Introduction: The Dangerous Moment Nobody Talks About

There's a moment in every enterprise AI project when success becomes a trap. A team launches its first AI agent, it solves a real problem, and everyone celebrates. The deployment works. Metrics improve. Stakeholders are happy. Leadership greenlights more budget.

Then comes the scaling phase.

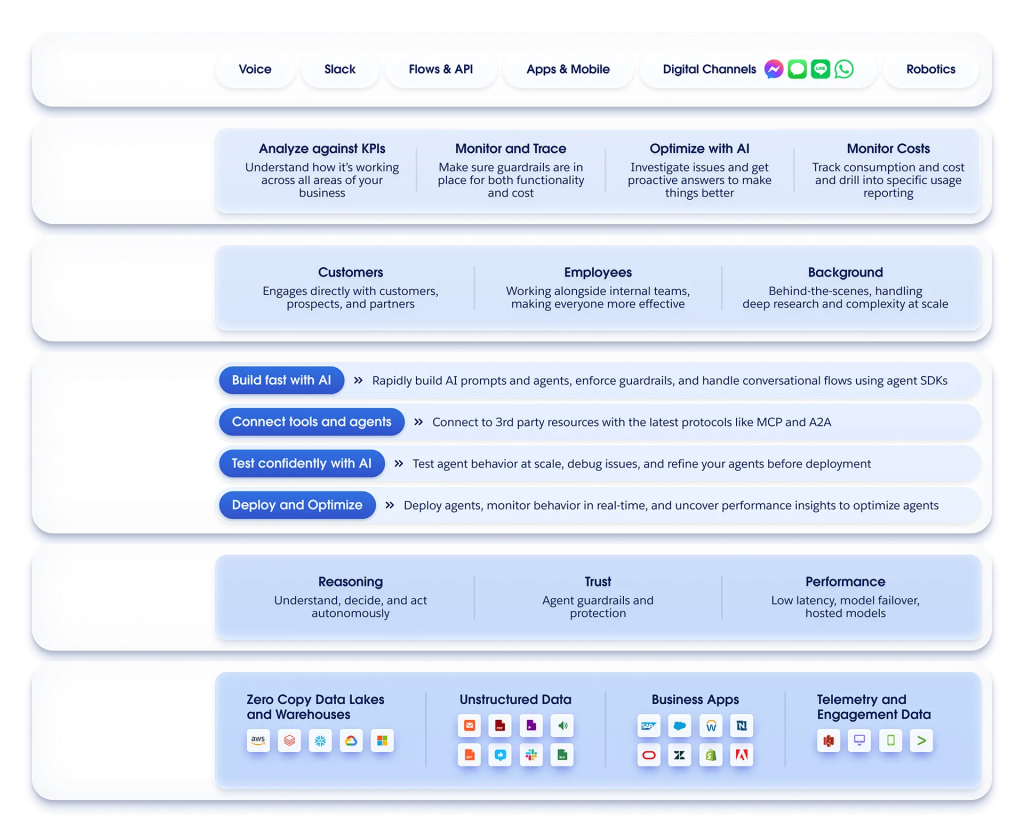

A company that built a voice agent for customer support wants to extend it to chat. A chatbot that handles inbound leads needs to work across WhatsApp, web, and email simultaneously. An internal AI assistant that works on Slack needs to integrate with Teams for hybrid workforces.

This is when organizations discover a painful truth: the system that nailed a single-channel deployment wasn't built to handle multiple channels without fundamental restructuring.

The logic has to be rebuilt. Integrations get duplicated. Governance becomes messier, not cleaner. Progress stalls at the exact moment the business expects acceleration. The team faces an ugly choice: slow down to rebuild, or rush forward and accept growing technical debt.

Here's what's critical to understand: this isn't a technology problem. The underlying AI models are fine. The integrations work. The APIs function properly. The real issue is architectural—a decision made early in the project to optimize for single-channel speed instead of multi-channel resilience.

Enterprise AI maturity isn't measured by how quickly you can launch your first agent. It's measured by how effectively you can scale that agent across channels, geographies, and use cases without recreating core logic each time.

This guide breaks down why most enterprises get scaling wrong, what omnichannel architecture actually means in practice, and how to build AI systems that grow instead of fracture as demands increase.

TL;DR

- Single-channel optimization costs you twice at scale: Early AI deployments built for one surface (voice, chat, email) require near-complete rewrites to extend to additional channels, creating massive technical debt.

- Most enterprises discover this too late: Teams validate pilots on one channel, then hit friction when business demands multi-channel coverage, forcing expensive architectural resets.

- Omnichannel isn't a deployment mandate—it's an architectural direction: You can start with one channel, but your foundation must allow future expansion without recreating core workflows, integrations, and logic.

- Shared agent logic reduces operational risk: When AI logic is channel-agnostic, governance improves, inconsistencies decrease, and scaling becomes predictable instead of chaotic.

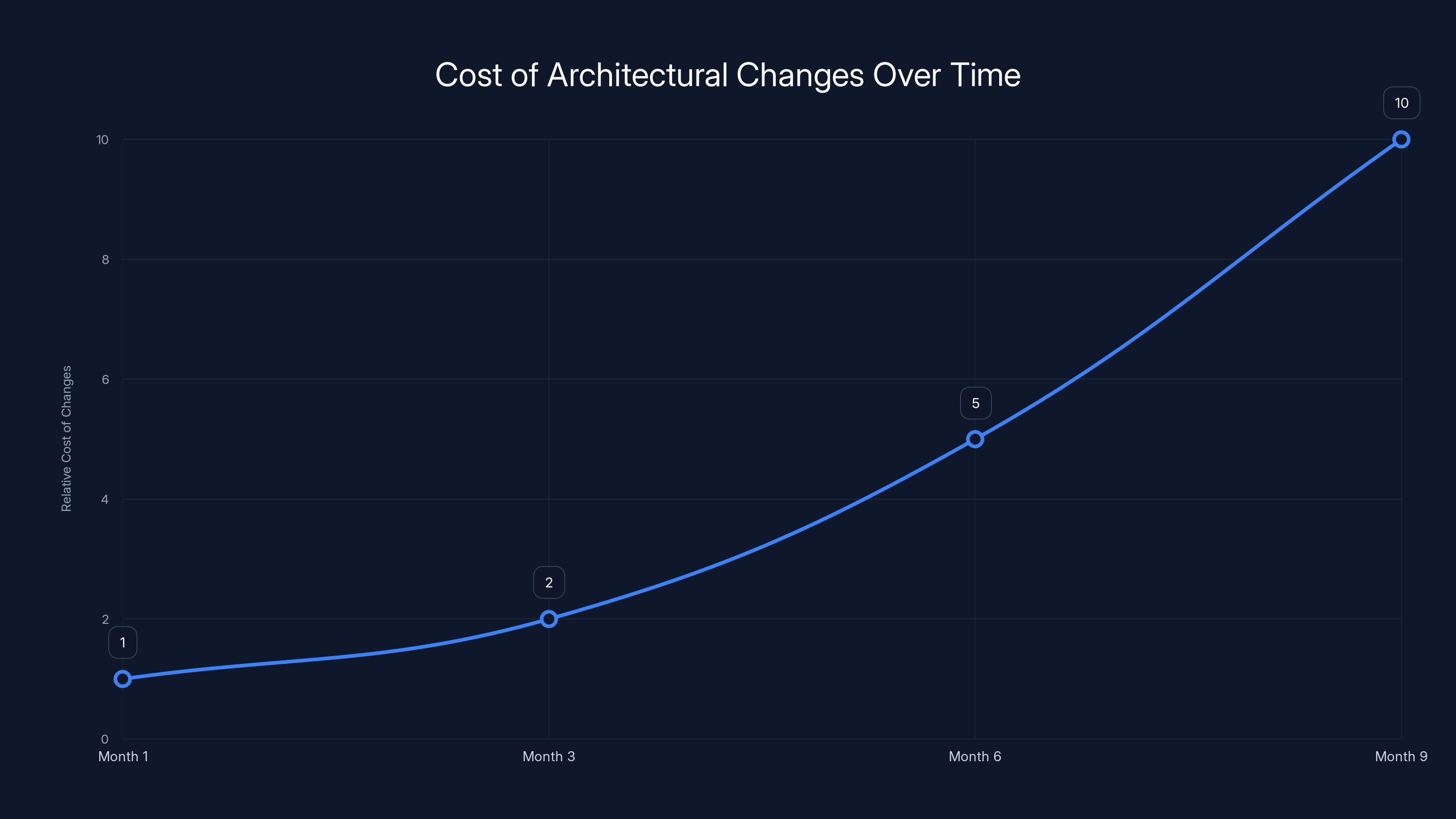

- This decision gets locked in early: Platform choices, integration patterns, and workflow design made in month two determine your scaling ceiling in month twelve—change is exponentially harder later.

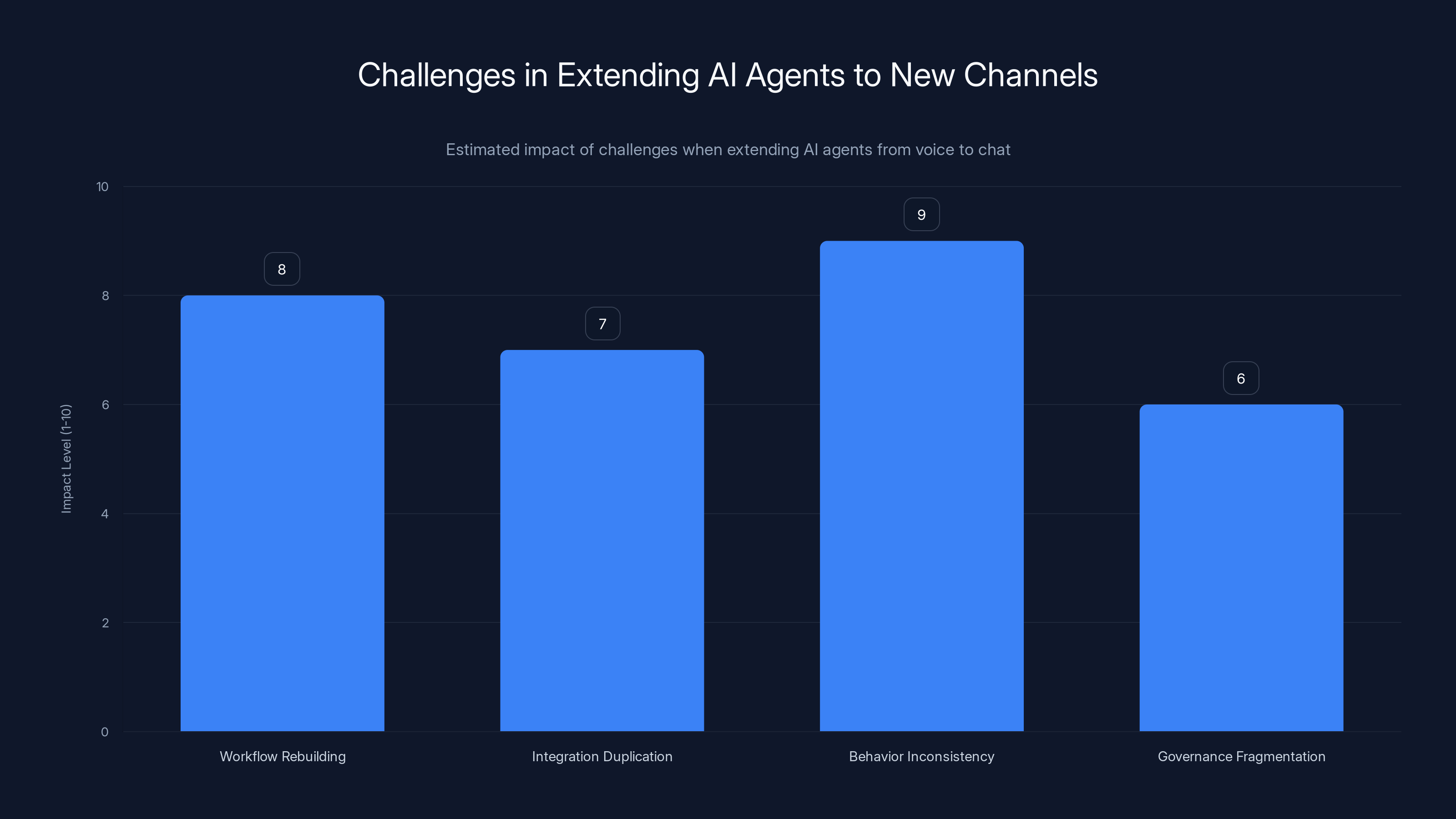

Estimated data suggests that behavior inconsistency is the biggest challenge when extending AI agents to new channels, followed by workflow rebuilding and integration duplication.

The Reality of How Enterprises Begin Their AI Journey

Most organizations don't start with an omnichannel vision. That's not because they're naive. It's because they're pragmatic.

A company faces a concrete problem: customer support is drowning in repetitive questions, inbound leads are going unanswered, or operational costs are spiraling. The solution is equally concrete—deploy an AI agent to handle a narrow, high-impact use case. One problem. One channel. One team.

This approach is sensible. It's how real enterprise adoption works.

A financial services firm might deploy a voice agent for mortgage application inquiries, as seen in Atrium's financial services solutions. An e-commerce company launches a chat agent to handle returns and refunds. A healthcare provider uses an AI system to screen appointment requests before they reach staff. These pilots work because they're focused. Scope is controlled. Success is measurable within weeks or months.

Internal stakeholders see tangible results. Cost per interaction drops. Customer satisfaction metrics improve. The team gets budget for expansion. This is when momentum builds.

But momentum conceals a structural problem. Teams optimizing for a single channel make certain architectural choices that feel fine at pilot scale and become anchors at production scale. They build workflows specific to voice interactions. They integrate with systems that work for chat but not email. They set up monitoring tailored to one user journey. They create governance rules for one surface.

None of these decisions feel wrong in isolation. Voice-specific workflows make sense when you're building a voice agent. Tight coupling to one system seems efficient when you have one integration. The problem isn't any individual choice. It's the cumulative effect of optimizing locally instead of globally.

When the business asks for broader coverage, what seemed like incremental progress becomes structural reset territory.

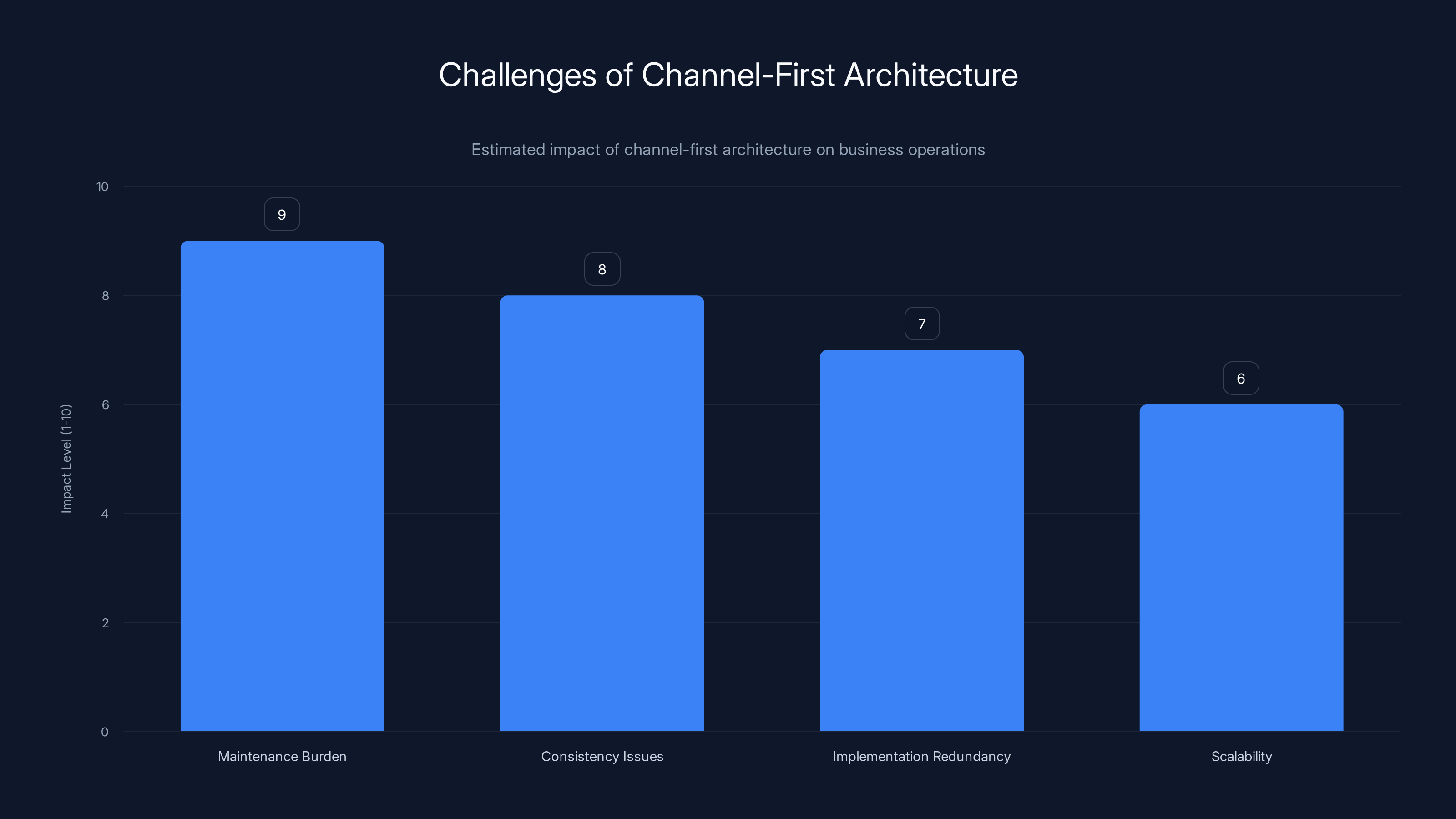

Channel-first architecture significantly increases maintenance burden and consistency issues, with high impact levels estimated across key operational challenges.

Where the Momentum Quietly Turns Into Friction

Month three or four is when cracks typically appear. The original deployment succeeded beyond expectations. Volume is higher than projected. Customer satisfaction is up. Now leadership wants the same agent on a second channel.

This is where project managers and engineering leads discover something uncomfortable: extending the AI agent to a new surface requires significantly more effort than the initial deployment.

Here's what actually happens:

Workflows need rebuilding. A voice agent asks questions one at a time, listens for responses, handles interruptions, and manages context across turns. A chat agent has to handle asynchronous messaging, text-based inputs, conversations that resume hours later, and different escalation patterns. These aren't the same workflows. They can't be, because the interaction model is fundamentally different. Teams end up redefining business logic rather than reusing it.

Integrations get duplicated. The voice agent might integrate with a CRM system through one API endpoint and authentication pattern. The chat agent needs the same CRM data but through a different integration that chat platforms support. Instead of a single integration layer serving both channels, you end up maintaining two separate connection points, two authentication schemes, two sets of error handling.

Behavior becomes inconsistent. Voice agents have certain safety rails: they time out after 30 seconds of silence, they repeat information differently, they handle clarification requests through different mechanisms. Chat agents work differently: they might time out after hours of inactivity, they can show information in structured formats, they escalate differently. When the same business logic needs to operate across both, teams discover that behavior that made sense in one context breaks in another.

Governance fragments. Monitoring, logging, audit trails, escalation rules, and compliance checks were built for one channel. Chat introduces different data retention requirements. Voice adds call recording compliance. Email brings its own security concerns. What looked like unified governance actually has channel-specific assumptions baked in.

The friction isn't visible in the first few weeks. It compounds. Each new channel requires 70-80% of the effort of the original build, not the 20-30% executives expected. By month six, teams face a binary choice: slow expansion to rebuild properly, or accelerate and accept growing technical debt.

Most organizations choose to accelerate. They shouldn't. But they do, because business expectations have been set.

The Dangerous Illusion of Channel-First Architecture

Much of today's enterprise AI marketing speaks about "omnichannel capabilities." It sounds comprehensive. It sounds like a solved problem.

But if you look under the surface, most "omnichannel" AI platforms are actually built on channel-first foundations. Voice agents and chat agents are separate systems, developed independently, connected loosely if at all. Voice uses one model, chat might use another. Escalation logic is different. Configuration is separate. Reporting is distinct.

This architecture works fine for single-channel pilots. It fails at scale.

Here's why: when each channel is a separate system, the same business logic needs to be reimplemented for each surface. A financial services company with rules about loan eligibility needs to implement those rules in voice, then again in chat, then again in email. A healthcare provider with guidelines about appointment scheduling needs those rules in voice and text and web simultaneously. But they're not the same implementations—they're adapted to each medium.

This creates invisible costs:

Maintenance burden explodes. When a business rule changes (say, a bank's lending criteria get updated), the change needs to cascade across every channel separately. A small rule revision in voice might be three lines of code. The same change in chat might be a different format. In email integration, another variation. Over time, when you have five channels and dozens of business rules, changing one rule touches fifteen different code paths.

Consistency becomes aspirational. When each channel reimplements logic independently, consistency requires perfect synchronization across independent teams. In practice, this never happens. Voice escalates slightly differently than chat. The decision tree for chat takes a slightly different path than email. Customers notice. They interact with one channel and get one answer, switch channels and get a slightly different one. It erodes trust.

Scaling becomes unaffordable. Adding a sixth channel means implementing every workflow, integration, and rule again. The marginal cost of each new channel never meaningfully drops, and the maintenance load grows with every surface you add. At some point, businesses stop expanding because the effort to launch channel N+1 becomes too high. Growth gets capped by architecture, not by business demand.

Governance fails under weight. Compliance teams can't audit a system where the same logical decision gets made five different ways. Security reviews need to cover every implementation separately. Risk management becomes fragmented. When incident response happens, teams have to trace logic through multiple implementations instead of one.

The illusion is that "omnichannel" means "multiple channels." The reality is that true omnichannel means a single core intelligence operating across multiple surfaces—not multiple intelligences pretending to be unified.

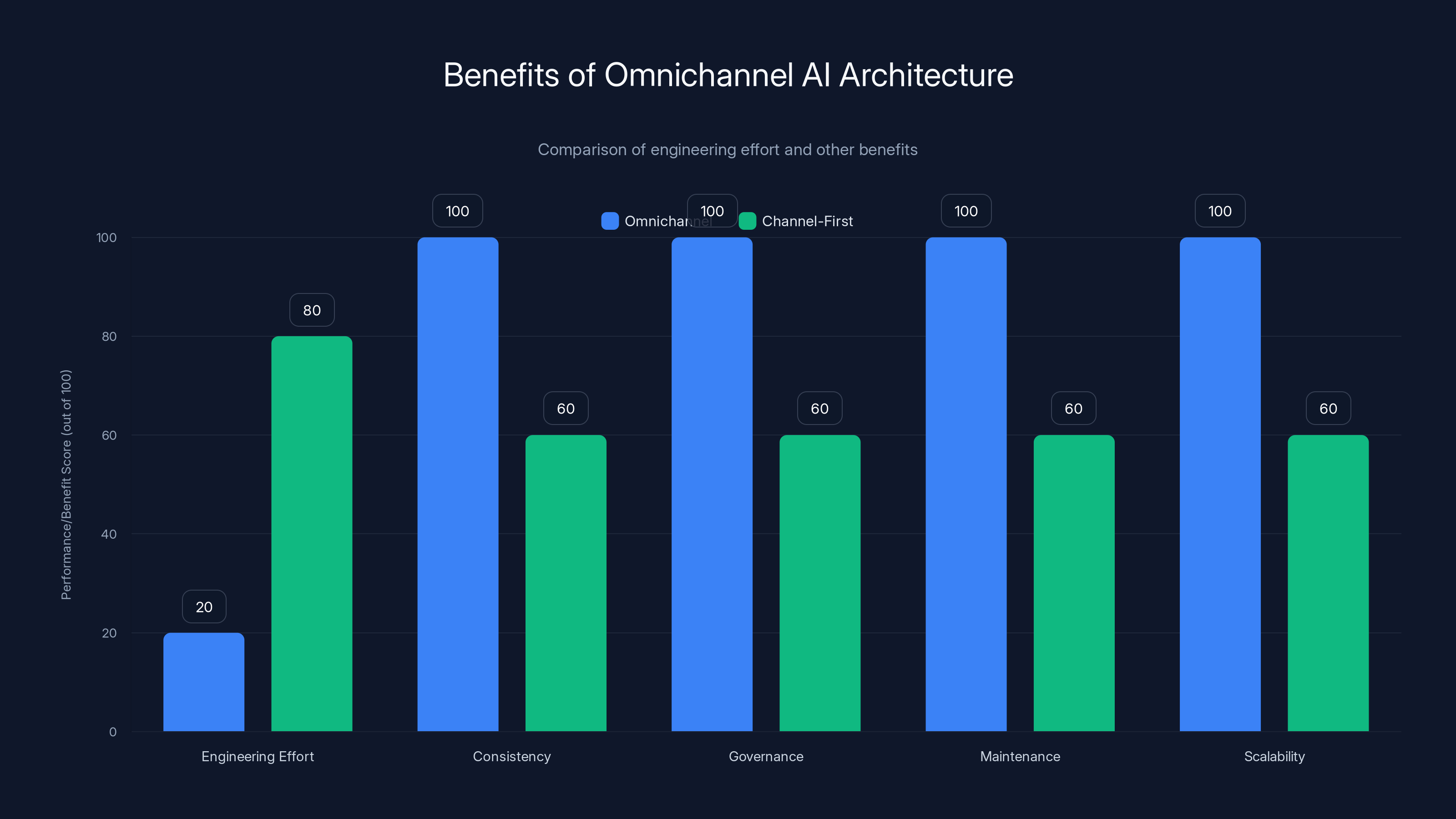

Omnichannel architecture significantly reduces engineering effort and enhances consistency, governance, maintenance, and scalability compared to channel-first systems. Estimated data based on typical industry insights.

Defining Omnichannel as Architectural Direction (Not Deployment Requirement)

This is the distinction that changes everything.

Omnichannel is often framed as a deployment mandate—"you must launch on all channels simultaneously." That's wrong and impractical. Most organizations shouldn't launch everywhere at once. Focus is a feature of early-stage adoption.

But omnichannel as an architectural direction is different. It means building intelligence in a way that's channel-agnostic, allowing that same intelligence to operate across multiple surfaces without fundamental reimplementation.

Here's what this actually looks like in practice:

Single core logic layer. Business rules, decision trees, workflow orchestration—the intelligence that decides what the agent does—lives in one place. It's not voice-specific or chat-specific. It's a pure business logic layer that doesn't care which channel invokes it.

Channel adapters as lightweight wrappers. Voice interfaces to this core through an adapter that handles voice-specific concerns: audio input/output, turn-taking, timeout handling. Chat interfaces through a different adapter: text formatting, message queuing, session management. But both adapters work with the same underlying intelligence.

Unified integration layer. CRM systems, databases, APIs, payment processors—these integrate once, at the core level. Channel adapters don't reimplement these integrations. They delegate to the shared layer.

Centralized governance. Rules, monitoring, escalation, compliance—all defined once at the core level. When a rule changes, it changes everywhere. When you audit the system, you're auditing one implementation, not five.

The advantage is enormous: when the business needs a new channel, engineers write an adapter (days or weeks of work) instead of reimplementing the entire system (weeks or months).

You still start with one channel. But that channel is built on foundations that don't collapse when you add the next one.

The critical insight: you don't need to build omnichannel infrastructure before you have a multi-channel strategy. You need to build foundations that allow omnichannel expansion when the business demands it.

Why This Matters Most for Early-Stage Adopters

If you're in month two or three of an AI project, this distinction is critical.

Your early architectural choices lock in your scaling ceiling. The integration patterns you choose now determine how easily you can add channels in month twelve. The way you structure business logic in month three constrains your options in month nine.

Changing these decisions later is exponentially harder. Refactoring a tightly coupled system built for single-channel operation into one designed for multi-channel expansion often requires rebuilding core components. You can't just sprinkle a new layer on top and call it omnichannel. You're restructuring how the system thinks.

This is why timing matters so much. There's a sweet spot—usually between month one and month three of a project—where architectural changes are still affordable. The codebase is small enough to modify. Business logic hasn't accumulated in channel-specific ways. Integration decisions haven't yet become load-bearing walls.

Wait until month six, and the cost of architectural changes starts climbing. Wait until month nine, and you're looking at full-system rewrites.

For organizations just starting their AI journey, this means asking hard questions early:

Does your chosen platform support channel-agnostic workflow definition? Can you define business logic once and reuse it across channels, or do you need to define workflows per channel?

How tightly coupled is the integration layer? Is each integration designed for a specific channel, or can it serve multiple channels?

What does governance look like across channels? One audit trail, or separate ones? One set of rules, or per-channel variations?

How much reimplementation would actually be required to add a second channel? Not "how much would the vendor say," but honest assessment with your engineering team.

These conversations are uncomfortable because the answers sometimes suggest you need to slow down or change platforms. But that discomfort now beats crisis later.

The cost of making architectural changes in AI projects increases significantly over time, especially after month three. Estimated data.

The Mathematics of Channel Expansion and Technical Debt

There's a useful way to think about this quantitatively.

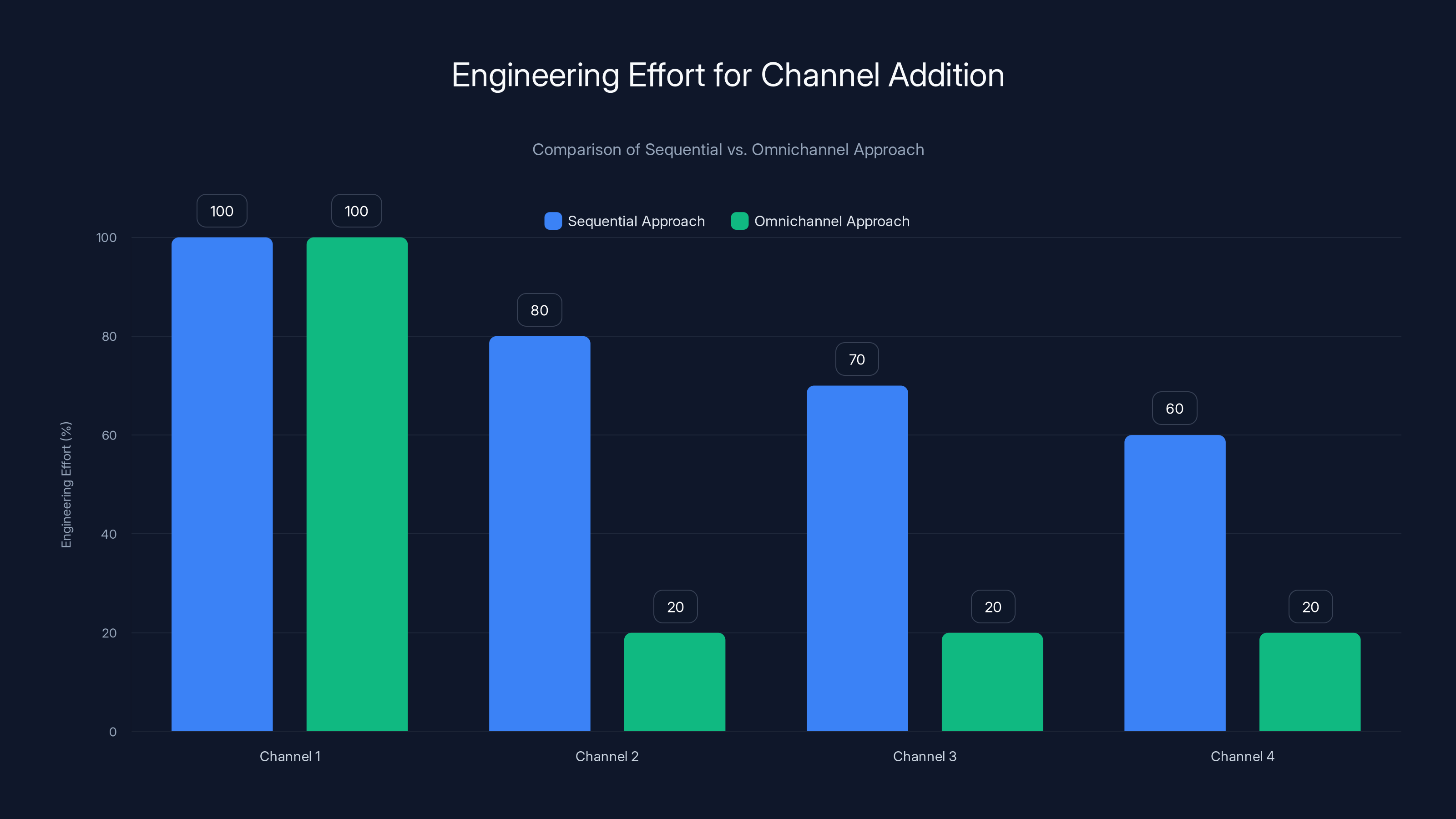

Assuming your first channel requires 100 units of engineering effort, what does the second channel actually cost?

In channel-first architecture: Channel 2 costs roughly 70-85 units of effort. You're reimplementing business logic (40 units), rebuilding integrations (25 units), adapting governance (10 units), learning new patterns (5 units).

In omnichannel architecture: Channel 2 costs roughly 18-30 units of effort. You're building an adapter (10-15 units), configuring channel-specific settings (5-10 units), testing integration (3-5 units).

The math compounds:

Channel 3 in channel-first: 70 units. You've learned some efficiency, but you're still essentially rebuilding.

Channel 3 in omnichannel: 12 units. The pattern is established and repeatable.

Channel 4 in channel-first: 70 units. The cost plateaus because you're always doing the same reimplementation.

Channel 4 in omnichannel: 10 units. The falling marginal cost works in your favor.

Over a 12-month roadmap with five channels:

Channel-first total effort: 100 + 75 + 70 + 70 + 70 = 385 units

Omnichannel total effort: 100 + 20 + 12 + 10 + 10 = 152 units

Omnichannel is roughly 2.5x more efficient over this scope. But more importantly, the omnichannel path gets easier as you add channels, while channel-first gets harder or stays flat. This is where the business impact becomes clear: the team that can deploy channel five in month eleven instead of month seventeen has a competitive advantage.
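The totals are easy to check by summing the per-channel figures quoted above. The short sketch below does that; the function names are illustrative, and the effort units are the article's own estimates rather than measured data.

```python
# Per-channel effort figures taken from the text above (first channel = 100 units).
CHANNEL_FIRST = [100, 75, 70, 70, 70]
OMNICHANNEL = [100, 20, 12, 10, 10]


def cumulative(efforts):
    """Return the running total of effort after each channel launch."""
    totals, running = [], 0
    for effort in efforts:
        running += effort
        totals.append(running)
    return totals


def effort_ratio(baseline, alternative):
    """How many times more total effort the baseline needs than the alternative."""
    return sum(baseline) / sum(alternative)


if __name__ == "__main__":
    for name, efforts in (("channel-first", CHANNEL_FIRST), ("omnichannel", OMNICHANNEL)):
        print(f"{name:>13}: per-channel={efforts} cumulative={cumulative(efforts)}")
    print(f"ratio: {effort_ratio(CHANNEL_FIRST, OMNICHANNEL):.2f}x")
```

Swapping in your own per-channel estimates shows how sensitive the gap is to the cost of channels two through five, which is where the two approaches diverge.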

There's also a quality dimension. In channel-first architecture, each new channel introduces new bugs, new edge cases, new failure modes. The system becomes harder to maintain as you add surfaces. In omnichannel architecture, new channels test existing logic but don't introduce fundamentally new code paths. Maintenance becomes easier, not harder.

Per-channel cost in the omnichannel model is roughly adapter effort plus configuration effort: adapter effort decreases slightly as you refine the pattern, and configuration effort stays relatively constant.

This breakdown shows why early architectural choices matter. The cost structure is fixed at platform-selection time. You can't reduce it later without major work.

Building Shared Intelligence Across Channels: The Practical Model

How does this actually work in implementation?

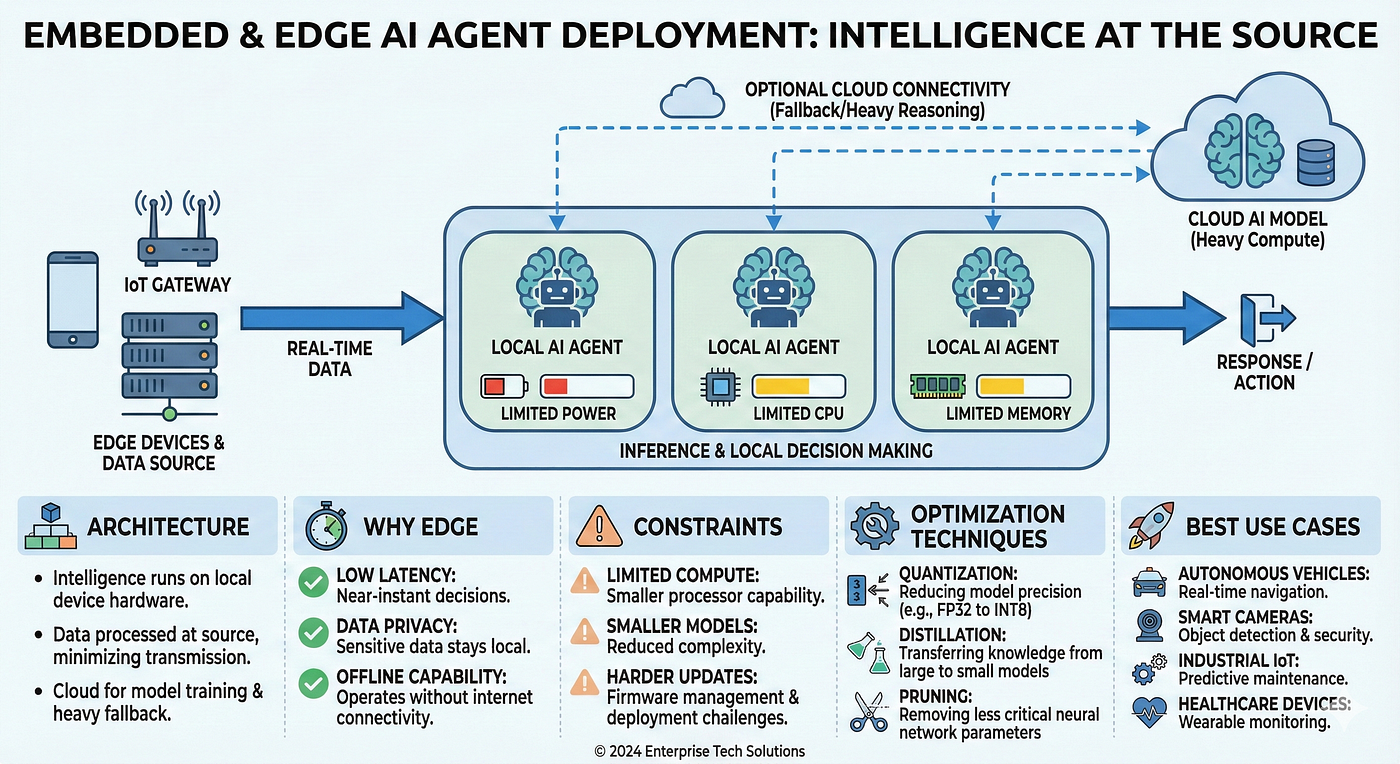

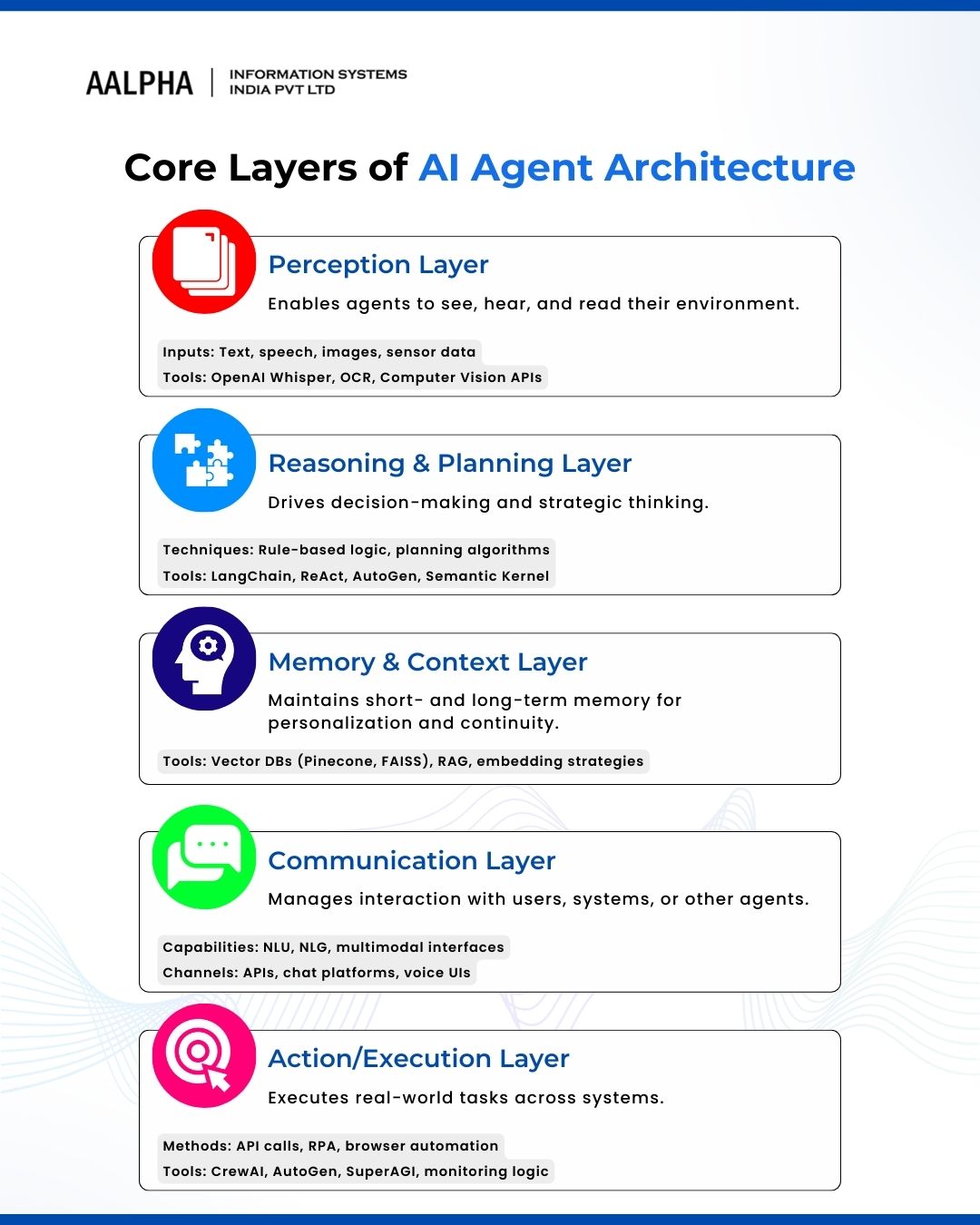

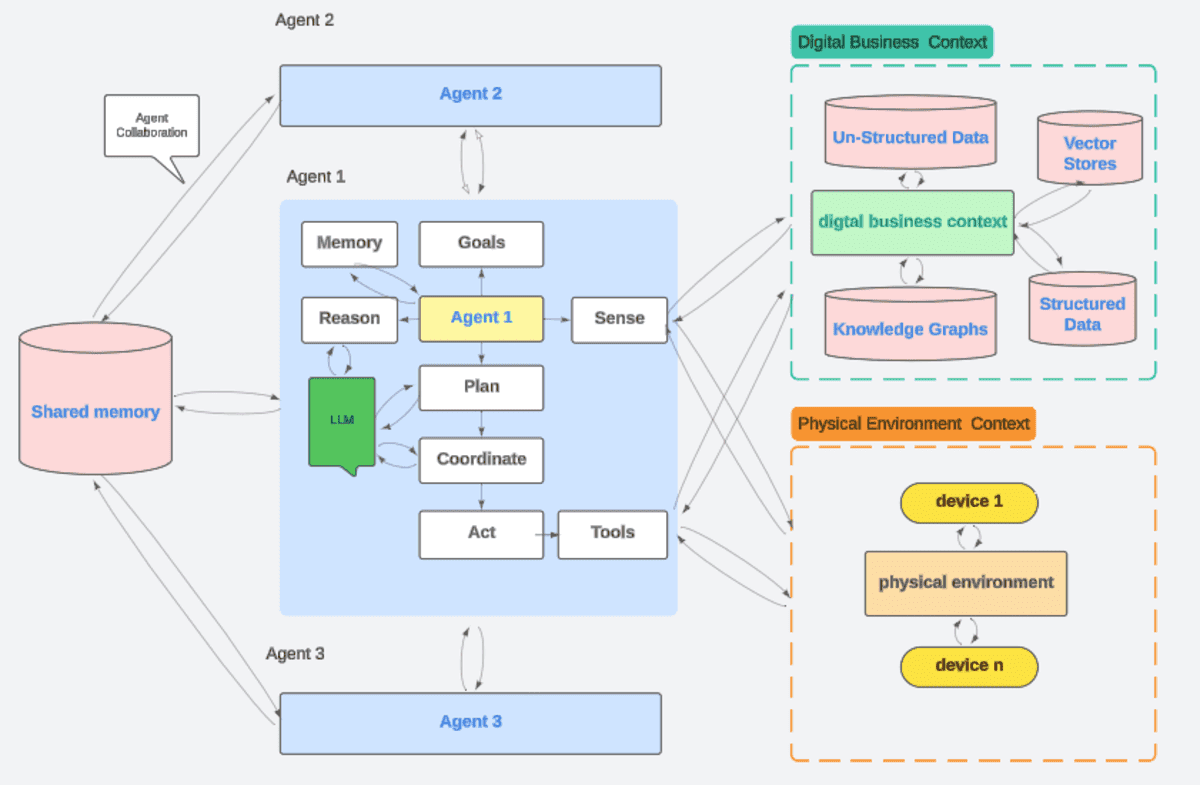

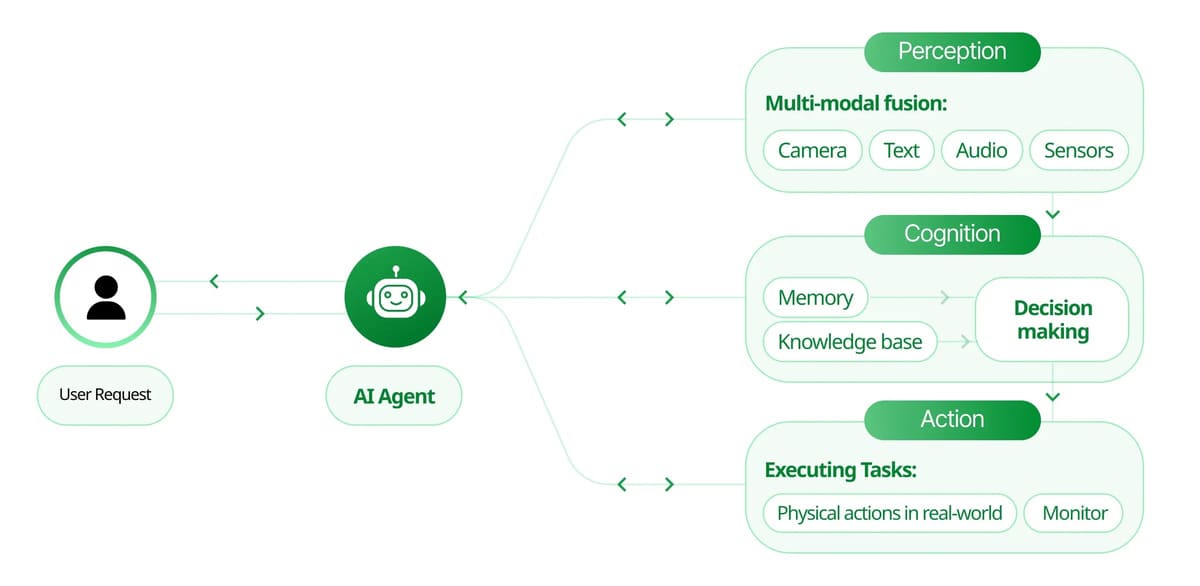

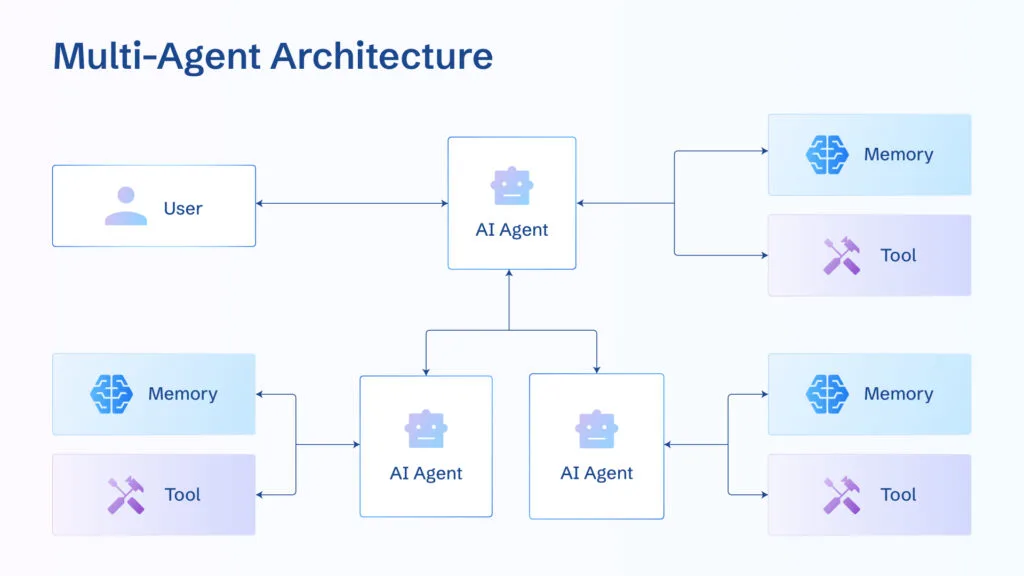

Most successful omnichannel AI deployments follow a three-layer architecture:

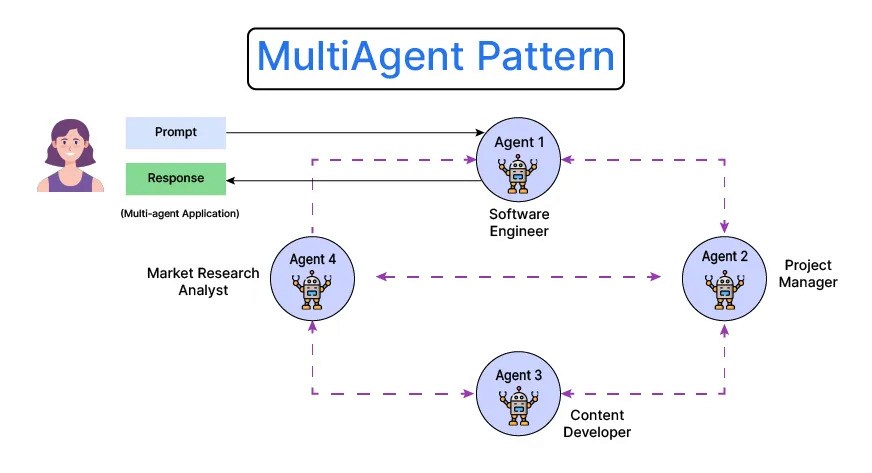

Layer 1: Orchestration and Business Logic

This is where the actual intelligence lives. Decision trees, workflow states, business rules, context management, escalation logic. This layer is completely channel-agnostic. It doesn't know if it's being invoked by voice or chat or email.

Example: A mortgage application workflow has steps: collect applicant name, verify income, assess credit, make decision. The logic is pure—applicant provides info (somehow), system validates (somehow), system decides (somehow). The "somehow" is channel-specific, but the workflow is not.

In practice, this layer is often implemented as:

- A rules engine (if you're using traditional enterprise platforms)

- A state machine with defined transitions

- A decision graph that routes through branches based on inputs

- A workflow engine that orchestrates steps

The key principle: logic is defined once, invoked many times, from many channels.
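As one possible illustration of the state-machine option, here is a minimal sketch of the mortgage workflow from the example above. The step names follow the text; the thresholds, the `Application` class, and the `advance` function are hypothetical and exist only to show that nothing in the logic refers to a channel.

```python
from dataclasses import dataclass, field
from enum import Enum, auto
from typing import Optional

# Hypothetical thresholds, for illustration only.
MIN_INCOME = 40_000
MIN_CREDIT_SCORE = 650


class Step(Enum):
    COLLECT_NAME = auto()
    VERIFY_INCOME = auto()
    ASSESS_CREDIT = auto()
    DECIDE = auto()
    DONE = auto()


@dataclass
class Application:
    step: Step = Step.COLLECT_NAME
    data: dict = field(default_factory=dict)
    decision: Optional[str] = None


def advance(app: Application, value) -> Application:
    """Consume one already-normalized input and move the workflow forward."""
    if app.step is Step.COLLECT_NAME:
        app.data["name"] = value
        app.step = Step.VERIFY_INCOME
    elif app.step is Step.VERIFY_INCOME:
        app.data["income"] = value
        app.step = Step.ASSESS_CREDIT
    elif app.step is Step.ASSESS_CREDIT:
        app.data["credit_score"] = value
        app.step = Step.DECIDE

    # The decision needs no further input, so it runs as soon as it is reached.
    if app.step is Step.DECIDE:
        ok = (app.data["income"] >= MIN_INCOME
              and app.data["credit_score"] >= MIN_CREDIT_SCORE)
        app.decision = "approved" if ok else "referred"
        app.step = Step.DONE
    return app
```

A voice adapter would feed `advance` values extracted from transcripts, and a chat adapter would feed it values parsed from messages. The function itself never learns which one called it.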

Layer 2: Integration and Data Access

When the orchestration layer needs external data—customer records, payment systems, compliance databases—it goes through a unified integration layer. This layer abstracts away the specific systems.

Instead of voice having its own CRM integration and chat having another, both invoke the same data access layer. That layer handles authentication, error handling, caching, and response formatting. Channel-specific concerns (like how to present data) are handled downstream, not in the integration layer.

Example: When the orchestration layer needs a customer's credit score, it calls getCustomerCredit(customerId). The integration layer handles whether that's coming from the Equifax API, a local database, or a third-party service. Voice doesn't need to know. Chat doesn't need to know.

This is where maintenance effort gets recaptured. When you upgrade the CRM system or change the credit score provider, you change it once in the integration layer, not five times across five channels.
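A rough sketch of that idea, assuming a simple provider interface: the credit lookup from the example is rendered in Python naming as `get_customer_credit`, and `CreditProvider`, `InMemoryCreditProvider`, and `swap_credit_provider` are hypothetical names, not part of any real vendor API.

```python
from typing import Dict, Protocol


class CreditProvider(Protocol):
    """Anything that can return a credit score for a customer ID."""

    def fetch_score(self, customer_id: str) -> int: ...


class InMemoryCreditProvider:
    """Stand-in for a bureau API or internal database (hypothetical)."""

    def __init__(self, scores: Dict[str, int]):
        self._scores = dict(scores)

    def fetch_score(self, customer_id: str) -> int:
        return self._scores[customer_id]


class IntegrationLayer:
    """Single entry point used by the orchestration layer for every channel."""

    def __init__(self, credit_provider: CreditProvider):
        self._credit_provider = credit_provider
        self._cache: Dict[str, int] = {}

    def get_customer_credit(self, customer_id: str) -> int:
        # Caching and error translation live here, once, not in each channel.
        if customer_id not in self._cache:
            try:
                self._cache[customer_id] = self._credit_provider.fetch_score(customer_id)
            except KeyError as exc:
                raise LookupError(f"no credit record for {customer_id}") from exc
        return self._cache[customer_id]

    def swap_credit_provider(self, provider: CreditProvider) -> None:
        # Changing the upstream source is one change that every channel inherits.
        self._credit_provider = provider
        self._cache.clear()
```

Callers only ever see `get_customer_credit`, so replacing the provider does not touch voice, chat, or email code.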

Layer 3: Channel Adapters

This is the only layer that's channel-specific, and it should be as thin as possible.

A voice adapter handles: audio encoding, real-time transcription, turn-taking logic, managing context across voice interactions, audio output formatting. It translates voice inputs into the format the orchestration layer expects, and translates orchestration outputs into voice responses.

A chat adapter handles: message queuing, session management, text formatting, asynchronous message delivery. Same orchestration layer, different translation.

An email adapter handles: message parsing, attachment handling, threading logic, response formatting.

The key principle: adapters are thin, specific, and focused only on translation between the channel's format and the core system's format. No business logic lives here.

In practice:

Voice Input → Voice Adapter → Orchestration → Integration → Response → Voice Adapter → Audio Output

Chat Input → Chat Adapter → Orchestration → Integration → Response → Chat Adapter → Text Output

Email Input → Email Adapter → Orchestration → Integration → Response → Email Adapter → Email Message

The adapters are different. The path through the middle is identical.
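The flow above can be sketched in a few lines. Everything here is a simplified assumption: `orchestrate` stands in for the orchestration and integration layers together, the voice adapter receives text that is presumed to have come from speech-to-text already, and the canned replies are made up for illustration.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class CoreRequest:
    customer_id: str
    text: str


@dataclass
class CoreResponse:
    message: str
    options: List[str]


def orchestrate(request: CoreRequest) -> CoreResponse:
    """Hypothetical stand-in for the shared orchestration + integration layers."""
    if "status" in request.text.lower():
        return CoreResponse("Your claim is in review.",
                            ["Talk to an agent", "Upload documents"])
    return CoreResponse("How can I help?", ["Check claim status"])


class ChatAdapter:
    """Translates chat messages in and renders structured text out."""

    def handle(self, customer_id: str, message: str) -> str:
        response = orchestrate(CoreRequest(customer_id, message.strip()))
        bullets = "\n".join(f"- {option}" for option in response.options)
        return f"{response.message}\n{bullets}"


class VoiceAdapter:
    """Translates transcripts in and renders a speakable sentence out."""

    def handle(self, customer_id: str, transcript: str) -> str:
        response = orchestrate(CoreRequest(customer_id, transcript.strip()))
        spoken = " or ".join(option.lower() for option in response.options)
        return f"{response.message} You can say: {spoken}."
```

Both adapters contain only translation code. The decision about what to tell the customer is made once, inside `orchestrate`.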

When this architecture is properly implemented, adding a new channel is genuinely fast. You write an adapter (which is well-understood and constrained), test integration points, and launch. You're not reimplementing intelligence. You're not redefining workflows. You're translating between a new medium and existing intelligence.

Omnichannel approach significantly reduces engineering effort for adding new channels, with only 15-20% additional effort per channel compared to the sequential approach.

Governance, Compliance, and the Hidden Cost of Fragmentation

This is where the importance of shared architecture becomes existential, especially in regulated industries.

Imagine an insurance company with an AI agent handling claims inquiries. In voice, the agent explains claim status. In chat, the same agent should provide the same information. In email, the response should be consistent.

But when voice, chat, and email are separate systems, consistency becomes impossible to guarantee. Different implementations might have different safety checks. Escalation rules might vary. Information might be presented differently. Audit logs might have different structures.

When a regulator audits the system, they're looking at three separate implementations of what should be one decision. That's a compliance nightmare.

Governance in channel-first systems requires:

- Training separate teams on separate implementations

- Documenting three versions of the same logic

- Testing each channel independently (and missing cross-channel issues)

- Monitoring three separate audit trails

- Debugging across multiple code paths when something breaks

- Updating multiple implementations when regulations change

Governance in omnichannel systems enables:

- One version of the truth about what the AI does

- Single audit trail showing all interactions

- Unified monitoring and alerting

- Testing that covers the core logic once (not three times)

- When regulations change, one update that applies everywhere

- When something breaks, one investigation that fixes all channels

For a financial services company or healthcare provider, this difference isn't academic. It's the difference between a system that can be audited and a system that becomes increasingly opaque as it scales.
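To make the single-audit-trail point concrete, here is a small sketch using the claims example. The 30-day escalation threshold, the rule identifier, and the in-memory log are all assumptions for illustration; a production system would write to durable, append-only storage.

```python
import time

# Hypothetical threshold: claims open longer than this are escalated to a human.
ESCALATE_AFTER_DAYS = 30

# Single in-memory audit trail shared by every channel (illustrative only).
AUDIT_LOG = []


def decide_claim_action(channel: str, customer_id: str, days_open: int) -> str:
    """Apply the one shared rule and record the decision, whatever the channel."""
    decision = "escalate" if days_open > ESCALATE_AFTER_DAYS else "inform"
    AUDIT_LOG.append({
        "timestamp": time.time(),
        "channel": channel,          # recorded as metadata, never used to decide
        "customer_id": customer_id,
        "rule": "claim_status_v1",   # hypothetical rule identifier
        "decision": decision,
    })
    return decision


if __name__ == "__main__":
    for channel in ("voice", "chat", "email"):
        print(channel, decide_claim_action(channel, "cust-42", days_open=45))
    print(len(AUDIT_LOG), "entries in one audit trail")
```

Because the channel is only a field on the log entry, an auditor can filter by channel but always finds the same rule behind every decision.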

There's also a risk dimension. When decision logic is reimplemented across channels, bugs manifest differently. A security issue in voice might not appear in chat because the implementations are different. This creates a false sense of security. In omnichannel systems, security issues are consistent—one fix protects all channels.

From Pilot Success to Platform Evolution: The Scaling Trajectory

Successful teams follow a clear progression over 12-18 months:

Months 1-3: Single-channel pilot

Launch voice agent for customer support. Build orchestration layer, integration layer, voice adapter. Prove the concept. Measure success. Get stakeholder buy-in.

At this stage, your foundation is being set. If you're choosing omnichannel architecture, this is when it matters most.

Months 4-6: Initial expansion

Add chat to the same orchestration layer. Discover some edge cases in the shared core logic. Refine governance. You're now running two channels on one intelligence.

If you built channel-first in months 1-3, this is where pain starts appearing. If you built omnichannel, you're validating that the architecture works.

Months 7-9: Broader integration

Add email and messaging (WhatsApp, SMS). The system now handles three distinct interaction patterns. You've refined the adapter pattern twice; adding new adapters is increasingly predictable.

This is where channel-first architectures usually hit a scaling wall. Omnichannel systems start to show their advantage.

Months 10-12: Cross-channel coherence

Focus shifts from "can we do this on multiple channels" to "how do we ensure consistency across channels." Session management spans channels. A customer starts a conversation in voice, continues it in chat. Context flows across surfaces.

This requires shared data structures and cross-channel awareness at the orchestration level. If you've been maintaining separate implementations, this becomes extremely hard. If you've been working from shared orchestration, it's an incremental addition.
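One way to picture the shared data structure is a session keyed by customer rather than by channel. The sketch below is a minimal in-memory version; `Session`, `SessionStore`, and `record_turn` are hypothetical names, and a real deployment would need persistence, expiry, and identity resolution that are out of scope here.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Tuple


@dataclass
class Session:
    customer_id: str
    turns: List[Tuple[str, str]] = field(default_factory=list)  # (channel, text)
    facts: Dict[str, str] = field(default_factory=dict)         # context carried forward


class SessionStore:
    """Sessions are keyed by customer, so every channel sees the same context."""

    def __init__(self) -> None:
        self._sessions: Dict[str, Session] = {}

    def get(self, customer_id: str) -> Session:
        if customer_id not in self._sessions:
            self._sessions[customer_id] = Session(customer_id)
        return self._sessions[customer_id]


def record_turn(store: SessionStore, customer_id: str, channel: str,
                text: str, **facts: str) -> Session:
    """Append a turn from any channel and merge newly learned facts."""
    session = store.get(customer_id)
    session.turns.append((channel, text))
    session.facts.update(facts)
    return session
```

A conversation that starts on voice and continues in chat then looks like two turns on one session, with facts learned on the first channel available on the second.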

Months 13-18: Platform maturity

The AI agent is now infrastructure. It's integrated into workflows across the organization. Teams depend on it. You're measuring impact not by channel, but by business outcome.

You're also adding new capabilities: proactive outreach, predictive routing, cross-channel campaign orchestration. These are only possible when channels are unified at the orchestration level.

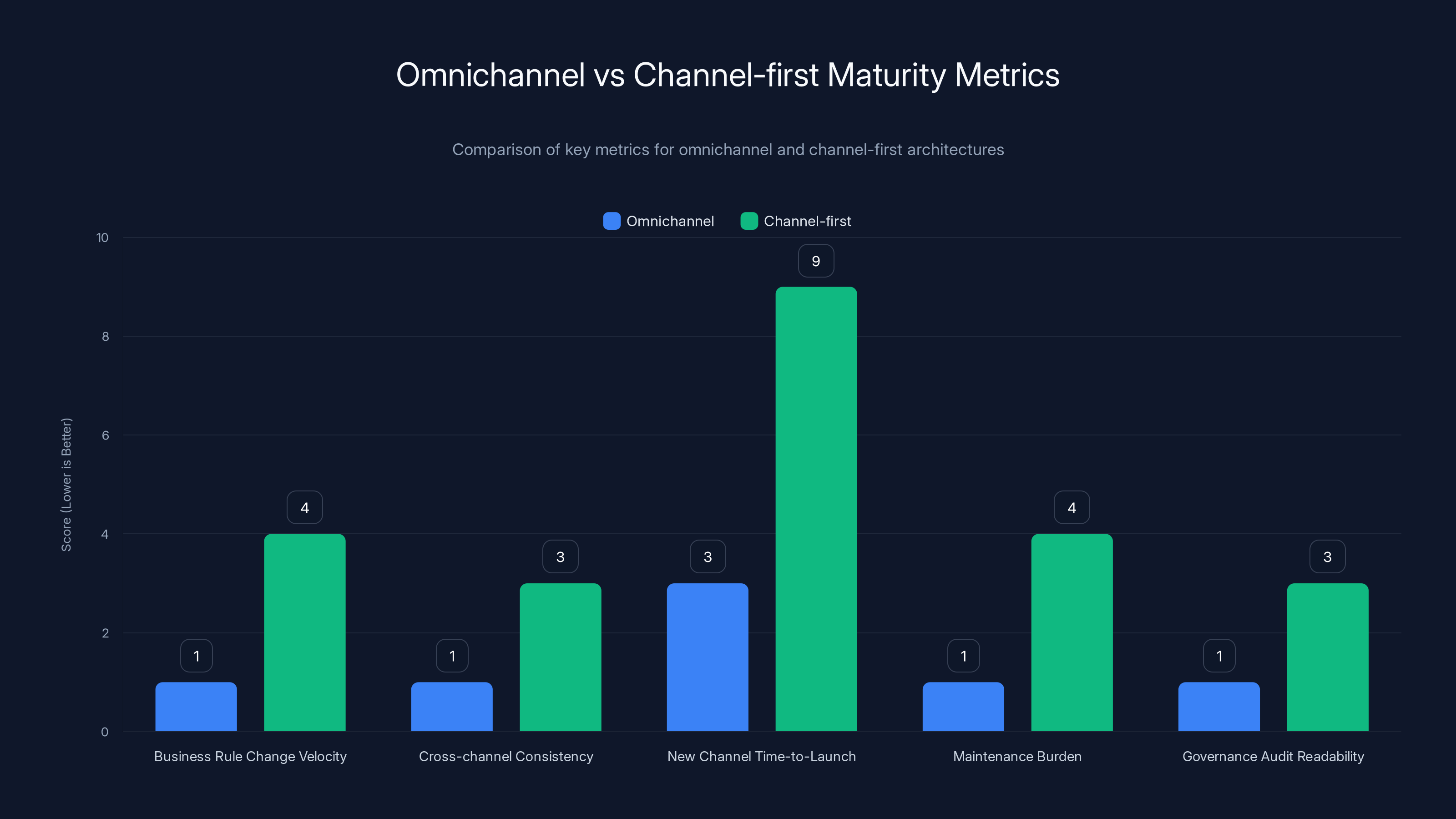

Omnichannel architecture generally scores better across key metrics, indicating a more integrated and efficient system compared to channel-first approaches. Estimated data based on typical implementations.

Common Scaling Mistakes and How Omnichannel Avoids Them

Mistake 1: Assuming sequential channel addition is cheap

The myth: "We'll get voice working, then add chat next quarter, then expand from there."

The reality: Each new channel requires rearchitecting if the first was channel-specific. It's not sequential. It's cascading complexity.

Omnichannel approach: Sequential addition is genuinely cheap. Channel 2 adds only 15-20% engineering effort.

Mistake 2: Building channel-specific business logic

The myth: "Voice has its own workflow, chat has its own—they're different media."

The reality: The underlying business logic is identical. The channel is just the delivery medium. Separating them creates maintenance nightmares.

Omnichannel approach: One workflow, multiple translations. When business rules change, they change once.

Mistake 3: Separate integration points per channel

The myth: "Voice connects to this CRM, chat connects to that one. They have different requirements."

The reality: CRM data is CRM data. The channel doesn't change the requirement. Separate integrations create inconsistency and synchronization headaches.

Omnichannel approach: One integration point serving all channels. Changes and upgrades happen once.

Mistake 4: Inconsistent governance across channels

The myth: "Each channel has its own escalation rules and monitoring."

The reality: Regulators and users see one agent operating inconsistently. That erodes trust and creates compliance risk.

Omnichannel approach: Unified governance visible across all channels simultaneously.

Mistake 5: Treating multi-channel as a future problem

The myth: "We'll handle omnichannel when we need to. For now, let's ship."

The reality: Architectural decisions lock in within weeks. Waiting until you "need" multi-channel means rebuilding.

Omnichannel approach: Build foundations that support future expansion from day one. This doesn't delay initial launch—it enables it.

The Competitive Advantage of Architectural Clarity

Here's what separates fast-moving enterprises from slow ones in the AI era:

Fast enterprises make one architectural decision in month two and execute consistently against it for 12-18 months. They know which layers are channel-agnostic and which are channel-specific. They know where business logic lives. They know how to add a new channel.

Slow enterprises make channel-specific optimizations that felt efficient at the time, then spend months untangling them when business demands shift. They're stuck between "this works for one channel" and "we need to rebuild for multi-channel."

The difference in speed isn't about AI capability. It's about architectural clarity.

Omnichannel architecture provides that clarity:

- You know adding a new channel requires building an adapter, not reimplementing intelligence.

- You know business rule changes flow to all channels simultaneously.

- You know governance is singular, not fragmented.

- You know your scaling ceiling isn't locked in at month three—it's expandable.

This clarity has business impact. When a competitor launches on a new channel, you can match them in weeks instead of months. When the market shifts and you need to support a new interaction pattern, you're ready. When regulators demand changes, you implement once, not five times.

The organizations winning at enterprise AI right now aren't winning on model quality. Models are commoditized. They're winning on the ability to move fast when the business demands it.

Building Your AI Strategy Around Architectural Direction, Not Deployment Timing

This is the framing that changes decision-making:

Don't ask, "Can we launch an AI agent on voice by Q2?"

Ask, "Can we build foundations that support voice in Q2, chat in Q3, messaging in Q4, and web in Q1 of the following year without major rework?"

The first question optimizes for speed to first success. The second optimizes for sustainable scaling.

Both are achievable. But they require different architectural choices.

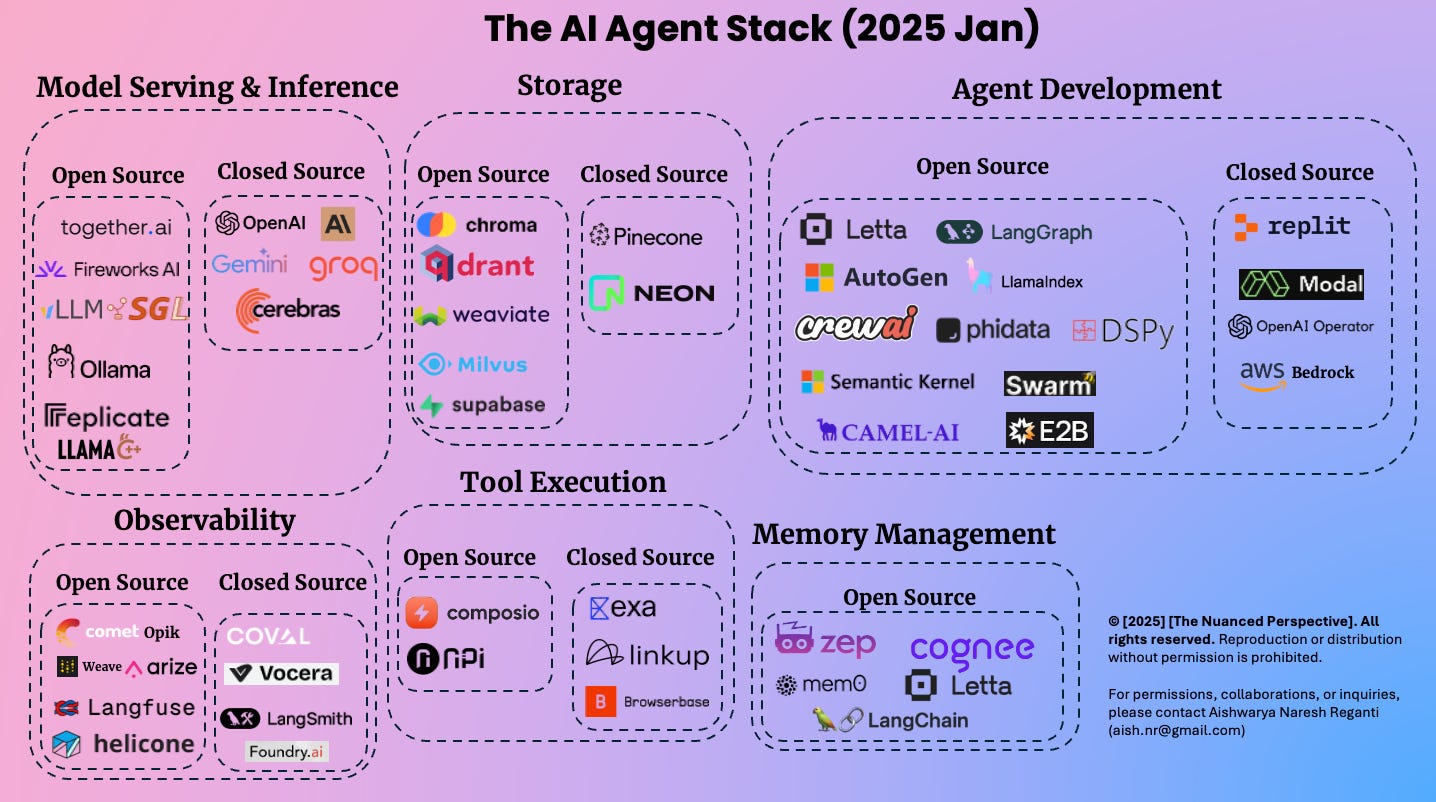

If you're choosing a vendor platform:

- Ask about orchestration layer design (channel-agnostic or channel-specific?)

- Understand integration patterns (shared or separate per channel?)

- Probe governance architecture (unified or fragmented?)

- Test with your own use case: "How much code changes if we add a new channel?"

If you're building in-house:

- Separate business logic from channel logic from the start.

- Make integration layers channel-agnostic.

- Design adapters as thin translation layers, not logic containers.

- Build governance at the orchestration level.

The additional upfront investment (usually 10-15% more effort in months 1-3) pays for itself by months 8-9, when you're scaling faster than channel-first approaches allow.

Measuring Omnichannel Maturity

How do you know if your architecture is actually omnichannel, or just multichannel with separate implementations?

Metric 1: Business rule change velocity

Omnichannel: Change a business rule, roll it out to all channels in a single deployment.

Channel-first: Change a rule, update it in voice, test it, update chat, test it, update email, test it. Multiple deployments.

Measure: How many code repositories do you modify when changing one business rule? Omnichannel = 1. Channel-first = 3-5.

Metric 2: Cross-channel consistency

Omnichannel: Same scenario, same query, same decision across channels (differences are only in presentation).

Channel-first: Same scenario produces slightly different results in different channels because implementations are independent.

Measure: Run a test case through all channels. Do you get the same decision? Omnichannel = yes. Channel-first = sometimes.
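This measurement can be automated as a simple cross-channel check. The sketch below is an assumption-laden example: the 30-day refund rule, the handler functions, and the deliberately drifted email handler are invented to show what a mismatch looks like, and real channel handlers would be called through their own interfaces.

```python
def core_decision(scenario: dict) -> str:
    """Hypothetical shared rule: refunds within 30 days are approved."""
    return "approve" if scenario["days_since_purchase"] <= 30 else "escalate"


def voice(scenario: dict) -> dict:
    decision = core_decision(scenario)
    return {"decision": decision, "rendered": f"I will {decision} your refund request."}


def chat(scenario: dict) -> dict:
    decision = core_decision(scenario)
    return {"decision": decision, "rendered": f"Refund request: {decision}"}


def drifted_email(scenario: dict) -> dict:
    # A channel that reimplemented the rule on its own and drifted to 14 days.
    decision = "approve" if scenario["days_since_purchase"] <= 14 else "escalate"
    return {"decision": decision, "rendered": f"Subject: Refund {decision}"}


def consistency_report(scenarios, channels):
    """Return (scenario, decisions) pairs where channels disagree on the decision."""
    mismatches = []
    for scenario in scenarios:
        decisions = {name: handler(scenario)["decision"]
                     for name, handler in channels.items()}
        if len(set(decisions.values())) > 1:
            mismatches.append((scenario, decisions))
    return mismatches


SCENARIOS = [{"days_since_purchase": d} for d in (5, 20, 45)]

if __name__ == "__main__":
    print(consistency_report(SCENARIOS, {"voice": voice, "chat": chat}))
    print(consistency_report(SCENARIOS, {"voice": voice, "email": drifted_email}))
```

The check compares only the `decision` field, since presentation is allowed to differ between channels while the decision is not.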

Metric 3: New channel time-to-launch

Omnichannel: New channel launches in 2-4 weeks (adapter building and testing).

Channel-first: New channel launches in 6-12 weeks (full reimplementation).

Measure: How long did channel 2 take compared to channel 1? Omnichannel = similar or faster. Channel-first = significantly longer.

Metric 4: Maintenance burden

Omnichannel: Bug fixes happen once, affect all channels.

Channel-first: Bug fixes happen multiple times across implementations.

Measure: When you find a bug, how many places do you have to fix it? Omnichannel = 1. Channel-first = 3-5.

Metric 5: Governance audit readability

Omnichannel: Auditors see one implementation, one decision path, one logic flow.

Channel-first: Auditors see the same logic implemented five different ways, creating confusion.

Measure: Can a compliance officer trace a decision through the system and find the same path regardless of which channel the customer used? Omnichannel = yes. Channel-first = unclear.

Track these metrics over time. They're leading indicators of architectural clarity.

What Will Differentiate Enterprise AI in the Next Phase

We're at an inflection point in enterprise AI adoption. The question "Can we deploy an AI agent?" has been answered—most large companies can and do. The next phase will separate winners from everyone else.

Winners will be organizations that can move fast at scale.

That ability doesn't come from better models or shinier interfaces. It comes from architectural decisions made in month two that turned out to be right in month twelve.

It comes from choosing foundations that don't limit future growth.

The companies that win the next three years will be the ones that realized early that omnichannel isn't a deployment requirement ("launch everywhere at once") but an architectural direction ("build in a way that allows expansion without rebuilding").

They'll move at 2-3x the speed of organizations still locked in channel-first architecture. They'll be able to pivot business models when markets shift. They'll handle regulatory changes faster. They'll integrate AI into more workflows, more channels, more use cases without linear growth in engineering effort.

And the truly competitive organizations will go further. They'll realize that once you have omnichannel intelligence (one agent operating across voice, chat, email, and web), you can start optimizing across channels. Proactive outreach through the customer's preferred channel. Seamless handoffs between channels. Context flowing across surfaces. Campaigns orchestrated across every channel at once.

That's not possible when channels are separate systems. It's native when they're unified.

The Architecture That Scales

The most dangerous moment in enterprise AI is indeed early success. Success on one channel creates momentum that can push an organization toward short-term decisions with long-term costs.

But success doesn't have to come at that cost.

Omnichannel architecture isn't a complex, costly approach that only large enterprises can afford. It's a clear decision to separate business logic from channel logic and build adapters that translate between them. It's maybe 10-15% more upfront effort. And it saves 3-4x effort by month twelve.

For any organization starting an AI journey, the question isn't "Will we need multiple channels?" (the answer is almost always yes, eventually). The question is "When do we want to discover that our architecture doesn't support it?"

Discover it in month three when architecture is still malleable and changes are cheap. Not month nine when you're facing a choose-your-fate moment: slow down for a rewrite, or accept growing technical debt.

The organizations building AI today that will still be competitive in 2026-2027 are the ones making that choice correctly.

FAQ

What is omnichannel AI architecture?

Omnichannel AI architecture is a system design approach where a single core intelligence layer operates across multiple communication channels (voice, chat, email, etc.) through channel-specific adapters. Unlike channel-first approaches where each channel has its own implementation, omnichannel means business logic is defined once and reused across all channels. This approach avoids duplicating workflows, integrations, and governance rules.

How does omnichannel architecture differ from multichannel AI?

Multichannel typically means operating on multiple channels, but each might be a separate system with its own logic and integrations. Omnichannel means those channels share common intelligence—the same workflows, rules, and business logic, just translated for different interaction patterns. It's the architectural difference between "managing five separate agents" and "deploying one agent across five surfaces."

What are the key benefits of omnichannel architecture for enterprises?

Omnichannel architecture provides faster scaling (new channels cost 15-20% engineering effort instead of 70-80%), consistency across channels (customers get the same decision regardless of medium), easier governance and compliance (one audit trail instead of five), reduced maintenance burden (rule changes happen once), and future-proof foundations (you're not locked into a single-channel scaling ceiling). It also enables cross-channel capabilities like seamless handoffs and context persistence across surfaces.

What specific mistakes happen when enterprises scale channel-first AI systems?

Channel-first systems require reimplementing business logic for each new channel, creating separate integration points instead of shared ones, developing inconsistent behavior across channels, fragmenting governance across implementations, and often forcing expensive architectural rewrites when business demands shift. Teams discover these problems too late, usually around months six through nine, when reversing course becomes costly.

When should an enterprise decide on omnichannel architecture?

The ideal time is during the initial design phase, typically month one to three of an AI project. This is when architectural decisions are still malleable and cheap to change. By month four to six, integration patterns and business logic structures have solidified, making architectural changes exponentially more expensive. Early decisions lock in scaling capability—waiting until you "need" multiple channels means rebuilding.

How much additional effort does omnichannel architecture require upfront?

Omnichannel architecture typically requires 10-15% additional engineering effort in months one through three compared to a single-channel approach. You're investing in architectural clarity, shared integration layers, and orchestration design. This upfront investment pays back by months eight to nine, when adding each subsequent channel requires only 15-20% of the original effort instead of 70-80%, making the cumulative cost significantly lower over 12-18 months.
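The payback arithmetic can be worked through directly. The sketch below treats a single-channel build as 1.0 unit of effort and plugs in the percentage ranges quoted above; the three-channel rollout and the function name are assumptions made for illustration.

```python
def cumulative_effort(first_channel: float, per_extra_channel: float, channels: int) -> float:
    """Total effort in units where a plain single-channel build costs 1.0."""
    return first_channel + per_extra_channel * (channels - 1)


CHANNELS = 3  # assumed rollout size for this example

# Omnichannel, least favorable end of both ranges:
# 15% extra upfront, 20% per added channel.
omni = cumulative_effort(1.15, 0.20, CHANNELS)

# Channel-first, most favorable end of its range:
# no upfront premium, 70% per added channel.
channel_first = cumulative_effort(1.00, 0.70, CHANNELS)

print(f"omnichannel:   {omni:.2f}")
print(f"channel-first: {channel_first:.2f}")
```

Even with the figures tilted against omnichannel, three channels come to roughly 1.55 units against 2.40. With two channels the totals are 1.35 and 1.70, so under these assumptions the upfront premium is already recovered when the second channel ships.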

What does a practical three-layer omnichannel system look like?

Layer 1 is the orchestration and business logic layer—completely channel-agnostic, containing workflows, decision trees, rules, and context management. Layer 2 is the unified integration layer where external systems (CRM, payment processors, databases) connect once and serve all channels. Layer 3 is channel-specific adapters—thin translation layers that convert voice to text, text to voice, handle async messaging, manage audio encoding, etc. The adapters are the only channel-specific code; everything else flows through shared layers.

How do you measure whether your architecture is truly omnichannel?

Key metrics include: How many code repositories change when you update one business rule (omnichannel = 1, channel-first = 3-5)? How long did your second channel take to launch compared to your first (omnichannel = similar timeframe, channel-first = significantly longer)? Can the same query produce inconsistent results across channels (omnichannel = no, channel-first = yes)? Can auditors trace a single decision path (omnichannel = yes, channel-first = confusing)?

What are the governance advantages of omnichannel architecture?

With omnichannel, you have one audit trail, one set of rules, one implementation to monitor and maintain. Regulatory changes affect one codebase. Security reviews cover one logic flow. Inconsistent decisions become far less likely because the decision logic exists in only one place. This is critical in regulated industries like finance and healthcare where fragmented implementations create compliance nightmares.
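A rough sketch of what "one audit trail" can look like in code: the orchestration layer records every decision in one place and stores the channel purely as metadata, so a trace looks the same regardless of where the customer arrived. The `AuditLog` class, the `transfer_limit_v1` rule ID, and the 1000 limit are hypothetical.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass(frozen=True)
class AuditEntry:
    customer_id: str
    channel: str   # metadata only; never used to select the rule
    rule_id: str
    outcome: str


@dataclass
class AuditLog:
    entries: List[AuditEntry] = field(default_factory=list)

    def record(self, entry: AuditEntry) -> None:
        self.entries.append(entry)

    def rule_path(self, customer_id: str) -> List[str]:
        """Rules applied for a customer, in order, across all channels."""
        return [e.rule_id for e in self.entries if e.customer_id == customer_id]


LOG = AuditLog()


def approve_transfer(customer_id: str, channel: str, amount: int) -> str:
    """Single decision point; logging happens here, not in the adapters."""
    outcome = "approved" if amount <= 1000 else "manual_review"
    LOG.record(AuditEntry(customer_id, channel, "transfer_limit_v1", outcome))
    return outcome


approve_transfer("c-42", "voice", 500)
approve_transfer("c-42", "chat", 5000)
print(LOG.rule_path("c-42"))
```

Because the log is written at the decision point, a compliance officer reviewing customer `c-42` sees the same rule ID for the voice and chat interactions, with only the `channel` field differing.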

Why do most enterprises discover this problem too late?

Most organizations start with a single-channel pilot that succeeds beyond expectations. Success builds momentum. By the time the business asks for a second channel, engineering teams have optimized around channel-specific concerns. The system that worked perfectly for voice or chat creates friction when expanded. At that point, architectural decisions from month three have become load-bearing walls, expensive to change. The "too late" moment is typically month six to nine.

Conclusion: Your Architecture Determines Your Destiny

Here's the uncomfortable truth about enterprise AI adoption: your most important decision isn't which model to use or which vendor to choose. It's how you structure intelligence, integration, and governance in the first 90 days.

That decision—made almost quietly, without executive fanfare—determines whether you'll be moving fast at scale in month twelve or trapped in architectural debt.

Early success masks this risk. Your voice agent works beautifully. Customers are happy. Metrics are good. Everyone celebrates. But if that success was built on channel-specific optimizations, you've essentially built a system that can't easily become more.

The question isn't whether you need omnichannel AI. The question is when you want to discover that your architecture doesn't support it.

Discover it now, when you can still change direction affordably and an omnichannel approach costs 10-15% extra in month two. Not in month nine, when the cost is a full rebuild.

The enterprises that will be winning at AI in 2026 aren't the ones that shipped the fastest in 2024. They're the ones that made the hard architectural decision early, stuck with it, and systematically built on foundations that didn't limit future growth.

Fundamental question for your organization: Are you optimizing for speed to first success, or for sustainable scaling?

Because those are different architectures. Choose accordingly.

Key Takeaways

- Early success on a single AI channel masks architectural decisions that become expensive to reverse: channel-first systems require 70-80% of the original effort to add each new channel, while omnichannel requires only 15-20%.

- The critical decision window is month 1-3 of an AI project when architecture is still malleable; waiting until month 6+ makes architectural changes exponentially more costly.

- Omnichannel architecture means one shared intelligence layer serving multiple channels through thin adapters, not five separate implementations of the same logic.

- Channel-first approaches create governance nightmares in regulated industries: separate implementations of the same decision create compliance complexity that scales with the number of channels.

- Organizations that commit to omnichannel architecture early move 2-3x faster at scaling than those that refactor channel-first systems later, creating sustainable competitive advantage.
