AI Coding Tools and Open Source: The Hidden Costs of Abundance

Let's start with what seemed obvious. AI coding assistants are supposed to make building software cheaper, faster, and more accessible. Lower the barrier to entry, and suddenly junior developers can ship features that once took senior engineers weeks. Startups can replicate complex SaaS features without massive engineering teams. Open-source projects, perennially starved of resources, should theoretically be among the first to benefit.

Except it's not working that way.

I've spent the last eight months talking to maintainers across major open-source projects—from VLC, the media player that's been around since 2001, to Blender, the 3D modeling tool, to infrastructure projects like cURL. The story they tell is radically different from the "AI will solve everything" narrative we've been hearing.

AI coding tools have dramatically lowered the friction for submitting code. That sounds good. But friction existed for a reason: it filtered out low-quality contributions and signaled genuine investment from contributors. Remove that friction, and you don't get a surge in high-quality contributions. You get a flood.

The real issue isn't that AI coding tools create bad code. It's that they create abundant bad code, and open-source projects don't have the resources to handle abundance at scale. Building new features became easy. Maintaining them didn't.

TL;DR

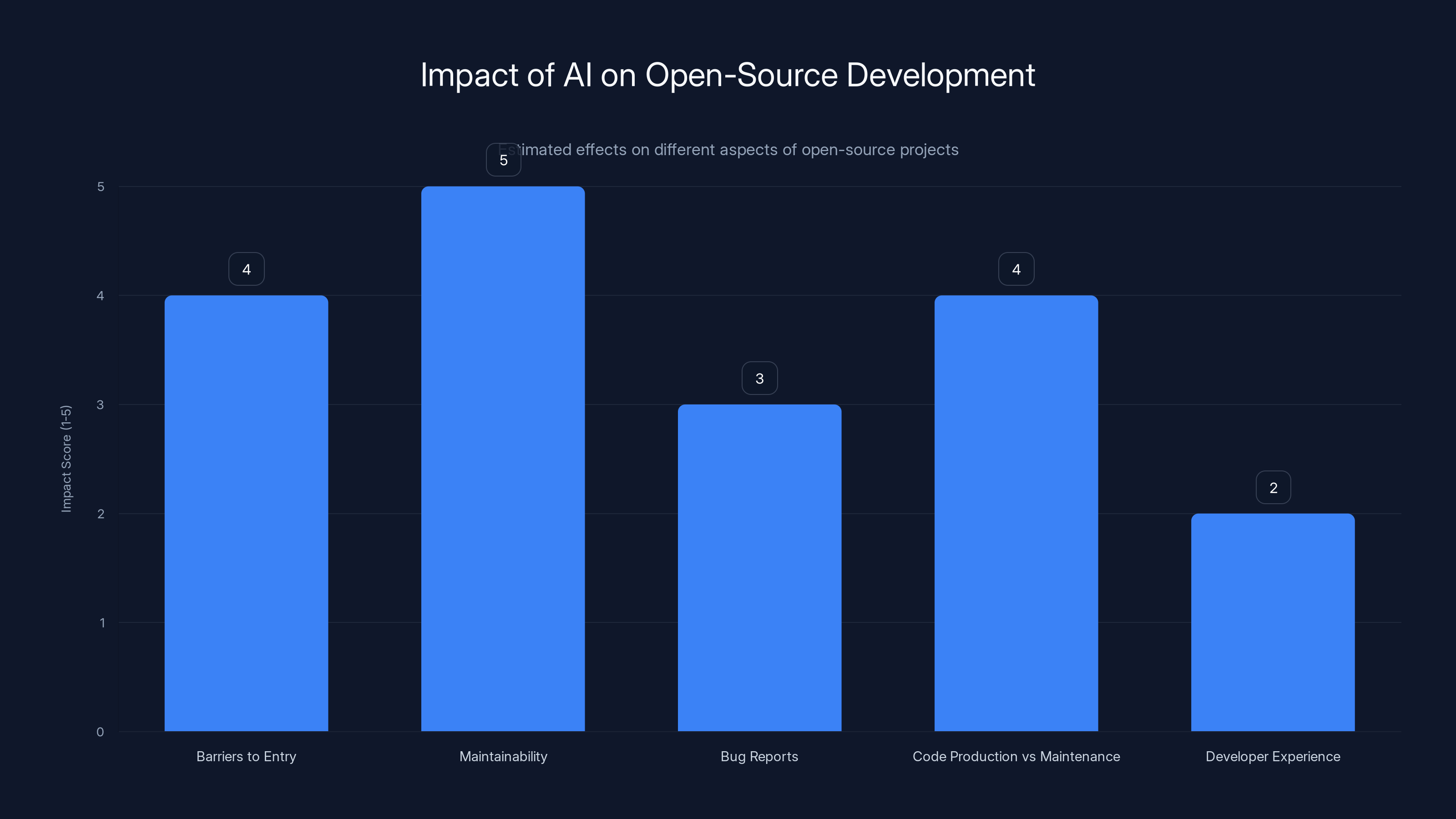

- AI lowered barriers to entry, not to quality: The flood of contributions from junior and non-expert developers has overwhelmed review capacity at major open-source projects

- Maintainability crisis is worse than code quality: Even good AI-generated code still needs testing, documentation, and long-term maintenance—areas where open-source projects are already resource-constrained

- Bug bounty programs are drowning in spam: Maintainers flooded with AI-generated vulnerability reports are shutting down bug bounty programs, a key channel for responsible disclosure

- The gap between code production and code maintenance is widening: Open-source ecosystems are fragmenting as the number of dependencies grows exponentially while maintainers stay flat

- Experienced developers benefit; junior developers hurt: AI coding tools work best for senior developers writing new features. They're actively harmful for junior developers trying to learn through code review

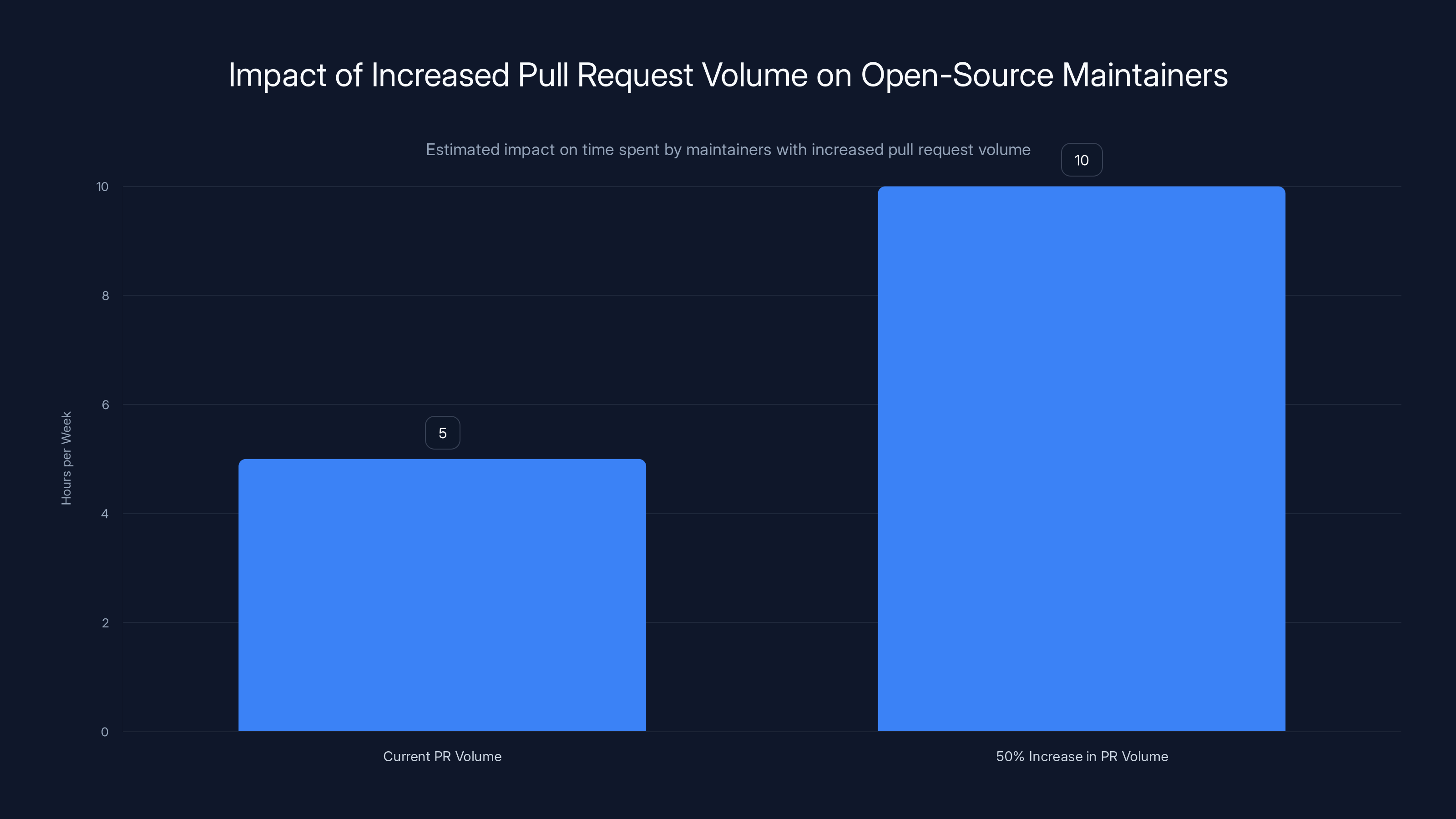

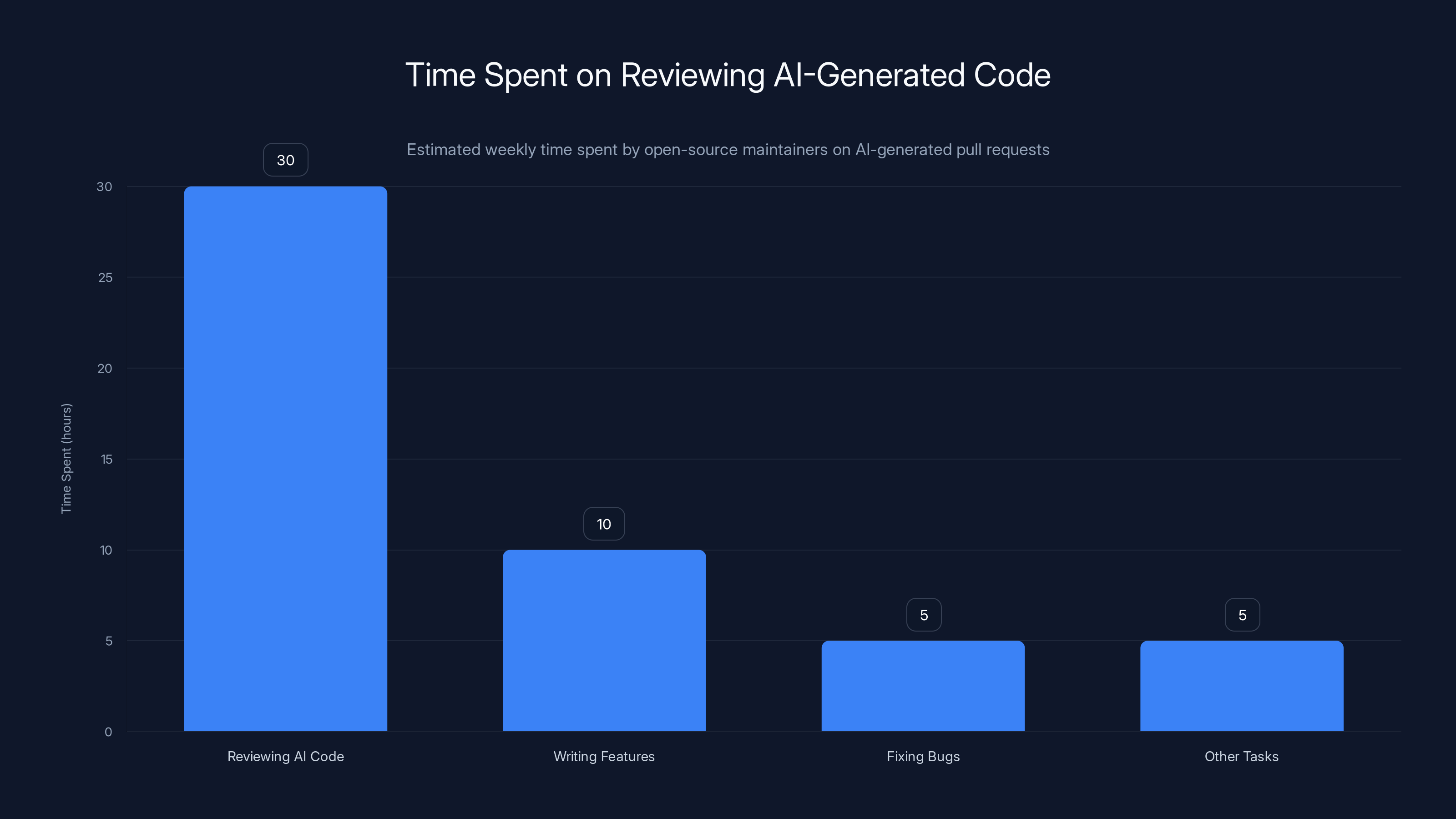

Estimated data shows that a 50% increase in pull request volume could double the time open-source maintainers spend on code review, significantly impacting their available free time.

Why Open-Source Projects Can't Scale With AI-Generated Code

Here's the fundamental problem: open-source maintainers operate under severe resource constraints. A single person or small team often manages projects with millions of users. They're not paid to review code—they do it because they care. They have day jobs.

When you dramatically increase the volume of incoming contributions, you don't proportionally increase the review capacity. You hit a breaking point.

VLC is a case study in this. The media player is used by hundreds of millions of people. It's also maintained by a relatively small core team. Jean-Baptiste Kempf, CEO of the VideoLAN Organization, was direct in conversations with me: the quality of AI-assisted merge requests has been "abysmal."

He wasn't being hyperbolic. The pattern is consistent across projects:

- Junior or external developer generates code using an AI tool

- They submit a pull request without testing on their actual system

- The code follows none of the project's conventions or architecture

- A maintainer spends 30 minutes to an hour reviewing a contribution that took the developer 5 minutes to generate

- The maintainer writes detailed feedback

- The external contributor never responds or makes superficial fixes

The math doesn't work. A maintainer reviewing 50 AI-assisted pull requests per week, which is conservative for popular projects, is spending 25 to 50 hours per week (at 30 minutes to an hour each) just reading, testing, and responding to code they didn't ask for. That's not writing features. That's not fixing bugs. That's not the work open-source maintainers signed up for.
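The arithmetic behind that estimate is easy to check. The sketch below simply multiplies the figures from the workflow above (50 pull requests a week at 30 to 60 minutes each); it assumes a single maintainer handles every review, which is a simplification.

```python
# Weekly review load for one maintainer, using the figures from the text:
# 50 pull requests per week, 30 to 60 minutes of review each.
PRS_PER_WEEK = 50
MIN_REVIEW_MINUTES = 30
MAX_REVIEW_MINUTES = 60


def weekly_review_hours(prs_per_week: int, minutes_per_pr: float) -> float:
    """Total hours per week spent reviewing, assuming one maintainer does it all."""
    return prs_per_week * minutes_per_pr / 60


low = weekly_review_hours(PRS_PER_WEEK, MIN_REVIEW_MINUTES)
high = weekly_review_hours(PRS_PER_WEEK, MAX_REVIEW_MINUTES)
print(f"{low:.0f} to {high:.0f} hours per week")  # prints "25 to 50 hours per week"
```

Even the low end is more than half of a standard work week, on top of a day job.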

Blender has seen a similar pattern. Francesco Siddi, CEO of the Blender Foundation, told me that LLM-assisted contributions "wasted reviewers' time and affected their motivation." This is critical. Open-source maintainers are already stretched thin. Demoralize them further, and they stop contributing.

Blender hasn't banned AI-assisted contributions, but they're not recommending them either. The official policy is essentially neutral with a heavy lean toward "maybe don't."

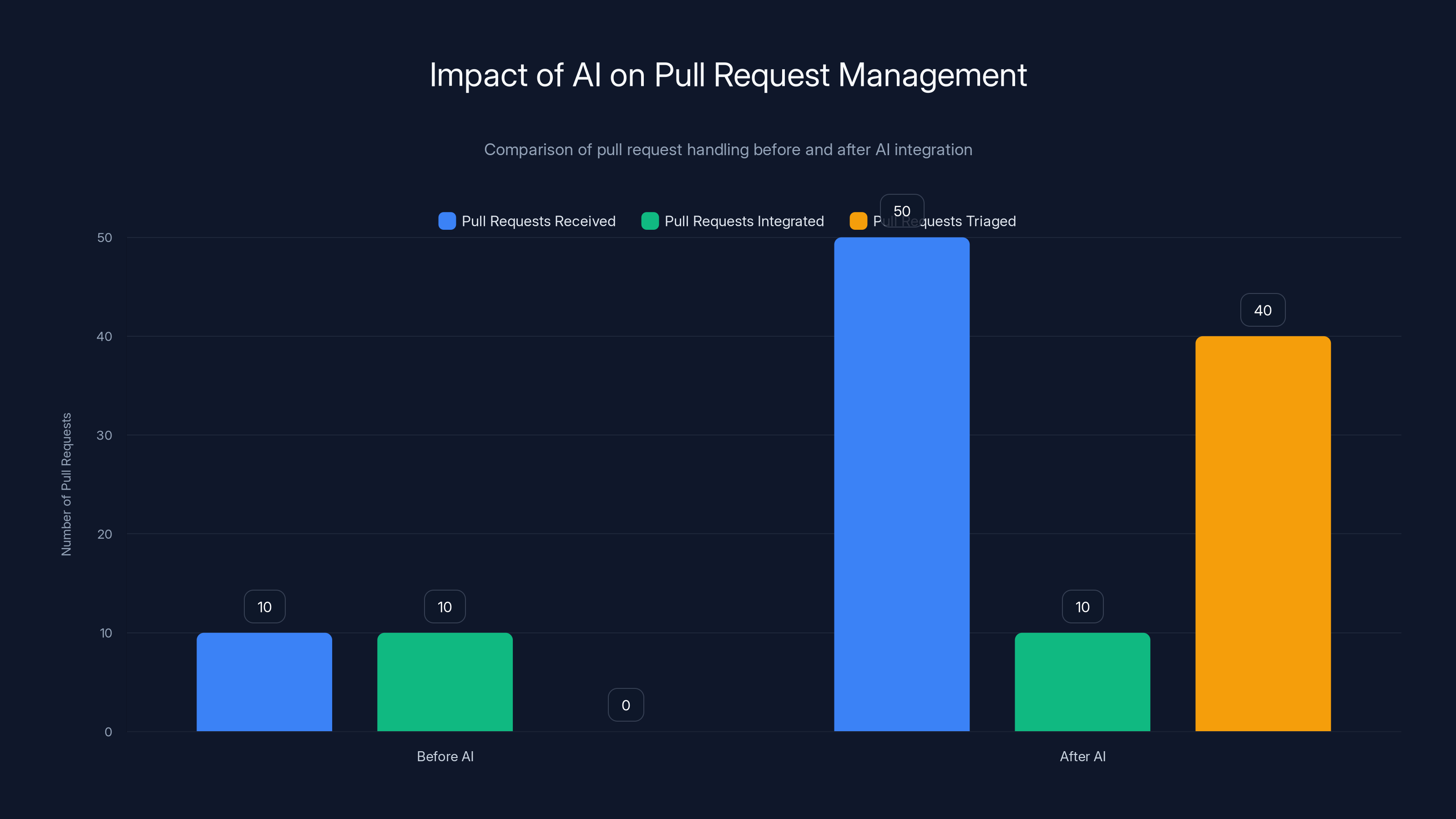

AI tools increase pull requests by 5x, but only 20% are integrated, leading to increased triaging workload. Estimated data.

The Paradox: AI Makes Writing New Code Easier, Not Maintaining It

Here's where the story gets complicated. Kempf is honest about the upside: for experienced developers, AI coding tools are genuinely useful. He gave me a specific example.

Imagine you're VLC and you want to port the media player to a new operating system. You have the entire VLC codebase—millions of lines of code covering everything from codec handling to platform-specific UI. A senior developer can now feed that codebase to an AI, say "port this to operating system X," and the AI generates a first draft that actually compiles and runs.

That's real progress. A few years ago, that kind of work took weeks of manual effort. Now it takes days.

But here's the catch: that workflow only works if the developer at the helm knows VLC deeply. They understand the architecture. They know which shortcuts are acceptable and which will cause problems downstream. They can look at the AI's output and immediately see what needs to be fixed, refactored, or removed.

A junior developer trying the same thing? They'll paste the codebase into ChatGPT or Claude, get back 5,000 lines of code, and have no idea if it's correct. They'll submit it anyway, assuming the AI is smarter than they are.

This creates a weird dynamic. For senior developers, AI is a multiplier. For junior developers, it's a footgun disguised as a shortcut. And most open-source contributions come from junior developers trying to build their skills and portfolios.

The Flood: When Open Doors Become Unsustainable

Open-source has always had a culture of openness. Anyone can submit code. That's the whole point. It's meritocratic in theory—good ideas and good code win. It's democratic.

But AI broke something fundamental in that model. It eliminated the natural friction that signaled genuine effort.

In the old days, if you wanted to contribute to an open-source project, you had to:

- Clone the repository

- Set up the local development environment (often painful)

- Make your changes

- Test everything locally

- Write clear commit messages

- Submit a pull request with a thoughtful description

This whole process took hours, sometimes days. If you were going to invest that time, you were probably serious. You'd thought through your contribution. You understood the project.

Now? You can describe what you want in English to an AI and have working (or at least compilable) code in five minutes. The friction is gone.

Mitchell Hashimoto, the creator of Terraform, launched a system earlier this year to address exactly this problem. He calls it a "vouching" system—you can only contribute to certain open-source projects if an existing contributor vouches for you.

In his announcement, he was blunt: "AI eliminated the natural barrier to entry that let OSS projects trust by default." His solution essentially closes the open-source door to outsiders.

That's a significant shift. Open-source was supposed to be open. But scaling open-source when anyone can generate pull requests in seconds? That might not be possible without some form of gatekeeping.
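In rough outline, a vouching gate is a trust list that can only be extended by people already on it. The sketch below illustrates that general idea only; the class and method names (`VouchRegistry`, `vouch`, `can_contribute`) are hypothetical and are not taken from Hashimoto's system, whose actual mechanics may differ.

```python
from dataclasses import dataclass, field


@dataclass
class VouchRegistry:
    """Hypothetical vouch-based trust list (illustration only, not any real project's code)."""

    trusted: set[str] = field(default_factory=set)
    vouched_by: dict[str, str] = field(default_factory=dict)

    def vouch(self, voucher: str, newcomer: str) -> None:
        # Only someone who is already trusted can extend trust to a newcomer.
        if voucher not in self.trusted:
            raise PermissionError(f"{voucher} is not a trusted contributor")
        self.trusted.add(newcomer)
        self.vouched_by[newcomer] = voucher  # audit trail: who vouched for whom

    def can_contribute(self, author: str) -> bool:
        # Contributions from anyone outside the trust list are not accepted.
        return author in self.trusted


registry = VouchRegistry(trusted={"maintainer"})
print(registry.can_contribute("newcomer"))  # False: no one has vouched yet
registry.vouch("maintainer", "newcomer")
print(registry.can_contribute("newcomer"))  # True
```

Recording who vouched for whom matters as much as the gate itself: if a vouched-for contributor starts submitting junk, the maintainer knows whose judgment to revisit.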

Estimated data shows maintainers spend the majority of their time reviewing AI-generated code, leaving less time for other essential tasks.

The Bug Bounty Disaster: When Security Drowns in Spam

The problem is particularly acute in bug bounty programs. These programs invite external security researchers to find vulnerabilities and report them responsibly. In exchange, researchers get cash rewards and recognition.

It's a beautiful system when it works. Researchers find real bugs before attackers do. Projects get patches out. Users stay safer.

But AI broke it.

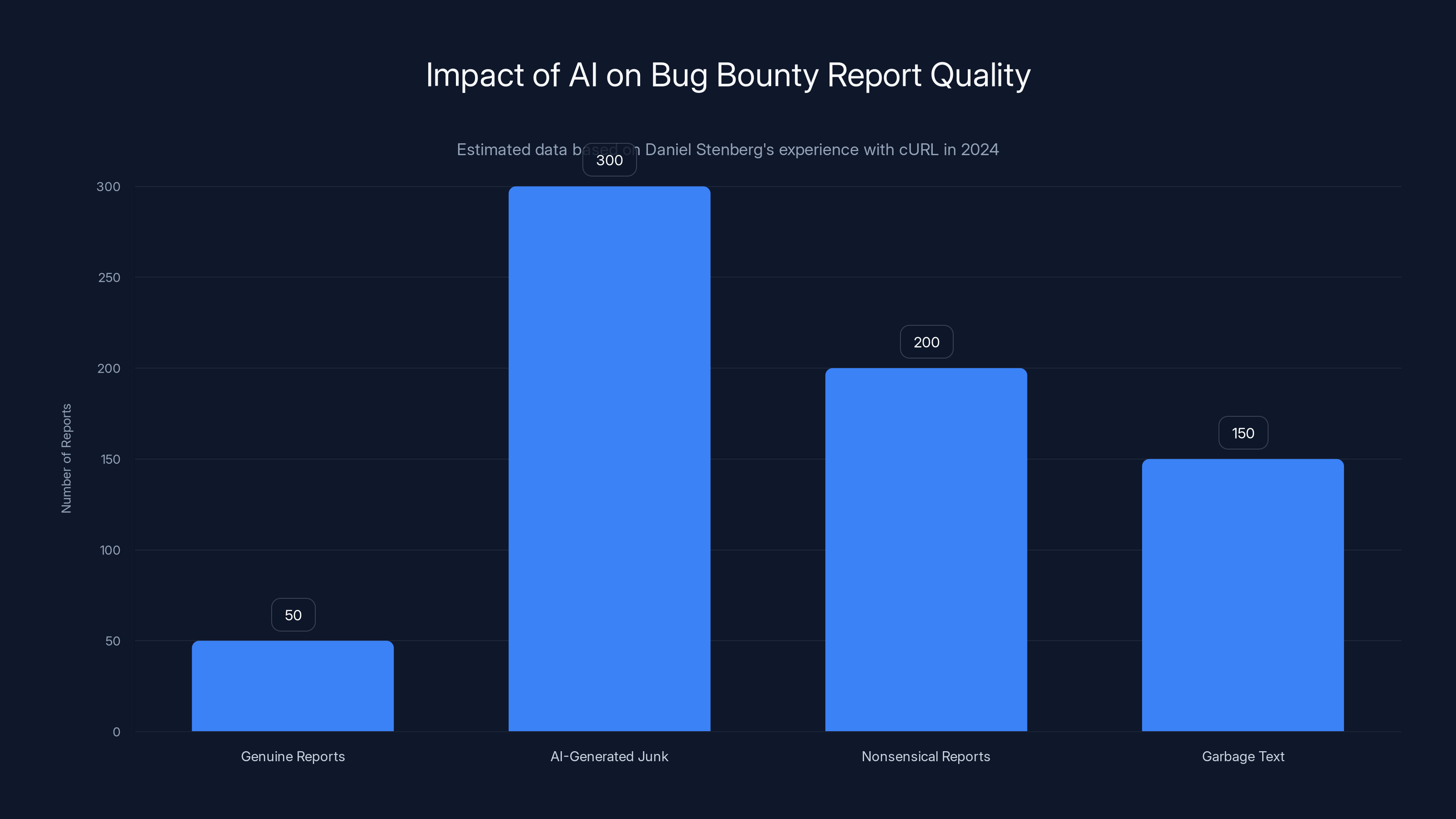

Daniel Stenberg, creator of cURL, halted the cURL bug bounty program. Why? He was drowning in AI-generated vulnerability reports.

I got to talk with Stenberg about this, and his frustration was palpable. In the old days, security research required investment. You had to understand the codebase. You had to trace through potential attack vectors. You had to write a coherent report explaining why something was actually a vulnerability.

"There was a built-in friction," Stenberg told me. "Someone actually invested time. Now there's no effort at all. The floodgates are open."

He's getting hundreds of junk reports. Many are nonsensical—descriptions of attack vectors that don't actually exist, or vulnerabilities in dependencies that aren't even used by cURL. Some are just garbage text that an AI hallucinated.

For legitimate security researchers, this is a nightmare. Real vulnerability reports get buried in the noise. Stenberg spends hours triaging garbage instead of working with researchers on genuine issues.

The economic incentive structure breaks when the cost of generating false reports drops to essentially zero.
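A simple expected-value calculation from the submitter's side shows why near-zero submission cost breaks the system. The numbers below (bounty size, acceptance rate, effort cost) are hypothetical and chosen only to show the shape of the incentive, not to describe any real program.

```python
def expected_payoff(p_accepted: float, bounty: float, cost_to_submit: float) -> float:
    """Submitter's expected gain from one report: chance of payout times bounty, minus effort."""
    return p_accepted * bounty - cost_to_submit


# Hypothetical numbers for illustration only.
BOUNTY = 1000.0
P_JUNK_ACCEPTED = 0.01  # a junk report is almost never paid

# Writing a report by hand costs real effort (say, $200 worth of time)...
print(expected_payoff(P_JUNK_ACCEPTED, BOUNTY, cost_to_submit=200.0))  # -190.0
# ...but generating one with an AI tool costs close to nothing.
print(expected_payoff(P_JUNK_ACCEPTED, BOUNTY, cost_to_submit=1.0))  # 9.0
```

Once the expected payoff turns positive, sending junk in bulk becomes rational for the sender, while every report still costs the maintainer real triage time.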

The Maintenance Crisis: Code Abundance Without Stewardship

Here's where the problem gets genuinely scary. It's not just about managing volume. It's about what happens to code when it's generated faster than it can be maintained.

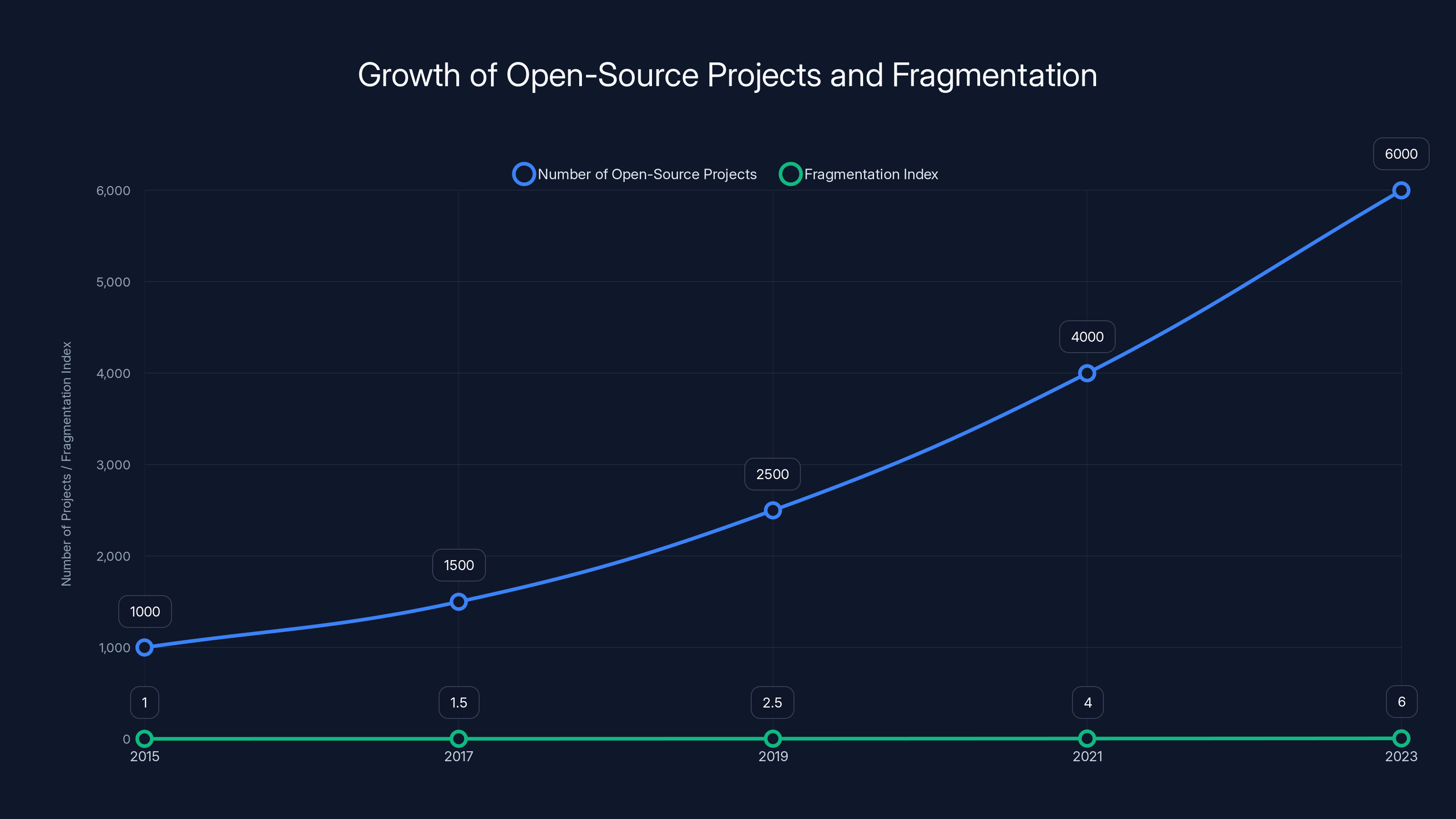

Open-source software is already highly fragmented. You have dependencies on dependencies on dependencies. A typical modern application might depend on hundreds of open-source packages. Each of those packages has its own maintainers—often just one or two people doing the work in their spare time.
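The "dependencies on dependencies" effect is easiest to see by walking a dependency graph. The sketch below uses a small, made-up graph (the package names are placeholders, not real packages) and counts every package an application pulls in transitively.

```python
from collections import deque

# Hypothetical dependency graph: each package maps to its direct dependencies.
DEPENDENCIES: dict[str, list[str]] = {
    "my-app": ["web-framework", "http-client"],
    "web-framework": ["template-engine", "http-parser"],
    "http-client": ["http-parser", "tls-lib"],
    "template-engine": ["string-utils"],
    "http-parser": ["string-utils"],
    "tls-lib": ["crypto-lib"],
    "string-utils": [],
    "crypto-lib": [],
}


def transitive_dependencies(root: str, graph: dict[str, list[str]]) -> set[str]:
    """Breadth-first walk collecting every package reachable from root (excluding root)."""
    seen: set[str] = set()
    queue = deque(graph.get(root, []))
    while queue:
        pkg = queue.popleft()
        if pkg in seen:
            continue  # shared dependencies (like string-utils) are counted once
        seen.add(pkg)
        queue.extend(graph.get(pkg, []))
    return seen


direct = DEPENDENCIES["my-app"]
everything = transitive_dependencies("my-app", DEPENDENCIES)
print(len(direct), len(everything))  # 2 7
```

Two direct dependencies become seven packages, each with its own maintainers; real applications repeat this at a much larger scale.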

Konstantin Vinogradov, founder of the Open Source Index and someone who thinks deeply about the sustainability of open-source infrastructure, described the problem this way: "On one hand, we have exponentially growing codebase with exponentially growing number of interdependencies. On the other hand, we have the number of active maintainers, which is maybe slowly growing, but definitely not keeping up."

AI accelerates both sides of that equation. More code gets written faster. More dependencies get added. But the number of people available to maintain all that code stays flat.

This creates a sustainability crisis. Projects accumulate technical debt. Features get half-finished because the maintainer ran out of energy. Security patches pile up. Bugs go unfixed.

From the outside, open-source looks like a beautiful, meritocratic ecosystem. From the inside, especially for maintainers, it's increasingly unsustainable.

AI coding tools didn't cause this problem—the crisis has been brewing for years. But they're accelerating it. They're making it possible to create more code than can possibly be maintained, more features than can possibly be stewarded, more dependencies than can possibly be secured.

AI has significantly lowered barriers to entry and increased code production, but it has exacerbated maintainability issues and overwhelmed bug reporting systems. Estimated data.

The Real Cost of "Cheap Code"

There's a mental model in the industry that goes like this: if code generation is cheap, then software engineering is cheap, and software engineering jobs are threatened.

But that model confuses code production with software engineering.

Engineering, in the truest sense, isn't about producing code. It's about managing complexity. It's about designing systems that work reliably at scale. It's about maintaining software over years or decades. It's about understanding trade-offs and making good decisions about what to build and what not to build.

AI coding tools can handle code production. They're not bad at it. But they can't handle the hard parts of engineering.

When you generate code without understanding the system you're building, you create technical debt. When you add dependencies without considering their maintenance burden, you create fragility. When you build features without thinking about how they'll be maintained, you create problems for future maintainers.

AI coding tools make it easier to create these problems. They don't make it easier to solve them.

For large companies with dedicated engineering teams and clear priorities, this might not matter much. They can absorb the technical debt. They can hire people to maintain the code.

For open-source projects with volunteer maintainers? It's catastrophic.

The Divergence: What Works for Companies Breaks Open-Source

Here's a crucial insight that Kempf pointed out: the incentive structures are completely different.

At large tech companies, engineers get promoted for writing code. They get bonuses for shipping features. They get recognized for their contributions to product roadmaps. There's essentially no reward for maintaining existing code or refactoring messy systems.

AI coding tools align perfectly with this incentive structure. Generate more code, ship more features, get promoted. Perfect.

But open-source projects optimize for different things. Stability matters more than velocity. A security patch is more valuable than a new feature. Maintainability matters because the person who has to maintain the code next month might be a volunteer with limited time.

AI coding tools misalign with these values. They make new features easy to add, but they make stability and maintainability harder.

This creates a divergence. Companies will use AI coding tools to accelerate product development. Open-source projects will get crushed under the weight of AI-generated contributions they can't possibly maintain.

Kempf is still optimistic that AI coding tools can be useful for open-source, but only if used the right way: by experienced developers, in service of long-term project goals, with a clear understanding of maintenance burden.

That's not how they're being used right now.

AI-generated reports significantly outnumber genuine reports, creating a challenge for security researchers to identify real vulnerabilities. (Estimated data)

The Quality Problem: Bad Code Is Worse Than No Code

Let me be specific about what "bad code" means in this context. It's not just "code that doesn't work." Some of that AI-generated code actually compiles and runs.

Bad code, in the open-source context, means:

Code that doesn't follow project conventions. Every project has style guides, naming standards, architectural patterns. AI-generated code often ignores all of it. It technically works, but it creates inconsistency and makes the codebase harder to navigate.

Code that doesn't handle edge cases. AI generates code that works for the happy path. It often misses error handling, boundary conditions, and the weird real-world scenarios that maintainers know about from experience.

Code that isn't tested. Developers submit AI-generated code without testing it in their own environment. They assume the AI's testing was sufficient (spoiler: it wasn't).

Code that creates unexpected dependencies. An AI might suggest using a library that looks good in isolation but conflicts with another dependency, or introduces a security risk the AI didn't understand.

Code that is poorly documented. Documentation written by AI tends to be generic and unhelpful. It doesn't explain why a design decision was made, only what the code does.

None of these are dealbreakers individually. But together, they create a maintenance burden that scales quickly. Every piece of bad code requires more review time, more fixes, more follow-up conversations.
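Here is a deliberately tiny, hypothetical example of the happy-path problem. Both functions average a list of numbers, but only the second decides what should happen when the list is empty. It illustrates the pattern and is not code from any of the projects mentioned.

```python
from typing import Optional


def average_happy_path(values: list[float]) -> float:
    # Works for the common case, but raises ZeroDivisionError on an empty list.
    return sum(values) / len(values)


def average(values: list[float]) -> Optional[float]:
    """Mean of values, or None when there is nothing to average."""
    if not values:
        return None  # explicit, documented behavior for the empty case
    return sum(values) / len(values)


print(average_happy_path([2.0, 4.0]))  # 3.0
print(average([]))  # None
```

In a real project, the right answer for the edge case (return None, raise a clear error, return 0) depends on the project's conventions, which is exactly the context a generated patch tends to lack.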

The Role of Experienced Developers: AI as a Multiplier

Now, here's what's working: AI tools in the hands of experienced developers.

Kempf's example of porting VLC to a new OS is worth thinking through. A senior developer who knows the VLC architecture deeply can use AI as a starting point. They generate code, review it critically, fix the parts that don't work, refactor what needs refactoring, and integrate it with the existing system.

This isn't fundamentally different from using AI as a sophisticated code snippet library. It's faster than writing from scratch, but it's not magic. The developer is still doing the actual engineering work.

For experienced developers, AI coding tools are genuinely valuable. They handle boilerplate. They generate first drafts. They suggest approaches. They save time on the mechanical parts of coding so engineers can focus on the hard problems.

But this use case—experienced engineers leveraging AI for specific tasks—is the opposite of what's actually happening in open-source. In open-source, it's usually junior developers or non-expert contributors using AI to generate code they don't fully understand.

The number of open-source projects is growing exponentially, leading to increased fragmentation. Estimated data shows a parallel rise in the fragmentation index, indicating more projects with overlapping purposes.

The Documentation and Discovery Problem

One thing that's rarely discussed: AI coding tools are terrible at learning from undocumented code.

VLC has millions of lines of code. Some of it is well-documented. Some of it isn't. An AI tool trying to understand the codebase might miss important context. It might not understand why a particular design pattern was chosen. It might miss edge cases that are only handled correctly in three specific places in the codebase.

Senior developers have built up that contextual knowledge over years. They know where to look, what to pay attention to, which parts of the codebase are fragile.

AI tools have to infer all of this from code alone. They often get it wrong.

This is particularly problematic for legacy projects. The older a project is, the less likely it is to be well-documented, and the more likely it is that critical knowledge lives only in the heads of long-time maintainers. AI tools struggle with this kind of context.

What Open-Source Projects Are Actually Doing About It

Maintainers aren't passive about this problem. They're building tools and policies to manage the flood.

VLC is implementing stricter review processes for contributors with no history. Blender is developing an official policy (currently "neither mandated nor recommended"). cURL shut down its bug bounty program.

And, as mentioned, some projects are moving toward a vouching system or limiting contributions to pre-approved developers.

These are not the actions of projects that are thriving. These are the actions of projects that are overwhelmed.

Think about what this means culturally. Open-source was supposed to be open. "Anyone can contribute." That's the whole promise. Now, for some projects, that promise is breaking. The door is closing. Not because maintainers are hostile to new contributors—they're not—but because they literally cannot handle the volume.

The Ecosystem Fragmentation Problem

Here's a second-order effect that's particularly concerning. As open-source projects become harder to contribute to, developers might create new projects instead.

Why deal with the VLC maintainers and their strict review process? Why not fork VLC and create your own media player? You can use AI to generate features faster, and you don't have to deal with anyone else's code quality standards.

This is how ecosystems fragment. It's how you end up with dozens of similar projects, each solving the same problem slightly differently, each with its own dependencies, its own maintenance burden.

Vinogradov has studied this problem extensively. The open-source ecosystem is already fragmenting due to exponential growth and flat maintainer capacity. AI tools are accelerating that fragmentation.

More projects means more interdependencies. More interdependencies means more complexity. More complexity means more work for everyone trying to maintain anything.

This is genuinely scary from a software resilience perspective. The systems we all depend on—servers, networks, applications—are built on a foundation of open-source software maintained by volunteers. If that foundation starts to crack, we all suffer.

The Knowledge Problem: Can Junior Developers Still Learn?

Here's something I worry about that doesn't get discussed enough: if junior developers are using AI to generate code they don't fully understand, what are they learning?

For decades, open-source has been a primary educational channel for junior developers. You contribute to a project, experienced maintainers review your code and give feedback, you learn from their criticism, you level up.

But if your code was AI-generated and you don't fully understand it, then what? The maintainer says "this doesn't work because [technical reason]," but you don't have the context to learn from that criticism.

Worse, if you're just using AI as a shortcut to look productive without actually understanding what you're doing, you're not leveling up at all. You're just getting better at prompt engineering.

That might sound harsh, but it's a real risk. Open-source has always been where junior developers actually learn engineering. If that channel gets corrupted by AI-generated code that nobody understands, we've lost something valuable.

The Maintenance Debt Accumulation

There's a mathematical problem here worth thinking through carefully.

Let's say a project currently receives 10 pull requests per week. The core team can review and integrate all of them, plus fix bugs, plus work on strategic improvements. It's tight, but manageable.

Now AI tools make it 5x easier to generate pull requests. Suddenly the project is receiving 50 pull requests per week. If only 20% of them are worth integrating, that's still 10 pull requests to integrate—plus the time spent triaging and rejecting the other 40.

The math is:

Weekly review time per maintainer = (PRs received × average review time per PR) ÷ team size

If you increase "PRs Received" by 5x without changing the team size, review time increases by 5x. Someone's day is now fully consumed just triaging contributions.
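Written as code, the review-load relationship is a one-liner. The sketch assumes review time scales linearly with the number of PRs and splits evenly across the team; the 30-minute review time and two-person team are hypothetical, while the 10 and 50 PRs per week and the 20% acceptance rate come from the scenario above.

```python
def weekly_triage_hours(prs_received: int, review_minutes_per_pr: float, team_size: int) -> float:
    """Review hours per maintainer per week: (PRs received x review time per PR) / team size."""
    return prs_received * review_minutes_per_pr / 60 / team_size


# Hypothetical inputs: 30 minutes per PR and a two-person core team.
REVIEW_MINUTES = 30
TEAM_SIZE = 2

before = weekly_triage_hours(10, REVIEW_MINUTES, TEAM_SIZE)  # 2.5 hours each
after = weekly_triage_hours(50, REVIEW_MINUTES, TEAM_SIZE)  # 12.5 hours each
print(after / before)  # 5.0: five times the PRs, five times the review load

# Only 20% of the 50 PRs are worth merging; the rest still have to be triaged.
accepted = 50 * 20 // 100
rejected = 50 - accepted
print(accepted, rejected)  # 10 40
```

The number of merged PRs is the same 10 as before; everything the extra review time buys is spent on rejections.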

But there's a second equation:

Debt added per week = code committed - code the team can properly maintain

Every line of code that gets committed without proper maintenance planning adds to the debt. AI-generated code often gets committed without that stewardship, so the debt accumulates faster than it can be paid down.

Eventually, technical debt becomes so heavy that it slows down all future development. The project that was supposed to accelerate—because everyone can generate code easily—instead slows down because the maintenance burden is unsustainable.
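A toy model makes the compounding visible. It is a simplification: it assumes a fixed weekly stewardship capacity and treats "code" as abstract units, and the numbers are hypothetical.

```python
def unmaintained_backlog(weeks: int, committed_per_week: int, capacity_per_week: int) -> list[int]:
    """Units of code awaiting proper stewardship at the end of each week.

    Each week the team can steward `capacity_per_week` units; anything committed
    beyond that carries over. The backlog never drops below zero.
    """
    backlog = 0
    history = []
    for _ in range(weeks):
        backlog = max(backlog + committed_per_week - capacity_per_week, 0)
        history.append(backlog)
    return history


print(unmaintained_backlog(4, committed_per_week=10, capacity_per_week=10))  # [0, 0, 0, 0]
print(unmaintained_backlog(4, committed_per_week=15, capacity_per_week=10))  # [5, 10, 15, 20]
```

While commits stay within capacity, the backlog stays at zero. Any sustained excess grows every week instead of leveling off, which is why a modest, persistent surplus of incoming code eventually dominates a project.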

How Large Companies Are Handling This Differently

It's worth noting that large companies don't face these problems the same way.

At Meta or Google or Microsoft, if you use an AI coding tool to generate code, there's a team on the other side who will maintain it. You're not dumping your code on volunteers. You're handing it off to full-time engineers who are paid to maintain whatever you build.

Incentive structures are aligned. You generate code, they maintain it, everyone moves forward.

Open-source doesn't work that way. The person who generates the code and the person who maintains it are often different people, separated by months or years.

This is why Kempf says AI coding tools are "best for experienced developers." What he really means is they're best for contexts where the developer is responsible for the long-term maintenance of what they create.

The Path Forward: What Actually Needs to Happen

So if AI coding tools are breaking open-source, what's the solution? Close the door? Ban AI-assisted contributions? That's not realistic and, frankly, it's not desirable.

The real solutions are messier:

Better tooling for maintainers. We need AI-assisted tools that help maintainers manage incoming contributions, not just tools that help developers generate code. Tools that can automatically run test suites, check for style compliance, flag contributions that don't follow project norms.

Cultural shifts in education. Junior developers need to understand that AI-generated code is a starting point, not a finished product. They need to own their contributions fully.

Sustainability investment. Open-source maintainers need resources—not just money, but time. Grants or sponsorships that let maintainers work part-time on open-source without starving.

Thoughtful contribution policies. Projects might need to be selective about who can contribute. This is hard to admit, because it violates the spirit of open-source, but it might be necessary for projects to survive.

Better documentation and contribution guides. Projects that explicitly explain their architecture, their review standards, their maintenance philosophy will attract contributors who understand what they're signing up for.

None of these are silver bullets. But together, they might help preserve what's valuable about open-source while acknowledging the new reality of AI-generated code.
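As one illustration of the "better tooling" idea, the sketch below shows the kind of pre-review checks a triage bot could run before a human spends time on a contribution. Everything here is hypothetical: the `PullRequest` fields, the threshold, and the flag wording are assumptions, and a real tool would get this data from CI and the code-hosting platform rather than from hand-built objects.

```python
from dataclasses import dataclass


@dataclass
class PullRequest:
    # Hypothetical fields; a real tool would fetch these from CI and the hosting platform.
    author_prior_merged: int  # merged PRs the author already has in this project
    touches_tests: bool  # does the change add or modify tests?
    ci_passed: bool  # did the project's own test suite pass?
    lint_passed: bool  # did the style/lint check pass?
    description: str


def triage_flags(pr: PullRequest, min_description_chars: int = 80) -> list[str]:
    """Return reasons a maintainer may want to look twice before spending review time."""
    flags = []
    if not pr.ci_passed:
        flags.append("test suite failing")
    if not pr.lint_passed:
        flags.append("does not follow project style")
    if not pr.touches_tests:
        flags.append("no tests added or changed")
    if len(pr.description.strip()) < min_description_chars:
        flags.append("description too short to explain the change")
    if pr.author_prior_merged == 0:
        flags.append("first-time contributor")
    return flags


pr = PullRequest(author_prior_merged=0, touches_tests=False, ci_passed=True,
                 lint_passed=False, description="Fix bug")
print(triage_flags(pr))
# ['does not follow project style', 'no tests added or changed',
#  'description too short to explain the change', 'first-time contributor']
```

The flags are meant to order the review queue, not to reject anything automatically: they tell a maintainer where the cheap decisions and the expensive reviews are likely to be.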

The Broader Engineering Question

Here's the deepest question this raises: what actually is software engineering?

If engineering is just writing code, then AI coding tools make engineers less necessary. They can generate code fast and cheap.

But if engineering is managing complexity, making good design decisions, understanding systems deeply, maintaining software over years, and making trade-off decisions that affect millions of users, then code generation is just one small part of the job.

Most of the work is everything else.

AI coding tools have made one part of engineering easier. They haven't made the hard parts easier. They've arguably made them harder.

When you can generate code in seconds instead of hours, your biggest constraint shifts. It's no longer "how do I write this code?" It's "how do I maintain all this code I just created?"

That's a fundamentally different problem. And it's one that no AI coding tool can solve for you.

What This Means for Software Development Moving Forward

The story of AI coding tools in open-source is a case study in unintended consequences. The tools themselves aren't bad. But deploying them in systems where the incentive structures don't align can cause real harm.

For organizations using AI coding tools internally, the lesson is clear: think about maintenance. If you use AI to generate code, you're committing to maintaining that code. If you can't maintain it, don't generate it.

For junior developers, the lesson is: understand what you're submitting. If an AI generated it, you still need to read it, test it, and own it.

For open-source projects, the lesson is: your maintainability capacity is your real constraint, not your development capacity. Protect it fiercely.

And for the industry broadly, the lesson is: abundance of code is not the problem we actually needed to solve. Maintainability, sustainability, and architectural clarity are the hard problems.

AI coding tools are excellent at creating abundance. They're terrible at creating clarity or maintaining systems over time.

Until we figure out how to apply AI to the hard problems (understanding complex systems, managing technical debt, making good architectural decisions), we'll keep seeing stories like this one: tools that were supposed to help, creating new problems instead.

The story isn't over. But it's already clear that the "AI makes everything easier" narrative was too simple. The real world is more complicated. And open-source projects are learning that the hard way.

FAQ

What is the impact of AI coding tools on open-source projects?

AI coding tools have created a paradoxical situation for open-source projects. While they make writing new code easier for experienced developers, they've also dramatically lowered the barriers to submitting code, resulting in a flood of low-quality contributions that overwhelm maintainers' review capacity. This has fundamentally changed the economics of open-source maintenance, shifting the bottleneck from code generation to code review and maintenance.

Why are open-source maintainers struggling with AI-generated contributions?

Open-source projects typically operate with volunteer maintainers who have limited time. When AI tools make it trivial to generate and submit pull requests, the review burden rises sharply. A maintainer who could previously handle 10 weekly pull requests might now receive 50, forcing them to spend most of their time triaging and rejecting submissions rather than actually improving the project. Additionally, AI-generated code often lacks understanding of project conventions, edge cases, and long-term maintenance considerations.

How have AI tools affected bug bounty programs in open-source?

AI tools have caused significant problems in bug bounty programs by enabling the rapid generation of false or meaningless vulnerability reports. People using AI to generate bulk submissions have flooded projects like cURL with spam, to the point that cURL shut down its program entirely. This eliminates a legitimate security research channel and makes it harder for genuine researchers to report real vulnerabilities that need patching.

What's the difference between how experienced and junior developers use AI coding tools effectively?

Experienced developers can leverage AI coding tools as a multiplier, using them to generate starting points or handle boilerplate while maintaining responsibility for design decisions and system integration. Junior developers often use AI tools as shortcuts without understanding the generated code, which creates maintenance problems and prevents them from actually learning software engineering principles through code review feedback.

Are open-source projects banning AI-assisted contributions?

Most major projects aren't outright banning AI-assisted contributions, but they're implementing stricter policies. Some projects are moving toward a "vouching" system where only pre-approved contributors can submit, while others like Blender are explicitly neither mandating nor recommending AI tools. These changes represent a significant shift from open-source's historically open-door philosophy toward necessary gatekeeping due to unsustainable contribution volumes.

How does AI code generation affect software maintenance and technical debt?

AI tools make it possible to create code faster than it can be maintained. This creates a widening gap between code production and code maintenance, leading to rapid accumulation of technical debt. When code is generated without consideration for long-term stewardship, future maintainers inherit problems they didn't create. This is particularly damaging in open-source, where maintainers are already stretched thin and can't spare resources for paying down debt created by AI-generated contributions.

What are the solutions to these problems in open-source projects?

Solutions include developing better tooling for maintainers to automatically enforce code quality standards, investing in open-source sustainability through funding and time allocation, implementing clear contribution policies and better documentation, and cultural shifts in how junior developers approach AI-generated code. Projects also need to recognize that maintainability capacity is their real constraint, not development capacity, and protect it accordingly.

Can AI coding tools coexist successfully with open-source development?

Yes, but only with thoughtful implementation. AI tools work well when used by experienced developers who understand the systems they're modifying and take responsibility for long-term maintenance. They fail when they become shortcuts for junior developers or when they're deployed in systems where incentive structures reward code generation over maintenance and stability. The key is aligning tool usage with sustainable practices.

Key Takeaways

- AI coding tools lowered the friction for submitting code, but that friction existed for a reason—it signaled genuine effort and project understanding

- Open-source maintainers now face sharp increases in pull request volume that far exceed what they can review, breaking the volunteer-driven model

- Bug bounty programs are drowning in AI-generated spam vulnerability reports, eliminating legitimate security research channels

- Technical debt accumulates faster than it can be maintained when code production vastly exceeds stewardship capacity

- AI tools amplify experienced developers' productivity but confuse junior developers, undermining open-source's role as a place to learn

- The real engineering work isn't code generation—it's managing complexity, maintaining systems, and making good design decisions over time
