Introduction: The Age of Slopaganda

Last month, a 23-year-old with a smartphone uploaded a video making unfounded claims about daycares run by Somali Americans in Minneapolis. The video didn't just get shares. It triggered a federal occupation that, as PBS NewsHour reported, left two residents dead at the hands of immigration agents. Welcome to 2025, where a kid with a ring light and a Twitter account can move government machinery.

His name is Nick Shirley. You've probably never heard of him. That's not because he's obscure. It's because he's everywhere, just not in the places you're looking.

For the past few years, something strange has been happening on social media. A new breed of content creator has emerged, one that looks nothing like traditional influencers. They're not slick. They're not polished. They don't spend months perfecting a brand. Instead, they churn out videos at breakneck speed, hitting the same beats over and over: a city has "fallen," migrants are destroying neighborhoods, protesters look ridiculous, Democrats are destroying America. The content is cheap to make. It's repetitive. It's often factually thin. But it works.

We call this "slop." Most people associate slop with AI-generated garbage, but slop predates AI. It's any content made quickly, cheaply, and poorly, designed to capture attention and extract value from your time. Slop doesn't need to be synthetic. It can be a vlogger filming in 4K. It can be a politician's incendiary tweet. It can be a 45-second climate change debunking on Instagram Reels.

But here's what makes Shirley and his cohort different: they're not just making slop. They're making propaganda wrapped in slop, optimized by algorithms, and weaponized for political purposes. Call it slopaganda.

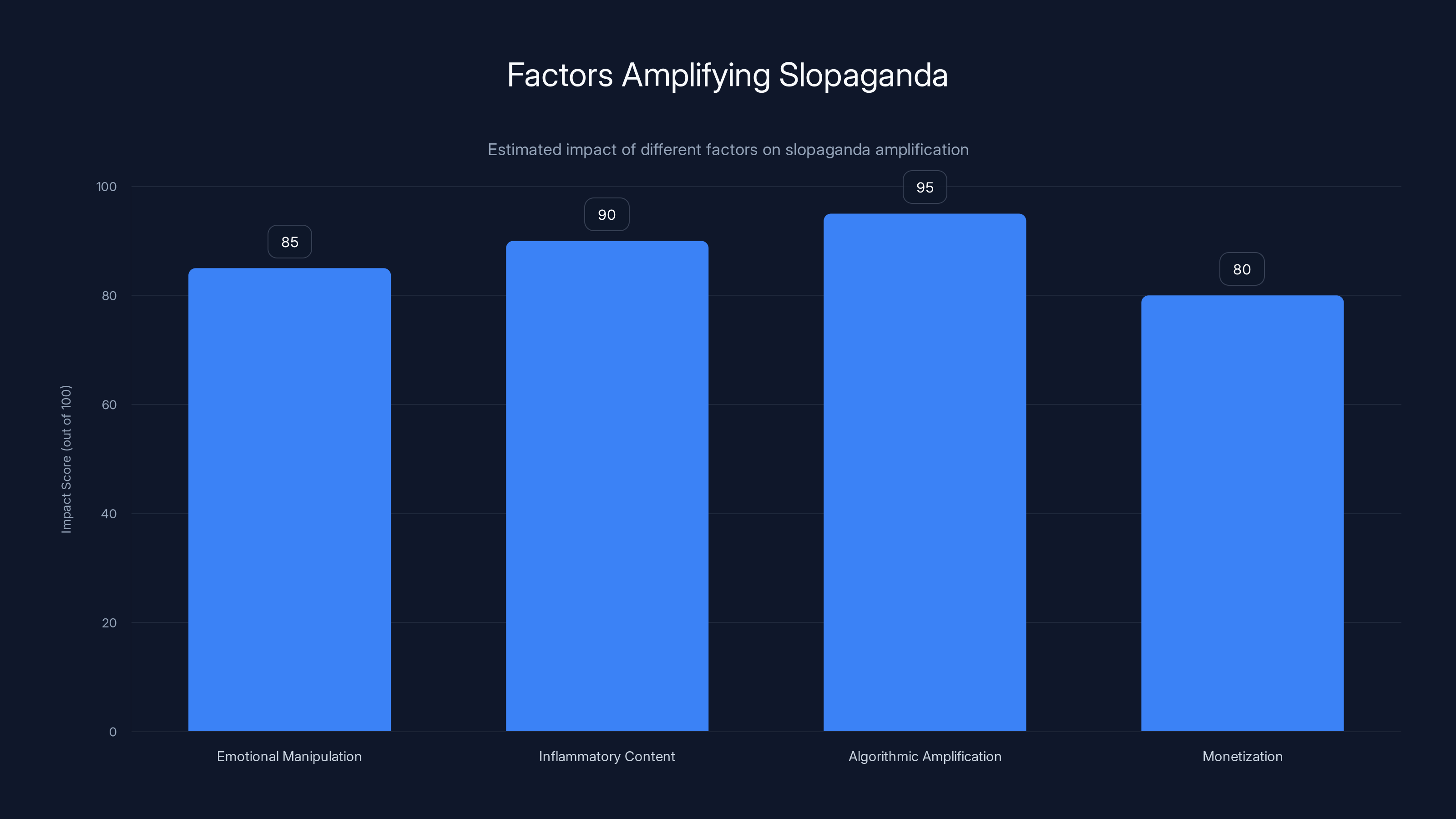

The thing that haunts me most is that this isn't new. This is the yellow journalism of the 1890s, turbocharged by machine learning. The printing press democratized information. The internet democratized it again. But algorithms, those invisible ranking systems that decide what billions of people see, have concentrated power in ways we're still struggling to understand.

This article breaks down who Shirley is, how slopaganda works, why algorithms amplify it, and what it means for American democracy when a single person with a phone can trigger federal action. More importantly, it explains why this matters to you, even if you've never heard of Nick Shirley before.

TL;DR

- Slopaganda is propaganda as slop: Low-effort, inflammatory content designed to exploit algorithmic amplification for political ends, not just engagement.

- Nick Shirley's influence is real: His unfounded claims about Somali-American daycares directly preceded federal immigration raids that killed two residents, as noted by the Star Tribune.

- It mirrors yellow journalism: Like 1890s newspapers, modern slopagandists generate outrage to drive engagement, but with algorithmic acceleration amplifying the effect.

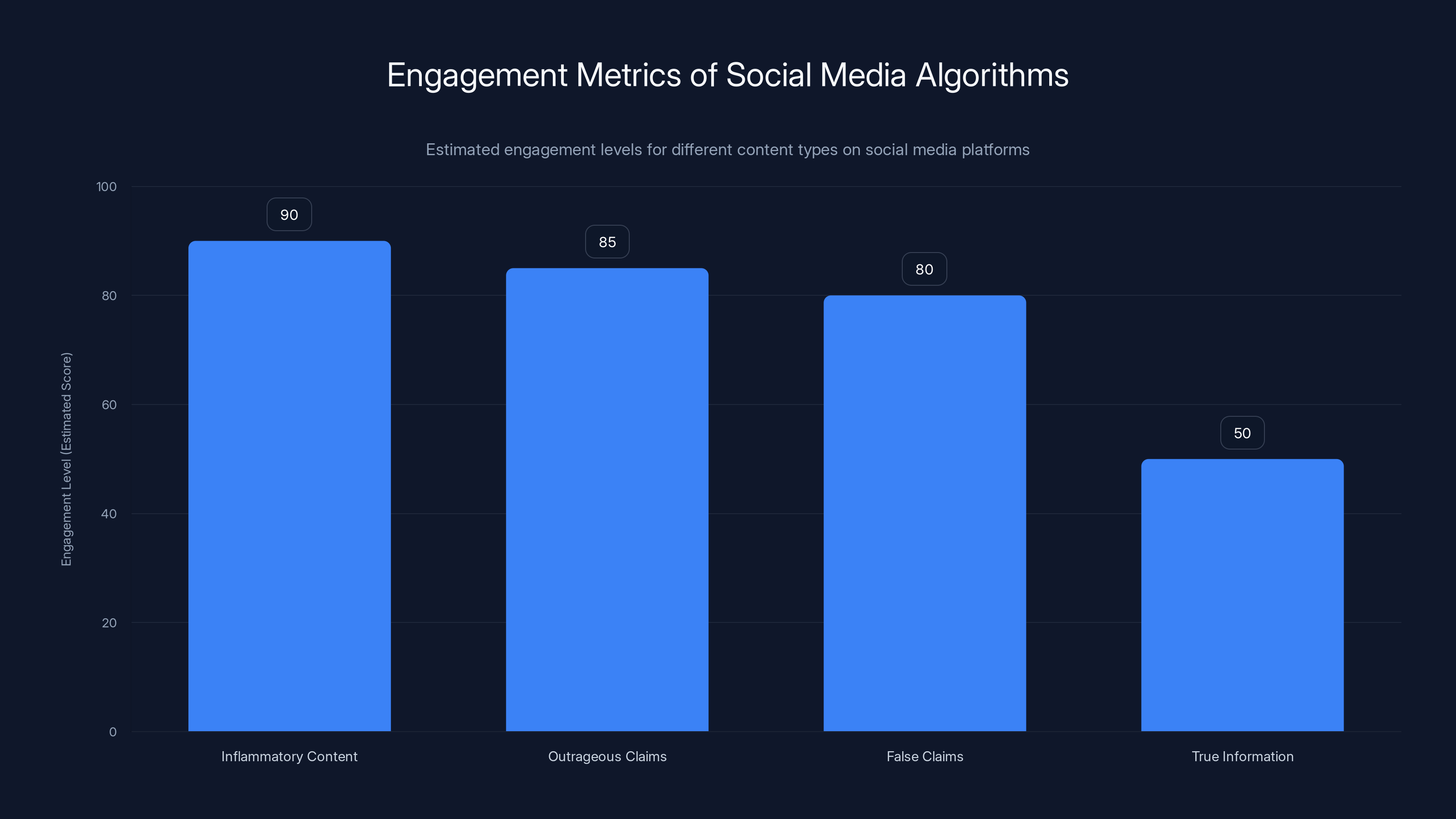

- Algorithms are the distribution engine: Social media platforms' recommendation systems actively reward inflammatory content that generates clicks and engagement.

- The economics are perverse: Low production costs, high engagement yields, and monetization through ads, merchandise, and political donations create a self-sustaining system.

- This is a democratic crisis: When unfounded accusations can trigger government violence, the boundary between speech and incitement dissolves.

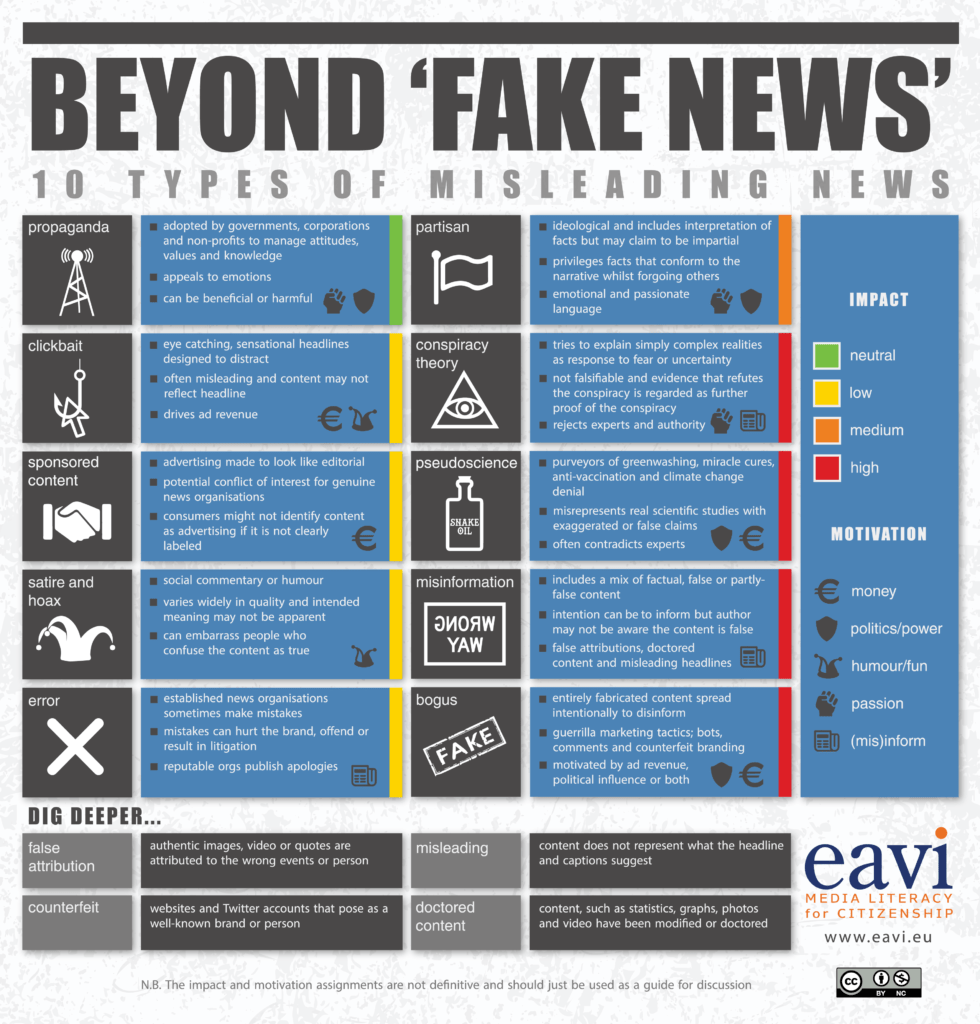

Estimated data shows a dramatic increase in both reach and speed from yellow journalism in the 1890s to modern social media in 2025, highlighting the amplified influence of media today.

Who Is Nick Shirley, Actually?

Nick Shirley is a content creator from Georgia who, until 2023, was mostly making the kind of bland clickbait that populates YouTube. Think "16-Year-Old Flies to New York Without Telling Parents" and "Giving My Teachers $1,000 for Christmas!" Videos designed to exploit parasocial relationships and algorithmic promotion. Views were decent. Nothing special.

Then something shifted. Shirley discovered that inflammatory political content got way more engagement. His early political videos hammered the same themes: immigration chaos, drug epidemics, urban decay, and the "destruction" of American cities. The videos exploded. His following swelled from thousands to hundreds of thousands.

By early 2024, Shirley had become a fixture of the right-wing internet ecosystem. He appeared on podcasts. He sold merchandise. He would go on to be praised by JD Vance, now Vice President, and invited to Trump's Mar-a-Lago compound. His reach extended directly into the highest offices of government, as discussed in The Verge.

But his content never evolved. It remained what it always was: repetitive, usually thin on facts, aggressively designed to provoke reaction rather than inform. The formula became mechanical. He'd go to the same neighborhoods (Canal Street in NYC, Kensington in Philadelphia), film the same scenes, and repackage them with slightly different titles. Same song, different verse.

What makes Shirley notable isn't his originality or depth of analysis. It's that he weaponized something much more powerful: algorithmic amplification. And he did it at scale.

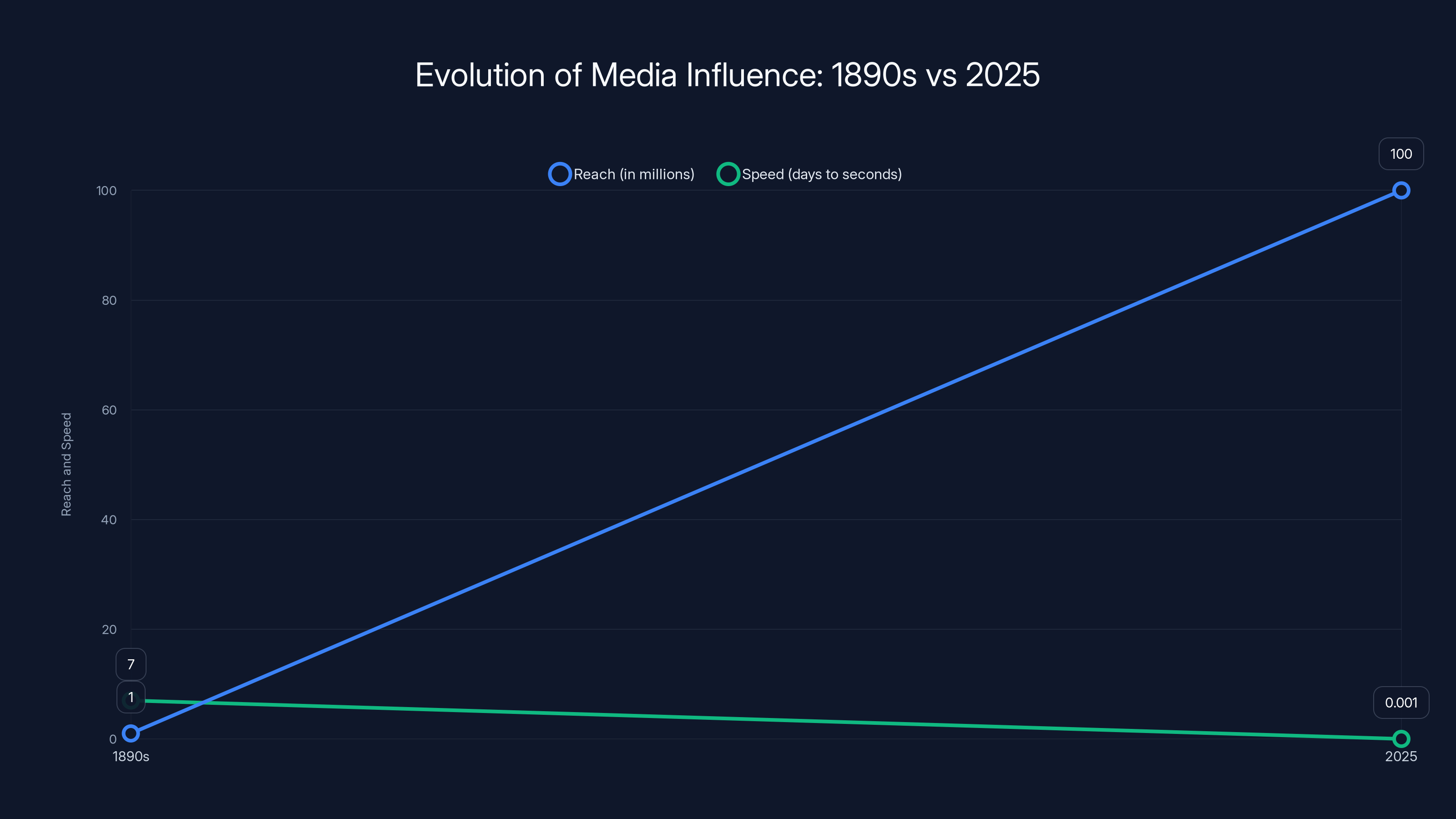

Estimated data shows ad revenue and donations as the largest revenue streams for slopaganda creators, highlighting the diverse income sources that make this content profitable.

The Minneapolis Raids: When Slop Becomes Violence

In early January 2025, Shirley uploaded a video alleging that daycares operated by Somali Americans in Minneapolis were engaged in fraud and trafficking. The allegations were unsubstantiated. No evidence was presented. But the video was made in Shirley's signature style: inflammatory music, dramatic cuts, and urgent language suggesting imminent danger.

The video spread. It was shared on Twitter, on TikTok, on YouTube. Engagement spiked. Shirley's algorithm gods smiled.

Within weeks, federal immigration agents descended on Minneapolis in what became a violent occupation. Two residents died. The allegations Shirley had made? They were largely false. But the damage was already done. Two people were dead. A community was traumatized. And Shirley had moved on to his next video, as noted by The Guardian.

This is the distinction that matters. Shirley wasn't just posting a false opinion. He wasn't sharing a debunked conspiracy theory in a chat room. He was using the tools of modern content creation—editing, pacing, emotional manipulation—combined with algorithmic distribution to create a video designed to provoke maximum outrage. And that outrage didn't stay online. It catalyzed federal violence.

When you can trace a direct line from a YouTube video to dead bodies, the question stops being about free speech and starts being about accountability. How did we get here? How does a 23-year-old with no credentials trigger a federal operation? And why is the algorithm rewarding this behavior?

Those are the questions that haunted everyone who paid attention to what happened in Minneapolis.

What Is Slop, Really?

Before we can understand slopaganda, we need to define slop itself. And it's not what you think.

When people talk about slop, they usually mean AI-generated garbage. ChatGPT essays. Fake images. Stock footage compilations. But that's just a specific subgenre of something much larger.

Slop is any content made quickly, cheaply, and poorly for the explicit purpose of extracting value from your attention. That value could be ad revenue. It could be engagement metrics. It could be influence. It could be political power. But the mechanism is always the same: make something that provokes enough reaction to get distributed, without spending the resources to make it actually good.

The financial advice peddled by thousands of people on Instagram Reels? Slop. Climate change debunking that snowballs to millions of views? Slop. The president's social media posts? Slop. Engagement bait designed to make you react before you think? Slop.

Slop is passive. It asks nothing of you except to consume it, react to it, and move on. It's the natural endpoint of an internet built for scale and ruthless optimization. When the algorithm rewards engagement above all else, slop is what you get.

Before Shirley's pivot to conservative politics, he was making slop for babies. Sensationalist titles, minimal production value, maximum engagement. The format never changed when he switched to political content. The only difference was that political slop has higher stakes. When you're making slop about conspiracy theories, the algorithm doesn't just reward you with ad revenue. It rewards you with influence. With political power. With the ability to move institutions.

That's when slop becomes dangerous.

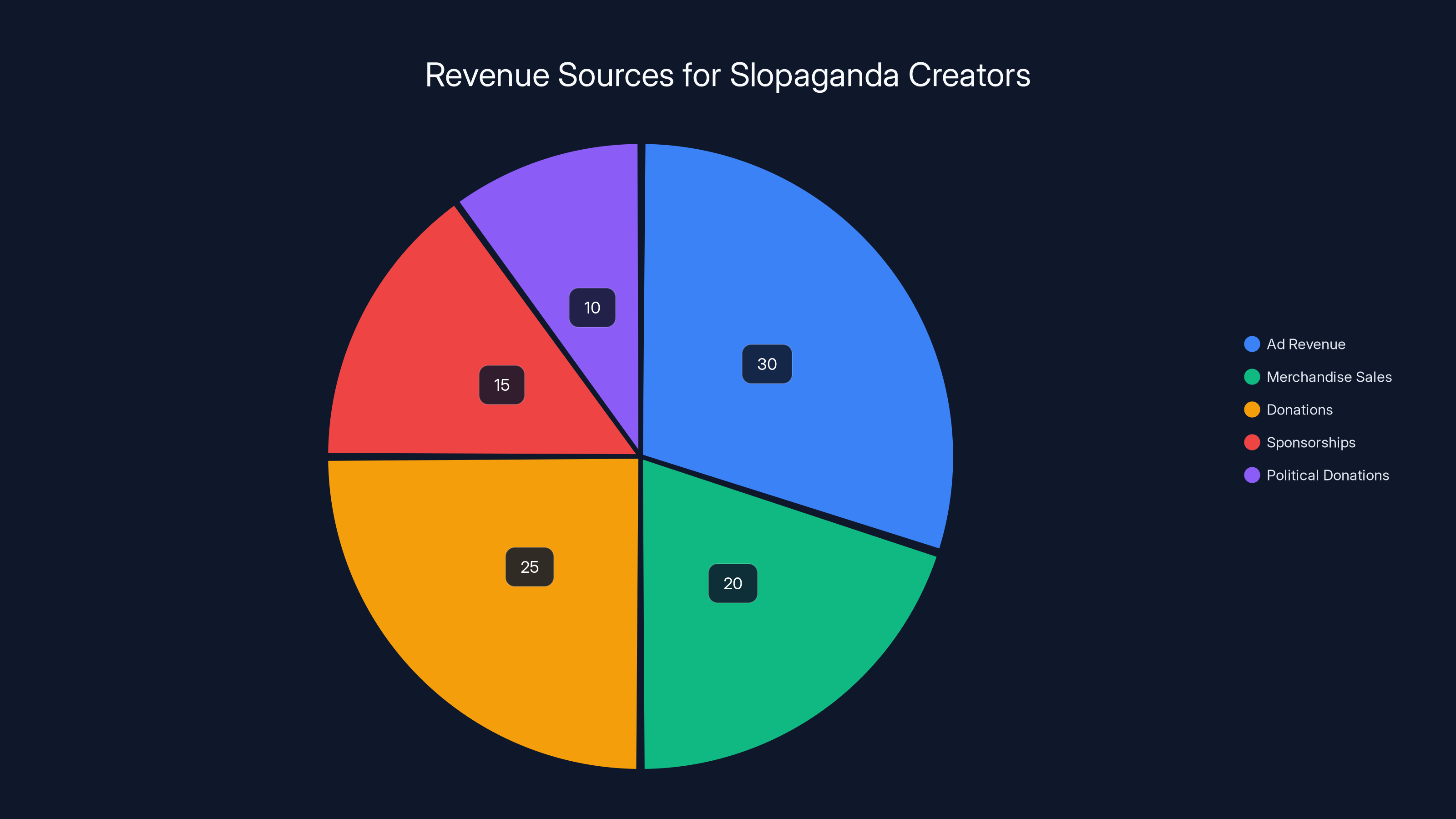

Algorithmic amplification and inflammatory content have the highest impact on slopaganda reach. Estimated data.

The Yellow Press Playbook: History Repeating

The parallel historians keep drawing is to yellow journalism of the 1890s. It's not a perfect comparison, but it's instructive.

Yellow journalism got its name from a cartoon strip called "The Yellow Kid" that ran in competing New York newspapers. The New York World and the New York Journal would publish legitimate reporting alongside sensationalist stories designed to provoke outrage and "almost invent scandals in order to sell more newspapers," according to journalism historians.

What's crucial about that era is that professional news standards hadn't been established yet. There were no codes of ethics. No fact-checking departments. No institutional accountability. Papers could print whatever would sell copies, truth was optional, and the consequences were whatever the market would bear.

The Spanish-American War of 1898 remains the textbook example. Yellow papers ran stories about Spanish atrocities in Cuba that were either exaggerated or entirely invented. "You furnish the pictures and I'll furnish the war," William Randolph Hearst, the Journal's publisher, allegedly told the artist Frederic Remington. Whether that quote is accurate or not, the dynamic was real: sensationalist press coverage and political ambitions aligned, and the result was a war that killed thousands.

Now look at 2025. We have Shirley's unfounded claims about Somali daycares. We have federal raids. We have dead bodies. The dynamic is identical. The press (in this case, social media creators) generates outrage. Political actors signal approval. The outrage intensifies. Action is taken. Consequences follow.

The difference is scale and speed. Yellow journalists needed printing presses and distribution networks. They were still constrained by physical limitations. Shirley needs a smartphone and a Wi-Fi connection. And instead of reaching thousands, he reaches millions, in seconds, with algorithmic amplification doing the heavy lifting.

Historians note that debate continues about how much responsibility yellow journalism actually bears for the Spanish-American War. But there's no debate about causation here. The Minneapolis raids followed directly from Shirley's video. The algorithm had moved from passive distribution to active incitement.

We keep thinking this is a free speech problem. It's not. It's an algorithmic amplification problem. The speech itself is protected. The mechanism that amplifies false speech into federal violence is the issue.

The Anatomy of Slopaganda: How It's Designed

Slopaganda is propaganda optimized for algorithmic distribution. It's not subtle. It's not sophisticated. It's mechanically designed to hit specific buttons that we know the algorithm rewards.

First, repetition. Shirley's videos follow predictable patterns. "[City] Has Fallen." "What's Happening in [Neighborhood]." Same structure. Same framing. Same conclusion. Why? Because the algorithm rewards consistency. If viewers know what they're getting, they're more likely to click. More clicks equals more engagement. Repetition isn't a flaw—it's a feature.

Second, emotional manipulation. Every video is shot with the sense that you're about to see something shocking or urgent. The music swells. The cuts are fast. The language is alarming. This isn't journalism trying to inform. It's theater trying to provoke reaction. Anger, fear, disgust—these emotions generate engagement. Thoughtful analysis doesn't.

Third, visual confirmation bias. Shirley films in rough neighborhoods, usually in poor areas with visible drug use, homelessness, or decay. He never provides context. He never interviews residents. He never explores causes. He just points a camera at suffering and lets the viewer draw conclusions. The algorithm doesn't care about nuance. It cares about clarity. Stark visual contrasts between order and chaos generate engagement.

Fourth, false causation. Shirley implies causation without establishing it. He shows a rough street and implies it's "because of" immigration or Democratic policy. He never proves the claim. He doesn't need to. The visual evidence feels convincing. Our brains prefer simple narratives over complex ones. The algorithm knows this and rewards it.

Fifth, monetization integration. Every video includes merchandise links. Patreon links. Affiliate links. The content itself becomes a funnel. You watch because you're angry. You stay because you're engaged. You buy because you feel part of a community. The economics are transparent: slopaganda is profitable. That profit incentive ensures it will continue.

Sixth, ecosystem leverage. Shirley doesn't exist in isolation. His videos get shared by larger right-wing media figures. They get discussed on podcasts. They get cited by politicians. The slopaganda ecosystem amplifies individual pieces. One video reaches thousands. Shared by an influencer with millions of followers, it reaches tens of millions. That reach creates political pressure. Political pressure creates action.

Understood this way, slopaganda is perfectly rational. It's not stupid. It's not ineffective. It's precisely engineered to exploit the incentive structures of modern social media. And it works.

Algorithms prioritize content that generates high engagement, often amplifying inflammatory, outrageous, or false claims over true information. Estimated data based on typical algorithm behavior.

The Algorithm as Amplification Engine

Here's the uncomfortable truth: algorithms don't care whether content is true. They care whether it generates engagement. Engagement is the metric that social media companies optimize for, because engagement is how they sell ads.

Inflammatory content generates engagement. Outrageous claims generate engagement. False claims that provoke reaction generate engagement. The algorithm doesn't distinguish between true outrage and manufactured outrage. It just sees the signal and amplifies it.

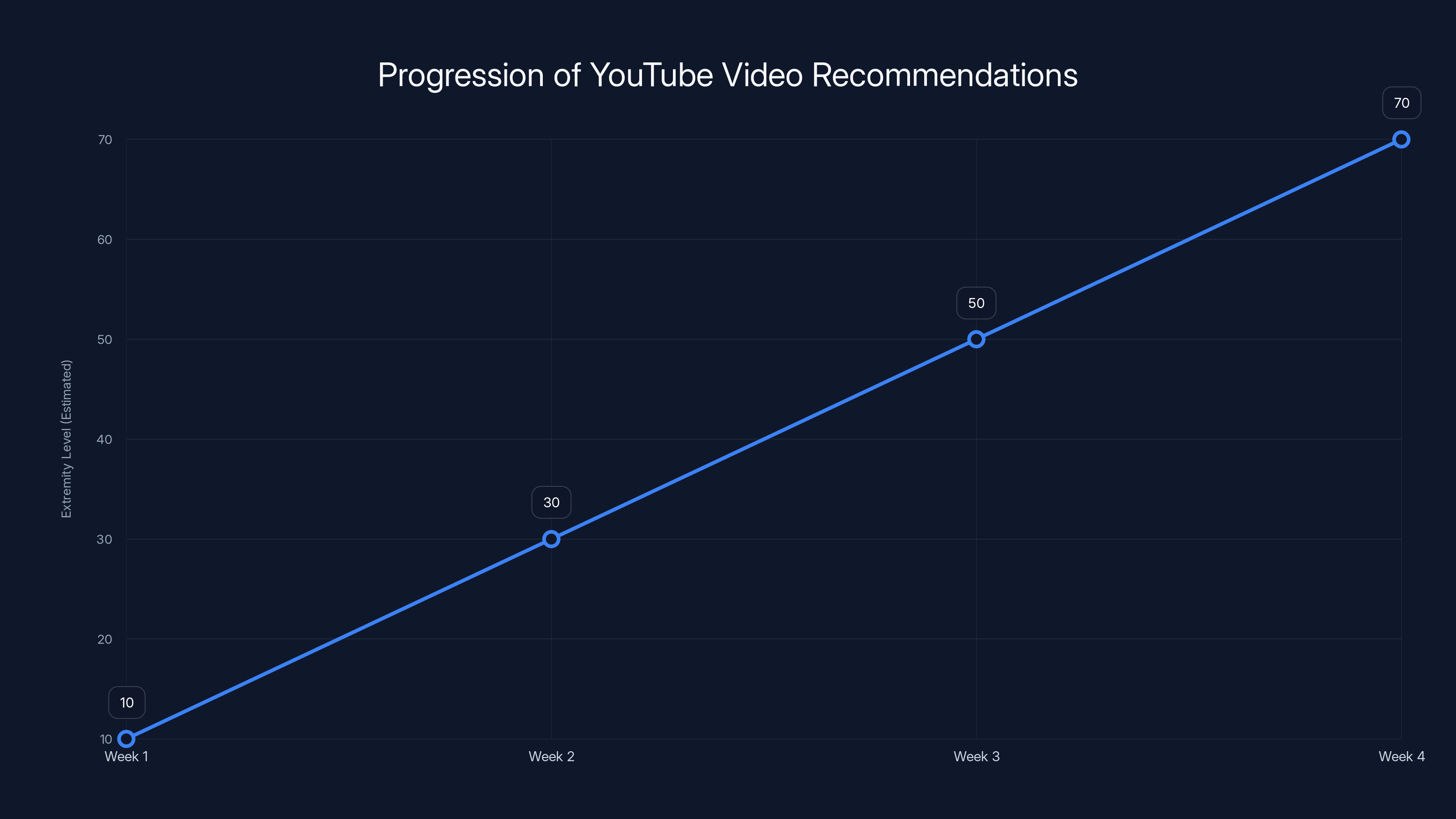

YouTube's recommendation algorithm is designed to keep you watching. It learns what kind of content makes you click. If you watch one political video, the algorithm learns that you're interested in political content. It starts recommending more. The more you watch, the more extreme the recommendations become. This isn't conspiracy. This is documented behavior.

TikTok's algorithm is even more aggressive. It doesn't rely only on what you choose to follow or search for. It learns from what you watch, what you skip, how long you pause, whether you go back and rewatch. It's profiling your emotional response in real time. It knows what makes you angry. It knows what scares you. And it feeds you more of that.

This is why slopaganda is so effective. The algorithm is doing the heavy lifting. Shirley makes the video. The algorithm decides whether it reaches 10,000 people or 10 million. And the algorithm's incentive is always to amplify whatever generates reaction, true or false.
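To make the mechanism concrete, here is a toy ranker. It is a minimal sketch, not any platform's actual system (real rankers are far more complex and not public), and the `Video` record, its fields, and the scoring weights are all hypothetical. The point it illustrates is narrow: an objective built only from engagement signals never reads the accuracy field at all.

```python
from dataclasses import dataclass


@dataclass
class Video:
    # Hypothetical fields for illustration; no platform exposes these names.
    title: str
    predicted_watch_seconds: float  # expected watch time per impression
    predicted_share_rate: float     # expected shares per impression
    is_accurate: bool               # ground truth the ranker never consults


def engagement_score(v: Video) -> float:
    """Toy objective: a weighted sum of engagement signals only (weights are made up)."""
    return v.predicted_watch_seconds + 100.0 * v.predicted_share_rate


def rank_by_engagement(videos: list[Video]) -> list[Video]:
    # Highest predicted engagement first; is_accurate plays no role in the sort.
    return sorted(videos, key=engagement_score, reverse=True)


feed = [
    Video("Careful explainer on daycare licensing", 40.0, 0.01, True),
    Video("[City] Has Fallen", 55.0, 0.08, False),
    Video("Local council meeting recap", 20.0, 0.005, True),
]

for v in rank_by_engagement(feed):
    print(f"{engagement_score(v):6.1f}  accurate={v.is_accurate}  {v.title}")
```

With these invented numbers, the inaccurate video lands on top simply because it is predicted to hold attention and get shared.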

The question becomes: at what point does algorithmic amplification of false information constitute the platform's responsibility? If YouTube's algorithm pushes Shirley's video to millions of people, knowing that previous versions of similar videos have triggered real-world violence, is YouTube complicit in the violence?

That's the legal and moral question that nobody has answered satisfactorily. Platforms hide behind Section 230, which shields them from liability for user-generated content. Whether that shield extends to what their algorithms choose to amplify is contested; the Supreme Court took up the question in Gonzalez v. Google in 2023 and then declined to decide it. The distinction matters. The algorithm isn't neutral distribution. It's active amplification.

When a 23-year-old's video can trigger federal violence, the algorithm has stopped being a distribution system and become a weapon.

The Economics of Slopaganda

Slopaganda wouldn't exist if it wasn't profitable. Understanding the money explains why we have so much of it.

The production cost for slopaganda is close to zero. You need a smartphone, decent lighting, and basic editing software. Most of that is free or very cheap. A professional documentary costs hundreds of thousands. A slop video costs hundreds. That's a cost-to-reach ratio that's almost impossible to beat.

The revenue model is diverse. First, ad revenue from the platform itself. If you upload a video to YouTube that gets 10 million views, you're earning somewhere between

Second, merchandise sales. Shirley sells t-shirts, hats, and other merchandise branded with slogans from his videos. If even 1% of your viewers buy one item at $25 profit, a 10-million-view video funds itself multiple times over.

Third, donations. Platforms like Patreon allow creators to ask for direct financial support. Engaged audiences often donate. A creator with 100,000 dedicated followers might raise $50,000 per month from Patreon alone.
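The merchandise and Patreon figures are easy to check. This is a minimal sketch using only the article's own hypothetical inputs (10 million views, a 1% conversion rate, $25 profit per item, $50,000 a month from Patreon); the function names are illustrative, not from any real tool. It treats every view as a distinct viewer, which overstates reach, so the results are best read as illustrative upper bounds rather than typical earnings.

```python
def merch_profit(views: int, conversion_rate: float, profit_per_item: float) -> float:
    """Profit if a fraction of viewers each buy one item.

    Assumes every view is a distinct viewer, which overstates reach
    (repeat views are common), so this is an upper-bound illustration.
    """
    return views * conversion_rate * profit_per_item


def patreon_annual(monthly_amount: float) -> float:
    """Annualize a steady monthly Patreon total."""
    return monthly_amount * 12


# Hypothetical inputs taken from the text above, not measured data.
print(merch_profit(10_000_000, 0.01, 25.0))  # 2500000.0
print(patreon_annual(50_000))                # 600000
```

On these assumptions, one viral video's merchandise alone would clear $2.5 million, and the Patreon scenario annualizes to $600,000.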

Fourth, sponsorships. Companies love reaching engaged audiences. A slopaganda creator with a few hundred thousand subscribers can charge

Fifth, political donations. This is the part that gets less attention but matters most. Conservative PACs and political organizations donate directly to creators who align with their messaging. Shirley's content gets shared by Republican politicians. It's entirely possible (likely, even) that his work attracts direct financial support from political actors with an agenda.

Add it all up and a single slopaganda creator can earn half a million dollars per year with minimal effort. Multiply that across hundreds of creators doing the same thing, and you're looking at an industry worth hundreds of millions annually.

That's the engine. As long as the economics work, creators will keep making slopaganda. As long as the algorithm rewards engagement, the platforms will keep amplifying it. As long as slopaganda generates political power, political actors will keep amplifying it further. It's a self-reinforcing cycle.

The only way to break it would be to change the incentives. Tax engagement-based algorithms. Regulate algorithmic amplification. Change how platforms monetize content. Require transparency about recommendation systems. But none of that has happened, and it probably won't happen soon.

So we're stuck. The economics are too good. The incentives are too perverse. Slopaganda is here to stay.

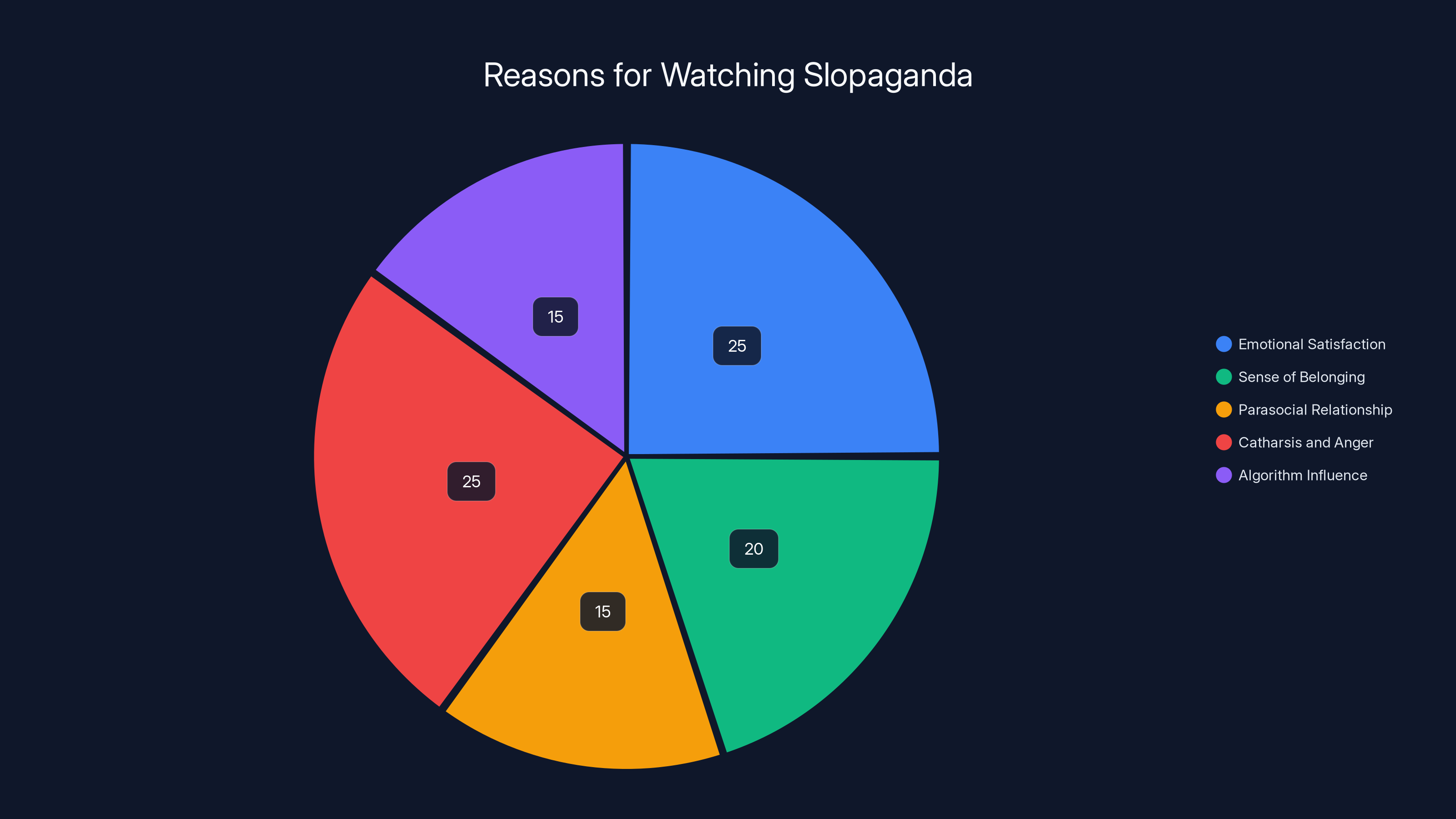

Viewers are primarily motivated by emotional satisfaction and catharsis, with the algorithm also playing a significant role. Estimated data based on narrative insights.

Who Watches This Stuff, and Why?

It's easy to dismiss slopaganda viewers as gullible or stupid. That misses the point entirely.

People watch slopaganda because it explains the world in ways that feel true, even if they aren't accurate. They watch because they feel like something is wrong, and slopaganda offers an explanation. They watch because their real material conditions have gotten worse (stagnant wages, rising housing costs, deteriorating infrastructure), and slopaganda tells them who to blame.

Shirley's audience probably includes people who saw their neighborhood change, who felt economically precarious, who felt left behind. Slopaganda doesn't tell them "well, complex structural economic forces are at play." It tells them: "That group over there is destroying your city." That's emotionally satisfying. That's the kind of narrative your brain enjoys.

There's also a tribal element. Watching Shirley signals membership in a community. You're part of the group that "sees what's really happening." You're not asleep like the mainstream media consumers. You understand the truth. That sense of belonging is powerful. Humans are tribal creatures. We want to belong.

The parasocial relationship is real too. Viewers feel like they know Shirley. To them, he's always honest. He tells it like it is. He's not afraid of being canceled. He represents a version of authenticity that's increasingly rare in media. That feeling of connection makes people protect him, defend him, share his work.

Also, there's actual anger. Real grievances. If you're struggling economically, if you feel like institutions have failed you, if you feel like your voice doesn't matter, then slopaganda offers catharsis. It channels that anger outward. It gives you someone to blame. That's psychologically valuable, even if it's not true.

Finally, there's the algorithm itself. Once you watch one slopaganda video, your feed fills with more. You're not choosing to watch a hundred videos about urban decay. The algorithm chose for you. Before you know it, you've spent 30 hours consuming content that frames reality in a very specific way. Repetition creates belief, even for claims you'd normally question.

Understanding the audience means understanding that this isn't a simple problem. These aren't people who need better education. These aren't people who need to "learn to think critically." These are people with real grievances who've been captured by a recommendation system that profits from amplifying the most emotionally resonant framing of their grievances.

That's much harder to fix than debunking individual false claims.

The Slopagandists: Who Else Is Out There?

Shirley isn't alone. There's an entire ecosystem of creators doing the same thing, with slight variations.

Some focus on immigration. Some focus on urban crime. Some focus on protests. Some focus on education or cultural issues. The topics vary, but the format is identical: show a problem, blame a scapegoat, imply urgency and danger, monetize the attention.

They call themselves "independent journalists." Sometimes they claim to be doing "real reporting" that the mainstream media ignores. But there's nothing journalistic about it. Journalists report facts. They provide context. They check their sources. They correct themselves when they're wrong. Slopagandists do none of these things.

What defines a slopagandist:

- Repetition over reporting: Same topics, same framing, same conclusions, minimal original investigation.

- Emotional manipulation over information: Content is designed to provoke reaction, not to inform.

- Monetization transparency: All content includes links to merchandise, donations, sponsorships.

- Community building over accuracy: Viewers feel part of a tribe, not necessarily informed.

- Algorithm optimization: Every choice is made to maximize engagement, not understanding.

- Political alignment: The content consistently aligns with and amplifies one political perspective.

- Minimal accountability: No corrections. No retractions. No engagement with criticism.

There are probably dozens of slopagandists with audiences in the hundreds of thousands. Maybe hundreds with smaller audiences. They form a network. They promote each other. They appear on each other's podcasts. They reference each other's claims. They create the impression of a movement, a perspective, a legitimate alternative media.

But it's all slop. Repetitive, emotionally manipulative, algorithmically optimized slop.

The scary part is that it works. It moves institutions. It changes policy. It triggers federal violence. The scarier part is that nobody's stopping it. The platforms profit from it. The political actors benefit from it. The creators grow wealthy from it. Everyone wins except the public, which is left holding the bag of consequences.

Estimated data shows that YouTube's algorithm may push users towards more extreme content over a period of weeks, starting from initial engagement with political videos.

The Credibility Crisis: How We Lost Trust in Everything

There's a question lurking behind all of this: How did we get to a place where an unsubstantiated video by a 23-year-old can trigger federal violence?

Part of the answer is that we've lost institutional trust. Mainstream media is perceived as biased. Fact-checkers are perceived as partisan. Government agencies are perceived as corrupt. When institutional authority collapses, people will believe whatever explanation makes sense to them emotionally.

Slopaganda fills that gap. It's not constrained by institutional standards. It doesn't have to be true. It just has to be satisfying. And in a world where nothing feels trustworthy anymore, satisfying is enough.

But there's a circularity here. Slopaganda contributes to institutional distrust. When you watch hundreds of videos claiming that mainstream media is lying, that fact-checkers are corrupt, that institutions are conspiring against you, your trust in those institutions erodes. And as trust erodes, you become more receptive to slopaganda. It's a reinforcing loop.

The platforms have enabled this. By treating mainstream news outlets and slopagandists as equivalent sources worthy of equal algorithmic consideration, they've delegitimized the very institutions that might have counteracted slopaganda. Why trust the New York Times when the algorithm shows you a slop video with higher engagement?

It's not clear how we escape this trap. Reinstating institutional authority won't work as long as those institutions haven't meaningfully changed. Fact-checking slopaganda won't work as long as viewers don't trust the fact-checkers. Regulating the platforms might help, but it's slow and political and unlikely to happen before more damage is done.

Meanwhile, slopaganda keeps working.

The Government-Media-Influencer Feedback Loop

What makes Shirley genuinely dangerous is that he exists within a feedback loop with political power.

It works like this: Shirley makes a video with inflammatory claims about immigration or urban decay. The video goes viral because the algorithm rewards engagement. The video gets shared by conservative influencers with larger audiences. Conservative politicians see the video, recognize the messaging aligns with their political agenda, and amplify it. They quote it. They share it. They reference it in speeches and statements.

That political amplification sends the video back through the recommendation system with added legitimacy. If a politician is talking about it, the algorithm treats it as more important. More people see it. More engagement. The cycle continues.
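The loop can be caricatured in a few lines. This is a toy model under loudly hypothetical assumptions, not a description of any real recommender: each round, every current viewer brings in a fixed fraction of new viewers, and once reach crosses a threshold, political amplification multiplies that round's growth. The function, its parameter names, and every number below are illustrative only.

```python
def simulate_feedback_loop(
    initial_reach: int,
    spread_rate: float,
    boost_threshold: int,
    political_boost: float,
    rounds: int,
) -> list[int]:
    """Toy reach -> engagement -> more reach loop (all parameters hypothetical).

    Each round, every current viewer brings in `spread_rate` new viewers.
    Once reach reaches `boost_threshold`, political sharing multiplies
    that round's new viewers by `political_boost`.
    """
    history = [initial_reach]
    reach = initial_reach
    for _ in range(rounds):
        new_viewers = reach * spread_rate
        if reach >= boost_threshold:
            new_viewers *= political_boost
        reach = int(reach + new_viewers)  # truncate to whole viewers
        history.append(reach)
    return history


print(simulate_feedback_loop(10_000, 0.5, 50_000, 3.0, 6))
# [10000, 15000, 22500, 33750, 50625, 126562, 316405]
print(simulate_feedback_loop(10_000, 0.5, 50_000, 1.0, 6)[-1])
# 113905
```

With these made-up numbers, the politically boosted run ends at about 316,000 viewers versus about 114,000 without the multiplier: the same video, a different loop.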

Eventually, enough political pressure builds around the claims in the video that the government feels compelled to respond. The Department of Homeland Security can't ignore reports of trafficking, even if those reports come from a YouTuber. So they investigate. They take action. Federal agents descend. And what started as slop becomes policy.

This is exactly what happened with the Minneapolis raids. The video had no credible sourcing. But the claims fit within the Trump administration's immigration agenda. So the administration treated them as credible and acted. The result was federal violence, as detailed by ABC News.

The feedback loop means slopaganda isn't just media anymore. It's a propaganda system. The traditional definition of propaganda is information designed to influence public opinion or policy toward a specific end. Slopaganda does exactly that. It's designed to generate outrage about specific groups and policies, and it's coordinated (even if loosely) with political actors who benefit from that outrage.

What distinguishes propaganda from journalism is intent and accuracy. Journalists intend to inform. Slopagandists intend to inflame. Journalists care about accuracy. Slopagandists care about engagement. The distinction matters legally and morally.

But here's the terrifying part: The feedback loop means it doesn't matter if the claims are true or not. Eventually, the political system will act on them anyway. False claims about daycares trigger federal violence. That's what we've normalized.

The Role of Platform Architecture

Let's be clear about something: none of this happens without the platforms.

YouTube, TikTok, Twitter, Instagram—these platforms are architected to amplify engagement. Every design choice is made to maximize the time you spend on the platform and the number of ads you see. Inflammatory content generates more engagement than thoughtful content. That's not coincidence. That's how the system was designed.

The recommendation algorithm doesn't think. It doesn't have values. It doesn't care about truth. It's a mathematical optimization function trained on one metric: engagement. Given that objective, it will reliably recommend content that provokes reaction, regardless of whether that content is accurate.

When you watch a slopaganda video, the algorithm learns that you're interested in that category of content. It starts showing you more. The more you watch, the more extreme the recommendations become. This is documented behavior across all these platforms. It's called the "filter bubble" or "echo chamber" effect.

The platforms know this is happening. They've known for years. There's been substantial internal research at these companies documenting how their algorithms amplify extreme content. Some of that research has leaked to the public. It all confirms the same thing: the platforms know their systems amplify misinformation and extreme content, and they haven't fixed it because fixing it would reduce engagement and ad revenue.

There's a technical fix available. You could rewire recommendation algorithms to prioritize accuracy over engagement. You could downrank content from sources with poor fact-checking records. You could promote content from sources with higher editorial standards. You could do a lot of things.
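What "downranking" might look like, as a minimal sketch: blend predicted engagement with a per-source quality score (for example, one derived from a fact-checking record), so that low-quality sources pay a penalty. The `Item` record, the quality score, and the weight `alpha` are all assumptions for illustration; no platform's actual ranking formula is implied.

```python
from dataclasses import dataclass


@dataclass
class Item:
    # Hypothetical fields for illustration only.
    title: str
    engagement: float      # predicted engagement, normalized to [0, 1]
    source_quality: float  # hypothetical fact-check-based score in [0, 1]


def blended_score(item: Item, alpha: float) -> float:
    """alpha = 0 ranks purely on engagement; alpha = 1 purely on source quality."""
    return (1 - alpha) * item.engagement + alpha * item.source_quality


def rerank(items: list[Item], alpha: float) -> list[Item]:
    # Highest blended score first.
    return sorted(items, key=lambda it: blended_score(it, alpha), reverse=True)


items = [
    Item("Unsourced fraud allegations", engagement=0.9, source_quality=0.1),
    Item("Reported investigation with documents", engagement=0.5, source_quality=0.9),
]

print([it.title for it in rerank(items, alpha=0.0)])  # unsourced item first
print([it.title for it in rerank(items, alpha=0.5)])  # sourced item first
```

The design choice is the weight. In this toy example, at `alpha = 0.5` the sourced report overtakes the unsourced one, but any nonzero `alpha` trades away some predicted engagement, and with it ad revenue.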

But you'd make less money. So they don't do them.

This is where the free speech defense breaks down. The platforms aren't just hosting speech. They're actively amplifying it through algorithmic decisions. They're choosing which speech gets seen by millions and which speech gets seen by hundreds. That's not neutrality. That's editorial decision-making. And when the editorial decisions consistently amplify misinformation and propaganda, at what point do the platforms become responsible for the consequences?

There's no legal consensus on that yet. But the moral question seems clear.

Fact-Checking as Theater

One response to slopaganda has been fact-checking. Fact-checkers debunk false claims. They post corrections. They try to stop misinformation from spreading.

It doesn't work.

There are several reasons why. First, corrections rarely land where they're aimed. Researchers still debate how common the so-called backfire effect is, but when people encounter fact-checks that contradict their existing beliefs, they often stay just as entrenched, and some dig in further. Showing someone that their favorite influencer spread misinformation doesn't make them trust the influencer less. It makes them distrust the fact-checker more.

Second, fact-checkers operate at a disadvantage. Slopagandists can make 50 videos in the time a fact-checker can properly debunk one. The sheer volume of content makes comprehensive fact-checking impossible. By the time a claim is debunked, it's already spread to millions of people.

Third, fact-checkers have lower engagement. A fact-check article is dry and academic. It's designed to inform, not to provoke reaction. Slopaganda is designed to provoke reaction. The algorithm favors slopaganda. So even when fact-checks exist, they reach far fewer people than the original misinformation.

Fourth, fact-checkers aren't trusted by the people who need fact-checking most. If you believe the mainstream media is lying, why would you trust mainstream fact-checkers? Why would you trust academic institutions? Why would you trust anything except independent creators who claim to be telling the truth?

This is the trap. Fact-checking works great for people who already value accuracy and institutional authority. But slopaganda's audience is precisely the people who don't value those things anymore. Fact-checking bounces off them.

There's a solution, maybe. You'd need fact-checkers who are also trusted by the audience. You'd need influencers with credibility in these communities who are willing to say "this claim is false." You'd need independent media producers who are willing to do real reporting instead of slop. But none of that exists at scale yet. And it won't exist as long as real reporting doesn't generate the engagement that slop does.

So fact-checking remains theater. It makes us feel like we're doing something. But it doesn't actually stop slopaganda.

The Free Speech Paradox

This is where it gets politically complicated.

In the U.S., the First Amendment bars the government from restricting most speech. That's a hard limit. But responding to slopaganda doesn't require government regulation. It requires institutional responses.

Platforms could demonetize slopagandists. That would be legal. They could deprioritize inflammatory content in their algorithms. That would be legal. They could refuse to amplify content without credible sourcing. That would be legal. They could do a lot of things without violating anyone's free speech rights.

But they don't, because doing so would reduce engagement and ad revenue. So instead, they hide behind free speech principles that don't actually apply to their editorial decisions.

Meanwhile, slopaganda advocates claim free speech protections too. They say they're just sharing their perspective. That everything they say is opinion. That fact-checks are censorship. That deplatforming is a violation of their rights.

Here's the thing, though: free speech doesn't protect you from consequences. It protects you from government punishment. It doesn't protect you from your employer firing you. It doesn't protect you from advertisers dropping you. It doesn't protect you from platforms demonetizing you. It only protects you from the state.

But slopagandists' framing has convinced many people that any consequence for speech is censorship. That's not what free speech means. That's just how power works.

None of this solves the fundamental problem. Even if you regulate the platforms perfectly, even if you demonetize every slopagandist, the incentive structures remain. There will always be a way to make money from inflammatory content. There will always be an audience desperate to find explanations for their suffering. The tools will always exist.

Free speech is a prerequisite for democracy. But it's not sufficient. Democracy also requires an informed citizenry and functioning institutions. When the institutional mechanisms for distributing information get hijacked by algorithms that profit from misinformation, free speech becomes a weapon against itself.

We haven't solved that paradox yet.

Slopaganda and Political Radicalization

One question researchers haven't fully answered: how much does slopaganda contribute to political radicalization?

We know that the algorithm can radicalize people. A person might start watching mildly conservative content and get gradually nudged toward more extreme versions. Some studies have documented this pattern, though how often and how strongly it happens is still debated. The algorithm learns what provokes engagement and pushes you further in that direction.
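That feedback loop can be illustrated with a toy simulation. This sketch rests on stated assumptions and is not a model of any real platform. Content sits on a hypothetical 0 to 10 intensity scale. Predicted engagement peaks one notch above whatever the user currently watches. The user's taste moves halfway toward each recommended item. Because the drift is built into those assumptions, the sketch shows how the mechanism works. It is not evidence that it happens in practice.

```python
def predicted_engagement(user_position: float, intensity: float) -> float:
    # Hypothetical assumption: engagement peaks for content one notch
    # more intense than what the user currently watches.
    return -abs(intensity - (user_position + 1.0))


def simulate(start: float, steps: int, catalog: list[float]) -> list[float]:
    """Greedy engagement-maximizing recommender; returns the user's position after each step."""
    position = start
    history = [position]
    for _ in range(steps):
        # The recommender picks whichever item it predicts will engage most
        # (ties go to the first item in the catalog, i.e. the milder one).
        choice = max(catalog, key=lambda c: predicted_engagement(position, c))
        # Hypothetical assumption: the user's taste moves halfway toward what they watched.
        position += 0.5 * (choice - position)
        history.append(position)
    return history


catalog = [float(i) for i in range(11)]  # content intensity 0 (mild) .. 10 (extreme)
trajectory = simulate(start=2.0, steps=10, catalog=catalog)
print([round(p, 2) for p in trajectory])
```

Starting at a mild 2.0, the simulated user ends ten steps at roughly 6.7, and no step ever moves them back toward the middle. A greedy recommender that only asks "what will engage most right now?" never has a reason to stop.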

But slopaganda accelerates the process. It presents extreme claims as facts. It demonizes outgroups as threats. It frames political opponents as enemies of the state. It creates an in-group identity that becomes central to how people see themselves. People radicalized through slopaganda don't just disagree with political opponents. They believe the opponents are destroying the country.

That mindset is dangerous. It justifies extreme actions. When your political opponent is an existential threat, normal rules don't apply. Violence becomes defensible. That's how you get federal raids and dead bodies.

There's been less research on this than there should be, partly because it's hard to study. You can't do a controlled experiment on radicalization. You have to do observational studies, which are messy and contested. But what research exists suggests slopaganda is particularly effective at radicalization because it operates at an emotional level, below conscious reasoning.

The algorithm is designed to do this. It's not a side effect. It's the intended behavior. Emotionally engaged users spend more time on the platform. They click more. They see more ads. They generate more data. From the platform's perspective, radicalization is profitable.

That's the most troubling part of all this. The platforms aren't failing to stop radicalization. The platforms are profiting from it.

The Path Forward: Possible Solutions

So what do we actually do about this?

The first step is transparency. We need to understand how algorithms work. We need to see which content is being amplified, why, and to what effect. Right now, that's a black box. Researchers can't access the data. Regulators can't audit the systems. We're flying blind.

Legislation has been proposed in Congress, the Algorithmic Accountability Act, that would require companies to assess and document the impacts of the automated decision systems they use. Measures like that are necessary but insufficient. Even with transparency, we'd still need enforcement.

The second step is changing the incentive structure. Right now, engagement is the only metric that matters. You could change that. You could incentivize accuracy. You could reward content from sources with strong editorial standards. You could penalize content from sources with records of misinformation. You could make the algorithm optimize for informed citizens instead of just engagement.

Again, this would reduce ad revenue. But it would also reduce the spread of misinformation that triggers federal violence and kills people. There's a trade-off. We have to decide what we value.

The third step is media literacy. We need to teach people how to identify misinformation, how to evaluate sources, how to understand how algorithms work. That's slow. It takes years. But it's necessary. People need to understand that what they're seeing online is being selected by an algorithm based on what will provoke reaction, not what's true.

The fourth step is supporting real journalism. Investigative journalism is expensive. It doesn't generate the engagement that slop does. But it's essential for holding institutions accountable. We could fund journalism directly, through subscriptions or public funding. We could treat quality reporting as a public good, like we do with roads and schools.

The fifth step is political will. None of this happens without politicians being willing to regulate the platforms. And politicians benefit from slopaganda too. It helps them get elected. It mobilizes their base. They have an incentive to keep the current system in place.

So we're stuck. The economic incentives are perverse. The political incentives are perverse. The algorithmic incentives are perverse. Everyone with power to change the system benefits from keeping it the way it is.

Meanwhile, slopagandists keep posting. Algorithms keep amplifying. Politicians keep acting. And people keep dying.

Conclusion: Living in the Age of Slopaganda

Nick Shirley is not a unique problem. He's a symptom of a system that rewards misinformation, amplifies extremism, and monetizes outrage. He's what the internet looks like when you optimize purely for engagement and treat truth as irrelevant.

We didn't get here by accident. We got here through deliberate design choices made by platforms that prioritize growth and profit. We got here through the erosion of institutional trust and the lack of credible alternatives. We got here through our own participation in systems that reward the content we engage with most, regardless of its accuracy.

The scary thing is that Shirley won't be the last slopagandist to trigger real-world violence. The system is designed to produce more of them. As long as the economic incentives exist, as long as the algorithms reward engagement over accuracy, as long as the political system responds to manufactured outrage, we'll keep seeing this pattern.

Two people died in Minneapolis because a 23-year-old made a video. That's not normal. But it's not unique either. It's the inevitable endpoint of treating information as just another product to be optimized for scale and profit.

If we want things to change, we have to change the incentives. We have to demand that platforms prioritize accuracy over engagement. We have to fund real journalism. We have to restore institutional credibility. We have to teach media literacy. We have to regulate algorithmic amplification.

Or we accept that slopaganda is just what the internet is now, and that sometimes people will die because of false videos made by young creators with smartphones.

Choose wisely.

FAQ

What exactly is slopaganda?

Slopaganda is propaganda designed and optimized for algorithmic amplification on social media. It's content made quickly and cheaply that combines inflammatory political messaging with emotional manipulation to provoke maximum engagement, regardless of factual accuracy. Unlike traditional propaganda, which prioritizes persuasion through ideology, slopaganda prioritizes profit through engagement metrics.

How does Nick Shirley's work fit the definition of slopaganda?

Shirley creates low-effort, repetitive videos making unsubstantiated claims about immigration, urban decay, and social issues. He uses emotional manipulation, dramatic editing, and algorithmic formatting to maximize engagement. His content is monetized through ads, merchandise, and donations. His videos have also influenced policy directly: his false claims about Somali American daycares preceded federal immigration raids. This pattern exemplifies slopaganda perfectly.

What's the difference between slopaganda and yellow journalism?

Yellow journalism (1890s-1900s) was sensationalist reporting designed to sell newspapers. It prioritized outrage over accuracy but was still constrained by printing presses and distribution networks. Slopaganda is algorithmically amplified, reaches millions instantly, and operates at global scale. Its speed and reach are orders of magnitude greater, making slopaganda more dangerous.

Why does the algorithm amplify slopaganda?

Algorithms are trained to maximize engagement. Inflammatory content, emotional manipulation, and conspiracy theories generate more engagement than thoughtful analysis. The algorithm doesn't care whether content is true. It cares whether it provokes reaction. Since slopaganda is engineered specifically to provoke reaction, the algorithm consistently prioritizes it.

Can fact-checking stop slopaganda?

Fact-checking alone is insufficient because it operates at a disadvantage. Slopagandists produce content faster than fact-checkers can debunk it. Corrections also rarely dislodge beliefs people already hold: people who accept false claims often dismiss the fact-check, and some become more entrenched. Fact-checking works for people who already value accuracy, but not for people who have lost trust in institutions.

What happens if slopaganda is regulated?

Regulating slopaganda is complex because free speech protections prevent government censorship. However, platforms could voluntarily demonetize slopaganda, deprioritize it in algorithms, or require stronger sourcing without violating free speech. The challenge is that platforms profit from engagement, and slopaganda generates the most engagement.

Is slopaganda legal?

Yes, in most cases. In the U.S., false statements are generally protected speech. The exceptions, such as defamation, fraud, and incitement, are narrow. Incitement, for example, requires speech directed at producing imminent lawless action and likely to produce it. The Minneapolis raids followed directly from Shirley's video, which raises an obvious causation question. But proving legal incitement is difficult. Most slopaganda exists in a gray zone where it's legal but deeply harmful.

Why do people believe slopaganda?

People believe slopaganda for several reasons: it explains their lived experiences and grievances, it provides simple answers to complex problems, it signals tribal membership, it triggers emotional satisfaction through catharsis, and it exploits algorithmic recommendation systems that concentrate consumption around similar content. Additionally, loss of trust in institutions means people are more receptive to alternative explanations.

What role do social media platforms play?

Platforms are central to slopaganda's success. They build algorithmic systems that reward engagement over accuracy. They monetize content through advertising. They provide distribution at global scale. Without platforms, slopaganda would be confined to small audiences. With platforms, a single creator can influence federal policy.

How can individuals protect themselves from slopaganda?

Individuals can develop media literacy by learning to identify emotionally manipulative content, evaluating source credibility, understanding how algorithms work, seeking diverse perspectives, and checking claims through credible sources. Being aware of your own algorithmic filter bubble and actively consuming content that challenges your existing beliefs can also help guard against radicalization.

Key Takeaways

- Slopaganda is propaganda optimized for algorithmic amplification, combining misinformation with emotional manipulation to maximize engagement regardless of accuracy.

- Nick Shirley exemplifies slopagandists: content creators whose false video claims directly triggered federal immigration raids and deaths in Minneapolis.

- Social media algorithms actively reward inflammatory content, creating a feedback loop that amplifies misinformation faster than fact-checkers can debunk it.

- Slopaganda's profitability through ads, merchandise, donations, and political funding creates economic incentives that ensure continued production.

- Yellow journalism parallels show this isn't new, but algorithmic scale makes modern slopaganda far more dangerous to democratic institutions.
