The Era of Solo AI Is Over: Why Coordination Is the Next Battlefield

We've spent the last two years watching AI chatbots answer questions, summarize documents, and solve equations at superhuman speeds. It's impressive. It's also wildly incomplete.

Here's the thing: every major AI advancement has optimized for one user talking to one bot. ChatGPT? Designed for individuals. Claude? Built for solo users. Even the fanciest enterprise deployments basically bolt together a bunch of single-user experiences and call it "enterprise AI."

But that's not how work actually happens. Work is messy. It's collaborative. It involves competing priorities, changing requirements, people in different time zones disagreeing about the same problem, and decisions that ripple across weeks or months.

The real bottleneck isn't intelligence anymore. It's coordination.

That's the bet Humans& is making, and frankly, it's the most original AI startup thesis I've seen in months. Founded by alumni from Anthropic, Meta, OpenAI, xAI, and Google DeepMind—basically a who's who of AI research—the company just raised $480 million to build a new foundation model architecture designed specifically for collaboration, not information retrieval.

No product yet. No clear answer on what it'll be. But the founding team is talking about something genuinely different: an AI "central nervous system" for teams that understands social dynamics, tracks distributed decision-making, and helps groups of humans work together more effectively.

Let me break down why this matters, who's building it, and where the real opportunity actually sits.

Why Chatbots Failed at Collaboration (And Why It Matters)

Chatbots are, by design, optimized for a very specific interaction pattern: question in, answer out. They get tuned and evaluated against two objectives that sound sensible but create a fundamental blind spot.

First: minimize latency. Users want fast responses. Second: maximize accuracy on the specific question being asked. Both of these push AI toward surface-level, immediate problem-solving.

Neither of these metrics cares about whether a question is actually useful to ask in the first place. They don't care about context that existed three weeks ago. They don't understand that asking someone the same question twice creates frustration. They don't track that a decision made today contradicts something decided last month.

Current models, no matter how smart, are optimized to behave like extremely knowledgeable strangers. They have no institutional memory. No understanding of group dynamics. No ability to recognize when a conversation is just rehashing an old argument.

The coordination gap is massive.

Think about what coordination actually requires:

- Distributed memory: Someone needs to remember that the team decided on option A three weeks ago, why they chose it, and who disagreed.

- Perspective tracking: Who's in favor of what? Who needs to be consulted before moving forward? Who'll resist this change?

- Decision continuity: Understanding that today's choice contradicts yesterday's decision, and flagging that contradiction.

- Asynchronous intelligence: Not every communication is synchronous. A good collaborator understands that Sarah in London might not see a message for hours, and you need to structure information accordingly.

- Conflict resolution: When people disagree, a good coordinator doesn't pick a winner. They make space for the disagreement to be heard and explored.

Every single one of these requires a different model architecture, different training objectives, and different evaluation metrics than what we're currently optimizing for.
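To make the first and third items on that list concrete, here is a minimal sketch of a decision log that remembers what was chosen, why, and who disagreed, and that flags a new choice contradicting the previous one. Everything in it (the `Decision` and `DecisionLog` names, the fields, the sample data) is hypothetical and purely illustrative; it says nothing about how Humans& or anyone else actually implements this.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from datetime import date


@dataclass
class Decision:
    topic: str                 # what the decision is about (hypothetical field)
    choice: str                # the option the group picked
    decided_on: date
    rationale: str = ""        # why the group chose it
    dissenters: list[str] = field(default_factory=list)  # who disagreed


class DecisionLog:
    """Toy 'distributed memory': keeps the full history of choices per topic."""

    def __init__(self) -> None:
        self._history: dict[str, list[Decision]] = {}

    def record(self, new: Decision) -> Decision | None:
        """Store a decision and return the earlier one it contradicts, if any."""
        past = self._history.setdefault(new.topic, [])
        previous = past[-1] if past else None
        past.append(new)
        if previous is not None and previous.choice != new.choice:
            return previous
        return None


# Illustrative data only.
log = DecisionLog()
log.record(Decision("pricing", "option A", date(2025, 1, 6),
                    rationale="simplest to ship", dissenters=["Marcus"]))
conflict = log.record(Decision("pricing", "option B", date(2025, 1, 27)))
if conflict is not None:
    print(f"Contradicts {conflict.choice!r} decided on {conflict.decided_on}; "
          f"dissenters at the time: {conflict.dissenters}")
```

A plain lookup like this only catches contradictions when the topic labels match exactly. Recognizing that two differently worded conversations are about the same decision is the hard part, and that is where a model would have to do the work.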

Current chatbots fail at collaboration because they're not built for collaboration. They're built for answering questions.

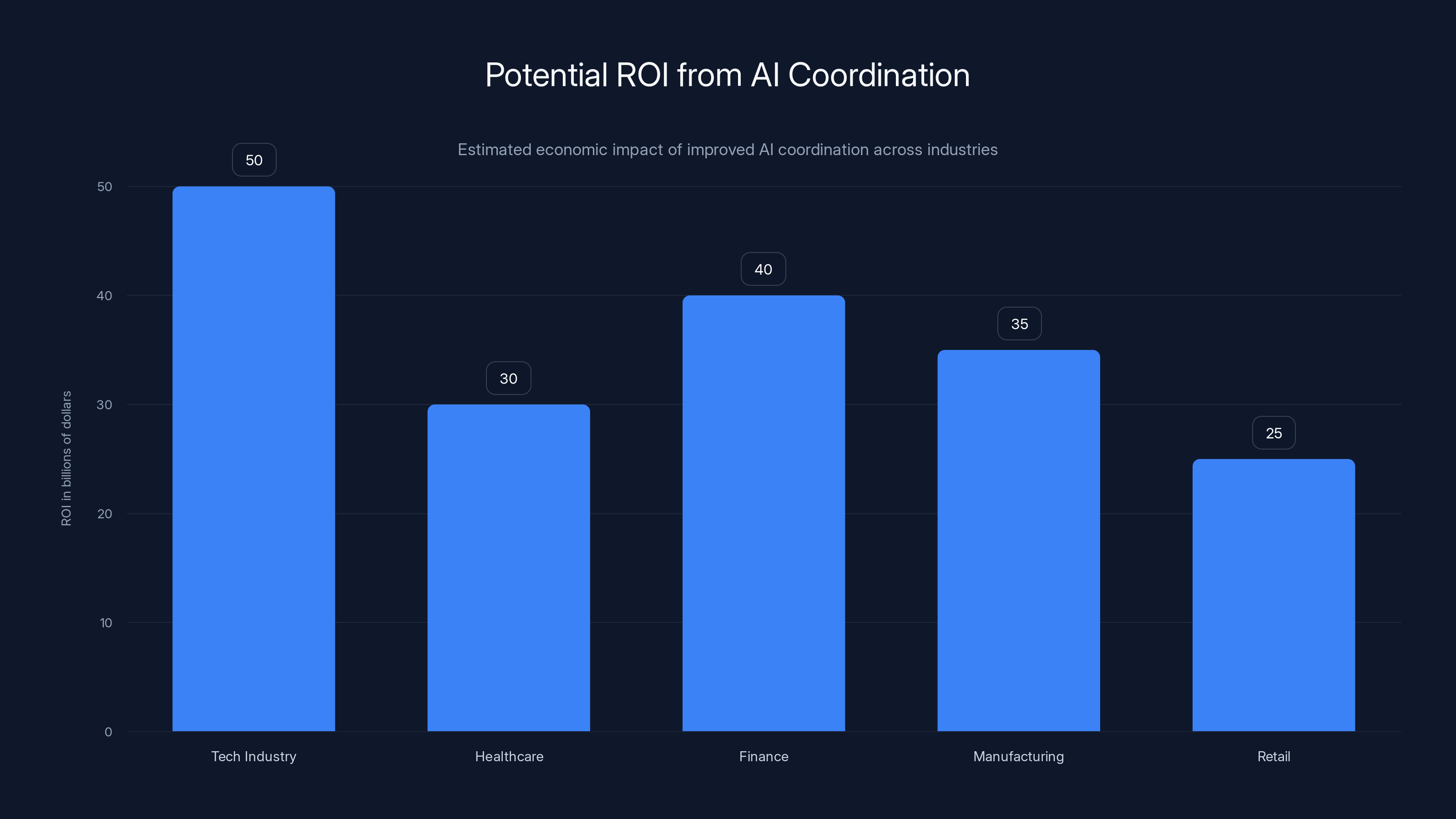

AI coordination could unlock billions in ROI by improving decision-making and reducing miscommunication across industries. Estimated data.

Humans&'s Bet: The Model as Connective Tissue

Humans& isn't trying to build a better chatbot. The co-founders—Eric Zelikman (CEO, former xAI researcher), Andi Peng (former Anthropic), and the broader team—are explicit about this.

They're building what they call "a model for coordination." The framing matters. It's not a model that can be plugged into Slack or Notion or Google Docs. It's a model that becomes the foundation for an entirely new product category designed around how humans actually collaborate.

In early interviews, Zelikman described a specific scenario that clarifies their thinking. Imagine you're a startup trying to decide on a logo. Everyone has opinions. It's tedious, it takes forever, and by the time you've polled everyone, you've lost momentum.

A coordination model would work differently. It would understand that Sarah, the designer, has the strongest opinions about aesthetics. Marcus, from marketing, cares about brand consistency. Priya, the CEO, needs to approve final decisions. The model would structure the conversation to surface these perspectives, minimize wasted discussion, and actually move the group toward a decision.

That's not answering a question. That's orchestrating a process.

The model itself changes:

Current models are trained to optimize for immediate user satisfaction (did the user like my response?) and accuracy on a specific query. A coordination model would be trained differently:

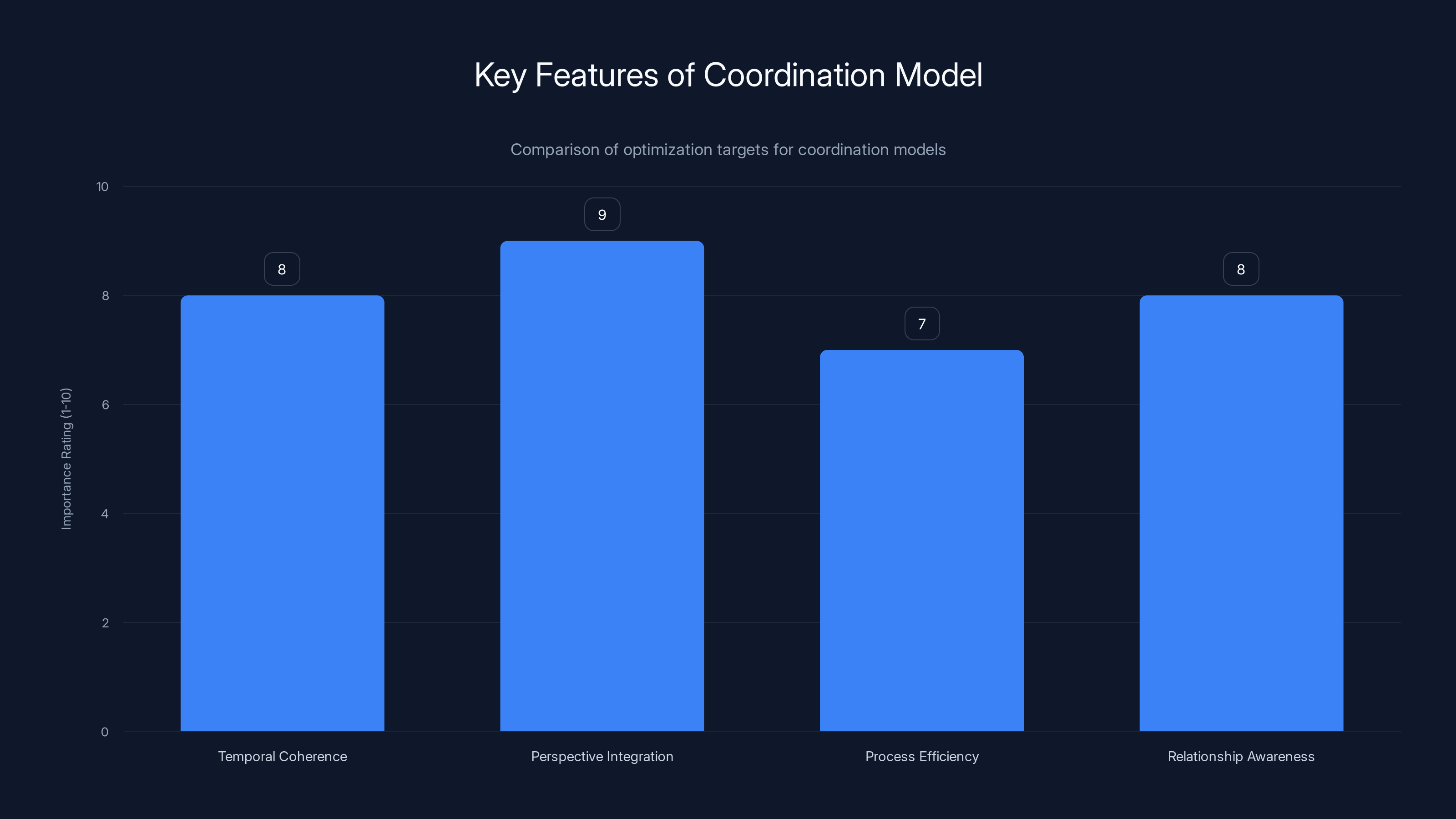

- Temporal coherence: Does this response make sense given what the group decided yesterday?

- Perspective integration: Have I understood all the viewpoints in this conversation?

- Process efficiency: Am I moving us toward a decision, or just rehashing the same arguments?

- Relationship awareness: Does this suggestion respect the trust and hierarchy in this group?

These are fundamentally different optimization targets. And they require different architectures.
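One simple way to picture these four targets as a single training signal is a weighted average. This is a sketch under loud assumptions: that each criterion can be scored between 0 and 1 (by human raters or some learned judge), and that equal weights are a reasonable starting point. The names and weights are made up, and I don't know what Humans&'s actual objective looks like.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CoordinationScores:
    # Each score is assumed to lie in [0, 1]; how it gets measured is left open.
    temporal_coherence: float
    perspective_integration: float
    process_efficiency: float
    relationship_awareness: float


# Hypothetical equal weighting; a real system would tune or learn these.
DEFAULT_WEIGHTS = {
    "temporal_coherence": 0.25,
    "perspective_integration": 0.25,
    "process_efficiency": 0.25,
    "relationship_awareness": 0.25,
}


def coordination_reward(scores: CoordinationScores,
                        weights: dict = DEFAULT_WEIGHTS) -> float:
    """Weighted average of the four criteria, normalized by total weight."""
    total_weight = sum(weights.values())
    weighted = sum(getattr(scores, name) * w for name, w in weights.items())
    return weighted / total_weight


# A response that fits past decisions but ignores the group's relationships.
example = CoordinationScores(1.0, 0.5, 0.5, 0.0)
print(coordination_reward(example))
```

Contrast this with a single "did the user like my response?" signal: here a reply can please the person who asked and still score poorly because it contradicts last week's decision.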

Humans& is designing the model and the product together, which is a smart move. Most AI companies build models first and figure out the product later. Humans& is deliberately iterating on the two in parallel, making sure that as the model improves, the interface and capabilities actually map to something useful.

The $480 Million Bet: What Investors Believe About the Market

A $480 million seed round is not normal. Humans& raised it from top-tier investors, and the size tells you something important: these investors believe the coordination problem is as big as the intelligence problem.

For context, that's far larger than a typical Series A round, let alone a seed. It's comparable to what you'd raise if you were trying to build the next Google or Facebook. Investors don't write checks that size unless they believe the TAM (total addressable market) is massive and the founding team is uniquely positioned to own it.

Why would they believe that?

Market timing is everything. For the last two years, the question has been: what do we do with all these smart AI models? Companies have been running pilots, experimenting with chatbots in customer service, automating data entry. It's been a land-grab mentality—use AI wherever possible and worry about the business model later.

But we're entering a different phase. Companies are transitioning from "let's use AI for this specific task" to "let's restructure how our teams work around AI." That requires a different infrastructure layer.

Think of it like how Slack didn't displace email. Instead, Slack became the coordination layer that made email less necessary. Humans& isn't trying to replace your email or your project management tool. They're trying to become the coordination layer that makes those tools work better.

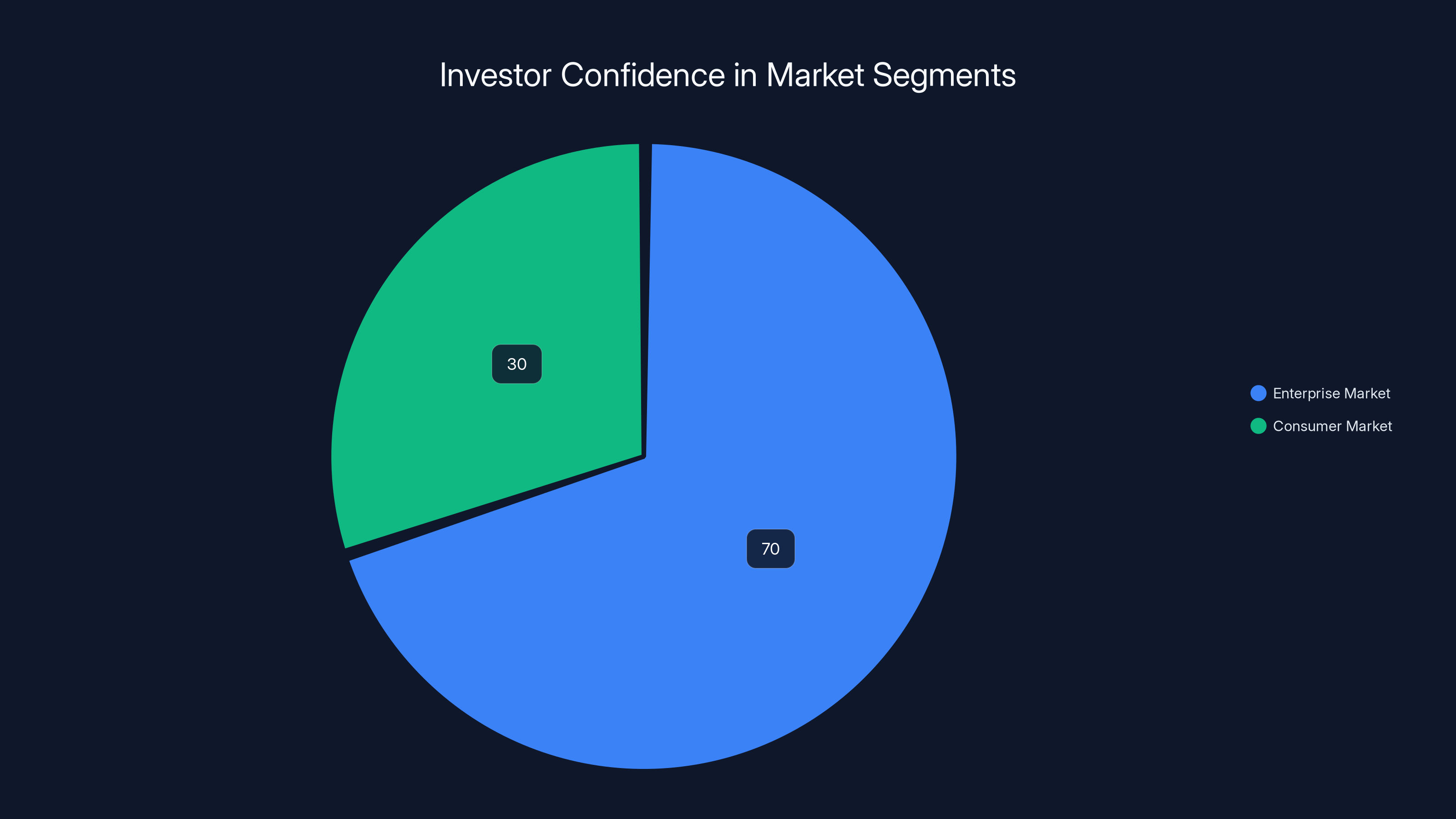

The enterprise picture is clearer than the consumer one.

For enterprise, the value is straightforward. If you can reduce the friction of distributed decision-making by 20%, that's billions of dollars of productivity gains across the economy. If you can help teams avoid rehashing decisions or duplicating work, that's even bigger.

For consumer, it's murkier. Humans& hinted at both enterprise and consumer use cases, but they were vague. Building a consumer coordination product is genuinely harder because coordination value is often invisible until you've used it for months. A chatbot delivers value immediately (great answer). A coordination system delivers value over time (fewer wasted meetings).

Investors are clearly betting that the enterprise value is so enormous that even if the consumer opportunity takes longer to materialize, the company will be worth hundreds of billions.

Investors are estimated to focus 70% on the enterprise market due to clearer value propositions, while the consumer market receives 30% due to its complexity and less visible coordination value. Estimated data.

Why This Matters More Than Another AI Startup

There are hundreds of AI startups raising money. Most are building tools that work within existing workflows or are applying models to specific verticals (legal AI, medical AI, sales AI). Those are useful, but they're not reshaping how work happens.

Humans& is different because it's betting on something that affects the entire stack: how groups of people make decisions and coordinate action.

There are three reasons this is actually important.

First: The coordination problem is genuinely unsolved. We've had Slack since 2013. We've had Asana and Monday.com and Linear and a thousand project management tools. All of them are still clunky for distributed coordination. They're great at making information visible, but they're bad at helping groups actually make decisions efficiently. Adding AI to these tools hasn't solved the problem—it's just made individual parts of the workflow faster.

A fundamental rethinking of how coordination works could be genuinely transformative.

Second: This maps to real pain. Talk to any engineering manager, product manager, or executive, and they'll tell you the same thing: we spend way too much time in meetings, on Slack, in email threads, trying to get alignment. The coordination bottleneck is real and it's expensive.

If Humans& can build something that materially reduces that friction, the ROI is enormous. Even a 10% improvement in team coordination efficiency translates to billions of dollars across the economy.

Third: The founding team actually understands both AI and organizational behavior. This is not a group of researchers who suddenly learned to code and decided to build organizational tools. These are people who've shipped products at scale, who understand how model capabilities map to user needs, and who've thought deeply about how AI changes human behavior.

That combination is rare.

The Shift From Chat Interfaces to Agent-Based Workflows

We're at an inflection point in how organizations deploy AI. For the last two years, the dominant pattern has been: chatbot interface + human decision-making. You ask the AI a question, it gives you an answer, you decide what to do.

That's changing.

Companies are moving toward agent-based systems where AI isn't just answering questions—it's executing multi-step workflows, coordinating with other systems, and sometimes making decisions autonomously within guardrails.

The shift is important for understanding why coordination matters so much:

In a chat interface, you're the bottleneck. You ask the question, wait for the answer, decide what to do next. The AI is passive. It's responding.

In an agent system, the AI is active. It's identifying problems, proposing solutions, checking with relevant stakeholders, and moving work forward. The human is the checkpoint, not the operator.

This creates a completely different coordination challenge. When an AI agent is actively coordinating with multiple humans and systems, you need a layer that helps manage that coordination. You need something that tracks what the agent did, who needs to know about it, when decisions need to be revisited, and how changes propagate.
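As a rough illustration of what that tracking layer has to hold, here is a small sketch of an agent action record: what the agent did, who needs to know, who has acknowledged it, and when it should be revisited. The `AgentAction` type, its fields, and the sample actions are all hypothetical, and the sketch leaves out the hardest item on the list, which is how changes propagate.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from datetime import date


@dataclass
class AgentAction:
    summary: str                        # what the agent did
    notify: set[str]                    # who needs to know about it
    revisit_on: date | None = None      # when the decision should be re-checked
    acknowledged: set[str] = field(default_factory=set)

    def unacknowledged(self) -> set[str]:
        """People who still have not seen or confirmed this action."""
        return self.notify - self.acknowledged


def due_for_review(actions: list[AgentAction], today: date) -> list[AgentAction]:
    """Actions whose revisit date has arrived."""
    return [a for a in actions
            if a.revisit_on is not None and a.revisit_on <= today]


# Illustrative data only.
actions = [
    AgentAction("Rescheduled the vendor review", {"Sarah", "Priya"},
                revisit_on=date(2025, 3, 1)),
    AgentAction("Archived stale tickets", {"Marcus"}),
]
actions[0].acknowledged.add("Sarah")

print(actions[0].unacknowledged())
print([a.summary for a in due_for_review(actions, date(2025, 3, 2))])
```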

Humans& is betting that this is exactly where their model becomes critical.

Instead of thinking about AI as "a smart assistant that answers questions," you start thinking about AI as "part of the team that needs to stay coordinated with everyone else." That requires fundamentally different model capabilities.

The Social Intelligence Layer: What Humans& Thinks Is Missing

One of the most interesting things about Humans&'s framing is their emphasis on "social intelligence." They're not building a model that's smarter at understanding language or code. They're building a model that understands social dynamics.

What does that actually mean?

It means understanding group psychology. When you ask a group of people a question, there are dynamics at play that aren't visible in the text. Some people are more comfortable speaking up. Others are deferring to authority. Someone might strongly disagree but stay quiet because they perceive social pressure.

A socially intelligent system would recognize these patterns. It would actively elicit perspectives from people who are holding back. It would flag contradictions between what people say publicly and what they might actually think. It would structure conversations to surface disagreements early rather than letting them fester.

It means understanding relationship dynamics. Not all relationships are equal. In a typical team, there are relationships of trust, skepticism, hierarchy, and friendship. These relationships shape how information flows and how decisions get made.

A socially intelligent model would understand these dynamics and account for them. It would know that when Sarah says something, Marcus trusts her completely, so her input carries more weight on certain decisions. It would know that Priya and James have a history of disagreement, so their different perspectives should both be explicitly surfaced, not hidden.

It means understanding temporal dynamics. Decisions aren't made in isolation. They build on previous decisions. They get revisited. Context matters.

A socially intelligent model would track the history of decisions. It would recognize when the team is about to repeat a decision they already made. It would surface relevant context from past conversations that informs current ones.

None of these things require superhuman intelligence. They require a different kind of intelligence: one that's attuned to how humans actually interact in groups.

This is what differentiates Humans& from just "let's add AI to Slack." They're building a model specifically trained to understand group dynamics, not individual intelligence.

The 'Embedded Layer' option scores highest in advantages due to potential for faster adoption, while the 'New Category' faces the most significant disadvantages due to unclear product-market fit. Estimated data based on qualitative analysis.

The Product Question: What Could Humans& Actually Ship?

Here's the honest part: Humans& still doesn't have a clear product. They've hinted at replacing multiplayer contexts like Slack or Google Docs or Notion, but they haven't committed to anything.

This is either a massive red flag or a sign they're thinking deeply about something that can't be easily described. Probably both.

There are a few plausible directions they could go:

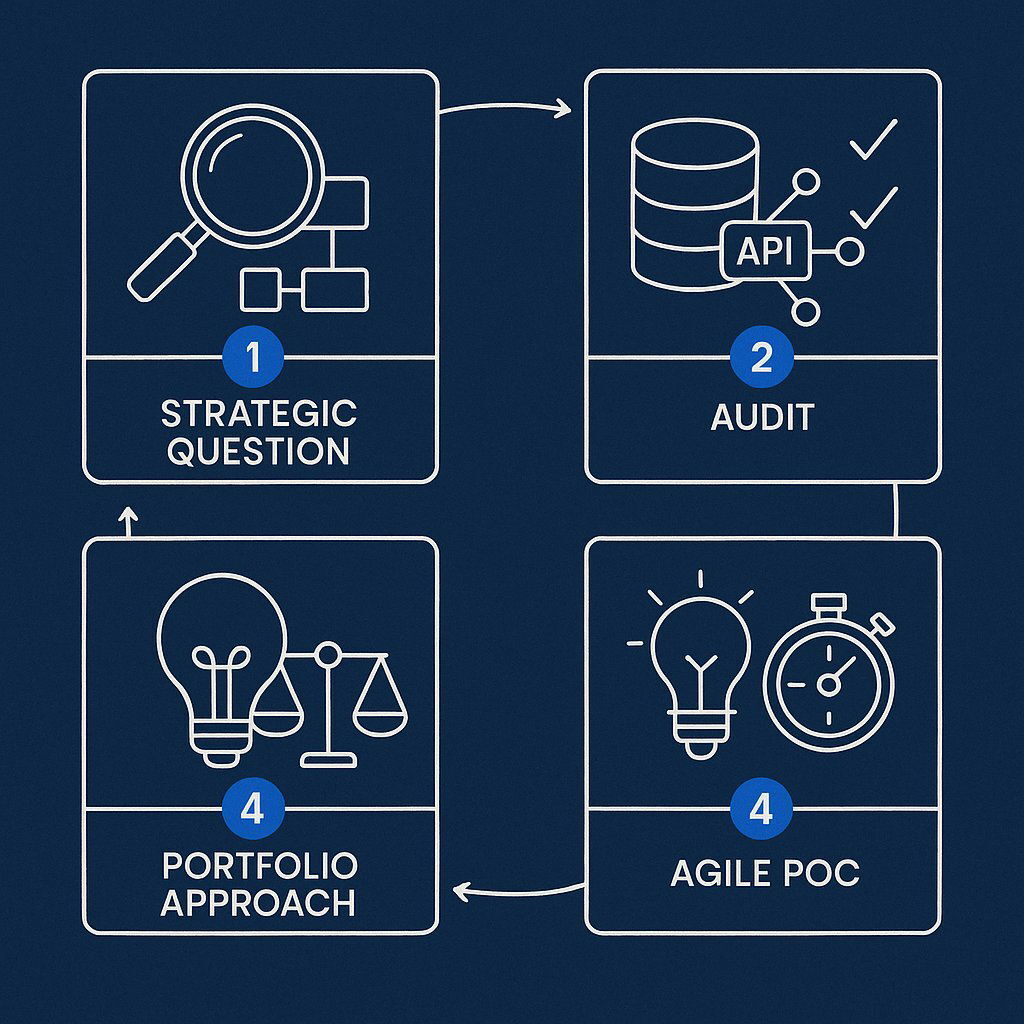

Option 1: A replacement for synchronous communication platforms. Imagine a tool that's like Slack, but where the AI actively helps coordinate conversations toward decisions. Instead of an endless thread of messages, you get structure. You get the AI flagging when someone's perspective isn't being heard. You get intelligent summaries that aren't just "here's what everyone said," but "here's what we actually decided and why."

The advantage: huge market, clear comparison point. The disadvantage: Google, Microsoft, and Slack are all adding AI features. You'd be fighting entrenched players.

Option 2: A new category that sits between communication and project management. A tool specifically for distributed decision-making. You'd use it to run decisions like "what product should we build next?" or "how do we restructure the team?" It would structure the conversation, surface perspectives, and help the group move toward a decision.

The advantage: new category, no direct competitors. The disadvantage: product-market fit is unclear, and adoption is slow for new categories.

Option 3: An embedded layer in existing tools. Like how Figma has plugins, Humans& could provide a coordination layer that sits on top of existing communication and project management tools. Their model would power a smart assistant that lives in Slack, Notion, Google Docs, etc.

The advantage: faster adoption, leverages existing workflows. The disadvantage: completely dependent on whether Slack, Notion, etc. are willing to give them deep access.

Full transparency: I don't know which path they'll take. And that's probably intentional. They're thinking about the model first, the product second. Once the model is good enough at coordination, the product form will become obvious.

That's actually a smart approach for a $480M startup. You're not constraining yourself to a product shape before you understand what the model can actually do.

The Broader Landscape: Who Else Is Working on Coordination?

Humans& isn't alone in thinking about coordination. Several smart people in AI and business are converging on the same insight.

Reid Hoffman, LinkedIn co-founder, recently argued that companies are implementing AI wrong. They treat it like isolated pilots: use AI for this customer service task, use AI for this data entry task. But the real leverage isn't in individual tasks. It's in the coordination layer.

Hoffman framed it clearly: "AI lives at the workflow level, and the people closest to the work know where the friction actually is." The idea is that better coordination is where you unlock actual productivity improvements.

This framing aligns perfectly with what Humans& is building. They're not trying to optimize individual tasks. They're trying to optimize how humans and AI work together across tasks.

Granola, an AI note-taking app, raised

The pattern is clear: the next wave of AI adoption is about coordination, not automation.

Even big companies are signaling this. Google and Microsoft are investing heavily in multi-user AI features. Anthropic has been explicit about building models that work in team contexts. OpenAI is working on multi-agent systems.

But none of them have built a foundation model specifically designed for coordination. That's Humans&'s bet.

Training Data and Model Architecture: What's Actually Different?

Let's get technical for a second, because this is where Humans& has a real structural advantage.

Training a coordination model requires different data than training a general-purpose language model. You need data that captures group decision-making processes, not just individual question-answering.

What kind of data? Think about:

- Slack workspaces where teams made important decisions. Not just the messages, but understanding which decisions stuck, which ones got revisited, and why.

- Meeting transcripts where teams discussed and debated options. Understanding how perspectives were introduced, challenged, and resolved.

- Email threads tracking decision evolution over time. The back-and-forth that shows how group consensus forms.

- GitHub discussions where engineers debate architecture. The way technical disagreements get resolved (or don't).

- Product review documents where multiple stakeholders weigh in. How feedback gets incorporated or dismissed.

This is harder to source than typical training data. You can't just scrape Reddit for this. You need data that captures the full arc of group decision-making.

The founders have access to something valuable: relationships at Anthropic, Meta, OpenAI, xAI, and Google DeepMind. These companies have decades of internal coordination data. They understand group dynamics at massive scale.

That doesn't mean they'll use proprietary data. It does mean they have insight into what good coordination looks like at scale.

Model architecture is also different.

A standard language model is basically: context goes in, next-token prediction comes out. You're optimizing for, "Given what I know, what token comes next?"

A coordination model might look different. You might have:

- A module that tracks decision state over time. Not just remembering what was said, but maintaining a representation of the decision being made, who's involved, and what the status is.

- A module that models relationships and perspectives. Understanding not just what people are saying, but what they actually think and who they trust.

- A module that predicts group dynamics. Understanding that if you push this perspective now, person X will feel unheard and will block the decision later.

- A module that optimizes for decision quality and speed. Not just answering correctly, but getting the group to a good decision efficiently.

These are speculative—I don't know what their actual architecture looks like. But they'd be logical directions if you're building a coordination model.
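To show what "tracking decision state" could mean at its simplest, here is a toy version of the first module as an ordinary data structure, using the logo example from earlier. It is a hand-written sketch, not a learned component: the `DecisionState` class, its fields, and the three status labels are my own assumptions.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class DecisionState:
    question: str
    required: set[str]                  # people who must weigh in first
    approver: str                       # person who signs off
    positions: dict[str, str] = field(default_factory=dict)
    outcome: str | None = None

    def hear(self, person: str, position: str) -> None:
        """Record (or update) one person's stated position."""
        self.positions[person] = position

    def waiting_on(self) -> set[str]:
        """Required people who have not weighed in yet."""
        return self.required - set(self.positions)

    @property
    def status(self) -> str:
        if self.outcome is not None:
            return "decided"
        return "blocked" if self.waiting_on() else "ready"

    def decide(self, person: str, outcome: str) -> None:
        """Close the decision; only the approver may, and only once all are heard."""
        if person != self.approver:
            raise PermissionError(f"{person} cannot approve this decision")
        if self.waiting_on():
            raise ValueError(f"still waiting on {sorted(self.waiting_on())}")
        self.outcome = outcome


logo = DecisionState("Which logo do we ship?",
                     required={"Sarah", "Marcus"}, approver="Priya")
logo.hear("Sarah", "prefers the minimal mark")
print(logo.status, logo.waiting_on())   # Marcus has not weighed in yet
logo.hear("Marcus", "fine with either if it matches the brand colors")
logo.decide("Priya", "minimal mark")
print(logo.status)
```

The explicit structure is the easy part. The speculative part is getting a model to fill in `required`, `approver`, and `positions` from messy conversation rather than from someone typing them in.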

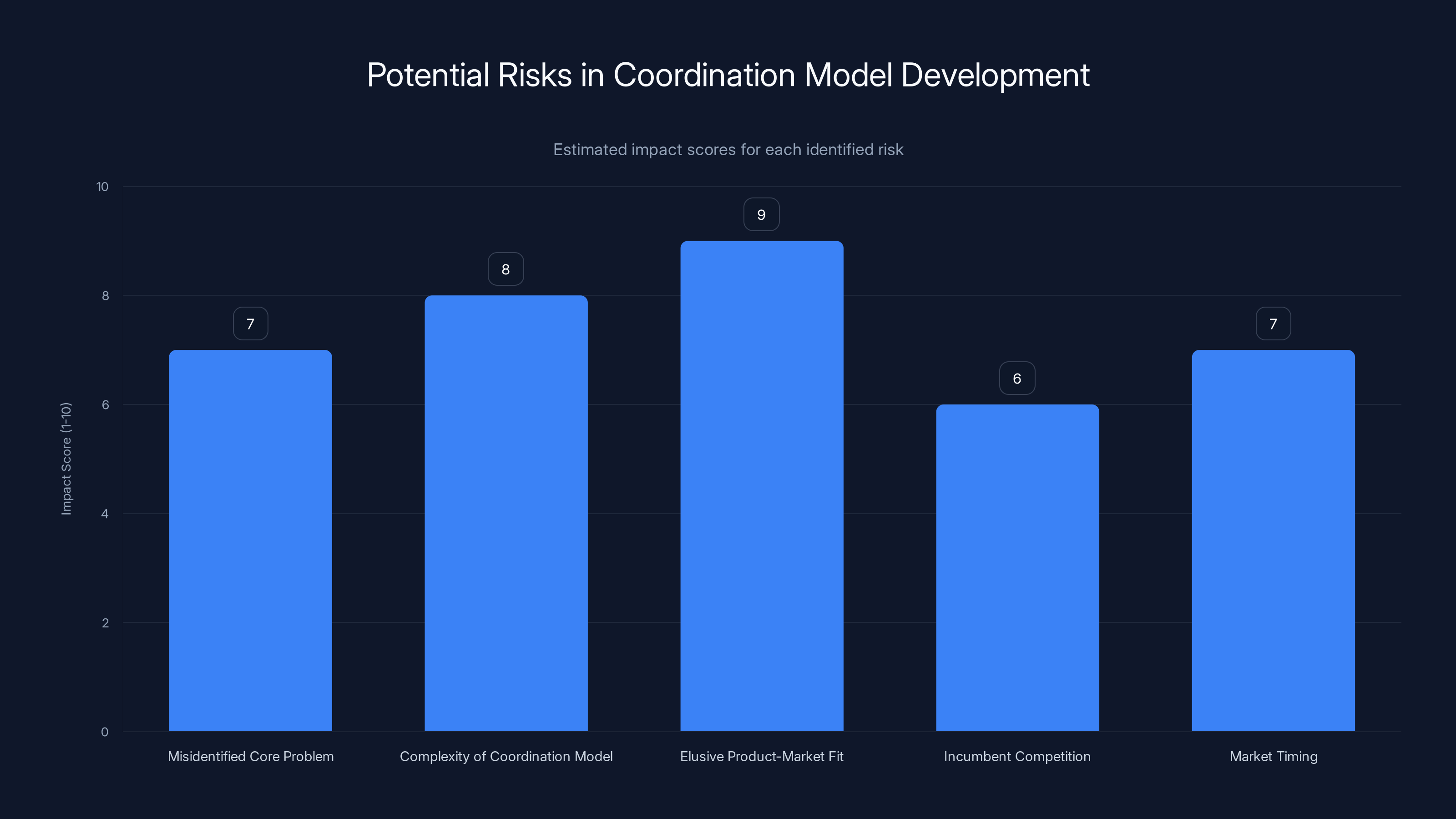

The most significant risk is achieving product-market fit, with a high impact score of 9, indicating the challenge of translating a model into a viable product. Estimated data.

The Talent Play: Why Founding Team Pedigree Matters Here

Let's be direct: the founding team is the primary asset. A $480 million seed round isn't really about the technology or the product. It's about the people.

Why? Because this problem is genuinely hard. You need people who:

- Understand state-of-the-art AI research well enough to push the boundaries

- Have shipped products at scale and understand what users actually need

- Have thought deeply about organizational behavior and group dynamics

- Can recruit other world-class researchers and engineers

Humans& has all of that:

Eric Zelikman (CEO) came from xAI, where he worked on some of the most advanced language models. He's published research on reasoning and planning in large language models. He understands both the cutting edge of AI research and how to build products.

Andi Peng comes from Anthropic, where she worked on some of the most capable models in the world. She understands constitutional AI, interpretability, and how to think about model alignment. She also has a track record of translating research into product impact.

The broader team has people from Meta (product scale), OpenAI (product-market fit at speed), and Google DeepMind (research excellence). That combination is almost magical.

Why does this matter? Because if your thesis is "coordination is the next frontier," you need people who can:

- Design experiments that test whether a model is actually learning coordination behaviors

- Build products that users want to use (not just that work technically)

- Recruit other talented people who believe in the thesis

All three of these require a specific combination of skills and credibility.

Investors know this. When top-tier AI researchers leave cushy positions at the best research labs to start a startup, it signals something about how important they think the problem is.

The Competitive Response: Why Incumbents Are Vulnerable

One of the most interesting things about Humans& is that they're entering a space where the incumbent solutions are weak.

Slack, Notion, Google Workspace, Microsoft Teams—these are all incredibly successful products. But none of them are actually solving the coordination problem. They're solving the communication and information storage problems.

Coordination is different. It requires understanding group dynamics, tracking decision state, and actively helping groups move toward decisions. These tools have added AI features, but they're treating AI as "a smarter search tool" or "a better auto-responder." Not as "a fundamental rethinking of how coordination works."

This is where Humans& has a real opening.

The incumbent playbook for responding to Humans& would be:

- Wait and see if they actually ship something valuable

- Add similar features to existing products

- Compete on scale, brand, and integration ecosystem

But that playbook doesn't actually work here. You can't just bolt on a coordination layer to a chat tool. You need to rebuild the fundamental architecture.

Slack could theoretically add coordination features. But Slack is optimized for real-time communication, while coordination plays out over asynchronous, multi-day processes. Those are competing optimization targets.

Humans& is building a product from scratch, optimized for coordination. They'll win or lose on whether they nail that singular problem, not on whether they can also do everything Slack does.

That's actually Humans&'s advantage. They don't have to maintain backward compatibility with 15 years of product decisions. They can design everything around coordination.

Enterprise vs. Consumer: Where the Real Value Sits

Humans& mentioned both enterprise and consumer opportunities, but the enterprise opportunity is clearly larger and clearer.

For enterprise, the value is immediate and measurable:

- A 10% improvement in meeting efficiency translates to millions of dollars saved

- A 20% reduction in decision cycle time maps directly to revenue (faster product launches, faster customer response)

- Better coordination means fewer duplicate efforts, fewer miscommunications, fewer costly mistakes

You can model the ROI. You can sell it to CFOs. Enterprise companies will pay for this if it works.
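Here is what that back-of-the-envelope model might look like for the first bullet. All the inputs are hypothetical round numbers (a 1,000-person company, 8 meeting hours per person per week, a $75 loaded hourly cost, 48 working weeks); swap in your own.

```python
def annual_meeting_savings(employees: int,
                           meeting_hours_per_week: float,
                           loaded_hourly_cost: float,
                           efficiency_gain: float,
                           working_weeks: int = 48) -> float:
    """Dollars saved per year if meeting time shrinks by `efficiency_gain`."""
    annual_meeting_cost = (employees * meeting_hours_per_week
                           * loaded_hourly_cost * working_weeks)
    return annual_meeting_cost * efficiency_gain


# Hypothetical inputs, not data from any real company.
savings = annual_meeting_savings(employees=1_000,
                                 meeting_hours_per_week=8,
                                 loaded_hourly_cost=75.0,
                                 efficiency_gain=0.10)
print(f"${savings:,.0f} per year")
```

With these inputs the annual meeting bill is $28.8 million, so a 10% gain is worth roughly $2.9 million a year. That is the kind of number a CFO can check against the price of the tool.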

For consumer, it's hazier:

- Consumer users don't have a coordination problem in the same way. They're not running multi-day group decisions

- The value is more subtle (fewer misunderstandings with roommates? Smoother friend group planning?)

- It's harder to monetize

Humans& will probably start enterprise. That's where the money is, where the pain is clearly felt, and where you can charge meaningful prices.

The consumer opportunity might come later if they can figure out what coordination problems consumers actually have. But I'd expect them to focus on enterprise for the first few years.

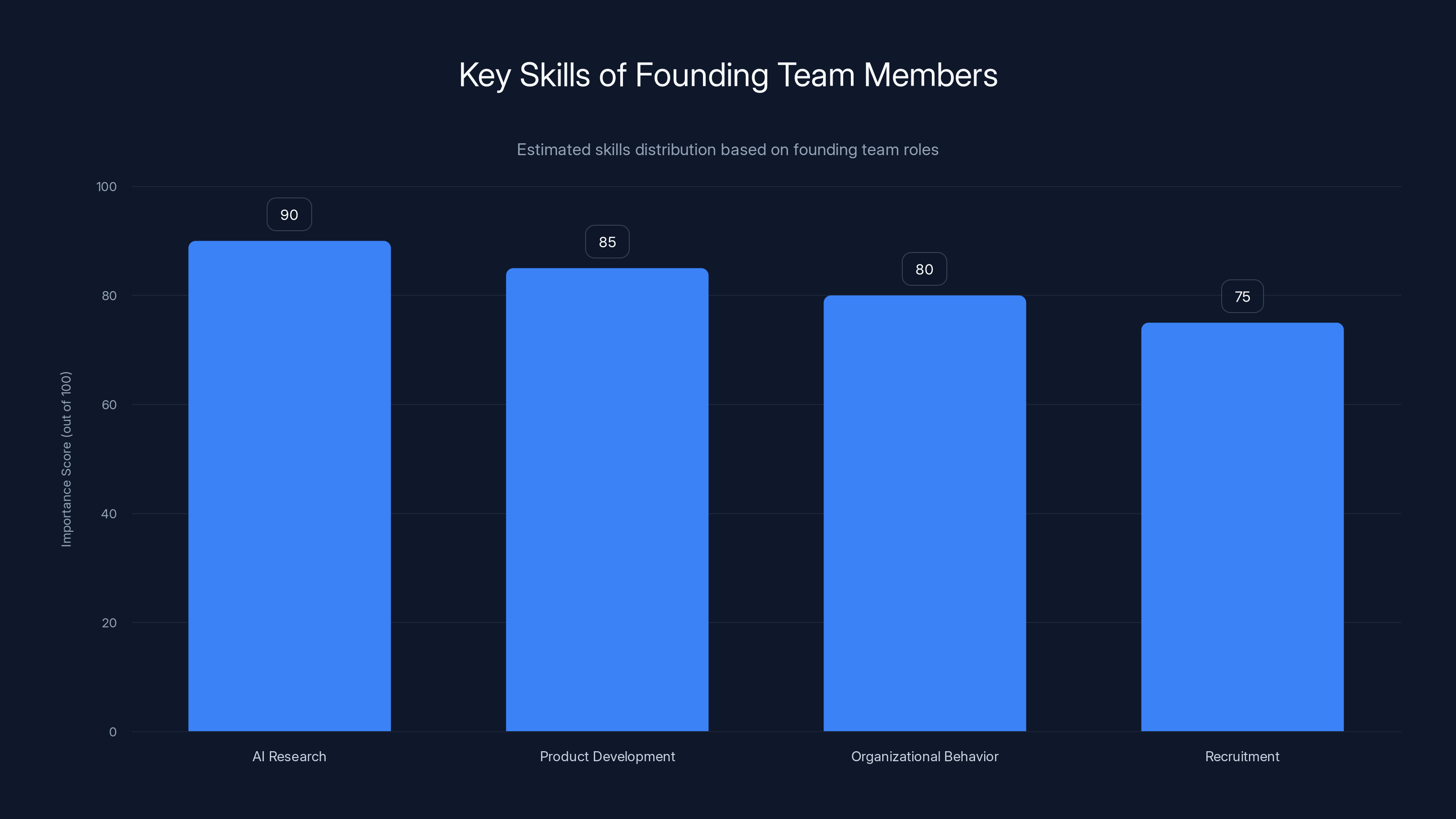

Founding team members need a blend of AI research, product development, organizational behavior, and recruitment skills to succeed. Estimated data.

Potential Pitfalls: What Could Go Wrong?

Let's be honest about the risks here.

Risk 1: Coordination might not be what they think it is. It's possible that better coordination isn't actually what companies need. Maybe the problem isn't coordination—maybe it's decision quality, or management skills, or something else entirely. If they've misidentified the core problem, the whole thesis fails.

Risk 2: Building a coordination model might be harder than expected. Training on group decision-making data is complex. You need to understand not just what was said, but what people were thinking, what the stakes were, and what dynamics were at play. That's much harder to represent in training data than typical language model tasks.

Risk 3: Product-market fit could be elusive. Even if they build a great model, translating that into a product people want to pay for is hard. The coordination value might be real but invisible to end users. They might be unable to charge enough to justify the cost of development.

Risk 4: Incumbents could actually move fast. Slack, Google, Microsoft are big, but they're not dumb. If they see Humans& gaining traction, they could pour resources into coordination features and lock users in with their existing ecosystems.

Risk 5: The market timing could be wrong. Maybe companies aren't ready to rethink coordination yet. Maybe they need two more years of AI maturation before they're willing to switch to a new product for this. A $480M raise gives them time, but not infinite time.

None of these risks are fatal. But they're real.

The Broader Shift: From Automation to Augmentation to Coordination

There's a useful framework for thinking about how AI is progressing:

Phase 1: Automation (2020-2023). Can AI do this task instead of a human? Replace customer service reps with chatbots. Replace data entry with AI extraction. Replace junior analysts with AI analysis.

Phase 2: Augmentation (2023-2025). Can AI make this task easier for humans? Code completion, writing assistance, research help. The human is still in control—AI is just making them faster.

Phase 3: Coordination (2025+). Can AI help humans work together better? This is where Humans& is betting. The focus isn't on replacing humans or making them faster. It's on fundamentally changing how groups of humans interact and make decisions together.

Each phase requires different model capabilities, different product designs, and different business models.

Phase 1 sold on cost savings. Phase 2 sells on productivity. Phase 3 will sell on decision quality and organizational effectiveness.

We're at an inflection point. The automation narrative is running out of steam (because not everything can be automated). Augmentation is proving useful, but it's not transformative. Coordination is where the next decade of AI value actually lives.

Humans& is betting their $480M on that thesis. And honestly, I think they might be right.

Timeline Expectations: When Will We Know If This Works?

A fair question: how long until we know if Humans& is onto something real?

Next 12 months: They'll ship something. It might be limited, it might be a beta for select partners, but they'll need to start testing the model in real-world coordination scenarios. This is where we'll get the first signal on whether the model architecture is actually capturing something useful about coordination.

12-24 months: They'll need to show early adoption and product-market fit signals. That means users who are actively choosing their product over alternatives, retention that's strong, and word-of-mouth growth. If those aren't happening, the thesis is probably wrong.

24-36 months: They'll need to show revenue growth and enterprise traction. At this point, if they haven't found a market willing to pay real money for coordination tools, the runway gets short and the bet likely fails.

3-5 years: If they get past year three successfully, they're probably building a real company. A company that's actually solving coordination problems and charging for it.

The timeline is aggressive. $480M goes pretty quickly if you're hiring top talent and paying for compute. They probably have 3-4 years to prove the thesis before the money gets tight.
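It's worth spelling out what a 3-4 year window implies. This is just division on the numbers above, assuming no revenue and no follow-on funding; the burn figures it produces are implied by my estimate, not reported by the company.

```python
def implied_annual_burn(capital_musd: float, runway_years: float) -> float:
    """Average yearly spend (in $M) that exhausts the capital in `runway_years`."""
    return capital_musd / runway_years


CAPITAL_MUSD = 480.0  # the seed round, in millions of dollars

for years in (3, 4):
    burn = implied_annual_burn(CAPITAL_MUSD, years)
    print(f"{years}-year runway -> about ${burn:.0f}M per year")
```

So the estimate amounts to assuming an average burn of somewhere between $120M and $160M a year. If compute costs push spending well past that, the window shrinks accordingly.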

But for a problem this important, that timeline is reasonable.

Coordination models prioritize perspective integration and relationship awareness, differing from traditional models focused on immediate user satisfaction. Estimated data.

What Humans& Means for the Broader AI Landscape

Here's why Humans& matters even if they fail:

They're signaling to the entire AI industry that the problem worth solving next isn't "smarter models." It's "models that help humans work together better."

That's a fundamental shift from the last five years of AI research, which has been obsessed with scale, capabilities, and raw intelligence.

If Humans& is successful, you'll see a wave of coordination-focused AI products. You'll see research labs publishing papers on social intelligence. You'll see VCs funding startups that approach collaboration problems.

Even if Humans& fails, they've identified something real. Other teams will eventually solve the coordination problem. It might be Google or Microsoft doing it within their platforms. It might be a different startup that figures out the right approach. But the problem is now on the table.

That matters.

The Philosophical Question: What Are We Actually Building?

There's something worth sitting with: what does it mean to build a "central nervous system" for human-plus-AI organizations?

It means the AI isn't just a tool anymore. It becomes infrastructure. It becomes the connective tissue that holds the organization together.

That's different from a chatbot (which is a tool you use) or even an agent (which is a system that works on your behalf). It's more fundamental.

It's closer to how email became the nervous system for distributed organizations. It's not that email is smart (it's not). It's that email became the layer through which coordination happens. Every organizational structure, every decision, every project exists on top of email.

Humans& is betting that there's room to build something similar for AI-plus-human organizations: something so central to how work happens that it becomes invisible. You're not opening a coordination tool. Coordination is simply part of how the work gets done.

That's ambitious. That's also probably correct.

What You Should Watch For

If you're trying to understand whether Humans& is onto something real, here's what to watch:

Product announcements: What do they actually ship? Pay less attention to the marketing and more to the feature set. Does it solve coordination problems, or does it just look like a prettier Slack with AI?

Customer traction: Are they getting enterprise customers? Are those customers actually using it to make better decisions, or is it just sitting in their stack unused? Retention and expansion metrics will tell you the truth.

Research publications: Will they publish papers on what they've learned about coordination and social intelligence? The best AI researchers publish, and what they publish signals what they've figured out.

Hiring: Who are they hiring? Are they bringing in organizational psychologists and social scientists, or just more ML engineers? A company's hiring tells the world what it actually believes.

Investor confidence: Will they raise a follow-up round? And at what valuation? If investors keep believing in the thesis, you'll see that in the capital flowing in.

Competitive response: How do incumbents respond? If Google, Microsoft, and Slack are panicking and rushing to build coordination features, that's a signal the thesis is real.

Watch these signals. They'll tell you whether Humans& is a legitimately important company or a well-funded bet that didn't work out.

Final Thoughts: The Coordination Frontier

AI didn't solve the hard problems. It solved the easy ones first. Making search faster. Making text generation work. Making code completion possible.

The hard problems are still there: helping humans make better decisions together, improving how organizations coordinate action, building AI systems that understand group dynamics.

Those problems are worth solving. And they're worth $480 million. Maybe worth a lot more.

Humans& is betting their capital, their credibility, and their careers on the idea that coordination is next. That the next phase of AI adoption isn't about automating more tasks or making individuals more productive. It's about fundamentally improving how humans work together.

I don't know if they'll succeed. The risks are real. But I do know that someone will eventually solve this problem. And when they do, it'll be as transformative as Slack was for communication or Google was for search.

Humans& is betting that they're the team to do it.

The next few years will tell us whether they're right.

FAQ

What is AI coordination, and how does it differ from AI automation?

AI coordination focuses on helping groups of humans work together more effectively with AI assistance, rather than automating individual tasks. While automation replaces human work with AI, coordination uses AI to improve decision-making, reduce miscommunication, and track distributed processes across teams. The key difference is that coordination is fundamentally about group dynamics—understanding competing priorities, surfacing diverse perspectives, and moving teams toward decisions—whereas automation just makes individual tasks faster or replaces them entirely.

Why is coordination considered the next frontier for AI?

Coordination is the next frontier because automation and augmentation have run into diminishing returns. Most easily automatable tasks have been automated. Most tasks that benefit from individual AI assistance have been enhanced. But the coordination challenge, helping distributed teams make good decisions together, remains largely unaddressed by current tools. Companies spend enormous amounts of time in meetings, email threads, and Slack channels trying to get alignment. If AI could materially improve that process, the payoff could plausibly run to billions of dollars across the economy.

What makes Humans& uniquely positioned to build a coordination model?

Humans& has a founding team with rare pedigree, including researchers and product leaders from Anthropic, Meta, OpenAI, xAI, and Google DeepMind. These individuals have a deep understanding of both cutting-edge AI research and how to ship products at scale. They also bring insights about organizational coordination from their previous roles at large tech companies. Additionally, they're designing the model and the product together, so that theoretical capabilities translate into practical tools people actually want to use.

How would a coordination model differ from current language models in terms of architecture and training?

A coordination model would be trained on different data (group decision-making transcripts, meeting recordings, email threads showing decision evolution) and would optimize for different objectives than current models. Instead of maximizing user satisfaction with individual answers or accuracy on specific queries, a coordination model would be trained to understand group psychology, track decision state over time, recognize when perspectives aren't being heard, and predict how group dynamics will evolve. The architecture might include specialized modules for relationship tracking, temporal decision modeling, and perspective synthesis—none of which current language models prioritize.
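To give a feel for what "tracking decision state" and "recognizing when perspectives aren't being heard" could mean in practice, here's a minimal sketch. It is purely illustrative: the `DecisionThread` class, its fields, and the sample names are hypothetical, and nothing here reflects Humans&'s actual architecture, which hasn't been disclosed. A real coordination model would presumably learn this kind of state rather than hard-code it.

```python
from dataclasses import dataclass, field


@dataclass
class DecisionThread:
    """Hypothetical record of one group decision as it evolves."""

    topic: str
    participants: set[str]
    status: str = "open"  # either "open" or "decided"
    # Chronological (speaker, position) pairs.
    history: list[tuple[str, str]] = field(default_factory=list)

    def record(self, speaker: str, position: str) -> None:
        """Append one participant's stated position to the history."""
        if speaker not in self.participants:
            raise ValueError(f"{speaker} is not a participant in {self.topic!r}")
        self.history.append((speaker, position))

    def unheard(self) -> set[str]:
        """Participants who have not yet stated any position."""
        return self.participants - {speaker for speaker, _ in self.history}

    def decide(self) -> None:
        """Close the decision, refusing while anyone is still unheard."""
        missing = self.unheard()
        if missing:
            raise RuntimeError(f"no input yet from: {sorted(missing)}")
        self.status = "decided"


thread = DecisionThread("Q3 roadmap", {"ana", "ben", "chen"})
thread.record("ana", "ship the API first")
thread.record("ben", "fix onboarding first")
print(sorted(thread.unheard()))  # ['chen']
```

The point of the toy example is the shape of the state, not the logic: a decision has participants, a history, and a status that changes over time, which is different from a single question-and-answer exchange.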

What are the main risks Humans& faces in execution?

The primary risks include: misidentifying the core coordination problem, finding it harder than expected to train models on group decision-making data, struggling to achieve product-market fit despite having a good model, facing rapid competitive responses from incumbents like Slack and Google, and timing risks if companies aren't ready to adopt new coordination tools yet. Additionally, even with $480 million in funding, the burn rate for a team of world-class researchers could exhaust capital in 3-4 years if they don't demonstrate meaningful revenue growth.

How long until we know if Humans&'s thesis about coordination is correct?

The timeline is probably 2-3 years for initial signals. Within 12 months, they should ship something testable in real-world coordination scenarios. By 18-24 months, early adoption metrics and product-market fit signals should be visible (user retention, word-of-mouth growth, customer willingness to pay). By the 3-year mark, if they haven't found a market willing to pay for coordination tools, the thesis is probably wrong. If they get past year three with strong metrics, they're likely building a genuinely important company.

What could be the killer application for a coordination model?

Potential killer applications include enterprise decision-making workflows (product strategy, organizational changes, budget allocation), distributed team management (reducing wasted meetings and email threads), complex project coordination (tracking interdependencies and surfacing blockers), and customer success (understanding how teams evaluate vendors and move toward decisions). The most likely first market is enterprise, where the pain is clear and customers can measure ROI. Consumer applications might emerge later if the team can identify coordination problems consumers actually face and are willing to pay to solve.

How does Humans&'s approach compare to competitors who are adding AI to existing collaboration tools?

Humans& is building from scratch, optimizing the entire architecture for coordination rather than bolting coordination features onto existing communication tools. Companies like Slack, Notion, and Google Workspace are adding AI features, but they're constrained by years of product decisions optimized for communication and information storage. Coordination requires fundamentally different optimization targets. Humans& has the freedom to design everything around coordination, though they lack the distribution and network effects that incumbents have, and the switching costs that protect them. This is a classic disruption dynamic: specialists can beat generalists when the problem is specific enough.

What would success look like for Humans& in their first 18 months?

Success would mean shipping a product that early users describe as materially improving their team's decision-making process or reducing coordination friction. Quantitatively, they'd need strong retention (users still using the product after 30, 60, 90 days), evidence of word-of-mouth growth, and at least a few enterprise customers paying real money. They'd also likely publish research on what they've learned about coordination and social intelligence, signaling to the industry that they've figured something out. Finally, they'd need to be able to articulate, in clear terms, what coordination problem they're solving better than alternatives.
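The 30/60/90-day retention check mentioned above is easy to sketch. This is a simplified version that treats a user as retained at day N if their last recorded activity falls at least N days after signup; real analytics pipelines usually define retention more carefully, and the sample data below is made up for illustration.

```python
from datetime import date


def retention_rate(users: list[tuple[date, date]], day: int) -> float:
    """Share of users whose last activity is at least `day` days after signup.

    Each user is a (signup_date, last_active_date) pair.
    """
    if not users:
        return 0.0
    retained = sum(
        1 for signup, last_active in users if (last_active - signup).days >= day
    )
    return retained / len(users)


# Made-up sample data, for illustration only.
sample_users = [
    (date(2025, 1, 1), date(2025, 4, 15)),   # active for 104 days
    (date(2025, 1, 1), date(2025, 2, 20)),   # active for 50 days
    (date(2025, 1, 10), date(2025, 1, 25)),  # active for 15 days
    (date(2025, 1, 15), date(2025, 3, 20)),  # active for 64 days
]

for day in (30, 60, 90):
    print(f"day-{day} retention: {retention_rate(sample_users, day):.0%}")
```

With this sample, retention falls from 75% at day 30 to 50% at day 60 and 25% at day 90. A curve that keeps dropping like that would be a warning sign; one that flattens out is the kind of signal to look for.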

Could Humans& be wrong about coordination being the next frontier?

Absolutely. It's possible that the real next frontier is something else entirely—maybe vertical-specific AI, maybe multimodal capabilities, maybe reasoning improvements. It's also possible that coordination is important but that the market isn't ready to adopt new tools for it yet. Organizations might prefer to wait for Slack or Google to add coordination features rather than adopt a new platform. Additionally, the underlying assumption that coordination can be meaningfully improved by AI might be wrong. Maybe the problem isn't intelligence or AI—maybe it's organizational structure, management skills, or something entirely different. Smart people have been wrong about market trends before.

The Coordination Revolution Starts Now

The story of AI has been a story of increasing capabilities: better language understanding, better code generation, better reasoning. But capabilities alone don't transform organizations.

Transformation comes from solving problems people actually face every day. And the problem most people face isn't "I need a smarter AI." It's "how do we get our team aligned on what we're building?"

Humans& is betting their $480 million that solving that problem, at scale, is worth everything. They might be wrong. But they might also be right about something genuinely important that nobody else has figured out yet.

Watch this space. The next five years of AI adoption might be defined by who figures out coordination first.

Key Takeaways

- AI coordination focuses on helping teams make better decisions together, not automating individual tasks—a fundamentally different problem than what current models solve

- Humans& raised $480M on the thesis that coordination is the next frontier, representing a shift from automation (Phase 1) through augmentation (Phase 2) to coordination (Phase 3)

- The founding team's pedigree from Anthropic, Meta, OpenAI, xAI, and Google DeepMind suggests this is more than hype: they're assembling rare talent specifically for this problem

- Coordination models require different training data (group decision-making transcripts) and different optimization targets (group dynamics) than standard language models

- The market timing is critical: companies are transitioning from chat to agents, and the coordination gap is the last major bottleneck before AI-plus-human workflows reach peak efficiency

- Enterprise is the clearer initial market; consumer opportunities are less obvious but potentially enormous if the team can identify real coordination problems consumers face

- Humans& will likely have 3-4 years to prove the thesis—watch for product announcements, enterprise traction, research publications, and how incumbents respond

![AI Coordination: The Next Frontier Beyond Chatbots [2025]](https://tryrunable.com/blog/ai-coordination-the-next-frontier-beyond-chatbots-2025/image-1-1769380741431.jpg)