AI Cybercrime: Deepfakes, Phishing & Dark LLMs [2025]

Introduction: The New Face of Cybercrime

We're living through a pivotal moment in cybersecurity. Not because hackers suddenly got smarter, but because the tools enabling them just got dramatically cheaper and easier to use.

For years, launching a convincing phishing campaign required technical skill. Creating a deepfake needed expensive equipment and expertise. Automating social engineering attacks meant building custom bots. All of that has changed in the last 18 months.

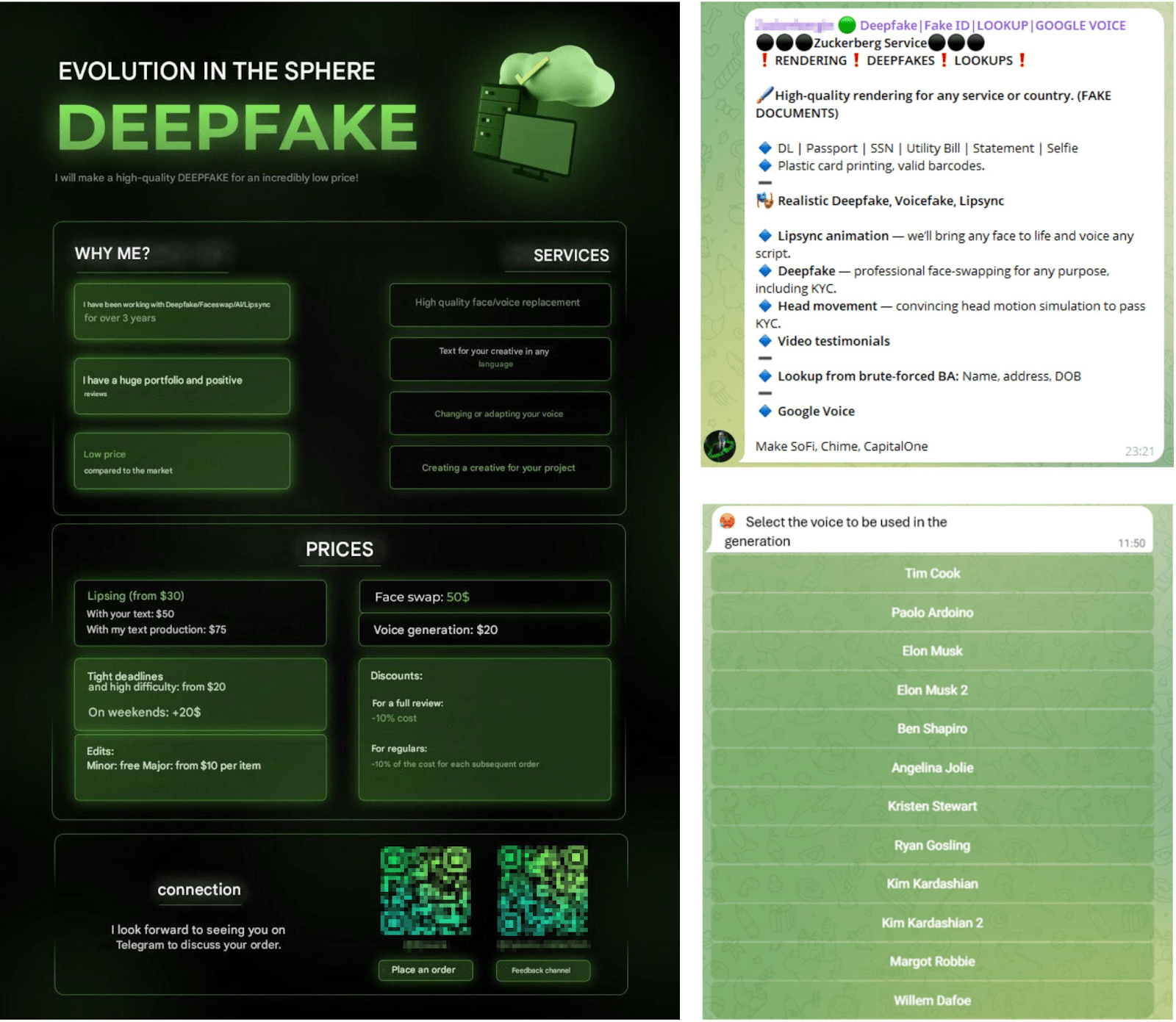

Today, criminals with minimal technical knowledge can access dark web marketplaces selling subscription-based "AI crimeware." These platforms bundle large language models without safety restrictions, deepfake generation tools, and phishing automation into turnkey crime packages. Prices? Between

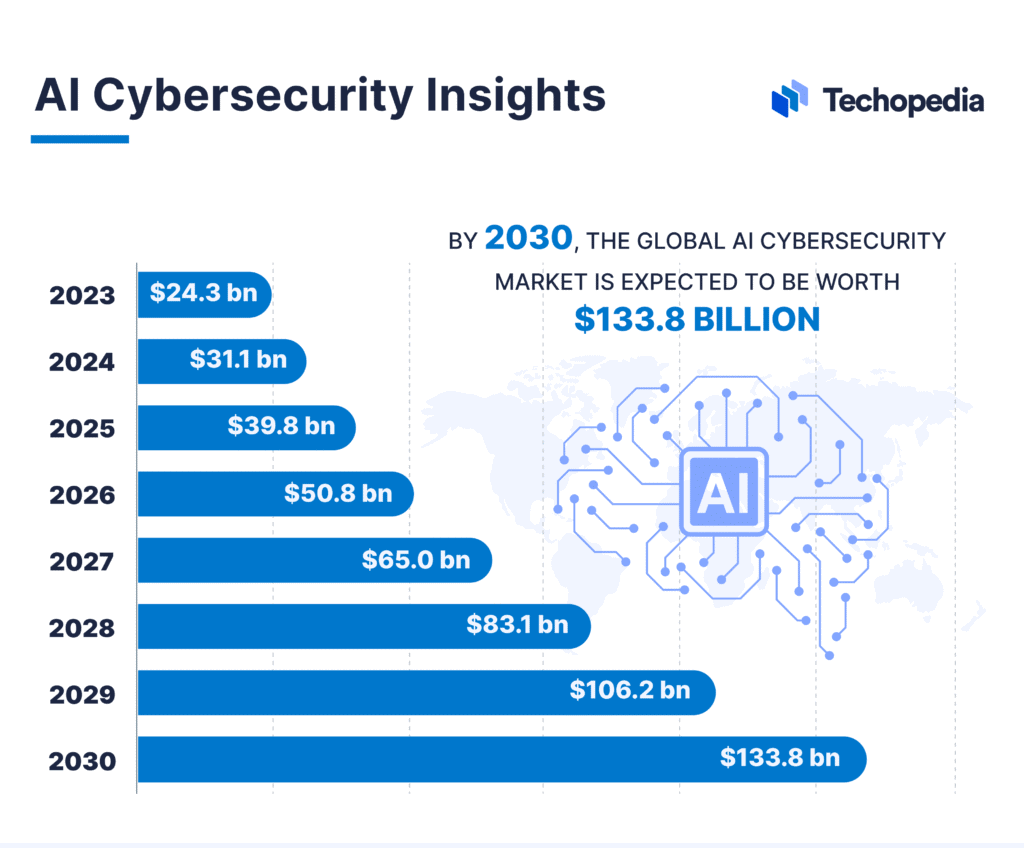

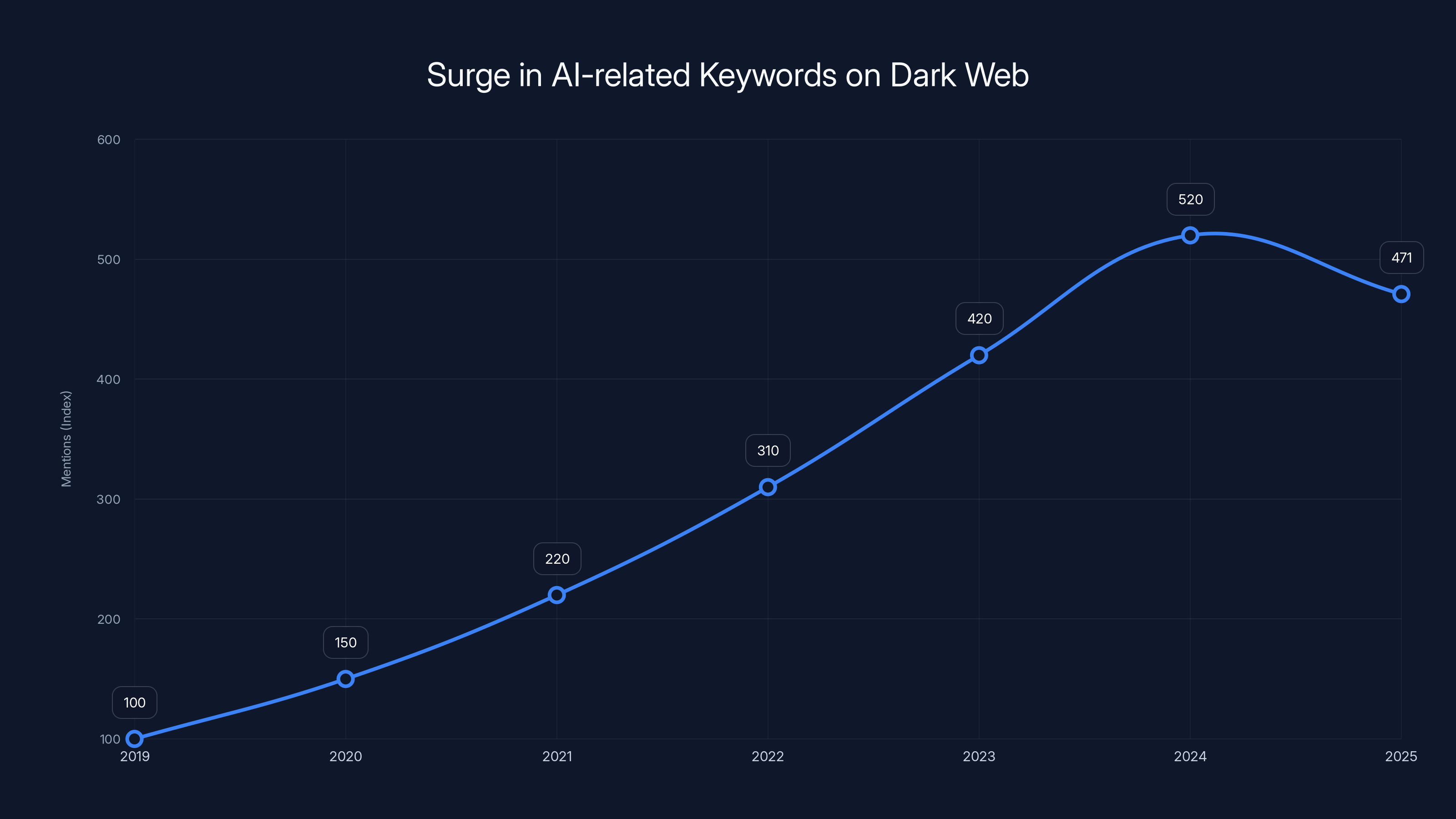

According to recent threat intelligence data, first-time dark web posts mentioning AI-related keywords surged by 371% between 2019 and 2025. But here's the thing that should scare you more than that number: it's not experimental anymore. Thousands of forum discussions each year reference AI misuse. The underground market is stable. Growing. Organized.

We're not talking about isolated incidents or one-off attacks. This is the emergence of what security researchers are calling the "fifth wave of cybercrime," driven by the democratization of AI tools rather than isolated experimentation. Deepfake-enabled fraud has caused $347 million in verified global losses. Entry-level synthetic identity kits sell for as little as

Your business is potentially facing the most sophisticated, accessible wave of attacks in history. But here's the good news: understanding how these attacks work is your first line of defense.

Let's break down what's actually happening in the AI cybercrime underground, how these attacks target your organization, and what you need to do right now to protect yourself.

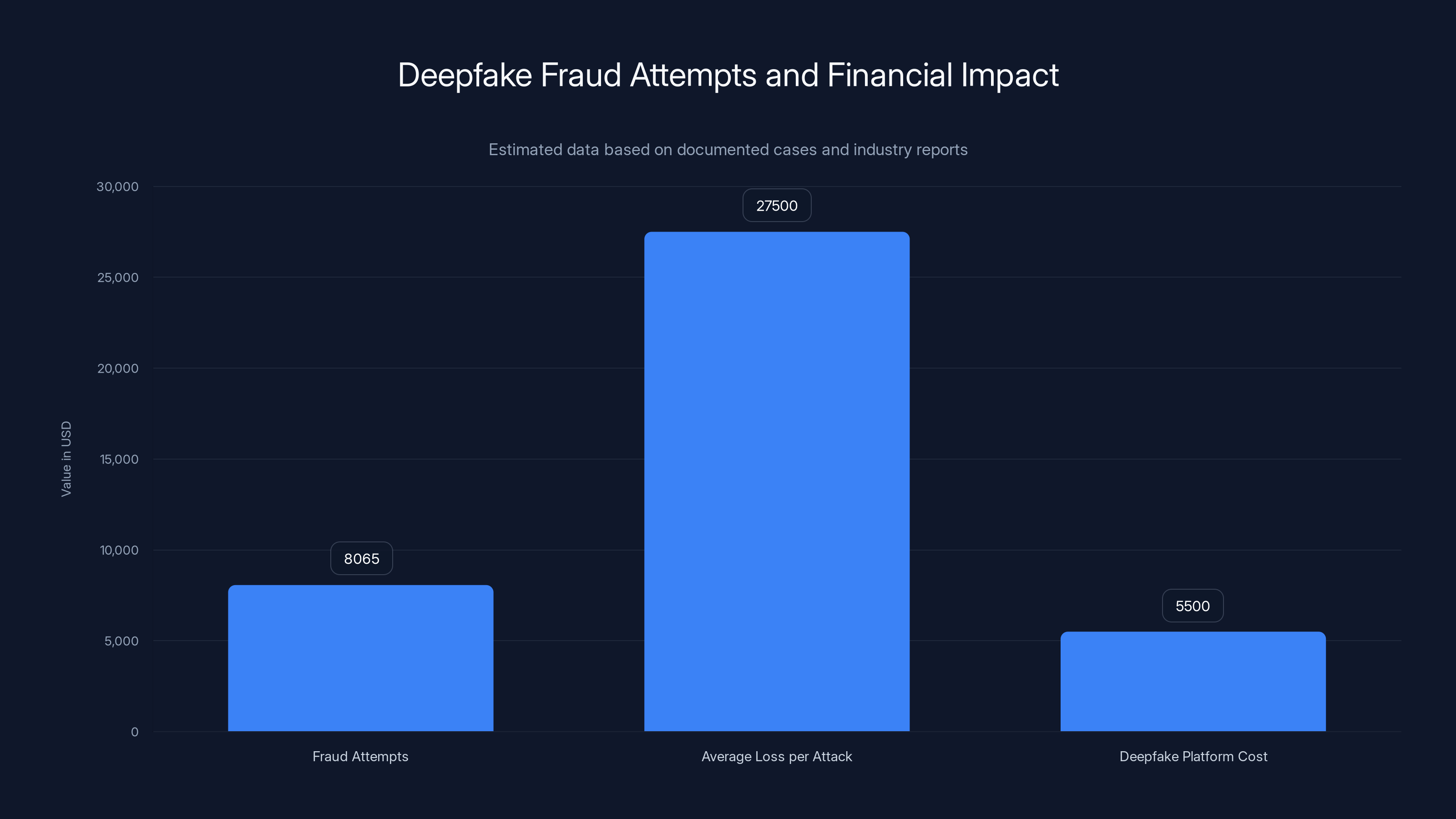

The underground marketplaces offer a range of AI-enabled cybercrime services with varying price tiers. Deepfake generation platforms and credential databases are among the most expensive offerings. (Estimated data)

TL;DR

- First-time dark web posts mentioning AI-related keywords surged 371% between 2019 and 2025, and persistently high forum discussion volume points to a stable underground market, not experimental curiosity

- Deepfake-enabled fraud caused $347 million in verified global losses, with real-time deepfake platforms sitting at the premium end of the market

- Dark LLMs and AI crimeware subscriptions cost 200 monthly, democratizing attack capabilities to low-skill actors and creating a sustainable criminal economy

- AI-generated phishing is now embedded in malware-as-a-service platforms, enabling rapid scaling of convincing campaigns that bypass traditional email defenses

- Your defenses must evolve immediately: multi-factor authentication, continuous monitoring, AI-powered threat detection, and behavioral analytics are no longer optional

The Dark LLM Economy: How AI Became a Crime Service

Let's talk about the infrastructure that's making all of this possible. Because the real story isn't about AI being dangerous in theory. It's about criminals building a profitable, sustainable industry around weaponized AI.

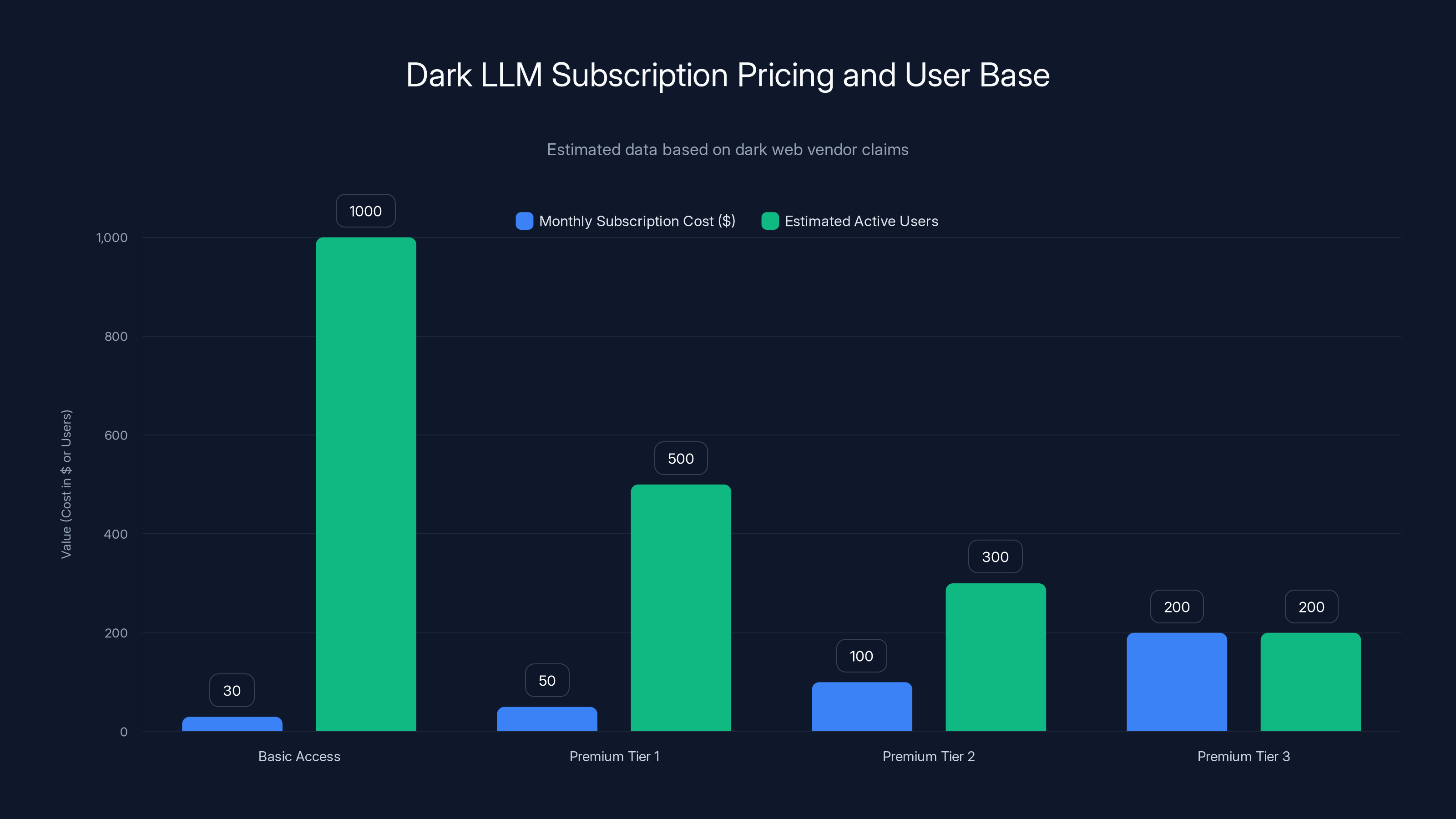

Three distinct vendors have emerged on dark web marketplaces selling what they call "Dark LLMs." These aren't hobbyists tinkering in basements. They're running structured businesses with subscription pricing, feature tiers, and documented user bases.

The specifics are chilling. These dark LLMs are fundamentally large language models without safety guardrails. No content filtering. No usage restrictions. No ethical constraints. You feed them instructions for creating convincing phishing emails, social engineering scripts, or fraud narratives, and they output polished, deployment-ready content.

Subscription models follow a simple economy: Basic access starts at

Here's what makes this different from previous crime waves. In the past, scaling required proportional increases in criminal infrastructure. More phishing emails meant hiring more humans or building better automation. More deepfakes meant investing in expensive video production tools. The cost curve favored defenders. Organizations could simply hire security teams faster than criminals could expand operations.

AI inverted that curve. One dark LLM operator can serve thousands of paying customers simultaneously. A single deepfake generation platform can process millions of requests. The marginal cost of serving one additional criminal customer approaches zero.

At least 251 posts explicitly referenced large language model exploitation on monitored dark web forums. The vast majority referenced systems based on OpenAI's architecture. This creates an interesting security paradox: criminals are leveraging the same foundational models that legitimate businesses use, but with safety controls stripped away.

The psychological impact matters too. Criminals selling access to dark LLMs aren't anonymous ghosts anymore. They're actively marketing. They publish testimonials from satisfied customers. They offer free trials. They've professionalized the entire supply chain of AI-enabled crime.

This is arguably more dangerous than any individual exploit or vulnerability. Because you can patch a vulnerability. But you can't patch the human decision to buy a $49/month subscription that lets someone impersonate your CEO.

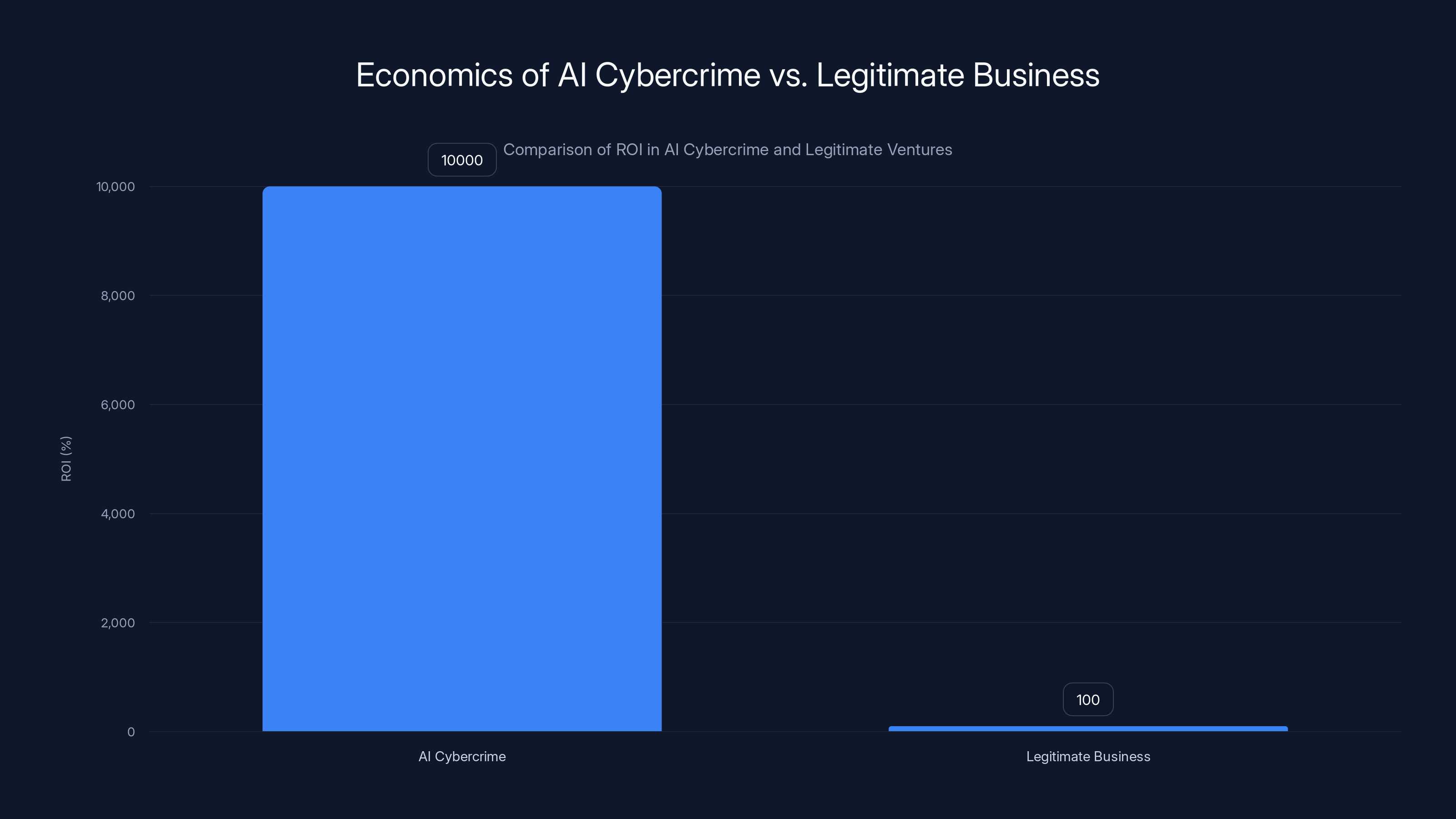

AI cybercrime offers a staggering 10,000% ROI compared to 100% for legitimate businesses, highlighting the economic allure for criminals. Estimated data.

Deepfake Fraud: The $347 Million Reality

Deepfakes used to feel like science fiction. Now they're a thriving criminal enterprise with documented losses exceeding $347 million globally in verified fraud cases.

Let's be precise about what's happening here. Deepfake technology has evolved from "impressive but obviously fake" to "indistinguishable from reality" in approximately 18 months. We're not talking about crude video swaps anymore. We're talking about real-time synthetic video generation that can fool identity verification systems, banking biometrics, and human observers.

The attack pattern is becoming standardized. Step one: criminals obtain your photo from social media or data breaches. Step two: they use a real-time deepfake platform to generate synthetic video of you conducting transactions, approving wire transfers, or confirming identity verification.

Step three: they use that video as part of a multi-vector attack. Some attacks target the financial institution directly through deepfaked video calls to support teams. Others target you personally, making it appear you've authorized unusual transactions.

One financial institution documented 8,065 deepfake-enabled fraud attempts in an eight-month period. Eight thousand attempts. At a single organization. This is industrial-scale attack volume.

Entry-level synthetic identity kits are available for

Real-time deepfake platforms sit at the premium end of the market. A functional platform that generates convincing video in real-time costs between

The technology enabling this is surprisingly accessible. Consumer-grade machine learning frameworks can generate deepfakes. Cloud computing resources are cheap and anonymous. The only barrier was technical skill, and that barrier is crumbling as more tutorials, pre-built templates, and automation tools enter the market.

What terrifies security researchers is the targeting precision. Criminals aren't deploying random deepfake attacks. They're researching specific high-value targets. Executives. Finance managers. System administrators. People with access to valuable accounts or critical infrastructure.

They construct elaborate social engineering campaigns around deepfaked video. A CFO receives an email appearing to come from the CEO, with an embedded video call request. The "CEO" requests immediate wire transfer authorization. The video is perfect. The voice matches. The background matches the CEO's office. The request aligns with normal business operations.

How many people would question that?

AI-Powered Phishing: Beyond the Obvious Red Flags

Traditional phishing campaigns are getting harder to execute. Most organizations have email filtering. Users get training. Obvious spelling mistakes and suspicious formatting get flagged and deleted.

AI changes the calculus entirely.

Criminals are now using large language models to generate phishing emails that are syntactically perfect, contextually appropriate, and genuinely difficult to distinguish from legitimate business communications. We're not talking about broken English anymore. We're talking about perfectly crafted correspondence that references your actual company processes, uses appropriate jargon, and adopts the communication style of legitimate business contacts.

Here's a concrete example of how this works in practice. A criminal with access to a dark LLM inputs the following prompt: "Generate a convincing phishing email targeting employees in the finance department of [Company Name]. The email should request password reset due to a security audit. Use the company's actual terminology and reference their actual security policies."

The dark LLM outputs a complete, deployment-ready email. Grammar is flawless. The tone matches corporate communication standards. It references specific security policies visible on the company website. It creates appropriate urgency without being heavy-handed.

The criminal sends that email to 10,000 employees. In previous attack waves, maybe 200 clicked a suspicious link. Now, with AI-generated personalization and perfect language, maybe 800 click. That's a 4x improvement in conversion rate.

But that's just the beginning. AI is also embedding itself into malware-as-a-service platforms. These are essentially crime-as-a-subscription services where criminals can deploy phishing campaigns, create malware payloads, and manage compromise infrastructure without any technical skill.

AI-assisted malware generation now produces variants automatically. Instead of releasing one malware version, criminals release thousands of variations, each slightly different from the last. This defeats signature-based antivirus detection because each variant is technically unique.

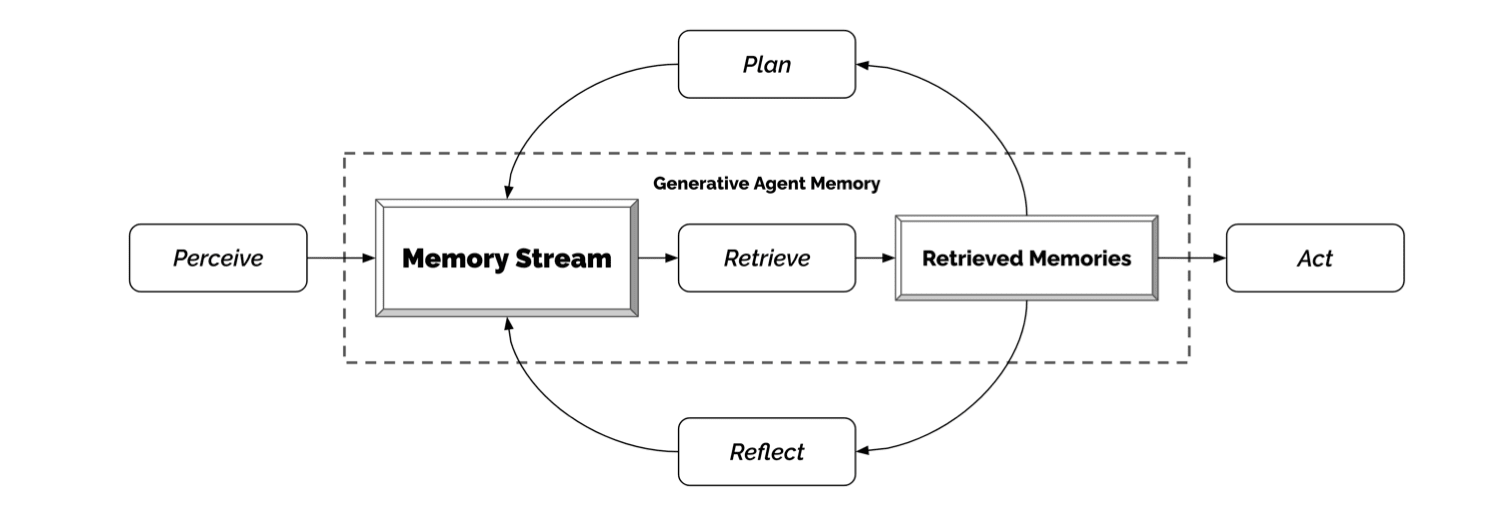

Remote access tools are being paired with AI automation. Once a criminal gains initial access to a network, AI-powered tools automatically enumerate systems, identify valuable targets, and accelerate lateral movement. What previously required manual reconnaissance by a skilled attacker now happens automatically in minutes.

The sophistication is genuinely intimidating. An attacker deploys an AI-generated phishing email, gains initial access, and then an AI-powered tool takes over: identifying domain administrators, discovering backup systems, locating financial data repositories, and proposing privilege escalation paths. The human attacker barely has to do anything.

Email filtering becomes nearly impossible when the phishing email is indistinguishable from legitimate business communication. Your traditional defenses break down. Your human security awareness training becomes less effective when the email is genuinely well-crafted.

This is why security leaders are shifting strategies. Instead of trying to block every phishing email (impossible when they're AI-generated and perfect), they're implementing zero-trust frameworks, behavioral analytics, and multi-factor authentication as fundamental infrastructure rather than optional security measures.

Voice Cloning and Audio Deepfakes: A New Attack Vector

Video deepfakes get the headlines, but audio deepfakes might be more dangerous because they're harder to verify and easier to deploy at scale.

Voice cloning technology has reached a point of remarkable fidelity. Advanced systems can replicate someone's voice from as little as 10 seconds of audio. The generated speech is indistinguishable from the original speaker. Inflection, accent, breathing patterns, even the subtle quirks that make human speech recognizable are present in the synthetic version.

Criminals are using voice cloning in several distinct attack scenarios. The first is what security researchers call "authoritative impersonation." A criminal calls a bank representative and claims to be the account holder, except they're using a cloned voice of the actual account holder.

The voice is perfect. The background matches (criminal plays recorded office noise). The details they provide are accurate (obtained through previous research or data breaches). The representative hears a voice that matches the account holder's voice on file. Authorization is granted. Money is transferred.

The second attack vector targets contact centers specifically. These are environments where humans are trained to trust voice as an identity verification mechanism. A criminal calls in claiming to be a system administrator, using a cloned voice of someone with administrative authority. They request password resets, system access, or data retrieval.

The contact center employee faces an interesting problem: the voice they're hearing is authentic-sounding, they have no independent verification mechanism, and the requester is claiming to be someone with authority. Many contact center employees are taught to respect authority. This makes them vulnerable.

The third scenario involves fraud amplification. A criminal initiates a transaction (often a wire transfer or fund withdrawal). The bank calls the account holder to verify the unusual activity. The criminal has prepared for this. They've set up a fake bank phone number or intercepted the call. When the account holder tries to reach their bank to report fraud, they're actually talking to a criminal using a cloned voice of a bank representative.

The account holder is assured the transaction is legitimate. The account holder approves it. The theft is complete.

The psychological impact is significant. We trust voice. We've evolved to trust voice for thousands of years. Voice carries emotional content and nuance that text doesn't. When a voice sounds authentic, our skepticism decreases.

As voice cloning technology continues improving (and it's improving rapidly), this attack vector will become more prevalent. Some security researchers believe voice impersonation attacks will surpass text-based phishing in frequency within 24 months.

Dark LLM vendors offer tiered subscriptions from

Synthetic Identity Fraud: Building Fake People

Synthetic identity fraud is perhaps the most insidious AI-enabled attack because it operates at scale below the radar. There's no victim whose account is compromised. There's no existing person being impersonated. Instead, criminals are essentially manufacturing entire fake people, complete with digital history, to commit fraud.

This process starts with the purchase of a "synthetic identity kit." These kits sell for

A criminal purchases a kit, customizes it slightly (changing the name or address), and deploys it. The synthetic identity opens credit accounts, applies for loans, accumulates credit history. Everything happens in the background for months or years.

During this accumulation period, the synthetic identity behaves like a real person. Bills are paid on time. Credit is used responsibly. No red flags appear. The credit score climbs.

Then, at a predetermined moment, the criminal activates. They rack up massive debt on the credit accounts, secure large unsecured loans, and disappear. The fake identity is left with outstanding debt. The lenders discover they've been defrauded by someone who doesn't actually exist.

But here's the scale problem: this attack is easy to automate. A criminal with access to synthetic identity generation tools can deploy 1,000 synthetic identities simultaneously. Each one operates independently. Each one slowly accumulates credit. Each one eventually gets "harvested" for fraud.

Financial institutions are estimating losses in the billions from synthetic identity fraud. The attack works because existing fraud detection systems are designed to catch compromised identities or clear cases of impersonation. They're not designed to catch brand-new identities committing fraud because there's no historical identity to check against.

AI accelerates this process by automating the detection and exploitation of vulnerabilities in identity verification systems. Machine learning models can identify which lenders have the weakest identity verification procedures. They can generate synthetic identity profiles optimized to pass those specific verification systems. They can operate thousands of identities across thousands of institutions simultaneously.

What's particularly troubling is that synthetic identity fraud is difficult to prevent without compromising legitimate lending. How do you distinguish a genuine new person from a synthetic identity? Both have no credit history. Both will need to build credit from scratch. Both will have some digital presence that can be fabricated.

Some financial institutions are responding with deeper verification (additional documentation, phone calls, in-person visits). But this increases friction for legitimate customers. The criminals simply adapt, moving to lenders with lower verification thresholds. The incentives are misaligned.

The Underground Marketplace: Where Crime Meets Commerce

One of the most significant developments in AI-enabled cybercrime is the professionalization of the underground marketplace. This isn't chaotic. It's not loosely organized. It's structured commerce.

Dark web marketplaces have evolved into platforms that look remarkably like legitimate SaaS businesses. There are storefronts with product pages. Pricing tiers. Feature comparisons. Customer testimonials. Refund policies. Support tickets.

These marketplaces offer complete AI crime packages. You can purchase:

- Dark LLM access (200/month): Pre-configured large language models without safety restrictions, optimized for generating phishing emails, social engineering scripts, and fraud narratives

- Deepfake generation platforms (10,000): Real-time video synthesis tools for creating convincing deepfaked video of specific individuals

- Voice cloning services (500): Tools for replicating someone's voice from audio samples

- Synthetic identity generation (100 per identity): Complete fake identity packages with documentation and digital history

- Phishing infrastructure (100/month): Email sending platforms optimized for bypassing security filters, with analytics and A/B testing capabilities

- Malware customization (200): Tools for generating malware variants that evade antivirus detection

- Credential databases (10,000): Collections of stolen usernames and passwords from previous breaches, organized by industry and institution

The marketplace operators are actively recruiting customers. They post tutorials. They offer free trials. They provide customer support. Some even offer affiliate programs where customers can earn commissions by referring other criminals.

The sophistication of the marketing would be almost amusing if it weren't so dangerous. One marketplace operator publishes monthly "product updates" highlighting new features and performance improvements. Another runs seasonal promotions ("Back-to-school discount: 30% off all malware packages").

Pricing suggests healthy demand and profitable operations. If an operator charges

What's remarkable is how this marketplace innovation follows legitimate business principles. Vendors compete on feature parity and customer service. Innovation happens in real-time as competitors release new capabilities. Pricing reflects supply and demand dynamics.

The only difference is that the product being sold is crime.

This marketplace professionalization represents a substantial shift in cybercrime. Previous crime waves were led by brilliant individuals or small, tightly-knit groups. Now, the infrastructure is distributed. Thousands of criminals can participate at different skill levels. A low-skill actor with $100/month can run sophisticated phishing campaigns. A medium-skill operator can deploy deepfake fraud attacks. A sophisticated actor can customize tools and develop new capabilities.

The barrier to entry has collapsed. The barrier to scaling has disappeared. The profitability is clear.

Attribution and the Threat Actor Landscape

One of the interesting challenges in the AI cybercrime era is attribution. Historically, security researchers could trace attacks back to specific threat actors or nations through unique tools, tactics, and procedures.

AI commoditizes attack capabilities. When 1,000 different threat actors are using the same dark LLM to generate phishing emails, attribution becomes nearly impossible. The phishing email provides no clues about the attacker's identity, origin, or motivation.

Currently, documented AI-related cybercrime activity suggests multiple distinct threat actor types:

Opportunistic criminals are low-skill actors without significant cybercriminal experience. They purchase access to dark LLMs and phishing infrastructure and deploy basic attacks. Success rates are low, but they operate with minimal risk and minimal investment. Volume compensates for low conversion rates.

Organized crime groups are using AI to enhance existing criminal operations. They have established infrastructure, victim targeting strategies, and money laundering processes. They're adopting AI selectively to improve specific attack vectors (particularly deepfake fraud and voice cloning for credential theft).

Financially motivated nation-state actors are deploying AI-enabled attacks against specific strategic targets. These attacks are highly sophisticated and precisely targeted. They use custom modifications to dark LLMs. They develop novel attack strategies combining deepfakes, voice cloning, and social engineering. Observed targets include financial institutions, energy companies, and government agencies.

Ideologically motivated groups are using AI tools to amplify disinformation campaigns, which sometimes include financial fraud elements. Their primary goal is information warfare, but they monetize attacks opportunistically.

Insider threat actors are using dark LLMs to generate convincing social engineering campaigns targeting colleagues and business partners. An employee with insider knowledge of a company's security practices can use AI to craft attacks specifically tailored to bypass that company's defenses.

The diversity of threat actors using AI tools is concerning because it suggests no single defense strategy will address all attack vectors. Nation-state actors require different defensive postures than opportunistic criminals. Insider threats require different mitigation than external attacks.

What unites all these threat actors is access to the same fundamental AI tools. The playing field has been leveled upward: everyone now has access to sophisticated capabilities that previously required years of expertise to develop.

Deepfake fraud attempts at a single institution reached 8,065, with average losses per attack estimated at

Detection Challenges: Why Traditional Defenses Fail

Here's the problem with AI-enabled attacks from a defensive perspective: your traditional security tools weren't designed to detect them.

Signature-based antivirus looks for known malware signatures. AI-generated malware is unique. Every variant is different. Your signatures don't match. Detection fails.
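To see why exact-match signatures struggle with variants, here is a minimal sketch that uses a SHA-256 hash as a stand-in for a signature. This is a simplification: real antivirus engines also use byte patterns and heuristics. The sample bytes and the `BLOCKLIST` name are placeholders invented for illustration.

```python
import hashlib

def signature(payload: bytes) -> str:
    """Hex SHA-256 digest, used here as a stand-in for a file signature."""
    return hashlib.sha256(payload).hexdigest()

# Hypothetical blocklist containing the hash of one previously seen sample.
KNOWN_SAMPLE = b"placeholder-bytes-of-a-known-sample"
BLOCKLIST = {signature(KNOWN_SAMPLE)}

def matches_blocklist(payload: bytes) -> bool:
    """True only if the payload is byte-for-byte identical to a known sample."""
    return signature(payload) in BLOCKLIST

# A variant that differs by a single trailing byte.
VARIANT = KNOWN_SAMPLE + b"\x00"

print(matches_blocklist(KNOWN_SAMPLE))  # True
print(matches_blocklist(VARIANT))       # False
```

One changed byte is enough to produce a different hash, so a blocklist built this way never sees the variant coming.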

Email filtering looks for suspicious patterns: unusual sender addresses, malicious links, formatting anomalies. AI-generated phishing emails have none of these. The sender is spoofed properly. The links are legitimate (sometimes). The formatting is perfect corporate communication. Your email filter is helpless.

Behavioral analysis systems look for anomalous activity: unusual login times, atypical file access, strange network traffic. A deepfake-enabled fraud attack carried out with cloned credentials can look identical to a legitimate user accessing their own account. In some cases the behavior really is normal, because the account owner performs the transaction themselves after being deceived into believing it is legitimate.

Multi-factor authentication stops many attacks, except when the second factor is compromised. How? Social engineering through a perfectly crafted phishing email. Malware stealing the MFA code. Voice cloning bypassing voice-based verification.

Domain verification and email authentication (SPF, DKIM, DMARC) prevent basic email spoofing, except when the domain itself is compromised or the attacker registers a lookalike domain (attacker@company-security.com can pass for attacker@company.com at a glance).
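Lookalike domains can be screened for separately from SPF, DKIM, and DMARC. The sketch below is one rough way to do it, assuming a hypothetical `TRUSTED_DOMAINS` allowlist and an arbitrary similarity threshold of 0.8. It flags a sender domain that is not trusted but either contains a trusted brand label or is nearly identical in spelling.

```python
from difflib import SequenceMatcher

# Hypothetical allowlist of domains your organization actually uses.
TRUSTED_DOMAINS = {"company.com"}

def is_lookalike(sender_domain: str, threshold: float = 0.8) -> bool:
    """Flag domains that resemble a trusted domain without being one."""
    domain = sender_domain.lower().strip()
    if domain in TRUSTED_DOMAINS:
        return False  # the genuine domain is not a lookalike
    for trusted in TRUSTED_DOMAINS:
        brand = trusted.split(".")[0]
        # Case 1: the brand label is embedded in a different domain.
        if brand in domain:
            return True
        # Case 2: the spelling is nearly identical (e.g. a swapped character).
        if SequenceMatcher(None, domain, trusted).ratio() >= threshold:
            return True
    return False

print(is_lookalike("company-security.com"))  # True
print(is_lookalike("company.com"))           # False
```

A check like this is noisy on its own (legitimate partners may share a brand word), so it fits better as one signal feeding a review queue than as a hard block.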

The fundamental problem is this: AI-enabled attacks are adapting faster than defenses. When deepfakes reach a certain fidelity threshold, your human security awareness training becomes ineffective. When phishing emails are indistinguishable from legitimate business communication, your email filtering becomes ineffective. When malware is automatically generated in thousands of variants, your signature-based detection becomes ineffective.

Your defenses were built for a different threat landscape. They assume attackers operate at human speed. They assume attacks contain detectable signatures. They assume humans can be trained to recognize attacks.

AI invalidates these assumptions.

Security teams are struggling to adapt. The detection tools they've invested in for years are becoming less effective. The detection methodologies they've relied on (pattern matching, rule-based detection) are insufficient. The human expertise they've built (knowing how to spot phishing emails) is becoming less valuable as phishing emails become technically indistinguishable from legitimate communication.

Many organizations are in a reactive posture: understand the attack, update the defense, then the attack evolves slightly and the defense becomes obsolete again.

Building Resilience: Practical Defense Strategies

Given these challenges, what can you actually do?

First, abandon the idea that you can prevent all attacks. With AI-generated phishing, deepfake fraud, and synthetic identity attacks, some percentage of attacks will succeed. Your goal shifts from prevention to resilience: detect compromise quickly, minimize impact, and recover faster than the attacker can expand.

Implement Zero-Trust Architecture

Traditional security assumed the network perimeter was protected and anything inside could be trusted. Zero-trust reverses this. Assume everything is untrusted. Every access request requires verification. Every user needs multi-factor authentication. Every device requires endpoint verification. Every application requires explicit authorization.

In the context of AI attacks, zero-trust is essential because deepfake-enabled compromise looks legitimate from a traditional perspective (correct credentials, expected access patterns). Zero-trust forces additional verification layers: is the request originating from a recognized device? Is the request consistent with the user's normal behavior? Is there additional context suggesting compromise?

Zero-trust doesn't prevent deepfake fraud, but it adds friction. An attacker using cloned credentials still needs to pass device verification, behavioral analytics, and potentially additional authentication. More layers mean more opportunities for detection.
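The layered checks described above can be sketched as a single decision function. This is an illustrative model, not a reference to any particular product: the `AccessRequest` fields, the `KNOWN_DEVICES` registry, and the 0.7 behavior cutoff are all assumptions.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class AccessRequest:
    user: str
    device_id: str
    mfa_passed: bool
    behavior_score: float  # 0.0 = typical for this user, 1.0 = highly unusual

# Hypothetical registry of devices enrolled per user.
KNOWN_DEVICES = {"alice": {"laptop-123"}}

def evaluate(request: AccessRequest, max_behavior_score: float = 0.7):
    """Return (allowed, failed_checks). Every check must pass on every request."""
    failures = []
    if request.device_id not in KNOWN_DEVICES.get(request.user, set()):
        failures.append("unrecognized device")
    if not request.mfa_passed:
        failures.append("MFA not completed")
    if request.behavior_score > max_behavior_score:
        failures.append("behavior deviates from baseline")
    return (not failures, failures)

# Valid credentials and MFA, but an unknown device: still denied.
print(evaluate(AccessRequest("alice", "unknown-phone", True, 0.2)))
# (False, ['unrecognized device'])
```

The point of the design is that correct credentials alone never produce an "allow"; each independent check is another chance to catch an impersonator.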

Behavioral Analytics and Anomaly Detection

Instead of looking for signatures (which AI constantly changes), look for behavioral anomalies. Machine learning systems can establish baselines for normal user behavior: typical access times, typical applications used, typical geographic locations, typical data access patterns.

When behavior deviates significantly from the baseline, the system flags it. A user accessing sensitive data at 3 AM from a different country than usual generates an alert. A user suddenly accessing 10 GB of financial records is flagged as anomalous.

This approach works against AI attacks because even if an attacker has legitimate credentials, their behavior will often look different from the legitimate user. The timing is different. The applications accessed are different. The data accessed is different.
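As a rough illustration of baseline-and-deviation logic, the sketch below checks a session against a per-user baseline using country, hour of day, and a z-score on data volume. The field names, the sample baseline, and the threshold of 3 standard deviations are assumptions; production systems typically use far richer models.

```python
from statistics import mean, stdev

def z_score(value: float, history: list) -> float:
    """How many standard deviations `value` sits above the historical mean."""
    mu, sigma = mean(history), stdev(history)
    if sigma == 0:
        return 0.0 if value == mu else float("inf")
    return (value - mu) / sigma

def anomaly_reasons(session: dict, baseline: dict, z_threshold: float = 3.0) -> list:
    """List every way the session deviates from the user's baseline."""
    reasons = []
    if session["country"] not in baseline["countries"]:
        reasons.append("unusual country")
    if session["hour"] not in baseline["active_hours"]:
        reasons.append("unusual hour")
    if z_score(session["mb_read"], baseline["mb_read_history"]) > z_threshold:
        reasons.append("unusual data volume")
    return reasons

# Hypothetical baseline: works from the US, 08:00-18:59, reads ~100 MB a day.
BASELINE = {
    "countries": {"US"},
    "active_hours": set(range(8, 19)),
    "mb_read_history": [120, 80, 150, 100, 90],
}

# 3 AM, different country, roughly 10 GB read.
print(anomaly_reasons({"country": "BR", "hour": 3, "mb_read": 10_000}, BASELINE))
# ['unusual country', 'unusual hour', 'unusual data volume']
```

Returning the list of reasons rather than a bare yes/no makes alerts easier for an analyst to triage.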

Behavioral analytics isn't foolproof (a sophisticated insider threat actor knows expected behavior and can mimic it), but it's effective against most AI-enabled attacks.

Continuous Verification and Adaptive Authentication

Stop treating authentication as a single gate (you authenticate once, then you're trusted for hours). Move to continuous verification where authentication strength adapts based on risk context.

High-risk actions (transferring large amounts of money, accessing sensitive data, making permission changes) require stronger authentication: biometric verification, out-of-band verification through a separate channel, or security key authentication.

Low-risk actions (viewing publicly available information, sending emails to known recipients) require lighter authentication.

This approach is effective against deepfake-enabled fraud because while an attacker might have stolen credentials, they're unlikely to have stolen the specific biometric or security key associated with the account.
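A risk-adaptive policy like the one described above might look something like this. The action names, the three `AuthLevel` tiers, and the 10,000 amount threshold are placeholders chosen for illustration.

```python
from enum import IntEnum

class AuthLevel(IntEnum):
    PASSWORD = 1
    MFA = 2
    HARDWARE_KEY_OR_OUT_OF_BAND = 3

# Hypothetical set of actions always treated as high risk.
HIGH_RISK_ACTIONS = {"wire_transfer", "permission_change", "sensitive_data_access"}

def required_level(action: str, amount: float = 0.0, new_device: bool = False,
                   large_amount: float = 10_000.0) -> AuthLevel:
    """Pick the minimum authentication strength for this action and context."""
    if action in HIGH_RISK_ACTIONS or amount >= large_amount:
        return AuthLevel.HARDWARE_KEY_OR_OUT_OF_BAND
    if new_device:
        return AuthLevel.MFA
    return AuthLevel.PASSWORD

def is_allowed(session_level: AuthLevel, action: str, **context) -> bool:
    """Allow the action only if the session already meets the required level."""
    return session_level >= required_level(action, **context)

print(is_allowed(AuthLevel.MFA, "view_public_page"))               # True
print(is_allowed(AuthLevel.MFA, "wire_transfer", amount=50_000))   # False
```

When `is_allowed` returns False, the application would prompt for step-up authentication instead of rejecting the user outright.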

Isolation and Segmentation

When an attacker gains access to your network (and they will, at some point), they'll attempt to move laterally, accessing more sensitive systems and data. Network segmentation prevents this.

Critical systems live on isolated network segments. Access between segments requires explicit verification. A compromised general user workstation doesn't automatically grant access to the financial system segment.

This doesn't prevent initial compromise, but it limits the scope of damage. An attacker who successfully deploys deepfake-enabled fraud against a user might gain access to that user's email and basic files. Network segmentation prevents them from accessing the financial system, credential storage, or other critical infrastructure.
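One way to reason about segmentation is to model permitted flows as an allowlist and ask what an attacker can reach from a compromised segment. The segment names and flows below are invented for the example; a real policy would live in firewall or SDN configuration.

```python
from collections import deque

# Hypothetical allowlist of (source segment, destination segment) flows.
ALLOWED_FLOWS = {
    ("user_workstations", "email"),
    ("user_workstations", "file_share"),
    ("finance_workstations", "financial_system"),
}

def reachable_segments(start: str) -> set:
    """Every segment reachable from `start` by following allowed flows."""
    seen = {start}
    queue = deque([start])
    while queue:
        current = queue.popleft()
        for src, dst in ALLOWED_FLOWS:
            if src == current and dst not in seen:
                seen.add(dst)
                queue.append(dst)
    return seen

blast_radius = reachable_segments("user_workstations")
print("financial_system" in blast_radius)  # False
```

Running this kind of reachability check against your actual rules is a cheap way to find flows that quietly widen the blast radius.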

Incident Response and Rapid Recovery

Assume you will be compromised at some point. Your incident response plan determines how much damage the compromise causes.

Rapid incident response means: detecting compromise quickly (within minutes, not days), containing the damage (isolating compromised systems), and recovering (rebuilding systems from clean backups).

In the AI cybercrime context, this means: you detect deepfake-enabled fraud or phishing compromise, you immediately revoke compromised credentials, you rebuild affected systems, and you restore from backups. The attacker's window of opportunity is measured in minutes, not weeks.

Security Awareness Training (But Different)

Traditional security awareness training teaches users to spot phishing emails and social engineering attempts. This training is becoming less effective as AI generates technically perfect phishing emails.

New security awareness training focuses on: verification habits (always verify through a separate channel), skepticism about unusual requests (even if they appear legitimate), and clear escalation paths (if something feels off, involve the security team).

The training acknowledges that users can't reliably distinguish AI-generated phishing from legitimate communication. Instead, the training emphasizes process (verify through separate channels), not perception (identify the email as suspicious).
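The "process, not perception" idea can be expressed as a simple rule: contact details for verification come from a directory you already trust, never from the request itself. The sketch below assumes a hypothetical `TRUSTED_DIRECTORY` and made-up request fields.

```python
from typing import Optional

# Hypothetical directory maintained independently of inbound messages.
TRUSTED_DIRECTORY = {"ceo@company.com": "+1-555-0100"}

def callback_number(request: dict) -> Optional[str]:
    """Look up the claimed sender's number; ignore any number in the message."""
    return TRUSTED_DIRECTORY.get(request["claimed_sender"])

def may_proceed(request: dict, number_confirmed_on: Optional[str]) -> bool:
    """Proceed only if confirmation happened over the directory-listed number."""
    expected = callback_number(request)
    return expected is not None and number_confirmed_on == expected

request = {
    "claimed_sender": "ceo@company.com",
    "action": "urgent wire transfer",
    "number_in_message": "+1-555-0199",  # supplied by whoever sent the message
}

print(may_proceed(request, request["number_in_message"]))  # False
print(may_proceed(request, "+1-555-0100"))                  # True
```

However convincing the message, voice, or video is, the request only moves forward after a callback on a channel the sender could not have chosen.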

Threat Intelligence and Information Sharing

Understanding the current threat landscape is essential. What attack techniques are being deployed? What tools are being used? What targets are being prioritized?

Join industry threat intelligence sharing groups. Participate in information sharing communities. Implement real-time threat feeds from reputable sources.

When a new dark LLM emerges on underground marketplaces, you need to know about it quickly. When a new deepfake attack variant appears, you need to understand it. When a new phishing technique is deployed at scale, you need to have detected it.

Information sharing doesn't prevent attacks, but it accelerates your understanding and response.
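As a rough illustration of what consuming a threat feed involves, the sketch below checks mail or proxy events against a set of shared indicators. The event keys (`sender_domain`, `url_host`) and the example domains are assumptions for illustration; real feeds and log schemas vary.

```python
def match_indicators(events: list[dict], indicators: set[str]) -> list[dict]:
    """Return events whose sender domain or URL host appears in the feed."""
    known_bad = {indicator.lower() for indicator in indicators}
    hits = []
    for event in events:
        observed = {
            event.get("sender_domain", "").lower(),
            event.get("url_host", "").lower(),
        }
        observed.discard("")  # ignore missing fields
        if observed & known_bad:
            hits.append(event)
    return hits


# Hypothetical feed entries and events using reserved example domains.
feed = {"login-portal.example", "Invoice-Update.example"}
events = [
    {"id": 1, "sender_domain": "partner.example", "url_host": "docs.example"},
    {"id": 2, "sender_domain": "invoice-update.example"},
    {"id": 3, "sender_domain": "news.example", "url_host": "LOGIN-PORTAL.example"},
]
flagged = match_indicators(events, feed)
```

Here `flagged` contains events 2 and 3; matching is case-insensitive because domains are.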

Emerging AI Defense Technologies

As AI-enabled attacks become more sophisticated, defensive AI systems are evolving to counter them.

AI-Powered Threat Detection

Security vendors are deploying machine learning systems to detect AI-generated attacks. These systems are trained on large datasets of both legitimate and malicious AI-generated content. They can identify phishing emails generated by dark LLMs with higher accuracy than human analysts (though not perfect accuracy).

The challenge: as detection improves, attackers adapt their generation techniques. The arms race accelerates. Detection accuracy improves, attack generation improves, detection improves further.

Currently, AI-powered threat detection achieves approximately 75-85% accuracy for detecting AI-generated phishing. That means 15-25% of attacks still pass detection. In a high-volume campaign, a miss rate that large means thousands of emails slip through.
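The arithmetic is worth making explicit. The short sketch below computes how many malicious emails get past a filter at a given detection rate; the campaign size of 10,000 emails is an assumed figure for illustration.

```python
def expected_missed(volume: int, detection_rate: float) -> int:
    """Expected number of malicious emails that pass a filter."""
    if not 0.0 <= detection_rate <= 1.0:
        raise ValueError("detection_rate must be between 0 and 1")
    return round(volume * (1.0 - detection_rate))


campaign_size = 10_000  # assumed volume for illustration
missed_at_85 = expected_missed(campaign_size, 0.85)  # 1500
missed_at_75 = expected_missed(campaign_size, 0.75)  # 2500
```

At this volume, the 75-85% range leaves between 1,500 and 2,500 emails in inboxes, which is why detection alone is not treated as sufficient.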

Deepfake Detection Technology

Multiple approaches are emerging for detecting deepfakes. Some focus on video artifacts: compression patterns, lighting inconsistencies, biological markers (eye movement, blink patterns, pulse detection).

Others focus on audio artifacts: subtle voice synthesis markers, breathing pattern inconsistencies, timing irregularities.

The problem: deepfake technology is advancing faster than detection technology. When deepfakes reach perfect quality (and they're getting close), detection based on visual artifacts becomes impossible. You'd need to detect the deepfake through other means: verification of the communication channel, out-of-band confirmation, or behavioral analysis.

Synthetic Identity Detection

Financial institutions are deploying machine learning models to identify synthetic identities before they're activated for fraud. These models look for patterns: identities opened at suspicious times, identities with unusual geographic patterns, identities with identical behavioral signatures (suggesting automated generation), identities accumulating credit in suspicious patterns.

The accuracy is improving, but it remains imperfect. The fundamental challenge: legitimate new identities and synthetic identities follow similar patterns during the accumulation phase.
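One of the patterns above, identical behavioral signatures across supposedly unrelated applicants, can be sketched with a simple grouping step. This is not how any particular institution's model works; the field names and the cluster size threshold are assumptions for illustration.

```python
from collections import defaultdict


def shared_signature_clusters(
    applications: list[dict], min_size: int = 3
) -> dict[tuple, list[str]]:
    """Group applications by a behavioral signature and keep large groups.

    Many distinct "people" sharing one signature suggests automated generation.
    """
    groups: dict[tuple, list[str]] = defaultdict(list)
    for app in applications:
        # Hypothetical signature fields; real systems use richer features.
        signature = (
            app["device_fingerprint"],
            app["typing_cadence_bucket"],
            app["signup_hour"],
        )
        groups[signature].append(app["applicant_id"])
    return {sig: ids for sig, ids in groups.items() if len(ids) >= min_size}
```

A production model would combine many weak signals; exact matching like this only catches the least careful automation.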

Voice Synthesis Detection

Voice cloning detection is particularly challenging because human listeners often cannot tell high-quality synthetic speech from the real thing. Detection technologies look for subtle markers: frequency analysis inconsistencies, temporal artifacts, or voice characteristics that are physically impossible to achieve with a human voice.

These detection methods are becoming more sophisticated, but voice synthesis is also becoming more sophisticated. The arms race continues.

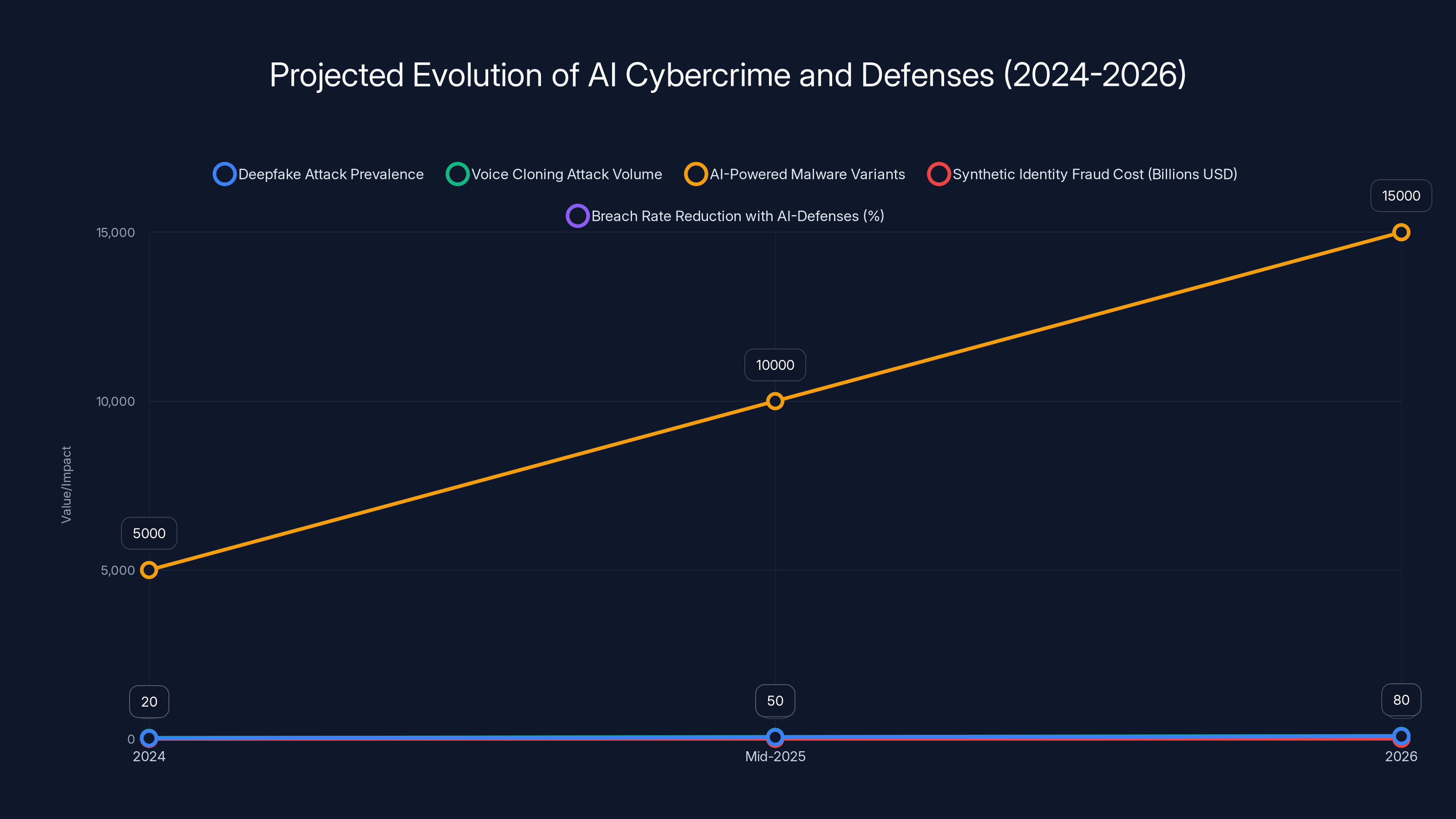

The chart shows an estimated increase in AI cybercrime threats, such as deepfake attacks and voice cloning, from 2024 to 2026. Organizations using AI-appropriate defenses are projected to experience significantly lower breach rates. Estimated data.

Regulatory and Legal Responses

Governments and regulators are slowly responding to AI-enabled cybercrime, though the pace is considerably slower than the threat evolution.

Regulatory Requirements

Several regulatory frameworks now explicitly require protection against AI-enabled attacks. Financial regulations require banks to implement controls specifically designed to detect deepfake fraud and voice cloning attacks. Data protection regulations require organizations to implement AI-appropriate security measures.

The challenge: regulations are written reactively (after attacks occur) while threats evolve proactively. By the time a regulation is implemented, the threat has evolved beyond the regulatory requirements.

Law Enforcement Challenges

Federal law enforcement agencies are dedicating resources to AI cybercrime investigation. But the challenges are substantial: attribution is difficult, jurisdiction is unclear (a dark LLM might be operated from any country), and the scale of activity is enormous (thousands of active criminals across dozens of countries).

Prosecuting dark LLM operators is complicated by the infrastructure involved: server hosting, payment processing, customer support all involve multiple jurisdictions and entities.

International Cooperation

AI cybercrime is international. Darknet operators might be in Eastern Europe, customers might be in the Middle East, victims might be in North America. No single nation can effectively combat this.

International law enforcement cooperation is improving, but it remains slow. Joint task forces take months or years to develop sufficient evidence for prosecution. By the time prosecution begins, the underlying threat has evolved significantly.

Industry-Specific Threats and Responses

Financial Services

Banks and fintech companies face the highest volume of AI-enabled attacks. Deepfake fraud and voice cloning specifically target financial institutions because the payoff is direct (money transfer).

Responses include: mandatory video verification for large transfers, behavioral analytics on all accounts, voice biometric systems that are more difficult to spoof than simple voice passwords, and AI-powered fraud detection that has reached 85%+ accuracy.

But financial institutions are also implementing unconventional defenses. Some require in-person verification for large transactions. Others use "out-of-band" verification (the bank calls you on a phone number in their records, not a number you provide). Some financial institutions are exploring blockchain-based verification systems.

Healthcare

Healthcare organizations face AI-enabled attacks targeting patient data, medication orders, and insurance claims. Deepfakes of physicians can authorize inappropriate treatments or medication changes.

Responses include: mandatory physician verification for critical decisions, audit logs on all medication orders, and behavioral analytics on physician access patterns.

Government and Defense

Government agencies are implementing the most sophisticated AI defenses because they face nation-state level threats. These include:

- Biometric verification for all critical access

- Behavioral analysis with immediate human intervention when anomalies are detected

- Isolated networks with no internet connectivity for most sensitive systems

- Mandatory multi-factor authentication with security keys

- Regular red team exercises testing AI-enabled attacks

The Timeline: Where We Are and Where We're Going

Current State (2024-2025)

We're in the early-to-mid phase of the AI cybercrime wave. Dark LLMs are emerging. Deepfakes are becoming convincing. Voice cloning is becoming accessible. Phishing is becoming technically perfect.

Threats are clearly increasing, but defenses are adapting. Organizations implementing zero-trust architecture and behavioral analytics are experiencing lower breach rates than organizations using traditional security approaches.

The crime marketplace is consolidating. Multiple dark LLM vendors are competing. Prices are stabilizing. Customer bases are growing.

6-12 Months Out (Mid-2025)

Expect: deepfake attacks to become indistinguishable from reality. Voice cloning to mature. AI-powered malware to reach 10,000+ variants per day. Synthetic identity fraud to accelerate.

Expect defensive responses to lag behind. Organizations without zero-trust implementation will face increasing difficulty. Traditional security tools will become demonstrably less effective.

12-24 Months Out (2026)

Expect: deepfake-enabled fraud to become the primary attack vector against financial institutions. Voice cloning attacks to equal text-based phishing in volume. Synthetic identity fraud to cost financial institutions billions annually.

Expect regulatory responses to sharpen. Expect law enforcement to achieve some notable prosecutions (which will have limited deterrent impact). Expect technological defenses to improve, but to remain one step behind the threats.

Expect a bifurcation between organizations investing heavily in AI-appropriate defenses (zero-trust, behavioral analytics, continuous verification) and organizations using traditional security approaches. The former will experience breach rates 50-70% lower than the latter.

Mentions of AI-related keywords on dark web forums surged by 371% from 2019 to 2025, indicating a significant increase in AI misuse discussions. Estimated data.

Building Your Defense Strategy: A Practical Framework

Given the landscape of threats, here's how to prioritize your defensive investments:

Phase 1: Foundation (Next 30 Days)

- Implement mandatory multi-factor authentication across your entire organization. Non-negotiable. This single measure stops the majority of initial compromises.

- Deploy endpoint detection and response (EDR) on all critical systems. This gives you visibility into compromise attempts.

- Establish incident response capability. Know who you'll call if you're compromised. Know what your recovery process is.

- Conduct threat assessment. Understand which attack vectors pose the highest risk to your specific organization.

Phase 2: Detection (Next 30-90 Days)

- Deploy behavioral analytics on user accounts, particularly privileged accounts. Establish baselines for normal behavior.

- Implement network segmentation. Isolate critical systems from general network access.

- Deploy email authentication (SPF, DKIM, DMARC). This stops basic email spoofing.

- Implement fraud detection on financial systems and data access systems. Look for unusual patterns.
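For the email authentication item above, a DMARC policy is published as a DNS TXT record made of semicolon-separated `tag=value` pairs. The sketch below parses such a record and checks whether the policy is actually enforced; the reporting address is a placeholder, and fetching the record from DNS is left out.

```python
def parse_dmarc(record: str) -> dict[str, str]:
    """Parse a DMARC TXT record into a tag -> value mapping."""
    tags: dict[str, str] = {}
    for part in record.split(";"):
        part = part.strip()
        if not part:
            continue
        key, _, value = part.partition("=")
        tags[key.strip().lower()] = value.strip()
    return tags


def dmarc_enforced(record: str) -> bool:
    """True when receivers are told to quarantine or reject failing mail."""
    tags = parse_dmarc(record)
    return tags.get("v") == "DMARC1" and tags.get("p", "").lower() in {
        "quarantine",
        "reject",
    }


# Placeholder record; the rua address is illustrative.
example_record = "v=DMARC1; p=reject; rua=mailto:dmarc-reports@example.com"
```

A record with `p=none` only collects reports, so `dmarc_enforced` returns False for it even though DMARC is technically deployed.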

Phase 3: Adaptation (Next 90-180 Days)

- Migrate to zero-trust architecture. This is a major undertaking, but it's increasingly non-negotiable.

- Deploy AI-powered threat detection. Supplement human analysis with machine learning.

- Implement continuous verification. Make authentication adaptive based on risk context.

- Establish threat intelligence integration. Consume real-time threat feeds and share intelligence with peers.
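The continuous verification item above, authentication that adapts to risk context, can be sketched as a scoring function. The signals, weights, and thresholds here are illustrative assumptions rather than tuned values.

```python
def required_auth(context: dict) -> str:
    """Map a risk context to an authentication requirement.

    Weights and thresholds are illustrative assumptions, not tuned values.
    """
    score = 0
    if context.get("new_device"):
        score += 2
    if context.get("unusual_location"):
        score += 2
    if context.get("sensitive_action"):
        score += 3
    if context.get("off_hours"):
        score += 1

    if score >= 5:
        return "deny_and_escalate"
    if score >= 2:
        return "step_up_mfa"
    return "allow"
```

With these assumed weights, a login from a new device triggers a step-up challenge, while a sensitive action from a new device is blocked and escalated to a human.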

Phase 4: Resilience (Ongoing)

- Continuous testing. Red team exercises should specifically include AI-enabled attack scenarios.

- Regular updating. Keep all systems patched and current. AI-enabled attacks often exploit known vulnerabilities.

- Training evolution. Shift security awareness training from "spot the phishing" to "verify through separate channels."

- Monitoring and adjustment. Evaluate detection accuracy. Adjust models and rules based on observed threats.
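For the monitoring item above, "detection accuracy" is easier to act on when split into precision (how many alerts were real) and recall (how many real attacks were caught). A minimal sketch, with made-up counts from a hypothetical review period:

```python
def detection_metrics(true_pos: int, false_pos: int, false_neg: int) -> dict[str, float]:
    """Compute precision and recall from reviewed alert counts."""
    flagged = true_pos + false_pos
    actual = true_pos + false_neg
    return {
        "precision": true_pos / flagged if flagged else 0.0,
        "recall": true_pos / actual if actual else 0.0,
    }


# Hypothetical counts: 80 attacks caught, 20 false alarms, 20 attacks missed.
metrics = detection_metrics(true_pos=80, false_pos=20, false_neg=20)
```

Falling recall suggests attackers have adapted and models or rules need updating; falling precision suggests analysts are being buried in false alarms.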

The Economics of AI Cybercrime: Why This Won't Stop

Understanding the economics helps explain why this threat wave will persist and accelerate.

From a criminal perspective, the risk-reward calculation is extremely favorable. Cost to operate is low (

A criminal operating 100 deepfake fraud attacks with a 10% success rate nets

Compare this to legitimate business. Venture-backed companies are thrilled with 100% ROI annually. Criminals are achieving that per week with AI-enabled attacks.

From a marketplace operator perspective, the economics are equally favorable. A dark LLM vendor serving 1,000 customers at

That's enough revenue to justify full-time criminal operations, continuous development, and marketing.

From a defender perspective, the economics are unfavorable. Implementing zero-trust architecture costs substantial capital investment (millions for mid-sized organizations). Maintaining advanced security infrastructure requires ongoing investment (staff, tools, training). ROI is measured in "breaches prevented," which is difficult to quantify.

This economic imbalance drives the threat growth. Criminals are running profitable enterprises. Defenders are making cost-based decisions. The gap widens.

What AI Defenders Are Getting Right (And Wrong)

Getting Right:

Organizations that have successfully reduced AI-enabled attack impact are doing several things correctly:

They're assuming compromise is inevitable and designing for resilience rather than prevention. They're implementing detection that works against AI-generated attacks (behavioral analytics, anomaly detection) rather than signature-based approaches. They're making authentication adaptive based on risk context. They're testing their defenses against realistic AI-enabled attack scenarios.

Getting Wrong:

Many organizations are making critical mistakes:

They're investing heavily in AI-powered threat detection tools while neglecting foundational security (multi-factor authentication, endpoint detection, network segmentation). They're treating the threat as purely technical when it's increasingly behavioral and social. They're building detection systems for known attack patterns while threats are constantly evolving. They're attempting to prevent AI attacks through detection and rules when the attack surface is too large.

The most effective defensive strategy combines: strong foundational security (MFA, EDR, segmentation), behavioral analysis and anomaly detection, assumption of compromise and rapid incident response, and continuous adaptation rather than static rule-based detection.

Preparing Your Organization: A Security Team Checklist

Immediate Actions (This Week)

- Audit your current multi-factor authentication coverage. What percentage of employees use MFA?

- Identify which systems handle sensitive data and could be targeted for deepfake-enabled fraud.

- Document your current incident response process. Is it adequate for an active compromise?

- Assess your current email filtering effectiveness. Test with simulated AI-generated phishing.

- Identify your highest-value targets (executives, system administrators, finance staff) and increase their security controls.
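The MFA coverage question in the first item above reduces to a small calculation over a user export. The sketch below assumes a list of records with hypothetical `username` and `mfa_enabled` keys; your identity provider's export will use its own field names.

```python
def mfa_coverage(users: list[dict]) -> tuple[float, list[str]]:
    """Return the percentage of users with MFA and the usernames without it."""
    if not users:
        return 0.0, []
    missing = [u["username"] for u in users if not u.get("mfa_enabled", False)]
    covered = len(users) - len(missing)
    return 100.0 * covered / len(users), missing


# Hypothetical export for illustration.
directory = [
    {"username": "alex", "mfa_enabled": True},
    {"username": "blake", "mfa_enabled": True},
    {"username": "casey", "mfa_enabled": True},
    {"username": "dana", "mfa_enabled": False},
]
percent, without_mfa = mfa_coverage(directory)
```

The list of users without MFA is usually more useful than the percentage, especially when cross-checked against the high-value targets from the last item.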

Short-term Actions (Next Month)

- Deploy mandatory MFA across your organization.

- Implement endpoint detection and response on critical systems.

- Establish baseline behavioral profiles for privileged users.

- Create incident response playbooks specifically for deepfake fraud and AI-generated phishing.

- Conduct a red team exercise that includes AI-enabled attack scenarios.
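For the behavioral baseline item above, the simplest possible form is a per-user history of one metric and a check for values far outside it. This sketch uses a z-score with an assumed threshold of three standard deviations; commercial behavioral analytics products use far richer models.

```python
from statistics import mean, stdev


def is_anomalous(history: list[float], observed: float, threshold: float = 3.0) -> bool:
    """Flag a value more than `threshold` standard deviations from the baseline."""
    if len(history) < 2:
        return False  # not enough data to form a baseline
    mu = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        return observed != mu  # any change from a perfectly flat baseline
    return abs(observed - mu) / sigma > threshold


# Hypothetical daily counts of servers accessed by one administrator.
baseline = [10, 12, 11, 9, 10, 11, 12, 10]
```

Against this baseline, a day with 11 servers accessed is normal and a day with 40 is flagged for review.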

Medium-term Actions (Next Quarter)

- Begin migration to zero-trust architecture.

- Deploy behavioral analytics and anomaly detection.

- Implement network segmentation for critical systems.

- Establish AI-powered threat detection for phishing and malware.

- Test deepfake detection technology and integrate into identity verification processes.

Long-term Actions (Next Year)

- Complete zero-trust migration.

- Establish continuous verification and adaptive authentication across all systems.

- Implement threat intelligence integration and real-time threat feed consumption.

- Develop AI-enabled security operations center capabilities.

- Participate in information sharing communities and contribute threat intelligence.

FAQ

What is a Dark LLM and how is it different from regular AI tools like ChatGPT?

A Dark LLM is a large language model stripped of safety guardrails and content filters, sold on dark web marketplaces specifically for generating malicious content. Unlike ChatGPT, which refuses to help with phishing or fraud, Dark LLMs comply with any request, including generating convincing phishing emails, social engineering scripts, and fraud narratives. The key difference is intent: Dark LLM developers deliberately removed the safety constraints that legitimate AI companies implement.

How can criminals use deepfakes to commit fraud at my financial institution?

Criminals use deepfakes in several attack patterns. They can create convincing video of your company's CEO requesting wire transfers, call your bank support team using deepfaked video showing the account holder's face, or intercept your call to the bank and use a cloned voice of a bank representative to approve fraudulent transactions. A single successful deepfake-enabled attack can drain accounts of thousands to hundreds of thousands of dollars, which is why verified global losses from deepfake-enabled fraud already run into the hundreds of millions of dollars.

What is synthetic identity fraud and why is it so difficult to prevent?

Synthetic identity fraud involves criminals creating entirely fake identities (fake names, addresses, social security numbers) with fabricated digital history, then using those fake identities to accumulate credit before disappearing with the stolen funds. It's difficult to prevent because existing fraud detection systems look for compromised identities or impersonation of real people. Completely fake identities don't trigger these systems since there's no historical identity to check against, and no real person to victimize.

How can voice cloning technology be used to commit fraud?

Voice cloning can be used to impersonate bank account holders calling their bank, system administrators requesting access to networks, or even bank representatives calling account holders to verify fraudulent transactions. Advanced voice synthesis can replicate someone's voice from as little as 10 seconds of audio, making it indistinguishable from the original speaker even to trained listeners. The synthetic voice includes subtle characteristics like accent, inflection, and breathing patterns that make verification by ear nearly impossible.

What is the most effective defense against AI-powered phishing attacks?

The most effective defense isn't trying to detect every AI-generated phishing email (which is increasingly difficult as they become technically perfect), but rather implementing multi-factor authentication so that even if an employee clicks a malicious link and enters credentials, the attacker still can't access the account without the second factor. Combined with behavioral analytics to catch unusual account activity and mandatory out-of-band verification for sensitive actions, multi-factor authentication significantly reduces the impact of successful phishing attacks.

Why is zero-trust architecture important for defending against AI cybercrime?

Zero-trust architecture eliminates the assumption that anything inside your network is trustworthy. Instead, every access request requires verification, every user needs multi-factor authentication, and every device requires endpoint verification. This is critical for AI-enabled attacks because a compromise in which an attacker uses a legitimate user's stolen credentials, whether obtained through deepfake-enabled social engineering or phishing, looks identical to normal access from a traditional security perspective. Zero-trust forces additional verification layers that can catch these compromises.

How much does dark LLM access cost and how many criminals are using these services?

Dark LLM subscriptions typically cost between

What should I do if I suspect my organization is being targeted by AI-enabled attacks?

First, implement immediate protective measures: enforce mandatory multi-factor authentication, deploy endpoint detection and response, and establish network segmentation. Second, monitor actively for signs of compromise including unusual login patterns, unexpected credential usage, and behavioral anomalies. Third, prepare incident response procedures including clear escalation paths and recovery procedures. Finally, maintain threat intelligence feeds to understand current attack techniques and adapt your defenses proactively.

Are there regulatory requirements for defending against deepfake fraud specifically?

Several regulatory frameworks now explicitly address deepfake fraud and AI-enabled attacks. Financial regulations require banks to implement controls for detecting deepfake fraud. Some jurisdictions are considering regulations specifically banning deepfake creation for fraudulent purposes. However, regulations are written reactively after threats emerge, meaning they always lag behind threat evolution. Your defensive strategy should exceed current regulatory requirements since the threats are moving faster than regulatory frameworks.

How quickly are deepfake and voice cloning technologies improving?

Both technologies are advancing remarkably quickly. Voice cloning systems can now replicate someone's voice from a single 10-second audio sample. Deepfake video is reaching imperceptible quality, with generations now indistinguishable from authentic video even when studied carefully. The rate of improvement suggests that within 12-24 months, detection based on visual or audio artifacts may become impossible, requiring defenses based on verification processes, behavioral analysis, and communication channel verification rather than detection of the synthesis artifacts themselves.

Conclusion: The New Reality of Cybersecurity

We're living through a transition point in cybersecurity. The previous 30 years of defense-in-depth, perimeter security, and signature-based detection were effective because attacks operated at human scale. Phishing emails had to be written by humans. Malware had to be created by developers. Deepfakes required expensive equipment.

That era is over.

AI has collapsed the barrier to sophistication. A teenager with a $50/month dark LLM subscription can generate phishing campaigns that fool 90% of users. An amateur with access to deepfake generation tools can create convincing synthetic video. A low-skill criminal with access to synthetic identity generation can commit fraud at industrial scale.

The threat landscape has fundamentally changed. Your defenses need to change too.

The good news: we understand what works. Organizations implementing zero-trust architecture, behavioral analytics, continuous verification, and adaptive authentication are experiencing dramatically lower breach rates than organizations using traditional security approaches. Foundational controls (multi-factor authentication, endpoint detection, network segmentation) remain effective against AI-enabled attacks.

The challenge: implementing these defenses requires investment, organizational change, and continuous evolution. It's not a one-time project. It's a permanent shift in how you approach security.

But the alternative is worse. Organizations not implementing these defenses are facing increasing breach rates, increasing financial impact, and increasing operational disruption. The cost of inaction exceeds the cost of action.

Start immediately. Implement multi-factor authentication this month. Deploy behavioral analytics this quarter. Plan zero-trust migration for the next year. Test your defenses against realistic AI-enabled attack scenarios.

The AI cybercrime wave is here. Your response determines whether it's a manageable threat or an existential challenge for your organization.

The time to prepare was six months ago. The second best time is right now.

Key Takeaways

- First-time dark web posts mentioning AI-related keywords increased 371% between 2019 and 2025, pointing to a stable underground market with thousands of active participants

- Deepfake-enabled fraud caused 1,000-5

- Dark LLMs and AI crimeware are democratizing sophisticated attacks: subscription prices of 200/month enable low-skill actors to deploy enterprise-scale phishing campaigns

- AI-generated phishing achieves 4x higher success rates than traditional phishing, making traditional email filtering and security awareness training increasingly ineffective

- Defense requires fundamental shift to zero-trust architecture, behavioral analytics, continuous verification, and rapid incident response; traditional signature-based detection no longer suffices
