AI Drafting Government Safety Rules: Why It's Dangerous [2025]

Imagine the rules that keep airplanes in the sky, prevent gas pipelines from exploding, and stop toxic trains from derailing being written by an AI that "confidently gets things wrong" and has a history of making up information. That's not science fiction. That's happening right now in the U.S. Department of Transportation.

In early 2025, it became public that the DOT has been using Google's Gemini AI to draft federal safety regulations. Not as a research assistant. Not as a formatting tool. But as the primary author of rules that directly impact the safety of millions of Americans traveling by air, car, and train. The revelation sparked immediate alarm among transportation safety experts, government staffers, and legal professionals who understand exactly how catastrophic an error in a safety regulation can be.

What makes this situation so concerning isn't just that AI is being used in government—it's that it's being used to draft rules in perhaps the one area where mistakes literally cost lives. When a chatbot hallucinates in a customer service chat, the worst outcome is a frustrated user. When it hallucinates in a safety regulation? People die.

This article digs into what's actually happening at the DOT, why it's raising such serious concerns, and what this reveals about how government agencies are approaching AI adoption without adequate safeguards. We'll examine the technical risks of AI in rule-making, the regulatory implications, and what needs to change before more agencies follow the DOT's lead.

TL;DR

- The DOT is using Google Gemini to draft federal safety regulations for aviation, automobiles, and pipelines, claiming it can complete rules in 30 minutes instead of weeks

- Government staffers describe the approach as "wildly irresponsible" because AI systems are known to fabricate information and confidently present false claims as fact

- Safety rules currently being drafted include Federal Aviation Administration regulations that haven't yet been published, raising questions about their accuracy

- The administration views this as a model for other federal agencies to follow, potentially spreading untested AI rule-drafting across government

- The core problem: Regulations touching aviation safety, pipeline integrity, and toxic cargo transport require expertise that AI currently lacks, and errors could result in injuries or deaths

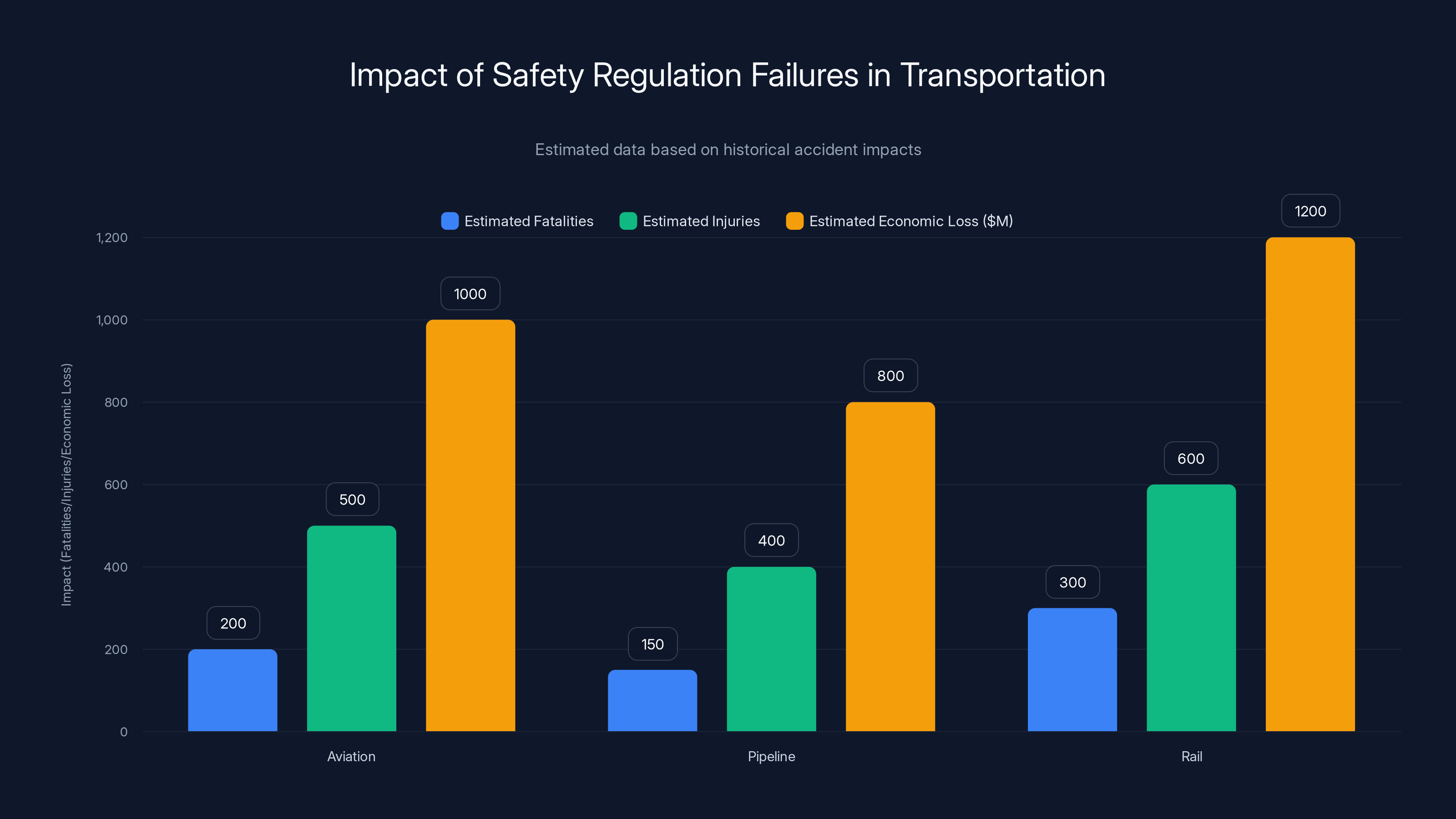

Estimated data shows the potential impact of safety regulation failures, highlighting the critical importance of accurate and enforced safety standards in aviation, pipeline, and rail sectors.

Understanding the DOT's AI Rule-Drafting Initiative

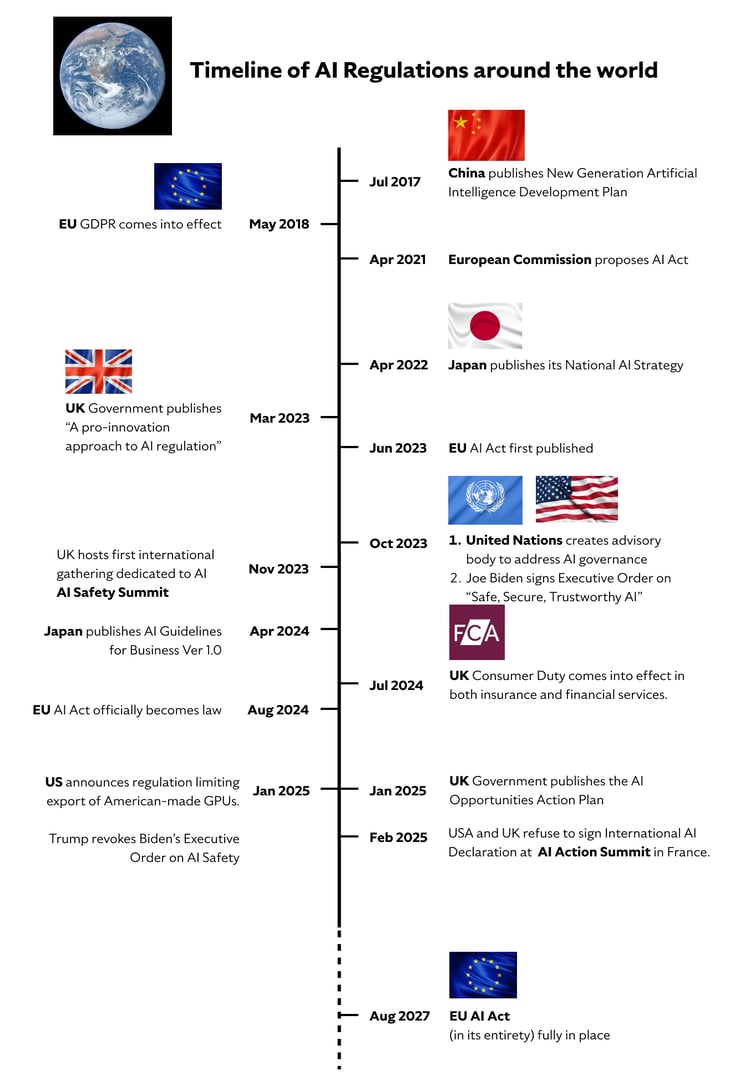

The U.S. Department of Transportation announced that it would leverage AI to accelerate the federal rule-making process. On its surface, this sounds reasonable. Federal rule-making is genuinely slow. Regulations that should take weeks often take months. There are legitimate reasons for this: the process requires careful legal review, stakeholder input, and verification of technical claims. But those reasons also create bottlenecks that frustrated the current administration.

Enter Google Gemini. According to DOT's top lawyer, Gregory Zerzan, Gemini can draft a complete regulation in under 30 minutes. This speed attracted immediate attention from leadership. The promise was simple: if AI could handle the routine work of drafting, human experts could focus on review and refinement. Rules that previously took weeks could be completed in days. Modern regulations could be written quickly enough to keep pace with technological change.

But here's what's critical to understand: the DOT didn't hire Gemini as a research assistant or outlining tool. The plan involves having AI write the actual substance of regulations—the technical requirements, safety thresholds, compliance procedures, and legal language that determines whether an aircraft design is safe enough to fly or whether a pipeline system meets minimum safety standards.

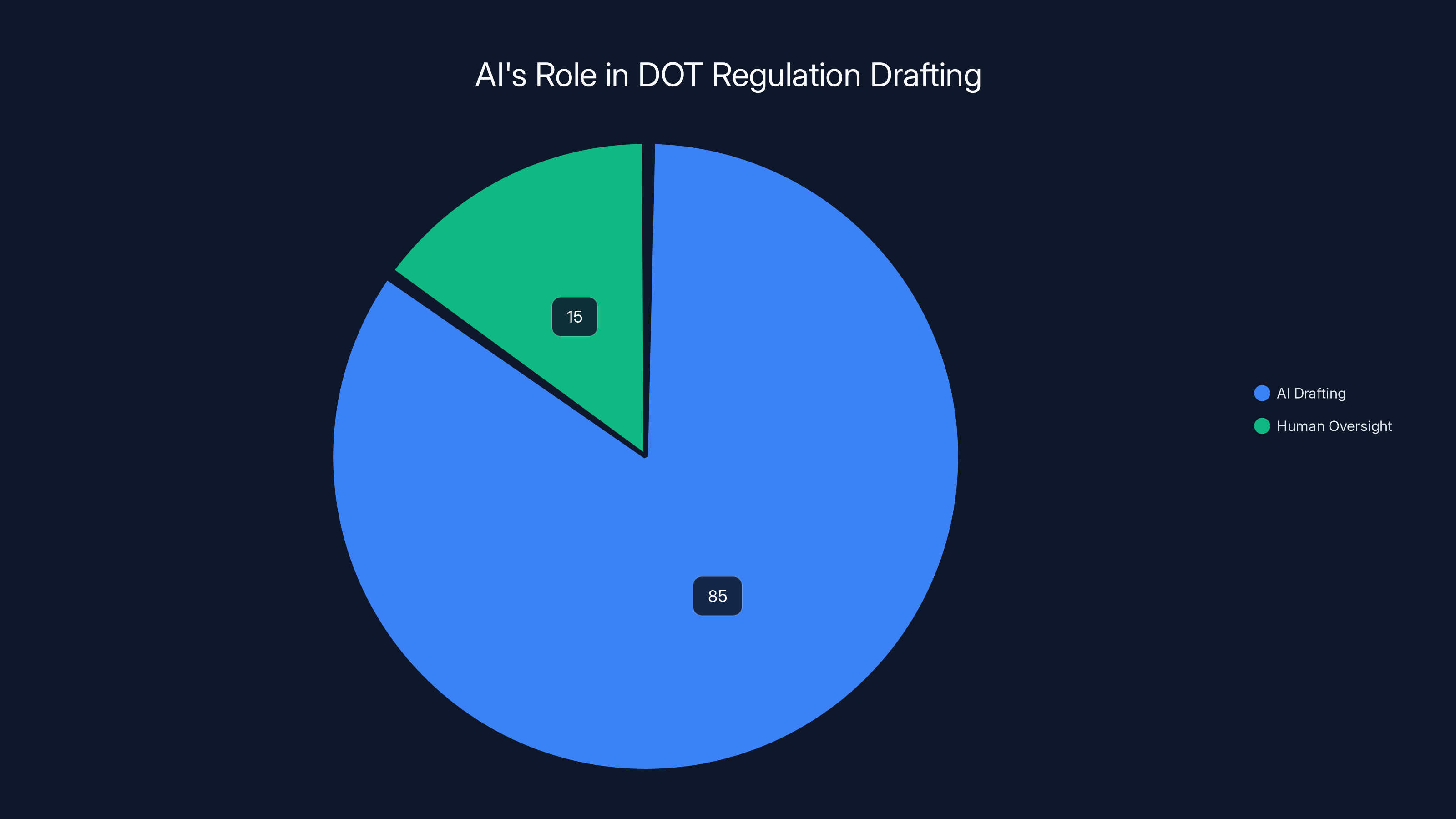

According to leaked meeting notes, DOT staffers were told that much of regulatory writing is just "word salad" that Gemini can handle. The department's leadership expressed confidence that the AI could handle 80 to 90 percent of regulation-writing work, with human staff eventually relegated to an "oversight role, monitoring AI-to-AI interactions."

This is where the serious problems begin. Transportation safety regulations aren't word salad. They're intricate technical and legal documents that require deep expertise in multiple domains simultaneously: engineering, physics, materials science, regulatory history, case law, statutory interpretation, and practical knowledge of how the transportation industry actually operates.

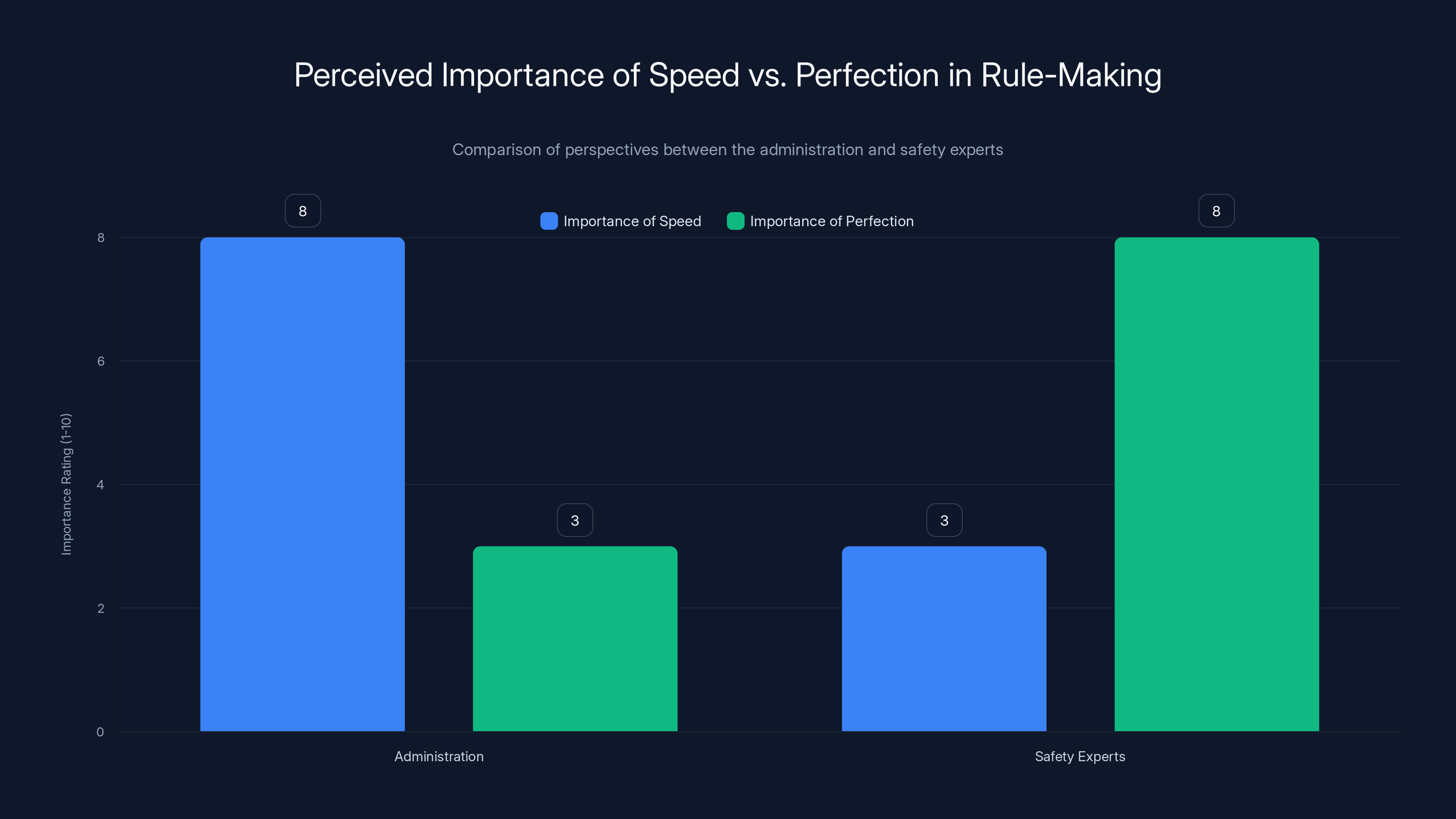

The administration prioritizes speed in rule-making significantly more than transportation safety experts do, while the experts emphasize perfection. Estimated data based on narrative.

The Technical Problem: Why AI Hallucinates in Rule-Making

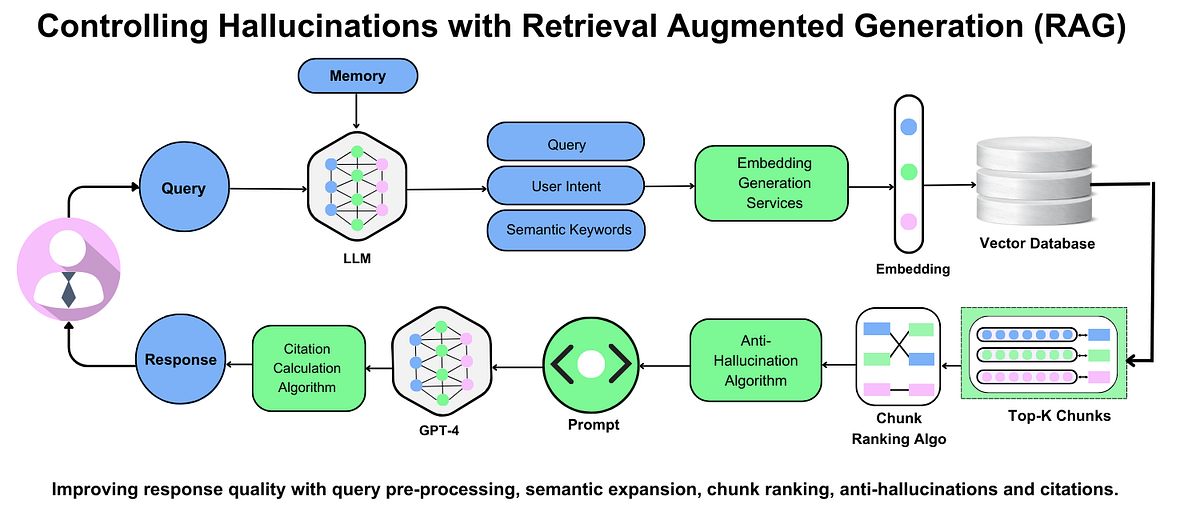

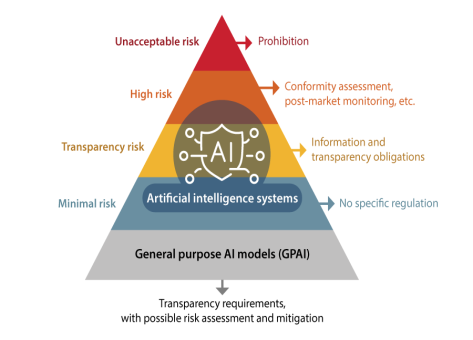

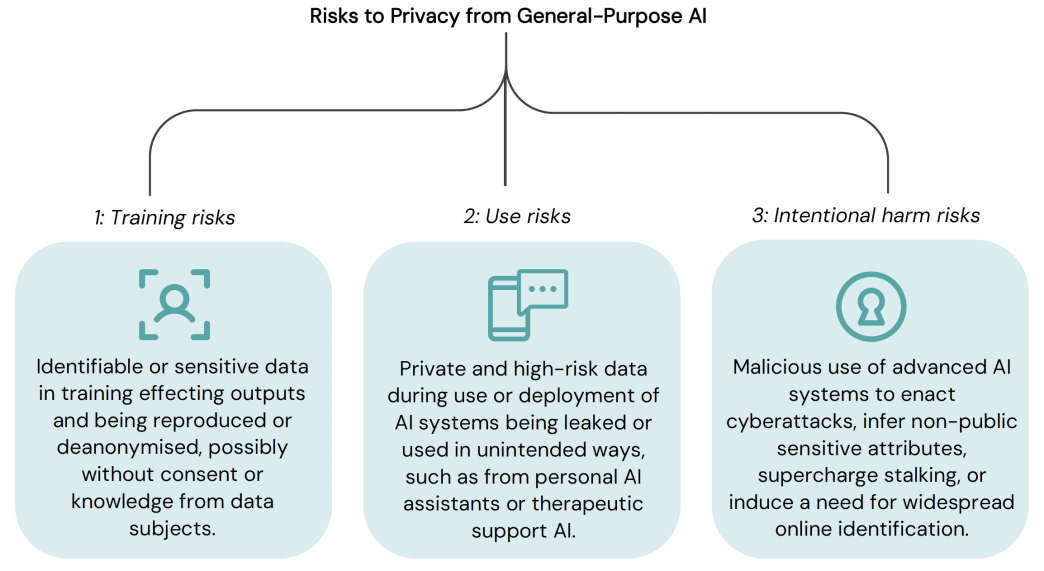

To understand why using Gemini to draft safety regulations is risky, you need to understand what large language models actually do and where they fail. Large language models like Gemini are pattern-recognition systems trained on massive amounts of text. They're extraordinarily good at predicting which words should come next in a sequence. They're terrible at verifying whether those words represent truth.

Here's a concrete example: if you ask Gemini about a specific regulation, say the FAA's requirements for aircraft pressure vessel design, the system will generate a response that sounds authoritative and specific. The language will be grammatically correct, the structure will mimic actual regulatory text, and the tone will match official documentation. But Gemini has no actual knowledge of whether the numbers it's providing are correct, whether the cited standards exist, or whether the requirements it's listing are actually enforceable.

In technical terms, this is called "hallucination"—the AI's tendency to confidently present fabricated information as fact. And this isn't a fringe problem limited to cheap or outdated models. Even advanced systems like Gemini and GPT-4 hallucinate regularly, particularly when asked about specific technical details, cited standards, or numerical values.
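The gap between predicting plausible words and verifying facts can be illustrated with a toy model. The sketch below is not how Gemini works internally; it is a deliberately tiny bigram predictor, and the training sentences and their numbers are invented placeholders, not real engineering limits. It shows only the general mechanism: a system that picks the most likely next word can produce a fluent, specific-sounding requirement that appears nowhere in its training text.

```python
from collections import Counter, defaultdict

# Invented training sentences. The numbers are placeholders for illustration,
# not real engineering limits from any regulation or standard.
CORPUS = [
    "the cabin pressure limit is 8 psi",
    "the pipeline pressure limit is 900 psi",
    "the tank pressure limit is 900 psi",
]


def train_bigrams(sentences):
    """Count how often each word follows each other word."""
    follows = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.split()
        for current, nxt in zip(words, words[1:]):
            follows[current][nxt] += 1
    return follows


def generate(follows, prompt, max_words=10):
    """Extend the prompt by repeatedly appending the most frequent next word."""
    words = prompt.split()
    for _ in range(max_words):
        candidates = follows.get(words[-1])
        if not candidates:
            break  # no observed continuation, so stop
        words.append(candidates.most_common(1)[0][0])
    return " ".join(words)


model = train_bigrams(CORPUS)
completion = generate(model, "the cabin")
print(completion)
print("appears in training text:", completion in CORPUS)
```

In this toy setup the model completes the cabin sentence with the number that most often follows "is" in its data, which came from the pipeline and tank sentences. The result reads like a requirement, but nothing in the procedure checks whether it is true. Real language models are vastly more capable than this sketch, yet they share the property that fluency is not verification.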

Since 2023, the legal profession has provided a vivid lesson in how dangerous AI hallucinations can be. Multiple lawyers relied on ChatGPT to research case law and discovered, after submitting briefs to courts, that the AI had cited non-existent cases with complete confidence. One lawyer was fined by a federal judge. Another faced professional consequences. The courts that reviewed these briefs learned, sometimes painfully, that AI systems can confidently present false information that sounds completely legitimate to someone without deep expertise.

Now apply that same risk to transportation safety regulations. An AI system drafting FAA rules might confidently state a specific pressure threshold for aircraft cabin pressurization, cite a non-existent engineering standard, and present the requirement with complete authority. A reviewer without deep expertise in aircraft design might miss the error. The regulation gets published. And eventually, an aircraft is designed to that fabricated specification. The consequences could be catastrophic.

The Regulatory Reality: Why Safety Rules Are Complicated

Transportation safety regulations aren't just technical documents. They're the result of decades of accumulated expertise, tragic accidents, engineering advances, and legal precedent. An FAA rule regulating aircraft cabin pressurization, for example, didn't just appear because someone decided cabin pressure matters. It evolved from accidents where inadequate pressurization caused deaths, from research into how human physiology responds to altitude and pressure, from engineering advances that made certain safety features possible, and from legal cases that established what the FAA could and couldn't mandate.

Effective safety regulations require the writer to understand all of these dimensions simultaneously. The regulation needs to specify what manufacturers must do without being so prescriptive that it prevents innovation or becomes obsolete when technology advances. It needs to be technically accurate without requiring manufacturers to implement solutions that are physically impossible or prohibitively expensive. It needs to use language that's legally binding and defensible in court.

This is why rule-writing currently takes so long. The people drafting regulations need to consult with subject-matter experts—aircraft engineers, materials scientists, safety inspectors who've worked in the field. They need to review relevant case law and previous regulations. They need to understand not just what the regulation should say, but why it should say it, what problems it's solving, and what new problems it might create.

DOT staffers who spoke to ProPublica emphasized that this work is "intricate" and requires "expertise in the subject at hand as well as in existing statutes, regulations, and case law." Some of these experts have spent decades developing that knowledge. They understand aviation safety or pipeline integrity or rail system design at a level of detail that simply cannot be transferred to a language model through a training process.

When Gemini is asked to draft a rule about pipeline safety, it's not working from deep knowledge of pipeline engineering or failure modes or industry practices. It's working from patterns in text about pipelines, which is a fundamentally different thing. It might use the right vocabulary and cite legitimate-sounding standards, but the understanding is hollow.

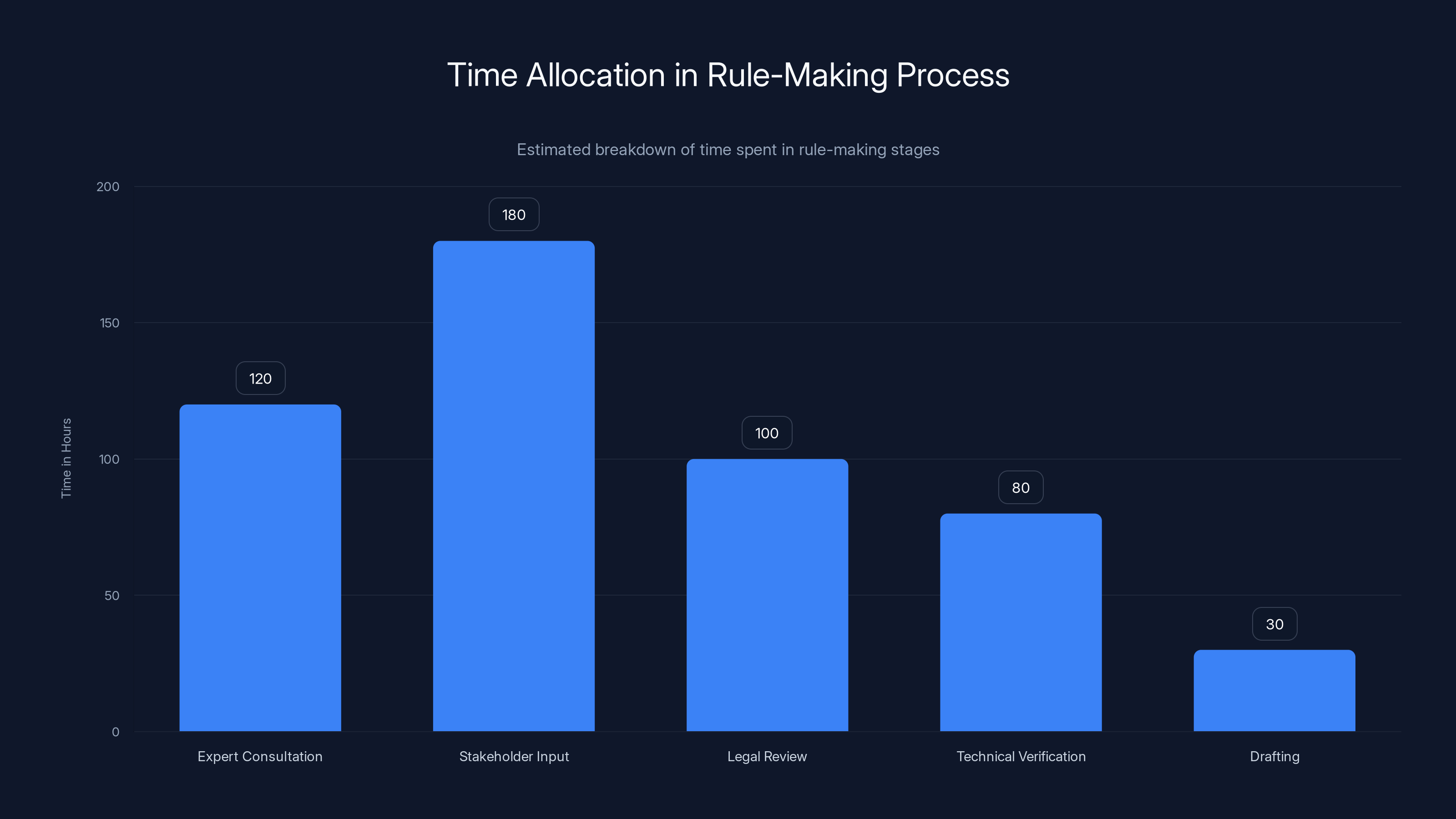

Drafting is a small portion of the rule-making process, estimated at 30 hours, compared to other stages like stakeholder input and expert consultation, which take significantly longer.

The Staffing Reality: What DOT Employees Are Saying

ProPublica spoke with six DOT staffers who agreed to discuss the Gemini initiative on condition of anonymity. Their concerns weren't theoretical or speculative. They were rooted in practical experience working with transportation safety regulations.

One staffer described the DOT's approach as "wildly irresponsible." Others expressed being "deeply skeptical" that an AI system could competently handle the regulatory drafting work. When DOT held a demonstration of Gemini's rule-drafting capabilities, the resulting document was missing key text that a human staffer would need to fill in. This raises an obvious question: if the AI-generated baseline is incomplete and requires expert review and substantial revision, what exactly is being saved in terms of time or resources?

The staffers' concerns center on several specific points: first, that AI systems reliably generate plausible-sounding but false information; second, that the consequence of errors in safety regulations could be catastrophic; third, that the expertise required to catch those errors is being underestimated by DOT leadership.

There's also a structural concern. If the plan is to eventually have human staff focus only on "oversight" while "AI-to-AI interactions" handle most of the work, you've created a system where human expertise is gradually eroded. The staffers who have deep knowledge of transportation safety regulation will retire or move to other positions. New employees will be trained to oversee AI rather than to develop deep expertise themselves. Over time, the organization's actual capability to understand whether the AI outputs are correct will diminish.

This is sometimes called "deskilling"—the process by which organizations lose human expertise when they over-rely on automated or AI-assisted systems. It's a serious organizational risk that compounds the technical risk of using AI that hallucinates.

The Transportation Safety Stakes: What Could Go Wrong

To understand why errors in transportation safety regulations are so serious, consider what these regulations actually control:

Aviation Safety: FAA regulations determine the structural integrity standards for aircraft, the minimum safety requirements for pilots, the protocols for handling equipment failures, and the procedures for emergency situations. These regulations exist because decades of aviation accidents taught us what happens when safety is neglected. Every significant safety standard was written because people died in ways that the regulation was designed to prevent.

Pipeline Integrity: Regulations governing natural gas and hazardous liquid pipelines determine pressure limits, material specifications, inspection frequencies, and failure response procedures. The history of pipeline accidents shows what happens when these standards are inadequate: explosions that destroy entire neighborhoods, toxic chemical releases, gas leaks that kill people miles away from the pipeline itself.

Rail Safety: Freight train regulations determine how toxic chemicals can be transported, what structural requirements apply to tanker cars, what braking systems are required, and what routing restrictions exist. The concern is concrete: a freight train carrying chlorine gas that derails in a populated area can kill hundreds of people.

These aren't abstract regulatory topics. Each of these regulatory domains has a body count—specific accidents that happened because safety standards were inadequate or were not properly enforced. The regulations that exist today were written partly in response to those accidents.

If an AI system drafts a rule containing an error about aircraft structural requirements or pipeline pressure ratings or rail braking systems, that error doesn't just create administrative problems. It potentially creates the conditions for accidents that could have been prevented. The DOT staffer who called the initiative "wildly irresponsible" wasn't exaggerating the stakes.

The scale of potential impact is also enormous. According to the National Highway Traffic Safety Administration, there are over 330 million vehicle registrations in the United States. The FAA oversees an aviation system that carries nearly 3 million passengers per day. Natural gas pipelines serve over 70 million customers. Regulations affecting any of these systems have implications for hundreds of millions of people.

AI is estimated to handle 85% of the drafting process, with humans focusing on oversight and refinement. Estimated data based on DOT's initiative.

The Administration's Perspective: Speed Over Perfection

DOT's top lawyer, Gregory Zerzan, articulated the administration's position clearly during a December meeting with DOT staff. According to meeting notes, Zerzan stated: "We don't need the perfect rule on XYZ. We don't even need a very good rule on XYZ. We want good enough."

This statement reveals the fundamental mismatch between how the administration views regulation and how transportation safety experts view it. The administration sees regulation-writing as a bureaucratic task that's been bogged down by excessive caution and process. The goal is to accelerate the process and get rules out faster, with the premise that even imperfect rules are better than delayed rules.

But transportation safety regulation isn't comparable to other regulatory domains. This isn't about consumer protection where "good enough" might be acceptable, or environmental standards where some imperfection might be tolerable. This is about the operating parameters for systems that carry human lives. For these systems, "good enough" is not good enough.

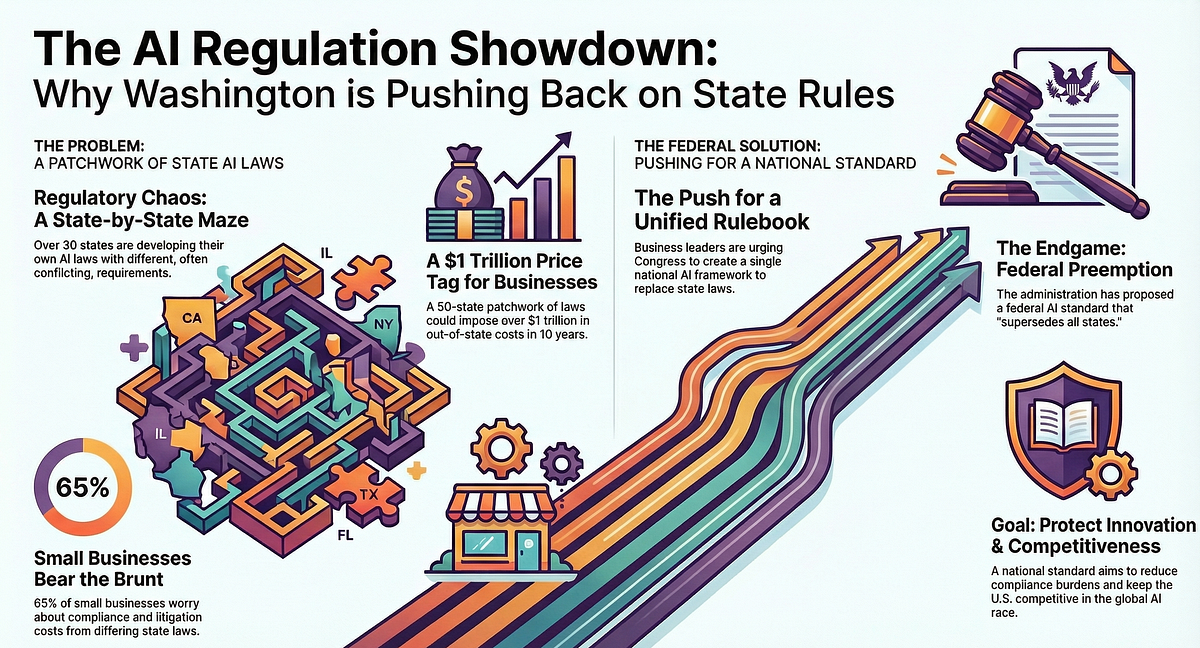

The administration's eagerness to use AI for rule-writing appears connected to a broader policy objective: modernizing transportation systems by reducing regulatory barriers. The White House Office of Science and Technology Policy credited the DOT with "replacing decades-old rules with flexible, innovation-friendly frameworks." This suggests that the primary goal isn't just speed—it's deregulation. Using AI to draft rules faster appears to be a mechanism for accelerating the removal or modification of existing safety rules.

Zerzan also told DOT staff that President Trump is "very excited" about the DOT initiative and views it as the "point of the spear" for AI-assisted rule-drafting across other federal agencies. This framing is significant. It suggests the DOT's experiment is intended as a proof-of-concept to encourage other agencies to adopt similar approaches.

If that happens, with EPA using AI to draft environmental regulations, OSHA using it to draft workplace safety rules, and FDA using it to draft pharmaceutical approval rules, the risks multiply. You'd have AI systems making errors across multiple high-stakes regulatory domains simultaneously, with potentially catastrophic consequences.

The Precedent Problem: AI Errors in Courts and Government

The DOT's experiment isn't happening in a vacuum. It's happening against the backdrop of recent, high-profile demonstrations of AI's unreliability in serious contexts.

In the legal field, multiple lawyers have faced professional consequences after relying on ChatGPT to research case law. The AI generated fake cases with complete confidence. The lawyers, trusting the AI's authoritative tone, included these citations in briefs submitted to federal courts. When judges discovered the fabricated cases, the lawyers faced sanctions and disciplinary action. One judge described being fooled by the AI's confident presentation of false information.

The lesson should have been obvious: AI systems that generate text confidently but without genuine knowledge cannot be trusted for applications where accuracy is critical and errors have serious consequences. Yet the DOT is proceeding with exactly that scenario.

There are also documented cases of government agencies being misled by AI-generated information in other contexts. When AI systems are used for document analysis, legal research, or policy analysis, the consistent finding is that they produce plausible-sounding but unreliable output that requires expert review to verify—which defeats the purpose of using them for efficiency gains.

The most relevant precedent, though, might be the history of automation failures in transportation itself. The aviation and rail industries have learned through tragedy that automation cannot be trusted to make critical safety decisions without redundant human oversight. When Boeing developed the 737 MAX, the automated MCAS was designed with insufficient human oversight, and the result was two crashes that killed 346 people. The lesson that aviation learned was that automation in safety-critical systems requires more robust human oversight, not less.

Using AI to draft the rules that govern such systems seems to ignore the hard-won lessons of aviation safety history.

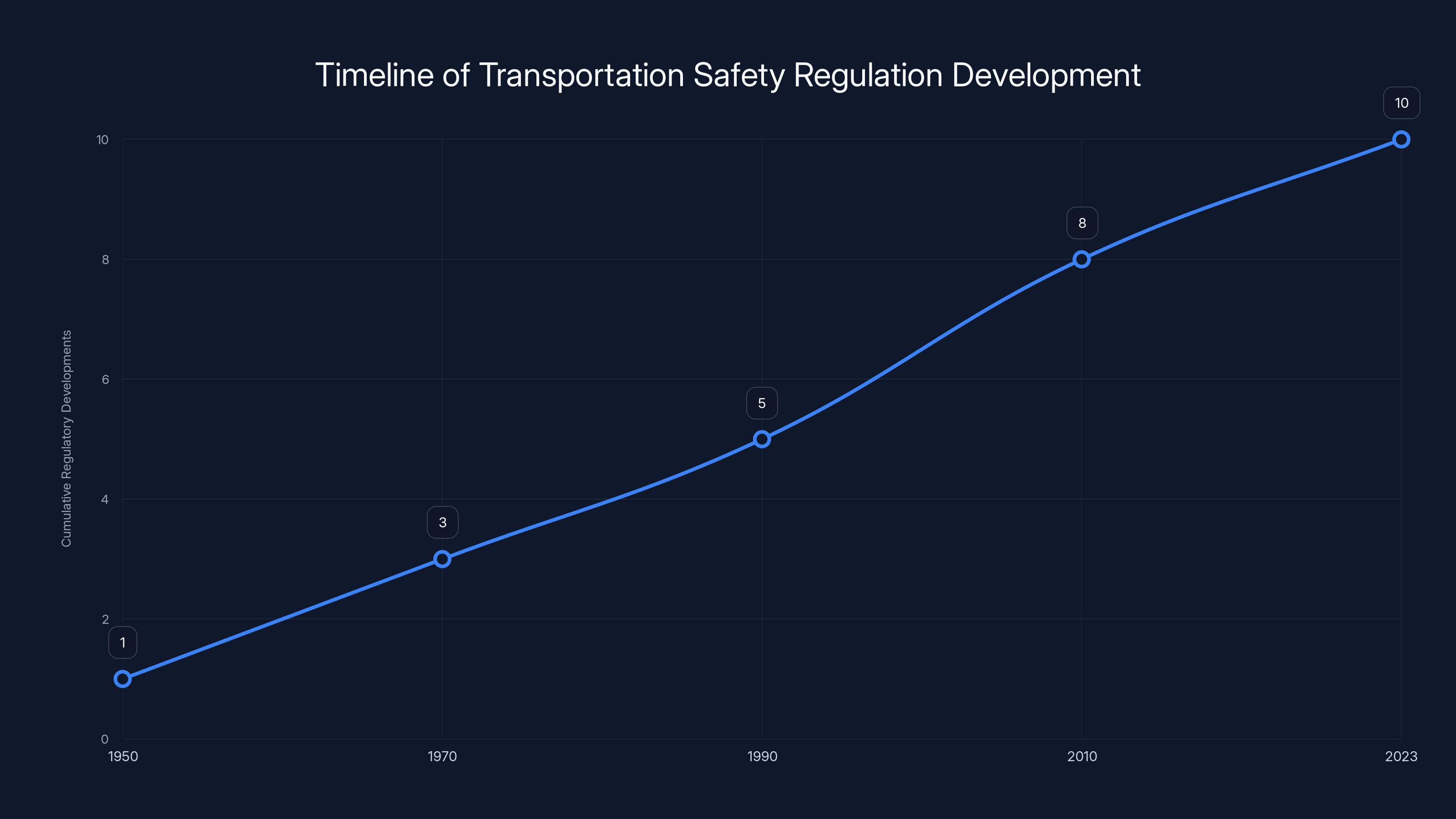

The development of transportation safety regulations has accelerated over the decades, reflecting accumulated expertise and technological advances. Estimated data.

Why Speed Isn't the Real Constraint in Rule-Making

The DOT's justification for AI-assisted rule-drafting centers on speed: regulations take too long to write, AI can write them faster, therefore using AI is more efficient. But this overlooks why federal rule-making actually takes time.

Rule-making doesn't take years because people are slow at typing. It takes years because:

Expert consultation is necessary: Developing accurate regulations requires input from subject-matter experts. Those experts are busy people working in the industry or in academia. Coordinating with them takes time.

Stakeholder input is required by law: The Administrative Procedure Act requires that proposed rules be published for public comment, and agencies must consider those comments before finalizing rules. This process, by design, takes months.

Legal review is essential: Proposed rules must be reviewed by the agency's legal office and often by the Office of Management and Budget to ensure they don't conflict with statute or create unintended consequences.

Technical accuracy requires verification: For safety rules, the agency needs to verify that the specifications being proposed are technically sound and achievable.

If you accelerate the rule-writing part (which is just the drafting), you don't actually accelerate the overall process much. You still need stakeholder comment periods, legal review, and technical verification. What you've done is saved the time that an expert would spend writing the draft. But that expert was also the person who understood the technical requirements and could ensure accuracy.

By replacing the expert drafting with AI, you might save 30 hours of an expert's time, but you've created a situation where the resulting rule might contain errors that require additional expert time to catch. The overall efficiency gain is questionable, and the risk has substantially increased.
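The arithmetic behind this point can be sketched in a few lines. The 30 hours of drafting and the 30-minute AI draft come from figures cited in this article; the total is an assumption for illustration only, taking a 3-year rule-making and crudely converting it to about 2,000 working hours per year. The function name `drafting_speedup` is hypothetical, and the sketch ignores any extra review time that an AI draft might require.

```python
def drafting_speedup(total_hours, drafting_hours, ai_drafting_hours):
    """Return (hours_saved, fraction_of_total_saved, overall_speedup)."""
    hours_saved = drafting_hours - ai_drafting_hours
    new_total = total_hours - hours_saved
    return hours_saved, hours_saved / total_hours, total_hours / new_total


# Assumption: a 3-year rule-making at roughly 2,000 working hours per year.
ASSUMED_TOTAL_HOURS = 3 * 2000

saved, fraction, speedup = drafting_speedup(
    total_hours=ASSUMED_TOTAL_HOURS,
    drafting_hours=30,       # expert drafting time cited in the article
    ai_drafting_hours=0.5,   # the claimed 30-minute AI draft
)
print(f"hours saved: {saved}")
print(f"share of total process: {fraction:.2%}")
print(f"overall speedup: {speedup:.3f}x")
```

Under these assumptions, the drafting step is such a small slice of the whole that making it nearly instantaneous shortens the overall process by well under one percent. Different assumptions about the total would change the exact figure, but not the shape of the result: speeding up a step that is a small fraction of the process yields a small overall gain.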

The Broader AI Governance Problem

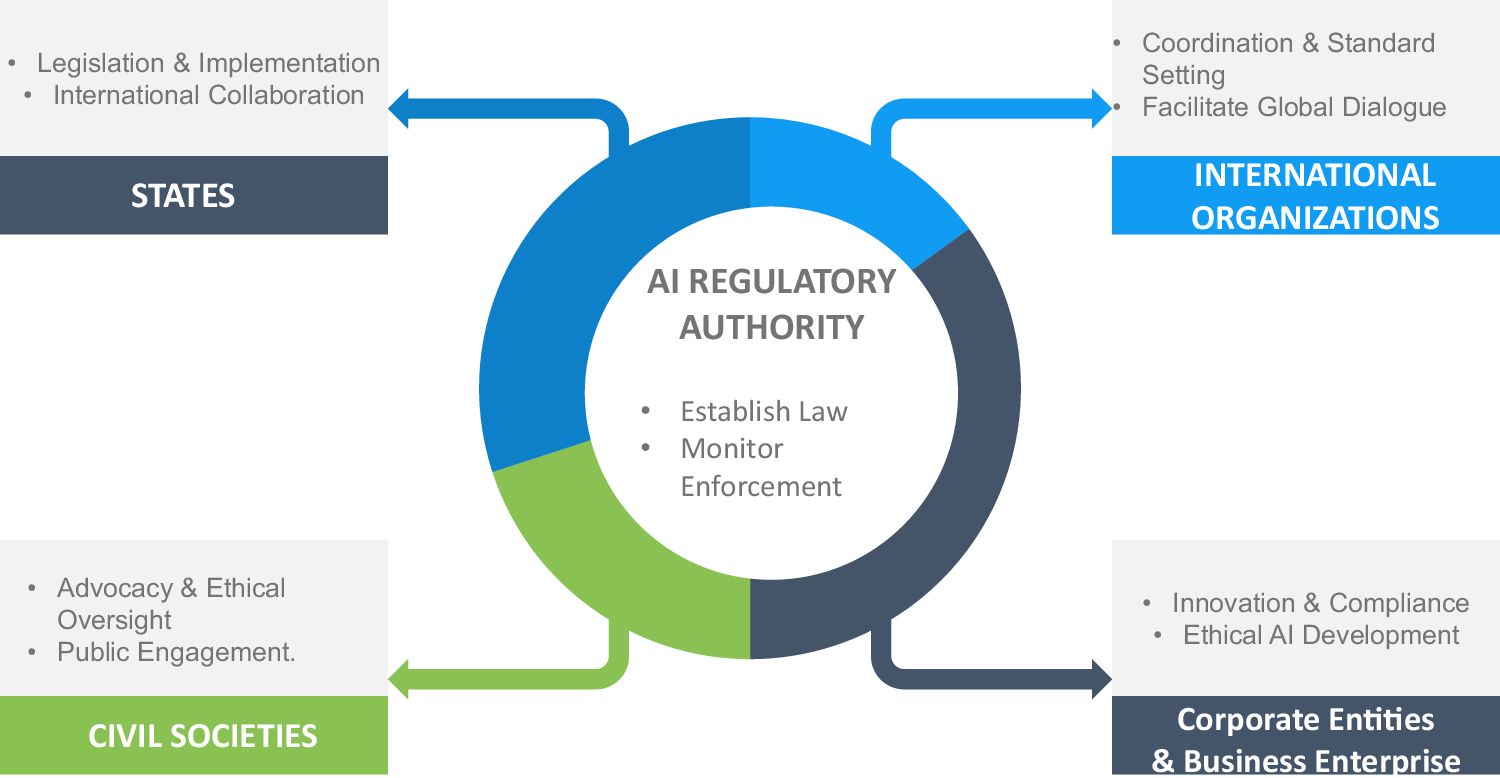

The DOT's approach to AI-assisted rule-drafting reveals a wider problem in how government is approaching AI adoption: speed and efficiency are being prioritized over accuracy, safety, and public input.

The rule-making process, for all its slowness, includes built-in safeguards: multiple levels of expert review, legal analysis, stakeholder comment periods, and documented decision-making that can be challenged in court if necessary. When you introduce AI into that process without adequate safeguards, you're removing transparency and accountability.

If a regulation is drafted by a human expert, that expert's reasoning is documented and can be examined. If there are errors or problems, they're often caught during the review process. If the rule is later challenged in court, the agency can explain its reasoning.

If a regulation is drafted by Gemini, the agency can't really explain how the specific language was generated or why particular requirements were chosen. The AI doesn't have reasoning to document. It has outputs. The reasoning is opaque, and accountability becomes difficult.

This opacity becomes a serious problem when something goes wrong. If an AI-drafted rule led to regulatory failures that resulted in injuries or deaths, how would accountability work? You can't sue an AI. You'd have to sue the agency, but the agency's defense would be essentially "we used AI to draft the rule." That doesn't actually clarify whether the rule was wrong, why it was wrong, or who should be held responsible.

There's also the question of democratic legitimacy. Rule-making, slow as it is, is supposed to be a process where public input shapes regulations that affect them. If rules are drafted by AI and then published with minimal substantive revision by humans, the public's actual influence over their own regulations diminishes.

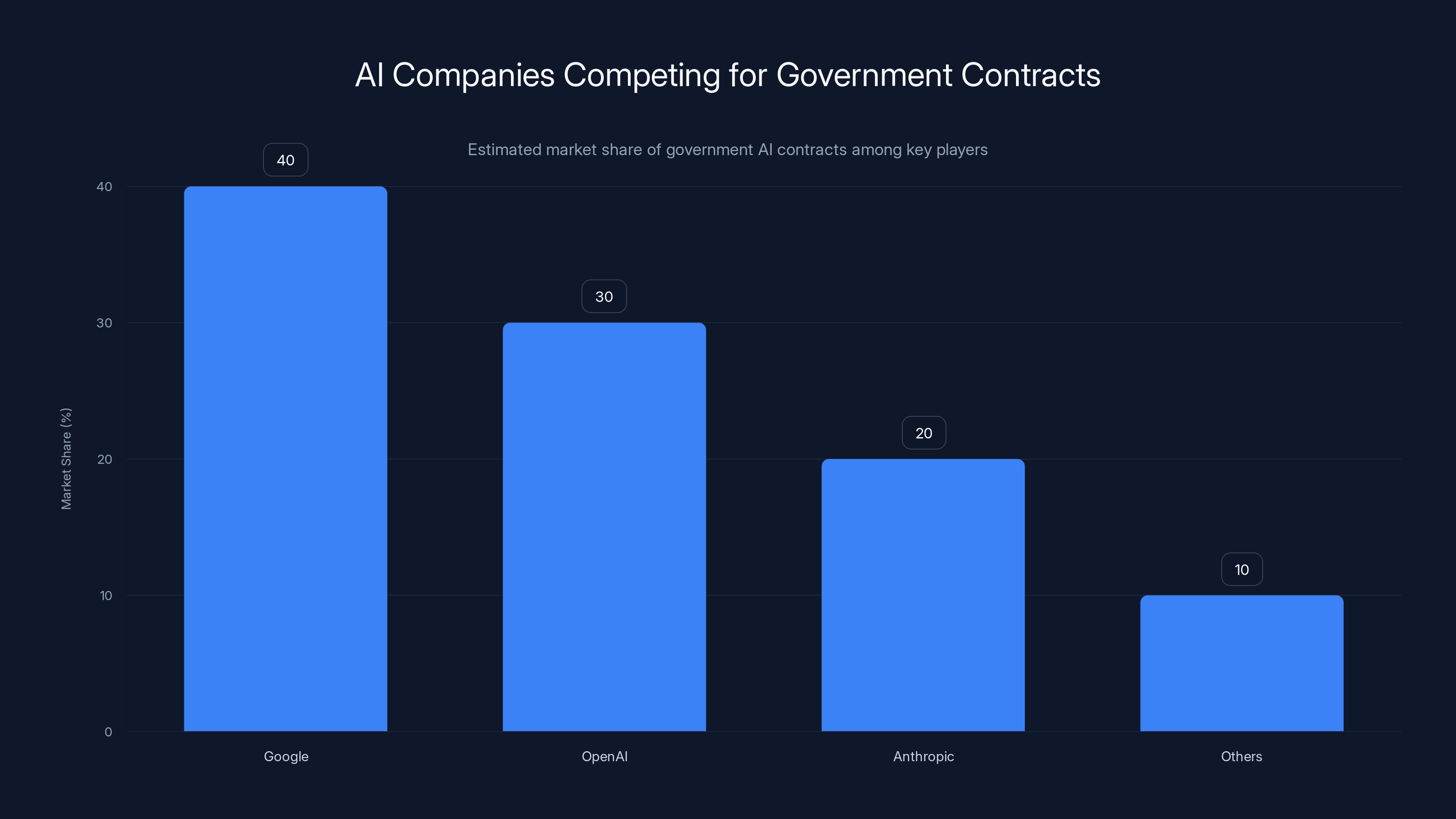

Google is estimated to hold the largest share of government AI contracts, leveraging initiatives like the DOT's adoption of Gemini to strengthen its position. Estimated data.

Expert Responses and Industry Concerns

Experts who monitor AI in government were cautious in their responses to ProPublica's investigation. Some suggested that using Gemini as a research assistant, with substantial oversight and transparency, might be defensible. But even those measured responses emphasized the need for robust review by experts who actually understand transportation safety.

The distinction is important: using AI to gather information and organize research is different from using AI to draft the substantive requirements of regulations. The former might be a reasonable efficiency tool. The latter is substantially riskier.

Transportation safety organizations and industry associations haven't yet issued public statements on the DOT initiative, but private conversations among engineers and safety professionals suggest significant concern. People who have spent careers ensuring transportation safety understand how easily small errors in specifications or procedures can have catastrophic consequences.

Legal scholars who study administrative law have also raised concerns about the process. The Administrative Procedure Act gives the public a right to notice and comment on proposed rules, but it doesn't explicitly require that rules be drafted by humans or allow public input into the drafting process itself. This creates a potential loophole: if an agency uses AI to draft a rule and publishes the result for comment, the public's only recourse is to comment on what's already been drafted. The decision to use AI in the first place, and what prompts were used to generate the rule, would typically be invisible to the public.

This raises constitutional and administrative law questions that have not yet been fully litigated. Courts have not yet ruled on whether using AI to draft regulations without transparent disclosure of that fact violates the Administrative Procedure Act or other legal requirements.

Google's Role and Incentives

Google has positioned itself as the primary beneficiary of the DOT's initiative. The company has been competing aggressively with other AI companies like OpenAI and Anthropic for government contracts. To undercut competitors, Google offered the DOT a year of access to Gemini for just $0.47, which is effectively free.

The company has celebrated the DOT's adoption of Google Workspace with Gemini across the entire agency, framing it as a success story and encouraging other federal agencies to do the same. In its public communications, Google emphasizes efficiency and transformation, but notably does not emphasize the risks associated with using AI to draft safety regulations.

Google did not respond to requests for comment on the DOT's use of Gemini for rule-drafting. The company's public statements on the topic have been limited to a blog post arguing that Gemini can help federal workers with "creative problem-solving to the most critical aspects of their work." But this framing glosses over the specific problem: AI is being used to generate content for regulations that affect public safety, not to help with general problem-solving.

Google's incentive structure here is important to understand. Each successful deployment of Gemini in government creates a case study that can be used to sell the system to other agencies. Even if the DOT initiative proves problematic, Google benefits from having established a foothold in government AI. Future contracts with other agencies will be easier to secure if Google can say it's already being used by the DOT.

This creates a perverse incentive: it's in Google's interest to have the DOT succeed with the Gemini initiative, even if "success" by DOT's metrics (faster rule-drafting) creates problems by other metrics (accurate, safe, transparent regulation).

The Path Forward: What Should Happen

If the DOT's Gemini rule-drafting initiative is to continue responsibly, several changes need to happen:

First, the agency needs to establish clear standards for when and how AI can be used in rule-drafting. AI should not be generating the substantive technical requirements of safety regulations. AI might be useful for literature review, organizing research, or formatting documents, but the actual requirements and specifications need to be written by domain experts.

Second, any use of AI in rule-drafting needs to be transparent. The public and Congress need to know which rules were drafted with AI assistance, what prompts were used, and what changes were made by human reviewers. Transparency is essential both for public accountability and for future legal challenges.

Third, rules drafted with AI assistance need to undergo enhanced expert review before publication. Time saved in drafting cannot be paid for by shortening review: an AI-generated draft needs at least as much expert scrutiny as a human-written one. You have to choose: use AI and reduce safety margins, or maintain current safety standards and accept that rules will take longer to develop.

Fourth, the administration needs to acknowledge that "good enough" is not acceptable for safety regulations. The position that rules don't need to be perfect or even very good is not tenable when those rules govern systems that can kill people if they fail.

Fifth, Congress should consider legislation requiring that AI use in federal rule-making be disclosed and approved at the agency level, with oversight of the process. The current situation allows agencies to use AI without congressional knowledge or input.

Without these changes, the DOT initiative represents an experiment where the subjects are the American public, and the stakes are safety in the transportation system.

The Ripple Effects: Other Agencies Watching

The DOT initiative is significant beyond just the Department of Transportation. Trump administration officials have made clear that the DOT is being positioned as a model for other agencies to follow. If other federal agencies adopt similar approaches, the problems multiply.

Imagine EPA using Gemini to draft environmental regulations, or OSHA using it to draft workplace safety standards, or FDA using it to draft pharmaceutical approval procedures. The hallucination problems don't go away. The expertise gaps don't diminish. What you get is errors propagating across multiple regulatory domains simultaneously.

The efficiency pressure that drove the DOT initiative exists across government. Agencies everywhere are struggling with limited budgets and staffing. The promise that AI could accelerate rule-making is genuinely attractive to administrators facing resource constraints. But that attractiveness doesn't change the underlying technical reality: current AI systems are not reliable enough for safety-critical rule-drafting, no matter how much pressure there is to move fast.

The broader question is whether government agencies can learn from the legal profession's recent experience with AI hallucinations, or whether we'll see those same mistakes replicated across the entire federal regulatory apparatus.

FAQ

What is AI hallucination and why does it matter for government regulation?

AI hallucination is when a language model like Gemini confidently generates false information that sounds plausible and authoritative. It matters for government regulation because regulations that contain fabricated technical specifications or nonexistent standards could result in unsafe systems being approved or implemented. When a lawyer used ChatGPT to research cases and the AI cited non-existent cases in court briefs, judges and lawyers alike learned how convincingly AI can present false information. That same risk applies to safety regulations, but the consequences are higher.

How long does federal rule-making actually take, and what causes the delays?

Federal rule-making typically takes 3 to 7 years, with complex safety rules sometimes taking a decade or more. The delays aren't primarily due to slow drafting. They result from required expert consultation, stakeholder comment periods mandated by the Administrative Procedure Act, legal review, and technical verification. If you use AI to speed up just the drafting part, you don't significantly reduce the overall timeline because the other steps still need to happen. Meanwhile, you've removed human expertise from the process, creating new risks.

Can AI be used responsibly in government rule-making?

Yes, but only in limited ways with significant safeguards. AI might be appropriately used for literature review, organizing research, or formatting documents, with the understanding that all outputs require expert review. AI should not be used to generate the substantive technical requirements or specifications in safety regulations. The current DOT approach of having AI draft the core requirements of rules is fundamentally different from appropriately limited AI assistance and carries substantially greater risk.

What are the specific safety risks of using AI-drafted regulations for transportation?

Transportation safety rules govern aircraft structural integrity, pipeline pressure limits, rail system specifications, and emergency procedures. Errors in these regulations don't create administrative problems—they create conditions for accidents. An error in an FAA rule about aircraft cabin pressurization, a pipeline rule about pressure safety margins, or a rail rule about chemical transport could directly contribute to accidents that injure or kill people. The consequences of regulatory error in transportation are measured in human lives, not in administrative inconvenience.

Why is the DOT pushing this approach forward despite staffers' concerns?

DOT leadership appears motivated by two factors: first, a genuine desire to accelerate rule-making to keep pace with technological change; second, a broader policy objective of deregulation and making rules more "innovation-friendly." The Trump administration views faster rule-writing as a mechanism for modernizing regulations. However, in safety-critical domains, faster isn't always better. The appropriate speed is whatever speed allows experts to ensure the rules are actually correct.

How would accountability work if an AI-drafted rule caused a regulatory failure?

That question doesn't yet have a clear legal answer, which is part of the problem. You can't sue an AI. If a rule drafted by Gemini proved inadequate and caused injuries, legal liability would presumably fall on the agency, but the agency's argument would essentially be "we used a tool that generated this output." This creates an accountability gap. The reasoning behind regulatory decisions becomes opaque, making it harder for the public or courts to understand why particular requirements were chosen or to challenge those requirements meaningfully.

What would happen if other federal agencies adopted similar AI approaches?

The risks would compound significantly. EPA drafting environmental regulations with AI, OSHA drafting workplace safety standards with AI, and FDA approving pharmaceuticals with AI would mean errors propagating across multiple critical regulatory domains simultaneously. The legal and safety precedents don't exist for managing such widespread automation of rule-making. It would represent a fundamental shift in how federal regulation works, done largely without public input or congressional oversight.

Is there legislation pending to govern AI use in federal rule-making?

No specific legislation currently addresses AI use in federal rule-making. The Administrative Procedure Act doesn't explicitly require that rules be drafted by humans, which creates a potential loophole. Courts haven't yet ruled on whether using AI to draft regulations without transparent disclosure violates the APA or other legal requirements. This is an area where the law is significantly behind technological practice, leaving agencies with broad discretion to use AI however they see fit.

The Bottom Line: When Speed Becomes Recklessness

The DOT's use of Gemini to draft federal safety regulations represents a critical test case for how government will adopt AI. The outcome will likely influence how other agencies approach similar tools and whether adequate safeguards develop or whether AI-assisted rule-making becomes normalized without proper protections.

The core problem isn't that AI is being used in government. It's that AI is being used in safety-critical regulation, a domain where the margin for error is extremely thin and the consequences of errors are measured in human lives. It's being justified by a statement that "good enough" is acceptable, when "good enough" could mean regulations that fail to prevent the very accidents that past deaths taught us to guard against.

Transportation safety as a field exists because of tragic lessons. Every major safety regulation was written because people died in ways the regulation was designed to prevent. Using an AI system known to confidently fabricate information to draft those regulations seems like a serious step backward, regardless of how much time it might save.

The staffers at the DOT who called the approach "wildly irresponsible" understood something crucial: when the consequence of being wrong is deaths, caution isn't a bug in the system. It's the entire point.

What happens with the DOT initiative over the coming months will determine whether government agencies learn from recent high-profile AI failures in law and elsewhere, or whether they accelerate toward a future where critical safety decisions are made by systems that sound authoritative but lack actual expertise. The stakes are measured in human lives, and that leaves no room to prioritize speed over accuracy.

Key Takeaways

- The DOT is using Google Gemini to draft federal safety regulations for aviation, pipelines, and rail, claiming it can complete draft rules in under 30 minutes instead of weeks

- AI systems like Gemini are known to hallucinate—confidently presenting fabricated information as fact—which is particularly dangerous in safety-critical regulations where errors could cause injuries or deaths

- Transportation safety regulations govern systems affecting 330 million vehicle registrations, 3 million daily airline passengers, and 70 million pipeline customers, making the stakes for regulatory errors extraordinarily high

- DOT leadership explicitly stated that they want 'good enough' regulations, not perfect ones, which directly contradicts the safety principle that the margin for error must be minimized when lives are at stake

- The DOT initiative is positioned as a proof-of-concept for other federal agencies to follow, potentially spreading AI-assisted rule-drafting across government without adequate safeguards or legal frameworks

![AI Drafting Government Safety Rules: Why It's Dangerous [2025]](https://tryrunable.com/blog/ai-drafting-government-safety-rules-why-it-s-dangerous-2025/image-1-1769459797429.jpg)