AI Energy Consumption vs Humans: The Real Math [2025]

TL;DR

- Training vs. Inference: AI models consume massive energy during training, but per-query inference is increasingly efficient.

- Water Usage Myths: Claims about 17 gallons of water per ChatGPT query are unsupported; modern data centers have largely moved away from evaporative cooling.

- The Real Comparison: A single ChatGPT query uses far less energy than a human thinking through the same problem, when normalized properly.

- Brain Training Costs: The human brain takes 20 years and millions of calories to develop; comparing it to AI inference alone is fundamentally unfair.

- Future Challenge: Total AI energy consumption is rising because billions use it daily, making grid infrastructure and renewable energy adoption the actual problem.

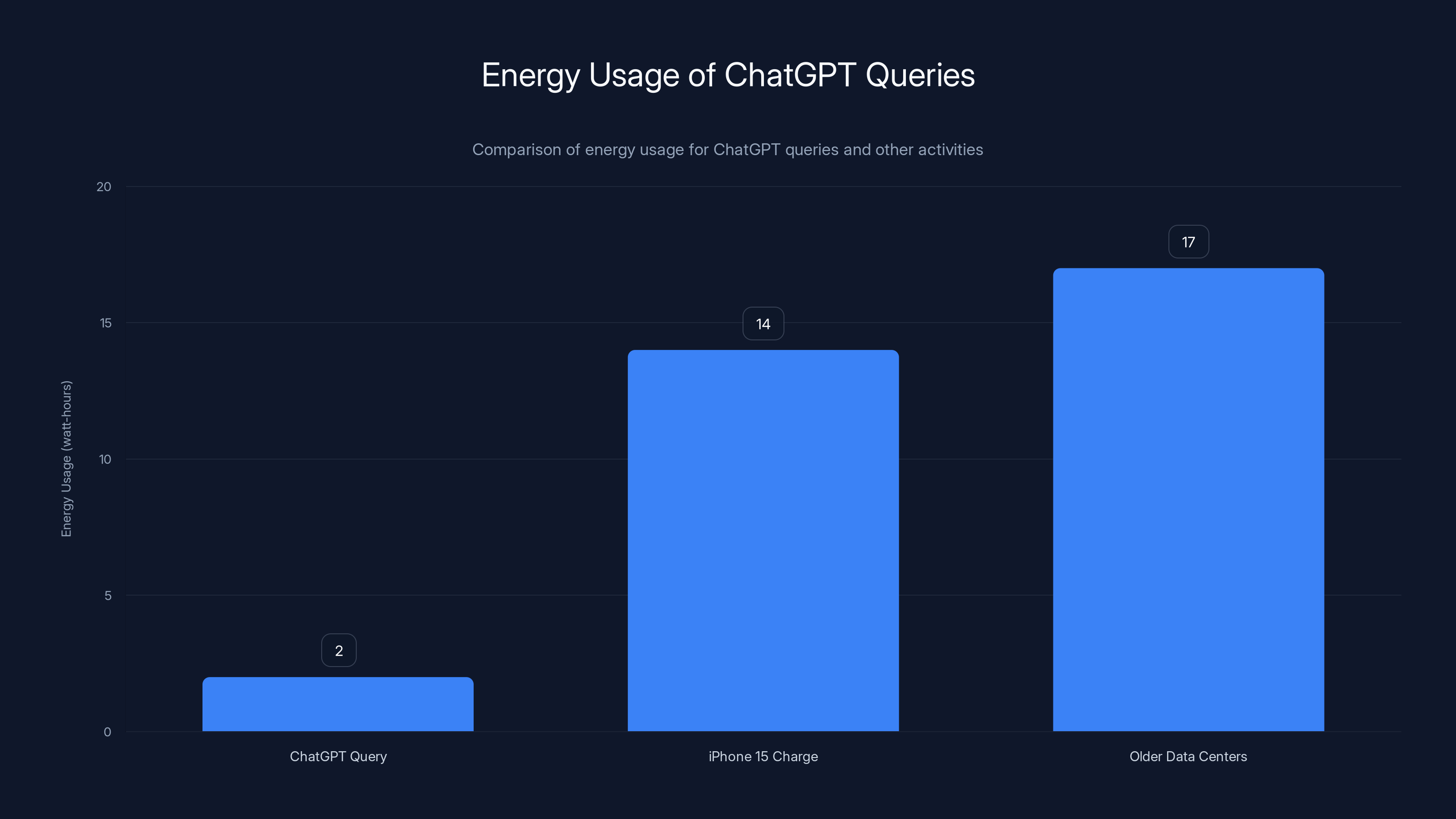

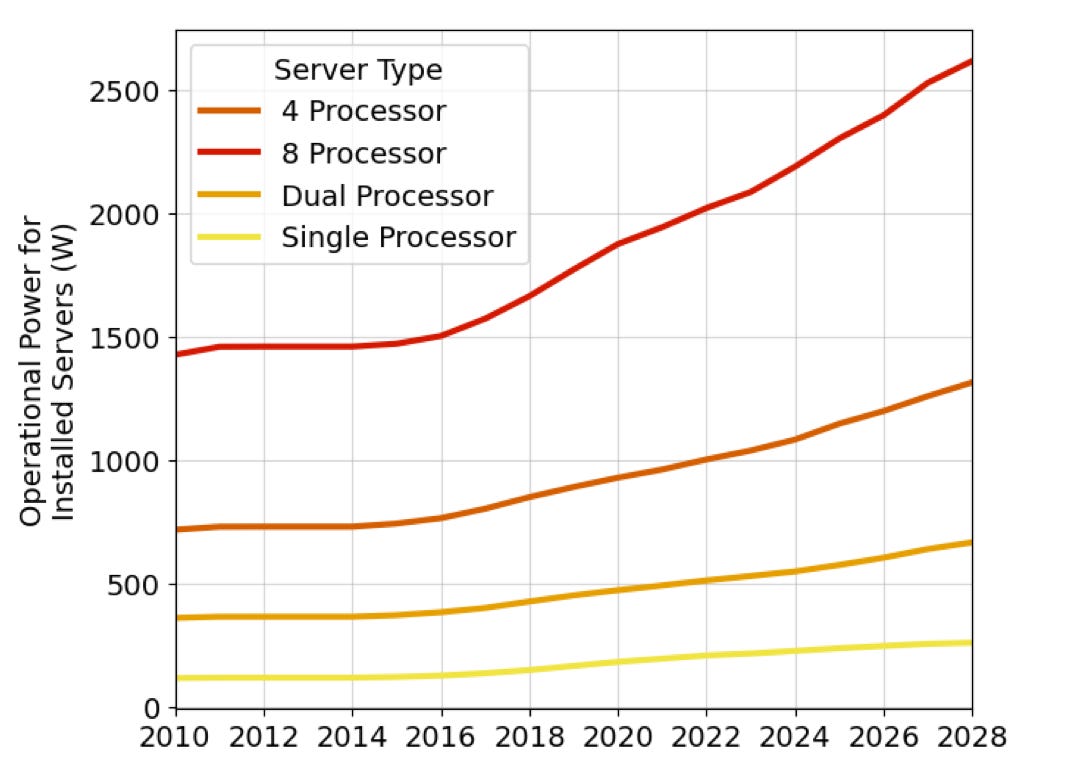

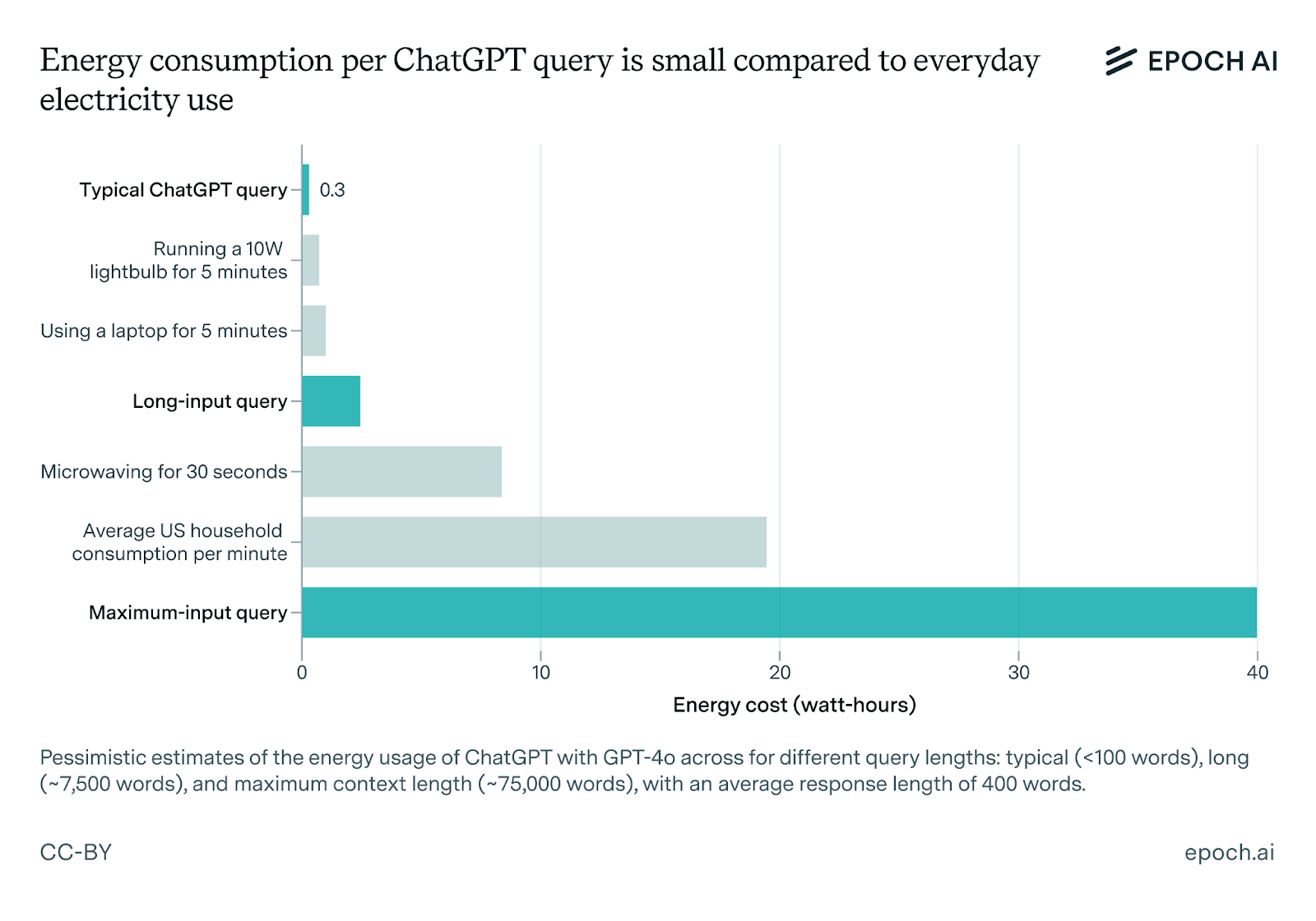

A typical ChatGPT query uses 1-3 watt-hours, significantly less than an iPhone 15 charge. Estimated data for older data centers shows higher energy use due to less efficient cooling systems.

Introduction: Why Everyone Gets AI Energy Math Wrong

There's a viral talking point that won't die: ChatGPT uses more electricity than your entire house. Or it takes 17 gallons of water per query. Or running GPT-4 is burning the planet faster than data centers in Iceland can be built.

Most of it is wrong.

But here's the tricky part: the underlying concern isn't wrong. AI does consume significant energy. Data centers do draw massive amounts of power. And yes, that matters for climate and electricity grids. The problem is we've been comparing apples to telephones, then acting surprised when the numbers don't match.

When OpenAI's CEO Sam Altman pushes back on these claims, he's not denying that AI is energy-intensive. He's pointing out that the way we measure and compare it has been fundamentally broken.

Here's what actually matters: understanding where energy goes in AI systems, what the honest comparisons look like, and why the real energy challenge isn't about individual queries but about infrastructure scaling at a pace that power grids aren't prepared for.

This article breaks down the actual science, the myths, the legitimate concerns, and what we should actually be worried about when we talk about AI and energy. You'll learn how to spot bad statistics, understand the real energy cost of intelligence (human or artificial), and see why the conversation needs to shift from "is AI energy-intensive?" to "how do we build enough clean power for the world's AI infrastructure?"

Let's start with the numbers everyone gets wrong.

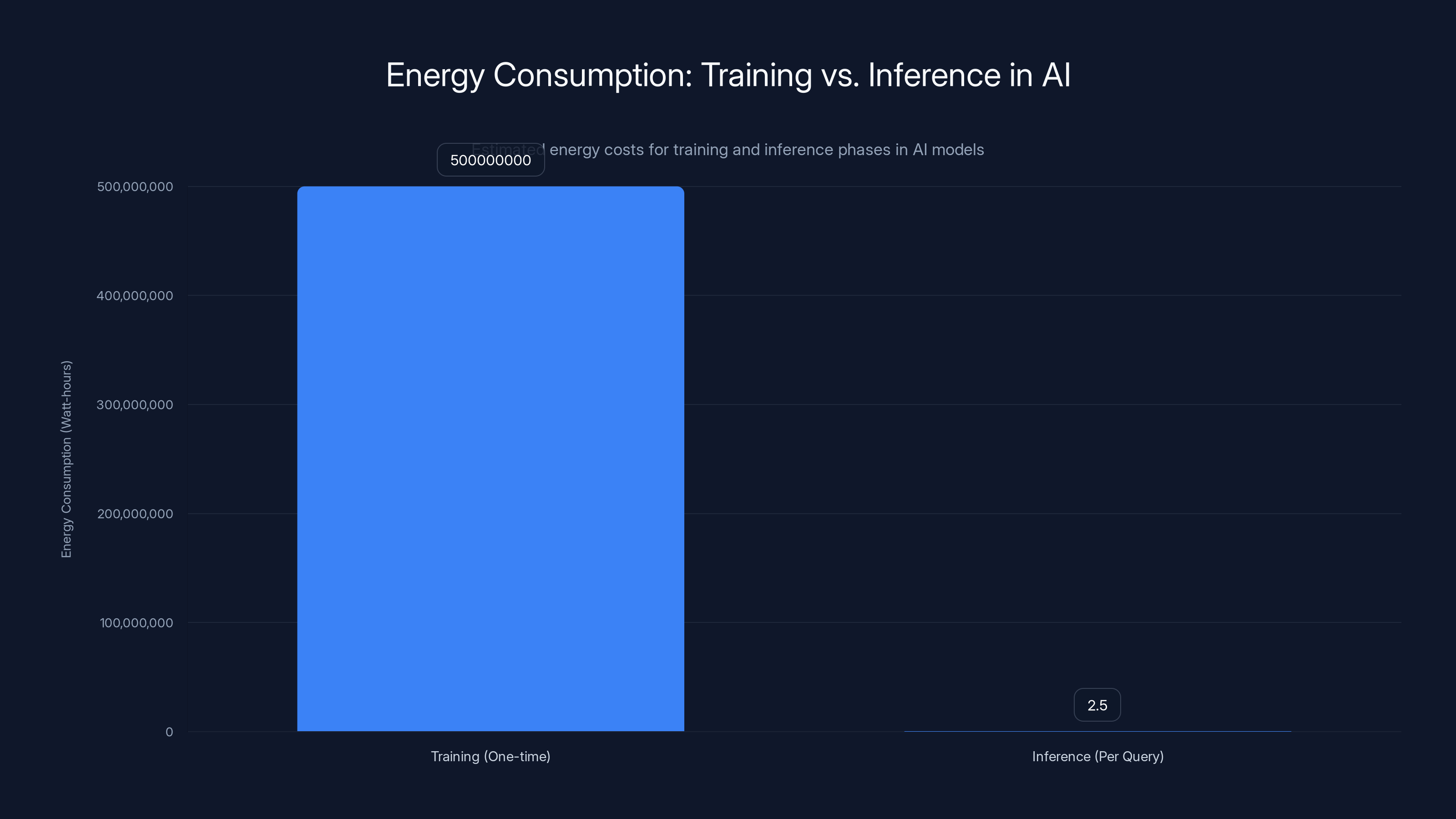

Training AI models like GPT-4 is extremely energy-intensive, consuming millions of kilowatt-hours in a single run. In contrast, inference is much less costly, using only 0.5 to 5 watt-hours per query. Estimated data.

The 17 Gallons Per Query Myth: Where It Came From and Why It's Backwards

You've probably heard the statistic: a single ChatGPT query uses 17 gallons of water. It sounds specific. It sounds like someone measured it. It sounds terrifying.

It's also completely fabricated.

This myth likely traces back to a misunderstanding about water cooling in data centers. Older data center designs used evaporative cooling, which does consume significant water. A 2018 Washington Post study examined water usage at major tech facilities and found real concerns about regional water depletion. That was legitimate.

But modern AI data centers have largely moved away from evaporative cooling. They use other methods: immersion cooling (submerging hardware in specialized liquids), air-cooled systems, and hybrid approaches that dramatically reduce water consumption.

Someone then took the older water-usage numbers, divided by the number of queries a data center might handle, and created a fake per-query statistic. It spread because it was shocking, specific, and played into existing fears about tech companies draining water in drought-stricken regions.

The irony: if you put the same question to a human instead of asking ChatGPT, the human would need drinking water, and if they worked in an air-conditioned office, the building's cooling might use more water than any inference query.

This doesn't mean data centers aren't a real water concern in water-stressed regions. TSMC in Taiwan and various AI facilities have faced legitimate water shortage issues. The point is the per-query number is propaganda masquerading as science.

Training vs. Inference: The Energy Math Nobody Explains Correctly

This is where the real confusion lives, and it's actually important.

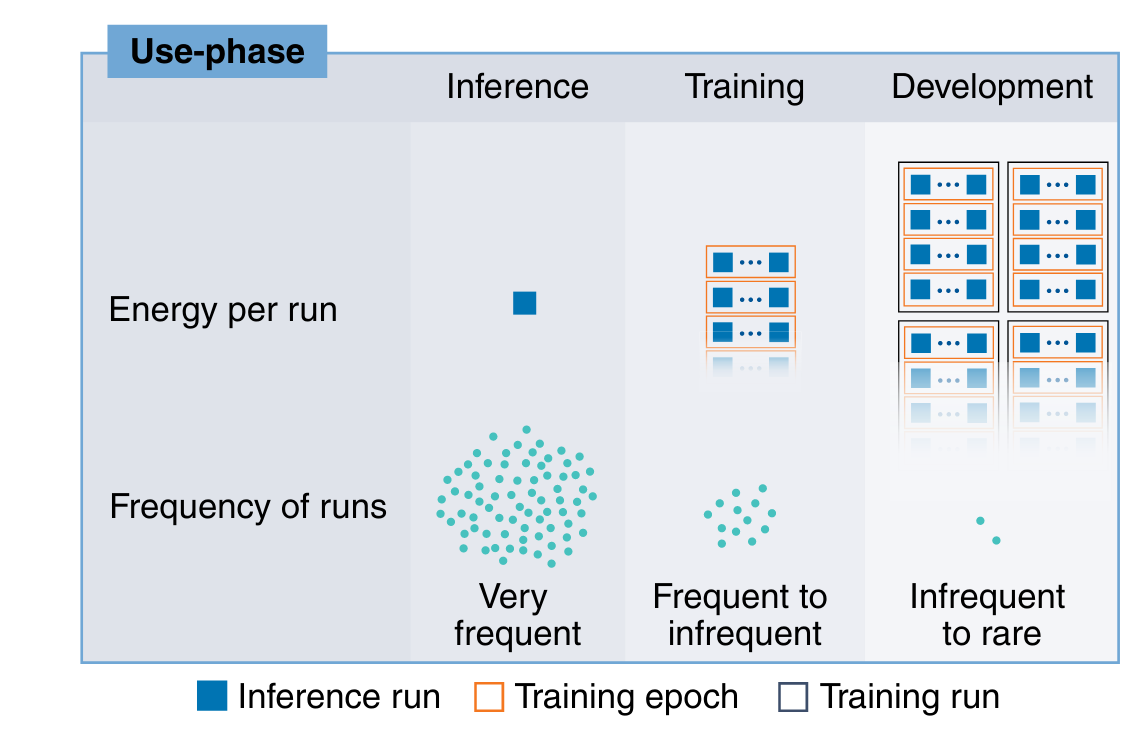

There are two energy-intensive phases in AI: training and inference.

Training is expensive. Stupidly expensive. Building GPT-4 or comparable large language models requires enormous computational resources. We're talking about thousands of specialized chips running continuously for weeks or months. The energy bill for training state-of-the-art models runs into millions of dollars.

Inference is when the model is already trained, and you ask it a question. The weights are fixed. The model just runs through your prompt and generates an answer. This is cheaper than training, but still requires electricity.

Here's where people mess up the comparison:

They compare the total energy to train ChatGPT (a massive, one-time cost) against the energy to ask ChatGPT one question (a tiny, per-use cost), then ask "How many queries equal one training run?" and conclude that if you ask ChatGPT 500 million times, you use as much energy as training it once.

Then they claim this proves AI is inefficient.

But that's like saying cars are inefficient because manufacturing a car burns more fuel than a single mile of driving. The manufacturing cost is amortized across millions of miles.

For AI, the training cost is amortized across billions of queries. Over time, if people use the model for years, the cost per query becomes infinitesimal.

According to multiple analyses of neural network optimization, a well-optimized inference pass through a large language model consumes roughly 0.5 to 5 watt-hours per query, depending on the model size and hardware. That's genuinely small.
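To make the amortization argument concrete, here is a minimal Python sketch of the break-even arithmetic. It assumes the GPT-3 training estimate of 1,287 MWh cited later in this article and the 0.5 to 5 watt-hour inference range above; the function name is illustrative.

```python
# Illustrative arithmetic only: how many inference queries add up to one training run?
TRAINING_ENERGY_MWH = 1_287   # GPT-3 training estimate cited in this article
WH_PER_MWH = 1_000_000


def breakeven_queries(training_mwh: float, wh_per_query: float) -> float:
    """Number of queries whose combined inference energy equals the training energy."""
    return training_mwh * WH_PER_MWH / wh_per_query


for wh in (0.5, 2.5, 5.0):
    n = breakeven_queries(TRAINING_ENERGY_MWH, wh)
    print(f"{wh} Wh/query -> {n / 1e6:,.0f} million queries")
```

At 2.5 Wh per query the break-even lands near 515 million queries, in line with the "500 million" figure quoted above. Past that point, inference rather than training dominates a model's lifetime energy use.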

For context: a human brain running at rest consumes about 20 watts continuously. Just existing. Not problem-solving, not being creative. Just baseline brain function.

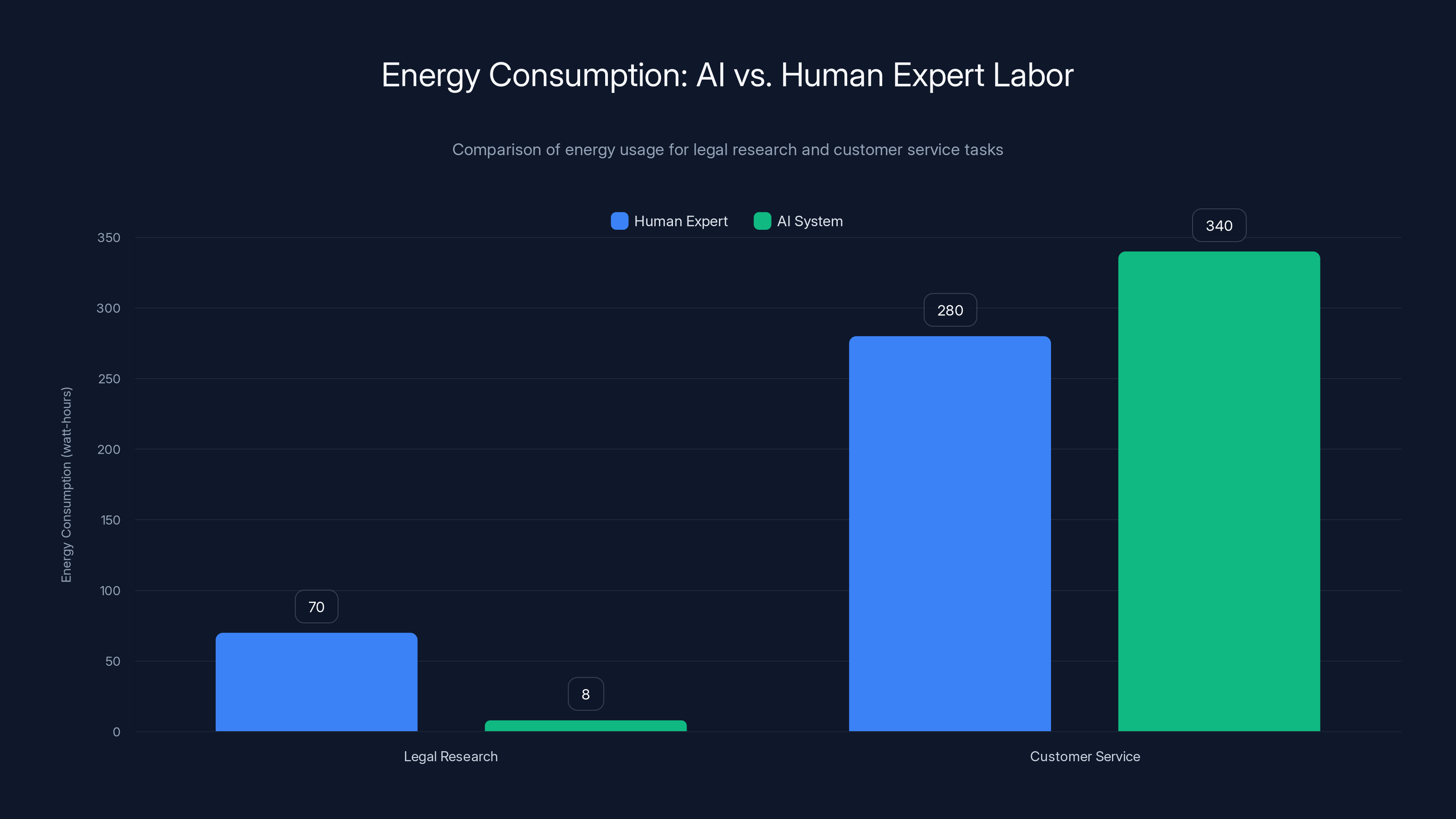

AI systems use significantly less energy than human experts for legal research and, even after accounting for human escalations, less per question for customer service. Estimated data based on typical scenarios.

The Unfair Comparison: Training a Human vs. Inferencing an AI

Sam Altman's main point was actually brilliant, even if it was expressed casually: everyone comparing AI energy consumption was comparing different categories of things.

Here's what he said, essentially: "If you compare how much energy it takes to train an AI model versus how much energy it takes a human to answer a question, the comparison is meaningless. A human took 20 years and millions of calories to develop. An AI was trained once, then answers millions of queries."

This is the core insight that most energy criticisms miss.

Let's build out the actual math:

Human Brain Development Energy:

- From conception to adulthood: approximately 18-20 years

- Daily caloric intake: roughly 2,000 calories per day for an adult (more during childhood growth)

- Total calories to develop: roughly 2,000 × 365 days × 20 years = 14.6 million calories

- Converting to kilowatt-hours: 1 food calorie (kilocalorie) ≈ 0.00116 kWh, so 14.6 million calories = roughly 17,000 kWh
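The conversion above can be checked with a few lines of Python. This is a sketch under the article's own assumptions (a flat 2,000 food calories per day for 20 years, whole-body intake rather than the brain alone); the function name is illustrative.

```python
# Unit conversion: food calories (kilocalories) to kilowatt-hours.
JOULES_PER_KCAL = 4_184        # one food calorie (kilocalorie) in joules
JOULES_PER_KWH = 3_600_000     # one kilowatt-hour in joules


def food_energy_kwh(kcal_per_day: float, years: float) -> float:
    """Total dietary energy over the given number of years, expressed in kWh."""
    total_kcal = kcal_per_day * 365 * years
    return total_kcal * JOULES_PER_KCAL / JOULES_PER_KWH


total = food_energy_kwh(kcal_per_day=2_000, years=20)
print(f"{total:,.0f} kWh")
```

The result is just under 17,000 kWh, matching the rounded figure in the list.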

Large Language Model Training Energy:

- GPT-3 training: estimated at 1,287 MWh = 1,287,000 kWh (but this amortizes across billions of potential queries)

- GPT-4 training: estimated at 100+ million dollars in compute, or roughly 2,000+ MWh = 2,000,000+ kWh

- But this isn't energy "used up." The model remains useful indefinitely

Per-Query Energy (Amortized):

If GPT-4 was trained with 2,000,000 kWh of energy, and millions of people use it, asking millions of queries per day, the per-query share of the training cost shrinks to a small fraction of a watt-hour and keeps shrinking as usage accumulates.

Add inference energy (0.5-5 watt-hours per query), and you're still looking at total energy consumption on the order of a few watt-hours per query.
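How small the amortized training share gets depends on how many queries the model serves. The sketch below uses the article's 2,000,000 kWh estimate; the daily query volumes and the one-year service period are hypothetical values chosen only to show the sensitivity.

```python
# Amortized training energy per query under assumed (hypothetical) usage volumes.
TRAINING_KWH = 2_000_000   # article's GPT-4 training estimate


def amortized_wh_per_query(training_kwh: float,
                           queries_per_day: float,
                           days_in_service: float) -> float:
    """Training energy spread evenly over every query served, in watt-hours."""
    total_queries = queries_per_day * days_in_service
    return training_kwh * 1_000 / total_queries


# Hypothetical volumes: 10 million, 100 million, and 1 billion queries per day.
for qpd in (10_000_000, 100_000_000, 1_000_000_000):
    share = amortized_wh_per_query(TRAINING_KWH, qpd, days_in_service=365)
    print(f"{qpd:>13,} queries/day -> {share:.4f} Wh of training energy per query")
```

Under these assumed volumes the training share ranges from roughly half a watt-hour down to a few milliwatt-hours per query, so how negligible it is depends on how heavily and how long the model is used.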

Meanwhile, if you ask a human expert the same question, they:

- Took 20 years to develop

- Continue consuming 20 watts of brain power just to exist

- Require salary, office space, electricity, commute energy, food calories, etc.

The honest comparison is this: For most informational queries, AI has already achieved better energy efficiency than getting a human expert to answer.

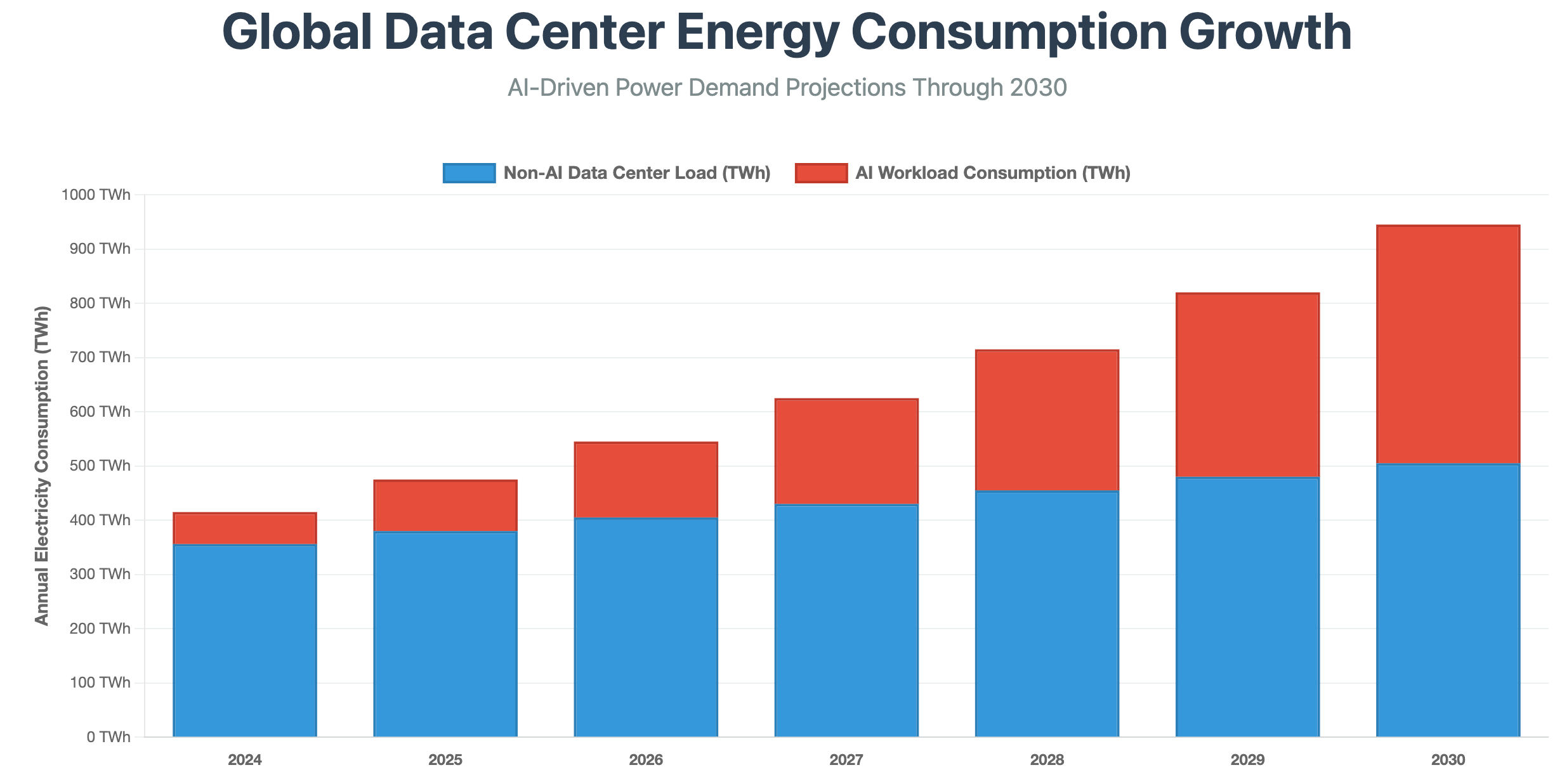

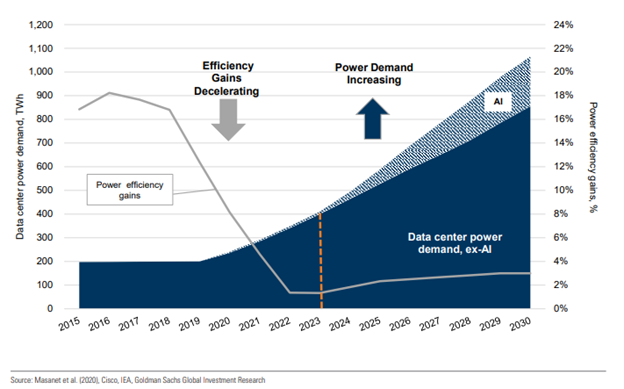

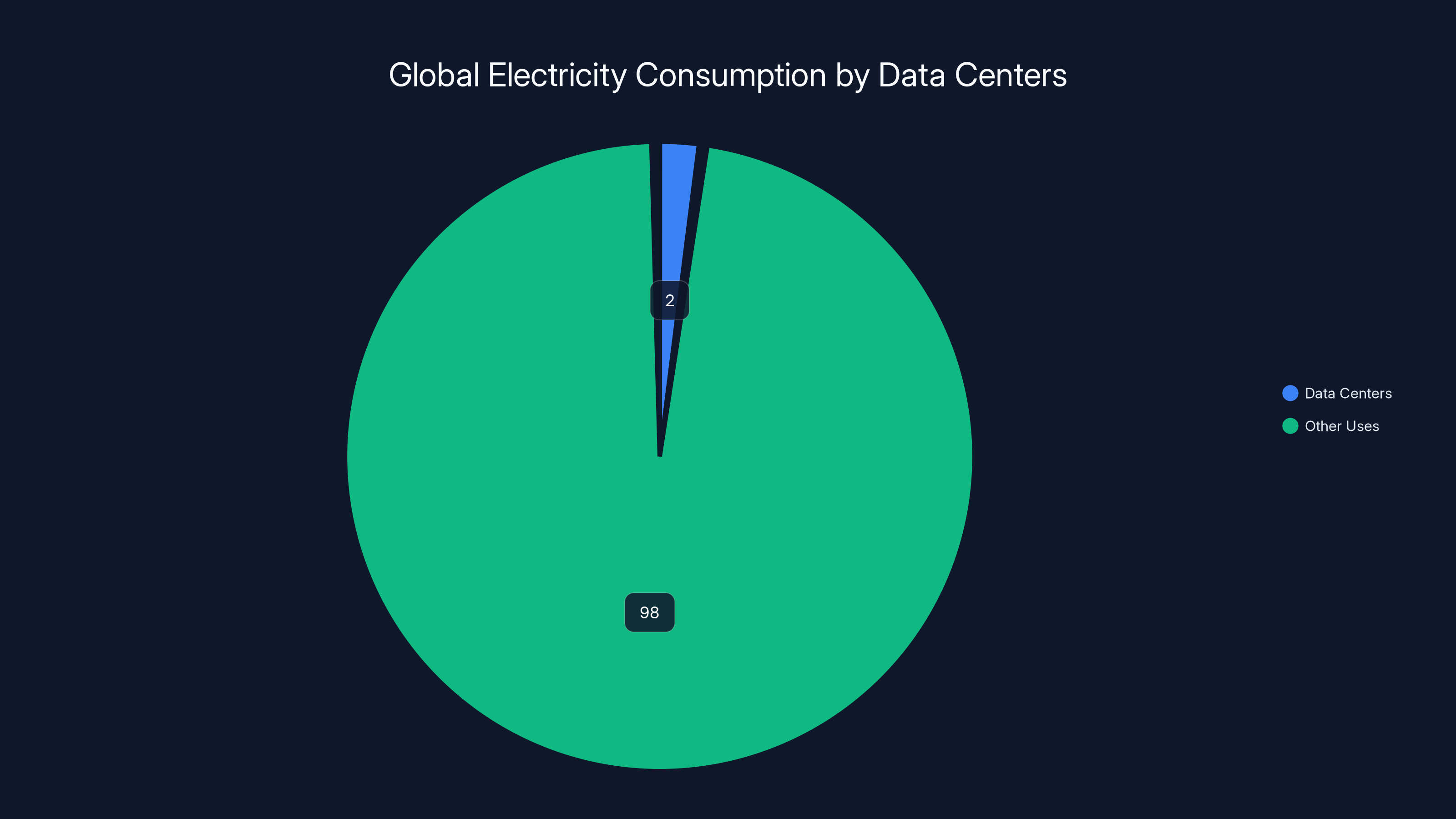

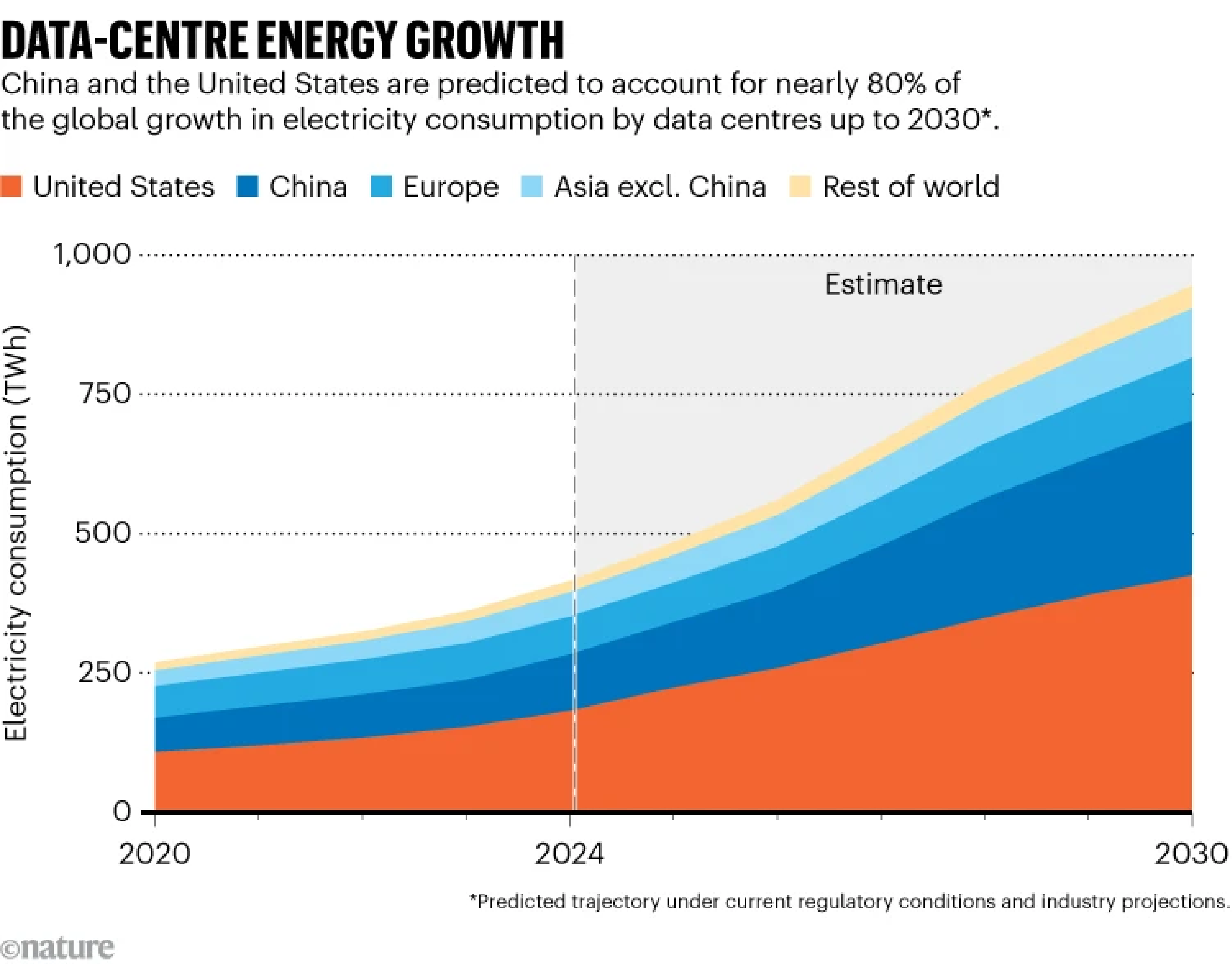

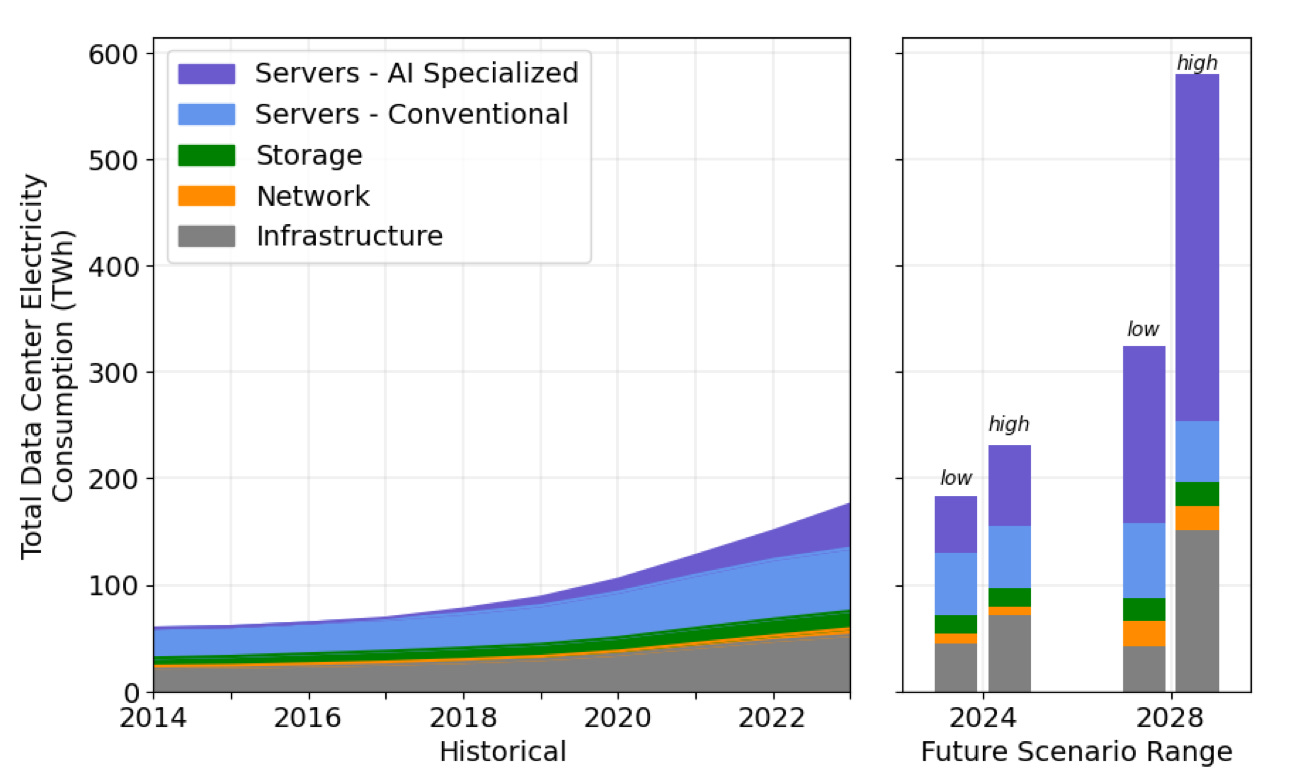

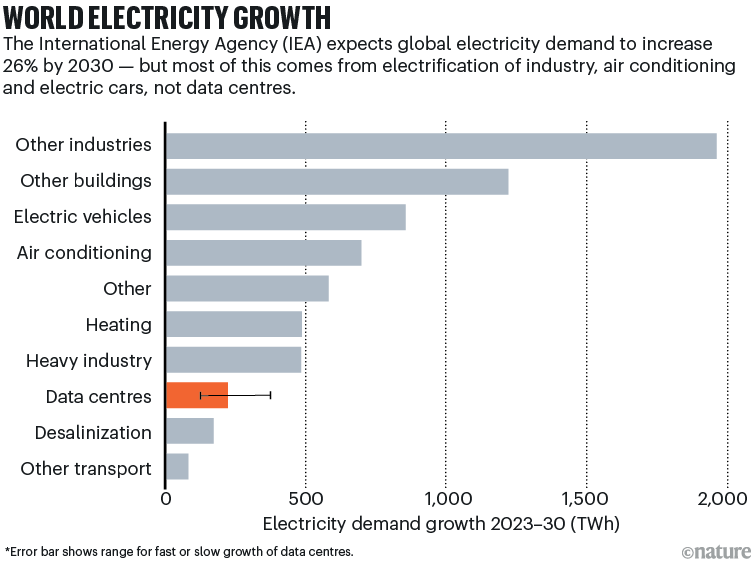

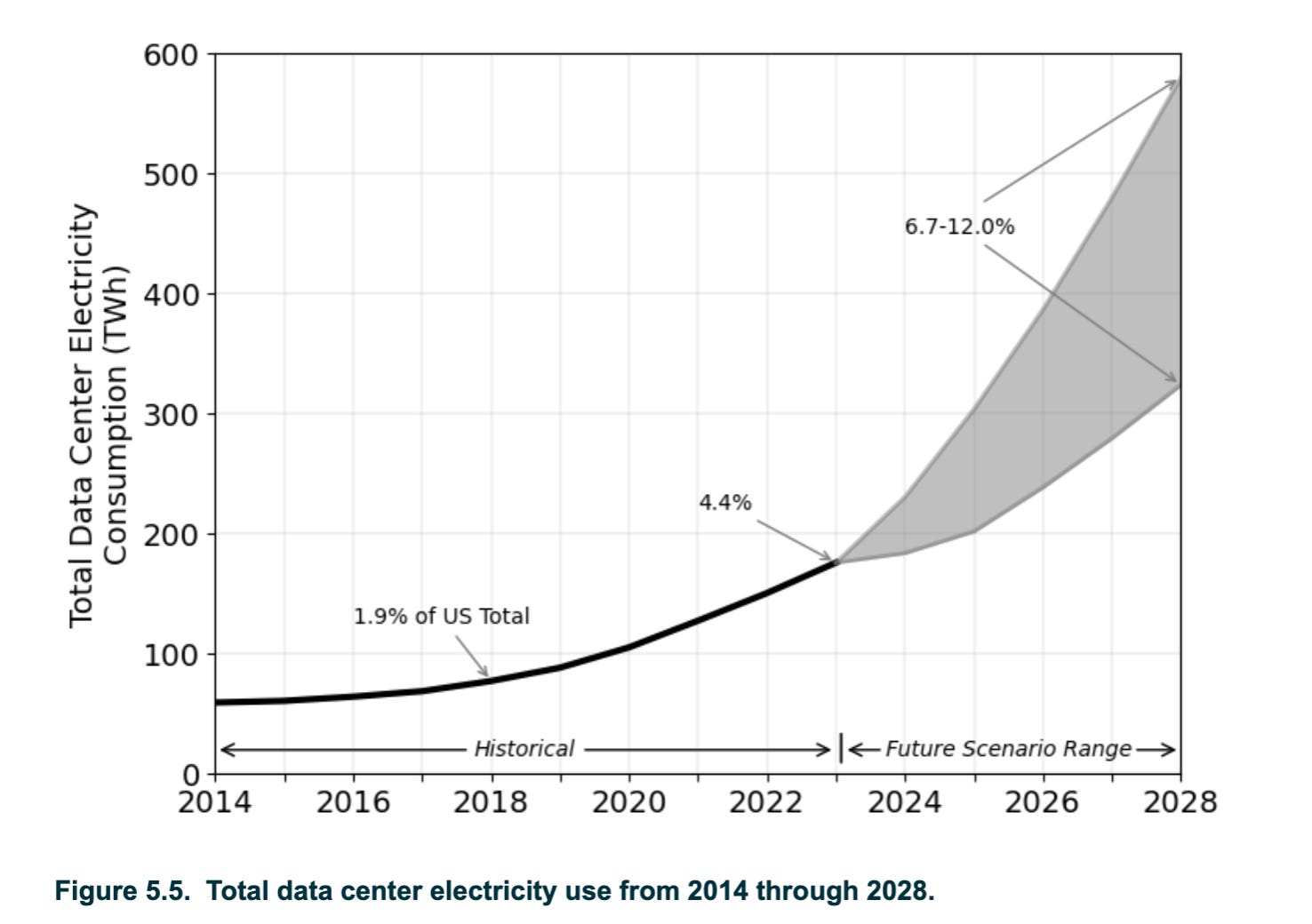

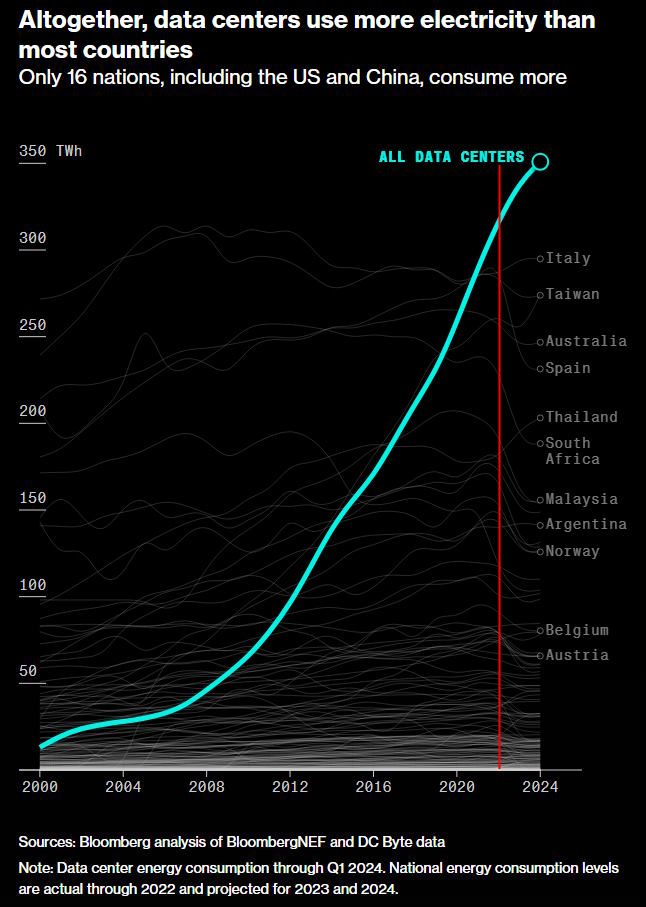

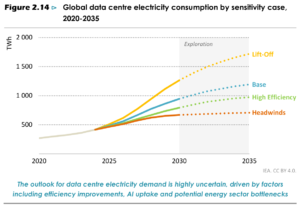

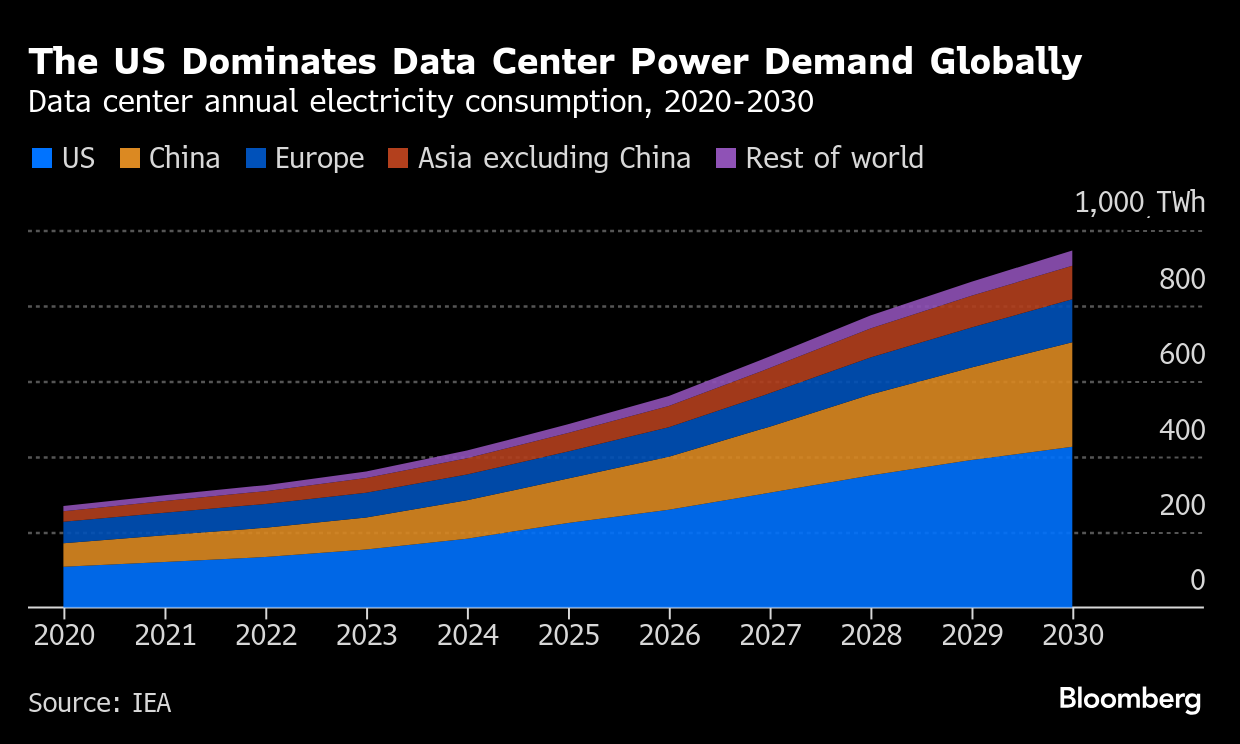

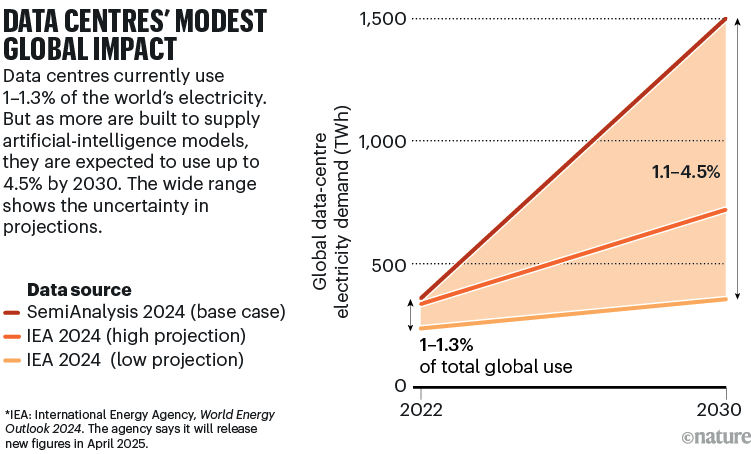

The Real Problem: Total Energy Consumption, Not Per-Query Efficiency

Okay, so individual queries are becoming more energy-efficient. Per-query costs are dropping. The training costs are one-time and amortized. If that's true, why do we keep reading about data center power crises?

Because the real problem isn't efficiency. It's scale.

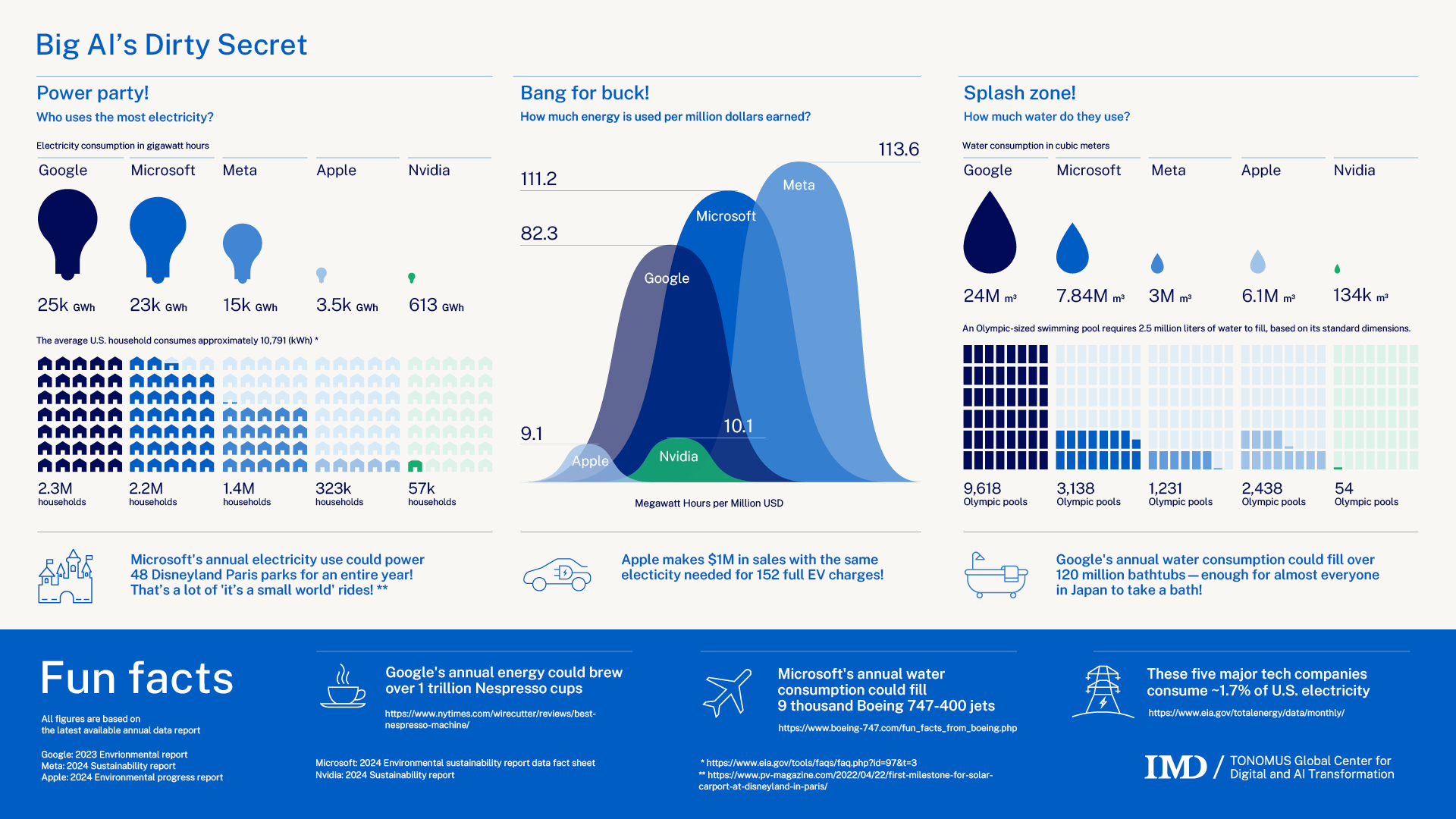

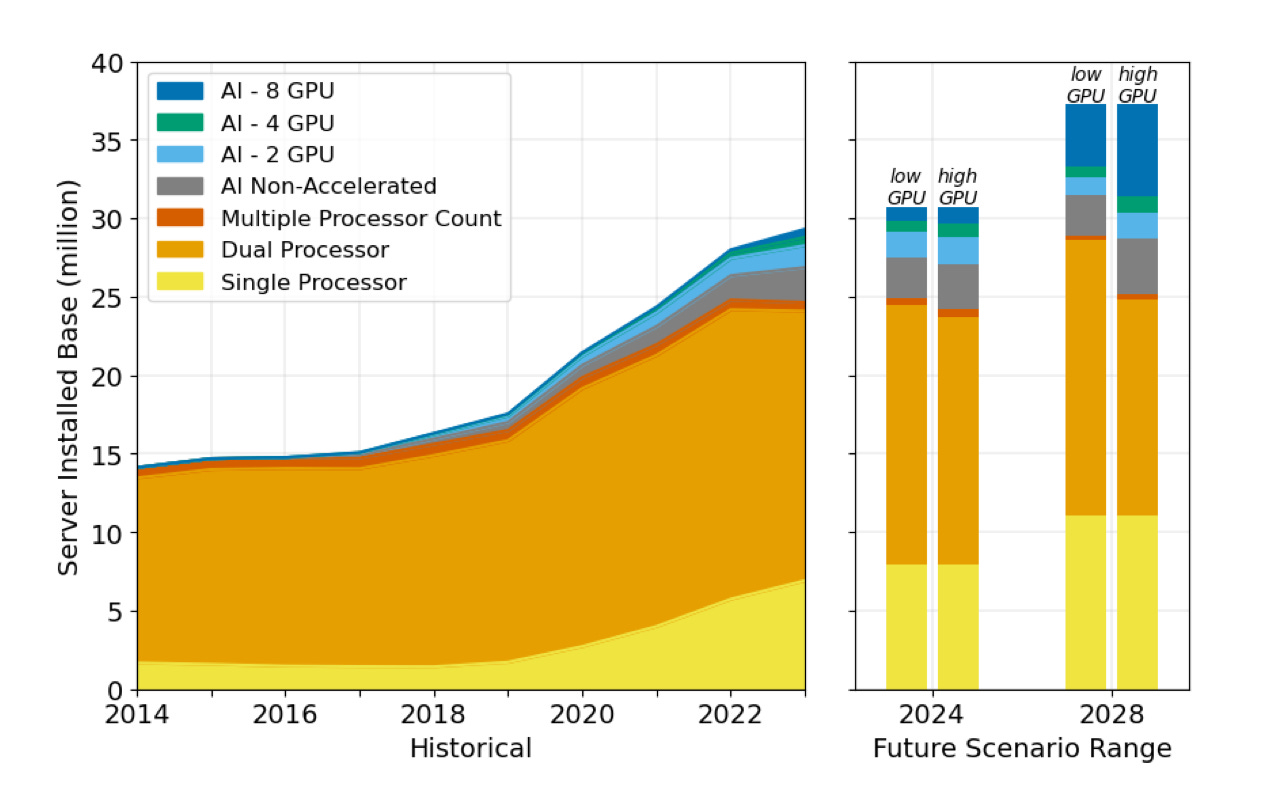

AI models are increasingly power-efficient on a per-query basis. But billions of people are using AI. ChatGPT alone handles millions of queries daily. Add in Gemini, Claude, Llama, and thousands of smaller models, and the total electricity consumption is genuinely massive.

This is the distinction between marginal efficiency and total consumption.

You can have the most efficient query processor in the world, but if a billion people query it ten times a day, the total power draw is enormous.
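The billion-user scenario can be put into numbers with a short Python sketch. The 2 Wh per query is an assumption taken from the middle of the article's 1-3 Wh typical range; the function name is illustrative.

```python
# Marginal efficiency vs. total consumption: scale a small per-query cost up to a fleet.
def fleet_energy(users: float, queries_per_user_per_day: float, wh_per_query: float):
    """Return (daily energy in GWh, average continuous power in MW)."""
    daily_wh = users * queries_per_user_per_day * wh_per_query
    daily_gwh = daily_wh / 1e9
    avg_power_mw = daily_wh / 24 / 1e6   # spread evenly over 24 hours
    return daily_gwh, avg_power_mw


daily_gwh, avg_mw = fleet_energy(users=1_000_000_000,
                                 queries_per_user_per_day=10,
                                 wh_per_query=2.0)   # assumed mid-range value
print(f"{daily_gwh:.0f} GWh per day, about {avg_mw:.0f} MW of continuous draw")
```

Even at 2 Wh per query, this scenario works out to about 20 GWh per day, or several hundred megawatts of round-the-clock demand from inference alone.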

This matters because:

- Electricity grids aren't unlimited: Data centers are consuming increasingly large portions of available grid capacity. In some regions, this is pushing electricity prices up.

- Renewable energy isn't built out yet: Most grids still rely on fossil fuels. More consumption = more emissions, unless the power source is clean.

- Physical infrastructure is needed: Servers, cooling, transmission, backup power, etc. This has real environmental and capital costs.

- Regional concentration: Data centers cluster in specific areas, straining local power infrastructure and sometimes water supplies.

The honest takeaway from major tech companies right now is: we need to scale renewable energy deployment at a pace that matches AI growth. Not because AI is uniquely wasteful, but because total AI infrastructure is growing faster than we're building clean power.

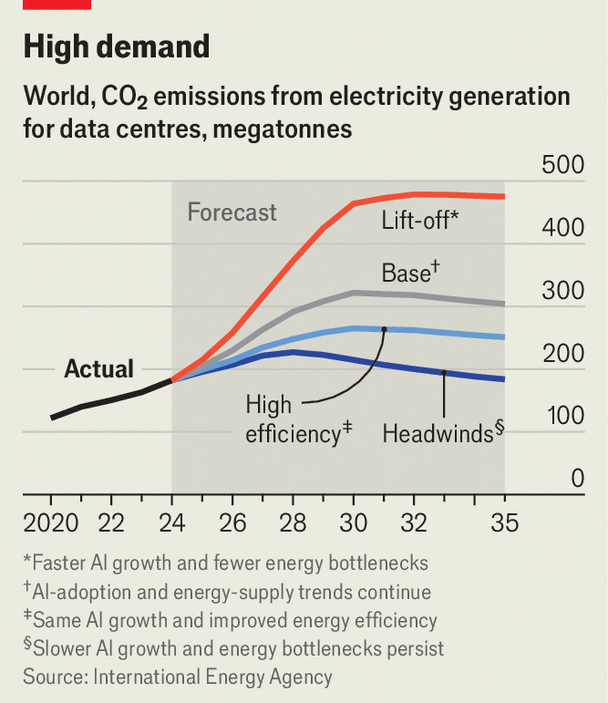

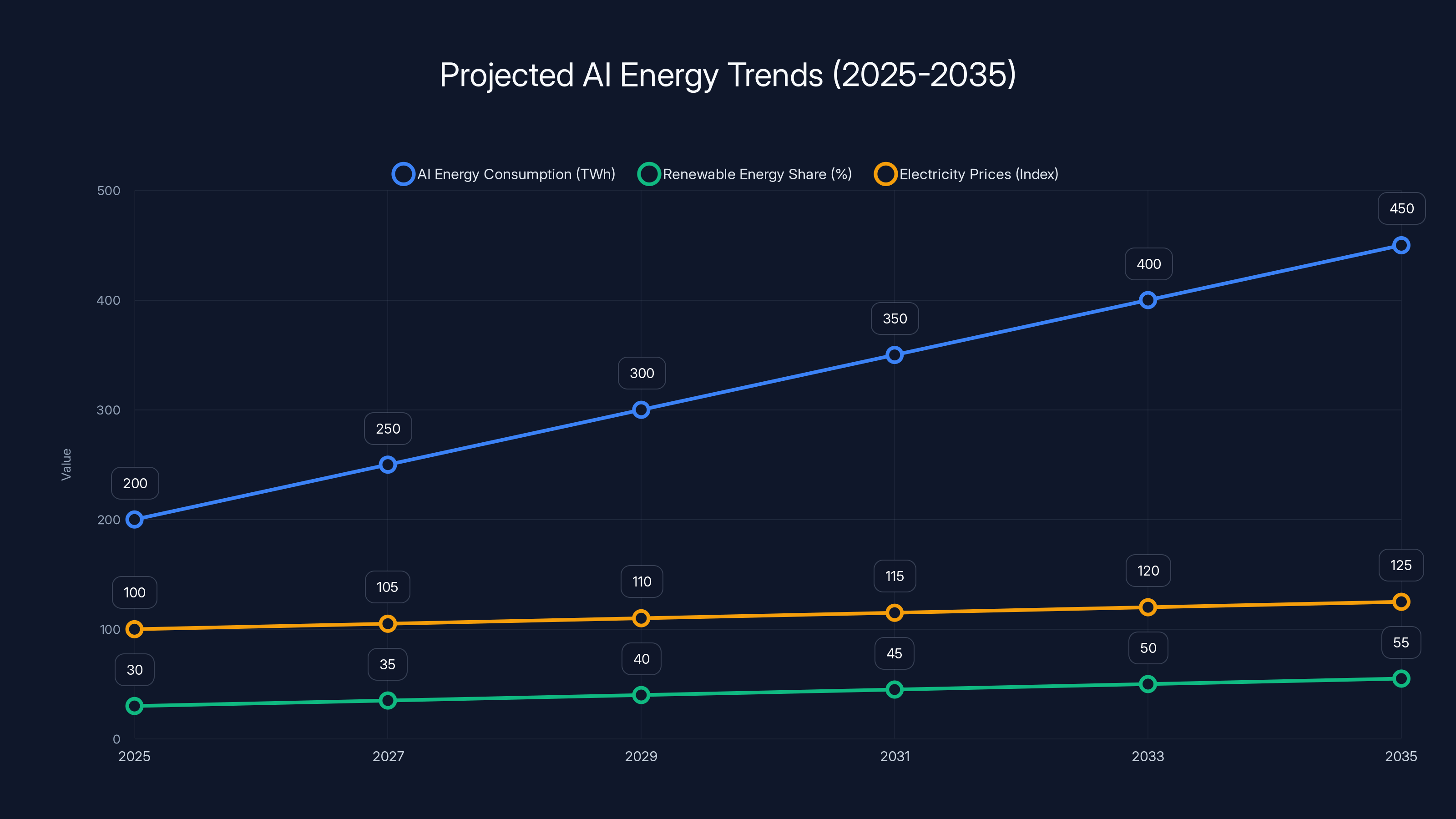

AI energy consumption is projected to grow significantly by 2035, with renewable energy share increasing but not fully matching the demand. Electricity prices are expected to rise, especially in regions with high data center concentrations. Estimated data.

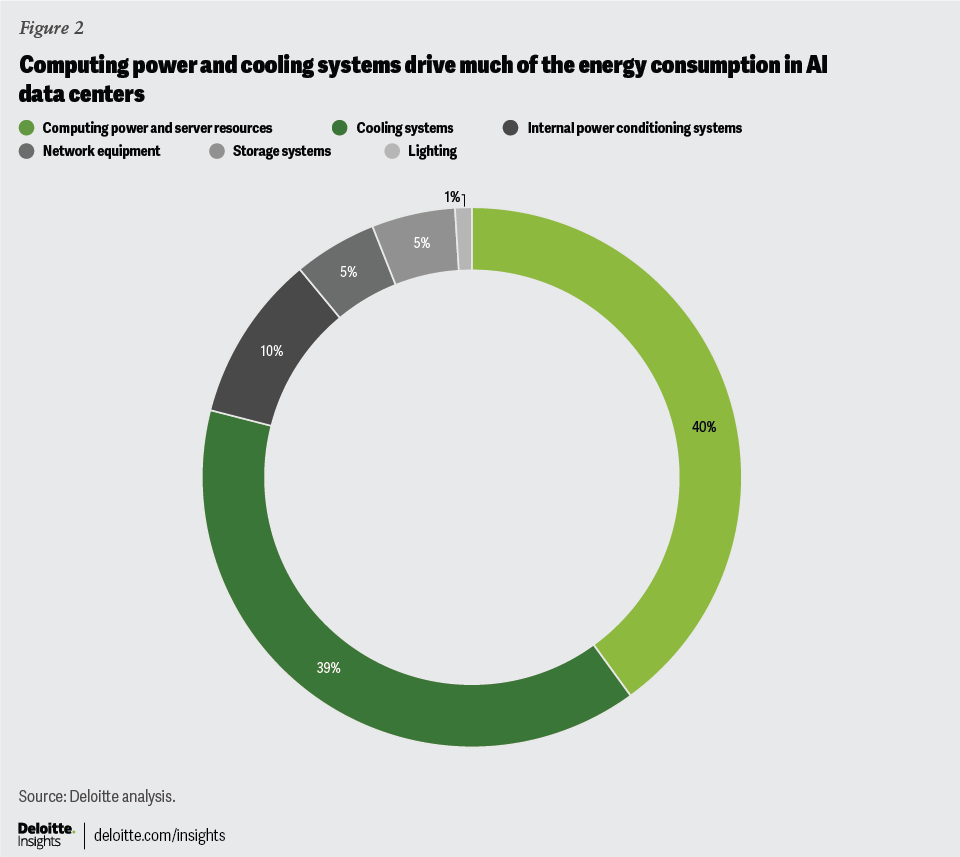

How Data Centers Actually Cool Themselves (And Why It Matters)

Understanding data center cooling is crucial because it's where the real environmental impact lives.

Older data centers used evaporative cooling (also called swamp cooling). You blow dry air across water-saturated pads, the water evaporates, and the air cools. It works, but it uses massive amounts of water. This is what triggered the original water-usage concerns, which were legitimate.

Modern data centers have shifted to several approaches:

Immersion Cooling: Hardware is submerged in special liquids (not water, typically engineered fluids or mineral oil). Heat transfers directly to the liquid. The liquid circulates to radiators. This is far more efficient than air cooling and uses no water. Companies like Microsoft and others have invested heavily in this technology.

Free Air Cooling: Many newer data centers use outside air directly, relying on geographic location and design to avoid needing mechanical cooling. Google has pioneered this in cool climates.

Liquid Cooling with Recycled Water: Some facilities use recycled water systems where the water is reused, not discharged.

Hybrid Systems: Combine multiple approaches to optimize for cost and environmental impact.

The key point: evaporative cooling is being phased out. The water usage concern was real but is becoming outdated as technology improves.

That said, electricity consumption remains real. A large modern data center can draw 50-200+ megawatts continuously, equivalent to the power needs of a small city.
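To relate a continuous power draw in megawatts to the annual energy figures used elsewhere in this article, a minimal conversion sketch follows. It assumes the facility runs at the stated load all year, which is a simplification.

```python
# Convert a continuous power draw (MW) into annual energy (GWh).
HOURS_PER_YEAR = 8_760   # 365 days x 24 hours


def annual_energy_gwh(continuous_mw: float) -> float:
    """Energy used in one year by a load that draws continuous_mw around the clock."""
    return continuous_mw * HOURS_PER_YEAR / 1_000


for mw in (50, 200):
    print(f"{mw} MW continuous -> {annual_energy_gwh(mw):,.0f} GWh per year")
```

Under that assumption, a 50 MW facility uses about 438 GWh per year and a 200 MW facility about 1,752 GWh.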

The 1.5 iPhone Battery Claim: Another Debunked Statistic

Another viral claim: a single ChatGPT query uses as much energy as 1.5 full charges of an iPhone battery.

Sam Altman's response was direct: "There's no way it's anything close to that much."

He's right.

Let's do the math:

iPhone Battery Energy:

- iPhone 15 battery capacity: roughly 3,500 mAh at 3.85 V nominal = approximately 13.5 watt-hours

- 1.5 iPhone batteries = roughly 20 watt-hours

ChatGPT Query Energy:

- Inference energy for a typical query: 0.5 to 5 watt-hours, typically in the 1-3 watt-hour range

- This is roughly 4 to 40 times less than the claim across the full range, and about 7 to 20 times less in the typical range
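The battery arithmetic above can be reproduced in a few lines of Python. The capacity and voltage are the article's rounded figures, and the function name is illustrative.

```python
# Compare the "1.5 iPhone batteries per query" claim with the inference estimates.
def battery_wh(capacity_mah: float, nominal_v: float) -> float:
    """Battery energy in watt-hours from capacity (mAh) and nominal voltage (V)."""
    return capacity_mah / 1_000 * nominal_v


iphone_wh = battery_wh(capacity_mah=3_500, nominal_v=3.85)   # article's rounded specs
claim_wh = 1.5 * iphone_wh

for query_wh in (0.5, 1.0, 3.0, 5.0):
    ratio = claim_wh / query_wh
    print(f"{query_wh} Wh/query -> the claim overstates energy by about {ratio:.0f}x")
```

The overstatement runs from about 4x at the top of the inference range to about 40x at the bottom.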

Where did the 1.5 battery number come from? Likely a conflation of different estimates, or someone multiplying training costs by a ridiculous factor.

The real number is: a ChatGPT query uses roughly the same energy as an iPhone processing a complex local task, not charging the entire battery.

This matters because false statistics undermine legitimate environmental concerns. When you debunk one exaggerated claim, people assume all the concerns are overblown. But the real issue—total data center energy draw in a world rapidly adopting AI—remains genuine.

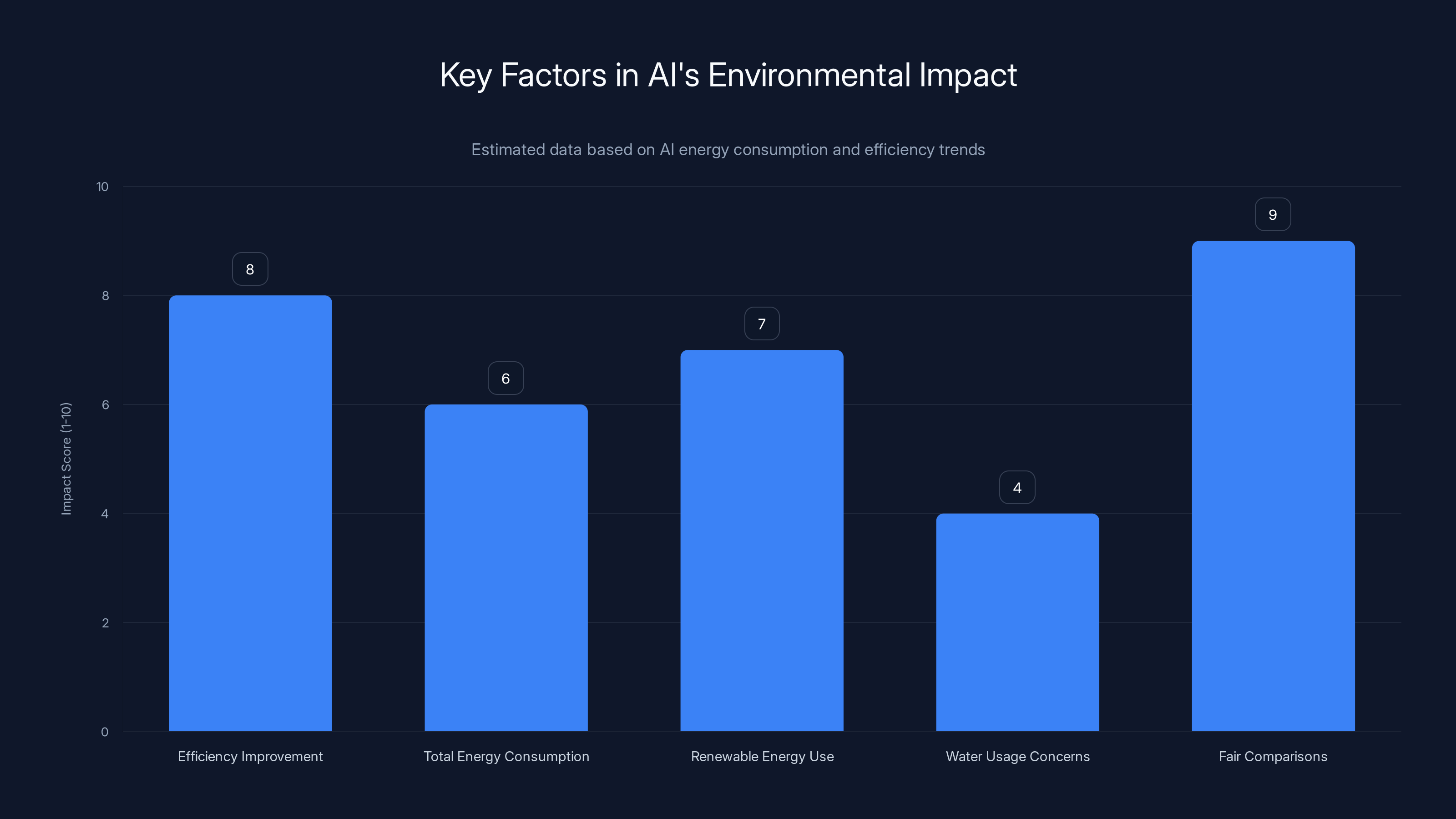

AI queries are becoming more energy-efficient, but total energy consumption is still growing. Renewable energy use and fair comparisons highlight AI's environmental benefits. Estimated data.

Energy Efficiency Gains: Why AI Is Actually Getting Cleaner

Here's something that rarely makes headlines: AI systems are becoming dramatically more energy-efficient.

This happens through several mechanisms:

Algorithm Improvements: Newer architectures like Transformers (the foundation of GPT and similar models) are more compute-efficient than earlier deep learning methods. Researchers continue optimizing them.

Hardware Specialization: Custom chips like Google's TPUs and NVIDIA's GPUs are optimized for AI workloads, consuming less energy per operation than general-purpose CPUs.

Model Optimization: Techniques like quantization (reducing floating-point precision), pruning (removing unnecessary connections), and distillation (training smaller models to mimic larger ones) all reduce energy needs.
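As a toy illustration of the quantization idea mentioned above, the sketch below maps floating-point weights to 8-bit integers with a single shared scale and then restores them. It is a simplified symmetric scheme with made-up weights, not how any particular production model is quantized.

```python
# Toy symmetric int8 quantization: store weights as small integers plus one scale factor.
def quantize_int8(weights):
    """Map floats to integers in [-127, 127] using one shared scale."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float values from the integers."""
    return [q * scale for q in quantized]


weights = [0.82, -1.27, 0.003, 0.45, -0.31]   # made-up example values
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
max_error = max(abs(a - b) for a, b in zip(weights, restored))
print(q, f"scale={scale:.4f}", f"max error={max_error:.4f}")
```

Each weight now fits in one byte instead of four, at the price of a rounding error no larger than half the scale. Moving and multiplying smaller numbers is where the energy saving comes from.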

Inference Optimization: Once a model is trained, companies spend significant effort optimizing how it runs. Caching, batching queries together, and hardware-specific optimizations all reduce per-query energy.

OpenAI has publicly stated that the per-token inference cost (the energy to process one unit of text) has dropped by over 90% since the original GPT-3. That's not a marginal improvement; that's a radical efficiency gain.

Meanwhile, demand is growing. So we have a scenario where:

- Each query uses less energy (efficiency improving)

- But total queries are increasing (demand growing)

- Net effect: total energy consumption is rising, but not as fast as it would without efficiency improvements

In real-world terms: as AI adoption grows from 1 billion to 2 billion to 5 billion daily users, even per-user efficiency gains won't prevent total energy consumption from rising significantly. The infrastructure needs to grow to accommodate demand.

Renewable Energy: The Real Solution (And Why It's Hard)

Alright, so AI uses energy. Some of it's concentrated in data centers. We need a solution.

The obvious one: power those data centers with renewable energy.

Major tech companies have committed to this. Google commits to carbon-neutral operations. Microsoft has climate pledges. Apple markets its renewable energy investments.

But here's the infrastructure reality: building enough renewable capacity to power growing AI infrastructure is genuinely hard.

Solar and Wind Generation:

- Solar panels have gotten cheap and efficient, but still require significant upfront capital, land, and years to deploy

- Wind farms are powerful but face siting challenges, environmental concerns, and transmission limitations

- Both are intermittent, requiring battery storage or backup power

Nuclear Power:

- Incredibly dense energy source, carbon-free, runs 24/7

- But regulatory approval takes years, construction takes a decade, and public acceptance is fraught

- New modular reactor designs are promising but unproven at scale

The Grid Problem:

- Even if you build renewable capacity, you need transmission lines to move power

- Electrical grids weren't designed for the kind of concentrated demand that modern data centers represent

- Grid upgrades take time

The Economics:

- It's cheaper to sign a power purchase agreement with an existing coal or natural gas plant than to build new renewable capacity

- So companies do both: they buy renewable credits (offsetting their carbon) while drawing power from the grid, which often includes fossil fuels

The honest assessment: tech companies are investing in renewable energy, but the pace of deployment is slower than the pace of AI infrastructure growth. This means, for the next 5-10 years, a growing portion of AI compute is powered by whatever is on the grid—which, in many regions, includes fossil fuels.

The practical path forward involves:

- Continuing efficiency improvements (shrinking the energy per-query)

- Scaling renewable energy deployment (especially nuclear and solar)

- Grid modernization (smart grids, demand response, storage)

- Geographic diversification (placing data centers where power is abundant)

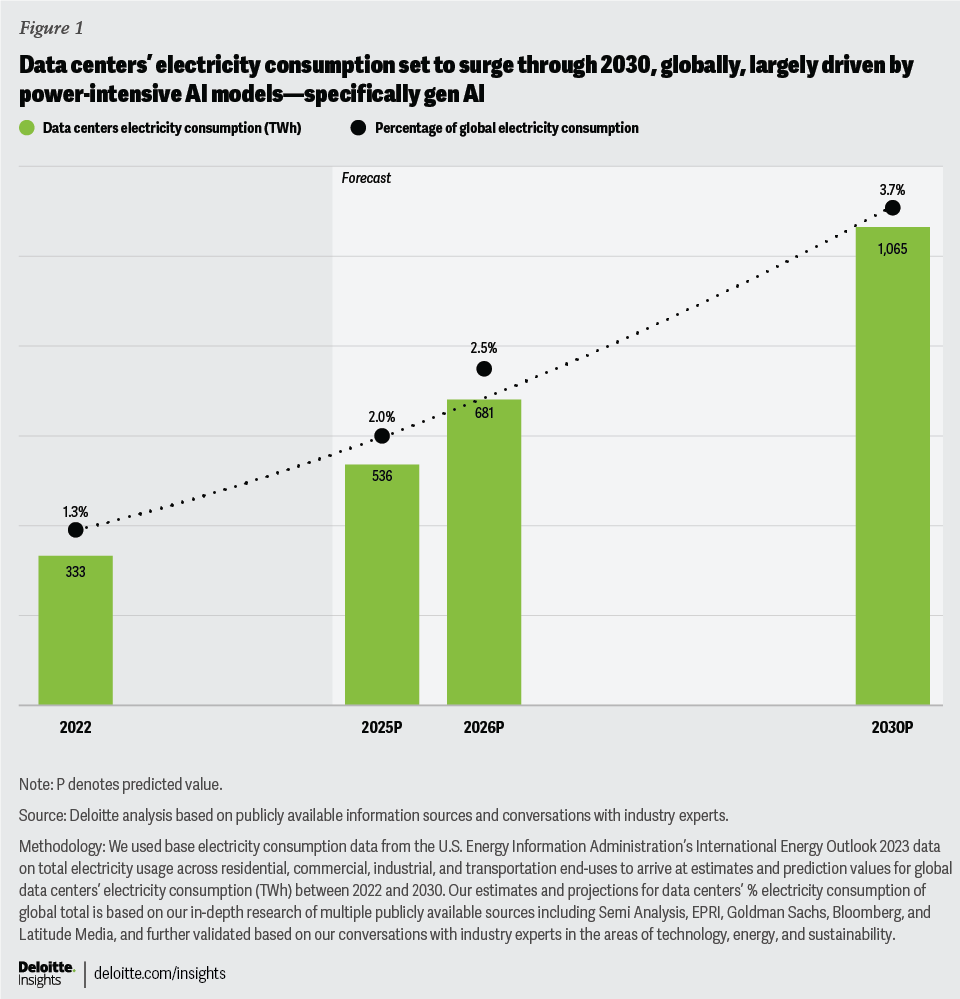

Data centers consumed approximately 1-2% of global electricity in 2023, highlighting their significant impact on energy resources. Estimated data.

What About Water Stress in Specific Regions?

While the "17 gallons per query" statistic is fabricated, water remains a real concern in water-stressed regions.

Data centers need cooling. Even efficient cooling systems need some water in many designs. In regions facing drought or limited freshwater supplies, concentrated data center development can exacerbate local water stress.

This has happened:

- TSMC's facilities in Taiwan have competed with agriculture for limited freshwater, leading to serious policy discussions.

- Data center expansion in the American Southwest (where water is increasingly scarce) has faced local opposition.

- Northern Europe and Canada have attracted data center investment partly because they have abundant water and cool climates.

The solution isn't to claim data centers don't use water. It's to:

- Use water-efficient cooling (immersion cooling, recycling systems)

- Locate facilities in water-abundant regions

- Invest in water recycling and reclamation infrastructure

- Be transparent about local water impact

The asymmetry is interesting: electricity concerns are global (power affects climate everywhere), but water concerns are hyperlocal (water impact depends on regional availability and use). Both matter, but they're different problems requiring different solutions.

The Fair Comparison: AI Inference vs. Human Expert Labor

Let's build out the most honest comparison: what's the energy cost of replacing human expert work with AI?

Scenario: Legal Research

Task: A lawyer needs to research precedent for a specific legal question.

Human Expert Path:

- Lawyer at desk: 20 watts (brain) + 10 watts (office equipment) + 5 watts (share of building cooling/lighting) = roughly 35 watts

- Time: 2 hours

- Total energy: 35 watts × 2 hours = 70 watt-hours

- Plus: commute, building infrastructure, salary, etc.

AI Path:

- User queries Claude or GPT or similar: 2 watt-hours for inference

- User reads and validates results: 25 watts × 0.25 hours = roughly 6 watt-hours

- Total: roughly 8 watt-hours

Result: AI uses roughly 9x less energy for the same task.
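The legal research comparison can be reproduced with a short Python sketch. All wattages and durations are the article's illustrative estimates, and the helper name is hypothetical.

```python
# Legal research scenario: human-only path vs. AI-assisted path.
def energy_wh(power_w: float, hours: float) -> float:
    """Energy in watt-hours for a constant power draw over a duration."""
    return power_w * hours


# Human path: brain + office equipment + share of building services, for 2 hours.
human_wh = energy_wh(power_w=20 + 10 + 5, hours=2.0)

# AI path: one inference call plus 15 minutes of human review at 25 W.
ai_wh = 2.0 + energy_wh(power_w=25, hours=0.25)

ratio = human_wh / ai_wh
print(f"human: {human_wh:.0f} Wh, AI-assisted: {ai_wh:.2f} Wh, ratio: {ratio:.1f}x")
```

Without rounding the intermediate values, the ratio comes out between 8 and 9, the same order as the rounded result above. Commute, building infrastructure, and similar overheads are left out on both sides.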

Scenario: Customer Service

Task: Answer 100 customer support questions.

Human Path:

- Customer service rep: 35 watts × 8 hours = 280 watt-hours

- Handles maybe 20-30 questions per day due to complexity and fatigue

- Per question: 9-14 watt-hours

AI Path:

- Chatbot handles 100 questions: roughly 100 × 2 watt-hours = 200 watt-hours (inference)

- Plus: human escalations for 10% of queries = 10 × 14 watt-hours = 140 watt-hours

- Total: roughly 340 watt-hours for 100 questions

- Per question: 3.4 watt-hours

Result: AI with human escalation uses roughly 3x less energy for the same throughput.
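The same approach works for the customer service scenario. The sketch below uses the article's figures (35 W over an 8-hour shift, 2 Wh per chatbot answer, 10% escalation at 14 Wh each); the function names are illustrative.

```python
# Customer service scenario: energy per question, human-only vs. chatbot with escalation.
HUMAN_POWER_W = 35
SHIFT_HOURS = 8


def human_wh_per_question(questions_per_day: float) -> float:
    """A full shift's energy divided by the number of questions handled."""
    return HUMAN_POWER_W * SHIFT_HOURS / questions_per_day


def ai_wh_per_question(n_questions: int, inference_wh: float,
                       escalation_rate: float, escalation_wh: float) -> float:
    """Chatbot inference for every question plus human handling for escalated ones."""
    inference_total = n_questions * inference_wh
    escalation_total = n_questions * escalation_rate * escalation_wh
    return (inference_total + escalation_total) / n_questions


human_low = human_wh_per_question(30)    # faster rep
human_high = human_wh_per_question(20)   # slower rep
ai = ai_wh_per_question(100, inference_wh=2.0, escalation_rate=0.10, escalation_wh=14.0)
print(f"human: {human_low:.1f}-{human_high:.1f} Wh, AI with escalation: {ai:.1f} Wh")
```

Depending on how many questions the human handles per day, the advantage ranges from a little under 3x to a little over 4x, consistent with the "roughly 3x" summary.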

These comparisons matter because they show that AI isn't inherently energy-wasteful relative to the tasks it displaces. In many cases, it's more efficient than the human alternative.

The problem isn't that AI is wasteful. The problem is that AI is so efficient that it enables entirely new use cases, expanding overall consumption. People don't replace human research with AI research; they ask AI 100 research questions instead of one.

The Growth Problem: More AI Means More Energy, Even If It's Efficient

This is the real crux of the energy concern.

AI systems might be energy-efficient on a per-query basis. They might use less energy than human alternatives. They might become 90% more efficient over time. But if usage grows exponentially, total energy consumption still rises dramatically.

This is called the rebound effect or Jevons paradox (originally observed with efficiency of steam engines leading to increased coal consumption).

Here's how it plays out with AI:

- Year 1: ChatGPT uses 500 GWh per year to serve millions of queries

- Year 2: Adoption doubles, but efficiency improves 20%, so total usage grows to 800 GWh

- Year 3: Adoption triples again relative to Year 2, efficiency improves another 20%, and total usage still grows to roughly 1,920 GWh

Even with efficiency gains, total consumption rises because demand grows faster.
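The year-over-year scenario can be sketched as simple compounding. The code assumes "efficiency improves 20%" means each query uses 20% less energy than the year before, and that adoption multipliers are measured against the previous year. Both are interpretations of the illustrative numbers above.

```python
# Rebound-effect arithmetic: demand growth vs. per-query efficiency gains.
def next_year_gwh(current_gwh: float, adoption_multiplier: float,
                  efficiency_gain: float) -> float:
    """Total energy after usage scales up and each query gets cheaper."""
    return current_gwh * adoption_multiplier * (1 - efficiency_gain)


year1 = 500.0                                                            # GWh, starting point
year2 = next_year_gwh(year1, adoption_multiplier=2, efficiency_gain=0.20)
year3 = next_year_gwh(year2, adoption_multiplier=3, efficiency_gain=0.20)

for label, gwh in (("Year 1", year1), ("Year 2", year2), ("Year 3", year3)):
    print(f"{label}: {gwh:,.0f} GWh")
```

Year 2 works out to 800 GWh and Year 3 to about 1,920 GWh when tripling is measured against Year 2. In this model, total consumption falls only when the adoption multiplier drops below 1 / (1 - efficiency gain), which is 1.25 for a 20% gain.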

This is why the honest conversation from major tech leaders now includes: "We're optimizing per-query efficiency, AND we're investing in renewable energy, because both are necessary."

The energy growth from AI isn't a bug in the system. It's a feature of success. As AI becomes more capable and more useful, more people use it, and total energy consumption grows. This is true of any technology: the internet, smartphones, cloud computing, etc.

The question isn't whether this is sustainable (it isn't, under current energy infrastructure). The question is whether we can build clean power infrastructure fast enough to accommodate it.

How AI Companies Actually Report on Energy (Or Don't)

Here's a frustrating fact: there's no legal requirement for tech companies to disclose energy and water usage.

So most don't. Or they do, in vague terms, buried in sustainability reports that focus on feel-good narratives rather than hard numbers.

Anthropic has published some training compute figures. OpenAI has discussed per-token efficiency improvements. But detailed operational data remains proprietary.

Why? It's competitively sensitive. Energy consumption and cooling efficiency are part of your competitive advantage if you've optimized them well. Companies don't want to disclose what they're spending on infrastructure.

This means:

- Scientists have to estimate based on published model sizes, hardware specs, and training duration

- Estimates vary wildly because many parameters are unknown

- Some claims are unverifiable and thus become impossible to debunk convincingly

- Bad statistics propagate because there's no clear source of truth

Some researchers have called for mandatory energy and water disclosure in AI model development, similar to how pharmaceutical companies must disclose clinical trial data. The logic is straightforward: if environmental impact is a legitimate concern, we should have access to real data.

Mandatory disclosure requirements could include:

- Annual third-party audits of data center energy and water use

- Training compute disclosed for major models

- Efficiency improvements tracked and published

- Carbon footprint calculated and verified

Without this, the conversation remains dominated by bad faith arguments ("AI is destroying the planet") and corporate vagueness ("we're committed to sustainability").

Future Outlook: Where AI Energy Trends Are Heading

Given current trajectories, where does AI energy consumption go?

Likely Scenario (2025-2030):

- Per-query efficiency continues improving through algorithm optimization, hardware specialization, and inference optimization

- Total AI energy consumption still grows because demand grows faster than efficiency improves

- Renewable energy investment accelerates but doesn't keep pace with growth, so fossil fuel grid power still supplies a significant portion of AI compute

- Electricity prices rise in regions with heavy data center concentration, spurring policy interventions

- Geographic consolidation around renewable-rich regions (Northern Europe, Canada, regions with cheap hydro/wind/solar)

2030-2035 Outlook:

Depends heavily on policy and investment:

- Optimistic path: Massive nuclear, solar, and wind deployment means AI growth is powered by clean energy; per-query costs continue dropping; AI becomes economically efficient enough to replace significant human labor

- Realistic path: Renewable deployment accelerates but lags behind AI growth; mixed grid with increasing clean energy percentage; energy costs become a meaningful input to AI model pricing; some cooling innovation reduces water concerns

- Pessimistic path: Deployment of clean energy stalls; AI energy costs become prohibitive for many use cases; return to on-premise or smaller models; public backlash limits AI adoption

The most likely outcome is between realistic and optimistic. The key factors:

- Nuclear power regulation and construction (Can we build small modular reactors quickly?)

- Battery storage technology (Can we store enough renewable energy for nighttime?)

- Grid infrastructure upgrades (Can we transmit power to where it's needed?)

- AI efficiency breakthroughs (Do we discover fundamentally more efficient approaches?)

- Policy intervention (Do carbon pricing or renewable mandates accelerate deployment?)

The Mitigation Strategies: What Companies Are Actually Doing

Large AI companies are implementing serious strategies to reduce energy impact. Some are genuine; some are greenwashing.

Real Strategies:

- Renewable energy procurement: Long-term power purchase agreements for wind and solar projects, which fund actual development. This is real, has measurable impact, and creates accountability.

- Efficiency optimization: Hardware-software co-optimization, kernel optimization, model distillation, quantization. This directly reduces per-query energy, measurable and verifiable.

- Chip design: Custom processors (TPUs, specialized GPUs) that reduce energy per operation. This is capital-intensive but works.

- Cooling innovation: Immersion cooling, free cooling, liquid cooling systems. Real hardware improvements with measurable water/energy savings.

- Geographic diversification: Placing facilities in renewable-rich regions. Microsoft's underwater data center experiments, for example, combined cooling efficiency with renewable energy proximity.

Greenwashing Strategies:

- Carbon offsets: Buying credits instead of reducing actual consumption. Useful as a gap measure, not a solution.

- Vague sustainability goals: "Carbon neutral by 2030" without explaining how, which often means offsets, not actual reduction.

- Renewable energy claims: Sometimes companies claim to run on renewable energy because they've signed power purchase agreements, but the actual electricity consumed comes from the grid, which includes fossil fuels.

- Marketing efficiency: Talking constantly about improvements while consumption grows.

The honest companies discuss both efficiency AND renewable energy procurement. The greenwashing companies focus on one and ignore the other.

A More Nuanced Take on AI Environmentalism

Here's the uncomfortable truth: AI's energy consumption is real and growing, but it's not uniquely bad compared to other technologies, and it often replaces more energy-intensive alternatives.

But saying "AI is efficient compared to human work" doesn't mean we should ignore energy impact. It means we should:

- Acknowledge the real costs rather than dismissing concerns or exaggerating them

- Compare fairly against realistic alternatives, not strawman scenarios

- Focus on solutions that are actually tractable (renewable energy deployment, not "stop using AI")

- Demand transparency so we can measure progress

- Invest in infrastructure that can sustainably support growth

The path forward isn't to reject AI because of energy concerns. It's to build the energy infrastructure that AI's growth demands.

That's harder than either "AI is fine" or "AI is destroying the planet," which is probably why those narratives dominate. But it's the honest conversation.

FAQ

How much energy does a single ChatGPT query actually use?

A typical ChatGPT query uses between 0.5 and 5 watt-hours of energy during inference, with most queries falling in the 1-3 watt-hour range. At about 2 watt-hours, that is roughly 1/7th the energy of a full iPhone 15 battery charge, not 1.5 batteries as some viral claims suggest. The exact amount varies based on query length, model version, and hardware optimization.

Is the "17 gallons of water per query" statistic true?

No, this statistic is false. It likely originated from misunderstandings about older evaporative cooling systems used in some data centers, which did consume significant water. Modern data centers have largely moved away from evaporative cooling to more efficient methods like immersion cooling and free air cooling, dramatically reducing water consumption. The per-query water usage claims lack scientific basis.

Why do AI companies say the energy comparison is unfair?

The comparison is unfair because it conflates training costs (one-time, up to hundreds of millions of dollars in compute, amortized across years of usage) with inference costs (per-query, very small). Additionally, comparing total training energy against a single query ignores that humans also require 20 years of development before they can answer questions, consuming roughly 17,000 kilowatt-hours of food energy along the way. Fair comparisons normalize for these differences.

How much energy does it take to train a large language model?

Training models like GPT-3 consumed approximately 1.3 million kilowatt-hours of energy, while GPT-4 likely required more due to increased scale. However, these are one-time costs amortized across billions of queries over years. Current estimates suggest the per-query training cost is negligible compared to inference costs when distributed across widespread usage.

What's the biggest factor in AI's environmental impact: training or inference?

The answer depends on scale. For a newly released model with limited usage, training dominates the total energy cost. But as models are deployed and reach billions of users, inference energy becomes more significant in aggregate, even though individual inference is cheap. For mature, widely-used models, the question shifts from training to total electricity consumption across all inference operations.

Are there technologies making AI more energy-efficient?

Yes, multiple areas of development are reducing energy consumption. These include algorithm optimization (like improved Transformer architectures), hardware specialization (custom chips like TPUs), inference optimization (quantization, pruning, caching), and cooling innovation (immersion cooling systems). OpenAI has reported over 90% improvements in per-token energy efficiency since GPT-3, demonstrating real progress.

Will renewable energy solve AI's environmental impact?

Renewable energy is necessary but not sufficient. The real challenge is deployment speed: building enough solar, wind, and nuclear capacity to power growing AI infrastructure while simultaneously decarbonizing other sectors. This is an infrastructure problem, not an AI problem. Even with aggressive renewable deployment, total energy consumption will grow, requiring significant grid modernization and storage infrastructure.

Should we be concerned about AI's environmental impact or not?

Yes, but for the right reasons. The concern isn't that individual AI queries are wasteful (they're relatively efficient). The concern is that total AI infrastructure energy consumption is growing rapidly, and we haven't yet built the clean power infrastructure to support it sustainably. The solution is scaling renewable energy and grid modernization, not limiting AI development.

Conclusion: Moving the Conversation Forward

Sam Altman's point about human brain development was actually the most important part of the recent conversation about AI energy, even if it seemed flippant at the time.

He wasn't saying AI has no environmental impact. He was pointing out that the way we measure and discuss that impact has been deeply broken. We've been comparing things in fundamentally different categories and pretending the numbers tell us something meaningful.

Here's what we actually know:

Individual AI queries are becoming more energy-efficient. Serious optimization work is happening across hardware, algorithms, and inference systems. That's verifiable and measurable.

Total AI energy consumption is still growing because demand grows faster than efficiency improves. This is normal for any successful technology, but it's worth acknowledging.

The environmental impact depends on the energy source. If AI runs on renewable energy, the marginal carbon cost of a query is close to zero. If it runs on coal, it's significant. The problem is infrastructure, not the AI itself.

Water usage concerns are mostly outdated. Modern data centers have moved away from the worst practices. Regional water stress remains a concern in specific areas, but it's not a global problem with AI technology.

Fair comparisons matter. When you compare AI against realistic alternatives (human experts, previous technology), AI often comes out ahead from an energy perspective. But those alternatives also had environmental costs we've normalized.
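Two of the points above, demand outpacing efficiency and the dependence on energy source, reduce to one multiplication: queries times energy per query times the grid's carbon intensity. The sketch below uses hypothetical round numbers throughout (query volumes, a 3 Wh to 0.3 Wh efficiency gain mirroring the 90% improvement, and illustrative grid intensities of 800 and 20 g CO2 per kWh), so treat the outputs as a shape, not a forecast.

```python
def annual_emissions_tonnes(queries_per_year: float,
                            wh_per_query: float,
                            grid_g_co2_per_kwh: float) -> float:
    """Yearly CO2 in tonnes: queries x energy per query x grid carbon intensity."""
    kwh = queries_per_year * wh_per_query / 1_000
    return kwh * grid_g_co2_per_kwh / 1e6  # grams -> tonnes


# All inputs below are illustrative assumptions, not measured values.
COAL_HEAVY = 800   # g CO2 per kWh, rough figure for a coal-dominated grid
LOW_CARBON = 20    # g CO2 per kWh, rough figure for a mostly renewable grid

before = annual_emissions_tonnes(1e9, 3.0, COAL_HEAVY)        # early deployment
after = annual_emissions_tonnes(1e11, 0.3, COAL_HEAVY)        # 10x efficiency, 100x demand
after_clean = annual_emissions_tonnes(1e11, 0.3, LOW_CARBON)  # same demand, cleaner grid

print(f"before: {before:,.0f} t  after: {after:,.0f} t  after (clean grid): {after_clean:,.0f} t")
```

In this toy scenario a tenfold efficiency gain is swamped by a hundredfold rise in demand, so emissions grow tenfold on the same grid, while moving that larger workload to the low-carbon grid brings the total below where it started.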

The path forward isn't mysterious:

- Continue optimizing AI system efficiency

- Scale renewable energy deployment as fast as possible

- Modernize electrical grids to handle concentrated demand

- Demand transparency from companies about energy and water usage

- Make environmental impact a core design consideration for new AI systems

None of this requires rejecting AI. All of it requires treating the energy challenge as genuinely important infrastructure work that needs serious investment and policy attention.

The conversation shouldn't be "Is AI wasteful?" That's too simple and the answer depends entirely on your reference point. The conversation should be "How do we build a clean energy infrastructure that can sustain the world we're building?"

That's harder. It requires thinking about electricity markets, nuclear policy, transmission infrastructure, battery storage, grid modernization, and long-term energy security.

But it's the conversation that actually matters.

And unlike the viral myths about water gallons and iPhone batteries, it's one grounded in real physics, real infrastructure challenges, and real solutions.

Key Takeaways

- The '17 gallons per query' water usage claim is false; it likely traces back to older evaporative cooling systems that most modern data centers have moved away from.

- Per-query energy costs have dropped by over 90% since GPT-3, but total consumption keeps growing because demand increases faster than efficiency improves.

- Fair energy comparison requires amortizing training costs across years of usage and comparing against human expert work, not cherry-picked scenarios.

- The real environmental challenge is deploying renewable energy infrastructure fast enough to match AI growth, not the technology itself.

- Renewable energy procurement and grid modernization, not efficiency tweaks alone, will determine if AI infrastructure becomes sustainable.
