Facial Recognition Goes Mainstream: Why 2026 Marks the Year Enterprise Tech Stops Being Exciting

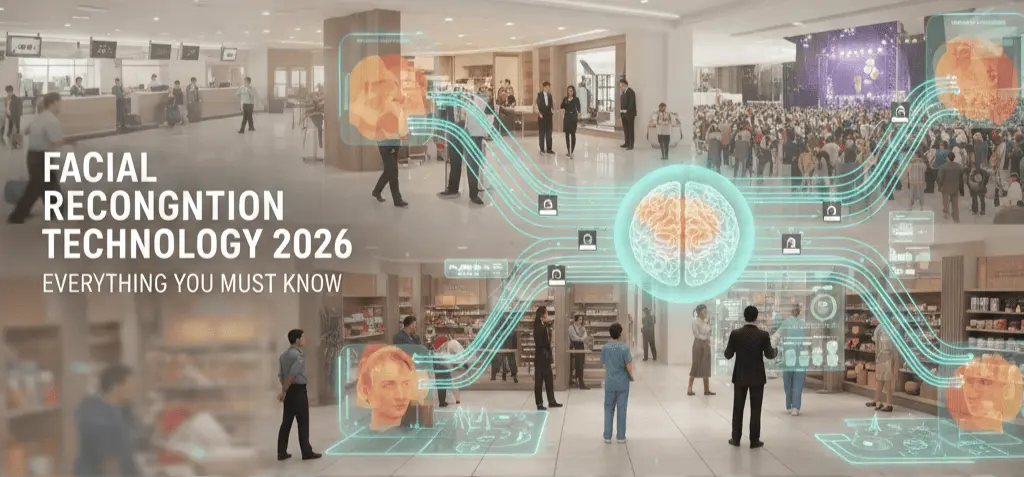

Here's the thing about transformative technology: the moment it becomes truly transformative is exactly when nobody gets excited about it anymore.

Cloud computing used to feel like magic. Now it's just infrastructure. Multi-factor authentication used to be this novelty thing that security teams evangelized. Now it's table stakes. And in 2026, facial recognition technology is about to make that same transition.

It's already happening. Quietly. Without the breathless press releases or the dystopian think pieces. Organizations across border control, financial services, workplace security, and healthcare are moving facial recognition out of pilot programs and into production systems. Not as innovation theater. Not as proof-of-concept. But as actual, everyday infrastructure that runs the business.

When a technology becomes boring, that's when the real transformation begins.

The shift from "cutting-edge science experiment" to "business-critical infrastructure" matters more than it might seem. It means facial recognition will finally have to prove itself in the real world, with all its complications and constraints. It means accuracy standards will tighten. It means governance and accountability will stop being afterthoughts. It means trust has to be earned, not assumed.

This isn't hype. It's maturity. And if you're building or deploying systems in 2026, understanding this shift is non-negotiable.

TL;DR

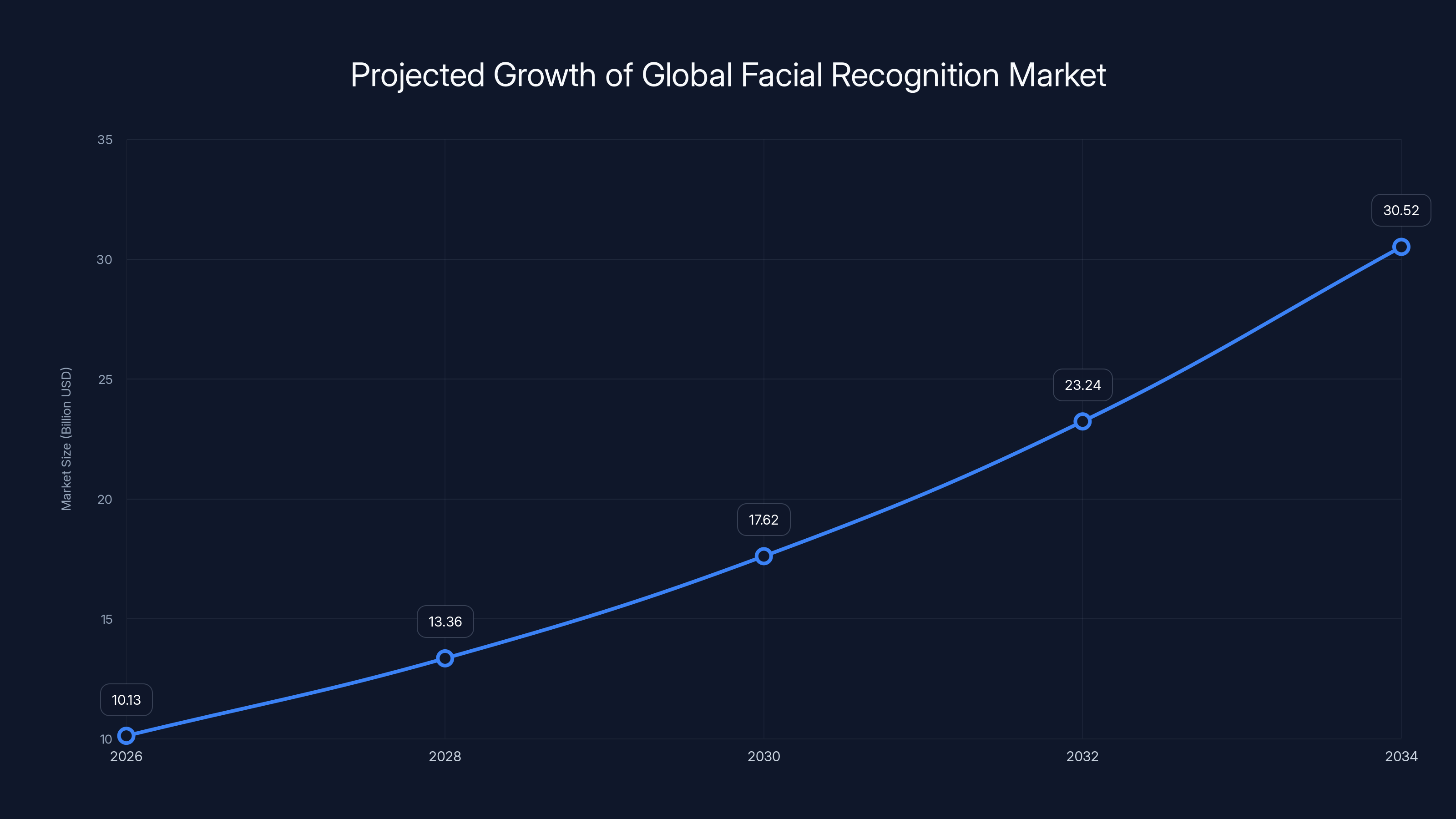

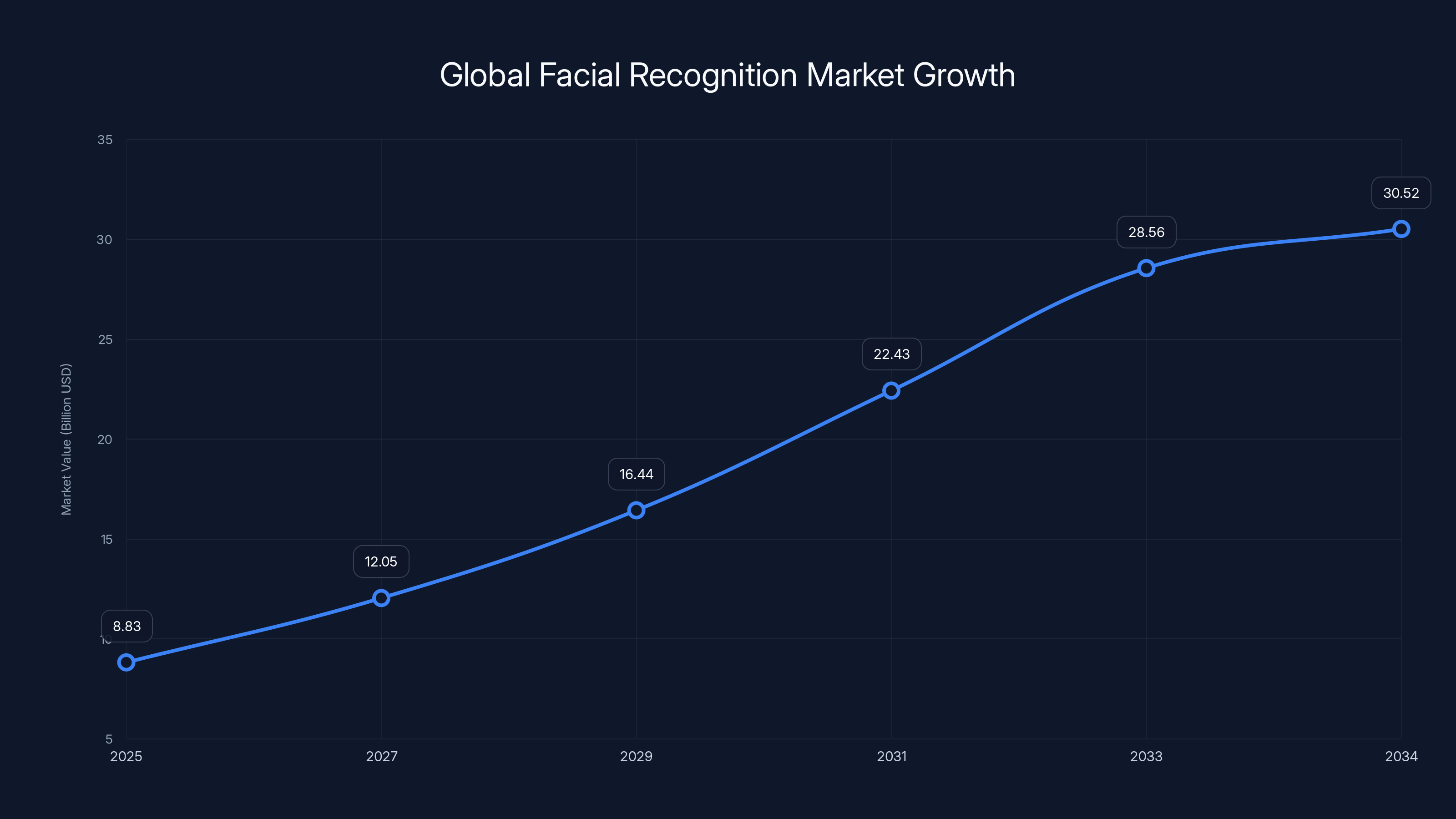

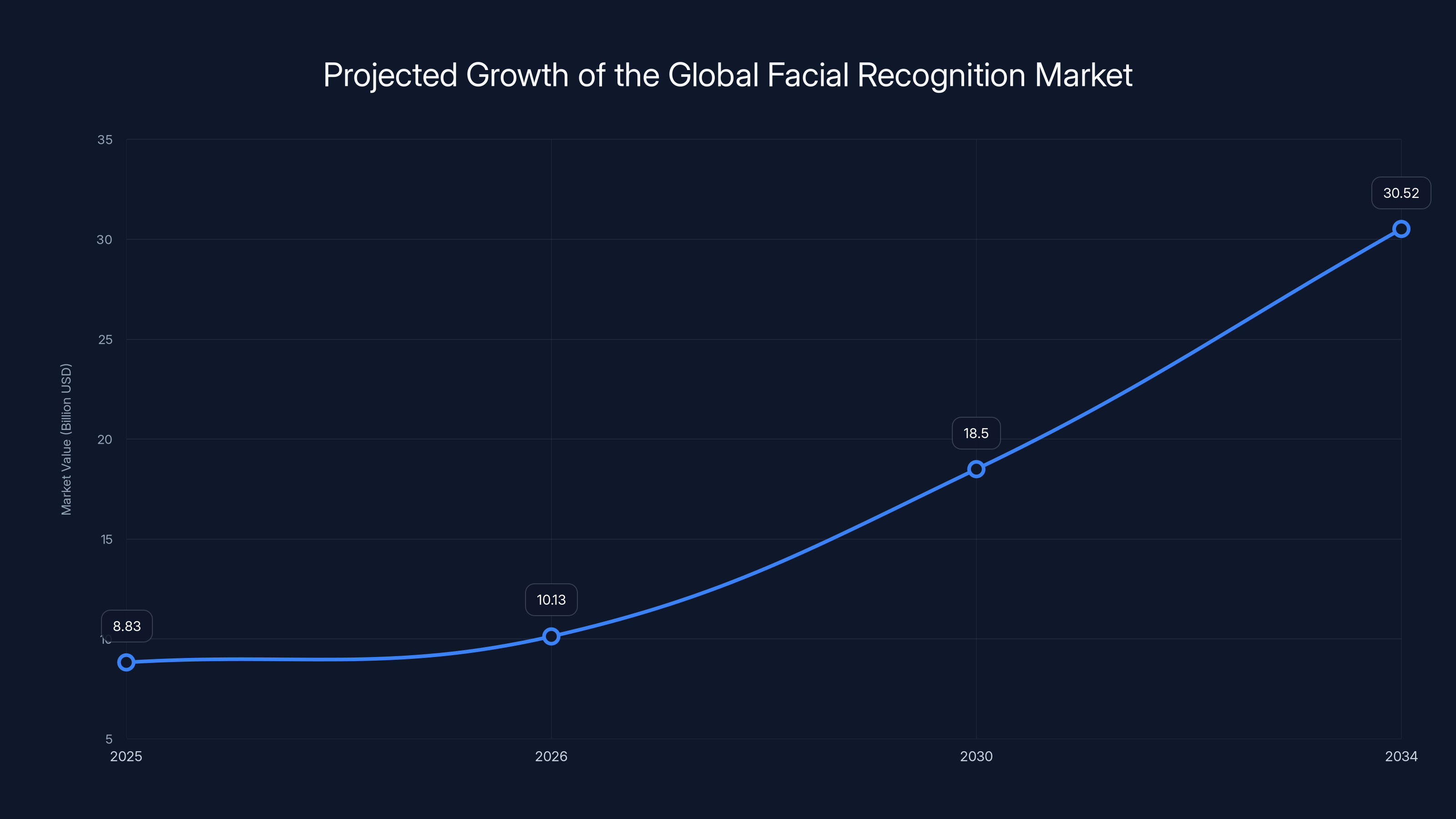

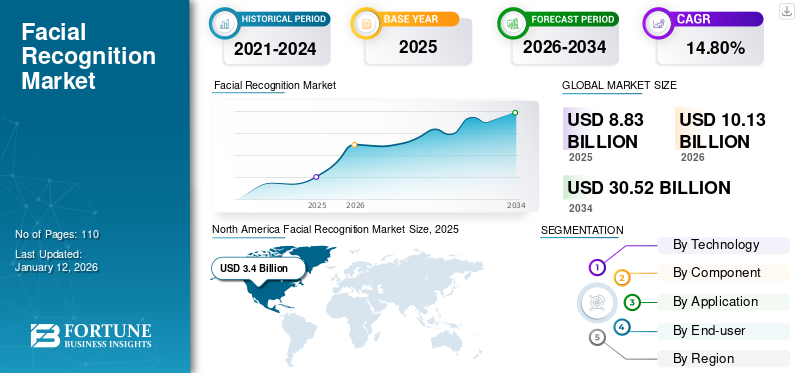

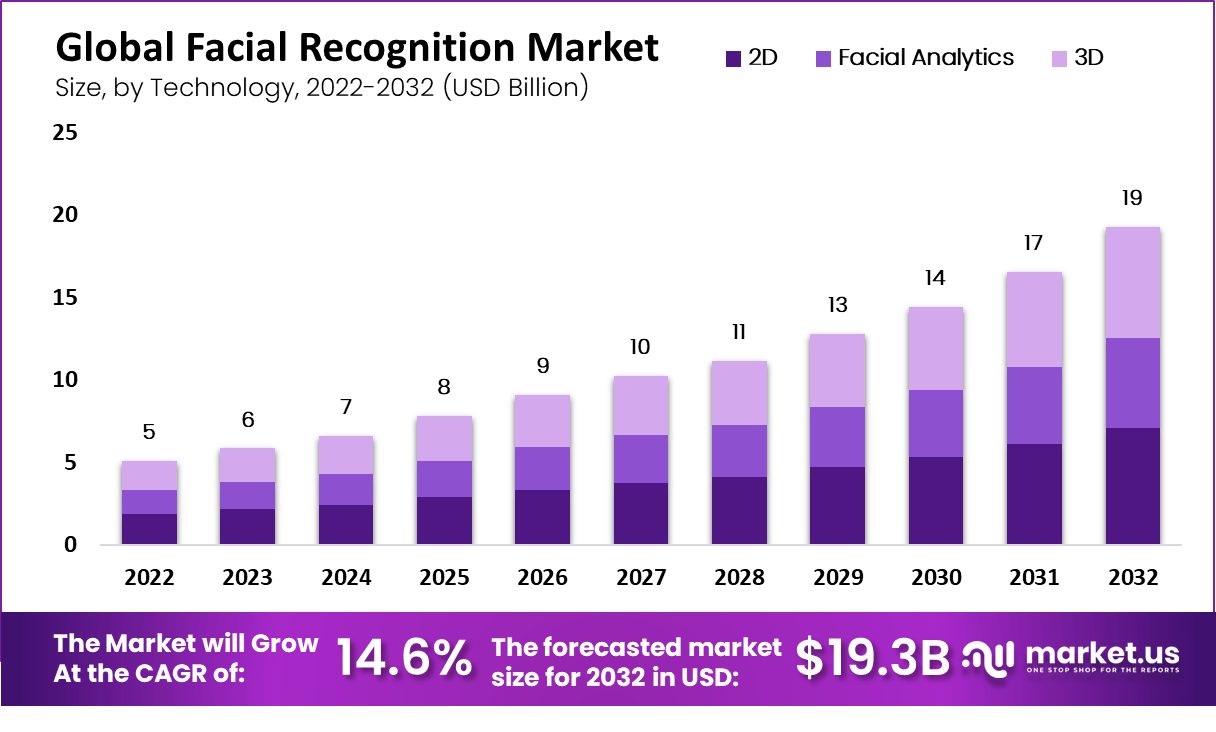

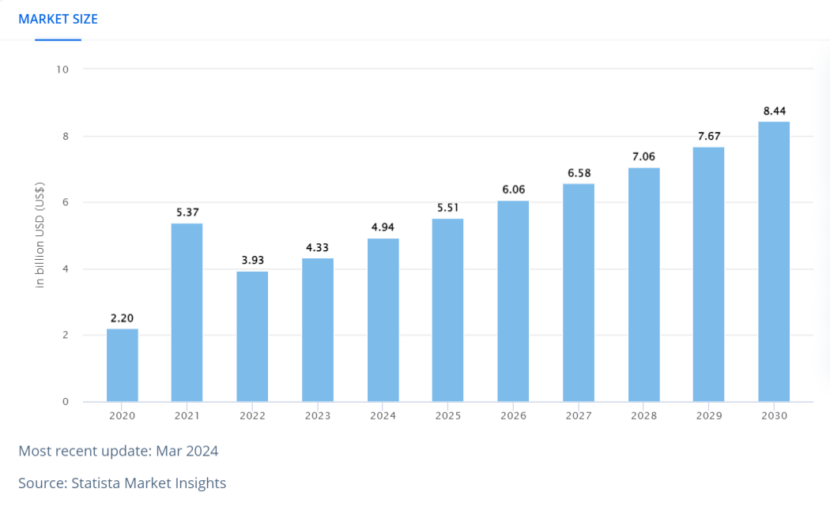

- Market trajectory is undeniable: The global facial recognition market is projected to reach $30.52 billion by 2034, growing at a 14.80% compound annual growth rate

- Production deployment is accelerating: Organizations are moving FRT from pilots to live operations across border control, financial services, healthcare, and workplace security

- Accuracy matters more than speed: Real-world performance varies dramatically based on lighting, demographics, camera quality, and operational context, so static benchmark scores alone say little about how a system will perform in production

- Governance becomes non-negotiable: As FRT scales, privacy, transparency, and regulatory compliance shift from nice-to-have to business-critical requirements

- Trust must be built systematically: Successful FRT deployment requires clear policies, ongoing testing, stakeholder communication, and accountability mechanisms from day one

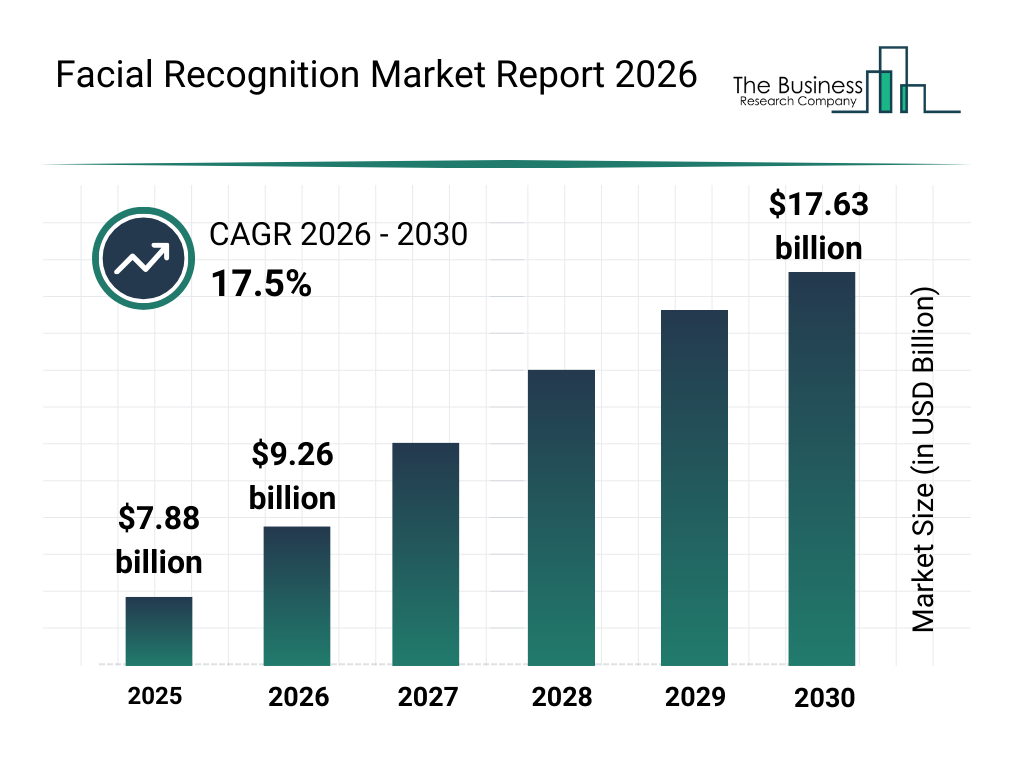

The global facial recognition market is expected to grow significantly through 2034.

The Quiet Revolution: Facial Recognition Leaves the Lab

Facial recognition has been around longer than most people realize. The foundational mathematical concepts go back decades. But for the general public—and for most enterprises—it's always felt like something out of science fiction.

That's changing. And it's not because the technology suddenly became better overnight. It's because the conditions for adoption have finally aligned.

First, the infrastructure exists. You need computational power, storage, and connectivity. Cloud computing solved that. GPUs became accessible and affordable. Real-time processing at scale went from impossible to routine. Organizations don't need massive on-premises server farms anymore. They need API calls and cloud spending.

Second, the accuracy crossed a threshold. Modern facial recognition systems reached human-level accuracy on standard benchmarks in the mid-to-late 2010s. That's not to say they're perfect (they're not), but they're good enough to be useful in constrained environments. And that's the key word: constrained. When you control the variables, FRT becomes genuinely reliable.

Third, the regulatory landscape started developing. The EU's AI Act forced organizations to think about accountability. The UK's Information Commissioner's Office issued guidance on facial recognition in CCTV. GDPR forced businesses to ask hard questions about biometric data processing. Regulation doesn't kill adoption; it forces adoption to become responsible.

Fourth, competitive pressure became real. If a comparable airport or border agency is using facial recognition to cut processing time from 5 minutes to 30 seconds, you're now at a disadvantage. If your peer in financial services is using liveness detection to prevent fraud on remote account openings, you have a security gap. Adoption becomes less about innovation and more about keeping pace.

All of this adds up to a maturation curve. And 2026 is where the inflection point hits.


From Pilot Hell to Production Reality: The FRT Maturation Curve

Every transformative technology follows a similar path. And facial recognition is no exception.

Phase 1: The Science Phase (roughly 2010-2015). Academic papers. Research institutions proving feasibility. Benchmarks showing steady accuracy improvements. Excitement in specialized circles. Nobody outside of computer vision researchers cares.

Phase 2: The Hype Phase (roughly 2015-2020). Startups emerge. Tech press goes crazy. "AI is here!" "Facial recognition will change everything!" Companies spin up pilot programs. Big announcements. Pilot results rarely translate to production.

Phase 3: The Pilot Hell Phase (roughly 2018-2025). Lots of organizations have pilots. They work fine in controlled environments. Real-world deployment reveals unexpected problems. Accuracy drops. Performance degrades under load. Privacy concerns become concrete. Some companies push forward. Most sit in extended pilot limbo.

Phase 4: The Production Phase (starting 2026). The companies that solved the real-world problems in Phase 3 now have production systems running at scale. Others learn from their experience and adopt faster. The technology becomes standard infrastructure. Press stops covering it because it's boring.

We're entering Phase 4. Not everywhere, not evenly across industries. But in sectors with clear use cases and regulatory pressure—border control, financial services, workplace security, healthcare—facial recognition is moving into production.

Why does this matter? Because Phase 4 is where the real work begins.

In production systems, accuracy isn't an average across your test set. Accuracy is measured in how many times it fails when someone's backlit by a window at 3 PM on a Tuesday. Accuracy is how it performs on demographics that were under-represented in training data. Accuracy is what happens when your camera degrades or your lighting changes seasonally.

Production systems also have to deal with scale. A pilot serving 100 people a day is fundamentally different from a system serving 100,000 people a day. Throughput requirements change. Storage requirements explode. Latency budgets tighten. Systems that looked reliable at pilot scale can fail catastrophically at production scale.

And production systems have accountability. When a pilot makes a mistake, you learn from it. When a production system makes a mistake, someone gets denied entry to a secure area. Or a fraudulent account gets opened. Or law enforcement arrests the wrong person. The stakes change.

The Market Numbers: Toward $30.52 Billion by 2034

Market projections are always estimates. They're rarely precise. But the direction matters. And the direction for facial recognition is unambiguous.

From its 2025 valuation, the global facial recognition market is projected to reach $30.52 billion by 2034, a compound annual growth rate of 14.80%.

To put that in perspective, that is sustained double-digit growth for nearly a decade. It's growth that suggests genuine adoption, not speculative investment. It's growth that indicates organizations are moving from conversation to deployment.
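Because growth compounds, a 14.80% CAGR over the nine years from 2025 to 2034 amounts to far more than nine times 14.8%. The sketch below shows the arithmetic; the starting value in the last line is a hypothetical round number for illustration, not the reported 2025 valuation.

```python
def growth_multiple(cagr: float, years: int) -> float:
    """Total growth factor after compounding `cagr` annually for `years` years."""
    return (1.0 + cagr) ** years


def project(start_value: float, cagr: float, years: int) -> float:
    """Project a value forward at a constant compound annual growth rate."""
    return start_value * growth_multiple(cagr, years)


years = 2034 - 2025  # nine compounding periods
print(f"Compound multiple: {growth_multiple(0.148, years):.2f}x")  # about 3.46x
print(f"Simple (non-compounded) growth: {0.148 * years:.1%}")

# Hypothetical starting value of 10.0 (any unit), purely for illustration.
print(f"{project(10.0, 0.148, years):.2f}")
```

In other words, the stated rate implies a market roughly three and a half times its 2025 size by 2034, not the "a little over double" that simple addition of 14.8% per year would suggest.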

But here's what's important: growth in market size doesn't automatically mean growth in maturity. A market could be growing because more companies are throwing more money at the same problems, not because those problems are being solved.

In the case of facial recognition, though, the growth correlates with something more meaningful: deployment across new use cases and new geographies.

Border control applications are scaling beyond early adopters. Countries across Asia, Europe, and North America are rolling out large-scale facial recognition systems for passport control and customs screening. What used to be a novelty in select airports is becoming standard infrastructure.

Financial services adoption is accelerating. Banks and payment processors are implementing facial recognition for identity verification, fraud detection, and remote onboarding. The friction that kept digital-only banking from being truly seamless is shrinking. Companies that previously required in-person identity checks are moving to fully remote account opening with facial liveness detection.

Workplace access control is becoming more sophisticated. Security teams that spent decades managing physical keycards and badge readers are migrating to facial recognition for access control. It's faster, harder to spoof, and creates an audit trail that cards can't match.

Healthcare facilities are implementing facial recognition for patient identification, preventing medication errors and improving security. It sounds like a small use case until you realize that patient misidentification is a known patient safety issue, and anything that reduces it has value.

Each of these use cases pushes forward the timeline for maturation. Each success in production deployment provides a template for the next organization considering adoption. Each solved problem becomes a case study.


Where Facial Recognition Actually Works: Use Cases That Justify Deployment

Facial recognition isn't a general-purpose technology. That's actually a strength, not a limitation. It works exceptionally well in specific contexts, and those contexts are scaling rapidly.

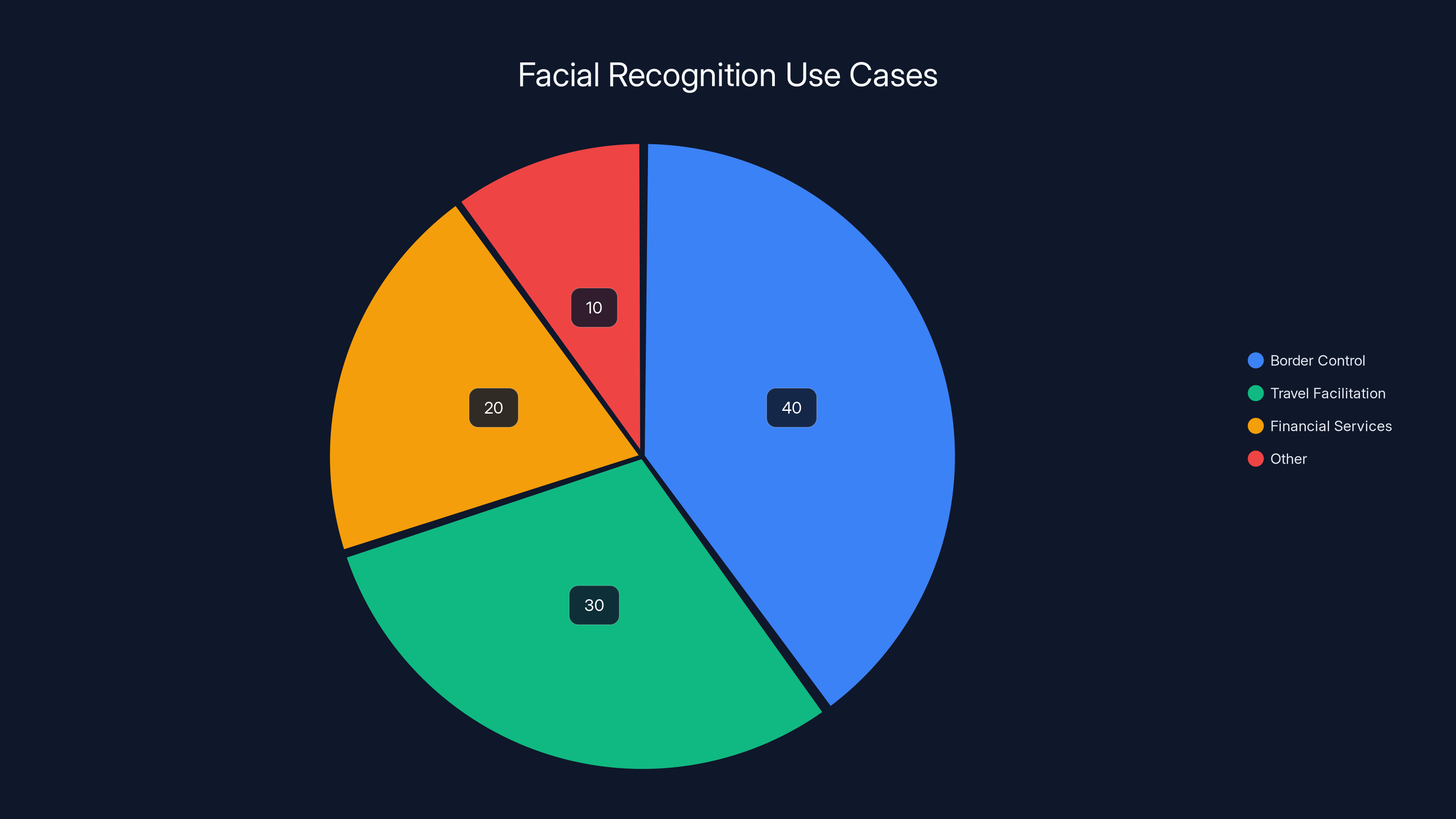

Border Control and Travel Facilitation

This is the use case where facial recognition matured first. Why? Because the constraints are clear, the stakes are manageable, and the potential efficiency gains are massive.

Traditional passport control involves a human officer examining a physical document, comparing the photo to the person's face, checking against databases, and making a decision. At high volume, this takes time. Queues form. Friction builds.

Facial recognition automates the comparison step and accelerates database lookups. The system captures an image, compares it against the passport database, checks watchlists and no-fly lists in milliseconds, and flags anomalies for human review. Processing time drops from minutes to seconds.
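At its core, the verification step is a 1:1 comparison between a live capture and the passport photo, with anything uncertain routed to an officer. Here is a minimal sketch under stated assumptions: face embeddings come from some model not shown here, similarity is cosine similarity, and the 0.8 threshold and the `on_watchlist` flag (a stand-in for a real watchlist lookup) are hypothetical.

```python
import math
from dataclasses import dataclass
from typing import Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity between two face embeddings (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


@dataclass
class GateDecision:
    outcome: str  # "clear" or "human_review"
    reason: str


def verify_traveller(live: Sequence[float], passport: Sequence[float],
                     on_watchlist: bool, threshold: float = 0.8) -> GateDecision:
    # Watchlist hits are never resolved automatically; an officer decides.
    if on_watchlist:
        return GateDecision("human_review", "watchlist hit")
    score = cosine_similarity(live, passport)
    if score >= threshold:
        return GateDecision("clear", f"match score {score:.2f}")
    # Low-confidence match: hand off to the officer at the gate.
    return GateDecision("human_review", f"low match score {score:.2f}")


print(verify_traveller([0.9, 0.1, 0.4], [0.8, 0.2, 0.5], on_watchlist=False))
```

The threshold is a parameter rather than a constant because the right value depends on conditions at the specific gate.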

The key constraint: controlled environment. Lighting is designed for facial recognition. The person knows they're being photographed. They're not moving quickly. If the system can't get a good match, a human is right there to handle it.

This is why airports, border agencies, and travel hubs are leading deployment. The operational case is clear. The failure modes are manageable. The reference images come from government ID databases, which are reliable and comprehensive.

Real-world example: The UK's border control system now processes facial recognition checks on millions of passengers annually. The system has matured to the point where it's trusted infrastructure, not an experiment.

Financial Services and Identity Verification

Banks and payment processors face a fundamental problem: how do you verify someone's identity remotely without seeing them in person?

Traditional solutions involved document scanning (easy to fake), video calls (slow and expensive), and knowledge-based questions (vulnerable to data breaches). None of them are perfect.

Facial liveness detection solves a piece of this. It confirms that the person claiming to be the account owner is physically present and not just holding up a photo. Combined with document scanning and other checks, it creates a verification workflow that's faster and more secure than in-person visits.
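This combined workflow can be read as a routing rule: approve automatically only when every check passes, and send everything else to a human reviewer. A minimal sketch, assuming three upstream checks with the score ranges shown; the thresholds and field names are illustrative, not any particular vendor's API.

```python
from dataclasses import dataclass
from typing import Iterable


@dataclass
class OnboardingChecks:
    document_valid: bool     # outcome of a document-authenticity check
    liveness_score: float    # 0..1, from a liveness detector
    face_match_score: float  # 0..1, selfie vs. ID-document photo


def route_application(c: OnboardingChecks,
                      liveness_min: float = 0.9,
                      match_min: float = 0.85) -> str:
    """Return "auto_approve" only when all checks pass; otherwise "manual_review"."""
    if (c.document_valid
            and c.liveness_score >= liveness_min
            and c.face_match_score >= match_min):
        return "auto_approve"
    return "manual_review"


def manual_review_rate(applications: Iterable[OnboardingChecks]) -> float:
    """Share of applications that still need a human reviewer."""
    routes = [route_application(a) for a in applications]
    return routes.count("manual_review") / len(routes) if routes else 0.0


apps = [
    OnboardingChecks(True, 0.97, 0.91),   # clean application
    OnboardingChecks(True, 0.40, 0.93),   # possible photo held up to the camera
    OnboardingChecks(False, 0.99, 0.95),  # document failed authenticity check
]
print(manual_review_rate(apps))
```

The manual-review rate is the lever here: tightening or loosening the thresholds trades reviewer workload against fraud risk.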

Organizations in the financial sector report that liveness detection reduced the need for second-factor human review by 40-60%, cutting identity verification time from 15 minutes to 5 minutes or less. For remote account opening, that's transformative.

The constraint: the person initiating the account opening is trying to be verified. They want the process to succeed. There's genuine incentive alignment.

Workplace Access Control

Security teams managing physical access have always faced tradeoffs between security and friction.

Badge-based systems are fast but spoofable. Guards checking IDs are secure but slow. Biometric fingerprint systems are reliable but require physical contact and can fail for people with worn fingerprints.

Facial recognition sits in an interesting middle ground. It's fast (faster than guards checking badges). It's hard to spoof (much harder than stolen badges). It creates an audit trail. And unlike fingerprints, it isn't affected by physical wear, though faces do change with age, so enrollment photos need periodic updates.

Companies deploying facial recognition for access control report measurable improvements: fewer delays during peak hours, fewer instances of unauthorized access, and better audit trails for security reviews.

The constraint: the system sees the same enrolled people repeatedly. Reference images can be kept current as staff change over time, so match quality tends to hold up or improve.

Healthcare Patient Identification

This use case highlights why maturation matters. Patient misidentification sounds like an edge case, but it's surprisingly common. Studies suggest that patient identification errors occur in roughly 1-2% of healthcare encounters. Most are caught before they cause harm. Some aren't.

Facial recognition provides a secondary check. After a patient checks in and gets a wristband, the system can verify that the right patient is receiving the right medication or procedure. It's not about surveillance. It's about safety.
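As a secondary check, the logic can be as simple as requiring the wristband, the face match, and the medication order to agree before proceeding, with a fallback to the standard manual identity check when no confident face match is available. A minimal sketch; the identifier format and the returned messages are hypothetical.

```python
from typing import Optional


def medication_check(wristband_id: str,
                     face_match_id: Optional[str],
                     order_patient_id: str) -> str:
    """Secondary patient-identity check before administering medication.

    face_match_id is None when the system could not make a confident match.
    """
    if wristband_id != order_patient_id:
        return "stop: wristband does not match the order"
    if face_match_id is None:
        # No confident match: fall back to the standard manual identity check.
        return "manual check: no confident face match"
    if face_match_id != wristband_id:
        return "stop: face does not match the wristband"
    return "proceed"


print(medication_check("P-1042", "P-1042", "P-1042"))  # proceed
```

Note that a missing face match never blocks care on its own; it only sends staff back to the existing manual process.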

Hospital systems implementing facial recognition for patient verification report measurable improvements in safety metrics. Not dramatic ones: 1-2% reductions in identification errors. But in healthcare, 1-2% across millions of patient interactions adds up.

The constraint: patients have every incentive to be identified correctly. The system operates in controlled indoor environments. Accuracy requirements are high, but failure modes are manageable.

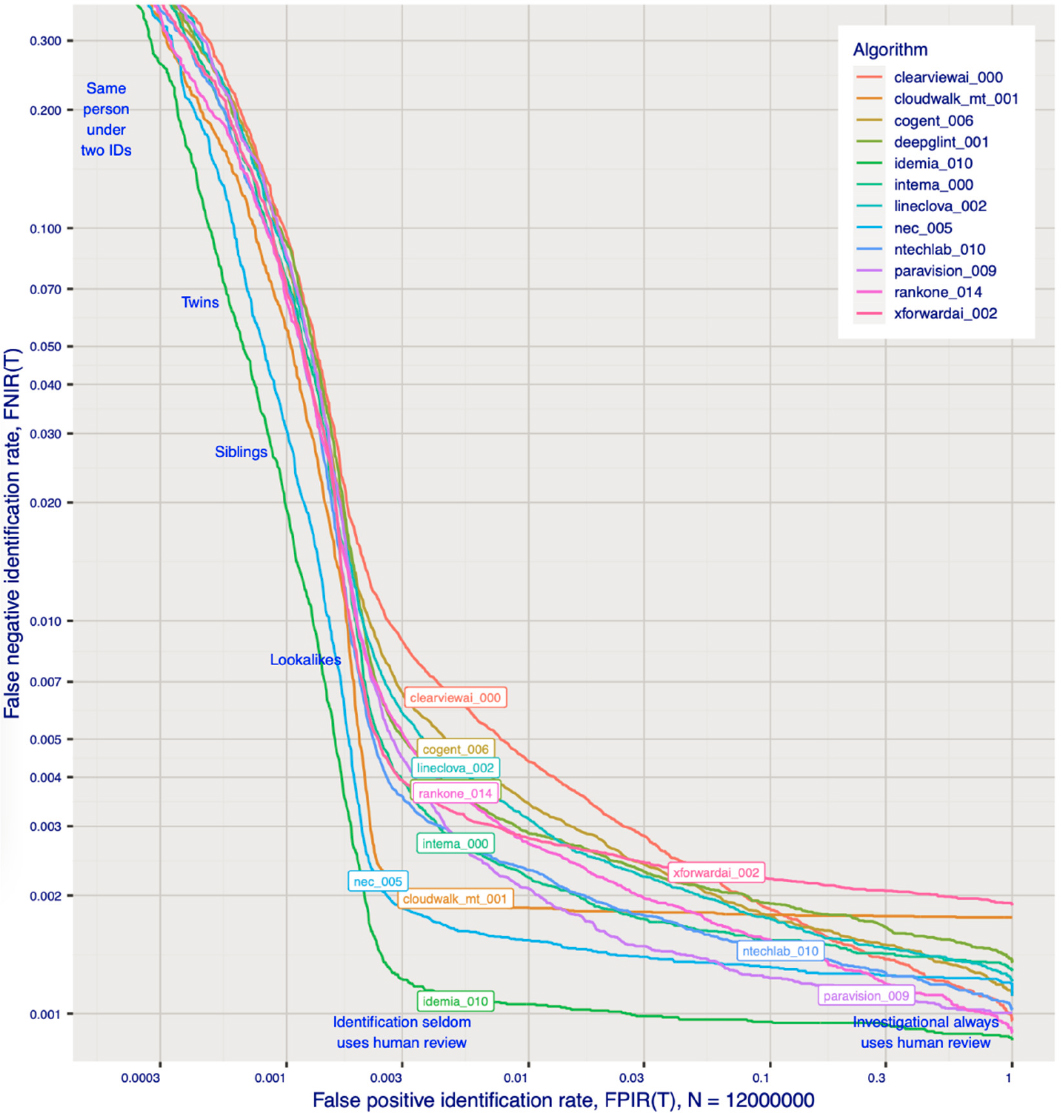

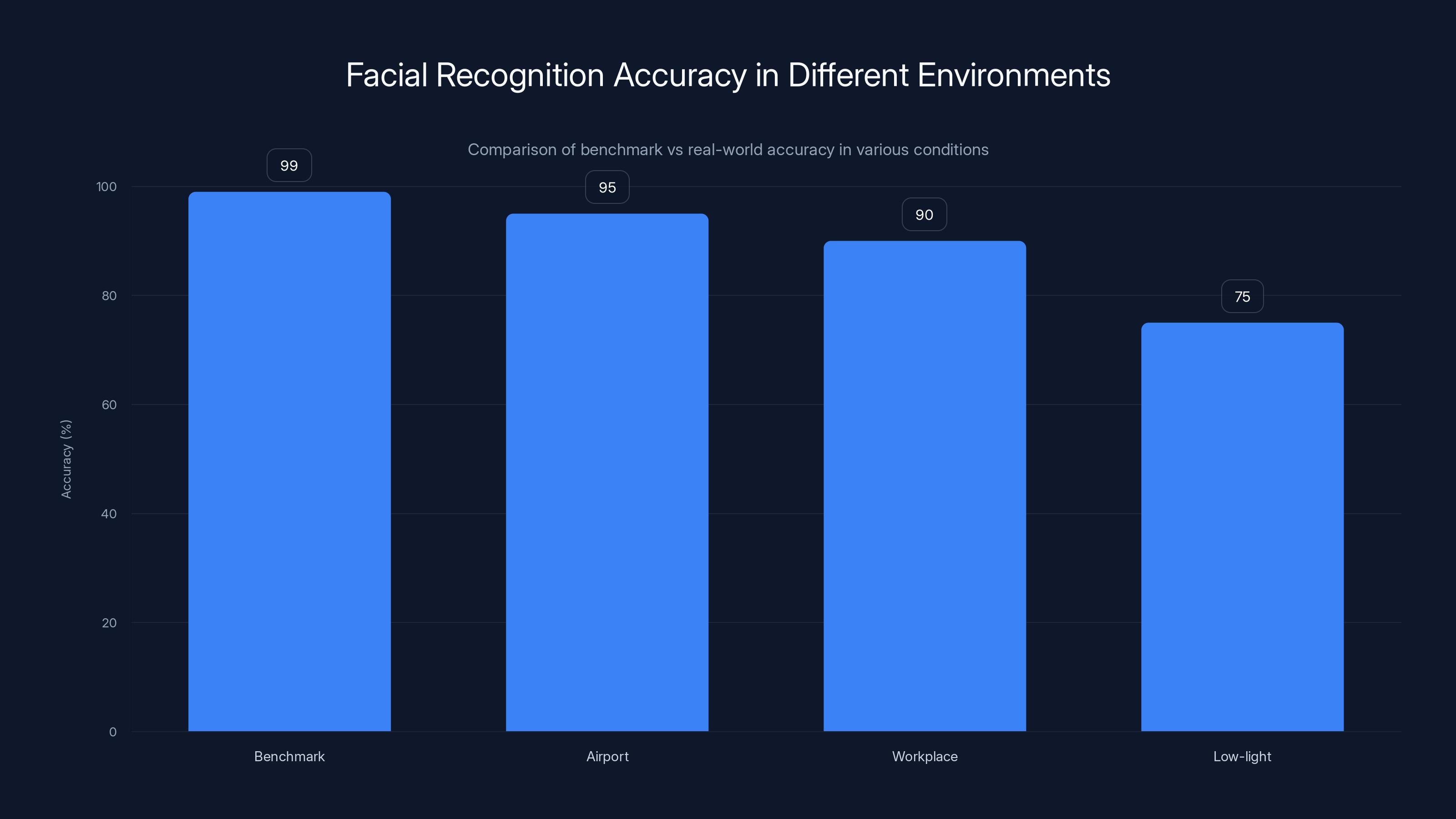

The Accuracy Paradox: Why High Benchmark Scores Mean Less Than You Think

This is where the transition to maturation gets serious. Facial recognition benchmarks—the ones you see in papers and on vendor websites—show impressive accuracy numbers. 99% accuracy. 99.5%. Sometimes higher.

Those numbers are real. And they're also misleading.

Benchmark accuracy is measured in controlled conditions. The same lighting. The same camera quality. The same demographic distribution as the training data. The same angle and distance. It's like testing a car's fuel efficiency on a perfectly flat test track with zero wind resistance.

Real-world accuracy is different. It's weather. It's people wearing sunglasses. It's backlighting from windows. It's camera angles that aren't ideal. It's people whose faces have aged since their ID photo. It's demographics that were under-represented in the training data.

When you move from benchmarks to production, accuracy doesn't stay the same. It degrades. How much depends on how much the production environment differs from the benchmark conditions.

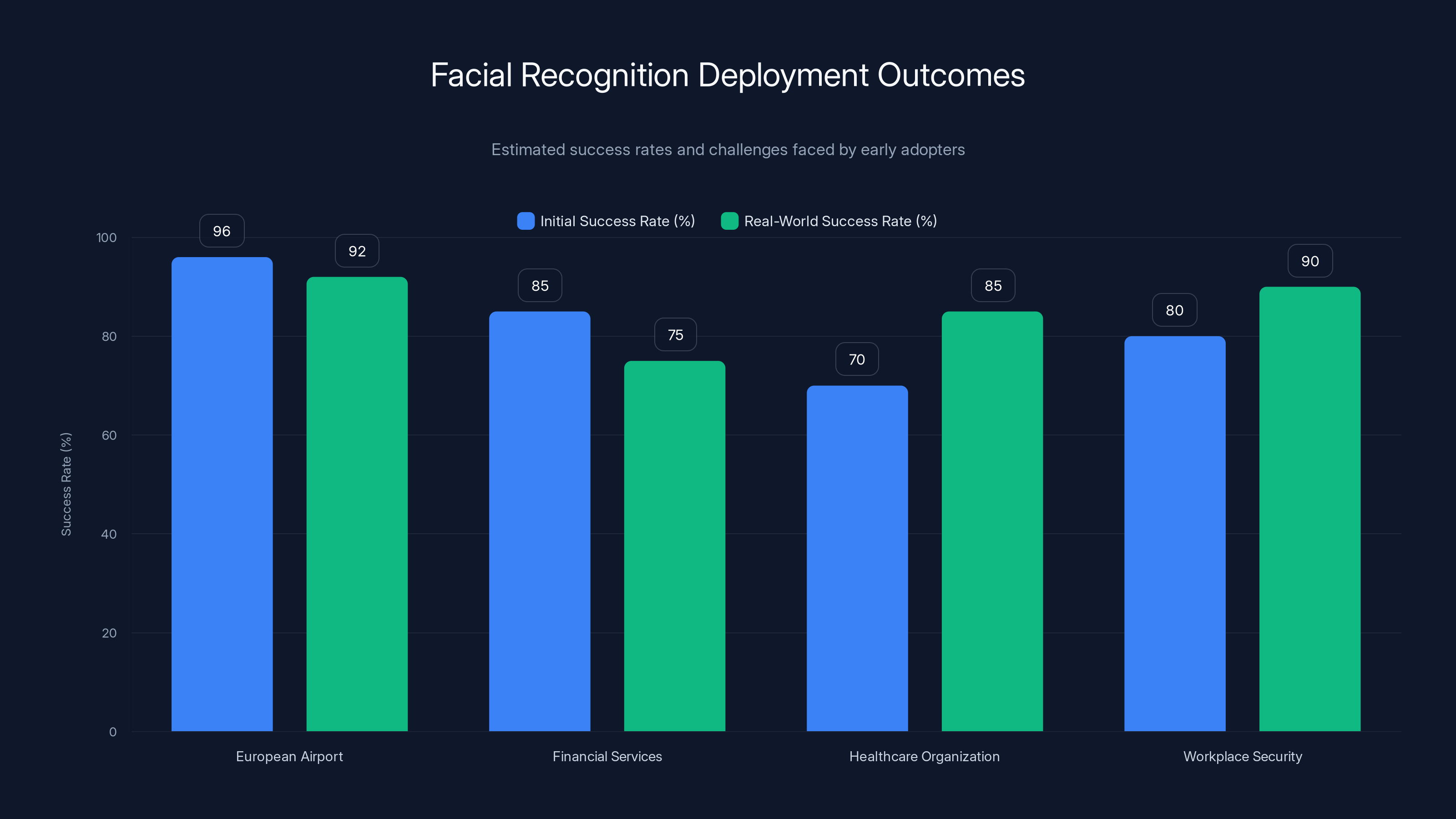

Consider an illustrative example. A facial recognition system might achieve 99% accuracy on a standard benchmark. That same system in an airport with variable lighting and diverse passengers might achieve 95% accuracy. In a workplace with aging ID photos and unexpected angles, it might drop to 90%. Under infrared lighting in low-light security scenarios, it might be 75%.

None of those numbers are "accurate" or "inaccurate." They're all accurate descriptions of performance in those specific contexts. The problem is that organizations deploying in 2026 need to know their own specific accuracy, not benchmark accuracy.

This is the discipline that separates mature FRT deployment from amateur hour. You test in conditions matching your actual use case. You establish accuracy baselines across demographics. You identify failure modes. You set acceptance thresholds. You build monitoring to catch degradation.
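Setting an acceptance threshold from your own test data usually comes down to two error rates: the false match rate (FMR, different people wrongly accepted) and the false non-match rate (FNMR, the right person wrongly rejected). The sketch below picks the lowest threshold that meets a target FMR on scores collected locally; the scores and candidate thresholds are made-up illustrative values.

```python
from typing import Optional, Sequence, Tuple


def error_rates(genuine: Sequence[float], impostor: Sequence[float],
                threshold: float) -> Tuple[float, float]:
    """Return (FMR, FNMR) at a threshold.

    genuine:  similarity scores for same-person comparisons
    impostor: similarity scores for different-person comparisons
    """
    fnmr = sum(s < threshold for s in genuine) / len(genuine)
    fmr = sum(s >= threshold for s in impostor) / len(impostor)
    return fmr, fnmr


def threshold_for_target_fmr(genuine, impostor, target_fmr, candidates
                             ) -> Optional[Tuple[float, float, float]]:
    """Lowest candidate threshold whose FMR meets the target.

    FMR can only fall as the threshold rises, so the first hit is the
    threshold that rejects the fewest genuine users while meeting the target.
    """
    for t in sorted(candidates):
        fmr, fnmr = error_rates(genuine, impostor, t)
        if fmr <= target_fmr:
            return t, fmr, fnmr
    return None


# Illustrative scores collected under the deployment's own conditions.
genuine = [0.91, 0.88, 0.95, 0.72, 0.85]
impostor = [0.30, 0.45, 0.62, 0.81, 0.20]
candidates = [0.5, 0.6, 0.7, 0.8, 0.9]

print(threshold_for_target_fmr(genuine, impostor, 0.2, candidates))  # (0.7, 0.2, 0.0)
print(threshold_for_target_fmr(genuine, impostor, 0.0, candidates))  # (0.9, 0.0, 0.6)
```

Tightening the FMR target from 0.2 to 0.0 in this toy data raises the FNMR from 0 to 0.6: that trade-off is exactly what an acceptance threshold has to settle. Real evaluations need far larger samples per condition than five scores.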

A production facial recognition system in 2026 isn't a simple classifier. It's a system that knows its own limitations and accounts for them.

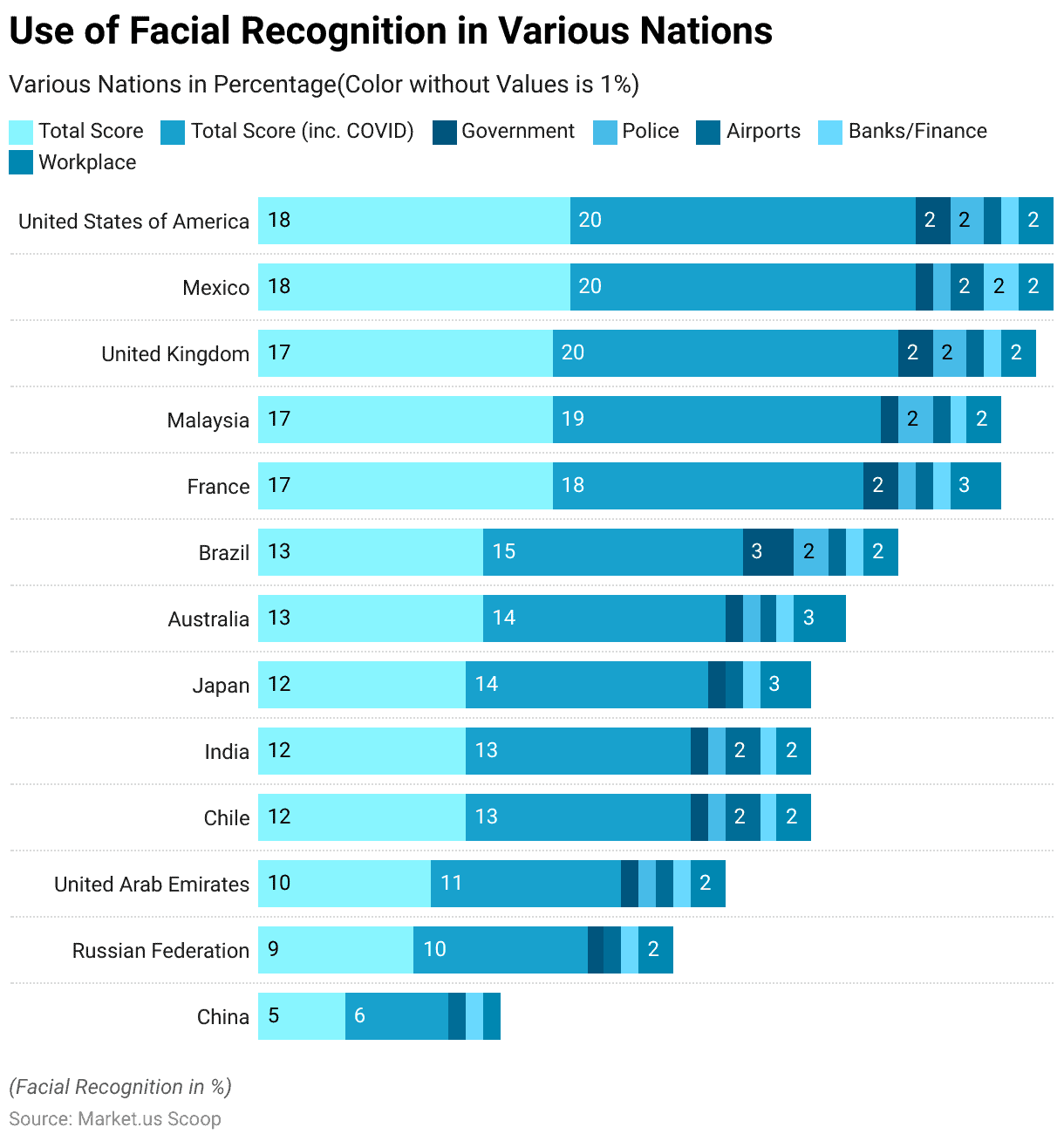

Facial recognition is predominantly used in border control and travel facilitation, accounting for 70% of its applications. Estimated data.

Demographic Bias: The Problem That's Solvable But Requires Vigilance

One of the most criticized aspects of facial recognition is demographic bias. The research is clear and concerning: facial recognition systems trained on datasets with limited demographic diversity perform worse on populations that are under-represented.

Specifically, many systems show lower accuracy for women, for people with darker skin tones, and for specific ethnicities. This isn't a flaw in the concept of facial recognition. It's a flaw in how training data has historically been collected and how models have been built.

But here's the important part: this is a solvable problem. It requires intentionality, but it's solvable.

Organizations deploying facial recognition in 2026 have the option to do this right. They can audit training data for demographic representation. They can test accuracy across demographic groups explicitly. They can reject models that show unacceptable performance variance. They can monitor performance over time as the system encounters new data.

It's not free. It requires additional testing and potentially accepting lower overall accuracy to achieve acceptable performance across demographics. But the alternative—deploying a system that works great for some people and poorly for others—is both ethically problematic and legally risky.
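Explicit demographic testing can start with computing the false non-match rate per group at the deployment threshold, then failing the acceptance test if the gap between the best- and worst-served groups exceeds an agreed limit. A minimal sketch; the group labels, scores, threshold, and allowed gap are hypothetical, and a full audit would also compare false match rates per group on much larger samples.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def fnmr_by_group(genuine_trials: Iterable[Tuple[str, float]],
                  threshold: float) -> Dict[str, float]:
    """False non-match rate per group for same-person comparisons."""
    totals: Dict[str, int] = defaultdict(int)
    misses: Dict[str, int] = defaultdict(int)
    for group, score in genuine_trials:
        totals[group] += 1
        if score < threshold:
            misses[group] += 1
    return {g: misses[g] / totals[g] for g in totals}


def passes_disparity_check(rates: Dict[str, float], max_gap: float) -> bool:
    """Accept only if the worst and best group error rates are within max_gap."""
    return max(rates.values()) - min(rates.values()) <= max_gap


trials = [
    ("group_a", 0.90), ("group_a", 0.85), ("group_a", 0.60), ("group_a", 0.95),
    ("group_b", 0.70), ("group_b", 0.65), ("group_b", 0.90), ("group_b", 0.88),
]
rates = fnmr_by_group(trials, threshold=0.8)
print(rates)                                       # {'group_a': 0.25, 'group_b': 0.5}
print(passes_disparity_check(rates, max_gap=0.1))  # False
```

Using an absolute gap rather than a ratio avoids dividing by zero when the best-served group has no errors in the sample.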

The companies getting this right are treating demographic bias testing as non-negotiable. They're building it into procurement requirements. They're including it in acceptance testing. They're monitoring it in production.

The companies glossing over it are taking on risk. Regulatory risk (as agencies increasingly mandate demographic testing). Operational risk (as biased systems cause problems). Reputational risk.

Maturation means taking these issues seriously. And in 2026, the organizations deploying facial recognition are increasingly doing exactly that.

Privacy by Design: The Shift From Afterthought to Foundation

Privacy and facial recognition have always had a tense relationship. The technology is inherently capable of surveillance at scale. It can identify people without their knowledge or consent. It can be used in ways that are deeply invasive.

For years, privacy considerations were often treated as a regulatory hurdle to clear, not a core design principle. Compliance meant checking boxes. It meant putting up notices saying facial recognition was in use. It meant having a privacy policy.

Maturation is changing that. Organizations deploying facial recognition in 2026 are increasingly treating privacy as a design requirement from day one, not a constraint to accommodate.

Data minimization means collecting only the facial data actually needed for the specific use case. If you're doing access control, you don't need historical video of everyone who passed through the area. You need the real-time match for access control. Historical data is overhead and risk.

Purpose limitation means being explicit about what the data will be used for and not using it for anything else. If you collect facial data for access control, you don't then use it for marketing analytics or employee monitoring without explicit consent and clear policy.
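One way to make purpose limitation enforceable is to attach the permitted purposes to each stored record, then check them (and log the attempt) on every read. A minimal sketch; the record shape, purpose names, and in-memory audit log are hypothetical stand-ins for a real datastore and audit system.

```python
from dataclasses import dataclass
from typing import FrozenSet, List, Tuple


class PurposeViolation(Exception):
    """Raised when facial data is requested for a purpose it was not collected for."""


@dataclass(frozen=True)
class FaceRecord:
    subject_id: str
    template: bytes
    allowed_purposes: FrozenSet[str] = frozenset({"access_control"})


def read_template(record: FaceRecord, purpose: str,
                  audit_log: List[Tuple[str, str, bool]]) -> bytes:
    allowed = purpose in record.allowed_purposes
    # Every attempt is logged, including refused ones.
    audit_log.append((record.subject_id, purpose, allowed))
    if not allowed:
        raise PurposeViolation(f"{purpose!r} is not a permitted use for {record.subject_id}")
    return record.template


log: List[Tuple[str, str, bool]] = []
rec = FaceRecord("emp-17", b"\x01\x02")
read_template(rec, "access_control", log)  # permitted
try:
    read_template(rec, "marketing_analytics", log)  # refused, but still logged
except PurposeViolation as err:
    print(err)
```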

Secure storage means facial data is encrypted at rest and in transit. It's stored separately from other identity data. Access is logged and audited. Retention policies are clear and enforced.

Transparency means being upfront about when and where facial recognition is used. It means publishing policies. It means making it possible for people to understand why they were flagged by the system.

Retention limits mean facial data isn't kept indefinitely. For access control, you might retain identity matches for 90 days for audit purposes, then delete them. For border control, you might retain images for longer, but with clear policies and legal justification.
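Retention limits only work if something actually deletes the data. Here is a minimal sketch of a scheduled purge, assuming each stored match record carries a timezone-aware `matched_at` timestamp; the record format is hypothetical and the 90-day period is the example above. A real purge would also have to reach backups and replicas.

```python
from datetime import datetime, timedelta, timezone
from typing import Dict, List, Tuple

RETENTION = timedelta(days=90)  # example retention period for access-control matches


def purge_expired(records: List[Dict], now: datetime,
                  retention: timedelta = RETENTION) -> Tuple[List[Dict], int]:
    """Keep records younger than the retention period; return (kept, number purged)."""
    kept = [r for r in records if now - r["matched_at"] <= retention]
    return kept, len(records) - len(kept)


now = datetime(2026, 6, 1, tzinfo=timezone.utc)
records = [
    {"subject_id": "emp-17", "matched_at": now - timedelta(days=10)},
    {"subject_id": "emp-42", "matched_at": now - timedelta(days=120)},
]
kept, purged = purge_expired(records, now)
print([r["subject_id"] for r in kept], purged)  # ['emp-17'] 1
```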

Organizations getting this right are treating facial recognition data like protected health information: rare access, extensive logging, regular audits, strong encryption, clear retention policies.

They're doing this not just because regulation requires it, but because it's operationally sensible. Less data means smaller liability surface. Clearer policies mean fewer disputes. Transparency builds trust.

When facial recognition becomes boring infrastructure, privacy practices will matter more, not less. Because there will be thousands of facial recognition systems in use, and the ones operating with clear privacy policies will have a measurable competitive advantage.

Facial recognition accuracy drops from 99% in controlled benchmarks to as low as 75% in challenging real-world scenarios like low-light conditions. Estimated data.

Governance Frameworks: How Mature Organizations Deploy FRT

You can't bolt governance onto facial recognition after deployment. It has to be built in from the beginning.

Here's how mature organizations are structuring FRT governance in 2026:

1. Multi-stakeholder oversight. Not just IT, not just security. FRT decisions involve legal (regulatory risk), HR (employee considerations), security (operational risk), technology (implementation), and sometimes ethics committees. Decisions are made collaboratively, not unilaterally by one department.

2. Clear policy documentation. What scenarios will facial recognition be used for? Under what conditions? What accuracy standards must be met? What happens when the system fails? Who reviews errors? How long is data retained? These are written down, published, and regularly reviewed.

3. Explicit testing protocols. Before deployment, the system is tested against diverse demographics, in representative environmental conditions, and across the use cases it will actually encounter. Acceptance thresholds are set. These thresholds are based on operational requirements, not just technical perfection.

4. Monitoring and auditing. Production systems are monitored continuously. Accuracy is tracked over time. Usage is logged. Access to facial data is audited. Anomalies trigger investigation.

5. Regular reassessment. Governance frameworks aren't static. They're reviewed quarterly or semi-annually. Performance data is analyzed. Policy changes are made if warranted. The system evolves as the organization learns.

6. Clear failure modes and human fallbacks. What happens when the facial recognition system returns a low-confidence match? What happens when it fails to return a match? Who makes the final decision? These scenarios are defined in advance, not resolved in crisis mode.

7. Accountability mechanisms. Someone is responsible for the facial recognition system. Not just technically, but ethically and operationally. That person is empowered to make decisions about the system and accountable for outcomes.
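Items 4 and 6 connect naturally: outcomes confirmed by human reviewers can feed a rolling error-rate monitor that flags drift from the accuracy baseline set during acceptance testing. A minimal sketch; the window size, baseline, and margin are hypothetical values a governance group would set, and "falsely rejected" assumes a reviewer has confirmed the person was genuine.

```python
from collections import deque


class RejectionRateMonitor:
    """Tracks the false rejection rate over the most recent confirmed-genuine attempts."""

    def __init__(self, baseline_rate: float, margin: float, window: int = 500):
        self.baseline_rate = baseline_rate
        self.margin = margin
        self.outcomes = deque(maxlen=window)  # oldest outcomes drop off automatically

    def record(self, falsely_rejected: bool) -> None:
        self.outcomes.append(falsely_rejected)

    def rate(self) -> float:
        return sum(self.outcomes) / len(self.outcomes) if self.outcomes else 0.0

    def needs_investigation(self) -> bool:
        # Only alert once the window is full, so a few early errors don't trigger it.
        full = len(self.outcomes) == self.outcomes.maxlen
        return full and self.rate() > self.baseline_rate + self.margin


monitor = RejectionRateMonitor(baseline_rate=0.02, margin=0.03, window=100)
for attempt in range(100):
    monitor.record(falsely_rejected=(attempt % 10 == 0))  # 10% false rejections
print(monitor.rate(), monitor.needs_investigation())  # 0.1 True
```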

Organizations deploying without these governance structures in 2026 are making a bet that they won't encounter serious problems. That's not a bet most responsible organizations take with business-critical infrastructure.

The Regulation Timeline: What 2026 Actually Looks Like Legally

Regulation around facial recognition varies dramatically by geography. There's no global standard. But the direction is clear.

The EU's AI Act has categorized facial recognition as high-risk in many contexts. That means comprehensive documentation requirements, impact assessments, bias testing, and human oversight. These aren't onerous from a technical perspective, but they are real requirements that shape how deployments happen.

The UK is still determining its AI regulatory framework. But guidance from the Information Commissioner's Office and rulings from courts have made clear that facial recognition requires compelling justification and careful governance.

The US doesn't have comprehensive federal AI regulation yet. But individual states are moving. Illinois has strong biometric privacy laws. California has regulations around government use of facial recognition. Sectoral regulation (healthcare, finance) applies to facial recognition deployments in those sectors.

Asia and other regions are developing frameworks at varying paces. What's consistent: as facial recognition adoption increases, regulatory attention increases.

Here's what that means for organizations deploying in 2026: you need to understand the regulatory landscape in the jurisdictions where you operate. You need to design systems that can meet the most stringent requirements that apply to you. You need to document compliance.

But you also need to recognize that regulation is still evolving. Some requirements might change. Some interpretations might shift. Governance frameworks need flexibility built in.

Maturity means being compliant today while being positioned to adapt tomorrow.

The chart compares initial and real-world success rates of facial recognition deployments across different sectors. While initial testing showed high accuracy, real-world conditions often led to lower success rates, highlighting the importance of realistic expectations and adjustments. (Estimated data)

Ethical Considerations: Beyond Compliance

Compliance is table stakes. But ethical deployment goes further.

One ethical consideration is consent. In some scenarios, people can opt out of facial recognition. In access control, employees could use badges instead. In financial services, customers could use alternative identity verification methods. In other scenarios, opt-out isn't practical.

Border control presents an interesting case. People entering a country could theoretically refuse facial recognition, but that refusal might mean being processed through a slower manual line, creating a de facto penalty for non-consent. Is that acceptable?

Different organizations answer this differently. Ethical organizations are explicit about it. They acknowledge the tradeoff and explain their reasoning.

Another consideration is function creep. A facial recognition system deployed for access control could, in theory, be used for employee monitoring, attendance tracking, or marketing analysis. Function creep is a real risk. Addressing it requires technical controls (access restrictions on the data), policy controls (explicit limits on use), and organizational culture (enforcing the limits).

A third consideration is error impact asymmetry. When facial recognition makes an error, the impact isn't symmetric. Some errors (false positives, incorrectly identifying someone) have worse consequences than others. Ethical deployments recognize this and design systems to minimize high-consequence errors, even if it means accepting more low-consequence errors.
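Designing for asymmetric errors can be made explicit: assign each error type a cost and choose the threshold that minimizes total cost on evaluation data, rather than the one that maximizes raw accuracy. A minimal sketch; the scores and the 10:1 cost ratio are illustrative assumptions an organization would have to justify for its own context.

```python
from typing import Sequence


def expected_cost(genuine: Sequence[float], impostor: Sequence[float], t: float,
                  cost_false_match: float, cost_false_non_match: float) -> float:
    """Total weighted cost of errors at threshold t on an evaluation set."""
    false_matches = sum(s >= t for s in impostor)   # wrong person accepted
    false_non_matches = sum(s < t for s in genuine)  # right person rejected
    return cost_false_match * false_matches + cost_false_non_match * false_non_matches


def cheapest_threshold(genuine, impostor, candidates, cost_fm, cost_fnm) -> float:
    # min() keeps the first of any ties, so with ascending candidates
    # the lowest threshold wins a tie.
    return min(sorted(candidates),
               key=lambda t: expected_cost(genuine, impostor, t, cost_fm, cost_fnm))


genuine = [0.91, 0.88, 0.95, 0.72, 0.85]
impostor = [0.30, 0.45, 0.62, 0.81, 0.20]
candidates = [0.5, 0.6, 0.7, 0.8, 0.9]

print(cheapest_threshold(genuine, impostor, candidates, 1, 1))   # 0.7 (equal costs)
print(cheapest_threshold(genuine, impostor, candidates, 10, 1))  # 0.9 (false matches 10x worse)
```

Raising the cost of a false match pushes the chosen threshold up, accepting more low-consequence rejections in exchange for fewer high-consequence misidentifications.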

Organizations taking ethics seriously build these considerations into design. They're not trying to optimize for pure accuracy. They're trying to optimize for ethical outcomes.

Implementation Roadmap: Getting Facial Recognition Into Production

If your organization is considering facial recognition deployment in 2026, here's the realistic timeline:

Months 1-2: Planning and stakeholder alignment. Define the use case precisely. Identify stakeholders. Establish governance structure. Conduct regulatory impact analysis. Set success criteria.

Months 3-4: Technology assessment. Evaluate vendor solutions (or open-source alternatives). Conduct proof-of-concepts in representative environments. Test performance across demographics. Assess integration complexity.

Months 5-6: Pilot design. Define the pilot scope. Select the pilot location. Set up monitoring and evaluation frameworks. Prepare staff for the change. Document policies.

Months 7-10: Pilot execution. Run the pilot in a constrained environment. Collect performance data. Gather feedback from users and operators. Identify problems. Iterate on policies and processes.

Months 11-12: Scale preparation. Incorporate pilot learnings into the full deployment plan. Prepare additional environments. Train staff at scale. Set up monitoring infrastructure.

Month 13+: Production rollout. Deploy to production environments systematically. Monitor performance closely. Be ready to pause or adjust if unexpected issues emerge.

This timeline is realistic for a moderately complex deployment. Simpler deployments might happen faster. More complex ones take longer. The point is that responsible facial recognition deployment isn't fast.

Organizations treating FRT like a three-month project are setting themselves up for problems. Mature deployment requires deliberation.

The Cost Equation: Why Investment in FRT Is Increasing

Facial recognition isn't cheap when you do it right. And doing it right is increasingly the norm.

Software licensing costs are moderate. Facial recognition APIs from established vendors cost anywhere from a few thousand dollars monthly to tens of thousands, depending on volume and capabilities.

Infrastructure costs depend on deployment scale and architecture. But a medium-scale facial recognition deployment might require significant cloud infrastructure investment, especially if you're running real-time processing.

Integration costs are often underestimated. Facial recognition doesn't exist in isolation. It has to connect with access control systems, identity databases, audit logging, human review workflows. Integration is where projects spend unexpected time and money.

Testing and validation costs are real. Demographic testing. Environmental testing. Failure mode testing. Security testing. These aren't optional if you want a reliable system.

Staffing and training costs matter. You need people who understand the system, who can monitor it, and who can handle edge cases and exceptions, plus staff who can explain it to stakeholders.

Compliance and governance costs are often invisible but substantial. Legal review. Privacy assessments. Documentation. Audit preparation.

Adding this up, a meaningful facial recognition deployment (not just a pilot, but a real production system) is a substantial investment once every one of these cost categories is included.

So why are organizations making this investment? Because the productivity gains or risk reduction justify it. When facial recognition reduces border control processing time from 5 minutes to 30 seconds and you process a million passengers annually, you're looking at significant time savings and improved customer experience. When it reduces fraudulent account opening attempts, you're looking at measurable risk reduction.
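The border-control example is simple arithmetic. Using the figures above (5 minutes per passenger before, 30 seconds after, one million passengers a year), a quick sketch:

```python
def hours_saved(before_seconds: float, after_seconds: float, passengers: int) -> float:
    """Total processing time saved, in hours, across all passengers."""
    return (before_seconds - after_seconds) * passengers / 3600


print(hours_saved(5 * 60, 30, 1_000_000))  # 75000.0
```

That is 75,000 hours of processing time per year, before considering knock-on effects such as shorter queues.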

The organizations deploying facial recognition in 2026 are those where the math works. They're not doing it because it's trendy. They're doing it because they've calculated that the benefits exceed the costs.

Success Metrics: How to Actually Measure FRT Deployment Outcomes

Facial recognition deployments live or die based on whether they deliver measurable value. And that requires defining the right success metrics before deployment.

Operational efficiency metrics measure how much faster or smoother the process becomes. In border control, that's processing time per person. In access control, it's time to grant access. In financial services, it's account opening time.

Accuracy metrics measure real-world performance, not benchmark performance. This includes false positive rate, false negative rate, and accuracy across demographic groups.

User experience metrics measure friction and satisfaction. Are people happy with the experience? Are there unexpected failure modes from a user perspective?

Security metrics measure whether the deployment actually improves security. For fraud detection, that's fraudulent transactions prevented. For access control, it's unauthorized access attempts detected.

Cost metrics measure return on investment. What was spent? What was saved?

Compliance metrics measure whether the deployment meets regulatory and policy requirements. Audit findings. Policy violations. Stakeholder complaints.

Successful organizations track all of these. They establish baselines before deployment. They measure performance post-deployment. They use the data to decide whether to scale, whether to adjust the system, or whether to pivot.
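Comparing post-deployment measurements against the pre-deployment baseline is simple arithmetic, but it helps to record which direction counts as an improvement for each metric. A minimal sketch; the metric names and numbers are illustrative assumptions, not figures from any deployment, and the function assumes every baseline value is nonzero.

```python
def compare_to_baseline(baseline, current, lower_is_better):
    """Relative change (in percent) per metric, and whether it moved in the desired direction.

    Assumes every baseline value is nonzero.
    """
    report = {}
    for name, before in baseline.items():
        after = current[name]
        change = (after - before) / before  # relative change vs. baseline
        improved = change < 0 if name in lower_is_better else change > 0
        report[name] = (round(change * 100, 1), improved)
    return report


# Illustrative numbers only: processing time fell, false rejections rose
baseline = {"seconds_per_person": 300.0, "false_reject_pct": 2.0}
post = {"seconds_per_person": 45.0, "false_reject_pct": 3.0}
print(compare_to_baseline(baseline, post, lower_is_better={"seconds_per_person", "false_reject_pct"}))
# {'seconds_per_person': (-85.0, True), 'false_reject_pct': (50.0, False)}
```

A mixed result like this one (faster, but more false rejections) is the kind of evidence that should feed the decision to scale, adjust, or pivot.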

Facial recognition deployments that lack clear success metrics are essentially experiments running without hypothesis testing. That's how you end up with systems that consume resources without delivering value.

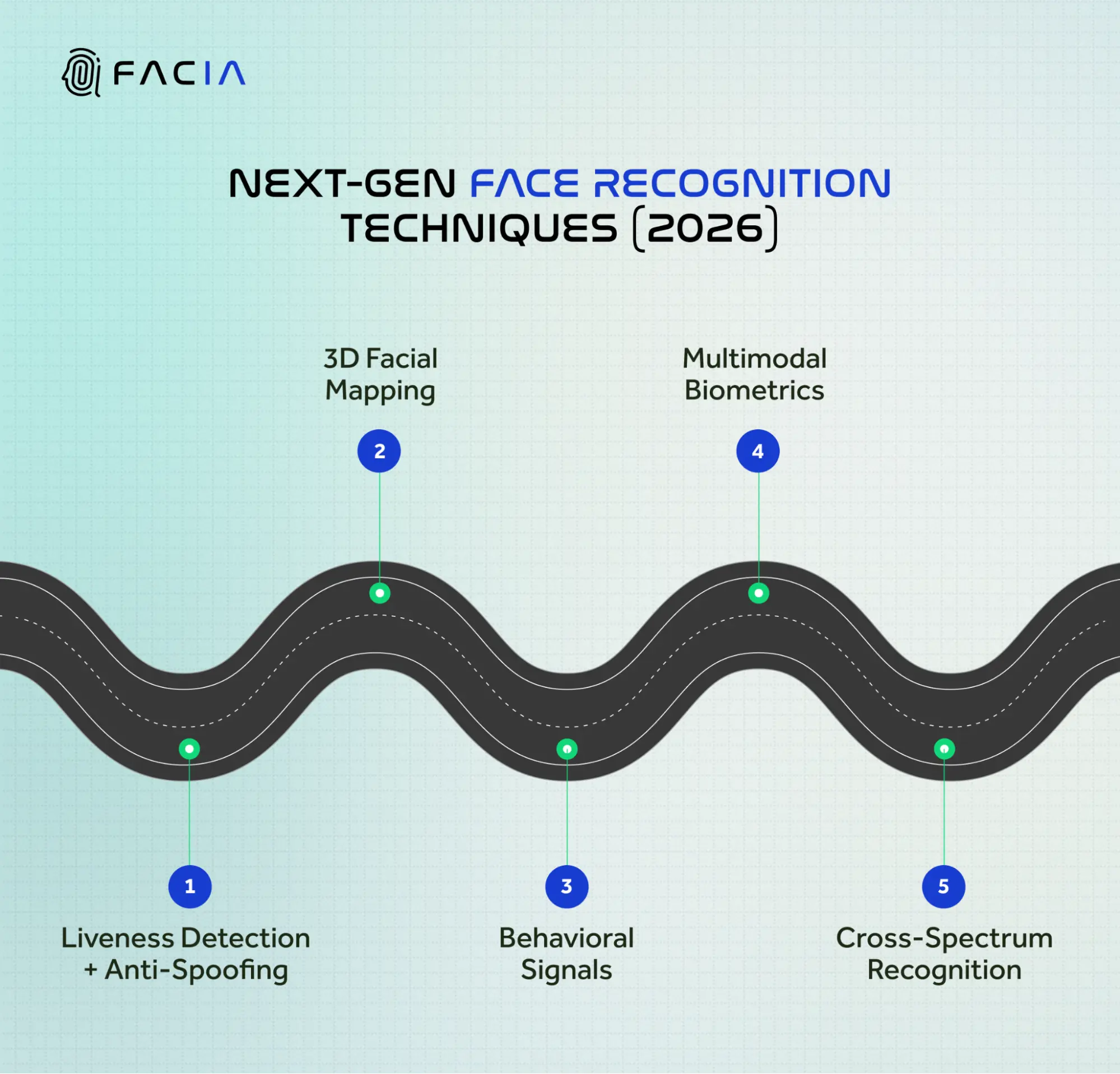

The Future Beyond 2026: Continued Evolution

When facial recognition becomes boring in 2026, it doesn't mean the technology stops evolving. It just means evolution happens in the background, driven by practical requirements rather than hype.

Liveness detection will improve. The ability to distinguish live faces from spoofing attempts (photos, videos, masks) will become more sophisticated. This matters for security applications where spoofing is a real threat.

Multi-modal authentication will become standard. Facial recognition will be one component of authentication, not the only one. It will be combined with liveness detection, iris recognition, gait analysis, or behavioral biometrics to create more robust identity verification.
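The text doesn't say how the modalities would be combined. One common textbook approach is score-level fusion: each matcher produces a normalized score, the scores are combined with a weighted sum, and liveness acts as a hard gate. A minimal sketch under those assumptions; the modality names, weights, and threshold are hypothetical, and real matchers usually need score normalization before their outputs can be added.

```python
def fused_decision(scores, weights, threshold, liveness_passed):
    """Weighted-sum score fusion across biometric modalities.

    scores and weights are dicts keyed by modality name; scores are assumed
    to be normalized to [0, 1] already. Liveness is a hard gate, not a score.
    """
    if not liveness_passed:
        return False  # reject suspected presentation attacks before any matching
    total_weight = sum(weights[m] for m in scores)
    fused = sum(scores[m] * weights[m] for m in scores) / total_weight
    return fused >= threshold


weights = {"face": 0.6, "iris": 0.4}  # hypothetical weights
print(fused_decision({"face": 0.9, "iris": 0.7}, weights, threshold=0.75, liveness_passed=True))   # True
print(fused_decision({"face": 0.9, "iris": 0.7}, weights, threshold=0.75, liveness_passed=False))  # False
```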

Edge deployment will expand. Processing facial recognition locally on devices, rather than sending data to the cloud, will become more practical. This has privacy and latency benefits. It requires smaller, more efficient models.

Integration with other biometrics will deepen. Fingerprint readers, iris scanners, and facial recognition will work together, creating authentication systems that are harder to spoof and more reliable.

Algorithmic improvements will continue. The underlying algorithms for facial recognition will get better. Accuracy will improve. But the improvements will be incremental, not dramatic. We're no longer in the steep part of the learning curve.

Regulation will continue to tighten. As facial recognition becomes more common, regulatory frameworks will become more detailed. You should expect more specific requirements around testing, documentation, and governance.

All of this suggests that organizations deploying facial recognition in 2026 should design systems with evolution in mind. Architecture should support upgrades. Governance should be flexible enough to accommodate new regulations. Skills should be developed for continuing adaptation.

Maturity is a process, not a destination.

Real-World Deployment Stories: Learning From Early Adopters

Here's what's actually happening in organizations leading facial recognition deployment:

A European airport deployed facial recognition for passport control. Initial accuracy was 96% in testing. In production, it was 92% due to variable lighting and diverse passenger demographics. They initially treated this as a failure. Then they reframed it: 92% accuracy meant 92% of passengers had minimal friction. The other 8% were directed to manual review, which they could handle within normal staffing. The system is now considered successful. Lessons learned: real-world accuracy expectations should be modest and realistic.
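The airport's reframing is easy to check with arithmetic: if accuracy is read as the share of passengers who clear automatically, the remainder is the manual-review load. A minimal sketch; the 10,000 passengers per day is a hypothetical figure, not from the story.

```python
def manual_reviews_per_day(passengers_per_day: int, auto_clear_rate: float) -> int:
    """Passengers per day who do not clear automatically and need a manual check."""
    # round() absorbs floating-point noise such as 1 - 0.96 == 0.040000000000000036
    return round(passengers_per_day * (1 - auto_clear_rate))


daily = 10_000  # hypothetical passenger volume
for rate in (0.96, 0.92):  # testing vs. production accuracy from the story
    print(f"{rate:.0%} auto-clear -> {manual_reviews_per_day(daily, rate)} manual reviews/day")
# 96% auto-clear -> 400 manual reviews/day
# 92% auto-clear -> 800 manual reviews/day
```

The drop from 96% to 92% doubles the review load (from 4% to 8% of passengers), which is why the story hinges on whether normal staffing could absorb the difference.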

A financial services company implemented facial liveness detection for remote account opening. They tested it comprehensively for spoofing attacks. But they didn't account for elderly users with age-related changes to facial features. The system struggled when users had aged significantly since their ID photos were taken. They had to adjust acceptance thresholds, which increased manual review volume but reduced false rejections. Lessons learned: demographic diversity includes age-related changes, which need explicit testing.

A healthcare organization rolled out facial recognition for patient identification. Staff initially resisted, concerned about privacy and surveillance. The organization spent significant time on communication, explaining why the system was being deployed and how privacy was being protected. Resistance decreased. The system is now accepted. Lessons learned: stakeholder communication is as important as technical implementation.

A workplace security team implemented facial recognition for access control. They configured the system to flag low-confidence matches for manual review. This created more work initially. But it caught cases where similar-looking employees were being confused. Over time, with better training data, false positives decreased. The system is now a genuine security improvement. Lessons learned: conservative confidence thresholds in early deployment prevent security gaps.
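The financial services and workplace stories both come down to where confidence thresholds sit. A minimal sketch of three-way routing, assuming a match score in [0, 1] and two hypothetical cut-offs: scores at or above accept_at pass automatically, scores between review_at and accept_at go to a person, and the rest are rejected. Lowering review_at moves would-be rejections into manual review (the financial services adjustment); raising accept_at sends more low-confidence matches to a human (the workplace team's conservative setting).

```python
from collections import Counter


def route(score: float, accept_at: float, review_at: float) -> str:
    """Three-way decision on a match confidence score in [0, 1]."""
    if not 0.0 <= review_at <= accept_at <= 1.0:
        raise ValueError("expected 0 <= review_at <= accept_at <= 1")
    if score >= accept_at:
        return "accept"
    if score >= review_at:
        return "manual_review"
    return "reject"


scores = [0.97, 0.91, 0.82, 0.66, 0.41]  # hypothetical match scores
narrow = Counter(route(s, accept_at=0.90, review_at=0.75) for s in scores)
wide = Counter(route(s, accept_at=0.90, review_at=0.60) for s in scores)
print(dict(narrow))  # {'accept': 2, 'manual_review': 1, 'reject': 2}
print(dict(wide))    # {'accept': 2, 'manual_review': 2, 'reject': 1}
```

Widening the review band trades more human workload for fewer outright rejections, which is the same trade-off both teams made.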

What these stories have in common: successful organizations didn't expect perfection. They planned for real-world complexity. They iterated based on actual performance. They involved stakeholders. They measured outcomes.

The Boring Reality: What Actually Matters

When facial recognition becomes boring infrastructure in 2026, the organizations that benefit are those that:

- Deployed thoughtfully, with clear use cases and realistic performance expectations, not just because it was exciting.

- Built governance into the system, with multi-stakeholder oversight, clear policies, and accountability mechanisms.

- Tested comprehensively, across real-world conditions and diverse demographics, not just against benchmark datasets.

- Designed for privacy, with data minimization, purpose limitation, and secure storage from day one.

- Measured systematically, tracking real-world performance and business outcomes, not just technical metrics.

- Communicated clearly, with stakeholders and the public, about how the system works and why it exists.

- Adapted continuously, refining policies and systems based on actual performance and changing requirements.

Organizations that treat facial recognition as a technical problem to be solved quickly will struggle. Those that treat it as an organizational and ethical challenge that requires deliberation will succeed.

The boring year isn't 2025, when everyone's still talking about facial recognition. It's 2026 and beyond, when facial recognition is just how certain processes work. When it's a supporting layer that makes workflows smoother without being the interesting part of the story.

That's when the real value emerges. And that's why understanding the transition matters.

FAQ

What exactly is facial recognition technology and how does it work?

Facial recognition is a biometric technology that identifies people by analyzing the distinctive characteristics of their faces. The system captures an image of a face, converts it into a mathematical representation (a "faceprint"), and compares that representation against stored facial data to determine identity. Some earlier systems relied on hand-measured landmarks such as the distance between the eyes or the shape of the cheekbones; modern systems use deep learning models that learn hundreds of numerical features from training data, producing highly specific identifiers that distinguish one person from another.
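The capture, convert, and compare pipeline can be sketched with plain vectors. A minimal sketch, assuming faceprints are embedding vectors already produced by some trained model (not shown) and compared with cosine similarity, which is one common choice; the three-element vectors, names, and the 0.8 threshold are hypothetical stand-ins for real embeddings, which have far more dimensions. It shows both 1:1 verification (is this the claimed person?) and 1:N identification (who, if anyone, is this?).

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors: 1.0 means identical direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm


def verify(probe, enrolled, threshold):
    """1:1 verification: does the probe match one specific enrolled faceprint?"""
    return cosine_similarity(probe, enrolled) >= threshold


def identify(probe, gallery, threshold):
    """1:N identification: best-matching identity in the gallery, or None if below threshold."""
    best_id, best_score = None, -1.0
    for person_id, template in gallery.items():
        score = cosine_similarity(probe, template)
        if score > best_score:
            best_id, best_score = person_id, score
    return best_id if best_score >= threshold else None


gallery = {"alice": [0.9, 0.1, 0.2], "bob": [0.1, 0.8, 0.5]}  # hypothetical enrolled faceprints
probe = [0.85, 0.15, 0.25]                                    # hypothetical new capture
print(identify(probe, gallery, threshold=0.8))            # alice
print(identify([0.0, 0.0, 1.0], gallery, threshold=0.8))  # None
```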

Why is 2026 being positioned as a turning point for facial recognition adoption?

Several converging factors make 2026 significant. First, the technology has matured past the proof-of-concept phase—most systems now deliver human-level or better accuracy on standard benchmarks. Second, the global facial recognition market is projected to grow from

What are the biggest challenges organizations face when deploying facial recognition systems?

The primary challenges are technical, operational, and organizational. Technically, real-world accuracy degrades from benchmark accuracy due to variable lighting, camera quality, and demographic diversity in the actual population served. Operationally, facial recognition doesn't exist in isolation—it requires integration with existing identity systems, access control infrastructure, and audit logging. Organizationally, deploying facial recognition requires governance frameworks that span legal, HR, security, and technology teams, not just IT. Additionally, stakeholder communication is essential because facial recognition generates privacy concerns that need to be addressed transparently. Organizations underestimating any of these dimensions encounter unexpected delays and costs.

How accurate is facial recognition technology in real-world applications?

Benchmark accuracy—the numbers vendors advertise—can reach 99%+ under controlled conditions. Real-world accuracy is lower and varies significantly based on context. A system achieving 99% on benchmark datasets might achieve 95% in an airport with variable lighting and diverse passengers, or 90% in a workplace with aging ID photos and unexpected angles. Accuracy also varies across demographic groups—systems trained on limited demographic diversity often perform worse on populations that are under-represented in training data. The key metric isn't benchmark accuracy but real-world accuracy in your specific use case with your specific population. Organizations deploying facial recognition in 2026 are increasingly doing explicit testing to establish realistic accuracy baselines for their actual operational conditions.

What privacy safeguards should be built into facial recognition systems?

Responsible facial recognition systems are designed with privacy as a foundational principle, not an afterthought. This means data minimization—collecting only the facial data needed for the specific use case, not more. It means purpose limitation—using facial data only for explicitly stated purposes, not for unrelated analytics or monitoring. It requires secure storage—facial data encrypted at rest and in transit, with restricted access and detailed audit logging. It demands transparency—being clear about when and where facial recognition is used and allowing people to understand why they were flagged. And it requires retention limits—establishing and enforcing clear policies about how long facial data is kept before deletion. Organizations implementing these safeguards treat facial recognition data like protected health information, with equivalent security and governance rigor.
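Retention limits and purpose limitation only protect anyone if something enforces them. A minimal sketch of a periodic purge job, assuming each stored record carries the purpose it was collected for and a collection timestamp; the record type, purposes, and retention periods are hypothetical policy values. Records whose purpose has no policy are deleted rather than kept, treating an unlisted purpose as a purpose-limitation violation.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass
class FaceRecord:
    subject_id: str
    purpose: str           # the stated purpose the data was collected for
    collected_at: datetime


def purge_expired(records, retention_days, now=None):
    """Split records into (kept, deleted) under per-purpose retention limits."""
    now = now or datetime.now(timezone.utc)
    kept, deleted = [], []
    for r in records:
        limit = retention_days.get(r.purpose)
        if limit is None or now - r.collected_at > timedelta(days=limit):
            deleted.append(r)  # unlisted purpose or past its retention period
        else:
            kept.append(r)
    return kept, deleted


utc = timezone.utc
now = datetime(2026, 6, 1, tzinfo=utc)
records = [
    FaceRecord("a", "access_control", datetime(2026, 5, 1, tzinfo=utc)),   # 31 days old
    FaceRecord("b", "access_control", datetime(2025, 12, 1, tzinfo=utc)),  # 182 days old
    FaceRecord("c", "marketing", datetime(2026, 5, 30, tzinfo=utc)),       # no policy for purpose
]
kept, deleted = purge_expired(records, {"access_control": 90}, now=now)
print([r.subject_id for r in kept], [r.subject_id for r in deleted])  # ['a'] ['b', 'c']
```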

How is facial recognition regulated, and what compliance requirements exist?

Regulation varies significantly by jurisdiction. The EU's AI Act classifies facial recognition as high-risk in many contexts, requiring impact assessments, comprehensive documentation, bias testing, and human oversight. The UK's Information Commissioner's Office provides guidance requiring organizations to justify facial recognition deployments and implement clear governance. The US lacks federal regulation but has sectoral rules (healthcare, finance) and state-level biometric privacy laws (notably in Illinois and California). Most jurisdictions are still developing comprehensive frameworks, but the trend is clear: facial recognition deployments increasingly require documented justification, technical safeguards, and governance mechanisms. Organizations deploying facial recognition in 2026 should assume that regulatory requirements will tighten and should design systems capable of meeting the most stringent requirements that apply to their jurisdiction.

What are the key success metrics for measuring facial recognition deployment outcomes?

Successful facial recognition deployments track multiple categories of metrics. Operational efficiency metrics measure whether the system actually improves workflow speed—processing time per person, account opening time, or time to grant access. Accuracy metrics measure real-world performance—false positive rate, false negative rate, and performance variance across demographic groups. User experience metrics capture whether the system creates or reduces friction from the perspective of people using it. Security metrics measure whether the deployment actually improves security—fraudulent transactions prevented, unauthorized access attempts detected, or comparable security outcomes. Cost metrics track return on investment against deployment and operational costs. Compliance metrics measure whether the system meets regulatory and policy requirements. Organizations tracking all of these categories can make evidence-based decisions about whether to scale, adjust, or modify their facial recognition deployments.

What's the realistic timeline for deploying facial recognition from planning to production?

Responsible facial recognition deployment takes 12-16 months from initial planning through full production rollout. This timeline breaks down roughly as: months 1-2 for planning and stakeholder alignment; months 3-4 for technology assessment and proof-of-concept testing; months 5-6 for pilot design and policy development; months 7-10 for pilot execution and iteration; months 11-12 for scale preparation; and months 13+ for production rollout with continuous monitoring. This timeline is realistic for moderately complex deployments in large organizations. Simpler deployments might be faster. More complex ones take longer. Organizations trying to deploy facial recognition in 3-4 months are setting themselves up either to cut corners or to run into unexpected problems in production.

Why do facial recognition systems show different accuracy for different demographic groups?

Facial recognition systems are machine learning models trained on datasets. If the training dataset has limited demographic diversity (for example, more examples of men than women, or more people with lighter skin tones than darker skin tones), the model learns to recognize those groups better. When the model encounters people from under-represented groups, it has learned less about what distinguishes one individual from another within those groups, which leads to lower accuracy. This isn't a fundamental limitation of facial recognition technology. It's a limitation of how training data has historically been collected and how models have been built. Systems trained on demographically diverse data with explicit bias testing show much better balanced performance. Organizations deploying facial recognition in 2026 are increasingly making demographic bias testing non-negotiable: they require vendors to demonstrate balanced accuracy across demographic groups before procurement.
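"Balanced accuracy" needs a concrete acceptance criterion before it can gate a procurement decision. A minimal sketch of one possible criterion, assuming per-group error rates (for example, false negative rates) in percent from a vendor's test report or your own testing; the maximum allowed gap, the group labels, and the numbers are hypothetical, and ratio-based criteria are a reasonable alternative to the absolute gap used here.

```python
def balance_check(error_pct_by_group, max_gap_pct):
    """Return (passes, worst_group, gap) comparing the worst- and best-performing groups.

    error_pct_by_group maps group label -> error rate in percent.
    """
    worst_group = max(error_pct_by_group, key=error_pct_by_group.get)
    best_group = min(error_pct_by_group, key=error_pct_by_group.get)
    gap = error_pct_by_group[worst_group] - error_pct_by_group[best_group]
    return gap <= max_gap_pct, worst_group, gap


vendor_fnr = {"group_a": 1.0, "group_b": 1.2, "group_c": 3.5}  # hypothetical test results
print(balance_check(vendor_fnr, max_gap_pct=1.0))  # (False, 'group_c', 2.5)
```

Returning the worst group alongside the verdict makes it clear where the vendor needs to improve, rather than producing a bare pass or fail.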

What should organizations do if a facial recognition system makes an error in production?

Facial recognition systems inevitably make errors: false positives (incorrectly matching two people) and false negatives (failing to recognize someone who should be recognized). Responsible systems are designed with error handling built in. When the system returns a low-confidence match, the decision escalates to a human. When the system fails to return a match, a fallback process engages. In high-stakes scenarios (security access, financial transactions), the two error types rarely cost the same, and thresholds should be set according to which one is worse; where a missed match is the costlier error, systems are configured to accept more false positives and rely on human review to catch them. Organizations should establish policies before deployment defining what happens when the system fails, who makes the final decision, and how errors are logged and analyzed. Over time, as the system encounters different scenarios, both the system accuracy and the human processes improve.
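The escalation and fallback rules can be written down explicitly, with every decision leaving an audit entry. A minimal sketch; the outcome names, the logger name `frt.audit`, and the log fields are hypothetical, and what the fallback actually is (a badge check, a document check, a staffed desk) is a policy decision the code leaves open.

```python
import json
import logging
from datetime import datetime, timezone
from typing import Optional

audit_log = logging.getLogger("frt.audit")  # hypothetical logger name


def handle_result(attempt_id: str, best_match: Optional[str],
                  score: Optional[float], accept_at: float) -> str:
    """Decide the next step for one recognition attempt and write an audit entry."""
    if best_match is None:
        outcome = "fallback"       # no candidate returned: hand off to a non-biometric process
    elif score is not None and score >= accept_at:
        outcome = "auto_accept"
    else:
        outcome = "human_review"   # low confidence: a person makes the final decision
    audit_log.info(json.dumps({
        "attempt_id": attempt_id,
        "outcome": outcome,
        "match": best_match,
        "score": score,
        "logged_at": datetime.now(timezone.utc).isoformat(),
    }))
    return outcome


logging.basicConfig(level=logging.INFO)  # make audit entries visible when run as a script
print(handle_result("att-001", None, None, accept_at=0.9))       # fallback
print(handle_result("att-002", "emp-17", 0.95, accept_at=0.9))  # auto_accept
print(handle_result("att-003", "emp-17", 0.71, accept_at=0.9))  # human_review
```

Logging every outcome, not only failures, is what makes later error analysis possible: the human-review and fallback rates become measurable over time.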

Looking Ahead: The Boring Infrastructure of Tomorrow

Facial recognition technology is at an inflection point. It's transitioning from experimental to essential. From novel to normal. From something tech executives talk about at conferences to something that just works in the background.

That transition—that maturation into boredom—is the most significant development happening in facial recognition right now. Not because the technology is becoming dramatically more powerful (though it will). But because it's becoming trustworthy, predictable, and integrated into how organizations actually operate.

For organizations deploying facial recognition in 2026, the implications are substantial. You can't treat it like a three-month technology implementation. You need governance, testing, policy, and stakeholder alignment. You need realistic expectations about accuracy and careful management of edge cases. You need privacy built in from day one, not added afterward.

But the payoff is real. Organizations that get facial recognition right don't just get a new technology. They get measurably faster, more secure operations. Reduced friction in customer-facing processes. Better identity verification. Improved security in sensitive areas. Better audit trails and accountability.

The next five years will see facial recognition adoption accelerate across sectors. The organizations that succeed will be those that understood early that boredom was the goal. Not because boredom is exciting—it isn't. But because boring, reliable, trusted infrastructure is how transformative technology actually transforms organizations.

When facial recognition stops being the interesting part of the story and just becomes part of how you do business, that's when you know it actually works.

Key Takeaways

- The global facial recognition market is projected to reach 30.52 billion by 2034, growing at a 14.80% compound annual growth rate, indicating genuine enterprise adoption

- Real-world facial recognition accuracy drops significantly from benchmark performance due to variable lighting, diverse demographics, and uncontrolled environmental conditions

- Production facial recognition systems require multi-stakeholder governance, comprehensive testing across demographics, privacy-by-design principles, and realistic accuracy expectations

- 2026 marks the transition from facial recognition as experimental technology to facial recognition as business-critical infrastructure, with accuracy, governance, and accountability becoming non-negotiable

- Organizations deploying facial recognition should expect 12-16 month implementation timelines and plan for integration challenges, stakeholder communication, and ongoing performance monitoring
