Why Everyone's Betting Big on Healthcare AI

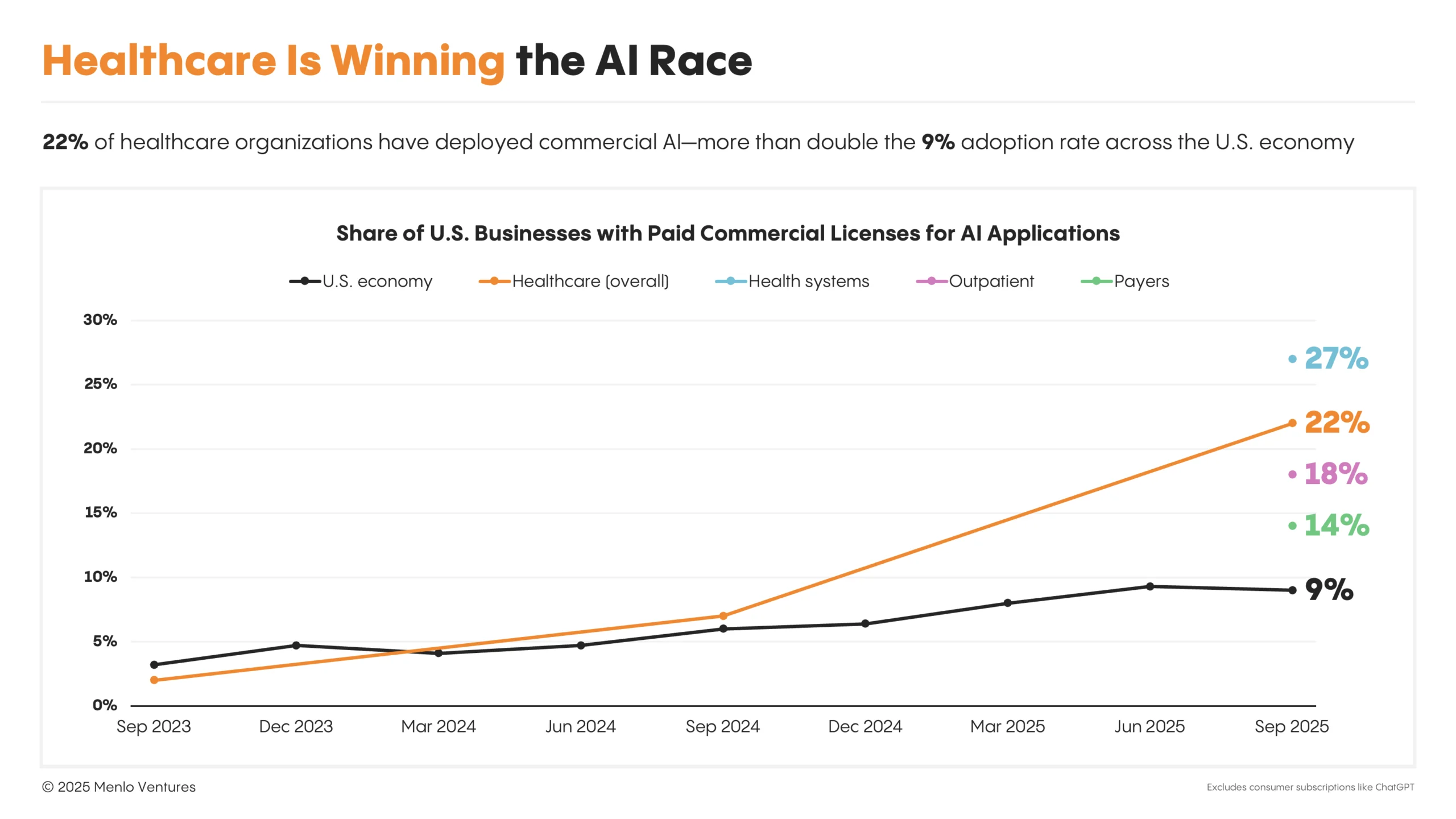

Something shifted in the AI industry about six months ago. Suddenly, every major language model company started making healthcare announcements simultaneously. The timing wasn't coincidental.

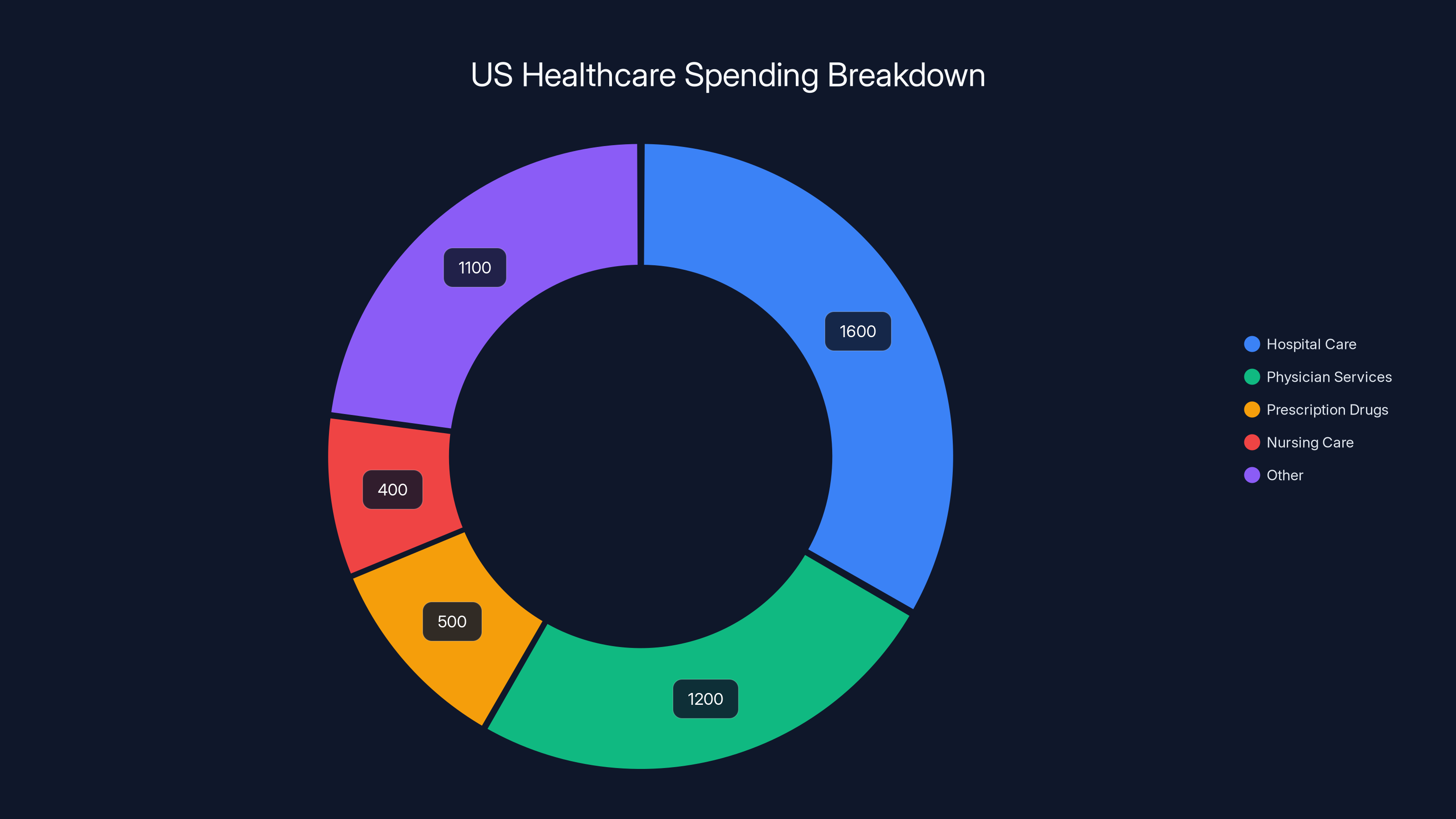

Healthcare represents something different from other industries. It's massive—the United States alone spends over $4.8 trillion annually on healthcare. It's fragmented, with countless inefficiencies baked into the system. And crucially, it's desperately hungry for solutions. Hospital administrators aren't waffling about whether they need help. They know they do. They're overwhelmed with administrative burden, facing staffing shortages, and dealing with burnout that's driving physicians out of medicine entirely.

That's the gold rush moment. When an industry is clearly broken and has the budget to fix it, venture capitalists and product teams notice. They notice hard.

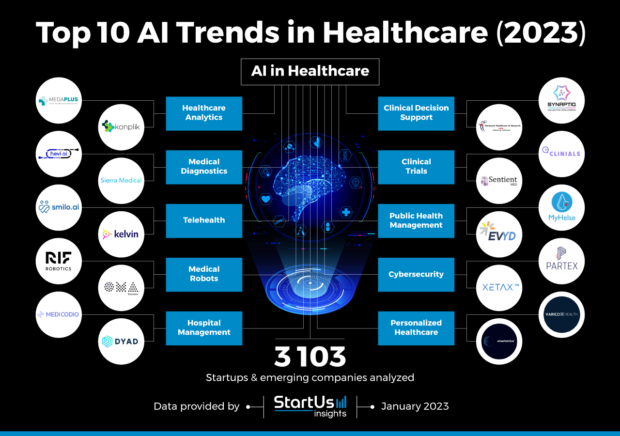

The past eighteen months have proven that AI isn't just another technology trying to wedge itself into healthcare. It's fundamentally reshaping how medical work happens. Voice AI for clinical documentation. Predictive models for patient deterioration. Diagnostic assistance tools that augment radiologists. Drug discovery platforms that process medical literature in ways humans never could.

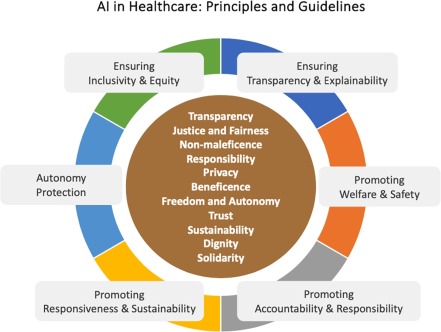

But here's where the story gets complicated. The same capabilities that make AI valuable in healthcare also create unique dangers. Medical hallucinations aren't just embarrassing—they can kill people. A chatbot confidently recommending the wrong dosage. An algorithm flagging a normal imaging result as cancerous. A system suggesting treatment plans based on fabricated studies.

This is the central tension in AI healthcare right now: enormous potential colliding with genuine, high-stakes risk. And the industry is still figuring out which force wins.

The Numbers Behind Healthcare's AI Moment

Investment data tells the story more clearly than any keynote ever could.

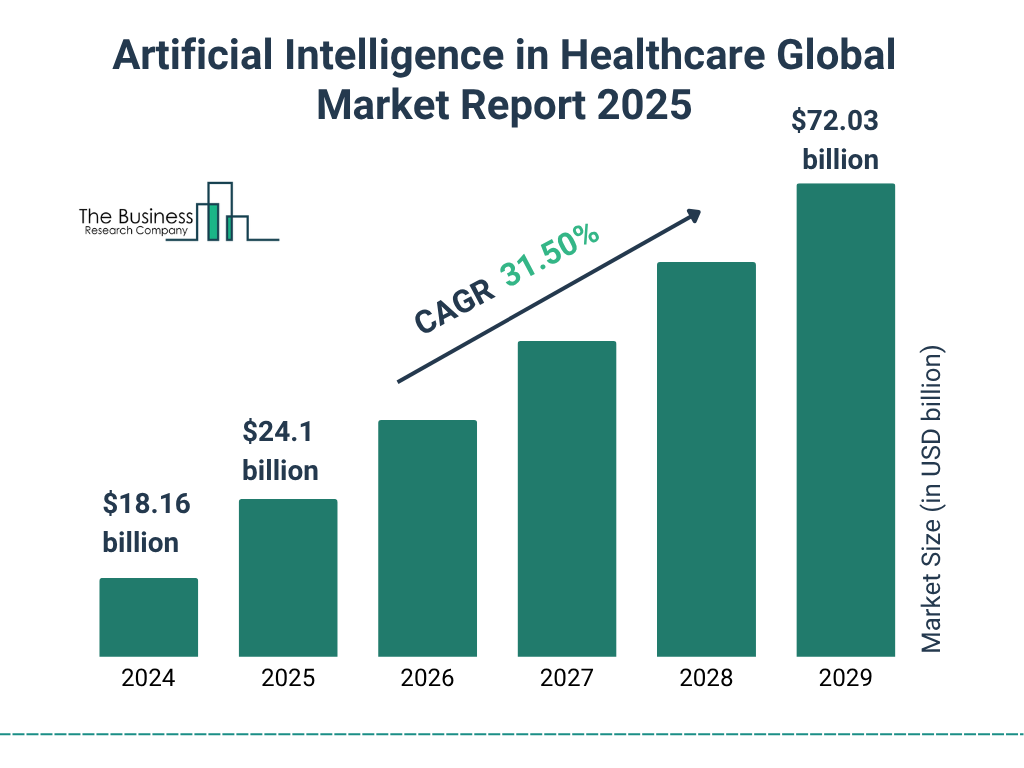

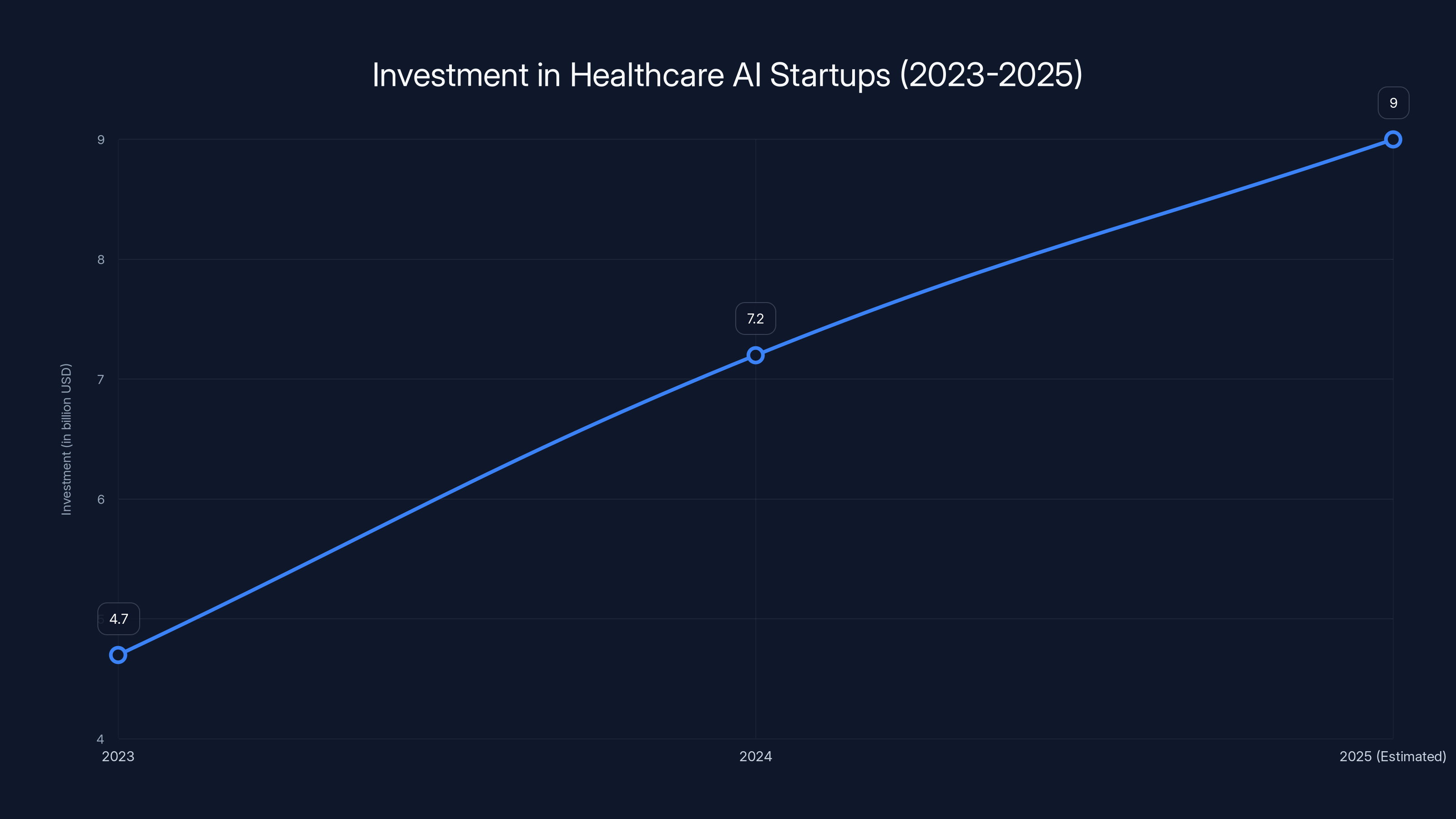

In 2023, healthcare AI startups raised approximately

These aren't marginal increases. This is an explosive growth trajectory. And it's not scattered randomly across thousands of small companies. The capital is concentrating in specific problem areas: clinical documentation, diagnostic imaging analysis, drug discovery, predictive analytics, and patient engagement platforms.

What's particularly telling is who's investing. OpenAI's acquisition of Torch Health, Anthropic's launch of healthcare-specific Claude configurations, and the emergence of Merge Labs as a $250 million seed-round recipient signal that the biggest AI foundation model companies see healthcare as core to their business strategy, not a nice-to-have experiment.

These aren't one-off bets. These are platforms that companies are building their entire futures around.

The revenue potential is equally compelling. A single healthcare AI application serving a mid-sized hospital network can generate millions in value through administrative efficiency gains alone. Scale that to a nationwide application, and the addressable market becomes genuinely enormous.

Compare this to other AI applications. A productivity tool that saves knowledge workers thirty minutes daily is valuable, but it's not transformative. A system that prevents a misdiagnosis or catches a deteriorating patient's condition hours earlier? That's literally lifesaving. From a business perspective, that kind of impact commands premium pricing.

Hospital care and physician services dominate US healthcare spending, highlighting areas where AI can address inefficiencies. (Estimated data)

Why Healthcare Is Different for AI Companies

Healthcare isn't like other industries AI has already transformed. It has unique characteristics that make it simultaneously more attractive and more challenging than the sectors that came before.

The regulatory environment is complex but clear. Healthcare doesn't move fast and break things. Regulatory bodies like the FDA, state medical boards, and health privacy authorities have established frameworks. Those frameworks are restrictive, but they're not unknowable. A company that takes regulatory compliance seriously from day one can navigate these waters. Compared to trying to interpret evolving AI regulation in industries without established oversight, healthcare's regulatory clarity is almost an advantage.

The data is phenomenally rich. Healthcare generates more structured, detailed data than almost any other industry. Electronic health records contain not just raw information but years of historical context, relationships between symptoms and outcomes, and treatment decisions made by trained physicians. For training AI systems, that richness is invaluable. A language model trained on clinical notes understands medical language in ways general models can't.

There's zero ambiguity about whether the problem needs solving. You can argue about whether we need another social media platform or another productivity tool. Nobody argues about whether we need better healthcare. This creates a fundamentally different sales dynamic. Healthcare organizations aren't adopting these tools because they're fashionable. They're adopting them because they're drowning.

The stakes are genuinely high. This cuts both ways. On one hand, organizations will pay premium prices and tolerate longer implementation timelines for solutions that deliver real impact. On the other hand, a failure in healthcare carries reputational and legal consequences that dwarf most other industries. An AI system that makes wrong stock recommendations? That's a problem. An AI system that recommends the wrong cancer treatment? That's potentially catastrophic.

Clinical Documentation: The Low-Hanging Fruit Everyone's Reaching For

If you want to understand why AI companies are clustering in healthcare, start with clinical documentation. It's the least sexy application and the most impactful. That paradox tells you something important about AI's actual role in healthcare.

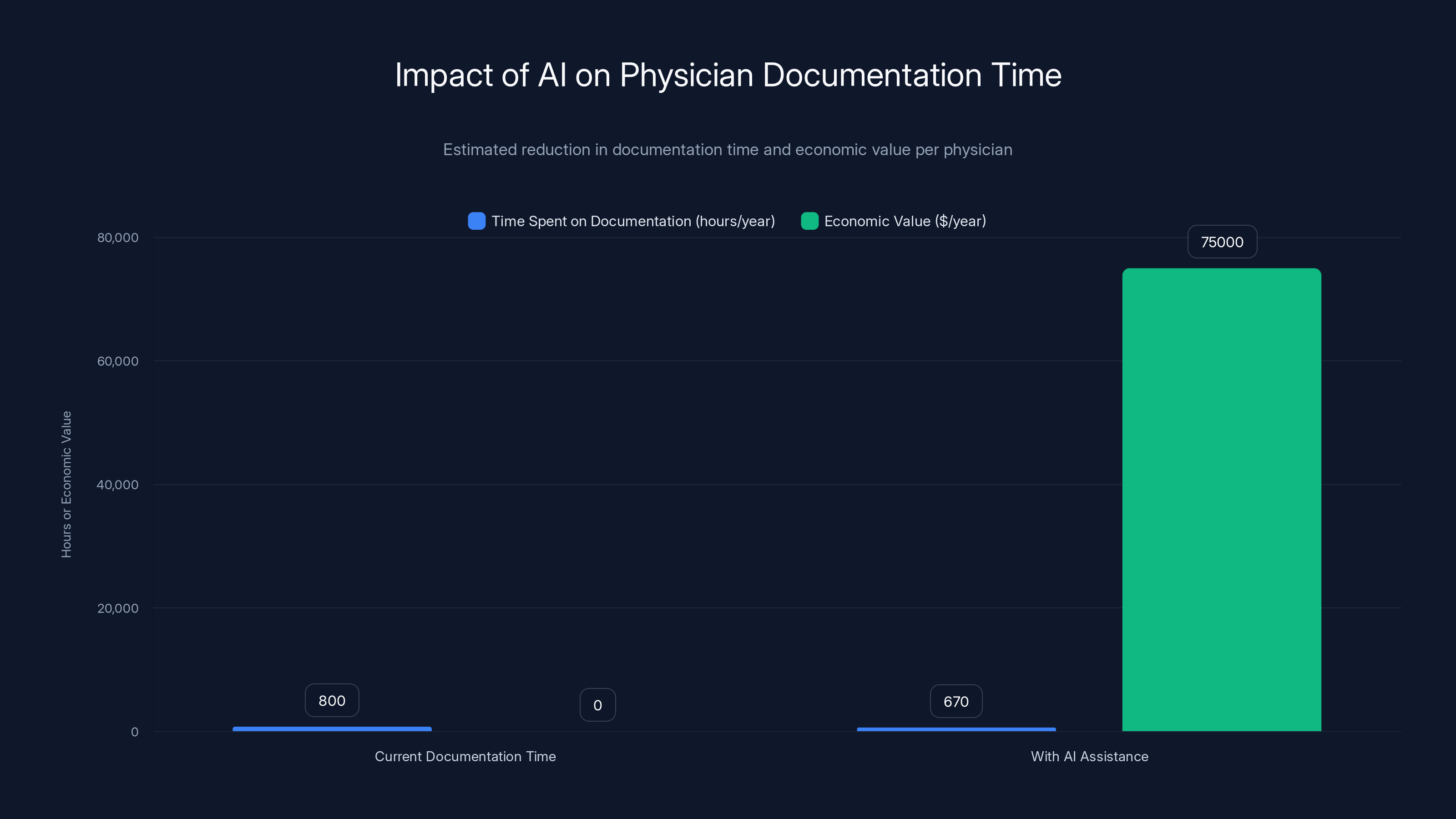

A physician today spends an astonishing amount of time doing paperwork. Conservative estimates suggest around 40% of a doctor's day involves documentation, billing codes, prior authorization requests, and regulatory compliance work. Some medical practices report the figure as high as 60% for administrative specialists.

This time shouldn't be spent on documentation. It should be spent on patients. The irony is painful: we've built healthcare systems that force medical professionals to act as data entry clerks, then express shock when burnout rates climb.

Voice-based AI changes this dynamic immediately. A physician examining a patient speaks naturally into a system. That system transcribes the conversation, interprets clinical context, and generates structured documentation that's ready for the patient record or requires minimal editing.

This isn't novel in concept. Dragon Medical (speech recognition for doctors) has existed for years. But modern language models do something different. They understand medical context. They can infer what diagnoses the physician is considering based on examination findings. They can suggest relevant diagnostic codes. They can flag potential medication interactions or allergy contradictions in real time.

The impact is staggering when you do the math. A physician saving 20 to 30 minutes per working day on documentation frees up roughly 90 to 130 hours per year (assuming about 260 working days) for patient care, research, or simply being less exhausted. At typical physician billing rates, that's

But here's the crucial part: unlike some AI applications that are genuinely optional ("nice to have"), this is immediately useful to every practicing physician. It doesn't require retraining. It doesn't require workflow redesign. A doctor uses the tool, and the benefit is apparent within minutes.
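
The annual total behind a daily documentation saving depends heavily on how many days are counted. A minimal sketch of that arithmetic follows; the day counts (260 working days, 365 calendar days) and the 30-minute scenario are assumptions added for illustration, not figures from any study.

```python
def hours_saved_per_year(minutes_per_day: float, days_per_year: int) -> float:
    """Convert a daily time saving (minutes) into hours per year."""
    return minutes_per_day * days_per_year / 60


# Illustrative scenarios; the day counts are assumptions, not sourced data.
scenarios = {
    "20 min/day over 260 working days": hours_saved_per_year(20, 260),
    "20 min/day over 365 calendar days": hours_saved_per_year(20, 365),
    "30 min/day over 260 working days": hours_saved_per_year(30, 260),
}

for label, hours in scenarios.items():
    print(f"{label}: about {hours:.0f} hours per year")
```

Under these assumptions, 20 minutes per working day comes to roughly 87 hours a year, while 30 minutes per working day comes to 130 hours.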

That's why every major AI company has launched documentation products. OpenAI, Google, Microsoft, and Anthropic have all made their plays. Specialized startups like Torch Health built entire companies around this single problem. The market dynamics almost demanded it.

Investment in healthcare AI startups is on a rapid growth trajectory, projected to exceed $9 billion by 2025. Estimated data for 2025 suggests continued strong investor confidence.

Diagnostic Assistance: The Compelling Promise and the Real Risks

If clinical documentation is the low-hanging fruit, diagnostic assistance is the ambitious longshot that everyone's funding anyway.

The premise is intuitively appealing: AI systems trained on millions of medical images and clinical cases can identify patterns that individual radiologists might miss. A second opinion from an intelligent system could catch early-stage cancers, reduce diagnostic errors, and potentially improve patient outcomes across populations.

The data suggests this is technically feasible. Numerous studies have demonstrated that AI systems can match or occasionally exceed radiologist performance on specific, narrow tasks. An algorithm trained specifically on chest X-rays can reliably identify pneumonia. A model trained on mammography images can flag potentially cancerous lesions.

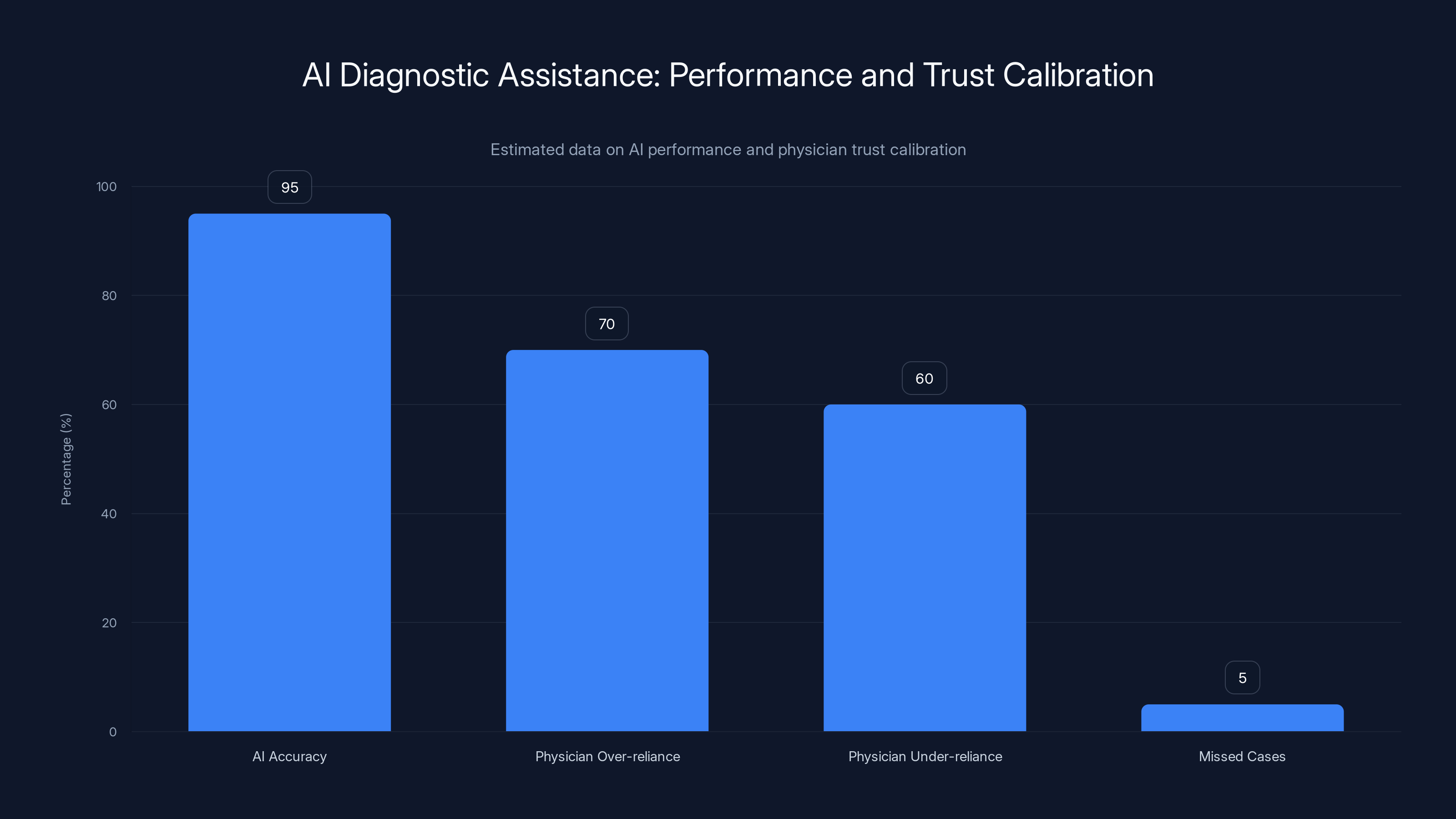

But here's where the healthcare application of AI diverges from other industries. In consumer products, a 95% accuracy rate on a specific task might be acceptable. In medicine, physicians and patients want to understand the 5% of cases where the system fails. What types of cases does it miss? Under what conditions is it less reliable? Are there specific demographics where its performance drops?

These aren't questions you can answer with a simple accuracy metric. They require deep investigation, clinical validation, and honest acknowledgment of limitations.
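
To make the point about a single accuracy number concrete, here is a small sketch that breaks results down by subgroup. The two sites and all counts are synthetic, invented purely for illustration; `subgroup_metrics` is a hypothetical helper, not part of any real evaluation library.

```python
from collections import defaultdict


def subgroup_metrics(records):
    """records: iterable of (group, truth, prediction) with boolean truth/prediction.

    Returns per-group sensitivity (true positive rate) and
    specificity (true negative rate).
    """
    counts = defaultdict(lambda: {"tp": 0, "fn": 0, "tn": 0, "fp": 0})
    for group, truth, pred in records:
        if truth:
            counts[group]["tp" if pred else "fn"] += 1
        else:
            counts[group]["fp" if pred else "tn"] += 1

    result = {}
    for group, c in counts.items():
        positives = c["tp"] + c["fn"]
        negatives = c["tn"] + c["fp"]
        result[group] = {
            "sensitivity": c["tp"] / positives if positives else None,
            "specificity": c["tn"] / negatives if negatives else None,
            "n": positives + negatives,
        }
    return result


def make_records(group, tp, fn, tn, fp):
    """Build synthetic (group, truth, prediction) rows from confusion counts."""
    return (
        [(group, True, True)] * tp
        + [(group, True, False)] * fn
        + [(group, False, False)] * tn
        + [(group, False, True)] * fp
    )


# Synthetic data: both sites look fine on accuracy, but site_B misses more disease.
records = make_records("site_A", 9, 1, 88, 2) + make_records("site_B", 6, 4, 89, 1)

overall_accuracy = sum(t == p for _, t, p in records) / len(records)
metrics = subgroup_metrics(records)

print(f"overall accuracy: {overall_accuracy:.2f}")
for group, m in metrics.items():
    print(group, m)
```

In this toy data the overall accuracy is 96%, yet sensitivity at one site is 0.9 and at the other only 0.6, which is exactly the kind of gap a headline accuracy figure hides.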

More importantly, diagnostic assistance raises a problem researchers study under the name "appropriate reliance." When a physician has access to an AI second opinion, they might rely on it too heavily or too lightly. They might dismiss the system's finding when it's actually correct because they trust their own judgment too much. Or they might defer to the AI when their clinical intuition is actually superior.

The current evidence suggests physicians—like most humans—struggle with calibrating trust in AI systems. They either over-rely or under-rely, and the failure mode (trusting incorrectly) can have patient safety implications.

Then there's the hallucination problem specific to AI in medicine. Language models sometimes generate plausible-sounding but entirely false information. They might cite medical studies that don't exist. They might suggest treatment protocols based on misremembered information. In most contexts, these hallucinations are embarrassing. In medicine, they're dangerous.

A radiologist consulting an AI system that confidently reports "findings consistent with stage three malignancy" when the image shows normal tissue is sent down a diagnostic path that could derail patient care significantly. The physician might order unnecessary procedures, expose the patient to additional radiation, or cause psychological harm through a false cancer diagnosis.

Drug Discovery: Where AI's Pattern Recognition Meets Pharma's Desperate Need

Drug discovery is perhaps the area where AI's capabilities feel most naturally aligned with healthcare's actual problems.

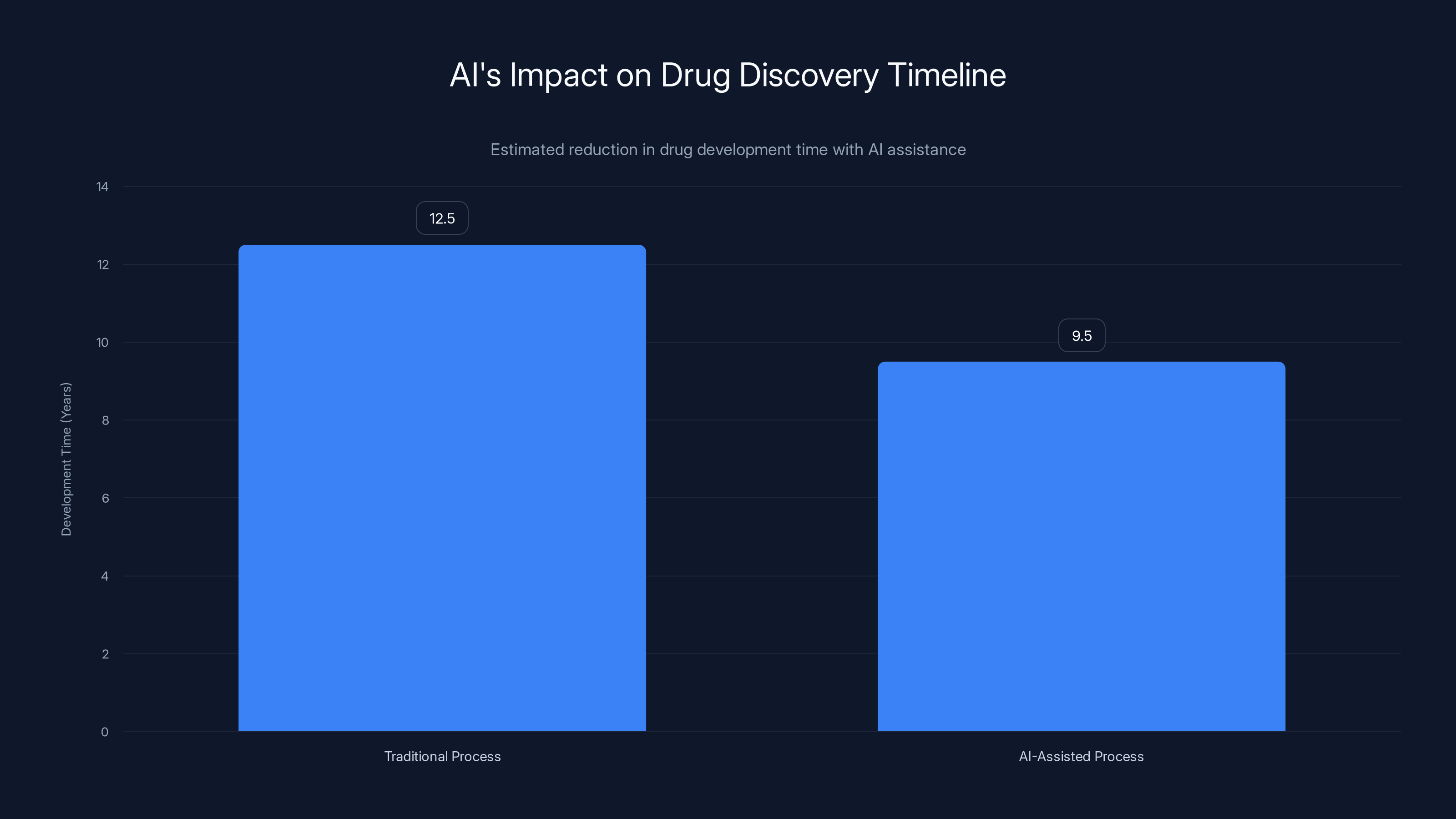

Pharmaceutical companies currently spend $2.6 billion and 10-15 years on average to bring a single new drug to market. The process involves screening millions of chemical compounds, testing those that show promise in cells, then progressing through animal trials, then through progressively larger human trials.

Most compounds tested never become viable drugs. The hit rate in early stages is catastrophically low. Companies test thousands of molecules to find one that has the combination of efficacy, safety profile, and manufacturability required for clinical use.

AI systems can compress parts of this process dramatically. Machine learning models trained on published research, chemical databases, and structural biology can predict how molecules will behave without requiring physical synthesis and testing. A system might screen millions of candidate compounds in computational space and identify the thousand most promising ones for experimental validation.
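
The "screen millions, keep the best thousand" step can be sketched as a top-k selection over model scores. Everything below is a stand-in: the compound identifiers are made up and the scores are random numbers in place of a trained model's predictions, so this shows only the shortlisting mechanics, not any real screening pipeline.

```python
import heapq
import random


def top_candidates(candidates, score, k):
    """Return the k highest-scoring candidates without sorting the full set."""
    return heapq.nlargest(k, candidates, key=score)


rng = random.Random(0)

# Placeholder for a model's predicted scores: random values, purely illustrative.
predicted = {f"compound-{i}": rng.random() for i in range(100_000)}

shortlist = top_candidates(predicted, predicted.get, k=1000)
print(len(shortlist), "candidates kept for experimental validation")
```

The shortlist is only as good as the scoring model behind it, which is why the experimental validation step described above still matters.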

This isn't theoretical. Companies like Owkin and others have already demonstrated that AI-assisted drug discovery can identify promising new drug targets, predict patient populations most likely to respond to specific treatments, and accelerate the path from initial hypothesis to clinical testing.

From an economic perspective, if an AI system can even modestly accelerate drug discovery, the returns are enormous. A drug that reaches market one year faster generates billions in additional revenue. Every year of development time saved reduces risk and cost.

Compare this to diagnostic imaging, where the benefits are important but ultimately bounded by volume and efficiency gains. Drug discovery benefits can compound across an entire pharmaceutical pipeline. One successful application of AI can potentially create value across dozens of development programs.

That's why pharma companies are investing heavily in AI partnerships. That's why academic medical centers are launching AI research institutes. That's why venture capital is flowing into biotech AI companies at record rates.

The Hallucination Problem: When AI Gets Confidently Wrong

This deserves its own extended discussion because it's not a minor edge case. It's a fundamental behavioral characteristic of current AI systems.

Language models are pattern-matching systems. They've learned statistical relationships between words and concepts from training data. When they generate text, they're predicting what words should come next based on that learned pattern. Importantly, this process has no built-in mechanism to distinguish between "high confidence because I've seen similar patterns many times" and "complete fabrication because I'm hallucinating."

A language model can say "according to the 2023 Journal of Medicine study on topic X" and that sentence will sound exactly as confident and credible as one based on an actual paper that actually exists. The system has learned how to write academic-sounding citations. It hasn't learned the difference between real citations and fabricated ones.

In healthcare, this is more than embarrassing. It's dangerous.

Consider a clinical decision support system that tells a physician: "For this patient's condition, literature suggests treatment protocol X with success rate of 72%." If the system is hallucinating, and no such literature exists, and protocol X is actually ineffective or harmful, the physician's decision-making process starts from a fundamentally false premise.

The risk is amplified because physicians, like all humans, are subject to confirmation bias. If an AI system's recommendation aligns with what the physician was already considering, the physician is more likely to trust it without verification. If it contradicts their intuition, they might dismiss it correctly—or they might dismiss an actually correct recommendation because they over-trusted their own judgment.

Vendors are working on mitigation strategies. Some systems require explicit citation retrieval and validation. Some refuse to generate clinical recommendations without explicitly sourcing every claim. Some maintain confidence intervals and explicitly communicate uncertainty.
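
As a rough illustration of the "citation retrieval and validation" idea, the sketch below rejects any generated answer that cites nothing or cites an identifier missing from a trusted index. The `[REF-123]` citation format, the `TRUSTED_SOURCES` index, and `check_citations` are all hypothetical; a real system would query a curated literature database instead.

```python
import re

# Hypothetical trusted index (identifier -> title). Assumed for illustration.
TRUSTED_SOURCES = {
    "REF-001": "Example guideline A",
    "REF-002": "Example trial B",
}

CITATION_PATTERN = re.compile(r"\[(REF-\d+)\]")


def check_citations(answer, trusted=TRUSTED_SOURCES):
    """Return (ok, unknown_ids).

    ok is False when the answer cites nothing or cites an id
    that is not present in the trusted index.
    """
    cited = CITATION_PATTERN.findall(answer)
    unknown = [ref for ref in cited if ref not in trusted]
    return bool(cited) and not unknown, unknown


print(check_citations("Protocol X is supported by prior work [REF-001]."))
print(check_citations("Protocol X has a 72% success rate [REF-999]."))
print(check_citations("Protocol X is widely recommended."))
```

A check like this only confirms that a cited source exists. It says nothing about whether the source actually supports the claim, which still needs separate verification or human review.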

But the underlying problem remains unsolved. Current AI systems don't truly "know" what they're talking about. They generate statistically plausible text. In medicine, statistical plausibility isn't sufficient.

AI assistance can reduce documentation time by 130 hours per year, translating to an estimated $75,000 in economic value per physician annually. Estimated data.

Data Privacy and Security: The Elephant in Every Healthcare AI Room

Healthcare data is uniquely sensitive. Patient records contain not just medical information but intimate personal details—sexual history, mental health diagnoses, substance use, genetic predispositions. This information is protected under regulations like HIPAA in the United States and GDPR in Europe.

Breaking down the data sensitivity barriers that protect patient privacy creates liability and regulatory risk. But building effective AI healthcare systems requires training data. Modern language models and machine learning systems need massive amounts of examples to learn from.

There's an inherent tension here. You need data to build good systems. You can't ethically share data without destroying patient privacy. How do you resolve this?

Current approaches include:

Federated learning: AI models are deployed to hospitals and trained on local data, rather than centralizing all data in one place. The model learns from all hospitals' data without requiring data to leave secure environments.

Synthetic data generation: Training data is artificially generated based on patterns from real data, removing personally identifiable information while preserving statistical relationships the AI needs to learn.

Differential privacy: Formal mathematical techniques add noise to data in ways that preserve utility for machine learning while providing formal guarantees about privacy protection.

Data sharing agreements: Organizations share limited datasets under strict legal agreements defining permitted uses and requiring data deletion after model training.
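
The federated learning approach described above can be sketched with a toy example: each hospital trains on its own data, and only model parameters are averaged centrally. This is a simplified version of federated averaging on a one-variable linear model with synthetic data; the function names, learning rate, and round counts are assumptions chosen for the illustration.

```python
def local_update(weights, data, lr=0.02, epochs=20):
    """One hospital's training pass on y = w*x + b, using only its local data."""
    w, b = weights
    n = len(data)
    for _ in range(epochs):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return (w, b)


def federated_average(updates):
    """updates: list of ((w, b), n_samples). Sample-weighted mean of parameters."""
    total = sum(n for _, n in updates)
    w = sum(params[0] * n for params, n in updates) / total
    b = sum(params[1] * n for params, n in updates) / total
    return (w, b)


# Synthetic local datasets drawn from y = 2x + 1; the raw rows never leave a site.
hospital_data = [
    [(x, 2 * x + 1) for x in (0.0, 1.0, 2.0)],
    [(x, 2 * x + 1) for x in (1.0, 3.0, 4.0, 5.0)],
]

global_weights = (0.0, 0.0)
for _ in range(200):
    # Each site starts from the shared model and returns only updated parameters.
    updates = [(local_update(global_weights, d), len(d)) for d in hospital_data]
    global_weights = federated_average(updates)

print(global_weights)
```

Only the `(w, b)` pairs and sample counts cross the network in this sketch. Real deployments typically add secure aggregation and other safeguards, since model updates themselves can leak information.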

None of these approaches is perfect. Federated learning increases computational complexity and slows training. Synthetic data generation might lose important edge cases. Differential privacy adds noise that can reduce model performance. Restricted data sharing limits the diversity and scale of training data.
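
The noise-versus-utility trade-off in differential privacy is easiest to see with the Laplace mechanism applied to a simple count. The sketch below is illustrative only: the ages are synthetic, `private_count` is a hypothetical helper, and Python's `random` module is not suitable for production privacy guarantees.

```python
import random


def laplace_noise(scale, rng):
    """Sample Laplace(0, scale) as the difference of two exponential draws."""
    return rng.expovariate(1 / scale) - rng.expovariate(1 / scale)


def private_count(values, predicate, epsilon, rng):
    """Release a count with Laplace noise calibrated to epsilon.

    A counting query changes by at most 1 when one person is added or
    removed (sensitivity 1), so the noise scale is 1 / epsilon.
    """
    true_count = sum(1 for v in values if predicate(v))
    return true_count + laplace_noise(1 / epsilon, rng)


rng = random.Random(42)
ages = [34, 71, 68, 45, 80, 59, 66, 23, 77, 90]  # synthetic data


def over_65(age):
    return age > 65


for epsilon in (0.1, 1.0, 10.0):
    released = private_count(ages, over_65, epsilon, rng)
    print(f"epsilon={epsilon}: released count is about {released:.1f}")
```

Smaller values of epsilon give stronger privacy but noisier answers, which is the performance cost noted above.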

But the alternative, centralizing sensitive patient data for AI training, creates unacceptable privacy and security risk. The 2024 Change Healthcare ransomware attack, which disrupted operations across thousands of healthcare facilities and potentially compromised 100 million patients' data, illustrates exactly why centralized healthcare data repositories are dangerous.

Organizations building healthcare AI are increasingly adopting privacy-preserving approaches not just because regulation requires it, but because the reputational and operational risk of data breaches is genuinely catastrophic. A healthcare company that loses patient data faces regulatory fines, class action litigation, loss of customer trust, and potentially business-ending consequences.

Regulatory Approval: The FDA's Evolving Relationship with AI

One of the most important dynamics in healthcare AI is regulatory approval. Unlike many industries where companies can launch products and ask forgiveness later, healthcare requires approval before deployment in clinical settings.

The FDA has created specific pathways for AI-based medical devices. These pathways recognize that AI systems learn and improve over time, requiring different regulatory approaches than traditional fixed software. The FDA's guidance on predetermined change control plans (PCCPs) for AI-enabled device software describes how a manufacturer can have planned model updates authorized up front, so that changes within the plan can be deployed without a new submission while changes outside it still require one.

This is actually somewhat beneficial for established companies. Navigating FDA approval requires expertise, resources, and patience that smaller startups struggle with. Companies that successfully get FDA approval gain both regulatory blessing and competitive moat. A diagnostic AI that has FDA clearance has massive advantages over uncleared competitors.

But the regulatory process also slows innovation. A startup that invents a novel approach to patient monitoring might need 18-36 months for FDA review before deployment. By that time, well-funded competitors might have built similar solutions and obtained approval. The timeline creates competitive advantage for well-funded companies that can absorb regulatory delays.

This is partly why major tech companies and well-funded venture startups dominate AI healthcare. They have the resources to navigate regulatory processes. Smaller companies struggle to compete unless they have enough venture backing to weather regulatory cycles.

Why Clinical Validation Matters (and Why It's Often Skipped)

Here's an uncomfortable truth about healthcare AI: not all deployed systems have rigorous clinical validation.

Proper clinical validation requires prospective studies where the AI system is tested in real clinical environments with real patients, and outcomes are compared against established standards or alternative approaches. These studies take time and money. They need institutional review board approval. They require careful documentation and statistical analysis.

It's slower and more expensive than releasing a product and iterating based on user feedback. It's the opposite of the "move fast and break things" mentality that shaped consumer technology.

Some healthcare AI companies do rigorous validation. They publish results in peer-reviewed journals. They conduct multi-site studies demonstrating their system's effectiveness. They're transparent about limitations and failure modes.

Others deploy systems with minimal validation, relying on internal testing, preliminary studies, or customer case studies. The systems might actually be effective. But the evidence level is lower. The confidence about how they'll perform across different patient populations or care settings is weaker.

This matters because healthcare organizations are increasingly cautious about validation. Some refuse to deploy systems without published evidence from independent studies. Others will accept manufacturer-provided studies or case reports. Still others adopt systems with minimal evidence, hoping they'll help and monitoring outcomes carefully.

The right answer is probably in the middle: meaningful evidence requirements that prevent obviously ineffective systems from deploying, without such stringent requirements that useful innovations can't be deployed for years while perfect evidence accumulates.

But that balance is hard to get right, and different organizations strike it differently.

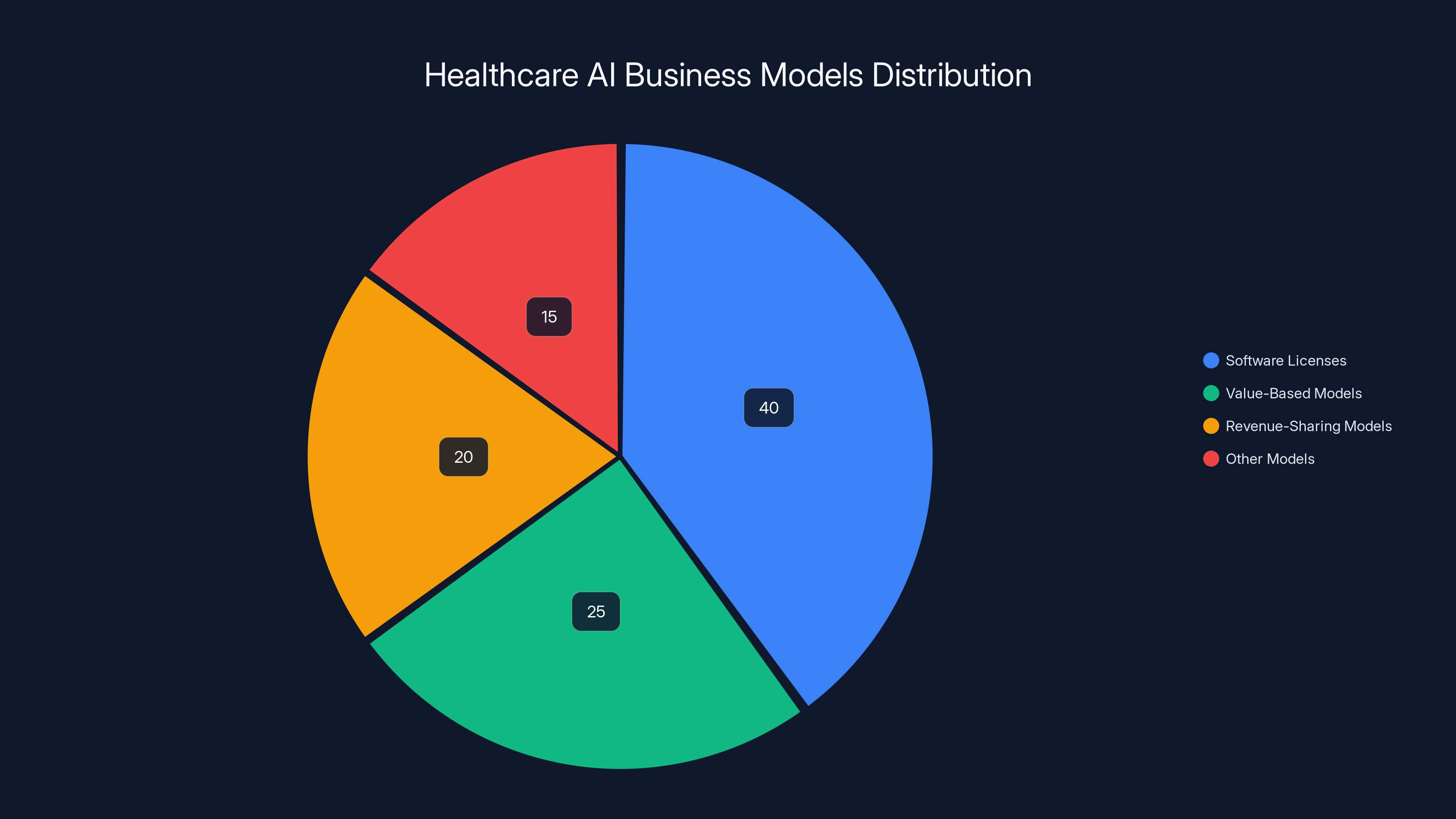

Estimated data suggests that software licenses dominate healthcare AI business models, followed by value-based and revenue-sharing models.

The Predictive Analytics Revolution: Finding Patients Before Problems Become Crises

One of the most promising applications of AI in healthcare doesn't get as much attention as diagnostics, but arguably has bigger impact: predictive analytics that identifies patients at risk of deterioration before clinical problems become obvious.

A patient admitted to the hospital for pneumonia might appear stable when checked by nurses on the 3 AM rounds. But subtle changes—respiratory rate slowly increasing from 18 to 22 breaths per minute, oxygen saturation drifting from 96% to 94%, temperature creeping up—might indicate deterioration before the patient becomes critically ill.

A human clinician checking on the patient every four hours might miss these gradual changes. But an AI system analyzing minute-by-minute vital signs, lab values, and trend data can detect concerning patterns and alert staff for earlier intervention.
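
A very simple version of this kind of trend detection fits a slope to recent vital-sign readings and flags sustained drift. The sketch below uses the respiratory rate and oxygen saturation values from the example above; the thresholds are hypothetical placeholders and are not clinically validated.

```python
from statistics import mean


def slope_per_hour(samples):
    """Least-squares slope of (hours, value) samples."""
    times = [t for t, _ in samples]
    values = [v for _, v in samples]
    t_bar, v_bar = mean(times), mean(values)
    denom = sum((t - t_bar) ** 2 for t in times)
    if denom == 0:
        return 0.0
    return sum((t - t_bar) * (v - v_bar) for t, v in samples) / denom


# Hypothetical thresholds for illustration only; not clinically validated.
RESP_RATE_RISE_PER_HOUR = 0.5   # breaths per minute, per hour
SPO2_FALL_PER_HOUR = -0.25      # percentage points, per hour


def deterioration_flags(resp_rate, spo2):
    """Return a list of human-readable flags for concerning trends."""
    flags = []
    if slope_per_hour(resp_rate) >= RESP_RATE_RISE_PER_HOUR:
        flags.append("respiratory rate rising")
    if slope_per_hour(spo2) <= SPO2_FALL_PER_HOUR:
        flags.append("oxygen saturation falling")
    return flags


# Readings over four hours: respiratory rate 18 -> 22, SpO2 96% -> 94%.
resp_rate = [(0, 18), (1, 19), (2, 20), (3, 21), (4, 22)]
spo2 = [(0, 96), (1, 95.5), (2, 95), (3, 94.5), (4, 94)]

print(deterioration_flags(resp_rate, spo2))
```

No single reading here would look alarming on its own; the trend is what triggers the flag. Production early-warning systems combine many more signals and are tuned against real outcome data.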

This kind of early warning system can prevent ICU admissions, reduce mortality, and improve patient outcomes. And critically, it addresses one of the healthcare system's biggest problems: understaffing.

When nurses have adequate staffing, they notice subtle changes and intervene. When units are understaffed (which is nearly constant in American hospitals), important clinical changes get missed because there simply aren't enough eyes watching enough patients.

AI-based monitoring systems act as an additional set of continuous observers. They're not replacing nurses—they're augmenting human attention by highlighting patients who need immediate assessment.

The economics are compelling. A hospital system that prevents ICU admissions through earlier detection saves potentially tens of thousands of dollars per prevented admission. The clinical impact is equally compelling. Earlier intervention for deteriorating patients genuinely saves lives.

Unlike diagnostic AI, which augments physician decision-making, predictive monitoring is fundamentally supportive. It's not asking clinicians to trust an algorithm's judgment. It's alerting them to pay attention to a specific patient. The action and judgment remain entirely with human clinicians.

Patient Engagement and Remote Monitoring: Healthcare at Home

Traditionally, healthcare happens in medical facilities. Patients visit clinics, hospitals, and specialists. But the future increasingly involves healthcare happening at patients' homes, with clinicians maintaining monitoring and communication through digital platforms.

AI enables this shift by handling communication and basic triage that previously required clinical staff time.

A patient with diabetes can use an AI-powered app that asks about symptoms, reviews medication adherence, suggests lifestyle adjustments, and escalates to human clinicians when needed. A cardiac patient recovering at home can have continuous remote monitoring from wearable devices analyzed by AI that alerts their care team if concerning patterns emerge.

This works because AI is good at standardized assessment and information gathering. It's less good at nuanced clinical judgment, which is where human clinicians come in.

The impact on healthcare access is significant. Rural patients who might travel four hours to see a specialist can have much of that interaction occur remotely, with AI handling routine communication and human specialists focusing on complex decision-making.

The impact on cost is equally significant. Remote monitoring is cheaper than in-person visits. AI-assisted patient engagement reduces no-show rates and improves medication adherence—two factors that have enormous impact on health outcomes and healthcare system costs.

But like other AI healthcare applications, there are risks. An AI system that misses early warning signs could delay critical care. A chatbot that confidently gives wrong advice could harm patients. Poor user interface design could cause patients to use the system incorrectly, providing false reassurance.

These are solvable problems. But they require that companies building patient-facing healthcare AI take safety and usability seriously, not as afterthoughts but as central design principles.

The Business Models: Who Pays and How Much

Understanding how healthcare AI companies monetize is crucial to understanding whether they're solving real problems or building solutions hunting for problems.

Some healthcare AI companies sell software licenses. A hospital pays a per-seat fee (e.g.,

Others operate on value-based models where pricing is tied to outcomes. A predictive monitoring system might be priced per prevented ICU admission or per death averted. This aligns incentives but requires demonstrating a clear causal link between the system and the outcomes.

Still others operate on revenue-sharing models where they participate in the savings they generate. A documentation system that saves a hospital $5 million annually might be priced at 30-40% of those savings.
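
The revenue-sharing arithmetic is simple enough to check directly. The snippet below uses the $5 million savings figure and the 30-40% range from the example above; `revenue_share_fee` is a hypothetical helper for illustration.

```python
def revenue_share_fee(annual_savings, share_percent):
    """Vendor fee as a whole-number percentage of documented savings (in dollars)."""
    return annual_savings * share_percent // 100


savings = 5_000_000  # annual savings from the example in the text
low = revenue_share_fee(savings, 30)
high = revenue_share_fee(savings, 40)

print(f"Vendor fee: ${low:,} to ${high:,} per year")
print(f"Hospital keeps: ${savings - high:,} to ${savings - low:,} per year")
```

The whole arrangement depends on both sides agreeing on how the savings figure is measured.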

The most sustainable models tend to be the ones that solve clear, quantifiable problems with obvious ROI. Documentation time savings are measurable. A hospital knows how much it costs to have clinicians do administrative work and can calculate savings precisely.

Models based on harder-to-quantify benefits ("improves patient experience") struggle more. Hospitals are increasingly sophisticated about ROI analysis and less willing to invest in tools without clear financial justification.

This creates an interesting dynamic: companies are strongly incentivized to solve the highest-impact problems rather than tangential ones. There's no room for nice-to-have features. Healthcare AI succeeds by being genuinely useful.

AI systems show high accuracy (95%), but physicians struggle with trust calibration, leading to over-reliance (70%) or under-reliance (60%). Estimated data.

Integration Challenges: The Hidden Cost of Implementation

One of the least appreciated challenges in healthcare AI deployment is integration with existing systems.

Healthcare organizations use dozens of software systems: Electronic Health Records (EHRs), Practice Management systems, billing systems, lab systems, imaging systems, pharmacy systems. These systems rarely integrate seamlessly. They often don't share data efficiently. Some still use 30-year-old technology built on outdated architecture.

A new AI tool needs to connect to these systems to be useful. A documentation AI needs to read data from the EHR and write back into the EHR. A diagnostic AI needs to access imaging studies from the radiology information system. A predictive monitoring system needs real-time data feeds from multiple monitoring systems.

Building these integrations requires understanding existing systems' technical architecture, which varies wildly between organizations. A solution that works perfectly at one hospital might require completely different integration at another.

Implementation timelines and costs often exceed initial estimates by 50-100% due to integration complexity. A healthcare AI company that quotes 3-month implementation might hit 6 months in reality because unexpected integration challenges emerged.

This creates advantage for solutions that work more independently. A clinical documentation tool that works well through voice input, without heavy EHR integration, deploys faster than a diagnostic system that requires real-time imaging system access.

It also creates an advantage for companies that build their own integrated systems rather than trying to connect to fragmented existing ones. But the cost of building complete healthcare platforms is enormous, putting this approach out of reach for all but the largest tech companies.

The Talent War: Why AI Healthcare Startups Struggle to Hire

Building effective healthcare AI requires uncommon combinations of skills. You need machine learning engineers who understand deep learning. You need clinicians or clinical informaticists who understand healthcare workflow and regulations. You need regulatory specialists who understand FDA requirements. You need data engineers who can handle sensitive patient information securely.

Finding people with all these skills is hard. Finding enough of them to build a company is harder.

Healthcare organizations are competing with well-funded AI startups for healthcare-trained talent. When OpenAI or Google launches a healthcare initiative, they can offer compensation that startups can't match. When a talented physician-engineer is considering whether to join a startup or take a VP role at a major tech company, many factors favor the latter.

This creates a dynamic where companies best positioned to hire the talent needed to build healthcare AI are often the big tech companies, not the focused startups with domain expertise. That's somewhat suboptimal for healthcare innovation, since focused companies often move faster than large organizations with competing priorities.

Some healthcare AI startups have solved this by building in academic medical centers where they can recruit physician and clinical informatics talent more easily. Others have focused on problems where clinicians aren't essential—pure software or data problems.

But the fundamental challenge remains: building healthcare AI at startup pace with startup budgets while hiring the specialized talent needed is genuinely hard.

Competitive Dynamics: Big Tech Versus Specialized Startups

The healthcare AI landscape has an interesting structural tension: major tech companies building healthcare capabilities versus specialized healthcare startups building AI applications.

Big tech companies have advantages in foundational model development. Building a 70-billion-parameter language model costs tens of millions and requires specialized infrastructure. Only large tech companies can bear these costs. They can then apply these models across hundreds of healthcare applications.

Specialized startups have advantages in domain focus. A team building documentation AI can optimize specifically for clinical language and EHR integration. They can talk to hundreds of clinicians about their actual needs. They can move quickly without navigating large-organization politics.

Ideally, these would be complementary. Large companies develop foundational models. Startups build domain-specific applications on top of those models. Startups get leverage from improved underlying models. Large companies get revenue from specialized applications they wouldn't have built themselves.

In practice, there's increasing overlap. Large companies are building specialized applications directly rather than letting startups innovate on top of their models. Startups are building foundational models rather than just applying existing ones.

This competitive overlap is creating consolidation. Acquisition of promising healthcare AI startups by major tech companies is increasing. Some specialized startups are raising enormous funding rounds to compete directly with major companies rather than positioning as complementary.

From a healthcare system perspective, the outcome likely features both. Foundational models from major tech companies will power many applications. Specialized companies will build domain-specific solutions. The balance between integration (everything comes from one vendor) and specialization (best-of-breed point solutions) will likely vary by organization.

AI-assisted drug discovery can potentially reduce development time by approximately 3 years, significantly cutting costs and accelerating market entry. Estimated data.

International Expansion: Different Regulations, Different Opportunities

So far, this discussion has largely focused on the American healthcare system. But healthcare AI is a global opportunity.

Europe has stricter data privacy requirements (GDPR) but also earlier adoption of AI in healthcare in some areas. The UK's National Health Service has been relatively aggressive about deploying AI diagnostics. France and Germany have funded healthcare AI research heavily.

Asia-Pacific is emerging as a massive market. China's healthcare system is deploying AI for screening and diagnosis at scale. Japan's aging population is creating specific opportunities for AI-assisted care for elderly patients. India's large population and cost-conscious healthcare system create demand for AI that can augment limited clinical resources.

But each region requires different regulatory approaches. European systems require different privacy handling than American systems. Asian markets have different reimbursement models. Healthcare AI companies expanding internationally need to adapt solutions, not just translate them.

This creates both opportunity and challenge. The global addressable market for healthcare AI is larger than the US market. But executing globally is harder than dominating domestically.

The Immediate Future: What's Actually Deployable in the Next 12-24 Months

Looking at what healthcare organizations are actually investing in right now, several categories stand out:

Clinical documentation remains priority one. Vendor revenue, implementation rates, and customer satisfaction all suggest documentation automation continues driving AI healthcare adoption. The economics are clear. The implementation is manageable. The clinician enthusiasm is there.

Predictive monitoring for hospitalized patients is seeing increased investment and deployment. The ability to identify deteriorating patients earlier has clear clinical and economic impact. Many vendors are moving from pilot to production phases.

Specialty-specific AI applications are expanding. Radiology still leads, but pathology, cardiology, and dermatology are all seeing targeted AI tools. These specialist applications often have narrower scope than general diagnostic AI, making validation and deployment more manageable.

Administrative and revenue cycle AI is growing faster than clinician-facing applications. Tools that automate prior authorization, identify billing optimization opportunities, and manage scheduling are increasingly deployed. These have clear ROI even if they don't directly impact patient care.

Patient engagement and remote monitoring tools are moving beyond pilot stages. Organizations are realizing that home-based monitoring with AI triage actually works and can reduce healthcare costs.

What's not seeing rapid deployment yet: fully autonomous diagnostic AI that replaces physician judgment. AI-generated treatment recommendations without clinician oversight. Systems that make significant clinical decisions without human involvement.

This makes sense. Organizations are moving cautiously with AI in high-stakes domains. They're using it to augment human expertise, not replace it. That's the sustainable path.

The Unresolved Questions That Will Define the Next Five Years

Major questions remain unanswered. How these resolve will shape healthcare AI's trajectory:

How do we actually solve the hallucination problem? Current large language models hallucinate. Until we solve this at a fundamental level, deploying these systems in high-stakes medical environments remains risky.

What does appropriate liability look like? If an AI system recommends treatment and the patient is harmed, who's liable? The vendor? The hospital? The physician? Until this is clarified, healthcare organizations will remain cautious about deployment.

How do we validate AI effectively without requiring 10-year studies? Traditional pharmaceutical validation is slow. AI systems improve iteratively. How do we validate them without either approving unproven systems or slowing innovation to pharmaceutical timescales?

Can we actually preserve privacy while sharing enough data to build good systems? Privacy-preserving AI is promising but still immature. Can federated learning, synthetic data, and differential privacy all work together to enable good data science without compromising privacy?

How do we ensure equitable healthcare AI? Most healthcare AI is trained on data from wealthy countries' healthcare systems. Will systems built on this data work as well for less privileged populations? What's the obligation to validate in diverse populations before deployment?

What happens as AI becomes more capable? At some point, AI might become genuinely better than human clinicians at specific diagnostic or treatment decisions. Do we then require humans to follow AI recommendations? Or do we maintain human authority even when humans are making objectively worse decisions?

These aren't rhetorical questions. The answers will determine how much impact healthcare AI actually has and whether that impact is uniformly beneficial or creates new problems even as it solves existing ones.

Beyond Healthcare: Where AI Gets the Same "Opportunity Collision" Pattern

Healthcare is getting the AI gold rush treatment, but it's not unique. The same pattern appears wherever you have a massive industry, clear problems, and regulatory clarity.

Legal services are seeing similar dynamics. Legal research and contract analysis are perfect AI applications. Multiple startups and major companies are deploying AI here. But like healthcare, legal work involves high stakes and a strong preference for human expert involvement.

Financial services and compliance are further along in AI deployment. Regulatory requirements for financial institutions actually encourage AI use (machine learning flags suspicious transactions better than humans do). The economics are clear. Implementation is standard.

Manufacturing and industrial processes have been using AI longer than healthcare. But they're seeing acceleration. Predictive maintenance, quality control, and process optimization all see increasing AI adoption.

The pattern is consistent: industries with massive markets, clear problems, strong incentives for solutions, and regulatory frameworks that permit innovation see rapid AI adoption. Healthcare fits perfectly.

FAQ

Why are major tech companies suddenly investing so heavily in healthcare AI?

Healthcare is a massive market: the US healthcare system alone spends over $4.8 trillion annually. It's fragmented, with clear inefficiencies. Physician burnout and administrative burden create immediate, pressing problems that organizations will pay to solve. Unlike some industries where AI adoption is aspirational, healthcare adoption is driven by genuine desperation for solutions. Combined with established regulatory frameworks and rich training data, healthcare represents a genuinely attractive market opportunity where AI can demonstrate immediate impact.

What's the difference between AI augmentation and AI replacement in healthcare?

Augmentation means AI assists human clinicians who maintain decision-making authority. A documentation system that transcribes clinical conversations is augmentation. A diagnostic AI that flags suspicious imaging is augmentation. Replacement means AI makes decisions without human oversight. Current healthcare AI is almost entirely augmentation because healthcare systems are appropriately cautious about autonomous medical decision-making. The industry is moving slowly toward more autonomous systems, but human oversight remains central to all deployed clinical AI.

Why is hallucination such a big problem in healthcare specifically?

In consumer applications, an AI system confidently providing false information is embarrassing. In healthcare, it's dangerous. A language model might confidently cite medical studies that don't exist, suggest dosages based on completely fabricated data, or recommend treatments that don't actually exist. Physicians relying on these false outputs make clinical decisions based on false premises. Unlike general-purpose applications where users might fact-check outputs, healthcare users often trust medical-sounding information from AI systems. Until hallucination is solved at a fundamental level, deploying AI systems in high-stakes medical decision-making remains risky.

How does healthcare AI handle patient privacy concerns?

Healthcare data is protected under regulations like HIPAA and GDPR. Organizations training AI on patient data use several approaches: federated learning trains models on local hospital data without centralizing sensitive information, synthetic data generation creates artificial training data that preserves statistical patterns without personal information, differential privacy adds mathematical noise to data to provide privacy guarantees, and restricted data sharing uses legal agreements to control how shared data is used. None of these approaches is perfect, but they collectively allow AI development while maintaining reasonable privacy protection.
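To make the differential privacy idea a little more concrete, here is a minimal Python sketch of one common form of it: adding Laplace noise to a simple counting query. Everything in it is an illustrative assumption rather than a description of any vendor's system. The function names (`laplace_noise`, `private_count`), the toy patient records, and the epsilon value are all hypothetical, and a real deployment would rely on a vetted privacy library instead of hand-rolled noise.

```python
import random


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Sample Laplace(0, scale) noise.

    The difference of two independent Exp(1) draws follows a
    Laplace(0, 1) distribution, so scaling it gives Laplace(0, scale).
    """
    return scale * (rng.expovariate(1.0) - rng.expovariate(1.0))


def private_count(records, predicate, epsilon: float, rng: random.Random) -> float:
    """Return a noisy count of records matching `predicate`.

    Assumes each patient contributes at most one record, so adding or
    removing one patient changes the true count by at most 1 (sensitivity 1).
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    true_count = sum(1 for record in records if predicate(record))
    sensitivity = 1.0
    return true_count + laplace_noise(sensitivity / epsilon, rng)


# Hypothetical toy data: one record per patient.
patients = [
    {"age": 71, "readmitted": True},
    {"age": 45, "readmitted": False},
    {"age": 63, "readmitted": True},
    {"age": 58, "readmitted": False},
]

rng = random.Random(0)  # seeded only so the sketch is repeatable
noisy = private_count(patients, lambda p: p["readmitted"], epsilon=1.0, rng=rng)
print(f"noisy readmission count: {noisy:.2f}")
```

A smaller epsilon means more noise and stronger privacy, at the cost of less accurate answers. That trade-off is one reason none of these approaches is perfect on its own.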

What's the biggest obstacle preventing faster healthcare AI adoption?

Integration with existing healthcare systems is substantially underestimated. Healthcare uses dozens of incompatible legacy systems that rarely share data efficiently. New AI tools need to connect to these systems to be useful. Integration complexity often causes implementation timelines to exceed initial estimates by 50-100%. A healthcare AI company quoting a three-month deployment might hit six months in reality when unexpected integration challenges emerge. This structural reality slows deployment and creates advantage for less integrated solutions that can operate more independently.
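The overrun arithmetic is simple enough to sketch in a few lines of Python. This is a rough planning aid under the 50-100% overrun range quoted above, not a forecasting model; the function name `overrun_range` and its parameters are hypothetical.

```python
def overrun_range(estimate_months: float, low: float = 0.5, high: float = 1.0):
    """Return (best_case, worst_case) durations after a 50-100% overrun."""
    return estimate_months * (1 + low), estimate_months * (1 + high)


best, worst = overrun_range(3)
print(f"quoted 3 months -> plan for {best:.1f} to {worst:.1f} months")
# prints: quoted 3 months -> plan for 4.5 to 6.0 months
```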

Why are specialized healthcare startups struggling when big tech companies are entering?

Huge capital requirements for foundational model development favor large companies. Building a 70-billion-parameter language model costs tens of millions, and only large tech companies can sustain these investments. However, specialized startups have advantages in domain focus, speed, and understanding specific clinical problems. The competitive landscape is shifting toward consolidation, with major tech companies acquiring promising startups rather than competing directly. The sustainable future likely features both: foundational models from tech companies powering applications, with both large companies and specialized players building domain-specific solutions.

How do healthcare organizations decide whether to trust an AI system's recommendations?

Healthcare organizations are increasingly sophisticated about AI validation. They want evidence from prospective clinical studies showing the system works in real clinical environments with diverse patient populations. Some require published, peer-reviewed evidence from independent researchers. Others accept manufacturer studies or case reports. Still others adopt systems with minimal evidence while monitoring outcomes carefully. The right balance likely involves meaningful evidence requirements that prevent obviously ineffective systems from deploying without such stringent standards that useful innovations can't be deployed for years while perfect evidence accumulates. Different organizations strike this balance differently based on their risk tolerance and resources.

What healthcare AI applications are actually deployable in 2025?

Clinical documentation automation is leading: vendors are profitable, implementation is manageable, and clinician enthusiasm is high. Predictive monitoring for hospitalized patients is moving from pilots to production. Specialty-specific diagnostic AI (radiology, pathology, cardiology) is expanding, with narrower scope making validation easier. Administrative and revenue cycle AI is growing faster than clinician-facing tools. Patient engagement and remote monitoring tools are moving beyond pilots. What's not seeing rapid deployment: fully autonomous diagnostic AI replacing physician judgment, AI-generated treatment recommendations without clinician oversight, and systems making significant clinical decisions without human involvement. Healthcare adoption remains fundamentally cautious about AI autonomy.

What's Next for Healthcare AI

The gold rush is real, and it's accelerating. Healthcare's unique combination of massive market opportunity, clear problems, regulatory clarity, and rich training data creates genuine alignment between what AI can do and what healthcare needs.

But success in healthcare AI isn't about innovation speed. It's about solving real problems safely. It's about building systems that augment human expertise rather than replacing it. It's about regulatory compliance, privacy protection, and honest acknowledgment of limitations.

The companies and products that will define healthcare AI aren't the ones moving fastest. They're the ones moving most thoughtfully. The ones willing to do clinical validation, prioritize safety over speed, and genuinely solve problems that clinicians and healthcare organizations are desperate to solve.

That's a fundamentally different mindset from consumer tech. It's slower. It's more expensive. It requires patience with regulatory processes and acceptance that perfect evidence takes time to accumulate.

But when it works, when an AI system genuinely improves patient outcomes and clinician experience, the impact compounds. Every physician freed from administrative burden can spend time on patient care. Every patient identified as high-risk before crisis occurs gets earlier intervention. Every diagnostic assistance system that catches something a human missed prevents preventable harm.

That's the real gold in the healthcare AI rush. Not the venture capital flowing in or the company valuations inflating. It's the opportunity to genuinely improve how medicine works. That's motivation that drives the best work, whether you're a startup or a major tech company.

The healthcare AI revolution is here. The next five years will determine whether it delivers on its promise or falls short of its potential. Everything depends on whether we prioritize innovation speed or patient safety, investor returns or genuine healthcare improvement, moving fast or moving thoughtfully.

Based on the organizations leading right now, there's reason for cautious optimism. They're getting it right.

Key Takeaways

- Healthcare AI investment surged to $7.2B in 2024, with projections exceeding $9B in 2025, driven by the industry's massive scale, clear problems, and established regulatory frameworks

- Clinical documentation automation, predictive patient monitoring, and specialty-specific diagnostics are seeing rapid real-world deployment, while fully autonomous AI clinical decision-making remains limited

- Hallucination risks, integration complexity with legacy healthcare systems, and validation requirements create substantial challenges that slow adoption despite enormous market opportunity

- Success in healthcare AI depends on prioritizing patient safety over innovation speed, conducting rigorous clinical validation, and maintaining human clinician oversight rather than pursuing autonomous systems

- Integrating with the 50+ legacy systems many healthcare organizations run stretches implementation timelines 50-100% beyond initial estimates, so vendors need to account for substantial integration complexity in deployment planning
