OpenAI Scam Emails & Vishing Attacks: How to Protect Your Business [2025]

TL;DR

- Scam targeting OpenAI users: Fraudsters register fake accounts and embed malicious links in organization names to bypass email filters

- Multi-vector attack strategy: Initial phishing emails are followed by aggressive vishing calls to pressure victims into revealing account credentials

- Business risk multiplier: Attackers target multiple employees simultaneously, making companies more vulnerable than individual users

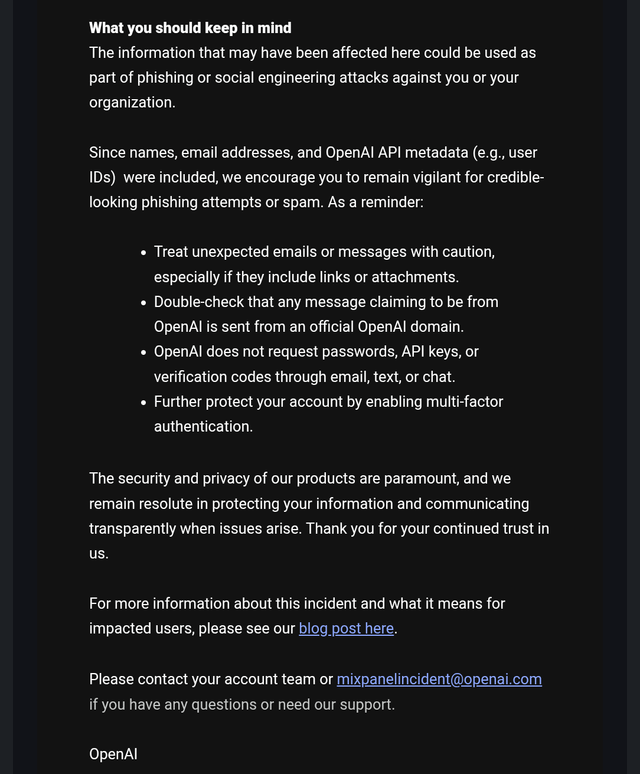

- Key protection: Enable multi-factor authentication, scrutinize all team invitations, verify URLs before clicking, and never call numbers in suspicious messages

- Bottom line: OpenAI's legitimate collaboration features have been weaponized for social engineering, requiring heightened vigilance across your organization

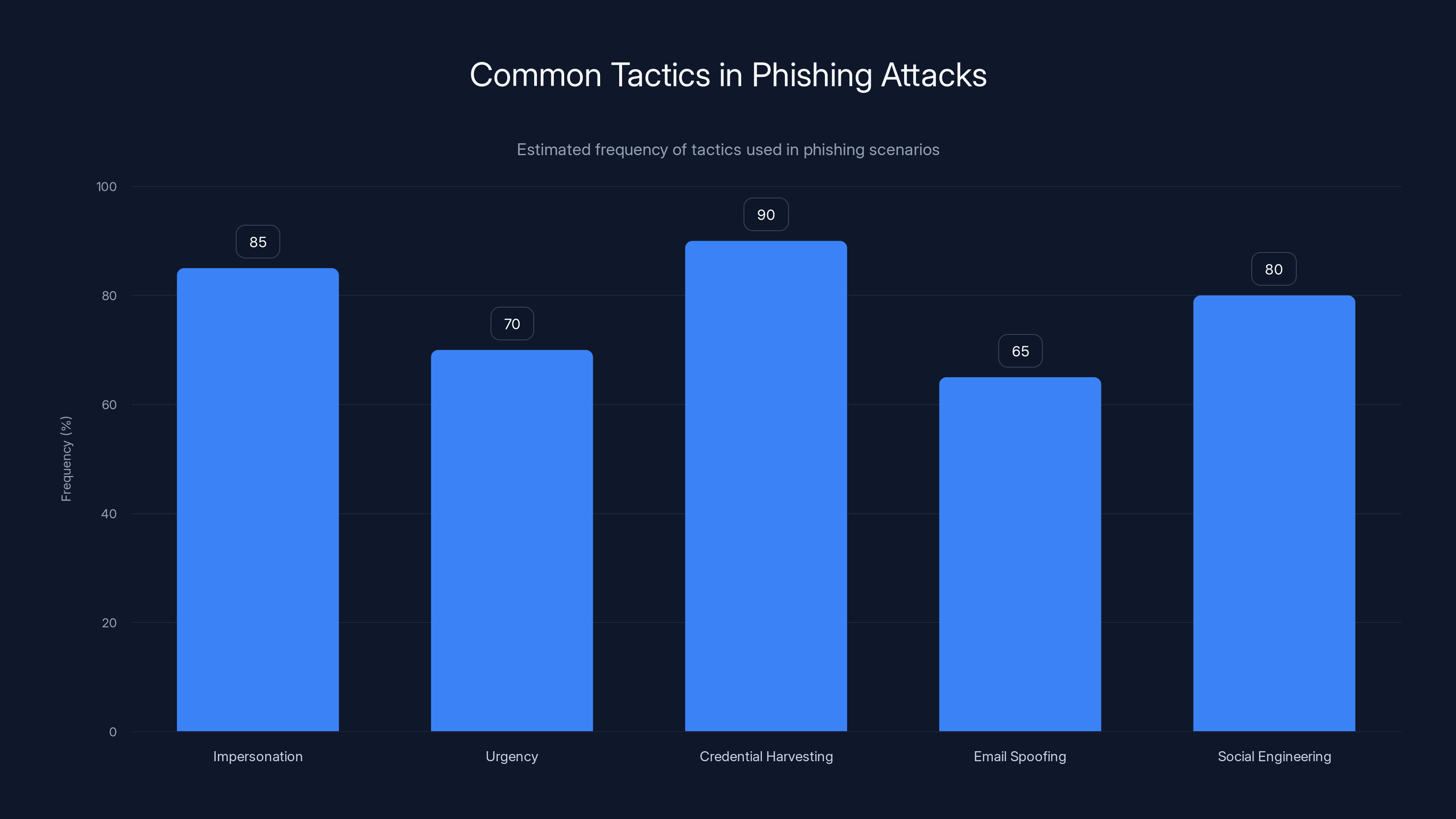

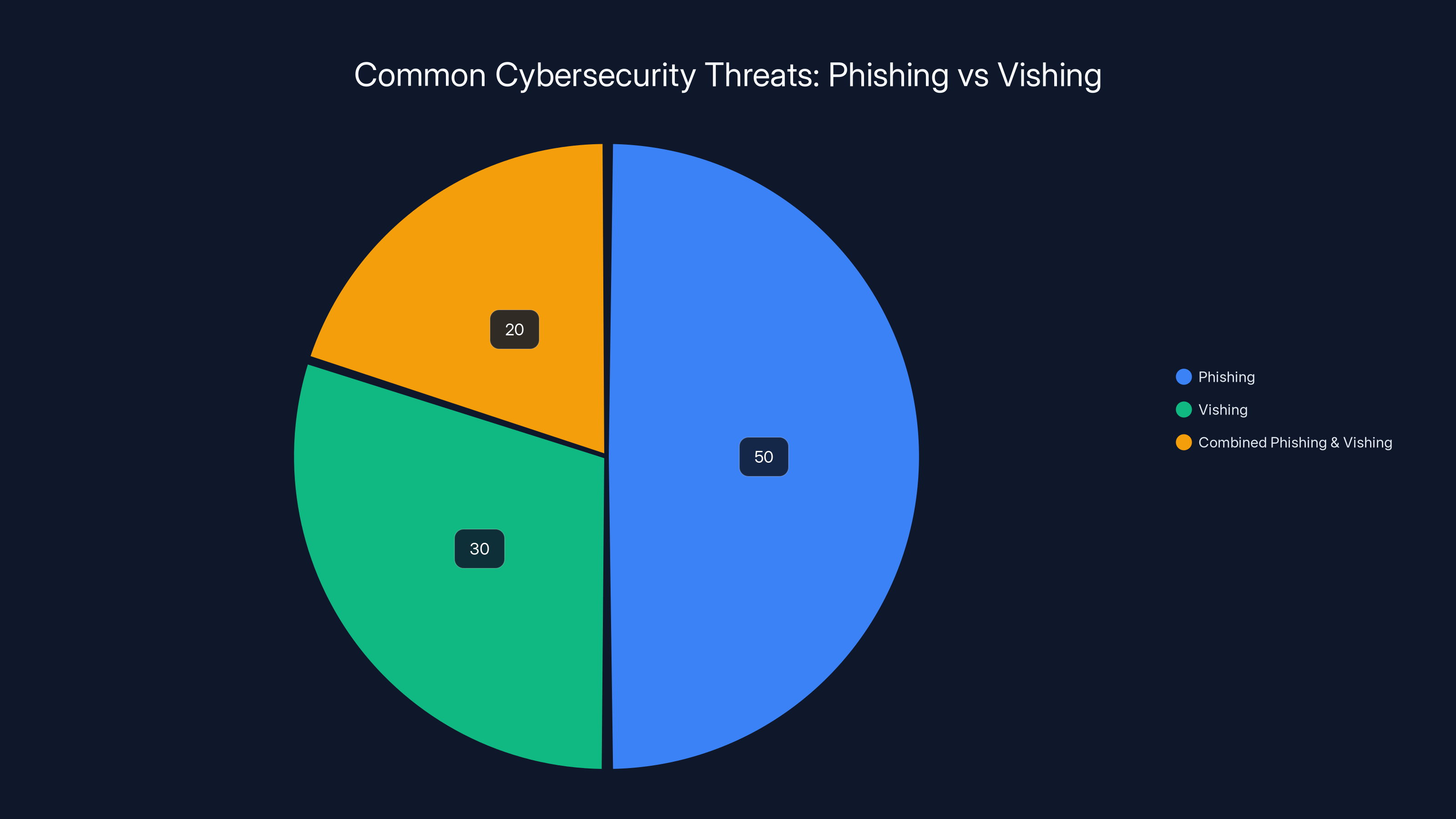

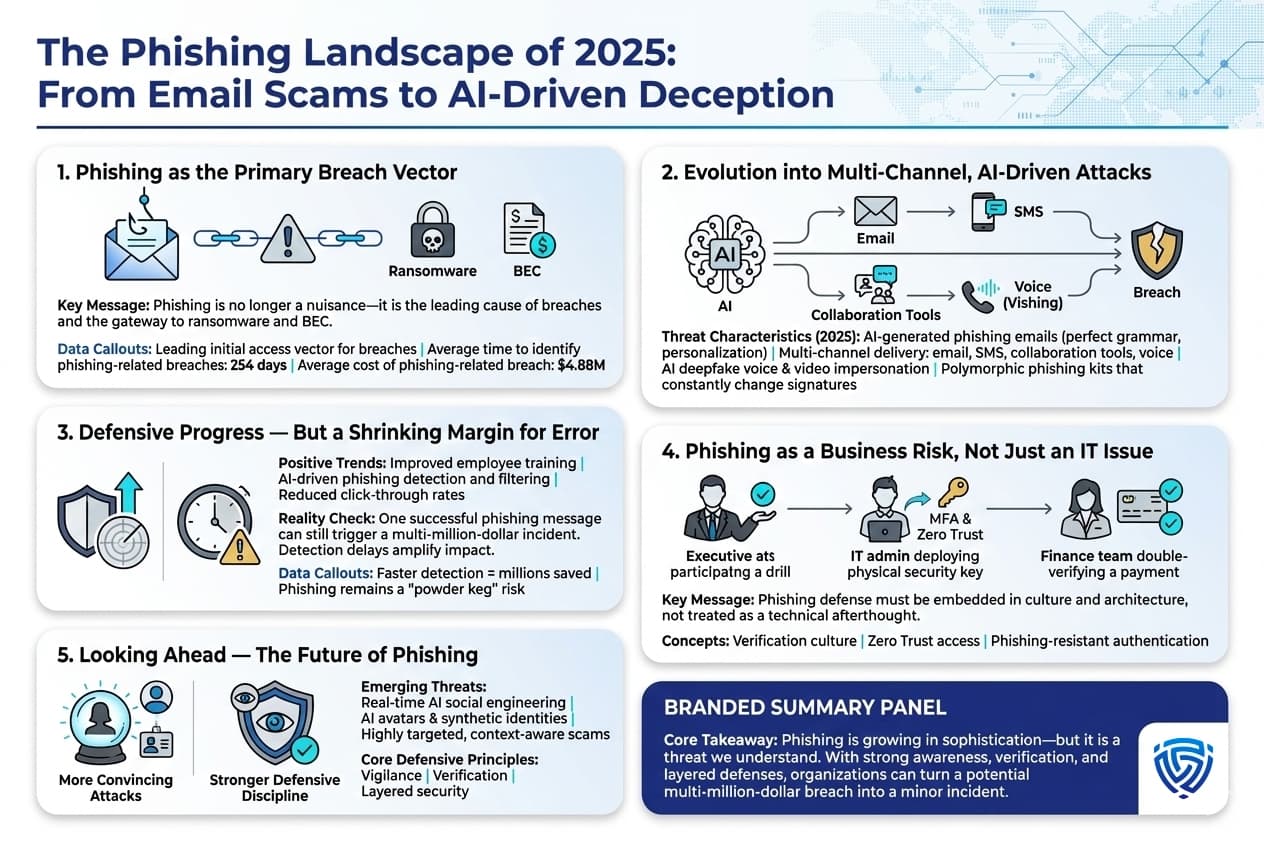

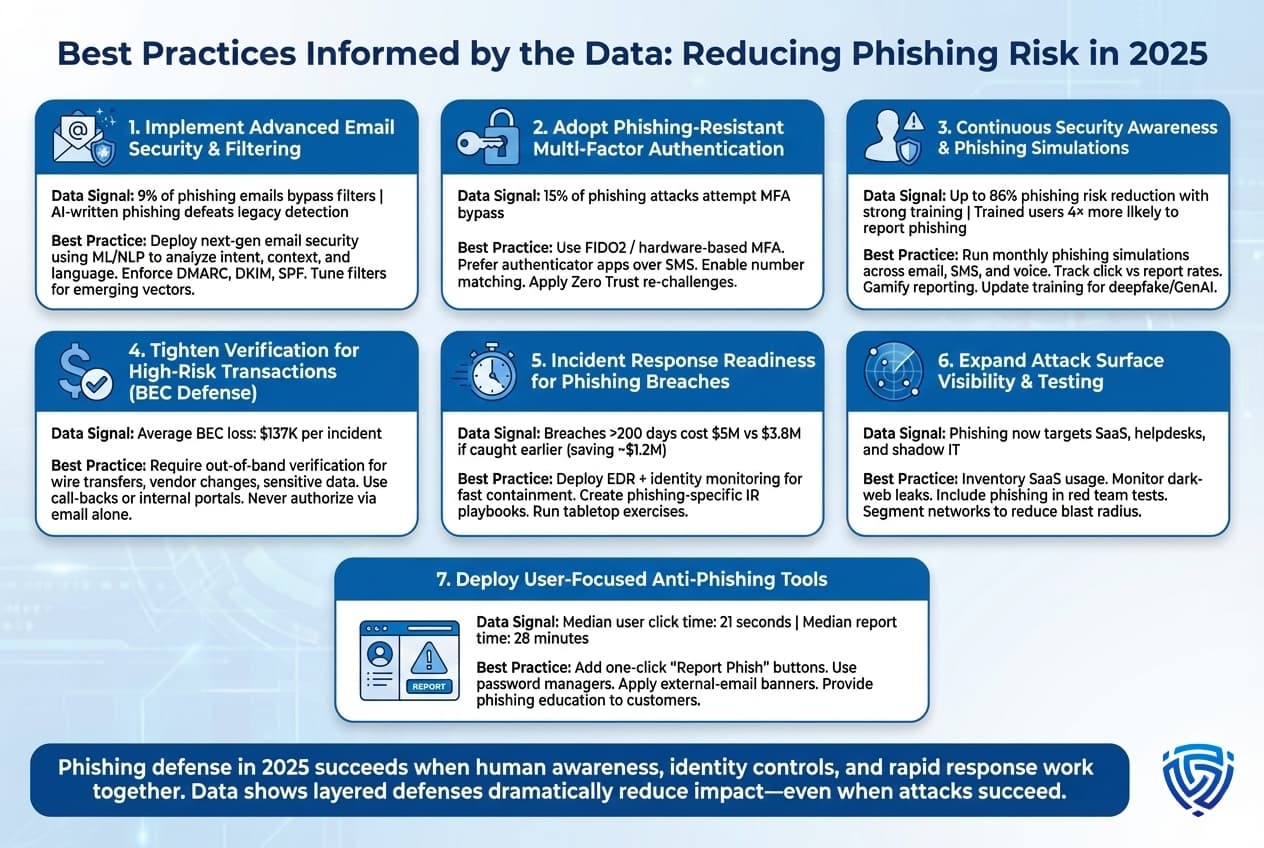

Credential harvesting and impersonation are the most common tactics in phishing attacks, with estimated frequencies of 90% and 85% respectively. (Estimated data)

Introduction: When Trusted Platforms Become Attack Vectors

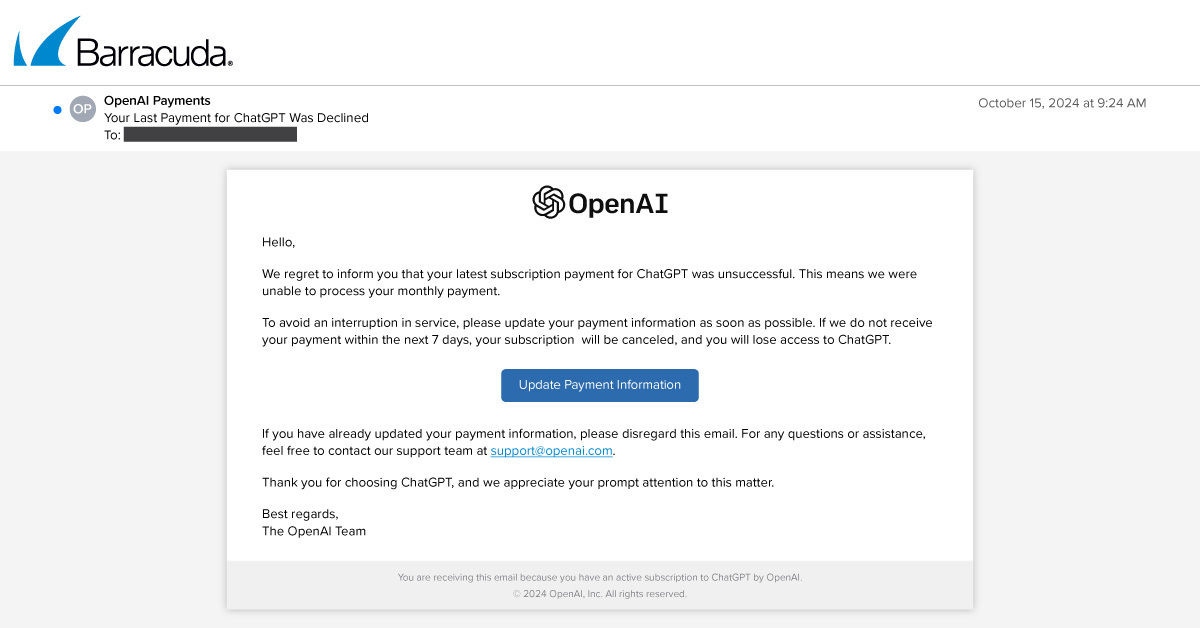

Let me set the scene. You're sitting at your desk, and an email lands in your inbox. It looks official. The sender appears to be OpenAI. It says someone is inviting you to join their team. Your instinct is to click the link and get started. That's exactly what the fraudsters are counting on.

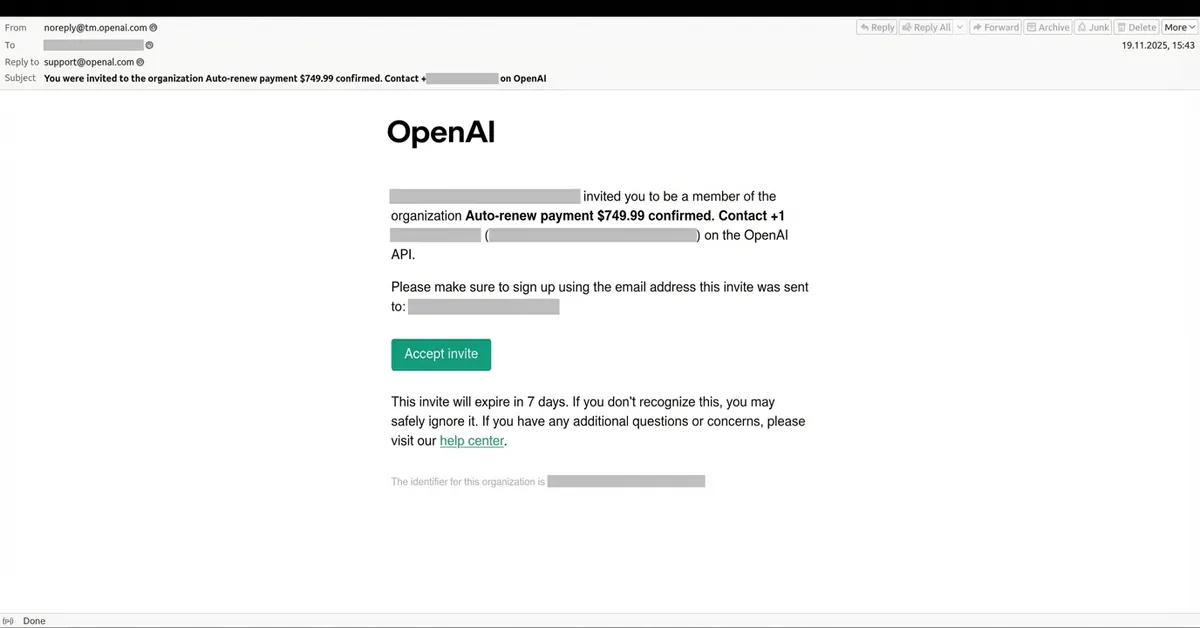

Here's the thing: this isn't a random phishing attempt. Security researchers at Kaspersky have uncovered a sophisticated, multi-stage attack that exploits an ordinary, legitimate OpenAI feature: the team invitation system. It's clever. It's persistent. And it's targeting businesses at scale.

What makes this attack particularly dangerous is that it's not just about stealing one person's credentials. Fraudsters register fake OpenAI accounts, then embed deceptive links and phone numbers directly into the organization name field. This bypasses traditional email filters because the malicious content lives inside a field that security systems don't scrutinize as heavily. When that invitation lands in your inbox, it looks like it came from a real OpenAI organization. Because technically, it did.

The attack doesn't stop with the email, though. That's just the first hook. Once someone clicks through or expresses interest, attackers follow up with vishing calls (voice phishing) to pressure victims into revealing account details, payment information, or access credentials. The psychological pressure is real. Attackers claim subscriptions have been renewed, invoices are overdue, or urgent action is required immediately. Panic clouds judgment, and people make mistakes they otherwise wouldn't.

Why does this matter to you? Because if your organization uses OpenAI's services, and many do, your employees are potential targets. Attackers don't need to compromise your entire infrastructure. They just need one employee to panic, click a link, or give up their password. One breach can cascade into unauthorized access, stolen proprietary data, financial losses, and a reputation hit that takes months to recover from.

This guide walks you through everything you need to know about these attacks: how they work, why they're so effective, and most importantly, concrete steps to protect your business and your people.

Estimated time allocation for each remediation step shows 'Stop the Bleeding' and 'Check Unauthorized API Keys' as the most time-consuming tasks.

How the OpenAI Team Invitation Scam Works: The Anatomy of a Multi-Stage Attack

The Registration and Account Creation Phase

The attack starts with something deceptively simple. Fraudsters obtain genuine OpenAI accounts, either by using stolen credentials or by registering new accounts with fake details. They don't hack into OpenAI's systems. They don't need to. They just sign up like anyone else would.

Once inside, they navigate to the team creation feature. This is a standard function designed to help organizations collaborate. The attacker names their organization something deceptive. Maybe "OpenAI Security Team." Maybe "OpenAI Account Verification." Maybe something that mimics a legitimate enterprise customer organization. The key insight is that OpenAI's interface allows relatively long organization names, and many security administrators don't scrutinize this field.

Here's where it gets clever. Instead of putting a malicious link in the email body, the attacker embeds it in the organization name itself. When the invitation email goes out, that text appears where the organization's official name should be. Many email clients render a URL written in plain text as a clickable link, so a single click leads to a phishing page. From a technical standpoint, this bypasses many email filters that focus on the email body and headers but don't heavily scan organization metadata.

The result is an invitation that passes initial security checks and lands directly in your inbox looking legitimate.

The Email Delivery and Initial Hook

Once the fake organization is set up, the attacker generates team invitations. These go out to target lists the attacker has compiled—sometimes from public sources like LinkedIn, sometimes from data breaches, sometimes from industry-specific targeting.

The email itself mimics OpenAI's official communication style. It says something like: "You've been invited to join [Fake Organization Name] on OpenAI." The design, fonts, and structure match legitimate OpenAI emails. Most people skim their inbox. They see OpenAI's branding. They see a team invitation. They assume it's work-related. They click.

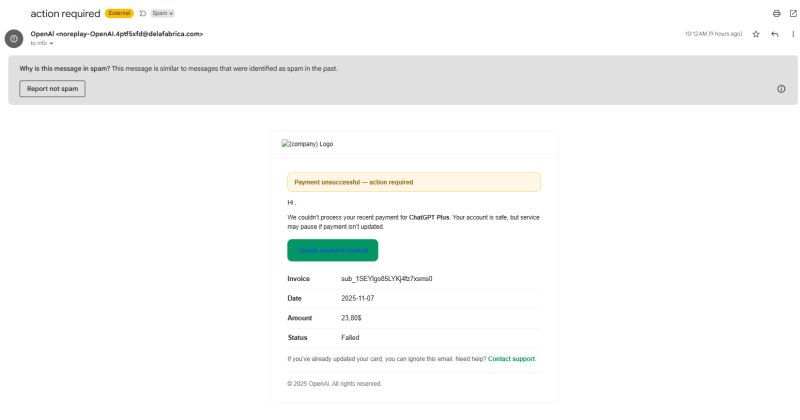

What varies about these emails is the deceptive content. Some claim subscriptions have been renewed for unusually large amounts. Others mention account verification issues. Still others promote fraudulent offers. Attackers use different subject lines and message templates to avoid triggering content filters and to appeal to different psychological triggers.

Notice the structural inconsistencies, though. Kaspersky researchers found that many of these emails contain grammar mistakes, awkward phrasing, and formatting irregularities. Legitimate OpenAI communications are typically polished. But attackers bank on two things: most people don't read carefully, and when they're stressed or in a hurry, they're even less likely to notice.

The Phishing Page and Credential Harvesting

Once someone clicks the link in the deceptive organization name, they're redirected to a phishing page. This page mimics OpenAI's login interface with stunning accuracy. Logos are correct. Color schemes match. The form fields are identical to the real thing.

The user sees something like: "Please sign in to access your team." They're already partially committed—they clicked a link about joining a team, so the next logical step is to enter their credentials. The attacker captures the email and password. Sometimes there's a second step asking for additional information: phone number, recovery email, security questions.

If the victim has enabled multi-factor authentication (which far too few people have), the attacker may ask for that too. Some advanced phishing pages include a step that requests the verification code; the attacker then relays that code to the real login page before it expires.

Within seconds, the attacker has access to a legitimate OpenAI account.

The Follow-Up Vishing Attack: Pressure and Urgency

Here's where the attack shifts from phishing to vishing. Within hours or days, the victim receives a call. The caller claims to be from OpenAI and says the victim's account has been compromised. Or there's an unusual charge. Or a security incident. Or anything else that creates urgency.

The attacker's goal now is simple: pressure the victim into revealing additional credentials, payment information, or account access. The psychological tactics are refined. The caller sounds professional. They have the victim's email address. They may know which company the victim works for. These details make the call seem credible.

The victim might say: "I don't remember changing my password." The attacker pivots: "That's why I'm calling—someone accessed your account. For your security, I need to verify your identity. What's your recovery email?" Or: "We need to update your payment method immediately to prevent service suspension."

Under pressure, people make bad decisions. They provide information they shouldn't. Some victims, in a panic, even let the caller screen-share or remotely access their device to "fix" the issue. Once that happens, the attacker can see, and often control, whatever is on the victim's screen.

Why Businesses Are Higher-Value Targets Than Individuals

The Multiplier Effect of Corporate Environments

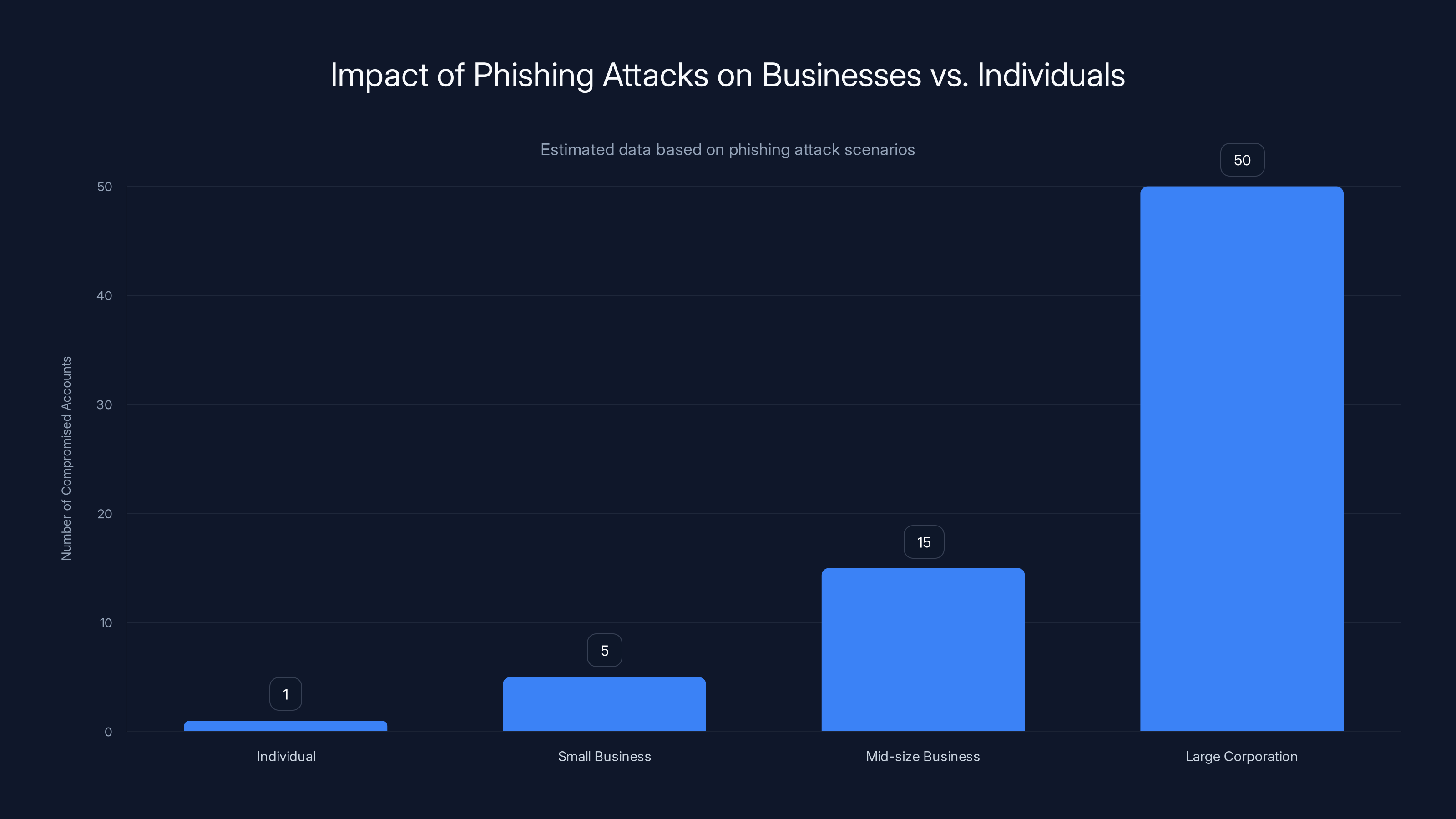

Attackers don't randomly target people. They strategically target organizations because businesses amplify the return on investment for fraud. A single phishing attack against an individual might yield one compromised account. The same attack against a business targets dozens or hundreds of employees simultaneously.

Consider a mid-size tech company with 150 employees. If an attacker sends out 150 team invitations spoofing Open AI, statistically some percentage will click. Maybe 5–10% click immediately. That's 7–15 people. Of those, maybe 2–3 actually fall for the phishing page. But that's 2–3 employees with compromised Open AI accounts.
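The funnel in this example is simple multiplication. A quick sketch using the illustrative 5 to 10% click rate above, plus an assumed 20% of clickers who go on to enter credentials (an illustrative figure chosen because it roughly reproduces the 2 to 3 accounts above). The 20-company campaign size is also hypothetical.

```python
def expected_compromises(employees: int, click_rate: float,
                         submit_rate: float, companies: int = 1) -> float:
    """Expected compromised accounts = employees x click rate x credential-entry rate x companies."""
    clicks_per_company = employees * click_rate
    return clicks_per_company * submit_rate * companies


# Illustrative inputs only: 150 employees, 5-10% click, an assumed 20% of clickers submit.
low = expected_compromises(150, 0.05, 0.20)                     # about 1.5 accounts
high = expected_compromises(150, 0.10, 0.20)                    # about 3 accounts
campaign = expected_compromises(150, 0.10, 0.20, companies=20)  # about 60 accounts
print(f"per company: {low:.1f} to {high:.1f}; across 20 companies: up to {campaign:.0f}")
```

The per-company number looks small; the multiplier comes from running the same automated campaign against many organizations at once.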

Now multiply that. If the attacker has contact lists for dozens of companies, they're not attacking serially. They're running this attack in parallel against multiple organizations. The effort scales because the phishing infrastructure is automated. The return scales because businesses have more assets to steal.

Access to Company Resources and Financial Systems

Why do attackers care about compromising employee OpenAI accounts specifically? Because OpenAI isn't just a consumer app. Businesses use OpenAI's API in critical systems: customer service automation, code generation, data analysis, market research, and competitive intelligence.

If an employee's account is compromised, the attacker gains access to:

- API keys and integration tokens that might be stored in account settings

- Conversation history containing proprietary business data, customer information, or strategic planning documents

- Billing information and payment methods linked to company accounts

- Team settings and permissions that might reveal how the organization uses the platform

- Audit trails showing what the organization is analyzing or building

From a single compromised account, an attacker can pivot to other systems. If the OpenAI account is linked to a corporate email address, they can attempt password resets on other platforms. If they extract payment information, they can attempt fraud. If they see company data in the conversation history, they can sell it or use it for competitive advantage.

Supply Chain and Third-Party Risk

Here's another layer. Many companies don't use OpenAI directly. They use third-party tools and services that integrate OpenAI's API behind the scenes. If attackers compromise the right employee accounts at a company that provides AI-powered services, they can potentially access API keys that serve many downstream customers.

This creates a supply chain vulnerability. One compromised employee at a SaaS company can lead to compromised API keys affecting dozens of enterprise customers. The attack surface expands rapidly.

Businesses, especially larger ones, are more lucrative targets for phishing attacks due to the higher number of potential compromised accounts. Estimated data highlights the scale of impact.

Red Flags: How to Spot Suspicious OpenAI Invitations

Sender and Organization Name Inconsistencies

Legitimate OpenAI invitations come from OpenAI's own sending domains. If you receive an invitation from an email address that doesn't match OpenAI's domains, or from a personal email address, that's a red flag.

But here's the subtle part. The attack exploits organization names, not sender addresses. The email may genuinely come from OpenAI's servers, because it was sent through OpenAI's own invitation system by an account the attacker controls. What's deceptive is the organization name embedded in the invitation.

Read the organization name carefully. Does it match what you'd expect? "Acme Corp Research Team" makes sense. "OPENAI SECURITY VERIFICATION REQUIRED" is suspicious. Look for organization names that create urgency, use all caps, contain URLs, phone numbers, or stray symbols, or sound like they come from OpenAI itself rather than from a customer organization.
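These checks are mechanical enough to script, for example in a mail-triage tool or a training exercise. The sketch below is a heuristic, not a vetted detector: the urgency word list, the TLD list, and the phone-number pattern are illustrative assumptions, and an all-caps acronym such as "IBM" would trip the all-caps rule.

```python
import re

# Illustrative assumptions, not a vetted ruleset: tune these for your organization.
URGENCY_WORDS = {"verify", "verification", "urgent", "required", "suspended",
                 "security", "billing", "renewal", "immediately", "refund"}
# Explicit URLs, "www." prefixes, or bare domains with a few common TLDs.
URL_PATTERN = re.compile(
    r"(?:https?://|www\.)\S+|\b[\w-]+\.(?:com|net|org|io|ai|xyz|top|info)\b",
    re.IGNORECASE,
)
# A digit, seven or more digits/separators, and a closing digit: a rough phone-number shape.
PHONE_PATTERN = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def org_name_red_flags(org_name: str) -> list[str]:
    """Return reasons an invitation's organization name looks suspicious."""
    flags = []
    letters = [c for c in org_name if c.isalpha()]
    if letters and all(c.isupper() for c in letters):
        flags.append("all caps")
    words = {w.lower() for w in re.findall(r"[A-Za-z]+", org_name)}
    hits = sorted(words & URGENCY_WORDS)
    if hits:
        flags.append("urgency or authority words: " + ", ".join(hits))
    # Removing spaces also catches the "Open AI" spelling.
    if "openai" in org_name.lower().replace(" ", ""):
        flags.append("claims to be OpenAI itself")
    if URL_PATTERN.search(org_name):
        flags.append("contains a URL or domain")
    if PHONE_PATTERN.search(org_name):
        flags.append("contains a phone number")
    return flags


print(org_name_red_flags("Acme Corp Research Team"))
print(org_name_red_flags("OPENAI SECURITY VERIFICATION REQUIRED"))
```

An empty list is not proof of safety; it only means none of these particular patterns matched.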

Suspicious Language and Pressure Tactics

Legitimate team invitations are straightforward: "You've been invited to join [Organization]. Click here to accept or decline." That's it.

Suspicious invitations include urgent language. "Your account needs immediate verification." "Unusual activity detected." "Subscription renewal pending." "Secure your account now." These phrases create pressure. They make you feel like you need to act immediately without thinking.

Legitimate OpenAI communications don't typically:

- Ask you to verify your password

- Claim your account is compromised

- Demand immediate payment

- Request personal or financial information via email

- Use threatening language

- Create artificial urgency

- Reference services or features you don't use

If any of these elements appear in an invitation or follow-up email, treat it as suspicious.

Structural and Design Inconsistencies

This is where visual inspection matters. If you have a legitimate OpenAI email in your inbox, pull it up and compare it side by side with the suspicious invitation.

Do the fonts match? Are the colors identical? Is the spacing the same? Legitimate companies use consistent design systems. Fraudsters often copy designs from screenshots, and small details get lost in translation. A logo might be slightly blurry. A button might have slightly different padding. Text alignment might be off.

Also check where links actually go. If an invitation says "Click here," hover over the link before clicking. Look at the hostname, not just whether "openai.com" appears somewhere in the URL: a link such as openai.com.account-check.example contains that text but points to a different domain entirely.
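Hovering shows you the URL, but a substring match is not enough: both a lookalike host that starts with "openai.com." and a URL like https://openai.com@evil.example/ contain the text "openai.com". What matters is the parsed hostname. A minimal sketch with Python's standard urllib.parse; the allowlist is an assumption you should confirm against the domains OpenAI actually uses.

```python
from urllib.parse import urlsplit

# Assumption: an allowlist of domains you expect genuine OpenAI links to use.
# Confirm it against OpenAI's own documentation before relying on it.
TRUSTED_DOMAINS = ("openai.com", "chatgpt.com")


def is_trusted_link(url: str) -> bool:
    """True only for https links whose hostname is a trusted domain or a subdomain of one."""
    parts = urlsplit(url)
    if parts.scheme != "https":
        return False
    host = (parts.hostname or "").rstrip(".")  # .hostname is already lowercased
    return any(host == d or host.endswith("." + d) for d in TRUSTED_DOMAINS)


for link in ("https://platform.openai.com/account",
             "https://openai.com.account-check.example/login",
             "https://openai.com@evil.example/"):
    print(link, "->", is_trusted_link(link))
```

In the last example, everything before the "@" is treated as login information, so the browser would actually connect to evil.example. That is why the check reads the parsed hostname instead of searching the raw string.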

Grammatical and Spelling Errors

OpenAI, like most professional companies, has quality assurance processes. Its public communications are spell-checked and grammar-reviewed. If an email contains obvious mistakes ("You'r account" instead of "Your account," inconsistent capitalization, awkward phrasing), that's a sign it wasn't written by OpenAI.

This isn't foolproof because some phishers are careful. But many aren't. If you notice errors, report the email as phishing.

The Vishing Follow-Up: Why Phone Calls Are So Effective

The Psychology of Voice-Based Social Engineering

Phishing attacks succeed because they exploit cognitive biases. We trust written communication more than we should. We assume official-looking emails are real. We skim instead of read carefully.

Vishing attacks succeed because they exploit different biases. We trust voice more than text. A human voice sounds authoritative. It creates a conversation, which makes it harder to think clearly. And a live call leaves no time to reflect: you're talking to someone in real time, so you feel pressure to give an immediate answer.

Research in social psychology suggests that spoken requests are often more persuasive than written ones, even when the written request is more detailed and reasonable. When a caller says, "For your security, I need to verify your identity," people respond differently than when an email says the same thing. The real-time nature of the call increases compliance.

Attackers know this. They know that if they can get someone on the phone, they dramatically increase their chances of getting what they want.

Common Vishing Scripts and Pretexts

Attackers use various scripts depending on the target. Here are the most common:

The Account Compromise Pretext: "We detected unusual activity on your account. For your security, I need you to log in and reset your password. Let me walk you through it." The attacker gets you to screen-share or confirm your password, giving them access.

The Billing Pretext: "Your subscription renewed at a higher tier, and there's a charge of $999." Most people will panic at this. The attacker then says: "To help reverse this, I need to verify your billing information to confirm you're the account holder." They're now extracting sensitive financial data.

The Security Verification Pretext: "We're conducting a security audit. I need to verify your credentials. What's your OpenAI password?" A legitimate company will never ask for your password over the phone. But some people don't know this.

The Urgent Access Pretext: "Your colleague initiated a payment transfer from the company account. To prevent fraud, I need to verify your identity to block it." This creates urgency and appeals to your sense of protecting the company.

The Support Recovery Pretext: "Your account is locked due to too many failed login attempts. I'm going to send you a password reset link. Don't share it with anyone. For your security, read it back to me so I know it went through." Now the attacker has the reset link, and with it the ability to set a new password.

Each script is designed to extract a specific piece of information or gain a specific type of access.

The Screen-Share Trap

One of the most dangerous tactics is getting the victim to allow remote screen-sharing. The attacker says: "Let me guide you through fixing this. I'll screen-share so I can see what you're seeing."

Once screen-sharing is active, the attacker can see everything on the victim's device. They can see browser history, cached passwords, open email, documents on the desktop. They can observe the victim typing credentials. And some screen-sharing tools allow bidirectional control—the attacker can actually move the mouse and click things on the victim's machine.

This is far more dangerous than a standard phishing attack because the attacker gets real-time visibility and, often, direct control.

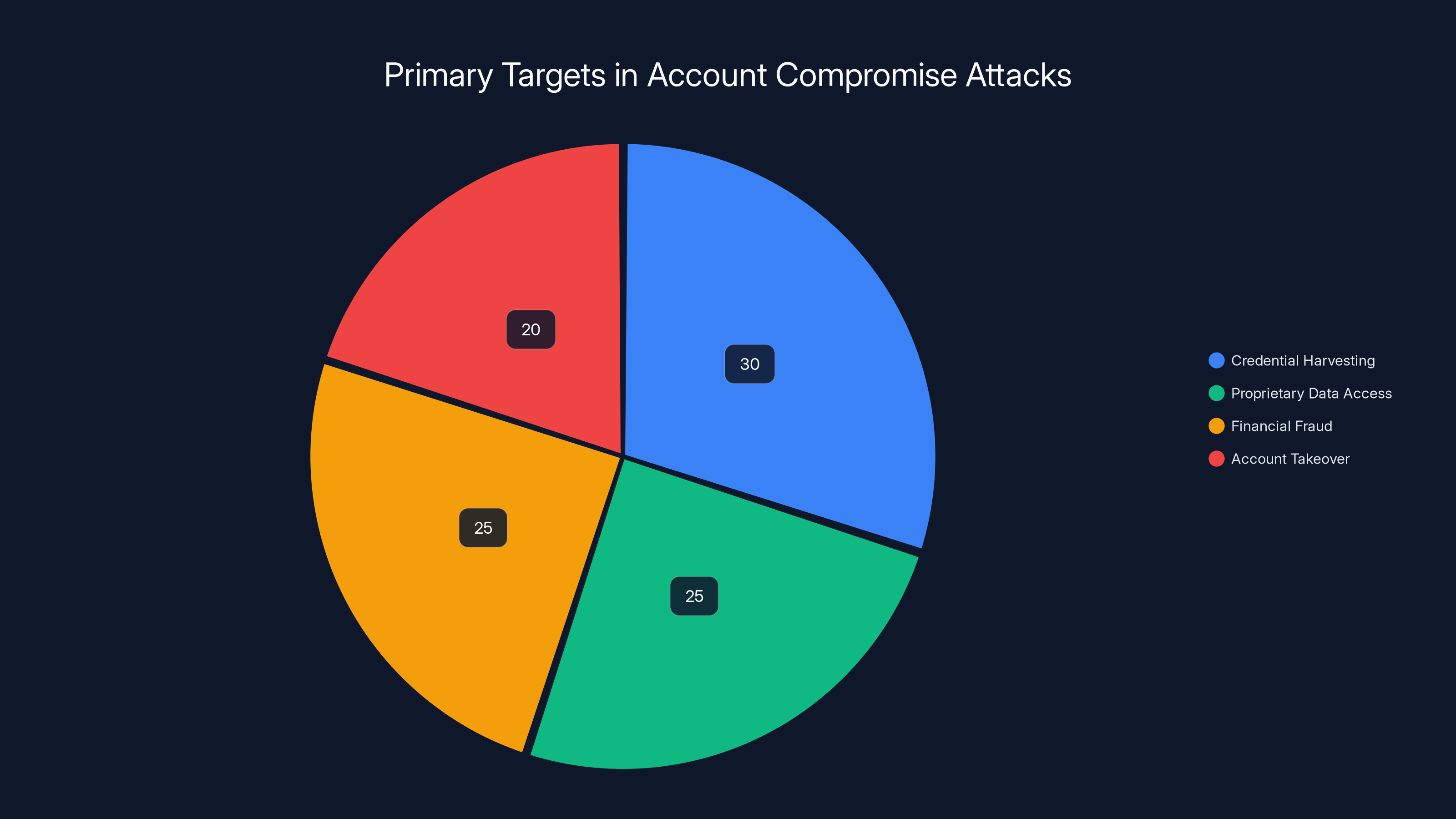

Estimated data shows that credential harvesting is the most common focus in account compromises, followed closely by accessing proprietary data and financial fraud.

Data at Risk: What Attackers Are Really After

Credential Harvesting and Account Takeover

The immediate goal of the attack is getting credentials. But why? What do attackers actually do with a compromised OpenAI account?

First, they take over the account. They change the password, add a recovery email they control, and lock out the original owner. Now they have persistent access.

Second, they explore what's accessible from that account. They might find API keys. They might find billing information. They might find conversation history containing sensitive business data.

Third, they use the account to continue the attack chain. They might use the compromised account to send team invitations to other employees, turning it into a worm-like attack that spreads within the organization. Or they might use the account to access downstream services that trust OpenAI's login system.

Proprietary Business Data and Competitive Intelligence

Here's what many organizations don't realize. If your employees use OpenAI for work, and many do, the conversation history in those accounts contains proprietary information.

Maybe your team uses ChatGPT to analyze customer data. Maybe you're using it to help with product development. Maybe you're asking it to review financial projections or marketing strategies. All of that lives in the conversation history.

When an attacker compromises an account, they can read everything. They see your competitive strategies. They see your customer list. They see your financial metrics. They see your product roadmap.

For a startup, this is catastrophic. A competitor who gets early access to your strategy can undercut you in the market. For an enterprise, this is a data breach—sensitive proprietary information is now in the hands of someone who might sell it or leak it.

Financial Fraud and Payment Method Abuse

Billable services are attractive targets. If an attacker gains access to OpenAI account settings, they can see billing information, payment methods, and usage patterns. The attacker can:

- Increase API quota and generate massive bills on your account

- Extract payment method information for credit card fraud

- Change billing address to intercept statements

- Add themselves as a billing contact to prevent you from noticing charges

- Downgrade security settings so they can maintain persistent access

Some attackers use compromised accounts as proxies for other fraud. They use your account to generate API calls that mask their identity. They use your payment method to purchase subscriptions to other services. They're using your identity as a shield.

Supply Chain Access and Downstream Compromises

If your company integrates OpenAI's API into your own products or services, a compromised employee account can lead to API key exposure. This creates risk for your customers.

For example, if you're a SaaS company that provides AI-powered features to enterprises, and an attacker compromises an engineer's OpenAI account, they might find the API keys that power your product. Now they can:

- Access customer data processed through your API

- Generate massive usage and costs that you'll be billed for

- Impersonate your service by using your API key

- Disrupt your service for customers

This transforms a single compromised account into a supply chain incident affecting your entire customer base.

Why Email Filters Aren't Enough

The Flaw in Traditional Email Security

Most organizations use email filters that scan:

- Email body content for phishing indicators

- Subject lines for suspicious keywords

- Sender domain against reputation databases

- Attachment content for malware

- URLs in the email body against blocklists

What they don't consistently scan:

- Metadata fields like organization name

- Fields populated by legitimate services (because it would block valid emails)

- Content that's embedded in legitimate service structures (like team invitation organization names)

The OpenAI attack exploits this gap. The malicious content isn't in the email body that a filter scans. It's in the organization name field, which the email filter trusts because it comes from OpenAI's legitimate infrastructure.
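One way to close this gap in your own tooling is to treat the organization name as untrusted input even when the message authenticates cleanly. A minimal sketch using only Python's standard email and re modules. It assumes the invitation's plain-text body uses the wording quoted earlier ("You've been invited to join ... on OpenAI"), which is an assumption about the template, and it only reads the Authentication-Results header your receiving mail server adds. The sample message uses placeholder addresses.

```python
import re
from email import message_from_string, policy

# Assumption: the plain-text body contains "invited to join <Organization> on OpenAI".
INVITE_PATTERN = re.compile(r"invited to join (?P<org>.+?) on Open ?AI", re.IGNORECASE | re.DOTALL)
URL_OR_DOMAIN = re.compile(r"(?:https?://|www\.)\S+|\b(?:[\w-]+\.)+[a-z]{2,}\b", re.IGNORECASE)
PHONE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def inspect_invitation(raw_email: str) -> dict:
    """Report sender authentication alongside anything embedded in the organization name."""
    msg = message_from_string(raw_email, policy=policy.default)
    auth = str(msg.get("Authentication-Results", ""))
    body_part = msg.get_body(preferencelist=("plain",))
    body = body_part.get_content() if body_part is not None else ""
    match = INVITE_PATTERN.search(body)
    org = match.group("org").strip() if match else ""
    return {
        "dmarc_pass": re.search(r"\bdmarc=pass\b", auth, re.IGNORECASE) is not None,
        "org_name": org,
        "urls": URL_OR_DOMAIN.findall(org),  # links hidden in the org name
        "phones": PHONE.findall(org),        # callback numbers hidden in the org name
    }


# Placeholder message: example.com/.net addresses, not real OpenAI senders.
sample = (
    "From: OpenAI <noreply@example.com>\n"
    "Authentication-Results: mx.example.net; spf=pass; dkim=pass; dmarc=pass\n"
    "Subject: Team invitation\n"
    "\n"
    "You've been invited to join Billing Dept call +1 (800) 555-0199 on OpenAI.\n"
)
print(inspect_invitation(sample))
```

The sample passes DMARC, which is exactly the point: sender authentication tells you who sent the message, not whether the text inside it is safe.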

This is why traditional spam filters and phishing detection tools might miss these emails entirely. The email technically comes from OpenAI. The syntax is correct. The structure is legitimate. But the payload is malicious.

The False Sense of Security

Many organizations have email security tools they believe are protecting them. They might be using Microsoft Defender, Proofpoint, Mimecast, or similar solutions. These tools are good. They catch a lot of phishing.

But they don't catch everything. And this attack specifically is designed to be difficult for automated systems to catch because it exploits trust in a legitimate service's infrastructure. The malicious content is delivered through legitimate channels, so it bypasses reputation-based filtering.

This creates a false sense of security. Organizations think they're protected because they have security tools, but they're not protected against this specific attack vector.

The Human Layer is the Last Defense

Which means the real defense is the human layer. Your employees. Your team members.

If employees know what to look for, they're the most powerful security tool you have. A human can recognize that an "OpenAI Security Team" organization name doesn't make sense. A human can hear a vishing script and recognize the social engineering behind it. A human can decide not to click a link from an unknown organization.

But this only works if employees are trained and vigilant. It only works if there's a culture of security awareness. And it only works if you give people clear, actionable guidance on what to do if they suspect they've encountered an attack.

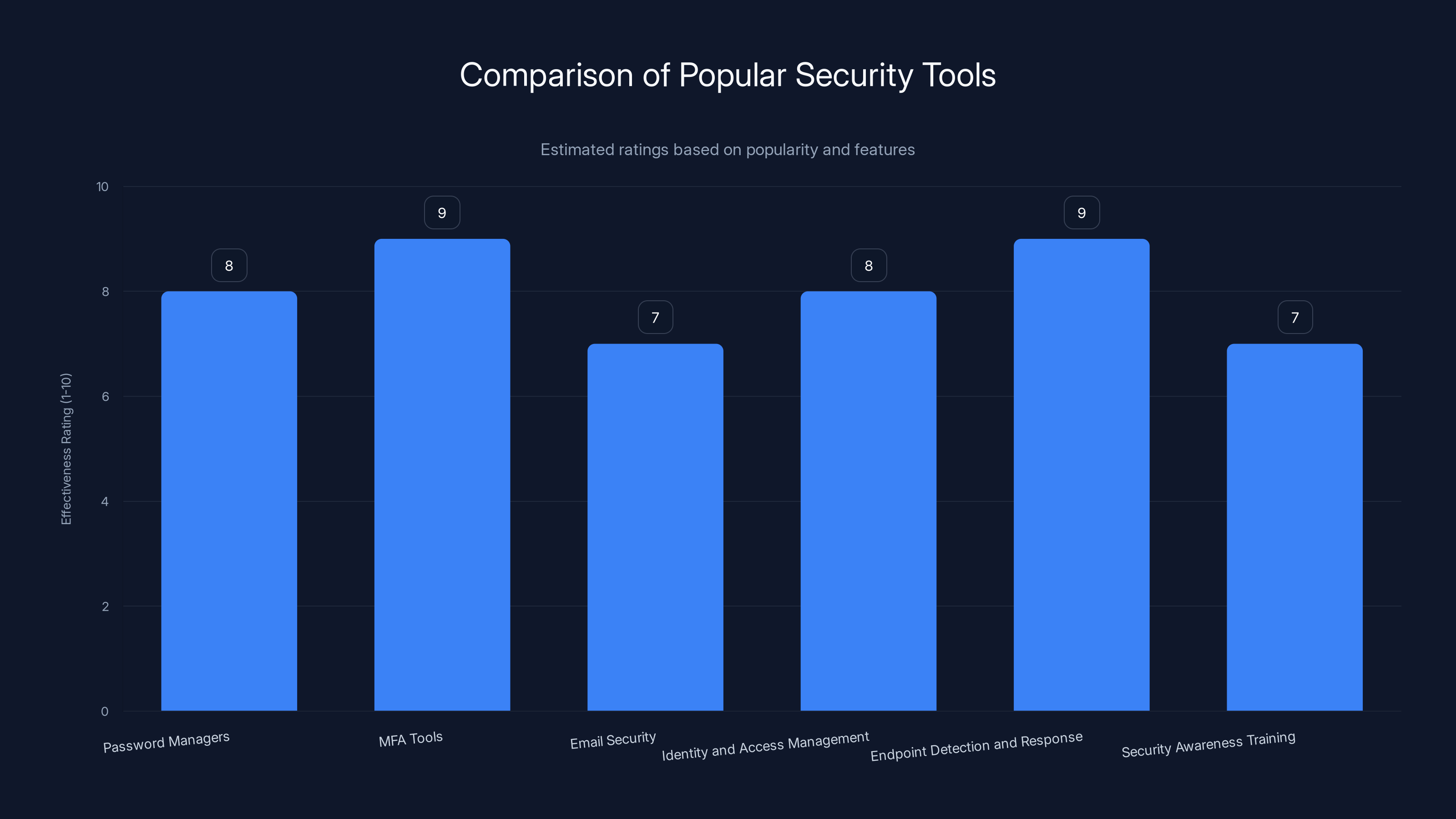

This bar chart provides an estimated effectiveness rating for various security tool categories based on their popularity and features. Estimated data.

Attack Examples: Real-World Scenarios

Scenario 1: The Billing Scare Vishing Attack

A marketing director at a mid-size SaaS company receives an email invitation to join "OpenAI Billing Team." The organization name feels official. She clicks it.

Two days later, she gets a call. "Hi, this is OpenAI Support. We detected an unusual charge on your account: $3,500 for API usage. That's unusual for your account type. I want to help reverse this, but I need to verify your identity."

She panics. Thirty-five hundred dollars is real money, and she's worried about her company's budget. The caller sounds professional. He asks: "Can you confirm the email address on the account?" She provides it. "And can you confirm the payment method? Is it a corporate card or a personal card?" She says it's her personal card.

Now he has her email and knows her payment method is a personal card, which makes her feel more personally responsible for the charge. He says: "I'm going to need to take a closer look at this on our end, but I'll also need you to review your account settings to make sure nothing else is wrong. Can you log into your OpenAI account right now?"

While she's logging in, he walks her through the process and asks her to spell out the password as she types it, "just to make sure it matches what we have on file." She reads it out: "M-a-r-k-1-2-3-4." Now he has her password.

He then says: "I'm going to email you a password reset link for security. When you get it, read back the entire link to me so I know it went through properly." She gets the link and reads it. He now has the password reset token.

The call ends with him saying he'll handle the billing issue and she'll see a credit within 24 hours. There's no credit. By that time, he's already used her credentials to access her account, reset her password, and extracted payment information.

Scenario 2: The Competitor Intelligence Breach

A product manager at a fintech startup uses OpenAI to brainstorm product strategies. She regularly asks ChatGPT to analyze competitive landscapes, estimate customer acquisition costs, and develop growth strategies. All of this is stored in her conversation history.

She falls for a phishing invitation from a fake "OpenAI Team Collaboration" organization. Her credentials are compromised.

Within days, a competitor has access to her entire conversation history. They see:

- Her strategy to target a specific customer segment

- Her estimated CAC and LTV projections

- Her planned pricing strategy for the next quarter

- Her analysis of the competitor's weaknesses

- Her timeline for launching a new feature

The competitor uses this information to launch a targeted marketing campaign against the fintech startup's planned strategy. They pivot their roadmap to block the startup's planned feature. They undercut the startup's planned pricing.

Within six months, the startup's growth rate slows significantly. They never realize the cause was a compromised OpenAI account.

Scenario 3: The Supply Chain Compromise

A software company that provides AI-powered customer service tools for enterprises has a junior engineer whose OpenAI account is compromised. The attacker finds API keys in the engineer's account settings.

These API keys power the company's customer service platform, used by 50+ enterprise customers. The attacker uses the API keys to:

- Intercept customer data flowing through the service

- Generate massive API usage charges ($40K+ in a week)

- Access customer conversation history

- Plant malicious responses that affect customer experiences

The company's customers start complaining. Their support systems are slow. Their data might be compromised. One customer initiates a compliance investigation.

It takes the company two weeks to realize the API keys were compromised. By then, the damage is done. They're liable for the customer data breach. They have to explain the incident to 50+ enterprise customers. They lose three major contracts.

The initial point of entry: one employee clicking one phishing link.

Prevention: Multi-Layer Defense Strategy

Layer 1: Employee Training and Awareness

This is foundational. Every employee who has access to OpenAI, or any cloud service, needs training on:

- Phishing recognition: How to spot suspicious emails and invitations

- Social engineering: The psychology of vishing attacks and pressure tactics

- Safe practices: How to verify legitimacy before clicking or providing information

- Reporting procedures: How to report suspicious activity

- Password security: Why strong, unique passwords matter

Training should be specific, not generic. Generic security training ("Don't click suspicious links") is forgettable. Specific training ("If you get an OpenAI invitation, verify it from your account dashboard before clicking") is actionable.

Training should also be ongoing. One annual training session isn't enough. Monthly phishing simulations, quarterly refresher training, and real-time alerts when new threats emerge create a culture of vigilance.

Layer 2: Technical Controls and Account Security

Multi-Factor Authentication (MFA)

This is non-negotiable. Every employee with access to critical systems needs MFA enabled. MFA means that even if an attacker has your password, they can't log in without a second factor—a code from an authenticator app, a hardware key, or a biometric.

In the context of this attack, MFA is a game-changer. When a vishing attacker tries to log in with the stolen password, OpenAI will request the second factor. The attacker doesn't have it. Access is denied.

MFA isn't perfect (attackers can talk victims into reading out codes, or relay codes through real-time phishing pages), but it's dramatically better than password-only authentication.
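Authenticator apps commonly implement TOTP (RFC 6238): the app and the server share a secret, and each derives a short code from the current 30-second time window. The sketch below, using only Python's standard library, shows why a code is useless to an attacker a minute later, and also why reading the current code to a "support agent" hands over everything they need for that window. It is for illustration only; in practice, rely on your identity provider's MFA rather than rolling your own.

```python
import hashlib
import hmac
import struct
import time


def totp(secret: bytes, unix_time: int, step: int = 30, digits: int = 6) -> str:
    """RFC 6238 time-based one-time password using HMAC-SHA1 (a common app default)."""
    counter = unix_time // step  # index of the current time window
    mac = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = mac[-1] & 0x0F  # dynamic truncation from RFC 4226
    code = struct.unpack(">I", mac[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)


# RFC 6238 test secret; a real authenticator app stores a per-account secret instead.
demo_secret = b"12345678901234567890"
print(totp(demo_secret, int(time.time())))  # changes every 30 seconds
```

Because the code depends only on the shared secret and the clock, anyone who hears it within the same window can use it. Never read an MFA code to a caller.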

Password Managers

Password managers like 1Password and Bitwarden reduce the risk of password reuse. If one service is compromised, the same password isn't also protecting six other services.

Password managers also make strong, unique passwords practical. Without a password manager, it's hard to remember a unique 20-character password for every service. With one, it's automatic.

Account Recovery Options

Make sure your OpenAI account has a recovery email and phone number that you actively monitor. Security notifications, such as alerts about a password reset, generally go to the contact details on file. If those are outdated or unmonitored, you won't know that an attacker is trying to take over the account.

Review your account recovery settings quarterly.

Layer 3: Email and Network Controls

Advanced Email Filtering

While traditional filters often miss this attack, advanced email security solutions that use sandboxing and behavioral analysis can catch some phishing attempts. These tools open suspicious links and attachments in isolated environments and observe their behavior before the message reaches your inbox.

Implement tools that provide additional protections:

- URL rewriting: Links are rewritten to pass through security checkers

- Attachment sandboxing: Files are detonated in isolated environments before delivery

- User authentication enforcement: Links require re-authentication before proceeding

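- Domain authentication: SPF, DKIM, and DMARC records prevent sender-domain spoofing (they won't flag these invitations, though, because the messages are genuinely sent from OpenAI's infrastructure)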

Network Segmentation

If an attacker gains access to one employee's device, they shouldn't automatically have access to your entire network. Network segmentation (using VLANs, firewalls, and Zero Trust architecture) limits the lateral movement an attacker can do.

For example, your financial systems should require additional authentication even if someone's device is already inside your network. Your employee data should be in a separate zone from your customer data.

Layer 4: Detection and Response

Behavioral Monitoring

Tools that monitor account behavior can flag unusual activity. If an OpenAI account suddenly logs in from a new location at an unusual time, or makes API calls at unusual volumes, that's worth investigating.

Implement monitoring for:

- Logins from new locations or devices: Unusual geography or device fingerprints

- Bulk API calls: Sudden spikes in usage that don't match historical patterns

- Password changes: Multiple password reset attempts or unexpected changes

- Permission escalation: New team members or API keys being added

- Data access: Access to conversation history the user doesn't normally open
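As a rough illustration of the first two items, the sketch below flags logins from a country or device a user hasn't used before, and API call counts far above the historical median. The `Login` record shape and the 3x threshold are hypothetical placeholders; real monitoring would pull these events from your identity provider or usage logs.

```python
from dataclasses import dataclass
from statistics import median


@dataclass(frozen=True)
class Login:
    """Hypothetical login event; real logs carry more fields."""
    user: str
    country: str
    device_id: str


def unfamiliar_login(history: list[Login], event: Login) -> list[str]:
    """Return reasons an incoming login is new for this user (empty if familiar)."""
    seen = [h for h in history if h.user == event.user]
    reasons = []
    if event.country not in {h.country for h in seen}:
        reasons.append(f"new country: {event.country}")
    if event.device_id not in {h.device_id for h in seen}:
        reasons.append(f"new device: {event.device_id}")
    return reasons


def is_usage_spike(daily_calls: list[int], today: int, factor: float = 3.0) -> bool:
    """True if today's API call count exceeds `factor` times the historical median."""
    if not daily_calls:
        return False  # no baseline yet
    baseline = max(median(daily_calls), 1)  # avoid a zero baseline
    return today > factor * baseline


if __name__ == "__main__":
    history = [Login("ana", "DE", "laptop-1"), Login("ana", "DE", "phone-1")]
    print(unfamiliar_login(history, Login("ana", "BR", "laptop-9")))
    # ['new country: BR', 'new device: laptop-9']
    print(is_usage_spike([100, 120, 90, 110], 900))  # True
```

A user's very first login is always flagged as new, so expect noise until a baseline builds up. The median is used rather than the mean so that one earlier spike doesn't inflate the baseline.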

Incident Response Plan

If an account is compromised, what's your process? Who do you notify? How quickly can you revoke access?

Your incident response plan should include:

- Detection triggers: What alerts you to a compromise

- Investigation procedures: Who investigates, what they check

- Containment steps: How to isolate the compromised account

- Eradication: How to remove attacker access and restore the account

- Recovery: How to restore normal operations

- Notification: Who you inform, and on what timeline

Write this plan down. Share it with your team. Test it regularly.

Phishing remains the most common threat at 50%, but vishing is significant at 30%, with combined attacks at 20%. Estimated data.

If You're Already Compromised: Remediation Steps

Immediate Actions (First Hour)

Step 1: Stop the Bleeding

If you suspect your OpenAI account is compromised, the first step is to isolate it. Change your password immediately from a clean device (not one that might also be compromised).

Log in to your OpenAI account from a device other than the one you normally use and go to Settings > Security. Change your password to something completely new: never used before, long (20+ characters), and mixing uppercase letters, lowercase letters, numbers, and symbols.
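A password manager's generator does this for you, but the sketch below shows what "long and mixed" means in practice. It uses Python's `secrets` module, which is designed for security-sensitive randomness, and redraws until every character class appears. The 24-character default is an assumption chosen above the 20-character minimum; some sites reject certain symbols, so you may need to trim the symbol set.

```python
import secrets
import string

# The four character classes the step above asks for.
CHAR_CLASSES = (
    string.ascii_uppercase,
    string.ascii_lowercase,
    string.digits,
    string.punctuation,
)


def strong_password(length: int = 24) -> str:
    """Return a random password of `length` characters containing every class.

    `secrets` draws from the operating system's cryptographically secure
    generator; the `random` module is not suitable for passwords.
    """
    if length < 20:
        raise ValueError("use at least 20 characters")
    alphabet = "".join(CHAR_CLASSES)
    while True:
        candidate = "".join(secrets.choice(alphabet) for _ in range(length))
        # Reject and redraw if any class is missing; usually succeeds in a pass or two.
        if all(any(ch in cls for ch in candidate) for cls in CHAR_CLASSES):
            return candidate


if __name__ == "__main__":
    print(strong_password())
```

Redrawing the whole password, rather than patching in a missing character, keeps every valid password equally likely.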

Step 2: Check Your Recovery Options

Go to Settings > Security > Recovery options. Check that your recovery email is correct and that you have access to it. Check that your recovery phone number is correct. If an attacker added their own recovery email or phone number, remove it immediately.

If you can't access your account at all (because the attacker changed your password), use the account recovery process. Expect to be asked to prove your identity.

Step 3: Review Recent Activity

Most modern web services let you see login activity. In your OpenAI account, check your login history. Do you see logins from places you don't recognize, or at times you weren't using the service? Write down the locations, times, and devices.

If you see suspicious activity, take note of it for reporting.

Step 4: Check for Unauthorized API Keys

Go to Settings > Organization > API keys. Do you see any API keys you didn't create? If so, revoke them immediately. Attackers often create new API keys to maintain access even after passwords are changed.

To be safe, revoke all existing API keys and create new ones from a clean device.
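Spotting keys you didn't create is easier if you keep your own inventory of key IDs. The sketch below assumes you can get the current key list as simple records (copied from the dashboard or exported by an admin tool); the field names `id`, `name`, and `created_at` (Unix seconds) are hypothetical, not a documented OpenAI schema.

```python
from datetime import datetime, timezone


def unexpected_keys(current: list[dict], approved_ids: set[str]) -> list[dict]:
    """Keys present in the account that are missing from your own inventory."""
    return [k for k in current if k["id"] not in approved_ids]


def created_since(keys: list[dict], cutoff: datetime) -> list[dict]:
    """Keys created at or after `cutoff`, e.g. the first suspicious login.

    Assumes `created_at` is a Unix timestamp in seconds and `cutoff` is timezone-aware.
    """
    return [
        k for k in keys
        if datetime.fromtimestamp(k["created_at"], tz=timezone.utc) >= cutoff
    ]


if __name__ == "__main__":
    current = [
        {"id": "key_a", "name": "billing-service", "created_at": 1700000000},
        {"id": "key_x", "name": "test", "created_at": 1730000000},
    ]
    unknown = unexpected_keys(current, approved_ids={"key_a"})
    first_alert = datetime(2024, 1, 1, tzinfo=timezone.utc)
    print([k["name"] for k in created_since(unknown, first_alert)])  # ['test']
```

Keys created after the first suspicious login are the strongest candidates for attacker persistence, but revoking everything remains the safer default.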

Short-Term Actions (First Day)

Step 5: Check Your Billing and Settings

Go to Settings > Billing > Overview. Check your billing history for charges you don't recognize. Make sure your payment method is still correct. Check your subscription tier: did an attacker upgrade you to an expensive plan?

If you see fraudulent charges, report them immediately. Depending on your region and payment method, you might have dispute rights.

Go through all account settings systematically. Check email addresses, phone numbers, security questions, and contact information. Anything that's wrong, correct it.

Step 6: Notify Your Organization

If your account is linked to your organization (through an OpenAI organization account), notify your organization's administrators immediately. They need to:

- Review who has access to shared resources

- Check if conversation history was accessed

- Assess if any API keys for organizational use were compromised

- Review team invitations for suspicious additions

- Change organizational API keys

Step 7: Report to OpenAI

Contact OpenAI's security team. Provide them with:

- Date and time you discovered the compromise

- Evidence of unauthorized access (login history, timeline)

- Actions you've already taken

- Any information about how the compromise occurred (phishing email, vishing call, etc.)

OpenAI may be able to investigate further, check for wider data exposure, and identify whether your account was used in other attacks.

Step 8: Change Related Account Passwords

If your OpenAI account password was the same as passwords on other accounts, change those too. Use a password manager to ensure passwords are unique across all services.

Prioritize:

- Email account (most critical, because whoever controls your email can reset the passwords on your other accounts)

- Work accounts

- Financial accounts

- Cloud storage

- Other SaaS platforms
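If your password manager can export a local list of accounts, a short script can find every account that reused the compromised password and sort it by the priority order above. The `vault` mapping of account name to (category, password) is a hypothetical export format; run anything like this only on your own machine and delete the export afterward.

```python
# Priority order from the list above; earlier entries should be changed first.
PRIORITY = ["email", "work", "financial", "cloud storage", "saas"]


def accounts_to_change(vault: dict[str, tuple[str, str]], leaked_password: str) -> list[str]:
    """Accounts that reuse `leaked_password`, most critical category first.

    `vault` maps account name -> (category, password); this is a hypothetical
    export shape. Categories not in PRIORITY sort last, in original order.
    """
    reused = [name for name, (_, pw) in vault.items() if pw == leaked_password]

    def rank(name: str) -> int:
        category = vault[name][0]
        return PRIORITY.index(category) if category in PRIORITY else len(PRIORITY)

    return sorted(reused, key=rank)  # sorted() is stable, so ties keep export order


if __name__ == "__main__":
    vault = {
        "bank": ("financial", "hunter2"),
        "gmail": ("email", "hunter2"),
        "slack": ("saas", "hunter2"),
        "github": ("saas", "a-unique-password"),
        "forum": ("hobby", "hunter2"),
    }
    print(accounts_to_change(vault, "hunter2"))  # ['gmail', 'bank', 'slack', 'forum']
```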

Medium-Term Actions (First Week)

Step 9: Assess Data Exposure

Review your conversation history. What sensitive information might an attacker have accessed? Categorize it:

- Personally identifiable information: Names, email addresses, phone numbers

- Financial information: Credit card numbers, salary, pricing data

- Proprietary information: Business strategies, customer lists, product plans

- Credentials: Passwords, API keys, access tokens

Document what was potentially exposed. You'll need this for compliance reporting.
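A first pass over exported conversation text can be automated with pattern matching, then reviewed by a person. The sketch below flags email addresses (personally identifiable information), strings shaped like OpenAI secret keys, which begin with `sk-` (credentials), and card-number candidates that pass the Luhn checksum (financial information). The patterns are illustrative heuristics that will miss things and raise false positives; proprietary information such as strategies or customer lists still needs human review.

```python
import re

PATTERNS = {
    "pii_email": re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+"),
    "credential_api_key": re.compile(r"\bsk-[A-Za-z0-9_-]{16,}"),
}
# 13-19 digits, optionally separated by single spaces or hyphens.
CARD_CANDIDATE = re.compile(r"\b(?:\d[ -]?){13,19}\b")


def luhn_ok(digits: str) -> bool:
    """Luhn checksum; filters out most digit runs that aren't card numbers."""
    total = 0
    for i, ch in enumerate(reversed(digits)):
        d = int(ch)
        if i % 2 == 1:  # double every second digit from the right
            d *= 2
            if d > 9:
                d -= 9
        total += d
    return total % 10 == 0


def scan(text: str) -> dict[str, list[str]]:
    """Return matches per category, omitting categories with no hits."""
    findings = {name: pat.findall(text) for name, pat in PATTERNS.items()}
    findings["financial_card"] = [
        m.group().strip(" -")
        for m in CARD_CANDIDATE.finditer(text)
        if luhn_ok(re.sub(r"\D", "", m.group()))
    ]
    return {k: v for k, v in findings.items() if v}


if __name__ == "__main__":
    sample = ("Contact ana@example.com, card 4111 1111 1111 1111, "
              "key sk-proj-abcdefghijklmnop1234, order 4111 1111 1111 1112.")
    print(scan(sample))
```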

Step 10: Notify Affected Parties

Depending on what information was exposed, you might have legal obligations to notify affected people or organizations. This varies by jurisdiction and industry.

Consult with your legal team. Some laws (GDPR in the EU, CCPA in California, and others) set specific notification timelines and requirements.

Step 11: Monitor for Follow-Up Attacks

Compromises often come in waves. An attacker might maintain multiple access points. After regaining control of your account, monitor closely for:

- Additional login attempts

- New team invitations or organization changes

- Unexpected conversations in your history

- API usage from unknown sources

Set up alerts for unusual activity and check your account regularly.

Step 12: Broader Security Review

Use this incident as a catalyst for a broader security review. If your account was compromised, it's likely others in your organization are at risk too. Conduct a security assessment:

- Which employees have access to OpenAI?

- Do they have MFA enabled?

- What sensitive data are they inputting into AI systems?

- Do you have policies around data classification?

- Is there monitoring of API usage?

- Do you have an incident response plan?

Address gaps.

Organizational Defense: Company-Wide Protections

Employee Training Program Structure

Baseline Training

Every employee should complete baseline security training that covers:

- Phishing and vishing attack tactics

- Social engineering psychology

- How to verify legitimacy of communications

- Password security and MFA

- Reporting procedures

This should be 30-60 minutes long and completed on hire. Update it annually.

Role-Specific Training

Employees with access to sensitive systems or data need deeper training:

- IT and security staff: Deeper technical controls, incident response

- Finance staff: Payment fraud, account compromise detection

- Engineering staff: API key security, supply chain risks

- Customer-facing staff: Social engineering resistance

Phishing Simulations

Send simulated phishing emails to employees and track who clicks the simulated malicious links. Those employees get additional training. This turns abstract guidance into practical learning.

Start with obvious simulations and gradually make them more sophisticated. Track metrics:

- Click rate

- Report rate (people reporting the simulation to security)

- Improvement over time

The goal is to drive both the click rate down and the report rate up.
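The metrics above are simple ratios, and tracking them per campaign makes the trend visible. A minimal sketch, using a hypothetical `Campaign` record:

```python
from dataclasses import dataclass


@dataclass
class Campaign:
    """One simulated phishing send (hypothetical record)."""
    name: str
    sent: int
    clicked: int
    reported: int

    @property
    def click_rate(self) -> float:
        return self.clicked / self.sent if self.sent else 0.0

    @property
    def report_rate(self) -> float:
        return self.reported / self.sent if self.sent else 0.0


def trend(campaigns: list[Campaign]) -> tuple[float, float]:
    """(change in click rate, change in report rate) from first to latest campaign.

    The stated goal corresponds to a negative first value and a positive second.
    """
    if len(campaigns) < 2:
        return 0.0, 0.0
    first, last = campaigns[0], campaigns[-1]
    return last.click_rate - first.click_rate, last.report_rate - first.report_rate


if __name__ == "__main__":
    history = [Campaign("jan", 200, 40, 20), Campaign("jun", 200, 16, 70)]
    for c in history:
        print(f"{c.name}: click {c.click_rate:.0%}, report {c.report_rate:.0%}")
    print(trend(history))
```

Because simulations should get harder over time, a flat click rate across increasingly sophisticated campaigns can still be progress; where possible, compare campaigns of similar difficulty.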

Institutional Policies

Data Classification Policy

Not all data should be input into AI systems. Establish a data classification system:

- Public: No restrictions

- Internal: Restricted to employees, not shared with third parties

- Confidential: Restricted to specific teams or people, with audit logging

- Restricted: Should not be input into AI systems at all (customer PII, passwords, financial data, etc.)

Train employees on what data falls into each category. Make it easy for them to classify data correctly.

AI Usage Policy

Define what employees can and cannot do with AI systems:

- Permitted: Brainstorming, drafting, learning, summarizing

- Prohibited: Inputting customer data, passwords or credentials, or financial information; making commitments on the organization's behalf

- Review required: Large projects, analysis of competitive data, anything that might affect external parties

Make it clear that violations have consequences. Also make it clear that your goal is to support safe usage, not to punish.
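The two policies combine naturally into a single decision rule. The sketch below encodes the classification levels above and returns allow, review, or deny for a proposed AI request. The mapping of levels to outcomes and the use labels are illustrative assumptions, not a standard; in particular, whether Internal data may go to an external AI service depends on your agreement with the provider.

```python
from enum import IntEnum


class Classification(IntEnum):
    """Levels from the data classification policy, least to most sensitive."""
    PUBLIC = 0
    INTERNAL = 1
    CONFIDENTIAL = 2
    RESTRICTED = 3


# Hypothetical labels for the permitted and prohibited uses listed above.
PERMITTED_USES = {"brainstorming", "drafting", "learning", "summarizing"}
PROHIBITED_USES = {"making commitments"}


def ai_request_decision(level: Classification, use: str) -> str:
    """Return "allow", "review", or "deny" for a proposed AI request."""
    if level == Classification.RESTRICTED or use in PROHIBITED_USES:
        return "deny"    # never goes into an AI system
    if level == Classification.CONFIDENTIAL:
        return "review"  # assumption: confidential data needs sign-off
    return "allow" if use in PERMITTED_USES else "review"


if __name__ == "__main__":
    print(ai_request_decision(Classification.PUBLIC, "summarizing"))             # allow
    print(ai_request_decision(Classification.INTERNAL, "competitive analysis"))  # review
    print(ai_request_decision(Classification.RESTRICTED, "drafting"))            # deny
```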

Incident Reporting Process

Make it easy for employees to report security concerns:

- Create a dedicated email address for security reports

- Create a web form for anonymous reporting

- Train managers to take security concerns seriously

- Provide feedback to reporters (not necessarily public, but confirm the report was investigated)

- Protect reporters from retaliation

Technical Enforcement

SSO and Centralized Access Management

If your organization uses a centralized identity provider (such as Okta, Google Workspace, or Microsoft Entra ID, formerly Azure AD), require all employees to use it. This gives you centralized control over:

- MFA enforcement

- Password policies

- Access revocation

- Login monitoring

- Audit logging

VPN and Zero Trust Architecture

For remote workers and BYOD (bring your own device) environments, require VPN access. Go further with Zero Trust architecture that requires authentication for every resource, even internal ones.

This limits the impact if an employee's device is compromised.

EDR and Endpoint Protection

Deploy endpoint detection and response (EDR) tools on company devices. These tools monitor:

- Malware execution

- Suspicious process behavior

- Credential dumping

- Lateral movement

- Command and control communications

If an employee falls for a phishing attack that installs malware, the EDR tool can detect it and block it before it causes damage.

Understanding the Broader Attack Ecosystem

Why AI Services Are Attractive Targets

OpenAI accounts are attractive because they represent several things to attackers:

- Direct access to enterprise data: Employees are inputting business data into AI systems

- Financial accounts: Billable services with payment methods

- API keys: Programmatic access to services

- Trusted third party: Employees trust AI systems and are less defensive

- Lack of awareness: Many organizations don't have policies around AI use

As AI adoption increases, attacks targeting AI platforms will increase too. This isn't a temporary threat. It's a structural change in the threat landscape.

Copycat Attacks and Variants

Once attackers discover a successful attack vector, others copy it. The technique behind the OpenAI team invitation attack isn't unique to OpenAI. Similar attacks likely exist, or soon will, for:

- Slack team invitations

- GitHub organization invitations

- Google Workspace invitations

- Microsoft Teams invitations

- Any platform with team/group invitation features

The attack vector is generalizable. Once you understand how this attack works, you understand how similar attacks work against other platforms.
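Because the technique generalizes, so can a simple check: a legitimate team or organization name is usually short plain text, while this attack stuffs links and phone numbers into it. The heuristic below is a sketch under that assumption; the regular expressions, top-level-domain list, urgency words, and 60-character limit are all hypothetical tuning choices, and names that legitimately include a domain (say, "Acme.io") will be flagged.

```python
import re

# Illustrative heuristics; tune the patterns and word list to your own mail.
URL_LIKE = re.compile(
    r"(?:https?://|www\.)\S+|\b[a-z0-9-]+\.(?:com|net|org|io|co|info|xyz|top)\b",
    re.IGNORECASE,
)
PHONE_LIKE = re.compile(r"\+?\d[\d\s().-]{7,}\d")
URGENCY = re.compile(
    r"\b(?:urgent|suspended|verify|call|invoice|payment|locked)\b", re.IGNORECASE
)
MAX_NAME_LENGTH = 60


def suspicious_name(name: str) -> list[str]:
    """Return reasons a team or organization name looks like it carries a lure."""
    reasons = []
    if URL_LIKE.search(name):
        reasons.append("contains a link or domain")
    if PHONE_LIKE.search(name):
        reasons.append("contains a phone number")
    if URGENCY.search(name):
        reasons.append("uses urgency language")
    if len(name) > MAX_NAME_LENGTH:
        reasons.append("unusually long for a name")
    return reasons


if __name__ == "__main__":
    print(suspicious_name("Acme Marketing"))  # []
    print(suspicious_name("Billing alert: call +1 (800) 555-0199 or visit secure-pay.xyz now"))
```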

The Role of Data Brokers

Attackers are targeting specific companies and employees, which means they have targeting lists. Where do these come from?

- Public data: LinkedIn, industry directories, company websites

- Previous breaches: Attackers buying breached email lists from other compromises

- Social engineering: Calling company phone numbers to gather employee names and emails

- Business data brokers: Companies that legally collect and sell contact lists

Your organization's employees are very likely already on attacker mailing lists. The question isn't "if" your employees receive phishing, but "when." Prepare accordingly.

Emerging Trends and Future Threats

AI-Generated Phishing Content

As AI tools become more sophisticated, so do phishing attacks. Phishing emails used to give themselves away with clumsy grammar; now attackers use AI to generate polished, convincing content.

AI-generated phishing emails:

- Rarely contain grammatical errors

- Sound professional and natural

- Can be personalized at scale

- Can adapt to different targets

- Are harder for humans to distinguish from legitimate emails

Defense against this requires shifting from "look for grammatical errors" to "verify legitimacy through independent channels."

Deepfake Vishing Attacks

Voice cloning technology is improving rapidly. In the future, attackers might use AI-generated voices that sound exactly like OpenAI support staff, your CEO, or someone else you trust.

A vishing call becomes dramatically more convincing when the caller's voice is cloned. You're hearing a familiar voice, so you trust it more and are less likely to be skeptical.

Defense requires not just training people to be skeptical, but also creating processes that don't rely on voice authentication. If someone calls claiming to be support, you should verify through a different channel: call them back using a number from the official website, send a message through the official support system, etc.

Supply Chain Targeting

As businesses become more security-conscious, attackers are shifting focus upstream. Instead of targeting enterprises directly, they target the vendors that serve enterprises.

A smaller SaaS company is easier to compromise than a Fortune 500 company. But if that SaaS company has access to enterprise data, the payoff is the same.

Organizations need to extend their security practices to their vendors and require similar standards from them.

Tools and Resources for Protection

Employee Security Tools

Password Managers

- 1Password

- Bitwarden

- LastPass

- Microsoft Authenticator

MFA Tools

- Authy

- Microsoft Authenticator

- Google Authenticator

- YubiKey (hardware)

Email Security

- Proofpoint

- Mimecast

- Microsoft Defender for Office 365

- Cisco Secure Email

Organizational Tools

Identity and Access Management

- Okta

- Microsoft Entra ID (formerly Azure Active Directory)

- Ping Identity

- JumpCloud

Endpoint Detection and Response

- CrowdStrike Falcon

- Microsoft Defender for Endpoint

- Elastic Security

- SentinelOne

Security Awareness Training

- Proofpoint Security Awareness Training

- KnowBe4

- Cymulate

- SANS Security Awareness

Free Resources

- NIST Cybersecurity Framework: Comprehensive guidance on building security programs

- CISA Alerts: Real-time alerts about emerging threats

- OpenAI Security Documentation: Official guidance on securing OpenAI accounts

- SANS Institute: Free white papers and webcasts

FAQ

What exactly is vishing and how is it different from phishing?

Phishing is primarily text-based (emails, messages) and designed to trick people into clicking malicious links or revealing information. Vishing is voice phishing: using phone calls to achieve the same goal. Vishing is often more effective because a human voice conveys authority and urgency, making it harder to think critically. In the OpenAI attack, the two are combined: phishing softens the target, vishing closes the deal.

How can I tell if an OpenAI invitation is legitimate?

The safest approach is to never click links in unsolicited invitations. Instead, log in to your OpenAI account directly through the official website, go to your team invitations section, and check whether the invitation appears there. If it does, it's legitimate. If it doesn't, the invitation was a phishing attempt. This one extra step defeats the email stage of the attack.

Is it safe to use OpenAI for work-related tasks?

OpenAI's platform isn't the weak point in this attack. The issue is user behavior and what data users input into it. If you input proprietary business information, customer data, or credentials, you're creating a target. Establish a data classification policy with your organization: some data can be input into AI systems, some cannot. For example, never input customer passwords, financial information, or unreleased product details. Summarizing non-sensitive documents or brainstorming ideas is generally safe.

What should I do if I think I've been compromised?

Immediately change your password from a clean device. Check your account settings for unauthorized changes. Review your billing for unusual charges. Check for unauthorized API keys and revoke them. Report the incident to OpenAI security. If your organization uses your account for work, notify your IT team. The faster you act, the less damage an attacker can do.

Should my organization require all employees to use OpenAI's SSO login option?

Yes, if your plan offers it. SSO (single sign-on) lets employees log in through your organization's identity provider. This is more secure because it supports centralized MFA enforcement and monitoring: if an attacker tries to log in without the second factor, they're blocked. Additionally, when someone leaves the organization, revoking their identity provider access automatically cuts off their OpenAI access too. This is much more secure than email/password authentication.

How often should we run phishing simulations?

There's no universal standard, but a practical schedule is monthly simulations with quarterly variations. Monthly keeps security top-of-mind. Quarterly variations (different attack types, different targets, increasing sophistication) ensure training doesn't become predictable. Track metrics: click rates should decline over time, and report rates should increase. If they don't improve after 6-12 months, your training approach needs adjustment.

What's the best multi-factor authentication method?

For maximum security, hardware keys (like YubiKey) are most resistant to phishing and interception. For practicality and ease of adoption, authenticator apps (Microsoft Authenticator, Google Authenticator, Authy) provide strong security and better UX. SMS-based MFA is weakest but better than nothing. Use whatever method your organization can actually get employees to adopt and use consistently, with hardware keys for high-value targets.
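Authenticator apps generally implement TOTP (RFC 6238), and seeing the algorithm explains both its strength and its weakness. The code depends only on a shared secret and the clock, so a code read out to a vishing caller or typed into a fake page works for the attacker until it expires. Hardware keys using FIDO2/WebAuthn instead bind each login to the real site's origin, which is why they resist phishing. A minimal standard-library sketch:

```python
import hashlib
import hmac
import struct
import time
from typing import Optional


def hotp(secret: bytes, counter: int, digits: int = 6) -> str:
    """HOTP (RFC 4226): HMAC-SHA1 of an 8-byte big-endian counter, dynamically truncated."""
    mac = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = mac[-1] & 0x0F  # low 4 bits of the last byte pick a 4-byte window
    code = struct.unpack(">I", mac[offset:offset + 4])[0] & 0x7FFFFFFF  # drop the sign bit
    return str(code % 10 ** digits).zfill(digits)


def totp(secret: bytes, at: Optional[float] = None, step: int = 30, digits: int = 6) -> str:
    """TOTP (RFC 6238): HOTP whose counter is the number of `step`-second windows since the epoch."""
    now = time.time() if at is None else at
    return hotp(secret, int(now // step), digits)


if __name__ == "__main__":
    demo_secret = b"12345678901234567890"  # RFC test secret; never use a real secret like this
    print(totp(demo_secret))  # changes every 30 seconds
```

The 30-second step and 6 digits are the common defaults. Checked against the RFC test vectors, `hotp(b"12345678901234567890", 0)` returns `"755224"`. Real apps usually receive the secret as a base32 string in a QR code, which would be decoded to bytes first.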

Should we restrict what data employees can input into Open AI?

Absolutely. Create a data classification policy. Establish which data categories can be input into AI systems: it's fine to input general ideas, frameworks, and summarized information. Never input customer data, personally identifiable information, financial details, credentials, or unreleased product information. Document this policy and train employees on it. Periodically audit compliance. Some organizations use data loss prevention tools to prevent sensitive data from being pasted into web forms.

How do we know if an attack has affected multiple employees in our organization?

Monitor OpenAI for unusual organizational activity: unexpected team members being added, new API keys being created, unusual geographic login patterns. More practically, ask your security team to review OpenAI access logs if you have organizational accounts. If multiple employees report suspicious emails or calls within a short timeframe, that's a sign of a targeted campaign. Share threat intelligence internally and increase monitoring during these periods.
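The "several reports in a short timeframe" signal can be checked mechanically with a sliding window. The sketch below returns the first moment at least `min_reporters` distinct employees have reported within the window; the two-hour window and threshold of three are placeholders to tune, and the (timestamp, employee) input is a hypothetical log format.

```python
from datetime import datetime, timedelta
from typing import Optional


def first_campaign_alert(
    reports: list[tuple[datetime, str]],
    window: timedelta = timedelta(hours=2),
    min_reporters: int = 3,
) -> Optional[tuple[datetime, set[str]]]:
    """Return (time, reporters) when `min_reporters` distinct people first report within `window`.

    `reports` holds (timestamp, employee) pairs for suspicious emails or calls.
    Returns None if the threshold is never reached.
    """
    events = sorted(reports)
    start = 0
    for end in range(len(events)):
        # Advance the left edge until the window spans at most `window`.
        while events[end][0] - events[start][0] > window:
            start += 1
        reporters = {who for _, who in events[start:end + 1]}
        if len(reporters) >= min_reporters:
            return events[end][0], reporters
    return None


if __name__ == "__main__":
    t0 = datetime(2025, 1, 6, 9, 0)
    reports = [
        (t0, "ana"),
        (t0 + timedelta(minutes=20), "ana"),  # repeat reporter counts once
        (t0 + timedelta(minutes=50), "ben"),
        (t0 + timedelta(hours=5), "cho"),
        (t0 + timedelta(hours=5, minutes=30), "dev"),
        (t0 + timedelta(hours=6), "eli"),
    ]
    print(first_campaign_alert(reports))
```

Counting distinct reporters rather than raw reports keeps one person forwarding the same message several times from triggering an alert.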

What's the legal liability if our organization's data is compromised through OpenAI?

This depends on what data was compromised and which jurisdictions apply. If customer data was exposed, you likely have notification obligations (GDPR, CCPA, etc.) and potential liability to those customers. If proprietary business data was exposed, the harm falls mainly on your own organization. Working with legal counsel is essential. From a practical security perspective, the best protection is preventing the compromise in the first place through strong security practices.

Can we trust OpenAI's security, or should we use a different platform?

OpenAI's security is reasonable. The vulnerability exploited by this attack isn't a flaw in OpenAI's systems; it's a flaw in how humans interact with systems. The attack works because people trust email and click links without verification. The same technique could be used against other AI platforms, such as Claude or Gemini. The issue isn't OpenAI, it's user behavior. Focus on defense mechanisms (MFA, training, monitoring, verification procedures) rather than avoiding platforms entirely.

Conclusion: Building a Culture of Vigilance

The OpenAI team invitation scam isn't an isolated incident. It's a symptom of a larger trend: attackers are becoming more sophisticated at exploiting legitimate business tools for social engineering.

The good news is that these attacks are preventable. They require human action: clicking a link, calling a number, providing information. That means you can break the chain by training people to recognize the attacks and establishing processes that don't rely on people making perfect decisions under pressure.

Here's what we know works:

Employees are your strongest defense layer, but only if they're trained and supported. A single employee who recognizes a phishing email and reports it saves the organization from a potential breach. Scale this across an organization, and you've built a resilient defense.

Awareness alone isn't enough; technical controls matter too. MFA stops attackers who have only a password. Endpoint detection stops malware even if employees make mistakes. Email filtering catches much of the phishing that would otherwise reach employees, even though it misses attacks like this one.

Incident response plans transform chaos into order. If a compromise occurs, having a clear, practiced process means you respond within hours instead of weeks. Minutes and hours matter in breach response.

Verification breaks the attack chain. Making it policy to verify legitimacy before clicking or calling strips phishing emails of most of their power. "If you got an invitation via email, verify it in your account dashboard" is a simple procedure, yet it would have stopped the email stage of this attack every time.

For your organization:

- Implement MFA on all critical accounts today, not next quarter

- Schedule security training that includes this specific attack vector

- Establish a clear process for verifying invitations and communications

- Create an incident response plan and test it

- Monitor OpenAI account activity for unusual patterns

- Build a security culture where employees feel comfortable reporting suspicious activity

These steps aren't perfect. No defense is. But they dramatically reduce your risk and your exposure.

The attackers will keep adapting. They'll use AI-generated content. They'll use voice cloning. They'll find new platforms and new attack vectors. But the fundamentals of their attacks stay the same: they need you to trust them, click on something, or tell them something you shouldn't.

Your job is to make it harder for them to achieve any of those things. It's possible. It takes commitment. But it's absolutely possible.

Start today. Don't wait for a breach to force your hand.

Key Takeaways

- Attackers targeting OpenAI users embed malicious content in organization names instead of email bodies to bypass filters, making the initial phishing significantly harder to detect

- Vishing (voice phishing) follow-ups are the critical second stage: attackers use psychological pressure and false authority to extract credentials over the phone

- Businesses are targeted at scale because attackers can compromise multiple employees simultaneously, gaining access to shared resources and proprietary data

- Multi-factor authentication is the single most effective defense: attackers who have only a password cannot access the account without the second factor

- Verification through independent channels (logging directly into account dashboards rather than clicking email links) breaks the phishing attack chain
