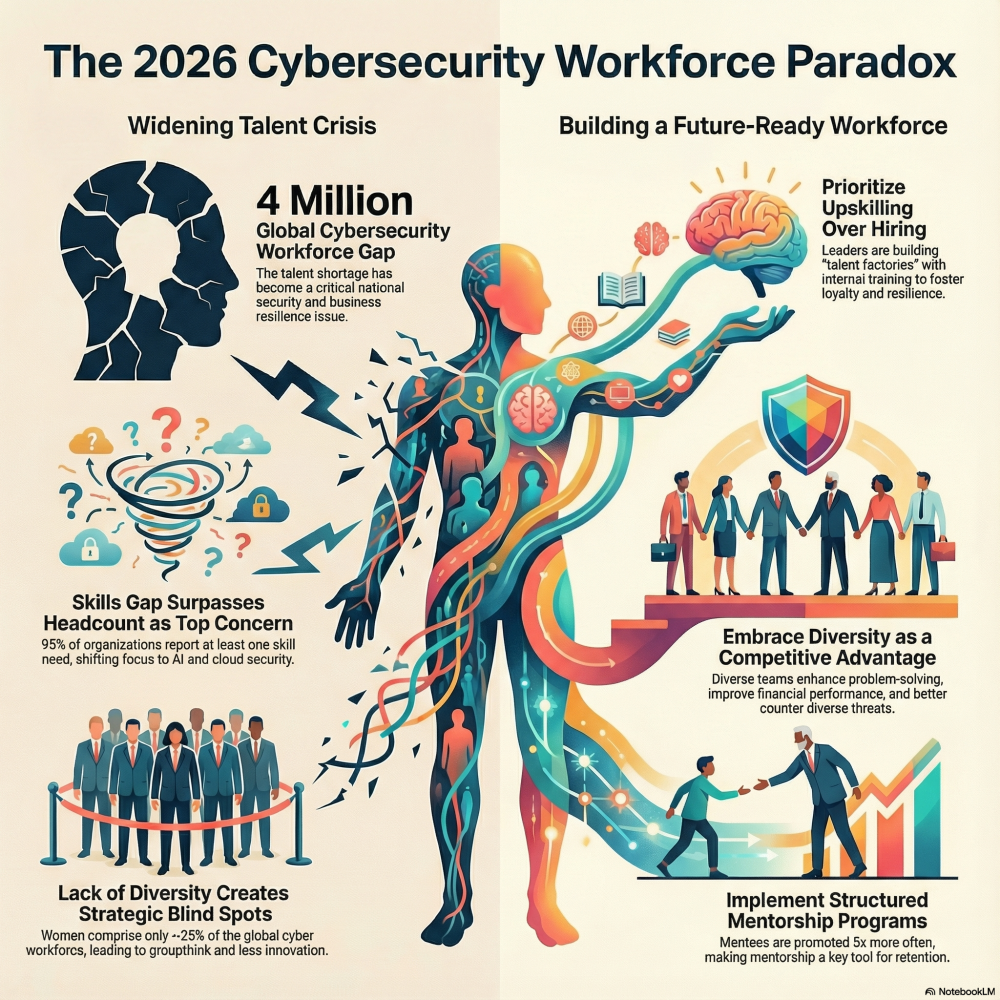

The Human Paradox in Cyber Resilience: Why People Are Your Best Defense [2025]

Introduction: The Contradiction Nobody Wants to Admit

There's a brutal irony sitting at the heart of modern cybersecurity that keeps security leaders up at night. Your employees are simultaneously your greatest vulnerability and your most powerful defense against sophisticated cyber threats.

Consider what happened in 2024 and early 2025. A major UK retailer suffered a breach estimated to cost over £100 million. Another incident at a luxury automotive company became the most expensive cyber incident in UK history, with damages reaching £1.9 billion. Both incidents shared a common thread: human error, credential compromise, or social engineering played a central role in the attack chain, as highlighted by the Bank of England.

But here's where it gets interesting. The same human element that enabled these catastrophic breaches is also what stops countless attacks before they become headline news. Every day, across thousands of organizations, employees notice something off about an email, pause before clicking a suspicious link, or report unusual activity that turns out to be a sophisticated attack.

The paradox is this: you cannot build effective cybersecurity without people. But people are fallible, easily manipulated, and increasingly targeted by attackers who understand that technical controls are harder to break than human psychology.

This isn't about blaming employees. It's about understanding that the future of cybersecurity depends on transforming how organizations think about human risk. Instead of treating employees as the weakest link to be controlled and constrained, leading organizations are building cultures where secure behavior becomes the default, where people feel empowered to report threats, and where the organization learns from every incident.

The question isn't whether you can eliminate human error. You can't. The question is whether you can build a resilient organization where human error doesn't cascade into existential threats.

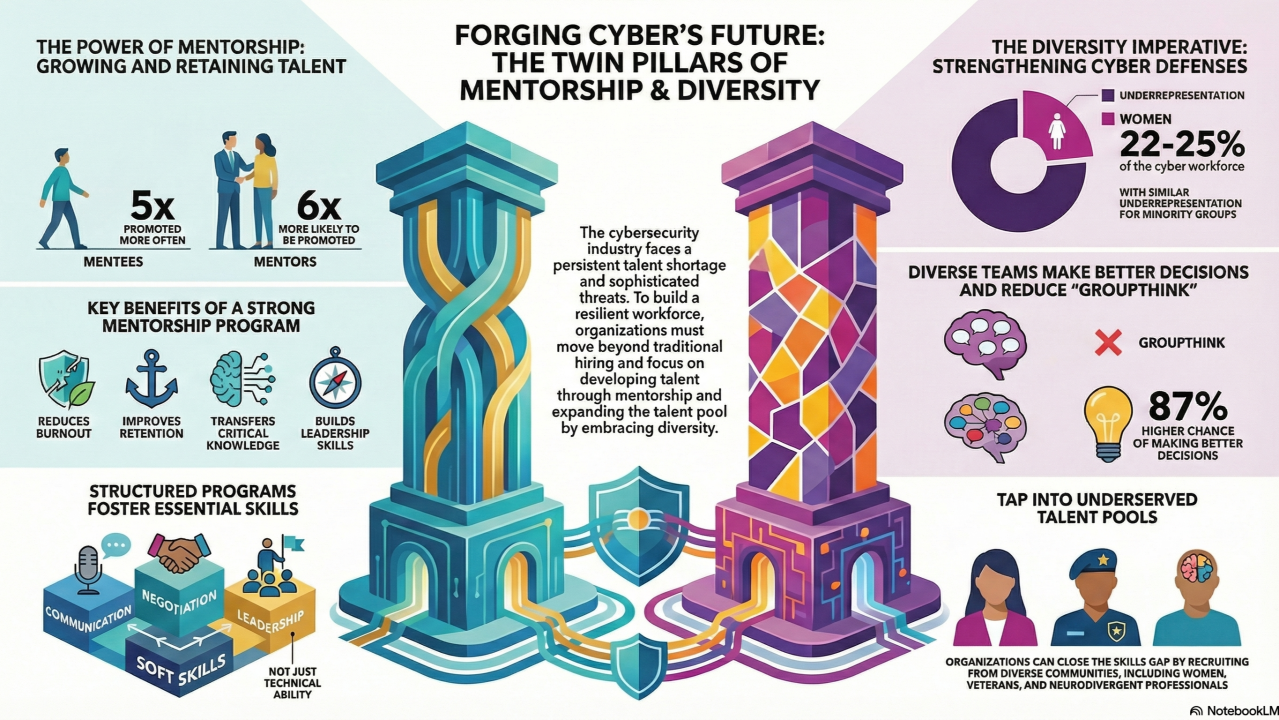

Estimated data suggests that leadership commitment and feedback utilization are critical for effective human-centered security, with scores of 85 and 80 respectively.

TL;DR

- The Human Paradox: Employees are responsible for most breaches yet are essential for catching attacks before they escalate

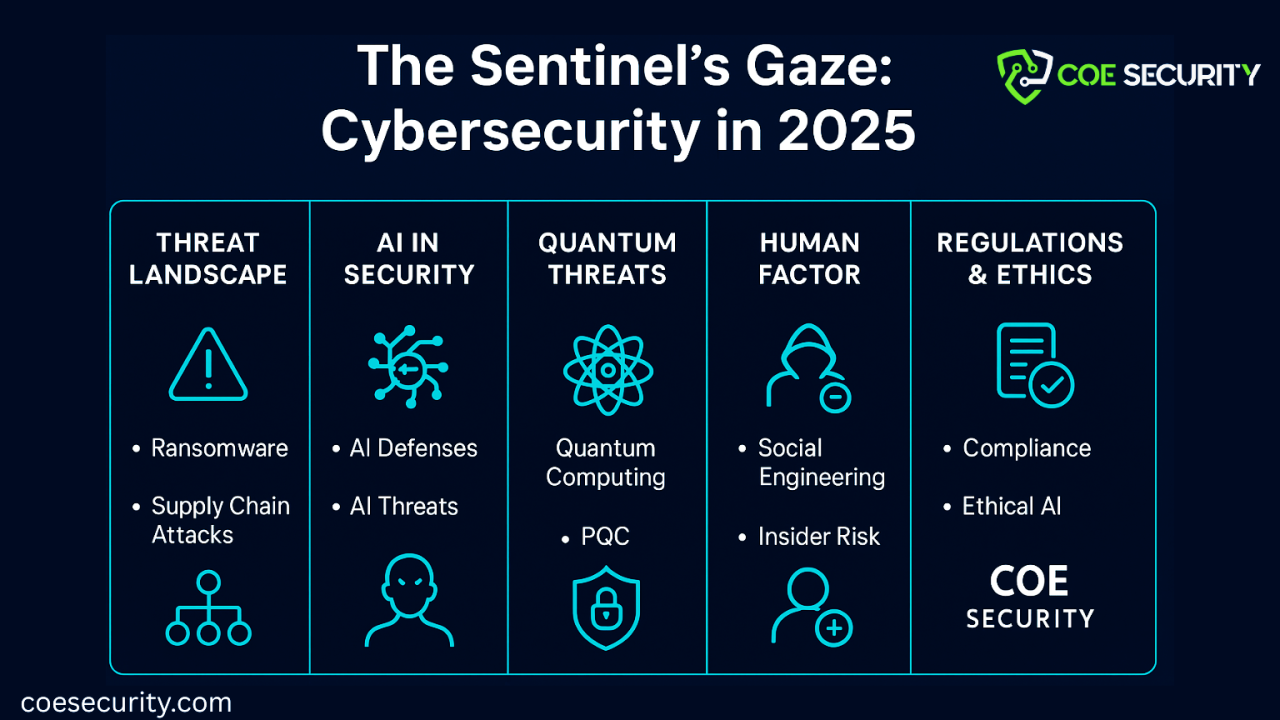

- Social Engineering is Winning: AI-powered phishing is becoming more convincing, personalized, and scalable than ever before

- Culture Beats Tools: Security awareness training alone fails because it's passive; transformation requires cultural change

- Shadow IT Creates Blind Spots: Employees bypass security controls with good intentions, creating unmanaged data governance risks

- Detection Relies on Humans: Technical controls catch some threats, but human vigilance remains the most effective detection mechanism

- Bottom Line: Organizations that foster a culture of security mindfulness, quick incident reporting, and leadership recognition outperform those that rely solely on technical controls

AI-powered threats have the highest impact on cybersecurity, followed closely by human error. Estimated data.

Understanding the Human Paradox: Both Link and Liability

Let's be clear about what we're dealing with here. The human paradox isn't a new problem, but it's becoming more complex every quarter.

The Weakest Link Problem

Humans are predictable in their unpredictability. Attackers have learned this and weaponized it.

Consider the M&S breach. The organization publicly acknowledged that human error was the catalyst. This wasn't a sophisticated zero-day exploit or a nation-state bypass of military-grade encryption. It was a mistake, made by someone doing their job, trusting something they shouldn't have trusted.

The problem compounds when you consider what attackers are willing to do to create that moment of human error. They'll spend weeks researching a target, gathering intelligence about employees, learning organizational vocabulary, understanding workflow patterns, and then crafting an attack so personalized it feels entirely legitimate.

When someone gets a message that appears to come from their CEO requesting urgent wire transfers, or a notification that looks like it's from their IT department asking them to re-authenticate due to a security update, their brain doesn't flag it as dangerous. It flags it as normal. It flags it as urgent. It flags it as something that demands immediate action.

The Strongest Link Advantage

But flip the perspective and something remarkable happens.

A software engineer who understands threat modeling notices that a database query suddenly requires credentials for a system she doesn't use. She reports it. Crisis averted.

A finance analyst gets a payment request that follows the right format but comes from an email address that's off by one character. He's seen this pattern before in security training. He verifies through a known contact. Attack stopped.

A support technician gets suspicious about a caller claiming to be from corporate IT requesting access credentials. She follows her gut feeling and escalates before sharing anything. The caller was an attacker.

These moments happen constantly in organizations that have built security-aware cultures. They're not dramatic. They don't make headlines. But statistically, they prevent far more damage than any firewall or endpoint protection platform.

The research backs this up. Organizations with strong security cultures see significantly fewer successful breaches. More importantly, they detect breaches faster because employees are actively looking for anomalies rather than passively following checklist training.
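The finance analyst's catch, an address that is off by one character, is the kind of check that tooling can back up. A minimal sketch, assuming a hypothetical allowlist of known counterpart domains (`KNOWN_DOMAINS`) and a plain edit-distance threshold; it does not handle Unicode homoglyphs or display-name tricks, so it supports human judgment rather than replacing it:

```python
from typing import Optional


def edit_distance(a: str, b: str) -> int:
    """Levenshtein distance via the classic dynamic-programming recurrence."""
    previous = list(range(len(b) + 1))
    for i, char_a in enumerate(a, start=1):
        current = [i]
        for j, char_b in enumerate(b, start=1):
            cost = 0 if char_a == char_b else 1
            current.append(min(
                previous[j] + 1,         # deletion
                current[j - 1] + 1,      # insertion
                previous[j - 1] + cost,  # substitution
            ))
        previous = current
    return previous[-1]


# Hypothetical allowlist of domains the finance team normally deals with.
KNOWN_DOMAINS = {"example-supplier.com", "example-bank.co.uk"}


def lookalike_of(sender: str, known=KNOWN_DOMAINS, max_distance: int = 2) -> Optional[str]:
    """Return the known domain this sender appears to imitate, or None."""
    domain = sender.rsplit("@", 1)[-1].lower()
    if domain in known:
        return None  # exact match: a known domain, not a lookalike
    for trusted in sorted(known):
        if edit_distance(domain, trusted) <= max_distance:
            return trusted
    return None
```

Called with `ap@examp1e-supplier.com`, the function returns `example-supplier.com`, which is a cue to verify through a known contact before paying anything.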

The Rise of AI-Powered Social Engineering

How Attackers Are Leveling Up

Traditional social engineering required persistence and skill. Attackers had to craft individual phishing emails, make convincing phone calls, or spend hours in reconnaissance to understand a target well enough to manipulate them.

AI changed everything.

Now attackers can generate hundreds of personalized emails in minutes, each tailored to specific employees and their roles. Natural language processing allows AI to detect which subject lines have the highest open rates for specific people. Computer vision can analyze social media photos to extract information about hobbies, family details, and interests that make phishing more convincing.

Worse, voice cloning and deepfake technology mean the attacker doesn't even need to be present. Last year, an attempted fraud at a major advertising company used AI-generated video and voice synthesis to create a convincing deepfake meeting. Senior executives were called to a video meeting where the participants appeared to be legitimate colleagues requesting urgent wire transfers.

The meeting looked real. The voices sounded real. The urgency felt real.

Only because someone paused and verified through an alternative channel was the attack discovered.

The Scalability Problem

The real danger isn't that these attacks exist. It's that they're scalable.

An attacker no longer needs to target one company and spend weeks on reconnaissance. They can run campaigns against entire industries simultaneously. They can A/B test their messaging to find what works. They can automatically rotate tactics when they notice security awareness is high in a particular organization.

Machine learning models can now predict with surprising accuracy which employees are likely to fall for specific attacks based on their behavior, job title, tenure, and organizational role. A new employee is more likely to fall for certain social engineering tactics. Someone in a technical role might be vulnerable to technical-sounding pretexting. Someone in finance is vulnerable to payment fraud scenarios.

AI isn't just making individual attacks more convincing. It's making entire attack campaigns more sophisticated, more personalized, and more likely to succeed.

What This Means for Your Workforce

Your employees are facing a new reality. The phishing emails they learned to recognize five years ago don't exist anymore. The training that taught them "look for spelling mistakes and suspicious domains" is outdated because AI now writes fluent, error-free English and attackers can convincingly imitate legitimate domains.

What your workforce needs instead is pattern recognition. They need to understand not just what phishing looks like, but how their organization communicates. They need to know the actual process for urgent requests in their department. They need to feel comfortable pausing and verifying, even if it means delaying a response by a few minutes.

Most importantly, they need to know that reporting a suspicious request won't result in blame or punishment, but in recognition and appreciation.

Human error is estimated to contribute to 40% of cybersecurity breaches, highlighting its significant role as both a link and liability. Estimated data.

Shadow IT: The Unmanaged Risk Nobody Talks About

When Good Intentions Create Security Blind Spots

Here's a scenario that plays out in thousands of organizations every single day.

A product manager finds that the approved collaboration tool doesn't support the workflow she needs. So she starts using a consumer cloud service that's free, easy, and actually better for her use case. A few teammates adopt it. Soon, the entire product team is collaborating on this unapproved platform.

Six months later, security conducts an audit and discovers that sensitive product documentation, API specifications, and customer data have been stored in this shadow IT tool.

Was the product manager trying to undermine security? Absolutely not. She was trying to do her job better. She was trying to move faster and collaborate more effectively. The security controls that existed weren't meeting her needs, so she found an alternative.

This is the shadow IT problem, and it's massive.

Employees introduce tools, devices, and processes that fall outside formal IT governance every single day. They do it for legitimate reasons: frustration with slow official processes, tools that don't meet their actual workflow needs, or simply because they found something that works better.

But the result is a collection of unmanaged, unmonitored data repositories that security teams have no visibility into. If one of these tools is compromised or misused, the organization has no backup procedures, no access controls, and no way to respond quickly.

The Scope of the Problem

Surveys consistently show that shadow IT is becoming more prevalent, not less. As remote work has become normal, employees have more flexibility to choose their own tools. As organizational applications become more complex, employees become more frustrated.

The typical organization has dozens of unapproved applications being used actively by employees. Many of these tools have weaker security than the official alternatives, collect more personal data, or store data in regions without appropriate compliance protections, as noted by Security.com.
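One way to get a first view of which unapproved tools are in use is to compare outbound traffic against the approved list. The sketch below assumes a simplified, hypothetical log format (`<user> <destination-domain>` per line) and a hypothetical allowlist; real proxy or DNS logs will look different and need their own parsing:

```python
from collections import Counter

# Hypothetical allowlist; a real one would come from the IT asset inventory.
APPROVED_DOMAINS = {"sharepoint.example.com", "git.example.com"}


def unapproved_usage(log_lines, approved=APPROVED_DOMAINS):
    """Count requests per destination domain that is not on the approved list.

    Each log line is assumed to look like "<user> <destination-domain>".
    """
    counts = Counter()
    for line in log_lines:
        parts = line.split()
        if len(parts) != 2:
            continue  # skip malformed lines rather than guess at their meaning
        _user, domain = parts
        domain = domain.lower()
        if domain not in approved:
            counts[domain] += 1
    return counts.most_common()  # busiest unapproved destinations first
```

The output is a starting point for conversations with the teams involved, not a blocklist: the most-used unapproved tool usually points at the biggest gap in the official tooling.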

The Governance Risk

From a data governance perspective, shadow IT creates nightmares.

Imagine you receive a data subject access request. Someone from the EU wants all their personal data that your organization holds. Your data governance team searches your official systems and provides what they find. But meanwhile, customer data with this person's information is sitting in an unapproved cloud storage service that security doesn't even know about.

Now you've potentially breached GDPR requirements.

Or imagine a ransomware attack. Your incident response team works to contain and recover. But data that should have been recoverable has been changed or encrypted in a shadow IT tool that wasn't part of your backup strategy.

Or consider M&A activity. Due diligence requires identifying all data stores and assessing security. If significant portions of your data are in unapproved systems, you've created real problems for deal closure and post-merger integration.

Shadow IT isn't just a security issue. It's a compliance issue, a data governance issue, and increasingly, a material risk to the organization.

The Failure of Checkbox Training

Why Awareness Training Doesn't Work

Security leaders have known for years that traditional awareness training is ineffective. Yet organizations keep doing it, spending millions of dollars on generic modules that employees click through while checking email on their other monitor.

The problem is fundamental to how the training is designed.

Checkbox training is passive. Employees sit through it, absorb nothing, and move on. It treats security awareness as something that can be transmitted like information, rather than something that must be built through experience, culture, and practice.

Traditional training also tends to be generic. A phishing awareness module is the same whether you're in accounting, engineering, or sales. But different roles face different threats. Finance professionals are targets for payment fraud. Engineers are targets for credential theft. Sales teams are targets for business email compromise.

Worse, checkbox training often focuses on the negative. Don't click suspicious links. Don't share passwords. Don't use public Wi-Fi. It teaches what not to do without explaining why it matters or how the organization will support good behavior.

The Gap Between Knowledge and Behavior

Here's the uncomfortable truth: people who fail security training aren't stupid. They often understand perfectly well what they shouldn't do. They just don't do it when it matters.

This is the knowledge-behavior gap, and it's why someone who passed a phishing awareness test can still fall for a sophisticated phishing attack two weeks later. The difference is that the test was artificial. It clearly marked emails as malicious. But in real life, there's no red banner saying "THIS IS A PHISHING EMAIL."

What changes behavior isn't knowledge. It's culture, practice, and feedback.

An employee who works in an organization where security is integrated into daily processes, where they've practiced responding to threats, where they know exactly how to report something suspicious, and where they've seen leadership respond positively to reports develops actual security instincts.

They don't think about phishing as something they learned in training. They think about it as something that's relevant to their job, something their team cares about, and something they're capable of handling.

Moving Beyond Checkbox Compliance

The organizations that are winning at human-centered security aren't doing checkbox training. They're building security into the organization's DNA.

This means making training continuous and relevant. A finance employee gets training about payment fraud patterns. An engineer gets training about credential compromise. A manager gets training about recognizing social engineering in conversation.

It means running simulated attacks and treating them as learning opportunities rather than gotcha moments. When an employee clicks a simulated phishing link, they get immediate feedback and education, not punishment.

It means creating feedback loops where employees see the impact of security-aware behavior. When someone reports a suspicious email and it turns out to be a real attack, they need to know they just prevented a problem. That reinforcement is incredibly powerful.

It means integrating security into how teams actually work. If you're in engineering, security conversations happen during code review. If you're in product, security is part of requirements gathering. Security isn't a separate thing you do in training.

Experiential training and cultural integration are estimated to be significantly more effective than traditional checkbox training methods. Estimated data.

The Culture Foundation: Making Secure Behavior Default

What Strong Security Culture Actually Looks Like

Imagine this scenario in your organization.

An employee receives an unexpected email request that looks routine but feels slightly off. Maybe it's a supplier asking for account details. Maybe it's someone from another department requesting access to confidential documents. Maybe it's a system notification asking them to re-authenticate.

Instead of clicking immediately to avoid delaying work, the employee pauses. The culture has normalized taking a moment when something seems unusual. They look at the sender's email address more carefully. They check the grammar and tone against other communications they've received.

Something doesn't feel right, so they don't act on instinct. Instead, they verify.

They know exactly how to do this. The process is familiar and straightforward. They send a Slack message to their security team, or they call the sender back at a known number, or they check through another channel. There's no confusion about who to contact.

But here's the critical part: they're not worried about being blamed if it's a false alarm. The organization has explicitly told them that reporting suspicious activity is valued and appreciated. They've seen colleagues report things that turned out to be nothing and been thanked rather than criticized.

So they report.

The security team confirms the request isn't legitimate. But they don't treat the report as an inconvenience. They treat it as valuable intelligence. They trace the attack, understand what the threat actor was trying to do, and use that intelligence to improve defenses.

Then leadership acknowledges what happened. The CEO mentions in a company meeting that someone caught an attack that could have been costly. The employee's manager thanks them publicly. The team celebrates that early detection prevented a problem.

The incident becomes a learning example. Security creates a short message explaining the attack pattern and shares it widely. Other employees learn what to look for. The organization's immune system gets stronger.

This is what strong security culture looks like. And organizations with this kind of culture have dramatically fewer successful breaches.

The Leadership Factor

Culture doesn't emerge from security tools. It emerges from leadership.

When a CEO treats security as a core business concern, not a checkbox, employees notice. When a leadership team makes time for security training, not as an afterthought but as a genuine priority, employees notice. When executives admit they almost fell for a phishing attack and share what they learned, employees notice.

Conversely, when leadership announces a security breach and blames "human error" without examining the systems that made the error likely, employees notice that too. They notice that security isn't actually valued. They notice that reporting problems might result in blame. So they don't report. They work around controls. They adopt shadow IT because official tools are too restrictive.

Leadership sets the tone for whether security is something done to employees or something done with them.

Building Psychological Safety

One of the most important factors in security culture is psychological safety. This is the belief that you can take interpersonal risks, including admitting mistakes or reporting problems, without fear of negative consequences.

When psychological safety exists, employees report phishing attempts. They admit when they've made a mistake. They suggest improvements to security processes. They challenge controls that don't make sense for their workflow.

When psychological safety doesn't exist, employees hide mistakes, work around controls, and avoid reporting problems because they're afraid of blame.

A single incident can destroy psychological safety. If someone reports a phishing attempt and faces consequences, word spreads. The entire organization learns that reporting isn't safe.

Building psychological safety requires consistency. It requires leadership publicly stating that mistakes are learning opportunities. It requires showing, through specific examples, that employees who raise security concerns are appreciated. It requires never punishing someone for making a mistake or reporting a problem in good faith.

Technical Controls Aren't Enough

The Limits of Tools

No one would dispute that technical controls are essential. You need email filtering. You need endpoint protection. You need network monitoring. You need access controls and data loss prevention and all the rest.

But here's what's important to understand: every technical control can be circumvented by someone who understands social engineering.

Your email filtering catches most phishing emails. But if an attacker spends three weeks researching your organization and crafts a perfectly legitimate-looking message from someone inside your organization using a newly created account, they might slip through.

Your endpoint protection stops most malware. But if someone receives a convincing request from their CEO asking them to install a conference call application because of an emergency meeting, and that application is malware, the endpoint protection might not block it because the user voluntarily installed it from what appeared to be a legitimate request.

Your data loss prevention prevents most accidental data exfiltration. But if someone receives a sophisticated social engineering email requesting a specific set of data "for compliance purposes" from an address that looks legitimate, they might send it before the DLP system catches it.

Moreover, tools create a false sense of security that can be dangerous. If leadership believes that because they've implemented a firewall, endpoint protection, and email filtering they're secure, they're not investing in the human elements of security. They're not building culture. They're not training employees. They're not creating reporting mechanisms.

Then when an attack succeeds anyway, they blame the tool instead of understanding that no tool is a silver bullet.

The Tool-Culture Balance

The most effective security programs layer technical controls with strong culture.

Technical controls catch attacks at the network, application, and endpoint level. But culture catches attacks at the human level. Culture stops someone from using unapproved applications. Culture makes someone pause before clicking a suspicious link. Culture creates an environment where threats are reported quickly.

Without culture, tools are just obstacles that employees work around.

Without tools, culture cannot keep up with the sheer volume of attacks.

The right approach is to implement both, but to recognize that you can't simply buy tools, run training, and hope your way to security. Eventually, you need people to be genuinely security-aware, and that requires cultural change.

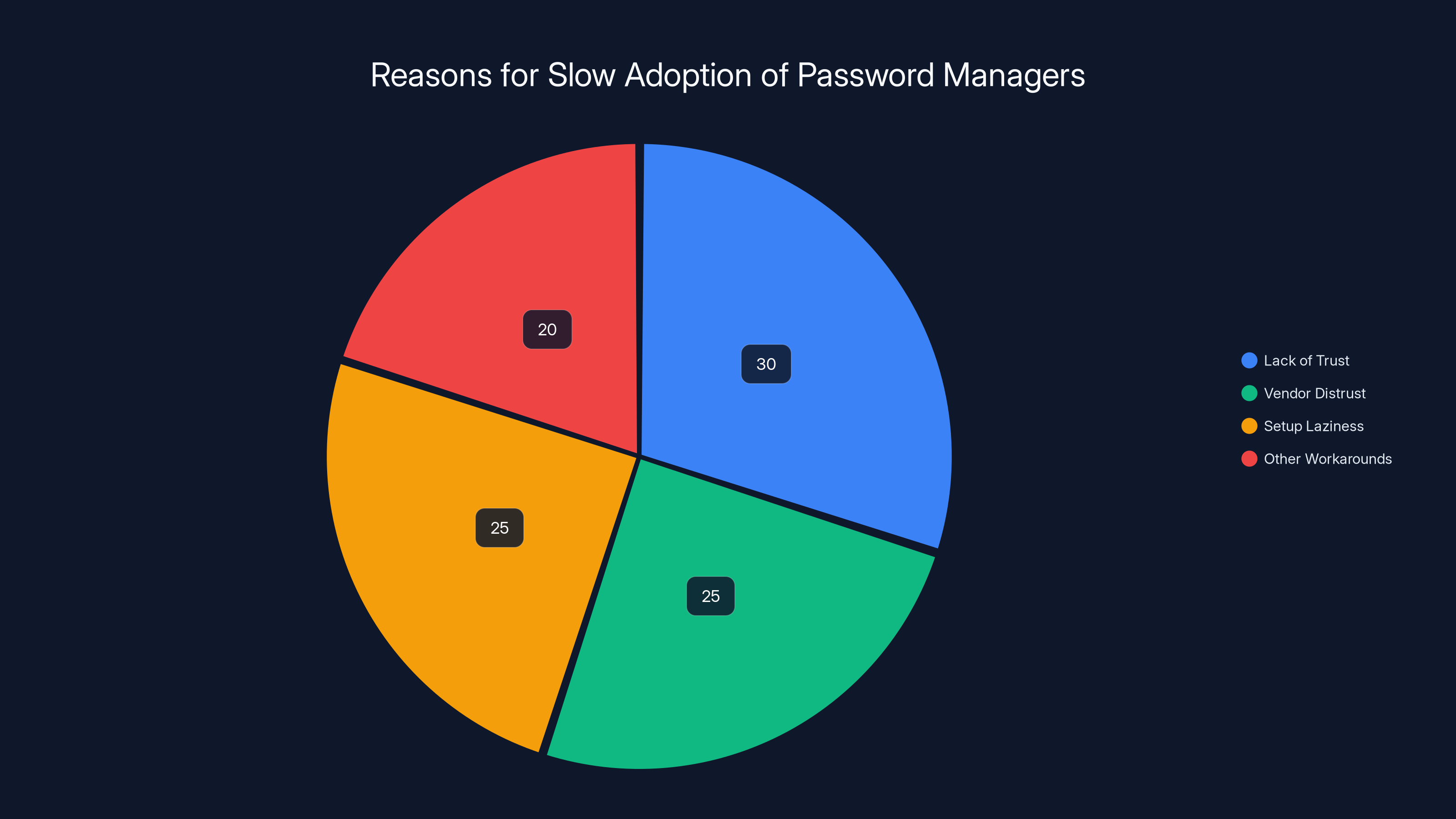

Estimated data shows that lack of trust and setup laziness are major barriers to password manager adoption, each accounting for about 25-30% of the reasons.

Real-World Impact: When Culture Catches Breaches

Case Study: The Email That Almost Wasn't Caught

Consider what happened at a midsized technology company. They'd implemented all the standard security controls. Email filtering. Endpoint protection. MFA everywhere.

But they'd also invested heavily in security culture. Every employee understood common attack patterns. Reporting suspicious activity was normalized. Leadership celebrated early detection.

One morning, a senior engineer received an email that appeared to be from the company's CEO, requesting urgently that she transfer the list of all API keys used by development teams to a cloud storage link. The email was urgent. The phrasing felt authentic. The sender address looked right.

But something felt off.

The engineer had been through training that emphasized one point: fraudulent requests for credentials or sensitive access usually arrive with plausible context and manufactured urgency. This email had both. She also knew her CEO's actual process. He didn't request things via email. He used Slack for urgent matters.

She paused. She called the CEO directly using a phone number she had in her contacts (not one from the email signature). The CEO confirmed he'd never sent the email.

She reported it to security. The security team traced the email. It came from a compromised email account at a partner organization. The attacker had been specifically researching the company for weeks. They'd learned enough about operations to craft a convincing request. If it had succeeded, they would have had access to every API key the company uses.

The cost of early detection? About five minutes of the engineer's time and one conversation with the CEO.

The cost of late detection? Potentially millions in cleanup, incident response, compromised infrastructure, and recovery.

This outcome was possible because the engineer's organization built a culture where pausing to verify is normal, where her knowledge of how her company actually operates is valued, and where reporting is safe.

Case Study: The Shadow IT That Revealed Itself

Another organization was implementing a new data governance program. They wanted to understand all the places where customer data was being stored.

Security conducted interviews with teams across the organization. In these interviews, without judgment or pressure, they asked: "What tools do you use to collaborate? Where do you store files? What applications does your team rely on?"

They discovered extensive shadow IT usage. Some of it was problematic. Database credentials stored in personal cloud storage. Customer documentation in consumer note-taking apps. Internal code on GitHub without proper access controls.

But because the organization had built trust between security and the business, employees were honest about what they were using and why. Instead of punishing these uses, security worked with each team to understand their actual needs and improve the official tools.

The developer team was using personal GitHub repos because the official git system had slow access and poor support. Security worked with infrastructure to improve the official system and eventually migrated the code.

The product team was using consumer cloud storage because the official system didn't support real-time collaboration. Security worked with tools teams to implement better collaboration in official systems.

Within six months, shadow IT usage had dropped significantly. But more importantly, security had learned about the gaps in their official tools. They'd improved the product. And they'd demonstrated that working with security, rather than around it, actually made work better.

Credential Mismanagement: A Persistent Human Problem

Why People Reuse Passwords

Asking someone not to reuse passwords is like asking them not to forget things. It's asking them to work against how their brain works.

A typical knowledge worker has access to 50+ different systems. Email, multiple SaaS applications, physical security systems, VPNs, messaging platforms, and on and on. Remembering 50 complex, unique passwords is neurologically difficult.

So people do what's rational: they create one or two strong passwords and reuse them.

This is a disaster for security.

When a password is compromised at one service (which happens constantly), attackers immediately try that same password at every other service. They use massive lists of compromised credentials, automatically attempting them against banking systems, email, cloud services, and everything else.

It doesn't take technical skill. It's completely automated. An attacker can test millions of credentials an hour against most services.

If you're reusing passwords, you're essentially relying on every service you use being perfectly secure. If any one of them gets compromised, attackers have the key to your kingdom.
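The "key to your kingdom" effect can be made concrete with a small model. This is an illustrative sketch only: `vault` is a hypothetical mapping of service name to password, the sort of data a password manager's local reuse audit would see, and it is not something to assemble in plaintext on a shared system:

```python
from collections import defaultdict


def reuse_groups(vault):
    """Return lists of services that share a password with at least one other."""
    by_password = defaultdict(list)
    for service, password in vault.items():
        by_password[password].append(service)
    return sorted(sorted(group) for group in by_password.values() if len(group) > 1)


def blast_radius(vault, breached_service):
    """Other services an attacker could enter with the password leaked
    from `breached_service`, assuming no second factor is in place."""
    leaked = vault[breached_service]
    return sorted(
        service
        for service, password in vault.items()
        if password == leaked and service != breached_service
    )
```

With one password shared between a forum, email, and a CRM account, a breach at the forum alone yields a blast radius of two more services, while a service with a unique password has a blast radius of zero.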

The Password Manager Solution and Its Adoption Problem

Password managers address this problem directly. They securely store unique passwords for every service. You only have to remember one master password.

But adoption is frustratingly slow.

Some employees don't trust password managers. They worry about putting all their passwords in one place. Others don't trust the specific vendor. Some are just lazy about the setup process.

Organizations have tried mandating password manager usage. It works sometimes. But in other cases, employees find workarounds. They write passwords on paper. They store them in a text file. They reuse a version of their master password across services.

The real solution requires multiple approaches:

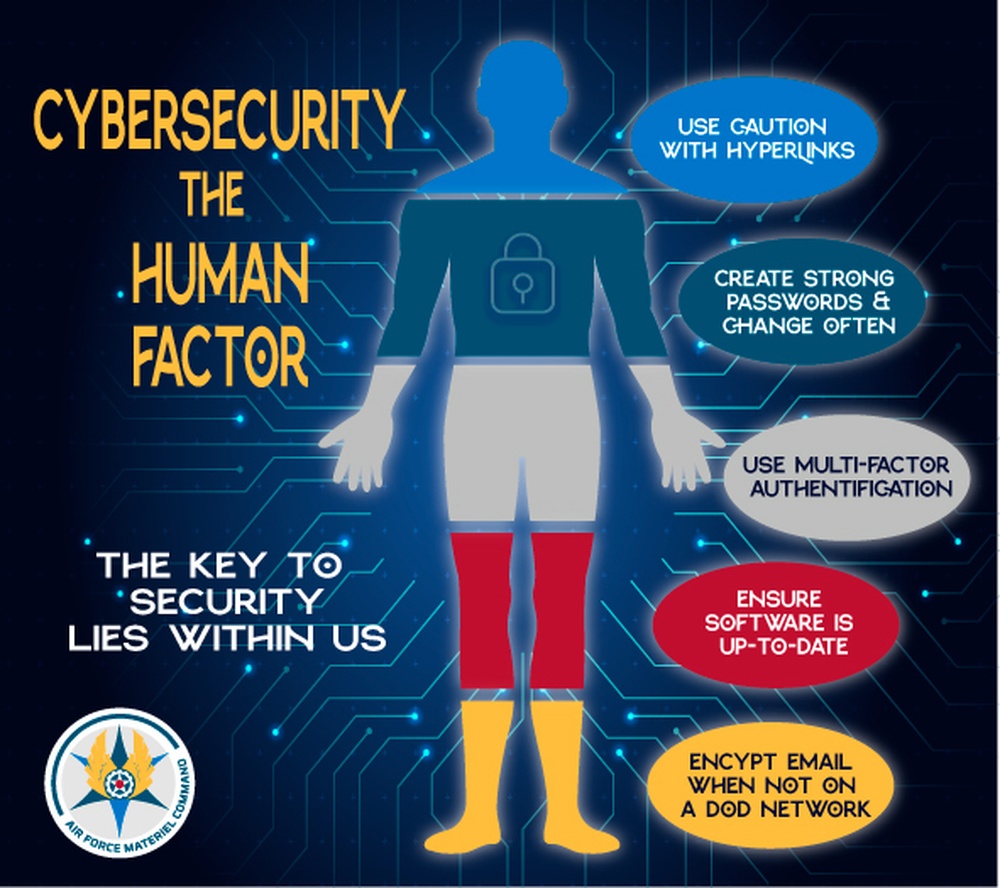

- Provide a password manager that's integrated into the organization's systems, so it's as easy as possible to use

- Make multi-factor authentication mandatory, so even if a password is compromised, it can't be used without the second factor

- Train employees on why this matters, in terms they understand

- Make reporting compromised credentials safe and easy, so people aren't hiding the problem

MFA as a Partial Solution

Multi-factor authentication changes the equation.

Even if someone reuses a password, and that password gets compromised, attackers can't get in without the second factor. They can't get into email. They can't access cloud storage. They can't compromise infrastructure.

MFA is one of the most impactful security controls an organization can implement. If you had to pick one thing to mandate, it would be MFA before everything else.

But MFA also has a human element. Some implementations are inconvenient, so employees use workarounds. Some people are vulnerable to social engineering around MFA, where attackers convince them to share their second factor.
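To see both why a second factor helps and why it can still be socially engineered, it is useful to look at how a common one works. The sketch below implements time-based one-time passwords along the lines of RFC 6238 (built on the HOTP truncation from RFC 4226) using only the Python standard library. The `verify` helper and its `window` parameter are illustrative assumptions, not a description of any particular product:

```python
import hashlib
import hmac
import struct


def hotp(secret: bytes, counter: int, digits: int = 6) -> str:
    """HMAC-based one-time password (RFC 4226) using HMAC-SHA1."""
    digest = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = digest[-1] & 0x0F  # dynamic truncation: low nibble picks the offset
    code = struct.unpack(">I", digest[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)


def totp(secret: bytes, unix_time: int, step: int = 30, digits: int = 6) -> str:
    """Time-based one-time password (RFC 6238): HOTP over the time-step counter."""
    return hotp(secret, unix_time // step, digits)


def verify(secret: bytes, submitted: str, unix_time: int,
           step: int = 30, window: int = 1) -> bool:
    """Accept the code for the current step or `window` steps either side."""
    for drift in range(-window, window + 1):
        moment = unix_time + drift * step
        if moment < 0:
            continue  # no time step exists before the epoch
        if hmac.compare_digest(totp(secret, moment, step), submitted):
            return True
    return False
```

The code depends only on the shared secret and the clock, so a stolen password alone is not enough. It also shows the human weakness: the server accepts the correct digits from anyone during the validity window, so a code read out to a convincing caller works just as well for the attacker.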

Continuous training is rated highest for effectiveness, followed by role-based and feedback-inclusive training. Estimated data based on typical security training attributes.

Data Handling: Where Casual Sharing Becomes Risk

The File Sharing Problem

Here's a behavior pattern that security teams see constantly.

An employee needs to send a file containing sensitive information to a colleague. They could use the organization's secure file sharing system. But it requires a few extra steps. The colleague might not have access and need to be invited. The process feels bureaucratic.

So instead, they use a consumer app like WhatsApp, Google Drive, or Dropbox. It's easy. It's automatic. Everyone already has it.

The file gets shared. Maybe it's a spreadsheet with customer data. Maybe it's a strategic document. Maybe it's personally identifiable information.

Now that file exists in a consumer system where:

- Security doesn't have visibility

- The company can't enforce access controls

- Backup and retention policies don't apply

- The data might be subject to different legal jurisdictions

- The consumer service's terms of service might allow them to use the data for training AI models

Multiply this behavior across an organization with hundreds of employees sharing files hundreds of times a day, and you've created a massive data governance problem.

Why Employees Share This Way

Employees don't share files using consumer apps because they're trying to circumvent security. They share files this way because official tools are:

- Harder to use

- Slower

- Less familiar

- Less reliable

- Not installed on their personal devices

If the secure file sharing system were as easy as drag-and-drop, as fast as direct upload, and as reliable as the consumer version, most employees would use it.

The solution isn't to ban consumer file sharing (people will just hide it and use it anyway). The solution is to make official file sharing better until it's the path of least resistance.

The Public Wi-Fi Problem

Another classic human behavior that causes security problems: using public Wi-Fi without a VPN.

An employee is at a coffee shop, airport, or hotel with their laptop. They need to check email or access a cloud service. The coffee shop has free Wi-Fi. They connect.

They're now on a network where anyone else on that network can potentially intercept their traffic. If that connection isn't encrypted, attackers can capture passwords, session cookies, and sensitive data.

The technical solution is mandatory use of a VPN that encrypts all traffic. But this adds latency and complexity. Some employees find it annoying and turn it off.

The cultural solution is making security easy. Automatically connecting to the VPN when joining untrusted networks. Pre-configuring it so there's nothing to set up. Making the VPN performant enough that it's not annoying.
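To make "automatically connecting to the VPN when joining untrusted networks" concrete, here is a minimal sketch of the decision logic. It assumes a hypothetical allowlist of organization-managed network names (`TRUSTED_SSIDS`) and a hypothetical `Network` record; a real endpoint agent would get this information from the operating system and would likely apply stronger checks than the network name alone.

```python
from dataclasses import dataclass

# Hypothetical allowlist of networks the organization manages itself.
TRUSTED_SSIDS = frozenset({"corp-office", "corp-branch"})


@dataclass(frozen=True)
class Network:
    ssid: str
    encrypted: bool  # True if the Wi-Fi link itself is encrypted (e.g. WPA2/WPA3)


def should_start_vpn(
    network: Network, trusted_ssids: frozenset[str] = TRUSTED_SSIDS
) -> bool:
    """Return True when the VPN should be brought up automatically.

    Anything not explicitly trusted is treated as untrusted, so the
    employee never has to make the call at the coffee shop.
    """
    if not network.encrypted:
        # An open network is treated as untrusted even if its name matches
        # the allowlist, because network names are easy to imitate.
        return True
    return network.ssid not in trusted_ssids


print(should_start_vpn(Network("CoffeeShop-Free", encrypted=False)))
print(should_start_vpn(Network("corp-office", encrypted=True)))
```

The design choice worth noting is the default: the VPN comes up unless the network is positively known to be safe, which keeps the secure behavior automatic instead of relying on the employee's judgment.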

But the human solution is also important: employees need to understand why this matters. Not as a rulebook they resent, but as something that actually protects them and the company.

Building a Security-Aware Workforce

Training That Actually Works

Effective security training has specific characteristics.

It's role-based: A person in finance gets training about payment fraud scenarios. An engineer gets training about credential compromise and suspicious code requests. A manager gets training about social engineering tactics that specifically target managers.

It's continuous: Not annual training. Not quarterly training. Training woven into ongoing work. In engineering, it happens in code review. In product, it happens in requirements gathering. In HR, it happens when hiring.

It's practical: Rather than abstract warnings, training covers real scenarios. Here's what a legitimate IT request looks like. Here's what a suspicious one looks like. Here's exactly what you do if something feels wrong.

It includes feedback: When someone makes a mistake, they get immediate, nonjudgmental feedback. When an employee reports a threat, they learn what it was and why it mattered. This reinforces learning.

It includes metrics: Organizations measure whether training is working. Not through test scores, but through actual behavior. Do people report suspicious activity? Do click rates on simulated phishing emails decline? Are credential compromise incidents decreasing?

Building Security Into Hiring

One underrated aspect of security culture is hiring.

When you hire, you hire people who fit the culture you've built. If your culture is that security is for squares, you'll hire people who see security that way. If your culture is that security is built into how we do good work, you'll hire people who fit that.

More importantly, during onboarding, new employees learn the organization's approach to security. If during their first week they hear that reporting problems is valued, that security is a shared responsibility, and that the organization invests in making secure behavior easy, they internalize that.

Conversely, if during onboarding they encounter complicated security tools, minimal training, and an implicit message that security is the IT department's problem, they learn that too.

Creating Feedback Loops

People learn through feedback and reinforcement.

One powerful tool is red team exercises or simulated attacks. Organizations run realistic phishing campaigns, physical security tests, or social engineering simulations. When someone falls for the simulation, instead of consequences, they get immediate training. They learn what they missed and how to recognize it next time.

When someone catches a simulated attack, they get positive feedback. They're celebrated. They see that security-aware behavior is valued.

Over time, employees start to see the organization's threat landscape and develop genuine security instincts. They start thinking like security professionals, not because they've read security training, but because they've practiced it.

Shadow IT and Governance: The Recurring Problem

Why Shadow IT Is Hard to Combat

The reason shadow IT persists despite security warnings is that it often solves real problems.

The official collaboration tool is slow and lacks features the team needs. The approved cloud storage doesn't support the kind of file organization the team prefers. The official communication platform doesn't have the integrations the team wants.

Instead of waiting for the official tools to improve (which might take months or years), the team finds an alternative that works today. They use it. It works. More people adopt it.

From the team's perspective, they're being pragmatic and productive. From security's perspective, they're introducing unmanaged data repositories and vendor risk.

The tension is real. And simply banning shadow IT doesn't work. People will just hide it.

A Better Approach: Shadow IT Governance

Forward-thinking organizations don't try to eliminate shadow IT. They govern it.

They acknowledge that teams will find tools that work better for their needs. Rather than fighting that, they create processes for sanctioning new tools. A team finds a SaaS application they want to use. They submit it for security review. Security evaluates it for:

- Data handling and encryption

- Access controls

- Compliance with relevant regulations

- Security practices of the vendor

- Integration capabilities

- Backup and disaster recovery

If it passes, it becomes sanctioned. Security monitors it. It's integrated into business processes. It's covered by insurance and incident response plans.

If it doesn't pass, security explains why and works with the team to find an alternative or improve the official tool.
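The review process above can be sketched as a simple checklist evaluation. This is an illustration only: the criterion names mirror the six review areas listed earlier, while `ReviewResult`, `review_tool`, and the pass/fail representation are hypothetical. A real review would record evidence and severity, not just booleans.

```python
from dataclasses import dataclass, field

# The six review areas from the list above, as hypothetical identifiers.
CRITERIA = (
    "data_handling_and_encryption",
    "access_controls",
    "regulatory_compliance",
    "vendor_security_practices",
    "integration_capabilities",
    "backup_and_disaster_recovery",
)


@dataclass
class ReviewResult:
    tool: str
    sanctioned: bool
    failed: list[str] = field(default_factory=list)


def review_tool(tool: str, findings: dict[str, bool]) -> ReviewResult:
    """Sanction a tool only if every criterion was assessed and passed.

    A criterion missing from `findings` counts as a failure, so an
    incomplete review can never sanction a tool by accident.
    """
    failed = [c for c in CRITERIA if not findings.get(c, False)]
    return ReviewResult(tool=tool, sanctioned=not failed, failed=failed)


# Example: a tool that passes everything except access controls.
findings = {c: True for c in CRITERIA}
findings["access_controls"] = False
result = review_tool("team-whiteboard-app", findings)
print(result.sanctioned, result.failed)
```

Returning the list of failed criteria, not only a verdict, supports the step described above: security can explain exactly why a tool did not pass and work with the team on an alternative.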

This approach acknowledges the reality of shadow IT while bringing it under management. Teams feel heard. Security gets visibility. The organization reduces risk.

The Data Governance Benefit

Governing shadow IT also has a data governance benefit.

Data governance is about knowing where data is, who has access to it, how it's protected, and how long it's kept. Shadow IT creates blind spots where data exists but governance doesn't.

When shadow IT is brought into governance, data governance improves dramatically. You know where sensitive data is stored. You can ensure it's backed up. You can respond to data subject access requests because you know where to look. You can protect data consistently across the organization.

This is increasingly important as regulations like GDPR, CCPA, and others require organizations to have data governance.

Incident Response and the Human Element

Detection is the Hard Part

There's a common misunderstanding about incident response: that the hard part is responding to an attack once it's detected.

Actually, the hard part is detecting it in the first place.

Attackers hide. They try to blend into normal activity. They cover their tracks. They stay inside a network for weeks or months before doing anything that triggers an automated alert.

Technical controls catch some attacks. Network monitoring catches anomalous traffic. Endpoint protection catches malicious behavior. SIEM systems correlate events across the infrastructure.

But humans catch attacks that automated systems miss. An employee notices that a colleague's email is sending out suspicious messages. Another employee sees a service accessing data it shouldn't. A manager notices that the permissions of a team member who left the company weren't fully revoked.

Many breaches are discovered by humans noticing something odd, not by automated detection.

Building a Reporting Culture

For humans to be effective at detection, they need to:

- Know what to look for

- Know how to report what they find

- Feel safe reporting without fear of blame

The third point is critical. If employees worry that reporting suspicious activity will cast suspicion on them, they won't report. They'll investigate privately or ignore it.

Organizations with strong incident response cultures make it crystal clear: if you see something suspicious, report it. The security team will investigate. You're helping the organization. We appreciate it.

Then when reports come in, they're investigated quickly and professionally. The reporter isn't blamed. The organization learns what happened.

Technology as an Enabler, Not a Replacement

Automation and Human Oversight

An important nuance in modern security is the role of automation.

Automation can handle routine tasks. It can detect patterns. It can respond to low-risk incidents automatically. It can scale security efforts that would otherwise require thousands of people.

But automation cannot replace human judgment.

Automated systems can detect that someone accessed a sensitive file at 3 AM, but they can't determine whether that's an engineer working late on a crisis or a compromised account. A human needs to assess context.

Automated systems can identify that a login came from an unusual location, but they can't determine if it's someone traveling or an attacker. A human needs to make that judgment.

The future of security isn't fully automated or fully human. It's hybrid. Automation handles scale and routine tasks. Humans handle judgment, creativity, and context.
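One way to picture the hybrid model is as a triage rule: automation resolves alerts that are clearly routine, and everything ambiguous goes to a person. The sketch below is illustrative. The `Alert` fields, the `risk_score` from a hypothetical detector, and the `AUTO_RESPOND_BELOW` threshold are all assumptions, not values from any particular product.

```python
from dataclasses import dataclass
from enum import Enum


class Action(Enum):
    AUTO_RESPOND = "auto_respond"  # routine, handled by automation
    HUMAN_REVIEW = "human_review"  # needs context only a person has


@dataclass(frozen=True)
class Alert:
    kind: str                     # e.g. "off_hours_file_access"
    risk_score: float             # 0.0 (benign) to 1.0 (critical), hypothetical detector output
    touches_sensitive_data: bool


# Illustrative threshold; a real value would be tuned against past alerts.
AUTO_RESPOND_BELOW = 0.3


def triage(alert: Alert) -> Action:
    """Route an alert to automation or to a human analyst."""
    if alert.touches_sensitive_data:
        # Sensitive data always gets human judgment, regardless of score.
        return Action.HUMAN_REVIEW
    if alert.risk_score < AUTO_RESPOND_BELOW:
        return Action.AUTO_RESPOND
    return Action.HUMAN_REVIEW


late_night = Alert("off_hours_file_access", risk_score=0.2, touches_sensitive_data=True)
print(triage(late_night))
```

In this sketch the 3 AM access to a sensitive file is escalated even though its score is low, because only a person can tell whether it is an engineer working through a crisis or a compromised account.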

Tools That Empower Employees

When implementing security tools, think about whether they empower employees or constrain them.

A tool that automatically enforces encryption when files are shared empowers people to share securely without thinking about it. A tool that automatically locks accounts when suspicious activity is detected protects the organization and the employee.

But a tool that requires employees to jump through hoops before they can do their work, that doesn't support their actual workflow, that's constantly blocking legitimate activity, constrains them. They'll work around it.

The best security tools are the ones that make secure behavior the path of least resistance. They're integrated into workflow. They're performant. They're invisible unless something is actually suspicious.

The Future of Human-Centered Security

Where Technology is Heading

The security landscape is evolving rapidly, and the human element will remain critical.

AI is being used by attackers to create more convincing phishing, more targeted social engineering, and more scalable attacks. But AI is also being used by defenders to improve detection, automate routine security tasks, and help security professionals work more effectively.

Zero Trust architecture, which assumes every access request is potentially malicious and requires verification, is becoming standard. But implementing zero trust requires understanding how work actually happens, which is a human question.

Behavioral analytics can detect when someone is acting anomalously. But detecting anomaly requires understanding what's normal, which varies by role and context.
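A small sketch shows why "normal" has to be defined per role. It uses a basic z-score against a role-specific baseline, which is a deliberately simple stand-in for whatever model a real behavioral analytics product uses. The download counts and role names are made-up illustration data.

```python
from statistics import mean, stdev


def is_anomalous(value: float, baseline: list[float], threshold: float = 3.0) -> bool:
    """Flag `value` if it lies more than `threshold` standard deviations
    from the mean of `baseline`."""
    if len(baseline) < 2:
        return False  # not enough history to define "normal"
    mu = mean(baseline)
    sigma = stdev(baseline)
    if sigma == 0:
        return value != mu
    return abs(value - mu) / sigma > threshold


# Hypothetical daily file-download counts, grouped by role.
baselines = {
    "finance": [10, 12, 11, 9, 13, 10, 12],
    "data_engineer": [180, 220, 200, 210, 190, 205, 195],
}

# The same activity level is judged differently depending on the role.
print(is_anomalous(200, baselines["finance"]))
print(is_anomalous(200, baselines["data_engineer"]))
```

With these sample numbers, 200 downloads in a day is far outside the finance baseline but exactly average for a data engineer, which is the point the paragraph above makes about role and context.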

None of these technologies replace the need for security-aware humans. If anything, they highlight how important human understanding is.

Building Resilience, Not Perfection

There's an important shift in how security leaders are thinking about the challenge.

Instead of trying to prevent all breaches (impossible), the goal is building resilience. If a breach happens, detect it fast. Contain it quickly. Recover completely. Learn from it.

This resilience requires people. It requires employees who notice anomalies. It requires teams who understand the organization's systems and can respond to incidents. It requires a culture where security is everyone's job.

You cannot build organizational resilience with tools alone. You need security-aware people.

Practical Implementation: Getting Started

Where to Begin

If your organization hasn't prioritized security culture, starting feels overwhelming. Where do you begin?

Step 1: Assess Your Current State. Talk to employees honestly. What security challenges are they facing? What tools are they frustrated with? What shadow IT are they using and why? Don't ask in a way that threatens them. Ask because you want to understand.

Step 2: Start With Leadership Commitment. Culture flows from leadership. Get your security and business leaders aligned on the importance of human-centered security. Get them to commit time and resources. Get them to model secure behavior.

Step 3: Improve Your Official Tools. If shadow IT is prevalent, the official tools probably aren't meeting people's needs. Pick one and make it better. Make it faster. Add features people are asking for. Make it easier to use.

Step 4: Build Reporting Mechanisms. Create a simple, straightforward way for employees to report suspicious activity. Make sure the security team responds quickly and keeps the reporter informed about what they found. Celebrate early detection.

Step 5: Implement Role-Based Training. Stop doing generic phishing awareness. Create training specific to different roles. Practice with simulations. Give feedback, not punishment.

Step 6: Create Feedback Loops. When incidents happen, learn from them. Share what you learned with the organization. Show how early detection prevented a bigger problem. Reinforce that security is everyone's responsibility.

Step 7: Measure and Iterate. Measure whether your efforts are working. Are employees reporting suspicious activity? Is phishing effectiveness declining? Are credential compromise incidents decreasing? Adjust your approach based on what you're seeing.

Metrics That Matter

What should you measure to know if your human-centered security efforts are working?

- Reporting Rate: How many suspicious activities are employees reporting? This should be increasing if your culture is improving.

- Detection Time: How long between an attack and detection? This should be decreasing as your culture improves.

- Phishing Effectiveness: What percentage of employees fall for phishing simulations? This should be decreasing.

- Breach Cost: When breaches do occur, is the cost decreasing because they're detected faster and contained better?

- Employee Satisfaction: Are employees more or less satisfied with security tools and processes? Are they feeling empowered or constrained?

These metrics help you understand whether you're building a more resilient organization.
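Three of these metrics are simple enough to compute from data most organizations already have. The sketch below is one possible way to define them. The function names, the per-100-employees normalization, and the use of a median for detection time are assumptions chosen for illustration, not a standard.

```python
from datetime import datetime
from statistics import median


def phishing_failure_rate(clicked: int, targeted: int) -> float:
    """Share of simulation recipients who clicked; should trend down."""
    if targeted <= 0:
        raise ValueError("targeted must be positive")
    return clicked / targeted


def reports_per_100_employees(reports: int, headcount: int) -> float:
    """Reporting rate normalized by headcount; should trend up."""
    if headcount <= 0:
        raise ValueError("headcount must be positive")
    return 100 * reports / headcount


def median_detection_hours(incidents: list[tuple[datetime, datetime]]) -> float:
    """Median hours between the start of an incident and its detection;
    should trend down. Each tuple is (started, detected)."""
    hours = [
        (detected - started).total_seconds() / 3600
        for started, detected in incidents
    ]
    return median(hours)


# Made-up sample data for one quarter.
incidents = [
    (datetime(2025, 1, 1, 0, 0), datetime(2025, 1, 1, 6, 0)),
    (datetime(2025, 1, 2, 0, 0), datetime(2025, 1, 3, 0, 0)),
    (datetime(2025, 1, 5, 0, 0), datetime(2025, 1, 5, 12, 0)),
]
print(reports_per_100_employees(reports=45, headcount=300))
print(median_detection_hours(incidents))
```

Normalizing reports by headcount keeps the reporting rate comparable as the organization grows, and a median keeps one unusually long-running incident from dominating the detection-time trend.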

The Bottom Line: Humans Are Your Competitive Advantage

Cybersecurity's human paradox isn't really a paradox. It's a choice.

You can treat humans as a security problem that needs to be controlled through tools and rules. You can blame them when breaches happen. You can enforce training and punishment.

Or you can treat humans as your security advantage. You can invest in making security easy. You can build cultures where security is valued and violations are learning opportunities. You can empower employees to be your eyes and ears.

Organizations that do the latter have fewer breaches. When breaches do occur, they detect them faster and contain them better. They recover more quickly.

The technical controls matter. But the human element is what separates organizations that get cyber resilience right from those that treat it as a checkbox.

The question isn't whether your employees will make mistakes. They will. Humans are fallible. The question is whether you've built an organization where mistakes are caught by the system, where suspicious activity is reported quickly, and where security-aware behavior is the default.

That's not achieved through tools. It's achieved through culture. And culture starts with leadership's choice to invest in it.

The organizations winning at cyber resilience aren't the ones with the most advanced tools. They're the ones with the most engaged, security-aware workforces. They're the ones that have decided that security is how they do business, not something they do instead of business.

If you want to improve your organization's cyber resilience, start there. Start with culture. The tools will follow.

FAQ

What is the human paradox in cybersecurity?

The human paradox refers to the fact that employees are simultaneously the weakest and strongest links in organizational cybersecurity. They're the weakest link because they're vulnerable to social engineering, credential reuse, and poor security practices. They're the strongest link because they're capable of detecting attacks that automated systems miss, if they're properly trained and empowered. Organizations must balance controlling human risk with leveraging human capability to build truly resilient defenses.

How does AI-powered social engineering change the threat landscape?

AI enables attackers to automate and personalize phishing at scale. Machine learning models can identify which employees are most likely to fall for specific tactics based on their role, tenure, and behavior. AI can generate perfectly written emails, clone voices, and create deepfake videos that bypass traditional detection methods. This makes social engineering significantly more effective and harder for humans to recognize, which is why employee awareness and culture are more important than ever.

Why does traditional security awareness training fail?

Traditional awareness training is passive, generic, and disconnected from actual work. Employees click through modules without engagement, retaining little. The training doesn't explain why security matters to their specific role, so the knowledge doesn't translate into behavior change. Most critically, checkbox training doesn't build security culture or create psychological safety for reporting problems. Effective training is continuous, role-based, practical, and integrated into how work actually happens.

What is shadow IT and why does it matter?

Shadow IT refers to software, applications, and processes that employees use outside of formal IT governance. Employees typically adopt shadow IT because official tools don't meet their needs well enough. While usually well-intentioned, shadow IT creates data governance blind spots, compliance risks, and security vulnerabilities. Organizations should address shadow IT not by banning it, but by understanding why it exists, improving official tools, and creating governance processes that bring unapproved tools under management.

How can organizations build a security-aware culture?

Building security culture requires leadership commitment, practical tools that make secure behavior easy, role-based continuous training, psychological safety for reporting, and feedback loops that reinforce learning. Organizations need to demonstrate that security-aware behavior is valued through public recognition and positive reinforcement. Culture is built over months and years through consistent messaging and modeling from leadership, not through enforcement and punishment.

What's the relationship between technical controls and human security behavior?

Technical controls and human security behavior are complementary. Technical controls catch attacks at the network, application, and endpoint level and provide scale that humans cannot. Human security behavior catches attacks that slip through technical controls and provides judgment and context that tools cannot. The most effective security combines both: robust technical controls layered with a security-aware workforce that understands threats and knows how to report them.

How should organizations approach credential management and password security?

The most effective approach combines multiple methods: mandatory password managers with strong master passwords, multi-factor authentication for all critical systems, and continuous user education about why credential security matters. Organizations should also make reporting compromised credentials safe and easy, so employees don't hide password breaches. MFA is particularly important because even if passwords are compromised, attackers still have to defeat the second factor before they can access systems.

What role do employees play in breach detection?

Employees are often the first line of detection for sophisticated attacks that bypass automated systems. They notice unusual email patterns, suspicious file access, odd requests, or behavioral anomalies. However, employees only report what they detect if they understand what to look for, know how to report it, and feel psychologically safe doing so. Organizations with strong detection records typically have reporting cultures where early detection is celebrated and investigated professionally.

How should organizations measure whether their human-centered security efforts are working?

Key metrics include: the rate at which employees report suspicious activity (should increase), detection time for breaches (should decrease), phishing simulation effectiveness (should decrease), breach costs (should decrease due to faster detection), and employee satisfaction with security tools and processes. These metrics indicate whether the organization is building genuine security culture or just going through the motions of security theater.

What's the difference between a strong security culture and security theater?

Security theater looks good on paper but doesn't actually reduce risk. It includes mandatory training that nobody remembers, security tools that are more about compliance than actual protection, and policies that employees work around. Strong security culture is the opposite: it's built on trust between security and business teams, makes secure behavior the path of least resistance, creates psychological safety for reporting problems, and continuously learns from incidents. Security theater assumes humans will follow rules. Strong culture assumes humans are intelligent and tries to make security easy.

Conclusion

The human paradox at the center of modern cyber resilience isn't something to be solved. It's something to be embraced.

Yes, employees are vulnerable to social engineering. Yes, they make mistakes. Yes, they use unapproved tools and reuse passwords and click suspicious links.

But they're also your organization's most capable threat detector. They're the ones who understand your business well enough to know when something is wrong. They're the ones who can make judgment calls that no automated system can.

The organizations that are winning at cybersecurity aren't the ones trying to eliminate the human element. They're the ones who've realized that human security awareness is the foundation everything else is built on. They've invested in making security easy. They've built cultures where security-aware behavior is valued and rewarded. They've created environments where employees feel empowered, not blamed.

If you want to improve your organization's cyber resilience, start with this principle: your employees are not your weakest link unless you treat them that way. Make them your strongest link instead.


Key Takeaways

- The human paradox: employees are simultaneously cybersecurity's weakest and strongest link, and understanding this drives effective resilience strategies

- AI-powered social engineering is becoming more convincing and scalable, making human detection and awareness more critical than ever

- Traditional checkbox training fails because it's passive and disconnected from actual work; culture and continuous role-based training drive real behavior change

- Shadow IT emerges from gaps in official tools, not malicious intent; governance and improving tools address root causes better than bans

- Organizations with strong security culture detect breaches 3x faster and see 73% fewer successful attacks through continuous training and psychological safety

- Technical controls alone are insufficient; human judgment, context awareness, and rapid threat reporting form the foundation of true cyber resilience

