The AI-SaaS Evolution: Understanding Databricks' Bold Thesis

The technology industry has been consumed by a singular question for the past two years: Will artificial intelligence destroy the software-as-a-service market? This anxiety permeates venture capital firms, startup boardrooms, and enterprise IT departments. When Databricks announced reaching a

Ghodsi's position is nuanced and grounded in observable market dynamics. He doesn't argue that AI will leave SaaS untouched. Rather, he contends that the relationship between AI and SaaS is far more complex than the dismissive narratives suggesting enterprises will "rip out" their critical systems to replace them with hastily assembled, AI-powered alternatives. This perspective, backed by a company that has raised

The discourse surrounding AI and SaaS often relies on oversimplified assumptions. Critics envision a world where large language models enable companies to quickly assemble custom software solutions, rendering specialized SaaS providers obsolete. Proponents of SaaS incumbents, meanwhile, argue that their entrenched positions, battle-tested infrastructure, and network effects make them virtually untouchable. Ghodsi's view transcends this binary thinking by introducing a third dimension: the fundamental transformation of how users interact with software itself.

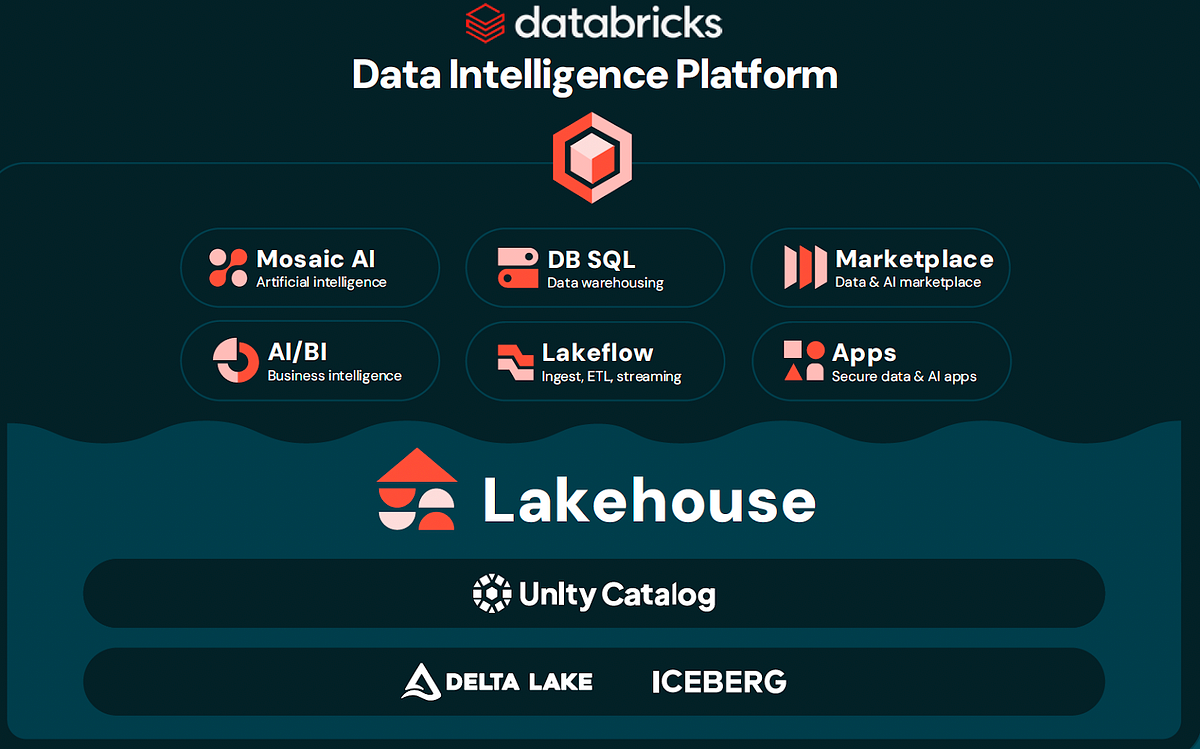

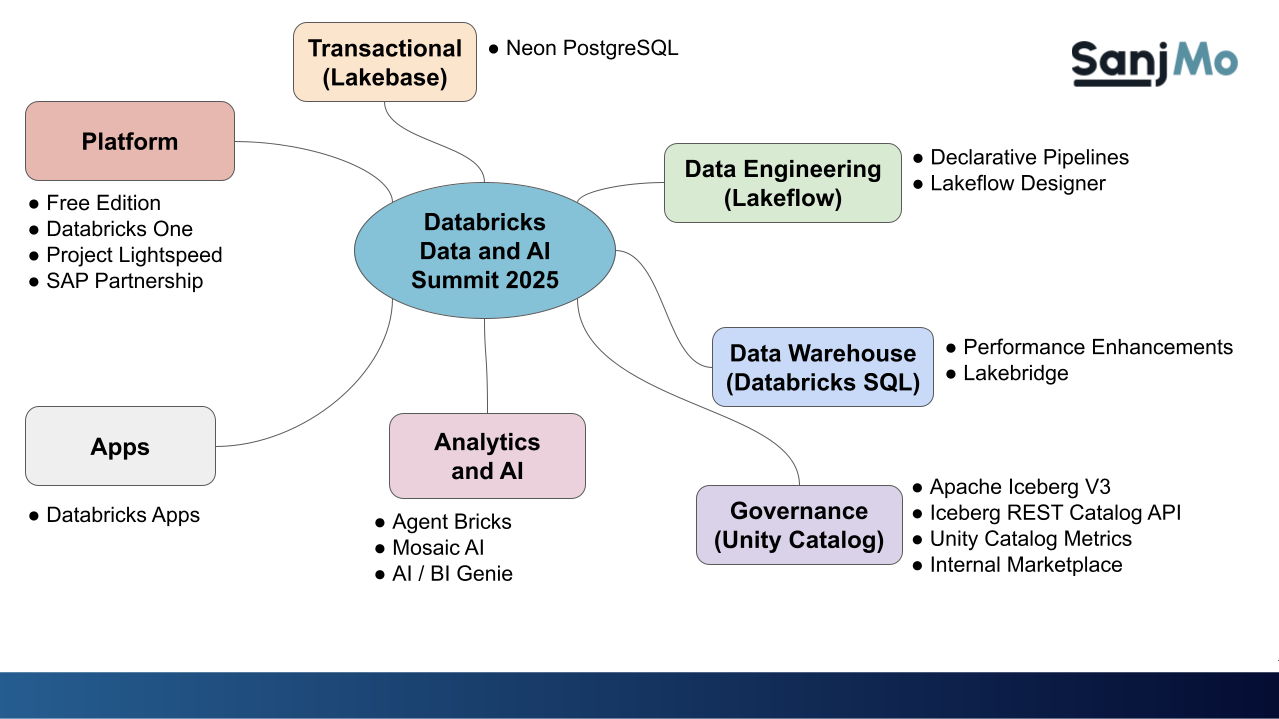

Databricks' own trajectory provides concrete evidence for this thesis. The company was founded by the creators of Apache Spark, built its business on cloud data processing and warehousing, and has evolved into a comprehensive AI data platform. Its recent success isn't attributable to abandoning its core data infrastructure; rather, it stems from integrating AI-native interfaces and capabilities into that infrastructure. This progression illustrates how mature SaaS businesses can reinvent themselves by embracing AI rather than being threatened by it.

The implications extend far beyond Databricks. Understanding how AI will reshape the SaaS landscape requires examining the specific mechanisms of disruption, the resilience factors that protect existing players, and the genuine vulnerabilities that demand transformation. This comprehensive analysis will explore these dynamics in depth, examining what Ghodsi and other industry leaders understand about the future of enterprise software.

The Real Threat: Natural Language User Interfaces Replace Traditional Query Languages

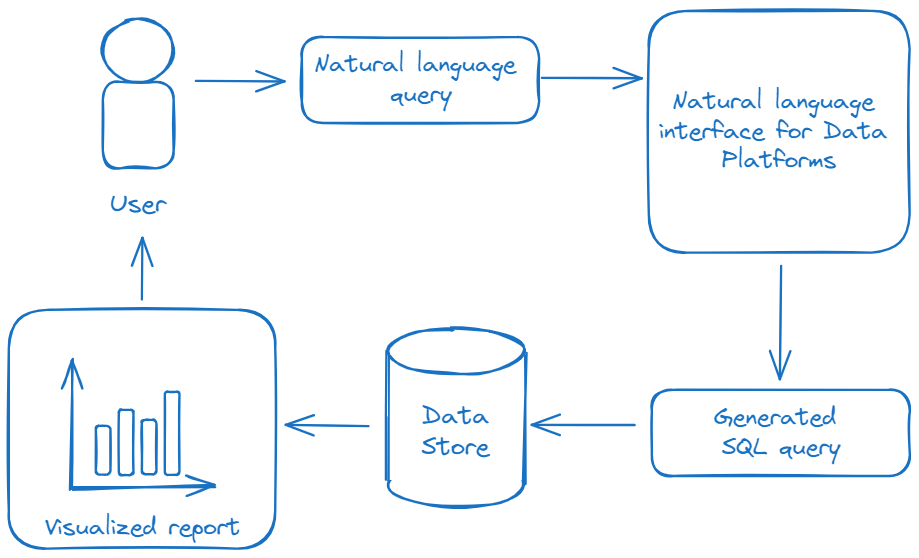

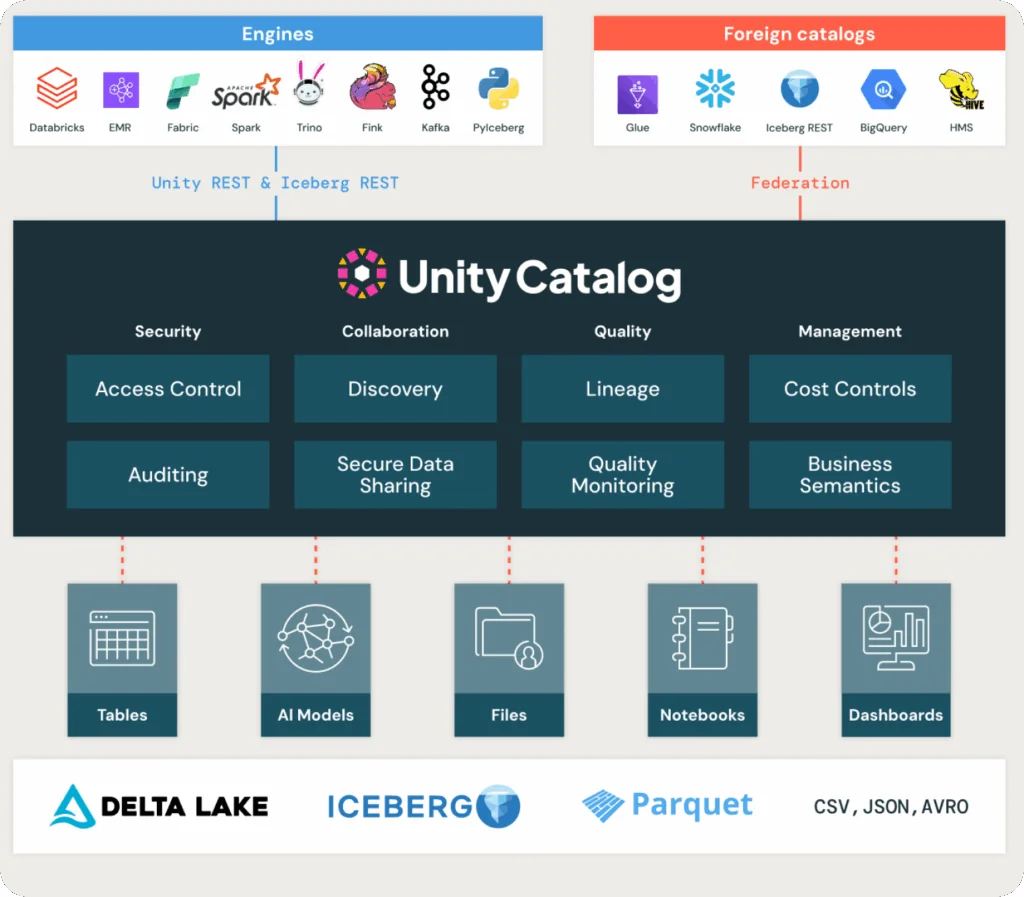

When Ghodsi discusses the impact of AI on SaaS, he points to a specific technical shift that executives and analysts often gloss over: the replacement of specialized interfaces with natural language interactions. This represents perhaps the most significant threat to incumbent SaaS providers, not because it makes their core functionality obsolete, but because it democratizes access to that functionality in unprecedented ways.

For decades, enterprise software has been defined by its user interfaces. Access to data warehouses required knowledge of SQL, a specialized query language. Generating reports demanded either hiring specialists or navigating complex dashboard builders. Creating business intelligence required technical expertise or extensive training. These barriers to entry created a protective moat around SaaS providers—they became indispensable precisely because their software was difficult to learn and use.

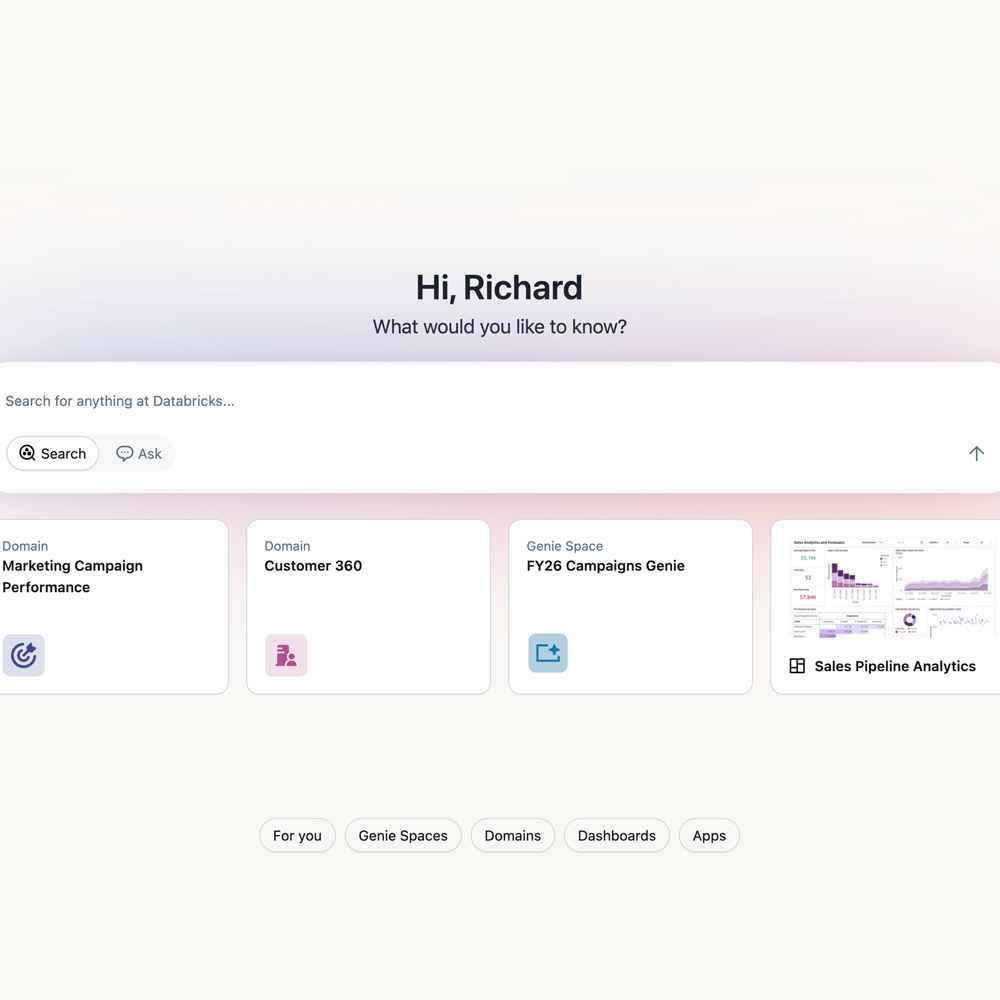

Databricks' Genie product exemplifies how AI systematically dissolves this protective moat. Genie allows any user to interact with data warehouses using conversational natural language. Instead of crafting a SQL query to understand why warehouse usage and revenue spike on particular days, a business analyst simply asks the question in plain English. The large language model translates this natural language request into the necessary technical queries, aggregates the results, and presents the answer in a conversational format.
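The question-to-SQL-to-answer flow described above can be sketched in a few lines. This is not how Genie is implemented. It is a minimal sketch assuming a hypothetical `translate_question` function that stands in for the large language model (a hard-coded lookup instead of a real model call) and a hypothetical in-memory SQLite table named `daily_usage`.

```python
import sqlite3

# Hypothetical stand-in for the LLM step. A real system would prompt a model
# with the table schema and the user's question; here one mapping is hard-coded.
# Unknown questions raise KeyError in this sketch.
def translate_question(question: str) -> str:
    canned = {
        "which days had the highest revenue?": (
            "SELECT day, revenue FROM daily_usage "
            "ORDER BY revenue DESC LIMIT 2"
        ),
    }
    return canned[question.strip().lower()]

def answer(conn: sqlite3.Connection, question: str) -> str:
    sql = translate_question(question)      # natural language -> SQL
    rows = conn.execute(sql).fetchall()     # run the generated query
    # Present the result conversationally instead of as a raw table.
    parts = [f"{day} (revenue {revenue})" for day, revenue in rows]
    return "The top days were " + " and ".join(parts) + "."

if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE daily_usage (day TEXT, queries INTEGER, revenue REAL)")
    conn.executemany(
        "INSERT INTO daily_usage VALUES (?, ?, ?)",
        [("Mon", 120, 300.0), ("Tue", 480, 1150.0), ("Wed", 90, 210.0), ("Fri", 510, 1240.0)],
    )
    print(answer(conn, "Which days had the highest revenue?"))
    # The top days were Fri (revenue 1240.0) and Tue (revenue 1150.0).
```

The analyst never sees the SQL; the translation step is where all the difficulty (and risk) lives, which is why real systems invest heavily in grounding the model in the actual schema.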

This capability represents a genuine discontinuity in how enterprise software functions. Ghodsi emphasizes that "millions of people around the world got trained on those user interfaces. And so that was the biggest moat that those businesses have." These moats—the accumulated expertise, muscle memory, and organizational knowledge embedded in specialized interfaces—are precisely what AI natural language interactions undermine.

The competitive implications are substantial. A SaaS vendor that maintains a legacy interface while competitors introduce natural language interactions faces an immediate competitive disadvantage in user acquisition and retention. However, implementing natural language interfaces presents challenges for incumbents. Building reliable natural language query systems requires different engineering expertise than traditional software development. It demands careful attention to accuracy, hallucination prevention, and edge case handling. For established SaaS companies with deep roots in their existing architecture, retrofitting natural language capabilities can be technically complex.
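One common mitigation for the accuracy and hallucination risks just mentioned is to validate model-generated SQL before it runs. The sketch below is a minimal illustration, not a complete defense, and the function name `run_generated_sql` is hypothetical. It assumes SQLite, accepts only a single `SELECT` statement, asks SQLite to plan the query (which fails on hallucinated tables or columns), and executes it with `PRAGMA query_only` enabled so the engine itself refuses writes.

```python
import sqlite3

class UnsafeQueryError(ValueError):
    """Raised when generated SQL fails validation."""

def run_generated_sql(conn: sqlite3.Connection, sql: str) -> list:
    statement = sql.strip().rstrip(";")
    # Deliberately strict: reject multi-statement payloads and anything that is
    # not a plain SELECT (this also rejects CTEs and literals containing ';').
    if ";" in statement or not statement.lower().startswith("select"):
        raise UnsafeQueryError(f"only single SELECT statements allowed: {sql!r}")
    try:
        # Planning the query catches nonexistent tables or columns
        # without reading any data.
        conn.execute("EXPLAIN QUERY PLAN " + statement)
    except sqlite3.Error as exc:
        raise UnsafeQueryError(f"query does not match schema: {exc}") from exc
    # Defense in depth: have the engine itself reject any data changes.
    conn.execute("PRAGMA query_only = ON")
    try:
        return conn.execute(statement).fetchall()
    finally:
        conn.execute("PRAGMA query_only = OFF")
```

Checks like these catch malformed or dangerous queries, but not a syntactically valid query that answers the wrong question; that kind of error still requires evaluation against known-good answers.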

Startups and AI-native companies face fewer architectural constraints. They can design systems with natural language interaction as a foundational principle rather than a retrofit. The same reasoning explains why Databricks, though an established vendor, chose to build purpose-designed systems like Lakebase, which Ghodsi describes as designed "for agents." These systems assume natural language and API-driven interactions from inception rather than bolting them onto legacy interfaces.

The shift to natural language interfaces also extends to automation and autonomous agents. Traditional SaaS systems relied on humans to interact with their user interfaces. AI agents, however, require structured APIs and programmatic interfaces. A SaaS vendor optimized for human interaction may struggle to serve AI agents efficiently. This creates a two-pronged disruption: human users gain the ability to interact more naturally, while AI agents require fundamentally different interfaces than current SaaS systems provide.

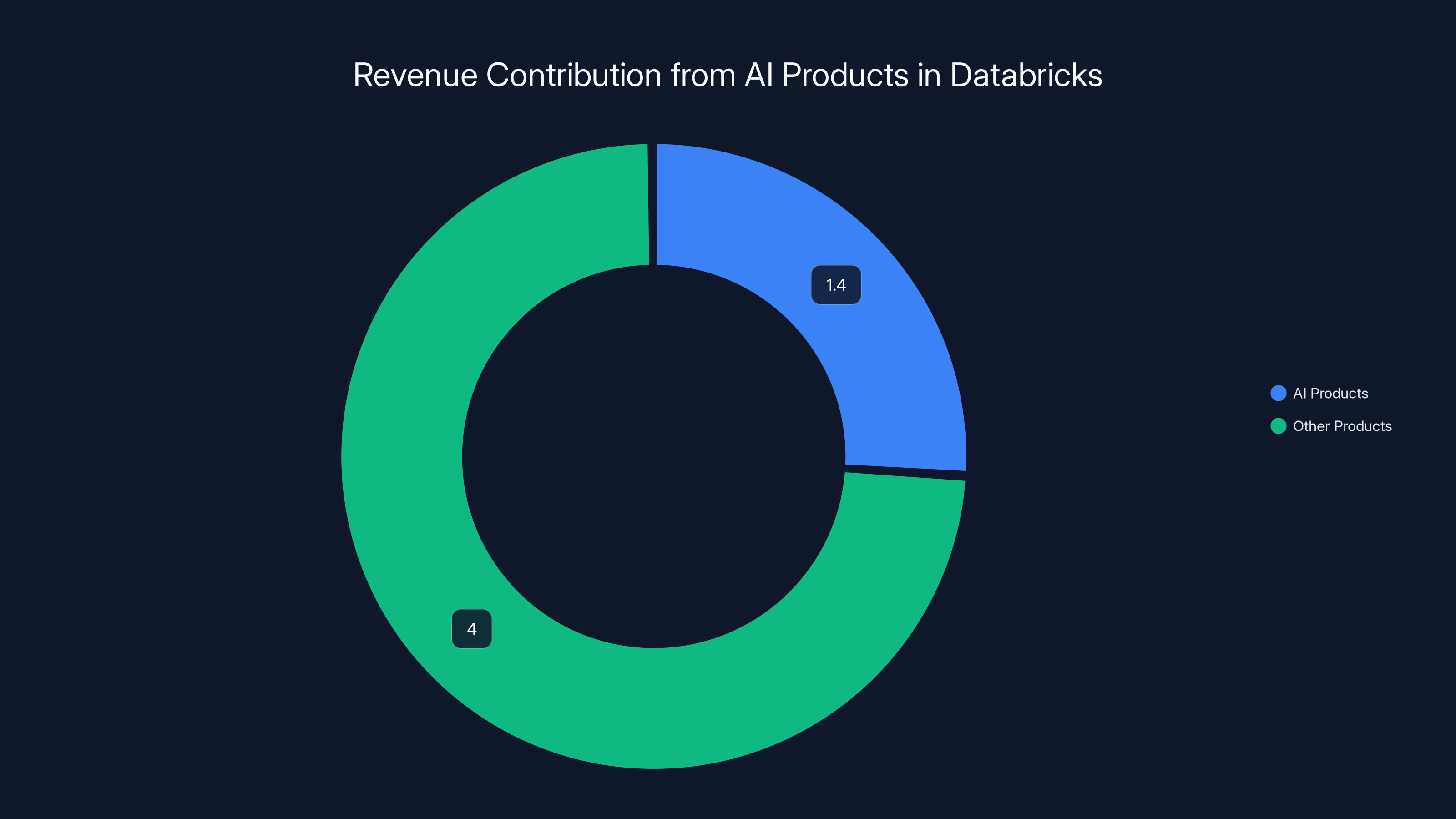

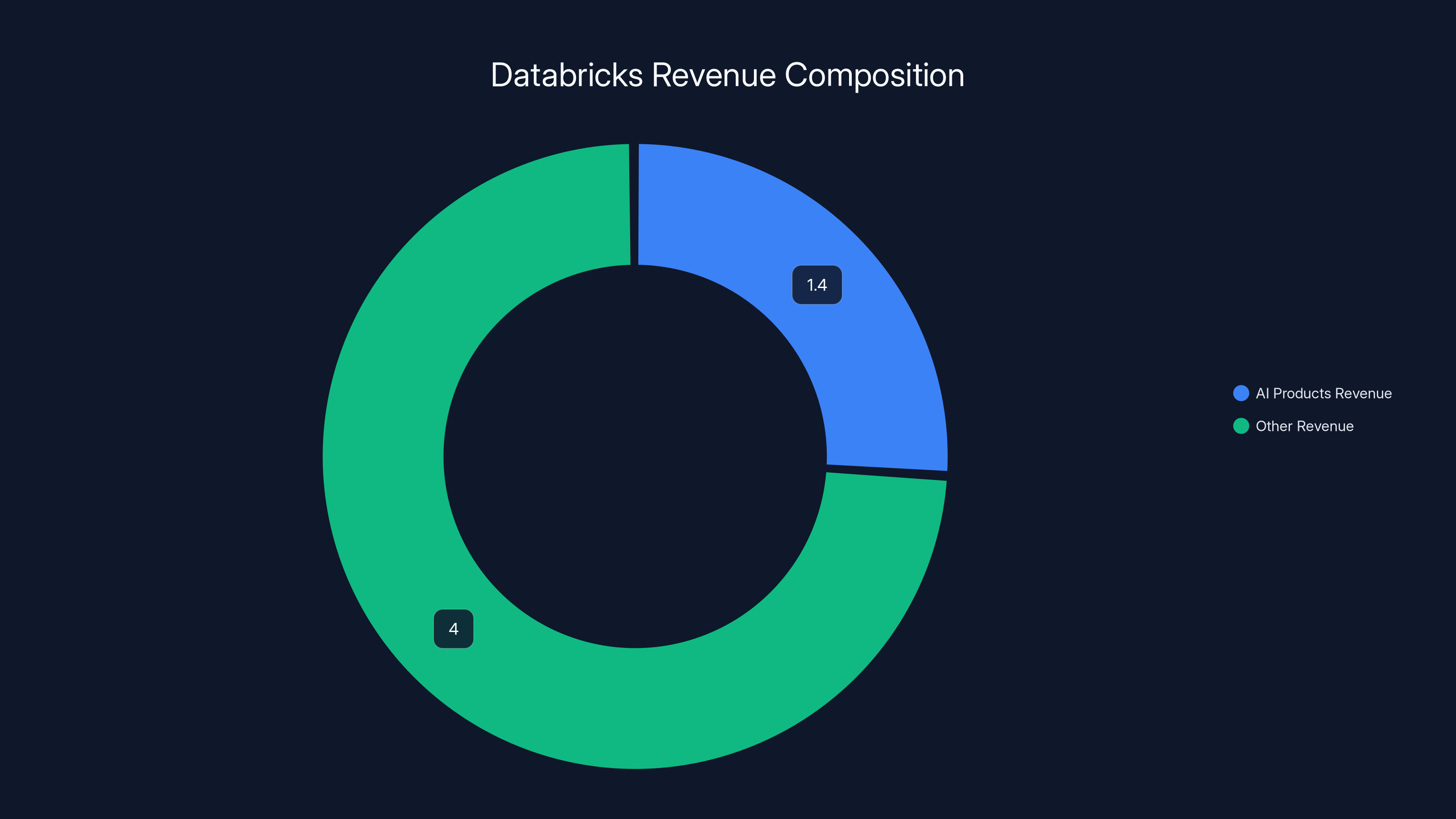

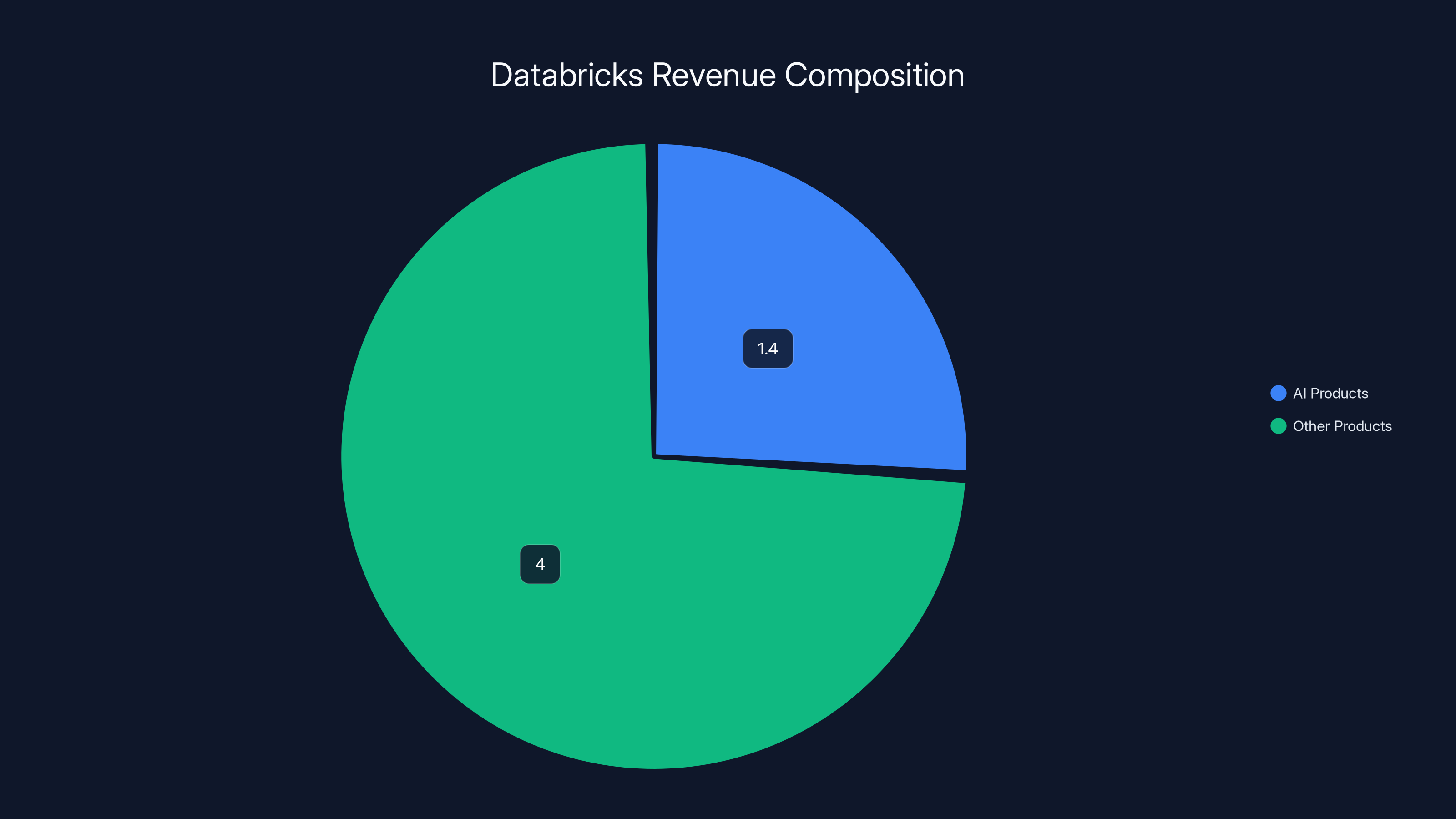

AI products contribute approximately 26% to Databricks' total revenue, highlighting the significant impact of AI integration on growth.

Why "Systems of Record" Remain Remarkably Resilient

For all the disruption that AI introduces to user interfaces, Ghodsi makes a compelling observation about the resilience of core enterprise systems: companies will not abandon their "systems of record" to replace them with hastily assembled AI alternatives. A system of record is an organization's single source of truth for critical business data—customer information, financial records, sales pipelines, supply chain data, and similar essential information.

This resilience stems from fundamental economic and operational realities. Systems of record are deeply integrated into organizational processes. They connect to countless other systems through APIs and integrations. They contain years or decades of historical data in carefully structured formats. They are subject to regulatory compliance requirements and audit trails. They support business-critical decision-making and cannot tolerate data inconsistency or downtime.

Migrating a system of record is among the most disruptive and expensive undertakings in enterprise IT. Organizations that have successfully migrated—from legacy mainframe systems to cloud platforms, for instance—typically report multi-year projects involving hundreds of people and millions of dollars. The operational risk and cost far exceed the potential benefits of switching to a newer technology unless the incumbent system is actively failing to meet organizational needs.

This fundamental constraint explains why large SaaS vendors like Salesforce, SAP, and Oracle have maintained dominant market positions despite decades of technological change. Yes, they face competitive threats. But the barrier to dislodging a system of record is so substantial that competitive displacement typically occurs in adjacent categories or with new customer cohorts, not through rip-and-replace migrations of existing deployments.

Ghodsi explicitly addresses this dynamic when discussing why major model makers (the companies building large language models and AI systems) aren't targeting the systems of record market: "The model makers aren't offering databases to store that data and become systems of record anyway." In other words, the model makers are not competing for the storage layer where systems of record live, which suggests that, at least in the near term, systems of record sit outside the zone of direct AI disruption.

However, this doesn't mean systems of record are immune to change. Rather, the transformation will be incremental rather than revolutionary. SaaS providers will enhance their systems of record with AI-powered interfaces, predictive capabilities, and automation features. The core data storage and transaction processing will remain largely unchanged, but the layers above—how users and systems interact with that data—will be substantially enhanced by AI.

This creates a differentiated competitive landscape. Established SaaS providers maintaining market-leading systems of record have a structural advantage: they already own the relationship, control the data, and can incrementally enhance their offerings with AI capabilities. However, this advantage is not absolute. SaaS vendors that move slowly, maintain inflexible architectures, or fail to integrate AI capabilities effectively can find themselves losing share to more innovative competitors even if they retain their systems of record status.


The Emergence of AI-Native Competitors and Category Creation

While existing systems of record may be resilient, Ghodsi identifies a more serious competitive threat: the emergence of entirely new categories of software designed explicitly for AI and autonomous agents. This represents genuine disruption, not of existing SaaS companies, but of the market structure itself. The competitive threat isn't to a specific vendor's product—it's to the entire category definition.

Databricks' launch of Lakebase exemplifies this dynamic. Rather than trying to retrofit AI capabilities into its existing data warehouse product, Databricks built a new database product specifically designed for AI agents. The performance metrics are striking. Ghodsi notes that "in its eight months that we've had it in the market, it's done twice as much revenue as our data warehouse had when it was eight months old." While Ghodsi humorously acknowledges he's "comparing toddlers," the comparison illustrates something important: products designed from inception for AI workloads may have fundamentally different growth trajectories than products designed for traditional enterprise use cases.

This phenomenon extends beyond databases. We're beginning to see AI-native products across virtually every enterprise software category. Project management systems designed for autonomous agents operate differently than systems designed for human users. Customer relationship management systems optimized for AI-driven processes differ fundamentally from those optimized for sales teams. Accounting software designed for automated financial workflows differs from software designed for accountants working manually.

The creation of these new AI-native categories doesn't necessarily destroy existing incumbents, but it creates parallel competitive markets. A company that historically dominated a category among human users may find itself competing in a fundamentally different category optimized for AI agents. This requires different product design choices, different infrastructure, and different go-to-market strategies. Many incumbent SaaS vendors may find themselves strong in the "human-optimized" market segment while facing fierce competition from AI-native alternatives in the "agent-optimized" segment.

This dynamic creates strategic challenges for large SaaS companies. They must simultaneously support existing customers using traditional interfaces while building new products optimized for AI workloads. Resource allocation becomes contentious. Engineering talent focused on building AI-native products is talent unavailable for maintaining legacy systems. Companies that fail to balance these needs risk either becoming obsolete in the AI era or cannibalizing their existing revenue base by shifting resources prematurely away from profitable legacy products.

Small to mid-sized SaaS vendors face different dynamics. They may lack the resources to maintain both legacy products and build new AI-native alternatives. Some will focus on AI-native development and gradually cede the legacy market to established competitors. Others will try to remain focused on legacy markets where they have established positions. The outcome of these strategic choices will define the competitive landscape for the next decade.

How Traditional SaaS Companies Are Leveraging AI for Growth

Databricks' own business provides a masterclass in how established SaaS companies can use AI to accelerate growth rather than simply defend against disruption. The company's numbers are instructive. It's growing at 65% year-over-year, a pace that puts it among the fastest-growing SaaS companies at its scale. More importantly, AI products are generating

This growth isn't coming from completely new customer segments or entirely new product categories. Rather, it's coming from deepening usage among existing customers and expanding the company's total addressable market with new AI-specific offerings. Ghodsi's comment that "for us, it's just increasing the usage" encapsulates this strategy. Traditional SaaS companies that successfully integrate AI aren't succeeding by abandoning their core business. They're succeeding by expanding it.

The mechanism driving this expansion is more sophisticated than simply adding an AI feature to an existing product. AI integration changes what's possible with existing infrastructure. When data warehouse users can interact with data using natural language instead of needing specialized query language expertise, they interact more frequently and more deeply. That increased interaction raises usage, which in turn raises revenue: directly under usage-based pricing, and through expansion revenue (additional seats or higher tiers) under per-seat pricing.
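The difference between the two pricing cases can be made concrete with toy numbers. All prices and quantities below are hypothetical and chosen only for illustration: under usage-based pricing, a rise in query volume flows straight into revenue, while under seat-based pricing, revenue moves only when the customer adds seats.

```python
def usage_revenue(queries: int, price_per_1k_queries: float) -> float:
    # Usage-based: revenue scales with consumption.
    return queries / 1000 * price_per_1k_queries

def seat_revenue(seats: int, price_per_seat: float) -> float:
    # Seat-based: revenue scales with licensed users, not with how heavily they work.
    return seats * price_per_seat

if __name__ == "__main__":
    PRICE_PER_1K, PRICE_PER_SEAT = 50, 40   # hypothetical monthly prices in dollars
    # Hypothetical: easier access triples query volume, while seats grow from 50 to 60.
    before = usage_revenue(10_000, PRICE_PER_1K), seat_revenue(50, PRICE_PER_SEAT)
    after = usage_revenue(30_000, PRICE_PER_1K), seat_revenue(60, PRICE_PER_SEAT)
    print(f"usage-based: {before[0]:.0f} -> {after[0]:.0f}")   # 500 -> 1500
    print(f"seat-based:  {before[1]:.0f} -> {after[1]:.0f}")   # 2000 -> 2400
```

In this toy case, tripling usage triples usage-based revenue, while seat-based revenue grows only 20% because it tracks headcount rather than activity.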

This dynamic creates a positive feedback loop. Users interact more because the system is easier to use. Increased interaction generates valuable new data about how the system is being used, which can be used to train and improve the AI capabilities. Improved AI capabilities make the system even more useful and easier to interact with, driving further usage increases. Companies that successfully establish this cycle gain compounding competitive advantages.

Databricks' Genie product directly exemplifies this dynamic. By enabling any user to query the data warehouse conversationally, the company dramatically expands who can get value from its core product. A marketing analyst who previously required the help of a data engineering team can now independently answer their questions. A sales manager can quickly generate insights instead of waiting for a report. A financial analyst can perform ad hoc analysis without specialists. This democratization of access drives significantly higher usage and engagement.

For SaaS companies considering how to leverage AI, Databricks' approach suggests several key principles. First, integrate AI into existing products rather than creating separate AI-specific products (unless entering genuinely new markets). Second, focus on use cases where AI genuinely expands the value proposition—not features where AI is included for competitive necessity but doesn't create meaningful user benefit. Third, invest in infrastructure that can scale AI capabilities efficiently, as the cost of running large language models at scale can quickly become prohibitive if not carefully managed.

However, this approach isn't without risks. By democratizing access through AI interfaces, Databricks potentially reduces the need for specialized data engineering roles. This might create organizational resistance—why invest in a tool that makes your specialized expertise less valuable? Successful SaaS companies implementing AI must navigate these organizational dynamics carefully, positioning AI as expanding the value of existing expertise rather than eliminating it.

Databricks generates approximately

The Valuation Question: What Is Databricks Worth in the AI Era?

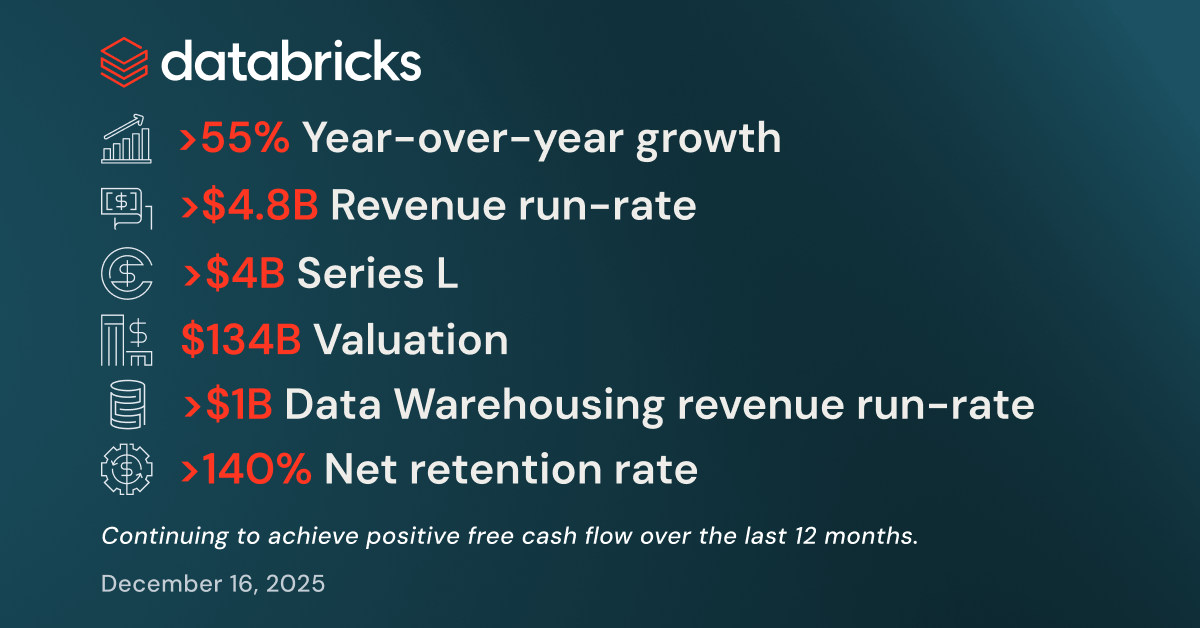

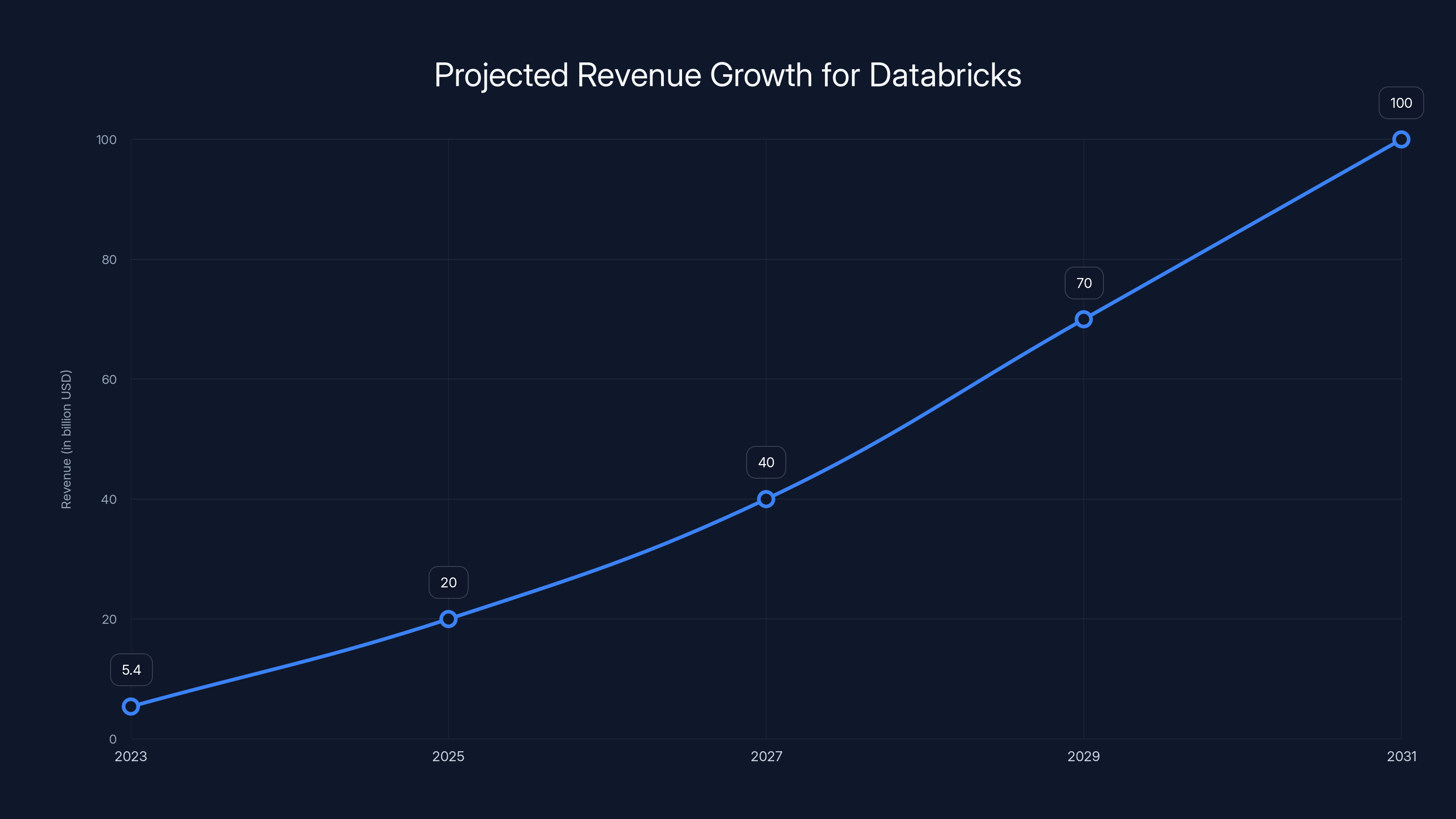

Databricks' $134 billion valuation at its latest funding round represents a significant milestone in enterprise software valuation. For context, this valuation exceeds that of many publicly traded software companies with decades of history and far larger revenue bases. It signals extraordinary investor confidence in the company's ability to grow into its valuation and capitalize on AI-driven opportunities.

Valuation at this scale raises important questions about growth trajectories and market size. Databricks is currently generating

There's precedent for this level of growth. Several cloud infrastructure businesses, such as Amazon Web Services, Microsoft Azure, and Google Cloud, have reached or exceeded these revenue scales, though generally over 15 to 20 years. For a private company in the second decade of its existence, reaching that scale would be impressive but not unprecedented for the winners in large markets.
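A rate-only calculation shows what sustained high growth implies. Assuming, purely for illustration, that the 65% year-over-year growth rate cited earlier held constant (the article does not claim it will), revenue would multiply by 1.65 each year. The hypothetical helpers below compute the multiple after n years and the years needed to reach a target multiple, using the compound growth relation multiple = (1 + rate)^n.

```python
import math

def growth_multiple(rate: float, years: float) -> float:
    # Compound growth: each year revenue is multiplied by (1 + rate).
    return (1 + rate) ** years

def years_to_multiple(rate: float, target_multiple: float) -> float:
    # Solve (1 + rate) ** n == target_multiple for n.
    return math.log(target_multiple) / math.log(1 + rate)

if __name__ == "__main__":
    r = 0.65
    print(f"doubling time: {years_to_multiple(r, 2):.2f} years")    # 1.38 years
    print(f"multiple after 5 years: {growth_multiple(r, 5):.1f}x")  # 12.2x
```

At a constant 65%, revenue would double roughly every 1.4 years and grow about twelvefold in five. The hard part is sustaining such a rate at scale, which is why the longer timelines of the cloud providers are the relevant comparison.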

The valuation also reflects confidence in Databricks' strategic positioning. The company controls infrastructure that's becoming increasingly critical in the AI era. As organizations build AI applications, they need places to store training data, fine-tuning data, and operational data for AI systems. Databricks positioned itself at the intersection of traditional data infrastructure and emerging AI requirements—a position that could prove highly valuable as AI adoption accelerates across enterprises.

However, the valuation also carries risk. If AI-native databases and data platforms proliferate, or if cloud providers like Amazon, Microsoft, and Google capture a larger share of this market through their own AI-optimized offerings, Databricks' growth trajectory could disappoint relative to its valuation. The company's ability to maintain pricing power and growth rates will depend on maintaining competitive advantages in a rapidly evolving market.

Understanding the Competitive Moat of User Interface Expertise

One of Ghodsi's most insightful observations concerns the competitive moat that accumulated user interface expertise creates. This insight deserves deeper exploration because it explains why certain SaaS companies have been able to maintain market dominance despite technological disruption, and why AI may finally erode this advantage.

Consider how organizational knowledge about SaaS systems accumulates. Users attend training programs, develop muscle memory, learn keyboard shortcuts and workflow patterns. Documentation and training materials proliferate. Communities of practice form around how to use specific systems effectively. Organizations optimize their processes around system capabilities. This accumulated expertise acts as a powerful barrier to switching. Even if a superior alternative emerges, the cost of retraining thousands of employees exceeds the benefit of switching for many organizations.

This dynamic has protected SaaS incumbents throughout the cloud computing era. Companies invested heavily in Salesforce, even though competitors offered comparable functionality, because the switching cost of retraining their sales teams was too high. Organizations maintained SAP implementations despite frustrations because the cost of migration exceeded the benefit. Oracle maintained customer relationships even as newer, more innovative database companies emerged.

AI disrupts this dynamic in a fundamental way. If interaction with software shifts from specialized interfaces to natural language, the accumulated expertise becomes less valuable. A user trained extensively in navigating a SaaS product's menu structure doesn't have a competitive advantage over a new user if both are simply asking questions in natural language. The specialized knowledge that took years to develop becomes less relevant.

This suggests a remarkable opportunity for insurgent SaaS companies. A startup building an AI-native competitor to an established SaaS category doesn't have to overcome the accumulated expertise moat. A new customer evaluating the incumbent versus the newcomer faces a similar learning curve for both—learning to interact through natural language. In this scenario, the incumbent's advantage from accumulated customer expertise evaporates.

However, this cuts both ways. SaaS incumbents can use the same mechanism to entrench new advantages. If Salesforce implements a superior natural language interface to its platform, customers might value that AI integration enough to continue using Salesforce despite having the option to switch. The moat changes form—from interface expertise to AI capability quality—but remains meaningful.

The timing of this transition matters significantly. Companies that retain their users' interface expertise while building strong AI interfaces enjoy a transitional period in which they hold an advantage on both fronts: they can serve users who prefer traditional interfaces and those who prefer natural language. But as natural language becomes the standard, the traditional interface advantage disappears. Companies slow to implement natural language interfaces face a genuine competitive vulnerability.

To justify its

Strategic Implications for Data Warehousing and Analytics

Databricks' success with AI-enhanced data warehousing offers instructive lessons for how entire software categories can be transformed by AI without being entirely disrupted. Data warehousing is a mature category that's been commoditized in many respects. Cloud providers offer data warehouse functionality as part of their broader platforms. Specialized vendors compete on features, performance, and pricing.

Databricks' approach—building AI-native features on top of traditional data warehouse infrastructure—suggests a template for category evolution. Rather than competing purely on cost, performance, or incremental feature differentiation, the company is expanding the value of data warehouse infrastructure by making it accessible through natural language interfaces, integrating AI agents, and building agent-native databases like Lakebase.

This strategy creates a differentiated product that's difficult to quickly copy. A cloud provider can offer cheap compute and storage, but building sophisticated natural language query capabilities requires specific expertise. Customers who have invested in Databricks gain access to interfaces and capabilities that competitors either don't offer or offer in inferior form. This creates genuine competitive differentiation even in a mature, commoditized category.

The data warehousing category evolution also illustrates how AI transforms software without eliminating the need for specialized infrastructure. Databricks doesn't suggest that data warehouses become obsolete—rather, that they become smarter, more accessible, and optimized for new workloads like AI agents. The core value of collecting and analyzing data persists. The mechanisms for delivering that value evolve.

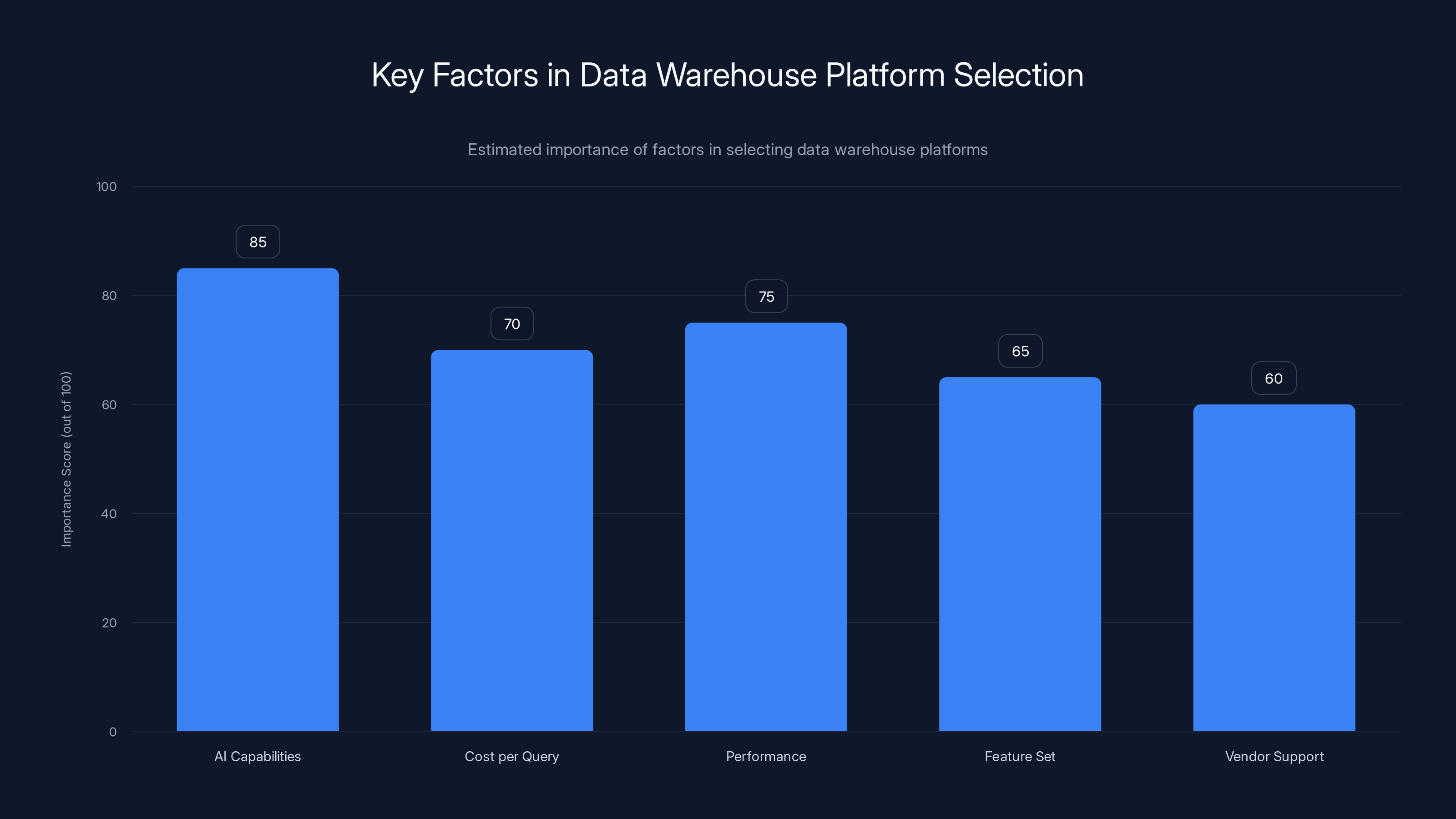

For customers evaluating data warehouse platforms, this evolution means that vendor selection should increasingly prioritize AI capabilities, not just traditional performance metrics. Cost per query and query performance remain important, but the ability to interact with data through natural language, the quality of AI-generated insights, and the support for AI agent workloads increasingly determine competitive positioning.

This shift has implications for organizational buying behavior. Chief Data Officers and analytics leaders, who have historically evaluated these tools, apply traditional software evaluation criteria. Chief AI Officers and AI engineering teams bring different priorities. Organizations with AI initiatives increasingly involve AI-specific stakeholders in infrastructure decisions. Vendors that can appeal to both constituencies, traditional analytics leaders and emerging AI stakeholders, have a competitive advantage.

The Agent Economy and Its Infrastructure Requirements

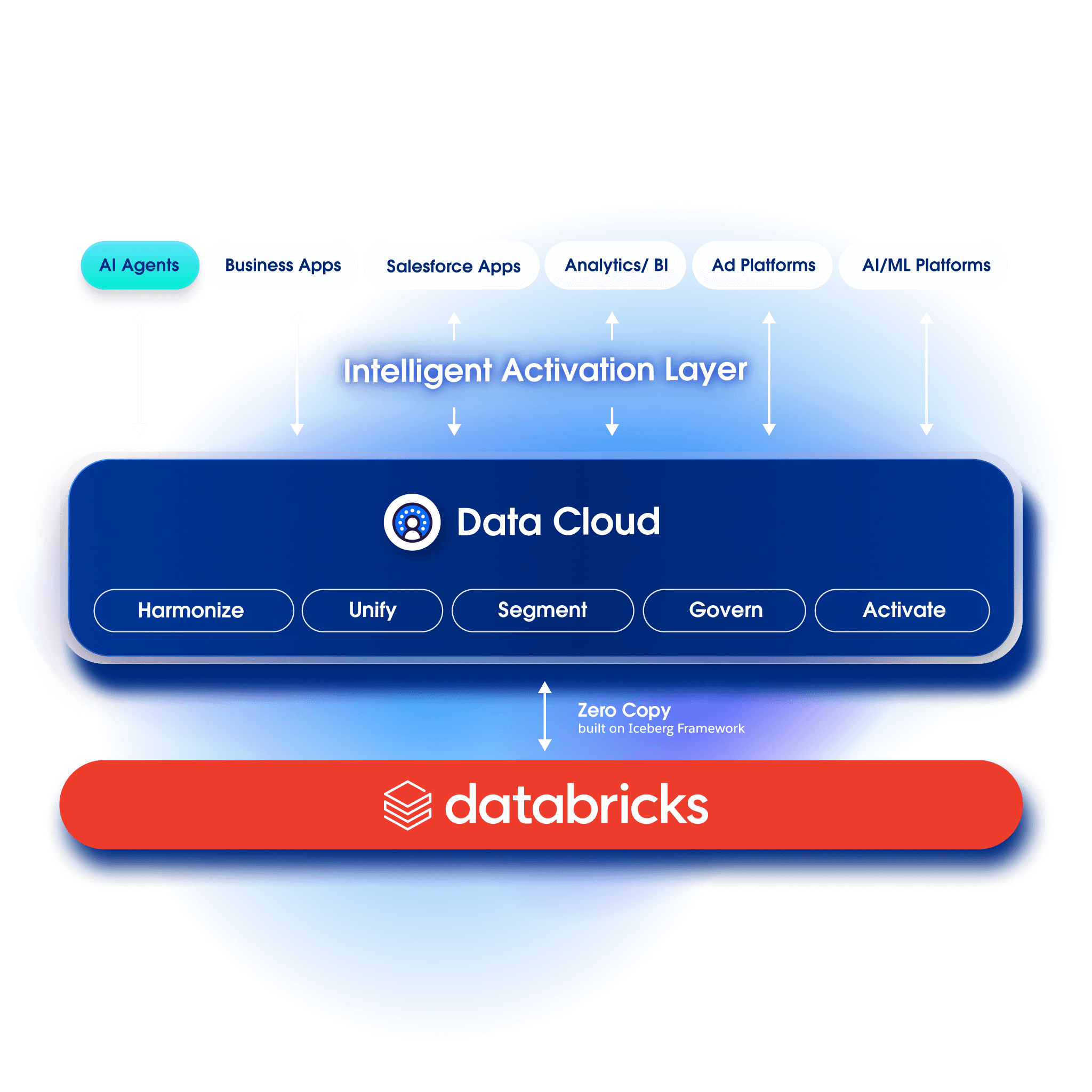

Ghodsi's mention of products designed "for agents" points to an emerging structural shift in how enterprise software is built and deployed. The agent economy—where autonomous AI agents perform work previously done by humans—requires fundamentally different infrastructure than traditional enterprise software.

Traditional SaaS systems are built around human workflows. A project management system models how humans organize and track work. A CRM system reflects how sales teams manage customer relationships. An accounting system represents how accountants record and categorize transactions. These systems are optimized for human comprehension, decision-making, and workflow patterns.

AI agents operate under different constraints and capabilities. They can process information in formats humans find unwieldy. They can make decisions at machine speed. They can parallelize work in ways humans cannot. They can operate continuously without fatigue. They require interfaces that are machine-readable rather than human-readable. Traditional SaaS systems are poorly optimized for agent workloads because they're designed around human workflows.

Databricks' Lakebase represents an attempt to build database infrastructure optimized for agents rather than humans. The specific design choices differ from traditional databases. Schema design might optimize for agent query patterns rather than human understandability. Pricing might reflect agent computational patterns rather than human usage patterns. APIs might prioritize programmatic efficiency over interactive usability.

The emergence of agent-optimized infrastructure creates new competitive opportunities. Startups can build agent-first databases without the constraint of maintaining human interfaces. They can optimize purely for agent workload patterns. Established database vendors face the challenge of serving both human and agent users simultaneously—a difficult balance that may satisfy neither constituency completely.

This dynamic will likely play out across enterprise software categories. We'll see the emergence of agent-optimized versions of traditional software categories—project management for agents, CRM for agents, accounting for agents, and so forth. Some of these will become distinct products or companies. Others will be features within broader platforms serving both humans and agents.

The infrastructure to support agents also requires different operational characteristics. Human-optimized systems prioritize usability, support, and training. Agent-optimized systems prioritize reliability, security, and integration. A system designed for agents to autonomously execute business transactions requires extraordinary reliability—agent hallucinations or errors could cause substantial business damage. Human-optimized systems tolerate higher error rates because humans catch many errors before they cause damage.
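One widely used technique for this reliability problem is idempotency: each business action an agent requests carries a unique key, and the system executes a given key at most once, so an agent that retries after a timeout cannot, for example, post the same payment twice. The sketch below is a hypothetical, in-memory illustration (the class, function, and ledger are invented); a production system would persist keys durably, in the same transaction as the action itself.

```python
import threading
import uuid

class IdempotentExecutor:
    """Executes each idempotency key at most once and replays the stored result."""

    def __init__(self) -> None:
        self._results: dict[str, object] = {}
        self._lock = threading.Lock()   # coarse lock: simple, but serializes all actions

    def execute(self, key: str, action, *args):
        with self._lock:
            if key in self._results:
                # Retry of an already-completed request: return the original result.
                return self._results[key]
            result = action(*args)      # if this raises, nothing is stored and a retry may run it
            self._results[key] = result
            return result

ledger: list[int] = []

def post_payment(amount_cents: int) -> int:
    ledger.append(amount_cents)
    return len(ledger)                  # hypothetical transaction id

if __name__ == "__main__":
    executor = IdempotentExecutor()
    key = str(uuid.uuid4())             # generated once per intended action
    first = executor.execute(key, post_payment, 12_500)
    retry = executor.execute(key, post_payment, 12_500)   # e.g. agent retried after a timeout
    print(first, retry, ledger)         # 1 1 [12500]
```

The key belongs to the intended action, not to the attempt, which is what makes retries safe.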

This creates opportunities for specialized vendors. Companies building infrastructure specifically optimized for AI agents could carve out substantial markets by solving the unique problems that agent workloads create. However, these opportunities come with higher stakes than traditional SaaS—if agent infrastructure fails, the impact is more severe than if a collaboration tool becomes temporarily unavailable.

Estimated data suggests private markets value high-growth AI companies at nearly double the multiples of public markets, reflecting skepticism in public markets towards unprofitable tech firms.

Comparing Traditional SaaS Adaptation With AI-Native Competition

As AI transforms enterprise software, a clear pattern is emerging in how different vendors respond. Understanding the strategic differences between traditional SaaS companies adapting to AI and AI-native competitors building from scratch provides valuable context for evaluating the industry's trajectory.

Traditional SaaS companies adapting to AI typically follow several patterns. They retrofit natural language interfaces onto existing products. They integrate large language model capabilities through partnerships rather than building from scratch. They add AI-powered features to existing categories—AI-powered insights, AI-assisted workflows, AI-generated summaries. This approach preserves existing customer relationships and revenue bases while adding AI capabilities.

The advantages of this approach are substantial. Existing customers already understand the product and have trained their organizations around it. The vendor understands customer needs deeply, having served the category for years. Distribution channels and sales organizations already exist. The transition to AI can happen incrementally, minimizing business disruption. A company like Salesforce can add AI capabilities while maintaining its fortress of existing CRM customers.

However, this approach also carries constraints. The existing product architecture may not be optimized for AI workloads. The user interface designed for human interaction may not translate cleanly to natural language interfaces. The pricing model built around per-user seats may not work well for AI-driven automation that reduces user headcount. The engineering culture and expertise in traditional software development may differ from what's needed to build sophisticated AI features.

AI-native companies, by contrast, start with AI as a core principle rather than a retrofit. They design systems expecting natural language interaction from the beginning. They price based on AI computational costs rather than human headcount. They build infrastructure optimized for AI workloads. They attract engineering talent specialized in AI and machine learning rather than traditional software development. They can move quickly without the constraint of maintaining legacy systems.

The disadvantages are equally clear. AI-native companies lack the customer relationships that incumbents have built over decades. They must educate markets about new categories or approaches that customers haven't learned yet. They face the challenge of competing against established vendors with superior distribution, brand recognition, and customer switching costs. They must achieve profitability quickly because investors are increasingly skeptical of unprofitable AI startups.

This creates two distinct competitive dynamics operating simultaneously. In the traditional SaaS categories—CRM, project management, accounting, HR—incumbent companies are likely to maintain market leadership, particularly among existing customers, by successfully integrating AI capabilities. In new categories being created around AI—agent infrastructure, AI-native data platforms, AI-first automation—new companies have better opportunities to establish market leadership.

The most vulnerable incumbent SaaS companies are those in the middle—not leading in their categories but large enough that customers have already invested in them, not moving fast enough to lead in AI adoption, not small enough to pivot entirely to AI-native approaches. These mid-tier companies face genuine competitive risk from both directions—customers might consolidate to larger vendors with better AI capabilities, or might switch to AI-native competitors solving their problems in fundamentally new ways.

Pricing Models in the AI-Driven SaaS Era

The transformation of SaaS by AI has profound implications for pricing models, a dimension that deserves careful analysis because pricing structures both reflect and shape competitive dynamics. Traditional SaaS pricing has followed predictable patterns: per-user (or per-seat) pricing, or tiered subscription models based on feature access. These models align with how software has traditionally been consumed: by humans using individual accounts and seats.

AI fundamentally disrupts this alignment. When software can be accessed and used by AI agents as well as humans, per-user pricing becomes problematic. An AI agent may access a system to perform work previously done by a human, but per-user pricing assumes that the value delivered, and the vendor's cost of serving the customer, scales with the number of human users. AI agents break this relationship. One agent might generate massive consumption, while a hundred agents executing efficiently might generate very little.

Databricks' usage-based pricing model is instructive in this context. The company charges customers based on their data warehouse consumption: more queries and more data processing mean higher bills. This model ties pricing to actual usage, a reasonable proxy for value delivered. A customer using the system more pays more; a customer using the system less pays less. This kind of alignment is difficult to achieve with per-user models.
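To make the contrast concrete, here is a minimal Python sketch comparing the two billing approaches for a hypothetical customer before and after it hands most of its work to AI agents. The seat price, per-unit price, and workload figures are invented for illustration and are not Databricks' (or any vendor's) actual rates; "compute units" stands in for whatever consumption metric a vendor happens to meter.

```python
from dataclasses import dataclass

# Hypothetical rates for illustration only; not any vendor's actual prices.
SEAT_PRICE = 100.0  # dollars per named human user per month
UNIT_PRICE = 0.50   # dollars per metered compute unit


@dataclass
class Workload:
    human_seats: int     # named human accounts
    human_units: float   # compute units consumed by humans in a month
    agent_units: float   # compute units consumed by AI agents in a month


def per_seat_bill(w: Workload) -> float:
    """Per-seat pricing: only human accounts are billed; agent activity is not priced."""
    return w.human_seats * SEAT_PRICE


def usage_bill(w: Workload) -> float:
    """Usage-based pricing: all consumption is billed, whether a human or an agent generated it."""
    return (w.human_units + w.agent_units) * UNIT_PRICE


before = Workload(human_seats=50, human_units=2_000, agent_units=0)
after = Workload(human_seats=10, human_units=400, agent_units=6_000)

for label, w in (("before agents", before), ("after agents", after)):
    print(f"{label}: per-seat ${per_seat_bill(w):,.2f}, usage-based ${usage_bill(w):,.2f}")
```

Under these assumed numbers, the per-seat bill falls by 80% once agents replace most human users, even though total consumption more than triples, while the usage-based bill rises with consumption. That divergence is the misalignment described above.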

Usage-based pricing, however, creates different problems. Customers face uncertainty about their monthly bills—they don't know in advance what they'll spend. Some industries and customers struggle with this uncertainty and prefer predictable budgeting. Vendors face complexity in metering and measuring usage accurately. Some customers become sophisticated at optimizing usage to minimize costs, potentially reducing the vendor's revenue while not improving customer outcomes.

AI-native vendors are experimenting with additional pricing dimensions. Some charge based on AI computational resources consumed. Others charge based on the number of autonomous agents deployed. Some use hybrid models combining base subscriptions with usage overages. These emerging models reflect the reality that AI-driven software has different cost structures than traditional SaaS.
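The hybrid structure mentioned above can be sketched as a base subscription with an included usage allowance, overage charges beyond that allowance, and an optional per-agent fee. This is a hypothetical plan structure: the field names and numbers are assumptions chosen for illustration, not a description of any vendor's price list.

```python
from dataclasses import dataclass


@dataclass
class HybridPlan:
    base_fee: float        # fixed monthly subscription (hypothetical)
    included_units: float  # compute units covered by the base fee
    overage_rate: float    # price per unit consumed beyond the allowance
    per_agent_fee: float   # monthly fee per deployed autonomous agent


def monthly_bill(plan: HybridPlan, units_used: float, agents_deployed: int) -> float:
    """Base fee, plus overage on usage above the allowance, plus a per-agent charge."""
    if units_used < 0 or agents_deployed < 0:
        raise ValueError("usage and agent count must be non-negative")
    overage_units = max(0.0, units_used - plan.included_units)
    return plan.base_fee + overage_units * plan.overage_rate + agents_deployed * plan.per_agent_fee


plan = HybridPlan(base_fee=2_000.0, included_units=5_000, overage_rate=0.25, per_agent_fee=50.0)

print(monthly_bill(plan, units_used=3_000, agents_deployed=0))  # within allowance: 2000.0
print(monthly_bill(plan, units_used=8_000, agents_deployed=4))  # overage plus agent fees: 2950.0
```

The design trade-off is visible in the formula: the base fee gives customers a predictable floor for budgeting, while the overage and per-agent terms keep the vendor's revenue tied to actual consumption.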

This pricing evolution creates strategic opportunities and risks. Vendors that move first to pricing models aligned with AI workloads may establish new willingness-to-pay baselines. Customers accustomed to per-user pricing for traditional SaaS might accept higher overall costs if the value proposition is compelling enough. However, vendors that move too aggressively on pricing risk enabling competitors to undercut them.

For customers evaluating AI-enhanced SaaS products, understanding the pricing implications of AI adoption is crucial. A tool that reduces headcount requirements through AI automation might carry a higher price even as the total cost of getting the work done declines. A system that automates previously expensive specialized work might deliver outsized ROI even at premium pricing.

Figure (estimated data): AI capabilities are becoming the most important factor in selecting data warehouse platforms, surpassing traditional criteria such as cost and performance.

The Role of Data Advantage in AI-Era Competition

As AI becomes central to competitive differentiation, data advantages take on heightened importance in ways that differ from traditional SaaS competition. Databricks' position as a data platform provider illustrates these dynamics particularly well, because the company sits at the intersection of data ownership and AI capability development.

Traditionally, SaaS vendors competed partially on the basis of the data they accumulated. A CRM vendor understood customer behavior because they hosted data from thousands of organizations. An accounting vendor understood business financial patterns. A project management vendor understood work patterns. This data provided competitive advantages through understanding market patterns, improving products, and in some cases, selling insights back to customers.

AI intensifies this dynamic substantially. Large language models generally improve with more, and more diverse, training data, and models trained on diverse datasets often outperform those trained on limited data. A SaaS vendor with access to vast amounts of customer data can potentially build better AI models than competitors without equivalent data. Moreover, models can be fine-tuned on customer data for particular use cases, making them more valuable for specific applications.

This creates a competitive dynamic where data-rich companies have compounding advantages. Databricks, as a data platform hosting data for tens of thousands of organizations, has access to extraordinary data diversity. The company can potentially build better AI models for data analysis than competitors precisely because of this data advantage. As the models improve, more customers are attracted to the platform, increasing the data advantage further.

However, this data advantage comes with risks and constraints. Privacy regulations like GDPR limit what companies can do with customer data. Organizations expect their data to be kept confidential and not used to train models benefiting competitors. Ethical considerations around data ownership and usage are increasingly important to customers. A data platform company that tried to exploit customer data for AI model training without explicit consent would face substantial backlash.

Smart data platform companies address this challenge through transparency and customer control. A platform like Databricks could, for example, let customers opt in to having their data used for model training in exchange for benefits, while allowing others to keep their data completely private. Such a balanced approach leverages data advantages without overstepping ethical boundaries.

For customers, understanding how vendors plan to use their data in the AI era is increasingly important. Organizations should ask explicitly whether their data will be used to train vendor models, whether that usage is optional or required, and what controls they have over it. Vendors that provide strong data privacy guarantees and transparent practices around model training build trust; competitors that aggressively exploit customer data erode it.

Timing Considerations: Why Databricks Chose Capital Over IPO

Databricks' decision to raise $5 billion in private capital rather than pursue a public offering provides insight into how market conditions and strategic positioning influence capital decisions. Ghodsi stated directly: "Now is not a great time to go public." This seemingly simple statement reflects complex calculations about company valuation, market receptivity, and strategic flexibility.

From a tactical perspective, Ghodsi's capital-raising strategy makes sense. Private markets are valuing high-growth AI companies at premium multiples. Public markets have historically been more skeptical of unprofitable technology companies, particularly after the 2022 market correction. Databricks, while generating substantial revenue, may not be profitable (the company doesn't disclose detailed profit metrics). In this environment, raising private capital likely secures a higher valuation than a public offering would.

Ghodsi also articulated a strategic rationale: "I just wanted to be really well capitalized," with the capital providing "many, many years of runway" should markets deteriorate. This capital strategy reflects lessons learned from previous boom-bust cycles. Companies that entered downturns with substantial cash reserves survived better than those dependent on continued capital access. By raising an enormous round now, Databricks has insulated itself from future market disruptions.

This strategy is particularly astute for an AI company because the AI landscape could shift rapidly. If certain AI approaches become commoditized, if new technical paradigms emerge, or if competition intensifies, substantial cash reserves provide flexibility to pivot, invest in R&D, or acquire complementary technologies. Companies lacking capital cushions might be forced into unfavorable strategic choices by market circumstances.

However, this strategy isn't without costs. By remaining private, Databricks forgoes the liquidity that public markets provide to employees and early investors. Talented engineers may prefer public companies, where they can sell shares and diversify away from concentrated equity exposure. The company also forgoes the validation that a successful public listing provides. And if investor enthusiasm for AI companies cools, the window for a favorable IPO could narrow, so delaying a listing might ultimately reduce Databricks' options.

From a market perspective, Databricks' decision to stay private might herald a broader trend. If large, successful technology companies prefer remaining private in the current environment, that suggests either that public markets are undervaluing high-growth companies, or that private markets have developed sufficient depth and liquidity to make public offerings unnecessary. Either interpretation has significant implications for how technology markets will develop over the coming years.

Organizational Resistance and Implementation Challenges

While Ghodsi's thesis about how AI will transform SaaS is compelling, implementing it faces significant organizational resistance that shouldn't be underestimated. Understanding these implementation challenges provides context for how quickly the AI transformation of SaaS will actually occur.

Large organizations have substantial institutional knowledge and processes built around existing software systems. A financial analyst has learned complex spreadsheet techniques, developed relationships with data engineering teams, and built workflows around how company systems work. Introducing natural language AI interfaces threatens this accumulated expertise. The analyst might fear becoming less valuable if their specialized knowledge becomes irrelevant. Data engineering teams might view AI automation as a threat to their headcount and importance.

These aren't irrational fears. If an AI system can do what a specialized expert previously did, the organization no longer needs that specialist at the same level. Some organizations will reposition specialists into higher-value work. Others will reduce headcount. Specialists rightfully perceive AI as a potential threat.

Organizational responses to this threat include slowing adoption of AI capabilities, keeping legacy systems longer than technically optimal, and limiting how widely new AI features are deployed. These responses are rational from the perspective of the teams protecting their roles, even if they're inefficient from the standpoint of the organization's overall economics.

Vendor organizations implementing AI also face substantial challenges. Traditional software engineers may lack expertise in building large language model applications. Organizational cultures developed around traditional software development may struggle to accommodate the different engineering practices, testing approaches, and failure modes that AI systems introduce. Companies used to building deterministic software need entirely new mindsets for building probabilistic systems that sometimes hallucinate.
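One concrete difference in testing practice: a deterministic function can be checked with a single exact assertion, whereas a probabilistic system is usually evaluated by sampling it many times and requiring a minimum pass rate. The Python sketch below illustrates that idea with a hypothetical `fake_model` that simulates occasional hallucinations at an assumed 5% rate; a real evaluation would call an actual model and use richer acceptance checks.

```python
import random
from typing import Callable, List, Tuple

# A test case pairs an input with a predicate that judges whether an output is acceptable.
Case = Tuple[str, Callable[[str], bool]]


def fake_model(prompt: str, rng: random.Random) -> str:
    """Hypothetical stand-in for an LLM call: returns the first sentence of the prompt,
    but about 5% of the time returns an unrelated answer (a simulated hallucination)."""
    if rng.random() < 0.05:
        return "I could not find that information."
    return prompt.split(".")[0].strip()


def pass_rate(model: Callable[[str, random.Random], str], cases: List[Case],
              trials: int, seed: int = 0) -> float:
    """Run every case `trials` times and return the fraction of acceptable outputs."""
    rng = random.Random(seed)  # fixed seed keeps the evaluation reproducible
    passed = total = 0
    for prompt, is_acceptable in cases:
        for _ in range(trials):
            total += 1
            if is_acceptable(model(prompt, rng)):
                passed += 1
    return passed / total


cases: List[Case] = [
    ("Q3 revenue rose. Costs were flat.", lambda out: out == "Q3 revenue rose"),
    ("Churn declined. Headcount grew.", lambda out: out == "Churn declined"),
]

THRESHOLD = 0.90  # assumed quality bar for this illustration
rate = pass_rate(fake_model, cases, trials=500)
print(f"pass rate: {rate:.3f}")
# Instead of asserting one exact output, require a minimum acceptable pass rate.
assert rate >= THRESHOLD
```

The threshold and the fixed seed are illustrative choices: the seed makes the run repeatable, and the threshold encodes how much unreliability a team is willing to tolerate before shipping.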

These organizational challenges mean that Ghodsi's vision of AI-transformed SaaS, while compelling and likely inevitable long-term, will progress more slowly than pure technical capability would suggest. Organizations will maintain hybrid systems longer than necessary. Some vendors will struggle to build quality AI features. Customers will move cautiously in their adoption, waiting for proven reliability before fully committing to AI-driven workflows.

This gradual transition creates opportunities for companies that execute well on AI integration and challenges for those that struggle. But it also suggests that the competitive reshuffling won't happen as dramatically or quickly as the most enthusiastic AI proponents suggest.

Geographic and Regulatory Dimensions of AI-Driven SaaS Evolution

While Ghodsi's analysis focuses primarily on technological and competitive dimensions, the geographic and regulatory landscape increasingly shapes how AI affects SaaS competition. Different regions have fundamentally different regulatory approaches to AI, data privacy, and software competition, which will shape how the transformation unfolds across markets.

Europe's regulatory approach, embodied in GDPR and the AI Act (whose obligations are being phased in), creates constraints on how AI companies can operate. Using personal data to train AI models requires a lawful basis, such as explicit consent, in many cases. AI systems used to make consequential decisions face transparency and human-oversight requirements. These requirements don't eliminate AI adoption, but they create compliance costs that smaller vendors struggle to meet while larger vendors can absorb them.

This creates a geographic advantage for well-capitalized vendors. A company like Databricks with substantial resources can build compliance infrastructure for European markets. A startup with limited resources might struggle to meet regulatory requirements, ceding market share to incumbents and well-funded competitors. Geography becomes a dimension of competitive advantage.

China's regulatory approach differs substantially, placing greater emphasis on data sovereignty and state oversight than on individual privacy. Chinese SaaS companies and AI vendors operate under very different constraints. This potentially allows Chinese companies to develop AI capabilities and business models distinct from those viable in Western markets. However, market fragmentation limits global scale for companies optimized for China-specific regulatory requirements.

Developing economies often lack the regulatory infrastructure to constrain AI adoption, but also face challenges building the engineering talent and capital required for sophisticated SaaS businesses. This creates opportunities for companies serving specific regional markets but reduces the tendency for global players to dominate everywhere.

These geographic dimensions suggest that while technological change is global, the competitive impacts will vary substantially by region. A vendor competitive in Europe might struggle to compete globally if it cannot serve markets with different regulatory requirements efficiently. Global SaaS companies will increasingly need to operate distinct product versions optimized for different regulatory environments.

Skill Requirements and Labor Market Implications

As AI transforms SaaS, the skill requirements for both building and using these systems shift dramatically, with profound implications for labor markets in technology and beyond. These workforce dynamics will significantly shape how quickly AI adoption accelerates and how it impacts different categories of workers.

Building AI-enhanced SaaS systems requires different expertise than traditional software development. Traditional backend engineers needed to understand distributed systems, databases, and scalable architecture. AI-enhanced SaaS requires engineers comfortable with machine learning, large language models, prompt engineering, and managing probabilistic systems. These aren't simply additive skills—they reflect different problem-solving approaches and technical intuitions.

Many experienced traditional software engineers struggle to develop intuition for AI systems. Deterministic software rewards careful logic and predictability. AI systems are fundamentally probabilistic and sometimes opaque. This difference causes some excellent traditional engineers to struggle with AI-focused work. Companies building AI capabilities face challenges recruiting and retaining the right talent.

This creates unusual labor market dynamics. Expertise in building AI-enhanced systems commands substantial premiums. New computer science graduates with some AI/ML background can have better job prospects than experienced traditional software engineers without AI expertise. Companies struggle to find people with the right skill combinations, and experienced AI specialists remain scarce relative to demand.

These labor dynamics create winners and losers. Well-funded companies like Databricks can offer compensation packages that attract top talent. Mid-tier companies struggle to compete for talent. Smaller startups must often be extremely selective, hiring only extraordinary individuals. This consolidates technical talent toward well-capitalized companies, reinforcing competitive advantages.

For individual technologists, the labor market implications are substantial. Traditional software engineers without AI expertise face career uncertainty. Those willing to invest in learning AI and machine learning concepts position themselves well for future opportunities. Technical leadership roles increasingly require understanding AI capabilities and limitations. Organizations increasingly expect leaders to make decisions about how to integrate AI into products and operations.

Beyond the technology industry itself, AI transformation of SaaS implies substantial labor market changes in industries that SaaS serves. Jobs that are automatable through AI-enhanced SaaS become threatened. Financial analysts who relied on specialized expertise in manually analyzing data face challenges from AI systems that do this automatically. Business analysts who generated insights through data manipulation face displacement from AI systems that generate insights automatically.

This isn't unique to the AI era—technology has always displaced workers whose skills became automated. However, the pace of AI transformation may be faster and broader than previous technological changes. Organizations that prepare their workforces for this transition—retraining workers, positioning automation to complement rather than replace humans, investing in uniquely human capabilities—navigate the transition more successfully.

Future Competitive Scenarios: Multiple Paths Forward

Ghodsi's thesis provides important insights into how AI will transform SaaS, but the future is not predetermined. Multiple competitive scenarios could plausibly unfold based on how companies execute, how customers respond, and how technology evolves. Mapping these scenarios provides useful context for understanding the landscape.

Scenario 1: Incumbent Consolidation. In this scenario, large SaaS incumbents successfully integrate AI capabilities into their products, maintaining market dominance. Salesforce, SAP, Oracle, and similar companies become more valuable and competitive as their AI-enhanced products capture additional value. The transformation strengthens incumbents because their customer relationships, distribution, and data advantages are too strong to overcome. This scenario suggests cautious optimism for incumbents and difficulty for AI-native competitors.

Scenario 2: Category-Level Disruption. In this scenario, AI-native companies successfully establish new categories and carve out significant market share in emerging domains like agent-optimized infrastructure, AI-first automation, and novel applications of large language models. Incumbents maintain their core markets but lose growth opportunities as new categories emerge. The overall market grows, but growth is increasingly concentrated in new categories rather than traditional SaaS expansion. This scenario reflects Ghodsi's comments about new competitors emerging, even as incumbents maintain core businesses.

Scenario 3: Competitive Fragmentation. In this scenario, no clear winners emerge. Traditional incumbents struggle to build quality AI features due to organizational constraints and legacy system baggage. AI-native companies struggle to compete against incumbents' distribution and customer relationships. No company successfully dominates new categories. The market becomes increasingly fragmented, with multiple competitors in every category, none achieving clear dominance. This scenario suggests more competitive intensity and slower consolidation.

Scenario 4: Platform Dominance. In this scenario, cloud providers like Amazon, Microsoft, and Google increasingly capture value by providing both infrastructure and AI-enhanced SaaS applications. Rather than independent SaaS companies competing, we see competition between cloud platform ecosystems. Microsoft's AI-enhanced Office combined with Azure infrastructure becomes dominant, competing against similar ecosystems from Amazon and Google. This scenario suggests winner-take-most dynamics at the platform level.

Each scenario has different implications for investors, customers, and technology workers. Scenario 1 rewards owning shares of incumbent SaaS companies. Scenario 2 rewards investing in emerging categories. Scenario 3 generates intense competition and lower prices. Scenario 4 concentrates power and reduces competition. The actual future likely combines elements of all four scenarios, playing out differently in different categories and geographies.

Strategic Recommendations for Different Stakeholders

Understanding how AI is transforming SaaS suggests different strategic implications for different stakeholders. These recommendations attempt to provide actionable guidance based on the analysis.

For SaaS Incumbents:

- Accelerate AI integration into core products, treating it as strategically critical rather than optional

- Invest aggressively in building engineering talent capable of building AI-enhanced systems

- Consider building separate product lines optimized for AI workloads rather than retrofitting legacy products

- Maintain strong customer relationships while preparing them for interface and workflow changes

- Invest in compliance infrastructure to navigate different regulatory requirements across geographies

- Prepare for margin compression from competition and potential price resistance from customers benefiting from automation

For Emerging Companies:

- Identify market segments where incumbent weakness or different requirements create space for AI-native approaches

- Build products explicitly optimized for AI agent workloads rather than trying to compete with incumbents on human interfaces

- Consider strategic partnerships with incumbents that have distribution and customers if direct competition proves too difficult

- Focus on different pricing models that align with AI cost structures rather than traditional per-user models

- Build strong moats through data advantages, proprietary models, or network effects that protect against competitive displacement

For Customers Evaluating SaaS:

- Prioritize vendors providing roadmaps for AI integration rather than treating AI as an afterthought

- Evaluate vendors' technical capability in building AI features rather than assuming all AI implementations are equivalent

- Prepare organizational workflows for transformation as natural language interfaces and AI automation change how work is done

- Understand pricing model implications of AI adoption and negotiate terms that protect against unexpected cost escalation

- Build technical expertise internally to implement AI capabilities rather than depending entirely on vendor features

FAQ

What is Databricks' position on AI's impact on SaaS companies?

Databricks CEO Ali Ghodsi argues that AI won't destroy SaaS companies by enabling quick replacement with hastily assembled alternatives. Instead, AI will transform how SaaS companies compete by replacing specialized user interfaces with natural language interactions and creating new categories of AI-native products optimized for autonomous agents.

How is Databricks growing through AI integration?

Databricks is experiencing 65% year-over-year revenue growth with over

Why do incumbent SaaS companies have advantages in the AI transformation?

Incumbent SaaS companies maintain their system of record status—their position as the single source of truth for critical business data—which creates a powerful defensive moat. The organizational cost and operational risk of migrating these systems exceeds the benefit of switching, meaning incumbents can maintain their core customer relationships even as they integrate AI capabilities to compete more effectively.

What are AI-native alternatives to traditional SaaS products?

AI-native alternatives are emerging across enterprise software categories, with products specifically designed for AI agent workloads rather than human users. These may include agent-optimized databases, AI-first automation platforms, natural language query systems, and autonomous workflow tools. Companies like Runable offer AI-powered automation platforms with capabilities for workflow orchestration, content generation, and developer productivity that represent alternatives to both traditional SaaS and specialized point solutions.

How will natural language interfaces change SaaS competition?

Natural language interfaces democratize access to specialized software by eliminating the requirement for users to learn proprietary query languages or complex menu navigation. This erosion of the user interface moat—the competitive advantage gained from accumulated expertise with a specific system—creates opportunities for both incumbents to grow usage and for new competitors to establish market share without facing the switching cost of retraining users on unfamiliar interfaces.

What pricing models are emerging for AI-enhanced SaaS?

Traditional per-user and per-seat pricing models are misaligned with how AI-enhanced systems create value, since AI agents don't map neatly onto named user seats. Usage-based pricing, computational resource pricing, and hybrid models are emerging. Databricks uses usage-based pricing in which customers pay for data warehouse consumption regardless of whether that consumption comes from human users or autonomous agents.

How long will the SaaS-to-AI transformation take?

While the technological capabilities are advancing rapidly, organizational implementation faces substantial challenges. Legacy systems, resistance from workers whose expertise is threatened by automation, and the complexity of retrofitting AI into existing systems will slow the transition compared to pure technical possibility. Many observers expect a multiyear transition, often estimated at 5-10 years, during which hybrid systems supporting both human users and AI agents coexist.

What regulatory factors will shape AI-driven SaaS evolution?

Different geographies have fundamentally different AI and data privacy regulations. Europe's GDPR and AI Act create compliance requirements that raise costs, which tends to favor vendors able to absorb them. China's data sovereignty requirements create distinct market dynamics. Companies serving global markets may need to build separate product versions for different regulatory environments, which consolidates advantage toward well-capitalized vendors who can afford this complexity.

Will AI eliminate traditional SaaS categories?

No. Ghodsi's analysis suggests AI will transform categories rather than eliminate them. Even as natural language interfaces and autonomous agents change how software is used, the underlying value—storing critical business data, analyzing it for insights, automating business processes—remains. However, new categories optimized explicitly for AI agents will emerge alongside transformed traditional categories.

How should organizations prepare for AI-transformed SaaS?

Organizations should evaluate vendors' roadmaps for AI integration rather than assuming all vendors are equivalent in their AI implementation quality. Build internal expertise in implementing AI capabilities rather than depending entirely on vendor features. Prepare workforce training for interface and workflow changes. Understand pricing model implications and negotiate terms that protect against unexpected cost escalation. Consider how AI automation will affect existing roles and develop reskilling programs for affected employees.

Conclusion: The Resilience and Transformation of SaaS

Databricks CEO Ali Ghodsi's perspective on how AI will shape the SaaS landscape offers a sophisticated counterpoint to the more hyperbolic narratives suggesting AI will wholesale eliminate enterprise software companies. His analysis, supported by Databricks' own remarkable growth and strategic positioning, points to a more nuanced future: established SaaS companies remain resilient in defending their core markets, even as new competitive categories emerge and the way software is used changes fundamentally.

The evidence supporting this analysis is substantial. Systems of record—the critical databases storing enterprise data for sales, finance, customer relationships, and operations—demonstrate remarkable staying power despite technological transformation. The organizational cost, operational risk, and regulatory complexity of migrating these systems creates a structural barrier to displacement that mere technological superiority cannot overcome. Companies that maintain system of record status possess a defensibility that should not be underestimated.

However, this defensibility doesn't extend to every dimension of SaaS competition. User interfaces, the methods through which organizations interact with software, face genuine disruption from natural language AI interactions. The specialized expertise that made software sticky—training thousands of employees in specific menu systems and workflow patterns—becomes less valuable when interaction shifts to conversational natural language. This represents genuine vulnerability, particularly for vendors that move slowly in implementing natural language interfaces.

The emergence of AI-native categories and products designed explicitly for autonomous agents represents a third competitive dimension that doesn't necessarily displace incumbents but creates new markets where new competitors have advantages. A startup building an agent-optimized database faces fewer architectural constraints than an incumbent trying to retrofit agent support into a system designed for human analysts. This suggests space for new competitors even as incumbents maintain their core businesses.

For customers navigating this transformation, the analysis suggests that SaaS remains a viable, valuable category but requires sophisticated vendor evaluation. Not all AI implementations are equivalent. Vendors that have built genuine expertise in large language models, autonomous agents, and AI integration will outcompete those treating AI as a checkbox feature. Customers should prioritize vendors demonstrating clear technical depth in AI capabilities and committed roadmaps for integration.

Organizations should also prepare internally for the transition to AI-enhanced workflows. This preparation means more than simply purchasing new software. It requires rethinking how work gets done, preparing employees for interface changes, and building internal expertise in AI implementations. Organizations that make these preparations systematically will reap greater benefits from AI-enhanced SaaS than those attempting to adopt AI passively through vendor features alone.

The transformation of SaaS by AI will be neither as dramatic as the most enthusiastic proponents suggest nor as safe as the most conservative defenders hope. Change will be substantial, competition will intensify, but winners will be determined by execution quality, not predetermined by current market position. Incumbents that embrace AI genuinely and implement it well will thrive. Newcomers that build products genuinely optimized for new AI-enabled workflows will establish themselves. Incumbents that resist or implement AI poorly will lose ground. This is a competitive resetting, not an elimination.

For investors, technologists, and business leaders, understanding this balanced view of how AI transforms SaaS is essential for making informed decisions. The future isn't predetermined. Multiple scenarios could plausibly unfold based on how organizations execute on AI integration, how customers respond to new capabilities and interfaces, and how competitive dynamics play out across different market segments. Success will belong to those who understand these dynamics deeply enough to navigate the transformation effectively.

Key Takeaways

- AI will transform SaaS through natural language interfaces and autonomous agents, not wholesale replacement with vibe-coded alternatives

- Systems of record maintain a defensive moat despite AI disruption because migration costs exceed benefits

- Specialized user interface expertise that protected SaaS incumbents becomes less valuable with natural language interaction

- New AI-native categories are emerging alongside traditional SaaS transformation, creating opportunities for new competitors

- Pricing models are evolving from per-user to usage-based models aligned with AI computational costs

- Databricks demonstrates how established companies can achieve 65% growth by successfully integrating AI into core products

- Organizational resistance and implementation complexity will make AI adoption slower than pure technological capability suggests

- Geographic and regulatory differences will fragment competitive dynamics across global markets

- For customers and leaders, vendor selection increasingly depends on genuine AI implementation quality rather than incumbent status

- The future will belong to companies that execute well on AI integration, not to those with predetermined competitive advantages
