Intel's GPU Offensive: A Historic Shift in Semiconductor Strategy

The semiconductor landscape is experiencing a fundamental realignment. For decades, Intel has dominated the CPU market while largely ceding GPU development to specialized competitors like Nvidia and AMD. But in 2024-2025, Intel's strategic direction has shifted dramatically. Chief Executive Officer Lip-Bu Tan's recent public statements confirm what industry observers have suspected: Intel is mounting a serious, sustained effort to become a credible GPU competitor as reported by WebProNews.

This isn't casual experimentation or incremental improvement. The company has made deliberate leadership hires, allocated significant manufacturing resources through its Intel Foundry Services division, and positioned GPU development as a critical pillar of its future strategy. These moves represent one of the most significant strategic pivots in Intel's nearly 60-year history, according to FindArticles.

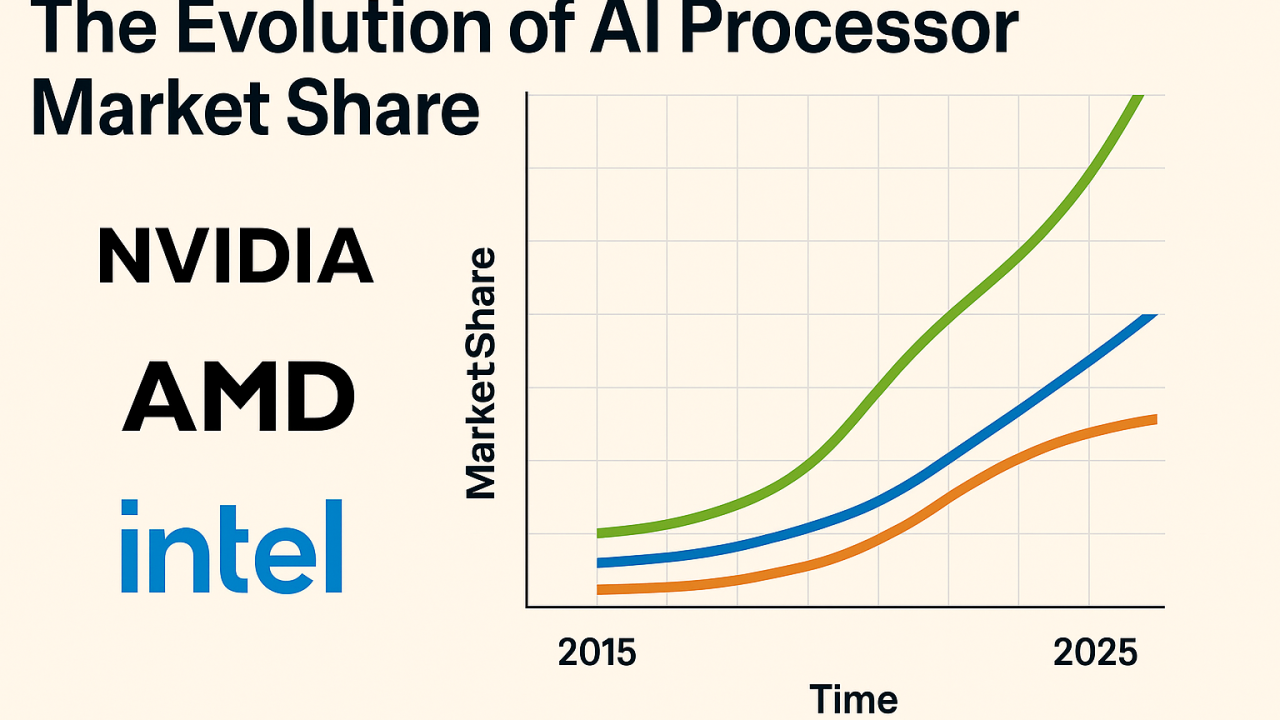

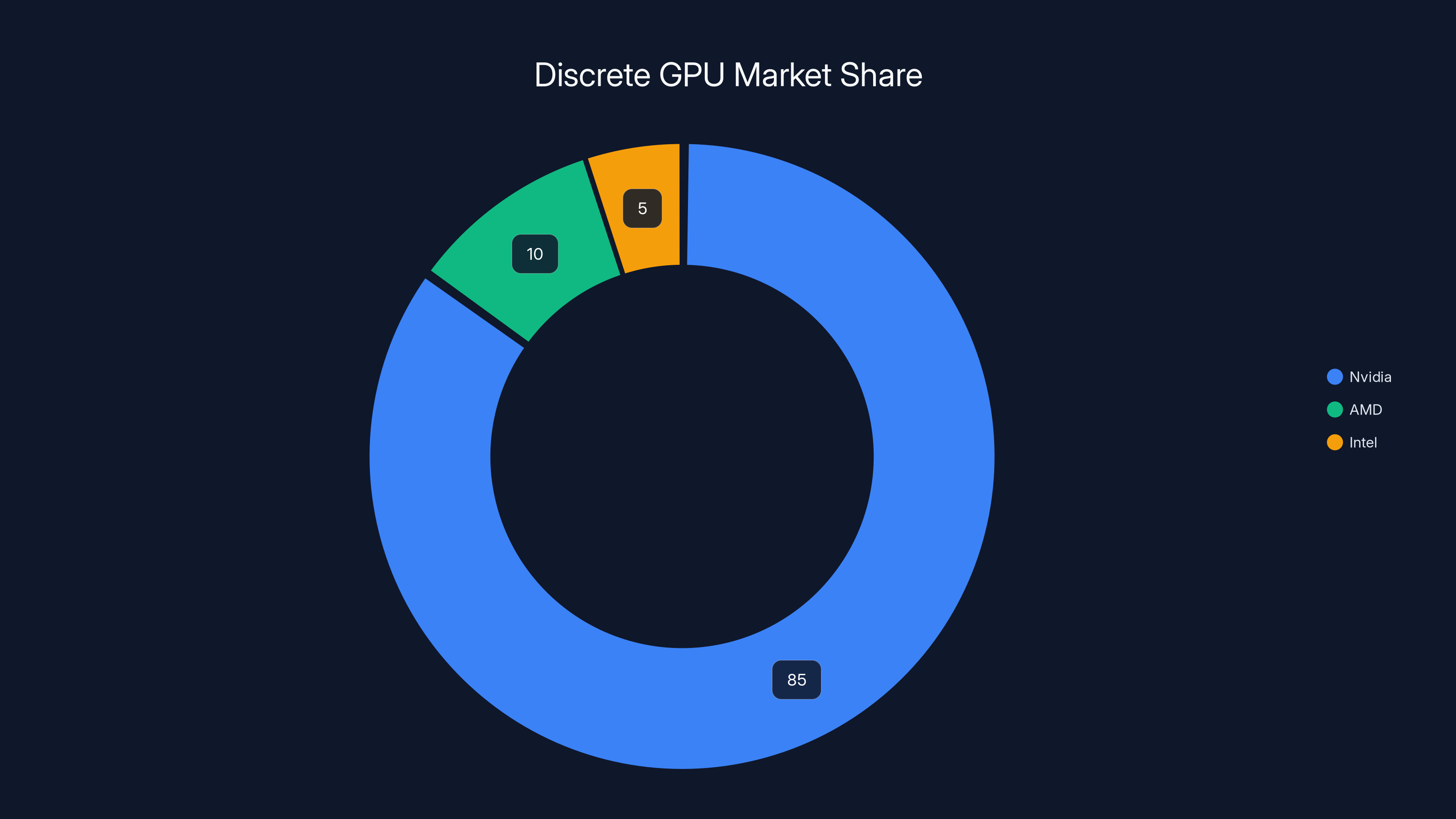

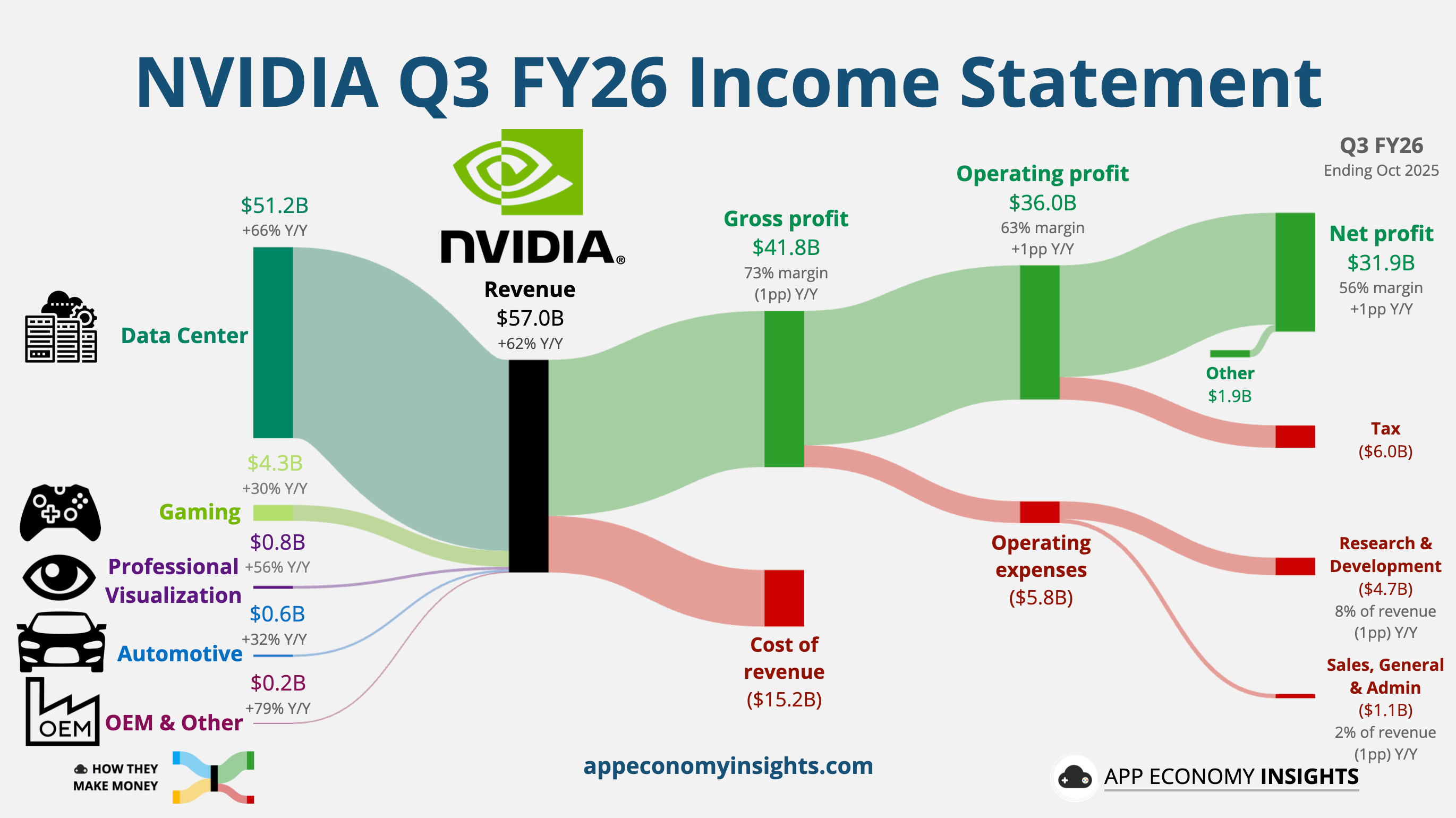

The stakes couldn't be higher. The GPU market has transformed from a niche graphics segment into the epicenter of the trillion-dollar AI revolution. Nvidia's dominance in data center GPUs generates astronomical margins—the company's gross margins exceeded 75% in recent quarters, driven almost entirely by AI accelerator demand as noted by Financial Content. AMD has captured meaningful share in certain segments, but Nvidia maintains commanding market position across consumer, gaming, and enterprise AI workloads as analyzed by Seeking Alpha.

Intel's entry into this market represents a fundamental competitive threat. The company possesses manufacturing capabilities, engineering talent depth, and customer relationships that most competitors simply cannot match. If Intel executes successfully on its GPU roadmap, it could fundamentally alter competitive dynamics, put pricing pressure on Nvidia, and accelerate AI hardware innovation across the industry, as reported by CNBC.

Understanding Intel's GPU strategy requires examining multiple dimensions: the leadership appointments that signal seriousness, the technical architecture decisions that differentiate Intel's approach, the manufacturing advantages Intel can leverage, the competitive landscape Intel must navigate, and the realistic timeline for meaningful market impact. This analysis explores each dimension in depth, providing clarity on what Intel's GPU push means for developers, enterprises, and the broader semiconductor industry.

The Leadership Appointments: Signaling Serious Technical Intent

Eric Demers: Chief GPU Architect from Qualcomm

The appointment of Eric Demers as Intel's Chief GPU Architect represents the most significant signal of Intel's GPU commitment. Demers spent over a decade at Qualcomm, where he led critical GPU architecture development for the Snapdragon platform as detailed by Tom's Hardware. At Qualcomm, Demers worked on Adreno GPU architecture, which powers billions of mobile devices worldwide and competes directly with Apple's GPU designs in performance metrics.

Demers brings several critical capabilities to Intel. First, he understands GPU architecture at a deep systems level—not just individual GPU cores, but how to integrate graphics compute with memory hierarchies, interconnect topology, and manufacturing process nodes. Second, he has proven experience scaling GPU designs across multiple process nodes and power envelopes, from low-power mobile to higher-performance segments. Third, he understands the software ecosystem challenges of launching a new GPU architecture, including compiler optimization, driver development, and developer relations.

Lip-Bu Tan's public comments about Demers' recruitment are particularly revealing. Tan specifically noted that hiring Demers "takes some persuasion," suggesting Intel offered significant compensation and executive authority to attract him. This willingness to commit premium resources to talent acquisition indicates that Intel executives view GPU architecture capability as a strategic bottleneck that justifies substantial investment, as reported by Wccftech.

Demers' track record at Qualcomm provides important clues about what he might accomplish at Intel. The Adreno GPU architecture evolved from generation to generation, improving memory efficiency, increasing compute density, and maintaining competitive performance per watt against Apple's increasingly aggressive GPU designs. Intel is likely betting that Demers can apply similar methodical, incremental architecture improvements to a data center GPU product family, focusing initially on AI inference workloads where time-to-market and competitive positioning matter enormously.

Kevork Kechichian: Data Center Leadership and Organizational Authority

Beyond Demers, Intel elevated Kevork Kechichian as a key GPU development overseer during a broader organizational restructuring. Kechichian previously led Intel's data center business unit, giving him intimate familiarity with enterprise customer requirements, product roadmapping processes, and sales channel dynamics as noted by CRN. His involvement in GPU development signals that Intel isn't treating this as a skunkworks project—it's being integrated into mainstream data center business strategy.

Kechichian's background matters because GPU products succeed or fail partly on engineering excellence and partly on go-to-market strategy. A brilliant GPU architecture means nothing if customers don't adopt it. Kechichian has proven ability to navigate complex enterprise sales environments, manage long product cycles, and maintain customer relationships through development uncertainty. Having him involved suggests Intel is thinking beyond technical specification sheets toward production, marketing, and customer enablement.

The Broader Talent Acquisition Strategy

Beyond these two high-profile hires, Intel has reportedly expanded its GPU engineering team substantially. The company is recruiting experienced GPU architects from competitors, acquiring smaller GPU-focused design firms, and expanding partnerships with universities for GPU research talent. This multi-pronged hiring approach suggests Intel is building not just a small team around a few specialists, but a complete end-to-end GPU organization capable of managing architecture, validation, software, manufacturing integration, and customer enablement as reported by Financial Content.

The competitive implications are significant. When talented engineers leave Nvidia or AMD to join Intel, it signals not just better compensation but belief in Intel's strategic direction. GPU engineers are in extraordinarily high demand—compensation for senior GPU architects at Nvidia routinely exceeds $1 million annually when including equity. Intel's ability to attract this talent suggests the company is offering not just competitive compensation but compelling strategic narratives about the role they'll play in Intel's future.

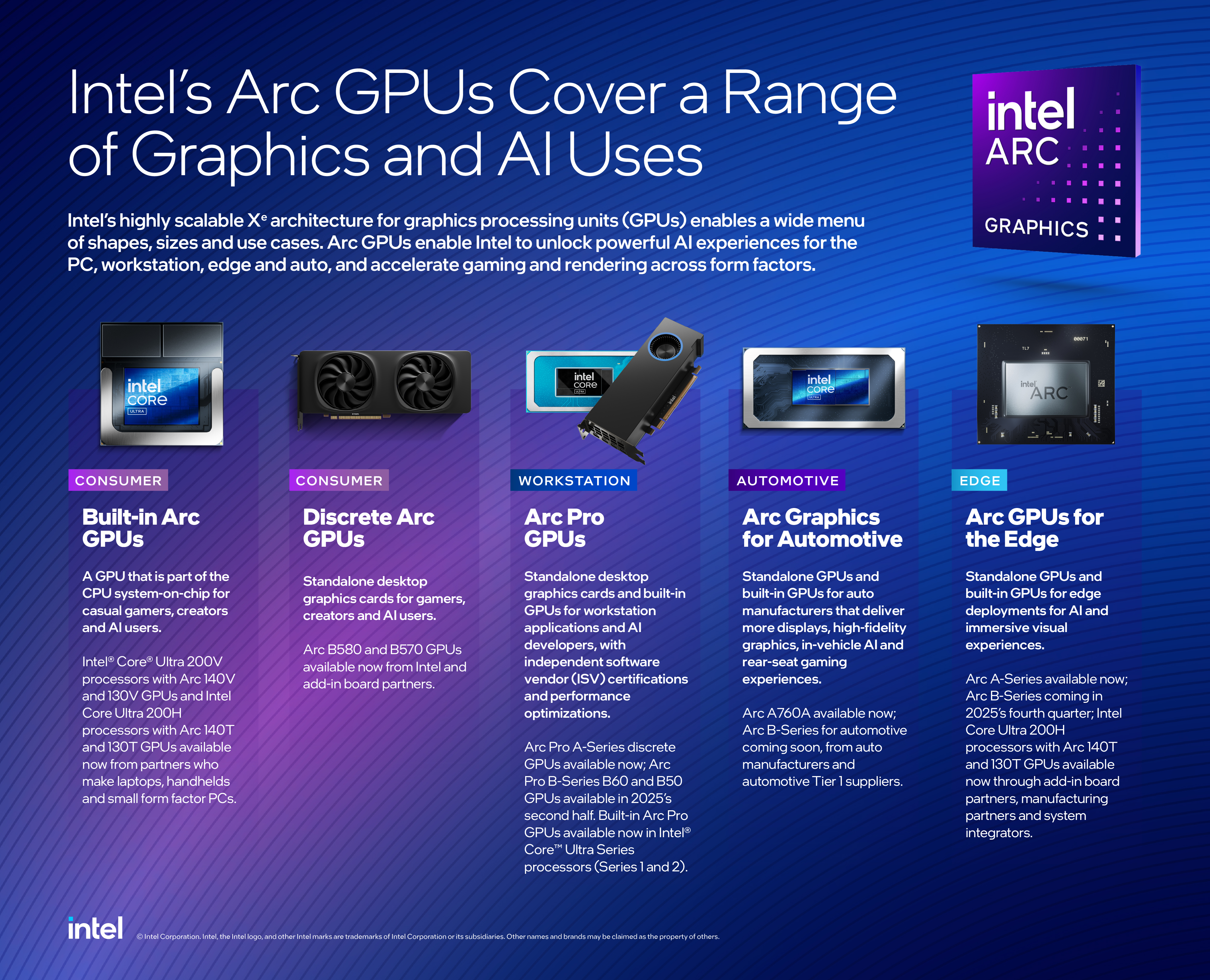

Intel's GPU Architecture Strategy: Learning from Competitors and History

Discrete Data Center GPUs: The Primary Target

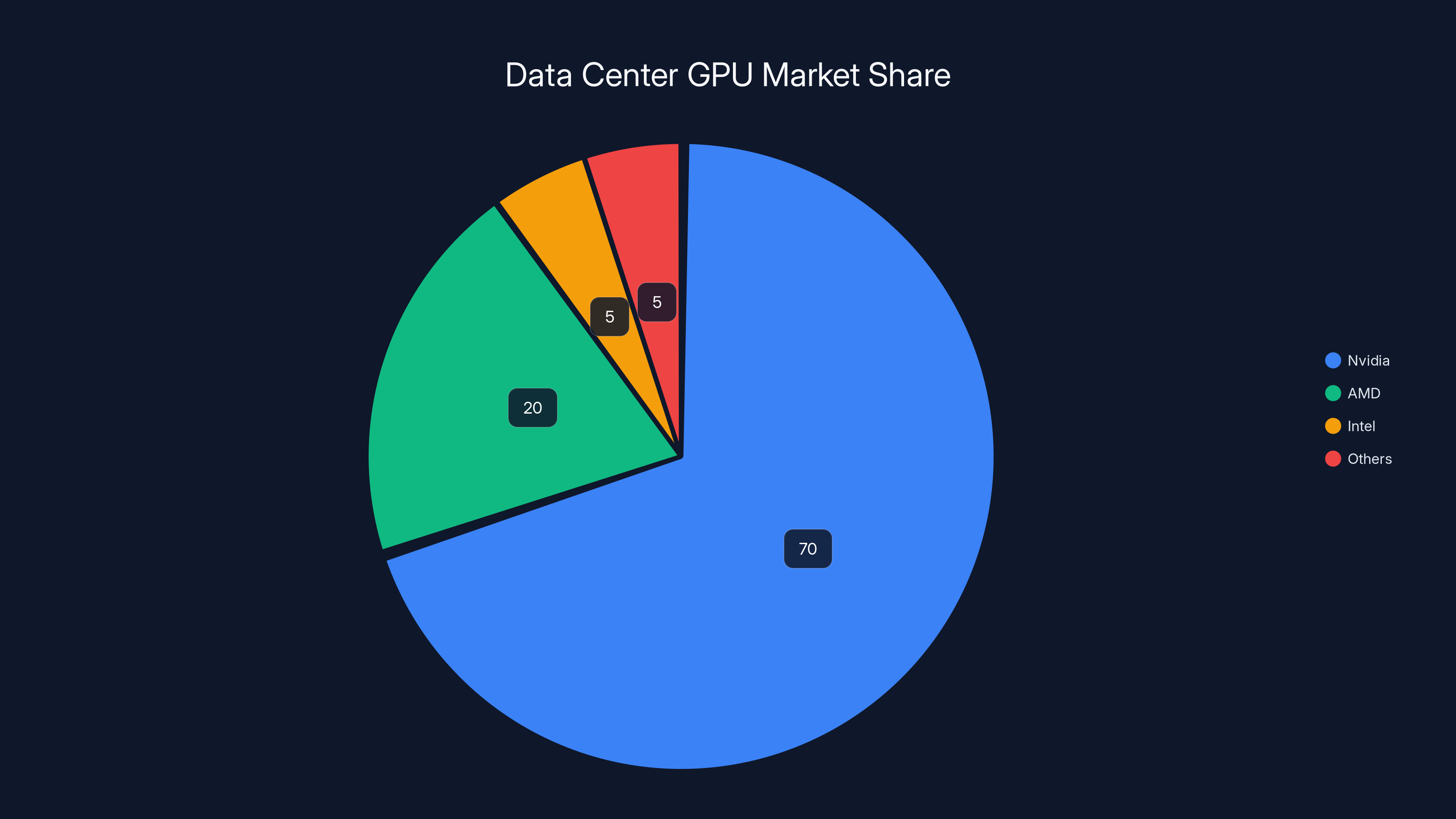

Intel's initial GPU focus centers on discrete data center accelerators, not integrated graphics or consumer gaming GPUs. This strategic choice reveals sophisticated competitive thinking. The data center GPU market is growing fastest, driven by AI training and inference workloads. Nvidia's A100 and H100 GPUs generate margins exceeding 70%, making this segment extraordinarily profitable as reported by Nvidia News. AMD's MI250 and MI300 lines represent the only meaningful competitive threat, and even AMD's data center share lags Nvidia significantly.

Why focus on data center first rather than consumer gaming? Several factors matter. First, enterprise customers evaluate GPUs on raw performance metrics, software maturity, and total cost of ownership—not marketing or brand loyalty. Intel can compete on performance and leverage its superior manufacturing process technology for power efficiency advantages. Second, data center customers are less brand-loyal than consumers; they'll evaluate Intel's offering seriously if performance and price are competitive. Third, data center market growth is more predictable and less subject to consumer preference volatility.

Intel's manufacturing advantages become most visible in the data center segment. Intel's cutting-edge process nodes, whether Intel 7, Intel 4, or next-generation technology, could provide power efficiency advantages over competitors that use older nodes. This translates directly into lower customer operating costs and competitive performance per watt, a key decision criterion for hyperscale data center operators managing electricity bills for millions of GPUs, as discussed by Time.

Process Technology as Competitive Moat

Here's where Intel's strategy becomes particularly sophisticated: the company isn't just building GPUs, it's leveraging its manufacturing prowess as a core competitive advantage. Intel operates some of the world's most advanced semiconductor fabs, capable of producing chips at leading-edge process nodes. Nvidia outsources manufacturing to Taiwan Semiconductor Manufacturing Company (TSMC), which makes it dependent on TSMC's capacity, pricing, and technology roadmap.

Intel's manufacturing control provides several competitive benefits. First, Intel can implement tighter integration between CPU and GPU products, creating systems-level advantages competitors struggle to match. Second, Intel maintains pricing power on GPU components because it controls manufacturing cost structure. Third, Intel can prioritize GPU production capacity without competing for TSMC allocation against other customers.

Historically, Intel stumbled in the discrete GPU market. The company's earlier Larrabee project, which was canceled as a graphics product around 2010, attempted to leverage x86 architecture for GPU compute but ultimately failed due to architectural limitations for graphics workloads. Intel subsequently released discrete graphics cards under the Intel Arc brand for consumer and gaming markets, with mixed results. The company is learning from these failures: Demers' new architecture will likely not force-fit x86 semantics onto GPU design but will instead be a purpose-built GPU architecture optimized for AI workloads and compute-intensive applications, as reviewed by Tom's Hardware.

Targeting AI Inference as Initial Market

Most observers expect Intel's initial GPU launches will target AI inference workloads rather than AI training. Why? Inference represents a larger total addressable market, with different competitive dynamics. Training is hyperscaler-dominated (massive data centers at Google, OpenAI, Meta, Microsoft). Inference is more distributed—every company deploying large language models needs inference hardware, and requirements span from cloud to edge to on-premises deployments.

Inference workloads also impose different performance characteristics. Inference typically emphasizes latency, throughput at batch sizes of 1-32, and power efficiency. Training emphasizes raw peak throughput and scaling efficiency across thousands of GPUs. Intel's architecture can potentially offer competitive performance on inference even if it trails on training workload performance, capturing enormous market share in the higher-volume inference segment as noted by Financial Content.
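The batch-size effect described above can be made concrete with a rough back-of-the-envelope model. The sketch below assumes a simplified view of token generation in which each decoding step streams all model weights from memory once and performs about two floating-point operations per parameter for each sequence in the batch; the step takes as long as the slower of those two costs. The function names and every number (model size, bandwidth, peak compute) are illustrative placeholders, not measurements of any Intel or Nvidia product.

```python
def decode_step_seconds(params, bytes_per_param, batch, bandwidth_bytes_s, peak_flops):
    """Rough time for one decoding step under a two-term bound (assumed model)."""
    # Memory term: all weights are streamed from memory once per step.
    memory_time = params * bytes_per_param / bandwidth_bytes_s
    # Compute term: about 2 FLOPs per parameter for each sequence in the batch.
    compute_time = 2 * params * batch / peak_flops
    return max(memory_time, compute_time)


def tokens_per_second(params, bytes_per_param, batch, bandwidth_bytes_s, peak_flops):
    """Aggregate tokens generated per second across the whole batch."""
    step = decode_step_seconds(params, bytes_per_param, batch, bandwidth_bytes_s, peak_flops)
    return batch / step


if __name__ == "__main__":
    # Hypothetical accelerator and model, chosen only for round numbers.
    PARAMS = 70e9            # 70 billion parameters
    BYTES_PER_PARAM = 2      # 16-bit weights
    BANDWIDTH = 3e12         # 3 TB/s memory bandwidth
    PEAK_FLOPS = 60e12       # 60 teraFLOPS of usable compute
    for batch in (1, 8, 32):
        step_ms = 1000 * decode_step_seconds(PARAMS, BYTES_PER_PARAM, batch, BANDWIDTH, PEAK_FLOPS)
        rate = tokens_per_second(PARAMS, BYTES_PER_PARAM, batch, BANDWIDTH, PEAK_FLOPS)
        print(f"batch={batch:>2}  step={step_ms:6.1f} ms  throughput={rate:7.1f} tokens/s")
```

With these placeholder values, the small batches are limited by memory bandwidth, so extra peak compute would not shorten a step; only at the largest batch does compute become the limit. That is one way to see why an inference-focused design could trade peak throughput for bandwidth.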

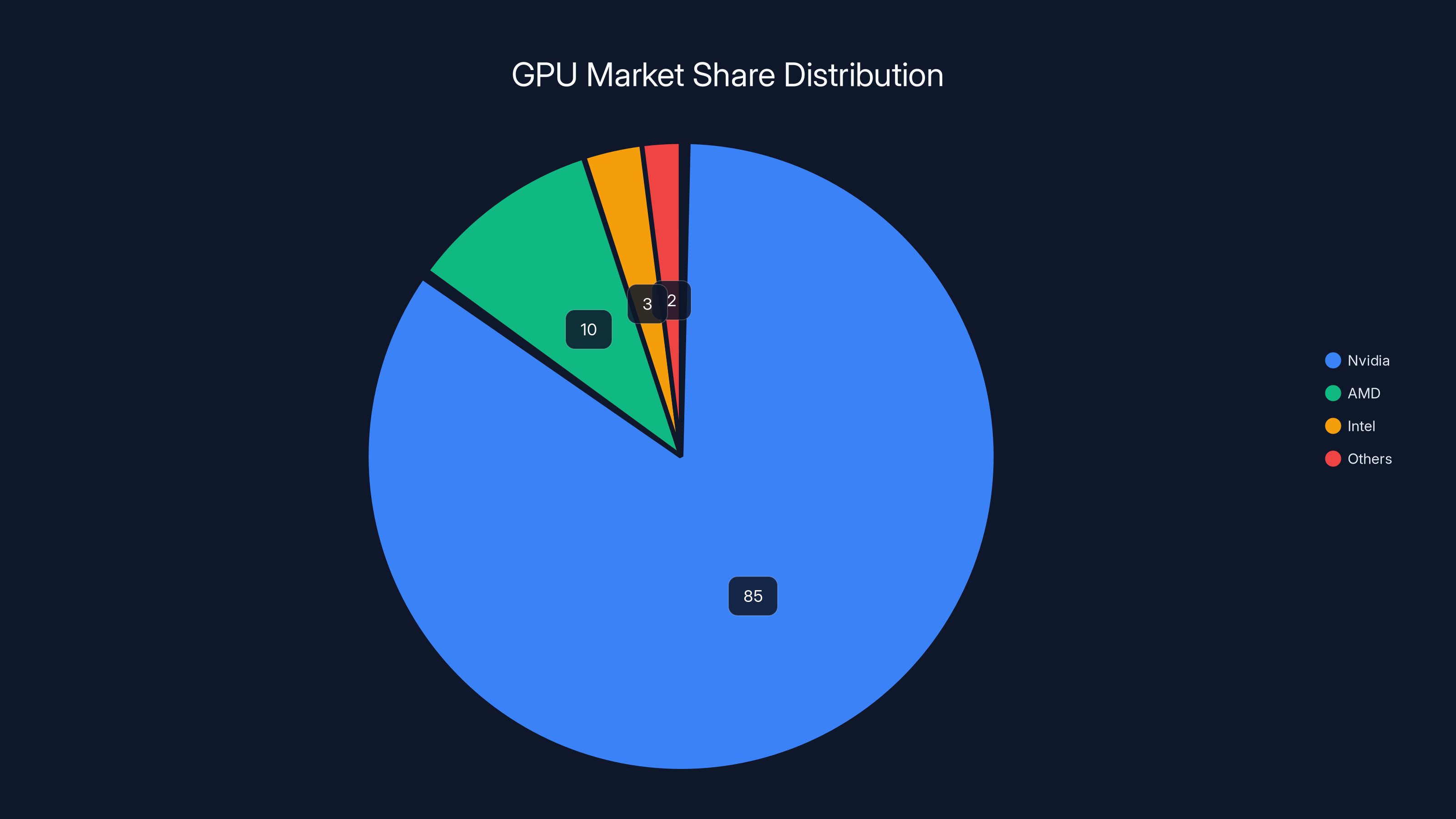

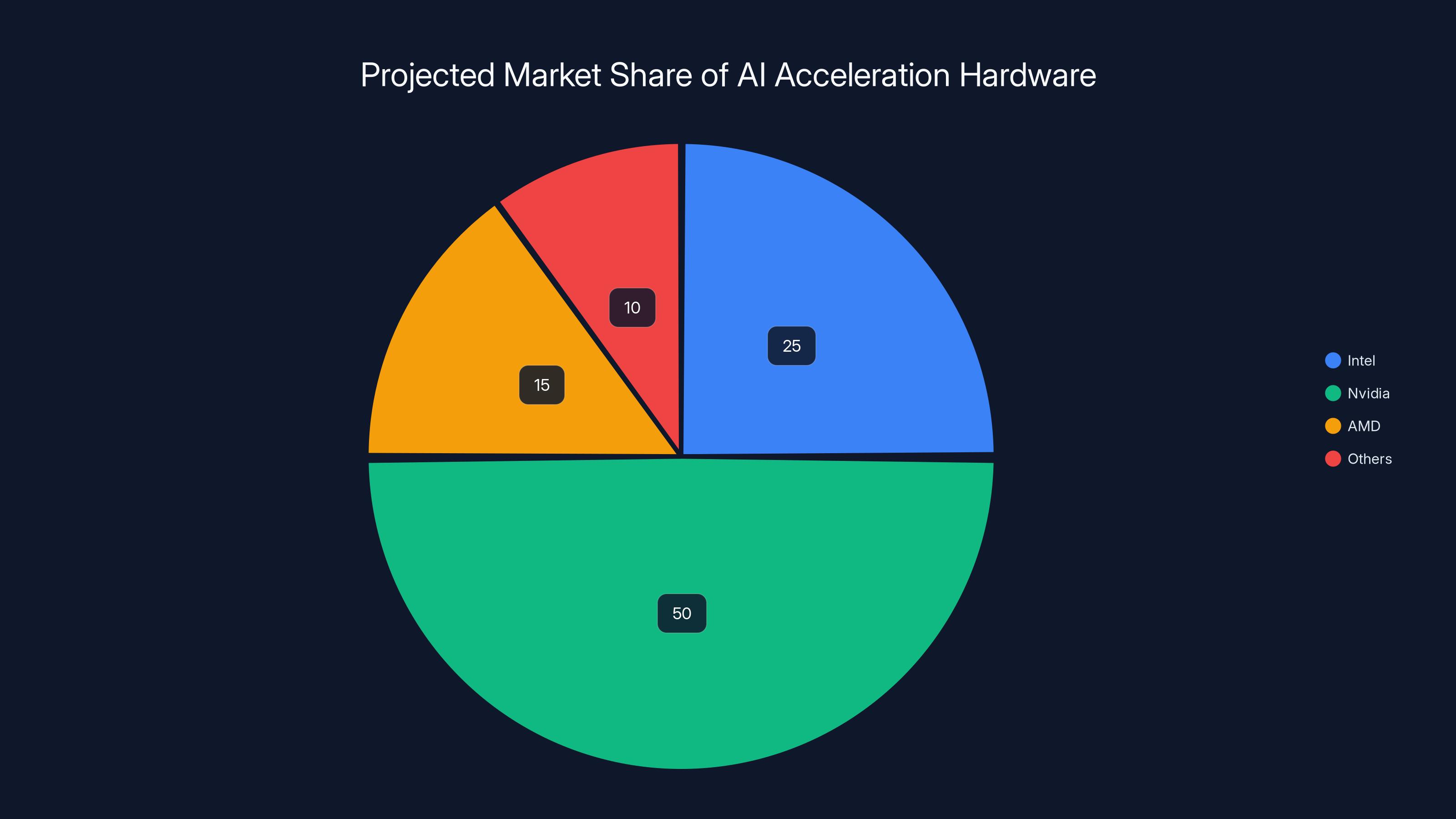

Nvidia dominates the GPU market with an estimated 85% share, while Intel is emerging as a new competitor with a small but growing presence. (Estimated data)

Manufacturing Advantage: Intel Foundry Services and Competitive Differentiation

The Strategic Role of Intel Foundry Services

Intel's commitment to GPU development becomes inseparable from its foundry strategy. Intel Foundry Services (IFS) represents the company's effort to operate as a contract manufacturer like TSMC, accepting manufacturing orders from external customers while also serving Intel's internal product requirements. This dual role creates unique strategic opportunities for GPU development as reported by FindArticles.

When Intel manufactures its own GPUs at IFS fabs, the company gains several advantages. First, it can prioritize production capacity allocation without competing against other customers for manufacturing slots. During periods of high demand (which AI hardware has experienced continuously since 2023), foundry capacity becomes extraordinarily valuable. Intel's own GPUs receive priority scheduling, enabling faster time-to-market and better capacity matching with demand.

Second, Intel gains detailed process technology roadmap information earlier than external competitors. When new manufacturing capabilities become available—whether advanced packaging, chiplet interconnect improvements, or power delivery enhancements—Intel's GPU team can incorporate these advantages immediately into product design. TSMC customers learn about new capabilities on published roadmaps, with significant delays between announcement and actual production availability.

Third, Intel can implement vertical integration opportunities that competitors cannot match. The company could combine leading-edge CPU cores with specialized GPU compute elements on the same die, creating differentiated products not achievable through separate components. Such integration could target specific workloads like AI inference combined with real-time data processing, where system-level optimization provides meaningful performance advantages as discussed by WebProNews.

Cost Structure Implications

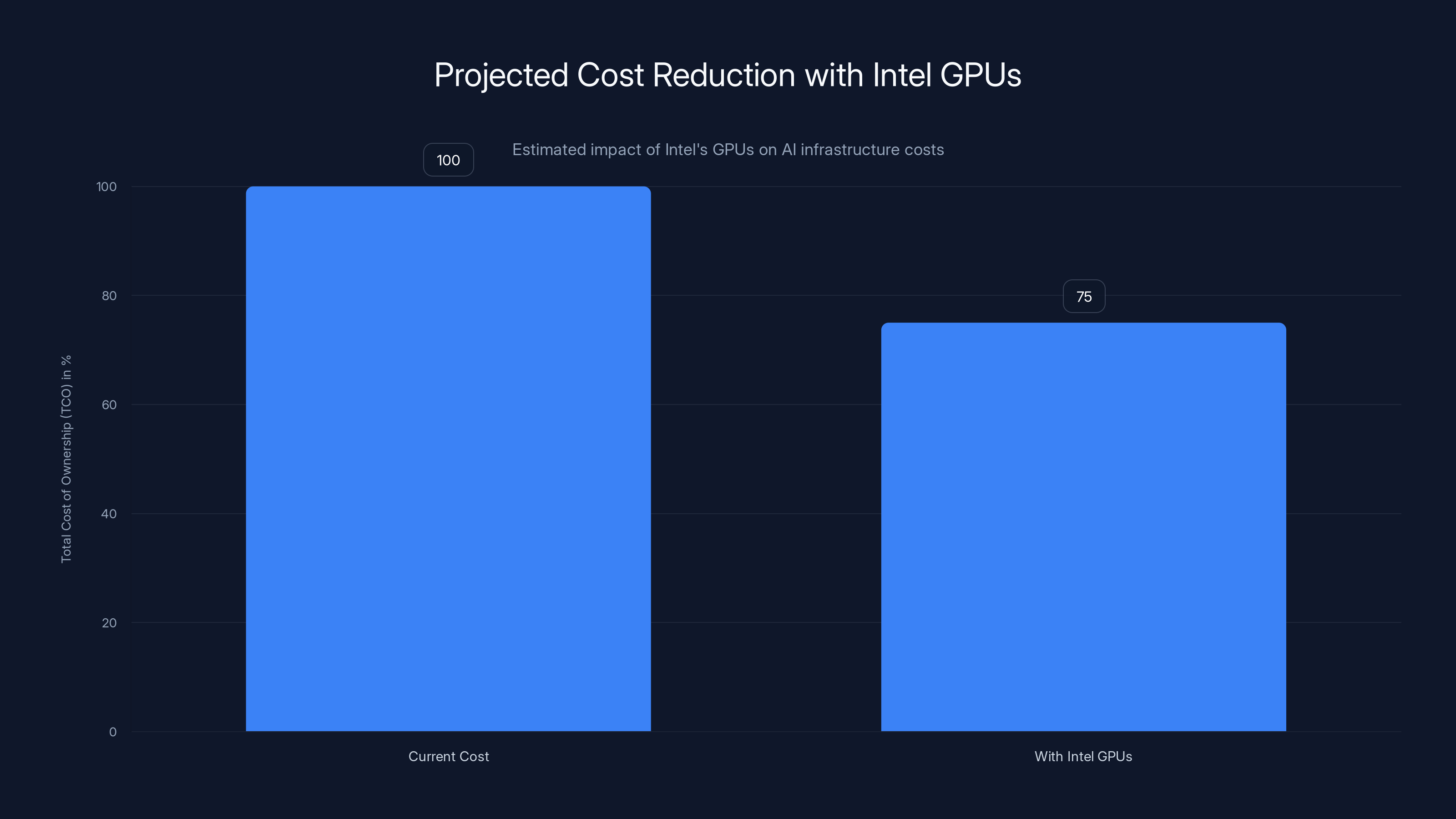

Intel's manufacturing advantage also translates into cost structure benefits that ripple through product pricing strategy. Manufacturing cost represents perhaps 30-40% of the total cost of goods sold for high-end GPUs. Intel's advanced manufacturing processes, optimized for GPU production, potentially deliver lower per-unit manufacturing costs than competitors relying on external foundries.

Lower manufacturing costs enable several strategic pricing options. Intel could price GPUs below Nvidia's pricing, capturing market share through competitive pricing. This would immediately impact Nvidia's margins and force the company to respond—either accepting lower margins or improving product performance to justify pricing. Alternatively, Intel could maintain pricing competitive with Nvidia while capturing superior margins, improving profitability faster than competitors.

The competitive dynamics matter enormously. Nvidia's gross margins on data center GPUs exceed 75%, far above normal semiconductor industry margins of 45-55%. This margin premium reflects Nvidia's market power and limited competition. Introduction of serious price competition from Intel, backed by manufacturing cost advantages, would compress Nvidia's margins and change the financial profile of GPU businesses dramatically. Over a 5-10 year period, the margin impact could exceed hundreds of billions of dollars, making Intel's GPU investment potentially one of the highest-ROI strategy decisions the company could pursue as noted by Financial Content.
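The pricing options above come down to simple margin arithmetic. The sketch below normalizes an incumbent's price to 100 and derives its cost of goods sold from the 75% gross margin cited in the text. The manufacturing share of COGS (35%) sits inside the article's 30-40% range, but the 20% manufacturing saving and the 50% target margin are purely hypothetical inputs, and the function names are invented for illustration.

```python
def gross_margin(price, cogs):
    """Gross margin as a fraction of the selling price."""
    return (price - cogs) / price


def cogs_with_manufacturing_saving(cogs, manufacturing_share, saving):
    """Cost of goods sold after cutting only the manufacturing portion.

    manufacturing_share: fraction of COGS that is manufacturing.
    saving: hypothetical fractional reduction in manufacturing cost.
    """
    return cogs * (1 - manufacturing_share * saving)


def price_for_margin(cogs, target_margin):
    """Selling price needed to earn target_margin on the given COGS."""
    return cogs / (1 - target_margin)


if __name__ == "__main__":
    price = 100.0                          # normalized incumbent price
    incumbent_cogs = price * (1 - 0.75)    # implied by a 75% gross margin
    # Assumption: manufacturing is 35% of COGS and the challenger saves 20% on it.
    challenger_cogs = cogs_with_manufacturing_saving(incumbent_cogs, 0.35, 0.20)
    print(f"challenger COGS: {challenger_cogs:.2f}")
    print(f"margin at incumbent price: {gross_margin(price, challenger_cogs):.1%}")
    # Price at which the challenger still earns a 50% margin (assumed target).
    print(f"price for 50% margin: {price_for_margin(challenger_cogs, 0.50):.2f}")
```

Changing the assumed saving or target margin shows how the two levers in the text interact: a lower cost base raises margin at an unchanged price, and accepting an industry-typical margin opens room for a much lower price.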

Competitive Landscape: Understanding Nvidia's Position and AMD's Challenge

Nvidia's Market Dominance and Vulnerability

Nvidia currently dominates the discrete GPU market with an estimated 80-90% share of data center AI accelerator revenue. The company's H100 GPU represents the industry standard for AI training and remains highly competitive for inference workloads as reviewed by Tom's Hardware. Nvidia's CUDA ecosystem—the software framework developers use to write GPU-accelerated applications—represents perhaps the company's most durable competitive moat. Thousands of libraries, frameworks, and applications support CUDA, creating switching costs that lock in existing customers.

However, Nvidia's dominance contains latent vulnerabilities that Intel could exploit. First, customer dissatisfaction with Nvidia's pricing power continues growing. Hyperscalers and enterprises openly discuss GPU costs as a constraint on AI model deployment. Second, CUDA's software dominance is weakening as open standards like OpenCL and SYCL gain adoption. Third, customer desire for manufacturing diversification—to avoid single-supplier risk—creates demand for viable GPU alternatives even if performance isn't identical to Nvidia's latest generation.

Intel's strategy exploits these vulnerabilities effectively. The company doesn't need to match Nvidia's cutting-edge performance immediately. Early products that deliver 80-90% of Nvidia's performance at 20-30% lower total cost of ownership would capture meaningful market share. Enterprise customers, particularly those managing dozens or hundreds of thousands of GPUs, view total cost of ownership as a primary decision criterion. A competitive Intel GPU could reshape buying decisions across the entire hyperscaler market as noted by Financial Content.
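The performance and cost ranges above can be combined into a single figure of merit: performance per dollar of total cost of ownership, relative to the incumbent. The sketch below is a minimal illustration that treats both quantities as simple ratios; the function name is hypothetical, and real TCO comparisons would also account for software porting, power, and utilization.

```python
def relative_performance_per_dollar(performance_ratio, tco_discount):
    """Challenger performance per TCO dollar, relative to the incumbent (1.0 = parity)."""
    if not 0 <= tco_discount < 1:
        raise ValueError("tco_discount must be in [0, 1)")
    return performance_ratio / (1 - tco_discount)


if __name__ == "__main__":
    # Corners of the ranges quoted in the text: 80-90% performance, 20-30% lower TCO.
    for perf in (0.80, 0.90):
        for discount in (0.20, 0.30):
            value = relative_performance_per_dollar(perf, discount)
            print(f"performance={perf:.0%}  TCO discount={discount:.0%}  relative value={value:.2f}x")
```

At the weakest corner of those ranges (80% of the performance at 20% lower cost) the result is parity, while the strongest corner (90% at 30% lower cost) works out to roughly 1.29 times the incumbent's performance per dollar. Where a product lands inside the range therefore matters a great deal.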

AMD's Partial Success and Strategic Position

AMD represents Intel's most immediate competitive reference point. The company successfully developed RDNA architecture GPUs that compete with Nvidia in consumer gaming and has invested in the ROCm software ecosystem as an open-source alternative to CUDA. However, AMD has struggled to translate this success into meaningful data center share. Why?

Several factors limit AMD's success in the data center. First, the ROCm software ecosystem remains substantially less mature than CUDA, with fewer optimized applications and libraries. Developers continue preferring CUDA for new projects, even when AMD hardware might be competitive. Second, AMD's manufacturing is outsourced to TSMC, leaving it with the same capacity and cost-structure constraints that Nvidia faces. Third, AMD has smaller sales and customer engineering organizations than Nvidia, limiting its ability to support complex enterprise deployments.

Intel enters the market with several advantages over AMD's position. First, Intel's manufacturing control addresses the TSMC capacity constraint that limits AMD's ability to ramp production. Second, Intel's existing data center relationships and sales infrastructure provide superior distribution channels compared to AMD. Third, Intel can potentially achieve better software integration if the company targets inference-optimized architecture different from AMD's approach. Fourth, Intel's process technology roadmap provides credible path to performance improvement, making the company a more compelling long-term partner than AMD.

From AMD's perspective, Intel's entry both threatens existing progress and potentially validates AMD's strategy. If Intel successfully launches competitive GPUs, it validates the market opportunity and reduces the risk that hyperscalers will over-commit to Nvidia. Paradoxically, strong Intel competition could help AMD, because a credible third supplier makes multi-vendor GPU procurement more routine and forces Nvidia to defend share on more than one front, as analyzed by Seeking Alpha.

Alternative Architectures and Software Ecosystems

Beyond Nvidia and AMD, emerging competitors like Cerebras, Graphcore, and others have attempted to build specialized AI accelerators using novel architectures. Most of these companies have struggled to achieve meaningful market traction or profitability. Their challenges provide important lessons for Intel.

Specialized architectures often fail because they optimize for specific workloads but struggle with generality and software ecosystem breadth. A company that designs GPUs optimized for large language model inference might discover that customers want to run dozens of different model architectures, each with different optimization requirements. Building software support for this diversity requires enormous investment that startup-scale companies cannot sustain.

Intel's strategy avoids this trap by designing GPUs for general-purpose compute, not specialized workloads. Intel GPUs will run training, inference, graphics, scientific simulation, and custom applications. This generality reduces performance optimization opportunities but dramatically improves market addressability and software ecosystem viability. Developers building AI applications will write for Intel GPUs even if the hardware is not optimized for every possible model architecture, knowing their code can run across diverse customer environments.

Nvidia dominates the data center GPU market with an estimated 70% share, while AMD holds around 20%. Intel is entering the market with a focus on leveraging its manufacturing advantages. (Estimated data)

Technical Architecture Decisions: Learning from Demers' Track Record

GPU Compute Architecture Fundamentals

Intel's GPU architecture will face several critical design decisions that determine competitive viability. Modern GPU architecture divides compute capability into clusters of processing elements, typically arranged in symmetric configurations. Nvidia's H100 in its SXM form contains 16,896 CUDA cores organized into 132 streaming multiprocessors. AMD's MI300X arranges compute elements differently, using 19,456 stream processors across 304 compute units, as reviewed by Tom's Hardware.

Intel's architecture must balance several competing objectives. The company needs sufficient compute density to match or exceed competitor performance at equivalent power budgets. Compute efficiency, measured in floating-point operations per second per watt, determines how many GPUs a data center can power within electrical infrastructure constraints. Hyperscalers provision a fixed power budget for each GPU and each rack, creating hard power envelope constraints.

Within power constraints, Intel must also optimize memory bandwidth, cache efficiency, and latency characteristics. AI workloads vary enormously in memory access patterns. Large language model inference typically involves moving enormous amounts of data from memory into compute elements, making memory bandwidth a critical bottleneck. Training workloads exhibit different characteristics, with more temporal locality that benefits from larger caches. Intel's architecture must balance these competing requirements as reported by CNBC.

Eric Demers' experience at Qualcomm suggests he understands these tradeoffs intimately. The Adreno architecture evolved significantly across mobile processor generations, improving performance per watt through careful optimization of compute element organization, memory hierarchies, and cache configurations. Intel likely expects similar iterative improvement in data center GPU architecture across successive product generations as noted by Wccftech.

Memory Subsystem and Bandwidth Optimization

Memory bandwidth represents perhaps the most critical constraint for AI workloads. A GPU with enormous compute capability but inadequate memory bandwidth cannot feed compute elements with data quickly enough, resulting in poor utilization. Nvidia's H100 SXM provides about 3.35 TB/second of memory bandwidth alongside roughly 67 teraFLOPS of peak FP32 compute, with far higher tensor-core throughput at reduced precision. AMD's MI300 provides bandwidth in similar proportion to its compute performance, as reviewed by Tom's Hardware.
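The relationship between bandwidth and compute described above is commonly summarized by the roofline model, in which attainable throughput is the lower of peak compute and memory bandwidth multiplied by arithmetic intensity (floating-point operations per byte moved). The sketch below is a minimal version of that model. The peak and bandwidth figures are round placeholders rather than vendor specifications, and the function names are chosen here for illustration.

```python
def attainable_flops(arithmetic_intensity, peak_flops, bandwidth_bytes_s):
    """Roofline bound: the lower of the compute roof and the memory roof.

    arithmetic_intensity: FLOPs performed per byte moved from memory.
    """
    return min(peak_flops, arithmetic_intensity * bandwidth_bytes_s)


def ridge_point(peak_flops, bandwidth_bytes_s):
    """Arithmetic intensity above which a kernel stops being memory-bound."""
    return peak_flops / bandwidth_bytes_s


if __name__ == "__main__":
    PEAK = 60e12        # hypothetical 60 teraFLOPS
    BANDWIDTH = 3e12    # hypothetical 3 TB/s
    print(f"ridge point: {ridge_point(PEAK, BANDWIDTH):.0f} FLOPs/byte")
    for intensity in (1, 5, 20, 100):
        utilization = attainable_flops(intensity, PEAK, BANDWIDTH) / PEAK
        print(f"intensity={intensity:>3} FLOPs/byte  utilization={utilization:.0%}")
```

Kernels whose intensity falls below the ridge point leave compute units idle no matter how many there are, which is the "poor utilization" case the paragraph describes. Raising bandwidth moves the ridge point lower and brings more workloads up to the compute roof.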

Intel can potentially exceed competitor bandwidth through manufacturing advantages. The company's advanced process nodes enable denser memory interfaces and more efficient power delivery to memory subsystems. Wider memory buses and higher-frequency memory clocks become feasible with leading-edge manufacturing, potentially providing Intel GPUs with bandwidth advantages even if compute performance is comparable to competitors.

Memory hierarchy design also matters enormously. Modern GPUs include multiple cache levels—register files, L1 cache, L2 cache, and sometimes L3 cache—creating hierarchical memory systems where data locality significantly impacts performance. Demers likely understands optimal cache hierarchy organization for various workload types. Intel's architecture can potentially improve cache efficiency beyond competitors through careful design of data movement patterns and cache coherency protocols.

Power Delivery and Thermal Architecture

As GPUs consume more power, power delivery and thermal management become critical engineering challenges. The H100 operates at 700 watts peak power consumption, requiring sophisticated power delivery networks and thermal solutions. Scaling to thousands of GPUs in data centers creates facility-level constraints on total power, cooling capacity, and physical deployment density as reviewed by Tom's Hardware.
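To see how per-GPU power turns into a facility-level constraint, consider a simple budget calculation. The sketch below divides a fixed power allocation by the power drawn per GPU, scaled up by an overhead multiplier for host servers, networking, and cooling. The 700-watt figure comes from the text; the 1 MW budget, the 1.5 multiplier, and the lower-power alternatives are hypothetical planning inputs, not data from any operator.

```python
import math


def gpus_within_budget(facility_watts, gpu_watts, overhead_factor):
    """Whole GPUs that fit in a power budget.

    overhead_factor scales GPU power up to cover host servers, networking,
    and cooling; it is an assumed planning multiplier, not a measured value.
    """
    if gpu_watts <= 0 or overhead_factor < 1:
        raise ValueError("gpu_watts must be positive and overhead_factor at least 1")
    return math.floor(facility_watts / (gpu_watts * overhead_factor))


if __name__ == "__main__":
    BUDGET = 1_000_000   # hypothetical 1 MW facility allocation
    OVERHEAD = 1.5       # hypothetical multiplier for non-GPU power
    for watts in (700, 600, 500):
        count = gpus_within_budget(BUDGET, watts, OVERHEAD)
        print(f"{watts} W per GPU -> {count} GPUs")
```

Under these assumptions, every reduction in per-GPU power directly increases how many accelerators fit in the same facility, which is why performance per watt can matter as much as peak performance to data center buyers.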

Intel's manufacturing advantages become visible in power delivery architecture. The company's advanced process nodes enable more efficient power conversion, lower parasitic resistance in power distribution networks, and better thermal properties. This translates to lower power loss and superior thermal efficiency compared to competitors using older process nodes.

Thermal architecture also affects practical deployment. Data centers prefer GPUs that can be cooled efficiently through standard liquid cooling systems without requiring exotic thermal solutions. Intel can potentially design GPUs with superior thermal characteristics—more uniform heat distribution, lower peak thermal density, better integration with standard cooling infrastructure—that provide deployment advantages relative to competitors.

Software Ecosystem Strategy: Beyond Drivers to Full Software Stack

The CUDA Moat: Challenges and Opportunities

Nvidia's CUDA ecosystem represents perhaps the company's most durable competitive advantage. CUDA consists of multiple components: a programming model that developers use to write GPU-accelerated code, compilers that translate CUDA code into GPU instructions, libraries optimized for common operations, and runtime systems that manage GPU execution as noted by Nvidia News.

Intel faces a strategic choice regarding software strategy. The company could attempt to build a CUDA-equivalent framework from scratch, targeting long-term parity but requiring years of development and ecosystem building. Alternatively, Intel could leverage open standards like SYCL and OpenCL, potentially offering faster time-to-market and broader compatibility but sacrificing some performance optimization opportunities.

Most likely, Intel will pursue a hybrid strategy. The company will probably build comprehensive SYCL support and remain open to standard frameworks, while also developing Intel-specific optimizations that perform well on Intel GPUs. This approach balances time-to-market against long-term ecosystem strength. Developers who write portable SYCL code can deploy on Intel GPUs immediately, while developers optimizing specifically for Intel architecture can achieve superior performance through Intel-specific tuning.

Intel's approach differs from AMD's strategy. AMD invested heavily in the ROCm software ecosystem, building ROCm equivalents to CUDA features. However, ROCm development has been uneven, with inconsistent support for various frameworks and lower developer adoption than hoped. Intel is likely learning from AMD's experience by pursuing software compatibility and ease of use without trying to be 100% CUDA-equivalent, which is an unwinnable strategy, as analyzed by Seeking Alpha.

Framework Support and Library Development

Beyond low-level programming models, Intel must ensure that high-level frameworks popular with AI developers support Intel GPUs efficiently. PyTorch, TensorFlow, JAX, and other frameworks account for the vast majority of AI development. If Intel GPUs integrate well into these frameworks with minimal code changes, adoption accelerates dramatically. If developers must write specialized code for Intel GPUs, adoption suffers.

Intel's strategy likely involves multiple layers of support. At the framework level, Intel will work with PyTorch and TensorFlow teams to ensure their GPU abstraction layers support Intel GPUs. At the library level, Intel will develop or optimize core libraries like oneDNN (the oneAPI Deep Neural Network Library) to provide excellent performance on Intel GPUs. At the application level, Intel will potentially provide pre-optimized models and reference implementations for popular workloads like large language models and diffusion models.

This tiered approach recognizes that different customers have different requirements. Hyperscalers with custom applications need excellent low-level support and access to raw GPU capabilities. Enterprise customers running standard applications need excellent performance on standard frameworks. Intel must support both use cases effectively.

Developer Relations and Ecosystem Cultivation

Building a software ecosystem requires more than technical excellence; it requires systematic developer engagement. Nvidia invests heavily in developer relations, sponsoring conferences, maintaining extensive documentation, providing developer support, and funding research partnerships. Intel must match this investment to attract developer mindshare, as noted by Nvidia News.

Intel likely plans significant investment in developer programs, including funding for developers targeting Intel GPUs, technical training and certification programs, and close collaboration with key open-source projects. The company could also provide early hardware access to researchers and leading developers, building excitement and commitment before commercial launches.

Estimated data suggests Nvidia will maintain a leading position in AI hardware market share by 2030, but Intel is projected to capture a significant portion due to its strategic investments and capabilities.

Market Segmentation Strategy: Where Intel Can Compete Effectively

Data Center Inference Workloads: The Primary Opportunity

Intel's most immediate opportunity involves AI inference in data centers. The inference market is larger than training in terms of total deployment volume and continues growing rapidly. Every organization deploying large language models requires inference infrastructure. This creates a heterogeneous market with diverse customer requirements: hyperscalers, cloud providers, enterprises, and on-premises deployments all need inference hardware.

Inference workloads impose different requirements than training. Inference emphasizes latency (how quickly a single request processes) and throughput (how many concurrent requests the GPU processes). These characteristics enable different GPU design tradeoffs than training optimization would suggest. Intel can potentially design inference-optimized GPUs that deliver competitive or superior performance for inference even if training performance lags competitors.

The inference market also exhibits favorable pricing dynamics. While Nvidia commands premium pricing for H100 GPUs used in training, customers show greater price sensitivity for inference GPUs. They're willing to evaluate alternatives if total cost of ownership improves, creating opportunity for Intel to capture share through competitive positioning as noted by Financial Content.

Edge Computing and Specialized Deployments

Beyond hyperscale data centers, Intel can address edge computing and specialized deployment scenarios. Autonomous vehicles, robotics, industrial automation, and real-time inference systems increasingly require local AI processing capability without reliance on cloud infrastructure. These deployments emphasize power efficiency, thermal efficiency, and real-time latency guarantees more than pure peak performance.

Intel's manufacturing advantages become especially visible in these segments. Efficient power delivery and thermal design enable edge deployments that competitors struggle to support within power and thermal envelopes. Intel could develop specialized SKUs optimized for edge scenarios, differentiating from competitors through deployment efficiency rather than pure performance metrics as discussed by Time.

Enterprise and Mid-Market Adoption

Large enterprises deploying AI internally represent another target segment. These organizations often prioritize supply chain diversification, existing IT relationships, and integration with existing infrastructure over pure performance metrics. An enterprise already using Intel CPUs in their data centers might preferentially adopt Intel GPUs to simplify procurement, reduce supply chain complexity, and integrate GPUs more tightly with existing systems.

Intel's sales organization and existing relationships provide advantages in reaching enterprise customers. The company's large field sales team and established enterprise accounts can introduce GPU offerings through existing relationships, reducing customer acquisition costs compared to startups or companies without established enterprise presence.

Manufacturing Scale and Production Ramp Timeline

Building Sufficient Manufacturing Capacity

Successfully executing Intel's GPU strategy requires not just excellent architecture but also the ability to manufacture GPUs at scale. Nvidia's challenge involves securing sufficient TSMC capacity to ramp production. Intel avoids this constraint by manufacturing internally, but faces different challenges: ramping new product manufacturing at leading-edge process nodes is extraordinarily difficult.

Process node ramp involves discovering and fixing defects, optimizing yield, and ramping production volume while maintaining quality. Intel has ramped new process nodes successfully for CPUs, but GPU ramp adds complexity because GPUs contain different design characteristics than CPUs. Yield typically starts lower than expected, requiring months of debugging before reaching acceptable production efficiency.

Intel's timeline likely anticipates 12-18 months from design tape-out (completion of final design and submission to manufacturing) to volume production achieving acceptable yields. Early production will likely emphasize smaller GPU SKUs with lower complexity before ramping larger, higher-complexity products. This enables yield improvement through experience while generating initial revenue to fund larger-scale deployment as reported by FindArticles.
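One way to see why smaller dies are attractive early in a ramp is the textbook Poisson yield model, in which yield falls exponentially with die area times defect density. The sketch below uses that simplified model; the die areas and defect densities are illustrative assumptions, not Intel data.

```python
import math


def poisson_yield(die_area_mm2, defects_per_cm2):
    """Fraction of good dies under the simple Poisson model Y = exp(-D0 * A)."""
    area_cm2 = die_area_mm2 / 100.0  # 100 mm^2 per cm^2
    return math.exp(-defects_per_cm2 * area_cm2)


# Hypothetical defect densities for an immature and a mature process.
for label, d0 in (("early ramp", 0.5), ("mature", 0.1)):
    small = poisson_yield(200, d0)   # assumed small die, 200 mm^2
    large = poisson_yield(800, d0)   # assumed large die, 800 mm^2
    print(f"{label}: small die {small:.1%}, large die {large:.1%}")
```

With these assumed inputs, the 200 mm² die yields about 37% at the early-ramp defect density while the 800 mm² die yields about 2%, which is why a small-die-first sequence can make economic sense before the process matures.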

Supply Chain and Component Integration

GPU manufacturing requires sophisticated supply chains for memory, packaging, testing, and assembly. HBM (High Bandwidth Memory) represents a critical component for high-performance GPUs, with supply constrained by limited HBM manufacturers (SK Hynix, Samsung, Micron). Intel must secure long-term HBM supply agreements, likely negotiating exclusive capacity allocation to ensure production ramp doesn't face memory supply constraints.

Advanced packaging technology represents another critical component. Leading-edge GPUs use chiplet designs where compute elements and memory controllers sit on separate dies, connected through advanced interconnect. These interconnects require specialized packaging. Intel can either build on its in-house packaging technologies, such as EMIB and Foveros, or partner with companies like TSMC or Samsung for advanced packaging services.

Intel likely plans phased supply chain maturation. Initial products might use simpler monolithic designs without advanced chiplet interconnect, enabling faster ramp. Subsequent generations could introduce advanced chiplet designs once supply chain partners achieve necessary capability and yield.

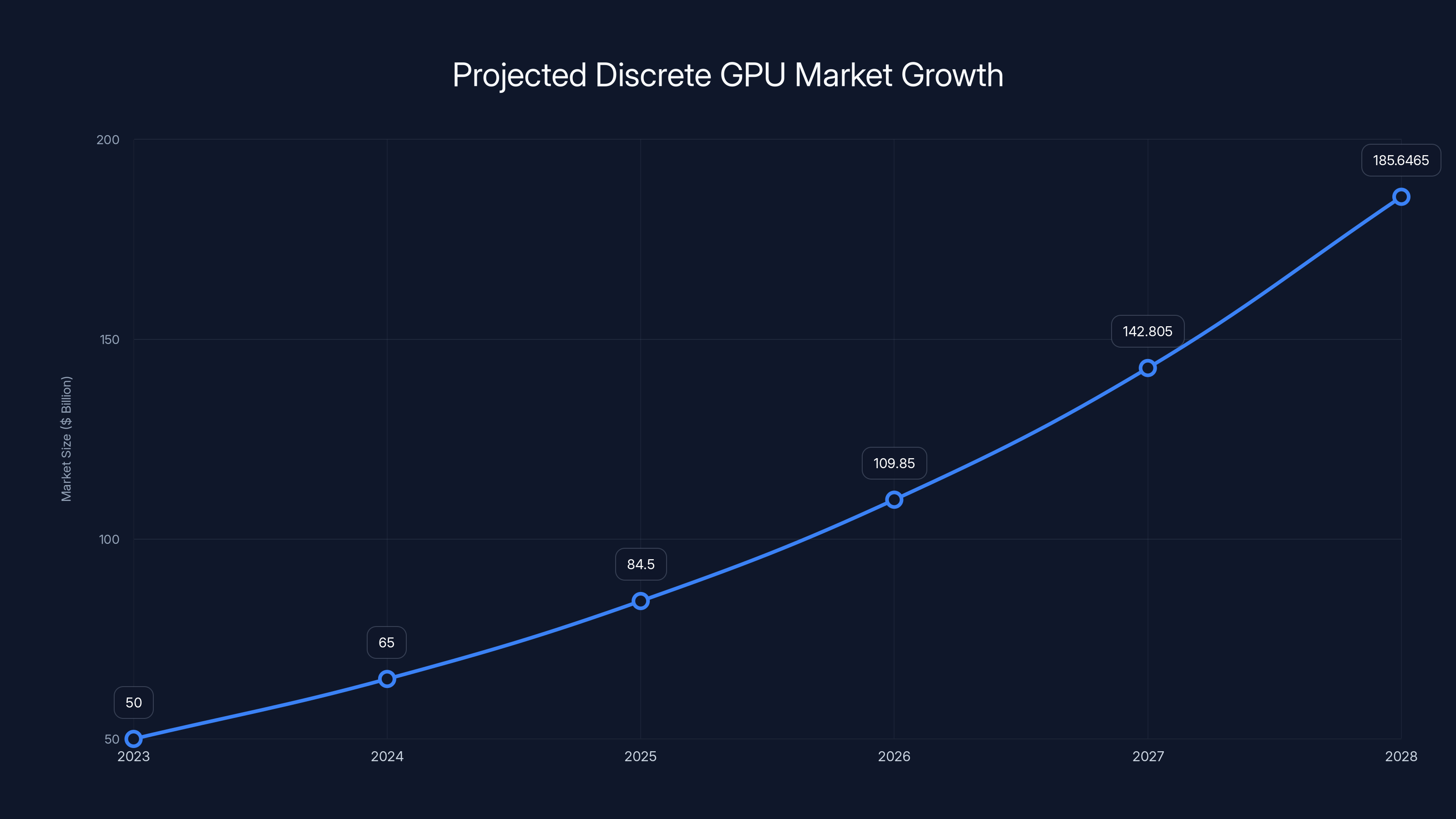

The discrete GPU market is projected to grow at an annual rate of 30-40%, reaching approximately $185.65 billion by 2028. Estimated data based on industry growth projections.

Revenue Potential and Financial Impact

Market Size Calculations and Growth Projections

The discrete GPU market continues expanding rapidly. Nvidia's data center GPU revenue exceeded $40 billion in 2023-2024, representing the majority of the company's total revenue and its fastest-growing segment. Industry analysts project the discrete GPU market will grow 30-40% annually through 2028, driven by continued AI adoption and inference expansion as noted by Financial Content.
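The effect of a 30-40% annual growth rate is easy to underestimate, so a short compound-growth sketch may help. The $50 billion starting value and four-year horizon below are hypothetical placeholders chosen for illustration; only the growth-rate range comes from the text.

```python
def project_market(base_billion, annual_growth, years):
    """Compound a starting market size forward at a constant annual rate."""
    return base_billion * (1 + annual_growth) ** years


def implied_cagr(start_billion, end_billion, years):
    """Constant annual growth rate that links a start and end value."""
    return (end_billion / start_billion) ** (1 / years) - 1


base = 50.0   # assumed starting market size in $ billions (hypothetical)
years = 4     # assumed projection horizon

for rate in (0.30, 0.40):
    size = project_market(base, rate, years)
    print(f"{rate:.0%} growth: ${size:.1f}B after {years} years")
```

Under these assumptions the market reaches roughly $143 billion at 30% growth and roughly $192 billion at 40%, so the gap between the low and high ends of the range compounds into a large difference in absolute market size.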

At

These projections assume Intel executes on its product roadmap and achieves successful customer adoption. Execution risks remain substantial. However, the magnitude of potential financial impact justifies Intel's investment and explains management's public commitment to the strategy.

Margin Profile and Profitability Timeline

GPU businesses typically operate at gross margins of 50-70%, substantially above Intel's recent gross margins of roughly 40-50%. This margin premium reflects the value AI workloads place on GPU acceleration and current supply constraints limiting competition. As Intel ramps production and competition increases, margins will likely compress from current levels but should remain above Intel's traditional CPU margin profile.

Initial profitability will likely be modest, with early products emphasizing volume ramp and market share capture over margin maximization. As manufacturing yields improve and production volumes increase, margins expand materially. Analysts expect GPU business profitability to approach acceptable levels around 3-4 years post-launch, with full margin expansion requiring 5-7 years as manufacturing matures and competitive dynamics stabilize.

Competitive Response Scenarios: Nvidia's Options and Likely Actions

Price Competition and Margin Compression

Nvidia's most direct response to Intel's GPU entry involves price competition. The company could reduce GPU pricing, capturing share through pricing leadership and forcing Intel to match prices or lose customers. This strategy compresses margins industry-wide but leverages Nvidia's scale and profitability to outlast newer competitors as noted by Financial Content.

Historically, Nvidia has been reluctant to compete primarily on price, preferring to maintain premium pricing justified by superior performance and ecosystem maturity. However, a serious competitor like Intel might force the company to reconsider its pricing strategy. Early indications suggest Nvidia is already moderating price increases and potentially reducing prices in certain segments, which would be consistent with the company recognizing the Intel threat and taking defensive action.

Performance Improvement and Feature Acceleration

Alternatively, Nvidia could compete through accelerated product improvement. The company could increase GPU architecture development pace, introducing new products more frequently with superior performance characteristics Intel struggles to match. This strategy preserves margins while maintaining competitive advantage through persistent performance leadership.

Nvidia's history suggests the company favors this approach. The company maintains aggressive product roadmaps, introducing new architectures every 18-24 months with meaningful performance improvements. Continued roadmap acceleration would force Intel to constantly chase Nvidia performance, reducing opportunity for price-based competition.

Software Ecosystem and Developer Lock-in

Nvidia could deepen CUDA ecosystem lock-in by expanding proprietary libraries and frameworks difficult for competitors to replicate. The company could invest more heavily in developer relations, developer programs, and specialized CUDA features that create switching costs. This strategy doesn't directly respond to Intel competition but instead attempts to make switching to Intel GPUs more painful, preserving customer attachment despite potential price advantages.

Acquisition and Strategic Partnerships

Nvidia might also pursue acquisitions or partnerships with software companies, integrating specialized AI frameworks into Nvidia offerings and making them difficult for competitors to support. Nvidia's acquisition of the software company Run:ai suggests the company is pursuing this strategy, building a comprehensive AI platform where Nvidia hardware becomes embedded within broader software solutions.

Nvidia dominates the discrete GPU market with an estimated 85% share, while AMD holds 10% and Intel 5%. Estimated data.

Challenges and Risks: Execution Uncertainties and Competitive Threats

Technical Execution Risk

Building competitive discrete GPUs represents an extraordinarily complex technical challenge. Intel's previous GPU architecture attempts (Larrabee, Arc) encountered performance and software challenges that limited commercial success. The company may face similar challenges with data center GPUs. Technical risks include:

Achieving competitive performance: Designing GPU architecture that matches Nvidia's performance at equivalent power budgets requires deep expertise. If Intel's architecture underperforms, customers will prefer Nvidia's superior performance even at higher cost.

Memory interface optimization: GPU memory subsystems are extraordinarily complex, with tight tradeoffs between bandwidth, latency, and power consumption. Suboptimal memory architecture could bottleneck performance and eliminate Intel's manufacturing advantages.

Thermal and power delivery challenges: Operating GPUs at 500-700 watts requires sophisticated power delivery and thermal management. Design oversights in these areas could create field reliability issues or thermal management complexity that customers resist.

Software Ecosystem Maturation Risk

Intel must build comprehensive software support for data center workloads. If Intel's software stack remains immature compared to Nvidia's, developers will avoid Intel GPUs despite competitive hardware performance. Building mature software ecosystems requires years of development and community contribution. Intel must either invest billions in software development or accept initial software limitations while the ecosystem matures.

Manufacturing Ramp Risk

Intel's GPU production depends on successful manufacturing at leading-edge process nodes. If Intel encounters process challenges that delay GPU manufacturing or result in poor yields, production ramps fail and competitive opportunity passes to other suppliers. Intel's foundry services group has experienced challenges ramping new process nodes, suggesting manufacturing risk is real and material as reported by FindArticles.

Time-to-Market Window

The window for GPU market entry is narrowing as competition intensifies. If Intel's GPU products launch later than expected, competitors will have consolidated market positions, relationships, and software ecosystems. A 2026-2027 launch remains viable for meaningful market entry. A 2027-2028 launch becomes materially more challenging as competitors improve products and develop relationships.

Strategic Implications for Different Stakeholders

Implications for Hyperscale Data Centers

Hyperscale operators like Google, Meta, OpenAI, and Microsoft are probably watching Intel's GPU strategy with mixed emotions. Intel GPU success creates welcome competition that addresses Nvidia pricing and supply concentration concerns. However, GPU competition also creates deployment complexity: hyperscalers must now evaluate multiple GPU suppliers, integrate multiple vendor software stacks, and manage workforce expertise across multiple GPU architectures.

Hyperscalers will likely adopt Intel GPUs if performance is competitive and pricing provides a material total cost of ownership advantage. This likely happens around 2027-2028, when Intel's second- or third-generation GPU products achieve mature software support and manufacturing scale. Early hyperscaler adoption would prove commercially important to Intel's success.

Implications for Enterprise Data Centers

Enterprise customers have strong incentives to diversify GPU suppliers. Currently, GPU procurement essentially means Nvidia procurement, creating supply risk and pricing dependency. Intel GPUs offer welcome competition that improves negotiating leverage with Nvidia and reduces supply risk. Enterprises will likely require longer evaluation timelines than hyperscalers, potentially delaying adoption until 2028-2030, when Intel's GPU ecosystem achieves greater maturity.

Implications for Cloud Service Providers

Cloud providers like AWS, Microsoft Azure, and Google Cloud face interesting strategic decisions. Each provider has made significant Nvidia GPU commitments and offers Nvidia GPUs as primary AI acceleration option. If Intel GPUs become competitive, cloud providers will offer Intel GPUs to customers, potentially reducing prices or improving price-to-performance. Alternatively, cloud providers might create exclusive partnerships with Nvidia, differentiating their offerings through superior GPU capability.

Likely, cloud providers will eventually offer both Nvidia and Intel GPUs, allowing customers to choose based on workload requirements and cost preferences. This creates vendor diversity and competitive pricing pressure that benefits customers.

Development Timeline and Product Roadmap Expectations

2025-2026: Validation and Early Prototyping

Intel likely has GPU designs in various stages of development. Early designs are probably in validation phase, being tested through simulation and prototype manufacturing. The company is probably submitting designs to manufacturing for initial production runs, identifying yield issues and design problems. This phase emphasizes learning and iteration rather than volume production.

Public announcements during this phase likely focus on partnerships (software framework providers, cloud vendors), hiring announcements, and technical research papers demonstrating Intel's architecture innovations. Intel wants to build excitement about upcoming products while developing the technical foundation for volume production.

2026-2027: Initial Product Launches

First GPU products probably launch in late 2026 or early 2027, likely focused on inference workloads. Initial products might emphasize specific use cases—large language model inference, vision model inference—rather than general-purpose GPU computing. This allows Intel to optimize products for specific workloads and achieve better early performance positioning.

Initial production volumes will probably be modest, perhaps 10,000-50,000 units annually until manufacturing yields improve and volume ramps. These initial shipments likely target early adopter customers and reference designs, allowing Intel to gather real-world performance data and optimize software support.

2027-2028: Volume Production and Market Expansion

By 2027-2028, Intel likely achieves meaningful production volumes and expands its product portfolio beyond initial launches. Second-generation products targeting different market segments (edge inference, training, specialized workloads) probably enter development. Manufacturing processes mature, yields improve, and cost structure becomes competitive with Nvidia.

Market adoption accelerates during this phase as customers overcome evaluation concerns and the software ecosystem matures. Intel's market share probably approaches 5-15% by 2028, representing successful market entry but still substantial Nvidia dominance.

2028+: Sustained Competition

By 2030 and beyond, Intel likely achieves steady-state competitive position with meaningful but still secondary GPU market share. The competitive landscape probably stabilizes with Nvidia maintaining dominance (60-70% share) while Intel, AMD, and other suppliers capture remaining share. This equilibrium maintains competition while ensuring no single supplier dominates GPU markets, which enterprise and hyperscale customers strongly prefer.

How This Relates to Broader Technology Trends

AI Infrastructure Buildout and Acceleration

Intel's GPU strategy cannot be separated from broader AI infrastructure investment trends. Enterprises and hyperscalers continue investing enormous sums in AI compute infrastructure, driven by adoption of large language models, generative AI, and AI-powered features across applications. This $500+ billion annual investment creates enormous opportunity for GPU suppliers as reported by CNBC.

Intel's entry accelerates infrastructure buildout by improving price-to-performance economics. If Intel delivers competitive GPUs at 20-30% lower total cost of ownership, enterprises can afford more extensive AI deployments, accelerating overall industry growth. This benefits Intel through absolute unit volume growth even if market share remains below Nvidia levels.
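The link between lower cost of ownership and larger deployments is simple arithmetic, sketched below under the assumption of a fixed budget and a uniform per-GPU total cost of ownership. The function name is illustrative; only the 20-30% range comes from the text.

```python
def extra_capacity(tco_reduction):
    """Fractional increase in GPUs affordable at a fixed budget
    when per-GPU total cost of ownership falls by tco_reduction."""
    if not 0.0 <= tco_reduction < 1.0:
        raise ValueError("tco_reduction must be in [0, 1)")
    return 1.0 / (1.0 - tco_reduction) - 1.0


for reduction in (0.20, 0.30):
    gain = extra_capacity(reduction)
    print(f"{reduction:.0%} lower TCO -> {gain:.1%} more GPUs for the same budget")
```

A 20% reduction in cost of ownership buys 25% more capacity for the same spend, and a 30% reduction buys about 43% more, so the capacity gain is larger than the headline discount.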

Process Technology Leadership and Manufacturing Advantage

Intel's GPU strategy also demonstrates how manufacturing leadership can translate to competitive advantage across product categories. By deploying advanced manufacturing processes exclusively for its own products initially, Intel potentially achieves superior performance per watt compared to competitors. Over time, this advantage compounds as subsequent process nodes offer continued improvements.

Intel's ability to maintain manufacturing leadership through advanced process nodes becomes critical to long-term GPU competitiveness. If Intel's manufacturing roadmap fails to deliver expected process improvements, GPU competitive advantage erodes. However, Intel's commitment to manufacturing leadership suggests the company is viewing GPU development as strategic validation of foundry strategy as reported by FindArticles.

Open Standards and Software Ecosystem Maturation

Intel's GPU strategy also accelerates maturation of open GPU standards and software frameworks. Nvidia's dominance created incentives for developers to invest in proprietary CUDA optimization. Competition from Intel (and AMD) creates incentives for developers to invest in open standards like SYCL and OpenCL, enabling code portability across multiple GPU vendors.

Increasingly portable GPU software ecosystems benefit the entire industry by reducing switching costs between GPU vendors and enabling healthier competition. This benefits customers through more choice and better pricing, though it challenges individual vendors by reducing platform lock-in opportunities.

Long-term Strategic Vision: Intel's Role in AI Hardware

Integration of CPU and GPU Capabilities

Long-term, Intel's strategy likely involves deeper integration between CPU and GPU products. The company could develop heterogeneous systems where traditional CPUs and specialized GPU compute elements work together, optimized for specific workload combinations. This integration would be extraordinarily difficult for competitors to replicate, creating meaningful competitive differentiation.

Historically, Intel's expertise centered on CPU-centric computing, with GPUs treated as specialized accelerators for niche workloads. Future AI workloads increasingly involve hybrid compute where CPUs handle control flow, memory management, and coordination while GPUs accelerate numerical compute. Intel could optimize system architecture for this hybrid approach, delivering superior system-level performance compared to separate CPU and GPU components from different vendors.

Competitive Positioning Against Nvidia's Vertical Integration

Nvidia has pursued aggressive vertical integration, combining hardware (GPUs), software (CUDA, frameworks), and cloud services (Nvidia cloud offerings). This integration creates powerful competitive advantages and high customer lock-in. Intel's strategy likely involves similar integration across its product portfolio—combining leading-edge CPUs, competitive GPUs, and Intel Foundry Services for custom silicon.

This broadens Intel's competitive positioning beyond individual product competition toward ecosystem and platform competition. Customers choosing Intel GPUs also benefit from better integration with Intel CPUs and potentially superior manufacturing economics compared to competitors relying on external foundries.

Long-term Market Share Projections

Long-term equilibrium likely involves GPU market share distributed across multiple vendors, with Nvidia maintaining a majority position but no longer approaching 90% dominance. Conservative estimates suggest the market around 2030 might settle at roughly: Nvidia 60-70%, Intel 15-20%, AMD 10-15%, and emerging competitors 5-10%. This fragmentation benefits customers through competition while still allowing individual vendors to operate profitably.

This projection assumes successful Intel execution and eventual maturity of Intel's GPU ecosystem. Execution failures, competitive overresponse from Nvidia, or supply chain disruptions could shift equilibrium substantially.
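Because the projected shares are given as ranges, it is worth checking that they can add up to 100%. The short sketch below does that arithmetic with the ranges quoted above; the variable names are illustrative.

```python
# Projected share ranges (percent) as quoted in the text above.
ranges = {
    "Nvidia": (60, 70),
    "Intel": (15, 20),
    "AMD": (10, 15),
    "Emerging": (5, 10),
}

low = sum(lo for lo, _ in ranges.values())    # every vendor at its low end
high = sum(hi for _, hi in ranges.values())   # every vendor at its high end
feasible = low <= 100 <= high                 # some combination can total 100%

print(f"sum of low ends: {low}%, sum of high ends: {high}%, feasible: {feasible}")
```

The low ends total 90% and the high ends total 115%, so the ranges are mutually consistent, but not every vendor can land at the top of its range at once. A gain toward the high end for Intel or AMD has to come out of another vendor's share.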

Practical Implications for IT Decision-Makers Today

Procurement Strategy Considerations

Enterprise IT leaders should monitor Intel's GPU development progress and consider long-term supplier diversity implications. Current Nvidia GPU dominance creates supply and pricing risks. IT leaders should:

Evaluate workload portability: Understand which AI workloads require Nvidia-specific optimization versus those that could run on alternative GPU platforms. Emphasizing portable workloads reduces long-term Nvidia dependency.

Build relationships with alternative suppliers: As Intel launches GPU products, IT leaders should evaluate offerings early and build relationships with Intel sales teams, positioning for future purchases if competitive offerings materialize.

Plan infrastructure for multi-vendor support: Future data center infrastructure should support multiple GPU vendors without substantial architecture changes. This flexibility enables future vendor diversification as alternatives mature.

Software Development Strategy

Developers building AI applications should prioritize code portability across multiple GPU platforms. Using SYCL, OpenCL, or other portable frameworks rather than vendor-specific optimizations ensures applications remain competitive across GPU vendors as markets evolve. This approach requires some performance optimization sacrifice in the short term but provides substantial long-term flexibility.
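One common way to keep an application portable is to hide vendor-specific kernels behind a small interface with a fallback that runs anywhere. The sketch below shows that pattern in plain Python. The registry, the backend names, and the functions are all hypothetical illustrations, not part of SYCL, OpenCL, or any vendor SDK.

```python
from typing import Callable, Dict, List, Sequence

Matrix = List[List[float]]

# Hypothetical registry mapping backend names to implementations.
BACKENDS: Dict[str, Callable[[Matrix, Matrix], Matrix]] = {}


def register(name: str):
    """Decorator that adds an implementation to the registry."""
    def wrap(fn):
        BACKENDS[name] = fn
        return fn
    return wrap


@register("reference")
def matmul_reference(a: Matrix, b: Matrix) -> Matrix:
    """Pure-Python fallback with no vendor dependency."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def matmul(a: Matrix, b: Matrix,
           preferred: Sequence[str] = ("vendor_a", "vendor_b", "reference")) -> Matrix:
    """Use the first registered backend in preference order."""
    for name in preferred:
        if name in BACKENDS:
            return BACKENDS[name](a, b)
    raise RuntimeError("no backend available")


print(matmul([[1, 2], [3, 4]], [[5, 6], [7, 8]]))
```

Application code calls `matmul` and never names a vendor directly, so adding or swapping an accelerated backend later means registering one more function rather than rewriting call sites.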

Financial Planning and Budgeting

CFOs planning AI infrastructure budgets should assume that GPU pricing may decline as competition intensifies. Rather than extrapolating current Nvidia pricing levels forward, assume that by 2027-2028, competitive alternatives may reduce effective GPU pricing by 20-40%. This improves overall AI project economics and should influence investment decisions and use case prioritization.

FAQ

What is Intel's GPU development strategy and why is it significant?

Intel's GPU development strategy involves building discrete graphics processing units to compete in the AI accelerator market currently dominated by Nvidia. The significance lies in Intel leveraging its manufacturing capabilities, CPU ecosystem, and established customer relationships to challenge Nvidia's 80-90% market share in data center GPUs. If successful, this could fundamentally reshape the GPU market, introducing meaningful competition in a market segment currently generating over $40 billion in annual revenue as noted by Financial Content.

How does Eric Demers' hiring affect Intel's GPU prospects?

Eric Demers, recruited as Chief GPU Architect from Qualcomm, brings critical expertise in GPU architecture design and process technology optimization. His decade-plus experience developing the Adreno GPU architecture for mobile devices demonstrates capability to create competitive GPU designs. CEO Lip-Bu Tan's public statements about hiring Demers "requiring persuasion" suggest Intel made significant compensation and authority commitments, indicating serious strategic intent. Demers' presence signals Intel has acquired the specialized technical leadership necessary to execute GPU development at scale as reported by Wccftech.

What manufacturing advantages does Intel possess in GPU production?

Intel operates advanced semiconductor manufacturing facilities capable of producing chips at leading-edge process nodes (Intel 7, Intel 4, and next-generation technology). Unlike Nvidia, which outsources manufacturing to TSMC, Intel controls its own manufacturing, providing several advantages: direct control over capacity allocation for GPU production, potential cost structure advantages from internal manufacturing, and ability to implement vertical integration between CPUs and GPUs. These manufacturing advantages could translate to superior power efficiency and cost competitiveness compared to competitors relying on external foundries as reported by FindArticles.

When can customers expect Intel GPU products to reach market?

Based on typical GPU development cycles, initial Intel GPU products likely launch in late 2026 or early 2027, probably focused on AI inference workloads. Initial production volumes will be modest until manufacturing yields improve and software ecosystem matures. Meaningful market availability with expanded product portfolio likely occurs in 2027-2028, with the company potentially achieving 5-15% market share by 2028. Full ecosystem maturity and competitive parity with Nvidia may require 3-5 additional years beyond initial launch as noted by FindArticles.

How will Intel's GPU entry affect Nvidia's competitive position?

Intel's GPU entry introduces meaningful competition that will likely pressure Nvidia through several mechanisms. First, pricing pressure becomes likely as Nvidia must compete on total cost of ownership rather than maintaining monopolistic margins. Second, customer diversification incentives strengthen as enterprises prefer multiple GPU suppliers to reduce supply risk. Third, software ecosystem competition intensifies as developers invest in portable frameworks rather than Nvidia-specific optimizations. However, Nvidia maintains substantial competitive advantages through CUDA ecosystem maturity, performance leadership, and established market position, so the company likely maintains majority market share even after Intel competition materializes as noted by Financial Content.

What role will Intel's software ecosystem play in competitive success?

The software ecosystem is a critical competitive determinant alongside hardware performance. Intel cannot succeed with superior hardware if developers cannot easily develop applications targeting Intel GPUs. Intel's strategy likely involves supporting open standards like SYCL and OpenCL to enable rapid ecosystem adoption, while also developing Intel-specific optimizations for customers targeting Intel architecture specifically. The company must achieve software ecosystem maturity roughly simultaneously with hardware product launches to enable successful customer adoption. Early ecosystem inadequacy could delay meaningful market penetration despite competitive hardware capabilities as analyzed by Seeking Alpha.

How does Intel's GPU strategy relate to its foundry services business?

Intel's foundry services strategy and GPU development are deeply connected. By manufacturing its own GPUs at Intel foundry facilities, the company demonstrates leading-edge process capabilities to potential customers while gaining priority access to manufacturing capacity and process roadmaps. GPU development essentially serves as a showcase for Intel's manufacturing capabilities, proving that Intel can produce world-class semiconductors at advanced process nodes. Conversely, competitive GPU products strengthen Intel foundry services' value proposition by demonstrating that companies can obtain leading-edge products from Intel's manufacturing services as reported by FindArticles.

What alternative platforms should IT leaders consider alongside Intel and Nvidia GPUs?

Beyond Nvidia and Intel, AMD's RDNA consumer GPUs and Instinct MI300 data center accelerators represent emerging competitive alternatives, though AMD currently lags in data center market share. For developers and teams seeking broad automation capabilities beyond traditional GPU computing, platforms like Runable offer AI-powered automation for workflow optimization and developer productivity. While Runable focuses on automation rather than raw GPU acceleration, it complements GPU investments by optimizing utilization of expensive compute resources through intelligent workflow orchestration. Organizations evaluating GPU investments should simultaneously assess whether automation platforms can optimize overall infrastructure efficiency and reduce total compute requirements.

How will Intel's GPU development impact broader AI infrastructure investment decisions?

Intel's GPU competition likely improves overall AI infrastructure economics by reducing per-unit GPU costs and total cost of ownership. Enterprises budgeting for AI infrastructure deployments should expect competitive pricing pressure as Intel products reach market, potentially reducing effective GPU costs by 20-40% compared to current Nvidia-exclusive pricing. This cost improvement enables more extensive AI deployments and should influence infrastructure investment decisions and use case prioritization. Organizations should plan procurement strategies assuming multi-vendor GPU environments become increasingly common by 2028 and beyond as reported by CNBC.

What risks could delay or limit Intel's GPU success?

Several execution risks could constrain Intel's GPU success: manufacturing challenges in ramping production at leading-edge process nodes could delay product launches or result in poor yields; software ecosystem maturity challenges could limit developer adoption despite competitive hardware; Nvidia's aggressive competitive response through accelerated product improvements or pricing reductions could compress Intel's competitive window; and customer inertia toward Nvidia's established position could limit adoption even if Intel products offer superior economics. Additionally, broader geopolitical tensions affecting semiconductor manufacturing or trade policies could impact Intel's ability to manufacture and distribute GPUs globally, creating supply uncertainties that favor established competitors with diversified supply chains as noted by FindArticles.

Conclusion: Intel's GPU Strategy and the Future of AI Acceleration Hardware

Intel's confirmed GPU development strategy represents one of the semiconductor industry's most consequential strategic decisions in the past decade. The company's investment in leadership talent, manufacturing capability, and product development signals serious, sustained commitment to challenging Nvidia's dominance in data center GPUs and AI acceleration hardware.

Underlying this strategy is clear-eyed recognition that GPU markets will define semiconductor industry economics for the next decade. AI adoption continues accelerating across enterprises and consumer applications. GPU hardware enables this transformation and generates margin profiles substantially exceeding traditional semiconductor businesses. For a company like Intel facing eroding CPU margins, GPU success becomes existential to maintaining competitive position and financial health.

Intel possesses genuine competitive advantages that could translate to successful GPU business. Manufacturing capability represents a durable differentiator—the company can potentially deliver superior power efficiency and cost structure compared to competitors relying on external foundries. Established customer relationships provide distribution advantage for GPU products. Integration between CPUs and GPUs offers system-level optimization opportunities competitors struggle to match. The company is hiring experienced talent like Eric Demers, signaling that execution will benefit from specialized expertise rather than forcing x86-centric architectures onto GPU designs as reported by Wccftech.

However, execution risks remain substantial. GPU development requires different expertise than CPU design. Intel's previous attempts at discrete GPU products (Larrabee, Arc) encountered challenges that limited commercial success. Building comprehensive software ecosystems takes years, and Nvidia's entrenched CUDA ecosystem creates switching costs that competitors must overcome through compelling advantages. Manufacturing at leading-edge process nodes remains extraordinarily complex, with yield challenges that could delay production ramps.

Competitive dynamics will also prove challenging. Nvidia isn't defenseless against Intel competition—the company's financial strength, market dominance, and CUDA ecosystem provide substantial competitive advantages. Nvidia could respond through aggressive pricing, accelerated product innovation, and deeper ecosystem integration that makes switching costs prohibitive. AMD has invested years building GPU alternatives without achieving meaningful data center traction, suggesting that competing against Nvidia proves harder than it appears.

Still, Intel's GPU strategy appears sound despite the execution challenges. The company is entering the market with serious intent, substantial resources, and genuine competitive advantages. Even if Intel captures a much smaller share than Nvidia (perhaps 10-20% by 2030), the absolute market size is large enough to support profitable GPU businesses across multiple competitors. Industry analysts project a data center GPU market exceeding $150-200 billion by 2030, with compound annual growth rates of 30-40% through the decade, as noted by Financial Content.
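To put the share and market-size figures in the preceding paragraph side by side, here is a back-of-the-envelope sketch. It assumes the 10-20% share and the $150-200 billion projection refer to the same year (2030) and the same market definition, which the cited figures do not guarantee; the function name is purely illustrative.

```python
def implied_revenue_range(share_low_pct, share_high_pct, market_low_bn, market_high_bn):
    """Return (low, high) implied annual revenue in billions of dollars.

    The low end pairs the smallest share with the smallest market;
    the high end pairs the largest share with the largest market.
    """
    low = share_low_pct * market_low_bn / 100
    high = share_high_pct * market_high_bn / 100
    return low, high


# Figures taken from the paragraph above: 10-20% share, $150-200B market.
low, high = implied_revenue_range(10, 20, 150, 200)
print(f"Implied annual GPU revenue: ${low:.0f}B to ${high:.0f}B")
```

Under those assumptions the range works out to roughly $15-40 billion in annual revenue, which is why even a minority share would be material for Intel.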

For IT leaders, enterprises, and other customers, Intel's GPU entry is a welcome development. Nvidia's current near-monopoly creates supply risk, pricing dependency, and the risk of technology stagnation. Credible competitive alternatives improve customers' negotiating positions, keep multiple suppliers investing in GPU innovation, and allow architectural flexibility in future deployments.

The most likely outcome is that GPU markets evolve toward a multi-vendor equilibrium over the next 5-7 years. In that scenario, Nvidia remains the market leader with 60-70% share but no longer approaches monopolistic dominance. Intel captures 15-20% share by leveraging its manufacturing advantages and customer relationships. AMD holds 10-15% through continued product improvements and software ecosystem development. Emerging competitors capture the remaining 5-10% through specialized workload optimization.
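As a quick sanity check, the share ranges in this scenario can be tested for internal consistency: a valid split must be able to sum to exactly 100%. The sketch below uses only the ranges quoted above; the dictionary layout and variable names are illustrative assumptions.

```python
# Share ranges (percent) from the multi-vendor scenario described above.
scenario = {
    "Nvidia": (60, 70),
    "Intel": (15, 20),
    "AMD": (10, 15),
    "Emerging competitors": (5, 10),
}

# Sum of every vendor's low end and of every vendor's high end.
total_low = sum(low for low, _ in scenario.values())
total_high = sum(high for _, high in scenario.values())

# The ranges are mutually consistent only if 100% lies between the two totals.
feasible = total_low <= 100 <= total_high

print(f"Combined range: {total_low}% to {total_high}%, consistent: {feasible}")
```

The totals span 90% to 115%, so the scenario is coherent, but not every vendor can land at the top of its range at once: if Nvidia holds 70%, the other three must share the remaining 30%.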

This fragmented competitive landscape benefits customers through improved pricing, technology choice, and supply security. It benefits the industry through accelerated innovation as competitors improve products to maintain position. It benefits Intel specifically through substantial new revenue and margin opportunities, even if the company never achieves Nvidia's market dominance.

Intel's GPU strategy ultimately reflects a recognition that technology leadership no longer means CPU leadership alone; it means GPU and AI accelerator leadership. By making a genuine commitment to GPU development and backing that commitment with leadership talent, manufacturing resources, and multi-year investment timelines, Intel positions itself as a meaningful competitor in the markets that will define semiconductor industry economics for the coming decade. Execution success remains uncertain, but Intel's strategic direction appears sound, and its investments appear substantial enough to translate into a meaningful competitive presence within 3-5 years.

Key Takeaways

- Intel's GPU strategy targets data center AI workloads where Nvidia currently dominates with 80-90% market share

- Leadership hires like Eric Demers signal serious technical execution intent beyond strategic experiments

- Intel's manufacturing capability could provide genuine advantages in power efficiency and cost structure

- Software ecosystem maturity is as much a challenge as hardware performance for competitive success

- Intel could reach roughly 10-20% share by 2030 if execution succeeds, fundamentally reshaping the competitive landscape

- Customer demand for supply diversification creates favorable market conditions for Intel's competitive entry

- Nvidia's entrenched CUDA ecosystem creates switching costs that Intel must overcome through compelling differentiation

- A multi-vendor equilibrium is the most likely outcome, reducing monopolistic pricing and accelerating industry innovation

- Enterprise IT leaders should plan infrastructure for multi-vendor GPU support and evaluate competitive alternatives

- A data center GPU market projected to exceed $150-200 billion by 2030 provides sufficient opportunity for multiple profitable vendors
