Amplitude vs PostHog 2025: Complete Analytics Comparison & Alternatives

Introduction: Understanding Product Analytics in the Modern Era

Product analytics has become the backbone of data-driven decision making across software companies, from scrappy startups to Fortune 500 enterprises. Yet choosing the right analytics platform can make the difference between shipping features that users actually want and wasting engineering cycles on misguided priorities.

The analytics landscape has fundamentally shifted over the past five years. Where teams once relied solely on basic page view metrics and conversion funnels, modern product organizations now demand sophisticated behavioral tracking, experimentation capabilities, and the ability to rapidly test hypotheses at scale. This evolution has spawned two distinct categories of analytics tools: platforms designed for business and marketing teams who need accessible, visual dashboards, and platforms built specifically for product and engineering teams who need deep technical control and feature flag integration.

Amplitude and PostHog represent the two poles of this spectrum. Amplitude built its reputation by making behavioral analytics accessible to anyone: product managers, growth marketers, and business analysts without deep technical training. PostHog, meanwhile, emerged from the developer-first wave of infrastructure tools, designed with engineers as the primary user and with experimentation and feature flags built in as first-class features.

But this framing (Amplitude for business teams, PostHog for engineers) oversimplifies the decision. Both platforms offer surprising depth, and the best choice depends on your team's composition, your product's complexity, and the questions you're actually trying to answer.

In this comprehensive guide, we'll examine both platforms from first principles, exploring not just what they do, but why they made the architectural choices they did. We'll walk through real-world scenarios where each excels, and we'll also explore alternative solutions that might serve your specific needs even better. Whether you're a small team trying to understand your first users or a large organization managing experimentation across multiple product surfaces, this analysis will help you make an informed decision.

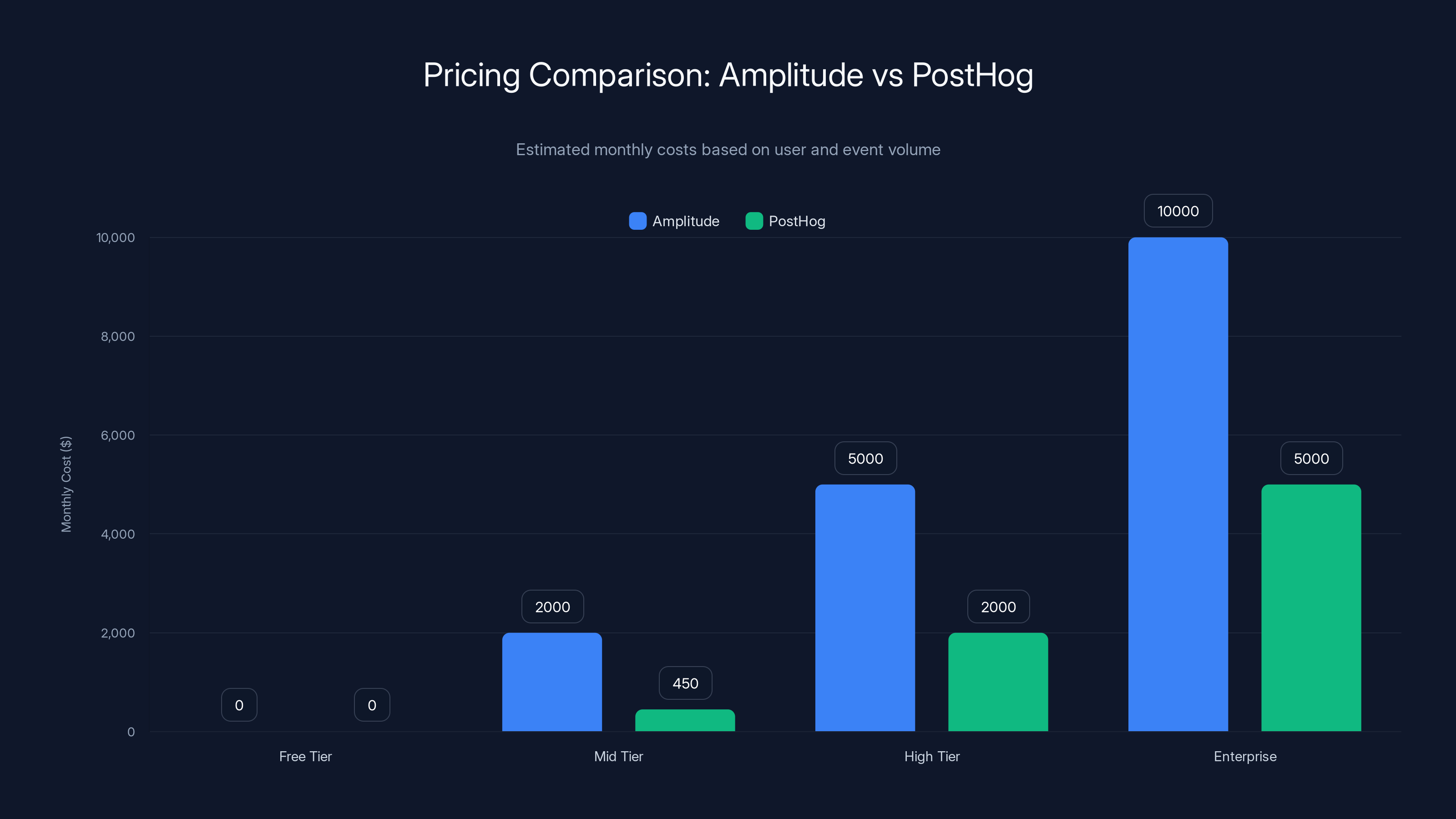

Amplitude's costs scale with user growth, while PostHog's scale with event volume. Estimated data shows Amplitude is more expensive at higher tiers.

The Fundamental Philosophy: Different Problems, Different Solutions

Amplitude's Origin Story and Design Principles

Amplitude emerged in 2012 with a clear mission: democratize analytics. The founders recognized that while enterprise analytics platforms existed, they required large teams of data scientists and SQL expertise to extract insights. Amplitude's breakthrough was asking a simple question: what if product managers and growth teams could answer behavioral questions without becoming data engineers?

This philosophical commitment shaped every architectural decision at Amplitude. The company built a query engine that could handle complex behavioral analysis—funnel segmentation, cohort retention, user property filtering—while presenting results through a visual interface that didn't require coding knowledge. A product manager could segment users by signup date, acquisition channel, and feature usage, then visualize the resulting cohort's retention curve within minutes, without requesting help from analytics engineers.

This approach proved incredibly valuable for teams building consumer products, SaaS applications, and e-commerce platforms where understanding user behavior patterns directly translated to business metrics like retention and revenue. The company's positioning as "the analytics platform for growth" attracted companies that needed to move quickly and test hypotheses frequently.

PostHog's Development and Engineering-First Philosophy

PostHog, founded in 2020, emerged during a different era, when open-source infrastructure tools were reshaping how companies built their technology stacks. The PostHog founding team went through Y Combinator and had experienced firsthand how feature flags, session recordings, and experimentation were becoming essential infrastructure for rapidly iterating products.

Where Amplitude asked "how do we make analytics accessible," PostHog asked "how do we make all of product discovery part of the engineering workflow?" Rather than building tools that live in a separate analytics dashboard, PostHog decided to embed product insights directly into the development process. Engineers could deploy feature flags, run experiments, and analyze results without context-switching to another application.

This architectural philosophy meant PostHog needed different technical foundations. Instead of optimizing for ease of use for non-technical users, PostHog optimized for integration into code, APIs, and CI/CD pipelines. The interface could be more technical and the learning curve steeper, because the target user already understood programming concepts.

Both philosophies are correct—they're just solving for different constraints and different user types.

Feature Set Analysis: Beyond Surface-Level Comparisons

Product Analytics and Event Tracking Infrastructure

Both Amplitude and PostHog track user events: the actions users take within your product. This is the foundation layer on which all other analytics builds. However, the way each platform handles this foundation reveals significant differences.

Amplitude's Event Architecture: Amplitude treats events as immutable fact records. When a user completes an action—opens an email, clicks a button, submits a form—Amplitude records that event with timestamps, user properties, and custom dimensions. The system is optimized for subsequent analysis: once events are in Amplitude, you can slice, filter, and aggregate them countless ways using the visual query builder.

Amplitude's strength lies in retroactive analysis. You can ask "which cohort of users who performed action X within Y timeframe have the highest lifetime value?" without having anticipated this question when you instrumented the event. The event data is rich enough to answer almost any behavioral question you might have later. This flexibility is invaluable for exploratory analysis—you're not forced to know all the questions you'll ask when you design your event schema.

The tradeoff is overhead. Amplitude's data model involves sending event payloads with numerous properties, and because pricing is tied to usage (monthly tracked users plus additional event volume), teams often need to think carefully about what data they collect to manage costs.

PostHog's Event Architecture: PostHog also collects events, but treats them as inputs into a larger system. Events feed into feature flags, which influence experimentation, which generates results data. Rather than making event analysis the primary interface, PostHog positions event data as one component of an integrated product development platform.

This architectural choice allows PostHog to be more efficient with data. Because experiments and feature flags are built into the system natively, PostHog doesn't need to transmit as much metadata with each event. The platform knows which users received which variant of a feature because that information is stored in the flag system, not redundantly in every event record.

PostHog's event data is also SQL-queryable. If Amplitude's visual query builder doesn't support your use case, you might be stuck. With PostHog, you can write SQL queries against the underlying data directly, which gives you far more analytical flexibility if you have someone who knows SQL.
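To make the SQL point concrete, here is a minimal sketch of the kind of question that is easy to express in SQL but awkward in a point-and-click builder. It uses Python's built-in sqlite3 module as a stand-in; the `events` table, its columns, and the sample rows are assumptions made for illustration and do not reflect PostHog's actual schema or query dialect.

```python
import sqlite3

# Hypothetical events table; a stand-in, not PostHog's real data model.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE events (user_id TEXT, event TEXT, ts TEXT)")
conn.executemany(
    "INSERT INTO events VALUES (?, ?, ?)",
    [
        ("u1", "signup", "2025-01-01"),
        ("u1", "purchase", "2025-01-03"),
        ("u2", "signup", "2025-01-02"),
        ("u3", "signup", "2025-01-02"),
        ("u3", "purchase", "2025-01-20"),
    ],
)

# Which users purchased within 7 days of signing up?
QUERY = """
SELECT s.user_id
FROM events AS s
JOIN events AS p
  ON p.user_id = s.user_id
 AND p.event = 'purchase'
 AND julianday(p.ts) - julianday(s.ts) BETWEEN 0 AND 7
WHERE s.event = 'signup'
GROUP BY s.user_id
ORDER BY s.user_id
"""
fast_buyers = [row[0] for row in conn.execute(QUERY)]
print(fast_buyers)
```

In this sample data only u1 purchases inside the 7-day window; u3 purchases 18 days after signup and u2 never purchases.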

Behavioral Segmentation and Cohort Analysis

Amplitude's Segmentation: Amplitude's segmentation tools are often described as "the most powerful in the industry" by users who've worked extensively with both systems. You can create segments based on complex behavioral conditions: "users who completed purchase event, followed by support ticket within 7 days, with property country = US, segmented by signup month."

Amplitude pre-computes these segments, meaning once you've created a cohort definition, new users matching that criteria are automatically added. This enables sustained analysis—you can track how a cohort's behavior evolves over weeks and months. You can also export segments to email platforms, advertising systems, and other downstream tools, making Amplitude a natural fit for marketing teams who need behavioral targeting.

The visual interface for building segments is remarkably intuitive. Where a less well-designed tool might require nested boolean logic, Amplitude's interface guides you through a natural workflow that reads almost like a sentence: "Users who did [event] [frequency] [time window] AND have property [name] [equals/contains] [value]."

PostHog's Segmentation: PostHog offers comparable segmentation capabilities, but they're somewhat less forgiving if you want complex behavior-based segments. You can segment by events and properties, but the interface is more basic than Amplitude's. However, you can write SQL to define segments if you need more power, and you can create segments in your feature flags to control which users see which variants.

Where PostHog's segmentation shines is in its integration with feature flags and experiments. Because segments are native to the flag system, targeting a flag at a segment happens seamlessly. The workflow of "create segment, apply to flag, run experiment" is more fluid than with Amplitude, where these are separate workflows.

Funnel Analysis: Predefined vs. Exploratory

Amplitude's Funnel Capabilities: Amplitude's funnel analysis lets you define a specific sequence of steps and measure how users move through them. You specify: users should click button A, then view page B, then submit form C. Amplitude counts the percentage of users who complete each step in that exact sequence and identifies where drop-off occurs.

Amplitude also offers "flexible funnels" where you specify that users need to complete all steps, but they can occur in any order. This is useful for understanding whether the sequence of events matters or whether it's simply completion of the set that matters.
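The difference between an ordered funnel and an any-order funnel can be sketched in a few lines. This is an illustrative approximation, not either vendor's implementation: the function names, event names, and integer timestamps are all hypothetical, and the ordered version allows unrelated events to occur between steps.

```python
from collections import defaultdict

def _events_by_user(events):
    """Group event names per user, ordered by timestamp."""
    by_user = defaultdict(list)
    for user_id, name, ts in sorted(events, key=lambda e: e[2]):
        by_user[user_id].append(name)
    return by_user

def ordered_funnel(events, steps):
    """Count users who reached each step, requiring steps in the given order."""
    counts = [0] * len(steps)
    for names in _events_by_user(events).values():
        reached = 0
        for name in names:
            if reached < len(steps) and name == steps[reached]:
                reached += 1
        for i in range(reached):
            counts[i] += 1
    return counts

def completed_any_order(events, steps):
    """Count users who performed every step, regardless of order."""
    required = set(steps)
    return sum(
        1 for names in _events_by_user(events).values() if required <= set(names)
    )

STEPS = ["click_button_a", "view_page_b", "submit_form_c"]
EVENTS = [  # (user_id, event_name, timestamp), all hypothetical
    ("u1", "click_button_a", 1), ("u1", "view_page_b", 2), ("u1", "submit_form_c", 3),
    ("u2", "view_page_b", 1), ("u2", "click_button_a", 2), ("u2", "submit_form_c", 3),
    ("u3", "click_button_a", 1),
]

ordered_counts = ordered_funnel(EVENTS, STEPS)
any_order_total = completed_any_order(EVENTS, STEPS)
print(ordered_counts, any_order_total)
```

Here u2 performs all three actions but views the page before clicking the button, so u2 completes the any-order funnel while stalling at step one of the ordered funnel. A gap like that between the two numbers is the signal that sequence matters.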

The visualization is clear: a waterfall chart showing the percentage of users remaining at each funnel step, with demographic breakdowns showing which segments convert at higher rates. You can apply segment filters before creating the funnel, so you might ask "what's the purchase funnel for users who came from Twitter ads?" The answer appears instantly.

Amplitude's weakness is that this requires knowing what funnel to measure. The system is optimized for hypothesis testing—"I think mobile users drop off more at the payment step"—rather than exploratory discovery. If you want to understand all possible multi-step paths users take, Amplitude isn't the best tool.

PostHog's Funnel Analysis: PostHog's funnel tool is functionally similar to Amplitude's, with one notable addition: PostHog shows additional context for why users might be dropping off. The platform displays the most common actions users took instead of completing the next funnel step, which helps diagnose where friction actually exists.

If users should be clicking a checkout button but are clicking a help link instead, PostHog's funnel visualization makes this visible. This is more actionable than just seeing that 40% of users dropped off: you now know they were seeking support, which suggests a UX problem rather than price sensitivity.

PostHog's weakness is that funnels still require you to know what you're measuring. Like Amplitude, it's a tool for validating hypotheses, not discovering them.

User Journey and Path Analysis

Amplitude's Journeys Feature: Amplitude's Journeys tool shows the actual paths real users took through your product, not the paths you designed for them. Rather than defining a funnel and measuring completion, you can see: "what did users who eventually purchased do on their first session?" The visualization shows the common event sequences and where paths diverge.

This is more exploratory than funnels. You're not starting with a hypothesis; you're observing actual behavior. For product teams trying to understand user behavior patterns without preconceptions, this is incredibly valuable.

Amplitude also offers Retention analysis, which shows cohorts of users and tracks what percentage remain active over subsequent days, weeks, or months. Combined with segment filters, you can ask "how does retention differ between users acquired through organic search vs. paid ads?" Understanding retention differences helps you identify which acquisition channels bring engaged vs. casual users.

PostHog's Approach to Journey Understanding: PostHog doesn't have a dedicated "journeys" feature in the Amplitude sense. Instead, it provides session replays, which let you watch what users actually did on screen. This is fundamentally different from event sequences. A session replay shows you the user experience; an event sequence shows you the interaction data.

For diagnosing why users are confused or frustrated, session replays often provide more insight than event data. You'll see users clicking the same button repeatedly, or scrolling up and down looking for something, or getting stuck on a particular input field. These behavioral patterns are invisible in event data but obvious in video.

PostHog also integrates user journeys into experimentation. When you run an experiment, you can slice results not just by overall conversion rate but by which steps in a multi-step flow improved or regressed between variants.

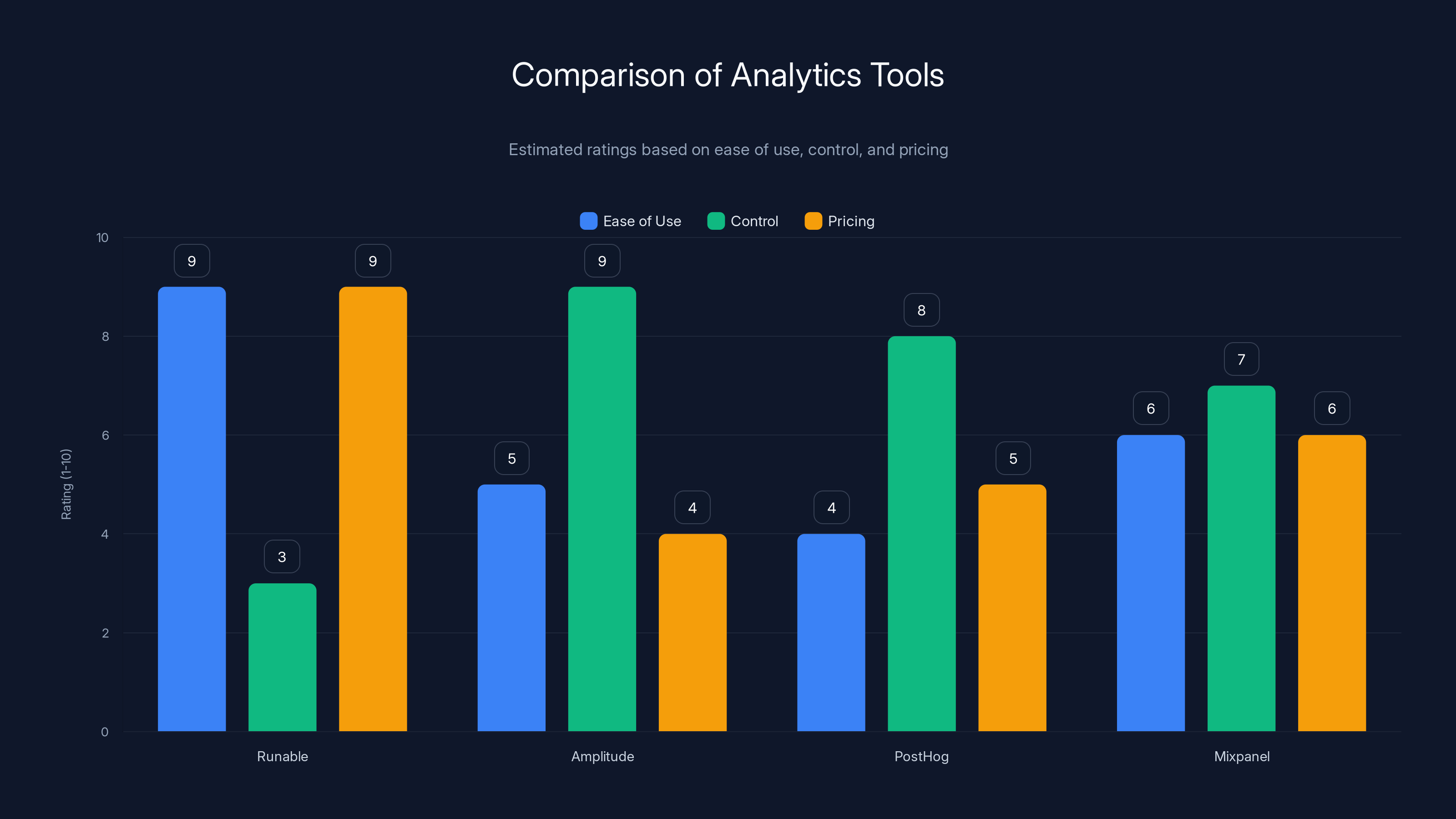

Runable excels in ease of use and pricing, making it ideal for small teams. Amplitude and PostHog offer more control, suitable for detailed analytics. Mixpanel provides a balance, focusing on retention analytics. Estimated data.

Experimentation Capabilities: A Critical Differentiator

Amplitude's Experimentation Approach

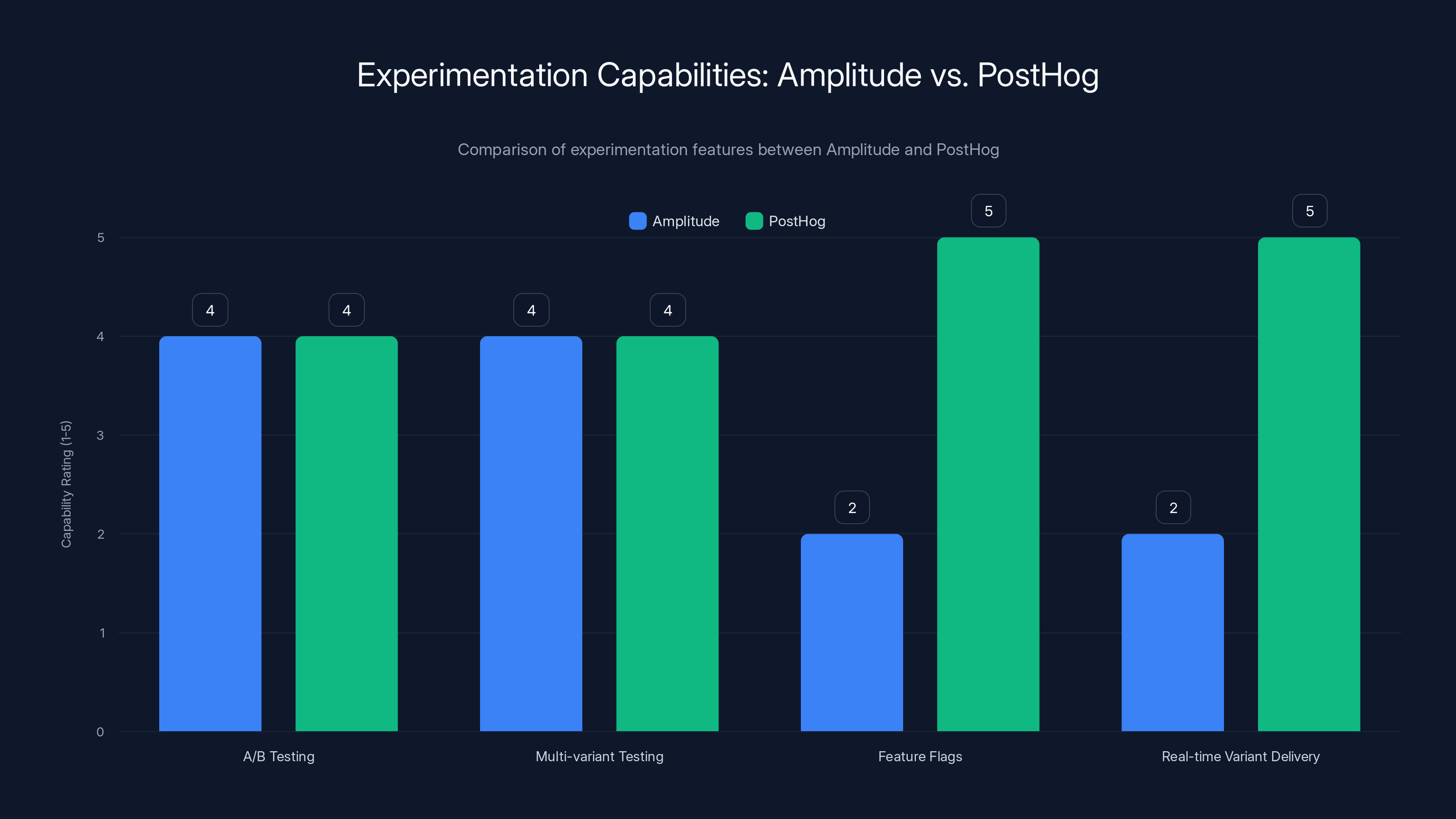

Amplitude built experimentation as an add-on to the core analytics platform. Using Amplitude Experiment, teams can configure A/B tests, multi-variant tests, and sequential testing strategies.

The workflow goes: define your test in Amplitude, expose it through Amplitude's SDK, measure results using your event data. Amplitude aggregates the event data and calculates statistical significance, providing a dashboard showing conversion rates for each variant with confidence intervals.

Amplitude's experimentation is sound and functional, particularly for teams that are primarily using Amplitude for analytics and want to avoid context-switching. However, it's somewhat disconnected from the core analytics experience. You run an experiment to learn that variant B converts 12% better, but then you have to manually integrate variant B into your product code and launch it. Amplitude doesn't maintain the flag state or deliver the variant assignment to your application—it's an analysis tool, not an infrastructure tool.

This separation of concerns is actually a philosophical choice. Amplitude remains focused on answering the question "did our change work?" while delegating the question of "how do we deploy changes safely?" to other tools.

PostHog's Integrated Experimentation

PostHog integrates experimentation directly with feature flags. When you create an experiment in PostHog, you're defining a feature flag variant and specifying what percentage of users should see each variant. PostHog then delivers the correct variant to each user in real time through its SDKs, and simultaneously measures the impact on your defined metrics.
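One common way to implement percentage-based variant delivery is deterministic hashing: hash the flag key together with the user ID, map the hash to a number in [0, 1), and pick the variant whose cumulative percentage range contains it. The sketch below illustrates that general technique only. It is not PostHog's actual algorithm, and `assign_variant`, the flag key, and the variant names are hypothetical.

```python
import hashlib

def assign_variant(flag_key, user_id, variants):
    """Pick a variant for a user.

    variants: list of (name, percentage) pairs whose percentages sum to 100.
    The same (flag_key, user_id) pair always maps to the same variant.
    """
    digest = hashlib.sha256(f"{flag_key}:{user_id}".encode()).hexdigest()
    bucket = int(digest[:8], 16) / 0x100000000  # uniform value in [0, 1)
    cumulative = 0.0
    for name, pct in variants:
        cumulative += pct / 100
        if bucket < cumulative:
            return name
    return variants[-1][0]  # guard against floating-point rounding

# Hypothetical 50/50 experiment
split = [("control", 50), ("test", 50)]
first = assign_variant("new-checkout", "user-42", split)
second = assign_variant("new-checkout", "user-42", split)
print(first == second)  # assignment is stable across calls

# Rolling the winner out to everyone is just a change of percentages
rollout = [("control", 0), ("test", 100)]
print(assign_variant("new-checkout", "user-42", rollout))
```

Because assignment depends only on the flag key and user ID, no per-user state needs to be stored, and moving a variant to 100% is a configuration change rather than a code deployment.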

This integration is incredibly valuable. As soon as your experiment reaches statistical significance and you've decided to push variant B to 100% of users, there's no separate deployment step. You move the flag to 100%, and variant B is immediately live.

This also enables rapid iteration. You can have dozens of concurrent experiments running, learning from failed variants quickly and baking winning variants into your product architecture. For teams shipping multiple features per week, this velocity advantage is enormous.

PostHog's statistical testing is also more sophisticated. The platform uses sequential testing (continuous monitoring as data comes in) rather than traditional fixed-horizon testing, which means you can stop an experiment as soon as it reaches statistical significance rather than waiting for a predetermined sample size.

Statistical Rigor and Multiple Comparison Problems

The two platforms handle multiple comparisons differently. Amplitude applies statistical corrections when you run multiple variants, reducing the risk of false positives. PostHog's sequential testing approach inherently manages this problem through its methodology.

Where it gets tricky is running multiple experiments simultaneously. If you run 20 experiments and look for any that shows statistical significance at p < 0.05, you'd expect to find false positives by chance alone. Neither platform perfectly solves this problem, though both are aware of it. In practice, this means teams need to be cautious about over-segmenting experiments and looking at too many metrics.
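The size of this problem is easy to quantify. Assuming the experiments are independent and none has a real effect, the chance of at least one false positive across n tests at significance level alpha is 1 - (1 - alpha)^n. The sketch below computes that figure along with the Bonferroni-adjusted threshold, a standard conservative correction that is shown here for illustration and is not claimed to be what either platform applies.

```python
def family_wise_error_rate(num_tests, alpha=0.05):
    """Probability of at least one false positive across independent tests."""
    return 1 - (1 - alpha) ** num_tests

def bonferroni_threshold(num_tests, alpha=0.05):
    """Per-test significance level that keeps the family-wise rate at or below alpha."""
    return alpha / num_tests

fwer_20 = family_wise_error_rate(20)
threshold_20 = bonferroni_threshold(20)
print(f"Chance of a spurious winner across 20 tests: {fwer_20:.1%}")
print(f"Bonferroni per-test threshold: {threshold_20:.4f}")
```

With 20 experiments at p < 0.05, the chance of at least one spurious "winner" is roughly 64%, which is why looking across many experiments or many metrics calls for a stricter per-test threshold.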

Pricing Models: Understanding the Cost Structure

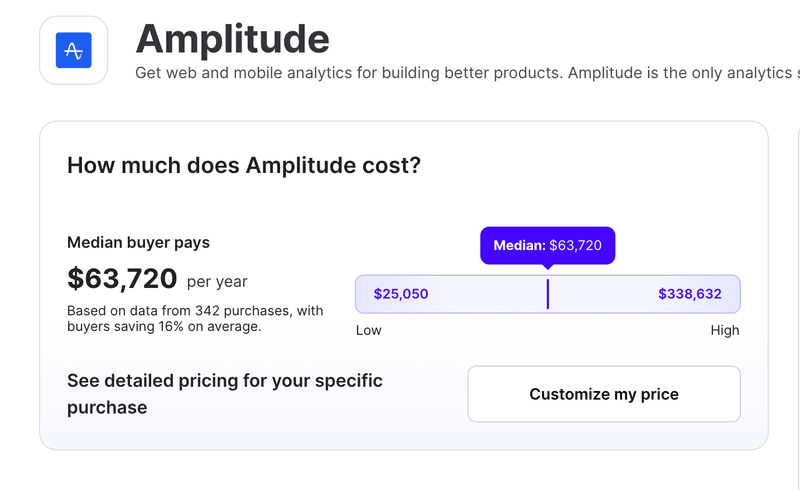

Amplitude's Pricing Architecture

Amplitude historically charged based on Monthly Tracked Users (MTU)—essentially, the number of unique users generating events. A startup with 100,000 monthly users might pay $1,000-2,000 monthly depending on the plan tier.

This has significant implications. As your user base grows, your costs scale accordingly. A viral product launch that quadruples your user base would quadruple your analytics costs. For high-growth companies, this can become expensive quickly.

Amplitude also charges for additional services: unlimited dashboards, more extensive data retention, and advanced segmentation features live behind higher-tier plans. The pricing tiers are roughly: Free (up to 10 million events/month), Plus (

For many teams, the Plus tier is appropriate—you get all core analytics features, basic experimentation, and reasonable data retention. Moving to Pro primarily unlocks more concurrency and deeper customization.

Amplitude's pricing transparency is good. You can see exactly what each tier includes and make an informed decision. However, the MTU-based model creates predictability in one direction and unpredictability in another. If you know your user base growth trajectory, you can forecast costs. But if you do a major launch and user numbers spike, you might suddenly face much higher bills.

PostHog's Consumption-Based Pricing

PostHog charges based on actual events captured and stored. Their free tier includes 1 million events per month. Paid plans scale from

The advantage of PostHog's model is that it rewards efficiency. If you instrument fewer events (capturing only the signals that actually matter), you pay less. The disadvantage is that cost is proportional to activity, not user count. A product with 1 million mostly inactive users can generate fewer events than a product with 10,000 highly engaged users, and PostHog bills on the events generated, not on the number of users.

PostHog also offers on-premises deployment, which some companies prefer for data privacy or regulatory reasons. There's an open-source version that you can self-host entirely, though you lose some features.

PostHog's pricing is less transparent than Amplitude's. Calculating your likely monthly cost requires understanding your event volume, which most teams don't know precisely before implementation.

Cost Comparison Scenarios

Imagine a SaaS company with 50,000 monthly active users:

- Amplitude would charge based on the 50,000 MTU: approximately $1,500-2,500/month

- PostHog's cost depends on events. If users generate an average of 50 events/month, that's 2.5M events/month, costing roughly $450-600/month

PostHog is cheaper here. But now imagine a social app with 100,000 users, each generating 200 events/day:

- Amplitude: Based on 100,000 MTU, roughly $3,000-5,000/month

- PostHog: 200 × 100,000 × 30 = 600 million events/month, far exceeding the ceiling of PostHog's highest pricing tier

Amplitude is cheaper here because Amplitude's user-based model doesn't scale with per-user event volume.

The fundamental difference: Amplitude penalizes large user bases; PostHog penalizes high-frequency event tracking. Choose the tool whose cost structure aligns with your usage pattern.
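The event-volume arithmetic behind the two scenarios above can be reproduced with a short script. It deliberately computes volumes only, not dollar amounts, since actual prices depend on each vendor's current rate card. The function name and the 30-day month are assumptions for illustration.

```python
def monthly_event_volume(users, events_per_user, per="month", days_in_month=30):
    """Estimate total monthly events from a per-user event rate."""
    if per == "day":
        return users * events_per_user * days_in_month
    if per == "month":
        return users * events_per_user
    raise ValueError("per must be 'day' or 'month'")

# Scenario 1: SaaS product, 50,000 users at 50 events per user per month
saas_events = monthly_event_volume(50_000, 50, per="month")

# Scenario 2: social app, 100,000 users at 200 events per user per day
social_events = monthly_event_volume(100_000, 200, per="day")

print(f"SaaS:   {saas_events:,} events/month")
print(f"Social: {social_events:,} events/month")
print(f"Ratio:  {social_events // saas_events}x")
```

The social app has only twice as many users but produces 240 times the event volume, which is why the two pricing models rank the products in opposite order.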

Integration Ecosystems and Data Export

Amplitude's Integration Network

Amplitude has built partnerships with over 100 downstream tools. You can export segments to Braze for push notifications, to Mailchimp for email campaigns, to Stripe for behavioral billing, and to dozens of advertising platforms for audience targeting.

These integrations are valuable because they extend Amplitude's usefulness beyond analytics. Your behavioral segments become actionable across your entire marketing and product infrastructure. If you identify that users with more than 5 logins per week have 10x retention, you can automatically send those users to your email platform with a high-value engagement campaign.

Amplitude also provides APIs for exporting raw event data, allowing integration with data warehouses and custom analytics tools. If Amplitude doesn't have a specific visualization or analysis you need, you can extract the raw data and build custom dashboards in Tableau, Looker, or internal tools.

Amplitude's data export is also useful for migration. If you ever decide to switch platforms, you can extract all your historical data and import it into a new system. This reduces lock-in.

PostHog's Integration Philosophy

PostHog takes a different approach. Rather than offering extensive integrations with downstream tools, PostHog aims to be comprehensive enough that you don't need to export data. All experimentation, flags, feature releases, and analytics live in one platform, so the need to push segments to external tools is reduced.

That said, PostHog does integrate with Slack and webhook systems, and has APIs for custom integrations. You can trigger actions based on experiment results or set up webhooks to notify other systems when feature flags change.

PostHog also provides direct database access (with permission controls) if you want to query the underlying data directly or build custom reports. For technical users, this is more powerful than fixed-format integrations.

The tradeoff: PostHog requires more self-service integration work if you want to connect to downstream systems, but it's also more flexible for teams with engineering resources to build custom workflows.

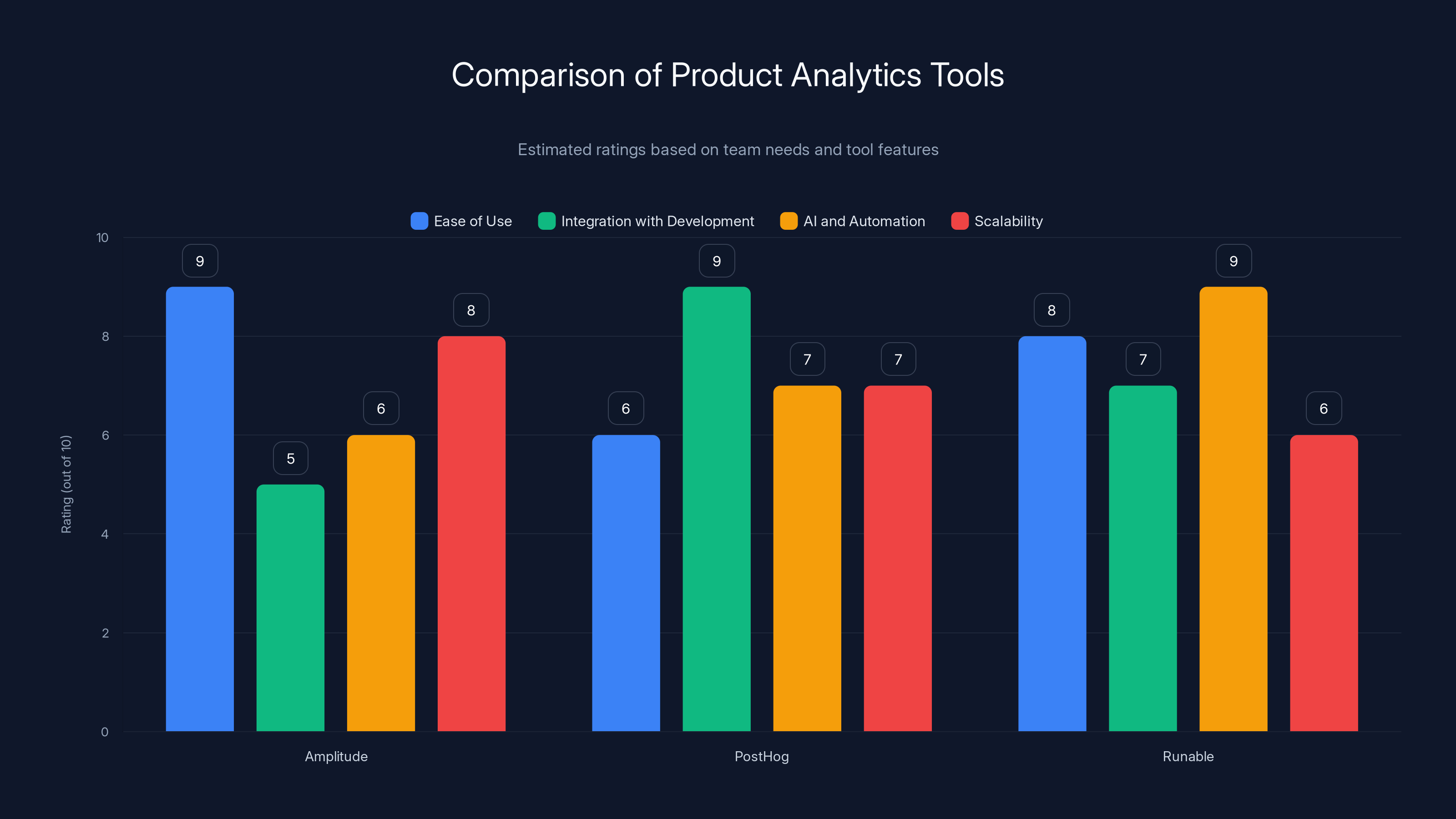

Estimated data shows Amplitude excels in ease of use and scalability, PostHog in integration with development, and Runable in AI and automation. Choose based on team needs.

User Experience and Learning Curve

Amplitude's Interface Design

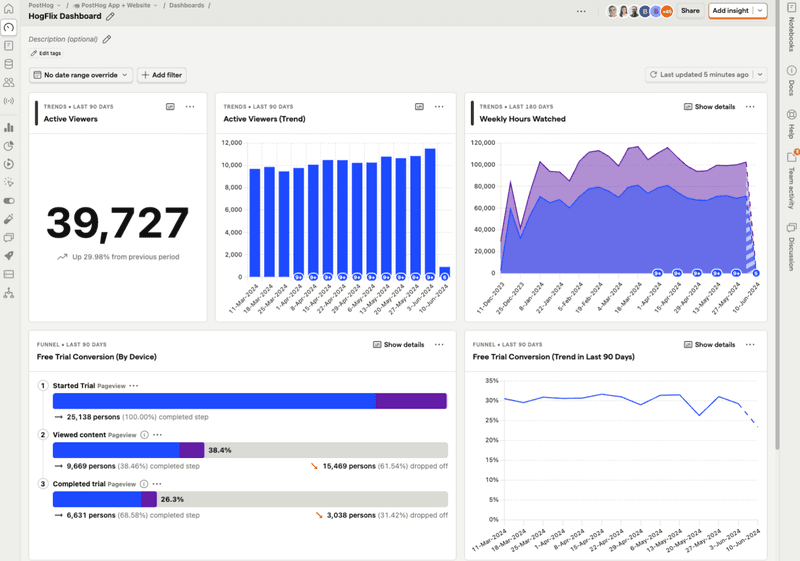

Amplitude's interface is primarily designed for product and growth managers. The visual query builder uses metaphorical language: "users who did [event] [frequency] [timeframe]." This reads naturally to non-technical users. Most product managers can open Amplitude, navigate to create a chart, and write a query with minimal training.

Dashboards in Amplitude are highly customizable. You can create dashboards with cards that show funnels, retention charts, trend lines, or custom metrics. The visualizations are polished and suitable for presenting to executives.

Amplitude's weakness is that this simplicity breaks down at the edges. If you want to ask a question that doesn't fit the visual query builder's patterns—maybe you need a highly specific formula or a non-standard visualization—you're stuck. You can't hand-write the query; you have to contact support or accept that the answer isn't available in Amplitude.

For teams with 5-10 product managers all running queries, Amplitude is very efficient. For teams with 100+ people running queries, the limitations become more apparent, and they usually graduate to SQL-based tools alongside Amplitude.

PostHog's Interface Design

PostHog's interface is engineering-oriented. It uses terms like "events," "properties," "filters," and "series definitions" rather than natural-language phrasing. For someone who understands database concepts, PostHog feels natural and intuitive. For a product manager used to drop-down builders, it feels intimidating.

This is intentional. PostHog optimizes for power over simplicity. You can ask almost any question you can formulate because you're essentially writing database queries through the UI (and can also write SQL directly).

PostHog's learning curve is real. Most teams allocate 2-3 weeks for onboarding, with dedicated team members learning the system deeply. Once onboarded, technical users are enormously productive. But the initial investment is higher.

For teams where all analytics users know how to code or have deep data literacy, PostHog is faster than Amplitude. For teams with many business users, Amplitude's approach is better.

Data Privacy, Compliance, and Security

Amplitude's Data Handling

Amplitude stores data in AWS and operates data centers in multiple regions (US and EU), complying with GDPR. The company is SOC 2 Type II certified and offers HIPAA compliance for healthcare clients.

Amplitude's user deletion processes are important for privacy compliance. Since Amplitude is tracking individual users, GDPR's right to be forgotten requires that you can delete a user's data on request. Amplitude supports this, and you can request deletion of specific users' event histories.

Amplitude also offers data export capabilities, allowing you to move your data if you switch vendors. This reduces lock-in and addresses vendor risk concerns.

One consideration: Amplitude's business model depends on having your event data. They use your data (anonymized, in aggregate) to build their platform's features and sell benchmarking insights. This isn't hidden, but it's worth understanding.

PostHog's Data Approach

PostHog has made privacy a core competitive advantage. By default, PostHog doesn't identify users by email or personal information; it uses anonymous session IDs. You can identify users if you choose, but it's opt-in rather than default.

PostHog also offers self-hosted deployment, where you run the entire analytics infrastructure on your own servers. This means your event data never leaves your infrastructure, which is critical for companies with strict data residency requirements or privacy concerns.

The open-source version of PostHog is particularly valuable for teams that want complete control over their data. You can inspect the code, see exactly what's being collected, and audit the entire system.

PostHog also supports EU data centers for GDPR compliance and is working toward HIPAA certification.

PostHog's privacy positioning is a genuine advantage for teams handling sensitive user data or operating in regulated industries. Being able to run analytics entirely on your own infrastructure eliminates data transfer concerns.

Real-World Use Case Analysis

When Amplitude Excels

E-commerce and Marketplace Companies: Amplitude's strength in behavioral segmentation makes it ideal for e-commerce. You can segment by purchase history, price sensitivity (inferred from browsing patterns), and engagement level, then use these segments for personalized marketing. Companies like DuckDuckGo and Atlassian have used Amplitude to understand user behavior at scale.

Growth-Focused SaaS Companies: Amplitude's integration with marketing platforms makes it natural for teams with dedicated growth organizations. You can identify high-value user cohorts, export them to your email platform, and run campaigns. The analytics-first approach gives you deep insights into what drives retention and expansion.

Mobile App Analytics: While both platforms support mobile, Amplitude's focus on event aggregation and visualization makes it particularly good for mobile products. Mobile apps generate lots of events, and Amplitude handles high-frequency event streams well.

Teams with Limited Engineering Resources: Amplitude's visual interface means non-technical users can run sophisticated analyses independently. You don't need a data team to answer behavioral questions. This is invaluable for startups without dedicated analytics engineers.

When PostHog Excels

Feature-Heavy Products with Rapid Iteration: PostHog's integrated experimentation and feature flags make it ideal for teams shipping multiple features per week. The seamless workflow from "define experiment" to "measure results" to "roll out winner" is optimized for velocity.

Developer Tools and Infrastructure Software: Products used primarily by developers benefit from PostHog's engineering-first design. The team that built your product can analyze its usage without switching to a separate analytics tool, reducing friction and enabling faster decision-making.

Organizations with Regulatory Requirements: PostHog's self-hosted option is critical for companies handling sensitive data, such as healthcare platforms, financial apps, or government contractors. Running analytics entirely on your infrastructure is often a requirement, not an option.

Highly Technical Product Teams: If your product team includes many engineers and few traditional product managers, PostHog's interface aligns naturally with how they think about problems. SQL queries and database concepts feel comfortable.

Companies Wanting to Avoid Vendor Lock-in: PostHog's open-source nature means you can always run your own version of the platform. You're not dependent on PostHog the company continuing to operate or maintaining favorable pricing.

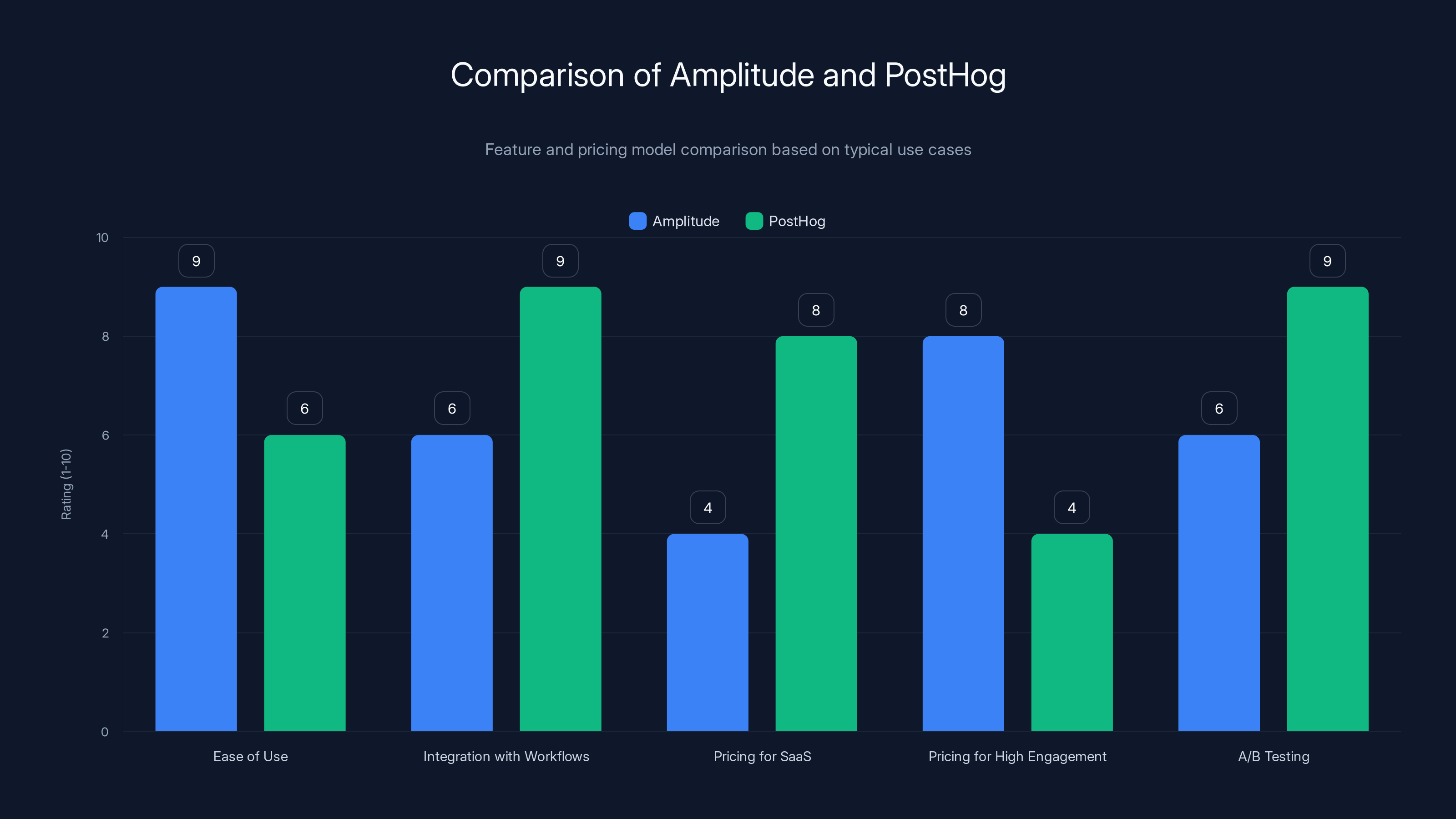

Amplitude excels in ease of use and high engagement pricing, while PostHog is better integrated with engineering workflows and offers more affordable pricing for SaaS products. PostHog also leads in A/B testing capabilities. Estimated data.

Technical Implementation and SDKs

SDK Quality and Documentation

Both Amplitude and PostHog provide SDKs for web, mobile (iOS/Android), and backend environments. SDK quality is roughly comparable: both are well-maintained and extensively documented.

Amplitude's SDKs are slightly more mature, with longer histories and larger communities. PostHog's SDKs are newer but have evolved quickly and now match Amplitude's functionality.

A notable difference: PostHog's SDKs include built-in feature flag support. When you initialize the PostHog SDK, you immediately get access to flag evaluation without needing a separate SDK. With Amplitude, you need a separate feature flag library or Amplitude Experiment specifically.

Server-Side and Client-Side Tracking

Both platforms support both client-side (browser JavaScript) and server-side (backend API) event tracking. This is important because some events naturally occur on your backend (subscription renewal, API call) and others on the frontend (button click, form submission).

Amplitude's client-side SDK is mature and handles complex scenarios like queueing events offline and syncing when connectivity returns. PostHog's approach is simpler but also handles offline scenarios.

For server-side tracking, both have REST APIs and support batch event ingestion, which is more efficient for high-volume scenarios.
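Batching is the reason server-side ingestion stays efficient at high volume: events are buffered in memory and sent in groups instead of one request per event. The sketch below shows the general pattern with a hypothetical `EventBatcher` class. It is not either vendor's SDK, and the `send` callable stands in for whatever HTTP call your real client would make.

```python
from typing import Callable, List

class EventBatcher:
    """Buffer events and hand them to `send` in fixed-size batches."""

    def __init__(self, send: Callable[[List[dict]], None], batch_size: int = 100):
        self._send = send
        self._batch_size = batch_size
        self._buffer: List[dict] = []

    def track(self, event: dict) -> None:
        """Queue one event; flush automatically once the batch is full."""
        self._buffer.append(event)
        if len(self._buffer) >= self._batch_size:
            self.flush()

    def flush(self) -> None:
        """Send whatever is buffered, e.g. on shutdown or on a timer."""
        if not self._buffer:
            return
        batch, self._buffer = self._buffer, []
        self._send(batch)

# Usage: collect batches in a list instead of making real network calls
sent_batches = []
batcher = EventBatcher(sent_batches.append, batch_size=3)
for i in range(7):
    batcher.track({"event": "api_call", "user_id": f"u{i}"})
batcher.flush()  # send the final partial batch
print([len(b) for b in sent_batches])
```

A production version would typically add a time-based flush and retry handling, which are omitted here to keep the pattern visible.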

Data Freshness and Query Latency

Amplitude's query engine processes events in near real-time for recent data, but older data (beyond a certain retention window, depending on your plan) might be accessed with slight delays. For most purposes, you get results within seconds.

Post Hog processes events similarly—recent events appear in queries within seconds. For complex queries over large datasets, latency increases, but it's typically still under a minute for reasonable query scopes.

Neither platform is designed for real-time streaming queries. If you need sub-second latency on arbitrary questions, you'd want a dedicated real-time analytics tool.

Comparing Core Metrics and KPIs

Retention and Churn Analysis

Both platforms excel at calculating retention (percentage of users remaining active after N days/weeks/months). However, they approach it slightly differently.

Amplitude's Retention: Amplitude's retention dashboard shows retention curves with multiple cohorts overlaid. You can segment by user properties and see how retention differs by acquisition source, user tier, or geography. The visualization makes it immediately obvious which cohorts retain best and which churn.

Retention is particularly useful in Amplitude because you can retroactively define it however you want. Maybe "active" means "logged in" for your app, but later you decide it should mean "generated an event" (including passive tracking). You can change the definition and recalculate retention without re-instrumenting events.
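A small sketch can show why a retroactive definition needs no re-instrumentation: the set of events that count as "active" is just a parameter of the query, not something baked into tracking. The function name, the tuple layout, and the sample events below are hypothetical and do not reflect how either platform computes retention internally.

```python
from datetime import date
from typing import Iterable, Set, Tuple

# (user_id, event_name, day the event occurred)
EventRow = Tuple[str, str, date]


def day_n_retention(
    events: Iterable[EventRow],
    cohort_event: str,
    active_events: Set[str],
    cohort_day: date,
    n: int,
) -> float:
    """Share of users who did `cohort_event` on `cohort_day` and were active n days later."""
    rows = list(events)
    cohort = {u for u, name, d in rows if name == cohort_event and d == cohort_day}
    if not cohort:
        return 0.0
    returned = {
        u
        for u, name, d in rows
        if u in cohort and name in active_events and (d - cohort_day).days == n
    }
    return len(returned) / len(cohort)


d0, d6, d7 = date(2025, 1, 1), date(2025, 1, 7), date(2025, 1, 8)
sample = [
    ("a", "signup", d0), ("b", "signup", d0), ("c", "signup", d0), ("d", "signup", d0),
    ("a", "login", d7), ("b", "page_view", d7), ("c", "login", d6),
]

# Same stored events, two definitions of "active".
strict = day_n_retention(sample, "signup", {"login"}, d0, 7)              # 0.25
broad = day_n_retention(sample, "signup", {"login", "page_view"}, d0, 7)  # 0.5
```

Changing the definition only changes the `active_events` argument; the stored events are untouched.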

Post Hog's Retention: Post Hog's retention feature is similar but slightly less flexible. You define "active" as specific events and get retention curves. The feature integrates with experiments—you can measure whether an experiment improved retention for exposed users.

Post Hog's strength is causal attribution. You can ask "did this experiment move the needle on retention?" and get a yes/no with statistical confidence. Amplitude would require separate cohort analysis to answer the same question.

Engagement and Frequency Analysis

Amplitude's Engagement Metrics: Amplitude calculates engagement frequency (how often users complete key actions) and provides distributions showing what percentage of users engage once, twice, weekly, etc. These insights directly inform whether your product is sufficiently habit-forming.

If you see that 20% of users engage daily, 30% weekly, 40% monthly, and 10% are inactive, you understand your engagement pyramid. You can drill into the "monthly" cohort to understand what would move them to weekly engagement.
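The engagement pyramid described above can be sketched as a simple bucketing of users by how many days they were active in a 30-day window. The tier thresholds (20+ days for "daily", 4+ for "weekly", 1+ for "monthly") are assumptions chosen for illustration, not definitions used by Amplitude or Post Hog.

```python
from collections import Counter
from typing import Dict, Mapping

TIERS = ("daily", "weekly", "monthly", "inactive")


def engagement_tier(active_days: int) -> str:
    """Classify a user by days active in the last 30 days (thresholds are assumptions)."""
    if active_days >= 20:
        return "daily"
    if active_days >= 4:
        return "weekly"
    if active_days >= 1:
        return "monthly"
    return "inactive"


def engagement_distribution(active_days_by_user: Mapping[str, int]) -> Dict[str, float]:
    """Return the share of users in each tier; shares sum to 1 for non-empty input."""
    total = len(active_days_by_user)
    if total == 0:
        return {tier: 0.0 for tier in TIERS}
    counts = Counter(engagement_tier(days) for days in active_days_by_user.values())
    return {tier: counts[tier] / total for tier in TIERS}
```

Drilling into one tier then amounts to filtering the same mapping by `engagement_tier(days) == "monthly"` and examining what those users did.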

Post Hog's Engagement Tracking: Post Hog doesn't have dedicated engagement metrics in the same way. Instead, you'd define events that represent engagement and create charts showing their frequency distribution. It's more flexible but requires you to know which events represent engagement.

Where Post Hog shines is integrating engagement into feature flags. You might flag a new feature only to users in your "high engagement" segment and measure whether the feature increases engagement frequency further.

Revenue and Monetization Analysis

Many teams want to correlate user behavior with revenue. Both platforms support this, but through different mechanisms.

Amplitude's Approach: Amplitude lets you add revenue events—when a user makes a purchase or upgrades their plan. You can then correlate revenue with behavioral cohorts. For example, "users who completed 5+ tutorials have 3x LTV."
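A statement like "users who completed 5+ tutorials have 3x LTV" is, at its simplest, a ratio of average revenue between two behavioral cohorts. The sketch below shows that arithmetic with a hypothetical `UserSummary` record; the field names and the threshold are assumptions, and the result is a correlation, not evidence that tutorials cause higher revenue.

```python
from statistics import mean
from typing import List, NamedTuple


class UserSummary(NamedTuple):
    user_id: str
    tutorials_completed: int
    lifetime_revenue: float


def ltv_ratio(users: List[UserSummary], min_tutorials: int = 5) -> float:
    """Average revenue of users at or above the threshold divided by that of the rest."""
    engaged = [u.lifetime_revenue for u in users if u.tutorials_completed >= min_tutorials]
    others = [u.lifetime_revenue for u in users if u.tutorials_completed < min_tutorials]
    if not engaged or not others or mean(others) == 0:
        raise ValueError("need both cohorts and non-zero revenue in the comparison cohort")
    return mean(engaged) / mean(others)
```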

Amplitude also offers Amplitude Recommend, which uses machine learning to identify which features or content drive the highest LTV users. This is useful for focusing product efforts on features that attract high-value users.

Post Hog's Approach: Post Hog handles revenue events similarly but without the machine learning layer. You track revenue events, segment by user properties, and compare LTV across segments. If you want machine learning recommendations, you'd need to export the data to a separate analysis tool.

Alternative Solutions and When to Consider Them

The Case for Runable's Analytics Automation

While Amplitude and Post Hog handle traditional analytics and experimentation, teams often face a broader problem: too much data, not enough insight. This is where Runable offers an interesting alternative approach. Rather than asking users to formulate complex queries or build experiments, Runable uses AI to generate analytics reports, summaries, and insights automatically.

For teams that find themselves overwhelmed by the sophistication of Amplitude or Post Hog, or for organizations where not everyone is comfortable with the query interfaces of either platform, Runable provides a different workflow. You point Runable at your analytics data, and AI agents generate natural-language summaries of trends, alerts on anomalies, and reports comparing time periods or cohorts.

Runable is positioned at the opposite end of the spectrum from Post Hog's engineering-first design. Rather than maximum control and flexibility, Runable optimizes for ease of use and requires minimal training. At $9/month, it's also accessible to small teams and startups that find Amplitude or Post Hog's pricing high.

The tradeoff is reduced control. With Amplitude or Post Hog, you specify exactly what to measure. With Runable, you trust the AI to identify what matters. This works well for exploratory analysis but less well if you have specific, well-defined questions.

Mixpanel: The Middle Ground

Mixpanel competes more directly with Amplitude than Post Hog does. Mixpanel also offers behavioral analytics with event tracking, segmentation, and funnels. The key difference is that Mixpanel focuses on "retention and engagement analytics" specifically, whereas Amplitude is broader.

If you're building a consumer product and retention is your primary concern, Mixpanel's depth in retention cohorts, retention formulas, and churn prediction is notable. However, Amplitude has added significant retention features over time, and the gap has narrowed.

Mixpanel's pricing is similar to Amplitude's MTU-based model, and it faces similar scaling concerns. For most teams, Amplitude offers more flexibility, though Mixpanel remains competitive for retention-focused products.

Google Analytics 4: Why It Might Be Enough

Google Analytics 4 (GA4) is often overlooked in discussions of "serious" analytics tools, but for many teams, it's sufficient. GA4 provides event tracking, segments, funnels, and audience creation at no cost.

GA4's weakness is that it's optimized for web analytics and marketing metrics, not product behavior analysis. If your primary question is "which marketing channels drive the most engaged users," GA4 is excellent. If your question is "which users are at risk of churning, and what can we do about it," GA4 is limited.

GA4 also has data retention limitations and rate limits on queries that make it unworkable for serious analytics at scale. But for early-stage products or teams just getting started, GA4 can save money and provide foundational analytics without premature tooling complexity.

Heap and Fullstory: Behavioral Analytics with Session Recordings

Heap and Fullstory position themselves as alternatives to Amplitude by adding session recordings (replays of individual user sessions) to behavioral analytics. Both automatically capture all user interactions without requiring you to define events upfront.

This "zero-config" approach is attractive because you don't need to instrument events carefully: the system captures everything. However, this generality comes at a cost. You collect so much data that you still need to know which questions to ask, and without a careful definition of what matters, you end up drowning in events.

Session recordings are genuinely valuable for diagnosing specific user problems ("why are users abandoning this checkout page?"), but they don't scale to understanding broad behavioral patterns across millions of events.

For teams that need both behavioral analytics and session replays, Heap or Fullstory can provide both. Post Hog also includes session replays, making it competitive for this use case.

Segment, mParticle, and Customer Data Platforms (CDPs)

Larger organizations often use CDPs like Segment or mParticle as middleware between products and analytics tools. CDPs collect data from all your applications, normalize it, and route it to Amplitude, Post Hog, data warehouses, and other tools.

CDPs are valuable for companies with many data sources (website, app, backend, third-party services) because they centralize data collection and ensure consistency. However, they add cost and complexity.

For small teams with one or two primary data sources, a CDP is unnecessary overhead. But as you scale to multiple products and complex data pipelines, a CDP becomes increasingly valuable.

PostHog offers a more integrated experimentation experience with real-time variant delivery and feature flags, while Amplitude focuses on analytics with strong A/B and multi-variant testing capabilities. Estimated data.

Migration and Switching Costs

Migrating from Amplitude to Post Hog

If you're considering switching from Amplitude, the primary concerns are:

- Historical Data: Amplitude allows you to export raw event data. Post Hog has import capabilities, so you can theoretically migrate historical data. However, the process is manual and requires technical work.

- Segment Definitions: Your sophisticated segment definitions in Amplitude don't automatically translate to Post Hog. You'd need to recreate them using Post Hog's interface or SQL queries.

- Experiment History: Experiments you've run in Amplitude Experiment can't be automatically imported to Post Hog. However, you can export the results data.

- SDK Changes: Your product code currently uses Amplitude's SDK. You'd need to replace it with Post Hog's SDK, which is straightforward but requires code changes and testing.

- Integration Rewiring: If you're exporting segments to marketing tools, you'd need to reconfigure those integrations in Post Hog.

The total migration effort is typically 2-4 weeks for a mid-sized team. At the upper end, that is roughly one week each for planning and data export, SDK migration, testing and validation, and operational transition; simpler setups can overlap these phases and finish sooner.

The upside is that you can run both systems in parallel during transition, ensuring no loss of data or insights.
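Much of the "manual, technical work" in a historical data migration is translating each exported record into the shape the destination expects. The sketch below illustrates that step. The source field names (`event_type`, `user_id`, `device_id`, `event_time`, `event_properties`), the target field names, the timestamp format, and the UTC assumption are all illustrative assumptions; check them against an actual export file and the destination's import documentation before relying on them.

```python
from datetime import datetime, timezone
from typing import Any, Dict


def to_target_event(source: Dict[str, Any]) -> Dict[str, Any]:
    """Translate one exported source record into the target tool's event shape."""
    # Field names on both sides are assumptions for illustration only.
    user = source.get("user_id") or source.get("device_id")
    if not user:
        raise ValueError("record has neither user_id nor device_id")
    # Assumes export timestamps look like "2025-01-15 10:30:00.000000" and are UTC.
    occurred = datetime.strptime(source["event_time"], "%Y-%m-%d %H:%M:%S.%f")
    return {
        "event": source["event_type"],
        "distinct_id": str(user),
        "timestamp": occurred.replace(tzinfo=timezone.utc).isoformat(),
        "properties": dict(source.get("event_properties") or {}),
    }
```

Running both systems in parallel, as described above, gives you a way to validate a mapping like this: the same event should show up with matching counts on both sides.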

Migrating from Post Hog to Amplitude

Reverse migration is generally simpler because you're moving from a more technical tool to a more user-friendly one:

- Event Data Export: Post Hog provides SQL access to export events. This is straightforward for teams with technical capabilities.

- Feature Flags: You can export feature flag history from Post Hog, but integrating it into Amplitude requires custom work since Amplitude Experiment is separate.

- SDK Migration: Simpler than the reverse because Amplitude's SDK is easier to integrate for most teams.

Migration from Post Hog typically takes 1-2 weeks for most teams.

Implementation Timeline and Success Metrics

Amplitude Implementation Roadmap

Amplitude deployments typically follow this timeline:

Week 1-2: Planning and Event Schema Definition

- Define what events you want to track and what properties each event needs

- Create a shared dictionary of event names (critical for team alignment)

- Plan which teams need access and what they'll use Amplitude for

Week 3-4: SDK Integration and Instrumentation

- Integrate Amplitude SDK into your web app

- Add tracking calls for key events

- Test in staging environment

Week 5: Deployment and Initial Data Collection

- Deploy to production

- Allow 24-48 hours of data collection to verify things are working

- Create initial dashboards for key metrics

Week 6+: Ongoing Analysis and Iteration

- Train teams on using Amplitude

- Identify and answer your first questions

- Refine instrumentation based on learnings

For most organizations, teams are running productive analyses within 6 weeks.

Post Hog Implementation Roadmap

Post Hog implementations typically take slightly longer due to the higher learning curve:

Week 1-2: Planning and Environment Setup

- Decide on cloud-hosted vs. self-hosted (affects timeline)

- Plan feature flags and experiments strategy

- Define success metrics

Week 2-3: SDK Integration

- Integrate Post Hog SDK

- Add feature flag calls to conditional feature logic

- Set up basic event tracking

Week 3-4: Feature Flag Validation

- Verify flags are being evaluated correctly

- Test rollout and percentage-based targeting

- Set up monitoring for flag performance
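When validating percentage-based targeting, it helps to know what property you are checking: a given user should get the same answer on every evaluation, and raising the rollout percentage should only add users, never remove them. The sketch below shows one common way to get those properties with a deterministic hash. It is a generic illustration with hypothetical function names, not Post Hog's actual evaluation algorithm.

```python
import hashlib


def rollout_bucket(flag_key: str, user_id: str) -> float:
    """Deterministically map a (flag, user) pair to a number in [0, 1)."""
    digest = hashlib.sha256(f"{flag_key}:{user_id}".encode("utf-8")).hexdigest()
    # 13 hex digits = 52 bits, which a float represents exactly, so the result stays below 1.
    return int(digest[:13], 16) / 16**13


def is_enabled(flag_key: str, user_id: str, rollout_percent: float) -> bool:
    """True if the user falls inside the first `rollout_percent` of the bucket range."""
    return rollout_bucket(flag_key, user_id) * 100 < rollout_percent
```

Including the flag key in the hash means different flags split users differently, so the same users are not always the first to receive every new feature.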

Week 4-5: Experimentation Setup

- Design first experiment

- Set up event tracking for experiment metrics

- Run small pilot experiment

Week 6+: Scaling and Advanced Use

- Run multiple concurrent experiments

- Integrate flags into deployment workflows

- Train team on reading experiment results

Post Hog reaches productivity slightly slower than Amplitude (6-8 weeks vs. 4-6 weeks) due to complexity, but the productivity advantage of integrated flags and experiments compounds over time.

Architectural Considerations for Scale

Event Volume and Query Performance

As your product grows and event volumes increase, both Amplitude and Post Hog face performance considerations, but in different ways.

Amplitude at Scale: Amplitude's cloud infrastructure is designed to handle massive event volumes. Companies sending billions of events monthly use Amplitude without performance degradation. The primary concern is cost: at very high event volumes, your costs become substantial.

Query performance remains fast because Amplitude pre-aggregates much of the data. Common queries (retention, funnels, segmentation) have optimized code paths.

Post Hog at Scale: Post Hog uses ClickHouse (a columnar database) for event storage, which is excellent at aggregating events but has quirks at very high volumes. Companies with billions of events per month typically move to Post Hog's enterprise tier with additional infrastructure.

Post Hog's self-hosted deployments require more operational attention at scale. You're responsible for database optimization, backup strategies, and capacity planning.

Data Retention and Archival

Both platforms store event data, but have different retention policies:

Amplitude: Free and Plus tiers have limited data retention (30-90 days typically). Pro and Enterprise tiers offer longer retention (up to 2+ years). Archived data incurs additional costs but remains queryable.

Post Hog: Self-hosted Post Hog retains data as long as your storage allows. Cloud Post Hog has similar retention to Amplitude. This is significant—if you want to analyze user behavior over 5-year periods, Post Hog's self-hosted option gives you that capability at the cost of managing storage.

Clear event taxonomy and testing/validation are rated highest for successful event tracking implementation. Estimated data.

Team Structure and Tool Alignment

Product Manager-Led Organizations

If your organization is structured around product managers as the primary decision-makers, Amplitude is likely the better fit. Product managers can independently run analyses, create dashboards, and answer questions without depending on analytics engineers or data scientists. The visual interface matches how product managers think about problems.

In this structure, a single analytics engineer or data lead can support 5-10 product managers, each running their own Amplitude queries.

Engineer-Led Product Development

If your product team includes many engineers and engineering decisions drive the product roadmap, Post Hog is more aligned. Engineers shipping features can measure the impact themselves without switching to another tool. Feature flags and experimentation are close to the code they're modifying.

This structure works well for developer tools, infrastructure software, and deeply technical products where the product-building team has strong engineering skills.

Hybrid Organizations with Dedicated Analytics

Larger organizations often employ dedicated analytics engineers—people with deep SQL and data modeling expertise. For these teams, both tools are workable, but Post Hog's SQL interface becomes valuable. Analytics engineers can write sophisticated queries that go beyond what Amplitude's visual builder supports.

At this scale, Post Hog's flexibility and integration with engineering workflows often win. But Amplitude's visualization and segment management remain valuable for analysts and product managers without SQL skills.

Machine Learning and Predictive Analytics

Amplitude's ML Capabilities

Amplitude includes machine learning features focused on prediction:

- Amplitude Recommend: Identifies which content or features drive the highest LTV users

- Predictive Cohorts: Estimates which users are likely to churn, based on historical patterns

- Behavioral ML: Segments users by similar behavioral patterns without manual definition

These are valuable for teams wanting to be proactive about retention. Rather than analyzing cohorts you've already defined, the ML models discover patterns in your data that correlate with churn or value.

The tradeoff is that ML features are black boxes. You get predictions but limited visibility into why the model is making them. For teams needing interpretability, this is limiting.

Post Hog's Approach

Post Hog doesn't include built-in ML models in the traditional sense. However, its analytics are tied directly to its experiment and feature flag systems, so teams can learn from controlled tests rather than from model predictions.

Where Post Hog could add significant value is through integration with external ML tools. You can export your event data to Jupyter, scikit-learn, or other ML frameworks for custom model development.

For teams wanting sophisticated ML on top of their analytics, Post Hog's flexibility (SQL access + ability to export data) enables custom solutions more easily than Amplitude's walled-garden approach.

Organizational Change and Analytics Adoption

Getting Teams to Actually Use Analytics

Having an analytics platform doesn't guarantee it gets used. Many organizations buy Amplitude or Post Hog and see adoption plateau after initial enthusiasm.

Successful analytics adoption depends on:

- Clear Dashboards: Create dashboards focused on decisions people actually need to make. A dashboard showing "daily active users, conversion rate, and retention," without business context, is not useful. A dashboard titled "Q4 Growth Focus: New User Acquisition and Engagement" with targets and context is actionable.

- Regular Cadence: Schedule weekly analytics reviews where the team discusses insights and next steps. Without structure, analytics becomes occasional rather than integral to decision-making.

- Access and Training: Make sure everyone who should use analytics has access and knows how. This sounds obvious but is often skipped.

- Integration with Decision-Making: Analytics should directly inform decisions. When someone asks "should we build feature X?" the answer should involve analytics. Over time, teams learn to turn to analytics naturally.

- Choosing the Right Tool: This is where Amplitude vs. Post Hog matters. If you choose a tool poorly aligned with your team's skills, adoption suffers. An organization of marketers using Post Hog will struggle with the interface. An engineering team using Amplitude for feature flag decisions will find workarounds.

Competitive Differentiation and Future Roadmap

Amplitude's Competitive Advantages

- User-Friendliness: The visual interface remains best-in-class for non-technical users

- Ecosystem Integrations: The largest partner network of any analytics platform

- ML Features: Predictive cohorts and recommendations give competitive advantage for retention focus

- Maturity: Longest history means most battle-tested, stable platform

Amplitude's challenge is maintaining relevance as development teams (increasingly technical) want analytics integrated into their workflows rather than separate from them.

Post Hog's Competitive Advantages

- Integrated Features: One platform for analytics, flags, experiments, and replays

- Developer Alignment: Built by developers for developers

- Open Source Option: Self-hosting and open-source model appeal to companies with privacy/control concerns

- Feature Velocity: Regular improvements and new capabilities

Post Hog's challenge is remaining accessible to non-technical users. As the product becomes more sophisticated, the interface risks becoming overwhelming for product managers without technical training.

Best Practices for Implementation Success

Event Tracking Best Practices

1. Define a Clear Event Taxonomy: Before implementing, document what events you'll track and what properties each event needs. Example:

Event: "purchase_completed"

Properties: product_id, amount, currency, payment_method, signup_date, user_tier

Why: Understanding purchase patterns by product, payment method, and user cohort

2. Use Consistent Naming: Event names should be consistent and descriptive. "purchase_completed" is better than "transaction" or "purchase_v2." Descriptive names reduce confusion when analyzing later.

3. Include Contextual Properties: Not every property needs to be added to every event, but important context should be. If understanding geographic differences matters, include location. If understanding differences by user subscription tier matters, include the tier.

4. Avoid Over-Tracking: More data isn't always better. Every event has a cost (storage, processing, engineering overhead). Track what matters; don't track everything.

5. Test and Validate: Before production deployment, verify tracking in staging. Check that events are firing correctly and properties contain expected values.
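One way to make the taxonomy and the staging checks enforceable is to express the event dictionary as data and validate events against it. The sketch below uses the "purchase_completed" example from the taxonomy step; the property types, the `EVENT_SCHEMAS` name, and the `validate_event` helper are assumptions for illustration, not part of either platform's SDK.

```python
from typing import Any, Dict, List

# Required properties per event. The property names come from the taxonomy
# example; the types are assumptions.
EVENT_SCHEMAS: Dict[str, Dict[str, type]] = {
    "purchase_completed": {
        "product_id": str,
        "amount": float,
        "currency": str,
        "payment_method": str,
        "signup_date": str,
        "user_tier": str,
    }
}


def validate_event(name: str, properties: Dict[str, Any]) -> List[str]:
    """Return a list of problems; an empty list means the event matches its schema."""
    schema = EVENT_SCHEMAS.get(name)
    if schema is None:
        return [f"unknown event: {name}"]
    problems: List[str] = []
    for key, expected in schema.items():
        if key not in properties:
            problems.append(f"missing property: {key}")
        elif not isinstance(properties[key], expected):
            problems.append(f"{key} should be {expected.__name__}")
    return problems
```

A check like this could run in staging or in unit tests, so that a misspelled event name or a missing property is caught before it reaches production data.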

Dashboard and Report Best Practices

1. Create Decision-Focused Dashboards: Dashboards should support specific decisions, not be generic. A dashboard titled "Product Metrics" doesn't tell you what to do. A dashboard titled "New Feature Adoption: Weekly Rollout Plan" is actionable.

2. Set Clear Targets: Show not just metrics, but goals. "Retention: 35%" is useless. "Retention Target: 40% (currently 35%, +2% vs last week)" provides context.

3. Separate Dashboards by Role: Executives need different information than engineers. An exec dashboard might show retention, revenue, and churn. An engineer dashboard might show feature flag health, experiment status, and query latencies.

4. Use Alerts: Most analytics platforms support alerts (email or Slack notifications) when metrics deviate from normal ranges. Set up alerts for critical metrics so you catch problems before they become severe.
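"Deviates from normal ranges" can be defined in several ways. A minimal sketch of one simple definition is shown below: flag the latest value when it sits more than a chosen number of standard deviations from the recent mean. The function name and the default threshold of 3 are assumptions, and the alerting features built into each platform may use different logic.

```python
from statistics import mean, stdev
from typing import Optional, Sequence


def metric_alert(
    history: Sequence[float], latest: float, threshold: float = 3.0
) -> Optional[str]:
    """Return an alert message if `latest` is far outside the recent range, else None."""
    if len(history) < 2:
        return None  # not enough data to estimate a normal range
    center, spread = mean(history), stdev(history)
    if spread == 0:
        if latest == center:
            return None
        return f"value {latest} differs from constant baseline {center}"
    z = (latest - center) / spread
    if abs(z) >= threshold:
        return f"value {latest} is {z:+.1f} standard deviations from the recent mean {center:.2f}"
    return None
```

A lower threshold catches problems earlier but produces more false alarms, so the value is worth tuning per metric.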

Experimentation Best Practices

1. Define Metrics Before Running Experiments: Choose your primary metric (what you're trying to improve) and guardrail metrics (things you don't want to regress) before launching. This prevents p-hacking, that is, testing many metrics until one reaches statistical significance by chance.

2. Run Adequate Sample Sizes: Underpowered experiments (too few users) often miss real improvements, and the significant results they do produce are more likely to be false positives or to overstate the effect. Calculate the required sample size from your baseline conversion rate and minimum detectable effect size.

3. Respect Statistical Significance: Don't stop an experiment and declare a winner just because you see a difference; if the difference isn't statistically significant, it may be noise. Statistical significance roughly means that a difference this large would be unlikely to appear by chance if the change had no real effect. Most platforms use p < 0.05 as the threshold.

4. Monitor for Unexpected Effects: When testing a feature to improve checkout conversion, also monitor engagement metrics, support ticket volume, and other guardrails. Sometimes optimizing one metric hurts others in unexpected ways.

5. Iterate Quickly but Carefully: You want to run many experiments, but not so fast that you're constantly in experimental debt (several experiments running simultaneously that interact in complex ways). A good cadence is 2-5 concurrent experiments per product.
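The sample size and significance practices above can be sketched with standard textbook formulas for comparing two conversion rates. This is an illustration using the normal approximation, with hypothetical function names; the statistics engines inside Amplitude Experiment and Post Hog may use different methods (for example, Bayesian or sequential approaches).

```python
from math import ceil, sqrt
from statistics import NormalDist


def sample_size_per_variant(
    baseline: float, mde: float, alpha: float = 0.05, power: float = 0.8
) -> int:
    """Users needed per variant to detect an absolute lift of `mde` over `baseline`."""
    if mde <= 0 or not 0 < baseline < 1 or baseline + mde >= 1:
        raise ValueError("need 0 < baseline < baseline + mde < 1")
    p1, p2 = baseline, baseline + mde
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided test
    z_beta = NormalDist().inv_cdf(power)
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    return ceil((z_alpha + z_beta) ** 2 * variance / mde**2)


def two_proportion_p_value(conv_a: int, n_a: int, conv_b: int, n_b: int) -> float:
    """Two-sided p-value for the difference between two conversion rates."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        return 1.0  # no variation at all, so no evidence of a difference
    z = (p_b - p_a) / se
    return 2 * (1 - NormalDist().cdf(abs(z)))
```

Because the required sample size grows with the inverse square of the effect size, halving the minimum detectable effect roughly quadruples the number of users needed, which is why small expected lifts call for long-running experiments.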

Conclusion: Making the Right Choice for Your Team

Amplitude and Post Hog represent two different philosophies for product analytics. Amplitude asks "how do we make analytics accessible?" Post Hog asks "how do we make analytics integral to engineering?" Both questions are valid; the right answer depends on your context.

Choose Amplitude if:

- Your team includes many non-technical product managers, growth marketers, or business analysts

- You need a tool that requires minimal training and can be used independently

- You want extensive integrations with marketing and advertising platforms

- Behavioral segmentation and retention analysis are primary concerns

- You prefer a visual query interface over SQL and code

- Your team is scaling rapidly and you need a battle-tested, mature platform

Choose Post Hog if:

- Your product team is primarily engineers

- You want experimentation and feature flags integrated directly into your development workflow

- You need SQL query access for complex analytical questions

- Data privacy and self-hosting capabilities are important

- You want to avoid vendor lock-in through open-source optionality

- You're building a product where rapid iteration and A/B testing are core to your process

Consider Runable if:

- You want AI-generated insights without having to formulate complex queries

- Your team lacks time or expertise to dive deep into either platform

- You're looking for a more accessible, lower-cost entry point to analytics

- You want automated anomaly detection and trend reporting

- Your primary need is understanding what's happening (insights) rather than precisely answering predefined questions

The best tool isn't always the one with the most features. It's the one your team will actually use, that aligns with how you think about problems, and that scales with your organization's growth.

Implementation is more important than selection. A company using Amplitude well will generate more value than a company using Post Hog poorly. Invest in training, create helpful dashboards, and establish a culture where analytics informs decisions. Then, the tool becomes a multiplier for that culture rather than a constraint on it.

Start with a 30-day trial of your top choice. Let your team use the real interface, instrument your actual product, and run actual queries. This practical experience will reveal which tool feels natural to your team far better than any feature comparison table.

The right analytics tool is one you'll actually use. Choose accordingly.

FAQ

What is the main difference between Amplitude and Post Hog's approach to analytics?

Amplitude was built to make behavioral analytics accessible to non-technical users through visual dashboards and query builders. Post Hog took an engineering-first approach, integrating analytics directly with feature flags and experimentation so developers can measure product changes without leaving their development environment. Amplitude optimizes for ease of use, while Post Hog optimizes for integration with engineering workflows.

How do the pricing models differ between Amplitude and Post Hog?

Amplitude uses a Monthly Tracked Users (MTU) model, charging based on unique users generating events. This scales with your user base but is predictable if you know growth projections. Post Hog uses consumption-based pricing calculated on event volume processed monthly. High-frequency event tracking drives Post Hog costs higher, while large user bases with light engagement drive Amplitude costs higher. For most SaaS products, Post Hog is cheaper; for high-engagement consumer products, Amplitude is cheaper.
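The tradeoff between the two pricing models comes down to how many events each user generates. The sketch below shows the break-even arithmetic. The per-user and per-event rates in the usage note are made-up placeholders, not real prices from either vendor, and the model ignores free tiers, volume discounts, and plan minimums.

```python
def mtu_cost(monthly_users: int, price_per_user: float) -> float:
    """Monthly cost under a per-tracked-user model."""
    return monthly_users * price_per_user


def event_cost(monthly_users: int, events_per_user: float, price_per_event: float) -> float:
    """Monthly cost under a per-event model."""
    return monthly_users * events_per_user * price_per_event


def break_even_events_per_user(price_per_user: float, price_per_event: float) -> float:
    """Events per user per month at which both models cost the same."""
    if price_per_event <= 0:
        raise ValueError("price_per_event must be positive")
    return price_per_user / price_per_event
```

With placeholder rates of 0.01 per user and 0.00005 per event, the break-even point is about 200 events per user per month: below that, event-based pricing is cheaper in this simplified model, and above it, user-based pricing is.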

Can I migrate my event data from Amplitude to Post Hog or vice versa?

Migration is possible but requires manual work. Amplitude allows you to export raw event data through APIs or dashboard exports. Post Hog can import historical data, but the process requires technical effort (typically 2-4 weeks for a mid-sized organization). You'll also need to recreate segment definitions, rebuild dashboards, and update SDKs in your product code. Both platforms recommend running them in parallel during transition to ensure no data loss.

Which platform is better for A/B testing and experimentation?

Post Hog has integrated experimentation with feature flags, making it more efficient for rapid iteration—you can define an experiment, measure results, and deploy the winner without leaving the platform. Amplitude Experiment is functional but separate from the analytics platform, requiring additional integration steps. If experimentation velocity is critical, Post Hog has a structural advantage. If you just need solid statistical testing capabilities, both are equivalent.

What's the learning curve for each platform?

Amplitude has a gentler learning curve for non-technical users—most product managers can start running useful analyses within 1-2 weeks. Post Hog requires more technical acumen; expect 3-4 weeks before teams are fully productive. For experienced engineers, Post Hog's learning curve is shorter because the concepts (events, properties, SQL queries) align with software development thinking. The right tool depends on your team's technical skill level.

How important is data privacy and compliance in choosing between these platforms?

Both platforms comply with GDPR, SOC 2, and other standards, but Post Hog has stronger privacy positioning. Post Hog's self-hosted option means your event data never leaves your infrastructure, which is critical for companies handling sensitive data, healthcare applications, or those with strict data residency requirements. If privacy is a primary concern, Post Hog's approach is more flexible. Amplitude is cloud-only but may be sufficient if your data isn't particularly sensitive.

Which platform is better for e-commerce and consumer products?

Amplitude's strength in behavioral segmentation and cohort analysis makes it particularly valuable for e-commerce and consumer products. You can deeply segment users by purchase history, engagement patterns, and lifetime value, then activate those segments in marketing tools. Post Hog is more suitable if your product team is engineering-focused and you want to rapidly test feature variations. Many e-commerce companies use both: Post Hog for engineering-driven experimentation and Amplitude for marketing-driven segmentation and insights.

Can I use both Amplitude and Post Hog together?

Many larger organizations run both platforms. Post Hog handles experimentation and engineering analytics, while Amplitude provides deeper segmentation and business intelligence. This requires sending events to both platforms (typically through a CDP like Segment for deduplication and consistency) and maintaining separate dashboards. The additional cost and complexity only make sense if you're large enough to justify dedicated teams for experimentation and insights analytics.

What are the main success factors for analytics adoption within my team?

Beyond choosing the right platform, success requires: (1) clear, decision-focused dashboards tied to business goals, (2) a regular cadence of analytics review meetings, (3) proper training and access for all relevant stakeholders, (4) integration of analytics insights into formal decision-making processes, and (5) choosing a tool aligned with your team's technical skills. The best tool is the one your team will actually use consistently. No platform drives adoption by itself.

How should I evaluate alternatives like Runable for my specific needs?

If your primary pain point is "we have data but struggle to extract insights," Runable's AI-generated analysis might be valuable. If your pain point is "our tool is too technical for our product managers," Amplitude is more appropriate. If your pain point is "we need to iterate faster," Post Hog is better. Evaluate solutions based on your actual constraints, not feature lists. Run 30-day trials with real product instrumentation and real team members to understand which tool fits naturally into your workflows.

Key Takeaways

- Amplitude excels for non-technical users and behavioral segmentation; PostHog excels for engineering-driven experimentation and rapid iteration

- Amplitude charges by monthly tracked users (scales with user base); PostHog charges by event volume (scales with engagement frequency)

- PostHog integrates feature flags and experiments natively; Amplitude keeps experimentation separate but offers deeper visualization

- Team composition matters: product managers prefer Amplitude; engineers prefer PostHog

- Both platforms support sophisticated analytics, but PostHog provides SQL access while Amplitude uses visual query builders

- Runable offers AI-generated insights as an alternative for teams wanting simplified, automated analysis

- Migration between platforms is possible but requires 2-4 weeks of technical work to transfer data and rebuild configurations

- Success depends more on implementation, training, and dashboard design than on tool selection

- Consider privacy requirements: PostHog self-hosting available; Amplitude cloud-only but mature

- Hybrid approaches exist for larger organizations running both platforms for different purposes
