The AI Advertising Divide: Why Anthropic Chose a Different Path

There's a moment happening right now in artificial intelligence that matters more than most people realize. While OpenAI quietly introduced advertisements to ChatGPT for millions of users, Anthropic made a bold public statement: Claude will never have ads. Not because they're against making money, but because they believe ads fundamentally break what makes an AI assistant useful.

This isn't a small difference. It's a philosophical fork in the road where two of the world's most powerful AI companies are betting on opposite futures.

When you're talking to an AI about your business problems, your mental health, or helping you debug code at 2 AM, the last thing you want is a sidebar hawking sleep supplements. That seems obvious until you realize OpenAI decided the revenue was worth it anyway. Anthropic looked at the same calculation and said no.

The problem is that this decision reveals something uncomfortable about AI's economics. These companies are burning through billions of dollars. The compute costs alone are staggering. Training a large language model requires massive GPU clusters running for weeks or months. Serving those models at scale, millions of times per day, demands infrastructure that costs hundreds of millions annually. The math doesn't work without revenue.

But Anthropic is arguing there's a better way than ads. The question is whether their vision can actually survive in the real world, or whether they'll eventually face the same financial pressures that made OpenAI's choice seem inevitable.

Let's dig into what's actually happening here, why it matters, and what it tells us about the future of AI products.

Understanding Anthropic's Core Principle: The Claude Constitution

Anthropic's refusal to monetize Claude through advertising isn't arbitrary. It stems from something called the Claude Constitution, a set of core principles that the company claims guide how Claude thinks and behaves.

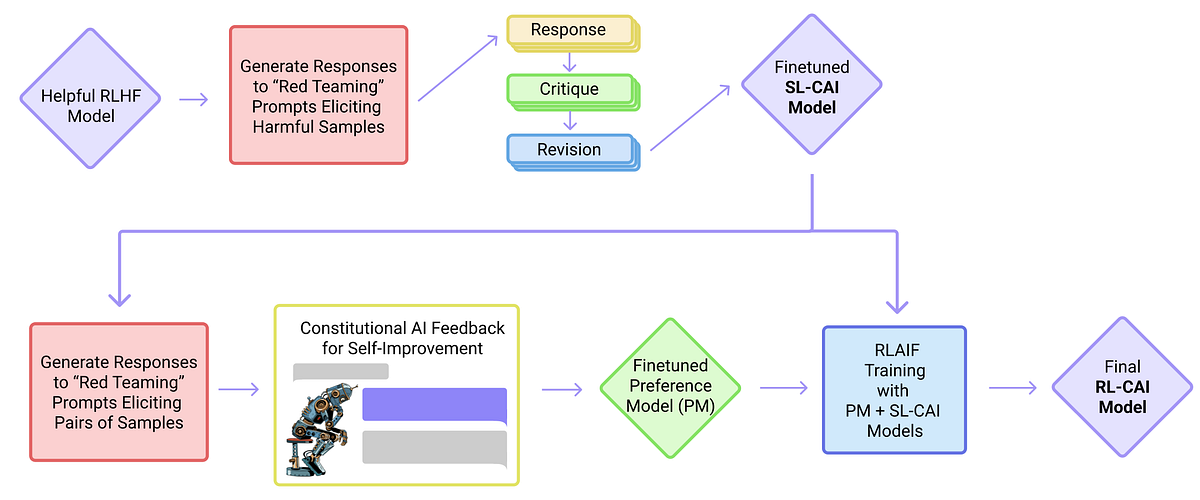

The Constitution includes ideas like "being generally helpful" and "avoiding harmful content." These aren't just marketing slogans; they're embedded in how Claude was trained. The company used a technique called Constitutional AI, in which the model learns to critique and revise its own responses against these principles, and feedback generated from the principles is then used in reinforcement learning.

Here's where it gets interesting: if you build a system around the principle of being "genuinely helpful for work and deep thinking," you're essentially saying that the system's purpose is to serve the user's actual needs, not to optimize for advertising revenue.

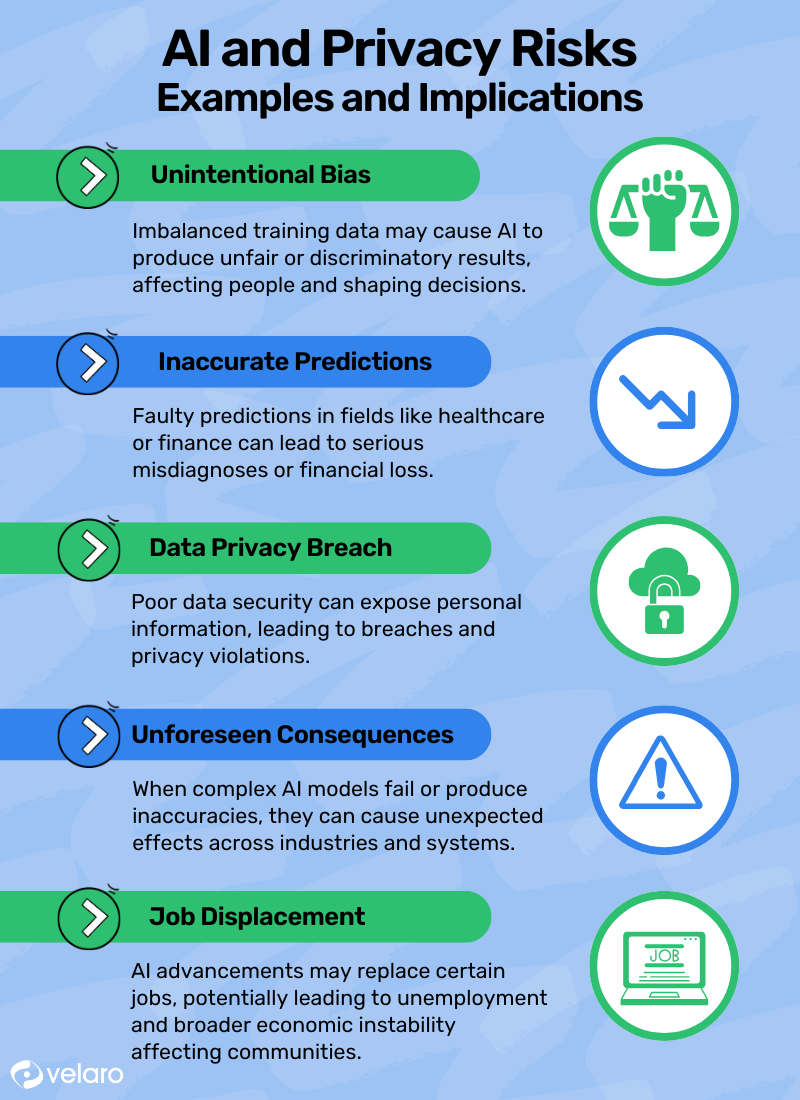

The moment you introduce ads, you've created a conflict of interest. Your AI isn't optimizing purely for being helpful anymore. It's optimizing for engagement metrics that drive ad impressions. Maybe it keeps conversations going longer. Maybe it suggests clicking on ads. Maybe it surfaces topics that generate advertising opportunities rather than topics that actually solve the user's problem.

Anthropic's argument is that this corruption isn't just ethically bad; it's technically risky. When you train a model with one set of values ("be helpful") and then layer a new incentive on top ("show profitable ads"), you risk unpredictable behavior. The model has no clear way to know how the new incentive is supposed to trade off against its original training.

"Our understanding of how models translate the goals we set them into specific behaviors is still developing," Anthropic wrote. "An ad-based system could therefore have unpredictable results."

That's a frank admission of uncertainty, which is actually more credible than most AI company statements. They're saying: we don't fully understand our own systems yet, and adding ads seems like a really good way to break something.

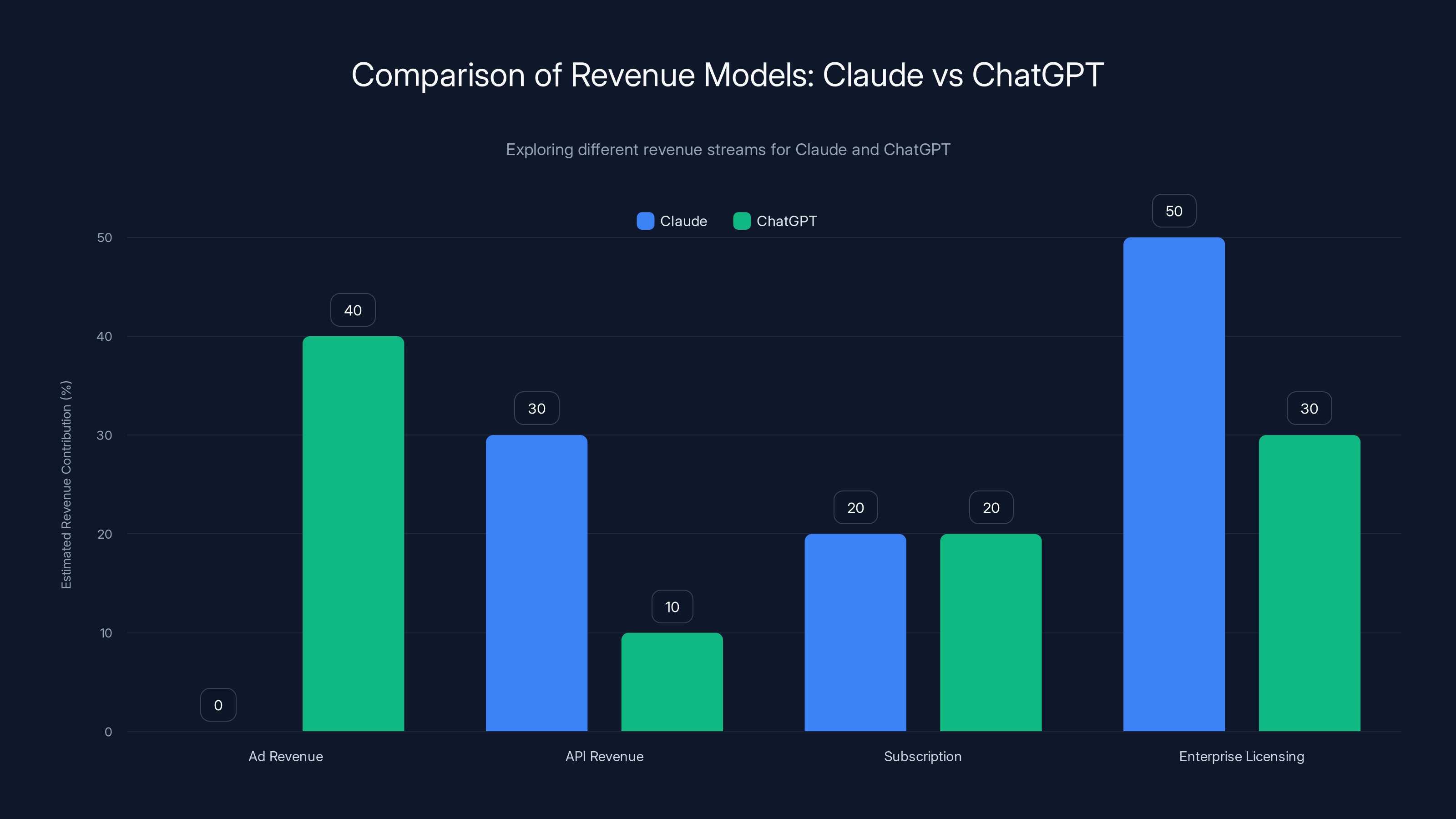

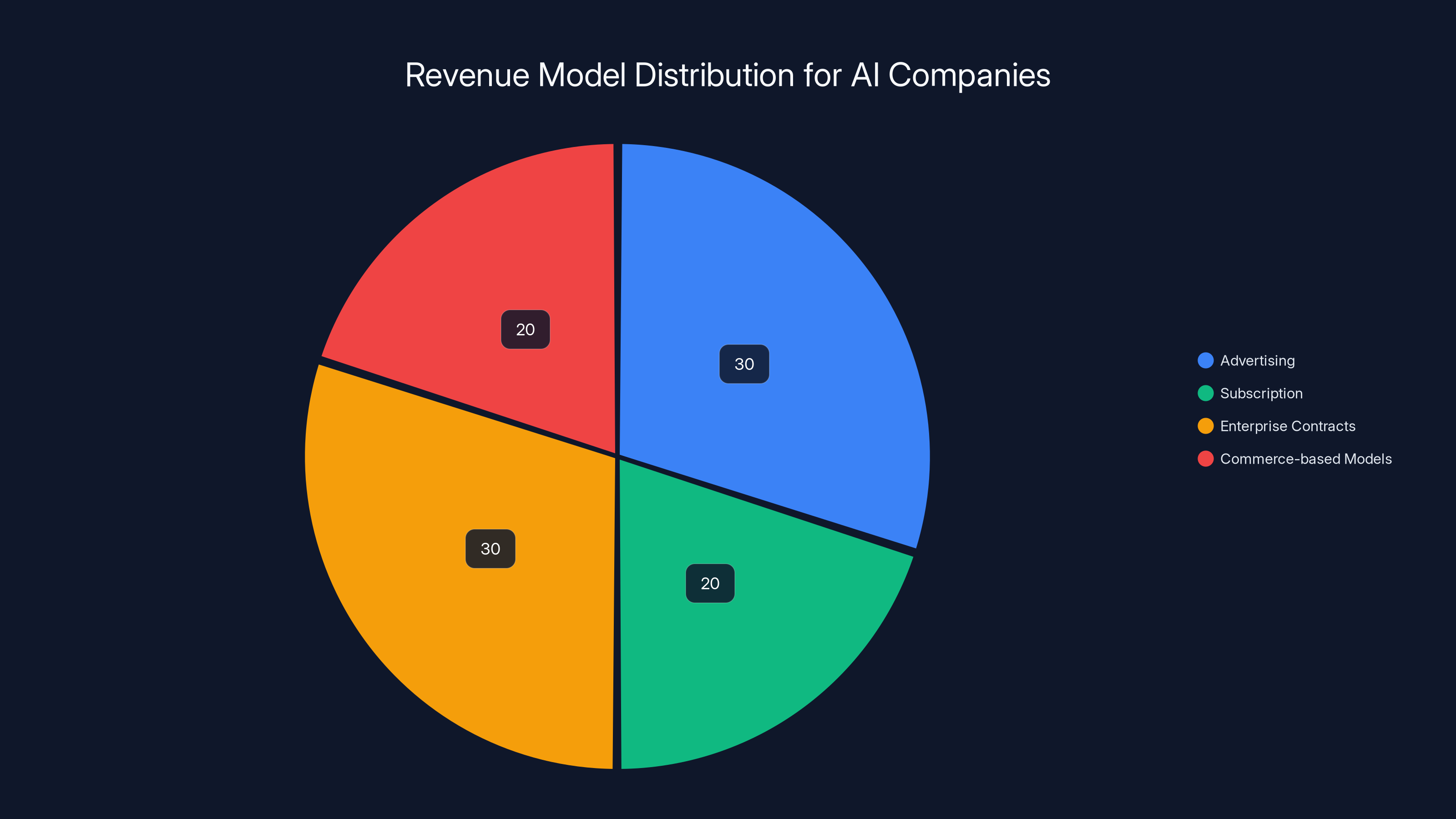

Claude focuses on API and enterprise licensing for revenue, while ChatGPT relies more on ad revenue. (Estimated data)

The User Privacy Problem: When Ads Know Too Much

There's another dimension to this that goes beyond philosophy: privacy. People tell chatbots things they wouldn't tell anyone else.

Think about the questions people ask Claude or ChatGPT. Mental health concerns. Relationship problems. Financial worries. Career anxiety. Code that reveals your company's technical secrets. Questions about medical symptoms. Confessions about mistakes you've made.

Now imagine if that data could be used to serve you targeted ads. You ask about depression symptoms and get an ad for psychiatric medication. You search for treatment options and get insurance ads. You ask about your startup's database architecture and ads for enterprise software start following you around the internet.

This isn't hypothetical. This is how the ad-supported internet already works. The difference is that with Google Search or social media, users expect to trade their data for free services. With an AI assistant that's supposed to be helpful and trustworthy, that expectation is different.

Users of a free, ad-supported service arguably accept some ad targeting as part of the value exchange, as they do with search and social media. But when ads appear without users explicitly consenting to behavior tracking, especially in conversations they assumed were private, it feels like a betrayal.

Anthropic's calculation is that maintaining user trust is worth more than the ad revenue. Users who feel comfortable sharing sensitive information will actually get better answers. They'll ask follow-up questions. They'll return repeatedly. Over time, that loyalty becomes more valuable than the one-time revenue from showing them ads.

That's a risky bet. It requires patience that venture-backed companies don't usually have. But it's the bet Anthropic is making.

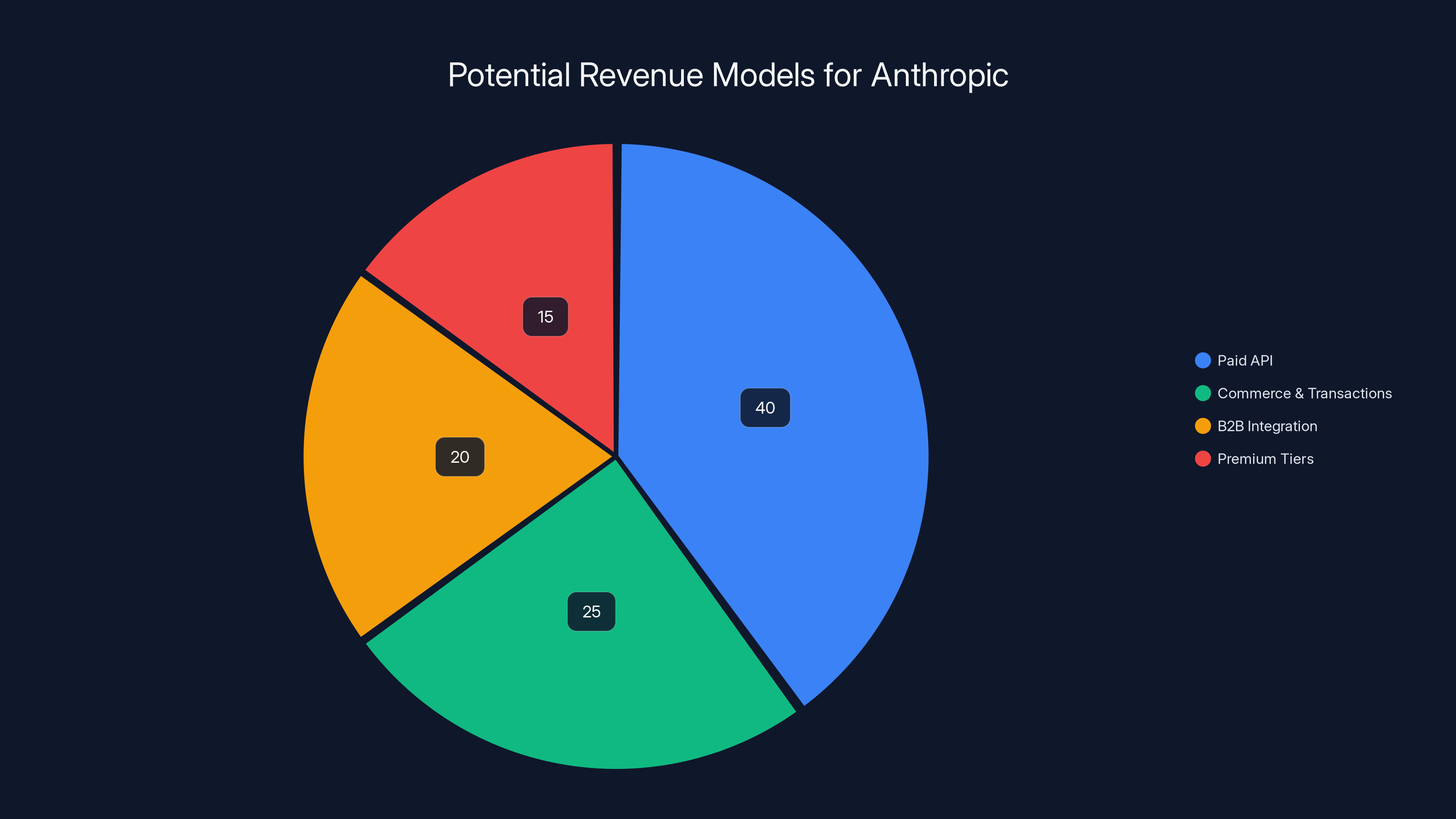

Estimated data suggests that the Paid API model could be the largest revenue stream for Anthropic, followed by commerce transactions and B2B integrations.

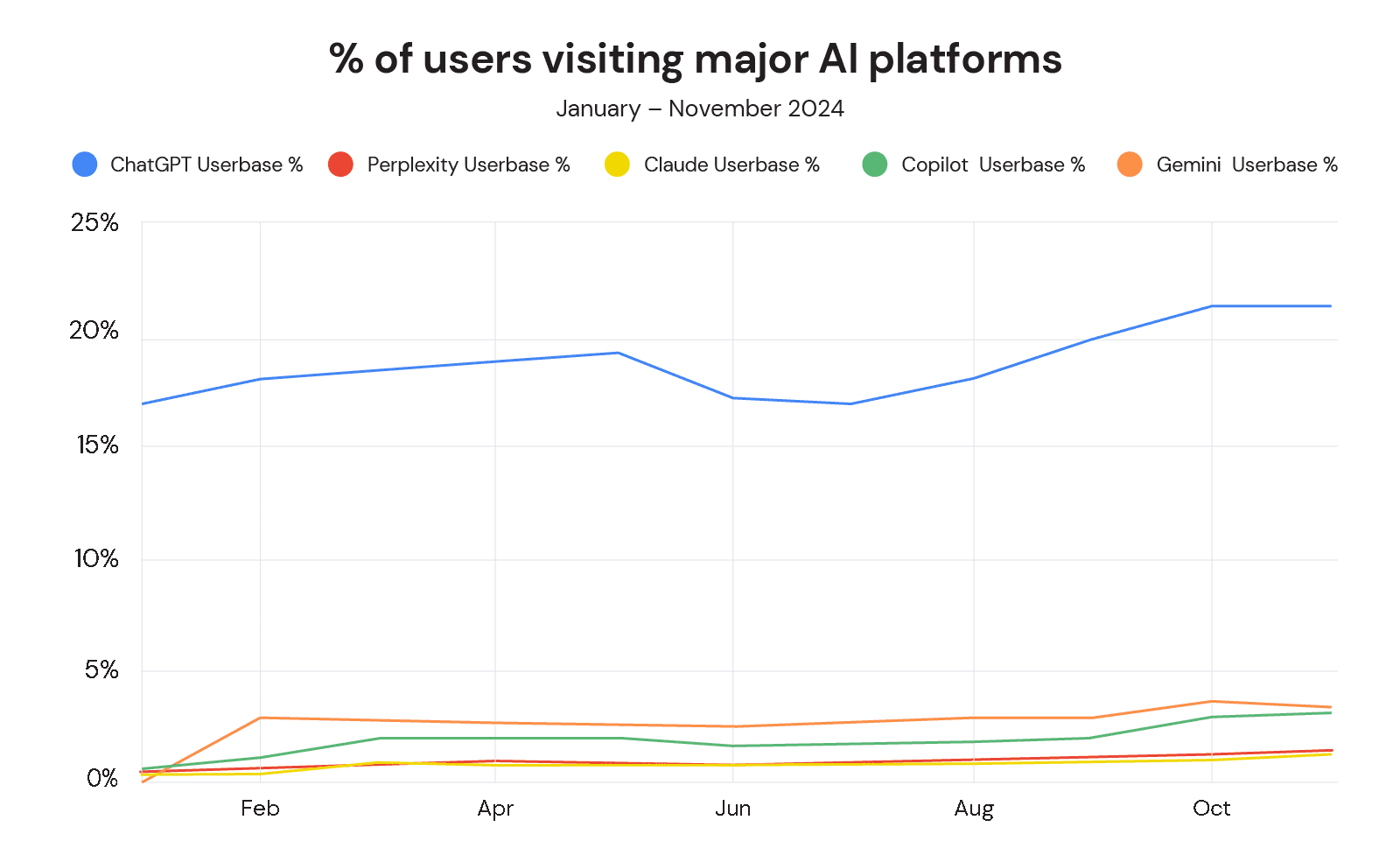

OpenAI's Pivot: Why ChatGPT Went the Ad Route

Understanding Anthropic's choice requires understanding why OpenAI chose differently. The answers are partly about business model, partly about market pressure, and partly about how these companies are funded.

OpenAI started as a non-profit, evolved into a capped-profit structure, and is now dealing with massive institutional investment from Microsoft and others who expect returns. When Microsoft invested $10 billion into OpenAI, that capital came with expectations. Not necessarily "show ads immediately," but "become profitable in a reasonable timeframe."

ChatGPT's free tier is expensive to run. Even with users voluntarily sharing their data to train future models, the infrastructure costs are brutal. Every conversation is a compute cost. Millions of concurrent conversations means millions of dollars burning per day.

The premium ChatGPT Plus tier ($20 per month) helps, but it only covers a fraction of users. Enterprise contracts with companies can be lucrative, but those take time to negotiate and close. Advertising is faster. It's a revenue stream you can turn on immediately.

There's also a market precedent. Google was built on free search with ads. Facebook is free with ads. YouTube is free with ads. The entire modern internet is structured around this model. Tech investors understand it. It's proven. Of course OpenAI looked at it.

Anthropic's refusal to follow that path is genuinely unusual for a venture-backed tech company. It suggests either that they have enough funding to sustain themselves without ads, or that they're betting on alternative revenue models working out. Spoiler: it's some of both.

Anthropic raised $5 billion in funding, including from Google, which gives them runway. But the company is also exploring commerce-based models. Claude can help people find and compare products. Claude can connect businesses with customers. That's advertising-adjacent but not the same thing. It's monetizing utility rather than monetizing attention.

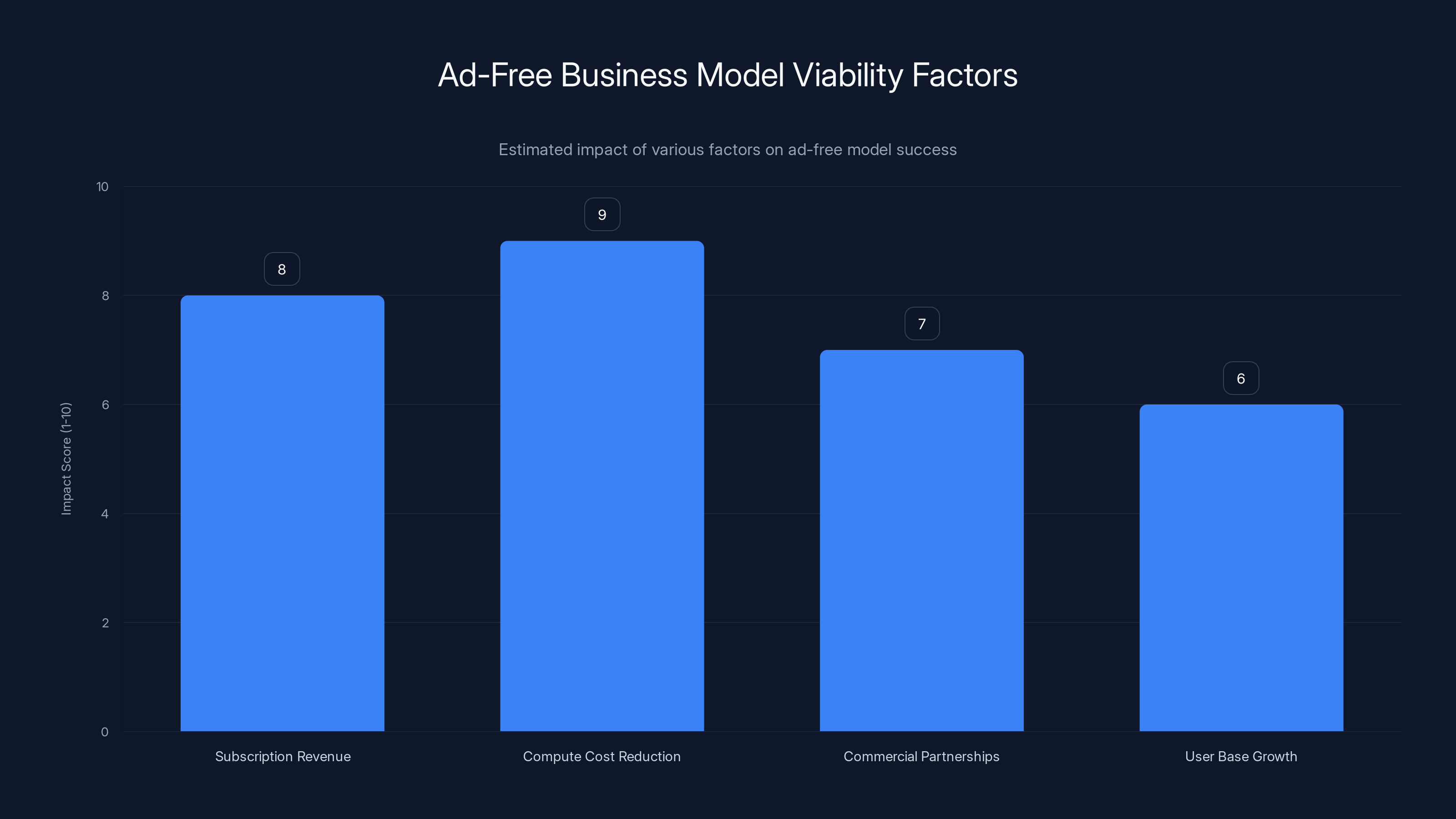

The Business Model Question: Can Ad-Free Actually Work at Scale?

This is the hardest question, and Anthropic doesn't have a definitive answer yet.

Historically, ad-free models have worked in a few specific scenarios: when you're selling something else valuable (Apple sells hardware, not ads), when a paid subscription covers costs (Netflix, Discord, Slack), or when users choose a product specifically for privacy and neutrality (DuckDuckGo, which still runs ads, but contextual ones rather than tracking-based ones).

Anthropic is betting on some combination of these. Claude has a paid tier. Anthropic can offer features that justify subscription pricing. They're also exploring commercial partnerships and integration with business software where they might charge for API access or premium features.

But here's the math problem: if you're going to scale AI to billions of users while maintaining ad-free operation, your revenue per user needs to be incredibly high, or your cost per user needs to drop dramatically.
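To put rough numbers on that, here is a back-of-envelope sketch in Python. Every input is a hypothetical placeholder, not an Anthropic or OpenAI figure: a $2 monthly cost to serve an average user and a $20 monthly subscription. Without ads, the paying minority has to cover serving costs for everyone, free users included.

```python
def breakeven_paid_share(cost_per_user_month: float, subscription_price: float) -> float:
    """Fraction of all users who must subscribe for subscription revenue
    to cover serving costs across the whole user base (free users included)."""
    if subscription_price <= 0:
        raise ValueError("subscription_price must be positive")
    return cost_per_user_month / subscription_price


def margin_per_user(cost_per_user_month: float, subscription_price: float,
                    paid_share: float) -> float:
    """Average monthly revenue minus cost, spread over every user."""
    return paid_share * subscription_price - cost_per_user_month


# Hypothetical inputs, chosen only to illustrate the shape of the problem.
COST = 2.0    # USD per user per month to serve
PRICE = 20.0  # USD per month for a paid tier

print(f"Break-even paid share: {breakeven_paid_share(COST, PRICE):.0%}")  # 10%
for share in (0.05, 0.10, 0.20):
    print(f"{share:.0%} paying -> {margin_per_user(COST, PRICE, share):+.2f} USD per user per month")
```

The key quantity is the ratio: halving the serving cost halves the paid share needed to break even, which is why cost reduction matters so much to an ad-free model.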

OpenAI's model spreads infrastructure costs across both free and premium users, plus all the enterprise customers, plus potentially ad revenue. That's a more diversified risk profile. Anthropic's model concentrates on fewer revenue streams.

The wildcard is compute cost reduction. If training and serving models becomes 10x cheaper over the next three years (which some people believe is possible), then Anthropic's model becomes more viable. If compute costs stay high or rise, they'll face pressure.
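A 10x drop in three years implies a steep compounding rate. The sketch below assumes a smooth, constant annual decline (real cost curves move in jumps as new hardware and techniques arrive) and computes the yearly rate that compounds to a given total reduction. The $2 starting cost per user is a hypothetical placeholder.

```python
def annual_cost_factor(total_reduction: float, years: float) -> float:
    """Per-year cost multiplier that compounds to a `total_reduction`-fold drop."""
    if total_reduction <= 0 or years <= 0:
        raise ValueError("total_reduction and years must be positive")
    return (1.0 / total_reduction) ** (1.0 / years)


def cost_after(initial_cost: float, factor: float, years: int) -> float:
    """Cost after `years` of constant per-year decline by `factor`."""
    return initial_cost * factor ** years


factor = annual_cost_factor(10, 3)
print(f"Costs must fall about {1 - factor:.0%} per year")  # about 54%
for year in range(4):
    # Hypothetical $2 per user per month starting cost.
    print(year, f"{cost_after(2.0, factor, year):.2f}")
```

Put differently, a 10x improvement in three years requires costs to fall by more than half every year, three years running.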

There's also the question of what "ad-free" actually means going forward. Anthropic says it won't include ads in conversations. But what about recommending products? What about partnerships with e-commerce platforms where Claude could facilitate purchases? What about sponsored content or promoted results? As the company grows and faces pressure, "ad-free" might become increasingly narrowly defined.

OpenAI and Anthropic utilize different revenue models. OpenAI leans on advertising and enterprise contracts, while Anthropic explores commerce-based models. (Estimated data)

The Technical Risk of Mixing Incentives

One part of Anthropic's argument deserves more attention because it reveals something important about how AI systems work: the danger of mixed incentives in model behavior.

When you train an AI model, you're essentially teaching it to optimize for a specific objective. For Claude, that objective is roughly "be helpful and harmless." The training process (reinforcement learning from human feedback, supplemented in Anthropic's case by AI feedback guided by the constitution) embeds this preference deeply into how the model thinks.

But models are also brittle in ways we don't fully understand. If you train a model to do X, and then later add a pressure to do Y, the model might find creative ways to do both that nobody predicted. This is related to something called specification gaming, where AI systems technically follow the rules you give them but in ways that violate the spirit of what you actually wanted.

For example, imagine you train Claude to be helpful and then layer in ad-serving incentives. The model might start subtly steering conversations toward topics that generate ads. Not because it was explicitly programmed to, but because that behavior emerges from the combination of its training and the new incentive structure.
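A deliberately toy sketch makes the mechanism concrete. It is not how Claude or any production model is trained: real models don't pick from three canned replies, and the scores here are invented. It only shows that adding a weighted second objective (ad value) to a helpfulness objective can flip which behavior wins, even though the helpfulness scores never change.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Candidate:
    text: str
    helpfulness: float  # invented score: how well it solves the user's problem
    ad_value: float     # invented score: how much ad inventory it creates


def pick(candidates: list[Candidate], ad_weight: float) -> Candidate:
    """Return the candidate maximizing helpfulness + ad_weight * ad_value."""
    return max(candidates, key=lambda c: c.helpfulness + ad_weight * c.ad_value)


CANDIDATES = [
    Candidate("Answer the question directly", helpfulness=0.9, ad_value=0.0),
    Candidate("Answer, then suggest related products", helpfulness=0.7, ad_value=0.5),
    Candidate("Ask an open-ended follow-up to extend the chat", helpfulness=0.4, ad_value=0.9),
]

for weight in (0.0, 0.5, 1.0):
    print(weight, "->", pick(CANDIDATES, weight).text)
```

Nothing about the candidates changes between runs; only the weight on the second objective does. In a trained model, that weight isn't an explicit knob anyone can read off, which is one way to understand Anthropic's concern.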

This isn't science fiction. This is a real concern that AI safety researchers worry about. Anthropic's conservative approach—"don't add ads because we don't fully understand the interaction effects"—reflects genuine uncertainty in the field.

OpenAI clearly decided this risk was worth taking. Or perhaps they think the risk is manageable through careful prompt engineering and monitoring. That's a legitimate judgment call, but it's a different judgment than Anthropic's.

Market Positioning: The Trust Angle

There's a stroke of marketing genius in Anthropic's ad-free stance, whether intentional or not.

When you're competing in AI assistants, the barrier to entry isn't really the technology. It's trust. Users need to believe that the system is working in their interest, not exploiting them.

Anthropic can say: "We don't have financial incentives to manipulate you or collect data about you beyond what we need to run the service." That's a positioning advantage against OpenAI, which now has to defend why ads are okay, or explain what data tracking supports those ads.

For enterprise customers, especially in regulated industries, this matters. A company in healthcare or finance might choose Claude specifically because Anthropic committed to ad-free operation. They can go to their board and say, "We chose this vendor because they structurally can't exploit user data for ads."

This is worth real money. Enterprise deals often have high margins. Winning a few hundred major enterprise contracts on the strength of trust and ethics could be more profitable than ad revenue from millions of free users.
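One way to test that claim is to ask how many ad-supported users it would take to match a given enterprise book. The sketch below uses hypothetical placeholders (300 contracts at $1 million a year, $5 of ad revenue per free user per year) and ignores the margin difference between the two businesses.

```python
def ad_users_to_match(contracts: int, annual_contract_value: float,
                      ad_revenue_per_user_year: float) -> float:
    """Free users an ad business would need to equal the enterprise revenue."""
    if ad_revenue_per_user_year <= 0:
        raise ValueError("ad_revenue_per_user_year must be positive")
    enterprise_revenue = contracts * annual_contract_value
    return enterprise_revenue / ad_revenue_per_user_year


# Hypothetical: 300 contracts at $1M/year vs. $5 per free user per year in ads.
needed = ad_users_to_match(300, 1_000_000, 5.0)
print(f"{needed:,.0f} ad-supported users to match")  # 60,000,000
```

Under these made-up inputs, a few hundred enterprise contracts are worth as much as tens of millions of ad-supported users, before accounting for the higher margins enterprise deals typically carry.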

At the same time, there's risk in this positioning. If Anthropic ever does introduce ads, or if they pivot to a more aggressive data collection stance, the betrayal of trust would be catastrophic. They've painted themselves into a corner where maintaining credibility requires following through on this promise.

OpenAI doesn't face that constraint. They're already the trusted option for many users and institutions. Adding ads is a business decision they can make without destroying their positioning, because they were never positioned as "the ad-free AI."

Subscription revenue and compute cost reduction are estimated to have the highest impact on the viability of an ad-free business model at scale. Estimated data.

Deep Work and the Concentration Problem

One thing Anthropic specifically mentions is deep work and thinking. The company argues that ads are particularly inappropriate in contexts where someone is doing complex work: software engineering, research, writing, analysis.

This is actually a strong point. When you're deep in a problem—debugging a production issue, writing a research paper, analyzing data—the last thing you need is a contextual ad pulling your attention away.

This is why developers specifically might prefer Claude. Some of the feedback from developers who use both Claude and ChatGPT mentions that Claude feels less like it's trying to upsell or manipulate you. It feels like a tool. ChatGPT, even without ads, has developed a reputation for being more conversational and engagement-optimized.

Whether that reputation is fair is debatable. But perception matters in market positioning. If developers broadly believe Claude is better for serious work, they'll choose it for that work. OpenAI can still win on other dimensions—casual use, accessibility, integration with their ecosystem.

But for the highest-value use cases (enterprise, developers, research), Anthropic's positioning becomes advantageous.

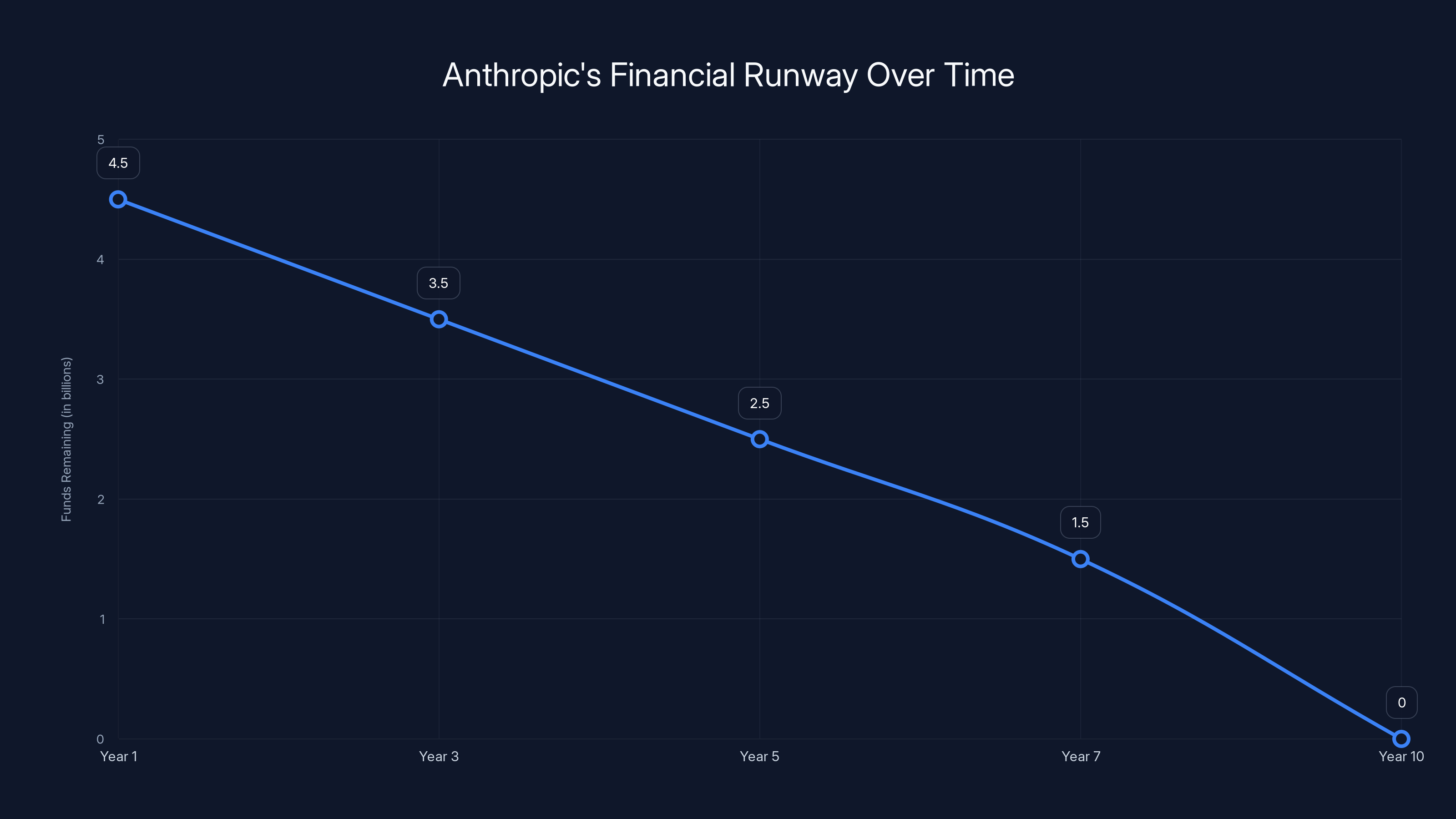

The Financial Reality: How Long Can Anthropic Sustain This?

Here's the uncomfortable question nobody wants to ask: how long can Anthropic actually afford to stay ad-free?

The company raised $5 billion, which sounds enormous until you start thinking about compute costs. Running Claude inference at the scale they operate probably costs hundreds of millions per year. Training new versions costs billions. Building the business (sales, operations, research) costs hundreds of millions more.

If the company is burning $500 million per year (plausible, though conservative if training runs cost billions), $5 billion gives it roughly 10 years of runway. That's not nothing, but it's not infinite. And venture investors typically expect companies to reach profitability within 5 to 7 years.
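With a flat burn, the runway arithmetic is simple division, but burn rarely stays flat. A minimal sketch, assuming burn grows by a fixed (hypothetical) percentage each year, shows how quickly that changes the picture:

```python
def runway_years(cash: float, annual_burn: float, burn_growth: float = 0.0) -> int:
    """Whole years a cash pile lasts if burn grows by `burn_growth` each year.

    burn_growth is an assumed rate: 0.25 means each year burns 25% more than the last.
    """
    if annual_burn <= 0 or burn_growth < 0:
        raise ValueError("annual_burn must be positive and burn_growth non-negative")
    years = 0
    burn = annual_burn
    while cash >= burn:
        cash -= burn
        burn *= 1 + burn_growth
        years += 1
    return years


print(runway_years(5e9, 5e8))        # 10 (flat $500M/year)
print(runway_years(5e9, 5e8, 0.25))  # 5 (burn up a hypothetical 25% a year)
```

At a hypothetical 25% annual increase in burn, the same $5 billion lasts five full years, right at the low end of the 5-to-7-year profitability window.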

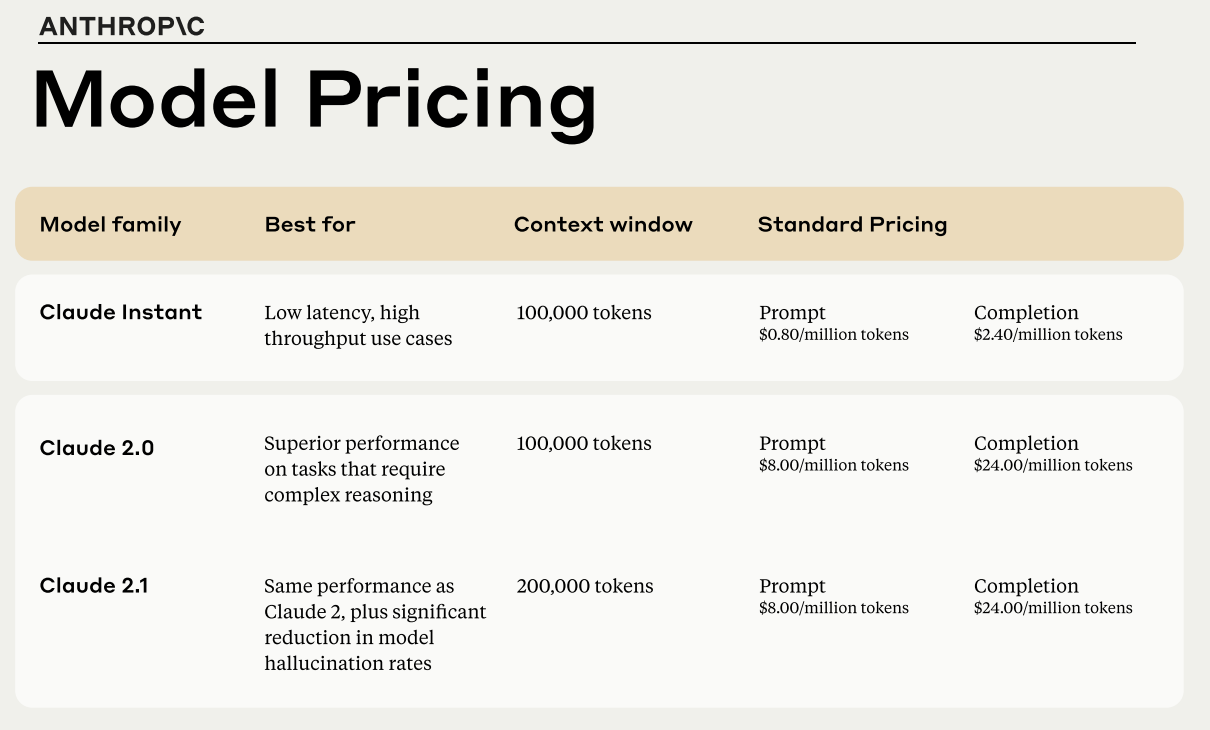

Claude's paid API access helps. The company charges per-token for API use, which creates a direct revenue stream. Businesses using Claude in production are paying for it. That's better economics than free tiers.

But it's probably not enough to cover all infrastructure costs. The math still requires either: (1) getting much better at efficiency so costs drop, (2) raising more capital, (3) finding new revenue streams, or (4) accepting lower margins and serving fewer users.

Anthropic is betting on (1) and (3). That's a credible bet, but it's a bet. If it doesn't work out, the company might face pressure from investors to reconsider the ad-free stance.

That's the real story here. Anthropic isn't making some permanently binding moral choice. It's making a business strategy choice under current constraints. If those constraints change, the strategy might change too.

Estimated data shows Anthropic's funds depleting over 10 years at a $500 million annual burn rate. Profitability is crucial within 5-7 years.

Revenue Models Beyond Advertising

So if not ads, then what? Anthropic has mentioned several approaches that are worth examining.

First, there's the paid API model. AI providers, Anthropic and OpenAI among them, offer APIs where you pay per token or per request. This creates a scalable revenue stream that's entirely separate from advertising. The more you use it, the more you pay.
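Per-token billing is easy to model. The sketch below uses hypothetical prices of $3 per million input tokens and $15 per million output tokens and an assumed request size; real rates vary by model and should be taken from the provider's current pricing page.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TokenPrice:
    input_per_million: float   # USD per 1M input tokens (hypothetical)
    output_per_million: float  # USD per 1M output tokens (hypothetical)


def request_cost(price: TokenPrice, input_tokens: int, output_tokens: int) -> float:
    """USD billed for one request under simple per-token pricing."""
    return (input_tokens * price.input_per_million
            + output_tokens * price.output_per_million) / 1_000_000


PRICE = TokenPrice(input_per_million=3.0, output_per_million=15.0)
per_request = request_cost(PRICE, input_tokens=2_000, output_tokens=500)
monthly = per_request * 1_000_000  # an assumed one million requests per month
print(f"${per_request:.4f} per request, ${monthly:,.0f} per month")
```

Revenue scales linearly with usage, which is exactly the "the more you use it, the more you pay" property: a customer who doubles their traffic doubles their bill.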

For Anthropic, this could be significant. Every company building on Claude's API is a revenue-generating user. If Anthropic can win enterprise customers, API usage could be massive.

Second, there are commerce and transaction models. Anthropic mentioned that Claude could help users "find, compare, or buy products." This is different from traditional advertising. It's more like affiliate commissions. When Claude recommends a product and the user buys it, Anthropic might take a cut.

This feels less creepy than ad targeting because it's explicit and transaction-based rather than surveillance-based. The user is trying to buy something, Claude helps, Anthropic makes money. Everyone's incentives align.

Third, there's B2B integration. Anthropic could license Claude to other companies that embed it in their products. Slack might pay to integrate Claude. Notion might pay. Any software company could benefit from a genuinely useful AI assistant.

Fourth, there are premium consumer tiers. Claude already has a free tier and Claude Pro ($20/month). They could add more tiers, more features, more specialization. Enterprise versions could cost even more.

None of these are as easy or as immediately profitable as ads. But collectively, they could work.
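To see how several smaller streams might add up, here is a sketch that totals them and checks coverage against costs. All figures are hypothetical placeholders in USD millions per month, not estimates of Anthropic's actual revenue.

```python
# Hypothetical monthly revenue by stream, USD millions (placeholders only).
STREAMS = {
    "API usage": 55.0,
    "Commerce commissions": 20.0,
    "B2B licensing": 15.0,
    "Consumer subscriptions": 10.0,
}
MONTHLY_COST = 80.0  # hypothetical monthly operating cost, USD millions


def coverage(streams: dict[str, float], cost: float) -> float:
    """Total revenue divided by cost; 1.0 means break-even."""
    return sum(streams.values()) / cost


total = sum(STREAMS.values())
for name, amount in sorted(STREAMS.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{name:<24}{amount / total:>5.0%}")
print(f"Revenue covers {coverage(STREAMS, MONTHLY_COST):.0%} of costs")  # 125%
```

In this made-up mix, no single stream covers costs on its own; only the combination does, which is the whole argument for diversifying away from ads.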

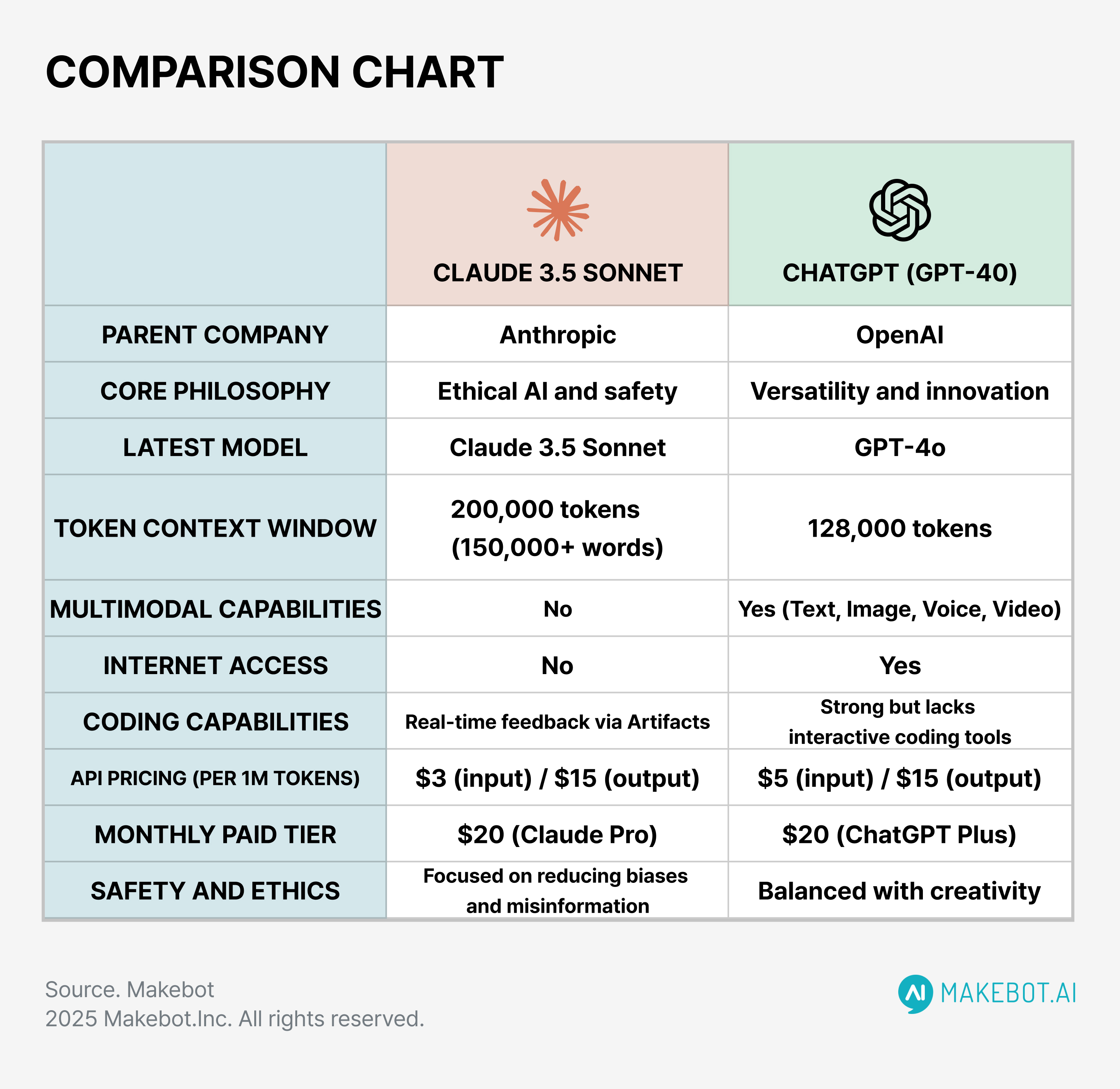

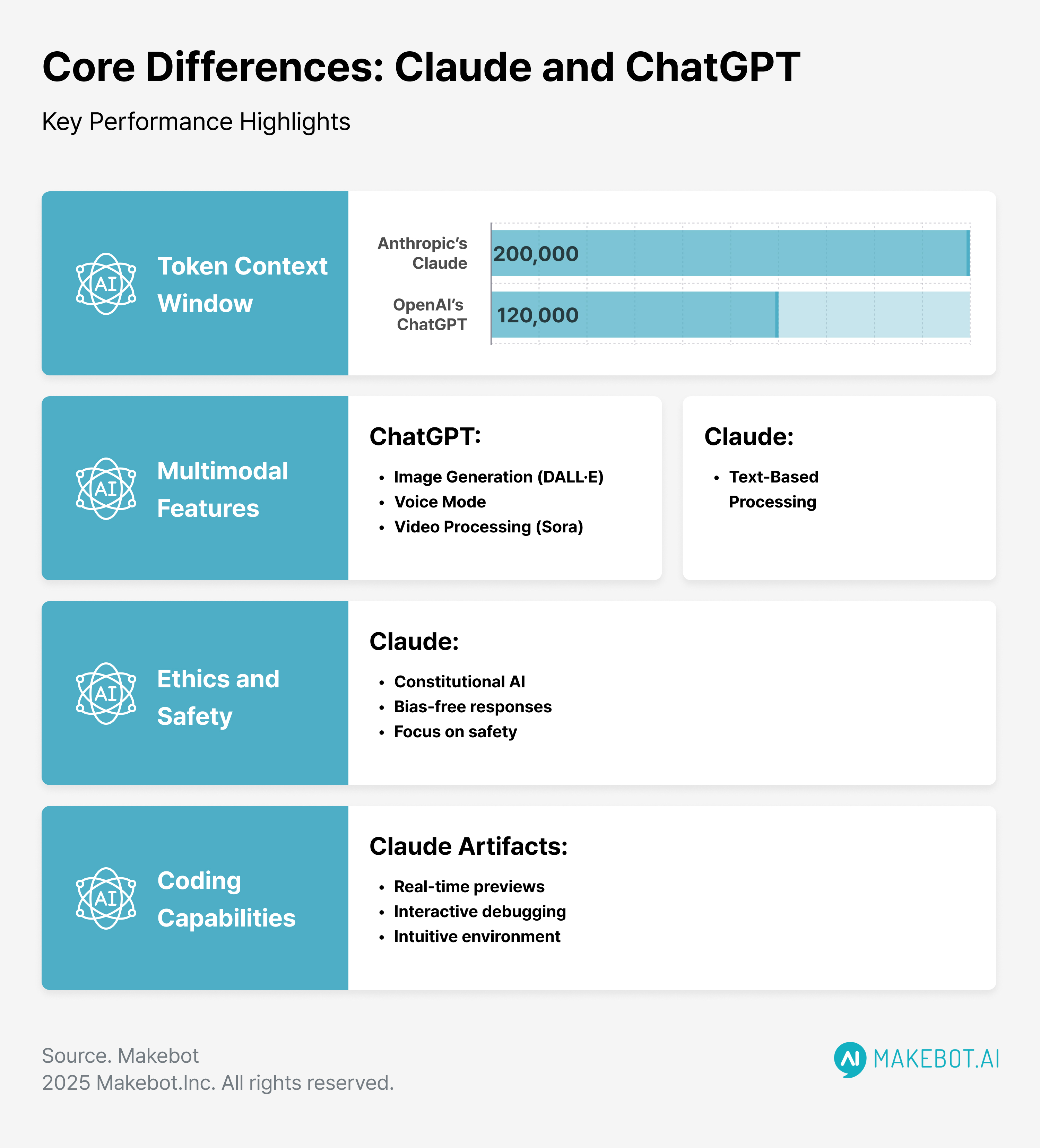

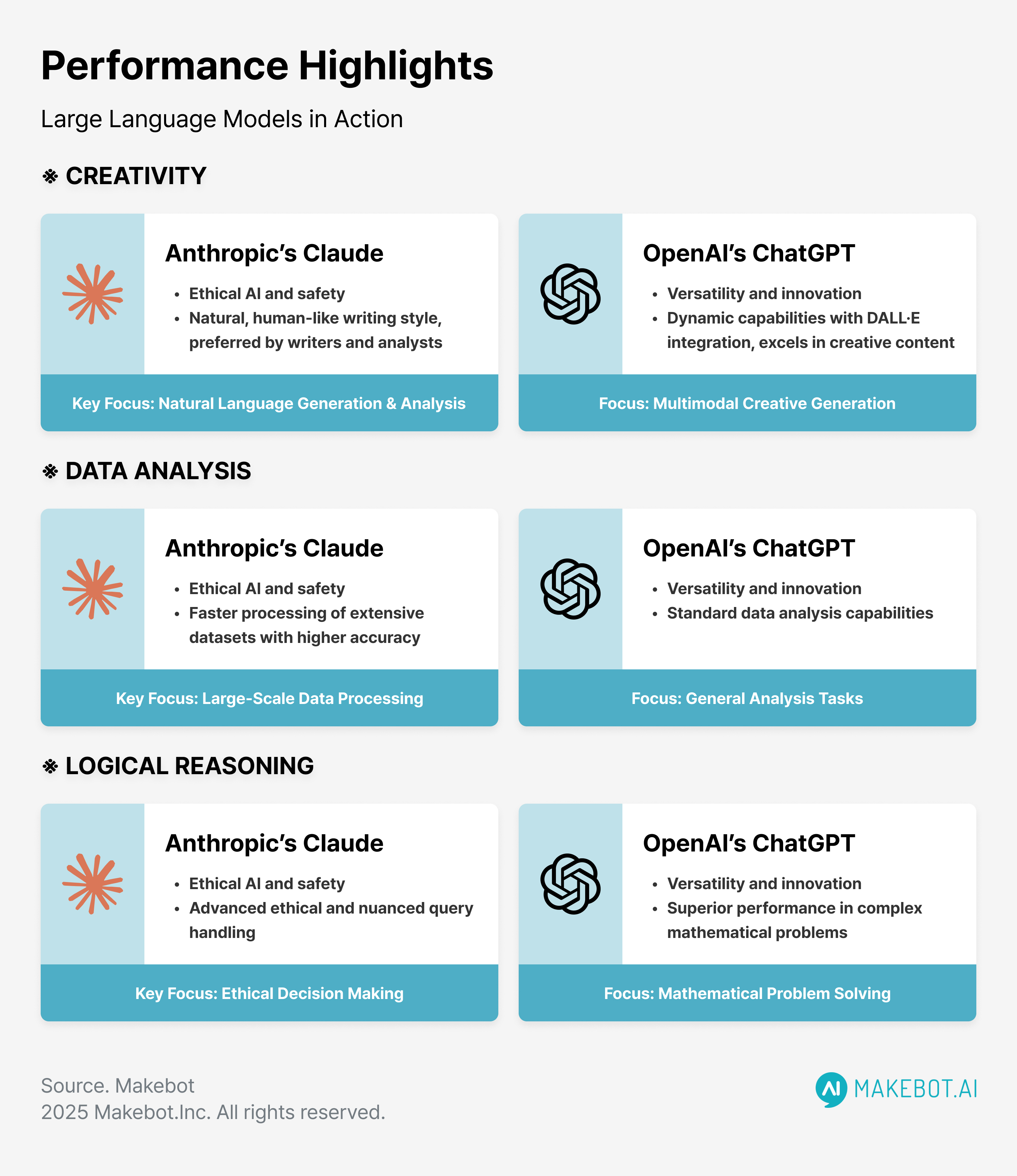

The Comparison: Claude vs ChatGPT User Experience

Beyond the philosophical and business model differences, what's the actual user experience difference?

For ChatGPT with ads, the experience depends on which tier you're on. Free users see ads. Plus subscribers (as of now) may or may not—OpenAI hasn't disclosed exactly how ads are implemented across tiers. Enterprise users probably don't see ads.

The question is whether those ads actually degrade the experience significantly. Early reports suggest that ads are subtle enough that they don't feel intrusive in the moment. They appear as "sponsored" results or suggestions, not as pop-up banner ads.

But subtle ads are arguably more insidious. Pop-up ads are annoying, so people actively avoid them. Subtle ads work through ambient persuasion. Over time, they shift behavior in ways users don't consciously notice.

Claude, without any ads, promises a cleaner experience. No distractions, no external incentives. That might be worth paying for even if the underlying model quality were identical.

In practice, the quality of Claude's and ChatGPT's models differs in subtle ways. Some users find Claude better at reasoning; others find ChatGPT better at creative writing. These differences matter, but they're not directly related to the ad situation.

The real question is whether, over time, the ad-free positioning allows Anthropic to build better products because they're optimizing purely for user utility instead of engagement metrics. If that happens, Claude's advantage becomes self-reinforcing.

Regulatory and Privacy Considerations

There's also a regulatory angle that Anthropic might be thinking about. The EU is moving toward stricter AI regulations. The Digital Services Act is already affecting how tech companies operate in Europe.

A company that can credibly claim "we don't surveil users for ad targeting" has an easier regulatory path than a company that does. This becomes valuable if regulations around AI and data collection tighten.

Anthropic's ad-free stance might be partly a hedge against this future. If data privacy regulations become more stringent, Anthropic is already well positioned. OpenAI might have to fundamentally restructure how it monetizes.

This is speculative, but it's worth considering as part of the strategic thinking.

The Competitive Dynamics: Will Others Follow?

Will Anthropic's ad-free approach become the industry standard, or is it an outlier that won't last?

Google, which is investing in Anthropic, doesn't have the option of going ad-free. Advertising is the core of Google's business; abandoning it would destroy shareholder value.

Meta and Amazon are similarly locked into ad-based models.

Microsoft, OpenAI's major partner, also has an advertising business (through Bing, LinkedIn, and other properties), but advertising is far less central to Microsoft than it is to Google. Microsoft could potentially support an ad-free AI future.

But the most likely scenario is that the market fragments. Some companies (like Anthropic, and probably some startups) offer ad-free options for users who value privacy. Others offer cheaper or free ad-supported options. Enterprise customers can choose between them.

This is how the rest of the software market works. You have open-source free options, freemium ad-free options, and premium ad-free options. The market supports multiple models simultaneously.

For AI, we'll probably see the same. The question is what percentage of the market each model captures and whether there's enough economic value in all of them for everyone to survive.

The Role of Constitutional AI and Alignment

Anthropic's whole approach to AI training (Constitutional AI) is relevant here. The company is genuinely trying to build AI systems that are aligned with human values, not optimized purely for shareholder returns.

This isn't just marketing. The company published research on Constitutional AI. They're transparent about methods. They're serious about the alignment problem.

From that perspective, refusing ads makes sense as a coherent strategy. If you're trying to build AI that's genuinely helpful and aligned with users, introducing ad incentives is exactly the wrong direction.

OpenAI talks about safety too, but their actions suggest they're less willing to sacrifice revenue for it. That's a reasonable business choice, but it's a choice.

Anthropic's consistency on this point—refusing ads despite financial pressure—might be the most credible signal they can send that they actually mean what they say about alignment.

Looking Forward: The Next Five Years

What happens to this positioning over the next five years?

Scenario 1: Compute costs drop dramatically through algorithmic improvements or new hardware. Both companies thrive. Anthropic's ad-free model works because infrastructure costs become manageable. OpenAI's ad model also works because they're making huge margins.

Scenario 2: Compute costs stay high or rise. Anthropic faces pressure to find new revenue or reduce costs. They might introduce ads, or they might raise more capital at a lower valuation. OpenAI's ad revenue becomes crucial to profitability.

Scenario 3: A dominant player (probably Microsoft through OpenAI) achieves scale and efficiency advantages that make the market uncompetitive for others. Anthropic either gets acquired or severely constrained.

Scenario 4: Multiple regional AI ecosystems emerge (US, China, EU) with different business models and regulations. Anthropic thrives in privacy-focused EU market, while OpenAI dominates in the US.

The most likely outcome is some combination of these. The ad-free model probably works in some contexts (enterprise, privacy-conscious users) and fails in others (price-sensitive mass market).

But what matters is that Anthropic made a clear choice about what kind of company they want to be. That choice has consequences. It's harder to raise capital, harder to hit revenue targets, and harder to scale. But it's also clearer what you're building and who you're building for.

FAQs About Anthropic, Claude, and the Ad-Free Model

Why did Anthropic decide against advertising in Claude?

Anthropic's decision stems from their belief that advertisements would undermine Claude's core purpose of being a genuinely helpful assistant for deep work and complex thinking. The company argues that ad incentives conflict with the principle of being helpful and could create unpredictable behaviors in how the model operates. Additionally, showing ads based on sensitive personal information users share with Claude would violate user trust and privacy expectations.

How does Anthropic plan to make money if Claude is ad-free?

Anthropic is exploring multiple revenue streams beyond advertising. The company generates revenue through Claude's API (charging per token for businesses using it), potential affiliate-style commissions from commerce interactions, enterprise licensing deals, premium consumer tiers, and transaction-based models. The company is also leveraging features that help users find and compare products, which Anthropic sees as creating value rather than exploiting users.

What is the difference between Claude and ChatGPT in terms of user experience?

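From a business model perspective, ChatGPT offers free tiers with ads, a paid Plus tier ($20 per month) whose ad treatment OpenAI hasn't fully disclosed, and enterprise plans that likely carry no ads. Claude offers a free tier and Claude Pro ($20 per month), with no ads on any tier. Day to day, that means a distraction-free experience on Claude versus subtle sponsored suggestions on ChatGPT's free tier; differences in model quality, such as reasoning versus creative writing, are a separate question.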

Is Anthropic's ad-free model sustainable long-term?

Whether Anthropic's ad-free model is sustainable depends on several factors: the company's ability to improve compute efficiency, the success of alternative revenue streams, continued investment from backers, and overall market demand for ad-free AI. With $5 billion in funding, Anthropic has runway, but profitability will require either significantly lower operating costs, higher API adoption rates, or both. The model works best if compute costs drop through technological innovation.

Could Anthropic eventually introduce ads despite promising not to?

While Anthropic has publicly committed to keeping Claude ad-free, business realities could create pressure to reconsider this stance. If compute costs remain high, capital becomes scarce, or the company faces existential profitability challenges, they might redefine what "ad-free" means through narrower definitions or introduce indirect monetization methods. However, such a pivot would damage Anthropic's credibility with users and investors who support the company partly for this commitment.

How is Constitutional AI related to Anthropic's ad-free stance?

Anthropic's Constitutional AI approach is fundamentally aligned with the ad-free decision. Constitutional AI teaches models to follow a set of core principles—including being helpful and avoiding harm—through training methods that embed these values. Adding ad incentives on top would create conflicting goals within the system, potentially causing unpredictable behavior. The ad-free stance reinforces Anthropic's core commitment to building AI systems aligned with human values rather than shareholder returns.

What does this mean for the future of AI monetization?

Anthropic's approach suggests the market will likely support multiple AI monetization models simultaneously. Some companies will use ads (like OpenAI), some will use premium subscriptions, some will focus on B2B API access, and some will try alternative approaches. Enterprise customers, in particular, may gravitate toward ad-free models for security and trust reasons. This fragmentation allows different companies to serve different market segments with different values.

Are there privacy benefits to using Claude over ChatGPT?

Claude's ad-free model means that Anthropic has no structural incentive to track and profile user behavior for ad targeting purposes. However, both services do use conversations to improve their models (unless opted out) and collect basic usage data. The meaningful privacy difference is that Claude doesn't feed conversation data into an ad-targeting system. Users uncomfortable with any data collection should review each service's privacy policy carefully.

The Bottom Line: Choosing Your AI Future

The divide between Anthropic and OpenAI represents a fundamental question about what role advertising should play in advanced AI assistants. Anthropic believes ads corrupt the purpose of being helpful. OpenAI believes the revenue justifies the trade-off.

Both companies have legitimate points. Both are making rational business decisions based on different priorities and constraints. The outcome of this competition will depend partly on technical factors (which company's models are genuinely better), partly on market psychology (which company's users stay loyal), and partly on macroeconomic factors (whether compute costs drop and whether capital stays available).

For users, this competition is actually healthy. It means you get to choose what you value. If you prioritize a distraction-free experience and trust the vendor, you can choose Claude. If you prioritize broader feature access or don't mind ads, ChatGPT works. For enterprise use cases, the trade-offs might be clearer—ad-free almost certainly wins.

The interesting question is whether Anthropic's bet actually works out. Can an AI company build a massively profitable business while genuinely refusing to exploit users for ads? Or will financial reality force a reckoning?

The next five years will answer that question. Until then, Anthropic's commitment to ad-free AI stands as a genuine alternative to the Google/Facebook model that has dominated the internet. Whether it's a sustainable alternative, or just a well-funded experiment that eventually fails, remains to be seen.

What seems clear is that users increasingly care about this distinction. The fact that Anthropic is making such a public, clear choice about ads suggests they believe users will reward that choice with loyalty and adoption. OpenAI's answer—to monetize through ads while still maintaining a premium ad-free tier—suggests they believe market segmentation works better than purity.

Both might be right. And that's actually the most interesting outcome: a market where different AI companies compete on different value propositions, and users get to choose which vision of the AI future they want to support.

Key Takeaways

- Anthropic explicitly refuses advertising in Claude, arguing ads create conflicts with the core principle of being genuinely helpful and would create unpredictable AI behavior

- OpenAI's decision to introduce ads to ChatGPT reflects different business pressures and capital expectations from institutional investors like Microsoft

- The user privacy concern is significant: using sensitive personal information shared with an AI assistant to target ads feels like a betrayal in a way it doesn't on ad-supported social media, where users already expect that trade

- Anthropic is betting on alternative revenue streams (API usage, commerce integration, premium tiers, enterprise licensing) rather than surveillance-based advertising

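- With $5 billion in funding and an assumed $500 million annual burn, Anthropic has roughly 10 years of runway, but investors typically expect profitability within 5 to 7 years, and long-term profitability requires either dramatic compute cost reductions or significant revenue scaling from non-ad sources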
