Anthropic's Data Center Power Pledge: AI's Energy Crisis [2025]

Last week, Anthropic made a promise that sounds simple on the surface but reveals something much bigger about where AI is heading. The company said it would cover 100 percent of the electricity grid upgrades needed to connect its data centers to local power systems. No passing costs to residents. No hiding behind infrastructure investors. Anthropic itself would absorb the full bill.

This isn't altruism. It's necessity.

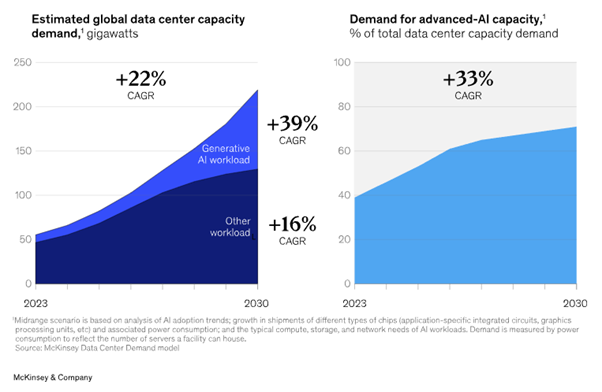

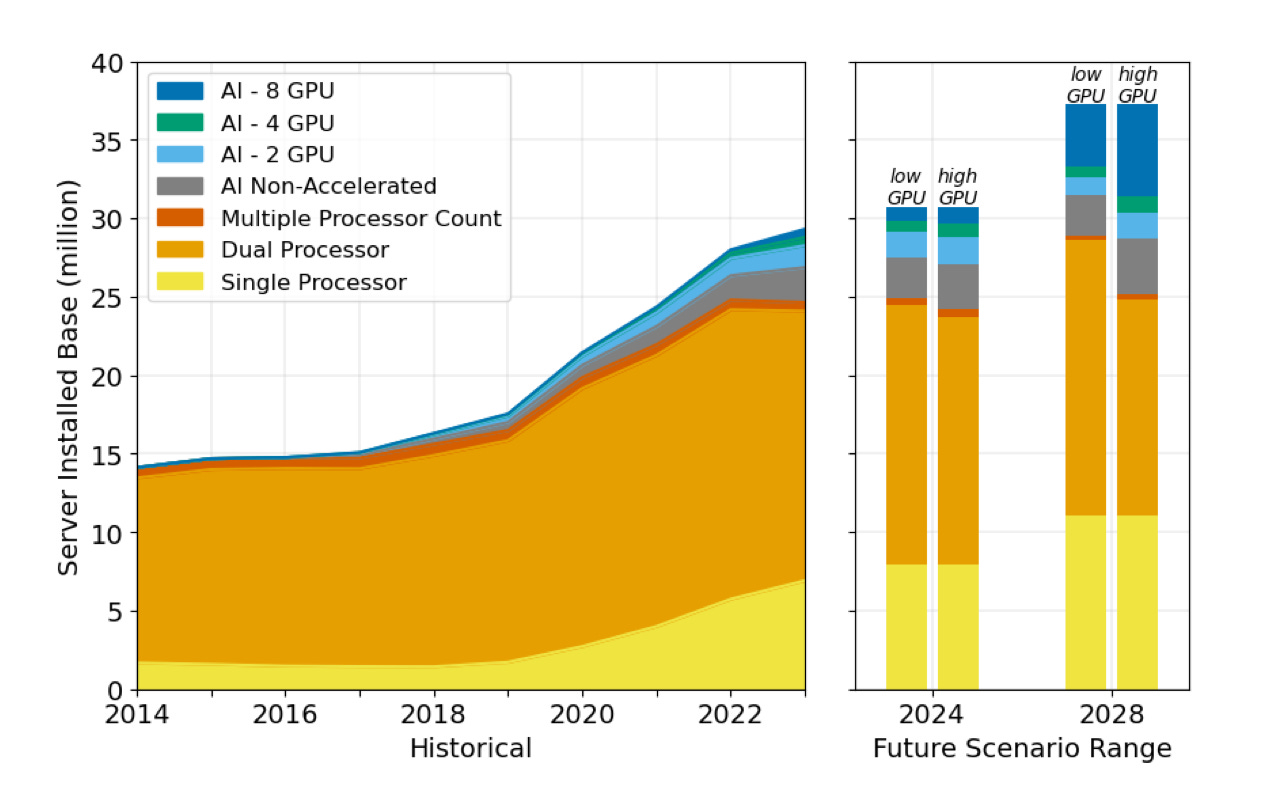

AI has become a power hog unlike anything we've seen before. Depending on its size, training a single large language model can consume as much electricity as dozens to thousands of homes use in a year. When you stack multiple models, inference requests, and the constant improvements these systems require, you're talking about data centers that drink electricity at scales that can visibly impact local power grids and monthly utility bills for everyone nearby.

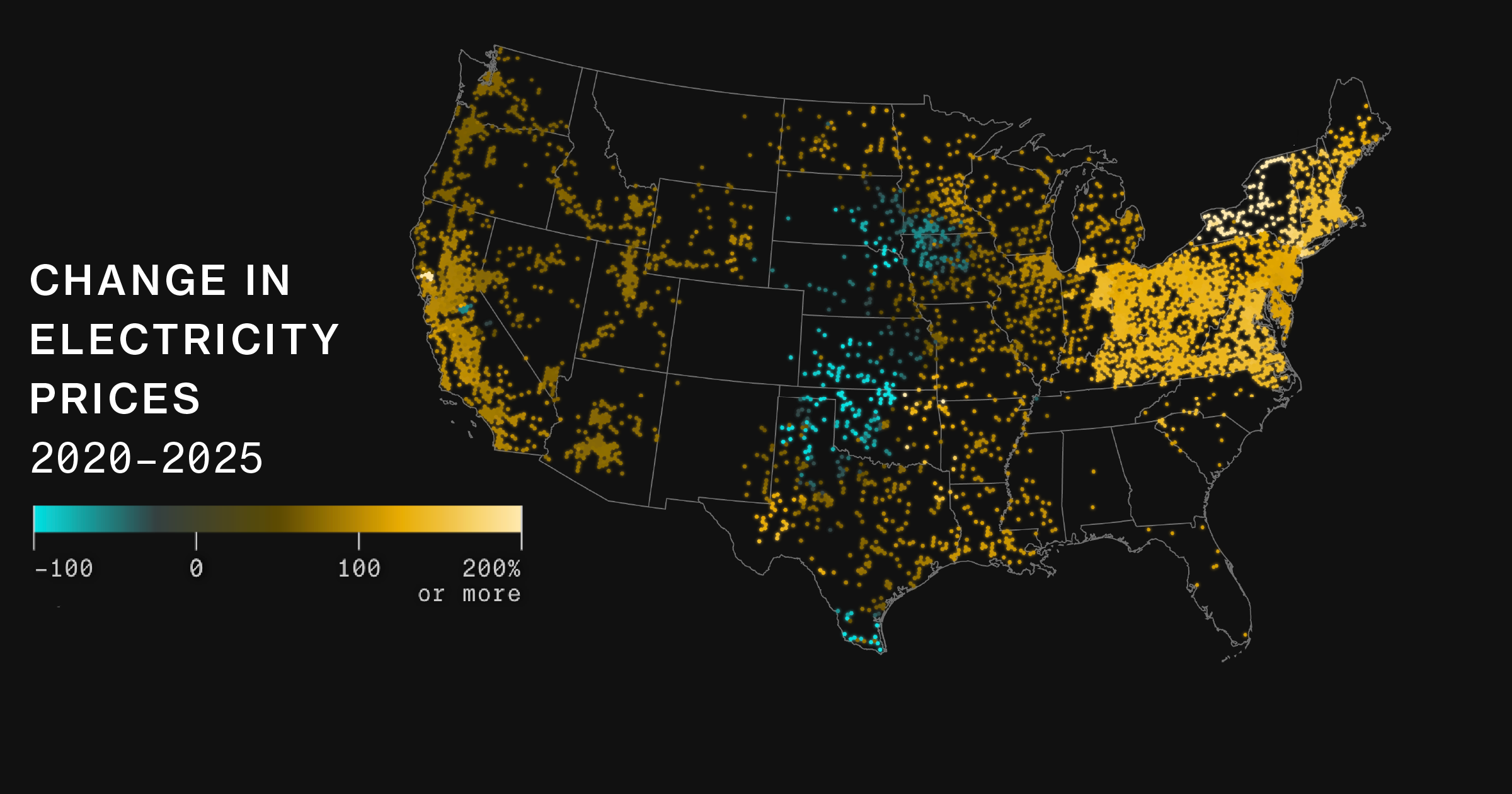

The backlash has been swift and political. Across the United States, electricity rates have become a top election priority. Communities are canceling data center projects. Grid operators are sweating about capacity. And regular people are starting to notice their power bills climbing in areas near new AI infrastructure.

Anthropic isn't alone in this. Microsoft and Meta have made similar pledges. OpenAI is quietly dealing with its own power constraints. What started as a tech infrastructure problem has become a public relations crisis and a policy battlefield.

Here's what's really happening beneath these corporate promises, why they matter less than you'd think, and what actually needs to happen to solve the AI power crisis.

TL;DR

- AI data centers consume extraordinary amounts of power: Training modern models uses megawatts continuously, equivalent to powering entire towns

- Local electricity bills are rising because of data center expansion: Grid upgrades cost billions, and historically, consumers absorbed these costs through rate increases

- Anthropic's pledge is meaningful but incomplete: Covering upgrade costs doesn't address supply shortages or the fundamental strain on aging infrastructure

- Microsoft and Meta are making similar commitments: The tech industry is racing to manage the political fallout before stricter regulations emerge

- The real crisis is power generation capacity, not just infrastructure costs: Even if companies pay for upgrades, there's not enough electricity being produced to meet AI demand

- This will reshape where data centers get built: Companies will cluster around nuclear plants, hydroelectric dams, and regions with excess capacity

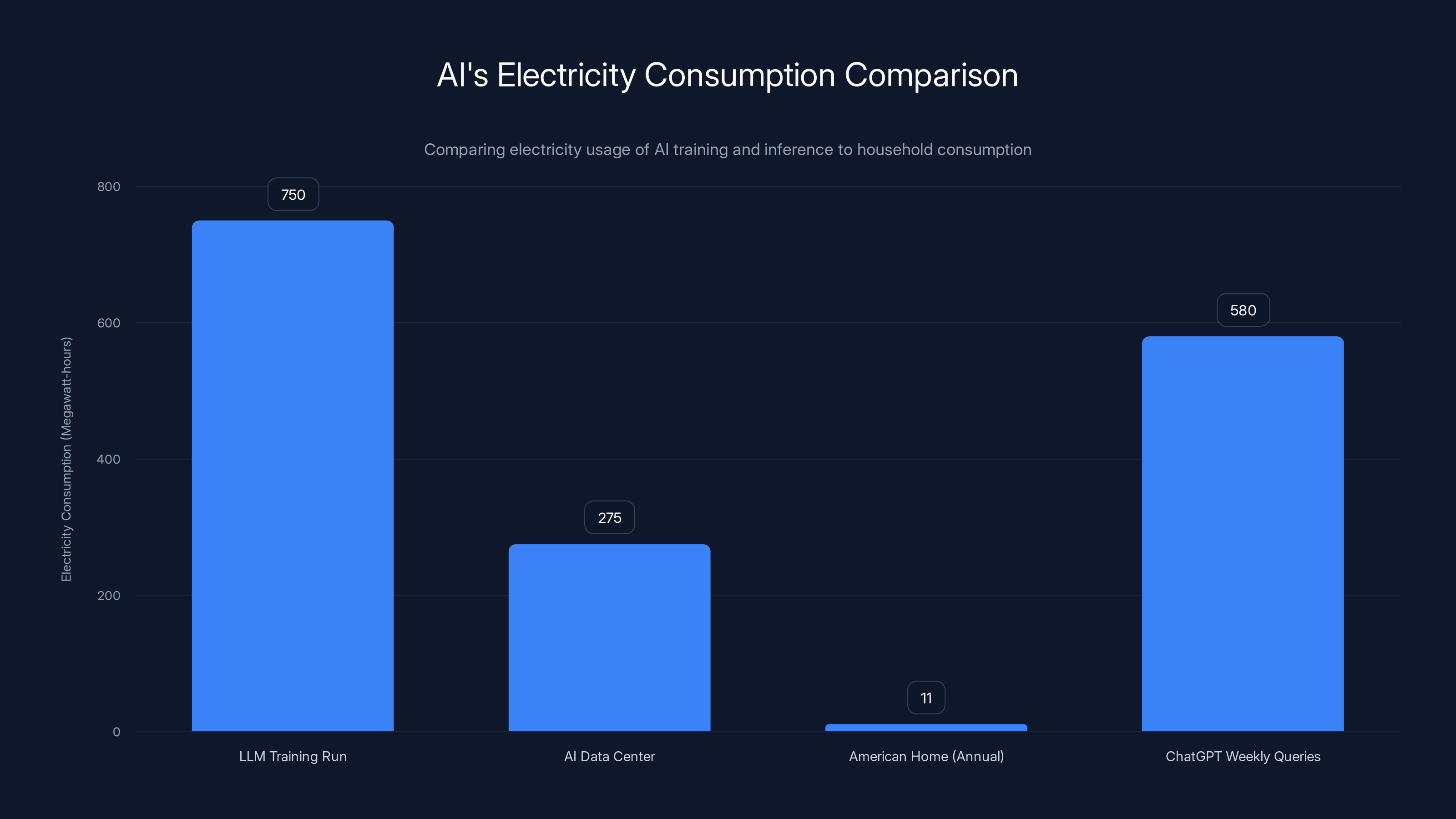

Training a large language model consumes 750 MWh, equivalent to 68 American homes' annual usage. AI data centers and ChatGPT queries also have significant power demands. Estimated data for contextual understanding.

The Scale of AI's Power Hunger Is Almost Unimaginable

Let's start with numbers, because they're the only way to grasp what's actually happening.

Rough estimates put the training of Claude 3.5 Sonnet or similar large language models at between 500 and 1,000 megawatt-hours of electricity per training run. A single run. One model. One iteration.

To put that in perspective: the average American home uses about 11,000 kilowatt-hours per year. That's roughly 11 megawatt-hours annually. One training run of a modern LLM uses what roughly 45 to 90 American homes need for an entire year. In weeks. Sometimes days.
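The home-equivalent comparison is just division. Here is a minimal sketch using the 500 to 1,000 MWh training range and the 11 MWh-per-home figure above. Both are rough estimates, not measured values.

```python
# Rough figures from the text; these are estimates, not measurements.
AVG_HOME_MWH_PER_YEAR = 11.0  # ~11,000 kWh per average American home per year

def home_years(training_mwh: float, home_mwh: float = AVG_HOME_MWH_PER_YEAR) -> float:
    """Number of average homes whose annual usage equals one training run."""
    return training_mwh / home_mwh

for run_mwh in (500, 750, 1000):
    print(f"{run_mwh:>5} MWh per run = about {home_years(run_mwh):.0f} homes for a year")
```

This prints about 45, 68, and 91 homes. The 750 MWh case lands on the same 68 homes cited in the chart caption above.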

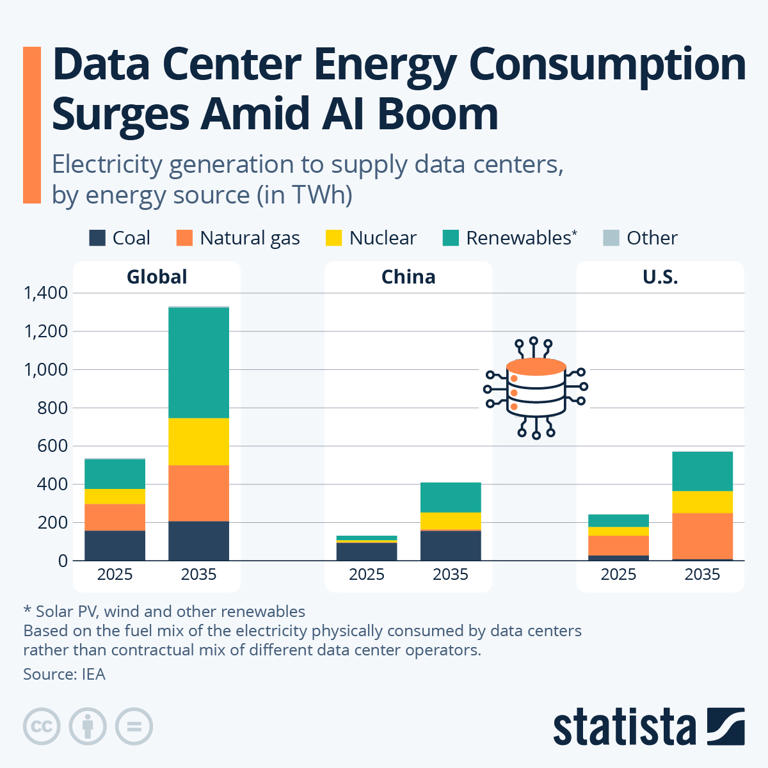

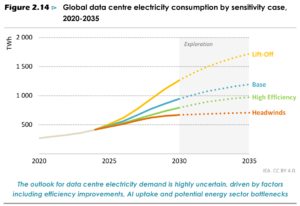

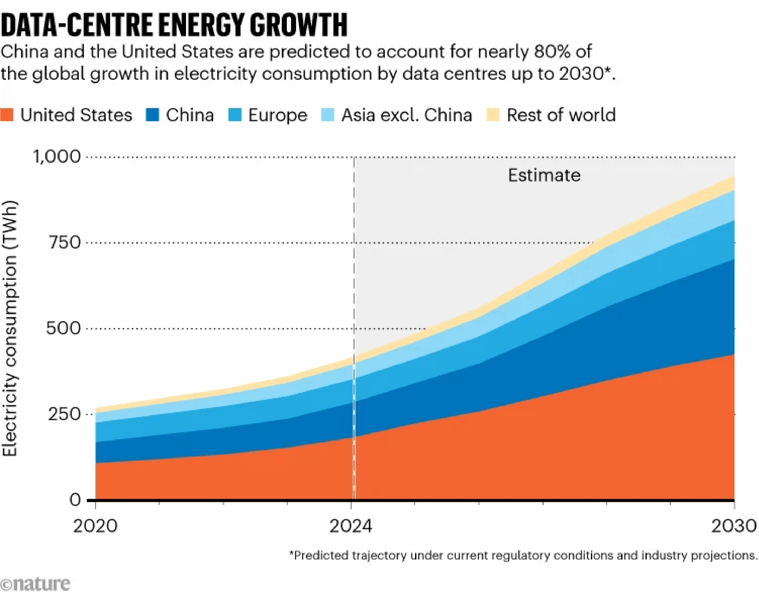

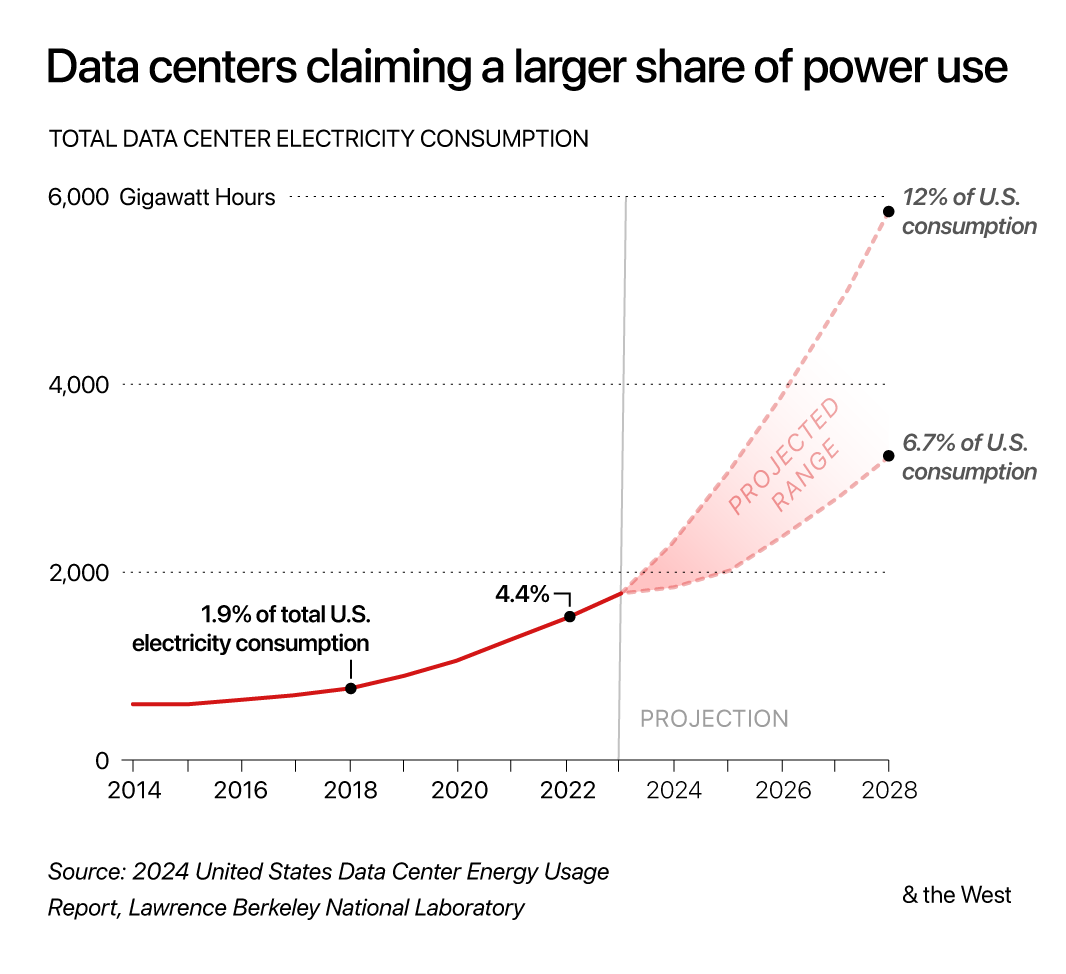

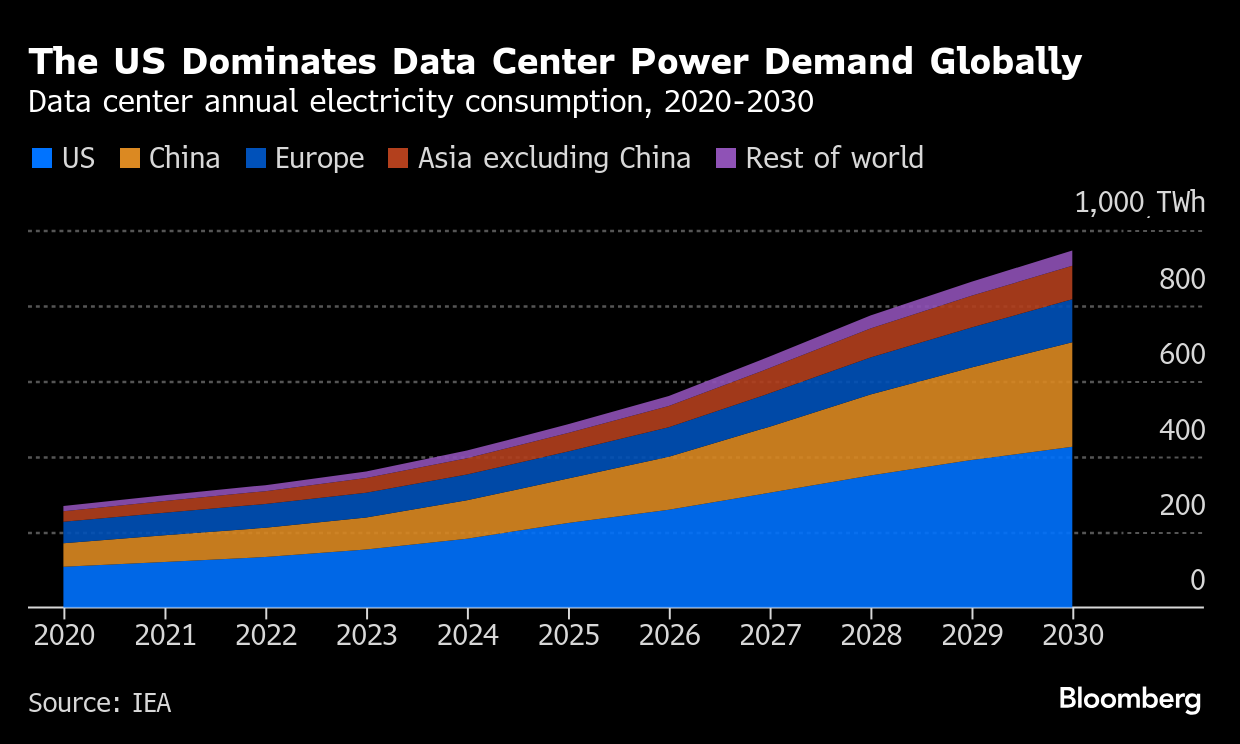

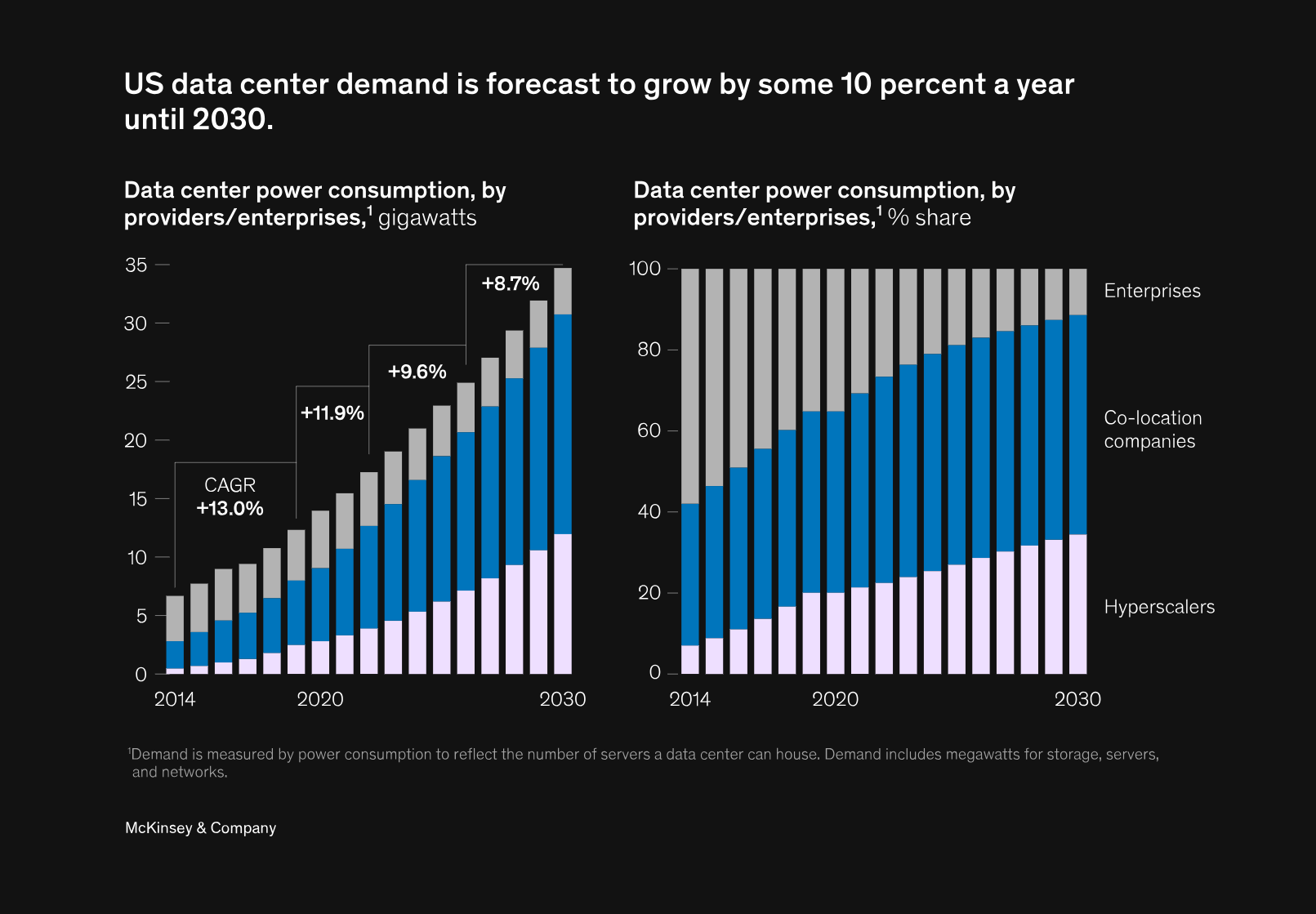

But training is only the beginning. Once models are deployed, they run inference constantly. Every time someone uses ChatGPT, Claude, Gemini, or any AI assistant, it consumes electricity. Not as much as training, but continuously, at scale. The International Energy Agency projects that electricity consumption by data centers worldwide, driven largely by AI, could roughly double by 2030 to just under 3 percent of global electricity use, up from about 1.5 percent today.

And that growth is concentrated in a handful of regions, which is exactly where local grids feel it.

Data centers running AI models are typically massive facilities. A single data center supporting large-scale AI operations might draw 50 to 500 megawatts continuously. For comparison, a large coal or nuclear power plant generates about 1,000 megawatts. So you're talking about data centers that rival major power generation facilities in their appetite.
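A continuous load in megawatts becomes an annual energy total once you multiply it by the hours in a year. The sketch below assumes the facility runs at full load all year, which is an upper bound. The `utilization` parameter is an illustrative knob for scaling that down.

```python
HOURS_PER_YEAR = 8760
AVG_HOME_MWH_PER_YEAR = 11.0  # from the text above

def annual_mwh(load_mw: float, utilization: float = 1.0) -> float:
    """Annual energy in MWh for a facility drawing load_mw, scaled by utilization (0-1)."""
    return load_mw * utilization * HOURS_PER_YEAR

for mw in (50, 500):
    mwh = annual_mwh(mw)
    print(f"{mw} MW continuous -> {mwh / 1000:,.0f} GWh/yr, ~{mwh / AVG_HOME_MWH_PER_YEAR:,.0f} homes")
```

At full load, a 50 MW facility uses about 438 GWh a year, which is roughly what 40,000 average homes use. Real facilities run below 100 percent, so actual totals would be lower.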

The reason this matters for Anthropic's pledge is that electricity grid infrastructure was never designed for this. The grids in most American cities were built incrementally over decades, scaled for residential and commercial growth that increased gradually. A new data center showing up and demanding 50 megawatts of continuous power is like a tsunami hitting a river.

Large power plants, nuclear plants especially, can take 10-20 years to plan, license, and build. New transmission lines take 5-10 years and billions in investment. Substations need upgrades. Distribution lines need reinforcement. When Anthropic or any AI company arrives in a region and says "we're building a data center here," the local utility faces a choice: upgrade infrastructure at massive cost, or don't upgrade and risk brownouts affecting existing customers.

Historically, those costs got passed directly to consumers through rate increases. That's what has people angry.

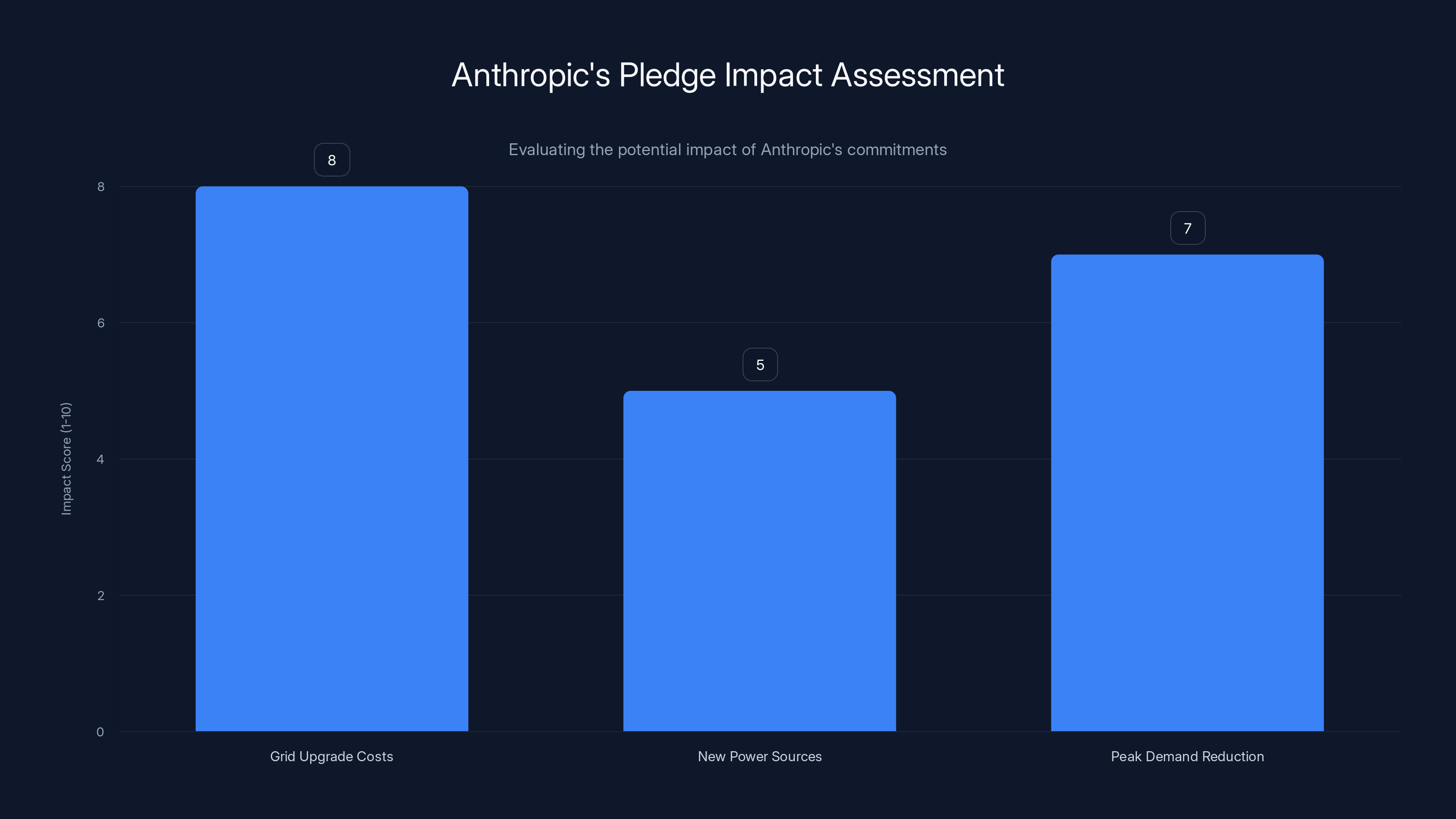

Anthropic's commitment to covering grid upgrade costs scores highest in impact due to direct financial relief for consumers. Peak demand reduction also scores well for its potential to stabilize the grid. Support for new power sources remains vague, resulting in a lower impact score. Estimated data.

Why Local Electricity Bills Are Spiking (And It's Not Just Weather)

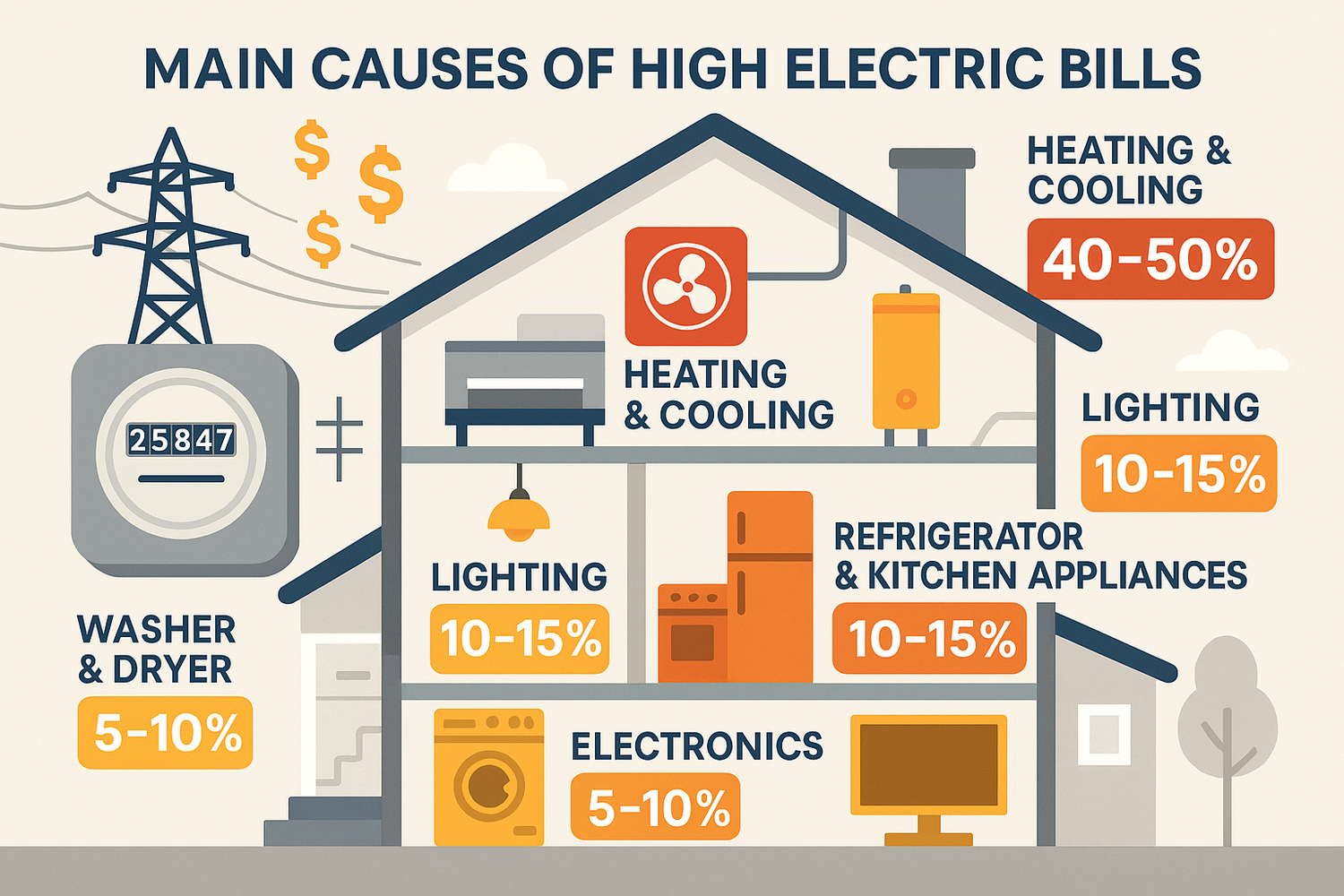

You've probably noticed electricity costs climbing even if you're not running any AI workloads. There are multiple reasons for this, but data center expansion is becoming the primary culprit in specific regions.

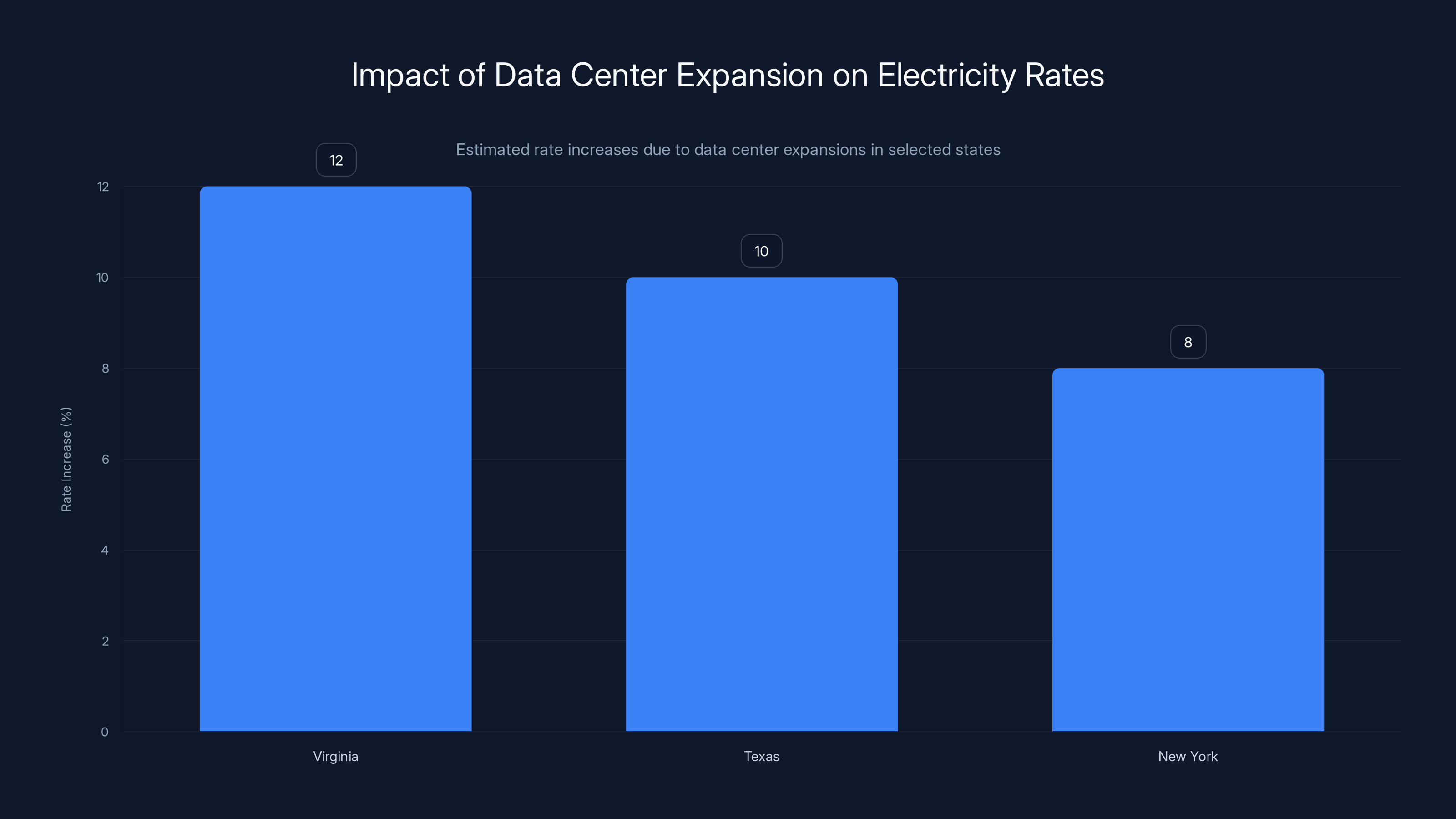

Virginia, which has become a hotbed for data center construction because of proximity to cloud infrastructure hubs, has seen residential electricity rates rise by up to 12 percent in recent years. Texas, where Anthropic is planning massive expansion, is facing its own capacity crunch. New York, another target for Anthropic's $50 billion infrastructure plan, has aging grids that need serious investment.

The math is straightforward. When a utility company needs to upgrade infrastructure to support a new industrial customer (a data center), those upgrades cost money. Historically, utilities recover those costs through rate increases on all customers, distributed across the entire service area. So when Anthropic or Microsoft arrives and demands grid upgrades, every resident in that region ends up paying for it.
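Spreading an upgrade across every ratepayer makes the per-household charge look small, but it adds up across the service area. The sketch below uses entirely hypothetical numbers: a $100 million upgrade, an 8 percent annual cost of capital, a 20-year recovery period, and 500,000 customers. It applies the standard capital recovery factor to turn a one-time cost into level annual payments. Real rate cases also include taxes, depreciation schedules, and allowed returns, and this sketch ignores all of those.

```python
def capital_recovery_factor(rate: float, years: int) -> float:
    """Fraction of an upfront cost recovered each year as a level annual payment."""
    growth = (1 + rate) ** years
    return rate * growth / (growth - 1)

def annual_cost_per_customer(upgrade_cost: float, rate: float, years: int, customers: int) -> float:
    """Level annual charge per customer if the upgrade is spread across every ratepayer."""
    return upgrade_cost * capital_recovery_factor(rate, years) / customers

# Hypothetical inputs, for illustration only.
per_year = annual_cost_per_customer(100e6, rate=0.08, years=20, customers=500_000)
print(f"${per_year:.2f} per customer per year (${per_year / 12:.2f}/month)")
```

Under a pledge like Anthropic's, that annual amount would be assigned to the data center instead of being divided among residential customers.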

For a family living on a fixed income, a 10 percent rate hike means real money. For a retiree, it's the difference between keeping the air conditioning on during summer and suffering through the heat. Politically, this is explosive. It's direct, tangible, and hits people's wallets immediately.

That's why state legislatures across the country have started pushing back, and why grid operators are becoming increasingly concerned about stability.

But here's the catch with Anthropic's pledge. Paying for infrastructure upgrades is good. It's legitimate. It prevents direct cost-shifting to residents. But it doesn't solve the actual problem underlying this crisis.

Anthropic's Pledge: What It Actually Covers (And What It Doesn't)

Let's be precise about what Anthropic committed to, because the company's own announcement left a lot of details vague.

Anthropic pledges to:

- Pay 100 percent of grid upgrade costs needed to connect its data centers to existing power infrastructure

- Support efforts to develop new power sources to meet AI electricity demand

- Voluntarily reduce power consumption during peak demand periods to help stabilize the grid during extreme weather

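The first one is straightforward and valuable. If a utility needs to spend money upgrading substations or transmission lines to connect an Anthropic data center, Anthropic covers that cost instead of the utility recovering it from residents through rate increases.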

The second pledge is vaguer. "Support efforts to develop new power sources" could mean anything from lobbying for renewable energy projects to providing capital for nuclear facilities. Anthropic didn't specify. No dollar amounts. No timeline. "Support" is doing a lot of work in that sentence.

The third pledge is the most interesting and potentially impactful. Data centers that can reduce power consumption during peak demand—when heat waves are taxing grids or cold snaps are pushing heating loads to the max—can actually help grid stability. It's not altruism; it's operational flexibility. Companies with sophisticated load management can shift computationally intensive tasks to off-peak hours when electricity is cheaper and grid stress is lower.
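Shifting deferrable work away from peak hours is, at its core, a scheduling problem. The sketch below assumes a hypothetical 24-hour price curve, a hypothetical peak window, and hypothetical batch jobs that can run in any off-peak hour. None of this reflects Anthropic's actual systems. The scheduler greedily places the longest jobs into the cheapest available hours and keeps the peak window empty.

```python
from typing import Dict, List, Set

def schedule_deferrable(jobs: Dict[str, int], prices: List[float],
                        peak_hours: Set[int]) -> Dict[str, List[int]]:
    """Give each job its requested number of one-hour slots, cheapest off-peak hours first.

    Each hour hosts at most one job slot. Raises ValueError if off-peak hours run out.
    """
    # Off-peak hours sorted by price (cheapest first); ties broken by hour of day.
    free = sorted((h for h in range(len(prices)) if h not in peak_hours),
                  key=lambda h: (prices[h], h))
    plan: Dict[str, List[int]] = {}
    # Place the longest jobs first so they claim the cheapest hours.
    for name, hours_needed in sorted(jobs.items(), key=lambda kv: -kv[1]):
        if hours_needed > len(free):
            raise ValueError(f"not enough off-peak hours for {name}")
        plan[name], free = sorted(free[:hours_needed]), free[hours_needed:]
    return plan

# Hypothetical $/MWh prices by hour of day, and a 4 p.m. to 9 p.m. peak window.
prices = [30.0] * 6 + [50.0] * 10 + [120.0] * 5 + [60.0] * 3
peak = set(range(16, 21))
plan = schedule_deferrable({"checkpoint_eval": 4, "batch_embeddings": 2}, prices, peak)
print(plan)
```

A real system would also model per-hour power limits, job deadlines, and demand-response signals from the utility. The greedy rule here only shows the basic idea.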

But here's what Anthropic's pledge does NOT cover:

It doesn't guarantee that electricity generation capacity will exist to power its data centers. It doesn't promise that the region has enough power plants, solar farms, or wind turbines. Paying for infrastructure upgrades is meaningless if there isn't actual electricity being produced to flow through those upgrades.

This is the part that worries grid operators most. In states like Texas, existing power plants are already operating near maximum capacity. Adding massive data centers means building new generation facilities—solar farms, wind turbines, or nuclear plants. That takes money, regulatory approval, and years. It's not something companies can fix by paying for infrastructure.

Microsoft and Meta have made similar commitments, but with slightly different angles that reveal how each company is thinking about the problem differently.

Estimated data shows that Virginia has experienced the highest electricity rate increase at 12% due to data center expansions, followed by Texas and New York.

Microsoft's Approach: Rewiring Data Centers for Efficiency

Microsoft's strategy for managing electricity consumption has focused less on pledging payment and more on technical innovation—which is telling.

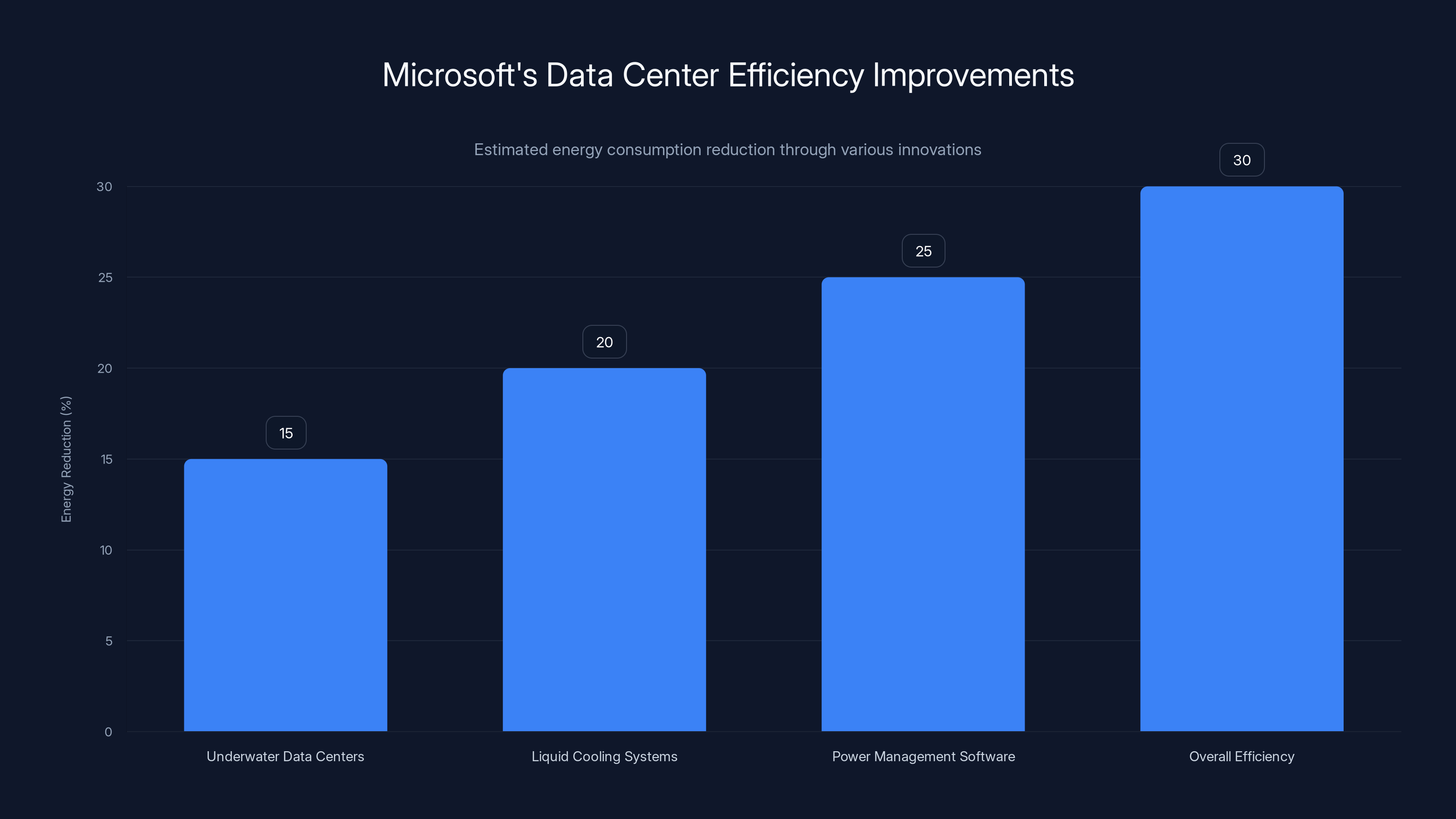

Microsoft has been investing heavily in making data centers more efficient. The company has experimented with underwater data centers (to improve cooling efficiency), liquid cooling systems instead of air cooling, and advanced power management software that optimizes which servers are active and which are idle based on real-time demand.

Why? Because Microsoft understands something critical: if you can reduce power consumption by 30 percent without reducing computing capacity, you've solved nearly a third of the problem. You need fewer power plants. Your infrastructure upgrades are cheaper. Your carbon footprint shrinks. Grid impact decreases.

This is why Microsoft Azure's energy efficiency ratings have become a competitive advantage. Companies using Azure infrastructure can market their AI services as more sustainable, which matters to enterprise customers increasingly concerned about their own carbon footprint.

Microsoft's approach doesn't eliminate the need for Anthropic-style pledges. But it recognizes that the companies which solve the efficiency problem first will have a massive competitive advantage. Microsoft can run more computation on less electricity, which means it needs less new infrastructure, which means fewer battles with communities and regulators.

The catch: this efficiency innovation is expensive and capital-intensive. Smaller AI companies or startups can't afford to invest in custom cooling systems and proprietary power management software. So you'll likely see a stratification emerge: well-funded companies like Microsoft, Google, and Anthropic invest in efficiency and can expand more freely. Smaller competitors get squeezed by infrastructure costs and regulatory pushback.

Meta's Gamble: The Open Source Approach

Meta has taken a different angle, one that's less about making a pledge and more about strategic positioning.

By releasing its large language models (particularly Llama) as open source, Meta effectively distributed the computational burden. Instead of running all inference on Meta's centralized data centers, companies and researchers can run Llama models on their own infrastructure.

This is brilliant strategically because it shifts electricity consumption away from Meta's facilities and onto distributed systems. Meta still uses power to train and update models, but inference workloads get spread across thousands of organizations running the models locally or on their own cloud infrastructure.

It's not a philanthropy move. It's a power play, literally. By making models open source, Meta reduces the concentrated electricity demand on its own data centers, making it easier to manage grid impact and avoid the exact backlash Anthropic is now facing.

But there's a cost: Meta loses the monopoly on serving every inference request. Users running Llama locally get a slightly different model than what Meta's proprietary systems run. Performance may vary. But from a power consumption perspective, it's a genuinely clever solution.

The challenge with Meta's approach is that it only works for specific use cases. Enterprise customers running real-time AI systems at scale still need centralized infrastructure. Scientific computing still needs massive data centers. Training new models still requires concentrated computing power. So Meta's open source strategy reduces pressure but doesn't solve the underlying problem.

Microsoft's innovative approaches, such as underwater data centers and liquid cooling, are estimated to reduce energy consumption by up to 30%, significantly enhancing data center efficiency. Estimated data.

The Real Crisis: Power Generation Capacity, Not Infrastructure

All three companies—Anthropic, Microsoft, Meta—are essentially rearranging deck chairs on the Titanic. They're solving the wrong problem.

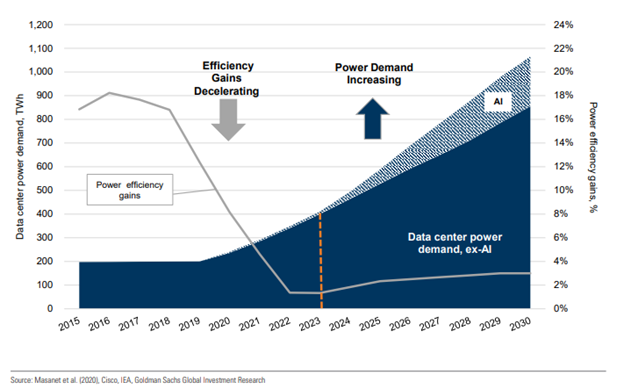

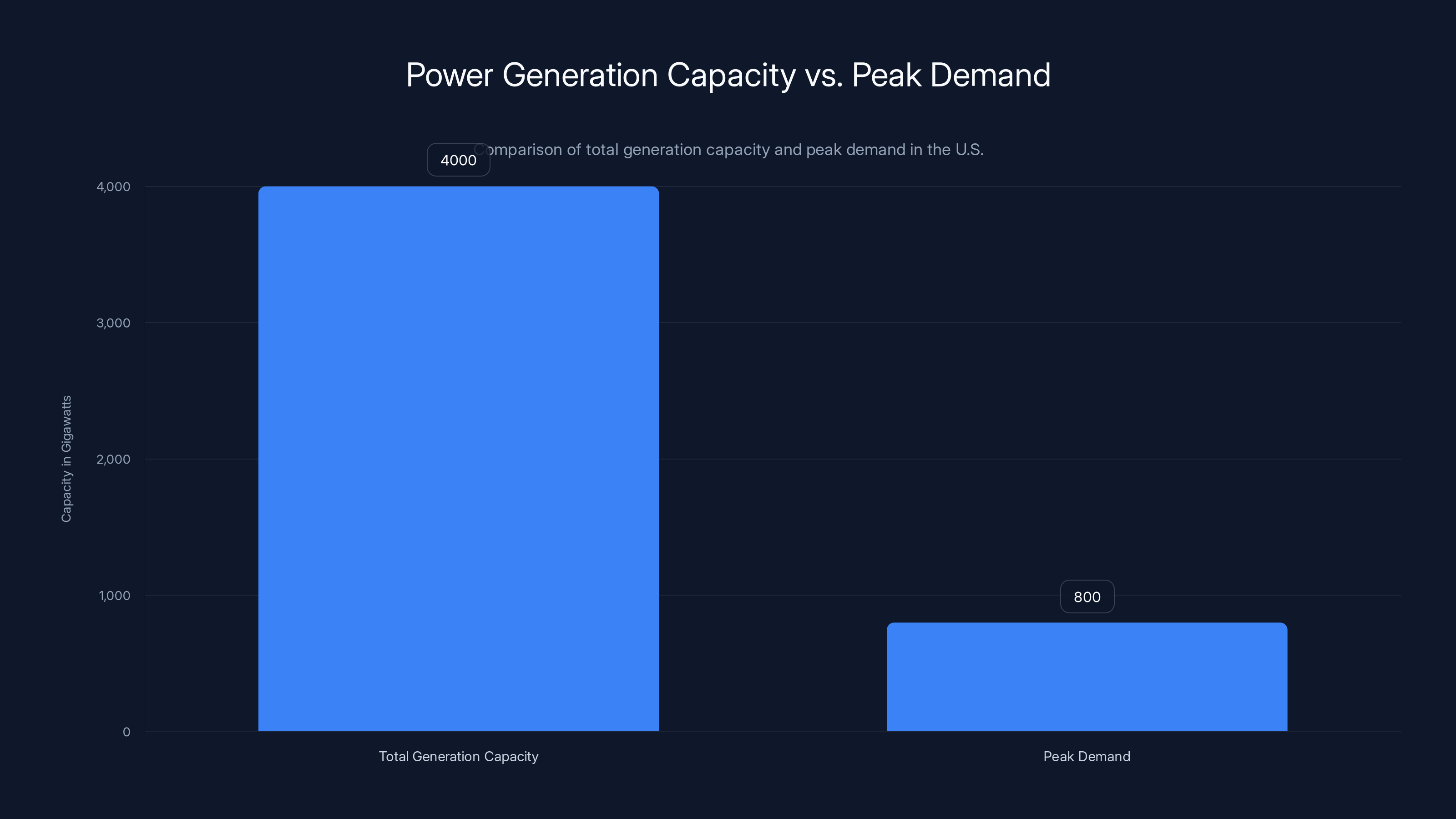

The actual crisis is power generation capacity. The United States generates roughly 4,000 terawatt-hours of electricity a year from all sources combined (coal, natural gas, nuclear, renewables, etc.), from roughly 1,300 gigawatts of installed generating capacity. Peak demand typically maxes out around 800 gigawatts during summer afternoons. On paper, that leaves headroom. But the growth of AI is happening at the same time that coal plants are retiring and climate concerns are limiting gas plant expansion.
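Energy (terawatt-hours over a year) and power (gigawatts at a given moment) are easy to conflate, and the difference matters for this argument. The quick conversion below assumes annual U.S. generation of roughly 4,000 TWh and shows what that total means as an average load, compared with an 800 GW summer peak.

```python
HOURS_PER_YEAR = 8760

def average_load_gw(annual_twh: float) -> float:
    """Convert annual energy (TWh/yr) to average continuous power (GW)."""
    return annual_twh * 1000 / HOURS_PER_YEAR  # 1 TWh = 1,000 GWh

avg = average_load_gw(4000)
print(f"4,000 TWh/yr is an average load of about {avg:.0f} GW")
print(f"an 800 GW peak is {800 / avg:.2f}x that average")
```

Demand swings well above its average on hot afternoons. That gap is why grid operators worry about capacity during peak hours rather than about annual energy totals.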

In regions where data center expansion is happening fastest—Texas, Virginia, the Pacific Northwest—existing capacity is already tight. During extreme weather (heat waves, cold snaps), systems operate at near-maximum capacity. Adding massive new loads increases the risk of rolling blackouts and grid instability.

The solution requires massive investment in new power generation. Nuclear power is drawing the most attention from AI companies because it provides baseload power (unlike solar and wind, which are intermittent) and produces no carbon emissions during operation. But a new nuclear plant takes 10-15 years to plan, build, and license. Billions of dollars. Regulatory hurdles. Community opposition.

Companies like Anthropic and Microsoft are beginning to recognize this, which is why both have started investing directly in nuclear power development and signing long-term contracts with nuclear plants.

But here's the timeline problem. These contracts are multi-year, multi-billion-dollar commitments. The power plants won't be online for years. Meanwhile, companies want to expand data centers now. So you end up with a mismatch: companies promising to cover infrastructure costs and reduce peak demand, while simultaneously racing to build infrastructure faster than new power generation can come online.

It's unsustainable. And everyone knows it.

How This Is Reshaping Where Data Centers Get Built

The power crisis is creating a geographic bottleneck that will reshape the entire AI infrastructure landscape.

Traditionally, data centers clustered in regions with cheap electricity and good connectivity. That meant Texas (cheap gas), the Pacific Northwest (hydropower), and Virginia (established tech corridors). But as electricity becomes a constraint rather than an abundant resource, companies are now forced to locate near specific power sources.

You're seeing this play out already. Companies are buying land adjacent to nuclear power plants. Microsoft signed a deal with Constellation Energy to restart a reactor at the Three Mile Island nuclear plant specifically to power its data centers. Anthropic is building facilities in Texas partly because of the state's existing power infrastructure.

This creates a new competitive advantage: being located where power is abundant. Companies that secure capacity near nuclear plants, hydroelectric dams, or high-renewable-energy regions have a moat. They can expand faster, with lower power costs, facing less regulatory pushback.

Smaller companies without that leverage will struggle. They'll either have to negotiate with utilities at unfavorable terms (paying premium rates for less guaranteed capacity) or stay in regions with cheaper electricity (which might be coal-heavy and facing climate scrutiny).

You'll also see more international expansion. Iceland's abundant geothermal power is attracting data center investment. Norway's hydroelectric capacity is becoming valuable. Even Ireland is leveraging its wind energy potential to attract data center companies.

The countries and regions with abundant clean electricity will become magnets for AI infrastructure. The rest will be left competing for scraps.

The U.S. generates about 4,000 terawatt-hours of electricity a year, while peak demand is around 800 gigawatts, leaving spare capacity on paper. However, regional constraints and growing AI demand pose risks.

The Political Fallout: From Infrastructure Problem to Election Issue

What makes Anthropic's pledge significant isn't its technical merit. It's the political context.

Electricity rates and grid reliability have become top-tier election issues in 2024-2025. Voters are noticing their power bills climbing. They're experiencing more frequent brownouts and peak demand warnings. In some states, electricity became the defining campaign issue.

When federal policymakers saw AI companies being blamed for grid stress, they started asking hard questions. What happens to the grid when you add trillion-dollar AI infrastructure? How do we make sure it doesn't bankrupt regular people?

Anthropic and other companies realized that if they didn't get ahead of this politically, they'd face new regulations. Data center siting regulations. Power purchase requirements. Environmental reviews that could delay or kill projects.

So the pledge is partly genuine commitment and partly preemptive political move. By voluntarily agreeing to pay for infrastructure upgrades and support new power generation, companies hope to demonstrate that they're "responsible corporate citizens" and avoid stricter mandates.

Whether this works depends entirely on execution. If Anthropic pays for upgrades and they actually help communities, politicians will point to it as a success story. If communities still see rate increases or grid problems, the pledge will be seen as corporate greenwashing.

Based on early signs, it's mixed. Some utilities and communities have welcomed the commitments. Others remain skeptical that corporate pledges can substitute for actual regulatory oversight.

The Efficiency Path: Can Technology Actually Solve This?

The most optimistic narrative is that AI companies will innovate their way out of the power crisis.

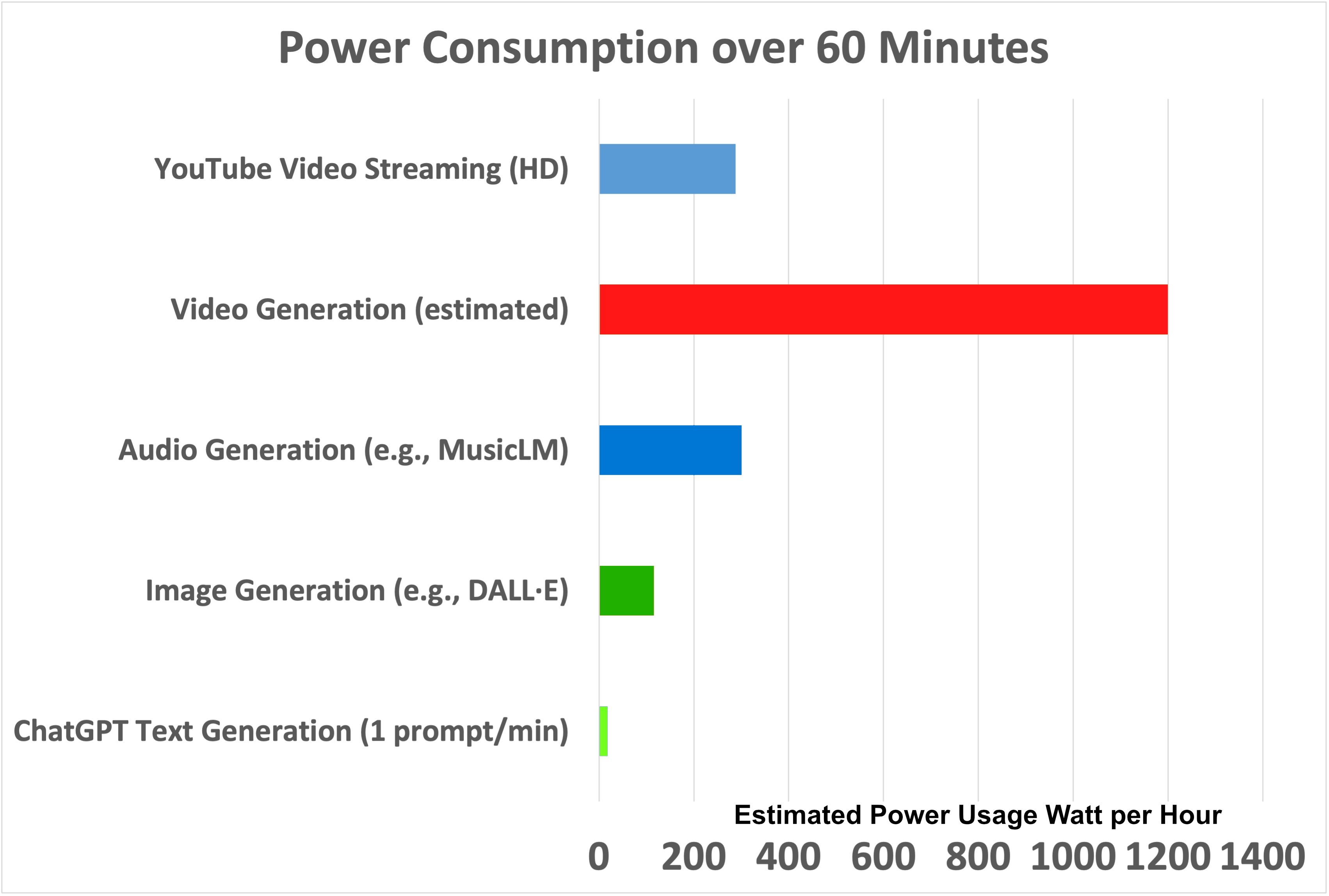

Improvement in model efficiency is happening. New architectures and training techniques are reducing power consumption per unit of computation. OpenAI's recent focus on reasoning models, which spend more computation at inference time instead of relying only on ever-larger parameter counts, is arguably driven in part by efficiency concerns.

Liquid cooling systems (which Microsoft and others are deploying) can reduce data center cooling costs by 30-40 percent. Chip makers are designing AI accelerators (like NVIDIA's latest GPUs) to be more power-efficient.
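One way to see where cooling savings land is power usage effectiveness (PUE): total facility power divided by the power delivered to IT equipment. The sketch below assumes a hypothetical 100 MW IT load and a starting PUE of 1.4. It also assumes that all non-IT overhead is cooling, whereas in practice that overhead also includes power conversion losses and lighting.

```python
def facility_power_mw(it_mw: float, pue: float) -> float:
    """Total facility draw: IT load times power usage effectiveness."""
    return it_mw * pue

def pue_after_cooling_cut(pue: float, cooling_cut: float) -> float:
    """New PUE if the overhead (assumed here to be all cooling) shrinks by cooling_cut (0-1)."""
    return 1 + (pue - 1) * (1 - cooling_cut)

it_load = 100.0  # MW, hypothetical
before = facility_power_mw(it_load, 1.4)
after = facility_power_mw(it_load, pue_after_cooling_cut(1.4, 0.35))
print(f"{before:.0f} MW -> {after:.0f} MW ({(before - after) / before:.0%} less at the meter)")
```

Under these assumptions, a 35 percent cut in cooling energy trims total facility draw by about 10 percent, because cooling is only part of the load. The chips themselves still draw the same 100 MW.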

If efficiency improvements happen fast enough, the crisis might not be as severe. Maybe data centers will need 20 percent less electricity in five years than initial projections. That buys time for new power generation to come online.

But there's a problem with this narrative: efficiency gains often get swallowed by increased usage. This is called Jevons Paradox. When something becomes more efficient, people use more of it. Cheaper, more efficient AI might actually increase total electricity consumption even as per-unit efficiency improves.
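A toy model makes the paradox concrete. It rests on two hypothetical assumptions: the cost of using AI is proportional to its energy per query, and demand responds to cost with a constant price elasticity. Under those assumptions, if efficiency cuts energy per query by a fraction g, total energy scales by (1 - g)^(1 - elasticity). Total consumption therefore rises whenever demand is elastic, meaning elasticity above 1.

```python
def total_energy_multiplier(efficiency_gain: float, elasticity: float) -> float:
    """Change in total energy when energy per query falls by efficiency_gain (0-1).

    Toy model: cost per query tracks energy per query, and demand follows
    a constant-elasticity curve, so queries scale as (1 - g) ** -elasticity.
    """
    per_query = 1 - efficiency_gain
    queries = per_query ** -elasticity
    return per_query * queries

for e in (0.5, 1.0, 1.5):
    print(f"elasticity {e}: total energy x{total_energy_multiplier(0.5, e):.2f}")
```

In this model, halving energy per query reduces total use only when demand is inelastic. At an elasticity of 1.5, it raises total consumption by roughly 41 percent. The real elasticity of AI demand is unknown, so these values are illustrative only.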

We see this with GPUs. As GPUs became more efficient, the total electricity consumed by AI workloads exploded because suddenly training huge models became economically viable.

The same will likely happen with data centers. More efficient cooling means data centers can pack in denser, hotter-running hardware. More efficient chips mean companies deploy more servers. Better infrastructure means more data centers get built in the same region.

Efficiency buys time. It doesn't solve the fundamental problem.

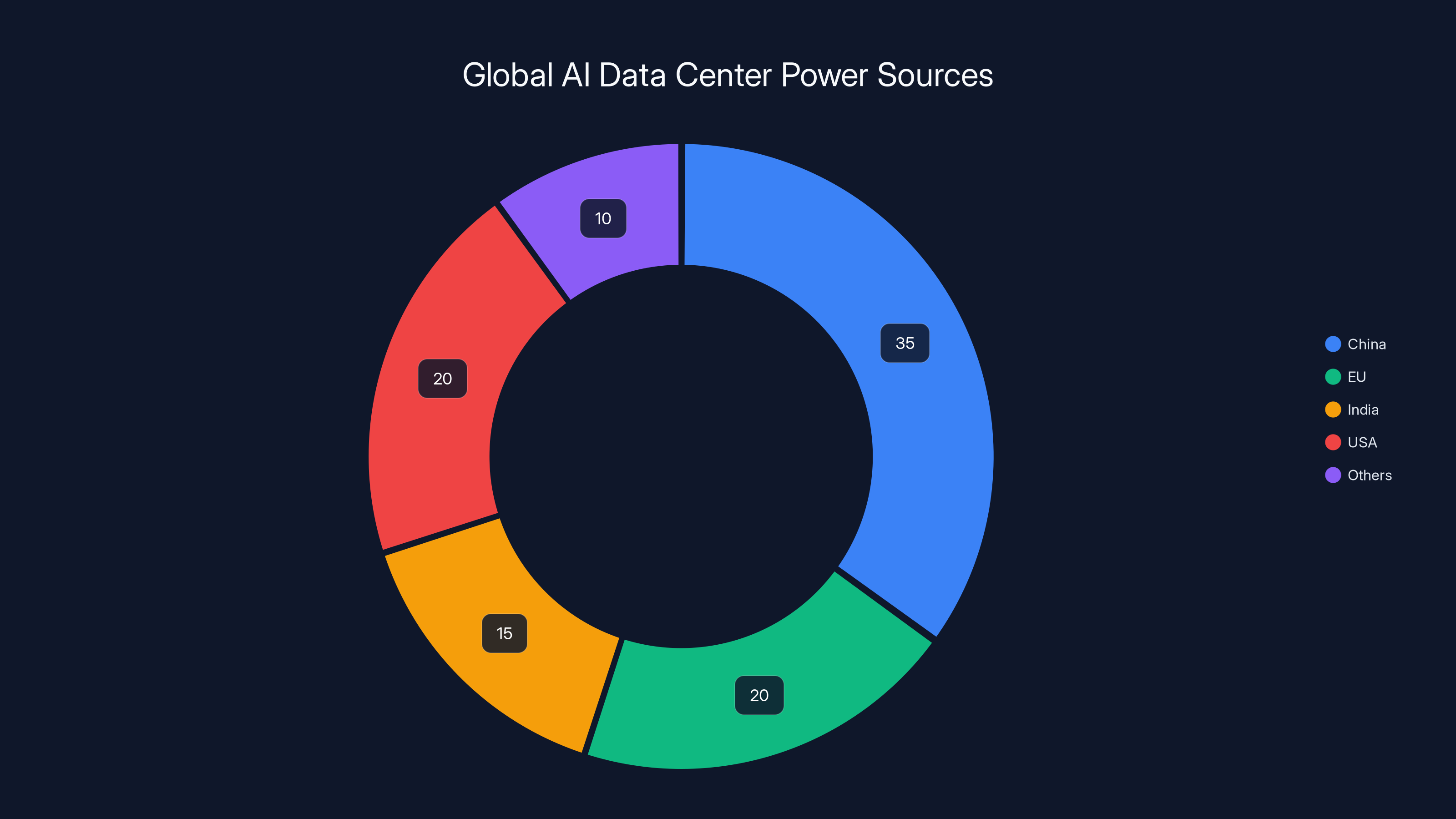

China leads in AI data center power sources with 35% due to minimal environmental constraints and coal-based power. The EU and USA follow with stricter regulations. Estimated data.

What Anthropic's Pledge Actually Signals About AI's Future

Step back from the details and look at what the pledge reveals about where this is heading.

Anthropic is essentially saying: "We're big enough and committed enough to take on costs that most companies would externalize." This is a strength signal. It's saying Anthropic is serious about long-term infrastructure investment, not just quick expansion followed by abandonment.

It's also a confidence signal. Anthropic is betting that it can grow faster and more profitably than the power crisis can slow it. If the company didn't think it could sustain operations with higher infrastructure costs, it wouldn't make this pledge. The fact that it did suggests the company is projecting massive revenue growth and can absorb these costs.

Finally, it's a consolidation signal. Smaller AI companies can't afford to pay for all grid upgrades themselves. So they'll either have to partner with larger companies or locate in regions where utilities subsidize infrastructure costs. This pushes consolidation toward the biggest players with the most capital.

You'll see a future where maybe five or six mega-companies (Anthropic, OpenAI, Microsoft, Google, Meta, Tesla) control most of the AI infrastructure because they're the only ones who can afford the energy costs and infrastructure pledges. Smaller competitors get squeezed out or acquired.

The International Dimension: Who Wins the AI Power Race

For all the focus on US electricity markets, the real competition is global.

China is currently building data centers with minimal environmental constraints and cheap power (partly coal-based). The EU is establishing stricter energy efficiency requirements for data centers. India is building renewable infrastructure specifically to attract data center investment.

This creates an asymmetry. Companies willing to operate outside strict regulatory environments can expand faster with lower constraints. Companies in regulated markets face higher costs and more scrutiny.

Anthropic is a US company committed to operating domestically, so it's bound by American environmental and regulatory standards. That's ethically defensible but commercially challenging. A Chinese AI company with the same computational requirements would face different constraints.

Over time, this might push more AI infrastructure development to countries with abundant, cheap electricity—which often correlates with less strict environmental regulation. That's a problematic dynamic for climate goals.

The solution requires global coordination on data center standards, which doesn't exist yet. Without it, you'll see a race to the bottom where companies migrate operations to the least regulated jurisdictions.

Practical Implications for AI Users and Developers

If you're building AI products or relying on AI infrastructure, what does this crisis mean for you?

First, electricity costs will almost certainly increase. As companies pay for infrastructure upgrades, some of that cost will eventually flow through to users via higher API prices or subscription costs. OpenAI, Anthropic, Microsoft—they'll all need to recoup power expenses somehow.
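How much of an API price is electricity? The back-of-the-envelope sketch below uses only hypothetical inputs: a 10 kW inference server, 10,000 tokens per second of throughput, $0.08 per kWh, and a PUE of 1.3 to cover cooling overhead. It shows the shape of the calculation, not any provider's real costs.

```python
def energy_cost_per_million_tokens(server_kw: float, tokens_per_sec: float,
                                   usd_per_kwh: float, pue: float = 1.0) -> float:
    """Electricity cost (USD) to generate one million tokens on one server."""
    kwh_per_hour = server_kw * pue          # facility energy per hour of operation
    tokens_per_hour = tokens_per_sec * 3600
    cost_per_hour = kwh_per_hour * usd_per_kwh
    return cost_per_hour / tokens_per_hour * 1_000_000

# Hypothetical inputs; compare today's price with a 50 percent higher one.
base = energy_cost_per_million_tokens(10, 10_000, 0.08, pue=1.3)
pricier = energy_cost_per_million_tokens(10, 10_000, 0.12, pue=1.3)
print(f"${base:.4f} -> ${pricier:.4f} per million tokens")
```

The electricity component scales linearly with the price per kWh, so a 50 percent rise in power prices raises it by 50 percent. How much of that increase reaches customers depends on how large energy is relative to hardware and other costs, which this sketch leaves out.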

Second, availability might become less guaranteed. If grids become increasingly stressed, you might see rate limiting, priority queues, or service degradation during peak demand periods. Major cloud providers will prioritize their premium customers and their own services.
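If rate limiting during peak periods does materialize, the usual client-side defense is to retry with exponential backoff and jitter. The sketch below is generic and not tied to any provider's SDK. `RateLimitedError` is a hypothetical stand-in for whatever exception your client raises on an HTTP 429 response.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")

class RateLimitedError(Exception):
    """Hypothetical: raised by your API client when the provider rate-limits a request."""

def call_with_backoff(fn: Callable[[], T], max_retries: int = 5, base_delay: float = 1.0,
                      max_delay: float = 60.0,
                      sleep: Callable[[float], None] = time.sleep) -> T:
    """Call fn, retrying on RateLimitedError with capped exponential backoff and full jitter."""
    for attempt in range(max_retries + 1):
        try:
            return fn()
        except RateLimitedError:
            if attempt == max_retries:
                raise  # give up after the final retry
            # Full jitter: wait a random time between 0 and the capped exponential delay.
            delay = min(max_delay, base_delay * 2 ** attempt)
            sleep(random.uniform(0, delay))
    raise AssertionError("unreachable")
```

Randomizing the wait keeps many clients from retrying in lockstep, which matters most when a provider is already shedding load.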

Third, location matters more now. If you're building infrastructure in North America, the region you choose significantly impacts your power costs and availability. Texas is becoming competitive again because of grid capacity. The Pacific Northwest is stable because of hydropower. Virginia is increasingly constrained.

Fourth, efficiency becomes competitive differentiation. Companies that build AI systems to use less electricity will have lower operating costs and more room to scale. This rewards thoughtful architecture design and sustainable practices.

Fifth, specialized hardware might become necessary. As power becomes a bottleneck, custom silicon designed for specific AI workloads (like inference at scale) might become standard. This benefits companies that can afford custom chip design and penalizes those stuck with general-purpose hardware.

The Unspoken Reality: This Isn't Just About Infrastructure

Here's what corporate pledges like Anthropic's don't address directly but everyone in the industry understands:

This is about existential risk management. The companies making these pledges aren't doing it primarily because they're good corporate citizens. They're doing it because they fear what happens if they don't.

If communities across the country start blocking data center construction, refusing to allow land sales, and implementing zoning restrictions against large computing facilities, AI companies lose the ability to scale. No new data centers means no growth.

If the federal government decides to restrict data center expansion or impose capacity taxes, suddenly the entire economic model of AI companies shifts. Profitability depends on being able to scale infrastructure cheaply.

If power becomes so constrained that prices spike dramatically, electricity might become the limiting factor for AI growth rather than compute innovation.

All of these are genuine possibilities if communities feel harmed and politicians feel pressure. So companies are trying to prevent that by being proactive—paying for infrastructure themselves rather than letting communities bear the cost.

It's a rational strategy. It might even work. But it doesn't change the fundamental dynamic: AI infrastructure is outpacing power generation, and no amount of corporate pledges eliminates that gap. The gap only gets closed when new power plants come online.

Until then, you'll see more pledges, more efficiency improvements, more geographic shifts toward power-abundant regions, and increasing pressure on grids in areas with data center expansion.

The political and regulatory response to this will define AI's infrastructure trajectory for the next decade.

FAQ

What is Anthropic's electricity pledge?

Anthropic committed to paying 100 percent of the costs to upgrade local electricity grids needed to connect its data centers to power systems, rather than allowing utilities to pass those costs to residential customers through rate increases. The company also pledged to support development of new power sources and reduce power consumption during peak demand periods.

Why are data center electricity costs becoming a major political issue?

As AI companies expand data center operations, local electricity grids require expensive upgrades to handle the increased demand. Historically, utilities recover these costs through rate increases on all customers, meaning residents pay for infrastructure built primarily to serve industrial users. This has made electricity rates a top election issue in states with significant data center expansion like Texas and Virginia.

How much electricity does training an AI model actually consume?

Rough estimates for training modern large language models like Claude 3.5 or GPT-4 fall between 500 and 1,000 megawatt-hours per training run. For context, the average American home uses approximately 11 megawatt-hours per year, so a single model training run consumes what roughly 45-90 homes need annually. This doesn't include ongoing inference costs when the model is deployed and users interact with it.

What's the difference between grid infrastructure and power generation capacity?

Grid infrastructure (transmission lines, substations, transformers) is the physical network that delivers electricity from power plants to users. Generation capacity is the maximum amount of electricity that power plants can produce at any given moment. You can have perfect infrastructure but insufficient power generation, or abundant generation with inadequate distribution infrastructure. Anthropic's pledge addresses infrastructure costs but doesn't guarantee sufficient electricity will be generated to power its data centers.

Why are AI companies investing in nuclear power plants?

Nuclear plants provide reliable baseload power (unlike solar and wind, which depend on weather conditions) without producing carbon emissions during operation. Data centers need continuous, predictable power supply. Microsoft's deal with Constellation Energy to restart a reactor at Three Mile Island and Anthropic's support for new nuclear development reflect recognition that existing grid capacity is insufficient to meet AI's electricity demands.

How is this reshaping where data centers get built globally?

Companies are increasingly locating data centers near abundant power sources: nuclear plants, hydroelectric dams, and regions with high renewable energy capacity. This gives companies like Microsoft access to cheaper, more reliable electricity and reduces community opposition. International regions with abundant clean power—Iceland (geothermal), Norway (hydroelectric), Ireland (wind)—are becoming increasingly attractive for data center investment compared to capacity-constrained US regions.

What does this mean for AI costs and availability for users?

As electricity costs rise, some of those expenses will likely be passed to users through higher API prices and subscription costs. Grid stress may also lead to service degradation, rate limiting, or availability issues during peak demand periods. Companies using AI services should expect increasing power-related costs as operational expenses rise for providers.

Can efficiency improvements actually solve the power crisis?

Efficiency improvements help by reducing electricity consumption per unit of computation. Liquid cooling, better chip design, and optimized training methods can reduce power usage by 20-40 percent. However, efficiency often leads to increased usage (Jevons Paradox), where cheaper AI capabilities encourage more deployments, potentially increasing total electricity consumption despite per-unit improvements.

Will data centers in different regions face different constraints?

Yes. Regions with abundant power (parts of Texas with gas generation, Pacific Northwest with hydropower) will have more flexibility and lower costs. Regions with tight grid capacity (parts of Virginia, California) will face higher costs, more regulatory scrutiny, and potential service constraints. Choosing your data center location based on regional power availability has become a major strategic decision.

How does Anthropic's pledge compare to what Microsoft and Meta are doing?

Anthropic focuses on paying infrastructure costs directly. Microsoft emphasizes efficiency innovation (liquid cooling, power management) and securing long-term contracts with specific nuclear plants. Meta uses open-source model release (like Llama) to distribute inference workloads away from its centralized data centers. Each strategy reflects different assessments of how to manage power constraints.

The Bottom Line: Corporate Pledges Are Good, But They Don't Solve the Core Problem

Anthropic's commitment to cover electricity grid upgrade costs is meaningful. It shows the company is thinking seriously about infrastructure impact and is willing to absorb costs rather than pushing them to communities.

But let's be clear about what it actually accomplishes: it makes data center expansion less directly harmful to residents' power bills. It doesn't create new electricity generation. It doesn't magically expand grid capacity. It doesn't solve the fundamental mismatch between AI's fast-growing power demands and the slow timeline for bringing new power plants online.

The real solution requires three things happening simultaneously:

First, massive investment in new power generation capacity, particularly nuclear, which takes 10-15 years to come online. That investment is happening, but not at the scale needed.

Second, meaningful efficiency improvements in AI infrastructure and model training. These are happening, but increased usage keeps offsetting them. It's a race between getting better and using more.

Third, regulatory frameworks that price power costs and grid impact appropriately, so companies make location and expansion decisions based on true costs instead of shifting part of those costs onto others as externalities. Such frameworks barely exist yet.

Without all three, you'll continue seeing the same pattern: corporate pledges, localized electricity price increases, community opposition, project delays, and geographic concentration of AI infrastructure around power-abundant regions.

The companies making these pledges understand this. They're not under the illusion that voluntary commitments solve structural problems. They're buying time and political goodwill while betting that they can scale faster than the power crisis constrains them.

For everyone else (people paying electricity bills, grid operators managing stability, policymakers balancing AI innovation against energy costs, and developers building AI applications), the next five years will be shaped by how aggressively new power generation gets built and how quickly efficiency improves.

Anthropic's pledge matters. But it's one variable in a much larger game.

Key Takeaways

- AI data centers consume extraordinary amounts of electricity: training a modern LLM uses an estimated 500-1,000 megawatt-hours per run, roughly the annual consumption of 50-100 homes

- Anthropic's pledge covers infrastructure costs but not power generation capacity, which is the actual bottleneck limiting AI expansion

- Companies are investing directly in nuclear power plants because grid capacity constraints are becoming the primary limiting factor for infrastructure growth

- Data center locations will increasingly cluster around abundant power sources (nuclear plants, hydroelectric dams), creating geographic competitive advantages

- Efficiency improvements help but often get offset by increased usage, meaning the power crisis likely persists for 10-15 years until new generation comes online
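The home-equivalent figure in the first takeaway is simple division. The sketch below assumes an average home uses roughly 10.5 MWh (10,500 kWh) of electricity per year, close to recent US averages; treat that constant as an assumption, since real averages vary by year, region, and climate.

```python
# Assumption: an average home uses roughly 10.5 MWh (10,500 kWh) of electricity per year.
# Real figures vary by year, region, and climate.
AVG_HOME_MWH_PER_YEAR = 10.5


def home_years(training_mwh: float, home_mwh_per_year: float = AVG_HOME_MWH_PER_YEAR) -> float:
    """Number of homes whose combined annual electricity use equals `training_mwh`."""
    if home_mwh_per_year <= 0:
        raise ValueError("home_mwh_per_year must be positive")
    return training_mwh / home_mwh_per_year


if __name__ == "__main__":
    for run_mwh in (500, 1_000):  # the per-run range from the first takeaway
        print(f"{run_mwh:,} MWh ≈ {home_years(run_mwh):.0f} homes' annual use")
```

With that assumption the range works out to about 48 and 95 homes, consistent with the 50-100 figure; a different per-home average scales the result proportionally.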
