Apple's $2 Billion Q.ai Acquisition: Reshaping Tech's AI Landscape

In a landmark move that signals Apple's renewed commitment to artificial intelligence advancement, the tech giant announced its acquisition of Q.ai, an Israel-based startup, for a reported $2 billion.

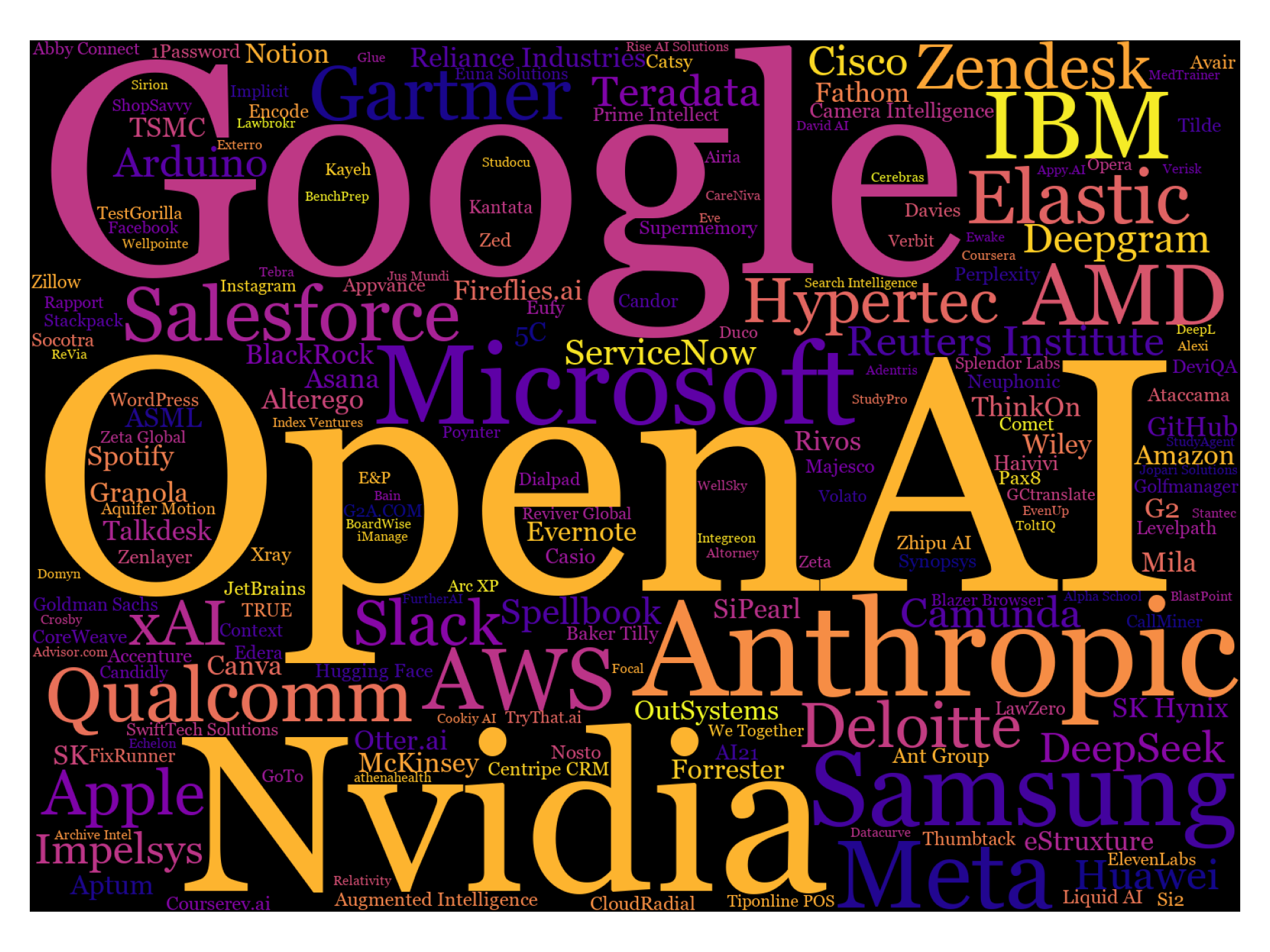

The acquisition wasn't merely a financial transaction; it was a strategic pivot. At a time when competitors like Google, Microsoft, and OpenAI dominate public conversations about artificial intelligence breakthroughs, Apple had been noticeably quiet about its own AI initiatives. While the company has integrated AI features across its ecosystem—from computational photography in iPhones to on-device machine learning in Siri—it hadn't matched the public fanfare of competitors. The Q.ai deal changes this narrative, bringing proven AI talent and innovative imaging technology directly into Apple's hardware engineering division.

What makes this acquisition particularly intriguing is Q.ai's specific technological focus. The startup specializes in imaging and machine learning technologies with applications that extend far beyond traditional smartphone processing. According to patent filings and technology analyses, Q.ai's work encompasses facial recognition, gesture control through "facial skin micro movements," and advanced imaging capabilities that could revolutionize how users interact with wearable devices. Imagine controlling your augmented reality glasses or next-generation AirPods Pro not through voice commands or physical buttons, but through subtle facial expressions or micro-gestures—this is the territory Q.ai has been exploring.

Apple's formal announcement came through Johny Srouji, the company's Senior Vice President of Hardware Technologies, who praised Q.ai as "a remarkable company that is pioneering new and creative ways to use imaging and machine learning." This positioning matters because it places the acquisition squarely within Apple's hardware innovation division, not its software or services teams. This suggests Apple sees Q.ai's technology as fundamental to the next generation of physical products, not merely as a feature addition to existing services.

The timing of this acquisition carries additional significance. Apple CEO Tim Cook had publicly acknowledged during the company's Q3 2025 earnings call that "We're open to M&A that accelerates our roadmap"—a statement that directly preceded this major acquisition. Cook's willingness to pursue strategic acquisitions marked a notable shift for a company that historically preferred building technology entirely in-house. For decades, Apple's philosophy centered on vertical integration and proprietary development. But in the rapidly accelerating AI era, that approach proved insufficient. The acquisition of Q.ai represents Apple's pragmatic acknowledgment that acquiring proven talent, patents, and technology can sometimes move faster than internal development, especially in competitive technological frontiers.

Understanding Q.ai: The Company Behind the Deal

The Founding Vision and Team Pedigree

Q.ai wasn't simply a random AI startup that caught Apple's attention. The company emerged from exceptionally strong technological and leadership foundations. The founding team, led by CEO Aviad Maizels, brought not only entrepreneurial ambition but also proven success in the Israeli tech ecosystem and previous relationships with Apple itself. Maizels' background is particularly noteworthy: he had previously founded PrimeSense, a pioneering 3D sensing and imaging company that Apple acquired in 2013 for reportedly under $400 million. The fact that Maizels returned to Apple through Q.ai wasn't coincidental. It reflected both his understanding of Apple's engineering culture and his proven ability to build technologies that align with Apple's product vision.

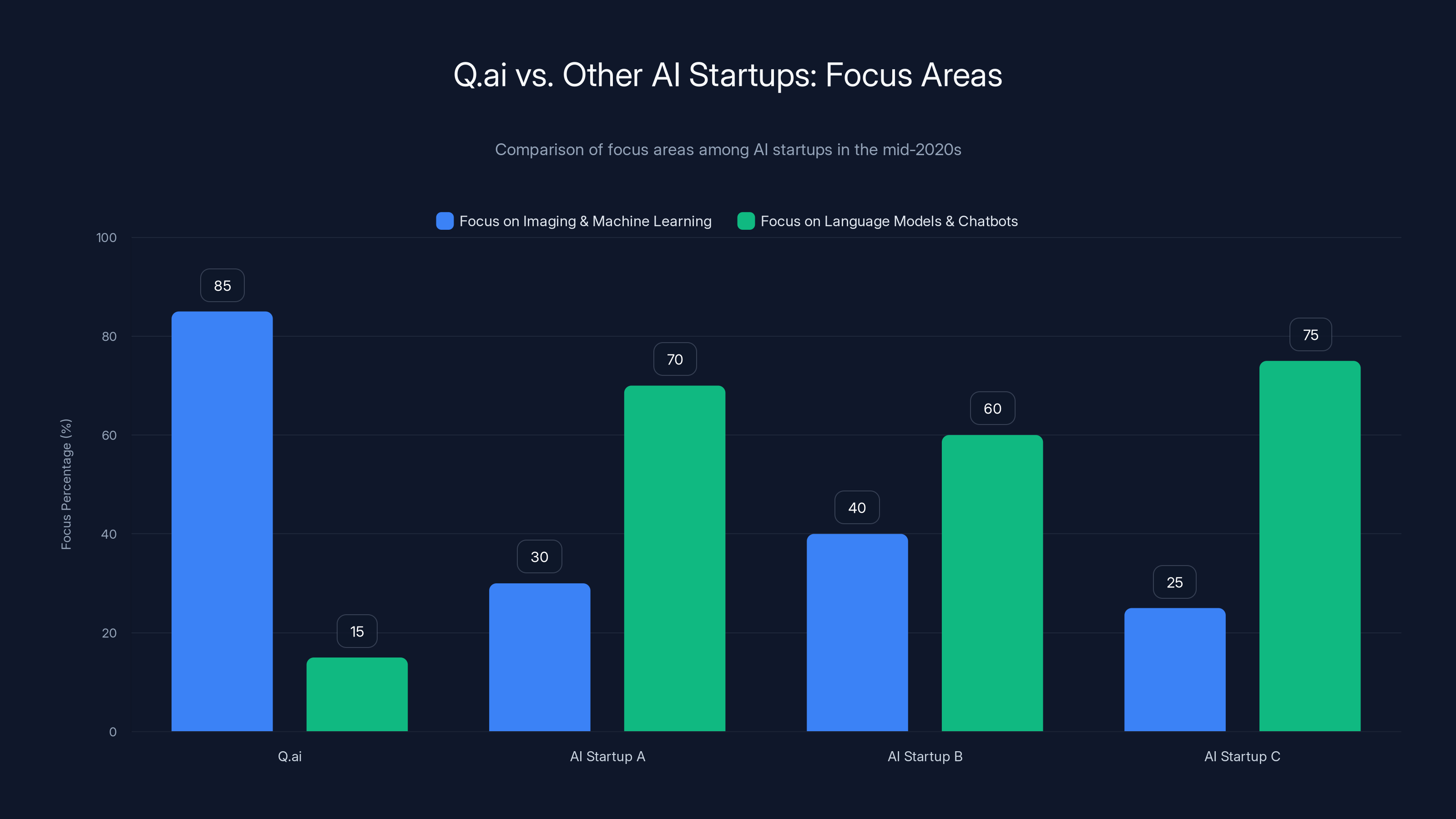

The founding of Q.ai occurred during a period of intense innovation in computer vision and machine learning. The company positioned itself at the intersection of these fields, focusing specifically on how sophisticated imaging technology could be combined with machine learning algorithms to create entirely new categories of human-computer interaction. While many AI startups in the mid-2020s chased large language models and chatbot capabilities, Q.ai took a different approach, doubling down on the physical layer—how humans and devices could communicate through visual and gestural means rather than purely through text or voice.

PrimeSense, Maizels' previous startup, had pioneered 3D depth sensing technology that became foundational to motion sensing in gaming (it powered Microsoft Kinect). That experience informed Q.ai's approach: starting with hardware-level imaging innovations and then layering machine learning intelligence on top. This contrasted with many AI companies that started with algorithms and then sought hardware partnerships. Q.ai built from the ground up with the assumption that specialized hardware would enable more powerful and efficient AI applications.

Core Technology Focus

Q.ai's primary technological focus centered on what researchers call "facial micro-expression" recognition and gesture-based interfaces. The company's patents and published research indicate deep expertise in detecting minute muscular movements beneath the skin's surface—changes so subtle that they're invisible to the naked eye but detectable through specialized imaging sensors. These micro-expressions contain rich information about user intent, emotional state, and desired actions. Traditional interfaces rely on gross motor movements (hand gestures, head positioning) or explicit inputs (voice commands, touch). Q.ai's innovation mapped the space between intention and action, creating interfaces responsive to subtler human signals.

The technical implementation involves several sophisticated components working in concert. First, specialized imaging sensors capture high-frequency visual data from facial regions. Unlike standard RGB cameras, these sensors might operate in infrared, hyperspectral, or depth-sensing modes, technologies Q.ai had developed or optimized. Second, machine learning models trained on extensive datasets can recognize patterns in these micro-movements and correlate them with user intentions. Rather than requiring explicit gestures, a user might accomplish tasks through natural facial expressions, eye movements, or involuntary facial tension patterns. This represents a paradigm shift from explicit interface design toward implicit interaction, in which the device infers intent from signals the user does not deliberately produce.

The implications extend across wearable device categories. For users with motor disabilities or mobility restrictions, such technology could enable communication and device control previously requiring assistive devices. For surgical applications, surgeons could control imaging systems or robotic arms through subtle gestures captured during procedures. For consumer devices, imagine AirPods that adjust noise cancellation based on detected facial tension patterns, or AR glasses that follow your eye movements and emotional responses to optimize content delivery. These weren't theoretical applications—they represented areas where Q.ai had demonstrable patents and proof-of-concept work.

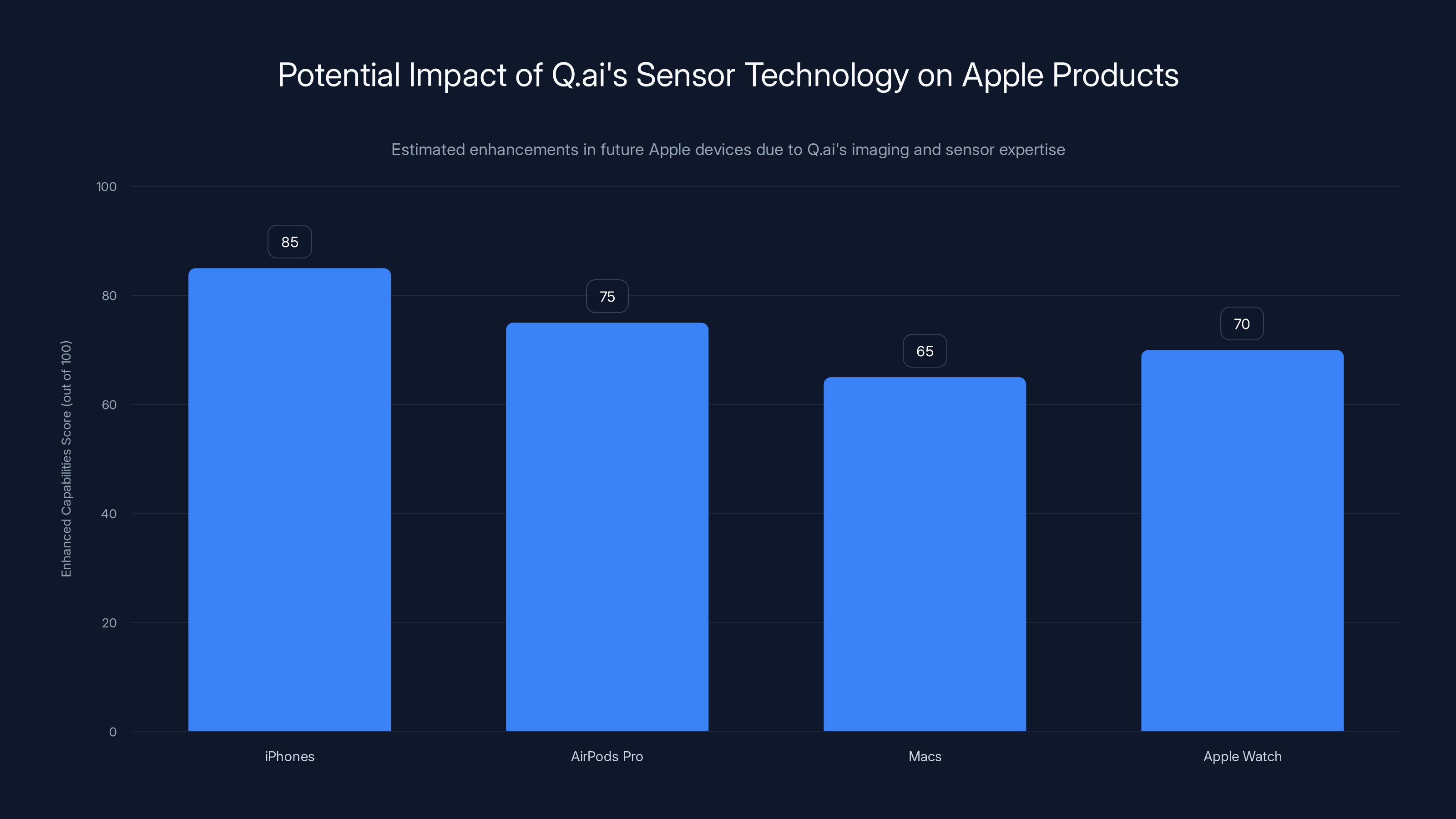

Estimated data shows iPhones could benefit most from Q.ai's sensor technology, enhancing capabilities like facial recognition and gesture control.

Strategic Context: Apple's AI Acceleration Plan

The AI Gap Apple Faced

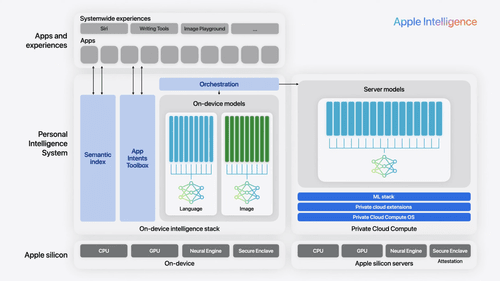

Entering 2025, Apple faced an unusual competitive position in the public AI narrative. The company had been integrating machine learning throughout its products for years—from computational photography that makes iPhone cameras competitive with professional equipment, to on-device processing that powers Siri's voice recognition and suggested replies in Messages. Yet Apple's AI work remained largely invisible to the public and industry analysts. Meanwhile, competitors garnered headlines for ChatGPT, Gemini, Claude, and other large language models that captured public imagination.

This visibility gap masked Apple's actual AI sophistication but created a perception problem. Investors, enterprise customers, and developers increasingly viewed Apple as lagging in the "AI revolution." The company's partnerships—including relying on Google and OpenAI to power some AI features in iOS—seemed to reinforce the narrative that Apple wasn't leading in artificial intelligence, merely integrating other companies' technology. Tim Cook's acknowledgment that Apple was "open to M&A that accelerates our roadmap" directly addressed this perception challenge.

Apple's historical strength lay in hardware-software integration and user experience design. The company excelled at taking emerging technologies and making them intuitive for consumers. But in the AI era, the conversation had shifted toward algorithmic innovation and model development—domains where smaller, more specialized companies often moved faster than large incumbents. By acquiring Q.ai, Apple could acquire both the specialized expertise and the proof-of-concept work necessary to leapfrog years of internal development.

Accelerating the Wearables and AR Strategy

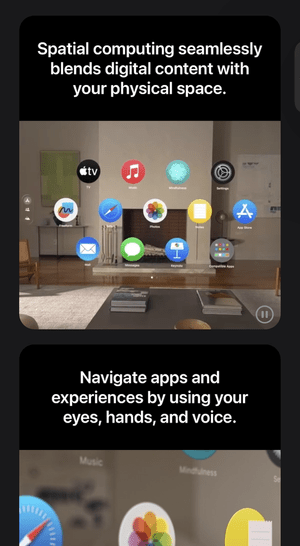

Q.ai's technology proved particularly valuable for Apple's wearables and augmented reality ambitions. Apple's wearables division—driven by Apple Watch, AirPods, and Vision Pro—represents some of the company's highest-growth categories. Yet these devices face interaction challenges inherent to their form factors. Watches have tiny screens. AirPods have limited input surfaces. Vision Pro requires complex hand gesture recognition in three-dimensional space. Q.ai's micro-gesture and facial-expression-based interfaces could revolutionize how users command and interact with all these device categories.

Consider Apple Watch interactions: instead of fumbling with a tiny touchscreen or speaking voice commands in public settings, users could accomplish tasks through subtle wrist movements, breathing patterns, or facial expressions. For AirPods, Q.ai's technology could enable unprecedented awareness of user context (detecting when the user is in a meeting, exercising, or concentrating) without any explicit input, enabling automatic optimization of audio profiles. For Vision Pro, facial expression recognition could replace or supplement hand gesture controls, making AR interactions more natural and intuitive. These capabilities don't merely represent incremental improvements; they represent a fundamental reimagining of how wearables could function.

Apple's broader spatial computing strategy—including Vision Pro development and the eventual move toward AR glasses—depends on solving the interaction problem. Wearable AR devices need interfaces that work seamlessly while the device is mounted on the face, without requiring hand movements or constant voice commands. Facial expression and micro-gesture recognition addresses precisely this challenge. By acquiring Q.ai, Apple acquired a significant head start on technology that could become central to the next decade of human-computer interaction in wearable devices.

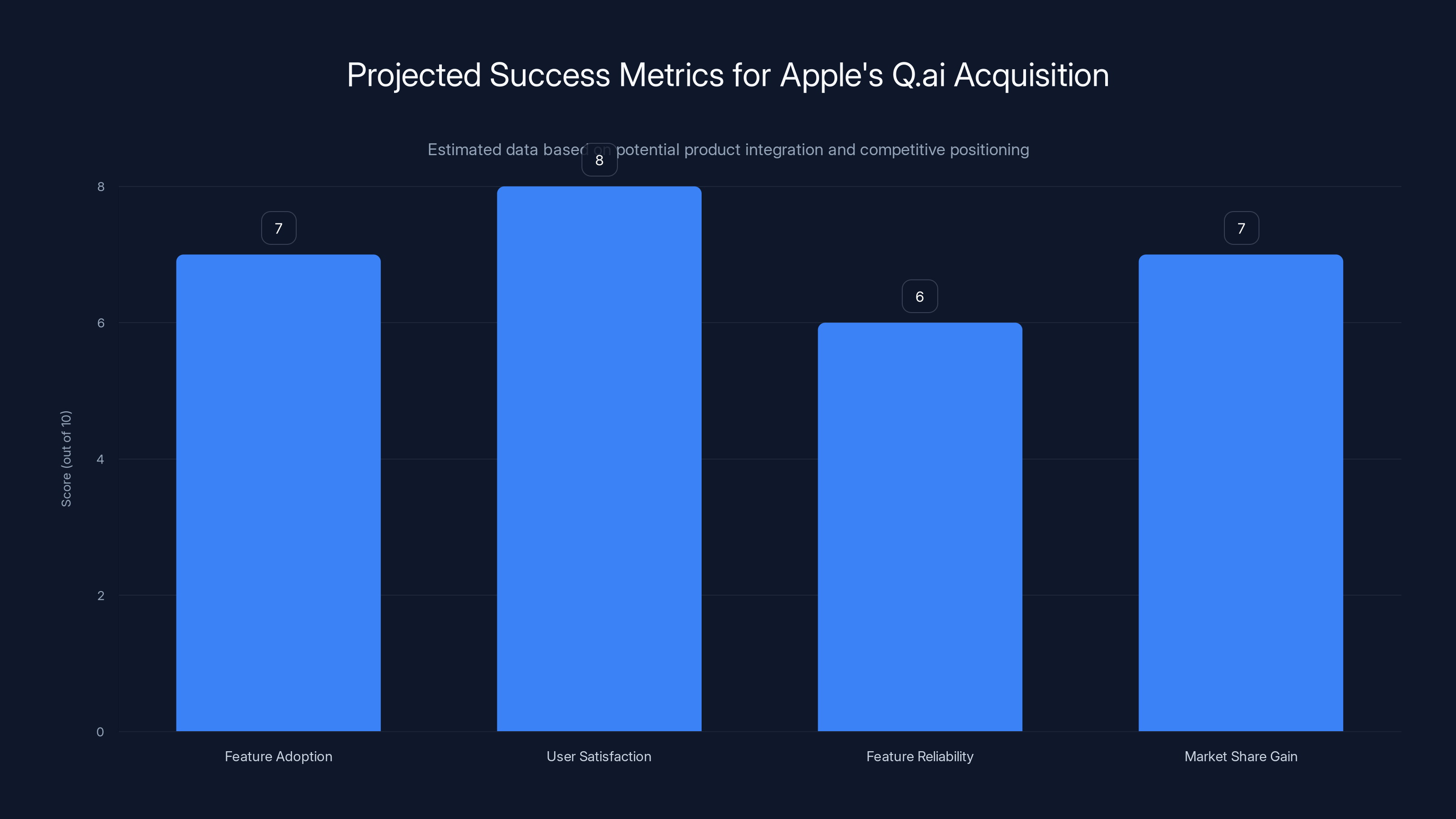

Estimated data suggests Apple's success with Q.ai will be gauged by feature adoption, user satisfaction, feature reliability, and market share gain, with user satisfaction expected to be the highest.

The Technology Stack: What Q.ai Brings to Apple

Imaging and Sensor Innovation

Beyond algorithmic capability, Q.ai brought specialized expertise in imaging and sensor technology that differentiates it from pure software AI companies. Building sophisticated gesture and expression recognition requires more than software algorithms—it requires sensors optimized to capture the right data. Q.ai had developed or partnered with specialists in various imaging modalities: infrared cameras sensitive to skin temperature variations, depth cameras capturing three-dimensional facial geometry, and potentially hyperspectral cameras detecting subtle color variations that reveal underlying vascular activity.

Apple's acquisition meant absorbing this sensor expertise directly. The company already manufactures custom chips and components at scale; adding specialized imaging capabilities to its product lineup became possible. Imagine future iPhones incorporating subtle infrared cameras optimized for facial micro-expression recognition. Or AirPods Pro featuring miniaturized thermal and depth sensors. These aren't incremental tweaks—they represent new sensing capabilities that require manufacturing innovation, component sourcing relationships, and supply chain integration. Q.ai brought the technical specifications and manufacturing knowledge necessary to make these additions viable.

The timing aligned well with Apple's silicon strategy. The company had been developing custom chipsets for years—the M-series for Macs, the A-series for iPhones, specialized chips for different device categories. Integrating Q.ai's sensor and imaging expertise meant Apple could design specialized processing capabilities for facial and gesture recognition tasks, similar to how dedicated neural engine cores in Apple's chips accelerate machine learning tasks generally. Custom silicon optimized for specific imaging processing tasks could deliver better performance and efficiency than generic processors.

Machine Learning Models and Algorithms

Q.ai's technical foundation extended to proprietary machine learning models trained on extensive datasets of facial expressions, micro-movements, and corresponding user intents. These models represent years of research and thousands of hours of training data collection. Acquiring Q.ai meant Apple acquired these trained models, the underlying algorithms, and the expertise to refine and extend them further. Given the competitive advantage of pre-trained models in machine learning applications, this represented tremendous value beyond the raw cash transaction.

The company's machine learning work likely included several sophisticated components: computer vision models for detecting and tracking facial landmarks and expressions, temporal models for understanding how expressions evolve over time, intention-mapping models linking expressions to predicted user actions, and personalization models adapting to individual users' unique expressions and micro-movement patterns. Each of these components required substantial training data and iterative refinement—the exact work that would take Apple's internal teams years to replicate from scratch.

Patent Portfolio and Intellectual Property

The $2 billion price tag reflected not just the team and current technology but also Q.ai's patent portfolio. Tech acquisitions of this magnitude almost invariably include valuable patent holdings covering novel techniques, specific implementations, and potential applications. Q.ai's patents—particularly those covering micro-expression detection, facial gesture interfaces, and multi-modal sensing approaches—became Apple's intellectual property upon acquisition. This patent portfolio provides defensive protection against competitors and creates potential licensing revenue opportunities for Apple.

Patent-based valuations can be significant in technology acquisitions. A single patent in emerging technology spaces might be valued at $5-50 million depending on breadth, technical significance, and competitive landscape. A startup like Q.ai likely held dozens of patents across facial recognition, gesture control, and imaging optimization domains. Collectively, this patent portfolio might have represented 20-30% of the acquisition value, with the remainder distributed across team talent, customer relationships, and existing technology products.
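The ranges in the paragraph above can be cross-checked with a few lines of arithmetic. The following is only a sketch that takes the article's own estimates (a $2 billion deal, a 20-30% portfolio share, and $5-50 million per patent) at face value; the function names are illustrative and none of the figures are confirmed deal terms.

```python
# Back-of-the-envelope check using only the figures quoted in the text.
DEAL_VALUE_M = 2_000            # reported $2 billion, in millions of dollars
PORTFOLIO_SHARE = (0.20, 0.30)  # the article's 20-30% estimate
PER_PATENT_VALUE_M = (5, 50)    # the article's $5-50 million per-patent range


def portfolio_value_range(deal_value_m, share_range):
    """Return (low, high) portfolio value in millions of dollars."""
    low_share, high_share = share_range
    return deal_value_m * low_share, deal_value_m * high_share


def implied_patent_count_range(portfolio_range_m, per_patent_range_m):
    """Return (fewest, most) patents consistent with the two ranges."""
    low_value, high_value = portfolio_range_m
    cheapest, priciest = per_patent_range_m
    # Fewest patents: smallest portfolio made up of the most valuable patents.
    # Most patents: largest portfolio made up of the least valuable patents.
    return low_value / priciest, high_value / cheapest


if __name__ == "__main__":
    value = portfolio_value_range(DEAL_VALUE_M, PORTFOLIO_SHARE)
    count = implied_patent_count_range(value, PER_PATENT_VALUE_M)
    print(f"Portfolio value: ${value[0]:.0f}M to ${value[1]:.0f}M")
    print(f"Implied patent count: {count[0]:.0f} to {count[1]:.0f}")
```

Under these assumptions the portfolio would be worth roughly $400 million to $600 million, which corresponds to somewhere between about 8 and 120 patents. That wide band is consistent with the "dozens of patents" estimate but does not narrow it.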

Implications for Apple's Product Roadmap

Next-Generation AirPods and Wearables

The most immediate impact of the Q.ai acquisition likely manifests in Apple's wearables lineup. AirPods Pro, the company's premium wireless earbuds, already incorporate sophisticated sensors and machine learning for features like adaptive audio and conversation detection. Q.ai's facial micro-expression recognition technology could enable entirely new capabilities. Imagine AirPods that understand when the wearer is speaking, excited, stressed, or focused—all without explicit user input—and automatically adjust audio profiles accordingly.

Beyond AirPods, Apple Watch represents another target for this technology. Current Watch interfaces rely heavily on the watch's small display and the Digital Crown. Adding facial gesture recognition (detecting when the user has tilted their head in specific ways, or tensed facial muscles in patterns corresponding to predetermined actions) could create entirely new interaction modalities. Users could respond to notifications through subtle facial expressions, control music playback through eye movements, or even dismiss alarms through specific micro-expressions. This might sound futuristic, but Q.ai's patents suggest significant progress toward practical implementation.

Apple's rumored ring-form-factor devices might benefit particularly from Q.ai technology. A smart ring lacks any interaction surface—no screen, no buttons, no speaker. Such a device would require sophisticated remote sensing capabilities to detect user intent. Facial expression recognition, combined with other sensing modalities, could enable smart rings to function reliably despite lacking traditional input mechanisms. This represents a product category where Q.ai's innovations move from nice-to-have features to absolutely necessary technology.

Vision Pro Evolution and Spatial Computing

Apple's Vision Pro represents the company's most ambitious hardware initiative in years—a spatial computing device that blends digital content with the physical world. The device already incorporates sophisticated eye-tracking technology, hand gesture recognition, and advanced sensors. Yet user interaction with Vision Pro remains somewhat cumbersome, particularly for extended use. Q.ai's facial expression and micro-gesture recognition could streamline interaction significantly.

Vision Pro's current interaction model relies heavily on eye gaze for menu selection and hand gestures for action confirmation. Users can feel fatigued after extended periods of precise eye tracking and complex hand gestures. Micro-expression-based controls—where subtle facial movements trigger actions without conscious user effort—could reduce cognitive load and fatigue. Imagine a Vision Pro user navigating applications and selecting content primarily through natural facial expressions and implicit gestures, with minimal hand movement required. This would make the device more comfortable for extended use and more intuitive for users transitioning from traditional computing interfaces.

Longer term, Q.ai technology could enable the next evolution toward true AR glasses. The current Vision Pro is tethered to an external battery for practical use. Future AR glasses will need to detect user intent and respond to commands without requiring any external input device or explicit gesture. Because they are worn on the face itself, AR glasses could incorporate Q.ai's micro-expression recognition directly in the device's own sensing array, enabling users to control the glasses through subtle facial movements invisible to observers. This represents a significant step toward truly seamless human-computer interaction in wearable AR.

Siri Intelligence and Voice Assistant Evolution

Siri has long been considered one of Apple's weaker AI products compared to competing voice assistants. While Google Assistant and Alexa captured market mindshare through integration into countless devices and smart home ecosystems, Siri remained largely confined to Apple devices. The Q.ai acquisition presents an opportunity to fundamentally reimagine Siri—not as purely a voice assistant, but as a multimodal intelligence system that understands facial expressions, gestures, voice commands, and implicit intent signals simultaneously.

Imagine using Siri in settings where voice commands feel socially awkward or practically difficult. In a crowded coffee shop, instead of speaking a voice command loudly, users could accomplish the same task through subtle facial expressions detected by the device's front camera. During exercise or activities where voice commands are impractical or unsafe, micro-gestures could replace voice. Siri could become context-aware based on detected user state—understanding when the user is distracted, focused, emotional, or calm—and adjusting its responses accordingly. This multimodal approach would make Siri more capable and intuitive than single-modality competitors.

Competitive Landscape Analysis

Microsoft's Acquisition Strategy

Microsoft's massive investment in OpenAI and integration of advanced AI into its Office productivity suite and Bing search engine represented one competitive benchmark that Apple watched carefully. Microsoft's strategy emphasized partnering with cutting-edge AI companies and integrating their technology into existing products. By acquiring Q.ai, Apple adopted a similar playbook but with a crucial difference—Microsoft pursued general-purpose AI capabilities applicable across many domains, while Apple focused on specialized capabilities directly supporting hardware innovation.

The strategic contrast is worth noting. Microsoft built partnerships and minority stakes in AI companies, maintaining independence while gaining access to innovation. Apple traditionally preferred acquisitions that brought full control over critical technologies. This Q.ai acquisition represented Apple's confidence that the startup's technology was sufficiently aligned with Apple's vision to warrant acquisition rather than partnership. It also suggested that Apple saw facial recognition and gesture control as core to its future, worth owning entirely rather than licensing from external providers.

Google's Computational Imaging Leadership

Google has dominated computational photography and imaging-based machine learning for years, from Pixel computational photography that rivals or exceeds dedicated cameras to sophisticated depth sensing in Pixel phones. Q.ai's expertise in imaging and facial recognition represented another domain where Google held a considerable lead. By acquiring Q.ai, Apple wasn't merely matching Google's capabilities; it was addressing a specific gap in gesture and expression-based interaction where Google had invested less heavily.

Yet competitive pressure from Google wasn't the only driver. Google's approach emphasizes cloud-based processing and continuous model refinement through online learning. Apple's philosophy prioritizes on-device processing and privacy preservation. Q.ai's technology could enable sophisticated facial recognition and gesture control entirely on-device, without sending facial data to Apple's servers. This privacy-first approach differentiated Apple's implementation from competitors and aligned with the company's established privacy messaging to consumers.

Meta's AR and Gesture Control Work

Meta has invested heavily in augmented reality and spatial computing, with significant research into gesture recognition and hand tracking. The company's Quest headsets incorporate sophisticated hand gesture recognition enabling interaction without controllers. Yet Meta had invested less in facial expression recognition than in hand gestures. Q.ai's specific expertise in micro-expression detection and facial gesture control would have complemented Meta's hand gesture work. Had Meta pursued a similar acquisition strategy, it could have been a competing bidder for Q.ai, which would have given Apple reason to move quickly.

Technical Deep Dive: Micro-Expression Recognition

The Science Behind Facial Micro-Expressions

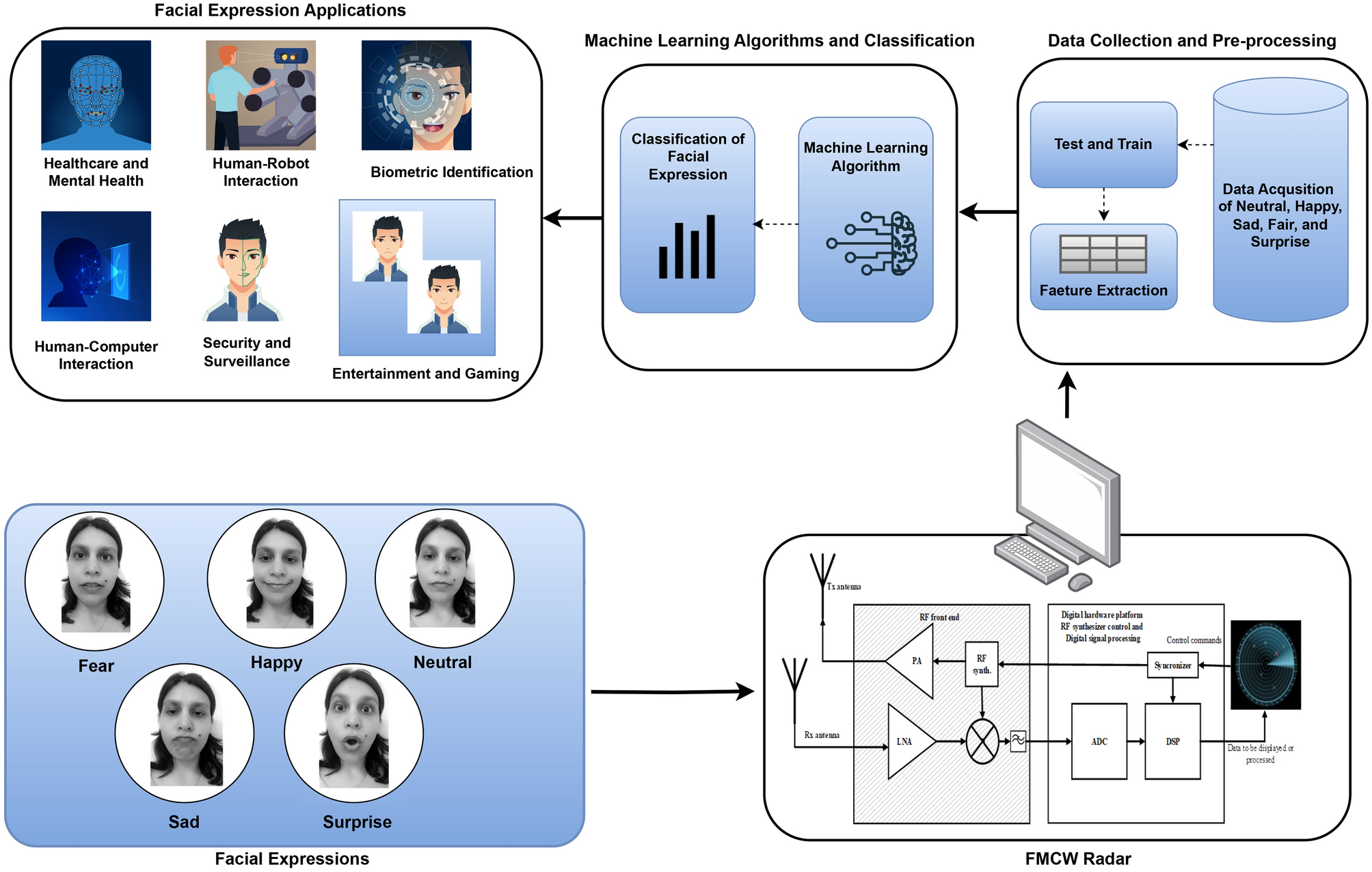

Facial micro-expressions represent involuntary facial muscle movements that reveal emotional and cognitive states. Unlike deliberate expressions that people consciously control, micro-expressions occur involuntarily and typically last less than a second, often between 1/25th and 1/5th of a second. Scientists have identified seven universal emotional micro-expressions—happiness, sadness, fear, anger, surprise, disgust, and contempt—that appear consistently across cultures. Beyond emotions, micro-expressions also correlate with cognitive states: concentration produces distinctive facial tension patterns, confusion generates characteristic expressions, and high focus creates specific eye and brow movements.
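The durations quoted above translate directly into a sensor frame-rate requirement. The sketch below works through that arithmetic; the 1/25 s and 1/5 s bounds come from the paragraph, while the target of five frames per expression is purely an illustrative assumption and not a figure from Q.ai or Apple.

```python
from fractions import Fraction
import math

SHORTEST = Fraction(1, 25)  # seconds, lower bound quoted in the text
LONGEST = Fraction(1, 5)    # seconds, upper bound quoted in the text


def frames_spanned(duration_s, fps):
    """Whole frames guaranteed to fall inside an event of the given duration."""
    return math.floor(duration_s * fps)


def min_fps_for(frames_needed, duration_s=SHORTEST):
    """Lowest integer frame rate that fits frames_needed frames in duration_s."""
    return math.ceil(frames_needed / duration_s)


if __name__ == "__main__":
    for fps in (30, 60, 120, 240):
        print(fps, "fps:", frames_spanned(SHORTEST, fps), "to",
              frames_spanned(LONGEST, fps), "frames per micro-expression")
    # Five frames per expression is an assumed target, not a published spec.
    print("fps needed for 5 frames in the shortest case:", min_fps_for(5))
```

At an ordinary 30 frames per second, the shortest micro-expression is guaranteed to land on only a single frame, which is one way to see why the text stresses fast capture and processing.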

Q.ai's innovation centered on detecting these subtle signals using advanced imaging and machine learning. Traditional facial recognition systems detect gross features—overall face shape, relative eye and mouth positions—to identify who a person is. Micro-expression detection goes deeper, analyzing muscular movements at the millimeter scale to understand what a person is thinking or feeling. This requires sensors sensitive enough to detect minute changes, processing fast enough to capture expressions lasting fractions of a second, and algorithms sophisticated enough to disambiguate subtle differences between similar expressions.

The detection methodology involves several computational steps. First, specialized sensors—potentially infrared or depth cameras—capture detailed geometric information about facial structure and movement. Unlike standard RGB cameras that capture color information, these sensors might measure skin temperature variations, surface topology changes, or depth shifts as facial muscles contract. Second, preprocessing algorithms normalize the captured data, accounting for head position, lighting variations, and individual differences in facial structure. Third, machine learning models trained on extensive labeled datasets predict the specific micro-expression present and its associated intent.
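The preprocessing step described above, which removes the effect of head position and individual face size before any model sees the data, can be sketched in a few lines. This is a minimal illustration that assumes 2D landmark coordinates are already available and that the first two points are the eyes; it handles translation and scale only (not head rotation or lighting) and is not Q.ai's actual method.

```python
import math


def normalize_landmarks(points, left_eye=0, right_eye=1):
    """Center landmarks on their centroid and scale by inter-eye distance.

    points: list of (x, y) tuples. The eye indices are illustrative
    assumptions about how the landmark list is ordered.
    """
    cx = sum(x for x, _ in points) / len(points)
    cy = sum(y for _, y in points) / len(points)
    (lx, ly), (rx, ry) = points[left_eye], points[right_eye]
    scale = math.hypot(rx - lx, ry - ly)
    if scale == 0:
        raise ValueError("eye landmarks coincide; cannot normalize scale")
    return [((x - cx) / scale, (y - cy) / scale) for x, y in points]


def displacement(neutral, current):
    """Per-landmark movement between two already-normalized frames."""
    return [
        math.hypot(cx - nx, cy - ny)
        for (nx, ny), (cx, cy) in zip(neutral, current)
    ]


if __name__ == "__main__":
    face = [(0.0, 0.0), (2.0, 0.0), (1.0, 2.0)]
    # Same face, twice as large and shifted: normalization should cancel both.
    moved = [(10.0, 10.0), (14.0, 10.0), (12.0, 14.0)]
    print(displacement(normalize_landmarks(face), normalize_landmarks(moved)))
```

After normalization, the per-landmark displacement between a neutral frame and a later frame reflects muscle movement rather than the user shifting closer to or further from the sensor.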

Machine Learning Approaches

Building accurate micro-expression recognition systems requires careful machine learning architecture design. Early computer vision approaches relied on hand-crafted features and traditional machine learning algorithms. Modern approaches use deep learning, particularly convolutional neural networks (CNNs) for spatial feature extraction and recurrent neural networks (RNNs) for temporal analysis—understanding how expressions evolve over time rather than examining static frames in isolation.

A sophisticated micro-expression recognition system might employ multiple neural network layers with specific responsibilities. Spatial layers extract local features from image patches—detecting muscle movements, skin texture changes, and geometric deformations. Temporal layers analyze sequences of frames to understand expression trajectories and timing characteristics. Attention mechanisms highlight which facial regions and temporal patterns are most informative for accurate recognition. Ensemble approaches combining multiple specialized networks improve robustness and accuracy beyond any single model.
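Two of the ideas in this paragraph, attention over time and ensembling, are simple enough to show in plain Python. The sketch below assumes per-frame feature vectors and per-frame relevance scores already exist (in a real system both would come from trained networks); all names are illustrative and nothing here reflects Q.ai's architecture.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention_pool(frame_features, frame_scores):
    """Collapse a sequence of frame features into one attention-weighted vector.

    frame_features: list of equal-length float lists, one per frame.
    frame_scores: one relevance score per frame (learned in a real model,
    supplied by hand in this sketch).
    """
    weights = softmax(frame_scores)
    dim = len(frame_features[0])
    return [
        sum(w * feat[i] for w, feat in zip(weights, frame_features))
        for i in range(dim)
    ]


def ensemble_average(model_probs):
    """Average the class-probability vectors produced by several models."""
    n_models = len(model_probs)
    n_classes = len(model_probs[0])
    return [sum(p[i] for p in model_probs) / n_models for i in range(n_classes)]


if __name__ == "__main__":
    features = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]
    print(attention_pool(features, [2.0, 0.1, 0.1]))
    print(ensemble_average([[0.2, 0.8], [0.6, 0.4]]))
```

The attention weights let a handful of informative frames dominate the pooled representation, which matters when the expression of interest occupies only a small part of the clip.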

Training such systems requires substantial labeled data. Machine learning researchers would need thousands of video clips with annotated micro-expressions, indicating when they occur, what type of expression they represent, and what user action or intent they correlate with. This dataset creation is expensive and time-consuming, requiring human annotators to review footage frame-by-frame, identifying subtle muscular movements and categorizing them. Q.ai's value included not just algorithms, but the extensive datasets developed over years of research and product development.
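An annotation of the kind described above (when the expression occurs, what type it is, and what intent it maps to) could be represented by a small record type. The field names and example labels below are hypothetical and chosen only for illustration; the article does not describe Q.ai's actual dataset schema.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MicroExpressionAnnotation:
    """One human-labeled micro-expression inside a video clip (hypothetical schema)."""
    clip_id: str
    onset_frame: int    # first frame where movement is visible
    offset_frame: int   # last frame where movement is visible
    expression: str     # e.g. "surprise"
    intent: str         # illustrative label, e.g. "dismiss_notification"

    def __post_init__(self):
        if self.onset_frame < 0 or self.offset_frame < self.onset_frame:
            raise ValueError("frames must satisfy 0 <= onset <= offset")

    def duration_s(self, fps):
        """Length of the annotated span in seconds at the clip's frame rate."""
        return (self.offset_frame - self.onset_frame + 1) / fps


if __name__ == "__main__":
    label = MicroExpressionAnnotation(
        clip_id="clip-001",
        onset_frame=120,
        offset_frame=131,
        expression="surprise",
        intent="dismiss_notification",
    )
    print(label.duration_s(fps=60))
```

Storing onset and offset frames rather than a single timestamp lets annotators' work be checked against the expected duration range for micro-expressions.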

Real-World Implementation Challenges

Implementing micro-expression recognition in consumer devices faces substantial practical challenges. Lab settings with controlled lighting, camera angles, and subject cooperation enable high accuracy. Consumer devices operate in uncontrolled environments: varying lighting conditions, extreme head angles, partially obscured faces (users might be wearing sunglasses or hats), and changing expressions. Robustness across diverse demographic groups—different ethnicities, ages, genders—requires training data spanning this diversity and careful bias testing.

Computational efficiency represents another challenge. Running sophisticated neural networks on wearable devices with limited power budgets and processing capacity requires optimization. Q.ai's team would have developed specialized approaches—quantized models requiring less computational resources, efficient architectures exploiting mobile hardware capabilities, and on-device processing pipelines optimized for real-time performance. These implementation details often determine whether laboratory innovations become practical consumer products.
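Quantization, mentioned above, is the most easily illustrated of these optimizations. The sketch below shows symmetric per-tensor int8 quantization of a list of weights, a common textbook scheme; it is offered as an assumption about the general technique and not as a description of what Q.ai or Apple actually ship.

```python
def quantize_int8(weights):
    """Symmetric per-tensor quantization of floats to integers in [-127, 127].

    Returns the integer codes and the scale needed to recover approximate floats.
    """
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    codes = [max(-127, min(127, round(w / scale))) for w in weights]
    return codes, scale


def dequantize(codes, scale):
    """Map integer codes back to approximate float values."""
    return [c * scale for c in codes]


if __name__ == "__main__":
    weights = [0.5, -1.0, 0.25, 0.0]
    codes, scale = quantize_int8(weights)
    restored = dequantize(codes, scale)
    worst = max(abs(w - r) for w, r in zip(weights, restored))
    print("codes:", codes)
    print("worst-case error:", worst, "(bounded by about scale / 2 =", scale / 2, ")")
```

Each weight then occupies one byte instead of four, at the cost of a rounding error of at most about half the scale per weight, which is the kind of tradeoff that makes models fit within a wearable's memory and power budget.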

Privacy considerations constrain how micro-expression recognition can operate. Apple's privacy-first philosophy means facial data—particularly data revealing emotional state—should remain on-device rather than transmitting to cloud servers. This requires efficient models small enough to run entirely on devices like AirPods, smartwatches, and phones, without network connectivity. This constraint pushes toward smaller neural networks, specialized hardware accelerators, and clever edge processing techniques—exactly the areas where Q.ai's engineering team had developed expertise.

Privacy Implications and User Data Protection

On-Device Processing Strategy

Apple's acquisition of Q.ai must be understood within the context of the company's privacy positioning. For decades, Apple has differentiated itself through privacy advocacy, marketing devices that process sensitive information on-device rather than transmitting it to corporate servers. This privacy commitment creates both trust with consumers and practical constraints on how AI capabilities can operate.

Micro-expression recognition presents particularly sensitive privacy implications. If Apple could train models that infer users' emotional states, stress levels, and thoughts from facial expressions, this could enable powerful personalization. It could also amount to an exceptionally invasive form of surveillance: a system monitoring emotional state without explicit consent or awareness. Apple's resolution to this tension involves on-device processing: running sophisticated facial recognition models entirely on the devices in users' hands and on their wearables, never transmitting raw facial data or detailed expression analysis to Apple's servers.

This on-device approach provides genuine privacy advantages. Users retain control over their facial data—the device captures and analyzes it locally, but no centralized databases accumulate facial expression profiles. Apple doesn't need to build server infrastructure processing billions of facial images daily. Yet on-device processing also constrains capabilities. Without access to vast server-side computing resources and continuous learning from new data, on-device models might be less capable than cloud-based alternatives offered by competitors. Apple accepted this tradeoff explicitly, valuing privacy over maximum capability.

Regulatory Alignment

The timing of this acquisition intersected with evolving privacy regulations worldwide. The European Union's Digital Markets Act, AI Act, and other regulatory frameworks were increasingly constraining how large technology companies could process biometric data. Regulations emerging in various US states similarly raised questions about facial recognition and emotion detection. Q.ai's technology and Apple's acquisition strategy needed to account for this regulatory environment.

On-device processing aligns well with privacy-first regulations. If Apple can demonstrate that micro-expression detection and analysis occur entirely on users' devices without data transmission to Apple's servers, regulatory scrutiny diminishes. Users retain fundamental control over who accesses emotional state information derived from facial analysis. Explicit user consent becomes practical—enabling the feature only for specific applications where users opt-in, rather than building system-wide surveillance infrastructure.
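The per-application opt-in described above amounts to a default-deny permission check. The following sketch shows that idea with a hypothetical in-memory registry; the class, its methods, and the app identifiers are invented for illustration and do not correspond to any Apple API.

```python
from dataclasses import dataclass, field


@dataclass
class ExpressionSensingPolicy:
    """Hypothetical per-app opt-in registry kept on the device; not an Apple API."""
    allowed_apps: set = field(default_factory=set)

    def grant(self, app_id):
        """Record that the user opted in for this app."""
        self.allowed_apps.add(app_id)

    def revoke(self, app_id):
        """Withdraw consent; safe to call even if consent was never granted."""
        self.allowed_apps.discard(app_id)

    def may_analyze(self, app_id):
        # Default deny: analysis runs only for apps the user explicitly opted into.
        return app_id in self.allowed_apps


if __name__ == "__main__":
    policy = ExpressionSensingPolicy()
    print(policy.may_analyze("com.example.music"))
    policy.grant("com.example.music")
    print(policy.may_analyze("com.example.music"))
```

Keeping the check default-deny means a newly installed app receives no expression data until the user acts, which is the behavior the opt-in framing implies.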

Apple's historical privacy advocacy gave the company credibility in implementing privacy-protective approaches to facial expression recognition. Competitors like Google or Meta would face greater skepticism introducing similar technology, given historical concerns about how these companies monetize user data. Apple's brand reputation for privacy meant consumers might more readily trust micro-expression-based features if Apple implemented them with genuine privacy safeguards. The Q.ai acquisition represented an opportunity to introduce powerful capabilities while maintaining privacy commitments that differentiate Apple.

Market Context and Industry Implications

The 2025 AI Acquisition Landscape

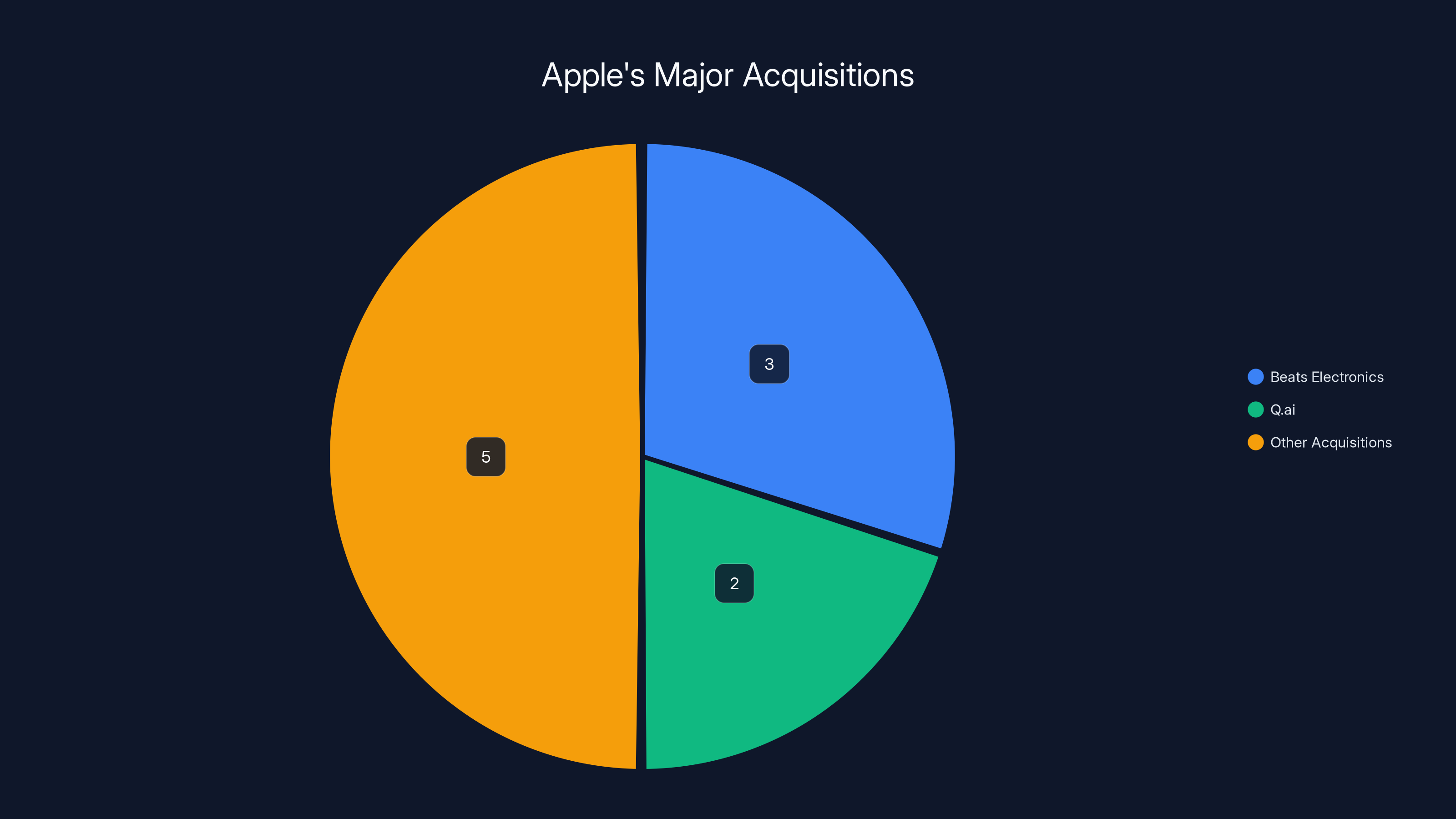

The Q.ai acquisition arrived during a broader wave of AI-driven corporate acquisitions throughout 2024-2025. Tech companies facing competitive pressure in artificial intelligence increasingly preferred acquiring proven startups over building internally. This reflected recognition that AI leadership often concentrates in specialized startups moving faster than established companies. For Apple specifically, the acquisition represented a more aggressive posture toward AI competition after years of maintaining that the company was investing heavily in AI but would do so primarily internally.

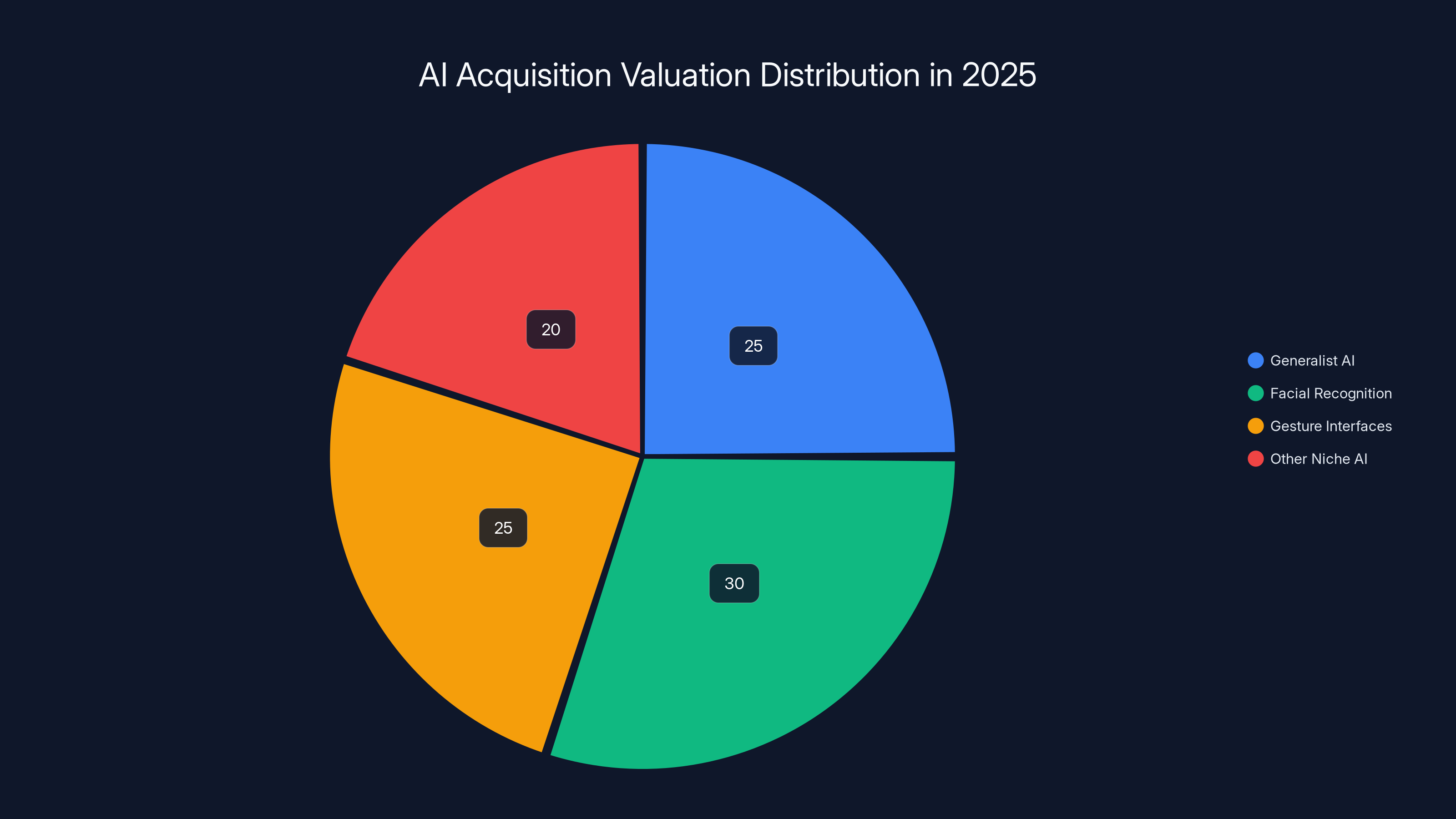

The $2 billion valuation provided market signals about startup valuations in the specialized imaging and gesture recognition space. Q.ai wasn't a generalist AI company pursuing large language models or multimodal foundation models. It occupied a specific niche—facial micro-expression recognition and gesture-based interfaces. Yet Apple valued this specialization highly, suggesting that niche AI capabilities addressing specific consumer problems commanded significant valuations in 2025's technology market. For founders and investors in specialized AI companies, the Q.ai acquisition demonstrated that focused innovation in specific domains could be more valuable than building broadly applicable general-purpose AI.

Startup Ecosystem Implications

The acquisition of Q.ai likely accelerated a dynamic where Israeli AI startups, particularly those with founders who had previous successful exits to Apple or other major tech companies, attracted disproportionate attention from acquiring companies. Aviad Maizels' track record (PrimeSense acquisition in 2013, subsequent Q.ai acquisition in 2025) demonstrated that successful Israeli founders could command significant valuations for specialized hardware-software companies combining imaging innovation with AI capability.

This created ripple effects throughout the Israeli technology ecosystem. Other imaging and gesture recognition startups would likely field acquisition interest, knowing that Apple had just validated the market for such technology. The Israeli government and venture capital community, already supportive of technology entrepreneurship, received signals that hardware-AI combinations addressing consumer devices represented particularly attractive investment domains. The Q.ai acquisition thus influenced capital flows and startup formation in the broader ecosystem, potentially accelerating development of related technologies.

Consolidation Trends in Hardware AI

Broader patterns of technology industry consolidation accelerated through the mid-2020s, driven by AI competition. Even well-resourced large companies struggled to compete in pure AI domains where specialized startups moved faster. Rather than trying to match competitors' research velocity internally, acquirers opted to buy and integrate successful startups. This consolidation trend meant fewer independent AI companies survived long-term. Promising founders faced clear choices: pursue independent venture-backed company building with eventual acquisition, or join large technology companies as employees from the beginning.

For the consumer technology industry specifically, consolidation around hardware-AI integration meant that the innovation ecosystem became increasingly concentrated. A few large companies (Apple, Google, Microsoft, Meta, Samsung) would control most consumer-facing AI capabilities, with smaller independent players filling niche markets serving enterprise or specialized applications. This raised questions about innovation incentives in subsequent decades—would venture capital invest in hardware-AI startups if acquiring companies could purchase promising companies before they achieved meaningful scale?

In 2025, niche AI specializations like facial recognition and gesture interfaces commanded significant valuations, reflecting a trend towards targeted innovation. (Estimated data)

Comparative Analysis: Hardware AI vs. Software AI

Distinctions in AI Strategy

Q.ai's acquisition revealed important distinctions between hardware-focused AI and pure software AI that dominated public conversations about artificial intelligence. OpenAI, Anthropic, and similar companies built large language models—mathematical constructs trained on vast text data, running on cloud infrastructure. These companies' valuations derived from their algorithms and trained models. Q.ai's value derived differently: from specialized hardware and sensors combined with machine learning, designed to operate on consumer devices in users' hands.

These approaches involve fundamentally different competitive dynamics. Software-AI companies compete through model sophistication and training data scale. More parameters, better training data, and more computational resources generally produce superior language models. This favors companies with access to massive computational infrastructure and broad internet data. Hardware-AI companies compete through sensor specialization, form factor integration, and on-device efficiency. Success requires deep expertise in consumer electronics manufacturing, supply chain management, and hardware-software co-design. These capabilities concentrate in companies like Apple with decades of hardware engineering experience.

Apple's acquisition of Q.ai reflected recognition that competing with software-AI pure-plays on their terms proved difficult. Apple lacked the computational infrastructure and data advantages of competitors. But Apple could dominate hardware-AI integration where consumer devices became the competitive battleground. By acquiring Q.ai, Apple positioned its wearables and spatial computing products as the primary arena for meaningful AI innovation from the consumer perspective—not raw algorithmic capability, but AI capabilities that solve real consumer problems through better hardware.

Implications for Product Development

This distinction shaped how Apple would likely integrate Q.ai's technology into products. Rather than building general-purpose AI capabilities available across applications, Apple would bake micro-expression recognition and gesture control into specific products where these capabilities created superior user experiences. AirPods would gain emotional awareness capabilities enabling automatic optimization. Vision Pro would support more natural gesture control. Apple Watch would transition from predominantly voice-command and touch-based interaction toward multimodal interaction combining expressions, micro-gestures, and explicit commands.

This focused approach suited Apple's product philosophy. The company historically resisted adding features merely because they were technically possible. Features needed clear use cases improving user experiences in meaningful ways. Q.ai's technology wouldn't be bolted onto every Apple product indiscriminately. Instead, product teams would carefully evaluate where micro-expression recognition genuinely improved interaction paradigms. This disciplined approach meant slower technology adoption than competitors might achieve, but potentially more meaningful integration when the technology did ship.

The Balance of On-Device vs. Cloud Processing

Q.ai's focus on on-device processing differentiated it from many AI startups pursuing cloud-first strategies. Cloud processing offers advantages—access to vast computational resources, continuous model improvement from accumulated data, easy deployment to users without special hardware requirements. Yet cloud processing also creates privacy concerns and latency issues. On-device processing inverts this tradeoff: privacy and low-latency response times improve, but computational resources and model sophistication constraints apply.

Apple's commitment to on-device processing meant Q.ai's technology would need to operate efficiently on devices like AirPods, with power budgets measured in milliwatts and battery energy budgets measured in milliwatt-hours. This required developing lean, efficient models that sacrificed some accuracy for computational efficiency. Yet even this tradeoff aligned with Apple's strategy. Users might accept slightly lower accuracy in micro-expression recognition (for illustration, 97% instead of 99%) in exchange for guaranteed privacy, knowing that facial data never left their device. This privacy-first approach differentiated Apple from competitors and justified continued focus on Q.ai's on-device processing specialization.
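To make the power constraint concrete, here is a rough back-of-the-envelope sketch in Python relating a model's power draw (milliwatts) to a battery's stored energy (milliwatt-hours). Every number below is a made-up assumption chosen for illustration; none comes from Apple or Q.ai, and the function names are hypothetical.

```python
def average_power_mw(active_mw: float, idle_mw: float, duty_cycle: float) -> float:
    """Average draw when the model runs for a fraction `duty_cycle` of the time."""
    if not 0.0 <= duty_cycle <= 1.0:
        raise ValueError("duty_cycle must be between 0 and 1")
    return active_mw * duty_cycle + idle_mw * (1.0 - duty_cycle)


def runtime_hours(battery_mwh: float, avg_power_mw: float) -> float:
    """Hours of operation: stored energy (mWh) divided by average power (mW)."""
    if avg_power_mw <= 0:
        raise ValueError("avg_power_mw must be positive")
    return battery_mwh / avg_power_mw


if __name__ == "__main__":
    # Assumed values: 5 mW while inferring, 0.5 mW idle, model active 10% of the time.
    avg = average_power_mw(active_mw=5.0, idle_mw=0.5, duty_cycle=0.10)
    # Assumed share of the battery reserved for this feature: 200 mWh.
    hours = runtime_hours(battery_mwh=200.0, avg_power_mw=avg)
    print(f"average draw: {avg:.2f} mW, runtime on reserved energy: {hours:.1f} h")
```

The arithmetic shows why lean models matter: under these assumptions, halving the active power or the duty cycle stretches the same energy reserve considerably, which is the kind of trade a designer might make against a small loss in accuracy.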

Future Product Possibilities and Timeline

Near-Term Integration (2025-2026)

The most likely near-term products benefiting from Q.ai integration would be next-generation AirPods and Apple Watch models. These products release on annual cycles, and Apple would have begun design phases for 2025-2026 models prior to publicly announcing the Q.ai acquisition. Apple's product teams would integrate micro-expression detection capabilities into devices launching 12-18 months after acquisition. Rather than complete reimagining, early integration would likely focus on specific features: AirPods detecting user stress levels and adjusting audio profiles automatically, or Apple Watch understanding when users are distracted and suppressing notifications accordingly.

These initial integrations would represent careful, limited applications of the technology—not full-scale deployment across all features. Product teams would gather real-world usage data, refine algorithms based on diverse users' actual patterns, and identify bugs or edge cases before expanding functionality. This phased approach aligned with Apple's philosophy of avoiding shipping technologies that feel half-baked or unreliable to consumers. Better to launch limited micro-expression detection that works flawlessly than ambitious capabilities that occasionally fail.

Medium-Term Product Evolution (2026-2027)

Medium-term, Apple would likely introduce more sophisticated applications. Vision Pro 2 or a future AR glasses prototype might incorporate significantly enhanced gesture control derived from Q.ai's micro-expression and facial movement recognition. Rather than relying primarily on hand gestures and eye gaze, users could control spatial computing devices through subtle facial expressions and micro-movements detected by the device's front sensors. This would reduce user fatigue and enable interaction in situations where hand gestures prove impractical—while lying down, during exercise, or while holding objects.

Simultaneously, improvements to Siri would likely incorporate Q.ai's technology. By 2026-2027, Siri could understand not just spoken requests but emotional context derived from facial expression analysis. Users could express frustration through micro-expressions that Siri would interpret, potentially offering reassurance or modified responses. Siri could detect when users were confused by responses and proactively offer clarifications or alternative approaches. This emotional intelligence layer wouldn't merely improve Siri's capabilities technically—it would change how the assistant felt to users, making interaction feel more natural and empathetic.

Long-Term Vision (2027+)

Longer-term, Q.ai's technology could enable entirely new product categories impossible without gesture and expression recognition. Apple might develop smart contact lenses or glasses lacking any visible control surface, requiring sophisticated facial expression recognition to operate. A true augmented reality glasses device could detect users' expressions and eye movements, inferring intent and automatically adjusting displayed content. Rather than explicitly commanding devices through gestures, users could browse information through natural eye movements and control augmented reality content through subtle facial expressions.

Within existing products, Q.ai technology could enable more sophisticated health tracking. Wearables detecting signs of emotional distress, exhaustion, or health issues through micro-expression analysis could provide early warnings of health problems. A smartwatch might detect stress-related facial muscle tension and recommend breathing exercises or mindfulness breaks before stress escalates. Apple Health could incorporate emotion tracking alongside current metrics, providing holistic understanding of users' physical and mental states. These capabilities transform wearables from simple activity trackers into more sophisticated health and wellness devices understanding users' emotional and psychological states.

Q.ai's unique focus on imaging and machine learning sets it apart from other AI startups that predominantly focus on language models and chatbots. (Estimated data)

Investment in AI Talent and Infrastructure

Human Capital Acquisition

While the $2 billion price reflected the technology and intellectual property, substantial value came from acquiring the Q.ai team itself. Aviad Maizels and his leadership team brought proven experience building successful hardware-AI companies, relevant domain expertise in facial recognition and gesture control, and demonstrated ability to execute. Apple's hiring practices typically involve evaluating individual engineers, but acquisitions like this provide the option to bring entire teams onto Apple's payroll with their existing working relationships intact. Teams that collaborated successfully at Q.ai would continue collaborating within Apple's organization.

Apple's commitment to retaining Q.ai's team manifested in Apple's public statements. Srouji explicitly noted that Maizels and the founding team "will join Apple," signaling Apple's commitment to keeping the founders involved in continued development. This mattered tremendously—when startup founders see acquirers dismissing their leadership, they often choose not to stay, instead pursuing new ventures. By publicly committing to retain leadership, Apple signaled respect for Q.ai's vision and likely negotiated earnout arrangements or retention bonuses ensuring the team remained engaged.

Integration Into Apple's Organization

Integrating acquired startup teams into large organizations proves notoriously challenging. Startups operate with different cultures, decision-making processes, and incentive structures than large companies. Some acquisitions fail because acquired teams become frustrated within slow-moving corporate bureaucracies and leave after vesting stock grants. Apple's reputation for successful acquisitions (Beats, various chip design companies) derived partly from the company's ability to integrate acquired talent while maintaining startup-like execution velocity.

Q.ai would likely integrate into Apple's Hardware Technologies division under Johny Srouji's leadership. This placed the team within Apple's most forward-thinking product development organization, responsible for speculative future products and fundamental technology innovations beyond current product roadmaps. Rather than being absorbed into a product team with immediate shipping deadlines, Q.ai's team would have operational independence to continue advancing core technologies while Apple's product teams determined integration points. This organizational structure acknowledged that Q.ai's technology required continued development before it could power consumer products reliably.

Research Infrastructure and Funding

Apple's acquisition provided Q.ai's team with resources vastly exceeding what a startup could access independently. Apple can fund research and development without needing quarterly revenue targets or exits on specific timelines. Q.ai's researchers could pursue ambitious long-term projects—building new datasets for micro-expression recognition, developing next-generation neural network architectures, exploring applications in spatial computing—without venture capital pressure to achieve profitability within fixed timelines.

This research freedom likely accelerated Q.ai's innovation velocity. The team gained access to Apple's computing infrastructure, research labs, and prototype manufacturing capabilities. Rather than building proof-of-concepts in a startup lab, Q.ai engineers could work with Apple's manufacturing teams to develop production-ready implementations. Access to Apple's supply chain and component suppliers meant they could experiment with specialized sensors and hardware that startup budgets couldn't support. These resource advantages could advance Q.ai's technology years beyond where independent development would progress.

Alternative Approaches Apple Could Have Taken

Internal Development Path

Apple could have pursued entirely internal development of micro-expression recognition and gesture control capabilities. The company employs thousands of machine learning engineers and researchers across various AI domains. Theoretically, this internal team could research facial micro-expression recognition, develop datasets, train models, and integrate capabilities into products. The advantage of this approach: Apple maintains 100% control over all technology, intellectual property, and product roadmap. The disadvantage: this path would likely require 3-5 years of focused research before shipping production-ready capabilities. Apple's recent acknowledgment that it was "open to M&A that accelerates our roadmap" suggested internal development would move too slowly given competitive pressure.

Licensing or Partnership Approach

Alternatively, Apple could have licensed Q.ai's technology or pursued a minority equity investment maintaining Q.ai's independence while gaining access to its innovations. This approach would have been lower-risk financially and maintained Q.ai's potential as an independent company. Yet partnerships and licensing arrangements create ongoing complications—disagreements over product direction, royalty negotiations, constraints on how Apple could modify or integrate technology. Full acquisition resolved these complications by giving Apple complete control over how Q.ai's technology evolved and integrated into products.

Competing Startup Acquisitions

Apple might have pursued different startups with relevant capabilities. The facial recognition and gesture control space included multiple companies working on related problems. Other startups offered different technical approaches: some focused on hand gesture recognition rather than facial expressions, others pursued depth-sensing approaches, still others developed software-only solutions without specialized hardware assumptions. The choice to acquire Q.ai specifically reflected Apple's assessment that micro-expression recognition through advanced imaging represented the most important missing capability for future products, and that Q.ai offered the most advanced development in this specific domain.

Regulatory Considerations and Compliance

Biometric Data Regulations

Q.ai's facial expression recognition technology falls into regulatory categories involving biometric data processing. European regulations (GDPR, AI Act) impose strict requirements on biometric data collection and processing. US state regulations (California Consumer Privacy Act, Illinois Biometric Information Privacy Act, and others) similarly constrain facial recognition applications. Any product Apple developed using Q.ai's technology would need to navigate this regulatory landscape carefully.

Apple's privacy-first stance and on-device processing approach left the company better placed to address regulatory requirements than competitors pursuing cloud-based facial recognition. By ensuring facial expression data never left users' devices, Apple could argue it wasn't collecting or storing biometric information in centralized databases subject to regulatory oversight. This regulatory edge, combined with Apple's strong privacy brand, suggested the company would find it easier than its rivals to integrate Q.ai's technology.

Antitrust Considerations

Apple's $2 billion acquisition of Q.ai generated minimal antitrust scrutiny, suggesting the deal didn't raise significant competition concerns among regulators. Q.ai was a small startup; its acquisition by Apple didn't consolidate competing major players or reduce consumer choice. If Apple had attempted to acquire a larger competitor with significant existing market share, regulatory review would have intensified. The relatively small size of the Q.ai deal likely meant streamlined approval processes, though Apple would still have needed to make regulatory filings in the jurisdictions involved.

Measuring Success: Future Evaluation Criteria

Product Integration Metrics

Apple's acquisition success would ultimately be measured through product integration and consumer adoption. Did Q.ai's micro-expression recognition technology ship in products on planned timelines? Did shipped features function reliably and provide meaningful user value? Did consumers appreciate expression-based interaction capabilities, or did they find them gimmicky or intrusive? These practical metrics would determine whether Apple's $2 billion investment generated returns through stronger products and increased customer loyalty.

Within specific products, measurable success criteria would include adoption rates for new expression-based features, user satisfaction metrics, feature reliability across diverse demographic groups, and power efficiency characteristics. AirPods Pro might measure success through adoption rates for emotion-aware audio profiles—what percentage of users enabled the feature, how frequently did they use it, and did it improve satisfaction? Vision Pro might measure success through reduction in user fatigue during extended use sessions after implementing micro-expression-based gesture controls.
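Two of the criteria above, adoption rate and reliability across demographic groups, reduce to simple arithmetic. The Python sketch below shows one way they might be computed; the function names and all figures are hypothetical placeholders, not real Apple metrics.

```python
def adoption_rate(enabled_users: int, eligible_users: int) -> float:
    """Share of eligible users who turned the feature on."""
    if eligible_users <= 0:
        raise ValueError("eligible_users must be positive")
    return enabled_users / eligible_users


def reliability_gap(accuracy_by_group: dict) -> float:
    """Spread between the best- and worst-served groups (0 means uniform)."""
    values = list(accuracy_by_group.values())
    if not values:
        raise ValueError("at least one group is required")
    return max(values) - min(values)


if __name__ == "__main__":
    # Hypothetical counts and per-group accuracies, for illustration only.
    print(f"adoption: {adoption_rate(250, 1000):.0%}")
    gap = reliability_gap({"group_a": 0.97, "group_b": 0.94, "group_c": 0.96})
    print(f"accuracy gap across groups: {gap:.2f}")
```

Tracking the gap rather than only the average accuracy matters because a feature can look reliable overall while still failing noticeably for one group of users.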

Competitive Positioning

Broader competitive success would manifest through Apple's improved positioning relative to competitors in AI and wearables. Did the acquisition enable Apple to offer capabilities competitors couldn't match? Did products incorporating Q.ai's technology gain market share against competing wearables and spatial computing devices? The acquisition's success would be measured not in absolute financial terms but in Apple's improved competitive position in increasingly important categories.

Research Output and Follow-Up Innovation

Longer-term success would be evidenced through continued research innovation from Q.ai's team within Apple. Would the team publish research papers advancing the field's understanding of micro-expression recognition? Would the team develop follow-on innovations building on the initial acquisition? Would Q.ai's work within Apple inspire additional acquisitions or research initiatives in adjacent areas? Sustained innovation output would signal a successful acquisition where the team remained engaged and productive.

Comparative Landscape: How This Fits Into Broader AI Strategy

Positioning Relative to Competitors

The Q.ai acquisition positioned Apple distinctly within the competitive landscape. While Microsoft and Google pursued general-purpose AI through partnerships and acquisitions with large language model companies, Apple pursued specialized hardware-AI integration. This differentiation reflected each company's strengths: Microsoft and Google excel at cloud infrastructure and broad software ecosystems, while Apple excels at consumer hardware and integrated device ecosystems. Rather than competing on competitors' turf (general-purpose AI models), Apple leveraged distinctive strengths in hardware innovation.

This differentiation strategy created long-term competitive advantages. If consumers' primary interaction with AI occurs through consumer devices—smartphones, wearables, AR glasses—then companies mastering hardware-AI integration possess advantages over companies with superior underlying AI models but weaker hardware capabilities. Apple's bet, signaled through the Q.ai acquisition, was that the future of AI consumer value creation lay in hardware integration more than raw algorithmic capability. Competitors with different strengths would struggle to match Apple's execution in this specific domain.

Broader Investment Pattern

The Q.ai acquisition represented one move in a broader Apple pattern of targeted acquisitions accelerating specific technology roadmaps. The company had previously acquired chip design assets (Intel's smartphone modem business, for advanced wireless capabilities), AR technology companies (Metaio, for AR expertise), and health technology companies (Gliimpse, Beddit, and other health startups) to accelerate specific product roadmaps. The Q.ai acquisition followed this pattern: acquiring proven capability in specific technology domains rather than building entirely from scratch internally.

Key Takeaways and Strategic Implications

Apple's $2 billion acquisition of Q.ai represents one of the most significant moves in the company's recent AI strategy, signaling a fundamental shift from purely internal AI development toward strategic acquisitions accelerating specific technology roadmaps. The deal places facial micro-expression recognition and gesture-based interfaces at the center of Apple's next-generation wearables and spatial computing vision. By acquiring Q.ai's team, intellectual property, and proven technology, Apple gained a significant head start on capabilities that could define human-computer interaction for the next decade.

The acquisition revealed important truths about AI competition and innovation in consumer technology. While software-AI companies compete through model sophistication and training data scale—domains favoring larger companies with computational resources—hardware-AI integration creates competition around specialization, supply chain expertise, and device ecosystem integration. Apple's distinctive advantages lie precisely in these hardware domains, making the Q.ai acquisition strategically coherent with the company's existing strengths.

Looking forward, this acquisition's impact will unfold across multiple product categories over several years. Initial integration into AirPods and Apple Watch will establish the technical foundation and refine algorithms through real-world usage. Medium-term vision will likely see significant Vision Pro and AR-related capabilities leveraging Q.ai's gesture recognition. Longer-term, entirely new product categories may emerge—true AR glasses without visible controls, smart rings enabling sophisticated interaction through expression recognition, or next-generation health devices understanding emotional and psychological states alongside physical metrics.

For the broader technology industry, the acquisition signals that specialized hardware-AI startups addressing specific consumer problems represent valuable acquisition targets. The Israeli startup ecosystem in particular may see accelerated activity as successful founders pursue specialized hardware-AI combinations. Investors observing Apple's $2 billion valuation for Q.ai's proven technology may increase investment in related domains, potentially accelerating innovation in facial recognition, gesture control, and wearable AI more broadly.

Ultimately, the Q.ai acquisition represents Apple's pragmatic recognition that artificial intelligence competition in consumer technology isn't determined solely by algorithmic capability. It's determined by how effectively companies integrate AI into devices that delight users through superior experiences. By acquiring Q.ai, Apple acquired the specialized capability, proven talent, and intellectual property necessary to lead in that integration over the next decade.

FAQ

What is Q.ai and why did Apple acquire it?

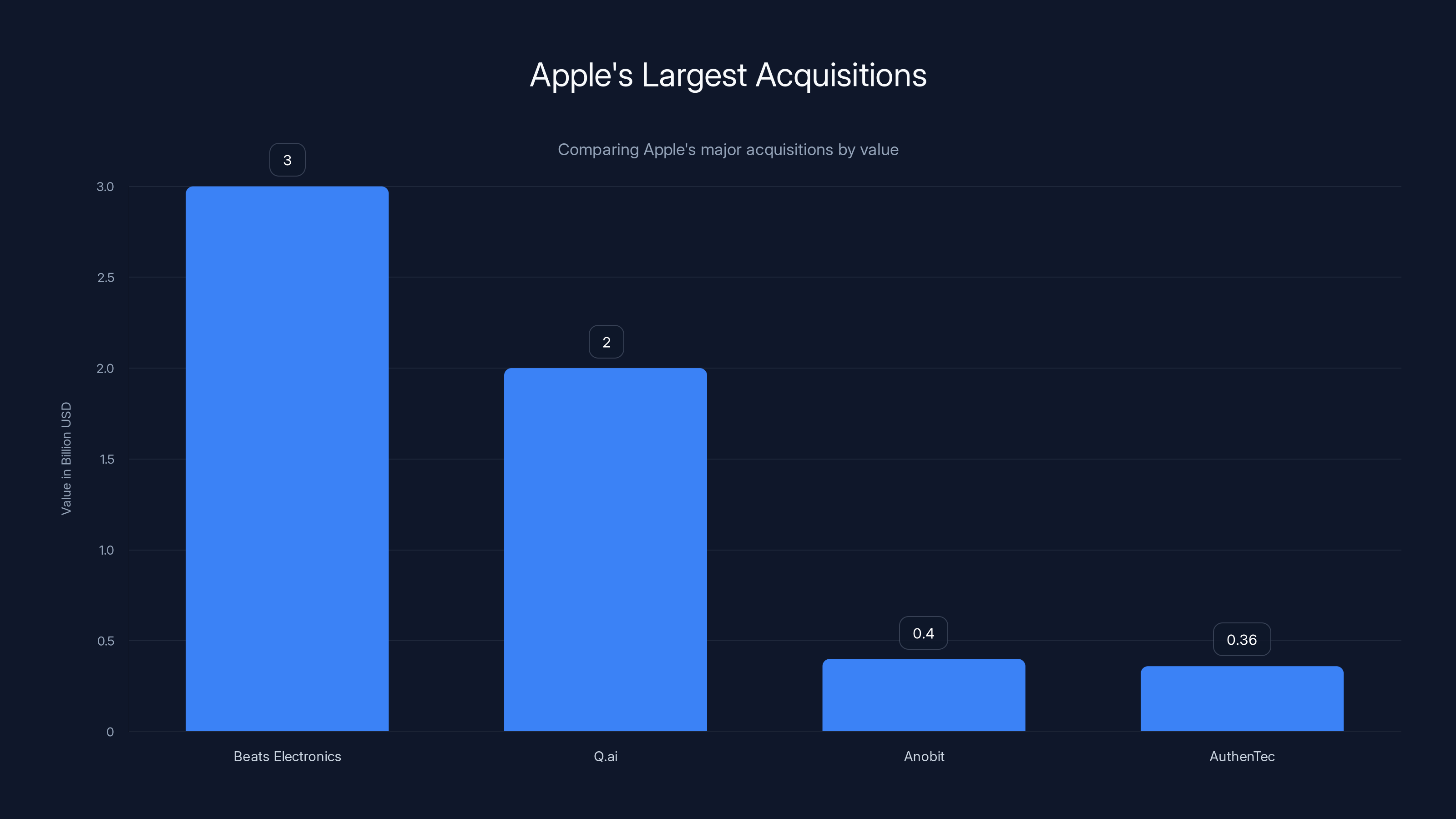

Q.ai is an Israel-based startup specializing in facial micro-expression recognition and gesture-based interface technology. Apple acquired Q.ai for approximately $2 billion to gain advanced imaging and machine learning capabilities focused on detecting subtle facial movements, which could enable entirely new ways for users to interact with devices. The acquisition is reportedly Apple's second-largest purchase ever, after the Beats Electronics acquisition in 2014.

What specific technology does Q.ai develop?

Q.ai develops technology for detecting and interpreting micro-expressions—subtle involuntary facial movements lasting fractions of a second that reveal emotional and cognitive states. The company has pioneered techniques for using specialized imaging sensors combined with machine learning algorithms to recognize these expressions and translate them into device commands or contextual understanding. Patents filed by Q.ai show applications in headphones and glasses using "facial skin micro movements" to enable hands-free control without voice commands.

How might Q.ai's technology improve Apple products?

Q.ai's technology could enable significant improvements across Apple's wearables and spatial computing products. AirPods could become emotion-aware, automatically adjusting audio profiles based on detected user stress or focus levels. Apple Watch could support expression-based interaction, allowing users to control devices through subtle facial movements rather than relying on tiny screens or voice commands. Vision Pro could provide more natural gesture control requiring less hand movement and physical effort during extended use, reducing user fatigue in spatial computing applications.

Why did Apple choose acquisition over internal development?

Apple's CEO Tim Cook stated the company is "open to M&A that accelerates our roadmap." Internal development of micro-expression recognition technology would likely have required many years of research, dataset creation, and algorithm refinement. By acquiring Q.ai, Apple gained proven team talent, extensive research datasets, registered patents, and working prototypes that compressed the development timeline significantly. This strategic choice reflected recognition that competitive pressure in AI required faster paths to advanced capabilities than pure internal development could provide.

What does the Q.ai acquisition reveal about Apple's AI strategy?

The acquisition signals that Apple differentiates its AI strategy from competitors like Google and Microsoft by focusing on hardware-AI integration rather than general-purpose language models. While Microsoft invested heavily in OpenAI and Google developed large language models, Apple pursued specialized capabilities directly supporting consumer devices. The Q.ai acquisition demonstrates Apple's bet that future AI value creation in consumer technology lies in sophisticated hardware-software integration enabling new interaction paradigms, rather than in raw algorithmic capability alone.

How does Q.ai's technology protect user privacy?

Q.ai designed its technology for on-device processing, meaning facial expression recognition and analysis occur entirely on users' devices without transmitting raw facial data or detailed analysis to Apple's servers. This privacy-first approach aligns with Apple's privacy positioning and regulatory requirements around biometric data processing in jurisdictions like the European Union. Users maintain control over their facial data, and Apple doesn't accumulate centralized databases of facial expression profiles or emotional state information, addressing privacy concerns that would arise with cloud-based alternatives.

When will Q.ai technology appear in Apple products?

While Apple hasn't announced specific products or timelines, the technology will likely begin appearing in next-generation AirPods and Apple Watch models within 12 to 18 months of the acquisition announcement. Initial implementations would focus on specific features, such as emotion-aware audio profiles and gesture-based control, before expanding to more sophisticated applications. In the medium term (2026-2027), Vision Pro successors would likely incorporate significantly enhanced gesture control based on Q.ai's facial micro-movement recognition. In the longer term, entirely new product categories may build on the technology.

How does this acquisition impact competitors like Google and Meta?

The Q.ai acquisition intensifies competitive pressure on Google and Meta to develop comparable facial expression recognition and gesture control capabilities. Google has computational imaging expertise but has invested less in micro-expression recognition than in hand gesture control. Meta has invested heavily in hand gesture recognition but less in facial expressions. Both companies must now choose whether to accelerate internal development, pursue competing acquisitions, or partner with specialized companies. The reported $2 billion price for Q.ai's focused technology demonstrates the market value of specialized hardware-AI capabilities and will likely draw acquisition interest and investment toward related startups.

What makes Aviad Maizels' previous experience relevant to Q.ai's acquisition?

Aviad Maizels co-founded PrimeSense, a 3D sensing and imaging company that Apple acquired in 2013 for approximately $360 million. PrimeSense's technology pioneered depth sensing and 3D imaging capabilities later integrated into Apple products. Maizels' second venture, Q.ai, continued this pattern of combining advanced imaging hardware with machine learning to create new interaction capabilities. His track record of building hardware-AI companies, and of creating technologies Apple values, likely contributed both to Apple's confidence in the Q.ai acquisition and to its decision to retain Maizels within the organization.

Could facial expression recognition technology raise ethical concerns?

Facial expression recognition technology revealing emotional states could raise legitimate privacy and ethical concerns if implemented without proper safeguards. The technology could theoretically enable invasive emotional surveillance, discrimination based on detected emotional states, or manipulation based on emotional understanding. Apple's privacy-first approach—implementing on-device processing, providing explicit user controls, and avoiding centralized data accumulation—addresses these concerns substantially. However, ongoing questions about how emotion detection should be regulated, when users should provide explicit consent, and what limitations should apply to emotion-based algorithmic decision-making will likely emerge as the technology matures and appears in consumer products.

How does Q.ai's acquisition fit into Apple's long-term vision for spatial computing?

Spatial computing devices like Vision Pro require sophisticated interaction capabilities that go beyond traditional interfaces. Q.ai's facial expression and micro-gesture recognition technology enables Vision Pro successors and future AR glasses to detect user intent and respond to commands with minimal explicit gesturing or voice commands. This advancement is crucial for true augmented reality glasses that need to be lightweight, power-efficient, and comfortable during extended use. Facial expression recognition allows such devices to understand what users want without requiring visible hand gestures or voice commands that might feel socially awkward in public settings, making AR glasses viable for all-day wear like traditional eyeglasses.
