AI Glasses & the Metaverse: What Zuckerberg Gets Wrong [2025]

Introduction: The Hype Machine Meets Reality

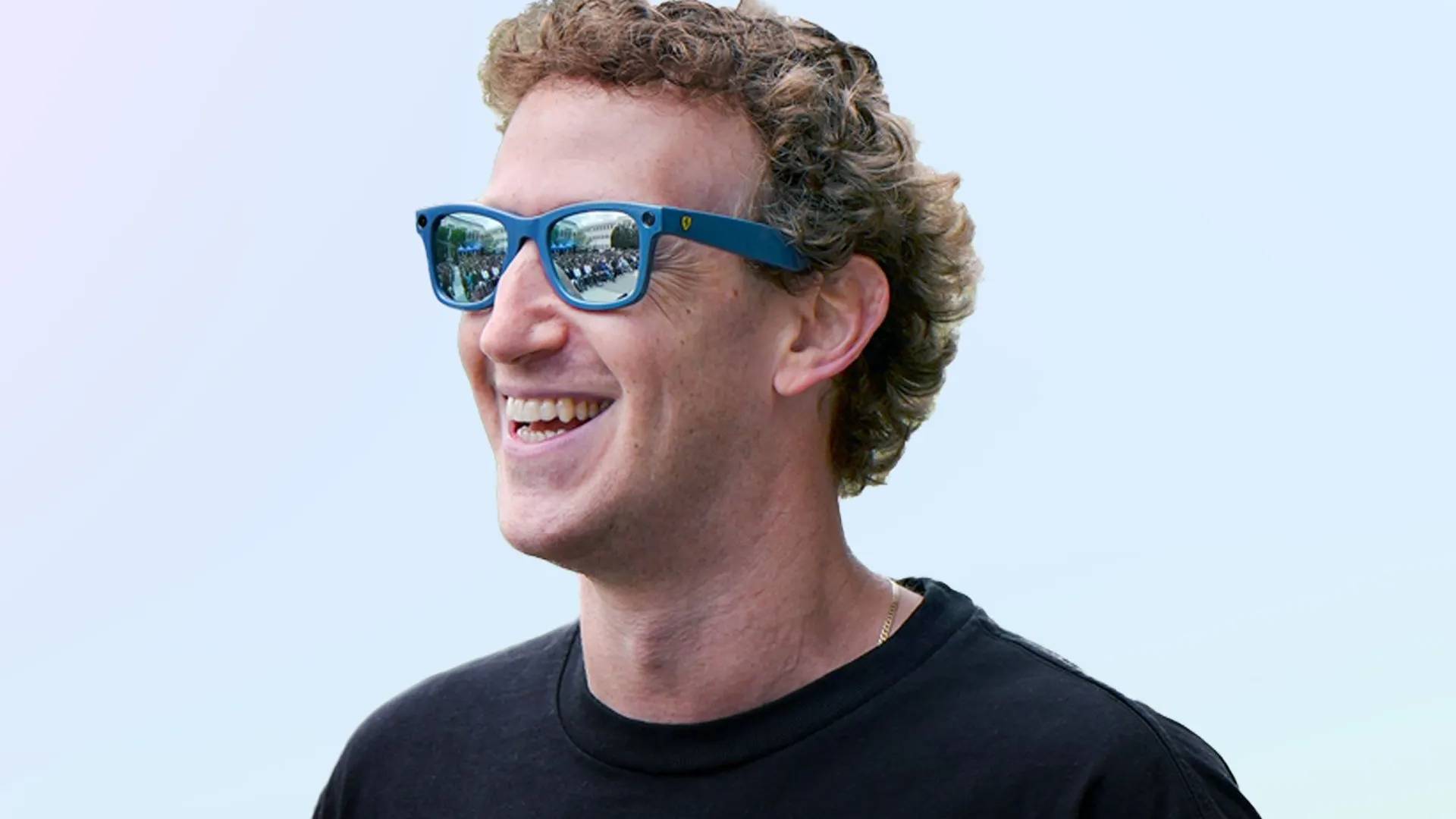

Mark Zuckerberg says it's hard to imagine a future without AI glasses. And he'd know something about betting big on hardware that the world wasn't ready for. Yet here we are again, watching the CEO of Meta evangelize the next revolution in computing while the previous one (remember the metaverse?) quietly fades from headlines.

This isn't cynicism. This is pattern recognition.

Back in 2021, Zuckerberg announced Meta's pivot away from Facebook and toward the metaverse with absolute conviction. He spent

Now he's pivoting again. This time, the play is AI glasses.

The thing is, Zuckerberg isn't entirely wrong about AI glasses being significant. They probably will shape how we interact with information, navigation, communication, and work. But calling the future "hard to imagine" without them is either masterful marketing or a fundamental misunderstanding of market timing, consumer behavior, and the engineering challenges that actually matter.

Let's dig into what's real, what's hype, and what Zuckerberg isn't talking about when he talks about AI glasses as inevitable.

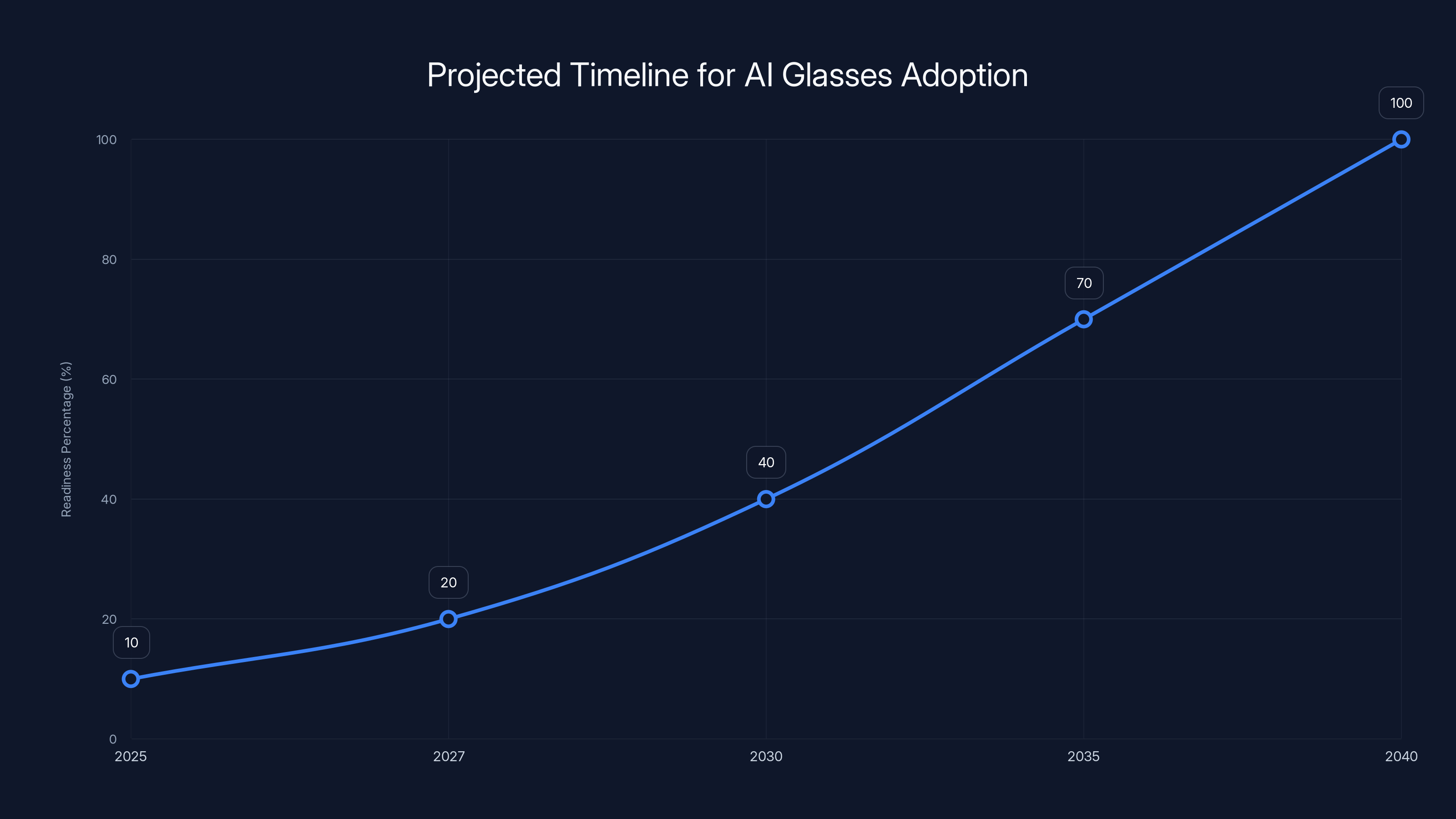

Estimated data suggests AI glasses may not reach full market readiness until 2040, highlighting the gap between vision and current technological capabilities.

TL;DR

- AI glasses could reshape human-computer interaction, but we're 5-10 years minimum from mainstream adoption, not the 2-3 years Meta is implying

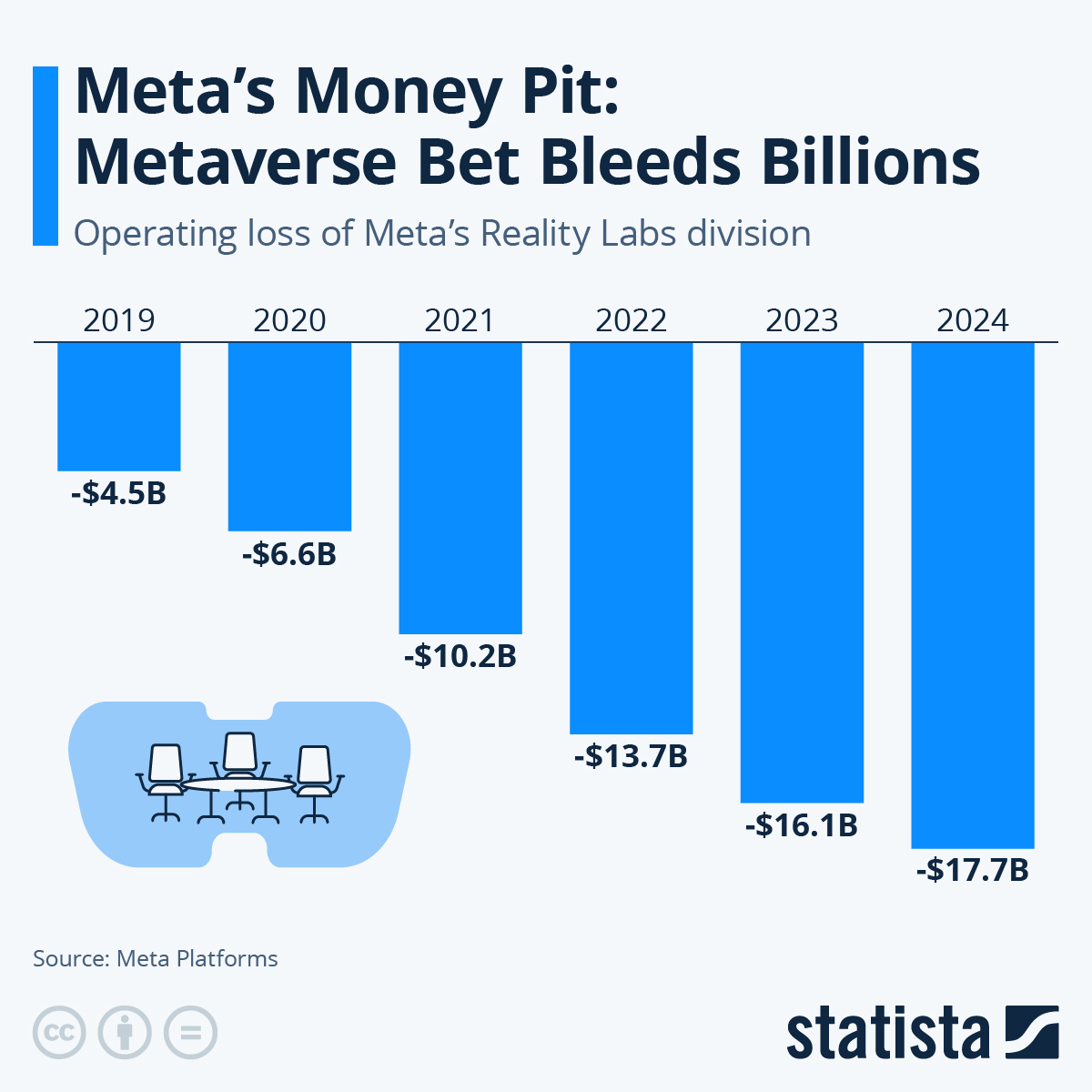

- Meta's metaverse bet failed spectacularly, with $50+ billion in losses since 2020, signaling that brand conviction alone doesn't create market demand

- Technical hurdles remain substantial: battery life, thermal management, display quality, and spatial AI processing are years away from consumer-grade solutions

- Privacy concerns are existential, not peripheral—always-on cameras and AI interpretation of your environment raise questions regulators are only starting to ask

- The real competition isn't Meta's Vision Pro rivals, it's your smartphone—and phones are remarkably good at doing what AR glasses promise

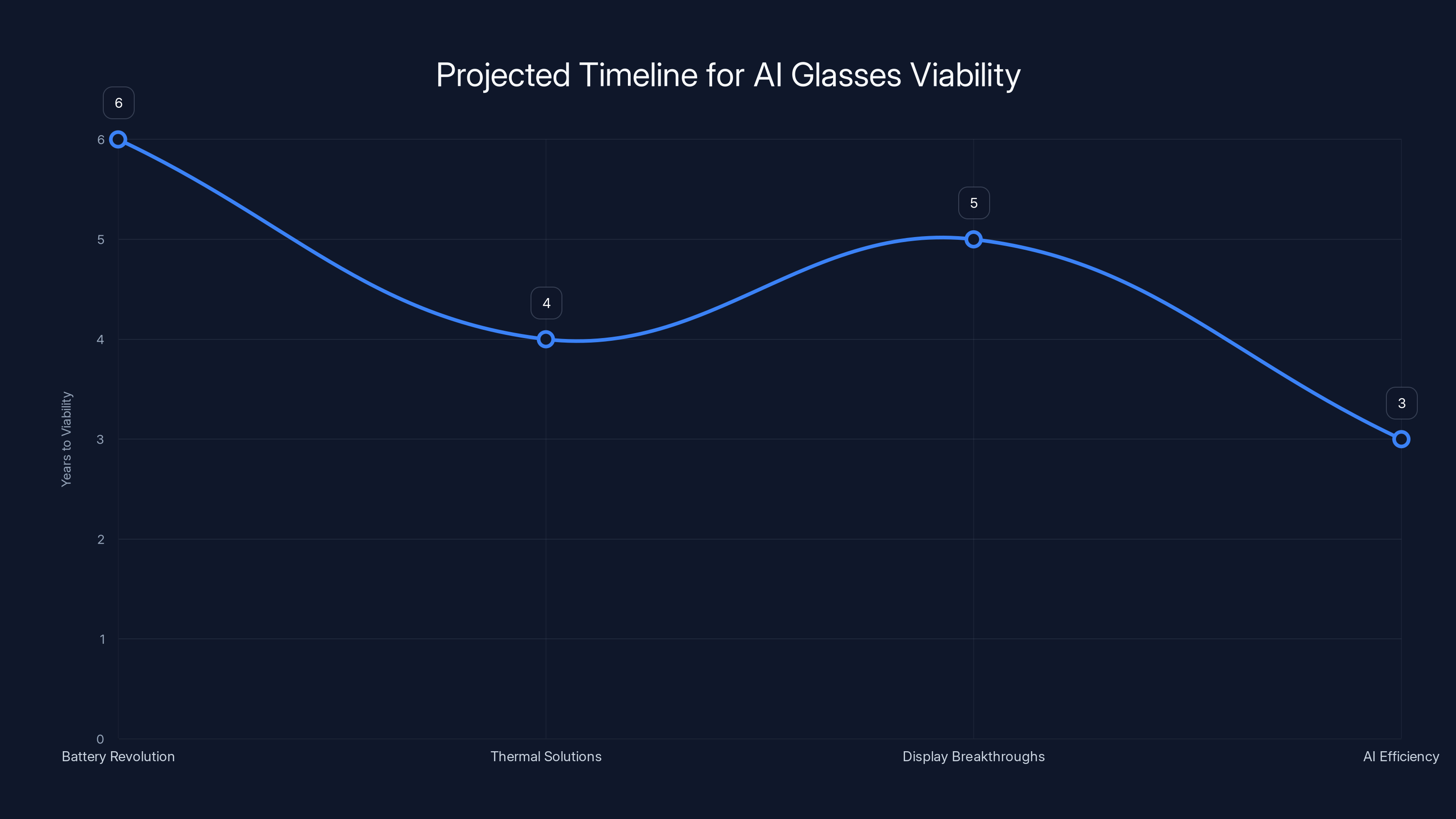

Estimated data shows that AI glasses could become commercially viable within 3 to 6 years, depending on advancements in battery, thermal, display, and AI technologies.

Why Mark Zuckerberg's Vision Matters (Even When It's Wrong)

Let's start here: Zuckerberg has built a trillion-dollar company before. Facebook went from dorm room to global infrastructure. Instagram, which Facebook acquired in 2012, became the default social platform for visual media. WhatsApp, acquired in 2014, powers messaging for more than 2 billion people. Whatever you think of his choices, his ability to spot emerging platforms and move capital toward them is undeniably real.

Which makes his current conviction in AI glasses interesting, not because it's definitely right, but because it reveals something about how he's thinking about the next decade.

Zuckerberg's operating theory, as he's articulated it, goes like this: computing today is still fundamentally constrained by the interface. We stare at screens. We tap glass. We're limited to a 6-inch window into digital information. AI glasses remove that constraint. They project information directly into your field of view. They understand context through cameras and spatial sensors. They can interpret what you're looking at and provide real-time information, suggestions, translations, and augmentation.

The vision, in its simplest form, is that AI glasses become the default interface between you and artificial intelligence.

Here's where it gets interesting: that vision is probably directionally correct. The form factor might look different. The timeline is definitely wrong. But the idea that we'll eventually move past phone-centric computing toward more ambient, contextual forms of information access? That's not a crazy prediction.

The problem is that Zuckerberg is collapsing "someday, probably true" into "soon, definitely inevitable." And he's making billion-dollar bets accordingly.

The Metaverse Gamble: A $50 Billion Lesson

You can't understand Meta's current push on AI glasses without understanding how completely the metaverse bet failed.

In October 2021, Mark Zuckerberg renamed Facebook, Inc. to Meta Platforms and declared that it was now essentially a metaverse company. Facebook, the social network, would be one product among many, all oriented toward a vision of persistent, three-dimensional virtual worlds where people would work, play, and socialize through digital avatars.

It was a bold repositioning. It was also premature.

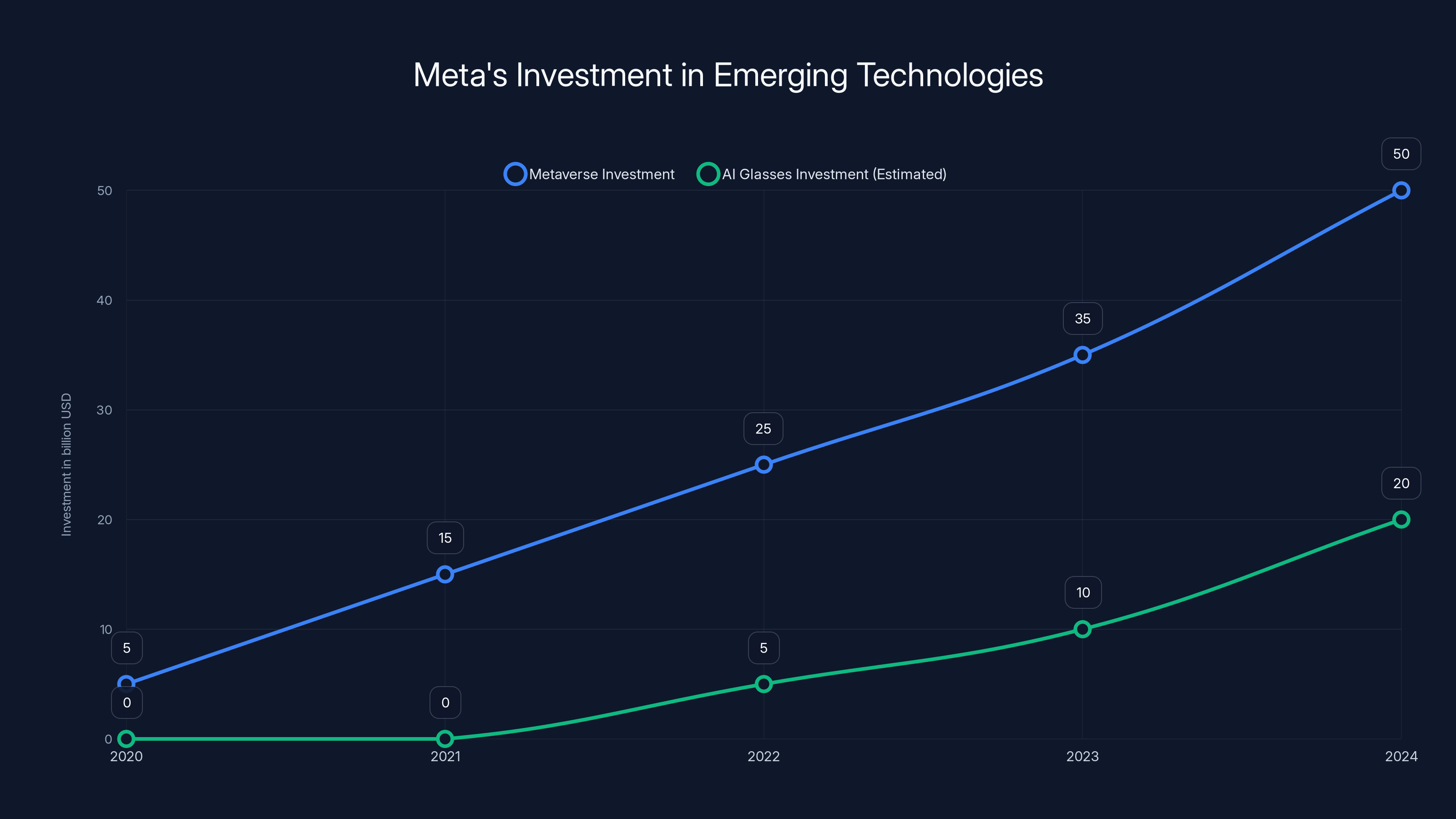

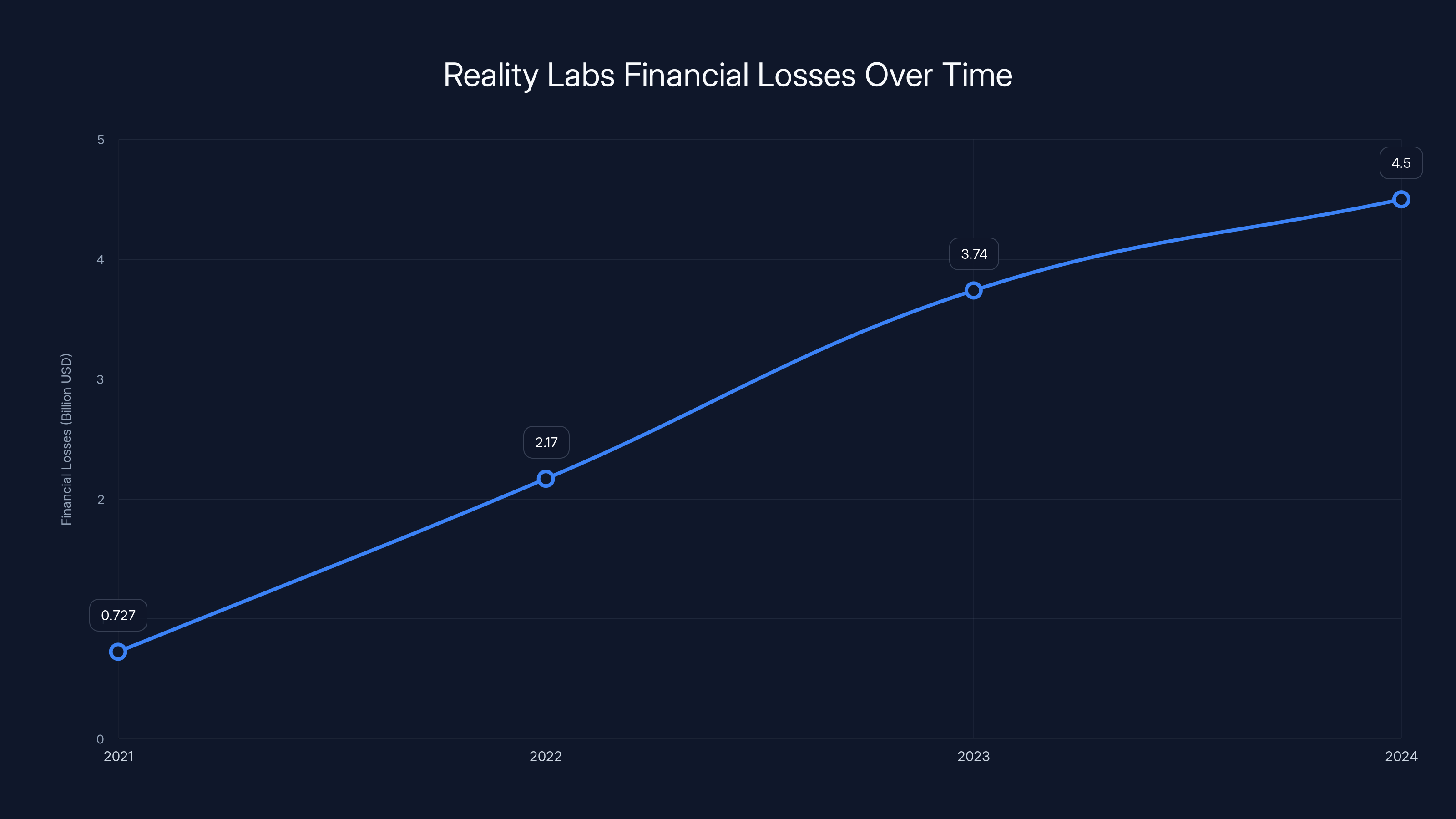

Meta's Reality Labs division became the vehicle for this bet, and it started spending like a company that had already won. According to Meta's financial filings, Reality Labs posted operating losses of roughly:

- 2021: $10.2 billion

- 2022: $13.7 billion

- 2023: $16.1 billion

- 2024: Losses continuing with no clear path to profitability

Total losses since 2020: over $50 billion.

Meanwhile, what happened to the metaverse itself?

It didn't arrive. Meta's Quest VR headsets remained niche products. Virtual worlds populated by avatars didn't become how people spend their leisure time. Office work didn't move into Horizon Workrooms. The ecosystem of VR content never achieved critical mass. By 2024, VR was still a hobbyist product, not a consumer standard.

Why? Several converging reasons:

Adoption friction was higher than predicted. VR headsets are expensive (

The experiences weren't compelling enough. Virtual environments looked and felt uncanny. Socializing through avatars felt performative rather than authentic. Work in VR was slower and more frustrating than work in real environments or on traditional computers. Gaming was the one use case that worked, and it was still better on traditional platforms.

Content required massive investment. Building quality VR experiences costs millions. Without mainstream adoption to justify the investment, the content never materialized. Without content, adoption stalled. It's a classic chicken-and-egg problem.

This is crucial context because Meta is now positioning AI glasses as the solution. But AI glasses have many of the same problems:

- Higher friction than phones (new form factor, new interfaces, new ecosystems)

- Experiences still need to be invented (what actually gets better with AR glasses?)

- Content requires massive investment

- Adoption feedback loops need to be created from nothing

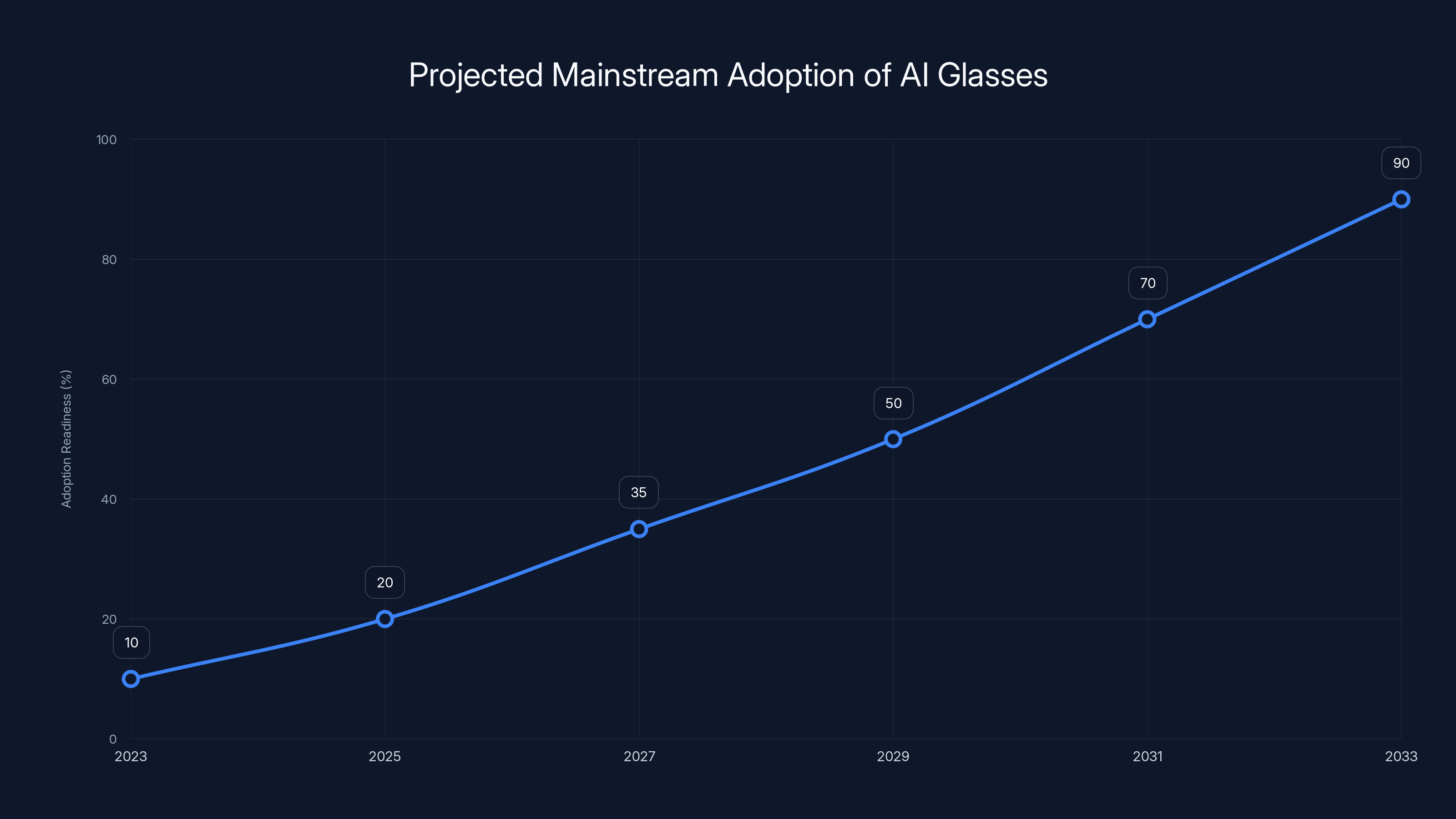

Mainstream adoption of AI glasses is projected to be viable by 2030-2032, with readiness increasing as technological breakthroughs occur. Estimated data.

The Technical Reality Behind AI Glasses

Let's talk about what actually has to work for AI glasses to be viable.

This isn't theory. Companies like Apple with the Vision Pro, Microsoft with HoloLens, and Meta with its Ray-Ban smart glasses are actively shipping products. They're hitting real constraints that no amount of hype solves.

Battery Life: The Unsolved Problem

AI glasses need to run for a full day of use without dying. That's roughly 8-12 hours of active use, plus standby time.

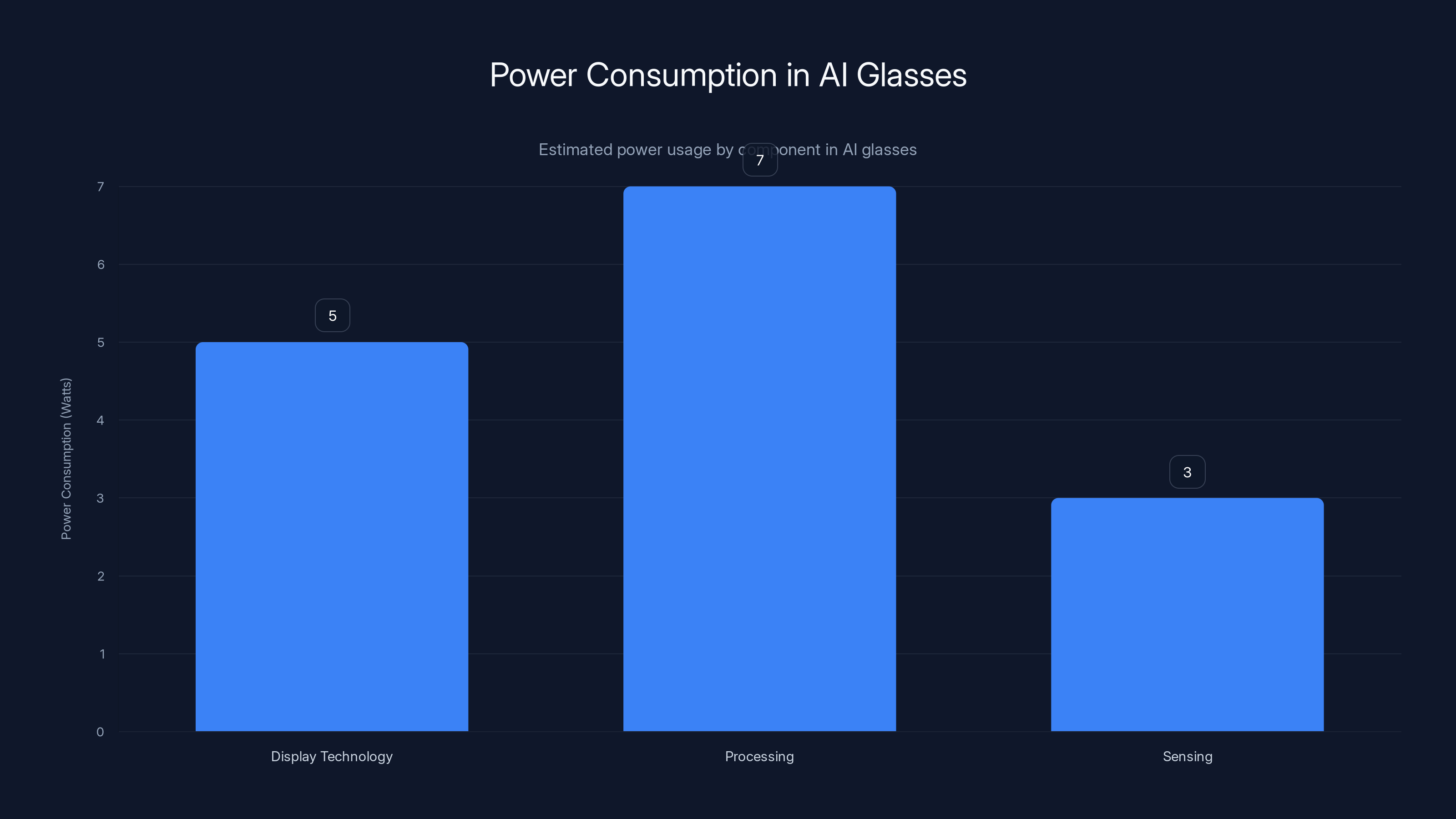

Here's the challenge: today's AI glasses consume power from three places simultaneously:

- Display technology (whether micro-LED, micro-OLED, or holographic): Drawing images directly into your field of view requires significant power

- Processing (running AI models for scene understanding, translation, object recognition): Modern AI models are power-hungry

- Sensing (cameras, depth sensors, IMU data): Continuous capture of spatial information draws steady power

Meta's current smart glasses (the Ray-Ban collaboration) dodge this by being relatively dumb. They're mostly cameras, microphones, and speakers with modest onboard processing, and the heavy AI work runs on your phone or in the cloud. That's how they get usable battery life from a battery small enough to fit in the frame.

But that's not the vision. The vision requires the glasses themselves to be intelligent. Which means processing on-device. Which means significantly more power consumption.

Today, even the best AR glasses prototypes get 2-4 hours of actual use before needing a charge. That's not viable for a device meant to replace your phone or compete with glasses you wear all day.

The math: a modern smartphone battery (
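To make the runtime arithmetic concrete, here is a minimal sketch that divides stored energy by total power draw across the three consumers listed above (display, processing, sensing). Every number in it, the per-component wattages and the 1 Wh battery, is a hypothetical assumption chosen for round arithmetic, not a measurement of any shipping product.

```python
# Hypothetical per-component power draws in watts for an "intelligent"
# on-device design. Illustrative assumptions only, not product measurements.
POWER_DRAW_W = {
    "display": 0.3,
    "processing": 0.5,
    "sensing": 0.2,
}


def runtime_hours(battery_wh: float, draws_w: dict[str, float]) -> float:
    """Hours of continuous use: stored energy (Wh) divided by total draw (W)."""
    total_w = sum(draws_w.values())
    if total_w <= 0:
        raise ValueError("total power draw must be positive")
    return battery_wh / total_w


def battery_needed_wh(hours: float, draws_w: dict[str, float]) -> float:
    """Energy required to sustain the given total draw for the given hours."""
    return hours * sum(draws_w.values())


if __name__ == "__main__":
    # Assumed ~1 Wh battery as a stand-in for what fits in a frame (assumption).
    print(f"Runtime: {runtime_hours(1.0, POWER_DRAW_W):.1f} h")
    print(f"Needed for a 10 h day: {battery_needed_wh(10, POWER_DRAW_W):.1f} Wh")
```

Under these assumed inputs, a design drawing 1 W lasts about an hour, and a 10-hour day needs about 10 Wh, ten times the assumed frame battery. Trimming any single consumer helps, but the gap only closes if total draw falls sharply or stored energy rises sharply.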

Thermal Management: Heat That Can't Escape

AI processing generates heat. Lots of it.

When you're running language models, vision processing, and spatial computing simultaneously, you're dissipating watts of power in a device that sits directly on your face.

There's no room in a glasses frame for a meaningful heat sink or a fan, so there's no way to move air. The thermal budget is maybe 1-2 watts before the device becomes uncomfortable to wear. Current AI chips in phones and tablets easily exceed that when running inference-heavy workloads.

This is why current AR glasses either:

- Offload processing to a phone or cloud server (adds latency, requires connectivity)

- Run very lightweight AI models (severely limits capability)

- Only enable AI when stationary (defeats the purpose of wearable AI)

None of these scale to a mass-market product.
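One way to see why these workarounds exist is to treat the thermal budget as a cap on average power. The sketch below, assuming a 1.5 W sustained budget (inside the 1-2 W range above) and hypothetical idle and active power figures, computes the largest fraction of time a workload can run. It treats heat as averaging out over time, a simplification that ignores short-term temperature spikes.

```python
# Assumed sustained thermal budget in watts (inside the 1-2 W range above).
THERMAL_BUDGET_W = 1.5


def max_duty_cycle(budget_w: float, idle_w: float, active_w: float) -> float:
    """Largest fraction of time a workload can be active within a power budget.

    Average power is d * active_w + (1 - d) * idle_w. Requiring that to stay
    at or below budget_w gives d <= (budget_w - idle_w) / (active_w - idle_w),
    which is then clamped to the range [0, 1].
    """
    if active_w <= idle_w:
        # Activity costs nothing extra: always allowed, or never if idle is over budget.
        return 1.0 if idle_w <= budget_w else 0.0
    d = (budget_w - idle_w) / (active_w - idle_w)
    return max(0.0, min(1.0, d))


if __name__ == "__main__":
    # Hypothetical workloads: heavy on-device inference vs. a lightweight model.
    heavy = max_duty_cycle(THERMAL_BUDGET_W, idle_w=0.5, active_w=4.0)
    light = max_duty_cycle(THERMAL_BUDGET_W, idle_w=0.5, active_w=1.2)
    print(f"Heavy model can run {heavy:.0%} of the time")
    print(f"Light model can run {light:.0%} of the time")
```

With these assumed figures, the heavy model fits only about 29% of the time while the light model can run continuously, which mirrors the choice above between limited capability and intermittent AI.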

Display Technology: Still Not There

AR glasses need displays that:

- Project information without blocking your view of the real world

- Cover a wide field of view (minimum 50 degrees, ideally 90+)

- Achieve brightness visible in sunlight

- Maintain color accuracy

- Keep weight and heat under control

- Cost under $500 for the entire device

Today's options:

Micro-OLED: High brightness, excellent color, but expensive (

Micro-LED: More power-efficient than OLED, but manufacturing is in early stages. Cost and yield aren't consumer-friendly yet.

Holographic displays: Theoretically the best (glasses could be nearly transparent when off), but we're still in prototyping phase. Technical breakthroughs needed on multiple fronts.

Transparent OLED: Samsung and others are researching this, but transparent pixels are inherently less efficient and dimmer than opaque ones. Solving this requires materials breakthroughs.

None of these technologies are ready for a

Spatial AI: The Hardest Part

The real value proposition of AI glasses is understanding context. You look at a plant and the glasses tell you what species it is. You see a sign in Japanese and it translates in real-time. You're in a meeting and the glasses highlight important points. You're navigating an airport and AR shows you the gate numbers overlaid on your vision.

This requires:

- Real-time scene understanding (what's in the image)

- Object recognition with enough specificity to be useful

- Text recognition and OCR for instant translation

- Semantic understanding (knowing not just what something is, but what to do about it)

- Spatial mapping in real-time without slowing down or draining power

All of this running locally (not cloud-dependent) while you move your head around.

Modern large language models can handle some of this, but typically at 100-200 ms or more per frame. For glasses, you need end-to-end latency under 100 ms, or the experience feels laggy.
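A per-frame latency budget makes the local-versus-cloud trade-off easier to reason about. The sketch below sums hypothetical stage latencies for an on-device pipeline and a cloud pipeline and checks each against the 100 ms target from the paragraph above. The stage names and millisecond values are illustrative assumptions, not measurements.

```python
# Target end-to-end latency per frame, from the sub-100 ms requirement above.
FRAME_BUDGET_MS = 100.0

# Hypothetical stage latencies in milliseconds; none of these are measurements.
LOCAL_PIPELINE_MS = {"capture": 10.0, "on_device_inference": 120.0, "render": 10.0}
CLOUD_PIPELINE_MS = {
    "capture": 10.0,
    "network_round_trip": 40.0,
    "cloud_inference": 30.0,
    "render": 10.0,
}


def total_latency_ms(stages: dict[str, float]) -> float:
    """End-to-end latency when stages run one after another."""
    return sum(stages.values())


def within_budget(stages: dict[str, float], budget_ms: float = FRAME_BUDGET_MS) -> bool:
    """True if the sequential pipeline finishes inside the frame budget."""
    return total_latency_ms(stages) <= budget_ms


if __name__ == "__main__":
    for name, stages in [("local", LOCAL_PIPELINE_MS), ("cloud", CLOUD_PIPELINE_MS)]:
        print(name, total_latency_ms(stages), "ms, within budget:", within_budget(stages))
    # The cloud path only fits while the network cooperates.
    congested = {**CLOUD_PIPELINE_MS, "network_round_trip": 150.0}
    print("cloud (congested)", total_latency_ms(congested), "ms, within budget:",
          within_budget(congested))
```

Under these assumptions, the cloud path fits when the network round trip is short and fails when it isn't, while the local path misses the budget because on-device inference is slow. Which stage dominates in practice depends on the hardware and the network.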

Quantization helps (storing model weights at lower precision so models fit and run on-device), but each optimization trades capability for speed. You end up with a spectrum:

- Cloud processing: High capability, but adds network latency and requires connectivity

- Local processing: No connectivity requirement, but slower and less capable
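Quantization is a concrete example of that capability-for-efficiency trade. The sketch below shows one common scheme, symmetric per-tensor int8 quantization, on a handful of made-up weights; it illustrates the general technique, not how any particular glasses or model vendor does it. Each weight is stored as a small integer plus one shared scale factor, so memory drops from 4 bytes per float32 weight to 1 byte, and the rounding error per weight is at most half a quantization step.

```python
def quantize_int8(weights: list[float]) -> tuple[list[int], float]:
    """Symmetric per-tensor int8 quantization.

    The scale maps the largest absolute weight to 127; each weight becomes
    round(w / scale), clamped to [-127, 127].
    """
    max_abs = max((abs(w) for w in weights), default=0.0)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0  # avoid dividing by zero
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q: list[int], scale: float) -> list[float]:
    """Recover approximate float weights from int8 codes."""
    return [v * scale for v in q]


if __name__ == "__main__":
    weights = [0.12, -0.5, 0.33, 0.9, -0.07]  # made-up example weights
    q, scale = quantize_int8(weights)
    restored = dequantize(q, scale)
    max_err = max(abs(w - r) for w, r in zip(weights, restored))
    print("int8 codes:", q)
    print(f"scale={scale:.5f}, max error={max_err:.5f} (bound {scale / 2:.5f})")
```

Real deployments layer refinements on top (per-channel scales, calibration data, 4-bit formats), each trading a little more accuracy for a little less memory and power.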

Zuckerberg's pitch is that AI can bridge this gap. But right now, it requires compromises that make the glasses less useful.

Privacy: The Existential Question Nobody's Answering

Here's what AI glasses actually are: always-on cameras pointed at your world.

Meta's current Ray-Ban smart glasses have cameras, and you can see them. They don't record constantly today, and a small LED lights up during capture, but that indicator is easy to miss. When you do record, you're documenting everyone around you, whether they consent or not.

Scale that to mainstream adoption. Imagine 20% of people in public spaces wearing AI glasses that capture everything. Facial recognition software that identifies strangers instantly. AI systems that interpret what they see and report it back to servers.

This isn't hypothetical. Privacy organizations are already raising alarms. The FTC has started asking questions about data collection practices. EU regulators are drafting rules.

But nobody has actually solved this problem:

How do you prevent AI glasses from enabling mass surveillance?

Potential answers, and their problems:

- Local processing only, no cloud upload: This limits capability and requires more power

- Strict encryption and anonymization: Still requires trust in the company, and still records footage

- Legal restrictions on recording in public: How do you enforce this? How do you detect violations?

- Consent-based systems: Impractical and unenforceable at scale

Zuckerberg's answer, when pressed, is usually some version of "we'll be responsible." That's not a technical solution. It's a promise. And given Meta's history with privacy (Cambridge Analytica, underage users, location tracking), that promise carries less weight than he'd like.

The regulatory reality is that AI glasses will almost certainly face significant restrictions before mainstream adoption. Recording in public spaces without consent may become illegal. Facial recognition may be banned. Data retention policies may be mandated. These aren't hypothetical; they're already being debated in major markets.

This isn't a minor friction point. It's a fundamental constraint on how useful the glasses can actually be. Remove the ability to recognize faces, and half the useful applications disappear. Prevent cloud upload, and you lose real-time processing. Require explicit consent for video capture, and you've killed the ambient nature of the experience.

Estimated data shows that processing AI models consumes the most power in AI glasses, followed by display technology and sensing. This highlights the challenge of extending battery life for all-day use.

The Competition Isn't What You Think

When people talk about AI glasses competition, they usually mention Apple's Vision Pro or Microsoft's HoloLens or ByteDance's upcoming AR glasses.

That's wrong. The real competition is your phone.

Your phone already does translation. It already has a camera. It already has AI. It's already in your pocket. You already know how to use it. Millions of apps run on it.

For AI glasses to win, they need to be more convenient than reaching into your pocket. They need to be faster. They need to offer capabilities your phone can't. And they need to be cheaper.

Let's look at this honestly:

Convenience: A phone is quick to unlock and use. AR glasses require looking at something, waiting for recognition, and interpreting an overlay. Not obviously faster.

Speed: Phone processors are extremely fast. AR glasses offload processing to avoid thermal issues, which means cloud latency and connectivity dependence.

Capability: Phones have mature ecosystems. AR glasses require developers to build new experiences from scratch.

Price: Phones cost

Zuckerberg's argument is essentially: "Eventually, AR glasses will be better." That's probably true. But "eventually" is not 2025 or 2026. It's more like 2030 at the absolute earliest, maybe not until the 2030s.

In the meantime, phones will get better. AI on phones will get better. The phone ecosystem will deepen. By the time AR glasses are consumer-ready, the phone might be irrelevant—but not because of AR glasses. Because of something we haven't predicted yet.

That's why Zuckerberg hedges with phrases like "hard to imagine." It's not certainty; it's hope.

What Would Actually Make AI Glasses Viable

Let's be concrete about what needs to happen for AI glasses to achieve mainstream adoption.

1. Battery Revolution

You need a big jump in battery energy density without a proportional weight increase. We're talking about 500 Wh per kilogram, roughly double what typical lithium-ion cells deliver today, in a form factor that fits in glasses.

This isn't impossible. Solid-state batteries, lithium-metal, new cathode materials—the research is real. But we're talking about 5-7 years before commercial viability, minimum. And even then, it requires manufacturing scale that doesn't exist yet.
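The energy-density target is easier to judge as grams of cell. A minimal sketch: mass equals stored energy divided by gravimetric energy density. The 10 Wh daily requirement (about 1 W for 10 hours) and the 250 Wh/kg figure for today's lithium-ion cells are assumptions for illustration; 500 Wh/kg is the target discussed above.

```python
def battery_mass_grams(energy_wh: float, density_wh_per_kg: float) -> float:
    """Cell mass needed to store energy_wh at a given gravimetric energy density."""
    if density_wh_per_kg <= 0:
        raise ValueError("energy density must be positive")
    return energy_wh / density_wh_per_kg * 1000.0  # convert kg to g


if __name__ == "__main__":
    daily_energy_wh = 1.0 * 10  # assumed: ~1 W average draw for a 10-hour day
    for density in (250.0, 500.0):  # assumed current Li-ion vs. the 500 Wh/kg target
        grams = battery_mass_grams(daily_energy_wh, density)
        print(f"{density:.0f} Wh/kg -> {grams:.0f} g of cells")
```

Under these assumptions, doubling density halves the cell mass from about 40 g to about 20 g, before counting the frame, optics, electronics, and packaging that also have to sit on your nose.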

2. Thermal Solutions

You need to dissipate 3-5 watts of heat continuously without making the device uncomfortable to wear.

Possible solutions:

- Phase-change materials that absorb heat

- Microfluidic cooling integrated into the frame

- Smarter AI that uses less power through optimization

Again, 3-5 years minimum to make this work at scale.

3. Display Breakthroughs

You need a display technology that's bright, full-color, wide field-of-view, and costs under $50 in volume.

Micro-LED manufacturing needs to improve yield rates by 10x. Holographic displays need to move from lab to production. New materials for transparent OLED need to reach maturity.

Timeline: 4-6 years to commercial viability.

4. AI Efficiency

You need AI models that run locally with 90% of the capability of cloud models but at 1/10th the power consumption.

This is actually happening. Quantization, distillation, and architectural innovations are making progress. But another 2-3 years of optimization is needed.

5. Software Ecosystem

You need developers to build genuinely useful AR experiences that are actually better than phone equivalents.

This requires mainstream adoption first, which requires solving the above problems. It's a downstream dependency.

6. Regulatory Clarity

You need governments to set clear rules about surveillance, data collection, and facial recognition—and those rules to allow useful AI glasses functionality.

Timeline: 2-4 years of regulatory clarification, but the rules will almost certainly be more restrictive than current tech allows.

Add all of these up, and you're looking at 5-10 years before AI glasses are actually consumer-ready. That's not Zuckerberg's timeline.
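The "add them up" step works more like a critical-path calculation than a sum: the hardware and regulatory tracks progress in parallel, so readiness is gated by the slowest one, and the software ecosystem only forms afterward. A minimal sketch using the year ranges from the list above, assuming the tracks are independent and adding a hypothetical 0-3 year lag for the ecosystem (an assumption, since the list gives no number for it):

```python
# (low, high) years to commercial viability, taken from the list above.
PREREQUISITES = {
    "battery": (5, 7),
    "thermal": (3, 5),
    "display": (4, 6),
    "ai_efficiency": (2, 3),
    "regulation": (2, 4),
}
# Hypothetical assumption: years for a software ecosystem to form afterward.
ECOSYSTEM_LAG = (0, 3)


def readiness_window(
    prereqs: dict[str, tuple[int, int]], downstream_lag: tuple[int, int]
) -> tuple[int, int]:
    """Parallel tracks finish when the slowest does; the ecosystem follows."""
    low = max(lo for lo, _ in prereqs.values()) + downstream_lag[0]
    high = max(hi for _, hi in prereqs.values()) + downstream_lag[1]
    return low, high


if __name__ == "__main__":
    low, high = readiness_window(PREREQUISITES, ECOSYSTEM_LAG)
    bottleneck = max(PREREQUISITES, key=lambda k: PREREQUISITES[k][1])
    print(f"Consumer-ready in roughly {low}-{high} years; bottleneck: {bottleneck}")
```

Under these assumptions the window comes out at 5 to 10 years with battery as the bottleneck, and speeding up a non-critical track such as AI efficiency doesn't move the date at all.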

Meta's investment in the Metaverse reached over $50 billion by 2024, while AI glasses investment is estimated to grow significantly from 2022 onward. Estimated data for AI glasses.

The Real Reason Meta Is Betting Everything

Let's talk about why Zuckerberg is pushing so hard on AI glasses despite the failures of the metaverse and the massive technical challenges ahead.

The answer isn't that he's wrong about the long-term vision. It's that Meta needs to move away from its core business before the core business stops working.

Facebook and Instagram are mature platforms. They're profitable, but growth is slowing. Mobile advertising is saturated. Apple's privacy changes made targeted advertising harder. Competition from TikTok is eroding engagement. Regulatory pressure is increasing globally.

Meta needs a new platform. Not because the metaverse failed, but because every platform eventually matures.

AI glasses represent that new platform in Zuckerberg's mind. If everyone wears AI glasses, Meta can be the operating system. The app layer. The AI layer. The integration point.

That's worth $50 billion in losses and more.

But here's where the strategy breaks down: you can't will new platforms into existence. You can't spend your way past the laws of physics. You can't overcome bad product-market fit with enough conviction.

The metaverse didn't fail because Meta wasn't trying hard enough. It failed because virtual worlds where you talk to cartoon avatars aren't actually what people want to do when they have free time. The market said no, and no amount of capital changed that.

AI glasses face a different form of market friction. They're not fundamentally undesirable; they're fundamentally unready. The physics hasn't caught up. The AI still isn't good enough. The privacy rules don't exist yet. The ecosystem doesn't exist.

Zuckerberg knows this, intellectually. But he's committed the capital, so he has to commit the narrative. He has to convince investors, employees, and the public that this is inevitable. Because if he admits it's a 10-year bet with no guarantee of success, the stock tanks. The talent leaves. The momentum stops.

So he says it's "hard to imagine a future without AI glasses." And maybe that's true, eventually. But the future he's imagining might not include Meta at the center of it.

Where AI Glasses Will Actually Win (In the Short Term)

Let's be fair: AI glasses won't be useless before they're mainstream.

There are specific use cases where they're genuinely valuable today:

Enterprise & Industrial Applications

Factory workers, surgeons, maintenance technicians—these are use cases where AR glasses provide real value:

- Real-time guidance overlaid on physical tasks

- Hands-free documentation

- Remote expert assistance with shared vision

- Training simulations

Microsoft's HoloLens and similar devices are already being deployed in these contexts. These aren't consumer-facing, but they're proof that the form factor works for specific problems.

The margins are better, the ROI is easier to calculate, and the users are trained professionals, not consumers. This is a viable market in the near term.

Navigation & Translation

Walking through a foreign city and needing directions or translation? AR glasses are genuinely useful here. Your phone already does this, but having it in your field of view is better.

This is probably the first mainstream use case that sticks, if the hardware catches up. But it's still years away from consumer-ready execution.

Gaming & Entertainment

Nintendo had success with mobile gaming by catching people in moments when they had phones but not dedicated gaming hardware. AR glasses could do something similar—location-based gaming, social gaming, casual entertainment.

But this requires the glasses to be cheap, ubiquitous, and reliable. None of which is true yet.

Accessibility

For people with vision impairments, AR glasses could be transformative. Visual information could be enhanced, magnified, or translated to audio. This is a smaller market than mainstream consumer, but the value proposition is immediate.

Companies are already building glasses for this use case. It's probably the most legitimate near-term application.

Reality Labs has incurred increasing financial losses from 2021 to 2024, with no clear path to profitability, highlighting the challenges faced by Meta in its metaverse venture. Estimated data for 2024.

The Timeline Reality Check

Here's what's actually going to happen, if I'm being honest:

2025-2026: Premium AR glasses launch and find niche audiences. Enterprise adoption grows. Consumer awareness increases. Battery and thermal issues remain unsolved.

2027-2029: Incremental improvements in display, power efficiency, and processing speed. Prices drop from

2029-2032: Real breakthroughs in battery or display technology. Thermal management becomes manageable. Prices drop below $500. Mass-market adoption begins. Privacy regulations force compromises in functionality.

2032+: AI glasses become ubiquitous. But they look different from what we're imagining today. The killer app isn't what anyone predicted. Meta's market position depends on a series of bets that may or may not pay off.

This timeline is basically saying: "Zuckerberg is right about the direction, wrong about the speed."

What Zuckerberg Isn't Talking About

When Zuckerberg says it's hard to imagine a future without AI glasses, what he's really not talking about:

The metaverse still hasn't arrived, and nobody's talking about it anymore. This is a credibility issue. Making huge bets on the next big thing when the last bet failed spectacularly is a harder sell than it looks.

Privacy concerns will almost certainly force compromises. By the time AI glasses are mainstream, recording and facial recognition may be heavily regulated. The product might be less useful as a result.

Phones are really good at this already. The functional gaps between phones and AR glasses are smaller than people realize. Closing them requires genuine breakthroughs, not just engineering iteration.

Competition might come from unexpected places. Maybe contact lens displays. Maybe neural interfaces. Maybe something we haven't imagined. Meta's bet on glasses might be correct about the direction but wrong about the form factor.

The economic model is unclear. How does Meta make money on AI glasses? Advertising? Premium subscriptions? Hardware sales? The metaverse failure suggests hardware alone isn't enough.

These are all things Zuckerberg knows but doesn't emphasize. They don't fit the narrative of inevitability.

The Real Lesson from the Metaverse

If you want to understand where AI glasses actually go, study what happened with the metaverse.

The metaverse was technically feasible. VR headsets work. Virtual environments can be built. Avatars can move around. Everything was possible in 2021.

What wasn't possible was making people want to spend time in virtual worlds instead of doing literally anything else. That's a desire and behavior problem, not a technology problem.

AI glasses face something different. It's not that people don't want them. It's that the technology isn't ready, and people's needs for what they promise can already be met by phones.

So the path forward is narrow: AI glasses have to become noticeably better than phones for enough use cases that the friction of switching is worth it.

History suggests that takes longer than any executive predicts.

Industry Trends That Actually Matter

If you're thinking about investing in or betting your company on AI glasses, here are the trends that actually matter:

1. Quantization and Model Efficiency

The AI industry is moving toward smaller, more efficient models. Claude, GPT, and others are all exploring ways to run capable AI with less compute.

This directly helps AI glasses, which need low-power processing.

2. On-Device AI

Companies are investing heavily in on-device AI inference. Apple's Neural Engine, Qualcomm's Snapdragon, and others are optimizing for local processing.

This trend favors wearables like AI glasses.

3. Battery Innovation

Solid-state batteries are moving closer to commercial viability. Multiple startups are targeting 2027-2028 for production. This is the single biggest unlock for wearable devices.

4. AR Software Maturity

Apple's ARKit, Google's ARCore, and Meta's tools are improving. The software layer is getting better while the hardware lags.

Once hardware catches up, the software will be ready.

5. Regulatory Clarification

The EU is setting rules on AI and surveillance. The US will follow (eventually). Once regulations are clear, companies can build with certainty about what's allowed.

This is actually helpful for the market, even if it limits some capabilities.

The Real Question: What Actually Changes?

Let's zoom out. Assume Zuckerberg is right and AI glasses become ubiquitous by 2035.

What actually changes about human experience?

Some obvious things:

- Navigation becomes seamless (no need to check your phone for directions)

- Translation becomes real-time (language barriers decrease)

- Information access becomes ambient (you look at something, context appears)

- Work becomes more flexible (hands-free access to information while doing other tasks)

Some obvious downsides:

- Privacy erosion (you're broadcasting visual data)

- Attention fragmentation (information is always available, so sustained focus becomes harder)

- Inequality (early adopters get advantages, late adopters are at a disadvantage)

- Social awkwardness (always-on cameras create consent issues)

But here's the deeper question: do AI glasses change what we actually want to do?

Computers haven't made us smarter. The internet hasn't made society more truthful. Smartphones haven't made us less lonely. They've made some things easier, some things worse, and some things we didn't know we needed.

AI glasses will probably be the same. They'll make some things better. They'll enable new forms of commerce and surveillance. They'll create new dependencies. They'll be adopted because they're convenient, not because they're good.

Zuckerberg frames them as inevitable because that's what you say when you're betting $50 billion. But inevitability and desirability are different things.

Comparing AI Glasses to Actual Breakthroughs

Let's compare AI glasses to technologies that actually did transform markets:

Smartphones (2007)

Probably the last true platform shift. Steve Jobs showed the first iPhone, and people immediately understood why it was better than flip phones. The form factor was right. The software was elegant. The ecosystem exploded. Within 10 years, smartphones were ubiquitous.

Key difference from AI glasses: immediate, obvious value proposition that most people understood without explanation.

Cloud Computing (2006)

AWS launched and solved a real problem (nobody wants to manage physical servers). Adoption was gradual but inevitable. Enterprise adopted first, then scale meant consumer prices dropped.

Key difference from AI glasses: problem-solution fit was crystal clear. The value was direct: lower cost, less complexity.

Generative AI (2022)

ChatGPT showed that large language models could do things people actually wanted (write things, answer questions, brainstorm). Adoption was explosive because the value proposition was obvious and immediate.

Key difference from AI glasses: this wasn't about a hardware form factor. It was about capability. And capability arrived in a product people could use immediately.

AI glasses don't have that clarity. The value proposition requires explaining. The form factor requires adjustment. The ecosystem doesn't exist yet.

That doesn't mean they won't eventually succeed. But it does mean Zuckerberg's "hard to imagine a future without them" is more hope than prediction.

What Should Actually Happen

If I were advising Meta on AI glasses strategy, I'd say: stop predicting inevitability. Start proving value.

Here's what that looks like:

- Focus on enterprise first. Surgeons, pilots, mechanics, and technicians need better tools. Build for them. Generate revenue. Build credibility.

- Solve one problem really well. Don't try to replace phones. Try to make translation better, or navigation better, or hands-free information access better. Pick one and execute perfectly.

- Accept longer timelines. Stop talking about 2025-2026. Commit to the 2030s. It's more honest, and it removes pressure to deliver on unrealistic roadmaps.

- Don't bet the company on hardware profitability. Make money on software, services, and data. Use hardware as a beachhead.

- Address privacy head-on. Don't wait for regulators to force the issue. Design privacy into the product from the beginning. Make it a feature, not an afterthought.

- Be honest about the metaverse. Acknowledge it didn't work. Explain what you learned. Use that credibility to build trust on the next bet.

None of this sounds like what Meta is actually doing. They're doubling down on the narrative, not the honest assessment.

The Bottom Line on AI Glasses

Mark Zuckerberg is probably right that AI glasses will eventually be important. Someday, we might interact with digital information primarily through wearable devices rather than phones.

But he's wrong about the timeline, the inevitability, and the path to get there.

The metaverse failed because people didn't want what it was offering. AI glasses might succeed because people do want hands-free, context-aware information access. But "might succeed eventually" and "are inevitable soon" are very different statements.

The technology isn't ready. Battery life is wrong. Thermal management is wrong. Display technology is wrong. AI efficiency is wrong. Privacy regulations don't exist yet.

All of these problems are solvable. None of them are solved yet.

Zuckerberg is betting that Meta can drive innovation fast enough to overcome these challenges before someone else does. Or before the market decides phones (which actually work right now) are good enough.

That's a valid bet. It's just not inevitable.

And when a CEO frames a $50 billion bet as inevitable, that's usually when you know it's not.

FAQ

What exactly are AI glasses?

AI glasses are wearable devices that combine optical displays (lenses that project information into your field of view) with cameras, sensors, and AI processing to provide real-time information about your environment. Unlike traditional AR glasses that simply overlay digital content, AI glasses interpret what you're looking at and provide contextual information, translation, navigation, or other AI-powered assistance. Currently, most products on the market (like Ray-Ban smart glasses) are primarily camera-focused, with limited on-device processing and heavy reliance on cloud services for the actual AI interpretation.

How close are we to mainstream AI glasses?

Based on current technology roadmaps and engineering constraints, realistic mainstream adoption is probably 5-10 years away, with serious technical viability closer to 2030-2032. Premium head-worn devices such as Apple's Vision Pro headset exist today but remain niche products because of their high cost ($3,500), limited battery life (2-3 hours), and thermal management issues. The physical challenges (battery density, heat dissipation, display brightness, and power-efficient AI processing) all require fundamental breakthroughs, not just incremental improvement. Each breakthrough takes 2-4 years minimum to move from the lab to commercial viability.

Why did the metaverse fail, and does that predict AI glasses' fate?

The metaverse failed because the fundamental value proposition wasn't compelling. After spending $50+ billion, Meta created virtual worlds that people didn't actually want to spend time in when they could do literally anything else. For AI glasses, the situation is different—the value proposition (hands-free, context-aware information access) is actually appealing. The problem isn't desire; it's that the technology isn't ready and phones already solve most of the problem reasonably well. The metaverse teaches us that market timing matters more than engineering effort, but it doesn't necessarily doom AI glasses if the technical obstacles can be overcome.

What are the main technical obstacles to mainstream AI glasses?

Four major obstacles prevent current AI glasses from mainstream adoption. First, battery life: today's glasses get 2-4 hours of active use with limited processing, versus the 8-12 hours needed for all-day wear. Second, thermal management: running AI models on a device pressed against your face generates dangerous heat without effective cooling. Third, display technology: micro-OLED displays that provide good brightness and color cost

What privacy concerns do AI glasses raise that are different from smartphones?

Smartphones collect data about you; AI glasses collect data about everyone around you. The glasses have cameras pointing at the world, not at you, so they capture images of strangers, their faces, their surroundings, and their activities without their knowledge or consent. Combined with facial recognition and AI interpretation, this enables surveillance at a scale that didn't exist before. And because the cameras can be always on, bystanders can't opt out simply by not owning a pair: they're recorded by the people around them whether they consent or not. Privacy advocates have raised legitimate concerns about mass surveillance, consent, and data retention that current regulatory frameworks aren't equipped to address. The European Union is already moving toward restrictions on AI-powered facial recognition in public spaces, which could severely limit AI glasses functionality before the devices even reach mainstream adoption.

How would AI glasses actually improve on what smartphones already do?

For specific use cases, genuinely: navigation (you don't need to glance at a phone screen; directions appear in your field of view), translation (point at a sign in another language and see an instant translation in your vision), hands-free documentation (surgeons, technicians, and inspectors can capture and annotate information without putting down their tools), and context-aware information (point at a restaurant and see reviews, hours, and the menu without searching). These are real improvements. The problem is that these same tasks are already possible with smartphones; they just involve slightly more friction (taking out your phone, opening an app). That friction is small enough that it hasn't driven mass demand for glasses. AI glasses would need to be dramatically faster, cheaper, and more intuitive to justify the switch, and they're not there yet. Some of these value propositions might even be delivered better by contact lenses or other form factors than by glasses.

What's Zuckerberg's actual strategy with AI glasses, and why does it matter?

Meta's underlying strategy is to establish a new computing platform before the current one (smartphones/social media) becomes commodified or displaced. Facebook and Instagram are mature, with slowing growth and regulatory challenges. AI glasses represent a potential platform shift that could give Meta a central role in the next decade of computing—similar to how they dominated mobile social networking. The

If I'm a developer, should I invest in building AI glasses applications?

The answer depends on your risk tolerance and timeline. Building for enterprise/professional use cases (healthcare, manufacturing, inspection) is lower-risk because the value proposition is clear and customers will pay for functionality that directly improves work. Building for consumer use cases requires believing that widespread AI glasses adoption will happen in the next 3-5 years, which seems unlikely based on technical timelines. Consider Apple's ARKit and Google's ARCore (which work on phones today) as safer platforms for AR development while the glasses hardware matures. If you're in enterprise or specifically solving problems for glasses (like real-time translation or hands-free navigation), there's legitimate opportunity. For general consumer apps, you're probably betting on a timeline that's further out than the startup lifecycle allows.

What would actually accelerate AI glasses adoption?

Three things would meaningfully accelerate adoption. First, a battery breakthrough (solid-state batteries reaching 500+ Wh/kg in practical form factors), which would solve the all-day wear constraint. Second, a killer app that's dramatically better on glasses than on phones (which hasn't been demonstrated yet), which would justify the friction of switching. Third, regulatory clarity that permits the useful features of AI glasses without enabling mass surveillance, which would remove uncertainty around the business model. Any one of these would help. All three together would make mainstream adoption very likely. Right now, none of them is solved. Battery research is advancing but is still 5+ years from commercialization. No killer app has emerged. Regulators are moving toward restriction, not clarity. So the timeline remains extended.

Conclusion: The Vision vs. The Timeline

Mark Zuckerberg is right about something important: AI glasses will probably be significant eventually. Computing will likely move beyond phone screens, and information access will become more ambient and less screen-dependent. The direction he's pointing toward is probably correct.

But here's what he's not saying: "eventually" doesn't mean 2025. It doesn't mean 2026. It might not even mean 2030.

The metaverse taught Meta a brutal lesson about the difference between visionary thinking and market reality. You can spend $50 billion on the right vision and still be wrong about the timeline, the product, and the market's willingness to adopt.

AI glasses face that same risk. The vision might be right (hands-free, contextual information access through wearable AI). The market might eventually want it. But getting there requires solving simultaneous problems in battery technology, thermal engineering, display manufacturing, AI efficiency, and regulatory frameworks.

Solving one of these is hard. Solving all of them, simultaneously, with commercial viability—that's the actual bet.

Zuckerberg is making that bet because his core business is maturing and he needs the next platform. That's honest motivation, even if his public narrative is "this is inevitable." The question isn't whether he's right about the direction. It's whether Meta can execute on the physics, and whether the market will adopt when the technology is finally ready.

History suggests: maybe, but not as fast as he's claiming.

For now, keep your phone. It works. AI glasses will get there eventually, but eventual and imminent are different things.

And that's the gap between Zuckerberg's vision and reality.

If you're exploring workflow automation and want to understand how AI is already transforming real productivity (without waiting for glasses), consider trying platforms that already deliver AI-powered benefits today. Runable offers AI-powered automation for presentations, documents, reports, and slides starting at $9/month—delivering contextual AI capabilities right now, not in 5-10 years.

Use Case: Create marketing presentations automatically from data, generate quarterly reports with AI, or build documentation without writing everything manually.

Key Takeaways

- AI glasses will likely become important eventually, but realistic mainstream adoption is 5-10 years away, not the 2-3 years Meta implies

- Meta's $50+ billion metaverse losses show that capital and conviction alone can't overcome market resistance when product-market fit is wrong

- Technical obstacles (battery life, thermal management, display technology, spatial AI processing) require fundamental breakthroughs, not just engineering iteration

- Privacy concerns around always-on cameras and facial recognition may force regulatory restrictions that limit AI glasses functionality before mainstream adoption

- Smartphones already solve most promised use cases well enough that AI glasses face a high bar to justify adoption, even if technology catches up
