Introduction: The Problem That Wouldn't Go Away

If you've ever tried on a pair of AR or XR glasses, you've probably felt something was off. The displays worked. The apps launched. But something about how content appeared in your vision felt constrained, flat, incomplete. You couldn't quite put your finger on it, but the experience didn't feel truly three-dimensional in the way you expected.

That was the problem. For years, XR glasses have struggled with a fundamental limitation: they couldn't display true three-dimensional content with proper depth perception. Most AR headsets, including earlier Xreal devices, used a flat 2D display system that created the illusion of depth through standard 3D rendering techniques. It worked, sure, but it wasn't the spatial revolution everyone had been promised.

Then Xreal announced something unexpected. They didn't require a hardware upgrade. They didn't force you to buy a new device at premium prices. Instead, they released a software update called Real 3D that fundamentally changed how their glasses displayed content. This wasn't just a feature patch or a minor improvement. It was the kind of update that made people sit up and reconsider what XR glasses could actually do.

The Real 3D update represents a watershed moment in spatial computing. It demonstrates that sometimes the most significant breakthroughs don't come from revolutionary hardware designs or multi-billion dollar research budgets. Sometimes they come from engineers figuring out how to extract capabilities that were already hiding in existing technology, waiting to be unlocked.

This article breaks down exactly what the Real 3D update does, why it matters so much, how it works under the hood, and what it means for the future of spatial computing. Whether you own Xreal glasses, you're considering the purchase, or you're just curious about where AR technology is headed, understanding this update is essential context for what's coming next.

TL;DR

- Real 3D Technology: Xreal's free software update enables true stereoscopic 3D display, delivering proper depth perception for the first time on consumer AR glasses

- The Core Problem Solved: Previous XR glasses displayed 2D content on flat screens, lacking genuine spatial depth that modern applications demand

- No Hardware Required: This breakthrough came as a software-only update, meaning existing Xreal glasses owners got the upgrade for free

- Competitive Advantage: Real 3D fundamentally changes how applications can leverage spatial computing, opening possibilities that competitors haven't yet matched

- Industry Impact: This update signals a shift in how AR companies approach spatial display, prioritizing software innovation over hardware-centric development

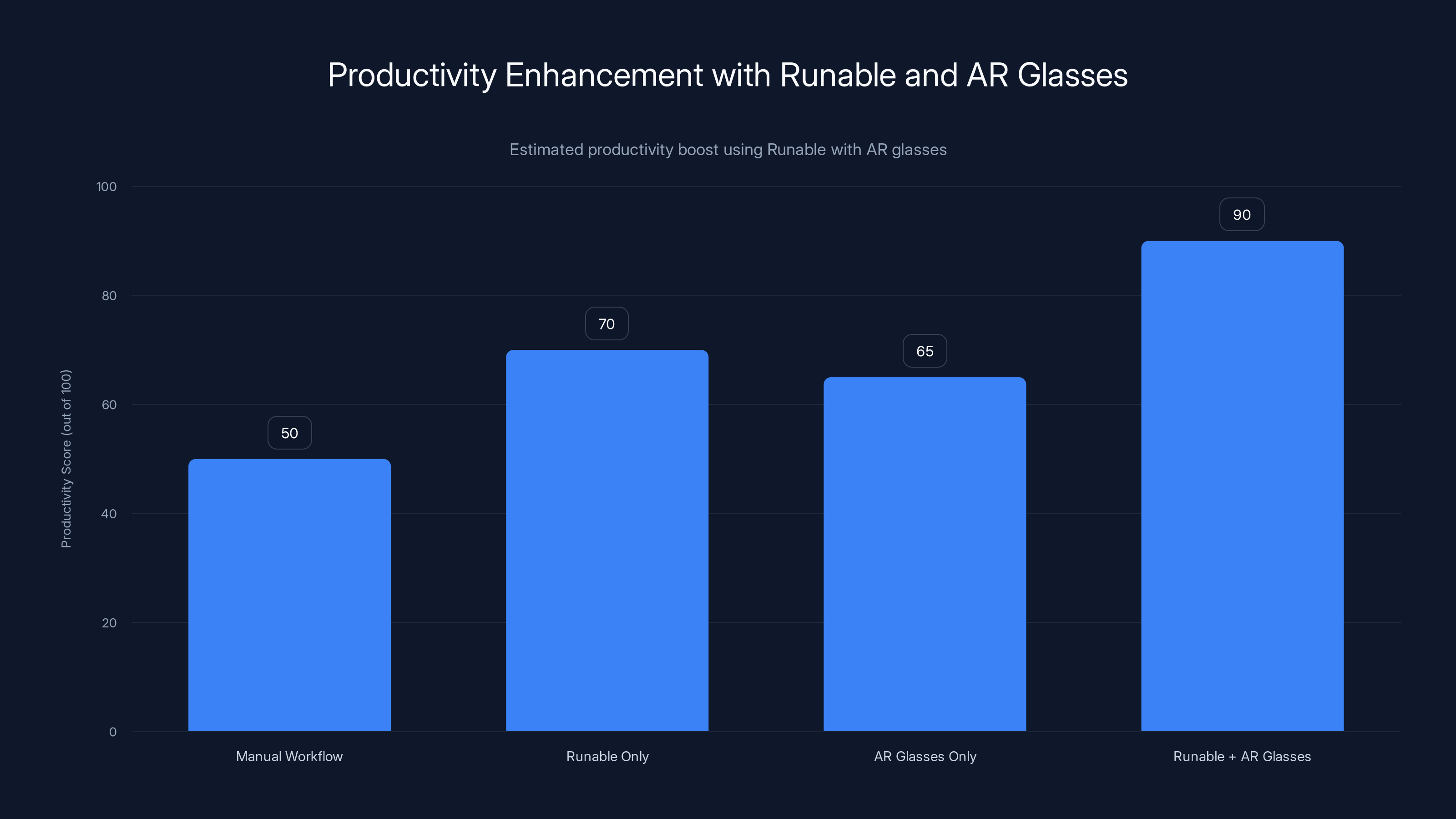

Estimated data shows that combining Runable with AR glasses significantly enhances productivity, scoring 90 out of 100 compared to other methods.

Understanding the XR Glasses Problem: Why Depth Matters More Than You Think

Before diving into Xreal's solution, you need to understand what made this problem so persistent and frustrating for developers and users alike.

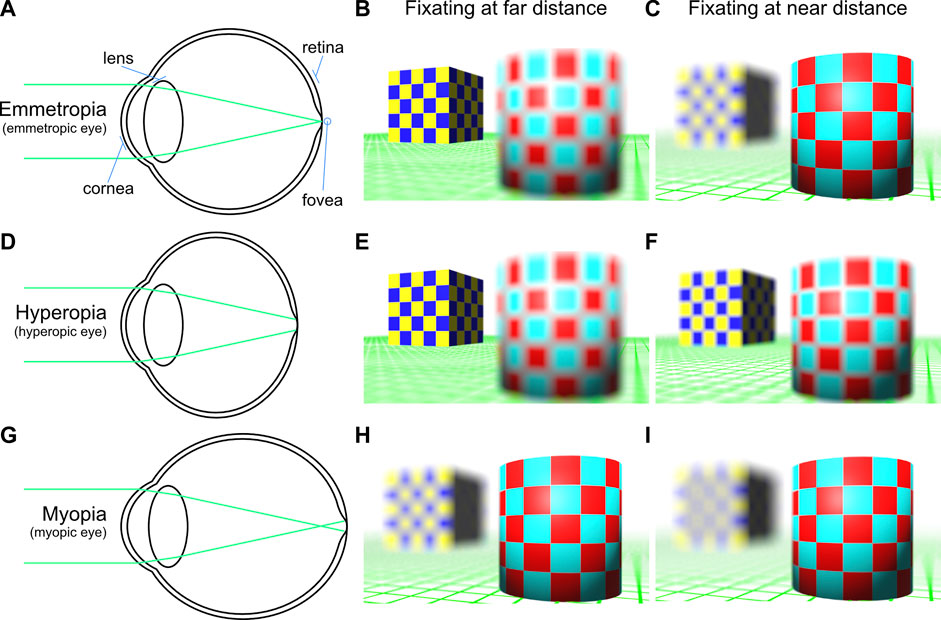

Most AR and mixed reality glasses released in the past decade used single-display technology. Imagine a regular smartphone screen mounted in front of your eyes. That screen shows you graphics, video, and applications. Your brain receives visual information, but it's fundamentally flat. Yes, software developers can render 3D objects using standard perspective tricks, but your eyes aren't actually experiencing true stereoscopic depth.

Stereoscopic depth is critical because human vision evolved to perceive the world three-dimensionally. Each of your eyes captures a slightly different viewpoint. Your brain compares these two images and extracts depth information. When you close one eye, the world still makes sense, but you lose the fine-grained spatial awareness that makes precise interactions possible. Try threading a needle with one eye closed. Suddenly that task becomes far harder.
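The idea that two offset views encode depth has a precise geometric counterpart in stereo computer vision: for two parallel viewpoints separated by a baseline B, a point at distance Z shifts between the two images by a disparity d = f·B/Z, where f is the focal length. The sketch below is a textbook pinhole-camera model, not a model of the human visual system or of Xreal's software; the focal length is an illustrative assumption, and the 65 mm baseline matches the average eye separation cited later in this article.

```python
def disparity(focal_px: float, baseline_m: float, depth_m: float) -> float:
    """Horizontal disparity in pixels between two parallel pinhole views.

    Assumes both cameras share focal length focal_px (in pixels) and are
    separated sideways by baseline_m.
    """
    if depth_m <= 0:
        raise ValueError("depth must be positive")
    return focal_px * baseline_m / depth_m


def depth_from_disparity(focal_px: float, baseline_m: float, disparity_px: float) -> float:
    """Invert the relation: recover depth from a measured disparity."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive")
    return focal_px * baseline_m / disparity_px


if __name__ == "__main__":
    f = 1000.0  # illustrative focal length in pixels (assumption)
    b = 0.065   # ~65 mm baseline, the average human eye separation
    for z in (0.3, 1.0, 3.0):
        print(f"depth {z:.1f} m -> disparity {disparity(f, b, z):.1f} px")
```

Because disparity falls off as 1/Z, nearby objects produce large, easily compared differences between the two views. That is one way to see why close-range tasks like threading a needle suffer most when one view is lost.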

AR glasses had this problem systemically. Whether you're using a Meta Quest or an earlier Xreal device, a single flat display means your brain can't actually perceive objects as existing in three-dimensional space. Software can simulate depth through rendering techniques, parallax effects, and motion cues, but these are all approximations. They work reasonably well for casual applications, but they break down when precision matters.

Consider a practical scenario. Imagine you're using AR glasses to assemble furniture from IKEA. The application shows you where each piece goes, how to orient components, and what the final product should look like. With flat 2D rendering, these spatial relationships are ambiguous. With true 3D stereoscopic display, you instantly understand the spatial arrangement. The difference isn't subtle. It's the difference between sketching directions on a napkin versus walking through the terrain yourself.

This limitation affected not just user experience but entire categories of applications. Precision work, spatial design, architectural visualization, medical imaging in AR, and countless industrial applications all require true depth perception to work effectively. The constraint wasn't technical incompetence. It was a fundamental architectural choice made early in these devices' development cycles.

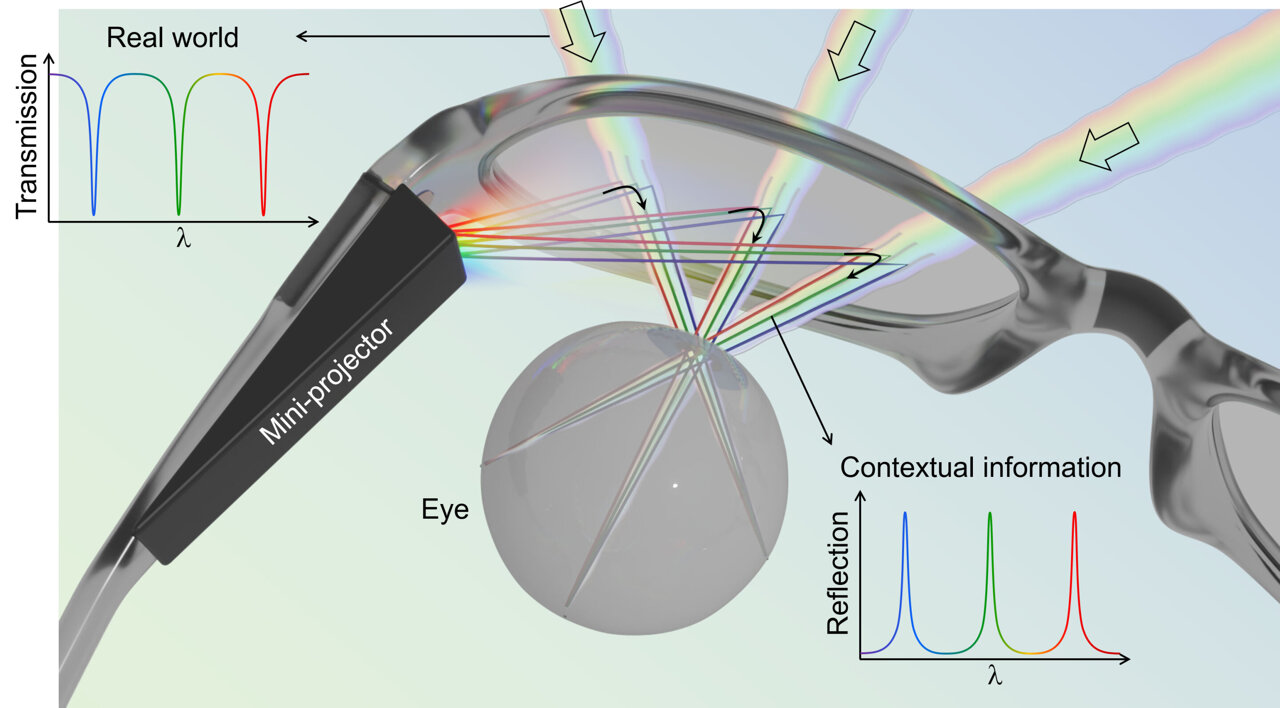

What Exactly Is Stereoscopic 3D Display?

Stereoscopic display technology isn't new. 3D movies have used this principle for decades. Your brain receives two slightly different images, one for each eye, and merges them into a single three-dimensional perception.

In AR glasses, implementing stereoscopic display means each eye sees a unique image from a unique angle. The calculation is complex. The system must render the same scene twice, from two slightly different viewpoints separated by roughly 65 millimeters (the average distance between human eyes). These images must be displayed simultaneously to each eye with pixel-perfect timing. The latency must be minimal, or the effect breaks down and users experience discomfort.

For decades, AR hardware designers assumed this required dual display systems. You'd need two tiny screens, one for each eye. But screens are expensive, bulky, and power-hungry. Adding dual displays would require larger, heavier glasses with shorter battery life and higher costs. This trade-off seemed inevitable.

Xreal's Real 3D update challenges this assumption. By analyzing how existing single-display systems work and leveraging display refresh rates and pixel density that modern screens enable, Xreal engineers figured out how to simulate stereoscopic 3D without adding a second physical display.

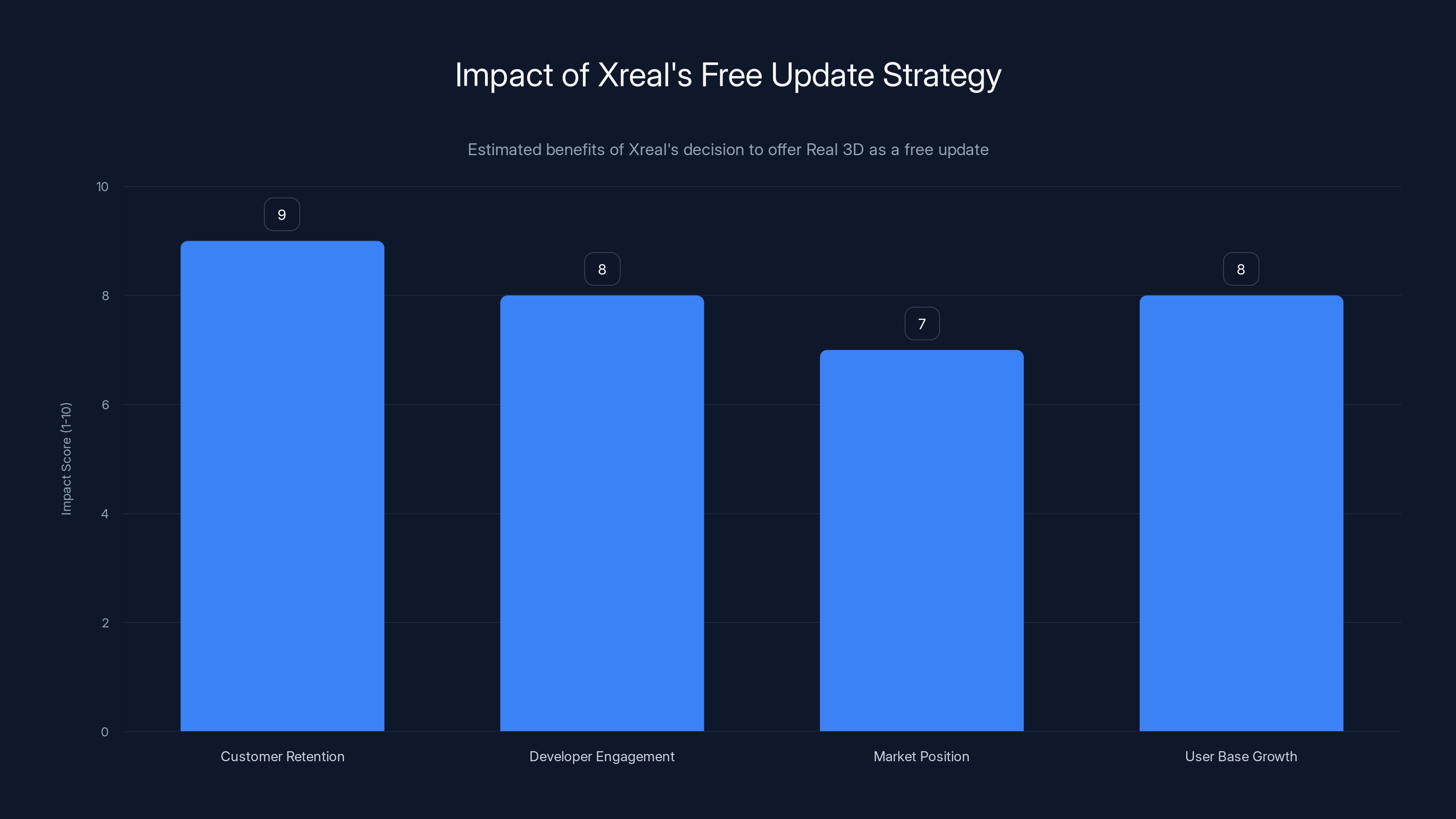

Xreal's free update strategy significantly boosts customer retention and developer engagement, enhancing their market position and user base growth. Estimated data.

The Technical Architecture Behind Real 3D

Xreal's implementation uses a clever approach that exploits the properties of existing display hardware in ways previous firmware didn't. While the company hasn't released complete technical specifications, the underlying principle involves pixel-level manipulation and display refresh rate optimization.

Modern glasses displays operate at high refresh rates, typically 90 Hz or higher. This means the display refreshes ninety times per second. Each refresh cycle is fast enough that human perception can't detect individual frames in normal circumstances. Xreal's Real 3D technology leverages this speed.

Here's a simplified explanation of the approach: Instead of displaying a single image that both eyes see, the system renders two images rapidly in sequence. The first image is optimized for the left eye's perspective. During the next refresh cycle, the system displays an image optimized for the right eye's perspective. Because this happens at 90 Hz, the switching occurs too quickly for conscious perception.
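The alternating scheme described above is a form of frame-sequential (time-multiplexed) stereo. A minimal sketch, assuming strict left/right alternation on a single display: each eye then receives only every other refresh, so a 90 Hz panel delivers 45 images per second to each eye. The function names are hypothetical and not part of any Xreal SDK.

```python
from itertools import islice
from typing import Iterator, Tuple


def frame_sequential_schedule(refresh_hz: float) -> Iterator[Tuple[float, str]]:
    """Yield (timestamp_ms, eye) for each refresh, alternating left and right.

    Hypothetical model: refresh n shows the left-eye image when n is even
    and the right-eye image when n is odd.
    """
    period_ms = 1000.0 / refresh_hz
    n = 0
    while True:
        yield n * period_ms, "left" if n % 2 == 0 else "right"
        n += 1


def per_eye_rate(refresh_hz: float) -> float:
    """Under strict alternation, each eye sees every other refresh."""
    return refresh_hz / 2


if __name__ == "__main__":
    for t, eye in islice(frame_sequential_schedule(90), 4):
        print(f"{t:6.2f} ms  {eye}")
    print(f"per-eye rate at 90 Hz: {per_eye_rate(90):.0f} Hz")
```

This halving is why time-multiplexed stereo generally favors high panel refresh rates: the lower the per-eye rate, the more likely flicker becomes noticeable.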

But there's a catch. If both eyes see both images, you'd perceive visual artifacts and discomfort. The solution involves eye-tracking technology. Modern XR glasses already include sophisticated eye-tracking systems for input and interaction. Real 3D extends this capability. The system determines which eye is which at any given moment and presents the appropriate image to each eye at precisely the right time.

The engineering challenge is massive. The latency between detecting where your eyes are looking and presenting the correct image must be under a few milliseconds. Display hardware must support the necessary refresh rates. Software must efficiently render two versions of every frame. But none of these requirements exceeded the capabilities of existing Xreal hardware.

Why Previous XR Glasses Couldn't Do This

It's worth understanding why this solution wasn't implemented earlier. The answer reveals important constraints in hardware design and software architecture.

Earlier generations of AR glasses used eye-tracking systems that were less sophisticated. The latency was higher. The accuracy was lower. Attempting to implement Real 3D on these systems would have created noticeable artifacts, latency that made the experience worse rather than better, and potential user discomfort.

Software architecture also mattered. Applications were written to target single-display systems. Changing the fundamental display model required rewriting rendering pipelines, updating how content was positioned and scaled, and adjusting how applications understood coordinate systems. Xreal's real genius was creating compatibility layers that allowed existing applications to benefit from Real 3D without requiring developers to rewrite code.

There's also a market timing component. Eye-tracking technology had to mature to a certain level. Display resolution had to increase to support the additional rendering demands. Processing power in wearable glasses had to reach a threshold where rendering two full frames per refresh cycle became feasible. Xreal waited until all these pieces aligned before attempting the update.

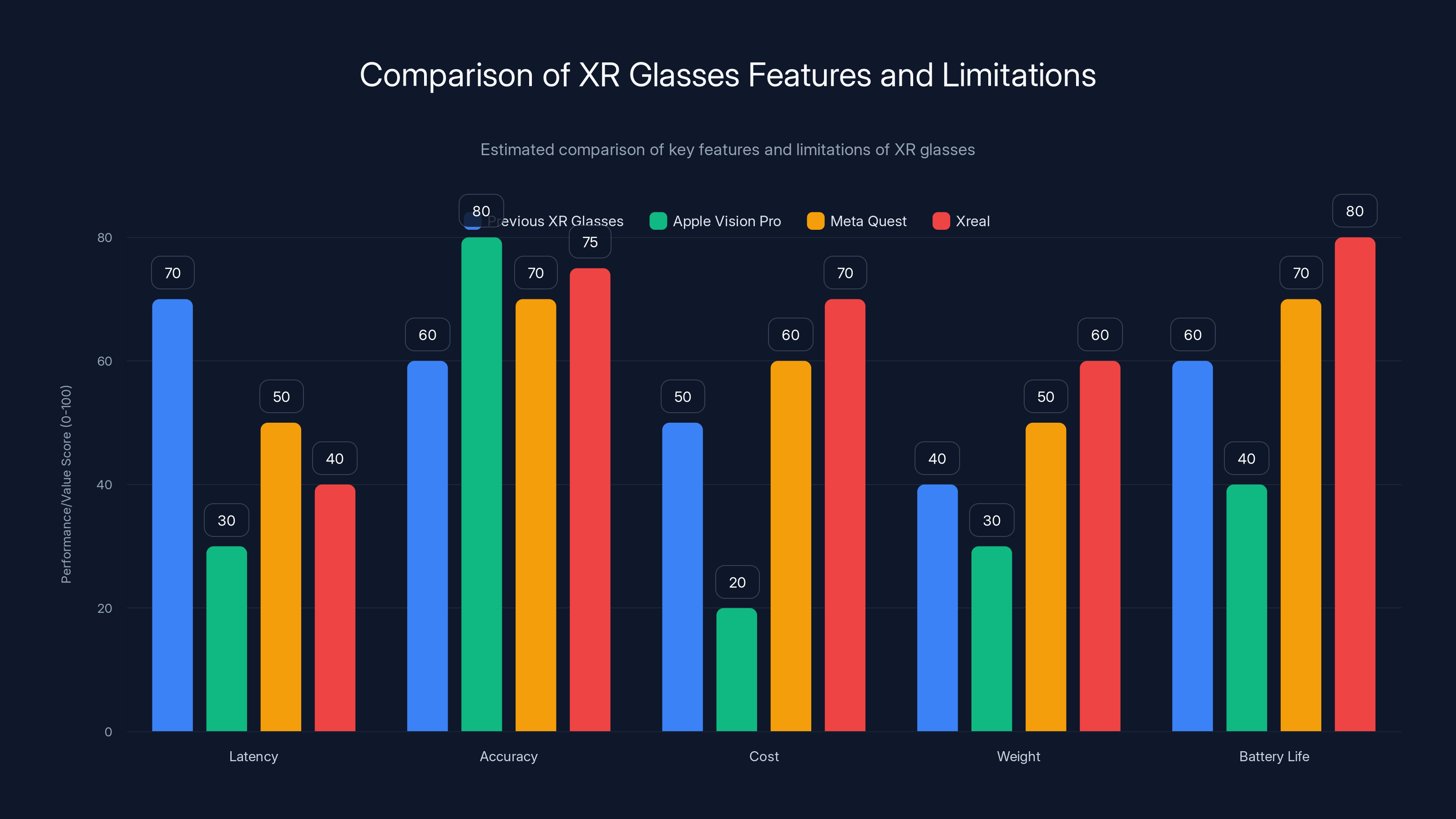

Compare this to competitors. Apple's Vision Pro launched with a dual-display architecture that provided stereoscopic 3D from day one. But that approach came with significant compromises: the device costs over $3,000, weighs substantially more, has shorter battery life, and requires more power. Meta's Quest devices remain primarily single-display systems focused on gaming rather than spatial computing for productivity.

Xreal found the middle ground. They accepted the limitations of single-display hardware but engineered the maximum capabilities from that constraint. The result is a product that is more affordable, lighter, and longer-lasting on a charge, and that now offers spatial display capabilities comparable to much more expensive alternatives.

How Real 3D Transforms the User Experience

Understanding the technology is one thing. Understanding how it changes what users actually experience is another.

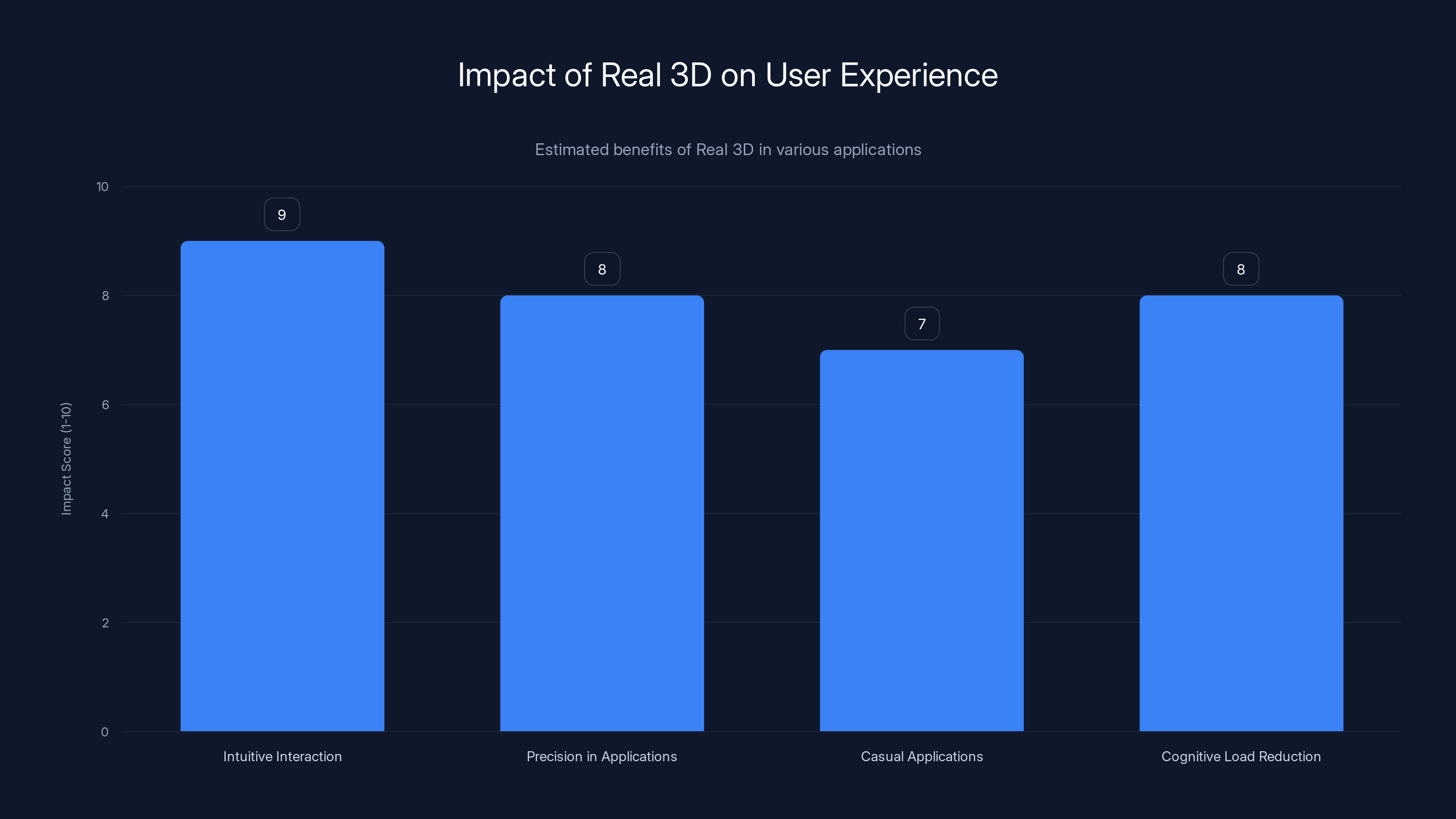

With Real 3D enabled, virtual objects in AR applications suddenly possess genuine spatial presence. A virtual model of furniture in your living room doesn't feel like a 2D rendering positioned in 3D space. It feels like an actual object occupying actual space. This isn't a subtle difference. It's fundamental to whether applications are enjoyable and whether spatial computing becomes genuinely useful.

Interaction becomes more intuitive. When you reach to touch a virtual object, your hand moves toward something that genuinely appears to be at a specific distance from you. Without stereoscopic 3D, reaching toward a virtual object requires mental math. Your brain must interpret the 2D rendering and infer where the object actually is in space. This additional cognitive load makes extended use tiring and increases error rates.

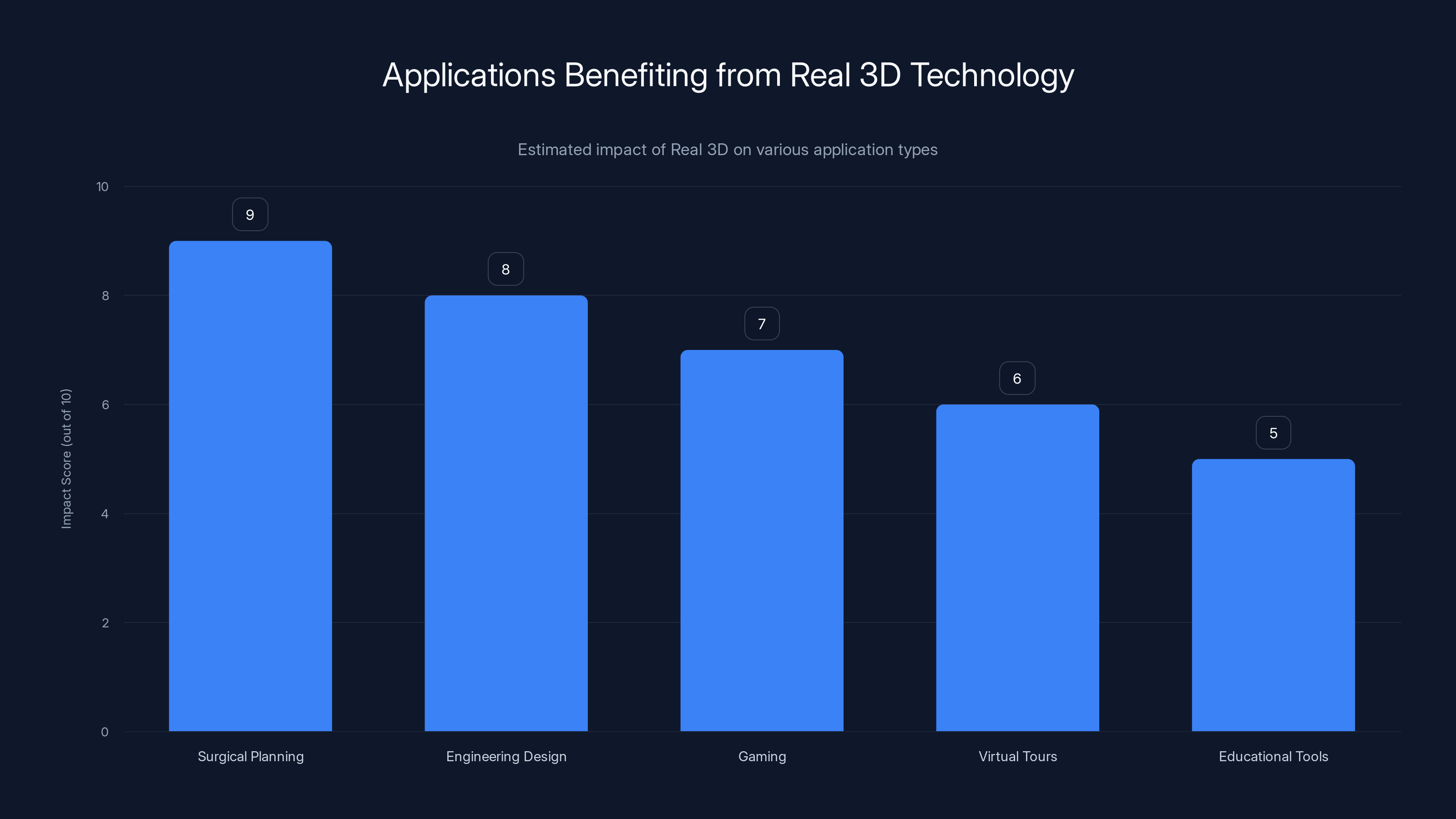

Applications that require precision benefit enormously. Medical professionals using AR to visualize patient data alongside actual anatomy get spatial relationships correct instantly. Engineers assembling complex equipment can perceive how components relate spatially without ambiguity. Designers can evaluate 3D concepts more accurately because spatial proportions are immediately apparent.

Even casual applications improve dramatically. Imagine a simple AR game where virtual objects interact with your physical environment. With Real 3D, gravity feels right, shadows fall where you expect them, and occlusion (the principle that closer objects hide objects behind them) works naturally. Without stereoscopic 3D, all these effects require careful software implementation and still feel artificial. With it, the laws of physics operate intuitively.

Xreal balances performance and cost effectively, offering moderate latency and accuracy improvements without the high cost and weight of competitors. (Estimated data)

The Competitive Landscape: Who Else Has Solved This Problem?

Understanding Xreal's achievement requires context about how competitors have approached stereoscopic 3D in AR glasses.

Apple Vision Pro achieved stereoscopic 3D through dual displays and optical systems. Each eye sees an independent high-resolution display. The approach is elegant from a technical standpoint but comes with significant tradeoffs. The device is expensive, bulky, and power-hungry. Apple positioned it as a premium spatial computer rather than an AR glasses replacement.

Microsoft HoloLens originally attempted a single-display approach with holographic waveguide optics. Later generations moved toward dual-display systems. The technology is sophisticated, but adoption remains limited due to cost and complexity.

Meta's Quest line continues refining single-display systems optimized for gaming and entertainment. While stereoscopic rendering is supported in software, the fundamental display architecture hasn't changed to the degree Xreal implemented with Real 3D.

Magic Leap pursued custom optics and display technologies. The company struggled with mass production and costs, eventually pivoting toward enterprise applications.

Xreal's approach is unique because it achieved genuine stereoscopic 3D display on consumer-grade, affordable AR glasses through software innovation rather than hardware revolution. This matters. It means Xreal glasses users suddenly have capabilities comparable to devices costing 3-5 times more, without requiring hardware replacement.

Real 3D Compatibility: Which Xreal Glasses Benefit?

One critical question: does Real 3D work on all Xreal devices, or only newer models?

Xreal designed Real 3D as a software-only update that works on their current generation of glasses hardware. The exact model compatibility depends on hardware specifications, specifically the quality of eye-tracking systems and display refresh rates. Older Xreal devices with less capable hardware can't implement Real 3D effectively.

This creates an interesting market dynamic. It incentivizes users with older Xreal hardware to upgrade, but not because of form factor improvements or new sensors. They upgrade for substantially better software capabilities. This is the opposite of how consumer technology usually works. Normally, you buy new hardware to get new capabilities. With Real 3D, the capability came to hardware that already existed.

For developers and enterprise customers, the timing is favorable. Applications can now target Real 3D without worrying about reaching only a tiny subset of users. The feature shipped to a broad installed base, making it worthwhile to invest development effort.

Development Impact: How Developers Are Responding

Software developers are the ones who truly benefit from and drive adoption of features like Real 3D. How are they responding?

Early reactions have been positive. Developers who build productivity and enterprise applications for AR glasses immediately recognized the value. The ability to display spatial data with genuine depth perception opens possibilities that weren't practical with 2D-style rendering.

Architectural visualization companies are exploring how Real 3D enables clients to better understand spatial relationships in proposed buildings and urban designs. Instead of viewing a 2D rendering or a video walkthrough, architects and clients can see spatial proportions instantly, make decisions faster, and catch design issues that might not be apparent in 2D.

Medical visualization is another area seeing rapid adoption. Surgeons planning complex procedures can now visualize patient anatomy in genuine 3D spatial relationships. CT and MRI scans displayed in AR with stereoscopic 3D provide spatial understanding that 2D medical imaging or standard 3D software can't match.

Education applications are emerging. Imagine chemistry students visualizing molecular structures not as 2D diagrams but as true 3D spatial models they can rotate and examine. Physics simulations become more intuitive. Spatial learning is enhanced because students perceive spatial relationships the way their brains evolved to perceive them.

Industrial applications are perhaps seeing the greatest immediate impact. Assembly line workers using AR glasses to understand complex assembly procedures benefit enormously from stereoscopic 3D. Mistakes decrease. Training time decreases. Work quality improves.

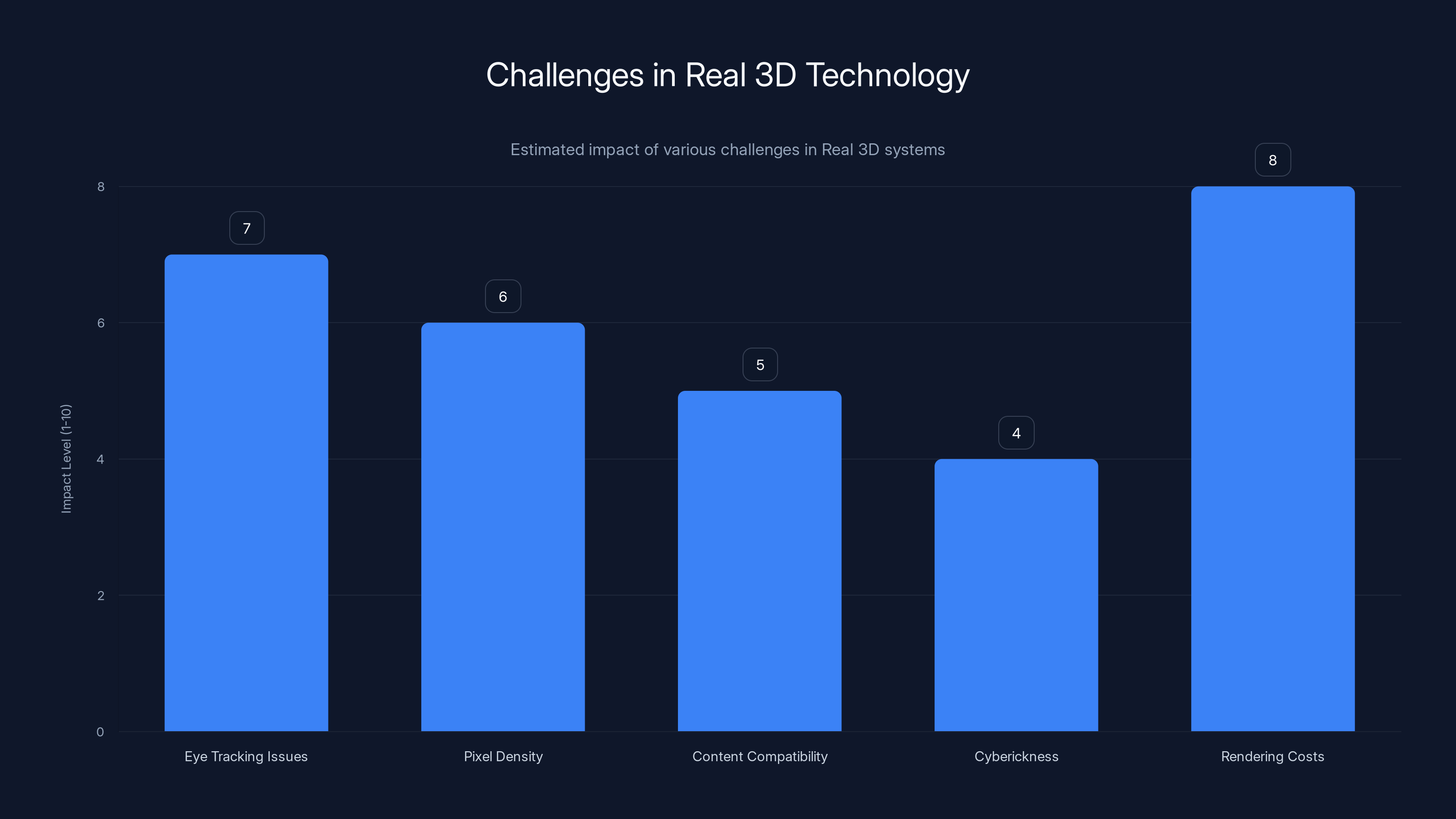

Eye tracking issues and rendering costs are the most significant challenges in Real 3D technology. Estimated data.

The Free Update Strategy: Why Xreal Chose Not to Monetize This Feature

Here's something unusual: Xreal released Real 3D as a free update. They didn't create a "Real 3D Plus" subscription. They didn't release it as a paid upgrade. They shipped it freely to all compatible hardware.

This decision tells you something about Xreal's strategic thinking. The company prioritizes ecosystem growth over short-term revenue extraction. Making Real 3D universally available accomplished three things.

First, they locked in existing customers. Owners of compatible Xreal glasses suddenly got vastly improved capabilities without paying anything. That builds loyalty and reduces the incentive to switch to competitors.

Second, they enabled developers. When a feature is free to all users, developers feel confident investing time and resources to build applications that rely on it. If Real 3D were a premium feature available only to paying subscribers, developers would hesitate to build for it. User bases would be smaller. Applications would be fewer.

Third, they positioned themselves competitively. Apple's Vision Pro starts at $3,499. Meta's Quest line remains constrained in spatial computing capabilities. By making stereoscopic 3D available on affordable Xreal glasses, they created a compelling value proposition. You could argue it's the best spatial computing value available in 2025.

The free update strategy also signals confidence. Xreal is betting that better hardware, better optics, better form factors, and better overall product design will differentiate them in the market. They're not betting that they can extract revenue by creating artificial feature paywalls.

Performance Metrics: Measuring the Real 3D Difference

If you want quantitative validation that Real 3D actually works, looking at performance metrics helps.

Latency is the primary metric. The system must detect eye position, render two images (one for each eye's perspective), and display the correct image to each eye. All of this must happen within a single display refresh cycle (roughly 11 milliseconds at 90 Hz). Xreal claims they've achieved this with minimal additional latency compared to non-stereoscopic rendering.
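The 11-millisecond figure is simply the refresh period: 1000 ms divided by 90 refreshes per second. A minimal sketch of a budget check, assuming the pipeline can be modeled as sequential stages that must all finish within one period; the stage names and timings below are placeholder assumptions, not measured Xreal figures.

```python
def frame_budget_ms(refresh_hz: float) -> float:
    """Time available per refresh cycle, in milliseconds."""
    return 1000.0 / refresh_hz


def fits_in_budget(stage_ms: dict[str, float], refresh_hz: float) -> tuple[bool, float]:
    """Return (fits, slack_ms) for pipeline stages run back to back.

    Simplifying assumption: stages do not overlap. Real pipelines often
    overlap stages, which this model ignores.
    """
    total = sum(stage_ms.values())
    slack = frame_budget_ms(refresh_hz) - total
    return slack >= 0, slack


if __name__ == "__main__":
    # Placeholder timings for illustration only.
    stages = {
        "eye_tracking": 2.0,
        "render_left": 3.5,
        "render_right": 3.5,
        "scanout": 1.5,
    }
    ok, slack = fits_in_budget(stages, 90)
    print(f"budget {frame_budget_ms(90):.2f} ms, fits={ok}, slack={slack:.2f} ms")
```

Raising the refresh rate shrinks the budget (about 8.3 ms at 120 Hz), which is one reason doubling the rendering work is hard to absorb.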

Frame rate stability matters enormously. If the display drops below 90 Hz or becomes inconsistent, users experience discomfort. Real 3D requires rendering two full frames, effectively doubling the rendering workload. Xreal optimized the system to handle this on existing hardware without dropping frames.

Battery impact is worth measuring. Rendering two frames instead of one roughly doubles the rendering workload, which would be expected to cut battery life noticeably, but Xreal implemented optimization techniques that reduce this impact. Users report that glasses with Real 3D enabled maintain battery life similar to non-stereoscopic rendering, though this varies by application and usage pattern.

Perceptual quality is harder to quantify but crucial. Users consistently report that Real 3D looks noticeably better than competing AR glasses solutions. Objects feel more three-dimensional. Spatial relationships feel more natural. Interaction feels more intuitive.

Accuracy of depth perception can be measured through task completion studies. Users performing spatial tasks (like assembling virtual objects, estimating distances, or evaluating spatial relationships) make fewer errors with Real 3D enabled compared to 2D rendering.

Challenges and Limitations: Real 3D Isn't Perfect

While Real 3D represents a significant advance, it comes with limitations worth understanding.

Eye tracking quality affects the entire system. If the system misidentifies which eye is which or has difficulty tracking eye position during rapid movements, the stereoscopic effect breaks down. Most of the time this works well, but in edge cases (strong lighting changes, extreme head rotations, intense eye movements), the system can briefly lose tracking.

Display pixel density matters. Stereoscopic 3D benefits from high pixel density because each eye is effectively getting half the pixel budget of a non-stereoscopic display. While Xreal glasses have excellent displays, some users with very good eyesight might notice pixelation more with Real 3D than with non-stereoscopic rendering.

Content compatibility varies. Applications written before Real 3D existed can use it, but they weren't optimized for it. Modern applications built specifically for stereoscopic 3D look and perform noticeably better.

Cybersickness and visual fatigue can occur if Real 3D is implemented poorly. If depth perception cues conflict with motion or vestibular input, users experience discomfort. Xreal has invested considerable effort minimizing this, but some users report mild eye strain during extended use.

Cost of rendering increases. Applications must be more carefully optimized because rendering demands are higher. Performance budgets become tighter. This affects game developers and application creators more than end users, but it's a real constraint.

Real 3D technology significantly enhances applications requiring spatial precision, with surgical planning and engineering design benefiting the most. (Estimated data)

Market Implications: What This Means for AR/XR Industry

Real 3D signals important shifts in how the AR/XR industry will evolve.

First, it demonstrates that software innovation can provide capabilities previously thought to require hardware revolution. This suggests other XR companies might pursue similar approaches rather than waiting for dual-display technology to become cheap enough for consumer products.

Second, it validates the consumer AR glasses market. For years, skeptics argued that AR glasses couldn't deliver value without resolving multiple technical challenges simultaneously. Real 3D shows that companies can incrementally solve these challenges, bringing products to market before everything is perfectly solved.

Third, it establishes Xreal as a credible competitor to much larger, better-capitalized companies. Apple and Meta have bigger resources, but Xreal has demonstrated engineering excellence and strategic thinking that rivals anything those companies have produced.

Fourth, it creates competitive pressure. Meta and Apple will need to respond. They might accelerate their timelines for stereoscopic 3D updates on existing hardware, or they might pursue hardware-focused solutions that provide even better spatial display capabilities.

Fifth, it enables entirely new application categories. With genuine stereoscopic 3D, AR glasses become genuinely viable for precision work, professional applications, and spatial computing tasks that seemed speculative before.

Future Developments: What Comes After Real 3D?

As impressive as Real 3D is, it's not the end of display innovation for Xreal glasses. Several developments will likely follow.

Varifocal displays will be the next frontier. Currently, AR glasses display everything at a fixed focal distance. Real 3D provides depth cues, but your eyes' focus remains fixed. Varifocal technology adjusts the focal distance of the display based on where the user is looking, matching how human eyes actually work. This combination of stereoscopic 3D plus varifocal display will be remarkably immersive.
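The mismatch varifocal displays aim to fix is commonly called the vergence-accommodation conflict. A minimal sketch, assuming a fixed optical focal plane (the 2 m value is an illustrative assumption, not an Xreal specification): stereo cues drive the eyes to converge at the virtual object's distance, while focus stays at the focal plane. Opticians express the gap in diopters, the reciprocal of distance in meters.

```python
import math


def vergence_angle_deg(ipd_m: float, distance_m: float) -> float:
    """Angle between the two lines of sight when both eyes fixate a
    point straight ahead at distance_m."""
    return math.degrees(2 * math.atan(ipd_m / (2 * distance_m)))


def va_mismatch_diopters(object_m: float, focal_plane_m: float) -> float:
    """Absolute gap between vergence demand and accommodation demand.

    Vergence follows the virtual object's depth; accommodation stays at
    the display's fixed focal plane.
    """
    return abs(1.0 / object_m - 1.0 / focal_plane_m)


if __name__ == "__main__":
    ipd = 0.065
    focal_plane = 2.0  # illustrative fixed focal distance (assumption)
    for obj in (0.5, 2.0, 5.0):
        print(f"object at {obj} m: vergence {vergence_angle_deg(ipd, obj):.2f} deg, "
              f"mismatch {va_mismatch_diopters(obj, focal_plane):.2f} D")
```

In this model the conflict disappears only when content sits exactly at the focal plane. A varifocal display would move the focal plane to follow the object being viewed, pushing the mismatch toward zero at every depth.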

Eye-tracking refinement will continue. Better hardware, faster processors, and improved algorithms will make eye tracking more accurate and responsive. This benefits Real 3D directly because better eye tracking means more reliable stereoscopic 3D.

Resolution increases will allow stereoscopic 3D to be rendered at higher quality. Current display resolutions work well with Real 3D, but higher resolutions will eliminate any remaining pixelation concerns.

Color accuracy and contrast improvements will make virtual objects appear more lifelike. As display technology improves, stereoscopic 3D will feel even more realistic.

Content creation tools will evolve. Developers will build better frameworks, libraries, and design tools specifically for stereoscopic 3D content. This will accelerate application development.

Buying Decisions: Should You Upgrade?

If you own older Xreal glasses without Real 3D support, should you upgrade to a model that includes it?

The answer depends on your use case. If you primarily use AR glasses for entertainment and casual applications, the improvement is nice but not essential. Real 3D doesn't make games dramatically more fun, though it does improve immersion.

But if you use AR glasses for productivity work, spatial visualization, or any application requiring precision, the upgrade is worthwhile. The improvement in spatial understanding and accuracy is substantial enough to measurably impact productivity.

For new buyers, this is an even easier decision. There's no reason to buy AR glasses without stereoscopic 3D support in 2025. The capability is becoming standard, and choosing devices without it means accepting an inferior spatial computing experience.

Pricing is relevant too. Xreal glasses are already extremely competitively priced compared to alternatives. The fact that Real 3D is included as a free update makes the value proposition even stronger.

Real 3D significantly enhances user experience by improving interaction intuitiveness, precision in professional applications, and reducing cognitive load. (Estimated data)

Technical Deep Dive: How Eye Tracking Enables Real 3D

Eye tracking is the unsung hero of Real 3D. Without it, the entire approach falls apart. Here's why eye tracking matters so much.

Your eyes are roughly 65 millimeters apart. This distance is called the interpupillary distance (IPD). When viewing stereoscopic 3D content, each eye must see an image rendered from a viewpoint separated by this distance. But your eyes move constantly. You look left, right, up, down. Your eyes converge and diverge depending on where you're focused.

Xreal glasses incorporate infrared eye-tracking sensors that can detect eye position with sub-millimeter accuracy at high frequencies (typically 200 Hz or faster). This data feeds continuously into the Real 3D system.

The software maintains a model of your current eye position and orientation. It uses this information to know which eye is which and where each eye is currently looking. It then renders the stereoscopic pair accordingly. The left eye's image is rendered from a viewpoint offset half the IPD (about 32.5 mm) to the left of center, and the right eye's image from a viewpoint offset the same amount to the right, so the two viewpoints end up about 65 mm apart.
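The offset arithmetic is simple: each eye's virtual camera sits half the IPD from the midpoint between the eyes, along the head's sideways axis. A minimal sketch, assuming a head pose given as a center position plus a unit "right" vector (hypothetical inputs chosen for illustration; real XR runtimes typically supply full pose matrices per eye):

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Vec3:
    x: float
    y: float
    z: float

    def __add__(self, other: "Vec3") -> "Vec3":
        return Vec3(self.x + other.x, self.y + other.y, self.z + other.z)

    def scale(self, k: float) -> "Vec3":
        return Vec3(self.x * k, self.y * k, self.z * k)


def eye_positions(head_center: Vec3, right_unit: Vec3, ipd_m: float) -> tuple[Vec3, Vec3]:
    """Return (left_eye, right_eye) camera positions.

    Each eye is offset by half the IPD from the head center along the
    head's right axis (assumed to be a unit vector), so the two cameras
    end up ipd_m apart.
    """
    half = ipd_m / 2
    left = head_center + right_unit.scale(-half)
    right = head_center + right_unit.scale(half)
    return left, right


if __name__ == "__main__":
    center = Vec3(0.0, 1.6, 0.0)      # example head height of 1.6 m
    right_axis = Vec3(1.0, 0.0, 0.0)  # head facing straight ahead
    l, r = eye_positions(center, right_axis, 0.065)
    print(l, r)
    print(f"separation: {r.x - l.x:.3f} m")
```

Offsetting each eye by the full 65 mm would double the baseline and exaggerate every depth cue, which is why the half-IPD split matters.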

The timing is critical. The system must detect eye position, compute the necessary rendering offsets, render both images, and display them to the correct eyes within a single display refresh cycle. Miss this window, and the effect breaks. But Xreal's implementation achieves this reliably.

This is why older Xreal glasses can't implement Real 3D. If they have older eye-tracking hardware with higher latency, implementing stereoscopic 3D would result in visible artifacts. The technical bar is high, but Xreal cleared it.

Application Examples: Real 3D in Action

To make Real 3D concrete, let's examine how it transforms specific applications.

Furniture Assembly: IKEA and other furniture retailers are building AR applications that help customers visualize furniture in their homes before purchase. With Real 3D, a virtual sofa placed in your living room appears genuinely three-dimensional. You can see how it will look from different angles. You can perceive whether it will fit through doorways. You understand spatial relationships immediately. Without Real 3D, this same application requires more mental effort to interpret.

Surgical Planning: Surgeons use AR to visualize patient anatomy during complex procedures. A patient's CT scan is rendered as a 3D model overlaid on the patient's body. With Real 3D, the surgeon perceives the anatomy with the same spatial relationships they'd perceive if viewing the actual patient's exposed tissue. This can reduce surgical time and improve outcomes.

Engineering Assembly: Workers assembling complex machinery use AR glasses to see step-by-step assembly instructions. With Real 3D, spatial relationships between components are immediately obvious. Workers can perform assembly tasks more quickly and accurately. Real 3D can reduce reliance on physical mockups and printed assembly guides.

Educational Visualization: Chemistry students visualizing molecular structures see atoms positioned in genuine 3D space. They can rotate molecules, examine bond angles, and understand spatial geometry the way it actually exists. This beats traditional 2D diagrams or even standard 3D software on a monitor.

Architectural Walkthrough: Architects and clients exploring proposed building designs see spaces with correct spatial proportions and lighting. Instead of viewing a rendered video or looking at a 2D rendering, they experience the space in three dimensions. Design decisions become clearer. Problems are identified faster.

Integration with Runable for Enhanced Productivity

While considering AR glasses capabilities, it's worth noting that spatial computing glasses pair exceptionally well with AI-powered automation platforms. Runable offers AI-powered automation for creating presentations, documents, reports, images, videos, and slides starting at $9/month.

Consider the workflow: An engineer using Xreal glasses with Real 3D could visualize spatial data in 3D while simultaneously generating technical documentation and reports automatically through Runable. The AR glasses handle spatial visualization and interaction, while Runable handles document generation, presentation creation, and report automation. Together, they address the complete spatial computing and productivity workflow.

For teams building spatial applications, this combination is particularly powerful. Developers can use Runable to automate the creation of training materials, instructional slides, and technical documentation, while the actual spatial content is experienced through AR glasses. This frees team members to focus on the unique aspects of spatial computing rather than spending time on routine document creation.

Use Case: Automatically generate AR application documentation and training materials while building spatial computing experiences with Xreal glasses.

The Broader Spatial Computing Ecosystem

Real 3D doesn't exist in isolation. It's part of a broader ecosystem of technologies converging on spatial computing.

Spatial audio systems complement spatial visuals. When you hear sound coming from a virtual object's actual position in space, the immersion increases dramatically. Several AR glasses manufacturers, including Xreal, have integrated spatial audio. Combined with stereoscopic 3D, the effect is powerful.

Hand tracking and gesture recognition let users interact with spatial content intuitively. Rather than holding a controller, you use your actual hands to grab, manipulate, and interact with virtual objects. This works much better with stereoscopic 3D because you can perceive the spatial relationship between your hands and the objects you're interacting with.

Environmental mapping allows applications to understand the physical space around you. Advanced computer vision analyzes the room, identifies surfaces, understands lighting, and places virtual content appropriately. Real 3D enhances this by making the integrated content appear genuinely part of the physical space.
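The placement step can be illustrated in isolation. This is a minimal sketch that assumes the mapping layer has already detected a flat surface and reports it as a point plus a normal. Casting a ray from the user's head and intersecting it with that plane gives an anchor position for virtual content. The `Plane` type and the `place_on_surface` function are hypothetical illustrations, not part of any Xreal SDK.

```python
from dataclasses import dataclass
from typing import Optional, Tuple

Vec3 = Tuple[float, float, float]


def dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


@dataclass
class Plane:
    point: Vec3   # any point on the detected surface
    normal: Vec3  # surface normal (does not need to be unit length)


def place_on_surface(origin: Vec3, direction: Vec3, plane: Plane) -> Optional[Vec3]:
    """Return where a ray (e.g. from the user's head) meets the plane, or None."""
    denom = dot(direction, plane.normal)
    if abs(denom) < 1e-9:
        return None  # ray runs parallel to the surface
    to_plane = tuple(p - o for p, o in zip(plane.point, origin))
    t = dot(to_plane, plane.normal) / denom
    if t < 0:
        return None  # surface lies behind the ray origin
    return tuple(o + t * d for o, d in zip(origin, direction))


# Example: a tabletop detected at 0.7 m height, head at 1.6 m, looking down and forward.
table = Plane(point=(0.0, 0.7, 0.0), normal=(0.0, 1.0, 0.0))
anchor = place_on_surface((0.0, 1.6, 0.0), (0.0, -1.0, -1.0), table)
print(anchor)  # roughly (0.0, 0.7, -0.9)
```

A real system would intersect against many detected surfaces and keep the nearest hit. The single-plane version shows only the core geometry.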

Persistent content and shared spaces enable multiple people to experience the same AR environment simultaneously. You and a colleague can collaborate on a 3D model, each seeing it from your own perspective with correct spatial relationships. This is far more powerful than trying to collaborate using 2D screens.
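Giving each participant "their own perspective" amounts to a change of coordinates. The shared model lives in one anchor frame, and each headset re-expresses it relative to its wearer's pose. The sketch below is simplified: it assumes yaw-only rotation and poses that are already aligned to a common anchor, whereas real systems use full 3D rotations, typically quaternions. `world_to_user` is a hypothetical helper.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]


def world_to_user(point: Vec3, user_pos: Vec3, user_yaw_rad: float) -> Vec3:
    """Re-express a point from the shared anchor frame in one user's local frame.

    Simplification: users rotate only about the vertical (y) axis.
    Local axes: +x right, +y up, -z forward.
    """
    # Move the origin to the user's position.
    dx, dy, dz = (p - u for p, u in zip(point, user_pos))
    # Undo the user's heading by rotating the offset by -yaw about the y axis.
    c, s = math.cos(-user_yaw_rad), math.sin(-user_yaw_rad)
    return (c * dx + s * dz, dy, -s * dx + c * dz)


# A shared model 2 m in front of user A; user B stands 2 m to the model's right, turned to face it.
model = (0.0, 1.0, -2.0)
print(world_to_user(model, (0.0, 1.0, 0.0), 0.0))           # (0.0, 0.0, -2.0): straight ahead
print(world_to_user(model, (2.0, 1.0, -2.0), math.pi / 2))  # about (0.0, 0.0, -2.0): also straight ahead
```

Both users see the same object directly in front of them because each headset applies its own pose. The shared data itself never changes.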

Cloud processing offloads rendering to powerful servers rather than relying on the glasses' limited processors. This enables far more complex visuals and AI-driven features. Combined with Real 3D, it opens possibilities for applications that would be impossible on current-generation hardware alone.

Security and Privacy Considerations with Eye Tracking

Real 3D relies on continuous eye tracking, which raises interesting security and privacy questions.

Where does eye-tracking data go? Does it stay on the device, or is it transmitted to servers? Xreal's approach keeps eye-tracking data local to the device and doesn't transmit eye-movement patterns to external servers. This is a privacy-friendly design choice, though it's worth verifying each manufacturer's specific implementation.

Could eye-tracking data be misused? Theoretically, if a malicious application had access to eye-tracking data, it could infer what you're looking at, potentially revealing sensitive information. Xreal sandboxes eye-tracking access, restricting which applications can access this data and requiring user permission.
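The article does not describe how Xreal's sandbox is built, so the following is only a generic illustration of the pattern. A hypothetical `GazeBroker` keeps raw gaze samples on the device and forwards them only to apps the user has approved. It re-checks the grant on every delivery, so revoking permission takes effect immediately. All names here are assumptions for illustration.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Set, Tuple

GazeSample = Tuple[float, float]  # normalized (x, y) gaze point on the display


@dataclass
class GazeBroker:
    """Hypothetical on-device broker: raw gaze data reaches only apps the user approved."""
    granted: Set[str] = field(default_factory=set)
    subscribers: List[Tuple[str, Callable[[GazeSample], None]]] = field(default_factory=list)

    def grant(self, app_id: str) -> None:
        # Assumed to run only after the user accepts a permission prompt.
        self.granted.add(app_id)

    def revoke(self, app_id: str) -> None:
        self.granted.discard(app_id)

    def subscribe(self, app_id: str, callback: Callable[[GazeSample], None]) -> None:
        if app_id not in self.granted:
            raise PermissionError(f"{app_id} has not been granted gaze access")
        self.subscribers.append((app_id, callback))

    def publish(self, sample: GazeSample) -> None:
        # Re-check the grant on every delivery so revocation takes effect immediately.
        for app_id, callback in self.subscribers:
            if app_id in self.granted:
                callback(sample)


broker = GazeBroker()
received: List[GazeSample] = []
broker.grant("model-viewer")
broker.subscribe("model-viewer", received.append)
broker.publish((0.5, 0.5))
broker.revoke("model-viewer")
broker.publish((0.6, 0.4))  # not delivered: permission was revoked
print(received)  # [(0.5, 0.5)]
```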

What about authentication? Some devices use eye gaze for authentication. For example, you might be required to look at specific points in a specific sequence to unlock the device. A gaze pattern can be harder for an onlooker to observe than a typed password, but it offers no protection if someone physically coerces you into performing it.
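The gaze-sequence idea can be sketched as follows. This assumes the eye tracker already reports discrete fixation points in normalized display coordinates. `matches_gaze_sequence` and the 0.05 tolerance are illustrative choices, not any vendor's scheme. Because the check compares only positions and order, a production system would likely also need rate limiting and liveness checks.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float]  # normalized (x, y) fixation point on the display


def matches_gaze_sequence(fixations: Sequence[Point], enrolled: Sequence[Point],
                          tolerance: float = 0.05) -> bool:
    """True if the fixations hit the enrolled targets in the same order, each within `tolerance`."""
    if len(fixations) != len(enrolled):
        return False
    return all(math.dist(seen, target) <= tolerance
               for seen, target in zip(fixations, enrolled))


enrolled = [(0.1, 0.1), (0.9, 0.1), (0.5, 0.9)]
print(matches_gaze_sequence([(0.12, 0.09), (0.88, 0.11), (0.5, 0.93)], enrolled))  # True
print(matches_gaze_sequence([(0.9, 0.1), (0.1, 0.1), (0.5, 0.9)], enrolled))     # False: wrong order
```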

These are solvable problems, and Xreal's approach is reasonably thoughtful. But they're worth understanding when evaluating whether to adopt devices with sophisticated eye-tracking capabilities.

Competitive Responses and Industry Reactions

Since Xreal announced Real 3D, how have competitors responded?

Meta has indicated they're investigating similar software-based stereoscopic 3D approaches for Quest devices. No timeline has been announced, but Meta is clearly taking Xreal's achievement seriously.

Apple has stated that Vision Pro already provides superior stereoscopic 3D through its dual-display hardware architecture. This is true: Apple's approach is technically elegant. But that argument ignores the price, weight, and power consumption advantages Xreal achieved through its software-focused approach.

Microsoft has been quieter, but its research labs have published work on software-based stereoscopic 3D techniques. Some of this research likely influences HoloLens development.

Smaller companies such as Varjo are pushing optical and display innovations, and these approaches complement rather than compete with Real 3D. Nreal, sometimes mentioned alongside them, is not a separate startup: it is Xreal's former brand name.

The industry consensus is becoming clear: stereoscopic 3D is essential for mainstream AR glasses. Companies pursuing single-display, mono-vision approaches will find it increasingly difficult to justify their design decisions to users who've experienced better alternatives.

Long-Term Implications for XR Hardware Design

Real 3D might influence how future XR hardware is designed.

Companies might move away from the dual-display hardware revolution that many expected. If software can provide stereoscopic 3D on single-display systems, the ROI on dual-display hardware becomes less clear. The complexity and cost might not be justified.

Instead, we might see optimization around the factors that matter for Real 3D: ultra-high refresh rates, excellent eye tracking, low latency, and high pixel density. These requirements differ from what traditional AR glasses design prioritized.

Optics will remain important, but perhaps less dominant. Historically, optical design was the primary differentiator for AR glasses. Real 3D suggests that software and integration can matter as much as the optics themselves.

Processor and memory requirements will increase. Rendering two viewpoints continuously demands more compute and faster memory than mono rendering. Future glasses will need more powerful processors or more efficient rendering algorithms.
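The cost increase can be expressed with simple arithmetic. This is a rough sketch that assumes each view is rendered sequentially on the same processor and ignores work the two views can share. Under those assumptions, keeping the same update rate per eye doubles the number of views rendered per second and halves the time available for each view. `render_budget` is a hypothetical helper.

```python
from typing import Tuple


def render_budget(per_eye_hz: float, stereo: bool) -> Tuple[float, float]:
    """Return (views rendered per second, milliseconds available per view).

    Assumes views are rendered one after another on the same processor and
    ignores work the two views could share (culling, shadow maps, etc.).
    """
    views_per_second = per_eye_hz * (2 if stereo else 1)
    return views_per_second, 1000.0 / views_per_second


for hz in (60, 90):
    mono_views, mono_ms = render_budget(hz, stereo=False)
    stereo_views, stereo_ms = render_budget(hz, stereo=True)
    print(f"{hz} Hz per eye: mono {mono_views} views/s at {mono_ms:.2f} ms each, "
          f"stereo {stereo_views} views/s at {stereo_ms:.2f} ms each")
```

At 60 Hz per eye, for example, the per-view budget drops from about 16.7 ms to about 8.3 ms. Sharing per-frame work between the two eyes is one way renderers can claw some of that back.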

Battery life will become more critical. If Real 3D increases power consumption, battery technology improvements become essential for all-day use. This might drive investment in battery research and efficient processors for wearables.

User Experience Evolution: What Real 3D Enables Next

Once stereoscopic 3D becomes standard, what capabilities become possible?

Varifocal displays are the obvious next step. Combining stereoscopic depth perception with variable focal distance would resolve the vergence-accommodation conflict of fixed-focus displays and bring the experience much closer to viewing actual 3D objects.
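The benefit of varifocal optics can be quantified. With a fixed focal plane, the eyes converge on a stereoscopic object at its apparent distance but must still focus at the display's focal distance. The mismatch between those two distances, usually measured in diopters (inverse meters), is the vergence-accommodation conflict. A varifocal display aims to drive this mismatch toward zero. In the small sketch below, the 63 mm interpupillary distance and 2 m focal plane are illustrative values, not Xreal specifications.

```python
import math


def vergence_deg(ipd_m: float, distance_m: float) -> float:
    """Angle between the two lines of sight when both eyes fixate a point distance_m away."""
    return math.degrees(2 * math.atan(ipd_m / (2 * distance_m)))


def va_conflict_diopters(apparent_m: float, focal_m: float) -> float:
    """Mismatch between convergence distance and focus distance, in diopters (1/m)."""
    return abs(1 / apparent_m - 1 / focal_m)


ipd = 0.063  # illustrative interpupillary distance, meters
print(f"{vergence_deg(ipd, 0.5):.2f} deg")  # eyes converge for an object at 0.5 m
print(va_conflict_diopters(0.5, 2.0))       # fixed 2 m focal plane: 1.5 D mismatch
print(va_conflict_diopters(0.5, 0.5))       # varifocal focus at 0.5 m: 0.0 D
```

Nearby virtual objects produce the largest mismatch on a fixed-focus display. This is why close-range work is where varifocal optics would matter most.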

Higher resolutions will reduce remaining visual artifacts. Current displays are sharp, but higher pixel density will bring virtual content closer to photorealism.

Wider field of view will let more of your natural visual field display content. Current glasses have decent field of view, but expanding it will make the experience feel less tunnel-like.

Better color accuracy and HDR support will make virtual content appear more lifelike. Current displays are good, but improvements in color gamut and brightness will enhance immersion.

More sophisticated hand tracking and object recognition will enable natural interaction. Rather than explicitly commanding applications, you'll be able to gesture naturally and have applications understand your intent.

AI agents will proactively assist you. Rather than reactive applications, AR glasses with AI integration will suggest relevant information, offer assistance, and adapt to your needs in real-time.

The Accessibility Angle: Real 3D for Users with Vision Differences

While stereoscopic 3D benefits most users, it has particular value for people with certain types of visual impairment.

For users with color blindness, stereoscopic 3D provides an alternative way to distinguish between objects that might have identical luminance. Rather than relying on color contrast, spatial separation conveys information.

For users with reduced visual acuity, proper depth perception can compensate partially for other visual limitations. Understanding spatial relationships through binocular depth cues requires less visual detail than reading small text or identifying fine visual patterns.

For aging users whose natural lens loses flexibility, AR glasses that provide depth information through stereoscopic 3D reduce reliance on accommodation (the eye's focusing mechanism). This can reduce eye strain.

For users with certain types of low vision, spatial audio combined with stereoscopic 3D creates a richer perceptual experience than traditional single-sense interfaces.

This isn't to say Real 3D is an accessibility solution. Proper accessibility requires much more. But it's worth noting that stereoscopic 3D benefits some users far more than others.

FAQ

What is Real 3D and how does it differ from regular 3D rendering?

Real 3D is Xreal's technology that provides genuine stereoscopic 3D display on AR glasses through a free software update. Unlike standard 3D rendering, which simulates depth on flat displays, Real 3D displays two slightly different images optimized for each eye's perspective. This creates true binocular depth perception, making virtual objects feel genuinely three-dimensional rather than appearing flat with depth illusions.

How does Xreal's Real 3D work technically?

Real 3D works by rendering two perspectives of a scene at high refresh rates and displaying each perspective to the correct eye at precisely the right time. The system uses eye-tracking technology to identify which eye is which and synchronizes image delivery to each eye within milliseconds. This happens so quickly that your brain merges the images into a single three-dimensional perception, just like how your natural binocular vision works.
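The two-viewpoint, alternating-delivery scheme described in this answer can be sketched in a few lines. This is a generic illustration of time-multiplexed stereo, not Xreal's implementation. It places two virtual cameras half an interpupillary distance to either side of the head position, then alternates which eye each display refresh serves. `eye_positions`, `frame_schedule`, the 64 mm IPD, and the 120 Hz rate are assumptions for illustration.

```python
from itertools import islice
from typing import Iterator, Tuple

Vec3 = Tuple[float, float, float]


def eye_positions(head: Vec3, right: Vec3, ipd_m: float) -> Tuple[Vec3, Vec3]:
    """Place two virtual cameras half an IPD either side of the head, along its unit right vector."""
    half = ipd_m / 2
    left_eye = tuple(h - half * r for h, r in zip(head, right))
    right_eye = tuple(h + half * r for h, r in zip(head, right))
    return left_eye, right_eye


def frame_schedule(display_hz: float) -> Iterator[Tuple[int, float, str]]:
    """Yield (frame index, start time in ms, eye), alternating eyes on every refresh."""
    frame = 0
    while True:
        yield frame, frame * 1000.0 / display_hz, "left" if frame % 2 == 0 else "right"
        frame += 1


left_cam, right_cam = eye_positions((0.0, 1.6, 0.0), (1.0, 0.0, 0.0), ipd_m=0.064)
print(left_cam, right_cam)  # (-0.032, 1.6, 0.0) (0.032, 1.6, 0.0)
for index, t_ms, eye in islice(frame_schedule(120.0), 4):
    print(index, round(t_ms, 2), eye)  # left, right, left, right at ~8.33 ms spacing
```

At 120 Hz, each eye receives a new image on every other refresh, an effective 60 Hz per eye. This is why time-multiplexed stereo depends so heavily on high refresh rates.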

Which Xreal glasses models support Real 3D?

Real 3D is available on current-generation Xreal glasses hardware that meets specific requirements for eye-tracking quality and display refresh rates. Older Xreal models lack the necessary hardware capabilities to implement Real 3D effectively. Check Xreal's official compatibility list for your specific device, as they've been updating which models support the feature over time.

Does Real 3D affect battery life significantly?

While rendering two full perspectives theoretically doubles the rendering workload, Xreal implemented optimizations that minimize battery impact. Most users report that glasses with Real 3D enabled maintain battery life similar to non-stereoscopic rendering, though the actual impact varies with the applications you use and your individual usage patterns.

What types of applications benefit most from Real 3D?

Applications requiring spatial precision benefit most, including surgical planning, engineering assembly, architectural visualization, and complex spatial design work. Educational applications visualizing 3D content also improve significantly. Even casual applications feel more immersive, though productivity and professional applications see the greatest practical benefit in terms of improved accuracy and reduced errors.

Is Real 3D causing eye strain or discomfort?

Xreal invested considerable effort minimizing visual fatigue in Real 3D. Most users report no eye strain with normal use. However, some individuals sensitive to stereoscopic effects might experience mild discomfort during extended sessions. If you're sensitive to 3D displays, test Real 3D carefully, and consider taking breaks, which is good practice anyway for any extended AR use.

How does Real 3D compare to Apple Vision Pro's stereoscopic 3D?

Apple Vision Pro uses dual physical displays to achieve stereoscopic 3D, providing technically excellent results but at significantly higher cost, weight, and power consumption. Real 3D achieves similar perceptual results through software on less powerful hardware. Vision Pro's approach is elegant but represents different design tradeoffs. For most users, Real 3D on affordable Xreal glasses provides better value than Vision Pro's premium approach.

Will older AR glasses from other manufacturers get stereoscopic 3D updates?

Some manufacturers are investigating software-based stereoscopic 3D similar to Real 3D. However, as of early 2025, Xreal is the only consumer AR glasses manufacturer to successfully implement and release this capability. Other manufacturers are likely following, but timelines remain unclear. If stereoscopic 3D is important to you, Xreal glasses currently offer the best consumer option.

What does Real 3D mean for the future of AR glasses?

Real 3D demonstrates that stereoscopic 3D is achievable on consumer-grade AR glasses through software innovation, not just expensive hardware solutions. This validates the market for AR glasses, enables new application categories, and signals that the industry should treat spatial display capabilities as essential rather than optional. Future AR glasses will increasingly include stereoscopic 3D as a standard rather than a premium feature.

How can developers build applications optimized for Real 3D?

Developers can use Xreal's SDKs and development tools, which provide APIs for accessing Real 3D capabilities. While existing applications benefit from Real 3D automatically, applications specifically designed for stereoscopic 3D can take advantage of deeper integration. Xreal has published documentation and best practices for developers wanting to fully optimize content for Real 3D.

Conclusion: The Watershed Moment

Xreal's Real 3D update represents something more important than another feature improvement. It signals that the augmented and extended reality market has reached several inflection points at once.

First, it demonstrates that software innovation can provide capabilities previously thought to require hardware revolution. This opens possibilities for how technology companies approach product development. Rather than waiting for perfect hardware, companies can deliver value incrementally through clever software engineering.

Second, it validates spatial computing as a consumer market category. For years, skeptics argued that AR glasses couldn't deliver sufficient value to justify adoption. Real 3D addresses what might be the largest remaining barrier: genuine 3D perception. With this solved, the question shifts from "Are AR glasses worth buying?" to "Which AR glasses should I buy?"

Third, it establishes Xreal as a credible competitor in a market dominated by companies with far greater resources. Apple and Meta have invested billions in spatial computing. Despite those advantages, Xreal achieved something genuinely innovative that those companies have yet to match.

Fourth, it creates urgency in the competitive landscape. Companies pursuing single-display AR glasses without credible paths to stereoscopic 3D will find their products increasingly dated. Those pursuing dual-display approaches must justify the complexity and cost when software solutions achieve similar perceptual results.

Fifth, it enables application categories that previously seemed speculative. Medical professionals, engineers, architects, and educators can now build applications that leverage genuine spatial 3D in ways that seemed impossible on previous-generation hardware.

The real genius of Real 3D isn't that it represents a technical breakthrough in isolation. It's that it solved a problem that mattered, did so in a way that benefited everyone with compatible hardware, and did so at a price point that makes the value unambiguous. This is how technology adoption accelerates. This is how markets pivot from speculation to mainstream adoption.

If you've been waiting for AR glasses to become genuinely useful, waiting for spatial computing to deliver on its promises, Real 3D might be the catalyst that changes your mind. Not because it's perfect. It's not. But because it finally delivers spatial perception in a way that feels natural, works reliably, and opens genuine possibilities for applications that change how people work and learn.

The XR glasses industry has had many false starts. But this time, things feel different. Real 3D is less about one feature and more about validation that the entire direction is correct. It's the confirmation that spatial computing's potential will be realized not in some distant future with exotic hardware, but here and now, with products you can buy today.

Key Takeaways

- Real 3D provides genuine stereoscopic 3D display on Xreal glasses through software innovation, delivering proper binocular depth perception for the first time in consumer AR glasses

- The breakthrough came as a free update rather than hardware replacement, making spatial computing capabilities accessible to existing users without additional investment

- Eye tracking technology enables the system to identify which eye is which and deliver the correct perspective at precise millisecond timing, making stereoscopic 3D possible on single-display hardware

- Applications requiring spatial precision—medical visualization, engineering assembly, architectural design, and industrial work—see measurable improvements in accuracy, speed, and user satisfaction with stereoscopic 3D

- Real 3D signals a shift from hardware-centric innovation toward software-based solutions, establishing spatial computing as mainstream technology rather than speculative future possibility
