Vercel v0: Solving the 90% Problem with AI-Generated Code in Production [2025]

There's a massive disconnect happening inside every enterprise right now, and most companies don't even realize it's a security crisis waiting to explode.

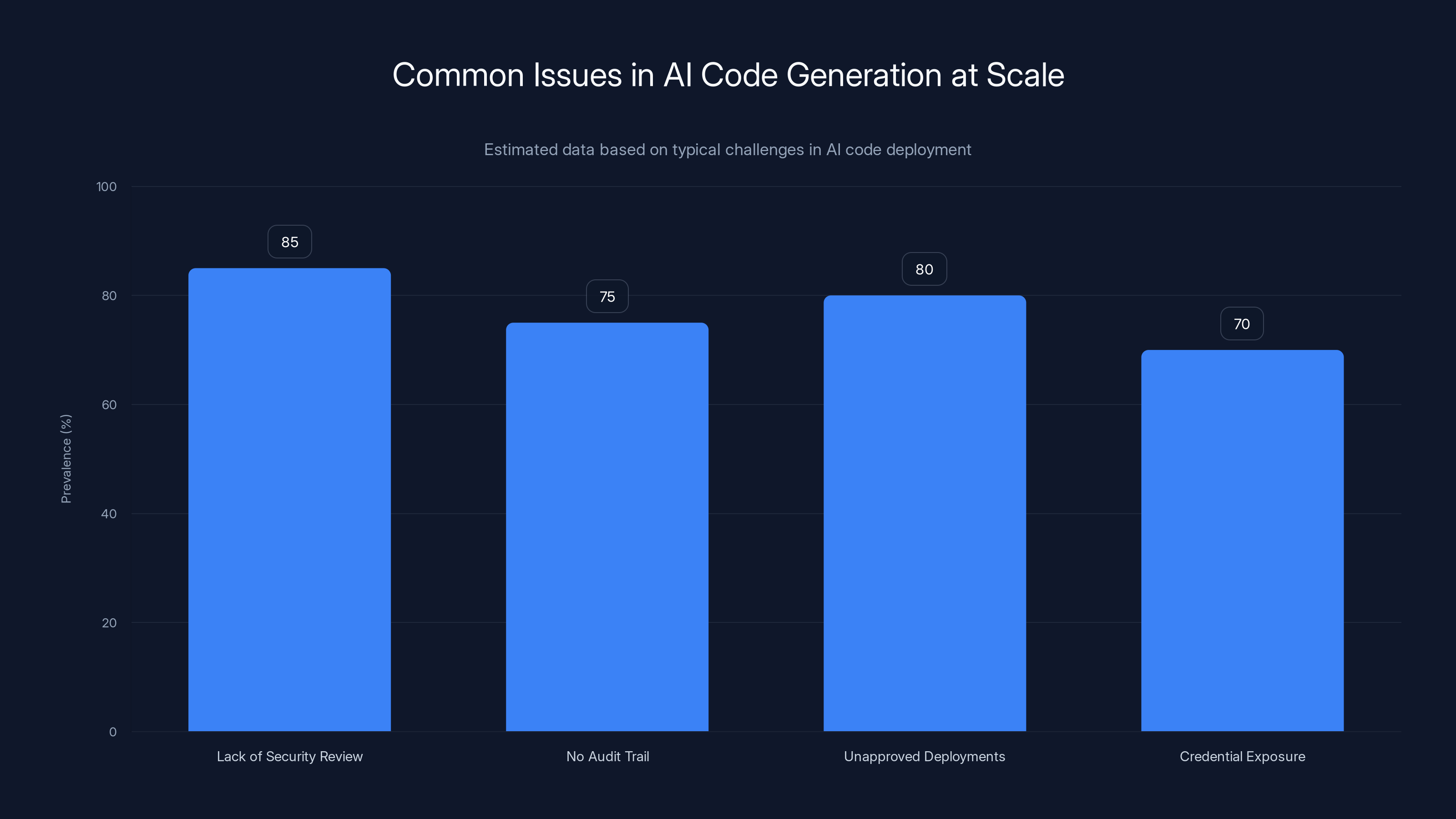

Developers are spinning up AI tools, copying database credentials into prompts, generating code, and deploying it to random cloud accounts—completely outside official channels. No audit trail. No governance. No idea what's running where. This is what's being called the world's largest shadow IT problem, and it's happening because the tools that generate code don't actually understand how production systems work.

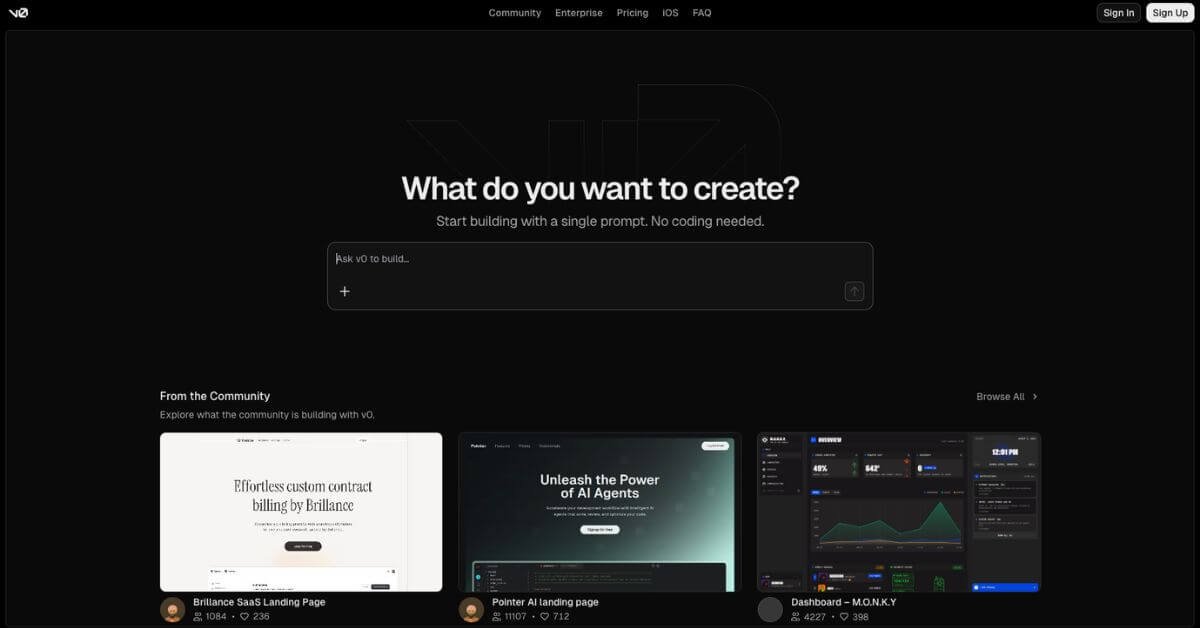

That's the gap Vercel just decided to fix.

The company rebuilt its v0 platform from the ground up to do something that sounds simple but turns out to be monumentally difficult: connect AI-generated code directly to existing production infrastructure. Not prototypes. Not isolated sandboxes. Real deployments with real security controls, proper git workflows, and infrastructure that actually exists in your company.

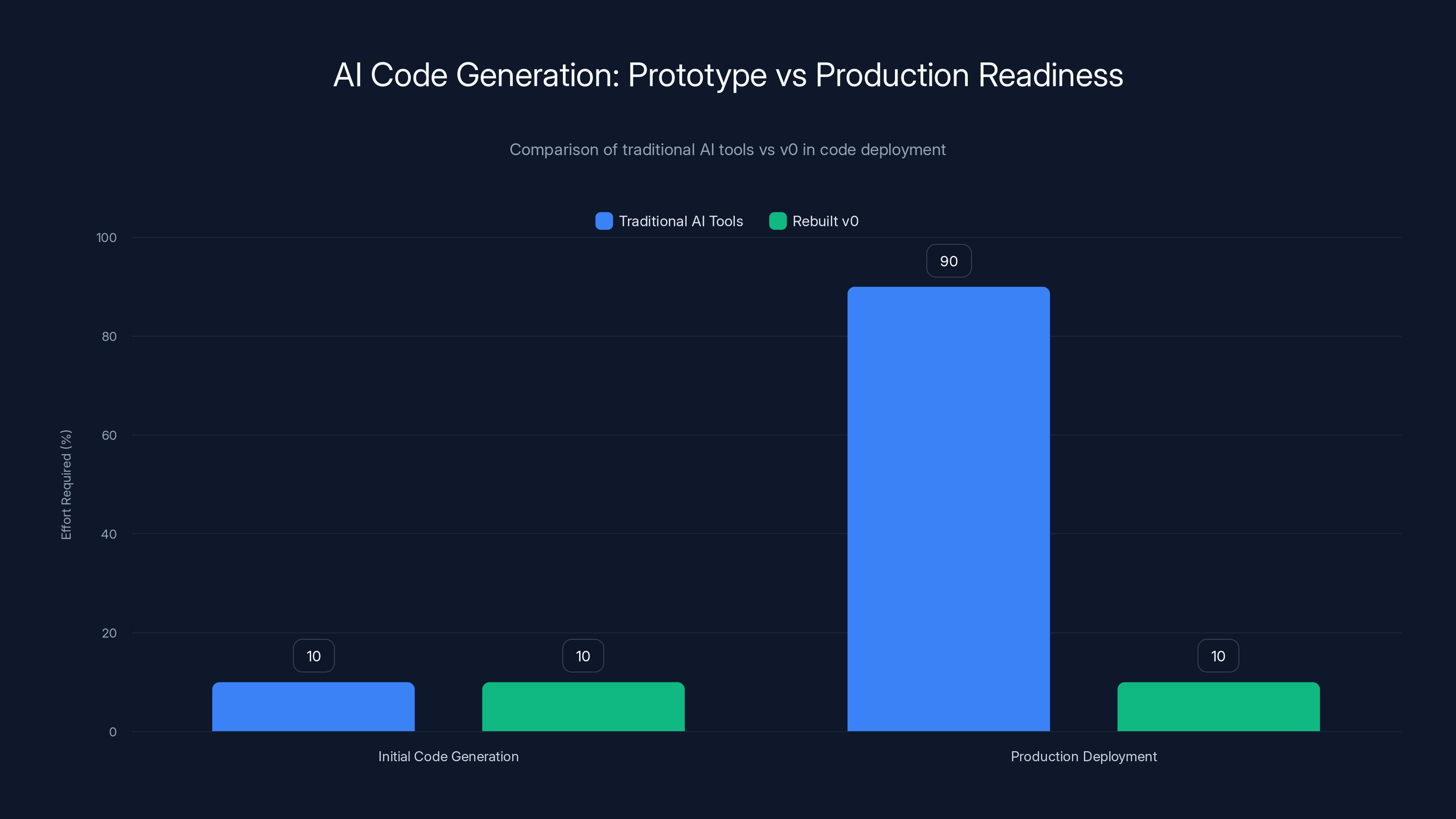

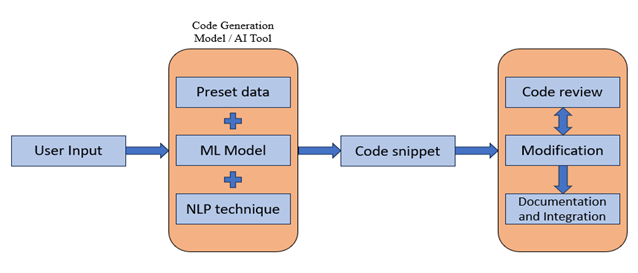

Before this rebuild, v0 was positioned as a "version 0" tool, something that solved the blank canvas problem for developers. You'd prompt your way to a UI scaffold that looked decent, iterate on it conversationally, and then... you'd have to figure out how to get it into your actual system. That's the 90% problem. The first 10% is generating the initial code. The other 90% is integrating it with everything else your organization has built, securing it, auditing it, and shipping it through proper channels.

With v0 rebuilt, that 90% gap starts closing. And the implications ripple across how teams think about AI-assisted development, infrastructure control, and enterprise security.

Let's dig into what actually changed, why it matters, and what this means for how your team might build software in 2025.

TL;DR

- The 90% problem: AI tools generate initial code (10%), but integrating it with production infrastructure (90%) requires manual rewrites and security workarounds

- Vercel's solution: Sandbox runtime that imports GitHub repos, pulls environment variables, and generates code that maps directly to real deployments

- Security model shift: Built-in audit trails, git workflows, and infrastructure controls replace the "shadow IT" approach of copying credentials into prompts

- Team collaboration: Non-engineers can now ship production code through proper PR processes without needing local dev environments

- Infrastructure matters: Vercel's decade-long focus on React and Next.js infrastructure is the differentiator, not just the AI UI

Traditional AI tools require significant effort for production deployment (90%), while v0 minimizes this to 10% by integrating with production infrastructure from the start. Estimated data.

The Original v0: Smart Prototyping, But a Dead End for Production

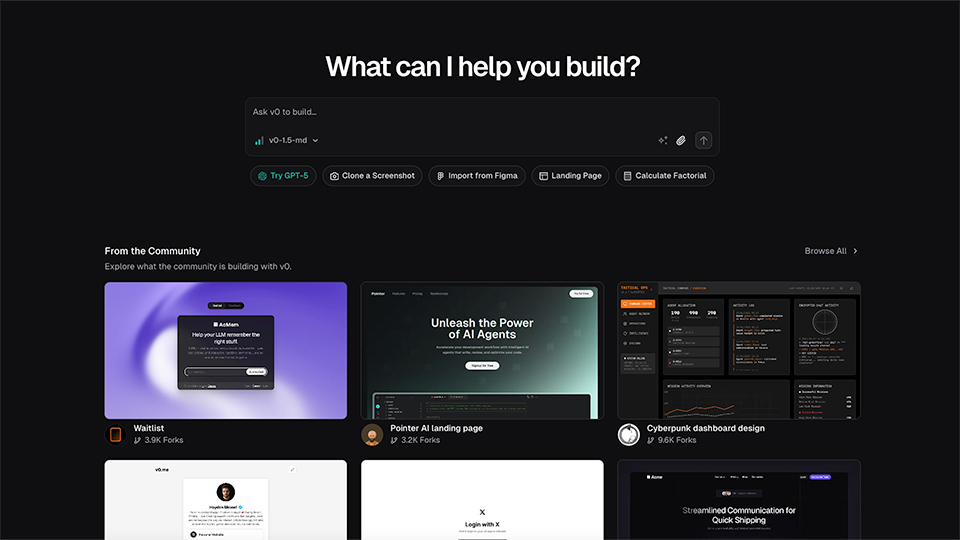

When v0 launched in 2024, it solved a real developer problem beautifully. Staring at a blank canvas is friction. You start a new project, you've got an empty file, and suddenly you're wondering whether you should hand-code a basic layout or just ask Claude to scaffold something. v0 removed that friction completely.

More than 4 million people have used v0 to build millions of prototypes. That's not a fluke. The product works. You describe what you want, the AI generates React code, it renders in a preview pane, you iterate through conversation, and within minutes you've got something that looks reasonable.

But here's the fundamental problem: the code lived entirely inside v0's isolated environment. It wasn't connected to anything your company actually uses. So when you wanted to move it to production, you had to:

- Copy the code out of v0

- Rewrite imports to match your actual project structure

- Wire up API endpoints manually

- Reconnect environment variables

- Integrate it with your design system

- Pass it through code review

- Actually deploy it

That's seven steps. Seven friction points. And at step three, you're essentially back to manual development because the AI code doesn't understand your infrastructure.

This is the 90% problem in its purest form.

Product managers loved v0 for quick mockups. Designers used it for rapid prototyping. But engineers looked at it and thought: "Cool, but my actual job is integrating this into our stack." And that's where most AI code generation tools fail. They're brilliant at the first 10%. They're useless at the next 90%.

The 90% Problem: Why AI Code Generation Fails at Scale

The reason this matters goes way beyond developer convenience. The real consequence is that companies end up with employees who are quietly deploying code outside official infrastructure.

Here's how it actually goes down: a product manager asks an engineer to add a feature. The engineer doesn't want to spin up a local environment. They open v0, describe the feature, copy the generated code, and paste it into their company Slack. The product manager runs it locally. They like it. Suddenly, it's deployed to a development server. Then staging. Then production. Nobody in security knew about it. Nobody reviewed the dependencies. There's no audit trail. The code might be connecting to a database with credentials that were copy-pasted into a prompt and now sit on some AI company's servers.

This is the shadow IT problem. And it's not malicious. It's just friction driving bad security decisions.

Vercel's insight was that the solution isn't to make the AI better at generating code. The code generation part is actually pretty good at this point. The solution is to make the infrastructure understand where that code is going to live, what it's going to connect to, and how it's going to get there.

You need sandbox runtimes that know your actual GitHub repos. You need environment variables that pull from your real deployments. You need git workflows that map directly to your PR processes. You need security controls that are enforced by infrastructure, not by policy docs that nobody reads.

In other words, you need to make generating production-ready code the path of least resistance.

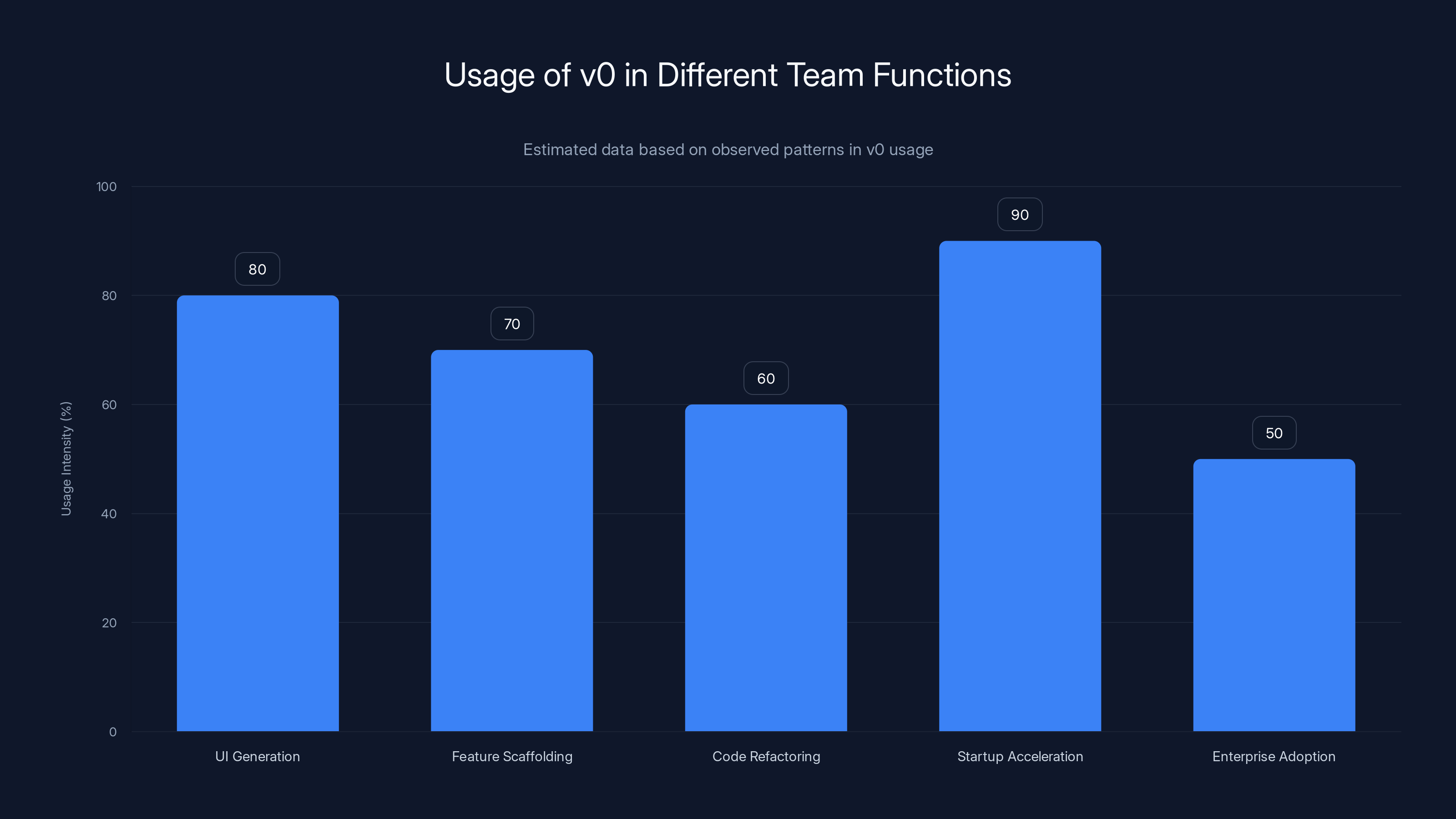

Estimated data shows startups and UI generation teams using v0 most intensively, while enterprise teams are more cautious. Estimated data.

How v0 Was Rebuilt: The Sandbox Runtime Architecture

The technical shift Vercel made is subtle but profound. Instead of generating code in isolation, the new v0 runs a sandbox that directly understands your production environment.

When you open a project in the rebuilt v0, here's what happens:

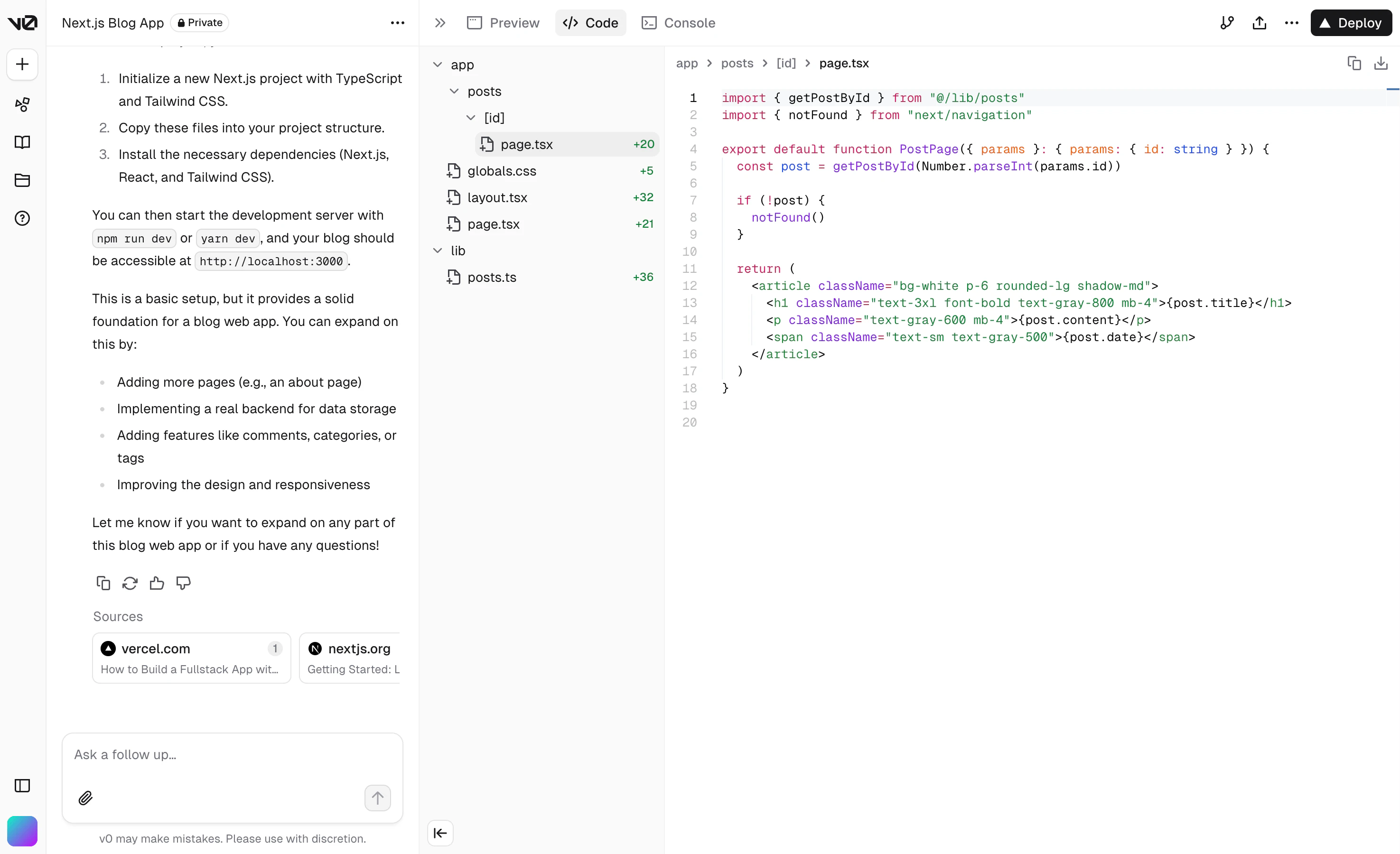

First, you import an existing GitHub repository. Not a new project: your actual codebase, the one that's running right now in production. v0 clones it into a sandbox environment where it can be modified safely.

Next, v0 automatically pulls your environment variables and deployment configurations from Vercel. The sandbox gets your database connection strings, API endpoints, API keys, and infrastructure setup. Deployment platforms have managed this kind of configuration for years; what's new is making it available to an AI code generation tool, something security concerns had ruled out. The rebuilt v0 does it inside a sandboxed environment that doesn't expose the credentials to the AI model itself.

This is critical. The AI never sees your actual credentials. Instead, the sandbox runtime maps credential requests to the right environment variables, so when the AI generates code like `connectToDatabase(process.env.DATABASE_URL)`, that code works at runtime without the AI ever knowing what the password is.
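The credential-mapping idea can be sketched in a few lines. This is a hypothetical TypeScript model, not Vercel's implementation: the `SandboxEnv` class, its methods, and the `[REDACTED:NAME]` marker are invented for illustration. Running code resolves variable names to real values, while anything that flows back to the model (variable lists, error logs) carries names only.

```typescript
// Hypothetical sketch of a sandbox that hands secret values to running code
// but only variable *names* to the model. Not Vercel's actual API.
class SandboxEnv {
  private readonly secrets: Map<string, string>;

  constructor(secrets: Map<string, string>) {
    this.secrets = secrets;
  }

  // What generated code receives at runtime when it reads process.env.NAME.
  resolve(name: string): string | undefined {
    return this.secrets.get(name);
  }

  // What the model may see as context: variable names, never values.
  describeForModel(): string[] {
    return Array.from(this.secrets.keys()).sort();
  }

  // Scrub secret values out of logs or errors before they are fed back
  // to the model on the next iteration.
  redactForModel(text: string): string {
    let out = text;
    this.secrets.forEach((value, name) => {
      if (value.length > 0) {
        out = out.split(value).join(`[REDACTED:${name}]`);
      }
    });
    return out;
  }
}

// Usage: the runtime connects with the real URL; the model sees a placeholder.
const env = new SandboxEnv(
  new Map([["DATABASE_URL", "postgres://app:s3cret@db.internal:5432/prod"]]),
);
const log = `connect failed: ${env.resolve("DATABASE_URL")}`;
console.log(env.describeForModel());
console.log(env.redactForModel(log)); // connect failed: [REDACTED:DATABASE_URL]
```

Redacting on the way back to the model matters as much as hiding values on the way in, since error messages and stack traces are a common place for connection strings to leak.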

Then, when you prompt v0 to generate a feature, it generates code that already understands your project structure. Imports are correct. API routes align with your backend. It's not spraying random code into the void and hoping something sticks.

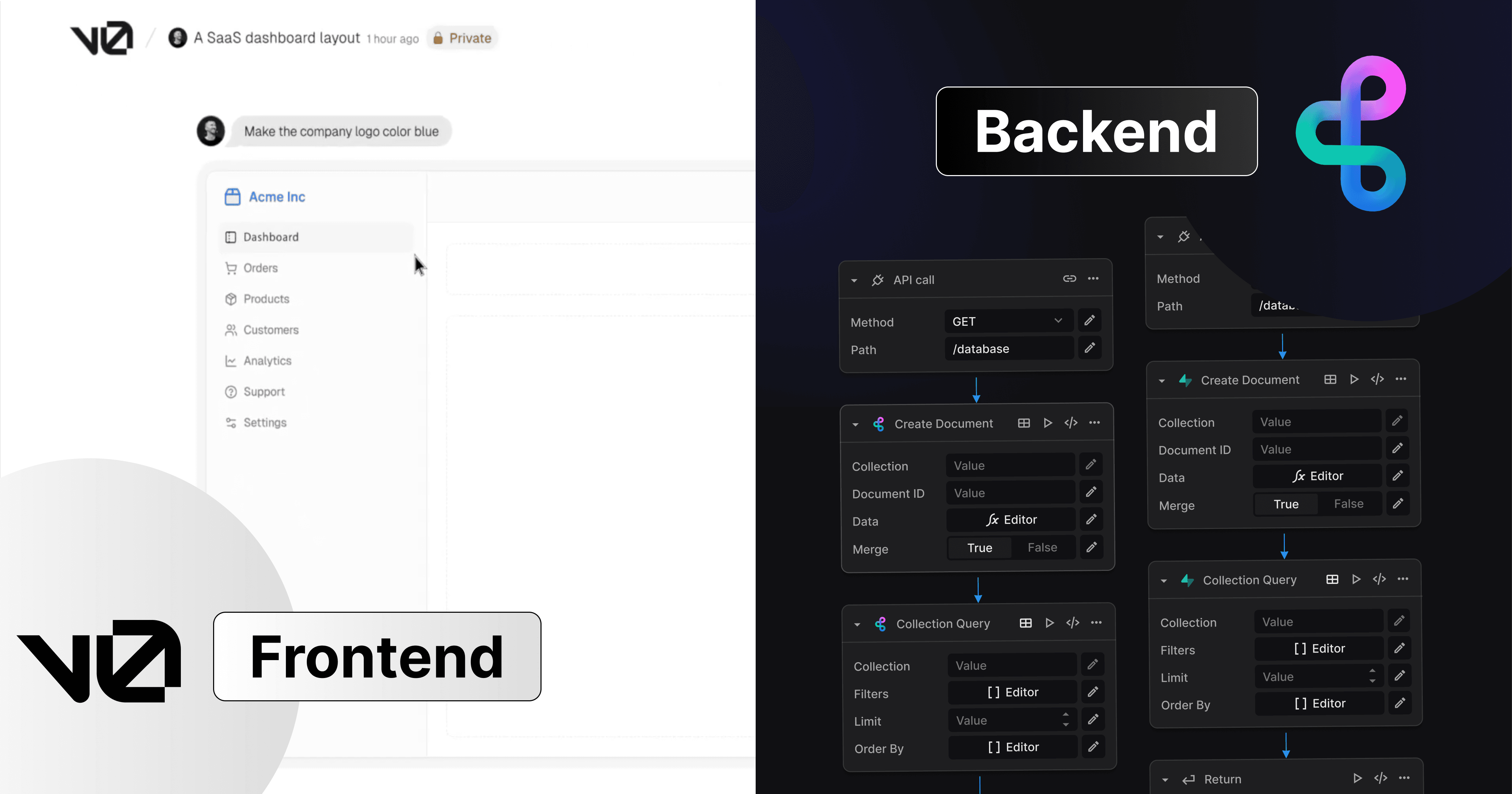

The code lives in your GitHub repository, not in v0's cloud. You can edit it directly in VS Code within the v0 interface. A full git panel lets you create branches, open PRs, and merge to main. Pull requests are first-class citizens: when you open a PR, Vercel automatically creates a preview deployment that maps to the exact code you're reviewing.

This matters because product managers can now review actual production previews, not isolated demos. And when they approve, the code goes through proper git workflows. It's not copy-paste into Slack anymore.

GitHub Integration: The Bridge Between AI and Your Codebase

The Git Hub integration is where the rebuild really shows its teeth.

Most AI code tools treat your codebase as a black box. They might read it for context, but they don't actually understand how it's structured, what conventions it follows, or how new code should integrate with existing code.

Vercel's approach is different. When v0 imports a GitHub repo, it's not just reading files. It's understanding your project structure, your component architecture, your styling conventions, your naming patterns. If your team uses a specific way of organizing components, v0 learns that. If you have shared utility functions, v0 knows where they are. If you follow a pattern for API routes, v0 follows that same pattern.

This is why the integration matters so much. Most developers who test v0 report that the generated code feels native to their codebase. It doesn't feel like an external tool dumped code into their project. It feels like it was written by a developer who actually understands how the project works.

The secondary benefit is that changes stay in version control. Every prompt, every iteration, every change gets committed to a branch. There's a full audit trail. If a feature breaks in production, you can trace it back to the exact PR, the exact prompt, the exact AI response that generated it. That's invaluable for enterprises that need to understand how code came into being, who approved it, and why it was deployed.
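One lightweight way to get that prompt-to-PR traceability is to record generation metadata as git commit trailers ("Key: value" lines at the end of a commit message, the convention `git interpret-trailers` works with). The sketch below assumes that approach; the trailer names `Generated-by`, `Prompt-Id`, and `Requested-by` and the record fields are hypothetical, not something v0 is documented to write.

```typescript
// Hypothetical metadata for one AI-assisted change.
interface GenerationRecord {
  tool: string; // e.g. "v0"
  promptId: string; // hypothetical ID linking back to the chat turn
  requestedBy: string; // human who issued the prompt
}

// Build a commit message whose final paragraph is a block of git trailers.
function buildCommitMessage(subject: string, rec: GenerationRecord): string {
  return [
    subject,
    "",
    `Generated-by: ${rec.tool}`,
    `Prompt-Id: ${rec.promptId}`,
    `Requested-by: ${rec.requestedBy}`,
  ].join("\n");
}

// Read the trailers back, e.g. when tracing a production bug to its prompt.
function parseTrailers(message: string): Record<string, string> {
  const paragraphs = message.trim().split("\n\n");
  const last = paragraphs[paragraphs.length - 1];
  const trailers: Record<string, string> = {};
  for (const line of last.split("\n")) {
    const match = /^([A-Za-z][A-Za-z0-9-]*):\s*(.+)$/.exec(line);
    if (match) {
      trailers[match[1]] = match[2];
    }
  }
  return trailers;
}

const msg = buildCommitMessage("Add regional revenue dashboard", {
  tool: "v0",
  promptId: "chat-123",
  requestedBy: "pm@example.com",
});
console.log(parseTrailers(msg)["Prompt-Id"]); // chat-123
```

This parser is deliberately simplified: it treats every "Key: value" line in the last paragraph as a trailer, whereas git's own parsing is stricter. For real tooling, `git interpret-trailers --parse` is the safer reader.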

Git workflows also mean code review doesn't get bypassed. You can't just merge code to main and ship it. PRs go through the normal review process. Humans still control what gets deployed. The AI is just helping humans write better code faster, not replacing human judgment about what should run in production.

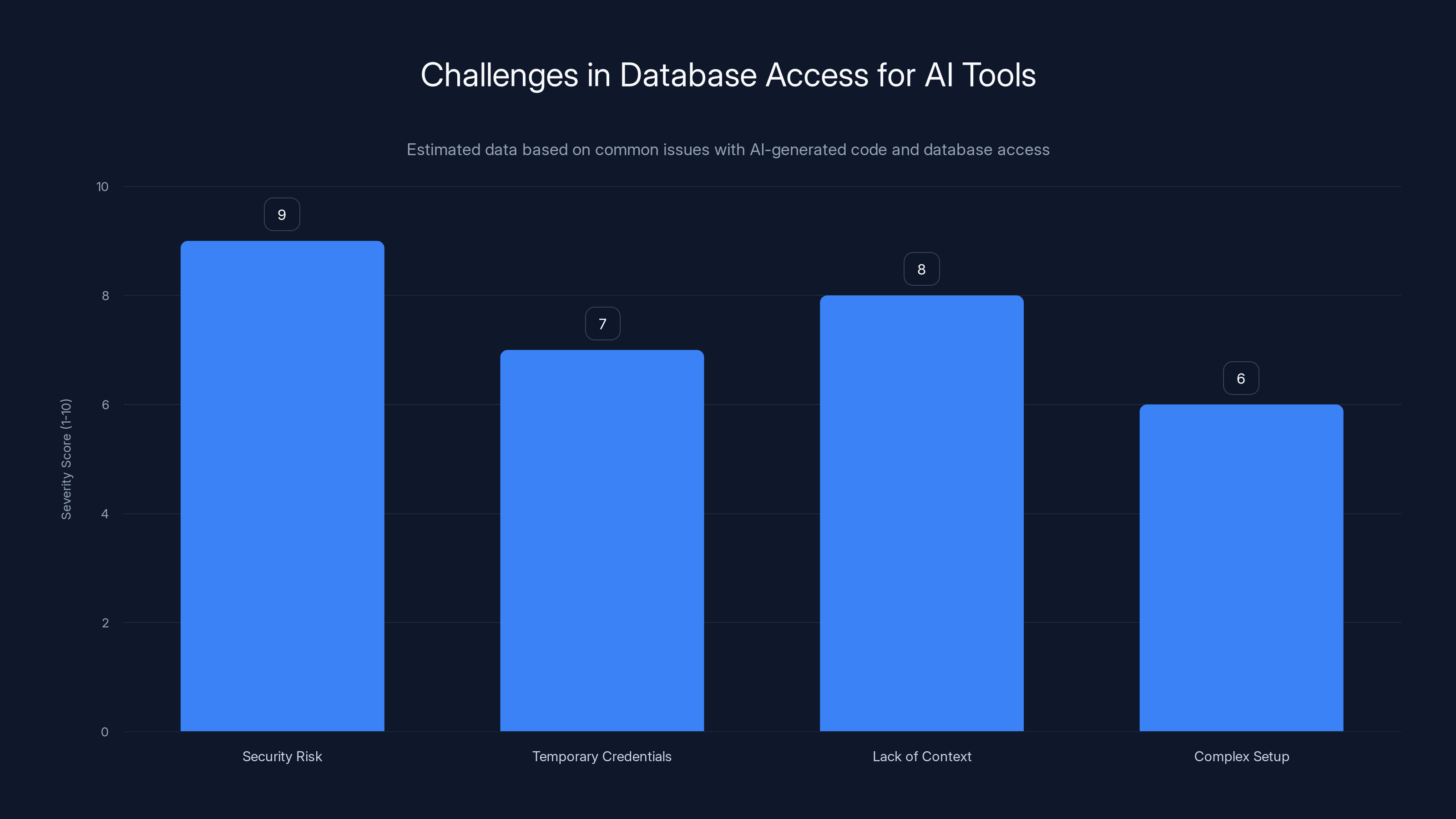

Environment Variables and Database Access: The Security Shift

This is where things get really interesting from a security perspective.

Traditionally, when you want to use an AI tool to generate code that connects to your database, you have a few options, all of them bad:

- Copy your database password into the prompt (terrible security)

- Create a temporary credential and hope you remember to delete it (usually don't)

- Tell the AI tool to generate code without actual database context (it'll be wrong)

- Use a read-only replica with test data (expensive, complex setup)

Vercel's approach is different. The sandbox runtime gets direct access to your Vercel deployments, which means it can pull actual environment variables. But the AI model never sees them. Instead, the sandbox translates requests.

When AI-generated code reads `process.env.DATABASE_URL`, the sandbox intercepts that and returns the actual URL from your Vercel deployment. The generated code works with real data, but the AI never sees the password.

The new v0 also adds direct integrations with Snowflake and AWS databases. You can connect a production data source directly, and the sandbox runtime will execute queries against real data. The AI understands the actual schema, the actual relationships, the actual constraints. It can generate code that actually works with your real database, not mock data.

This is a massive security improvement because it removes the primary reason developers bypass official tools: "The official tools don't understand our actual infrastructure." Now, they do. You're not forcing developers to choose between security and speed. Security is the faster path.

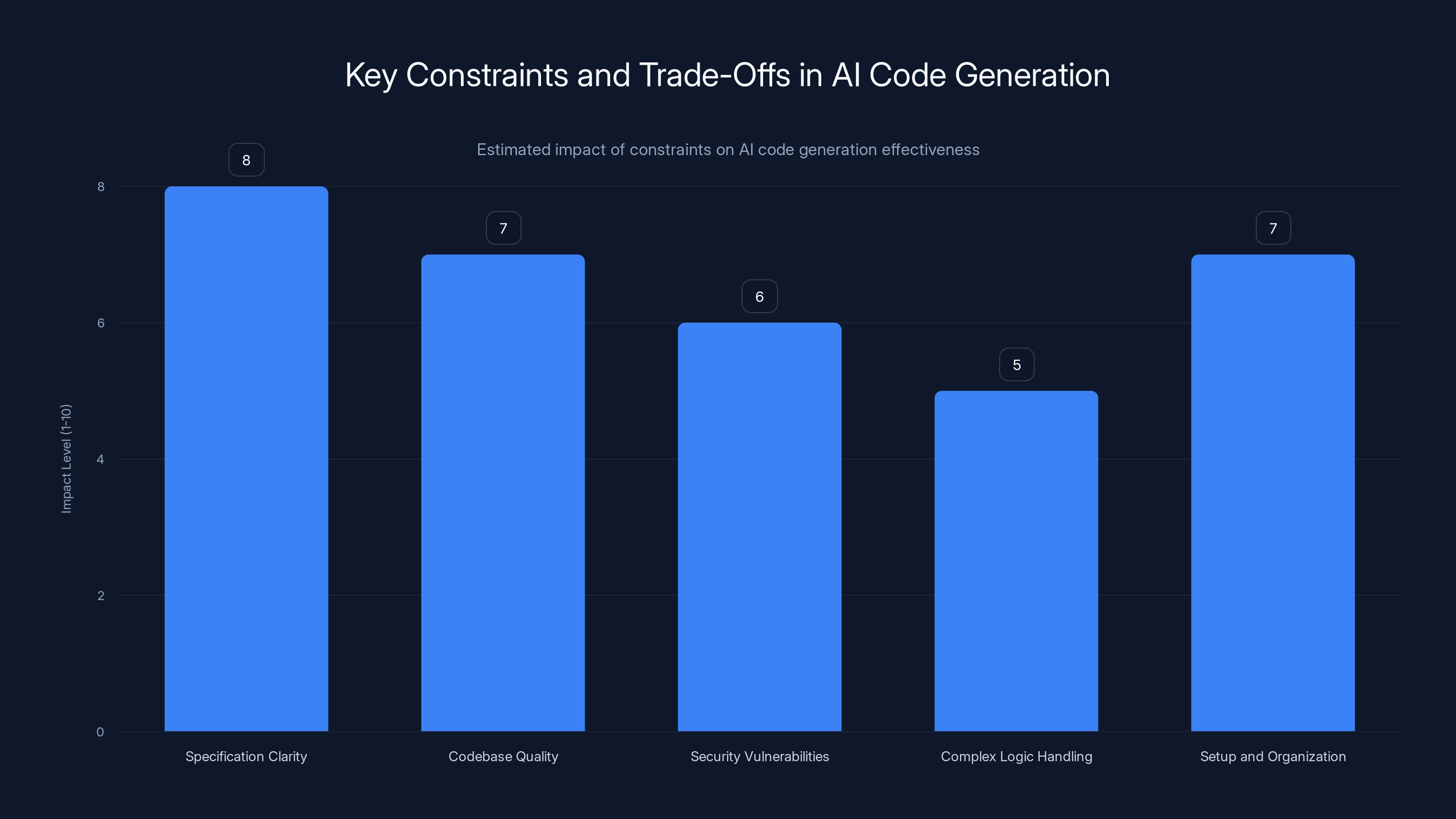

Specification clarity and codebase quality have the highest impact on AI code generation effectiveness. Estimated data.

Vercel's Infrastructure Advantage: A Decade of React and Next.js

Here's what makes Vercel's position interesting: they're not just building an AI UI on top of generic infrastructure. They're leveraging a decade of expertise in building frameworks and deployment infrastructure specifically designed for modern web development.

Vercel's founder, Guillermo Rauch, created Next.js, which has become the dominant full-stack framework in the AI-assisted code generation space. Tom Occhino, who leads the product side of v0, spent a dozen years at Meta working on React. These aren't people who recently jumped into AI. They've spent years building the frameworks whose code AI models are trained on.

Why does this matter? Because when v0 generates code, it generates it in a way that's native to Next.js and React. The code is idiomatic. If you're using Next.js, as a lot of modern teams are, the AI-generated code doesn't feel foreign. It feels like it was written by someone who actually understands the framework.

More importantly, Vercel publishes React best practices specifically designed to help AI agents generate better code: component structure patterns, naming conventions, and data flow patterns. When these get into AI training data, the models learn to generate code that aligns with those patterns. So v0 benefits from being built by the people who define what "good" Next.js code looks like.

The infrastructure side is equally important. Vercel handles millions of deployments annually. The company understands how to ship code safely. They've built deployment protections, edge caching, preview environments, analytics—all the infrastructure machinery that production code needs. Now all of that is available to the AI code generation process.

You're not deploying to generic cloud infrastructure. You're deploying to infrastructure that was specifically designed to run Next.js and React applications. The entire stack is optimized. The code v0 generates deploys faster and runs more efficiently because the infrastructure knows how to run it.

This is the biggest differentiator Vercel has. It's not that they have a better AI model. It's that they have better infrastructure that understands how to deploy the code the AI generates.

From Prototyping to Production: Breaking Down the Workflow

Let's walk through what an actual workflow looks like with the rebuilt v0.

A product manager wants to add a customer analytics dashboard to an existing application. In the old workflow, this would involve multiple handoffs and rewrites. Now, it's different.

The PM opens v0 and imports the company's GitHub repo (which already contains their existing application, design system, and data models). v0 automatically pulls the Vercel deployment config, which includes database access, API endpoints, and environment setup.

The PM describes what they want: "Show me a dashboard that pulls customer metrics from our database, visualizes them with charts, and breaks down by region."

v0 generates the code. Not a prototype, but production code that:

- Uses the existing design system components

- Queries the actual database (through the sandbox runtime)

- Integrates with existing API routes

- Follows the codebase's naming conventions

- Creates a proper Next.js page structure

The PM can iterate on the design conversationally. "Make the charts bigger." "Sort regions by revenue." "Add a date filter." Each change generates updated code in a preview that maps directly to a real Vercel deployment URL.
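To make "break down by region," "sort regions by revenue," and "add a date filter" concrete, here is roughly the kind of data-shaping logic such a dashboard ends up containing. It is a hypothetical TypeScript sketch: the `CustomerMetric` row shape and the `summarizeByRegion` function are invented, and a real generated page would read rows from the team's own data layer rather than an in-memory array.

```typescript
// Hypothetical row shape; real rows would come from the app's database.
interface CustomerMetric {
  region: string;
  revenue: number;
  date: string; // ISO date such as "2025-03-14"
}

interface RegionSummary {
  region: string;
  revenue: number;
  records: number;
}

// Filter by date range (inclusive), group by region, sort by revenue descending.
function summarizeByRegion(
  rows: CustomerMetric[],
  from: string,
  to: string,
): RegionSummary[] {
  const byRegion = new Map<string, RegionSummary>();
  for (const row of rows) {
    // ISO "YYYY-MM-DD" strings compare correctly as plain strings.
    if (row.date < from || row.date > to) continue;
    const summary = byRegion.get(row.region) ?? { region: row.region, revenue: 0, records: 0 };
    summary.revenue += row.revenue;
    summary.records += 1;
    byRegion.set(row.region, summary);
  }
  return Array.from(byRegion.values()).sort((a, b) => b.revenue - a.revenue);
}

const rows: CustomerMetric[] = [
  { region: "EMEA", revenue: 120, date: "2025-01-10" },
  { region: "APAC", revenue: 300, date: "2025-01-12" },
  { region: "EMEA", revenue: 250, date: "2025-02-01" },
  { region: "AMER", revenue: 90, date: "2024-12-31" }, // outside the filter
];
console.log(summarizeByRegion(rows, "2025-01-01", "2025-03-31"));
```

Each conversational tweak maps to one small, reviewable change here (the sort comparator, the date bounds), which is part of why iterating on real code rather than a mockup keeps review manageable.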

Once it's right, the PM doesn't hand it off to an engineer to "integrate it properly." Instead, they click "create PR," and v0 opens a pull request against main. The code goes through the normal review process: an engineer reviews it, approves it, and merges. On merge, it deploys to production automatically.

No copy-paste. No rewrites. No "let me just integrate this manually." The code was production-ready from the start.

This changes the whole collaboration model. Teams end up collaborating on the product itself, not on PRDs and spec documents. The design is reviewable and executable immediately.

The Web Application Firewall and Security Protections

Production code needs more than just correct functionality. It needs security controls.

v0 deployments also sit behind Vercel's web application firewall (WAF). A WAF inspects incoming HTTP traffic rather than source code, so its job is to block common attack patterns, such as SQL injection and cross-site scripting (XSS) payloads, before they reach the application.

The WAF isn't trying to be a magic solution. It's not going to catch every possible vulnerability. But it blunts entire categories of basic attacks, which limits the damage when a flawed change slips through review.

This is important because AI models sometimes generate code that works but isn't secure. They might generate string concatenation instead of parameterized queries. They might miss authentication checks on API endpoints. Code review still has to catch these patterns; the WAF is a runtime backstop for the ones that get through.
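The string-concatenation mistake is easy to picture. In this sketch the `customers` table and its column names are hypothetical; the `{ text, values }` object with `$1` placeholders is the shape node-postgres (`pg`) accepts in `client.query()`, which keeps the user-supplied value out of the SQL text entirely.

```typescript
// Shape accepted by node-postgres: client.query({ text, values }).
interface ParameterizedQuery {
  text: string;
  values: unknown[];
}

// UNSAFE: user input is spliced straight into the SQL string.
function unsafeRegionQuery(region: string): string {
  return "SELECT id, revenue FROM customers WHERE region = '" + region + "'";
}

// SAFER: the SQL text is fixed; the value is sent separately as $1.
function regionQuery(region: string): ParameterizedQuery {
  return {
    text: "SELECT id, revenue FROM customers WHERE region = $1",
    values: [region],
  };
}

const attack = "x' OR '1'='1";
console.log(unsafeRegionQuery(attack));
// SELECT id, revenue FROM customers WHERE region = 'x' OR '1'='1'
console.log(regionQuery(attack).text); // placeholder only; the input stays in values
```

In the unsafe version the input rewrites the WHERE clause into a condition that is always true; in the parameterized version the database treats the same input as an ordinary string value.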

Beyond the WAF, there's Single Sign-On (SSO) integration, which means the generated code can immediately work with your company's authentication system. You don't have to manually wire that up.

There's also deployment protection, which means you can set rules like "code generated by v0 requires explicit approval before deploying to production." You could allow auto-deploy to staging, but require manual approval for production. This gives teams flexibility about risk tolerance while maintaining control.
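The staging-versus-production rule described above boils down to a small policy check. This is a hypothetical TypeScript sketch of the logic, not Vercel's deployment protection configuration: the `DeployRequest` fields and the `canDeploy` function are invented, and real protection rules are configured in the platform rather than written as application code.

```typescript
type Environment = "preview" | "staging" | "production";

interface DeployRequest {
  environment: Environment;
  aiGenerated: boolean;
  approvals: string[]; // reviewers who explicitly approved this deployment
}

interface Decision {
  allowed: boolean;
  reason: string;
}

// Hypothetical policy: AI-generated code auto-deploys to preview and staging,
// but production requires at least one explicit approval.
function canDeploy(req: DeployRequest): Decision {
  if (req.environment !== "production") {
    return { allowed: true, reason: "non-production: auto-deploy" };
  }
  if (!req.aiGenerated) {
    return { allowed: true, reason: "human-authored: already reviewed via normal PR flow" };
  }
  if (req.approvals.length > 0) {
    return { allowed: true, reason: `approved by ${req.approvals.join(", ")}` };
  }
  return { allowed: false, reason: "AI-generated change needs explicit approval for production" };
}

console.log(canDeploy({ environment: "production", aiGenerated: true, approvals: [] }));
```

Keeping the rule this explicit makes the team's risk tolerance a reviewable artifact: loosening it means changing one condition, in the open.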

The chart highlights the prevalence of common issues faced when AI-generated code is deployed without proper infrastructure integration. Estimated data.

Why This Solves the Shadow IT Problem

The shadow IT problem exists because the official path is harder than the unofficial path.

Developers don't get security wrong because they're trying to be reckless. They get security wrong because:

- Copying credentials into prompts is fast

- Using random AI tools is faster than waiting for infrastructure teams

- Deploying to personal cloud accounts is easier than navigating corporate deployment systems

- There's no immediate feedback when you're doing something unsafe

Vercel's approach removes each of these friction points.

Credentials aren't copied anywhere; they're pulled securely from your Vercel deployments. The official tool (v0) is faster than AI tools that don't understand your infrastructure. Corporate deployment systems are integrated directly, so deploying through proper channels is easier than deploying elsewhere. And the audit trail means you get feedback if something goes wrong.

This is the insight that matters: security through friction doesn't work. Security through convenience does. Make the secure path the easy path, and people take it.

The shadow IT problem also becomes visible. There's now a record of what code is being generated, when it's being generated, who's generating it, and where it's being deployed. That visibility alone, the audit trail, makes governance possible. You can't govern what you can't see.

Integration Ecosystem: From Database Connections to Deployment Pipelines

Building just the AI code generation isn't enough. You need integrations.

The rebuilt v0 includes direct integrations with Snowflake for data warehousing and AWS databases for relational data, and the list is expanding. You're not writing SQL queries by hand. You're describing what you want, and the AI generates the queries. The queries run against real data.

On the deployment side, v 0 integrates with Vercel's preview deployments. Every PR automatically gets a preview URL that lets you see exactly what the code does before it deploys to production. This is crucial for review. You're not looking at code and imagining what it does. You're looking at the actual rendered application.

For teams using other tools, there are MCP (Model Context Protocol) integrations. MCP is a newer open protocol that lets AI models talk to external systems through a standard interface. You can integrate v0 with your internal tools, your deployment systems, and your monitoring systems, so the AI understands the broader context.
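Under the hood, MCP messages are JSON-RPC 2.0 requests; invoking a tool uses the `tools/call` method with the tool's `name` and its `arguments`. The sketch below only builds such a request. The tool name `get_deployment_status` and its `deploymentId` argument are hypothetical, and the transport (stdio or HTTP) and the initialization handshake are omitted.

```typescript
// Minimal JSON-RPC 2.0 request shape used by MCP.
interface JsonRpcRequest {
  jsonrpc: "2.0";
  id: number;
  method: string;
  params?: Record<string, unknown>;
}

let nextId = 1;

// Build a tools/call request for a tool exposed by an MCP server.
function toolCall(name: string, args: Record<string, unknown>): JsonRpcRequest {
  return {
    jsonrpc: "2.0",
    id: nextId++,
    method: "tools/call",
    params: { name, arguments: args },
  };
}

// Hypothetical internal tool; a real server advertises its tools via tools/list.
const request = toolCall("get_deployment_status", { deploymentId: "dpl_123" });
console.log(JSON.stringify(request));
```

Because every integration speaks the same request shape, connecting a new internal system means writing an MCP server for it once rather than teaching each AI tool a bespoke API.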

There's also agentic workflow support, which means you can let the AI take multiple steps autonomously. "Generate the feature, run the tests, generate the tests if they're failing, commit to a branch, and create a PR." Instead of iterating manually with each prompt, you describe the goal and let the AI execute a multi-step plan.
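A goal-driven loop like that can be sketched as: generate, test, feed failures back, and stop at a pull request. Everything here is hypothetical: the `AgentSteps` interface stands in for a model call, a test runner, and a git client, and the sketch is kept synchronous for brevity where real calls would be async. Note that it stops at opening a PR, leaving the merge to a human.

```typescript
// Hypothetical capabilities the agent can call; not a real v0 API.
interface TestResult {
  passed: boolean;
  output: string;
}

interface AgentSteps {
  generate(goal: string, feedback: string | null): string; // returns a patch
  runTests(patch: string): TestResult;
  openPullRequest(patch: string): string; // returns the PR URL
}

// Generate, test, and retry with the failure output, up to maxAttempts.
function runAgent(goal: string, steps: AgentSteps, maxAttempts = 3): string | null {
  let feedback: string | null = null;
  for (let attempt = 1; attempt <= maxAttempts; attempt++) {
    const patch = steps.generate(goal, feedback);
    const result = steps.runTests(patch);
    if (result.passed) {
      return steps.openPullRequest(patch); // merging stays a human decision
    }
    feedback = result.output; // give the model the failures to fix
  }
  return null; // out of attempts: hand back to a human
}

// Fake steps: the first patch fails its tests, the second passes.
let calls = 0;
const fakeSteps: AgentSteps = {
  generate: (_goal, feedback) => `patch-${++calls}${feedback ? " (fixed)" : ""}`,
  runTests: (patch) => ({ passed: patch.indexOf("(fixed)") !== -1, output: "1 test failed" }),
  openPullRequest: () => `https://example.com/pr/${calls}`,
};
const prUrl = runAgent("Add a date filter to the dashboard", fakeSteps);
console.log(prUrl);
```

The attempt cap is the important design choice: without it, an agent that can't make the tests pass would loop indefinitely instead of handing the problem back.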

Practical Constraints and Honest Trade-Offs

The rebuilt v0 closes much of the 90% gap, but it doesn't solve every problem.

First, you're still limited by how well you can describe what you want. If you're vague, you get vague results. The AI is very good at executing against clear specifications, but it's not psychic. "Make a dashboard" works better than "make something cool," which always works better than "I don't know, surprise me."

Second, the generated code is only as good as your existing code. If your codebase is a mess, v0 will learn bad patterns and generate code that fits your mess. This is actually good, because consistency matters, but it's worth knowing. If you use v0 as an occasion to clean up your architecture, you'll get better results than if you use it as a quick fix for a broken system.

Third, there are security vulnerabilities that no WAF can catch because they require understanding business logic. If an AI generates code that's technically secure but does the wrong thing (like applying a discount twice instead of once), no automated system catches that. You need human review, and v0 requires it. That's the constraint you actually want: humans stay in the loop for judgment calls.

Fourth, complex backend logic still often requires hand-coding. v0 excels at UI, API integration, and straightforward data retrieval. For business logic that involves complex calculations, state machines, or domain-specific rules, you're still often better off writing code manually. The AI can help, but it usually needs human guidance.

Fifth, setup matters. v0 works great if your codebase is reasonably clean and well-organized. If you're working with a spaghetti codebase that's 15 years old, v0 will struggle more. You might need to refactor first, which is a project in itself.

None of these are failures. They're constraints that make sense. The fact that v0 requires human review is good. The fact that it works best with clean codebases is good. These are features, not bugs, because they force good practices.

Estimated data shows that security risks and lack of context are major challenges when using AI tools for database access. Vercel's approach mitigates these issues by securely managing environment variables.

How Teams Are Actually Using v0 in Production

The theory is nice. The practice is what matters.

Preliminary data from teams using the rebuilt v0 shows a few patterns emerging.

Product and design teams are using v0 as their primary tool for generating UI. Instead of building prototypes in design tools and handing off to engineers, they're building in v0 and shipping production code. This doesn't replace the design process; it just makes the implementation part faster. What's surprising is how much this changes collaboration. Designers are now thinking about code earlier, and engineers are thinking about design requirements earlier, because they're literally working with the same artifact.

Engineering teams are using v0 for scaffolding new features. The pattern is: a PM or designer describes the feature, v0 generates about 80% of the code, and engineers review, refine, and ship. This shortens feature development because the scaffolding step, which used to be manual and tedious, is now nearly instant.

Some teams are using v0 for refactoring. You describe the change you want to make to an existing feature, and v0 generates the updated code. This is useful for things like switching from one styling library to another, converting a class component to a function component, or moving from one state management approach to another. The AI understands the old pattern and can mechanically convert it to the new one, which is exactly the kind of work that bores humans.

Startups are using v0 to punch above their weight. With small teams, the ability to ship code faster matters. A three-person startup can now move at the speed of a 20-person startup because they're using AI to handle the scaffolding work. This has real competitive implications.

Enterprise teams are using v0 more conservatively. They're starting with non-critical features and building up trust in the tool and the process. They're using it more as a way to accelerate experienced developers than as a way to let non-engineers ship code. But even conservative usage cuts development time by 30-40% based on early numbers.

Building in the AI Era: Skills and Team Composition

One thing that's starting to shift is what skills matter for software development.

You still need people who understand systems thinking, architecture, trade-offs. That hasn't changed. If anything, those skills matter more because infrastructure decisions impact what kind of code the AI generates.

But you need fewer people who are experts at basic code syntax, boilerplate generation, and mechanical transformations. The AI handles those things. This means experienced engineers can focus on harder problems, and junior engineers can contribute on harder problems earlier.

It also means that the people you hire matter differently. Communication skills become more important than they used to be. If you're using AI to generate code, you need to be really good at describing what you want. You need product managers who can write clear specs. Designers who can articulate specific behavior. Engineers who understand when AI-generated code is good enough versus when it needs customization.

This has implications for team composition. You probably don't need as many junior engineers doing mechanical work, but you might need more senior engineers who can guide the AI effectively. Teams may shrink overall while the mix shifts toward people with different skills.

For existing teams, this means some reskilling. People who were spending 50% of their time on scaffolding and boilerplate need to learn how to direct an AI to do that work, then review and refine the output. It's a different skill than writing the code yourself, and it takes practice.

Competitive Landscape: Vercel vs. Cursor, Replit, and Lovable

Vercel isn't alone in this space. There are other tools trying to solve the production integration problem.

Cursor is a locally-run IDE that lets you edit code with AI assistance. It's fast, it's good for writing code locally, but it doesn't integrate with deployment infrastructure. You're still responsible for wiring your code into production. It's solving a different problem—making the coding process itself smarter—rather than connecting code to infrastructure.

Replit is a cloud IDE that's been adding AI capabilities. It has similar positioning to Vercel in some ways (cloud-based, integrated development), but Replit is more general-purpose. You can build anything there. v0 is specifically focused on web applications that deploy to Vercel infrastructure. That specificity is actually an advantage for Vercel because the entire system is optimized for that use case.

Lovable is positioning itself as a no-code builder with AI. It's going after the "let non-engineers build web applications" market. Vercel is going after the "accelerate engineer productivity" market. Different customer, different problem, different solution.

From a purely technical standpoint, the thing that matters is how deep the integration goes. If all you do is run an AI in a text editor and let humans figure out deployment, that's one thing. If you actually integrate with deployment infrastructure, pull real environment variables, generate code that maps directly to production, and enforce security controls—that's deeper. That's harder to replicate. And that's what Vercel's built.

The secondary thing that matters is framework specificity. Vercel's advantage is that it knows Next.js and React deeply. If you're using those technologies, the advantage is real. If you're using something else—Django, Laravel, Rails—that advantage evaporates.

Multi-step agentic workflows reduce iteration time by approximately 60%, significantly enhancing productivity by automating multiple steps in AI-driven processes.

Looking Forward: Where AI Code Generation Heads in 2025 and Beyond

A few trends are emerging that'll probably shape where this goes.

First, AI code generation is moving further up the stack. It started with UI scaffolding. Now it's moving into business logic, data models, API design. Within a year or two, you'll probably have AI that can design your entire application architecture from a high-level specification. The constraint there isn't the AI. It's getting humans to think clearly about what they actually want before the AI generates it.

Second, multi-step agentic workflows are going to become the default. Instead of prompting an AI once and editing the output, you'll describe a goal and the AI will execute a series of steps to reach it. Generate code, run tests, fix failures, update documentation, create a PR. This makes the tool feel less like "write some code for me" and more like "work on this project with me."

Third, domain-specific code generation will improve. Generic AI is good at generic code. But industry-specific AI that knows healthcare regulations, financial compliance, or e-commerce patterns will generate better code for those domains. We're already seeing specialized models emerge. That trend accelerates.

Fourth, the infrastructure arms race continues. The companies that win at AI code generation won't win because they have the best AI model. They'll win because they have the best infrastructure integration. The ability to generate code that deploys cleanly, securely, and reliably matters more than the ability to generate impressive code that doesn't actually work in production. Vercel's betting on that, and it's probably the right bet.

Fifth, security and compliance become differentiators. As more teams use AI code generation in production, security and compliance will matter more. Audit trails, deployment protections, security scanning—these become table stakes. The tools that build these in from day one have an advantage over tools that bolt them on later.

The Real Shift: From Code Generation to Development Infrastructure

Step back from the specifics for a moment. What's actually changing here?

It's not just that AI can write code now. AI could write code before. What's changing is that the infrastructure for deploying code—the thing that used to be separate from code generation—is now integrated with it.

This matters because it reframes the problem. Historically, "code generation" was thought of as an AI problem. Build a better AI model and you'll generate better code. Now it's clear that code generation is also an infrastructure problem. You can't separate generating code from deploying code. They're the same process.

This has implications for how companies should think about investing in this space. If you're an enterprise considering AI code generation, don't just evaluate the AI. Evaluate the infrastructure. Does the tool integrate with your deployment system? Can it pull your environment configuration? Does it enforce your security policies? These matter as much as code quality.

For Vercel specifically, this is why their position is strong. They've spent a decade building infrastructure. Now they're adding AI on top of that infrastructure. The infrastructure is the moat. It's hard to replicate.

For teams using the tool, this means that adoption isn't just about learning how to write prompts. It's about rethinking your deployment process, your git workflow, your review process. You need to design for AI-assisted development. That's a team and process question, not just a tool question.

Getting Started: How to Implement v0 in Your Organization

If you're thinking about adopting the rebuilt v0 in your organization, here's a practical approach.

Phase 1: Evaluation (1-2 weeks)

Start with a non-critical project or feature. Pick something that's high-value but low-risk if it needs rework. Have a couple of experienced engineers spend a few hours with v0. The goal is to build intuition about what it's good at and what it's not.

During this phase, you're also validating that v0 works with your codebase. Import your repo. Verify that it pulls your environment variables correctly. Make sure the deployments work. If there are incompatibilities, find them now.
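One way to make "verify that it pulls your environment variables correctly" concrete is a small preflight check that runs at build time or startup. The TypeScript sketch below assumes a hypothetical list of required variable names (`DATABASE_URL`, `NEXT_PUBLIC_API_BASE`); substitute your project's own. The environment is passed in as a plain object so the check can be tested without a runtime. In a Node-based app you would pass `process.env`.

```typescript
// Hypothetical list of variables this project expects; replace with your own.
const REQUIRED_VARS = ["DATABASE_URL", "NEXT_PUBLIC_API_BASE"] as const;

type Env = Record<string, string | undefined>;

interface EnvCheckResult {
  ok: boolean;
  missing: string[]; // names that are absent or blank
}

// Report every required variable that is unset or blank instead of stopping
// at the first one, so a single run surfaces all gaps at once.
function checkRequiredEnv(
  env: Env,
  required: readonly string[] = REQUIRED_VARS,
): EnvCheckResult {
  const missing = required.filter((name) => {
    const value = env[name];
    return value === undefined || value.trim() === "";
  });
  return { ok: missing.length === 0, missing };
}

// Usage in a Node-based app (assumption): fail fast at startup.
// const result = checkRequiredEnv(process.env);
// if (!result.ok) throw new Error(`Missing env vars: ${result.missing.join(", ")}`);
```

Running the same check in both the v0 sandbox and your existing deployments is a cheap way to confirm they see the same configuration.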

Phase 2: Pilot (2-4 weeks)

Expand to a small team or a few features. Have product managers and designers use v0 to generate features. Let engineers review and refine. Track metrics: how long does feature development take? How many review cycles? How many bugs?

During this phase, you're building muscle memory. People learn how to write good prompts. You figure out which types of features work well with v0 and which don't. You identify process changes you need to make (like updating your code review guidelines for AI-generated code).

Phase 3: Process Optimization (2-4 weeks)

Based on what you learned in the pilot, make changes. Update your PR templates to note that code is AI-generated. Update your code review guidelines. Potentially update your testing requirements—do you need more tests for AI-generated code? Set deployment protection rules.
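If your PR template asks authors to disclose AI-generated code, a CI step can enforce it. The sketch below assumes a hypothetical convention in which the PR body contains a line `AI-generated: yes` or `AI-generated: no`. It also assumes an equally hypothetical `needs-ai-review` label that routes the PR to your updated review guidelines. Neither is a GitHub or v0 feature. Adapt both to whatever your template actually uses.

```typescript
// Hypothetical template convention: one line "AI-generated: yes" or
// "AI-generated: no" somewhere in the PR body (case-insensitive).
const DISCLOSURE_PATTERN = /^\s*AI-generated:\s*(yes|no)\s*$/im;

type Disclosure = "yes" | "no" | "missing";

// Extract the disclosure value from a PR description.
function readAiDisclosure(prBody: string): Disclosure {
  const match = DISCLOSURE_PATTERN.exec(prBody);
  if (match === null) return "missing";
  return (match[1] ?? "").toLowerCase() === "yes" ? "yes" : "no";
}

// Decide what the CI check requires. null means "fail: template not filled in".
// AI-generated PRs get the hypothetical "needs-ai-review" label.
function requiredLabels(prBody: string): string[] | null {
  const disclosure = readAiDisclosure(prBody);
  if (disclosure === "missing") return null;
  return disclosure === "yes" ? ["needs-ai-review"] : [];
}
```

The check fails closed. A PR with no disclosure line is rejected rather than treated as hand-written.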

The goal of this phase is to make AI-assisted development feel like a native part of your development process, not an external tool.

Phase 4: Rollout (4-8 weeks)

Expand to your whole team. Provide training. Make sure people understand the new process. Monitor whether people are following it. Gradually increase the fraction of features being developed with v0.

Expect that this takes time. Even within teams, adoption varies. Some people love it immediately. Some people are skeptical and need convincing. That's normal.

Phase 5: Iterate and Optimize (ongoing)

As more people use it, you'll discover new use cases and new constraints. Evolve your practices. If you find that v0 works really well for a specific type of feature, consider routing more features that way. If you find a type of feature where it consistently fails, consider excluding it.

The goal is to get to a state where AI-assisted development is just how your team works, not something special.

Security Best Practices When Using AI Code Generation

v0 is built with security in mind. The practices below complement its built-in protections.

Require human review for all code, even code generated by AI. The AI is a tool that improves speed and reduces errors, not a replacement for human judgment. Code should go through your normal review process.

Audit AI-generated dependencies. When AI generates code, it sometimes adds new dependencies to your project. Review those. Does your team approve this dependency? Are there security concerns? Is it maintained? These questions don't disappear because code is AI-generated.
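A simple way to surface new dependencies for review is to diff `package.json` before and after an AI-generated change. The sketch below flags any newly added package that is not on a team-maintained allowlist. The allowlist itself is a hypothetical artifact you would keep alongside your repo. It looks only at package names, so version bumps of existing dependencies are not flagged.

```typescript
// The dependency sections of package.json that this check inspects.
interface PackageJson {
  dependencies?: Record<string, string>;
  devDependencies?: Record<string, string>;
}

// Collect every dependency name from both sections.
function allDeps(pkg: PackageJson): Set<string> {
  return new Set(
    Object.keys(pkg.dependencies ?? {}).concat(Object.keys(pkg.devDependencies ?? {})),
  );
}

// Return packages that appear in `after` but not in `before` and are not on
// the (hypothetical) team allowlist, sorted for stable CI output.
function unapprovedNewDeps(
  before: PackageJson,
  after: PackageJson,
  allowlist: ReadonlySet<string>,
): string[] {
  const existing = allDeps(before);
  return Array.from(allDeps(after))
    .filter((name) => !existing.has(name) && !allowlist.has(name))
    .sort();
}
```

An empty result means the change introduced nothing new beyond approved packages. A non-empty one is the list a reviewer should look at for maintenance status and known vulnerabilities.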

Log and monitor AI usage. Who's using v0? Which teams? How often? This doesn't have to be invasive, but visibility helps. If one team is generating massive amounts of code and shipping it unsupervised, that's a sign you need to adjust your process.
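The "one team shipping unsupervised" signal can be computed from usage logs. The sketch assumes you already export hypothetical per-change records with a team name, the number of generated lines, and whether the change was reviewed before deploy. v0 does not necessarily expose data in this shape. The 20% threshold is an arbitrary placeholder.

```typescript
// Hypothetical usage record from your own logging pipeline.
interface AiUsageEvent {
  team: string;
  linesGenerated: number;
  reviewedBeforeDeploy: boolean;
}

interface TeamSummary {
  team: string;
  totalLines: number;
  unreviewedShare: number; // fraction of generated lines deployed without review
}

// Aggregate events per team and compute the unreviewed share.
function summarizeByTeam(events: AiUsageEvent[]): TeamSummary[] {
  const totals = new Map<string, { total: number; unreviewed: number }>();
  for (const e of events) {
    const t = totals.get(e.team) ?? { total: 0, unreviewed: 0 };
    t.total += e.linesGenerated;
    if (!e.reviewedBeforeDeploy) t.unreviewed += e.linesGenerated;
    totals.set(e.team, t);
  }
  const summaries: TeamSummary[] = [];
  totals.forEach((t, team) => {
    summaries.push({
      team,
      totalLines: t.total,
      unreviewedShare: t.total === 0 ? 0 : t.unreviewed / t.total,
    });
  });
  return summaries.sort((a, b) => a.team.localeCompare(b.team));
}

// Teams whose unreviewed share exceeds the (placeholder) threshold.
function teamsNeedingAttention(events: AiUsageEvent[], threshold = 0.2): string[] {
  return summarizeByTeam(events)
    .filter((s) => s.unreviewedShare > threshold)
    .map((s) => s.team);
}
```

The metric is a share of lines rather than a raw count, so a high-volume team that reviews everything is not flagged.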

Train people on secure coding practices. The AI is good at generating code, but it's not magically better at security than humans. If your team doesn't know how to write secure code, the AI won't either. Invest in security training.

Keep your environment configuration secure. v0 pulls environment variables from Vercel, so make sure those are properly restricted. Don't give v0 access to production credentials unless it needs them. Keep development, staging, and production configurations separate.
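Vercel lets you scope each environment variable to Production, Preview, and/or Development, which maps roughly onto the separation described above. The sketch below audits a simplified, hypothetical export of that configuration; it is not the exact Vercel API response. It flags any variable your team has designated production-only that is also exposed to preview or development.

```typescript
// The three standard environments a Vercel variable can be scoped to.
type Target = "production" | "preview" | "development";

// Hypothetical, simplified record; not the exact Vercel API shape.
interface EnvVarConfig {
  key: string;
  targets: Target[];
}

// Return production-only secrets that leak into non-production environments.
function leakedProductionSecrets(
  vars: EnvVarConfig[],
  productionOnly: ReadonlySet<string>,
): string[] {
  return vars
    .filter((v) => productionOnly.has(v.key))
    .filter((v) => v.targets.some((t) => t !== "production"))
    .map((v) => v.key);
}
```

Running this in CI against your exported configuration turns "separate your environments" from a guideline into a check that fails when someone widens a secret's scope.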

Require explicit approval for production deployments. Even if code is reviewed before merging to main, require an additional approval before deploying to production. This gives you a gate where a human explicitly approves moving code to the real world.
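The two gates above, review before merge and a separate sign-off before production, can be expressed as a single policy function. The record shape is hypothetical. So is the rule that authors may not count as their own reviewer or production approver. That rule is a common choice but not something the article or v0 prescribes.

```typescript
// Hypothetical, simplified record of a change on its way to production.
interface Change {
  author: string;
  mergedToMain: boolean;
  codeReviewApprovers: string[]; // approvals on the pull request
  productionApprover: string | null; // separate sign-off for the deploy
}

interface GateResult {
  allowed: boolean;
  reason: string;
}

// Gate 1: merged with at least one review from someone other than the author.
// Gate 2: an explicit production approval, also not from the author (assumption).
function canPromoteToProduction(c: Change): GateResult {
  if (!c.mergedToMain) return { allowed: false, reason: "not merged to main" };
  const independentReviews = c.codeReviewApprovers.filter((a) => a !== c.author);
  if (independentReviews.length === 0) {
    return { allowed: false, reason: "no independent code review" };
  }
  if (c.productionApprover === null) {
    return { allowed: false, reason: "no production approval" };
  }
  if (c.productionApprover === c.author) {
    return { allowed: false, reason: "author cannot approve own deploy" };
  }
  return { allowed: true, reason: "ok" };
}
```

Returning a reason string alongside the boolean makes a blocked deploy self-explanatory in CI logs.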

Building Sustainable Development Teams in the AI Era

As tools like v0 become common, teams need to think about sustainability differently.

One risk is that the acceleration turns into pressure. If v0 lets you ship features 3x faster, does that mean you're expected to ship 3x more features? If so, you're not saving time; you're just shipping more problems. The sustainable approach is to use the time savings for things that matter: better architecture, better testing, better documentation, better refactoring.

Another risk is skill atrophy. If junior engineers are spending all their time reviewing and refining AI code, they're not learning how to write code from scratch. That's a problem five years from now when they're supposed to be senior engineers but they've never actually written a complex system. The sustainable approach is to deliberately rotate people through work that requires deep thinking and hand-writing code.

A third risk is that the easy problems all get automated, but the hard problems remain. This is actually good—it means humans focus on interesting work—but it requires making sure people have time for the hard problems. If you're shipping features so fast that nobody has time to think about architecture, you'll accumulate technical debt quickly.

The teams that will succeed with AI code generation are the ones that treat it as a tool to improve quality and reduce repetition, not just a tool to ship more code. Use the speedup to iterate on design. Use it to build better tests. Use it to refactor. Use it to think bigger.

FAQ

What is the 90% problem in AI code generation?

The 90% problem refers to the gap between initial code generation (10%) and production deployment (90%). Traditional AI tools generate working prototypes quickly, but integrating that code with existing production infrastructure, security controls, databases, and deployment systems requires extensive manual work. The rebuilt v0 addresses this by making code generation aware of production infrastructure from the start, so generated code deploys directly without rewrites.

How does v0's sandbox runtime connect to my production infrastructure?

The sandbox runtime imports your GitHub repository and automatically pulls environment variables, database configurations, and deployment settings from your Vercel deployments. The AI generates code that understands your actual project structure, real databases, and real API endpoints, all without exposing credentials to the AI model itself. The sandbox maps credential requests securely, so generated code works in production without manual rewiring.
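The general pattern behind "without exposing credentials to the AI model" is to give the model variable names, never values. Generated code then references those names, and the real values are resolved only when the code runs in the deployment. The function below illustrates that pattern under this assumption. It is not v0's actual implementation, which the article does not describe at this level.

```typescript
type Env = Record<string, string | undefined>;

// Build the environment context a model would see: each variable's name and
// whether it is set, never its value. Code the model writes can then refer to
// a variable by name, and the runtime supplies the value at execution time.
function describeEnvForPrompt(env: Env): string {
  return Object.keys(env)
    .sort()
    .map((name) => `${name}=<${env[name] ? "set" : "unset"}>`)
    .join("\n");
}
```

For example, `{ DATABASE_URL: "postgres://user:secret@db/app", FEATURE_FLAG: "" }` becomes two lines, `DATABASE_URL=<set>` and `FEATURE_FLAG=<unset>`. The connection string never enters the prompt.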

Why does GitHub integration matter for AI code generation?

GitHub integration means v0 understands your codebase structure, naming conventions, component architecture, and existing patterns. When AI generates code, it follows your team's conventions rather than creating foreign code that needs rewrites. Every change creates a git commit with a full audit trail, proper PR workflows manage the code, and human review happens through normal channels. This keeps security controls intact while accelerating development.

Can AI-generated code really be production-ready, or is it always a prototype?

With the rebuilt v0, AI-generated code is genuinely production-ready because it's generated with knowledge of your actual infrastructure, environment configuration, databases, and security requirements. It goes through normal code review and testing processes. However, complex business logic, security-critical code, and domain-specific rules should still be reviewed carefully. The AI is excellent at scaffolding and standard patterns, but human judgment remains essential for architectural decisions.

What security concerns should we address when using AI code generation?

Key concerns include credentials exposed in prompts (v0 handles this via the sandbox), unapproved dependencies added by the AI, code that deploys outside your security controls, and missing audit trails. Mitigation strategies include requiring human review for all code, auditing AI-added dependencies, enforcing deployment protection rules, and maintaining proper environment separation between development and production. v0 includes built-in WAF protection and git workflows to enforce these, but your team's practices matter equally.
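For prompts typed outside a sandboxed tool, a lightweight guard is to scan the prompt for obvious credentials before it is sent anywhere. The rules below are a small illustrative set chosen for this sketch: a URL with an embedded password, the AWS access key ID prefix `AKIA`, a PEM private-key header, and `password=`/`api_key:`-style assignments. Dedicated secret scanners ship far larger rule sets, so treat this as a first line of defense only.

```typescript
// Illustrative rules only; production scanners use many more patterns.
const SECRET_PATTERNS: { name: string; pattern: RegExp }[] = [
  // scheme://user:password@host, e.g. database connection strings
  { name: "url-with-password", pattern: /\b[a-z][a-z0-9+.-]*:\/\/[^\s:@\/]+:[^\s@\/]+@/i },
  // AWS access key IDs start with AKIA followed by 16 uppercase letters/digits
  { name: "aws-access-key-id", pattern: /\bAKIA[0-9A-Z]{16}\b/ },
  // PEM-encoded private keys
  { name: "private-key-block", pattern: /-----BEGIN [A-Z ]*PRIVATE KEY-----/ },
  // password=..., api_key: ..., token=... style assignments
  { name: "key-value-secret", pattern: /\b(?:password|secret|api[_-]?key|token)\s*[:=]\s*\S+/i },
];

// Return the names of all matching rules; an empty array means no rule fired.
// The regexes have no "g" flag, so test() carries no state between calls.
function findSecretsInPrompt(prompt: string): string[] {
  return SECRET_PATTERNS.filter(({ pattern }) => pattern.test(prompt)).map(({ name }) => name);
}
```

A non-empty result should block the prompt and tell the developer which rule fired, so they can switch to an environment variable name instead.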

How does this compare to other AI code generation tools like Cursor or Replit?

Cursor is a local IDE that makes the coding process smarter but doesn't integrate with deployment infrastructure. Replit is a general-purpose cloud IDE with AI capabilities, good for building anything but not specialized for web deployment. v0 is specifically built on top of Vercel's production infrastructure, which means code deploys directly without manual integration. The advantage is greatest if you're using Next.js and deploying to Vercel, but the infrastructure integration is what fundamentally makes the difference across any tech stack.

What types of features work best with v0?

UI scaffolding, API integration, standard data retrieval, and component generation work consistently well. Refactoring existing code (like changing styling libraries or component patterns) also works well. Complex business logic, security-critical features, and domain-specific rules need more human involvement. Features requiring multi-step reasoning or understanding business context often need engineers to guide the AI or refine the output. The best approach is starting with high-value, straightforward features and learning your tool's boundaries.

How do we measure productivity gains from AI code generation?

Track metrics such as time from feature request to production deployment, number of code review cycles per feature, percentage of code written by AI versus by hand, and bug rates in AI-generated versus hand-written code. Teams report a 30-60% reduction in development time for scaffolding-heavy features, with the largest gains on straightforward UI and data retrieval features. Compare metrics before and after adoption, and measure quality alongside speed rather than optimizing for speed alone.
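To produce your own numbers instead of relying on reported ranges, compare AI-assisted and hand-written features side by side. The sketch assumes a hypothetical per-feature record assembled from your issue tracker and CI. It uses the median for lead time because a few outlier features can skew a mean. Bugs per feature sits next to speed so that a faster cohort with more defects is visible.

```typescript
// Hypothetical per-feature record assembled from your tracker and CI.
interface FeatureRecord {
  aiAssisted: boolean;
  leadTimeHours: number; // feature request -> production deploy
  reviewCycles: number; // rounds of review before merge
  bugsWithin30Days: number; // bugs attributed to the feature after release
}

interface CohortStats {
  features: number;
  medianLeadTimeHours: number;
  meanReviewCycles: number;
  bugsPerFeature: number;
}

function median(values: number[]): number {
  if (values.length === 0) return NaN;
  const sorted = values.slice().sort((a, b) => a - b);
  const mid = Math.floor(sorted.length / 2);
  return sorted.length % 2 === 0 ? (sorted[mid - 1] + sorted[mid]) / 2 : sorted[mid];
}

function cohortStats(records: FeatureRecord[]): CohortStats {
  const n = records.length;
  const sum = (f: (r: FeatureRecord) => number): number =>
    records.reduce((acc, r) => acc + f(r), 0);
  return {
    features: n,
    medianLeadTimeHours: median(records.map((r) => r.leadTimeHours)),
    meanReviewCycles: n === 0 ? NaN : sum((r) => r.reviewCycles) / n,
    bugsPerFeature: n === 0 ? NaN : sum((r) => r.bugsWithin30Days) / n,
  };
}

// leadTimeReduction = 1 - (AI median / hand-written median);
// e.g. 0.4 means AI-assisted features ship with a 40% shorter median lead time.
function compareCohorts(records: FeatureRecord[]) {
  const ai = cohortStats(records.filter((r) => r.aiAssisted));
  const manual = cohortStats(records.filter((r) => !r.aiAssisted));
  const leadTimeReduction = 1 - ai.medianLeadTimeHours / manual.medianLeadTimeHours;
  return { ai, manual, leadTimeReduction };
}
```

With small samples, treat the reduction as directional. Splitting by feature type (UI scaffolding versus business logic) will usually tell you more than a single overall figure.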

Do we need to retrain our team to use AI code generation effectively?

Yes, but not extensively. The main skill shift is learning to write clear specifications and prompts that AI can execute well. Experienced engineers adapt quickly, usually within 1-2 weeks. Code review practices need updating—reviewers should check different things in AI-generated code than in hand-written code. The bigger shift is organizational: deciding which features use AI assistance, how to distribute the time savings, and how to keep people learning despite automation handling routine work. This is a process question more than a training question.

What happens if AI-generated code breaks in production?

v0 maintains a complete audit trail: the prompt that generated the code, the exact git commit, the PR that approved it, the deployment that shipped it. You can trace the issue back through all these artifacts to understand what happened. This actually makes debugging easier than with hand-written code because you have a record of the decision-making process. The trade-off is that you need comprehensive logging and monitoring in production, which you should have regardless.
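Tracing a production issue back to its origin amounts to following links between records. The shapes and field names below are hypothetical, not v0's or Vercel's actual data model. The point is that each artifact stores the ID of the one before it. A failing deployment can then be walked back to its commit and PR, and, for AI-generated commits, to the prompt.

```typescript
// Hypothetical, simplified audit records; field names are illustrative only.
interface PromptRecord { id: string; author: string; text: string }
interface CommitRecord { sha: string; promptId: string | null } // null = hand-written
interface PullRequestRecord { number: number; commitShas: string[]; approvedBy: string[] }
interface DeploymentRecord { id: string; commitSha: string }

interface AuditLog {
  prompts: PromptRecord[];
  commits: CommitRecord[];
  pullRequests: PullRequestRecord[];
  deployments: DeploymentRecord[];
}

interface Trace {
  deployment: DeploymentRecord;
  commit: CommitRecord | undefined;
  pullRequest: PullRequestRecord | undefined;
  prompt: PromptRecord | undefined; // undefined for hand-written commits
}

// Walk backwards from a deployment to the commit, PR, and prompt behind it.
// Returns null if the deployment ID is unknown.
function traceDeployment(log: AuditLog, deploymentId: string): Trace | null {
  const deployment = log.deployments.find((d) => d.id === deploymentId);
  if (!deployment) return null;
  const commit = log.commits.find((c) => c.sha === deployment.commitSha);
  const pullRequest = log.pullRequests.find(
    (pr) => pr.commitShas.indexOf(deployment.commitSha) !== -1,
  );
  const promptId = commit ? commit.promptId : null;
  const prompt = promptId === null ? undefined : log.prompts.find((p) => p.id === promptId);
  return { deployment, commit, pullRequest, prompt };
}
```

Missing links come back as `undefined` rather than throwing. During an incident, a gap in the chain (for example, a deployment with no PR) is itself a finding worth surfacing.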

How does this scale to large enterprise teams?

The security model and infrastructure control are specifically designed for enterprises. Environment separation, deployment protections, SSO integration, and audit trails give enterprises the control they need. The main scaling challenge is process and culture: teaching large teams to work with AI assistance, maintaining code quality standards, and preventing people from bypassing proper workflows. Organizations that invest in good processes around AI code generation see better results than those that just hand out the tool.

The Path Forward: Production-Ready AI Code Generation Is Here

The 90% problem existed because code generation and deployment were treated as separate problems. You'd generate code in one tool, then manually integrate it in another. That gap was huge.

Vercel's rebuild of v0 collapses that gap. It treats code generation and deployment as one integrated process. That changes both how fast and how safely you can move.

This doesn't mean your engineers don't write code anymore. It means they write code at a higher level of abstraction. Instead of writing boilerplate, they direct AI to write boilerplate and then they focus on the logic that actually matters. Instead of manual integration, the infrastructure handles integration automatically.

It doesn't mean security disappears. It means security controls move from policy documents to infrastructure. Instead of hoping people follow guidelines, the infrastructure enforces them. This actually improves security because it removes the choice to do it wrong.

It doesn't mean code review disappears. Code review becomes more important, but it focuses on different things. Reviewers aren't checking whether syntax is correct—the AI and the infrastructure handle that. They're checking whether the logic is right, whether the approach is sound, whether it fits the product direction.

The teams that adapt to this first get a significant competitive advantage. They ship faster, safer, and with better code. The teams that struggle to adapt are the ones that treat AI code generation as just another tool rather than a fundamental shift in how development works.

The tools are ready. The infrastructure is ready. The question now is whether teams are ready to think differently about development. Based on early adoption, the answer is yes. Teams are hungry for this shift. They're tired of boilerplate. They're tired of manual integration. They're tired of the gap between "this is a good idea" and "this is running in production."

Built right, AI code generation doesn't replace developers. It amplifies them. It lets small teams punch above their weight. It lets experienced engineers focus on the problems worth their time. It lets non-engineers participate more directly in building products.

That's worth the process changes. That's worth the learning curve. That's worth thinking carefully about how you want to work.

The future of development isn't AI replacing engineers. It's infrastructure that understands production, systems that generate code in context, and humans freed from routine work to focus on judgment calls. That future looks a lot like the rebuilt v0.

Key Takeaways

- AI code generation historically solved the first 10% of development (scaffolding), but deployment integration required 90% manual work

- v0's rebuilt sandbox runtime imports repositories, pulls environment variables, and generates production-ready code that deploys directly

- GitHub integration maintains full audit trails and enforces proper PR workflows, eliminating shadow IT risks from credential exposure

- Infrastructure control and deployment integration are more important differentiators than AI model quality for enterprise adoption

- Teams using v0 report 30-60% reduction in development time for standard features, but complex logic and security decisions still require human judgment
