Benchmark's $225M Cerebras Bet: Inside the AI Chip Revolution

Introduction: When the Best Investors Back the Underdog

Last week, something remarkable happened in the AI infrastructure world, and frankly, most people missed it. A venture capital firm called Benchmark Capital—you know, the one that discovered Instagram and Uber—quietly committed $225 million to a single company called Cerebras Systems. Not a flashy announcement. Not a press tour. Just two separate investment vehicles, both named "Benchmark Infrastructure," both created for this exact moment.

Here's why this matters: Benchmark doesn't usually move like this. The firm runs deliberately small funds, capped at $450 million by design. To invest this much in Cerebras, they had to create separate vehicles. That tells you something important. This isn't a diversification play or a portfolio hedge. This is conviction. Real conviction.

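Cerebras raised $1 billion in the round, at a $23 billion valuation, nearly triple what the company was worth six months earlier.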

So what's the story here? Why would one of Silicon Valley's most respected investors commit this much capital to an AI chipmaker that most people have never heard of? The answer sits in the physics of how we build AI systems today, the limitations of current approaches, and the possibility that Nvidia's dominance isn't inevitable.

This article explores what Cerebras is actually doing, why Benchmark sees it as the future of AI computing, and what this funding round really signals about the next chapter of artificial intelligence infrastructure. We'll dig into the technology, the market dynamics, the competition, and what comes next.

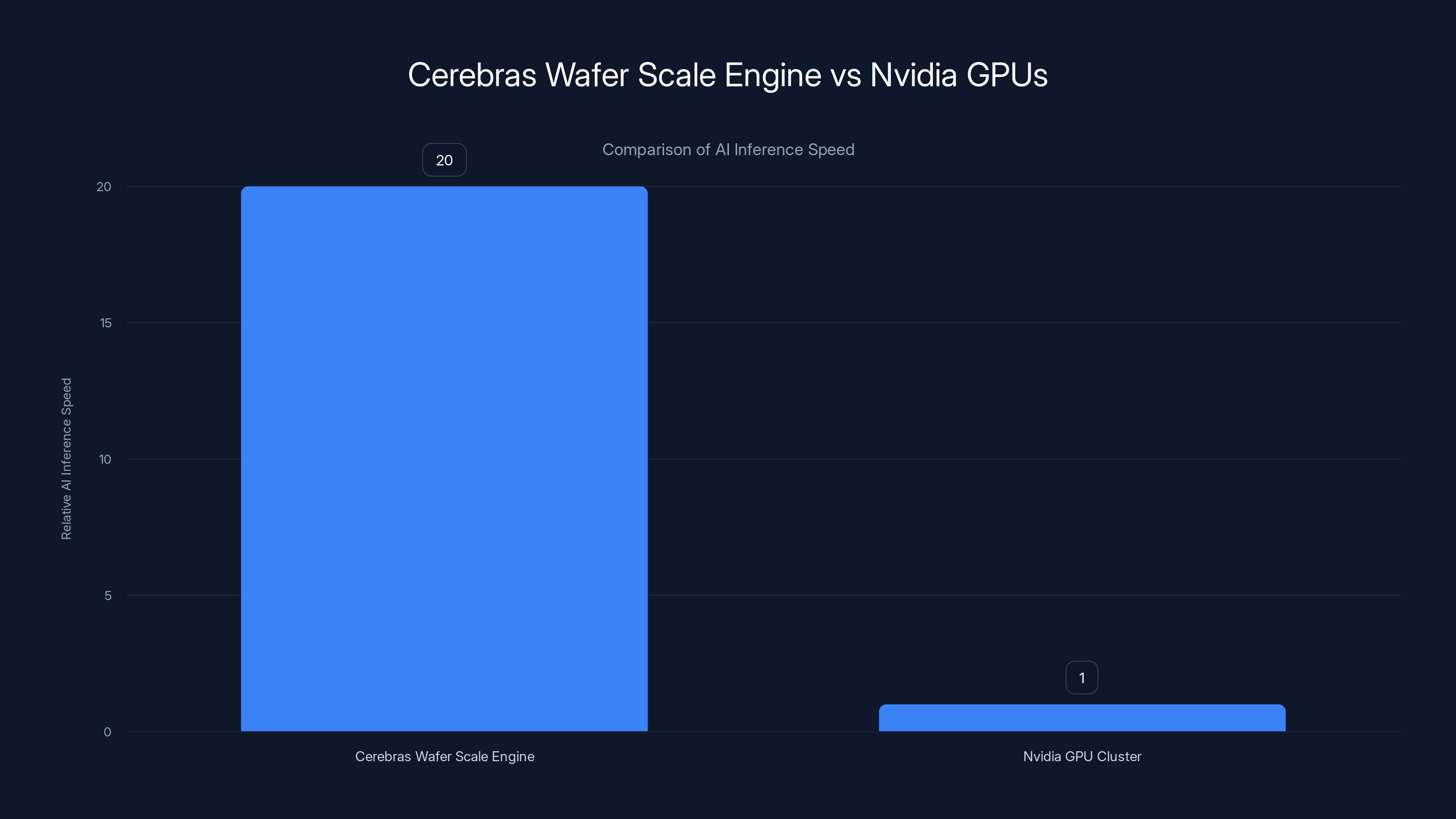

Cerebras' Wafer Scale Engine delivers over 20 times faster AI inference compared to Nvidia's GPU clusters, highlighting its optimization for specific workloads. Estimated data based on claims.

TL;DR

- Benchmark invested $225M in Cerebras' $1B funding round, valuing the company at $23 billion, nearly 3x its valuation from 6 months prior

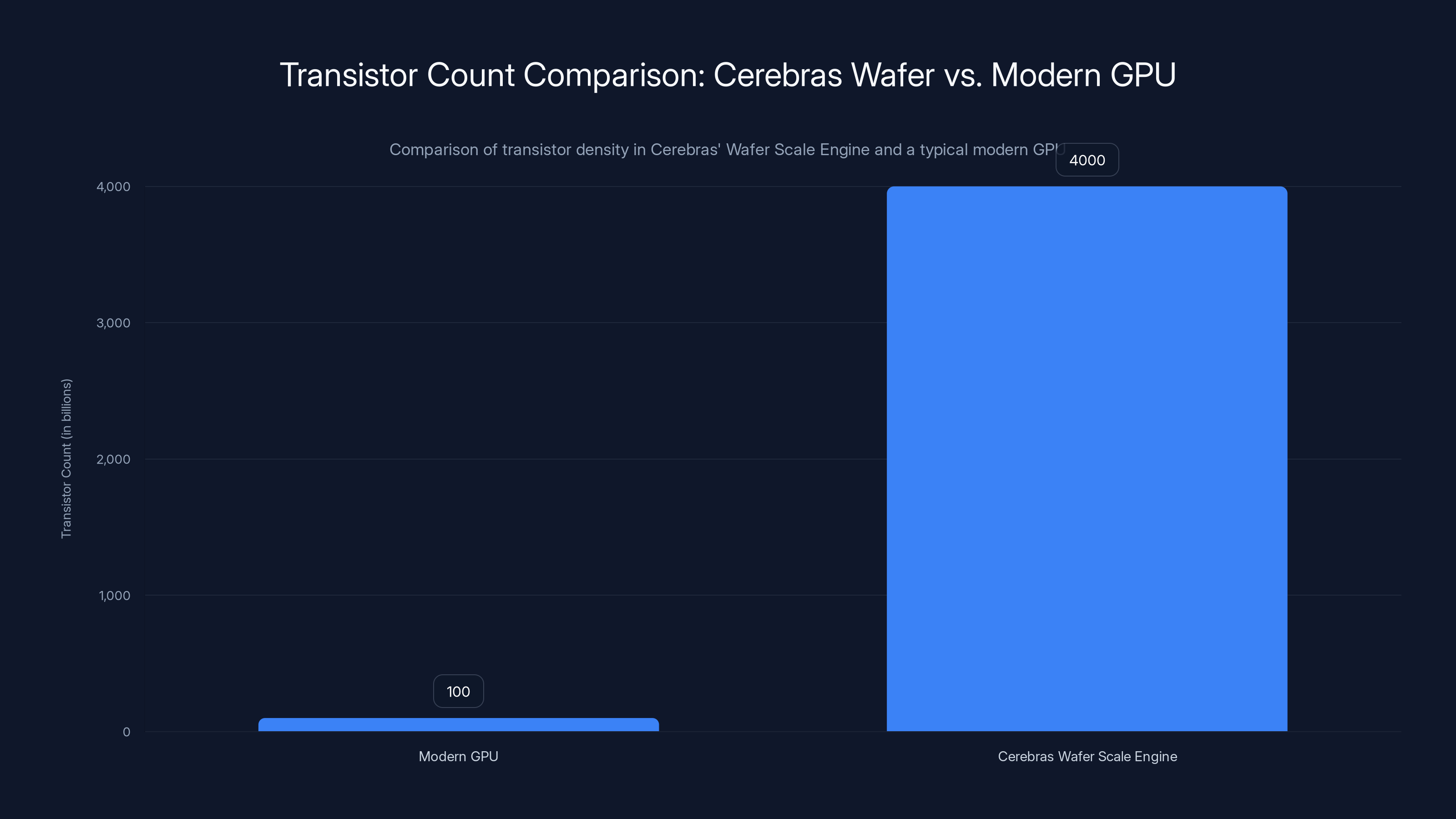

- Cerebras' Wafer Scale Engine packs 4 trillion transistors into a single chip, delivering 900,000 specialized cores and 20x faster AI inference compared to traditional GPU clusters

- OpenAI partnership worth $10B+ extends through 2028, covering 750 megawatts of computing capacity

- IPO planned for Q2 2026, following successful removal of G42 from investor list and resolution of national security concerns

- Valuation momentum reflects broader shift toward alternative AI architectures beyond Nvidia's dominance

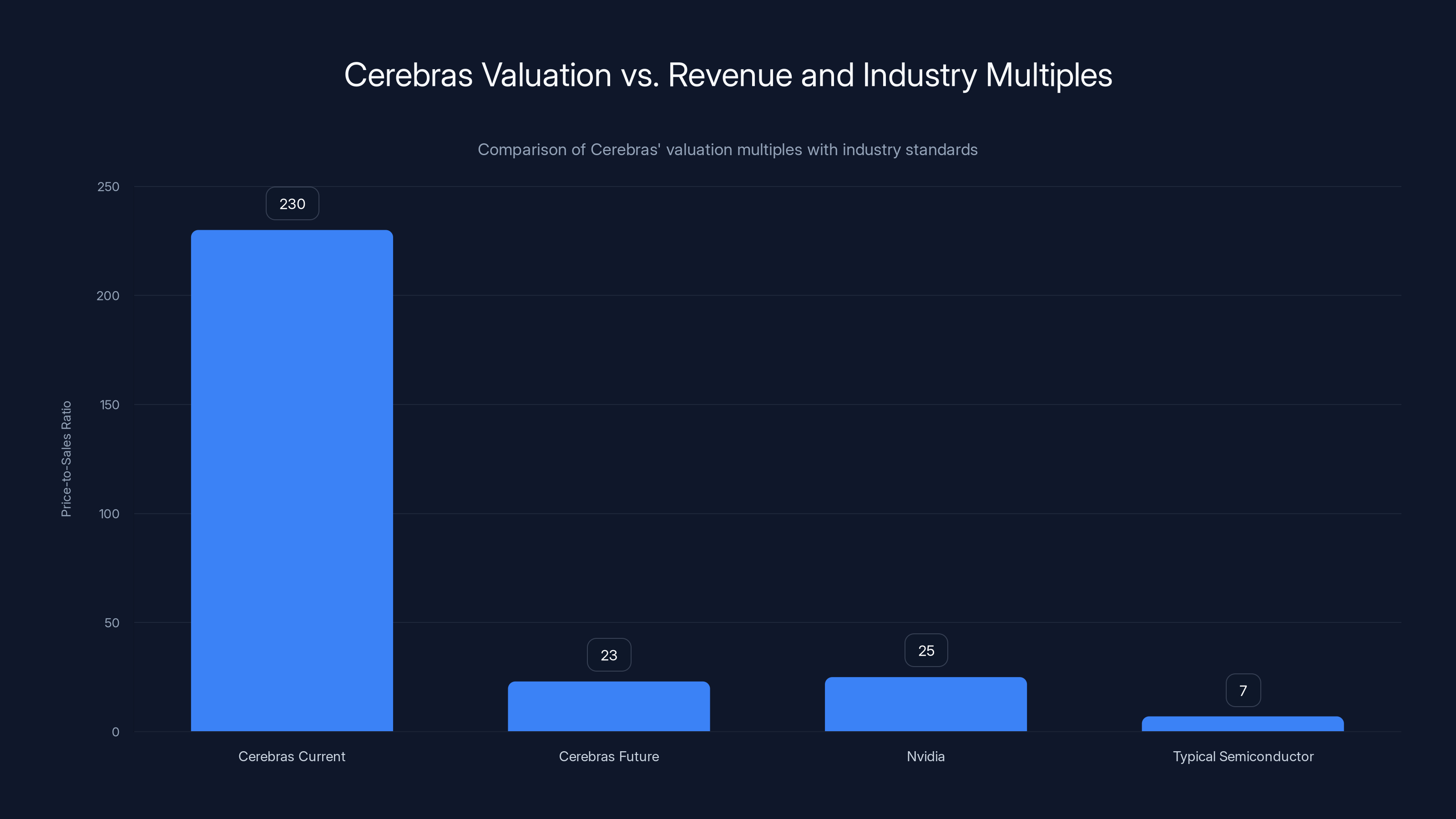

Cerebras' current price-to-sales ratio is significantly higher than industry norms, reflecting speculative future growth. Estimated data used for Cerebras' future scenario.

The $225M Question: Why Benchmark Goes All-In

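Benchmark Capital making a $225 million commitment to a single company is unusual for a firm that deliberately keeps its funds small.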

Mark Lapidus and others at Benchmark have watched AI infrastructure evolve for nearly a decade. They were there in 2016 when Cerebras was essentially a whiteboard and an idea. Ten years later, that idea has crystallized into a chip architecture that fundamentally challenges how we think about AI computation.

What drives this conviction? First, there's the track record. Benchmark has backed some of the most consequential companies in tech history. When you've backed Uber, Instagram, and Snapchat, you develop an eye for founders and technologies that shift paradigms. Benchmark's infrastructure bets have a particular flavor: they back technologies that become invisible but indispensable.

Second, there's the timing. The AI infrastructure market is experiencing what you might call "architect fatigue" with Nvidia. Don't get me wrong—Nvidia is extraordinary. They've built an empire on GPUs that happen to be perfect for training massive language models. But every architect eventually faces a fundamental question: are there better tools for the job?

Third, Cerebras' customer base is already validating the approach. OpenAI's $10 billion+ commitment over three years is not a charity deal. Sam Altman, OpenAI's CEO, wouldn't commit that kind of capital if the math didn't work. When OpenAI needs faster inference, lower latency, and more efficient computation, Cerebras' architecture delivers. The fact that Altman is also a personal investor in Cerebras suggests that this isn't just corporate procurement; it's founder-to-founder conviction.

Fourth, there's the supply constraint problem. Nvidia's H100 and H200 chips are brilliant, but they're limited by manufacturing capacity. Taiwan Semiconductor Manufacturing Company (TSMC) can only produce so many advanced chips. Building alternative architectures that can scale using different manufacturing approaches or different design philosophies becomes strategically important. Cerebras isn't fighting Nvidia directly on every dimension—they're building for different use cases where their architecture wins decisively.

Benchmark's $225 million bet is essentially saying: "We believe that the future of AI infrastructure is not GPU clusters. We believe it's radically different hardware architecture. And we're willing to bet enormous capital that Cerebras is the company that builds it."

The Cerebras Technology: How to Build a Giant Chip Instead of Giant Clusters

When you look at how AI inference works today, you're looking at clusters. Multiple GPUs wired together, passing data back and forth, calculating in parallel, optimizing for throughput. A typical large language model inference setup might involve dozens or hundreds of individual chips, all communicating through networks, all competing for bandwidth.

Cerebras took a fundamentally different approach. Instead of fragmenting computation across multiple chips, they asked a simple but radical question: what if we put everything on one piece of silicon?

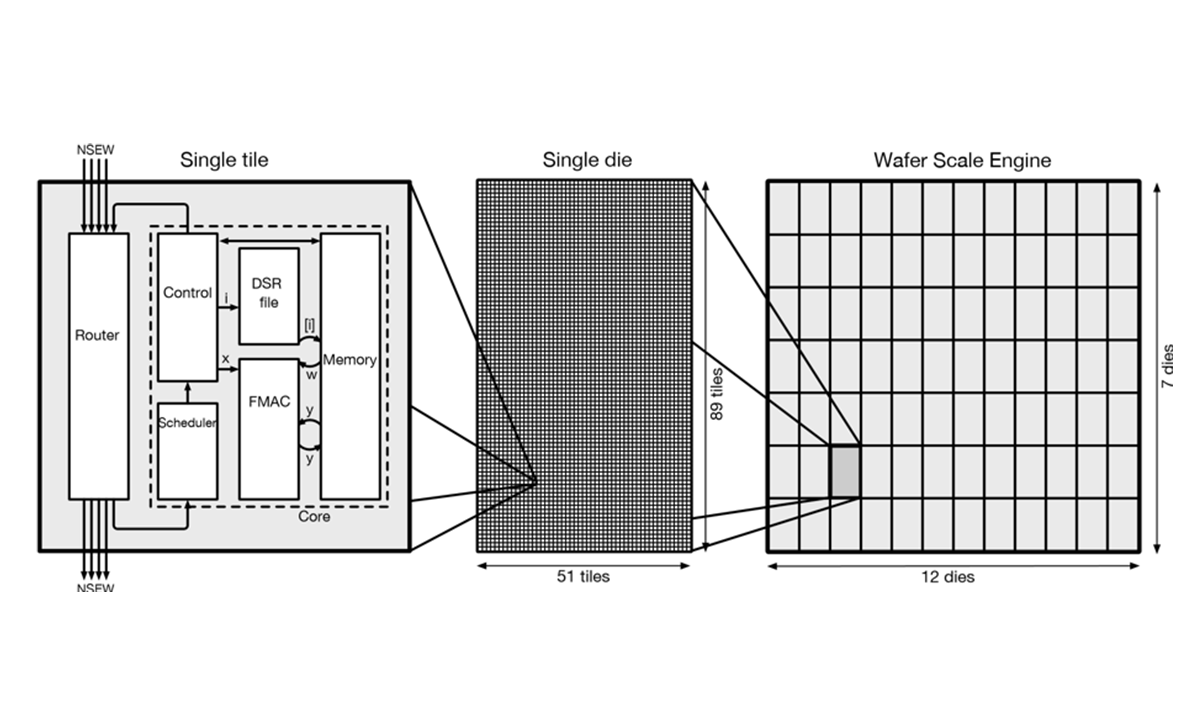

The company's flagship product is called the Wafer Scale Engine. To understand why this matters, you need to know what a wafer is. Silicon wafers are circular discs, typically 300 millimeters in diameter, manufactured to incredible precision. They're the raw material for all semiconductor production. Normally, chipmakers dice each wafer into dozens or hundreds of individual chips, roughly thumbnail-sized fragments. Each fragment becomes a separate GPU or CPU.

Cerebras doesn't dice their wafers into separate chips. They use nearly the whole wafer as a single chip. The Wafer Scale Engine measures approximately 8.5 inches on each side and contains 4 trillion transistors on one piece of silicon.

To give you a sense of scale: a single modern GPU contains roughly 100 billion transistors. Cerebras' Wafer Scale Engine contains 40 times as many. It's a staggering concentration of computational power on a single substrate.
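The 40x figure is simple division. Here is a quick check using the round numbers quoted in the text. Neither count is an exact chip specification.

```python
# Back-of-envelope check of the transistor comparison in the text.
# Both counts are the article's round figures, not exact specifications.
WSE_TRANSISTORS = 4_000_000_000_000   # ~4 trillion on one Wafer Scale Engine
GPU_TRANSISTORS = 100_000_000_000     # ~100 billion on a single modern GPU

ratio = WSE_TRANSISTORS / GPU_TRANSISTORS
print(f"WSE / GPU transistor count: {ratio:.0f}x")
```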

The physical architecture delivers 900,000 specialized cores working in parallel. Each core is relatively simple compared to a GPU core, but the sheer quantity and the way they're organized make the system extraordinary for certain workloads. These cores are connected by a high-speed network fabric built directly into the chip. Data passed between cores never leaves the silicon. There's no shuffling over external links, and latency is far lower than when data has to travel from one chip to another.

This matters enormously because moving data is expensive. In a traditional GPU cluster, a significant portion of the computational overhead comes from data movement—reading from memory, writing results, shuffling data between chips. Cerebras eliminates that overhead by putting everything on the same substrate.
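The toy timing model below makes the data-movement argument concrete. It assumes compute and communication do not overlap, which is pessimistic for real systems. The workload size, compute rate, and both bandwidth values are hypothetical numbers chosen only to show the shape of the effect. They are not Cerebras or Nvidia specifications.

```python
def step_time(flops, compute_rate, bytes_moved, bandwidth):
    """Return (total_seconds, fraction_spent_moving_data) for one step.

    Toy model: compute and data movement are assumed NOT to overlap,
    so the step takes compute time plus transfer time.
    """
    compute_s = flops / compute_rate
    transfer_s = bytes_moved / bandwidth
    total = compute_s + transfer_s
    return total, transfer_s / total

# Hypothetical workload: 1e12 FLOPs and 1e10 bytes exchanged per step,
# on hardware that sustains 1e15 FLOP/s.
FLOPS, RATE, BYTES = 1e12, 1e15, 1e10

for label, bw in [("off-chip link (assumed 1e11 B/s)", 1e11),
                  ("on-chip fabric (assumed 1e14 B/s)", 1e14)]:
    total, frac = step_time(FLOPS, RATE, BYTES, bw)
    print(f"{label}: {total * 1e3:.2f} ms, {frac:.0%} moving data")
```

With identical compute, the step time is governed almost entirely by how fast data can move. That is the overhead a single-substrate design aims to shrink.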

The result? Cerebras claims their systems run AI inference tasks more than 20 times faster than competing systems built with GPU clusters. For complex queries where latency matters—think real-time AI assistants, live reasoning, interactive inference—this speed advantage is transformational.

The Manufacturing Challenge Nobody Talks About

Building a functional wafer-scale chip sounds elegant in theory. In practice, it's manufacturing hell. When you're working with 4 trillion transistors on a single piece of silicon, the defect rate matters enormously. Without countermeasures, even a single manufacturing defect could render the entire wafer unusable.

Traditional chip manufacturers address this by breaking wafers into smaller chips. If one small chip has a defect, it's isolated—the other chips on the wafer remain functional. With a wafer-scale approach, you lose this redundancy advantage.

Cerebras solved this problem partially through clever engineering. The Wafer Scale Engine uses built-in redundancy—spare cores and memory that can be swapped in if defects are found. Think of it like having extra lanes on a highway that activate when the main lanes are damaged. This approach allows them to tolerate certain defects while maintaining functionality.
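The spare-core idea can be illustrated with a deliberately simplified, one-dimensional sketch. It assumes each row of cores carries a fixed number of spares, and logical cores are assigned in order while defective positions are skipped. `remap_row` is a hypothetical helper. Cerebras' actual fabric reroutes around defects in two dimensions, and this article does not describe its details.

```python
def remap_row(num_logical, num_physical, defective):
    """Map logical core indices onto the working physical cores in one row.

    Hypothetical scheme: each row has num_physical - num_logical spare cores,
    and logical cores simply skip over defective positions. Returns a list
    where entry i is the physical core hosting logical core i, or raises
    ValueError if the row has more defects than spares.
    """
    working = [p for p in range(num_physical) if p not in defective]
    if len(working) < num_logical:
        raise ValueError(
            f"{num_physical - len(working)} defects exceed "
            f"{num_physical - num_logical} spare cores")
    return working[:num_logical]

# Example: 8 logical cores per row, 10 physical cores (2 spares),
# with manufacturing defects found at physical positions 2 and 5.
mapping = remap_row(8, 10, {2, 5})
print(mapping)  # [0, 1, 3, 4, 6, 7, 8, 9]
```

Software sees the same eight logical cores either way. The cost of tolerance is the silicon spent on spares, and a row with more defects than spares still fails.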

It's an engineering triumph, but it also means Cerebras' manufacturing yield is a closely guarded secret. Yield determines what fraction of each production run is actually usable, which directly impacts margins and manufacturing economics. For investors like Benchmark, understanding this yield becomes crucial to assessing long-term profitability.

The manufacturing partnership with TSMC gives Cerebras access to cutting-edge fabrication capabilities, but it also creates dependency. TSMC has limited capacity for advanced node production, and prioritizing Cerebras means deprioritizing other customers. This is a real constraint on Cerebras' growth rate.

Software Stack: The Invisible Foundation

Here's what many people miss about Cerebras: the hardware is only half the story. The real competitive moat is the software stack.

TensorFlow, PyTorch, and other deep learning frameworks grew up around GPU-based hardware. If you want to run code on Cerebras, you can't just compile it directly. You need compilers, runtime systems, and optimization frameworks that understand the Wafer Scale Engine's unique architecture.

Cerebras has spent years building this software layer. They've developed compiler technology that can take traditional neural network code and map it efficiently onto their hardware. They've built memory management systems, communication protocols, and debugging tools optimized for their architecture.
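The sketch below shows the kind of decision such a compiler has to make. It is a toy placement heuristic that splits a pool of cores among a network's layers in proportion to each layer's compute. `allocate_cores` and the layer FLOP counts are hypothetical and do not describe Cerebras' compiler. A real mapper would also weigh per-core memory and traffic between neighboring regions.

```python
def allocate_cores(layer_flops, total_cores):
    """Split a pool of cores among layers in proportion to each layer's FLOPs.

    Toy heuristic (hypothetical): every layer gets at least one core, and
    cores left over after rounding down go to the layers with the largest
    fractional remainders (the largest-remainder method).
    """
    if total_cores < len(layer_flops):
        raise ValueError("need at least one core per layer")
    spare = total_cores - len(layer_flops)   # cores beyond the 1-per-layer floor
    total_flops = sum(layer_flops)
    shares = [spare * f / total_flops for f in layer_flops]
    alloc = [1 + int(s) for s in shares]
    leftover = total_cores - sum(alloc)
    # Hand out the remaining cores by largest fractional part.
    order = sorted(range(len(shares)),
                   key=lambda i: shares[i] - int(shares[i]), reverse=True)
    for i in order[:leftover]:
        alloc[i] += 1
    return alloc

# Hypothetical 4-layer network; FLOP counts are made-up relative weights.
print(allocate_cores([2.0, 8.0, 8.0, 2.0], 100))
```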

This software advantage compounds over time. As more researchers and engineers use Cerebras systems, they discover optimizations that feed back into the software stack. The feedback loop strengthens the competitive position.

This is exactly what happened with Nvidia and CUDA. Twenty years ago, CUDA was just Nvidia's parallel computing platform. Today, it's a complete ecosystem—universities teach it, researchers optimize for it, companies build entire products around it. The software lock-in is as powerful as the hardware advantage.

Cerebras is building toward this same position, but they're doing it while competing against a company with a 20-year head start. That's a real challenge, but it's not insurmountable. When the hardware advantage is large enough—like the claimed 20x speedup for certain workloads—software compatibility becomes secondary to raw performance.

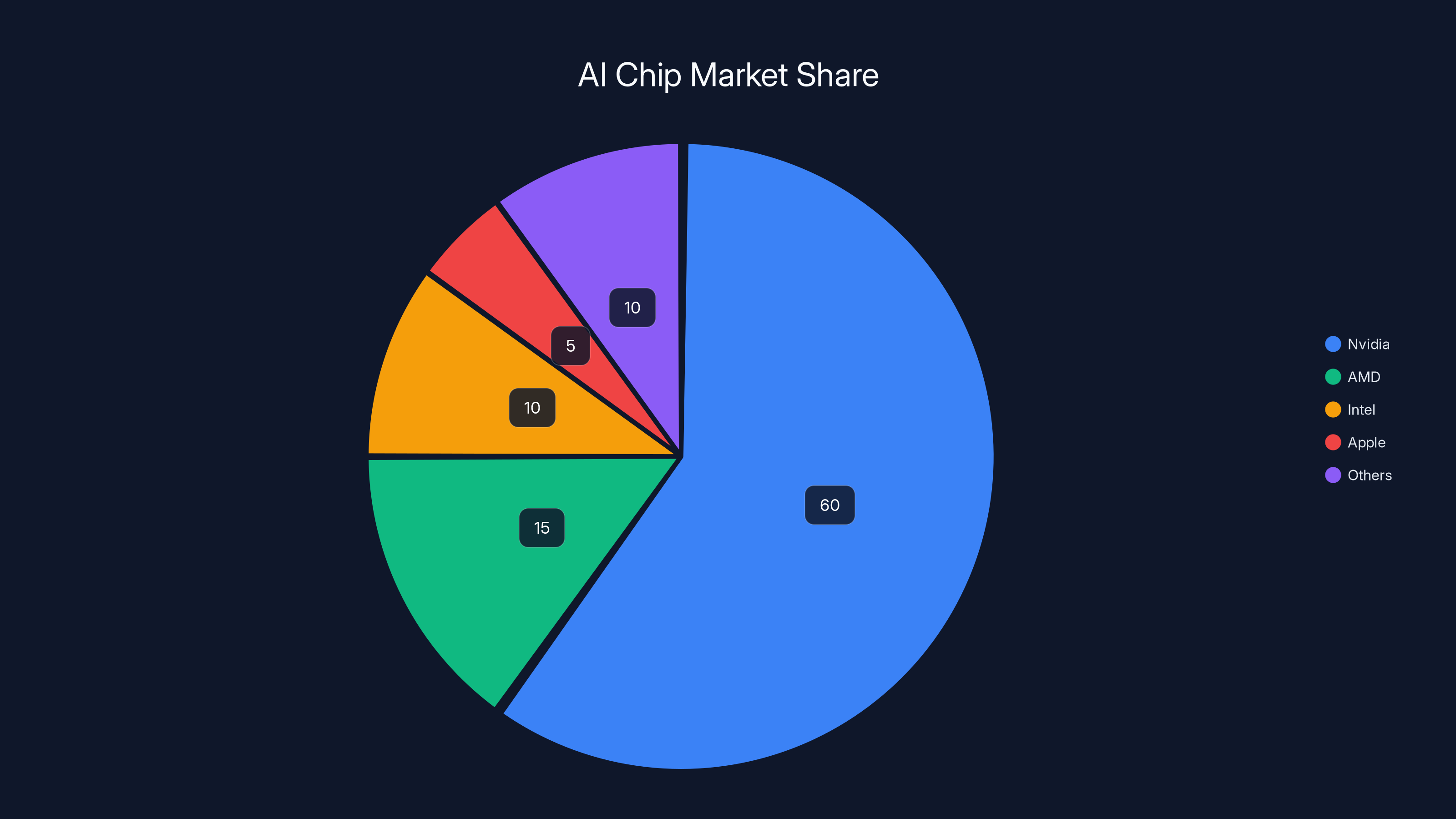

Nvidia holds an estimated 60% of the AI chip market, showcasing its dominance. However, competitors like AMD and Intel are gradually increasing their presence. (Estimated data)

The OpenAI Partnership: When Validation Comes from the Top

In January, Cerebras announced a multi-year agreement with OpenAI worth more than $10 billion. The contract extends through 2028 and involves providing 750 megawatts of computing power. For context, 750 megawatts is enough to power roughly 600,000 homes. This isn't a pilot program or a proof of concept. This is large-scale, production infrastructure deployment.
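The homes comparison depends on an assumed average household load. The article does not state one. This check assumes a continuous draw of about 1.25 kW per home, or roughly 11,000 kWh per year. A different household average moves the home count proportionally.

```python
# Sanity check of the "750 MW powers roughly 600,000 homes" comparison.
# Assumption (not from the article): an average home draws about 1.25 kW
# continuously.
CONTRACT_MW = 750
AVG_HOME_KW = 1.25

homes = CONTRACT_MW * 1_000 / AVG_HOME_KW
annual_kwh_per_home = AVG_HOME_KW * 24 * 365
print(f"{homes:,.0f} homes at {annual_kwh_per_home:,.0f} kWh/year each")
```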

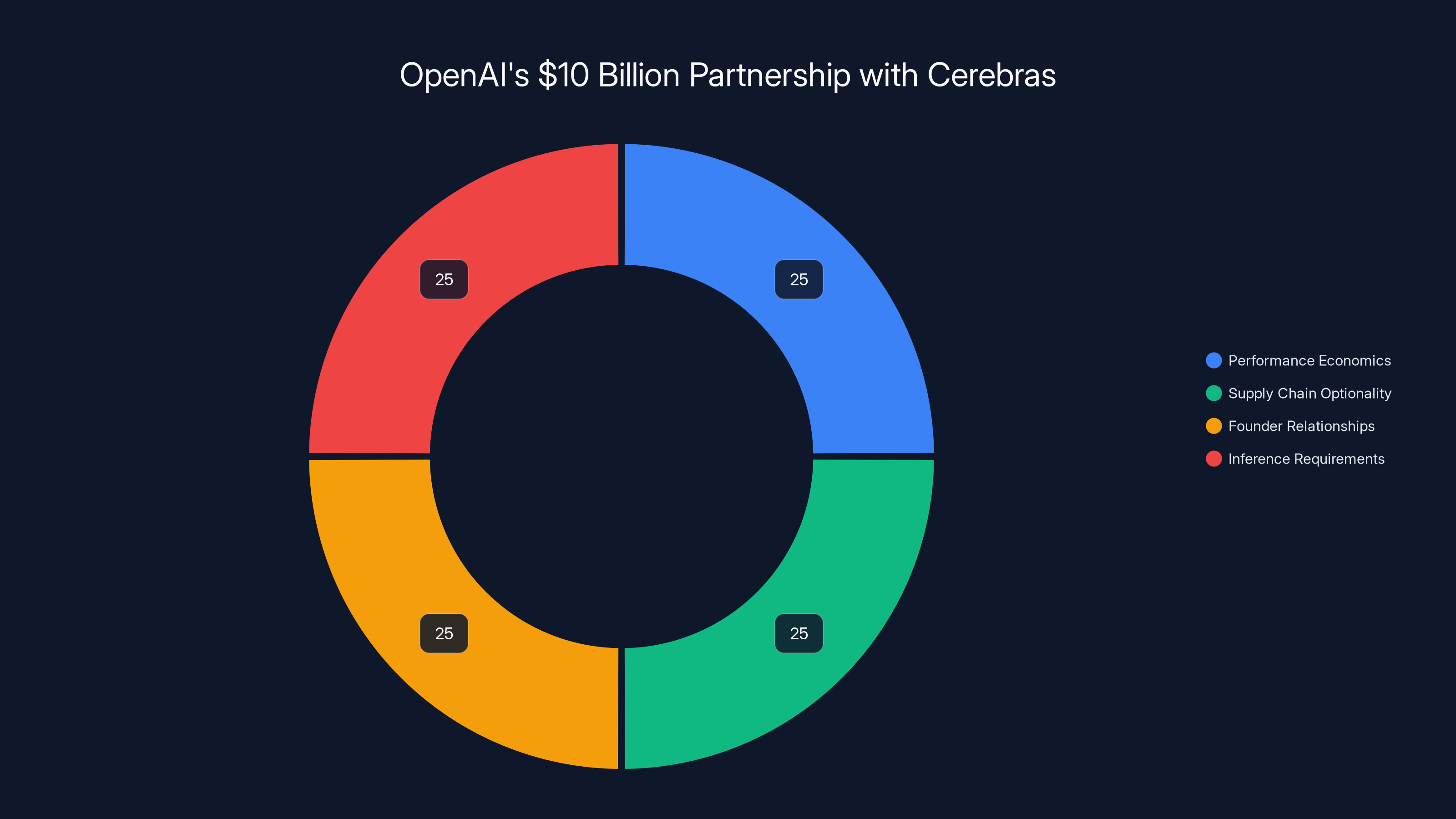

OpenAI has enormous capital, can negotiate with any vendor, and has deep relationships with Nvidia. So why commit $10 billion to Cerebras? There are several possibilities, and the truth probably involves all of them.

First, raw performance economics. If Cerebras' systems deliver faster inference, lower latency, or better power efficiency for certain workloads, the math justifies the commitment. OpenAI runs inference servers that process millions of queries per day. Even a 10% improvement in throughput or latency translates to massive operational savings or a better user experience, potentially both.
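Here is one way to see what a 10% throughput gain is worth in hardware. The sketch sizes a hypothetical inference fleet against a peak query rate. The peak load and per-server throughput are made-up figures. The point is that 10% more throughput per server cuts the fleet by about 9% (1 − 1/1.1), not a full 10%.

```python
import math

def units_needed(peak_qps, qps_per_unit):
    """Number of inference servers needed to serve a given peak query rate."""
    return math.ceil(peak_qps / qps_per_unit)

# Hypothetical fleet: 1,000,000 queries/s at peak, 100 queries/s per server.
baseline = units_needed(1_000_000, 100)          # 10,000 servers
improved = units_needed(1_000_000, 100 * 1.10)   # 10% more throughput per server
print(baseline, improved, f"{1 - improved / baseline:.1%} fewer servers")
```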

Second, supply chain optionality. Nvidia chips are in high demand, and capacity is limited. By diversifying their infrastructure across multiple vendors and architectures, OpenAI ensures that they're not dependent on any single supplier. If Nvidia supply gets tight, or if Nvidia deprioritizes certain products, OpenAI still has computational capacity available through Cerebras.

Third, founder relationships. Sam Altman is a personal investor in Cerebras. This is important context. Altman isn't just making a business decision as OpenAI's CEO; he's also making a financial decision as a personal shareholder. When you have that alignment, you tend to negotiate harder, commit deeper, and find creative ways to make partnerships work.

Fourth, inference requirements are different from training. Everyone focuses on training large language models, but inference (actually running the model to answer questions) is where the ongoing computational burden lies. OpenAI runs ChatGPT inference at massive scale. The latency and cost characteristics of inference are completely different from training. Cerebras' architecture, optimized for inference, addresses OpenAI's specific pain point.

The OpenAI partnership signals something crucial: Cerebras is not a speculative technology. It's not a proof of concept or a research prototype. It's production infrastructure running real workloads for the most important AI company in the world. That validation is worth more than any marketing campaign.

The Competitive Landscape: Nvidia's Reign and Alternative Architectures

When people hear "AI chips," they immediately think Nvidia. The company has achieved something remarkable—dominance in a market that didn't exist ten years ago. Nvidia's stock price reflects this dominance, and the market capitalization has grown to over $3 trillion at points. This is one of the most successful business positions in technology.

But even dominance has limits. Several factors are creating space for alternative architectures.

Nvidia's Constraints

Nvidia's GPUs are extraordinarily powerful and remarkably flexible. You can run almost any computation workload on them. They're the default choice for a reason. But flexibility comes with tradeoffs.

GPUs are general-purpose accelerators. They're designed to handle diverse computational patterns: matrix multiplication, convolution, branching logic, and memory access patterns that vary unpredictably. To support all of this, they need flexible instruction sets, large caches, sophisticated thread schedulers, and complex memory management systems.

When you're running a specific workload repeatedly—like neural network inference with a fixed model architecture—much of this generality is wasted. You're paying for computational capability you don't use. It's like buying a Formula One racing car to commute to work. The car is extraordinary, but you're using a tiny fraction of its capability.

Second, Nvidia's supply is limited by TSMC's capacity. The most advanced semiconductor manufacturing is bottlenecked on advanced packaging, advanced node production, and assembly. Nvidia competes with AMD, Apple, and others for this capacity. When demand exceeds supply—as has been the case consistently during the AI boom—prices increase and delivery times extend.

Third, the software ecosystem around Nvidia creates its own gravity. Decades of optimization, millions of engineers who understand CUDA, libraries optimized for Nvidia architecture—this creates lock-in. But lock-in is a double-edged sword. If a better option emerges, switching costs are high, but the reward for switching also scales with the advantage.

Alternative Architectures Emerging

Cerebras isn't alone in the space. Google has invested heavily in custom chips like the TPU (Tensor Processing Unit), optimized specifically for machine learning. Microsoft has explored custom hardware as well. AMD is aggressively challenging Nvidia with its Instinct GPUs and ROCm software.

But most of these alternatives compete on the same dimensions as Nvidia—they're more efficient, cheaper, or better optimized, but they're still built on fundamentally similar architecture principles. You're still working with clusters of individual chips wired together.

Cerebras is different. The wafer-scale approach is a fundamentally different architectural choice. It's not an incremental improvement—it's a different category of compute.

This difference matters in specific contexts. For latency-sensitive workloads, for inference with fixed model architectures, for problems where you want to minimize data movement—the Wafer Scale Engine's advantages compound. For flexible, diverse workloads or training large models, traditional approaches still have advantages.

The market is probably large enough for multiple approaches. Nvidia handles the 80% of use cases where their flexibility and ecosystem dominance win. Cerebras captures the 20% where their specialized advantages matter most. Even 20% of the AI infrastructure market is enormous.

Custom Silicon: The Broader Trend

Beyond Cerebras and Google's TPUs, there's a broader industry trend toward custom silicon. Apple designs its own chips. Tesla builds AI chips for autonomous driving. Qualcomm optimizes for mobile. Amazon developed Trainium and Inferentia chips.

What's driving this trend? Three factors: (1) generic chips waste resources for specific applications, (2) custom hardware creates competitive advantage that's hard to replicate, and (3) margins are better when you design and manufacture your own compute infrastructure.

Cerebras is betting that custom silicon for AI inference becomes not just a competitive advantage for individual companies, but a separate market category. Companies like OpenAI, Anthropic, and others need specialized compute infrastructure. They'll contract with manufacturers like Cerebras rather than trying to build it themselves.

This business model—being the specialized chip manufacturer for AI companies—is different from Nvidia's model. Nvidia sells to everyone. Cerebras sells to specific customers with specific needs. The market might be smaller, but the margins could be higher, and customer lock-in is stronger.

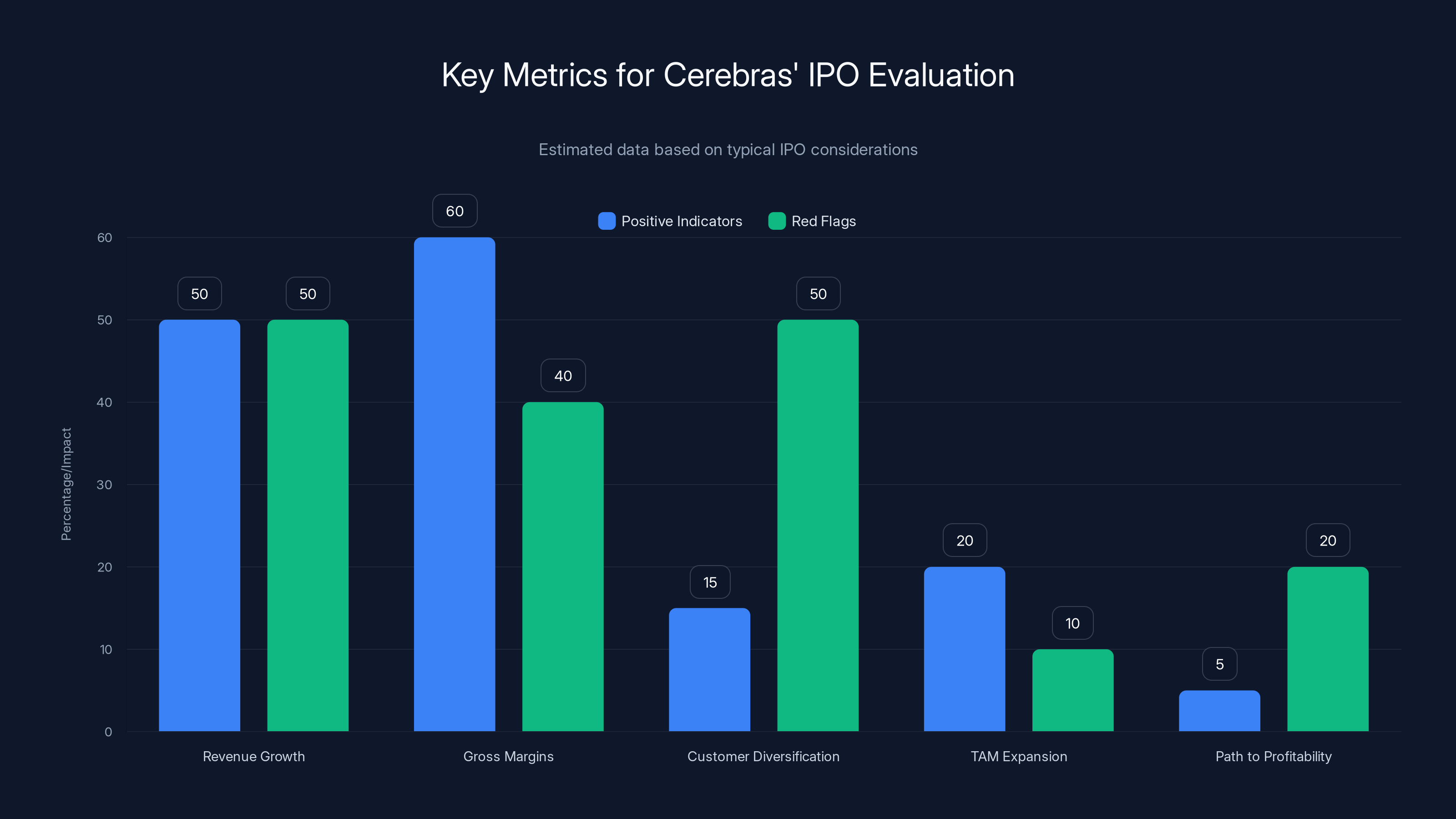

Investors should focus on revenue growth and gross margins as positive indicators, while being wary of revenue concentration and declining margins as red flags. Estimated data.

The Valuation Question: Is $23 Billion Reasonable?

This round values Cerebras at $23 billion, nearly three times what it was worth six months earlier.

Let's approach this analytically. What drives semiconductor company valuations? Generally, some combination of revenue, gross margins, addressable market, and competitive moat. Let's examine each.

Current Revenue and Trajectory

Cerebras hasn't disclosed exact revenue figures, but we can make educated estimates based on available information. The company has been generating revenue for several years, primarily from a small set of enterprise customers—research institutions, cloud providers, and AI companies.

Based on reported partnerships and typical enterprise hardware pricing, Cerebras is probably generating somewhere in the range of $50-150 million in annual revenue currently. This is an estimate, but it's probably directionally correct.

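At a $23 billion valuation and roughly $100 million in annual revenue (the midpoint of that estimate), Cerebras would trade at about 230x sales.

A 230x multiple seems absurd on its face. But wait. Cerebras is a pre-revenue-ramp company. The real valuation case isn't based on current revenue; it's based on future revenue after the company scales.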
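The multiple swings widely across the $50-150 million revenue range estimated above. The sketch divides the $23 billion valuation by each end of that range and by the midpoint. The revenue inputs are the article's rough estimates, not disclosed figures.

```python
VALUATION = 23e9  # $23 billion valuation from this round

def price_to_sales(valuation, annual_revenue):
    """Valuation divided by annual revenue (a simple price-to-sales multiple)."""
    return valuation / annual_revenue

# Revenue figures are the article's estimate range, not disclosed numbers.
for revenue in (50e6, 100e6, 150e6):
    print(f"revenue ${revenue / 1e6:.0f}M -> "
          f"{price_to_sales(VALUATION, revenue):.0f}x sales")
```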

If Cerebras can reach

But the valuation is $23 billion. So the market is pricing in even more upside than this scenario. The implicit assumption is that Cerebras becomes a larger business than this, or that the market opportunity is larger than standard multiples suggest.

The Addressable Market

How big is the AI infrastructure market? It's enormous and growing. Current estimates suggest the global AI hardware and infrastructure market is roughly

If Cerebras can capture even 5% of this market, that's

But here's the catch: these valuations assume no execution risk, no competitive pressure, and no market evolution. Reality is messier.

Competitive Moat and Sustainability

The critical question is whether Cerebras can sustain competitive advantage. In semiconductor manufacturing, competitive advantages typically come from:

- Engineering talent and IP: Cerebras has world-class engineers and proprietary IP around wafer-scale manufacturing and optimization. This is valuable but replicable: other companies can hire similar talent and develop similar technology.

- Manufacturing partnerships: Cerebras' relationship with TSMC is valuable, but TSMC works with everyone. There's no exclusive manufacturing agreement.

- Software ecosystem: Over time, as more people use Cerebras hardware, the software ecosystem becomes more valuable. This creates lock-in.

- Customer relationships: The OpenAI partnership and others provide credibility and early deployment, but they're not exclusive relationships.

The moat is real but not insurmountable. A well-funded competitor—or an acquihire by a larger company—could potentially replicate Cerebras' technology. This is why the company is racing to scale and to build an irreplaceable software ecosystem.

Valuation Verdict

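Is $23 billion reasonable? It depends on execution. If Cerebras scales manufacturing, diversifies its customers, and builds a durable software ecosystem, the valuation could prove justified.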

If Cerebras faces execution challenges, if their manufacturing yield is lower than expected, or if competitive alternatives emerge, $23 billion is overvalued.

Benchmark's $225 million bet suggests they believe in the first scenario. They've been backing Cerebras since 2016. That decade-long conviction suggests they've seen something that justifies the bet. But conviction alone doesn't eliminate risk.

The IPO Path: G42, National Security, and Going Public

Cerebras has been pursuing an IPO for several years, but the path has been complicated by geopolitics. Specifically, the company's relationship with G42, a UAE-based AI firm.

The G42 Problem

G42 was a significant customer and investor in Cerebras. At its peak, G42 accounted for 87% of Cerebras' revenue—essentially the company was being funded and powered by a single customer. For a public company, that level of customer concentration is a massive red flag. But it was more complicated than that.

G42 has historical ties to Chinese technology companies. The United States government, through the Committee on Foreign Investment in the United States (CFIUS), conducted a national security review. The concern was that Cerebras' advanced AI chip technology might, through G42, effectively transfer to Chinese entities. This touched on sensitive questions about AI supremacy, semiconductor technology transfer, and national security.

CFIUS reviews can result in deals being blocked, restricted, or restructured. In Cerebras' case, it created uncertainty around the IPO. Public investors don't want to invest in companies with unresolved national security reviews.

Cerebras addressed this by effectively divesting from G42. By late 2024, G42 had been removed from the company's investor list and was no longer a significant customer. This resolution cleared the path for an IPO attempt.

IPO Timeline and Expectations

Cerebras is now targeting a public debut in the second quarter of 2026. If that timeline holds, it would come roughly six months after this funding round. That's an aggressive timeline but achievable for a company with Cerebras' scale and institutional backing.

When Cerebras goes public, what should investors expect? Several things become visible:

- Exact revenue figures: All speculation ends. We'll know exactly how much revenue the company is generating and what margins look like.

- Customer concentration: We'll see whether they've successfully diversified beyond G42 and OpenAI, or whether they remain dependent on a small number of large customers.

- Gross margins: Semiconductor manufacturing economics become transparent. We'll know whether margins are 30%, 60%, or somewhere in between.

- Cash burn and path to profitability: We'll see the company's actual spending, cash reserves, and timeline to profitability.

- Risk factors: The S-1 filing will detail the risks investors should be aware of, including manufacturing risk, competitive risk, customer concentration, and more.

For a company valued at $23 billion, the IPO will be significant. Even if they price at a lower valuation than the private round, they're still raising enormous capital and creating a new publicly traded company in a hot sector.

Cerebras' Wafer Scale Engine contains 40 times more transistors than a typical modern GPU, showcasing its incredible computational density.

Why Benchmark's Conviction Matters for the Broader Industry

Benchmark Capital isn't just a venture capital firm—it's a signal provider. When Benchmark makes a massive bet, the venture capital world pays attention. The $225 million double-down on Cerebras is a signal: alternative AI architectures are not edge cases. They're going to be important.

This matters for several reasons.

Signaling to Other Investors

Large institutional investors—family offices, endowments, mutual funds—pay attention to what top-tier venture capital firms are doing. If Benchmark thinks Cerebras is worth $225 million, that's a data point that increases the probability that other sophisticated investors will also back the company.

Benchmark's

Encouraging Competitive Entry

Benchmark's conviction also signals to entrepreneurs and other companies: "There's a huge market for alternative AI architectures." This attracts talent, encourages entrepreneurship, and spurs competition. In the long run, competition is good for the ecosystem even if it's bad for Cerebras.

Validating the Technology Category

Wafer-scale processors aren't new—they've been explored for decades. But Cerebras has made them work at scale. Benchmark's bet is validation that the technology is real, the product is valuable, and the market opportunity is substantial.

This validation might encourage universities, researchers, and other companies to invest in wafer-scale architecture exploration. It's a broader signal about where computing infrastructure is heading.

The Manufacturing Reality: Why Scaling Hardware is Brutally Difficult

Everyone talks about software scaling—you write code once, it runs on millions of devices. Hardware is completely different. Every unit has to be manufactured, physically transported, and installed. Scaling hardware means building more factories, training more workers, and managing complex supply chains.

Cerebras faces all of these challenges.

Capacity Constraints

TSMC's advanced manufacturing capacity is the bottleneck. The company manufactures chips for Apple, Qualcomm, Nvidia, AMD, and dozens of others. Cerebras is in a queue waiting for capacity. They can't just decide to "scale up" production—they need TSMC to allocate wafer starts to their production.

This is fundamentally different from software. With software, if demand increases, you just deploy more instances. With hardware, demand is constrained by manufacturing capacity. Cerebras will be capacity-constrained for years, possibly decades.

This has both advantages and disadvantages. The disadvantage is that you can't satisfy all demand quickly. The advantage is that you can maintain pricing power because you're supply-constrained rather than demand-constrained.

Manufacturing Economics

Wafer-scale manufacturing is expensive. Each production run costs millions in setup. The yield, meaning the percentage of wafers that come out of manufacturing usable, determines the unit economics.

If yield is 80%, only 80% of the wafers are usable, and you split the manufacturing cost of the run among them. If yield drops to 50%, the per-unit manufacturing cost is double what it would be at perfect yield, and 60% higher than at 80% yield. In semiconductor manufacturing, yield is everything.
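The cost of each usable wafer scales with 1/yield. The sketch spreads a production run's cost over only the good wafers. The $10 million run cost and 100-wafer run size are illustrative assumptions, not Cerebras figures. Only the ratios between yields matter.

```python
def cost_per_good_wafer(run_cost, wafers_per_run, yield_rate):
    """Spread a production run's cost over only the wafers that turn out usable."""
    good_wafers = wafers_per_run * yield_rate
    return run_cost / good_wafers

# Hypothetical run: $10M for 100 wafers (dollar figure is illustrative only).
for y in (1.0, 0.8, 0.5):
    print(f"yield {y:.0%}: ${cost_per_good_wafer(10e6, 100, y):,.0f} per usable wafer")
```

Going from 80% to 50% yield raises the cost per usable wafer by a factor of 0.8 / 0.5 = 1.6.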

Cerebras doesn't disclose their yield. Keeping yield a secret is standard practice in the industry—it's a competitive advantage. If competitors knew your yield was 30%, they'd know your manufacturing cost structure is weak. But lower yield means higher unit costs, which means lower margins.

This is where Benchmark's conviction matters. Benchmark has done enough diligence to believe that Cerebras' manufacturing economics work—that their yield is good enough to support the business. This isn't guaranteed, but it's an important assumption baked into the valuation.

Supply Chain Fragility

Beyond TSMC, Cerebras depends on packaging houses, test facilities, logistics companies, and other suppliers. Any disruption in this chain affects their ability to deliver product.

The pandemic showed how fragile semiconductor supply chains are. A single disruption can cascade. Cerebras, as a smaller manufacturer, has less negotiating power with suppliers than Nvidia does. This is another form of execution risk.

OpenAI's $10 billion commitment to Cerebras is likely driven by a combination of performance economics, supply chain diversification, founder relationships, and specific inference requirements. (Estimated data)

The Inference Economy: Why This Matters for AI Costs

Most discussions about AI focus on training, the process that produces models like GPT-4. But training is largely a one-time cost per model. Inference, actually running the model to answer questions, is the ongoing cost.

Consider ChatGPT. Training the model was expensive, but training is done. Now, OpenAI needs to handle millions of inference queries daily. Each query requires computation. That computation cost is what users ultimately pay for.

Nvidia's GPUs are good at both training and inference. Cerebras' architecture is optimized specifically for inference. For inference workloads, their claimed 20x speedup is transformational.

Let's do some math. Suppose an inference query on an Nvidia-based system costs 1 cent to compute and takes 2 seconds. On Cerebras' system, if it's 20x faster and uses 1/20th the compute (at the same price per unit of compute), the cost drops to 0.05 cents and the latency drops to 0.1 seconds.

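For a service running on the order of a billion queries daily, a saving of 0.95 cents per query compounds into roughly $3.5 billion a year. At a few million queries a day, the savings are in the millions, not billions. At OpenAI's scale, this is the kind of math that justifies a $10 billion commitment to Cerebras.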
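The per-query example and its annual impact fit in a few lines. The sketch keeps the article's simplifying assumption that cost falls in proportion to compute used. The two daily query volumes are hypothetical inputs showing how the total depends on scale.

```python
def per_query(cost_cents, latency_s, speedup):
    """Scale cost and latency by a speedup, assuming cost falls in proportion
    to compute used (the article's simplifying assumption)."""
    return cost_cents / speedup, latency_s / speedup

def annual_savings_usd(queries_per_day, saving_cents_per_query):
    """Convert a per-query saving in cents into dollars saved per year."""
    return queries_per_day * saving_cents_per_query / 100 * 365

gpu_cost, gpu_latency = 1.0, 2.0   # cents, seconds (article's example)
fast_cost, fast_latency = per_query(gpu_cost, gpu_latency, 20)
saving = gpu_cost - fast_cost      # cents saved per query

for qpd in (1e6, 1e9):  # hypothetical daily query volumes
    print(f"{qpd:,.0f} queries/day -> ${annual_savings_usd(qpd, saving):,.0f}/year")
```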

Inference at Scale

Most AI companies can't afford to run all their inference on GPU clusters. It's too expensive. Instead, they make tradeoffs—shorter response times for premium customers, cached responses for common queries, lighter models for common tasks.

With cheaper inference compute from Cerebras, companies can offer better service to more users at lower cost. This changes the unit economics of AI services.

Imagine a chatbot service where:

- Running inference on GPUs costs 0.1 cents per query

- Users pay 0.05 cents per query

- The business loses money on every query

With Cerebras infrastructure:

- Inference costs 0.005 cents per query

- Users still pay 0.05 cents per query

- The business is profitable

This transformation—from a business model that doesn't work to one that does—is exactly what happens when you reduce infrastructure costs by 20x.
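The chatbot scenario above reduces to a per-query margin calculation. The sketch plugs in the article's hypothetical price and costs. Here "20x cheaper" means the GPU cost divided by 20, as in the text. It expresses the margin in cents and as a share of the price.

```python
def margin_per_query(price_cents, cost_cents):
    """Gross margin per query in cents (negative means a loss on every query)."""
    return price_cents - cost_cents

PRICE = 0.05  # cents per query, from the article's hypothetical
for label, cost in [("GPU", 0.1), ("Cerebras (20x cheaper)", 0.1 / 20)]:
    m = margin_per_query(PRICE, cost)
    print(f"{label}: cost {cost:.3f}c, margin {m:+.3f}c ({m / PRICE:+.0%} of price)")
```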

This is the economic logic driving Cerebras' success. It's not about having better technology for technology's sake. It's about enabling business models that weren't possible before. This is a powerful force.

The Software Moat: Why Hardware Alone Isn't Enough

Historically, semiconductor companies compete on hardware—clock speed, transistor density, power efficiency. But increasingly, the real competitive advantage comes from software.

Consider Nvidia and CUDA. Twenty years ago, CUDA was just Nvidia's parallel computing platform. Today, it's an entire ecosystem. Researchers learned CUDA in university. Libraries are optimized for CUDA. Training materials, courses, documentation—all assume CUDA.

If you wanted to switch to AMD GPUs today, you could theoretically run most CUDA code through compatibility layers. But practically, you'd face friction. You'd need to relearn, retune, and adapt. The software lock-in is real.

Cerebras is building toward the same position, but they're starting from scratch against an entrenched competitor. Their advantage is that their software is optimized for their specific hardware in ways that general-purpose solutions can't match.

Over time, as more researchers and companies use Cerebras systems, the software ecosystem strengthens. Libraries are built. Best practices emerge. The next generation of engineers learns to program Cerebras hardware. Eventually, switching costs become prohibitive.

This is a multi-year process, but it's how durable competitive advantages are built in infrastructure software.

Open Source and Proprietary Strategy

Cerebras faces a strategic choice: should they open-source their software stack (like Nvidia has partially done with CUDA) or keep it proprietary?

Open-source advantages: attracts developers, builds community, accelerates adoption. Proprietary advantages: maintains control, enables premium pricing, allows aggressive optimization without sharing IP.

Cerebras seems to be taking a hybrid approach—some tools are open, some are proprietary. This is pragmatic but creates complexity. The strategy needs to balance ecosystem development with competitive moat protection.

The Future Roadmap: What's Next for Cerebras

Cerebras has a clear roadmap:

- Scale manufacturing: Work with TSMC to increase capacity and yield

- Expand customer base: Move beyond G42 and OpenAI to cloud providers, research institutions, and AI companies

- Improve software ecosystem: Build more developer tools, optimize for more models, reduce friction

- Go public: Execute an IPO and raise capital for continued growth

- Pursue next-generation chips: The Wafer Scale Engine will eventually be superseded by faster, more efficient designs

Each of these steps has risks. Manufacturing scaling is brutally difficult. Customer diversification requires proving the technology to skeptical buyers. Software ecosystem development is a multi-year effort. IPO execution requires favorable market conditions. And next-generation development requires continued innovation against increasingly capable competitors.

But Benchmark's bet suggests confidence in this roadmap. The firm has deployed significant capital and is betting on successful execution.

Competitive Responses and Industry Evolution

What will Nvidia do in response? The company faces a strategic choice.

Option 1: Ignore Cerebras as a niche player. If Cerebras' advantages only apply to inference (not training), and if the inference market is a fraction of the total AI infrastructure market, Nvidia can maintain dominance by focusing on training and general-purpose compute.

Option 2: Develop competitive products. Nvidia could invest in alternative architectures—maybe a wafer-scale GPU or a specialized inference processor. Nvidia has the capital, engineering talent, and manufacturing relationships to do this.

Option 3: Acquire Cerebras. If Cerebras threatens Nvidia's market position, Nvidia might acquire the company to neutralize the threat and gain their technology and team. This would be a massive acquisition—probably $20-30 billion—but within Nvidia's means.

History suggests incumbents often choose option 3. Intel acquired Altera (FPGAs) for $16.7 billion. Broadcom has made numerous acquisitions to extend its market reach. Nvidia itself agreed to buy Arm for $40 billion in 2020, then abandoned the deal in 2022 amid regulatory opposition.

Cerebras going public changes the acquisition calculus. Acquiring a public company is typically more difficult and expensive than acquiring a private one: buyers usually pay a premium over the market price, and shareholders and independent directors evaluate any offer carefully. Once public, Cerebras would also have a currency of its own (its stock) for making acquisitions.

The competitive landscape is likely to remain dynamic. Alternative architectures will emerge. Some will succeed, others will fail. Eventually, we'll probably end up with a fragmented market where different workloads use different infrastructure—GPUs for some tasks, Cerebras for others, TPUs for specialized Google workloads, etc.

This is healthy for the industry. Monocultures are vulnerable to disruption. Diversity creates resilience and spurs innovation.

The Bigger Picture: What This Signals About AI Infrastructure

Benchmark's $225 million bet is significant on its own, but its real meaning only becomes clear when you zoom out to the broader evolution of AI infrastructure.

We're at an inflection point. For the past 15 years, GPU-based compute has been the dominant paradigm. Nvidia built an empire on this. But empires don't last forever. Eventually, new paradigms emerge.

The shift toward specialized hardware—whether wafer-scale processors, specialized inference chips, or quantum computers—is probably inevitable. The question is timing and execution. When will specialized hardware become mainstream? Who will capture the market? Which architectures will win?

Cerebras is betting they know the answers. Benchmark is betting Cerebras is right. If they're correct, the next decade of AI infrastructure will look very different from the last decade.

This isn't guaranteed. Nvidia has the resources, talent, and relationships to adapt. But the opening is there. For the first time, there's a credible alternative to GPU dominance. Cerebras is that alternative, or at least the leading example of what alternatives could look like.

Benchmark's conviction suggests they see this clearly. The $225 million bet is a bet on a paradigm shift.

Learning from Benchmark's Investment Philosophy

Why would a venture capital firm make a $225 million bet on a company founded over a decade ago? This question reveals something important about how top-tier venture capital actually works.

Benchmark's approach has always been concentrated. Rather than making hundreds of small bets and hoping some work out, Benchmark makes fewer, larger bets in companies they believe will be transformational. This approach requires enormous conviction and carries enormous risk.

But it also produces enormous returns. When Benchmark backs a winner—and they've backed Instagram, Uber, Snapchat—the returns can be 100x or 1000x. These outsized returns more than compensate for the inevitable failures.
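The arithmetic behind that claim is simple. A minimal sketch with made-up outcomes (not Benchmark's actual portfolio): ten equal-sized bets, seven written off, two returning their capital, and one returning 100x. `fund_multiple` is a hypothetical helper name.

```python
def fund_multiple(outcomes: list[float], check_size: float = 1.0) -> float:
    """Total value returned divided by total capital invested.

    Each entry in `outcomes` is the exit multiple on one equal-sized
    investment; 0.0 means the company failed and the money was lost.
    """
    invested = check_size * len(outcomes)
    returned = sum(check_size * m for m in outcomes)
    return returned / invested


# Hypothetical concentrated portfolio: seven zeros, two 1x, one 100x.
portfolio = [0.0] * 7 + [1.0, 1.0] + [100.0]
print(f"Fund multiple: {fund_multiple(portfolio):.1f}x")
```

This portfolio returns about 10.2x overall even though 70% of its bets went to zero, which is why a concentrated fund can absorb failures as long as one winner is large enough.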

The $225 million Cerebras bet reflects this philosophy. Benchmark isn't diversifying risk through numerous small investments. They're concentrating capital in what they believe is the future of AI infrastructure. This is conviction investing at its purest.

What gave Benchmark confidence to make this bet?

- Founder quality: Cerebras was co-founded by CEO Andrew Feldman, a hardware entrepreneur who previously founded SeaMicro and sold it to AMD.

- Customer validation: OpenAI, a customer rather than a venture capital firm, has committed $10 billion. This isn't theoretical validation; it's real money from a real company making a real bet.

- Product-market fit: The product exists, customers are using it, and they're willing to pay for it. This isn't a prototype or a research project.

- Market opportunity: The AI infrastructure market is enormous and growing. Even if Cerebras captures a small percentage, the outcome is huge.

- Long-term track record: Benchmark has been backing Cerebras since 2016. A decade-long investment relationship provides confidence that a single data point can't.

These factors combined suggest that Benchmark's bet isn't reckless—it's informed by deep due diligence and conviction developed over years.

Challenges and Risks: The Honest Assessment

For all the optimism around Cerebras, there are real risks.

Market Risk

What if inference compute doesn't become as important as Cerebras believes? What if companies continue optimizing inference through software rather than custom hardware? What if the market opportunity is smaller than the valuation assumes?

Execution Risk

What if Cerebras' manufacturing yield is lower than expected? What if TSMC's capacity constraints prevent scaling? What if the next generation of chips doesn't deliver expected improvements?

Competitive Risk

What if Nvidia successfully develops competitive products? What if other startups develop better wafer-scale architectures? What if the software ecosystem doesn't develop as expected?

Customer Risk

What if OpenAI significantly reduces its compute spend? What if other large customers don't emerge? What if customer concentration remains a problem?

Regulatory Risk

What if future national security reviews block exports or partnerships? What if the geopolitical environment shifts in ways that disadvantage companies like Cerebras?

These risks are real. They're not a reason to dismiss Cerebras, but they are a reason to be realistic about the probability of success. Even the best-backed companies fail or underperform. Benchmark's conviction doesn't guarantee success.

The IPO Narrative: What Investors Should Watch

If Cerebras goes public in Q2 2026 as targeted, the narrative will matter. Here's what investors should pay attention to:

The Good Narratives:

- Revenue growth rates (50%+ year-over-year is impressive)

- Gross margins (60%+ is healthy for hardware)

- Diversified customer base (multiple customers each contributing more than 15% of revenue, rather than one dominant buyer)

- TAM expansion (the total addressable market proves larger than expected)

- Path to profitability (a credible timeline to turning a profit)

The Red Flags:

- Revenue concentration (if OpenAI or any customer > 50% of revenue)

- Declining gross margins (suggests manufacturing or competitive pressure)

- Rising inventory (suggests sales slowdown)

- Competitive losses (customers switching to Nvidia)

- Delayed product launches (manufacturing delays)
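The quantitative items in these lists can be turned into a simple screen. This is a sketch under stated assumptions: `FilingMetrics` and `screen` are hypothetical names, the thresholds are the ones listed above, and the qualitative items (competitive losses, delayed launches, inventory trends) still require reading the filing itself.

```python
from dataclasses import dataclass


@dataclass
class FilingMetrics:
    """Hypothetical subset of figures an investor might pull from an S-1."""
    revenue_growth_pct: float          # year-over-year revenue growth
    gross_margin_pct: float            # latest gross margin
    prior_gross_margin_pct: float      # gross margin a year earlier
    largest_customer_share_pct: float  # biggest single customer's share of revenue


def screen(m: FilingMetrics) -> tuple[list[str], list[str]]:
    """Sort the quantitative checks into good signals and red flags."""
    good: list[str] = []
    red: list[str] = []
    if m.revenue_growth_pct >= 50:
        good.append("revenue growth of 50%+ year over year")
    if m.gross_margin_pct >= 60:
        good.append("gross margin of 60%+")
    if m.gross_margin_pct < m.prior_gross_margin_pct:
        red.append("declining gross margin")
    if m.largest_customer_share_pct > 50:
        red.append("one customer above 50% of revenue")
    return good, red


# Invented figures for illustration only; not Cerebras' actual results.
good, red = screen(FilingMetrics(revenue_growth_pct=80, gross_margin_pct=55,
                                 prior_gross_margin_pct=62,
                                 largest_customer_share_pct=70))
print("Good signals:", good)
print("Red flags:", red)
```

A fast-growing company can still trip several red flags at once, which is why the growth rate alone shouldn't carry the narrative.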

The S-1 filing will be the most important document. Read it carefully. The risk factors section is where management admits the things that could go wrong.

Cerebras will likely go public at a lower valuation than the private round, which is typical. The question is how much lower. If they're valued at

Conclusion: The Bet on Paradigm Shift

Benchmark's $225 million bet on Cerebras is really a bet on the future of AI infrastructure. It's saying: "We believe that GPU clusters are not optimal for AI inference. We believe that wafer-scale processors are better. And we believe Cerebras is the company that will dominate this market."

This is a bold, concentrated bet. It requires conviction that Cerebras can execute, that the market opportunity is as large as believed, and that competitive threats can be managed. It requires believing that specialized hardware is the future, not a niche.

Are they right? History suggests that paradigm shifts in computing are real and lucrative. The shift from mainframes to personal computers was massive. The shift from CPUs to GPUs for AI was massive. The next shift—to specialized architectures optimized for specific workloads—seems plausible.

If Cerebras successfully executes its roadmap, scales manufacturing, builds a durable software ecosystem, and captures meaningful share of the inference market, Benchmark's stake could be worth many times the $225 million it just committed.
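How large a return is plausible depends on the price paid. A back-of-the-envelope sketch, assuming the new $225 million bought equity at the $23 billion valuation cited for this round, and ignoring dilution, liquidation preferences, and Benchmark's earlier, cheaper stakes dating back to 2016. The function names are illustrative.

```python
INVESTMENT = 225e6   # Benchmark's new commitment, in dollars
POST_MONEY = 23e9    # assumed: the round's $23 billion valuation


def implied_stake(investment: float, post_money: float) -> float:
    """Fraction of the company bought (ignores dilution and preferences)."""
    return investment / post_money


def required_valuation(investment: float, post_money: float, target_value: float) -> float:
    """Company valuation at which the stake would be worth target_value."""
    return target_value / implied_stake(investment, post_money)


stake = implied_stake(INVESTMENT, POST_MONEY)
print(f"Implied stake: {stake:.2%}")
for target in (2.25e9, 10e9):  # a 10x return, and a $10B stake
    v = required_valuation(INVESTMENT, POST_MONEY, target)
    print(f"Stake worth ${target / 1e9:.2f}B needs a ${v / 1e9:,.0f}B valuation")
```

Under those assumptions the new money buys just under 1% of the company, so a 10x return needs roughly a $230 billion valuation, and turning this tranche alone into $10 billion would take a valuation above $1 trillion. Benchmark's earlier holdings, bought at lower prices, would multiply faster.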

But execution in hardware is brutally difficult. Manufacturing is unforgiving. Competition is intense. Market conditions change. For every success like Cerebras, there are dozens of talented hardware startups that didn't make it.

What's impressive about Benchmark's bet isn't just the amount of capital—it's the signal it sends. It says: "Alternative architectures are not hypothetical. They're real. They're here. And they're going to be important."

Whether that signal is right remains to be seen. But in technology, when top-tier investors like Benchmark make concentrated bets, it's usually worth paying attention. This is one of those bets worth watching.

FAQ

What exactly is Cerebras' Wafer Scale Engine?

The Wafer Scale Engine is a custom semiconductor chip that uses nearly an entire 300-millimeter silicon wafer as a single processor, packing 4 trillion transistors and 900,000 specialized cores into one piece of silicon. Unlike traditional chips that are cut from wafers into individual units, Cerebras' architecture keeps the entire wafer as one integrated system, eliminating data-shuffling bottlenecks that slow down GPU clusters.

How does Cerebras compare to Nvidia's GPUs for AI inference?

Cerebras claims their Wafer Scale Engine delivers more than 20 times faster AI inference compared to comparable GPU cluster setups. While Nvidia's GPUs are general-purpose accelerators that work for many applications, Cerebras' architecture is optimized specifically for inference workloads with fixed model architectures. The speed advantage comes from eliminating data movement between chips, but the trade-off is less flexibility than general-purpose GPUs.

Why did Benchmark make such a large $225 million investment?

Benchmark created two separate

What is the significance of the OpenAI partnership for Cerebras?

OpenAI's $10 billion+ multi-year commitment, extending through 2028 for 750 megawatts of computing power, provides crucial product-market validation. This isn't theoretical interest; it's massive capital deployment from one of the world's most prominent AI companies, signaling confidence that Cerebras' technology works at production scale for real inference workloads. Sam Altman's personal investment in Cerebras adds further credibility to the partnership.

When is Cerebras going public and what should investors expect?

Cerebras is targeting a public debut in Q2 2026. The IPO will provide transparency on exact revenue, gross margins, customer concentration, and path to profitability. Investors should watch for strong revenue growth (50%+ annually), healthy gross margins (60%+), and a customer base that doesn't depend on any single buyer. The removal of G42 from the investor list resolved national security concerns that had previously delayed the IPO timeline.

What manufacturing challenges does Cerebras face scaling production?

Cerebras depends on TSMC to manufacture its wafer-scale chips, which means it faces capacity constraints, since TSMC allocates advanced production capacity among many customers, including Apple and Qualcomm. Manufacturing yield (the percentage of wafers that come out usable) is critical: lower yield increases per-unit costs. Wafer-scale chips are harder to build than conventional chips because on a normal wafer a defect ruins only one small die, whereas a wafer-scale chip must work as a single system. Cerebras addresses this by building in redundant cores so the design can route around defects.
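The yield problem can be made concrete with the textbook Poisson yield model, where the chance a chip has zero defects is exp(-area × defect density). A sketch with hypothetical numbers: the defect density, die areas, and spare count are illustrative assumptions, not TSMC or Cerebras figures. It compares a large conventional die, a wafer-sized chip with no redundancy, and one that can map a limited number of defects onto spare cores.

```python
import math


def poisson_yield(area_mm2: float, defects_per_mm2: float) -> float:
    """Probability a chip of this area has zero defects (Poisson model)."""
    return math.exp(-area_mm2 * defects_per_mm2)


def yield_with_spares(area_mm2: float, defects_per_mm2: float, spares: int) -> float:
    """Probability the defect count is <= spares, so every defect can be
    mapped to a spare core. Simplification: each defect disables at most
    one core and never hits shared routing."""
    lam = area_mm2 * defects_per_mm2      # expected defects per chip
    term = math.exp(-lam)                 # P(k = 0)
    total = term
    for k in range(1, spares + 1):
        term *= lam / k                   # P(k) computed from P(k - 1)
        total += term
    return min(total, 1.0)                # guard against float round-up


D0 = 0.001            # hypothetical defect density: 0.1 defects per cm^2
GPU_DIE = 800         # hypothetical large conventional die, mm^2
WAFER_CHIP = 46_000   # hypothetical wafer-scale chip area, mm^2

print(f"Large die, no redundancy:   {poisson_yield(GPU_DIE, D0):.1%}")
print(f"Wafer-scale, no redundancy: {poisson_yield(WAFER_CHIP, D0):.2e}")
print(f"Wafer-scale, 200 spares:    {yield_with_spares(WAFER_CHIP, D0, 200):.4f}")
```

Without redundancy the wafer-sized chip essentially never yields; with a modest pool of spares, nearly every wafer does. This is why wafer-scale designs depend on tolerating defects rather than avoiding them.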

Could Nvidia simply acquire Cerebras as a competitive response?

While Nvidia has the capital (over

What is the addressable market for Cerebras' technology?

The global AI hardware and infrastructure market is estimated at

How does Cerebras' software ecosystem compare to Nvidia's CUDA?

Cerebras is building proprietary software tools and compiler technology optimized for its hardware, but it starts roughly two decades behind Nvidia's CUDA ecosystem. CUDA benefits from 20+ years of development, millions of trained engineers, and comprehensive libraries. Cerebras' advantage is that its software is specifically optimized for its hardware, while CUDA is general-purpose. Over time, if Cerebras scales successfully, its software ecosystem will strengthen through increased developer adoption and community optimization.


Key Takeaways

- Benchmark's $225M commitment is a concentrated bet on specialized AI inference architecture as the future of computing infrastructure

- Cerebras claims its Wafer Scale Engine delivers 20x faster inference than GPU clusters by consolidating 4 trillion transistors and 900,000 cores on a single chip

- OpenAI's $10B+ partnership validates production-scale deployment, not theoretical capability

- Manufacturing capacity constraints and yield uncertainty represent real execution risks despite strong market signals

- The 1B+ revenue at 60%+ gross margins, which requires successful manufacturing scale and customer diversification
