Introduction: The Robot That Makes You Uncomfortable (And That's Intentional)

Last year, something unsettling walked into a conference room. It looked almost human. Almost. Not quite. That discomfort you're feeling? That's the uncanny valley doing its job.

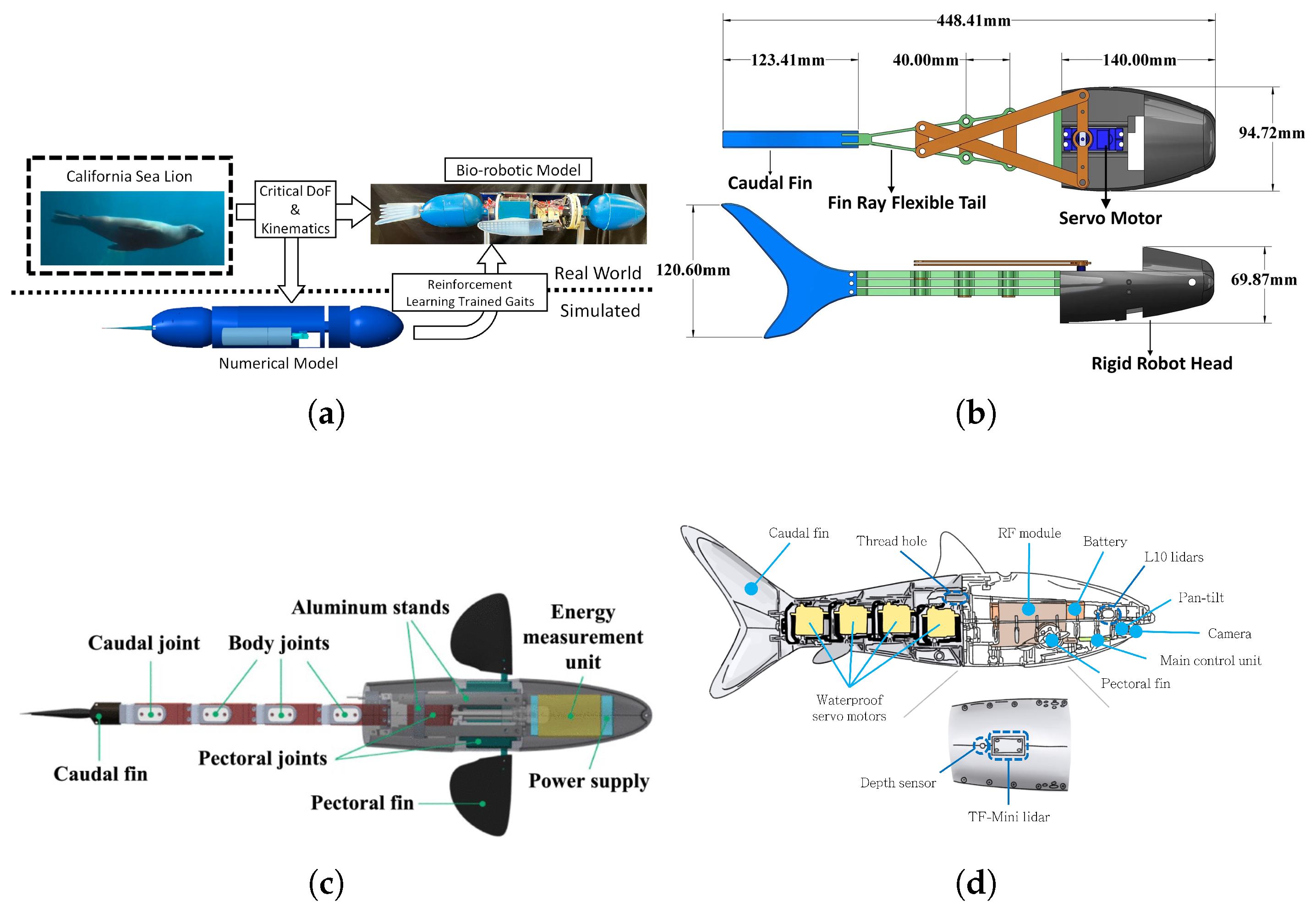

We're talking about the rise of biomimetic AI robots - machines designed to mimic human biology as closely as possible. And I'm not exaggerating when I say this represents one of the most significant shifts in robotics we've seen in the past decade. These aren't your industrial robots bolted to factory floors anymore. These are humanoid machines with warm, silicone skin, cameras embedded in lifelike eyes, and movement patterns that can trick your brain for just a split second before it screams "something's wrong here."

The technology is both fascinating and deeply unsettling. And that's exactly why we need to understand it.

Whether you're a tech enthusiast, a business leader considering robotics investment, or someone who's just creeped out by the idea of human-looking machines taking over your workplace, this guide breaks down everything you need to know about biomimetic AI robots. We'll explore how they work, why companies are building them, what makes them different from traditional robots, and whether we should actually be worried about the uncanny valley effect (spoiler: it's more nuanced than you think).

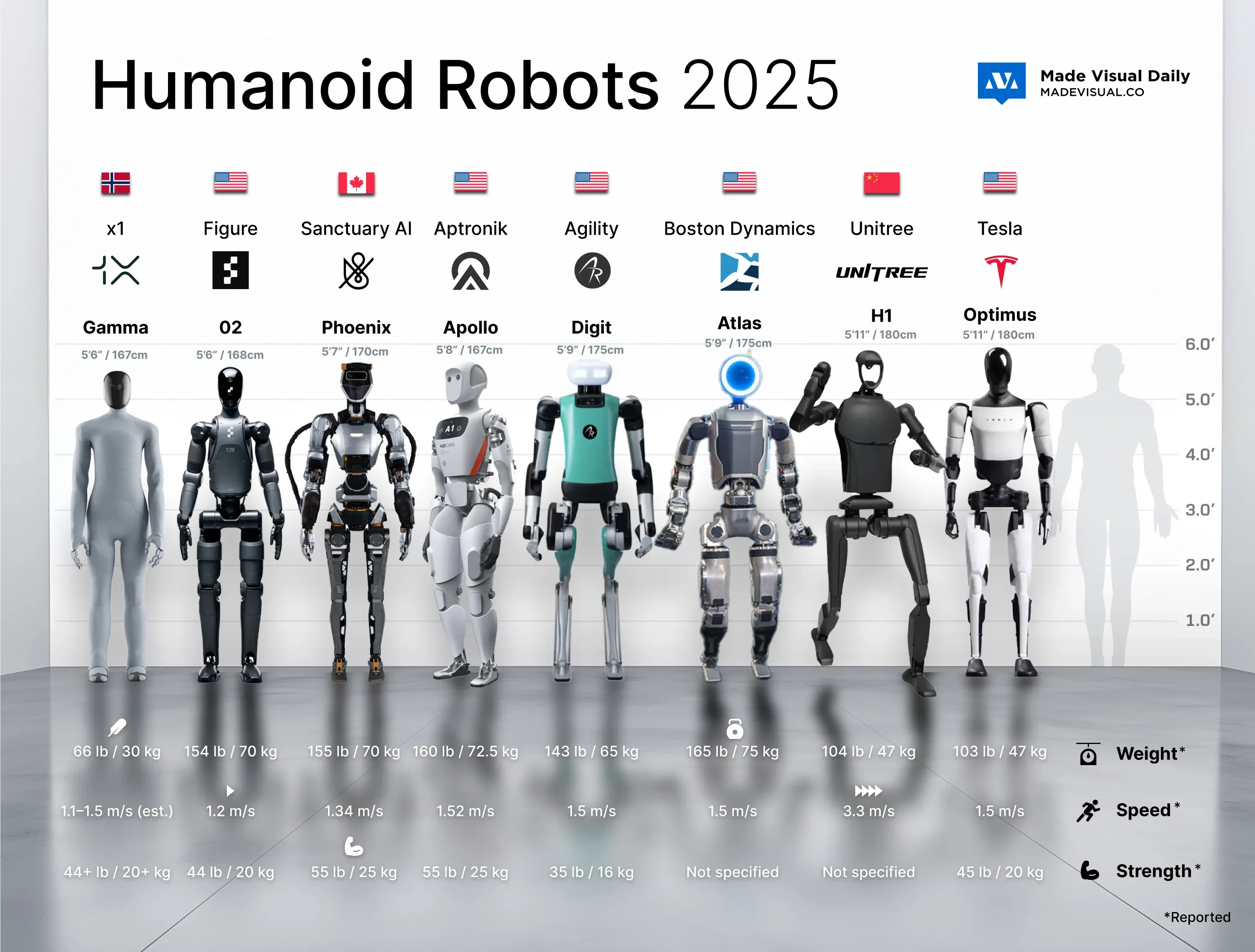

The robotics industry is at an inflection point. Companies like Tesla, Boston Dynamics, and Sanctuary AI are racing to create machines that don't just move like humans - they genuinely interact with humans in ways that feel almost natural. And the implications go way beyond the creepy factor. We're talking about reshaping manufacturing, elder care, customer service, and even friendship itself.

Let's dig into what's actually happening in robotics right now, and why some of the smartest minds in tech think biomimetic design is the future.

TL;DR

- Biomimetic robots use human-like features including warm skin, realistic eyes, and natural movement patterns to interact with humans more intuitively

- The uncanny valley effect is real but less problematic than people think - purpose matters more than appearance

- Major players like Tesla, Boston Dynamics, and Sanctuary AI are racing to perfect humanoid robots with AI integration

- Real applications exist today in manufacturing, healthcare, hospitality, and elder care - not just sci-fi fantasies

- Market projections estimate the humanoid robot market will exceed $30 billion by 2035, with significant deployment in labor-shortage industries

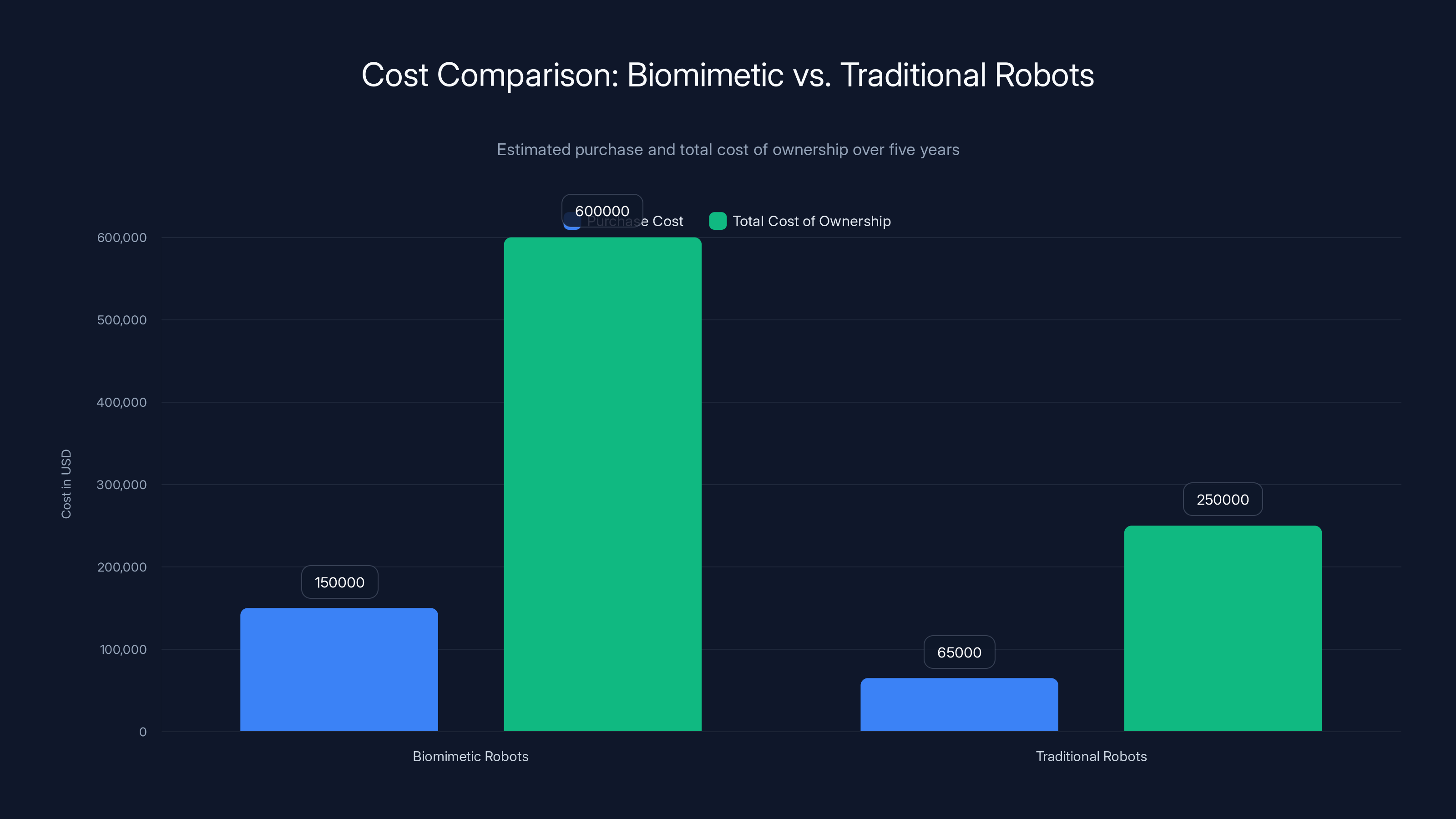

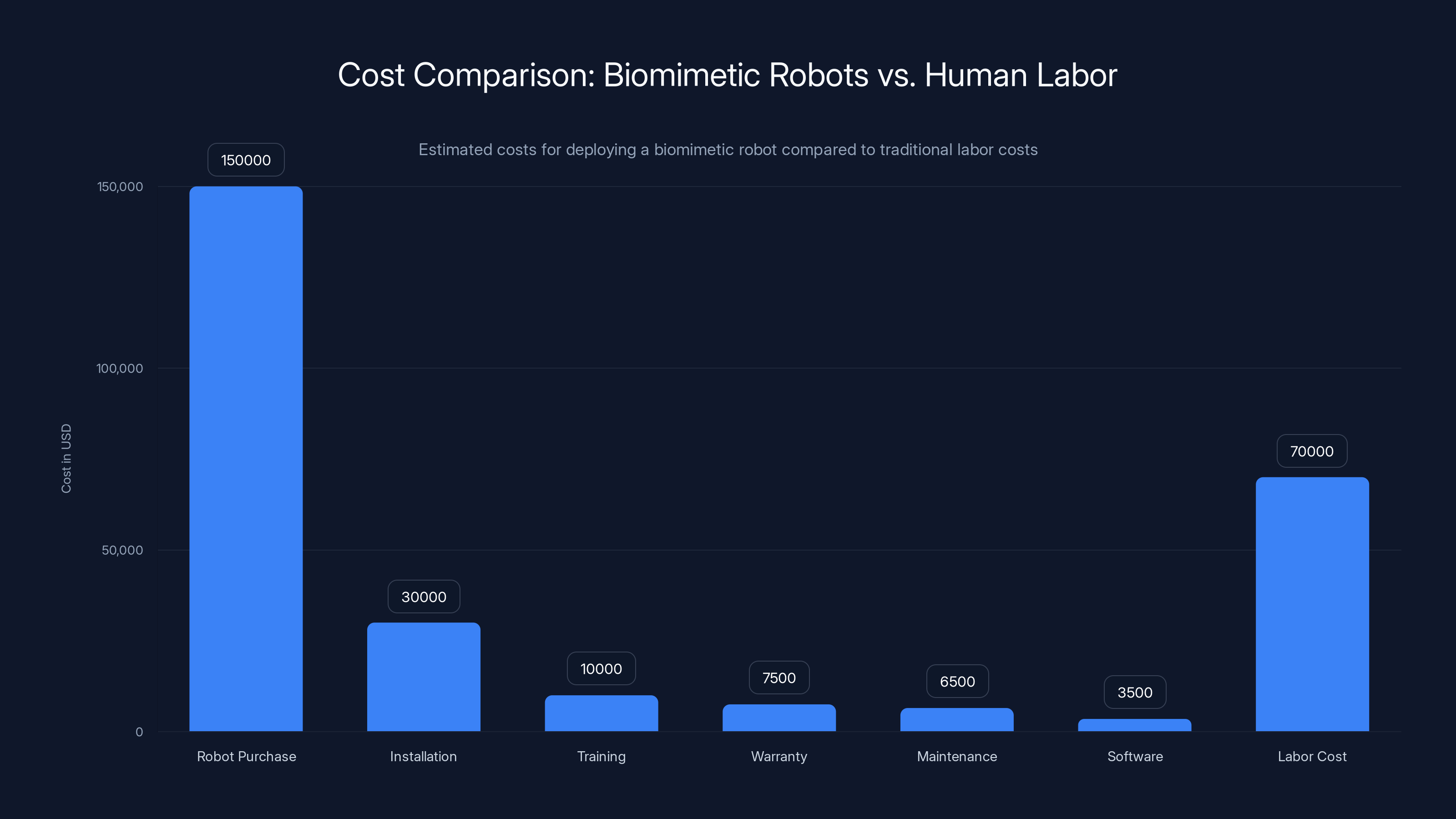

Biomimetic robots have a higher initial purchase cost and total cost of ownership compared to traditional robots, but they offer advanced capabilities that can justify the investment. Estimated data.

What Exactly Is a Biomimetic AI Robot?

Biomimetic means copying nature. In robotics, it means designing machines that look and move like humans.

But here's the thing that separates modern biomimetic robots from your standard humanoid: they're actually intelligent. They combine realistic human features with advanced AI systems that let them adapt, learn, and interact in real-time. That's a critical distinction.

Traditional humanoid robots like Honda's ASIMO or SoftBank's Pepper had some human characteristics, but they were essentially sophisticated puppets. They followed programmed routines. They couldn't truly understand context or adapt to novel situations.

Modern biomimetic robots? Different animal entirely. These machines are equipped with computer vision, natural language processing, reinforcement learning, and sophisticated sensor networks. They don't just look human - they can perceive their environment like humans do, make decisions based on context, and adjust their behavior on the fly.

The physical design matters too. We're talking about:

- Silicone skin that mimics human tissue texture and warmth (some models maintain a skin temperature close to human body temperature, around 98.6°F)

- Realistic facial features with moving eyes that can shift focus and express emotion

- Natural joint movement powered by pneumatic or electric actuators that mimic muscle behavior

- Distributed sensors throughout the body that provide haptic feedback and environmental awareness

- Adaptive posture and gait that shifts based on terrain, load, and interaction context

One robot that exemplifies this is Moya, the biomimetic robot that sparked this whole conversation. Moya features warm skin to the touch, cameras embedded in her eyes that can track and respond to human faces, and a movement system designed to feel natural rather than mechanical. Walk into a room with Moya, and your first instinct isn't "wow, cool robot." Your first instinct is usually a slight sense of wrongness.

And that wrongness is intentional.

The Uncanny Valley Explained: Why Human-Like Robots Feel Wrong

You've probably heard the term before. The uncanny valley is that zone where something looks human enough to be recognizable but not quite right enough to be comfortable. It triggers something primal in your brain that says "something's off."

Imagine a spectrum. On one end, you have clearly robotic machines like industrial arms or even Boston Dynamics' Spot robot. Your brain classifies those as "machine" and moves on. On the other end, you have actual humans. Your brain classifies those as "human" and your social instincts kick in.

But in the middle? That's the uncanny valley. When a robot is 85% human but not quite 100%, your brain gets confused. It sends mixed signals. You're drawn to the human characteristics but repelled by the subtle wrongness. Eye contact becomes uncomfortable. Smiles feel creepy. Movement patterns trigger unease.

The science behind this is actually pretty straightforward. Humans are wired to recognize other humans with incredible precision. We've evolved to spot micro-expressions, subtle gait irregularities, and timing abnormalities in movement. When something mimics these characteristics but doesn't get them exactly right, it triggers an alarm system.

Most people describe the experience as "it looks like it's about to jump at me" or "something about its eyes is wrong." That's not irrational. That's your threat detection system doing exactly what it evolved to do.

Here's what's interesting though: the uncanny valley effect varies dramatically based on context and purpose.

When researchers tested biomimetic robots in hospital settings, nurses and patients reported significantly less discomfort when they understood the robot's purpose (providing care, assisting with tasks). The uncanny feeling didn't disappear, but it became manageable and even irrelevant when the robot was actually useful.

In contrast, robots designed purely to look human without serving a specific function triggered much stronger negative reactions. The same robot, essentially, but with different context created completely different emotional responses.

This matters because it suggests the uncanny valley effect isn't some fundamental, unchangeable truth about human psychology. It's context-dependent. And it's probably going to become less relevant as biomimetic robots become more common in our daily lives.

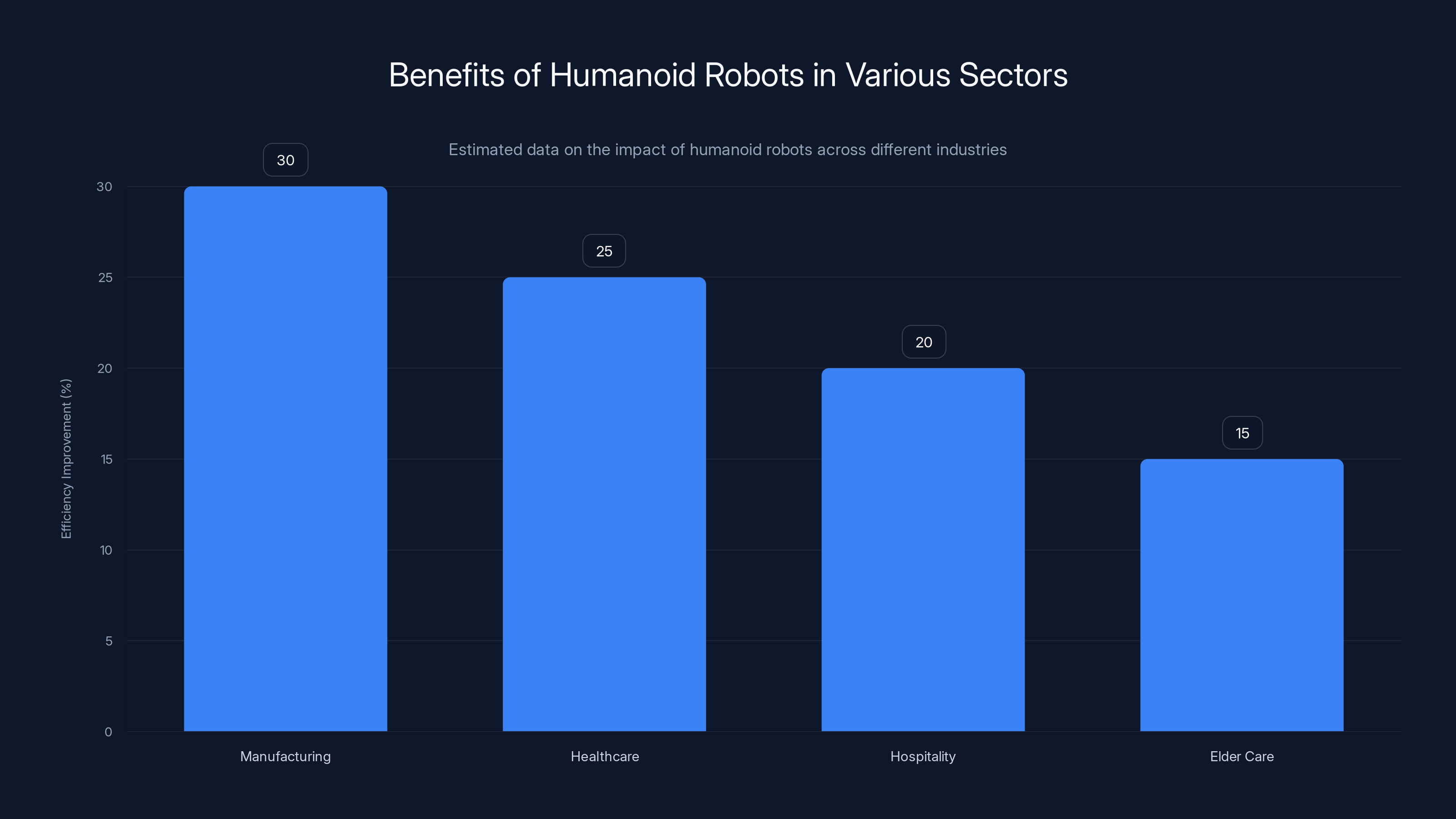

Humanoid robots can significantly improve efficiency in manufacturing (30%) and healthcare (25%), with notable benefits in hospitality and elder care as well. Estimated data.

Why Companies Are Actually Building These Things

You might be wondering: if robots this human-like make people uncomfortable, why build them at all? Why not just stick with clearly robotic designs that don't trigger the uncanny valley at all?

The answer is efficiency and interaction.

Humanoid robots can work in environments designed for humans. They can use human tools. They can navigate human spaces. They can interact with humans intuitively without extensive training. That's enormous.

Consider a manufacturing plant. If you want a robot to work on an assembly line, you can design a specialized robotic arm that's incredibly efficient at that specific task. Great. But if that robot breaks down or the product specs change, you need engineers to reprogram it, often requiring expensive downtime.

Now imagine a humanoid robot. It can learn new tasks through demonstration. A human shows it how to assemble something, and the robot learns and adapts. When the product changes, you show it the new process. It's more flexible, less specialized, and potentially more cost-effective over time.
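To make "learning through demonstration" a little more concrete, here's a toy sketch. It is not how any particular vendor's robot works. It's the simplest version of the idea I can think of (nearest-neighbor imitation), and the state tuples, action labels, and the function name `make_imitation_policy` are all made up for illustration.

```python
import math


def make_imitation_policy(demonstrations):
    """Build a policy from (state, action) pairs recorded while a human demonstrates.

    The policy simply copies the action taken in the most similar recorded state.
    """
    if not demonstrations:
        raise ValueError("need at least one demonstration")

    def policy(state):
        # Find the demonstration whose recorded state is closest to the current one.
        _, action = min(demonstrations, key=lambda demo: math.dist(demo[0], state))
        return action

    return policy


# Hypothetical demonstration data: (gripper_x, gripper_y) -> action label
demos = [((0.0, 0.0), "grasp_part"), ((1.0, 1.0), "place_part")]
policy = make_imitation_policy(demos)
print(policy((0.1, 0.2)))  # the nearest demonstrated state is (0.0, 0.0)
```

When the product changes, you'd record new demonstrations and rebuild the policy. Real systems swap the lookup for a learned model that generalizes far better, but the workflow (show, record, imitate) is the same shape.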

More importantly, humanoid robots can work in customer-facing roles. Hospitality, healthcare, elder care - these industries have massive labor shortages and high turnover. A robot that can engage naturally with humans could fill these gaps.

The economics are compelling. Japan has an aging workforce and incredibly low immigration. Building humanoid robots isn't just a tech flex for companies like Sony or Toyota - it's a potential solution to an existential labor problem.

Tesla's Optimus robot, for instance, is being developed specifically for manufacturing and service tasks where human-like dexterity matters. Boston Dynamics' Atlas can navigate obstacles and manipulate objects in ways that purely specialized robots can't. These capabilities come directly from the humanoid design.

There's also the data angle. A humanoid robot with cameras in its eyes and sophisticated vision systems is essentially a mobile data collector. In industrial settings, it can monitor quality, identify problems, and flag inefficiencies. In retail settings, it can track customer behavior, inventory status, and store layout optimization.

Add AI to this mix, and you've got systems that genuinely learn and improve over time. That's something you can't achieve with fixed-purpose robots.

The Technical Architecture: How These Robots Actually Work

Building a biomimetic robot is absurdly complex. Let's break down the key systems.

Physical Structure and Movement

Start with the body. Modern biomimetic robots typically use:

- Skeletal framework made from aluminum alloys or carbon fiber for strength-to-weight ratio

- Joint systems powered by pneumatic actuators (air-driven) or electric motors with precision gearboxes

- Synthetic skin made from medical-grade silicone that can be both soft and durable

- Weight distribution systems that mimic human balance and allow bipedal walking on uneven terrain

The challenge isn't building a robot that can walk upright - we've had that for decades. The challenge is making it move naturally. Human movement is incredibly efficient and complex. We don't think about it because it's automatic, but achieving that same efficiency in a machine requires sophisticated algorithms.

Most advanced robots use what's called "dynamic balance control," where the system constantly adjusts leg position and center of gravity to maintain stability. Some models incorporate reinforcement learning algorithms that let them optimize their gait over time, actually improving their walking efficiency as they practice.
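Here's a rough sketch of what "constantly adjusting to maintain stability" can look like in code. I'm assuming the textbook linear inverted pendulum model, which is a common simplification and not necessarily what any robot mentioned here runs. The gains, the 0.9 m center-of-mass height, and the function name `simulate_balance` are illustrative assumptions.

```python
G = 9.81  # gravitational acceleration, m/s^2


def simulate_balance(x0, v0, height=0.9, kp=20.0, kd=9.0, dt=0.005, steps=1000):
    """Simulate a 1-D linear inverted pendulum under feedback control.

    x is the horizontal offset of the center of mass (m), v its velocity (m/s).
    Model: x'' = (G / height) * (x - p), where p is the center of pressure
    that the controller shifts under the foot.
    """
    x, v = x0, v0
    omega_sq = G / height
    for _ in range(steps):
        # Pick p so the closed loop behaves like a damped spring:
        # x'' = -kp * x - kd * v. (Assumption: p is unconstrained; a real
        # controller must keep it inside the foot's support area.)
        p = x + (kp * x + kd * v) / omega_sq
        accel = omega_sq * (x - p)
        v += accel * dt
        x += v * dt
    return x, v


# Start 5 cm off balance and let the controller recover for 5 simulated seconds.
final_x, final_v = simulate_balance(x0=0.05, v0=0.0)
print(f"offset after recovery: {final_x:.6f} m")
```

The reinforcement learning mentioned above would sit on top of something like this, tuning gains or foot placement over many trials instead of using hand-picked values.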

Vision and Environmental Awareness

The eyes aren't just for looks. Modern biomimetic robots typically have:

- Multiple camera systems (RGB cameras for color vision, infrared for temperature sensing, depth cameras for 3D mapping)

- Eye-tracking mechanisms that let the robot look at specific objects or people

- Real-time processing that identifies objects, faces, gestures, and emotional expressions

- Integration with AI models that can classify environments and predict human intentions

These systems run on local processors (for speed and privacy) combined with cloud connectivity for more complex reasoning. The embedded cameras in Moya's eyes, for example, can track human facial expressions and adjust the robot's own expression in response.

AI and Decision-Making Systems

Here's where it gets really interesting. Modern biomimetic robots don't just execute pre-programmed routines. They use:

- Transformer-based language models that let them understand and respond to natural language commands

- Computer vision models trained on millions of images to recognize objects, people, and situations

- Reinforcement learning systems that improve behavior through trial and error

- Contextual reasoning that lets them understand social cues and adapt behavior accordingly

Think of the AI stack as having multiple layers. The lowest layer handles immediate sensory processing - what do I see, what do I feel, what's the immediate threat level? The middle layer handles decision-making - given this situation, what action should I take? The highest layer handles social and contextual reasoning - this human looks angry, I should pause and ask if something's wrong.

Integrating these layers smoothly is where most of the engineering complexity comes in. You need systems that can communicate across layers, prioritize conflicting information, and respond quickly enough to feel natural.
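One way to picture the layering is a priority-ordered arbitration loop. This is a minimal sketch under my own assumptions: the layer names, the 0.3 m threshold, and the action strings are hypothetical, and a safety-first ordering is just one reasonable design choice, not a description of any shipping robot.

```python
from dataclasses import dataclass


@dataclass
class Percept:
    obstacle_distance_m: float  # from depth sensing
    human_expression: str       # e.g. "neutral" or "angry", from a vision model


def reflex_layer(p):
    # Lowest layer: immediate threat check.
    return "stop" if p.obstacle_distance_m < 0.3 else None


def social_layer(p):
    # Highest layer: react to social cues.
    return "pause_and_ask" if p.human_expression == "angry" else None


def task_layer(p):
    # Middle layer: default decision when nothing else objects.
    return "continue_task"


# Layers are consulted in priority order; the first one with an opinion wins.
LAYERS = [reflex_layer, social_layer, task_layer]


def decide(percept):
    for layer in LAYERS:
        action = layer(percept)
        if action is not None:
            return action
    return "idle"


print(decide(Percept(obstacle_distance_m=2.0, human_expression="angry")))
```

Putting the reflex check first means a safety stop always beats a social nicety, which is one simple answer to the "prioritize conflicting information" problem.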

Haptic Feedback and Touch

Touching a robot and having it feel natural is surprisingly hard. Modern systems use:

- Pressure sensors distributed across the robot's skin that detect touch intensity and location

- Temperature control systems that maintain body warmth (many models maintain 98.6°F)

- Responsive movement that adjusts based on touch intensity (gentle touch gets gentle response, firm touch gets acknowledgment)

- Safety systems that prevent injury if the robot comes into contact with humans

This matters because touch is how humans signal comfort, agreement, and emotional connection. A robot that doesn't respond appropriately to touch feels wrong in a way that's hard to articulate.
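The touch behaviors listed above boil down to mapping a pressure reading to a response, with a safety cutoff on top. Here's a minimal sketch. The thresholds (in kPa), the response names, and the function `touch_response` are placeholders I chose for illustration, not values from any real robot.

```python
def touch_response(pressure_kpa, gentle_max=5.0, firm_max=40.0):
    """Map a skin pressure reading to a response category.

    Thresholds are illustrative assumptions, not measured values.
    """
    if pressure_kpa < 0:
        raise ValueError("pressure cannot be negative")
    if pressure_kpa == 0:
        return "none"
    if pressure_kpa <= gentle_max:
        return "gentle_response"   # gentle touch gets a gentle response
    if pressure_kpa <= firm_max:
        return "acknowledge"       # firm touch gets acknowledgment
    return "safety_stop"           # anything harder halts motion


for reading in (0.0, 2.5, 20.0, 80.0):
    print(reading, touch_response(reading))
```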

Current Biomimetic Robots: What's Actually Out There Now

Let's look at what exists today. Not vaporware, not concept renders. Actual robots that are functioning, being tested, or deployed.

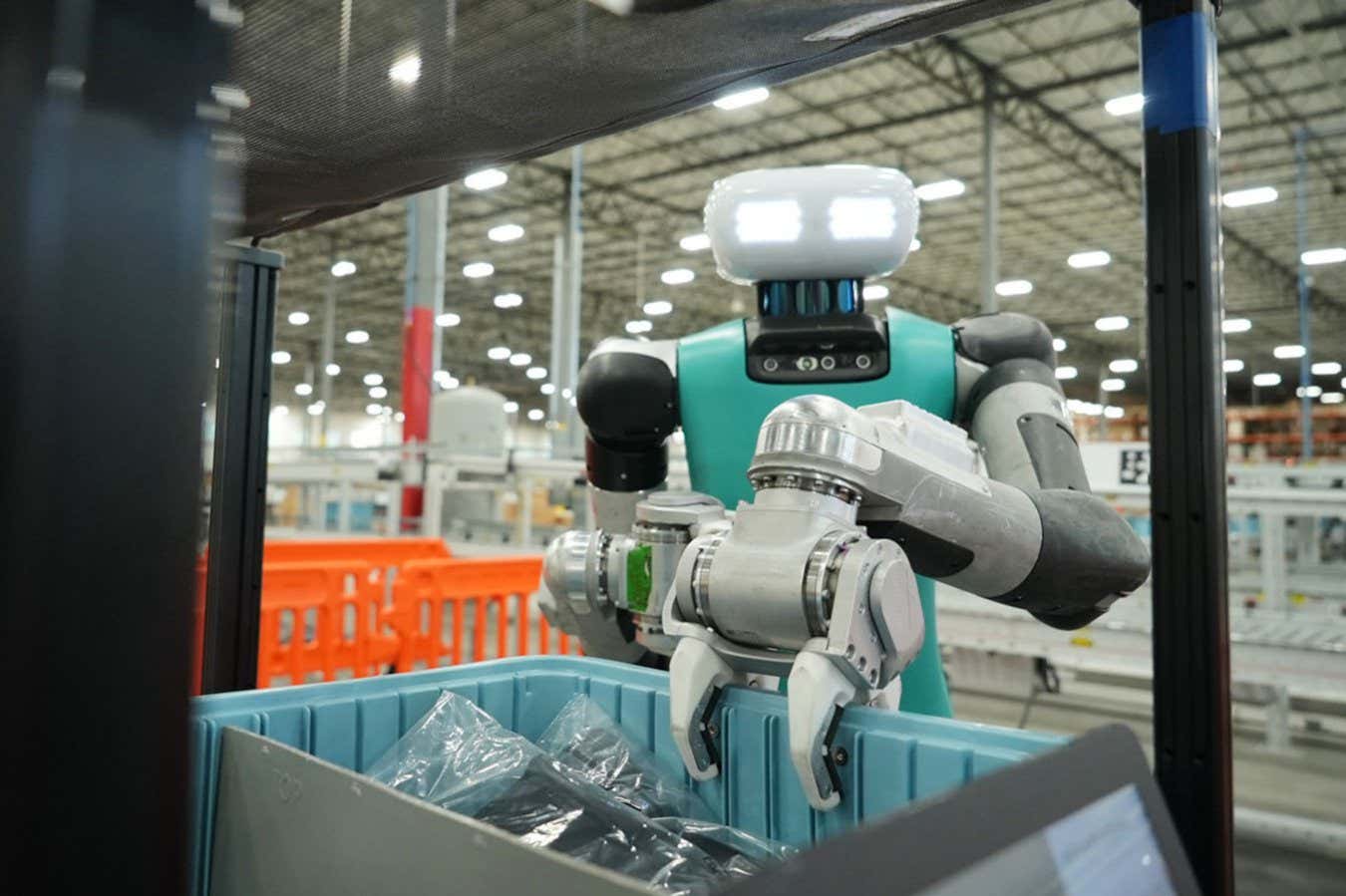

Sanctuary AI's Phoenix

Sanctuary AI released Phoenix, a humanoid robot designed for warehouse and logistics work. It stands about 5'6", has a human-like upper body, and can perform tasks like sorting, picking, and packing. What makes Phoenix interesting is the focus on dexterity - it has hands designed to grip and manipulate objects like humans do, not specialized grippers.

The AI component focuses on learning from human demonstration. Show Phoenix how to do a task once or twice, and it generalizes to similar tasks. Early deployments suggest significant productivity gains in warehouse environments.

Boston Dynamics' Atlas

Boston Dynamics has shifted Atlas toward commercial deployment after years of impressive demonstrations. The latest versions can navigate complex terrain, manipulate objects with precision, and adapt to environmental changes in real-time.

What's notable is that Boston Dynamics is partnering with actual companies, including its parent company, Hyundai, to put these robots in real-world environments. They're not perfect, but they're functional enough that businesses are willing to invest time and money in integration.

Tesla's Optimus

Tesla has been quieter about its robotics progress than its EV work, but the Optimus (originally announced as Tesla Bot) project is progressing steadily. The focus is explicitly on manufacturing automation, but with a secondary goal of general-purpose tasks.

Tesla's approach is interesting because they're using their expertise in AI and manufacturing to build robots optimized for factory environments. The design is distinctly humanoid but not trying to be creepy - it's more functional than biomimetic in appearance.

Moya and Other Research Prototypes

Moya represents a different approach - maximizing human-likeness specifically to study interaction patterns. With warm skin and embedded eye cameras, Moya is pushing the boundaries of what biomimetic means.

Other research institutions (MIT, UC Berkeley, ETH Zurich) are building prototypes focused on specific challenges: bipedal balance, object manipulation, environmental adaptation, or social interaction.

What's important to understand is that we're in a fragmented phase. Different organizations are optimizing for different goals. Some prioritize dexterity, some prioritize human interaction, some prioritize cost-effectiveness. This is actually healthy - it means the field is exploring multiple directions before settling on dominant designs.

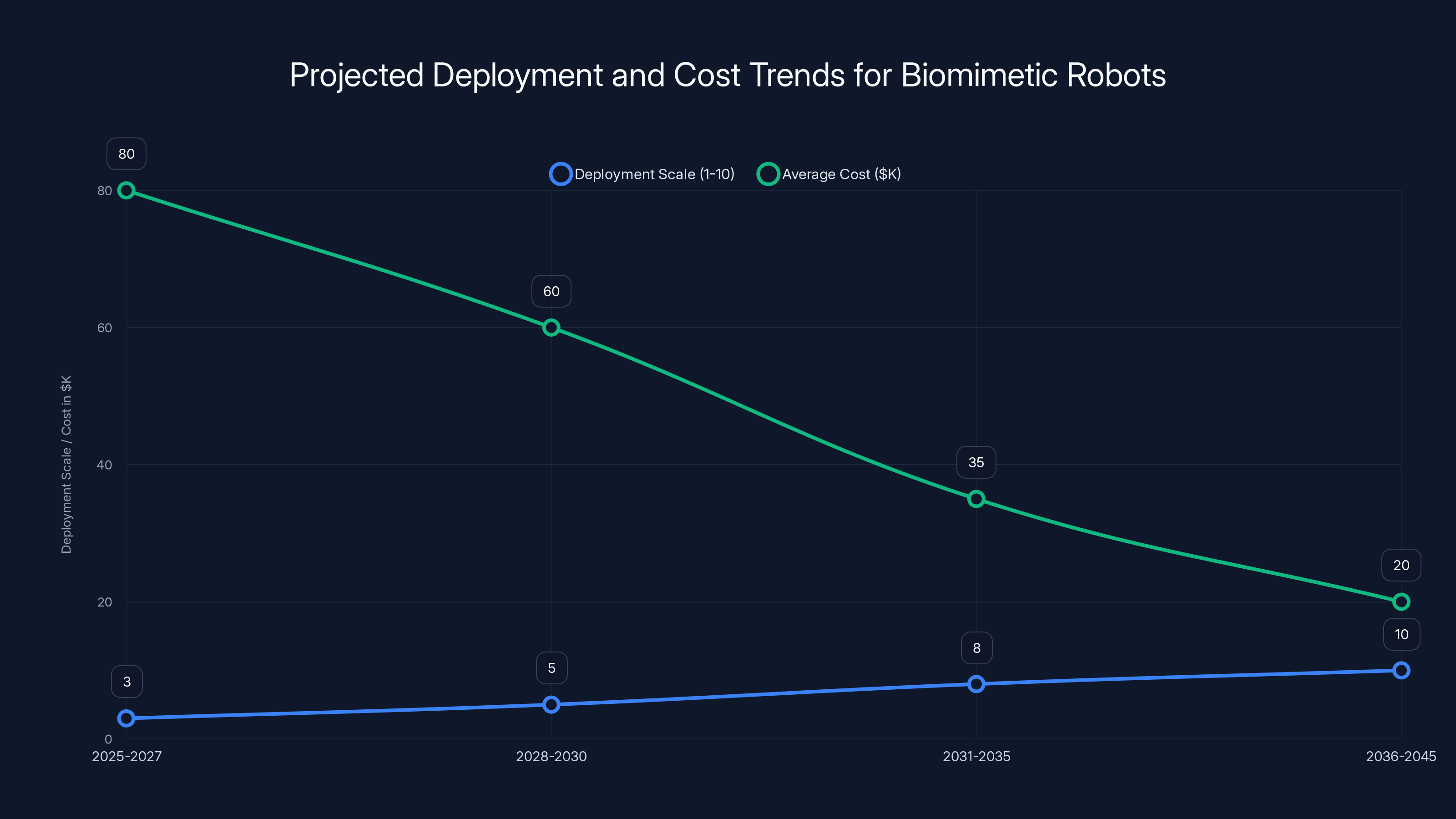

Biomimetic robots are expected to become more widely deployed and less costly over the next two decades, with significant mainstream adoption by 2031-2035. (Estimated data)

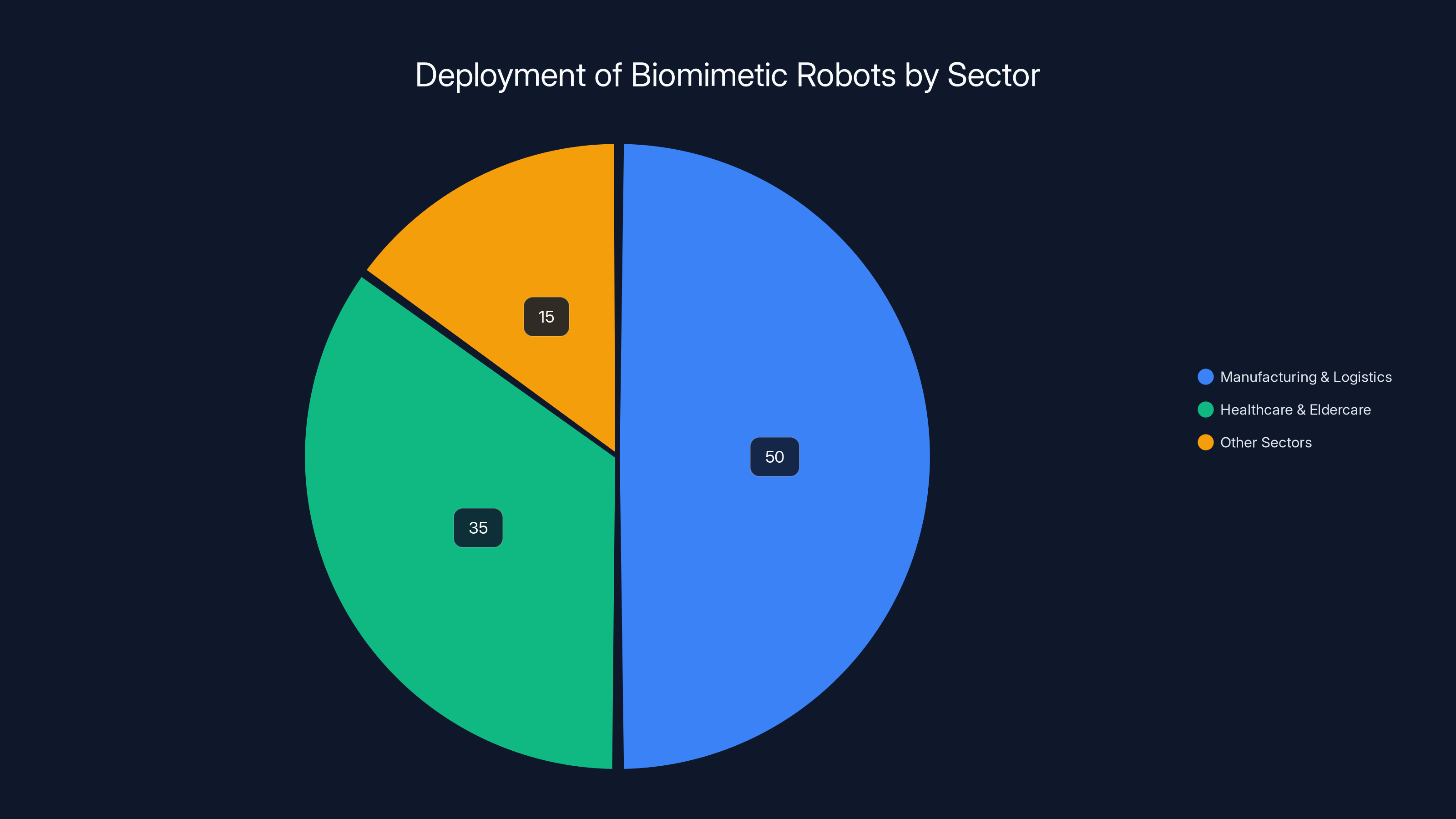

Where Biomimetic Robots Are Actually Being Deployed

Forget sci-fi fantasies for a moment. Where are these robots actually working right now?

Manufacturing and Logistics

This is the biggest opportunity. Factories and warehouses have massive labor shortages, repetitive tasks, and environments already instrumented with safety systems. Humanoid robots fit naturally into this space.

We're seeing initial deployments in:

- Electronics manufacturing where precision and dexterity matter

- Automotive assembly for tasks that are physically demanding or unsafe

- Warehouse automation for sorting, picking, and packaging

- Quality control where vision systems identify defects

The economics are straightforward. A humanoid robot costs

Healthcare and Eldercare

Aging populations create massive demand. Japan and several European countries are exploring biomimetic robots for elder care: companionship, physical assistance, monitoring, medication reminders.

Here's what's interesting: older adults often respond better to humanoid robots than younger people do. The uncanny valley effect seems less pronounced (or possibly they're less bothered by it). And the psychological benefit of interaction with something that appears alive seems genuine.

Hospitals are testing robots for:

- Patient transport and mobility assistance

- Medication delivery and tracking

- Disinfection in sterile environments

- Monitoring and alerts for high-risk patients

- Companion functions for isolated or dementia patients

The human touch element matters here. A cold robotic arm handing someone medication feels impersonal. A humanoid robot doing the same action feels more natural, even if intellectually you know it's still a machine.

Hospitality and Customer Service

Restaurants, hotels, and retail spaces are early adopters because labor shortages are acute and customer expectations are flexible. People seem to find it novel to interact with humanoid robots in these contexts.

Applications include:

- Servers in restaurants and cafes

- Concierges in hotels

- Shelf stockers in retail

- Tour guides in museums and attractions

- Receptionists in medical and corporate offices

The interesting thing is that companies using these robots report that customers are more entertained than disturbed. Novelty wears off quickly (after a few weeks, people treat the robot as just another staff member), but the initial engagement drives foot traffic.

Research and Development

Universities and tech companies use biomimetic robots as testbeds for AI, robotics, human-robot interaction, and biomechanics research. These aren't commercial deployments, but the insights feed back into product development.

The Competition: Major Players in the Biomimetic Robot Space

This is becoming a crowded field. Here's who's seriously investing:

Traditional Robotics Companies

Boston Dynamics (acquired by Hyundai in 2021) has shifted from research spectacle to commercial deployment. Their focus on dexterity and environmental navigation is unmatched. They're partnering with actual companies, which signals serious commercialization.

ABB, KUKA, and Fanuc are traditional industrial robotics companies slowly moving into humanoid space. They have manufacturing expertise and customer relationships, but they're not leading on AI integration.

Automotive and Tech Giants

Tesla is unique because it's developing robotics alongside AI expertise. The vertical integration of manufacturing knowledge, AI capability, and capital gives Tesla advantages that pure robotics companies don't have.

Hyundai (through Boston Dynamics ownership and its own robotics division) is betting heavily on this market, likely driven by Korean labor economics.

Toyota and other Japanese manufacturers are investing in humanoid robotics, again driven by domestic labor market pressures.

Startups and Specialized Companies

Sanctuary AI is focused on dexterous manipulation and learning from human demonstration. They're less flashy than Boston Dynamics but potentially more practically focused.

Figure AI (backed by OpenAI and others) is building bipedal robots specifically for manufacturing. Their recent demonstrations show significant progress in object manipulation and navigation.

Unitree Robotics has developed surprisingly capable quadrupedal and humanoid robots at much lower price points than Western competitors. Their approach suggests that biomimetic robotics might be more cost-effective than people assume.

Academic and Government Programs

Universities and government research institutions (DARPA funding especially) continue pushing the boundaries. The U.S. and China are both investing heavily in robotics research, treating it as strategically important.

The Economic Case: When Does Deploying a Biomimetic Robot Make Sense?

Here's the practical question: should your organization invest in biomimetic robots?

The math is actually pretty straightforward. Calculate the total cost of ownership for a robot versus the labor cost you're replacing.

Capital costs (one-time):

- Robot purchase: $200K

- Installation and integration: $50K

- Training and setup: $20K

Operating costs (recurring):

- Warranty and insurance: $10K per year

- Maintenance and repairs: $10K per year

- Software updates and licensing: $5K per year

Labor replacement:

- Average manufacturing worker salary: $60K per year

- Benefits and overhead: $20K per year

- Total labor cost: $80K per year

Basic math: A

But there are complexity multipliers:

Advantages that increase ROI:

- Availability (robots don't take vacation, call in sick, or quit)

- Consistency (robots don't have bad days or quality variations)

- Safety (robots handle hazardous tasks)

- Adaptability (robots can learn new tasks)

- Scalability (once one robot is optimized, deploying 10 more is incremental)

Disadvantages that decrease ROI:

- Setup complexity (integrating a robot into existing workflows takes time)

- Limited capability (robots still can't handle every situation)

- Maintenance (when robots break, fixing them is expensive)

- Regulatory friction (some industries have strict robot regulations)

- Social friction (workers and customers may resist)

For a manufacturing facility with high repetition, clear workflows, and stable labor costs, the ROI is compelling. For a dynamic environment with constantly changing tasks, the ROI is questionable.
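If you want to run the numbers yourself, here's a rough payback sketch using the figures listed earlier in this section. I'm treating them as thousands of dollars, counting purchase, integration, and training as one-time costs, and counting the per-year items as recurring costs. It also assumes one robot replaces exactly one worker unless you say otherwise. Those are my simplifications, so adjust them for your situation.

```python
def payback_years(upfront, annual_operating, annual_labor_saved):
    """Years until cumulative net savings cover the upfront cost."""
    net_annual_savings = annual_labor_saved - annual_operating
    if net_annual_savings <= 0:
        return float("inf")  # the robot never pays for itself
    return upfront / net_annual_savings


# Figures from the lists above, in thousands.
upfront = 200 + 50 + 20          # purchase + integration + training
annual_operating = 10 + 10 + 5   # warranty/insurance + maintenance + software
labor_per_worker = 60 + 20       # salary + benefits and overhead

one_worker = payback_years(upfront, annual_operating, labor_per_worker)
two_workers = payback_years(upfront, annual_operating, 2 * labor_per_worker)
print(f"replacing one worker:  {one_worker:.1f} years")   # 4.9 years
print(f"replacing two workers: {two_workers:.1f} years")  # 2.0 years
```

The gap between those two results is the whole story: the case gets much stronger when one robot covers more than one worker's worth of labor, for example by running across multiple shifts.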

Deploying a biomimetic robot incurs significant upfront costs but can lead to savings over time by replacing labor costs. Estimated data based on typical values.

Challenges and Limitations: What Biomimetic Robots Still Struggle With

Let's be honest about the limitations. Biomimetic robots are impressive but far from perfect.

Dexterity and Fine Motor Control

Human hands have 27 degrees of freedom and thousands of tactile receptors. Robot hands typically have 5-10 degrees of freedom with basic pressure sensors. Tasks that require delicate touch or complex manipulation are still difficult.

This is improving - OpenAI's work on robotic hands has shown significant progress - but we're still behind human capability for anything requiring genuine finesse.

Generalization and Learning

Robots trained in one environment often struggle in slightly different environments. A robot trained to pick objects from a specific bin layout might fail if the layout changes slightly. Transfer learning is getting better, but it's still a limitation.

Human learning is incredibly efficient - we see something done once and can adapt it to new contexts. Robot learning requires hundreds or thousands of repetitions.

Cost and Manufacturing Complexity

Making robots affordable at scale is still an open problem. Current biomimetic robots cost

Manufacturing costs are dropping (like everything else in tech), but we're probably years away from $10K humanoid robots, which is what you'd need for truly ubiquitous deployment.

Reliability and Maintenance

Robots break. And when they do, repairs are expensive and require specialist expertise. A manufacturing robot with 40 years of maturity has reliable parts and widespread service expertise. A 2-year-old biomimetic robot? You might be dealing with the manufacturer's support directly.

Safety and Liability

What happens when a robot injures someone? The liability chain is unclear. Is it the manufacturer? The operator? The programmer? These questions are still being worked out in courts and regulatory bodies.

This is creating friction in deployment. Insurance companies are nervous. Legal departments are cautious. This slows adoption more than technology limitations do.

The Uncanny Valley Revisited: Should We Actually Worry About This?

Here's my honest take: the uncanny valley is real, but it's way less important than people think.

Yes, seeing Moya for the first time is uncomfortable. Yes, most humans experience a moment of "what the hell is that?" when encountering a sufficiently human-like robot.

But that discomfort fades remarkably quickly. After about 10 minutes of interaction, people stop thinking about whether the robot is creepy and start focusing on what it's doing. Context matters more than appearance.

My prediction: the uncanny valley becomes irrelevant over the next 5-10 years not because we stop noticing it, but because we stop caring about it. As biomimetic robots become more common, as people see them in their workplaces and public spaces, the novelty wears off. They become just another tool.

There's also an opposite effect starting to happen: as robots become more capable and more useful, they become less uncanny. A robot that helps you with something challenging feels less weird than a robot that just sits there looking human.

The real risk isn't the uncanny valley. It's that biomimetic robots become so useful and so commonplace that we stop thinking critically about the implications.

Ethical Considerations: The Harder Questions

Beyond the creepy factor, there are legitimate ethical concerns:

Labor and Displacement

Robots replace workers. That's the entire economic case. What happens to the people whose jobs disappear? Retraining programs? Universal basic income? Society is poorly prepared for this transition.

The historical precedent is mixed. Automation has always created net job growth... eventually. But that "eventually" can be decades, and it doesn't help the people displaced in the meantime.

Anthropomorphism and Emotional Attachment

If robots look and act human, people develop emotional attachments to them. That's not necessarily bad - it could improve elder care if people bond with care robots. But it could also be exploitative if companies manipulate emotional attachment for profit.

Surveillance and Privacy

A biomimetic robot with embedded cameras is basically a mobile surveillance device. In a workplace, that raises questions about worker monitoring. In a home, it raises questions about family privacy.

Regulation is lagging here. We need clear rules about when and where these robots can be deployed, and what data they can collect.

Dependency and Human Skill Atrophy

If robots do everything, what happens to human skills? If nobody needs to do manual work, what does that mean for human development and capability?

This is a longer-term concern, but it's worth thinking about.

Biomimetic robots are primarily deployed in manufacturing and logistics (50%), followed by healthcare and eldercare (35%), with other sectors making up the remaining 15%. Estimated data.

The Future of Biomimetic Robots: Where This Is Heading

Let me make some predictions based on current trends:

2025-2027: Specialization and Niche Deployment

We'll see biomimetic robots increasingly deployed in specific high-ROI contexts: manufacturing, logistics, certain healthcare settings. Deployment will still be limited to companies with scale and capital to absorb the complexity.

Cost will decrease modestly (maybe 15-20%), but robotics companies will prioritize capability improvements over cost reduction.

2028-2030: Market Consolidation

The market will consolidate around 3-5 dominant players (likely Boston Dynamics, Tesla, and 2-3 others). Smaller competitors will either be acquired or pivot to specialized niches.

Standardization will begin emerging around interfaces, software, and integration patterns. This sounds boring, but it's actually the critical step that makes adoption scalable.

2031-2035: Mainstream Deployment

By this timeframe, biomimetic robots become genuinely commonplace in manufacturing and logistics. Costs will have dropped to

We'll start seeing secondary-market deployment: restaurants, retail, hospitality. Public acceptance will be higher because people will have grown up seeing these robots.

AI integration will be seamless. Robots will learn from each other across organizations. Federated learning systems will allow robots to improve based on collective experience.
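For a sense of what "learning from each other" could mean mechanically, here's a sketch of the core step in federated averaging: each site trains locally, then only model weights (not raw data) are combined, weighted by how much data each site saw. This is the standard textbook idea, not a description of any deployed robot fleet, and the weight vectors and sample counts below are made up.

```python
def federated_average(client_weights, client_sizes):
    """Combine per-site model weights into one shared model.

    client_weights: one weight vector (list of floats) per site.
    client_sizes:   number of training samples each site used.
    """
    if not client_weights or len(client_weights) != len(client_sizes):
        raise ValueError("need one sample count per weight vector")
    total = sum(client_sizes)
    dim = len(client_weights[0])
    # Each coordinate is a sample-weighted mean across sites.
    return [
        sum(w[i] * n for w, n in zip(client_weights, client_sizes)) / total
        for i in range(dim)
    ]


# Two hypothetical factories: the second contributed three times as much data.
shared = federated_average([[1.0, 2.0], [3.0, 4.0]], [1, 3])
print(shared)
```

The appeal for cross-organization learning is that raw camera footage and sensor logs never leave the site. Only the averaged parameters are shared.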

2036-2045: Generalization

This is longer-term speculation, but if trends continue, robots could eventually handle most human-level physical tasks. We're probably 15+ years from truly general-purpose humanoid robots, but the trajectory is clear.

At that point, the labor market questions become unavoidable. We'll need serious policy responses around job displacement, retraining, income distribution.

Preparing Your Organization for Biomimetic Robots

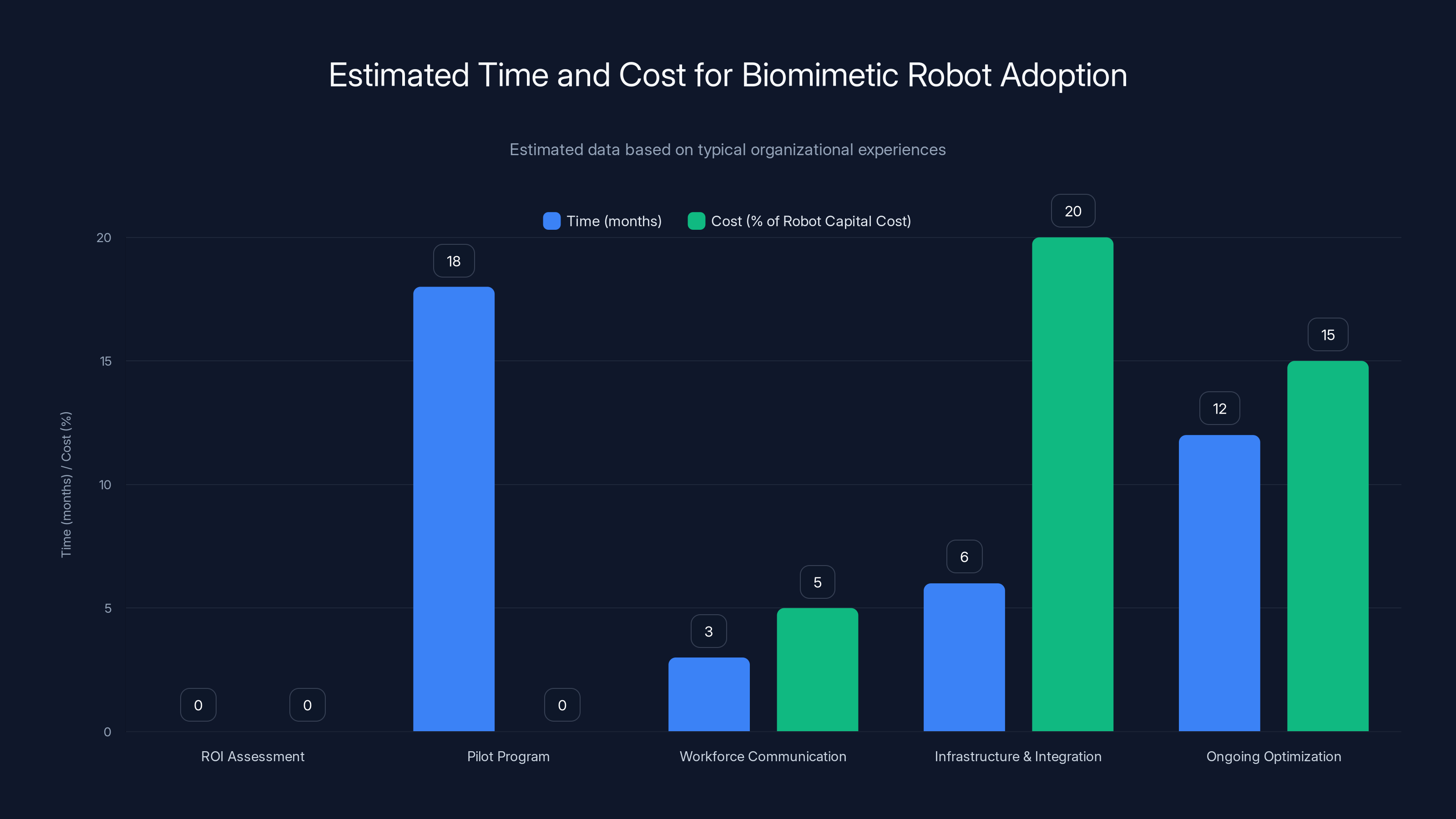

If you lead an organization that might benefit from robotics, here's how to think about adoption:

Step 1: Honest ROI Assessment

Don't romanticize robots. Calculate actual labor costs, setup complexity, and maintenance requirements. Be brutally honest about whether the math works.

A good rule: if a robot can't achieve a 2-year payback period, it's not ready for your organization yet.

Step 2: Pilot Program

Don't deploy at scale immediately. Start with one robot in one workflow. Learn what works and what doesn't. Document everything.

Most organizations report that pilots take 6-12 months longer than expected. Build that into your timeline.

Step 3: Workforce Communication

Talk to your workers before deploying. Be honest about what robots will do. Invest in retraining for roles that will change. This reduces resistance and improves adoption.

Workers aren't inherently opposed to robots. They're opposed to job loss. Show them the robot creates new roles or improves existing ones, and resistance drops significantly.

Step 4: Infrastructure and Integration

Robots need integration with your existing systems. Manufacturing execution systems, inventory management, quality control. The robot is part of a larger system.

Budget for integration expertise. This is often more expensive than the robot itself.

Step 5: Ongoing Optimization

After deployment, robots don't maintain themselves. You need dedicated people managing robots, handling maintenance, dealing with failures.

Most organizations underestimate this. Budget 10-15% of robot capital cost annually for operation and maintenance.

Comparing Biomimetic Robots to Traditional Automation

Here's how biomimetic robots compare to established automation approaches:

| Factor | Biomimetic Robots | Traditional Industrial Robots | Cobots | Manual Labor |
|---|---|---|---|---|
| Initial Cost | | | | ~$0 |
| Setup Time | 3-6 months | 1-3 months | 1-2 weeks | Immediate |
| Flexibility | High | Medium | High | Very High |
| Per-Task Cost | Medium-Low | Very Low | Medium | Medium-High |
| Environment | Human-designed | Specialized | Human-designed | Human-designed |
| Learning Capability | Yes | Limited | Limited | Yes |
| Maintenance | Complex | Established | Simple | N/A |
| Human Interaction | Natural | Minimal | Collaborative | Direct |
| Payback Period | 2-4 years | 1-2 years | 2-3 years | N/A |

The key insight: biomimetic robots aren't better than all other approaches. They're better for tasks requiring flexibility, learning, and human-like dexterity. They're worse for repetitive, specialized tasks where traditional robots excel.

Chart caption: organizations typically spend 6-18 months on pilot programs and should budget 10-15% of the robot's cost annually for ongoing optimization (estimated data).

Real-World Case Studies: Where Biomimetic Robots Are Actually Working

Let's look at actual deployments:

Case Study 1: Electronics Manufacturing

A mid-sized electronics manufacturer in Singapore deployed three humanoid robots (from a company similar to Sanctuary AI) for assembly of circuit boards. The robots handled soldering, component placement, and quality inspection.

Results:

- 23% improvement in assembly speed (robots work 24/7 without breaks)

- 12% improvement in quality metrics (fewer defects per unit)

- 8% reduction in overall labor costs (not full replacement - humans handle complex tasks)

- 6-month payback period on robot investment

- Initial 3-month setup period where productivity was below baseline

Critical success factor: management invested heavily in worker retraining rather than layoffs. Workers transitioned to robot oversight and complex assembly tasks. This created organizational buy-in.

Case Study 2: Senior Living Facility

A Japanese elder care facility deployed two humanoid companion robots (similar to Moya) alongside human staff for dementia patients.

Results:

- Significant increase in measured social engagement

- Reduced anxiety and agitation in dementia patients

- Improvement in staff ability to handle crises (robot handles routine care, staff focuses on complex needs)

- Slightly higher overall costs (robots are supplementary, not replacement)

- Unexpected benefit: family visitors found the robots novel and visited more frequently

Critical success factor: Clear positioning as complementary to human care, not replacement. Families understood robots couldn't replace human caregivers but could improve patient outcomes.

Case Study 3: Warehouse Automation

A logistics company deployed 12 biomimetic robots in a packaging warehouse handling e-commerce fulfillment.

Results:

- 35% faster order fulfillment

- 18% reduction in order errors (robots don't get tired)

- 15% reduction in worker injuries (robots handle heavy lifting)

- 4-year payback period (longer than manufacturing case)

- Sustained implementation issues with changing product SKUs

Critical success factor: Ongoing investment in robot training for new tasks. The initial deployment handled only standard packages. As the team developed expertise, the robots handled increasingly complex scenarios.

The Skills Gap: What You Need to Actually Deploy These Robots

Here's what most organizations miss: robots require completely different operational expertise than traditional manufacturing.

Technical Skills Needed

- Robotics technicians who understand both hardware and software and can troubleshoot problems

- Machine learning engineers who can train and retrain models as environments change

- Systems integrators who can connect robots to existing manufacturing systems

- Data engineers who can manage the enormous amount of data robots generate

- Robot programmers who understand both robot-specific languages and general programming

Organizational Skills Needed

- Change management - this isn't just technology adoption, it's organizational transformation

- Process redesign - workflows need to be optimized around robots

- Workforce development - people need retraining for new roles

- Risk management - handling robot failures and unexpected scenarios

- Supply chain coordination - making sure robot-enabled facilities align with the rest of the organization

Most organizations dramatically underestimate the human skills required. The technology is maybe 40% of the challenge. The organizational change is 60%.

Regulatory Landscape: What Laws Apply to Biomimetic Robots?

This is still a gray area. Here's what we know:

Manufacturing and Workplace Safety

OSHA (in the U.S.) and equivalent bodies in other countries are developing safety standards for collaborative robots. These standards cover:

- Maximum force/pressure robots can exert

- Safety zones and proximity requirements

- Emergency stop requirements

- Human-robot interaction protocols

Biomimetic robots that work alongside humans fall into these categories, and compliance is increasingly mandatory.

Healthcare and Eldercare

Robots in healthcare face stricter regulation because human safety is at stake. The FDA (in the U.S.) and equivalent bodies are developing guidelines for medical robots.

Key questions still being settled:

- Who's responsible if a robot causes injury?

- What testing and certification are required?

- How do you handle liability in autonomous systems?

Data Privacy

Robots with cameras and sensors collecting data face privacy regulations (GDPR in Europe, state laws in the U.S., etc.). Organizations deploying robots must ensure:

- Clear data collection policies

- Consent from people being recorded

- Data security and retention limits

- Right to deletion and access
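Two of the requirements above, consent and retention limits, can be enforced mechanically. The sketch below is one hypothetical way to flag robot camera recordings for deletion. The `Recording` fields, the function name, and the 30-day window are all assumptions for illustration. Privacy regulations like GDPR don't prescribe a single retention period, so the right value depends on your stated purpose and legal advice.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass
class Recording:
    recording_id: str       # hypothetical identifier
    captured_at: datetime   # when the robot captured the footage
    has_consent: bool       # whether the recorded person consented


def recordings_to_delete(recordings, retention_days, now=None):
    """Return recordings that lack consent or are older than the window."""
    now = now or datetime.now(timezone.utc)
    cutoff = now - timedelta(days=retention_days)
    return [
        r for r in recordings
        if not r.has_consent or r.captured_at < cutoff
    ]


# Illustrative data with a fixed "now" so the result is reproducible.
now = datetime(2025, 6, 1, tzinfo=timezone.utc)
recordings = [
    Recording("a", now - timedelta(days=5), has_consent=True),    # keep
    Recording("b", now - timedelta(days=45), has_consent=True),   # too old
    Recording("c", now - timedelta(days=1), has_consent=False),   # no consent
]
expired = recordings_to_delete(recordings, retention_days=30, now=now)
print([r.recording_id for r in expired])
```

A real system would also need audit logging and a path for access and deletion requests. This only covers the purge decision.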

Labor Law

There are emerging questions about robot workers and labor rights. Some countries are considering "robot taxes" to fund retraining and social support. In a 2017 resolution, the European Parliament asked the European Commission to consider an "electronic persons" legal status for the most sophisticated autonomous robots, though that idea has not become law.

This is all still developing, but the trajectory is clear: governments will regulate robots significantly.

The Psychological Impact: How Humans Actually Respond

Beyond the uncanny valley, how do people really feel about biomimetic robots?

Research shows a complex picture:

Initial Response:

- Novelty and curiosity dominate the first few minutes

- Uncanny valley effect is real but milder than expected

- People quickly shift focus to what the robot is doing rather than how it looks

After Extended Interaction:

- People develop mental models of robots as "tools with personality"

- Emotional attachment can form, especially with service robots

- Cognitive dissonance (knowing it's a machine but treating it as semi-alive) becomes normal

- People become surprisingly protective of robots they work with

In Commercial Settings:

- Customers find robot servers/employees novel and entertaining

- But novelty wears off in weeks - people treat robots matter-of-factly after initial exposure

- Workers show surprising acceptance if robots are framed as teammates rather than replacements

- Safety concerns are higher than they probably should be - people are cautious around robots even when safety systems are comprehensive

In Healthcare/Eldercare:

- Older adults show higher acceptance than younger people

- Emotional attachment to care robots seems genuine and potentially beneficial

- Some concerns about dehumanization if robots replace human contact entirely

- Best outcomes when robots supplement rather than replace human care

The pattern across all studies: humans are incredibly adaptable. We adjust our mental models and emotional responses based on context and repeated exposure. The uncanny valley effect is real but not the limiting factor most people think it is.

Conclusion: We're Living in the Transition

Here's what's actually happening: we're in the middle of a transition from robots being specialized tools to robots being general-purpose collaborators.

The biomimetic approach - making robots that look and move like humans - isn't just about appearance. It's about unlocking flexibility, capability, and ease of integration that specialized robots can't achieve.

Are biomimetic robots creepy? Yes, absolutely. Moya walking into a room creates a visceral reaction. That discomfort serves a purpose - it makes you think about what you're looking at.

But creepiness shouldn't drive your decision-making. Utility should. If a biomimetic robot solves a real problem - a labor shortage, dangerous work, a need for flexibility or human interaction - then the creepy factor is worth it.

The uncanny valley is real, but it's not an insurmountable barrier. It's a transition zone between "obviously a machine" and "genuinely alive." We'll spend some time in that zone, but we'll move through it.

My prediction for the next decade: biomimetic robots become commonplace in specific domains (manufacturing, logistics, healthcare) while remaining novelties elsewhere. They don't become humanoid servants doing everything - that's still science fiction. But they become important enough that organizations ignoring them lose competitive advantage.

The real risks aren't technological. They're social. How do we handle labor displacement? How do we ensure robot benefits are distributed broadly? How do we maintain human skills and purpose as robots handle more tasks?

Those questions matter more than whether Moya makes you uncomfortable.

If you're considering robotics for your organization, start with basics: calculate your ROI, identify your actual needs, assess your readiness to manage the implementation complexity. The robots are ready. The question is whether you are.

The future of work will involve robots. Specifically, humanoid robots that look unsettling and work alongside humans. That's not dystopian fiction anymore. That's emerging reality.

The only question is whether you'll adapt first or react later.

FAQ

What is a biomimetic AI robot?

A biomimetic AI robot is a machine designed to mimic human physical characteristics (warm skin, facial features, movement patterns) while incorporating advanced artificial intelligence for learning and adaptation. Unlike traditional specialized robots, biomimetic robots can work flexibly in human-designed environments and interact naturally with people through cameras in eyes, touch-responsive skin, and AI-powered decision-making systems.

How does the uncanny valley affect robot acceptance?

The uncanny valley effect is real - human-like robots that aren't quite perfect trigger discomfort in people because our brains expect biological responses we don't receive. However, research shows this discomfort diminishes rapidly with exposure and is heavily influenced by context. When robots serve clear purposes (manufacturing, healthcare), the uncanny feeling becomes secondary to perceived utility, making deployment feasible despite initial discomfort.

What are the main applications for biomimetic robots right now?

Current real-world deployments focus on manufacturing, warehouse logistics, healthcare, and eldercare. Manufacturing sees the strongest ROI with 2-4 year payback periods. Healthcare applications emphasize complementary human support rather than replacement. Hospitality and retail deployments exist but often serve as novelty attractions rather than core labor solutions.

How much do biomimetic robots cost compared to traditional automation?

Biomimetic robots typically cost

What skills do organizations need to successfully deploy biomimetic robots?

Successful deployment requires technical expertise (robotics technicians, machine learning engineers, systems integrators) combined with organizational capabilities (change management, workforce development, process redesign). Most organizations underestimate the non-technical challenges - the human and organizational aspects typically represent 60% of implementation complexity versus 40% for pure technology.

Are there safety concerns with biomimetic robots working alongside humans?

Biomimetic robots working in shared spaces require comprehensive safety systems including force-limiting controls, proximity sensors, and emergency stop mechanisms. Regulatory frameworks are still developing, but OSHA and international bodies are establishing standards for collaborative robots. Current deployments demonstrate that safety concerns can be managed, though liability frameworks remain legally unclear in some jurisdictions.

How quickly will biomimetic robots replace human workers?

Replacement is happening gradually in specific domains (manufacturing, logistics) where ROI is clear, but forecasts suggesting rapid widespread displacement are probably overstated. Historical precedent shows automation creates net job growth eventually, though it creates painful transitions. More likely scenario: biomimetic robots displace specific roles while creating new ones in robot management, maintenance, and human-robot collaboration.

What's the timeline for biomimetic robots becoming mainstream?

Most industry analysts project that humanoid robots become common in manufacturing and logistics by 2030-2035 as costs decline and capability improves. Mainstream adoption in service industries (hospitality, retail) likely follows 5-10 years later. General-purpose robots capable of handling most human-level physical tasks remain 15+ years away, if they're feasible at all within current technological paradigms.

Key Takeaways

- Biomimetic robots integrate realistic human features (warm skin, embedded eye cameras, natural movement) with advanced AI for flexible human-like interaction in real-world environments

- The uncanny valley effect is measurable but context-dependent - discomfort diminishes rapidly with exposure when robots serve clear purposes rather than existing purely for appearance

- Current real-world deployments focus on manufacturing and logistics where 2-4 year ROI is achievable, with healthcare and hospitality growing secondary markets

- Organizations deploying biomimetic robots face greater organizational challenges (change management, workforce retraining) than technical ones - successful implementation requires both capability and culture shift

- Market projections show humanoid robotics growing to $30 billion by 2035, driven by labor shortages, increasing capability, and declining costs as the market consolidates around dominant players
