Building AI Culture in Enterprise: From Adoption to Scale

You've probably watched your organization pour millions into AI tools, only to find employees hesitant to use them. That's not a software problem. It's a culture problem.

The gap between AI investment and actual adoption is massive. Companies are spending heavily on the technology, but something crucial is missing: the human side. Employees worry about job security. They don't understand how AI fits into their daily work. They see flashy demos but can't connect them to the problems they actually face. Meanwhile, your ROI projections stay on spreadsheets instead of becoming reality.

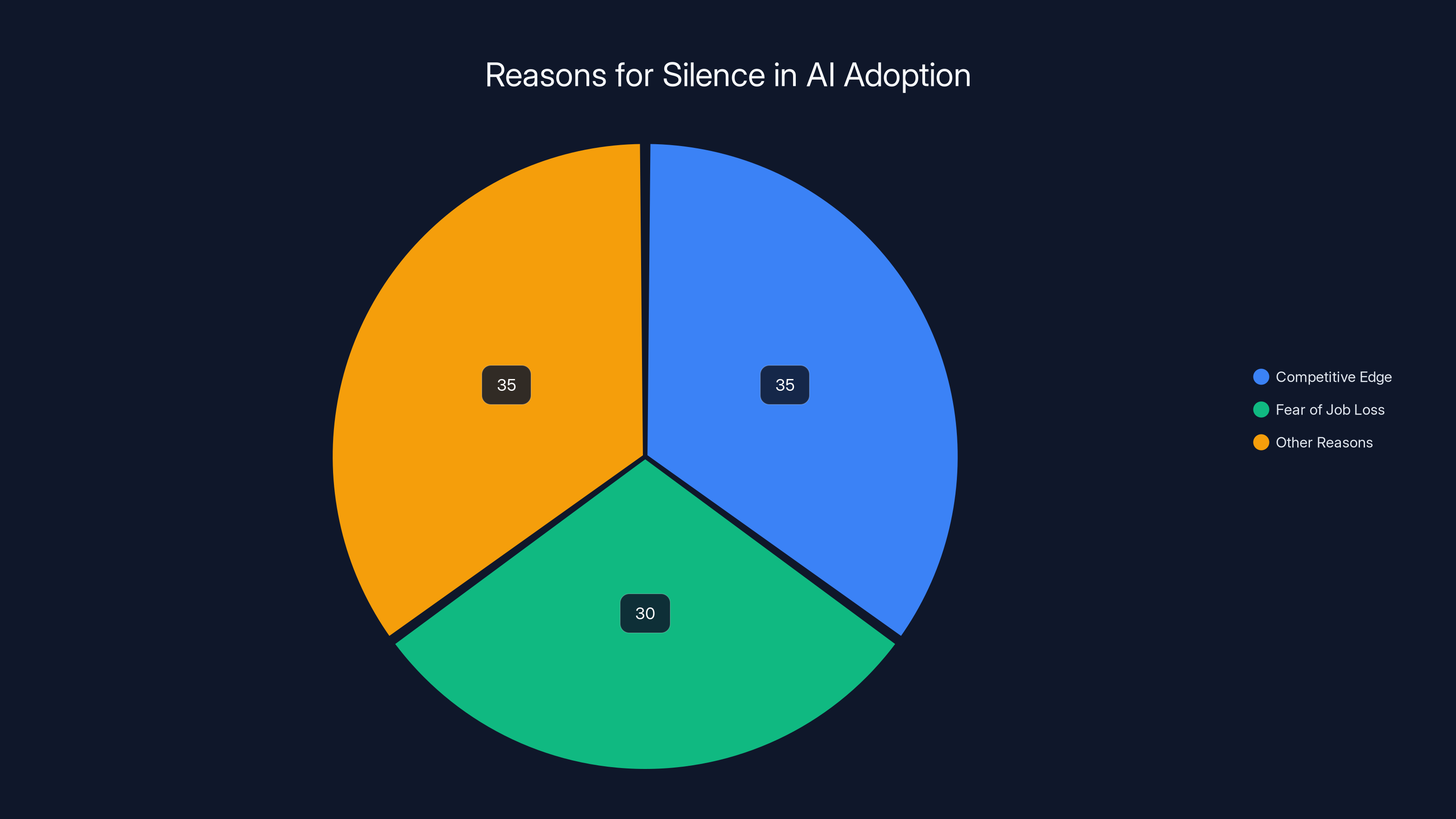

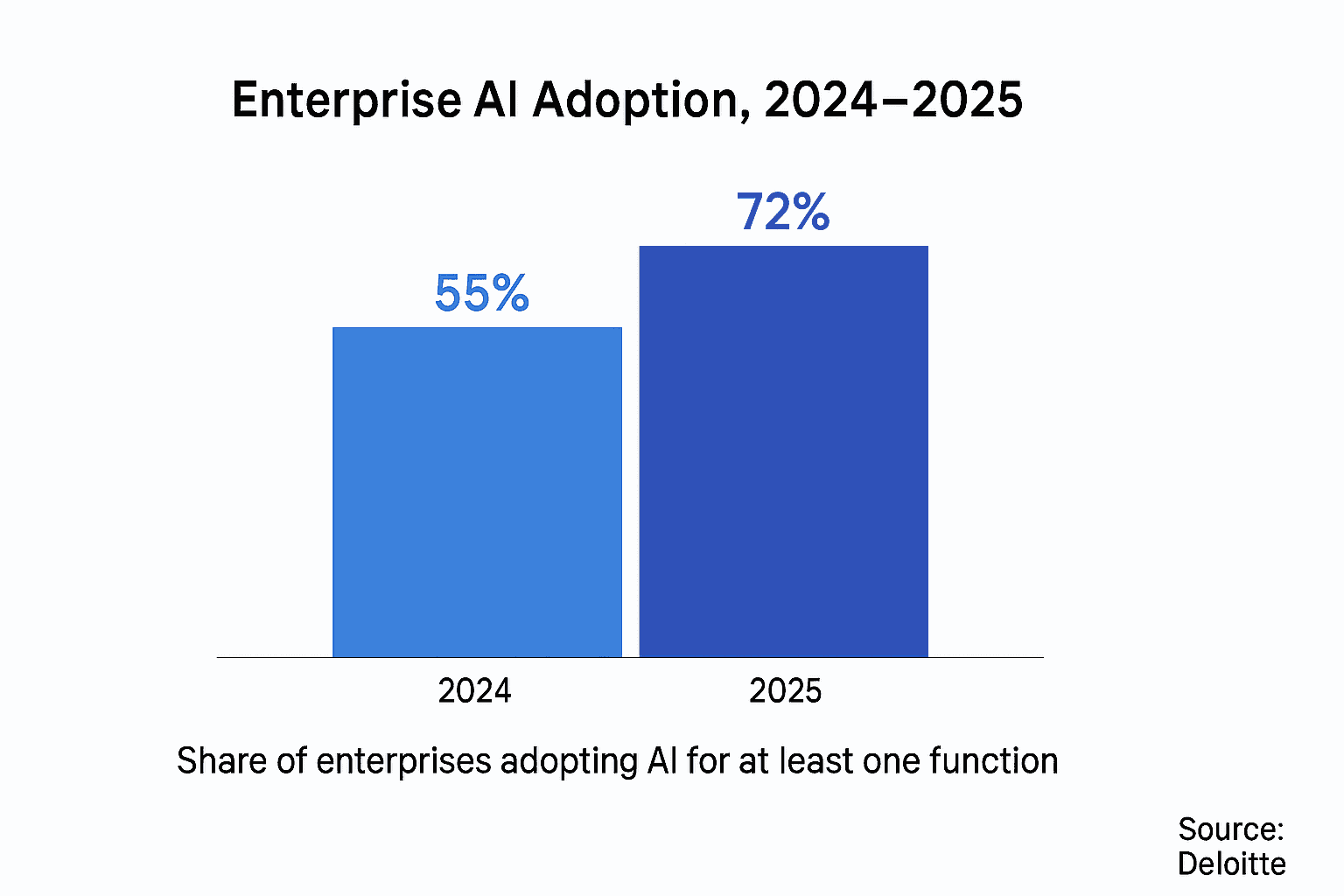

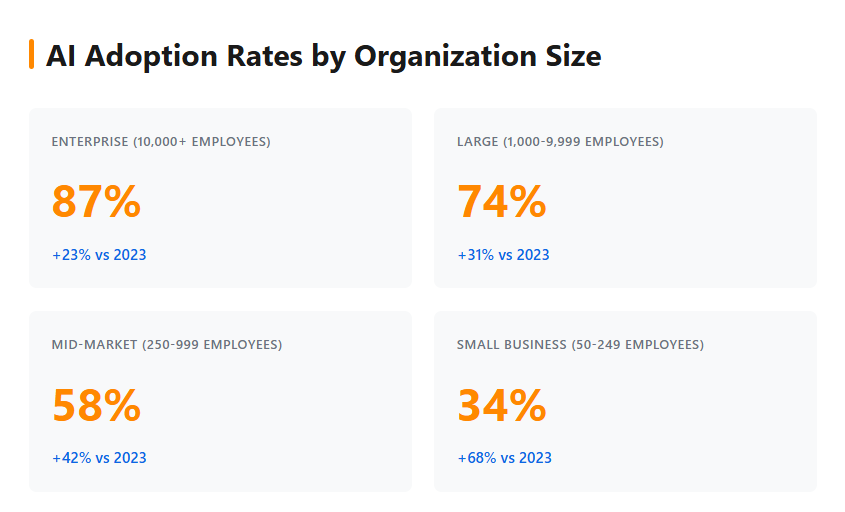

Here's what's happening right now in enterprise organizations: nearly 90% of companies plan to increase AI budgets this year. Yet according to research from Ivanti, over a third of workers keep their AI experiments secret to gain a competitive edge, while 30% stay completely quiet because they fear their roles could be eliminated. That hesitation directly limits the productivity gains your organization should be getting from these investments.

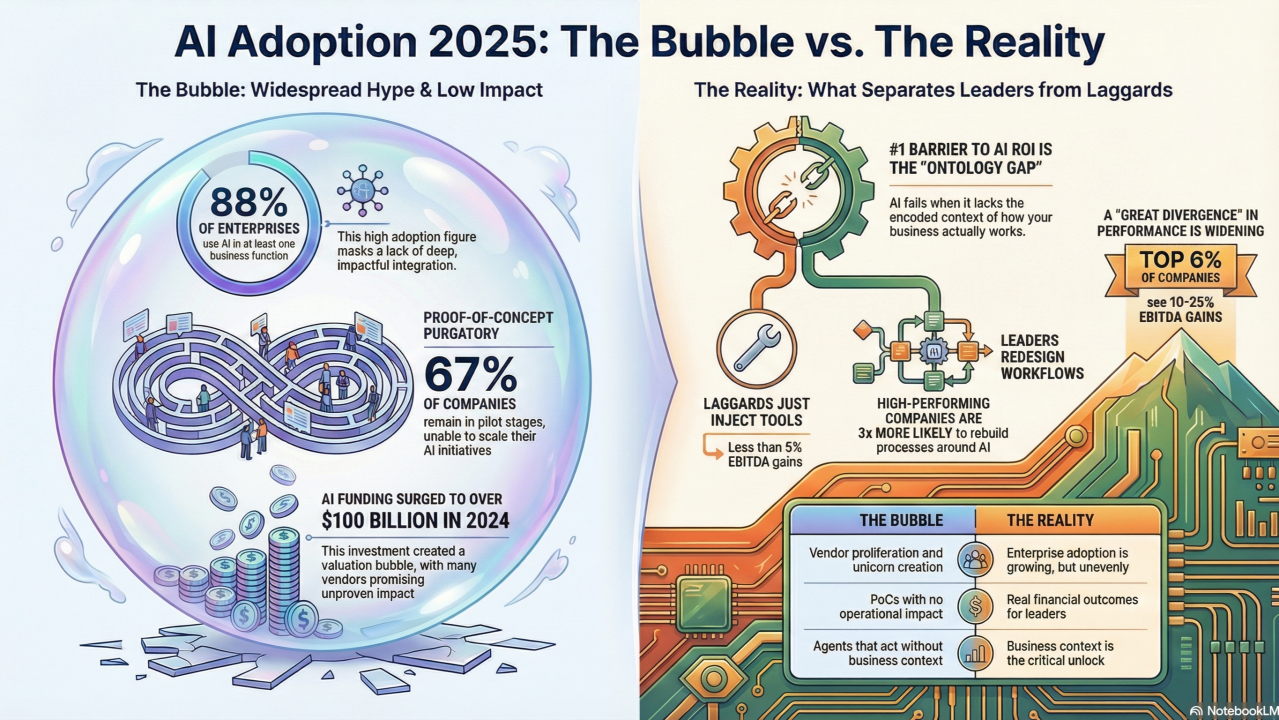

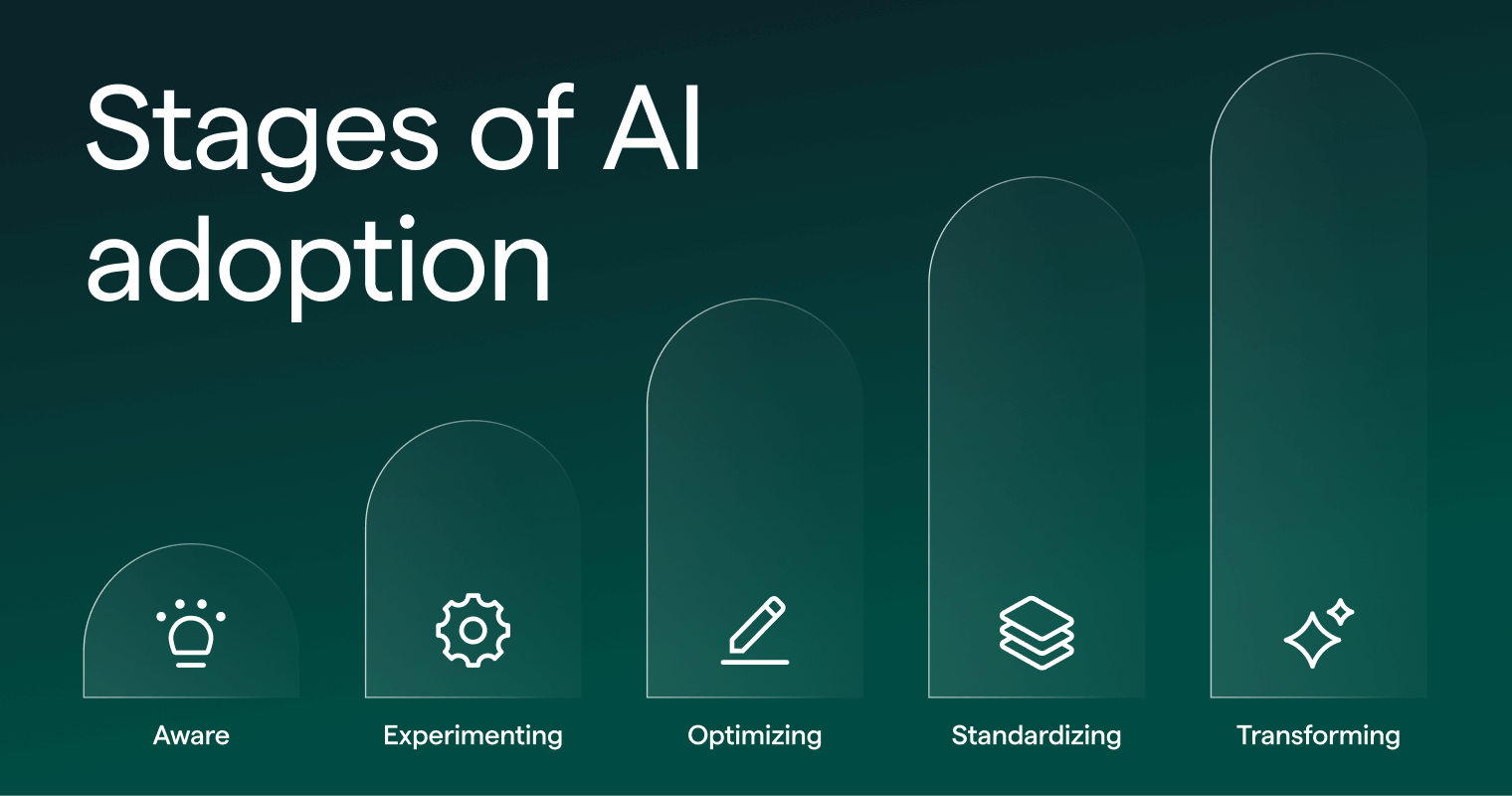

The real problem isn't the AI itself. It's the organizational structure around adoption. Companies are treating AI like a software rollout, when it actually requires a fundamental shift in how teams work, how leaders communicate, and how employees see their roles evolving. The companies that are winning aren't the ones with the fanciest models or the biggest budgets. They're the ones building AI culture.

Building that culture starts with a simple shift: moving from "AI curiosity" to "AI as normal." This means creating safe spaces where employees can experiment without fear. It means leaders openly sharing both wins and failures. It means measuring success beyond speed, including collaboration quality, decision-making improvement, and team capability development.

This guide walks you through the exact strategies that are working right now. You'll learn how to address employee concerns directly, how to measure AI ROI in ways that matter, how to scale trust alongside technology, and how to position your organization for sustainable AI transformation. The companies getting real value from AI aren't waiting for perfect conditions. They're building culture while they build capability.

TL;DR

- Culture beats technology: 36% of workers hide their AI use, and 30% stay quiet due to job security fears—cultural barriers matter more than tool quality.

- Safe experimentation drives adoption: Leading firms that apply AI systematically across workflows report roughly 37% productivity gains versus peers, but getting there depends on safe spaces for AI pilots, paired with training and leadership modeling.

- Most AI pilots fail due to strategy, not capability: MIT research shows only 5% of integrated AI pilots deliver significant value—the gap isn't in the tool, it's in organizational approach.

- Trust scales ROI: Embedding governance, transparency, and employee buy-in from day one determines whether AI investments reach scale or stay isolated.

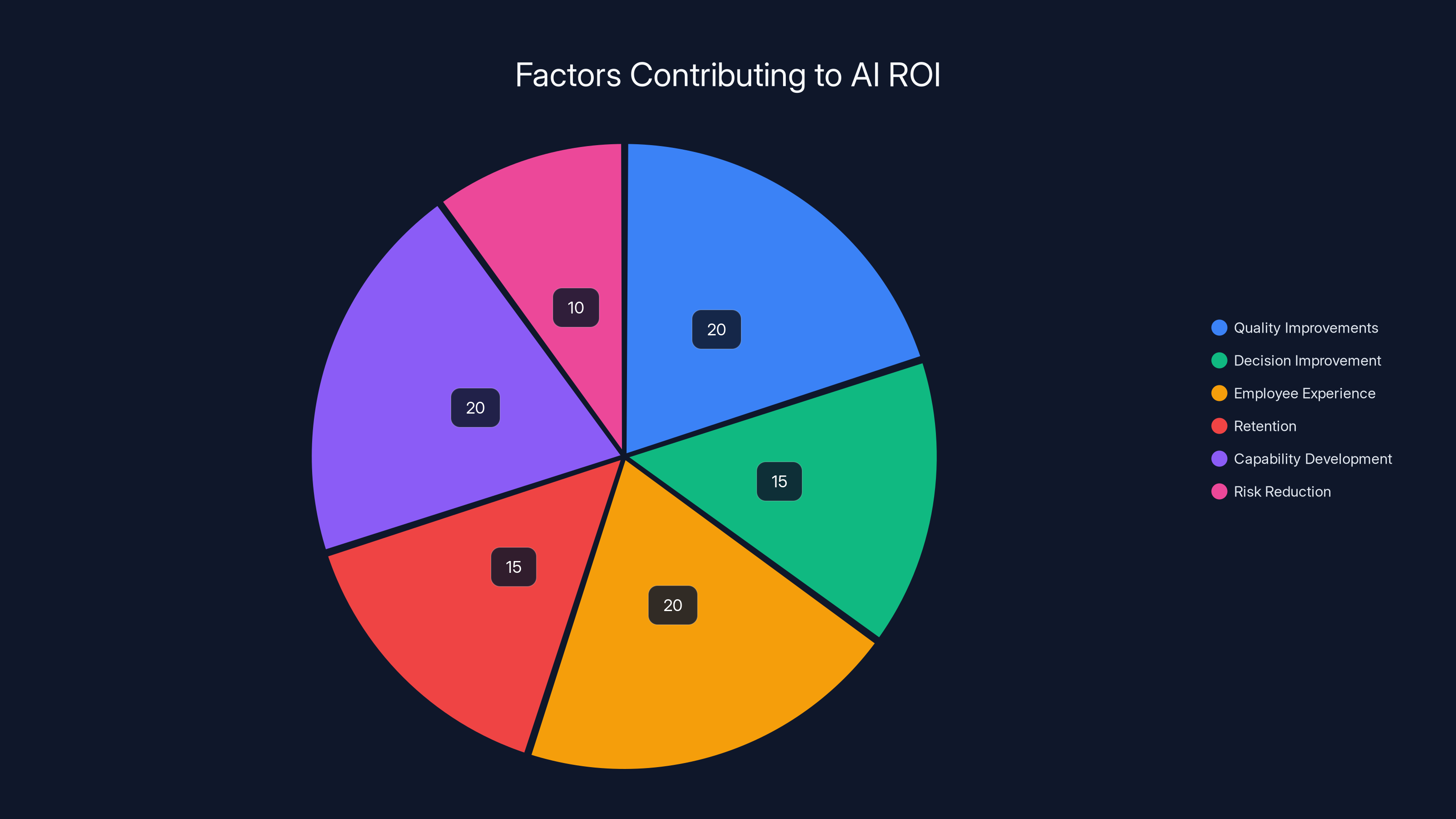

- Measure what matters: Speed matters, but real ROI comes from improved collaboration, better decision-making, skill development, and reduced employee turnover.

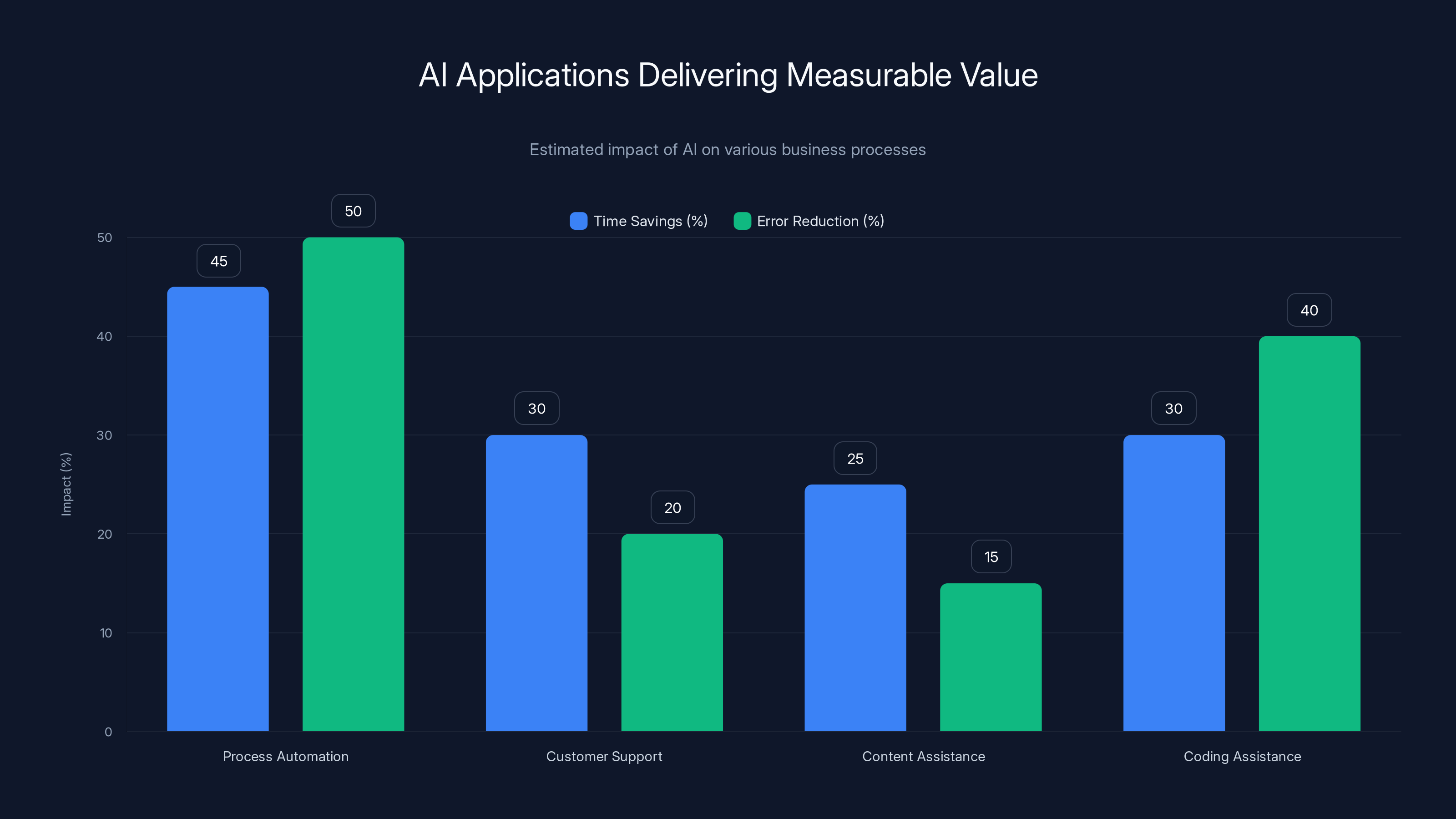

AI significantly reduces processing time and errors in process automation and coding assistance, with estimated time savings of 20-50% and error reductions up to 50%.

Understanding the AI Adoption Gap: Why Investment Doesn't Equal Impact

Your organization probably has the same problem as thousands of others right now. There's a chasm between what companies invest in AI and what they actually get back.

The numbers look good on a spreadsheet. Companies have allocated serious capital to AI initiatives. Tools are deployed. Training materials exist. But when you check actual adoption metrics three months later, what do you find? Sporadic use. Employees working around the tools instead of with them. The promised transformation hasn't materialized.

This gap exists for specific, identifiable reasons, and understanding them is the first step to closing it.

The Fear Factor: Why Employees Stay Silent

When Ivanti surveyed workers about AI adoption in their organizations, something unexpected became clear: silence is a strategic choice for many. Over a third of workers deliberately keep their AI experiments to themselves, believing it gives them a competitive edge in promotions or project selection. Another 30% stay quiet because they're genuinely afraid their role might be eliminated.

That's not paranoia. That's rational caution in an environment where the rules haven't been clearly explained. If your organization hasn't explicitly addressed what AI means for careers, job security, and role evolution, employees will fill that gap with their own worst-case scenarios.

The problem compounds when leadership isn't transparent. If executives talk about AI productivity gains without also talking about career pathways, skill development, and role transformation, employees hear one message: "We're replacing people." That fear becomes a massive barrier to adoption, regardless of how good your tools are.

The Productivity Paradox: Why Budget Alone Doesn't Deliver

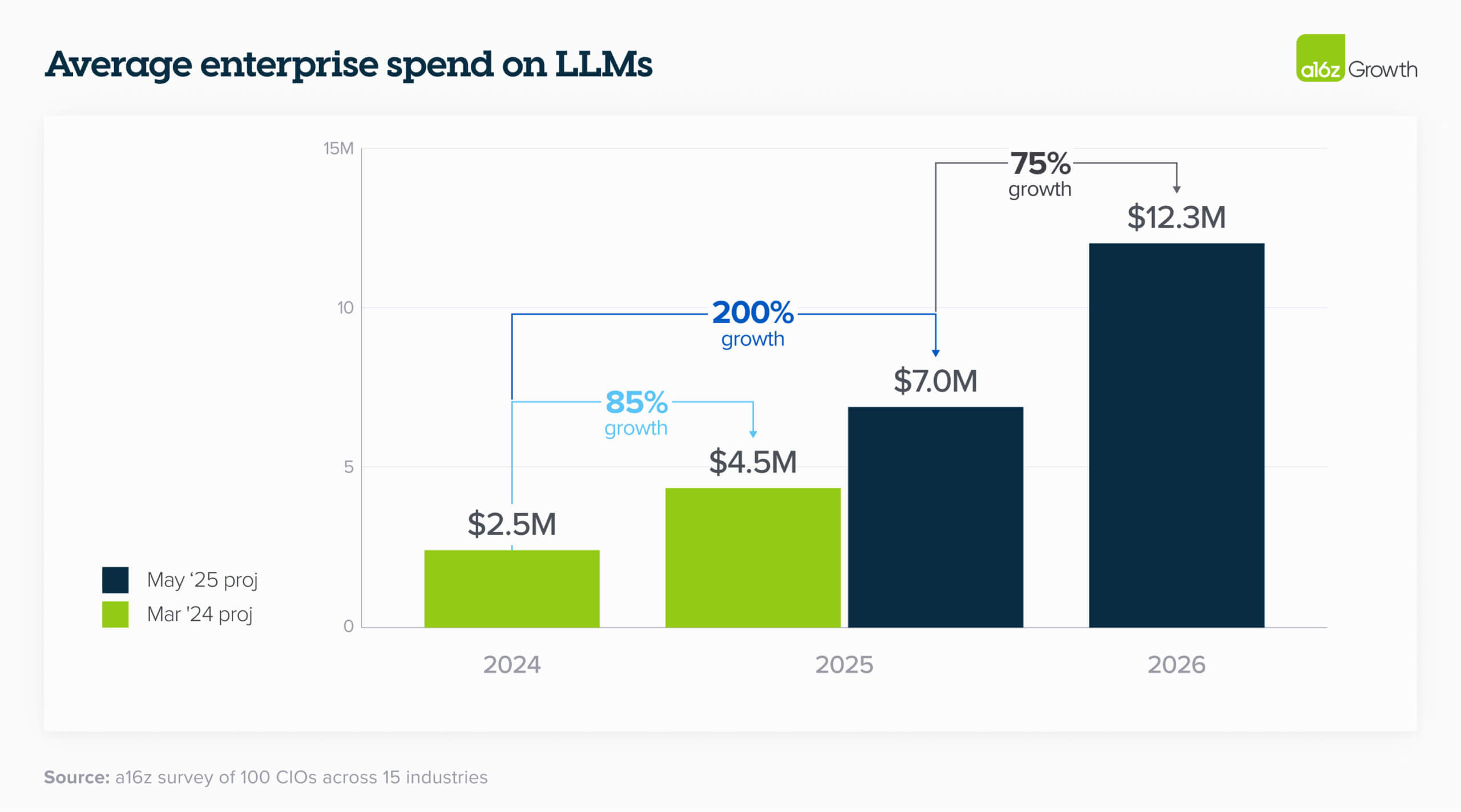

Almost 90% of companies surveyed expect to increase their AI budgets this year, and most predict measurable transformation within two years. Those predictions are optimistic, maybe too optimistic.

Budget is necessary but insufficient. You can throw $10 million at AI infrastructure, but if your organizational structure, workflows, and decision-making processes don't shift to accommodate it, you're just buying expensive software that nobody uses effectively.

The real issue is structural. Most organizations approach AI adoption like they approached previous software implementations: top-down mandate, mandatory training, measured compliance. That worked for email and spreadsheet tools because they were straightforward productivity replacements. AI is different. It requires employees to think differently about their work, to understand uncertainty and probability, to accept that sometimes machines are right and sometimes they're confidently wrong.

That requires more than a training video. It requires a cultural shift.

A significant portion of employees stay silent about their AI use, either to protect a competitive edge or out of fear of job loss; each group accounts for roughly a third of the workforce.

The Foundation: Creating Safe Spaces for AI Experimentation

You want employees to embrace AI, but first you need to make it psychologically safe for them to try.

That's where safe experimentation comes in. This isn't about building sandboxes where employees play with AI in their spare time. It's about creating structured, supported spaces where people can experiment with AI in their actual work, measure results, learn from failures, and build confidence alongside capability.

Designing Your Experimentation Framework

A solid experimentation framework has several core components. First, it needs clear boundaries. Employees should understand exactly what they can and cannot experiment with. Can they run AI on customer data? No. Can they use AI to draft internal emails and see if it saves time? Yes. Those boundaries exist to protect your organization while enabling real experimentation.

Second, it needs visible support from leadership. This isn't optional. If teams are going to take risks with AI, they need to know that leadership is genuinely supporting experimentation, including failed experiments. When leaders openly share their own AI experiments and failures, everything changes. Suddenly, trying something that doesn't work isn't a career risk. It's part of the process.

Third, the framework needs practical tools and resources. Experimentation doesn't mean "figure it out yourself." It means providing access to AI tools, documentation, example use cases, and support channels where employees can ask questions without feeling like they're slowing down the team.

Fourth, it needs measurement built in from the start. Before an experiment begins, teams should define what success looks like. How much time will this save? What quality improvement are we looking for? How will we know if it worked? This transforms experimentation from vague exploration into systematic learning.
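To make the "define success first" step concrete, here is a minimal Python sketch of an experiment record whose criteria are fixed before any results arrive. The class, metric names, and thresholds are hypothetical illustrations, not a prescribed tool. The sketch also assumes that lower is better for every metric, as with minutes per task or error rate.

```python
from dataclasses import dataclass, field


@dataclass
class Experiment:
    """An AI experiment whose success criteria are fixed before it starts."""
    name: str
    # metric -> (baseline value, minimum relative improvement required)
    criteria: dict[str, tuple[float, float]]
    # metric -> value observed during the pilot
    results: dict[str, float] = field(default_factory=dict)

    def record(self, metric: str, value: float) -> None:
        # Refuse metrics that were not agreed up front, so the goalposts can't move.
        if metric not in self.criteria:
            raise KeyError(f"{metric!r} was not defined before the experiment began")
        self.results[metric] = value

    def evaluate(self) -> dict[str, bool]:
        """Per metric: did the observed value beat the baseline by the required margin?

        Assumes lower is better for every metric (minutes per task, error rate).
        """
        outcome = {}
        for metric, (baseline, min_improvement) in self.criteria.items():
            observed = self.results.get(metric)
            if observed is None:
                outcome[metric] = False  # no data yet, so not demonstrated
                continue
            improvement = (baseline - observed) / baseline
            outcome[metric] = improvement >= min_improvement
        return outcome


# Hypothetical pilot: AI-drafted internal emails.
exp = Experiment(
    name="AI-drafted internal emails",
    criteria={"minutes_per_email": (12.0, 0.25), "revision_rounds": (2.0, 0.0)},
)
exp.record("minutes_per_email", 8.0)  # (12 - 8) / 12 is about 0.33, meets 0.25
exp.record("revision_rounds", 2.5)    # (2 - 2.5) / 2 = -0.25, worse than baseline
print(exp.evaluate())  # {'minutes_per_email': True, 'revision_rounds': False}
```

A mixed result like this one is still useful. Because the team agreed on the criteria before starting, the outcome is informative rather than something to argue about afterward.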

Meeting Employees Where They Are

Advocating for AI adoption starts with meeting employees in an open forum, not lecturing at them. That means understanding what they actually care about.

Different roles have different concerns. A developer worries about whether AI will replace their coding. A marketer wonders if AI can actually understand brand voice or if it's just a template machine. A finance person wants to know if AI hallucinations could affect audit trails. An HR professional wonders if AI will introduce bias into hiring.

Those concerns aren't obstacles to push past. They're the actual conversation you need to have.

Lead by example. If you're an executive, share your own AI experiments. Not polished case studies—real stories about what you tried, what worked, what didn't, and what you learned. When senior leadership models curiosity and openness, it gives everyone permission to do the same.

Create forums specifically designed for this conversation. Not mandatory training sessions. Actual forums where employees can ask questions, share concerns, and learn from peers. Make participation optional and informal. The people who show up are already interested, and their enthusiasm is contagious.

Strategic AI Training: Building Capability and Confidence

Here's the truth about AI training: most of what organizations do isn't actually training. It's broadcasting information and hoping people retain it.

Real training changes how people think about their work. It builds confidence. It gives people frameworks for understanding what AI can and can't do. It addresses specific concerns relevant to their role. That requires a different approach than a single all-hands presentation.

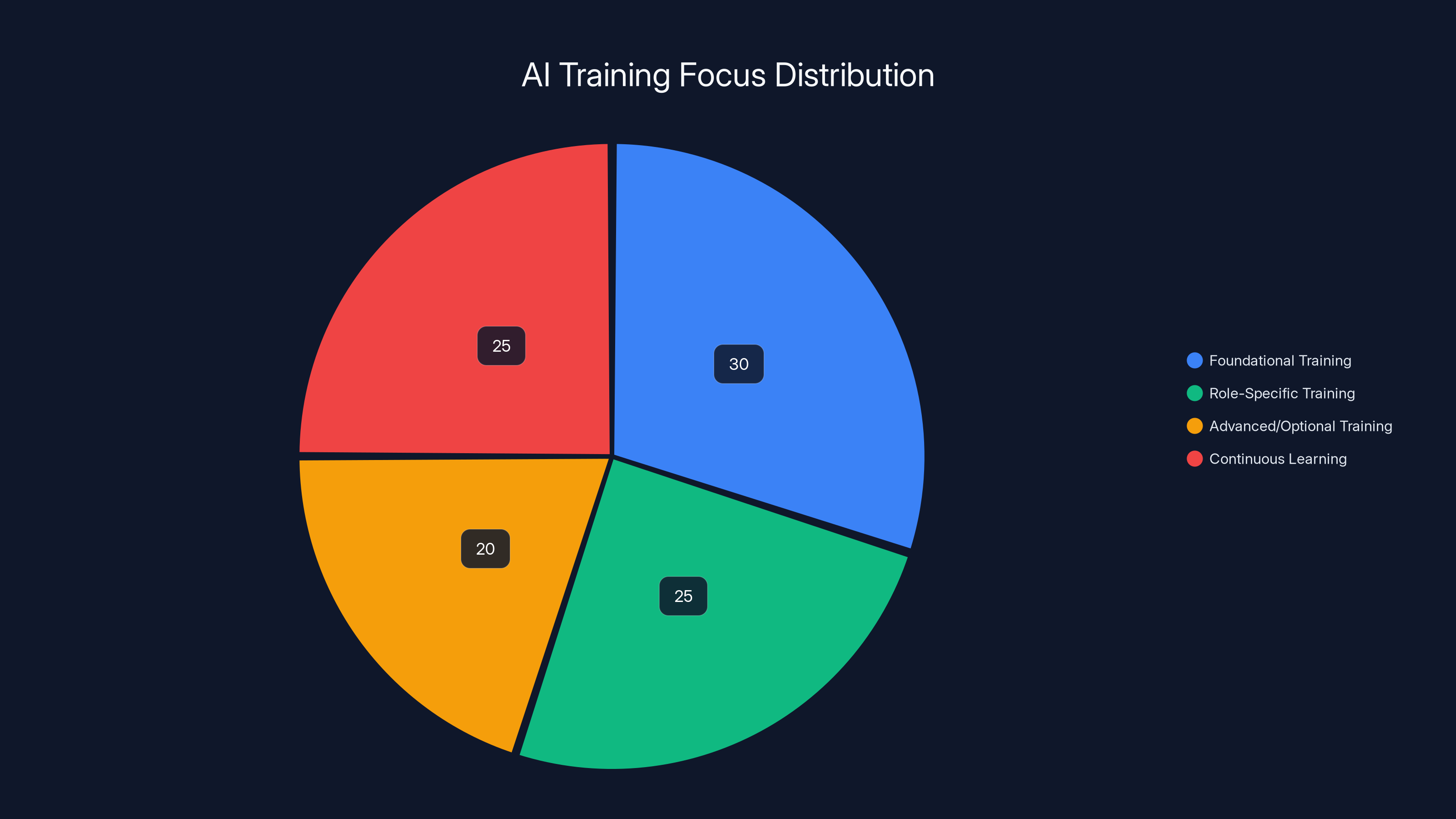

The Tiered Training Model

Effective AI training works in layers. Start with foundations that everyone needs: what is AI, how does it work, what are its limitations, what are common misconceptions. This doesn't need to be technical. Most employees don't need to understand transformer architecture. They need to understand that AI works from patterns in training data, which means it can reflect biases from that data. They need to know that AI can sound confident while being completely wrong. They need to understand that AI is a tool for augmenting human judgment, not replacing it.

Second layer: role-specific training. A developer gets training on AI-assisted coding workflows, code review best practices with AI-generated code, and how to handle security concerns. A marketer gets training on using AI for content generation, brand voice consistency, and editing AI-generated copy. A salesperson gets training on using AI for prospect research, email drafting, and follow-up workflows.

Third layer: advanced and optional. As people gain confidence, some will want deeper knowledge. Offer technical explainer series for people interested in understanding model behavior. Offer advanced modules on prompt engineering, fine-tuning, and building custom AI applications. Make this optional and available for people who want to go deeper.

Fourth layer: continuous learning. AI is moving fast. Build regular learning opportunities into your culture. Monthly AI case studies. Quarterly deep dives into emerging techniques. Accessible repositories of prompts, templates, and workflows that employees create and share.

Engagement Over Compliance

Mandatory AI training tends to fail. People check the box, forget what they learned, and go back to their regular workflows.

Engaging materials work better. That means mixing formats: interactive modules instead of lectures, real use cases instead of hypotheticals, practical exercises instead of theory, peer learning instead of top-down instruction. Create an AI community of practice where employees share discoveries, troubleshoot problems together, and build confidence through peer support.

Make materials bite-sized. A single 2-hour training session on AI rarely sticks. Five 15-minute modules spread across a week, with practical homework between sessions, tend to generate much higher engagement and retention.

Include both wins and failures. When you share case studies, don't just show successes. Show experiments that didn't work, explain why they failed, and describe what was learned. That's how people actually build judgment.

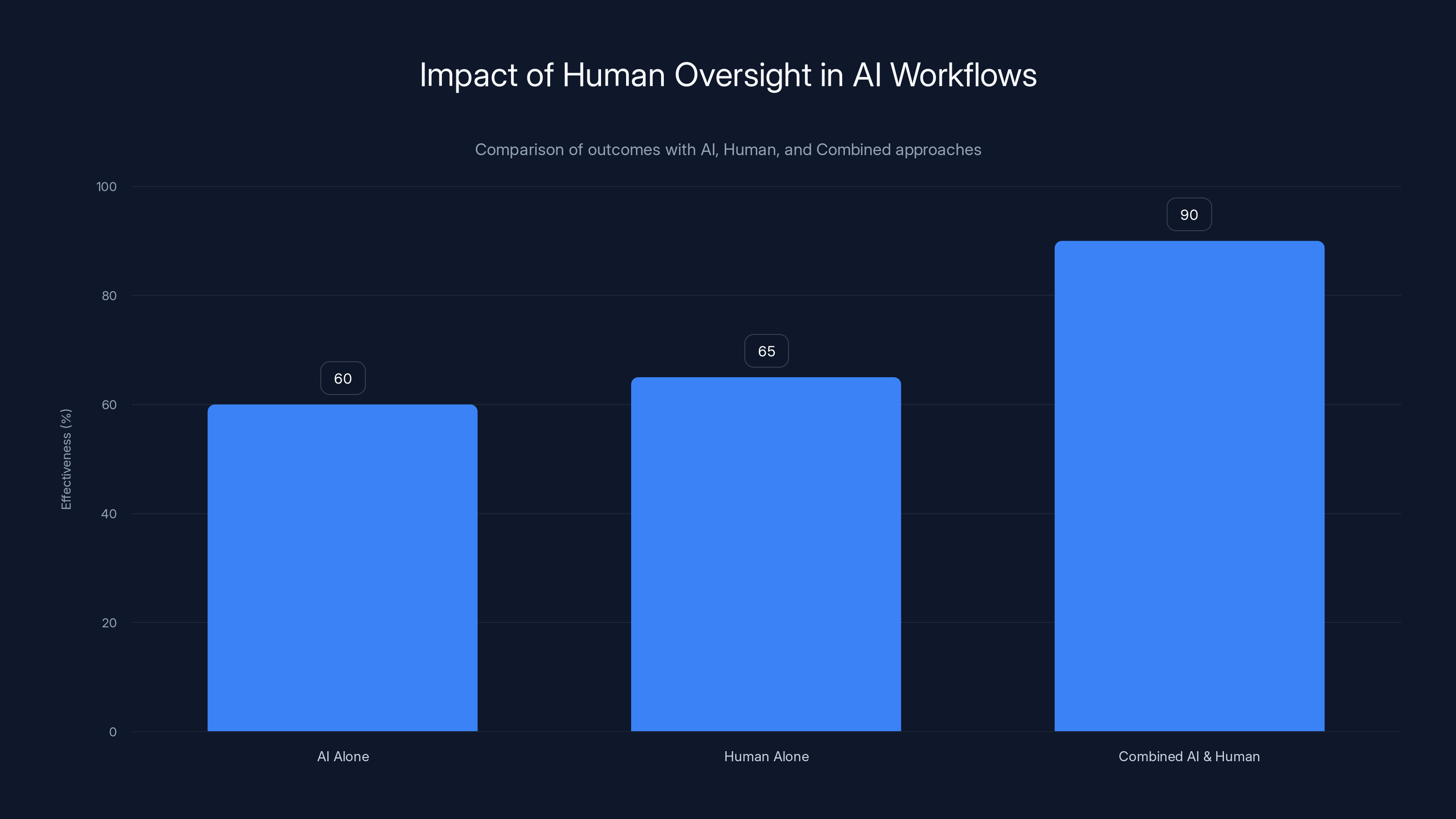

Workflows that combine AI automation with human judgment show 40% better outcomes than AI alone or human alone. This highlights the importance of human oversight in AI processes.

Leadership as Model: Why Your Actions Matter More Than Your Strategy

You can write the best AI adoption strategy in the world, but if leadership doesn't visibly embody it, employees won't trust it.

Leadership matters in adoption because people watch what leaders do, not just what they say. If you're asking employees to experiment with AI while you publicly dismiss it as a fad, you've already lost. If you're saying jobs are secure while every AI announcement comes with "efficiency gains" language, employees will assume the worst.

Demonstrating Responsible AI Use

Leaders need to actively model the behavior they want to see. That means using AI visibly in your own work. It means sharing what you learned. It means talking about failures, not just successes.

When a senior leader stands up and says, "I used AI to draft my quarterly strategy memo. Here's how it helped and where I had to step in," something shifts. Suddenly, it's not theoretical. It's real. And it's okay to admit that the tool helped with the hard parts but humans still need to make the final decisions.

Demonstrating responsible AI use also means addressing bias, hallucinations, and limitations openly. Don't pretend AI is perfect. Talk about where it makes mistakes. Talk about the controls you put in place. Talk about how you verify important outputs. When leaders normalize this conversation, employees understand that using AI doesn't mean blindly trusting it.

Leading Change Through Outcomes

Real leadership in AI adoption means identifying where AI delivers the most impact, piloting those high-impact workflows, measuring the outcomes carefully, and scaling what actually works.

That's different from how many organizations approach it. The typical pattern is: pick a tool, deploy it, hope people use it. Then measure adoption (did people click on it?) instead of actual impact (did it improve how work gets done?).

Leading change means resisting that pattern. Start small. Identify a specific process that's high-volume, repetitive, and important. Get a small team to pilot AI-assisted workflows. Measure actual outcomes: time spent, quality metrics, employee satisfaction, decision quality. Share the results. Let that success build momentum for the next pilot.

This approach teaches an important lesson: we're not doing AI for its own sake. We're doing it where it solves real problems.

Connecting AI to Career Development

Leaders need to explicitly connect AI adoption to employee career development. This is where organizations often miss the biggest opportunity.

Employees care about their careers. If they see AI as a threat to their career, they'll resist. If they see it as a tool that makes them more valuable and accelerates their career growth, they'll embrace it.

That means reframing the narrative. Instead of "AI will make your job easier," try "AI will let you focus on the parts of your job that require human judgment and creativity. You'll become better at the skills that actually matter for your career." Instead of "we're automating administrative work," try "we're upgrading your role. You'll spend less time on admin and more time on strategy, creativity, and client relationships."

Back that up with concrete action. Offer AI skill development as part of career growth. Create career pathways for people who want to specialize in AI workflows. Give people dedicated time to develop AI skills, rather than expecting them to squeeze it in around their regular responsibilities.

When employees see that learning AI makes them more valuable and opens new career opportunities, adoption shifts from reluctant compliance to genuine interest.

The ROI Question: Why 95% of AI Pilots Fail and How to Be in the 5%

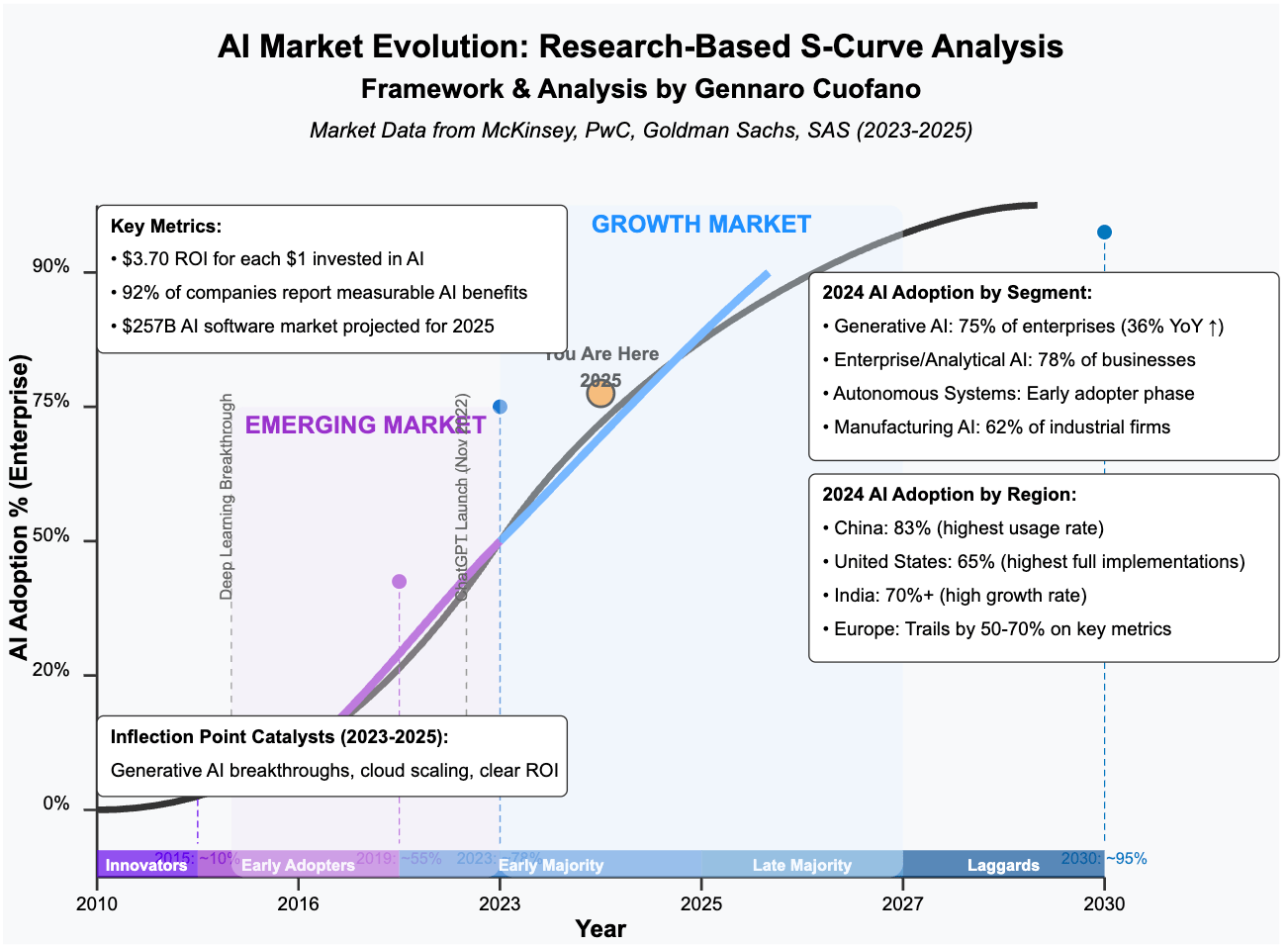

Here's the uncomfortable truth: according to MIT's State of AI in Business research, only about 5% of integrated AI pilots deliver significant value.

That statistic gets quoted to suggest AI doesn't work. That's the wrong conclusion. What it actually shows is that most pilot strategies fail: the organizations running those pilots didn't have a clear approach to AI adoption. They treated it as a technology problem instead of a strategy problem.

The companies that ARE getting real value understand something crucial: ROI isn't about speed alone. It's about strategy.

Where AI Actually Delivers Value

Let's be specific about where AI is actually delivering measurable value today.

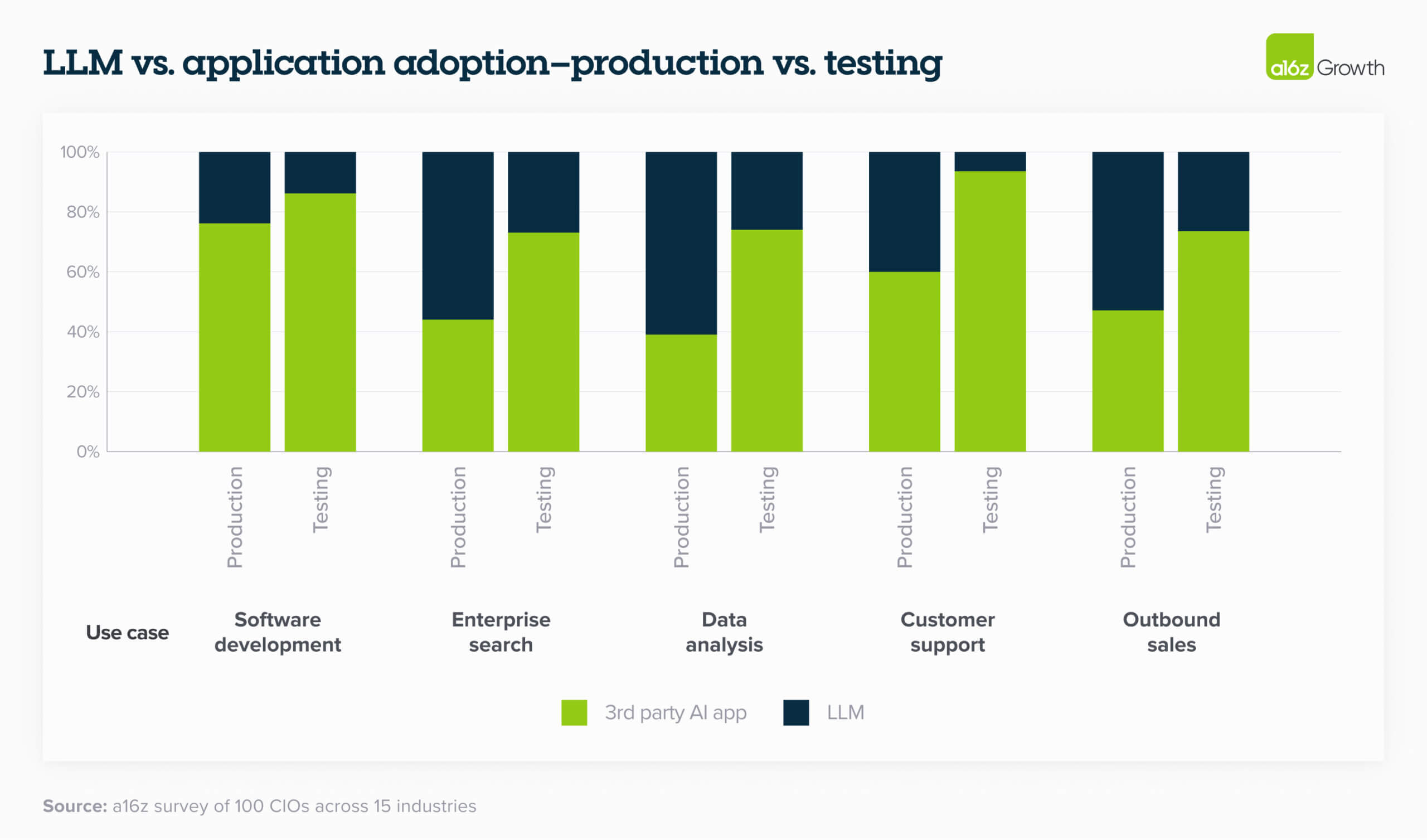

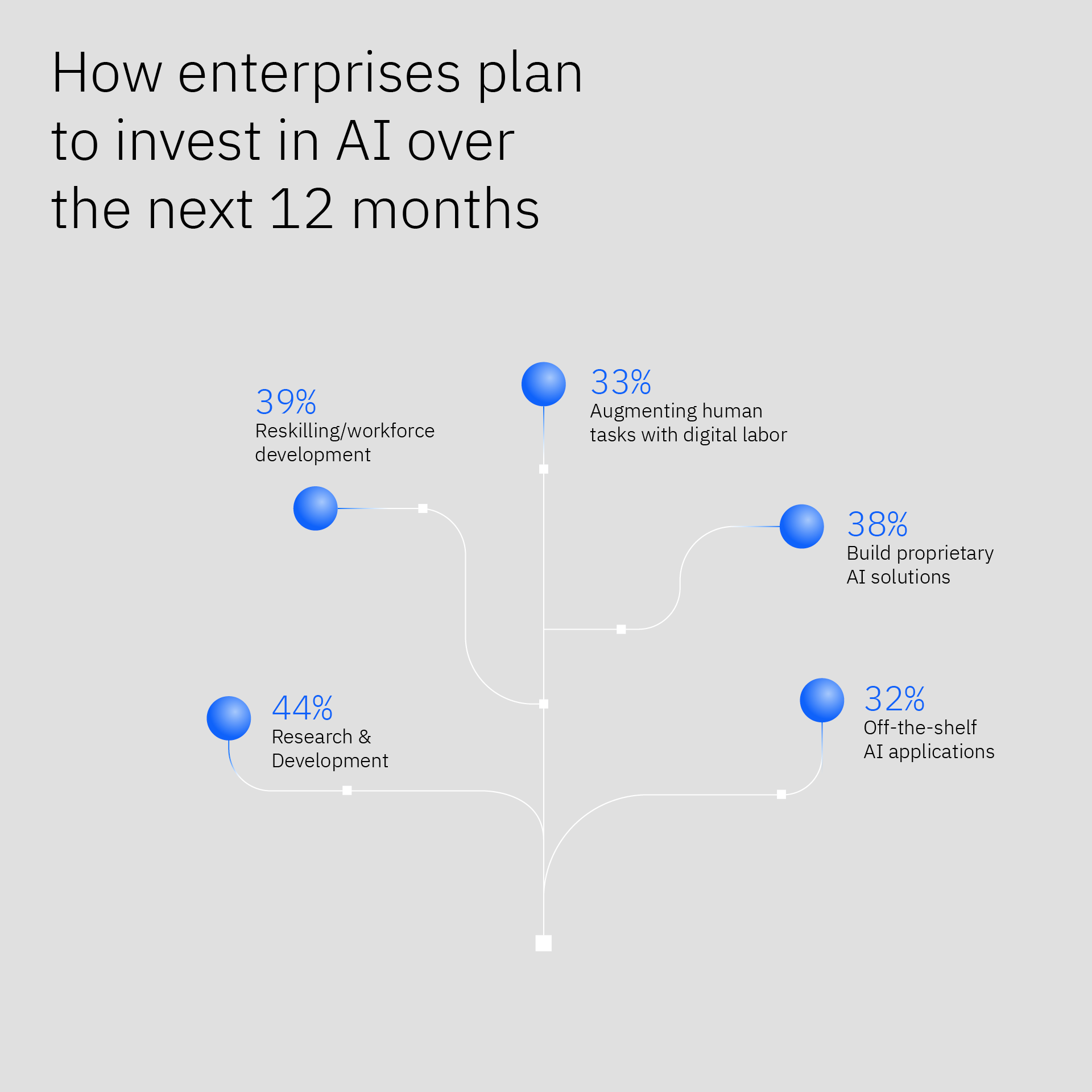

Process automation is one. Invoice processing, claims processing, data entry, and document categorization are areas where AI consistently reduces manual work and improves accuracy. Organizations are seeing 40-50% reductions in processing time. More importantly, they're reducing errors in areas where errors are expensive.

Customer support is another. AI-powered response suggestions, ticket categorization, and knowledge base search all improve response time and customer satisfaction. The automation isn't replacing the human—it's giving the human better information faster.

Content assistance is real. AI isn't replacing writers, but it's helping them write faster. First-draft generation, editing suggestions, research assistance, and outline creation are all areas where AI legitimately helps knowledge workers produce more while maintaining quality.

Coding assistance is one of the clearest wins. Organizations using AI-assisted coding report time savings of 20-40% on coding tasks, and often improved code quality because developers spend less time on boilerplate and more time on architecture. That's not speculation—that's measurable.

Decision support is emerging. When organizations feed AI their data and use it to suggest optimal inventory levels, demand forecasts, or resource allocation, decisions improve and costs come down.

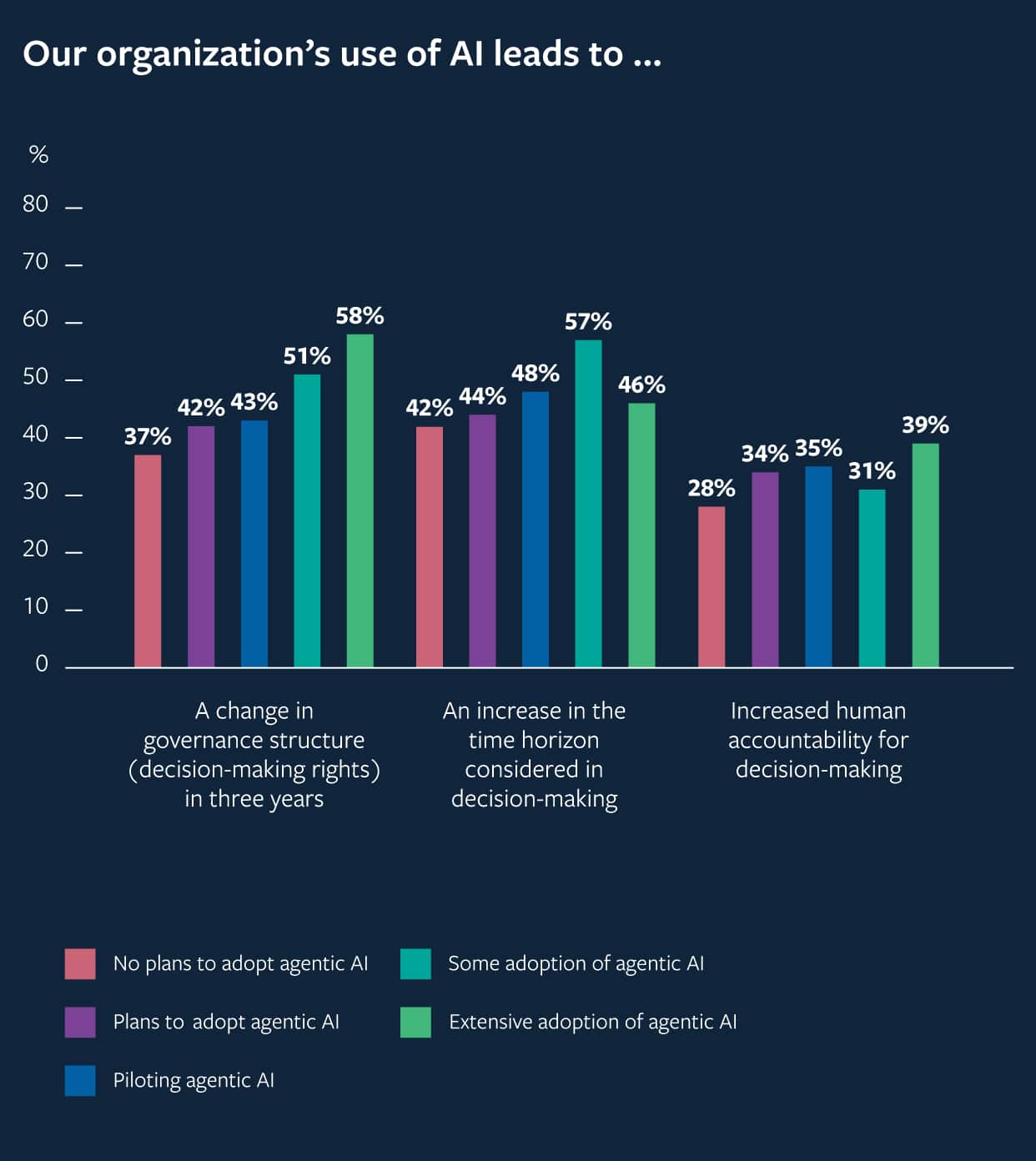

Leading firms using AI for process automation across these areas report productivity gains of approximately 37%, significantly outpacing peers. That's not from one tool or one process. That's from systematic application of AI across multiple workflows.

The Strategy Gap: Why Isolated Pilots Fail

Most organizations fail at AI ROI because they treat pilots as isolated experiments instead of building blocks for systematic transformation.

Here's the typical pattern: A department gets excited about AI. They identify a process they think will work. They run a pilot. It shows promise. Then what? Usually, nothing. The pilot ends. The lessons aren't systematized. The tools aren't integrated into actual workflows. The people who participated move on to other projects. Six months later, they're back to the old process.

That's how a successful pilot becomes a waste of resources. The issue isn't the pilot. It's that isolated pilots don't create systematic change.

Companies that get real ROI approach it differently. They identify a strategic area where AI can drive meaningful improvement. They pilot it. They measure outcomes carefully. They integrate it into actual workflows and roles. They measure results at scale. They systematize the learnings. Then they move to the next area.

This requires different thinking. Instead of asking "Does this AI tool work?" you're asking "How do we systematically integrate AI into this business process in a way that employees adopt, measure correctly, and scale across the organization?"

That means clear ownership. Someone is accountable for the ROI, not just the pilot execution. That means actual integration, not "try if you want." That means measurement that goes beyond adoption metrics to actual business outcomes.

Measuring What Actually Matters

Most organizations measure the wrong things when evaluating AI ROI.

Tool adoption metrics ("how many people used it?") are easy to measure but mostly meaningless. People might use a tool because it's mandatory, not because it's valuable. Measuring adoption tells you nothing about whether you're getting return on investment.

Time savings are better, but incomplete. If an employee saves 30 minutes on routine work, that's good. But what do they do with that 30 minutes? If they just take on more routine work, you haven't improved anything. If they spend the time on higher-value work—strategy, creativity, client relationships—that's real value.

Quality improvements matter. If AI reduces errors in your invoicing, that impacts your bottom line directly. If AI helps sales teams write better proposals, your win rate goes up. If AI helps customer service provide faster, more accurate answers, satisfaction improves. Those are real outcomes.

Employee experience matters. If AI tools make your employees' jobs more interesting, more autonomous, and less frustrating, you see lower turnover. That's a huge financial impact that doesn't usually make it into AI ROI calculations.

Decision quality matters. If AI gives your team better information faster, do they make better decisions? That's harder to measure, but enormously valuable.

Team capability matters. If employees develop new skills through AI use, they're more valuable to the organization. That's an investment that pays off for years.

A comprehensive ROI calculation should include: time savings, quality improvements, error reduction, decision quality, employee satisfaction, retention, and capability development. The sum of all that is much larger than speed alone.
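The sketch below illustrates that broader calculation. It adds up several benefit components and computes a simple ROI. Every field name and number is an invented assumption. The point it demonstrates is that counting only redeployed time, plus quality and retention effects, can flip the answer compared with a speed-only view.

```python
from dataclasses import dataclass


@dataclass
class AnnualBenefits:
    """Yearly benefit estimates in currency units. Every input is an assumption."""
    hours_saved: float          # hours saved per year across all users
    loaded_hourly_cost: float   # fully loaded cost of one employee hour
    share_redeployed: float     # fraction (0-1) of saved hours moved to higher-value work
    error_costs_avoided: float = 0.0   # rework, corrections, write-offs avoided
    retention_savings: float = 0.0     # replacement costs avoided through lower turnover
    other_quality_value: float = 0.0   # e.g. win-rate or decision-quality gains, if estimated

    def total(self) -> float:
        # Count only redeployed hours: time saved and then absorbed by more
        # routine work adds no value.
        time_value = self.hours_saved * self.share_redeployed * self.loaded_hourly_cost
        return (time_value + self.error_costs_avoided
                + self.retention_savings + self.other_quality_value)


def roi(benefits: AnnualBenefits, annual_cost: float) -> float:
    """Simple ROI: (total benefit - cost) / cost."""
    return (benefits.total() - annual_cost) / annual_cost


# Illustrative numbers only.
speed_only = AnnualBenefits(hours_saved=2000, loaded_hourly_cost=60, share_redeployed=0.5)
broader = AnnualBenefits(hours_saved=2000, loaded_hourly_cost=60, share_redeployed=0.5,
                         error_costs_avoided=20_000, retention_savings=40_000)
print(f"{roi(speed_only, 100_000):.0%}")  # -40%
print(f"{roi(broader, 100_000):.0%}")     # 20%
```

How to estimate the harder components, such as decision quality or capability development, remains a judgment call. Writing the components down explicitly at least makes those assumptions visible and debatable.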

Quality improvements, employee experience, and capability development are key contributors to AI ROI, each estimated at 20%. Estimated data.

Building Scalable Trust: The Foundation of Enterprise AI

You can't scale AI without trust. And trust isn't something you build after you deploy—it's something you build into everything from the beginning.

Scalable trust has specific components. It's not vague. It requires enterprise-grade security, clear data compliance, transparent model behavior, and employee confidence that innovation doesn't come at the cost of privacy or governance.

Enterprise Security and Data Compliance

When you scale AI across your organization, you're dealing with serious data. Customer data. Employee data. Financial data. Proprietary data. Your employees need to know that AI tools handle that data responsibly.

Enterprise-grade security means encrypting data in transit and at rest. It means access controls. It means audit trails. It means knowing who accessed what data, when, and why. It means compliance with regulations like GDPR, HIPAA, or whatever applies to your industry.

This isn't negotiable for enterprise adoption. Your employees won't trust AI systems with sensitive data unless they can verify that the system protects that data. That verification means technical controls, documented processes, and regular audits.

Data compliance also means being clear about what happens to data after processing. Does the AI vendor retain your data for model training? Do they share it with other organizations? What are the terms? Your employees need to understand these terms before they're comfortable using the tools.

Transparent Model Behavior

Trust requires understanding. Employees should know, at least at a high level, how the AI system works and why it's making the recommendations it makes.

This doesn't mean everyone needs to understand transformer architectures. But they should understand the basics: the AI learned patterns from training data, so it reflects what's in that data. The AI can be confident while being completely wrong. Unless it's connected to live data sources, the AI doesn't know about anything after its training cutoff, so newer information might not be reflected. The AI can't actually understand context the way humans do; it's working from patterns.

When employees understand these limitations, they use AI more effectively. They verify important outputs instead of blindly trusting them. They don't feed the AI sensitive data it doesn't need.

Transparent model behavior also means being honest about limitations specific to your use case. If your industry has specialized jargon, the AI might misunderstand it. If your business has unique rules or constraints, the AI might not be aware of them. If your data is sensitive, even occasional mistakes can be costly or embarrassing.

The organizations that build trust name these limitations explicitly and design workflows that account for them. That honesty creates confidence, not fear.

Governance That Scales

As you scale AI, you need governance that scales with it. This isn't about bureaucracy. It's about controls that let the organization scale safely.

Scalable governance means clear policies about what data can go to which AI systems. It means approved tool lists for different use cases. It means audit capabilities that let you verify compliance. It means change management so that when AI tools update or change, the organization understands the implications.
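Here is a minimal sketch of a "which data may go to which AI system" policy. It assumes a four-tier data classification and a hypothetical approved-tool list; the tool names are placeholders, not real products. Unapproved tools are denied by default, and each check is appended to an in-memory audit log. A real deployment would write that log to durable, access-controlled storage.

```python
from datetime import datetime, timezone
from enum import IntEnum


class DataClass(IntEnum):
    """Illustrative sensitivity tiers; a higher value means more sensitive."""
    PUBLIC = 0
    INTERNAL = 1
    CONFIDENTIAL = 2
    RESTRICTED = 3  # e.g. customer personal data, health or payment records


# Hypothetical approved-tool list: the most sensitive class each tool is cleared for.
APPROVED_TOOLS: dict[str, DataClass] = {
    "public-chat-assistant": DataClass.PUBLIC,
    "enterprise-assistant": DataClass.CONFIDENTIAL,
    "on-prem-document-model": DataClass.RESTRICTED,
}

# In-memory audit trail for the sketch; use durable, access-controlled storage in practice.
AUDIT_LOG: list[dict] = []


def is_allowed(tool: str, data: DataClass) -> bool:
    """Allow only approved tools, and only up to their clearance level."""
    ceiling = APPROVED_TOOLS.get(tool)
    if ceiling is None:
        return False  # deny anything not on the approved list
    return data <= ceiling


def check_and_log(user: str, tool: str, data: DataClass) -> bool:
    """Run the policy check and record who asked, for which tool and data class, and when."""
    allowed = is_allowed(tool, data)
    AUDIT_LOG.append({
        "time": datetime.now(timezone.utc).isoformat(),
        "user": user,
        "tool": tool,
        "data_class": data.name,
        "allowed": allowed,
    })
    return allowed


print(check_and_log("a.lee", "enterprise-assistant", DataClass.RESTRICTED))    # False
print(check_and_log("a.lee", "on-prem-document-model", DataClass.RESTRICTED))  # True
```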

Governance also means controls on decision-making. Some decisions can be made by AI with light review. Some need human sign-off. Some should never be made by AI alone. Being clear about which is which prevents problems.
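The three review levels described above can be encoded as a lookup table. This is a hypothetical sketch. The decision types and their tier assignments are examples only. Deciding which decisions belong in which tier is a policy question for your organization, not something code settles.

```python
from enum import Enum


class Oversight(Enum):
    LIGHT_REVIEW = "AI decides; humans spot-check samples"
    HUMAN_SIGNOFF = "AI recommends; a named person approves"
    HUMAN_ONLY = "AI may inform, but a person decides"


# Hypothetical policy table; the assignments are examples, not recommendations.
POLICY: dict[str, Oversight] = {
    "ticket_categorization": Oversight.LIGHT_REVIEW,
    "invoice_matching": Oversight.LIGHT_REVIEW,
    "inventory_reorder": Oversight.HUMAN_SIGNOFF,
    "refund_over_limit": Oversight.HUMAN_SIGNOFF,
    "hiring_decision": Oversight.HUMAN_ONLY,
    "loan_denial": Oversight.HUMAN_ONLY,
}


def required_oversight(decision_type: str) -> Oversight:
    # Unclassified decision types get the strictest tier until someone reviews them.
    return POLICY.get(decision_type, Oversight.HUMAN_ONLY)


def ai_output_may_take_effect(decision_type: str, human_approved: bool = False) -> bool:
    """Can the AI's output be applied directly, given its tier and any approval?"""
    tier = required_oversight(decision_type)
    if tier is Oversight.LIGHT_REVIEW:
        return True
    if tier is Oversight.HUMAN_SIGNOFF:
        return human_approved
    return False  # HUMAN_ONLY: the output is advisory input to a human decision
```

Defaulting unknown decision types to the strictest tier is a deliberate choice. New AI uses then start under full human control until someone explicitly classifies them.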

Finally, governance means maintaining visibility. As AI use scales, you need to know what's happening. What problems are people encountering? What's working well? Where are the risks? That visibility lets you adjust and improve.

Addressing Bias, Hallucinations, and Limitations: The Reality of Modern AI

Here's what every organization adopting AI needs to accept: AI systems have real limitations and real failure modes. You can't build trust by ignoring them. You have to acknowledge them, understand them, and design workflows that account for them.

Bias in AI: Where It Comes From and Why It Matters

AI learns from data. If the data reflects biases—in hiring, lending, content moderation, whatever domain you're working in—the AI will learn and amplify those biases.

This isn't theoretical. There are documented cases of AI systems that showed bias against women in hiring, bias against certain groups in lending, bias in predictive policing. These biases came from the training data, which reflected historical discrimination.

For your organization, that means being careful about where you deploy AI that affects people. If you're using AI to screen resumes, you need to verify it's not biased against protected classes. If you're using AI to approve loans or make hiring decisions, you need to audit for bias regularly.

Bias isn't a flaw in AI that can be completely eliminated. It's a characteristic that needs to be managed. That means: 1) understanding what biases might exist in your training data, 2) testing your AI system for those biases, 3) designing oversight that catches problematic decisions, 4) being transparent with affected people about how decisions are made.
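For step 2, one widely used screening heuristic in US employment contexts is the "four-fifths rule" from the Uniform Guidelines on Employee Selection Procedures. Under that rule, a group whose selection rate is below 80% of the highest group's rate is treated as showing possible adverse impact. The sketch below computes those ratios from (group, selected) pairs. It is a first-pass check under that assumption, not a complete fairness audit or a legal determination, and the group labels and counts are made up.

```python
from collections import Counter


def selection_rates(outcomes: list[tuple[str, bool]]) -> dict[str, float]:
    """Selection rate per group from (group, was_selected) pairs."""
    totals = Counter(group for group, _ in outcomes)
    selected = Counter(group for group, was_selected in outcomes if was_selected)
    return {group: selected[group] / totals[group] for group in totals}


def adverse_impact_flags(outcomes: list[tuple[str, bool]],
                         threshold: float = 0.8) -> dict[str, float]:
    """Groups whose rate falls below `threshold` times the highest group's rate.

    The 0.8 default mirrors the four-fifths rule of thumb. A flag is a prompt
    for human investigation, not a conclusion about the system or the law.
    """
    rates = selection_rates(outcomes)
    highest = max(rates.values(), default=0.0)
    if highest == 0:
        return {}  # nobody selected at all; ratios are undefined
    ratios = {group: rate / highest for group, rate in rates.items()}
    return {group: ratio for group, ratio in ratios.items() if ratio < threshold}


# Made-up screening results: group A 50/100 selected, group B 30/100.
outcomes = ([("A", True)] * 50 + [("A", False)] * 50
            + [("B", True)] * 30 + [("B", False)] * 70)
print(adverse_impact_flags(outcomes))  # {'B': 0.6}
```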

When employees understand that you're actively managing for bias instead of pretending it doesn't exist, trust increases significantly.

Hallucinations: Why AI Sometimes Confidently Lies

Large language models sometimes generate false information while sounding completely confident. They can invent facts, cite sources that don't exist, and misstate details with the same fluency they use for correct answers.

This is called hallucination, and it's one of the most important limitations for employees to understand: a fabricated answer often looks just as plausible as a true one.

Why does this happen? A language model is trained to produce a plausible continuation of its input, so it is optimized for text that sounds right, not for text that has been checked against facts. When it lacks the answer, it often produces a plausible-sounding one instead of saying "I don't know."

For your organization, this means never relying on unverified AI output for information that matters. Use AI for drafting, for suggestions, for starting points, and verify its output for anything important. Create workflows that assume hallucination will happen and design human review accordingly.
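One way to "assume hallucination will happen" is to check cited sources mechanically before a human reviews the substance. This sketch makes two hypothetical assumptions. First, drafts cite internal documents as bracketed IDs like [POL-104]. Second, your team maintains a set of verified document IDs. Any citation not in that set holds the draft for verification. It catches invented references, but not false claims attributed to real ones, so human review stays in place either way.

```python
import re

# Hypothetical citation format: internal document IDs in brackets, e.g. [POL-104].
CITATION = re.compile(r"\[([A-Z]+-[\w-]+)\]")


def unverified_citations(draft: str, known_sources: set[str]) -> list[str]:
    """Citation IDs in the draft that don't match any verified source."""
    return [cid for cid in CITATION.findall(draft) if cid not in known_sources]


def route_draft(draft: str, known_sources: set[str]) -> str:
    """Every draft goes to a human; unknown citations add an explicit verification hold."""
    missing = unverified_citations(draft, known_sources)
    if missing:
        return "hold for verification: " + ", ".join(missing)
    return "send to human review"


known = {"POL-104", "POL-221", "CONTRACT-2023-17"}  # IDs your team has verified
draft = "Refunds over the limit follow [POL-104] and the escalation steps in [POL-999]."
print(route_draft(draft, known))  # hold for verification: POL-999
```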

The good news: employees understand this limitation pretty quickly once you explain it. And once they understand it, they know to verify important outputs. That's not a weakness in AI adoption. That's smart usage.

Limitations Beyond Bias and Hallucinations

There are other important limitations worth acknowledging.

Training data lag: The AI was trained on data up to a certain cutoff date. It doesn't know what happened after that. If you ask it about recent events, it doesn't have that information.

Context limitations: The AI has a limit on how much context it can hold. If your document is longer than that limit, the AI won't see the whole thing.

Specialization gaps: The AI was trained on general information. If your domain has specialized knowledge or unique rules, the AI might not understand them.

Inconsistency: The AI might give you different answers to the same question, especially if you phrase it differently. That's not a bug; it follows from how these systems generate text.

None of these mean "don't use AI." They mean "understand these limitations and design workflows that work with them, not against them."
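For the context limitation, a common workaround is to split a long document into overlapping chunks that each fit within the model's limit. You then process each chunk and combine the results. This sketch counts words as a rough proxy for tokens. That is an assumption: real limits are measured in model-specific tokens, so leave headroom.

```python
def chunk_words(text: str, max_words: int, overlap: int = 0) -> list[str]:
    """Split text into chunks of at most max_words words, with optional overlap.

    Word count is only a rough stand-in for tokens; real context limits are
    measured in model-specific tokens, so choose max_words conservatively.
    """
    if max_words <= 0:
        raise ValueError("max_words must be positive")
    if not 0 <= overlap < max_words:
        raise ValueError("overlap must be in [0, max_words)")
    words = text.split()
    step = max_words - overlap  # how far each new chunk starts after the previous one
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + max_words]))
        if start + max_words >= len(words):
            break  # this chunk already reached the end of the text
    return chunks


doc = " ".join(f"w{i}" for i in range(10))
for chunk in chunk_words(doc, max_words=4, overlap=1):
    print(chunk)
# w0 w1 w2 w3
# w3 w4 w5 w6
# w6 w7 w8 w9
```

The overlap repeats a little text at each boundary. That way, a sentence split between two chunks still appears whole in at least one of them when the boundary falls inside the overlap.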

Estimated data shows a balanced focus on foundational, role-specific, advanced, and continuous learning in AI training programs.

Championing Human Oversight: Why AI Without Judgment Fails

Here's a critical truth that some AI enthusiasts get wrong: AI is a tool for augmenting human judgment, not replacing it.

That means human oversight isn't a limitation or a bottleneck. It's the actual mechanism that makes AI valuable.

The Role of Human Review in AI Workflows

When you integrate AI into a workflow, you're not automating away the human. You're changing what the human does.

Instead of doing all the work, the human reviews and refines AI output. That's actually more valuable than the human doing all the work. Why? Because the human can now focus on the hard judgments while the AI handles the routine work.

Consider a sales workflow. AI can analyze prospects and suggest which are highest priority. A sales rep used to spend hours doing that analysis. Now they spend 30 minutes reviewing the AI's suggestions and adjusting them based on judgment the AI doesn't have. That's a huge time win, and it produces better decisions because it combines AI's data processing with human judgment.

Consider a content workflow. AI can draft content. A writer used to start from a blank page. Now they start with an AI-generated draft and refine it. That's faster and often better because the writer can focus on voice, nuance, and accuracy while the AI handles the structure and initial draft.

The key is building workflows where human review is built in, not an afterthought. Where the human is making judgment calls, not just checking boxes.

Training for Responsible AI Judgment

But human oversight only works if humans have the judgment to do it effectively. That requires training.

Employees need to understand how to review AI output. What should they be looking for? How do they catch hallucinations? How do they verify facts? How do they evaluate whether the AI understood the context correctly? How do they know when to trust the AI and when to be skeptical?

That's different from the upskilling training I mentioned earlier. This is specific training on "how to work with AI effectively." It's a critical skill that most people don't have yet.

Effective training here includes: case studies where AI output was wrong and how someone caught it, templates for reviewing AI output by role, checklists for verification, and practice exercises where people learn to spot common AI errors.
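To make the checklist idea concrete, here is a minimal Python sketch of a role-specific review checklist that blocks approval until every item is confirmed. The class name, method names, and the sample sales items are hypothetical illustrations, not a standard or any particular tool's API.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class ReviewChecklist:
    """Hypothetical role-specific checklist a reviewer completes before AI output is used."""

    role: str
    items: list[str]
    checked: set[str] = field(default_factory=set)

    def mark(self, item: str) -> None:
        # Reject items that are not on this role's checklist, so typos don't silently pass
        if item not in self.items:
            raise ValueError(f"not on the {self.role} checklist: {item!r}")
        self.checked.add(item)

    def outstanding(self) -> list[str]:
        # Unconfirmed items, in the order the checklist lists them
        return [item for item in self.items if item not in self.checked]

    def ready_to_approve(self) -> bool:
        # Output can only be approved once nothing is outstanding
        return not self.outstanding()


# Example items for a sales reviewer (illustrative, not a recommended standard)
sales_review = ReviewChecklist(
    role="sales",
    items=[
        "Figures and dates verified against the CRM",
        "No claims the product cannot back up",
        "Prospect context matches what we know from calls",
    ],
)
sales_review.mark("Figures and dates verified against the CRM")
print(sales_review.outstanding())
```

Keeping the check inside the workflow itself, rather than in a separate document, is one way to make review part of the process instead of an afterthought.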

As people get better at reviewing AI output, they get better results from the AI. They catch errors earlier. They provide better feedback that actually improves AI performance in their specific domain.

The Feedback Loop: Getting Better Over Time

Here's something that separates organizations that fail with AI from organizations that succeed: feedback loops.

When employees review and refine AI output, that feedback is valuable. If you're capturing it, analyzing it, and using it to improve your AI systems, you're building a virtuous cycle. Over time, the AI gets better at your specific use cases.

This might mean fine-tuning general AI models with your domain-specific data. It might mean creating custom templates and prompts that work better for your business. It might mean building specific guardrails that prevent common errors in your domain.

Organizations that do this see continuous improvement. A task that needed 30 minutes of review in month one might take 15 minutes by month six, because the prompts, templates, and guardrails have been tuned to your workflow.
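Capturing feedback doesn't require heavy infrastructure to start. The sketch below assumes a hypothetical log in which reviewers record how long each correction took and what kind of error they fixed. It summarizes the review-time trend by month and the most common error types, which suggests where prompt and guardrail work should go first. The record schema and category names are illustrative assumptions.

```python
from __future__ import annotations

from collections import Counter, defaultdict
from dataclasses import dataclass
from statistics import mean
from typing import Optional


@dataclass(frozen=True)
class ReviewFeedback:
    """One reviewer's record of correcting a single AI output (hypothetical schema)."""

    month: int                     # month of the rollout, 1 = first month
    review_minutes: float          # time spent reviewing and fixing the output
    error_category: Optional[str]  # e.g. "wrong figure"; None if no fix was needed


def avg_review_minutes_by_month(records: list[ReviewFeedback]) -> dict[int, float]:
    # Group review times by month so the trend over the rollout is visible
    by_month: dict[int, list[float]] = defaultdict(list)
    for r in records:
        by_month[r.month].append(r.review_minutes)
    return {month: mean(times) for month, times in sorted(by_month.items())}


def top_error_categories(records: list[ReviewFeedback], n: int = 3) -> list[tuple[str, int]]:
    # The most frequent corrections point to where prompts or guardrails need work
    counts = Counter(r.error_category for r in records if r.error_category)
    return counts.most_common(n)


# Illustrative log echoing the 30-minute vs. 15-minute example above
log = [
    ReviewFeedback(1, 30, "wrong figure"),
    ReviewFeedback(1, 30, "wrong figure"),
    ReviewFeedback(1, 30, "off-tone"),
    ReviewFeedback(6, 15, None),
    ReviewFeedback(6, 15, None),
]
print(avg_review_minutes_by_month(log))
print(top_error_categories(log))
```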

Organizations that don't capture and act on feedback just get the generic AI experience, forever.

Scaling Successfully: From Pilots to Systemic Transformation

The difference between AI pilots that stay pilots and AI initiatives that actually scale comes down to a few key factors.

Removing Structural Blockers

First, you have to identify and remove structural blockers. These are the organizational, process, or technical obstacles that prevent scaling.

Structural blockers might include: lack of integration between systems, unclear data ownership, complex approval processes, tools that don't work well together, outdated infrastructure that can't handle AI workloads, organizational silos that prevent knowledge sharing.

Unlike individual resistance to AI, structural blockers prevent everyone from scaling AI, not just the people who are skeptical. If your data is siloed across three different systems and there's no process for consolidating it, you can't scale AI data analysis. If your approval process requires three layers of review, you can't scale AI-assisted customer communications. If your tools don't integrate, you can't build seamless workflows.

Successful organizations identify these blockers early. They treat them as strategic priorities, not implementation details. They allocate resources to remove them. They measure progress on removing blockers, not just on adoption.

Model biases are a specific structural blocker worth calling out separately. If your organization hasn't addressed potential biases in your AI systems, that becomes a blocker to scaling into decisions that affect people. You'll hit regulatory or ethical resistance. You'll encounter employee hesitation. You'll be exposed to legal risk.

Removing this blocker means: auditing your AI systems for bias, documenting what you find, making improvements, and creating governance around bias management going forward.
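One common first check in a bias audit is comparing outcome rates across groups. The sketch below computes a selection rate per group and flags any group whose rate falls below 80% of the highest group's rate, the "four-fifths" ratio used as a screening heuristic in US employment-selection guidance. Group labels and data are made up. A flag is a reason to investigate, not proof of bias, and a real audit covers far more than this one ratio.

```python
from __future__ import annotations

from collections import Counter
from typing import Iterable


def selection_rates(outcomes: Iterable[tuple[str, bool]]) -> dict[str, float]:
    """Share of positive outcomes (e.g. 'advanced to interview') per group."""
    totals: Counter[str] = Counter()
    selected: Counter[str] = Counter()
    for group, was_selected in outcomes:
        totals[group] += 1
        if was_selected:
            selected[group] += 1
    return {g: selected[g] / totals[g] for g in totals}


def impact_ratio_flags(rates: dict[str, float], threshold: float = 0.8) -> dict[str, bool]:
    """Flag groups whose rate is below `threshold` times the highest group's rate."""
    best = max(rates.values())
    if best == 0:
        # Nobody was selected: the ratio is undefined, so nothing is flagged
        return {g: False for g in rates}
    return {g: rate / best < threshold for g, rate in rates.items()}


# Hypothetical decisions: 50 of 100 selected in group_a, 30 of 100 in group_b
decisions = (
    [("group_a", True)] * 50 + [("group_a", False)] * 50
    + [("group_b", True)] * 30 + [("group_b", False)] * 70
)
rates = selection_rates(decisions)
print(rates)
print(impact_ratio_flags(rates))
```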

Building Systems That Work at Scale

Successful scaling requires thinking about systems, not just tools.

A tool is ChatGPT, Copilot, or whatever specific AI software you're using. A system is how that tool integrates with your processes, your data, your people, and your governance.

When you think systemically, you ask questions like: How does this tool connect to our data sources? What happens to the output? Who reviews it? What controls are in place? How do we measure impact? How do we maintain governance? How do we handle edge cases?

Answering those questions requires coordination across technology, operations, compliance, and people. It requires upfront investment that might seem excessive for a pilot but is necessary for scaling.

It also requires thinking about consistency. If you're deploying AI across 50 different teams, are they all using it the same way? Are the prompts consistent? Are the controls consistent? Are you measuring results consistently? If the answer is no, you're not scaling AI—you're scaling chaos.

Successful organizations develop templates, best practices, shared prompts, and shared infrastructure that let teams move fast while maintaining consistency.
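Shared prompts are one of the simplest consistency tools. Here is a minimal sketch, assuming a hypothetical in-code registry keyed by prompt name and version (in practice it might live in a shared repository or config service), built on Python's standard `string.Template`. The prompt name, version label, and wording are illustrative.

```python
from __future__ import annotations

from string import Template

# Hypothetical shared registry: one reviewed template per (name, version),
# so every team sends the model the same instructions.
SHARED_PROMPTS: dict[tuple[str, str], Template] = {
    ("summarize_ticket", "v2"): Template(
        "Summarize the customer ticket below in three bullet points. "
        "Say explicitly if anything is unclear instead of guessing.\n\n"
        "Ticket:\n$ticket_text"
    ),
}


def render_prompt(name: str, version: str, **fields: str) -> str:
    # Raises KeyError if the template doesn't exist or a required field is
    # missing, so a team can't quietly drift from the shared version.
    return SHARED_PROMPTS[(name, version)].substitute(**fields)


prompt = render_prompt("summarize_ticket", "v2", ticket_text="Invoice #123 was charged twice.")
print(prompt)
```

Versioning the key means a team can adopt "v3" deliberately, and results measured across teams can be compared against the same prompt.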

Measuring and Adjusting

Scaling requires measurement and adjustment. You'll learn things as you scale that you couldn't have predicted during pilots.

Measure adoption, but measure real impact more heavily. Are employees actually using the tools? Yes or no. Are workflows actually improving? By how much? Is employee satisfaction improving? Are there unexpected problems?

As you learn things, adjust. Maybe the tool you chose works great for one team but not another. Adjust. Maybe the training approach works for some roles but not others. Adjust. Maybe the governance framework is too strict or too loose. Adjust.

This requires humility and flexibility. You won't get it right the first time. That's okay. What matters is learning and adapting.

It also requires transparent communication about adjustments. If you're changing tools or processes based on what you learned, tell people. Explain why. Let them provide feedback. When people see that their feedback actually leads to changes, trust in the AI initiative increases dramatically.

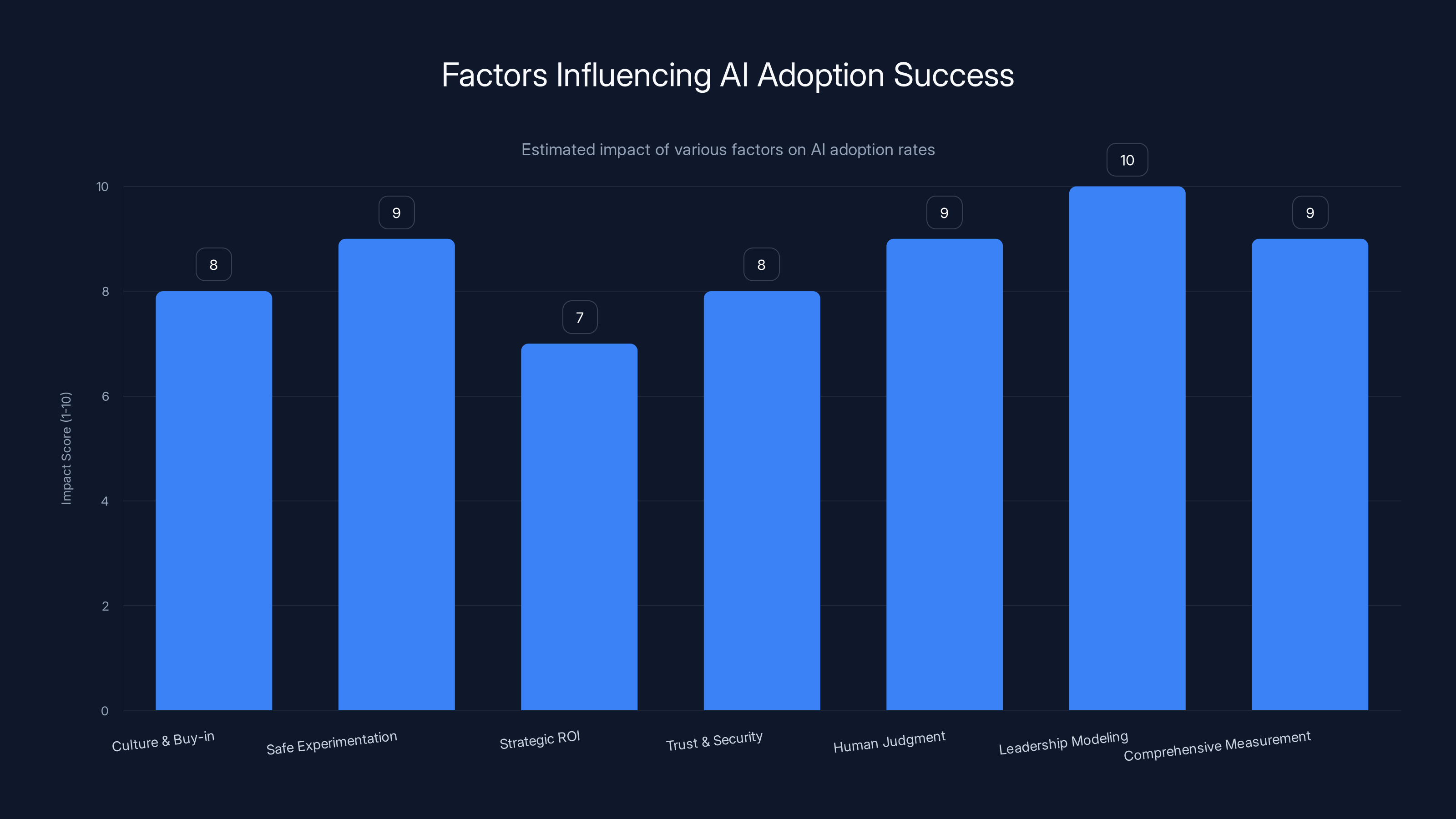

Leadership modeling and safe experimentation are key drivers of AI adoption success, with estimated impact scores of 10 and 9 respectively. Estimated data based on key takeaways.

Communicating Change: The Stories That Stick

Technical implementation and strategy matter, but the stories you tell matter equally.

When you're building AI culture, you're competing for mindshare with all the noise about AI taking jobs, AI being dangerous, AI being overhyped. You need stories that are more compelling than those narratives.

Sharing Real Wins, Not Marketing Wins

Marketing AI wins are great for external communication. They're polished, impressive, numbers-focused. "We saved 37% on processing costs." Employees don't connect to that.

Real wins are different. "Sarah used AI to draft her quarterly proposal last week. Normally that takes her three days. This time she had a draft in two hours that she could refine. She spent the time she saved on strategy instead of formatting. That's how AI changes work."

Real wins are specific. They're about people employees know or can relate to. They show actual impact on actual work. They're believable because they're not hyperbolic.

When you share real wins, employees start imagining how AI could help their own work. That's more valuable than any strategy document.

Sharing Failures and What Was Learned

Maybe more importantly, share failures. Not failures that make people look bad. Failures that teach something.

"We tried using AI for customer service responses. It worked great for 80% of tickets but struggled with ambiguous requests. We ended up with a hybrid approach where AI handles clear requests and humans handle ambiguous ones. That's actually better than what we had before—clearer routing, and people spend their time on harder problems."

That story teaches several things: AI doesn't have to be perfect to be valuable, failures are learning opportunities, and humans and AI work better together than either alone.
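The hybrid approach in that story amounts to a routing rule. The source doesn't say how requests were classified, so the sketch below assumes a hypothetical confidence score from whatever model scores each ticket, plus a few illustrative phrases that signal ambiguity. Both the threshold and the phrases would need tuning against real, reviewed tickets.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Ticket:
    text: str
    ai_confidence: float  # hypothetical 0..1 score from whatever classifier you use


# Illustrative values; a real team would tune these against reviewed tickets
CONFIDENCE_THRESHOLD = 0.85
AMBIGUITY_MARKERS = ("not sure", "something else", "or maybe")


def route(ticket: Ticket) -> str:
    """Return 'ai' for clear requests and 'human' for ambiguous ones."""
    lowered = ticket.text.lower()
    looks_ambiguous = any(marker in lowered for marker in AMBIGUITY_MARKERS)
    if ticket.ai_confidence >= CONFIDENCE_THRESHOLD and not looks_ambiguous:
        return "ai"
    # Anything uncertain defaults to a person, not to the model
    return "human"


print(route(Ticket("Please reset my password", 0.95)))
print(route(Ticket("I'm not sure which plan I'm on", 0.95)))
```

Defaulting to "human" when in doubt keeps the failure mode cheap: a clear ticket occasionally reaches a person, rather than an ambiguous one getting an automated answer.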

When leadership openly shares failures, the entire cultural tone shifts. Suddenly it's okay to try something and have it not work out. That's when real innovation happens.

Connecting AI to Values

Better yet, connect AI adoption to your organization's values.

If your company values customer satisfaction, show how AI is making customer interactions faster and more satisfying. If your company values employee development, show how AI is letting people spend less time on routine work and more time on meaningful work. If your company values innovation, show how AI is letting teams move faster and try more ideas.

When AI is connected to values, it stops being "technology we're pushing" and becomes "how we live our values." That connection is powerful.

The Organizational Role of AI Advocates and Architects

Successful AI transformation doesn't happen without champions. You need people explicitly responsible for driving it forward.

What AI Advocates Do

AI advocates are evangelists inside your organization. They're not necessarily technical. They might be business leaders, operations managers, or innovative individual contributors.

What makes them advocates is that they see the potential of AI for their organization, they're experimenting with it in their own work, they're willing to share what they learn, and they're helping other people understand how AI could help them.

They're not imposing AI on unwilling people. They're making a compelling case based on their own experience. They're answering questions and concerns honestly. They're modeling enthusiasm without hype.

The most effective advocates are people in roles that matter to the broader organization. When a VP of Sales becomes an AI advocate and starts sharing how AI is improving sales workflows, that's credible. When a well-respected engineer starts using AI for coding and talking about how it changes what they focus on, that matters.

What AI Architects Do

AI architects are different. They're responsible for the systems, governance, and strategy behind AI adoption.

They think about: Which processes should we target? What tools should we use? How do we integrate with our existing systems? What controls do we need? How do we measure success? How do we scale? How do we maintain governance?

Architects need technical knowledge but also business knowledge. They need to understand your processes, your data, your infrastructure, and your constraints. They need to balance innovation with stability.

The best architects are people who understand both technology and business deeply. They can talk to engineers about AI capabilities and limitations and also talk to business leaders about ROI and strategy.

Building Your AI Leadership Structure

Most organizations benefit from having both advocates and architects, working together.

Architects set strategy and oversee implementation. Advocates help drive adoption and gather feedback from the organization. Together, they create a feedback loop: architects learn from advocates what's working and what's not in the organization, advocates learn from architects what's possible and what makes sense strategically.

This structure works at any organizational size. A startup might have one person playing both roles. A large enterprise might have a whole team of each. The structure matters less than the fact that both roles exist and they're coordinated.

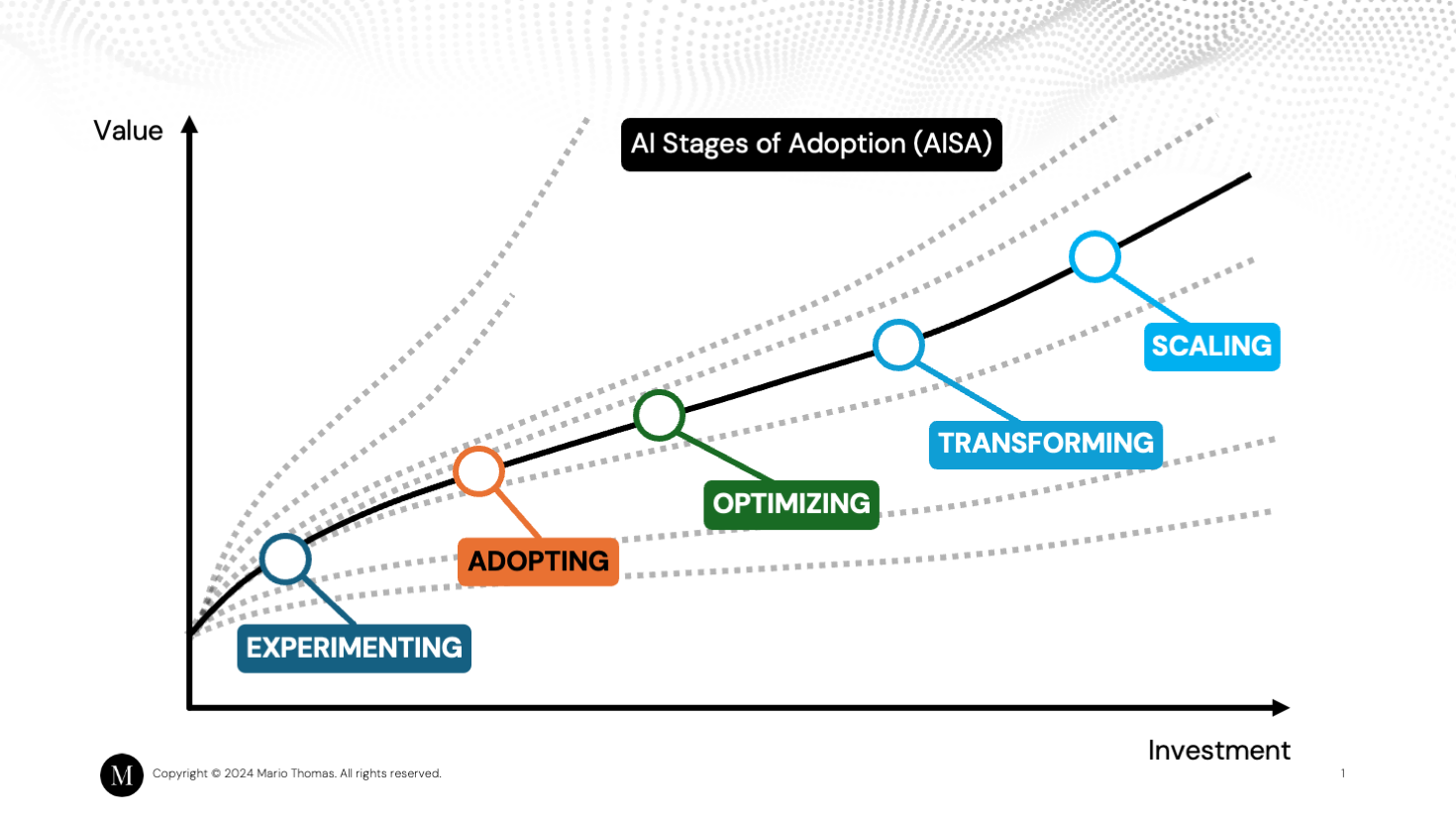

Future State: Where AI Is Heading and What Organizations Need to Do Today

AI is changing rapidly. What should your organization do today to prepare for where things are headed?

The Convergence of AI with Business Process Management

Right now, many organizations treat AI as a separate initiative. They're deploying AI tools, building AI skills, and adjusting governance for AI, all as a project of its own.

Over the next couple of years, that will change. AI will be less about special AI projects and more about how every process works.

Organizations that are building that integration today—where AI isn't a separate tool but part of how work gets done—are setting themselves up for the next phase. They're building muscle memory. They're developing skills that will transfer. They're establishing cultural norms that make AI adoption easier.

The Role of Custom AI and Domain-Specific Models

Right now, most organizations are using general-purpose AI models such as ChatGPT or Claude. That's fine for starting. It's fast and it works.

But over time, custom models and domain-specific models will deliver more value. Models trained on your organization's data. Models fine-tuned for your specific workflows. Models that understand your industry or domain deeply.

Building those custom models requires: data infrastructure, governance that allows AI to access data (but safely), and technical capability to fine-tune models. Organizations that are building those foundations today will move faster when the time comes.

The Skill Development Pipeline

Right now, there's a shortage of AI skills. That will improve, but organizations that have built internal AI capabilities and skills will still have an advantage.

Start building your AI skill pipeline now. Identify people who are interested in AI. Give them time and resources to develop skills. Create career pathways for AI roles. As people develop skills, they become more valuable to your organization and more capable of solving complex AI problems.

Governance and Trust as Competitive Advantages

As AI becomes more central to business, organizations with strong governance and trust will have advantages. They'll be able to scale AI faster because they don't have to build trust—it's already there. They'll be able to deploy more sensitive applications because the governance is solid. They'll attract talent because working with AI is seen as safe and structured.

Investing in governance today isn't about compliance. It's about competitive advantage.

Bringing It Together: The Path Forward

Building AI culture in your organization is possible. It's not easy, but it's straightforward if you understand the fundamentals.

Start with the right foundation: meet employees where they are, address their concerns honestly, provide practical training, and create safe spaces for experimentation. Leaders need to model the behavior they want to see. AI adoption isn't a mandate from above. It's a culture that's built one conversation, one experiment, and one win at a time.

Build strategy, not just initiatives. Identify where AI delivers real value. Run pilots that are designed to scale. Measure outcomes that matter. Be willing to fail and learn. Scale what works across your organization systematically.

Invest in trust from day one. Enterprise-grade security, transparent governance, honest conversations about limitations and risks. That investment pays dividends in adoption, in employee confidence, and in organizational resilience.

Recognize that human oversight isn't a limitation. It's the mechanism that makes AI actually valuable. Design workflows that combine AI automation with human judgment. Train people to make that combination work effectively.

Tell the right stories. Share wins. Share failures. Connect AI adoption to your organization's values and to employee career development. Let advocates emerge. Give them the platform to share their experience.

Appoint architects who are responsible for strategy and systems. Hold them accountable for ROI. Let them create the infrastructure that lets the rest of the organization move fast.

Your organization probably has significant investment in AI already. The question isn't whether you'll use AI. The question is whether you'll use it well, at scale, in ways that deliver real value.

That comes down to culture. It comes down to strategy. It comes down to people.

Get those three things right, and the technology takes care of itself.

FAQ

What is AI culture in an enterprise organization?

AI culture is the shared mindset, behaviors, and values that make AI adoption normal and effective across an organization. It's not just having AI tools—it's having employees who understand AI, see it as valuable for their work, use it confidently, and hold the organization accountable for using AI responsibly. Organizations with strong AI culture see significantly higher adoption rates, better ROI from AI investments, and more sustainable transformation.

Why do employees resist AI adoption even when organizations invest heavily in tools and training?

Employee resistance usually stems from specific concerns that haven't been addressed: fear that AI will eliminate their job, uncertainty about how AI fits into their daily work, lack of trust in AI systems, insufficient training that's relevant to their role, or leadership that talks about AI benefits without addressing how it changes their work. Addressing resistance requires direct conversation about these concerns, transparent communication about job impacts, practical training relevant to specific roles, and visible leadership support for responsible AI use.

How should an organization measure AI ROI beyond time savings?

Comprehensive AI ROI includes multiple factors beyond speed: quality improvements (fewer errors, better decisions), decision improvement (faster, more informed choices), employee experience (job satisfaction, career development), retention (reduced turnover from better role design), capability development (teams become more valuable), and risk reduction (compliance, governance). Organizations that measure only time savings typically underestimate ROI by 50-70%. A balanced measurement framework should weight factors based on your organization's priorities.
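A weighted scorecard is one straightforward way to implement that balanced framework. The sketch assumes each factor has already been scored on a 0-to-1 scale (how you derive those scores is up to you). The factor names echo the list above; the weights and scores are illustrative, not benchmarks.

```python
from __future__ import annotations


def weighted_roi_score(scores: dict[str, float], weights: dict[str, float]) -> float:
    """Combine per-factor scores (0..1, higher is better) into one weighted score."""
    missing = set(weights) - set(scores)
    if missing:
        raise ValueError(f"no score for: {sorted(missing)}")
    total_weight = sum(weights.values())
    if total_weight <= 0:
        raise ValueError("weights must sum to a positive number")
    # Weighted average: each factor counts in proportion to its priority
    return sum(scores[f] * w for f, w in weights.items()) / total_weight


# Illustrative numbers only: a team that prioritizes quality over raw speed
scores = {"time_savings": 0.5, "quality": 0.8, "decision_quality": 0.6, "employee_experience": 0.7}
weights = {"time_savings": 1, "quality": 3, "decision_quality": 2, "employee_experience": 2}
print(round(weighted_roi_score(scores, weights), 3))
```

Measuring time savings alone is equivalent to giving every other factor a weight of zero.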

What's the most common reason AI pilots fail to scale?

The most common reason is treating pilots as isolated experiments rather than as building blocks for systematic transformation. Organizations run a successful pilot, celebrate it, and then fail to integrate the learnings into actual workflows or to systematize what worked. Real scaling requires clear ownership and accountability for outcomes, explicit integration into workflows and roles, measurement that tracks actual business impact, and the discipline to fully scale one area before starting the next pilot.

How can organizations address bias and hallucinations in AI systems without paralyzing adoption?

Address these limitations by building them into your design from the start, not trying to work around them later. For bias, audit your systems for potential biases, document what you find, make improvements, and create oversight for high-impact decisions. For hallucinations, design workflows that assume AI output needs verification. Build verification into processes that matter. Train employees to spot common errors. Use AI for drafting and suggestions, not for final authority on facts that need to be accurate. This approach reduces risk while enabling adoption.

What role should leadership play in AI adoption?

Leadership is critical because adoption isn't really about technology; it's about people. Leaders set the tone by being transparent about AI limitations and risks, publicly modeling responsible AI use in their own work, sharing both wins and failures from AI experiments, explicitly addressing employee concerns about job security and career impact, measuring and celebrating the right outcomes, creating safe spaces for experimentation and learning, and keeping a consistent focus on AI as a strategic priority. When leadership models these behaviors, adoption accelerates significantly.

How long does it typically take for an organization to build sustainable AI culture?

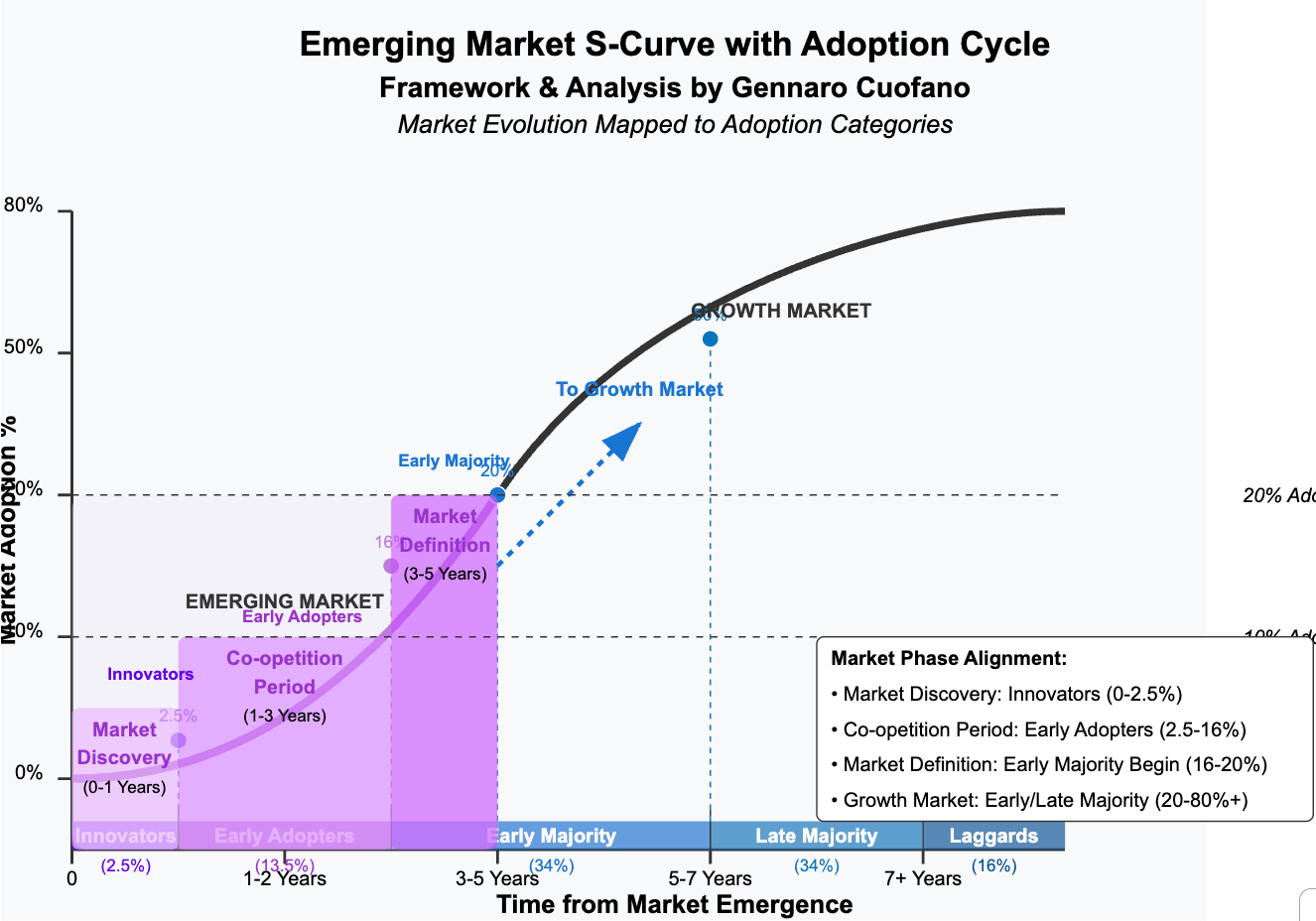

This depends on organizational size and starting point, but typical timelines are: months 1-3 (leadership alignment and initial training), months 3-6 (first pilots and early advocacy building), months 6-12 (scaling successful pilots and addressing resistance), months 12-24 (systemic integration across major business processes). The total timeline from start to sustainable culture is typically 18-24 months for medium-sized organizations. Larger organizations may need longer. The key is consistent focus and commitment throughout this period.

What's the relationship between AI tools, training, and culture—which matters most?

All three matter, but they're not equally important. Culture is the foundation. With strong culture, any reasonable tools and training will work. With weak culture, no tools or training will succeed. Your priority order should be: 1) build cultural foundation (leadership alignment, addressing concerns, creating psychological safety), 2) provide role-specific training (not generic), 3) deploy good tools (good but not perfect is sufficient). Many organizations reverse this order and wonder why adoption is slow.

How should organizations handle employees who are skeptical about AI benefits?

Don't try to convince skeptics with arguments. Instead, give them space to experience AI benefits directly. Let skeptical employees participate in pilots. Encourage them to try tools on their own timeline. Share case studies from peers they respect. Acknowledge legitimate concerns about limitations and risks—that credibility builds more trust than overselling benefits. Often, skeptics who have a positive experience become the strongest advocates because they're credible to other skeptics. Your best conversion tool is real experience, not persuasion.

Key Takeaways

- Culture determines AI adoption success more than technology. Without addressing employee concerns and building genuine buy-in, tools and training initiatives will fail regardless of quality.

- Safe experimentation is foundational. Organizations that create psychological safety for AI experimentation, coupled with practical training and leadership modeling, see adoption rates 3x higher than organizations that mandate AI use.

- ROI comes from strategy, not just speed. Only 5% of AI pilots deliver significant value—not because AI doesn't work, but because most organizations lack clear strategy for integration and scaling.

- Trust must be built into systems from the start. Enterprise-grade security, transparent governance, honest communication about limitations, and visible oversight create the foundation for scaling AI across an organization.

- Human judgment is where value comes from. Effective AI implementation combines AI automation with human oversight, creating workflows that leverage AI's processing power alongside human judgment. That combination is stronger than either alone.

- Leadership modeling changes everything. When senior leaders visibly use AI in their own work, share both successes and failures, and address employee concerns transparently, adoption accelerates significantly. Conversely, leadership that talks about AI without demonstrating it undermines the entire initiative.

- Measurement determines outcomes. Organizations that measure comprehensive ROI (quality, decision improvement, skill development, employee satisfaction) see 2-3x better results than organizations measuring only time savings. What gets measured gets managed.
