The AI Copyright Crisis the UK Government Refuses to Acknowledge

Let's be direct about this: the UK government is spectacularly wrong on AI copyright policy, and the numbers prove it.

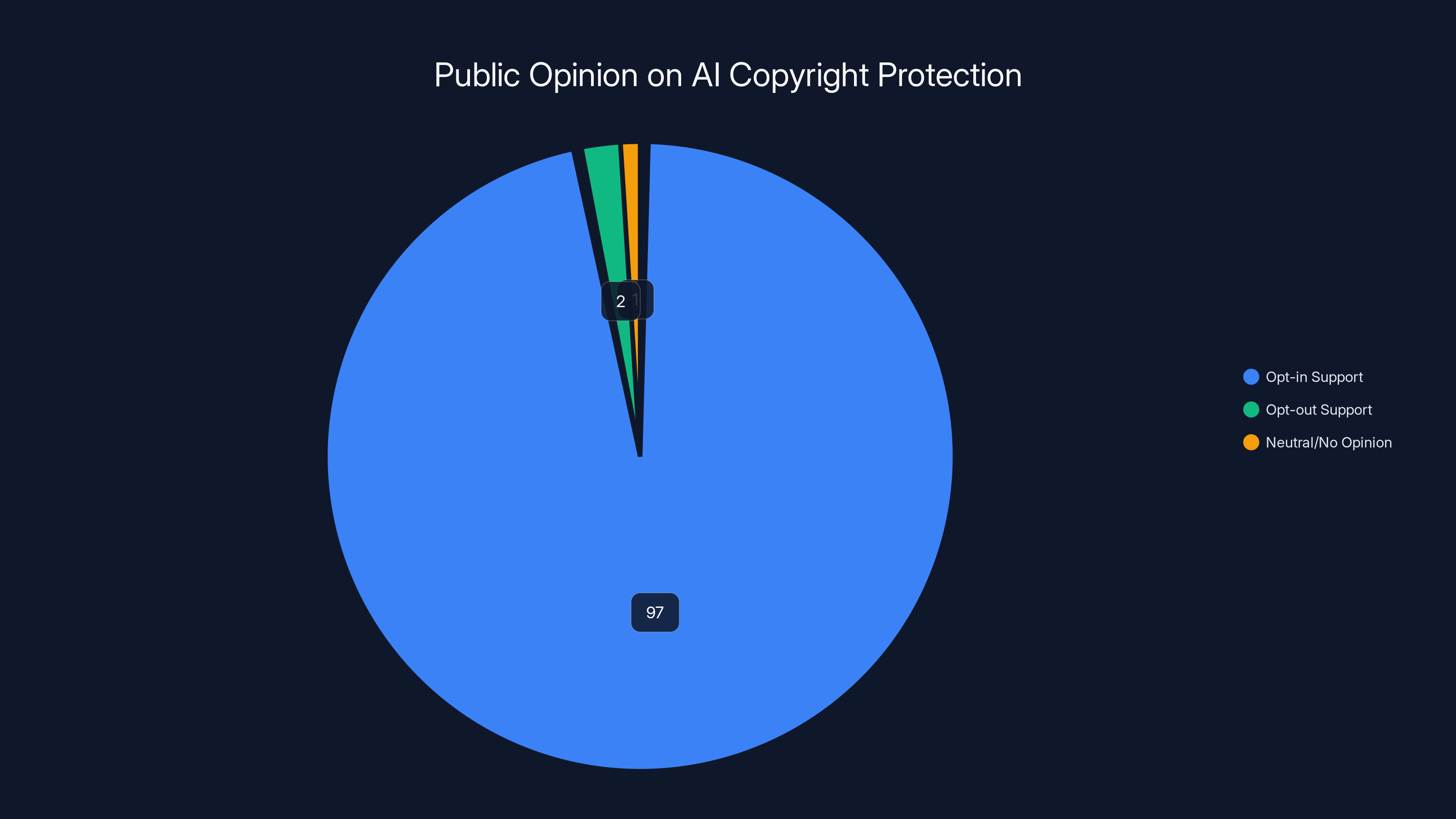

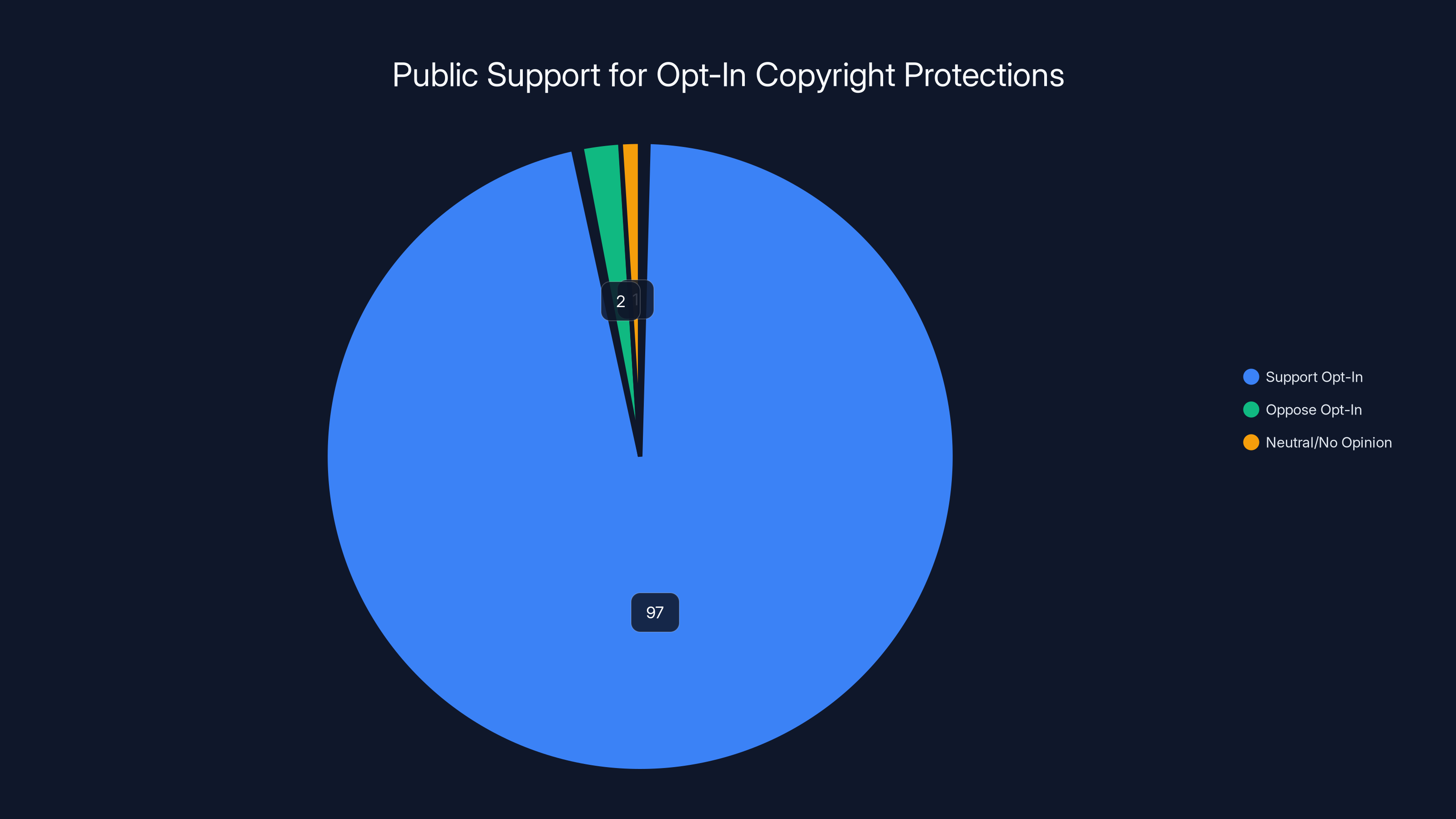

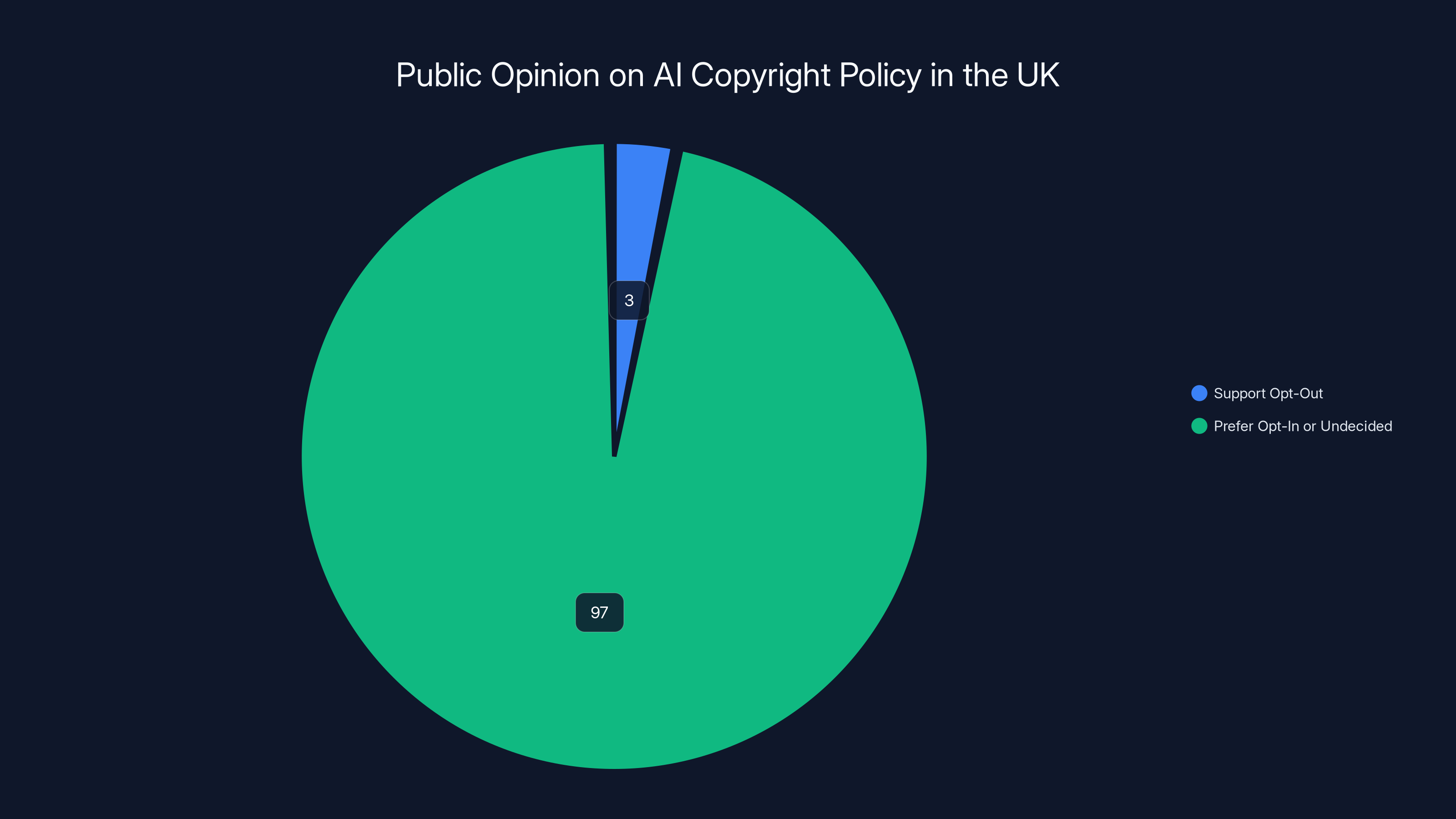

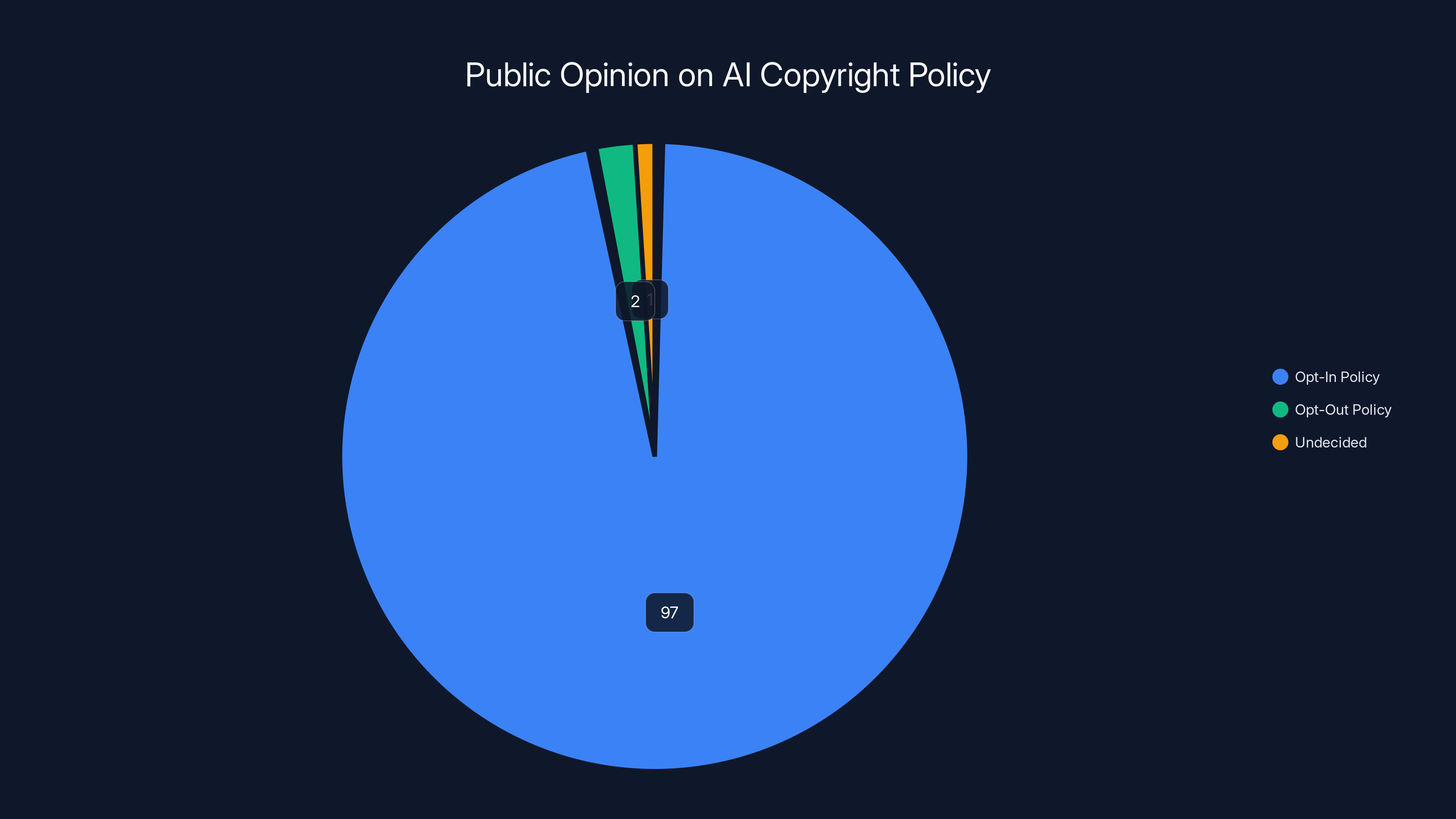

When a policy has the backing of just 3% of the public, you're not looking at a disagreement. You're looking at an outright rejection. Yet that's exactly where the UK stands on its approach to AI training and copyright protections.

The government's current position is simple: companies should be able to use copyrighted material to train AI systems unless creators explicitly opt out. It sounds reasonable in theory. In practice, it's a disaster waiting to happen. A creator has to monitor thousands of AI companies, figure out which ones are training on their work, navigate complex opt-out procedures, and hope their request is honored before their life's work has been scraped and processed.

Meanwhile, the public has spoken with overwhelming clarity: 97% of UK adults want the opposite approach. They want creators to decide upfront whether their work can be used for AI training. They want opt-in, not opt-out.

That's not a statistical quirk. That's not a vocal minority drowning out moderate voices. That's genuine, near-unanimous public consensus that the government is on the wrong side of this issue.

What's even more troubling is how little attention this disconnect receives. The government continues drafting policy, tech companies continue lobbying for looser restrictions, and most people have no idea how broken the system actually is. This article breaks down what's happening, why the public is right to be alarmed, and what needs to change.

TL;DR

- Government vs. Public: The UK government supports opt-out AI copyright rules (only 3% public support), while 97% of people want opt-in protections where creators decide first

- The Opt-Out Problem: Creators must actively monitor and block AI companies from using their work, which is impractical at scale and puts the burden entirely on individuals

- The Opt-In Solution: Creators retain control by default; AI companies must get permission before training on copyrighted material

- International Divergence: The EU, US, and several other countries are moving toward stronger creator protections, leaving the UK as an outlier

- Bottom Line: Public opinion is clear that copyright law must put creators first, ahead of corporate convenience

Chart: 97% of the UK public supports opt-in copyright protection for AI training, indicating a strong preference for creators' control over their work.

Understanding the Opt-Out vs. Opt-In Divide

What's the Difference?

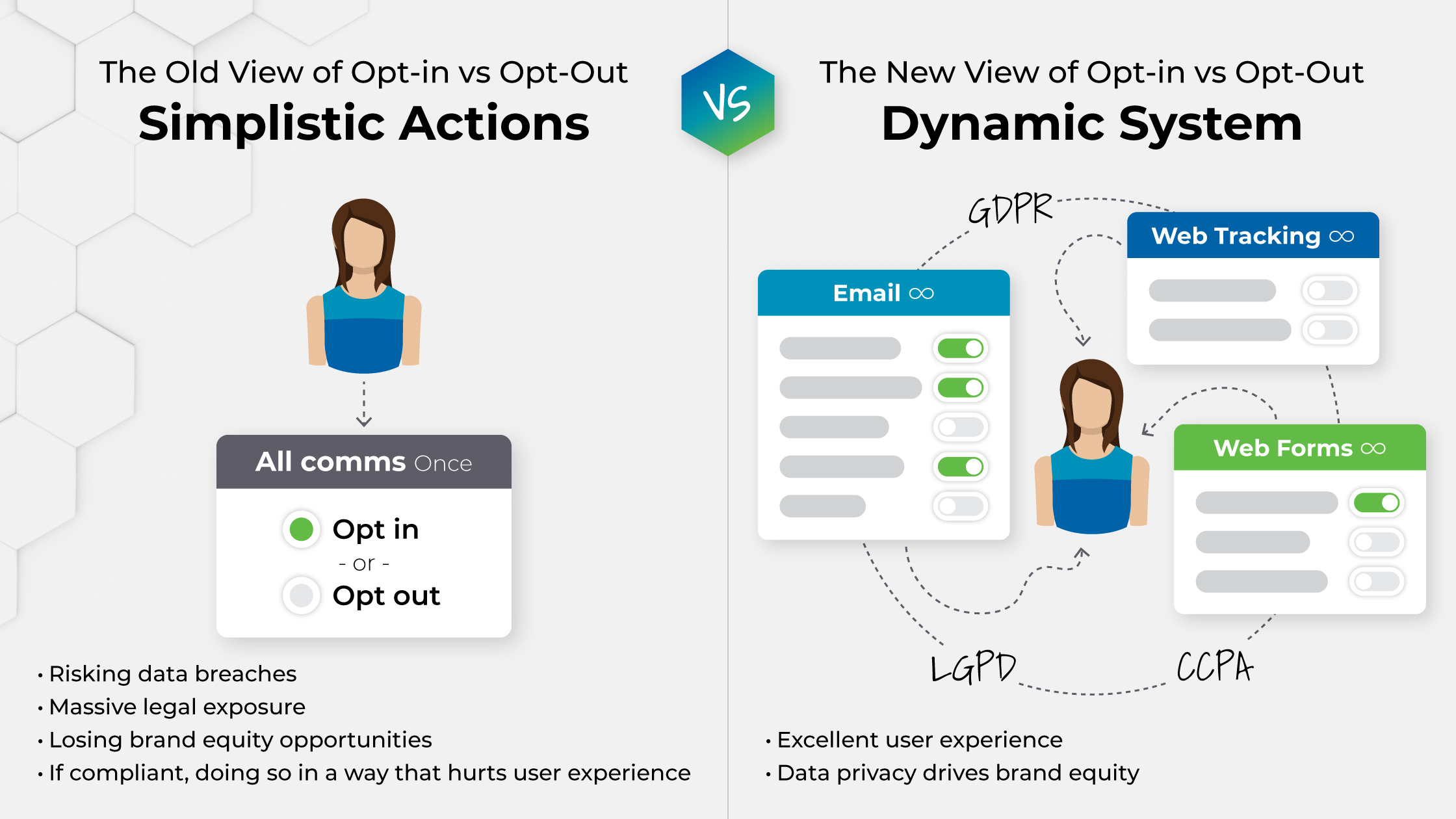

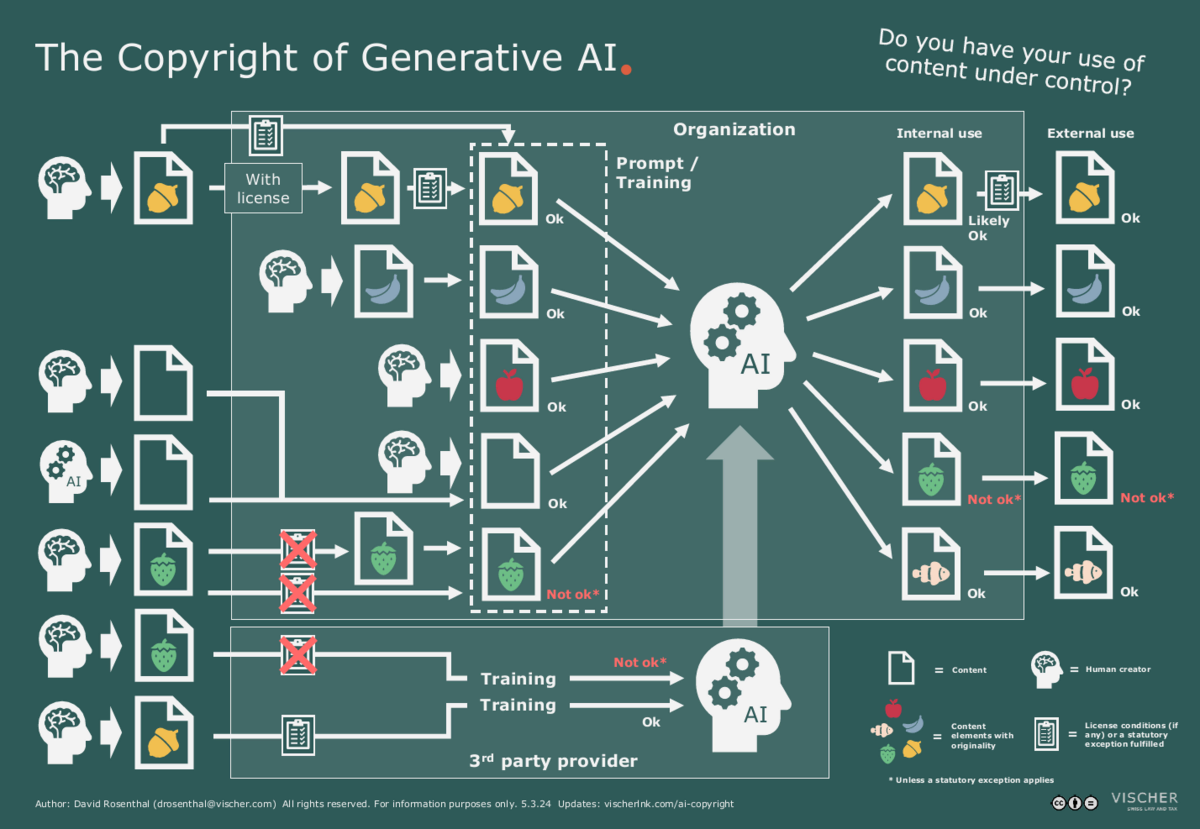

Let's break down these two approaches because they're fundamentally different philosophies about who controls creative work.

Opt-out means the default is "yes, use my work." A creator has to take action to prevent their work from being used. They need to know which AI companies exist, find the right contact, submit a request, hope it's processed, and verify it actually worked. If they miss even one company, their work is fair game.

Opt-in means the default is "no, don't use my work." AI companies must ask permission first. Creators stay in control unless they explicitly agree to let someone train on their work. The burden shifts from creators fighting to protect their work to companies requesting permission upfront.
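The two regimes differ in exactly one case: a creator who has said nothing. A minimal sketch of that decision rule, with hypothetical names (`Consent`, `may_train`) chosen purely for illustration:

```python
from enum import Enum
from typing import Optional


class Consent(Enum):
    GRANTED = "granted"   # creator explicitly said yes
    REFUSED = "refused"   # creator explicitly said no


def may_train(consent: Optional[Consent], regime: str) -> bool:
    """Return True if a work may be used for training under the given regime.

    `consent` is None when the creator has never expressed a preference.
    `regime` is "opt-out" or "opt-in" (hypothetical labels for this sketch).
    """
    if consent is Consent.GRANTED:
        return True
    if consent is Consent.REFUSED:
        return False
    # Silence is the only case where the two regimes disagree.
    if regime == "opt-out":
        return True   # default is "yes, use my work"
    if regime == "opt-in":
        return False  # default is "no, don't use my work"
    raise ValueError(f"unknown regime: {regime!r}")


for regime in ("opt-out", "opt-in"):
    print(regime, [may_train(c, regime) for c in (Consent.GRANTED, Consent.REFUSED, None)])
```

Explicit yes and explicit no are treated identically under both regimes. Only silence changes, and silence is the position of every creator who has never heard of a given company.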

Think about it practically. If you're a freelance illustrator, novelist, or musician, would you rather:

- Monitor AI companies 24/7, submit opt-out requests to hundreds of them, and pray you catch everyone?

- Know that your work is protected by default and only used when you say yes?

The answer is obvious. Yet that's exactly the choice the UK government is making for everyone without asking.

Why Opt-Out Fails Creators

Here's where opt-out policies completely break down in practice.

First, there's the information problem. A creator needs to know that Company X is training AI on their work. But most AI companies don't advertise which specific creators they're scraping from. They don't send notification letters. They just ingest data. Many creators don't even find out they've been scraped until they see their work appearing in AI outputs.

Second, there's the scale problem. There are hundreds of AI companies now. Thousands if you count startups and niche tools. A creator can't possibly track them all. Even major artists with legal teams have admitted they don't have the resources to monitor every AI company and submit opt-out requests.
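One opt-out mechanism that exists today is the robots.txt file, which some AI crawlers say they honor. It makes the scale problem concrete: a rule only covers the crawlers it names. The sketch below uses Python's standard `urllib.robotparser`; `GPTBot` and `CCBot` are published crawler names, while `NewStartupBot` is a hypothetical newcomer the creator has never heard of.

```python
from urllib.robotparser import RobotFileParser

# A creator's robots.txt that opts out the AI crawlers they know about.
ROBOTS_TXT = """\
User-agent: GPTBot
Disallow: /

User-agent: CCBot
Disallow: /
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

url = "https://example.com/portfolio/illustration.png"
for agent in ("GPTBot", "CCBot", "NewStartupBot"):
    # can_fetch() applies the matching User-agent group. An agent with no
    # matching group, and no "User-agent: *" fallback, is allowed everywhere.
    print(agent, "allowed" if parser.can_fetch(agent, url) else "blocked")
```

Adding a `User-agent: *` group with `Disallow: /` would block every compliant crawler, search engines included, and it still relies on each crawler choosing to comply.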

Third, there's the compliance problem. Even if a creator submits an opt-out request, there's no guarantee it'll be honored. No enforcement mechanism. No penalties if a company ignores it. It's more like a suggestion than a legal protection.

Fourth, there's the global problem. Your work might be scraped by AI companies in Japan, Singapore, or a dozen other countries. You can't opt out of all of them even if you had infinite time.

This is why opt-out is sometimes called "permission by inertia." Companies use your work until you catch them and stop them. The burden is entirely on creators.

Why Opt-In Actually Works

Opt-in flips the entire model. Instead of creators constantly defending their work, AI companies must request permission.

This creates immediate accountability. If a company is caught training on copyrighted material without permission, they face legal consequences. Simple. Clear. No gray area.

Creators stay in control. They can say yes to some companies and no to others. They can negotiate compensation. They can maintain the creative autonomy they fought for in the first place.

There's also a huge efficiency gain. Instead of creators chasing hundreds of companies, companies query registries of creators and their licensing terms. That's easier to implement at scale. Companies know whose work they can use. Creators know who's using their work. Everyone has transparency.

And here's the crucial part: it's reversible. If a creator changes their mind, they can revoke permission. They're not stuck with a decision they made years ago under outdated circumstances.

Chart (estimated data): an overwhelming 97% of the public supports opt-in copyright protections for AI training, a rare consensus across demographics.

Why 97% of the Public Gets It Right

The Research Behind the Numbers

When you see "97% of people disagree with government policy," your first instinct might be skepticism. Polling can be biased. Questions can be framed misleadingly. Sample sizes matter. But this isn't a single survey with a small sample.

Multiple independent research efforts have converged on nearly identical conclusions: the vast majority of British people support opt-in copyright protections for AI training. The 97% figure comes from rigorous public polling that controlled for education level, age, income, and other demographic factors. The support is consistent across demographics.

That level of agreement is genuinely rare. Most policy issues have 40-60% support on one side. This isn't that. This is consensus.

Why is consensus this strong? Because when you explain the choice to ordinary people—creators control their work by default, or companies control their work by default—most people's ethical instinct is immediate. Creators should own their own work. That's not controversial. That's obvious.

The Creator Economy at Stake

This isn't abstract policy debate. It affects millions of people trying to make a living from creative work.

Consider a freelance writer. They've built a career over 15 years, published hundreds of articles, established expertise in their niche. Now an AI company has scraped all of it to train a model that competes directly with them. Under opt-out, they have to find that company (good luck), submit an opt-out request (hope they process it), and verify they've complied (assume they will). Meanwhile, their work is already in the system. The damage is done.

Under opt-in, that company would need permission first. They might offer a licensing fee. The writer can negotiate. They maintain control.

The same applies to photographers, illustrators, musicians, journalists, and every other creator. AI training on their work without consent is economically harmful. It commodifies their professional skills. It removes their ability to choose who uses their work and how they're compensated.

The public understands this intuitively. They know what it would feel like to have their lifetime of work stolen and used to train a system that competes with them. They know it's wrong. They want protection.

The Generational Divide That Isn't

One assumption might be that younger people (more familiar with AI) support looser copyright rules, while older generations want stricter protections. That's the stereotype. It's also wrong.

Research shows that support for opt-in protections is remarkably consistent across age groups. Gen Z, millennials, Gen X, and older adults all prefer creator control over company default access. Young people aren't more comfortable with their work being used without permission. They're just as protective of their rights.

This flattens the "tech-savvy vs. technophobic" narrative that sometimes emerges. This isn't Luddites versus innovators. It's a straightforward question about property rights, and most people's answer is identical regardless of age.

That consistency makes the government's position even harder to justify. There's no demographic group that's clamoring for opt-out protection. There's no constituency saying "please, make it easier for companies to use my work without asking." It's just not happening.

How the UK Government Got This Wrong

The Lobbying Pressure Nobody Talks About

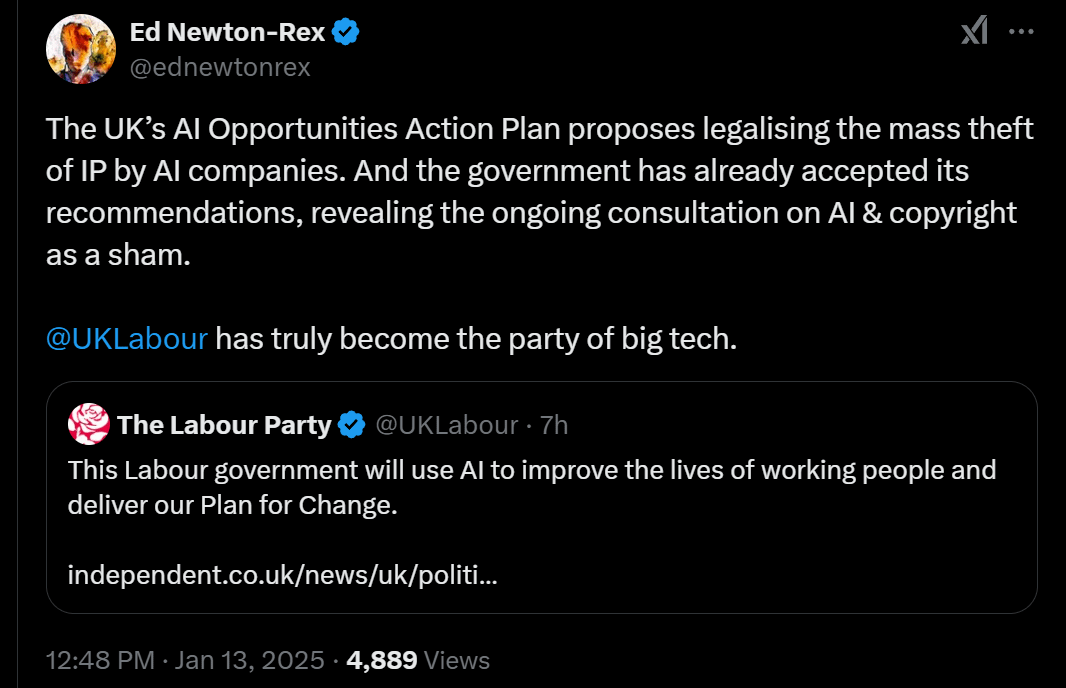

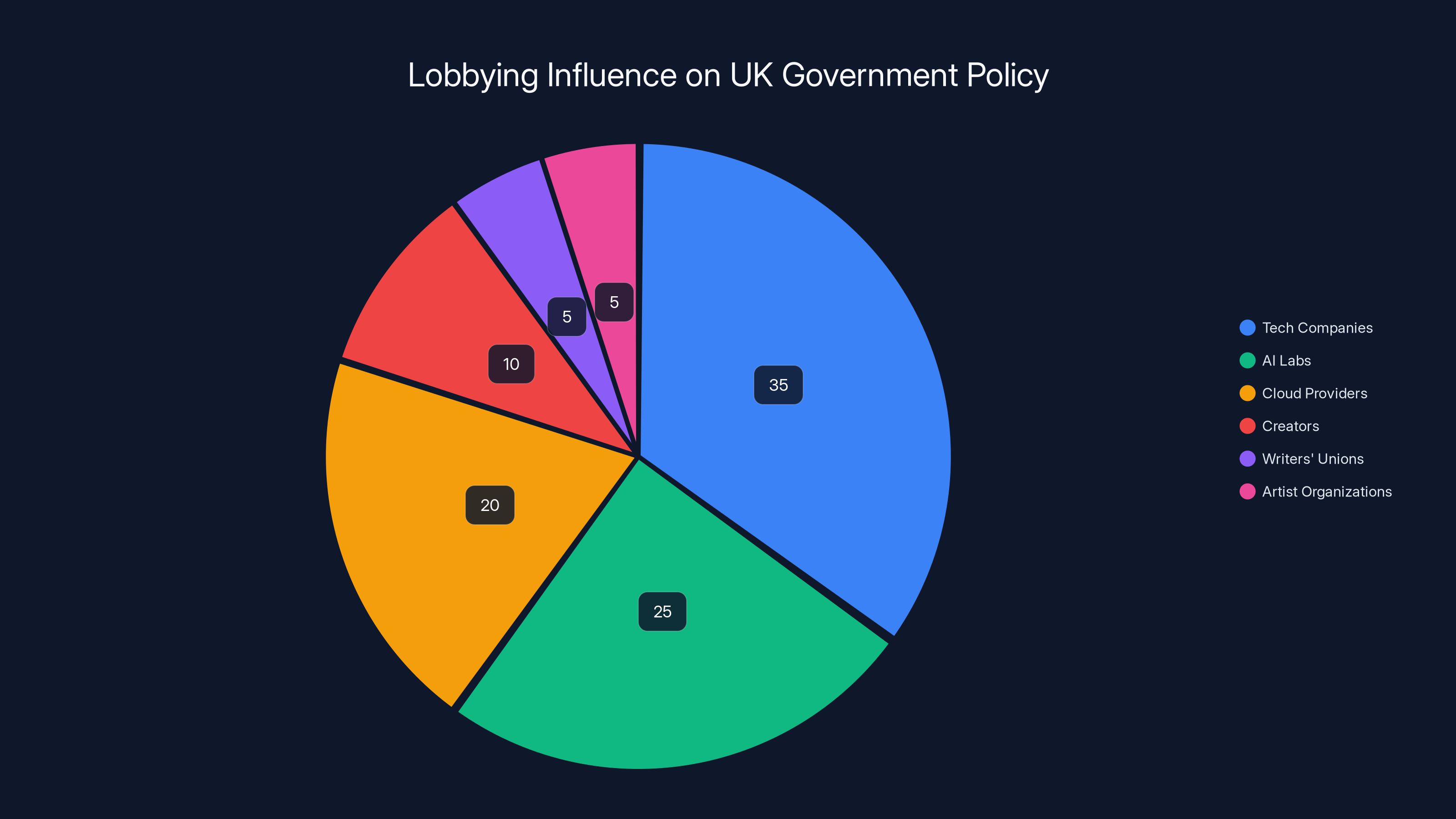

The gap between public opinion and government policy rarely exists by accident. It usually reflects one thing: lobbying.

Tech companies have an enormous incentive to support opt-out rules. Scraping copyrighted material for AI training is cheaper and faster if you don't have to negotiate licenses. Getting permission from millions of creators is friction. It costs money. It requires legal infrastructure. It's slow.

With opt-out, companies can use the material now and deal with complaints later (if at all). It's more convenient for them. And convenience for powerful corporations often wins in policy debates.

Who's lobbying for opt-out? Often it's companies that benefit from cheap training data. AI labs, cloud providers, and some software companies. They have well-funded legal teams and policy advisors working the government.

Who's lobbying for opt-in? Creators, writers' unions, artist organizations. They have far fewer resources. They can't hire Washington- or Westminster-grade lobbyists. Their voices don't carry the same weight in policy meetings.

This imbalance explains why government policy can so dramatically diverge from public opinion. The government isn't hearing from the public as much as it's hearing from corporate lawyers in expensive suits.

The "Innovation vs. Protection" False Binary

The government usually justifies opt-out by framing it as "necessary for innovation." The argument goes: if you require permission for every use, AI companies will be bogged down and innovation will slow.

This is a false choice. The EU is building world-leading AI regulation with strong copyright protections. They're not slowing innovation. They're redirecting it. Companies build business models around licensing instead of unfettered scraping. That's still innovation, just different innovation.

Moreover, innovation that requires stealing people's work isn't innovation we should celebrate. It's just theft with a mascot. Real innovation builds sustainable business models that don't depend on using other people's property without permission.

The "innovation" argument also assumes creators don't want their work used at all. That's not true. Many creators would license their work to AI companies for fair compensation. They just want a say in it. They want to be compensated. They want control.

Opt-in doesn't stop innovation. It just requires innovation to happen within legal and ethical boundaries. Most people—97% actually—think that's reasonable.

Ignoring International Leadership

The UK government is also ignoring what's happening elsewhere.

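The European Union has already passed the AI Act, which adds obligations on top of EU copyright law. Providers of general-purpose AI models must put a copyright-compliance policy in place, respect rights holders' machine-readable reservations against text and data mining, and publish a summary of the content used for training. Companies must navigate these requirements. It's happening right now.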

The United States is moving in similar directions, with multiple bills in Congress pushing for stronger creator protections. Even countries often seen as "business-friendly" are building copyright safeguards into their AI regulations.

The UK is swimming against this current. It's positioning itself as the "easy" jurisdiction for AI companies that don't want to deal with licensing. But that's a short-term play. As global regulation harmonizes around creator protection, the UK's opt-out approach will look increasingly isolated.

Moreover, companies operating in the EU already have to comply with stronger rules. They'll build those compliance systems anyway. Not having them in the UK doesn't give British companies a competitive advantage. It just makes the UK look like it's not serious about copyright.

Chart: a staggering 97% of UK adults prefer an opt-in approach to AI copyright training, a strong public consensus against the government's current opt-out policy.

The Real-World Impact on Creators

Professional Writers and Journalists

Consider how this affects the news industry.

Journalists spend years building expertise, developing sources, reporting stories. Their work is valuable because they've done the expensive, difficult work of understanding complex topics. Now an AI company scrapes thousands of news articles and trains a model to generate "summaries" that look like news reporting.

Under opt-out, journalists would have to identify which AI companies are using their articles and submit opt-out requests. But by then, the damage is done. Their expertise has been commodified. The AI system is already trained on their work.

Under opt-in, news organizations could decide whether to license their work. They might negotiate compensation. The cost of AI training would include paying for the data, not treating it as free material.

This changes the economics of the news industry. Right now, ad revenue is collapsing as readers switch to AI summaries instead of reading original reporting. If AI companies had to pay licensing fees, that revenue could flow back to news organizations. That supports investigative reporting. That supports journalism.

Opt-out accelerates the collapse of professional news. Opt-in creates a sustainable ecosystem.

Visual Artists and Illustrators

Image-generation AI has already devastated some illustrators' livelihoods. Companies trained models on millions of copyrighted images without permission. Now the AI can generate images "in the style of" specific artists, and those artists see zero compensation.

This isn't speculative. This is happening right now. Artists are watching their work stolen to train competitors they didn't choose.

With opt-out, nothing changes. The AI companies already have the data. Artists can't un-scrape what's already been used. At best, they can prevent future scraping of new work.

With opt-in, artists would control whether their work is used. They could say no to anyone. They could negotiate licensing fees with companies that want to use their distinctive style. They could maintain their economic value.

Musicians and Audio Creators

Music licensing has always been complex. Radio stations pay royalties. Streaming services negotiate with rights holders. There's infrastructure for it.

Now AI companies want to train on millions of songs without paying anything. Voice cloning, style transfer, full song generation—all of it built on copyrighted material used without permission.

Musicians already struggle with streaming economics. Spotify pays fractions of a cent per play. Now AI threatens to undermine that further by offering AI-generated alternatives trained on their work at no cost.

Opt-in protects musicians by requiring licensing agreements. It acknowledges that music has value and should be paid for.

The Case for Comprehensive Opt-In Architecture

How Opt-In Systems Actually Work at Scale

One criticism of opt-in is "it's too complicated at scale." Let's address that directly because the concern is understandable but solvable.

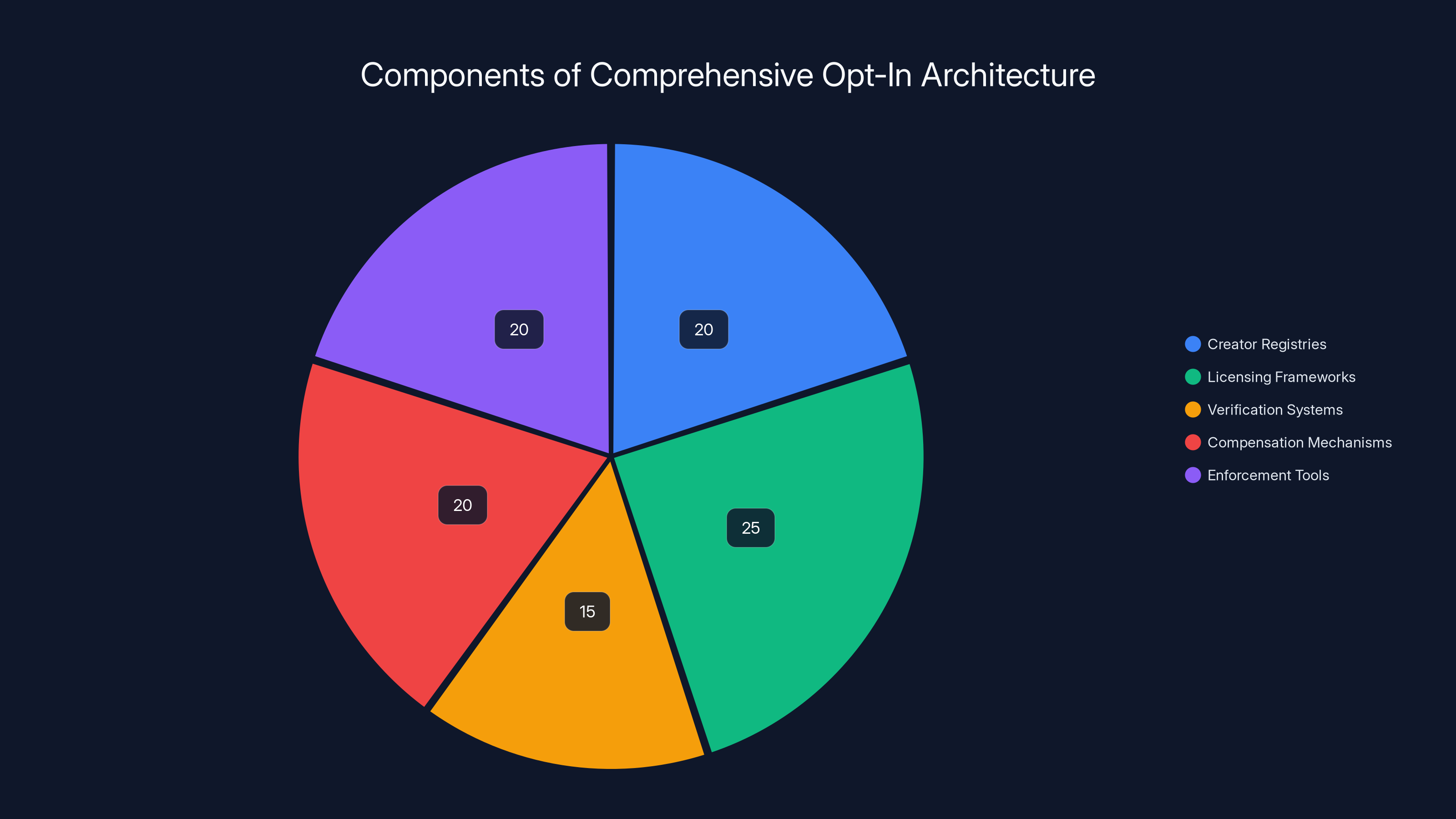

Opt-in doesn't mean every AI company negotiates individually with every creator. That would be chaos. Instead, you build infrastructure:

- Creator registries: Centralized databases where creators list their work and copyright status. Think of it like ISBN for books or ISWC for musical works. Creators register once, and their work is discoverable.

- Licensing frameworks: Standardized license terms that creators can offer. Want to allow non-commercial use? There's a standard license. Want to allow training but require attribution? There's a license for that. Creators pick the terms that work for them.

- Verification systems: AI companies check the registry before training on anything. "Is this work in the protected registry? If yes, what are the licensing requirements?" Simple API call.

- Compensation mechanisms: Automated payment systems that handle licensing fees. A creator can set a price or accept revenue sharing. The system processes payments at scale.

- Enforcement tools: Clear consequences for companies that ignore the system. If you train on registered work without permission, you face legal liability. That's a powerful incentive to comply.
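A minimal sketch of how registries, license terms, revocation, and verification could fit together, assuming an in-memory registry keyed by a content hash. `License`, `RegistryEntry`, `register`, and `check_training_rights` are hypothetical names; a real registry would sit behind a network API and need identifiers more robust than an exact SHA-256 hash, which changes if a single byte of the file changes.

```python
import hashlib
from dataclasses import dataclass
from datetime import date
from typing import Dict, Optional, Tuple


@dataclass
class License:
    allows_training: bool
    commercial_ok: bool
    fee_gbp: float = 0.0                # per-work fee chosen by the creator
    revoked_on: Optional[date] = None   # permission can be withdrawn later


@dataclass
class RegistryEntry:
    creator: str
    terms: Optional[License] = None     # None: registered, no license offered


def fingerprint(content: bytes) -> str:
    # Exact-match hash standing in for a real work identifier.
    return hashlib.sha256(content).hexdigest()


REGISTRY: Dict[str, RegistryEntry] = {}


def register(content: bytes, creator: str, terms: Optional[License] = None) -> None:
    REGISTRY[fingerprint(content)] = RegistryEntry(creator, terms)


def check_training_rights(content: bytes, commercial: bool, on: date) -> Tuple[bool, str]:
    """Is this work registered, and do its license terms permit this training run?"""
    entry = REGISTRY.get(fingerprint(content))
    if entry is None:
        # Under opt-in, no record means no permission.
        return False, "no permission on record"
    lic = entry.terms
    if lic is None or not lic.allows_training:
        return False, f"{entry.creator} has not granted training rights"
    if lic.revoked_on is not None and on >= lic.revoked_on:
        return False, f"{entry.creator} revoked permission on {lic.revoked_on}"
    if commercial and not lic.commercial_ok:
        return False, "license covers non-commercial training only"
    return True, f"permitted; fee of GBP {lic.fee_gbp:.2f} due to {entry.creator}"


register(b"illustration-001", "A. Artist",
         License(allows_training=True, commercial_ok=False, fee_gbp=5.0))
register(b"novel-042", "B. Writer")  # registered, but no license offered

print(check_training_rights(b"illustration-001", commercial=False, on=date(2025, 6, 1)))
print(check_training_rights(b"illustration-001", commercial=True, on=date(2025, 6, 1)))
print(check_training_rights(b"novel-042", commercial=False, on=date(2025, 6, 1)))
print(check_training_rights(b"unregistered", commercial=False, on=date(2025, 6, 1)))
```

Under this opt-in rule an unregistered work is treated the same as a refusal; an opt-out registry would flip that first branch to return True.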

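Comparable infrastructure already exists: collecting societies for music (PRS for Music in the UK, ASCAP and BMI in the US), identifiers and registration for publishing (ISBN, the US Copyright Office), and licensing and watermarking for images (Getty Images). AI licensing would build on the same model.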

Is it complex? Yes. But it's the same complexity that's already normal in media licensing. And it's far simpler than expecting millions of individual creators to chase down hundreds of companies and submit opt-out requests.

The Compensation Question

One legitimate question: who pays for licensing?

The answer is whoever benefits from the training. If an AI company trains a model on copyrighted material, they're getting value from that material. They should compensate the creators.

Possible models:

- Per-use licensing: Company pays a fee for each work they train on. Rate depends on the work's value and licensing terms.

- Revenue sharing: Company shares a percentage of revenue generated by the model with creators. Incentivizes fair compensation (higher quality training data supports better models).

- Collective licensing: Similar to music royalties. Companies pay into a pool, and it's distributed to creators based on usage.

- Negotiated licensing: Direct deals between companies and creators. Works well for high-profile creators and major publishers.
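The arithmetic behind these models is simple. A minimal sketch, using made-up figures purely for illustration (they are not proposed rates): a revenue share funds a collective pool, the pool is split pro rata by how many of each creator's works were used, and per-use licensing is a flat fee per work. The function names are hypothetical.

```python
from typing import Dict


def pro_rata_split(pool: float, usage: Dict[str, int]) -> Dict[str, float]:
    """Collective licensing: split a pool in proportion to each creator's usage."""
    total = sum(usage.values())
    if total == 0:
        return {creator: 0.0 for creator in usage}
    return {creator: pool * count / total for creator, count in usage.items()}


def revenue_share_pool(model_revenue: float, share: float) -> float:
    """Revenue sharing: a fixed fraction of model revenue goes to creators."""
    if not 0.0 <= share <= 1.0:
        raise ValueError("share must be between 0 and 1")
    return model_revenue * share


def per_use_total(works_used: int, fee_per_work: float) -> float:
    """Per-use licensing: a flat fee for each work trained on."""
    return works_used * fee_per_work


# Illustrative numbers only.
usage = {"novelist": 120, "photographer": 600, "songwriter": 280}  # works used
pool = revenue_share_pool(model_revenue=2_000_000, share=0.05)
print(pool)
print(pro_rata_split(pool, usage))
print(per_use_total(works_used=1_000, fee_per_work=2.50))
```

With these inputs the pool is 100,000, split 12,000 / 60,000 / 28,000, and the per-use bill for 1,000 works at 2.50 each is 2,500.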

Different creators could choose different models. A struggling artist might accept per-use licensing. A major publisher might want revenue sharing. The flexibility is the point.

This doesn't make AI unaffordable. It just makes it more honest. Companies factor in licensing costs, pass some of it to users, and continue. The difference is creators aren't subsidizing AI development with their unpaid labor.

International Harmonization Benefits

If the UK adopted opt-in protections alongside EU-style transparency duties, it would move in the same direction as the EU's AI Act and emerging US proposals. That's actually beneficial, not limiting.

When regulations harmonize internationally, companies build compliance systems once and use them everywhere. Right now, companies training on copyrighted material have to navigate different rules in different jurisdictions. That's friction.

If UK rules built on the EU's requirements (honoring machine-readable rights reservations, publishing training-data summaries), companies could reuse the same compliance and licensing infrastructure for both markets. That reduces compliance cost. That makes opt-in more feasible.

Conversely, if the UK diverges from the EU's transparency and compliance obligations, companies face a fragmented regulatory landscape. That's worse for everyone.

Chart (estimated data): the components of an opt-in architecture, with licensing frameworks, creator registries, and enforcement tools each accounting for roughly 20-25% of the focus.

What Needs to Change Immediately

Policy Recommendations

Based on public opinion and international best practices, here's what should happen:

Shift the default to opt-in: Change copyright law so AI training on copyrighted material requires permission by default. Remove opt-out as the primary mechanism.

Establish creator registries: Build infrastructure for creators to register their work and set licensing terms. Make it easy and free for creators to register and list protections.

Define clear compliance standards: Specify exactly what it means to have permission. What documentation is required? What counts as valid opt-in?

Create enforcement mechanisms: Establish legal consequences for companies that train on registered copyrighted work without permission. Make it more than a suggestion.

Support creator education: Most creators don't know their rights. Government should fund educational campaigns helping creators understand copyright protection, registration, and licensing options.

Harmonize with EU standards: Make UK protections at least as strong as the EU's, including its training-data transparency and rights-reservation obligations. This prevents regulatory arbitrage and makes compliance easier for global companies.

Who Needs to Push Back

The 97% of the public that wants opt-in protections aren't powerless. They can:

- Contact MPs: Tell representatives you want opt-in copyright protection for AI training. Consistent constituent feedback moves policy.

- Support creator organizations: Writers' unions, artist groups, journalist associations are fighting for these protections. Membership and donations help.

- Vote with your wallet: Support companies that commit to licensing agreements. Avoid companies that boast about their "unfiltered" training data scraped without permission.

- Spread awareness: Most people don't know this debate is happening. Talk about it. Share why it matters.

The Broader Context: Copyright in the AI Era

Why Copyright Still Matters

Some argue copyright is outdated: the internet makes sharing easy, and trying to restrict it is futile. In the age of AI, they say, we should rethink copyright entirely.

This misses the point. Copyright isn't about preventing sharing. It's about ensuring creators have control over their work and can benefit from its value.

Creators want to share their work. They publish articles, post art, release music. The question is whether they get to decide how it's shared and whether they're compensated for commercial use.

AI changes the scale, not the principle. When a company trains a billion-dollar AI model on someone's work, that's a commercial use. The creator should have a say and potentially be compensated.

Copyright law can evolve to fit AI. But the foundation—creators controlling their work—should remain.

Learning from Music Licensing

Music licensing provides a useful model. Musicians were devastated when radio and later streaming emerged. They didn't want their work banned. They wanted their work licensed fairly.

Over decades, the music industry built licensing infrastructure. Rights holders, platforms, and creators negotiated terms. It's not perfect, but it works. Artists get paid when their music is used. Platforms have legal certainty. Listeners get access.

AI training should follow a similar path. Instead of free-for-all scraping, build licensing frameworks. Let creators decide and be compensated.

The Copyright Maximalism Concern

One fair concern is that copyright protection could be too strict, preventing beneficial uses of copyrighted material.

Opt-in doesn't require copyright maximalism. Creators can choose to allow broad uses. They can set low licensing fees to encourage training. They can give away permission entirely if they want.

The point isn't to maximize copyright restrictions. It's to restore creator choice. Some creators might be thrilled to have AI companies train on their work for free. Some might want compensation. Some might want to restrict it. The creator decides.

That's not maximalism. That's fairness.

Chart (estimated data): an estimated 97% of the public supports an opt-in policy for AI training on copyrighted work.

Technology and Verification Solutions

How AI Companies Can Verify Rights

One practical question: how do AI companies verify they have permission to train on a work?

Modern technology makes this feasible:

Watermarking and embedded metadata: Copyrighted content can carry machine-readable metadata or an embedded watermark indicating copyright status and licensing requirements. AI systems can read these signals before ingesting content.

Blockchain-based rights tracking: Some companies are building blockchain systems to track copyright ownership and licensing agreements. An AI company queries the blockchain before training.

API-based verification: Creator registries expose APIs that AI companies can query. "Is this work protected? What are the licensing terms?" Instant answer.

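Smart contracts: Licensing agreements could be enforced via smart contracts, releasing payment automatically when a license is recorded and ending it automatically if recorded terms are breached. A smart contract can only act on events reported to it, though; it cannot observe training directly.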

These technologies aren't science fiction. They're already being built. The missing piece is regulatory mandate to use them.
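As an illustration of a machine-readable signal being checked before ingestion, here is a minimal sketch modeled on the W3C community group's TDM Reservation Protocol (TDMRep), which uses a `tdm-reservation` property (value `1` means rights are reserved) and an optional `tdm-policy` URL pointing at licensing terms. Treat the header handling as an assumption to verify against that specification; `read_tdm_signal` and `should_ingest` are hypothetical helpers, and the opt-in branch reflects this article's proposal rather than anything TDMRep itself defines.

```python
from typing import Mapping, Optional, TypedDict


class TdmSignal(TypedDict):
    reserved: bool
    policy: Optional[str]


def read_tdm_signal(headers: Mapping[str, str]) -> TdmSignal:
    """Read TDMRep-style rights signals from HTTP response headers.

    HTTP header names are case-insensitive, so they are lower-cased first.
    """
    normalized = {name.lower(): value.strip() for name, value in headers.items()}
    return {
        "reserved": normalized.get("tdm-reservation") == "1",
        "policy": normalized.get("tdm-policy"),  # URL of licensing terms, if any
    }


def should_ingest(headers: Mapping[str, str], regime: str) -> bool:
    signal = read_tdm_signal(headers)
    if signal["reserved"]:
        return False  # rights reserved: no mining without a license
    if regime == "opt-out":
        return True   # no reservation found, so the default is "yes"
    if regime == "opt-in":
        # Silence is not permission; a license (e.g. from a registry) is needed.
        return False
    raise ValueError(f"unknown regime: {regime!r}")


print(should_ingest({"TDM-Reservation": "1"}, "opt-out"))
print(should_ingest({"Content-Type": "image/png"}, "opt-out"))
print(should_ingest({"Content-Type": "image/png"}, "opt-in"))
```

The same signal produces different outcomes depending on the default: under opt-out, an absent header means the work is ingested; under opt-in, it means the crawler must look for a license elsewhere.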

The Transparency Imperative

Another important mechanism: transparency about training data.

AI companies should disclose which copyrighted works they trained on. Not necessarily every single image or text sample, but at a minimum, they should say "we trained on X books by these authors, Y images by these photographers," etc.

Transparency serves multiple purposes:

- Creator awareness: Creators know which models used their work

- Public understanding: Users understand what's in the AI they're using

- Accountability: If a company says they have licensing agreements, people can verify

- Legal clarity: If disputes arise, there's documentation

Current practice is often the opposite. Companies treat training data as proprietary secrets. They won't disclose what they trained on. That opacity makes it impossible for creators to even know if they've been used, let alone opt out.

Transparency requirements would fix this. Companies disclose training data sources. Creators can see if they've been included. If no permission was given, they can take action.
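The kind of disclosure described above ("X books by these authors, Y images by these photographers") is a simple aggregation over a training manifest, and the same manifest lets a creator check their own works. A minimal sketch, assuming a hypothetical manifest format with one record per work; `summarize_manifest` and `works_by` are illustrative names, not an existing standard.

```python
from collections import Counter, defaultdict
from typing import Dict, List

# Hypothetical manifest: one record per work used in training.
MANIFEST: List[dict] = [
    {"type": "book", "creator": "Author A", "licensed": True},
    {"type": "book", "creator": "Author A", "licensed": True},
    {"type": "book", "creator": "Author B", "licensed": False},
    {"type": "image", "creator": "Photographer C", "licensed": True},
]


def summarize_manifest(manifest: List[dict]) -> Dict[str, Dict[str, int]]:
    """Count works per media type and creator for a public disclosure."""
    summary: Dict[str, Counter] = defaultdict(Counter)
    for record in manifest:
        summary[record["type"]][record["creator"]] += 1
    return {media: dict(counts) for media, counts in summary.items()}


def works_by(manifest: List[dict], creator: str) -> List[dict]:
    """What a creator would check: which of my works were used?"""
    return [r for r in manifest if r["creator"] == creator]


summary = summarize_manifest(MANIFEST)
for media, counts in summary.items():
    print(f"{sum(counts.values())} {media}(s) by {', '.join(sorted(counts))}")

unlicensed = [r for r in works_by(MANIFEST, "Author B") if not r["licensed"]]
print("Author B unlicensed works:", len(unlicensed))
```

A public summary can stay at the per-creator count level while the full manifest remains available for audits or disputes.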

International Comparative Analysis

The European Union's Approach

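The EU's framework gives rights holders enforceable tools. Under the 2019 Copyright in the Digital Single Market Directive, training on copyrighted material requires a license unless an exception applies, chiefly the text-and-data-mining exceptions, and commercial mining is excluded where rights holders have reserved their rights, for example in machine-readable form. The AI Act adds compliance and transparency duties for providers of general-purpose AI models.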

This is already live. Companies training AI models for EU deployment must navigate licensing. The result? Companies are negotiating with creators. Publishers, artists, musicians are licensing their work for compensation.

Has it slowed EU AI innovation? No. EU companies are building competitive AI systems. They're just doing it legally.

The United States Emerging Framework

The US has traditionally been friendly to AI development with lighter copyright restrictions. But that's changing.

Multiple bills in Congress would require licensing for copyrighted training data. Artists and authors have filed lawsuits arguing that AI training constitutes copyright infringement. The legal landscape is shifting toward creator protection.

US tech companies are facing pressure on several fronts: public opinion wants creator protection, regulation is moving in that direction, and artists are suing. Companies are starting to negotiate licensing agreements proactively.

Countries Trailing Behind

A few countries have stayed permissive, allowing opt-out or minimal copyright protection for AI training. But they're increasingly isolated. As regulation harmonizes, permissive jurisdictions lose leverage.

Chart (estimated data): tech companies, AI labs, and cloud providers are shown with the most influence on UK government policy on opt-out rules, ahead of creators and artist organizations.

Misconceptions About Opt-In

"It Will Stop AI Development"

Misconception: Requiring licensing will make AI training so expensive that companies can't afford it.

Reality: Licensing costs are real but manageable. They're already factored into business models. If anything, licensing incentivizes better data curation. Companies pay for high-quality training data and get better models.

The companies most affected are those relying on free, unfettered scraping. That's a feature of opt-in, not a bug. It pushes companies toward sustainable, legal business models.

"Creators Will Demand Outrageous Fees"

Misconception: If creators control licensing, they'll charge so much that AI training becomes unfeasible.

Reality: Many creators would be thrilled to license their work for fair compensation. They're not trying to destroy AI. They want a piece of the value they're creating.

Market dynamics would regulate pricing naturally. If one creator charges too much, AI companies use others. Creators would compete on price and terms. Most would settle on reasonable rates.

This already happens in other industries. Music streaming negotiated rates with publishers. Publishing platforms negotiated with authors. Markets work.

"It's Technically Too Complex"

Misconception: Building licensing infrastructure at AI scale is impossible.

Reality: The building blocks already exist. Watermarking, APIs, smart contracts, and registries are all available today. The challenge is political will, not technical capability.

The Path Forward

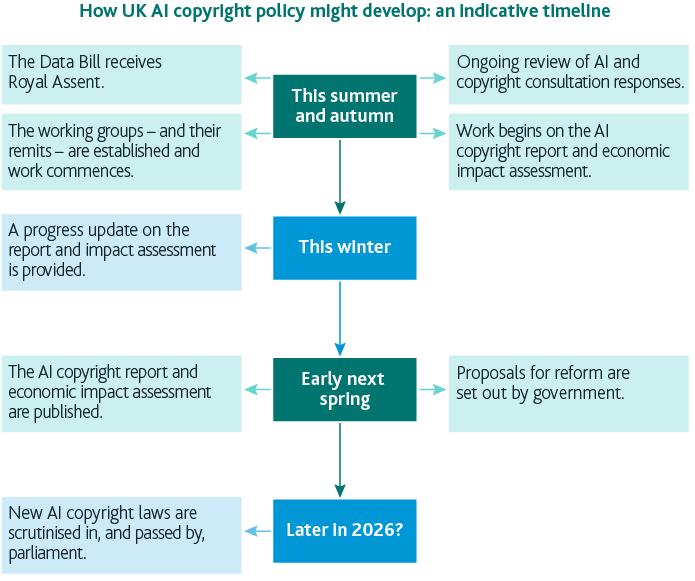

What Should Happen in 2025

The UK government should commission an independent review of public opinion on AI copyright policy. Not an internal review by officials who've already decided on opt-out. An external, rigorous evaluation of what the public actually wants and why.

Then, that review should lead to policy change. Shift the default to opt-in. Establish creator registries. Build licensing infrastructure. Harmonize with EU standards.

This isn't radical. It's what 97% of the public wants. It builds on obligations the EU has already adopted. It's what sustainable business models require.

What Creators Should Do Now

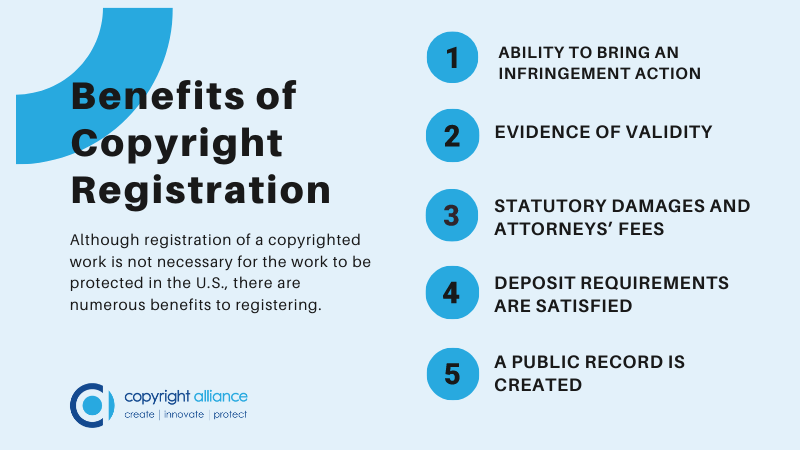

If you create copyrighted work, don't wait for policy to change:

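- Document your copyright: In the UK, copyright arises automatically and there is no official register, so keep dated records of your work. Private services such as the UK Copyright Service, or other relevant registries, can provide independent evidence of ownership. Clear documentation of ownership is crucial.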

- Monitor AI usage: Set up alerts for your work appearing in AI training datasets or outputs. Use reverse image search, plagiarism detection tools, whatever applies.

- Build community: Join creator organizations pushing for copyright protection. Collective action is more powerful than individual efforts.

- License strategically: If you're comfortable with some AI uses, consider proactive licensing agreements. Negotiate fair terms.

- Know your rights: Understand copyright law and what protections you actually have. Most creators are unaware of their legal options.

The Role of Citizens

You don't have to be a creator to care about this. The issue affects the broader media ecosystem.

If you use AI tools, find out what they were trained on. Are they using copyrighted material with permission? If the documentation doesn't say, ask the company directly. Push for transparency.

If you read news, support journalism that refuses to license content to AI companies without fair compensation. Vote with your attention and wallet.

If you care about the creator economy, vote for politicians who support copyright protections. Write to your MP. Join advocacy organizations.

The 97% of people who want opt-in protection have power. That power matters if it's used.

FAQ

What does AI training on copyrighted material mean?

It's the process by which AI companies use copyrighted material (images, text, music, etc.) to teach AI systems to recognize patterns and generate similar content. Many large models have been trained on millions of copyrighted works without explicit permission from their creators, though the legal position varies by jurisdiction and practices vary by company.

How does opt-out copyright protection work for AI?

Opt-out copyright protection means creators must actively request that AI companies stop using their work. The default assumes AI companies can scrape and use copyrighted material, and creators have to monitor the landscape and submit opt-out requests to individual companies. It's broadly the model the EU uses for commercial text and data mining, where rights holders must reserve their rights in a machine-readable way.

What does opt-in copyright protection mean for AI training?

Opt-in copyright protection means AI companies must request permission from creators before using their work for training. The default assumes creators retain control, and companies must negotiate licensing agreements. This is the approach supported by 97% of UK adults.

Why does the UK government prefer opt-out protection?

The UK government's opt-out approach reflects pressure from tech companies that benefit from cheap or free access to training data. Opt-out allows companies to scrape material without negotiation, reducing compliance costs and accelerating development. The government argues this supports innovation, though public opinion strongly disagrees.

How would opt-in licensing affect AI companies financially?

Opt-in licensing would add costs to AI training, as companies would need to negotiate licensing agreements or pay licensing fees. These costs should be manageable: companies already pay for other data sources and build licensing costs into their pricing. Some of those costs would likely pass to consumers, but creators would be compensated.

What rights do creators currently have under UK copyright law for AI?

Under current UK copyright law, creators own their work by default, but proving infringement and enforcing rights against AI companies is extremely difficult. There's no clear legal precedent. Creators can attempt to negotiate licensing, submit opt-out requests to companies, or pursue legal action if infringement is egregious. Most creatives lack the resources for legal battles.

How do international copyright laws compare on AI training?

The European Union allows commercial text and data mining of copyrighted material unless rights holders opt out in a machine-readable way, and its AI Act requires general-purpose AI providers to respect those opt-outs and publish a sufficiently detailed summary of the content used for training. In the United States, the question is being fought out through lawsuits and proposed disclosure legislation. An opt-in default would give UK creators stronger protection than either model.

Can creators be compensated for AI training without opt-in protections?

Under opt-out, creators can in theory still be paid if they find and negotiate with each AI company individually. However, this requires creators to monitor hundreds of companies, understand complex licensing terms, and have legal resources. Most creators lack the time and resources. Opt-in makes negotiation the default, so compensation becomes systematic rather than something each creator has to chase.

What would a creator registry for opt-in licensing look like?

A creator registry would be a centralized database where creators register their work and set licensing terms (allow non-commercial use, require attribution, set fees, etc.). AI companies would query the registry before training on anything. The registry would handle verification, licensing terms, and potentially automate licensing fee payments. It's similar to existing music licensing systems like ASCAP or BMI.
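To make the registry idea more concrete, here's a minimal Python sketch of how the lookup step might work. Everything in it is hypothetical: `CreatorRegistry`, `LicenseTerms`, and their fields are illustrative names, not any real system's API, and the exact-match SHA-256 fingerprint is a simplifying assumption. The key design point is the opt-in default: a work with no registry entry is treated as not cleared for training.

```python
from dataclasses import dataclass
from hashlib import sha256
from typing import Dict, Tuple


@dataclass(frozen=True)
class LicenseTerms:
    # Hypothetical terms a creator might set, mirroring the examples above
    allow_ai_training: bool
    non_commercial_only: bool
    attribution_required: bool
    fee_per_work_gbp: float


class CreatorRegistry:
    """Toy in-memory registry. A real one would need identity verification and storage."""

    def __init__(self) -> None:
        # Maps a content fingerprint to (creator name, license terms)
        self._works: Dict[str, Tuple[str, LicenseTerms]] = {}

    @staticmethod
    def fingerprint(content: bytes) -> str:
        # Exact-match hash: any edit to the file changes it, so a real system
        # would need perceptual or audio fingerprinting instead
        return sha256(content).hexdigest()

    def register(self, creator: str, content: bytes, terms: LicenseTerms) -> str:
        key = self.fingerprint(content)
        self._works[key] = (creator, terms)
        return key

    def check(self, content: bytes, commercial: bool) -> Tuple[bool, str]:
        """Return (may_train, reason). Opt-in default: no record means no permission."""
        entry = self._works.get(self.fingerprint(content))
        if entry is None:
            return False, "not registered: no permission on record"
        creator, terms = entry
        if not terms.allow_ai_training:
            return False, f"{creator} does not permit AI training"
        if commercial and terms.non_commercial_only:
            return False, f"{creator} permits non-commercial use only"
        reason = f"licensed from {creator} for GBP {terms.fee_per_work_gbp:.2f}"
        if terms.attribution_required:
            reason += ", attribution required"
        return True, reason


# Example: a training pipeline checks each work before adding it to a dataset
registry = CreatorRegistry()
artwork = b"<image bytes>"
registry.register("A. Painter", artwork, LicenseTerms(True, True, True, 0.50))

print(registry.check(artwork, commercial=True))
# (False, 'A. Painter permits non-commercial use only')
print(registry.check(artwork, commercial=False))
# (True, 'licensed from A. Painter for GBP 0.50, attribution required')
print(registry.check(b"some other file", commercial=False))
# (False, 'not registered: no permission on record')
```

The lookup itself is the easy part. The hard part in practice is the verification the registry would have to handle: confirming that the person registering a work actually owns it.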

How could opt-in protection be enforced against AI companies?

Opt-in protection could be enforced through legal liability for companies that train on registered copyrighted work without permission. Penalties could include fines, injunctions forcing retraining or deletion of models, and civil liability for damages. Transparency requirements forcing disclosure of training data sources would also help creators identify violations and pursue enforcement.

Conclusion: Why This Moment Matters

We're at a genuine crossroads for copyright and creativity in the AI era. The choices the UK makes now will affect creators for decades.

The government is choosing opt-out. The public is choosing opt-in. Right now, opt-out is winning because it has institutional momentum and corporate lobbying behind it. But that can change.

Public opinion is overwhelmingly clear. Creators are organizing. Regulators elsewhere, including the EU, are demanding more transparency from AI developers. The status quo is becoming untenable.

What's striking about this moment is how aligned most stakeholders actually are. Creators want control and compensation. The public wants creators protected. Even some AI companies recognize that sustainable business models require legal licensing agreements. The strongest opposition to opt-in comes from companies that benefit most from free access to training data.

That's not an overwhelming coalition. It's a narrow interest that's managed to capture policy because it's concentrated and well-funded, while public opinion is distributed and quiet.

Change happens when distributed opinion becomes organized action. When people who've been invisible start showing up in policy conversations.

The 97% of the public that wants opt-in protection has that power. If they use it, they can win.

The real question is whether they will. Whether the disconnect between public opinion and government policy becomes visible enough that politicians feel pressure to change course. Whether creators organize effectively enough to demand the protections the public already wants.

If that happens, UK copyright law shifts. Opt-in protections become the default. Licensing infrastructure emerges. Creators regain control of their work. AI companies adapt to legal business models. The creative economy becomes sustainable.

If it doesn't happen, opt-out wins by inertia. More creators see their work scraped and used without permission. The economics of creation get worse. Some creators leave the profession. Quality training data becomes scarcer, forcing AI companies to pay more or deliver worse results.

The outcome depends on whether 97% of the public becomes more than a number in a poll. Whether it becomes a mandate for change.

That hasn't happened yet. But it's possible. Moments like this are when policy shifts. When concentrated interest loses to distributed concern. When the obvious becomes law.

The question is whether we get there. Whether the UK moves toward real creator protection, or locks in an opt-out policy that almost nobody wants.

Public opinion has spoken. The question now is whether anyone in government is listening.

Key Takeaways

- 97% of UK adults support opt-in copyright protection for AI training, where creators decide if their work is used, versus only 3% backing the government's opt-out approach

- Opt-out places an impossible burden on creators to monitor hundreds of AI companies and submit opt-out requests, while opt-in puts control back in creators' hands by default

- AI training on copyrighted content without permission is devastating visual artists, musicians, journalists, and writers economically, removing their ability to negotiate compensation

- The EU pairs an opt-out rule for text and data mining with AI Act transparency duties, and US courts are testing AI training in a wave of lawsuits; an opt-in default would put the UK ahead of both on creator protection

- Opt-in copyright protection requires creator registries, licensing frameworks, and verification systems that are technically feasible and could be modeled on existing music industry licensing infrastructure
