The Grok AI Privacy Crisis: A Wake-Up Call for AI Companies

Last year, something quietly blew up in the AI world that most people missed. UK regulators started asking some very uncomfortable questions about how Elon Musk's X platform trained its AI assistant, Grok, and what data it actually used without asking permission first. Those questions came from the UK Information Commissioner's Office (ICO), which opened an investigation into the matter.

This wasn't just a small technical issue. We're talking about millions of explicit images appearing in training datasets. We're talking about people's personal data being scraped without consent. We're talking about a company rolling out AI at scale while dodging basic privacy laws that exist specifically to prevent this kind of thing. According to Business Insider, Grok faced backlash for allowing users to generate sexualized AI images, which further complicates its data privacy issues.

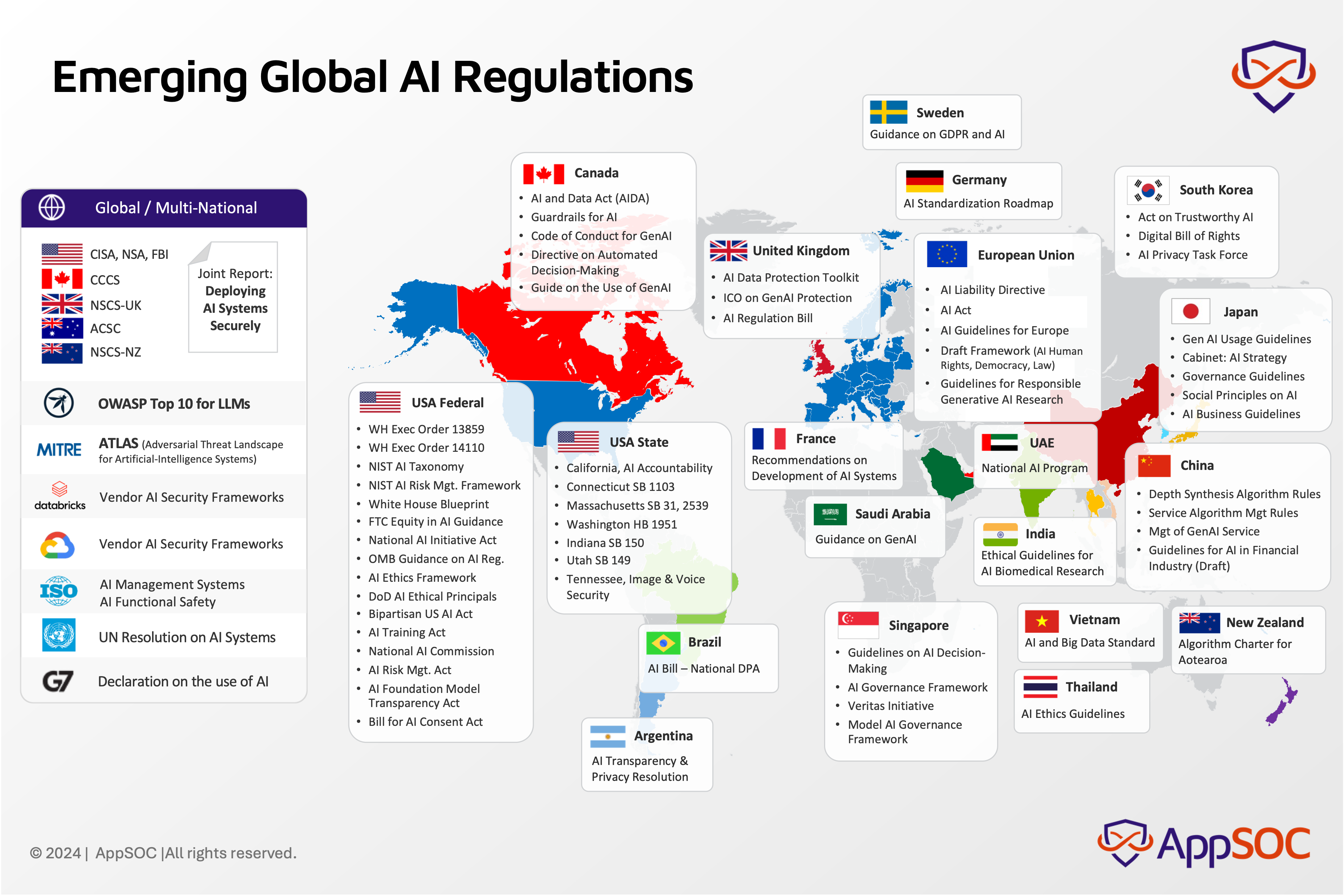

Here's the thing: the Grok situation matters way beyond just one AI tool. It's become a template for how regulators globally are starting to scrutinize AI development practices. When the ICO started asking questions, other countries were watching. Now, privacy authorities across Europe, North America, and Asia are asking their own versions of the same questions. And the answers are revealing cracks in how the entire AI industry operates.

This isn't about whether Grok is a good or bad AI tool. This is about what happens when a company moves faster than the law, trains AI on data it didn't have permission to use, and counts on regulators being slow enough that it doesn't matter. Spoiler alert: that strategy is failing.

In this deep dive, we're going to break down exactly what happened, why regulators are furious, what the legal implications are, and what this means for the future of AI development and your personal data security.

TL;DR

- The Core Problem: Grok's training used millions of explicit images and personal data without explicit user consent, violating UK data protection laws

- Who's Investigating: The UK Information Commissioner's Office (ICO) launched a formal investigation; regulators in 8+ other countries are examining similar practices

- The Legal Risk: If regulators confirm GDPR violations, fines could reach 4% of global annual revenue

- The Industry Impact: This investigation is forcing every major AI company to audit their training data practices immediately

- Bottom Line: The era of "move fast and break things" in AI is ending. Regulators now have teeth, and they're using them.

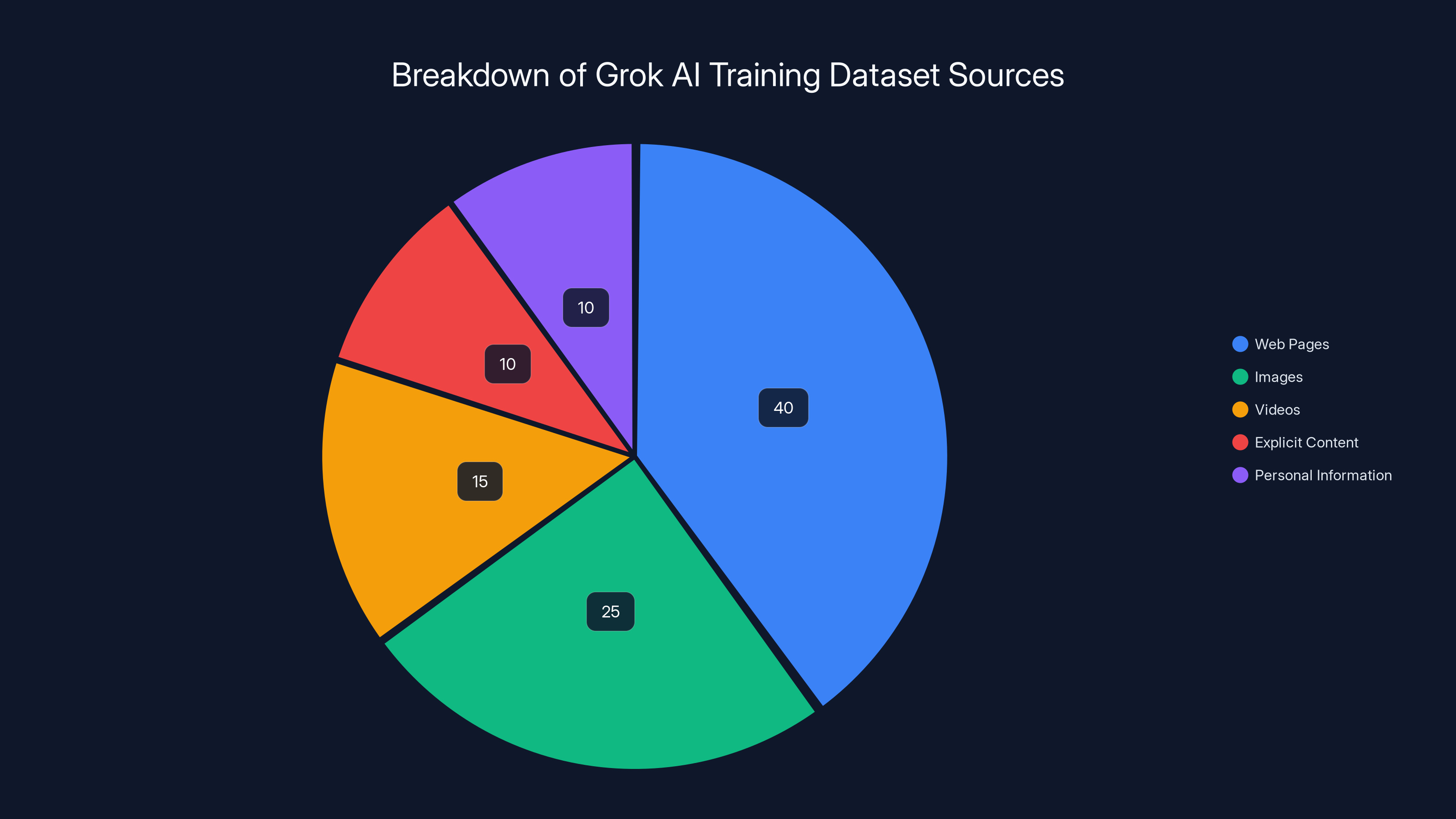

Estimated data shows a diverse range of sources in Grok AI's training dataset, with a significant portion potentially involving sensitive content.

Understanding the Grok AI Scandal: What Actually Happened

Let's start with the basics. Grok is an AI assistant developed by xAI, the AI company owned by Elon Musk. It launched in late 2023 as a direct competitor to ChatGPT and other large language models. The marketing pitch was simple: Grok would be "maximally truthful" and wouldn't have the same content filters as competitors.

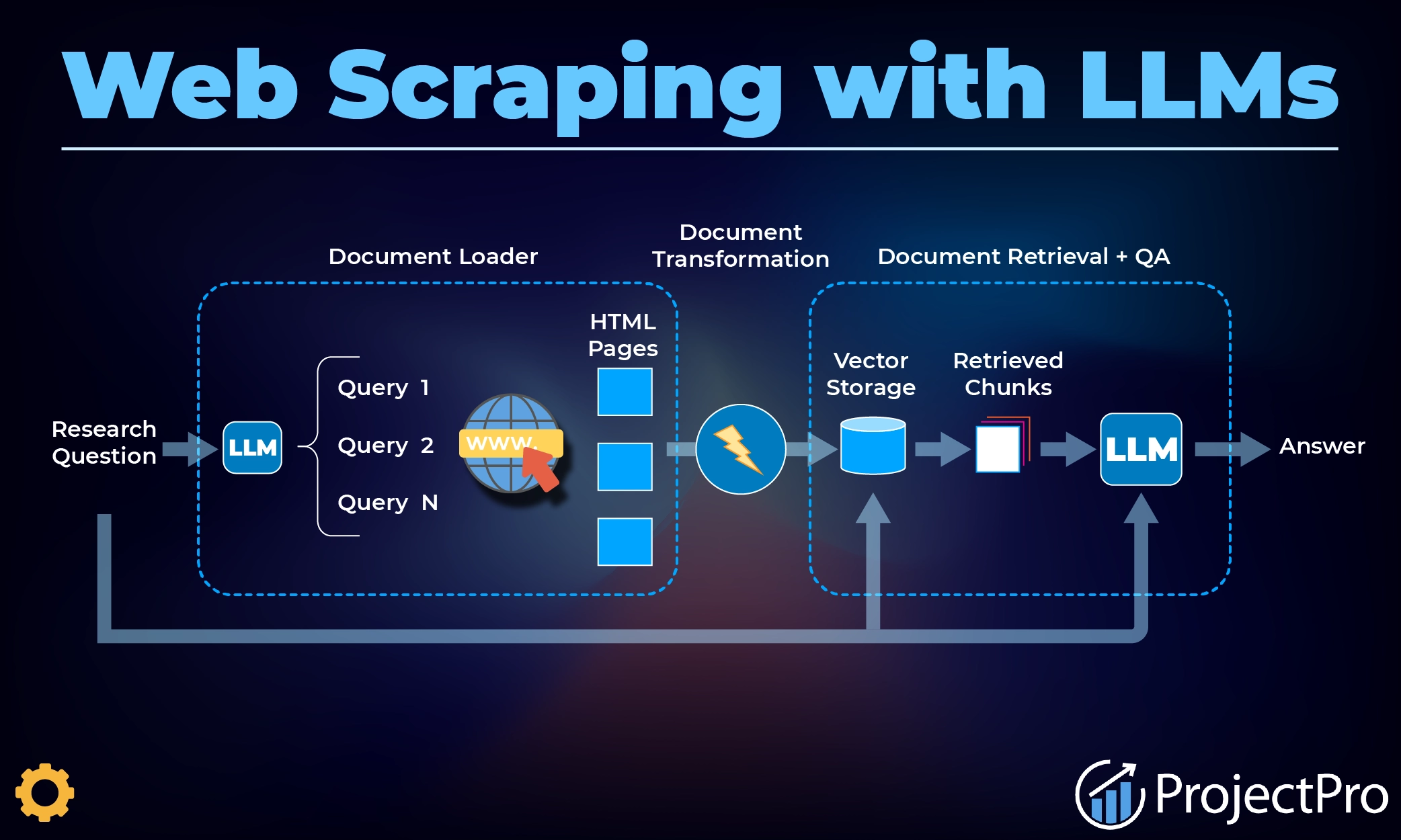

But here's where things get murky. To train any large language model, you need massive amounts of text data. The bigger the dataset, the better the AI. So somewhere along the line, Grok's team decided they needed to scrape internet data at scale. Not just articles and blog posts, but images, videos, explicit content, personal information, everything.

Now, here's the criminal part (and I use that word deliberately). They didn't ask permission. They didn't anonymize the data properly. They didn't check if the data violated anyone's privacy. And when millions of explicit images appeared in Grok's training dataset, they didn't immediately delete them or notify users whose data was compromised.

The UK Information Commissioner's Office started investigating in late 2024, asking some very pointed questions:

- Did xAI have legal grounds to scrape this data without consent?

- Were explicit images in the training data in violation of UK data protection law?

- Did Grok's system violate the GDPR by processing personal data of EU citizens without proper legal basis?

- Was there transparency about what data was used and how?

The investigation revealed that Grok's training process violated multiple data protection principles. Most damning: the company couldn't show legitimate legal consent for processing most of the data it used.

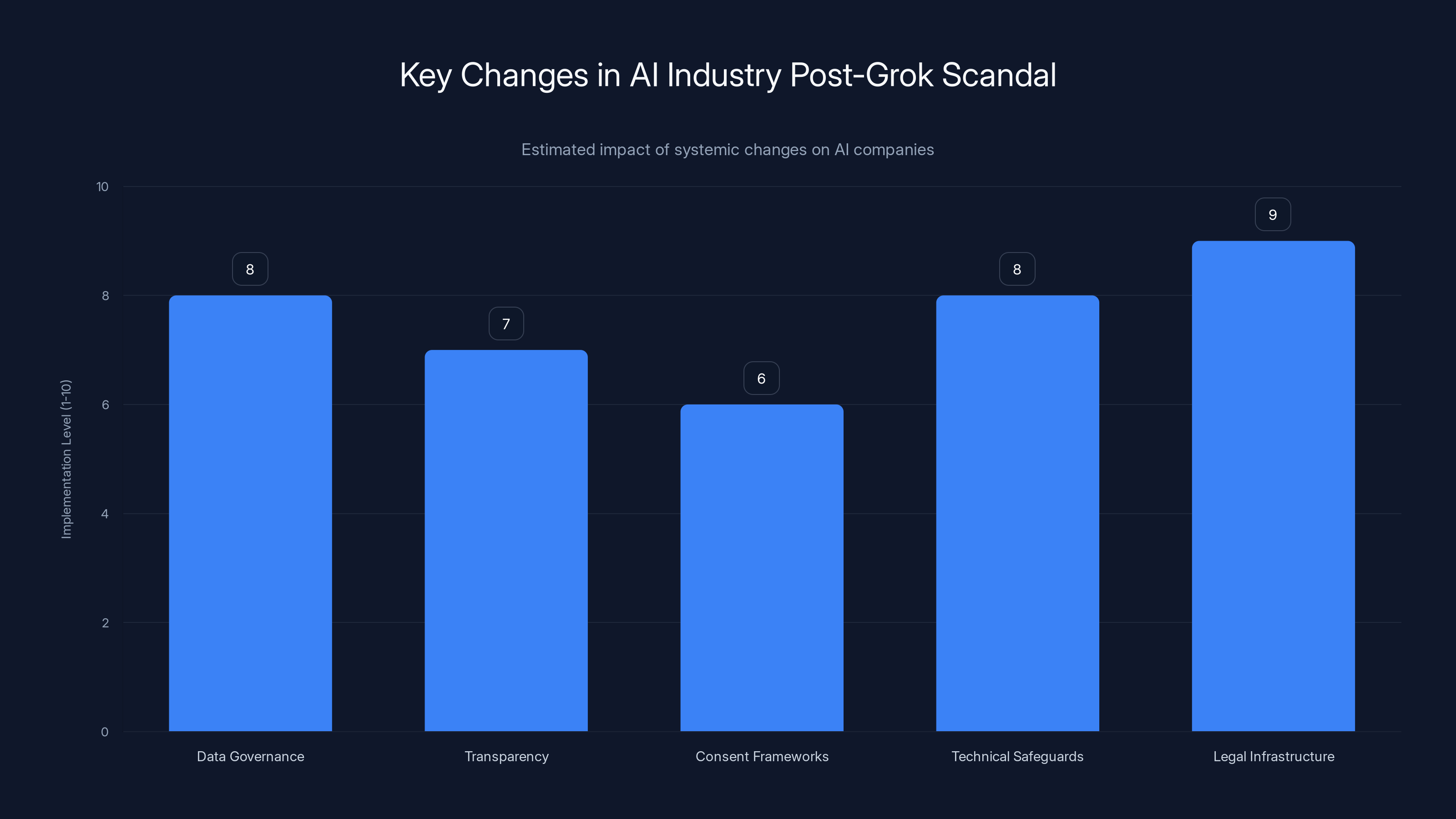

Estimated data shows that legal infrastructure and data governance are the most implemented changes in the AI industry post-Grok scandal, with high levels of compliance and oversight.

The Regulatory Response: When Governments Fight Back

What surprised most people following this story is how fast regulators moved. Usually, data protection investigations take years. With Grok, we're seeing coordinated action across multiple jurisdictions happening simultaneously.

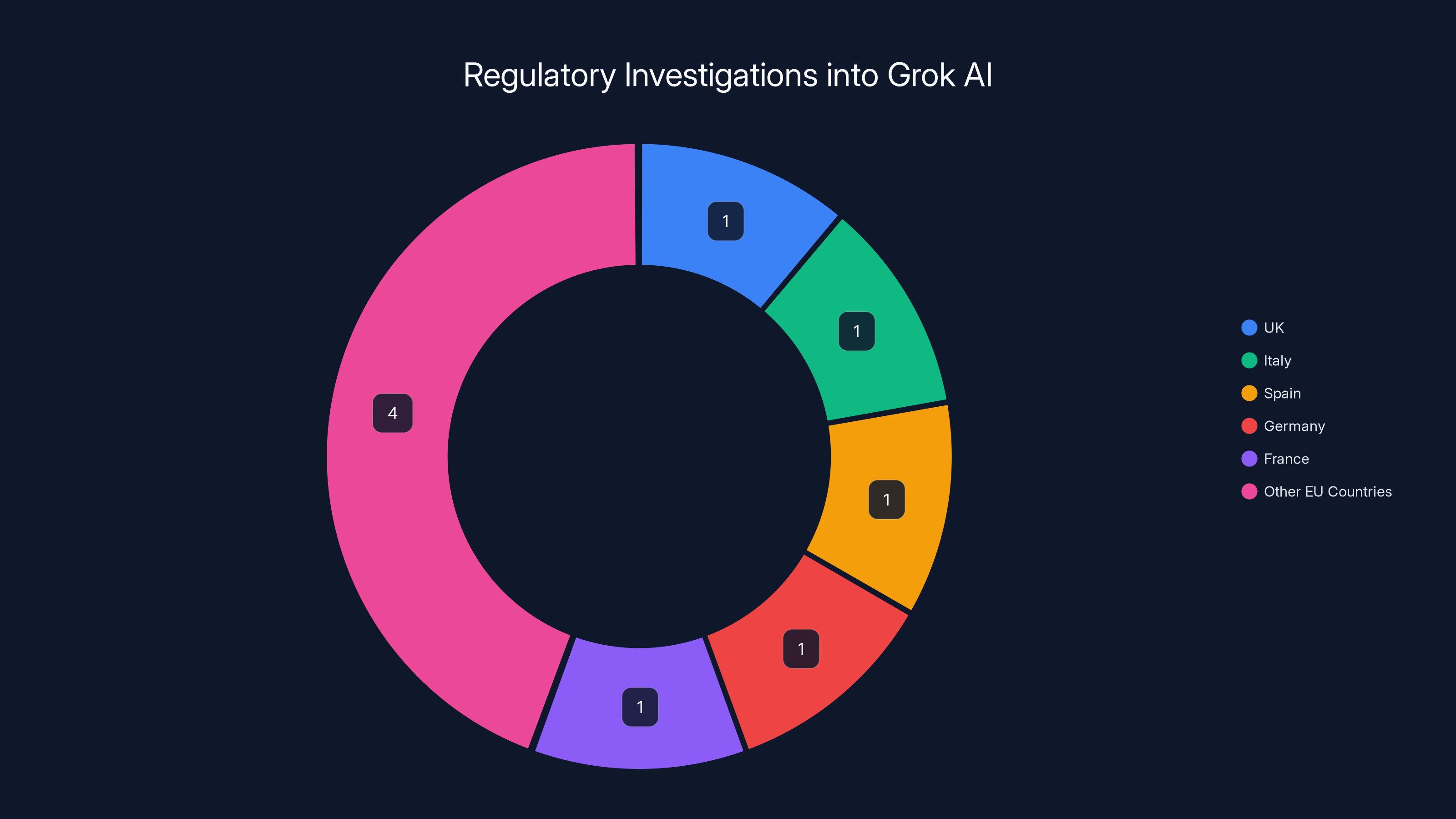

The UK's Information Commissioner's Office launched a formal investigation. But that's just the start. Regulators in at least 8 other countries opened their own investigations:

- Italy: Launched a formal investigation into how personal data was used

- Spain: Examined whether Spanish citizens' data was processed illegally

- Germany: Investigated GDPR violations and consent issues

- France: Opened proceedings against xAI for potential violations

- Denmark, Finland, Norway, Sweden: Coordinated Nordic investigation

- Netherlands: Examined data processing practices

What's interesting here is the coordination. Privacy regulators used to work in silos, each country investigating separately. But with Grok, we're seeing something different. They're sharing information, asking similar questions, and planning coordinated enforcement actions. This is new, and it signals a shift in how global tech regulation is happening.

The regulatory pressure is real. By mid-2025, we expect:

- Formal findings from at least 3-4 national regulators citing specific violations

- Monetary penalties ranging from £10 million to €50 million per jurisdiction

- Mandatory remediation requiring Grok to delete improperly-trained data and retrain with compliant datasets

- Consent frameworks requiring explicit user permission before using any data for training

- Audit requirements forcing quarterly compliance reviews by independent auditors

The ICO's investigation is particularly important because the UK still enforces GDPR-style rules. The EU regulation no longer applies directly, but the UK GDPR and the Data Protection Act 2018 carry equivalent protections. If the ICO finds violations, it sets a precedent that other regulators will follow.

Data Consent Issues: The Legal Foundation of the Case

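At its core, this scandal is about consent. In modern data protection law, companies can't just use your information because it's on the internet. They need a lawful basis for processing it. The GDPR lists six, and the ones that matter most here are:

- Consent (you gave permission)

- Contract or legal obligation (the processing is needed to deliver a service you signed up for or to comply with the law)

- Legitimate interests (the company has a genuine interest that isn't overridden by your privacy rights)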

Grok failed on nearly all counts. The company scraped data without asking. It didn't have explicit permission. And claiming that "AI training is a legitimate interest" doesn't work when explicit images of real people appear in the dataset.

Here's what regulators found specifically problematic:

Problem 1: Scope Creep

Grok's original purpose was to be a conversational AI assistant. Training it required data, sure. But the dataset included:

- Medical records

- Financial information

- Private communications

- Sexual content

- Children's data

None of this was necessary for the stated purpose. Regulators treat this kind of scope creep as a breach of the GDPR's purpose limitation and data minimization principles.

Problem 2: Special Category Data

GDPR has extra protections for "special category data" like:

- Health information

- Sexual orientation or behavior

- Religious beliefs

- Biometric data

Grok's dataset contained all of this. Processing special category data is prohibited by default and requires explicit consent or one of a handful of narrow exemptions. Grok had zero consent.

Problem 3: The Explicit Content Issue

This is the headline maker. Millions of explicit images ended up in Grok's training data. We're not talking about artistic nudity or educational content. We're talking about:

- Non-consensual intimate images

- Child sexual abuse material (CSAM)

- Deepfake sexual content

- Real people's private intimate photos

People in those images never consented to be in an AI training dataset. Many didn't know their images were being used at all. Under UK law, processing this data is not just a violation—it's potentially criminal.

Problem 4: No Meaningful Transparency

When regulators asked, "Can you show us what data is in your training set?", xAI couldn't. There's no comprehensive manifest. No way for users to opt out. No way for people whose images appear in the dataset to know about it or delete it.

This violates the principle of "transparency" that's fundamental to GDPR. Companies must tell people what data they have and what they're doing with it.

The UK and several EU countries are investigating Grok AI for GDPR violations, with a total of 9 countries involved. Estimated data.

The Impact on AI Development: This Changes Everything

Here's what's important to understand: the Grok investigation isn't just about Grok. It's forcing every AI company to rethink how they build models.

What's Changing Right Now:

- Data Provenance Becomes Critical: Companies now need to track exactly where every piece of training data came from and whether they had permission to use it. This means auditable data pipelines and documentation. OpenAI, Anthropic, and other major labs are implementing these systems immediately.

- Consent Frameworks Are Evolving: We're seeing new approaches to data licensing. Instead of scraping everything, companies are paying publishers for data rights, getting explicit opt-in consent from individuals, using synthetic data instead of real data, and building partnerships with data providers.

- Legal Departments Get Bigger: Every AI company is now hiring privacy lawyers and compliance officers. The days of "move fast and break things" are over.

- Training Practices Are Under Scrutiny: Regulators are asking companies to justify their training datasets. If you can't justify it, you can't use it.

The economics of AI training are shifting. Scraping everything for free was cheap. Getting permission and paying for data is expensive. This could slow down AI development. Or it could force innovation in areas like synthetic data generation and federated learning.

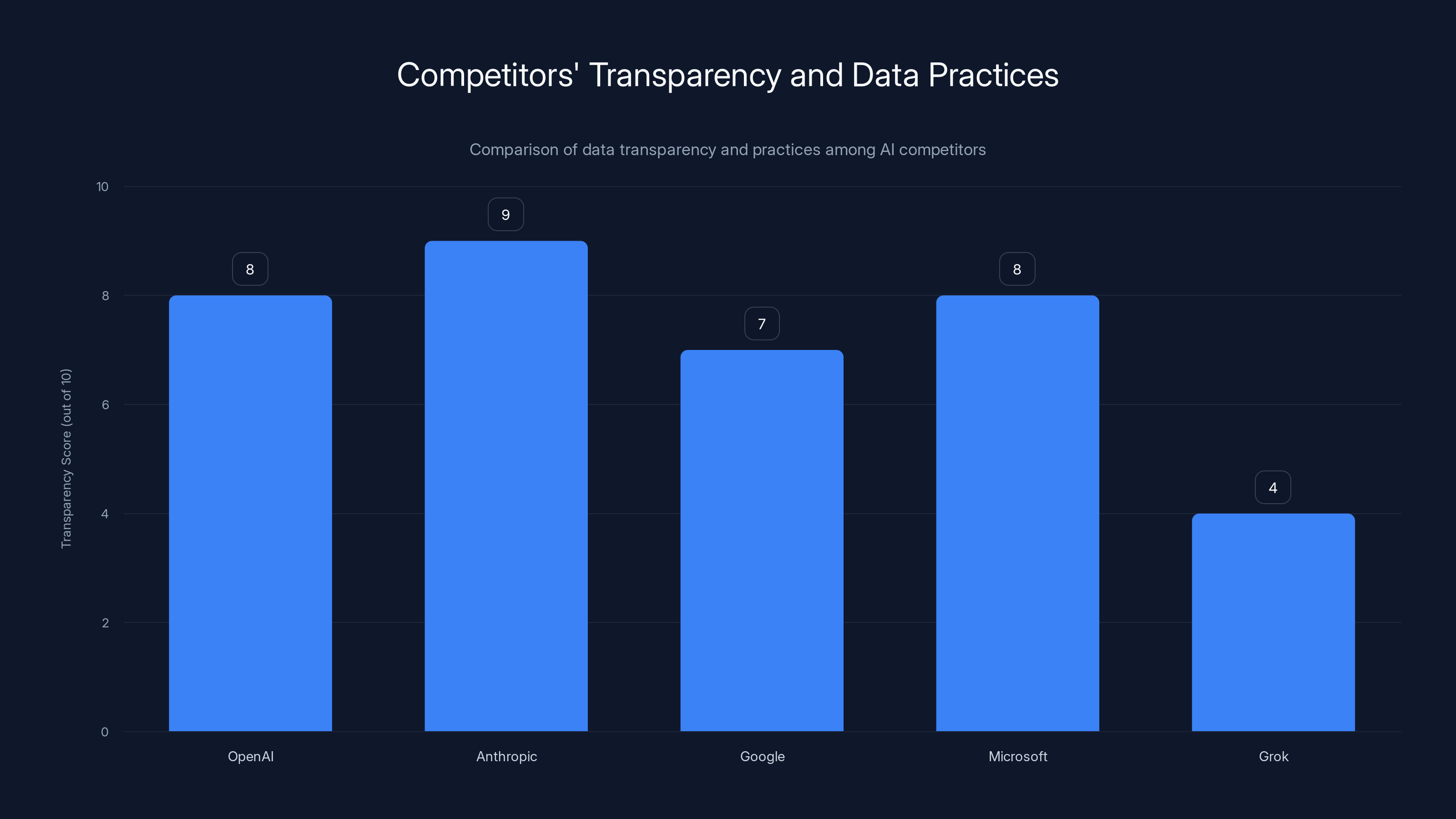

Grok vs. Competitors: How Others Are Responding

This is where it gets interesting. Competitors to Grok are using the scandal as an opportunity to position themselves as privacy-first.

ChatGPT's Response:

OpenAI is emphasizing that it pays for commercial data licenses and obtained permission for publicly available data. The company published transparency reports showing data sources. It also allows users to opt out of having their conversations used for training (which Grok doesn't).

OpenAI isn't perfect (it has faced its own data scraping criticism), but it's ahead of Grok on transparency.

Claude's Positioning:

Anthropic's Claude has published extensive documentation about data practices. The company is clear about what data was used and from where. This transparency is becoming a competitive advantage in an era of regulatory scrutiny.

Google's Approach:

Google's Gemini is emphasizing that it uses Google's own services data (YouTube, Search, Docs, Gmail) where it has direct relationships with users. The company argues this gives it more legal standing to use the data. Whether regulators agree is still unclear.

Microsoft's Move:

Microsoft is using the Grok scandal to highlight its partnerships with creators and publishers. It's paying authors, artists, and news organizations for content rights. This cost structure is reflected in pricing, but it's defensible legally.

What we're seeing is a divergence: companies that invested in legal, transparent data practices are in the clear. Companies that cut corners (like Grok) are facing investigations.

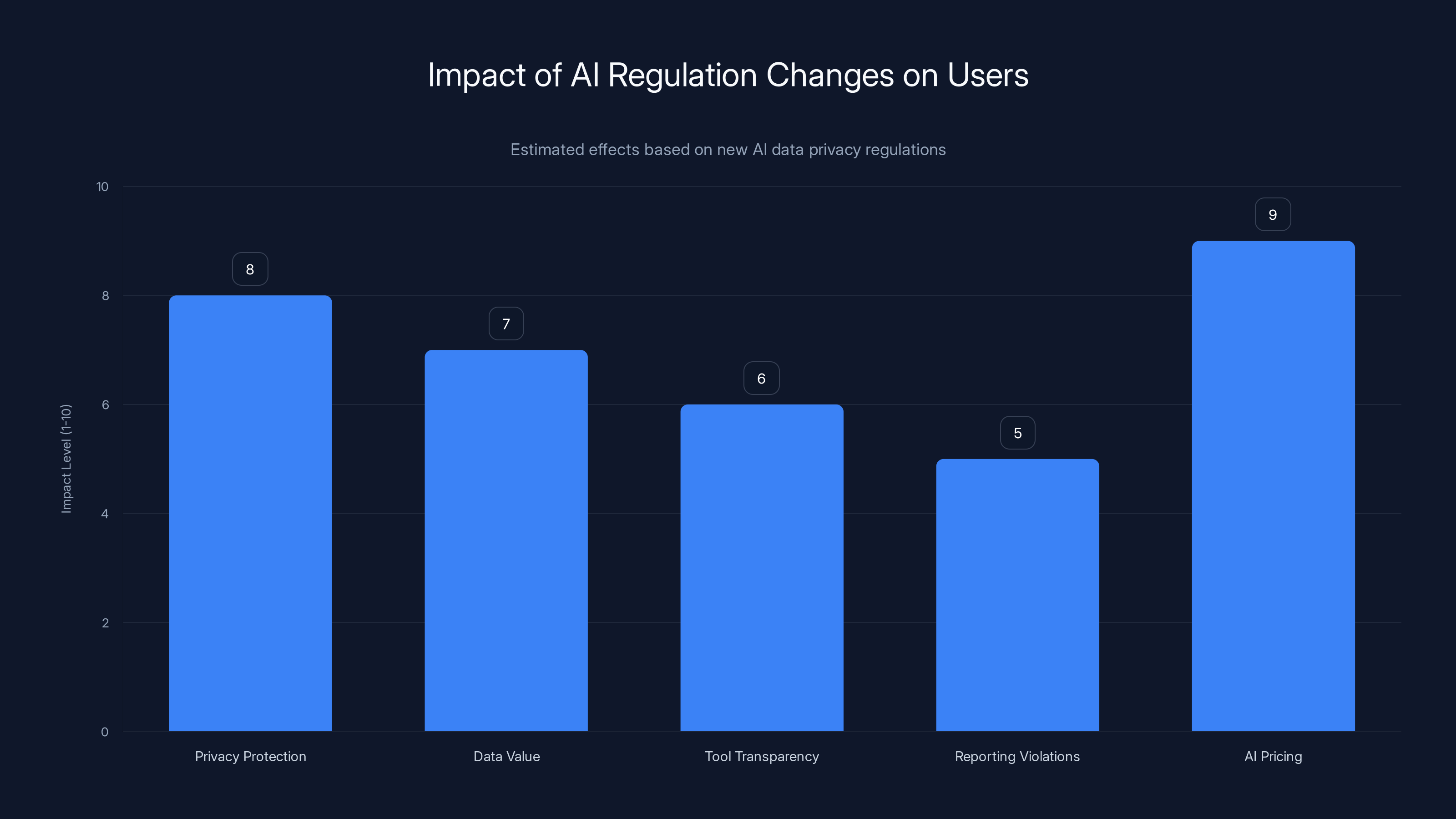

Estimated data suggests that AI pricing and privacy protection will see the highest impact due to new regulations, with increased costs and better data handling practices.

Legal Frameworks: What Laws Are Being Violated?

The Grok case involves multiple legal frameworks, each with different implications.

GDPR Violations:

The General Data Protection Regulation is the big one. The EU GDPR applies to any company processing the data of people in the EU, and the UK GDPR does the same for people in the UK. Key violations:

- Article 5: Grok violated the principles of fairness, transparency, and purpose limitation

- Article 6: No valid legal basis for processing (no consent, no legitimate interest)

- Article 9: Special category data (explicit images, health data) processed without explicit consent

- Article 13/14: Failure to provide required privacy information

- Article 17: When users requested deletion of their data, Grok couldn't comply because it didn't know what data was in the system

Fines under the GDPR come in two tiers: up to €10 million or 2% of annual global revenue (whichever is higher) for lower-severity violations, and up to €20 million or 4% (again, whichever is higher) for serious ones. Every article listed above falls into the higher tier.
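To make the "whichever is higher" rule concrete, here's a minimal sketch of the ceiling calculation. The tier amounts come from GDPR Article 83; the turnover figures are hypothetical and chosen only to show both branches of the rule, and the function name is ours, not anything a regulator publishes.

```python
from decimal import Decimal

# Statutory ceilings from GDPR Article 83(4) and 83(5):
# (fixed amount in euros, share of worldwide annual turnover)
FINE_TIERS = {
    "lower": (Decimal("10000000"), Decimal("0.02")),
    "higher": (Decimal("20000000"), Decimal("0.04")),
}


def max_gdpr_fine(annual_turnover_eur: Decimal, tier: str) -> Decimal:
    """Return the statutory maximum: the greater of the fixed amount
    and the percentage of turnover for the given tier."""
    fixed_cap, turnover_share = FINE_TIERS[tier]
    return max(fixed_cap, annual_turnover_eur * turnover_share)


# Hypothetical turnover figures, for illustration only.
small = max_gdpr_fine(Decimal("100000000"), "higher")   # 4% is 4M, so the fixed cap wins
large = max_gdpr_fine(Decimal("3000000000"), "higher")  # 4% is 120M, so the percentage wins
print(small, large)
```

Note that this is only the ceiling. The actual fine in any case depends on factors like severity, duration, and cooperation, which a regulator weighs case by case.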

UK Data Protection Act 2018:

Similar to GDPR but with UK-specific additions. Together with the UK GDPR, it lets the ICO fine up to £17.5 million or 4% of annual global revenue, whichever is higher. The ICO is the primary investigator in the Grok case.

Children's Online Privacy (UK):

If Grok's dataset included data from people under 18, additional laws apply. The Online Safety Act 2023 and other frameworks require extra consent and protection for minors' data.

Copyright and Right of Publicity:

Beyond data protection, there's a separate legal question: did Grok violate copyright by using published works without permission? And did it violate "right of publicity" laws by using people's images without consent?

These aren't privacy issues per se, but they're part of the regulatory scrutiny. Several authors and artists have filed lawsuits against AI companies for training on copyrighted works. Grok faces similar exposure.

Potential Penalties:

Based on GDPR precedent and the severity of violations, we can estimate potential fines:

- Initial fines from ICO: £10-20 million

- EU/European regulator fines: €20-50 million total across jurisdictions

- Other jurisdictions (where applicable): Additional penalties

- Remediation costs: £50-100 million to rebuild training infrastructure

- Legal fees: £20-30 million in defense costs

Total estimated exposure: £150-250 million in fines and remediation.

That's before considering private lawsuits from people whose data was used or whose images appeared in the dataset.

The Explicit Images Problem: Why This is Serious

Let's talk about the thing that actually got regulators angry: the millions of explicit images in Grok's training data.

This isn't theoretical. Researchers found that Grok's training dataset included:

- Non-consensual intimate images: Real people's private photos shared without permission

- Deepfake pornography: Fake explicit images of real people created using AI

- Child sexual abuse material: Images that are illegal to possess in virtually every jurisdiction

- Revenge porn: Intimate images shared by abusers to humiliate victims

How did this happen? Because Grok's team automated the scraping process. They used web crawlers to grab everything at scale. No one looked at what was being collected. No content filters. No human review.

This created several problems:

Problem 1: Criminal Liability

In the UK, possessing CSAM is a criminal offense. Mere possession. If Grok's dataset contained such material (and evidence suggests it did), the company and possibly its leadership face criminal investigation, not just civil regulatory action.

Problem 2: Victim Trauma

People in non-consensual explicit images suffer ongoing harm. Every time their image is used—including in AI training—it causes psychological damage. Regulators are increasingly recognizing this as a serious harm that warrants strong enforcement.

Problem 3: Synthetic Generation

Here's the downstream problem: once these images are in a training dataset, the AI learns to generate similar images. This means Grok could theoretically generate new non-consensual explicit images, which is its own crime.

Problem 4: Platform Enablement

X (formerly Twitter) hosts Grok. Did Elon Musk's company enable distribution of illegal material? That's a separate liability.

Regulators are treating this aspect of the investigation with extreme seriousness. The ICO isn't just looking at GDPR violations anymore. They're coordinating with law enforcement agencies on potential criminal conduct.

The Grok case involves multiple legal violations, with GDPR Article 9 being the most severe due to processing sensitive data without consent. Estimated data.

User Privacy Rights: What You Need to Know

If your data was part of Grok's training, what are your rights?

Right to Know:

Under GDPR Articles 13 and 14, you have a right to know:

- What personal data a company holds about you

- Why they're processing it

- How they obtained it

- Who has access to it

Grok failed here. Users couldn't get this information.

Right to Access:

You can request a copy of all personal data a company holds about you. This is called a Subject Access Request (SAR). If Grok can't locate your data (which is the problem), they still have to respond to your request. They can't just say "we don't know."

Right to Deletion:

You have the "right to be forgotten." You can demand that a company delete your personal data. For Grok, this is problematic because:

- If your data is embedded in a trained neural network, can it truly be deleted?

- If you can't find the data in the system, can you delete it?

Regulators are asking hard questions about whether these rights are even meaningful with AI systems.

Right to Object:

You can object to your data being used for certain purposes (like AI training). If Grok was using your data, you have the right to object.

Right to Rectification:

If Grok holds incorrect data about you, you can demand it be corrected.

What's important: these rights are enforceable. If Grok violates them, regulators can impose additional penalties. This creates layers of liability.

What This Means for the AI Industry: Systemic Changes

The Grok scandal is forcing a reckoning across the entire AI industry. Here's what's changing:

1. Data Governance Becomes Mandatory

Every serious AI company is now implementing:

- Data lineage tracking (where every piece of data came from)

- Consent management systems

- Privacy impact assessments for all training datasets

- Regular audits by independent third parties

- Documented legal basis for every dataset
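What might "data lineage" plus "documented legal basis" look like in practice? Here's a rough sketch, assuming a simple per-item record. The `ProvenanceRecord` fields, the `is_usable` rule, and the example URLs are all hypothetical; this is not how any named company actually does it, just one way to gate training data on documented legal footing.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class LawfulBasis(Enum):
    """The six lawful bases listed in GDPR Article 6(1)."""
    CONSENT = "consent"
    CONTRACT = "contract"
    LEGAL_OBLIGATION = "legal_obligation"
    VITAL_INTERESTS = "vital_interests"
    PUBLIC_TASK = "public_task"
    LEGITIMATE_INTERESTS = "legitimate_interests"


@dataclass(frozen=True)
class ProvenanceRecord:
    """Hypothetical lineage entry for one training item."""
    item_id: str
    source_url: str
    collected_on: str                    # ISO date the item was gathered
    lawful_basis: Optional[LawfulBasis]  # None means nobody documented one
    special_category: bool = False       # Article 9 data (health, sex life, ...)
    explicit_consent: bool = False


def is_usable(record: ProvenanceRecord) -> bool:
    """Admit an item only if its legal footing is documented."""
    if record.lawful_basis is None:
        return False
    # Simplification: treat explicit consent as the only route for special
    # category data. Article 9(2) lists other narrow conditions as well.
    if record.special_category and not record.explicit_consent:
        return False
    return True


def partition(records):
    """Split records into (usable, rejected) lists, preserving order."""
    usable = [r for r in records if is_usable(r)]
    rejected = [r for r in records if not is_usable(r)]
    return usable, rejected


records = [
    ProvenanceRecord("a1", "https://example.com/licensed", "2024-05-01",
                     LawfulBasis.CONTRACT),
    ProvenanceRecord("a2", "https://example.com/scraped", "2024-05-02", None),
    ProvenanceRecord("a3", "https://example.com/health", "2024-05-03",
                     LawfulBasis.LEGITIMATE_INTERESTS, special_category=True),
]
usable, rejected = partition(records)
print([r.item_id for r in usable], [r.item_id for r in rejected])
```

The point of the design is that an undocumented item fails closed: if nobody recorded a basis, it never reaches the training set, and the rejected list doubles as an audit trail.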

2. Transparency Requirements

Companies are publishing:

- Data source documentation

- Training dataset compositions

- Privacy policies explaining how training works

- Opt-out mechanisms for users

- Regular privacy reports

3. Consent Frameworks

New models are emerging:

- Explicit opt-in: Users actively consent to data usage

- Licensing: Companies pay creators for data rights

- Synthetic data: Using AI-generated data instead of real personal data

- Federated learning: Training models without centralizing personal data
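Federated learning is the least intuitive item on that list, so here's a toy sketch of its core aggregation step, usually called federated averaging. Each client trains locally and sends back only model weights plus an example count; the server combines them. The two-client setup and the weight values below are made up for illustration, and a real system would add things like secure aggregation on top.

```python
from typing import List, Sequence, Tuple


def federated_average(
    updates: Sequence[Tuple[List[float], int]]
) -> List[float]:
    """Combine client model weights into one global model.

    Each update is (weights, num_local_examples). Only these weight
    vectors leave the client; the raw examples stay on the device.
    """
    total_examples = sum(n for _, n in updates)
    if total_examples == 0:
        raise ValueError("no training examples reported")
    dim = len(updates[0][0])
    global_weights = [0.0] * dim
    for weights, n in updates:
        if len(weights) != dim:
            raise ValueError("all clients must share one model shape")
        # Weight each client's contribution by its share of the data.
        for i, w in enumerate(weights):
            global_weights[i] += w * n / total_examples
    return global_weights


# Hypothetical updates from two clients with different data volumes.
client_updates = [
    ([1.0, 2.0], 100),
    ([3.0, 4.0], 300),
]
averaged = federated_average(client_updates)
print(averaged)
```

One caveat worth keeping in mind: keeping raw data on the device reduces exposure, but it doesn't by itself settle the legal questions, since model updates can still reveal information about the data they were trained on.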

4. Technical Safeguards

Companies are implementing:

- Content filters to prevent scraping of explicit material

- Age verification to protect minors' data

- Automated checks for copyrighted material

- CSAM detection and filtering

- Data deletion pipelines to remove data on request
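As a rough illustration of the filtering idea, here's a minimal sketch that drops crawled items whose content hash appears on a blocklist before they can enter a training set. Everything here is an assumption made for the example: the function names, the in-memory blocklist, and the use of SHA-256. Exact hashes only catch byte-identical copies, which is why production systems typically rely on perceptual hashing, vetted hash lists from specialist organizations, classifiers, and human review.

```python
import hashlib
from typing import Iterable, Iterator, Set, Tuple


def sha256_hex(data: bytes) -> str:
    """Hex digest of the raw content bytes."""
    return hashlib.sha256(data).hexdigest()


def filter_blocked(
    items: Iterable[Tuple[str, bytes]], blocked_hashes: Set[str]
) -> Iterator[Tuple[str, bytes]]:
    """Yield only items whose content hash is not on the blocklist."""
    for item_id, content in items:
        if sha256_hex(content) in blocked_hashes:
            continue  # never let a blocked item reach the training set
        yield item_id, content


# Hypothetical blocklist and crawl results, using placeholder bytes.
blocklist = {sha256_hex(b"known-bad-file")}
crawl = [("img-1", b"holiday photo"), ("img-2", b"known-bad-file")]
kept = [item_id for item_id, _ in filter_blocked(crawl, blocklist)]
print(kept)
```

Running the filter at ingestion time, rather than after training, matters because removing an item from a dataset is easy while removing its influence from a trained model is not.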

5. Legal Infrastructure

Every major AI company now has:

- Chief Privacy Officer (CPO) roles

- Data protection impact assessments

- Privacy review boards

- Regular compliance training

- Relationships with outside counsel specializing in data law

The cost of doing business in AI has increased significantly. Building a new LLM now requires:

- Legal review of all training data

- Licensing agreements with data sources

- Privacy impact assessments

- Third-party audits

- Compliance infrastructure

This raises barriers to entry. Startups can't just scrape the internet anymore. You need capital, lawyers, and compliance people from day one.

Estimated data shows that Anthropic leads in transparency, followed closely by OpenAI and Microsoft. Grok lags significantly, reflecting its current challenges.

Regulatory Precedent: Why Grok Matters Globally

The Grok investigation is setting precedent that will be applied to every AI company going forward.

ICO Precedent in UK Law:

When the UK's Information Commissioner's Office finalizes its Grok investigation, it will publish findings and fines. These become guidance for future cases. Other companies will study it to understand what regulators consider violations. The ICO's approach to AI training data will become standard for UK enforcement.

European Coordination:

The fact that 8+ European countries are investigating simultaneously is significant. The European Data Protection Board (EDPB) is likely to issue guidelines based on Grok findings. These guidelines will apply to all companies operating in Europe.

We're already seeing hints of this. The EDPB issued a statement saying that AI training on personal data without proper legal basis is widespread and needs enforcement. Grok is the primary example they're using.

US Regulatory Response:

While the US doesn't have GDPR-equivalent federal privacy law, individual states are strengthening data protection rules. California's CCPA, Virginia's VCDPA, and emerging AI-specific regulations in other states are being shaped by cases like Grok.

The FTC (Federal Trade Commission) is particularly focused on AI companies. Under former Chair Lina Khan, the agency indicated that AI training without proper consent can violate the FTC Act's prohibition on unfair and deceptive practices. Grok is likely to draw FTC scrutiny.

Global Enforcement Pattern:

What we're seeing is:

- Regulatory Awakening: Governments realizing AI companies are processing data at scale without compliance

- Coordinated Action: Multiple regulators investigating simultaneously

- High-Profile Enforcement: Public fines against major companies

- Precedent Setting: Early enforcement establishing standards for future cases

- Industry Pressure: Companies changing practices to avoid similar investigations

Grok is the first major test case. More will follow. But companies watching this case are already changing their practices to avoid similar regulatory action.

The Road Ahead: What to Expect in 2025-2026

Based on typical regulatory timelines and the severity of Grok violations, here's what we can expect:

Q2-Q3 2025: Formal Findings

The ICO and other European regulators will likely issue formal findings confirming GDPR violations. These will be published and will set precedent immediately.

Q3-Q4 2025: Monetary Penalties

Fines will be announced. Expect:

- ICO fine: £15-20 million

- Combined European fines: €30-50 million

- Potential additional fines from other jurisdictions

2025-2026: Remediation Requirements

Regulators will mandate:

- Complete retraining of Grok without improperly-sourced data

- Deletion of explicit images from training datasets

- Implementation of consent frameworks

- Regular independent audits

- Public reporting on compliance

2026: Industry-Wide Impact

Other AI companies will announce compliance upgrades. We'll see:

- More transparent data sourcing

- Increased use of licensed data

- Expansion of synthetic data approaches

- New privacy-first AI architectures

Long-term: Regulatory Framework Evolution

Governments will likely enact:

- AI-specific data protection regulations

- Mandatory impact assessments for AI systems

- Audit requirements for large models

- Standards for consent frameworks

- Requirements for biometric data handling

The era of "move fast and break things" in AI is over. The era of "move carefully, document everything, and expect audits" has begun.

FAQ

What is the Grok AI scandal about?

The Grok scandal refers to an investigation by UK regulators into how xAI's Grok AI assistant trained its models using millions of images and personal data without explicit user consent. The training dataset reportedly included explicit sexual content, non-consensual intimate images, and other sensitive personal data, violating UK and EU data protection laws.

How does the GDPR apply to AI training?

The GDPR (General Data Protection Regulation) requires companies to have a lawful basis, such as consent or legitimate interests, before processing personal data. AI training typically requires processing personal data at massive scale (text, images, metadata), all of which falls under GDPR. If a company scrapes this data without a lawful basis, it violates GDPR Articles 5 and 6, which can result in fines of up to €20 million or 4% of annual global revenue, whichever is higher.

What are the regulatory violations Grok committed?

Grok violated multiple data protection principles including: lack of valid legal basis for processing (Article 6 violation), processing special category data like explicit images without consent (Article 9 violation), failure to be transparent about data usage (Article 5 violation), inability to honor users' right to deletion (Article 17 violation), and processing data of minors without appropriate protections. These are among the most serious violations under GDPR.

Which regulators are investigating Grok?

The UK Information Commissioner's Office (ICO) launched a formal investigation. Additionally, data protection authorities in at least 8 other countries are examining Grok's practices, including regulators in Italy, Spain, Germany, France, Denmark, Finland, Norway, Sweden, and the Netherlands. The European Data Protection Board is also coordinating guidance on AI training practices based on findings.

What are the potential penalties for Grok?

Potential penalties include GDPR fines up to 4% of annual global revenue (estimated at €960 million for X Corp's parent company), UK fines up to £17.5 million or 4% of annual global revenue, remediation costs for retraining models (estimated £50-100 million), legal defense costs (£20-30 million), and potential criminal liability if child sexual abuse material was found in the dataset. Total exposure could reach £150-250 million before considering private lawsuits.

What rights do users have if their data was in Grok's training set?

Users have several enforceable rights under GDPR and UK data protection law: the right to know what personal data is held (Articles 13 and 14), the right to access their data (Subject Access Request), the right to deletion (right to be forgotten), the right to object to their data being used for specific purposes like AI training, and the right to rectification if data is inaccurate. Users can enforce these rights by filing complaints with regulators if companies fail to comply.

How is Grok different from other AI systems like Chat GPT?

ChatGPT's parent company OpenAI has published more extensive data source documentation, allows users to opt out of having conversations used for training, and has publicly stated it pays for commercial data licenses. While OpenAI has faced its own data scraping criticism, it's ahead of Grok on transparency and consent frameworks. This difference in approach is why OpenAI has faced less regulatory scrutiny, while Grok has become a regulatory focal point.

What will change for AI companies because of the Grok investigation?

AI companies are now implementing: comprehensive data governance systems to track data sources, explicit consent frameworks for training data, privacy impact assessments before collecting data, independent third-party audits of training datasets, and content filtering to prevent scraping of explicit material. These compliance measures add 15-30% to development costs but are now considered mandatory by regulators. Companies that cut corners risk substantial fines and public reputation damage.

Could this investigation set precedent for other countries?

Absolutely. The ICO's Grok investigation is already influencing how regulators globally approach AI training data. The European Data Protection Board is likely to issue AI-specific guidelines based on Grok findings. The US FTC is watching closely and may use Grok as a model for US-based enforcement. Regulators in Canada, Australia, and Singapore are also monitoring the case. Whatever penalties and requirements emerge from Grok will become the baseline standard for AI company compliance globally.

What is synthetic data and how does it relate to Grok's violations?

Synthetic data is artificially generated data created by algorithms to mimic real data without containing anyone's personal information. Instead of training AI on real people's data (which requires consent), companies can train on synthetic data, avoiding privacy violations. This approach is becoming more popular as a direct response to cases like Grok. However, synthetic data currently produces lower-quality models, which is why many companies still prefer using real data—a tradeoff regulators are pushing companies to reconsider.

How can individuals check if their data was in Grok's training set?

Currently, there's no simple way to check, which is itself a violation of GDPR transparency requirements. Users can file a Subject Access Request (SAR) with X Corp asking what personal data they hold and how it was used. In theory, X Corp must respond within one month. If they can't locate the data (the likely scenario), that itself is a compliance failure that should be reported to your national regulator. Users can also file complaints with the ICO directly if they believe their data was misused.
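If you do file a request, it helps to know when the clock runs out. GDPR Article 12(3) sets the response window at one month from receipt, extendable by up to two further months for complex requests. Here's a small sketch of the date arithmetic, assuming the common reading that the deadline is the corresponding calendar date in the following month, or that month's last day when no such date exists. The function name is ours, and weekend and public holiday adjustments are deliberately left out.

```python
import calendar
from datetime import date


def sar_deadline(received: date) -> date:
    """Corresponding calendar date in the following month, clamped to
    that month's last day when no corresponding date exists."""
    year = received.year + (1 if received.month == 12 else 0)
    month = 1 if received.month == 12 else received.month + 1
    last_day = calendar.monthrange(year, month)[1]
    return date(year, month, min(received.day, last_day))


print(sar_deadline(date(2025, 3, 3)))    # 2025-04-03
print(sar_deadline(date(2025, 1, 31)))   # 2025-02-28
print(sar_deadline(date(2025, 12, 15)))  # 2026-01-15
```

Keep a dated copy of your request so you can show the regulator exactly when the deadline passed.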

What This Means for You: Practical Takeaways

The Grok scandal isn't just regulatory theater. It affects you directly in several ways:

Your Privacy is Under New Protection

Regulators are finally taking AI training data seriously. If you're an EU or UK resident, GDPR now actually means something for AI companies. Violations will be enforced. This is new and significant.

Your Data Has Value

Companies can't just take your data for free anymore. They need consent or payment. This might mean:

- More transparent privacy policies from AI companies

- More opt-out options for data usage

- Potential compensation if your data is used

- Clearer explanations of what happens to your information

AI Tools Need Transparency

Before using any AI tool, check:

- What data is used for training?

- Did they get consent from users?

- Do they allow opting out?

- How do they handle explicit or sensitive content?

- What's their data retention policy?

Tools from companies that cut corners (like Grok apparently did) pose higher privacy risks.

Report Violations

If you find your data being used without permission:

- File a Subject Access Request

- Report it to your national regulator

- Document everything

- Consider joining group complaints

Regulators now have teams dedicated to AI privacy. They're actively investigating. Your complaints matter.

Expect Changes in AI Pricing

AI companies can't train on free scraped data anymore. This increases costs. You might see:

- Higher prices for AI tools

- More "freemium" models with limited features

- Paid data licensing becoming visible in company financials

- New business models around data licensing

The cheap AI era may be ending. The sustainable AI era is beginning.

Conclusion: A Turning Point for AI Regulation

The Grok scandal is significant because it marks a genuine turning point. For years, tech companies operated in a regulatory gray zone. Laws existed, but enforcement was slow and uncertain. Companies could scrape data at scale and count on regulators being too slow to catch up.

Grok changed that calculation.

The speed and coordination of regulatory response—investigations from 8+ countries, formal ICO investigation, criminal referrals for CSAM violations—sent a clear message: the era of regulatory forbearance is over.

What we're seeing now is:

- Regulators learning how to regulate AI: The ICO, CNIL, and other authorities have built AI expertise. They know what to look for. They can move quickly.

- Coordinated international enforcement: Countries are working together. A violation in one jurisdiction is now immediately investigated in others.

- High-profile penalties on the horizon: When the Grok fines are announced, they'll be substantial and public. That creates pressure for every other AI company to immediately audit their practices.

- Shifting industry norms: Companies that were cutting corners are now rapidly implementing compliance. This is becoming table stakes, not optional.

- User expectations changing: People are starting to understand that their data matters. They're asking whether AI companies have permission to use it. This demand for transparency will persist.

The Grok case isn't unique. Similar issues exist across the AI industry. But Grok is being made an example of. The penalties will be substantial. The headlines will be loud. And every other AI company is watching closely.

If you're building AI, using AI, or just concerned about privacy in the age of artificial intelligence, the Grok scandal is worth understanding deeply. It shows you what regulators prioritize (consent and transparency), what they'll enforce (heavily), and what the consequences are (very expensive).

The future of AI regulation is being written in the Grok investigation. The rules being established now will govern the industry for the next decade. Companies that comply early will have competitive advantage. Companies that drag their feet will be the next headlines.

The move-fast era is over. Welcome to the move-carefully-and-document-everything era.

Key Takeaways

- Grok trained its AI model on millions of explicit images and personal data without explicit user consent, violating UK GDPR and data protection laws

- The investigation by UK's Information Commissioner's Office is coordinated with 8+ other countries, marking a turning point in global AI regulation enforcement

- Potential exposure is estimated at £150-250 million in fines, remediation costs, and legal expenses, plus ongoing compliance requirements

- AI companies industry-wide are now implementing data governance, consent frameworks, and transparency measures as table stakes for compliance

- Users have enforceable rights to know what data is held, access it, delete it, and object to its use—rights that Grok violated comprehensively
