Byte Dance's Seedance 2.0 AI Video Generator Faces Major Intellectual Property Crisis [2025]

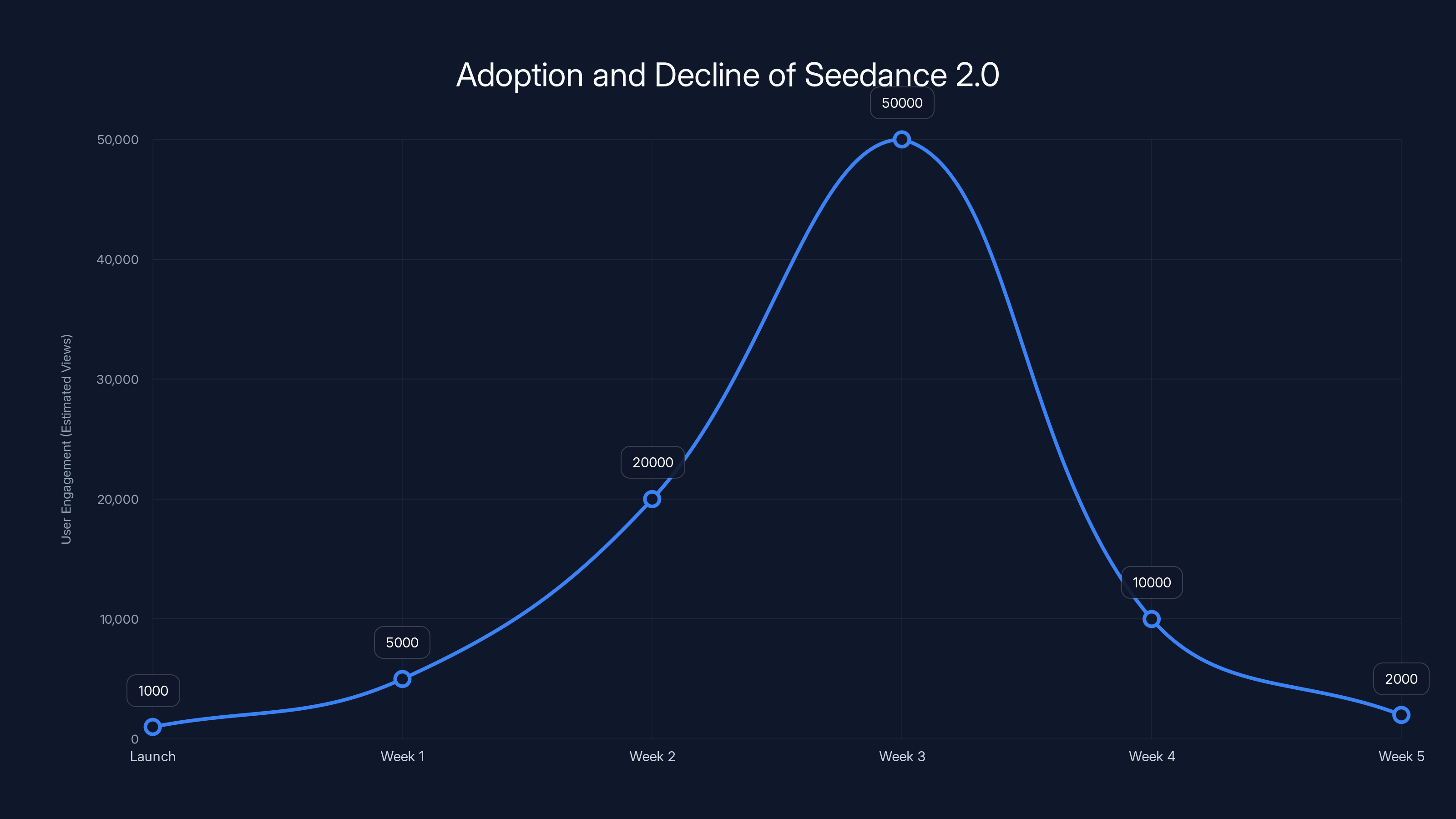

When Byte Dance launched Seedance 2.0, the cease-and-desist letters started arriving within days, and the timing felt almost comedic. The company had released what it believed was a breakthrough in AI video generation: a tool that could create hyper-realistic video content in minutes instead of hours. But within 48 hours, a viral clip showing Tom Cruise and Brad Pitt engaged in a fictional fight was circulating across social media, and suddenly everyone was asking the same question: how is this legal?

The answer, according to Disney and Paramount, is that it isn't. Or at least, not in the way Byte Dance had envisioned.

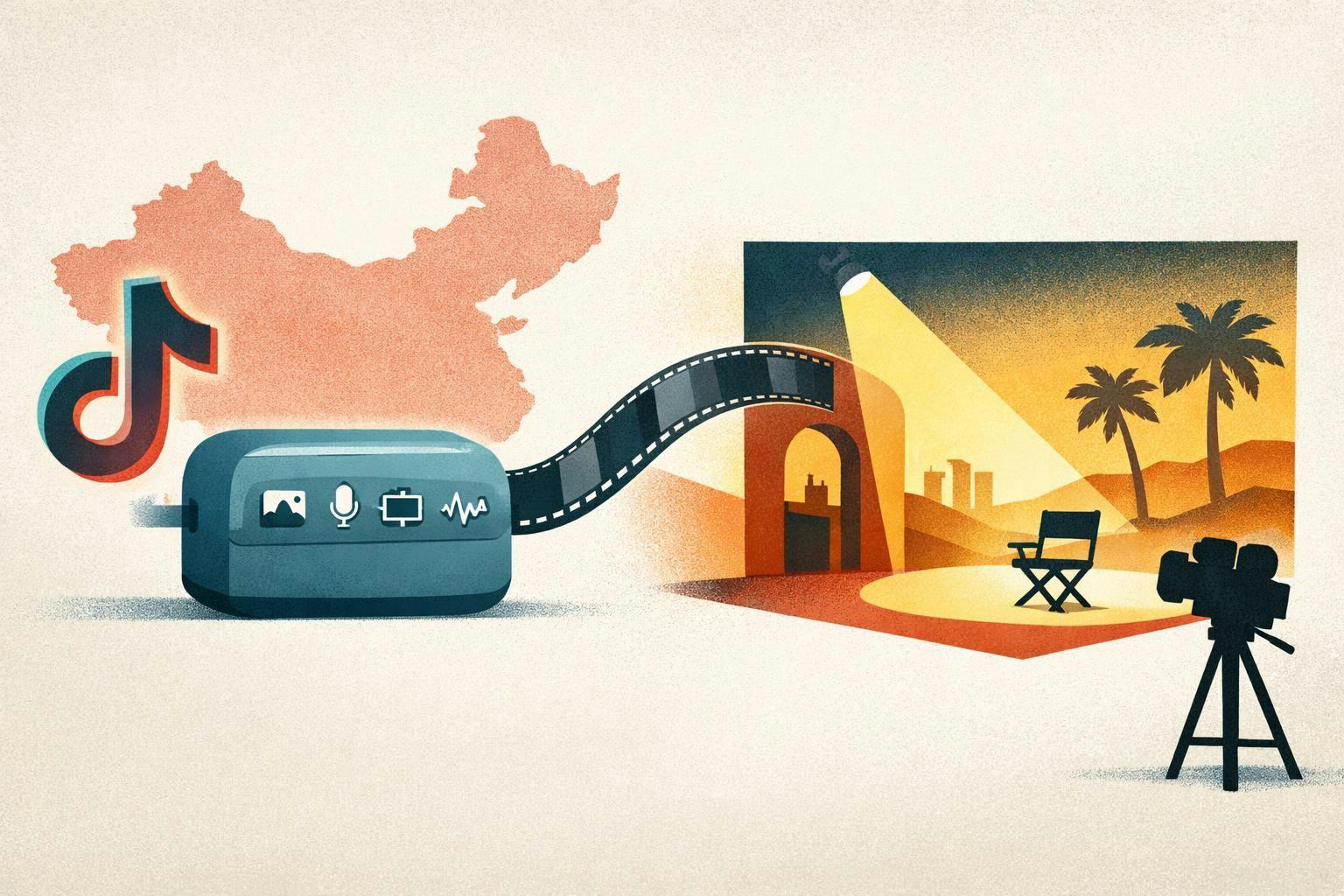

What happened next became one of the most significant wake-up calls in AI development in 2025. Disney fired off a cease-and-desist letter claiming that Seedance 2.0 used a "pirated library" of Disney's copyrighted characters from Star Wars, Marvel, and other franchises. Paramount followed suit with similar complaints. Within days, Byte Dance found itself at the center of a copyright infringement firestorm that exposed fundamental flaws not just in its product but in the entire approach the company had taken to training and deploying AI video technology.

This wasn't just another headline. It was a moment that forced the entire AI industry to confront a question it had been avoiding: if an AI tool can generate photorealistic videos of anyone doing anything, with no built-in protection against using copyrighted material, can intellectual property law as we know it still be enforced?

Let's dig into what actually happened, why it matters beyond the headlines, and what it tells us about the future of AI-generated content.

The Rise and Rapid Fall of Seedance 2.0

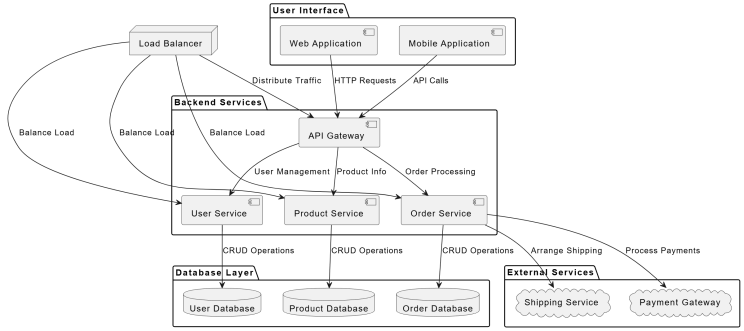

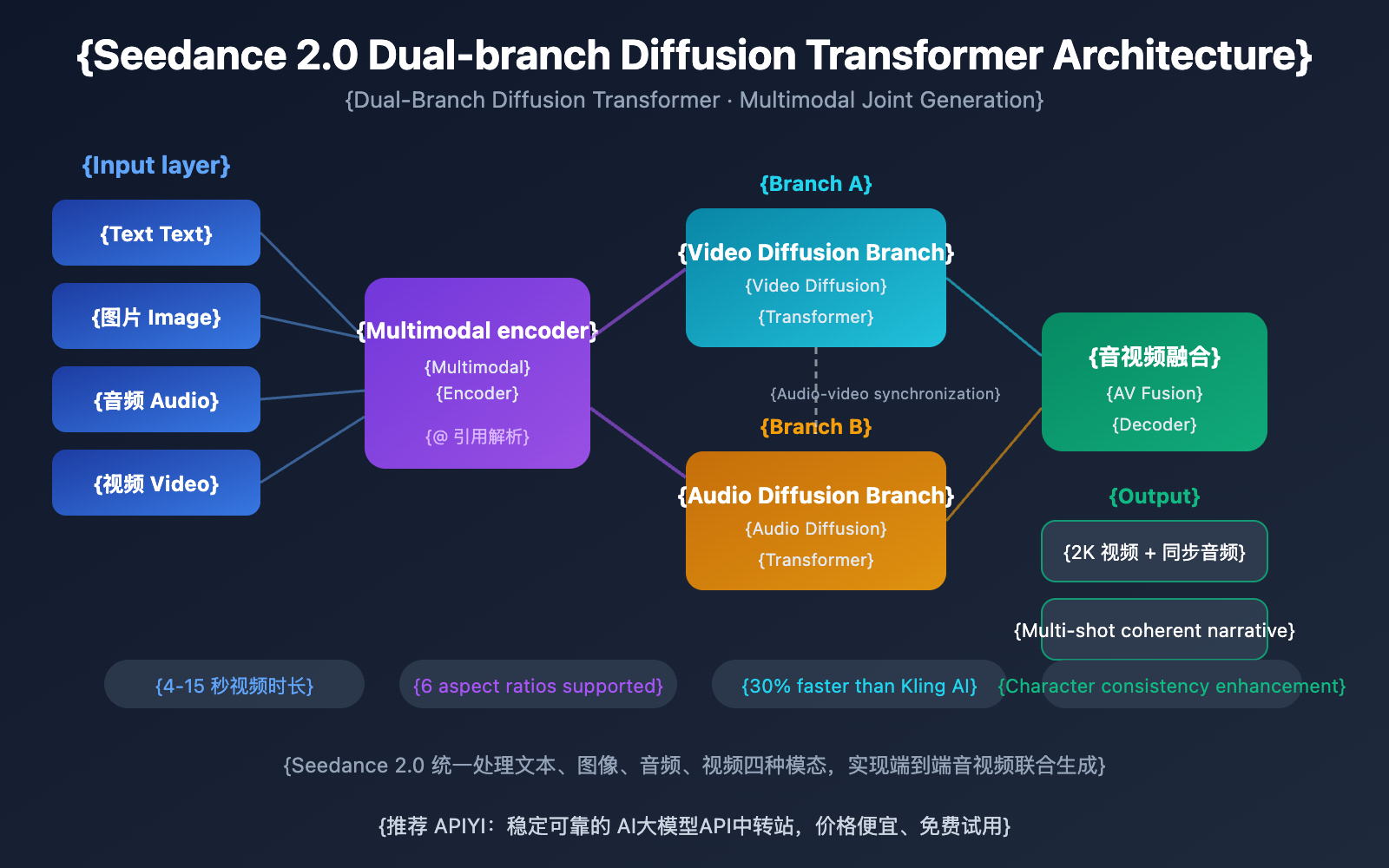

Seedance 2.0 represented a genuine technical achievement. The tool could take a simple text prompt and generate video that appeared to show real people, real places, and real scenarios with shocking fidelity. The underlying technology uses diffusion models trained on massive datasets to understand patterns in visual content, motion, temporal consistency, and human behavior. When you prompted it to show Tom Cruise and Brad Pitt fighting, it didn't require footage of them actually fighting—it synthesized the appearance based on patterns learned from countless hours of training data.

For content creators working within legitimate use cases, this was genuinely powerful. You could generate promotional videos, concept art rendered as video, synthetic footage for indie filmmakers without access to expensive crews. The technology had real applications that didn't involve impersonation or copyright infringement.

But Byte Dance made a critical mistake in how it deployed the tool. They didn't implement robust safeguards to prevent users from generating content that involved copyrighted characters, trademarked personas, or real people without explicit consent. The tool was, essentially, a copyright infringement machine waiting for someone to press the button.

And someone did. Within days, the Tom Cruise versus Brad Pitt clip had millions of views. It was clever, well-executed, and completely unauthorized. Disney watched it spread across social media and made a calculation: they could either let this set a precedent, or they could fight back immediately and publicly.

They chose to fight.

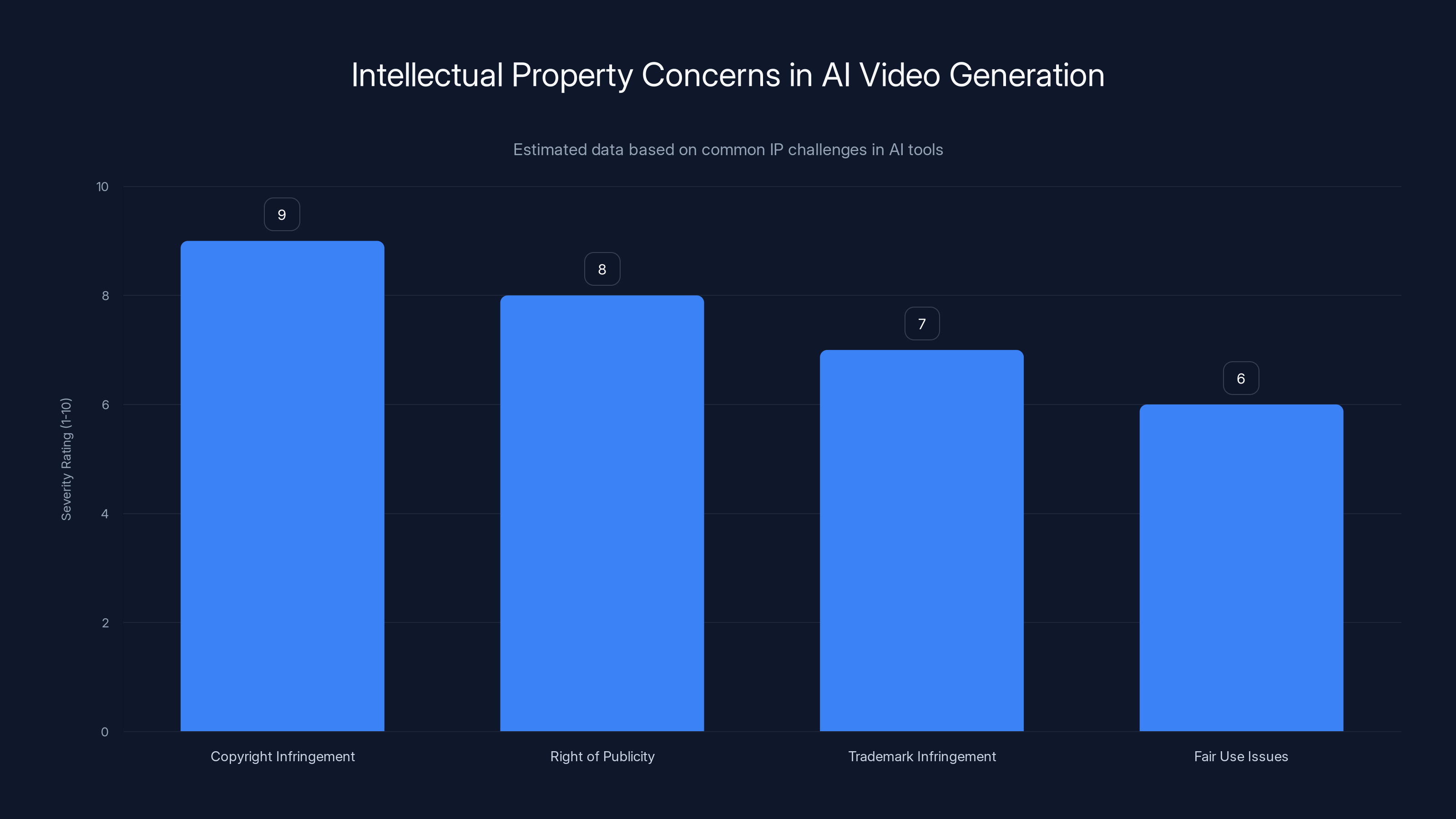

Copyright infringement is the most severe concern in AI video generation, followed by right of publicity and trademark issues. Estimated data based on typical challenges.

Understanding the Legal Angles: Copyright, Likeness, and Intellectual Property

When Disney's legal team drafted their cease-and-desist letter, they had multiple angles of attack. The first and most straightforward was copyright infringement. Disney owns the copyright to Marvel characters like Spider-Man and Star Wars characters like Darth Vader. These aren't just creative works; they're billion-dollar assets. In Disney's view, a tool that generates video featuring these characters without authorization isn't a gray area. It's textbook copyright infringement.

But the legal issues ran deeper than that. There's also the question of right of publicity and personality rights. Tom Cruise and Brad Pitt each hold rights in their own likenesses. When you generate a video that depicts them doing something they didn't actually do, you're using their image, voice, and persona without permission. In many jurisdictions, that can violate their right of publicity, a claim that is separate from copyright and belongs to the individuals themselves.

Then there's the trademark angle. Many of Disney's characters are trademarked. The Star Wars logo, the Marvel branding, the specific visual presentation of these intellectual properties—they're all protected under trademark law. Using them without authorization creates potential trademark infringement claims on top of copyright claims.

What made Disney's letter particularly aggressive was their assertion that Seedance 2.0 had been trained on a "pirated library" of Disney content. This suggested the problem wasn't just that users could misuse the tool—it was that the tool itself was built on unauthorized training data. If Disney could prove that premise, they wouldn't just have a case against users who misused Seedance 2.0. They'd have a case against Byte Dance itself.

Paramount's cease-and-desist involved similar concerns about their intellectual property being used without authorization.

Seedance 2.0 saw a rapid increase in user engagement, peaking in the third week before a sharp decline due to legal issues. Estimated data.

How AI Training Data Becomes a Legal Minefield

Here's where the story gets really complicated. Most large AI models—whether they're image generators, video generators, or text generators—are trained on massive datasets scraped from the internet. These datasets include copyrighted material, trademarked content, and images of real people without their consent. The companies building these tools typically justify this under the "fair use" doctrine, arguing that using copyrighted material for training machine learning models represents transformative use.

But that legal theory remains unsettled. A few US district courts have issued early rulings on AI training, with mixed results, but no appellate court has definitively addressed whether using millions of copyrighted works to train a generative model counts as fair use. And in Byte Dance's case, Disney was arguing something stronger: not just that copyrighted material was used for training, but that the result was a tool that could explicitly generate videos featuring Disney characters.

That's a different argument. If Seedance 2.0 was trained to recognize patterns in Disney's characters specifically, and then deployed with the ability to generate new content featuring those characters, that's not transformation. That's replication with extra steps.

The technical question is: did Byte Dance intentionally include Disney's copyrighted characters in their training data, and did they specifically engineer Seedance 2.0 to be able to generate new content featuring those characters? Or was it a byproduct of training on massive internet-scraped datasets?

If the former, they have a serious legal problem. If the latter, they have a serious product problem, because they deployed a tool without safeguards.

The answer may be a mix of both. There's no public evidence that Byte Dance deliberately set out to make Seedance 2.0 reproduce Disney characters, but it also didn't implement the safeguards that would have prevented either problem.

Byte Dance's Vague Response and What It Really Means

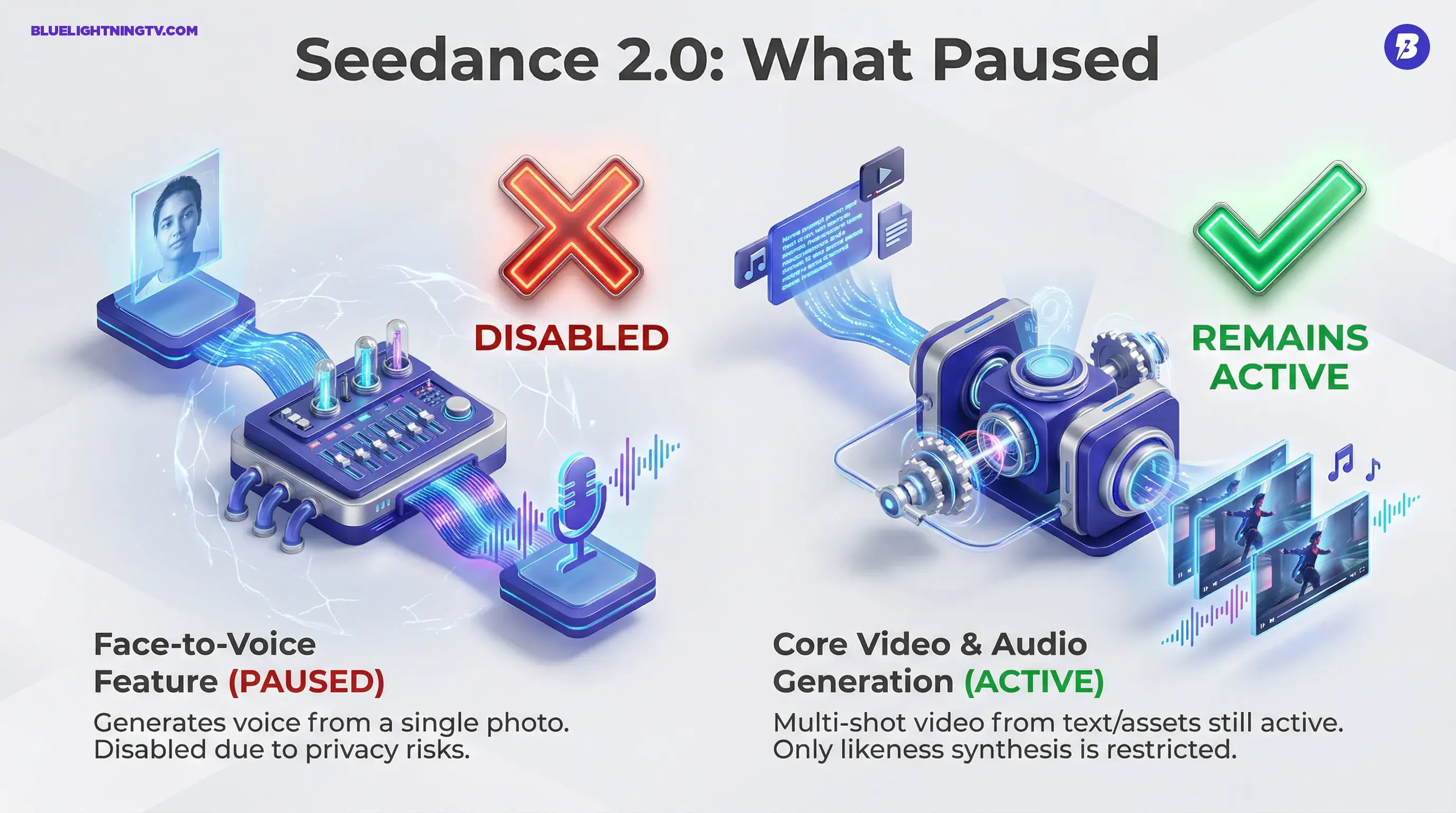

When Byte Dance issued a statement to the BBC, they said they were "taking steps to strengthen current safeguards as we work to prevent the unauthorised use of intellectual property and likeness by users." They also said the company "respects intellectual property rights and we have heard the concerns regarding Seedance 2.0."

That's corporate speak for "we're in trouble and we're going to minimize the damage."

What they actually said was remarkably vague. They didn't commit to specific changes. They didn't explain how they would prevent copyrighted content from being generated. They didn't address whether their training data included unauthorized material. They essentially announced that they would "strengthen safeguards" without explaining what that meant or how it would work.

This vagueness is telling. If Byte Dance had a clear technical solution ready to implement, they would have explained it. The fact that they didn't suggests they were still figuring out how to handle the problem.

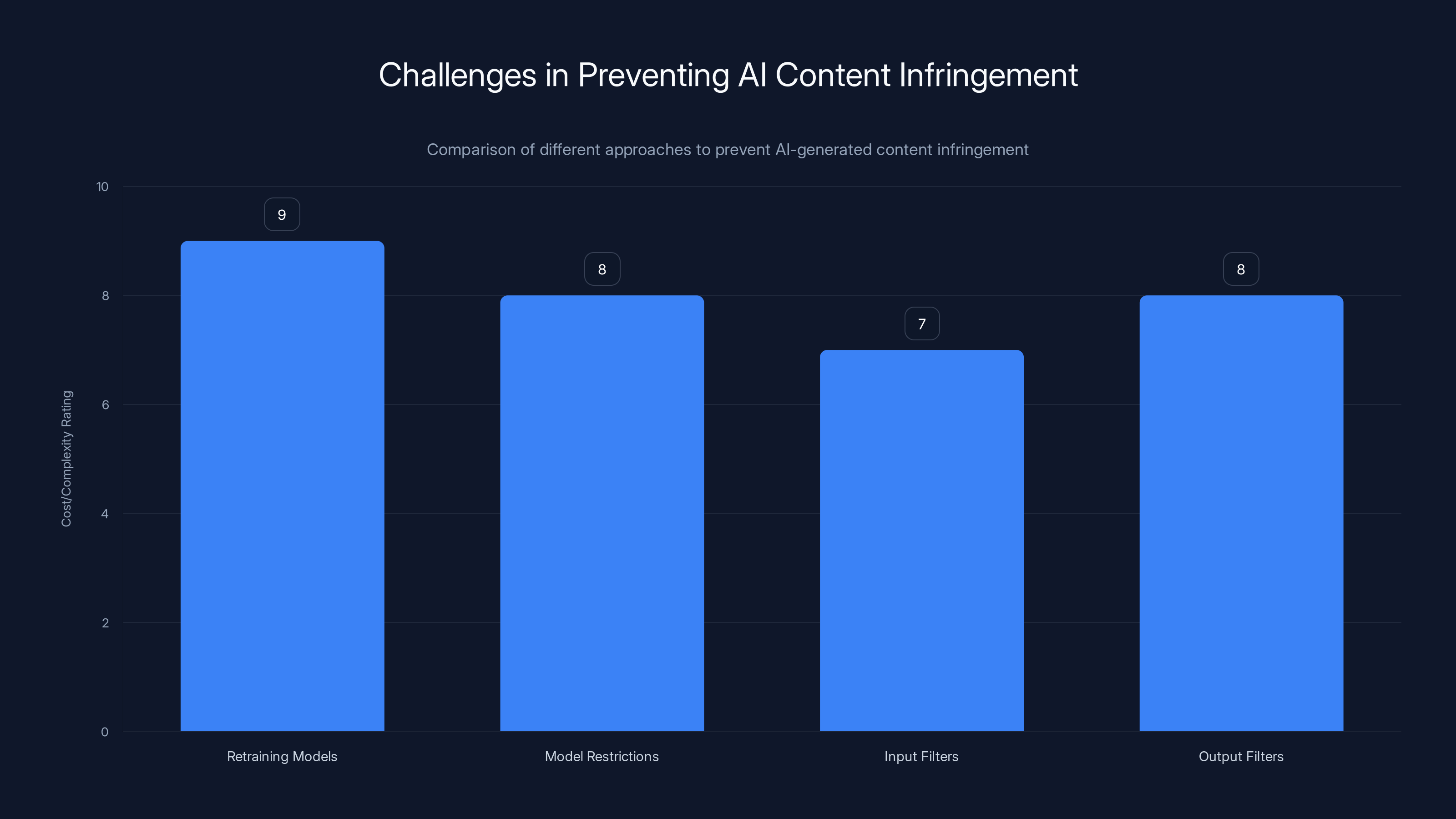

The core issue is this: how do you prevent an AI video generator from creating videos of copyrighted characters? There are a few approaches:

First approach: Blocklist-based filtering. You maintain a list of copyrighted characters, real people, and trademarked content, and you block any prompts that try to generate videos featuring them. This is straightforward to implement but requires constant updating as new intellectual property emerges. It also only works if users are explicit about what they want to generate. If they ask for "a furry creature in an open field," you might not catch that they're asking for Baby Yoda.
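
A minimal sketch of prompt-side blocklist filtering follows. The `BLOCKLIST` entries and the `normalize`, `blocked_terms`, and `is_allowed` helpers are hypothetical, written for illustration; they are not anything Byte Dance has described. The example shows both sides of the trade-off: explicit names are caught, while a descriptive paraphrase passes straight through.

```python
import re

# Hypothetical blocklist. A real deployment would need far more entries
# (characters, franchises, real people) and constant updating.
BLOCKLIST = {
    "darth vader",
    "spider-man",
    "baby yoda",
    "tom cruise",
    "brad pitt",
}


def normalize(text: str) -> str:
    """Lowercase and collapse punctuation/whitespace so 'Spider-Man!' becomes 'spider-man'."""
    text = text.lower()
    # Keep letters, digits, hyphens and spaces; turn everything else into a space.
    text = re.sub(r"[^a-z0-9\- ]+", " ", text)
    return re.sub(r"\s+", " ", text).strip()


def blocked_terms(prompt: str) -> list[str]:
    """Return blocklisted terms that appear as whole phrases in the prompt."""
    padded = f" {normalize(prompt)} "
    return sorted(term for term in BLOCKLIST if f" {term} " in padded)


def is_allowed(prompt: str) -> bool:
    return not blocked_terms(prompt)


print(blocked_terms("Tom Cruise fights Brad Pitt on a rooftop"))
# ['brad pitt', 'tom cruise']
print(is_allowed("a small green furry creature with big ears in an open field"))
# True: the paraphrase slips through
```

Exact phrase matching is cheap and predictable, which is why it tends to be the first line of defense, but it only sees the words the user chose to type.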

Second approach: Content detection at the output stage. Instead of blocking prompts, you generate the video and then analyze it to detect whether it contains copyrighted characters or real people without authorization. You can use computer vision to identify characters, faces, and trademarked imagery. This is more sophisticated but has false positives and false negatives. It's also computationally expensive.
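
Output-stage detection is often framed as a similarity search: embed each generated frame with an image encoder and compare it against embeddings of protected reference images. The sketch below assumes such an encoder exists and uses toy 3-dimensional vectors in place of its output; `REFERENCE_EMBEDDINGS`, `flag_frames`, and the 0.9 threshold are hypothetical. The threshold is where the false-positive/false-negative trade-off lives.

```python
import math

# Hypothetical reference embeddings. In practice these would come from an image
# encoder run over official reference art; these 3-d vectors are toy values.
REFERENCE_EMBEDDINGS = {
    "character_a": [0.9, 0.1, 0.0],
    "character_b": [0.0, 0.8, 0.6],
}


def cosine(u: list[float], v: list[float]) -> float:
    """Cosine similarity between two vectors (0.0 if either is all zeros)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def flag_frames(frame_embeddings: list[list[float]], threshold: float = 0.9):
    """Return (frame_index, character, score) for each frame/reference pair at or above threshold."""
    hits = []
    for i, frame in enumerate(frame_embeddings):
        for name, ref in REFERENCE_EMBEDDINGS.items():
            score = cosine(frame, ref)
            if score >= threshold:
                hits.append((i, name, round(score, 3)))
    return hits


frames = [[0.88, 0.12, 0.01], [0.3, 0.3, 0.3]]
print(len(flag_frames(frames)))                 # 1: frame 0 matches character_a
print(len(flag_frames(frames, threshold=0.6)))  # 3: frame 1 now matches both references
```

Lowering the threshold catches more near-misses but also flags ambiguous frames like frame 1, which is the false-positive cost described above; every frame also needs an encoder pass, which is where the computational expense comes from.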

Third approach: Restricting the training data. You retrain the model using only authorized content, or you filter your training data to exclude copyrighted material. This solves the problem at the source but requires massive retraining effort and potentially reduces the model's capabilities.
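
Filtering training data at the source can be sketched as a default-deny pass over a dataset manifest. The license labels, `Clip` fields, and `filter_manifest` helper below are hypothetical; real scraped datasets rarely carry clean provenance metadata, which is part of why this approach is so costly.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical license labels considered safe to train on.
ALLOWED_LICENSES = {"owned", "licensed", "cc0", "cc-by"}


@dataclass(frozen=True)
class Clip:
    clip_id: str
    source_url: str
    license_tag: Optional[str]  # None means provenance is unknown


def filter_manifest(clips: list[Clip]) -> tuple[list[Clip], list[Clip]]:
    """Split clips into (kept, dropped). Unknown or unlisted licenses are dropped."""
    kept, dropped = [], []
    for clip in clips:
        if clip.license_tag is not None and clip.license_tag.lower() in ALLOWED_LICENSES:
            kept.append(clip)
        else:
            dropped.append(clip)
    return kept, dropped


manifest = [
    Clip("c1", "https://example.com/own/1.mp4", "owned"),
    Clip("c2", "https://example.com/scraped/2.mp4", None),
    Clip("c3", "https://example.com/film/3.mp4", "all-rights-reserved"),
]
kept, dropped = filter_manifest(manifest)
print([c.clip_id for c in kept], [c.clip_id for c in dropped])
# ['c1'] ['c2', 'c3']
```

Treating unknown provenance as disallowed is the safe choice legally, and it is also exactly why the filtered dataset shrinks and the retrained model may lose capability.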

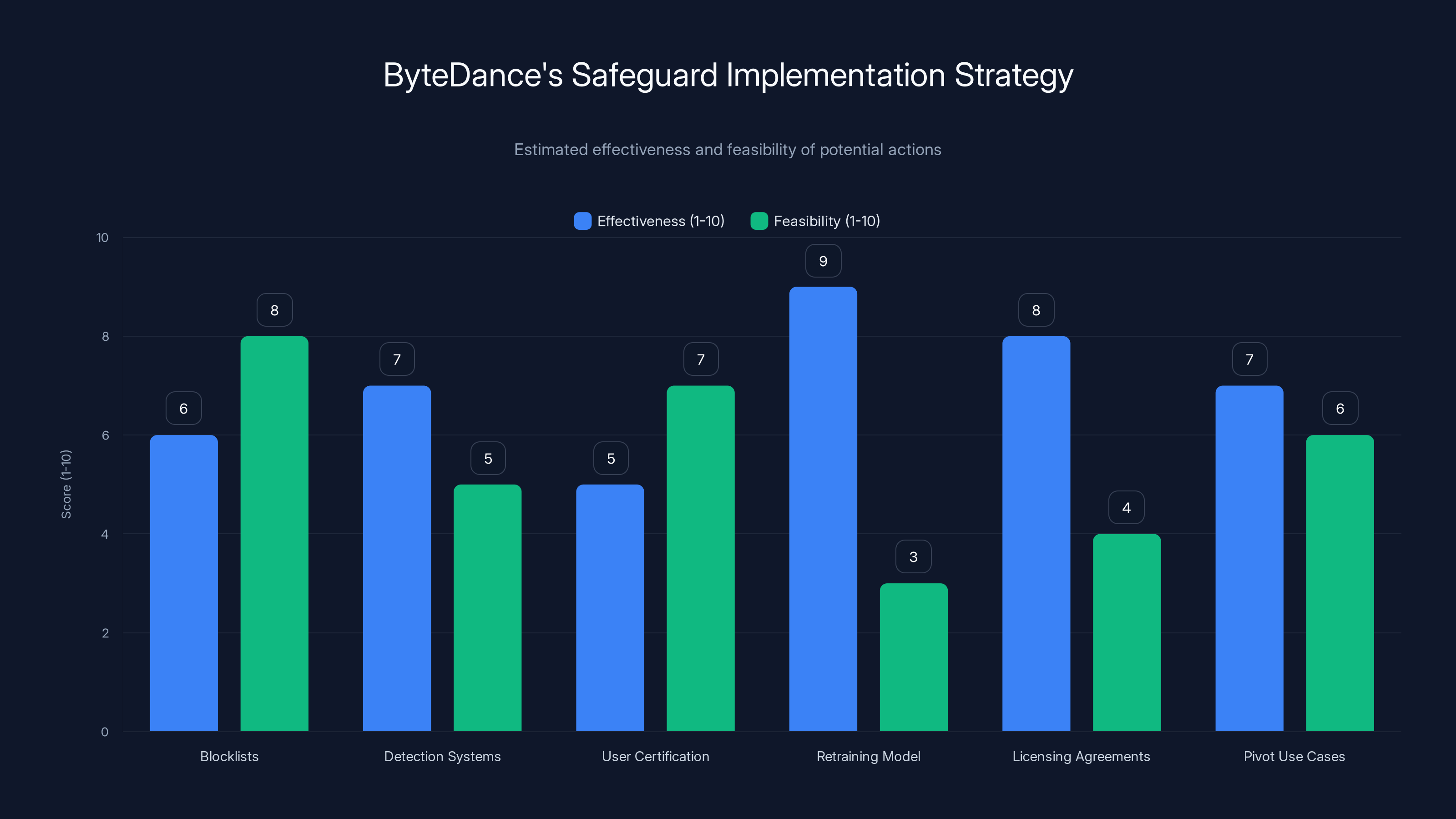

Fourth approach: Licensing agreements. You get permission from Disney, Paramount, and other rights holders to include their intellectual property in your training data and to let users generate content featuring those characters. This is expensive, but it is the most legally defensible option. It's not clear it is feasible at scale.
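
Operationally, licensing turns into a rights lookup before generation. The registry structure, property names, and `may_generate` function below are hypothetical; the point is that every entry stands for a separately negotiated contract with its own term and permitted uses, which is where the scale problem comes from.

```python
from datetime import date

# Hypothetical registry: protected property -> (licensor, expiry date, permitted uses).
LICENSE_REGISTRY = {
    "character_x": ("Studio A", date(2026, 12, 31), frozenset({"personal", "promotional"})),
}


def may_generate(property_id: str, use: str, on: date) -> bool:
    """Default-deny: allow generation only under an unexpired license covering this use."""
    entry = LICENSE_REGISTRY.get(property_id)
    if entry is None:
        return False  # no license on file
    _licensor, expires, permitted_uses = entry
    return on <= expires and use in permitted_uses


print(may_generate("character_x", "personal", date(2026, 6, 1)))    # True
print(may_generate("character_x", "commercial", date(2026, 6, 1)))  # False
```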

Byte Dance hasn't publicly committed to any of these approaches. That's the real problem.

Each approach to prevent AI content infringement has significant drawbacks, with retraining models being the most costly and complex. Estimated data.

The Disney Cease-and-Desist: The Nuclear Option in Copyright Disputes

Disney didn't write a gentle reminder or a polite letter asking Byte Dance to please implement safeguards. Disney fired off a cease-and-desist letter. This is the legal equivalent of clearing your throat very loudly. It's a formal demand that Byte Dance stop the infringing activity, and it establishes that Disney is aware of the infringement and is taking action to stop it.

This matters because it affects potential liability. If Byte Dance were sued for copyright infringement after receiving the cease-and-desist letter, Disney could argue willful infringement. Under US copyright law, willfulness raises the ceiling on statutory damages from $30,000 to $150,000 per infringed work, and courts can also award attorney's fees. Conversely, if Byte Dance doesn't comply with the cease-and-desist, it is effectively signaling that it is willing to risk litigation.
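
For a sense of scale, US copyright law lets a rights holder elect statutory damages, set per infringed work under 17 U.S.C. § 504(c): ordinarily $750 to $30,000, up to $150,000 where infringement is willful, and as low as $200 where it is innocent. The sketch below only computes the bounds a court would choose within. It assumes the works were timely registered (a precondition for statutory damages), ignores the alternative of actual damages and profits, and treats the number of works as a hypothetical input rather than a figure from this dispute.

```python
# Per-work statutory damage bounds under 17 U.S.C. § 504(c), in US dollars.
STATUTORY_BOUNDS = {
    "innocent": (200, 30_000),
    "ordinary": (750, 30_000),
    "willful": (750, 150_000),
}


def statutory_exposure(num_works: int, kind: str = "ordinary") -> tuple[int, int]:
    """Return (minimum, maximum) total statutory damages for num_works infringed works."""
    if num_works < 0:
        raise ValueError("num_works must be non-negative")
    low, high = STATUTORY_BOUNDS[kind]
    return num_works * low, num_works * high


print(statutory_exposure(100, "ordinary"))  # (75000, 3000000)
print(statutory_exposure(100, "willful"))   # (75000, 15000000)
```

The willful ceiling is five times the ordinary one, which is why putting a company on formal notice matters so much.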

For a company like Byte Dance—which is already under regulatory scrutiny in the United States and other countries—litigation is a luxury they can't afford. Even if they believed they had a strong legal case, the reputational and political damage of fighting Disney in court would be enormous.

So when Disney sent that letter, they weren't asking. They were demanding. And they were implicitly threatening legal action backed by essentially unlimited legal resources.

Disney has a long track record of aggressively defending its intellectual property. The Tom Cruise versus Brad Pitt video was too high-profile to ignore. It showed the world how easily the tool could replicate recognizable characters and faces without authorization.

Paramount's Parallel Action and the Industry Pattern

Paramount's cease-and-desist letter following Disney's created a pattern. It suggested this wasn't just Disney being protective of their assets—it was an industry-wide signal that rights holders would not tolerate AI video generators used to replicate their intellectual property.

Paramount owns franchises like Star Trek, Mission: Impossible, and various television and film properties. If Seedance 2.0 could generate content featuring these properties, Paramount had the same concerns and the same legal standing to demand that the generator stop.

When two major studios issue cease-and-desist letters within days of each other, it signals something important: this is not a one-off complaint but an industry-wide response. There may also be coordination behind the scenes. Studios talk to each other about legal threats, and when one studio successfully pressures a company to change its behavior, others take note.

The initial pressure came from Disney and Paramount. If this drags out, we would likely see cease-and-desist letters from Universal, Sony, Warner Bros., and others. That Disney and Paramount moved first suggests they were the most directly affected studios, but the threat of additional legal action was almost certainly implied.

Estimated data shows that retraining the model is highly effective but less feasible due to cost, while blocklists are more feasible but less effective.

The Technical Reality: Why Simple Solutions Won't Work

One of the fundamental challenges in policing AI-generated content is that the technology is indifferent to intellectual property. An AI model trained to understand visual patterns doesn't inherently "know" that some patterns are copyrighted and others aren't. It just learns to replicate patterns.

If you show an AI model 10,000 images of Spider-Man and train it to generate images that look like Spider-Man, it will generate images that look like Spider-Man. That's what it learned to do. The fact that Spider-Man is a copyrighted character doesn't change the model's behavior. It doesn't care.

So preventing infringement at the model level means either:

- Not including copyrighted material in the training data in the first place

- Building the model in a way that explicitly prevents it from replicating certain patterns

- Adding filters at the input stage that prevent users from requesting infringing content

- Adding filters at the output stage that detect infringing content

Each of these approaches has serious drawbacks.

Approach 1 means retraining massive models from scratch using only authorized content. That's enormously expensive and time-consuming. It also reduces the model's capabilities because you're removing training data.

Approach 2 means building in restrictions that require knowing in advance what shouldn't be replicated. This is brittle. New intellectual property emerges constantly. You can't update the model in real-time.

Approach 3 means maintaining blacklists of words and phrases that users can't use. This is easy to circumvent. Users can describe what they want to generate without naming it explicitly.
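
A blocklist can be hardened against the cheapest evasions, such as leetspeak and misspellings, with fuzzy matching. The sketch below uses the standard library's `difflib.get_close_matches` over short word n-grams; the blocklist, the character substitutions in `LEET`, and the 0.85 cutoff are hypothetical choices. It still cannot catch a prompt that describes a character without naming it.

```python
import difflib
import re

# Hypothetical blocklist and leetspeak substitutions.
BLOCKLIST = ["darth vader", "spider-man", "tom cruise"]
LEET = str.maketrans({"4": "a", "3": "e", "1": "i", "0": "o", "5": "s", "7": "t"})


def find_blocked(prompt: str, cutoff: float = 0.85, max_words: int = 3) -> list[str]:
    """Fuzzy-match every 1..max_words word n-gram of the prompt against the blocklist."""
    words = re.findall(r"[a-z0-9\-]+", prompt.lower().translate(LEET))
    hits = set()
    for n in range(1, max_words + 1):
        for i in range(len(words) - n + 1):
            phrase = " ".join(words[i : i + n])
            hits.update(difflib.get_close_matches(phrase, BLOCKLIST, n=1, cutoff=cutoff))
    return sorted(hits)


print(find_blocked("d4rth vadr in space"))  # ['darth vader']
print(find_blocked("T0m Cruis3 surfing"))   # ['tom cruise']
print(find_blocked("the dark lord in a black helmet who breathes heavily"))  # []
```

Each added layer of normalization closes one evasion route, but the last example shows why string matching alone cannot keep up with users who simply describe what they want.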

Approach 4 means building detection systems that can identify copyrighted content in the generated video. This is computationally expensive and imperfect. It also adds significant latency to the generation process.
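
One common way to bound the cost and latency of output-stage detection is to run the detector on a sample of frames rather than every frame. The helper names and the 30 ms per-frame detector cost below are hypothetical numbers for illustration, and the estimate assumes frames are checked one after another.

```python
def sampled_frame_indices(num_frames: int, stride: int) -> list[int]:
    """Every stride-th frame, always including the final frame."""
    if num_frames <= 0 or stride <= 0:
        raise ValueError("num_frames and stride must be positive")
    indices = list(range(0, num_frames, stride))
    if indices[-1] != num_frames - 1:
        indices.append(num_frames - 1)
    return indices


def detection_latency_ms(num_frames: int, stride: int, ms_per_frame: float) -> float:
    """Serial detector time if each checked frame costs ms_per_frame."""
    return len(sampled_frame_indices(num_frames, stride)) * ms_per_frame


# A 10-second clip at 24 fps has 240 frames.
print(detection_latency_ms(240, 1, 30))   # 7200: every frame checked
print(detection_latency_ms(240, 12, 30))  # 630: 21 sampled frames
```

The saving comes with a new false-negative risk: content that appears only between sampled frames is never checked.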

There's no silver bullet. Byte Dance can't actually prevent all infringing use of Seedance 2.0 without fundamentally crippling the tool.

The Broader Implications for AI Development

The Seedance 2.0 controversy is a microcosm of a larger problem in the AI industry. Companies have been building massive AI models trained on internet-scraped data that includes copyrighted material, and then deploying these models with minimal safeguards against infringing use.

This approach works fine as long as nobody cares enough to sue. But as AI becomes more capable and more commercially viable, the incentive for rights holders to sue increases dramatically. Disney's cease-and-desist letter was a shot across the bow. It's a signal that the era of consequence-free training on copyrighted material might be coming to an end.

Meanwhile, other companies working on AI video generation are watching this unfold and thinking about their own legal exposure. If you're training an AI video generator and you know there's potential for copyright infringement, do you wait for Disney to sue you, or do you implement safeguards proactively?

The rational choice is to implement safeguards proactively. But that adds cost and complexity and reduces capabilities. It's a business problem disguised as a technical problem.

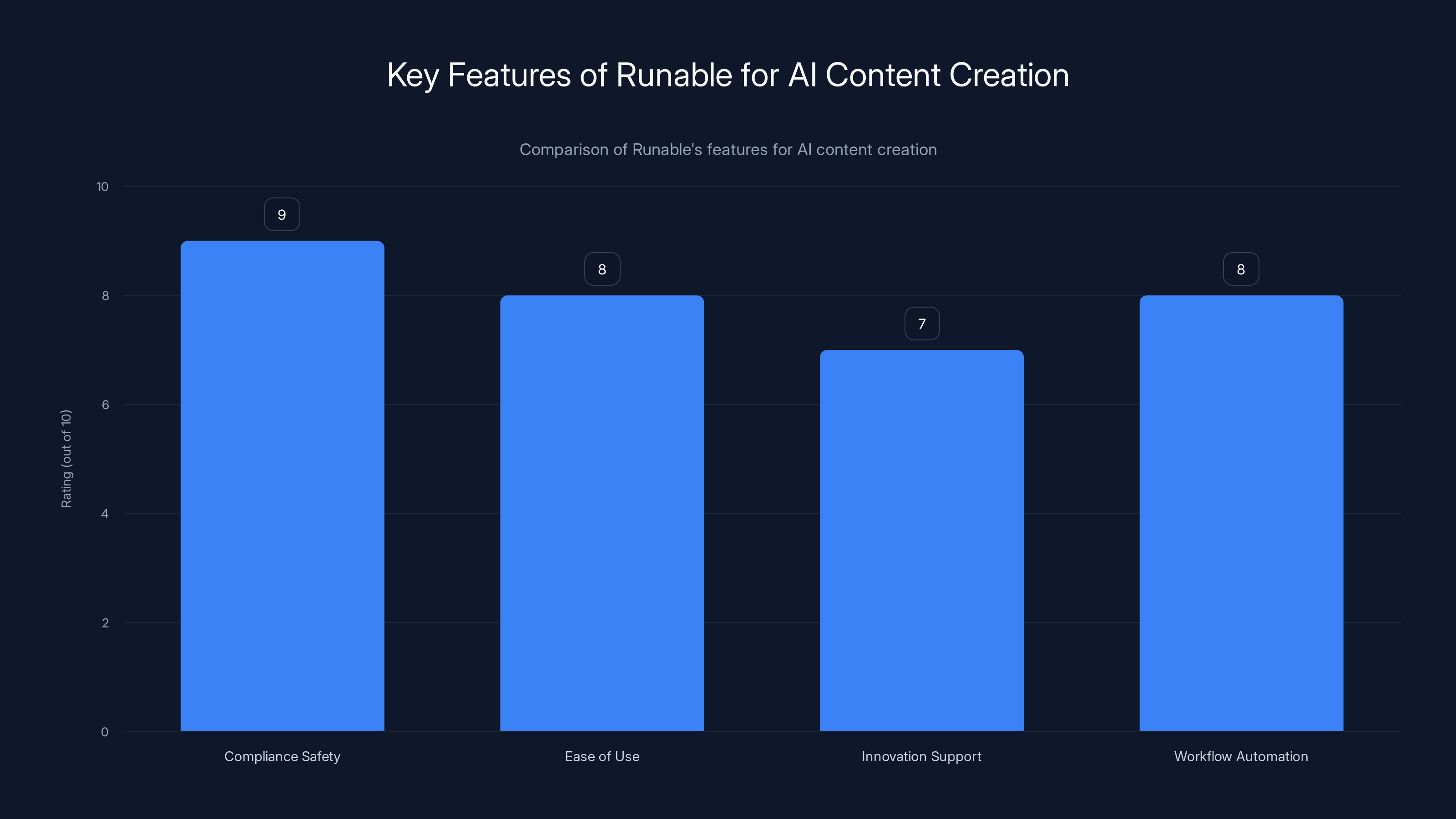


What Happens to Users of Seedance 2.0?

Here's a question that doesn't get asked often: if someone used Seedance 2.0 to generate a video featuring copyrighted characters before the cease-and-desist letters arrived, what happens to them?

Legally, they're potentially liable for copyright infringement. Just because Byte Dance provided the tool doesn't mean users are innocent. If you use a tool to infringe someone's copyright, you're still infringing their copyright.

But in practice, Disney and Paramount are almost certainly not going to pursue legal action against individual users who generated videos with Seedance 2.0. That would be terrible PR. Instead, they're going to pursue Byte Dance, the company that created and deployed the tool.

Byte Dance, meanwhile, might argue that it provided a tool and users misused it. The legitimate uses described above give that argument some weight: the Supreme Court's 1984 Sony Betamax decision shielded makers of products capable of substantial noninfringing uses. But that defense only goes so far. If you create a tool you know will be used to infringe copyrights, and you don't implement reasonable safeguards to prevent that infringement, courts have found tool makers liable under theories of contributory or vicarious infringement.

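The DMCA's safe harbor provisions might provide some protection, but they were written for services that host or transmit material supplied by users, and it is unclear how they apply when the service itself generates the output. Byte Dance probably doesn't have a get-out-of-jail-free card just because users chose to infringe copyrights.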

The Training Data Question: What Did Byte Dance Really Do?

One of the big unanswered questions is whether Byte Dance intentionally included copyrighted Disney and Paramount content in Seedance 2.0's training data, or whether it happened as a byproduct of training on internet-scraped data.

If they intentionally included Disney characters in the training data specifically to make Seedance 2.0 better at generating videos of Disney characters, that's intentional infringement. That's a much more serious legal problem.

If the training data simply included copyrighted material because it was scraped from the internet, and Byte Dance didn't specifically curate the training data to include copyrighted material, that's a different (though still problematic) situation. In that case, Byte Dance's mistake was deploying a tool without safeguards, not deliberately training on copyrighted material.

The distinction matters for the severity of potential legal liability, but either way, Byte Dance has a problem. Either they trained on copyrighted material deliberately (really bad), or they trained on copyrighted material and deployed a tool without safeguards that could prevent infringing use (also really bad).

Publicly available information suggests the latter is more likely. But Disney's assertion that Seedance 2.0 used a "pirated library" suggests they have reason to believe the former might also be true.

Estimated data shows copyright infringement as the most significant legal challenge in AI training data, followed by trademark violations and privacy concerns.

How Will Byte Dance Actually "Strengthen Safeguards"?

Assuming Byte Dance doesn't want to get sued into oblivion, they need to actually implement safeguards that meaningfully reduce the risk of infringing use. Here's what that might look like in practice:

Immediate actions: They could implement blocklists of known copyrighted characters and real people. They could block prompts that explicitly reference copyrighted franchises. They could remove features that allow you to generate videos of specific, identifiable real people. This wouldn't be perfect, but it would reduce the lowest-hanging fruit for infringing use.

Medium-term actions: They could build detection systems that analyze generated videos and flag those that appear to contain copyrighted characters or real people. They could require users to certify that they have rights to the content they're generating. They could implement DMCA-style takedown procedures where rights holders can request that specific generated content be removed.
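
A DMCA-style takedown procedure is essentially a small state machine. The sketch below is loosely modeled on the notice and counter-notice flow of the DMCA safe harbor (17 U.S.C. § 512); the state names, fields, and `TakedownRequest` class are hypothetical, and statutory details such as counter-notice waiting periods are not modeled.

```python
from dataclasses import dataclass, field
from enum import Enum


class Status(Enum):
    RECEIVED = "received"
    REMOVED = "removed"    # content taken down after a valid notice
    REJECTED = "rejected"  # notice found invalid or incomplete
    RESTORED = "restored"  # content reinstated after a counter-notice


# Which transitions the workflow permits from each state.
ALLOWED_TRANSITIONS = {
    Status.RECEIVED: {Status.REMOVED, Status.REJECTED},
    Status.REMOVED: {Status.RESTORED},
    Status.REJECTED: set(),
    Status.RESTORED: set(),
}


@dataclass
class TakedownRequest:
    video_id: str
    claimant: str
    work_identified: str  # the copyrighted work the claimant says was infringed
    status: Status = Status.RECEIVED
    history: list[tuple[Status, Status]] = field(default_factory=list)

    def transition(self, new_status: Status) -> None:
        """Move to new_status, refusing transitions the workflow does not allow."""
        if new_status not in ALLOWED_TRANSITIONS[self.status]:
            raise ValueError(f"cannot move from {self.status.value} to {new_status.value}")
        self.history.append((self.status, new_status))
        self.status = new_status


req = TakedownRequest("vid-123", "Studio A", "Character X")
req.transition(Status.REMOVED)
print(req.status.value)  # removed
```

Keeping an explicit history of transitions gives both sides an audit trail, which matters when a rights holder later asks whether flagged content was actually removed.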

Long-term actions: They could retrain the model using only authorized training data. They could negotiate licensing agreements with major studios. They could pivot to different use cases that don't involve replicating real people or copyrighted characters.

Each of these approaches has trade-offs. Blocklists and filtering reduce flexibility and can be circumvented. Detection systems add latency and cost. Requiring user certification is easy to ignore. Retraining with authorized data is enormously expensive. Licensing agreements are economically challenging. Pivoting use cases means admitting the current approach is flawed.

Byte Dance probably can't implement all of these solutions simultaneously. They'll likely start with the quick wins: blocklists, explicit prohibitions on real people, and improved terms of service that require users to certify they have necessary rights. Whether that's enough to satisfy Disney, Paramount, and other rights holders remains to be seen.
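
The quick wins described above can be layered so that cheap checks run first and expensive steps run only when needed. The `moderate` function and its callable parameters below are a hypothetical sketch of that ordering, not a description of any real Seedance pipeline; the stubs in the usage stand in for a real blocklist, generator, and detector.

```python
from typing import Callable, Optional, Tuple


def moderate(
    prompt: str,
    user_certified_rights: bool,
    prompt_check: Callable[[str], bool],
    generate: Callable[[str], bytes],
    output_check: Callable[[bytes], bool],
) -> Tuple[Optional[bytes], str]:
    """Run checks cheapest-first; generation and output scanning happen only if earlier checks pass."""
    if not prompt_check(prompt):   # 1. cheap: prompt blocklist
        return None, "blocked: prompt"
    if not user_certified_rights:  # 2. ToS certification: a speed bump, easily ignored
        return None, "blocked: no rights certification"
    video = generate(prompt)       # 3. the expensive step
    if not output_check(video):    # 4. detection on the generated output
        return None, "blocked: output"
    return video, "ok"


calls = []


def fake_generate(p: str) -> bytes:
    calls.append(p)
    return b"video-bytes"


print(moderate("darth vader duel", True, lambda p: "vader" not in p, fake_generate, lambda v: True))
# (None, 'blocked: prompt')
print(calls)  # []: nothing was generated
```

Ordering the checks this way means the costly generation and detection steps are spent only on prompts that have already cleared the cheap filters.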

The Regulatory Angle: Government Action and AI Governance

Beyond private legal action, there's the question of whether governments will get involved. The United States hasn't yet passed comprehensive AI regulation, but various agencies have shown interest in AI safety and responsible AI development.

The Copyright Office has been studying AI and copyright issues. Its conclusions don't bind courts, but if it concludes that training AI models on copyrighted material without permission constitutes infringement, that analysis could carry weight in litigation and add to the legal exposure of companies like Byte Dance. And even though Byte Dance isn't a US company, it has US users and therefore potential US legal exposure.

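The European Union has been more aggressive, through the AI Act, which among other things requires providers of general-purpose AI models to put in place a policy for complying with EU copyright law and requires deepfakes to be disclosed as artificially generated. If Seedance 2.0 becomes available in the EU, it could face additional scrutiny under these rules.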

Right now, the legal framework for AI and copyright is still being established. Courts will ultimately decide whether training AI models on copyrighted material constitutes fair use. But in the meantime, companies building and deploying AI tools operate in legal gray areas with significant risk.

Byte Dance's situation could accelerate the development of clearer legal frameworks. If Disney and Paramount win their claims against Byte Dance, that precedent will shape how other companies approach AI training and deployment. If Byte Dance wins, that will have the opposite effect.

But realistically, this is unlikely to go to trial. It's more likely to result in a settlement where Byte Dance agrees to implement certain safeguards, pays some damages, and moves on. That would be the path of least resistance for all parties involved.

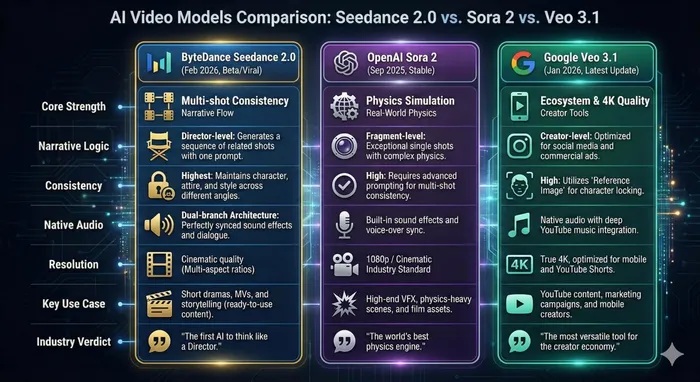

Parallel Developments: Other AI Video Generators and Copyright

Seedance 2.0 wasn't the first AI video generator to face copyright concerns, and it won't be the last. Companies building similar tools, such as OpenAI's Sora and Google's Veo, are all dealing with the same fundamental issues around training data and output safeguards.

What's different about Byte Dance is that they were more explicit about what Seedance 2.0 could do, and they deployed it with fewer apparent safeguards. They created a tool that was almost optimized for copyright infringement. That made them an easy target.

Other companies have been more cautious. They've been limiting access, requiring explicit user agreements, implementing safety filters, and generally trying to reduce their legal exposure. Byte Dance essentially did the opposite. They released the tool broadly and let users figure out what it could do.

That approach might have worked if nobody important got offended. But someone did, and now Byte Dance is dealing with the consequences.

The Future of AI-Generated Video Content

The Seedance 2.0 controversy raises a fundamental question: can we have AI video generators that are both capable and legally compliant?

The honest answer is: we don't know yet. The technology is advancing faster than the legal framework. Copyright law was written for a world where copying something required intentional action by a human. AI models can replicate copyrighted material as a byproduct of their normal operation.

Solving this problem will require collaboration between AI developers, rights holders, regulators, and courts. It will require new legal precedents that clarify the scope of copyright infringement in the AI context. It might require new licensing frameworks that allow AI developers to legally use copyrighted material for training and generation.

In the meantime, companies like Byte Dance are trying to navigate this uncertain landscape. Some will bet that aggressive safeguards are worth the cost. Others will bet that licensing agreements with major rights holders are worth the investment. Others will try to pivot to use cases that don't involve copyrighted material or real people.

The winners will be the companies that solve this problem without sacrificing capability or commercial viability. The losers will be the companies that try to ignore it or work around it.

Byte Dance is currently in the "trying to work around it" camp. Whether their "strengthened safeguards" are actually sufficient remains to be seen.

Lessons for Developers Building AI Tools

If you're building an AI tool that could potentially be used to infringe copyrights, the Seedance 2.0 situation offers clear lessons:

First, implement safeguards before launch, not after you get sued. It's much cheaper to prevent infringement than to deal with cease-and-desist letters and litigation.

Second, be transparent about your training data and your approach to preventing infringing use. Vague statements about "strengthening safeguards" look like damage control, not proactive responsibility.

Third, understand that rights holders will pursue legal action if the stakes are high enough. Disney's cease-and-desist wasn't a one-off reaction to a meme. It was a calculated decision that the threat was significant enough to warrant formal legal action.

Fourth, consider the licensing question early. If you want to allow users to generate content featuring copyrighted characters, get permission first. Don't assume fair use will protect you.

Fifth, design with user behavior in mind. Tools that make infringement easy and obvious will attract infringing use. Tools that require users to deliberately circumvent safeguards are less likely to be used for infringement.

The Real Impact: Beyond Headlines

The Seedance 2.0 controversy might seem like a story about one company's misstep and one viral video that upset some studios. But the real impact is broader.

This is a signal to the entire AI industry that copyright concerns are not theoretical or far-off. They're immediate and material. Rights holders have legal tools and the willingness to use them. Companies that ignore copyright risks in their AI products do so at their peril.

It's also a signal to regulators and policymakers that the AI industry cannot police itself entirely. The fact that Byte Dance didn't think to implement basic safeguards before releasing Seedance 2.0 suggests that market incentives alone won't ensure responsible AI development.

And it's a signal to users that just because an AI tool can do something doesn't mean you should use it to do that thing. Copyright still matters. Using AI to infringe copyrights is still infringement.

For the companies building the next generation of AI tools, the message is clear: think about intellectual property concerns early, implement safeguards seriously, and be transparent about your approach. The alternative is becoming the next cautionary tale.

What Comes Next for Byte Dance and Seedance 2.0

In the immediate term, Byte Dance will likely implement safeguards that reduce (but don't eliminate) the risk of infringing use. They'll probably settle with Disney and Paramount on terms that include some combination of damages, safeguard commitments, and ongoing monitoring.

They might also consider whether Seedance 2.0 is worth continuing to develop. If the legal liability and reputational damage outweigh the benefits, they might pivot to other applications or simply sunset the product.

Medium-term, we'll see whether Byte Dance's safeguards are actually effective. Rights holders will presumably monitor Seedance 2.0 to ensure the tool isn't still being used for infringing purposes. If violations continue, there will be additional legal action.

Long-term, this situation will influence how other companies approach AI video generation. Some will treat it as a reason to build safeguards and pursue licensing before launch. Others will bet that they can move fast and deal with complaints as they arrive, and Seedance 2.0 shows what that bet can cost.

Either way, the era of consequence-free AI development without serious copyright considerations is probably over.

FAQ

What exactly is Seedance 2.0 and how does it work?

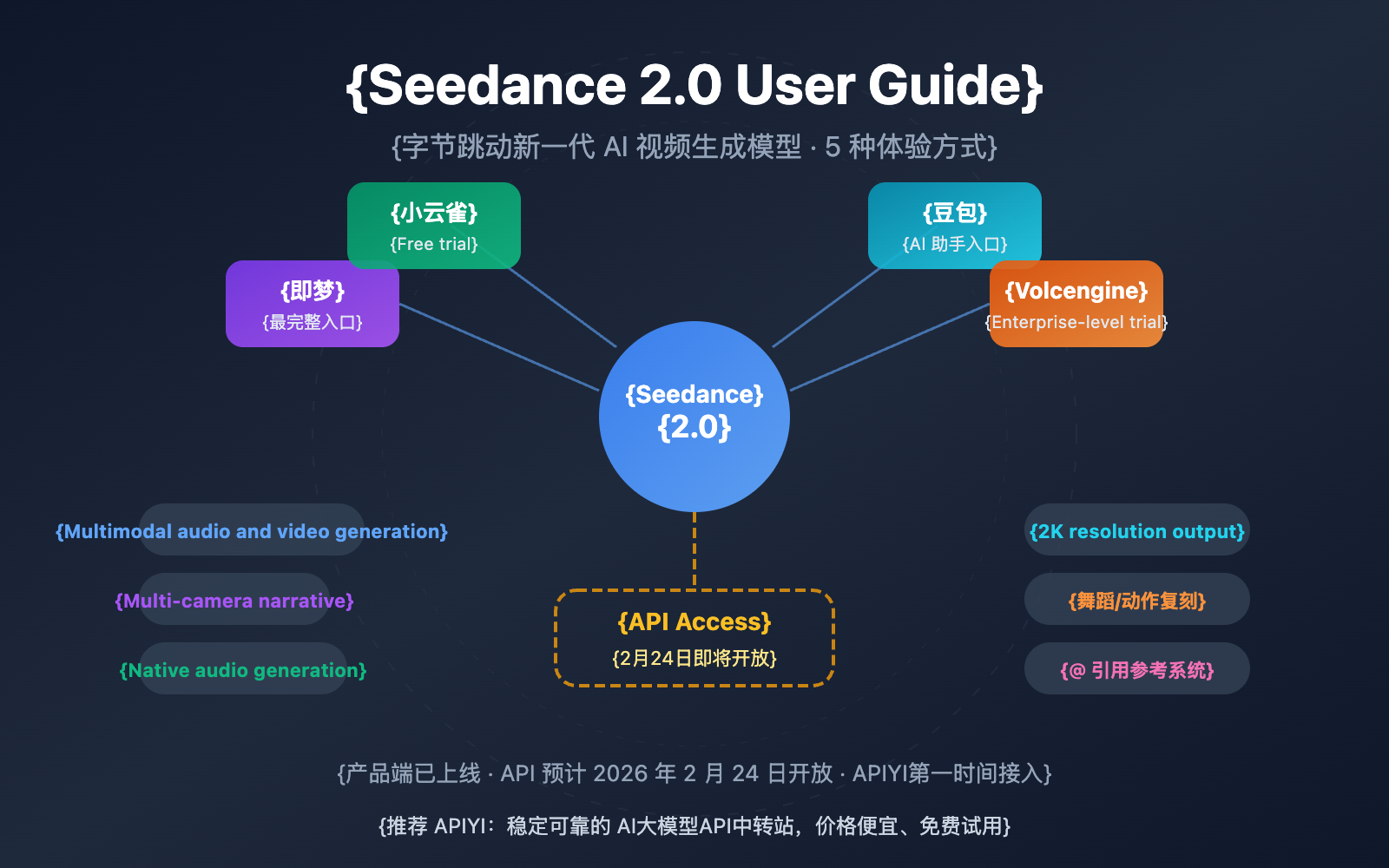

Seedance 2.0 is Byte Dance's AI-powered video generation tool that creates photorealistic videos from text prompts. The system uses diffusion models trained on massive video datasets to understand patterns in visual content, motion, and human behavior, then synthesizes new videos that can depict real people, fictional characters, and real-world scenarios with remarkable fidelity. Users provide a text description of what they want to see, and the AI generates a corresponding video without requiring actual footage or real-time filming.

Why did Disney and Paramount issue cease-and-desist letters?

Disney and Paramount issued cease-and-desist letters because Seedance 2.0 enabled users to generate videos featuring their copyrighted characters and intellectual property without authorization. When the viral Tom Cruise versus Brad Pitt video was generated using the tool, it demonstrated that the platform could create content featuring real people without consent and replicate copyrighted material. Disney specifically claimed that Seedance 2.0 contained a "pirated library" of their copyrighted characters from Star Wars, Marvel, and other franchises. These legal letters represent formal demands that Byte Dance cease the infringing activity and implement safeguards to prevent future infringement.

What are the main intellectual property concerns with AI video generation?

AI video generators present multiple intellectual property challenges including copyright infringement when generating content featuring copyrighted characters or franchises, right of publicity violations when creating videos of real people without permission, trademark infringement when using branded imagery or logos, and questions about whether training AI models on copyrighted material without permission constitutes fair use. Additionally, there's uncertainty about whether the companies building these tools are contributorily liable when users misuse them for infringing purposes. Each of these legal theories provides potential grounds for action by rights holders.

How can Byte Dance actually prevent infringing use of Seedance 2.0?

ByteDance can implement several safeguards: maintaining blocklists of known copyrighted characters and real people to reject related prompts, building computer vision systems that detect copyrighted content or identifiable people in generated videos before output, requiring users to certify they hold the necessary rights to the requested content, implementing DMCA-style takedown procedures, and potentially retraining the model using only authorized training data. However, each approach carries significant trade-offs involving cost, reduced functionality, and the possibility of circumvention. ByteDance is likely to combine several approaches rather than rely on any single solution.
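To make the first approach, and its circumvention problem, concrete, here is a minimal Python sketch of a prompt blocklist. The term list, the `normalize` and `screen_prompt` helpers, and the whole-word matching rule are hypothetical illustrations, not ByteDance's actual implementation. The sketch normalizes case, accents, punctuation, and spacing so that trivial variants still match.

```python
import re
import unicodedata
from dataclasses import dataclass

# Hypothetical blocklist entries for illustration only; a real system would
# need large, maintained lists, which this sketch does not model.
BLOCKED_TERMS = {
    "darth vader",
    "spider-man",
    "tom cruise",
    "brad pitt",
}


def normalize(text: str) -> str:
    """Lowercase, strip accents, and collapse punctuation and whitespace so that
    variants like 'Tom  CRUISE!' or 'Spider Man' map to the same key."""
    text = unicodedata.normalize("NFKD", text)
    text = "".join(ch for ch in text if not unicodedata.combining(ch))
    text = text.lower()
    text = re.sub(r"[^a-z0-9]+", " ", text)
    return " ".join(text.split())


# Normalize the blocklist once so comparisons are like-for-like.
_NORMALIZED_BLOCKLIST = {normalize(term) for term in BLOCKED_TERMS}


@dataclass
class ScreeningResult:
    allowed: bool
    matched: list


def screen_prompt(prompt: str) -> ScreeningResult:
    """Reject a prompt if any normalized blocklist term appears as a
    whole-word sequence in the normalized prompt."""
    # Pad with spaces so ' tom cruise ' cannot match inside 'atom cruiser'.
    padded = f" {normalize(prompt)} "
    matched = sorted(t for t in _NORMALIZED_BLOCKLIST if f" {t} " in padded)
    return ScreeningResult(allowed=not matched, matched=matched)


if __name__ == "__main__":
    print(screen_prompt("Tom CRUISE fights Brad   Pitt on a rooftop"))
    print(screen_prompt("Spider Man swings over the city"))
    # Simple character substitution slips straight past the list.
    print(screen_prompt("T0m Cru1se fights"))
```

The last example passes the filter, which illustrates the circumvention trade-off described above: a prompt-side blocklist is cheap but easy to evade, which is why it would typically be paired with output-side detection rather than used alone.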

Does training AI models on copyrighted material constitute copyright infringement?

This question remains legally uncertain and is one of the central disputes in AI copyright litigation. Some argue that using copyrighted material for AI training is transformative fair use that doesn't require permission, similar to how search engines index copyrighted content. Others argue that using massive copyrighted datasets to train commercial AI models that generate new content is infringement when done without authorization. Courts have not yet settled this question definitively, leaving companies like ByteDance that train models on internet-scraped data containing copyrighted material in a legal gray area.

Could users who generated videos with Seedance 2.0 face legal consequences?

Users who used Seedance 2.0 to generate videos featuring copyrighted characters or real people without authorization could theoretically face copyright infringement liability or right of publicity claims. In practice, however, Disney and Paramount are far more likely to pursue ByteDance, the company that built and deployed the tool without adequate safeguards, than individual users. ByteDance could face claims of contributory or vicarious infringement if courts determine that it knowingly provided tools suited to infringing use without adequate safeguards.

What regulatory changes might result from the Seedance 2.0 controversy?

The controversy could accelerate development of clearer legal frameworks around AI and copyright. The US Copyright Office is studying AI training and copyright issues and might issue guidance or recommendations. The European Union's AI Act could impose requirements on AI video generators distributed in EU markets. Courts might issue new precedents clarifying copyright liability in the AI context. Additionally, policymakers might pursue legislation specifically addressing AI training on copyrighted material and output safeguards. These regulatory developments will ultimately shape how companies approach AI model training and deployment.

How does this situation affect other AI video generators like Sora or Veo?

Other companies building AI video generation tools are watching the Seedance 2.0 situation and weighing their own legal exposure. Companies like OpenAI (Sora) and Google (Veo) have likely been more careful than ByteDance: implementing safeguards, limiting initial access, requiring explicit user agreements, and potentially negotiating with rights holders. The ByteDance controversy signals that rights holders will take legal action when infringement is sufficiently egregious or high-profile, which gives competitors an incentive to be more cautious. Still, all AI video generators face the same underlying technical challenge of preventing copyrighted content generation.

What should companies building AI tools learn from this situation?

Companies should implement copyright safeguards before launch rather than after facing legal action, be transparent about training data sources and safety approaches, understand that major rights holders will pursue legal remedies when stakes are high, consider licensing agreements early if they want to enable content generation involving copyrighted material, and design tools in ways that make infringing use require intentional circumvention of safeguards rather than being the default behavior. The Seedance 2.0 case demonstrates that moving fast and breaking things works fine until you break something that belongs to Disney, at which point legal consequences arrive immediately.

What's the difference between contributory infringement and vicarious infringement in this context?

Contributory infringement occurs when someone knows of infringing activity and materially contributes to it. ByteDance could face contributory infringement liability if it knew (or should have known) that Seedance 2.0 would be used to generate infringing content and did nothing to prevent it. Vicarious infringement occurs when someone has the right and ability to control infringing activity and receives a financial benefit from it. ByteDance could face vicarious infringement liability as the company that controls the platform and profits from its use. Both theories create potential liability beyond direct copyright infringement, which makes them particularly relevant to platform providers like ByteDance.


Key Takeaways

- ByteDance launched Seedance 2.0 without adequate copyright safeguards, enabling generation of unauthorized content featuring copyrighted characters and real people

- Disney and Paramount's cease-and-desist letters signal that rights holders will aggressively pursue legal action against AI tools used for copyright infringement

- Multiple intellectual property regimes overlap in AI video generation: copyright, right of publicity, trademark, and trade dress, creating complex legal exposure

- Technical solutions to copyright prevention (blocklists, detection systems, training data filtering) each have significant trade-offs between effectiveness, cost, and functionality

- The legal question of whether training AI on copyrighted material constitutes fair use remains fundamentally unresolved and will shape future AI development

- Companies building AI tools must implement safeguards before launch and be transparent about their approach, as vague commitments invite regulatory and legal scrutiny

