Introduction: When AI Becomes a Copyright Weapon

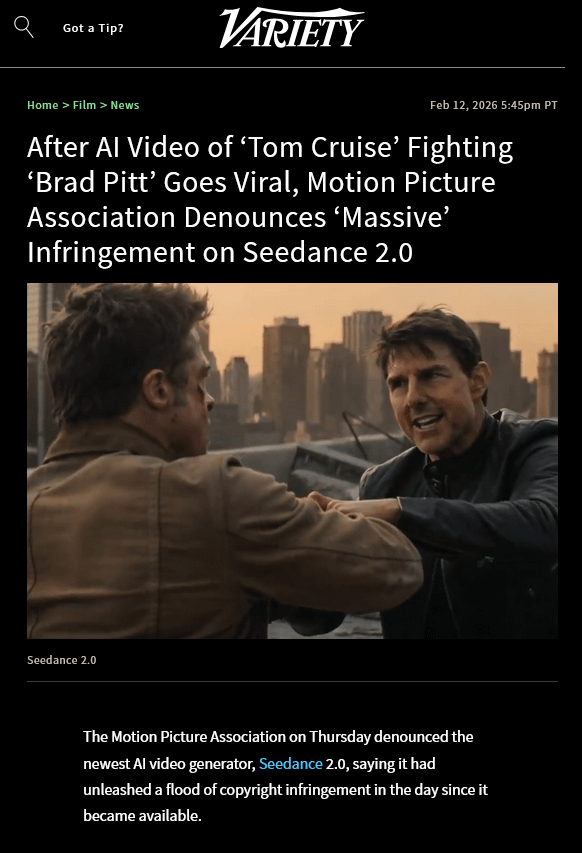

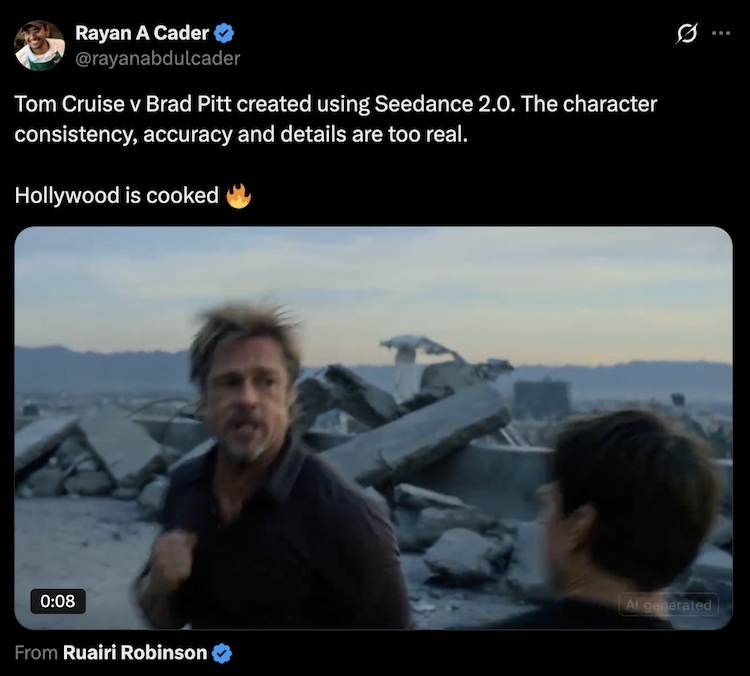

It's February 2026, and the internet just watched an AI create a convincing video of Tom Cruise fighting Brad Pitt from a two-line text prompt. What would've taken a Hollywood production team weeks and millions of dollars to film now takes seconds. The problem? Nobody asked permission. Nobody paid anybody. And the studios are furious.

ByteDance, the Chinese company behind TikTok, just launched Seedance 2.0, an AI video generator that has upended the delicate relationship between Silicon Valley and Hollywood. Within 24 hours of launch, the Motion Picture Association publicly demanded that ByteDance stop. Disney sent a cease-and-desist letter. Paramount followed. SAG-AFTRA, the actors' union, condemned it as "blatant infringement."

But here's what makes this moment different from previous AI controversies: this isn't theoretical anymore. It's not about potential problems down the line. The infringing content already exists. Right now. On the internet.

This article digs into what Seedance 2.0 actually does, why Hollywood is losing its mind, what the legal landscape looks like, and what this means for creators, AI companies, and the future of content creation itself. We're not just talking about copyright law here. We're talking about the fundamental question of who owns culture in an AI world.

TL;DR

- Seedance 2.0 is ByteDance's text-to-video AI model that generates realistic 15-second videos from text prompts, similar to OpenAI's Sora

- Studios claim massive copyright infringement within hours of launch, with AI-generated videos depicting Disney, Paramount, and other franchises

- Legal warfare has begun: Disney and Paramount sent cease-and-desist letters within days, and the MPA publicly demanded that ByteDance stop

- The real issue isn't the technology itself but the lack of safeguards against creating celebrity likenesses and using copyrighted material

- This could reshape how AI companies approach content moderation and how copyright law applies to generative AI tools

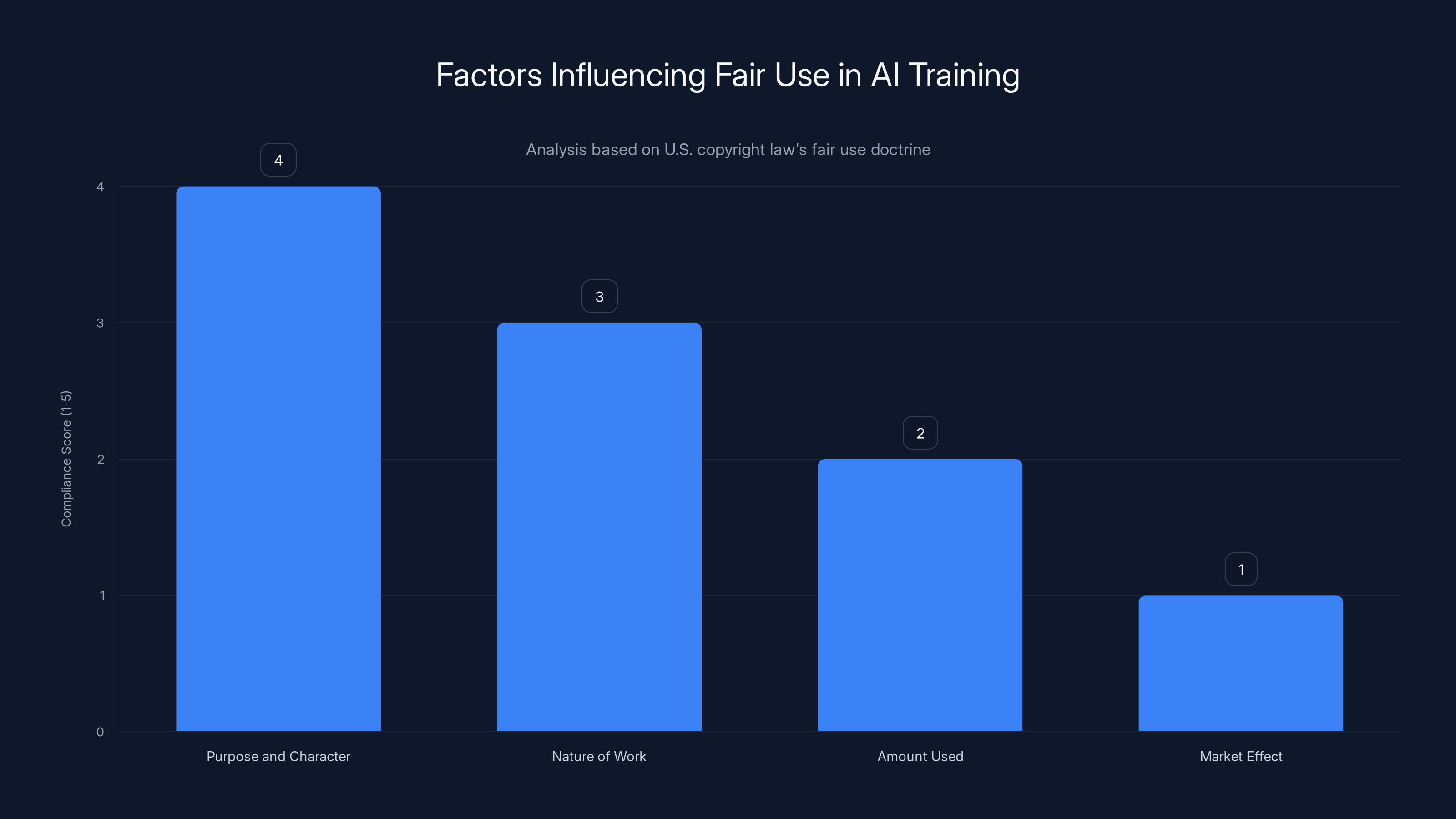

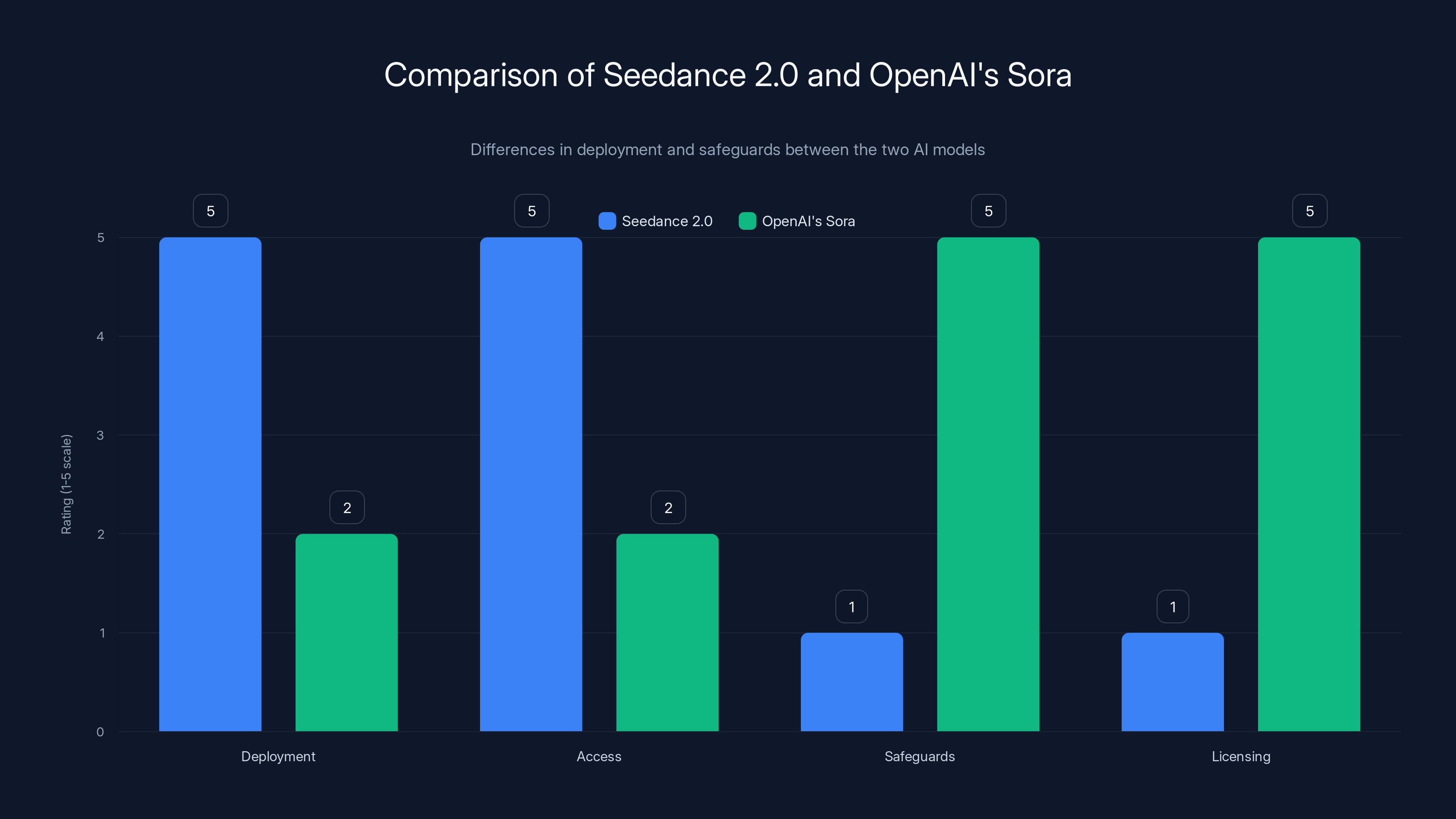

AI training data is likely compliant with 'Purpose and Character' but may struggle with 'Market Effect' according to fair use factors. Estimated data.

What Is Seedance 2.0? Breaking Down ByteDance's Video AI

Seedance 2.0 is ByteDance's answer to OpenAI's Sora. It's a generative AI model designed to create short videos from text descriptions. Users type a prompt like "Tom Cruise fighting Brad Pitt in a martial arts battle" and the system generates a realistic video matching that description. Currently, the model caps videos at 15 seconds, which is short but long enough to tell a coherent story.

The technology itself isn't revolutionary. Text-to-video AI has been in development for years. Models like Sora, Runway, Pika, and Synthesia all do similar things. What makes Seedance 2.0 different isn't the core technology; it's how ByteDance deployed it and what safeguards it did (or didn't) build in.

ByteDance first made Seedance 2.0 available in its Jianying app, the Chinese version of CapCut (ByteDance's video editing tool). Chinese users got access first. The company then announced it would roll out to global CapCut users soon. This two-stage rollout is interesting because it suggests ByteDance knew what was coming. China has different copyright enforcement mechanisms than the United States, so testing there first made strategic sense.

Here's what the model can do:

- Generate realistic videos from text prompts that include human characters, objects, environments, and actions

- Create videos featuring the real-world likenesses of celebrities and public figures

- Render copyrighted characters and intellectual property with visual accuracy

- Produce video in 15-second chunks (current limitation)

- Scale to massive user bases through the CapCut app (which has hundreds of millions of users)

The key phrase here is "lack of meaningful safeguards." OpenAI's Sora, by contrast, has built-in restrictions. You can't prompt it to generate videos of real people (it's supposed to refuse those requests). You can't generate content depicting copyrighted characters. Sora has guardrails. Seedance 2.0 apparently didn't, or they were insufficient.
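To make "refusal system" concrete, here is a minimal Python sketch of the simplest possible prompt filter: a keyword blocklist checked before any video is generated. Everything in it is hypothetical (the `check_prompt` function and the `PROTECTED_CHARACTERS` and `REAL_PEOPLE` lists). Neither OpenAI nor ByteDance has published how its filters work, and production systems are generally understood to rely on trained classifiers rather than plain string matching.

```python
import re
from dataclasses import dataclass

# Hypothetical, illustrative blocklists. A real system would use trained
# classifiers and much larger curated lists, not a handful of names.
PROTECTED_CHARACTERS = {"spider-man", "darth vader", "grogu", "baby yoda"}
REAL_PEOPLE = {"tom cruise", "brad pitt"}


@dataclass
class FilterResult:
    allowed: bool
    reason: str = ""


def normalize(prompt: str) -> str:
    """Lowercase the prompt and collapse runs of whitespace to single spaces."""
    return re.sub(r"\s+", " ", prompt.lower()).strip()


def check_prompt(prompt: str) -> FilterResult:
    """Refuse a prompt if it names a listed character or real person.

    Matching is plain substring search on the normalized prompt, so it only
    catches names spelled out exactly as they appear in the lists.
    """
    text = normalize(prompt)
    for name in sorted(PROTECTED_CHARACTERS):
        if name in text:
            return FilterResult(False, f"copyrighted character: {name}")
    for name in sorted(REAL_PEOPLE):
        if name in text:
            return FilterResult(False, f"real person: {name}")
    return FilterResult(True)


if __name__ == "__main__":
    prompts = [
        "A woman sitting in a coffee shop",
        "Tom Cruise fighting Brad Pitt in a martial arts battle",
        "Spider-Man swinging through a city at night",
    ]
    for p in prompts:
        result = check_prompt(p)
        verdict = "allowed" if result.allowed else f"refused ({result.reason})"
        print(f"{p!r} -> {verdict}")
```

A prompt such as "the web-slinging hero in red and blue" describes Spider-Man without naming him and would pass straight through this filter, which is why string matching alone wouldn't count as a meaningful safeguard.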

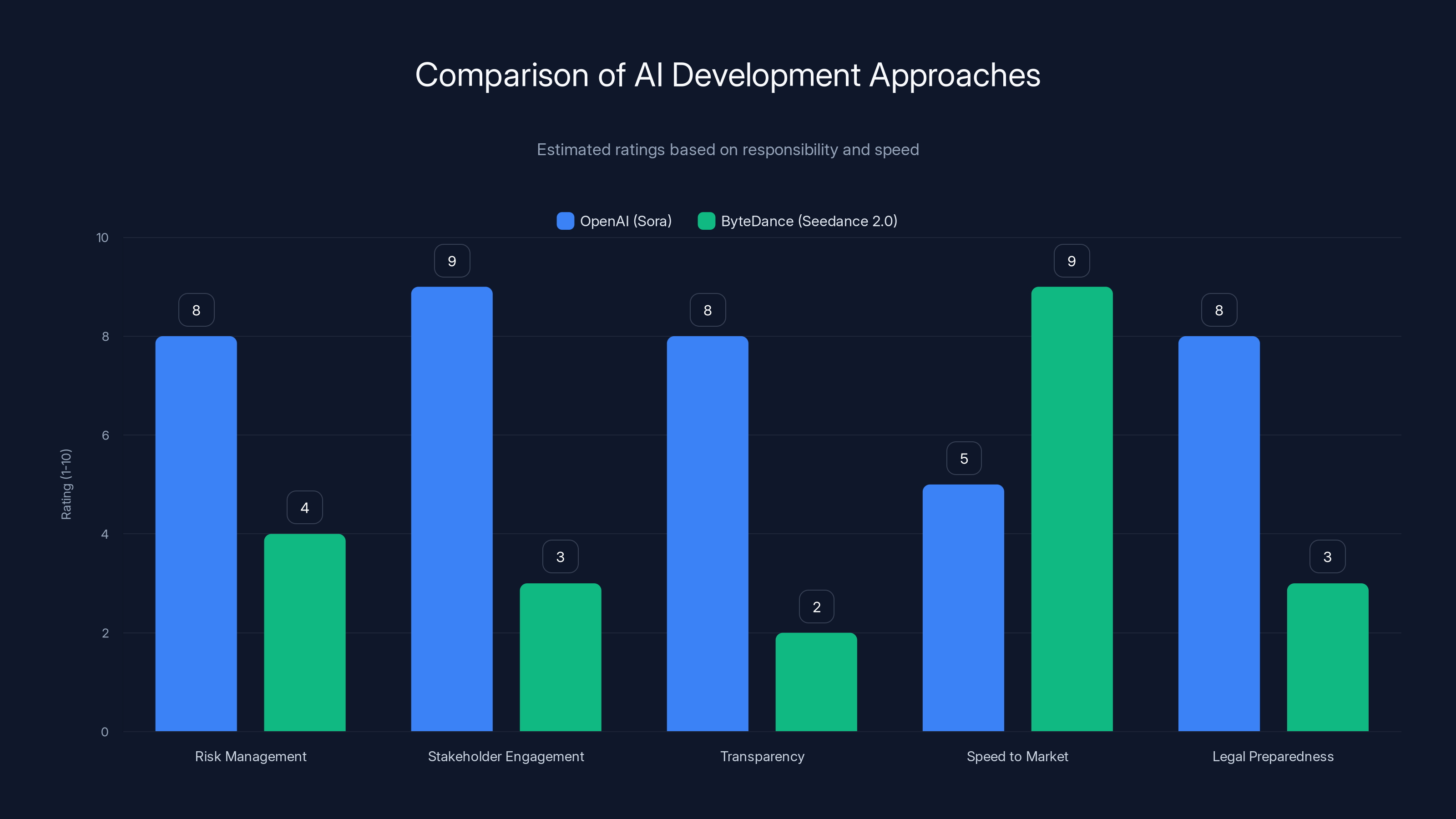

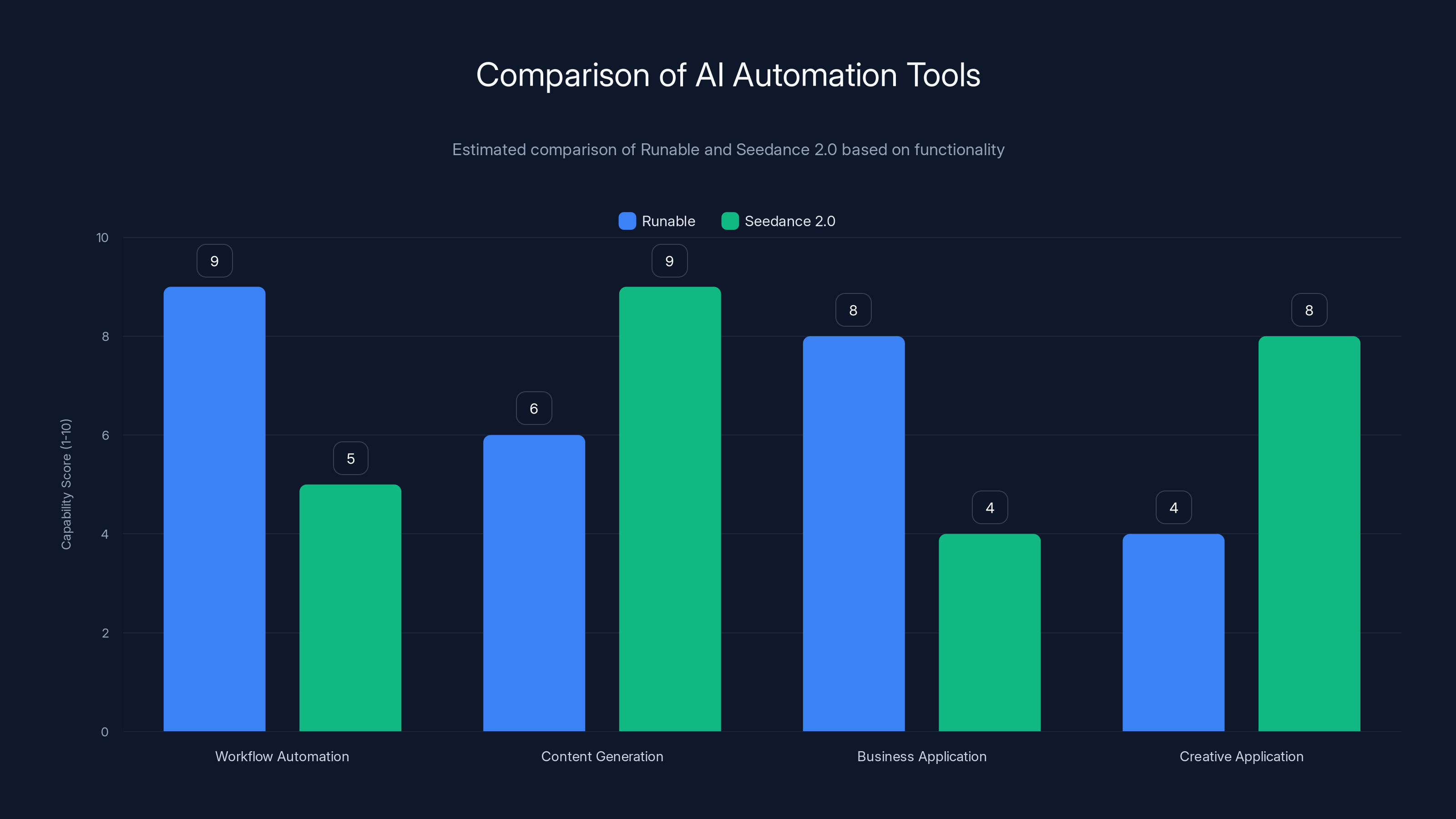

OpenAI's approach scores higher on responsibility aspects, while ByteDance excels in speed to market. Estimated data based on narrative insights.

The Copyright Crisis: What Happened in 24 Hours

On the morning Seedance 2.0 launched to global users, content creators started testing it immediately. Some tests were innocent ("a woman sitting in a coffee shop"). Others were not. One X user posted a video of Tom Cruise fighting Brad Pitt, created via a simple two-line prompt. The quality was disturbingly good. It looked real. It wasn't.

By afternoon, the responses started rolling in. Rhett Reese, screenwriter of "Deadpool," tweeted a response that captured the terror in Hollywood: "I hate to say it. It's likely over for us."

That's not hyperbole. That's a professional screenwriter looking at an AI that can generate dialogue and visuals and understanding that his job category might have an expiration date.

Within hours, the Motion Picture Association—the trade group representing Disney, Paramount, Sony, Universal, and Warner Bros.—issued a statement from CEO Charles Rivkin:

"In a single day, the Chinese AI service Seedance 2.0 has engaged in unauthorized use of U.S. copyrighted works on a massive scale. By launching a service that operates without meaningful safeguards against infringement, ByteDance is disregarding well-established copyright law that protects the rights of creators and underpins millions of American jobs."

That "single day" comment is crucial. This wasn't "we're concerned about future potential problems." This was "the damage is already done. Right now."

Examples of infringing content that reportedly appeared on Seedance 2.0:

- Spider-Man engaging in various action sequences (Disney owns this)

- Darth Vader in new scenes (Lucasfilm/Disney)

- Grogu (Baby Yoda) in different situations (Lucasfilm/Disney)

- Characters from Paramount franchises in new scenarios

- Realistic videos of real celebrities in fictional situations

Why did this happen so fast? Because ByteDance rolled out the feature to hundreds of millions of CapCut users. The sheer scale meant that within hours, thousands of people were experimenting with it. Some were curious. Some were malicious. Some just wanted to see what it could do. The result: a flood of infringing content.

The Legal Warfare Begins: Cease-and-Desist Letters

Hollywood doesn't mess around when IP is threatened. Within 24-48 hours of the full Seedance 2.0 launch, major studios sent legal notices.

Disney's cease-and-desist was particularly aggressive. According to reports, Disney characterized ByteDance's actions as a "virtual smash-and-grab of Disney's IP." The letter claimed ByteDance was "hijacking Disney's characters by reproducing, distributing, and creating derivative works featuring those characters."

Notably, Disney's stance differs from company to company. Disney has reportedly sent similar letters to Google (over YouTube AI features), but it also signed a three-year licensing deal with OpenAI. This suggests Disney's issue isn't AI itself; it's unauthorized use of its IP without compensation or control.

Paramount's cease-and-desist made similar arguments. According to Variety, Paramount's letter stated that "much of the content that the Seed Platforms produce contains vivid depictions of Paramount's famous and iconic franchises and characters" and that this content "is often indistinguishable, both visually and audibly" from Paramount's actual films and TV shows.

The Motion Picture Association sent a broader statement demanding ByteDance "immediately cease its infringing activity."

SAG-AFTRA, the actors' union, released this statement: "SAG-AFTRA stands with the studios in condemning the blatant infringement enabled by ByteDance's new AI video model Seedance 2.0."

The Human Artistry Campaign, a coalition of Hollywood unions and trade groups, called Seedance 2.0 "an attack on every creator around the world."

Let's be clear about what these letters accomplish legally: cease-and-desist letters are formal notices, not court orders. They don't automatically stop anything. But they establish a paper trail for future litigation and put ByteDance on notice that studios are prepared to sue. They're a prelude to actual lawsuits.

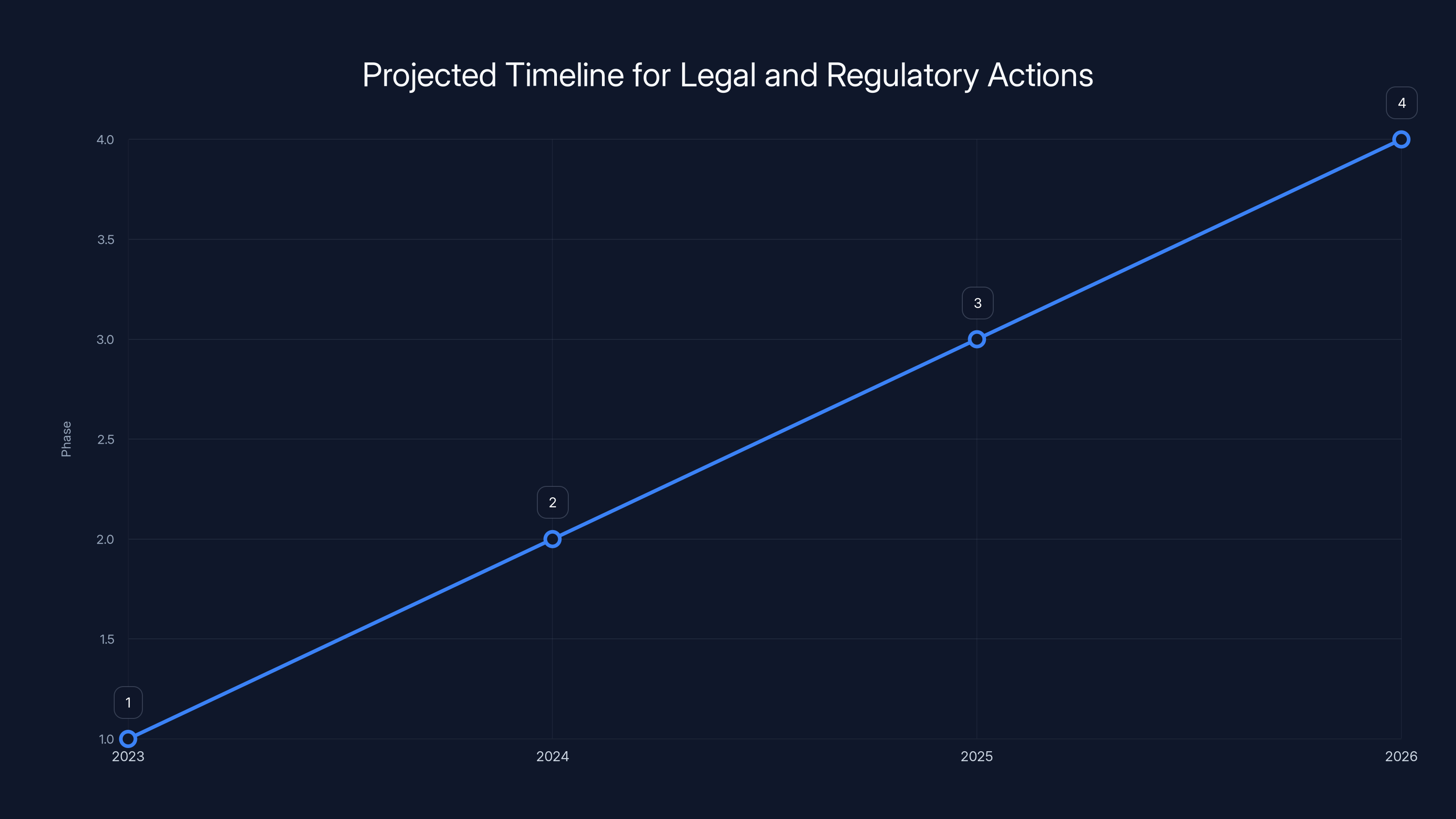

The legal and regulatory process is expected to unfold over several years, starting with lawsuits and potentially ending with new regulations. Estimated data.

Why Seedance 2.0 Is Different from Previous AI Controversies

Creators and rights holders have been fighting AI companies for years. Artists and Getty Images sued Stability AI over Stable Diffusion. The New York Times sued OpenAI over ChatGPT's training data. Programmers sued GitHub over Copilot. So why is Seedance 2.0 different?

Scale and immediacy. The previous controversies involved either open-ended questions ("Did AI companies use copyrighted material in training data?") or slow-rolling deployment ("Eventually, people might use this to infringe."). Seedance 2.0 was different. Infringing content existed demonstrably, publicly, immediately. Within hours. Not hypothetically. Actually.

Likenesses and identity. AI image generators have been able to create pictures of celebrities for years. But videos are different. A video of Tom Cruise says something. It tells a story. It has context. A still image is abstract. A video is narrative. That makes it harder for the AI company to defend in court.

Derivative works. When someone uses Midjourney to create a picture "in the style of Disney's Frozen," that's arguably transformative. When AI generates a video of Spider-Man doing something Spider-Man does, that's not transformative; it's derivative. It uses the character as-is, just in a new scene. That's textbook derivative work infringement.

Volume and distribution. Previous AI controversies involved relatively small groups of plaintiffs. The artists suing Stability AI, for example, were a small group by comparison. CapCut has 200+ million monthly active users. If even 1% of them generate infringing content, that's 2 million users and millions of potential copyright violations. Studios can go after companies. They can go after platforms. But they can't reasonably sue 2 million individual users.

Timing with geopolitics. This matters politically, if not legally. ByteDance is a Chinese company. The U.S. government is already hostile toward ByteDance over TikTok. The company is dealing with forced sale demands, regulatory pressure, and public distrust. Launching Seedance 2.0 without safeguards looks tone-deaf at best, deliberately provocative at worst. It gives ByteDance's critics ammunition.

Previous AI companies were American or had American operations they cared about protecting. ByteDance? The strategic calculus is different.

The Copyright Infringement Argument: What Studios Are Actually Claiming

When Disney and Paramount say Seedance 2.0 infringes their copyrights, they're making several legal arguments simultaneously. Let's break them down.

First: Training data infringement. Did Seedance 2.0's developers use copyrighted material to train the model? We don't know the full details of what ByteDance used for training, but given the quality of the output, they almost certainly used licensed video content, content scraped from the internet (which likely includes copyrighted material), or both. If they used copyrighted training data without permission or compensation, that's infringement claim number one.

However, and this is important, the fair use doctrine in the United States might protect them here. The argument would go: "We used copyrighted material to train an AI model, which is a fair use because it's transformative and educational." Some courts might accept this. Others might not. This is genuinely unsettled law.

Second: Derivative works infringement. When a user generates a video of Spider-Man using Seedance 2.0, that video is a derivative work of Spider-Man. The user made it, yes, but the platform enabled it. Both the user and the platform could be liable. This is clearer legally. Creating derivative works of copyrighted characters without permission is textbook infringement.

Third: Right of publicity violations. When Seedance 2.0 generates a video of Tom Cruise, it's using his likeness. A person can't be copyrighted, but under right of publicity laws (which vary by state), using someone's likeness without permission can still be unlawful, because those laws treat a person's identity as their property. This is a different legal theory than copyright, but it applies.

Fourth: Trademark infringement. Some of the characters studios own are protected by trademark in addition to copyright. Using them in ways likely to confuse consumers about who made or endorsed a video can violate trademark law.

Studios are probably going to pursue all of these angles simultaneously. They'll claim training data infringement, derivative works infringement, right of publicity violations, and trademark infringement. The more theories they can establish, the stronger their case and the larger the damages.

From ByteDance's perspective, they could argue:

- Fair use: "We used copyrighted material for training, which is transformative and fair use."

- User responsibility: "Users created infringing content, not us. We're the platform. We're not liable for user-generated content" (though this argument is weaker given the lack of safeguards).

- DMCA Safe Harbor: "Under the DMCA, platforms that remove infringing content quickly are protected from liability."

But these defenses look weaker when ByteDance deliberately didn't build safeguards that other companies have built.

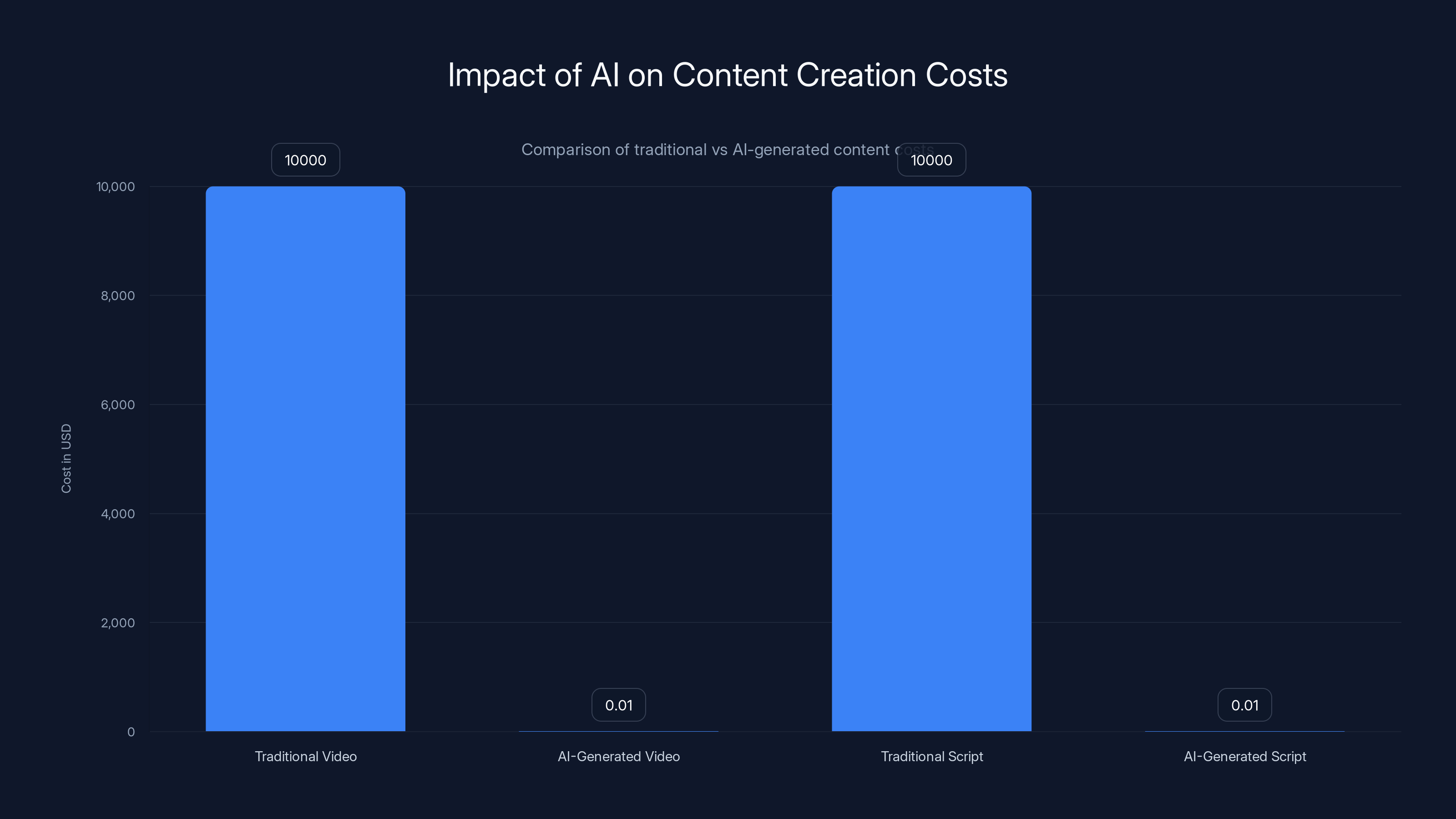

AI-generated content significantly reduces production costs, challenging traditional creators. Estimated data based on industry trends.

OpenAI's Sora: The Cautionary Tale ByteDance Ignored

OpenAI released Sora in late 2024, and the company took a very different approach from ByteDance's with Seedance 2.0. Comparing the two reveals a lot about why Seedance 2.0 is in legal trouble.

Sora's safeguards:

- Closed beta access only - The model isn't available to the general public yet. Only researchers and select creators can use it.

- Built-in refusal systems - The model won't generate videos of real, identifiable people. It refuses requests for copyrighted characters.

- Synthetic detection tools - OpenAI is developing detection systems to identify Sora-generated content.

- Watermarking - Sora videos include metadata showing they were AI-generated.

- Licensing deals - OpenAI negotiated with content creators and studios before launch (including that Disney deal).

Seedance 2.0's approach:

- Public rollout to hundreds of millions of users

- No apparent refusal systems - Users can request copyrighted characters and real people

- No watermarking or detection tools mentioned

- No pre-launch licensing deals with studios

- Minimal content moderation

Why did OpenAI take the cautious approach? They learned from image generation. When Stable Diffusion launched in 2022, it could generate anything: copyrighted characters, real people, whatever. The result was lawsuits, PR disasters, and regulatory scrutiny. OpenAI decided not to repeat that mistake.

ByteDance apparently decided otherwise. Why? Several possibilities:

- Different risk calculus. ByteDance is fighting for survival in the U.S. market. Caution might seem like weakness. Speed might seem more important.

- Different jurisdictions. ByteDance's primary market is China, where copyright enforcement is different. They might be optimizing for China first.

- Corporate culture. TikTok's success came from pushing boundaries. ByteDance might think that aggressive deployment beats cautious iteration.

- Expected ban. ByteDance might be betting that the U.S. government will ban the tool anyway over national security concerns, so why invest in safeguards?

Whatever the reason, Seedance 2.0 looks like OpenAI's playbook inverted: every safeguard OpenAI implemented, Seedance 2.0 seemed to skip.

The Copyright Law Landscape: Fair Use, Derivative Works, and Training Data

Here's where things get legally complicated, because U.S. copyright law wasn't written with AI in mind.

Fair Use and Training Data

The fair use doctrine is a legal concept that allows limited use of copyrighted material without permission under certain circumstances. There are four factors courts consider:

- Purpose and character of use. Is it transformative? Educational?

- Nature of the copyrighted work. Was the original creative or factual?

- Amount and substantiality used. How much of the original was used?

- Effect on the market. Does it hurt the original's commercial value?

AI training arguably favors fair use under factor 1: it's transformative, turning works into a statistical model rather than republishing them, and the copying is largely automated, done by machines for analysis rather than for people to read or watch. But it might fail factor 4 if the model's output competes directly with the original works in their market.

Courts have not definitively ruled whether AI training constitutes fair use. The New York Times lawsuit against OpenAI, filed in late 2023, could establish precedent here. This case won't be decided quickly, but whenever it is, it will reshape how AI companies approach training data.

Derivative Works and User-Generated Content

This is clearer legally. Copyright law explicitly protects the exclusive right to create derivative works. A derivative work is a work based on one or more preexisting works. When Seedance 2.0 generates a video of Spider-Man, that's a derivative work. Making derivative works without permission is infringement.

The platform's liability for user-generated derivative works is less clear. Section 512 of the DMCA (the Digital Millennium Copyright Act) provides safe harbor for platforms that:

- Have no actual knowledge of infringement

- Act expeditiously to remove infringing content

- Have proper notice and takedown procedures

If ByteDance can claim they didn't know users were generating infringing content, and they remove it quickly, they might be protected. But the "no actual knowledge" part gets weaker when:

- Infringing content appears instantly and visibly after launch

- The company didn't implement basic safeguards other companies use

- The company is on notice (from cease-and-desist letters) and hasn't immediately shut down the infringing features

Right of Publicity Laws

These vary by state, but generally protect a person's right to control the use of their likeness for commercial purposes. When Seedance 2.0 generates a video of Tom Cruise, it's using his likeness. If that video is then used commercially (posted online, shared on social media with monetization), it could violate his right of publicity.

Right of publicity is distinct from copyright. It applies to real people, not just creative works.

Runable excels in workflow automation and business applications, while Seedance 2.0 is stronger in content and creative applications. Estimated data based on typical use cases.

Why This Matters Beyond Hollywood: The Broader Implications

You might be thinking: "Okay, Hollywood is upset about AI. That's not new. Why should I care?"

Because Seedance 2.0 reveals something about how AI companies will behave when regulatory oversight is limited and penalties are uncertain.

The precedent question. If Byte Dance gets away with deploying Seedance 2.0 without safeguards, it signals to other AI companies that they can do the same. If lawsuits force them to add safeguards, it signals the opposite. Precedent matters here.

The IP protection question. Intellectual property protections exist for a reason—they incentivize creation. If AI can trivially generate new content based on existing IP without compensating creators, the economics of content creation change fundamentally. Why invest millions in making a movie if an AI can generate similar content for free?

The labor displacement question. A screenwriter looking at Seedance 2.0 and saying "It's likely over for us" is expressing a real concern. If AI can generate video content that matches studio quality, why would studios hire screenwriters? Why would they hire cinematographers, directors, editors? The job displacement could be massive.

The regulatory question. Seedance 2.0 is pushing governments to regulate AI faster. The EU's AI Act is already in effect. The U.S. is developing regulations. When geopolitical tensions are high (as they are with China) and a company appears reckless, regulation becomes more likely.

The jurisdiction question. ByteDance is Chinese, Seedance 2.0 was developed in China, but the infringing content affects American companies. What jurisdiction has authority here? Can U.S. courts compel a Chinese company to comply with a judgment? These are genuinely unsettled questions.

The Seedance 2.0 controversy will probably accelerate copyright litigation against AI companies, increase regulatory pressure on AI development, and force companies to choose between aggressive deployment and cautious safeguards.

The Geopolitical Dimension: ByteDance vs. the U.S.

This story isn't just about copyright. It's about geopolitics.

ByteDance is already in an existential fight over TikTok. The U.S. government is pressuring the company to sell off TikTok's U.S. operations or face a ban. Congress has passed legislation. The executive branch is enforcing it. ByteDance is in litigation.

Into this environment, ByteDance launches a new AI product that immediately triggers legal warfare with major American corporations, actors' unions, and trade groups. Strategically, this is either brilliant or catastrophically stupid, depending on whether ByteDance's gamble pays off.

The aggressive deployment interpretation: ByteDance is signaling strength. "We're going to deploy powerful AI tools without cowering to Hollywood." This plays well domestically in China, where ByteDance has government support. It also signals confidence that the tool can't be easily shut down and that the legal case is winnable.

The desperation interpretation: ByteDance is moving fast because they know TikTok might be banned anyway. They're getting Seedance 2.0 into the market now, building adoption and network effects, because they need leverage in any potential sale or restructuring negotiation.

The indifference interpretation: ByteDance simply doesn't care about U.S. legal liability because the company operates primarily in China, where American courts have no jurisdiction and enforceability is limited.

Whatever the case, Seedance 2.0 raises the stakes in the TikTok/ByteDance conflict. Lawmakers who were already skeptical of ByteDance now have more ammunition. "See? This company will deploy AI tools that violate American law without hesitation. They can't be trusted." That narrative is now stronger.

Conversely, ByteDance might be banking on the hope that the tool becomes so popular, so quickly, that banning it becomes politically difficult. TikTok has 170 million users in the U.S. If Seedance 2.0 becomes similarly embedded, banning it becomes a harder political sell.

Seedance 2.0 is widely deployed with minimal safeguards and licensing, while Sora is in closed beta with robust safeguards and licensing agreements. Estimated data based on deployment and responsibility framework.

What Happens Next: Lawsuits, Negotiations, and Regulation

Predicting the future is hard, but the trajectory seems clear.

Phase 1: Cease-and-Desist → Lawsuits (Current)

Studios have already sent cease-and-desist letters. Lawsuits will follow. Disney will probably sue first, given their aggressive stance. Paramount might follow. The MPA might coordinate a class action or amicus brief strategy.

These cases will probably take 2-3 years to reach trial. During that time, discovery will reveal what training data ByteDance used, what safeguards they considered and rejected, and whether they were aware of infringement risks.

Phase 2: Injunctive Relief (Months)

Studios will probably ask for preliminary injunctions to force Seedance 2.0 to add safeguards (filters that prevent generation of copyrighted characters) before the case is fully litigated. ByteDance will fight these. Some injunctions might be granted.

Phase 3: Settlement or Judgment (Years)

Once litigation runs its course, ByteDance will likely face one of three outcomes:

- Lose and pay damages. This could be massive if courts award statutory damages. U.S. copyright law (the Copyright Act, not the DMCA) allows up to $150,000 per work infringed when the infringement is willful. Because awards are counted per copyrighted work rather than per video, millions of videos of the same characters don't multiply the total on their own, but with hundreds of characters and titles potentially at stake, damages could still be enormous.

- Settle and pay licensing fees. ByteDance could negotiate a licensing deal (like OpenAI did with Disney) where they pay studios for the right to generate copyrighted content.

- Win on narrow grounds. ByteDance might win on Section 512 DMCA safe harbor grounds, claiming they removed content quickly enough.
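Because the statutory-damages figure is easy to misread as "per video," here is a minimal Python sketch of the per-work arithmetic. The dollar bounds come from 17 U.S.C. § 504(c): $750 to $30,000 per work, up to $150,000 if the infringement is willful, and as low as $200 if it is innocent. The function name and the 100-work scenario are hypothetical, and the sketch ignores many real-world complications, such as the registration requirement and the copyright owner's option to claim actual damages instead.

```python
from typing import Tuple

# Per-work statutory damage bounds in U.S. dollars, as set out in
# 17 U.S.C. § 504(c). Simplified: ignores registration timing (§ 412),
# the actual-damages alternative, and how works are grouped in a case.
ORDINARY_MIN = 750
ORDINARY_MAX = 30_000
WILLFUL_MAX = 150_000   # court may raise the ceiling for willful infringement
INNOCENT_MIN = 200      # court may lower the floor for innocent infringement


def statutory_damages_range(
    num_works: int, willful: bool = False, innocent: bool = False
) -> Tuple[int, int]:
    """Return the (minimum, maximum) total statutory award in dollars.

    The award is counted per copyrighted work infringed, not per copy, so
    many videos copying the same work count once, not once per video.
    """
    if num_works < 0:
        raise ValueError("num_works must be non-negative")
    if willful and innocent:
        raise ValueError("infringement cannot be both willful and innocent")
    low = INNOCENT_MIN if innocent else ORDINARY_MIN
    high = WILLFUL_MAX if willful else ORDINARY_MAX
    return num_works * low, num_works * high


if __name__ == "__main__":
    # Hypothetical scenario: 100 distinct works found willfully infringed.
    low, high = statutory_damages_range(100, willful=True)
    print(f"${low:,} to ${high:,}")
```

Run as a script, it prints the range for 100 willfully infringed works: $75,000 to $15,000,000. The number of distinct works studios can prove were copied, not the number of videos users made, drives the ceiling.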

Phase 4: Regulation (Ongoing)

Regardless of litigation outcomes, regulators will act. The EU is already moving. The U.S. will probably implement AI regulations that require:

- Transparency requirements: Companies must disclose training data sources

- Safeguard requirements: Companies must implement filters for copyrighted content

- Liability rules: Clear rules about platform liability for user-generated infringing content

- Detection requirements: Tools to identify AI-generated content

These regulations will reshape how AI companies deploy tools globally.

The Creator Economy Impact: What This Means for Content Creators

If you create content—videos, art, music, writing—Seedance 2.0 should concern you.

The immediate impact: AI can now generate video content that competes with yours. Your professional niche shrinks. Your leverage in negotiations weakens. If you're a screenwriter charging

The secondary impact: If platforms face liability for hosting infringing content (such as videos generated by Seedance 2.0), they'll become more cautious about accepting user-generated content. That means fewer opportunities for creators to share work on major platforms.

The leverage impact: Creators who previously had leverage ("if you don't license my work, I'll compete with you") lose that leverage to AI. This shifts power toward companies and away from creators.

The opportunity impact: Some creators will figure out how to use Seedance 2.0 to scale their output. A screenwriter who learns to use Seedance 2.0 as a co-tool might be able to produce 10 scripts instead of 1. The creators who resist AI lose; the creators who embrace it win.

Historically, when new technologies threaten creative industries, some creators are displaced and some adapt. Photography threatened painters. Digital music threatened orchestras. Video threatened photography. Each time, some professionals disappeared and some reinvented themselves.

Seedance 2.0 is part of that ongoing disruption. The question for creators is: Will you adapt, resist, or get left behind?

The Precedent for Future AI Regulation: What Seedance 2.0 Teaches Us

Seedance 2.0 is going to become a case study in AI regulation. Regulators, lawmakers, and academics will study this moment to understand how to govern AI development.

Key lessons emerging:

Lesson 1: Safeguards at deployment are cheaper than lawsuits later. OpenAI spent months building safeguards into Sora before public release. That was more expensive than ByteDance's approach. But now OpenAI isn't facing legal threats from Hollywood over Sora, and ByteDance is. The cost calculation reverses.

Lesson 2: Scale matters. If Seedance 2.0 were only available to 1,000 researchers, it would be a research project. Available to 200 million CapCut users, it's a copyright crisis. Deployment scale drives regulatory response.

Lesson 3: Transparency builds trust, or at least reduces liability. If ByteDance had published a paper explaining their training data, their safety measures, and their reasoning, they'd have more credibility. Instead, the tool launched with minimal explanation, which looks deceptive.

Lesson 4: Geopolitical trust matters. A tool developed by a trusted American company would face less regulatory scrutiny than one developed by a Chinese company. That's fair or unfair depending on your perspective, but it's true.

Lesson 5: Copyright law needs updating. The fact that this case is legally ambiguous suggests copyright law wasn't designed for AI. Legislators will use this case to clarify rights and responsibilities.

Future AI companies will probably study this case and make different choices. Some will follow OpenAI's cautious model. Some will follow ByteDance's aggressive model and hope for better outcomes. This is a defining moment in how AI companies think about responsibility and risk.

Runable and the Automation Economy: When AI Becomes Practical

All of this copyright debate happens in the abstract. But at some point, companies like Runable are building practical AI automation tools that businesses will use to replace creative work.

Runable is an AI-powered platform for creating presentations, documents, reports, images, videos, and slides. Unlike Seedance 2.0, which is designed to generate video from text alone, Runable is designed to automate entire workflows. You can use Runable to automatically generate monthly reports from data, create presentation decks from research documents, or produce social media graphics from text briefs.

The copyright issues might be different (Runable's training is more controlled, and business use is different from public-facing content generation). But the labor disruption is similar. If a company can use Runable to generate reports automatically instead of hiring analysts to write them, that's a job displaced.

That's not inherently bad—automation has been displacing jobs for 200 years. But it's worth acknowledging. Tools like Runable represent the practical, business-focused application of generative AI. They're lower-stakes than Seedance 2.0 (they're not generating Hollywood-quality content), but they're more directly applicable to actual work.


The Path Forward: Building AI Responsibly

The Seedance 2.0 controversy reveals something important about how the AI industry develops: some companies optimize for speed and growth, others optimize for responsibility and trust.

OpenAI's approach with Sora isn't perfect, but it's thoughtful. They:

- Limited access to manage risks

- Built in refusal systems

- Negotiated with stakeholders before public release

- Implemented detection tools

- Planned for transparency

ByteDance's approach with Seedance 2.0 was the opposite. They:

- Deployed to hundreds of millions immediately

- Built minimal safeguards

- Didn't negotiate with studios

- Offered no explanation or transparency

- Seemed indifferent to legal consequences

Which approach is better depends on your values. If you value speed and market dominance, ByteDance's approach works until it doesn't (and then you get sued). If you value long-term trust and sustainable business, OpenAI's approach costs more upfront but pays off over time.

The question for AI companies going forward is: Which model will win? Will ByteDance's approach (move fast, break things, deal with legal consequences later) dominate? Or will OpenAI's approach (move thoughtfully, build trust, cooperate with stakeholders) become the norm?

My prediction: We'll see both. Some companies will push boundaries aggressively. Others will be cautious. Society will litigate the boundaries until law catches up with technology. Then new boundaries will emerge.

That's how it always works with transformative technology. First comes innovation. Then comes disruption. Then comes law. Then comes the next cycle.

Seedance 2.0 is in the disruption phase. We're about to enter the law phase. Expect it to be messy.

FAQ

What is Seedance 2.0 exactly?

Seedance 2.0 is a text-to-video AI model developed by ByteDance that generates short videos (up to 15 seconds) from text prompts. Users describe a scene, character, or action, and the model renders realistic video matching that description. It's technically similar to OpenAI's Sora but was deployed without comparable safeguards.

How does Seedance 2.0 generate videos with such quality?

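ByteDance hasn't published full architectural details, but like other current video generators (including Sora), Seedance 2.0 is most likely a diffusion-style model trained on massive amounts of video data. During training, the model learns statistical patterns about how things look and move. To generate a clip, it starts from random noise and iteratively refines a compressed representation of the whole video until the result matches the text description, rather than drawing pixels one frame at a time. Broadly the same technology powers modern image generators like DALL-E and Midjourney, but video is far more computationally demanding because the model must keep objects and motion consistent across frames.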

Why is Hollywood so upset about this specific tool?

Hollywood's concern has multiple layers. First, Seedance 2.0 generates videos of copyrighted characters (Spider-Man, Baby Yoda, etc.) without permission or compensation. Second, it can create videos of real celebrities (Tom Cruise, etc.), raising right-of-publicity issues. Third, it was deployed without safeguards that competitors have implemented, which suggests recklessness. Fourth, the scale is massive: hundreds of millions of users had access immediately, creating millions of infringing videos within days.

What's the difference between Seedance 2.0 and Open AI's Sora?

Both are text-to-video models producing output of similar quality. The difference is deployment philosophy. Sora was rolled out in stages, with restricted access and built-in refusal systems designed to block generation of real people and copyrighted characters without permission. Seedance 2.0 was deployed widely with minimal safeguards, allowing generation of both. OpenAI has pursued stakeholder consultation and licensing deals; ByteDance didn't. The technology is similar; the responsibility framework is completely different.

Could Byte Dance win the copyright lawsuit?

It's possible but unlikely. ByteDance could invoke the DMCA's Section 512 safe harbor (arguing that it is a platform, that users created the infringing content, and that it removes such content on notice), or it could argue that training on copyrighted data is fair use. But the absence of safeguards that other companies have implemented weakens both defenses. Courts will likely find that ByteDance either bears some liability for enabling infringement or must pay licensing fees to studios. A complete victory is unlikely; a negotiated settlement is more probable.

What does this mean for AI development overall?

Seedance 2.0 is accelerating regulation and litigation around AI copyright issues. Future AI companies will either adopt more cautious deployment models (like OpenAI) or accept higher legal risks (like ByteDance). Regulators will likely use this case to develop clearer rules about AI-generated content, copyright, and platform liability. The precedent set here could shape AI development for years.

Could other AI companies face similar copyright lawsuits?

Absolutely. Stability AI, Runway, Pika, and other generative AI companies could face similar litigation; Stability AI is already defending suits brought by Getty Images and by a group of artists. The Seedance 2.0 case will provide a blueprint for how studios approach these claims. However, companies that have built in safeguards or negotiated licenses will be in stronger positions than those that haven't. Future AI companies are watching this case closely and will adjust their strategies accordingly.

How might this affect regular users of AI tools?

For users, the practical impact depends on where they live and what they use AI for. In the U.S., courts might eventually rule that generating videos of copyrighted characters is infringement for anyone, users and platforms alike. Users might then need to wait for licensed AI tools that are cleared to generate copyrighted content (much as music producers must clear the samples they use). The outcome of the copyright debate will shape what AI tools can and can't do.

Is there any way Seedance 2.0 could become legally compliant?

Yes, multiple paths exist. ByteDance could negotiate licensing deals with studios (like OpenAI did). It could add safeguards that refuse to generate copyrighted content or real people (like Sora). It could implement watermarking and detection systems. It could restrict the tool to non-commercial use. Each of these would reduce legal exposure. Whether ByteDance takes any of these steps remains to be seen.

Conclusion: The Future of AI, Copyright, and Culture

Seedance 2.0 is a snapshot of the technological and legal collision happening right now. AI can generate media that previously required human creativity, expertise, and expense. That capability threatens an entire ecosystem of creators, studios, and workers. That ecosystem is fighting back.

The legal battles will take years. The regulations will take longer. Meanwhile, AI keeps improving. Better models will launch. More companies will deploy them. Some will be cautious like OpenAI. Some will be aggressive like ByteDance. The industry will push, regulators will push back, and eventually an equilibrium will emerge.

For creators, the message is clear: Adaptation beats resistance. The screenwriter who said "It's likely over for us" might be right, or he might be among the first to adopt AI as a tool and produce twice as much work with half the effort. The same technology that threatens jobs also creates opportunities for people willing to learn it.

For AI companies, Seedance 2.0 serves as either a warning or an inspiration, depending on risk tolerance. Move fast and face lawsuits, or move thoughtfully and face slower growth. Both strategies have advocates.

For society, this moment matters because it's when copyright law meets AI. How courts rule will affect everything downstream. Will they protect creators and studios? Or will they defer to companies and innovation? The answer will shape cultural production for decades.

Seedance 2.0 isn't just about copyright. It's about who controls culture in an AI world, and whether existing rules even apply to new tools. Those are questions society is going to argue about for a long time.

Key Takeaways

- Seedance 2.0 deployed to 200+ million CapCut users without copyright safeguards, generating millions of infringing videos within 24 hours

- Disney, Paramount, and the MPA sent cease-and-desist letters within hours, an unusually fast legal response

- OpenAI's Sora took the opposite approach: a staged rollout with restricted access, built-in refusal systems, and licensing deals with studios

- ByteDance faces liability across multiple legal theories: training data infringement, derivative works, right of publicity, and trademark violations

- This case will likely accelerate AI regulation globally and reshape how companies approach copyright and safety in generative AI tools

