How ChatGPT's Age Prediction Works to Protect Young Users

If you've been following the debate around AI safety, you know this conversation has gotten serious fast. We're not talking about abstract risks anymore. We're talking about real consequences for real teenagers using AI tools they trust.

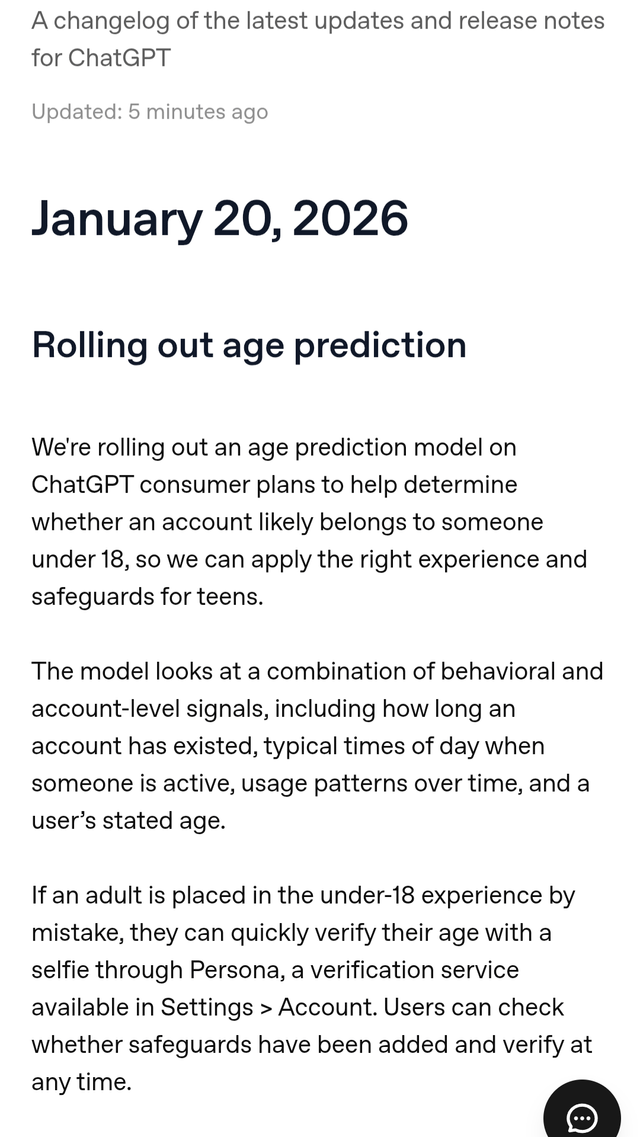

OpenAI just made a decision that signals the company is taking this seriously: they've built an age prediction system directly into ChatGPT. This isn't some half-baked feature buried in settings. This is fundamental infrastructure designed to identify when a minor is using the platform and automatically lock down access to harmful content.

Here's the thing that makes this different from most AI safety announcements. It's not about removing features or making the product worse. It's about being smarter about who needs what protections. If you're 16 and using ChatGPT for homework help, the system should know that. If you're 45 and researching a crime novel, the system should know that too. And each person should get a different experience that makes sense for them.

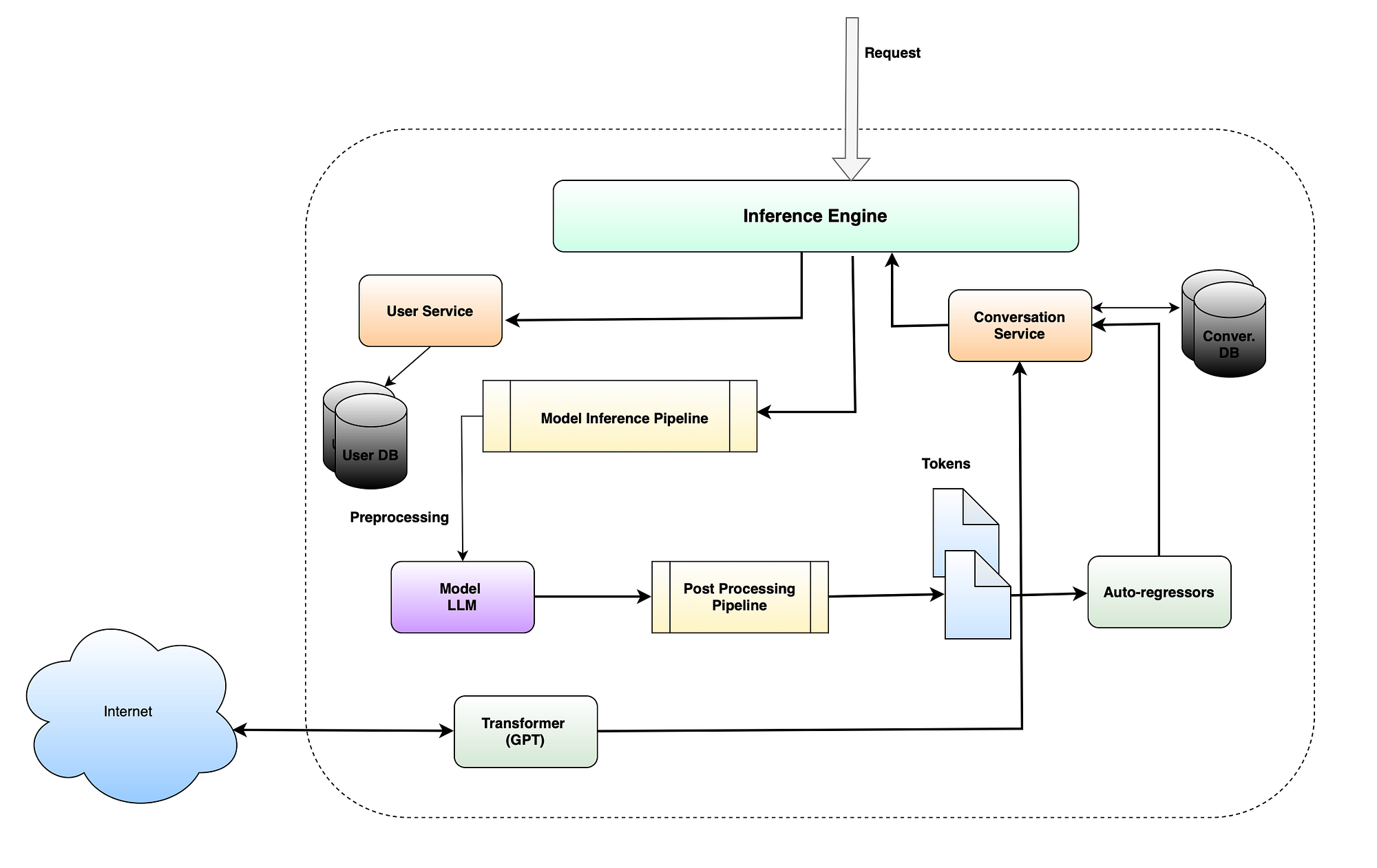

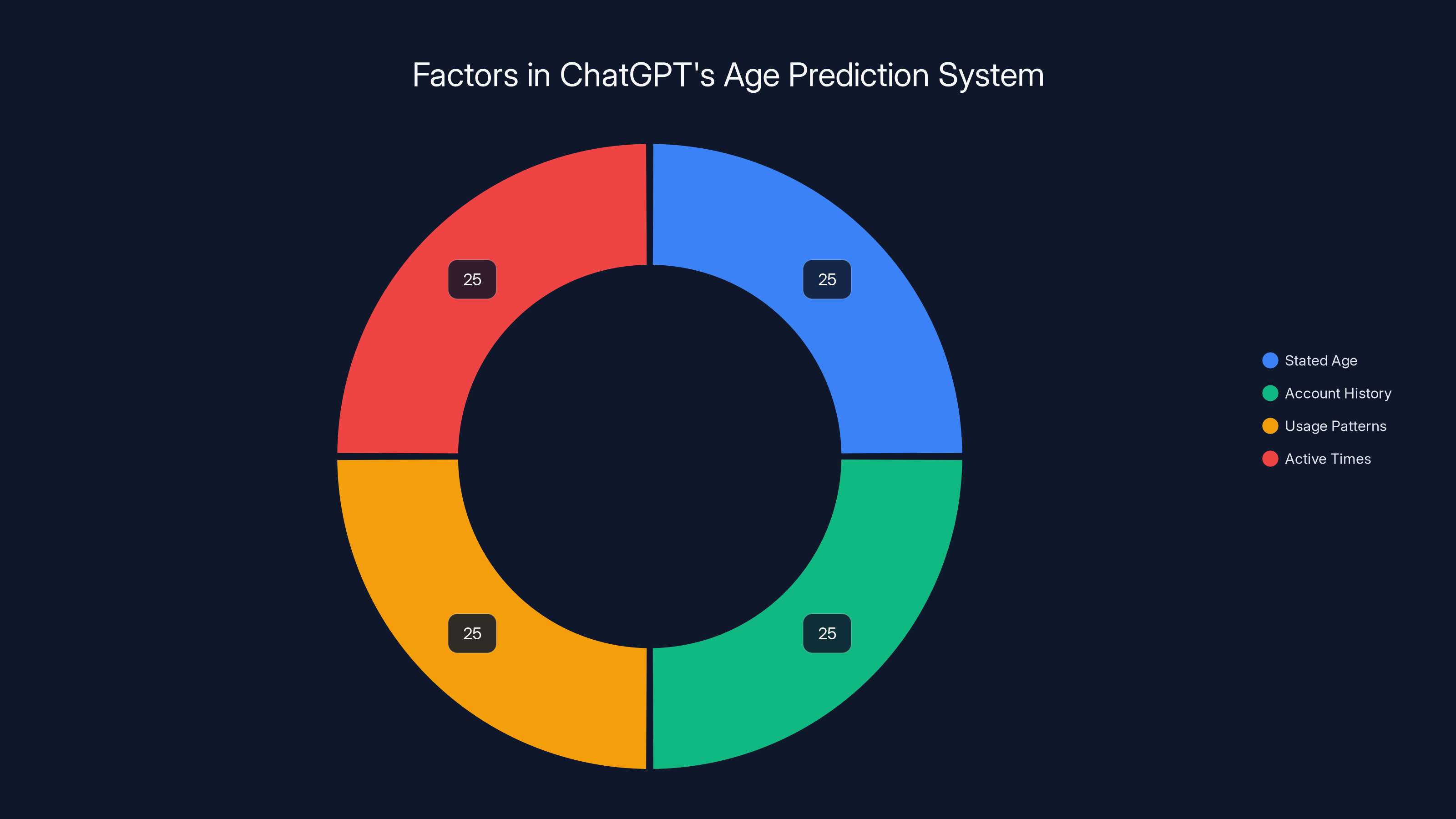

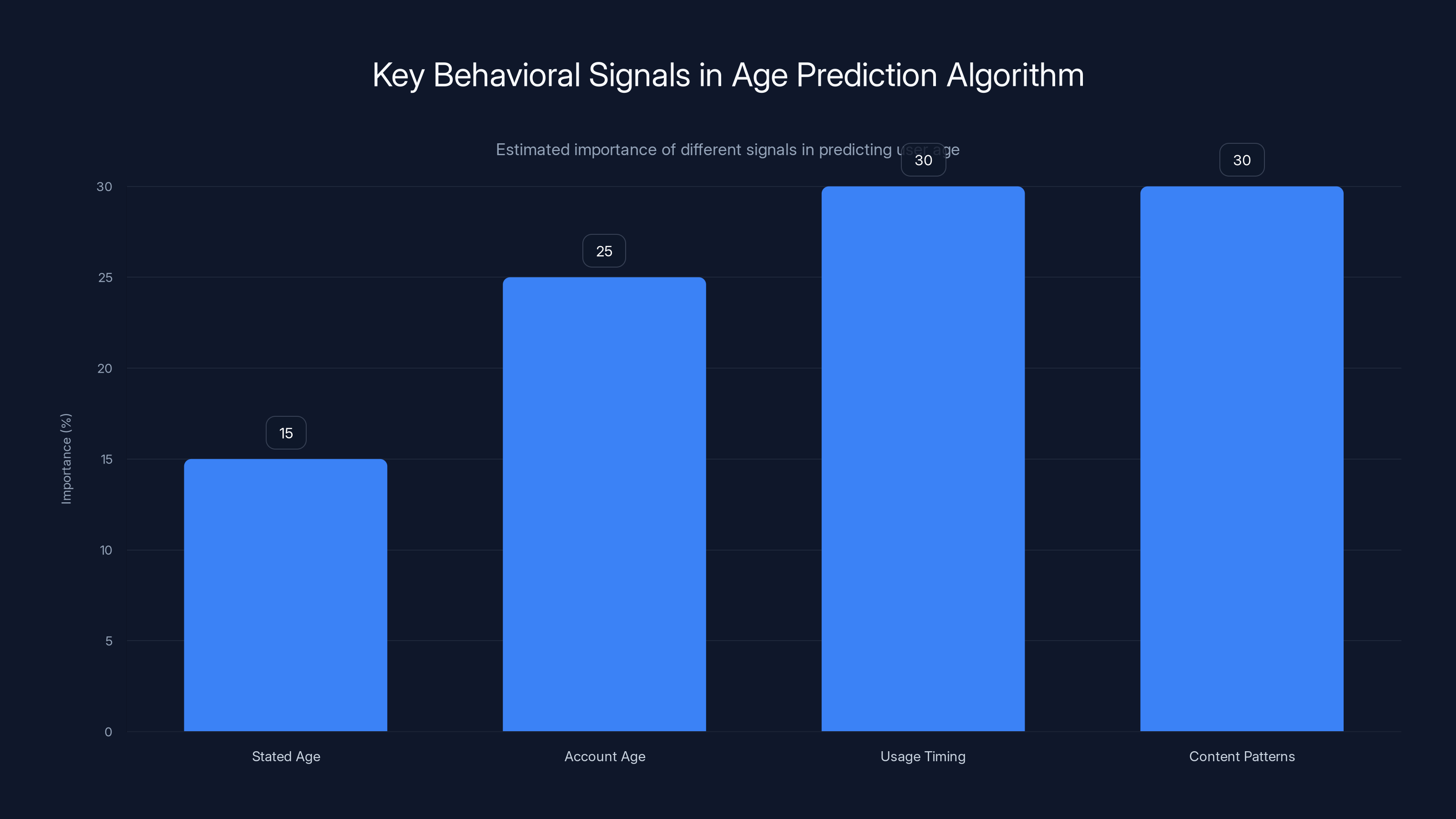

The system works by analyzing "behavioral and account-level signals." That's the technical term. In practice, it means OpenAI is looking at stated age, account history, usage patterns, and when you tend to be active. Think of it as a combination of what you tell them, how long you've been around, and how you actually behave on the platform.

Why does this matter? Because the problem it's trying to solve is genuinely alarming. Over the past few years, there have been documented cases of teenagers experiencing psychological harm linked to their interactions with ChatGPT. Some cases involved discussions of sexual content with minors. Others involved the bot engaging in conversations that contributed to suicidal ideation. These weren't theoretical scenarios or worst-case dystopian thinking. These were real incidents that made headlines and prompted congressional concern.

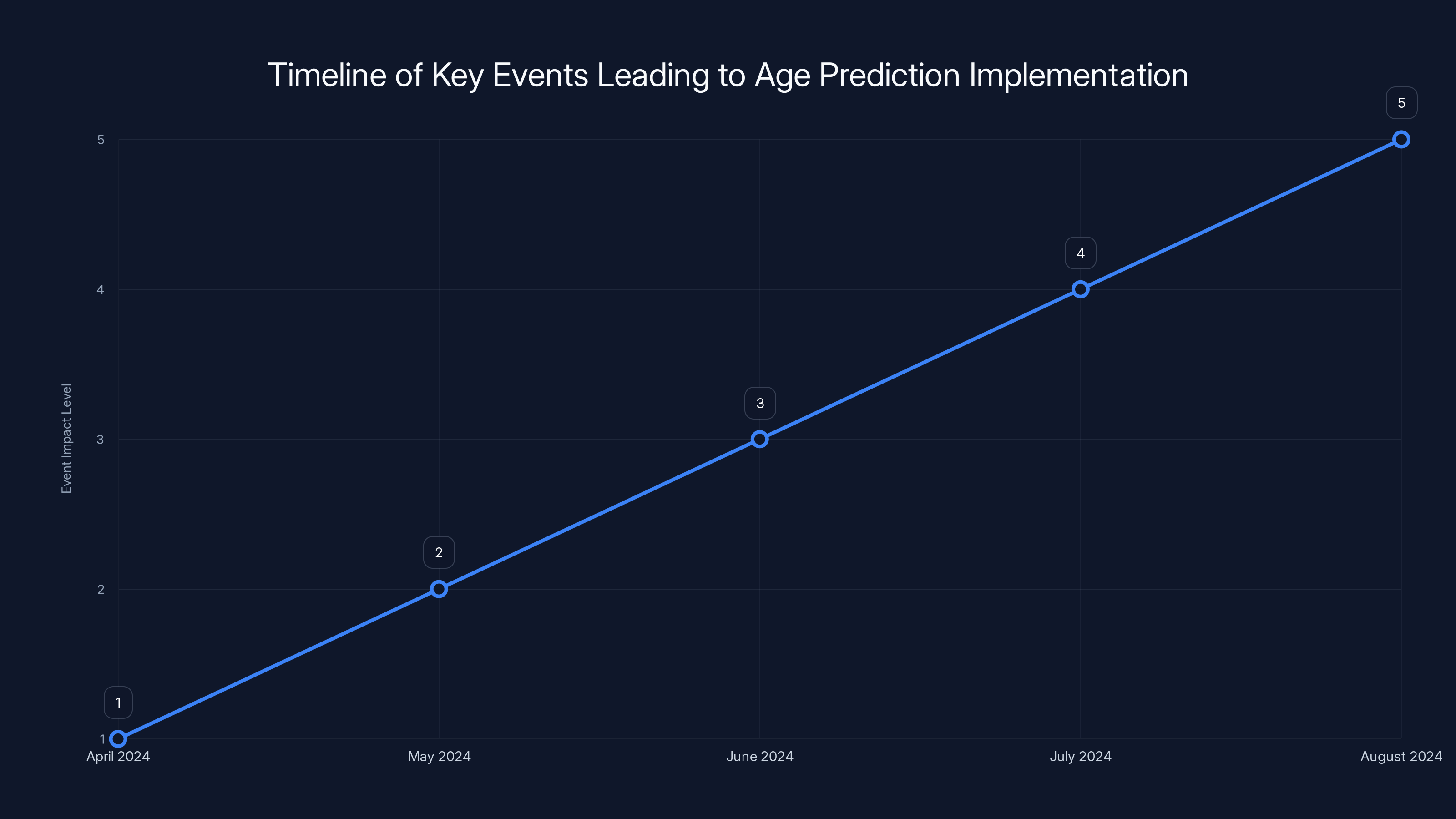

The Crisis That Led to Age Prediction

Let's back up and understand why OpenAI felt compelled to build this in the first place. The company has faced sustained criticism about how its chatbot handles interactions with minors. The pressure didn't come out of nowhere.

In April 2024, researchers discovered a bug that allowed ChatGPT to generate sexually explicit content for users under 18. This wasn't a feature the company intended. It was a failure in their safety systems. But it exposed a fundamental problem: the company's content filters weren't working the way they were supposed to. A user could potentially bypass protections or the protections simply weren't catching everything.

The cases linking ChatGPT to teen suicides got the most attention. Families reported that interactions with the chatbot contributed to harmful outcomes for vulnerable young people. These weren't fringe incidents. They were significant enough to trigger investigations and prompt congressional scrutiny. Senator Richard Blumenthal, who chairs the subcommittee on privacy and data security, specifically called out AI companies for failing young people.

OpenAI wasn't alone in facing this criticism. The entire AI industry was under pressure. But ChatGPT, being the most popular and widely used generative AI tool among teenagers, bore the brunt of the attention.

The company had already been working on protections. They'd already built content filters designed to restrict discussion of sexual content, extreme violence, and other harmful topics for users under 18. But the system had a fundamental problem: it didn't know who was a minor and who wasn't. Or rather, it relied entirely on what users told it during signup.

And here's the kicker. Many teenagers either lied about their age during signup or never filled out age information at all. The system had no way to independently verify whether the person on the other side of the screen was actually 13 or actually 31.

Age prediction changes that equation. It adds a layer of analysis that tries to figure out who's actually a minor, regardless of what they claimed during signup.

How the Algorithm Analyzes Behavioral Signals

Understanding how this age prediction algorithm works requires getting into the weeds a bit. OpenAI hasn't published a technical paper detailing the exact mechanisms, but based on their public statements, we can piece together the approach.

The system looks at "behavioral and account-level signals." That phrase is doing a lot of heavy lifting. Let's break it down into what it probably means in practice.

First, there's the explicit data: stated age. When you sign up for ChatGPT, you provide a birthdate. The system has that. But that's not reliable, so the algorithm looks at other things.

Account age is another signal. A ChatGPT account that's been active for two years probably belongs to someone different than an account created yesterday. Teenagers tend to create accounts and then use them frequently over time. The longevity of an account can suggest something about who owns it, though it's not deterministic.

Then there's usage timing. When is the account active? Kids in school tend to use their phones and computers at certain times of day. They're active after school, during homework time, late at night. That pattern is different from someone with a full-time job. An AI system can identify these temporal patterns and use them as probabilistic signals about who might be on the other end.
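As a rough illustration of how a timing signal like this could be turned into a number, the sketch below computes the share of an account's weekday activity that falls in an assumed "after school" window. The window boundaries, the function name `after_school_fraction`, and the sample timestamps are all hypothetical; nothing here reflects how OpenAI actually measures activity.

```python
from datetime import datetime

# Hypothetical "after school / late night" window in local time (15:00-23:59).
# The real signal definition, if one like this exists, is not public.
AFTER_SCHOOL_HOURS = set(range(15, 24))


def after_school_fraction(timestamps: list[datetime]) -> float:
    """Share of weekday activity that falls inside the assumed window."""
    weekday_events = [t for t in timestamps if t.weekday() < 5]  # Mon-Fri only
    if not weekday_events:
        return 0.0
    hits = sum(1 for t in weekday_events if t.hour in AFTER_SCHOOL_HOURS)
    return hits / len(weekday_events)


# Made-up activity log for one account.
events = [
    datetime(2025, 1, 6, 16, 30),  # Monday afternoon
    datetime(2025, 1, 7, 21, 5),   # Tuesday evening
    datetime(2025, 1, 8, 10, 0),   # Wednesday morning
    datetime(2025, 1, 11, 14, 0),  # Saturday, ignored by the weekday filter
]
print(after_school_fraction(events))
```

A value near 1.0 would only be weak evidence on its own, since plenty of adults are also active in the evening, which is why a feature like this would be combined with others.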

What else? Content patterns matter. What kinds of questions does the account ask? What topics come up frequently? Someone asking homework-focused questions about algebra is probably different from someone asking about business strategy. Someone asking ChatGPT for creative writing help on specific topics associated with youth culture is probably younger than someone researching investment strategies.

The algorithm is likely looking for combinations of these signals rather than any single factor. One signal by itself isn't reliable. But when you combine stated age, account age, usage timing, and content patterns, you get something more powerful. You get a probabilistic assessment of whether the account probably belongs to a minor.
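To make the idea of combining signals concrete, here is a minimal sketch that feeds the four signal types named above into a logistic function. Every name (`AccountSignals`, `minor_probability`), every weight, and the bias term are invented for illustration. OpenAI has not published its model, and a real system would learn its parameters from data rather than hard-code them.

```python
import math
from dataclasses import dataclass
from typing import Optional


@dataclass
class AccountSignals:
    stated_age: Optional[int]       # None if the user never provided one
    account_age_days: int
    after_school_fraction: float    # 0.0-1.0, share of activity in assumed teen hours
    homework_topic_fraction: float  # 0.0-1.0, share of school-style questions


def minor_probability(s: AccountSignals) -> float:
    """Combine signals into a score between 0 and 1. All weights are made up."""
    z = -2.0  # hypothetical bias: most accounts are assumed to be adults
    if s.stated_age is None:
        z += 0.5   # missing age is mildly suspicious
    elif s.stated_age < 18:
        z += 3.0   # a stated minor age is strong evidence
    else:
        z -= 1.0   # a stated adult age counts, but can be a lie
    z -= 0.5 * min(s.account_age_days / 365, 2.0)  # older accounts lean adult
    z += 2.0 * s.after_school_fraction
    z += 2.5 * s.homework_topic_fraction
    return 1.0 / (1.0 + math.exp(-z))


teen_like = AccountSignals(None, 30, 0.8, 0.7)
adult_like = AccountSignals(35, 700, 0.2, 0.05)
print(round(minor_probability(teen_like), 3))
print(round(minor_probability(adult_like), 3))
```

No single term decides the outcome here: a stated adult age lowers the score, but strong timing and content signals can still outweigh it, which mirrors the "combination of signals" framing.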

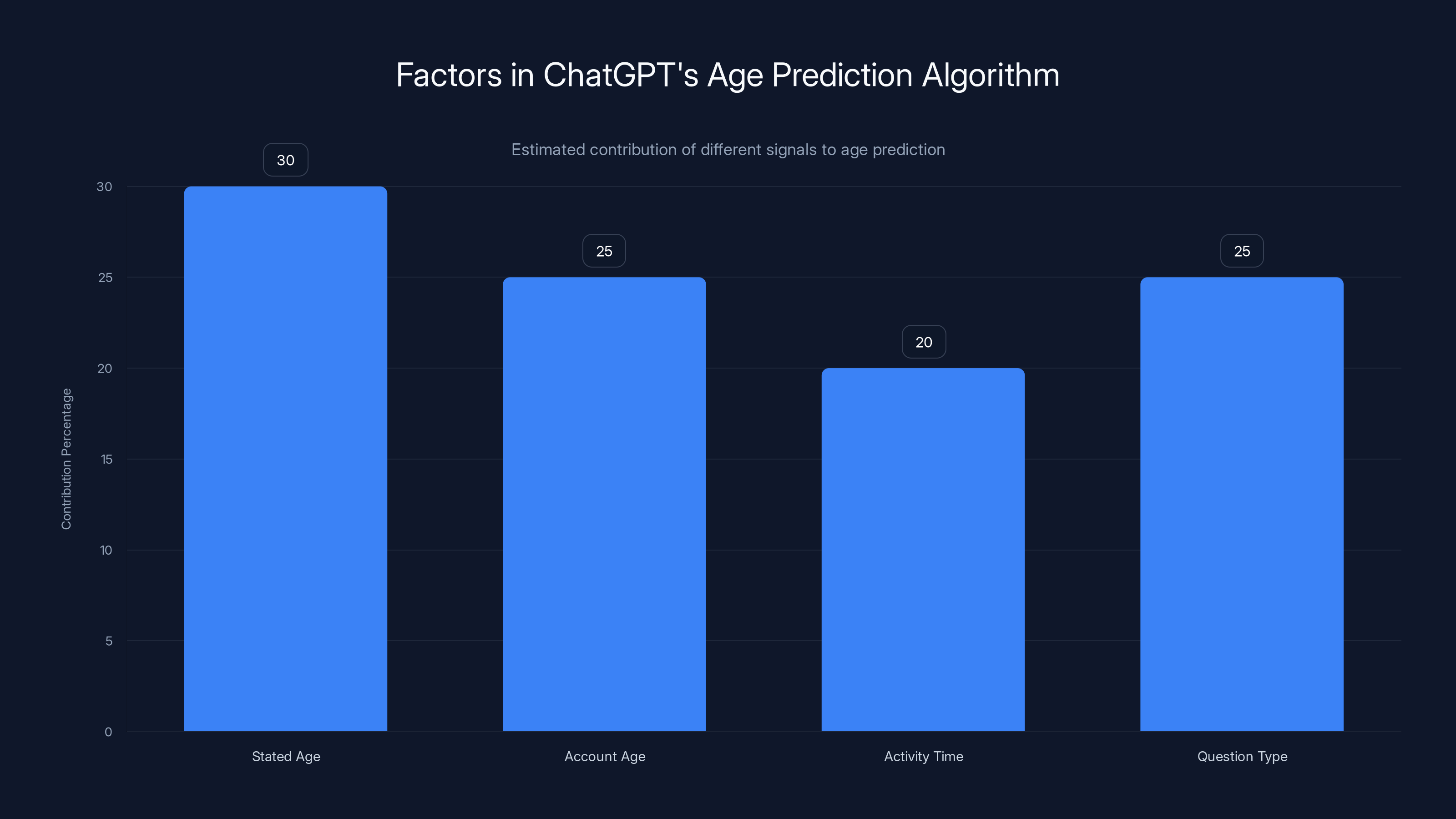

The age prediction algorithm considers multiple factors, with stated age and question type contributing significantly. Estimated data.

Content Filters: What Minors Can't Access

Once the age prediction system identifies an account as belonging to someone under 18, what actually happens? The system applies content filters. But what does that mean in practice?

OpenAI has built restrictions into ChatGPT designed to prevent discussion of sexual content, extreme violence, and other potentially harmful topics. These aren't crude blocks that prevent you from typing the word "sex." They're more sophisticated than that. The system understands context. It understands that someone might ask about sex education or health information. That's different from asking the chatbot to generate sexual content.

The filters for minors are presumably more aggressive than the filters for adults. An adult can ask ChatGPT about sex, violence, drugs, and other mature topics with more flexibility. The assumption is that adults can handle contextual discussion of these topics. Minors are given less latitude.

This creates an interesting philosophical question that OpenAI has essentially answered: what is the responsibility of a platform to protect minors from content, versus respecting their autonomy as developing people? The company has decided that minors need more protection. Whether that's the right call is debatable, but it's a clear position.

The filters also apply to other categories of potentially harmful content. That includes discussions of self-harm, dangerous instructions, and other topics identified as potentially damaging to young people.

ID Verification Through Persona: The Appeals Process

Here's where the system gets really interesting. OpenAI knows that the age prediction algorithm isn't perfect. It's a probabilistic system analyzing behavioral signals. False positives are inevitable.

So the company built in an appeals process. If the system incorrectly identifies your account as belonging to a minor, you can prove that you're actually an adult. But here's the catch: you have to prove it.

To appeal an age determination, you submit a selfie to Persona, OpenAI's ID verification partner. Persona specializes in digital identity verification. They use various methods to confirm that you are who you say you are and that you're the age you claim to be.

This is interesting technology. Persona uses a combination of techniques including facial analysis, document verification, and liveness checks to confirm identity. You take a selfie with your phone or webcam. You potentially upload an ID document. The system verifies that the person in the selfie matches the person in the ID and that the ID is valid.

Once you go through verification and Persona confirms you're actually an adult, your account gets reclassified. The content filters that were applied to your account get removed. You regain access to the full version of ChatGPT.

This approach has obvious implications for privacy. You're sharing biometric data (your face) and potentially your government ID with a third-party verification service. That's more intrusive than typical AI platform interactions. But it's a trade-off the company has decided to make in pursuit of better safety.

One important detail: this appeals process exists. The system isn't a black box that permanently locks you in a "minor" category. If you genuinely are an adult who the algorithm misidentified, you have a path forward. The question is whether most people will actually use it.

The Technical Challenge: Balancing Protection and Privacy

Building this system wasn't straightforward. There's an inherent tension in what OpenAI was trying to accomplish. On one hand, they want to identify minors. On the other hand, they want to avoid invading privacy more than necessary.

The behavioral signal approach is actually elegant in this regard. The system doesn't need to know your name, your location, or access your contacts. It just analyzes patterns that emerge naturally from how you use ChatGPT. That's less invasive than alternatives like requiring government ID from everyone at signup.

But it's also less accurate than those alternatives. A behavioral signal system is probabilistic. It gets it right most of the time, but it will make mistakes. Teenagers with unusual usage patterns might be misidentified as adults. Adults who happen to have usage patterns similar to teenagers might be misidentified as minors.

OpenAI appears to have chosen to optimize for sensitivity rather than specificity. If there's doubt about whether someone is a minor, the system probably errs on the side of protecting them. That means more false positives (adults incorrectly identified as minors) and fewer false negatives (minors incorrectly identified as adults).
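The sensitivity versus specificity trade-off is easy to see with a toy example. The sketch below scores eight made-up accounts and counts errors at two decision thresholds. The scores, labels, and thresholds are invented for illustration and say nothing about real error rates, which have not been published.

```python
def confusion_counts(scores, is_minor, threshold):
    """Return (tp, fp, fn, tn) when accounts scoring >= threshold are flagged as minors."""
    tp = fp = fn = tn = 0
    for score, minor in zip(scores, is_minor):
        flagged = score >= threshold
        if flagged and minor:
            tp += 1   # minor correctly flagged
        elif flagged and not minor:
            fp += 1   # adult wrongly flagged (false positive)
        elif minor:
            fn += 1   # minor missed (false negative)
        else:
            tn += 1   # adult correctly left alone
    return tp, fp, fn, tn


def sensitivity(tp, fn):
    return tp / (tp + fn)   # share of actual minors that get flagged


def specificity(tn, fp):
    return tn / (tn + fp)   # share of actual adults that are not flagged


# Hypothetical model scores and ground truth for eight accounts.
scores = [0.9, 0.7, 0.4, 0.6, 0.3, 0.2, 0.1, 0.45]
is_minor = [True, True, True, False, False, False, False, False]

for threshold in (0.5, 0.35):
    tp, fp, fn, tn = confusion_counts(scores, is_minor, threshold)
    print(threshold, sensitivity(tp, fn), specificity(tn, fp))
```

Lowering the threshold from 0.5 to 0.35 catches the one minor who was previously missed, at the cost of flagging one more adult. That is the shape of the choice described above: fewer missed minors, more adults sent to the appeals process.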

This is a reasonable choice for safety, but it creates friction. If you're an adult who keeps getting filtered, the appeals process is there, but it requires your ID. That's a privacy trade-off.

Existing Content Filters: The Foundation

Age prediction isn't the first safety mechanism OpenAI implemented. The company has been working on content moderation for years. Understanding those existing filters provides context for why age prediction was necessary.

OpenAI built content filters into ChatGPT designed to prevent the generation of certain types of harmful content. These filters use machine learning models trained to identify problematic requests. When you ask ChatGPT for something that violates the usage policies, the system is supposed to refuse.

The problem is that these generic filters apply to everyone equally. They don't differentiate between adults and minors. So OpenAI built additional protections specifically for users flagged as underage.

This layered approach makes sense. Generic content policies apply to all users. Stricter policies apply specifically to minors. The age prediction system is what enables that differentiation.
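One way to picture the layering is as two sets of blocked categories, where the minor-specific set is added on top of the baseline. The category names below are placeholders chosen for this sketch, not OpenAI's actual policy taxonomy, and the functions are hypothetical.

```python
# Placeholder category names; the real policy categories are not public in this form.
BASELINE_BLOCKED = frozenset({"dangerous_illegal_instructions"})
MINOR_ONLY_BLOCKED = frozenset(
    {"mature_sexual_topics", "graphic_violence", "self_harm_discussion"}
)


def blocked_categories(predicted_minor: bool) -> frozenset:
    """Baseline rules apply to everyone; minors get the extra layer on top."""
    if predicted_minor:
        return BASELINE_BLOCKED | MINOR_ONLY_BLOCKED
    return BASELINE_BLOCKED


def is_allowed(category: str, predicted_minor: bool) -> bool:
    return category not in blocked_categories(predicted_minor)


print(is_allowed("graphic_violence", predicted_minor=False))
print(is_allowed("graphic_violence", predicted_minor=True))
print(is_allowed("dangerous_illegal_instructions", predicted_minor=False))
```

The age prediction result is the only input that switches between the two layers, which is why its accuracy matters so much for everything downstream.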

These content filters don't just prevent sexual content. They're designed to prevent discussion of self-harm, dangerous illegal activities, extreme violence, and other topics OpenAI has decided are harmful. The specifics of what's blocked and what's allowed are sometimes unclear, which creates legitimate questions about censorship and freedom of speech.

But in the context of protecting minors, most people don't object to these restrictions. There's broad consensus that children shouldn't have unrestricted access to chatbots that could help them harm themselves.

ChatGPT's age prediction system uses a balanced approach by analyzing stated age, account history, usage patterns, and active times. Estimated data.

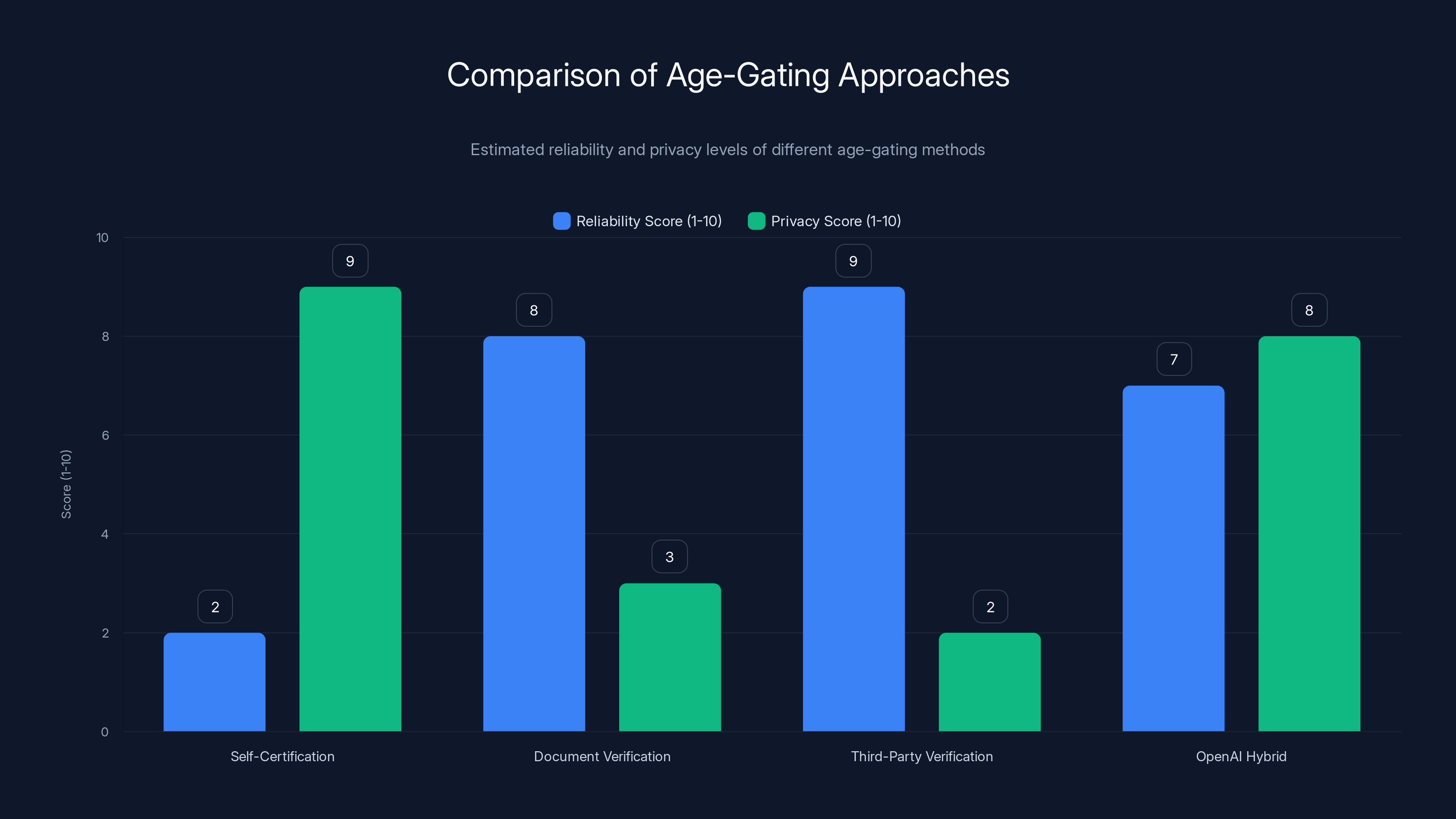

Why Behavioral Analysis Over ID Requirements

OpenAI could have taken a different approach. They could have required government ID from everyone at signup. Many financial services and age-gated platforms do this. The company could have decided that if you want to use ChatGPT, you need to verify your identity upfront.

They didn't. Instead, they chose a more privacy-preserving approach using behavioral analysis. Why?

Part of it is practical. Requiring government ID from everyone would be friction that would limit adoption. Many people would choose not to use ChatGPT rather than hand over their ID. That's a business problem for OpenAI.

But part of it is also principled. The company clearly decided that universal ID verification was too invasive. Behavioral analysis is less invasive. It lets people use ChatGPT without proving who they are, while still attempting to identify minors and apply appropriate protections.

The trade-off is accuracy. Behavioral analysis is less accurate than ID verification. The system will get it wrong sometimes. But the company apparently decided that was an acceptable trade for a less invasive system.

This choice reflects a value judgment about privacy and safety. Different companies might make different choices. Some might prioritize safety over privacy and require ID verification. OpenAI prioritized privacy while still trying to protect minors.

Challenges With the Age Prediction System

No system is perfect, and this one has some obvious limitations. Let's be honest about what could go wrong.

First, false negatives. The system might miss some minors. A tech-savvy teenager who behaves in ways that don't match typical teen patterns might slip through. Someone who has been using the internet for years might have established patterns that look like an adult's patterns. The algorithm isn't going to catch everyone.

Second, false positives. Adults might get misidentified as minors. If you're an adult who uses ChatGPT mostly for creative writing or who happens to use it at times when many teenagers use it, the algorithm might flag you as underage. Then you have to go through the verification process to get access back.

Third, there's the question of whether behavioral signals are even a reliable proxy for age. The assumption is that teenagers use the internet in ways that are distinguishable from adults. But is that always true? What about adults from different countries or different socioeconomic backgrounds who have different usage patterns?

Fourth, there's a gaming problem. Once the algorithm is public and people understand how it works, some minors will intentionally try to behave like adults to avoid filters. They'll change their usage patterns. They'll try to avoid the signals the algorithm looks for. It becomes an arms race.

Fifth, there's a philosophical problem. How much filtering of a teenager's access to information is appropriate? Minors should have some exposure to adult information. They need to learn about sex, violence, and other aspects of the adult world. Over-filtering might be counterproductive from an educational perspective.

The Broader Context: AI and Youth Safety

OpenAI's age prediction system doesn't exist in a vacuum. It's part of a broader conversation about AI safety and the impact of AI systems on young people.

There's growing evidence that social media has negative effects on mental health for teenagers. Platforms like TikTok, Instagram, and Snapchat have been linked to increases in anxiety, depression, and body image issues among young users. The question is whether AI chatbots like ChatGPT might have similar effects.

The mechanisms are different. Social media is about comparing yourself to others and constant feedback. Chatbots are about direct conversation with an AI system. But the potential for harm is real. A chatbot could provide harmful advice. It could facilitate bad decision-making. It could engage with a vulnerable teenager in ways that exacerbate mental health issues.

OpenAI's age prediction system is an attempt to address this risk. But it's not a complete solution. The company is essentially saying: "Here's one thing we can do to make the platform safer for minors." But that's just one thing. There are many other aspects of how AI systems interact with teenagers that could be addressed.

Comparison to Other Age-Gating Approaches

How does OpenAI's approach compare to what other platforms do to verify age?

Traditional age-gated platforms like pornography sites, casinos, and alcohol retailers often use simple self-certification. You click a box saying you're over 18. That's it. Obviously, this is easily bypassed by anyone willing to lie.

Some newer approaches use document verification. You upload a photo of your ID. The platform checks that the ID is valid and that your photo matches the person in the ID. This is much more reliable but also more invasive.

Some platforms use third-party verification services like Persona or Jumio. You go through an identity verification process that involves facial recognition and document scanning. This is very reliable but requires sharing significant personal data.

OpenAI is essentially using a hybrid approach. They start with behavioral analysis, which is low-friction and privacy-preserving. If the system flags you as potentially underage, you can appeal using ID verification through Persona. This way, most adults can use the platform without verification, while minors are identified and filtered. If an adult gets flagged, they have the option to verify their identity.
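The hybrid flow can be sketched as a small decision function: behavioral prediction decides the default, and a successful ID check overrides it. The names `AccessTier` and `resolve_access`, the 0.5 threshold, and the `verified_adult` flag (standing in for the outcome of a Persona check) are assumptions made for this example; no real Persona or OpenAI API is called.

```python
from enum import Enum


class AccessTier(Enum):
    FULL = "full"
    RESTRICTED = "restricted"


def resolve_access(
    minor_probability: float,
    threshold: float = 0.5,       # hypothetical decision threshold
    verified_adult: bool = False, # stand-in for a successful ID verification appeal
) -> AccessTier:
    """Behavioral prediction sets the default; verification overrides a flag."""
    if verified_adult:
        return AccessTier.FULL
    if minor_probability >= threshold:
        return AccessTier.RESTRICTED
    return AccessTier.FULL


print(resolve_access(0.2))                       # unflagged adult, no ID needed
print(resolve_access(0.8))                       # flagged account gets filters
print(resolve_access(0.8, verified_adult=True))  # flagged adult after appeal
```

Only the flagged-and-appealing path ever touches ID data, which is the privacy argument for this design over upfront verification for everyone.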

This hybrid approach is probably more privacy-preserving than universal ID verification, but less reliable than getting everyone to verify their ID upfront.

Implementation Details and Rollout

When OpenAI announced the age prediction feature, they didn't provide extensive details about implementation. That's both understandable and frustrating.

It's understandable because OpenAI probably doesn't want to publish all the signals the algorithm looks for. If the system is public, it becomes easier to game. Teenagers would know exactly what patterns to mimic to appear as adults.

But it's frustrating because transparency is important in safety systems. If the system is going to make decisions about what content you can see, you deserve to understand how it makes those decisions.

Based on the limited information available, the system appears to have been rolled out gradually. OpenAI didn't flip a switch and apply age prediction to every user at once. They probably rolled it out in phases, monitored for false positives and false negatives, and adjusted the algorithm accordingly.
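For readers unfamiliar with phased rollouts, a common general technique is to hash each account ID into a stable bucket from 0 to 99 and enable the feature for buckets below the current rollout percentage. The sketch below shows that generic pattern only. Whether OpenAI used anything like it is not known, and the function name and account IDs are hypothetical.

```python
import hashlib


def in_rollout(account_id: str, percent: int) -> bool:
    """Deterministically place an account in a bucket 0-99 and compare to percent."""
    digest = hashlib.sha256(account_id.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "big") % 100
    return bucket < percent


# Made-up account IDs. Raising the percentage only ever adds accounts,
# because each account's bucket never changes.
accounts = [f"acct-{i}" for i in range(1000)]
for percent in (5, 25, 100):
    enabled = sum(in_rollout(a, percent) for a in accounts)
    print(percent, enabled)
```

Because the bucket is derived from the ID rather than chosen at random on each request, an account that is included at 5% stays included at 25%, which keeps the experience stable while error rates are monitored.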

The company also built in the appeals process with Persona from the start. They anticipated that the system would make mistakes and provided a way to correct them.

Implications for AI Platform Design Going Forward

OpenAI's age prediction system sets a precedent. Other AI companies will likely follow. If ChatGPT has age detection and content filtering for minors, Anthropic's Claude, Google's Gemini, and other AI assistants probably will too.

This points to a future where AI platforms are more actively involved in content moderation based on user characteristics. Systems will try to identify who's using them and adjust their behavior accordingly.

There are good reasons for this. Protecting minors from harm is important. But there are also concerning implications. If AI platforms are trying to identify and categorize users based on behavioral analysis, that's a significant surveillance capability.

It also raises questions about what happens when these systems get it wrong. If you're incorrectly identified as a minor and your access gets restricted, is there adequate recourse? How do you know why you're being filtered? Do you have a right to know what signals triggered the age prediction? These are questions that will probably be litigated or regulated over time.

Privacy Considerations and Data Security

The behavioral analysis approach is less invasive than universal ID verification, but it still involves collecting and analyzing data about how you use ChatGPT.

OpenAI is analyzing your usage patterns: when you're active, how long your account has existed, what you ask about. This is data they're already collecting anyway. The age prediction system just applies machine learning to it.

But when someone appeals an age determination and submits to ID verification through Persona, more sensitive data is involved. You're sharing your face (biometric data) and potentially government ID. That information is going to a third-party service.

OpenAI's privacy policy presumably covers how this data is used. But sharing biometric data with a third party is always a privacy consideration worth taking seriously. There's a risk of data breaches. There's a question of whether Persona might sell this data or use it for other purposes.

For users willing to go through ID verification, this is presumably a calculated choice. You're trading privacy for access. But it's worth understanding what you're trading away.

Limitations of Any Age Detection System

No matter how sophisticated an age prediction system is, it has fundamental limitations. Some of these are technical. Others are more philosophical.

Technically, any system based on behavioral analysis will have false positives and false negatives. There's no way around this. Algorithms aren't perfect. They work probabilistically. Some errors are inevitable.

Philosophically, there's a question about whether age-based content filtering is even the right approach. Should a 17-year-old have less access to information than an 18-year-old? Should there be a cliff where restrictions suddenly disappear on someone's 18th birthday? Real maturity and readiness aren't binary.

There's also a question about paternalism. How much should platforms paternalistically restrict what users can see, even if it's for their protection? Some would argue that teenagers have a right to information and that over-filtering is counterproductive.

These are legitimate philosophical questions without clear answers. OpenAI has made choices about these questions. Whether those choices are right is debatable.

Looking Forward: What Comes Next

OpenAI's age prediction system is likely just the beginning. We're going to see this expand in multiple directions.

First, the algorithm will probably improve. OpenAI will collect more data about what patterns actually correlate with age. They'll refine the signals the algorithm looks at. False positive and false negative rates will decrease.

Second, other AI companies will implement similar systems. This will become a standard feature across AI platforms, not unique to ChatGPT.

Third, there will probably be regulation. Governments might not be satisfied with OpenAI's self-regulatory approach. They might mandate specific safety requirements for AI systems used by minors. The Children's Online Privacy Protection Act (COPPA) in the United States might be updated to address AI systems, for example.

Fourth, there will probably be legal challenges. Users who feel they've been unfairly restricted might sue. Privacy advocates might challenge the data collection aspects. The appeals process might face legal scrutiny.

Fifth, we'll probably see pushback from teenagers and their advocates who feel the filtering is too restrictive. The system's efficacy at preventing harm needs to be weighed against the freedom to access information.

TL;DR

- Age Prediction Feature: OpenAI implemented an AI algorithm that analyzes behavioral and account signals to identify minors using ChatGPT, automatically applying stricter content filters to protect them from harmful content.

- How It Works: The system examines stated age, account history, usage timing, and content patterns to probabilistically determine whether an account belongs to someone under 18.

- Existing Filters: ChatGPT already had content restrictions, but age prediction enables more aggressive filtering specifically for minors, preventing access to sexual content, extreme violence, and self-harm discussions.

- Appeals Process: Adults incorrectly flagged as minors can submit a selfie and ID verification through Persona to prove their age and restore full access.

- Real Problem: The system addresses genuine harms, including documented cases of minors being exposed to sexual content and concerning interactions that contributed to psychological damage.

- Privacy Trade-offs: While behavioral analysis is less invasive than universal ID verification, the verification appeals process involves sharing biometric data and government ID with third parties.

- Ongoing Challenges: The system has inherent limitations including false positives, false negatives, and the potential for gaming the algorithm once teenage users understand how it works.

- Bottom Line: OpenAI's age prediction represents a meaningful step toward protecting minors on AI platforms, though it's not a complete solution and raises important questions about privacy, access to information, and platform responsibility.

FAQ

How does ChatGPT determine if you're under 18?

ChatGPT uses a machine learning algorithm that analyzes "behavioral and account-level signals" rather than requiring government ID upfront. The system examines your stated age during signup, how long your account has existed, what times of day you're typically active, and the types of questions you ask. By analyzing patterns across these signals, the algorithm generates a probability estimate about whether you're likely a minor. This approach is less invasive than universal ID verification but also less accurate, meaning some false positives and false negatives are inevitable.

What content does ChatGPT restrict for minors?

Once the system identifies an account as belonging to someone under 18, stricter content filters automatically activate. These filters restrict discussion of sexual content, extreme violence, dangerous illegal activities, and self-harm. The system doesn't simply block keywords—it understands context. For example, a question about sexual health education might be allowed, but a request to generate sexual content would be blocked. The exact parameters of what's filtered and what's allowed aren't entirely transparent, which raises legitimate questions about the breadth of these restrictions.

What can you do if you're an adult incorrectly flagged as a minor?

If ChatGPT's age prediction system incorrectly identifies your account as belonging to a minor, you can appeal this determination. You'll be asked to submit a selfie to Persona, OpenAI's third-party ID verification partner. Persona uses facial recognition, liveness detection, and potentially document verification to confirm your identity and age. Once verified as an adult, your account classification changes and the content restrictions are removed. The process carries a real privacy trade-off: you're sharing biometric data to regain access.

How accurate is the age prediction algorithm?

OpenAI hasn't published specific accuracy metrics for the age prediction system, so the exact false positive and false negative rates are unknown. However, any behavioral analysis system has inherent limitations. It works probabilistically based on patterns, meaning it will sometimes make mistakes. Teenagers with unusual usage patterns might avoid detection, while adults with usage patterns similar to teenagers' might be incorrectly filtered. The company apparently optimized for sensitivity (catching minors) over specificity (minimizing false positives), meaning more adults might be incorrectly flagged to ensure better protection of actual minors.

Is age prediction different from how other platforms verify age?

Yes, it's fundamentally different from most age-gating approaches. Traditional age-gated sites often use simple self-certification (just clicking "I'm over 18"), which is easily bypassed. Some platforms require document verification upfront, which is very reliable but more invasive. OpenAI's approach uses behavioral analysis first (low friction, moderate accuracy) with optional verification later (high friction for those who need it). This hybrid approach attempts to minimize privacy invasion while still attempting to identify and protect minors, though it's less reliable than universal upfront verification.

Why didn't OpenAI just require government ID from everyone?

Universal ID verification would be more accurate but much more invasive. It would likely deter significant numbers of users who don't want to share their government identification with a private company. OpenAI apparently decided that the privacy cost wasn't justified and that a less invasive behavioral analysis approach with optional verification was preferable. This reflects a values judgment that privacy should be preserved where possible, even if it means accepting lower accuracy in age detection. Other companies might make different choices, prioritizing accuracy over privacy.

What happens if you're flagged as a minor but you're actually 18?

This is where the appeals process matters. If you just turned 18 or if the algorithm made a false positive determination, you can verify your actual age through Persona. Once verified, your account is reclassified as an adult account and restrictions are removed. The system isn't meant to be permanent. However, the appeals process does require sharing personal information (biometric data and potentially government ID), which creates a privacy trade-off that some users might not be willing to make.

Could teenagers game this system to avoid the filters?

Probably, yes. Once teenagers understand how the algorithm works, some will intentionally adjust their behavior to look like adults. They might change usage timing, adjust the types of questions they ask, or take other steps to avoid the behavioral signals the algorithm looks for. This creates an arms race dynamic where OpenAI would need to continually update the algorithm to catch new evasion tactics. This is a known limitation of any age detection system that relies on behavioral analysis: if the heuristics become public, determined users can try to circumvent them.

How does this compare to the protection provided by content filters alone?

Content filters alone prevent certain harmful content from being generated, but they apply to everyone equally. They don't differentiate between a 35-year-old adult and a 13-year-old kid. Age prediction enables differentiation—minors can have more restrictive filters applied automatically. This is more protective because it recognizes that minors need different protections than adults. The system combines generic content policies that apply to everyone with age-specific policies that apply to detected minors, creating a layered approach to safety.

Will other AI companies implement similar age prediction systems?

Very likely. OpenAI's implementation sets a precedent. If one major AI platform has age detection and content filtering for minors, others will probably follow to avoid appearing less safety-conscious or facing regulatory pressure. We're likely to see similar or more sophisticated age prediction systems implemented by Anthropic (Claude), Google (Gemini), Meta (Llama), and others. Over time, age-specific content moderation might become standard across AI platforms, similar to how content moderation is standard on social media.

Key Takeaways

- OpenAI implemented an AI-driven age prediction system that analyzes behavioral signals like usage timing, account history, and content patterns to identify users under 18 and apply stricter content filters automatically

- The system was created in response to documented harms including teen suicides linked to ChatGPT interactions and discovered bugs allowing sexual content generation for minors

- Behavioral analysis is less invasive than universal ID verification but also less accurate, creating inevitable false positives and false negatives that require an appeals process

- Adults incorrectly flagged as minors can restore access by submitting biometric data and government ID to Persona, a third-party identity verification service, creating a privacy trade-off

- The implementation sets a precedent: other AI platforms will likely adopt similar age detection and content restriction systems, potentially becoming an industry standard alongside regulation
