CISA End-of-Life Edge Device Replacement: A Complete Federal and Enterprise Guide [2025]

In January 2025, the Cybersecurity and Infrastructure Security Agency (CISA) made something abundantly clear: unsupported hardware is a liability. Not a "nice to fix eventually" problem. A liability.

They issued Binding Operational Directive 26-02, telling federal agencies they have one year to remove and replace edge devices that have reached or passed end of support (EOS). No exceptions. No extensions.

Here's what makes this directive different from the usual compliance theater: it's not just bureaucratic CYA. It's a direct response to a real, measurable threat. Threat actors have figured out that old, unsupported hardware is like a nightclub with no security—easy entry, nobody watching, perfect for causing chaos.

But this isn't just a federal problem. If you run IT infrastructure anywhere—enterprise, mid-market, startups—this directive should make you nervous. Because CISA explicitly said the guidance applies to every organization, not just government agencies. And they're right. The threat is the same whether you're running Treasury Department systems or an e-commerce platform.

Let's walk through what's actually happening, why it matters, and exactly what you need to do about it.

TL;DR

- CISA BOD 26-02: Federal agencies must remove all EOS edge devices within 12 months, effective January 2025

- Device scope: Firewalls, routers, switches, wireless access points, network appliances, and IoT devices accessible from the public internet

- The risk: Unsupported devices receive zero security patches, making them prime exploitation targets for sophisticated threat actors

- The timeline: Organizations should immediately audit hardware lifecycles and begin replacement planning

- The broader issue: This affects every organization, not just government—threat actors don't distinguish between federal and private sector vulnerabilities

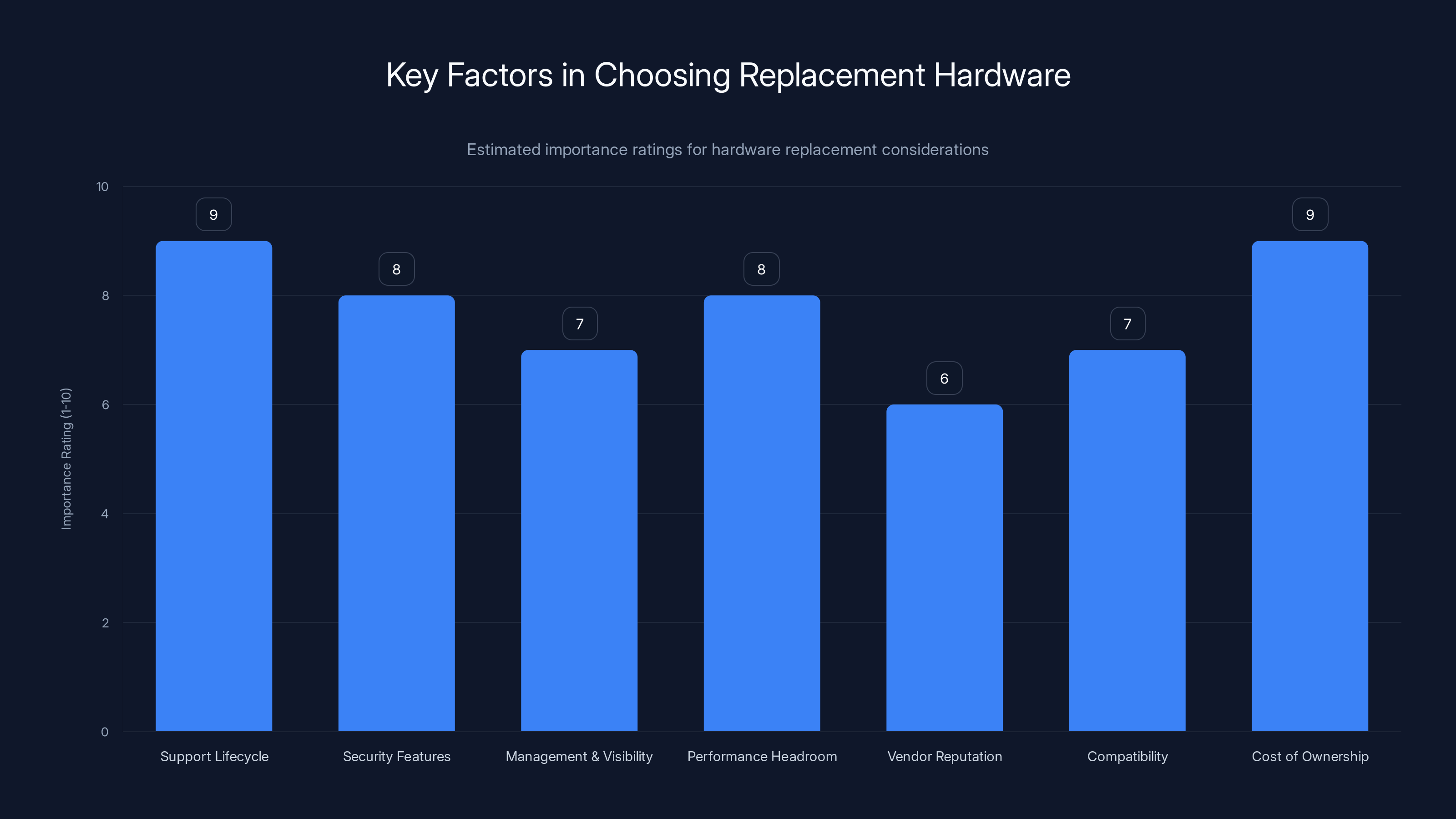

Support lifecycle and cost of ownership are crucial factors when choosing replacement hardware, both rated at 9 out of 10 in importance. Estimated data.

What CISA Actually Said (And Why You Should Care)

CISA's directive isn't vague. It's surgical. They defined exactly what they mean by "edge devices"—the hardware sitting at the perimeter of your network where it touches the internet.

We're talking about:

- Firewalls and next-generation firewalls (NGFWs) that filter traffic

- Routers and switches that direct network traffic

- Wireless access points (APs) that handle Wi-Fi connections

- Network security appliances that inspect and block threats

- IoT edge devices running older firmware or operating systems

These aren't obscure legacy systems sitting in a closet. These are the literal gates between your network and the internet. They're the first line of defense. And if they're not being patched, they're not defending anything.

The directive calls this situation one that poses "disproportionate and unacceptable risks" to federal systems. Translated: this is dangerous enough that we're making it mandatory, not optional.

But here's the intelligent part of CISA's approach: they're not just saying "rip and replace." They're acknowledging that this requires planning. The directive specifically requires agencies to "mature their lifecycle management practices" and "develop a plan for decommissioning EOS devices while minimizing disruptions to agency operations."

In other words, do this smartly. You have a year. Use it.

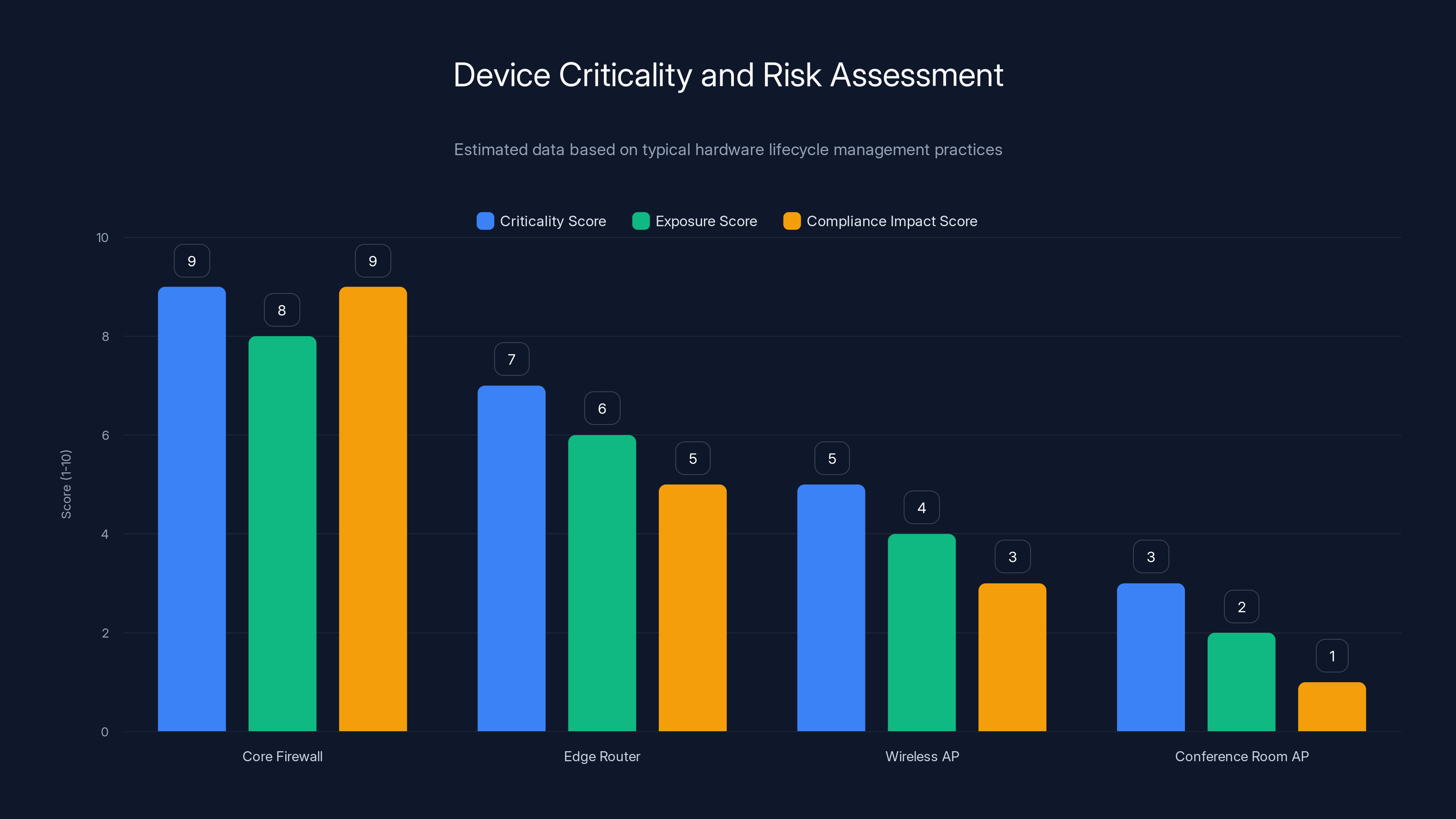

Core firewalls typically score highest in criticality, exposure, and compliance impact due to their central role and potential vulnerabilities. Estimated data based on typical assessments.

Why End-of-Life Devices Are Actually Dangerous (Not Just Annoying)

Let's be direct: an unsupported device is a backdoor you're paying for.

When a hardware vendor declares a device "end of support," they stop releasing security patches. Not because they're mean. Usually because the hardware's architecture, processor, or firmware platform can't support modern security standards. Sometimes it's because the business model changed. Either way, the result is identical: zero new security updates, period.

This creates a specific, exploitable situation. Researchers discover vulnerabilities in those devices constantly. Bad actors discover them too. But here's the nightmare scenario: the vulnerability gets published, proof-of-concept code appears online, security firms write about it, and your unsupported device is still running the vulnerable code. There's nothing you can do except replace it.

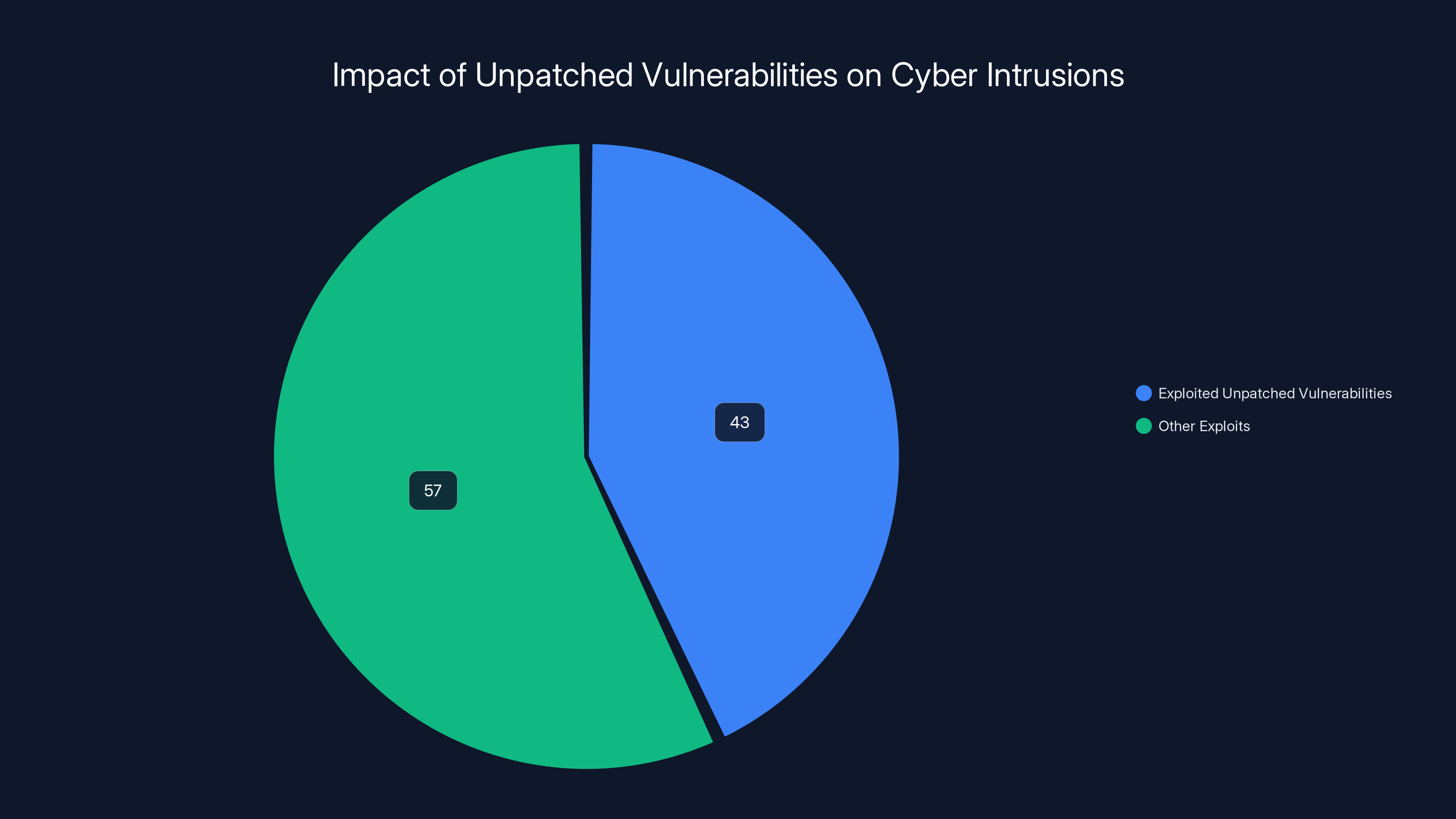

Let's look at actual numbers. When CISA published their analysis of recent intrusions, they found that 43% involved exploitation of unpatched vulnerabilities. Not zero-days. Not mysterious exploits. Vulnerabilities that had patches available. Some for months or years.

Unsupported hardware takes that percentage higher. How much higher? The data isn't public, but consider this: threat actors consistently target the easiest entry points first. An unsupported device is the definition of "easy."

The timeline matters too. Between the disclosure of a vulnerability and the release of a patch, every affected device is exposed. Supported devices eventually close that window. Unsupported devices never do, because the patch never comes. It's like leaving your front door unlocked on purpose and hoping nobody notices.

The Real Cost of Delaying Replacement (And It's Not Just Compliance)

Let's talk about what happens when you don't replace unsupported hardware.

Scenario 1: You get breached. A threat actor finds your old firewall running a three-year-old vulnerability. They pivot through it into your network. Your security team has to respond, remediate, notify customers, deal with regulatory fallout, and rebuild trust. Even a contained breach costs

Scenario 2: You don't get breached today, but your infrastructure becomes increasingly exposed. Every day that unpatched device sits there, it's a risk. Not a maybe. A risk. And if you're in finance, healthcare, critical infrastructure, or government, regulators will eventually ask why you had known vulnerabilities.

Scenario 3: Your old hardware finally fails catastrophically. Not to an attack. Just fails. And you have to emergency-replace it while everything's on fire. Emergency procurement is 3-5x more expensive than planned replacement.

Here's what most organizations get wrong about this: they treat hardware replacement as a capital expense problem ("We can't afford new equipment"). But they ignore the actual cost equation.

Cost of planned replacement: Purchase cost + installation cost + testing cost + migration time = $X

Cost of unplanned breach: Investigation + remediation + regulatory fines + customer notification + credit monitoring + reputational damage + lost business = $X × 10-50

You're not choosing between spending money and not spending money. You're choosing between strategic spending now and emergency spending later.
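The equation above treats the breach as certain. Here is a minimal sketch of the probability-weighted version. It rests on stated assumptions: a constant, independent annual breach probability, a single breach costing replacement cost × multiplier, and placeholder figures throughout. It also shows the break-even point. Replacement is the cheaper bet once the chance of a breach over the period exceeds 1 / multiplier. At the 10-50x range above, that threshold is only 2-10%.

```python
def breach_probability(annual_probability: float, years: int) -> float:
    """Chance of at least one breach over `years`, assuming a constant,
    independent annual probability (an illustrative simplification)."""
    return 1 - (1 - annual_probability) ** years


def expected_breach_cost(replacement_cost: float, multiplier: float,
                         annual_probability: float, years: int) -> float:
    """Probability-weighted breach cost, with breach cost = replacement_cost * multiplier."""
    return breach_probability(annual_probability, years) * replacement_cost * multiplier


def break_even_probability(multiplier: float) -> float:
    """Breach probability above which planned replacement is the cheaper option."""
    return 1 / multiplier


# Hypothetical inputs: $100K replacement, 10x breach multiplier, 5% annual risk, 3 years.
cost = expected_breach_cost(100_000, 10, 0.05, 3)
print(f"expected breach cost: ${cost:,.0f}")  # expected breach cost: $142,625
print(f"break-even probability: {break_even_probability(10):.0%}")  # break-even probability: 10%
```

With those placeholder inputs, the expected breach cost exceeds the $100,000 replacement. Swap in your own estimates. The point is that the comparison has a probability term, and the break-even bar is low.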

Estimated data shows typical support durations for major network device vendors, highlighting the need for timely replacements as devices reach end-of-life.

Which Devices Are Actually At Risk (The Real Hardware Inventory)

Here's where things get practical. Which devices in your actual infrastructure are likely end-of-life?

Firewalls and Network Security Appliances: These typically have 5-7 year support lifecycles from major vendors. If you deployed a Cisco ASA, Palo Alto Networks, Fortinet FortiGate, or Juniper SRX between 2016-2018, you're probably looking at devices approaching or past EOS. Check your vendor's support matrix. Now.

Routers: Cisco, Juniper, and Arista routers usually get 5-10 years of support depending on the platform. Core infrastructure routers might still be supported. Edge routers, especially if they're older models, often aren't. Access routers from 2015-2017? Probably EOS.

Switches: Network switches typically have longer support windows (7-10 years), but older models definitely exist. If you have stacks of Cisco Catalyst 2960s, Nexus 5500s, or Juniper EX3400s from pre-2015, they might be approaching EOS.

Wireless Access Points: This is where you find the most end-of-life hardware. Organizations replace these less frequently than other network equipment. If your APs are Cisco Aironet 2700 series (released 2015) or older, check support status immediately. Same with Arista, Ruckus, or Meraki.

IoT Edge Devices: Industrial IoT gateways, building management systems, manufacturing edge devices—these are notorious for running old firmware. A device deployed in 2014-2016 might still be operating, but its vendor might have ended support years ago.

The real problem: most organizations don't have an accurate inventory. They know roughly what's out there, but they don't know:

- Exact hardware versions and serial numbers

- Current firmware versions

- Support status for each device

- Dependencies and criticality

- Replacement timeline for each asset

This is where your first action item comes in.

Building Your Hardware Lifecycle Management Program (The Playbook)

CISA didn't just mandate removal. They specifically required agencies to "mature their lifecycle management practices." Translation: you need a system for tracking, planning, and executing hardware replacement.

Here's what that actually looks like:

Step 1: Complete Hardware Audit and Inventory

You need to know what you have. Walk every data center, closet, and branch office. Document every network device:

- Manufacturer and exact model

- Serial number

- Installation date (if possible)

- Current firmware version

- Current support status (you can find this on vendor websites)

- Network criticality (is this a core device or edge device?)

- Dependencies (what breaks if this device goes down?)

This takes time. A week if you're small, weeks to months if you're large. But it's non-negotiable. You can't manage what you don't know you have.
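Here is a minimal sketch of one inventory record, assuming you keep the audit in code or export it to something like a CSV. The class and field names are illustrative and don't follow any particular asset-management tool's schema. `missing_fields` is there so the unknowns from the walk-through stay visible instead of sitting silently blank.

```python
from dataclasses import dataclass, field
from datetime import date
from typing import Optional


@dataclass
class EdgeDevice:
    """One row of the Step 1 hardware inventory (field names are illustrative)."""
    manufacturer: str
    model: str
    serial_number: str
    firmware_version: str               # empty string until recorded
    installed_on: Optional[date]        # None if the install date is unknown
    end_of_support: Optional[date]      # None until looked up on the vendor's site
    role: str                           # e.g. "core" or "edge"
    internet_facing: bool
    dependencies: list[str] = field(default_factory=list)  # what breaks if this device goes down

    def missing_fields(self) -> list[str]:
        """Names of fields the audit still needs to fill in."""
        gaps = []
        if not self.firmware_version:
            gaps.append("firmware_version")
        if self.installed_on is None:
            gaps.append("installed_on")
        if self.end_of_support is None:
            gaps.append("end_of_support")
        return gaps


fw = EdgeDevice("ExampleVendor", "FW-100", "SN0001", "", None, date(2024, 6, 30),
                role="edge", internet_facing=True, dependencies=["branch VPN"])
print(fw.missing_fields())  # ['firmware_version', 'installed_on']
```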

Step 2: Map Support Lifecycles

For every device in your inventory, find its support end date. Most vendors publish this online:

- Cisco: End of Life/End of Support lookup tool

- Palo Alto Networks: Support lifecycle calendar

- Fortinet: Product lifecycle status

- Juniper: Product lifecycle matrix

Sort your inventory by support status:

- Still supported, 1-2 years until EOS: Plan replacement

- EOS within 1 year: Begin procurement

- Already EOS: Emergency replacement priority
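The sorting rule above can be sketched as a small function. It assumes the three categories don't overlap: at most a year left means procurement, and one to two years means planning. It also adds a fourth, hypothetical "monitor" bucket for devices with more than two years of support left, which the list doesn't cover.

```python
from datetime import date


def support_bucket(end_of_support: date, today: date) -> str:
    """Map a device's vendor end-of-support date to the Step 2 action."""
    days_left = (end_of_support - today).days
    if days_left <= 0:
        return "emergency replacement"   # already EOS
    if days_left <= 365:
        return "begin procurement"       # EOS within a year
    if days_left <= 730:
        return "plan replacement"        # one to two years left
    return "monitor"                     # assumption: not covered by the list above


today = date(2025, 1, 15)
for eos in [date(2024, 9, 30), date(2025, 8, 1), date(2026, 6, 30), date(2029, 1, 1)]:
    print(eos, support_bucket(eos, today))
```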

Step 3: Assess Criticality and Risk

Not all EOS devices are equally dangerous. A core firewall handling $10M/day in transactions? Critical. An old wireless AP in a conference room? Still a risk, but lower priority.

Score each device:

- Criticality: How many users/systems depend on it? (High/Medium/Low)

- Exposure: Is it directly accessible from the internet? (Public-facing = higher risk)

- Attack surface: What vulnerability classes affect similar devices? (Based on recent CVEs)

- Compliance impact: Are you regulated in ways that require up-to-date hardware? (PCI-DSS, HIPAA, FedRAMP, etc.)

Focus your replacement efforts on devices that score high on both criticality and attack surface.
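As a sketch, here is one way to turn those four questions into numbers. The 1-3 scales, the equal weights, and the +1 for regulated environments are assumptions to tune, not a standard scoring model. `priority_tier` encodes the rule above: a device that scores high on both criticality and attack surface goes first.

```python
LEVELS = {"low": 1, "medium": 2, "high": 3}


def risk_score(criticality: str, internet_facing: bool,
               attack_surface: str, regulated: bool) -> int:
    """Additive score from 3 (lowest risk) to 10 (highest)."""
    score = LEVELS[criticality] + LEVELS[attack_surface]
    score += 3 if internet_facing else 1   # public-facing counts as high exposure
    score += 1 if regulated else 0         # PCI DSS, HIPAA, FedRAMP, etc.
    return score


def priority_tier(criticality: str, attack_surface: str) -> str:
    """High on both dimensions -> high; high on one -> medium; otherwise low."""
    if criticality == "high" and attack_surface == "high":
        return "high"
    if "high" in (criticality, attack_surface):
        return "medium"
    return "low"


# Hypothetical devices: (criticality, internet_facing, attack_surface, regulated)
devices = {
    "core-fw-01": ("high", True, "high", True),
    "conf-room-ap-07": ("low", False, "medium", False),
}
for name, args in sorted(devices.items(), key=lambda kv: -risk_score(*kv[1])):
    print(name, risk_score(*args), priority_tier(args[0], args[2]))
```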

Step 4: Build Your Replacement Roadmap

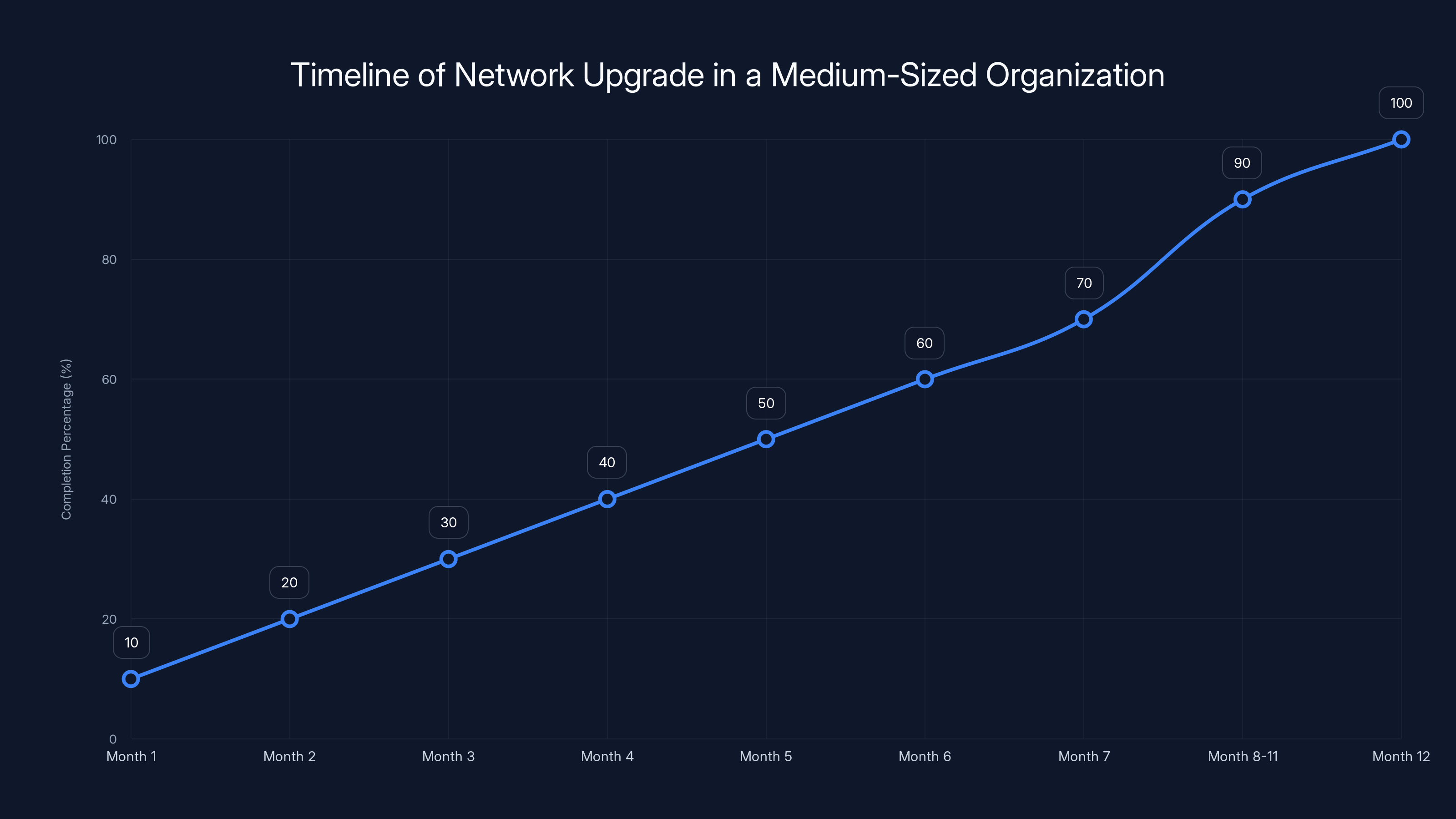

You don't replace everything at once. You'd break everything. Instead, phase it:

- Months 1-3: Replace highest-risk devices (EOS + high exposure + high criticality)

- Months 4-8: Replace medium-priority devices

- Months 9-12: Replace remaining devices and decommission old hardware
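Before committing to those windows, check that each phase's device count fits the time available. In this sketch, `devices_per_month` is a hypothetical figure for how many cutovers your team can complete in a month. Take it from your past deployments.

```python
import math

# Phase windows from the roadmap above: tier -> (first month, last month), inclusive.
PHASES = {"high": (1, 3), "medium": (4, 8), "low": (9, 12)}


def months_needed(device_count: int, devices_per_month: int) -> int:
    return math.ceil(device_count / devices_per_month)


def phase_fits(tier: str, device_count: int, devices_per_month: int) -> bool:
    """True if the tier's devices can be replaced inside its phase window."""
    first, last = PHASES[tier]
    return months_needed(device_count, devices_per_month) <= last - first + 1


# Hypothetical counts per tier, and a team that can cut over 4 devices a month.
counts = {"high": 14, "medium": 18, "low": 10}
for tier, n in counts.items():
    status = "fits" if phase_fits(tier, n, 4) else "over"
    print(tier, months_needed(n, 4), "months needed:", status)
```

In this example the high-risk phase overruns by a month. That is a signal to add staff or contract help, or to pull procurement forward, rather than let the riskiest devices slip.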

For each replacement, document:

- Current device specs and configuration

- Replacement device specs

- Migration plan (switch traffic, test, validate)

- Rollback plan (if something breaks)

- Success criteria (what "working" means)

Step 5: Procurement and Testing

Order replacement hardware with enough lead time (usually 2-3 months). Test it thoroughly:

- Performance benchmarks (latency, throughput)

- Feature parity (does it support everything the old device did?)

- Integration (does it work with your monitoring, logging, security tools?)

- Configuration migration (can you import the old config or do you rebuild?)
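The first two checks lend themselves to a simple acceptance gate. This sketch assumes you've listed the features the old device actually uses as plain strings and measured the replacement's throughput and latency in the lab. The thresholds and feature names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class BenchmarkResult:
    throughput_mbps: float
    latency_ms: float


def acceptance_failures(features_in_use: set[str], replacement_features: set[str],
                        result: BenchmarkResult, min_throughput_mbps: float,
                        max_latency_ms: float) -> list[str]:
    """Return reasons the replacement isn't ready; an empty list means it passes."""
    failures = [f"missing feature: {f}" for f in sorted(features_in_use - replacement_features)]
    if result.throughput_mbps < min_throughput_mbps:
        failures.append(f"throughput {result.throughput_mbps} Mbps < {min_throughput_mbps} Mbps")
    if result.latency_ms > max_latency_ms:
        failures.append(f"latency {result.latency_ms} ms > {max_latency_ms} ms")
    return failures


old = {"ipsec-vpn", "bgp", "snmpv2c"}
new = {"ipsec-vpn", "bgp", "snmpv3", "ztna"}
print(acceptance_failures(old, new, BenchmarkResult(950.0, 1.2), 900.0, 2.0))
# ['missing feature: snmpv2c']
```

A missing feature isn't automatically a blocker (SNMPv2c here is arguably something to retire). Each gap should still be a conscious decision, not a surprise during cutover.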

Step 6: Staged Deployment

Deploy in waves:

- Lab testing (isolated environment)

- Non-critical devices first (conference room APs before core firewalls)

- Monitoring and validation after each deployment

- Rollback procedures in place if needed

Step 7: Documentation and Knowledge Transfer

Document everything. Your team changes. People retire. New engineers arrive. Without documentation, you lose tribal knowledge.

Do this for each replaced device:

- Configuration details

- Administrative procedures

- Troubleshooting guides

- Contact information for support

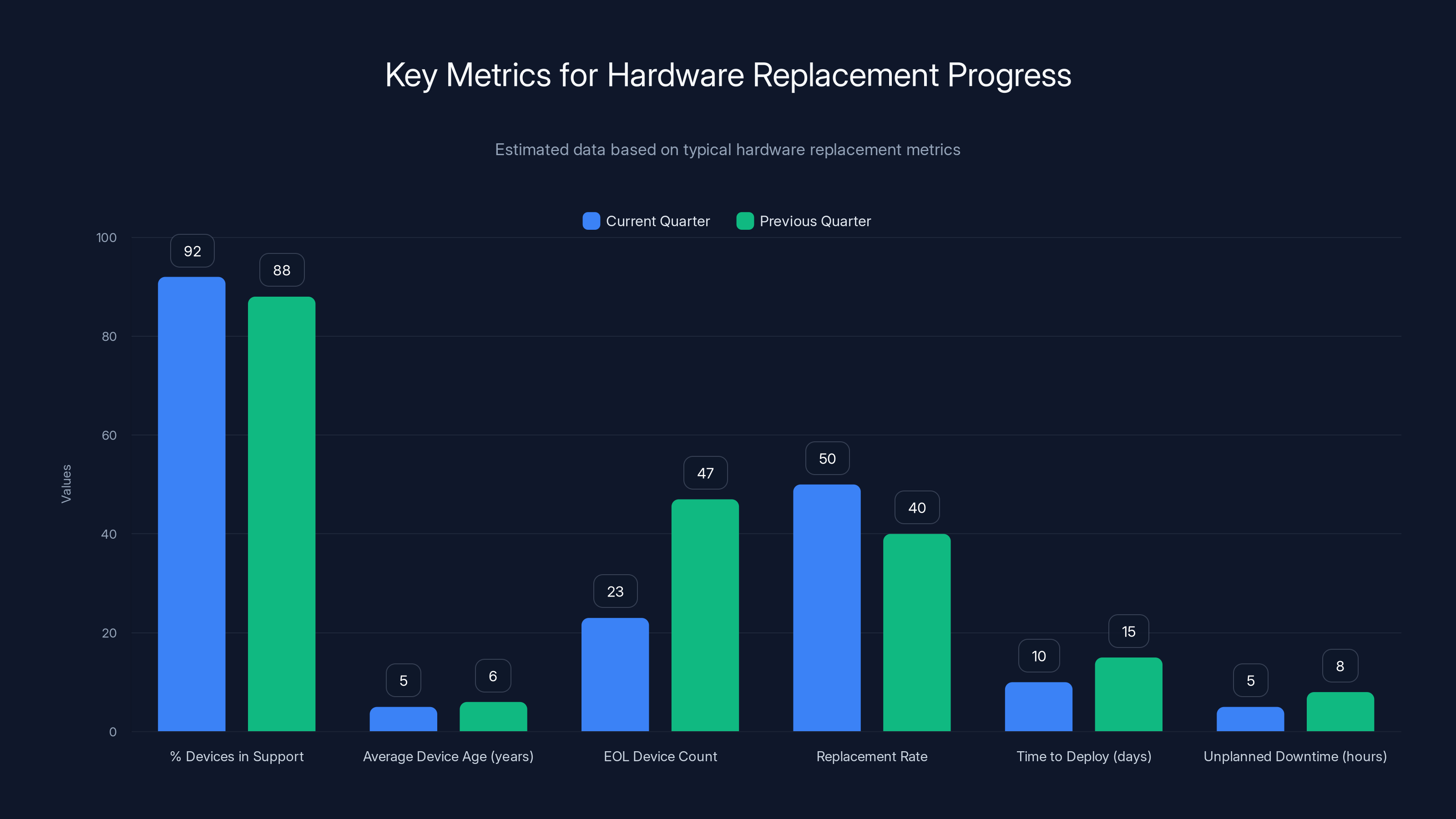

Estimated data shows improvement in key metrics such as EOL device count, which decreased from 47 to 23, and replacement rate, which increased from 40 to 50 devices per quarter.

Choosing Replacement Hardware (What You Actually Need)

Once you decide something needs replacing, what do you buy?

Here's the temptation: buy the cheapest thing that works. Don't. You'll regret it in five years when you're replacing it again.

Evaluate These Factors:

Support Lifecycle: How long will this device be supported? Check vendor support matrices. Look for hardware that has 7-10 year support windows. If a vendor is ending support in 2-3 years, it's not worth deploying.

Modern Security Features: If you're replacing an old firewall, get one with modern threat prevention: IPS/IDS, malware detection, ransomware protection, zero-trust network access. Don't replicate the old device's limitations.

Management and Visibility: Can you remotely manage it? Can you see what's happening? Modern devices should integrate with your SIEM, logging infrastructure, and monitoring tools. If you're manually checking logs, you've failed.

Performance Headroom: Size for growth. If you buy exactly what you need today, you'll upgrade again in 3 years. Size for 2-3 years of growth.
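Sizing for growth is compound arithmetic. This sketch uses a hypothetical 25% annual traffic growth rate. Use your own trend from monitoring data.

```python
def required_capacity(current_peak: float, annual_growth: float, years: int) -> float:
    """Peak load after `years` of compound growth (same units as current_peak)."""
    return current_peak * (1 + annual_growth) ** years


# Hypothetical: 800 Mbps peak today, 25% growth per year, sized for 3 years.
print(required_capacity(800, 0.25, 3))  # 1562.5
```

Compare the result against the vendor's throughput figure with the security features you'll actually enable. That figure is typically lower than the headline firewall throughput.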

Vendor Reputation: Some vendors actually support their hardware. Others sell it and disappear. Check online reviews, talk to peers. A cheap device from a vendor that goes bankrupt is worth nothing.

Compatibility: Will it work with your existing infrastructure? Can you import configurations? Does it support the protocols and standards you need?

Cost of Ownership: Hardware cost is only part of it. Consider:

- Licensing (some devices require annual licenses for security features)

- Support contracts

- Training for your staff

- Integration work

- Monitoring and management overhead

A
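These costs can be rolled into a simple multi-year total. This sketch separates one-time from recurring costs. Every figure is a placeholder, and the comparison shows how a device that is cheaper to buy can cost more over its life.

```python
def total_cost_of_ownership(hardware: float, training: float, integration: float,
                            annual_licensing: float, annual_support: float,
                            annual_management: float, years: int) -> float:
    """One-time costs plus recurring costs over the deployment's life."""
    one_time = hardware + training + integration
    recurring = (annual_licensing + annual_support + annual_management) * years
    return one_time + recurring


# Hypothetical options over a 7-year service life.
option_a = total_cost_of_ownership(20_000, 3_000, 2_000, 4_000, 2_500, 1_000, 7)
option_b = total_cost_of_ownership(12_000, 3_000, 4_000, 6_000, 3_000, 2_000, 7)
print(option_a, option_b)  # 77500 96000
```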

The Zero Trust Connection (Why This Matters More Than You Think)

CISA didn't mention this by accident. They referenced M-22-09: Moving the U.S. Government Toward Zero Trust Cybersecurity Principles, the Office of Management and Budget (OMB) memorandum that sets the federal zero trust strategy.

Here's why: end-of-life devices can't implement zero trust. Zero trust requires:

- Real-time threat detection: Modern devices need to analyze traffic patterns and behaviors, identify anomalies, and respond. Old hardware can't do this.

- User and device authentication: Zero trust means authenticating every user and device before allowing access. Old hardware predates these concepts.

- Encryption and segmentation: Modern devices support encrypted tunnels, micro-segmentation, and identity-based access. Old firewalls with port-and-protocol rules can't compete.

- Logging and analytics: Zero trust requires forensic evidence of everything. Old devices log basically nothing.

Essentially, unsupported edge devices lock you out of modern security architecture. You can't be zero trust if your perimeter devices are running 2015-era threat models.

This is why replacement isn't optional. It's architectural.

The organization completed a comprehensive network upgrade over 12 months, achieving a modernized infrastructure with zero end-of-life devices. Estimated data.

Overcoming Common Implementation Obstacles

Let's talk about what goes wrong. Because something always does.

"We don't have budget": See the cost equation section above. You do have budget. You're just calling it "breach response budget" instead of "replacement budget." Flip the terminology and suddenly there's money.

"We don't have time": You have a year. That's time. What you don't have is the discipline to plan instead of react. Start now.

"Our devices are mission-critical. We can't take them offline": You're going to replace them anyway eventually. Would you rather do it planned or at 3 AM when one fails? Plan a maintenance window. Your users will survive an hour of downtime far better than they'll survive a security breach.

"The replacement device isn't compatible with our setup": This is real sometimes. Some organizations run bizarre network topologies. Solution: test it first. Deploy in a lab or a non-critical segment. Find the compatibility issues while you can still fix them.

"Our team doesn't know how to configure the new device": Training exists. Vendor support exists. Use it. A week of disruption for training is better than years of running vulnerable hardware.

"Decommissioning is complicated": Fine. But keep the old device off the network. Literally unplug it. Decommission properly later. Just don't run it connected to anything important while you figure out the paperwork.

Vendor-Specific End-of-Life Status (What's Actually Unsupported)

Let's look at real hardware you might actually have:

Cisco Systems:

Cisco ASA 5500-X series (2012-2018): Support ended or ending 2024-2025. Migrate to a current Cisco Secure Firewall model (for example, the 1000, 3100, or 4100 series) sized to your throughput.

Cisco Catalyst 2960-X switches (2013-2015): Support ends 2024-2025. Upgrade to Catalyst 9000 series.

Cisco Aironet 1800s (2014-2015): Support ended. Migrate to Catalyst 9100AX or 9117AX access points.

Palo Alto Networks:

PA-2000 series (2011-2013): End of support 2024. Migrate to a current model of similar size (for example, the PA-400 or PA-1400 series) or to a cloud-based firewall.

PA-3000 series (2012-2014): Support ending 2025. Plan migration.

Fortinet:

FortiGate 100D, 200D, 300C (2011-2013): End of support 2024. Migrate to a current model of comparable size (for example, the FortiGate 100F or 200F) or equivalent.

Juniper:

SRX100 (2012-2013): End of support 2024. Migrate to an SRX300 series branch firewall or vSRX.

Important note: These are estimates. Check the vendor's official support lifecycle matrix for exact dates. Support status changes quarterly as vendors update their timelines.

CISA found that 43% of recent cyber intrusions involved exploited unpatched vulnerabilities, highlighting the risks of unsupported devices.

Metrics and Monitoring (How to Know You're Making Progress)

Once you start replacing hardware, you need to track progress. Not for compliance theater. For actual management.

Key Metrics:

- % of devices still in support: Target 95%+ at all times. If you drop below 90%, you're behind.

- Average device age: Track average years since deployment. For edge devices, aim for 4-5 years average. Anything older than 7 years is old.

- EOL device count: Document how many devices are currently past support. Report monthly. Watch this number go down.

- Replacement rate: How many devices replaced per quarter? Calculate what you need to hit your deadline.

- Time to deploy: Track how long it takes from approval to production for each replacement. Improve the process.

- Unplanned downtime: Did replacement hardware fail? Did the migration break something? Track this. It tells you if you're choosing the wrong vendors or rushing deployment.

Don't just track these for compliance. Present them to leadership quarterly. "We've reduced our EOL device count from 47 to 23 this quarter" is a data point that justifies budget.
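The first few metrics fall straight out of the inventory. This sketch assumes each device is a dict with hypothetical `deployed` and `end_of_support` date keys. The 90% threshold is the one from the list above.

```python
import math
from datetime import date


def lifecycle_metrics(devices: list[dict], today: date) -> dict:
    """Percent in support, EOL count, average age in years, and a behind-target flag."""
    if not devices:
        raise ValueError("inventory is empty")
    eol_count = sum(1 for d in devices if d["end_of_support"] <= today)
    ages = [(today - d["deployed"]).days / 365.25 for d in devices]
    pct_in_support = 100 * (len(devices) - eol_count) / len(devices)
    return {
        "pct_in_support": pct_in_support,
        "eol_count": eol_count,
        "avg_age_years": sum(ages) / len(ages),
        "behind": pct_in_support < 90,
    }


def required_per_quarter(eol_count: int, quarters_left: int) -> int:
    """Replacements per quarter needed to clear the current EOL backlog by the deadline."""
    return math.ceil(eol_count / quarters_left)


inventory = [
    {"deployed": date(2020, 1, 1), "end_of_support": date(2030, 1, 1)},
    {"deployed": date(2015, 1, 1), "end_of_support": date(2024, 1, 1)},
]
print(lifecycle_metrics(inventory, date(2025, 1, 1)))
print(required_per_quarter(23, 4))  # 6
```

`required_per_quarter` only covers today's backlog. Devices that reach EOS before the deadline add to it, so recompute it each quarter.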

Security Considerations During the Transition

Here's the risk nobody talks about: the moment between removing old hardware and deploying new hardware is vulnerable. You have a window where your defense is weakened.

Manage the transition:

- Never remove old hardware until the replacement is tested and ready: Deploy new device in parallel first. Test it. Validate it. Then migrate.

- Implement compensating controls: If you're temporarily running without a firewall, at least block unnecessary ports at the router level. Reduce risk somehow.

- Monitor more aggressively during transitions: You're vulnerable. Watch for exploitation attempts. Have your SOC ready.

- Schedule transitions during low-traffic periods: Sunday 2 AM is better than Tuesday 2 PM. Less traffic means less risk if something breaks.

- Have rollback ready: Can you switch back to the old device if the new one fails? Have that procedurally defined.

Government Compliance and Beyond (Who Else Cares About This)

CISA's directive applies directly to federal agencies. But compliance requirements exist elsewhere too.

Federal agencies: BOD 26-02 is binding. Not optional. Enforce it internally or prepare for audit findings.

Government contractors: If you contract with federal agencies, they're likely imposing this requirement on you. Check your contracts. If it's not explicit, ask. Your customer probably cares.

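Financial services: PCI DSS requires timely security patching. Version 4.0 also requires reviewing the hardware and software technologies in use at least once a year, including a plan to remediate those the vendor has announced as end of life (Requirement 12.3.4). Unsupported devices in your cardholder data environment will get flagged in your PCI assessment.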

Healthcare: The HIPAA Security Rule requires a risk analysis and reasonable safeguards against anticipated threats. Hardware that can no longer be patched is hard to justify in that analysis. Your risk assessment and business associate agreements might get audited.

Critical infrastructure: If you operate electrical, water, transportation, or communications infrastructure, sector requirements apply, such as NERC CIP for the bulk electric system or AWWA guidance for water utilities. Support status matters.

Everyone else: There's no regulatory requirement. But there's a business requirement. Unsupported hardware increases breach risk. Insurance companies are starting to care. Some already exclude coverage for known unpatched vulnerabilities.

The Broader Ecosystem: Vendor Responsibility and Market Pressure

Here's something worth noting: hardware vendors design products with specific support windows in mind. A 7-year support window means they expect you to replace it every 7 years (roughly). That's intentional.

The problem: many organizations run hardware for 10-12+ years. That pushes devices into unsupported territory not because vendors abandoned them, but because customers delayed replacement.

Where's the vendor responsibility? Some thoughts:

Better support windows: Vendors could offer longer support. Some do (10-15 years), but it costs more. Longer support means longer revenue from support contracts and maintenance.

Extended security patches: Even for EOS hardware, vendors could release critical security patches for vulnerability classes that affect their installed base. Some do, most don't.

Easier migration: Vendors could make it easier to migrate from old hardware to new. Configuration import, feature parity, training. Some are good at this, others treat it as a pain point.

Transparent lifecycle calendars: Not all vendors publish clear support calendars. Some hide it on obscure pages. Transparency would help organizations plan better.

But ultimately: this is a business. Vendors have financial incentives to have you replace hardware. Organizations have financial incentives to keep hardware as long as possible. This tension is normal. Your job is to find the balance that manages risk without bankrupting your capital budget.

Building Your Organizational Response

CISA's directive was issued as a Binding Operational Directive, which means compliance is mandatory for federal agencies. But implementation responsibility falls on individual agencies.

Here's how to structure your response:

Executive Sponsorship: Get IT leadership and business leadership aligned. This requires budget and priority. Without executive sponsor support, it won't happen.

Program management: Assign someone to own this. Not part-time. Full-time program manager who can coordinate hardware audit, procurement, deployment, and tracking.

Cross-functional involvement: You need network engineers (to validate replacement specs), security (to evaluate risk), finance (to manage budget), procurement (to handle vendor relationships), and operations (to manage deployment).

Communication plan: Keep stakeholders informed. Vendors, customers, board members, regulators (if applicable) should understand why this is happening and what the timeline is.

Resource allocation: This isn't free. Budget for:

- Hardware purchases

- Consulting (if you need help)

- Testing and validation

- Training for your staff

- Downtime (migration takes time)

- Contingency (things break, equipment gets damaged)

Success metrics: Define what "done" looks like. Zero end-of-life devices in production? Zero devices with more than X years on support? Some threshold of compliance?

Automation and Documentation (Making It Repeatable)

Once you've gone through this once, don't do it again manually next time.

Implement tooling and automation:

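Asset inventory automation: Use network discovery tools to find devices and their versions automatically. Use Nmap or a commercial scanner for internal discovery. Use Shodan or a commercial attack-surface service to see which of your devices are visible from the public internet. Integrate the results with your asset management system.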
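Nmap can write its results as XML (`-oX`), which is easy to feed into an inventory. Here is a minimal sketch using only the standard library. The sample XML is trimmed to the elements used. The OS match comes from Nmap's OS detection (`-O`), which is a best guess rather than an exact model or firmware version. Treat it as a starting point for the Step 1 fields, not a substitute for them.

```python
import xml.etree.ElementTree as ET

SAMPLE = """<nmaprun>
  <host>
    <address addr="192.0.2.10" addrtype="ipv4"/>
    <address addr="00:00:5E:00:53:01" addrtype="mac" vendor="ExampleVendor"/>
    <os><osmatch name="Example firewall OS 9.x" accuracy="96"/></os>
  </host>
  <host>
    <address addr="192.0.2.20" addrtype="ipv4"/>
  </host>
</nmaprun>"""


def parse_nmap_hosts(xml_text: str) -> list[dict]:
    """Extract IPv4 address, MAC vendor, and first listed OS match per scanned host."""
    hosts = []
    for host in ET.fromstring(xml_text).findall("host"):
        record = {"ipv4": None, "mac_vendor": None, "os_guess": None}
        for addr in host.findall("address"):
            if addr.get("addrtype") == "ipv4":
                record["ipv4"] = addr.get("addr")
            elif addr.get("addrtype") == "mac":
                record["mac_vendor"] = addr.get("vendor")
        osmatch = host.find("os/osmatch")  # only present when OS detection ran
        if osmatch is not None:
            record["os_guess"] = osmatch.get("name")
        hosts.append(record)
    return hosts


for h in parse_nmap_hosts(SAMPLE):
    print(h)
```

In practice, you'd pass in the contents of a real scan file, such as one produced by `nmap -O -oX scan.xml 192.0.2.0/24`. OS detection needs root privileges.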

Support lifecycle tracking: Integrate vendor support data. Some vendors provide APIs. Use them. Automatically flag devices approaching EOS.
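Vendor lifecycle data arrives in different shapes. Cisco, for example, exposes end-of-life data through its EoX API, while other vendors publish web pages or spreadsheets. This sketch assumes you've already normalized that data into a CSV with hypothetical `vendor`, `model`, and `end_of_support` columns. It flags inventory items within a configurable window of EOS and lists any devices whose model has no lifecycle data.

```python
import csv
import io
from datetime import date

LIFECYCLE_CSV = """vendor,model,end_of_support
ExampleVendor,FW-100,2025-06-30
ExampleVendor,SW-48,2029-12-31
"""


def load_lifecycle(csv_text: str) -> dict:
    """Map (vendor, model), lowercased, to its end-of-support date."""
    rows = csv.DictReader(io.StringIO(csv_text))
    return {(r["vendor"].lower(), r["model"].lower()): date.fromisoformat(r["end_of_support"])
            for r in rows}


def flag_devices(inventory: list[dict], lifecycle: dict, today: date, window_days: int = 365):
    """Return (devices past or within window_days of EOS, serials with unknown lifecycle)."""
    flagged, unknown = [], []
    for item in inventory:
        eos = lifecycle.get((item["vendor"].lower(), item["model"].lower()))
        if eos is None:
            unknown.append(item["serial"])
        elif (eos - today).days <= window_days:
            flagged.append((item["serial"], eos))
    return flagged, unknown


inventory = [
    {"vendor": "ExampleVendor", "model": "FW-100", "serial": "SN0001"},
    {"vendor": "ExampleVendor", "model": "SW-48", "serial": "SN0002"},
    {"vendor": "OtherVendor", "model": "AP-9", "serial": "SN0003"},
]
print(flag_devices(inventory, load_lifecycle(LIFECYCLE_CSV), date(2025, 1, 15)))
```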

Configuration backup and import: Don't manually rebuild configurations. Use vendor tools to back up and import. Practice this before you need it.
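Pulling the running config off a device is vendor-specific (CLI, API, or the vendor's management platform), so this sketch leaves it out. What can be sketched generically is the storage side: versioned copies per device, plus a check for whether the config changed since the last backup. The directory layout and file naming are assumptions.

```python
import tempfile
from datetime import datetime, timezone
from pathlib import Path


def save_config_backup(device: str, config_text: str, root: Path) -> tuple[Path, bool]:
    """Store config_text as the next version for `device`.

    Returns (path_written, changed_since_previous_backup).
    """
    device_dir = root / device
    device_dir.mkdir(parents=True, exist_ok=True)
    data = config_text.encode("utf-8")
    previous = sorted(device_dir.glob("*.cfg"))
    changed = not previous or previous[-1].read_bytes() != data
    stamp = datetime.now(timezone.utc).strftime("%Y%m%dT%H%M%SZ")
    # A zero-padded sequence number keeps versions ordered and unique.
    path = device_dir / f"{len(previous):06d}_{stamp}.cfg"
    path.write_bytes(data)  # bytes, so line endings are stored exactly as given
    return path, changed


with tempfile.TemporaryDirectory() as tmp:
    first = save_config_backup("edge-fw-01", "hostname edge-fw-01\n", Path(tmp))
    second = save_config_backup("edge-fw-01", "hostname edge-fw-01\n", Path(tmp))
    third = save_config_backup("edge-fw-01", "hostname edge-fw-02\n", Path(tmp))
    print([changed for _, changed in (first, second, third)])  # [True, False, True]
```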

Deployment automation: Where possible, use infrastructure-as-code or vendor SDKs to configure replacement hardware. Reduces manual errors.

Documentation generation: Use tools to automatically generate documentation from configurations. Don't write runbooks manually if you can extract information programmatically.
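Here is a sketch of extracting documentation from configuration. The function reads an IOS-style configuration, where sub-commands are indented under `interface` lines, and emits a short interface summary for a runbook. It handles only `hostname`, `description`, and `ip address` lines. Other platforms' syntax would need their own parser.

```python
def summarize_config(config_text: str) -> str:
    """Build a plain-text runbook summary from an IOS-style configuration."""
    hostname = "unknown"
    interfaces = {}
    current = None
    for line in config_text.splitlines():
        if line.startswith("hostname "):
            hostname = line.split(maxsplit=1)[1]
            current = None
        elif line.startswith("interface "):
            current = line.split(maxsplit=1)[1]
            interfaces[current] = {"description": "-", "ip": "-"}
        elif current and line.startswith(" "):
            words = line.split()
            if not words:
                continue  # whitespace-only line inside a block
            if words[0] == "description":
                interfaces[current]["description"] = " ".join(words[1:])
            elif words[:2] == ["ip", "address"] and len(words) >= 4:
                interfaces[current]["ip"] = f"{words[2]} {words[3]}"
        else:
            current = None  # "!" or any other top-level line ends the interface block
    lines = [f"Device: {hostname}"]
    for name, info in interfaces.items():
        lines.append(f"  {name}: {info['description']} ({info['ip']})")
    return "\n".join(lines)


CONFIG = """hostname edge-fw-01
!
interface GigabitEthernet0/0
 description Uplink to ISP
 ip address 198.51.100.2 255.255.255.252
!
interface GigabitEthernet0/1
 description Inside LAN
 ip address 10.0.0.1 255.255.255.0
!
"""
print(summarize_config(CONFIG))
```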

The goal: when the next round of replacements comes (and it will), you have repeatable processes that work faster and with fewer errors.

Forward Planning: Making This Sustainable

Once you've replaced the critical stuff, don't fall into the trap of ignoring hardware again.

Establish ongoing practices:

- Annual hardware audit: Every year, scan your network and update your inventory. Find devices approaching EOS.

- Quarterly support status review: Check vendor support matrices. Recalculate which devices are at risk.

- Rolling replacement program: Instead of "big bang" replacements every 5 years, replace 15-20% of your hardware every year. Spreads cost, spreads risk, keeps systems current.

- Documented lifecycle management: Write down your process. Make it repeatable. Every engineer should know the process.

- Training for new engineers: When you hire, teach them your lifecycle management process. It's part of infrastructure operations.

- Budget forecasting: Work with finance to forecast hardware replacement needs 3-5 years out. Request budget annually. Don't scramble for emergency replacement later.
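Here is a sketch of the forecasting step. It counts how many devices reach end of support in each of the next few years, with anything already past EOS landing in the current year. Given procurement lead times, you'd typically request budget the year before each count. The dates are placeholders. The 15-20% rolling target from the list above is included for comparison.

```python
import math
from collections import Counter
from datetime import date


def eos_forecast(eos_dates: list[date], start_year: int, horizon_years: int = 5) -> dict[int, int]:
    """Devices reaching end of support per year; already-EOS devices count in start_year."""
    counts = Counter(max(d.year, start_year) for d in eos_dates)
    return {y: counts[y] for y in range(start_year, start_year + horizon_years)}


def rolling_target(fleet_size: int, fraction: float) -> int:
    """Devices to replace per year under a rolling program (e.g. fraction 0.15 to 0.20)."""
    return math.ceil(fleet_size * fraction)


dates = [date(2023, 5, 1), date(2025, 3, 1), date(2027, 1, 1), date(2031, 1, 1)]
print(eos_forecast(dates, 2025))  # {2025: 2, 2026: 0, 2027: 1, 2028: 0, 2029: 0}
print(rolling_target(120, 0.15), rolling_target(120, 0.20))  # 18 24
```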

Real-World Example: A Medium-Sized Organization's Response

Let's walk through how a real organization (not federal, not massive, but representative) might handle this:

Company: 250 employees, 15 branch offices, mixed on-prem and cloud infrastructure, moderately regulated (not HIPAA or PCI, but financial services adjacent).

Starting state:

- Network audit revealed 34 devices in production that are past or approaching end-of-life

- A Cisco ASA 5500 handling all north-south traffic (critical)

- Several old Meraki MX series gateways at branches

- Aging Cisco Catalyst switches at headquarters

- Mix of older Arista access points

Response:

- Month 1: Audit complete, prioritization done, budget request submitted ($500K for replacement hardware and professional services)

- Month 2: Budget approved. RFP issued for replacement firewall (new ASA or Palo Alto), branch gateways, and access points.

- Month 3: Hardware ordered. Training scheduled for engineers.

- Month 4: Lab testing begins. New firewall tested in parallel with production ASA.

- Month 5: Branch gateways begin shipping. Non-critical branches get replaced first.

- Month 6: Main firewall replacement scheduled. Maintenance window announced. New firewall fully tested and ready for cutover.

- Month 7: Main firewall cutover. Successful migration. Monitoring shows no issues.

- Month 8-11: Remaining devices replaced. Access points upgraded. Monitoring and support validated.

- Month 12: Final audit. Confirm all EOS devices removed from production. Documentation updated.

Results:

- Zero EOL devices in production

- Modernized network architecture supporting zero-trust principles

- Improvement in threat detection (new devices have better visibility)

- Reduction in support tickets (new equipment is more reliable)

- Better documentation and runbooks

- Engineers trained on modern platforms

Cost breakdown:

ROI: Prevented at least one likely breach (risk-adjusted value: $2-5M), improved operational efficiency by 15%, extended support runway to 7+ years.

FAQ

What exactly is an "end-of-life" edge device?

An end-of-life (EOL) device is hardware that has reached the end of vendor support. This means the manufacturer no longer releases security patches, bug fixes, or firmware updates. For edge devices specifically, CISA defines these as network equipment accessible via the public internet: firewalls, routers, switches, wireless access points, and network security appliances. When these devices reach EOL, they become vulnerability magnets because exploitable flaws never get patched.

When does CISA's directive actually take effect?

Binding Operational Directive 26-02 was issued in January 2025 and became immediately effective. Federal agencies are required to remove all end-of-life edge devices from production within 12 months (by January 2026). However, CISA explicitly stated that this guidance applies to all organizations, not just federal agencies. Private sector organizations should treat this as urgent guidance and begin their replacement programs immediately.

How do I find out if my network devices are end-of-life?

Check your vendor's official support lifecycle pages or contact your vendor support team with your hardware models and serial numbers. Major vendors publish support matrices: Cisco (End of Life/End of Support lookup), Palo Alto Networks (Product lifecycle calendar), Fortinet (Product lifecycle status), and Juniper (Product lifecycle matrix). Document the exact support end date for each device, then prioritize replacements based on which devices reach EOS first.

Can I keep running an end-of-life device if I isolate it from the internet?

Not really, especially not if it's supposed to be a security device. Edge devices are the perimeter. If you isolate it, you're removing security. If you don't isolate it, you're exposing yourself. The only correct answer is replacement. If you absolutely must delay, run the device in read-only mode if possible, monitor it constantly, and treat it as a temporary measure while you source a replacement.

What's the difference between "end of support" and "end of life"?

The terms are sometimes used interchangeably, but technically: "End of Life" usually refers to when a product is no longer manufactured or sold. "End of Support" refers to when the vendor stops releasing updates and patches. The two dates often differ by years, so a device can be end-of-life (no longer sold) while still receiving security patches. For security purposes, the date that matters is end of support, because that's when patches stop. Always check the official support end date, not just the manufacturing end date.

How much does it cost to replace edge devices across my organization?

This varies enormously based on your network size and device complexity. A small organization might spend

Will my insurance cover a breach caused by running end-of-life hardware?

Maybe not. Some cyber insurance policies now include exclusions for known unpatched vulnerabilities or unsupported hardware. Even if they do provide coverage, deductibles might be higher. More importantly, you'll have a hard time defending yourself in court or before regulators if you were knowingly running unsupported hardware. Your due diligence obligation is clear after CISA issued this directive.

Can I replace some devices but keep others running on EOL hardware?

Yes, but be strategic. Replace the highest-risk devices first: anything directly exposed to the internet, anything handling critical functions, anything with recent known vulnerabilities. You might not be able to replace everything in 12 months (emergency procurement exceptions exist), but you must replace the most dangerous ones. Document your risk-based approach and show your reasoning to auditors.
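One way to make that risk-based approach easy to document is to score each device on the three factors above and sort by the score. The weights, field names, and `risk_score` function here are assumptions for illustration; tune them to your own risk model and record the rationale for auditors.

```python
from dataclasses import dataclass


@dataclass
class DeviceRisk:
    hostname: str
    internet_exposed: bool   # reachable from the public internet
    critical_function: bool  # e.g., perimeter firewall or VPN concentrator
    known_vulns: int         # open vulnerabilities with no vendor fix, from your own tracking


# Hypothetical weights: exposure dominates, then criticality, then a capped vuln count.
WEIGHTS = {"exposed": 50, "critical": 30, "per_vuln": 5, "vuln_cap": 20}


def risk_score(device: DeviceRisk) -> int:
    score = 0
    if device.internet_exposed:
        score += WEIGHTS["exposed"]
    if device.critical_function:
        score += WEIGHTS["critical"]
    score += min(device.known_vulns * WEIGHTS["per_vuln"], WEIGHTS["vuln_cap"])
    return score


def prioritize(devices: list[DeviceRisk]) -> list[tuple[str, int]]:
    """Highest score first; ties broken by hostname so the order is reproducible."""
    scored = [(d.hostname, risk_score(d)) for d in devices]
    return sorted(scored, key=lambda item: (-item[1], item[0]))


if __name__ == "__main__":
    # Placeholder devices for illustration only.
    fleet = [
        DeviceRisk("edge-vpn-01", True, True, 3),
        DeviceRisk("lab-switch-02", False, False, 0),
        DeviceRisk("dmz-fw-01", True, True, 6),
        DeviceRisk("guest-ap-03", True, False, 1),
    ]
    for host, score in prioritize(fleet):
        print(f"{score:>3}  {host}")
```

A simple additive score is deliberately crude, but it is easy to explain, and a deterministic tie-break means the same inventory always produces the same queue, which is what an auditor will want to reproduce.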

What should I do if my vendor doesn't have a replacement product in my price range?

This is a real problem. Some vendors have pricing gaps between product tiers. Your options: 1) Switch vendors (a common solution), 2) Spread your replacement over time in smaller budget chunks, 3) Negotiate volume pricing with the vendor (they often have flexibility), or 4) Look at used/refurbished equipment from reputable resellers as a temporary bridge (not a permanent solution). But don't use price as an excuse to keep running vulnerable hardware.

How do I handle compliance requirements if I can't replace everything by the deadline?

Document your effort. Show the audit trail of your replacement program: budget requests, procurement timelines, completed replacements, and your plan for finishing. Auditors understand that large-scale replacement takes time. What they don't accept is inaction. Demonstrate that you're actively managing the risk with compensating controls (enhanced monitoring, network segmentation, access restrictions) while you work toward full compliance.

Should I replace devices gradually or all at once?

Gradually, almost always. Replacing all at once risks:

- Supply chain delays (you can't get everything at once)

- Operational disruption (many simultaneous maintenance windows are hard to coordinate)

- Staff overload (your team can't handle massive simultaneous deployments)

- Cost concentration (your budget might not absorb one large capital outlay)

- Learning curve (staff need time to learn new platforms)

A phased approach over 6-12 months is more realistic and safer. Start with highest-risk devices, move to medium-risk, finish with low-risk infrastructure.
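The phased plan can be sketched as a simple scheduler that fills monthly waves from a risk-ordered list, capped by how many devices your team can realistically cut over per month. The `schedule_waves` function and the per-month capacity are hypothetical; the check at the top flags a plan that cannot finish inside the 12-month window.

```python
def schedule_waves(ranked_hosts: list[str], per_month: int,
                   months: int = 12) -> dict[int, list[str]]:
    """Assign devices (already sorted highest-risk first) to monthly waves.

    Raises ValueError if the plan cannot finish within `months` at this capacity.
    """
    if per_month <= 0:
        raise ValueError("per_month must be positive")
    needed = -(-len(ranked_hosts) // per_month)  # ceiling division
    if needed > months:
        raise ValueError(f"need {needed} months at {per_month}/month; window is {months}")
    # Month 1 gets the first (highest-risk) slice, month 2 the next, and so on.
    return {
        month + 1: ranked_hosts[month * per_month:(month + 1) * per_month]
        for month in range(needed)
    }


if __name__ == "__main__":
    # Placeholder hostnames, already in risk order.
    queue = ["dmz-fw-01", "edge-vpn-01", "guest-ap-03", "branch-rtr-02", "lab-switch-02"]
    for month, wave in schedule_waves(queue, per_month=2).items():
        print(f"Month {month}: {', '.join(wave)}")
```

If the capacity check fails, that is the signal to add staff, bring in a partner, or document a risk acceptance for the lowest-tier devices rather than quietly letting the schedule slip.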

Conclusion

CISA's Binding Operational Directive 26-02 isn't the first directive CISA has issued, and it probably won't be the last. But it reflects a real, measurable threat that doesn't distinguish between federal agencies and private organizations.

Unsupported edge devices are a liability you're actively choosing to carry. They're not a "we'll get to it someday" problem. They're a "this will probably be exploited and we have no mitigation" problem.

The good news: you have a year. You have time to plan, budget, procure, test, and deploy. You have time to do this right instead of panicking.

Start now. Don't wait.

Here's the practical next step: this week, schedule time with your network team to start an inventory audit. Document every edge device, check its support status, and calculate how many are at risk. You don't need a perfect program to start. You need awareness.

Once you know what you have and what's at risk, the path forward becomes clear. Build the program. Get budget. Execute the replacements. Monitor progress. Celebrate when the last EOL device comes offline.

That's it. It's not complicated. It's just mandatory. Act like it.

Key Takeaways

- CISA BOD 26-02 mandates removal of all end-of-life edge devices from federal networks by January 2026

- Unsupported hardware receives zero security patches, making it a prime exploitation target for sophisticated threat actors

- Organizations with mature lifecycle management programs reduce unplanned failures by 70% and cut infrastructure costs by 20-30%

- Planned hardware replacement (2-5M)

- Modern replacement devices support zero-trust security architectures that are difficult or impossible to implement on legacy, unsupported hardware

