Android AI Photo Editor Data Breach: What Went Wrong and What You Need to Know

You downloaded what seemed like a fun app to add AI effects to your photos. It had good ratings. The features looked cool. You uploaded a few personal images. Nothing seemed wrong. Then, security researchers discovered something terrifying: your photos weren't just stored on your phone—they were sitting in an unsecured online database accessible to literally anyone.

This isn't hypothetical. It happened to nearly two million users of a popular Android app. And it's becoming a depressingly common pattern in the app ecosystem.

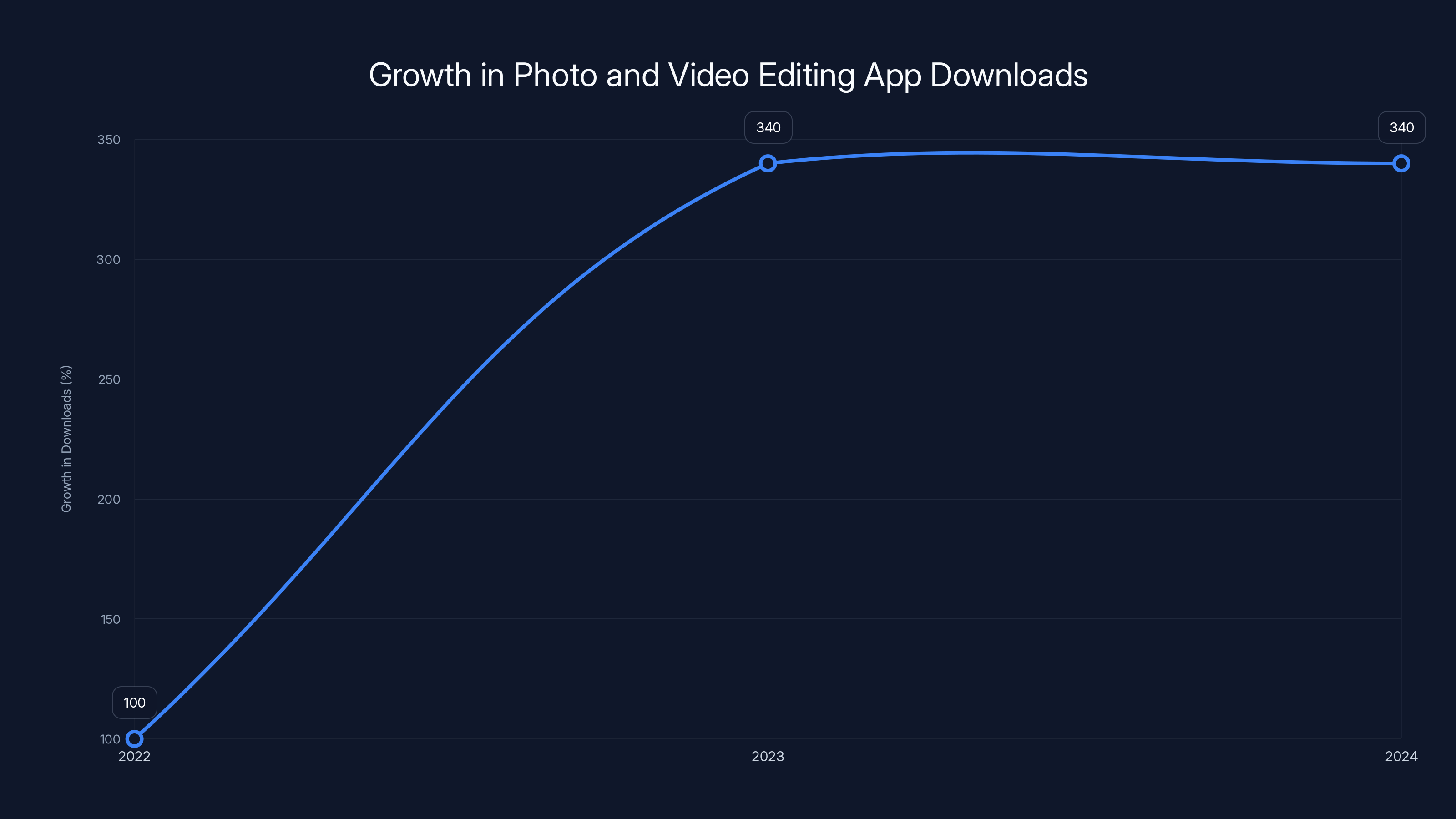

Last month, cybersecurity researchers uncovered a massive data breach affecting an Android application called "Video AI Art Generator & Maker." The app promised AI-powered photo and video enhancement, a feature that has exploded in popularity over the past two years. But behind the scenes, the developers had made a catastrophic security decision: they stored millions of user photos, videos, and AI-generated content in a Google Cloud storage bucket with no authentication at all.

This wasn't a sophisticated hack. There was no zero-day exploit. No clever social engineering. Just a misconfigured storage bucket, the digital equivalent of leaving your front door open and assuming nobody would notice.

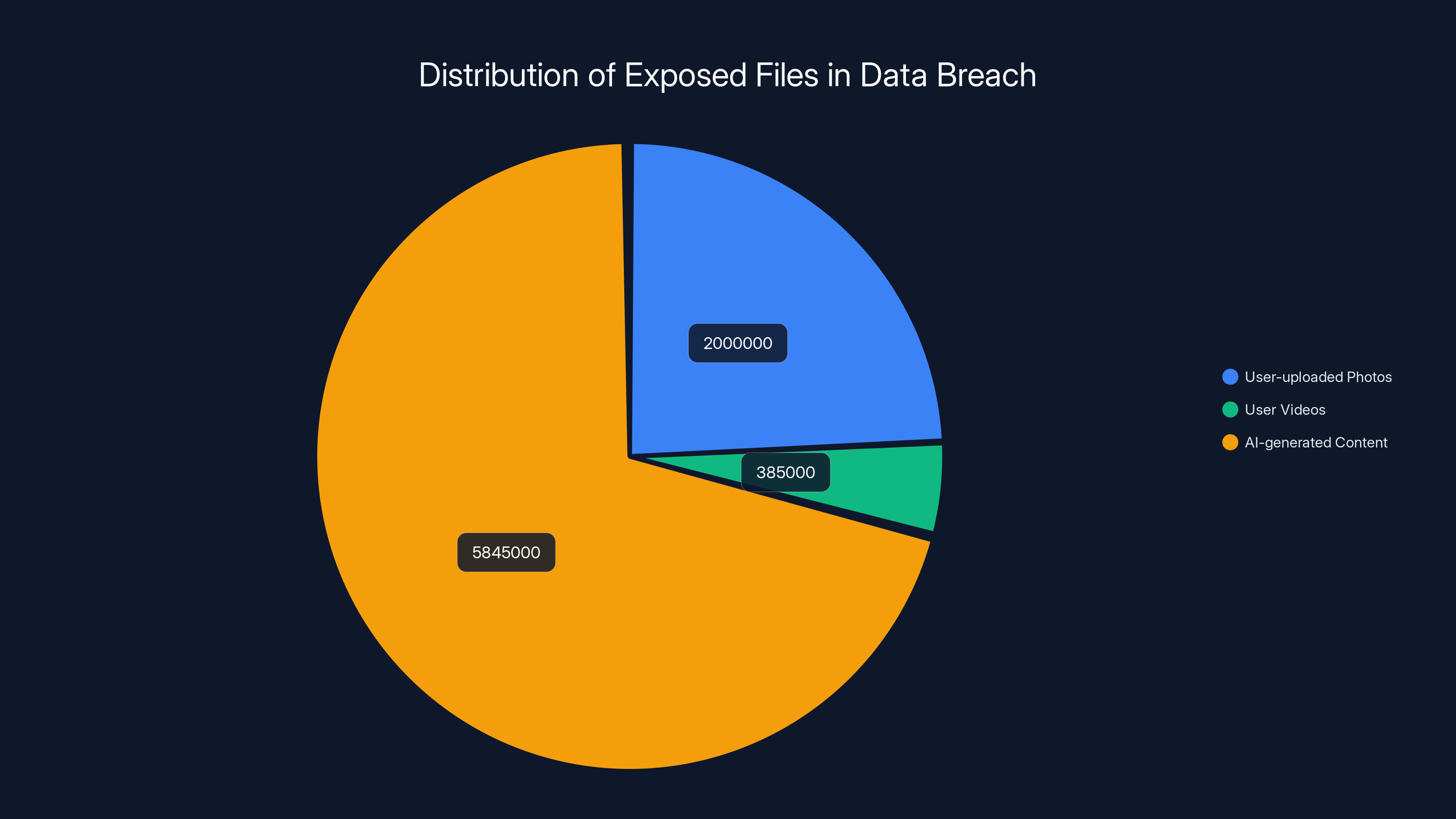

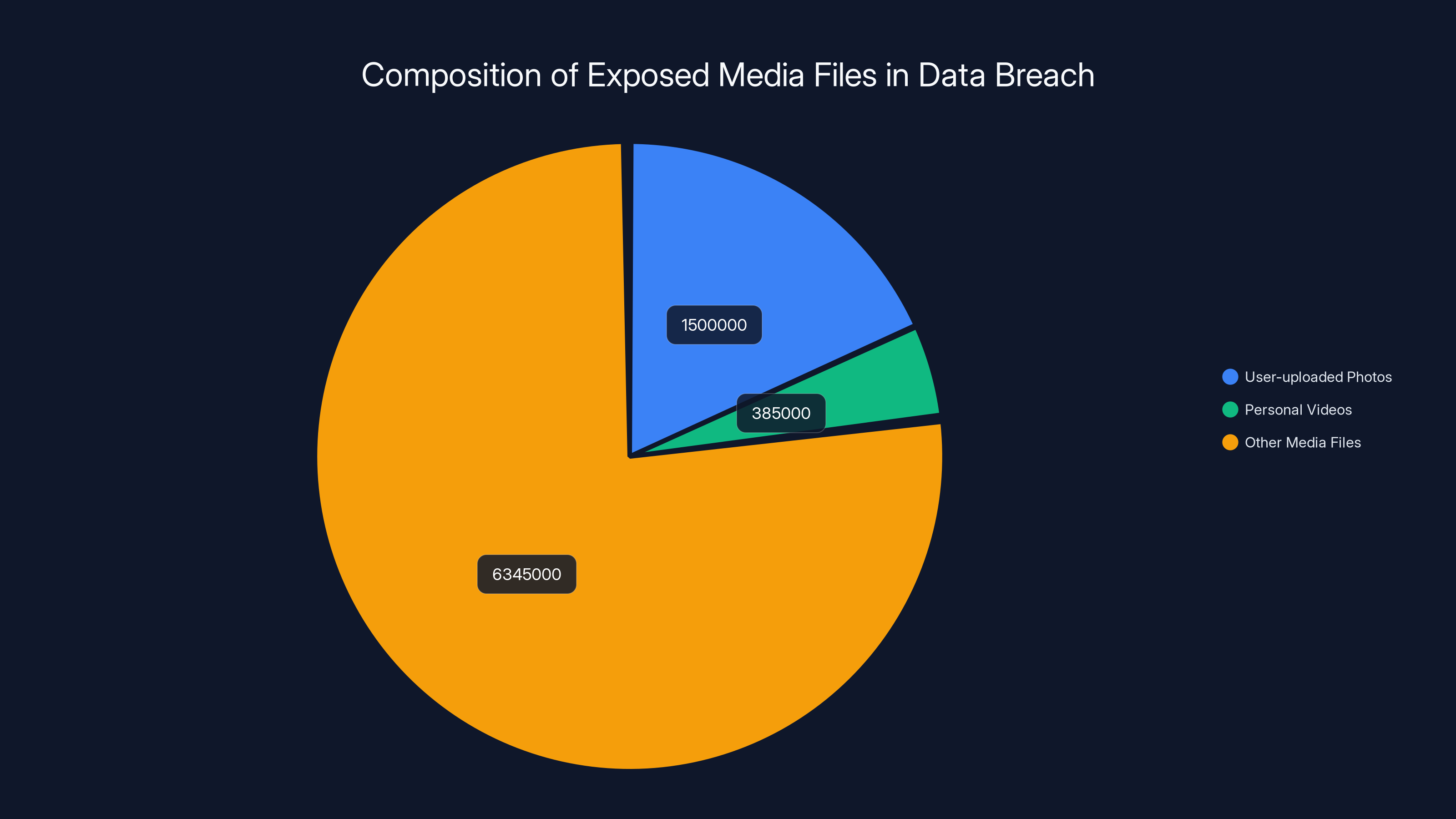

The numbers tell the story. The exposed bucket contained 8.27 million media files. Of those, more than 1.5 million were user-uploaded photos, and over 385,000 were personal videos. Add in AI-generated content, and you're looking at a collection of sensitive multimedia data that could compromise millions of people's privacy, security, and peace of mind.

But here's what makes this case particularly important: it's not an isolated incident. Similar vulnerabilities have been found in other apps from the same developer. This reveals a systemic problem in how some mobile app companies prioritize speed and features over security. And if you're using Android apps today, you need to understand what this means for your personal data.

Let's break down exactly what happened, why it happened, and most importantly, what you can do to protect yourself.

TL;DR

- The Breach: Popular Android app "Video AI Art Generator & Maker" left an unsecured storage bucket containing more than 1.5 million user photos and 385,000 user videos accessible to the public

- The Scale: Total leaked files reached 8.27 million, including AI-generated content and audio files

- The Cause: Misconfigured Google Cloud storage bucket with no authentication—developers prioritized speed over security

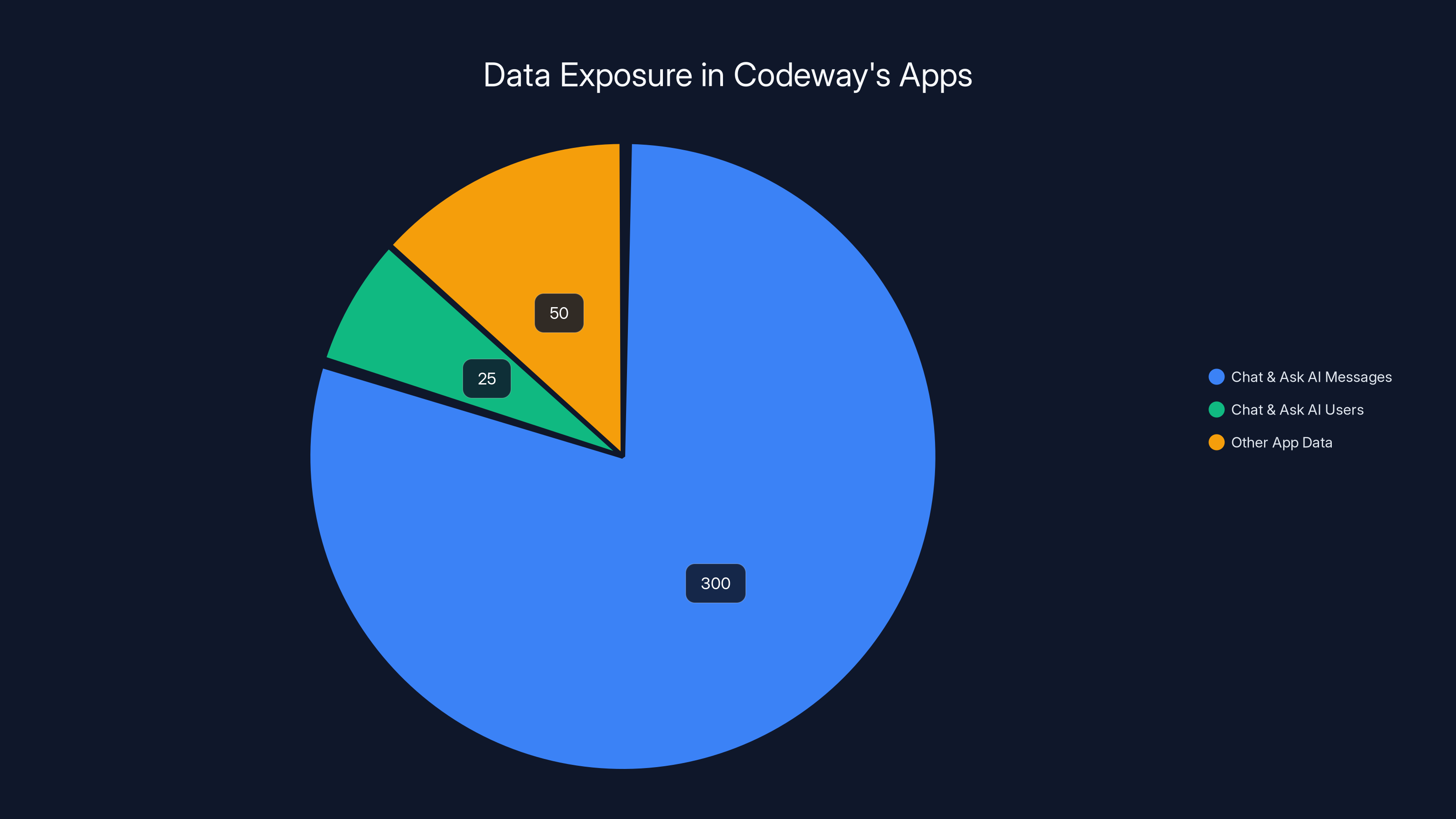

- The Pattern: Same developer's other app (Chat & Ask AI) had exposed 300 million messages from 25 million users with similar vulnerabilities

- Your Risk: If you used photo/video AI editing apps, your personal images could be in similar unsecured databases right now

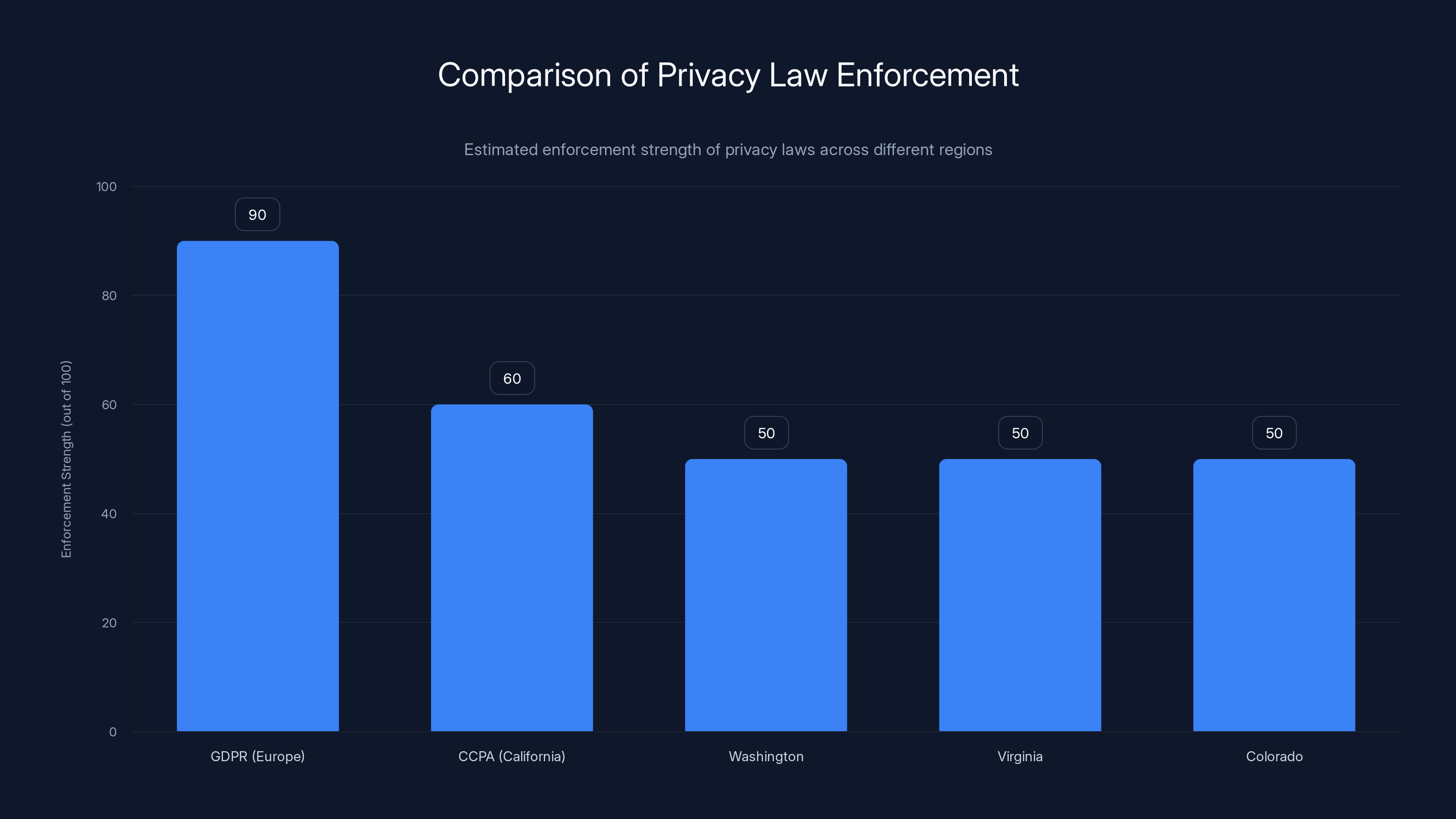

GDPR in Europe is estimated to have the strongest enforcement among privacy laws, while U.S. state laws like CCPA are catching up but still lag behind. Estimated data.

The App That Nobody (Officially) Knew About

Here's the first red flag that most users missed: the "Video AI Art Generator & Maker" app wasn't easy to find on the Google Play Store. Cybernews researchers traced it to a Turkish company called Codeway Dijital Hizmetler Anonim Sirketi. But when they checked the Play Store, the app wasn't publicly listed on the developer's official page.

This is significant. When an app doesn't appear on its developer's public store page, it often means it's new, niche, or deliberately kept under the radar. In this case, the app launched in mid-June 2023, which means it had been collecting and storing user media for months before the security vulnerability was discovered.

The app's core functionality was straightforward and genuinely appealing to users. It offered AI-powered makeovers for photos and videos—the kind of feature that went viral on social media in 2023 and 2024. People wanted to see what they'd look like with different styles, ages, or artistic filters. The convenience was irresistible.

But convenience came at a cost. Every photo you uploaded was sent to Codeway's servers. Every video you processed got stored in their database. Every AI-generated result they created for you was kept on their infrastructure. This is standard practice for many apps—they need to store media to provide the service. The problem was how they stored it.

Codeway's official website mentioned three apps on the Play Store, but only showed details for one. However, the company's online presence also advertised a different app called "Chat & Ask AI," which would later become relevant to this story. At the time, users had no way of knowing that their media was stored in the digital equivalent of a house with no locks.

How a Misconfigured Database Exposed Millions

Understanding the technical failure here helps explain why this keeps happening. It's not complicated, and that's exactly the problem.

Google Cloud Storage buckets are cloud storage containers that developers use to store files: photos, videos, documents, anything. They're incredibly useful for app backends. New buckets are private by default, and they support a range of security settings. You can require authentication. You can set permissions so only your app can access the data. You can encrypt everything. You can enable logging to track who accesses what.

Codeway did none of these things.

Instead, they configured their Google Cloud Storage bucket with public access enabled. No authentication required. No passwords. No API keys. No access controls. If you knew the bucket's URL, you could browse through millions of files as easily as scrolling through a public website.

This is catastrophically bad. It's like building a secure front door, then leaving the back door wide open with a neon sign pointing to it.
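
To make "no authentication required" concrete, here is a minimal sketch of how a developer might test whether one of their own buckets answers an anonymous listing request. It assumes the object-listing endpoint of the Cloud Storage JSON API; the function names are made up for illustration, and nothing here is taken from the researchers' actual tooling.

```python
import urllib.error
import urllib.parse
import urllib.request


def listing_url(bucket: str) -> str:
    """Build the JSON API URL that lists objects in a bucket."""
    return ("https://storage.googleapis.com/storage/v1/b/"
            + urllib.parse.quote(bucket, safe="") + "/o?maxResults=1")


def fetch_status(url: str) -> int:
    """Send an unauthenticated GET and return the HTTP status code."""
    try:
        with urllib.request.urlopen(url, timeout=10) as resp:
            return resp.status
    except urllib.error.HTTPError as err:
        # 401/403/404 arrive as exceptions; the code is what we want.
        return err.code


def is_publicly_listable(bucket: str, fetch=fetch_status) -> bool:
    """True if a request with no credentials can list the bucket."""
    # 200 means the listing was served to an anonymous caller;
    # 401 or 403 means the bucket demanded authentication.
    return fetch(listing_url(bucket)) == 200
```

Calling `is_publicly_listable("my-app-uploads")` (a hypothetical bucket name) against a correctly locked-down bucket should return `False`. The `fetch` parameter exists only so the check can be exercised without touching the network.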

The researchers who discovered this weren't using advanced hacking techniques. They found the misconfigured bucket through a combination of digital reconnaissance and public data. Google Cloud Storage buckets, when misconfigured, sometimes leak information through search indexes, domain configuration records, or accidental exposure in app code. Once researchers identified the bucket existed, accessing it required almost no technical skill.

They discovered:

- 1.5+ million user-uploaded photos capturing personal moments, family pictures, travel photos, and intimate content

- 385,000+ user-uploaded videos with similar sensitivity

- 2.87 million AI-generated images created by the app's processing

- 2.87 million AI-generated videos the app had processed

- 386,000+ AI-generated audio files associated with the processing

Each file sat there, waiting for anyone curious enough to look.

The exposure timeline is particularly troubling. The app launched in mid-June 2023. By the time researchers discovered the misconfigured bucket, it had been leaking data for months. During that period, the app was actively collecting new user uploads, all of which went directly into the unsecured database.

The breach exposed a total of 8.27 million files, with AI-generated content comprising the largest share. Estimated data.

The Pattern: This Isn't the First App from This Developer

If Codeway's security practices seem reckless, the follow-up discovery made it clear this wasn't a one-off mistake. It was systemic.

Researchers found that the same company had developed another popular app called "Chat & Ask AI." This app did what its name suggests—it was an AI chatbot interface where users could have conversations with artificial intelligence. Except it had its own massive data exposure problem.

In early February 2025, an independent researcher discovered that Chat & Ask AI's backend infrastructure was misconfigured in almost identical ways. The result: 300 million messages from 25 million users were exposed. These weren't just random messages. They were conversations where people asked the AI for advice, shared personal details, explored sensitive topics, and had intimate discussions.

When you put these two breaches together, a clear pattern emerges. Codeway Dijital Hizmetler wasn't making isolated mistakes. They had a corporate culture that prioritized shipping new features and acquiring users over implementing basic security measures. They likely didn't have dedicated security engineers. They probably didn't do security audits. They certainly didn't have processes to verify cloud infrastructure configuration.

This is devastatingly common in the mobile app space. A startup or small company builds an app, gets users, makes money or attracts investors. Security becomes an afterthought. "We'll secure it later," they tell themselves. By the time later arrives, millions of users have already uploaded personal data.

What Was Actually Exposed: The Privacy Implications

It's easy to see "nearly 2 million photos and videos exposed" and treat it as an abstract number. But each of those files represents something real. A person. A moment. Privacy.

Consider what people typically store in photo editing apps. Family photos. Travel pictures. Selfies at various angles. Videos of kids. Personal moments. Some users probably uploaded photos they wouldn't want shared with employers, family members, or the general public.

In the hands of malicious actors, this data has value. It can be used for identity theft. For blackmail. For creating deepfakes. For social engineering. For building databases of faces for surveillance. For targeting advertisements to specific individuals.

But beyond the dramatic risks, there's the everyday privacy violation. Someone, somewhere, was able to browse through millions of people's photos. They could see who went where, who was spending time with whom, what people looked like, how they dressed, what they valued enough to photograph.

The AI-generated content adds another layer of risk. If you used the app to generate stylized versions of your face or body, those AI outputs were also exposed. They could be used to create fake videos or images of you doing things you never did. As deepfake technology improves, this becomes an increasingly serious concern.

The 386,000+ audio files are particularly troubling. What were these? Voice recordings? Text converted to speech? Whatever they were, they represented another stream of personal data that nobody expected to be public.

The Technical Breakdown: How These Breaches Happen

Misconfigured cloud storage isn't a new problem. Security researchers have been sounding alarms about it for years. Yet it keeps happening. Understanding why helps explain why this is such a persistent issue.

When developers build a mobile app, they need backend infrastructure. Cloud providers like Google, Amazon, and Microsoft make this incredibly easy. You can spin up a storage bucket in minutes. You can integrate it into your app code with just a few lines. You can start uploading files immediately.

But here's where it goes wrong: all of this can happen without any involvement from a security team. A single engineer can set up the entire backend. They might not even know what security options are available. They might configure it with public access because that's the simplest way to get it working quickly. They might plan to "secure it later" once the MVP (minimum viable product) launches.

Later never comes. The app gets users. The developer gets distracted by feature requests and bugs. Security remains an afterthought until disaster strikes.

Google Cloud Storage has good security defaults available. But it also allows public access if you explicitly configure it that way. Same with Amazon S3 buckets. Same with most cloud storage services. The tools for security exist. The responsibility falls on developers to use them.
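
As a rough illustration of what "explicitly configure it that way" looks like, the sketch below scans a bucket's IAM policy for bindings that grant access to everyone. The policy shape (a `bindings` list of `role` and `members`) and the special members `allUsers` and `allAuthenticatedUsers` follow Google Cloud's IAM conventions; the example policy and service account name are invented.

```python
# Special IAM members that mean "anyone on the internet" or
# "anyone signed in to any Google account".
PUBLIC_MEMBERS = {"allUsers", "allAuthenticatedUsers"}


def public_bindings(policy: dict) -> list[tuple[str, str]]:
    """Return (role, member) pairs that expose the bucket publicly."""
    findings = []
    for binding in policy.get("bindings", []):
        for member in binding.get("members", []):
            if member in PUBLIC_MEMBERS:
                findings.append((binding.get("role", ""), member))
    return findings


# Hypothetical policy, as it might look when exported to JSON.
example_policy = {
    "bindings": [
        {"role": "roles/storage.objectViewer", "members": ["allUsers"]},
        {"role": "roles/storage.objectAdmin",
         "members": ["serviceAccount:app-backend@example-project.iam.gserviceaccount.com"]},
    ]
}

for role, member in public_bindings(example_policy):
    print(f"PUBLIC: {member} has {role}")
# prints: PUBLIC: allUsers has roles/storage.objectViewer
```

A check like this could run in a deployment pipeline so that a public binding fails the build instead of reaching production.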

This represents a fundamental mismatch in the app development ecosystem. Security requires upfront planning and ongoing maintenance. The app economy rewards speed and viral growth. Companies that ship features fast attract users and investment. Companies that take time to build security often lose to competitors who move faster.

Until recently, there were no real consequences for developers who got this wrong. A data breach might make the news, but the company often survives. Users delete the app but rarely pursue legal action. Regulators move slowly. By the time anything happens, the developer has moved on to another project.

This is slowly changing. Data privacy regulations like GDPR in Europe and CCPA in California have started imposing fines. Customers are becoming more privacy-conscious. But the incentives still favor speed over security in most cases.

The surge in downloads for photo and video editing apps, driven by AI features, reached 340% growth between 2023 and 2024. Estimated data based on industry trends.

The Disclosure and Response: How the Leak Was Handled

Once Cybernews researchers discovered the misconfigured bucket, they did what ethical security researchers should do: they reported the vulnerability to the developer. This is called responsible disclosure. Instead of publishing the discovery immediately, researchers give the company time to fix the problem before public disclosure.

Codeway responded relatively quickly. They secured the database shortly after being contacted by researchers. The bucket was reconfigured with proper authentication and access controls. The exposed files were presumably still accessible for a brief window, but the vulnerability was closed before widespread public knowledge.

This is both good and bad. It's good that the company responded to the disclosure and fixed the problem. It's bad that it took a breach for them to implement basic security measures. It's also bad that millions of people will never know their data was exposed. They'll continue using the app (or similar apps) with no awareness of what happened.

The fact that Cybernews was able to establish contact with Codeway and get them to respond is itself notable. Not all companies respond to vulnerability disclosures. Some ignore researchers entirely. Some threaten legal action. Codeway at least acknowledged the problem and acted.

But there are questions that remain unanswered. How much data was downloaded during the exposure window? Who accessed the bucket? Was any data misused? How long was the bucket actually public? Companies rarely provide transparency on these details, leaving users in the dark about the true scope of the breach.

Similar Vulnerabilities and the Broader Pattern

The Codeway breaches aren't aberrations. They're part of a larger pattern in the mobile app space where security lags dangerously behind feature development.

In 2024 and early 2025, security researchers documented dozens of similar cases. Photo sharing apps with exposed user galleries. Video editing tools with public video libraries. Fitness apps with exposed location histories. Dating apps with exposed profile information. The common thread: misconfigured cloud storage.

One analysis found that across all cloud storage breaches in the past two years, over 85% were caused by misconfiguration rather than sophisticated attacks. This means most of these breaches could have been prevented with basic security practices that cost nothing and take minutes to implement.

The problem isn't the cloud providers. Google, Amazon, and Microsoft all have excellent security documentation. They provide tutorials. They highlight best practices. They even have automated tools to detect and alert on misconfigurations. The problem is cultural and organizational. Companies don't prioritize security in their development process.

This creates perverse incentives. A company that spends time and money on security can't compete feature-for-feature with a company that skips security. Users can't easily tell which apps are secure. App stores don't prominently display security audits or certifications. So bad actors aren't punished until something goes catastrophically wrong.

The Data: What 8.27 Million Files Represents

To understand the scope of this breach, let's think about what 8.27 million files actually means.

If every file averaged 5 megabytes (roughly the size of a high-quality photo), the total dataset would be around 41 terabytes. Videos are larger, though: at 20 megabytes each, the roughly 3.27 million videos (user uploads plus AI-generated content) would come to about 65 terabytes on their own. Under those assumptions, the complete exposed dataset plausibly approached 100 terabytes of personal media.

For context, a home internet connection that downloads 1 terabyte in about 40 hours would need 4,000 hours, or roughly 167 days of non-stop downloading, to pull 100 terabytes. That's an enormous amount of personal data sitting in public.
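
The back-of-envelope figures above can be reproduced in a few lines. This is only a sketch: the average file sizes and the download rate are the article's assumptions, not measurements, and the last estimate is an extra one that counts each video once instead of sizing it both as a generic file and as a video.

```python
MB_PER_TB = 1_000_000          # decimal units: 1 TB = 1,000,000 MB

total_files = 8_270_000        # all exposed files
video_files = 3_270_000        # user-uploaded plus AI-generated videos
avg_photo_mb = 5               # assumed average size of a non-video file
avg_video_mb = 20              # assumed average size of a video
hours_per_tb = 40              # assumed home-connection download rate


def terabytes(n_files: int, avg_mb: float) -> float:
    """Total size in TB for n_files of avg_mb megabytes each."""
    return n_files * avg_mb / MB_PER_TB


all_at_photo_size = terabytes(total_files, avg_photo_mb)
videos_only = terabytes(video_files, avg_video_mb)
# Count each video once: non-video files at 5 MB, videos at 20 MB.
combined = terabytes(total_files - video_files, avg_photo_mb) + videos_only

hours_for_100_tb = 100 * hours_per_tb
days_for_100_tb = hours_for_100_tb / 24

print(f"every file at 5 MB:  {all_at_photo_size:.2f} TB")
print(f"videos at 20 MB:     {videos_only:.2f} TB")
print(f"combined estimate:   {combined:.2f} TB")
print(f"100 TB download:     {hours_for_100_tb} hours (~{days_for_100_tb:.0f} days)")
```

Changing any of the assumed constants shows how sensitive the total is to average file size, which is why the result is best read as an order of magnitude.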

But here's what matters more than the raw numbers: the humans behind them. With nearly 2 million user-uploaded photos and videos in the bucket, the number of people affected is hard to pin down from file counts alone, but even if many users uploaded several files each, a very large number of individuals had their media exposed.

Many of those individuals had no idea. They used the app, uploaded a photo, got their AI enhancement, and moved on. They assumed their data was secure. They didn't read the privacy policy (who does?). They didn't check where their files were being stored. They trusted a company they'd never heard of because the app worked well and had good reviews.

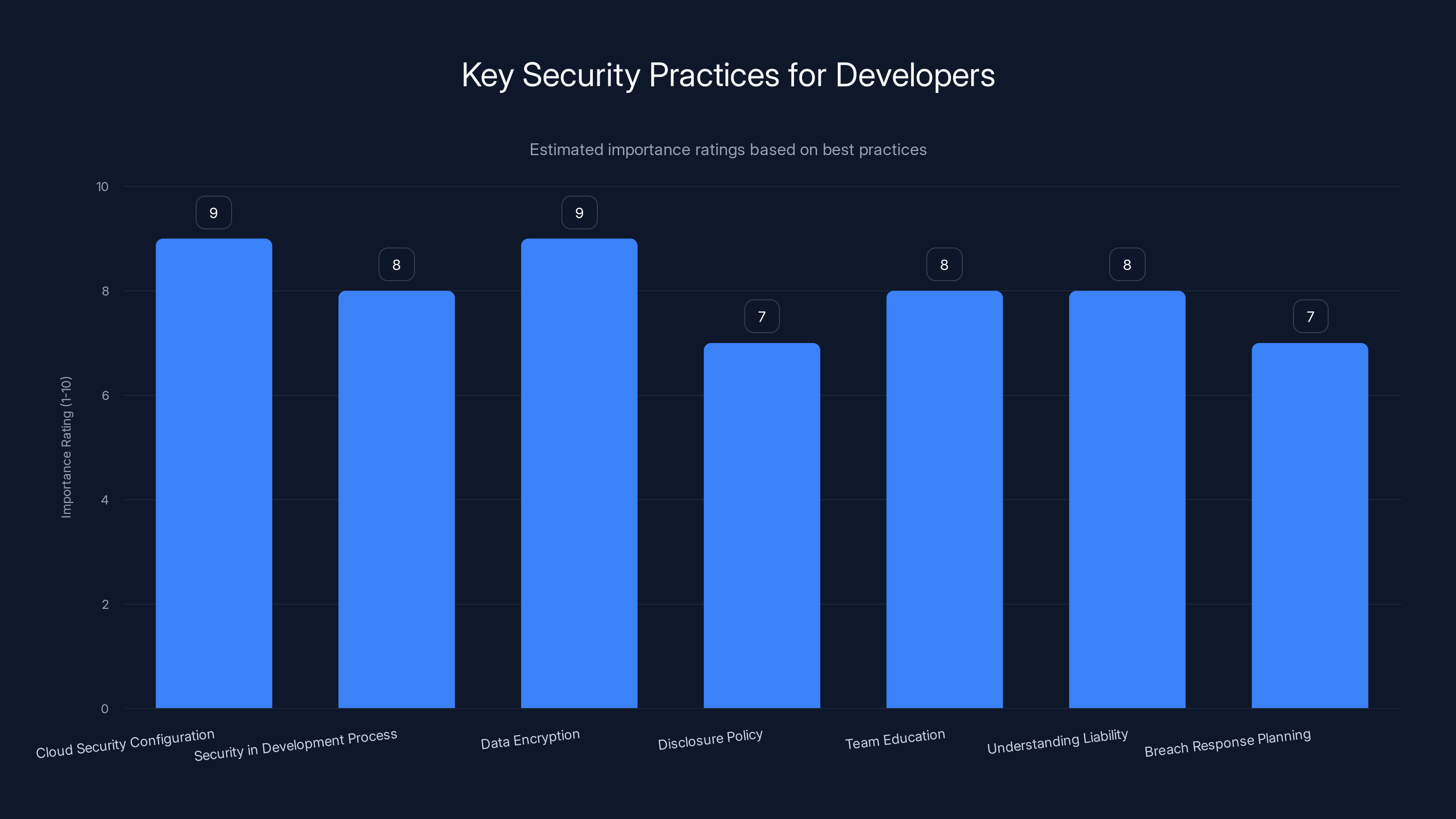

Cloud security configuration and data encryption are rated highest in importance for building secure apps. Estimated data based on industry best practices.

Security Red Flags: How to Spot Risky Apps

Not every app that stores your photos and videos is going to have a massive data breach. But knowing what to watch for can help you avoid the ones most likely to.

Vague privacy policies. If an app's privacy policy doesn't clearly explain where your photos are stored, who can access them, and how long they're kept, assume the worst. Transparent companies are usually more careful about security.

Unknown developers. Established companies have reputations to protect. Startups and solo developers might not. Check who made the app. Have they made other apps? Are they actively maintaining them? Do they have a company website with contact information?

No regular updates. Apps that don't receive regular updates are likely abandoned or neglected. Security vulnerabilities accumulate over time. If an app hasn't been updated in 6+ months, it's probably not receiving active security monitoring.

Excessive permissions. Does a photo editing app really need access to your contacts? Your location? Your call logs? Apps that request more permissions than they need are often poorly designed or worse.

Unclear business model. If it's not clear how a free app makes money, the answer might be "by selling your data." Legitimate companies are transparent about monetization.

Poor support and communication. Companies that don't respond to support requests or user feedback are unlikely to respond to security issues either.

Duplicate functionality. If you can get the same feature from Google Photos, Adobe Lightroom, or another major app, why use a smaller app? The big players have resources for security. Smaller apps often don't.

None of these are absolute disqualifiers. A small startup can build secure software. But taken together, they suggest risk.
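
The "excessive permissions" check in the list above lends itself to a quick script. The sketch below compares the permissions an app requests against what a photo editor plausibly needs. The permission strings are standard Android identifiers, but the allowlist itself is an assumption made for illustration, not an official baseline.

```python
# Assumed baseline for a photo/video editor; adjust to taste.
EXPECTED_FOR_PHOTO_EDITOR = {
    "android.permission.INTERNET",
    "android.permission.CAMERA",
    "android.permission.READ_MEDIA_IMAGES",
    "android.permission.READ_MEDIA_VIDEO",
}


def unexpected_permissions(requested: list[str]) -> list[str]:
    """Return requested permissions outside the expected baseline."""
    return sorted(set(requested) - EXPECTED_FOR_PHOTO_EDITOR)


# Hypothetical permission list, e.g. copied from an app's store listing.
requested = [
    "android.permission.INTERNET",
    "android.permission.READ_MEDIA_IMAGES",
    "android.permission.READ_CONTACTS",
    "android.permission.ACCESS_FINE_LOCATION",
    "android.permission.READ_CALL_LOG",
]

for permission in unexpected_permissions(requested):
    print("Worth questioning:", permission)
```

A flagged permission is a prompt to ask why the app wants it, not proof of wrongdoing; some editors have legitimate reasons for location access, such as geotagging.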

What Users Should Do If They Uploaded Content

If you used the "Video AI Art Generator & Maker" app or similar applications, here are concrete steps to take.

First, understand your exposure. Think about what you uploaded. Personal photos? Family pictures? Travel content? Selfies? Each represents different risk levels. Personal identifying information in photos is most dangerous.

Delete the app. Whether or not your specific data was accessed, continuing to use an app with known security vulnerabilities is unwise. Uninstall it and switch to alternatives.

Change passwords. If you used the same password anywhere else, change it immediately. Many breaches chain together—data from one breach gets used in attacks on other services.

Monitor financial accounts. Identity theft can follow photo breaches because images of faces and documents can be used to forge IDs or defeat photo-based identity checks. Watch your bank and credit accounts for suspicious activity. Consider a credit freeze if you're concerned.

Watch for phishing. Attackers often combine leaked personal data with phishing emails. If you receive suspicious messages claiming to be from the app or related companies, don't click links or download attachments.

Request deletion. Even though the database was supposedly secured, request that Codeway delete your specific files. You might not get compliance, but creating a paper trail helps.

Report to authorities. In jurisdictions with data protection laws (EU, California, etc.), you can report data breaches to regulatory bodies. These complaints create accountability and sometimes trigger investigations.

Consider reputation monitoring. Services like Google Alerts can notify you if your personal information appears online. It's not perfect, but it catches some cases where your data gets shared.

None of these steps are guaranteed to protect you retroactively, but they limit ongoing damage.

The Broader Context: Why Photo Apps Are Particularly Risky

Photo and video editing apps are particularly vulnerable vectors for data breaches for several reasons.

They handle sensitive media. Photos and videos reveal far more about people than text. They show faces, locations, relationships, physical appearance, and intimate moments. Governments, criminals, and surveillance companies all find this data valuable.

They require server storage. Unlike some apps that work entirely locally, photo editors usually need backend infrastructure to store user media. This creates infrastructure that needs security.

They're convenience-first. Users care about the feature (making their photos look cool) far more than about security. This gives developers permission to skip security to ship faster.

They attract funding and growth pressure. The photo editing space is competitive. Investors pour money into startups promising AI-powered photo tools. That money comes with pressure to scale fast, grow user numbers, and ship features. Security slows all of that down.

The business model often includes data monetization. Some photo apps make money by analyzing the photos you upload to sell demographic data to advertisers. This incentivizes collecting and storing user media rather than deleting it.

They're typically built by young teams. Many photo editing startups are founded by people in their 20s or early 30s with strong engineering skills but limited security expertise. They ship products that work but aren't secure.

None of this means you shouldn't use photo editing apps. But it should inform your choices. Prefer apps from established companies with security track records. Use open-source alternatives where available. Keep sensitive photos off the cloud when possible.

User-uploaded photos and personal videos made up a significant portion of the 8.27 million exposed media files, highlighting the risk to user privacy.

Lessons for Developers: Building Secure Apps

If you're a developer reading this, the lessons from the Codeway breaches are clear.

Security isn't a feature, it's a prerequisite. You wouldn't ship an app with bugs in the core functionality. Don't ship it with security issues either.

Cloud security requires explicit configuration. Default isn't always secure. Learn your cloud provider's security model. Enable authentication. Restrict access. Test your configuration. Assume defaults are insecure until proven otherwise.

Include security in your development process. Allocate time for security reviews. Use automated tools to scan for common vulnerabilities. Have someone dedicated to security, even if it's part-time.

Encrypt sensitive data. User photos and videos should be encrypted at rest (on storage) and in transit (while uploading). Encryption doesn't prevent breaches, but it reduces damage when they happen.

Have a disclosure policy. Tell users and security researchers how to report vulnerabilities. Respond to reports. Fix problems quickly. This is called "responsible disclosure" and it's the standard in the industry.

Educate your team. Most security vulnerabilities come from knowledge gaps, not malicious intent. Run security training. Share best practices. Build a culture where security is everyone's responsibility.

Understand your liability. Many jurisdictions now have data protection laws with real penalties. GDPR fines can reach 4% of annual global revenue. CCPA civil penalties can reach $7,500 per intentional violation. Security isn't just right; it's required.

Plan for breach response. You hope it never happens, but assume it might. Have a plan for how to respond, who to notify, what to communicate. Having a plan means you'll respond better when it matters.
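
One way to act on "enable authentication, restrict access" from the list above is to hand out short-lived, signed download links instead of making files public. Cloud providers offer signed URLs for this purpose; the sketch below is not that API but a standard-library illustration of the same idea using HMAC. The key, object paths, and function names are all hypothetical.

```python
import hashlib
import hmac
import time
from typing import Optional

# Hypothetical key; a real service would load this from a secret store.
SECRET_KEY = b"replace-with-a-real-secret"


def sign(object_path: str, expires_at: int, key: bytes = SECRET_KEY) -> str:
    """HMAC-SHA256 over the object path and its expiry timestamp."""
    message = f"{object_path}\n{expires_at}".encode()
    return hmac.new(key, message, hashlib.sha256).hexdigest()


def make_token(object_path: str, ttl_seconds: int,
               now: Optional[int] = None) -> tuple[int, str]:
    """Issue (expires_at, signature) granting access for ttl_seconds."""
    now = int(time.time()) if now is None else now
    expires_at = now + ttl_seconds
    return expires_at, sign(object_path, expires_at)


def verify(object_path: str, expires_at: int, signature: str,
           now: Optional[int] = None) -> bool:
    """Accept only unexpired tokens whose signature matches the path."""
    now = int(time.time()) if now is None else now
    if now > expires_at:
        return False
    expected = sign(object_path, expires_at)
    # Constant-time comparison avoids leaking how many characters matched.
    return hmac.compare_digest(expected, signature)
```

With this pattern, a link for `users/42/photo.jpg` stops working after its expiry and cannot be reused for another user's file, because the path is part of the signed message.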

The Regulatory Response: Are Laws Catching Up?

One of the most frustrating aspects of data breaches is that they keep happening even though we know how to prevent them. Part of the reason is lack of enforcement.

Traditionally, the U.S. had a patchwork of state-level privacy laws with limited enforcement. Companies could store data insecurely and face minimal consequences. The privacy landscape is slowly changing.

Europe's General Data Protection Regulation (GDPR) has been the strictest. Companies that violate GDPR can face fines up to 4% of annual global revenue. That's billions of dollars for large companies. But most app developers operate in the grey area where enforcement is unclear.
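
To put the 4% figure in perspective, here is a small worked calculation. It assumes the upper-tier cap in GDPR Article 83(5), which is the higher of EUR 20 million or 4% of worldwide annual turnover; the turnover values are invented, and real fines depend on many factors and are usually far below the cap.

```python
def gdpr_max_fine_eur(annual_global_turnover_eur: float) -> float:
    """Upper-tier GDPR cap: the higher of EUR 20M or 4% of turnover."""
    four_percent = annual_global_turnover_eur * 4 / 100
    return max(20_000_000, four_percent)


# Hypothetical companies of very different sizes.
for name, turnover in [("small app studio", 5_000_000),
                       ("mid-size publisher", 800_000_000),
                       ("tech giant", 100_000_000_000)]:
    cap = gdpr_max_fine_eur(turnover)
    print(f"{name}: turnover EUR {turnover:,} -> cap EUR {cap:,.0f}")
```

For a small developer the fixed EUR 20 million ceiling dominates, so the theoretical exposure can exceed annual revenue several times over.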

The California Consumer Privacy Act (CCPA) and similar state laws in Washington, Virginia, and Colorado are creating U.S.-based privacy rules. But enforcement is still weak compared to Europe.

The challenge is that data breaches are still treated as a cost of doing business by some companies. The fine for a breach might be less than the investment required to secure infrastructure properly. Until that changes, incentives will remain misaligned.

However, public pressure and reputational damage are starting to matter more. Users delete apps after breaches. Media coverage damages trust. Competitors use security as a marketing advantage. The market is slowly rewarding secure companies and punishing careless ones.

Alternatives: Safer Apps for Photo and Video Editing

If you want to edit photos and videos with AI features, you don't have to use risky apps. Several mainstream tools have better security track records.

Google Photos offers AI-powered editing from a company with dedicated security teams and compliance with major privacy regulations. Your photos are encrypted in transit and protected by Google's security infrastructure.

Adobe Lightroom is a professional tool with a clear business model (subscription fees) and decades of security focus. The company makes enough money from legitimate subscriptions that they don't need to monetize user data.

Microsoft Photos provides AI editing on Windows, with privacy controls aligned with major privacy regulations.

Snapseed (by Google) and Pixlr both offer AI features with more transparent privacy policies than unknown startups.

These aren't perfect. But they're built by companies with security infrastructure, motivated to protect reputations, and transparent about data handling.

For AI-powered media generation (creating new images or video from text descriptions), consider tools like Runway, Synthesia, or established platforms that have published security documentation.

The key question: Is the company's primary business selling you a product or service, or is it analyzing and monetizing your data? If it's the former, they have incentive to protect your data. If it's the latter, beware.

Chat & Ask AI exposed 300 million messages and data from 25 million users, highlighting a systemic security issue. Estimated data for 'Other App Data' based on typical exposure scenarios.

The Psychology: Why We Trust Apps With Personal Data

Understanding how millions of people came to upload personal media to an unknown app requires understanding how trust works in the app economy.

App store ratings artificially inflate trust. An app with 4.5 stars seems legitimate. Users assume that 100,000 five-star reviews indicate a safe product. But reviews can be faked. Early users might not yet have discovered security problems. People don't leave reviews after private data leaks—they just uninstall.

The app interface itself conveys legitimacy. A polished UI with smooth animations suggests professional development. But security isn't visible. An insecurely developed app can look exactly as professional as a secure one.

Social proof is powerful. If your friends used an app, or if you saw it on social media, it seems safe. Trends create gravity. Everyone's using this photo app, so it must be legitimate.

There's also the assumption that app stores (Google Play Store, Apple App Store) vet how an app handles data on its servers. They don't. Store review focuses on the app itself: malware, policy violations, and obvious abuse. A misconfigured database on the developer's backend is invisible to that review, so an app can be listed with terrible security practices.

Finally, there's the privacy paradox. Most people say privacy is important, but few take steps to protect it. Reading privacy policies takes time. Evaluating security infrastructure requires technical knowledge. It's easier to just install the app and assume it's fine.

This gap between what people say they care about and what they actually do creates an environment where risky apps thrive.

The Role of Security Researchers: How These Breaches Get Found

The Codeway breaches were discovered because dedicated security researchers actively hunt for misconfigured databases. Much of this work is unpaid; researchers do it to keep companies accountable.

Organizations like Cybernews run scanning operations that continuously probe cloud storage buckets for misconfigurations. They do this because they know that misconfigured databases are pervasive, and companies won't fix them unless forced.

This system is fragile. Security researchers are often not paid for this work. They're pursuing bugs for the satisfaction of uncovering vulnerabilities, the credibility of being first to find major breaches, and the hope that public disclosure will spur change.

But there are no guarantees. A researcher might find a breach, try to contact the company, and get ignored. Or the company might fix it quietly and ensure the researcher gets no public credit. Sometimes researchers publish findings and get threatened with legal action from companies who don't appreciate the attention.

Without security researchers, many breaches would go undetected indefinitely. The companies wouldn't learn they had vulnerabilities. Users would never know their data was exposed. This is why responsible disclosure is important—giving companies time to fix before public announcement rewards both the researcher and the security community.

But the entire system depends on researchers doing volunteer work. If fewer researchers hunt for vulnerabilities, more breaches go undiscovered. This is currently happening—the number of people doing security research hasn't grown as fast as the number of apps and companies that need it.

Long-term Solutions: Systemic Change in App Security

Fix individual apps and you solve one problem. Fix the systems that produce insecure apps and you solve the pattern.

Better defaults. Cloud providers could make insecure configurations harder to reach. Buckets are already private unless a developer explicitly opens them up, but louder warnings, organization-wide blocks on public access, and automatic alerts for public buckets would prevent many misconfiguration breaches.

Security in developer education. Computer science programs don't teach enough about security. They teach how to build features, not how to build them securely. Reversing this would produce more security-aware developers.

App store policy changes. Google and Apple could require security audits or certifications. They could flag apps with known vulnerabilities. They could reward secure development with better visibility in their stores.

Liability. If app developers faced real legal consequences for data breaches, they'd prioritize security. Right now, the worst consequence in practice is often app deletion and bad press. Make data breaches reliably actionable in court and behavior changes fast.

Security insurance. Making companies carry data breach insurance would create economic pressure to invest in security. Insurance companies would require security standards.

Open-source security tools. Better open-source security tools and libraries would make it easier for developers to build securely without reinventing infrastructure.

Community standards. Industry groups could establish security standards that developers aspire to, much as the healthcare industry has certifications and compliance standards.

None of these are silver bullets. But combined, they could significantly reduce the frequency of preventable breaches like Codeway's.
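The "better defaults" idea can also be approximated today with an automated check. The sketch below assumes you have exported a bucket's IAM policy as JSON in Google Cloud's usual shape, with a list of `bindings` that each hold a `role` and its `members`. It flags any binding that grants access to the special principals `allUsers` or `allAuthenticatedUsers`. The function name and the example policy are hypothetical.

```python
from typing import Dict, List, Tuple

# Special IAM principals that open a Google Cloud resource to everyone.
PUBLIC_MEMBERS = {"allUsers", "allAuthenticatedUsers"}


def find_public_bindings(policy: Dict) -> List[Tuple[str, str]]:
    """Return (role, member) pairs that grant access to the general public."""
    findings = []
    for binding in policy.get("bindings", []):
        role = binding.get("role", "")
        for member in binding.get("members", []):
            if member in PUBLIC_MEMBERS:
                findings.append((role, member))
    return findings


# Hypothetical policy for illustration; real policies come from your own project.
example_policy = {
    "bindings": [
        {"role": "roles/storage.objectViewer", "members": ["allUsers"]},
        {"role": "roles/storage.admin", "members": ["user:dev@example.com"]},
    ]
}

for role, member in find_public_bindings(example_policy):
    print(f"PUBLIC ACCESS: {member} has {role}")
```

A check like this could run in a deployment pipeline and fail the build whenever the returned list is not empty, which turns "we'll secure it later" into something that cannot ship by accident.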

What's Next: Protecting Yourself Going Forward

The Codeway breaches are one incident in a long pattern. More breaches will happen. Security practices will slowly improve, but not fast enough to prevent all incidents. You can't rely on developers or regulators to protect your data completely.

Here's what you can control.

Be selective about what you upload. Don't give unknown apps any photo you wouldn't want made public. Default to keeping personal content off the cloud.

Use established tools. Stick with photo editors from companies with security track records. You're paying with attention or subscription fees, which means your data is less likely to be the product.

Read privacy policies. I know, nobody likes this. But five minutes reading about where your photos are stored and how long they're kept is worth the time.

Enable device security. This won't protect photos an app has already uploaded to an insecure server, but local device encryption limits exposure of everything that stays on your phone. Use strong device passwords. Enable biometric authentication.

Assume breaches will happen. This is pessimistic but realistic. Use unique passwords for different services so a breach in one doesn't compromise others. Monitor your credit and accounts. Be alert to phishing and social engineering.

Delete old data. Apps that delete user media after processing reduce breach risk. Prefer tools that don't store your content long-term.

Stay informed. Follow security researchers and tech journalists. When breaches happen, learning about them quickly lets you respond before attackers leverage the data.

These steps won't make you 100% secure. But they'll make you harder to exploit than the majority of users who don't think about security at all.
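For the advice about unique passwords, a password manager is the practical answer, but the underlying idea is simple enough to sketch. The example below uses Python's standard `secrets` module to generate an independent random password for each service. The alphabet, the minimum length, and the function names are illustrative assumptions, not a recommendation from any standard.

```python
import secrets
import string
from typing import Dict, Iterable

# Assumed character set; adjust to whatever a given service accepts.
ALPHABET = string.ascii_letters + string.digits + "!@#$%^&*-_"


def generate_password(length: int = 20) -> str:
    """Generate a random password using a cryptographically secure source."""
    if length < 12:
        raise ValueError("use at least 12 characters")
    return "".join(secrets.choice(ALPHABET) for _ in range(length))


def passwords_for(services: Iterable[str]) -> Dict[str, str]:
    """Create a separate password for every service name given."""
    return {name: generate_password() for name in services}
```

Because each password is generated independently, a leak from one service reveals nothing about the credentials used anywhere else.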

FAQ

What exactly happened in the Video AI Art Generator data breach?

Researchers discovered that the "Video AI Art Generator & Maker" app stored user photos, videos, and AI-generated content in a Google Cloud Storage bucket with public access enabled and no password protection. This exposed more than 1.5 million user-uploaded photos and over 385,000 user videos, along with millions of AI-generated files. The misconfigured bucket was accessible to anyone who knew the URL, and the company secured it shortly after being notified by researchers.

How many users were potentially affected by this breach?

The breach is reported to have affected nearly two million users of the app. The exposed bucket held more than 1.5 million user-uploaded photos and over 385,000 user videos, and because many users upload more than one item, the number of people affected cannot exceed the number of uploads. The total dataset came to 8.27 million files when counting AI-generated content, audio files, and other processed materials.

How can I tell if my data was part of this breach?

The best approach is to check if you used the "Video AI Art Generator & Maker" app. If you did, assume your uploaded content was exposed. Monitor your email for any breach notification from Codeway (though this is unlikely). Watch your financial accounts and credit reports for suspicious activity. You can also request data deletion directly from Codeway, though compliance is not guaranteed.

Is this the only Codeway app with security problems?

No. The same developer created "Chat & Ask AI," which was found to have a similar misconfigured database exposing 300 million messages from 25 million users. This indicates a systemic security problem with the company's development practices rather than isolated incidents.

What are the practical risks of having my photos exposed in a data breach?

Exposed photos can be used for identity theft, creating fake IDs, building surveillance databases, creating deepfakes or fake videos, social engineering and phishing attacks, blackmail, and selling personal information to marketers or data brokers. The risk level depends on what information the photos contain and who accesses them.

Why did this misconfiguration happen in the first place?

Codeway's development team likely prioritized shipping features quickly over implementing security. They probably configured their cloud storage with the simplest settings to get the app working, intending to secure it later. Later never came. This pattern is common in startup environments where investors pressure teams to acquire users fast before worrying about infrastructure security.

How can I avoid similar risks with other apps?

Use photo editing apps from established companies with security track records like Google, Adobe, or Microsoft. Check app reviews for security complaints. Avoid uploading sensitive personal content to unknown apps. Read privacy policies to understand where photos are stored. Delete apps that don't receive regular security updates. Consider offline editing tools that process images locally without uploading to servers.

What responsibility do app stores have for these breaches?

App stores like Google Play do scan for obvious malware and policy violations, but they don't perform deep security audits on all apps. They don't detect misconfigured databases or server-side vulnerabilities. While stores could implement stricter security requirements, most breaches happen in infrastructure that app store scanning cannot easily identify.

Will Codeway face legal consequences for this breach?

This depends on jurisdiction and applicable laws. If users are in Europe (GDPR), California (CCPA), or other regions with data protection laws, they could file complaints with regulators who might impose fines. However, enforcement is often slow and penalties vary widely. Individual civil suits against the company are possible but difficult to organize and pursue.

What should I do if I find another app with similar security problems?

If you discover a misconfigured database or security vulnerability, report it responsibly to the company first. Give them reasonable time (typically 30-90 days) to fix the issue. If they don't respond or refuse to fix it, you can report to security researchers, journalists, or relevant regulatory bodies. Never publicly disclose vulnerability details until the company has had time to patch.
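The 30-90 day window mentioned above is easy to track with a few lines of date arithmetic. This is a minimal sketch with a hypothetical function name. The default values simply mirror the range given in the answer and do not represent a formal standard.

```python
from datetime import date, timedelta
from typing import Tuple


def disclosure_window(reported_on: date,
                      min_days: int = 30,
                      max_days: int = 90) -> Tuple[date, date]:
    """Return the earliest and latest dates in a typical disclosure window."""
    earliest = reported_on + timedelta(days=min_days)
    latest = reported_on + timedelta(days=max_days)
    return earliest, latest


# Example: a report sent to the company on 1 January 2025.
earliest, latest = disclosure_window(date(2025, 1, 1))
print(earliest, latest)  # 2025-01-31 2025-04-01
```

Recording the report date and both deadlines up front gives the company and the reporter the same timeline to work from.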

Conclusion: Security Is Everyone's Responsibility

The Video AI Art Generator data breach illustrates something that security researchers have been saying for years: misconfigured cloud storage is pervasive, preventable, and devastating. It's not a technical problem anymore. It's a cultural problem.

A single engineer at Codeway could have secured the database in five minutes. Instead, nearly two million people had their personal photos and videos exposed to the world. This wasn't a sophisticated attack. It was negligence at scale.

But responsibility doesn't fall only on developers. Users trust unknown apps without investigating their security. App stores claim to curate safe apps without actually verifying security. Regulators move slowly. Companies treat data breaches as the cost of doing business. The entire ecosystem enables insecurity.

Changing this requires movement on multiple fronts. Developers need security training and company cultures that prioritize security alongside features. Cloud providers need better defaults and automated detection of misconfigurations. App stores need stronger vetting. Laws need teeth. Companies need liability for breaches. Users need education and tools to protect themselves.

None of this will happen overnight. But it needs to happen because the current trajectory is unsustainable. Every month brings new major breaches. Every breach exposes millions of people's personal data. Every exposure makes surveillance and identity theft easier.

For now, you need to protect yourself. Be cautious about what you upload to unknown apps. Stick with established tools. Monitor your accounts. Understand your risk. The companies building the apps you use aren't yet obligated to make security a priority. Until that changes, you have to do it for them.

The good news is that this doesn't require advanced technical skills. It requires awareness. Read privacy policies. Check who made the app. Look at reviews. Delete apps that seem risky. Use established tools from known companies. These simple steps greatly reduce your exposure to breaches like Codeway's.

The Codeway breaches aren't unique because they failed. They're unique because they were discovered. Hundreds of other apps probably have similar vulnerabilities right now. Some will be found by researchers. Most won't be—until they're exploited by malicious actors. Until those databases are sold to the highest bidder.

The choice is yours: continue trusting every app that seems convenient, or start thinking about the data you're surrendering. The cost of the first choice keeps getting higher. The second choice takes just a bit more attention and time.

Choose wisely. Your data is worth it.

Key Takeaways

- A misconfigured cloud database in the 'Video AI Art Generator & Maker' app exposed more than 1.5 million user photos, over 385,000 user videos, and 8.27 million files in total, with no authentication required, affecting nearly two million users

- The same developer's other app ('Chat & Ask AI') had a similar misconfigured database, exposing 300 million messages from 25 million users, which points to systemic negligence rather than isolated incidents

- Misconfigured cloud storage causes 85%+ of cloud data breaches and is entirely preventable with basic security practices that take minutes to implement

- Users can protect themselves by using photo editors from established companies (Google, Adobe, Microsoft), reading privacy policies, avoiding permission creep, and deleting apps lacking security track records

- The app ecosystem systematically incentivizes speed over security; regulatory pressure and liability laws are slowly changing this, but users must manage personal data protection until systemic change occurs
