Enterprise AI Adoption Report 2025: 50% Pilot Success, 53% ROI Gains, and the Reality of AI in Big Business

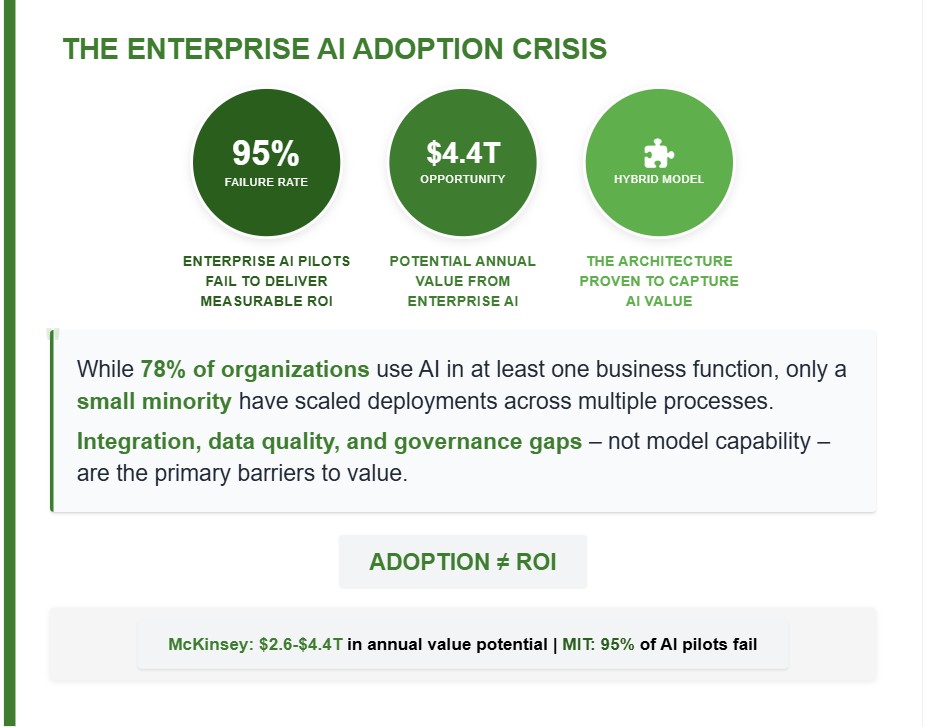

Here's the thing about enterprise AI adoption: everyone talks about it, but nobody has the real numbers. Until now.

Wing Ventures just dropped a comprehensive report interviewing 180+ C-level executives about how AI is actually playing out in their organizations. And the findings? They're way more grounded than the hype machine suggests.

When you dig past the headlines about AI replacing entire departments or revolutionary breakthroughs, you find something more interesting. Real companies are quietly moving AI from experiment to production. They're measuring ROI. They're building sustainable practices. And yes, they're worried about workforce reduction—but it's more nuanced than the doomsday narrative.

Let me walk you through what the data actually says, what it means for your organization, and where this is all heading.

TL;DR

- 50% of AI pilots successfully move into production, contrary to the "most AI fails" narrative

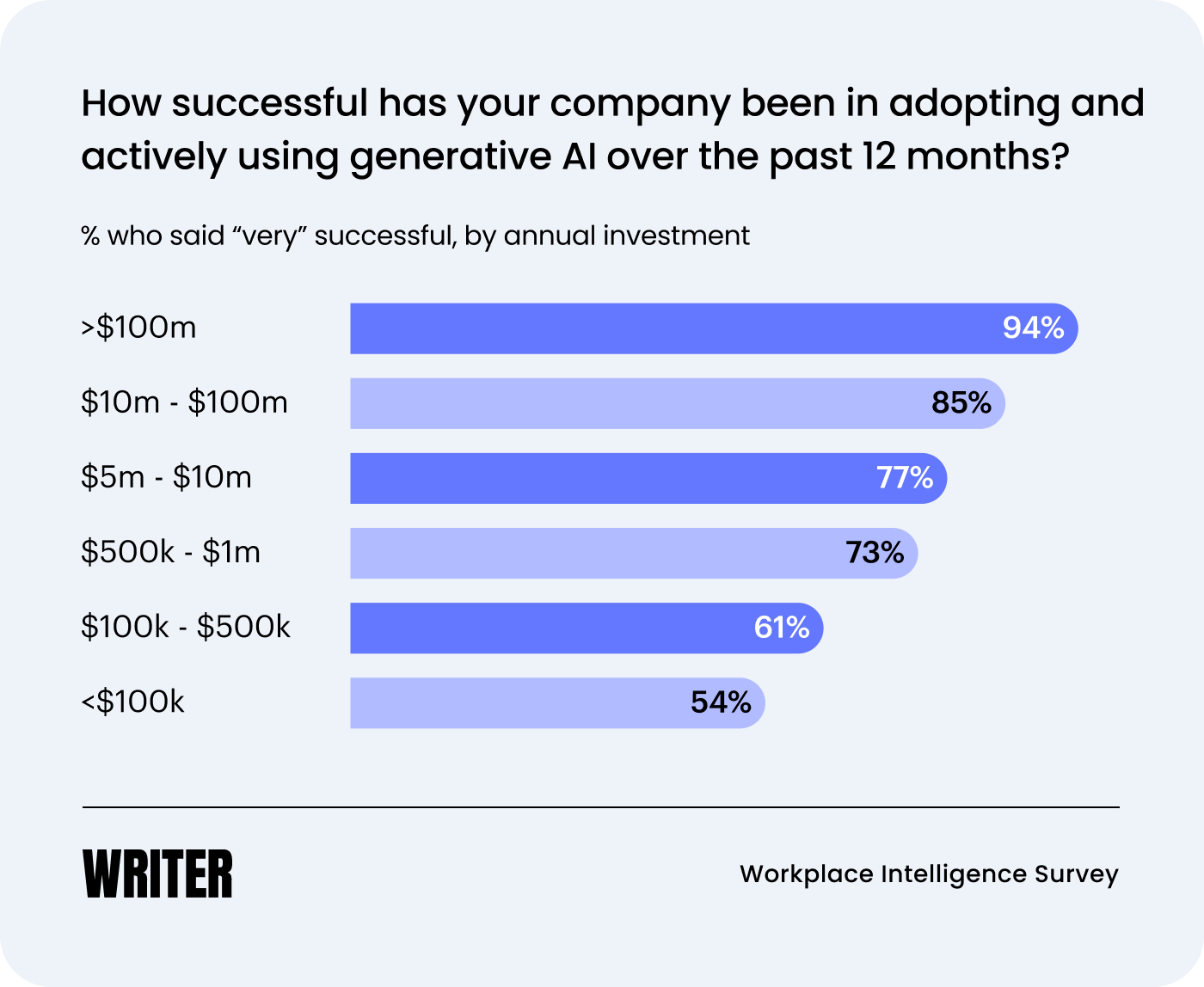

- 53% of enterprises report positive ROI on 2+ AI use cases, suggesting real business impact

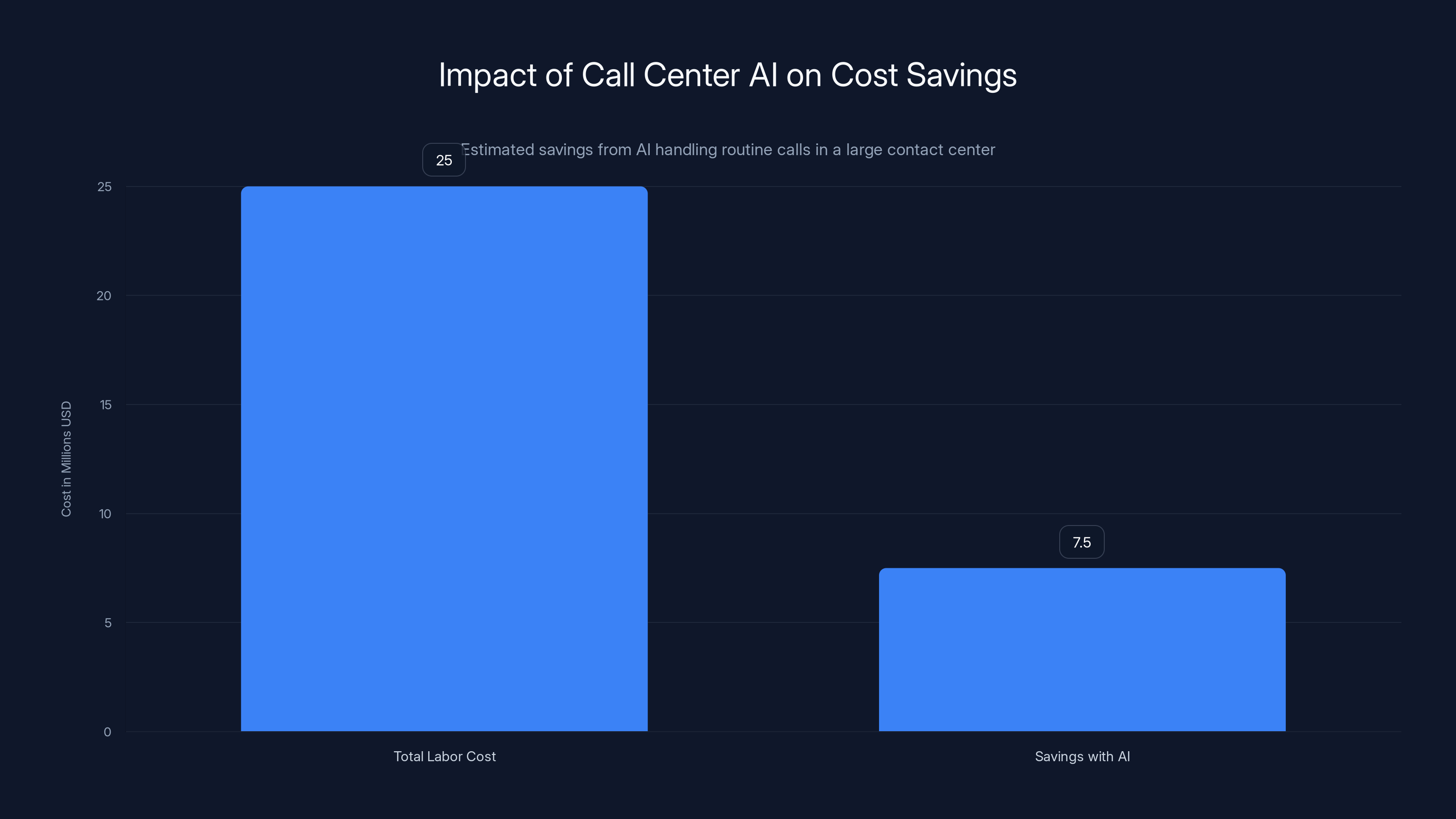

- Call center automation is the #1 use case for large enterprises, with customer service AI driving measurable efficiency gains

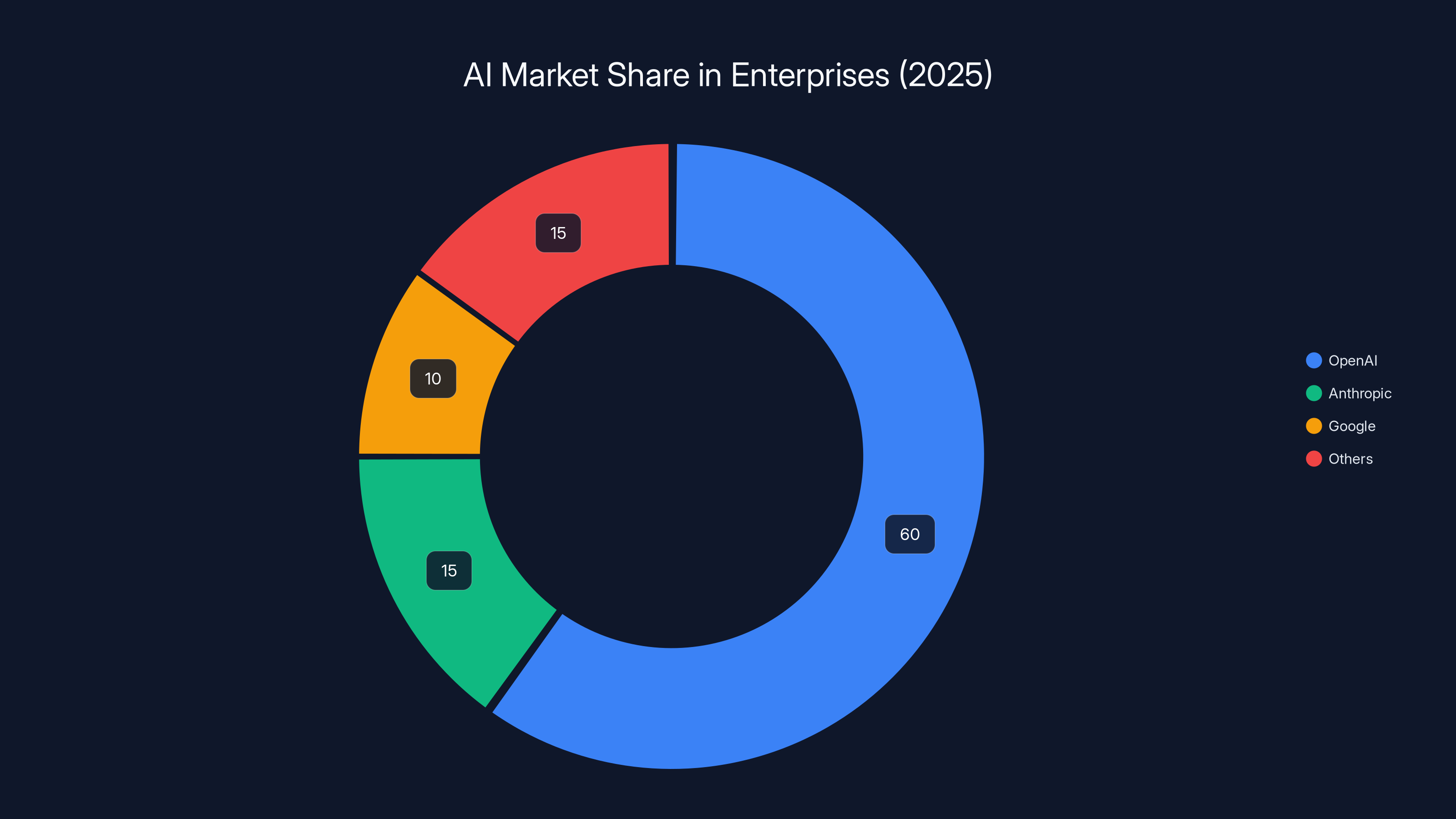

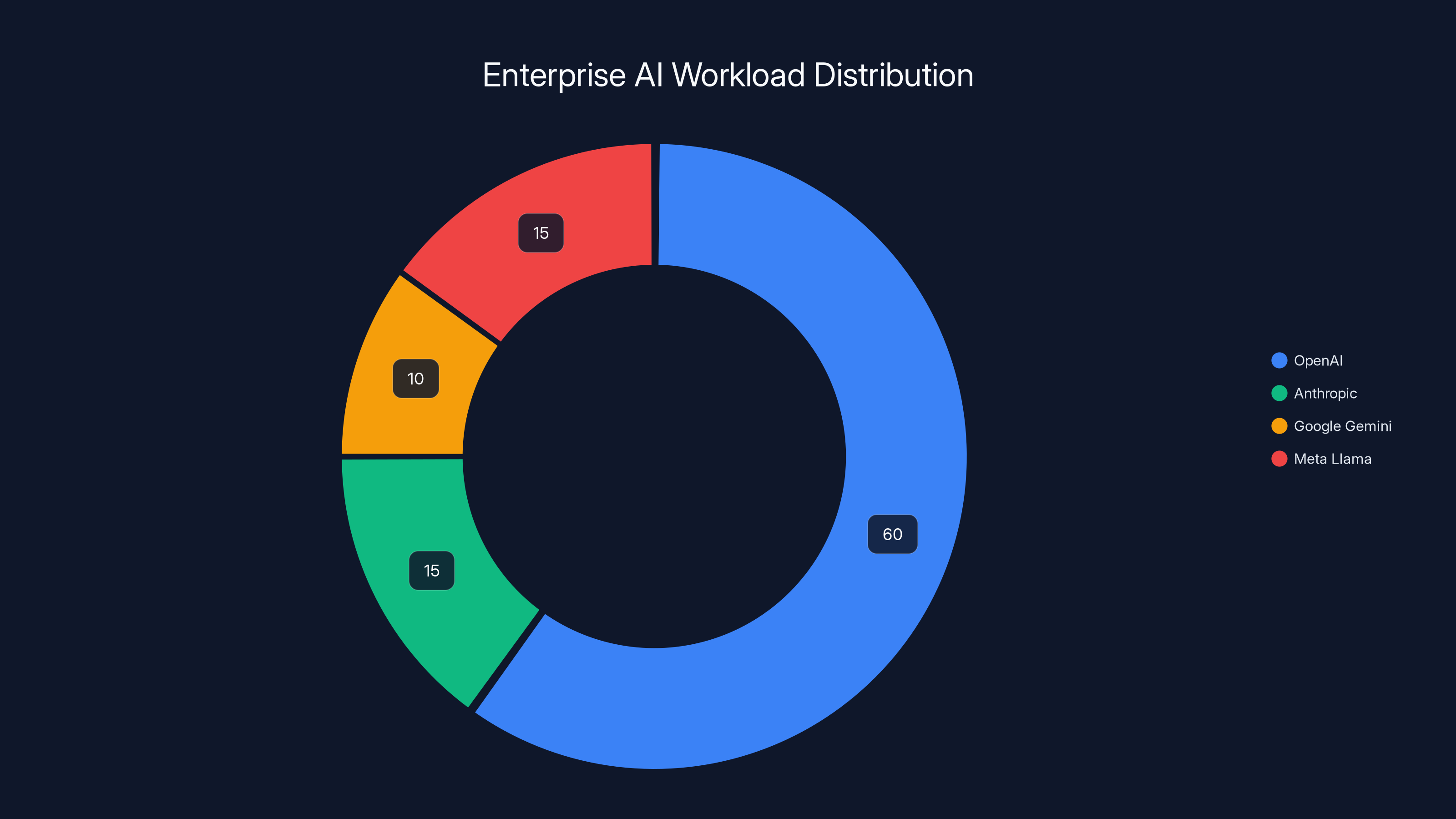

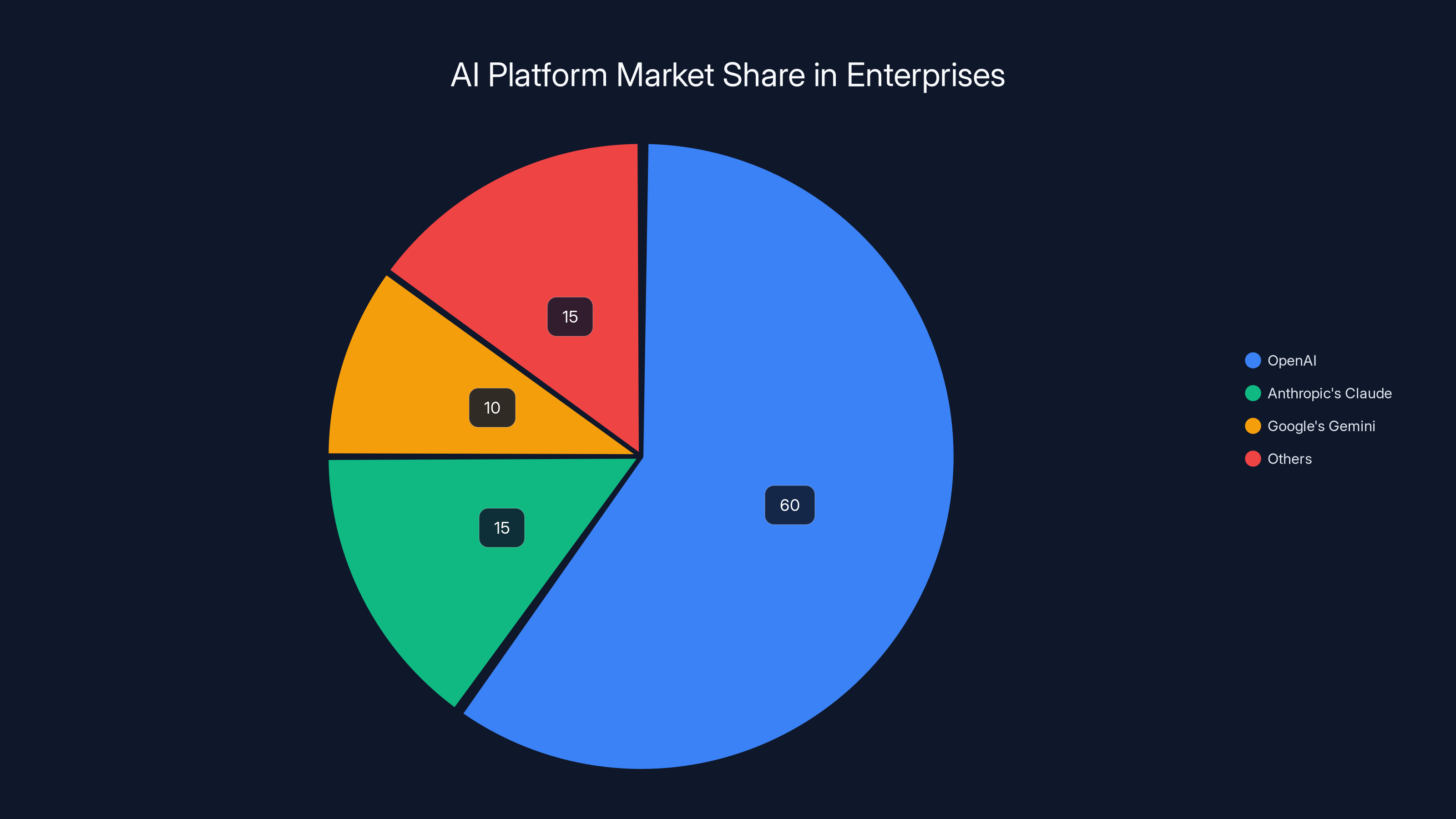

- 60%+ of enterprise workloads run on OpenAI, showing clear model preference despite Anthropic's growth

- 70-75% of enterprises believe AI will reduce headcount, indicating confidence in automation's impact on staffing models

- The gap between small and large enterprises is shrinking, democratizing AI adoption across company sizes

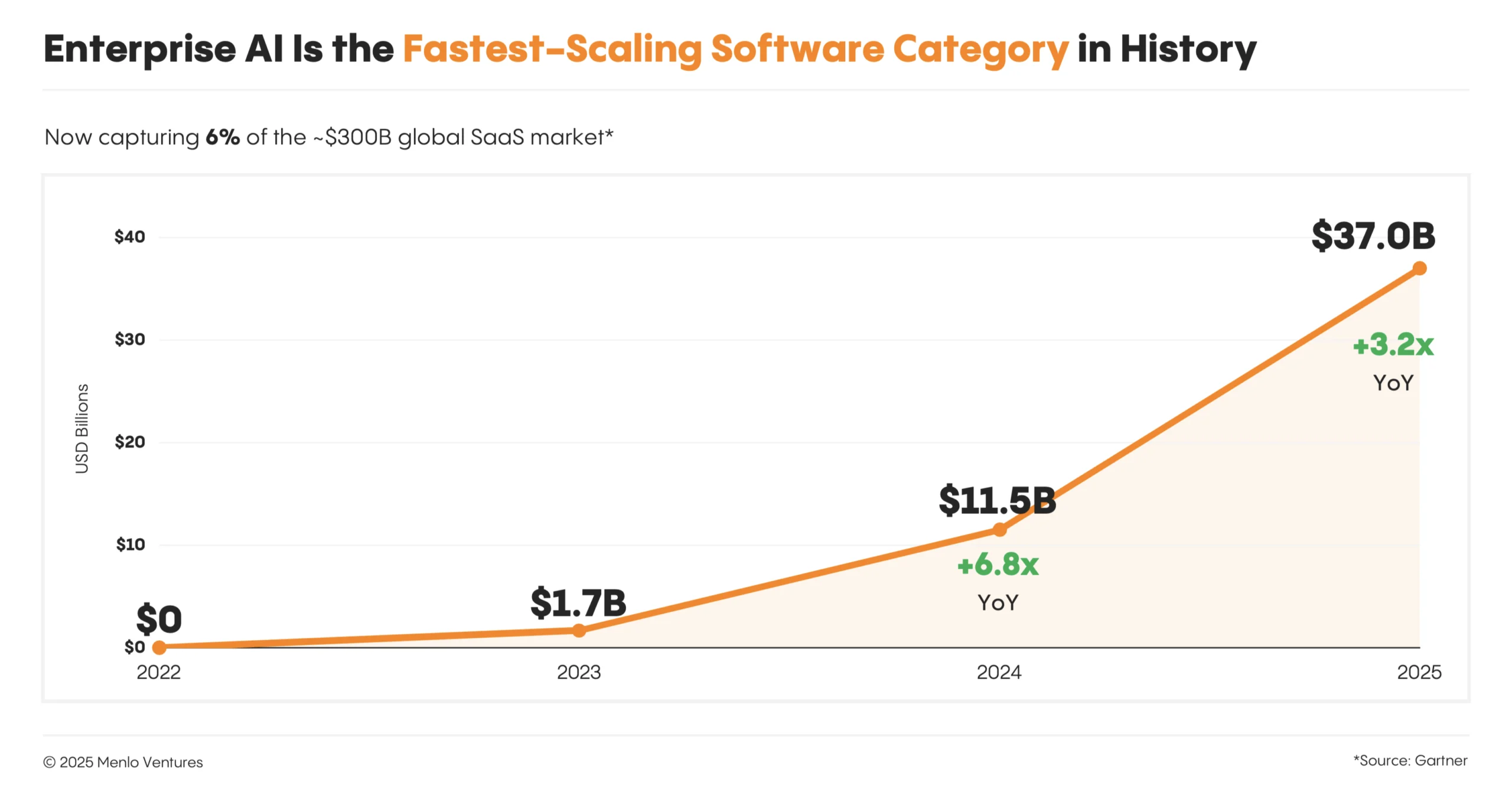

OpenAI holds a dominant 60% market share due to its first-mover advantage and ease of integration, while competitors like Anthropic and Google are gradually gaining ground. (Estimated data)

What Changed: Why Enterprise AI Finally Started Working

Five years ago, everyone built an AI pilot. Most crashed. But something shifted in 2024 and early 2025.

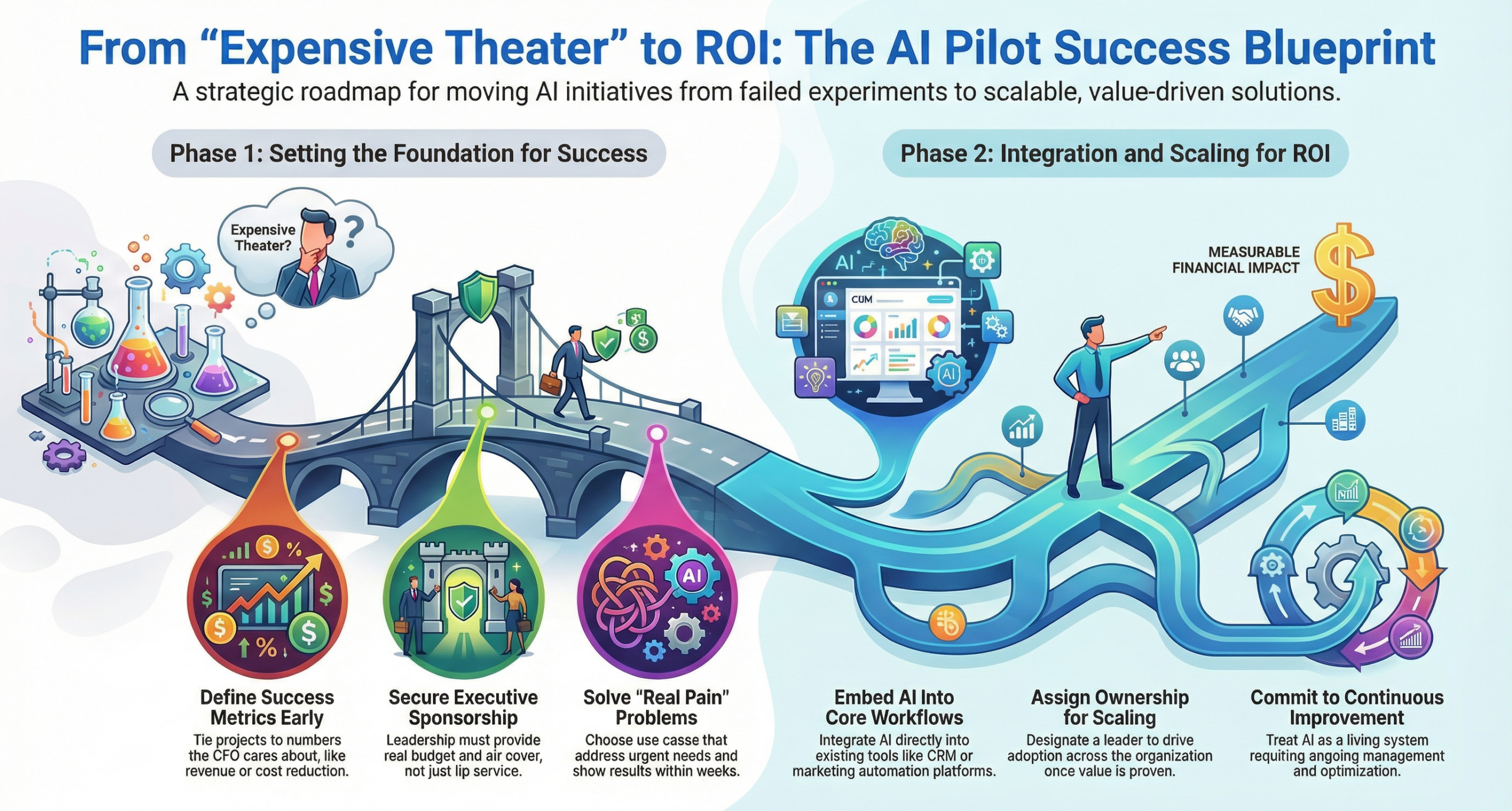

Companies stopped treating AI as a science experiment. They stopped asking "What can AI do?" and started asking "What's the smallest problem we can solve that saves us real money?" According to TechCrunch, this strategic shift has been pivotal.

That's why 50% of pilots now proceed to production. It's not that AI got better overnight (though it did). It's that enterprises got smarter about where to apply it.

Think about this: a pilot that costs

The executives Wing Ventures interviewed figured this out. They're building pilots with exit criteria from day one. They're measuring success in saved labor hours and improved customer satisfaction, not in how "advanced" the AI is.

The 50% Pilot Success Rate: Why Half of Them Actually Work

Let's unpack the headline: half of all AI pilots move into production.

That's shocking to people who remember 2016-2019, when machine learning pilots routinely failed or got abandoned. But it makes sense if you understand what's different now.

First, the barrier to entry dropped. Building a machine learning model five years ago required a PhD in statistics and a team of data engineers. Building an AI agent today requires someone who knows how to write a prompt. That's not hyperbole—it's why enterprises are running more pilots and more of them succeed.

Second, the ROI threshold changed. Enterprises aren't betting the farm on AI anymore. They're testing small, localized problems. "Can we automate this customer service workflow?" "Can we speed up document processing?" These aren't existential bets. They're Tuesday afternoon experiments that happen to use AI.

Third, there's organizational acceptance now. In 2022, an AI pilot meant a VP of Engineering had to justify it to the board. In 2025, it means a team lead can grab a tool and test it. That psychological shift matters enormously.

The 50% success rate tells us something important: we're past the "Does AI work at all?" phase. We're in the "Where does AI actually help us?" phase. That's progress.

But here's what the report doesn't explicitly say: the other 50% didn't fail because the technology is bad. They failed because someone built it for the wrong reason—proving they could use AI, rather than solving a concrete business problem.

OpenAI dominates with over 60% of enterprise AI workloads, despite competition from Anthropic, Google, and Meta. Estimated data.

53% Report Positive ROI: What "Positive" Actually Means

Here's a sentence that could be misread: "53% report positive ROI on 2 or more AI agent use cases."

Before you do a victory lap, understand what this means. These executives believe they're seeing positive ROI. That's not the same as having audited financial proof with quarterly reconciliation.

But also, don't dismiss it. Belief matters in business.

When a CFO thinks an AI implementation saved the company money, they're more likely to fund the next one. When a call center manager sees 15% fewer escalations after deploying AI, they're more likely to expand it to other teams. The perception of value drives adoption, which eventually creates actual value.

That said, the fact that it's 53% and not 85% is telling. It means roughly 47% of enterprises either don't see positive ROI on two or more use cases yet or are unsure if they do. That's a significant chunk of organizations still figuring out whether AI actually pays for itself.

What the data suggests: enterprises see ROI fastest when:

- The problem is measurable. You can't optimize what you don't measure. Companies with clear baseline metrics (call handle time, support ticket resolution time, document processing speed) see ROI faster.

- The solution is specific. Replacing an entire department is a fantasy. Replacing one annoying, repetitive workflow is realistic.

- The team is prepared. Organizations that trained staff on AI capabilities and limitations before deployment saw better outcomes.

- The timeline is reasonable. Companies expecting ROI in month one will be disappointed. Month four? That's more realistic.

The report mentions that executives value the belief in positive ROI almost as much as actual positive ROI. That's worth sitting with. It suggests that confidence in AI's business value might be driving further adoption, creating a self-reinforcing cycle.

Call Center AI: The Obvious Winner Nobody Expected to Dominate This Hard

This is the most interesting finding in the entire report: for enterprises with more than 10,000 employees, call center automation is the #1 AI use case.

Why is this surprising? Because everyone expected AI to disrupt something sexier—legal document review, financial analysis, code generation. But no. It's customer support.

The reason is obvious when you think about it: call centers represent a massive, predictable cost center. A mid-sized contact center might cost a company $50-100M annually in labor. That's before benefits, real estate, technology infrastructure, and management overhead.

If AI can handle just 20-30% of incoming calls—the routine billing questions, password resets, policy inquiries—that's $10-30M in savings. The ROI is so clear you can calculate it on a napkin.
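
The napkin math above can be written down in a few lines. This is a minimal sketch that assumes labor cost scales linearly with call volume, which is a simplification; the function name `automation_savings` is made up for illustration.

```python
def automation_savings(annual_labor_cost: float, share_automated: float) -> float:
    """Labor cost avoided if AI handles `share_automated` of call volume.

    Assumes cost scales linearly with call volume (a simplification).
    """
    if not 0.0 <= share_automated <= 1.0:
        raise ValueError("share_automated must be between 0 and 1")
    return annual_labor_cost * share_automated


# Low end: $50M labor cost, 20% of calls automated.
low = automation_savings(50_000_000, 0.20)
# High end: $100M labor cost, 30% of calls automated.
high = automation_savings(100_000_000, 0.30)

print(f"${low / 1e6:.0f}M to ${high / 1e6:.0f}M in annual savings")
```

The two calls simply pair the low ends and the high ends of the ranges quoted in the text, which is where the $10-30M span comes from.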

But there's something deeper going on here. Call center work is highly repetitive and rules-based. A customer calls about a charge. They ask the same questions thousands of other customers asked. The answer is documented. An AI system that's trained on your FAQs, billing system, and past conversations can handle this without hallucinating.

Compare that to, say, legal document review. An AI system can draft a memo or summarize case law, but lawyers worry about liability. They want a human checking the work. The ROI math is less clear-cut.

That's why call center AI is winning. It's boring, but it works.

What's interesting is that this finding contradicts some of the AI hype we've been hearing. The sexiest AI applications aren't the most deployed. The most painful problems are.

The OpenAI Dominance: 60%+ of Enterprise Workloads

If you've been following the AI space, you know Anthropic has been gaining serious ground. Claude is genuinely competitive with GPT-4. Some benchmarks show Claude ahead on reasoning tasks.

So the finding that 60%+ of enterprise workloads run on OpenAI models will surprise nobody who's been paying attention, but it still tells a clear story.

OpenAI owns enterprise AI. Not because GPT-4 is always the best model for every task (it's not), but because OpenAI hit the market first with a product people could actually use, and enterprise switching costs are real.

When your integration is built on OpenAI's API, your team is trained on ChatGPT, and you've got 50 workflows depending on GPT-4o, switching to Anthropic requires rework. It's not impossible, but it requires justification.

What's interesting is that this dominance exists despite legitimate competition. Anthropic is better at some tasks. Google Gemini is cheaper. Meta's Llama models are open source and increasingly powerful.

But network effects matter. When your peers all use OpenAI, when integrations exist for it, when your employees have ChatGPT accounts and understand the interface, you're unlikely to switch.

This is a classic "first-mover advantage" moment. It won't last forever. But in 2025, it's real.

The 60%+ number also suggests that enterprises aren't optimizing for model quality alone. If they were, we'd see more fragmentation—using Claude for reasoning tasks, Llama for cost-sensitive applications, GPT-4 for complex reasoning. Instead, there's consolidation around one provider.

That tells you enterprises value consistency and simplicity over optimal performance on each individual task.

OpenAI holds a dominant 60% market share in enterprise AI platforms, but competitors like Anthropic's Claude and Google's Gemini are gaining traction. Estimated data.

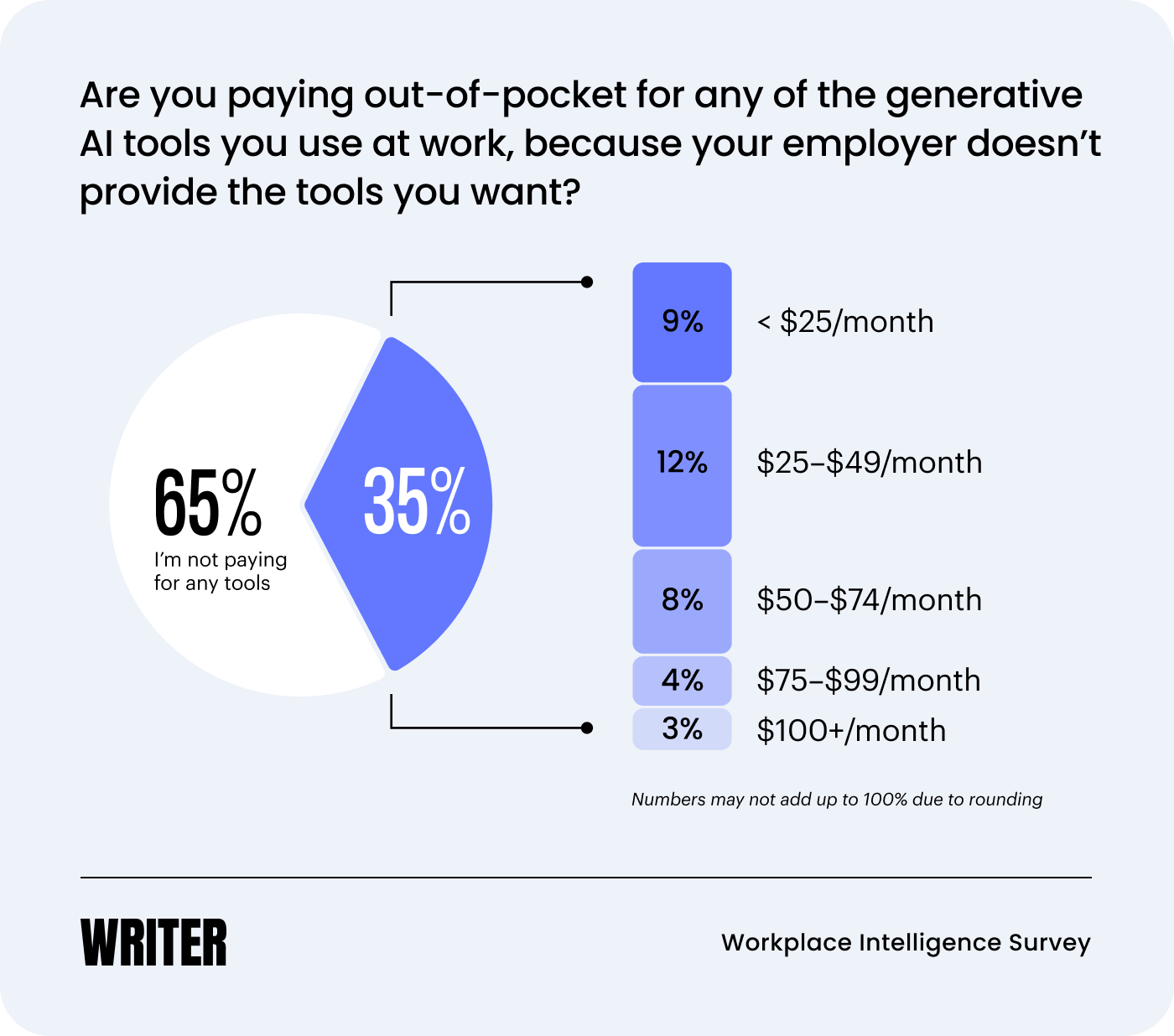

70-75% Believe AI Will Reduce Headcount: The Confidence Question

Here's the most politically charged finding: 70-75% of enterprises believe AI will reduce headcount.

Let's be clear about what this means and what it doesn't mean.

It means C-level executives think their organizations will need fewer people in the future because of AI. It doesn't mean layoffs are imminent or that this is malicious. It means they see a future where fewer human-hours are required to do the same work.

The report notes something important: "If they believe it to be true, it likely will be." That's psychology, not prophecy. But it matters.

When a VP of Operations believes AI will replace routine work, they start building differently. They stop hiring for low-complexity roles. They invest in training people for higher-value work. They create an environment where AI augmentation is expected, not resisted.

Self-fulfilling prophecies are real in business.

But here's what's worth examining: not all headcount reduction is equal.

If AI eliminates 10% of customer service rep positions but those people transition to higher-value roles like relationship management or technical support, that's workforce evolution, not displacement. If AI eliminates 30% of positions and those people are just... gone, with no transition path? That's different.

The report doesn't distinguish between these scenarios. But the fact that 70-75% of executives believe in headcount reduction suggests they're thinking about it. That's the first step toward either planning for transition or just hoping it works out.

What's notable is that even executives who say headcount will be reduced aren't showing panic. They're treating it as inevitable but manageable. That suggests either confidence in their industry's ability to absorb workers, or denial about the scope of potential displacement.

Historically, new technologies do eliminate some jobs while creating others. The industrial revolution automated farming, but created manufacturing jobs. ATMs reduced the number of tellers each branch needed, but banks actually increased their footprint and hired more people in other roles.

AI might follow that pattern. Or it might be different because it's general-purpose and cognitive, not task-specific. The report doesn't answer this. But the fact that executives aren't panicking suggests they think the disruption is manageable.

Why The Gap Between Small and Large Enterprises Is Closing

One finding hiding in plain sight: enterprise size matters less for AI adoption than it did two years ago.

In 2023, if you wanted to deploy AI, you needed: a data science team, infrastructure budget, integration expertise. That was expensive. It meant large enterprises (1,000+ employees) had an advantage.

In 2025, you need: a ChatGPT account, 30 minutes, and a business problem. That's democratized.

This changes everything about enterprise AI.

Smaller companies aren't waiting for large companies to figure things out anymore. They're moving simultaneously. A 500-person company can now deploy the same AI capabilities a 50,000-person company can. The barrier to entry is just too low.

Wing Ventures' report includes data on companies across multiple sizes, and the spread is tighter than you'd expect. That's not coincidence. It's a sign of maturation.

When a technology democratizes, adoption becomes about executive vision and organizational will, not budget and engineering resources. That's actually a bigger shift than the technology itself.

Think about it: if every company has access to the same AI models and tools, what becomes the differentiator? It's not the technology. It's how you apply it, how you integrate it with your existing systems, how you train your team to use it.

That shifts competitive advantage from "Who has the best data scientists?" to "Who understands their business problems deeply enough to ask AI the right questions?"

It's not a technical competition anymore. It's a business strategy competition.

The ROI Math: How Enterprises Calculate AI Value

When 53% of enterprises report positive ROI, how are they calculating it?

The report doesn't provide exact methodologies, but enterprise ROI calculations for AI typically work like this:

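Formula for AI ROI:

ROI (%) = (Cost Saved - Total AI Cost) / Total AI Cost × 100, where Total AI Cost = AI Implementation Cost + Ongoing Costs

In practical terms:

- Cost Saved: If your AI implementation handles 25% of routine support tickets, the labor it frees up might be worth $750K annually (for example, the equivalent of 15 support roles at $50K each).

- AI Implementation Cost: Building or deploying the system might cost $100K upfront.

- Ongoing Costs: Maintenance, monitoring, and refinement might cost $30K annually.

- Net ROI: ($750K - $130K) / $130K × 100 ≈ 477% in year one.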
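
For readers who want to plug in their own numbers, here is a small sketch of the calculation. It assumes one common convention, net benefit divided by total cost (implementation plus ongoing); the report does not specify a methodology, so treat the formula and the function name `net_roi_percent` as assumptions.

```python
def net_roi_percent(cost_saved: float, implementation_cost: float, ongoing_cost: float) -> float:
    """Net ROI in percent: (savings - total cost) / total cost * 100.

    This is one common convention, not a methodology taken from the report.
    """
    total_cost = implementation_cost + ongoing_cost
    if total_cost <= 0:
        raise ValueError("total cost must be positive")
    return (cost_saved - total_cost) / total_cost * 100


# Illustrative inputs from the example above: $750K saved,
# $100K upfront, $30K in annual upkeep.
roi = net_roi_percent(750_000, 100_000, 30_000)
print(f"Year-one net ROI: {roi:.0f}%")
```

With this definition the inputs above come out at roughly 477%. Counting costs differently (for example, amortizing the upfront spend over several years) would change the result, which is one reason self-reported ROI figures are hard to compare across companies.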

That's why 53% see positive ROI. The math is favorable when you target high-impact use cases.

But here's what makes the number credible: it's not 95% or 98%. It's 53%. That suggests enterprises are being somewhat realistic about success rates and measurement challenges.

They're not saying "AI is a guaranteed moneymaker." They're saying "Most of our AI initiatives show positive returns, but not all of them."

That's honest.

Implementing AI in call centers can save approximately $7.5 million annually by managing routine calls, significantly reducing labor costs. Estimated data.

Why Some Pilots Fail (And How to Avoid It)

The 50% success rate means 50% fail. Let's talk about why.

Failing AI pilots typically fall into several categories:

1. Wrong Problem, Wrong Solution

Someone decides to "use AI" and then goes looking for problems. Backwards. You find a concrete, expensive problem, then ask if AI solves it.

A company that started an AI project to "improve marketing" but didn't define what "improve" meant? Doomed. A company that said "We spend $2M annually on customer support for password reset requests, can AI handle this?" Likely to succeed.

2. Underestimated Data Quality Requirements

AI is only as good as the data it trains on. If your historical customer support conversations are full of errors, or if your sales data is dirty, you're teaching the AI to fail.

Failing pilots often start without getting the data cleaned first. Successful pilots begin with data quality assessment.

3. Organization Wasn't Ready

AI changes workflows. If you deploy an AI system that handles customer support, support staff need retraining on how to work with it, when to override it, how to handle edge cases. Skip this, and the system sits unused.

4. No Clear Success Metric

If nobody agreed upfront what success looks like, you can't determine if the pilot succeeded. Some pilots fail silently because nobody tracked the right metrics.

5. Scope Creep

Starting small is the right approach. But then stakeholders add requirements. "Can it also handle this?" and "Can it also do that?" Soon you're building something that's 3x larger than planned and unlikely to ship.

6. Underestimated Integration Costs

The AI model itself might be free or cheap. But connecting it to your existing systems—your CRM, your billing system, your knowledge base—that's where costs explode. Pilots that underestimate this run out of budget or timeline.

Wing Ventures' 50% success rate suggests these failure modes are real but not insurmountable. You can avoid them by being disciplined about problem definition, data quality, organizational readiness, and scope management.

The companies moving into production are the ones who got these basics right.

The Call Center AI Deep Dive: Why It's Winning

Let's zoom in on why call center AI dominates the list of enterprise AI deployments.

The Problem It Solves

Contact centers operate on narrow margins. A large center might handle 5 million calls annually with 500 agents. Each agent costs roughly

No other business process has ROI that obvious and that large.

The Implementation Is Straightforward

Call center AI doesn't need deep integration into complex business systems. It needs access to:

- Your phone system (to route calls)

- Your knowledge base or FAQ system (to answer questions)

- Your billing system (to look up account info)

- Your CRM (to add notes to customer records)

These integrations exist. The playbook is established. Multiple vendors have done this before.

Compare that to, say, AI for financial forecasting, which requires integration with ERPs, historical financial data, market data feeds, and complex business logic. Way harder.

It's Easy to Measure

Call center metrics are ancient and well-understood:

- Average handle time (AHT)

- First-call resolution (FCR)

- Customer satisfaction (CSAT)

- Cost per call

You know your baseline. You can measure if the AI changes these metrics. You can calculate ROI cleanly.
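
A before-and-after comparison on those four metrics can be sketched like this. The class, function names, and every number below are made up for illustration; none of them come from the report.

```python
from dataclasses import dataclass, fields


@dataclass(frozen=True)
class CallCenterMetrics:
    aht_seconds: float     # average handle time (AHT)
    fcr_rate: float        # first-call resolution (FCR), as a fraction 0..1
    csat: float            # customer satisfaction (CSAT) score
    cost_per_call: float   # cost per call, in dollars


def percent_change(before: float, after: float) -> float:
    """Relative change from `before` to `after`, in percent."""
    if before == 0:
        raise ValueError("baseline value must be non-zero")
    return (after - before) / before * 100


def compare(baseline: CallCenterMetrics, pilot: CallCenterMetrics) -> dict[str, float]:
    """Percent change for every metric, keyed by field name."""
    return {
        f.name: percent_change(getattr(baseline, f.name), getattr(pilot, f.name))
        for f in fields(baseline)
    }


# Made-up numbers purely for illustration; not from the report.
baseline = CallCenterMetrics(aht_seconds=400, fcr_rate=0.70, csat=4.0, cost_per_call=6.00)
pilot = CallCenterMetrics(aht_seconds=340, fcr_rate=0.77, csat=4.2, cost_per_call=5.10)

for name, change in compare(baseline, pilot).items():
    print(f"{name}: {change:+.1f}%")
```

Note that the sign means different things per metric: a negative change is good for handle time and cost per call, while a positive change is good for FCR and CSAT.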

It Doesn't Require Trust Yet

For many AI use cases, you need to trust the AI to make decisions autonomously. That's hard. But for call center AI, you can start with "assist mode." The AI suggests responses; the agent approves them. Gradually, you increase automation.

That gradual trust-building is psychologically important and reduces risk.
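
One way to picture the move from assist mode to automation is a pair of confidence thresholds that get loosened over time. This is a hypothetical sketch: the confidence score, the threshold values, and the names `Handling` and `choose_handling` are all assumptions, not something the report or any particular vendor describes.

```python
from enum import Enum


class Handling(Enum):
    AGENT_ONLY = "agent handles the call without an AI draft"
    ASSIST = "AI drafts a reply, agent approves or edits it"
    AUTOMATED = "AI replies directly"


def choose_handling(confidence: float, assist_at: float, automate_at: float) -> Handling:
    """Pick a handling mode from a (hypothetical) model confidence score in 0..1."""
    if assist_at > automate_at:
        raise ValueError("assist_at must not exceed automate_at")
    if confidence >= automate_at:
        return Handling.AUTOMATED
    if confidence >= assist_at:
        return Handling.ASSIST
    return Handling.AGENT_ONLY


# Rollout phases: start in pure assist mode (automate_at above 1.0 means the AI
# never replies on its own), then lower the bar as trust builds.
phases = [
    {"assist_at": 0.5, "automate_at": 1.1},
    {"assist_at": 0.5, "automate_at": 0.95},
    {"assist_at": 0.5, "automate_at": 0.85},
]

for number, phase in enumerate(phases, start=1):
    print(f"phase {number}: {choose_handling(0.9, **phase).name}")
```

The same call with a confidence of 0.9 stays in assist mode for the first two phases and only becomes fully automated once the threshold drops to 0.85, which mirrors the gradual handover described above.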

The Talent War Creates Urgency

Large contact centers struggle to hire and retain agents. If AI can handle 30% of volume, you need 30% fewer people, reducing hiring pressure and turnover. That's a competitive advantage beyond pure cost savings.

These factors combined explain why call center AI is the first domino in enterprise AI adoption. It's not the most interesting use case. It's the most obvious one.

What's interesting is what comes next. Once companies get comfortable with AI in call centers, they start asking: "What else can we automate?"

The Emerging Use Cases: What Comes After Call Centers

Call center AI is the easy win. But the Wing Ventures report hints at what's coming next for enterprises.

Document Processing and Review

Legal, HR, and finance teams spend enormous time reading documents: contracts, invoices, job applications, compliance filings.

AI can pre-process these at scale. Extract key information. Flag items that need human review. This is lower-risk than autonomous document review, but higher-value than call center AI. Expected ROI: 200-400%.

Sales Process Optimization

CRM and sales teams are next. AI can review prospect conversations, suggest next steps, identify deal risks. CRM integration is easier than ever. Sales teams are also extremely ROI-focused, making their buy-in easier than, say, HR.

Knowledge Work Augmentation

This is trickier because it's harder to measure. But engineers, marketers, and analysts are using AI as a thinking partner. Less about automation, more about augmentation. Enterprises are still figuring out how to evaluate ROI here.

Financial and Supply Chain Forecasting

Once you have clean historical data and good integrations, AI for forecasting is high-value. Companies are cautiously piloting this now. If successful, ROI is massive because better forecasting reduces waste across the entire supply chain.

The pattern here is that enterprises move from "AI handles routine work" to "AI helps smart people work smarter" to "AI optimizes complex systems."

It's a maturity curve. We're at stage two for most enterprises.

Scope creep is the most significant contributor to AI pilot failures, estimated to account for 25% of failures. Addressing these issues can improve the success rate of AI implementations. Estimated data.

The Organizational Change Required: Beyond Technology

Here's what the Wing Ventures report implies but doesn't spell out: the technology is the easy part. The organizational change is hard.

When you deploy call center AI, you're changing how 500 people do their jobs. That requires:

1. New Roles and Responsibilities

AI doesn't eliminate call center jobs overnight. It changes them. Agents become AI monitors. They handle escalations, edge cases, and complex issues the AI routes to them. They also train the AI by providing feedback on its responses.

That's a different skill set. Companies need to rethink hiring and training.

2. New Metrics and Incentives

If agents used to be measured on calls-handled-per-hour, and now AI handles 40% of calls, you need new metrics. Quality of escalation handling? Training feedback that improves the AI? Customer satisfaction on AI-assisted calls?

Wrong metrics and incentives will sabotage AI adoption.

3. Change Management and Communication

Employees worry AI will replace them. That's not paranoia; it's realistic. Transparent communication about how AI will be deployed, how roles will change, and what opportunities exist for reskilling is essential.

Companies that treated AI deployment as a communication challenge (not just a technology challenge) had better adoption rates.

4. Management Structure Evolution

If your call center manager used to manage agents purely on productivity metrics, managing them with AI requires new skills. They need to understand AI limitations, override decisions when needed, and manage a hybrid human-AI team.

Training managers on AI is as important as training the AI itself.

Wing Ventures' 50% success rate likely reflects that companies succeeding are the ones who took organizational change seriously. Those treating it as a pure technology play are in the 50% that fail.

Why Executives Believe in AI When Evidence Is Mixed

The report's finding that executives see positive ROI at 53% (not higher) is interesting because it suggests they're not delusional.

But 53% also means they believe in the potential of AI even when hard ROI proof is limited. Why?

1. They See Leading Indicators

Cost-per-call went down. Call handle time decreased. Customer satisfaction ticked up slightly. These aren't guaranteed ROI, but they're promising signals.

2. They're Comparing AI to the Status Quo

If your current call center process is broken, and AI improves it by 15%, that's a win. You're not comparing AI to a perfect process; you're comparing it to your current situation.

3. They Understand the Trend Arrow

Executives intuitively understand that AI is improving fast. GPT-4 is better than GPT-3.5. Claude 3.5 is better than Claude 3. There's a clear trajectory upward.

They might not have broken even on AI yet, but they're confident it's coming. That belief shapes their assessment of current ROI.

4. They See Competitors Moving

If your competitor deployed AI in their call center and they're claiming benefits, you feel pressure to match. That competitive dynamic doesn't require proven ROI; it just requires perceived opportunity.

This is actually healthy skepticism wrapped in optimistic language. Executives aren't saying "AI is a miracle." They're saying "It's working better than I expected, and it's improving, and I believe in the direction." That's reasonable.

The Pragmatist's Guide to Enterprise AI Adoption

Based on everything in the Wing Ventures report, here's how to think about enterprise AI adoption in 2025:

Start Small and Specific

Don't aim for "AI transformation." Aim for "AI solves this one expensive problem." Call centers, document processing, routine customer service queries. Problems where:

- ROI is calculable

- Success metrics are clear

- Implementation path is known

- Scale is manageable

Measure Everything

Baseline metrics before you deploy. Track metrics during and after. Be honest about results. If it's not working, kill it. This isn't failure; this is learning.

Invest in Organizational Change

Your team needs to adapt. New workflows. New roles. New metrics. Budget for this explicitly. It's not a side project for one person. It's organizational transformation requiring senior leadership attention.

Plan for Evolution

Your first AI use case won't be your last. Call center AI in year one probably leads to document processing in year two and forecasting in year three. Build platforms and approaches that scale across use cases.

Accept That 50% Will Fail

The Wing Ventures data suggests half your pilots will make it to production and half won't. Plan for this. Don't treat every pilot like it's make-or-break. Run multiple pilots simultaneously. Let the good ones succeed and the bad ones die quickly.
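
To see why running several pilots at once helps, here is a rough sketch that treats each pilot as an independent coin flip with a 50% chance of reaching production. Independence is a strong assumption (pilots inside one company share budgets, data, and leadership), so read the output as intuition, not a forecast.

```python
from math import comb


def prob_at_least(k: int, n: int, p: float = 0.5) -> float:
    """Probability that at least k of n independent pilots reach production."""
    return sum(comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(k, n + 1))


for n in (1, 2, 4, 6):
    print(
        f"{n} pilots: expect {n * 0.5:.1f} in production, "
        f"P(at least one) = {prob_at_least(1, n):.3f}"
    )
```

Under these assumptions a single pilot is a coin flip, while four pilots give about a 94% chance that at least one makes it to production.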

Stay Vendor Agnostic

OpenAI owns 60% of enterprise workloads right now, but that will change. Build systems that can swap models. Use APIs, not lock-in. Don't build your entire stack on one provider's proprietary features.
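
In code, "able to swap models" usually means business logic depends on a small interface of your own, with one thin adapter per provider behind it. The sketch below uses stand-in classes and no real vendor SDK; `ChatModel`, `EchoModel`, and `summarize_ticket` are hypothetical names for illustration.

```python
from typing import Protocol


class ChatModel(Protocol):
    """The only surface the rest of the codebase is allowed to depend on."""

    def complete(self, prompt: str) -> str: ...


class EchoModel:
    """Stand-in provider for illustration; a real adapter would wrap a vendor SDK."""

    def __init__(self, name: str) -> None:
        self.name = name

    def complete(self, prompt: str) -> str:
        return f"[{self.name}] {prompt}"


def summarize_ticket(model: ChatModel, ticket_text: str) -> str:
    """Business logic written against the interface, not against a vendor."""
    return model.complete(f"Summarize this support ticket: {ticket_text}")


# Swapping providers is a one-line change at the call site.
for provider in (EchoModel("provider-a"), EchoModel("provider-b")):
    print(summarize_ticket(provider, "Customer was double-billed in March."))
```

The design choice is that prompts and workflows live in functions like `summarize_ticket`, so replacing a provider means writing one new adapter instead of touching every workflow.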

Pilot success rates may vary significantly across sectors, with financial services potentially achieving higher success than retail. Estimated data.

The Competitive Landscape: Who's Winning

Wing Ventures' data shows clear patterns about which AI platforms and use cases are winning in enterprises.

OpenAI: Dominant but Not Inevitable

60%+ market share is huge. But it's built on momentum and ease-of-use, not technical inevitability. Anthropic's Claude is gaining. Google's Gemini is improving. But inertia is powerful. Expect OpenAI dominance to persist through at least 2026, then face real pressure.

Call Center AI Vendors: The Real Winners

While everyone talks about foundation models, the actual business value is concentrating in specialized AI vendors building for specific use cases. Amazon Connect, Genesys, and others building AI-first contact center platforms are seeing adoption rates that dwarf generic AI tool vendors.

The lesson: vertical AI tools are winning over horizontal AI tools in enterprise adoption.

Integration Platforms: Dark Horse Winners

Companies like Zapier and Make that make it easy to connect AI services to existing business systems are quietly becoming critical infrastructure. They're not sexy, but they enable every other AI deployment.

For enterprises looking to quickly integrate AI into existing workflows, automation platforms like Runable are providing AI-powered solutions for presentations, documents, reports, images, and videos starting at $9/month. These tools make AI accessible without requiring deep technical implementation.

What's Missing From the Report (And What It Means)

Wing Ventures surveyed 180+ executives, but their report has some notable gaps. Understanding what's not in the data is as important as what is.

Sector Breakdown Missing

We don't know if 50% pilot success is uniform across industries or if financial services sees 70% success while retail sees 30%. That would be really useful data. The absence suggests either that the report didn't capture it, or that sector differences aren't as big as expected.

Company Size Impact Understated

The report mentions the gap between small and large enterprises is closing, but doesn't quantify it. We don't know if a 500-person company has different pilot success rates than a 50,000-person company. That would be insightful.

Failure Mode Analysis Absent

Of the 50% of pilots that don't make it to production, what goes wrong? Technology issues? Organizational barriers? Budget constraints? ROI fell short? Understanding failure modes would be valuable for other enterprises planning pilots.

Longer-Term Outcomes Unknown

The report captures a moment in time (early 2025). We don't know which of these pilots are still running 18 months later, which ones expanded, which ones failed after initial success.

Cost Data Not Provided

How much does a typical pilot cost? How much does a production deployment cost? What's the payback period? We get ROI percentages but not absolute numbers.

The absence of this data doesn't invalidate the report. It just means it's a snapshot, not a comprehensive encyclopedia. Any enterprise using this data should expect to do additional research specific to their situation.

The 2025-2026 Outlook: Where This Is Heading

Based on Wing Ventures' data and broader industry trends, here's what I expect:

More Pilots, Higher Success Rates

As knowledge of what works spreads, success rates will tick up from 50%. By 2026, I expect 60%+ of AI pilots to make it to production as organizations learn from each other's mistakes.

Consolidation Around High-ROI Use Cases

Enterprises will stop exploring random AI applications and focus on proven categories: call center automation, document processing, forecasting. The explore phase ends; the optimize phase begins.

OpenAI Market Share Plateaus

60%+ is dominant, but defending it is harder than building it. By 2026, I expect OpenAI to hold 50-55% as Anthropic, Google, and Meta take market share. The story becomes "OpenAI is still leading but losing ground."

Headcount Impact Becomes Real

70-75% of enterprises believe AI will reduce headcount. By mid-2026, some of those beliefs become reality. We'll start seeing workforce reduction announcements citing AI, which will either accelerate or decelerate AI adoption depending on how the transitions are handled.

Workflow Integration Becomes the Bottleneck

As AI models mature, success will depend less on model quality and more on how well AI integrates into existing business workflows. Companies that excel at workflow redesign will outperform those betting on technology alone.

Lessons for Your Organization

If you're planning enterprise AI adoption, here are the lessons from Wing Ventures' data applied to your situation:

1. Pilot Success Is Achievable, But Intentional

50% success isn't an accident or a ceiling. It's what happens when organizations approach AI with clear business problems, realistic expectations, and discipline around scope and metrics.

2. ROI Is Real, But Timing Matters

53% reporting positive ROI suggests AI pays for itself in many cases. But the timeline matters. Plan for 6-12 months to positive ROI for high-volume process automation, and 18-24 months for more complex use cases.

3. Call Center AI Is the Safe Bet

If your organization has a contact center, that's your pilot target. The playbook is established, ROI is clear, and success will fund the next phase of AI adoption.

4. Organizational Change Is 50% of the Work

The technology is mostly solved. Your competitive advantage comes from how well you change workflows, train staff, and measure success.

5. Start Now, Even If Imperfectly

The enterprises winning with AI today started 12-18 months ago. If you wait for perfect clarity on the ROI and the best solution, you'll miss 2025 and be behind in 2026.

FAQ

What does the Wing Ventures report actually measure?

Wing Ventures surveyed 180+ C-level executives (CEOs, CFOs, CTOs, etc.) about their enterprise AI adoption. The report captures adoption rates, success metrics, model preferences, and beliefs about future impact. It's a snapshot of what large enterprises are doing with AI in early 2025, not a scientific study of optimal outcomes.

Why is 50% pilot success considered positive?

50% success is positive because historical AI adoption (pre-2022) saw much lower success rates. Most machine learning pilots 5-10 years ago either failed or never reached production. 50% reflects maturation of both the technology and enterprise understanding of how to deploy it effectively.

How is "positive ROI" measured in this report?

The report doesn't specify exact measurement methodologies, but enterprise ROI for AI typically compares cost savings from automation (labor hours eliminated, error reduction, etc.) against implementation and ongoing operational costs. At 53% reporting positive ROI, enterprises are seeing real financial benefits, though not universally.
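To make that comparison concrete, here's a minimal sketch of the usual ROI arithmetic. This is not the report's methodology (the report doesn't publish one). The function name `simple_roi` and every dollar figure below are hypothetical placeholders, so swap in your own numbers.

```python
def simple_roi(annual_savings: float,
               implementation_cost: float,
               annual_operating_cost: float,
               years: float = 1.0) -> float:
    """Return ROI as a fraction: (total savings - total cost) / total cost.

    All inputs are illustrative assumptions, not figures from the report.
    """
    total_savings = annual_savings * years
    # One-time build cost plus recurring run cost over the same period.
    total_cost = implementation_cost + annual_operating_cost * years
    if total_cost <= 0:
        raise ValueError("total cost must be positive")
    return (total_savings - total_cost) / total_cost


if __name__ == "__main__":
    # Hypothetical pilot: $500k/year saved, $200k to build, $100k/year to run.
    for horizon in (1, 2):
        roi = simple_roi(500_000, 200_000, 100_000, years=horizon)
        print(f"{horizon}-year ROI: {roi:.0%}")
```

Notice how the horizon changes the answer: the one-time implementation cost gets spread over more savings as the years add up, which is one reason the same project can look marginal in year one and strong in year two.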

Why is OpenAI dominating with 60%+ market share if Anthropic's Claude is competitive?

OpenAI's dominance reflects first-mover advantage, ease of use with ChatGPT, and integration inertia. Enterprises already built on OpenAI's API are unlikely to switch unless there's a compelling reason. This dominance is likely to gradually erode as Anthropic, Google, and others improve, but switching costs remain high.

Is the 70-75% headcount reduction belief realistic?

Partially. Enterprises will reduce headcount in high-volume, routine roles like basic customer service and data entry. But AI will also create new roles like AI trainers, quality monitors, and workflow designers. The net employment impact depends on industry and how well companies manage workforce transition. It's neither apocalyptic nor negligible.

What should my organization do with this data?

Use it as benchmarking data, not gospel. If 50% of pilots succeed, you should expect similar odds. If 53% see positive ROI, that's achievable for your organization if you target high-impact use cases and execute disciplined pilots. Focus on proving ROI in one domain (like call center AI) before expanding.

Which AI use case should we start with if we don't have a call center?

If you don't have a call center, start with your highest-volume, most repetitive process that has clear metrics. Document processing, data entry, routine customer inquiries (via email), or expense processing are all viable. The key is finding a process where AI can handle 25-40% of volume and the ROI math works.
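Here's one rough way to check whether "the ROI math works" for a candidate process. It's a back-of-the-envelope sketch, assuming you know your monthly volume and per-item costs. The function `payback_months` and all the inputs are hypothetical, and only the 25-40% range comes from the answer above.

```python
from typing import Optional


def payback_months(monthly_volume: float,
                   share_handled_by_ai: float,
                   manual_cost_per_item: float,
                   ai_cost_per_item: float,
                   upfront_cost: float) -> Optional[float]:
    """Months until cumulative net savings cover the upfront cost.

    Returns None when AI handling saves nothing per month.
    Every input is an assumption you supply; none comes from the report.
    """
    if not 0.0 <= share_handled_by_ai <= 1.0:
        raise ValueError("share_handled_by_ai must be between 0 and 1")
    items_handled = monthly_volume * share_handled_by_ai
    monthly_net_savings = items_handled * (manual_cost_per_item - ai_cost_per_item)
    if monthly_net_savings <= 0:
        return None
    return upfront_cost / monthly_net_savings


if __name__ == "__main__":
    # Hypothetical process: 20,000 items/month, $4.00 manual vs $0.50 with AI,
    # $150,000 upfront. Compare the low and high ends of the 25-40% range.
    for share in (0.25, 0.40):
        months = payback_months(20_000, share, 4.00, 0.50, 150_000)
        print(f"AI handles {share:.0%}: payback in about {months:.1f} months")
```

If the payback lands well past the timelines you're comfortable with, that's a signal to pick a higher-volume process or a cheaper pilot before committing.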

How much should we budget for an AI pilot?

This varies by scope, but a typical enterprise AI pilot costs

What's the biggest reason AI projects stall after a successful pilot?

Organizational change resistance and insufficient investment in workflow redesign. The technology works, but staff don't know how to work with it, managers don't have incentives to use it, and integration into existing processes is incomplete. Planning for production deployment is as important as building the pilot.

Should we expect AI headcount reduction at my organization?

If your organization uses high-volume, routine processes, expect some headcount reduction over 24-36 months. But reduction usually means attrition rather than layoffs, if you plan for it. Train people into higher-value roles. Offer buyouts or severance to those who can't or won't transition. Companies managing this proactively avoid both retention problems and PR crises.

The Bottom Line: Enterprise AI Adoption Is Normalizing

Wing Ventures' report is valuable not because it contains shocking revelations, but because it quantifies what smart enterprises have figured out: AI works when you point it at the right problems.

50% of pilots succeed. That's not a ceiling; that's a baseline we'll improve on. 53% see positive ROI. That's not a lottery; that's a repeatable business case. 70-75% believe in headcount reduction. That's not fear-mongering; that's realistic planning.

The enterprises winning with AI today are the ones who:

- Started with specific, expensive business problems

- Measured success rigorously

- Invested heavily in organizational change

- Ran multiple pilots to learn quickly

- Accepted that not all pilots would succeed

That's boring. It's not "AI reveals the future." It's "Disciplined execution with AI as a tool."

But boring is often what wins in business.

If you're planning your enterprise AI adoption in 2025, don't get caught up in the hype. Use Wing Ventures' data as a benchmark. Ask: Can we move at least half of our pilots into production? Can we show positive ROI on two or more use cases? Do we have a plan for the headcount changes that 70-75% of enterprises expect?

If the answer to those questions is yes, you're on a solid path. If it's no, dig into why before you spend real money.

The enterprises that think about AI adoption this way are the ones you'll read about in 2026, quietly crushing their competitors with AI. The ones chasing hype? They'll be writing blog posts about their failed AI transformation and asking what went wrong.

Don't be the second type.


Key Takeaways

- 50% of enterprise AI pilots successfully move to production, contradicting earlier narratives of widespread failure

- 53% of enterprises report positive ROI on 2+ AI use cases, demonstrating real business value despite implementation challenges

- Call center automation dominates as the #1 AI use case for large enterprises, driven by clear ROI and established implementation playbooks

- OpenAI maintains 60%+ market share of enterprise workloads, reflecting first-mover advantage and integration inertia despite competitive alternatives

- 70-75% of enterprises believe AI will enable workforce reduction, suggesting confidence in automation's impact but raising important transition planning requirements

- Organizational change management and workflow redesign are equally important to technology implementation for pilot success

- ROI becomes achievable when enterprises focus on specific high-impact problems rather than broad AI transformation initiatives

