Building Your Own AI VP of Marketing: The Real Truth [2025]

We didn't want to build it. That's the honest starting point here.

When you run a SaaS company at scale, the math is usually simple: buying beats building. The opportunity cost of engineering time is too high. The maintenance burden kills you. The vendor you'd compete against has ten thousand customers you don't, meaning their product is ten thousand times more battle-tested than anything you could ship.

But sometimes the market forces your hand.

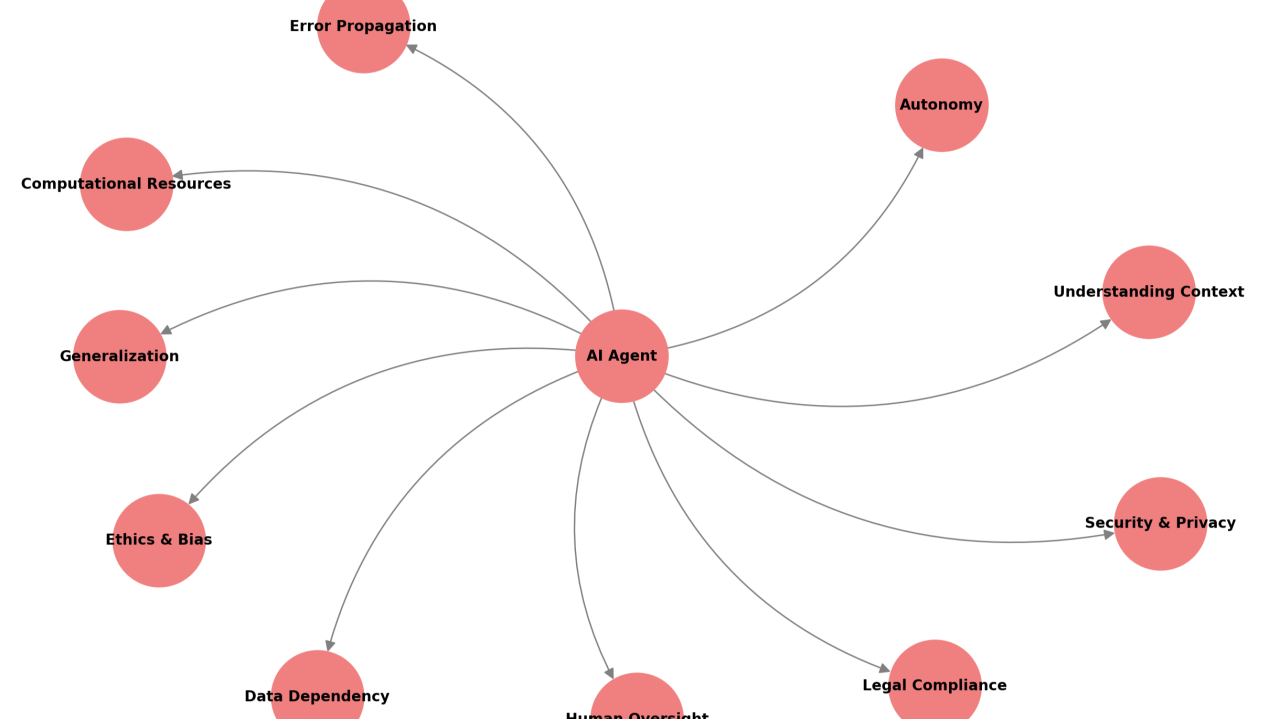

After evaluating every AI marketing agent solution available, we realized something uncomfortable: they all do the same thing. They write content. Blog posts, social captions, email copy, maybe some SEO optimization. And that's genuinely useful for some companies. But when you already have five thousand pieces of content, twenty million words, and a growing archive that's actually a liability instead of an asset, you hit a wall. Content generation isn't your bottleneck. Strategic orchestration is.

We couldn't find a tool that does what we actually needed. So we built one. And after running it in production for months, we've learned something that most of the AI hype completely misses: building AI agents is easy. Maintaining them is brutal. They require roughly as much management attention as human employees. But when they work, they work in ways that no generic tool ever could.

This is what building and running your own AI VP of Marketing actually looks like when you strip away the startup vibes and focus on the numbers.

TL;DR

- We wanted to buy but couldn't find a solution that does marketing orchestration instead of just content generation

- Most AI marketing agents are one-trick ponies that write content; they can't strategize, plan, or adapt to real-time data

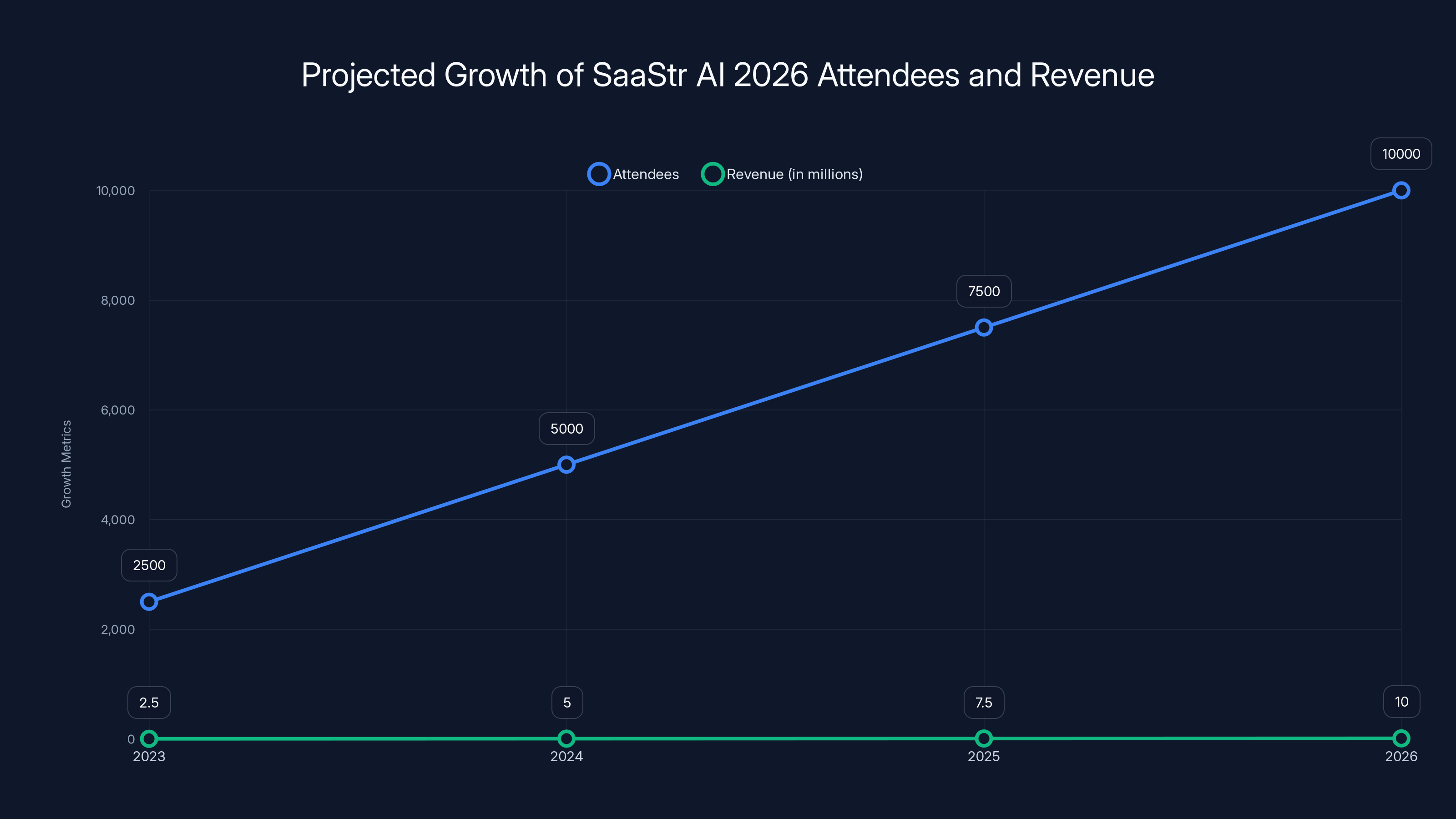

- We built our own agent called "10K" specifically focused on driving 10,000 SaaStr AI 2026 attendees and $10M in revenue

- It uses Claude Opus for deep analysis, real-time data synthesis, and daily plan adjustment instead of static quarterly planning

- Maintenance burden is real: our AI infrastructure requires 30% of an engineer's time every day to keep it running, monitored, and improving

- AI agents don't replace humans; they require different management work (prompt tuning, hallucination catching, output review) that still demands significant attention

- The real value comes from orchestration and pattern recognition, not content generation or task automation

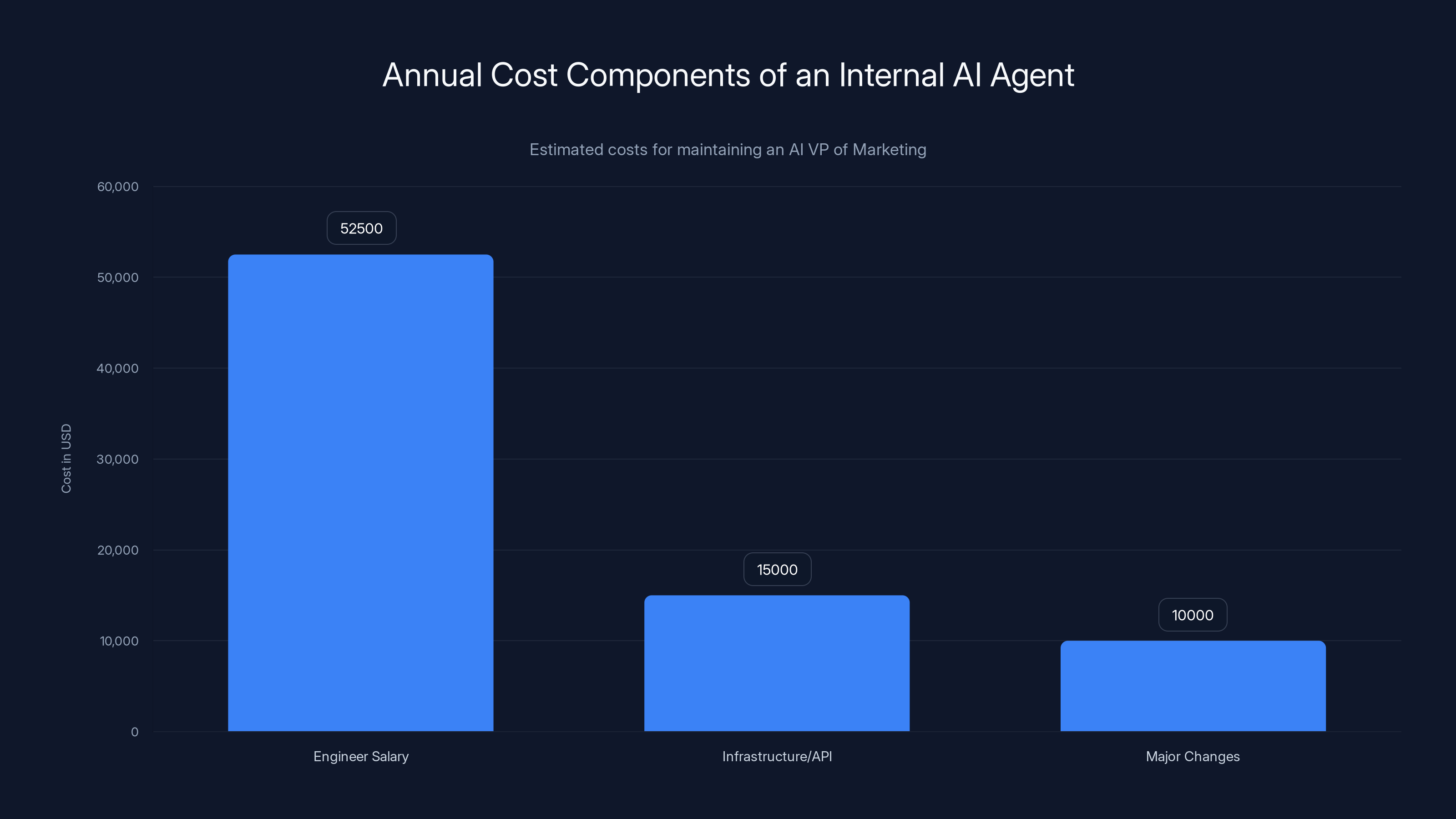

The major cost components for maintaining an internal AI agent include engineer salary, infrastructure/API costs, and major changes, totaling approximately $77,500 annually. Estimated data.

Why We Usually Buy, Not Build: The 90/10 Rule

Let's start with the meta-lesson that few companies get right: when you should build your own tools and when you should buy them.

At SaaStr, we've invested heavily in both sides of this equation. We're running twenty-plus AI agents in production. We've put over five hundred thousand dollars into AI infrastructure this year alone. We're the number one performing customer across multiple AI vendor platforms, which means we're not amateur hour with this stuff.

But here's the thing we've learned that the venture capital community seems to miss: the 90/10 rule is real.

Ninety percent of the time, you cannot build something better than what thousands of customers are already paying for. The collective intelligence of ten thousand paying customers, all providing feedback, all hitting edge cases, all demanding improvements, creates a product flywheel that's nearly impossible to compete with internally. Your five engineers are not smarter than five hundred engineers distributed across a thousand companies, all pressure-testing the same product every single day.

We use Artisan for outbound prospecting. Our response rates run between five and seven percent, and we've got over a million dollars in pipeline from AI-driven outbound. That's not because we're special. It's because Artisan has ten thousand Arthurs deployed, each one learning from interactions, each one getting feedback, each one making that system incrementally better every single day. We could never replicate that internally.

We use Qualified for inbound lead handling. Over one million dollars in closed revenue in three months. Seventy percent of our closed deals in October came directly from an AI agent handling the conversation. Again, that's not magic. It's the compounded intelligence of Qualified's entire customer base.

We use Gamma for presentations, Momentum and Attention for Rev Ops, Opus Pro for video production. In every case, we looked at the build versus buy decision and came to the same conclusion: we cannot compete with the vendor's entire customer base. The opportunity cost is absurd. The maintenance burden would be astronomical.

So we built a simple rule: only build custom tools when you literally cannot buy a solution AND the problem is P1 or P2 priority. Everything else, you buy.

We've built some custom tools that cleared this bar. Our speaker review system eliminated one hundred eighty thousand dollars in annual agency costs. Our valuation calculator has been used over three hundred thousand times. Our pitch deck reviewer has helped over one thousand founders. But in each case, we couldn't buy what we needed, and the problem was genuinely urgent.

Marketing orchestration became that problem.

The AI VP of Marketing is projected to help achieve 10,000 attendees and $10 million in revenue by 2026. Estimated data based on strategic objectives.

The Problem With Every AI Marketing Agent We Evaluated

Let me be specific about what we were looking for because this matters.

We evaluated the major players in the AI marketing agent space. Every single one we demoed had the same fundamental limitation: they're content generation engines with marketing labels on them. They produce blog posts. They optimize email copy. They write social media captions. Some of them have basic scheduling. A few of them integrate with multiple channels. But not a single one could do what we actually needed.

And here's what we actually needed: we needed something that understands our strategic goals for the next eighteen months, analyzes four years of historical performance data across every campaign we've ever run, looks at real-time conversion data, and then tells us specifically what to do today, tomorrow, next week, and next month to hit those goals. Not in general terms. Not quarterly themes. Specific, executable daily tasks that cascade into a coherent strategy.

We needed something that adapts. When a campaign underperforms, it recalibrates. When new pipeline data comes in, it adjusts. When a sponsor relationship is heating up, it recommends the right outreach strategy for that specific sponsor based on historical patterns.

We needed something that synthesizes data from multiple sources in real-time. What sponsors should we be reaching out to this week based on pipeline data and historical conversion patterns? Which audience segments are most likely to register for SaaStr AI if we reach out with specific messaging? When should we launch a campaign versus when should we hold back?

We needed something that understands causality. We don't just need more blog posts. We need to know which blog post, published on which specific date, connected to which email campaign, targeting which audience segment, will actually drive registrations for our conference. We need to know the execution path from blog post to email to sponsor outreach to actual attendee.

Every AI marketing agent we evaluated was fundamentally incapable of this. They were great at the execution layer. They could write a blog post. But they couldn't tell you which blog post to write, why you should write it, when you should publish it, or how it connects to your broader strategic goals.

So we stopped looking.

The Architecture Behind Our AI VP of Marketing

We built something we call "10K." The name isn't fancy. It reflects our two core objectives: get us to ten thousand attendees for SaaStr AI 2026 in May, and help drive our first ten million dollars in revenue this year. Everything the agent does ladders back to one of those two goals.

Here's how it actually works under the hood, because this is where the real lessons live.

Deep Historical Analysis Layer

The first component runs monthly. We feed 10K our complete historical dataset: every campaign we've run in the past four years, every conversion rate, every email open rate, every click-through rate, every sponsor interaction pattern, every registration sequence, literally everything. We use Claude Opus to process this data and create a comprehensive analysis of what actually drives results for us.

This isn't just descriptive statistics. It's causal analysis. What campaigns worked? Which variables moved the needle? When did we spend money on things that didn't work? What patterns emerge when we look at successful campaigns versus failed ones? What audience segments convert best? What messaging resonates?

This analysis becomes our knowledge base.
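To make the starting point concrete, here's a minimal sketch of the kind of descriptive summary a monthly pass could compute before anything is handed to a model. It is illustrative only, not 10K's actual code: the `Campaign` record, its field names, and the sample numbers are all hypothetical, and it covers only pooled conversion rates, not the causal analysis described above.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Campaign:
    # Hypothetical record shape; the real dataset's fields are not described.
    channel: str
    segment: str
    recipients: int
    registrations: int


def conversion_by_group(campaigns):
    """Return {(channel, segment): registrations / recipients}, pooled per group."""
    totals = defaultdict(lambda: [0, 0])  # [recipients, registrations]
    for c in campaigns:
        totals[(c.channel, c.segment)][0] += c.recipients
        totals[(c.channel, c.segment)][1] += c.registrations
    return {
        key: regs / sent
        for key, (sent, regs) in totals.items()
        if sent > 0  # skip groups with no recipients to avoid dividing by zero
    }


def rank_groups(campaigns):
    """Sort groups from best to worst pooled conversion rate."""
    rates = conversion_by_group(campaigns)
    return sorted(rates.items(), key=lambda item: item[1], reverse=True)


# Made-up sample data, for illustration only.
history = [
    Campaign("email", "founders", 1000, 30),
    Campaign("email", "founders", 1000, 50),
    Campaign("linkedin", "finserv", 500, 35),
    Campaign("email", "finserv", 2000, 20),
]
ranking = rank_groups(history)
for (channel, segment), rate in ranking:
    print(f"{channel}/{segment}: {rate:.1%}")
```

Pooling recipients and registrations per group, rather than averaging per-campaign rates, keeps small campaigns from dominating the ranking.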

Dynamic Campaign Planning Engine

That analysis feeds into the second layer, which is an app we built on Replit. This is where strategy becomes executable. The app takes our strategic objectives, combines them with the historical analysis, and then does something that no off-the-shelf marketing tool we found does: it designs every single marketing action for the year, day by day.

Not quarterly initiatives. Not monthly themes. We're talking about: Monday, write blog post X targeting audience segment Y. Tuesday, send email campaign Z to subscribers who have shown interest in topic W. Wednesday, reach out to sponsors A and B with specific messaging that we know converts based on historical patterns. Thursday, publish social content on LinkedIn and Twitter about specific topics. Friday, re-engage lapsed registrants with testimonial-based messaging.

Every single day has an executable plan. That plan updates as we go.
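A day-by-day plan like this can be represented with a very small data structure. The sketch below is an assumption about shape, not the real Replit app: `PlannedAction`, `DailyPlan`, `build_week`, and every field name are hypothetical, and the start date is arbitrary.

```python
from dataclasses import dataclass, field
from datetime import date, timedelta


@dataclass
class PlannedAction:
    # Hypothetical fields; the real plan format is not described in the article.
    channel: str      # e.g. "blog", "email", "sponsor_outreach"
    description: str
    segment: str
    goal: str         # which top-level objective this ladders up to


@dataclass
class DailyPlan:
    day: date
    actions: list = field(default_factory=list)


def build_week(start, templates):
    """Create one DailyPlan per template, on consecutive days from `start`."""
    return [
        DailyPlan(day=start + timedelta(days=offset), actions=list(actions))
        for offset, actions in enumerate(templates)
    ]


week = build_week(
    date(2025, 1, 6),  # a Monday, chosen arbitrarily
    [
        [PlannedAction("blog", "Write post X", "segment Y", "attendees")],
        [PlannedAction("email", "Send campaign Z", "interested in topic W", "attendees")],
        [PlannedAction("sponsor_outreach", "Contact sponsors A and B", "sponsors", "revenue")],
    ],
)
for plan in week:
    print(plan.day.strftime("%A"), [a.description for a in plan.actions])
```

Tagging each action with the goal it serves is what lets a later step check that every day's work still ladders back to one of the two objectives.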

Real-Time Adaptation Layer

Here's where it gets interesting. The plan isn't static. Every day, new data comes in. Campaign performance data, registration data, email engagement data, sponsor interaction data, all of it flows into Claude Opus for re-analysis.

If a campaign is underperforming—say, our email about early bird pricing isn't getting the open rate we predicted—the system recalibrates. It might recommend shifting budget to a different messaging angle or reaching out to a different audience segment. It might suggest pulling back on that campaign entirely and doubling down on something that's outperforming.

This isn't happening quarterly or monthly. This is daily recalibration based on real-time signals.
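The simplest version of daily recalibration is a comparison of predicted against observed metrics. The sketch below uses a plain threshold rule as a stand-in for the model-driven re-analysis described above; the function name, the decision labels, and the 20% tolerance are all assumptions made for illustration.

```python
def recalibrate(predicted, observed, tolerance=0.2):
    """Classify each campaign by how far its observed metric is from prediction.

    `predicted` and `observed` map campaign name -> rate (e.g. open rate).
    `tolerance` is the relative gap treated as noise; 0.2 is an arbitrary choice.
    """
    decisions = {}
    for name, expected in predicted.items():
        actual = observed.get(name)
        if actual is None or expected <= 0:
            decisions[name] = "hold"  # no data yet, or nothing to compare against
            continue
        gap = (actual - expected) / expected
        if gap < -tolerance:
            decisions[name] = "pull_back"
        elif gap > tolerance:
            decisions[name] = "double_down"
        else:
            decisions[name] = "keep"
    return decisions


# Made-up rates, for illustration only.
decisions = recalibrate(
    predicted={"early_bird_email": 0.30, "speaker_announcement": 0.25, "sponsor_promo": 0.20},
    observed={"early_bird_email": 0.18, "speaker_announcement": 0.35},
)
print(decisions)
```

A real system would want more than one day of data before pulling back, but the shape is the same: a prediction, an observation, and a rule for what counts as a meaningful miss.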

Predictive Intelligence Integration

The system also pulls data through the APIs of all our vendor tools. Salesforce for pipeline data, email platforms for engagement metrics, registration systems for sign-up trends, everything. It synthesizes this into predictive intelligence.

Which sponsors should we be talking to right now? Which audience segments are most likely to register for the conference in the next two weeks? What's the optimal time to reach out to lapsed leads? What messaging angle will resonate with each segment?

The system answers these questions daily based on real-time data and historical patterns.

Automation Where Possible

To the extent the system can actually execute on its recommendations, it does. It's not just planning; it's execution. Not everything. Some things still require human judgment or creativity. But a surprising amount of marketing execution is automatable when you have a clear plan and real-time data feeding into it.

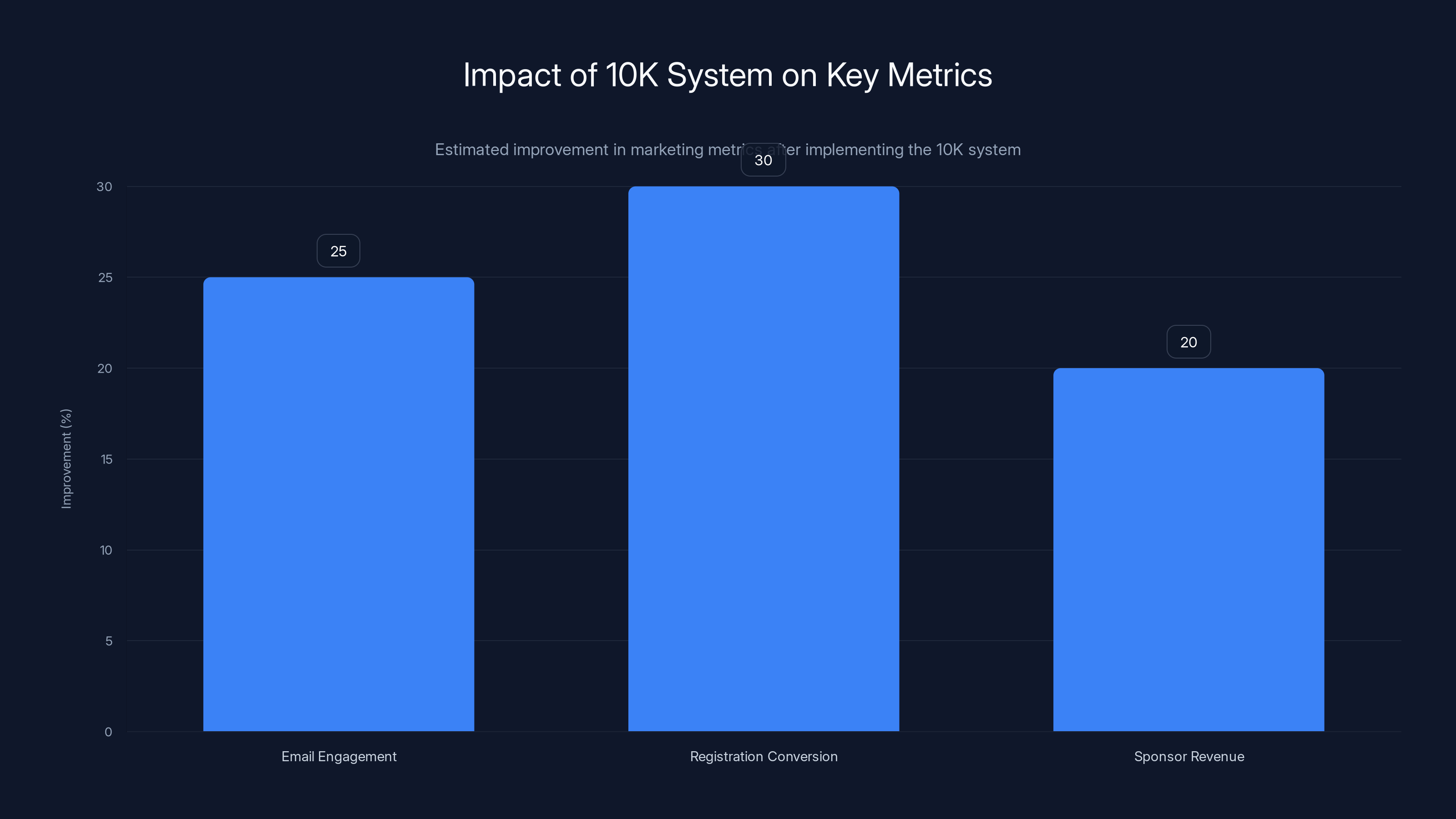

The 10K system has led to estimated improvements in email engagement by 25%, registration conversion by 30%, and sponsor revenue by 20%. Estimated data based on system performance insights.

What 10K Actually Produces: The Daily Output

So what does a day in the life of this system look like?

Every morning, our marketing leadership gets a briefing: today's recommended actions, context on why those actions make sense given current performance data and strategic goals, predicted outcomes for each action, and the relevant sponsor relationship, customer segment, and pipeline data.

Something like: "Based on current registration trends, attendees from the financial services industry are 40% more likely to register if contacted via LinkedIn with company-specific use case content. We have 1,200 financial services professionals in our database who haven't registered yet. We recommend launching a three-email sequence starting today targeting this segment with case studies and ROI data." The system can then actually launch that sequence.

Or: "Sponsor X has shown increased engagement over the past 72 hours—three website visits to sponsor resources, two email opens of sponsor-related content. Historically, this engagement pattern precedes a purchasing decision by 5-7 days. We recommend having the account manager reach out today with a specific offer." The system can flag this for human follow-up.

Or: "Blog post on X topic in your database has experienced a 200% increase in organic traffic over the past week, correlating with a trending topic in your industry. We recommend creating follow-up content on related subtopics and scheduling email outreach to segments who engaged with the original post." The system can draft that content and get it queued for review.

The output isn't magic. It's not replacing human judgment. But it's providing a strategic framework that's grounded in data, updated constantly, and executable daily instead of quarterly.
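The sponsor briefing above boils down to a windowed engagement rule. Here's a minimal sketch of that kind of check, assuming events arrive as `(timestamp, kind)` tuples. The event names and the function itself are hypothetical, and the thresholds simply mirror the example briefing rather than any real rule.

```python
from datetime import datetime, timedelta


def should_flag_sponsor(events, now, window_hours=72, min_visits=3, min_opens=2):
    """Return True if recent engagement meets the (assumed) thresholds.

    `events` is a list of (timestamp, kind) tuples, where kind is
    "site_visit" or "email_open". Thresholds mirror the example briefing
    but are assumptions, not the system's real rule.
    """
    cutoff = now - timedelta(hours=window_hours)
    recent = [kind for ts, kind in events if cutoff <= ts <= now]
    return (
        recent.count("site_visit") >= min_visits
        and recent.count("email_open") >= min_opens
    )


now = datetime(2025, 3, 10, 9, 0)  # arbitrary reference time
events = [
    (now - timedelta(hours=5), "site_visit"),
    (now - timedelta(hours=20), "site_visit"),
    (now - timedelta(hours=50), "site_visit"),
    (now - timedelta(hours=30), "email_open"),
    (now - timedelta(hours=60), "email_open"),
    (now - timedelta(hours=100), "site_visit"),  # outside the 72-hour window
]
flag = should_flag_sponsor(events, now)
print("Flag for account manager follow-up:", flag)
```

The output of a rule like this is a flag for a human, not an automated send, which matches how the sponsor example is handled.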

We've been running this system for several months, and the results are measurable. Our email engagement rates are up. Our registration conversion rates are up. Our sponsor revenue is tracking ahead of plan. Not because of AI magic, but because we're no longer making marketing decisions based on intuition and last quarter's planning spreadsheet. We're making decisions based on real-time data and historical patterns.

The Maintenance Reality Nobody Talks About

Here's where the venture capital narrative completely breaks down.

Building an AI agent is genuinely easy now. You can have something functional in a week. Training it, monitoring it, fixing it when it breaks, adapting it as your business changes, keeping it from hallucinating nonsense, reviewing outputs—that's the hard part. And it doesn't get easier. It gets more complex.

Our Chief AI Officer spends thirty percent of their time every single day maintaining and improving our AI agents. Not thirty percent of one project. Thirty percent of their entire job, every working day. That's roughly twelve hours per week, minimum.

What does that time look like?

First, there's prompt tuning. The system generates recommendations and sometimes those recommendations are wrong or miss context or don't account for something we care about. So we adjust the prompts that feed into Claude. We clarify what matters. We add constraints. We refine the logic. This is iterative and endless because business changes, market changes, and the system needs to evolve with it.

Second, there's hallucination detection and correction. Sometimes the system makes recommendations that seem plausible but aren't grounded in real data. It might recommend a campaign strategy that contradicts what we know about our audience. Or it might pull information from a data source that's outdated. We catch those and correct them, then adjust the system so it doesn't repeat the same mistake.

Third, there's output review. Not everything the system produces is good enough to ship without human eyes on it. We review recommendations, campaign plans, email copy, and content suggestions. We're not reviewing everything—we've created tiers where low-risk items go straight through and high-risk items get multiple reviewers—but this still takes time.

Fourth, there's integration maintenance. The system pulls data from multiple vendors. APIs change. Data formats change. We've had vendors deprecate endpoints we were using. Maintaining the plumbing that connects all these systems is not trivial.

Fifth, there's performance monitoring. We track: Do the system's recommendations actually lead to better results? Are there blind spots? Are there categories of decisions where the system consistently misses? We look at this monthly and feed the findings back into prompt adjustments.

Sixth, there's scaling. As we add new marketing channels, new data sources, new strategic goals, the system needs to evolve. We're not just maintaining something static; we're actively extending it.

This is not low-maintenance infrastructure. This is ongoing engineering work. And we're not unique in this—this is what every company that's running AI agents at scale is discovering right now.
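Some of the hallucination catching described above can be mechanical. The sketch below checks a recommendation's claimed numbers and data sources against a system of record before a human ever sees it. Every shape here is hypothetical: the recommendation dict, the source names, and the seven-day freshness limit are assumptions, and a check like this only catches the easy cases.

```python
from datetime import date


def grounding_issues(recommendation, segment_counts, source_updated, today, max_age_days=7):
    """List reasons a recommendation may not be grounded in current data.

    All argument shapes are hypothetical:
    - recommendation: dict with "segment", "claimed_count", and "sources"
    - segment_counts: segment name -> count from the system of record
    - source_updated: data source name -> date of last refresh
    """
    issues = []
    segment = recommendation["segment"]
    actual = segment_counts.get(segment)
    if actual is None:
        issues.append(f"unknown segment: {segment}")
    elif actual != recommendation["claimed_count"]:
        issues.append(
            f"count mismatch for {segment}: claimed "
            f"{recommendation['claimed_count']}, actual {actual}"
        )
    for source in recommendation["sources"]:
        refreshed = source_updated.get(source)
        if refreshed is None:
            issues.append(f"unknown source: {source}")
        elif (today - refreshed).days > max_age_days:
            issues.append(f"stale source: {source}")
    return issues


# Made-up values, for illustration only.
issues = grounding_issues(
    {"segment": "financial_services", "claimed_count": 1200, "sources": ["crm", "email_platform"]},
    segment_counts={"financial_services": 950},
    source_updated={"crm": date(2025, 3, 9), "email_platform": date(2025, 2, 1)},
    today=date(2025, 3, 10),
)
for issue in issues:
    print(issue)
```

Anything that comes back with a non-empty list goes to a reviewer; an empty list means only that the cheap checks passed, not that the recommendation is right.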

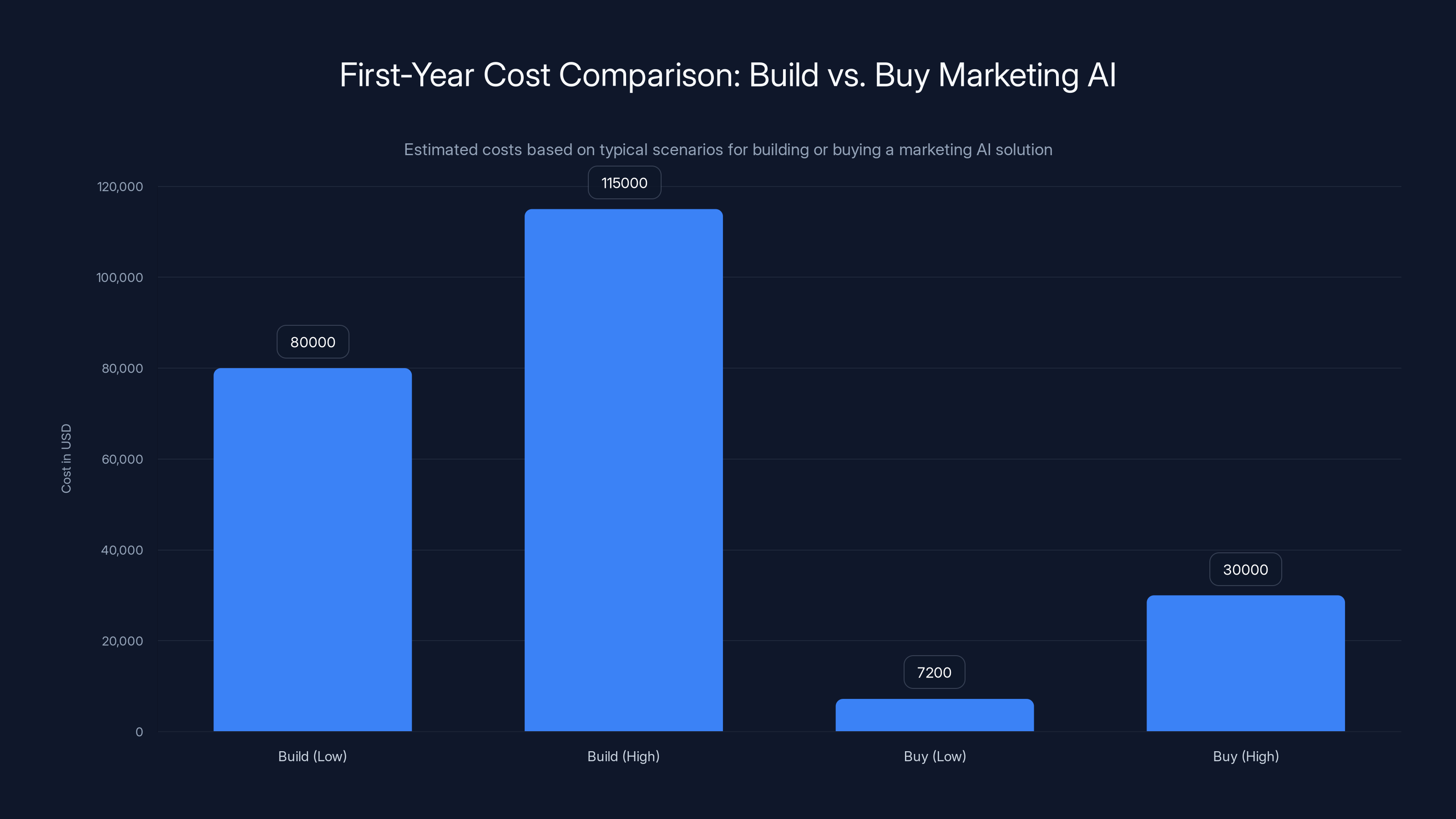

Building a marketing AI solution can cost significantly more in the first year than buying.

What 10K Can't Do (And Shouldn't Try)

Let me be equally clear about the limitations.

Our system is not creative. It can't come up with genuinely novel marketing ideas. It can recognize patterns and synthesize recommendations based on historical data, but true creative insight—"what if we did something nobody's ever done before"—requires human ingenuity. We still need our creative team for that.

It can't make high-stakes judgment calls. If we're deciding whether to acquire a company, restructure a marketing team, or enter a new market, that's not something we're delegating to an AI system. That requires human judgment, risk assessment, and accountability.

It can't replace relationship management. Some of our most valuable business relationships are based on personal connection and trust. A sponsor relationship that started as a business transaction has evolved into genuine partnership because two specific people have worked together for years. No AI system is going to replace that.

It can't handle unprecedented situations well. The system works well in steady state, when things are predictable and historical patterns apply. But when something genuinely novel happens (industry disruption, economic shock, unexpected competition), the system's recommendations might not be very good. It's seeing through a rearview mirror, and rearview mirrors don't predict the future well.

It can't be trusted without verification. We catch hallucinations regularly. The system will confidently recommend something that sounds plausible but is actually incorrect. We've built in review processes, but that means we still need human judgment in the loop.

It can't operate fully autonomously. We've tried letting it make decisions and execute on them fully without human approval, and every time we've done that, something weird has happened. Maybe the system interpreted a constraint differently than we intended. Maybe it optimized for the wrong variable. Maybe it got stuck in a local maximum instead of finding the global optimum. The more autonomy we give it, the more review we have to do after the fact.

So we've settled on a hybrid model: the system is a recommendation engine with execution privileges for low-risk activities. Everything significant goes through human review. This slows things down compared to full automation, but it also means we don't wake up to surprise decisions we regret.
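A hybrid model like this comes down to a routing decision per action. Here's a minimal sketch, assuming actions carry a type label. The tier names and the specific action types in each set are hypothetical, since the real risk classification isn't listed here.

```python
from enum import Enum


class Route(Enum):
    AUTO_EXECUTE = "auto_execute"
    SINGLE_REVIEW = "single_review"
    MULTI_REVIEW = "multi_review"


# Hypothetical risk classification; the article does not list the real tiers.
LOW_RISK = {"schedule_social_post", "draft_blog_outline"}
HIGH_RISK = {"sponsor_offer", "pricing_email", "budget_shift"}


def route(action_type):
    """Pick a review path; anything unclassified defaults to human review."""
    if action_type in HIGH_RISK:
        return Route.MULTI_REVIEW
    if action_type in LOW_RISK:
        return Route.AUTO_EXECUTE
    return Route.SINGLE_REVIEW


for action in ["schedule_social_post", "sponsor_offer", "new_channel_test"]:
    print(action, "->", route(action).value)
```

The important design choice is the default: an action type nobody has classified yet gets a human reviewer rather than execution privileges.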

AI Agents Require Similar Management to Humans (But Different Skills)

This is the most important lesson we've learned that contradicts the startup narrative.

Managing an AI agent is not fundamentally easier than managing a human employee. It's just different work.

With a human employee, you do regular one-on-ones, provide feedback, clarify expectations, catch mistakes, and iterate on performance. The cycle is weekly or monthly, and most of the work is conversational.

With an AI agent, you do prompt refinement, output review, hallucination detection, performance monitoring, and integration maintenance. The cycle is daily or weekly, and most of the work is technical and data-focused.

In both cases, you're spending roughly thirty percent of a senior person's time to manage one resource. In both cases, you need someone who understands your business deeply, can make judgment calls, and can hold the resource accountable to standards. In both cases, you're going to get bad outputs sometimes and you're going to need to catch and correct them.

The difference is that humans improve over time with feedback, while AI agents improve over time with prompt adjustment. Humans can improvise and adapt to unexpected situations, while AI agents get stuck when patterns don't match training data. Humans have judgment about what matters, while AI agents require you to encode that judgment explicitly.

This matters because the venture capital community is selling a story about AI agents that will reduce headcount and save money and operate autonomously. Some of that is true eventually. But in 2025, the reality is: AI agents are another thing your company has to manage carefully, with significant ongoing investment.

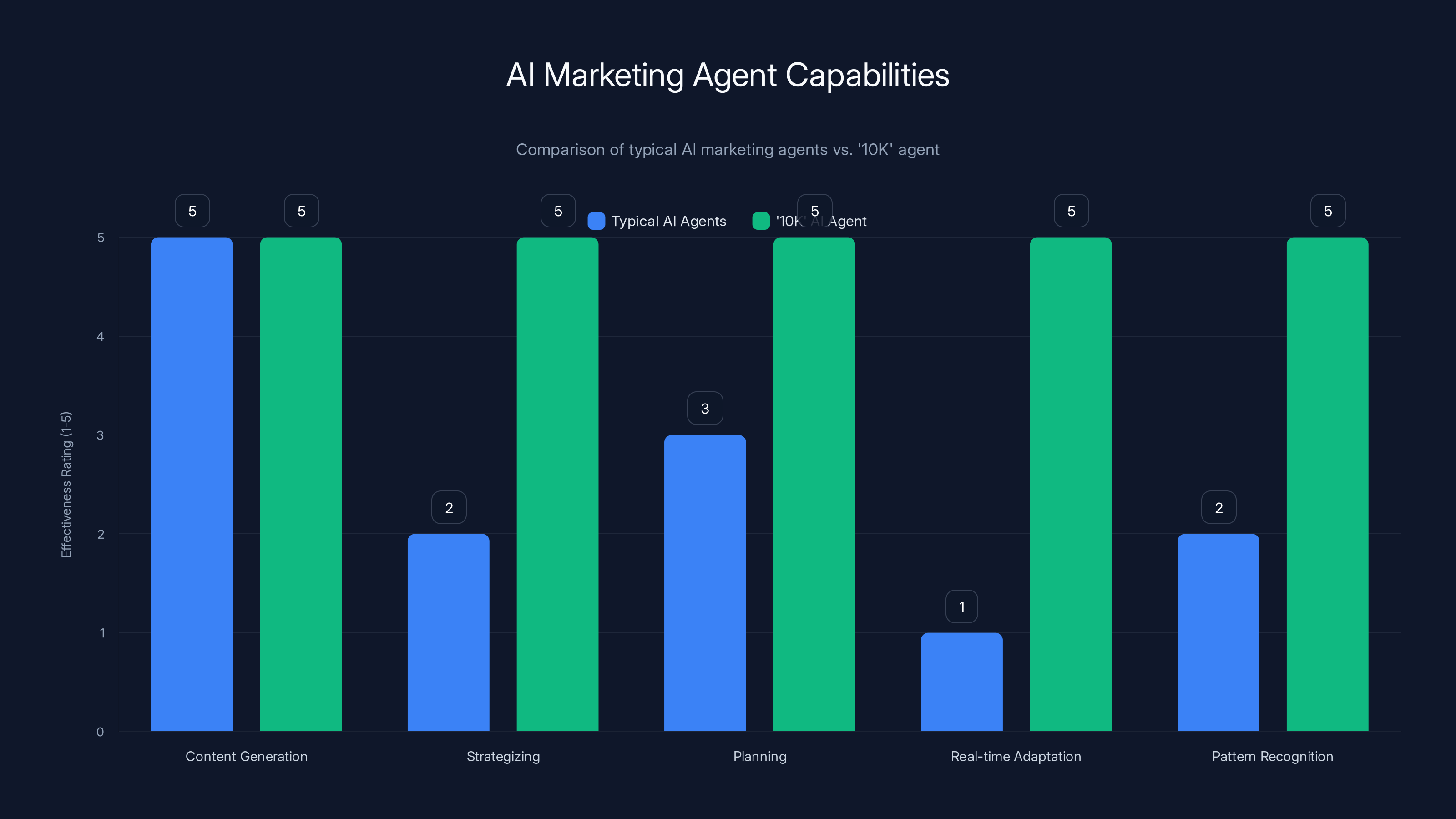

The '10K' AI agent excels in strategizing, planning, real-time adaptation, and pattern recognition compared to typical AI marketing agents, which often focus mainly on content generation. Estimated data.

The Economics of Build Versus Buy for Marketing AI

Let's talk about the actual financial decision tree here because the numbers matter.

Building an internal marketing AI agent costs:

- One senior engineer for 3-4 weeks to build the initial version: $35,000 in salary

- Ongoing maintenance at 30% of one engineer's time: $60,000 annually

- Infrastructure costs: Claude API calls, computational resources, data storage, monitoring: $20,000 annually

- Opportunity cost of that engineer not building other things: whatever the next best use of that person's time would be

Total first-year cost:

Buying a marketing AI agent solution costs:

- Zapier or Make.com with third-party apps: $500/month ($6,000 annually)

- Or an AI marketing agent platform: $2,000/month ($24,000 annually)

- Implementation time: one marketing person for 2-4 weeks

Total first-year cost:
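For a quick sanity check, the line items above can simply be added up. The sketch below sums only the figures that are actually listed. It leaves out opportunity cost and implementation time because no number is given for either, so treat the results as a floor, not a full comparison.

```python
# Figures as listed in the article; opportunity cost and implementation
# time are left out because the article gives no number for them.
build_costs = {
    "initial_build": 35_000,
    "maintenance": 60_000,
    "infrastructure": 20_000,
}
buy_options = {
    "automation_platform": 500 * 12,
    "ai_agent_platform": 2_000 * 12,
}

build_first_year = sum(build_costs.values())
build_recurring = build_costs["maintenance"] + build_costs["infrastructure"]

print(f"Build, first year (listed items only): ${build_first_year:,}")
print(f"Build, recurring per year: ${build_recurring:,}")
for name, annual in buy_options.items():
    print(f"Buy, {name}: ${annual:,} per year")
```

Swapping in your own salary, API, and subscription numbers is the point of keeping it this simple.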

On paper, buying looks cheaper. And it probably is cheaper for the first year or even the first three years. But there are factors that shift the calculation:

First, if no commercial solution actually does what you need, the cost of buying is infinite because you can't solve your problem at any price.

Second, if what you need is truly unique to your business model, a custom build might be the only option.

Third, if you're running multiple AI agents and you've got the infrastructure and expertise already built, the marginal cost of building another one goes way down.

Fourth, once you've built something, you own it. You can iterate on it as your needs change. With commercial software, you're at the mercy of the vendor's roadmap.

But fifth, and this is critical, maintaining something you built is more expensive than maintaining something you bought. You're responsible for bugs, security, API changes, everything.

So the real question isn't "should we build or buy?" It's "what problem do we have, what's the total cost to solve it either way, and what's the trade-off between customization and maintenance burden?"

For us, the answer was: build, because we couldn't find a commercial solution that did what we needed, and the problem was strategically important enough to justify the investment.

The Reality of Implementation: Three Months to MVP, Six to Production, Ongoing to Polish

Let's be concrete about the timeline because a lot of companies underestimate this.

Months 1-3: We built an MVP. Basic data analysis in Claude, simple planning logic, manual execution of recommendations. This was rough but functional. We got to a point where we had daily plans and could test the approach.

Months 4-6: We built out the real system. Better data integration, more sophisticated planning logic, partial automation, proper monitoring, integration with our other tools. This is when we moved from "experiment" to "production system."

Month 7+: We're still optimizing. Better data pipelines, more refined prompts, higher confidence in recommendations, more automation, better hallucination detection, integration with new data sources.

At each stage, the system was delivering value but also revealing gaps. The gaps couldn't be predicted upfront. So if you're planning this, expect the real timeline to be longer and the real cost to be higher than your initial estimates.


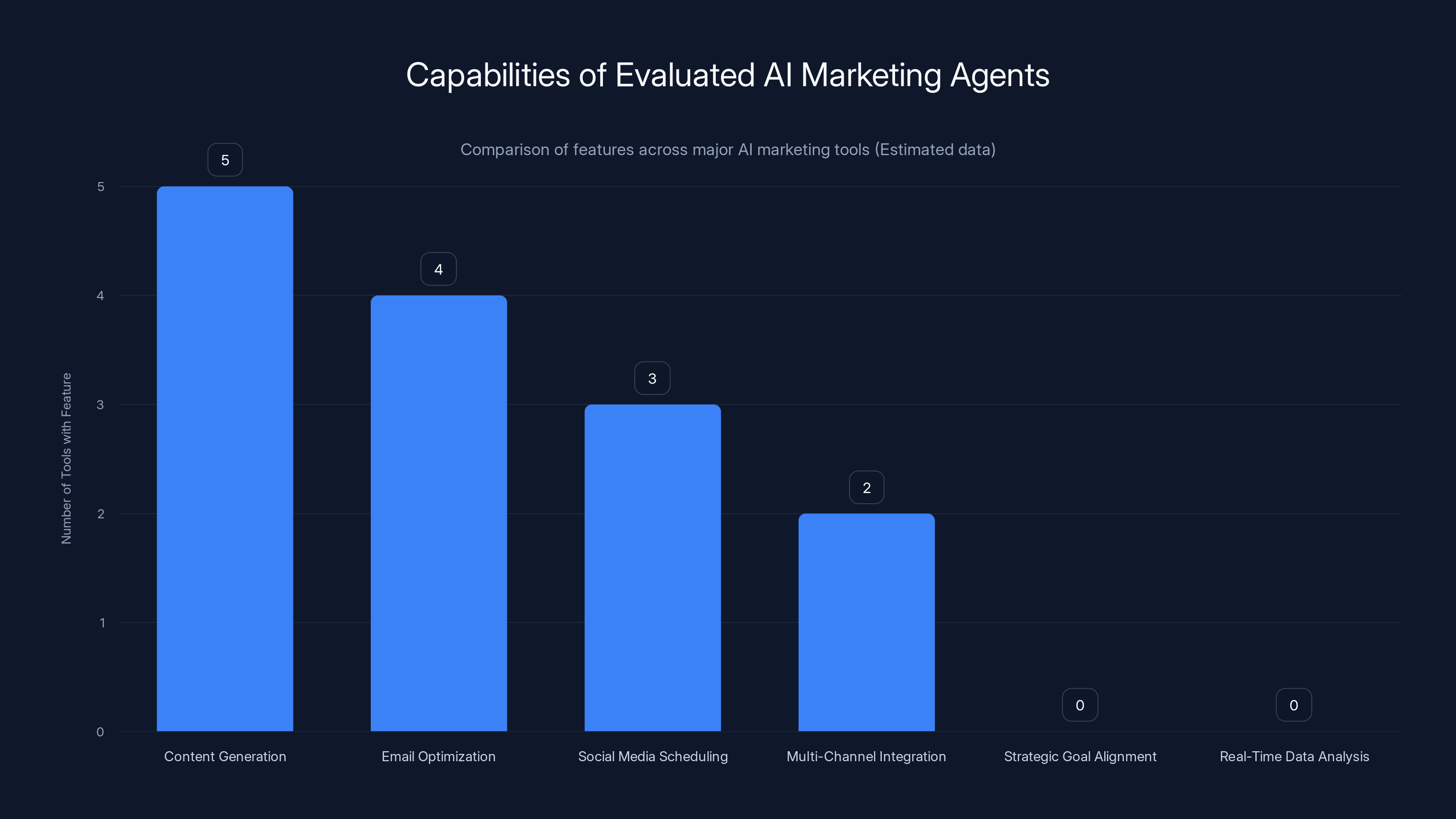

While most AI marketing agents excel in content generation and email optimization, none align with strategic goals or analyze real-time data effectively. Estimated data based on typical capabilities.

How This Fits Into a Broader AI Infrastructure Strategy

We're not running just one AI agent. We're running twenty-plus across our entire company. And the question we ask about each one is the same: what problem does this solve, what's the cost to build versus buy, and what's the ongoing maintenance burden?

For some problems, we buy. For others, we build. For most, we've actually started with buying and then built custom layers on top.

For example, we buy Artisan for outbound prospecting, but we built custom audience segmentation and messaging frameworks on top of it.

We buy Qualified for inbound, but we built custom qualification logic that feeds into our revenue operations systems.

We buy Gamma for presentations, but we built custom templates and styling that reflects our brand.

The pattern is: buy the core capability, build the custom layer that makes it work for your specific business.

This hybrid approach seems to be where the maturity curve is pointing. Full build is expensive and risky. Full buy is limiting if the vendor doesn't match your needs. Build plus buy, where you pick and choose commercial components and augment them with custom layers, seems to be the sweet spot.

What We'd Do Differently (Mistakes We Made)

Full transparency about what we'd change if we were building this again.

First, we'd invest more in data quality and pipelines upfront. The system is only as good as the data feeding it. We spent time in months 2-3 fixing data issues that we should have fixed before building the system. If you're going to do this, make your data infrastructure solid first.

Second, we'd spend more time defining clear success metrics upfront. What does success actually look like? We defined it (10K attendees, $10M revenue), but we should have built in more detailed sub-metrics that the system could optimize against. It would have given the AI clearer objectives.

Third, we'd involve our business stakeholders more in the design phase. We built something, showed it to marketing leadership, and then had to make adjustments because their mental model of how they want to work was different from how we designed the system. More collaboration upfront would have saved that iteration.

Fourth, we'd be less ambitious in the initial version. We tried to build something that did too much at first. If we'd started with a narrower scope—just event marketing instead of all marketing—we could have gotten to production value faster and expanded from there.

Fifth, we'd have a clearer escalation path for when the system gives recommendations that somebody disagrees with. What's the process? Who decides? That lack of clarity led to some conflicts that were dumb to have.

The Future: Where This Is All Heading

Right now, in 2025, AI agents are at the "useful but high maintenance" stage. This is similar to where data engineering was in 2015. Valuable, necessary, but requires specialists and significant ongoing work.

Over the next 2-3 years, I expect the tooling to improve significantly. Better out-of-the-box prompts. Better hallucination detection. Better self-monitoring systems that catch their own mistakes. Better integration between different agents so they can coordinate without human orchestration.

But I don't think we're heading toward "fully autonomous agents that don't need human oversight." I think we're heading toward "human-AI collaboration where the division of labor gets more sophisticated but never disappears."

Humans will focus on judgment, strategy, and creativity. AI will focus on analysis, execution, and optimization. The question will be how to structure organizations so that collaboration happens naturally instead of being forced.

For marketing specifically, I think we'll see:

- More sophisticated campaign orchestration where AI handles the day-to-day execution and humans handle the strategic direction

- Better real-time adaptation, where campaigns adjust based on performance data within hours instead of weeks

- More personalization at scale, where messaging gets tailored to individual segments or even individuals based on behavioral data

- Better measurement, where attribution becomes more sophisticated and AI can tell you more accurately which marketing actions actually drove which business outcomes

But I don't think we're heading toward "fire all the marketers and let the AI handle everything." That's not going to happen because strategy requires judgment, and judgment requires humans.

Should You Build Your Own Marketing AI Agent?

Here's the decision tree.

Do you have a specific marketing problem that no commercial solution addresses? If no, buy something off the shelf. If yes, continue.

Is that problem important enough to justify 3-4 weeks of engineering time and ongoing 30% maintenance burden? If no, accept the commercial solution and adapt your process around its limitations. If yes, continue.

Do you have the technical expertise in-house to build and maintain this system? If no, either hire that expertise or accept that you'll need to outsource it. If yes, continue.

Can you afford the infrastructure costs, the ongoing maintenance, and the opportunity cost of that engineering time? If no, rethink this. If yes, continue.

Are you prepared for this to be messier and more time-consuming than you expect? If no, don't do it. If yes, build it.

If you make it through that decision tree, then building might make sense for you. But most companies should stop at question two. They should buy something commercial and focus their engineering effort on core product.
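The tree is short enough to write down as code, which makes it easy to run against a real proposal. This is just a restatement of the five questions as a sketch; the `QUESTIONS` list and the `decide` function are illustrative names, and the outcome strings are abbreviated from the text.

```python
QUESTIONS = [
    # (question, outcome if the answer is "no")
    ("Do you have a problem no commercial solution addresses?", "Buy something off the shelf."),
    ("Is it worth the build time and ongoing 30% maintenance?", "Accept the commercial solution and adapt."),
    ("Do you have the in-house expertise to build and maintain it?", "Hire the expertise or outsource."),
    ("Can you afford infrastructure, maintenance, and opportunity cost?", "Rethink this."),
    ("Are you prepared for it to be messier than you expect?", "Don't do it."),
]


def decide(answers):
    """Walk the questions in order and stop at the first "no"."""
    if len(answers) != len(QUESTIONS):
        raise ValueError("one answer per question is required")
    for (_question, if_no), answer in zip(QUESTIONS, answers):
        if not answer:
            return if_no
    return "Build it."


print(decide([True, True, True, True, True]))
print(decide([True, False, True, True, True]))
```

Because evaluation stops at the first "no", the order of the questions matters: the cheapest exits come first.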

We got through the whole tree. The problem was unique enough. It was important enough to the business. We had the expertise. We could afford it. And we were prepared for it to be complicated.

So we built it. And it's working. But only because we understood what we were getting into.

The Real Reason AI Marketing Agents Aren't Solving This Problem Yet

Let's zoom out for a moment and talk about why the market hasn't solved this problem yet.

The reason is economic. Ninety-five percent of marketing organizations are SMB-sized. They need content generation. They need help writing copy. They need scheduling tools. The market that commercial AI marketing agents are serving is massive and standardized.

Building a marketing orchestration system is much harder. It requires understanding each customer's unique business model, their specific goals, their data structures, their existing tools and integrations. It's customized work. It's high-touch. The unit economics are terrible compared to selling content generation at scale.

So the vendor incentive is to build content generation tools and call them marketing agents. That's where the money is.

But there's a subset of companies—typically large SaaS companies or sophisticated brands—that have a different problem. They have enough content. They've got enough tools. What they need is orchestration. Strategic coherence. Real-time adaptation.

That subset is underserved by the market. And it's a significant opportunity for the next generation of marketing AI tools. But it's going to require a different approach. It's going to require some level of customization. It's going to require the vendor to understand your business deeply.

Or it's going to require you to build it yourself. Like we did.

Key Takeaways: The Practical Playbook

Let me distill everything into something actionable.

First, understand that AI agents are not magic. They're tools that combine pattern recognition, data analysis, and execution automation. They're useful but limited.

Second, before you build anything, really commit to finding a commercial solution. The opportunity cost of building is high.

Third, if you do build, start narrow. Don't try to build a system that solves all your marketing problems. Build something that solves one specific problem very well.

Fourth, plan for ongoing maintenance. If you think you're going to build something and then not touch it, you're going to be disappointed. Budget for 20-30% of an engineer's time per agent in perpetuity.

Fifth, make the data pipeline your first priority. A sophisticated system running on bad data is worse than a simple system running on good data.

Sixth, involve stakeholders in the design. Don't build in isolation and show it to people later. Get feedback early and often.

Seventh, measure everything. Define success metrics upfront and track them religiously. You need to know whether this thing is actually working.

Eighth, assume you'll find bugs and hallucinations. Build in a review process. Not everything the system produces is going to be correct.

Ninth, recognize that AI agents are supplements, not replacements. They're not going to eliminate the need for human marketing judgment. They're going to amplify good judgment and expose bad judgment.

Tenth, stay humble about what you've built. No matter how good your system is, there's a bigger, better version if you had infinite resources. Accept the limitations and work within them.

FAQ

What exactly does an AI VP of Marketing do that a regular AI tool doesn't?

An AI VP of Marketing goes beyond content generation to actually strategize. It analyzes historical performance data, understands your business goals, synthesizes real-time signals, and produces daily executable plans that connect different marketing activities into a coherent strategy. Regular AI tools typically just write content or schedule posts without understanding how those actions ladder up to business objectives.

Why couldn't you just buy an existing AI marketing solution off the shelf?

We evaluated every major AI marketing agent platform available and they all had the same limitation: they optimize for content generation or task automation, not strategic orchestration. Our problem wasn't "we need more blog posts" (we already have five thousand pieces of content). Our problem was "we need daily strategic decisions about which campaigns to run, when to run them, who to target, and how they connect to our business goals." No commercial platform we found could do that.

How long does it take to build something like this from scratch?

Our timeline was roughly three months to an MVP that we could test, six months to a production system we could rely on, and we're still optimizing beyond that. The initial build isn't actually that hard if you've got good engineers and clear requirements. The hard part is the ongoing maintenance, refinement, and adaptation as your business changes.

What's the annual cost of maintaining an internal AI agent like this?

Budget for: one engineer spending 20-30% of their time on maintenance and optimization (roughly

How do you prevent the AI from hallucinating or giving bad recommendations?

You build in multiple layers of review. The system doesn't have full autonomy to execute everything. Low-risk activities go through. High-risk decisions get human review. You actively monitor performance to catch when the system is consistently wrong about something. You refine the prompts based on mistakes you catch. And you maintain a mental model of where the system is likely to be wrong so you're alert to those failure modes.
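A minimal sketch of that kind of review gate, assuming each recommendation arrives already labeled with a risk level. The class names, category labels, and the `alert_after` threshold are all hypothetical, and how risk gets assigned in the first place is out of scope here.

```python
from collections import Counter
from dataclasses import dataclass
from enum import Enum


class Risk(Enum):
    LOW = "low"
    HIGH = "high"


@dataclass
class Recommendation:
    category: str      # hypothetical label, e.g. "email_send"
    description: str
    risk: Risk


class ReviewGate:
    """Route recommendations by risk and track where humans keep saying no."""

    def __init__(self, alert_after: int = 3):
        self.alert_after = alert_after   # assumed threshold, tune to taste
        self.rejections = Counter()

    def route(self, rec: Recommendation) -> str:
        # Low-risk activities go through; everything else waits for a human.
        return "auto_execute" if rec.risk is Risk.LOW else "human_review"

    def record_rejection(self, rec: Recommendation) -> None:
        # Call this whenever a reviewer overrides the system.
        self.rejections[rec.category] += 1

    def categories_to_investigate(self) -> list:
        # Categories rejected often enough that the prompts likely need work.
        return sorted(
            c for c, n in self.rejections.items() if n >= self.alert_after
        )
```

Counting rejections per category is one simple way to notice when the system is consistently wrong about something, which is the signal for refining prompts rather than fixing outputs one at a time.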

Is building an AI agent like this worth the investment versus just hiring a marketing manager?

That depends on your scale and your specific problem. For a small company, hire a marketing manager. For a large company trying to solve a specific orchestration problem that affects your entire marketing operation, building might make sense. But genuinely evaluate whether a commercial solution, even if it's not perfect, would be cheaper and easier than building.

How does your AI agent handle unexpected situations or market changes?

It doesn't handle them well, if we're being honest. The system works well when patterns are consistent and historical data predicts the future. When something genuinely novel happens—industry disruption, economic shock, unexpected competition—the system's recommendations might not be very good because it's seeing through a rearview mirror. That's when you need human strategic judgment to override or recalibrate the system.

Can we fully automate marketing execution with something like this?

Not really, not in 2025. Not safely. We've tried full automation and every time we've discovered that the system was optimizing for something we didn't intend or missing important context. A hybrid model where AI makes recommendations and humans execute significant activities is safer and more reliable than trying to run fully autonomous marketing.

What's your biggest regret in how you built this system?

Starting too ambitious. We tried to build something that solved all our marketing problems at once. If we could do it over, we'd start much narrower—just SaaStr AI 2026 event marketing—and then expand to other campaigns once we had that working well. Fewer moving pieces initially means faster iteration and getting to value faster.

How do you integrate data from multiple marketing systems into one AI agent?

We built APIs that connect to Salesforce, our email platform, registration systems, website analytics, and sponsor relationship data. We maintain data pipelines that keep this information current and accessible to Claude. That's actually one of the more complex technical challenges because different systems have different data formats and schemas. Don't underestimate the engineering work required to get good data flowing into your system.
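To make the schema problem concrete, here is a rough sketch of normalizing records from two sources into one shape before they reach the model. It is not our actual pipeline: the source names, field names, and timestamp formats below are invented for illustration, and real systems will differ.

```python
from datetime import datetime, timezone


def normalize_crm(record: dict) -> dict:
    # Assumed shape: CRM export with an ISO 8601 timestamp string.
    return {
        "email": record["Email"].strip().lower(),
        "source": "crm",
        "occurred_at": datetime.fromisoformat(record["CreatedDate"]),
    }


def normalize_registration(record: dict) -> dict:
    # Assumed shape: registration system with a Unix timestamp in seconds.
    return {
        "email": record["attendee_email"].strip().lower(),
        "source": "registration",
        "occurred_at": datetime.fromtimestamp(
            record["registered_ts"], tz=timezone.utc
        ),
    }


# One normalizer per upstream system; adding a source means adding an entry.
NORMALIZERS = {
    "crm": normalize_crm,
    "registration": normalize_registration,
}


def build_timeline(raw_records: list) -> list:
    """Convert (source, record) pairs to a common schema, oldest first."""
    events = [NORMALIZERS[source](record) for source, record in raw_records]
    return sorted(events, key=lambda e: e["occurred_at"])
```

Keeping one small normalizer per source means a schema change upstream breaks one function instead of the whole pipeline, and the model only ever sees a single consistent record format.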

Is this scalable to other departments beyond marketing?

Probably. We're exploring building similar systems for sales (orchestrating outbound and sponsor prospecting) and operations. The core architecture—deep analysis of historical data, dynamic planning, real-time adaptation, partial automation—applies to a lot of business problems. But each domain requires its own domain expertise and customization.

Building an AI VP of Marketing is not a shortcut. It's an investment. It's more sophisticated than just throwing content generation at your marketing problem. It requires commitment, expertise, and ongoing maintenance. But if you're a company that's already scaled to the point where your bottleneck is strategic orchestration and not content generation, and you've genuinely exhausted commercial solutions, it might be the right move. Just go in with your eyes open about what the real costs are, what the maintenance looks like, and what the limitations will be. The AI revolution in marketing isn't going to eliminate marketing leadership. It's going to amplify good marketing leadership and expose leadership that's actually just spinning wheels.
