The Memory Chip Shortage Nobody Saw Coming (But Should Have)

Remember when graphics cards disappeared from shelves? When you couldn't build a PC without dropping a small fortune on a GPU? That was 2021 and 2022, and most people thought it would never happen again.

They were wrong.

But this time it's not gamers getting squeezed out of the market. It's everyone else.

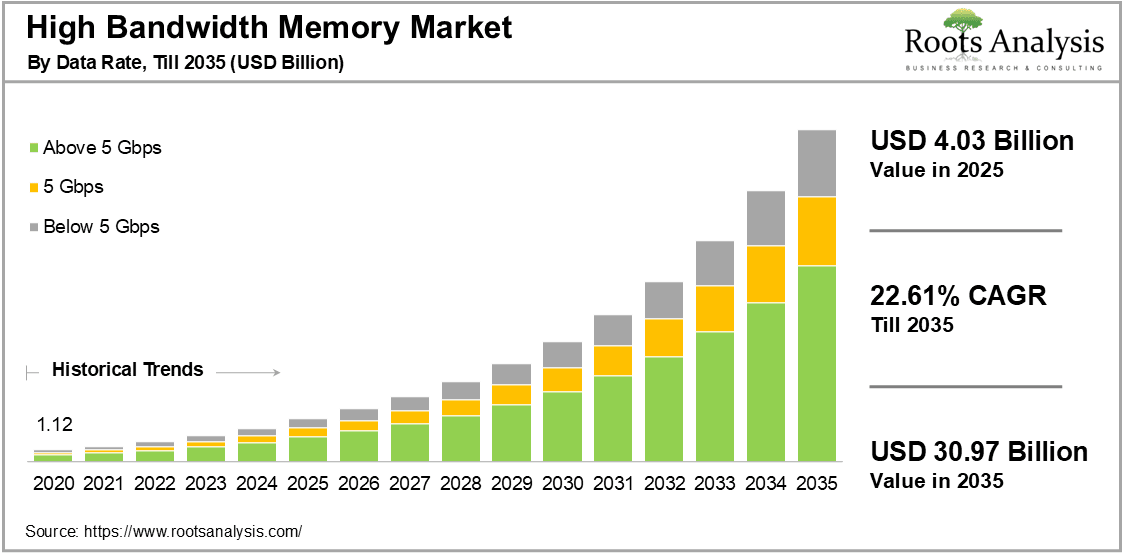

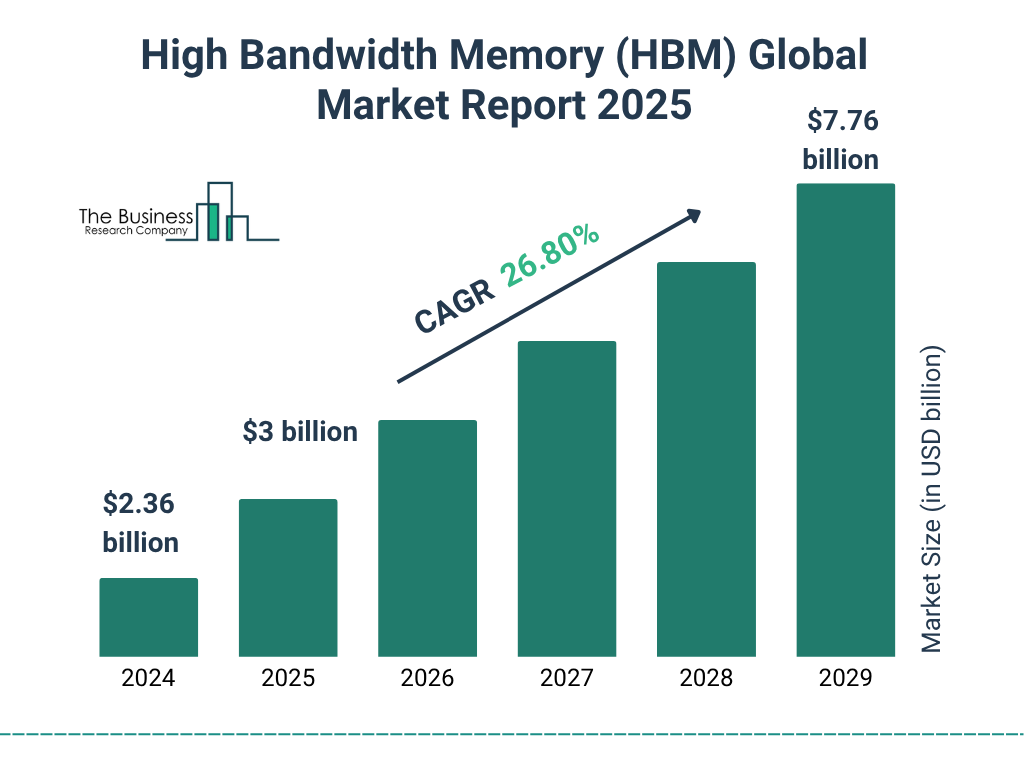

Right now, data centers are on an absolute rampage to vacuum up every premium memory chip they can find. We're talking about the highest-end stuff: HBM (high-bandwidth memory), DDR5, and GDDR6X. The kind of memory that powers AI servers, not your gaming rig or laptop.

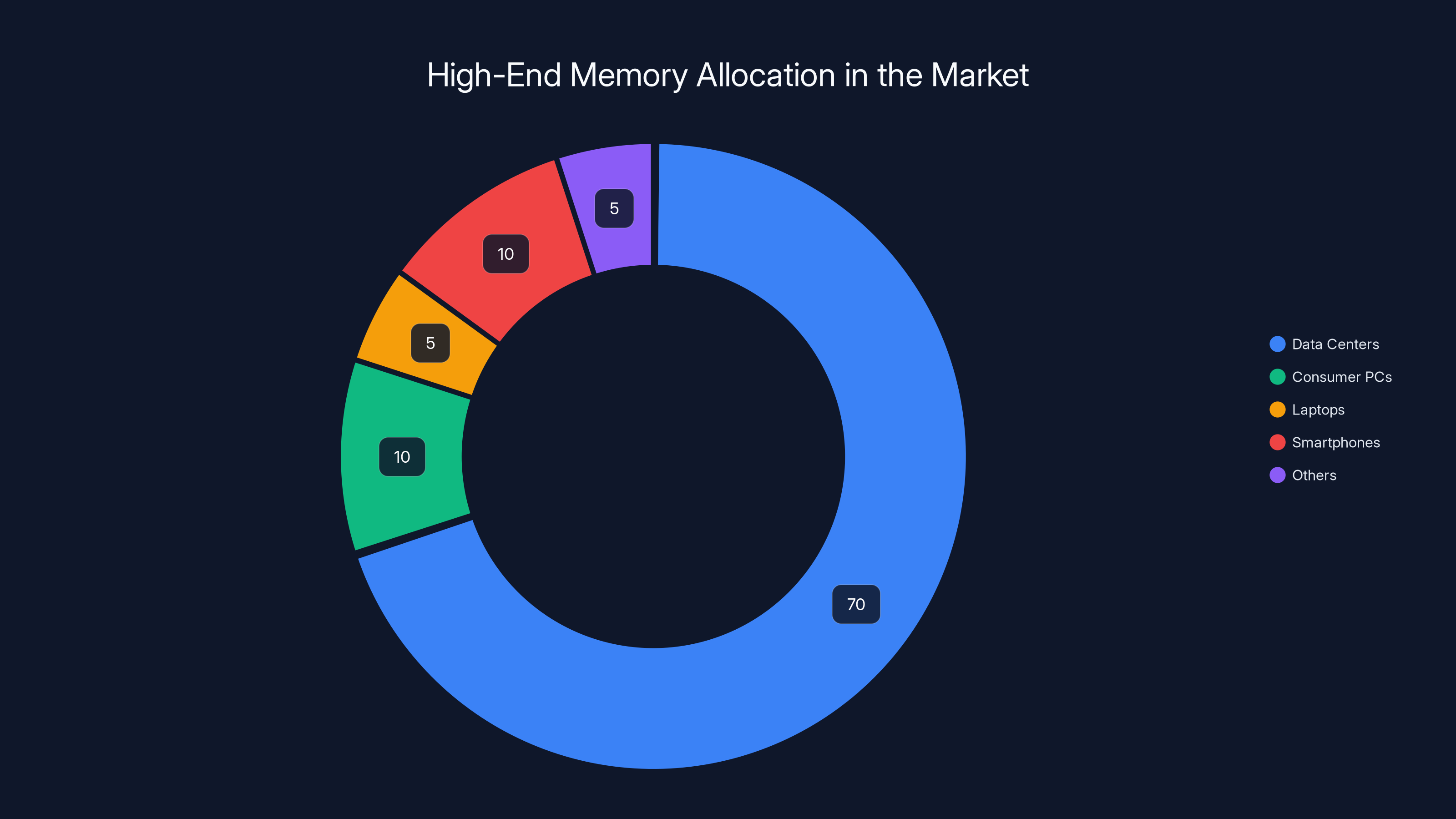

And here's where it gets wild: by 2026, data centers are expected to claim roughly 70% of all high-end memory chip production. That figure is a projection, not a guarantee, but the direction is not in doubt: AI demand is already reshaping the entire semiconductor landscape.

What does that mean for you? If you're shopping for a new PC, laptop, or gaming system in the next 18 months, you're about to get a masterclass in the economics of scarcity. Prices will climb. Availability will shrink. Options will narrow. The AI boom isn't just transforming technology. It's ransacking the supply chains that feed every other computing device on Earth.

This isn't hyperbole. Let's break down exactly what's happening, why it matters, and what you can actually do about it.

TL;DR

- Data centers will consume 70% of premium memory chips by 2026, leaving only 30% for consumer, mobile, and enterprise markets

- HBM (high-bandwidth memory) is the bottleneck, with AI companies competing fiercely for limited production capacity

- Memory prices are already climbing, with premium DDR5 and HBM becoming genuinely scarce commodities

- Consumer PC upgrades will stall as manufacturers prioritize enterprise and data center contracts

- The supply chain won't rebalance until 2027-2028 at the earliest, long after new AI server generations ship

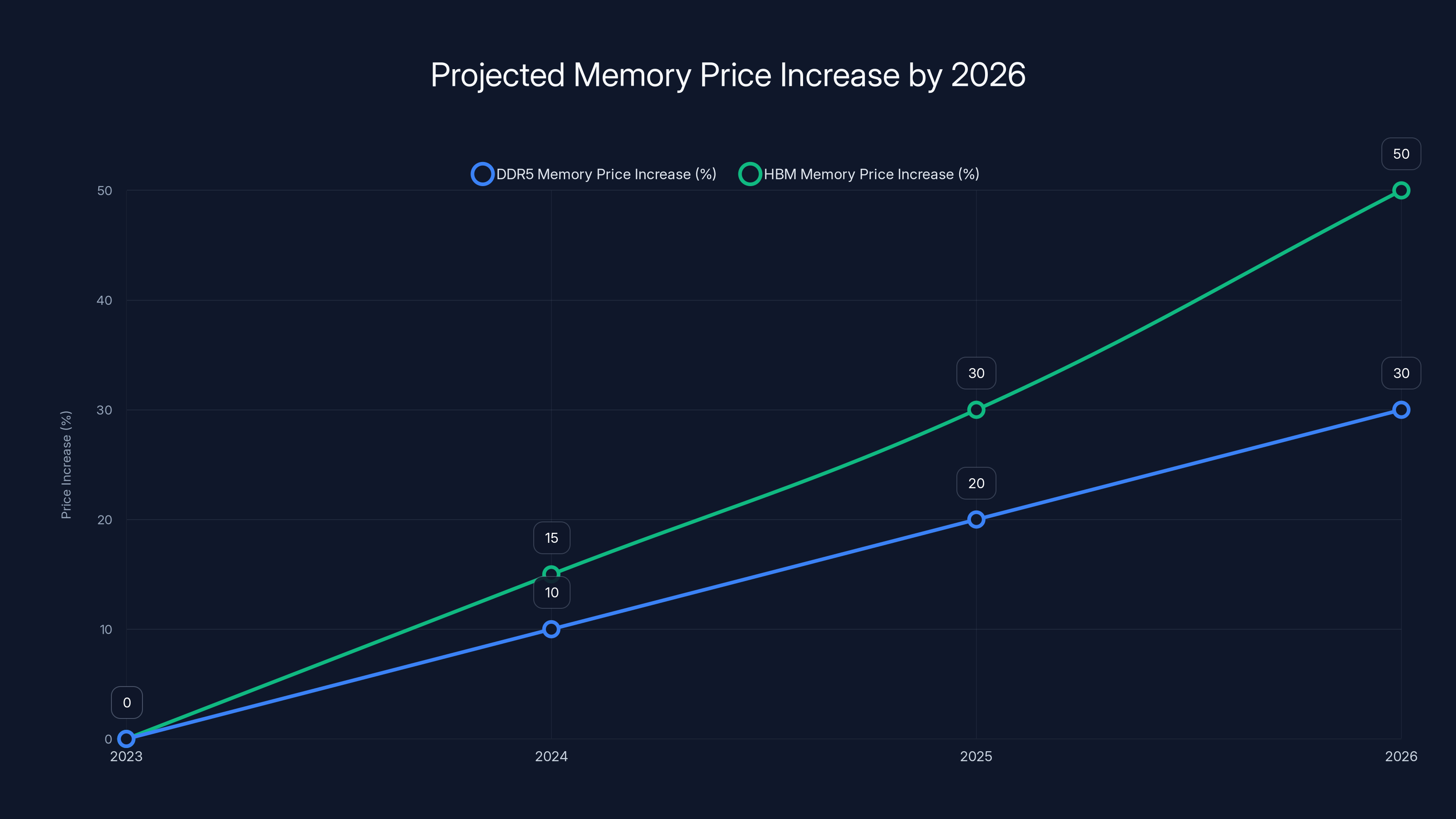

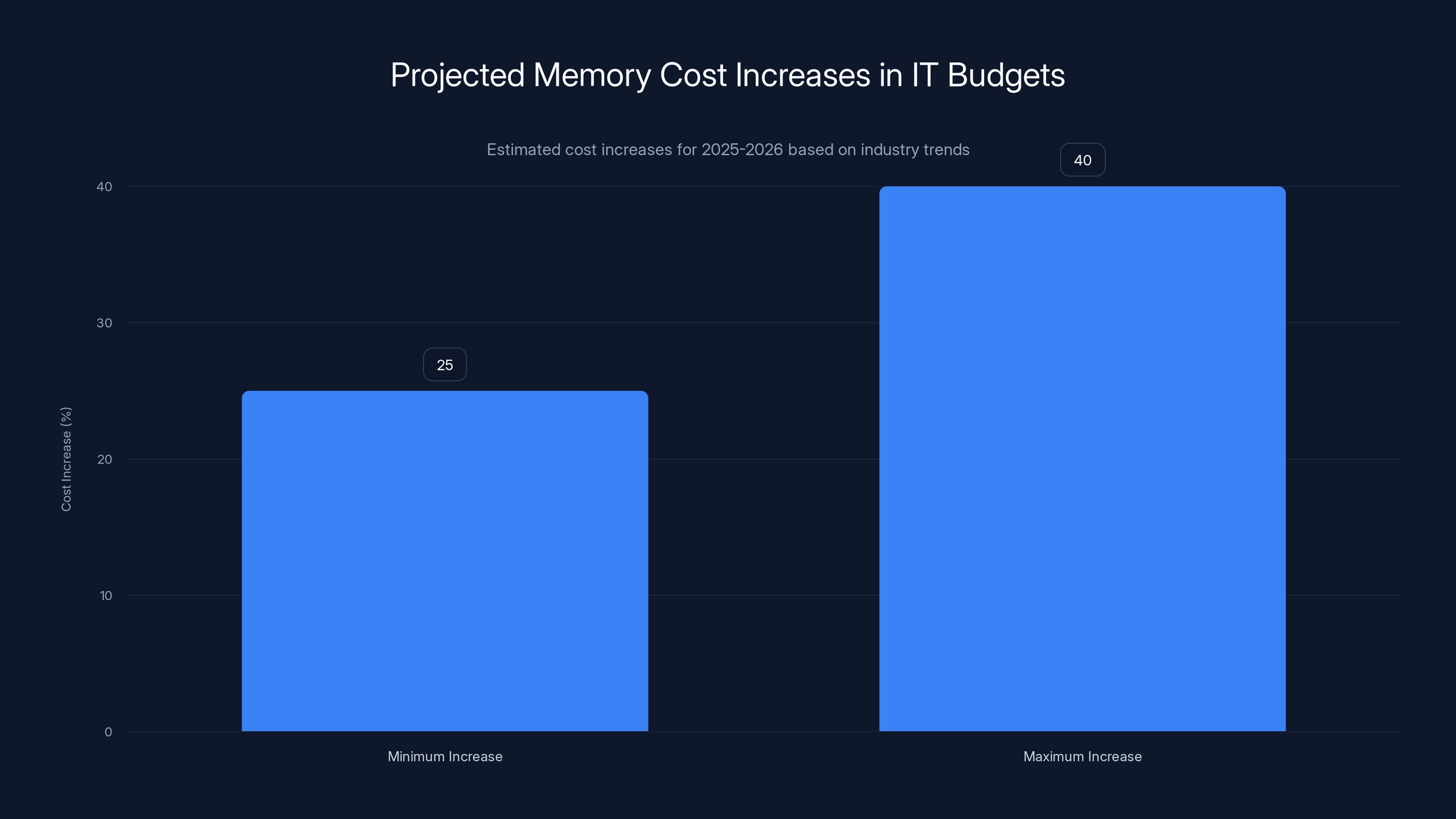

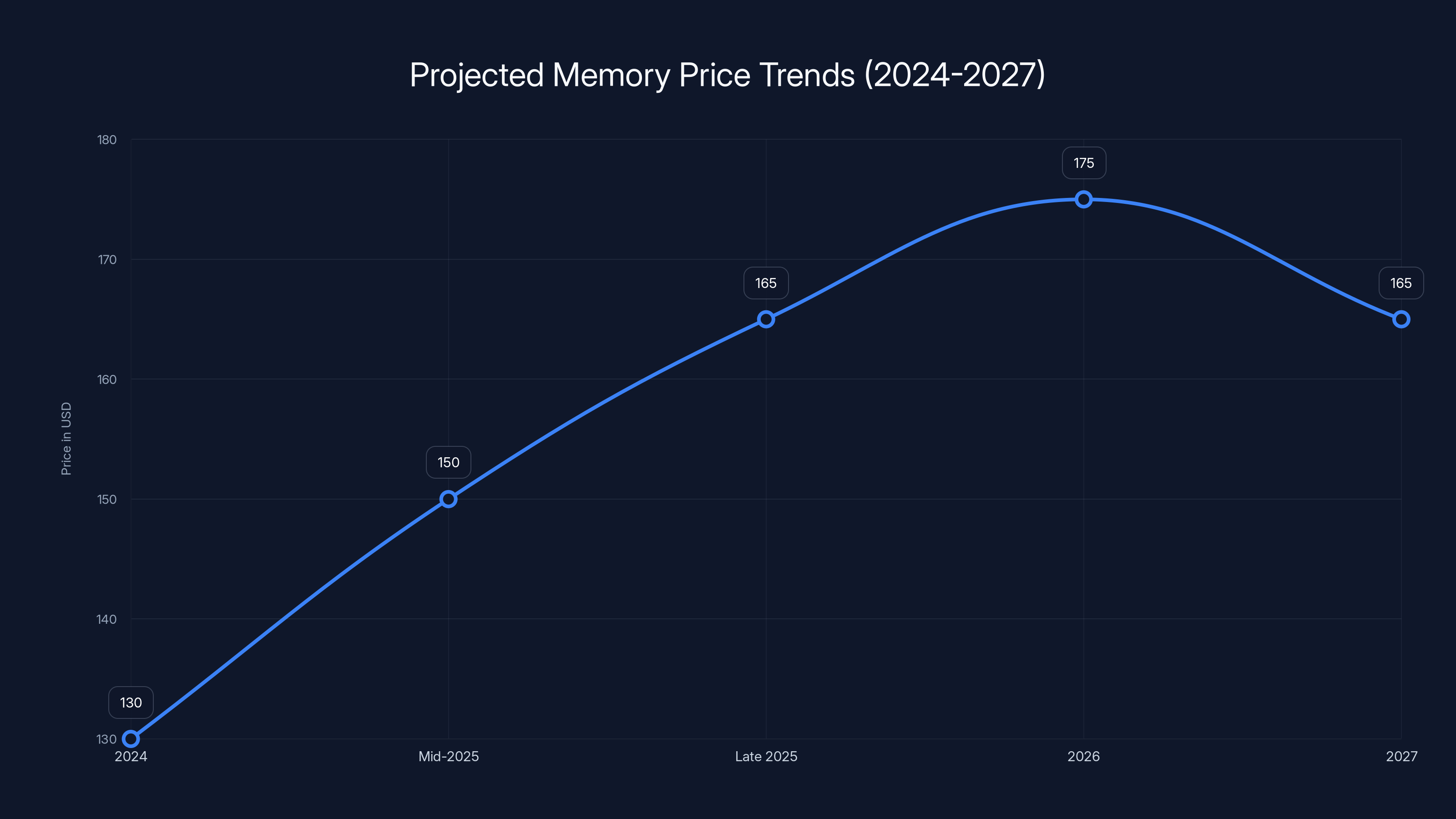

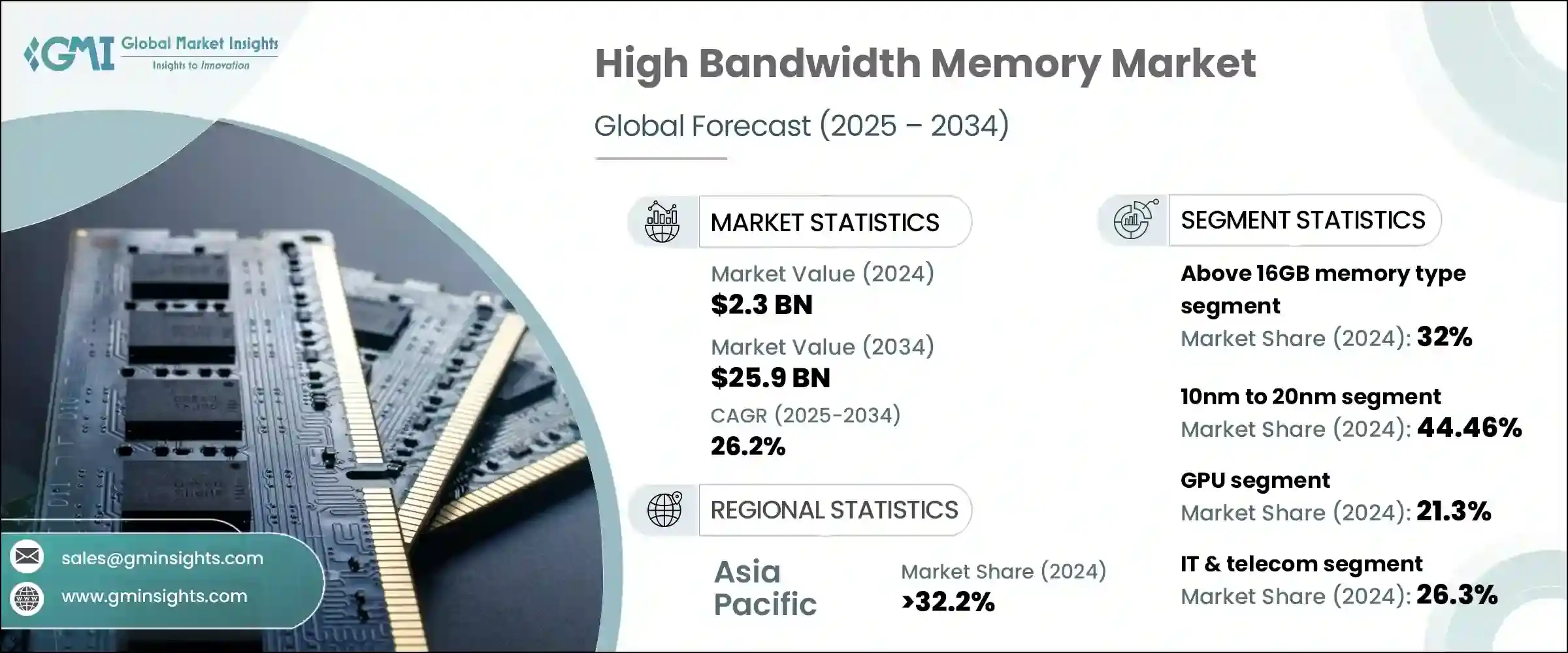

DDR5 memory prices are expected to increase by 25-40% by 2026, while HBM memory could see a 30-50% rise. Estimated data based on historical trends and fab constraints.

Why Data Centers Are Hoarding Memory Like It's Going Out of Style

Let's start with the obvious question: why are data centers even buying this much memory?

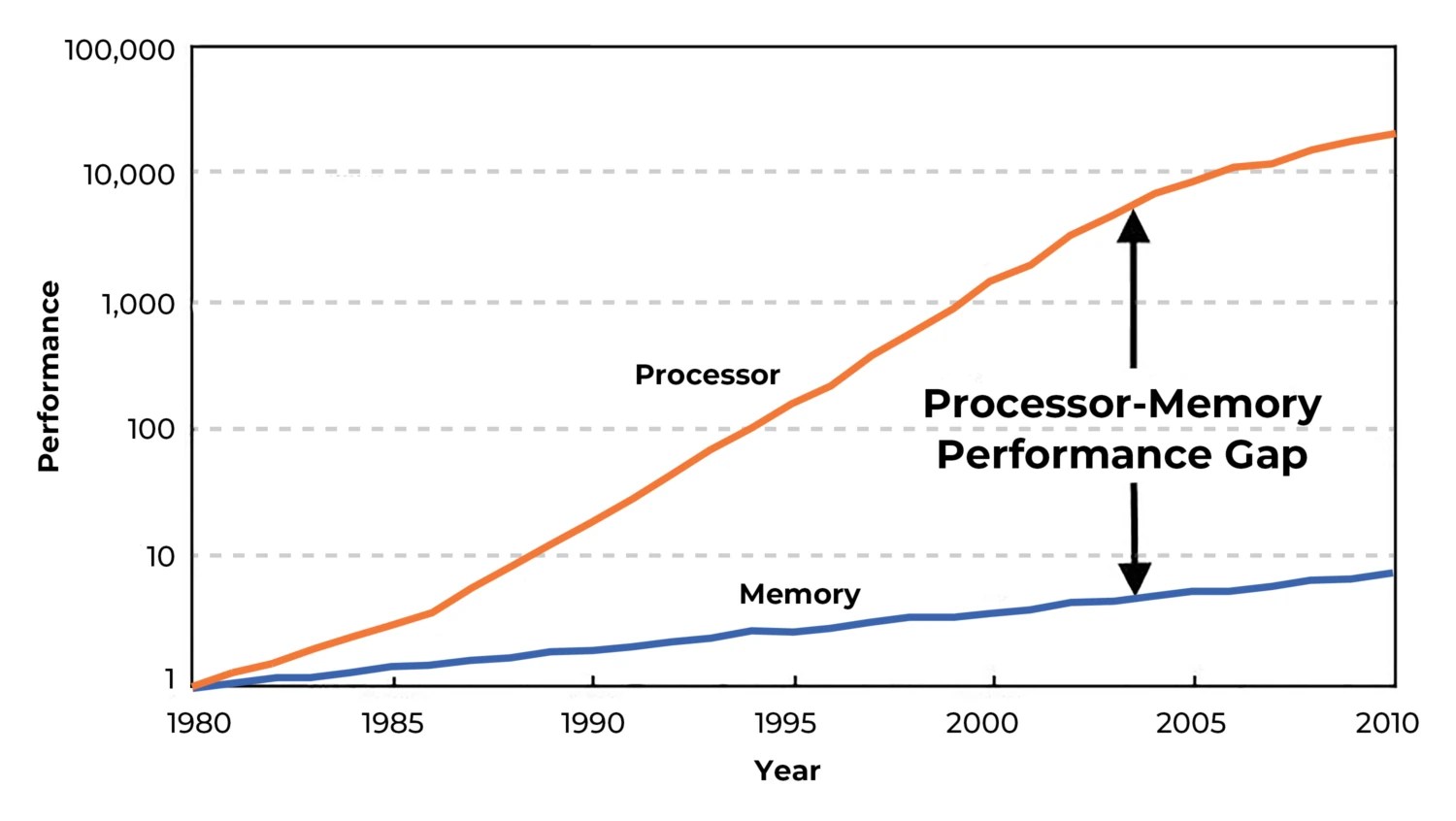

AI training doesn't work the way most people think. You can't just throw some GPUs at a problem and watch magic happen. Modern large language models like GPT-4 or Claude need massive amounts of working memory to function. We're talking about systems with parameters in the tens or hundreds of billions.

When you run inference on these models, every token that gets processed requires temporary storage in high-speed memory. The larger the model, the more memory you need. The faster you want responses, the more bandwidth that memory requires.

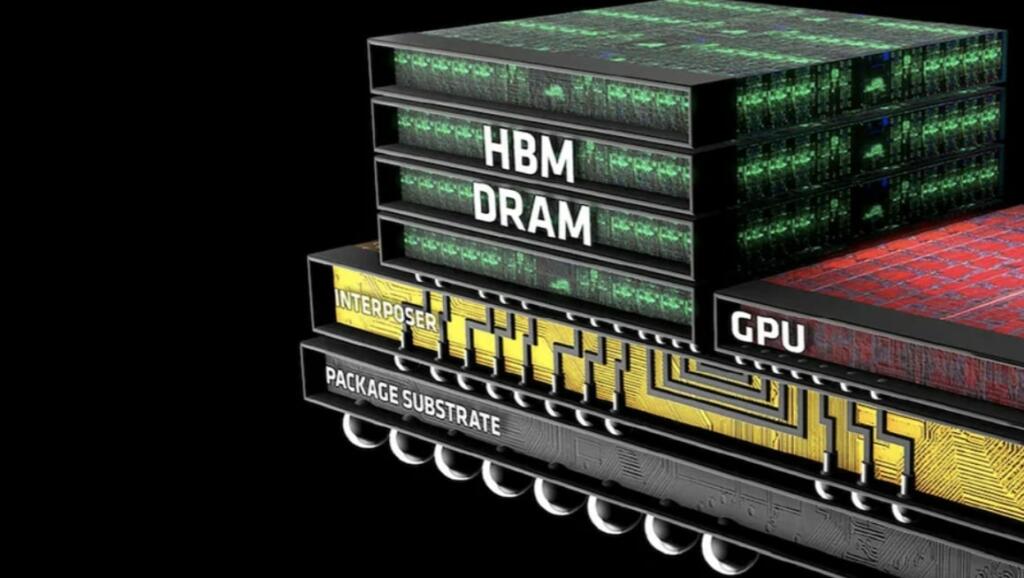

HBM is specifically designed for this scenario. It's got 2 to 3 times the bandwidth of traditional DDR5, and it sits in the same package as the GPU, right next to the die, which keeps the data path short. For AI workloads, HBM doesn't just help. It's often non-negotiable.

So here's what's happening: every major cloud provider (AWS, Google, Microsoft, Meta) is building out new AI infrastructure. Every startup working on AI is doing the same thing. Every hedge fund, every pharmaceutical company, every financial institution wants GPU-accelerated computing for their AI projects.

All of them need memory. Specifically, they need high-end memory. And there's only so much the world can manufacture in a given year.

The math is brutal. Memory manufacturers like SK Hynix, Samsung, and Micron can only make so much HBM per quarter. Every wafer that goes into AI memory is a wafer that's NOT going into consumer DDR5, laptop LPDDR5, or mobile memory.

It's zero-sum. More for data centers means less for everyone else.

The Memory Chip Supply Chain: A Bottleneck Gets Tighter

To understand why this matters so much, you need to understand how memory chips actually get made.

Memory manufacturing is one of the most capital-intensive industries on Earth. A single semiconductor fab costs $10-20 billion and takes 3-4 years to build.

Retooling a fab to produce a different type of memory? That's not quick. That's not even easy. Some fabs can produce DDR5, HBM, and GDDR6X with configuration changes. Others are locked into specific product lines.

Right now, the bottleneck is HBM. There are only a few manufacturers with the expertise to make it at scale: SK Hynix, Samsung, and Micron. Each of them has allocated massive amounts of production capacity to HBM because the AI companies are literally willing to pay premium prices for it.

According to industry analysts, HBM production is growing, but not fast enough. Even with increased investments, the gap between demand and supply won't close until 2028 or later. By then, the next generation of AI chips will demand even more advanced (and scarcer) memory technologies.

It's like watching a runner on a treadmill. The treadmill keeps speeding up. The runner keeps running faster. But they never quite catch up.

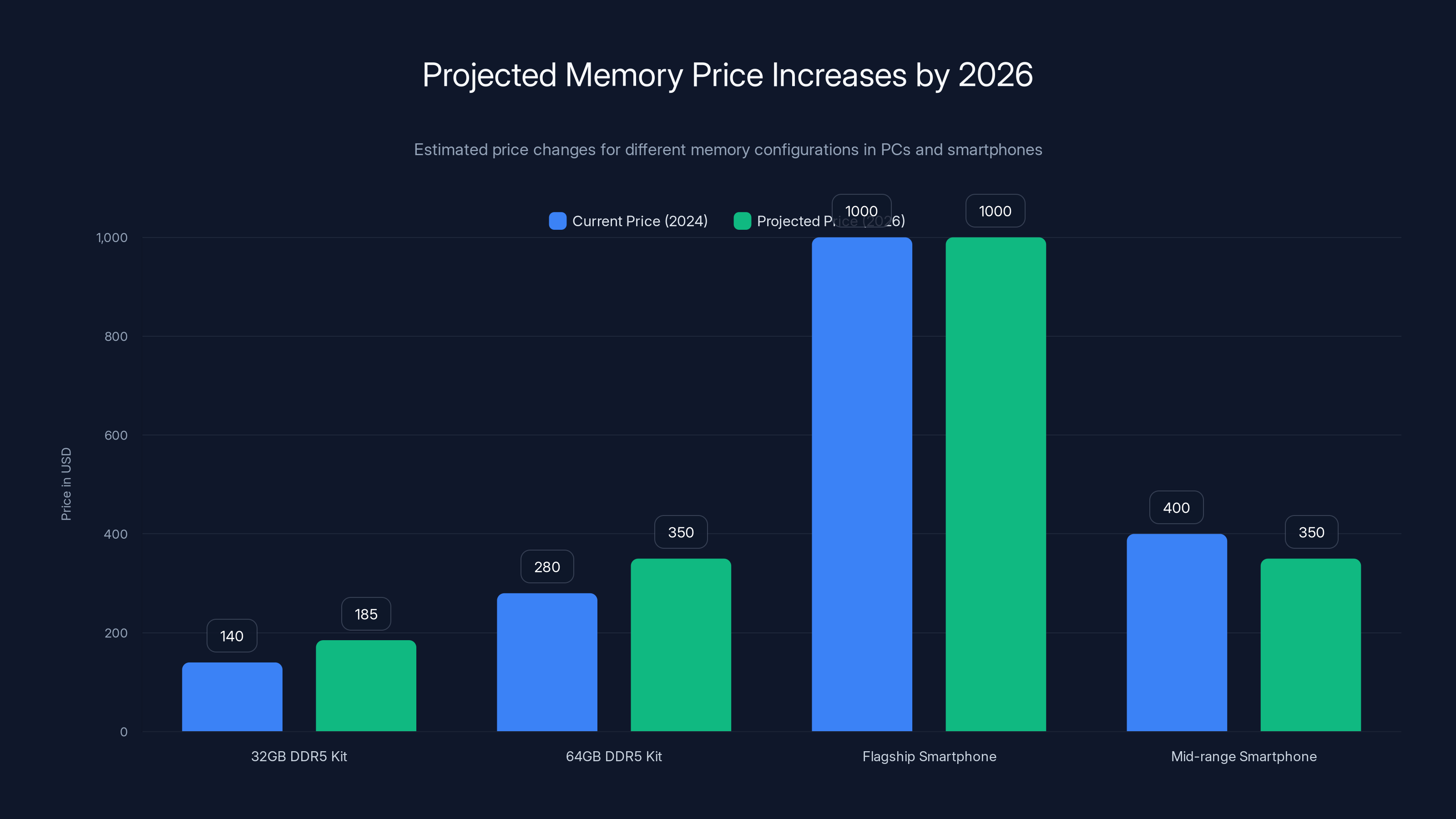

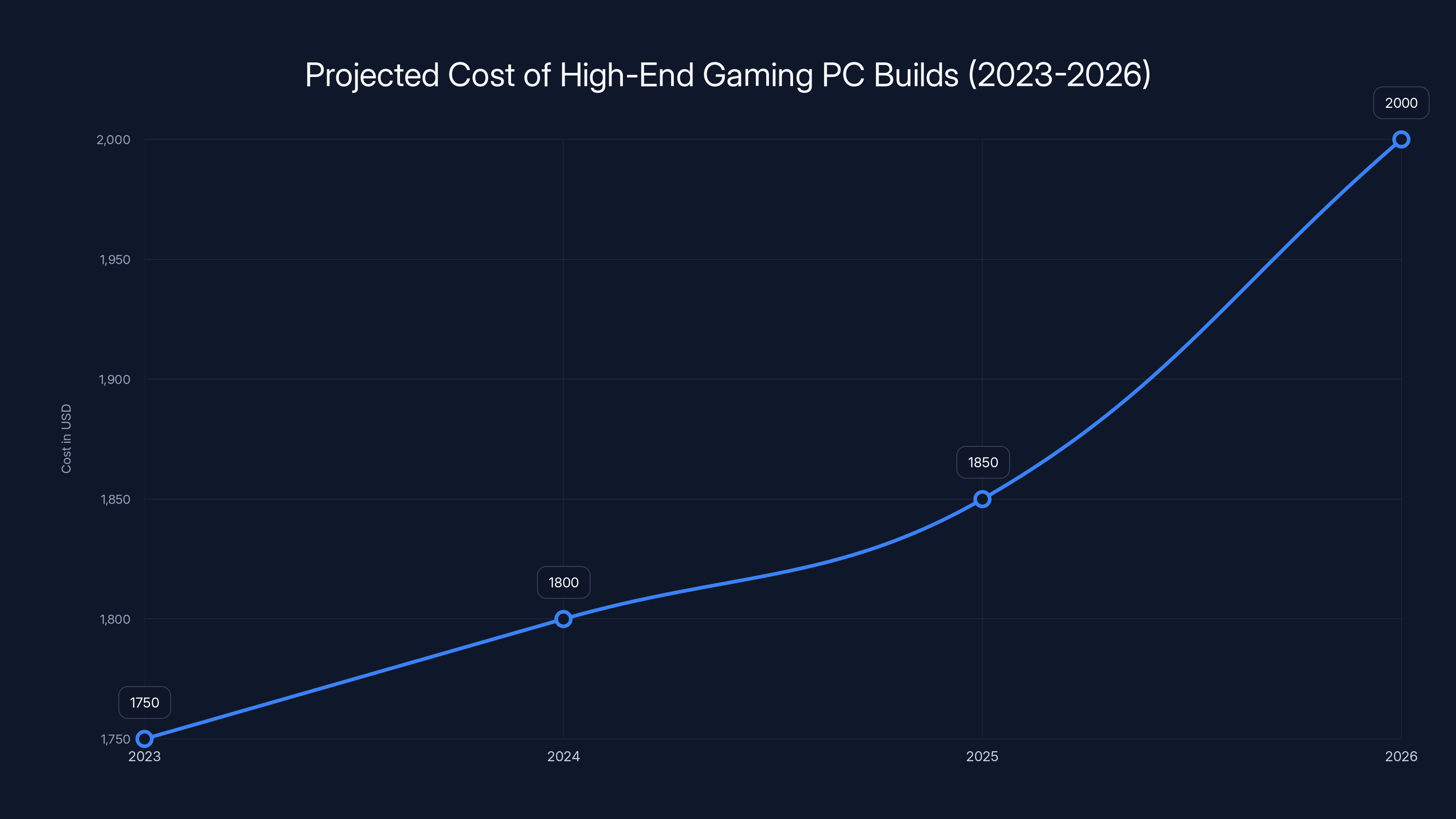

Estimated data shows that by 2026, the price of memory for PCs and smartphones will increase significantly, with high-end gaming PC memory seeing the largest hikes.

What 70% Allocation Actually Means for the Market

Let's translate the data center's dominance into real-world consequences.

If data centers are consuming 70% of all high-end memory production, that leaves 30% for everyone else. That "everyone else" includes:

- All consumer PC manufacturers (Dell, HP, Lenovo, ASUS, etc.)

- All laptop makers (Apple, Lenovo, Dell, HP, Razer, MSI, etc.)

- All smartphone manufacturers (Apple, Samsung, and the phone makers supplied by Qualcomm and MediaTek)

- All enterprise computing (corporations, financial institutions, law firms)

- All gaming hardware manufacturers

- All storage and embedded systems

- All consumer electronics (tablets, smart TVs, networking equipment)

Now think about the math. Consumer PCs alone sell roughly 250 million units per year globally. Each one needs DDR5 memory. Laptops? Another 200 million units annually. Smartphones? Over 1.2 billion. Tablets? Hundreds of millions more.

Now try to fit all that demand into a pool that represents only 30% of high-end memory production. You physically cannot.
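The squeeze can be sketched with a few lines of arithmetic. The 70/30 split is the projection discussed above; the supply and demand numbers in the usage example are hypothetical placeholders in arbitrary units, not measured data, and the function names are illustrative only.

```python
def remaining_pool(total_supply: float, data_center_share: float) -> float:
    """Supply left for non-data-center buyers after the data center cut."""
    if not 0.0 <= data_center_share <= 1.0:
        raise ValueError("data_center_share must be between 0 and 1")
    return total_supply * (1.0 - data_center_share)


def fill_rate(total_supply: float, data_center_share: float,
              other_demand: float) -> float:
    """Fraction of non-data-center demand that can be met, capped at 1.0."""
    if other_demand <= 0:
        raise ValueError("other_demand must be positive")
    pool = remaining_pool(total_supply, data_center_share)
    return min(1.0, pool / other_demand)


if __name__ == "__main__":
    # Hypothetical illustration: 100 units of supply, 70% claimed by data
    # centers, and every other segment combined wanting 50 units.
    rate = fill_rate(total_supply=100.0, data_center_share=0.70,
                     other_demand=50.0)
    print(f"Share of non-data-center demand that can be filled: {rate:.0%}")
```

With these placeholder inputs, only 30 units remain for buyers who want 50, so the gap has to be closed by higher prices, fewer configurations, or unmet orders.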

So what happens? First, prices rise. Manufacturers can't increase supply, so they increase prices to manage demand. A 32GB DDR5 kit that cost

Second, inventory shrinks. Retail channels dry up. Certain configurations become unavailable. Need 96GB of DDR5 for a workstation? Good luck finding it at any price.

Third, performance tiers get constrained. Manufacturers prioritize high-margin products. The super-premium stuff stays available (because customers will pay anything). The mid-range stuff dries up fast. The budget segment gets crushed.

Consumers and small businesses will feel this first. Large corporations with procurement contracts will be protected somewhat. But for the average person shopping for a laptop or building a PC? It's going to get rough.

The HBM Shortage: Why It's the Real Crisis

HBM isn't just another type of memory. It's the critical constraint in the entire system.

DDR5 is reasonably mature at this point. Multiple manufacturers produce it. Fab capacity exists globally. If demand spikes, production can ramp up over 12-18 months. It's not trivial, but it's doable.

HBM is different. It's newer. It's more complex. And the manufacturing process is significantly tighter.

HBM requires stacking multiple memory dies on top of each other and using micro bumps to connect them. The yield rates (the percentage of chips that don't have defects) are lower than DDR5. The capital investment required per unit capacity is higher. The expertise required to manufacture it exists in only a handful of fabs globally.

Right now, SK Hynix and Samsung dominate HBM production. Micron is building capacity, but they're years behind. Meanwhile, NVIDIA (the biggest customer) wants every chip they can get. They're not buying HBM as a nice-to-have feature. They're buying it because their customers absolutely require it.

The result? HBM prices have skyrocketed. Last year, high-end HBM3 memory was priced at roughly

This is the core of the problem. HBM isn't a commodity like DDR5. It's a bespoke, high-margin product that requires years of R&D investment and massive manufacturing precision. The supply constraint is real and structural, not temporary.

Manufacturers are investing in new HBM capacity, but even aggressive expansion won't close the gap until 2027 or 2028.

How AI Companies Are Actually Competing for Memory

Here's where it gets interesting: the AI companies aren't just buying memory. They're competing aggressively for it at every level of the supply chain.

OpenAI, Google, and Meta don't buy NVIDIA GPUs from retailers. They buy directly from NVIDIA. And when they do, they negotiate terms that include guaranteed memory configurations. They're essentially pre-allocating production capacity before the chips even leave the factory.

Microsoft signed a massive deal with NVIDIA for AI chips. Amazon is building its own AI accelerators. Both companies are competing for the same memory suppliers at the same time.

This creates a winner-take-all dynamic. The companies with the biggest budgets and strongest relationships get priority allocation. Smaller players get whatever is left over. And consumer manufacturers? They're at the back of the line.

This is already happening. Several PC manufacturers have reported difficulty sourcing enough DDR5 memory for mid-tier and entry-level systems. Inventory is down 20-30% compared to 2023. Available SKUs are limited.

A gaming PC builder might normally offer a system in 16GB, 32GB, 48GB, and 64GB configurations. Due to memory scarcity, they might only offer 32GB and 64GB by late 2025.

Data centers consume 70% of high-end memory, leaving only 30% for other segments like consumer PCs, laptops, and smartphones. Estimated data.

The Timeline: When This Gets Really Bad

The scarcity isn't uniform. It'll get worse in specific waves.

Q2-Q3 2025: The real squeeze begins. Companies start realizing that memory allocations are tight. Data center orders spike as companies rush to lock in AI infrastructure before prices rise further. Consumer memory availability tightens visibly.

Q4 2025 - Q1 2026: Peak shortage. New AI server generations ship (likely from NVIDIA, AMD, and others). All of them need massive amounts of HBM. Data centers are ramping deployments. Memory prices hit peak levels. Consumer DDR5 becomes genuinely hard to find in standard configurations.

2026: The 70% allocation to data centers becomes the new normal. Non-data-center demand must shrink to fit. Prices stabilize at higher levels. Supply chains adapt by producing different product mixes.

2027-2028: New HBM capacity comes online from expanded Samsung, SK Hynix, and Micron fabs. But by then, the next generation of AI chips (likely requiring HBM4 or beyond) will start the cycle again.

This is a multi-year problem, not a temporary blip.

Consumer Impact: Your Next PC Will Cost More (If You Can Get One)

Let's get specific about what this means for average people.

Gaming PCs: If you're planning to build a high-end gaming PC in 2026, expect to pay 20-30% more for memory than you would today. A 32GB DDR5 kit that costs

Availability will also narrow. Certain brands (like Corsair and G.Skill's ultra-premium lines) will become impossible to find at MSRP. Buyers will either wait months or pay secondary market premiums.

Laptops: Laptop manufacturers are already dealing with memory constraints. They're migrating to soldered memory (where possible) to reduce component sourcing complexity. This means fewer upgradeable laptops and longer product cycles. A laptop that might have gotten a 32GB option will stay stuck at 16GB much longer.

Enterprise Systems: Companies buying workstations for video editing, 3D rendering, or data analysis will face similar constraints. They might end up with older generation systems simply because current-generation memory is unavailable or prohibitively expensive.

Smartphones and Tablets: Mobile memory (LPDDR5) will also feel pressure, though less acutely than PC memory. Expect flagship phones to maintain their memory options longer than mid-range models. The $400 smartphone market will probably see memory specs decline (moving from 12GB to 8GB configurations, for example).

Budget Tier: The worst hit will be budget computing. Sub-

The Semiconductor Manufacturer Response: Building New Capacity

Of course, memory manufacturers aren't sitting idle. They see the demand. They're investing heavily in new capacity.

Samsung is expanding its HBM production at multiple fabs. SK Hynix is similarly ramping. Micron is building new production lines specifically for HBM and advanced DDR5.

But here's the catch: new fab construction takes 3-4 years. Even accelerated timelines put new HBM capacity online in 2026-2027. And that capacity needs time to ramp up to full production.

Meanwhile, demand from AI companies continues to accelerate. By the time new capacity comes online, the next generation of AI chips (requiring even more advanced memory) will likely be ramping.

It's a perpetual game of catch-up.

What manufacturers CAN do in the short term is optimize yields, increase working hours, and improve process efficiency. Samsung claims they can boost HBM output by 30-40% through operational improvements alone, without building new fabs. But even that's not enough to close the gap.

Some manufacturers are also hedging by investing in next-generation memory technologies like HBM4 and DDR6, hoping to get ahead of demand curves. But those technologies won't ship in meaningful volumes until 2027 or later.

IT budgets should account for a 25-40% increase in memory costs for 2025-2026. Estimated data based on industry trends.

The Broader Supply Chain Disruption

This isn't just about memory prices going up. It's about the entire PC and computing ecosystem getting disrupted.

When memory becomes scarce, manufacturers face difficult choices:

- Reduce total production - Build fewer PCs, fewer laptops, fewer devices

- Reduce memory configurations - Offer fewer SKUs with lower memory specs

- Increase prices - Pass costs to consumers

- Use older memory - Substitute DDR4 for DDR5, LPDDR4 for LPDDR5

- Prioritize high-margin products - Focus on premium systems, abandon budget segment

Most likely, manufacturers will do all five simultaneously.

This creates a ripple effect: retailers stock fewer options, consumers have less choice, and the competitive landscape shifts. Smaller manufacturers get squeezed out because they can't negotiate favorable memory allocation. Larger players with global leverage (Apple, Dell, HP, Lenovo) maintain relative stability while smaller brands struggle.

The market consolidates. Competition decreases. Prices rise. Innovation slows.

Enterprise and Data Center Winners vs. Consumer Losers

Let's be clear about who wins and who loses in this scenario.

Winners:

- Major cloud providers (AWS, Google Cloud, Microsoft Azure) - They have capital to secure allocation

- NVIDIA, AMD, Intel - Chip designers benefit from data center demand

- Memory manufacturers - Higher prices, guaranteed demand

- Large PC manufacturers - Can negotiate better terms

Losers:

- Consumer PC buyers - Higher prices, limited options

- Budget device makers - Squeezed out of supply chains

- Gamers - Gaming memory becomes harder to find

- Laptop buyers - Fewer configurations, higher prices

- Smartphone users - Incremental price increases

The irony is brutal: the AI boom that's supposed to democratize intelligence and make technology better is actually making it harder and more expensive for regular people to buy computers.

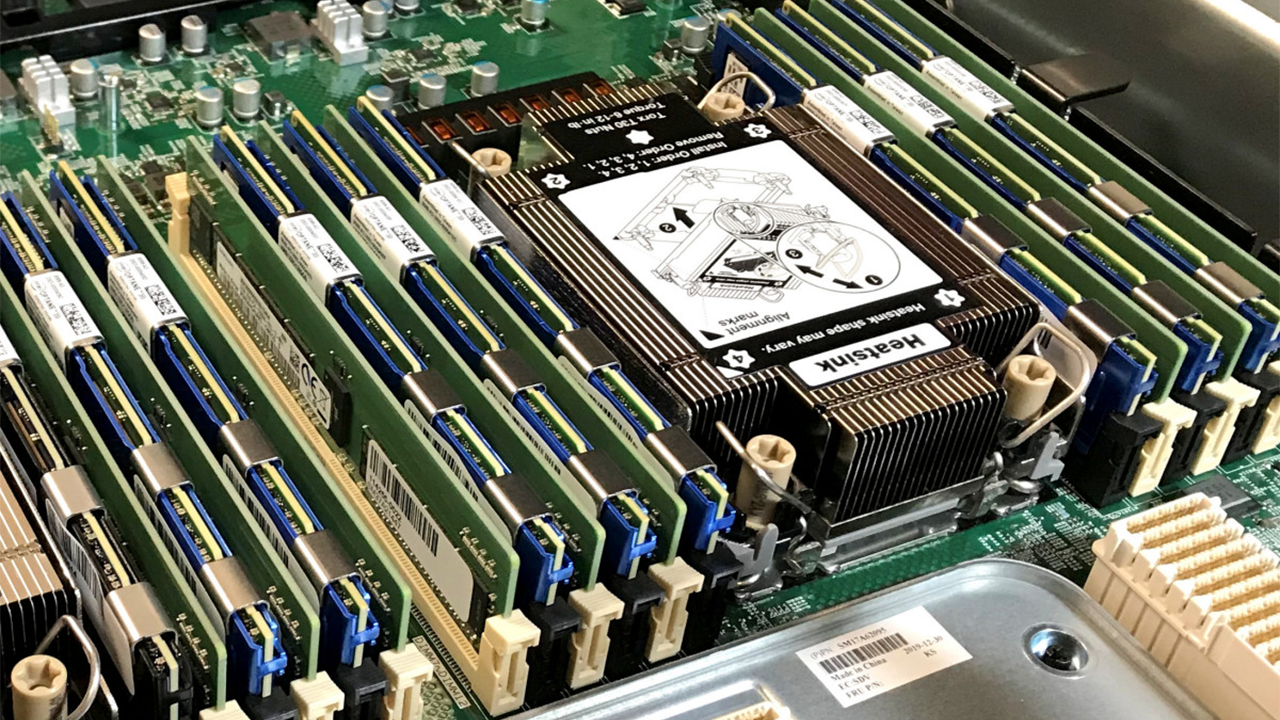

What Data Center Memory Actually Looks Like vs. Consumer Memory

Just to demystify this a bit: what exactly is data center memory, and how is it different from what's in your laptop?

Consumer DDR5: 32GB or 64GB modules, 5,600-6,400MHz speeds, mass-produced for desktops and laptops. Roughly $120-180 for 32GB today.

Enterprise DDR5: Same as consumer, mostly, but with ECC (error-correcting code) for reliability. Used in servers and workstations. Slightly more expensive ($150-200 for 32GB).

HBM3 (AI Servers): Stacked DRAM packaged right alongside the GPU, with up to 141GB per GPU and extraordinary bandwidth (up to 4,600GB/s). Manufactured in tiny quantities. Costs $50,000+ per unit in bulk.

So when we talk about data centers grabbing 70% of high-end memory, we're partly talking about HBM (which is almost exclusively AI), and partly about enterprise DDR5 for servers and storage systems.

Consumer DDR5 is still relatively abundant compared to HBM. But the problem is that some of the same fabs and production capacity that could make consumer memory is instead being allocated to enterprise and AI products because the margin is higher.

It's not that your DDR5 disappears entirely. It's that available capacity shrinks relative to demand.

Memory prices are projected to rise by 35-40% by 2026, with stabilization and slight decreases expected in 2027 as new production capacities are realized.

The Geopolitical Angle: Where Memory Is Actually Made

Here's a complicating factor: where memory is actually manufactured matters for supply chain resilience.

Most HBM is made in South Korea (Samsung, SK Hynix). Most commodity DRAM and DDR5 is also made in South Korea, Taiwan, and Japan. There's minimal memory production in the United States, Europe, or elsewhere.

This creates a concentration risk. If there's any disruption in South Korea's fabs (weather, geopolitics, accidents), the global memory supply collapses instantly.

The U.S. has been trying to incentivize domestic memory manufacturing through CHIPS Act funding, but new fabs won't produce memory in volume until 2025-2026 at the earliest.

Until diversification happens, global memory supply depends on maintaining stability in a handful of Asian locations. The data center demand surge actually exacerbates this risk by concentrating production schedules.

Memory Prices: What's Actually Happening Right Now

If you've bought memory recently, you've probably noticed prices are already climbing.

According to industry pricing data, DDR5 memory has increased roughly 15-25% since late 2024. HBM pricing is up 30-40% year-over-year. It's not a dramatic spike (yet), but the trend is clear.

Analysts project:

- Mid-2025: 15-20% additional increase from current levels

- Late 2025: 25-30% cumulative increase from early 2024 prices

- 2026: Prices stabilize at elevated levels (30-40% above 2024)

- 2027+: Modest decreases as new capacity comes online

These aren't wild speculations. They're based on historical shortage patterns, announced fab investments, and current allocation trends.

For a consumer, this means:

- Today: 32GB DDR5 = ~$130

- Mid-2025: 32GB DDR5 = ~$150

- Late 2025: 32GB DDR5 = ~$165

- 2026: 32GB DDR5 = ~$170-180

- 2027: 32GB DDR5 = ~$160-170 (slowly declining)

Over two years, you're looking at roughly a 30-40% price increase for baseline high-end memory.
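The percentages implied by the price list above can be checked directly. This is a minimal sketch that uses only the approximate dollar figures listed above; the function and variable names are illustrative.

```python
from typing import Dict, Tuple

BASELINE = 130.0  # approximate "Today" price of a 32GB DDR5 kit, from the list

# Projected price points from the list above, as (low, high) in dollars.
PROJECTIONS: Dict[str, Tuple[float, float]] = {
    "Mid-2025": (150.0, 150.0),
    "Late 2025": (165.0, 165.0),
    "2026": (170.0, 180.0),
    "2027": (160.0, 170.0),
}


def pct_increase(baseline: float, price: float) -> float:
    """Percentage change from baseline to price."""
    return (price - baseline) / baseline * 100.0


def increase_range(baseline: float, low: float, high: float) -> Tuple[float, float]:
    """Percentage increase for both ends of a projected price range."""
    return pct_increase(baseline, low), pct_increase(baseline, high)


if __name__ == "__main__":
    for period, (low, high) in PROJECTIONS.items():
        lo_pct, hi_pct = increase_range(BASELINE, low, high)
        if low == high:
            print(f"{period}: +{lo_pct:.1f}% vs. today")
        else:
            print(f"{period}: +{lo_pct:.1f}% to +{hi_pct:.1f}% vs. today")
```

On these figures, the 2026 range works out to roughly 31% to 38% above the ~$130 starting point.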

How to Navigate This: Practical Strategies for Buyers

Okay, so this is happening. What can you actually do about it?

Strategy 1: Buy Now If You Can

If you need memory and you have the budget, buy it now. This isn't rocket science. Prices are lower today than they'll be in 12 months. Locking in current prices beats paying 20-30% more later.

For consumers: Upgrade your PC or laptop now. For businesses: Stock up on server memory if cash flow allows. This isn't panicking. It's rational economic behavior.

Strategy 2: Prioritize DDR5 Over DDR4

DDR4 is being phased out anyway. Prioritize DDR5 systems. Yes, they're more expensive than older systems, but DDR4 won't get cheaper in the future. As manufacturers shift capacity to DDR5, DDR4 shortages will probably hit older systems even harder.

Strategy 3: Choose Upgradeable Devices

As shortages hit, more manufacturers will solder memory directly to motherboards (making upgrades impossible). If you're buying a laptop or device, prioritize models with upgradeable memory. When shortages hit in 2026, you might want to add more RAM. Having that option is valuable.

Strategy 4: Plan for Stability, Not Upgrades

Traditionally, consumers bought devices with minimal memory and planned to upgrade later. With memory scarcity ahead, that strategy breaks down. Instead, buy with all the memory you might need for the device's entire lifespan. The cost of upgrading in 2026-2027 will exceed the cost of buying it pre-installed today.

Strategy 5: Consider System Longevity

Instead of planning to upgrade every 3-4 years, buy higher-spec systems now that you can keep for 5-6 years. A PC with 64GB of memory today will feel more future-proof than a 32GB system you were planning to upgrade. The calculus changes when upgrades are expensive and hard to find.

Strategy 6: Watch Secondary Markets Carefully

Second-hand memory prices will eventually exceed new retail prices due to scarcity. Buying used memory in 2026 could be a disaster compared to buying new today. Monitor this carefully.

The cost of building a high-end gaming PC is projected to increase by approximately 14% from 2023 to 2026, primarily due to rising memory prices. Estimated data.

Enterprise and Corporate Implications

For businesses, this situation demands different strategies.

IT teams should:

- Conduct memory audits - Identify exactly what memory configurations are currently deployed across all systems

- Project future needs - Based on headcount growth and performance needs, calculate required memory capacity for next 3 years

- Lock in contracts - Negotiate multi-year memory supply agreements with vendors now while allocation is still reasonable

- Diversify vendors - Don't rely on single memory suppliers; maintain relationships with multiple manufacturers

- Budget conservatively - Assume 25-40% memory cost increases in IT budgets for 2025-2026

- Defer non-critical upgrades - Hold off on optional PC refreshes; prioritize only business-critical systems

- Evaluate cloud migration - For some workloads, moving to cloud infrastructure (where providers handle memory scarcity) might be cheaper than buying and maintaining local systems
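The "budget conservatively" item above is easy to turn into a planning range. The sketch below applies the 25-40% increase assumed in the checklist to a current memory spend; the $200,000 figure in the usage example is a hypothetical placeholder, and the function name is illustrative.

```python
from typing import Tuple


def memory_budget_range(current_spend: float,
                        low_increase: float = 0.25,
                        high_increase: float = 0.40) -> Tuple[float, float]:
    """Return (low, high) planned spend after applying the increase range.

    The 25% and 40% defaults mirror the budgeting assumption in the
    checklist above; they are planning inputs, not forecasts.
    """
    if current_spend < 0:
        raise ValueError("current_spend must be non-negative")
    if not 0.0 <= low_increase <= high_increase:
        raise ValueError("expected 0 <= low_increase <= high_increase")
    return (current_spend * (1.0 + low_increase),
            current_spend * (1.0 + high_increase))


if __name__ == "__main__":
    # Hypothetical example: an IT team that spends $200,000 a year on memory.
    low, high = memory_budget_range(200_000.0)
    print(f"Plan for ${low:,.0f} to ${high:,.0f}")
```

Carrying both ends of the range into the budget, rather than a single midpoint, makes it easier to see how much headroom a locked-in supply contract would actually buy.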

Large corporations will weather this better than small businesses because they can negotiate volume pricing and long-term contracts. Smaller businesses should act urgently.

The Gamer's Dilemma: High-End PC Building Gets Harder

For PC gamers specifically, this is particularly frustrating because gaming benefits from memory improvements, but gamers are getting priced out of the market.

Today, you can build a solid gaming PC with 32GB DDR5 for around

The memory itself isn't the biggest component cost (GPU is), but it's a meaningful piece. More importantly, availability constraints might mean certain systems simply aren't available at any price.

Gamers should consider:

- Building now if planning 2025-2026 - Lock in current pricing

- Waiting for GPU upgrades - If planning a major build, wait until NVIDIA or AMD's next generation ships, then build around those

- Considering pre-built options - Manufacturers might offer better memory allocation than hobbyist builders. Pre-built systems might actually be cheaper than custom builds

- Upgrading GPU without upgrading memory - If your system has adequate memory, the next GPU upgrade might not require memory changes

What Happens After 2026? The Long Game

Eventually, this resolves. New fab capacity comes online. Memory production increases. Prices moderate. But the timing matters.

If we assume:

- New HBM capacity ships in 2026-2027

- New DDR5 capacity ramps through 2027-2028

- Next-gen memory (DDR6, HBM4) starts shipping in 2027-2028

Then by 2028-2029, supply should roughly match demand again. Prices will decline modestly from 2026 peak levels (maybe 10-15% drops) but won't return to 2023-2024 prices. We've seen this pattern before: shortages create new baselines that rarely fully reset.
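To see why a 10-15% drop does not bring prices back to the old baseline, the two percentages can be compounded. This sketch assumes the 2026 peak sits 30-40% above 2024 prices, as in the analyst projections quoted earlier; the function name is illustrative.

```python
def post_decline_premium(peak_premium: float, decline: float) -> float:
    """Premium over the baseline after prices fall from their peak.

    peak_premium: peak price relative to baseline, e.g. 0.30 for +30%.
    decline: fractional drop from the peak, e.g. 0.15 for a 15% drop.
    """
    if peak_premium < 0 or not 0.0 <= decline <= 1.0:
        raise ValueError("peak_premium must be >= 0 and decline within [0, 1]")
    return (1.0 + peak_premium) * (1.0 - decline) - 1.0


if __name__ == "__main__":
    # Best case for buyers: lowest assumed peak, largest assumed decline.
    best = post_decline_premium(peak_premium=0.30, decline=0.15)
    # Worst case for buyers: highest assumed peak, smallest assumed decline.
    worst = post_decline_premium(peak_premium=0.40, decline=0.10)
    print(f"Still {best:.1%} to {worst:.1%} above the 2024 baseline")
```

Under these assumptions, prices settle roughly 10% to 26% above 2024 levels even after the decline.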

Meanwhile, the AI boom will likely have moved past training and into inference. Inference requires less memory than training, which might actually ease pressure on high-end memory by 2027-2028.

But that's speculation. The certain part is: 2025 and 2026 are going to be tight years for memory availability.

Memory Technology Roadmap: What's Coming

While we're dealing with current shortages, the industry is simultaneously engineering next-generation solutions.

HBM4: Expected 2026-2027. Roughly 2x the bandwidth and capacity of HBM3. Will help with AI workloads but will be scarce initially.

DDR6: Expected 2027-2028. Higher speeds, better power efficiency. Will take years to ramp to meaningful volumes.

3D DRAM: Being researched to increase capacity without increasing die size. Might help with future memory density but won't impact this shortage cycle.

Chiplet memory architectures: Some AI companies are exploring custom memory hierarchies (fast local memory + slower remote memory) to reduce reliance on HBM. This could ease pressure eventually.

None of these solve today's problem. They're tomorrow's solution.

The Uncomfortable Reality Check

Let's be honest about what's happening: the world is experiencing a severe imbalance between data center infrastructure investments and consumer computing supply chains.

Billions of dollars are flowing into AI infrastructure. Trillions of dollars in valuation are tied to AI companies. The incentive to secure memory for AI servers is enormous.

Meanwhile, the consumer computing market is fragmented. No single entity commands as much purchasing power as Google or Microsoft or OpenAI. Consumers can't compete with that scale.

This isn't new—it's happened before with GPUs. But it's instructive: when investment flows toward one sector, other sectors get squeezed. That's just how supply chains work.

The good news: this is temporary. In 2027-2028, capacity will rebalance. Consumers will be fine eventually.

The bad news: "eventually" is 18-24 months away. That's a long time to wait or pay premium prices.

Takeaways: What You Actually Need to Know

If you're still reading this far, here's what matters:

- Buy memory now if you need it. Prices are going up. Availability is shrinking. Lock in what you need.

- Plan for more expensive upgrades. If you were planning to upgrade your PC in 2026, budget 30-40% more than you would have budgeted in 2024.

- Businesses should secure supply contracts. IT teams should negotiate multi-year agreements with memory vendors immediately while allocation is still favorable.

- The shortage is structural, not temporary. This won't resolve in a few months. It'll run through 2026 at minimum.

- Older systems become relatively valuable. DDR4 systems might actually hold their value better than expected because DDR5 availability tightens. The upgrade equation changes.

- This will eventually pass. By 2028, this will be a footnote in tech history. But that's still years away.

The AI boom is genuinely transformative. It's also genuinely disruptive to the supply chains that feed everyone else. Both things are true simultaneously.

FAQ

What is HBM and why do AI data centers need it so badly?

HBM (High-Bandwidth Memory) is a specialized memory technology that stacks DRAM dies vertically with exceptional bandwidth capabilities (2-3x faster than DDR5). AI companies need it because training and running large language models requires massive amounts of fast memory packaged right alongside the GPU. A single NVIDIA H200 GPU carries 141GB of HBM, and data center clusters often run 1,000+ GPUs in parallel, meaning HBM demand is staggering.

How much more expensive will memory be in 2026 compared to today?

Based on current trends and analyst projections, premium DDR5 memory will likely be 25-40% more expensive in 2026 than it is today. HBM and enterprise-grade memory could see even larger increases of 30-50%. A 32GB DDR5 kit costing roughly

Why can't memory manufacturers just build more capacity immediately?

Building a new semiconductor fab takes 3-4 years and costs $10-20 billion. Manufacturers can't instantly pivot production lines to make different memory types—it requires reconfiguration and optimization that takes months. Even aggressive expansions of existing fabs won't meaningfully increase HBM supply until 2026-2027. By then, the next generation of AI chips will demand even more advanced memory.

Should I buy a laptop or PC now or wait?

If you need one, buy now. Memory prices are currently lower than they'll be in 12 months. If you were planning to buy in 2026, you'll either pay 25-40% more or face limited availability. If your current device works, you can wait, but don't expect better prices or options—expect worse. Prioritize devices with upgradeable memory so you can add more RAM later if needed.

What about gaming and consumer DDR5 specifically?

Consumer DDR5 will feel the shortage less acutely than HBM, but prices will still increase 20-35% through 2026. Gaming memory (Corsair, G.Skill) might face availability constraints with certain configurations becoming hard to find. Gamers who want specific memory specs should buy now rather than waiting. Waiting will mean higher prices and potentially impossible-to-find configurations.

Can I do anything to get around these price increases?

Several strategies work: Buy memory now before prices rise further. Prioritize systems with adequate memory for their entire lifespan (don't plan to upgrade later). Choose devices with upgradeable memory rather than soldered configurations. For businesses, negotiate multi-year supply contracts with vendors immediately. Lock in pricing before allocation gets tighter. For consumers, monitor secondary markets carefully (though they'll likely be expensive by 2026).

When will this shortage actually end?

Major relief likely arrives in 2027-2028 when new fab capacity from Samsung, SK Hynix, and Micron comes online. However, prices won't return to 2023-2024 levels—they'll stabilize at modestly elevated prices (20-30% above 2024) as the new baseline. The next generation of AI chips arriving around the same time will create renewed pressure on the most advanced memory types, so it's more of a rebalancing than a resolution.

How does this affect mobile phones and tablets?

Mobile memory (LPDDR5) will face some pressure, but less acutely than PC memory because it's produced on different manufacturing lines with somewhat more flexibility. However, expect flagship phones to maintain memory options longer than budget models. Budget smartphones might see memory specs decline (dropping from 12GB to 8GB configurations) to manage costs. Tablets will see similar patterns with reduced upgrade tiers.

Why are data centers getting 70% of all high-end memory?

Because AI infrastructure investments dwarf consumer computing investments. Companies like OpenAI, Google, Microsoft, Meta, and others are spending hundreds of billions on GPU clusters for AI training and inference. Each cluster needs enormous amounts of HBM. These companies can offer premium prices and volume commitments that individual consumer device manufacturers simply cannot match. It's pure economics: whoever can afford to pay most and commit longest gets the memory.

Should enterprises delay technology refreshes?

No, but they should be strategic. Defer non-critical upgrades to 2027+. For business-critical systems, proceed with upgrades now at current pricing and availability. Negotiate multi-year supply agreements locked at current prices to hedge against future increases. Consider cloud-based alternatives for some workloads where pricing might actually be more stable than managing on-premises hardware. Budget conservatively for IT spending in 2025-2026 (assume 25-40% increases for memory-related costs).

Conclusion: The New Reality of Computing

We're in a genuinely strange moment in technology history. AI is transforming everything. Investments are flowing at unprecedented scales. But those investments are creating real, immediate hardship for regular people trying to buy computers.

It's not permanent. It will resolve. But pretending it won't happen, or won't affect you, is naive.

The practical reality is straightforward: if you need memory, need a new PC, need to upgrade your laptop, or need to refresh enterprise systems, the time to act is now. Not in a panic-buying way. But in a rational, economically sound way.

Prices are lower today than they'll be in 12 months. Availability is higher today than it'll be in 12 months. Allocation is easier to secure today than it'll be in 12 months.

That's not speculation. That's what industry data is already showing.

The AI boom is real and transformative. The memory shortage is equally real. Both things coexist. Understanding that reality, and making purchasing decisions accordingly, is the only sensible approach.

By 2027 or 2028, we'll look back on 2025-2026 as a tight but temporary period. But living through tight, temporary periods is still… tight. Prepare accordingly.

Key Takeaways

- Data centers will claim 70% of all high-end memory production by 2026, forcing dramatic constraints on consumer systems

- HBM memory production is bottlenecked to only 3 manufacturers (SK Hynix, Samsung, Micron) with demand far exceeding supply through 2027

- Memory prices will increase 25-40% through 2026, with DDR5 climbing from ~$130 to ~$165-180 for 32GB modules

- New semiconductor fab capacity won't meaningfully increase supply until 2026-2027, making 2025-2026 the peak shortage period

- Consumers and businesses should lock in memory purchases now at current prices rather than waiting for future improvements
