TikTok Censorship Fears & Algorithm Bias: What Experts Say

Something shifted on TikTok last week, and it wasn't just the server infrastructure. When hundreds of thousands of users suddenly couldn't upload videos criticizing Immigration and Customs Enforcement (ICE), couldn't mention Jeffrey Epstein in direct messages, and kept hitting unexplained error messages, they weren't panicking at random. They recognized a pattern.

TikTok's official response? Technical glitches. A power outage at a US data center caused some bugs. Nothing intentional. Move along.

But here's where it gets complicated. When digital media experts at Columbia University and the University of Colorado Boulder looked at the pattern, they didn't dismiss it as coincidence. They described something worse: plausible deniability layered over what looks like systematic suppression. Whether the cause is deliberate or algorithmic bias embedded in the system, the result looks the same to users watching their content vanish.

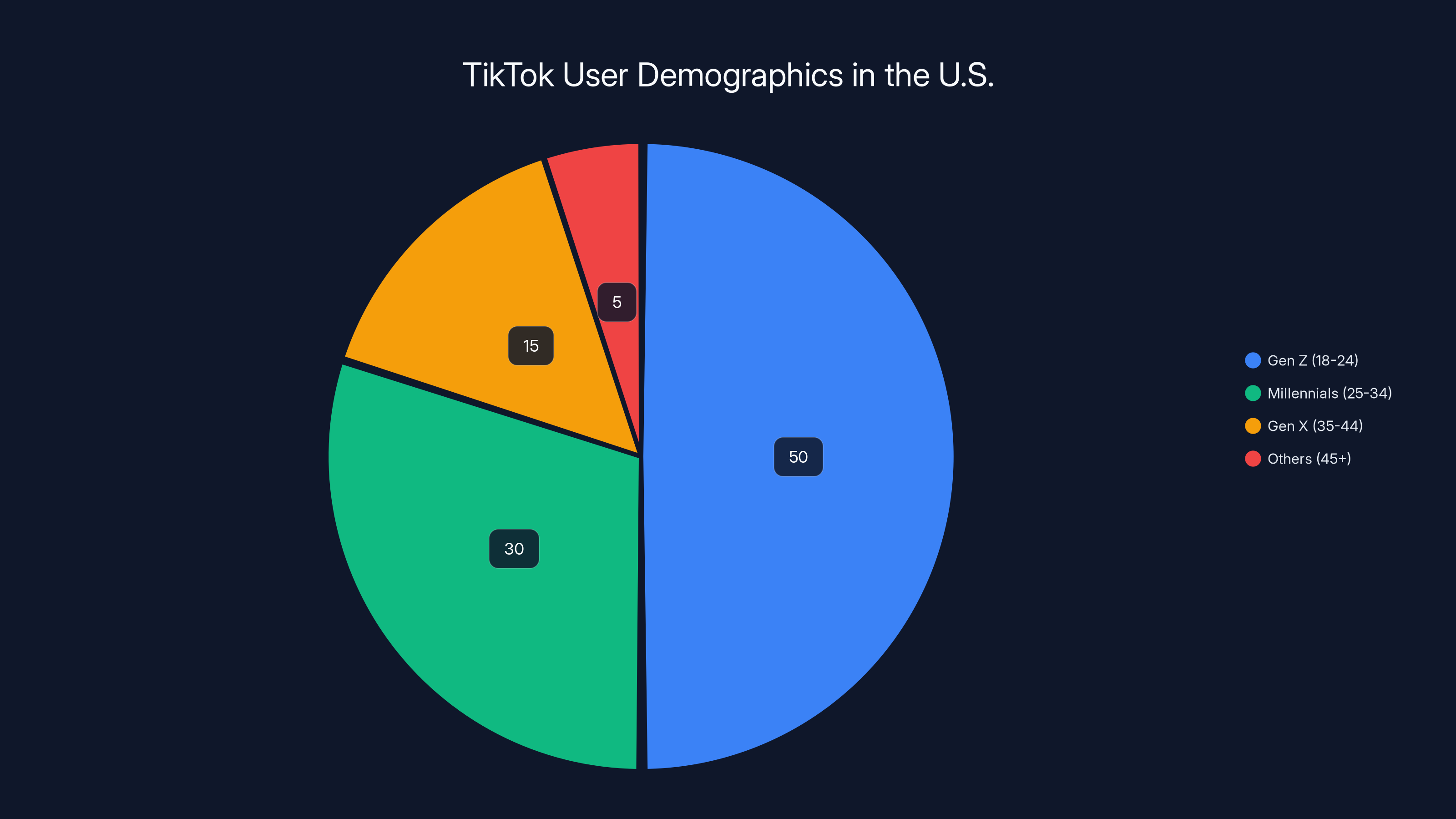

This matters because TikTok isn't just another app competing for your screen time. With 170 million American users, it's become the primary political communication platform for Gen Z, the place where cultural movements start, where activism organizes, where young voters discover news. When control of that platform shifts to politically aligned ownership, the stakes aren't theoretical anymore.

Let's break down what actually happened, why experts think users are right to be concerned, and what this means for digital freedom.

The Incident That Triggered Everything

Within days of the ownership change, TikTok users discovered they couldn't upload videos containing criticism of ICE enforcement. When they tried, they got an error message. No explanation. No support link. Just failure.

Then came the Epstein issue. Users who tried to mention Jeffrey Epstein in direct messages found those messages blocked, even though TikTok's official policy explicitly permits discussing Epstein. Public posts about him went through without issue; private conversations did not.

Anti-Trump content started disappearing from feeds. Pro-Trump content climbed faster. Users who'd spent years cultivating audiences built on progressive politics watched their engagement tank overnight.

TikTok responded with technical explanations. The data center power outage explanation made sense on the surface. Infrastructure failures do happen. AWS goes down sometimes. Google Cloud has outages. It's part of running internet-scale services.

But timing matters in pattern recognition. These bugs appeared immediately after Trump's hand-picked investors took control of the platform. They affected specific categories of content, not random data. And they appeared to correlate suspiciously well with political messaging critical of the administration.

That's not paranoia. That's observation.

Estimated data suggests a mix of technical issues, with data center outages and content-specific problems being the most prominent.

Why Experts Say Users' Fears Are Justified

Ioana Literat, an associate professor at Teachers College, Columbia University, who has studied TikTok's political function since 2018, cut through the technical explanations with a simple observation: the pattern is what matters.

"Even if these are technical glitches, the pattern of what's being suppressed reveals something significant," Literat explained to reporters. "When your 'bug' consistently affects anti-Trump content, Epstein references, and anti-ICE videos, you're looking at either spectacular coincidence or systems that have been designed—whether intentionally or through embedded biases—to flag and suppress specific political content."

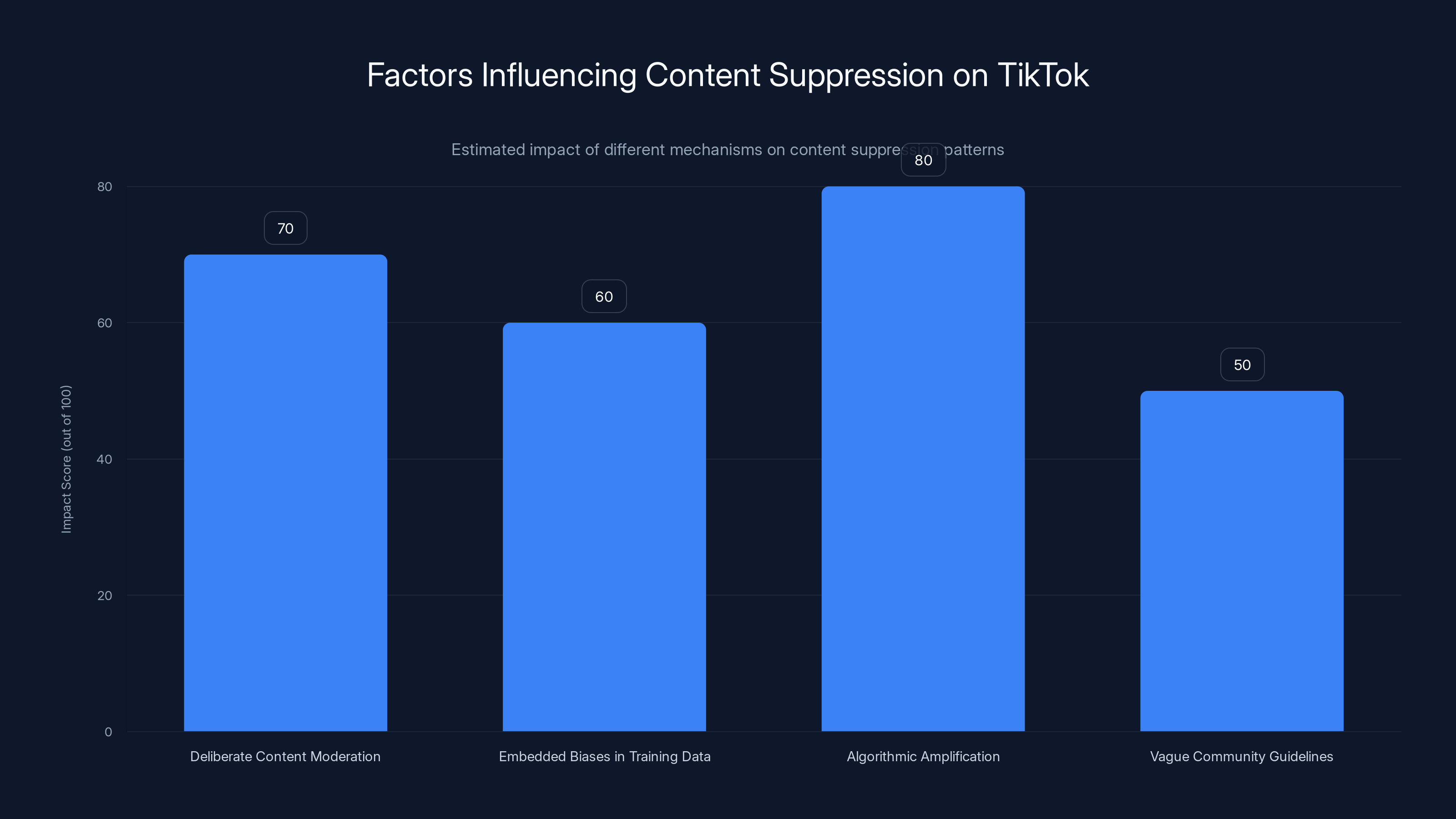

This distinction is crucial. Literat isn't accusing anyone of deliberate censorship, though that's possible. She's pointing to something more insidious: even if the bugs are genuine, the underlying systems may have learned to suppress certain categories of speech. That learning could have happened through:

Deliberate content moderation policies fed into machine learning systems by humans making editorial decisions. A hypothetical rule like "remove content critical of Republican candidates" would become a training signal that teaches the algorithm to suppress that content.

Embedded biases in training data where historical moderation decisions—even if made by different people with different intentions—taught the algorithm which content to prioritize and which to suppress.

Algorithmic amplification tied to engagement metrics. If conservative users engage more heavily with pro-Trump content on TikTok, the system learns that this type of content generates "valuable" interactions.

Vague community guidelines that are interpreted differently when applied to different political content. "Harassment" or "violence" could apply to anti-ICE activism or pro-Trump rhetoric, but enforcement is inconsistent.

Any of these mechanisms—or combinations of them—could produce exactly the outcome users are seeing without requiring a smoking gun memo saying "censor the left."

Casey Fiesler, an associate professor at the University of Colorado Boulder who studies technology ethics and internet law, took the analysis further. She told media outlets that whether the censorship is intentional is almost irrelevant to users' actual experience.

"Even if this isn't purposeful censorship, does it matter? In terms of perception and trust, maybe," Fiesler observed. "Once users lose confidence that they can speak freely, the platform's utility as a communication space collapses. It doesn't matter if the suppression was deliberate or accidental—the chilling effect on speech is identical."

That's the insight that separates experts from corporate PR. For users, the distinction between "we intentionally censored you" and "our algorithms learned to censor you, sorry about that" is meaningless. Either way, their voices aren't reaching people.

Algorithmic amplification and deliberate content moderation policies are estimated to have the highest impact on content suppression patterns on TikTok.

The Digital Literacy Argument: This Isn't Paranoia

One of the most interesting angles from Literat's research is her reframing of what looks like paranoia as actually sophisticated digital literacy.

Users worried about censorship on TikTok aren't making things up. They've watched this movie before:

Instagram suppressed Palestinian solidarity content for years, acknowledging problems only after researchers documented the pattern.

Twitter became a different platform after Elon Musk's acquisition, with algorithmic changes that measurably favored certain political perspectives.

TikTok itself had engaged in content moderation that affected left-leaning creators before the ownership change, shadowbanning videos without explanation.

YouTube demonetized anti-war content while allowing militaristic channels to thrive.

Facebook's algorithm was adjusted in ways that rewarded divisive political content because it drove engagement.

When you've experienced these patterns directly—watched your own content disappear, seen friends shadowbanned, noticed political videos from one perspective getting recommended while others don't—it's not paranoia to notice when the pattern repeats.

"They've watched Instagram suppress Palestine content, they've seen Twitter's transformation under Musk, they've experienced shadow-banning and algorithmic suppression, including on TikTok prior to this," Literat noted. "So, their pattern recognition isn't paranoia, but rather digital literacy. They understand how these systems actually work because they've been users of these systems for years."

This is worth sitting with. The people most concerned about TikTok censorship aren't conspiracy theorists. They're sophisticated users who understand platform mechanics, who've studied how algorithms work, who've experienced algorithmic suppression firsthand. When they say something feels off, they might be right.

The Political Stakes: Why This Platform Matters

Understanding TikTok's importance requires recognizing what it actually is in the American information ecosystem. It's not primarily an entertainment platform, though that's what the branding suggests. It's the primary political communication channel for voters under 30.

Where boomers get political news from cable TV and newspapers, where millennials get it from Facebook and podcasts, Gen Z gets it from TikTok. Climate activism? TikTok. Student loan policy? TikTok. Police reform? Immigration policy? Palestinian rights? LGBTQ+ protections? These movements organize and spread on TikTok faster than anywhere else.

That's why Trump's comment about wanting TikTok to be "100 percent MAGA" matters so much. Even if it was offhand, critics read it as a description of his actual intent for the platform. And the takeover structure, with Trump-aligned ownership taking control, creates the structural possibility for that intent to become policy.

Literat has studied both left-leaning and right-leaning TikTok communities. The left side is famous: young progressives using the platform to advocate for racial justice, climate action, gun reform, LGBTQ+ rights, Palestinian solidarity. But there's a whole conservative ecosystem too: Christian TikTok, tradwife content, right-wing political commentary, pro-Trump organizing.

For years, TikTok's algorithm created what researchers call "filter bubbles"—environments where you mostly see content aligned with your existing views, but not completely. You see some opposing viewpoints, enough to know they exist, but not enough to be challenged constantly.

"Political life on TikTok is organized into overlapping sub-communities, each with its own norms, humor, and tolerance for disagreement," Literat explained. "The algorithm creates bubbles, so people experience very different TikToks."

The question now is whether those bubbles remain neutral or get algorithmically biased toward one political perspective. If TikTok starts suppressing left-wing content while amplifying right-wing content—and the bugs suggest it already might be—the platform transforms from a communication space into a propaganda apparatus.

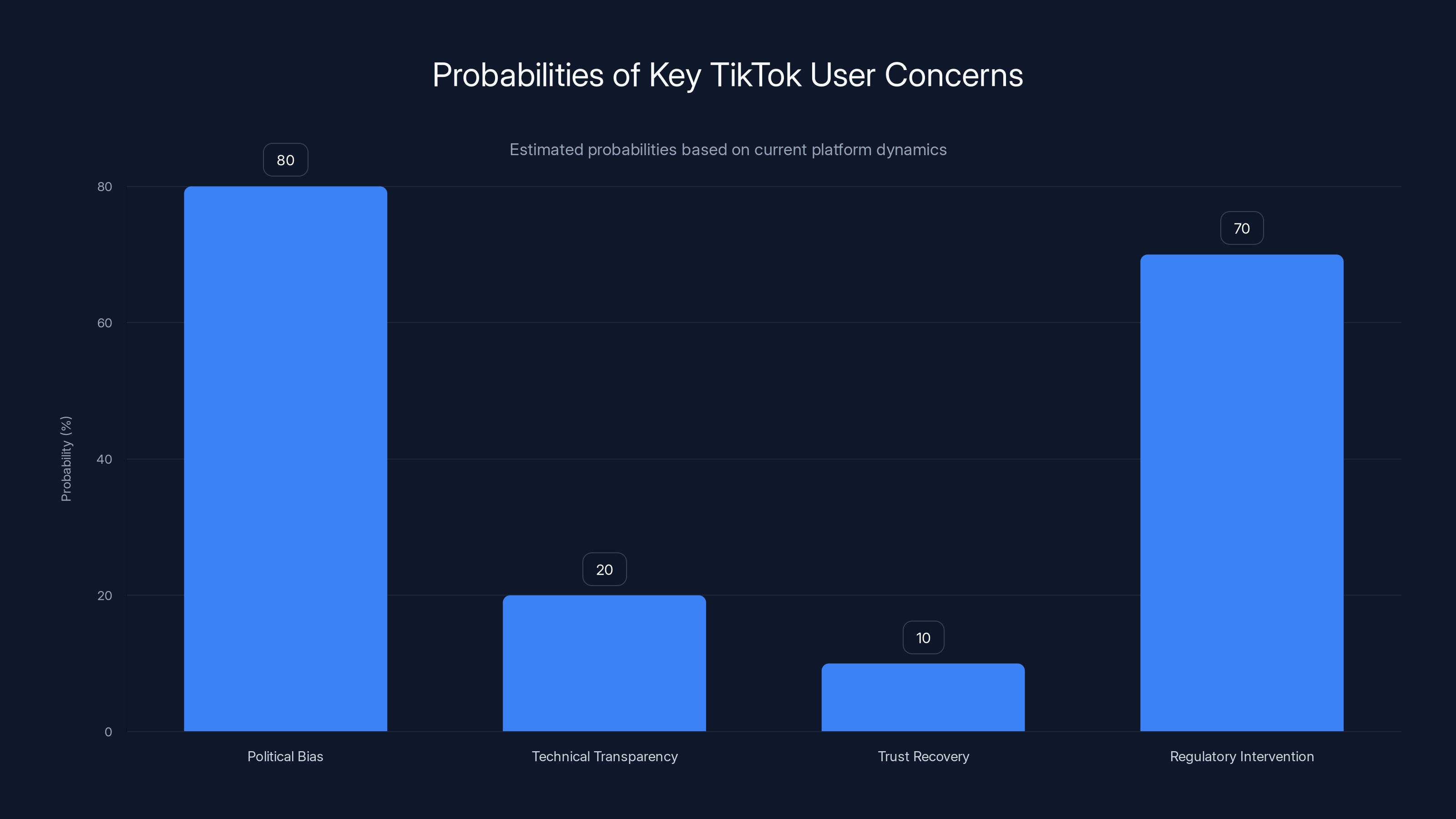

Estimated data shows high probability of increased political bias and regulatory intervention, with low chances for technical transparency and trust recovery.

The Historical Irony and Why It Matters

There's a bitter irony worth examining here. For years, American politicians argued that TikTok was dangerous specifically because it was controlled by the Chinese government and might manipulate Americans for propaganda purposes.

That argument had merit. Foreign control of a major political communication platform is risky. China's government does censor content, does propagandize, does use tech platforms for state purposes.

But the solution wasn't to hand the platform to American political leaders. It was to ensure the platform remained neutral and transparent about how it moderates content.

Instead, the sale that Congress forced through its 2024 divestiture law was brokered by the Trump administration and went to investors aligned with his political interests. Now the concern about government-backed censorship remains, but the government is a different one, and the censorship, if it's happening, serves a different power structure.

Literat called out this historical irony explicitly: "We went from 'TikTok is dangerous because it's controlled by the Chinese government and might manipulate Americans' to 'TikTok is actually now controlled by Trump-aligned investors and is manipulating Americans.' The threat model changed, but the fundamental problem—a major political communication platform being controlled by a government-aligned actor—remained the same."

This observation matters because it shows how the policy debate got the right problem (political control of communication infrastructure is bad) but implemented the wrong solution (gave it to American political leadership instead of making it independent).

What Users Are Actually Experiencing

Beyond the philosophical implications, real users with real audiences are experiencing real consequences.

TikTok's subreddit filled with posts from creators grieving the platform. Content they'd built for years suddenly stopped reaching audiences. Videos that used to get hundreds of thousands of views got a few hundred. The algorithm, which had been predictable enough for creators to optimize around, suddenly became random and hostile.

Some users tried to delete their accounts in protest. But here's where the frustration compounds: they couldn't. TikTok's account deletion system started failing. Users reported being unable to complete the deletion process, getting stuck on error screens, being told to wait 24 hours and try again.

Whether that's intentional platform lock-in or just another bug, it compounds the sense of betrayal. Users don't just feel like their speech is being suppressed; they feel trapped on the platform.

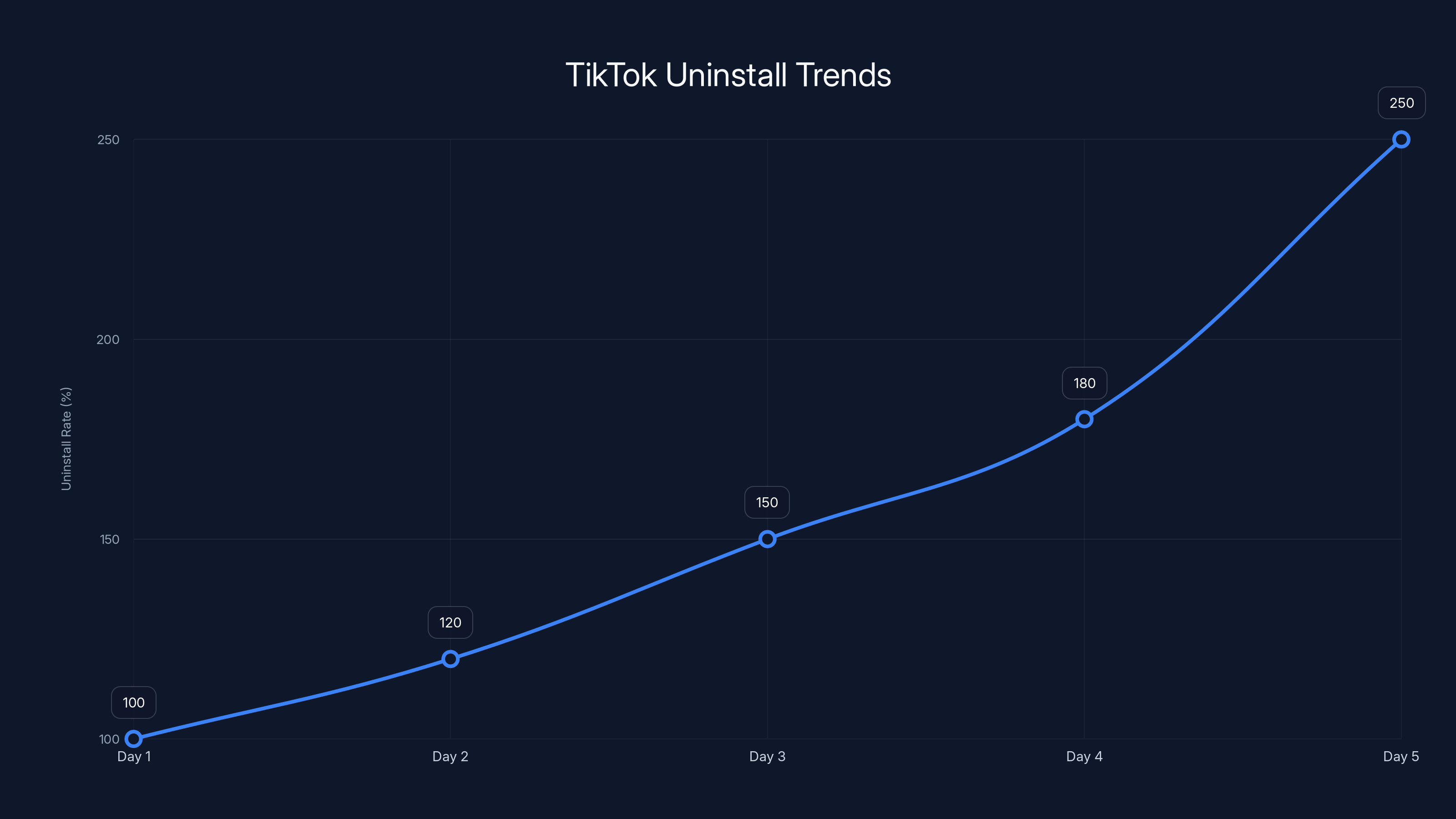

Data from Sensor Tower, a mobile analytics firm, quantified what was happening: TikTok uninstalls jumped nearly 150 percent in five days compared to the previous three-month average. Hundreds of thousands of users were trying to leave simultaneously.

But many couldn't. The system designed to let users exit the platform had stopped working.

TikTok uninstalls rose nearly 150% over five days compared with the prior three-month average, indicating user dissatisfaction and attempts to leave the platform. Estimated data.

The Technical Explanations and Why They're Insufficient

TikTok's official statements about the issues focused on technical explanations:

- The data center power outage knocked infrastructure offline temporarily, causing various errors and slow response times.

- TikTok described the Epstein filter as a separate issue, unrelated to the outage, that it was investigating.

- The anti-Trump content suppression was attributed to the broader infrastructure issues affecting upload functionality.

On their surface, these explanations are plausible. Data centers do experience power failures. Content moderation systems running on degraded infrastructure might behave erratically. Temporary suppression of uploads could definitely happen during infrastructure issues.

But the pattern raises questions that technical explanations don't address:

Why did some categories of content fail while others succeeded? If this was a blanket infrastructure issue, why could some users upload while others couldn't? Why was the failure correlated with specific content types?

Why did the Epstein filter persist separately? If everything was caused by the data center outage, why did the Epstein issue remain after other systems recovered?

Why did the timing align perfectly with political control changing hands? Technical failures happen randomly throughout the year. What are the odds it happened exactly when new leadership took over?

Why did TikTok lack transparency about what was happening? If the cause was genuinely just technical issues, why not explain the architecture decisions that caused content-specific failures? Why not provide detailed incident reports?
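The timing question ("what are the odds?") can be put in rough numbers. The sketch below assumes, purely for illustration, that serious outages arrive independently at a steady average rate (a Poisson process); the rates are invented, not TikTok's actual outage history. Under that assumption, the chance of at least one outage inside a window of `window_days` is `1 - exp(-rate * window_days / 365)`.

```python
import math

def prob_outage_in_window(outages_per_year: float, window_days: float) -> float:
    """Chance of at least one outage inside a window of `window_days`.

    Assumes (hypothetically) that outages arrive independently at a steady
    average rate, i.e. a Poisson process with `outages_per_year` events/year.
    """
    expected_in_window = outages_per_year * window_days / 365
    return 1 - math.exp(-expected_in_window)

# Illustrative rates only; these are not TikTok's real outage figures.
for rate in (1, 4, 12):
    p = prob_outage_in_window(rate, window_days=7)
    print(f"{rate:>2} outages/year -> {p:.1%} chance of one in the first week")
```

Under these assumptions, a coincidental outage in the first week ranges from about 2 percent to about 21 percent depending on the assumed rate. Timing alone is suggestive rather than conclusive, which is why the content-specific questions carry more weight.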

Fiesler's point resurfaces here: whether the suppression is intentional becomes less relevant than whether the platform can rebuild trust that it's not happening. TikTok's opaque technical explanations make rebuilding that trust much harder.

The Algorithm and How It Actually Works

Understanding TikTok's algorithm requires understanding how recommendation systems in general work. It's not a simple "show me what I want to see" setup.

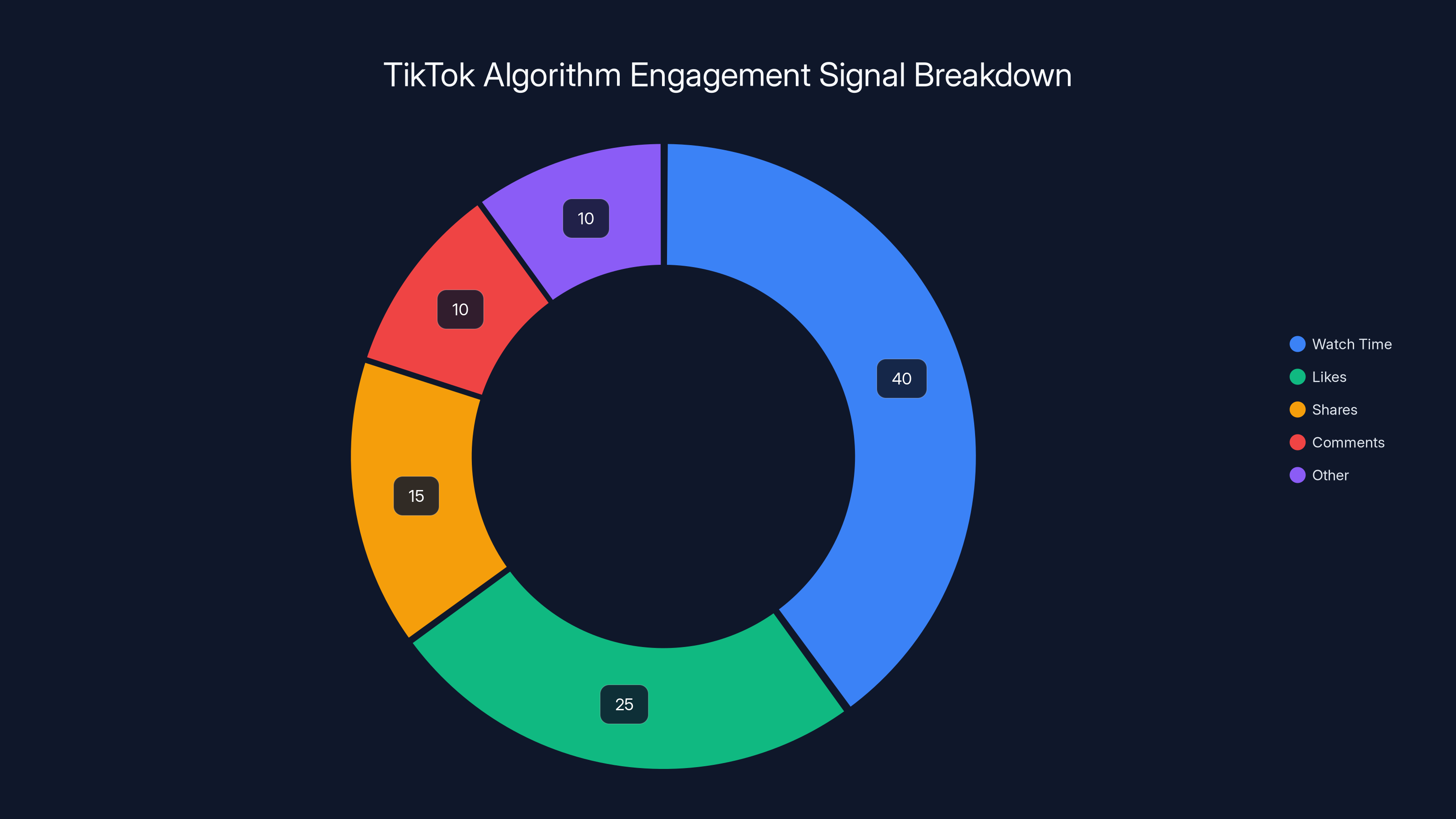

Instead, the algorithm optimizes for what keeps users engaged. Watch time, likes, shares, comments—these are the signals the system learns to maximize. The algorithm then learns which types of content produce those signals and prioritizes showing more of that content.
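To make engagement optimization concrete, here is a minimal scoring sketch. The signal names mirror the ones listed above, but the weights are invented for illustration: TikTok does not publish how it weighs watch time against likes, shares, or comments, and its real system uses learned models rather than a fixed weighted sum.

```python
from dataclasses import dataclass

# Hypothetical weights; TikTok's real signal weighting is not public.
WEIGHTS = {"watch_time": 1.0, "likes": 0.5, "shares": 0.8, "comments": 0.6}

@dataclass
class Video:
    video_id: str
    watch_time: float  # average fraction of the video watched (0..1)
    likes: float       # likes per impression
    shares: float      # shares per impression
    comments: float    # comments per impression

def engagement_score(video: Video, weights: dict[str, float] = WEIGHTS) -> float:
    """Weighted sum of engagement signals; higher means 'more engaging'."""
    return sum(w * getattr(video, signal) for signal, w in weights.items())

def rank_feed(videos: list[Video], weights: dict[str, float] = WEIGHTS) -> list[str]:
    """Order candidate videos by predicted engagement, best first."""
    ranked = sorted(videos, key=lambda v: engagement_score(v, weights), reverse=True)
    return [v.video_id for v in ranked]

candidates = [
    Video("a", watch_time=0.60, likes=0.10, shares=0.02, comments=0.01),
    Video("b", watch_time=0.45, likes=0.20, shares=0.10, comments=0.05),
    Video("c", watch_time=0.70, likes=0.05, shares=0.01, comments=0.00),
]
print(rank_feed(candidates))                            # ['c', 'a', 'b']
print(rank_feed(candidates, {**WEIGHTS, "shares": 1.5}))  # ['c', 'b', 'a']
```

Raising a single weight (`shares` from 0.8 to 1.5) swaps videos "a" and "b" even though nothing about the videos themselves changed, which is how a small change in signal weighting can alter visibility.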

This creates a feedback loop. If conservative users engage more with pro-Trump content, the algorithm learns to show more pro-Trump content to users it predicts will engage with it. This isn't necessarily intentional bias; it's the system doing exactly what it was designed to do: maximize engagement.
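A toy simulation shows how quickly such a loop can compound. It assumes, hypothetically, two content categories where category A earns engagement on 11 percent of impressions and category B on 10 percent, and a system that reallocates each round's impressions in proportion to the engagement each category earned in the previous round. Real recommenders are far more complex; the sketch only illustrates the compounding dynamic.

```python
def simulate_feedback_loop(rate_a: float, rate_b: float, rounds: int,
                           share_a: float = 0.5) -> list[float]:
    """Return category A's share of impressions after each round.

    Each round, impressions are reallocated in proportion to the
    engagement each category earned in the previous round.
    """
    history = []
    for _ in range(rounds):
        engaged_a = share_a * rate_a        # engagement earned by A
        engaged_b = (1 - share_a) * rate_b  # engagement earned by B
        share_a = engaged_a / (engaged_a + engaged_b)
        history.append(share_a)
    return history

shares = simulate_feedback_loop(rate_a=0.11, rate_b=0.10, rounds=30)
print(f"after 10 rounds: {shares[9]:.2f}, after 30 rounds: {shares[29]:.2f}")
```

Starting from an even split, a one-point engagement edge grows to roughly 72 percent of impressions after 10 rounds and about 95 percent after 30, without anyone deciding to favor category A.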

But here's the problem: engagement-maximization isn't the same as truth-maximization or democratic-speech-maximization. The algorithm doesn't care whether content is factually accurate or whether it's promoting healthier political discourse. It only cares whether people click, watch, and interact.

When the ownership changed, the objectives could have shifted. Maybe TikTok isn't just optimizing for engagement anymore. Maybe it's also optimizing for political alignment with the new owners. That would be a small change to the algorithm's objective function, but it could have massive effects on what content gets amplified.

A small adjustment like this might not show up in error messages or crash logs. It would just quietly change which content users see. And to most users, it would feel like bugs—inexplicable suppression of content they wanted to see.
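The kind of quiet objective change described above can be sketched in a few lines. The `alignment` label and `alignment_weight` parameter are hypothetical; nothing in the reporting shows that TikTok uses such a term. The point is only that adding one small term to a ranking objective reorders the feed while every function still returns normally, so nothing surfaces as an error.

```python
from typing import NamedTuple

class Candidate(NamedTuple):
    video_id: str
    engagement: float  # predicted engagement, as in a pure engagement ranker
    alignment: float   # HYPOTHETICAL stance label: +1, 0, or -1

def ranking_objective(c: Candidate, alignment_weight: float) -> float:
    """Engagement plus an optional, hypothetical alignment bonus or penalty."""
    return c.engagement + alignment_weight * c.alignment

def rank(candidates: list[Candidate], alignment_weight: float = 0.0) -> list[str]:
    ordered = sorted(candidates,
                     key=lambda c: ranking_objective(c, alignment_weight),
                     reverse=True)
    return [c.video_id for c in ordered]

pool = [
    Candidate("x", engagement=0.80, alignment=-1.0),
    Candidate("y", engagement=0.78, alignment=+1.0),
    Candidate("z", engagement=0.60, alignment=0.0),
]
print(rank(pool))                         # ['x', 'y', 'z']
print(rank(pool, alignment_weight=0.05))  # ['y', 'x', 'z']
```

With `alignment_weight=0.05`, video "y" overtakes "x" even though "x" is predicted to be more engaging, and no log line or crash records the change.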

Estimated data shows that Gen Z (18-24) constitutes the largest group of TikTok users in the U.S., highlighting its influence as a political communication platform.

What Happens When Algorithm Changes Occur

Literat made an important prediction that's worth examining: as the algorithm undergoes tweaks, frequent users will be the first to notice.

"Frequent TikTok users will likely be the first to pick up on subtle changes, especially if content unaligned with their political views suddenly starts appearing in their feeds when it never did before," Literat observed.

This is already happening. Users are reporting that their "For You" pages have become noticeably more conservative. Content they never would have seen before—militia-adjacent material, anti-immigrant content, pro-Trump commentary—is now showing up in their recommendations.

Some of this might be natural algorithmic drift. Some might be intentional. But the key point is that users will notice. TikTok's user base is digitally literate enough to spot when the algorithm shifts. They'll compare notes. They'll document changes. They'll share evidence on Reddit and other platforms.
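Comparing notes can be made somewhat more rigorous with a standard two-proportion z-test: count how many recommended videos fall into a tracked category in two sampled periods and check whether the change in share is larger than chance alone would explain. The counts below are invented for illustration. One caveat matters: a personalized feed is not a random sample, so a significant result shows that the feed changed, not why it changed.

```python
import math

def two_proportion_z(hits_before: int, n_before: int,
                     hits_after: int, n_after: int) -> float:
    """z statistic for the change in a category's share of sampled recommendations."""
    p_before = hits_before / n_before
    p_after = hits_after / n_after
    # Pooled share under the null hypothesis that nothing changed.
    pooled = (hits_before + hits_after) / (n_before + n_after)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / n_before + 1 / n_after))
    return (p_after - p_before) / std_err

def two_sided_p_value(z: float) -> float:
    """Two-sided p-value from the normal approximation."""
    return math.erfc(abs(z) / math.sqrt(2))

# Invented example: 30 of 300 sampled For You videos were in the tracked
# category before the change, 60 of 300 after.
z = two_proportion_z(30, 300, 60, 300)
print(f"z = {z:.2f}, p = {two_sided_p_value(z):.4f}")
```

A z of about 3.4 means a jump from 10 to 20 percent is very unlikely to be sampling noise alone, though it says nothing about intent.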

If the platform really is being "100 percent MAGA-fied," as Trump suggested, that process won't be secret. It'll be visible in the changing composition of recommended content. And once users confirm that it's happening, trust in the platform collapses.

This creates a curious situation: TikTok can't actually hide algorithmic bias. Its users are sophisticated enough to detect it. So any attempt to stealthily shift the algorithm toward political bias will eventually be exposed.

The question is whether TikTok even cares. If the ownership is explicitly trying to create a right-wing platform, maybe visibility doesn't matter. Maybe the plan is to own the transformation rather than hide it.

The Trust Problem and Why It Matters More Than the Technical Fix

Here's the thing about digital platforms: trust is everything. Once users believe they're being lied to, once they think the platform is working against them, engagement collapses.

Facebook experienced this. Trust in Facebook among younger users declined precipitously after the Cambridge Analytica scandal revealed how the platform's data was being used. Even though the platform's technical functionality remained the same, the loss of trust damaged the experience so badly that millions of users left.

Twitter experienced this more recently. Musk's takeover and the subsequent algorithmic changes convinced millions of users that the platform was no longer a neutral public square. Whether those concerns were technically justified became almost irrelevant. The perception was powerful enough to drive behavior.

TikTok is at this inflection point now. Whether the bugs are genuine technical issues or the start of political suppression, the outcome is the same: users have lost confidence that the platform operates fairly.

Fiesler emphasized exactly this: "The longer that errors damage the perception of the app, the more trust erodes. And once trust is gone, it's nearly impossible to recover."

TikTok's technical team might fix the bugs. The infrastructure might be restored. But rebuilding trust requires much more than that. It requires transparency about how the algorithm works, public explanation of moderation policies, independent audits of bias, and a commitment to remaining politically neutral.

Given TikTok's new ownership, and Trump's stated wish to make the platform "100 percent MAGA," rebuilding trust seems unlikely.

Estimated data shows that watch time is the most significant engagement signal processed by TikTok's algorithm, followed by likes and shares. A small change in signal weighting can significantly alter content visibility.

Why This Moment Matters for Digital Freedom

The TikTok situation is part of a larger pattern where major communication platforms are being captured by political actors.

Twitter was bought by an individual with strong political views. Meta remains under Mark Zuckerberg's control, and his political neutrality is questionable. YouTube suppresses certain viewpoints algorithmically. Reddit moderated its political forums extensively. Threads, Meta's Twitter competitor, is controlled by a company with clear political interests.

The fundamental problem is that the infrastructure of political speech in America is now owned by private companies with profit motives, political preferences, or both. These aren't neutral public squares. They're privately owned spaces where the owners can set rules.

For a generation that relies on these platforms for political organization, this is a huge problem. Young voters can't organize effectively if the platform where they primarily communicate is actively working against their political interests.

Literat called this out directly: "The implication that Trump views TikTok as a potential propaganda apparatus rather than a space for authentic expression and connection is deeply concerning. When political leaders see communication platforms as tools for political capture rather than spaces for free speech, the threat to democracy becomes very real."

This isn't about TikTok specifically anymore. It's about whether any major communication platform can remain free from political control. And right now, the answer appears to be no.

The Data Center Explanation and What It Actually Reveals

TikTok attributed the initial bugs to a power outage at a US data center operated by a partner company. This explanation might be technically true, but it reveals something important about how the company operates.

If TikTok's US infrastructure is now so fragile that a single data center failure causes widespread content moderation failures, that's a problem. Major platforms have redundancy built in specifically so that single points of failure don't cascade into widespread user-facing issues.

Either TikTok's infrastructure was hastily rebuilt to meet the deadline for the forced sale and doesn't have proper redundancy, or the technical explanation is incomplete. Neither option is reassuring.

A well-architected system would have:

- Multiple data centers so that failure of one doesn't affect the service

- Content moderation systems distributed across regions so that local failures don't suppress content globally

- Failover mechanisms that gracefully degrade service rather than suddenly blocking uploads

- Monitoring systems that alert engineers immediately to issues
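The failover and graceful-degradation points above can be sketched as an upload path that tries each moderation region in turn, records an alert for every failure, and, if all regions are down, queues the video for later review instead of rejecting it. The region names, the `review` stand-in, and the queue-instead-of-reject policy are illustrative assumptions, not a description of TikTok's architecture. Note that nothing in this failure path looks at what the video is about.

```python
from dataclasses import dataclass, field

class RegionUnavailable(Exception):
    """Raised when a (hypothetical) moderation region cannot be reached."""

@dataclass
class ModerationRegion:
    name: str
    healthy: bool = True

    def review(self, video_id: str) -> str:
        if not self.healthy:
            raise RegionUnavailable(self.name)
        return "approved"  # stand-in for a real moderation decision

@dataclass
class UploadService:
    regions: list[ModerationRegion]
    pending_review: list[str] = field(default_factory=list)
    alerts: list[str] = field(default_factory=list)

    def upload(self, video_id: str) -> str:
        # Fail over across regions in order; alert on each failure.
        for region in self.regions:
            try:
                return region.review(video_id)
            except RegionUnavailable:
                self.alerts.append(f"{region.name} unavailable for {video_id}")
        # Every region failed: degrade gracefully by queuing, not rejecting.
        self.pending_review.append(video_id)
        return "queued"

service = UploadService([ModerationRegion("primary", healthy=False),
                         ModerationRegion("secondary")])
print(service.upload("video-123"))  # approved, via the secondary region
```

Queuing rather than auto-approving is a judgment call: it keeps unreviewed content off the platform while still accepting the upload. In a design like this, an outage delays every upload equally, regardless of topic.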

The fact that TikTok experienced cascading failures across these systems suggests either recent, sloppy infrastructure changes or systems that were never well-designed to begin with.

This matters because it suggests that the platform's technical foundation is shakier than users realized. If a single data center power failure causes this much damage, what happens when there's a more serious infrastructure issue? What happens when the political pressure increases?

The Path Forward: What Users Need to Know

For TikTok users, the immediate question is whether to stay on the platform or leave. Some have already decided to go. Others are waiting to see whether the new ownership actually does implement political censorship or whether the bugs were just bad luck.

Making that decision requires honest assessment of the platform's likely future:

Probability of increased political bias: High. Trump has said he would like the platform to be "100 percent MAGA," and its new owners are politically aligned with him.

Probability of technical transparency: Low. Major platforms rarely explain their algorithms in detail, and TikTok has no incentive to be more transparent than competitors.

Probability of trust recovery: Very low. Users who've lost confidence rarely regain it unless the ownership changes fundamentally.

Probability of regulatory intervention: Moderate to high. California Governor Gavin Newsom announced plans to investigate whether TikTok is violating state law through censorship. Other states and federal regulators will likely follow.

Given these probabilities, users should consider what their continued presence on TikTok means. Are they comfortable using a platform that's likely to suppress certain political speech? Are they okay with their data being controlled by Trump-aligned investors? Can they trust the platform to treat all users fairly?

These are personal decisions that reasonable people might answer differently. But the decision should be made with clear eyes about what's actually happening, not based on TikTok's technical explanations.

What This Means for the Future of Digital Platforms

The TikTok takeover signals something important about how the internet is evolving. We're moving away from the era of nominally neutral platforms toward explicitly political ones.

Facebook used to present itself as politically neutral, a space where all voices could be heard equally. That pretense is increasingly abandoned. Twitter under Musk is openly right-leaning, while Threads and Bluesky have drawn many of the users who left it, and Bluesky's community in particular skews left.

TikTok may be next. Instead of pretending to be neutral, TikTok might become an explicitly right-leaning platform, captured by Republican interests.

What does this fragmentation mean? Probably a further divergence in information ecosystems. Left-leaning users go to Bluesky and Threads. Right-leaning users stay on Twitter and TikTok. The middle ground, platforms where different perspectives interact, shrinks.

That's bad for democracy, but it's where the market is heading. Users will sort into platforms that align with their politics. Creators will follow audiences. The algorithms will learn to reinforce homogeneity.

Understood this way, TikTok's transformation isn't a bug. It's the inevitable endpoint of how digital platforms evolve under private ownership and political pressure.

Literat's framing is useful here: "This is the historical irony. We're back to a world where major communication infrastructure is captured by governments or government-aligned actors. We've just swapped one government for another. The fundamental problem—communication platforms being tools for political capture rather than spaces for free speech—remains unchanged."

The Regulatory Response and What It Might Achieve

Governor Newsom announced plans to investigate whether TikTok violated California law through political censorship. This is likely the first of many regulatory actions.

What could regulation actually accomplish?

Algorithmic transparency: Require platforms to explain how their algorithms make decisions, including how political content is treated relative to other content.

Bias audits: Mandate independent audits of algorithmic bias, with results published publicly.

Data protection: Strengthen rules about who can access user data and how it's used for political purposes.

Switching costs: Make it easier for users to export their data and accounts to competing platforms, reducing lock-in.

Ownership restrictions: Prevent political actors from controlling major communication platforms, similar to media ownership rules for TV and radio.
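A bias audit needs a concrete metric. One simple, hypothetical choice is an exposure ratio: each category's share of impressions divided by its share of uploads. The category labels and counts below are invented, and labeling content by political leaning is itself a hard, contestable step that a real audit would have to document. A ratio well below 1.0 shows under-exposure, but not whether it comes from deliberate policy or engagement-driven ranking.

```python
def exposure_ratios(uploads: dict[str, int], impressions: dict[str, int]) -> dict[str, float]:
    """Impression share divided by upload share for each category.

    1.0 means a category is shown in proportion to how much of it is posted;
    below 1.0 means it is under-exposed relative to supply.
    """
    total_uploads = sum(uploads.values())
    total_impressions = sum(impressions.values())
    return {
        category: (impressions.get(category, 0) / total_impressions)
        / (count / total_uploads)
        for category, count in uploads.items()
    }

# Invented audit sample: equal uploads, unequal impressions.
ratios = exposure_ratios(
    uploads={"left": 500, "right": 500},
    impressions={"left": 300_000, "right": 700_000},
)
for category, ratio in ratios.items():
    print(f"{category}: {ratio:.2f}")
```

Computing a metric like this requires platform-side impression data, which is exactly what transparency and audit mandates would have to compel.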

None of these are guaranteed. Regulation moves slowly, and tech companies are effective at lobbying against it. But the political pressure is building, and TikTok's recent actions have made the problem impossible to ignore.

What's less clear is whether regulation can actually solve the problem. Even with transparency requirements, if the platform's ownership is explicitly political, it will still use the communication infrastructure for political purposes. Regulation might make that capture more visible, but it won't prevent it.

The deeper solution probably requires rethinking who can own major communication platforms. If we want genuine free speech, we might need platforms that are either publicly owned, cooperatively owned by users, or owned by neutral entities with explicit commitments to political independence.

That's not happening anytime soon. But the TikTok situation makes clear that the current model—private ownership with profit motives and political influence—isn't sustainable.

Why the Experts' Warnings Matter More Than You Think

When Literat and Fiesler issued their warnings about TikTok's direction, they weren't engaging in speculation. They were drawing on years of research studying exactly how platforms suppress speech, how algorithms develop bias, and how political actors capture communication infrastructure.

Literat has published extensively on TikTok's role in political organizing. Fiesler has studied content moderation decisions and their effects on free speech across multiple platforms. These aren't academics making wild predictions. They're researchers documenting patterns they've observed.

The pattern is consistent: when ownership of a platform shifts to a politically aligned actor, suppression of opposing viewpoints follows. Not always in obvious ways. Sometimes through algorithm changes. Sometimes through vague policy interpretations. Sometimes through infrastructure decisions that affect different content differently.

But it follows. And users notice.

So when these experts say TikTok users' fears are justified, they're not offering comfort. They're confirming that what users are experiencing is real, observable, and predictable based on how digital platforms work.

That's not reassuring. But it is honest.

The Election Implications and Political Stakes

We're heading into a 2026 midterm cycle and a 2028 presidential election. TikTok is where young voters get political information.

If TikTok is suppressing anti-Trump content and amplifying pro-Trump content, that's not a minor platform issue. That's election interference.

Literat made this point explicitly: "If TikTok becomes a propaganda apparatus controlled by Republican interests, we're not just losing a social media app. We're losing the primary political communication channel for Gen Z. The electoral implications are massive."

This creates an interesting situation for policymakers. They need to address TikTok's political bias before the next major election, but they also face pressure from different directions. Some want to ban TikTok entirely. Others want to preserve it as a platform for free speech. Still others want to capture it for their own political purposes.

The odds of actually solving this problem before 2026 are low. More likely, TikTok becomes a contested political issue itself—part of the broader battle over who controls digital communication infrastructure.

For users, that means paying attention to how the platform changes over the next year. Document algorithmic shifts. Share evidence. Organize backup communication channels. The political future might depend on it.

Moving Forward: What You Actually Need to Do

If you're a TikTok user concerned about censorship and political bias, here's what to do:

Document the changes you see. Screenshot your feed. Note what content gets recommended. Compare notes with friends. This documentation becomes evidence if there is a future investigation.

Export your data. Most platforms let you download your data and videos. Do it now, before the situation gets worse.

Find alternative platforms. Ironically, Red Note, which is Chinese-owned, became a temporary refuge for TikTok users. For longer-term alternatives, look at Bluesky, Threads, Discord servers, and independent platforms.

Support regulatory efforts. Call your representatives. Newsom's investigation is a start, but federal action will be necessary.

Stay connected. The communities you're part of matter more than the platform. If your favorite TikTok creators migrate to other platforms, follow them.

Educate others about algorithmic bias. Digital literacy is the defense against platform manipulation. Share what you know.

None of this guarantees that TikTok will become less political or more transparent. But it's how you maintain agency in a situation where major platforms are increasingly captured by political interests.

The Bigger Picture: Platforms and Democracy

The TikTok situation is one instance of a much larger problem: the concentration of communication infrastructure in private hands, with no accountability to users or the public.

We've allowed major communication platforms to develop with minimal regulation, minimal oversight, and minimal commitment to neutrality. Now we're seeing the consequences.

When platforms were smaller and more numerous, this was less of a problem. Users could leave one platform for another. But now that TikTok, Facebook, YouTube, Twitter, and a handful of others control the vast majority of digital speech, there are few places left to go.

Fixing this requires thinking bigger than just regulating TikTok. It requires rethinking the fundamental architecture of digital communication. Do we want social networks to be proprietary platforms owned by corporations? Or do we want them to be open, decentralized systems where no single actor can capture the infrastructure?

These are questions for the next decade of policy. For now, TikTok is the immediate crisis point.

The experts are telling us that what we're worried about—political capture of our communication platforms—is real and measurable. We should listen to them. The decisions we make about TikTok in the next few months will shape what digital freedom looks like for the rest of the 2020s.

FAQ

What exactly is the TikTok censorship concern?

Users reported that videos critical of ICE enforcement and references to Jeffrey Epstein were being suppressed or blocked. TikTok attributed these problems to technical glitches caused by a data center power outage, but experts note that the suppression disproportionately affected political content opposing Trump and coincided suspiciously with the new ownership structure.

Are the bugs real technical failures or intentional censorship?

Experts like Ioana Literat acknowledge that the bugs might genuinely be technical failures, but argue the distinction becomes meaningless to users. Whether intentional or accidental, the result—suppression of specific political content—remains the same. The underlying question is whether TikTok's systems have been designed or trained to suppress certain viewpoints.

Why should I care if I'm not a TikTok user?

TikTok is the primary political communication platform for Gen Z, with 170 million American users. If it becomes a tool for one political perspective, it affects election outcomes, policy debates, and how young voters organize politically. This matters for democracy regardless of whether you personally use the app.

What does algorithmic bias actually mean in this context?

Algorithmic bias means the system consistently suppresses or amplifies certain types of content—in this case, political content—not because of explicit rules, but because of patterns in training data or optimization objectives. It's discrimination encoded into the math rather than the policy document.

Can regulation actually fix TikTok?

Regulation can increase transparency and require bias audits, but it can't force a politically aligned owner to become neutral. If the ownership structure remains Trump-friendly, regulatory oversight might expose the bias but won't eliminate it. The deeper solution requires addressing who can own major communication platforms.

What's the difference between TikTok's current problems and Twitter's problems after Elon Musk acquired it?

Both involve ownership change by a politically aligned actor, followed by algorithmic shifts that suppress opposing viewpoints. The main difference is that Musk acted overtly—firing thousands of moderators and openly discussing right-wing bias—while TikTok is attributing changes to technical glitches, creating plausible deniability. Both outcomes threaten free speech, but TikTok's opacity makes it harder to prove.

If I delete TikTok, where should creators and communities move?

Bluesky is positioning itself as a Twitter alternative with better moderation. Threads is Meta's Twitter competitor but comes with Meta's own political questions. Red Note has attracted some TikTok users but is Chinese-owned. YouTube Shorts (owned by Google) and Instagram Reels (owned by Meta) are options, but both belong to large corporations with their own agendas. Discord communities and email lists offer long-term sustainability for creators who want to own their audience.

What would a truly neutral TikTok look like?

Transparency about algorithmic decision-making, independent audits for political bias, explicit commitment to treating all political content equally regardless of ownership preferences, and user control over algorithmic filtering. The challenge is that these commitments conflict with profit-maximization and political control, making them unlikely under current ownership models.

Is it paranoia if I'm worried about platform censorship?

No. Experts Ioana Literat and Casey Fiesler explicitly affirmed that users' concerns are justified and rooted in pattern recognition from previous platform experiences. Users have watched Instagram suppress Palestine content, Twitter shift under Musk, and Facebook manipulate feeds. That history makes current concerns reasonable, not paranoid.

What happens if TikTok actually becomes a fully right-wing platform?

Young progressive voters lose their primary political communication channel. The information ecosystem fragments further, with left-leaning users moving to Bluesky and right-leaning users staying on TikTok and Twitter. The electoral implications become significant because political organizing happens where young voters congregate, and if that space is captured by one party, the other party's ability to reach young voters diminishes dramatically.

Key Takeaways

- Experts confirm TikTok users' fears of political censorship are justified based on observed patterns of content suppression

- Whether bugs are intentional or accidental, the effect on users is identical: suppression of anti-Trump and anti-ICE content

- TikTok's new Trump-aligned ownership creates structural incentive to shift algorithm toward right-wing bias

- User digital literacy makes algorithmic bias detection inevitable—attempts to hide suppression will eventually be discovered

- Loss of trust in a platform matters more than technical explanations—users will abandon TikTok regardless of bug origin
