Introduction: The Clock Ticks Closer Than Ever

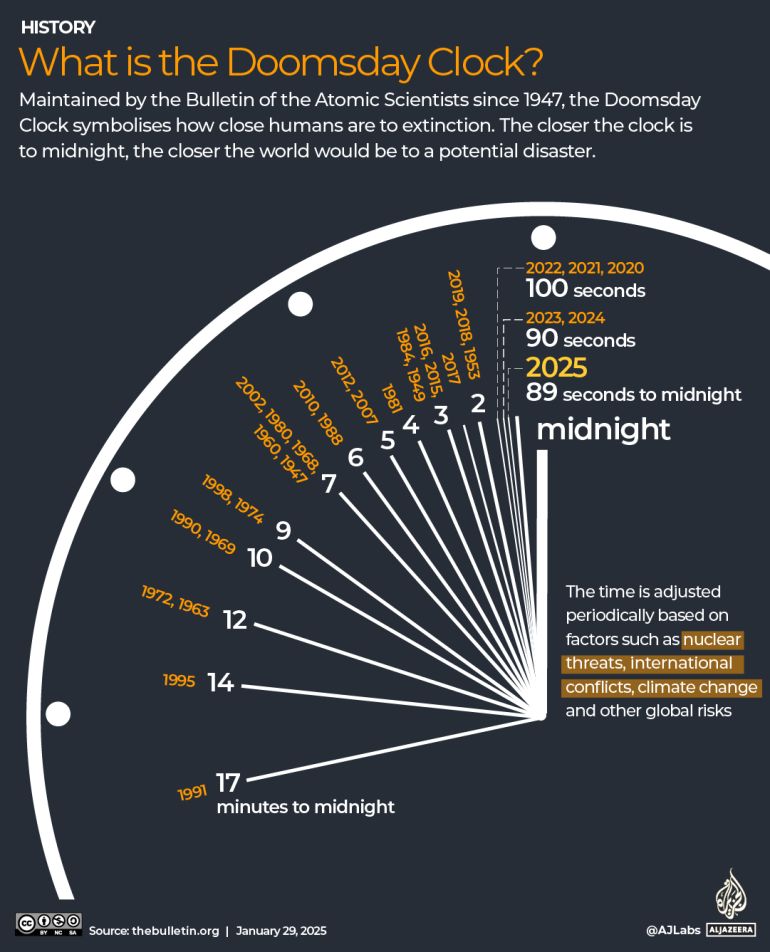

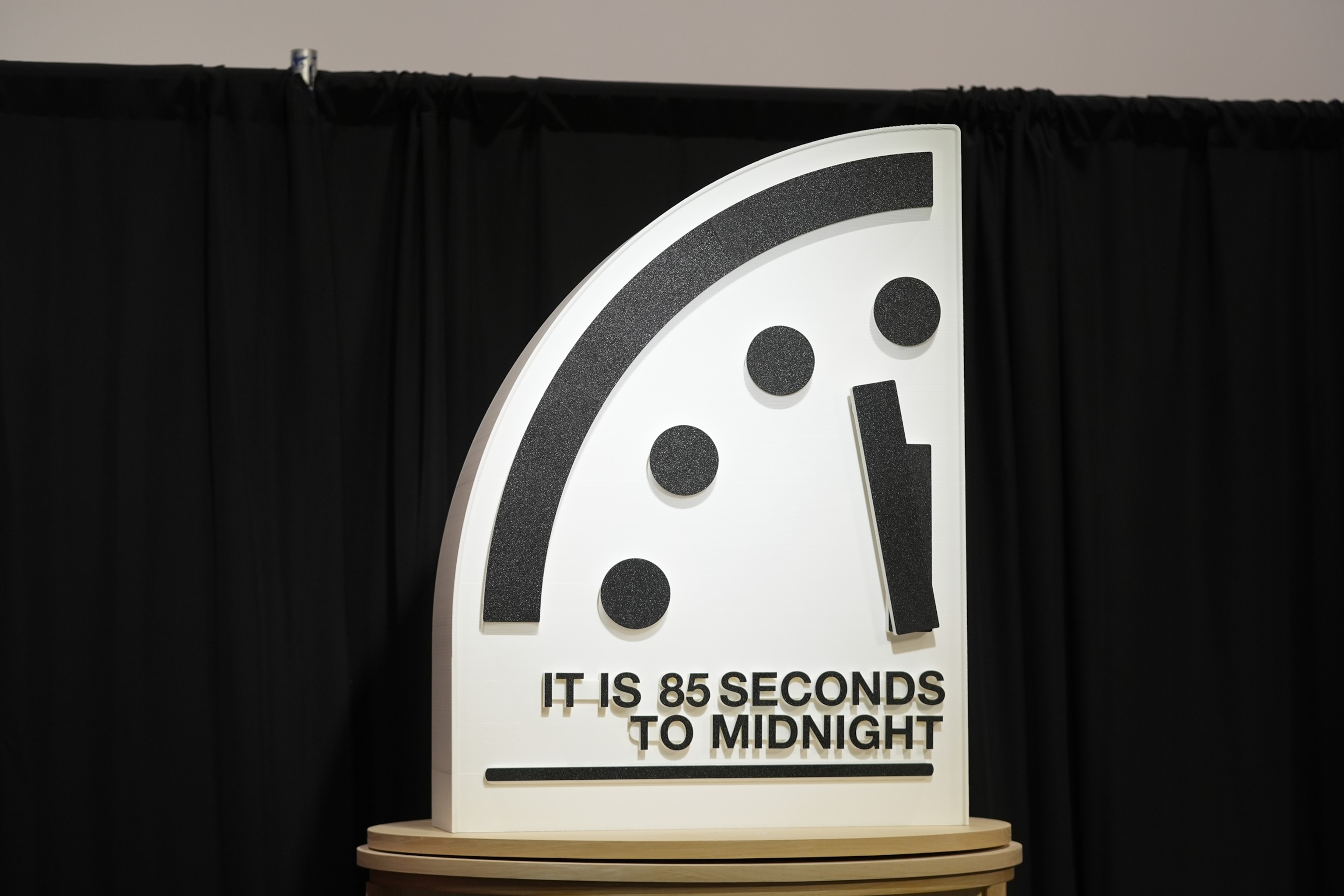

It's 11:58:35 PM on the world's most dire timepiece. That's where the Doomsday Clock stands now, closer to midnight than at any moment in its nearly 80-year existence. When the Bulletin of the Atomic Scientists announced this setting, the message was unambiguous: humanity isn't just walking toward the cliff anymore. We're sprinting.

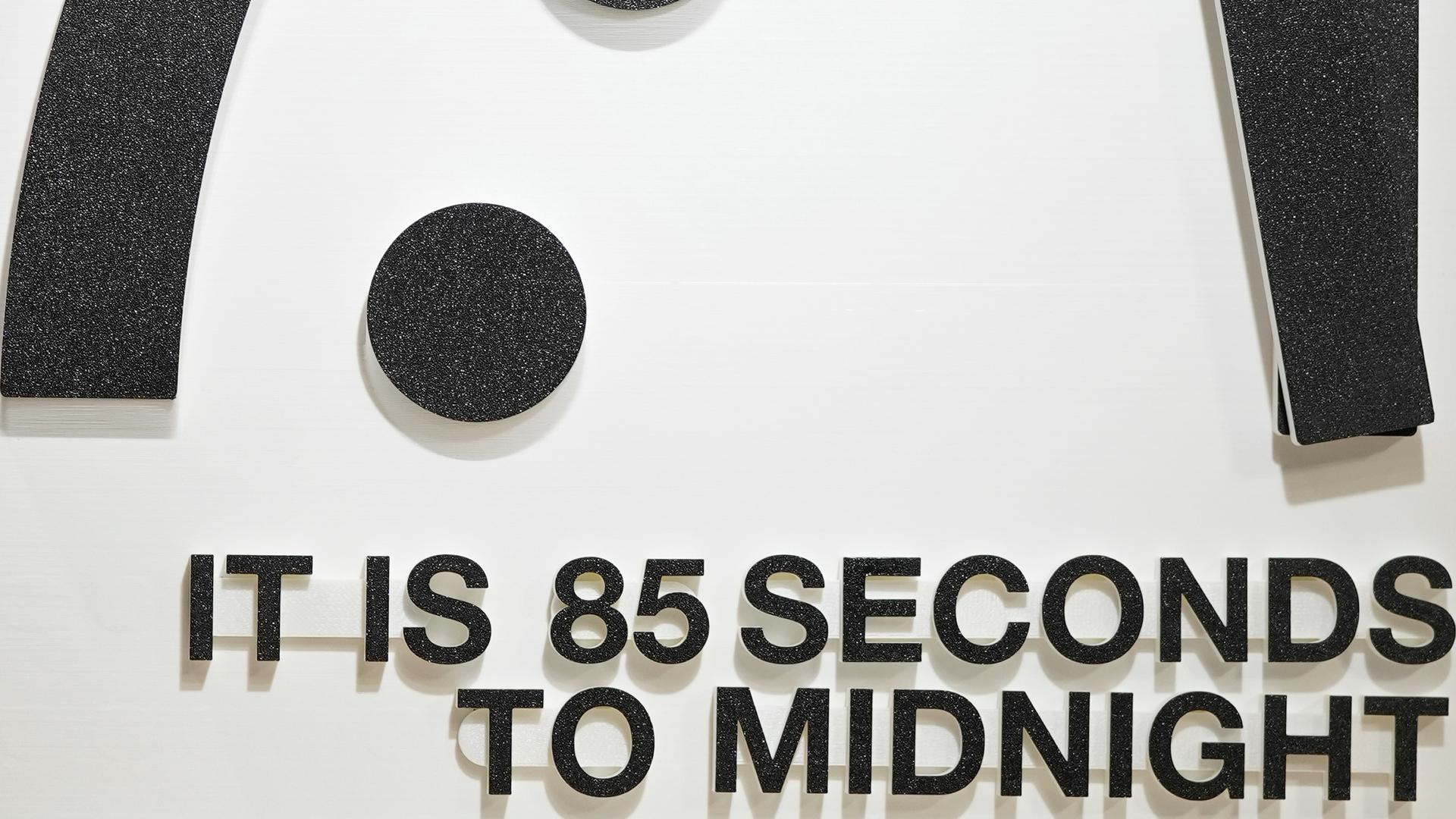

The clock reads 85 seconds to midnight. Not 90 seconds. Not 100. Eighty-five. Think about that for a moment. That's about as long as it takes to read a few paragraphs of this article. In the time your eyes move across these words, we've collectively moved closer to what the scientists call "the moment when humanity will have rendered the Earth uninhabitable."

But here's the thing that makes this different from doomsday porn or apocalyptic theater. The people adjusting this clock aren't conspiracy theorists or fringe prophets. They're physicists, climate scientists, biosecurity experts, and policy analysts. The Science and Security Board that sets the time includes former government officials, and it consults the Bulletin's Board of Sponsors, which includes Nobel laureates. When they say the world is in trouble, it's not ideology. It's assessment.

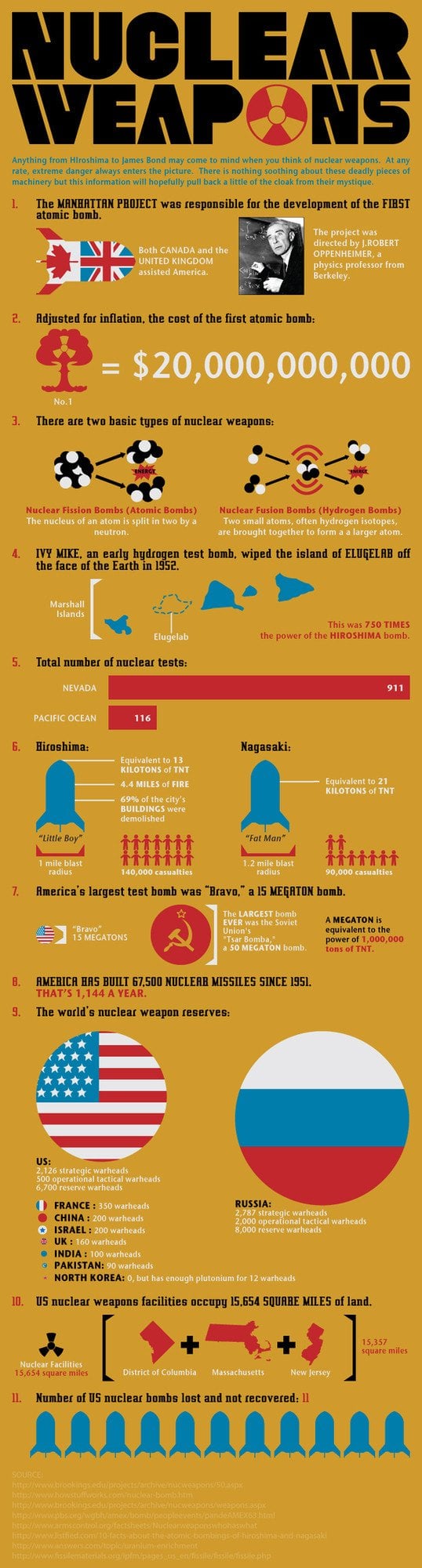

The reasons are multifaceted and overlapping. Nuclear arsenals that could end civilization remain on hair-trigger alert. Climate change is outpacing many of the projections made a decade ago. Artificial intelligence develops faster than governance structures can contain it. Biological threats loom larger as biotechnology becomes accessible to smaller actors. And underneath all of this sits a fracturing international system where great powers compete rather than cooperate.

This article breaks down what the Doomsday Clock actually measures, why it's moved so dramatically, and what the real-world consequences look like when you translate symbolic time into actual risk. We're not here to panic you. We're here to explain what you're actually dealing with.

TL;DR

- 85 seconds to midnight is the closest the Doomsday Clock has ever been to symbolic apocalypse

- Nuclear weapons remain the primary existential threat, with reduced diplomatic channels making accidental escalation more likely

- Artificial intelligence represents an entirely new category of risk that existing governance frameworks cannot contain

- Climate crisis continues accelerating while international cooperation collapses, making adaptation increasingly difficult

- Nationalist competition between great powers is actively preventing the cooperation needed to address shared threats

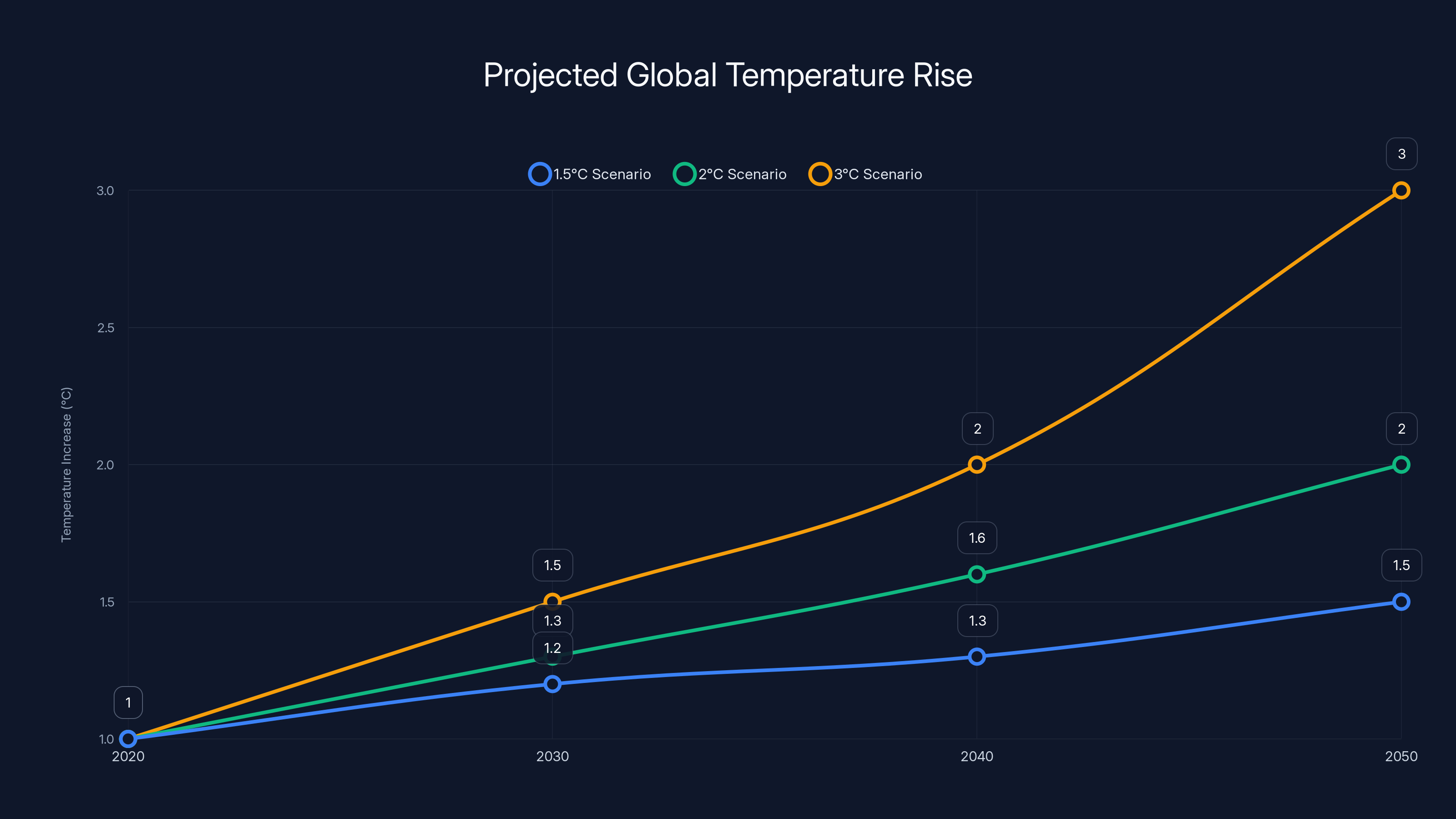

Estimated data shows potential temperature increases under different climate scenarios. Keeping warming below 1.5°C is crucial to avoid catastrophic impacts.

What Is the Doomsday Clock, Actually?

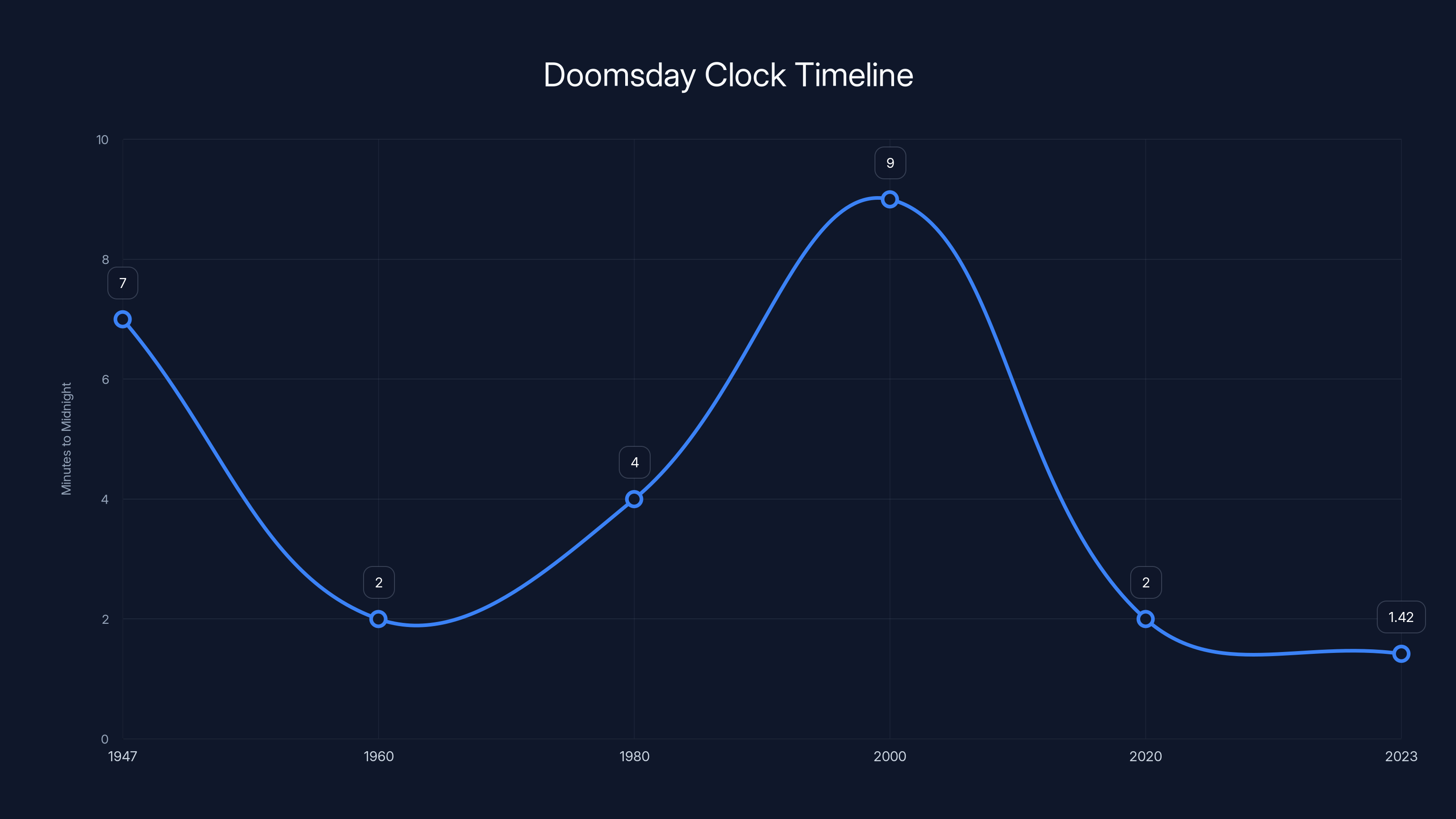

The Doomsday Clock was created in 1947 for the cover of the Bulletin of the Atomic Scientists, a publication founded by University of Chicago scientists who had worked on the Manhattan Project. It emerged from a specific moral crisis. These physicists had just watched their theoretical work become Hiroshima and Nagasaki. They understood, viscerally, that scientific knowledge could end civilization.

The clock itself isn't real. There's no actual timepiece ticking away in some laboratory. Instead, it's a metaphor. Midnight represents societal collapse. The hands move based on expert assessment of existential risk.

When the clock debuted, it was set to seven minutes to midnight. The world was still reeling from atomic weapons, and the Soviet Union was developing its own bomb. The analogy made sense: nuclear standoff felt like waiting for the inevitable explosion.

But the clock doesn't measure just nuclear risk anymore. Over decades, the scope broadened. Climate change was added in 2007. Biosecurity threats appeared in 2018. In 2020, the board explicitly added disruptive technologies—mostly meaning AI—to the assessment framework. The clock now represents a synthesis of multiple existential risks.

The clock moves in response to actions (or inactions) by world leaders. When countries sign arms control treaties, the clock steps back. When nuclear testing resumes, it steps forward. When countries pass climate legislation, it ticks backward. When they abandon climate commitments, it ticks forward. The board publishes a detailed statement explaining each move.

This matters because the Doomsday Clock has become the most visible artifact for communicating existential risk to the general public. It's simultaneously completely symbolic and taken seriously by policymakers. That paradox is the entire point.

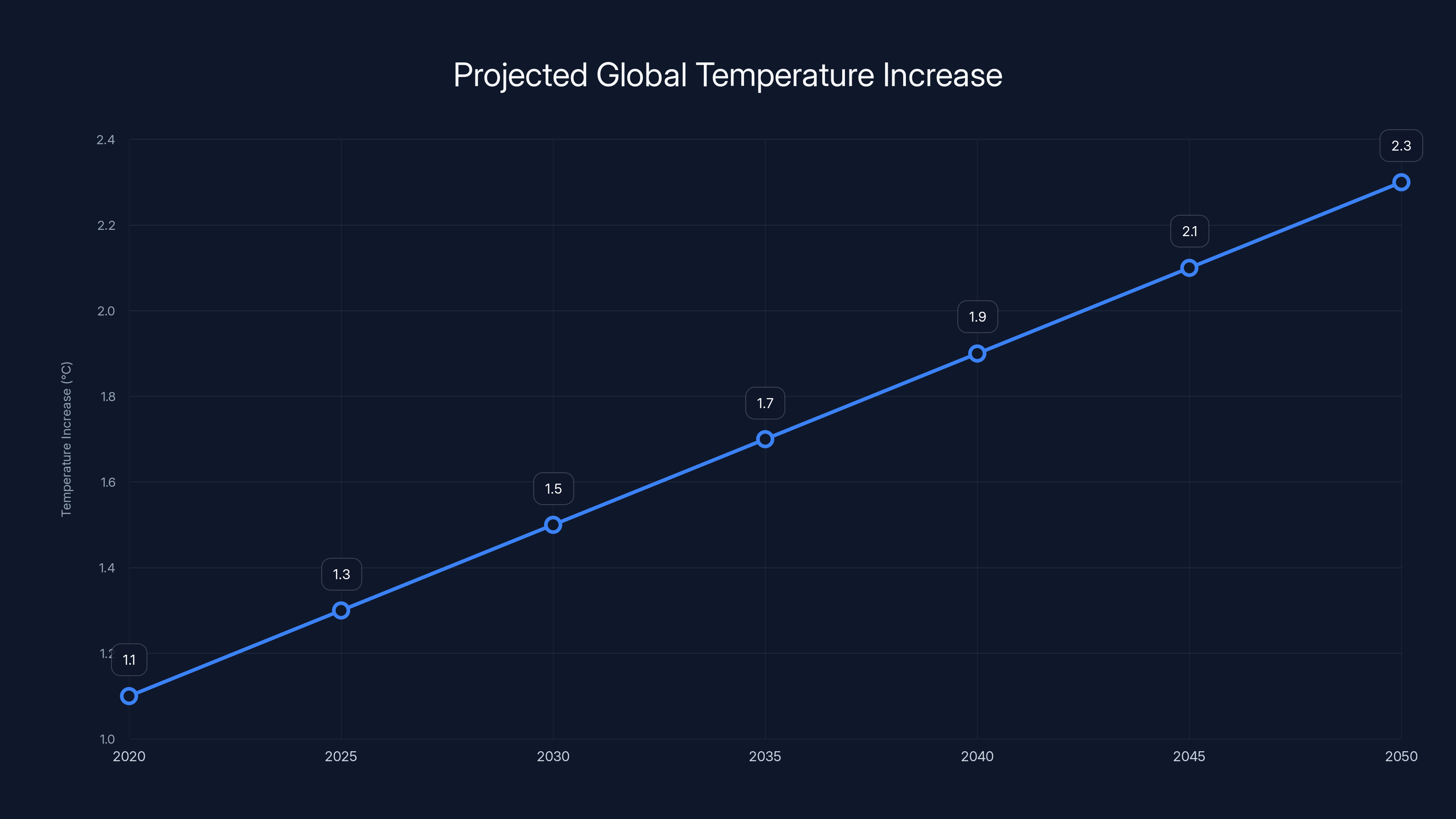

Projected data suggests global temperatures could increase by 2.3°C by 2050 if current emission trends continue. Estimated data.

The Nuclear Timeline: From Cold War Standoff to New Instability

Nuclear weapons still top the threat assessment, even as new risks have emerged. And the situation has fundamentally shifted from the Cold War era that spawned the clock.

During the height of Cold War tension, both superpowers knew exactly where they stood. There were hotlines. There were arms control treaties. The Strategic Arms Limitation Talks produced actual agreements that capped missile launchers, and the later START treaties capped deployed warheads. Missiles were visible, trackable, and constrained by mutual understanding. Mutually Assured Destruction created a perverse but functional equilibrium.

That entire architecture has crumbled. The US withdrew from the Anti-Ballistic Missile Treaty in 2002 and from the Intermediate-Range Nuclear Forces Treaty in 2019. Russia suspended its participation in New START in 2023. China accelerated its nuclear arsenal expansion without transparency. North Korea built and tested nuclear weapons. And the systems that prevented accidental escalation have atrophied from disuse.

Consider what happens when diplomatic channels close. In 1962, during the Cuban Missile Crisis, the US and Soviet Union had no direct hotline, but they did have working back channels, and they could negotiate. The crisis lasted 13 days of genuine uncertainty, and the Washington-Moscow hotline was set up the following year as a direct lesson. Today, those channels between the US and Russia have badly degraded. Military-to-military communication is minimal. If a miscalculation occurs (a radar glitch, a misidentified aircraft, a faulty satellite sensor), there's no established protocol for rapid de-escalation.

Russia currently possesses roughly 5,500 nuclear warheads, with about 1,700 strategic warheads deployed on missiles and aircraft. The US maintains similar numbers. These aren't theoretical capabilities locked in vaults. They're actively deployed on submarines, aircraft, and silos. A significant fraction are on launch-ready status, meaning they can be fired within 15 minutes of authorization.

Estimates of the toll vary widely. Blast, fire, and radiation from an all-out nuclear exchange between the US and Russia would kill hundreds of millions of people directly. A 2022 study in Nature Food estimated that the nuclear winter and crop failures that followed could kill more than 5 billion people through famine within a few years.

That's the baseline worst case. But the Doomsday Clock doesn't move based on worst-case analysis. It moves based on the probability of worst-case scenarios increasing. And that probability has climbed as diplomatic structures collapsed.

AI: The New Risk Nobody Fully Understands

Artificial intelligence represents something genuinely novel in the existential risk landscape. Nuclear weapons are at least well-understood. We know what they do. We've modeled the consequences. AI is different.

No one truly knows what happens when you build sufficiently advanced artificial intelligence systems. The science is settled on narrow, specific capabilities. Large language models can write coherent text. Vision systems can identify images. But scaling these systems to general intelligence—AI that can do most things humans can do—remains theoretical.

The leading AI labs are racing toward increasingly capable systems. OpenAI released systems that demonstrate step-by-step reasoning. Google DeepMind built systems that solve complex mathematical and scientific problems. The trajectory suggests we're moving toward more general capabilities.

Here's where the risk compounds. These systems are being deployed without adequate safety testing. They're being integrated into critical infrastructure. Military applications are being explored. And governance structures to manage AI development remain years behind the actual technology.

The concern isn't Skynet. It's subtler and potentially more dangerous. It's about systems that optimize for specified goals without understanding context or consequences. It's about AI making decisions in financial markets, weapons targeting, or disease surveillance without human oversight. It's about creating dependencies on systems whose decision-making processes nobody fully understands.

The Bulletin of the Atomic Scientists added AI to their assessment not because they believe AGI will achieve consciousness and rebel against humanity. They included it because the development trajectory of AI systems shows characteristics that historically precede catastrophic outcomes: rapid capability gains, decentralized deployment, inadequate oversight, and integration into critical systems.

Consider military applications. Military AI development is accelerating across all major powers. Autonomous weapons systems are being tested. AI is being explored for decision-support roles in nuclear command-and-control systems. What happens when an AI system makes a targeting decision in milliseconds based on pattern recognition, and human operators have no ability to verify its assessment before missiles launch?

This isn't science fiction. It's the current trajectory of military AI development.

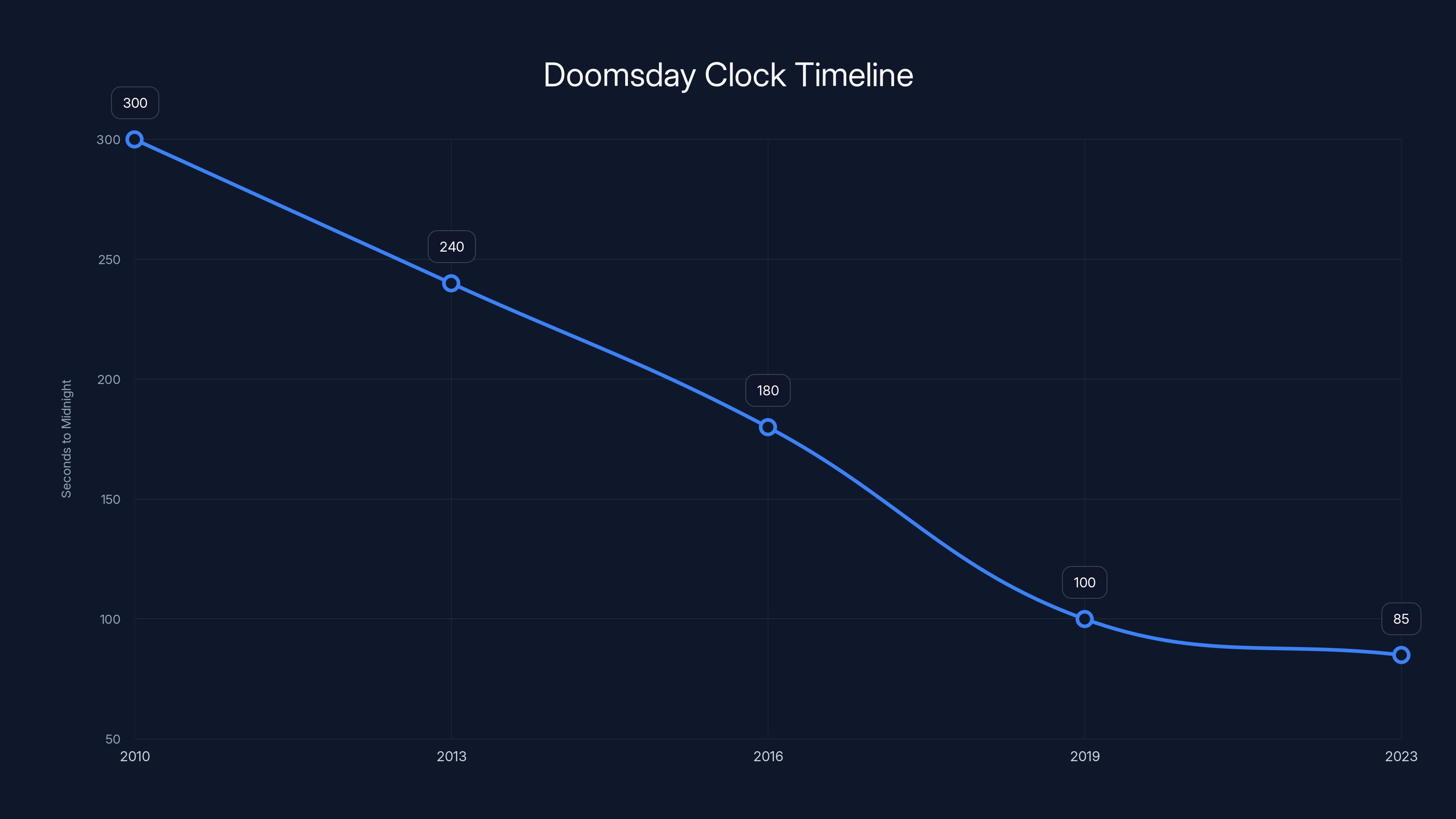

The Doomsday Clock has moved closer to midnight over the years, reflecting increased global threats. Estimated data shows a significant decrease in seconds to midnight from 2010 to 2023.

Climate Crisis: The Slow Apocalypse Nobody's Solving

Nuclear risk is dramatic and immediate. AI risk is nebulous and future-focused. Climate change is different. It's happening right now, and we're watching it outpace many of the projections made only a few years ago.

The Intergovernmental Panel on Climate Change publishes assessment reports on planetary warming, and each successive report has carried more urgency. Its 2023 Synthesis Report warned of "a rapidly closing window of opportunity to secure a liveable and sustainable future for all," and found that limiting warming to 1.5 degrees Celsius, the Paris Agreement's more ambitious target, requires deep and immediate emission cuts.

We've already warmed roughly 1.1 degrees Celsius since pre-industrial times. At current emission rates, we'll reach 1.5 degrees sometime in the 2030s. The gap between stated commitments and actual emission reductions continues widening.

Here's the math. To limit warming to 1.5 degrees, global carbon dioxide emissions would need to drop roughly 45% from 2010 levels by 2030. Instead, they're still rising. The gap between necessary reductions and actual progress is one of the largest policy failures in human history.
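To see what a target like that implies year by year, here is a minimal Python sketch that converts a total percentage cut into the constant annual decline that compounds to it. The 10-year and 5-year windows are illustrative assumptions, not figures from this article. The 45% target is measured against 2010 emissions, which have risen since then, so the rate actually required from today's level would be steeper still.

```python
def required_annual_decline(total_cut: float, years: int) -> float:
    """Constant yearly fractional decline that compounds to `total_cut` over `years`."""
    # (1 - r) ** years == 1 - total_cut  =>  r = 1 - (1 - total_cut) ** (1 / years)
    return 1 - (1 - total_cut) ** (1 / years)


# Illustrative windows (assumptions): a full decade versus a delayed five-year start.
for years in (10, 5):
    rate = required_annual_decline(0.45, years)
    print(f"45% cut over {years} years: {rate:.1%} per year")
```

Run as written, this prints about 5.8% per year for the ten-year window and about 11.3% for the five-year one. Each year of delay raises the annual rate required.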

Climate change creates compounding risks. Rising temperatures trigger agricultural stress. Agricultural stress creates resource scarcity. Resource scarcity creates conflict. Conflict disrupts the international cooperation needed to address shared threats. Meanwhile, climate migration displaces millions, creating political instability.

The real problem is that climate solutions require international coordination at massive scale. Nations need to transition away from fossil fuels simultaneously. That requires trust, shared sacrifice, and long-term thinking. Exactly the things that are disappearing from international relations.

Instead, we're seeing nationalist responses. Countries are securing their own resources, building walls, reducing immigration. The cooperation framework that climate solutions require is actively deteriorating.

Biosecurity: The Accelerating Threat

Biological risks have always existed. Pandemics occur naturally. Disease has killed more humans than warfare, famine, or disaster. But for most of history, biological threats were acts of nature or accident.

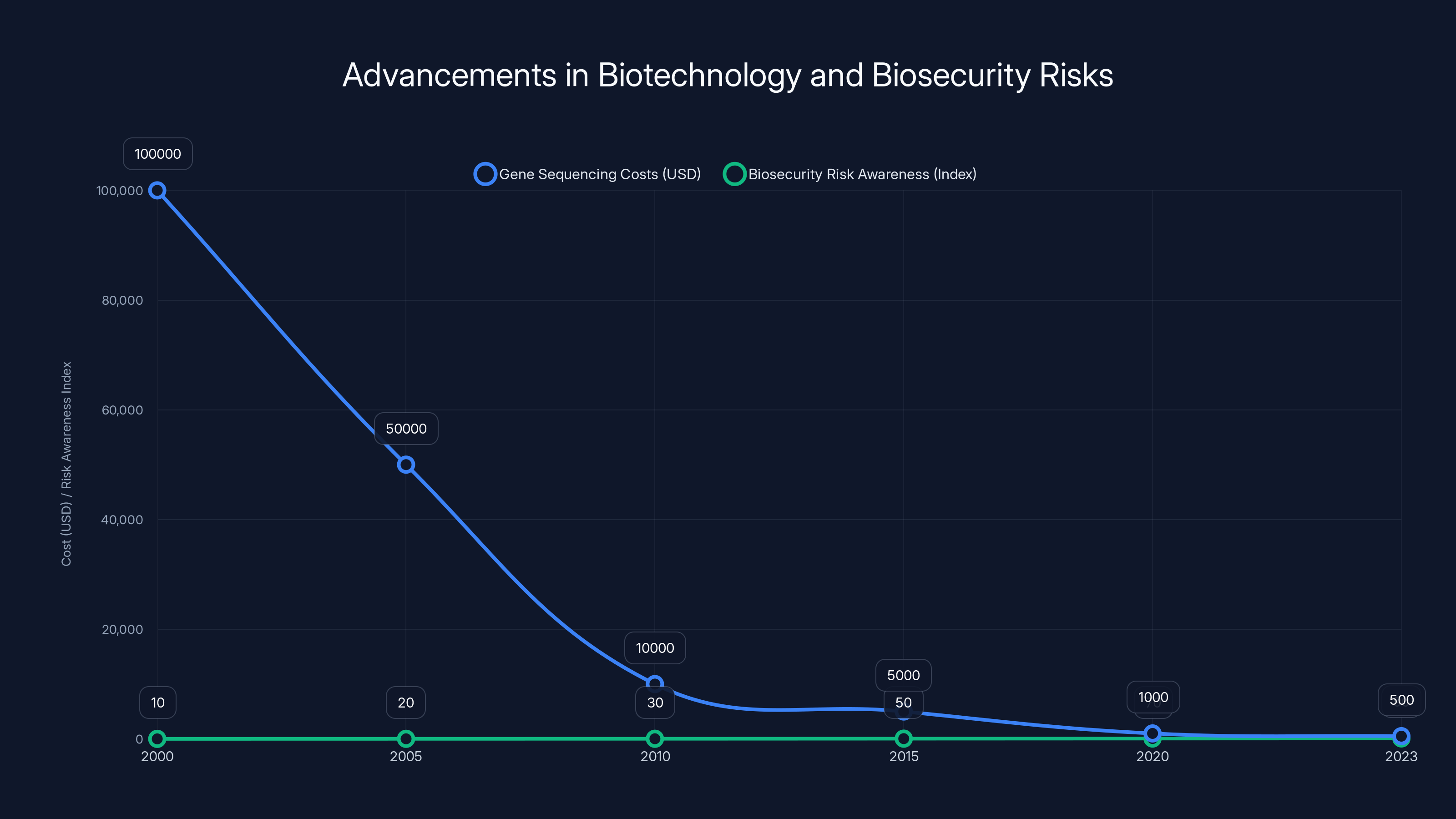

That's changing. Biotechnology is becoming democratized. Gene synthesis technology—the ability to manufacture DNA sequences—costs a fraction of what it did a decade ago. The knowledge required to work with dangerous pathogens is increasingly available online. The equipment is becoming smaller and cheaper.

The COVID-19 pandemic killed roughly 7 million people according to official counts, and an estimated 15-20 million once excess deaths are included. That virus was not designed as a weapon. Now imagine a scenario where someone with biotechnology knowledge intentionally creates or modifies a pathogen to be more transmissible or more lethal.

The World Health Organization has identified gain-of-function research—deliberately engineering pathogens to be more dangerous to understand how they might evolve naturally—as a biosecurity concern. This research occurs in laboratories worldwide, sometimes with minimal oversight.

The dual-use problem complicates this. The same knowledge used to create vaccines can be used to create bioweapons. The same techniques used for beneficial medical research can be weaponized. Distinguishing legitimate research from dangerous work is nearly impossible without intrusive inspection regimes that nations resist.

The combination of democratized knowledge, accessible technology, and weak governance creates genuine catastrophic risk. A single actor with expertise and malicious intent could potentially engineer a pathogen that spreads globally and kills millions.

The probability is low for any given year. But compound that over decades, and the cumulative probability of a biosecurity failure becomes substantial. Given the stakes, so does its expected cost.
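The compounding can be sketched in a few lines of Python. For illustration only, the sketch assumes a constant 1% chance of a catastrophic biosecurity failure each year and treats years as independent. The article gives no annual figure, so both assumptions are hypothetical.

```python
def cumulative_probability(annual_p: float, years: int) -> float:
    """Chance of at least one event over `years` independent years, each with probability `annual_p`."""
    # P(no event in any year) = (1 - p) ** years; the complement is "at least one event"
    return 1 - (1 - annual_p) ** years


HYPOTHETICAL_ANNUAL_P = 0.01  # illustrative assumption, not an estimate from the article

for years in (1, 10, 30, 50):
    print(f"{years:>2} years: {cumulative_probability(HYPOTHETICAL_ANNUAL_P, years):.1%}")
```

Under those assumptions, a 1% annual risk grows to about 9.6% over a decade, 26.0% over 30 years, and 39.5% over 50 years.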

The Doomsday Clock has fluctuated over the decades, reflecting global tensions and risks. Its current setting of 85 seconds (about 1.42 minutes) to midnight is the closest ever, highlighting urgent global challenges. (Estimated data)

The Collapse of International Cooperation

Here's what ties everything together: every solution to nuclear risk, climate change, AI risk, and biosecurity requires international cooperation. Treaties. Verification systems. Shared investments. Coordinated policy.

And international cooperation is actively collapsing.

The United Nations Security Council is deadlocked. The major powers can't agree on basic frameworks. Arms control treaties are abandoned faster than new ones are signed. Climate negotiations produce agreements that signatories don't implement. AI governance discussions haven't produced binding commitments from any major power.

Instead, we're seeing nationalist competition. Countries frame security in zero-sum terms. In that framing, if Russia limits its weapons, the world isn't safer; Russia is weaker. If the US reduces emissions, that isn't environmental leadership; it's an economic disadvantage relative to China.

This mindset makes cooperation extremely hard to sustain. In game theory terms, we're stuck in a prisoner's dilemma. Everyone is better off if all sides cooperate, but each individual actor is better off defecting no matter what the others do. Without enforcement mechanisms (which require power, which in turn requires cooperation), the system spirals toward mutual defection.
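Here is a minimal Python sketch of that dilemma as a two-player game. The payoff numbers are hypothetical. What makes it a prisoner's dilemma is only their ordering: temptation beats mutual cooperation, which beats mutual defection, which beats being exploited. The code finds each side's best response and the resulting pure-strategy equilibrium.

```python
# Hypothetical payoffs (row player, column player) for a symmetric one-shot game.
# Ordering that makes it a prisoner's dilemma: temptation 5 > reward 3 > punishment 1 > sucker 0.
PAYOFFS = {
    ("cooperate", "cooperate"): (3, 3),
    ("cooperate", "defect"): (0, 5),
    ("defect", "cooperate"): (5, 0),
    ("defect", "defect"): (1, 1),
}
MOVES = ("cooperate", "defect")


def best_response(opponent_move: str) -> str:
    """Move that maximizes the row player's payoff against a fixed opponent move."""
    return max(MOVES, key=lambda move: PAYOFFS[(move, opponent_move)][0])


def pure_nash_equilibria() -> list[tuple[str, str]]:
    """Move pairs where each player is already playing a best response to the other."""
    # The game is symmetric, so the column player's best response uses the same function.
    return [
        (a, b)
        for a in MOVES
        for b in MOVES
        if best_response(b) == a and best_response(a) == b
    ]


for other in MOVES:
    print(f"If the other side plays {other}, the best response is {best_response(other)}")
print("Equilibria:", pure_nash_equilibria())
```

Defecting is the best response either way, so mutual defection is the only equilibrium. That holds even though both sides would score 3 instead of 1 by cooperating.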

The rise of nationalist autocracies accelerates this dynamic. Democratic leaders face electoral pressure that incentivizes short-term thinking. Autocratic leaders face survival pressure that incentivizes consolidating power. Neither system creates incentives for long-term, cooperative international problem-solving.

Meanwhile, the problems requiring cooperation become more urgent. That's the trap humanity sits in. The clock doesn't tick based on absolute risk levels. It ticks based on the trajectory. And the trajectory shows growing risks combined with deteriorating institutions for managing those risks.

What Moving Closer Means in Practical Terms

The Doomsday Clock moving from 89 seconds to 85 seconds to midnight might sound abstract. But translate it to actual risk, and the implications are concrete.

Each step closer reflects meaningful changes in underlying threat conditions. When nuclear arsenals become less stable, the clock moves closer. When countries abandon arms control agreements, the clock moves closer. When climate change accelerates, the clock moves closer. When international institutions weaken, the clock moves closer.

This year's adjustment reflects all of these factors simultaneously. Russia's withdrawal from arms control frameworks. China's acceleration of nuclear buildup. Failed climate negotiations. Rapid AI development without governance. Weakened international institutions.

The compound effect is what matters. In isolation, each risk might be manageable. Nuclear standoff is tense but stable. Climate change is progressing but adaptable. AI is advancing but not yet transformative. Biological threats exist but remain theoretical.

But when these stressors combine, resilience degrades. Economic disruption from climate migration destabilizes governments, making them more aggressive on security. Economic pressure drives nations to defect from arms control agreements. Weak institutions can't coordinate responses to emerging AI risks. The cascade accelerates.

The optimistic interpretation is that 85 seconds still allows time for course correction. Midnight isn't imminent. There's still time to restart arms control negotiations. Still time to implement climate solutions. Still time to establish AI governance frameworks. Still time to rebuild international institutions.

The pessimistic interpretation is that we've exhausted the easy corrections. Every year, the problems become more entrenched, the solutions become more difficult, and the window for action narrows further.

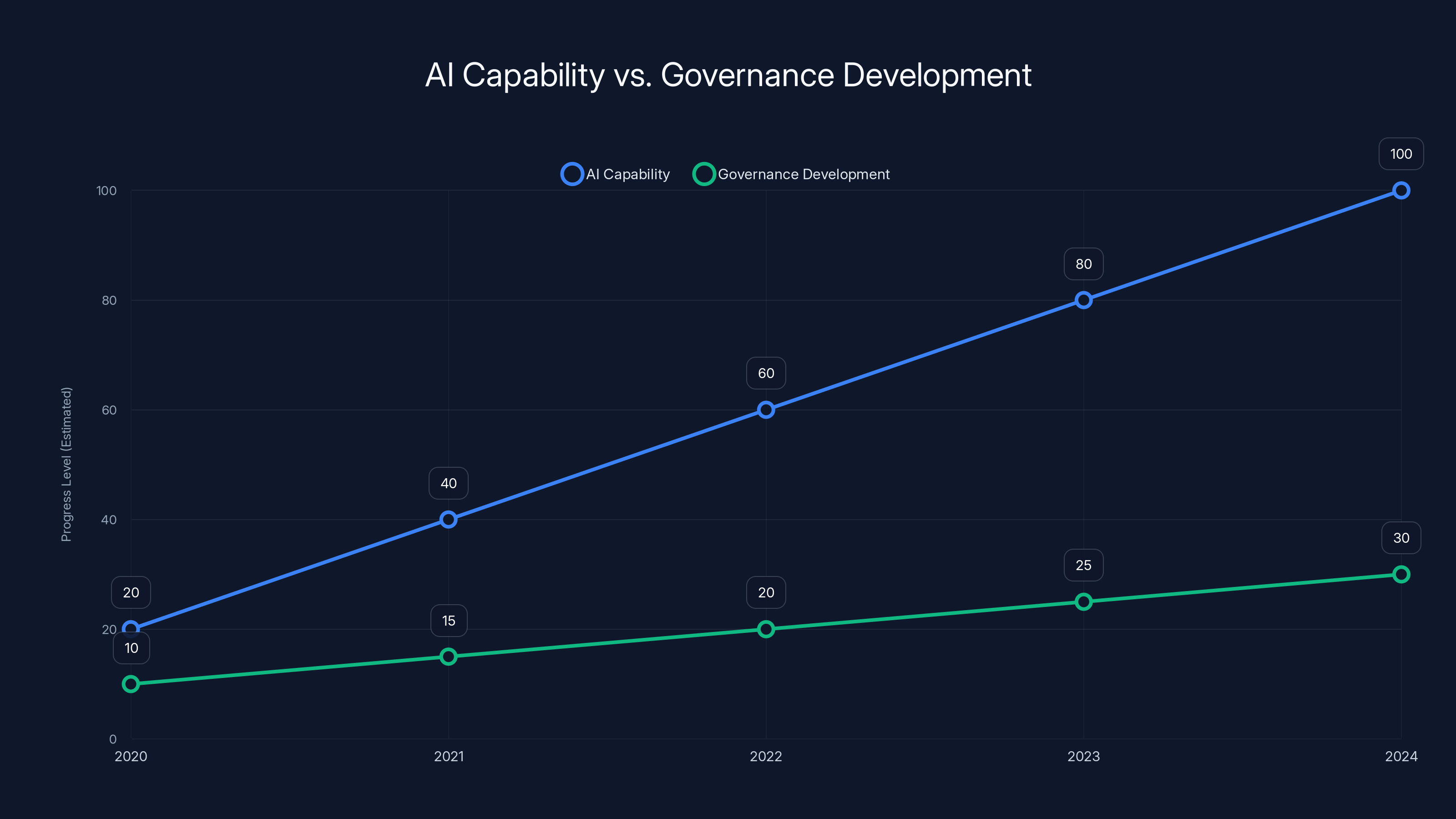

AI capabilities are advancing rapidly, akin to Moore's Law, while governance structures lag significantly behind. Estimated data highlights the growing gap.

Nuclear Weapons: The Unresolved First Problem

Nuclear weapons remain the most thoroughly studied existential risk. Generations of strategists, scientists, and policymakers have analyzed nuclear war. The conclusions are consistent: large-scale nuclear exchange would devastate civilization.

But understanding the risk hasn't reduced it. In fact, the mechanisms for preventing nuclear war have degraded significantly since the Cold War.

During the Cold War, deterrence doctrine was grim but coherent. Mutually Assured Destruction meant that attacking would guarantee your own destruction. Both sides understood this. It was awful, but it worked. Neither side launched.

Today, the situation is more complex and less stable. Major arms control agreements have collapsed. Russia suspended its participation in New START in 2023. The US withdrew from the Anti-Ballistic Missile Treaty back in 2002. Trust between nuclear powers is at historic lows.

Meanwhile, new complications emerged. Hypersonic missiles can't be easily tracked by existing detection systems. Cyber weapons can disable early warning systems. Space-based weapons add new dimensions of vulnerability. The arsenal designed for Cold War stability is deployed in an increasingly unstable context.

The probability of accidental nuclear war—a miscalculation, a technical failure, a misinterpreted signal—is difficult to quantify but clearly non-zero. And with diplomatic channels closed, the ability to de-escalate has been severely compromised.

Most concerning are scenarios involving multiple nuclear powers. India and Pakistan have nuclear weapons and a history of conflict. China and the US have growing tensions. North Korea's intentions are unclear. Israel is widely believed to maintain a nuclear arsenal amid regional instability. When multiple nuclear powers are involved, coordination becomes nearly impossible.

The solutions seem obvious in retrospect: restore arms control agreements, rebuild diplomatic channels, increase transparency about arsenals and intentions. But each of these requires the other side to cooperate. And in zero-sum competition, cooperation looks like weakness.

AI Development and Governance: A System Without Controls

Artificial intelligence presents a novel governance challenge because the capabilities are advancing faster than institutions can regulate them.

There's no binding international agreement on AI development. There's no treaty limiting AI research. There's no verification mechanism to ensure compliance. Individual countries have published policy documents and regulatory frameworks, but these lack enforcement and coordination.

Meanwhile, the capabilities race continues. The economic incentives to develop more powerful systems are enormous. Countries fear falling behind technologically. Companies fear losing competitive advantage. The race-to-the-bottom dynamic is already visible.

The specific risks from AI are threefold. First, capability risk: systems become capable of causing harm even when they're trying to be helpful, through misaligned incentives or unintended consequences. Second, concentration risk: powerful AI systems might be controlled by small groups without accountability. Third, militarization risk: AI integrated into weapons systems removes meaningful human control from targeting decisions.

Each of these risks requires governance frameworks. And creating those frameworks requires international cooperation at scale. Treaties need to be negotiated, enforced, and verified. That requires trust. That requires transparency. That requires countries to accept some constraints on their own capabilities in exchange for others doing the same.

Currently, none of this exists. AI development is decentralized across multiple countries, multiple companies, and multiple research labs. Some countries are pushing for stronger regulation. Others are actively avoiding it to preserve competitive advantage. The result is minimal coordination on the risks most likely to cause catastrophic harm.

The governance gap for AI is perhaps the widest among all existential risks. Nuclear weapons prompted governance efforts almost immediately, from the 1946 Baruch Plan to the 1968 Non-Proliferation Treaty. Climate change has international agreements addressing it. But AI emerged from technical research, not from geopolitical competition, so its governance structures are being built retroactively and inadequately.

Filling this gap requires genuine coordination. It requires countries to agree on red lines—certain AI capabilities too dangerous to develop. It requires verification mechanisms to ensure compliance. It requires penalties for defection that are more costly than the benefit of cheating.
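A toy game can illustrate that last requirement. In this Python sketch, the payoffs are hypothetical. A hypothetical `penalty` is subtracted from any side that defects, standing in for whatever enforcement an agreement might provide. Cooperation becomes the dominant strategy only once the penalty exceeds what either side could gain by cheating.

```python
# Hypothetical payoffs for the row player in a symmetric two-country game.
REWARD, TEMPTATION, SUCKER, PUNISHMENT = 3, 5, 0, 1
BASE = {
    ("cooperate", "cooperate"): REWARD,
    ("cooperate", "defect"): SUCKER,
    ("defect", "cooperate"): TEMPTATION,
    ("defect", "defect"): PUNISHMENT,
}


def payoff(me: str, other: str, penalty: float) -> float:
    """Row player's payoff after an assumed enforcement penalty charged to defectors."""
    return BASE[(me, other)] - (penalty if me == "defect" else 0)


def cooperation_dominant(penalty: float) -> bool:
    """True if cooperating strictly beats defecting whatever the other side does."""
    return all(
        payoff("cooperate", other, penalty) > payoff("defect", other, penalty)
        for other in ("cooperate", "defect")
    )


# Cooperation dominates once penalty > max(TEMPTATION - REWARD, PUNISHMENT - SUCKER) = 2.
for penalty in (0, 1, 2, 2.5, 4):
    print(f"penalty {penalty}: cooperation dominant = {cooperation_dominant(penalty)}")
```

In this toy model the threshold is the larger of the two gains from defecting. A real agreement's penalties would also have to be credible and verifiable, which is the hard part described above.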

Historically, when nations have succeeded in creating binding international agreements on dangerous technologies, they did so because the alternative was unacceptable to everyone. Nuclear non-proliferation worked because both superpowers feared a world with dozens of nuclear powers. The Biological Weapons Convention won broad support because releasing engineered pathogens posed risks to the nation releasing them.

For AI, the equivalent would be demonstrating that uncontrolled AI development poses risks to everyone, including the countries developing it. That's true, but it hasn't yet generated sufficient political will for action.

Gene sequencing costs have plummeted by more than 99% over two decades, while awareness of biosecurity risks has increased significantly. Estimated data.

Climate Change: Physical Limits We're Hitting

Climate change differs from other existential risks in one crucial way: it's not a future possibility. It's happening right now, and we're watching the predictions come true.

NASA's climate data is unambiguous. Global temperatures are rising. Arctic ice is melting faster than models predicted. Heat waves are becoming more intense and frequent. Precipitation patterns are changing. Agricultural zones are shifting.

Each degree of warming increases the severity of climate impacts. The difference between 1.5 and 2 degrees of warming is the difference between manageable and catastrophic for many regions. Beyond 2 degrees, the impacts compound in ways that become self-reinforcing.

Consider the math. The global economy has grown roughly 3% annually for decades. Limiting warming to 1.5 degrees requires cutting carbon emissions roughly 45% from 2010 levels by 2030. Doing that while the economy keeps growing means the carbon emitted per dollar of output has to fall faster than output rises. That's a massive structural shift. It requires transitioning energy systems, transportation systems, manufacturing systems, and agricultural systems away from fossil fuels.
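One way to make that arithmetic concrete is a two-factor version of the Kaya identity: emissions equal GDP times carbon intensity (emissions per unit of GDP). The Python sketch below computes how fast carbon intensity would have to fall. For illustration, it assumes the 45% cut is spread evenly over ten years and that GDP keeps growing 3% a year.

```python
def required_annual_decline(total_cut: float, years: int) -> float:
    """Constant yearly fractional decline that compounds to `total_cut` over `years`."""
    return 1 - (1 - total_cut) ** (1 / years)


def required_intensity_decline(emissions_decline: float, gdp_growth: float) -> float:
    """Yearly fall in emissions-per-unit-GDP needed for emissions to shrink while GDP grows."""
    # emissions = GDP * intensity, so (1 + g) * (1 - i) = 1 - e  =>  i = 1 - (1 - e) / (1 + g)
    return 1 - (1 - emissions_decline) / (1 + gdp_growth)


emissions_rate = required_annual_decline(0.45, 10)  # assumed ten-year window
intensity_rate = required_intensity_decline(emissions_rate, 0.03)  # assumed 3% GDP growth
print(f"Emissions must fall {emissions_rate:.1%} per year")
print(f"Carbon intensity must fall {intensity_rate:.1%} per year")
```

Under these assumptions, emissions fall about 5.8% a year, but carbon intensity has to fall about 8.5% a year to offset growth. That extra margin is the structural shift this section describes.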

This is technically feasible. Renewable energy is now cost-competitive with fossil fuels in most markets. Battery technology has improved dramatically. Energy efficiency technologies exist. The constraint isn't technological—it's political and economic.

Countries fear the disruption. Industries fight regulation. Short-term political cycles don't align with long-term climate solutions. The countries with highest emissions often lack political will to reduce them. The countries with will often lack the economic power to drive global change.

Meanwhile, the impacts accelerate. Extreme weather becomes the norm. Agricultural production becomes unreliable. Water stress increases. Migration pressure grows. The economic costs of adaptation rise steeply with each additional degree of warming.

The catastrophe isn't sudden. It's the slow breakdown of systems that civilization depends on. Food production declines. Water becomes scarce. Coastal cities become uninhabitable. Migration displaces hundreds of millions of people. That displacement creates conflict. The conflict destabilizes governments. Destabilized governments can't cooperate on shared problems. The spiral continues.

This is already happening in slow motion. Each year brings new records for extreme weather. Each year sees agricultural stress in new regions. The migration pressure from climate-affected regions is already reshaping geopolitics.

But the response remains inadequate. Climate commitments are missed. Fossil fuel subsidies continue. The investment required for energy transition isn't being deployed. We're watching the catastrophe happen in real time while the systems designed to prevent it remain paralyzed.

Biosecurity: The Scenario Nobody Wants to Contemplate

Biological threats occupy an uncomfortable position in existential risk discussions. Unlike nuclear weapons, which are explicitly dangerous, biological research often has legitimate beneficial purposes. Unlike climate change, which is visible and measurable, biological threats remain mostly potential rather than actual.

But the trajectory is concerning. Biotechnology is advancing rapidly. Gene sequencing costs have dropped by well over 99% in two decades. DNA synthesis technology is becoming increasingly available. The knowledge required to work with dangerous pathogens is increasingly published openly.

Combine this with the dual-use problem. Beneficial research into vaccines, diagnostics, and treatments uses the same knowledge and techniques that could be weaponized. The Biological Weapons Convention prohibits biological weapons, but verification is nearly impossible. Countries can maintain deniability about their biological weapons programs.

The risks take several forms. Natural pandemic risk: another jump from animal to human populations, potentially more severe than COVID-19. Accidental release from laboratories: lab-acquired infections have occurred repeatedly, including SARS infections at laboratories in Singapore, Taiwan, and Beijing in 2003 and 2004. Deliberate creation: someone with expertise and malicious intent using biotechnology to create a more dangerous pathogen.

Each of these has some probability. The compound risk over time is significant. The stakes are civilizational. A pathogen engineered to be highly transmissible and highly lethal could kill billions.

The governance structures are inadequate. The Biological Weapons Convention has no binding verification mechanism. Nations can pursue research under the guise of defensive programs. The WHO has no authority to inspect laboratories or restrict research. Most biosecurity governance relies on voluntary compliance by researchers and institutions.

This creates a collective action problem. If one nation unilaterally restricts biological research, it might lose scientific competitiveness and defensive capabilities against potential adversaries. So nations continue the research. But this perpetuates the risk that someone, somewhere, might create something catastrophic.

The solutions are similar to other existential risks: international agreements limiting dangerous research, verification mechanisms to ensure compliance, investment in surveillance and containment capabilities. But implementing these requires trust between nations that currently barely exists.

Why Nationalistic Competition Makes Everything Worse

Each existential risk would be easier to address through international cooperation. Nuclear weapons would be less dangerous with functioning arms control. Climate change would be more solvable with coordinated action. AI governance would be possible with global agreements. Biosecurity would be manageable with transparent oversight.

But the international system is moving in the opposite direction. Nationalist movements are strengthening. Great power competition is intensifying. Trust between nations is declining. Multilateral institutions are weakening.

This creates a perverse dynamic. The more urgent the shared problems become, the less capable the international system is of addressing them. Countries prioritize narrow national interest over collective survival. They defect from agreements when they perceive advantage in defection. They develop weapons systems specifically designed to give them advantage in competition rather than reduce mutual risk.

The NATO alliance and its competitors are locked in zero-sum thinking. Every advantage one side gains is seen as a loss for the other. This might make sense for traditional military competition. But for existential risks that threaten everyone, it's suicidal.

Consider climate change. Both the US and China will suffer severe consequences from climate catastrophe. It's clearly in both nations' interest to cooperate on emissions reduction. But instead, they compete. The US sees China's economic growth as threatening. China sees the US as trying to constrain its development. So both keep burning fossil fuels, and China keeps approving new coal plants. Each is trying to secure relative advantage while the underlying threat grows to catastrophic levels.

The same dynamic plays out with AI. Every country fears falling behind technologically. So they all accelerate development. No one restricts research that might be dangerous because the other side isn't restricting theirs. The result is a race toward potentially catastrophic capabilities with minimal safety infrastructure.

Breaking this dynamic requires leadership that can see beyond immediate competition. It requires leaders who understand that mutual survival is more important than relative advantage. It requires willingness to accept some constraints on one's own capabilities in exchange for constraints on others.

Historically, this has happened. The US and Soviet Union, despite Cold War hostility, signed arms control agreements because they recognized that nuclear war threatened both. But that required sustained effort, political will, and genuine fear of the alternative.

Today, those conditions seem absent. Neither the US nor Russia is as afraid of the other as they should be. Neither China nor the US has demonstrated willingness to constrain its own development for mutual benefit. The institutions that might bridge these gaps have weakened rather than strengthened.

Time Remaining and Decision Points Ahead

The clock reads 85 seconds to midnight. That isn't a literal countdown, but it points to a window of roughly 2-3 years of political decisions that will either reduce or increase existential risk.

The next major inflection points:

2025-2026: Nuclear negotiations. New START, the last treaty limiting US and Russian strategic nuclear arsenals, expires in February 2026. Talks on limiting advanced nuclear capabilities are possible but not likely without significant diplomatic shift. These negotiations will signal whether great powers are moving toward competition or cooperation.

2025-2030: AI governance. Serious governance frameworks for AI need to be established during this window. If major powers haven't agreed to binding rules on dangerous research by 2030, capabilities may have advanced too far for governance to catch up. The window for establishing rules is narrow.

2025-2030: Climate transition. The global economy needs to begin serious decarbonization in this period. The investment required is enormous, but the technology exists. The constraint is political will. If this period is wasted, limiting warming to 1.5°C becomes impossible, and holding it to 2°C becomes extremely difficult.

2025-2030: International institution reform. The UN Security Council and the broader institutions that coordinate global responses need reform. Deadlock among the Security Council's veto-holding permanent members often leaves it unable to act. Reform requires cooperation from the major powers, but it is possible during this window.

After 2030, the accumulated costs of inaction compound rapidly. Climate impacts become harder to adapt to. Advanced AI systems become more entrenched and harder to govern. The geopolitical situation locks into patterns that are difficult to reverse.

This doesn't mean the problems are unsolvable. Humans have addressed massive collective action problems before. The Montreal Protocol phased out most ozone-depleting chemicals. Non-proliferation agreements limited the spread of nuclear weapons. International institutions like the WHO coordinated pandemic responses.

But these successes required leadership, sustained effort, and often, a crisis that made the problem undeniable. For existential risks, the ideal is preventing the crisis rather than responding to it. But the political structures necessary to prevent catastrophe are increasingly difficult to build.

Specific Actions That Would Move the Clock Backward

The Doomsday Clock isn't immutable. It moves backward when conditions improve. Here's what would actually need to happen to reverse the current trajectory:

On nuclear weapons: Restart comprehensive arms control negotiations between the US and Russia. Include China in talks about strategic stability. Reduce deployed warheads further. Restore communication channels for de-escalation. These aren't new ideas. They're proven frameworks from the Cold War era that could be revived with political will.

On climate: Implement carbon pricing globally. Transition subsidies from fossil fuels to renewables. Establish binding emissions reduction targets with enforcement mechanisms. Invest trillions in energy transition. This requires coordinated global action but is economically feasible. The cost of transition is far lower than the cost of climate catastrophe.

On AI: Establish international agreements limiting dangerous AI research. Create verification mechanisms to ensure compliance. Invest heavily in AI safety research. Integrate safety into development processes. None of this is technologically impossible. It requires governance structures and coordinated commitment.

On biosecurity: Strengthen the Biological Weapons Convention with verification mechanisms. Implement transparency in dangerous biological research. Invest in surveillance and containment capabilities. Create agreements on dual-use research oversight. Again, technically possible but requiring international cooperation.

On cooperation itself: Reform the UN Security Council to be functional. Establish mechanisms for addressing transnational threats. Rebuild diplomatic channels between great powers. Create forums where shared interests can be identified and pursued. This is the foundation that makes everything else possible.

None of these actions are unknown. They're standard policy recommendations from the Bulletin of the Atomic Scientists and other expert bodies. The constraint is political will and the institutional capacity to implement coordinated global action.

The Psychology of Existential Risk: Why We're Failing to Act

Knowing what to do and doing it are different challenges. Humans are remarkably bad at responding to distant, probabilistic threats compared to immediate, certain ones. A nuclear war that might happen decades from now seems less urgent than next quarter's earnings. Climate change that will worsen gradually seems less actionable than a quarterly budget shortfall.

This is partly psychological. The human brain evolved to respond to immediate threats. A predator in front of you triggers a fight-or-flight response. A statistical probability of civilizational collapse at some point in the future doesn't trigger the same response.

It's also institutional. Political systems operate on election cycles of a few years, and corporations answer to quarterly earnings. The threats posed by nuclear weapons, climate change, and advanced AI unfold over years to decades, so the rewards leaders and executives actually face rarely align with addressing them.

Leadership matters enormously. When leaders take existential risks seriously and prioritize addressing them, progress happens. When leaders dismiss the risks or prioritize short-term advantage, inaction persists.

Currently, global leadership is inadequate to the challenge. The US is divided internally and struggling to coordinate globally. Russia is belligerent and uninterested in cooperation. China is rising but insecure about its position. Europe is fractured. The institutions that might coordinate global action are weak and dysfunctional.

Breaking this pattern requires leadership that recognizes the existential stakes. It requires willingness to sacrifice some short-term advantage for long-term survival. It requires building coalitions around shared interest in human survival rather than narrow national interest.

That's not impossible. Public opinion polls show citizens care about existential risks. Young people especially are motivated by climate change concerns. There's latent demand for leadership focused on these challenges. But translating public concern into political action requires institutional change.

The Role of Individuals in Addressing Existential Risk

When facing problems at the scale of existential risk—problems that require global coordination and policy change—individual action seems powerless. But individuals and communities actually play crucial roles.

First, there's the signaling function. When individuals make choices that reflect concern about existential risks—supporting climate-focused candidates, choosing careers in AI safety or biosecurity, investing in companies addressing these risks—it demonstrates that these problems matter to voters and consumers.

Second, there's the competence function. We need more people working on these problems. AI safety researchers are vastly outnumbered by capability researchers. Climate scientists are outnumbered by fossil fuel industry workers. Biosecurity experts are far fewer than the field needs. Individual choices to pursue careers in these fields matter.

Third, there's the institutional function. Schools, universities, and research institutions shape what problems people work on and how they think about them. Encouraging institutions to take existential risks seriously, to fund relevant research, and to train people in relevant fields drives change at scale.

Fourth, there's the political function. Voters can demand that leaders take existential risks seriously. Citizens can support international cooperation. Communities can transition away from fossil fuels. These actions are individually small but collectively powerful.

None of this is a substitute for policy change and global coordination. Individual switches to electric vehicles won't solve climate change without broader energy policy shifts. More people working on AI safety won't solve AI governance without binding international agreements. But individual action creates the conditions that make policy change possible: public concern, available expertise, and institutional capacity.

Looking Forward: Paths to Catastrophe and Paths to Safety

The clock at 85 seconds to midnight doesn't specify which catastrophe we're closest to. It's an aggregate assessment of multiple threats increasing simultaneously.

The catastrophic scenarios are familiar from decades of analysis. Nuclear war, whether a direct conflict or a regional conflict that escalates into a global nuclear exchange, remains the fastest path to civilizational collapse. Climate catastrophe, in which agricultural collapse, water stress, extreme heat, and mass migration combine to trigger instability, unfolds more slowly but becomes increasingly likely if current trends continue. AI catastrophe, meaning loss of control over advanced systems pursuing unaligned goals, remains speculative but grows more plausible as capabilities advance. Biosecurity catastrophe, the deliberate or accidental release of engineered pathogens, has low probability but enormous consequences.

But these aren't the only possibilities. There are paths toward increasing safety. Not utopian scenarios where all problems are solved. Rather, scenarios where major powers recognize existential stakes, rebuild cooperation, establish governance frameworks, and direct resources toward addressing catastrophic risks.

These paths aren't obvious or easy. They require political leadership that currently doesn't exist. They require international institutions that currently don't function. They require sacrificing some national advantage for shared survival. But they're possible.

The difference between catastrophic and survivable futures depends on decisions made in the next few years. Not decisions that will be made someday in the future. Decisions that need to be made now.

The Doomsday Clock is a reminder that time remains, but not unlimited time. It's a call to action dressed in symbolic language. And unlike fictional apocalypses, this one can actually be prevented.

FAQ

What exactly is the Doomsday Clock measuring?

The Doomsday Clock is a symbolic timepiece that represents how close humanity is to global catastrophe. Midnight represents destruction of civilization. The specific catastrophes measured include nuclear war, climate change, biological threats, artificial intelligence risks, and disruptive technologies. The clock's position is determined annually by the Science and Security Board of the Bulletin of the Atomic Scientists based on expert assessment of global threat levels.

Why did the clock move so close to midnight?

The clock advanced to 85 seconds from the previous 89 seconds due to multiple deteriorating conditions: escalation of nuclear tensions without functioning arms control agreements, collapse of international cooperation frameworks, acceleration of climate change without adequate response, rapid AI development without governance structures, and weakening of institutions designed to manage global risks. These factors combined signal an increasingly unstable situation where catastrophic outcomes are more likely.

Is the Doomsday Clock actually predictive of catastrophe?

The clock isn't a precise predictor. It's an assessment of trajectory and trend rather than probability. When the hands move closer to midnight, it indicates that underlying conditions have worsened—that the path toward catastrophe has become steeper. When the hands move backward, it indicates genuine progress in addressing existential risks. The clock's value is in communicating the directionality of change and the urgency of action required to reverse negative trends.

What specific actions would move the clock backward?

Major actions that would reverse the clock's movement toward midnight include: reestablishing arms control negotiations between nuclear powers, implementing serious climate transition policies with enforcement mechanisms, creating binding international agreements on AI development safety, strengthening biosecurity governance with verification systems, and rebuilding international institutions capable of coordinating global response to shared threats. Each of these requires political will and international cooperation but is technologically and economically feasible.

How much time does "85 seconds to midnight" actually represent?

The clock uses symbolic time rather than literal predictions, so the seconds don't translate directly into years. The board reassesses the setting every year, and 85 seconds to midnight signals that the next few years are a critical period in which decisions will significantly affect whether the trajectory toward catastrophe continues or reverses. Each annual assessment then shows whether the world has moved closer to midnight or pulled back.

Why is international cooperation so important for addressing existential risks?

Existential risks like nuclear war, climate change, AI development, and biological threats can't be solved by individual nations acting alone. A single country reducing emissions doesn't prevent climate catastrophe if others continue emitting. A single nation restricting AI research doesn't prevent catastrophic AI development if others continue accelerated research. International cooperation enables verification that agreements are followed, creates enforcement mechanisms for violations, and distributes the burden of transition costs. Without coordination, nations have incentives to defect from agreements and pursue narrow advantage, making collective problems worse for everyone.

Can anything actually be done to reverse this trend?

Yes. History shows that humans can recognize existential threats and coordinate responses. The Montreal Protocol successfully addressed ozone depletion. Arms control agreements reduced the number of deployed nuclear weapons. International cooperation managed pandemic responses. The challenge is building sufficient political will and institutional capacity to address the current constellation of threats simultaneously. This requires leadership that prioritizes shared survival over relative advantage and citizens who demand that leaders take existential risks seriously. The window for action is limited but still open.

Conclusion: The Clock Reminds Us That Time Still Remains

Eighty-five seconds to midnight is terrifyingly close. It's the closest humanity has ever come to the symbolic moment of apocalypse. But there's something important embedded in that number: it's not midnight itself. Time remains. Options exist. Futures are still contingent on choices made now.

The Doomsday Clock emerged nearly eighty years ago as a warning. Scientists who understood the power of nuclear weapons wanted to communicate the danger to the world. They created a symbol precisely because symbols can penetrate the noise and inertia of normal political discourse in ways statistics cannot.

The clock has performed that function consistently. Each time it moves, news cycles focus on existential risk for a moment. For a brief period, global leaders feel pressure to take catastrophic risks seriously. The clock works because it makes abstract probabilities concrete—not through false precision, but through symbolic urgency.

What matters now is whether that urgency translates into action. The Science and Security Board has laid out exactly what needs to happen: nuclear powers need to negotiate arms control frameworks, nations need to coordinate climate transition, major powers need to establish AI governance, and international institutions need to be rebuilt to function on these shared challenges.

None of this is secret knowledge. The policy solutions are understood. The technology to address climate change exists. The diplomatic frameworks for arms control have been proven. Expertise in managing AI development safely is growing, even if the field remains young. The main constraint is political will.

For individuals, the lesson is that concern about existential risk isn't defeatist. It's clarifying. Understanding the stakes makes it possible to act with appropriate urgency. It means choosing careers in fields that matter—AI safety, climate science, biosecurity, international relations. It means voting for leaders who take these risks seriously. It means supporting institutions working on these problems.

For leaders, the lesson is that the international system is creaking under the weight of unmanaged existential risks. Competition may make sense in a world of abundance, but near the threshold of catastrophe, cooperation becomes essential. The fastest path to national security runs through collective security. Economic prosperity depends on environmental stability. Technological advantage depends on responsible development.

The clock at 85 seconds to midnight is a reminder that humanity stands at a genuine inflection point. The decisions made in the next few years will determine whether we move closer to catastrophe or pull back from the brink. It's not predetermined. It's not inevitable. The future depends on what we choose to do right now.

That's terrifying. But it's also empowering. The outcome isn't fixed. The clock can move backward. Catastrophe can be prevented. But only if we understand the stakes and act accordingly.

Key Takeaways

- The Doomsday Clock is now at 85 seconds to midnight, closer than ever, reflecting multiple simultaneous existential threats reaching critical levels

- Nuclear stability is eroding as arms control frameworks collapse and diplomatic channels between major powers deteriorate

- Artificial intelligence represents a novel governance challenge with capabilities advancing faster than international regulatory structures

- Climate change is accelerating past projections while international cooperation mechanisms remain inadequate to implement necessary transitions

- International institutions are failing to coordinate global responses precisely when shared existential threats demand cooperation, creating a catastrophic mismatch

- Solutions exist but require political will: arms control negotiations, AI governance treaties, climate transition, biosecurity oversight, and rebuilt institutions

- The critical decision window is 2025-2030, when policy choices will determine whether humanity pulls back from the brink or continues sliding toward catastrophe

