The Open-Source Promise That Keeps Getting Broken

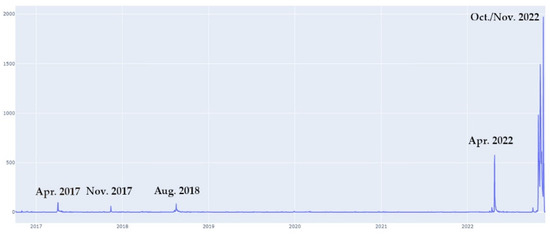

Back in early 2023, when the platform was still called Twitter, Elon Musk announced something that sounded genuinely revolutionary. The company would open-source the algorithm that decided what appeared in your feed. Not some sanitized version or high-level documentation, but actual code. The real thing.

For a second, the internet got excited. Here was one of the world's most powerful people seemingly embracing radical transparency about how social media's black box actually worked. You could see exactly why your feed showed you rage bait at 3 AM. You could understand why posts from people you follow sometimes disappeared. You could verify that the algorithm wasn't secretly shadowbanning you.

Then nothing happened. Well, that's not quite fair. Something did happen. The code got uploaded to GitHub. But it was barely touched afterward. No meaningful updates in the years that followed.

This matters because it reveals something uncomfortable about how tech companies handle transparency promises. They're not lies, exactly. But they're not quite truths either. They're announcements that feel good to make, that generate headlines about open-source commitment, that position the company as progressive and trustworthy. Then real work takes over. Engineers get pulled onto other projects. Maintaining public code becomes a low priority. And eventually, what was supposed to be a living, breathing open-source project turns into a dusty artifact that nobody touches.

Now Musk is doing it again. In early 2025, he announced that he'd open-source X's algorithm in seven days. He promised updates every four weeks. Developer notes with each release. Full transparency about organic and advertising recommendations.

Before you get excited, ask yourself: why should you believe this time will be different?

Why the 2023 Open-Source Release Mattered (And Failed)

The 2023 promise wasn't just talk. Musk actually did release code. In Twitter's official GitHub organization, you can still see the repository: the-algorithm. It's there. It exists. And that's the problem.

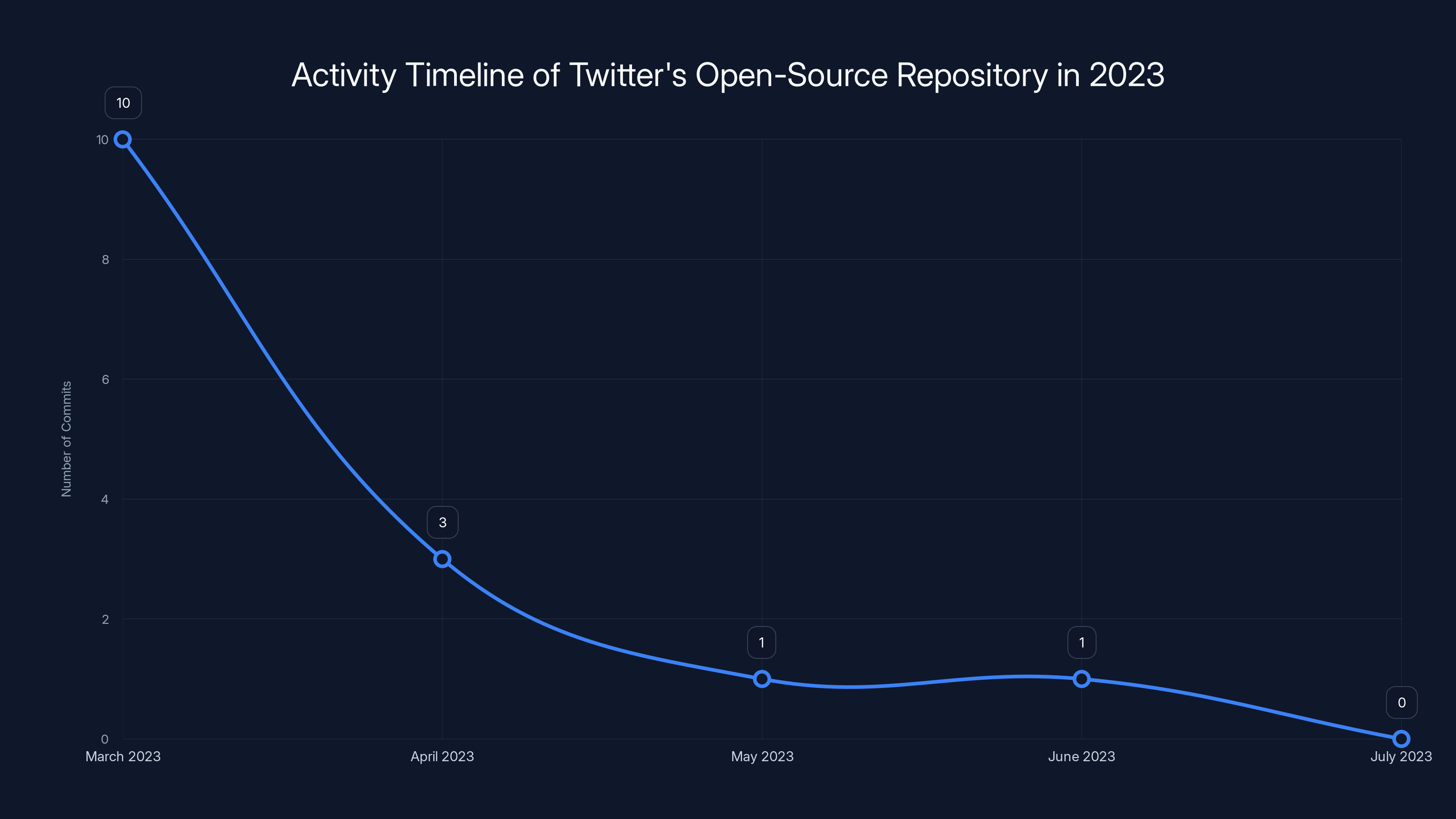

When you look at the commit history, most of the files show timestamps from March 2023. That's the initial upload. After that, the repository basically went dormant. A few tweaks here and there, but nothing substantial. No updates to reflect how the algorithm actually evolved. No changes to account for new features, algorithm adjustments, or improvements the company had made.

For developers who actually tried to use this code, the experience was frustrating. The repository was theoretically useful but practically abandoned. If you cloned it and tried to run it locally, you'd get deprecated dependencies. Functions would reference code that no longer existed in the main codebase. It was like being handed a car manual from 2019 when you're trying to fix a 2023 model.

Why did this happen? Several factors probably contributed. First, maintaining open-source code requires real work. You need to keep dependencies updated, document changes, and respond to issues from developers trying to use it. That costs time and engineering resources. Second, public code carries real security risk. Every piece of code you release is a potential target for people looking for exploits or ways to game the system. Third, and most cynically, once the initial announcement passed and the headlines faded, there was no external pressure to maintain it. Nobody important was checking if updates were coming. The promise was made and technically kept, even if not in spirit.

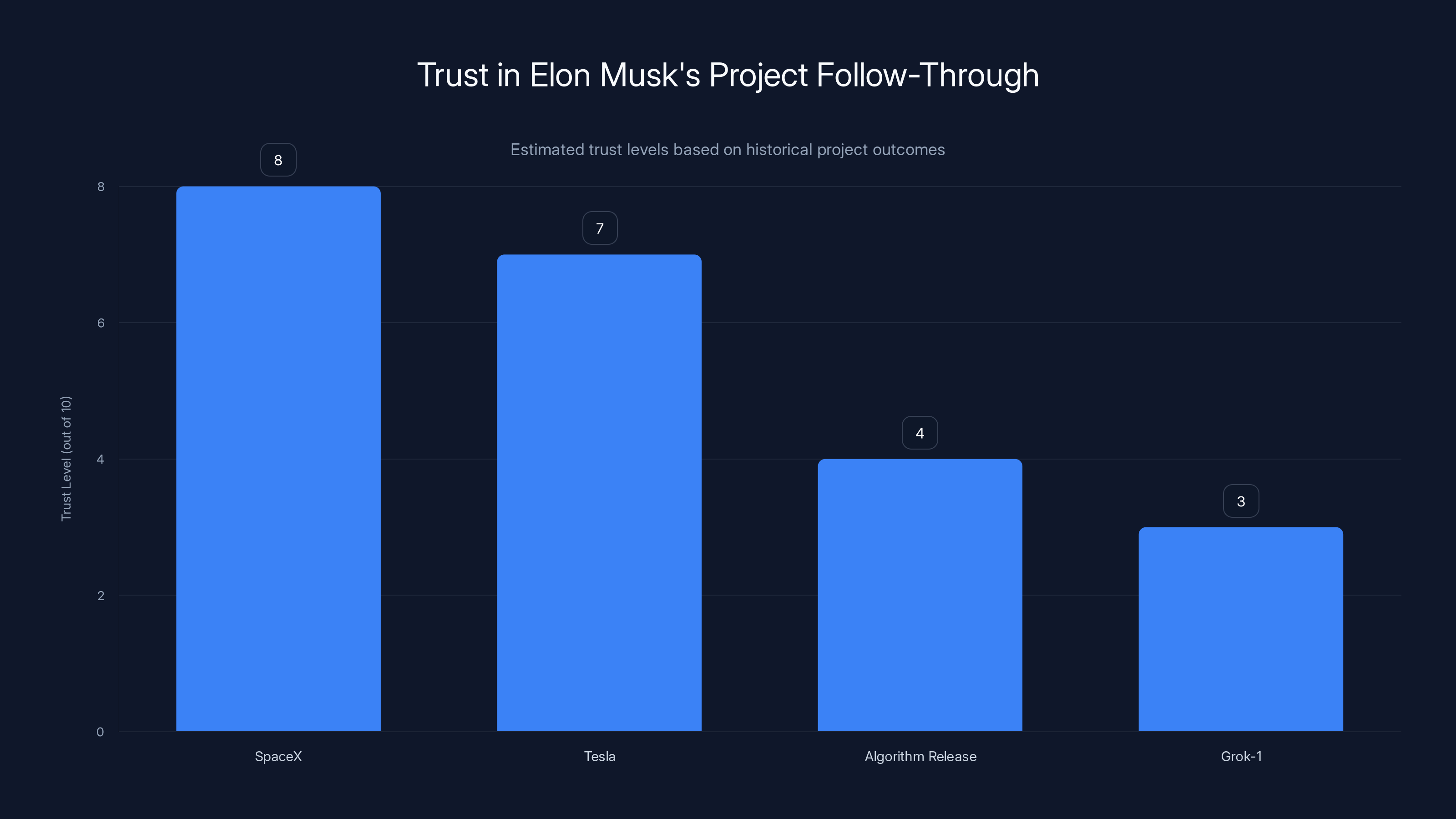

Then there's the case of Grok-1, the AI model from Musk's company xAI. In 2024, xAI open-sourced it with similar fanfare. The GitHub repository exists. But it hasn't been meaningfully updated since. xAI has moved on to newer Grok models, while the open-source version is stuck in time.

The pattern here is clear. Musk makes promises about open-source. He follows through initially. Then the promises fade as reality sets in.

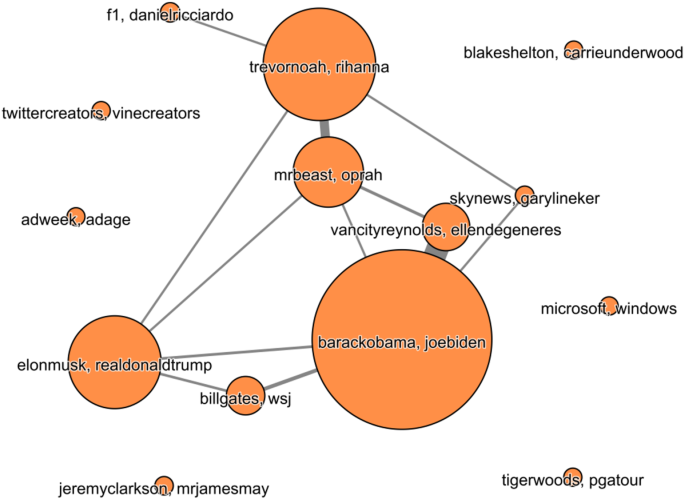

The repository showed initial activity in March 2023 but quickly became inactive, with minimal updates in subsequent months. Estimated data based on narrative.

Understanding Algorithm Transparency: What Could Actually Be Revealed

Before diving into why Musk might break this promise again, it's worth understanding what we're actually talking about when we discuss "the algorithm."

The recommendation algorithm at a social network like X is actually multiple algorithms working together. There's the candidate-generation step that pulls posts you might want to see. There's the ranking algorithm that decides which of those posts appear highest in your feed. There's a separate ad-ranking system that decides which ads you see. And there's the content moderation system that decides what gets removed or suppressed.
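As a rough illustration of how those pieces fit together, here is a toy Python sketch of a staged feed pipeline: candidate generation, ranking, then filtering. Every function name, field, and score in it is a hypothetical stand-in chosen for illustration, not X's actual code or architecture.

```python
from dataclasses import dataclass


@dataclass
class Post:
    post_id: str
    author: str
    score: float           # hypothetical stand-in for a ranking model's output
    flagged: bool = False  # hypothetical stand-in for a moderation decision


def generate_candidates(all_posts, followed):
    """Stage 1 (hypothetical): pull posts from accounts the user follows."""
    return [p for p in all_posts if p.author in followed]


def rank(candidates):
    """Stage 2 (hypothetical): order candidates by a precomputed score."""
    return sorted(candidates, key=lambda p: p.score, reverse=True)


def filter_feed(ranked):
    """Stage 3 (hypothetical): drop posts a moderation system flagged."""
    return [p for p in ranked if not p.flagged]


def build_feed(all_posts, followed, limit=3):
    """Chain the three stages and keep the top `limit` posts."""
    return filter_feed(rank(generate_candidates(all_posts, followed)))[:limit]


posts = [
    Post("a", "alice", 0.9),
    Post("b", "bob", 0.7, flagged=True),
    Post("c", "carol", 0.95),  # highest score, but carol isn't followed
    Post("d", "alice", 0.4),
]
print([p.post_id for p in build_feed(posts, followed={"alice", "bob"})])  # ['a', 'd']
```

Releasing only something like `rank` would reveal little about which posts ever reach it or which get filtered out afterward, which is why a partial release can leave the most consequential decisions out of view.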

When Musk says he'll release "all code used to determine what organic and advertising posts are recommended to users," that's a significant chunk but not the whole picture. It doesn't include the moderation systems. It doesn't include the APIs that integrate with Musk's broader business interests. It probably doesn't include the business logic that decides how to prioritize revenue generation through ads.

Even the parts that are theoretically being released get complicated. Modern recommendation systems rely on machine learning models trained on massive datasets. The trained weights and parameters are the secret sauce. The code that uses those models is less important than what the models learned. You can release all the code you want, but if the actual trained models stay private, you haven't really revealed how the system works.
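A toy example makes the point. In the Python sketch below, the scoring code is identical in both runs; only the weights change, and the top post flips. The features, their values, and both weight sets are invented for illustration and stand in for what a trained model would learn.

```python
def score(post, weights):
    """The same scoring code for everyone: a weighted sum of features."""
    return sum(weights[name] * value for name, value in post["features"].items())


def top_post(posts, weights):
    """Return the id of the highest-scoring post under the given weights."""
    return max(posts, key=lambda p: score(p, weights))["id"]


# Hypothetical posts with normalized feature values.
posts = [
    {"id": "news", "features": {"likes": 0.2, "replies": 0.9, "recency": 0.8}},
    {"id": "meme", "features": {"likes": 0.9, "replies": 0.1, "recency": 0.5}},
]

# Two hypothetical sets of "learned" weights fed into the identical code.
weights_a = {"likes": 1.0, "replies": 0.2, "recency": 0.1}
weights_b = {"likes": 0.1, "replies": 1.0, "recency": 0.5}

print(top_post(posts, weights_a))  # meme
print(top_post(posts, weights_b))  # news
```

Someone reading only `score` learns that posts are ranked by a weighted sum, but not what the system actually favors. That information lives in the weights.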

There's also the question of what happens when you actually understand how the algorithm works. Some people want to use that knowledge to game the system. If you know the algorithm favors posts with high early engagement, you'll design posts to get quick reactions. You might use bots. You might game metrics. Complete transparency doesn't just democratize information, it also democratizes manipulation.

Compare this to Google's approach. They publish broad guidelines about how search works, but they don't release the actual ranking algorithm. They've decided that the risks of complete transparency outweigh the benefits. Whether you agree with that decision or not, it's a coherent philosophy.

Musk's promise lands somewhere in the middle, which creates its own problems. Partial transparency sometimes raises more questions than it answers. People will see the code and wonder what's missing. They'll assume the hidden parts are doing something sinister. In some cases, they'll be right.

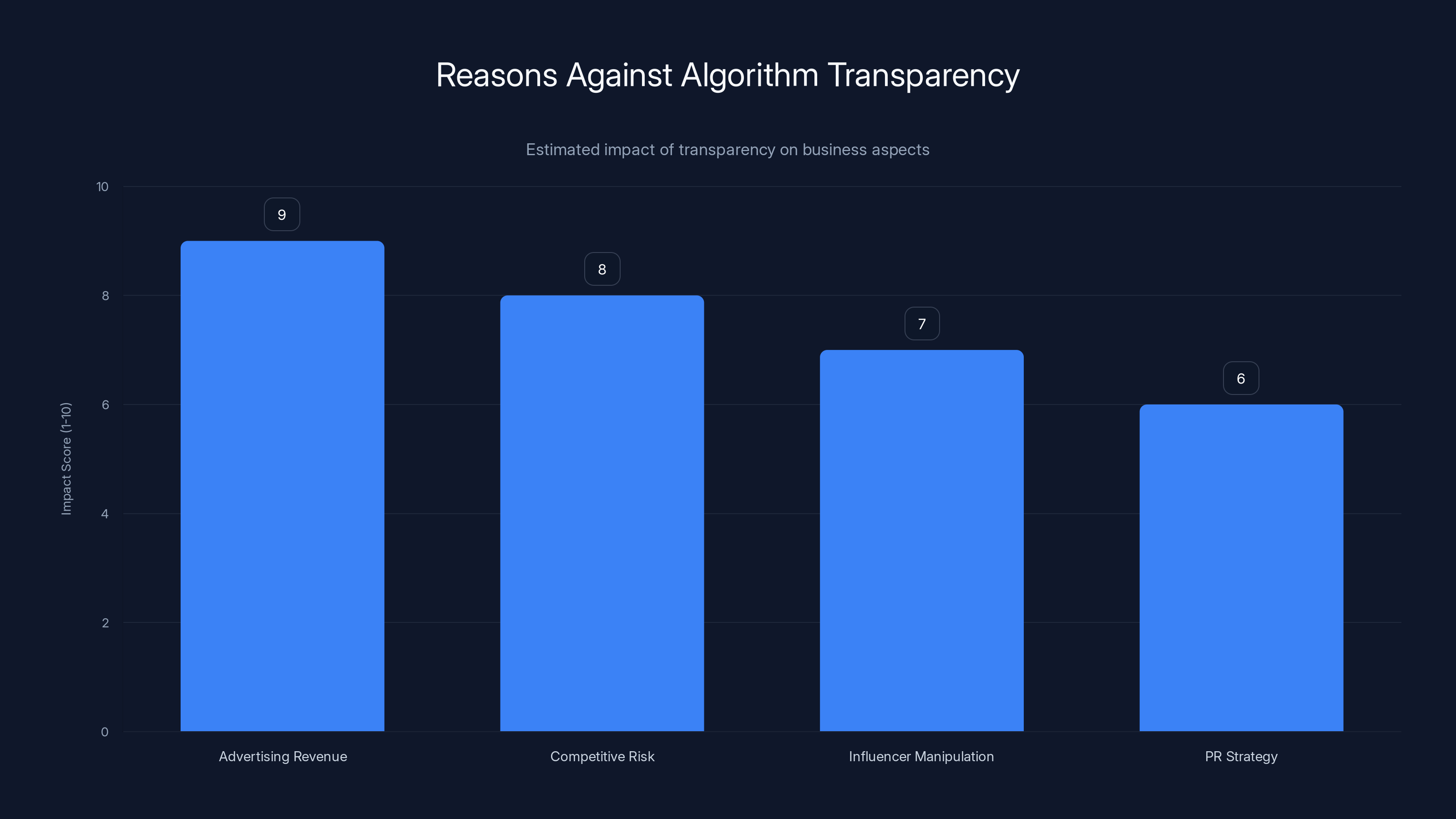

Estimated data suggests that algorithm transparency could significantly impact advertising revenue and competitive positioning, with a moderate influence on influencer manipulation and PR strategies.

The Business Problem With Algorithm Transparency

Here's the uncomfortable truth: there are legitimate business reasons why social media companies don't want to fully open-source their algorithms.

X is an advertising business. The algorithm's primary job is to keep you engaged so you see more ads. If you completely understand how the algorithm works, you can optimize for it in ways that might not align with X's business interests. Influencers and brands could manipulate their way to huge reach without ever running paid ads. The revenue model breaks down.

There's also competitive risk. If you release exactly how your recommendation system works, competitors can build better ones. TikTok's algorithm is famously secretive partly because it's believed to be genuinely superior to what competitors have. Releasing that code would level the playing field.

Musk, specifically, has a complicated relationship with X's business model. He bought the company for $44 billion in October 2022, largely to protect it from what he saw as political censorship. But he also needs to make the business profitable. Advertising revenue is still the main path to profitability for a social media platform. Advertisers want reliable audience targeting and performance metrics. They want to know their money is being spent effectively. None of that gets better with complete algorithm transparency.

There's also the timing issue. Musk announced the open-source release while facing criticism about Grok's ability to generate deepfake nudes. The announcement conveniently shifted conversation from "your AI makes fake pornography" to "look at our commitment to transparency!" It's a classic PR move: respond to criticism with a positive announcement that addresses a different concern.

The timing makes you wonder whether the algorithm release is actually coming or whether it's an announcement designed to make news and then quietly fade away when attention moves elsewhere.

Why Companies Promise Open-Source and Fail to Deliver

Musk isn't the only tech leader making open-source promises that fizzle. This is a broader pattern in tech.

Facebook promised to open-source more of their AI infrastructure. Meta actually follows through better than most, releasing LLaMA and other models. But even Meta's open-source strategy is selective. They release things they believe will become commodities anyway or where open-sourcing actually helps their business by setting standards.

Amazon talked about open-sourcing parts of their recommendation system. GitHub is still waiting. Microsoft's Shared Source program in the early 2000s let select partners and governments view parts of the Windows source code, a move that looked more like PR than openness, and Windows itself never went open-source.

The reasons fall into a few categories.

Resource constraints are real. Maintaining public code requires engineers. Those engineers could instead be building new features that customers pay for. Most companies choose new features.

Security concerns are legitimate. When you open-source something, malicious actors get to study your code for vulnerabilities. You're essentially holding a security workshop for hackers. Some companies decide the risks outweigh the benefits.

Competitive concerns are significant. If your algorithm is better than competitors', releasing it narrows your advantage. Some companies would rather keep secrets.

Regulatory uncertainty matters. If you're dealing with potential regulation around algorithms, publishing everything might create legal liability. You might be saying "this is what we do" right before regulators decide what you're doing is illegal.

Executive attention is limited. A CEO announces something big. The PR team celebrates. Then the CEO moves on to the next thing. There's no ongoing mechanism to ensure the promise gets followed through. Inertia takes over.

Sometimes promises are made with good intentions. The people making them genuinely believe they'll deliver. But when it's time to prioritize resources, open-source maintenance ranks below literally everything else.

Trust levels vary across Musk's projects; high for SpaceX and Tesla, but lower for open-source algorithm releases and Grok-1. (Estimated data)

What We Actually Know About X's Algorithm (Without the Code)

Even without the code being released, we know quite a bit about how X's algorithm works. Not because Musk released it, but because researchers have studied it, engineers have left the company and talked about it, and X has occasionally published blog posts explaining how it works.

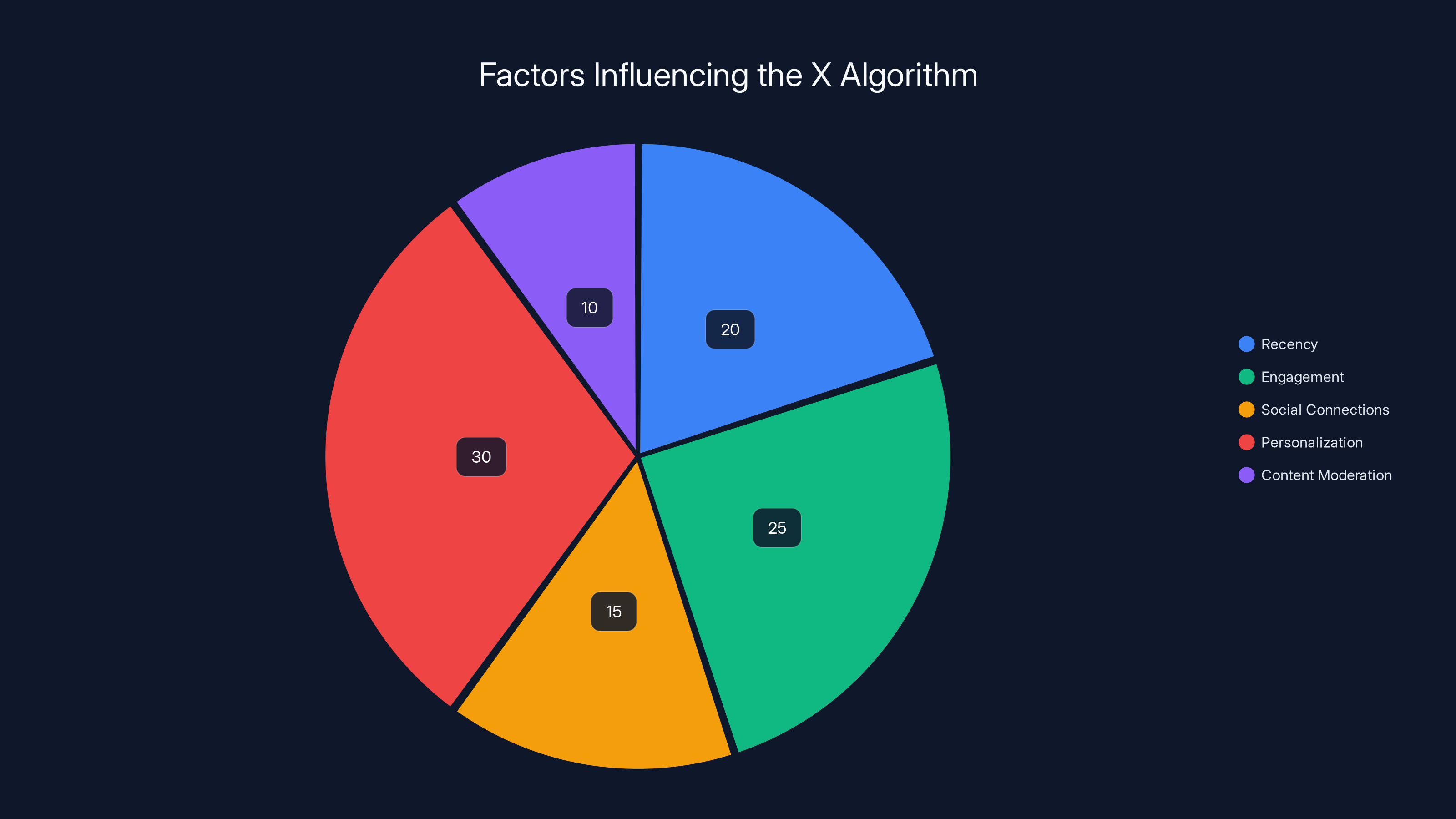

The algorithm combines multiple signals. Recency matters. Newer posts rank higher than old ones, though not exclusively. Engagement signals matter. Posts with lots of replies, retweets, and likes get boosted. Social connections matter. Posts from people you follow or interact with frequently get weighted higher. Personalization happens. The algorithm learns what topics you engage with and shows you more similar content.
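One common way to combine signals like these is a scoring function that multiplies a recency decay by engagement, social, and personalization terms. The Python sketch below is a minimal illustration under assumed weights and an assumed six-hour half-life; X hasn't said how it weights or combines these signals, so none of these numbers should be read as X's.

```python
import math


def feed_score(age_hours, likes, replies, reposts, follows_author,
               topic_affinity, half_life_hours=6.0):
    """Hypothetical score combining the four signal families described above.

    All weights and the half-life are illustrative assumptions.
    """
    # Recency: exponential decay that halves every `half_life_hours`.
    recency = 0.5 ** (age_hours / half_life_hours)
    # Engagement: log-damped so a viral post's advantage grows slowly.
    engagement = math.log1p(likes + 2 * replies + 2 * reposts)
    # Social connection: a flat multiplier for accounts the user follows.
    social = 1.5 if follows_author else 1.0
    # Personalization: topic affinity in [0, 1], learned from past behavior.
    personalization = 0.5 + topic_affinity
    return recency * engagement * social * personalization


fresh = feed_score(age_hours=1, likes=20, replies=5, reposts=2,
                   follows_author=True, topic_affinity=0.8)
old_viral = feed_score(age_hours=24, likes=5000, replies=800, reposts=900,
                       follows_author=False, topic_affinity=0.2)
print(f"fresh post from a followed account: {fresh:.2f}")
print(f"day-old viral post from a stranger: {old_viral:.2f}")
```

Because the terms multiply, one near-zero factor (a very old post, say) drags the whole score down; an additive form would let heavy engagement compensate for age. That single design choice changes what a feed looks like.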

There are also explicit ranking factors. Replies, media-heavy posts, and original tweets (as opposed to retweets) get some boost. Tweets from verified accounts get different treatment than tweets from unverified accounts. X's official support pages actually explain some of this.

Then there are the quality filters. X removes spam, blocks some content based on moderation policies, and suppresses other content from wide distribution without removing it entirely. This is effectively shadowbanning, though X calls it "visibility filtering."

And there's the business logic. Tweets from X Premium subscribers (the paid verification tier, formerly Twitter Blue) might get slight distribution boosts. Tweets that link to promoted content might get suppressed. The algorithm tries to optimize for multiple objectives: engagement, revenue, retention, content moderation, and user satisfaction. Those objectives sometimes conflict.

This knowledge comes from observation, leaked documents, and statements from people who've worked at the company. It's not as detailed as actual code, but it's more than most people realize.

The question is: would the actual code reveal anything dramatically different?

Probably not. The code would show you implementation details. You'd see variable names, data structures, and how different signals get weighted. You might discover some parameters are different from what was publicly stated. You might find some heuristics or shortcuts that weren't mentioned. But you wouldn't find a dramatically different system.

The Role of Regulatory Pressure in Transparency Promises

There's a larger context here. Governments around the world are starting to care about algorithm transparency.

The European Union's Digital Services Act requires platforms to explain the main parameters of their recommender systems, and the largest platforms must offer users at least one feed option that isn't based on personal profiling. The DSA doesn't require full code release, but it requires meaningful explanation. The law has teeth: violations can bring fines of up to 6% of a company's global annual turnover.

In the United States, there's less regulatory mandate, but there's congressional pressure. Senators and representatives have questioned social media executives about algorithmic amplification of misinformation. Congress hasn't passed comprehensive social media regulation yet, but the threat exists.

In this context, Musk's open-source promise serves multiple purposes. It positions X as progressive and transparent. It might help with regulatory compliance arguments. It looks good in press releases.

But the regulatory requirements are actually for transparency to regulators and users, not necessarily to the general public. X could technically comply with the DSA without ever releasing code. They could explain how the algorithm works in a report to regulators and to users, without making the code public.

So the promise to open-source isn't actually necessary for regulatory compliance. It goes beyond what's legally required. That makes it both more impressive on paper and more likely not to happen, because there's less legal pressure forcing it.

When you see companies making promises that exceed regulatory requirements, ask yourself why. Usually, it's either because they're genuinely committed to transparency, or because they're creating a good PR story while being confident the promise will be forgotten.

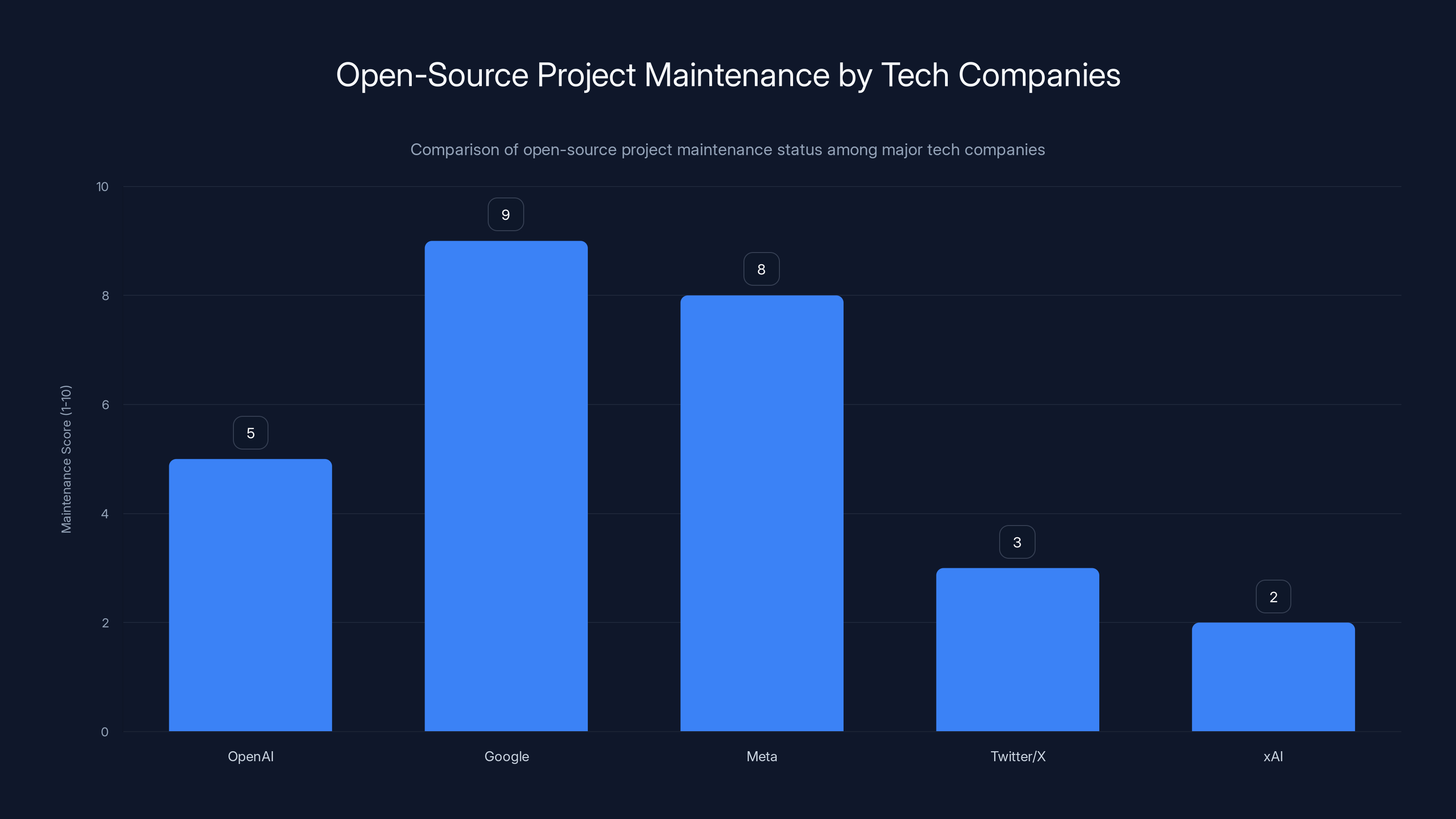

Google leads in maintaining open-source projects with a score of 9, while xAI lags behind with a score of 2. Estimated data based on project updates and community involvement.

Comparing Open-Source Promises: Who Actually Delivers?

Not all open-source promises are equal. Some companies actually follow through. Others don't.

OpenAI has a complicated relationship with open-source. They released GPT-2's weights and open-sourced tools like the Whisper speech model, but kept GPT-4's code and weights private. Other tools and libraries they release are actively maintained. Their track record is mixed but better than pure vaporware.

Google open-sourced TensorFlow, their machine learning framework. They actually maintain it. New versions come out regularly. They accept community contributions. This is real open-source that works.

Facebook (now Meta) open-sourced various infrastructure projects. PyTorch gets regular updates. It's not perfect, but it's maintained and useful.

Twitter/X released their algorithm code. Then didn't maintain it. This is the baseline for what we're discussing.

The companies that successfully maintain open-source projects do so because:

- The project serves their business interests (TensorFlow helps machine learning adoption, which benefits Google).

- They allocate actual engineering resources to it.

- There's external pressure or community involvement that keeps it active.

- The project is small enough to maintain without being a burden.

Musk's track record on maintaining open-source is not great. xAI released Grok-1. It hasn't been updated. There's no reason to think the algorithm code would be different.

What Would Actually Be Useful: The Code Vs. The Documentation

Here's something worth considering: the code might matter less than you'd think. What would actually be useful to the public is good documentation.

Imagine Musk released:

- Clear documentation of what the algorithm does, in plain English.

- Explanation of how each signal gets weighted.

- Examples of how different types of posts get ranked.

- Information about edge cases and exceptions.

- Regular updates when things change.

That would be genuinely useful. You wouldn't need the code. You'd understand how X works.

Conversely, imagine he releases:

- Raw Python code with minimal comments.

- Reference to internal variable names and data structures.

- Outdated dependencies and broken imports.

- No documentation explaining what anything does.

- Never updates it again.

That's useless. Worse than useless, because it creates the appearance of transparency while actually being opaque.

The promise matters less than the execution. And so far, Musk's execution on these promises has been poor.

For developers and researchers who might want to actually use released code, this matters enormously. Are you getting something you can actually work with? Or are you getting a museum exhibit of code that's no longer alive?

The promise to update every four weeks with developer notes is crucial. If that happens, the code could actually be useful. If it doesn't happen, it's theater.

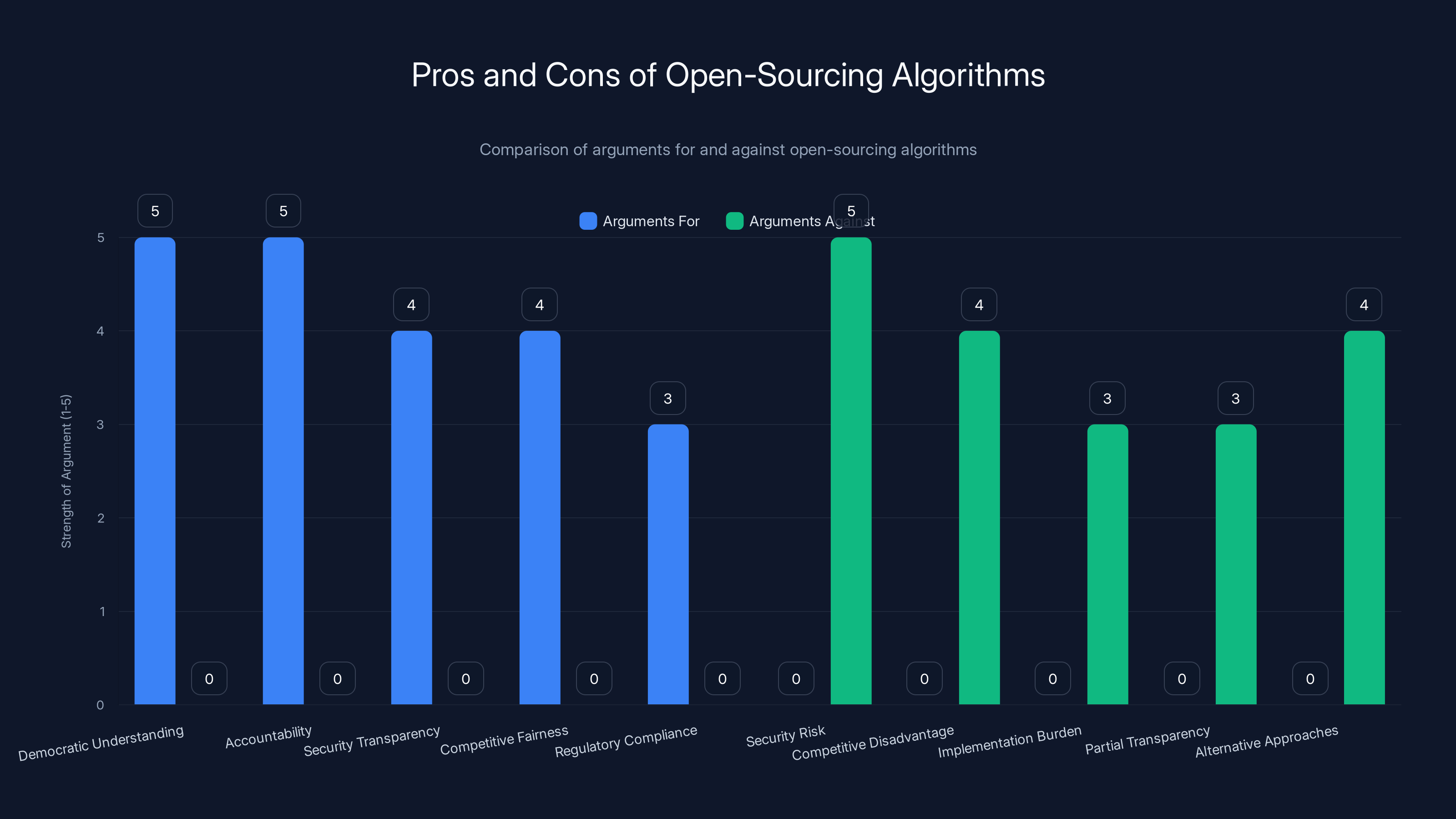

This bar chart compares the strengths of arguments for and against open-sourcing algorithms. Arguments for focus on transparency and fairness, while arguments against highlight security risks and competitive disadvantages.

The Broader Question: Should Algorithms Even Be Open-Source?

Worth asking: is open-sourcing the algorithm actually the right goal?

Arguments for open-sourcing the algorithm:

- Democratic understanding: The public should understand how decisions about information flow are made.

- Accountability: Code is facts. You can't lie about code. It is what it is.

- Security through transparency: Researchers can find vulnerabilities faster if they have the code.

- Competitive fairness: Everyone can see the rules and optimize accordingly.

- Regulatory compliance: Governments increasingly want this.

Arguments against open-sourcing the algorithm:

- Security risk: Bad actors get detailed information about how to game the system.

- Competitive disadvantage: If your algorithm is better, releasing it narrows your edge.

- Implementation burden: Maintaining public code requires resources.

- Partial transparency is worse than none: Understanding 80% of how something works creates confidence you shouldn't have.

- Alternative approaches exist: Regulatory oversight, audits, and reporting might be better.

The truth is probably that some middle ground is better than full release. Something like: regular audits by independent researchers with access to the code (but not public release), published reports about how the algorithm works, and regulatory oversight.

What's definitely not good is the current situation: promises of release followed by stagnant code. That's the worst of both worlds. You get the compliance theater without the actual transparency.

Predicting What Will Actually Happen

Let's be honest about what's likely to happen.

Musk will probably release some code. He'll point to it in interviews and articles. The story will exist: "Musk Open-Sources X Algorithm." News outlets will cover it. It'll trend on social media for a few hours.

Then, gradually, the code will become outdated. Updates will become less frequent. After a few months, they'll stop entirely. By the time you're reading this, if the release has happened, it's probably already slowing down.

This isn't cynicism. It's pattern recognition based on Musk's previous behavior and broader industry trends.

Why do I predict this?

- Historical precedent: The 2023 Twitter algorithm is still sitting there, unmaintained.

- Resource reality: Actually maintaining open-source code is harder and less rewarding than it sounds.

- Incentive misalignment: There's no penalty for stopping maintenance and no reward for continuing it.

- Attention economy: Once the announcement phase ends, there's no ongoing pressure to maintain it.

- Alternative solutions: X could comply with regulations through audits and reporting without ongoing code maintenance.

What could prove me wrong?

If X actually hires a dedicated team to maintain the released code. If they set up processes for accepting community contributions. If they publish updates every four weeks as promised. If the code becomes an actual resource that developers and researchers use and build on.

That's possible. It would be impressive. It would also require sustained commitment that hasn't been evident in previous releases.

The X algorithm is primarily influenced by personalization (30%) and engagement (25%), with recency, social connections, and content moderation also playing significant roles. Estimated data based on typical recommendation systems.

The Implications for Users and Researchers

Whether the code gets released and maintained matters less for users than for researchers and developers.

For users: The algorithm works the same way whether the code is open or closed. Releasing code won't change how your feed works. It might be interesting to understand how it works, but it won't improve your X experience. You'll still see posts the algorithm thinks you want to see.

For developers: Actual, maintained code could be useful. They could fork it, modify it, run it locally. They could understand what changes were made between versions. They could propose improvements. But only if the code is maintained and documented.

For researchers: Academic researchers studying algorithmic bias, filter bubbles, and content amplification could use actual code to run experiments. They could test theories about how the algorithm works. They could publish findings. Again, only if the code is maintained.

For competitors: Understanding exactly how the algorithm works helps them build better ones. This is why fully open-sourcing is a genuine competitive risk for X.

For regulators: They probably care more about documentation and regular reporting than about public code release. The European Union's regulators got clarification about how TikTok's algorithm works through regulatory requests and audits, not open-source releases.

The ripple effects of open-sourcing, if actually done well, could be significant. But they'd take years to materialize. You'd need researchers to analyze the code, run experiments, publish findings, and those findings to influence how the algorithm gets regulated or improved. That's a long chain that hasn't started yet.

Historical Context: Why Tech Companies Make These Promises

Understanding why Musk makes these promises in the first place requires looking at broader tech culture.

In the late 1990s and early 2000s, open-source software moved from niche to mainstream. Linux went from hobbyist project to powering much of the internet. Open-source became not just practical but ideologically significant. It represented transparency, democratization, and resistance to corporate control.

Tech culture absorbed this narrative. Companies started using open-source contributions as a way to build brand image. Google contributes to open-source. Facebook releases infrastructure. It looks good. It positions you as enlightened and committed to the greater good.

Musk has always positioned himself as ideologically committed to transparency and freedom. In his own mind, he probably genuinely believes in these values. But belief in a value and actual commitment to it are different things.

The promise is culturally significant. Making it signals that you're on the right side of transparency. The follow-through is difficult and expensive.

Over the years, tech companies learned they could make these promises with limited downside risk. If you follow through, you get credit. If you don't, people forget. There are no real consequences.

Nobody loses their job because an open-source project went unmaintained. Nobody gets fired for not following through on a promise from two years ago. The PR value comes upfront. The cost comes later, when people have moved on.

What Regulation Could Actually Require

There's a difference between what Musk is promising and what regulations actually require.

The European Union's Digital Services Act doesn't mandate open-source release. It requires platforms to:

- Explain how the algorithm works to users and regulators.

- Allow users to opt out of personalized recommendations.

- Publish regular reports about algorithmic amplification.

- Undergo audits by third parties.

None of this requires public code. A technical white paper would satisfy the law. Regular reports about changes would satisfy it. Allowing auditors to view the code under NDA would satisfy it.

Similar requirements are emerging in other jurisdictions. The UK's Online Safety Act, which became law in 2023, has algorithmic transparency requirements. Singapore's code of practice for AI has guidelines. But most of them allow for transparency without public release.

The actual regulatory requirement is "explain how it works." Musk's promise is more ambitious: actually release the code. This might be because he thinks it's right, or because he thinks it protects him from regulation, or some combination.

But from a regulatory standpoint, releasing code is going above and beyond. You could comply with regulations without it.

The Technical Debt Problem

There's another reason why releasing and maintaining the algorithm code gets harder over time: technical debt.

Code written two years ago might not reflect current best practices. Dependencies get outdated. The overall architecture might not be what you'd build today. Refactoring code to make it good enough for public release takes effort.

When you publish code, you're implicitly endorsing it as something worth other people using. If you know the code has technical debt, security issues, or architectural problems, you have an incentive to fix those before releasing. But fixing them takes time. You could also release the code as-is and explain that it's a snapshot of a specific point in time.

That's probably what would happen in practice: release code that represents how the algorithm worked at some point, but doesn't necessarily represent best practices or current implementation.

This gets at a deeper issue with the open-source promise. The code itself is less important than the documentation and explanation of what it does. But releasing code creates expectations about code quality and usability that might not be met.

Alternative Ways to Achieve Transparency

If you actually care about understanding how X's algorithm works, code release isn't the only path.

Academic research: Independent researchers have studied X's algorithm and published findings. Google Scholar has dozens of papers analyzing social media algorithms. These papers often reveal more than code would, because they explain what things mean.

Independent audits: Organizations like Trust Labs and Ranking Digital Rights evaluate tech platforms and publish findings. These assessments sometimes carry more weight than public code.

Leaked documents: Employees leave companies. They sometimes talk about how things worked. While not official, these accounts are often more revealing than sanitized public code.

Technical investigation: Security researchers and technologists can infer how algorithms work by reverse engineering. They submit test posts, observe results, and build models of how the system behaves.

Published papers from the company: X publishes some research about how their recommendation system works. These papers, while sometimes promotional, do explain algorithms in detail.

User-visible behavior: You can understand a lot about how an algorithm works by watching how it behaves. When you post something, how quickly does it spread? What types of posts get shown to how many people? What patterns emerge?

All of these provide transparency without the company needing to open-source the code.

The advantage of code release is that it's definitive. There's no ambiguity about what the system does. The disadvantage is that it requires maintenance and can be misinterpreted without good documentation.
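The technical-investigation approach described earlier boils down to a controlled comparison: publish matched test posts that differ in one feature, then compare how far each group travels. The Python sketch below estimates that difference with a rough standard error. The impression counts are made-up placeholders, not measurements, and a real study would need many more posts, randomized timing, and accounts with comparable audiences.

```python
import statistics


def estimate_effect(treated, control):
    """Difference in mean impressions between two groups of test posts,
    with a rough (Welch-style) standard error for that difference."""
    diff = statistics.mean(treated) - statistics.mean(control)
    se = (statistics.variance(treated) / len(treated)
          + statistics.variance(control) / len(control)) ** 0.5
    return diff, se


# Hypothetical impression counts for illustration, NOT real measurements.
with_image = [1200, 950, 1430, 1100, 1310]
text_only = [800, 760, 910, 690, 840]

diff, se = estimate_effect(with_image, text_only)
print(f"estimated lift: {diff:.0f} impressions (+/- {2 * se:.0f})")
```

A gap of about two standard errors is only a loose signal; with this few posts, differences in audience or timing can easily swamp a real effect.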

The Trust Question

Ultimately, this comes down to trust.

If you trust Musk and X to do what they promise, then the open-source release is good news. You'll get to see the algorithm, understand how it works, and the promise of regular updates means it stays current.

If you don't trust them, then the promise means nothing. You'll wait for the release, see unmaintained code, and feel cynical about corporate transparency claims.

There's evidence supporting both positions:

For trusting Musk: He's ambitious. He completes some big projects. SpaceX went from failed launches to successful reuse. Tesla went from bankruptcy risk to market dominance.

Against trusting Musk: He makes promises that don't materialize. The 2023 algorithm code went unmaintained. Grok-1 hasn't been updated. Twitter promised reliability improvements that took years to materialize.

The specific question is whether maintaining an open-source algorithm release is a priority for him. Given that it's not directly connected to any of his core business objectives, and given that it requires sustained engineering effort, the evidence suggests it won't be.

But it's possible. If he makes it a priority, if he allocates resources, if he makes it part of his mission to increase transparency, then it could happen.

The thing about Musk is that he's genuinely unpredictable. He might follow through to prove critics wrong. He might lose interest. He might get distracted by something else.

What to Watch For

If you actually want to track whether this open-source promise is real, here's what to watch:

The initial release: Does code actually appear on GitHub? Is it complete? Is there documentation?

The second release: The first release is always easy. You just publish the initial code. The second one is the real test. If it comes within four weeks with meaningful changes documented, you know there's a commitment.

Maintenance pattern: After a few updates, does the frequency hold or start slowing? Are there responses to GitHub issues from developers trying to use it? Are pull requests from the community getting reviewed?

Documentation quality: Does the code have good comments and explanation? Is there a README explaining what things do? Is there a changelog? Or is it raw code with no context?

Completeness: Are there models included or just code? Can someone actually run it? Or is it code that requires proprietary data and infrastructure to work?

Timeline tracking: Keep a calendar. Four weeks from the initial release should bring an update. Four weeks after that should bring another. If the updates stop, you have your answer.

These are concrete signals. If they all point positive, transparency is real. If they point negative, it's theater.
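The timeline check is easy to automate. The Python sketch below takes a list of release dates, which you could pull from the repository's commit history or tags, and flags every gap longer than the promised four weeks, including the time since the most recent release. The dates in the usage example are hypothetical, and treating "every four weeks" as exactly 28 days is an assumption.

```python
from datetime import date


def missed_updates(release_dates, today, cadence_days=28):
    """Return every gap, in days, that exceeded the promised cadence.

    The final gap runs from the latest release to `today`, so a stalled
    project shows up even when no new release has appeared.
    """
    if not release_dates:
        raise ValueError("no releases to check")
    dates = sorted(release_dates)
    gaps = [(later - earlier).days for earlier, later in zip(dates, dates[1:])]
    gaps.append((today - dates[-1]).days)
    return [gap for gap in gaps if gap > cadence_days]


# Hypothetical release history, for illustration only.
releases = [date(2025, 1, 17), date(2025, 2, 14), date(2025, 3, 28)]
print(missed_updates(releases, today=date(2025, 6, 1)))  # [42, 65]
```

An empty result means the promise is holding so far; a final number that keeps growing means the updates have stopped.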

The Bigger Picture: Where Transparency Is Actually Heading

Regardless of what happens with X, the broader trend toward algorithmic transparency isn't going away.

Regulators in Europe, California, and elsewhere are implementing algorithmic transparency requirements, and companies that don't comply will face fines. TikTok is already dealing with regulatory pressure over its algorithm. Instagram and Facebook have published information about how their feeds work.

The smart money is on transparency becoming table stakes. Companies that figure out how to be transparent without compromising competitive advantages will win. Those that resist will face pressure and regulation.

Musk's promise to open-source the algorithm fits this trend. Whether he follows through is almost secondary to the fact that the expectation now exists. Users expect transparency. Researchers expect access. Regulators expect explanation.

X might not need to actually release the code to satisfy these expectations. But the expectation is now in place. That's progress of a sort.

The question is whether it's real progress or just expectation-setting theater. Time will tell.

Making Sense of It All

Here's what we know:

- Musk promised to open-source X's algorithm in 2025, updating it every four weeks.

- He made similar promises in 2023 that never materialized into maintained code.

- The history of his other open-source projects, such as Grok-1, suggests poor follow-through.

- Industry patterns show that maintaining open-source projects is harder than releasing code initially.

- Business incentives don't strongly support algorithm maintenance and release.

- Regulatory requirements don't actually mandate code release, just transparency.

- Based on the evidence, confidence that this will happen should be low.

That doesn't mean it won't happen. It means the probability is lower than the announcement suggests.

Worth keeping in mind: even if the code gets released and properly maintained, it won't revolutionize your experience on X. You'll still see what the algorithm thinks you want to see. Understanding how it works is valuable for researchers and developers. For regular users, it's interesting but not transformative.

The real value of open-source algorithms would be for competitive purposes and for academic research. It would let people build better alternatives. It would let researchers understand bias and amplification. It would create accountability.

But those benefits only materialize if the code is real, current, and actually usable. Theater doesn't provide those benefits.

The question we should all be asking is whether Musk is making a genuine commitment or performing one. Based on the evidence, the answer is probably both, with performance winning out eventually.

FAQ

What exactly is the X algorithm?

The X algorithm is a recommendation system that decides what posts appear in your feed, in what order, and which ads you see. It combines signals like recency, engagement, social connections, and personalization based on your behavior. The algorithm also applies content moderation filters and other quality controls. Different parts of the algorithm serve different purposes: ranking posts, generating feed candidates, recommending ads, and determining suppression.
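To make those stages concrete, here is a toy Python sketch of a generic candidate, filter, and rank pipeline. It is not X's code: the `Post` fields, the candidate rule, the scoring weights, and the six-hour recency half-life are all hypothetical, and real recommendation systems typically rely on learned models rather than a hand-written formula. Ad recommendation is left out entirely.

```python
from dataclasses import dataclass


@dataclass
class Post:
    post_id: str
    author: str
    age_hours: float
    likes: int
    replies: int
    flagged: bool = False  # hypothetical stand-in for a moderation/quality signal


def candidates(posts: list[Post], following: set[str]) -> list[Post]:
    # Candidate generation (hypothetical rule): posts from accounts you follow,
    # plus out-of-network posts that already have some traction.
    return [p for p in posts if p.author in following or p.likes >= 100]


def score(post: Post, following: set[str], half_life_hours: float = 6.0) -> float:
    engagement = post.likes + 2 * post.replies  # hypothetical weights
    recency = 0.5 ** (post.age_hours / half_life_hours)  # exponential time decay
    social = 1.5 if post.author in following else 1.0  # boost for in-network posts
    return engagement * recency * social


def build_feed(posts: list[Post], following: set[str], limit: int = 10) -> list[str]:
    # Filtering: drop flagged posts before ranking.
    pool = [p for p in candidates(posts, following) if not p.flagged]
    # Ranking: highest score first, then truncate to the feed size.
    ranked = sorted(pool, key=lambda p: score(p, following), reverse=True)
    return [p.post_id for p in ranked[:limit]]
```

Even in this toy version, small choices such as the half-life or the in-network boost reorder the feed, which is why researchers want to see the real signals and weights rather than a description of them.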

Why did Musk's 2023 open-source release fail to stay updated?

The GitHub repository for the 2023 Twitter algorithm release went dormant because maintaining public code requires sustained engineering effort that competing business priorities discouraged. After the initial announcement and PR value faded, there was no external accountability or pressure to continue updates. Engineers got pulled to other projects, and the repository became a static artifact. This is a common pattern in tech companies where open-source maintenance ranks below everything else in priority.

What are the regulatory requirements for algorithm transparency?

The European Union's Digital Services Act requires platforms to explain how algorithms work to users and regulators, allow users to opt out of personalized recommendations, publish regular transparency reports, and undergo third-party audits. Similar requirements exist in other jurisdictions like the UK and Singapore. Importantly, these regulations don't require public code release; they require explanation and documentation, which can be provided through reports and restricted audits instead.

Could releasing the algorithm code actually harm X's business?

Yes, potentially. Competitors could study the code and build better alternatives. Malicious actors could find exploits or ways to game the system if they understand exactly how it works. Advertisers might optimize in ways that don't serve X's business interests if they fully understand the algorithm. These are real competitive and security risks that explain why complete transparency creates genuine trade-offs.

What's the difference between releasing code and documentation?

Code is the actual implementation, while documentation explains what the code does in human-readable form. Good documentation can provide more useful transparency than raw, uncommented code, and code without documentation is hard for anyone to use. For actual transparency, what matters most is a clear explanation of how decisions get made. Code is valuable mainly for researchers and developers who want to run experiments or verify claims. Regular users benefit more from clear documentation about how the algorithm works and what data it uses.

How can users verify whether X actually maintains the released algorithm code?

Watch for these concrete signals: Does code appear on GitHub with documentation? Does a second update arrive within four weeks of the initial release? Do subsequent updates keep coming regularly? Are there responses to GitHub issues and pull requests from the community? Is the code actually runnable, or does it require proprietary infrastructure? Are there trained models included or just code? Do changelogs explain what changed between versions? These signals distinguish real open-source projects from theatrical releases.

What would happen if the algorithm code became genuinely open-source and actively maintained?

Researchers could run experiments to understand algorithmic bias and filter bubbles. Developers could build alternative clients or systems that use similar algorithms. Security researchers could find vulnerabilities faster. Competitors could understand what makes X's algorithm work and build better ones. Regulators would have an easier time auditing claims. Users could understand exactly how their feeds get created. These benefits take years to materialize because they require research, publication, and adoption of findings, but the long-term impact could be significant.

Key Takeaways

- Musk promised to open-source X's algorithm in 2025 with updates every four weeks, but his 2023 Twitter algorithm release went dormant and was never updated.

- Open-source maintenance requires sustained engineering resources that competing business priorities usually discourage, explaining why tech company promises often fail.

- EU regulations like the Digital Services Act don't actually require code release, only transparency through reports and audits, making Musk's promise more ambitious than legally necessary.

- The critical test is whether a second update appears within four weeks of the initial release: the first release is easy, but sustained maintenance reveals genuine commitment.

- Even if code gets released and maintained, the benefit goes primarily to researchers and developers; most users won't notice algorithmic changes from transparency.
