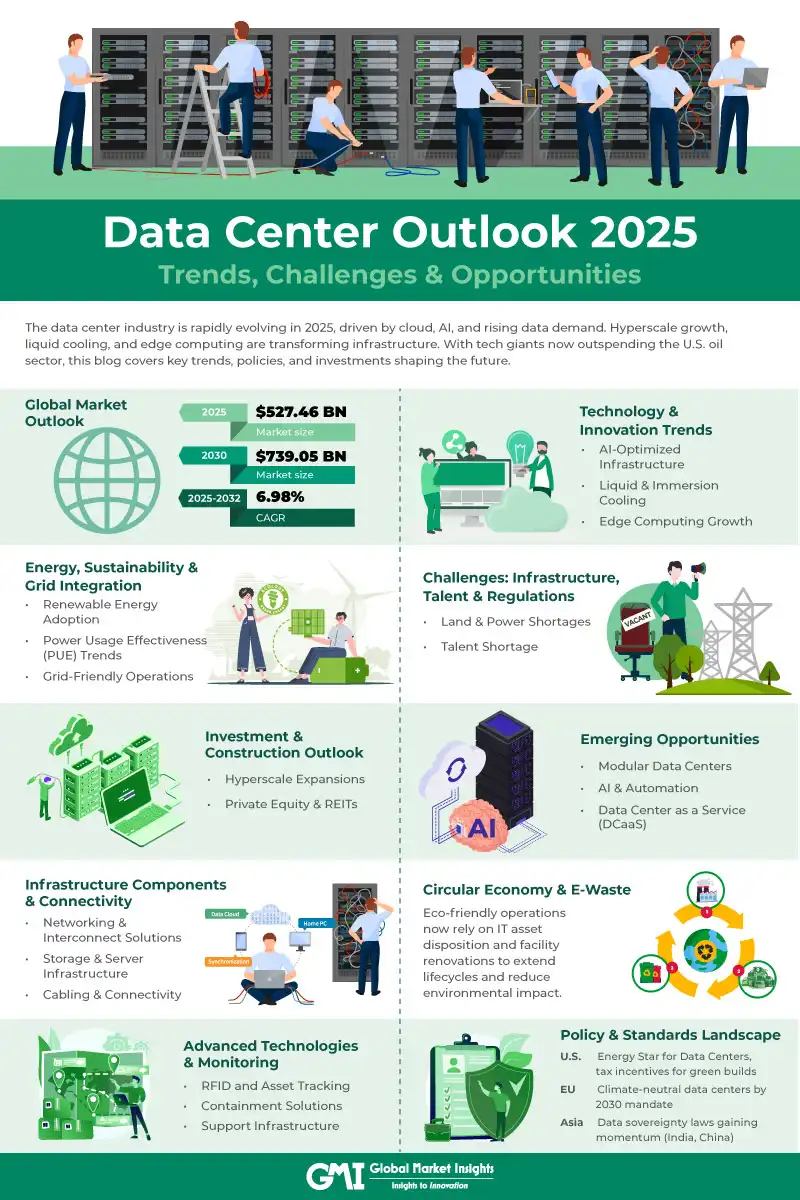

EU Data Centers & AI Readiness: The Infrastructure Crisis Holding Back Europe's AI Future

Europe's got a problem. And it's not a small one.

Right now, only one in five data centers across Europe and the Middle East can actually handle AI workloads. Let that sink in. While companies everywhere are racing to deploy AI models, build large language applications, and scale machine learning infrastructure, most of Europe's existing data center facilities are stuck in the past. They were built for traditional cloud computing. They're not built for what AI demands.

Here's the brutal math: AI workloads require high-density GPU racks that consume massive amounts of power and generate intense heat. Legacy data centers, built when the industry thought cloud servers would always be relatively modest, simply weren't engineered for this. Their power distribution systems can't handle it. Their cooling infrastructure wasn't designed for sustained heat loads from thousands of GPUs running 24/7. It's like trying to run a modern factory through electrical wiring designed for a 1980s office building.

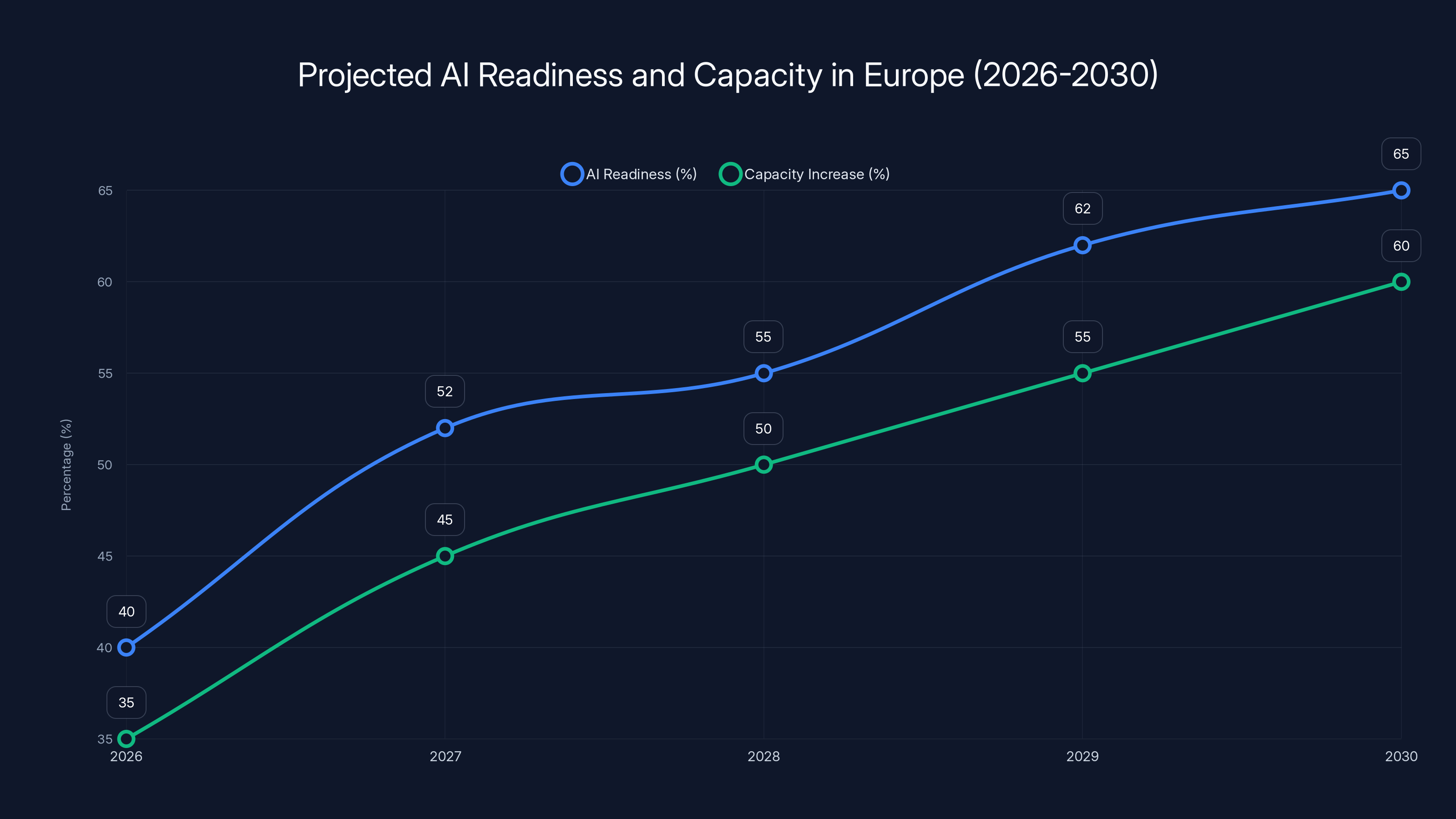

What makes this even worse? The gap between reality and demand is widening, not shrinking. Even though AI-readiness is projected to reach 70% by 2030, industry experts predict demand will outpace supply dramatically. In the next 12 months alone, 93% of surveyed data center professionals expect AI capacity demand to spike. Nearly 78% explicitly blame AI as the driver.

This isn't just a European problem, either. It's a European crisis. The continent faces a perfect storm: land shortages, grid connection delays, supply chain volatility, and a severe shortage of skilled technicians. Meanwhile, regions like the Nordic countries and Middle East are positioning themselves as the future hubs for AI infrastructure.

The question everyone's asking is simple: Can Europe catch up? Or will it fall permanently behind in the AI economy because its infrastructure can't support the workloads?

Let's dig into what's actually happening on the ground, why it matters, and what it means for companies trying to build AI systems in Europe.

TL;DR

- Only 20% of European data centers meet AI requirements today, though projections show 70% readiness by 2030

- Power density and cooling systems are the primary technical barriers preventing most legacy facilities from supporting high-density GPU racks

- Upgrade costs are staggering, often running into hundreds of millions for major facilities, making retrofits economically impractical for many operators

- Supply will likely lag demand even with aggressive buildout, creating potential bottlenecks for European AI companies through 2027-2028

- Nordic regions and Middle East countries have geographic and regulatory advantages that may attract new AI data center investment

- 86% of industry professionals report supply chain volatility is now structural, not temporary, extending development timelines

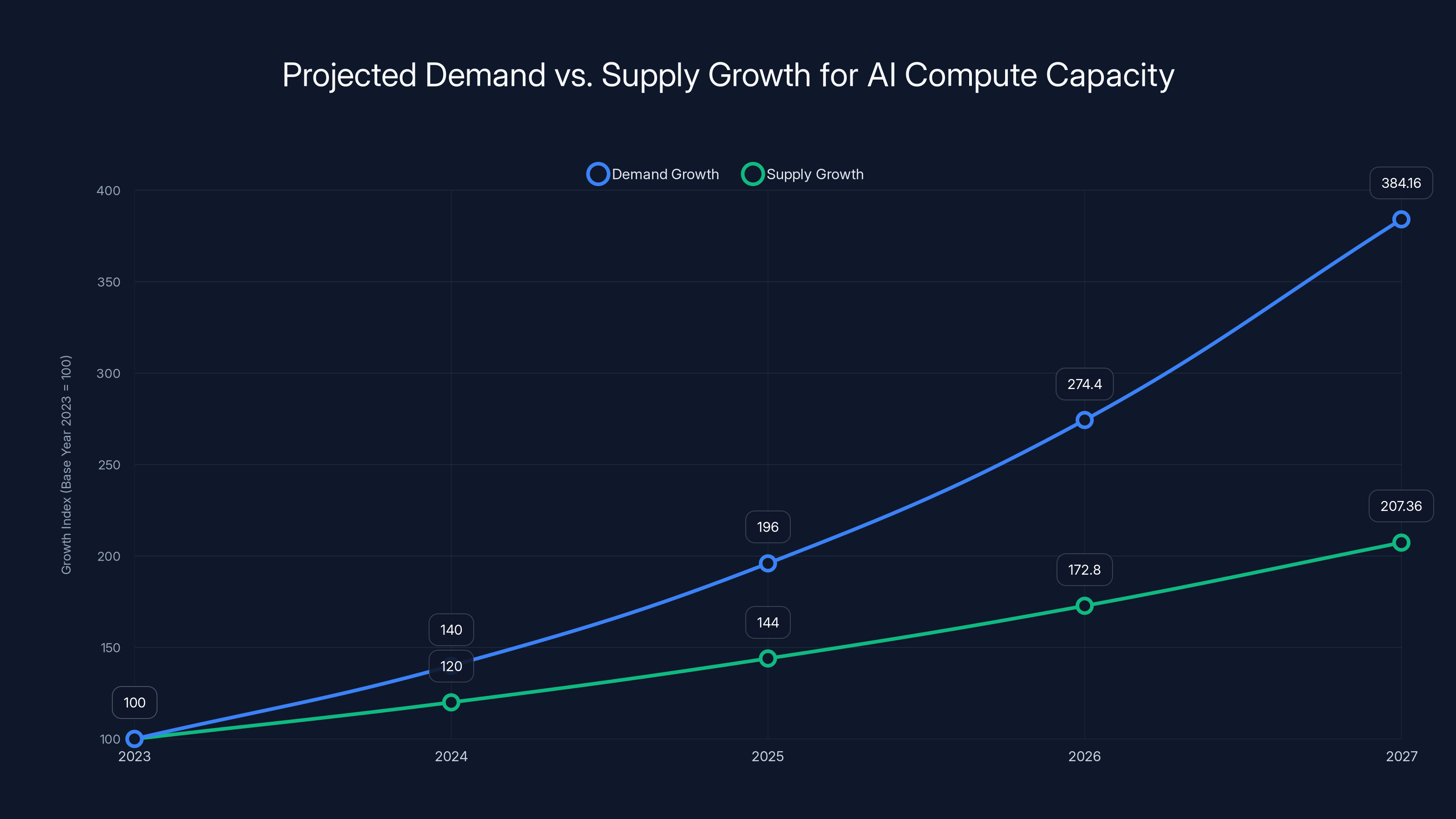

Estimated data shows demand for AI compute capacity growing significantly faster than supply, potentially leading to acute shortages by 2027.

The 20% Reality: Why Most European Data Centers Can't Handle AI

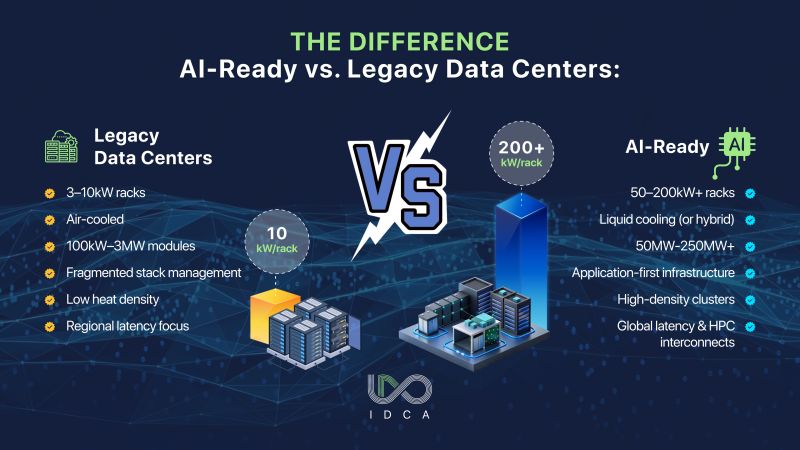

Let's start with the technical reality. When data centers were designed 10-15 years ago, nobody was running billions of parameters through neural networks simultaneously. The typical workload was web servers, databases, occasional storage, and some middleware. These weren't resource-intensive from a power perspective.

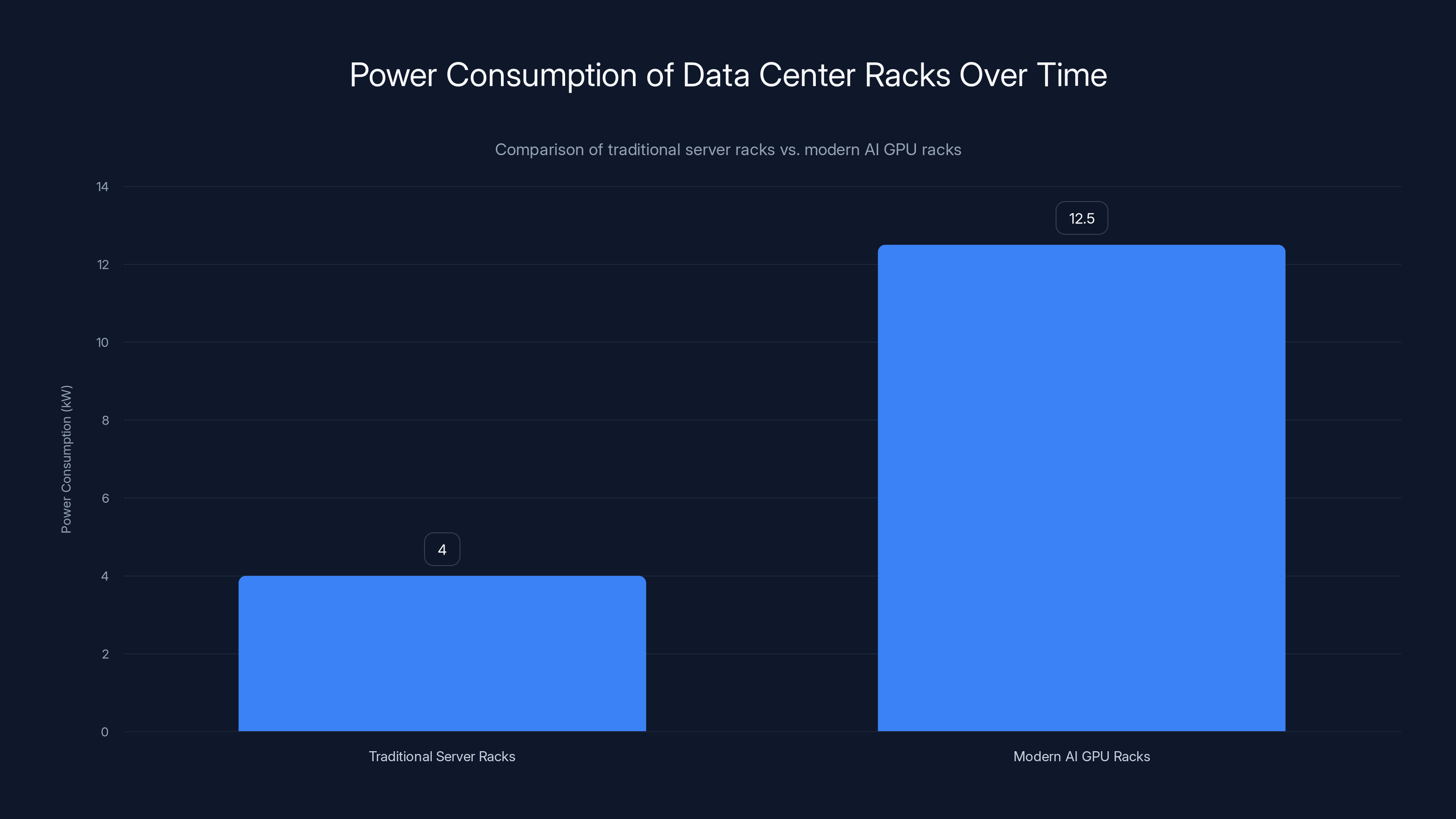

AI changed the game overnight. A single modern GPU rack can consume 10-15 kilowatts of power or more. Compare that to traditional server racks from a decade ago, which typically consumed 3-5 kilowatts. That's roughly a 3x increase in power density in the same physical footprint, and more at the extremes.

But power consumption is only half the problem. The other half is heat dissipation.

When you're running GPUs at full capacity for hours, they generate enormous amounts of heat. A single NVIDIA H100 GPU can dissipate up to about 700 watts as heat. String together a rack of them, and you're creating a localized heat furnace. Legacy cooling systems, designed when data centers operated at lower power densities, simply can't keep up. They were built around assumptions about ambient temperature, airflow patterns, and heat distribution that no longer apply.

The result? Data center operators face three choices:

Option One: Accept lower utilization. Run the GPUs at reduced capacity, which defeats the purpose of having them.

Option Two: Massive capital expenditure. Rip out the old power distribution infrastructure, install new electrical systems rated for 20+ kilowatts per rack, upgrade cooling systems (which might mean replacing entire sections of the facility), and pray the building's structure can handle it.

Option Three: Abandon the facility. Start from scratch with a purpose-built AI data center.

For most operators, Option Two is economically unviable. We're talking about investments of hundreds of millions of euros for a single facility. For smaller regional operators, that's an existential question.

Power Density: The Killer Metric

Power density is measured in watts per square meter (or watts per rack, or watts per cabinet). This single metric determines whether a data center can run modern AI infrastructure.

Traditional data centers operate at around 5-10 kilowatts per rack. They're happy with that. They planned their electrical infrastructure, cooling capacity, and facilities management around that assumption.

AI-ready data centers need to support 15-25+ kilowatts per rack without breaking a sweat. Some cutting-edge facilities are being built to handle 30 kilowatts per rack or more.

What's the practical impact? A data center with 500 racks designed for traditional cloud workloads can theoretically be retrofitted for AI, but the math gets ugly fast:

- Traditional power budget: 500 racks × 7.5 kW average = 3.75 megawatts

- AI power budget: 500 racks × 20 kW average = 10 megawatts

You've just increased your power requirements by 2.67x. Your electrical infrastructure, transformers, distribution panels, backup generators, and fuel storage all need to scale. Your cooling systems need to be completely redesigned. Your facility might not even be able to draw that much power from the grid—grid connection requests get queued and delayed in many European countries.
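The same arithmetic as a small Python sketch, in case you want to plug in your own rack counts. The function name is illustrative, and the figures are the article's own averages rather than measurements from any particular facility.

```python
def facility_power_mw(racks: int, kw_per_rack: float) -> float:
    """Total rack power draw in megawatts (racks x average kW per rack)."""
    return racks * kw_per_rack / 1000.0


# Figures from the example above: 500 racks, 7.5 kW vs. 20 kW average.
traditional_mw = facility_power_mw(500, 7.5)
ai_mw = facility_power_mw(500, 20.0)
increase = ai_mw / traditional_mw

print(f"traditional: {traditional_mw:.2f} MW")
print(f"AI-ready:    {ai_mw:.2f} MW")
print(f"increase:    {increase:.2f}x")
```

Note that this covers rack power only. Cooling and power-distribution overhead come on top, so the grid connection has to be sized larger than these figures.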

For a facility that's 20 years old and has already been amortized, spending $200-400 million on upgrades doesn't make financial sense, especially if you're not guaranteed enough AI workload demand to justify it.

Cooling: The Hidden Infrastructure Nightmare

Power is one thing. Heat is another.

Traditional data center cooling was based on the hot aisle/cold aisle layout. Racks are arranged in rows so that server fronts face each other across cold aisles (where cool air is supplied) and server backs face each other across hot aisles (where hot air exhausts). Air conditioning systems cool the air, it gets pushed into the cold aisle, servers draw it in, and hot air exhausts into the hot aisle.

This works great at 10 kW per rack. It breaks down at 20+ kW per rack.

Why? Because traditional cooling systems can't push enough cold air fast enough. The air in the hot aisle gets too hot too quickly. You end up with temperature stratification, hot spots, and thermal runaway conditions where certain areas of the rack experience temperatures well above safe operating ranges.

Modern AI data centers solve this with direct-to-chip liquid cooling. Instead of relying on air circulation, cold liquid (usually just water with some additives) flows directly to the heat-producing components. It's vastly more efficient.

But retrofitting a data center to support liquid cooling? That's essentially a complete rebuild. You're replacing the entire cooling distribution infrastructure, installing new loop systems, adding monitoring and redundancy for liquid systems (because a leak is catastrophic), and retraining your operations team.

Many European data centers simply don't have the space or structural capacity to support new cooling infrastructure. Liquid cooling loops need dedicated infrastructure. In a tightly packed facility built decades ago, there might not be room.

Estimated data shows AI readiness in Europe could reach 65% by 2030, with capacity increasing steadily. This suggests gradual improvements in infrastructure, though challenges remain.

The Scale of the Upgrade Challenge: Why Retrofitting Is Almost Impossible

Let's talk numbers, because this is where the infrastructure crisis becomes clear.

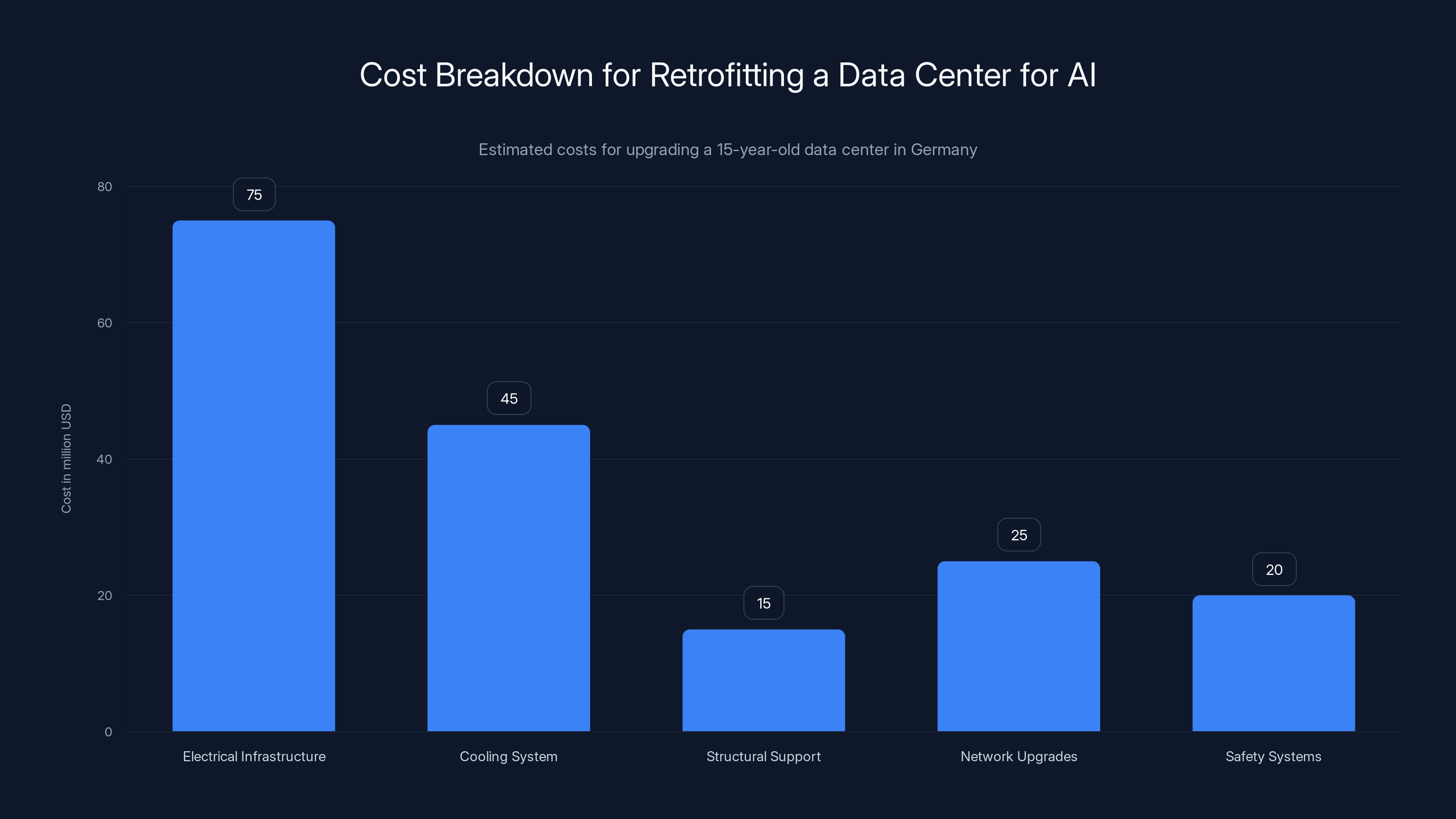

Suppose you're a data center operator with a major facility in Germany. You've got 2,000 racks, it's been operating for 15 years, and it's doing fine for traditional cloud workloads. Now your customers are demanding AI capacity. What do you do?

You commission an engineering study. It comes back with a sobering assessment: to make your facility AI-ready, you need:

- New electrical infrastructure: Replace transformers, upgrade distribution panels, potentially negotiate new power draw allocation with the utility grid. Cost: $50-100 million.

- Cooling system redesign: Install new chillers, loop systems, and potentially move to liquid cooling. Cost: $30-60 million.

- Reinforced structural support: Modern racks with heavy GPU hardware weigh more. Verify or upgrade floor loading. Cost: $10-20 million.

- Network infrastructure upgrades: AI clusters need lower-latency interconnects (NVIDIA's NVLink, InfiniBand, etc.). Rewire the facility. Cost: $20-30 million.

- Fire suppression and safety systems: Liquid cooling requires different fire suppression. Update backup generators and UPS systems. Cost: $15-25 million.

Total upgrade cost: $125-235 million for a facility that might have a residual economic life of only 10-15 years. Some of these facilities were built 20+ years ago, meaning they might have only 5 years of economically viable remaining life.
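To sanity-check that total and see what it means per rack, here is a minimal sketch. The line-item ranges are the article's estimates for the hypothetical 2,000-rack German facility; the dictionary keys and function names are made up for illustration.

```python
# Cost ranges in millions of USD, copied from the line items above.
RETROFIT_ITEMS = {
    "electrical": (50, 100),
    "cooling": (30, 60),
    "structural": (10, 20),
    "network": (20, 30),
    "fire_safety_and_backup": (15, 25),
}


def total_range(items: dict) -> tuple:
    """Sum the low ends and the high ends of every cost range."""
    low = sum(lo for lo, _ in items.values())
    high = sum(hi for _, hi in items.values())
    return low, high


def cost_per_rack(total_musd: float, racks: int) -> float:
    """Convert a total in millions of USD into USD per rack."""
    return total_musd * 1_000_000 / racks


low, high = total_range(RETROFIT_ITEMS)
print(f"total: ${low}-{high} million")
print(f"per rack: ${cost_per_rack(low, 2000):,.0f} to ${cost_per_rack(high, 2000):,.0f}")
```

For the 2,000-rack example, that works out to tens of thousands of dollars per rack before a single GPU is purchased.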

The ROI calculation doesn't work. You'd need to charge premium prices for AI capacity, run at extremely high utilization rates, and assume no competition or oversupply. In competitive European markets, that's not realistic.

Real Numbers from Real Facilities

Some European operators have actually attempted retrofits. The costs are staggering.

One major operator in the Netherlands spent approximately **

Was it worth it? For them, yes, because they had strong customer demand and the financial backing to absorb the costs. For most mid-sized operators? It's a non-starter.

The alternative—building new AI-dedicated facilities—is actually cheaper per-rack in many cases, though it requires capital allocation upfront and takes time to plan, permit, and construct.

The Supply Chain Bottleneck: Why Building Isn't Fast Enough

Here's the cruel irony: even if every data center operator in Europe decided tomorrow to build new AI-ready facilities, they couldn't do it fast enough to meet demand.

The issue isn't just capital. It's the supply chain infrastructure itself.

Building a new data center requires:

- Land acquisition and permitting (6-12 months)

- Environmental impact assessments (varies by country, 3-9 months)

- Grid connection requests and negotiations with utility providers (6-18 months, sometimes longer)

- Construction (12-24 months)

- Procurement of equipment (servers, racks, cooling systems, networking gear)

- Hiring and training staff (ongoing)

Just the grid connection process is a killer. In countries like Germany, Belgium, and the Netherlands, the electrical grid is already heavily loaded. If your proposed data center needs 50+ megawatts of power, you're not just plugging into an outlet. You're requiring utility companies to invest in new distribution infrastructure in your region.

They'll do it, but it takes time. Queue delays for grid connections are now measured in years, not months, in many parts of Europe.

Meanwhile, 86% of industry professionals report that supply chain volatility is now structural—not a temporary pandemic-related issue, but a permanent feature of the market. Chip shortages persist. Manufacturing capacity for GPUs and AI accelerators is constrained. Cooling equipment manufacturers are backlogged.

So the timeline for bringing new AI data center capacity online, even with unlimited capital, looks something like:

- Today to 12 months: Permitting, land deals, grid connection requests

- 12-24 months: Construction begins, long-lead equipment ordered

- 24-36 months: Facility coming online, staff training, initial customer pilots

- 36-48 months: Full capacity, optimized operations

Demand for AI computing capacity is growing in months, not years. That's the mismatch.

The Electricity Grid Problem

Power availability is now the primary factor when data center operators choose where to build new facilities. This is a shift from just a few years ago, when considerations like proximity to customers, real estate costs, and local talent pools dominated decisions.

Why? Because there's only so much electricity available, and major European countries have committed to electrification (electric vehicles, electric heating, industry) and renewable energy transition simultaneously. This creates competing demand for limited power.

Germany, for example, has been decommissioning nuclear power plants while expanding renewable capacity. The transition is happening, but there's a lag. Data centers wanting to operate at 50+ megawatts require coordination with regional and national grid operators, and getting priority is increasingly difficult.

France and Switzerland, with nuclear baseload power, have advantages. They can more easily allocate power to data centers without destabilizing the grid. Nordic countries (Norway, Sweden, Finland, Denmark) have abundant hydroelectric power and can draw from neighboring grids.

But countries in Central Europe—Poland, Czech Republic, Hungary—face tighter power situations. Their grid operators are more cautious about allocating massive power draws to data centers.

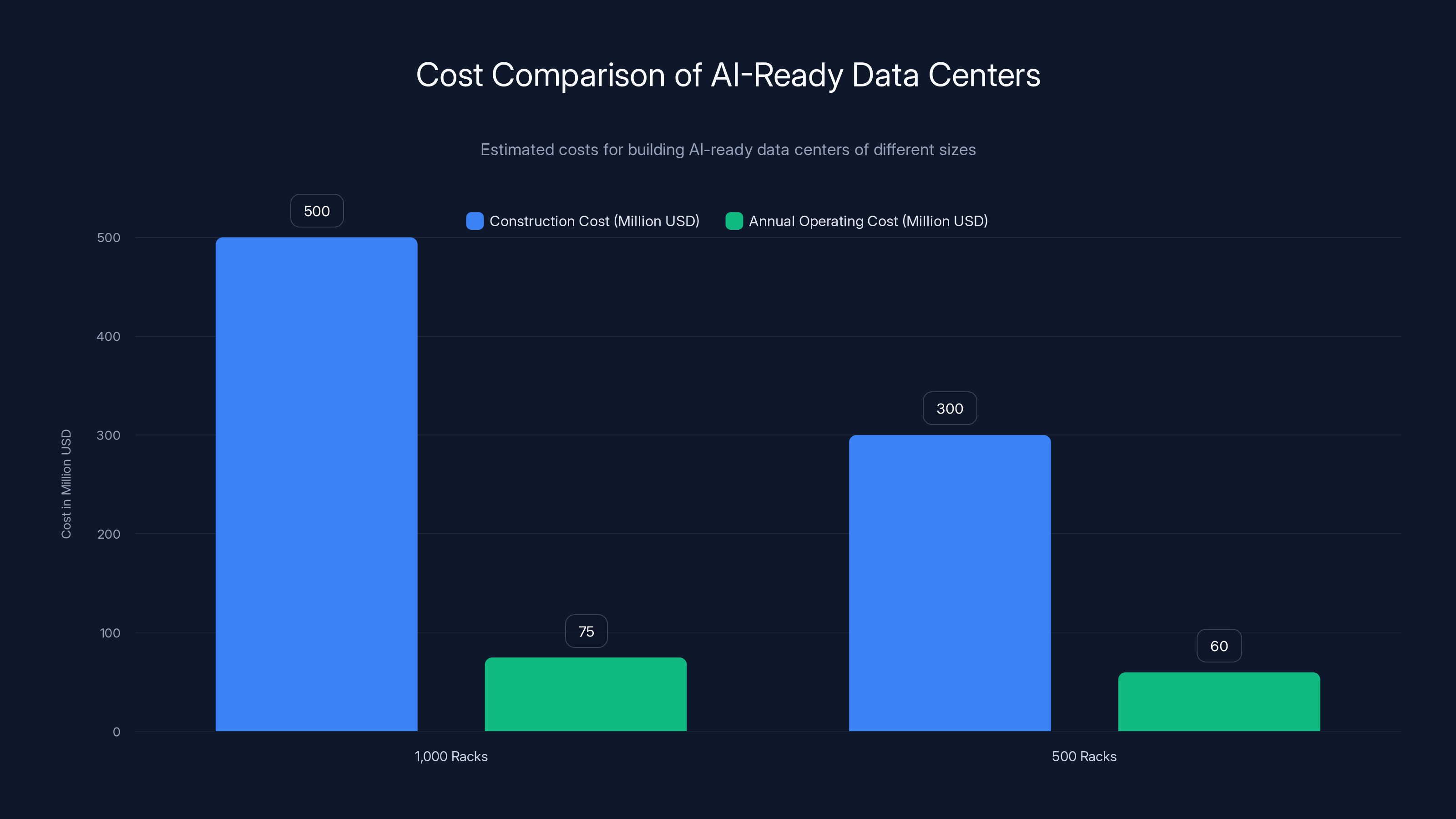

Building a 1,000-rack AI-ready data center costs approximately

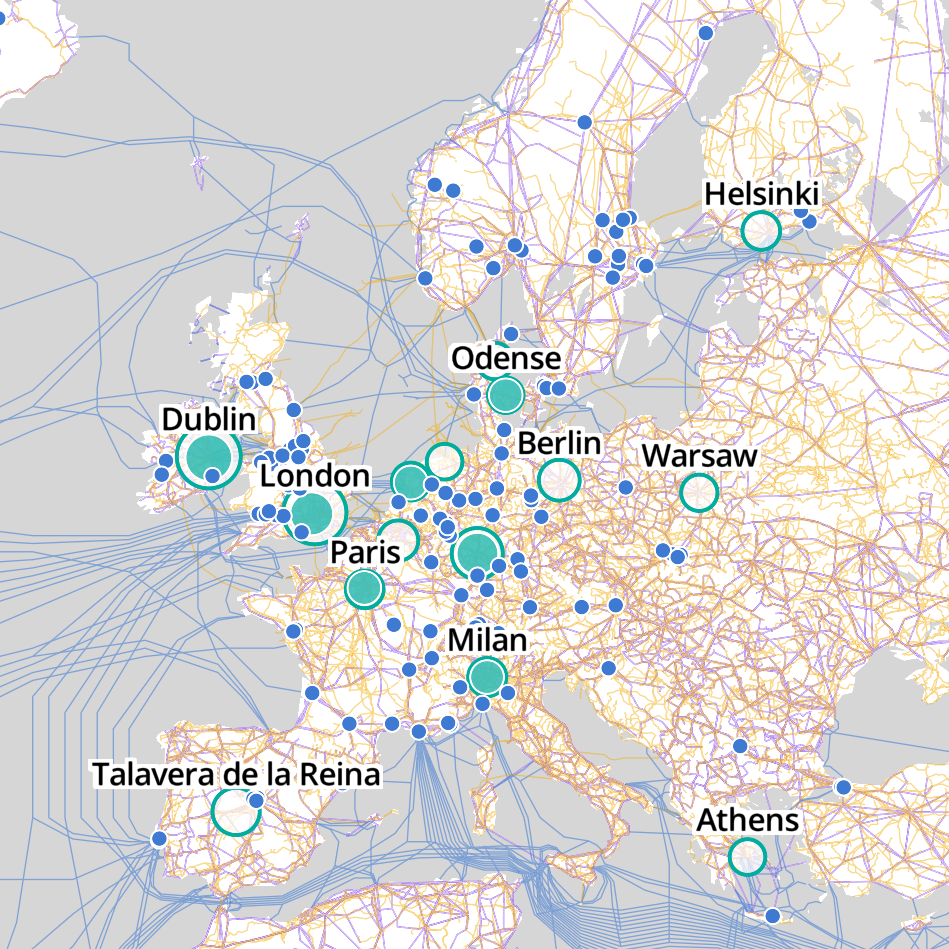

Geographic Winners and Losers: Where New AI Data Centers Will Actually Get Built

Not all of Europe is equal when it comes to AI data center infrastructure.

The geographic winners are becoming clear:

Nordic Dominance: The Climate Advantage

The Nordic countries—Sweden, Norway, Finland, Denmark—have an outsized advantage. Their cool climate reduces cooling costs dramatically. Air-based cooling (which is cheaper than liquid cooling) works much better when ambient temperatures are low.

During winter, data centers in Stockholm or Oslo can use free cooling—outside air is cold enough that you can dump data center heat directly into the atmosphere through economizer units. Even during summer, ambient temperatures are mild compared to Central Europe.

The financial advantage? A data center in the Nordics might spend 10-15% of operational costs on cooling. The same facility in Spain or southern France might spend 20-25% on cooling. Over a decade, that's hundreds of millions of dollars in difference.
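A rough way to test the "hundreds of millions" claim is to hold non-cooling costs equal and vary only the cooling share. The sketch below does that. The $100 million annual non-cooling figure is a purely hypothetical assumption, and the shares are the midpoints of the ranges above.

```python
def annual_cooling_cost(non_cooling_opex: float, cooling_share: float) -> float:
    """Cooling spend when cooling makes up `cooling_share` of total opex.

    If total = non_cooling + cooling and cooling = share * total,
    then total = non_cooling / (1 - share).
    """
    if not 0 <= cooling_share < 1:
        raise ValueError("cooling_share must be in [0, 1)")
    total = non_cooling_opex / (1.0 - cooling_share)
    return total * cooling_share


# Hypothetical assumption: $100M per year of non-cooling operating cost.
ASSUMED_NON_COOLING_OPEX = 100e6

nordic = annual_cooling_cost(ASSUMED_NON_COOLING_OPEX, 0.125)    # midpoint of 10-15%
southern = annual_cooling_cost(ASSUMED_NON_COOLING_OPEX, 0.225)  # midpoint of 20-25%
decade_gap = (southern - nordic) * 10

print(f"Nordic cooling:   ${nordic / 1e6:.1f}M per year")
print(f"Southern cooling: ${southern / 1e6:.1f}M per year")
print(f"Ten-year gap:     ${decade_gap / 1e6:.0f}M")
```

Under that assumption the ten-year gap lands in the low hundreds of millions, so the claim holds for very large facilities and scales down proportionally for smaller ones.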

Plus, the Nordics have abundant hydroelectric power (Norway, Sweden) and wind power (Denmark), with strong renewable commitments and grid capacity to support major new loads.

It's no surprise that some of the largest new AI data center projects in Europe are being planned for the Nordics. Tech companies, from hyperscalers to AI startups, are looking at Swedish and Norwegian sites.

Alpine Advantage: Switzerland and Austria

Switzerland and Austria also have climate and power advantages. Alpine locations mean cooler temperatures and abundant hydroelectric capacity. Both countries have stable grids and strong industrial infrastructure.

Switzerland's issue is cost—real estate and labor are expensive. But for premium AI services where margin justifies capital expenditure, it's viable.

Austria is seeing growing interest as companies balance cost with climate and power advantages.

Central Europe's Challenge

Countries like Germany, Poland, and Czech Republic face headwinds. Germany has strong industrial demand for electricity (automotive, manufacturing), plus the power transition is creating tight grid conditions. New data center capacity will get built—there's too much demand—but it won't be easy.

Warsaw and Prague are increasingly attractive for data centers because they're cheaper and have good connectivity, but power availability remains a constraint.

The Middle East as an Alternative

The report specifically highlighted the Middle East as a viable alternative for AI data center expansion. Why? Several reasons:

- Abundant energy capacity: Oil and gas-producing countries have extensive power infrastructure and can allocate capacity without the political complications of the energy transition.

- Land availability: Space isn't a constraint. You can build a massive facility for less capital per square meter than in Europe.

- Lower labor and construction costs: Building and staffing is significantly cheaper.

- Political will: Governments actively court data center investment as part of economic diversification.

The downside? Talent acquisition, geographic distance from most European customers, and potential latency issues for real-time AI applications.

But for training models, batch processing, and non-latency-critical workloads, the Middle East is becoming increasingly viable for European companies.

Sustainability Expectations: The 89% Commitment to Renewables

Here's a number that surprised many observers: 89% of data center professionals expect most energy (approximately 90%) to come from renewables by 2035.

That's a massive commitment. And it adds another layer of complexity to the infrastructure challenge.

Renewable energy is variable. Solar and wind output fluctuates based on weather and time of day. Data centers, especially those running AI workloads, need consistent, reliable power. The solution is a combination of:

- Direct power purchase agreements (PPAs) with renewable generators, locking in supply

- Battery storage systems to buffer intermittency

- Geographic distribution so you can shift workloads between regions based on renewable availability

- Demand flexibility where workloads that can tolerate slight delays run during high renewable output periods
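The demand-flexibility idea can be sketched in a few lines: given an hourly forecast of renewable share, run a deferrable job in the greenest hours. This is a toy illustration only. The function name and the forecast values are made up, and a real scheduler would also account for deadlines, job contiguity, and price.

```python
def pick_greenest_hours(renewable_share_by_hour: list, hours_needed: int) -> list:
    """Return the indices of the hours with the highest forecast renewable
    share, in chronological order."""
    if hours_needed > len(renewable_share_by_hour):
        raise ValueError("job needs more hours than the forecast covers")
    ranked = sorted(
        range(len(renewable_share_by_hour)),
        key=lambda hour: renewable_share_by_hour[hour],
        reverse=True,
    )
    return sorted(ranked[:hours_needed])


# Made-up forecast: fraction of grid power from renewables for 8 hours.
forecast = [0.30, 0.25, 0.40, 0.70, 0.85, 0.80, 0.55, 0.35]

# A 3-hour batch job that can tolerate delay is placed in hours 3, 4, and 5.
print(pick_greenest_hours(forecast, 3))
```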

The infrastructure investment required to support this is substantial. Battery systems for a large data center can cost tens of millions of dollars. Grid integration technology requires continuous investment.

For legacy facilities, adding renewable energy commitment essentially requires the same retrofit considerations as AI readiness. For new facilities, it's built in from the start, which is one reason new construction is becoming more economically attractive despite higher upfront costs.

This sustainability commitment is partly driven by customer demand (large tech companies want to advertise carbon-neutral operations) and partly by regulation. The EU's Energy Efficiency Directive and other regulations are tightening efficiency requirements.

Modern AI GPU racks consume approximately 3-5 times more power than traditional server racks, highlighting the increased power density challenges faced by data centers.

Demand Growth: Why 93% of Experts Expect Capacity Shortages

Let's look at the demand side of the equation.

93% of surveyed industry professionals expect AI-driven demand to increase significantly in the next 12 months. This isn't speculation—companies are already requesting capacity. Startups building generative AI applications need GPU clusters. Enterprises are training large language models. Research institutions are scaling AI projects.

Where's this demand coming from?

- Generative AI applications: Companies building ChatGPT-like interfaces, code generation tools, and creative AI assistants need reliable, high-capacity compute infrastructure.

- Enterprise AI adoption: Major corporations are embedding AI across their operations, including recommendation systems, fraud detection, customer service automation, and predictive maintenance. Each requires significant compute capacity.

- Research and development: Universities, research labs, and corporate R&D teams are scaling AI research projects.

- Real-time AI inference: Edge computing and real-time AI applications (autonomous vehicles, robotics, real-time translation) require distributed compute capacity.

- Regulatory compliance: Regulations like GDPR restrict transfers of personal data outside the EU, which pushes many companies to keep data in-region and drives demand for European compute capacity specifically.

The challenge is that demand is growing faster than supply. Industry models suggest:

- Demand growth: 40-50% annually through 2027

- Supply growth: 20-25% annually through 2027

The gap widens each year. By 2026-2027, the shortage could be acute.
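Compounding is what makes the gap widen. The sketch below uses the midpoints of the two growth ranges above and indexes both demand and supply to 100 in the starting year. That shared starting index is a simplifying assumption, not a claim that the two are balanced today.

```python
def project(start: float, annual_growth: float, years: int) -> list[float]:
    """Compound `start` at `annual_growth` per year, returning years 0..years."""
    return [start * (1 + annual_growth) ** year for year in range(years + 1)]


# Midpoints of the ranges above: 45% demand growth, 22.5% supply growth.
demand = project(100.0, 0.45, 3)
supply = project(100.0, 0.225, 3)

for year, (d, s) in enumerate(zip(demand, supply)):
    print(f"year {year}: demand {d:.0f}, supply {s:.0f}, demand/supply {d / s:.2f}")
```

After two years of compounding at these rates, demand is roughly 1.4 times supply relative to the starting point, and the ratio keeps growing every year the rates persist.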

The Pricing Implications

When supply is constrained relative to demand, prices rise. This is basic economics.

For companies needing AI compute capacity in Europe:

- Today (2025): GPU/accelerator capacity costs are at historical highs, but availability is still possible with planning

- 2026: Expect 30-50% price increases as capacity tightens

- 2027: If new capacity doesn't come online quickly, prices could increase another 50%+

- 2028+: Assuming new facilities launch, prices stabilize or decline
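Because those increases stack, the cumulative effect is larger than either number suggests on its own. Here is a minimal sketch that indexes 2025 prices to 100 and applies the percentages above; the function name is illustrative, and since the 2027 figure is "50%+", the results for that year are a floor.

```python
def price_index(base: float, yearly_increases: list[float]) -> list[float]:
    """Apply successive fractional increases to a base price index."""
    prices = [base]
    for increase in yearly_increases:
        prices.append(prices[-1] * (1 + increase))
    return prices


# 2025 -> 2026 -> 2027, with 2025 indexed to 100.
low_case = price_index(100.0, [0.30, 0.50])   # 30% in 2026, then 50% in 2027
high_case = price_index(100.0, [0.50, 0.50])  # 50% in 2026, then 50% in 2027

print("low case: ", [round(p) for p in low_case])
print("high case:", [round(p) for p in high_case])
```

In other words, a budget set at 2025 prices could need to roughly double by 2027 if both increases materialize.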

For a company planning a multi-year AI project, this creates a difficult trade-off:

- Buy capacity now: Lock in current prices but pay for unused capacity if demand doesn't materialize

- Wait for capacity: Risk higher prices later and potential project delays

- Hybrid approach: Commit to some baseload capacity now, plus flexible spot capacity for variable needs

Smart companies are already locking in long-term contracts with data center operators who have roadmaps for new AI capacity. This gives them price certainty and supply security.

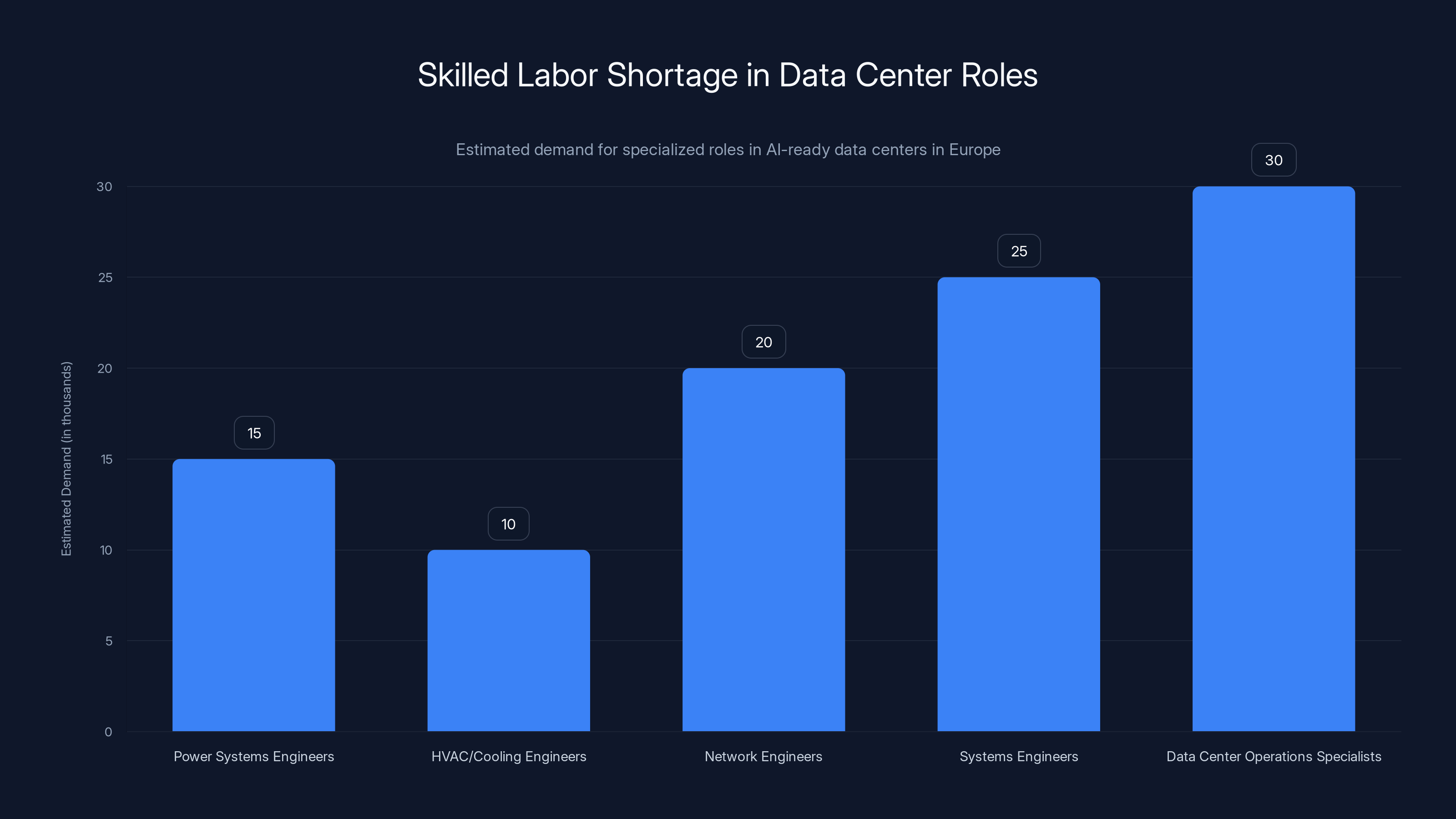

The Skilled Labor Shortage: The Workforce Problem Nobody Talks About

Technical infrastructure isn't the only constraint. Skilled labor is becoming a severe bottleneck.

Building and operating an AI-ready data center requires specialized expertise:

- Power systems engineers (electrical infrastructure, UPS systems, generators)

- HVAC/cooling engineers (advanced cooling system design and troubleshooting)

- Network engineers (high-performance interconnects like NVIDIA NVLink, InfiniBand)

- Systems engineers (GPU cluster management, CUDA optimization)

- Data center operations specialists (monitoring, troubleshooting, maintaining 99.99%+ uptime)

These aren't generic IT jobs. They require specialized training and experience. And Europe has a talent gap.

Why? Partly because the U.S. built out data center infrastructure earlier, creating a larger experienced workforce. Partly because smaller countries don't produce enough specialized graduates. Partly because compensation in Silicon Valley and the U.S. cloud provider hubs exceeds European salaries.

The result? Data center operators building new facilities in Europe face recruitment challenges. Some are solving this by:

- Importing talent from other countries (visa processes can be slow)

- Investing in training programs (takes 6-12 months to develop skilled staff)

- Partnering with equipment vendors for operational support (adds cost)

This labor constraint extends timelines and increases costs for new facility development. It's not a showstopper, but it's a real friction factor that compounds the infrastructure challenges.

Estimated costs for retrofitting a data center range from

Supply Chain Volatility: The "Structural" Problem

When 86% of industry professionals say supply chain volatility is structural, not temporary, that's a powerful signal. This isn't about pandemic disruptions anymore. This is about the fundamental reality of modern manufacturing and logistics.

Specific constraints affecting European data center buildout:

Semiconductor Supply

GPUs, CPUs, and other semiconductors are made by a handful of manufacturers (TSMC, Samsung, Intel). Demand far exceeds supply. NVIDIA's H100 and newer GPUs have multi-month lead times. AMD's MI300X accelerators are similarly constrained.

Building a 10,000-GPU data center facility might require waiting 6-12 months just for the chips. During that time, prices can fluctuate, and competitors might outbid you for available inventory.

Cooling Equipment

Liquid cooling solutions are increasingly necessary but not yet commoditized. Manufacturers like Asetek, LiquidCool, and others have limited production capacity. Custom solutions require 6-9 month development and manufacturing timelines.

Electrical Equipment

Transformers, distribution gear, UPS systems, and generators are all experiencing lead time extensions. Utility companies are investing in grid upgrades (renewable integration, EV charging infrastructure) and that's absorbing supply.

Construction Materials and Labor

Steel, cable, and other materials are subject to commodity price volatility. Labor rates are increasing as the construction industry competes for workers across multiple sectors (energy transition, infrastructure, housing).

The cumulative effect? Building a new data center facility takes longer and costs more than plans developed even 12 months ago. Operators must include contingency buffers in their timelines—often 20-30% padding—to account for supply chain uncertainty.
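To see what that padding does to a schedule, here is a small sketch that applies the 20-30% buffer to the 36-48 month path to full capacity quoted earlier in the article. The function name is illustrative.

```python
def padded_months(base_months: float, contingency: float) -> float:
    """Schedule length after adding a fractional contingency buffer."""
    return base_months * (1 + contingency)


for base in (36, 48):
    low = padded_months(base, 0.20)
    high = padded_months(base, 0.30)
    print(f"{base} months planned -> {low:.1f} to {high:.1f} months with padding")
```

A nominal four-year build becomes closer to five once the buffer is included.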

Regulatory and Sustainability Frameworks: New Requirements Adding Complexity

Europe isn't just dealing with technical and supply chain challenges. Regulatory frameworks are tightening.

Key regulations affecting data center development:

The Energy Efficiency Directive

This EU directive establishes minimum energy efficiency standards for data centers. Requirements include:

- Power Usage Effectiveness (PUE) targets (PUE is total facility energy divided by the energy delivered to IT equipment, so values closer to 1.0 mean less overhead for cooling and power distribution)

- Temperature and humidity monitoring requirements

- Water efficiency standards

- Waste heat recovery obligations

For new facilities, these requirements push toward advanced cooling (liquid cooling, economizers) and monitoring infrastructure. For legacy facilities, compliance often requires the same upgrades needed for AI readiness.
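Since PUE drives much of this, it helps to see how it is computed. PUE is total facility energy divided by IT equipment energy. The sketch below uses made-up meter readings, and the helper names are illustrative.

```python
def pue(total_facility_kwh: float, it_equipment_kwh: float) -> float:
    """Power Usage Effectiveness: total facility energy / IT equipment energy."""
    if it_equipment_kwh <= 0:
        raise ValueError("IT equipment energy must be positive")
    return total_facility_kwh / it_equipment_kwh


def overhead_share(pue_value: float) -> float:
    """Fraction of total energy that does not reach IT equipment."""
    return 1.0 - 1.0 / pue_value


# Made-up readings: 15,000 kWh drawn by the facility, 10,000 kWh used by IT gear.
example = pue(15_000, 10_000)
print(f"PUE: {example:.2f}")
print(f"overhead share: {overhead_share(example):.0%}")
```

A PUE of 1.5 means a third of the energy bill goes to cooling, power conversion, and other overhead rather than computation.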

Data Residency and Sovereignty Regulations

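GDPR restricts transfers of personal data outside the EU unless specific safeguards are in place, and emerging regulations like the EU Data Act add further conditions on cross-border data access. In practice, many companies respond by keeping EU data in EU facilities. This gives European data centers a regulatory captive demand base: for certain workloads, using data centers outside Europe is legally difficult or impractical.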

This sounds positive for European data center operators, but it also means European capacity is spoken for. If supply is constrained, European companies might find it harder to secure capacity because of competing demand from other European companies facing the same regulatory requirements.

Green Energy Mandates

Several countries (Sweden, Denmark, Norway) have passed or are considering laws requiring data centers to source renewable energy above certain thresholds. This isn't a bad thing from an environmental perspective, but it increases operational complexity and cost.

Estimated data shows a high demand for specialized roles in AI-ready data centers, with data center operations specialists being the most sought after. This shortage is a significant challenge for European facilities.

The Path Forward: What's Actually Being Built

Despite all these challenges, new AI data center capacity is being developed across Europe. Here's what's actually in the pipeline:

New Major Projects

Several major operators and cloud providers have announced new AI-dedicated facilities:

- Scandinavian projects: Multiple announcements for large-scale facilities in Sweden, Norway, and Denmark, leveraging climate and power advantages

- Germany: Despite challenges, significant investments in AI-ready facilities, particularly near Frankfurt and Cologne

- Netherlands: Amsterdam and Rotterdam are seeing new builds, with strong interconnection to other European facilities

- France: Projects leveraging nuclear power in eastern regions

- Poland and Czech Republic: Lower-cost alternatives attracting investment despite power constraints

Timeframes

Most projects announced in 2024 are targeting initial capacity in:

- 2025-2026: First-phase openings (50% capacity)

- 2027-2028: Full capacity, assuming construction stays on schedule

None of these will begin to solve the supply-demand gap until 2027 at the earliest.

Hybrid and Distributed Strategies

Companies needing AI capacity now are adopting diverse approaches:

- Multi-cloud strategy: Using AWS, Google Cloud, and Azure data centers that already have AI capacity, even if not in the ideal geography

- Regional partners: Contracting with European cloud providers building capacity

- Geographic distribution: Placing different workload types in different regions, and accepting lower performance where latency matters less

- On-premises AI infrastructure: For large enterprises, building private data centers or co-location arrangements

Strategic Implications for Companies and Policymakers

For AI Companies and Enterprises

The infrastructure reality demands pragmatism:

- Lock in capacity early: If you need substantial compute capacity, don't wait. Secure contracts now while availability exists.

- Diversify geography: Don't depend on a single data center operator or region. Build workload distribution strategies.

- Optimize for efficiency: Use techniques like mixed-precision training, quantization, and other optimization methods to reduce compute requirements.

- Consider alternatives: Cloud GPUs (AWS EC2, Google Cloud, Azure) offer flexibility and can be cost-effective if you're not in a geographic bottleneck.

- Plan for higher costs: Budget for 30-50% increases in compute costs through 2027.

For Data Center Operators

- Upgrade or build: The middle ground (struggling with legacy infrastructure while hoping capacity materializes) is not viable. Commit to either major upgrades or new facilities.

- Secure power contracts: The biggest differentiator for new projects is reliable, affordable power. Lock in renewable energy PPAs.

- Talent acquisition: Invest in hiring and training now. Competition for skilled staff will only increase.

- Geographic strategy: Nordic locations have advantages, but there's opportunity in other regions for operators who commit to infrastructure quality.

For Policymakers

- Streamline permitting: Fast-track environmental and construction permits for AI data center projects. The EU's economic competitiveness depends on infrastructure parity.

- Grid investment: Coordinate with utility companies to prioritize grid upgrades in regions where data center buildout makes sense.

- Talent development: Fund training programs for data center operations and specialized engineering fields.

- Incentivize sustainability: Use tax incentives or grants to encourage new facilities that meet or exceed renewable energy commitments.

- Regional balance: Avoid concentrating all new capacity in a few locations. Distributed infrastructure is more resilient.

The 70% by 2030 Projection: Is It Realistic?

The industry projection that 70% of European data centers will be AI-ready by 2030 is ambitious. Let's stress-test it.

Assuming approximately 7,000-8,000 data centers across Europe and the Middle East, and taking the low end of 7,000 for the arithmetic:

- Current state (2025): ~1,400 are AI-ready (20%)

- Target (2030): ~4,900 should be AI-ready (70%)

- Implied new capacity: ~3,500 facilities need to be upgraded or built

- Average per year: ~700 facilities over five years
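Because the facility count is itself a range, it is worth running the stress test at both ends. This sketch assumes a fixed total number of facilities, a 20% starting share, a 70% target, and five years to get there. The function name is illustrative, and holding the total constant is a simplification.

```python
def readiness_gap(total: int, current_share: float = 0.20,
                  target_share: float = 0.70, years: int = 5) -> tuple:
    """Return (AI-ready now, AI-ready at target, facilities to add, per year)."""
    current = total * current_share
    target = total * target_share
    to_add = target - current
    return current, target, to_add, to_add / years


for total in (7000, 8000):
    current, target, to_add, per_year = readiness_gap(total)
    print(f"{total} facilities: {current:.0f} ready now, {target:.0f} at 70%, "
          f"{to_add:.0f} to add, about {per_year:.0f} per year")
```

Across the range, hitting 70% means converting or building several hundred facilities every year for five years.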

That seems feasible on the surface. But the math isn't that simple:

- Not all facilities need to be AI-ready: Many are optimized for specific workloads (backup storage, archival, edge computing) that don't require AI capability. The relevant denominator might be smaller.

- Growth during the period: New facilities will be built that are AI-ready from day one. Retiring legacy facilities reduces the count.

- Partial upgrades: Some facilities might upgrade partially, offering limited AI capacity alongside traditional workloads.

Adjusting for these factors, the 70% target is plausible but will require:

- Massive capital investment ($100+ billion across Europe)

- Successful solution to the power/grid constraint

- Sustained growth in AI demand (if demand flattens, the economic case for upgrades weakens)

- Breakthrough cooling technologies or novel approaches

My honest assessment: 50-60% by 2030 is more likely than 70%, but 70% is achievable if several factors align favorably. It's not a slam dunk.

Cost of Delay: Why Waiting Isn't an Option

Here's a harsh reality: Every year Europe delays solving this infrastructure problem, the competitive gap with the U.S. and Asia widens.

The U.S. already has abundant AI data center capacity. Companies can build large-scale AI applications with confidence in infrastructure availability. Cloud providers have room to innovate and compete on price.

Europe is constrained. Companies building AI applications face uncertainty about capacity availability and pricing. This creates a vicious cycle:

- Limited capacity → Higher prices → European AI companies are less competitive → Venture capital flows to U.S. companies → Talent follows money → Europe falls further behind

Breaking this cycle requires rapid infrastructure investment. Years of delay compound.

Consider this: A company founded today in San Francisco has clear paths to scale AI infrastructure. A company founded in Paris or Berlin faces infrastructure uncertainty and higher costs. In a competitive market, that's a meaningful disadvantage.

This isn't just about business competitiveness. It's about economic sovereignty. If European companies can't reliably access compute infrastructure for AI development, they'll become dependent on U.S. cloud providers. Strategic AI capabilities will be controlled from the U.S.

Some policymakers are waking up to this risk. The EU's AI Act focuses on regulating AI systems rather than building capacity, but separate EU initiatives such as the AI Continent Action Plan target compute infrastructure. Individual countries are incentivizing data center investment. But it's happening more slowly than the market dynamics demand.

Emerging Solutions and Innovations

It's not all doom and gloom. Several emerging approaches might help address the infrastructure gap:

Distributed and Edge AI

Instead of centralizing all AI workloads in massive data centers, some workloads are moving to edge locations (smaller facilities closer to users). This reduces peak demand on central facilities and can improve latency for real-time applications.

Technologies like federated learning (training models across distributed locations) are evolving, making this more practical.
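To make the idea concrete, here is a minimal sketch of the aggregation step used in federated averaging (FedAvg), one common federated learning approach. The article doesn't name a specific algorithm, so this choice is an assumption, and the function name, site weights, and sample counts are all hypothetical. Each site trains locally and sends only its model weights; a coordinator combines them, weighted by how much data each site saw.

```python
def federated_average(site_weights, site_sizes):
    """Combine per-site model weights into one global model.

    site_weights: one weight vector (list of floats) per site.
    site_sizes:   number of training samples each site used.
    Sites with more data contribute proportionally more.
    """
    total = sum(site_sizes)
    dim = len(site_weights[0])
    return [
        sum(weights[i] * size for weights, size in zip(site_weights, site_sizes)) / total
        for i in range(dim)
    ]


# Two hypothetical edge sites with toy two-parameter models.
edge_models = [[1.0, 2.0], [3.0, 4.0]]
edge_samples = [100, 300]
print(federated_average(edge_models, edge_samples))  # [2.5, 3.5]
```

Raw training data never leaves the edge sites in this scheme; only the weight vectors travel, which is what makes the approach attractive when central capacity is scarce.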

AI Efficiency Improvements

As AI models become more efficient (lower compute requirements for comparable results), infrastructure demand growth might moderate. Relevant techniques include:

- Mixture of Experts (activate only relevant model components)

- Quantization (reduce precision, lower computational cost)

- Sparse models (skip unnecessary computations)

- Hardware-software co-optimization

These could reduce infrastructure demand by 20-40% over 5 years.
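Quantization is the easiest of these to illustrate. The sketch below shows symmetric per-tensor int8 quantization in plain Python. This is one of several schemes and is chosen here as an assumption for illustration; the function names and sample values are hypothetical. Storing each weight in 1 byte instead of a 4-byte float32 cuts weight memory by 4x, at the cost of a small rounding error.

```python
def quantize_int8(values):
    """Map floats to integers in [-127, 127] using one shared scale factor."""
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 127 if max_abs else 1.0
    quantized = [max(-127, min(127, round(v / scale))) for v in values]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate floats from the int8 representation."""
    return [q * scale for q in quantized]


weights = [0.5, -1.0, 0.25, 0.0]  # toy values, not from a real model
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)

# Worst-case rounding error is about half a quantization step.
max_error = max(abs(a - b) for a, b in zip(weights, restored))
print(q, f"max error {max_error:.5f}", f"step {scale:.5f}")
```

Production systems typically use per-channel scales and calibration data, so treat this as a sketch of the principle rather than a deployable recipe.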

Alternative Hardware

Beyond NVIDIA's dominance, alternatives are emerging:

- Custom AI accelerators from hyperscalers (Google's TPU, AWS Trainium, Meta's MTIA)

- FPGA-based solutions for specific workloads

- Emerging startups building novel architectures

Diversity in hardware reduces dependency on any single manufacturer and increases capacity options.

Power and Cooling Innovation

- Immersion cooling (submerging servers in non-conductive liquid)

- Direct water cooling with higher efficiency

- AI-optimized power systems with less waste

- Waste heat recovery for district heating in northern climates

These technologies are advancing rapidly and could improve data center efficiency by 15-25% in the next 3-5 years.

The Big Picture: Infrastructure as a Competitive Weapon

Data centers have become strategic weapons in economic competition.

The U.S. is investing heavily in compute capacity and has a head start. China is building massive facilities with government backing. Europe is playing catch-up.

The companies and countries that solve the infrastructure problem faster will have outsized advantages in AI applications, capabilities, and economic value creation.

Europe's path forward requires:

- Political will to treat data center infrastructure like energy infrastructure (essential, strategically important)

- Capital investment at scale—$100+ billion across the continent

- Regulatory pragmatism (streamline permitting, balance sustainability with growth)

- Public-private partnerships where government helps with land, grid connections, and incentives

- Talent development to staff new facilities and innovations

It's doable. Europe has the engineering expertise, capital resources, and demand. But it requires recognizing the problem as urgent and acting accordingly.

The next 24-36 months are critical. Decisions made now about infrastructure investment will determine European competitiveness in AI through 2030 and beyond.

Looking Ahead: 2026-2030 and Beyond

Let's project forward to see how this might play out:

2026 Scenario

New AI data centers start coming online in Scandinavia, Germany, and the Netherlands. Capacity increases by 30-40% from 2025 levels. Prices remain elevated but stabilize. Some companies that waited too long face serious capacity constraints and escalating costs.

2027-2028 Scenario

A second wave of facilities reaches full capacity. AI-readiness approaches 50-55% across Europe. Prices begin declining as supply starts catching up with demand. Companies that invested in diverse geographic strategies see competitive advantages.

2029-2030 Scenario

Additional capacity comes online. Efficiency improvements and distributed AI reduce central demand. AI-readiness approaches 60-65%. The market stabilizes with fewer capacity constraints and more competitive pricing.

Wildcard Scenarios

If efficiency improvements outpace expectations: Demand growth slows, supply catches up faster, prices decline. This helps Europe compete.

If new killer applications emerge: AI demand exceeds projections, capacity remains constrained, prices spike. The infrastructure shortage becomes a critical bottleneck.

If geopolitical tensions escalate: Export restrictions on semiconductors or compute capacity could create severe European shortages, forcing massive accelerated investment.

The most likely outcome is somewhere in the middle: gradual improvement in capacity availability through 2027-2028, with meaningful but not dramatic price relief. Europe doesn't fully solve the problem until 2029-2030, by which time the U.S. has solidified its lead in AI infrastructure and applications.

Conclusion: Europe's AI Infrastructure Reality Check

Let's cut through the corporate speak and policy nonsense. Here's the situation:

Only 20% of European data centers can handle modern AI workloads. Upgrading most legacy facilities is economically impractical. Building new capacity takes 3-4 years. Demand is growing faster than supply. Prices will likely remain elevated through 2027-2028.

This is a problem. And it's not going to solve itself.

For companies that need AI compute capacity in Europe, the message is clear: Plan conservatively, secure capacity early, diversify geography, and budget for higher costs. Cloud providers have capacity now. Lock in long-term contracts if you need certainty. Don't assume that waiting for prices to drop is a viable strategy.

For data center operators, the math is equally clear: Legacy facilities without clear paths to AI readiness are becoming stranded assets. Commit to either major upgrades or new builds. Half-measures won't work. The operators that build new AI-dedicated facilities in strategic locations (Nordic regions with power and climate advantages) will capture disproportionate value.

For European policymakers and industry leaders, the challenge is existential: Without significant infrastructure investment and regulatory streamlining, European companies will become dependent on U.S. cloud providers for AI capabilities. Strategic autonomy in AI will be surrendered.

The good news? It's solvable. Europe has the capital, expertise, and demand to build world-class AI infrastructure. What's missing is urgency and coordinated action.

The next 12-24 months will determine whether Europe closes the gap or falls further behind. The infrastructure decisions being made right now will reverberate through the rest of the decade.

FAQ

Why can't legacy data centers just add more cooling systems?

Adding cooling alone doesn't solve the problem. The issue is integrated: power distribution, structural support, cooling, networking, and electrical infrastructure all need to scale together. You can't upgrade cooling without upgrading the power systems that feed the cooling infrastructure. You can't increase power density without structural reinforcement for heavier equipment. The components are interdependent, and updating one often requires updating others. For a facility built 20 years ago, this cascades into a complete redesign.

How much does it cost to build a new AI-ready data center from scratch?

A modern AI-dedicated data center with 1,000 racks and 20 megawatts of capacity typically costs

Why are the Nordic countries better positioned for AI data centers?

The Nordic region (Sweden, Norway, Finland, Denmark) has several advantages: abundant hydroelectric and wind power (stable renewable energy), a cold climate that reduces cooling costs (free cooling in winter, lower ambient temperatures in summer), excellent electrical grid infrastructure, proximity to major European markets, high technical expertise, and regulatory environments that encourage data center investment. A data center in Stockholm or Oslo can operate with cooling costs 40-50% lower than a facility in Central Europe, which compounds to billions in savings over decades.

When will AI data center capacity be available in Europe without long waiting periods?

Based on current projects and typical development timelines, meaningful relief in capacity constraints likely comes in 2027-2028, when second-generation facilities reach full operation. Until then, expect continuing supply constraints and elevated prices, particularly outside major metropolitan areas with existing infrastructure. Companies with projects launching in 2025-2026 face the tightest capacity markets.

Is it cheaper to use U.S. cloud data centers instead of building in Europe?

Costs vary by workload and geography. U.S. cloud providers (AWS, Google Cloud, Azure) often have economies of scale that reduce per-unit compute costs. However, data residency regulations (GDPR, Data Act) may require European storage and processing, eliminating that option. Additionally, transferring large amounts of data internationally incurs latency and transfer costs. For workloads that legally must stay in Europe, it's not a matter of choice. For others, hybrid approaches (EU for regulated data, U.S. for commodity compute) can be cost-optimal.

What's the actual timeline for getting new AI data center capacity online?

From initial planning to full operational capacity typically takes 3-4 years: 6-12 months for site selection and permitting, 6-18 months for grid connection negotiation, 12-24 months for construction and equipment procurement, 6-12 months for staffing and initial operation. Some projects move faster with expedited permitting; others take longer due to grid constraints or complex environmental assessments. Most projects announced in 2024 will reach initial capacity in 2026-2027.
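The phase estimates can be added up to see how they relate to the 3-4 year headline figure. This minimal sketch assumes the phases run strictly one after another, which is a simplification for illustration; the function name and dictionary are hypothetical, and the month ranges are the ones quoted above.

```python
# Phase durations in months, from the estimates above: (shortest, longest).
PHASES = {
    "site selection and permitting": (6, 12),
    "grid connection negotiation": (6, 18),
    "construction and equipment procurement": (12, 24),
    "staffing and initial operation": (6, 12),
}


def sequential_range(phases):
    """Total duration range if every phase runs strictly back to back."""
    low = sum(shortest for shortest, _ in phases.values())
    high = sum(longest for _, longest in phases.values())
    return low, high


low, high = sequential_range(PHASES)
print(f"Strictly sequential: {low}-{high} months ({low / 12:.1f}-{high / 12:.1f} years)")
```

Run back to back, the phases span 30-66 months (2.5-5.5 years). The typical 3-4 year figure sits inside that range, which suggests most projects either land near the middle of each estimate or overlap some phases, such as negotiating grid connection while permitting is still under way.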

Are there any breakthrough technologies that could reduce the infrastructure crunch?

Several emerging approaches could help: distributed and edge AI (running computations closer to users rather than centralized), AI models becoming more efficient (requiring less compute for comparable results), specialized hardware alternatives to GPUs (providing more competition and capacity), and advanced cooling technologies (improving efficiency). However, none of these are mature enough to meaningfully reduce strain through 2026. Real relief comes from traditional supply expansion: more data centers, more power infrastructure, more computing capacity.

What happens if European data center capacity remains constrained through 2030?

High prices for compute capacity would make European AI companies less competitive with U.S. counterparts. Talent and investment would flow toward regions with abundant, affordable infrastructure. European strategic autonomy in AI would be compromised, creating dependency on U.S. cloud providers. However, this outcome isn't inevitable. Aggressive investment and policy changes starting now can prevent this scenario. But delay increases the risk significantly.

How does sustainability regulation impact data center upgrades?

Regulations requiring renewable energy sourcing and energy efficiency standards add complexity and cost to both new builds and upgrades. A facility must not only upgrade to handle AI workloads but also demonstrate renewable energy compliance and meet efficiency targets. For legacy facilities, this often means upgrades aren't economically viable because the combined costs of AI-readiness and sustainability compliance exceed the facility's remaining economic life. New facilities are designed for both from the start, making the financial case stronger.

The Bottom Line

Europe's AI infrastructure problem is real, significant, and time-sensitive. The gap between capacity and demand will likely persist through 2027-2028. Smart companies and policymakers are acting now to position themselves for this reality. Those waiting for the problem to solve itself will face higher costs, capacity constraints, and competitive disadvantages. The next 24 months are critical.

Key Takeaways

- Only 20% of European data centers can handle modern AI workloads due to power density and cooling system limitations

- Retrofitting legacy facilities costs $125-235 million per facility with questionable ROI, making new builds the more practical path forward

- Building new AI-ready data centers takes 3-4 years from planning to full capacity, while demand is growing 40-50% annually

- Nordic countries have significant geographic and climate advantages, making them the likely hubs for European AI infrastructure

- Supply chain volatility and power grid constraints will likely keep capacity tight and prices elevated through 2027-2028

- 86% of industry professionals report supply chain issues are now structural, not temporary, extending development timelines indefinitely

