EU's TikTok 'Addictive Design' Case: What It Means for Social Media [2025]

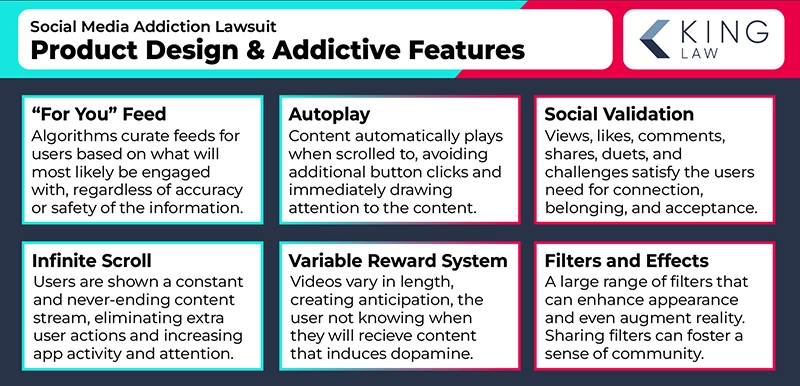

Last year, the European Commission made a bold move. It didn't just complain about TikTok's design choices or write a concerned letter. It issued preliminary findings that the platform's core features (the infinite scroll, the algorithm, the push notifications) breach European law. According to The New York Times, this wasn't some niche regulatory filing. This was the EU saying that one of the world's most powerful social media platforms is deliberately designed to be addictive, and it needs to change.

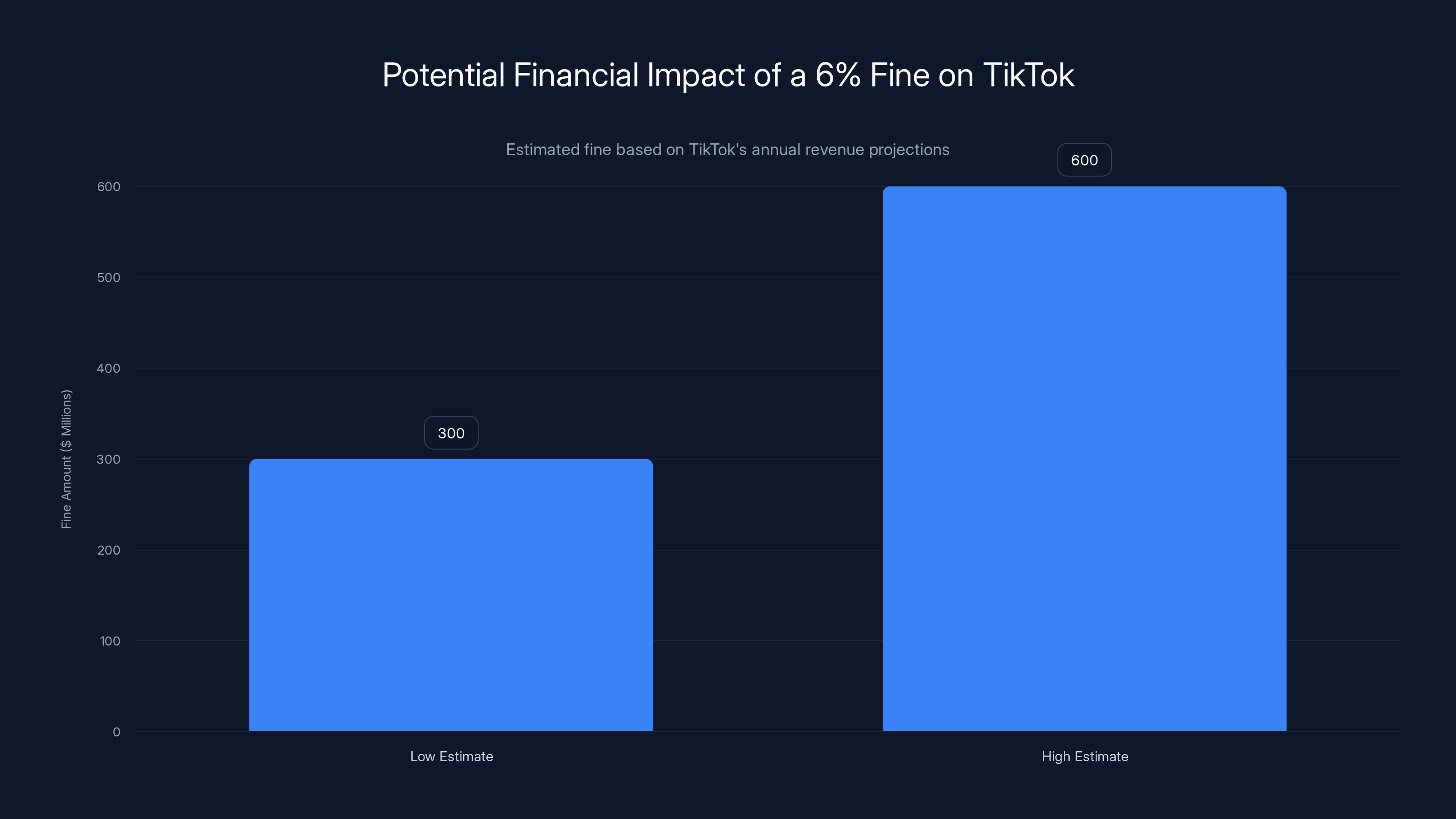

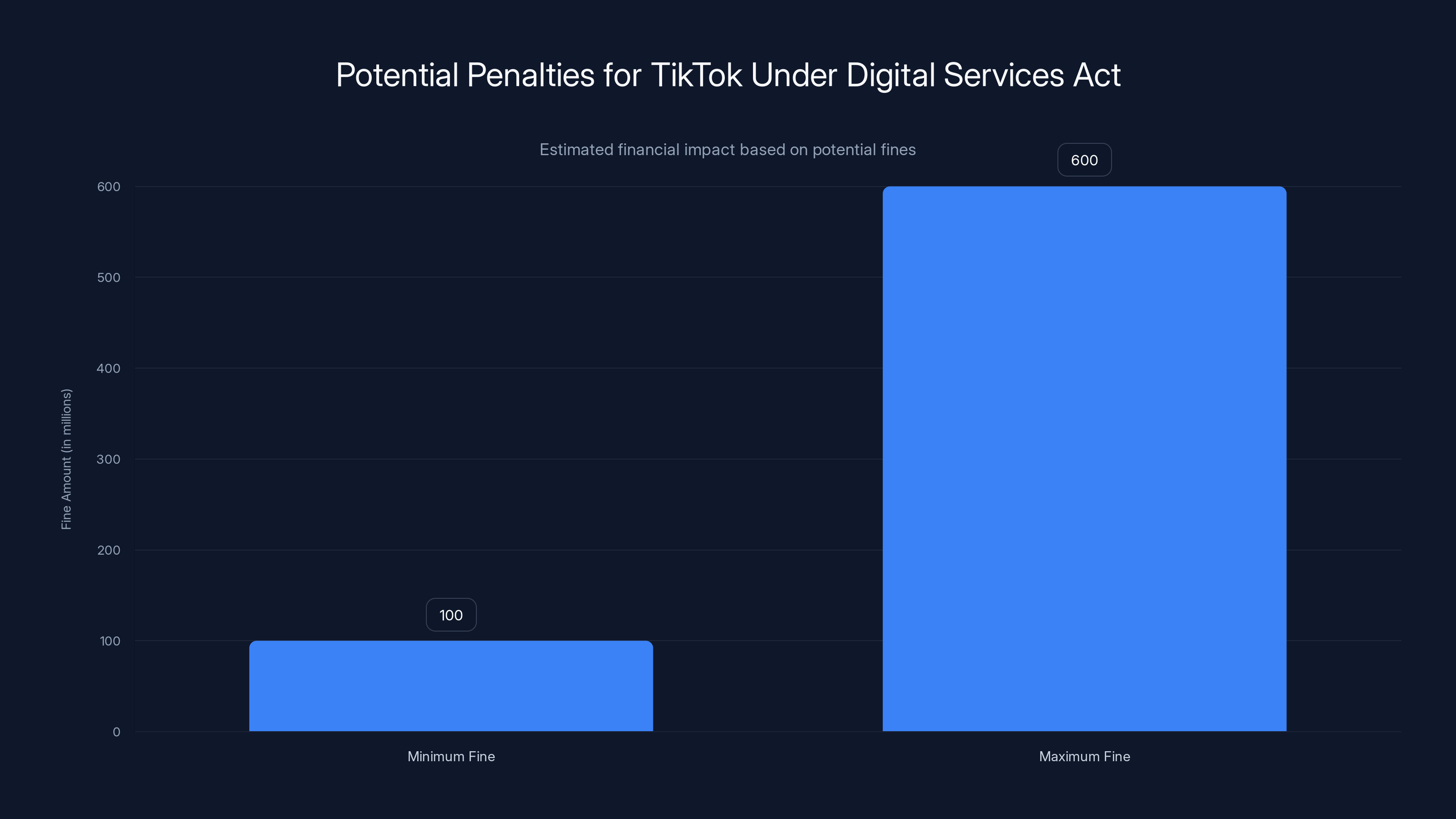

The decision matters because it sets a precedent. For the first time, a major regulatory body has put "addictive design" under legal scrutiny and argued that it's not just a product philosophy—it's a violation of consumer protection law. TikTok has a chance to respond and defend itself, but the pressure is real. A fine of up to 6% of annual worldwide turnover is on the table, as noted by Belga News Agency.

What's interesting is that this isn't really about banning TikTok or shutting it down. It's about forcing the company to redesign its most powerful engagement mechanisms. And that's the part that matters for anyone using social media, building products, or trying to understand how tech regulation works in 2025.

Let's break down what happened, why it matters, and what comes next.

TL;DR

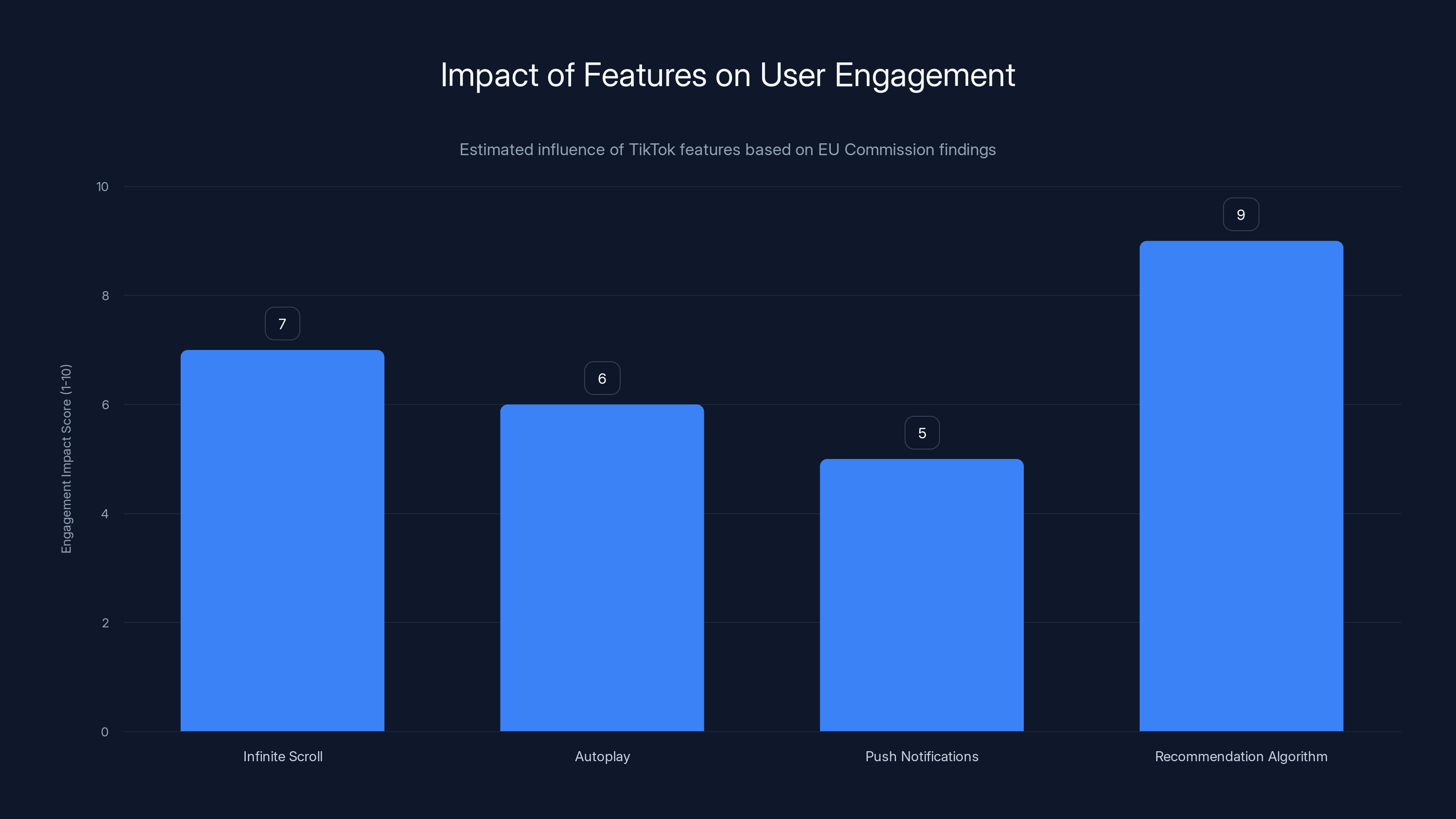

- The Finding: The EU Commission preliminarily found TikTok violates the Digital Services Act by using addictive design features including infinite scroll, autoplay, push notifications, and personalized algorithms, as reported by Politico.

- The Harm: Scientific research shows these features push users into "autopilot mode," reducing self-control and encouraging compulsive behavior, particularly affecting minors, according to Lawsuit Information Center.

- The Stakes: TikTok faces fines of up to 6% of annual worldwide revenue if the Commission confirms its findings in a final decision, along with possible forced modifications to core platform features.

- What's Next: The Commission will give TikTok time to respond, and the company has signaled it will "use any means available" to challenge the findings, as noted by ABC News.

- The Bigger Picture: This case establishes a legal framework for regulating engagement mechanics across all social platforms, not just TikTok.

A 6% fine on TikTok's estimated revenue could range from

Understanding the European Commission's Preliminary Findings

The European Union didn't wake up one day and decide to attack TikTok. This came from a systematic investigation that started in February 2024 under the Digital Services Act (DSA), a comprehensive piece of legislation designed to regulate how large tech platforms operate. The Commission's findings, as reported by BBC News, are preliminary, and that word matters: it means this is the EU's initial assessment, not a final judgment. TikTok gets to respond, present its case, and argue why the Commission is wrong. This is the due process part of the regulatory system.

But here's what makes this significant: the EU didn't just find one problem. It found several interconnected issues that work together to create what regulators call an "addictive" experience.

First, there's the infinite scroll feature. This isn't new—YouTube, Instagram, Facebook, and Twitter all use it. But the Commission argues that when combined with TikTok's other features, it creates a compulsive loop. You scroll, the algorithm shows you something interesting, you keep scrolling. There's no natural stopping point, no "end of feed." Your brain, the research suggests, shifts into autopilot.

Second, there's autoplay. When one video ends, the next one starts automatically. You don't have to decide to keep watching. You don't have to take action. The platform just... continues. For users, especially younger ones, this removes friction from continued consumption.

Third, there are push notifications. These are the alerts that bring you back to the app. "You have 5 new messages." "Your friend liked your video." "Check out this trending sound." Each notification is designed to trigger a behavior: open the app, engage, stay longer.

Fourth, and perhaps most powerful, is the personalized recommendation algorithm. This is TikTok's secret sauce. Unlike traditional social feeds that show you content from accounts you follow in chronological order, TikTok's algorithm learns what keeps you watching. It studies your behavior, tests different content, and optimizes for one metric: time spent on the app.
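To make "tests different content and optimizes for time spent" concrete, here is a minimal sketch of one generic technique that behaves this way: an epsilon-greedy multi-armed bandit. It is not TikTok's system, which is not public. The content categories, the simulated watch times, and the `EPSILON` value are all hypothetical.

```python
import random

# Hypothetical categories and the average seconds of watch time each one
# produces for a simulated user. The recommender never sees these numbers
# directly; it has to learn them from observed behavior.
TRUE_MEAN_WATCH = {"comedy": 40.0, "cooking": 20.0, "news": 10.0}
EPSILON = 0.1  # hypothetical share of recommendations spent exploring


def simulate_watch_time(category: str, rng: random.Random) -> float:
    """Noisy watch time (seconds) for one video of the given category."""
    return max(0.0, rng.gauss(TRUE_MEAN_WATCH[category], 5.0))


def run_feed(n_videos: int, seed: int = 0) -> dict[str, int]:
    """Epsilon-greedy bandit: mostly serve the category with the best observed
    average watch time, occasionally try a random one. Returns serve counts."""
    rng = random.Random(seed)
    totals = {c: 0.0 for c in TRUE_MEAN_WATCH}
    counts = {c: 0 for c in TRUE_MEAN_WATCH}
    for _ in range(n_videos):
        untried = [c for c in counts if counts[c] == 0]
        if untried:
            choice = untried[0]  # try every category once first
        elif rng.random() < EPSILON:
            choice = rng.choice(list(TRUE_MEAN_WATCH))  # explore
        else:
            # exploit: the category with the highest average watch time so far
            choice = max(counts, key=lambda c: totals[c] / counts[c])
        totals[choice] += simulate_watch_time(choice, rng)
        counts[choice] += 1
    return counts


if __name__ == "__main__":
    print(run_feed(500))  # comedy is expected to dominate after a short learning phase
```

Note that the only signal this loop optimizes is watch time; nothing in it asks whether the session was good for the user.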

The Commission's argument is that these features don't just happen to be engaging. They're intentionally designed to be addictive. And the company has failed to put in place adequate safeguards to protect users, especially minors, from the potential harms.

What's particularly important is the focus on minors. TikTok's user base skews young. A significant portion of users are teenagers and pre-teens. The Commission argues that these users are especially vulnerable to addictive design because their prefrontal cortex—the part of the brain responsible for impulse control and decision-making—is still developing.

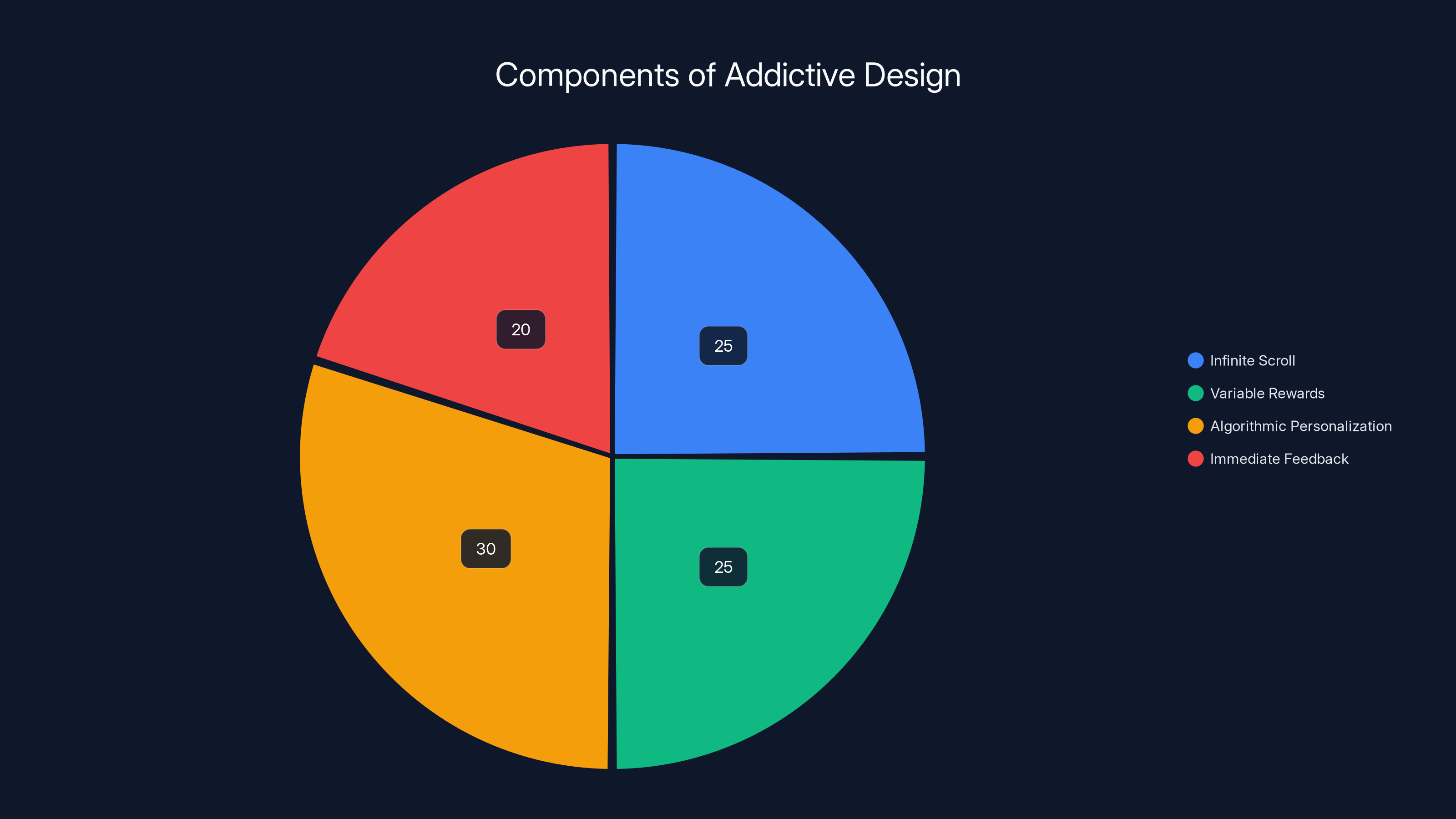

The scientific research cited by the Commission is real and peer-reviewed. Studies from neuroscientists and psychologists have documented that endless scroll design, variable rewards (not knowing what you'll see next), and algorithmic personalization can genuinely reduce self-control and encourage compulsive use. It's not a conspiracy theory or scaremongering. It's documented behavioral science.

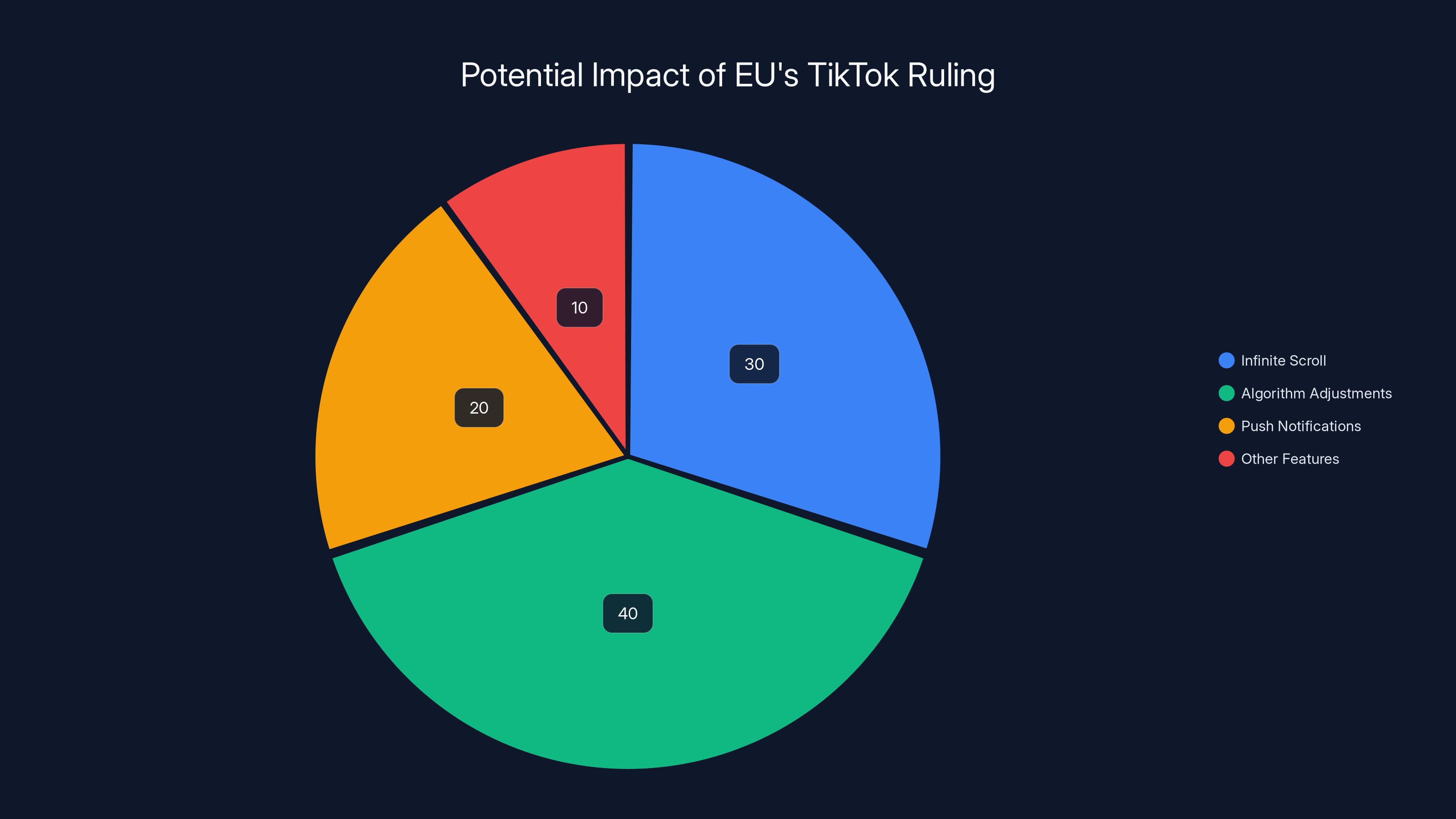

Estimated data shows algorithmic personalization as the largest contributor to addictive design, followed by infinite scroll and variable rewards.

The Digital Services Act and Why This Case Matters

To understand why the European Commission has the authority to regulate TikTok's design in the first place, you need to know about the Digital Services Act.

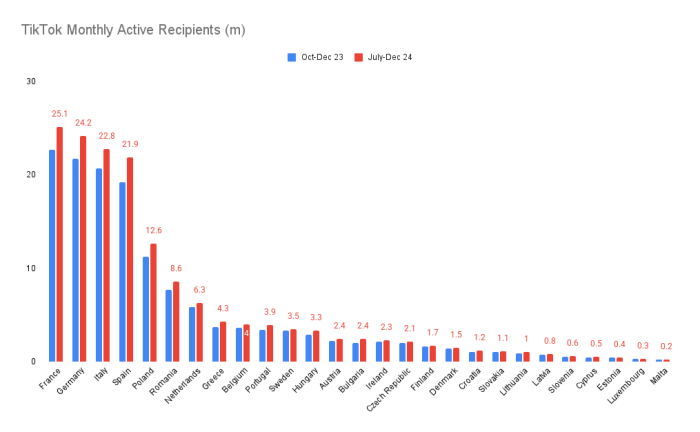

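The DSA is one of the most important pieces of tech regulation passed anywhere. Its obligations for the largest platforms began applying in August 2023, and the law became fully applicable in February 2024. Its strictest rules target "very large online platforms," those with more than 45 million monthly active users in the EU. TikTok definitely qualifies.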

The DSA has several key provisions. Platforms must be transparent about their algorithms. Very large platforms must offer at least one recommendation feed that isn't based on profiling. They must protect minors. They must remove illegal content quickly. And, most relevant to TikTok's case, very large platforms must assess and mitigate systemic risks, including risks to users' physical and mental wellbeing, and platforms must not use dark patterns or manipulative design to harm users.

A "dark pattern" is a design technique that tricks you into doing something you didn't intend to do. Common examples include:

- Making it easy to subscribe but hard to unsubscribe

- Pre-checking boxes that you're unlikely to notice

- Using confusing privacy controls so you accidentally share more data than intended

- Using urgency tactics ("Only 3 spots left!") to rush decisions

- Defaulting to options that benefit the company, not the user

TikTok's design features don't necessarily fall into the "trick you" category in the traditional sense. You know infinite scroll exists. You can put your phone down. But the DSA's definition of harmful design is broader. It's not just about obvious tricks. It's about design that exploits psychological vulnerabilities, particularly in vulnerable populations like minors.

This is a shift in how regulators think about tech. For years, the focus was on data privacy and security. "Where does your data go? Who accesses it? Is it encrypted?" Those are still important questions, and the EU has found TikTok wanting in those areas too.

But now, the focus is also on behavioral design. "How is this app designed to keep you using it? Does it exploit psychological principles? Does it harm mental health?" These are newer questions, and the DSA gives regulators the authority to address them.

The precedent set by this case is massive. If the Commission confirms that TikTok is in breach, every other social platform becomes a potential target. Instagram, YouTube, Snapchat, and Twitter all use infinite scroll and algorithmic recommendations. If TikTok has to change, why shouldn't they?

That's why TikTok's response matters so much. The company needs to convince the Commission that either:

- The design features aren't actually addictive (a tough argument given the science), or

- The company has adequate safeguards in place (current parental controls and screen time limits), or

- The design serves important user interests that outweigh the risks

None of these arguments are slam dunks.

The Science Behind "Addictive" Design: What Research Shows

When the Commission uses the word "addictive," it's not throwing around a marketing term. There's actual neuroscience behind it.

Infinite scroll, variable rewards, and algorithmic personalization trigger specific responses in the brain. When you scroll and don't know what you'll find, your brain releases dopamine in anticipation. This is the same neurochemical response triggered by gambling, which is why slot machines use the same principle: you don't know what you'll get, so you keep trying.

The personalized algorithm amplifies this. Because TikTok learns what you like and shows you more of it, you're essentially getting a customized slot machine. Content is curated specifically to maximize your engagement. The algorithm doesn't show you random content. It shows you content it predicts you'll watch, like, comment on, or share.

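Research from behavioral psychologists ties this combination (unpredictable rewards from infinite scroll, immediate feedback from likes and comments, and personalized content from the algorithm) to what's called a "variable ratio reinforcement schedule": rewards arrive after an unpredictable number of actions, so any swipe might be the one that pays off. Among the classic reinforcement schedules, this is the one that produces the highest and most persistent rates of response, which makes it the most effective for creating compulsive behavior.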
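A variable ratio schedule is easy to simulate. The sketch below is a minimal illustration, not a model of any real feed: each swipe pays off independently with a fixed probability (strictly a "random ratio" schedule, the usual way variable ratio schedules are approximated), so the number of swipes between rewards is unpredictable even though the average is fixed. A fixed ratio schedule is included for contrast. The reward probability and swipe counts are hypothetical.

```python
import random


def variable_ratio_gaps(n_swipes: int, reward_prob: float, seed: int = 1) -> list[int]:
    """Simulate a variable ratio schedule: each swipe independently pays off
    with probability reward_prob, so rewards arrive after an unpredictable
    number of swipes averaging 1 / reward_prob.

    Returns how many swipes it took to reach each reward."""
    rng = random.Random(seed)
    gaps: list[int] = []
    swipes_since_reward = 0
    for _ in range(n_swipes):
        swipes_since_reward += 1
        if rng.random() < reward_prob:  # this video "hits"
            gaps.append(swipes_since_reward)
            swipes_since_reward = 0
    return gaps


def fixed_ratio_gaps(n_swipes: int, ratio: int) -> list[int]:
    """Contrast case: a fixed ratio schedule rewards exactly every ratio-th swipe."""
    return [ratio] * (n_swipes // ratio)


if __name__ == "__main__":
    vr = variable_ratio_gaps(1000, reward_prob=0.2)
    fr = fixed_ratio_gaps(1000, ratio=5)
    print("VR gaps (first 10):", vr[:10])  # irregular
    print("FR gaps (first 10):", fr[:10])  # always 5
    print("VR average gap:", sum(vr) / len(vr))  # close to 5 on average
```

Both schedules pay out at roughly the same average rate; the difference is that under the variable schedule the user can never predict which swipe will be rewarded.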

Let's break down the mechanics:

The Dopamine Loop

You open TikTok. The first video is okay. You swipe. The next one is hilarious. Dopamine spike. You keep swiping. The algorithm has learned what makes you laugh, so it serves you more comedy. You're not consciously thinking about it. You're just swiping, rewarded repeatedly by content optimized for your taste.

Meanwhile, each video is designed to be short enough that you watch it, but compelling enough that you continue. Fifteen seconds to two minutes. That's long enough to be engaging but short enough that you feel like you can watch "just one more."

The Notification Effect

You put your phone down. Minutes later, a notification. Someone liked your video. A friend posted. A trending sound you follow is gaining traction. Each notification triggers a small dose of dopamine and a strong urge to check.

This is particularly effective for younger users. Adolescent brains are hyperresponsive to social reward signals. A like from a peer activates reward centers in a teenager's brain more intensely than in an adult's. This isn't a design flaw for TikTok—it's a feature. The company knows this and has optimized for it.

The Autoplay Continuation

When one video ends, the next begins automatically. There's no friction. No decision point. No moment to evaluate whether you want to keep watching. The experience flows seamlessly, and many users report that hours pass without them realizing it.

The EU Commission cited actual peer-reviewed research in its findings. Studies from institutions like Stanford and MIT have documented that infinite scroll design reduces self-control. Neuroimaging studies show increased activity in reward-processing brain regions during algorithmic feed scrolling. Behavioral studies demonstrate that users often spend significantly more time than they intended.

Now, here's the important caveat: research also shows that people enjoy TikTok. It's genuinely entertaining. Users aren't victims who can't help themselves. Many people use the app in a measured way. Some people never experience the compulsive use patterns that researchers describe.

But the point the Commission is making is that the design features are optimized to exploit psychological vulnerabilities. If you're susceptible to compulsive behavior, or if your brain is still developing (as with minors), these features can be particularly problematic. The company has a responsibility to account for this.

Estimated data showing potential focus areas for TikTok's redesign following the EU's preliminary findings. Algorithm adjustments and infinite scroll are likely primary targets.

TikTok's Current Safeguards: Are They Enough?

TikTok does have safeguards in place. The company isn't entirely without controls.

There's a screen time limit feature. Users can set a daily limit, and when they reach it, they get a prompt to take a break. There are parental controls that allow parents to restrict access, set time limits, and control who can message the user. There's a restricted mode that filters out more mature content.

The Commission's position is that these safeguards are insufficient.

Why? Because they're optional. You have to decide to turn them on. Most users don't. If you're a teenager, the nudge to "take a break" isn't particularly compelling when the app is literally serving you content optimized to keep you engaged.

It's like a casino putting a sign near the slots that says, "Remember, gambling can be addictive. Maybe take a break." The warning exists, but the entire environment is designed to encourage the opposite behavior.

The Commission's argument is that safeguards should be built into the core design, not tacked on as optional features.

Possible changes TikTok might be forced to make include:

- Limiting infinite scroll: Introducing natural stopping points. For example, after watching 20 videos, the app pauses and asks if you want to continue.

- Disabling autoplay by default: Making autoplay opt-in rather than the default, so users have to consciously choose to continue.

- Mandatory screen time warnings: More frequent and prominent reminders about time spent, especially for users under 18.

- Algorithmic transparency: Showing users why specific content is recommended to them and giving them more control over the algorithm.

- Reduced push notification frequency: Limiting how often the app sends notifications, particularly for younger users.

- Age-appropriate design for minors: Different interface and features for users under 18 that prioritize wellbeing over engagement metrics.
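Several of these changes amount to different default settings. Here is a minimal sketch of how they could be expressed as age-dependent defaults. It is purely illustrative: the `FeedPolicy` class and every number in it (the 20-video pause borrowed from the example above, reminder intervals, notification caps) are hypothetical, not anything TikTok or the Commission has specified.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class FeedPolicy:
    """Hypothetical per-user defaults reflecting the proposed changes."""
    autoplay_default: bool             # False: autoplay is opt-in, not the default
    pause_after_videos: Optional[int]  # natural stopping point; None = endless feed
    reminder_every_minutes: int        # how often to show a screen time reminder
    max_push_per_day: int              # cap on push notifications
    explain_recommendations: bool      # show "why you're seeing this"


def default_policy(age: int) -> FeedPolicy:
    """Return default settings; stricter for users under 18 (hypothetical values)."""
    if age < 18:
        return FeedPolicy(autoplay_default=False, pause_after_videos=20,
                          reminder_every_minutes=30, max_push_per_day=3,
                          explain_recommendations=True)
    return FeedPolicy(autoplay_default=False, pause_after_videos=50,
                      reminder_every_minutes=60, max_push_per_day=10,
                      explain_recommendations=True)


def should_pause(policy: FeedPolicy, videos_watched: int) -> bool:
    """True when the feed should stop and ask the user whether to continue."""
    n = policy.pause_after_videos
    return n is not None and videos_watched > 0 and videos_watched % n == 0


def allow_push(policy: FeedPolicy, sent_today: int) -> bool:
    """True if one more push notification still fits under today's cap."""
    return sent_today < policy.max_push_per_day


if __name__ == "__main__":
    teen = default_policy(15)
    print(should_pause(teen, 20))  # True: the 20th video triggers a pause
    print(allow_push(teen, 3))     # False: the daily cap of 3 is reached
```

Keeping the rules in one policy object makes the point of the proposals visible: the protections are on by default, rather than features a user has to find and enable.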

None of these changes would break the platform. Users would still find TikTok entertaining. But they would reduce the compulsive use factor.

The Financial Stakes: What a 6% Fine Actually Means

When the EU says a company could face a fine of up to 6% of annual worldwide turnover, people's eyes glaze over. Let's make it concrete.

TikTok's parent company, ByteDance, generates significant revenue, though exact numbers are hard to pin down since it's privately held. Estimates suggest ByteDance's annual revenue is in the ballpark of

If we estimate TikTok's annual revenue at roughly

That's not a rounding error for any company. That's real money. But it's also not catastrophic for ByteDance. The company could pay it.
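The cap itself is simple arithmetic: under the DSA, the maximum fine is 6% of the provider's total worldwide annual turnover in the preceding financial year. The sketch below computes that ceiling. Because ByteDance's figures aren't public, the turnover in the example is a round hypothetical number, not an estimate of TikTok's or ByteDance's revenue.

```python
from decimal import Decimal

DSA_MAX_FINE_RATE = Decimal("0.06")  # ceiling: 6% of worldwide annual turnover


def max_dsa_fine(worldwide_turnover: Decimal) -> Decimal:
    """Upper bound on a DSA fine for a given worldwide annual turnover.
    The Commission can impose less; this is only the ceiling."""
    if worldwide_turnover < 0:
        raise ValueError("turnover cannot be negative")
    return worldwide_turnover * DSA_MAX_FINE_RATE


if __name__ == "__main__":
    # Hypothetical turnover of 100 billion (any currency), NOT a real figure.
    hypothetical_turnover = Decimal("100000000000")
    print(max_dsa_fine(hypothetical_turnover))  # prints 6000000000.00
```

Because the ceiling scales linearly with turnover, it stays meaningful however large the company is.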

What's more important to TikTok than the fine is the operational impact. If the Commission forces changes to core platform features, how will that affect user engagement? If infinite scroll is limited, if autoplay is disabled by default, if push notifications are restricted—will users spend less time on the app? And if so, will advertising revenue decline?

That's the real risk. Not the fine itself, but the possibility that a redesigned TikTok becomes a less engaging product, and users migrate to alternatives.

Here's the thing, though: other platforms have adapted to regulation before. Instagram and Facebook were forced to change how they handle minors' data. YouTube added restrictions on algorithmic recommendations for kids. Twitter implemented content moderation policies. None of these changes destroyed the platforms. Users adapted.

The difference with TikTok is that the changes might be more fundamental to the user experience. Infinite scroll and algorithmic recommendation are TikTok's core mechanics. You can't really remove them without changing what TikTok is.

But that might also be the point. Maybe that's exactly what the Commission thinks needs to happen.

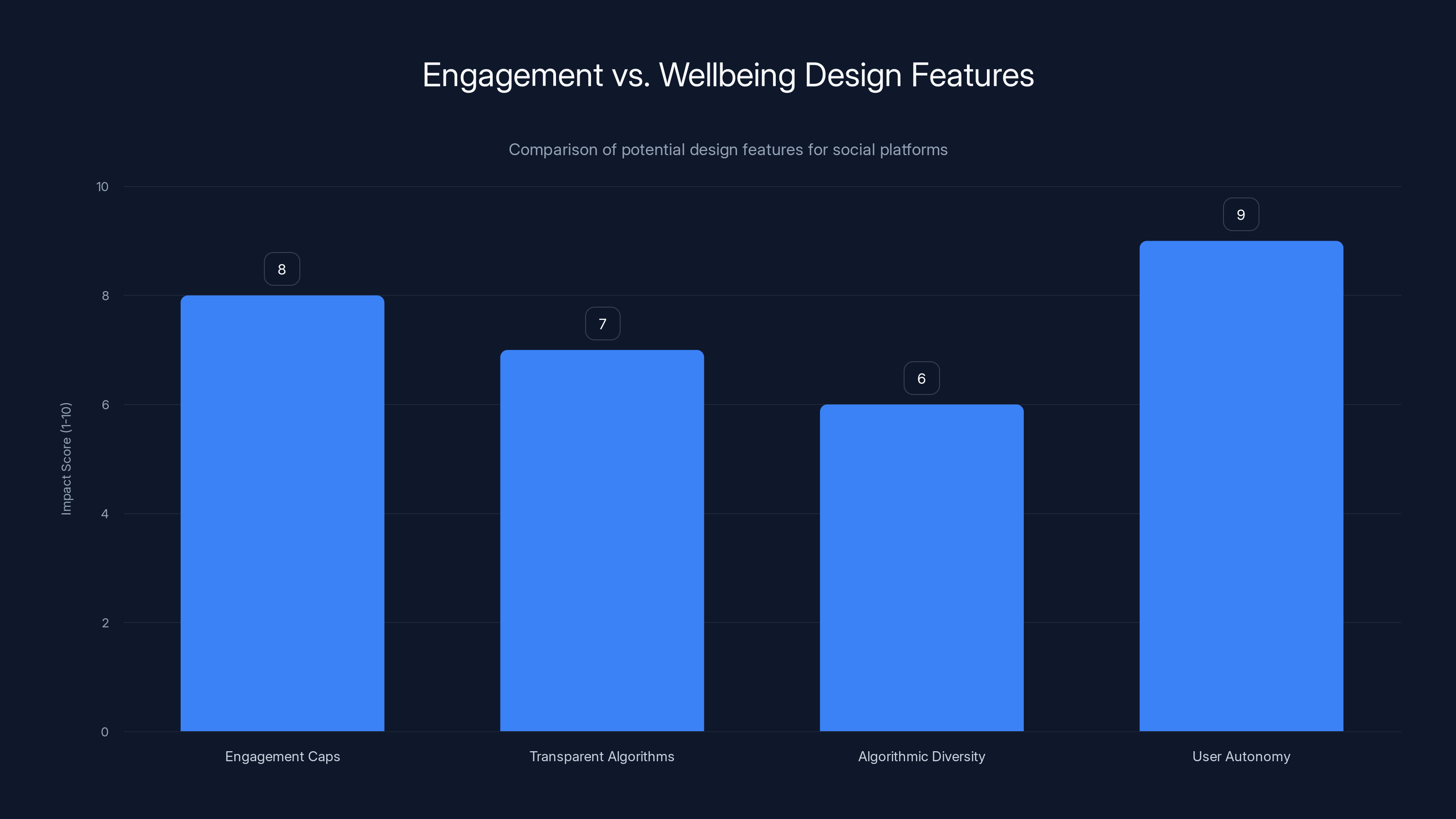

Estimated data: Engagement caps and user autonomy are projected to have the highest impact on balancing engagement with user wellbeing.

Why TikTok Is the Target: Algorithm, Data, and Politics

You might wonder: why is the EU coming down so hard on TikTok when YouTube and Instagram use essentially the same design features?

There are a few answers.

First, there's the geopolitical angle. TikTok is a Chinese company. The EU has concerns about Chinese tech companies, their data practices, and their relationship with the Chinese government. This doesn't make the regulatory findings any less valid, but it provides context. The EU is probably more motivated to regulate TikTok aggressively than it is to regulate Meta or Google, even if the design features are similar.

Second, TikTok's algorithm is particularly effective at engagement. The platform has invested heavily in recommendation systems and has seen remarkable success. TikTok users spend more time on the app than users of competing platforms. The algorithm is more sophisticated and more optimized for engagement. So when regulators look at addictive design, TikTok is the most obvious target.

Third, TikTok's user base is particularly young. Unlike Facebook or Instagram, which have broad demographics, TikTok skews heavily toward Gen Z and younger millennial users. A significant portion of users are minors. This makes the platform more vulnerable to harm-focused regulation because the protective standards for minors are higher.

Fourth, the EU opened its investigation in response to reported concerns about the platform's impact on young people's mental health. There were media reports, parental concerns, and calls for regulation. The Commission responded by investigating, and once it started looking, it found what it was looking for.

It's worth noting that the EU has also investigated other platforms. There are ongoing proceedings against Meta and Google under the DSA. But TikTok got hit first, probably because of the combination of factors above.

What TikTok's Response Actually Says About the Company's Strategy

When TikTok heard about the preliminary findings, the company didn't apologize or negotiate. It attacked.

"The Commission's preliminary findings present a categorically false and entirely meritless depiction of our platform," the company said in a statement. TikTok signaled it would "use any means available" to challenge the findings.

This is a combative stance. It's not "we hear your concerns and will work with you." It's "you're wrong and we're going to fight you."

Why take this approach?

For one, TikTok believes it's right. The company's position is probably that:

- The design features are not addictive in a harmful way—they're just engaging.

- Users have agency and can choose to limit their use.

- The safeguards in place are adequate.

- The Commission is playing politics and using regulation as a weapon against a Chinese competitor.

For another, TikTok can't afford to concede the broader point. If the company admits that its design is intentionally addictive and that safeguards are insufficient, that opens the door to massive regulation, not just in the EU but globally. Regulatory bodies in the US, UK, Canada, and Australia are all watching this case. A loss here could trigger a wave of similar findings worldwide.

So TikTok's strategy is to fight aggressively, argue that the Commission is wrong on the facts and the law, and try to discredit the regulatory process itself as politically motivated.

This is a high-stakes game. The company will likely hire expensive lawyers, commission its own studies arguing that the design is not addictive, and try to find technical flaws in the Commission's reasoning.

The Commission, meanwhile, has expert consultants, peer-reviewed research, and the backing of European governments. That gives it a strong hand, though the outcome won't be settled until a final decision and any court challenge.

If found guilty, TikTok could face fines ranging from

The Broader Implications: What This Means for All Social Platforms

Even if TikTok wins—even if the company successfully challenges the Commission's findings—the regulatory framework is now in place. Other platforms are on notice.

The DSA gives EU regulators clear authority to regulate addictive design. The Commission has published guidance on what constitutes a dark pattern or manipulative feature. The bar for what's considered harmful has been set.

This means Instagram, YouTube, Snapchat, and any other platform with infinite scroll and algorithmic recommendations could face similar investigations.

Meta is probably already preparing. The company has deep legal resources and regulatory experience. But the precedent is concerning. If TikTok loses, what's Meta's defense going to be? Instagram's infinite scroll is essentially the same as TikTok's, and its algorithm works in much the same way. The features that got TikTok into trouble are exactly what make Meta's platforms profitable.

YouTube might be slightly safer because users expect to watch longer videos there, so the design is optimized differently. But YouTube's recommendations and autoplay have been criticized for similar reasons.

The DSA applies across the EU, not just to TikTok. So even if TikTok wins this specific case, the Commission could launch new investigations into other platforms using different criteria or approaches.

One interesting question: could this regulation spread beyond the EU?

The UK has similar concerns and is developing its own regulatory framework. Canada is discussing online harms legislation. Various US states are pushing for restrictions on algorithmic recommendations, particularly for minors.

The US federal government hasn't taken the same approach as the EU, but there's definitely pressure. Congress has proposed bills like the Kids Online Safety Act that would require platforms to prioritize child safety over engagement metrics.

So the TikTok case in the EU could be a bellwether for global regulation. If the Commission wins and forces TikTok to change, we could see similar requirements worldwide.

The Design Philosophy Question: Engagement vs. Wellbeing

At the heart of this case is a fundamental question about how social platforms should be designed.

For the past 15 years, tech companies have optimized for engagement. More engagement means more time on the platform, more data collected, more advertising impressions, more revenue. This is the business model. The metrics that matter are daily active users, session length, frequency of returns, and total time spent.

Design teams have become incredibly sophisticated at optimizing for these metrics. They run thousands of A/B tests. They study user behavior. They understand psychological triggers. Everything from the color of a button to the timing of a notification has been engineered to maximize engagement.

This approach has been wildly successful. TikTok, Instagram, YouTube, Facebook, and Twitter are all massively profitable because they've perfected engagement optimization.

But the EU—and increasingly, other regulators—are saying this approach is wrong. Or at least, it's wrong when it comes at the expense of user wellbeing, especially for vulnerable populations like minors.

The Commission is essentially arguing for a different design philosophy: platforms should be engaging, yes, but not at the cost of manipulating users into compulsive use. The goal should be to create genuinely valuable experiences that users enjoy, but with built-in protections that respect autonomy and limit harms.

What does this actually look like in practice?

Some possibilities:

- Engagement caps: Limiting the amount of time an app can engage a user before requiring a conscious decision to continue. "You've been scrolling for 45 minutes. Do you want to continue?" Unlike a reminder you can swipe away, the experience would actually pause until you answer.

- Transparent algorithms: Showing users exactly why they're seeing specific content and giving them granular control over the recommendation system.

- Algorithmic diversity: Instead of optimizing for maximum engagement, algorithms could be designed to expose users to diverse content, even if it's not the most engaging.

- Downranking addictive content: Intentionally reducing the visibility of content designed to be extremely engaging (viral challenges, ASMR, specific triggering sounds) to limit compulsive use.

- Different defaults for minors: Disabling features like infinite scroll, autoplay, and notifications by default for users under 18.

- Deliberate friction: Making it slightly less seamless to continue using the app. If you have to tap to continue between videos, you might think about it more.
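The engagement cap described in the list above can be sketched as a small session timer. This is a hypothetical illustration: the `SessionGate` class, the 45-minute cap (taken from the example prompt), and the rule that the feed stays blocked until the user explicitly confirms are assumptions, not a specification from any regulator or platform.

```python
from dataclasses import dataclass

ENGAGEMENT_CAP_SECONDS = 45 * 60  # hypothetical: pause after 45 minutes


@dataclass
class SessionGate:
    """Tracks continuous viewing time and hard-pauses the feed at the cap.

    Unlike a dismissible reminder, next_video() refuses to serve content
    while paused; only an explicit confirm_continue() resumes the session.
    """
    cap_seconds: int = ENGAGEMENT_CAP_SECONDS
    elapsed_seconds: int = 0
    paused: bool = False

    def record_watch(self, seconds: int) -> None:
        """Add watch time and pause once the cap is reached."""
        self.elapsed_seconds += seconds
        if self.elapsed_seconds >= self.cap_seconds:
            self.paused = True

    def next_video(self) -> bool:
        """Return True if the feed may serve another video right now."""
        return not self.paused

    def confirm_continue(self) -> None:
        """The user made a conscious choice to keep going: start a new window."""
        self.paused = False
        self.elapsed_seconds = 0


if __name__ == "__main__":
    gate = SessionGate()
    for _ in range(90):  # 90 videos of 30 seconds each = 45 minutes
        if not gate.next_video():
            break
        gate.record_watch(30)
    print(gate.paused)        # True: the cap was hit
    gate.confirm_continue()
    print(gate.next_video())  # True: the user chose to continue
```

The design choice that matters is where the check lives: the gate sits in front of content delivery, so the pause can't be ignored the way a banner or pop-up can.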

None of these would make social platforms useless. But they would reduce the compulsive use factor.

The question is whether this is the right approach. Do platforms have a responsibility to protect users from their own impulses? Or is it up to individuals to manage their own use?

The EU's answer is clear: platforms have responsibility. Particularly for minors, and particularly when the design is specifically engineered to exploit psychological vulnerabilities.

Whether TikTok agrees, whether the courts agree, and whether other platforms follow remains to be seen.

Estimated data: relative impact on user engagement of the features named in the EU Commission's preliminary findings. The personalized recommendation algorithm ranks highest, followed by infinite scroll, autoplay, and push notifications.

Timeline: Where Does This Case Go From Here?

The current status is that the Commission has issued preliminary findings. TikTok now has time to respond, usually 10 to 30 business days depending on the complexity of the case.

During this period, TikTok will submit its response. The company will argue why the Commission is wrong. It might present evidence that the design is not addictive, that safeguards are adequate, that the research cited by the Commission is flawed, or that the Commission has misinterpreted the DSA.

After TikTok responds, the Commission will review the response and make a final decision. This could take weeks or months.

If the Commission adopts a final decision finding TikTok in breach, the company has legal recourse. It can challenge the decision in the EU courts. This process can take years. TikTok would need to convince the judges that the Commission was wrong in its interpretation of the law or in its factual findings.

Meanwhile, the Commission could also issue interim measures. For example, it might order TikTok to suspend infinite scroll or disable autoplay while the case is ongoing. This would be to prevent ongoing harm while the legal process unfolds.

The full timeline from preliminary findings to final resolution could be 2-5 years, depending on appeals and the complexity of the case.

But here's what's important: even during this lengthy process, regulatory pressure is building. Other investigations are ongoing. Other regulators are watching. If TikTok is forced to make changes, the changes will likely start being implemented relatively soon, not years from now.

The Precedent for Other Platforms and Future Tech Regulation

Let's say the Commission finds TikTok guilty and forces the company to make changes. What happens next?

Probably, the Commission launches similar investigations into other platforms. Meta faces scrutiny. YouTube faces scrutiny. Snapchat might be next.

The findings would likely be similar: infinite scroll, autoplay, algorithmic personalization, push notifications are all designed to maximize engagement and could be considered addictive design under the DSA.

But here's where it gets interesting: other platforms might have easier paths to compliance than TikTok.

For example, YouTube could argue that long-form video consumption is qualitatively different from short-form TikTok videos. Users expect videos to be longer. The autoplay feature is less of an issue when videos are 10-30 minutes rather than 15-60 seconds.

Instagram and Facebook could argue that their user bases are more mature than TikTok's, so different protective standards apply.

But these are marginal arguments. The core issue—infinite scroll plus algorithmic personalization equals addictive design—applies equally to all social platforms.

So we could see a future where all social platforms are required to:

- Disable infinite scroll by default or limit it to a certain number of content pieces

- Make algorithmic recommendations transparent and user-controllable

- Implement stricter limits on push notifications

- Offer different experiences for users under 18

- Submit to regular audits and compliance checks

This would fundamentally change how social media works. The business model might still hold—advertising is still possible—but the user experience would be different.

Think of it like tobacco regulation. Cigarette companies couldn't advertise freely anymore, couldn't use certain packaging, had to include warnings. The business survived, but it was constrained. The product was still sold and still profitable, just under more restrictions.

Social platforms might go through a similar evolution. They'll still exist, users will still use them, but they'll be designed and operated under stricter rules focused on limiting harms.

What Users Can Do Right Now

Regulation takes time. While the TikTok case unfolds, what can users do to protect themselves from addictive design?

First, be aware of the mechanics. Understanding that infinite scroll, variable rewards, and algorithmic personalization are designed to keep you engaged is half the battle. Just knowing that you're being targeted by sophisticated design patterns makes you more resistant to them.

Second, use the built-in controls. Most platforms have screen time limits, do-not-disturb settings, and ways to reduce notifications. These tools exist, and they work. Enable them.

Third, create friction. Remove the app from your home screen. Require Face ID to open it. Unfollow accounts that trigger compulsive scrolling. Mute notifications. The harder it is to reflexively open the app, the more likely you are to think about whether you actually want to use it.

Fourth, use alternative platforms and feeds with different design philosophies. Bluesky and Threads, for example, let you use a feed that shows posts from accounts you follow in chronological order instead of algorithmic recommendations. Those feeds still scroll endlessly, but swapping recommendations for a chronological list, and trimming notifications to direct interactions where the settings allow, changes the mechanics.

Fifth, set rules for yourself. Designate phone-free times. Don't use the app in bed before sleep. Don't check it first thing in the morning. These behavioral rules work better than relying on willpower.

Sixth, talk to younger people about this. If you're a parent, educator, or mentor, have conversations about how these apps work and how to use them healthily. Kids are not immune to addictive design—they're actually more vulnerable.

Seventh, support the regulation. If you think the EU's approach is right, make your voice heard. Write to your elected representatives. Support organizations pushing for tech regulation. Vote for politicians who prioritize consumer protection.

Regulation and individual agency aren't mutually exclusive. You can use these tools to protect yourself while also supporting systemic change through regulation.

The Counterargument: Why the EU Might Be Wrong

To be fair, there are legitimate arguments against the Commission's position.

First, there's the question of causality. The Commission argues that addictive design causes harm to mental health. But correlation isn't causation. TikTok users might spend a lot of time on the app because they genuinely enjoy it, not because they're addicted. The app doesn't create a compulsive need—it fulfills an existing desire for entertainment and social connection.

Second, there's the question of agency. Adults can regulate their own behavior. If you're spending too much time on TikTok, you can put the phone down. You have free will. The argument that design features override your ability to control your behavior might be overstated.

Third, there's the innovation question. If you mandate that platforms disable infinite scroll or autoplay, are you preventing innovation? These features exist because they enhance user experience. Requiring them to be disabled or limited might make platforms worse, not better.

Fourth, there's the question of consistency. The DSA also applies to American platforms such as YouTube, Instagram, and Facebook, and they use many of the same features. If the Commission moves against TikTok first and hardest, critics could argue that it is unfairly singling out a foreign company and handing American competitors an advantage.

Fifth, there's the question of whether design features are actually the problem, or whether other factors—social pressure, FOMO, mental health issues—are more important. Maybe the issue isn't TikTok's design. Maybe it's that young people are stressed, anxious, and seeking escape. Changing the app won't fix that.

These are serious arguments, and they deserve engagement. The Commission's position isn't obviously correct just because it's well-reasoned. There's genuine debate here about how to balance innovation, consumer freedom, and protection from harm.

But the EU's perspective is that platforms have tilted too far toward engagement optimization at the expense of user wellbeing, and that correction is necessary.

International Responses and the Possibility of Coordinated Regulation

The EU isn't alone in scrutinizing TikTok and social media engagement mechanics.

In the UK, the Online Safety Act became law in 2023, and Ofcom, the communications regulator, is enforcing it through codes of practice that require platforms to assess and reduce risks to users, especially children, including risks that arise from how their services are designed.

In Canada, regulators are exploring online harms legislation and have expressed concerns about addictive design.

In Australia, Parliament passed a law in late 2024 setting a minimum age of 16 for holding accounts on many social media platforms.

In the US, there isn't yet a comprehensive federal law like the DSA, but individual states are moving. California, for example, passed a law in 2024 restricting "addictive feeds" for users under 18 without parental consent, and New York passed a similar one. At the federal level, Congress has considered the Kids Online Safety Act, which would impose a duty of care on platforms toward minors.

What's interesting is that these jurisdictions are largely acting independently, yet they're arriving at similar conclusions. Addictive design, algorithmic harms, and protection for minors are concerns worldwide.

There's a possibility that we could see coordinated international regulation. The UN, OECD, or other international bodies could develop standards for platform design and engagement mechanics. Countries could harmonize their rules.

Alternatively, we could see a patchwork of different rules. The EU requires one set of changes, the UK requires different changes, the US requires others. This would be chaotic for platforms, which would have to maintain different versions of their products for different markets.

Or, we could see a middle ground where industry groups develop voluntary standards, and regulators acknowledge these standards as compliant with their rules.

Regardless of how it plays out, the era of completely unregulated engagement optimization is probably over. The question is just what the regulation will look like.

What This Means for Creators and the Creator Economy

One angle that doesn't get discussed much: how does regulation of addictive design affect creators?

TikTok's platform is massive because it drives engagement. Creators make money through ads, brand deals, and fan support—all of which depend on high engagement. If the platform is redesigned to reduce addictive features, could creator earnings decline?

It's possible. If infinite scroll is limited, users might spend less time on the platform, and less time means fewer video views, lower engagement, and less money.

But it's also possible that the change is beneficial for creators. If the platform is less optimized for pure engagement metrics, success might depend more on content quality and audience loyalty than on viral mechanics. Creators who build genuine followings might do better.

Also, regulation could level the playing field. Today, the algorithm tends to reward content built for maximum engagement: shocking, dramatic, entertaining at all costs. A different design philosophy might create opportunities for creators doing other things, such as education, skill-building, and meaningful storytelling.

For creators relying entirely on TikTok's algorithm for income, regulation is a threat. For creators with diversified audiences and revenue streams, it might not matter much.

The Long-Term Vision: What Does Healthy Social Media Look Like?

When we step back and think about what social media could be, absent the pressure to maximize engagement at all costs, what emerges?

Possibly platforms that are still fun and engaging, but designed around user autonomy and wellbeing. Chronological feeds instead of algorithmic ones, so you see what you chose to follow rather than what the algorithm predicts will keep you watching. Optional notifications, off by default. Transparent algorithms. Tools to control your experience.

Possibly platforms that combine social features with other value: education, skill-building, community. TikTok could be entertaining and also educational. Instagram could showcase beautiful moments and also help you develop photography skills.

Possibly platforms that are smaller and more community-focused. Instead of billion-user networks, we might see smaller platforms where you know the people, trust the moderation, and use the platform intentionally rather than compulsively.

Possibly platforms that are built on different business models. Instead of advertising-based (where engagement is the goal), we could see subscription-based platforms where success is measured by user satisfaction, not attention captured.

Possibly a future where we've moved past the social media boom entirely. Maybe younger generations will see the harms, reject these platforms, and build something different.

Or maybe we just get better regulation, platforms adapt, and we continue with social media as a central part of digital life, but with guardrails.

The TikTok case is one step in that evolution. It's the regulatory system saying: this matters, and we're going to enforce standards.

FAQ

What exactly did the European Commission find about TikTok's design?

The Commission preliminarily found that TikTok violates the Digital Services Act through its use of addictive design features, specifically infinite scroll, autoplay, push notifications, and a highly personalized algorithm. The Commission argues these features are designed to keep users engaged compulsively and fail to include adequate safeguards, particularly for minors, to prevent mental and physical harms from excessive use.

How can addictive design be illegal under the Digital Services Act?

The DSA doesn't use the word "addiction" or ban addictive design outright. Instead, it prohibits online platforms from designing interfaces that deceive or manipulate users or otherwise materially distort their ability to make free and informed decisions, practices often described as "dark patterns." It also requires very large platforms such as TikTok to assess and mitigate systemic risks, including serious negative consequences for users' physical and mental wellbeing and risks to minors. The Commission's argument is that TikTok's combination of features, and its failure to adequately mitigate their effects, crosses these lines.

What penalties could TikTok face if found guilty?

If the Commission ultimately concludes that TikTok breached the Digital Services Act, the company could face fines of up to 6% of annual worldwide turnover. Because the cap scales with worldwide turnover, the theoretical maximum for a company of TikTok's size is large, potentially running into the billions of dollars depending on which revenues are counted. Beyond fines, the Commission could order specific changes to the platform's features, such as disabling infinite scroll, limiting autoplay, or redesigning the recommendation algorithm. If TikTok then failed to comply, the DSA also allows periodic penalty payments of up to 5% of average daily worldwide turnover.

Can TikTok appeal or challenge these findings?

Absolutely. The Commission's findings are preliminary, meaning TikTok can review the case file, respond in writing, and challenge the findings. If the Commission then adopts a final decision that TikTok breached the DSA, the company can appeal to the EU courts, first the General Court and then the Court of Justice. The legal process could take several years, and TikTok will almost certainly contest both the Commission's interpretation of the law and its reading of the facts.

Are other social media platforms being investigated for similar issues?

Yes, the EU has ongoing investigations into other major platforms under the Digital Services Act, including Meta and Google. However, TikTok is the first platform to face specific preliminary findings related to addictive design. Other platforms like Instagram, YouTube, and Snapchat use similar design features (infinite scroll, algorithmic recommendations, autoplay), so they could potentially face similar scrutiny if the Commission proceeds with investigations into their practices.

What changes might TikTok be forced to make to comply?

Possible required changes include disabling infinite scroll by default or limiting it to a set number of videos, making autoplay optional rather than the default experience, reducing or limiting push notifications, providing greater transparency and user control over algorithmic recommendations, and implementing more robust parental controls and protections specifically for users under 18. The company might also be required to regularly audit its design for compliance with DSA requirements.
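For product teams, the first two changes can be pictured as changes to defaults. The sketch below is a minimal, hypothetical illustration in Python, not TikTok's code or anything the Commission has specified. The `FeedSettings` class, the `next_batch` function, and the 30-video cap are all assumptions chosen for illustration.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class FeedSettings:
    # Hypothetical defaults illustrating the kinds of changes discussed above.
    # These are NOT TikTok's real settings or values mandated by the Commission.
    max_videos_per_session: Optional[int] = 30  # None restores infinite scroll
    autoplay: bool = False                      # autoplay is opt-in, not the default
    push_notifications: bool = False            # off until the user turns them on


def next_batch(settings: FeedSettings, served_so_far: int,
               candidates: List[str], batch_size: int = 10) -> List[str]:
    """Return the next page of videos, stopping once the session cap is reached.

    An empty list tells the (hypothetical) client to show a stopping point
    instead of silently loading more content.
    """
    if settings.max_videos_per_session is None:
        # Infinite-scroll behavior: always hand back a full page.
        return candidates[:batch_size]
    remaining = max(settings.max_videos_per_session - served_so_far, 0)
    return candidates[:min(batch_size, remaining)]
```

When `next_batch` returns an empty list, the app would show something like a "You're all caught up" screen rather than another page of videos. Setting `max_videos_per_session` to `None` brings back infinite scroll, which illustrates why regulators focus on defaults: the same product can behave very differently depending on which setting users start with.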

How does this regulation affect users outside the EU?

Changes made to comply with EU rules might or might not apply globally. Platforms sometimes roll out a compliance change worldwide because maintaining separate versions is costly, but they also ship EU-only features. In 2023, for example, TikTok introduced an option for EU users to turn off personalization in their feeds to comply with the DSA. If TikTok decides that redesigned features work well enough, users outside the EU could see the same modifications even though they're not directly subject to EU law. That potential global spillover is one reason the TikTok case is significant internationally.

Is the regulation of addictive design unique to the EU?

The EU's Digital Services Act is among the most comprehensive frameworks addressing addictive design, but other jurisdictions are moving too. The UK's Online Safety Act and Australia's minimum-age law for social media both target harms to minors, and Canada has considered online harms legislation. In the US, some states have passed laws restricting addictive feeds for minors, and Congress has considered similar measures. The TikTok case in the EU could serve as a template for regulation in other parts of the world.

What's the scientific basis for calling design features "addictive"?

The Commission pointed to scientific research indicating that features such as infinite scroll, variable rewards (unpredictable content), and algorithmic personalization can push users into an "autopilot mode" and reduce self-control. Studies show that users often spend more time than intended on platforms optimized this way, particularly adolescents, whose prefrontal cortex (responsible for impulse control) is still developing. This research doesn't mean all users become compulsively dependent, but it suggests these designs can exploit psychological vulnerabilities.

What can users do to protect themselves from addictive design in the meantime?

Users can enable built-in screen time limits and do-not-disturb features, reduce notification frequency, unfollow accounts that trigger compulsive scrolling, delete the app from the home screen to create friction, set personal rules about when and how they use the app, and be aware of the psychological mechanisms at play. For younger users, parents and educators can set boundaries, have conversations about healthy use, and model good digital habits themselves.

The path forward for TikTok, social media regulation, and digital wellbeing is still being written. What's certain is that the era of completely unregulated engagement optimization is ending. How platforms adapt, how regulators proceed, and how users respond will shape the internet for years to come.

Key Takeaways

- The EU Commission preliminarily found that TikTok violates the Digital Services Act through addictive design features including infinite scroll, autoplay, and algorithmic personalization.

- Scientific research shows these design patterns exploit psychological vulnerabilities and can reduce self-control, particularly in adolescents.

- TikTok faces potential fines up to 6% of annual worldwide revenue and may be forced to redesign core platform features.

- The precedent could extend to other social platforms and prompt similar scrutiny of Instagram, YouTube, and other apps that use similar mechanics.

- Global regulation is accelerating with the UK, Canada, Australia, and US states all developing similar addictive design and algorithmic accountability frameworks.
