Meta's Teen Privacy Reckoning: A Deep Dive Into Congressional Scrutiny and Platform Accountability [2025]

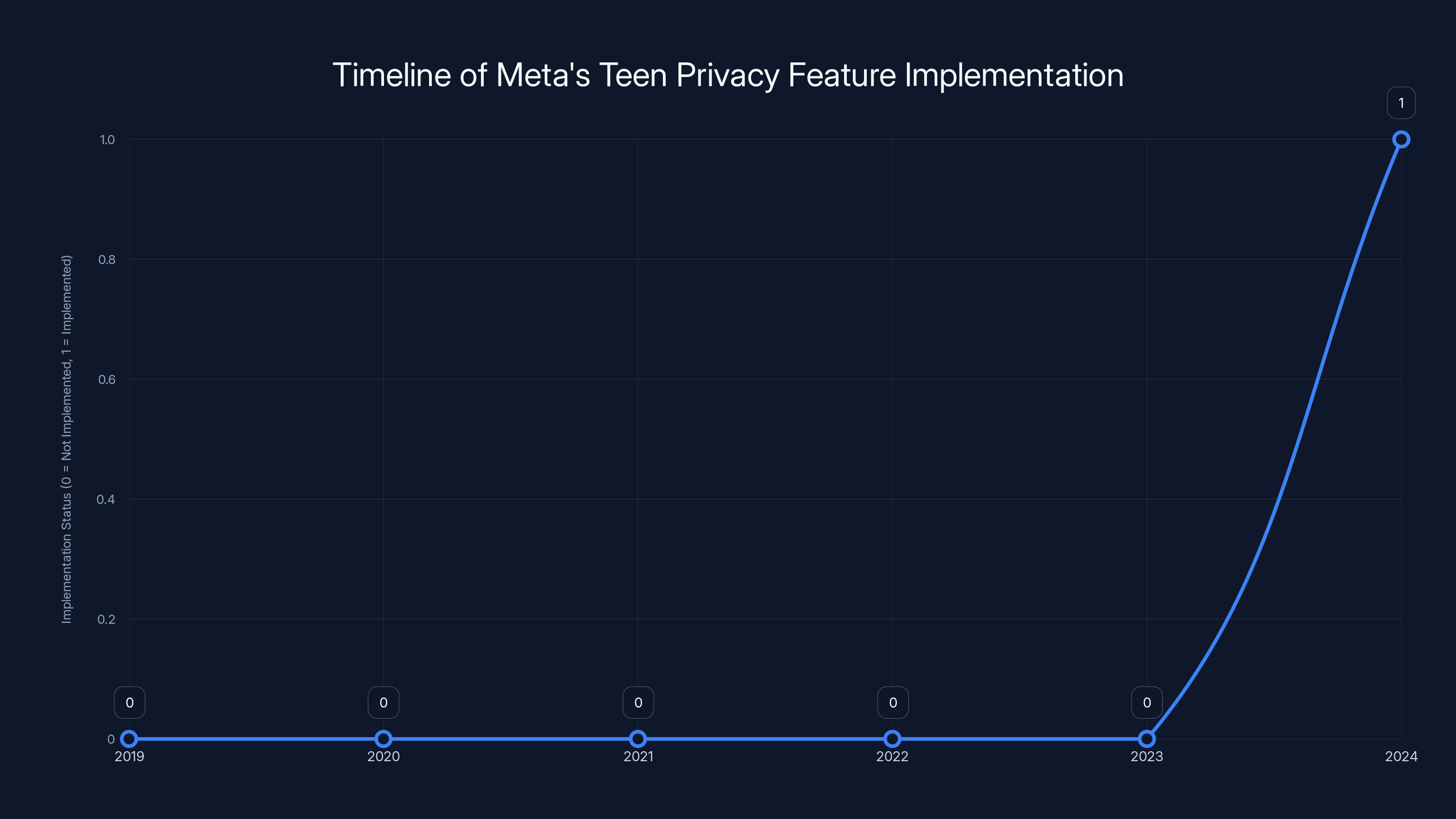

Last year, Meta made headlines by finally rolling out private account defaults for teenagers on Instagram. Sounds good, right? Here's the problem: the company apparently considered doing this back in 2019. That's a delay of roughly five years, and now Congress wants to know exactly why.

In early 2025, a bipartisan group of senators penned a letter to Meta CEO Mark Zuckerberg that reads like a parent asking their teenager why homework wasn't done. Except this teenager runs a company worth nearly $2 trillion and influences billions of lives. The letter, signed by Brian Schatz (D-HI), Katie Britt (R-AL), Amy Klobuchar (D-MN), James Lankford (R-OK), and Christopher Coons (D-DE), gets to the heart of something that's been festering in tech regulation for years: the gap between what companies know and what they actually do.

This isn't just about privacy settings. It's about a fundamental question that's haunted social media regulation since the early 2010s. When platforms know their products harm users, especially vulnerable teenagers, do they have an obligation to change course immediately? Or can they delay protective measures if those changes cut into the metrics that make investors happy?

The senators' investigation points to something potentially damning. Unsealed court documents revealed that Meta may have deliberately avoided making protective changes because they'd reduce engagement metrics. In the world of social media, engagement is everything. It drives ad revenue, stock prices, and investor confidence. Reducing it, even for good reasons, feels like heresy to executives focused on quarterly earnings.

But here's what makes this moment different. We're not in 2015 anymore, when social media companies could claim ignorance about their platforms' psychological effects. By 2019, when Meta supposedly considered making teen accounts private by default, the company had access to extensive internal research showing how its algorithms affected young users. Some of that research would later surface in the "Facebook Papers," leaked documents showing that Meta's own scientists understood the harm the company's platforms caused.

The senators' letter raises a cascade of uncomfortable questions about corporate responsibility, regulatory capture, and the price we're willing to pay for free apps. Let's unpack what's actually happening here and what it means for the future of social media regulation.

The Timeline: How a Five-Year Delay Became a Congressional Investigation

Understanding the Meta teen privacy story requires tracking when the company made decisions versus when those decisions finally became public policy.

Rewind to 2019. Meta (then still called Facebook; the rebrand to Meta came in late 2021) was sitting on research that showed its platforms negatively affected teen mental health and self-image. The company had sophisticated data about how algorithmic feeds, compare-and-despair dynamics, and addictive design patterns impacted adolescent psychology. That research wasn't hidden in a basement somewhere. It was in the hands of Meta's product teams, safety executives, and leadership.

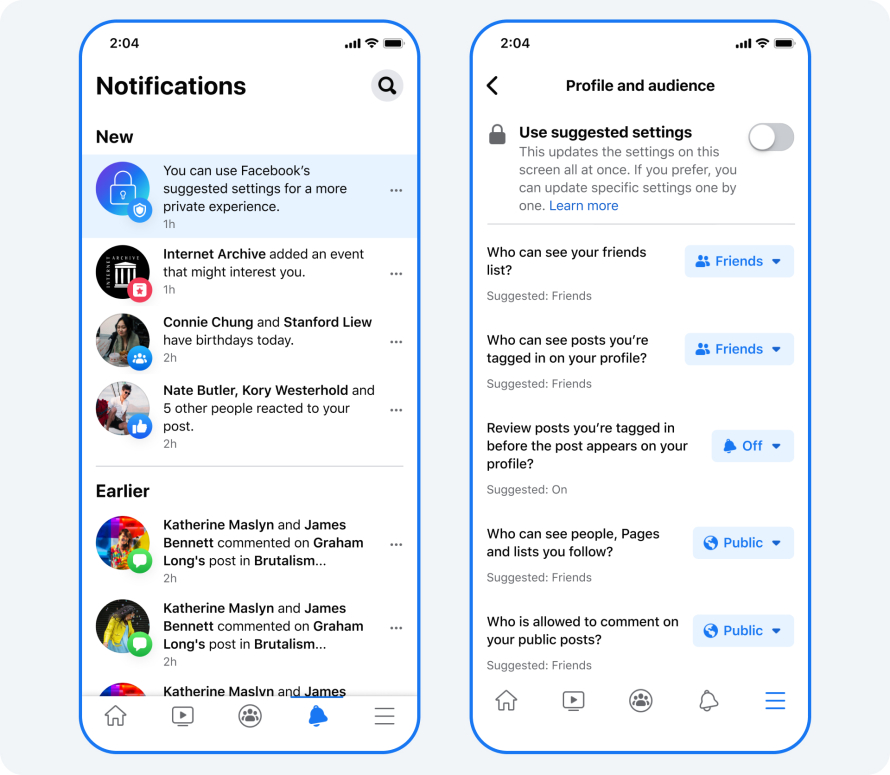

At some point in 2019, someone at Meta apparently said, "You know what would actually help? Making teen accounts private by default." It's a simple idea. Teenagers get accounts that can't be seen by the general public unless they say otherwise. They'd have to actively opt into public visibility, rather than having to dig through privacy settings to achieve safety. This is the opposite of how social media normally works. The usual default is maximum visibility, and safety requires effort.

But here's where the story gets interesting. According to the unsealed court documents cited in the senators' letter, Meta decided against implementing this feature at scale. The reason, allegedly, was that privacy-by-default would "likely smash engagement." That phrasing alone reveals something about how Meta's leadership thought about the tradeoff between safety and metrics. It's not, "We think there's a technical implementation challenge." It's not, "We need time to test this properly." It's specifically about engagement impact.

Fast forward to September 2024. More than five years later, Meta finally rolled out private accounts by default for teens on Instagram. The rollout came quietly, almost unannounced. No press conference. No CEO blog post. Just a feature update that made privacy the path of least resistance instead of something that required active effort from the user.

Meta later extended similar protections to Facebook and Messenger. Again, the rollout was subdued. No major announcement. No acknowledgment of the years-long delay.

The senators' letter landed in early 2025, with a deadline for Meta's response by March 6th, 2025. They're not asking hypothetical questions. They have specific documentation suggesting the company delayed a protective measure because of engagement concerns. They want to understand the decision-making process, which teams were involved, and whether similar delays happened with other safety features.

That timeline gap, 2019 to 2024, is now the center of a congressional inquiry. And that's the thing about paper trails. Sometimes they take five or six years to become visible, but eventually, they do.

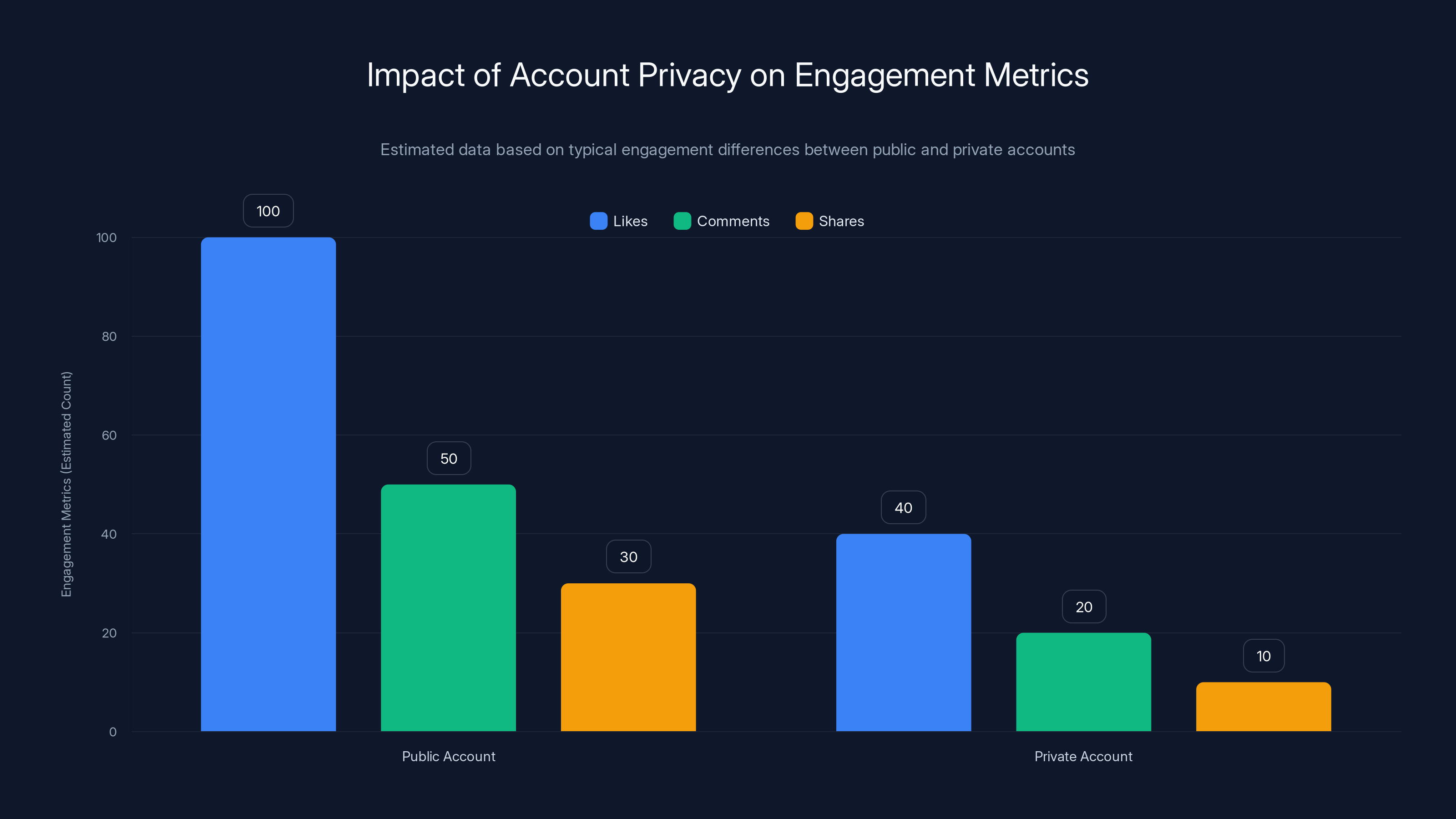

Estimated data shows public accounts generate significantly higher engagement metrics compared to private accounts, influencing Meta's decisions on privacy settings.

Understanding Teen Privacy Defaults: Why This Feature Matters More Than It Seems

To understand why the senators are so concerned about the timing, it helps to grasp what exactly a "private by default" account means and why it matters for teenage users specifically.

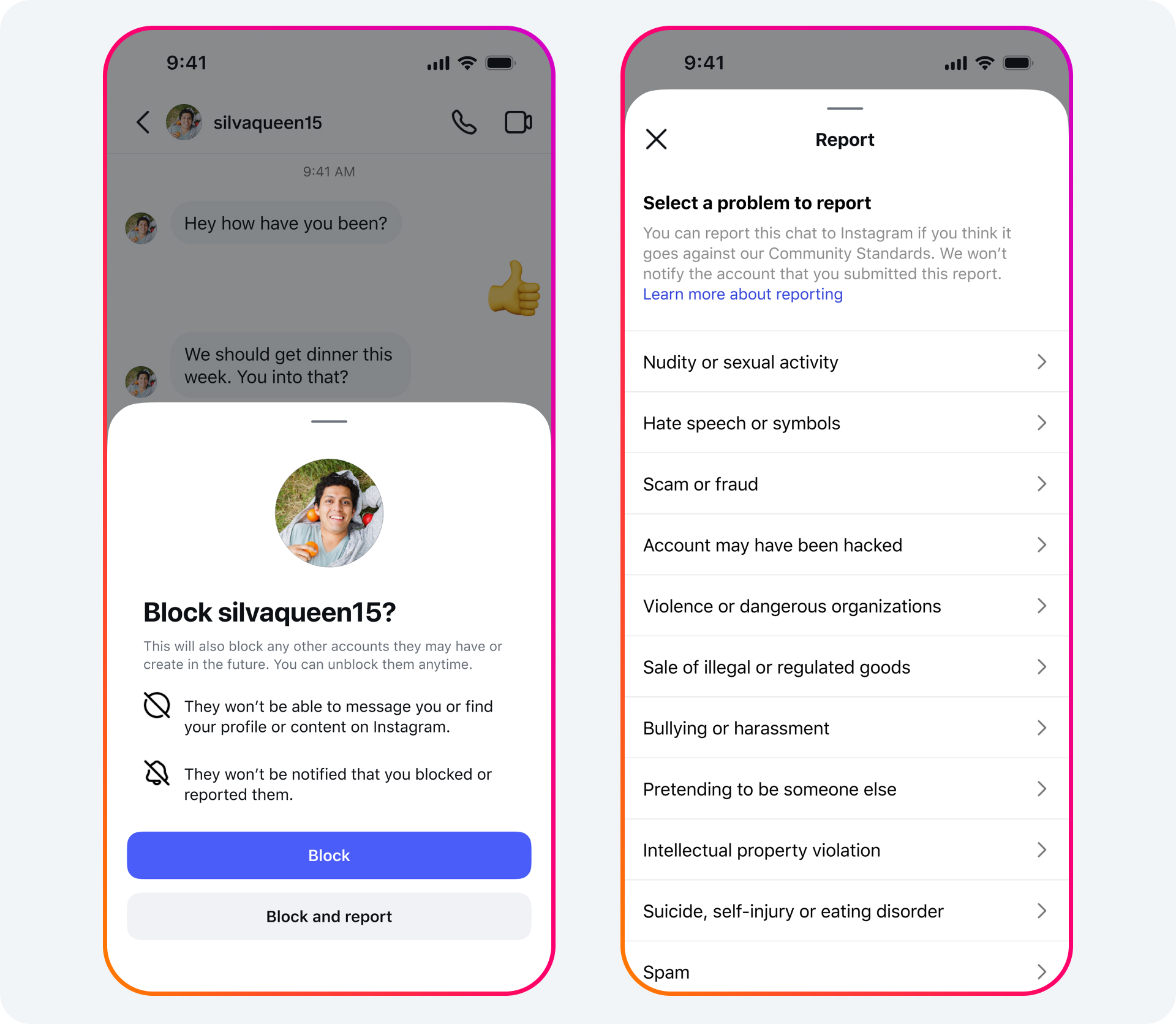

On Instagram and Facebook, an account's privacy setting determines who can see your posts, stories, and profile information. A public account means anyone on the internet can find you, comment on your content, and follow you without permission. A private account requires you to approve follower requests and restricts visibility to just your approved followers.

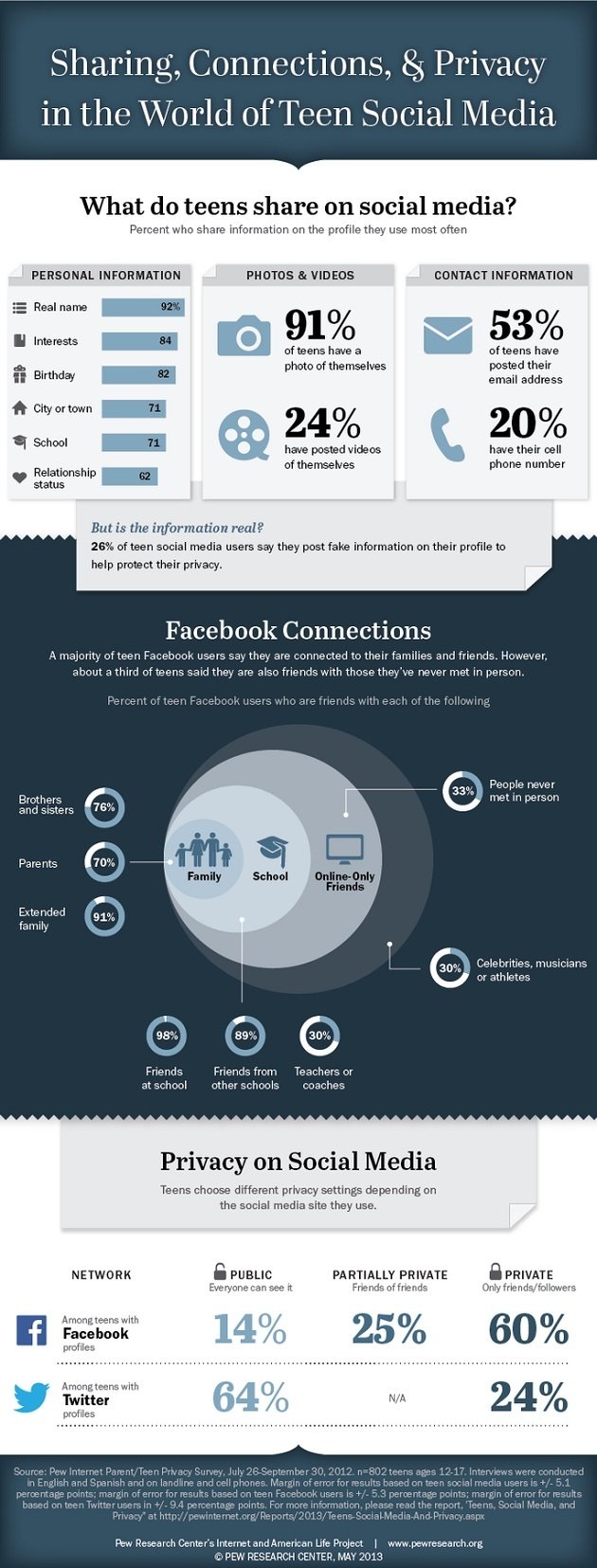

For adults, this is mostly a preference thing. Some people want to build a public brand. Others prefer a closed circle of friends. For teenagers, the implications are much more serious.

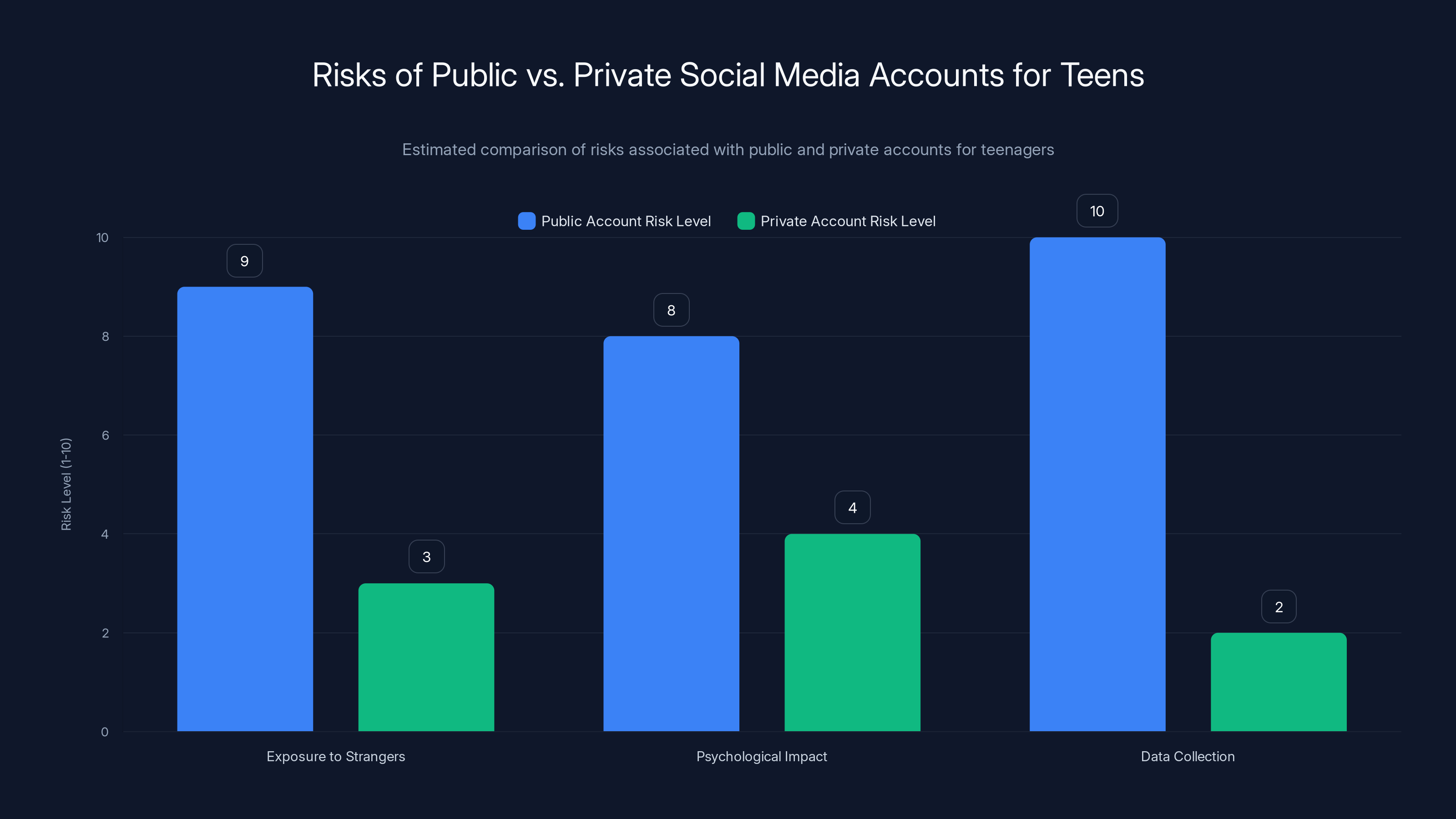

When accounts are public by default, teenagers face several specific risks. First, there's exposure to strangers. Predators who exploit social media have an easy way to find and contact young users. Researchers studying online child safety consistently find that public accounts make targeted grooming easier. Second, there's the psychological impact of exposure. Teenagers already deal with social anxiety at baseline. Knowing their posts are visible to thousands or millions of strangers amplifies that anxiety for many. Some research suggests it contributes to increased depression and anxiety symptoms.

Third, there's the data collection angle. Public accounts generate more engagement metrics for Meta. More visibility means more comments, likes, and shares. More engagement means more data flowing through Meta's algorithms. More data means more precise targeting for advertisers. It's not coincidental that Meta's default has always been maximum visibility. That default makes the company money.

Private-by-default accounts reverse this dynamic. They add friction to going public, making visibility something teenagers have to actively choose. They limit exposure to strangers by default. They reduce the raw engagement metrics that flow through Meta's systems. They make it harder for the platform to extract behavioral data from teen activity.

Does a private account make a teenager completely safe? No. Determined predators can still find ways to contact minors. Teenagers can still choose public visibility if they want. But private-by-default shifts the burden. Instead of requiring teenagers (and their parents) to understand privacy settings and actively change them, it makes privacy the starting point.
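
To make the idea of a default concrete, here is a minimal Python sketch. It is an illustration only, not Meta's implementation: the `Account` class, the `default_visibility` function, and the age cutoff of 18 are all hypothetical names and values chosen for this example.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Optional


class Visibility(Enum):
    PUBLIC = "public"
    PRIVATE = "private"


# Hypothetical cutoff for illustration; real platforms apply their own age rules.
ADULT_AGE = 18


def default_visibility(age: int) -> Visibility:
    """Illustrative policy: minors start private, adults start public."""
    return Visibility.PRIVATE if age < ADULT_AGE else Visibility.PUBLIC


@dataclass
class Account:
    username: str
    age: int
    # None means the user never chose, so the default applies.
    visibility: Optional[Visibility] = None
    approved_followers: set = field(default_factory=set)

    def __post_init__(self) -> None:
        if self.visibility is None:
            self.visibility = default_visibility(self.age)

    def can_view_posts(self, viewer: str) -> bool:
        """Public posts are open to anyone; private posts need approval."""
        if self.visibility is Visibility.PUBLIC:
            return True
        return viewer == self.username or viewer in self.approved_followers


teen = Account("teen_user", age=15)
print(teen.visibility.value)            # private
print(teen.can_view_posts("stranger"))  # False

# Going public is still possible, but it is now a deliberate choice.
creator = Account("teen_creator", age=16, visibility=Visibility.PUBLIC)
print(creator.can_view_posts("stranger"))  # True
```

The only thing that changes between a public-by-default and a private-by-default world in this sketch is the single line inside `default_visibility`. Everything else, including the teenager's ability to opt out, stays the same.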

The senators' core question is this: if Meta knew in 2019 that making accounts private by default would improve teen safety, why wait until 2024? That five-year gap, during which millions of teenagers came of age on the platform with default-public visibility, represents a lot of exposure that could have been prevented.

The Court Documents: What Unsealed Evidence Revealed About Meta's Decision-Making

The senators' investigation gained momentum when a crucial piece of evidence became public. Court documents filed in a nationwide social media child safety lawsuit included unsealed sections that contained damaging admissions and testimony about Meta's internal processes.

One document featured testimony from Meta's former head of safety and well-being. This is the person who should theoretically have the most authority to demand protective measures when evidence of harm emerges. Instead, the testimony suggests that even safety leadership at Meta faced pushback when trying to implement protective changes.

The document alleges that Meta would only suspend accounts belonging to people soliciting minors for sexual purposes after they had accumulated 17 violations. Seventeen. That's not a threshold. That's almost a quota. It suggests that enforcement was reactive and slow, allowing problematic behavior to persist across multiple incidents before any meaningful action was taken. If you're a parent reading that, it's chilling. If you're a regulator, it suggests Meta was accepting a certain level of harm as a normal cost of doing business.

Other unsealed sections alleged that Meta considered halting research or studies into its platforms' well-being impacts when the research produced unfavorable results. In other words, if data showed the algorithm was making teenagers depressed, Meta might not publish that research. Might not act on it. Might not even finish the study. This isn't just bad corporate practice. This is actively suppressing knowledge of harm.

These documents didn't emerge from some investigative journalist digging through trash bins. They came through official legal channels because plaintiffs in a lawsuit demanded access to Meta's internal communications and decision logs. The lawsuit is still ongoing, but these early unsealed documents have already painted a picture of a company that knew about harms and didn't always act with urgency.

What makes this particularly damaging is the specificity. These aren't accusations of negligence. They're specific claims about specific people, specific meetings, and specific decisions. Meta can't easily dismiss them as misunderstandings or cultural differences. The company now has to either refute them with evidence or explain them away with context.

Estimated data shows that public accounts pose significantly higher risks to teenagers in terms of exposure to strangers, psychological impact, and data collection compared to private accounts.

The Engagement Versus Safety Tension: Understanding Meta's Core Business Incentive Problem

To understand why Meta might delay teen safety features, you need to understand the fundamental economic structure of the company. Meta doesn't charge users for Instagram or Facebook. You don't pay a monthly subscription. So how does Meta make money? Through advertising. And what drives advertising revenue? Engagement metrics.

Engagement is the metric that matters most on Meta's platforms. It's measured in likes, comments, shares, saves, clicks, and time spent. The more users engage, the more data Meta collects about user behavior and interests. More data means more precise targeting for advertisers. More precise targeting means higher ad prices. Higher ad prices mean higher revenue.

This creates a permanent alignment of incentives around maximizing engagement. Every product decision at Meta gets filtered through this lens. Will this feature increase engagement or decrease it? Even if it's a safety feature, the first question is always how it affects engagement.

Now, making teen accounts private by default would absolutely decrease engagement. Here's why. Private accounts have smaller audience reach. When you post something, fewer people see it (just your approved followers). Fewer people seeing content means fewer comments, fewer likes, fewer shares. The feedback loop that makes social media addictive works partly because of wide visibility. You post something and dozens or hundreds of people engage with it. That positive reinforcement trains your brain to post again.

Private accounts limit that feedback. Posting to 200 followers will typically generate less engagement than posting to 5,000 random internet users. And teenagers, whose prefrontal cortices are not yet fully developed, are especially sensitive to these engagement signals. They want the likes. The wider audience creates more likes.
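
The audience comparison above is simple proportional arithmetic, sketched below. The interaction rate of 3 per 100 viewers is a hypothetical number picked for illustration, not a measured figure, and the sketch assumes everyone in the audience sees the post and responds at the same rate, which real feeds do not guarantee.

```python
def expected_interactions(audience_size: int, interactions_per_100_viewers: int) -> float:
    """Expected likes/comments if every viewer responds at the same rate."""
    return audience_size * interactions_per_100_viewers / 100


# Hypothetical rate, used only to compare the two audience sizes.
RATE_PER_100 = 3

private_post = expected_interactions(200, RATE_PER_100)    # approved followers only
public_post = expected_interactions(5_000, RATE_PER_100)   # wider public audience

print(private_post)                # 6.0
print(public_post)                 # 150.0
print(public_post / private_post)  # 25.0
```

Whatever rate is plugged in, the ratio between the two results is fixed by the audience sizes alone. That is why a change in default visibility shows up so directly in engagement dashboards.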

So Meta faced a choice in 2019. They could implement private-by-default, which would reduce teen engagement on the platform, which would reduce data collection from teen behavior, which would reduce advertiser targeting precision, which would reduce ad revenue. Or they could leave default accounts as public, accept some teen harm, and continue the revenue machine.

If the court documents are accurate, they chose the revenue machine.

This isn't unique to Meta, by the way. YouTube's algorithm prioritizes engagement (watch time and clicks) over accuracy or safety. TikTok's algorithm is famously addictive partly because it optimizes for engagement metrics. Twitter's (now X's) algorithm was similarly engagement-focused under previous ownership. The entire social media industry has structured itself around this engagement-first model.

But Meta is special in one important way. Meta conducts extensive internal research on these harms. The company employs psychologists, data scientists, and researchers who specifically study how their platforms affect users. They have sophisticated knowledge about how engagement-maximization creates harm, particularly for vulnerable populations like teenagers.

That knowledge-action gap is what the senators are focused on. It's not, "Meta should have known their platforms harm teens." It's, "Meta did know, had research proving it, and delayed protective measures anyway."

Congressional Intent: What the Senators Actually Want From Meta

The senators' letter isn't just performative outrage. It asks for specific information on a specific timeline. Understanding what they're actually demanding reveals what Congress is attempting to establish.

First, the senators want Meta to explain the decision-making process around private-by-default teen accounts. Specifically, they want to know why the company "delayed" implementation. The word "delayed" is important. It implies the feature was planned, then postponed. The senators have evidence suggesting exactly that. They're asking Meta to either confirm this narrative or refute it with alternative explanations.

Second, they want to know which teams were involved in the delay decision. This matters because it creates accountability. If a low-level product manager wanted to postpone the feature, that's different from the CEO mandating the delay. By asking which teams were involved, the senators are trying to establish whether this was a systematic, company-wide decision or an isolated choice by individuals. If the latter, Meta can blame a few bad actors. If the former, it suggests the delay was intentional policy.

Third, the senators want information about whether Meta has a pattern of halting research that produces unfavorable outcomes. This gets at the core of the knowledge-action gap problem. They're asking: does Meta systematically suppress information about harms when it conflicts with business interests? This would establish a pattern of behavior, not a one-time mistake.

Fourth, they're asking about Meta's child sexual abuse material (CSAM) enforcement. Specifically, they want to understand why accounts engaging in child sexual exploitation were only suspended after accumulating 17 violations. They want to know if this is an intentional threshold or a failure of enforcement. They want to know if it's changed. This question addresses the most serious potential harm, moving beyond privacy and mental health into direct exploitation.

The deadline for response is March 6th, 2025. That's a specific date, not an open-ended request. If Meta doesn't respond, Congress can escalate. Committees can subpoena documents. They can hold hearings. They can recommend enforcement actions to the FTC.

What the senators are essentially doing is establishing a paper trail of their own. They're asking specific questions that force Meta to provide detailed answers. Those answers become evidence in future regulatory or legal proceedings. They create a record of what Meta knew and when they knew it. In corporate accountability proceedings, that record is everything.

The Broader Regulatory Landscape: Why This Moment Matters

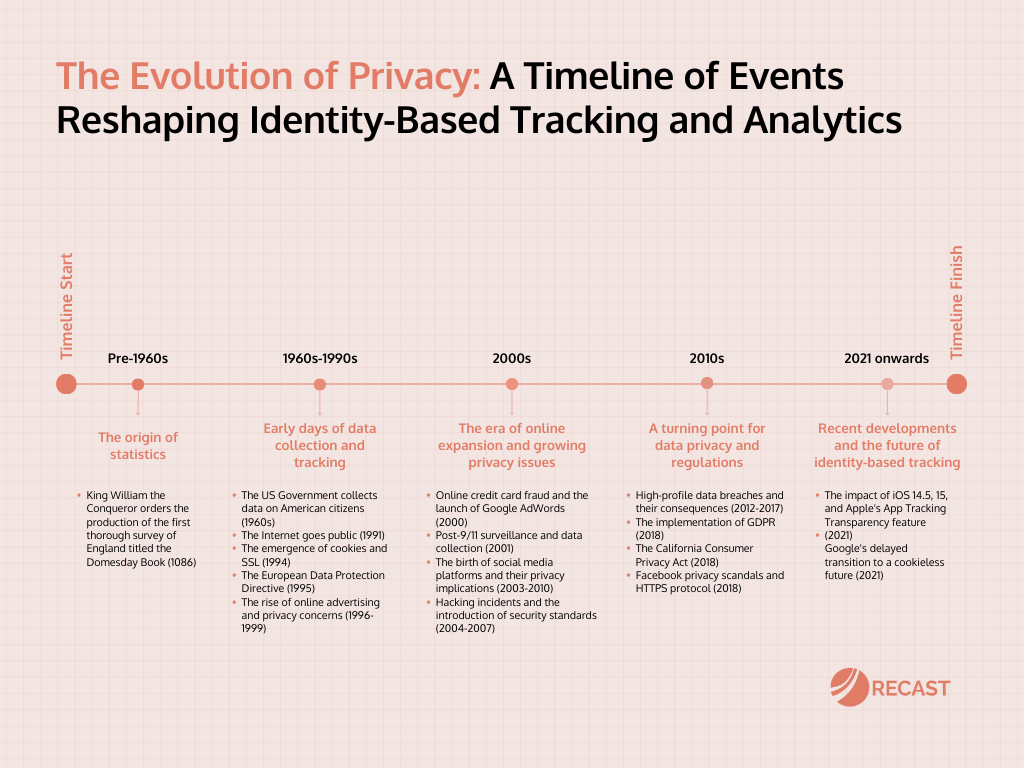

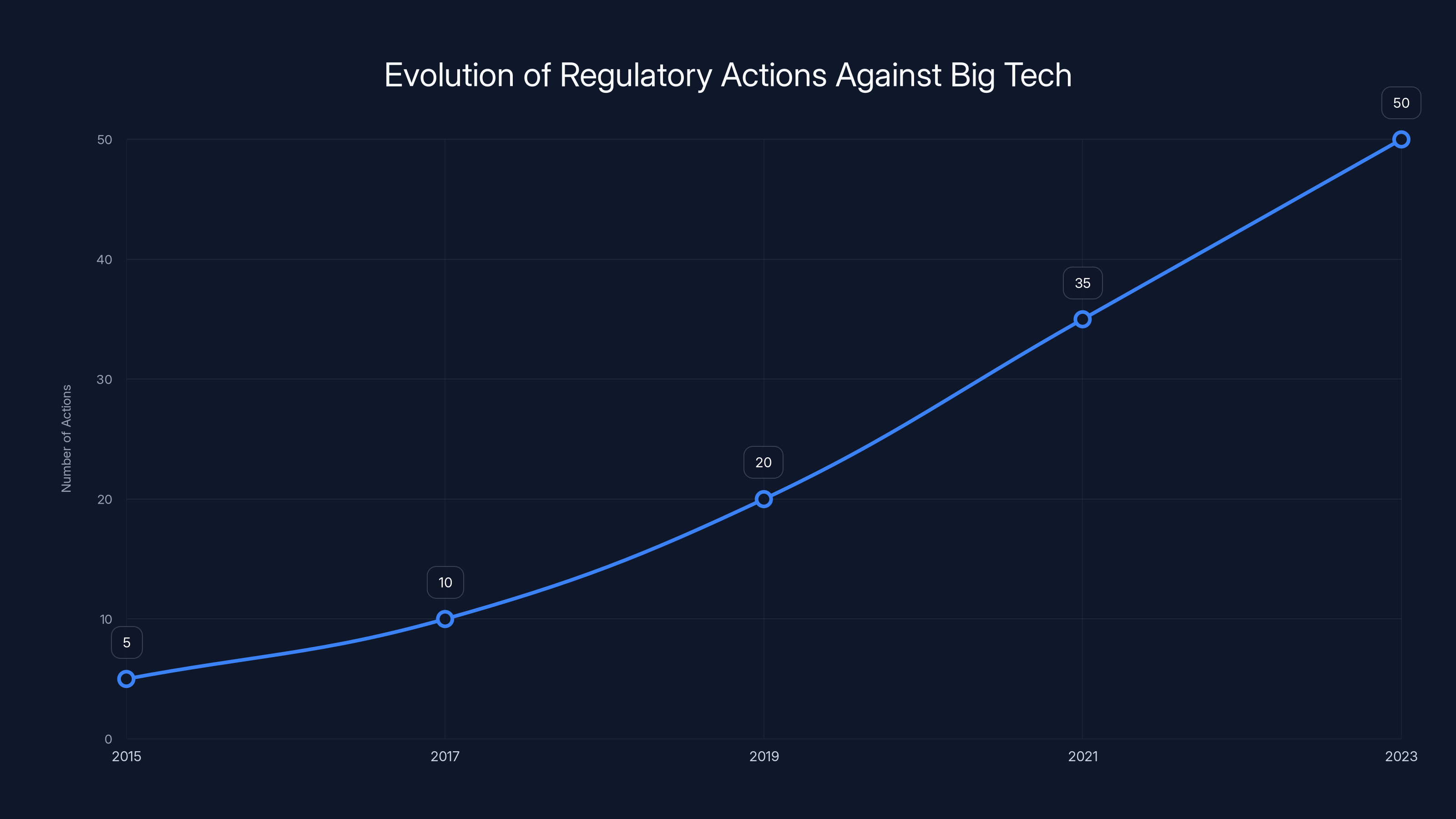

The senators' investigation doesn't exist in a vacuum. It's part of a broader shift in how Congress approaches social media regulation. For years, Big Tech companies operated with minimal regulatory oversight. Section 230 of the Communications Decency Act, passed in 1996, provided broad liability protections. The FTC had limited tools for enforcement. Legislators didn't understand the technology well enough to regulate effectively.

That's changed. Congress now understands that social media companies make deliberate product choices that affect billions of people. They understand that these companies have sophisticated knowledge of those effects. They understand that profit motive sometimes conflicts with public welfare. And they're starting to act on that understanding.

The Meta investigation is part of this broader trend. So is increased scrutiny of TikTok's algorithm. So is the push for state-level privacy laws like the California Consumer Privacy Act (CCPA). So is the FTC's increased enforcement against tech companies. So is the EU's Digital Services Act, which imposes strict requirements on platform responsibility for user harm.

What's shifting is the legal and regulatory theory. Instead of treating social media companies as neutral platforms that merely facilitate communication (the old Section 230 logic), regulators are treating them as editors who make deliberate choices about what content is promoted, what is suppressed, and how users interact. That distinction matters enormously. If you're an editor, you're responsible for editorial choices. You can't hide behind "we're neutral." You can't claim ignorance.

Meta's delays on teen privacy protections fit into this broader reframing. The company made a deliberate choice about a product feature that affects teen safety. They made that choice based on engagement metrics. That's an editorial choice, not a neutral platform decision. And Congress is asking them to justify it.

Estimated data shows a significant increase in regulatory actions against Big Tech from 2015 to 2023, reflecting a shift in legislative focus and understanding.

The Role of Internal Research: How Meta's Own Data Created a Liability

One of the most damaging aspects of the senators' investigation is that Meta can't claim ignorance. The company's own research documented harms. That research became public partly through the Facebook Papers leaked by Frances Haugen and partly through the ongoing lawsuit discovery process.

Meta's research teams have studied how Instagram affects teen body image, how the algorithm promotes eating disorders, how the platform's features correlate with increased anxiety and depression rates among teenage users. Some of this research was commissioned by Meta specifically to understand and mitigate harms. Some of it was conducted independently by Meta researchers and then ignored or buried.

What's particularly damaging is the pattern. When research showed that Instagram was beneficial (say, for community building), Meta highlighted it and used it in marketing and regulatory proceedings. When research showed Instagram harmed teens (increased body dissatisfaction, for example), the company downplayed it or limited its distribution. This selective use of internal research is exactly what creates regulatory liability.

From a legal standpoint, it means Meta can't claim the harm was unforeseeable. They foresaw it. They documented it. They chose to prioritize engagement growth anyway. That's not negligence. That's a knowing violation of an implicit duty of care toward minors.

The senators are smart to focus on this. By asking whether Meta "halted" research that produced unfavorable results, they're trying to establish not just that Meta knew about harms but that they actively worked to suppress knowledge of harms. That's an even more serious allegation than mere negligence.

The Teen Safety Landscape: What Private-by-Default Actually Prevents

To understand why a five-year gap between a considered change and its actual implementation matters, it's helpful to think through what happens during those years in terms of teen exposure to risk.

Between 2019 and 2024, millions of teenagers created accounts on Instagram and Facebook. Many of them started with default-public accounts. That means their profiles, their posts, and their patterns of activity could be visible to anyone on the internet. Some of those teenagers experienced harassment. Some experienced grooming. Some experienced non-consensual sharing of intimate images. Some were targeted for exploitation.

How many? That's hard to quantify exactly because social media companies have fought transparency measures that would allow precise counting. But law enforcement reports, research studies, and testimony from internet safety organizations paint a picture of massive scale. The National Center for Missing and Exploited Children reported examining over 32 million suspected child sexual abuse material (CSAM) images in 2023. That figure covers far more than Meta, but the problem spans social media platforms, and Meta's platforms are among the largest.

A private-by-default account doesn't eliminate exploitation risk, but it does reduce exposure. It makes it harder for strangers to find teen users. It limits the amount of behavioral data that can be extracted and used for targeting. It changes the likelihood that a teen receives unwanted contact from unknown users.

Over five years, if private-by-default accounts reduce teen exploitation risk by even 5 to 10 percent, that could mean tens of thousands of avoided negative interactions, hundreds of prevented grooming attempts, and lives made materially safer. The senators are implicitly asking: why did Meta accept that harm when they knew how to prevent it?
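
The paragraph above gives percentages but no baseline, so the sketch below works the claim backwards: it asks how large the baseline would have to be for a 5 or 10 percent reduction to yield 10,000 avoided incidents. Every number here is hypothetical and the function names are invented for this example. Nothing in it is a measured figure.

```python
def avoided_incidents(baseline_incidents: int, reduction_percent: int) -> float:
    """Incidents avoided if risk falls by the given percentage."""
    return baseline_incidents * reduction_percent / 100


def baseline_needed(target_avoided: int, reduction_percent: int) -> float:
    """Baseline required for a given reduction to avoid the target number."""
    return target_avoided * 100 / reduction_percent


# Hypothetical target: the low end of "tens of thousands".
TARGET = 10_000

for percent in (5, 10):
    print(percent, baseline_needed(TARGET, percent))
# 5 200000.0
# 10 100000.0

# Sanity check: a 5 percent cut on that baseline gives the target back.
print(avoided_incidents(200_000, 5))  # 10000.0
```

Put differently, the "tens of thousands" estimate holds only if the five-year baseline of harmful interactions runs into the hundreds of thousands. That baseline is exactly the kind of number the transparency fights described above have kept out of public view.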

From Meta's perspective, they might argue that the feature took time to develop properly. That they needed to test it thoroughly. That they wanted to make sure the implementation was right. Those are reasonable arguments. But the court documents suggest the delay wasn't about technical challenges or testing time. It was about engagement impact.

The Parallel to Other Tech Regulation: Lessons From History

The Meta investigation doesn't exist in historical isolation. There are parallels to other tech regulation efforts that reveal patterns about how Congress approaches these issues.

When the tobacco industry fought regulation decades ago, they used a similar playbook. They funded research that cast doubt on health effects. They claimed the science was unsettled. They delayed implementation of warning labels and safer products. When regulation finally came, it revealed that the industry had known about health risks for years. Internal documents showed they understood the dangers and chose profit over public health anyway.

Social media regulation is following a similar trajectory. First, skepticism about whether harms are real. Then, claims that the science is unsettled. Then, slow implementation of known protective measures. Then, regulatory investigations that reveal the internal knowledge gap. The timeline is different (cigarettes took decades; social media is moving faster), but the pattern is recognizable.

What makes the Meta investigation significant is that it's happening while the company is still dominant, while the harm is still occurring, and while regulatory opportunities still exist. The tobacco investigation came decades after the harms were established. This investigation is coming relatively quickly after the knowledge became public.

There are also parallels in how Congress is framing the issue. Just as tobacco regulation eventually focused on whether companies knowingly concealed health risks, social media regulation is increasingly focused on whether platforms knowingly implement features that increase harm. The legal theory is shifting from "platforms aren't responsible for user behavior" to "platforms are responsible for their own design choices."

Meta delayed the implementation of private-by-default teen accounts for approximately five years, from initial consideration in 2019 to actual implementation in 2024. Estimated data based on narrative.

Implementation Challenges: Why Teen Privacy Protections Are More Complex Than They Seem

To be fair to Meta, actually implementing private-by-default for millions of teen users is more complex than flipping a switch. There are technical considerations, product design challenges, and unexpected consequences that need to be managed.

One challenge is the existing user base. When you change defaults, what happens to users who already have public accounts? Do you force them private without asking? That's intrusive. Do you ask them to confirm? That defeats the purpose of private-by-default. Do you grandfather in existing users and only apply the default to new accounts? That's incomplete protection.
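
The three options for existing users can be sketched as a small policy function. This is a hypothetical illustration of the tradeoff, not a description of how Meta handled it: `RolloutStrategy`, `TeenAccount`, and `apply_rollout` are names invented for this example.

```python
from dataclasses import dataclass, replace
from enum import Enum


class RolloutStrategy(Enum):
    FORCE_PRIVATE = "force_private"  # flip existing public accounts without asking
    PROMPT = "prompt"                # keep the current setting, ask the user to choose
    GRANDFATHER = "grandfather"      # leave existing accounts alone


@dataclass(frozen=True)
class TeenAccount:
    username: str
    is_public: bool
    is_new: bool = False
    pending_prompt: bool = False


def apply_rollout(account: TeenAccount, strategy: RolloutStrategy) -> TeenAccount:
    """Return the account state after the new default takes effect."""
    if account.is_new:
        # New accounts get the private default under every strategy.
        return replace(account, is_public=False)
    if not account.is_public:
        # Already private: nothing to change.
        return account
    if strategy is RolloutStrategy.FORCE_PRIVATE:
        return replace(account, is_public=False)
    if strategy is RolloutStrategy.PROMPT:
        # Still public until the user answers, so protection is delayed.
        return replace(account, pending_prompt=True)
    return account  # GRANDFATHER: stays public indefinitely


existing = TeenAccount("existing_teen", is_public=True)
for strategy in RolloutStrategy:
    result = apply_rollout(existing, strategy)
    print(strategy.value, result.is_public, result.pending_prompt)
# force_private False False
# prompt True True
# grandfather True False
```

Only the first strategy protects an existing public account immediately, and it is also the most intrusive one. The other two leave the account public for some period, which is the incompleteness the paragraph above describes.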

Another challenge is international variation. Different countries have different cultural norms around privacy. In some regions, social media is primarily a tool for public presence and community building. Making accounts private by default might be unpopular in those markets. Meta has to balance global product consistency with local preferences.

There's also the creator economy consideration. Teenagers who have found audiences for their content (musicians, comedians, educators) benefit from public visibility. Making accounts private by default limits their reach and growth potential. Some teenage content creators might actively work against the feature to maintain public visibility.

And there's the enforcement challenge. A private-by-default setting doesn't prevent teenagers from changing it. It just changes what the default is. So the feature depends on teenagers not overriding it. Research on defaults suggests most people stick with defaults, but some portion will change them. For teenagers who are seeking attention and validation (which is normal developmental psychology), the temptation to go public is strong.

These are real implementation challenges, not excuses. They're reasons why rolling out the feature might take time. But they don't explain a five-year gap. A company with Meta's resources could have solved these challenges much faster if they prioritized teen safety over engagement metrics.

The Role of Regulatory Capture: How Industry Influence Shapes Policy Timelines

One question lurking beneath the senators' investigation is whether Meta's delay reflects not just the company's preferences but also successful lobbying to prevent regulatory action on teen privacy.

Regulatory capture is a term for when companies that are supposed to be regulated by government agencies end up influencing those agencies to their advantage. It happens through lobbying, through revolving doors between industry and government, through campaign contributions, and through favorable relationships between executives and regulators.

Has this happened with Meta and teen privacy? It's hard to prove definitively, but the pattern suggests it might. Meta has spent millions on lobbying related to social media regulation, child safety, and privacy issues. Meta executives have cultivated relationships with key regulatory figures. Meta has employed former government officials and regulators. These are all standard practices in corporate governance and lobbying, but they create an environment where regulatory action is slower and easier to influence.

The senators' letter is partly an attempt to bypass traditional regulatory channels. Instead of waiting for the FTC to investigate (the FTC has a slow bureaucratic process), they're using congressional pressure to force accountability more quickly. It's a way of working around potential regulatory capture by bringing direct political pressure to bear.

This is increasingly the pattern in tech regulation. Congressional pressure, often coordinated across party lines, is moving faster than agency-based regulation. When Congress applies pressure, executive agencies often respond more aggressively. The Meta investigation is using this dynamic strategically.

What Meta's Response Might Look Like: Predictions and Strategic Considerations

Meta's response to the senators' questions will be carefully crafted by teams of lawyers, executives, and communications professionals. It will likely follow a specific playbook that acknowledges some concerns while defending the company's actions.

Expect Meta to emphasize that they've now implemented the features in question. They'll point to private-by-default as a positive step that shows their commitment to teen safety. They'll argue that the feature is now broadly available, and teens can use it. They'll frame the previous delay as part of an iterative process, not a deliberate suppression of a safety feature.

Expect them to dispute or contextualize the "smash engagement" quote from the unsealed court documents. They'll argue that it was taken out of context, that it referred to a different feature, or that it was a preliminary discussion that didn't reflect final decision-making. They'll argue that engagement metrics are relevant to product decisions but not the sole consideration.

On CSAM enforcement, expect Meta to present new data showing recent improvements. They'll argue that the 17-violation threshold was either mischaracterized or has since been changed. They'll present their work with law enforcement and NCMEC (National Center for Missing and Exploited Children) as evidence of their commitment to combating exploitation.

On the research-halting question, expect Meta to distinguish between research projects that were completed and published and projects that were discontinued for resource reasons or because results were inconclusive. They'll argue that research discontinuation happens all the time in companies and isn't evidence of suppressing unfavorable findings.

Will these explanations satisfy the senators? Probably not completely. But they're the kind of response that creates room for further negotiation, additional requirements, and potential regulatory settlements. Meta's goal will be to contain the damage, show responsiveness to congressional concerns, and avoid triggering enforcement actions.

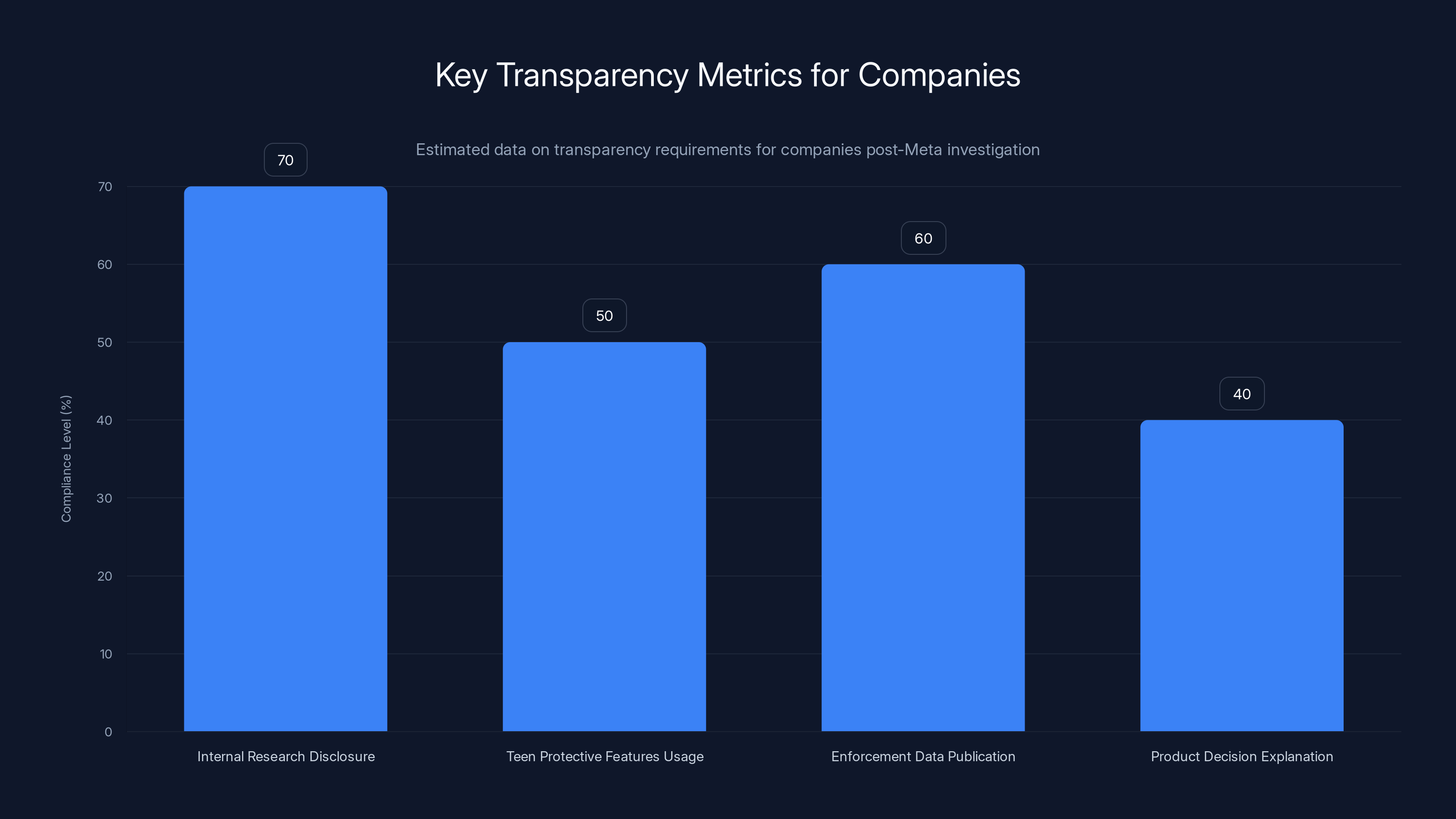

Estimated data suggests varying levels of compliance with transparency requirements, with internal research disclosure being the most adhered to.

The Future of Teen Privacy Protections: What Happens After the Investigation

If the Meta investigation follows typical patterns in tech regulation, it will likely lead to several outcomes.

First, more stringent requirements for teen privacy protections across all platforms. If Congress finds that Meta delayed an important safety feature, they'll likely want to ensure that other platforms don't do the same. You'll see proposed legislation mandating private-by-default accounts, limiting data collection from minors, requiring parental consent for certain uses, and increasing enforcement around exploitation.

Second, increased transparency requirements. Congress will likely demand that social media companies publicly disclose their internal research on user harms, particularly research related to teens. They'll want to see proof that the companies are acting on that research. This would flip the current paradigm where internal research is treated as proprietary.

Third, potential financial penalties for Meta and other platforms that have delayed protective measures. These could come through FTC enforcement actions, through regulatory fines, or through expanded liability in civil lawsuits.

Fourth, structural changes to how social media companies operate. Some proposals floating around include separating ad targeting from content creation (limiting the engagement incentives), requiring oversight boards to review major product decisions affecting minors, or implementing regular external audits of teen safety practices.

None of these are certain, but the trend is clear. Teen privacy and safety are becoming regulatory priorities. Platforms that continue to prioritize engagement over teen wellbeing will face consequences. That's a meaningful shift from where the industry was even five years ago.

Broader Implications: Beyond Meta to the Entire Social Media Industry

While the senators' letter is addressed to Meta, it sends a message to the entire social media industry. The era of self-regulation and good-faith claims about protecting teens is ending. Regulators now understand the business incentives that drive platforms to delay protective measures. They understand that engagement maximization and teen safety are often in tension. And they're not going to accept delays based on business metrics.

For TikTok, this means increased pressure to implement protective features for teen users and to demonstrate that their algorithm isn't being optimized at the expense of teen wellbeing. For YouTube, it means scrutiny of how their recommendation algorithm affects teen content consumption. For Discord, Snapchat, and other platforms popular with teens, it means they're all in the regulatory crosshairs.

The Meta investigation is setting a precedent. It's saying that platforms can't rely on claiming they eventually implemented safety features while hiding the fact that they delayed implementation for business reasons. Congress wants to know the real timeline, the real reasoning, and the real impacts.

For tech companies, this creates pressure toward genuine proactive protection rather than reactive responses. If you're a platform with a teen user base, you can't afford to research a safety measure, decide it conflicts with engagement metrics, and then implement it five years later. Congress will come after you. Regulators will impose requirements. Civil lawsuits will extract damages.

This is part of a broader evolution in how society regulates powerful technology companies. Instead of light-touch regulation based on trust, we're moving toward oversight based on understanding the structural incentives that drive behavior. Once you understand that engagement maximization drives harm, and that companies prioritize engagement, you can predict behavior. And you can design regulation accordingly.

The Role of Transparency and Disclosure: What Companies Need to Prove Now

One major change coming from the Meta investigation will be increased transparency requirements. Companies will need to demonstrate, not claim, that they're protecting teens.

This means disclosing internal research, both the positive findings and the unfavorable ones. It means publishing reports on how many teens use protective features. It means making enforcement data public (how many accounts they suspend for CSAM, how many attempts at minor exploitation they catch). It means explaining product decisions in detail, particularly decisions about features that affect teen users.

This transparency is uncomfortable for companies because it reveals tradeoffs, unresolved issues, and knowledge-action gaps. It's much easier to claim commitment to safety than to provide granular data showing what percentage of teens actually use your safety features and how your algorithms treat teen users differently than adult users.

But transparency is increasingly the regulatory requirement. The EU's Digital Services Act requires platforms to file regular compliance reports. Several U.S. states are moving toward transparency mandates. Congress is considering federal requirements. What was once considered proprietary business information is becoming regulatory necessity.

For companies, this means investing in safety infrastructure not just as a feature but as a core part of how you operate. You need auditable processes, documented decision-making, measurable metrics on outcomes, and clear communication about tradeoffs. You can't operate in the shadows anymore.

Chart caption: Regulatory approaches are estimated to enhance user safety but may reduce innovation and revenue while increasing compliance costs. Market solutions favor innovation and revenue but may compromise user safety. Estimated data.

Enforcement Mechanisms: What Can Congress Actually Do?

Understanding the senators' leverage requires knowing what tools Congress actually has to enforce compliance and consequences.

Direct regulatory power is one tool. Congress can pass legislation that creates specific requirements for platforms, sets penalties for violations, and establishes enforcement mechanisms. It has done this for children's data privacy before, through the Children's Online Privacy Protection Act (COPPA), and it can do the same for teen protections.

Subpoena power is another tool. If Meta doesn't cooperate voluntarily, Congress can subpoena internal documents, force executives to testify under oath, and demand access to research and decision-making materials. Subpoenas are binding, and defying one can lead to a contempt of Congress referral, which carries criminal penalties.

FTC referral is a third tool. Congress can urge the FTC to investigate companies and bring enforcement actions over unfair or deceptive practices. The FTC has broad authority to challenge practices that harm consumers, and teens are definitely consumers.

Public pressure is a fourth tool. Congressional hearings are public. Testimony gets covered by media. Bad headlines can affect company reputation, stock price, and advertiser relationships. Social media companies are sensitive to these pressures because advertising revenue depends partly on brand reputation.

The threat of these tools is often enough to push companies toward voluntary compliance. Meta doesn't want a Congressional subpoena. It doesn't want FTC enforcement. It doesn't want negative headlines. So it has strong incentives to respond to the senators' questions substantively and to make concessions.

Timeline Expectations: When Will This Investigation Conclude?

Based on the pattern of previous tech investigations, the Meta teen privacy investigation will likely unfold over months or years.

The immediate deadline is March 6, 2025, when Meta must respond to the senators' questions. That response will likely generate follow-up questions, requests for additional information, and potentially demands for documents or testimony.

If Meta's response is unsatisfactory, Congress might schedule a hearing. Tech executives testifying before Congress typically face tough questioning and often make public commitments to changes. Meta might commit to implementing new teen protections, increasing transparency, or changing enforcement processes.

Based on that commitment (or lack thereof), Congress might introduce legislation: a bill mandating teen privacy protections, requiring platform transparency, or increasing penalties for non-compliance. That legislation would go through committee, hearings, amendments, and eventually a vote, a process that typically takes months or longer.

Meanwhile, the underlying litigation continues. The child safety lawsuit will proceed through discovery, and more documents will likely be unsealed. These documents will probably show more of the decision-making process around teen safety features, creating additional pressure on Congress to act.

FTC enforcement might also proceed independently. The FTC might open an investigation into whether Meta's practices violate the FTC Act's prohibition on unfair or deceptive acts or practices. This investigation would be separate from Congressional oversight, though findings from one could inform the other.

The total timeline from investigation to meaningful regulatory change is typically 12 to 24 months. During that time, there will be public pressure, corporate concessions, legislative proposals, and regulatory actions. Meta will likely make some changes proactively to reduce regulatory pressure. Congress will likely propose legislation that might pass in some form.

Lessons for Other Platforms: How This Sets Precedent

Every other social media platform is watching the Meta investigation closely. The precedent being set is that regulators will investigate and penalize delays in implementing known protective measures for teens.

TikTok is particularly in the crosshairs. The platform is extremely popular with teens, its algorithm is powerful and personalized, and there's already significant political pressure to regulate it more heavily. A Congressional investigation into whether TikTok delayed teen safety measures is likely coming. Smart executives at TikTok are probably implementing every safety feature they can now, not because they think it's necessary but because they want to demonstrate responsiveness to regulatory concerns.

YouTube faces similar pressure. The platform hosts massive amounts of teen-generated content and teen-consumed content. Questions about whether YouTube's recommendation algorithm harms teen wellbeing are legitimate. The company has probably already made proactive changes to demonstrate safety commitment.

Snap, Discord, and other platforms popular with younger users will also feel the pressure. The Meta investigation sets expectations. You need to implement protective features quickly. You need to document your decision-making. You need to explain delays if they occur. You need to demonstrate measurable commitment to teen safety.

This creates a ripple effect through the industry. Platforms begin competing on safety as well as features. They invest more in teen-specific research, product design, and enforcement. They become more responsive to regulatory suggestions. The overall user experience becomes safer (in theory), though also potentially more restricted and less engaging.

The Deeper Question: Regulation Versus Market Solutions

Underlying the Meta investigation is a fundamental question about how to balance innovation and business growth against user safety and wellbeing. This is the core tension in tech regulation.

Market advocates argue that companies should be free to make product decisions based on business needs. If teenagers prefer public accounts, that's their choice. If engagement metrics matter to business models, that's how capitalism works. If there's demand for safer platforms, competitors will emerge to meet that demand.

Regulatory advocates argue that market solutions don't work for digital platforms because network effects create natural monopolies. If everyone else is on Instagram, you have to be on Instagram even if it's not ideal. Competitors can't simply outcompete Meta by being safer because the network is already locked in. So regulation is necessary.

The Meta investigation is implicitly taking the regulatory side. It's saying that companies with dominant market power have obligations beyond shareholder value. They have obligations to protect vulnerable users. Those obligations might require foregoing some engagement growth, some advertising revenue, and some metric optimizations.

Whether that regulatory approach is wise is still debatable. It will definitely make social media a bit less engaging (less engagement-optimized means less addictive). It will reduce revenue for platforms. It will create compliance costs. Those are real tradeoffs.

But the alternative is accepting that social media companies will knowingly implement features that increase teen harm if those features drive engagement. Accepting that companies will suppress research showing their platforms harm users. Accepting that the richest, most powerful technology companies are optimizing explicitly against the wellbeing of minors because it's profitable.

That's the choice Congress is making. And based on the Meta investigation, it seems they've decided that regulation is preferable to relying on corporate responsibility.

What Should Happen Next: A Realistic Assessment

If this investigation progresses along typical lines, here's what should reasonably happen.

Meta should be required to disclose, publicly, why they delayed private-by-default teen accounts. Not internally, not in a confidential settlement, but publicly. This sets expectations for transparency in future product decisions.

Meta should implement additional teen protections faster. If research suggests a safety measure, the company should implement it within months, not years. The timeline between identifying a harm and addressing it should be measured in quarters, not years.

Meta should implement external oversight for major product decisions affecting minors. This could be through an expanded version of their Oversight Board or through independent audits. The idea is that major decisions get reviewed by people outside the company who can challenge engagement-first thinking.

Meta should increase CSAM enforcement. The 17-violation threshold the senators mentioned should be reduced. Accounts engaging in child sexual exploitation should be suspended faster and more consistently. Patterns of behavior that suggest grooming should trigger immediate investigation.

Meta should conduct and disclose regular independent audits of their platforms' effects on teen mental health and safety. This takes the company's own research and subjects it to external review, preventing internal suppression of unfavorable findings.

All social media platforms should be required to implement similar protections and transparency measures. Teen privacy and safety should become baseline expectations, not competitive advantages.

Will all of this actually happen? Probably not completely. Companies will fight requirements they find costly. Congress will be pressured by industry lobbying. Enforcement will be inconsistent. But the direction is clear. Regulation is coming. Platforms will be held more accountable for knowingly delayed safety measures.

Conclusion: Understanding the Broader Arc of Tech Regulation

The investigation into Meta's delayed teen privacy protections represents a crucial moment in tech regulation. It marks the point where Congress stops accepting corporate claims about good intentions and starts demanding accountability for documented knowledge of harm.

The fact that Meta considered making teen accounts private by default in 2019 but didn't implement the change until 2024 is the core issue. That five-year gap, during which millions of teenagers were exposed to greater safety risks than necessary, reveals the tension between business incentives and user wellbeing.

The senators' investigation is methodical and strategic. It asks specific questions that force Meta to either confess to delays (creating legal liability) or deny them (requiring the company to refute specific allegations from court documents). It broadens the investigation beyond the single feature to ask whether Meta has a pattern of suppressing or delaying safety measures for business reasons. It puts on record Meta's knowledge of harms and asks for explanations of the gap between knowledge and action.

What happens next will depend on Meta's response and Congress's follow-up. But regardless of the specific outcomes, the precedent is being set. Platforms can't delay safety measures for business reasons without expecting regulatory consequences. Companies can't suppress research showing harm without expecting investigations. The era of self-regulation based on corporate responsibility is ending.

This is partly good news. Teens will probably get slightly safer social media experiences. Platforms will implement protective features faster. Enforcement on exploitation will improve. That matters.

It's also partly complicated news. Regulation creates compliance costs that get passed to users and shareholders. It reduces innovation in some areas while encouraging it in others. It empowers regulators who don't always make perfect decisions. These are real costs, not imaginary ones.

But fundamentally, the Meta investigation reflects a societal decision. We're going to hold powerful technology companies accountable for knowing when their products harm vulnerable users. We're not going to accept five-year delays between identifying a harm and addressing it. We're not going to accept private knowledge of risk while public safety is compromised. We're going to demand transparency, faster action, and measurable commitment to user wellbeing.

That's a significant change from where we were even five years ago. And it's probably overdue.

FAQ

What is the Meta teen privacy investigation about?

A bipartisan group of U.S. senators is investigating why Meta delayed implementing private-by-default accounts for teenagers until 2024, despite considering the feature as early as 2019. The investigation focuses on whether the company suppressed protective measures because they would reduce engagement metrics and hurt business performance.

Why did Meta delay making teen accounts private by default?

According to unsealed court documents, Meta decided against implementing the feature in 2019 because it would "likely smash engagement." Since Meta's business model depends on engagement for advertising revenue, the company apparently chose to prioritize revenue over teen safety protections.

What does private-by-default mean for teen accounts?

Private-by-default means new teen accounts are automatically set to private, where only approved followers can see posts and profile information. This restricts visibility to strangers and reduces exposure to grooming and exploitation. Teens can change the setting if they choose, but the default is privacy rather than public visibility.
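As a rough illustration of the idea, the sketch below models a default that depends on age at signup but remains changeable afterward. It is not Meta's implementation: the `Account` class, the `create_account` and `can_view_posts` helpers, and the under-18 cutoff are all hypothetical names and assumptions chosen for the example.

```python
from dataclasses import dataclass

# Assumption for illustration: anyone under 18 is treated as a teen account.
ADULT_AGE = 18


@dataclass
class Account:
    username: str
    age: int
    is_private: bool


def create_account(username: str, age: int) -> Account:
    """Create an account whose starting visibility depends on age."""
    # Teens start private, adults start public. This only sets the default;
    # the owner can still flip is_private later.
    return Account(username=username, age=age, is_private=age < ADULT_AGE)


def can_view_posts(owner: Account, viewer: str, approved_followers: set[str]) -> bool:
    """Public accounts are visible to anyone; private ones only to approved followers."""
    if not owner.is_private:
        return True
    return viewer == owner.username or viewer in approved_followers


teen = create_account("teen_user", 15)
adult = create_account("adult_user", 30)
```

The point of the sketch is that the policy question is about the starting value of one setting. A teen who never opens the settings page stays protected under a private default and stays exposed under a public one.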

How many years did Meta delay this protection?

Meta apparently considered implementing private-by-default teen accounts around 2019 but didn't launch the feature on Instagram until September 2024, with Facebook and Messenger following later. That's approximately a five-year gap between identifying the protective measure and implementing it.

What else is Congress investigating about Meta's teen safety?

Senators are asking about whether Meta halted research that showed unfavorable findings about platform harms, whether the company suppressed findings about user wellbeing, and whether Meta's enforcement against child sexual exploitation was adequate. The investigation specifically cited testimony that Meta suspended accounts for soliciting minors only after 17 violations.

What can Congress actually do to enforce compliance?

Congress can pass legislation mandating specific teen protections, subpoena documents and testimony, refer the company to the FTC for investigation and enforcement, hold public hearings that create reputational pressure, and recommend fines or other penalties for non-compliance.

Will this investigation affect other social media platforms?

Yes. The investigation sets precedent that platforms will be held accountable for delaying known protective measures. Other platforms like TikTok, YouTube, Snap, and Discord are likely to face similar scrutiny and are probably implementing protective features proactively to demonstrate safety commitment.

What is the deadline for Meta to respond?

Meta was given until March 6, 2025, to respond to the senators' questions in detail. The response will likely generate follow-up requests for documents, testimony, or additional information.

How does this investigation relate to other tech regulation efforts?

It's part of a broader shift toward holding social media companies accountable for product decisions that affect users. Similar investigations are ongoing related to algorithmic content recommendations, data privacy, antitrust concerns, and overall platform governance. The trend is toward increased regulation rather than self-regulation.

What might be the long-term consequences for Meta?

Potential consequences include FTC enforcement actions, regulatory fines, mandatory implementation of additional teen protections, increased transparency requirements for product decisions affecting minors, and possibly structural changes to how the company operates. The investigation could also accelerate the legislative process for comprehensive social media regulation.

Key Takeaways

- A bipartisan group of senators is investigating why Meta delayed teen privacy protections from 2019 until 2024, a five-year gap that exposed millions of teenagers to unnecessary safety risks

- Court documents indicate that Meta allegedly prioritized engagement metrics over teen safety, deciding against protective measures because they would "likely smash engagement" and reduce advertising revenue

- Meta faces questions about suppressing internal research on platform harms and delayed enforcement against child sexual exploitation, with specific testimony about 17-violation thresholds before account suspension

- The investigation sets regulatory precedent that platforms cannot delay known protective measures for business reasons without facing Congressional investigation, FTC enforcement, and potential legislative action

- Regulatory landscape is shifting from self-regulation to oversight-based requirements, with implications extending to TikTok, YouTube, and all platforms serving teenage users
