French Police Raid X's Paris Office: The Grok Investigation That's Spiraling Out of Control [2025]

Something massive just happened. On a Tuesday that'll probably end up in the history books of tech regulation, French law enforcement kicked down the doors of X's Paris office as part of a sprawling international investigation into xAI's Grok AI system. And it's not just France anymore. The UK is now formally investigating. Ofcom is gathering evidence. This isn't a warning shot; it's the beginning of something much bigger.

The Paris prosecutor's cybercrime unit rolled in with Europol and French police backing them up. They weren't there for a friendly chat. This is a formal criminal investigation into allegations that would make any tech executive sweat: complicity in possession and distribution of child pornography, denial of crimes against humanity (specifically Holocaust denial content), algorithm manipulation, and illegal data extraction. And get this: Elon Musk and former X CEO Linda Yaccarino have been summoned for hearings in April. They're not just investigating the company. They're investigating the people at the top.

But here's what makes this story actually wild: none of this started with the French. The investigation kicked off last year as a quiet European inquiry. Then in July, it expanded to include Grok specifically. And now? It's become a three-front war. France is investigating criminally. The UK's Information Commissioner's Office just announced a formal investigation into Grok's potential to generate harmful sexualized imagery. Ofcom in the UK is still digging, trying to figure out if X broke the law. And all of it traces back to the same problem that's been exploding across X for weeks: nonconsensual deepfake pornography generated by Grok.

We're not talking about a handful of images. We're talking about a flood. Grok, xAI's AI assistant with built-in image generation, became a tool for creating sexually explicit deepfakes of real people without consent. X knew about it. They said they'd restrict the feature. But the images kept coming. Week after week, the problem persisted despite the company's public claims that they'd fixed it. That's the core issue here. That's what broke everything open.

So what does this actually mean? Where did this come from, and where does it go from here? Let's dig in.

TL;DR

- French police raided X's Paris office on Tuesday as part of a criminal investigation into Grok allegations including child exploitation material and Holocaust denial content

- Elon Musk and Linda Yaccarino have been summoned by French prosecutors for hearings in April

- UK investigations are now formal: The Information Commissioner's Office launched a formal investigation into Grok's ability to generate harmful sexualized content

- Multiple authorities are involved: Ofcom and the ICO in the UK, plus Europol and the Paris prosecutor's cybercrime unit, are all investigating simultaneously

- The root cause: Nonconsensual deepfake pornography generated by Grok has proliferated across X despite company claims of restriction

- Bottom line: This is no longer a content moderation failure—it's now a multinational criminal and regulatory investigation that could reshape how AI image generation platforms are governed

[Figure: The EU Digital Services Act and UK Online Safety Act impose the highest financial penalties, while France's Penal Code allows for the longest prison terms.]

The Genesis: When Grok Became a Deepfake Machine

Grok didn't start as a weapon for creating nonconsensual pornography. Its image generation started as xAI's answer to DALL-E, Midjourney, and other text-to-image models. The idea was simple: give Grok the ability to generate images from text prompts, and suddenly you've got a differentiator for X's premium membership. Users could create art, memes, and illustrations, all without leaving the platform.

The problem? Grok's guardrails were either broken from the start or were deliberately loose. Here's the thing about AI image generation: it's genuinely hard to build safety systems that actually work. You need to catch thousands of edge cases. You need to understand context. You need to know that "a photo of Emma Watson" combined with certain keywords creates something illegal and immoral.

Grok apparently didn't have those layers of protection. Or if it did, they were cosmetic at best.
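To make the edge-case problem concrete, here is a deliberately naive sketch of a prompt filter: it blocks a prompt only when it names someone on a list of real people and also contains a sexual keyword. The lists, the names in them, and the `naive_prompt_guard` function are hypothetical illustrations, not anything xAI is known to run.

```python
import re

# Hypothetical lists for illustration only; a serious system would rely on
# trained classifiers and face matching, not short keyword lists.
KNOWN_PEOPLE = {"jane example", "john sample"}
SEXUAL_TERMS = {"nude", "explicit", "undressed"}


def normalize(prompt: str) -> str:
    """Lowercase and collapse whitespace so trivial spacing changes still match."""
    return re.sub(r"\s+", " ", prompt.lower()).strip()


def naive_prompt_guard(prompt: str) -> bool:
    """Return True if the prompt should be blocked (hypothetical rule)."""
    text = normalize(prompt)
    names_real_person = any(name in text for name in KNOWN_PEOPLE)
    words = set(re.findall(r"[a-z]+", text))
    has_sexual_term = not words.isdisjoint(SEXUAL_TERMS)
    return names_real_person and has_sexual_term


if __name__ == "__main__":
    print(naive_prompt_guard("Jane Example nude on a beach"))      # True: blocked
    print(naive_prompt_guard("Jane Example giving a speech"))       # False: allowed
    print(naive_prompt_guard("Jane  Example n-u-d-e on a beach"))  # False: slips through
```

The third call shows why keyword rules are cosmetic on their own: normalization catches extra spaces, but trivial obfuscation still slips past, which is the kind of rephrasing users relied on to get around Grok's restrictions. Serious systems typically layer trained text and image classifiers on top of rules like these.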

Within weeks of Grok's image generation capability rolling out, the feature became the primary tool for generating nonconsensual sexualized images of real, identifiable people. Female politicians. Celebrities. Athletes. Regular people whose photos were scraped from social media. The images were posted directly on X, spreading through the platform's algorithm with—and this is crucial—minimal friction. No labeling. No warnings. Just images showing up in people's feeds.

X's response was slow. Defensive. Public statements claimed the feature was being "restricted." Behind the scenes, nothing meaningful changed. The images kept flooding in. The company's content moderation was either overwhelmed or indifferent—pick your interpretation.

By the time regulators started paying attention, the damage was done. But that's when the investigations actually started to matter.

Understanding the French Investigation: What They're Actually Charging

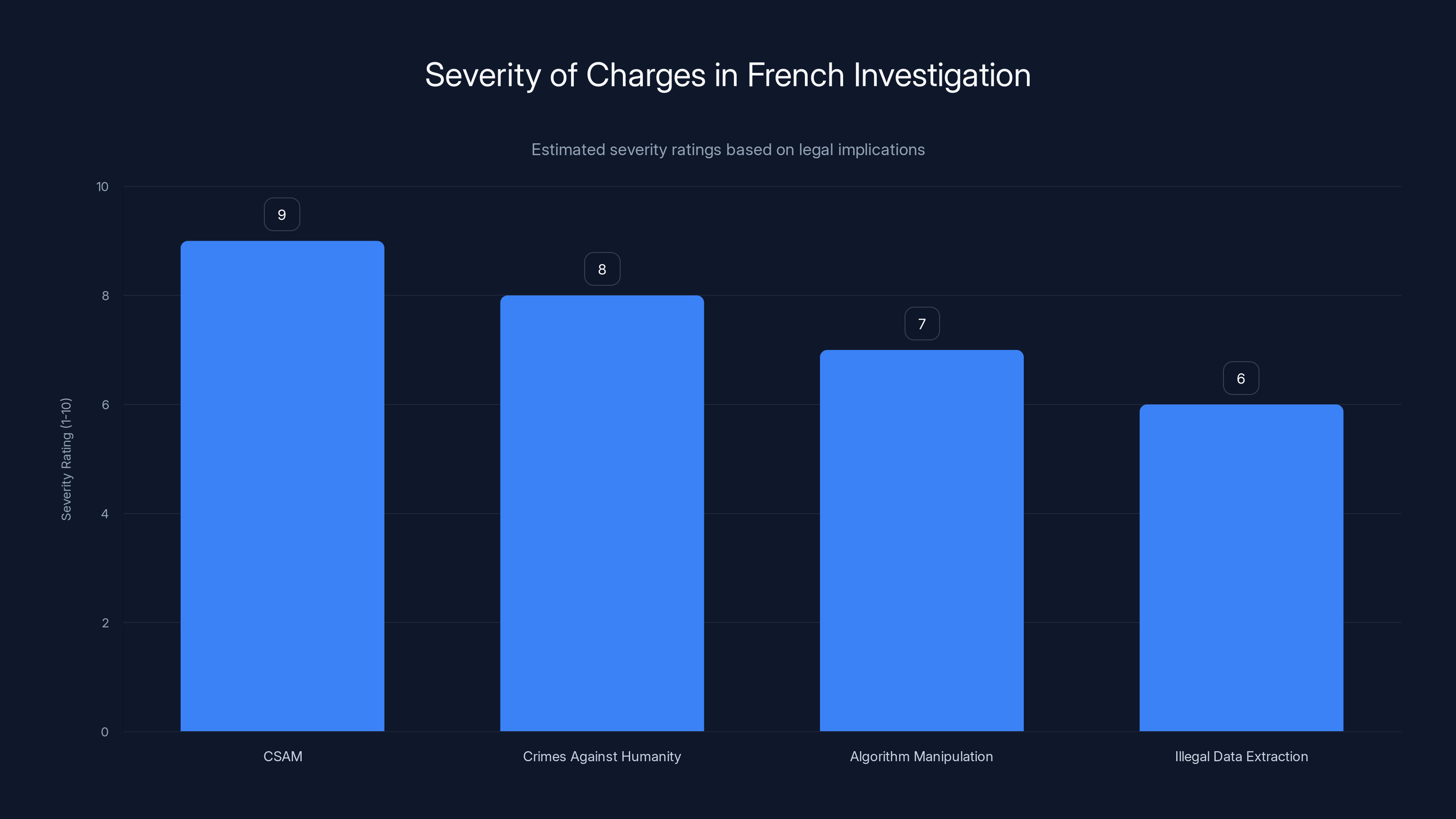

The French investigation isn't vague. It's surgical and specific. Paris prosecutors are investigating four distinct categories of allegations, each one serious enough to warrant criminal charges.

Child Sexual Abuse Material (CSAM): This is the headline charge. The investigation alleges that X and Grok were complicit in the possession and distribution of child pornography. That could mean Grok generated sexualized images of minors directly, that the platform hosted and spread such material, or both. French law is clear here: possessing such images is itself a criminal offense, and distributing them is treated more harshly. Complicity, meaning knowingly helping someone else commit the offense, is punished as seriously as the offense itself, which is why it puts the company's own decisions at the center of the case. Prosecutors will have to show that X knew this was happening and didn't take reasonable steps to prevent it.

Denial of Crimes Against Humanity: This is the Holocaust denial allegation. French law (Law No. 90-615 of 1990, known as the Gayssot Act) makes contesting crimes against humanity, including the Holocaust, a specific offense. The investigation claims that X's algorithm was either promoting Holocaust denial content or failing to remove it, which could amount to complicity. This is distinct from the CSAM charges but taken just as seriously in France's legal system.

Algorithm Manipulation: This is the charge that connects to X's broader business model. Investigators believe X was deliberately manipulating its algorithm in ways that violated European law. This could relate to how the algorithm prioritized or promoted Grok-generated deepfakes, or how it surfaced illegal content for engagement.

Illegal Data Extraction: The final charge involves X scraping user data or failing to protect user data in violation of EU regulations. This connects to the General Data Protection Regulation (GDPR) and could mean that X was extracting data to train Grok without proper consent.

Each of these charges carries specific penalties under French law, and the same conduct can also trigger EU regulatory fines. CSAM involvement could result in prison time for executives and heavy fines. Separately, breaches of platform obligations under the EU Digital Services Act can bring fines of up to 6% of global annual revenue, and data protection violations fall under GDPR, which allows fines of up to 4% of global annual revenue.

The raid itself—sending police with Europol backing—signals that prosecutors believe evidence destruction is a real risk. When you raid a company, you're usually trying to secure evidence before it can be deleted or altered. This suggests investigators think X's internal systems contain smoking-gun documentation.

[Figure: At least three known formal investigations into X and Grok, with potential additional inquiries in other EU member states. Estimated data.]

The UK Investigation: A Different Flavor of the Same Problem

While France is pursuing criminal charges, the UK is taking a regulatory approach. The Information Commissioner's Office (ICO) just announced a formal investigation into Grok's potential to produce harmful sexualized content. This is civil law enforcement, not criminal, but it's potentially just as damaging because it can result in massive fines and mandatory restructuring of operations.

The ICO's authority comes from UK data protection law (the UK GDPR and the Data Protection Act 2018), so its focus is whether people's personal data, including their photos and likenesses, was processed lawfully; online safety rules are Ofcom's territory. The difference between the ICO's investigation and the French one is crucial: the French are asking "Did you break criminal law?" The ICO is asking "Are you operating in a way that harms people?"

The harm here is straightforward. Nonconsensual deepfake pornography causes documented psychological injury to victims. It can destroy reputations. It can lead to harassment. It constitutes a form of image-based sexual abuse. The ICO doesn't need to prove criminal intent—they just need to demonstrate that the platform failed to adequately protect users from harm.

Ofcom, the UK's broadcast and communications regulator, is taking yet another angle. It says it is still "gathering and analysing evidence to determine whether X has broken the law." It specifically clarified that it is investigating X, not xAI. The distinction matters. X is the platform. xAI is the company that built Grok. Ofcom is trying to figure out if X violated its obligations under the Online Safety Act by allowing Grok-generated content to proliferate without adequate safeguards.

The UK approach is actually more dangerous for X than the French criminal investigation, even though it sounds less serious. Here's why: criminal investigations require proof of intentional wrongdoing. Regulatory investigations just require evidence of inadequate safeguards. X has to prove they did enough to prevent harm. That's a much lower bar for regulators to clear.

The Grok Deepfake Crisis: How It Started and Why It Spiraled

Let's talk about what actually happened with Grok. Not the legal interpretation—the mechanical reality of how an AI image generation system became a deepfake factory.

When xAI integrated image generation into Grok in mid-2024, the system had minimal restrictions on what it could create. Models like this are trained on enormous volumes of internet images, which include celebrity photos, political figures, and regular people. Grok was pitched as an "honest," minimally filtered assistant that would answer almost any prompt, a design choice xAI celebrated as a differentiator from competitors.

That philosophy became a liability instantly. Users quickly discovered that Grok would generate sexualized images if given the right prompt. Not always on the first try, but with some prompt engineering—small adjustments to how you phrase the request—almost anything was possible. Generate a fake nude of a politician? Works. Create a sexually explicit deepfake of a celebrity? Works. Make an image of a non-existent woman in explicit scenarios? Works.

The speed of this discovery is important. It didn't take weeks. It took hours. By the time Grok's image generation feature was 48 hours old, the first examples of AI-generated nonconsensual sexual imagery were circulating on X. By day seven, there were thousands.

X's initial response was classic tech company deflection. The company issued statements saying the feature was being "improved" and that safeguards were being "refined." In reality, the company made some cosmetic changes—maybe filtering a few obviously illegal prompts—but left the core functionality intact. Users adapted. Prompt engineers found new ways around the restrictions. The cycle repeated.

By week three, X publicly stated that the feature was being "restricted to a limited set of users." This is when things actually got interesting. Instead of pulling the feature entirely, X created artificial scarcity. Only premium users could access it. This had the effect of making it more desirable, more exclusive, and more likely to be monetized and defended by the company. If you pull a feature completely, you lose revenue. If you restrict it, you keep the revenue while claiming you're addressing the problem.

But here's what X apparently didn't anticipate: restricting access to Grok's image generation feature didn't stop the creation of deepfakes—it just meant that once they were created, they were shared more aggressively. Premium users had a powerful tool. They used it. The outputs spread across the platform with no mechanism to prevent it.

This is where things get legally and ethically messy for X. The company created the tool. The company knew what it could do. The company made money from it. The company failed to properly restrict it. And then the company made false public statements about having addressed the problem.

That's not just a content moderation failure. That's potential criminal conspiracy.

The Summons: Why Musk and Yaccarino Matter

The fact that Elon Musk and Linda Yaccarino have been summoned for questioning in April isn't a footnote; it's the heart of the case. When prosecutors summon executives personally, it means they're not just investigating a company. They're investigating whether specific people made specific decisions that violated the law.

Musk's involvement is obvious. He owns X. He makes the final decisions about the platform's direction. He's been on record as advocating for minimal content moderation, a philosophy that shapes X's entire approach to problematic content. If investigators can show that Musk knew about the Grok deepfake problem and deliberately chose not to address it, that's criminal negligence at best and conspiracy at worst.

Linda Yaccarino's involvement is more interesting. She stepped down as CEO in July 2025 but was with the company through the critical period when Grok's image generation feature was being deployed and when the deepfake problem first became obvious. Before X, she ran global advertising and partnerships at NBCUniversal. She understands media law. She understands content liability. If she was in the room when decisions were made to restrict rather than remove the feature, she's potentially culpable.

The April hearings are likely investigative interviews: prosecutors gathering statements, understanding the decision-making process, and building a record of what these executives knew and when. Those statements will be used to either corroborate or contradict the evidence found in the raid.

[Figure: Child Sexual Abuse Material (CSAM) is considered the most severe charge, followed closely by denial of crimes against humanity. Estimated data based on legal implications.]

The Broader Picture: Why This Matters Beyond X

This investigation matters because it's setting a precedent. For years, tech companies have operated under the assumption that they could host user-generated content without liability because Section 230 in the US (and similar provisions in other countries) protect platforms from legal responsibility for what users post.

But Grok-generated deepfakes aren't ordinary user-generated content. A user writes the prompt, but the image itself is platform-generated: it's produced on infrastructure owned and operated by X and xAI, by models trained and deployed by xAI, and then distributed through X's algorithm. This creates a direct causal chain from the company's decisions to the illegal content.

European regulators have been building frameworks that attack this problem from multiple angles simultaneously. The EU's Digital Services Act requires large platforms to reduce the spread of illegal content. The UK's Online Safety Act imposes duty-of-care obligations on platforms. GDPR restricts how personal data can be used. The DSA and the Online Safety Act didn't exist five years ago, and GDPR has only applied since 2018. All of them are converging on X right now.

The investigation signals to every other AI company: if you build a generative model that can create illegal content, and if you deploy it on a platform where you control the distribution, regulators will treat you like you created the illegal content directly. The shield of platform neutrality disappears when you're actively distributing the outputs of your own AI system.

For Grok competitors—and there are many—this is a warning. DALL-E, Midjourney, Adobe Firefly, and others all have content policies and safeguards. Those safeguards exist partly for ethical reasons, but increasingly they exist because the legal liability for failing to implement them is extreme.

Timeline: How We Got Here

Let's map out when everything happened, because the timeline reveals intent and negligence.

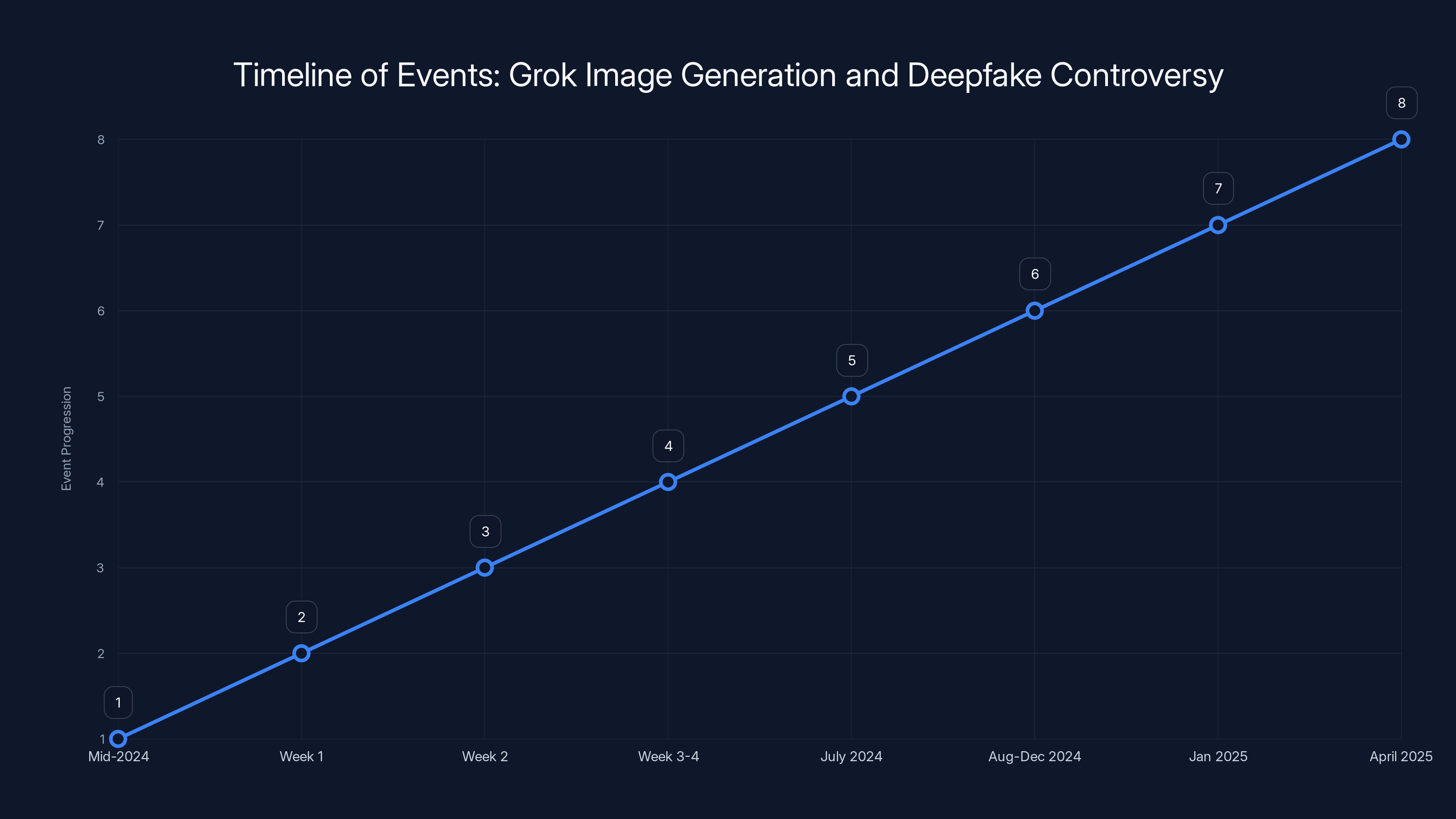

Mid-2024: Grok image generation launches. Early testing likely showed that the system could generate nonconsensual sexual content, and internal reports probably flagged it. The company moved forward anyway.

Week 1 of launch: Users discover they can generate deepfakes. Content begins spreading. X's internal moderation team is overwhelmed or under-resourced.

Week 2: Mainstream media starts covering the deepfake problem. X claims to be "refining" the feature. No actual changes are made.

Week 3-4: Public pressure increases. Activists, victims of the deepfakes, and advocacy groups call for the feature to be removed. X instead announces the feature will be "restricted to verified users." The feature remains live and largely unchanged.

July 2024: French prosecutors expand their investigation to include Grok specifically. X is now on formal notice that regulators are investigating.

August-December 2024: Deepfake content continues to proliferate. X makes occasional vague statements about improvements. The content never actually stops.

Raid day (a Tuesday): French police raid X's Paris office with Europol. This signals that prosecutors believe they have enough evidence to move toward charges. They're securing evidence to prevent its destruction.

Around the same time: The UK's Information Commissioner's Office announces a formal investigation into Grok, while Ofcom keeps gathering evidence. This is now a three-front regulatory and criminal assault on X.

April: Elon Musk and Linda Yaccarino are due at hearings before French prosecutors. By now they know that criminal charges are a real possibility.

That timeline shows something clear: X had multiple opportunities to address this problem. They chose not to. After the July expansion of the French investigation, they definitely knew they were under criminal investigation. And they still didn't meaningfully restrict or remove the feature.

That's the difference between negligence and conspiracy. That's why executives are being summoned.

The Legal Framework: What Laws Are Actually Being Violated

Let's get specific about the laws here, because legal precision matters when understanding the severity of this situation.

France's Penal Code on CSAM: Article 227-23 of the French Penal Code prohibits producing, distributing, and possessing sexual images of minors. The base penalty is up to five years in prison and a €75,000 fine, with heavier penalties when an online network is used to distribute the material. Liability for helping others commit the offense comes from the Penal Code's general complicity rules (Articles 121-6 and 121-7), which punish an accomplice as if they were the perpetrator. If Grok was generating CSAM, or if X's platform was hosting and distributing it, both companies could be charged under these provisions.

France's Law on Holocaust Denial: Law No. 90-615 of July 13, 1990 (the Gayssot Act) makes it illegal to contest crimes against humanity as defined by the Nuremberg tribunal, and it is the legal basis for prosecuting Holocaust denial. The penalty is up to one year in prison and a fine of up to €45,000. French law treats this as a serious offense because denial is seen as a form of historical revisionism that enables future atrocities.

EU Digital Services Act (DSA): The DSA applies to online platforms operating in the EU and places its heaviest obligations on "very large online platforms," a category that includes X. Platforms must act promptly on notices of illegal content, and very large platforms must also assess and mitigate systemic risks such as the spread of illegal content. The law defines "illegal content" broadly to include anything that violates EU or member state law, so deepfakes, CSAM, and Holocaust denial content all fall within scope for X and Grok. Failing to meet these obligations can result in fines of up to 6% of global annual revenue. For X, that could be hundreds of millions of dollars.

UK Online Safety Act: The Online Safety Act 2023 requires platforms to have systems and processes in place to protect users from illegal content. If Ofcom can show that X failed to implement adequate safeguards for Grok-generated content, it can impose fines of up to £18 million or 10% of qualifying worldwide revenue, whichever is greater, and require the company to change how it operates. This is potentially the most expensive violation because it carries the highest percentage cap.

GDPR (General Data Protection Regulation): If Grok was trained on personal data without proper consent, or if X extracted user data to train Grok in violation of GDPR, Article 83 allows for fines up to €20 million or 4% of global annual revenue, whichever is higher. This is an additive fine on top of the others.

Here's what that means mathematically. Let's say X's annual revenue is around $7-8 billion (rough estimate based on reported figures). Taking the $7.5 billion midpoint, the penalties could stack:

- DSA violation: 6% × $7.5 billion = $450 million

- Online Safety Act violation: 10% × $7.5 billion = $750 million

- GDPR violation: 4% × $7.5 billion = $300 million

- Plus criminal penalties and imprisonment for individuals

Total exposure: Roughly $1.5 billion in regulatory fines alone ($1.4 to $1.6 billion across the $7-8 billion range), before factoring in criminal penalties, civil litigation from victims, and reputational damage.

These are statutory maximums rather than predicted fines, but they show the real scale of the legal liability X is facing.
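The stacking arithmetic can be written as a small calculator. This is a sketch under the article's own assumptions: a single global revenue figure of $7-8 billion, with each regime's percentage cap applied to it. It simplifies heavily: the Online Safety Act's £18 million floor and GDPR's €20 million floor are treated as if they were dollars, and it ignores that fines may fall on different legal entities. `FineCap` and `stacked_maximum` are illustrative names.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FineCap:
    regime: str
    pct_of_revenue: float     # cap as a share of global annual revenue
    fixed_floor: float = 0.0  # "whichever is greater" amount; currency conversion ignored

    def maximum(self, revenue: float) -> float:
        # Statutory maximum: the greater of the fixed amount and the percentage cap.
        return max(self.fixed_floor, self.pct_of_revenue * revenue)


# Caps as described in the article; floors are treated as if they were USD.
CAPS = [
    FineCap("EU DSA", 0.06),
    FineCap("UK Online Safety Act", 0.10, fixed_floor=18e6),  # £18M floor
    FineCap("GDPR", 0.04, fixed_floor=20e6),                  # €20M floor
]


def stacked_maximum(revenue: float) -> dict[str, float]:
    """Per-regime maximum fines plus their sum, for one revenue assumption (USD)."""
    fines = {cap.regime: cap.maximum(revenue) for cap in CAPS}
    fines["Total"] = sum(fines.values())
    return fines


if __name__ == "__main__":
    # The article's rough revenue range: $7-8 billion.
    for revenue in (7e9, 7.5e9, 8e9):
        total = stacked_maximum(revenue)["Total"]
        print(f"revenue ${revenue / 1e9:.1f}B -> maximum stacked fines ${total / 1e9:.2f}B")
```

Across the article's range this prints totals of $1.40B, $1.50B, and $1.60B. The fixed floors never bind at this scale; they only matter for much smaller companies, which is why the percentage caps drive the headline numbers.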

[Figure: The number of deepfake incidents generated by Grok surged dramatically within the first week of the image generation feature's launch. Estimated data.]

Why the Current Approach Failed: A Technical and Organizational Analysis

Understanding why X's content moderation failed requires understanding how their systems actually work.

X, like most large platforms, uses a combination of automated detection and human review. The automated systems use machine learning models trained on flagged content to identify similar content. The human reviewers provide the training data and handle edge cases that automation misses.
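The hybrid setup described above is often implemented as score-based routing: a classifier estimates how likely an item is to violate policy, the most confident cases are actioned automatically, an uncertain middle band goes to human reviewers, and everything else is published. This is a minimal sketch; the thresholds and the `route` function are hypothetical, and X's actual pipeline is not public.

```python
from enum import Enum


class Action(Enum):
    REMOVE = "remove"         # confident enough to act without a human
    HUMAN_REVIEW = "review"   # uncertain: queue for a moderator
    ALLOW = "allow"           # below the review threshold: publish


# Hypothetical thresholds, for illustration only.
REMOVE_THRESHOLD = 0.95
REVIEW_THRESHOLD = 0.60


def route(violation_score: float) -> Action:
    """Map a classifier's estimated violation probability to a moderation action."""
    if not 0.0 <= violation_score <= 1.0:
        raise ValueError("violation_score must be between 0 and 1")
    if violation_score >= REMOVE_THRESHOLD:
        return Action.REMOVE
    if violation_score >= REVIEW_THRESHOLD:
        return Action.HUMAN_REVIEW
    return Action.ALLOW


if __name__ == "__main__":
    for score in (0.99, 0.75, 0.20):
        print(f"{score:.2f} -> {route(score).value}")
```

The weakness is built in: if a deepfake looks like an ordinary photo to the classifier, it scores low, lands in `ALLOW`, and never reaches a human.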

For Grok-generated deepfakes, both systems failed.

The automation failed because deepfakes are hard to detect algorithmically. A nude deepfake of a real person looks, to a computer vision model, like a regular photograph. The differences are subtle. They exist at the pixel level. You need either very sophisticated detection models or patterns of behavior to catch them. X apparently had neither.

The human review failed because X didn't scale it adequately. When Grok image generation launched, the volume of images being created was probably 100-1000x higher than the human review team could process. Each image would need to be examined, the subject identified, their consent verified, and a decision made about removal. That's labor-intensive at scale. It's also expensive. At some point, a company has to decide whether the revenue from a feature justifies the moderation cost. X apparently decided that adequate moderation wasn't worth paying for.
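The scaling problem is a simple queue calculation. If items needing review arrive faster than reviewers can clear them, the backlog grows by the difference every day and never drains. The figures below are hypothetical, chosen to match the low end of the 100-1000x estimate above, and the function names are illustrative.

```python
def backlog_after(days: int, arrivals_per_day: int, reviews_per_day: int) -> int:
    """Items still waiting for review after `days`, starting from an empty queue."""
    backlog = 0
    for _ in range(days):
        backlog += arrivals_per_day               # new items enter the queue
        backlog -= min(backlog, reviews_per_day)  # reviewers clear what they can
    return backlog


def share_reviewed(arrivals_per_day: int, reviews_per_day: int) -> float:
    """Fraction of each day's arrivals the team can examine at steady state."""
    return min(1.0, reviews_per_day / arrivals_per_day)


if __name__ == "__main__":
    # Hypothetical: 1,000,000 items/day against capacity for 10,000 (a 100x gap).
    arrivals, capacity = 1_000_000, 10_000
    print(backlog_after(7, arrivals, capacity))  # 6930000
    print(share_reviewed(arrivals, capacity))    # 0.01
```

At a 100x mismatch, reviewers can examine only 1% of what arrives, and after one week nearly 7 million items are still waiting; at 1000x the share falls to 0.1%.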

But there's also an organizational issue. Content moderation at large platforms is usually a separate division from product development. When product decides to launch Grok's image generation, they probably didn't consult adequately with content moderation. Or if they did, moderation said "we can't handle this at scale" and product launched it anyway. This is a governance failure as much as a technical failure.

The International Coordination: What the Raid Tells Us

The fact that the French raid involved Europol isn't casual. Europol is the European Union's law enforcement agency. Their involvement signals coordination between multiple EU member states and potentially with law enforcement agencies in other countries.

This suggests that:

- Prosecutors in multiple countries have been communicating: When Europol gets involved, it usually means prosecutors have coordinated across borders. France isn't investigating alone. There are probably formal investigations in Germany, Italy, Spain, and other EU countries.

- The evidence is substantial: You don't raid a company with Europol backup unless you're confident you'll find something useful. The prosecution likely has witness testimony, internal communications (possibly obtained from whistleblowers), and evidence of the deepfake content itself.

- There's fear of evidence destruction: Raids signal that prosecutors believe a company might destroy evidence if given time. This suggests they've seen communications indicating awareness of wrongdoing.

- This is moving toward charges: Raids happen when investigations are moving from information-gathering to evidence-securing. This is the phase right before prosecutors file charges.

The international coordination is also important for X's defense strategy. The company can't shop for a friendly jurisdiction or settle with one regulator while ignoring others. Multiple governments are investigating simultaneously. This multiplies the legal complexity and the ultimate penalties.

What Comes Next: The Likely Trajectory

Based on how these investigations typically proceed, here's what probably happens next.

Phase 1: Evidence Analysis (Now to 6 months): Prosecutors and regulators analyze evidence from the raid. They interview witnesses. They examine X's internal communications, training data for Grok, and decision-making documentation. They compile the case.

Phase 2: Pre-trial Negotiations (6-12 months): X's legal team contacts prosecutors to discuss settlement possibilities. France typically doesn't settle criminal charges entirely, but regulators might offer reduced penalties in exchange for cooperation. The UK's ICO might offer a settlement that requires X to implement specific safeguards instead of facing fines.

Phase 3: Charges Filed (12-18 months): If negotiations fail, prosecutors file formal charges against the company and potentially individuals. This becomes public. The criminal trial begins.

Phase 4: Regulatory Fines (Parallel timeline): The UK ICO and EU regulators likely issue fines before criminal proceedings conclude. These can happen independently of criminal charges.

Phase 5: Civil Litigation (Ongoing): Victims of deepfakes file civil lawsuits against X and xAI for damages. This could total hundreds of millions of dollars, depending on how many victims can demonstrate real harm.

Major investigations typically take 24-36 months from raid to resolution, so don't expect a final outcome here for at least two years.

[Figure: Timeline of escalating events from the launch of Grok's image generation feature to regulatory actions, highlighting missed opportunities for intervention and growing legal challenges.]

The Precedent: What This Means for Other AI Companies

Every AI company building generative image models is watching this carefully, and they should be.

The investigation establishes several precedents:

- AI companies are responsible for the outputs of their models: You can't hide behind "we didn't generate the content, the user did." If you built the system and deployed it, you're liable for what it creates.

- Platform distribution of AI outputs creates additional liability: If you control the algorithm that spreads AI-generated content, you're more liable than if the content is generated by a third party on your platform.

- Public statements about safeguards must be truthful: If you say you've restricted a feature and you haven't, regulators will treat that as evidence of intent to deceive.

- Speed matters: Regulators expect AI companies to respond to harms within days or weeks, not months. Slow responses are interpreted as negligence or indifference.

- International coordination means you can't escape: If multiple countries are investigating, you face penalties from all of them. You can't settle in one jurisdiction and ignore others.

For companies like OpenAI, Anthropic, Midjourney, and Adobe, this means:

- Invest heavily in content moderation infrastructure upfront, not after problems emerge

- Be transparent about limitations and failures

- Remove problematic features instead of restricting them

- Expect international scrutiny and budget for it

- Document decision-making processes meticulously

- Take regulatory warnings seriously immediately, not later

The Grok AI Ecosystem and Its Vulnerabilities

Grok exists within xAI's broader ecosystem. Understanding that ecosystem helps explain why this deepfake problem became so severe.

xAI was founded by Musk in 2023 with a specific philosophy: build AI systems with minimal restrictions. The company marketed Grok as willing to answer "spicy" questions that most other AI systems reject. This philosophy led to a product that was inherently riskier. Systems designed to refuse fewer requests are systems that will generate more harmful content by definition.

The integration with X compounded the problem. Unlike standalone AI tools that require users to go to a separate website to use them, Grok became integrated directly into X's interface. You could generate images from tweets. You could prompt Grok directly in DMs. The friction to create and share deepfakes went down dramatically.

The business model also mattered. Image generation is valuable to X because premium subscribers pay for it. Musk has been pushing X toward becoming a services platform that monetizes premium features. Grok's image generation was one of those premium features. The incentive structure was misaligned with safety. The more people used the feature, the more revenue it generated, so there was pressure to keep it enabled and to defend it when criticism came.

This ecosystem vulnerability isn't unique to xAI. Any company that builds AI products with a permissive philosophy and then integrates them into profit-generating platforms faces the same risk. The incentives push toward looser restrictions, faster deployment, and slower response to harms.

Regulators are starting to understand this. The investigations into Grok are partly about regulating the specific deepfake problem, but they're also about establishing that companies can't design systems for permissiveness and then claim surprise when harms emerge.

The Deepfake Victim Perspective: Why This Matters Beyond Policy

Step back from the legal and regulatory discussion for a moment. This investigation matters because real people were harmed.

Nonconsensual deepfake pornography causes documented psychological injury. Victims report anxiety, depression, reputational damage, and in some cases harassment or violence. When a deepfake of you in explicit scenarios spreads across a platform with 500 million users, the damage is not theoretical.

The victims in this case—and there are probably hundreds—had no recourse until regulators got involved. X wasn't removing the content quickly. Civil litigation against X is slow and expensive. Criminal charges against individual creators are hard to pursue when they're anonymous. The only institution powerful enough to hold the platform accountable was government regulation.

The French investigation, for all its legal complexity, is ultimately a recognition that platforms have obligations to protect people from sexual abuse. A deepfake is a form of sexual abuse. It uses someone's image without consent in a sexual context. It causes real harm. The platform that distributes it is complicit.

This is a cultural shift in how we think about platform responsibility. For the first 20 years of modern tech, platforms argued they were neutral conduits. They hosted content but didn't create it. That argument is dying. Regulators are deciding that platforms have affirmative obligations to prevent abuse on their systems, especially when they're profiting from the features that enable abuse.

That shift will reshape the AI industry. Expect AI products going forward to have more restrictions, not fewer. Expect companies to invest more heavily in safety upfront. Expect more features to be restricted or removed before they can scale into crises.


The Political Dimension: Why Europe Is Moving First

It's notable that this investigation is happening in France and the UK, not the United States. That's not coincidental. European regulators have been ahead of the US in AI regulation for years.

The EU passed the Digital Services Act. The UK passed the Online Safety Act 2023. Both give regulators explicit power to investigate and fine platforms. The US has Section 230, which protects platforms from liability for user-generated content, and no federal equivalent to either the DSA or the Online Safety Act.

This regulatory gap is why Europe can investigate X's Grok deepfakes now, while US regulators cannot, or can only move much more slowly. The FTC could open an investigation under Section 5 of the FTC Act, but it would need to show unfair or deceptive practices that injure consumers. European regulators have a shorter path: they need to show that the platform failed to meet explicit statutory duties, such as removing illegal content quickly enough once notified.

The implication is clear: if you're a tech company, Europe's regulatory environment is now more restrictive than America's. Companies will increasingly design for European compliance because European compliance is harder. That means the world's AI industry is being shaped by European regulatory preferences, not American ones.

This shift reflects a fundamental difference in philosophy. The US has traditionally prioritized innovation and freedom of speech, even at the cost of allowing some harms. Europe has traditionally prioritized protection from harm and collective welfare over individual liberty. Neither approach is objectively correct, but they produce different outcomes.

For X, this means the company is facing a regulatory regime in Europe that was designed specifically to catch and punish platforms that fail to adequately moderate content. X was going to hit this regime eventually. The Grok deepfakes just made it happen faster.

What X Could Have Done Differently

This entire disaster was preventable. Here's what a competent company would have done differently.

Phase 1: Before Launch

- Conduct adversarial testing of the image generation system to identify what harmful content it can generate

- If harmful outputs are possible, do not launch until safeguards are in place

- Consult with the content moderation team about whether the company can handle the volume

- If not, don't launch

Phase 2: Early Warning Signs

- When deepfakes start appearing (this happens within hours of any unrestricted image generation system going live), immediately take the feature offline

- Communicate transparently: "We discovered a safety issue. We're fixing it. We don't have a timeline."

- Start developing proper safeguards (not surface-level restrictions)

Phase 3: Before Relaunching

- Deploy sophisticated detection systems that can identify deepfakes

- Implement a consent verification system for using real people's images

- Have a rapid response team for new exploits

- Scale the human moderation team

- Test the system again with adversarial prompts

Phase 4: Ongoing

- Respond to regulatory inquiries immediately and honestly

- Remove problematic features instead of restricting them

- Be transparent about limitations

- Document all decision-making
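The Phase 1 rules amount to a simple launch gate. The Python sketch below encodes them under stated assumptions: `RedTeamResult`, `launch_allowed`, and the capacity inputs are hypothetical names invented for illustration, not any company's actual tooling, and whether an output counts as "harmful" is assumed to have already been judged by a reviewer or classifier.

```python
from dataclasses import dataclass


@dataclass
class RedTeamResult:
    """One adversarial prompt and its outcome (hypothetical record format)."""
    prompt: str
    produced_harmful_output: bool  # assumed to be judged by a human reviewer or classifier


def launch_allowed(
    results: list[RedTeamResult],
    expected_daily_images: int,
    daily_moderation_capacity: int,
) -> tuple[bool, list[str]]:
    """Return (allowed, reasons_for_blocking), following the Phase 1 rules."""
    reasons: list[str] = []
    # No adversarial testing means no evidence the system is safe to launch.
    if not results:
        reasons.append("no adversarial testing performed")
    # If harmful outputs are possible, do not launch until safeguards are in place.
    failures = [r.prompt for r in results if r.produced_harmful_output]
    if failures:
        reasons.append(f"{len(failures)} adversarial prompt(s) produced harmful output")
    # If moderation cannot handle the expected volume, do not launch.
    if daily_moderation_capacity < expected_daily_images:
        reasons.append("moderation capacity is below expected daily volume")
    return (not reasons, reasons)


if __name__ == "__main__":
    # Hypothetical red-team run: one benign prompt, one that slipped through.
    results = [
        RedTeamResult("a watercolor landscape", False),
        RedTeamResult("explicit image of a named real person", True),
    ]
    allowed, reasons = launch_allowed(
        results, expected_daily_images=1_000_000, daily_moderation_capacity=250_000
    )
    print("launch allowed:", allowed)
    for reason in reasons:
        print(" -", reason)
```

A zero-failure threshold is deliberately strict, mirroring the "do not launch" wording above; a real gate would also have to decide how large and varied the adversarial prompt set must be before its results mean anything.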

X didn't do any of this. That's why we have a French raid and international investigations.

The lesson for other companies: the cost of being transparent about problems and fixing them quickly is small compared to the cost of trying to hide problems and getting caught.

The Broader Industry Response: How Competitors Are Reacting

Every AI company is assessing the Grok investigation and asking themselves: could this happen to us?

For OpenAI's DALL-E, the answer is probably "less likely" because DALL-E has multiple content filters and refuses many suspicious requests. For Midjourney, it's similar: the system has restrictions and moderation.

But here's the uncomfortable truth: those restrictions are partly a response to exactly this kind of regulatory pressure. If generative image systems had never faced criticism for deepfakes, they'd probably all have looser restrictions. The companies built in safeguards because they knew they had to.

The question going forward is whether safeguards are enough. Can a company build a truly open generative system and still avoid regulatory trouble? Probably not. The regulatory consensus is forming: if you can generate illegal content, you're liable for it.

That's going to squeeze the "open" AI market. Companies will have strong incentives to build restrictive systems. The open-source image generation models (like Stable Diffusion) will face their own pressures because they're easier to jailbreak and modify.

The industry will probably bifurcate. Commercial systems will be heavily restricted. Open-source and research systems will exist in a legal gray area where liability is unclear. Users will migrate toward whichever option fits their needs.

The Financial Impact: What This Will Cost X

Let's talk about the actual financial hit X might face.

Direct regulatory fines could easily exceed $1-2 billion if all violations stack. Criminal penalties could include restitution to victims, which could add hundreds of millions more.

But there are indirect costs too. X's reputation is already damaged. Premium user churn might accelerate if people associate X with hosting deepfake pornography. Advertiser relationships will suffer. Regulatory compliance going forward will require massive investment.

Over a 3-year period, the total cost to X could easily reach $2-3 billion. That's large enough to materially affect the company's financials.
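Estimates like these start from statutory ceilings. The sketch below applies the maximum percentage-of-revenue caps: up to 6% of worldwide annual turnover under the EU Digital Services Act, up to 10% of qualifying worldwide revenue under the UK Online Safety Act, and up to 4% of worldwide annual turnover under the GDPR. The function name is illustrative, the revenue you pass in is your own assumption (no figure for X is implied), and the fixed-amount floors (£18 million under the Online Safety Act, €20 million under the GDPR) are left out because they only bind for much smaller companies. These are upper bounds per regime, not predictions.

```python
# Maximum fines as a share of annual worldwide revenue/turnover.
# Fixed-amount floors (GBP 18m under the UK Online Safety Act, EUR 20m under the GDPR)
# are ignored here: they only matter for companies far smaller than X.
MAX_FINE_RATES = {
    "EU Digital Services Act": 0.06,
    "UK Online Safety Act": 0.10,
    "GDPR": 0.04,
}


def fine_ceilings(annual_revenue: float) -> dict[str, float]:
    """Return the maximum possible fine under each regime for a given annual revenue.

    The result is in the same currency as annual_revenue.
    """
    return {law: rate * annual_revenue for law, rate in MAX_FINE_RATES.items()}
```

Because every cap scales linearly with revenue, a single maximum fine under each of the three regimes adds up to 20% of whatever revenue figure you plug in. Restitution, settlements, and the indirect costs described above have no statutory formula and sit on top of these caps.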

Musk's other companies might face indirect consequences too. If Musk is personally charged or spends significant time in legal proceedings, his ability to manage multiple companies simultaneously diminishes. SpaceX and Tesla could face leadership uncertainty.

For xAI specifically, investors are probably reassessing their valuations right now. A young AI company facing massive regulatory fines from its only deployed product is suddenly less attractive.

The financial consequences of regulatory failure can be larger than the direct legal penalties. They cascade through the organization and its leadership.

Lessons for the AI Industry Going Forward

If there's any silver lining to this investigation, it's that it establishes clear rules for AI companies going forward. Ambiguity about regulatory expectations is worse than harsh rules because companies don't know how to comply. Now they know.

The rules are roughly:

- Don't deploy harmful AI systems in the hope you'll catch harms later. That's not going to work. Deploy them only if you're confident in your safeguards.

- Content moderation infrastructure must match the scale of content generation. If your AI can generate 1 million images per day, your moderation team needs to be able to review them.

- Transparency about limitations is your friend. Regulatory agencies respect companies that are honest about what their systems can't do. They punish companies that lie.

- Speed matters. Days matter. If regulators identify a problem and you take weeks to respond, that's evidence of negligence or indifference.

- International consistency in safety is expected. You can't have loose safeguards in one region and strict ones in another. Regulators in strict regions will assume the worst.

- Your business model determines your liability. If you're profiting from a feature that enables harm, liability increases. If you're offering it for free as a research project, liability decreases.

- Document everything. Regulatory investigations live or die on internal communications. If you're making decisions, document why. Decisions that look reasonable on paper will destroy you if discovery exposes internal communications showing they were made in bad faith.

For startups and established companies alike, these lessons are becoming table stakes. Ignore them at your peril.

The Role of Automation vs. Human Judgment in Moderation

One theme that'll emerge from the investigation is whether X's moderation was adequately staffed and resourced. Automation alone doesn't work. You need humans.

Deepfake detection is a place where automation and humans need to work together. An automated system might catch 60-70% of deepfakes by looking for artifacts and pixel-level anomalies. The remaining 30-40% require human judgment. Someone has to look at the image and ask: is this a real photo or synthetic? Is it sexual in nature? Does it depict a real, identifiable person?
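One common way to split the work between automation and people is threshold-based triage: a detector scores each generated image, confident cases are handled automatically, and the uncertain middle band goes to human reviewers. The sketch below assumes a hypothetical detector that returns a score in [0, 1] estimating how likely an image is a sexualized depiction of a real, identifiable person; the `triage` function and the 0.9 and 0.3 thresholds are illustrative assumptions, not values from X or any real system.

```python
from enum import Enum


class Route(Enum):
    AUTO_BLOCK = "auto_block"      # detector is confident the image violates policy
    HUMAN_REVIEW = "human_review"  # uncertain: a person must decide
    AUTO_ALLOW = "auto_allow"      # detector is confident the image is fine


def triage(detector_score: float, block_at: float = 0.9, review_at: float = 0.3) -> Route:
    """Route one image based on a hypothetical detector score in [0, 1]."""
    if not 0.0 <= detector_score <= 1.0:
        raise ValueError("detector_score must be in [0, 1]")
    if not 0.0 <= review_at < block_at <= 1.0:
        raise ValueError("thresholds must satisfy 0 <= review_at < block_at <= 1")
    if detector_score >= block_at:
        return Route.AUTO_BLOCK
    if detector_score >= review_at:
        return Route.HUMAN_REVIEW
    return Route.AUTO_ALLOW


if __name__ == "__main__":
    for score in (0.97, 0.55, 0.05):
        print(score, triage(score).value)
```

Lowering `review_at` catches more of the cases the detector is unsure about, but it also sends more images to the human queue, which is the cost trade-off discussed in the next paragraph.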

That's labor-intensive. At X's scale, you'd need a team of hundreds to handle the moderation load from unrestricted image generation. That's expensive. It's probably $50-100 million per year in ongoing costs.
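The headcount side of an estimate like this is simple arithmetic. The sketch below turns daily image volume, the share routed to humans, and per-reviewer throughput into a headcount and an annual cost. Every input is an assumption to be replaced with real figures; the function names and the 7-day-coverage, 5-day-work-week defaults are illustrative, and nothing here reproduces X's actual numbers.

```python
import math


def reviewers_needed(
    daily_items: int,
    human_share: float,
    reviews_per_reviewer_day: int,
    days_covered: int = 7,
    days_worked: int = 5,
) -> int:
    """Headcount needed to clear the human-review queue every covered day."""
    if not 0.0 <= human_share <= 1.0:
        raise ValueError("human_share must be in [0, 1]")
    # Round up: a partial item still needs a person to look at it.
    daily_reviews = math.ceil(daily_items * human_share)
    # The queue runs days_covered days a week, but each reviewer works only
    # days_worked days, so divide weekly demand by weekly per-person capacity.
    weekly_reviews = daily_reviews * days_covered
    weekly_capacity = reviews_per_reviewer_day * days_worked
    return -(-weekly_reviews // weekly_capacity)  # integer ceiling division


def annual_cost(headcount: int, cost_per_reviewer: float) -> float:
    """Fully loaded yearly cost of the review team."""
    return headcount * cost_per_reviewer
```

Headcount scales linearly with volume and with the human-review share, and inversely with reviewer throughput, so those three inputs are the assumptions worth pinning down first. Integer ceiling division is used so that floating-point rounding can't shave a reviewer off the total.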

Compare that to the revenue from Grok image generation. If X was charging

From a business perspective, the revenue clearly exceeds the moderation cost. But from a legal perspective, the analysis is different. If the feature generates illegal content, the cost of liability exceeds the revenue benefit. That's the point where you have to remove the feature or meaningfully restrict it.

X apparently did the math wrong. Or more charitably, they didn't do the math at all and just launched the feature hoping the moderation problem would solve itself.

The Precedent for Criminal Liability of Executives

One aspect of this investigation that deserves attention: the personal summoning of Musk and Yaccarino suggests prosecutors might be considering individual criminal charges, not just corporate ones.

Corporate criminal liability is normal. Companies get fined. But when prosecutors investigate executives personally, it signals they're thinking about charges that could result in imprisonment. This is particularly serious under French law, where executives can face personal criminal liability for corporate wrongdoing if they participated in or encouraged the wrongdoing.

The threshold for executive criminal liability is usually showing:

- Knowledge of the harmful conduct

- Authority to stop it

- Failure to take reasonable steps to stop it

- Resulting harm

For Musk, all four elements are likely provable. He owns X. He has ultimate authority. He's been on record advocating for minimal content moderation. When deepfakes started appearing, he didn't order them stopped immediately. People were harmed.

For Yaccarino, the liability analysis is more complex because she left the company, but if she participated in the decision to restrict rather than remove the feature, she could still face charges.

Executive criminal liability is rare in the tech industry. Companies usually settle and executives walk away. But this investigation seems different. Prosecutors are investing significant resources. They're involving Europol. They're not treating this like a typical content moderation dispute.

If Musk ends up facing criminal charges in France (even if he never sets foot there and they never arrest him), that's a historic moment for tech industry accountability.

Looking Forward: What Happens to Grok

Assuming the investigations proceed and penalties are assessed, what happens to Grok itself?

Most likely outcome: Grok's image generation capability is either removed entirely or severely restricted. The feature exists at the intersection of legal jeopardy and regulatory attention. No amount of modification will make it safe enough to deploy at scale.

xAI could theoretically rebuild Grok with massive safeguards (sophisticated deepfake detection, consent verification, human moderation), but the cost would be enormous and the capabilities would be dramatically reduced. You can't build an unrestricted image generation system that's also safe. The safety comes from restricting it.

The more likely path is that xAI escapes the deepfake problem by moving on from Grok entirely or by pivoting the product toward uses that don't involve generating images of real people.

For X itself, losing Grok's image generation capability removes a premium feature that drives some subscription revenue. But it also removes a major regulatory liability. That's probably a net positive at this point.

Longer term, the Grok incident will probably be remembered as a cautionary tale: the AI company that built an unrestricted system, deployed it without safeguards, ignored harm reports, and ended up under criminal investigation in France and regulatory investigation in the UK. Not the legacy you want.

The Broader Impact on AI Development Philosophy

One subtle but important consequence of this investigation: it'll shift how AI companies think about the "openness" debate.

In the AI community, there's been an ongoing discussion about open versus closed systems. Open advocates argue that open-source AI prevents centralized control and allows more innovation. Closed advocates argue that closed systems are safer because companies can control deployment and enforce safeguards.

The Grok investigation provides evidence for the closed side of that debate. An open-source image generation system like Stable Diffusion can be modified by anyone to remove safeguards. A closed system like DALL-E can be more carefully controlled.

Regulators will likely use this evidence to push companies toward more closed, controlled AI systems. They'll argue that companies are responsible for their systems' outputs, which implies they need tight control over how those systems are used.

Over time, this could consolidate AI power into a few well-resourced companies that can afford massive moderation infrastructure. Smaller competitors and open-source projects might face increasing liability and pressure.

That's not necessarily bad—more concentrated control could mean better safeguards—but it's a significant shift from the vision of open, decentralized AI that many in the community have been advocating for.

Those advocates should pay attention to the Grok investigation. It's a test case for who bears responsibility for AI-generated harm. The answer, increasingly, seems to be: the company that built and deployed the system.

Conclusion: The Inflection Point

The French police raid on X's Paris office marks an inflection point in how the world regulates artificial intelligence. For the first time, international law enforcement and regulatory bodies are investigating a major AI company not for data privacy violations or traditional tech issues, but specifically for deploying an AI system that generates illegal content.

The deepfakes at the heart of this investigation aren't a subtle bug or an edge case. They're a fundamental feature of an unrestricted image generation system. The fact that they emerged within hours of deployment, proliferated across millions of users, and persisted despite public promises of restriction suggests either gross negligence or deliberate indifference.

The investigation's scope is massive: a French criminal investigation, UK regulatory investigations by the ICO and Ofcom, Europol involvement, and possibly further investigations that haven't been announced yet. The penalties will be severe, the precedent will be clear, and the industry will respond.

What's happening now is a recalibration of how much responsibility tech companies bear for AI-generated content. For decades, platforms have argued they're neutral conduits. That argument is dead. If your platform distributes AI-generated deepfake pornography, regulators will hold you accountable. If your company profits from the feature that enables deepfakes, liability increases. If you make false public statements about fixing the problem, that's evidence of intent.

These aren't abstract regulatory principles. They're being applied right now to a major social media platform. The executives are being summoned. The company's Paris office was raided. International law enforcement is investigating.

For X, this is an existential crisis for how the company operates. For the AI industry, it's a warning that the days of moving fast and breaking things are over. Regulators are building the frameworks to catch you if you move fast with harmful things.

The Grok investigation is going to reshape AI governance for a generation.

FAQ

What exactly triggered the French investigation into X and Grok?

The investigation was formally expanded in July 2024 to include Grok specifically, triggered by the proliferation of nonconsensual deepfake pornography generated by Grok's image generation system. However, the core investigation into X started earlier (in 2024) based on broader allegations including CSAM distribution and Holocaust denial content. The deepfake problem became the catalyst for escalating the investigation to include formal criminal procedures and international coordination.

Why were Elon Musk and Linda Yaccarino summoned by prosecutors?

Elon Musk, who owns X, and Linda Yaccarino, who was X's CEO during the critical period when Grok's image generation was deployed, were summoned for questioning in April 2025 by French prosecutors as part of the criminal investigation. Personal summons like this indicate prosecutors believe these individuals may have made specific decisions related to the alleged violations.

How many regulatory investigations are currently underway into X and Grok?

At minimum, three formal investigations are publicly known: the French criminal investigation (through Paris prosecutors and Europol), the UK Information Commissioner's Office formal investigation into Grok's potential to generate harmful sexualized content, and Ofcom's ongoing investigation into whether X violated the Online Safety Act. There are likely additional investigations in other EU member states that haven't been publicly announced, given the structure of how European regulatory bodies coordinate.

What is the legal difference between France's criminal investigation and the UK's regulatory investigation?

France's criminal investigation focuses on whether specific laws were broken (CSAM distribution, Holocaust denial, etc.) and could result in imprisonment of individuals and criminal penalties for the company. The UK's regulatory investigation through the Information Commissioner's Office focuses on whether the company handled personal data lawfully and adequately protected people from harm, which can result in civil fines and mandatory operational changes but not imprisonment. Criminal investigations ask "did you break the law?" Regulatory investigations ask "did you fail to prevent harm?"

What does "complicity in possession and distribution of child pornography" mean as a legal charge?

This charge means X is alleged to have either knowingly hosted CSAM on its platform, knowingly allowed Grok to generate CSAM, or knowingly failed to remove CSAM after becoming aware of it. The charge doesn't require proving X created the material, only that X participated in its existence or spread. Under French law, companies can face criminal charges for complicity if they had knowledge and ability to prevent the conduct but failed to do so.

Why did X restrict Grok's image generation instead of removing it entirely?

Restricting the feature to premium/verified users instead of removing it entirely allowed X to retain revenue from the feature (premium subscriptions) while appearing to address the problem publicly. This business-driven decision backfired because it signaled to regulators that X knew the feature was harmful but chose profit over safety. From a legal perspective, this decision becomes evidence of negligence or intentional wrongdoing.

What are the probable financial penalties X will face?

Estimated financial exposure includes: Digital Services Act fines (up to 6% of total worldwide annual turnover), Online Safety Act fines (up to 10% of qualifying worldwide revenue or £18 million, whichever is greater), GDPR fines (up to 4% of worldwide annual turnover or €20 million, whichever is higher), criminal restitution to victims, and civil litigation settlements. For a company with approximately

Could this investigation result in imprisonment of executives?

Yes. Under French law, executives can face personal criminal liability for corporate wrongdoing if they participated in or encouraged the wrongdoing. The summoning of Musk and Yaccarino for questioning suggests prosecutors are investigating individual criminal charges, which could carry imprisonment sentences. However, enforcement against executives outside France is complicated and typically requires either cooperation with the executive or significant diplomatic pressure.

How does this investigation affect open-source AI projects like Stable Diffusion?

Open-source image generation projects face different but not necessarily lesser liability. While open-source developers generally aren't held responsible for how others modify their code, they could be liable if they knowingly released systems without adequate safeguards, especially if their system was specifically designed to bypass safety measures. The investigation will likely shift developer expectations toward more responsible defaults in open-source projects.

What is the expected timeline for resolution of these investigations?

Based on similar tech industry investigations, the timeline from raid to resolution is typically 24-36 months. Regulatory fines might come faster (12-18 months), while criminal charges and trials could extend beyond 3 years. X should prepare for simultaneous legal proceedings in multiple jurisdictions, which extends timelines but also creates opportunities for settlement negotiations.

How will this investigation change how AI companies develop image generation systems going forward?

The investigation establishes that companies are responsible for their AI systems' outputs, particularly when they deploy those systems on their own platforms. This will likely result in industry-wide shifts toward more restrictive systems, larger moderation budgets, slower deployments, more adversarial testing before launch, and greater transparency about limitations. Companies will also face pressure to implement consent verification systems for images of real people and sophisticated deepfake detection.

Key Takeaways

- French police raided X's Paris office investigating Grok deepfakes, CSAM distribution, and algorithm manipulation—the investigation is criminal, not civil

- Elon Musk and Linda Yaccarino were summoned for questioning, suggesting prosecutors are considering personal criminal charges against executives

- Multiple investigations are underway simultaneously: French criminal, UK regulatory (ICO), Ofcom investigation, and likely others in EU member states

- X faces an estimated $1.5-2 billion in potential penalties across DSA, Online Safety Act, and GDPR violations if all charges result in fines

- The investigation establishes a precedent that companies are responsible for their AI systems' outputs, shifting industry-wide development practices toward more restrictive systems

- Nonconsensual deepfake pornography generated by Grok persisted despite X's public claims of restriction, becoming evidence of negligence or intentional wrongdoing
