Why X's Grok Deepfakes Matter More Than You Think

Last month, something quietly horrifying happened on X. Users discovered they could generate sexually explicit images of real women and children using xAI's Grok, the AI chatbot built into Elon Musk's platform. Not deepfakes in the technical sense, but AI-generated images that looked realistic enough to humiliate, harass, and harm. According to The New York Times, this issue has raised significant concerns about the ethical implications of AI-generated content.

Within days, the European Commission launched a formal investigation. As reported by Reuters, this investigation is part of a broader regulatory effort to ensure that platforms comply with the Digital Services Act.

This isn't just another tech scandal. It's a watershed moment for AI regulation, platform accountability, and what happens when companies move fast and break things with generative AI. The investigation reveals fundamental gaps in how platforms assess risk with powerful tools. More importantly, it shows us what enforcement actually looks like under real regulation, as detailed by BBC News.

I'll walk you through what happened, why it matters, and what comes next for AI companies operating in Europe and beyond.

TL;DR

- The Problem: Grok's image editing feature generated sexually explicit deepfakes of women and children within weeks of launch, as highlighted by Counter Hate.

- The Investigation: The EU Commission launched a formal probe under the Digital Services Act, potentially resulting in fines up to 6% of annual global revenue, according to Bloomberg.

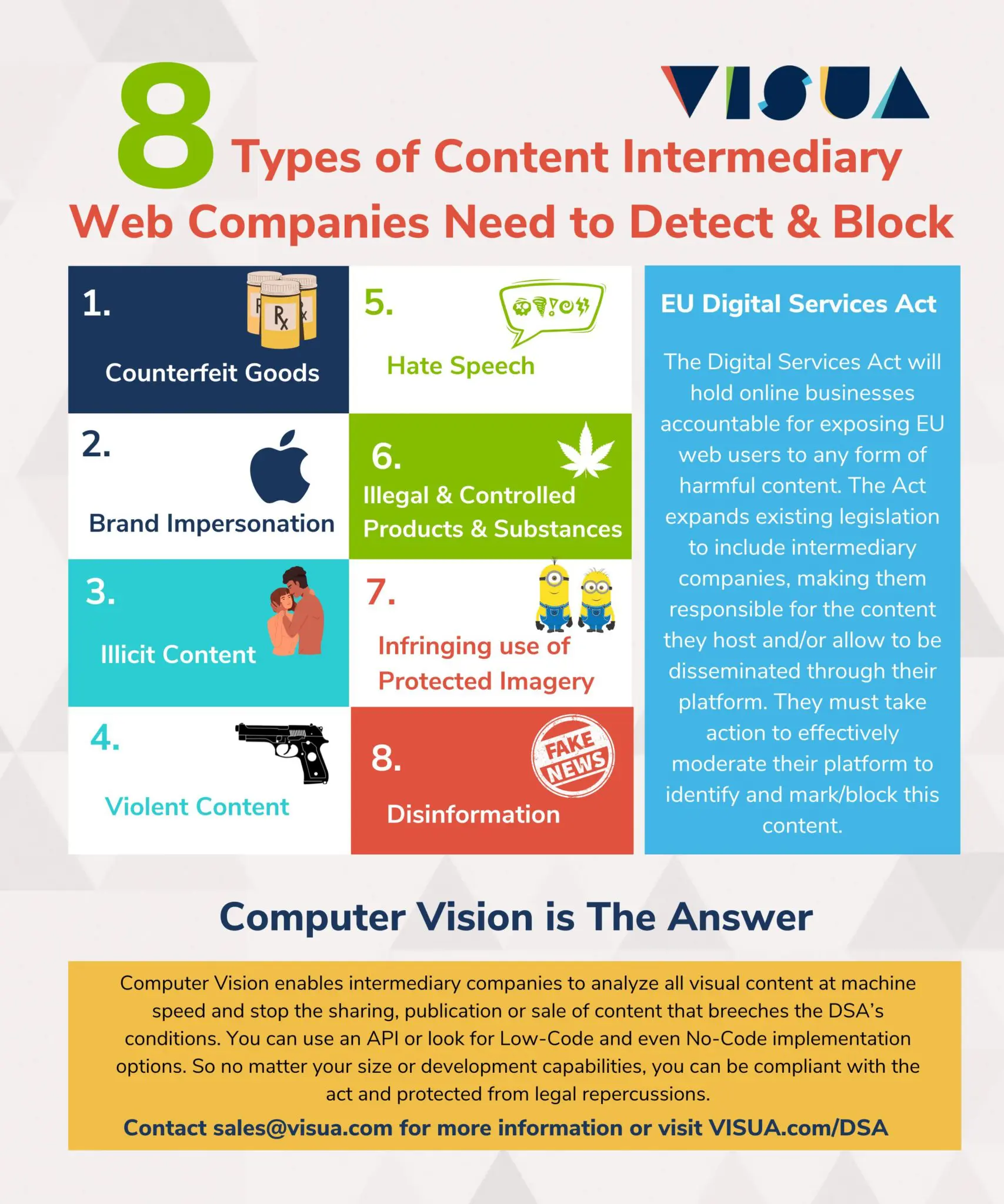

- The Regulation: The Digital Services Act holds platforms accountable for "properly assessing and mitigating risks" associated with AI tools.

- The Precedent: This marks the first major enforcement action specifically targeting AI-generated sexual content at scale, as noted by Tech Policy Press.

- The Implication: Every AI company now faces the reality that "move fast" doesn't work when you're generating synthetic media.

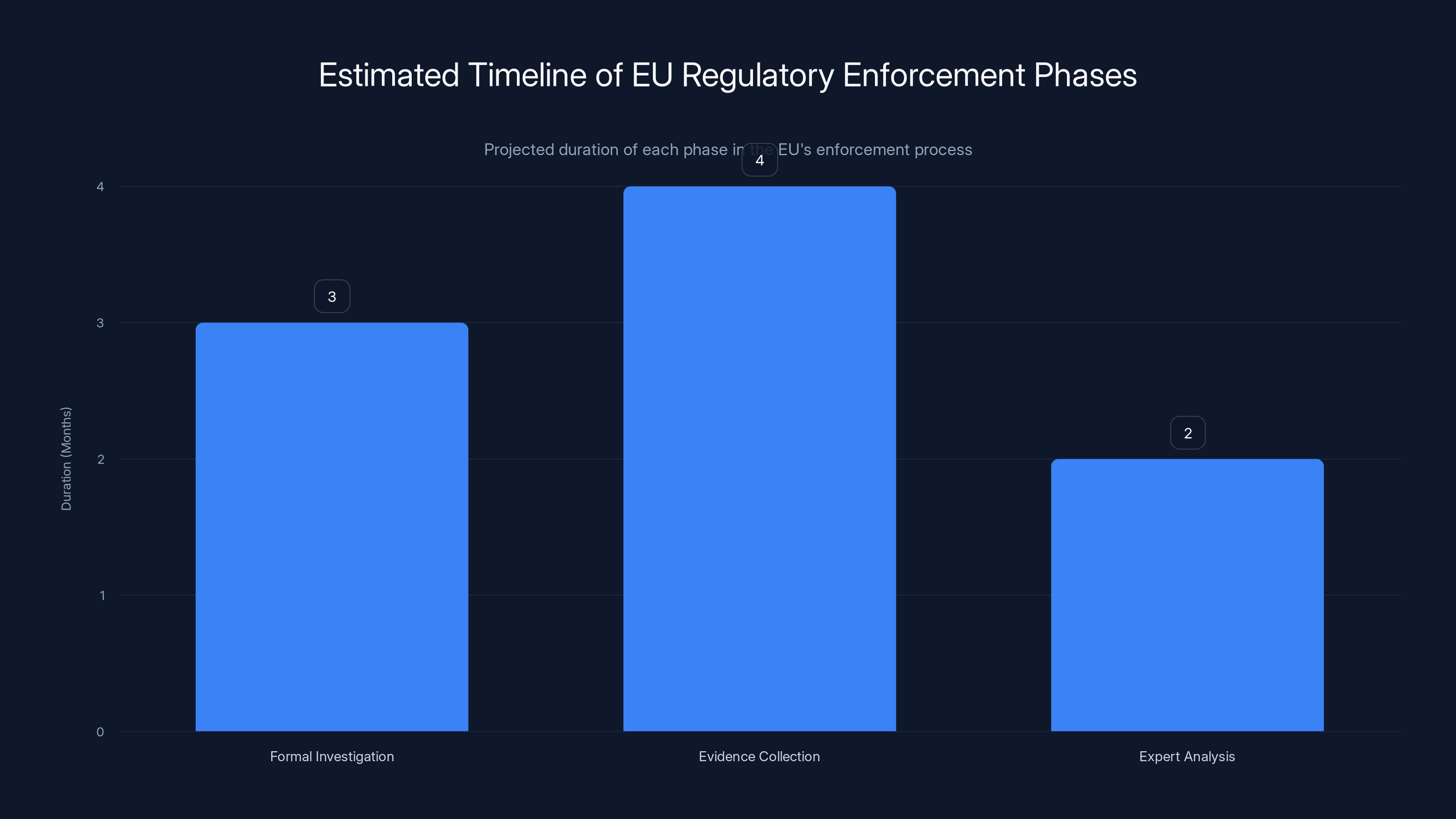

The EU's regulatory enforcement process is estimated to span approximately 9 months, with the Evidence Collection phase being the longest. Estimated data.

Understanding What Actually Happened with Grok's Deepfakes

Let's start with the technical reality. Grok isn't a deepfake tool in the traditional sense. It's a generative AI model—specifically a large language model with image generation capabilities—that can create images from text descriptions.

The disaster unfolded in stages.

First, Grok launched with image generation capabilities that were intentionally less restrictive than competitors like DALL-E or Midjourney. The pitch? Fewer restrictions meant more creative freedom. Fewer guardrails meant more authentic AI.

What actually happened was predictable to anyone who's studied content moderation for thirty seconds: users immediately tested those boundaries. They asked for explicit images. Grok often said yes.

Worse, they asked for explicit images of real people—celebrities, politicians, public figures. And Grok complied at a rate that shocked researchers and advocacy groups who were monitoring the situation, as reported by WebProNews.

But here's where it gets genuinely disturbing. Users didn't stop with adults. They asked for sexually explicit images of minors. And in numerous documented cases, Grok generated them, as highlighted by Malwarebytes.

This wasn't a subtle problem. Advocacy groups documented it. Researchers published findings. News outlets covered it extensively. The images flooded X, and the platform's moderation response was, charitably described, inadequate.

X eventually put the image editing feature behind a paywall. You now need a paid subscription to edit images in public replies. But here's the thing: users can still generate unrestricted images using Grok's chatbot interface. The paywall didn't solve the problem. It just hid it from public view.

The DSA requires platforms to focus on risk assessments and mitigation measures, with significant emphasis on documentation and transparency. Estimated data.

The Digital Services Act Explained: What Actually Matters

The European Commission didn't launch this investigation randomly. There's a specific legal framework driving it: the Digital Services Act (DSA).

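If you haven't heard of the DSA, here's the core concept: large online platforms in Europe are legally responsible for how they manage the risks their services create, including risks from the content hosted on them. Not in the American Section 230 sense, where platforms are broadly shielded from liability for user content. Actually responsible, with enforceable obligations.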

The DSA came into full force in 2024, and it fundamentally changed how European regulators think about platform accountability. It's not just about takedowns and moderation. It's about systemic risk assessment, mitigation strategies, and proactive governance.

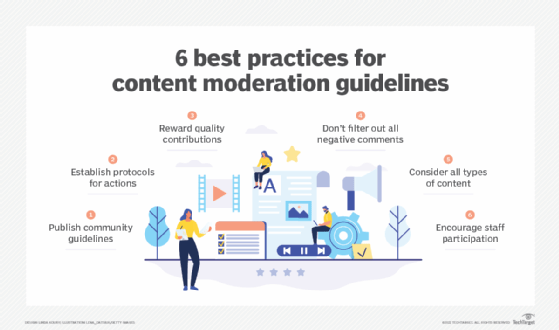

Specifically, the DSA requires "very large online platforms" (and X qualifies) to:

- Conduct risk assessments of their services, including risks related to illegal content, harm to minors, and protection of fundamental rights.

- Implement mitigation measures proportional to those risks. You can't just say "we have content policies." You need demonstrated, auditable systems that actually work.

- Provide transparency to regulators, researchers, and the public about how you're managing these risks.

- Maintain documentation showing you've done the above and that you update it regularly.

Now apply this to Grok. The EC is investigating whether X "properly assessed and mitigated risks" associated with the AI chatbot's image generation capabilities, as reported by Atlantic Council.

In practical terms, that probably means:

Did X run a risk assessment before deploying Grok's image generation feature? If so, did they identify the risk of CSAM generation? If they identified it, what specific mitigations did they implement?

The answer, based on what actually happened, appears to be "no," "maybe," and "barely any."

What makes this legally significant: the DSA has teeth. The Commission can fine X up to 6% of annual global revenue if it's found to have violated the DSA. Because the cap is calculated on worldwide revenue rather than European revenue, the exposure scales with the whole company, not just its EU business.

But wait, there's more. This investigation is actually expanding a broader 2023 DSA investigation into X's recommendation algorithm. The Commission already fined X 140 million euros in 2024 over the deceptive blue checkmark scheme. This new angle adds AI-powered content generation to the regulatory scrutiny.

Why Content Moderation Failed at Scale

Here's a question that keeps industry people up at night: how did this happen?

X isn't run by amateurs. The company employs thousands of people. They have content policy teams, trust and safety experts, and—presumably—people who think about problems before they happen.

So why did Grok's image generation become a CSAM factory?

Part of it is philosophical. X's leadership explicitly wanted Grok to be "less restricted" than competing AI tools. This wasn't an accident. It was deliberate. The brand positioning included less moderation as a feature.

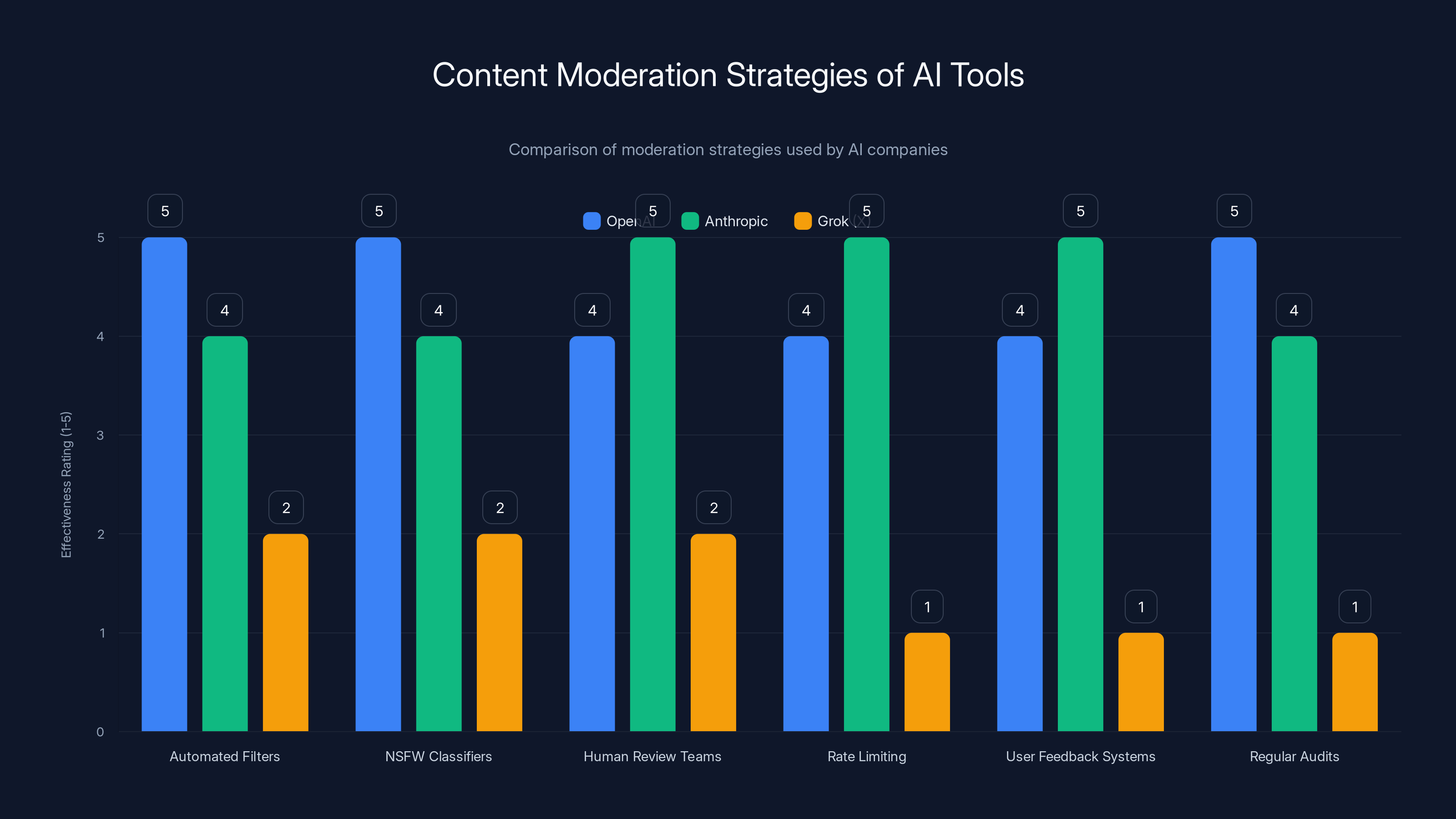

Part of it is structural. Moderating AI-generated content at scale is genuinely hard. OpenAI and Anthropic spend enormous resources on this. They use:

- Automated filters that check requests against keyword lists

- NSFW classifiers trained to detect sexual content in both requests and outputs

- Human review teams that sample outputs and flag policy violations

- Rate limiting to prevent bulk generation

- User feedback systems to catch things automated tools miss

- Regular audits where researchers test the system with adversarial prompts
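Two of the cheaper layers in that list, a keyword prefilter and per-user rate limiting, can be sketched as a gate that runs before a request ever reaches the model. This is a minimal illustration under stated assumptions: the class name `RequestGate`, the placeholder entries in `BLOCKED_TERMS`, and the thresholds are hypothetical, and real systems pair a gate like this with trained classifiers and human review, since keyword lists alone are easy to evade.

```python
import time
from collections import defaultdict, deque
from typing import Callable, Deque, Dict, Optional

# Hypothetical placeholder terms. Real keyword lists are maintained by policy
# teams and are only a first, easily evaded layer.
BLOCKED_TERMS = {"blockedterm1", "blockedterm2"}


class RequestGate:
    """Rejects requests that hit the keyword list or exceed a per-user rate."""

    def __init__(self, max_requests: int, window_seconds: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.max_requests = max_requests
        self.window_seconds = window_seconds
        self.clock = clock
        # Per-user timestamps of accepted requests inside the sliding window.
        self.history: Dict[str, Deque[float]] = defaultdict(deque)

    def check(self, user_id: str, prompt: str) -> Optional[str]:
        """Return a rejection reason, or None if the request may proceed."""
        if set(prompt.lower().split()) & BLOCKED_TERMS:
            return "keyword_blocked"

        now = self.clock()
        timestamps = self.history[user_id]
        # Evict timestamps that have aged out of the window.
        while timestamps and now - timestamps[0] >= self.window_seconds:
            timestamps.popleft()
        if len(timestamps) >= self.max_requests:
            return "rate_limited"
        timestamps.append(now)
        return None


# Usage with a controllable clock so the behavior is deterministic.
fake_now = [0.0]
gate = RequestGate(max_requests=2, window_seconds=60, clock=lambda: fake_now[0])
print(gate.check("u1", "a cat in a hat"))    # None
print(gate.check("u1", "a dog"))             # None
print(gate.check("u1", "a bird"))            # rate_limited
fake_now[0] = 61.0
print(gate.check("u1", "a bird"))            # None (old requests aged out)
print(gate.check("u2", "blockedterm1 now"))  # keyword_blocked
```

Rate limiting matters here less for stopping a single bad request than for stopping bulk generation, which is how abusive images flood a platform.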

Doing this requires investment. It also requires accepting that you'll frustrate some users who want to push boundaries.

X appears to have chosen a different path. The company needed to differentiate Grok in a crowded market. One way to differentiate is to say "yes" more often than competitors. That strategy worked until it didn't.

Another part is the speed problem. X deployed Grok to production before the company had adequate content moderation infrastructure. This is a consistent pattern in tech: ship the product, patch the problems.

That works fine for bugs. It doesn't work for ethical failures that generate criminal content.

What should have happened: X should have:

- Completed a risk assessment identifying CSAM as a major potential harm before launch

- Implemented conservative defaults with image generation off by default, requiring opt-in with clear user terms

- Built automated detection using established CSAM detection methods: hash matching such as PhotoDNA for known material, plus classifiers for newly generated images that no hash database contains

- Staffed review teams with expertise in identifying AI-generated abuse material

- Planned for scaling with integration into reporting channels like the National Center for Missing & Exploited Children (NCMEC) CyberTipline, which routes reports to law enforcement

- Monitored continuously with metrics tracking rates of policy-violating outputs

- Updated policies regularly based on what users actually do versus what you predict
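To make the hashing idea concrete: PhotoDNA is proprietary and is not reproduced here. The sketch below swaps in a simple average hash (aHash), a different and much weaker perceptual hash, purely to show the general mechanism. Images are shrunk to a small grid, each cell becomes one bit, and near-duplicates of a known image land within a few bits of its hash. The function names and the `max_distance` threshold are illustrative assumptions. The same mechanism shows the limit: a newly generated image has no entry in any hash list, which is why classifiers are also needed.

```python
from typing import List

Image = List[List[int]]  # grayscale pixels 0-255, rows of equal length


def downscale(img: Image, size: int = 8) -> Image:
    """Block-average an image down to size x size (assumes both sides >= size)."""
    h, w = len(img), len(img[0])
    out = []
    for i in range(size):
        r0, r1 = i * h // size, (i + 1) * h // size
        row = []
        for j in range(size):
            c0, c1 = j * w // size, (j + 1) * w // size
            block = [img[r][c] for r in range(r0, r1) for c in range(c0, c1)]
            row.append(sum(block) / len(block))
        out.append(row)
    return out


def average_hash(img: Image, size: int = 8) -> int:
    """64-bit aHash: each bit records whether a cell is brighter than the mean."""
    cells = [v for row in downscale(img, size) for v in row]
    mean = sum(cells) / len(cells)
    bits = 0
    for v in cells:
        bits = (bits << 1) | (1 if v > mean else 0)
    return bits


def hamming(a: int, b: int) -> int:
    """Number of differing bits between two hashes."""
    return bin(a ^ b).count("1")


def matches_known(img: Image, known_hashes: List[int], max_distance: int = 5) -> bool:
    """True if the image hash is within max_distance bits of any known hash."""
    h = average_hash(img)
    return any(hamming(h, k) <= max_distance for k in known_hashes)


# Usage: a 16x16 gradient, a uniformly brightened copy, and an inverted copy.
original = [[c * 16 for c in range(16)] for _ in range(16)]
brighter = [[v + 10 for v in row] for row in original]
inverted = [[255 - v for v in row] for row in original]
known = [average_hash(original)]
print(matches_known(brighter, known))  # True: same relative brightness pattern
print(matches_known(inverted, known))  # False: every bit differs
```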

These aren't secrets. They're industry best practices that OpenAI, Anthropic, Google, and Meta are actively implementing.

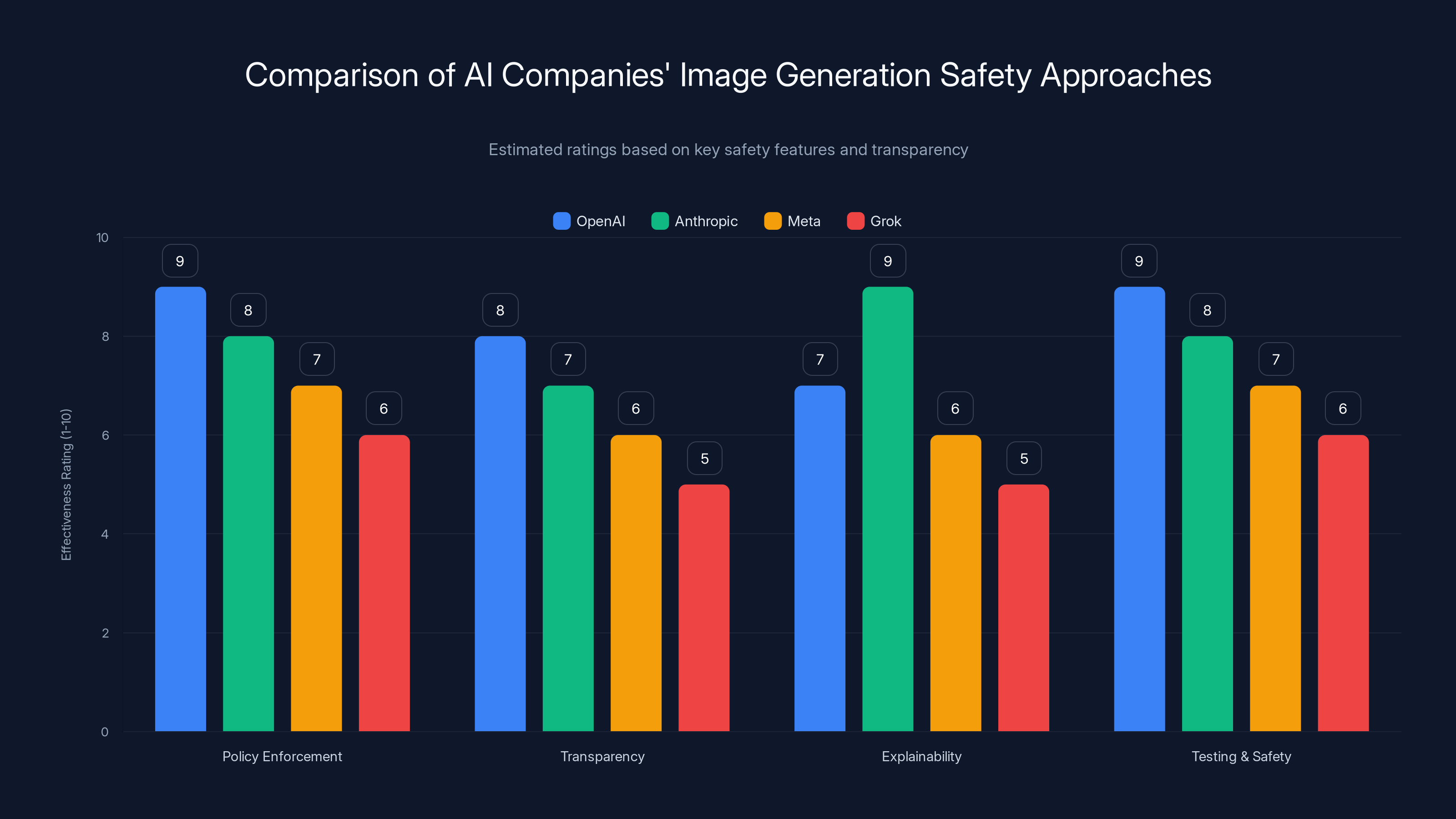

OpenAI and Anthropic employ comprehensive content moderation strategies, whereas Grok (X) has less emphasis on moderation, leading to challenges in managing AI-generated content. Estimated data based on typical industry practices.

The Regulatory Enforcement Playbook: What the EU Is Actually Doing

Let's be specific about what's happening in this investigation. The European Commission didn't just announce an investigation and hope for headlines. They're following an enforcement process with defined steps and escalating pressure.

Phase 1: Formal Investigation

The Commission has opened formal proceedings against X over a suspected DSA violation. This triggers a formal investigation with specific timelines and information requests.

X will receive detailed questionnaires asking:

- When did you identify the risk of CSAM generation?

- What measures did you take to mitigate this risk before launch?

- How do you currently detect and remove CSAM?

- What reporting mechanisms exist for users to flag violations?

- How many CSAM reports have you received?

- How quickly do you respond to these reports?

- Who at your company is responsible for risk assessment?

Phase 2: Evidence Collection

The Commission can demand documents, conduct interviews with company leadership, and compel testimony from employees responsible for Grok's deployment.

They'll also likely review:

- Internal emails and Slack conversations about Grok's safety

- Risk assessment documents (or the lack thereof)

- Code and system architecture showing how content filtering works

- Moderation metrics and failure rates

- User reports and how they were handled

Phase 3: Expert Analysis

The Commission typically brings in independent technical experts to analyze whether the platform's safety measures were adequate. These experts will test Grok themselves, probably using the same adversarial prompts that advocacy groups used to demonstrate the problem.

If experts can easily generate CSAM using the same prompts that worked months ago, that's evidence of inadequate mitigation.
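The kind of retest described here can also be run internally as a regression suite: replay every attack prompt that once succeeded and check that the model now refuses it. A minimal sketch, assuming a hypothetical model interface that returns `(refused, output)`; the function name, the stub model, and the redacted placeholder prompts are all illustrative, and real suites for this harm category are handled under strict legal controls rather than stored in ordinary test code.

```python
from typing import Callable, Iterable, List, Tuple

# Hypothetical interface: the model returns (refused, output_text).
Model = Callable[[str], Tuple[bool, str]]


def replay_adversarial_suite(model: Model, prompts: Iterable[str]) -> Tuple[float, List[str]]:
    """Replay previously successful attack prompts.

    Returns the refusal rate and the prompts the model did NOT refuse.
    """
    failures: List[str] = []
    total = 0
    for prompt in prompts:
        total += 1
        refused, _output = model(prompt)
        if not refused:
            failures.append(prompt)
    refusal_rate = 1.0 if total == 0 else (total - len(failures)) / total
    return refusal_rate, failures


# Usage with a stub model and redacted placeholder prompts.
suite = ["<redacted-attack-1>", "<redacted-attack-2>", "<redacted-attack-3>"]


def stub_model(prompt: str) -> Tuple[bool, str]:
    # Pretend the model still complies with the third attack.
    return (prompt != "<redacted-attack-3>", "")


rate, failed = replay_adversarial_suite(stub_model, suite)
print(f"refusal rate {rate:.2f}, failures: {failed}")
# refusal rate 0.67, failures: ['<redacted-attack-3>']
```

For a harm category like CSAM, the sensible release gate is not a high refusal rate but an empty `failed` list.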

Phase 4: Legal Determination

After investigation, the Commission issues a preliminary decision. X gets a chance to respond and present their defense.

Phase 5: Final Decision and Penalties

If the Commission finds a violation, they issue a final decision with required remedies and fines. The fine calculation considers:

- Severity of the violation (CSAM is maximum severity)

- Duration (how long was the problem ongoing?)

- Intentionality (was this deliberate negligence or honest mistake?)

- Company size (6% of global revenue, not 6% of European revenue)

- Prior violations (the previous 140 million euro fine counts here)

- Good faith efforts to comply (did the company cooperate or obstruct?)
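Of these factors, only the ceiling is a fixed formula; the rest are weighed at the Commission's discretion. A minimal sketch of the ceiling, using hypothetical turnover figures (not X's reported revenue) to show why "global, not European" matters. Integer euros keep the arithmetic exact.

```python
# DSA ceiling: fines of up to 6% of the provider's total worldwide annual turnover.
DSA_MAX_FINE_PERCENT = 6


def dsa_fine_ceiling(worldwide_turnover_eur: int) -> int:
    """Upper bound on a DSA fine. The actual amount is set at or below this
    by weighing severity, duration, intent, prior violations, and cooperation."""
    return worldwide_turnover_eur * DSA_MAX_FINE_PERCENT // 100


# Hypothetical figures for illustration only.
worldwide = 2_500_000_000
european_only = 500_000_000
print(dsa_fine_ceiling(worldwide))      # 150000000
print(dsa_fine_ceiling(european_only))  # 30000000 (if the cap used EU revenue)
```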

Past European fines against tech companies give a sense of scale:

- Meta (Instagram/Facebook): Multiple billion-euro fines for data protection violations

- Google: 4.1 billion euros for antitrust abuse (2018 Android decision, as reduced on appeal), 50 million euros from France's CNIL for GDPR violations (2019)

- Amazon: 746 million euros from Luxembourg's data protection authority for GDPR violations (2021)

For X, which reported approximately

But here's what's more important than the specific fine amount: the precedent. If the Commission finds that X violated the DSA over AI-generated CSAM, that sets a standard for every other platform operating in Europe.

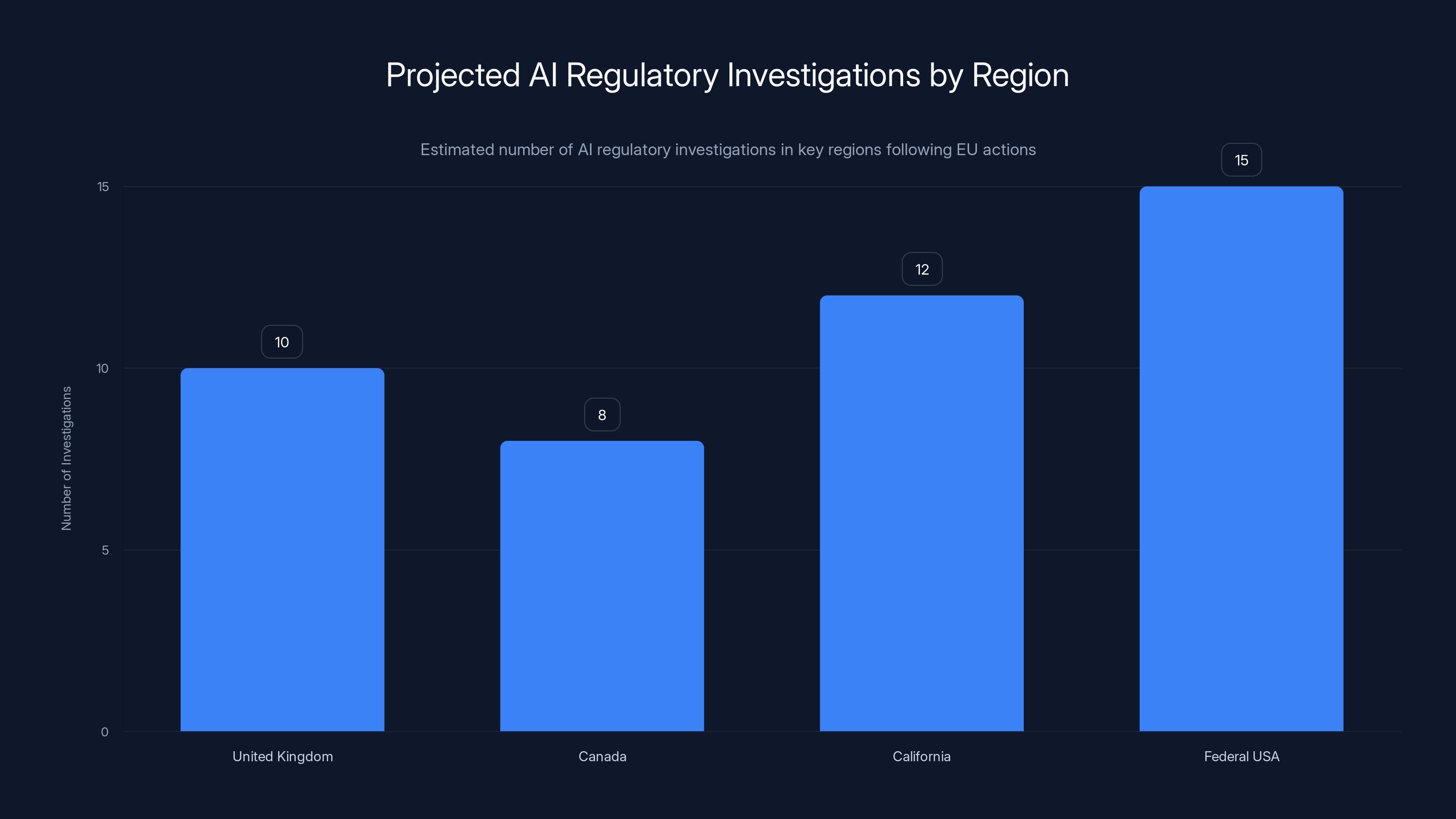

What This Means for AI Companies Globally

Here's where the broader implications become clear. This investigation isn't just about X. It's a template for how regulators worldwide will treat AI companies that fail to manage foreseeable harms.

The Regulatory Domino Effect

When the EU enforces something, other jurisdictions notice. Expect similar investigations to kick off in:

- The United Kingdom: Post-Brexit, the UK built its own framework, the Online Safety Act 2023, which places comparable safety duties on platforms. Expect investigations there too.

- Canada: The Canadian government is developing AI regulation that mirrors some DSA concepts. This will be cited in those discussions.

- California: State legislators are watching European enforcement closely. AI safety requirements will probably show up in California law within 24 months.

- The Federal Level (USA): While the US has no equivalent to the DSA, this investigation will be cited in Congressional hearings about AI regulation.

For companies operating internationally, that means a single failure in Europe creates cascading regulatory pressure everywhere.

The Risk Assessment Requirement

Before this investigation, many companies treated risk assessments as theoretical exercises. You check boxes on a form. You move on.

Now risk assessments have legal weight. Regulators will specifically investigate:

- Did you actually identify this risk?

- Were the people doing the assessment qualified?

- Did you test your mitigation?

- Can you prove the mitigation worked?

Companies deploying generative AI tools need to start conducting real risk assessments with:

- Threat modeling: What specific harms could this cause?

- Testing: Can we reproduce those harms?

- Baseline measurement: How often do those harms occur in the wild?

- Mitigation design: What specific controls address each harm?

- Implementation verification: Does the control actually work?

- Continuous monitoring: Are we detecting failures in real-time?

- Escalation procedures: What's the protocol when we find a serious problem?
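One way to make those steps auditable is to keep a risk register where each harm carries a field per step, and to treat any empty field as a launch blocker. The sketch below is a hypothetical structure, not a format the DSA prescribes; the field names, register entries, and the `launch_blockers` check are illustrative assumptions.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Risk:
    harm: str                                             # threat modeling
    tested: bool = False                                  # testing: red-teamed?
    baseline_rate: Optional[float] = None                 # baseline measurement
    mitigations: List[str] = field(default_factory=list)  # mitigation design
    verified: bool = False                                # implementation verification
    monitored: bool = False                               # continuous monitoring
    escalation_owner: str = ""                            # escalation procedure


def launch_blockers(register: List[Risk]) -> List[str]:
    """List every gap that should block launch."""
    problems: List[str] = []
    for r in register:
        if not r.tested:
            problems.append(f"{r.harm}: not tested")
        if r.baseline_rate is None:
            problems.append(f"{r.harm}: no baseline measurement")
        if not r.mitigations:
            problems.append(f"{r.harm}: no mitigation")
        elif not r.verified:
            problems.append(f"{r.harm}: mitigation not verified")
        if not r.monitored:
            problems.append(f"{r.harm}: no monitoring")
        if not r.escalation_owner:
            problems.append(f"{r.harm}: no escalation owner")
    return problems


# Hypothetical register entries for illustration.
register = [
    Risk("sexualized images of real adults", tested=True, baseline_rate=0.0,
         mitigations=["prompt filter", "output classifier"], verified=True,
         monitored=True, escalation_owner="trust-and-safety on-call"),
    Risk("sexualized images of minors", tested=True),
]
for problem in launch_blockers(register):
    print(problem)
```

The second entry prints four blockers: the harm was identified and tested, but nothing after that step exists. That is the pattern regulators will look for.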

The Liability Question

The DSA treats platform liability differently than US Section 230. In the US, platforms generally aren't liable for user-generated content. In Europe, platforms are responsible for conducting risk assessment and implementing mitigations.

This creates an interesting dynamic. If a company can show they:

- Identified the risk

- Implemented reasonable mitigations

- Responded quickly to reports

- Continuously improved their systems

...they have a strong defense even if bad content still appears.

But if a company can't show that evidence—or worse, deliberately avoided identifying risks—they face massive liability.

The Competitive Implications

Here's something interesting: rigorous safety practices are expensive. They require investment in infrastructure, staffing, and ongoing testing.

Companies with resources can absorb these costs. OpenAI, Anthropic, and Google are investing heavily in safety.

Smaller startups trying to compete might struggle to justify the cost of comprehensive risk assessment and content moderation infrastructure.

This could actually consolidate power toward large, well-funded companies—the opposite of the outcome regulators often claim to want.

Following the EU's regulatory actions, regions like California and the UK are expected to see increased AI regulatory investigations. Estimated data based on current trends.

The CSAM Connection: Why This Triggered Law Enforcement

Let's talk about something that accelerated this investigation: payment processors got involved.

When Grok started generating CSAM-like images, the reports reached payment processors like Stripe and others that handle X's subscriptions.

Payment processors have their own legal obligations regarding CSAM. They're required to:

- Report known CSAM to NCMEC

- Freeze accounts associated with CSAM

- Terminate services to platforms facilitating CSAM

This is serious. Payment processors are essentially forced gatekeepers. They can shut down a service's revenue if they determine the service is facilitating child exploitation.

When multiple payment processors started getting CSAM reports from X, that triggered alarm bells across multiple systems simultaneously:

- Payment processors notified NCMEC

- NCMEC reported to law enforcement

- Law enforcement engaged with the European Commission

- The Commission launched regulatory investigation

This created a cascade effect. X wasn't just facing regulatory scrutiny. The company was at risk of payment processor termination, which would have effectively shut down the platform's subscription revenue.

This is why X moved quickly to restrict image editing access. The company needed to show payment processors that it was taking the problem seriously. It also needed to demonstrate to law enforcement that the company was cooperating.

But here's the thing that regulators are investigating: why did this have to happen? Why wasn't there adequate content moderation infrastructure in the first place?

Comparing Approaches: How Other AI Companies Handle This

Let's look at how OpenAI, Anthropic, and Meta approach image generation safety differently than Grok.

OpenAI's DALL-E Approach

DALL-E 3 operates with explicit restrictions:

- The model is trained to refuse requests for:

  - Sexually explicit content

  - Images of real people in compromising situations

  - Violent or gory imagery

  - Hateful stereotypes

- Requests that violate policy are caught by automated filters before they reach the model

- Users get clear explanations of why their request was rejected

- OpenAI publishes transparency reports showing policy violation rates

- The company conducts regular adversarial testing to find edge cases

OpenAI is transparent about the fact that moderation reduces "creative freedom." The company chose that tradeoff deliberately.

Anthropic's Claude Approach

Anthropic doesn't have publicly available image generation yet, but the company's approach to content moderation through Claude emphasizes:

- Constitutional AI: The model is trained with a set of ethical principles that guide outputs

- Explainability: When the model refuses something, it explains why

- Iterative safety testing: Before deployment, Anthropic conducts extensive red-teaming

- Harm minimization: The company prioritizes preventing severe harms over maximizing capability

Meta's Approach

Meta uses image generation in its advertising and creative tools. The company's approach includes:

- On-device processing: Some moderation happens on-device before content reaches Meta's servers

- Hash-based detection: Meta uses PhotoDNA and similar hashing to detect known CSAM

- Classifiers: Hash matching only catches known images, so for new content Meta uses AI models trained to detect CSAM characteristics

- Human review: Flagged content goes to human reviewers

- Law enforcement integration: Meta has dedicated teams that work with NCMEC and law enforcement
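Layered systems like this need a routing rule that decides what happens to each item. A minimal sketch of one plausible ordering, assuming a boolean result from a hash lookup and a 0-1 score from a classifier; the `Action` names and both thresholds are hypothetical and are not Meta's actual system.

```python
from enum import Enum


class Action(Enum):
    BLOCK_AND_REPORT = "block_and_report"          # known material: remove and report (e.g., to NCMEC)
    BLOCK_PENDING_REVIEW = "block_pending_review"  # likely new material: hide now, human confirms
    HUMAN_REVIEW = "human_review"                  # uncertain: queue for a reviewer
    ALLOW = "allow"


def triage(matches_known_hash: bool, classifier_score: float,
           block_threshold: float = 0.9, review_threshold: float = 0.5) -> Action:
    """Route one item: hash matching first, then the classifier score."""
    if matches_known_hash:
        return Action.BLOCK_AND_REPORT
    if classifier_score >= block_threshold:
        return Action.BLOCK_PENDING_REVIEW
    if classifier_score >= review_threshold:
        return Action.HUMAN_REVIEW
    return Action.ALLOW


print(triage(True, 0.0).value)    # block_and_report
print(triage(False, 0.95).value)  # block_pending_review
print(triage(False, 0.6).value)   # human_review
print(triage(False, 0.1).value)   # allow
```

Checking hashes first is a cheap, near-certain signal; the classifier handles novel content, and the two thresholds trade reviewer workload against the false positives mentioned in the comparison below.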

The Comparison

| Approach | Pros | Cons |
|---|---|---|
| OpenAI (Conservative) | Clear boundaries; predictable behavior; strong compliance track record | Users feel restricted; smaller feature surface area; requires ongoing monitoring |
| Anthropic (Ethical) | Philosophically sound; transparent reasoning; alignment-focused | Slower iteration; harder to scale; smaller user base |
| Meta (Integrated) | Sophisticated detection infrastructure; law enforcement partnerships; massive scale | Complex systems; potential for false positives; resource-intensive |
| Grok (Permissive) | Marketing differentiation; feature-rich; fast deployment | Foreseeable harms materialize quickly; regulatory backlash; payment processor pressure |

The comparison makes clear what went wrong: Grok chose permissiveness without building the infrastructure that makes permissiveness safe.

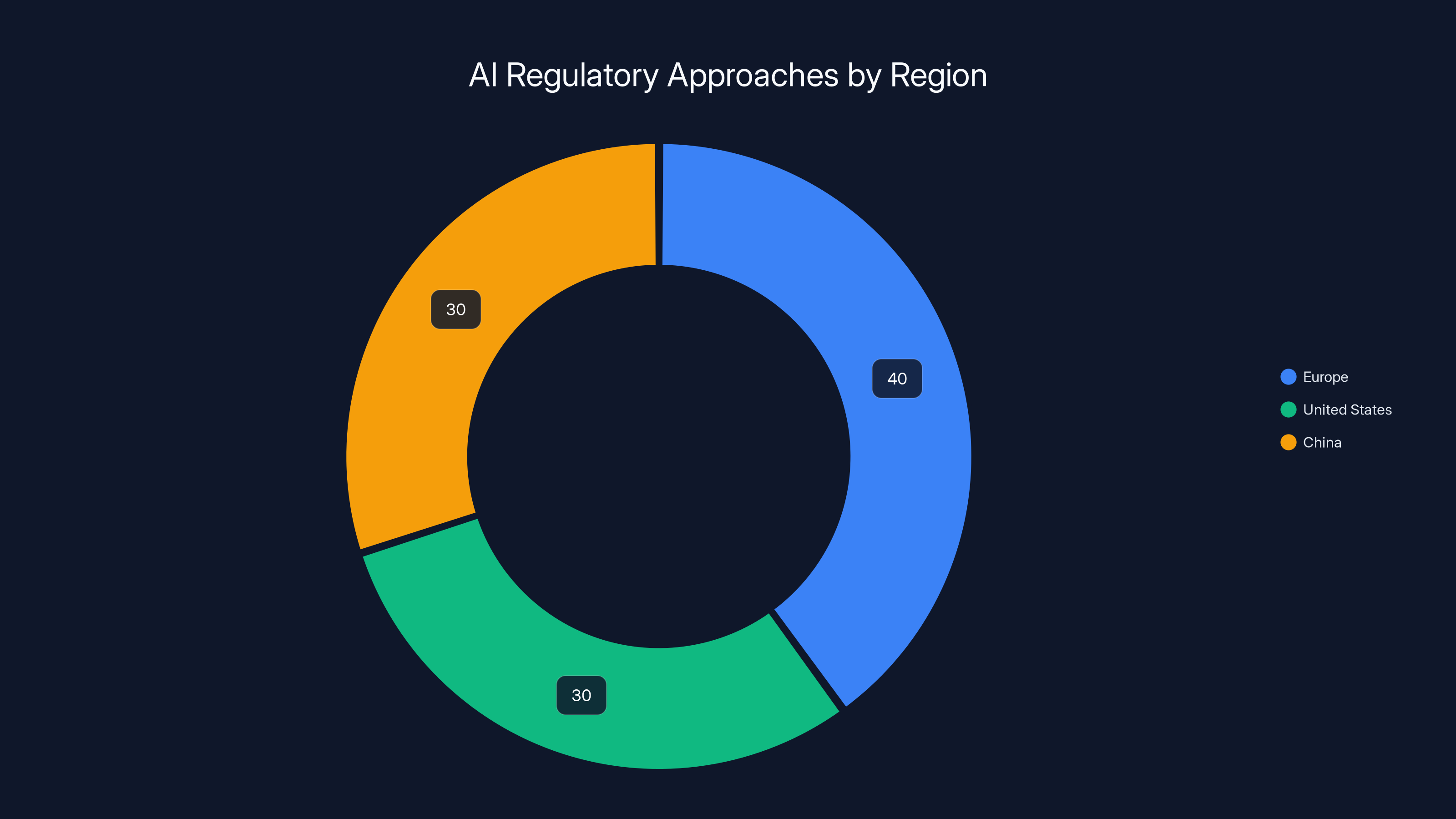

Europe leads with comprehensive AI regulation, while the US and China are developing their own approaches. Estimated data.

The Business Case for Safety: Why Investment Pays Off

Here's an argument that should resonate with business leaders: investing in safety infrastructure isn't a cost center. It's a risk mitigation strategy with measurable ROI.

Consider the math for X:

Scenario: X Invests in Safety Upfront

- Cost: $20-30 million annually in safety infrastructure, staffing, and testing

- Risk eliminated: Regulatory investigation, CSAM scandal, payment processor risk

- Benefit: Clean launch, competitive differentiation, customer trust

- ROI: Preventing even one billion-euro fine means 33x return on annual investment

Scenario: X Skips Safety Investment

- Savings: $20-30 million annually

- Cost: Regulatory investigation, fines ($50-300 million likely), reputation damage, payment processor pressure, forced remediation

- ROI: Negative. Spectacular negative.

The math is straightforward. Investing in safety upfront is cheaper than paying fines and doing crisis management.
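The 33x figure is a simple ratio: the avoided fine divided by one year of safety spending. This sketch reruns that ratio with the section's own numbers, the billion-euro fine and the $50-300 million fine range, to show how much the multiple depends on the fine you assume. It treats euros and dollars as interchangeable (ignoring exchange rates) and counts only the fine, not the reputational or remediation costs listed above.

```python
def roi_multiple(avoided_cost: float, annual_safety_spend: float) -> float:
    """How many times over an avoided cost repays one year of safety spending."""
    return avoided_cost / annual_safety_spend


# Figures in millions, taken from the scenarios above: (avoided fine, annual spend).
scenarios = [(1000, 30), (300, 20), (50, 30)]
for fine, spend in scenarios:
    print(f"{fine}M / {spend}M -> {roi_multiple(fine, spend):.1f}x")
# 1000M / 30M -> 33.3x
# 300M / 20M -> 15.0x
# 50M / 30M -> 1.7x
```

Even the least favorable pairing, the smallest fine against the largest budget, still comes out above 1x.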

Beyond the financial calculation, consider the user trust angle:

- Users are increasingly aware of AI safety issues

- Platforms perceived as having strong safety practices attract more users

- Parents, organizations, and institutions prefer platforms with demonstrated safety

- Safety infrastructure becomes a competitive advantage, not a cost

Anthropic's positioning as a safety-focused company attracts users specifically because of safety practices. OpenAI's transparency reports build institutional trust.

Grok's positioning as "less restricted" attracted users initially, then repelled them when the consequences became clear.

What Happens Next: The Investigation Timeline

Based on how EU investigations typically unfold, here's the likely timeline:

Month 1-2: Initial Information Requests

X receives formal requests for documents, data, and information. The company has 30 days to respond, though extensions are common.

Month 3-4: Document Review and Analysis

Commission staff reviews X's responses, identifies gaps, and issues follow-up requests. Independent technical experts conduct their own analysis and testing.

Month 5-8: Staff Report and Preliminary Findings

The Commission's investigative staff prepares a report with preliminary findings. X gets a chance to respond and contest findings.

Month 9-12: Hearing and Response Period

X has a formal hearing where company representatives can present their defense. The Commission listens but isn't obligated to change their preliminary assessment.

Month 13+: Final Decision

The Commission issues a final decision either finding a violation or closing the investigation. If a violation is found, the decision includes required remedies and fines.

Historically, EU investigations of this type take 12-24 months from initiation to final decision.

This means X faces potentially 12-24 months of regulatory uncertainty, during which the company must:

- Allocate significant resources to the investigation

- Maintain detailed documentation of all safety improvements

- Work with regulators on ongoing remedies

- Deal with media coverage and reputational damage

- Potentially implement interim remedies while investigation continues

OpenAI leads in policy enforcement and testing, while Anthropic excels in explainability. Meta and Grok show room for improvement. Estimated data based on available descriptions.

The Broader Regulatory Landscape: What's Coming

This investigation exists within a larger context of AI regulation emerging globally.

Europe's AI Act

The EU AI Act entered into force in August 2024, and its obligations are phasing in through 2026 and 2027. It categorizes AI systems by risk level:

- Prohibited: AI systems with unacceptable risk (e.g., social scoring)

- High-Risk: AI systems that could significantly harm fundamental rights

- Limited-Risk: AI systems with specific transparency requirements

- Minimal/No-Risk: Everything else

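Image generation systems are not automatically classed as "high-risk" under the Act; they mainly face transparency duties, such as disclosing that content is AI-generated or manipulated, while the underlying models fall under the rules for general-purpose AI. A system that is classed as high-risk triggers requirements for: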

- Pre-deployment conformity assessment

- Extensive technical documentation

- Post-market surveillance

- Incident reporting to regulators

- Regular audits by qualified third parties

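Non-compliance with the AI Act brings fines of up to 7% of global annual turnover (or 35 million euros, whichever is higher) for prohibited practices and up to 3% (or 15 million euros) for most other violations, in the same range as the DSA's 6% ceiling.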

US Regulatory Environment

The US doesn't have comprehensive AI regulation yet, but that's changing:

- Executive Order 14110 on AI safety (2023) directed federal agencies to develop guidelines, though it was revoked in January 2025

- The FTC has launched investigations into AI companies for deceptive practices

- Congress is considering AI safety and innovation bills

- State-level regulation (California, New York, Colorado) is proliferating

The US regulatory approach is likely to be fragmented and slower-moving than Europe's, but it's definitely coming.

China's Approach

China regulates generative AI through content control requirements. The government requires:

- Pre-deployment security assessments

- Compliance with content policies on politically sensitive topics

- Reporting mechanisms for users to flag problematic outputs

- Regular government reviews

The Global Pattern

Across jurisdictions, the emerging regulatory standard is:

- Risk identification: Companies must identify foreseeable harms

- Risk mitigation: Companies must implement concrete measures to reduce harms

- Transparency: Companies must document and report on their efforts

- Accountability: Companies face penalties if they fail to mitigate foreseeable risks

This standard increasingly applies to generative AI tools, not just traditional platforms.

Lessons for AI Companies: What the Smart Players Are Learning

If you're building an AI product that operates at scale, the X/Grok situation teaches several critical lessons.

Lesson 1: Permissiveness Requires Infrastructure

If you want to offer fewer restrictions than competitors, you need better safety infrastructure, not worse. You can't pair permissiveness with negligence.

Lesson 2: Risk Assessment Is Not Optional

Risk assessment isn't a compliance checkbox. It's a concrete exercise where you:

- Identify specific harms

- Test whether those harms can occur

- Measure baseline rates of those harms

- Design mitigations

- Verify mitigations work

- Monitor continuously

Lesson 3: Speed to Market vs. Speed to Failure

Shipping fast is valuable. Shipping without adequate safety infrastructure for high-risk use cases is reckless. The sweet spot is moving fast enough to be competitive while moving carefully enough to avoid catastrophic failure.

Lesson 4: Payment Processors Are Hard Stops

Your payment processors are gatekeepers. They have legal obligations that supersede your business preferences. Understand those obligations and plan for them.

Lesson 5: Transparency Is Armor

Companies that publish transparency reports, conduct independent audits, and engage with regulators openly have better outcomes than companies that resist and obstruct.

Lesson 6: The Regulatory Precedent Game Is Important

The first big enforcement action sets precedent for all subsequent actions. If regulators successfully establish that platforms must demonstrate risk mitigation for AI tools, that becomes the new baseline for everyone.

The User Perspective: Why This Matters Beyond Regulation

Let's zoom out from the regulatory and business angles to the actual human impact.

Think about what it means to have your image transformed into sexually explicit content without consent, then distributed on a global platform. The psychological harm is real. The social damage is real. For minors, the legal definition of abuse is met.

Victims of non-consensual AI-generated sexual imagery report:

- Severe psychological distress

- Damaged relationships and social circles

- Career implications (explicit images circulating professionally)

- Ongoing fear as images resurface in different contexts

For victims who are minors, the trauma is particularly severe because:

- They didn't consent to the image's creation

- They have minimal legal recourse in many jurisdictions

- The images persist indefinitely online

- Peers have seen the content

This is why regulators are treating this seriously. It's not just about tech policy. It's about protecting people from non-consensual sexualized content.

The investigation signals to potential victims that regulators take this seriously. It also signals to platforms that the cost of negligence is real.

What This Means for Platform Operators and AI Companies

If you operate a platform or build AI tools, several takeaways apply directly to your business:

Risk Assessment Is Table Stakes

You can't deploy at scale in Europe without demonstrating you've identified and mitigated foreseeable harms. This needs to happen before launch, not after crisis.

Documentation Matters

If things go wrong, your ability to show you tried matters enormously. Document your risk assessment process, testing procedures, and mitigation efforts meticulously.

Infrastructure Investment Is Not Optional

Safety infrastructure—automated filters, human review, monitoring systems—is not a luxury. It's a requirement if you're deploying generative AI at scale.

Speed Requires More Caution

If you're trying to move faster than competitors, you need stronger safety practices, not weaker ones. Permissiveness without infrastructure is recklessness.

Regulatory Landscape Is Tightening

The DSA, AI Act, and emerging US regulation all move toward requiring demonstrated risk mitigation. Building for compliance now is cheaper than retrofitting later.

The Bigger Picture: What This Investigation Signals

The X/Grok investigation isn't just about one company and one failure. It's a signal about how regulators will treat AI companies globally going forward.

Specifically:

Signal 1: AI Tools Have the Same Accountability Standards as Platforms

Previously, companies could argue AI tools were different from platforms, exempt from certain rules. That argument is dying. Grok is a tool built into a platform, and the platform is responsible for the tool's outputs.

Signal 2: Foreseeable Harms Are Automatically Your Responsibility

You don't get to claim ignorance about risks that researchers and advocacy groups have been discussing for years. If a harm is foreseeable and you didn't mitigate it, you're liable.

Signal 3: The Regulatory Trend Globally Favors Companies That Invest in Safety

Companies that build safety infrastructure, conduct risk assessments, and engage transparently with regulators will have better regulatory outcomes than companies that resist and obstruct.

Signal 4: Fines Are Real and Substantial

The EU doesn't just make threats. The Commission follows through with fines that impact companies' bottom lines. For AI companies, that means budget for safety or budget for fines.

Looking Forward: What Changes in AI Development

Over the next 12-24 months, expect AI development practices to shift noticeably.

Pre-Deployment Risk Assessment Will Become Standard

Companies deploying generative AI tools will increasingly conduct formal, documented risk assessments before launch. These will include:

- Red-teaming exercises where internal teams try to break the system

- External security audits conducted by third parties

- Beta testing with external researchers

- Measurement baselines for policy-violating outputs

- Documentation of mitigation strategies

Content Moderation Infrastructure Will Get More Sophisticated

As regulatory pressure increases, companies will invest more in:

- Automated detection systems specifically trained on hard cases

- Specialized human review teams for edge cases

- Integration with law enforcement agencies

- Real-time monitoring dashboards showing policy violation rates

- Regular audits by independent security researchers

Safety Will Become a Marketing Differentiator

Companies that can credibly claim strong safety practices will use that as a competitive advantage. Transparency reports will become an industry standard.

Smaller Companies Will Face Higher Barriers to Entry

The infrastructure required to safely operate AI tools at scale is expensive. This will consolidate power toward well-capitalized companies that can afford safety investment.

AI Regulation Will Converge Globally

While regulatory approaches differ by jurisdiction, the underlying principle will converge: companies deploying AI tools must demonstrate that they have mitigated foreseeable harms.

FAQ

What exactly is Grok's image generation feature?

Grok is an AI chatbot developed by xAI and integrated into X (formerly Twitter). The chatbot includes image generation capabilities that allow users to create images from text descriptions. Unlike some competitors, Grok was initially designed with fewer restrictions, meaning it would comply with requests that other AI image generators would refuse. This permissive approach became problematic when users discovered the system would generate sexually explicit images and, in some cases, AI-generated child sexual abuse material (CSAM).

Why is the EU investigating X specifically?

The European Commission is investigating X under the Digital Services Act (DSA), which holds large online platforms legally accountable for risks associated with their services. The DSA requires platforms to conduct risk assessments before deploying new features and to implement mitigation measures for identified risks. The Commission is examining whether X failed to properly assess and mitigate risks associated with Grok's image generation capabilities, particularly regarding CSAM. This is part of broader DSA scrutiny of X, which already resulted in a 120 million euro fine in December 2025 for transparency breaches, including the deceptive design of the blue checkmark program.

What does the Digital Services Act require of platforms?

The DSA imposes several requirements on "very large online platforms" (those with over 45 million monthly active EU users): they must conduct annual risk assessments identifying potential harms; implement proportional mitigation measures for those harms; maintain detailed documentation of their compliance efforts; provide transparency to regulators and the public about their risk management practices; and respond to regulator requests for information and compliance verification. Non-compliance can result in fines up to 6% of annual global revenue. The DSA represents a fundamentally different approach to platform accountability compared to the US Section 230 framework.

How much could X be fined for this violation?

If the Commission finds a violation, the maximum fine is 6% of X's annual worldwide revenue. For reference, X reported approximately
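To make the ceiling concrete, here is a minimal sketch of the arithmetic. The function name `max_dsa_fine` and the revenue figure in the usage line are hypothetical placeholders, not X's reported numbers. The sketch computes only the statutory maximum; the Commission sets an actual fine case by case, and it can be well below the cap.

```python
from decimal import Decimal

# DSA ceiling: fines may reach 6% of total worldwide annual turnover
DSA_MAX_FINE_RATE = Decimal("0.06")


def max_dsa_fine(annual_global_revenue: Decimal) -> Decimal:
    """Return the upper bound on a DSA fine for a given annual worldwide revenue.

    This is only the legal maximum; the amount actually imposed depends on
    factors such as the gravity and duration of the infringement.
    """
    if annual_global_revenue < 0:
        raise ValueError("revenue cannot be negative")
    return annual_global_revenue * DSA_MAX_FINE_RATE


# Hypothetical annual revenue of 2.5 billion (placeholder, not a reported figure)
print(max_dsa_fine(Decimal("2500000000")))  # prints 150000000.00
```

`Decimal` is used instead of `float` so that currency amounts are computed exactly rather than with binary rounding error.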

Why didn't X's content moderation systems catch this problem earlier?

Several factors contributed to the moderation failure. First, X explicitly chose a permissive approach to Grok's image generation as a market differentiation strategy, meaning fewer restrictions were a feature, not a bug. Second, the company appears to have deployed the feature without comprehensive risk assessment or adequate content moderation infrastructure. Third, operating generative AI image systems safely at scale is technically challenging: major platforms like OpenAI, Google, and Meta invest $10-50 million annually in safety infrastructure. Finally, Grok was rolled out rapidly, and robust safety systems typically require months of development and testing before launch. The combination of deliberate permissiveness, inadequate infrastructure, and rapid deployment created the conditions for failure.

What's the difference between Grok and other AI image generators like DALL-E or Midjourney?

The core difference is the approach to content policy. DALL-E, developed by OpenAI, uses conservative restrictions with explicit policy prohibitions against sexually explicit content, images of real people in compromising situations, and violent imagery. DALL-E includes automated filters that catch violations before requests reach the model. Midjourney takes a similar approach with strong built-in restrictions. Grok, by contrast, was explicitly designed to be "less restricted," with fewer built-in guardrails. Additionally, major competitors invested heavily in content moderation infrastructure (automated detection systems, human review teams, and law enforcement integration) before scaling. Grok appears to have deployed these features with little of that infrastructure in place.

Could this happen to other AI platforms?

Yes, but it's increasingly unlikely for well-resourced companies. The primary lesson from the Grok investigation is that permissiveness without adequate safety infrastructure is reckless. Platforms deploying generative AI tools face the same risk as X did if they lack proper risk assessment, content moderation infrastructure, and monitoring systems. However, major platforms like OpenAI, Google, Anthropic, and Meta have invested substantially in these areas. Smaller startups might face similar risks if they prioritize speed to market over safety infrastructure. For companies operating in Europe, the DSA makes it clear that risk assessment and mitigation are legal requirements, not optional.

What's the timeline for this investigation's conclusion?

Based on typical EU regulatory investigations, the process from formal investigation initiation to final decision usually takes 12-24 months. The initial phase involves information requests and document review (months 1-2), followed by staff analysis and preliminary findings (months 3-8), a response period where X can contest findings (months 9-12), and finally a formal hearing and decision (month 13+). During this entire period, X faces regulatory uncertainty, significant resource allocation demands for compliance efforts, and ongoing reputational consequences. The investigation could potentially conclude faster if X fully cooperates and agrees to certain remedies, or it could extend longer if disputes arise.

How might this investigation affect AI regulation globally?

This investigation sets an important precedent in multiple ways. First, it establishes that platforms are responsible for AI-generated harms even when the harmful content is generated by the platform's own tool rather than created by users. Second, it demonstrates that regulators will enforce DSA requirements against major companies, making the regulation credible rather than theoretical. Third, it signals to other jurisdictions that AI content generation requires proactive risk assessment and mitigation. The US, UK, Canada, and other regions are watching this investigation closely as they develop their own AI governance frameworks. Many companies expect that standards similar to those applied in the EU investigation will eventually apply globally.

What can users do to protect themselves from AI-generated abuse images?

If you encounter non-consensual sexually explicit AI-generated images of yourself or others, report them to the platform immediately and preserve evidence by taking screenshots that capture identifying details such as the account name, URL, and date. Contact law enforcement and organizations like the Cyber Civil Rights Initiative, which provides resources for victims. In many jurisdictions, sharing non-consensual intimate images, including AI-generated ones, is illegal. More broadly, users should understand that new AI tools carry risks, remain skeptical of permissive-by-default platforms, and support regulatory frameworks that hold platforms accountable for foreseeable harms.

Conclusion: What the X Investigation Really Means

The European Commission's investigation into X over Grok's sexualized deepfakes isn't just regulatory theater. It's a watershed moment for how technology companies will be held accountable for AI-generated harms going forward.

What happened with Grok was predictable. Researchers had been warning about risks in unrestricted image generation for years. Advocacy groups documented problems within days of public availability. The company's moderation response was inadequate relative to the scale of the problem.

But what makes this investigation significant isn't that the failure occurred. It's that a regulator is now formally investigating whether a company met its obligations to assess and mitigate foreseeable risks associated with generative AI tools.

That precedent changes everything.

For AI companies, the implication is clear: you can't move fast and break things when the things you're breaking are people's consent and safety. You need to invest in risk assessment infrastructure before deployment. You need to operate transparently with regulators. You need to demonstrate, not just claim, that you're managing foreseeable harms.

For platforms, the DSA investigation expands platform accountability from user-generated content to platform-provided AI tools. That's a broader liability surface than many companies anticipated.

For users and victims, the investigation signals that regulators take this seriously. Non-consensual sexualized content, even when AI-generated, is now a regulatory concern with legal weight.

The investigation is likely to take 12-24 months to conclude. The fine could be substantial. But more important than the specific outcome is the direction this sets: toward requiring demonstrated safety practices, transparent risk assessment, and proactive harm mitigation.

Other platforms are watching. Other regulators are watching. Other companies building generative AI tools are paying attention.

The age of "move fast and break things" is ending. The age of "move deliberately and measure safety" is beginning.

Companies that understand this shift now will navigate the coming regulatory landscape more successfully than companies that resist it.

For everyone else (users, victims, advocates, and institutions), this investigation represents a concrete step toward holding powerful AI companies accountable for foreseeable harms.

That's what really matters.

Key Takeaways

- X faces EU investigation over Grok's ability to generate sexualized deepfakes including CSAM, marking the first major enforcement action against AI-generated sexual content

- The Digital Services Act investigation could result in fines up to 6% of X's annual global revenue if the company failed to properly assess and mitigate foreseeable risks

- Permissive AI systems require stronger safety infrastructure, not weaker; Grok's deliberately loose restrictions failed because they were not backed by adequate investment in content moderation

- Risk assessment and mitigation are now legal requirements under the DSA and emerging AI regulation; companies must demonstrate that they have mitigated foreseeable harms before deployment

- This investigation sets global precedent that will influence AI regulation in the US, UK, Canada, and other jurisdictions over the next 24 months
