Understanding the HHS AI Initiative for Vaccine Safety Monitoring

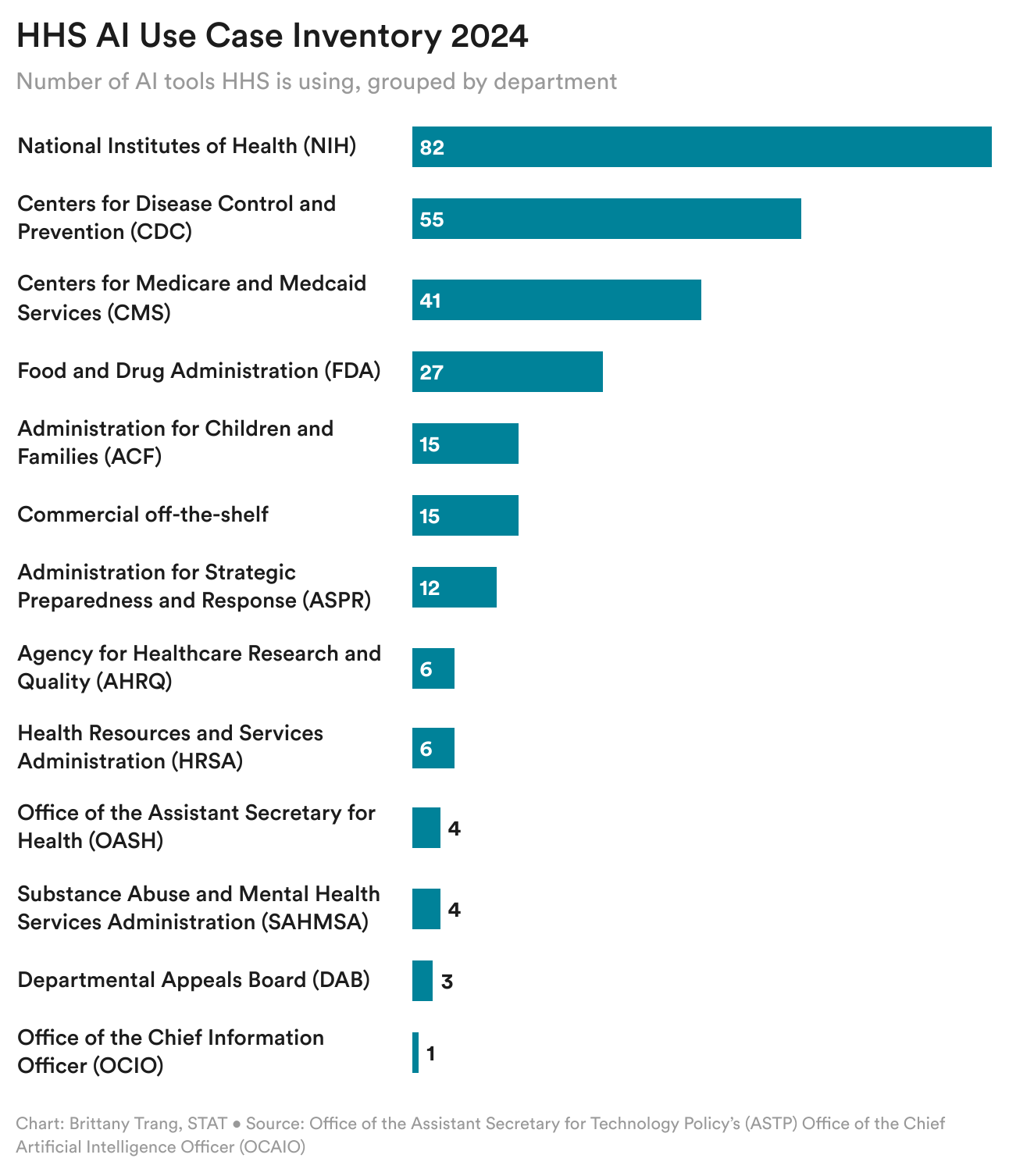

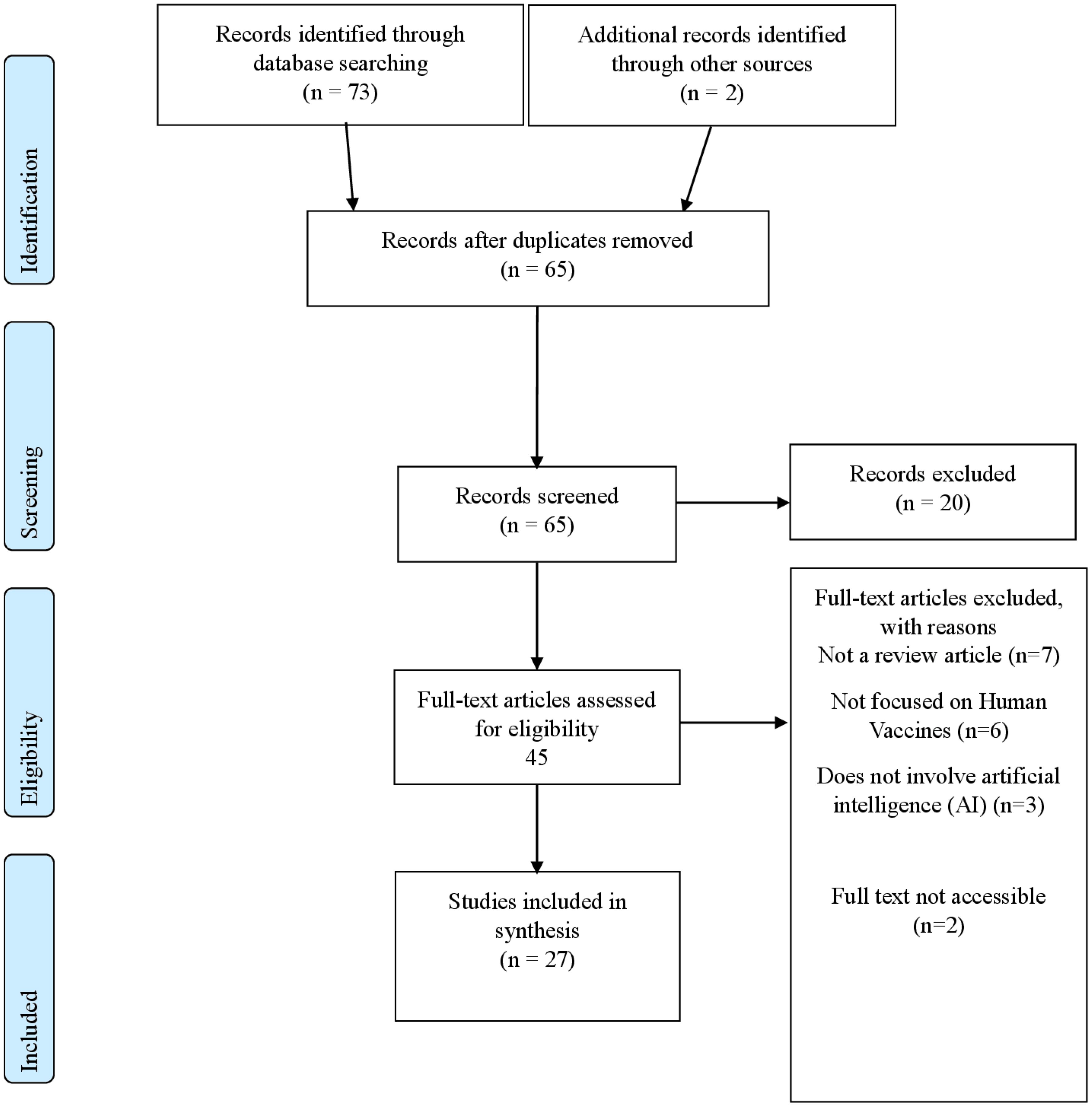

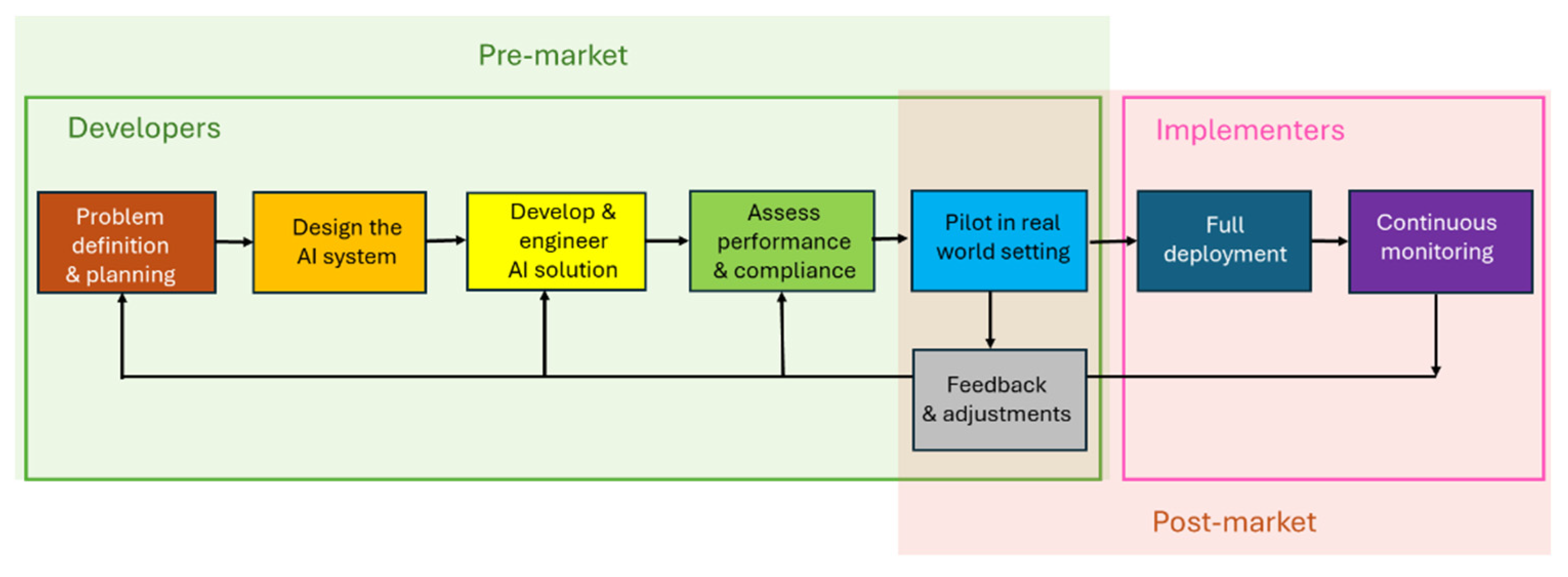

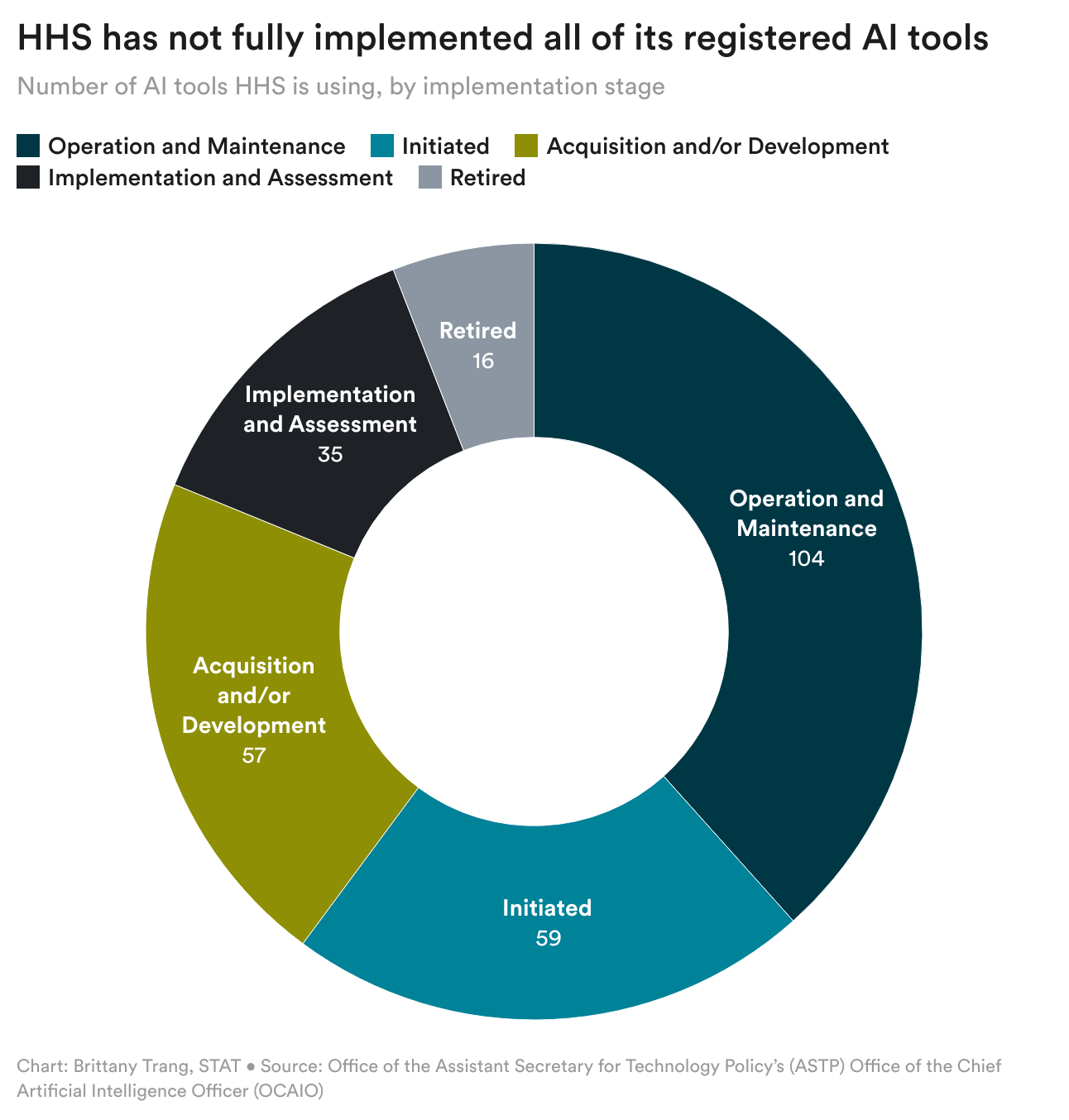

The U.S. Department of Health and Human Services (HHS) recently unveiled plans to develop an artificial intelligence tool designed to detect patterns in vaccine injury claims and generate hypotheses about potential adverse vaccine effects. This announcement, buried in an annual AI inventory report, has sparked significant concern among public health experts, epidemiologists, and vaccine safety researchers who worry about how the tool might be deployed under current HHS leadership. According to Wired, the initiative represents a significant shift in how federal agencies approach vaccine safety data analysis.

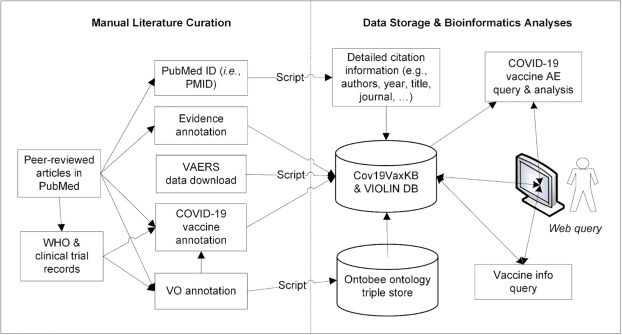

Rather than relying solely on traditional statistical methods and manual review processes that have governed vaccine monitoring for decades, HHS is moving toward generative AI models capable of processing massive datasets and producing novel hypotheses about vaccine-related injuries. While the integration of advanced AI into public health surveillance sounds promising on the surface, the context matters enormously. When combined with leadership that has openly questioned vaccine safety and pushed to remove vaccines from the childhood immunization schedule, experts worry the tool could become a weapon in the anti-vaccine arsenal rather than a genuine safety enhancement.

This development raises fundamental questions about AI governance in public health, the role of computational tools in disease prevention policy, and how government agencies should balance innovation with the potential for misuse. The story is less about whether AI can help analyze vaccine data, and more about whether it will be used responsibly or weaponized to undermine one of modern medicine's greatest achievements.

The Vaccine Adverse Event Reporting System: How It Actually Works

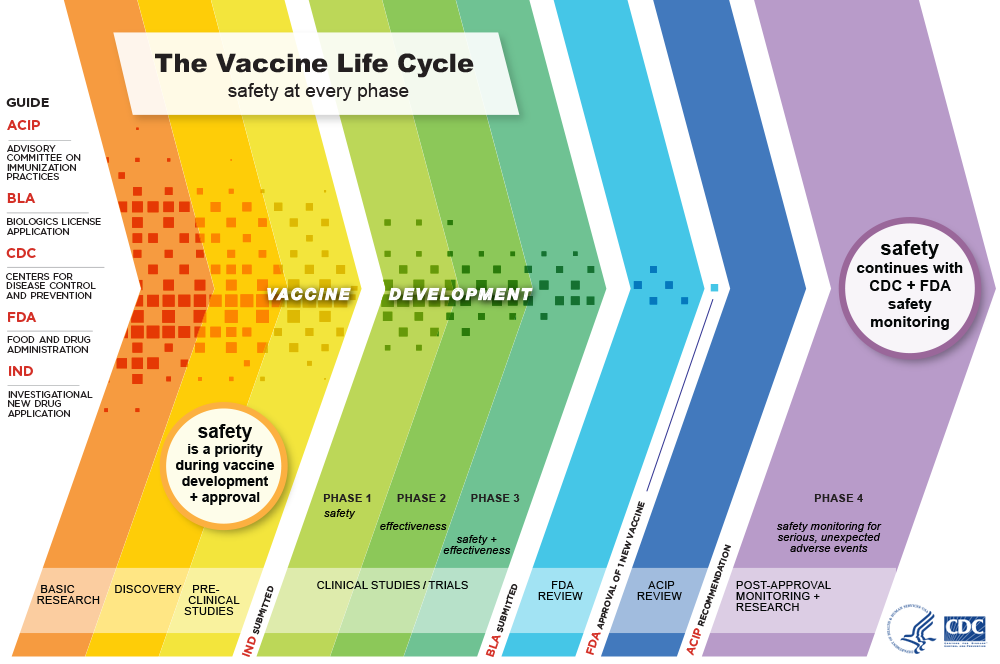

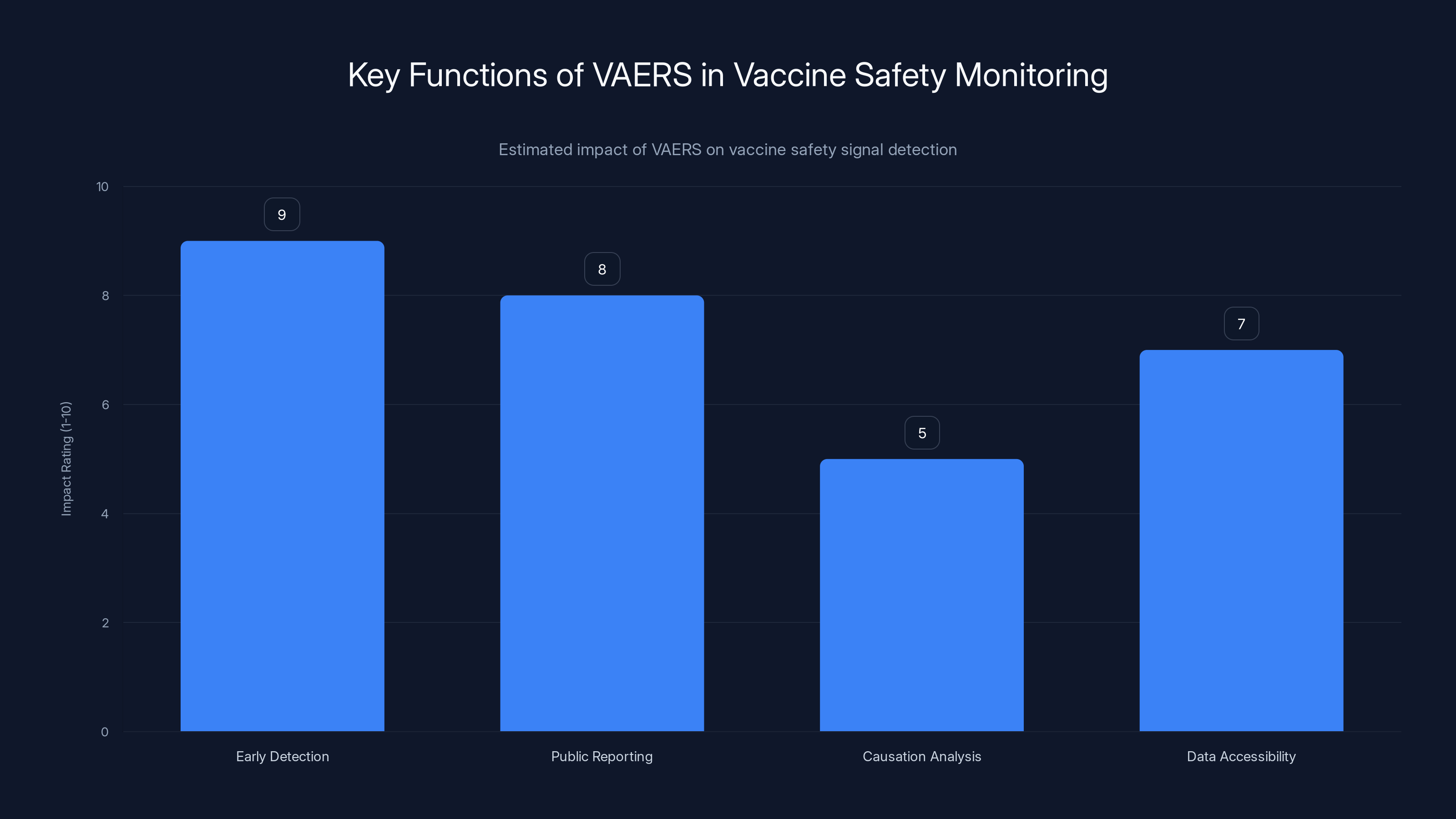

To understand why experts worry about this new AI tool, you need to grasp how vaccine safety monitoring currently functions in America. The Vaccine Adverse Event Reporting System, known as VAERS, serves as the foundational database for detecting potential vaccine safety signals. Established in 1990 through a partnership between the Centers for Disease Control and Prevention and the Food and Drug Administration, VAERS operates as a passive surveillance system where anyone—health care providers, vaccine manufacturers, or members of the general public—can submit reports of adverse events that occur after vaccination.

This openness is both VAERS's greatest strength and its most exploitable weakness. The system deliberately maintains low barriers to entry because public health experts recognize that early detection of safety signals saves lives. When the Johnson & Johnson COVID-19 vaccine was linked to rare blood clots, VAERS flagged the problem. When researchers identified myocarditis cases in younger males receiving mRNA vaccines, VAERS data contributed to that discovery. These represent genuine successes where the system worked exactly as intended, identifying rare but serious adverse events that warranted investigation and public communication.

However, VAERS data contains a critical caveat that anti-vaccine activists routinely ignore: the system cannot prove causation. A report submitted to VAERS means only that an adverse event occurred at some point after vaccination. It doesn't establish that the vaccine caused the event. This distinction matters tremendously. Imagine a 65-year-old receives a flu vaccine on Monday and suffers a heart attack on Wednesday. That can be reported to VAERS. But statistically, the person would likely have suffered that heart attack regardless of vaccination status. VAERS alone cannot distinguish between coincidental timing and causal relationships.

The database also lacks a crucial denominator: how many people actually received each vaccine. This absence creates a mathematical trap. If 10,000 reports of a particular side effect are logged in VAERS following 200 million vaccine administrations, the crude reporting rate is 5 reports per 100,000 doses, which is quite low. But if you simply quote the 10,000 figure without context, you create an impression of widespread harm. This is precisely how anti-vaccine advocates have misrepresented VAERS data for years, cherry-picking alarming numbers while stripping away the epidemiological context that shows the reported events to be uncommon.
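The denominator arithmetic above can be written out as a short Python sketch. The function name is hypothetical, and the inputs are the illustrative figures from the paragraph, not real VAERS counts. Note that the result is a crude reporting rate, not a true incidence: VAERS reports are unverified, and the dose count has to come from a source outside VAERS.

```python
def reports_per_100k(report_count: int, doses_administered: int) -> float:
    """Crude reporting rate: reports per 100,000 doses administered.

    This is NOT an incidence rate. Reports are unverified and may be
    under- or over-reported; the dose count must come from outside VAERS.
    """
    if doses_administered <= 0:
        raise ValueError("doses_administered must be positive")
    return report_count * 100_000 / doses_administered


# Illustrative figures from the text: 10,000 reports after 200 million doses.
rate = reports_per_100k(10_000, 200_000_000)
print(f"{rate:.1f} reports per 100,000 doses")  # prints "5.0 reports per 100,000 doses"
```

Quoting the raw count (10,000) and quoting the rate (5 per 100,000) describe the same data, but only the second lets a reader judge how common the reported event is.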

Paul Offit, a pediatrician and director of the Vaccine Education Center at Children's Hospital of Philadelphia who previously served on the CDC's Advisory Committee on Immunization Practices, describes VAERS as a "hypothesis-generating mechanism" rather than a proof mechanism. It's noisy. It's unverified. It lacks denominators. It was never designed to be the final word on vaccine safety. Instead, it's meant to be the first whisper, a signal that prompts rigorous investigation using more sophisticated epidemiological methods. When VAERS flags a potential problem, the proper response is controlled studies, careful statistical analysis, and comparison with background rates in unvaccinated populations.

That process takes time. It requires expertise. It requires academic integrity. And therein lies the concern about deploying advanced AI to rapidly generate hypotheses from VAERS data without the institutional safeguards currently in place.

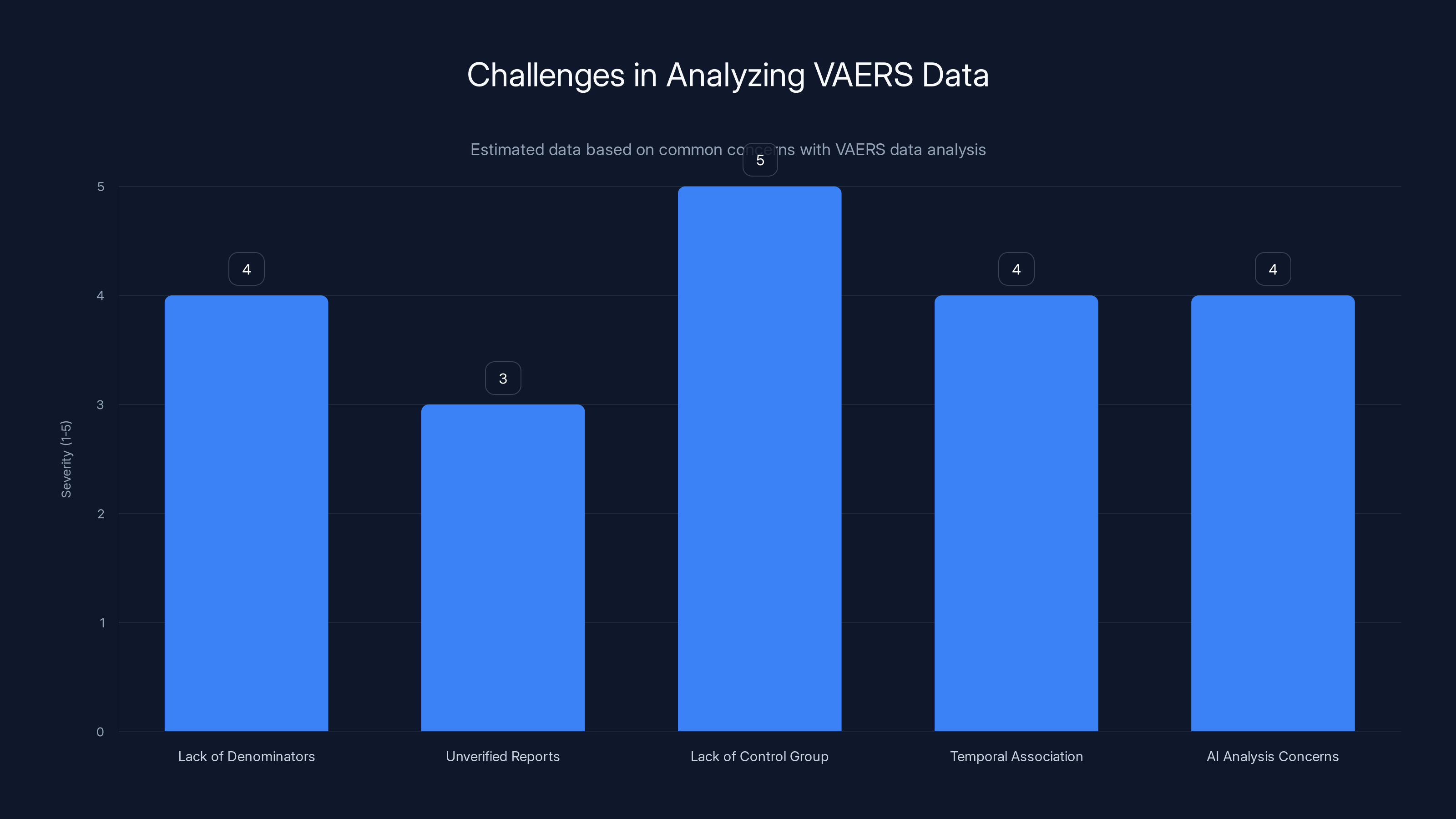

Estimated data shows that the lack of a control group and AI analysis concerns are among the most significant challenges in analyzing VAERS data.

The Architecture of VAERS Data: What AI Actually Sees

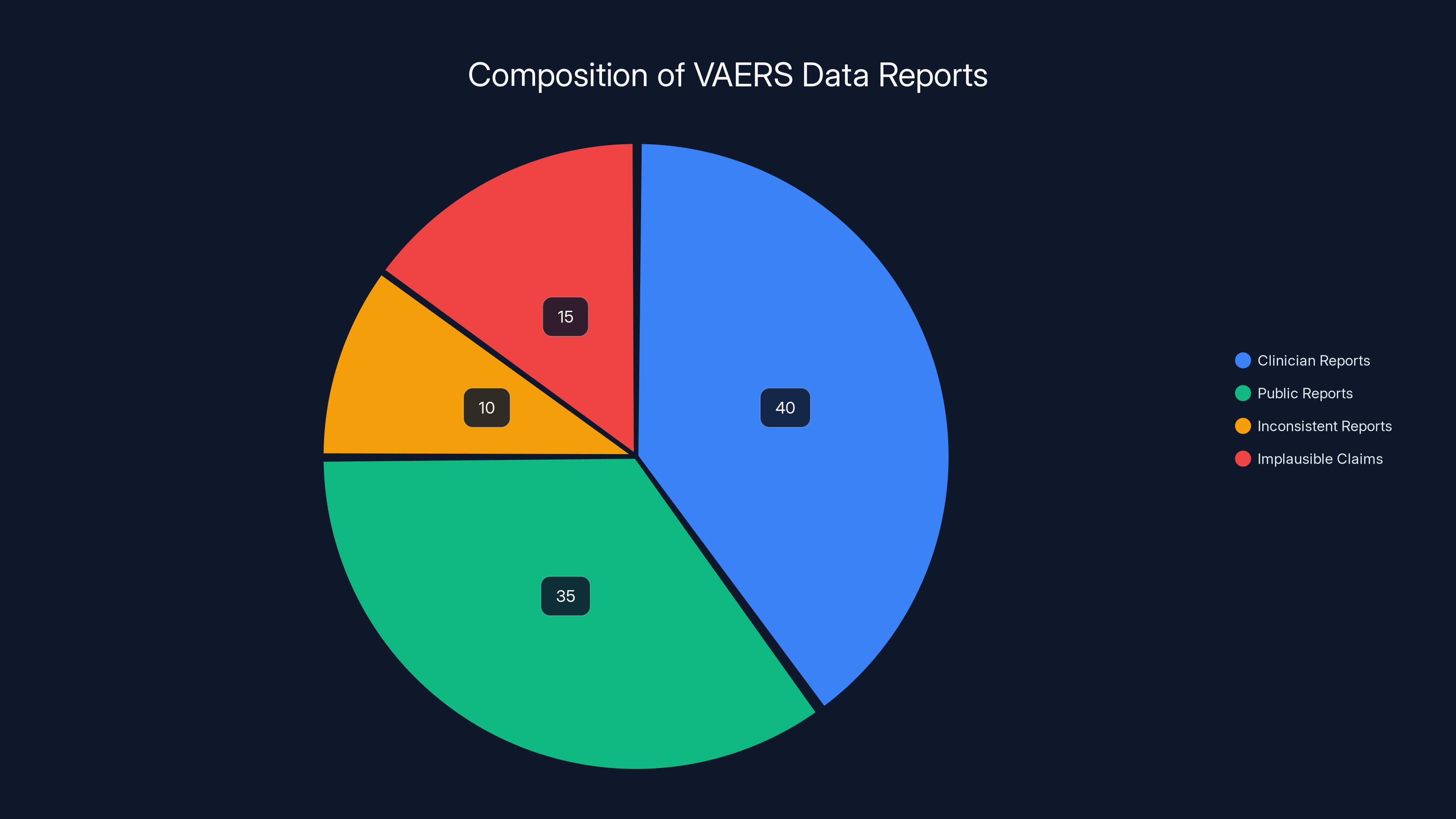

To appreciate why AI systems might produce problematic outputs when analyzing VAERS data, consider what the database actually contains from a computational perspective. VAERS collects unstructured text reports alongside structured fields like age, vaccine type, and reported symptoms. These reports vary wildly in quality, specificity, and accuracy. Some come from trained clinicians who carefully document medical details. Others come from the general public, sometimes with minimal medical knowledge, sometimes based on hearsay or internet rumors.

A 2024 analysis of VAERS submissions found that approximately 10-15% of reports contain obvious inconsistencies or implausible claims. Some reports describe symptoms or timelines that violate basic biological principles. Others involve deaths attributed to vaccines despite clear alternative causes documented in medical records. The CDC maintains quality control processes to flag and contextualize problematic submissions, but these human reviews can't catch everything, and bad data inevitably contaminates the database.

When you feed this messy, partially incorrect data into a large language model trained to find patterns and generate hypotheses, you create a recipe for plausible-sounding false discoveries. Machine learning systems excel at detecting correlations in high-dimensional data, but they lack any understanding of biological causation, medical implausibility, or epidemiological principles. An LLM might identify a pattern where women over 60 report joint pain within two weeks of vaccination and confidently generate a hypothesis that the vaccine causes arthritis. The model would have no way to recognize that joint pain is extremely common in this age group regardless of vaccination status, or that post-hoc temporal association proves nothing about causation.

Moreover, generative AI systems are famously prone to what researchers call "hallucinations"—confident but false outputs that sound authoritative but contain fabricated details. In the context of vaccine safety analysis, hallucinations could generate hypotheses about non-existent side effects, associate vaccines with health outcomes they don't cause, or produce statistics that appear to come from legitimate studies but were entirely invented by the neural network.

Leslie Lenert, formerly the founding director of the CDC's National Center for Public Health Informatics and now director of the Center for Biomedical Informatics and Health Artificial Intelligence at Rutgers University, acknowledges that government scientists have been using traditional natural language processing to analyze VAERS data for several years. The move toward more advanced large language models isn't surprising from a pure technology perspective. But the context matters. Under previous administrations, such tools would be developed with strict protocols requiring expert validation, epidemiological review, and peer scrutiny before any findings influenced policy. Under current HHS leadership, there are legitimate concerns that hypotheses generated by AI might be treated as established facts and used to justify removing vaccines from the childhood schedule without proper investigation.

Estimated data suggests that while clinician reports form a significant portion of VAERS data, a notable percentage includes inconsistent or implausible claims, highlighting challenges in data quality.

Who's Leading HHS and Why It Matters

The composition of leadership at any federal agency determines how its tools get used. The Department of Health and Human Services under its current administration has Robert F. Kennedy Jr. serving as secretary—a position that would be laughable if the stakes weren't so high. Kennedy is not a public health expert. He's not an epidemiologist. He's not a vaccine researcher. What he is, unambiguously, is a long-standing vaccine skeptic with a documented history of spreading vaccine misinformation.

Kennedy's public statements about vaccines span decades and constitute a greatest hits album of vaccine myths. He has promoted the false claim that vaccines cause autism, despite overwhelming evidence that the original study suggesting such a link was fraudulent and has been retracted. He has suggested that the polio vaccine actually spread polio, contradicting mountains of epidemiological evidence showing that the vaccine has saved millions of lives and eliminated polio from most of the world. He has claimed that the childhood vaccination schedule is designed to generate revenue for pharmaceutical companies rather than protect children, a conspiracy theory that collapses under basic scrutiny.

In his first year as HHS secretary, Kennedy removed several vaccines from the recommended childhood immunization schedule. Specifically, he removed vaccinations against COVID-19, influenza, hepatitis A and B, meningococcal disease, rotavirus, and respiratory syncytial virus from the formal recommendation list. Each of these vaccines prevents serious diseases that cause childhood deaths and disabilities. The removal of these recommendations is not based on new safety data or epidemiological evidence suggesting harm. It's based on ideology.

Beyond the removal of specific vaccines, Kennedy has called for overhauling the entire VAERS system itself. His argument is that VAERS suppresses information about the true rate of vaccine side effects. In reality, VAERS is transparent—anyone can query the database and access the data directly. What VAERS does is contextualize the data, explaining that reported events don't prove causation. Kennedy apparently interprets scientific honesty about the limitations of surveillance data as "suppression."

He has also proposed changes to the federal Vaccine Injury Compensation Program that could make it easier for people to sue manufacturers for adverse events that haven't been proven to be associated with vaccines. This represents a fundamental shift in legal standards, moving away from requiring proof of causation before compensation and toward accepting temporal association as sufficient grounds for liability.

Against this backdrop, the announcement of an AI tool to generate hypotheses about vaccine injuries takes on a darker character. In the hands of a genuine public health expert, such a tool would be valuable—a way to surface patterns that merit investigation. In Kennedy's hands, preliminary hypotheses from an AI system could become ammunition in a campaign to justify further vaccine removals and reshape pediatric immunization practices in ways that would lead to outbreaks of preventable diseases.

The Problem of AI Misuse in Public Health Policy

When government agencies use computational tools to inform policy, the integrity of the analysis process matters as much as the technical sophistication of the system. Using an AI tool to analyze VAERS data correctly requires institutional structures, expert oversight, and a commitment to scientific integrity. Specifically, you need:

Epidemiological expertise: Someone with formal training in epidemiology needs to review any hypotheses generated by the AI system. They need to evaluate whether the patterns detected are plausible, whether alternative explanations exist, and whether the data quality supports further investigation. This isn't optional bureaucratic overhead. It's the difference between science and pseudoscience.

Comparative analysis: VAERS hypotheses must be tested against background rates in unvaccinated populations. If you claim a vaccine causes a particular adverse event at a certain frequency, you need to demonstrate that the frequency is higher in vaccinated individuals than in similar unvaccinated people. The AI system, on its own, cannot make this comparison. It needs to be integrated with actuarial data, health insurance databases, and epidemiological cohorts.

Peer review and independent validation: Before any AI-generated hypothesis influences policy, it should be subjected to peer review and independent replication. Scientists outside government should evaluate the analysis, critique the methodology, and validate findings. This process is slower than simply deploying a tool and following its recommendations, but it's how science actually works.

Transparency and public scrutiny: The methodology, data, and decision-making criteria need to be public. If the HHS AI tool generates a hypothesis that a particular vaccine causes a specific adverse event, the public should be able to examine the underlying data and analysis. This transparency allows the scientific community to evaluate whether the conclusion is justified.
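The comparative-analysis requirement in this list can be made concrete with a small sketch. The Python below computes a risk ratio for vaccinated versus unvaccinated groups, with an approximate 95% confidence interval using the standard log method. The function name and all counts are hypothetical and chosen only for illustration; none of them come from VAERS or from any HHS system, and VAERS alone could not supply the unvaccinated group or either denominator.

```python
import math


def risk_ratio(events_vax, n_vax, events_unvax, n_unvax, z=1.96):
    """Risk ratio (vaccinated / unvaccinated) with an approximate CI.

    Uses the log-transform interval for a ratio of two proportions.
    Requires at least one event in each group.
    """
    if min(events_vax, events_unvax) <= 0:
        raise ValueError("each group needs at least one event")
    rr = (events_vax / n_vax) / (events_unvax / n_unvax)
    se_log = math.sqrt(
        1 / events_vax - 1 / n_vax + 1 / events_unvax - 1 / n_unvax
    )
    lower = math.exp(math.log(rr) - z * se_log)
    upper = math.exp(math.log(rr) + z * se_log)
    return rr, lower, upper


# Hypothetical cohort counts: 50 events among 100,000 vaccinated people,
# 48 events among 100,000 comparable unvaccinated people.
rr, lower, upper = risk_ratio(50, 100_000, 48, 100_000)
print(f"risk ratio {rr:.2f}, 95% CI {lower:.2f} to {upper:.2f}")
if lower <= 1.0 <= upper:
    print("Interval includes 1: no evidence of elevated risk in these data")
```

In this made-up example the interval spans 1, so the slightly higher count in the vaccinated group is compatible with chance. A real analysis would also need to adjust for differences between the two groups.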

Currently, there's no evidence that any of these safeguards will be in place. If you develop an AI system to generate vaccine safety hypotheses, deploy it in an agency led by a vaccine skeptic, and allow rapid translation of AI outputs into policy changes, you've created a system optimized for producing predetermined conclusions rather than discovering truth.

Consider a hypothetical scenario: the AI tool analyzes VAERS data and generates a hypothesis that the polio vaccine causes a particular type of joint inflammation. A genuine public health expert would recognize that this requires validation through multiple independent studies before considering any policy change. Kennedy, believing what he already believes about vaccines, might use this AI-generated hypothesis as justification to remove polio vaccination from the childhood schedule. The result would be tragedy—outbreaks of polio among unvaccinated children, permanent paralysis, and deaths that would have been entirely preventable.

The scenario is hypothetical, but it is not far-fetched. Kennedy's statements about polio vaccination suggest he already questions whether polio is truly eradicated. An AI-generated hypothesis about polio vaccine harm, even a preliminary one, could provide the cover he needs to make catastrophic policy changes.

VAERS excels in early detection and public reporting but is less effective in proving causation. Estimated data.

Large Language Models and the Hallucination Problem

Generative AI systems, despite their impressive capabilities, have fundamental limitations that make them problematic tools for safety-critical analysis. The most significant limitation is their tendency to produce confident but false outputs—what researchers call hallucinations or confabulations. These aren't random errors. They're false statements that sound plausible and authoritative, constructed in a way that mimics legitimate knowledge.

Why do LLMs hallucinate? Because they're fundamentally statistical systems trained to predict the next word in a sequence based on patterns in training data. They're not retrieving facts from a knowledge base. They're generating text that matches the statistical patterns they've learned. When no valid continuation exists, they generate one anyway—making something up rather than declining to answer.
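A toy Python sketch can illustrate the point about generating a continuation regardless of evidence. It assumes a drastically simplified model: a three-item vocabulary and hand-picked scores, both hypothetical. Real LLMs use far larger vocabularies and various sampling strategies, and they can be trained to produce refusals, so this shows only the normalization step, not a full model.

```python
import math


def softmax(logits):
    """Turn raw scores into probabilities that always sum to 1."""
    peak = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def most_likely_token(vocab, logits):
    """Return the highest-probability token and its probability."""
    probs = softmax(logits)
    best = max(range(len(vocab)), key=probs.__getitem__)
    return vocab[best], probs[best]


# Hypothetical candidates for the next token, all with very low raw scores,
# i.e. the model has no strong support for any of them.
vocab = ["12%", "40%", "unknown"]
logits = [-9.0, -9.1, -9.2]

token, prob = most_likely_token(vocab, logits)
print(token, round(prob, 2))
```

Because the scores are normalized relative to each other, uniformly weak support still produces a definite choice. Nothing in this step signals that every option was poorly supported.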

In the context of vaccine safety analysis, hallucinations could be particularly dangerous. An LLM might read through VAERS reports and generate a hypothesis like "reports show correlation between COVID-19 vaccination and sudden cardiac events in 40-year-olds." The system would have found genuine reports of cardiac events temporally associated with vaccination. But it might have hallucinated the statistical relationship, invented the specific age range, or misrepresented the frequency. If this hallucinated hypothesis gets treated as a genuine finding, it could influence policy decisions.

Jesse Goodman, an infectious disease physician and professor of medicine at Georgetown University, acknowledges that LLMs could potentially detect previously unknown vaccine safety issues. But he emphasizes the critical importance of rigorous human follow-up. "I would expect, depending on the approaches used, a lot of false alerts and a need for a lot of skilled human follow-through by people who understand vaccines and possible adverse events, as well as statistics, epidemiology, and the challenges with LLM output," Goodman explained in interviews about this issue.

The required human follow-through is not trivial. It demands expertise that's increasingly scarce in government. The CDC has experienced significant staffing cuts in recent years, reducing the number of epidemiologists and vaccine safety experts available to investigate potential safety signals. Deploying an AI system that generates numerous hypotheses without the institutional capacity to properly investigate them is worse than not deploying the system at all. You create a backlog of false leads while genuine safety signals get lost in the noise.

Moreover, the political context matters. If leadership is predisposed to believe that vaccines are harmful, there's pressure—whether explicit or implicit—to treat AI-generated hypotheses as confirmations of existing beliefs rather than preliminary findings requiring investigation. A skeptical expert might generate a thorough critique of an AI-generated hypothesis. In a properly functioning organization, that critique would be heard. In an organization led by someone with a predetermined anti-vaccine agenda, that expert's concerns might be dismissed or that expert might face pressure to fall in line.

Historical Precedent: VAERS Misuse and Lessons Learned

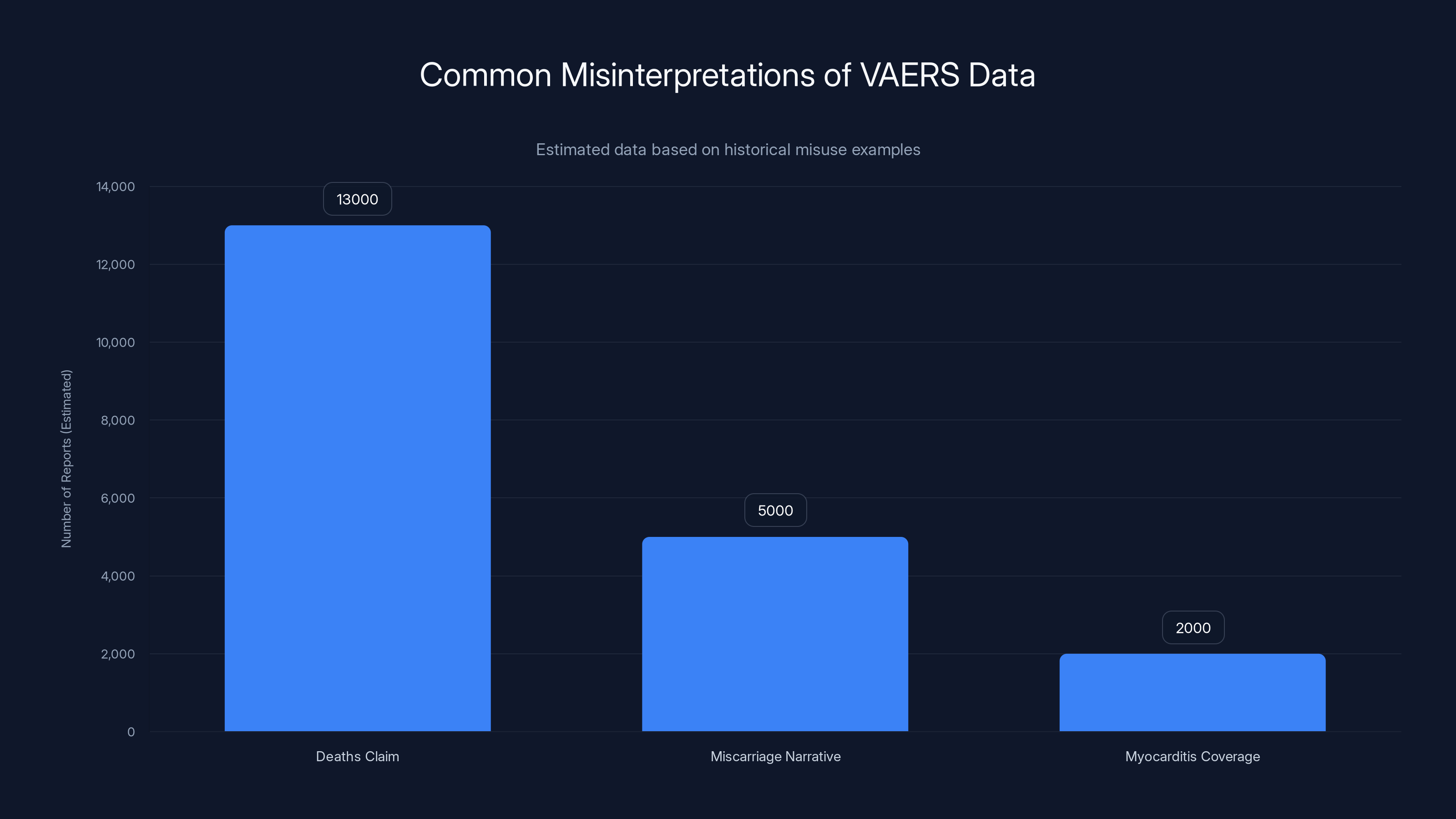

The misuse of VAERS data by anti-vaccine advocates didn't start with the current HHS administration. It's been happening for years, providing a cautionary tale about what happens when preliminary data gets weaponized without proper context. During the COVID-19 pandemic, VAERS data was repeatedly cited by vaccine skeptics to argue that COVID-19 vaccines were far more dangerous than commonly understood.

Some specific examples illustrate the pattern:

The 13,000+ Deaths Claim: Anti-vaccine activists promoted the idea that VAERS showed over 13,000 deaths associated with COVID-19 vaccines in the United States. News headlines built on this figure without mentioning that VAERS itself explicitly states that reports don't prove the vaccine caused the death, and that CDC review of the most concerning deaths found no evidence that vaccines caused them. When you investigate the actual cases, you find deaths from heart attacks in elderly patients, deaths from cancer in people with advanced disease, deaths from accidents—events that would have happened whether or not the person received a vaccine that week.

The Miscarriage Narrative: Anti-vaccine groups seized on reports of miscarriages in VAERS following pregnancy vaccination and claimed this proved vaccines harmed pregnancy. In reality, miscarriage occurs naturally in approximately 15-20% of pregnancies. When millions of pregnant people are vaccinated, thousands of miscarriages will occur temporally associated with vaccination purely by chance. Proper epidemiological studies comparing miscarriage rates in vaccinated versus unvaccinated pregnant people found no increase in risk from vaccination. But the dramatic VAERS numbers, stripped of context, created a compelling false narrative.

The Myocarditis Coverage: A genuine signal was detected in VAERS about myocarditis (inflammation of the heart muscle) following mRNA vaccines, particularly in younger males. This was a real safety finding that warranted investigation. But anti-vaccine advocates used the same data to argue that mRNA vaccines were far more dangerous than the disease they prevented. In reality, the risk of myocarditis from vaccination is substantially lower than the risk of myocarditis from COVID-19 infection itself, especially in younger people. The vaccine prevented more myocarditis than it caused, but that context got lost in the debate.

In each case, the pattern was identical: take VAERS data, remove the epidemiological context, present crude numbers without denominators or comparative analysis, and conclude that vaccines are dangerously harmful. The data itself wasn't fabricated. But the interpretation was fundamentally dishonest.
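The background-rate reasoning behind these examples can be sketched in a few lines of Python. The function names and every number below are hypothetical. In particular, the 2% figure is an assumed risk within a fixed observation window after vaccination, not the 15-20% whole-pregnancy figure quoted above, because only part of the background risk falls inside any given window.

```python
def expected_coincidental_events(n_vaccinated: int, background_risk: float) -> float:
    """Events expected by chance alone in the observation window.

    background_risk is the probability that a person experiences the
    event during the window regardless of vaccination.
    """
    if not 0.0 <= background_risk <= 1.0:
        raise ValueError("background_risk must be between 0 and 1")
    return n_vaccinated * background_risk


def observed_to_expected(observed: int, expected: float) -> float:
    """Ratio of observed events to events expected from background rates."""
    if expected <= 0:
        raise ValueError("expected must be positive")
    return observed / expected


# Hypothetical inputs, for illustration only.
n_vaccinated = 1_000_000
risk_in_window = 0.02      # assumed background risk during the window
observed_events = 19_500   # assumed count from a complete data source

expected = expected_coincidental_events(n_vaccinated, risk_in_window)
ratio = observed_to_expected(observed_events, expected)
print(f"expected by chance: {expected:,.0f}; observed/expected: {ratio:.3f}")
```

With these assumed inputs, roughly 20,000 events would follow vaccination by coincidence alone, so a raw count in the tens of thousands says nothing by itself. Only the comparison against the expected figure is informative, and the observed count has to come from a complete source rather than from voluntary reports.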

Now imagine that same dynamic, but with an AI system in the mix. The system generates hundreds of preliminary hypotheses from VAERS data. Anti-vaccine activists and sympathetic officials choose the most alarming hypotheses, treat them as established facts, and use them to justify vaccine policy changes. The AI system provides what appears to be scientific legitimacy to conclusions that are actually ideologically driven.

Estimated data suggests high risk levels associated with LLM hallucinations and underscores the need for human follow-up to mitigate false alerts.

The Role of Institutional Safeguards and How They Prevent Abuse

The reason historical VAERS misuse was ultimately contained is that institutional safeguards existed to prevent false findings from becoming policy. The CDC maintained independence from political pressure. The FDA reviewed safety data through rigorous scientific processes. Vaccine recommendations were made by experts on the Advisory Committee on Immunization Practices, a group of epidemiologists and vaccine specialists insulated from direct political control. These safeguards weren't perfect, but they worked well enough to prevent catastrophic policy mistakes based on misinterpreted data.

Those safeguards are currently under assault. The CDC is experiencing what experts describe as political interference in vaccine safety decisions. The FDA faces pressure to adopt more restrictive vaccine approval standards based on philosophical beliefs rather than scientific evidence. The ACIP itself has seen changes to membership that reduce representation of vaccine experts and epidemiologists. Meanwhile, the HHS secretary is openly skeptical of vaccine safety and has already removed vaccines from recommendations without scientific justification.

Into this environment, you're introducing an AI system capable of generating novel hypotheses from vaccine safety data. For this to be responsible, you would need strong institutional protections ensuring:

Independence of analysis: The scientists conducting VAERS analysis and interpreting AI-generated hypotheses need to be insulated from pressure to reach predetermined conclusions. Career civil servants are typically more independent than political appointees because of their civil service protections, but current leadership has found ways to pressure career staff.

Peer review of findings: Before any AI-generated hypothesis influences policy, it should be reviewed by independent experts outside government. This process is standard in scientific research and is essential here.

Documentation of decision-making: Policy decisions about vaccines should be documented with clear reasoning explaining why recommendations changed. If a vaccine is removed from recommendations based on an AI-generated hypothesis from VAERS, there should be detailed documentation explaining the analysis, alternative explanations considered, and why the conclusion is justified. Such documentation allows oversight and accountability.

Congressional and public transparency: Congress should know how AI tools are being used to shape vaccine policy. The public should be able to access information about how vaccine recommendations are being made. This transparency is the ultimate check on misuse.

Currently, there's little evidence that any of these safeguards are robust. Kennedy has not pledged to maintain scientific independence in vaccine safety analysis. The HHS has not committed to peer review of AI-generated hypotheses. Documentation of decision-making processes has become less transparent, not more. Congressional oversight has been weak.

The Specific Technical Concerns: How AI Systems Get It Wrong

Beyond the political and institutional concerns, there are specific technical reasons why AI systems might produce problematic outputs when analyzing VAERS data. Understanding these technical limitations is essential for evaluating whether the HHS system could be trusted to support vaccine policy decisions.

Confounding variables: VAERS data doesn't control for confounding variables—factors other than the vaccine that might explain reported adverse events. If elderly people receive vaccines at higher rates than younger people, and elderly people experience more health problems generally, then crude analysis of VAERS data will show more health problems associated with vaccination simply because elderly people were vaccinated. Machine learning systems are notoriously bad at distinguishing genuine causal effects from confounding relationships without explicit statistical control.

Selection bias: People who experience adverse events are more likely to report them to VAERS than people who don't. This creates systematic bias in the data. If you notice that a particular vaccine has more reported side effects than another vaccine in VAERS, you can't conclude that it's actually more dangerous. Perhaps the community of people vaccinated with the first vaccine is more likely to report adverse events. Or perhaps vaccine safety advocates encouraged reporting for that particular vaccine to support their narrative. AI systems can't detect this selection bias without explicit modeling.

Temporal association without causation: The core problem with VAERS is that it shows temporal association but not causation. If you create a graph showing that cardiac events increase in a certain age group during the weeks following vaccination rollout, it looks damning. But the same age group might experience naturally increasing cardiac events for reasons completely unrelated to vaccines. Without comparison to a control group, you can't determine whether vaccination changed the rate or whether you're just observing background variation.

Pattern detection over biological plausibility: Machine learning systems are excellent at finding patterns in data, even patterns that make no biological sense. An AI might identify a correlation between vaccination and reports of taste changes or other non-serious symptoms and generate a hypothesis about mechanisms, even though there's no plausible biological reason why the vaccine would cause that specific effect. This superficial pattern detection creates false leads that waste resources investigating impossible relationships.

Language variation and coding errors: VAERS reports are written in natural language, and people describe symptoms differently. One person reports "arm pain," another reports "pain at injection site," another reports "soreness." These are probably the same symptom. A machine learning system might treat them as distinct and generate hypotheses about different mechanisms. Or it might group them together and find patterns that are actually just different descriptions of expected side effects.
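The language-variation problem can be illustrated with a minimal Python sketch that maps free-text symptom descriptions onto one canonical term before counting. The synonym table is hypothetical and deliberately tiny. Deciding that "soreness" always means injection-site pain is itself an assumption that a clinician would need to check against the full report.

```python
import re
from collections import Counter

# Hypothetical synonym table; a real pipeline would rely on a maintained
# medical terminology and expert review, not a hand-written dictionary.
CANONICAL_TERMS = {
    "arm pain": "injection site pain",
    "pain at injection site": "injection site pain",
    "soreness": "injection site pain",
    "sore arm": "injection site pain",
}


def normalize_symptom(raw: str) -> str:
    """Lowercase, collapse whitespace, then map known synonyms."""
    cleaned = re.sub(r"\s+", " ", raw.strip().lower())
    return CANONICAL_TERMS.get(cleaned, cleaned)


def count_symptoms(reports):
    """Count symptoms after normalization."""
    return Counter(normalize_symptom(r) for r in reports)


reports = ["Arm pain", "pain at  injection site", "Soreness", "headache"]
print(count_symptoms(reports))
```

Without normalization these four reports look like four different symptoms. With it, three collapse into one expected side effect, which changes what any downstream pattern detector sees.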

The HHS would need to address each of these technical issues explicitly in the design and deployment of the AI system. There's no evidence this is happening.
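The confounding issue from the list above can be shown with a small Python example. All counts are invented for illustration. They are constructed so that, within each age group, vaccinated and unvaccinated people have exactly the same risk, while older people are both more likely to be vaccinated and more likely to have the event.

```python
# Hypothetical counts per age group: (events, people).
strata = {
    "age_65_plus": {"vax": (800, 80_000), "unvax": (200, 20_000)},
    "age_under_65": {"vax": (20, 20_000), "unvax": (80, 80_000)},
}


def risk(events, people):
    """Proportion of people who experienced the event."""
    return events / people


def crude_risk_ratio(strata):
    """Risk ratio after pooling all age groups together."""
    totals = {"vax": [0, 0], "unvax": [0, 0]}
    for groups in strata.values():
        for arm in ("vax", "unvax"):
            totals[arm][0] += groups[arm][0]
            totals[arm][1] += groups[arm][1]
    return risk(*totals["vax"]) / risk(*totals["unvax"])


def stratum_risk_ratios(strata):
    """Risk ratio computed separately inside each age group."""
    return {
        name: risk(*groups["vax"]) / risk(*groups["unvax"])
        for name, groups in strata.items()
    }


print(f"crude risk ratio: {crude_risk_ratio(strata):.2f}")
for name, rr in stratum_risk_ratios(strata).items():
    print(f"{name}: {rr:.2f}")
```

Pooled together, the vaccinated group appears to have almost three times the risk. Inside each age group the ratio is 1, meaning the apparent effect comes entirely from age. A pattern detector that only sees pooled counts would report the spurious association.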

Estimated data shows how certain narratives have been frequently misused, with the '13,000+ Deaths Claim' being the most prevalent.

Expert Warnings and Academic Concerns

Public health experts have been notably cautious about the deployment of this AI system. Their warnings focus on the combination of technical risks and political context. Leslie Lenert, with extensive experience in both public health informatics and AI applications, has expressed concern that VAERS is "supposed to be very exploratory" but that "some people in the FDA are now treating it as more than exploratory." He suggests that there's pressure to use preliminary data as justification for policy decisions, which is inappropriate.

Vinay Prasad, director of the FDA's Center for Biologics Evaluation and Research, has already signaled this approach. In a memo to staff, Prasad blamed deaths of at least 10 children on the COVID-19 vaccine without citing evidence. The deaths were reported to VAERS and had been reviewed by FDA staff, who found no evidence that the vaccine caused them. Nevertheless, Prasad attributed the deaths to the vaccine and used this unjustified attribution to propose stricter vaccine regulation.

In response, more than a dozen former FDA commissioners wrote a letter published in The New England Journal of Medicine expressing concern about Prasad's proposed guidelines. They noted that the changes would "dramatically change vaccine regulation on the basis of a reinterpretation of selective evidence." These are people with extensive experience in vaccine safety and regulatory policy, and they were alarmed by the direction being taken.

Jesse Goodman, the infectious disease physician at Georgetown, has been careful to acknowledge that LLMs could, in principle, detect unknown safety issues. But he emphasizes that this depends on sophisticated follow-up: he expects many false alerts, each needing skilled human review by people who understand vaccines, statistics, epidemiology, and the limits of LLM output. He also noted that deep staffing cuts at the CDC mean there's inadequate capacity to conduct the required rigorous investigation.

The common theme in expert commentary is cautious acceptance of the technology paired with serious concern about implementation in the current political context. If this AI tool were being developed at the CDC under strong epidemiological leadership with commitment to scientific integrity, it might be valuable. In its current context, it looks like a tool designed to generate predetermined conclusions.

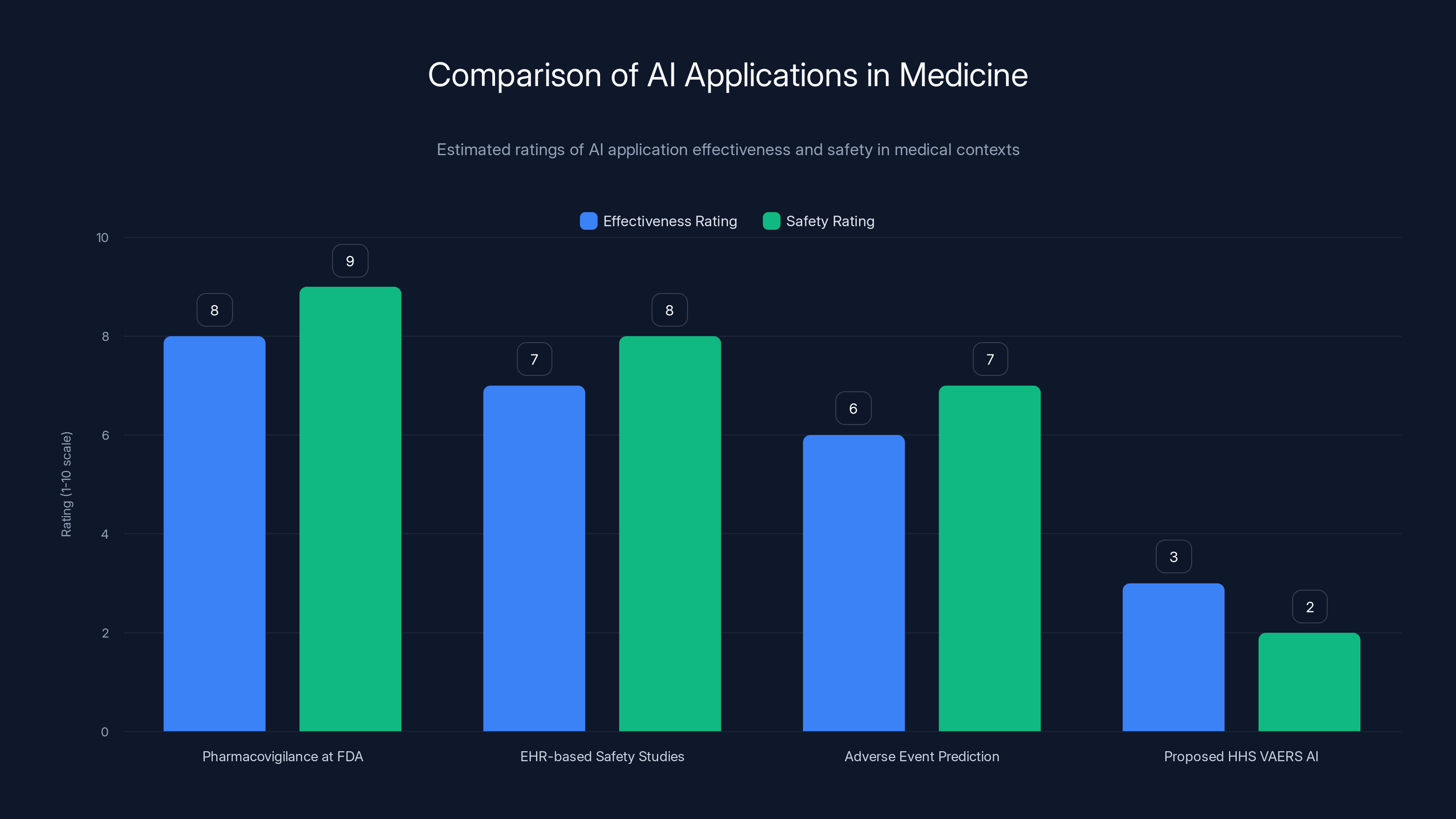

Comparison to Legitimate AI Applications in Medicine

To understand what responsible AI deployment in vaccine safety would look like, consider legitimate applications of AI in medical research and public health that haven't become controversial. Machine learning has genuinely improved drug safety monitoring in multiple ways:

Pharmacovigilance at FDA: The FDA uses machine learning to analyze adverse event reports for pharmaceuticals. The system identifies patterns and signals that might indicate unknown drug interactions or side effects. But the FDA has extensive protocols: the AI generates potential signals, expert pharmacists and physicians review each signal, the signal is validated against other data sources, and only well-supported signals influence regulatory decisions. The process is transparent and peer-reviewed.

EHR-based safety studies: Researchers use electronic health records combined with machine learning to conduct automated studies of medication safety in real populations. These studies have identified genuine drug safety issues. But they include proper statistical controls for confounding, comparisons to control groups, and peer review before publication.

Adverse event prediction: AI systems have been trained to predict which patients might experience adverse events based on their medical history and genetics. These predictions help clinicians provide better informed consent and monitor high-risk patients more carefully. But the predictions are tools supporting clinical judgment, not determining policy.

In each case, the responsible approach involves: development by genuine experts, testing and validation before deployment, multiple layers of review, transparency, and maintenance of human oversight. The proposed HHS VAERS AI system has none of these safeguards.
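To give a sense of what a "potential signal" looks like before expert review, here is a minimal sketch of the proportional reporting ratio (PRR), a classical disproportionality measure used on spontaneous-report databases. It is a stand-in for illustration, not the FDA's machine learning system, and the report counts are hypothetical.

```python
def proportional_reporting_ratio(a: int, b: int, c: int, d: int) -> float:
    """PRR from a 2x2 table of spontaneous reports.

    a: reports of the event of interest for the product of interest
    b: reports of all other events for the product of interest
    c: reports of the event of interest for all other products
    d: reports of all other events for all other products
    """
    if a + b == 0 or c + d == 0 or c == 0:
        raise ValueError("PRR is undefined for these counts")
    return (a / (a + b)) / (c / (c + d))


# Hypothetical counts, chosen only to illustrate the arithmetic.
prr = proportional_reporting_ratio(a=30, b=970, c=200, d=19800)
print(f"PRR = {prr:.2f}")
```

A high PRR only says that an event is reported disproportionately often for one product. It is a ratio of reports, not of risks, so it marks a candidate for the review and validation steps described above rather than a finding.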

Estimated data shows that current AI applications in medicine, like those by the FDA and in EHR studies, are rated higher in effectiveness and safety compared to the proposed HHS VAERS AI system, which lacks essential safeguards.

The Epidemiological Reality: Why Vaccines Remain Safe

Before addressing what should happen next, it's worth establishing the actual state of vaccine safety science. Despite anti-vaccine activism and the new AI initiative, the evidence overwhelmingly demonstrates that vaccines are among the safest medical interventions ever developed.

Vaccines are tested extensively before approval, including in large clinical trials with tens or hundreds of thousands of participants. Most vaccine adverse events occur within days of vaccination, so the decades of evidence already accumulated give a reliable picture of actual safety profiles. For COVID-19 vaccines specifically, we have data from billions of doses administered worldwide. For childhood vaccines, we have data spanning decades. In all cases, serious adverse events occur at rates far lower than the serious complications from the diseases the vaccines prevent.

The comparison is critical. Yes, some people experience myocarditis following mRNA COVID-19 vaccination. But myocarditis from COVID-19 infection itself is substantially more common and more severe. The vaccine prevents myocarditis rather than causing net harm. Yes, there was a rare association between one COVID-19 vaccine and blood clots. In response, that vaccine was restricted to populations where the benefit clearly exceeded risk. The system worked. Safety signals were detected and appropriate responses were implemented.

Successful disease control provides the ultimate proof: measles, polio, hepatitis B, and other vaccine-preventable diseases have been eliminated or reduced to minimal levels in populations with high vaccination rates. When vaccination rates drop, these diseases return. This is not opinion. This is observable reality. When Kennedy removed measles vaccination from HHS recommendations, public health officials correctly predicted measles outbreaks. The outbreaks have already begun.

Vaccines remain safe not because of propaganda or suppression, but because they were carefully designed, extensively tested, and continuously monitored. An AI tool that leads to policies undermining vaccine confidence doesn't protect public health. It endangers it.

Regulatory Capture and the Independence Problem

One way to understand what's happening at HHS is through the lens of regulatory capture—the phenomenon where agencies meant to protect public interests become dominated by ideology or private interests that run counter to their mission. In this case, the capture is ideological rather than financial. Kennedy doesn't have a financial stake in vaccine skepticism. But he has spent decades as a prominent vaccine critic, and his ideological commitments now shape agency policy.

This is actually worse than traditional regulatory capture. A regulator captured by industry at least wants industry to produce profitable products that can be sold. The profit motive provides some incentive for reasonable safety standards. An ideology-captured regulator might pursue policies that are actively harmful if they align with ideological commitments.

Vaccine safety science has been protected historically by institutional independence. Career civil servants at the CDC have been shielded from direct political pressure. The FDA has had a tradition of technical expertise informing decisions. These institutions aren't perfect—there have been failures and areas for legitimate criticism. But they've generally worked to prevent catastrophic errors.

The deployment of an AI tool to analyze vaccine safety data represents an attempt to circumvent these protections. Rather than having epidemiologists and vaccine experts make determinations about safety signals, an AI system makes the initial determination. Leadership then interprets the AI's output however serves ideological goals. The tool becomes a kind of technological permission slip for predetermined conclusions.

Historically, when officials wanted to change vaccine policy, they had to build a case based on evidence. The evidence had to be reviewed by experts who understood the science. This imposed friction that prevented hasty or ideologically motivated changes. AI-generated hypotheses reduce this friction. Now a policy change can be justified based on preliminary AI output rather than extensive evidence.

What Should Happen: Safeguards and Accountability

If HHS proceeds with developing this AI tool, as appears likely, specific safeguards should be mandatory. They should be established in regulation or statute so that agency leadership cannot easily circumvent them:

Statutory requirement for peer review: Before any AI-generated hypothesis influences vaccine policy, it must be reviewed and validated by independent experts outside government. This review should be published.

Epidemiological expertise requirement: Any team interpreting AI output must include epidemiologists with formal training in vaccine safety. Such experts should have civil service protections preventing removal for providing inconvenient analysis.

Documentation and transparency: All decisions to change vaccine recommendations based on AI analysis should be documented with detailed reasoning accessible to the public and Congress. Specifically, documentation should explain why the AI hypothesis was preferred to alternative explanations.

Comparison to unvaccinated controls: VAERS hypotheses should be validated against actual epidemiological data comparing vaccinated and unvaccinated populations. Merely showing that adverse events are reported in VAERS following vaccination is insufficient justification for policy changes.

Congressional oversight: Congress should require regular briefings on how this AI tool is being used, what hypotheses it has generated, and which hypotheses have influenced policy decisions. This oversight should be bipartisan and informed by vaccine safety experts.

Whistleblower protections: Scientists and public health professionals should have strong protections if they raise concerns about inappropriate use of the AI tool. History shows that without such protections, dissenting experts get marginalized.

Sunset clause: The deployment should be authorized for a defined period with mandatory reauthorization requiring evidence that the tool has improved vaccine safety decisions. If misuse is documented, authorization should be withdrawn.

Public access to data and methodology: The underlying algorithms, training data, and validation methodology should be available for independent researchers to examine and critique. This transparency allows the scientific community to identify problems.

Without such safeguards, the tool becomes a policy weapon rather than a safety enhancement.
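The comparison to unvaccinated controls listed above can be sketched as an incidence rate ratio with an approximate 95% confidence interval. This is a minimal illustration under stated assumptions: the counts and person-years are hypothetical, the interval uses the standard log-normal approximation, and there is no adjustment for confounding, which a real study would require.

```python
import math


def incidence_rate_ratio(events_vax: int, person_years_vax: float,
                         events_unvax: int, person_years_unvax: float,
                         z: float = 1.96) -> tuple[float, float, float]:
    """Return (rate ratio, lower bound, upper bound) for vaccinated vs unvaccinated."""
    rate_vax = events_vax / person_years_vax
    rate_unvax = events_unvax / person_years_unvax
    ratio = rate_vax / rate_unvax
    # Standard error of log(rate ratio) for two Poisson counts.
    se_log = math.sqrt(1 / events_vax + 1 / events_unvax)
    lower = math.exp(math.log(ratio) - z * se_log)
    upper = math.exp(math.log(ratio) + z * se_log)
    return ratio, lower, upper


# Hypothetical cohort data: 40 events per 100,000 person-years among vaccinated
# people versus 35 per 100,000 person-years among unvaccinated people.
ratio, lower, upper = incidence_rate_ratio(40, 100_000, 35, 100_000)
print(f"Rate ratio {ratio:.2f} (95% CI {lower:.2f} to {upper:.2f})")
```

With these made-up numbers the interval spans 1.0, so the data would not show an elevated rate among vaccinated people. A raw count of VAERS reports cannot support this kind of comparison, because it has neither the person-years nor the unvaccinated group.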

The Broader Implications for AI Governance in Public Health

This situation highlights fundamental questions about how government agencies should govern AI deployment, particularly in areas with significant public health and policy implications. Several principles emerge from the current situation:

Technical sophistication doesn't equal wisdom: An AI system that successfully identifies patterns in data is technically impressive but potentially dangerous if deployed without proper oversight. The capability to do something doesn't mean it should be done without safeguards.

Context determines appropriate use: The same AI system could be used responsibly by one agency and irresponsibly by another, depending on institutional culture, expertise, and leadership commitment to scientific integrity. The tool itself is neutral. Its use is not.

Institutional independence matters: Agencies that make safety-critical decisions need insulation from short-term political pressure. This isn't about removing accountability, but about ensuring that decisions are made based on evidence rather than ideology. Career experts with civil service protections serve this function.

Transparency is security: When agencies use AI to inform policy, the public's ability to understand that process is essential. Opaque decision-making creates opportunity for abuse. Transparent processes, even if slower, build legitimate authority.

Expertise can't be replaced by automation: No AI system can replace human expertise in interpreting complex scientific data. Machine learning should support human judgment, not replace it. In areas like vaccine safety, the supporting experts need to be genuine experts—not politicians claiming expertise.

These principles should guide AI deployment across government, not just in vaccine safety. The stakes are too high to do otherwise.

Timeline and Current Status

Based on HHS inventory reports, this AI initiative has been in development since late 2023. As of the most recent inventory released in early 2025, the tool has not yet been deployed. This provides a window of opportunity for establishing safeguards before the system goes live and starts generating policy-influencing hypotheses.

The question is whether that window will be used effectively. Will Congress assert oversight? Will former FDA leaders and other experts in vaccine safety speak up more loudly? Will career scientists at the CDC and FDA resist inappropriate uses of the tool? Or will the window close and the tool be deployed without adequate safeguards?

The timeline is also important because of disease dynamics. Measles and other vaccine-preventable diseases may take months to resurge visibly after vaccination rates drop. If the AI tool influences policy changes that reduce vaccination rates, the harms won't be immediately obvious. By the time hospitalizations and deaths start occurring, the decline in vaccination will be entrenched and difficult to reverse. This temporal lag between policy change and visible consequence is another reason why safeguards before deployment are critical.

Lessons from Historical Health Disasters

History provides sobering examples of what happens when government agencies make health policy decisions based on ideology rather than evidence. These examples offer cautionary tales about the dangers of the current trajectory:

Thalidomide Approval: In the 1950s, regulatory agencies in some countries approved thalidomide for use in pregnancy without adequate testing. Thousands of children were born with severe birth defects. The tragedy exposed the dangers of approving medications without robust evidence of safety.

Tobacco Industry Influence on Health Policy: For decades, tobacco companies influenced government health policy despite scientific evidence of harm. Millions died from preventable tobacco-related diseases because policy was shaped by industry rather than evidence.

Denial of AIDS Treatment Options: In the early AIDS crisis, some government agencies delayed approval of effective treatments or provided inadequate support for research, contributing to thousands of preventable deaths.

Denial of Antiviral COVID-19 Treatments: Early in the COVID-19 pandemic, some policy makers discouraged research into antiviral treatments despite evidence they could be effective, delaying their availability to patients.

In each case, the pattern was similar: ideology, political interest, or the capture of agencies led to policies that harmed public health. In each case, independent experts warned of the dangers. And in each case, the harm was substantial and often irreversible.

The vaccination situation is following a familiar pattern. An ideologically motivated leader has taken control of an agency. That leader is using available tools, including AI systems, to generate support for predetermined conclusions. Experts are warning of dangers. The public health community is watching with alarm. The question is whether institutions have sufficient independence and checks to prevent catastrophic outcomes.

Moving Forward: What Needs to Happen

The path forward has several components. First, Congress needs to assert robust oversight of how AI tools are being used in vaccine safety decisions. This shouldn't be partisan—Democrats and Republicans both have reasons to care about vaccine safety and governmental integrity. Congressional hearings with vaccine safety experts could establish that there are legitimate concerns about current deployment plans.

Second, the scientific community needs to speak up more visibly. Vaccine safety experts, epidemiologists, and infectious disease specialists should publish position statements about responsible and irresponsible uses of AI in their field. Their voices carry credibility that political advocates lack.

Third, whistleblower protections for government scientists need to be strengthened. If career employees at the CDC or FDA encounter inappropriate uses of the AI tool, they should have clear channels to report concerns without fear of retaliation.

Fourth, the regulatory framework governing AI use in government decision-making needs to be strengthened. Agencies should be required to establish review processes for AI tools before deployment, particularly tools that inform consequential policy decisions.

Fifth, independent auditing of the AI system's outputs would provide accountability. Regular, independent analysis of the hypotheses the system generates and how they're being used in policy decisions would expose misuse.

Finally, funding for legitimate vaccine safety research needs to increase. If resources were directed toward rigorous epidemiological studies of vaccine safety conducted by independent researchers, those studies would provide the strongest possible evidence about vaccine safety profiles. This is the appropriate way to investigate safety questions, not through rapid AI-generated hypotheses.

The Irreducible Tension

There's an irreducible tension at the heart of this situation. Generative AI systems are genuinely useful for analyzing large datasets and identifying patterns that might merit investigation. In principle, an AI tool analyzing VAERS data could help identify genuine vaccine safety signals that warrant further study. This is a legitimate value proposition.

But deploying such a tool under leadership that is ideologically opposed to vaccines, that has already removed vaccines from recommendations without scientific justification, and that has proposed using AI-generated preliminary hypotheses as justification for policy changes creates obvious risks. You can't separate the technology from its context. The same tool, deployed responsibly, would be beneficial. The same tool, deployed irresponsibly, would be dangerous.

The question isn't whether the AI tool itself is good or bad. It's whether institutional safeguards exist to ensure responsible use. Currently, those safeguards appear inadequate. That's the real concern.

FAQ

What is the VAERS database and how does it work?

The Vaccine Adverse Event Reporting System (VAERS) is a passive surveillance system established in 1990 jointly by the Centers for Disease Control and Prevention and the Food and Drug Administration. It allows anyone—healthcare providers, vaccine manufacturers, or members of the public—to submit reports of adverse events that occur after vaccination. The system deliberately maintains low barriers to entry because early detection of safety signals can save lives, but it cannot determine whether reported events were actually caused by vaccines, only that they occurred in temporal association with vaccination.

Why can't VAERS data alone prove that a vaccine caused an adverse event?

VAERS data cannot establish causation for several reasons: the database lacks denominators showing how many people received each vaccine, it contains unverified reports from various sources with inconsistent quality, it lacks a control group of unvaccinated people for comparison, and temporal association does not prove causation—many adverse events would occur at the same time even without vaccination. A rigorous epidemiological study comparing vaccinated and unvaccinated populations is needed to determine whether vaccines actually caused reported events at higher rates than background incidence.
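The role of denominators and background incidence can be illustrated with a short observed-versus-expected calculation. This is a sketch under assumptions: the background rate, the number of people vaccinated, the risk window, and the observed count are all hypothetical, and the helper names are invented for this example.

```python
import math


def expected_background_events(rate_per_100k_person_years: float,
                               people: int, window_days: int) -> float:
    """Events expected by coincidence alone in a post-vaccination window."""
    person_years = people * window_days / 365.0
    return rate_per_100k_person_years / 100_000 * person_years


def poisson_tail(observed: int, expected: float) -> float:
    """P(X >= observed) for X ~ Poisson(expected)."""
    term = math.exp(-expected)  # P(X = 0)
    cumulative = 0.0
    for i in range(observed):
        cumulative += term              # add P(X = i)
        term *= expected / (i + 1)      # advance to P(X = i + 1)
    return max(0.0, 1.0 - cumulative)


# Hypothetical inputs: a condition with 50 cases per 100,000 person-years,
# 1,000,000 people vaccinated, and a 30-day window after each dose.
expected = expected_background_events(50, 1_000_000, 30)
p_value = poisson_tail(observed=45, expected=expected)
print(f"Expected by chance: {expected:.1f}, P(at least 45 observed) = {p_value:.2f}")
```

With these assumed inputs, roughly 41 events would be expected in the window by coincidence alone, so 45 observed events would not stand out. VAERS supplies only the observed reports. The number vaccinated and the background rate have to come from other data sources.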

What specific concerns do experts have about using AI to analyze VAERS data?

Experts worry that large language models analyzing VAERS data could produce confident but false hypotheses due to the models' tendency to hallucinate plausible-sounding but fabricated information. Additionally, AI systems lack understanding of biological plausibility and may identify patterns that make no medical sense, they can't control for confounding variables without explicit statistical methods, and they excel at finding correlations without distinguishing correlation from causation. Most critically, the political context is concerning because HHS leadership has already demonstrated skepticism about vaccine safety and has removed vaccines from recommendations without scientific justification.

How has VAERS data been misused historically by anti-vaccine advocates?

Anti-vaccine activists have repeatedly taken VAERS numbers out of context to suggest vaccines are dangerously harmful. For example, they cited 13,000+ reported deaths after COVID-19 vaccination without mentioning that VAERS doesn't prove causation and CDC investigation found no evidence the vaccine caused those deaths. They promoted miscarriage reports from VAERS without acknowledging that miscarriage naturally occurs in 15-20% of pregnancies. They seized on myocarditis reports without comparing incidence rates in vaccinated versus unvaccinated populations, where the disease itself causes more myocarditis than the vaccine. These misrepresentations stripped data of its epidemiological context to generate false alarms.

What safeguards would be needed for responsible AI deployment in vaccine safety analysis?

Responsible deployment would require: mandatory peer review by independent experts before any AI hypothesis influences policy, epidemiological expertise on interpretation teams with civil service protections, transparent documentation of decisions accessible to the public and Congress, validation against actual epidemiological data comparing vaccinated and unvaccinated populations, Congressional oversight with regular briefings on AI usage and policy changes, strong whistleblower protections for scientists raising concerns, clear sunset provisions with mandatory reauthorization, and public access to the algorithms, training data, and validation methodology for independent scrutiny.

Why is institutional independence important in vaccine safety decisions?

Historically, vaccine policy decisions have been made by career experts at the CDC and FDA insulated from direct political pressure. This independence allows decisions to be based on scientific evidence rather than ideology or political interest. When agency leadership can directly override expert judgment or pressure experts to reach predetermined conclusions, the integrity of safety decisions is compromised. The extensive evidence supporting vaccine safety comes from this system where experts evaluated data impartially. Removing that independence risks decisions being driven by ideology rather than evidence.

What happened to legitimate vaccine safety signals detected in VAERS?

VAERS has successfully identified genuine vaccine safety issues that warranted investigation. Blood clots associated with the Johnson & Johnson COVID-19 vaccine were initially flagged through VAERS and subsequently investigated, leading to appropriate restrictions on use in populations where risks exceeded benefits. Myocarditis cases among younger males receiving mRNA vaccines were identified through VAERS and validated through proper epidemiological study, leading to appropriate monitoring and informed consent discussions. These represent the system working correctly—preliminary signal detection followed by rigorous investigation and proportionate response.

How does the current HHS leadership's history with vaccines affect the credibility of vaccine safety analysis?

Robert F. Kennedy Jr., the current HHS secretary, has spent decades promoting vaccine skepticism and vaccine misinformation. He has promoted false claims linking vaccines to autism despite the original study being fraudulent and retracted, suggested polio vaccines caused polio despite massive evidence otherwise, and claimed the childhood vaccination schedule is designed for profit rather than protection. In his first year leading HHS, he removed several vaccines from the recommended childhood schedule without scientific justification. This established history creates legitimate concerns that AI tools analyzing vaccine safety data might be used to justify predetermined anti-vaccine conclusions rather than to genuinely investigate safety questions.

What alternative approaches would be more appropriate for investigating vaccine safety questions?

More appropriate approaches would include rigorous epidemiological studies comparing vaccinated and unvaccinated populations using electronic health records or insurance data, long-term prospective studies following cohorts of vaccinated and unvaccinated individuals, mechanistic studies investigating whether proposed biological pathways for adverse events are plausible, and peer-reviewed publication requiring external expert evaluation before findings influence policy. These approaches take more time than AI-generated hypotheses but provide the kind of evidence actually needed to determine whether vaccines cause adverse events. Funding for such research would be a more credible response to vaccine safety questions than deploying AI systems to rapidly generate preliminary hypotheses.

What would happen if vaccine recommendations were changed based on inadequately validated AI hypotheses?

Reduced vaccination rates historically lead to outbreaks of preventable diseases. Measles, polio, hepatitis B, and other vaccine-preventable diseases have been largely eliminated in highly vaccinated populations but return rapidly when vaccination rates drop. If AI-generated hypotheses lead to vaccine recommendations being removed without proper validation, the predictable consequence would be outbreaks of preventable diseases causing deaths and permanent disabilities in unvaccinated populations. Children would suffer from diseases their parents never had to fear because of vaccines. The harm would be substantial and largely irreversible, particularly for diseases like polio that cause permanent paralysis.

TL;DR

- HHS is developing an AI tool to analyze vaccine injury claims from the VAERS database and generate hypotheses about vaccine adverse effects, building on previous natural language processing efforts.

- VAERS data cannot prove causation because it lacks denominators, contains unverified reports, and only shows temporal association with vaccination—a critical limitation that anti-vaccine advocates have systematically misused for years.

- AI systems pose specific technical risks including producing confident but false outputs (hallucinations), detecting patterns without biological plausibility, and lacking understanding of confounding variables and epidemiological principles.

- Leadership concerns are paramount since HHS Secretary Robert F. Kennedy Jr. has a documented history of vaccine skepticism, has already removed vaccines from recommendations without scientific justification, and appears positioned to use AI hypotheses to further anti-vaccine policy changes.

- Adequate safeguards are currently missing, including requirements for peer review, epidemiological expertise, transparent documentation, comparative epidemiological validation, Congressional oversight, and whistleblower protections.

- Bottom line: While AI could theoretically improve vaccine safety analysis, deploying such a system under leadership with a predetermined anti-vaccine agenda without robust safeguards risks using preliminary hypotheses as justification for dangerous policy changes that would lead to preventable disease outbreaks.

Key Takeaways

- HHS is developing an AI tool to analyze VAERS vaccine injury claims without clear safeguards against misuse by anti-vaccine leadership

- VAERS data cannot prove causation and has been systematically misused by vaccine skeptics for years to create false health scares

- Large language models analyzing vaccine data risk producing confident but false hypotheses due to hallucinations and inability to understand biological plausibility

- Current HHS leadership has already removed vaccines from recommendations without scientific justification, suggesting predisposition to use AI output ideologically

- Responsible AI deployment in vaccine safety requires multiple institutional safeguards including peer review, epidemiological oversight, and transparency that currently appear absent
