How Deep AI Integration Transforms Customer Service ROI [2025]

You've deployed AI in your support operation. The early wins came fast: response times dropped, tickets moved through the queue faster, and your team stopped drowning in routine questions. Everyone's happy. Numbers look good.

But here's what happens next: you hit a ceiling. The easy efficiency gains max out. You're still treating AI like a tool that saves time, not like a complete reshaping of how support creates value.

This is the moment most teams miss.

The real transformation doesn't happen when AI first arrives. It happens when AI becomes so deeply woven into your support operations that it changes the fundamental economics of customer service itself. When that shift occurs, everything changes. ROI becomes measurable at scale. Capacity freed by automation flows directly into revenue-generating activities. Support stops being a cost center and starts driving customer lifetime value.

The difference between teams seeing modest efficiency gains and teams experiencing true transformation? It's the depth of integration.

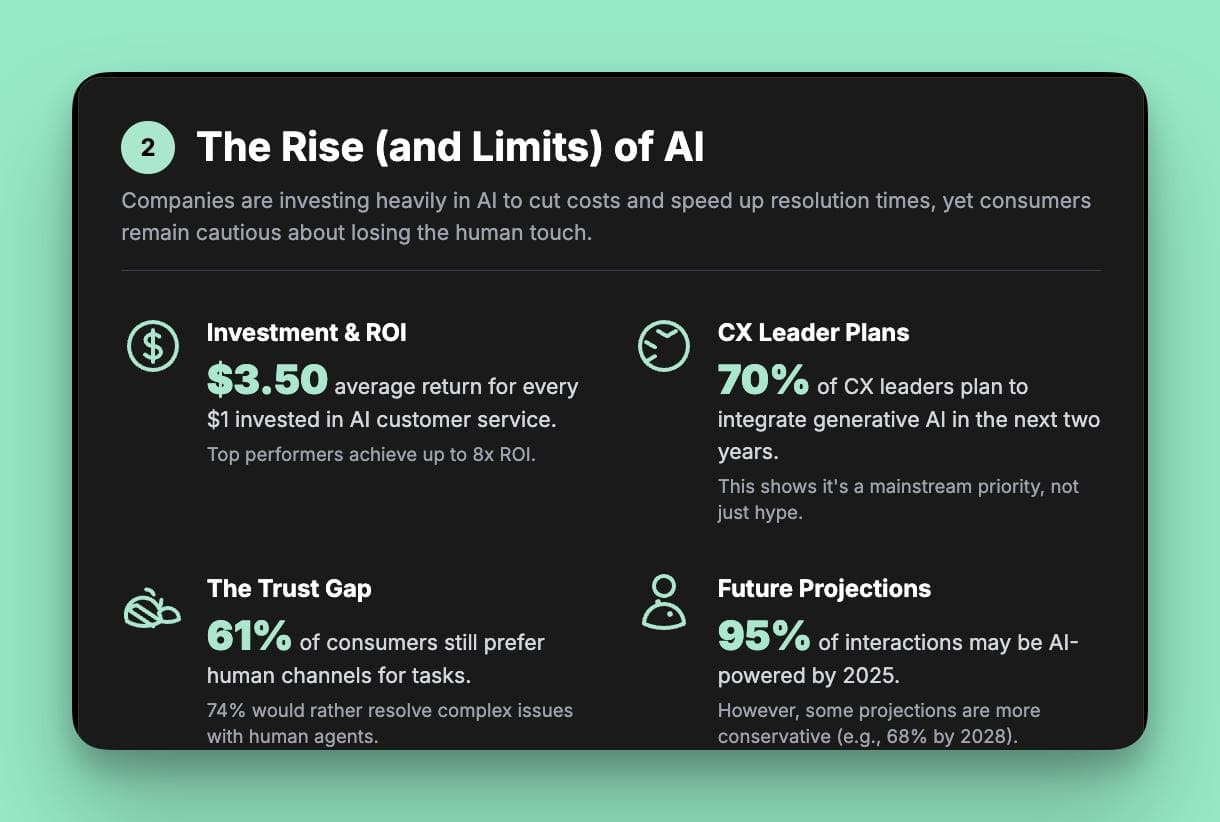

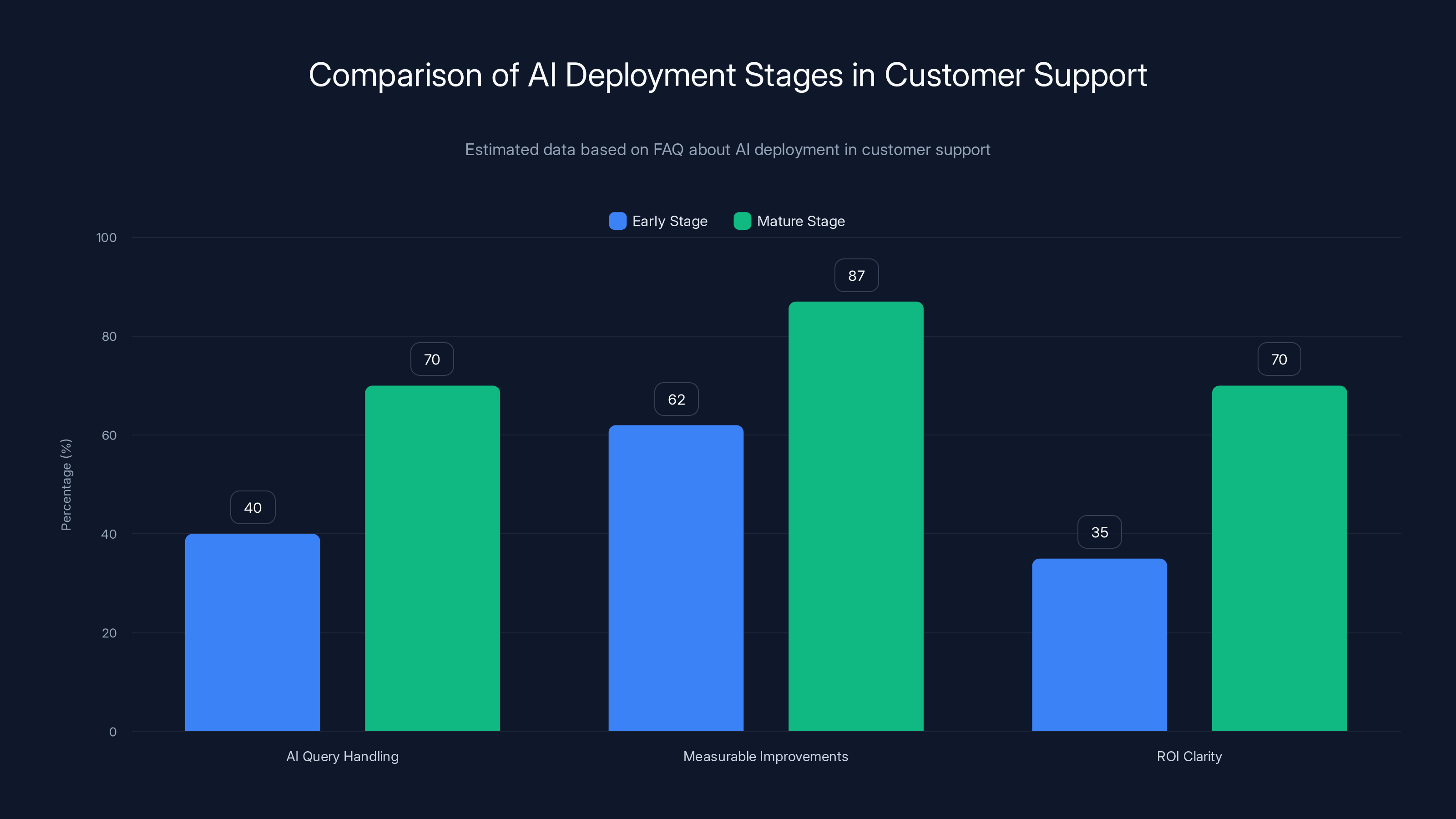

Our latest research tracking customer service transformation across hundreds of organizations reveals something striking: 87% of teams at mature AI deployment stages report improvements in key metrics, compared to just 62% across all stages. But that's not even the most important finding. The real story is how measurement itself evolves. Early-stage teams struggle to articulate ROI: only 35% can clearly measure their return on AI investment. By the mature stage, that number jumps to 70%, as noted in AI Journ.

That gap exists because the economics are fundamentally different. And until you understand how, you'll keep measuring AI through the lens of legacy support models that were designed for a completely different world.

This isn't about AI getting better at what support teams already do. This is about support teams getting better at what AI enables them to do. The implications ripple across your organization, touching customer activation, retention, lifetime value, and ultimately, revenue.

Let's explore how this transformation actually works, why the economics fundamentally shift, and how to position your organization to capture value at scale.

TL;DR

- 87% of mature AI deployments see measurable metric improvements, versus 62% at early stages, and 70% of mature teams can clearly measure ROI (versus 35% at early stages)

- The economics shift entirely from cost reduction to revenue generation, with 56% of mature teams directing freed capacity toward growth activities

- Measurement evolves dramatically as AI takes on complex work, moving from tracking time saved to demonstrating revenue influence and customer lifetime value impact

- Legacy support models break down under AI integration, requiring new frameworks that treat AI as a workforce multiplier rather than an efficiency tool

- System improvement creates compounding returns, where every resolved interaction makes the AI agent smarter, creating network effects within your support operation

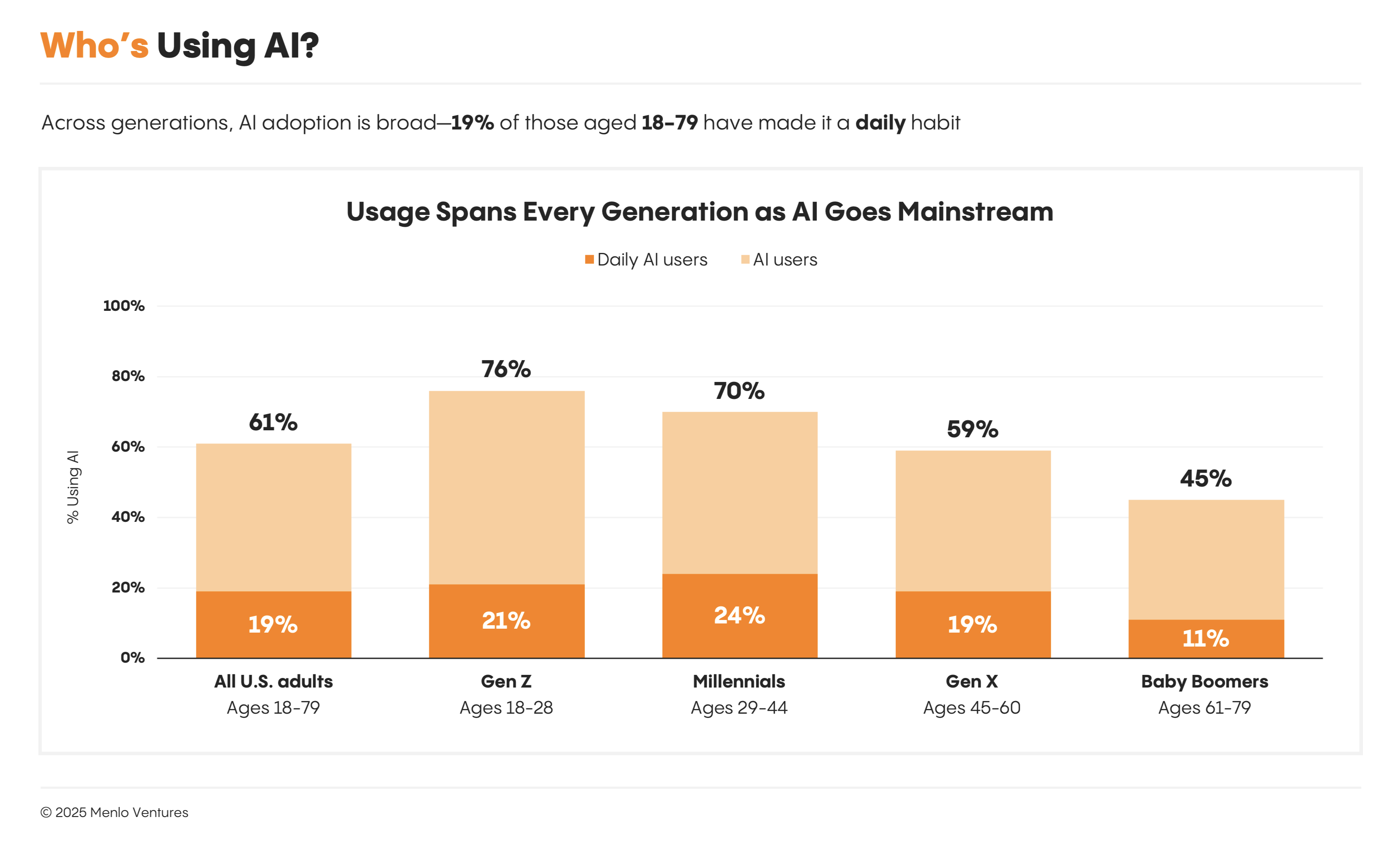

Mature AI deployments handle a higher percentage of queries and show greater improvements and ROI clarity compared to early-stage deployments. Estimated data based on FAQ insights.

The Deployment Gap That's Reshaping Customer Support

There's a quiet revolution happening in customer service organizations right now. It's not dramatic. There are no viral announcements or sudden pivots. Instead, it's the methodical difference between teams treating AI as another tool in their stack and teams treating AI as the foundation of their entire support strategy.

The gap between these two approaches is widening every quarter.

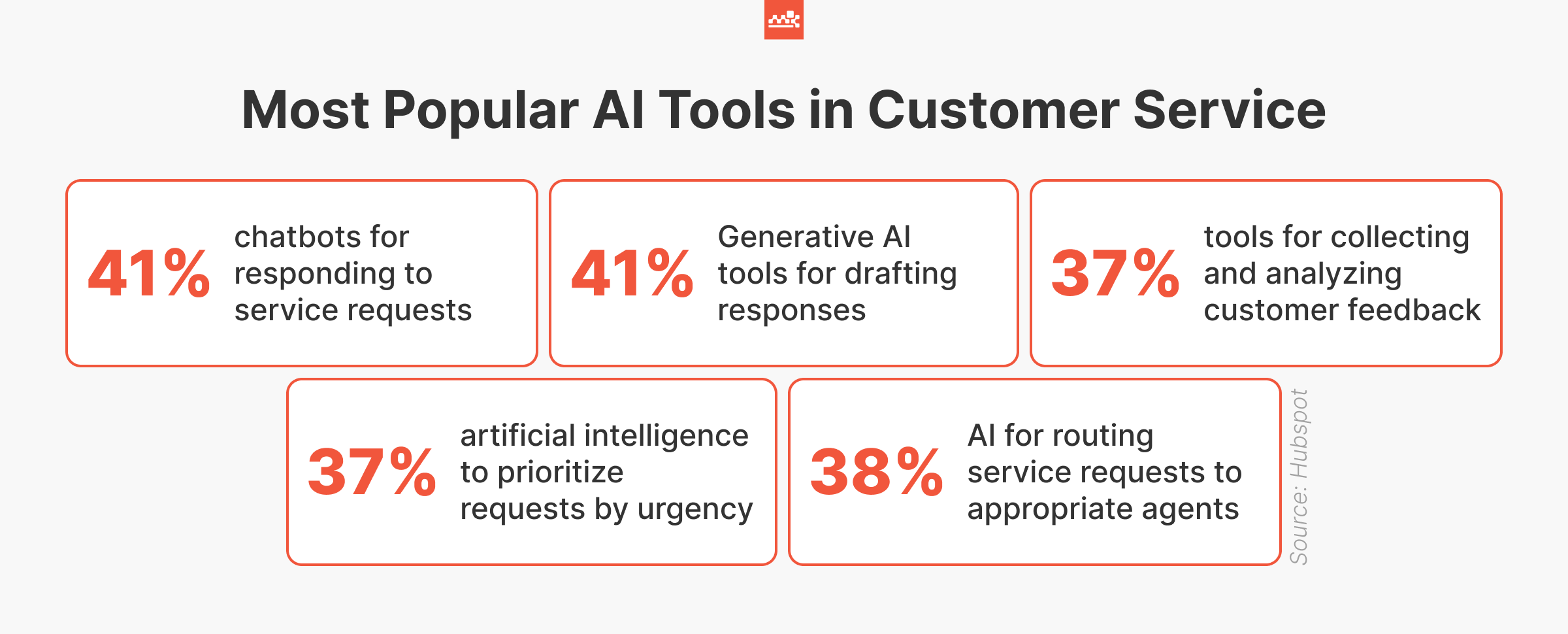

When AI first arrived in customer support, the adoption curve looked predictable. Early explorers grabbed quick wins. They used AI for simple query categorization, basic chatbot responses, and routine ticket deflection. The results were obvious and immediately measurable. Response times dropped by 20-40%. First-contact resolution rates improved. Support staff shifted from repetitive work to more complex issues.

But this created a false equilibrium. Teams celebrated the efficiency gains, reported the metrics to leadership, and then... stopped. They'd hit what looked like the natural ceiling of AI's potential.

The teams that pushed past this ceiling did something different. They didn't just deploy AI wider. They deployed it deeper. They let AI handle increasingly complex customer problems. They retrained their teams not to manage AI, but to work alongside it on problems that actually required human judgment. They redesigned workflows around what AI could do instead of forcing AI into workflows designed for humans.

The difference in outcomes is staggering.

Among teams in early exploration phases, 62% report improved metrics since deploying AI. That's not nothing. But among teams at mature deployment, where AI is fully integrated into operations, handling complex work, and driving the strategy, 87% report measurable improvements. That 25-percentage-point gap compounds across every dimension you measure.

Even more revealing is the ROI measurement gap. Among early-stage deployers, only 35% say they can clearly articulate their return on AI investment. Most teams at this stage can point to speed improvements and cost reductions. But they can't connect those to business outcomes. By the mature stage, 70% of teams have clear ROI visibility.

Why such a dramatic difference? Because the ROI story changes entirely.

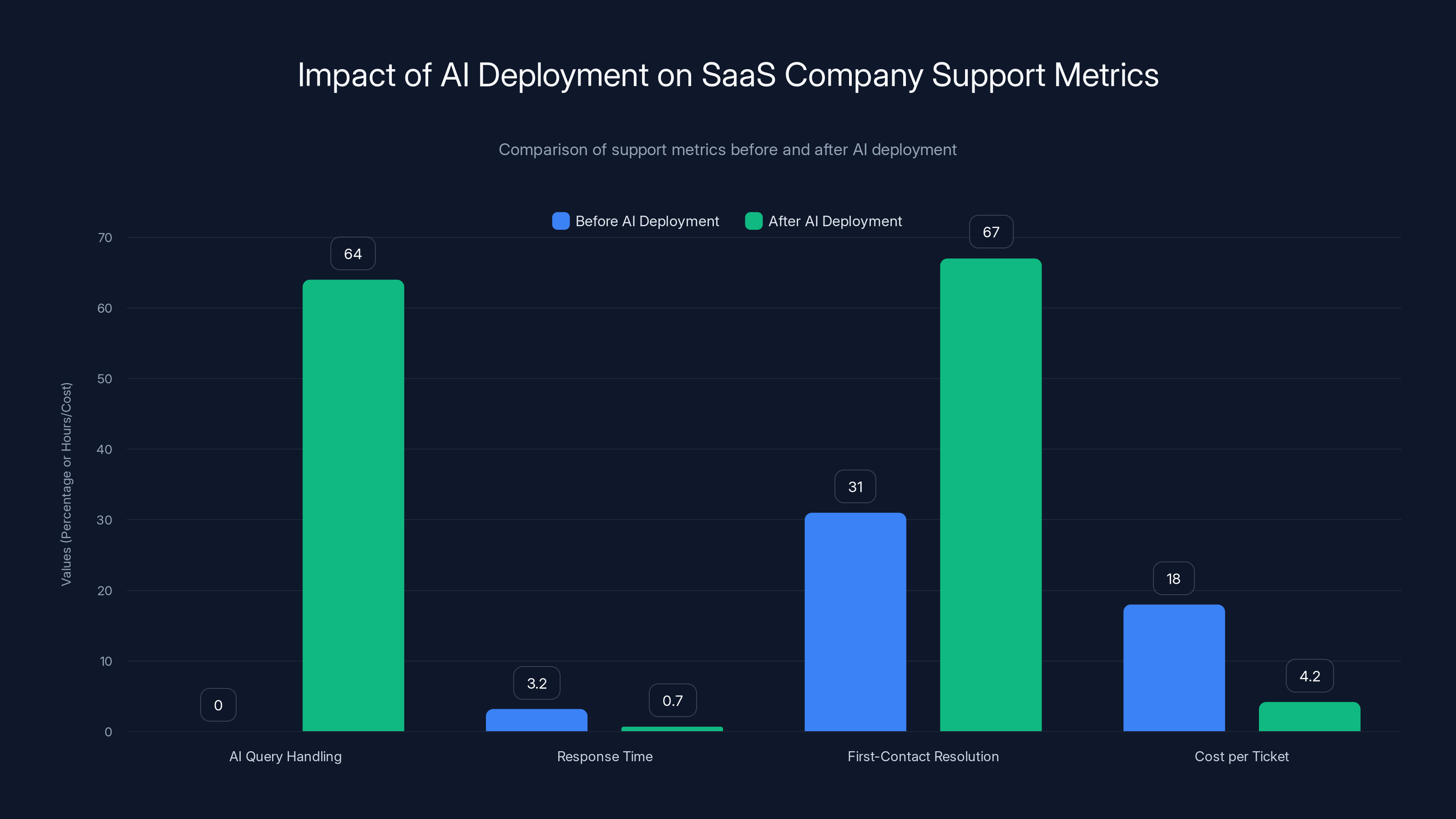

After AI deployment, the SaaS company saw a significant improvement in support metrics: AI now handles 64% of queries, response time decreased to 42 minutes, first-contact resolution increased to 67%, and cost per ticket dropped to $4.20.

Why Early AI Adoption Metrics Plateau

In the first weeks and months after deploying AI, support teams experience what feels like exponential progress. A chatbot resolves 15% of incoming tickets without human intervention. Your team resolves the remaining 85% faster because they're not context-switching between simple and complex queries. Average response time drops from 4 hours to 90 minutes. First-contact resolution climbs from 28% to 42%.

These metrics are real. The impact is genuine. Your support operation is legitimately more efficient.

But here's the problem: this is where most measurement stops.

Early-stage deployments typically measure three things. First, volume metrics: how many queries did the AI handle? Second, speed metrics: how much faster are responses? Third, capacity metrics: how much human time was freed?

These answers are straightforward to calculate. You can graph them. You can present them in quarterly reviews. They prove something happened.

But they also trap teams in a narrow view of value. You've optimized for throughput when the real opportunity is outcomes. You've measured what AI saves when you should be measuring what you create with those savings.

There's another invisible ceiling hiding in these early-stage metrics. As AI handles the easiest 20% of queries—simple password resets, account balance checks, subscription status questions—you get rapid returns. But that remaining 80% is harder. Some of it requires context about the customer's history. Some needs judgment about policy exceptions. Some involves problems the AI has never seen before.

If you keep trying to push AI into handling more volume, you hit diminishing returns fast. You spend more effort training and tuning the model for marginal improvements. The easy efficiency gains are gone. The system stalls.

Teams that break through this ceiling stop optimizing for volume and start optimizing for complexity. They let their AI handle increasingly difficult problems. They redesign their support workflows to support this shift. They measure different things.

The results look completely different.

How Mature Deployments Change the Measurement Game

Imagine you're running support at a fast-growing SaaS company. You deployed AI four months ago. Early metrics are solid. Your AI handles routine queries. Response times are down 35%. Your support team is less burned out.

Now imagine checking in with that same team eighteen months later. AI is handling 60% of all inbound queries. But here's what makes the difference: that 60% includes genuinely complex interactions.

Your AI now handles technical troubleshooting for integration issues. It understands your customer's usage patterns and can recommend features that would solve their problems before they even ask. It's fielding billing disputes by analyzing contract terms and usage history. It's investigating bugs and suggesting workarounds while it escalates to engineering.

Your support team isn't just managing fewer tickets. It's managing a different kind of ticket. The 40% that reach humans now involve complex judgment calls, policy exceptions, and strategic customer relationships.

When you measure this operation, you're measuring something completely different from what you measured eighteen months ago.

Early-stage teams measure: "How many tickets did AI handle?" and "How much faster are we responding?"

Mature-stage teams measure: "How is freed support capacity being reinvested?" and "What's the revenue impact of our support operation?"

These are radically different questions.

Our research shows this clearly. Across all maturity stages, the most common ROI metric is "time freed up that the support team can use to focus on value-adding activities for customers." But look at the adoption rates. At early exploration, 56% of teams cite this metric. At mature deployment, 73% cite it.

That's not just more teams using the same metric. That's the metric becoming deeper and more strategic.

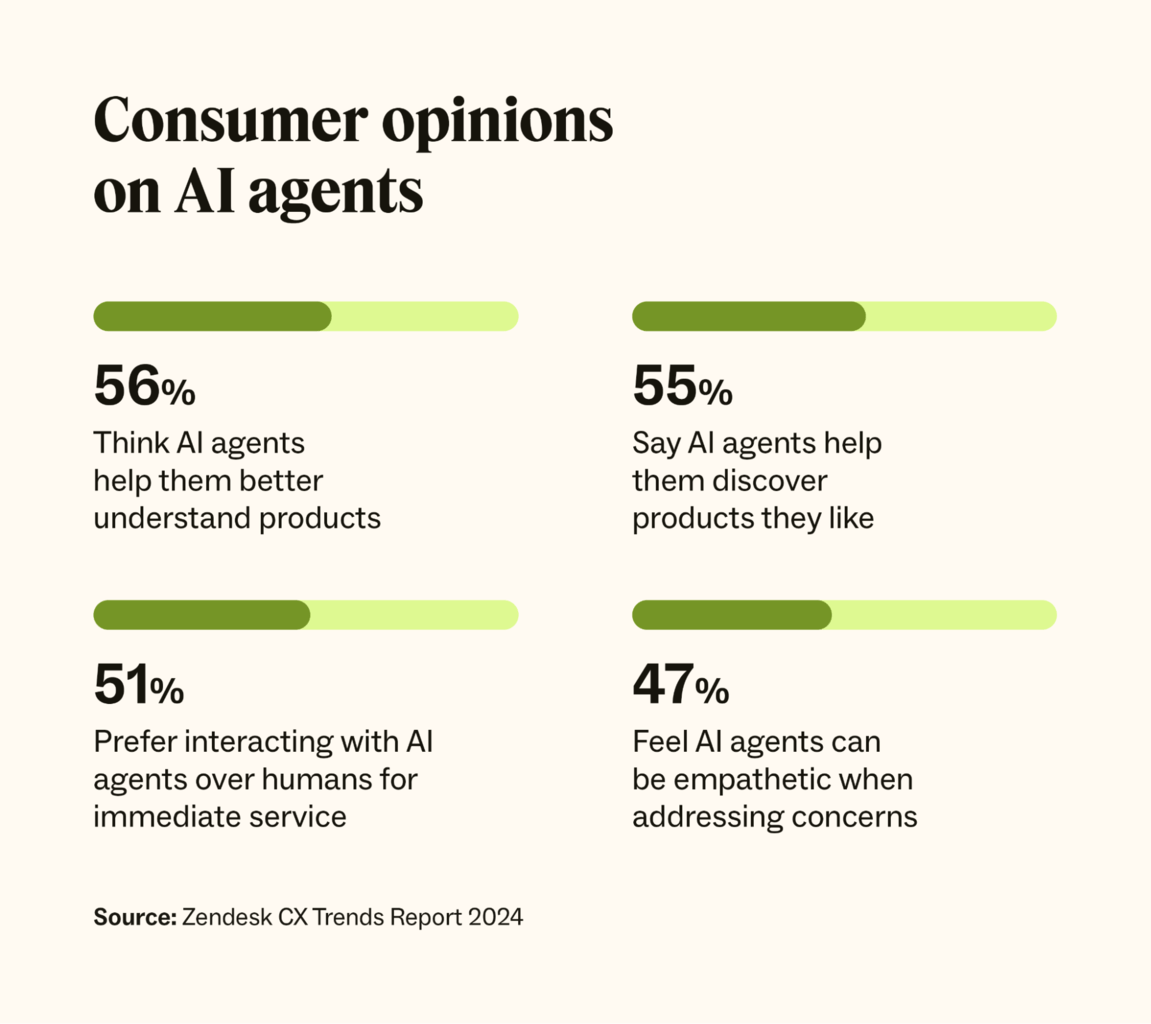

But here's where it gets really interesting. At early exploration, 34% of teams say freed capacity is being directed toward revenue-generating activities. By mature deployment, that number is 56%. More than half.

Think about what that means. Your support team is spending meaningful time on activities that directly drive revenue. Maybe they're helping high-value prospects during sales cycles. Maybe they're proactively reaching out to at-risk customers to prevent churn. Maybe they're identifying expansion opportunities within existing accounts.

These activities weren't possible before because the team was drowning in routine tickets. But when AI handles 60% of queries (including complex ones), suddenly your most experienced support staff have time to work on things that influence revenue.

You've just transformed support from a cost center to a revenue multiplier.

When you measure that, the ROI story is entirely different. It's not "we saved

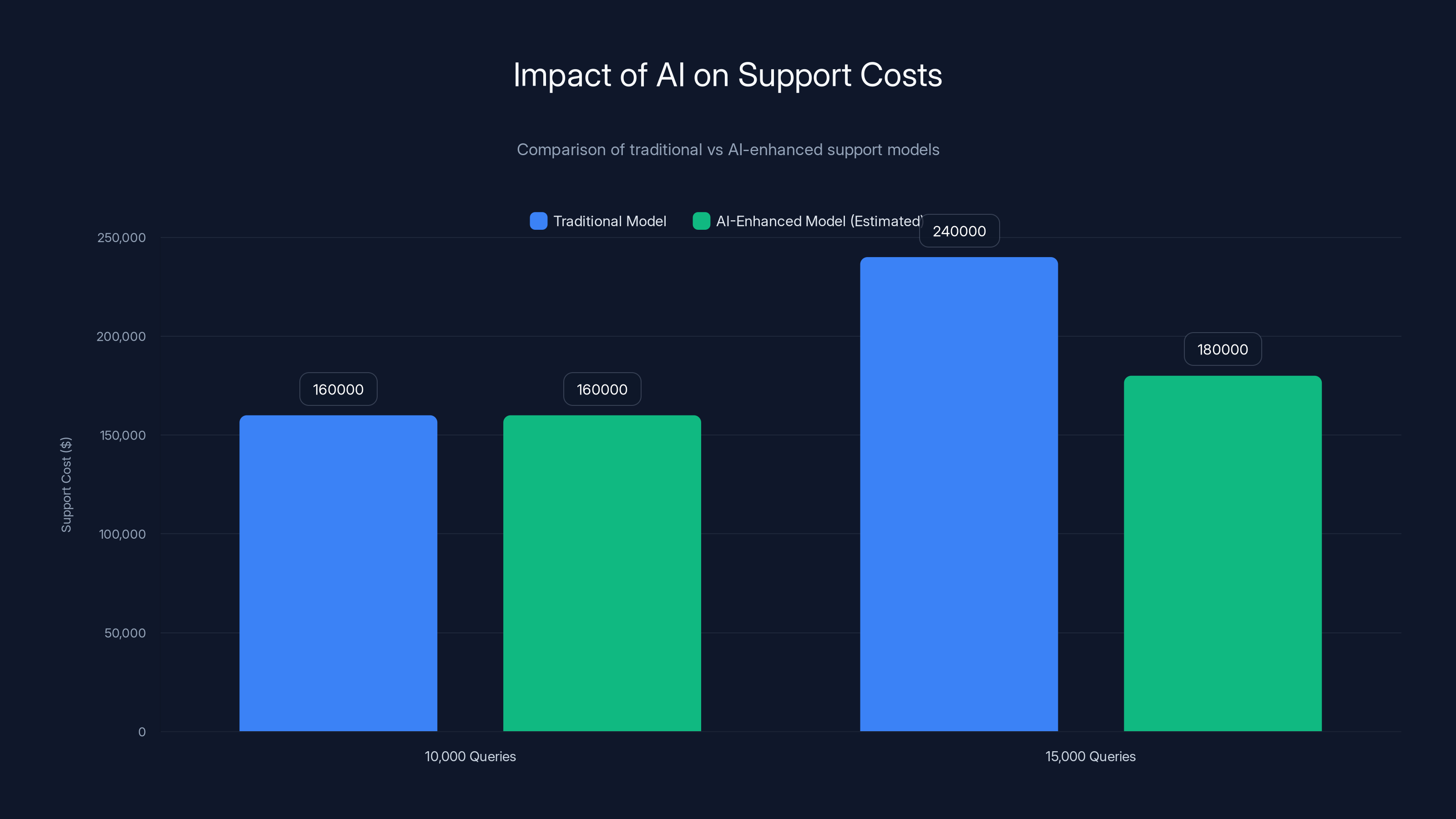

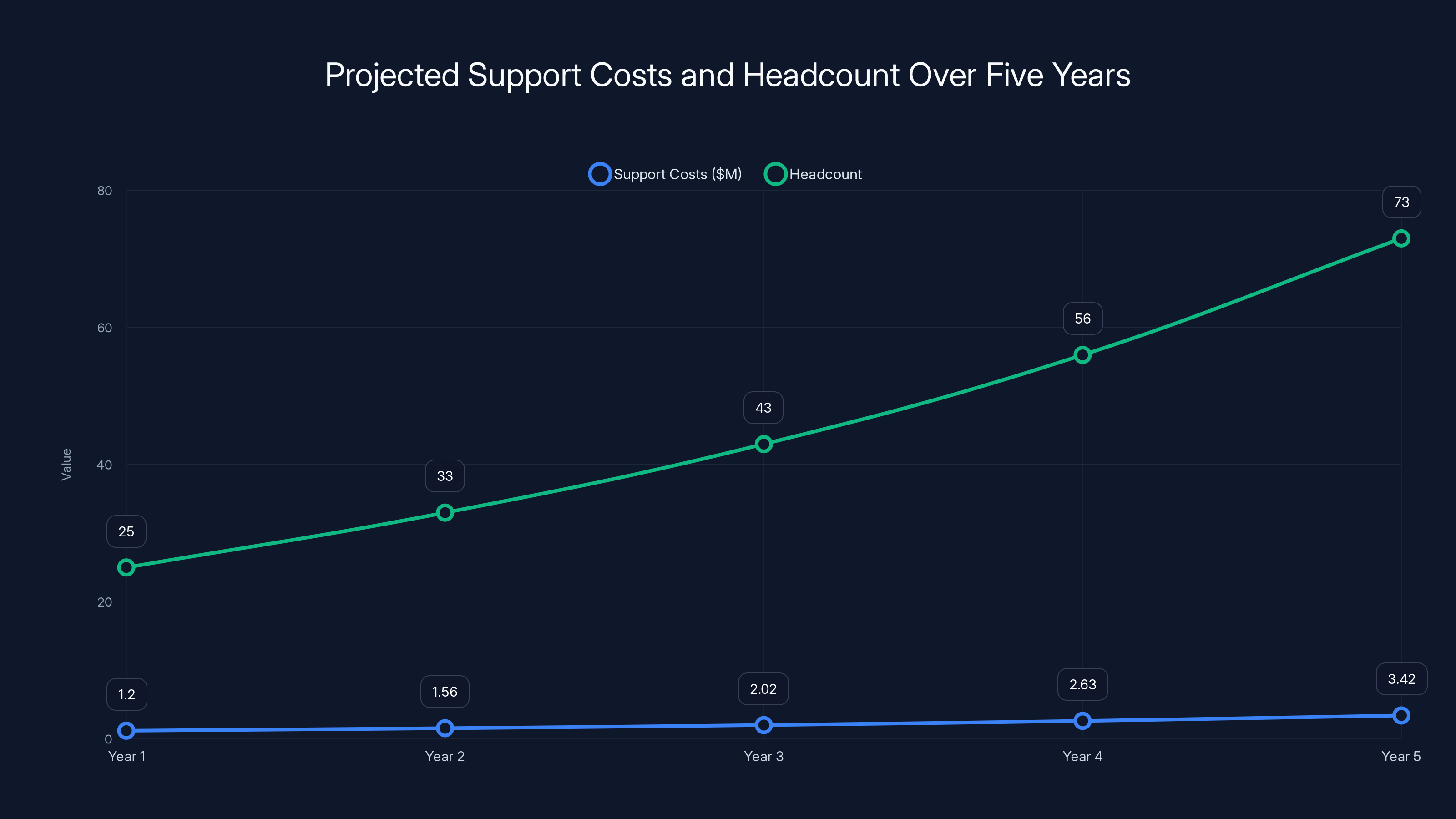

AI-enhanced support models can handle increased query volumes with significantly lower cost increases compared to traditional models. Estimated data shows a more efficient scaling with AI.

The Economics Model That Legacy Support Teams Can't Measure

Here's the uncomfortable truth: most support organizations are still operating under an economic model designed for the 1990s.

Legacy support economics work like this. Your company grows. Customer volume increases. Support ticket volume increases proportionally. You hire more support staff. You add capacity. You achieve success through containment: keep tickets in automation as long as possible, escalate the rest to humans, minimize the time humans spend on each ticket.

The economic relationship is linear. More customers mean more tickets, and more tickets mean more headcount. Your support cost per ticket stays flat or rises slightly as salary inflation outpaces efficiency improvements.

Success is measured through containment rates and cost per resolution. A good support team is a lean support team. You're trying to keep costs down while maintaining service quality.

This model made sense when the alternative to automation was hiring more people. In a linear growth model, that's the calculus that matters.

But AI doesn't scale linearly. It doesn't follow the old economic rules.

When you deploy a traditional chatbot or knowledge base, you get incremental improvement. You automate 10-15% of queries. You save some labor cost. The economic model holds.

But when you integrate AI deeply into your support operation, something different happens. You're not just automating individual queries. You're building a system that gets smarter with every interaction. You're creating a support multiplier that compounds.

Let's work through the math. Say you have a support team of twenty people handling 10,000 queries per month. Your fully-loaded cost per team member is $8,000 per month, so the team costs $160,000 per month.

Legacy economics would suggest: to increase capacity, hire more people. Want to handle 15,000 queries? Hire thirty people. Cost: $240,000 per month. Linear relationship.

Now imagine you deploy AI deeply. Your AI handles 6,000 queries per month (60% of volume). But more importantly, it handles those 6,000 with sufficient quality that your team trusts it completely. Your support team now has capacity to spend meaningful time on high-value work.

You're still running at $160,000 per month. But now that 20-person team is:

- Handling complex escalations that come from AI (2,000 queries per month)

- Proactively reaching out to at-risk customers (reducing annual churn by 8-12%)

- Working with sales on high-value prospects (directly influencing deals)

- Improving product by feeding customer insights back to engineering (reducing future support load)

Your cost per query drops dramatically. But more importantly, support is now driving measurable revenue impact.
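The headcount arithmetic in this example can be made explicit. The sketch below is a deliberately simplified model, not a forecasting tool: it derives 500 queries per agent per month and $8,000 per agent per month from the example's own figures (twenty agents for 10,000 queries; thirty agents for $240,000), assumes every query the AI doesn't resolve lands on a human, and leaves out AI platform costs, which the example doesn't state. The function names are hypothetical.

```python
import math

# Derived from the worked example: 20 agents handle 10,000 queries per month,
# and 30 agents cost $240,000 per month.
QUERIES_PER_AGENT = 10_000 // 20   # 500 queries per agent per month
COST_PER_AGENT = 240_000 // 30     # $8,000 fully loaded, per agent per month


def agents_needed(monthly_queries: int, ai_percent: int = 0) -> int:
    """Agents required to handle the queries the AI does not resolve.

    Assumes a fixed per-agent throughput and that every unresolved
    query is handled by a human.
    """
    human_share = monthly_queries * (100 - ai_percent)
    # Integer inputs keep the division exact for round-number examples.
    return math.ceil(human_share / (100 * QUERIES_PER_AGENT))


def monthly_labor_cost(monthly_queries: int, ai_percent: int = 0) -> int:
    """Labor cost only; AI licensing and maintenance are excluded."""
    return agents_needed(monthly_queries, ai_percent) * COST_PER_AGENT


if __name__ == "__main__":
    team_size = 20
    for volume in (10_000, 15_000):
        legacy = agents_needed(volume)
        with_ai = agents_needed(volume, ai_percent=60)
        print(
            f"{volume:,} queries/month: legacy needs {legacy} agents "
            f"(${monthly_labor_cost(volume):,}/month); with 60% AI resolution, "
            f"{with_ai} agents cover the human queue, "
            f"freeing {team_size - with_ai} of {team_size} for other work"
        )
```

Under these assumptions, the legacy model scales linearly (20 agents for 10,000 queries, 30 for 15,000), while with 60% AI resolution the human queue needs far fewer agents. The difference between the existing twenty-person team and that number is the capacity the text describes being redirected toward churn prevention, sales support, and product feedback.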

The economic model has completely changed. You're not measuring success through cost efficiency anymore. You're measuring success through customer lifetime value impact, revenue influence, and strategic contribution to growth.

Legacy economics can't measure this because the model assumes support is a cost center. The new model understands support as a revenue multiplier.

Teams trying to measure AI ROI within the legacy framework get stuck. They can calculate time saved. They can't calculate revenue influenced. They can see cost reduction. They can't see lifetime value impact. The numbers don't tell a compelling story because the framework isn't built for this kind of value.

This is why mature deployments are so much better at measuring ROI. They've abandoned the legacy framework and built a new one.

Breaking Down the New Support Economics Model

The teams that have successfully integrated AI aren't just deploying better technology. They're operating under a completely different economic theory about what support does and how it creates value.

This new model has five interconnected components.

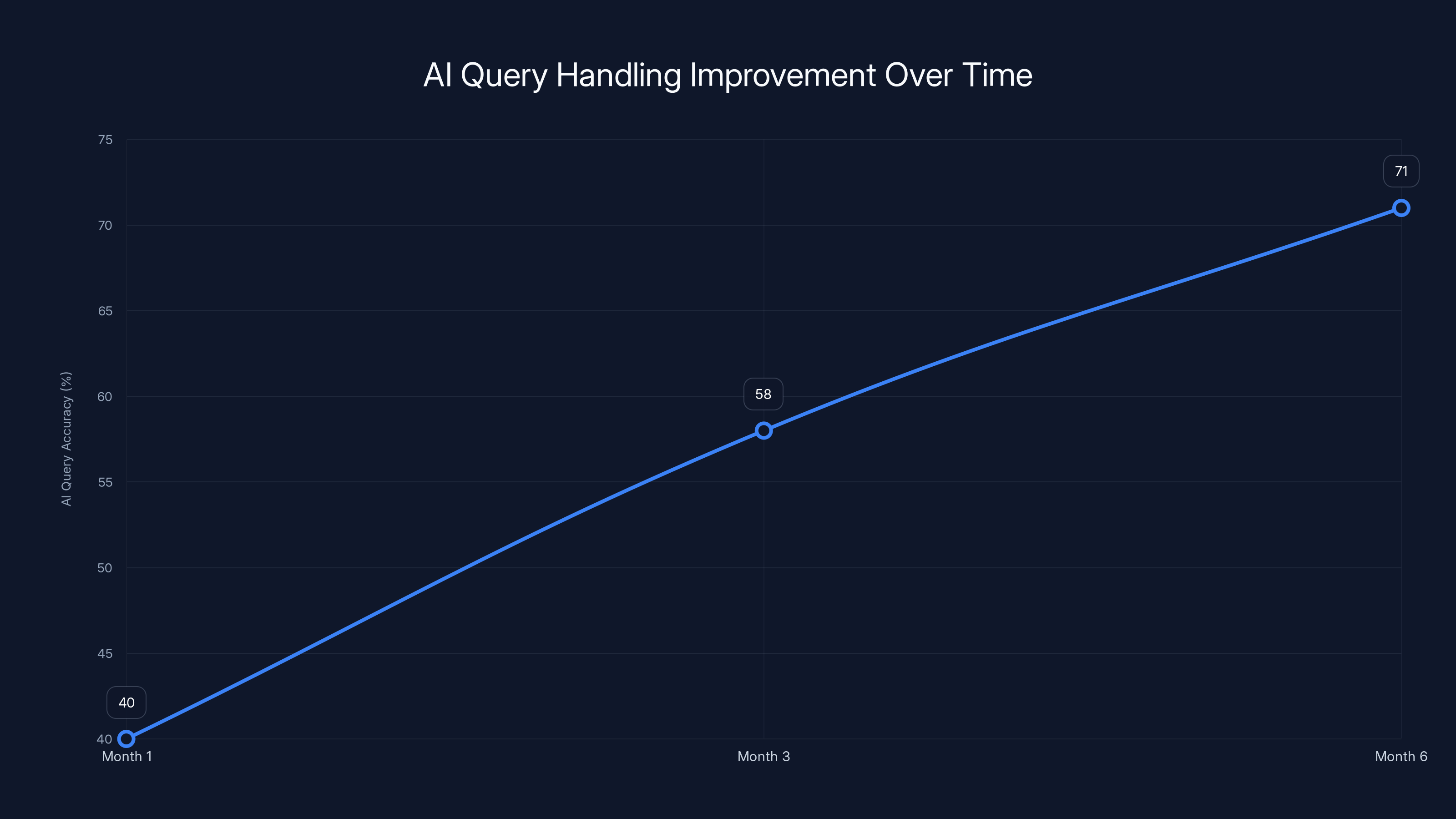

First: System improvement through continuous learning.

Every query your AI resolves makes the system slightly smarter. Not in the magic, science-fiction sense where AI suddenly becomes sentient. But in the concrete sense where patterns emerge, edge cases surface, and the system learns where it fails and adjusts.

This creates a compounding effect. Month one, your AI handles 40% of queries accurately. Month three, accuracy is 58% because it's seen more customer interactions and patterns. Month six, you're at 71%. The same system, same basic model, better performance because it's continuously learning from real customer interactions.

Human support teams never had this kind of learning curve. Hiring experienced support staff costs money, and training takes time. But an AI system that handles 10,000 monthly interactions has an inherent advantage: it's learning from every single one.

The economics compound. Your cost per query drops not just because AI is handling volume, but because every additional query handled improves future performance.

Second: Capacity reallocation toward strategic work.

When AI handles the predictable, routine work reliably, your human team's time becomes genuinely valuable in a different way.

Instead of your best people spinning on simple queries, they're working on problems that actually need human judgment. Instead of your team being reactive, they can be proactive. Instead of them being transaction-focused, they can be relationship-focused.

This reallocation is the second economic shift. You're not adding headcount. You're reallocating existing headcount toward higher-value work. That's a margin improvement with no additional cost.

Third: Revenue influence, not just cost reduction.

When your support team has capacity to reach out to at-risk customers, they prevent churn. When they have time to identify expansion opportunities in existing accounts, they drive net new revenue. When they work with sales on important prospects, they influence deal velocity and size.

The support function becomes a revenue lever, not just a cost to be minimized.

Our research shows 56% of mature-stage teams are directing freed capacity toward revenue-generating activities. For these organizations, support ROI is no longer "how much did we save?" It's "how much did we influence?"

A mature deployment might look like this:

- Cost reduction from automation: $120K annually

- Churn prevention from proactive outreach: $1.2M annually

- Revenue influence from sales collaboration: $800K annually

- Net new expansion revenue from customer success efforts: $600K annually

Total annual value: $2.72M

That's not efficiency. That's transformation.
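Summing the four streams gives total annual value, but strictly speaking that is not ROI: ROI also needs the cost of getting there, which the example doesn't give. The sketch below tallies the streams, shows how small a share pure cost reduction represents, and computes a conventional ROI ratio against a hypothetical annual investment of $400,000, an assumption for illustration only.

```python
# Annual value streams from the illustrative mature deployment (USD).
VALUE_STREAMS = {
    "cost_reduction_from_automation": 120_000,
    "churn_prevention_from_outreach": 1_200_000,
    "revenue_influence_from_sales": 800_000,
    "expansion_revenue_from_cs": 600_000,
}


def total_value(streams: dict[str, int]) -> int:
    """Sum every value stream attributed to the support function."""
    return sum(streams.values())


def roi_ratio(value: float, investment: float) -> float:
    """Conventional ROI: net gain divided by the cost of the investment."""
    if investment <= 0:
        raise ValueError("investment must be positive")
    return (value - investment) / investment


if __name__ == "__main__":
    total = total_value(VALUE_STREAMS)
    efficiency_share = VALUE_STREAMS["cost_reduction_from_automation"] / total
    # HYPOTHETICAL: annual AI platform and enablement spend, not from the article.
    assumed_investment = 400_000
    print(f"Total annual value: ${total:,}")                     # $2,720,000
    print(f"Share from cost reduction: {efficiency_share:.1%}")  # 4.4%
    print(f"ROI at assumed investment: {roi_ratio(total, assumed_investment):.1f}x")  # 5.8x
```

Influenced revenue is not the same as revenue caused by support alone, so you may want to apply an attribution weight to the sales and expansion streams before counting them, which would lower the total.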

Fourth: Product improvement feedback loop.

Your support team is your customers' voice. They know what problems customers are actually trying to solve. When they have capacity to feed that back to product, something magical happens: you build better products.

AI makes this loop faster. Your AI surfaces the patterns in customer interactions that humans would miss. Your support team translates those patterns into product insights. Your product team builds solutions. Future customer questions about that problem disappear entirely.

You've shifted from solving problems to eliminating them. The economics are profound. Every problem you eliminate is one your support team will never have to handle.

Fifth: Customer intelligence and strategy.

Deep AI integration creates rich data about customer behavior, preferences, problems, and intent. Not anonymized, abstract data. Specific information about how your specific customers are using your product and what they're struggling with.

That intelligence, properly used, informs business strategy. It tells you which customer segments are most at-risk. It reveals unmet needs that represent new product opportunities. It shows you where your product documentation is failing customers.

A mature support operation becomes an intelligence function that informs how the entire business operates.

The AI system's query handling accuracy improves significantly over six months, showcasing the compounding effect of continuous learning. Estimated data.

How Deep Integration Creates Compounding Returns

Let's ground this in what actually happens when a support team moves from early-stage to mature AI deployment.

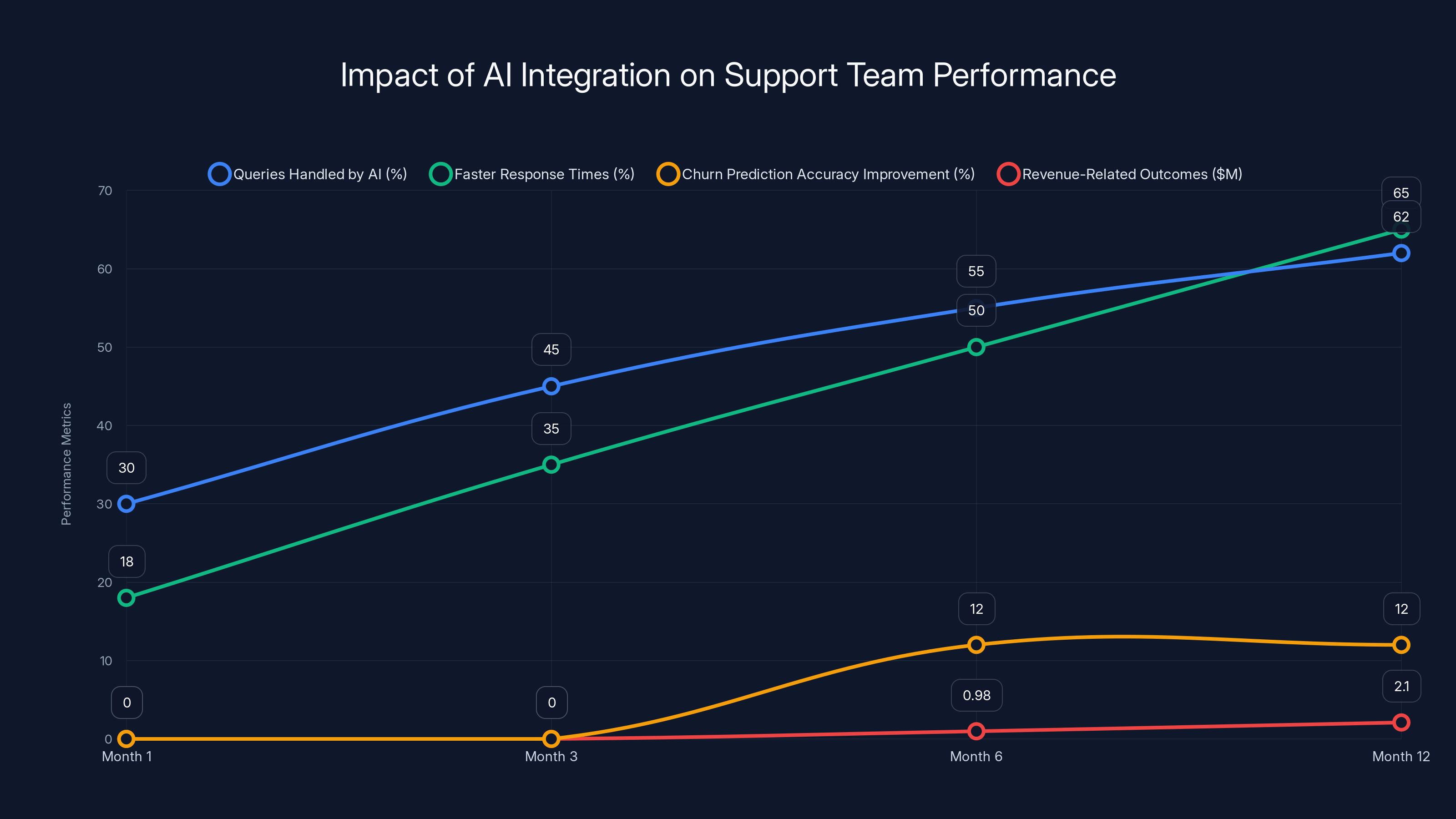

Month one: You deploy an AI chatbot. It's trained on your knowledge base and handles simple queries. Accuracy is moderate. You're excited but cautious. Metrics: 30% of queries handled by AI, 18% faster response times overall.

Month three: You've integrated AI deeper into your support workflow. It's now pre-writing responses for human agents to review and send, reducing draft time by 60%. It's categorizing tickets automatically, eliminating manual routing overhead. Accuracy has improved because it's handling more volume. Metrics: 45% of queries handled by AI, 35% faster response times, 40% reduction in time spent on ticket categorization.

Month six: AI is handling complex escalation decisions. It's analyzing customer history to identify when a refund might prevent churn. It's flagging high-value customers who are struggling so humans can proactively reach out. It's not perfect, but humans trust it enough to work with it. Metrics: 55% of queries handled by AI, 50% faster response times, 12% improvement in churn prediction accuracy, your team has spent 600 hours on proactive outreach (preventing an estimated $980K in annual churn).

Month twelve: AI isn't just handling queries. It's handling customer relationships. It's identifying when a customer is ready to upgrade and flagging them for sales team follow-up. It's debugging technical issues and suggesting workarounds while it escalates to engineering. It's analyzing support conversations for product improvement insights. Your team has shifted from transaction processing to relationship and growth management. Metrics: 62% of queries handled by AI, 65% faster response times, support team has influenced $2.1M in revenue-related outcomes (churn prevention + expansion opportunities + sales influence), product team has shipped three features directly informed by support insights, support cost per total customer has decreased 38% while customer lifetime value has increased 24%.

Notice what happened. The metrics didn't just improve. They fundamentally transformed. You're measuring different things because the function is doing different things.

This transformation compounds because of system improvement. Every month, your AI gets better. Every customer interaction teaches it something. Every mistake it catches and corrects makes it smarter. Your baseline gets better while you're adding new capabilities on top.

You reach a point where the ROI is self-evident and self-reinforcing. The better your AI gets at handling complex work, the more capacity your team has for strategic work. The more strategic work your team does, the more revenue and retention impact you create. The more impact you create, the easier it is to justify investment in making AI even better.

The Shift from Time-Saving to Value-Creation Narratives

When you're trying to convince leadership that AI in support is worth the investment, the narrative matters enormously.

Early-stage teams often lead with time-saving narratives. "AI handles 40% of queries, freeing our team to focus on complex issues." It's a clean story. Everyone understands it.

But time-saving narratives have a problem: time savings are easy to cut if the business gets tight. If you're telling a cost-reduction story, the CFO's default response to budget pressure is "then we need less support budget." You've positioned support as a cost center. That's the wrong frame.

Mature deployments tell a different story. "Our AI handles 65% of all queries, including complex ones. Our team is now spending 40% of their time on activities that directly influence customer lifetime value: proactive churn prevention, expansion opportunity identification, and strategic relationship management. Last year, support-led activities prevented

This is a completely different conversation. You're not defending support. You're claiming it as a growth lever.

The measurement shift reflects this narrative change. Early-stage teams measure: hours saved, cost per ticket, time to resolution.

Mature teams measure: revenue influenced, churn prevented, customer lifetime value impact, expansion opportunities identified, customer satisfaction with strategic interactions.

Both are technically ROI. But one is defensive, one is offensive. One frames AI as helping you do support cheaper. One frames support as helping you grow the business better.

This isn't just semantics. It's strategy. When you move from "AI saves us money" to "AI makes us more revenue," you've fundamentally repositioned support in the organization. You've given yourself the resources and authority to keep investing in making it better.

Over 12 months, AI integration significantly improved support team performance, handling more queries, speeding response times, and influencing revenue outcomes. Estimated data based on narrative.

Designing Support Operations for Deep AI Integration

Not every support team can move from early stage to mature deployment. It requires rethinking workflows, retraining teams, and rebuilding incentive structures. Here's what actually has to change.

Workflow redesign for AI collaboration, not automation displacement.

Most support workflows were designed with the assumption that a human will eventually read and respond to each query. Even with AI, they're structured around that assumption. The AI bounces out if it's not sure. The human takes over.

But if your AI is going to handle 65% of queries accurately without human review, you need different workflows.

Instead of AI doing initial triage and humans doing final work, you need AI doing the work and humans doing strategic oversight. The workflow becomes: AI handles query → AI escalates if necessary or uncertain → Human does high-value judgment work.

Instead of humans being gatekeepers, they become strategic advisors who step in when the situation needs human judgment.

This requires completely rethinking how tickets flow, how work is assigned, and how quality is managed.
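The workflow described here, where the AI handles the query and escalates when it's uncertain or the case needs human judgment, can be sketched as a simple routing rule. This is a minimal illustration under stated assumptions: the `AIResult` fields, the 0.85 confidence threshold, and the escalation flags are all hypothetical, and a real system would tune the threshold against quality reviews rather than hard-code it.

```python
from dataclasses import dataclass
from enum import Enum


class Route(Enum):
    AI_RESOLVES = "ai_resolves"
    HUMAN_JUDGMENT = "human_judgment"


@dataclass
class AIResult:
    """Hypothetical output of an AI agent's attempt at a ticket."""
    ticket_id: str
    confidence: float                     # self-reported, 0.0 to 1.0
    needs_policy_exception: bool = False
    strategic_account: bool = False


# Hypothetical threshold; tune it against QA review outcomes.
CONFIDENCE_THRESHOLD = 0.85


def route(result: AIResult) -> Route:
    """AI handles the query; escalate when uncertain or when judgment is needed."""
    if result.needs_policy_exception or result.strategic_account:
        return Route.HUMAN_JUDGMENT       # judgment work stays with people
    if result.confidence < CONFIDENCE_THRESHOLD:
        return Route.HUMAN_JUDGMENT       # AI is unsure, so it escalates
    return Route.AI_RESOLVES


if __name__ == "__main__":
    tickets = [
        AIResult("T-1", confidence=0.97),
        AIResult("T-2", confidence=0.60),
        AIResult("T-3", confidence=0.95, needs_policy_exception=True),
    ]
    for t in tickets:
        print(t.ticket_id, route(t).value)
```

The ordering is a design choice: judgment flags are checked before confidence, so even a confident answer on a policy exception or a strategic account still reaches a human.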

Team structure around capability, not query volume.

Early-stage operations organize around volume. You have a first-line team for simple stuff, a second-line team for complex stuff, and a specialist team for edge cases. The organization is tiered, and work escalates linearly from one tier to the next.

Mature deployments organize around capability. You have people who excel at reading customer intent and knowing which product feature would solve their problem (for product/expansion guidance). You have people who are exceptionally skilled at handling objections and preventing churn. You have relationship managers who work with high-value accounts. You have technical experts who debug integration issues.

AI handles the volume. Humans apply expertise.

This restructuring is dramatic. You're no longer training everyone to answer basic questions faster. You're developing deep expertise in different areas.

Measurement systems that reward strategic work, not transaction speed.

If you keep measuring your team on average response time and first-contact resolution rate, you're going to keep optimizing for transaction speed, not strategic value.

Mature teams measure differently. They measure things like: churn prevented through proactive outreach, expansion opportunities identified, revenue influenced, customer satisfaction with strategic interactions, product insights fed back to engineering.

These measurements require different tools and different data. But they're the only measurements that make sense once your AI is handling routine transactions.
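A metric like "churn prevented through proactive outreach" only holds up if you compare against what would have happened anyway. One way to estimate it, shown here as a sketch rather than a prescribed method, is to hold out a group of comparable at-risk accounts that support does not contact and compare churn rates between the two groups. The function name and all inputs below are hypothetical.

```python
def churn_prevented_value(
    contacted: int,
    contacted_churned: int,
    holdout: int,
    holdout_churned: int,
    annual_revenue_per_account: float,
) -> float:
    """Estimate annual revenue retained by proactive outreach.

    Compares churn among at-risk accounts that support contacted with a
    holdout group of comparable at-risk accounts that were not contacted.
    """
    if contacted <= 0 or holdout <= 0:
        raise ValueError("both groups need at least one account")
    contacted_rate = contacted_churned / contacted
    holdout_rate = holdout_churned / holdout
    # Only count a reduction; a negative difference means no measurable benefit.
    rate_reduction = max(holdout_rate - contacted_rate, 0.0)
    accounts_retained = rate_reduction * contacted
    return accounts_retained * annual_revenue_per_account


if __name__ == "__main__":
    # Hypothetical cohort: 200 contacted (20 churned), 100 held out (25 churned),
    # $12,000 average annual revenue per account.
    value = churn_prevented_value(200, 20, 100, 25, 12_000)
    print(f"Estimated churn prevented: ${value:,.0f}")  # $360,000
```

The comparison is only fair if the holdout group is chosen the same way as the contacted group, for example at random from the same at-risk list; otherwise the difference may reflect who was selected rather than the outreach itself.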

Quality standards that account for AI collaboration.

Your quality standards need to shift from "human answered the customer's question correctly" to "customer's problem was solved through the best combination of AI and human input."

Sometimes that means the AI handled it entirely. Sometimes it means the AI drafted a response the human refined. Sometimes it means the AI identified the customer's real problem underneath their initial question.

You're no longer grading individual human performance. You're grading team performance, which includes AI.
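Grading the team, AI included, means recording how each problem was actually resolved. A minimal sketch, assuming a hypothetical record format with the three resolution paths described above (AI alone, an AI draft refined by a human, and human only), computes a solved rate for the team as a whole and for each path.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Literal

# The three resolution paths described in the text (labels are hypothetical).
Path = Literal["ai_only", "ai_draft_human_refined", "human_only"]


@dataclass
class Resolution:
    ticket_id: str
    path: Path
    problem_solved: bool   # e.g. confirmed by the customer or a QA review


def solved_rates(records: list[Resolution]) -> dict[str, float]:
    """Share of problems solved, overall ("team") and per resolution path."""
    totals: dict[str, int] = defaultdict(int)
    solved: dict[str, int] = defaultdict(int)
    for r in records:
        for key in ("team", r.path):
            totals[key] += 1
            solved[key] += r.problem_solved   # True counts as 1
    return {key: solved[key] / totals[key] for key in totals}


if __name__ == "__main__":
    sample = [
        Resolution("T-1", "ai_only", True),
        Resolution("T-2", "ai_only", True),
        Resolution("T-3", "ai_draft_human_refined", True),
        Resolution("T-4", "human_only", False),
    ]
    print(solved_rates(sample))
```

The "team" figure is the headline quality measure; the per-path breakdown shows where the AI and human combination is working and where it needs attention.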

Real-World Implementation: What Mature Deployments Look Like

Let's walk through how this actually looks in practice. These are patterns we see across organizations that have reached mature AI deployment stages.

Example one: SaaS company with a complex technical product.

This company had a support team of 32 people handling about 8,000 queries per month before AI deployment. Average response time was 3.2 hours. First-contact resolution was 31%. Cost per resolved ticket was $18.

They deployed AI, but did it deeply. Instead of using AI just for simple queries, they invested in training it on their extensive API documentation, customer implementation guides, and technical problem history. They also deeply integrated it into their support platform so it could access customer account history, usage data, and implementation details.

After twelve months of mature deployment:

- AI handles 64% of queries, including complex technical troubleshooting

- Response time: 42 minutes (from 3.2 hours)

- First-contact resolution: 67% (up from 31%)

- Cost per resolved ticket: $4.20 (from $18)
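The percentage changes behind these before-and-after figures are easy to check. The short sketch below computes them from the numbers reported for this company (3.2 hours is 192 minutes; the $4.20 cost figure also appears in the earlier chart caption for this company). The helper name is illustrative.

```python
def pct_reduction(before: float, after: float) -> float:
    """Relative reduction, in percent, from `before` to `after`."""
    return (before - after) / before * 100


# Reported figures for the technical SaaS example.
RESPONSE_MINUTES = (192, 42)        # 3.2 hours -> 42 minutes
COST_PER_TICKET = (18.00, 4.20)     # dollars per resolved ticket
FCR_PERCENT = (31, 67)              # first-contact resolution rate

if __name__ == "__main__":
    print(f"Response time: {pct_reduction(*RESPONSE_MINUTES):.1f}% shorter")
    print(f"Cost per resolved ticket: {pct_reduction(*COST_PER_TICKET):.1f}% lower")
    print(f"First-contact resolution: +{FCR_PERCENT[1] - FCR_PERCENT[0]} percentage points")
```

Response time falls by about 78%, cost per resolved ticket by about 77%, and first-contact resolution rises by 36 percentage points.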

But here's what matters more. Their support team went from 32 transaction processors to 32 strategic assets.

Ten team members are now focused on proactive technical support: identifying customers who are struggling with implementations and reaching out before they request help. In the last year, they've prevented an estimated $2.8M in churn and accelerated implementations, reducing customer time-to-value by an average of 3.2 weeks.

Eight team members are focused on product feedback and feature requests. They work closely with engineering, translating customer problems into product insights. They've directly informed the roadmap for six major features, three of which are now live and have reduced support load by an estimated 18%.

Five team members work with the sales team on enterprise deals, helping navigate complex implementation scenarios and identifying expansion opportunities. They've influenced $1.4M in net new revenue.

Four members manage strategic relationships with the company's top fifty customers. They're focused on retention, expansion, and strategic partnership.

Five people manage the AI system itself: training, quality assurance, workflow optimization, and escalation management.

Their support cost per total customer has dropped 38%. Their customer lifetime value has increased 24%. Support ROI has moved from

Example two: High-volume consumer SaaS company.

This company had massive support volume: 400,000 queries per month. They were running lean with contracted support, outsourced overflow, and a small core team managing quality.

Deep AI integration fundamentally changed their model. Instead of throwing volume at outsourced support, they invested in AI that could handle the volume accurately.

After twelve months:

- AI handles 71% of queries

- Support ticket volume per customer has dropped 34% (because AI solves problems better)

- Customer satisfaction with AI interactions: 87%

- Support cost per customer: down 52%

But they didn't just close the outsourcing contract. They reinvested the savings.

They built a small team of about fifteen people focused on understanding their customer segments. This team is identifying which customer groups are churning fastest, why, and how the product should change to prevent it. They're working with product to design solutions.

They're identifying high-value customers who are showing early signs of churn and reaching out directly with personalized solutions. They've prevented an estimated $18M in annual churn.

They're working with sales to identify expansion opportunities in their user base. Last year, they identified $2.1M in expansion opportunities that had been sitting dormant in existing accounts.

This team of fifteen is now responsible for an enormous amount of growth and retention value. The support function has gone from a volume game to a strategic game.

Without AI integration, support costs and headcount are projected to increase significantly over five years with a 30% annual growth rate. Estimated data.

The Measurement Evolution: From Cost to Revenue

When you look at the data on how measurement changes across deployment stages, a clear pattern emerges.

At early exploration stage, teams measure:

- Queries handled by AI: 40-50%

- Response time improvement: 25-40%

- Cost per ticket: incremental reduction

- Time freed up: reported but not reinvested

- ROI clarity: 35% of teams can clearly measure it

At mature deployment, teams measure:

- Queries handled by AI: 60-75%

- Response time improvement: 50-70%

- Revenue influenced by support: millions of dollars

- Churn prevented through proactive work: millions of dollars

- Expansion opportunities identified: hundreds of thousands of dollars

- Product improvements informed by support insights: tracked

- Customer lifetime value impact: measurable improvement

- ROI clarity: 70% of teams can clearly measure it

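Notice that the first two items in each list are the same: the share of queries AI handles and response time improvement. Better tech, faster responses. But the mature stage adds five measurements that early-stage teams don't track: revenue influenced, churn prevented, expansion opportunities, product improvements, and customer lifetime value impact.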

These additional measurements exist because the support function is doing additional work. Early stage is optimizing the existing function. Mature stage has expanded what the function does.

This measurement evolution is why mature deployments have so much clearer ROI visibility. They're measuring all the value they're creating, not just the efficiency they've achieved.

Overcoming Integration Barriers: Why Most Teams Stay Early Stage

If mature deployment is so valuable, why don't more teams get there?

Because it's hard. Not technically hard. Organizationally hard.

The organizational barriers to deep integration:

First, most support leaders don't have the authority to reorganize their team structure. They typically report to an executive focused on cost control, such as a CFO, rather than a CMO or VP of Growth focused on revenue influence. The incentive structure points toward cost reduction, not revenue generation. Suggesting that support should spend more time on expansion and strategic activities sounds like cost creep to someone focused on cutting costs.

Second, the team composition makes deep integration difficult. If your support team is optimized for transaction volume, it's full of people who are good at handling volume, not good at strategic relationship management or product thinking. You can't just ask them to switch.

Third, your support infrastructure probably isn't built for deep AI integration. Your knowledge base might be thin. Your customer data might be siloed. Your support platform might not integrate with your CRM or product analytics. Building the infrastructure for deep integration costs money and time.

Fourth, there's the measurement problem we discussed. If you can't clearly measure ROI from strategic work, it's hard to justify the investment and get support for the organizational changes needed.

Fifth, there's risk. Deep integration means letting AI make important decisions with real business impact. If the AI makes a mistake, the cost isn't just a slower response; it can mean lost customers or revenue. That's a big responsibility to place on a system.

These are real barriers. They explain why many teams plateau at early-to-mid stage deployment. The easy wins are achievable. The hard ones require organizational commitment most support leaders can't secure.

Teams that do push through these barriers typically have one of three advantages: a leader with organizational authority to reorganize the team around AI opportunities, a clear business case showing revenue impact (which drives investment), or a crisis that forces rapid transformation.

Building the Business Case for Deep Integration

If you're trying to move your organization from early-stage to mature AI deployment, you need a business case that resonates with leadership.

Here's the structure that works:

Start with current state.

"Our current support operation costs $1.2M annually and delivers 31% first-contact resolution. We're handling 10,000 queries per month with a team of twenty-five people."

Be specific about costs. Fully-loaded salary, software, infrastructure, everything.

Describe what happens if nothing changes.

"If we maintain our current approach, as we grow, support costs will increase linearly. For every 20% growth in customer volume, we'll need to hire 20% more people. Support will remain a cost center. Our support cost per customer will remain stable or increase as hiring costs rise."

Show the extrapolation. If you grow 30% per year, what happens to support costs and headcount over five years?
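As a rough illustration of that extrapolation, here is a minimal sketch using the example figures from the current-state statement ($1.2M annual cost, twenty-five people). It assumes, as the "stay the same" scenario does, that cost and headcount scale one-to-one with customer volume and that volume compounds at 30% per year. The function name `extrapolate` is hypothetical.

```python
import math


def extrapolate(base_cost: float, base_headcount: int, growth: float, years: int):
    """Return (year, cost, headcount) rows, assuming cost and headcount
    scale one-to-one with customer volume (no automation gains)."""
    rows = []
    for year in range(years + 1):
        factor = (1 + growth) ** year  # compounded volume multiplier
        cost = base_cost * factor
        headcount = math.ceil(base_headcount * factor)  # round up: no fractional hires
        rows.append((year, cost, headcount))
    return rows


# Example inputs from the business case: $1.2M, 25 people, 30% annual growth.
for year, cost, heads in extrapolate(1_200_000, 25, 0.30, 5):
    print(f"Year {year}: ${cost:,.0f} annual cost, {heads} people")
```

Under these assumptions, year five lands at roughly 3.7 times today's cost (about $4.46M) and 93 people. Swap in your own baseline and growth rate; the point is to make the linear cost curve visible to leadership.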

Describe what deep integration unlocks.

"With mature AI deployment, our team of twenty-five could handle 60% higher volume while improving resolution quality. But that's not the main value. The freed capacity enables three new capabilities: (1) proactive churn prevention through at-risk customer outreach, (2) expansion opportunity identification and pursuit, (3) strategic relationship management with high-value accounts."

Be specific about activities. What, exactly, will your team do with freed capacity?
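One way to turn "handle 60% higher volume" into a concrete freed-capacity number is sketched below. The interpretation is our assumption, not the source's: per-person throughput rises by 60%, so the current 10,000 queries per month need fewer full-time equivalents (FTEs), and the difference is capacity you can assign to strategic work. The function name `freed_capacity` is hypothetical.

```python
def freed_capacity(team_size: float, monthly_queries: float, throughput_gain: float):
    """Return (queries per person per month after the gain,
    FTEs needed for current volume, FTEs freed for other work).

    Assumes the throughput gain applies evenly across the whole team.
    """
    base_per_person = monthly_queries / team_size
    new_per_person = base_per_person * (1 + throughput_gain)
    ftes_needed = monthly_queries / new_per_person
    return new_per_person, ftes_needed, team_size - ftes_needed


# Example inputs from the business case: 25 people, 10,000 queries/month, +60%.
per_person, needed, freed = freed_capacity(25, 10_000, 0.60)
print(f"{per_person:.0f} queries/person/month; {needed:.1f} FTEs needed; {freed:.1f} FTEs freed")
```

With these inputs, roughly 9.4 FTEs of capacity become available at today's volume. That is the pool you'd assign to churn prevention, expansion, and strategic account work when answering "what will the team do with freed capacity?"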

Quantify the upside.

This is the crucial part. You need to show concrete numbers.

For churn prevention: "Our current annual churn is

For expansion: "Our average customer lifetime value is

For sales support: "Our average deal size is

Total quantified upside: $800K+ in annual revenue influence.

Then add the cost savings: "We'll also realize $180K in annual support cost reduction through improved automation and efficiency."

Total value: $980K annually.

Describe the investment required.

"To build this capability, we need:

- AI infrastructure investment: $80K

- Team restructuring and retraining: $40K

- Workflow redesign: $30K

- First-year ongoing costs: $120K

Total first-year investment: $270K."

Show the ROI math.

"Year one:

"Year two:

These numbers change based on your situation. But the structure is the same.
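For a sense of how the ROI math can work with the example figures above ($800K revenue influence plus $180K cost savings, against $150K of one-time costs and $120K of ongoing costs), here is a minimal sketch. Two assumptions are ours, not the source's: the full annual value is realized in year one, and year two costs only the $120K of ongoing spend. It also treats revenue influence as value one-for-one, which overstates returns if leadership expects margin rather than revenue.

```python
# Example figures from the business case above.
ONE_TIME = {
    "ai_infrastructure": 80_000,
    "restructuring_and_retraining": 40_000,
    "workflow_redesign": 30_000,
}
ONGOING_PER_YEAR = 120_000
ANNUAL_VALUE = 800_000 + 180_000  # revenue influence + support cost savings


def roi(value: float, cost: float) -> float:
    """Simple ROI: net gain divided by cost."""
    return (value - cost) / cost


year_one_cost = sum(ONE_TIME.values()) + ONGOING_PER_YEAR
year_two_cost = ONGOING_PER_YEAR  # assumption: no new one-time spend in year two

print(f"Year one: cost ${year_one_cost:,}, ROI {roi(ANNUAL_VALUE, year_one_cost):.0%}")
print(f"Year two: cost ${year_two_cost:,}, ROI {roi(ANNUAL_VALUE, year_two_cost):.0%}")
```

Under those assumptions, year one returns about 263% on $270K and year two about 717% on $120K. A more conservative version would ramp the value in over the first year.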

The key is moving from "how much do we save" to "what revenue do we generate." That's what makes the business case compelling enough to overcome organizational barriers.

The Future of Support Economics: Where This Trend Leads

We're in the early stages of a complete transformation in how customer support works economically.

Right now, we're seeing the early manifestations. Teams are deploying AI and getting 35-40% of queries handled while improving response time. That's valuable. That's why adoption is accelerating.

But this is just the beginning. The teams that are reaching mature deployment are showing us where this goes.

In three to five years, the mature deployment model becomes the baseline. Support organizations still operating under early-stage paradigms will struggle. They'll be competing with organizations that have already moved past cost per customer as their core metric and are now optimizing for lifetime value per customer.

Further out, support operations become indistinguishable from growth operations. The line between "support" and "customer success" and "expansion" blurs. A single team, augmented heavily by AI, is managing customer relationships across the entire lifecycle: onboarding, adoption, expansion, retention.

AI handles all the transactional elements. Humans handle all the strategic relationship elements. The economic model is based not on cost per interaction but on lifetime value per relationship.

This shift has profound implications.

First, it means support organizations that don't integrate AI deeply will struggle to compete. Cost per ticket matters less than lifetime value per customer. If you're optimizing for the former while competitors optimize for the latter, you lose.

Second, it means support leaders need to develop skills beyond support. You need to understand business metrics. You need to think about revenue, retention, and expansion. You need to be able to make strategic decisions about where your team's capacity creates the most value.

Third, it means the support function becomes more strategically important. Right now, most support leaders report to operations or a VP of Customer Experience. In the future, they'll need to report to executives who care about growth. Because support, done right with deep AI integration, drives growth.

Fourth, it means the skills you need in support change. You still need people who are good with customers. But you increasingly need people who are good at strategic relationship management, product thinking, and business analysis.

The teams that are building this future now are gaining enormous competitive advantage. They're reducing support costs while increasing customer lifetime value. They're making support a revenue-generating function. They're positioning support as core to growth strategy rather than peripheral to cost management.

The teams that aren't building this future will be disrupted by those who are.

Moving From Theoretical to Actual: Implementation Roadmap

If all this resonates and you want to move your organization toward mature AI deployment, here's what the journey actually looks like.

Phase one: Deepen current deployment (months 1-3)

Don't rip and replace. Expand what's already working.

If you have AI handling 40% of simple queries, invest in expanding what it can handle. Improve the training data. Integrate more customer context. Test AI handling moderately complex issues.

Measure: How much can we expand query volume while maintaining quality?

Goal: Increase AI query handling from 40% to 55% while maintaining or improving quality metrics.
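The "expand while maintaining quality" rule can be expressed as a simple gate, sketched below. The metric names, the 5-point step size, and the 1-point tolerance are hypothetical choices for illustration. The logic: raise the AI's share of queries one step toward the 55% target only while satisfaction and resolution hold against the 40% baseline, and step back if they slip.

```python
from dataclasses import dataclass


@dataclass
class QualitySnapshot:
    ai_share: float         # fraction of queries handled by AI
    csat: float             # satisfaction with AI interactions, 0.0-1.0
    resolution_rate: float  # fraction resolved without reopen or escalation


def quality_held(baseline: QualitySnapshot, current: QualitySnapshot,
                 tolerance: float = 0.01) -> bool:
    """True if CSAT and resolution haven't dropped more than `tolerance` below baseline."""
    return (current.csat >= baseline.csat - tolerance
            and current.resolution_rate >= baseline.resolution_rate - tolerance)


def next_ai_share(baseline: QualitySnapshot, current: QualitySnapshot,
                  step: float = 0.05, target: float = 0.55) -> float:
    """Advance one step toward the target while quality holds; otherwise
    roll back one step, never below the baseline share."""
    if quality_held(baseline, current):
        return min(current.ai_share + step, target)
    return max(current.ai_share - step, baseline.ai_share)


baseline = QualitySnapshot(ai_share=0.40, csat=0.85, resolution_rate=0.70)
print(next_ai_share(baseline, QualitySnapshot(0.45, 0.86, 0.71)))  # quality held
print(next_ai_share(baseline, QualitySnapshot(0.50, 0.80, 0.70)))  # CSAT slipped
```

Small steps with an explicit rollback keep each expansion reversible, which matches the "expand gradually, measure constantly" pattern of the roadmap.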

Phase two: Redesign one workflow for AI-human collaboration (months 4-6)

Pick one high-volume ticket type. Redesign the workflow so AI completes the work end to end and humans provide oversight, rather than AI drafting and humans doing the final handling.

Measure impact. What changes? Does quality improve? Do humans trust the AI more? Does productivity improve?

Learn from this experiment before rolling out to other workflows.

Phase three: Restructure one team around strategic work (months 7-9)

Take your highest-performing team and restructure them around strategic activities. Give them ownership of churn prevention, expansion opportunities, or product insights.

Measure: What value can this team generate through strategic work? How does it compare to their previous work handling transactions?

Use this as proof of concept. Show what's possible when people focus on strategic work instead of transaction volume.

Phase four: Build measurement systems for revenue impact (months 10-12)

Design systems to track revenue influenced, churn prevented, expansion opportunities identified, product insights delivered. Make this visible and reportable.

Measure: Can you clearly see the revenue impact of support work? Is it compelling enough to justify broader investment?
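A measurement system for revenue impact can start as a simple ledger. The sketch below is one possible shape, with hypothetical names throughout: each piece of strategic support work is logged with an impact category and an estimated annualized dollar value, then rolled up for reporting. The sample records are illustrative placeholders, not data from this research.

```python
from collections import defaultdict
from dataclasses import dataclass
from enum import Enum


class Impact(Enum):
    CHURN_PREVENTED = "churn_prevented"
    EXPANSION_IDENTIFIED = "expansion_identified"
    REVENUE_INFLUENCED = "revenue_influenced"
    PRODUCT_INSIGHT = "product_insight"  # counted, not valued in dollars


@dataclass(frozen=True)
class ImpactRecord:
    account_id: str
    impact: Impact
    estimated_value: float  # annualized dollars; 0 for product insights
    attributed_to: str      # team that did the work


def summarize(records):
    """Roll records up into {impact: (count, total_estimated_value)}."""
    totals = defaultdict(lambda: [0, 0.0])
    for r in records:
        totals[r.impact][0] += 1
        totals[r.impact][1] += r.estimated_value
    return {k: (count, value) for k, (count, value) in totals.items()}


# Illustrative placeholder records only.
ledger = [
    ImpactRecord("acct-001", Impact.CHURN_PREVENTED, 48_000, "retention-team"),
    ImpactRecord("acct-002", Impact.EXPANSION_IDENTIFIED, 12_500, "retention-team"),
    ImpactRecord("acct-003", Impact.CHURN_PREVENTED, 30_000, "retention-team"),
    ImpactRecord("acct-004", Impact.PRODUCT_INSIGHT, 0, "insights-team"),
]

for impact, (count, value) in summarize(ledger).items():
    print(f"{impact.value}: {count} records, ${value:,.0f}")
```

In practice these records would live in your CRM or data warehouse, and the hard part is agreeing on how "estimated value" is attributed. Settle that with finance early so the reported numbers hold up.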

Phase five: Design and implement full organizational restructuring (months 13-18)

With proof from earlier phases, redesign the entire support function around AI + strategic work.

This is the big change. It requires reorganization, retraining, new tools, new metrics.

Phase six: Optimize and scale (months 19-24)

Tune workflows. Improve AI accuracy. Expand into additional customer or market segments. Refine measurement and reporting.

This roadmap is roughly two years. Some teams move faster, some slower. But the pattern is consistent: expand gradually, measure constantly, use proof points to justify bigger changes.

The teams that try to make all these changes at once usually fail. The teams that evolve gradually, learning at each stage, build to mature deployment successfully.

Common Mistakes That Block Integration

Over the course of researching this topic and talking with dozens of support leaders, we've seen clear patterns in what derails teams trying to deepen AI integration.

Mistake one: Starting with technology, not strategy.

Many support leaders approach AI by asking "What's the best AI tool?" and then trying to fit their organization to the tool.

Better approach: Ask "What's our support strategy? What do we want to accomplish? How would AI enable that?" Then choose technology that fits strategy, not the other way around.

Mistake two: Measuring narrow metrics instead of broad impact.

It's easy to measure response time and ticket volume. It's hard to measure churn prevented or revenue influenced.

But if you only measure the easy metrics, you optimize for the wrong things. You'll eventually hit a ceiling because you're optimizing for efficiency instead of impact.

Invest in measuring broad impact. It's harder upfront, but it drives better decisions.

Mistake three: Not restructuring the team.

You can't achieve mature deployment with an organization designed for transaction processing. You need to reorganize around strategic capabilities.

Most support leaders shy away from this because reorganization is hard and disruptive. But without it, you can't move beyond early-stage deployment.

Mistake four: Treating AI as a replacement for humans instead of an augmentation.

The goal isn't to automate people out of jobs. It's to free people from transactional work so they can do strategic work.

If your team feels threatened by AI, you'll get resistance. If your team sees AI as a tool that makes their jobs better and higher-impact, you'll get adoption.

Communicate this clearly. Make it real by actually reallocating freed capacity toward high-value work.

Mistake five: Not investing in infrastructure.

Deep integration requires good infrastructure. Your knowledge base needs to be comprehensive. Your customer data needs to be accessible. Your support platform needs to integrate with your CRM and product data.

If you skimp on infrastructure, AI implementation will always feel hacky and limited.

Budget for infrastructure. It's worth it.

Mistake six: Setting unrealistic timelines.

Some teams expect to move from early deployment to mature deployment in a few months.

Realistic: two years for a well-executed transition. That's a lot of change: technical, organizational, cultural.

Rushing leads to mistakes, team frustration, and failed implementation.

FAQ

What does "mature AI deployment" actually mean in customer support?

Mature deployment means AI is so deeply integrated into support operations that it handles 60-75% of all customer queries, including complex ones that require context, judgment, and analysis of customer history. More importantly, it means the entire support organization has been restructured so that humans focus on strategic work (churn prevention, expansion opportunities, customer relationship management) rather than transaction processing. At this stage, 87% of teams report measurable improvements in key metrics, compared with 62% across all stages, and 70% have clear visibility into ROI, compared with just 35% at early stages. The transformation is organizational and economic, not just technical.

How does the ROI measurement change between early and mature AI deployments?

Early-stage teams measure narrow metrics: how many tickets AI handles, response time improvements, and cost per ticket reductions. At this stage, only 35% of teams can clearly measure ROI because the metrics don't tell a complete story. Mature teams measure different things entirely: revenue influenced through churn prevention (56% report redirecting freed capacity to revenue activities), expansion opportunities identified, customer lifetime value impact, and product insights delivered. This shift from "cost reduction" to "revenue generation" measurement explains why mature teams achieve 70% ROI clarity. The economics fundamentally change—support goes from a cost center to a growth lever.

What specific activities should support teams focus on once AI handles routine queries?

Once AI reliably handles routine work, your support team should focus on activities that directly impact customer lifetime value: proactive churn prevention (reaching out to at-risk customers before they request cancellation), expansion opportunity identification (finding customers ready to upgrade or add products), strategic relationship management with high-value accounts, collaboration with sales on complex deals, and feeding product insights back to engineering based on customer problems. Research shows mature teams spend about 40% of time on these activities, with 56% reporting these activities are revenue-generating. The key shift is from reactive transaction processing to proactive relationship and growth management.

Why do most support teams plateau at early deployment stages instead of pushing to mature deployment?

Three main barriers stop teams from deepening integration. First, organizational barriers: most support leaders report to cost-focused executives rather than growth-focused ones, making it hard to justify spending capacity on revenue activities. Second, structural barriers: teams optimized for transaction volume lack the skills and experience needed for strategic relationship work. Third, infrastructure barriers: without comprehensive knowledge bases, integrated customer data, and CRM connectivity, deep AI integration remains limited. Teams that overcome these barriers typically have a leader with organizational authority, a compelling business case showing revenue impact, or a crisis that forces rapid change.

How long does it actually take to move from early-stage to mature AI deployment?

Expect a realistic timeline of 18-24 months for a well-executed transition. This breaks into phases: deepening current deployment (3 months), redesigning one workflow for AI-human collaboration (3 months), restructuring one team around strategic work (3 months), building measurement systems (3 months), full organizational restructuring (6 months), and optimization and scaling (6+ months). Trying to rush this timeline usually leads to mistakes, team frustration, and failed implementation. The best-performing organizations move gradually, learning at each stage before making bigger changes. Each phase should produce concrete proof points that justify moving to the next phase.

What's the financial impact of mature AI deployment compared to early deployment?

The difference is dramatic. Early deployment might achieve

How should support organizations restructure their teams to support deep AI integration?

Instead of organizing around query volume (first-line for simple issues, second-line for complex issues), reorganize around capability and strategic function. This means creating teams focused on specific high-value areas: one team dedicated to churn prevention through proactive outreach, one focused on expansion opportunity identification, one managing strategic relationships with top accounts, one collaborating with sales on complex deals, one focused on product insights and feedback loops, and a smaller team managing the AI system itself. This restructuring is a major change, but it's essential for moving beyond early-stage deployment. It requires different hiring criteria, different training approaches, and different performance metrics.

What infrastructure investments are necessary for mature AI deployment?

Four core infrastructure elements are essential. First, comprehensive knowledge base: your AI needs excellent training data in the form of complete, well-organized documentation. Second, integrated customer data: your support platform needs access to customer history, usage data, implementation details, and account value. Third, platform integration: your support platform needs to connect seamlessly with your CRM (to flag expansion opportunities and churn risk), product analytics (to understand usage patterns), and billing system (to identify expansion signals). Fourth, measurement infrastructure: you need systems to track revenue influenced, churn prevented, and expansion opportunities identified. Most teams underestimate these infrastructure costs—expect

How do you convince leadership that support should focus on revenue activities instead of just cost reduction?

The key is building a specific business case with concrete numbers. Start with your current state (support costs, customer metrics). Then quantify the opportunity: "Proactive churn prevention addressing 8-12% of annual churn =

What are the biggest risks of deep AI integration and how do you mitigate them?

The primary risk is that AI makes mistakes on important decisions with real business impact. If your AI incorrectly escalates a churn-at-risk customer or misses an expansion opportunity, the costs can be high. Mitigation: Don't fully automate critical decisions. Use AI to identify and flag opportunities, with humans making final calls on important decisions. Start with lower-risk areas (internal workflows, flagging) and gradually expand to customer-facing decisions as confidence builds. The second risk is team resistance if people fear job displacement. Mitigation: Be transparent that AI is freeing people from transactional work so they can do more strategic, fulfilling work. Make this real by actually creating strategic roles and giving people time to do strategic work. The third risk is that the infrastructure investment doesn't deliver expected returns. Mitigation: Start with a proof-of-concept in one area, measure carefully, and use results to justify broader investment.
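The "AI flags, humans decide" mitigation can be sketched as a routing rule. Everything here is hypothetical: the field names, the $25K account-value threshold, and the 0.9 confidence floor are illustrative choices, not recommendations from the research. The AI only proposes actions; anything customer-facing, high-value, or low-confidence goes to a human, and only low-risk internal actions run automatically.

```python
from dataclasses import dataclass


@dataclass
class Proposal:
    account_id: str
    action: str            # e.g. "churn_outreach", "expansion_offer", "tag_ticket"
    customer_facing: bool
    account_value: float   # annual contract value in dollars
    confidence: float      # model confidence, 0.0-1.0


def route(p: Proposal, value_threshold: float = 25_000,
          min_confidence: float = 0.9) -> str:
    """Return 'auto' for low-risk internal actions, otherwise 'human_review'."""
    if p.customer_facing:
        return "human_review"  # humans make the final call with customers
    if p.account_value >= value_threshold:
        return "human_review"  # high-value accounts always get a human
    if p.confidence < min_confidence:
        return "human_review"  # uncertain flags go to a person
    return "auto"              # low-risk internal workflow, e.g. tagging


print(route(Proposal("acct-001", "tag_ticket", False, 5_000, 0.95)))
print(route(Proposal("acct-002", "churn_outreach", True, 5_000, 0.99)))
```

Starting with tight thresholds and loosening them as accuracy is proven mirrors the advice to begin with internal workflows and flagging before expanding to customer-facing decisions.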

Conclusion: The Real Opportunity in AI-Driven Support

We're at an inflection point in customer support.

For years, the support function operated under a basic economic model: more customers meant more support costs. Success was measured through cost efficiency and volume containment. The best support organizations were the ones that served customers most cheaply.

That model is breaking down.

Teams that have deeply integrated AI aren't just optimizing the old model. They're operating under a completely different model. They're breaking the link between customer volume and support costs. They're turning support into a revenue-generating function. They're measuring success through customer lifetime value impact, not cost per ticket.

The data shows this shift clearly. Among teams at mature deployment stages, 87% report measurable improvements in key metrics, compared to 62% across all stages. More importantly, 70% of mature teams can clearly articulate their ROI, compared to just 35% at early stages. And critically, 56% of mature teams are directing freed capacity toward revenue-generating activities.

This isn't incremental improvement. This is transformation.

But here's the catch: not all organizations will get here. Reaching mature deployment requires more than good technology. It requires organizational restructuring, infrastructure investment, measurement system redesign, and a willingness to fundamentally reimagine what the support function does.

Most support leaders will plateau at early deployment. They'll get the easy efficiency gains and stop. They'll be measuring the same metrics. They'll be operating under the same economic assumptions. They'll feel good about their progress because early-stage wins are real.

But they'll be leaving enormous value on the table.

The organizations that push through will look completely different in three years. They'll be processing the same or higher volume with similar or lower headcount. But their support teams will be driving meaningful revenue impact. Churn will be lower. Expansion will be higher. Customer lifetime value will be significantly improved. Support will be a growth lever, not a cost center.

Your question now is: which organization will yours be?

The gap between early and mature deployment is widening. The longer you wait to transition, the bigger the competitive disadvantage grows. The teams that are building mature deployment now are establishing advantages that will be extremely difficult to overcome later.

If you're ready to move beyond early wins, the roadmap is clear. Expand AI into more complex work. Measure different things. Restructure your organization around strategic capabilities. Build the infrastructure that enables deep integration. Quantify the revenue impact. Use that to secure support for bigger changes.

It's a two-year journey. It's not easy. But the organizations that complete that journey will have broken their support cost curve, transformed their customer retention and expansion metrics, and repositioned support as core to their growth strategy.

That's not just operational improvement. That's competitive transformation. And it starts now.

Key Takeaways

- 87% of teams at mature AI deployment report measurable metric improvements vs 62% across all stages, and 70% achieve clear ROI visibility

- The economics fundamentally shift from cost reduction to revenue generation ($2-3M annually) as integration deepens

- 56% of mature deployments direct freed capacity toward revenue-generating activities like churn prevention and expansion

- Transformation requires 18-24 months and includes workflow redesign, team restructuring, infrastructure investment, and measurement system evolution

- The real opportunity extends far beyond efficiency: turning support into a growth lever that influences customer lifetime value and retention
