Introduction: The Problem With Impossible Scenarios

Imagine this: You're sitting in a Waymo robotaxi on a sunny California afternoon. Everything's normal. Then, without warning, a massive tornado forms across the highway. The vehicle's sensors light up. Its decision-making system kicks in. But here's the thing—the Waymo driver has never actually seen a real tornado before. It can't have. Tornadoes are rare. Testing against one in real-world conditions would be insanely dangerous, ethically impossible, and probably career-ending for everyone involved.

So Waymo solved this the way modern AI companies solve impossible problems: by building a digital world where the impossible becomes routine.

Waymo's new World Model, powered by Google's Genie 3 technology, can generate photorealistic simulated driving scenarios that never happened, never existed, and maybe never should exist in the real world. A flooded suburban street with furniture floating past. A neighborhood completely engulfed in flames. A rogue elephant wandering down a residential road. These aren't special effects from a disaster film. They're test cases for an autonomous vehicle system that needs to handle literally anything.

This is the cutting edge of autonomous vehicle development, and it reveals something important about how we actually build safe AI systems. You can't test safety by waiting for accidents to happen. You have to manufacture danger, study it, and prepare your system for every possible variant before anyone's life depends on it.

Here's what's really happening behind the scenes, how it works, why it matters, and what it means for the future of autonomous vehicles.

TL;DR

- Waymo built a hyper-realistic simulator using Google's Genie 3 AI model that can generate photorealistic driving scenarios from text prompts

- Edge cases are incredibly rare but incredibly dangerous, making simulation essential for AV development without risking real-world harm

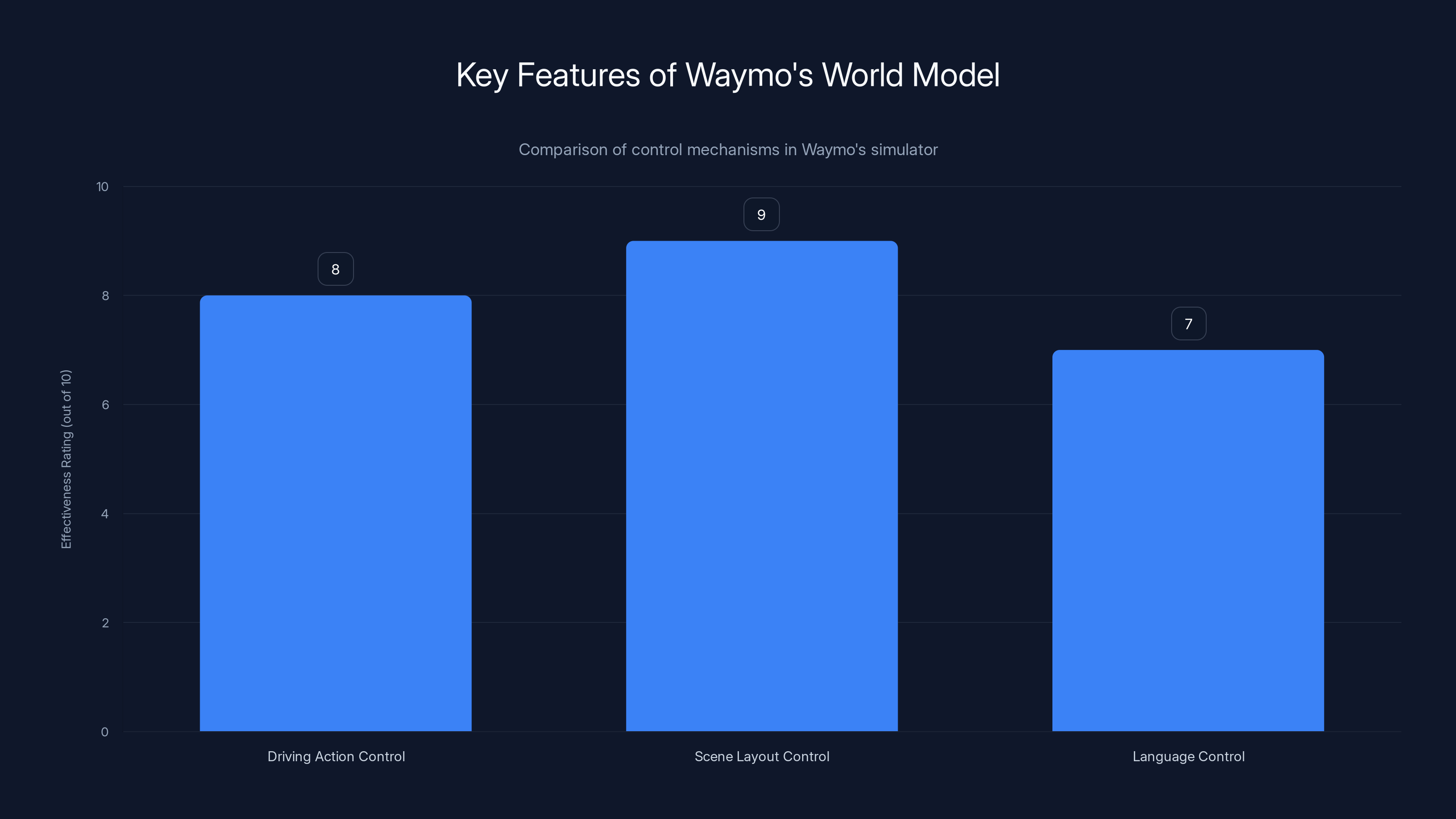

- Genie 3 enables three control mechanisms: driving action control ("what if" scenarios), scene layout control (road structure), and language control (weather, time of day)

- The simulator can process real dashcam footage and transform it into interactive environments for maximum realism

- This approach accelerates safety validation by allowing millions of simulated miles to be tested without physical vehicles or risk to passengers

Driving Action Control, Scene Layout Control, and Language Control are rated for their effectiveness in enhancing simulation flexibility and realism. Estimated data.

Understanding Edge Cases in Autonomous Driving

Let's start with the fundamental problem. Autonomous vehicles operate in one of the most complex, unpredictable environments known to robotics: actual human society on actual roads. But here's what nobody talks about: most of the time, driving is boring. Predictable. A robot can handle the normal stuff pretty well at this point.

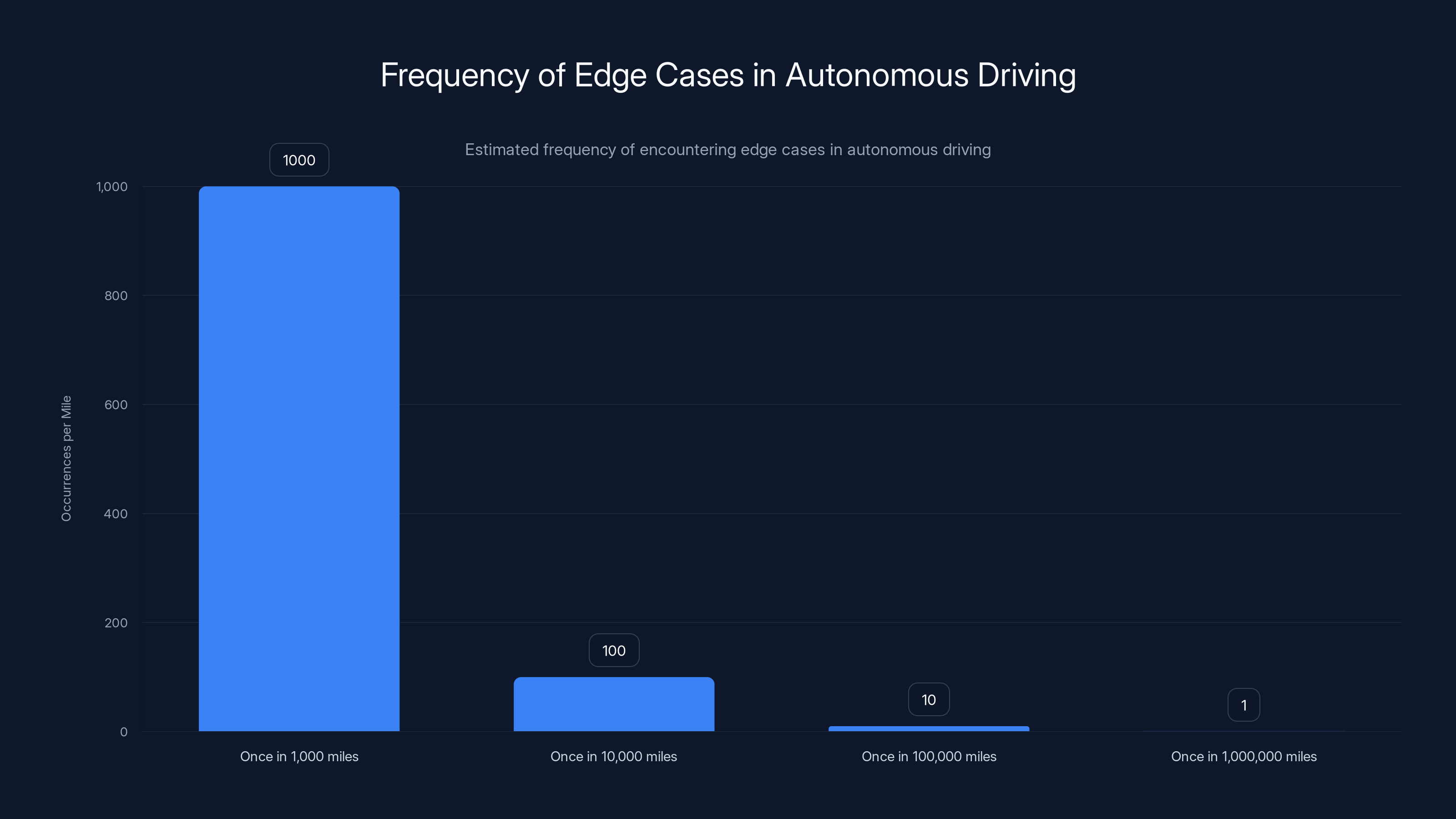

The danger isn't in the everyday scenarios. It's in what the industry calls "edge cases"—those rare, extreme, or unusual situations that happen maybe once in a thousand miles, or once in a million miles, or once in a billion miles. But they do happen. And when they do, your system better know what to do.

Consider some real examples. A school bus suddenly stops and opens its doors while your vehicle is approaching. A traffic signal malfunctions and cycles between red and green rapidly. A pedestrian runs directly in front of your car from between two parked vehicles. These things occur, but rarely enough that an individual driver may meet any one of them only a handful of times in a lifetime, if at all. An autonomous vehicle fleet operating 24/7 will encounter them. Statistically, it has to.

The traditional way to test for edge cases used to be the brute force method: drive billions of miles in the real world, document every weird thing that happens, and update your system. But this approach has obvious problems. It's slow. It's expensive. Most importantly, it's dangerous during the learning phase. You're asking the system to encounter rare situations it hasn't been trained on, in real time, with real passengers or pedestrians at risk.

Simulation solves this problem by flipping the script. Instead of waiting for rare scenarios to occur naturally, you create them synthetically. You run millions of them. You study what goes wrong. You fix it. Then you release your system into the world already prepared for situations it's never actually encountered.

How Genie 3 Changes the Simulation Game

Waymo's previous simulation systems weren't bad, necessarily. But they had limitations. Most autonomous vehicle simulators work by hand-crafting scenarios or using procedural generation—algorithms that follow predefined rules to create variations on themes. You can only create scenarios that match patterns you've explicitly coded.

Genie 3, Google's new world-building AI model, changes this fundamentally. It's a generative model, meaning it can create novel, realistic scenarios from scratch based on text descriptions or image prompts. Tell it "a tornado approaching a highway intersection" and it generates a photorealistic, interactive 3D environment that doesn't rely on pre-existing templates or hard-coded logic.

What makes this different isn't just the realism, although that's important. It's the flexibility. Waymo's product manager described it as enabling "virtually any scene—from regular, day-to-day driving to rare, long-tail scenarios—across multiple sensor modalities." That's not marketing fluff. That's actually a massive capability expansion.

Genie 3 works by understanding the physical rules of the world and applying them to generated content. It doesn't just create pretty pictures. It creates interactive environments where the physics are consistent, where obstacles have proper collision properties, and where sensor simulation works correctly. When a simulated lidar scans the generated tornado scene, it should return readings consistent with how real weather affects real lidar.

This is crucial because if you're going to test a system in simulation, the simulation has to be faithful to reality. A lidar sensor behaving differently in the simulated world versus the real world would teach your autonomous vehicle system to handle situations it will never actually encounter. That's worse than no testing at all.

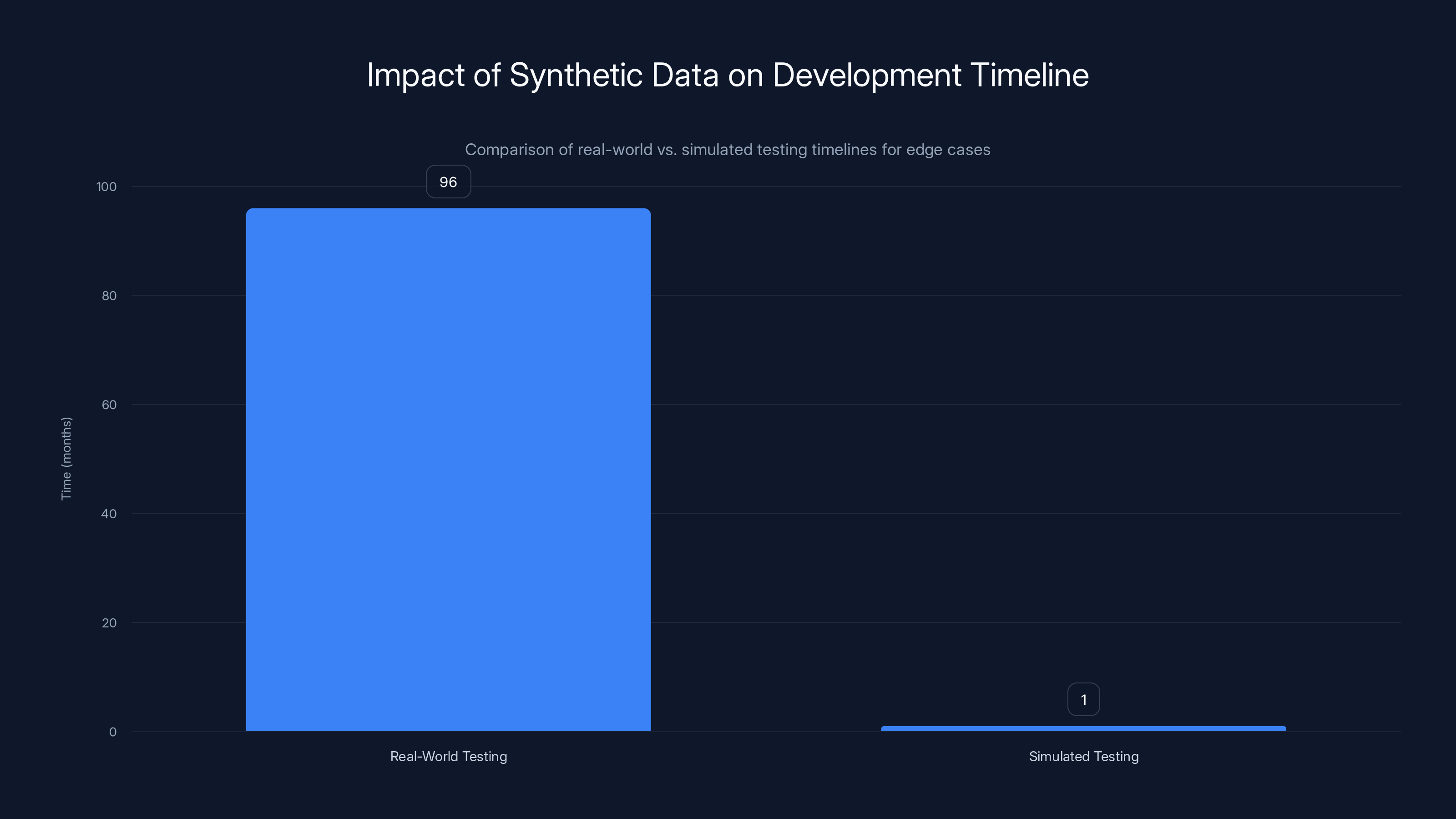

Simulated testing can compress the timeline for testing edge cases from 8 years (96 months) to just 1 month, significantly accelerating development. Estimated data based on typical scenarios.

The Three Control Mechanisms Explained

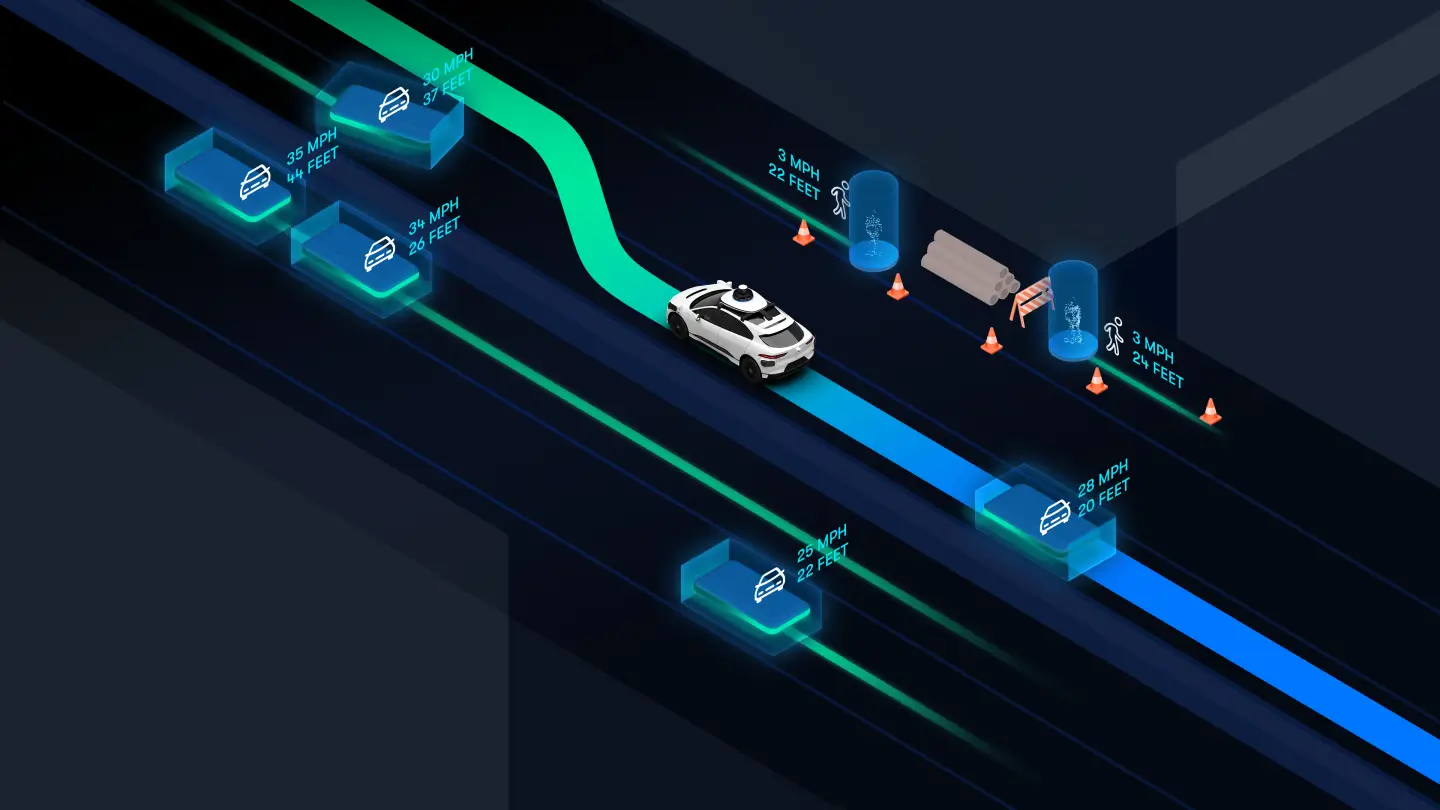

Waymo identified three specific ways to control what Genie 3 generates, and each one addresses a different testing need.

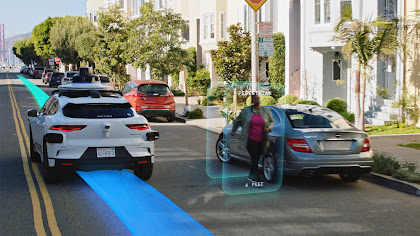

Driving Action Control: Testing "What If" Scenarios

This mechanism lets developers simulate counterfactual scenarios—situations that didn't happen in the original footage, but might have. Let's say Waymo captures dashcam footage of a car changing lanes. Driving action control lets them ask: what if that car changed lanes differently? What if it accelerated instead of slowed down? What if it failed to signal?

This is powerful because it lets you stress-test your decision-making algorithms against variations on real situations. You're not starting from scratch with entirely synthetic scenes. You're starting from real-world footage and then asking "what else could have happened?"

For instance, imagine a dashcam recording where a cyclist is riding at a safe distance from traffic. You can use driving action control to simulate that same cyclist suddenly swerving into traffic. The background, the lighting, the road conditions—all realistic from the original footage. But the behavior is a counterfactual, an alternative action the cyclist didn't actually take. Your system learns to handle that scenario without it ever actually happening.
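To make the counterfactual idea concrete, here is a minimal sketch of the cyclist example. It does not use Genie 3 or any Waymo interface; `Waypoint`, `swerve`, and the sample track are hypothetical names and numbers chosen for illustration. It takes a recorded track and produces a variant in which the cyclist drifts toward the ego lane, while the original recording stays untouched.

```python
from dataclasses import dataclass, replace
from typing import List


@dataclass(frozen=True)
class Waypoint:
    t: float  # seconds since the start of the clip
    x: float  # metres travelled along the road
    y: float  # lateral offset in metres from the ego lane centre


def swerve(track: List[Waypoint], start_t: float, duration: float,
           target_y: float) -> List[Waypoint]:
    """Return a counterfactual copy of `track` where the agent moves linearly
    from its recorded lateral offset to `target_y`, starting at `start_t`."""
    if duration <= 0:
        raise ValueError("duration must be positive")
    out = []
    for wp in track:
        # Fraction of the swerve completed at this timestamp, clamped to [0, 1].
        alpha = min(max((wp.t - start_t) / duration, 0.0), 1.0)
        out.append(replace(wp, y=wp.y + alpha * (target_y - wp.y)))
    return out


# Hypothetical recording: the cyclist holds a 3 m lateral gap for five seconds.
recorded = [Waypoint(t=float(i), x=5.0 * i, y=3.0) for i in range(6)]

# Counterfactual: from t=2 s the cyclist swerves into the ego lane over 2 s.
counterfactual = swerve(recorded, start_t=2.0, duration=2.0, target_y=0.0)
```

Only the behavior changes here. In a real pipeline, the edited track would be one input to the scene generator, and everything else about the clip would come from the original footage.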

Scene Layout Control: Customizing Road Structure and Behavior

This mechanism lets developers modify the structure of the simulated environment and the behavior of other road users. Want to test how the vehicle handles a complex four-way intersection during heavy traffic? Scene layout control lets you specify traffic flow patterns, signal timing, pedestrian behavior, and obstacle placement.

This is where you test the vehicle's ability to navigate different types of roads under different conditions. A quiet residential street looks different from a highway during rush hour, which looks different from a rural intersection, which looks different from a parking lot full of pedestrians. Each of these environments has different challenges, different expectations, and different failure modes.

You can use scene layout control to test "what if" scenarios that involve other road users too. What if the car in front of you suddenly brake-checks you? What if a pedestrian walks into traffic expecting you to swerve? What if a motorcycle weaves between lanes at high speed? All of these are variations on real-world conditions that you can parameterize and test systematically.
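One way to picture "parameterize and test systematically" is as a structured scene description that can be copied with one field changed at a time. The sketch below is an assumption about what such a description could look like, not Waymo's format; `SceneLayout`, `SignalTiming`, and all field names and values are hypothetical.

```python
from dataclasses import dataclass, replace
from typing import Tuple


@dataclass(frozen=True)
class SignalTiming:
    green_s: float
    yellow_s: float
    red_s: float

    @property
    def cycle_s(self) -> float:
        # Length of one full signal cycle in seconds.
        return self.green_s + self.yellow_s + self.red_s


@dataclass(frozen=True)
class SceneLayout:
    road_type: str
    vehicles_per_minute: int
    pedestrians_per_minute: int
    signal: SignalTiming
    obstacles: Tuple[Tuple[float, float], ...] = ()  # (x, y) positions in metres

    def validate(self) -> None:
        if self.vehicles_per_minute < 0 or self.pedestrians_per_minute < 0:
            raise ValueError("traffic rates must be non-negative")
        phases = (self.signal.green_s, self.signal.yellow_s, self.signal.red_s)
        if min(phases) <= 0:
            raise ValueError("signal phases must be positive")


# Hypothetical baseline: a four-way intersection with a 60-second signal cycle.
base = SceneLayout("four_way_intersection", 20, 5, SignalTiming(30.0, 4.0, 26.0))
base.validate()

# Each variant changes exactly one aspect of the baseline.
rush_hour = replace(base, vehicles_per_minute=60)
short_green = replace(base, signal=replace(base.signal, green_s=10.0))
stalled_car = replace(base, obstacles=((12.0, 0.0),))
```

Keeping the layout immutable and deriving variants from a baseline makes it easy to attribute a failure to the one parameter that changed.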

Language Control: The Most Flexible Tool

Waymo describes language control as their "most flexible tool," and it's worth understanding why. This mechanism lets developers specify conditions using natural language: "foggy morning in San Francisco," "heavy rain and nighttime," "snow-covered roads," "bright sunlight with high glare."

The significance here is that these conditions fundamentally change how sensors work. A camera struggles in heavy glare. Lidar can be fooled by snow. Radar behaves differently in rain. By specifying weather and lighting conditions in language, Waymo can ensure that their sensor fusion algorithms—the systems that combine data from multiple sensors—are tested under realistic adversarial conditions.

More broadly, language control means developers can iterate quickly. Instead of spending weeks building a specific scenario in a simulation tool, they can write a sentence: "autonomous vehicle approaching a crowded outdoor market during sunset with heavy foot traffic." Genie 3 generates it. They test it. They iterate. They don't like something? They modify the language prompt and regenerate.

This dramatically accelerates the design iteration cycle. Instead of testing sequential scenario variations one at a time, you can batch-generate dozens or hundreds of variations and test them all in parallel.
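The batch-and-parallel idea can be sketched in a few lines. This is a rough illustration under stated assumptions: the prompt fragments are made up, and `run_scenario` is a placeholder that only echoes its input, standing in for whatever would actually call the scene generator and the driving stack.

```python
from concurrent.futures import ThreadPoolExecutor
from itertools import product
from typing import Dict, List

# Hypothetical prompt fragments, combined into natural-language variations.
BASE = "autonomous vehicle approaching a crowded outdoor market"
WEATHER = ["heavy rain", "dense fog", "snow-covered roads",
           "bright sunlight with high glare"]
TIME_OF_DAY = ["at dawn", "at midday", "at night"]


def build_prompts(base: str, weather: List[str], times: List[str]) -> List[str]:
    """Combine every weather condition with every time of day."""
    return [f"{base}, {w}, {t}" for w, t in product(weather, times)]


def run_scenario(prompt: str) -> Dict[str, str]:
    # Placeholder: a real pipeline would generate the scene and run the
    # driving stack here. This stub does no simulation at all.
    return {"prompt": prompt, "status": "queued"}


prompts = build_prompts(BASE, WEATHER, TIME_OF_DAY)

# Submit all variations at once; map() returns results in input order.
with ThreadPoolExecutor(max_workers=4) as pool:
    results = list(pool.map(run_scenario, prompts))
```

Four weather conditions and three times of day already give twelve scenarios from one base sentence, which is where the speedup over hand-building each scene comes from.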

From Dashcam Footage to Interactive Simulation

One of the most sophisticated capabilities Waymo mentioned is the ability to take real-world dashcam footage and transform it into an interactive, simulated environment. This deserves special attention because it's genuinely non-trivial from a technical perspective.

Here's what's happening: A Waymo vehicle has been driving around for months or years, recording everything its cameras see. You have thousands of hours of footage. But footage is just video—a 2D representation of a 3D world. To create a simulation, you need a 3D model. You need to know not just what things look like, but where they are in 3D space, what their physical properties are, and how they interact with sensor systems.

Genie 3 can infer this 3D structure from 2D footage. It understands the scene well enough to reconstruct it as an interactive environment. This is valuable for the obvious reason: it's the most realistic source material possible. These aren't imagined scenarios. They're real situations that actually happened, reconstructed into a simulation space where you can now vary conditions and other agents' behaviors.

Waymo mentions that this approach delivers "the highest degree of realism and factuality" in virtual testing. That phrasing matters. Realism is about how the world looks. Factuality is about whether the scenario actually happened or is representative of real-world conditions. Both matter for safety validation.

Processing and Efficiency: Simulation at Scale

Here's another detail worth understanding: Waymo says their system can create "longer simulated scenes, such as ones that run at 4X speed playback, without sacrificing image quality or computer processing." This sounds technical, but the implications are huge.

In autonomous vehicle testing, you want to test millions of miles. Literally millions. Running full-speed simulation takes computational resources. A single dashcam video stream, uncompressed, is enormous. A full autonomous driving stack processing multiple sensor streams in real time is computationally expensive.

By allowing 4X speed playback without quality degradation, Waymo can test 4 miles of simulated driving in the time it would take to test 1 mile at real-time speeds. Over a testing campaign with millions of miles, this is the difference between weeks of computation and months. It's a practical engineering constraint that matters a lot.

The fact that they can do this without sacrificing image quality is the technical achievement. Most approaches would get faster by simplifying the visual fidelity, reducing sensor simulation accuracy, or cutting corners in other ways. If you degrade realism to get speed, you're not actually testing your system against conditions it will face in reality.
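The arithmetic behind the 4X claim is simple enough to write down. In the sketch below, the average speed (25 mph) and the number of parallel simulation workers (100) are invented for illustration; only the 4X playback factor comes from the text above.

```python
def wall_clock_hours(sim_miles: float, avg_speed_mph: float,
                     playback_speedup: float, parallel_workers: int) -> float:
    """Hours of wall-clock time needed to cover `sim_miles` of simulated driving."""
    if min(sim_miles, avg_speed_mph, playback_speedup, parallel_workers) <= 0:
        raise ValueError("all inputs must be positive")
    simulated_hours = sim_miles / avg_speed_mph  # driving time inside the sim
    return simulated_hours / (playback_speedup * parallel_workers)


# Assumed campaign: one million simulated miles at 25 mph across 100 workers.
realtime = wall_clock_hours(1_000_000, 25.0, playback_speedup=1.0, parallel_workers=100)
fast = wall_clock_hours(1_000_000, 25.0, playback_speedup=4.0, parallel_workers=100)

print(f"real-time playback: {realtime:.0f} h, 4X playback: {fast:.0f} h")
```

Under these assumed numbers the campaign drops from 400 hours to 100 hours. The ratio is always 4 regardless of the other inputs, as long as fidelity is held constant.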

Edge cases in autonomous driving occur at varying frequencies, with some happening as rarely as once in a million miles. Estimated data highlights the rarity and challenge of preparing for these scenarios.

The Broader Context: Why Waymo Keeps Leaning on Google's AI

Waymo's World Model is just the latest example of Waymo using Google's vast AI capabilities to improve autonomous driving. This is actually a strategic choice worth examining, because it suggests something important about how modern AV development works.

Waymo previously built EMMA (End-to-End Multimodal Model for Autonomous Driving) using Google's Gemini large language model. They're reportedly working on a Gemini-based in-car voice assistant. And DeepMind, Google's AI research lab, has provided solutions to help reduce "false positives" in sensor data—situations where sensors misidentify something, creating phantom obstacles that don't exist.

This pattern shows that modern autonomous vehicle development isn't really about isolated self-driving technology anymore. It's about integrating cutting-edge AI across multiple systems: perception (what you see), planning (what you should do), control (how you execute), voice interfaces, and now, simulation. Waymo doesn't build all of this from scratch. They leverage Google's research and infrastructure.

Is this a strength or a weakness? It's complicated. Strength: Waymo gets access to state-of-the-art AI research faster than competitors. They benefit from massive compute resources and a team of world-class researchers. Weakness: Waymo's autonomous vehicle system is deeply integrated with Google's AI ecosystem. If something changes at Google, it affects Waymo. If Google's AI research goes in a different direction, Waymo has to adapt.

For now, though, it's clearly a strength. Google's progress in AI directly translates to better simulation, better perception, better decision-making. Waymo moves faster because they're not reinventing the wheel in AI research.

Testing the Impossible: Real Examples of Simulated Edge Cases

Waymo provided specific examples of scenarios they can now test, and they're worth taking seriously because they illustrate the breadth of what's possible.

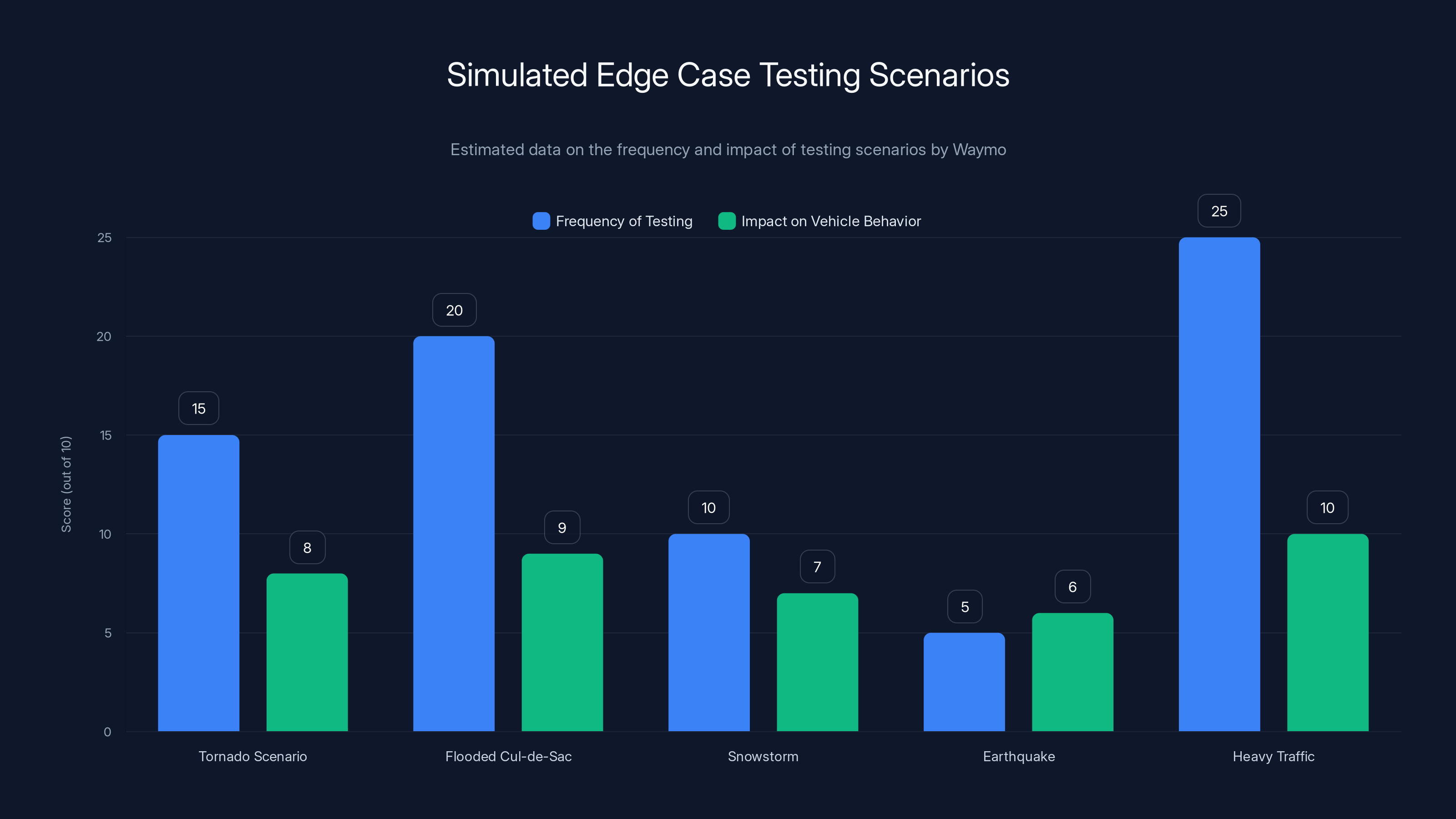

The Tornado Scenario

A tornado approaching a highway is genuinely rare. Most drivers go their entire lives without encountering one while driving. But in extreme weather conditions, it's possible. More importantly, autonomous vehicles operating continuously across the US—a region that experiences significant tornado activity—will eventually encounter something like this.

What should the vehicle do when a tornado is detected? The answer isn't obvious. Should it pull over? Should it accelerate away? Should it identify shelter? Can it even detect a tornado with its sensors, or does it need some other indicator? These are questions that deserve answers before a vehicle is operating with passengers in tornado-prone areas.

Waymo's system can now test how the vehicle responds to multiple tornado variations: different distances, different wind speeds (inferred from sensor behavior), different road conditions. They can test whether the vehicle maintains control, whether it makes reasonable navigation decisions, whether it prioritizes passenger safety appropriately.

The Flooded Cul-de-Sac

Flooding with floating furniture is absurd, but it's not fictional. Flooding happens. Flooding with floating debris happens. Furniture and other objects floating downstream is a real phenomenon during serious floods.

Waymo can simulate how their system responds to flooded roads, obscured lane markers, and unpredictable floating obstacles. How does the lidar sensor behave when bouncing off water? Can the vehicle identify that the road is impassable? Does it maintain control on slippery surfaces? What happens if something floating suddenly enters the vehicle's path?

These aren't questions with obvious answers, and they matter more than you'd expect, because flooding is a common weather event in many regions.

The Neighborhood Engulfed in Flames

This one seems extreme, but it's actually a documented scenario. During major wildfires, flames can cross roads. Smoke can be so thick that visibility is nearly zero. Heat can affect vehicle systems. A vehicle might need to navigate through or around an active fire.

It has happened in California, and it could happen again anywhere with serious wildfire risk. If you're operating an autonomous vehicle fleet in regions with that risk, you need to handle it.

The Rogue Elephant

This one is admittedly unusual, but it's not fictional either. Elephants do sometimes interact with human infrastructure, particularly in Africa and Southeast Asia. If Waymo ever operates in those regions—and they've expressed international ambitions—they need to handle large animals appearing on roads.

Even in North America, the principle applies: deer, moose, bears, and other large animals frequently appear on roads, especially in rural areas. Waymo can test how their system responds to large obstacles that appear suddenly. This is important because hitting a large animal at highway speed can damage the vehicle, injure the animal, and potentially harm passengers.

The Sensor Reality: Why Simulation Needs to Match Physical Sensors

Here's something crucial that's easy to overlook: when Waymo tests in their simulated environment, the vehicle isn't just processing images or video. It's processing sensor data the way it would in the real world. The lidar generates point clouds. The cameras capture RGB images. The radar returns distance and velocity measurements. The IMU (inertial measurement unit) provides acceleration data.

This is important because the vehicle's decision-making system is trained on real sensor data. It needs to process simulated sensor data the same way. If you simulate a tornado, you have to simulate how that tornado affects lidar returns. Lidar uses infrared lasers. Precipitation and water droplets scatter infrared light differently than visible light. So a simulated tornado doesn't just look like a tornado in the camera feed—it has to scatter lidar light realistically.

The same applies to all sensors. Radar behaves differently in heavy rain. Cameras struggle in bright glare. Ultrasonic sensors can be fooled by certain surfaces. An autonomous vehicle system uses all of these sensors together, cross-checking and validating each other's data. If one sensor is simulated accurately but another isn't, the system learns false correlations.

This is why Waymo emphasizes that their simulation works "across multiple sensor modalities." They're not just generating pretty pictures. They're generating physically consistent sensor behavior.
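As a small illustration of what "physically consistent" can mean for one sensor, the sketch below applies the standard Beer-Lambert attenuation law to a lidar pulse that travels out to a target and back. This is a textbook simplification, not a description of how Waymo or Genie 3 models lidar, and the extinction coefficients are illustrative placeholders rather than measured values.

```python
import math


def two_way_transmission(range_m: float, extinction_per_m: float) -> float:
    """Fraction of lidar energy that survives the round trip to a target at
    `range_m`, using Beer-Lambert attenuation over the out-and-back path."""
    if range_m < 0 or extinction_per_m < 0:
        raise ValueError("range and extinction must be non-negative")
    return math.exp(-2.0 * extinction_per_m * range_m)


def max_range_m(extinction_per_m: float, min_transmission: float) -> float:
    """Range at which the round-trip transmission falls to `min_transmission`.
    Solves exp(-2 * a * R) = T for R."""
    if extinction_per_m <= 0 or not 0 < min_transmission <= 1:
        raise ValueError("need extinction > 0 and 0 < min_transmission <= 1")
    return -math.log(min_transmission) / (2.0 * extinction_per_m)


# Illustrative (not measured) extinction coefficients, in 1/m.
CLEAR_AIR = 0.001
HEAVY_PRECIPITATION = 0.02

for label, alpha in [("clear", CLEAR_AIR), ("heavy precipitation", HEAVY_PRECIPITATION)]:
    print(f"{label}: 10% of the signal remains at {max_range_m(alpha, 0.1):.0f} m")
```

The point of the sketch is the shape of the relationship: a camera image of rain and a lidar return through rain have to degrade together, or the fusion logic is tested against a world that cannot exist.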

Waymo frequently tests various edge cases, with heavy traffic and flooded cul-de-sacs being the most tested scenarios. The impact on vehicle behavior is highest in heavy traffic and flooded conditions. (Estimated data)

Accelerating Development With Synthetic Data

The practical impact of this capability is substantial. Waymo can now generate millions of edge case scenarios without waiting for them to occur naturally. This means they can:

Compress the development timeline. Instead of driving billions of miles to encounter rare scenarios, they generate them. Testing that might take years of real-world operation can happen in weeks of simulated testing.

Test more comprehensively. They can test not just the edge cases they can think of, but systematic variations on edge cases. How does the system respond to a tornado at different distances? Different wind speeds? Different road types? Different vehicle speeds? Each variation is a different scenario to learn from.

Iterate faster on fixes. When they identify a problem in the simulated environment, they can fix the system, regenerate the scenario, and test again. The iteration cycle is hours or days, not months.

Reduce safety risks during deployment. Because the system has been tested against so many synthetic edge cases, it's less likely to encounter a real-world situation it hasn't been prepared for. This reduces the chance of failure when the vehicle is operating with passengers or near pedestrians.

The math here is worth considering. Let's say Waymo's fleet does 1 million real-world miles per month. In a year, that's 12 million miles. If a particular edge case occurs once per 100 million miles of driving, it would take roughly 8 years to encounter it naturally. With simulation, they can test that scenario hundreds of times in a month. This acceleration is the entire value proposition of simulation as a tool.
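The numbers in that paragraph can be checked directly. The sketch below reproduces the "roughly 8 years" figure and adds one step: the chance of seeing the event at least once in a given year. The second step assumes events arrive independently at a constant rate (a Poisson model), which is a simplification.

```python
import math


def years_to_expect_one(miles_per_event: float, fleet_miles_per_month: float) -> float:
    """Years of fleet driving needed to accumulate one event's worth of miles."""
    return miles_per_event / (fleet_miles_per_month * 12)


def prob_at_least_one(miles_driven: float, miles_per_event: float) -> float:
    """Probability of at least one event in `miles_driven`, assuming events
    occur independently at a constant rate (Poisson model)."""
    return 1.0 - math.exp(-miles_driven / miles_per_event)


MILES_PER_EVENT = 100_000_000     # one occurrence per 100 million miles
FLEET_MILES_PER_MONTH = 1_000_000

years = years_to_expect_one(MILES_PER_EVENT, FLEET_MILES_PER_MONTH)
p_year = prob_at_least_one(FLEET_MILES_PER_MONTH * 12, MILES_PER_EVENT)

print(f"expected wait: {years:.1f} years")
print(f"chance of seeing it in any one year: {p_year:.1%}")
```

The expected wait comes out at about 8.3 years, and under the Poisson assumption the fleet has only about an 11% chance of meeting the event in any single year of real-world driving.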

Integration With Existing Waymo Systems

Waymo's World Model doesn't exist in isolation. It integrates with their existing decision-making and perception systems. Here's how the pieces fit together:

Perception: Lidar, camera, radar, and other sensors generate data. In simulation, this data is generated synthetically based on the simulated environment.

Processing: The sensor data is processed by neural networks and decision-making algorithms. In real deployment, these are Waymo's trained models (EMMA is one published research example of such a model). In simulation, the exact same models process the synthetic sensor data.

Planning: The system decides what to do (accelerate, brake, turn, stop). In simulation, there are no real consequences, so any decision can be observed and studied.

Logging: The entire interaction is logged. Over millions of scenarios, patterns emerge about which decisions lead to good outcomes and which lead to failures.

Iteration: The models are retrained on this logged data. The system learns from its mistakes in simulation before ever encountering those situations in reality.

This closed-loop system is how simulation actually drives safety improvements. It's not just about testing the system as-is. It's about using simulation to train the system to be better.

Comparing This to Competitor Approaches

Waymo isn't the only autonomous vehicle company using simulation. Tesla, Cruise, and other Waymo competitors have all used simulators to some degree. But Waymo's approach is noteworthy for its sophistication.

Most simulators are hand-crafted or procedurally generated. You write code or create 3D models that describe specific scenarios. Genie 3-based simulation is generative. You describe scenarios in natural language or images, and the AI generates them.

This is faster and more flexible than traditional approaches. It also enables a different kind of testing: you can generate a scenario, test the vehicle, find a failure mode, slightly modify the scenario using natural language, and test again. This rapid iteration loop is powerful.

Competitors using more traditional simulation approaches are probably not at this level of flexibility and speed. Waymo's choice to use Genie 3 gives them a practical advantage in how quickly they can generate and test edge cases.

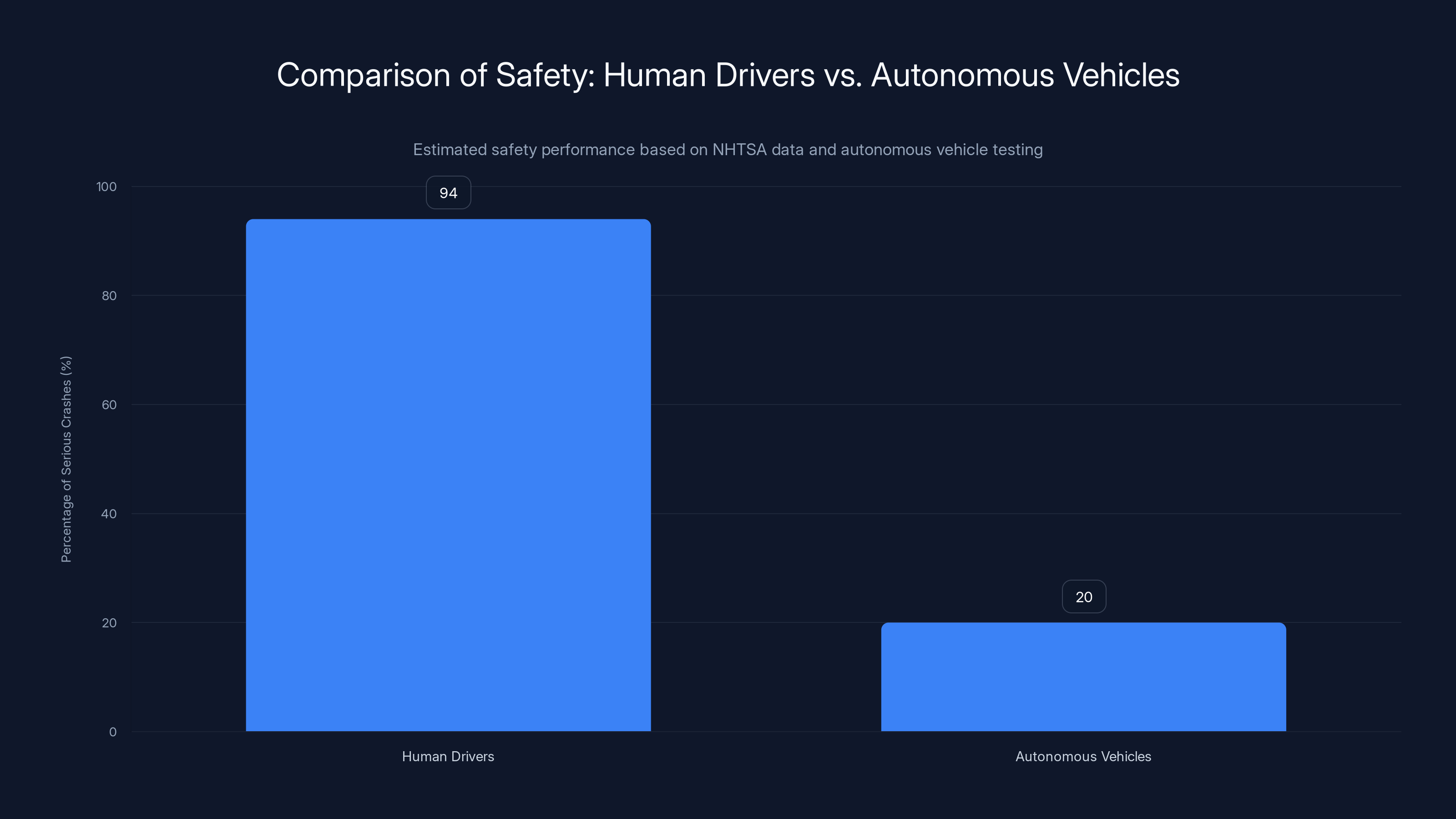

Human drivers are responsible for about 94% of serious crashes due to critical errors. Autonomous vehicles aim to significantly reduce this percentage, targeting a much lower incidence rate. Estimated data based on safety projections.

The Future: Where This Technology Goes

This is early technology for autonomous driving simulation. Genie 3 itself was only recently released. We should expect the capabilities to expand significantly.

More sensor modalities: Simulating how different sensors behave is complex. As the technology matures, simulating more sensor types (thermal cameras, additional radar configurations, event cameras) will become routine.

Longer, more complex scenarios: Current simulations focus on discrete interactions. Future simulations might generate long, complex scenarios with multiple interacting agents and evolving situations.

Closed-loop feedback: Real-time simulation where the system's decisions affect the environment in realistic ways. The vehicle brakes; the road conditions change; other vehicles react; the simulation updates accordingly.

Cross-domain learning: Testing in simulation, deploying in reality, learning from real-world failures, incorporating those into simulation. A continuous loop where reality and simulation inform each other.

International scenarios: Waymo has international ambitions. Future simulation might handle right-hand traffic, left-hand traffic, different road conventions, different pedestrian behaviors, and different vehicle types.

The Safety Argument: Why This Matters More Than You Think

All of this boils down to one crucial point: autonomous vehicles need to be safer than human drivers, and they need to be demonstrably, provably safer before they operate with passengers.

You can't prove that by statistics alone. You can't say "our vehicle has driven 10 million miles without serious incidents, so it's safe." First, 10 million miles is a small fraction of the miles an autonomous vehicle fleet will drive. Second, absence of evidence of a problem isn't evidence of absence of the problem.
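A quick calculation shows how little an incident-free mileage figure establishes on its own. The sketch below uses the standard zero-event bound (often called the "rule of three"): if no events are observed in n miles, the 95% upper confidence bound on the event rate is about 3/n. It assumes incidents are independent and occur at a constant rate, which is a simplification.

```python
import math


def upper_bound_rate(clean_miles: float, confidence: float = 0.95) -> float:
    """Upper confidence bound on events per mile after `clean_miles` with zero
    events. Solves exp(-rate * miles) = 1 - confidence for the rate."""
    if clean_miles <= 0 or not 0 < confidence < 1:
        raise ValueError("need clean_miles > 0 and 0 < confidence < 1")
    return -math.log(1.0 - confidence) / clean_miles


bound = upper_bound_rate(10_000_000)   # 10 million incident-free miles
miles_per_event = 1.0 / bound

print(f"rate could still be as high as one event per {miles_per_event:,.0f} miles")
```

Ten million clean miles are statistically consistent with a true rate as high as roughly one serious incident every 3.3 million miles. Demonstrating a much lower rate by mileage alone would take far more driving.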

What you need is evidence that your system can handle the things that go wrong. Simulation provides that evidence. You can show that your system has been tested against a diverse set of edge cases, and in each case, it responded safely.

Waymo's ability to generate arbitrary edge cases and test against them is a way of building confidence that the system is robust. Not perfect. Robustness isn't perfection. It's the ability to handle unexpected situations gracefully.

Simulation can't prove safety absolutely. A vehicle can pass a million simulated edge cases and still fail in an unexpected real-world situation. But simulation can significantly improve the odds of safe deployment by ensuring the system has been prepared for diverse scenarios.

This is the meta-argument for why Waymo's investment in advanced simulation is strategic. It's not just about testing faster. It's about being able to demonstrate that they've tested thoroughly, systematically, and against scenarios they specifically designed to be challenging.

Real-World Deployment: The Next Challenge

Waymo is already operating robotaxi services in multiple cities, including San Francisco, Los Angeles, and Phoenix. These are real operations with real passengers. The simulation work isn't about hypothetical future deployment. It's about improving the safety of existing deployments.

Each time Waymo encounters an unusual situation in the real world, they can potentially recreate it in simulation, test variations on it, and improve their system. Each time their simulation exposes a weakness in a scenario the fleet hadn't previously encountered, they can fix it, deploy the improved system to real vehicles, monitor the performance, and incorporate what they learn.

This feedback loop—real world to simulation to improved system—is continuous. Simulation is a tool for ongoing improvement, not just pre-deployment validation.

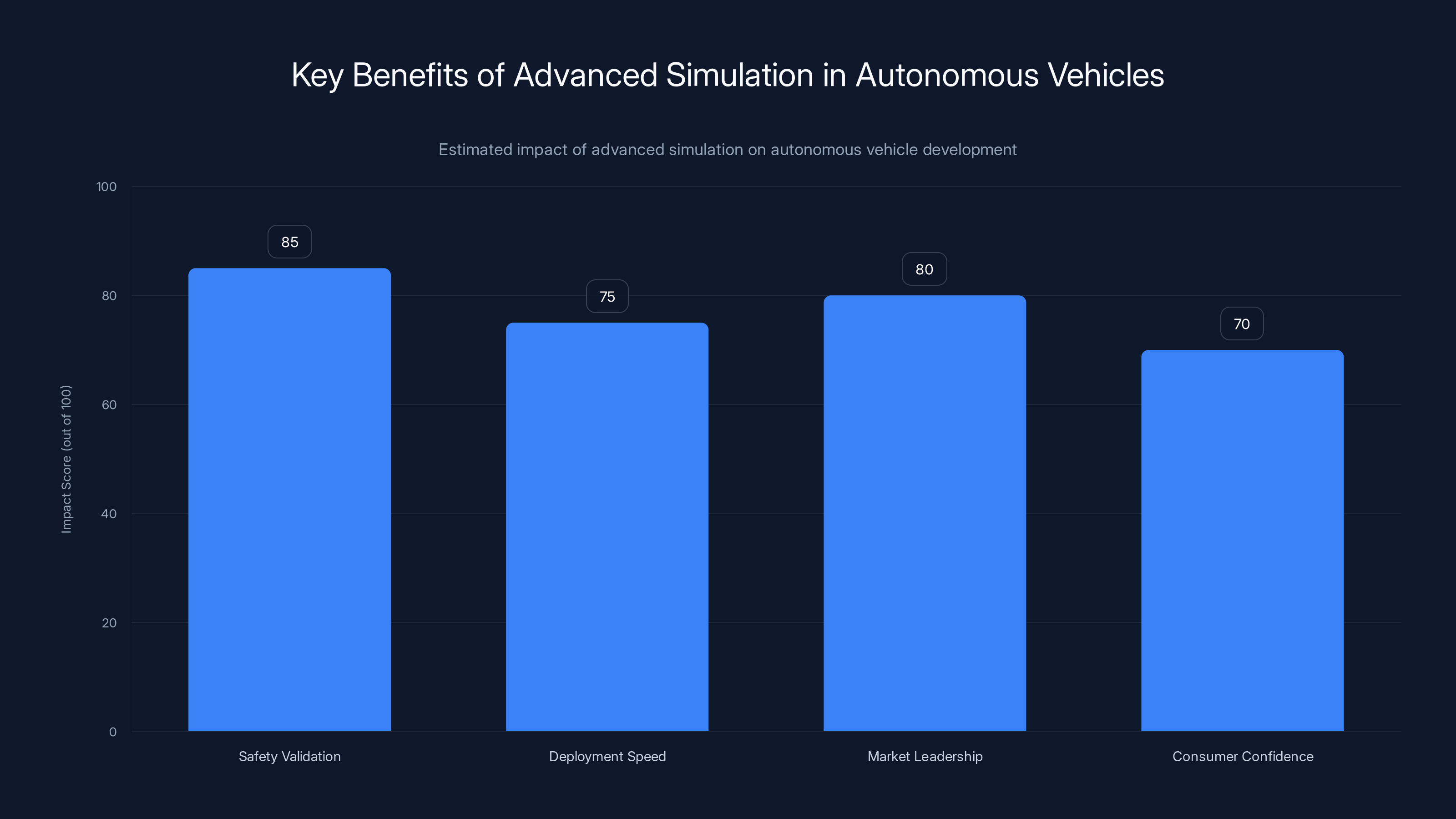

Advanced simulation technologies like Genie 3 significantly enhance safety validation, accelerate deployment speed, and bolster market leadership and consumer confidence. (Estimated data)

Technical Challenges and Open Questions

Despite the sophistication of Genie 3-based simulation, there are still challenges and open questions.

The Sim-to-Real Gap

Simulation can never be perfectly faithful to reality. There will always be aspects of the real world that the simulation doesn't capture perfectly. How confident can you be that a system trained in simulation will behave the same way in reality?

This is called the sim-to-real gap, and it's a known problem in robotics and autonomous systems. Waymo addresses this by using real dashcam footage as a foundation, by ensuring sensor simulation is physically consistent, and by continuous real-world validation. But the gap exists.

Edge Case Exhaustion

How many edge cases are there? You can't test all of them. At some point, you've tested enough that additional scenarios provide diminishing returns. Figuring out where that point is requires sophisticated analysis and judgment.

Unexpected Unknowns

The hardest scenarios to test are the ones you don't know to test. A novel situation that your simulation never imagined. These are rare, but they happen. The best mitigation is diverse, comprehensive simulation across different types of scenarios, different environments, and different conditions.

Practical Implementation: How This Actually Works

Let's ground this in actual practice. Here's roughly how Waymo's simulation workflow probably works:

Data collection: Waymo vehicles record dashcam footage and all sensor data continuously.

Scenario identification: Engineers or automated systems identify interesting scenarios from the collected data. Scenarios that are unusual, challenging, or representative of important categories.

Simulation generation: Using Genie 3, they generate variations on these scenarios. A dashcam recording becomes multiple interactive simulations with different vehicle behaviors, weather conditions, or road configurations.

Vehicle testing: They run their autonomous driving system (Waymo Driver) in these simulations, collecting data about how the system behaves.

Analysis: Engineers and machine learning systems analyze the behavior, identifying failures, edge cases where the system performed unexpectedly, or situations where the system made questionable decisions.

Improvement: Based on the analysis, they improve the system. Perhaps by retraining models on simulated data, perhaps by adjusting decision-making logic, perhaps by updating perception systems.

Real-world validation: They deploy the improved system to real vehicles and monitor actual performance against new data.

Feedback loop: Repeat. New real-world data informs new simulations, which improve the system, which is deployed again.

This loop, running continuously, is how simulation drives actual safety improvements.
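The steps above can be sketched as a small control loop. Everything here is a toy stand-in: `passes` and `improve` are hypothetical callbacks, the scenario names are made up, and "improving the system" is reduced to adding a name to a set. The sketch only shows the shape of the loop: test, collect failures, improve, repeat until nothing fails or the round budget runs out.

```python
from typing import Callable, List, Set


def run_loop(scenarios: List[str],
             passes: Callable[[str], bool],
             improve: Callable[[List[str]], None],
             max_rounds: int) -> List[int]:
    """Run test/analyze/improve rounds and return the failure count per round."""
    history = []
    for _ in range(max_rounds):
        failures = [s for s in scenarios if not passes(s)]   # testing + analysis
        history.append(len(failures))
        if not failures:
            break                                            # nothing left to fix
        improve(failures)                                    # improvement step
    return history


# Toy stand-ins for the driving system and the engineering work.
handled: Set[str] = {"lane_change"}


def passes(scenario: str) -> bool:
    return scenario in handled


def improve(failures: List[str]) -> None:
    handled.add(failures[0])   # pretend each round fixes one failure mode


history = run_loop(["lane_change", "cyclist_swerve", "flooded_road"],
                   passes, improve, max_rounds=5)
print(history)
```

In this toy run the failure count falls from two to one to zero. A real loop would also feed new real-world data back into the scenario list on each pass, so the list itself keeps growing.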

The Bigger Picture: AI for Safety

Waymo's investment in Genie 3-based simulation is part of a broader trend in autonomous systems: using AI not just for the core task, but for validating safety.

Simulation is one tool. Testing in controlled environments is another. Real-world deployment with extensive monitoring is a third. The most robust approach combines all of them.

And the use of generative AI (Genie 3) to accelerate simulation is a clever application of the technology. Rather than using generative AI to replace human judgment or make decisions, Waymo is using it to generate test cases that improve the safety of decision-making systems. This is a good use of the technology—leveraging AI's strengths without taking on unnecessary risks.

This model might extend to other safety-critical systems. Insurance companies could use generative AI to simulate unusual claims scenarios. Medical systems could use it to simulate rare diseases or drug interactions. Power grids could use it to simulate cascading failures. In each case, the idea is the same: use AI to generate challenging scenarios, test your system's response, improve the system, repeat.

Limitations Worth Understanding

For all its sophistication, Waymo's simulation system has real limitations worth stating clearly.

Simulation is not reality: No matter how realistic, a simulation is not the actual world. There will always be phenomena, interactions, or scenarios that the simulation doesn't capture perfectly.

Generative AI can hallucinate: Genie 3 is a generative model, which means it can sometimes generate physically impossible or unrealistic scenarios. The system needs humans to validate that generated scenarios are actually plausible.

Not all edge cases are testable: Some scenarios (rare disasters, certain physical phenomena) might not be feasible to simulate accurately. Or, they might be so rare that testing against them provides minimal practical value.

Testing doesn't guarantee safety: A system can pass all tests and still fail in the real world. Testing is necessary but not sufficient for safety.

Human oversight remains essential: Ultimately, autonomous vehicles will need human oversight in certain scenarios, and simulation can't fully prepare a system for situations where human judgment is required.
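The hallucination point above suggests screening generated scenarios before anyone spends time reviewing them. Here is a minimal sketch of such a pre-filter, which flags physically implausible agent motion for human review. The `AgentTrack` type, the function name, and both numeric bounds are illustrative assumptions, not part of Waymo's or Genie 3's tooling.

```python
from dataclasses import dataclass
from typing import List

MAX_PLAUSIBLE_SPEED_MPS = 70.0   # ASSUMPTION: illustrative bound for road vehicles
MAX_PLAUSIBLE_ACCEL_MPS2 = 12.0  # ASSUMPTION: illustrative bound


@dataclass
class AgentTrack:
    agent_id: str
    speeds_mps: List[float]  # speed sampled at a fixed interval
    dt_s: float = 0.1        # seconds between samples


def plausibility_issues(track: AgentTrack) -> List[str]:
    # Return a list of reasons this track should go to a human reviewer.
    issues = []
    if any(v < 0 or v > MAX_PLAUSIBLE_SPEED_MPS for v in track.speeds_mps):
        issues.append("speed out of range")
    for prev, curr in zip(track.speeds_mps, track.speeds_mps[1:]):
        if abs(curr - prev) / track.dt_s > MAX_PLAUSIBLE_ACCEL_MPS2:
            issues.append("implausible acceleration")
            break
    return issues


smooth = AgentTrack("car_1", [10.0, 10.5, 11.0])
jumpy = AgentTrack("car_2", [10.0, 40.0, 10.0])
print(plausibility_issues(smooth), plausibility_issues(jumpy))
```

A check like this only narrows the review queue. It cannot tell whether a deliberately strange scenario, such as an elephant on a residential road, is rendered faithfully, which is why human validation stays in the loop.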

What This Means for Autonomous Vehicle Adoption

Waymo's World Model represents meaningful progress in autonomous vehicle technology. Better simulation leads to better systems, which leads to safer vehicles, which leads to faster, broader adoption.

But it's important not to oversimplify the impact. Simulation is one component of safety validation, not a magic solution that makes autonomous vehicles instantly safe. It is, however, a powerful tool that lets Waymo compress the validation timeline and test more comprehensively.

For consumers and regulators, this matters because it means autonomous vehicles are likely to be safer when deployed at scale, and that deployment might happen faster than previously expected.

For competitors, this is a challenge. Waymo has access to Google's cutting-edge AI research and resources. Competitors without those resources might find themselves falling behind in the arms race of simulation sophistication.

The Path Forward

Waymo's continued investment in simulation, particularly using generative AI, suggests the company is confident in this approach as a path toward safer, more capable autonomous vehicles. They're betting that the ability to generate and test arbitrary edge cases will remain a competitive advantage, and that the integration with Google's AI research will continue to provide benefits.

The technology is likely to improve. Genie 3 is not the final form of generative world models. Future versions will be more realistic, more flexible, and more efficient. As simulation capabilities improve, so will the safety validation process.

This is how modern safety-critical systems are actually built: not by perfect design, but by iterative testing, learning, and improvement. Waymo's approach is sophisticated, but it's also fundamentally pragmatic.

FAQ

What is Waymo's World Model?

Waymo's World Model is a hyper-realistic simulator built using Google's Genie 3 AI technology that can generate photorealistic driving scenarios from text prompts or images. It allows Waymo to test autonomous vehicles against rare, dangerous edge cases like tornadoes, floods, wildfires, and unusual obstacles without physical risk. The simulator can transform real dashcam footage into interactive environments and play back scenario variations at 4X speed.

How does Genie 3 technology enable better simulation?

Genie 3 is a generative AI world model that creates novel, realistic 3D environments from natural language descriptions or image prompts, rather than relying on hand-crafted scenarios or rigid procedural generation. It is designed to produce physically plausible, interactive environments in which sensor behavior stays consistent, although its output still needs validation. This flexibility enables rapid iteration: developers can describe a scenario in text, generate it, test it, modify the description, and regenerate it without weeks of manual scenario creation.

Why is simulation critical for autonomous vehicle development?

Simulation is essential because autonomous vehicles must be safe before encountering dangerous, rare situations in reality. Edge cases like tornadoes or wildfires might occur once per hundred million miles of driving—too rare to test naturally but critical to handle correctly. Simulation lets developers test millions of scenarios safely, compress the validation timeline from years to weeks, and ensure their systems are prepared for situations they might never actually encounter in real deployment.

What are the three control mechanisms in Waymo's World Model?

Driving action control lets developers simulate "what if" scenarios by modifying how vehicles or other agents behave in real footage. Scene layout control lets them customize road structures, traffic patterns, pedestrian behavior, and obstacle placement. Language control is the most flexible, allowing developers to specify conditions like "foggy morning," "heavy rain," or "nighttime" that affect how sensors behave—without needing to manually recreate the environment.
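One way to picture the three mechanisms is as optional fields on a single scenario request. The sketch below is purely illustrative: `ScenarioRequest`, its field names, and the `clip_0001` identifier are hypothetical and do not reflect any published Waymo or Genie 3 interface.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class ScenarioRequest:
    source_clip: str                       # real footage used as the base
    driving_action: Optional[str] = None   # driving action control ("what if")
    layout_changes: List[str] = field(default_factory=list)  # scene layout control
    language_prompt: Optional[str] = None  # language control (weather, time of day)

    def active_controls(self) -> List[str]:
        # Report which of the three mechanisms this request actually uses.
        controls = []
        if self.driving_action:
            controls.append("driving_action")
        if self.layout_changes:
            controls.append("scene_layout")
        if self.language_prompt:
            controls.append("language")
        return controls


request = ScenarioRequest(
    source_clip="clip_0001",  # placeholder identifier
    driving_action="lead vehicle brakes hard",
    language_prompt="foggy morning",
)
print(request.active_controls())
```

Keeping the controls independent means the same base clip can be reused with any combination of them.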

How does Waymo ensure simulations are realistic enough to predict real-world behavior?

Waymo ensures realism by building simulations on real dashcam footage as a foundation, simulating sensor behavior consistently across multiple sensor types (lidar, camera, radar), and integrating with their actual autonomous driving system models. The simulations aren't just visually realistic—they're physically realistic. Sensors in the simulation behave the way they actually behave in the real world, so the vehicle's perception system learns from accurate data.

Can simulation guarantee autonomous vehicle safety?

No, simulation can't guarantee safety absolutely. A system can pass millions of simulated tests and still encounter unexpected situations in reality. However, comprehensive simulation significantly improves the odds by ensuring the system has been tested against diverse, challenging scenarios. Simulation is necessary but not sufficient for safety—it must be combined with real-world testing, human oversight, and continuous monitoring during deployment.

How does simulation accelerate Waymo's development timeline?

Instead of waiting years for rare edge cases to occur naturally during real-world testing, Waymo can generate them immediately in simulation. A scenario that might occur once per 100 million miles can be tested hundreds of times in a month. The iteration cycle is dramatically compressed—when they identify a problem, they can fix the system, regenerate the scenario, and test again in hours or days rather than months.
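A rough back-of-the-envelope calculation shows why waiting is impractical. The event rate comes from the figure above; the fleet mileage is an assumed round number chosen for illustration, not a Waymo statistic, and the Poisson model is a simplifying assumption.

```python
import math

EVENT_RATE_PER_MILE = 1 / 100_000_000  # "once per 100 million miles" from the text
FLEET_MILES_PER_WEEK = 1_000_000       # ASSUMPTION: illustrative, not a Waymo figure


def expected_years_until_event(rate_per_mile: float, miles_per_week: float) -> float:
    # Mean waiting time for one occurrence at a constant rate.
    miles_per_year = miles_per_week * 52
    return 1 / (rate_per_mile * miles_per_year)


def prob_at_least_one(rate_per_mile: float, miles: float) -> float:
    # Poisson model: P(N >= 1) = 1 - exp(-rate * miles)
    return 1 - math.exp(-rate_per_mile * miles)


years = expected_years_until_event(EVENT_RATE_PER_MILE, FLEET_MILES_PER_WEEK)
p_one_year = prob_at_least_one(EVENT_RATE_PER_MILE, FLEET_MILES_PER_WEEK * 52)
print(f"expected wait: {years:.2f} years, chance within one year: {p_one_year:.0%}")
```

Under these assumed numbers, the fleet would wait roughly two years on average for a single natural occurrence, while simulation can produce the same scenario on demand.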

What happens when simulation identifies a failure in the autonomous vehicle system?

When Waymo's system fails in simulation, they analyze the failure to understand what went wrong. They then improve the system—either by retraining neural network models on simulated data, adjusting decision-making logic, or updating perception algorithms. The improved system is tested again in simulation, and once it passes consistently, it's deployed to real vehicles where performance is monitored against real-world scenarios.

How does Waymo use real dashcam footage in simulation?

Waymo transforms real dashcam footage into interactive 3D simulated environments using Genie 3's world-building capabilities. This provides maximum realism and factuality—the scenarios are based on situations that actually happened. Then developers can modify these base scenarios using the control mechanisms: changing how other agents behave, modifying road structure, or adjusting weather and lighting. This hybrid approach combines the authenticity of real footage with the flexibility of generative simulation.

What role does sensor simulation play in Waymo's testing?

Sensor simulation is critical because autonomous vehicles don't work from video alone; they process data from lidar, cameras, radar, and other sensors. When Waymo simulates a scenario, they must also simulate how each sensor would behave in that scenario. Lidar returns different data in rain than in clear weather. Cameras struggle in glare. Radar behaves differently at different distances. Accurate sensor simulation ensures the vehicle's perception system learns from realistic sensor behavior, not simplified or idealized data.
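As a deliberately simplified illustration of weather-dependent sensor behavior, the sketch below randomly drops lidar points with a probability that depends on the weather. Real sensor models are far more involved; the dropout values and the `simulate_lidar_returns` function are assumptions made up for this example.

```python
import random
from typing import List, Tuple

Point = Tuple[float, float, float]

# ASSUMPTION: illustrative per-point dropout probabilities, not measured values.
DROPOUT_BY_WEATHER = {"clear": 0.0, "rain": 0.3, "fog": 0.6}


def simulate_lidar_returns(points: List[Point], weather: str, seed: int = 0) -> List[Point]:
    # Drop each point independently with a weather-dependent probability.
    rng = random.Random(seed)
    dropout = DROPOUT_BY_WEATHER[weather]
    return [p for p in points if rng.random() >= dropout]


cloud = [(float(i), 0.0, 0.0) for i in range(1000)]
clear = simulate_lidar_returns(cloud, "clear")
fog = simulate_lidar_returns(cloud, "fog")
print(len(clear), len(fog))
```

A perception stack trained only on the `clear` output would never see the sparser point clouds that bad weather produces, which is the gap accurate sensor simulation is meant to close.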

Conclusion: The Strategic Importance of Advanced Simulation

Waymo's investment in Genie 3-based simulation represents more than a technological upgrade. It's a strategic decision to prioritize safety validation and accelerate the path to safe, scalable autonomous vehicle deployment.

The ability to generate arbitrary edge cases from text prompts, test autonomous vehicles against them, and iterate rapidly addresses one of the fundamental challenges in autonomous vehicle development: how to prepare a system for rare situations without waiting decades for them to occur naturally.

Genie 3 provides the technical foundation. Waymo's integration of this technology into a comprehensive simulation pipeline provides the practical execution. The result is a system that can prepare autonomous vehicles for tornadoes, flooded roads, wildfire conditions, and countless other scenarios before those vehicles encounter those situations in reality.

This approach isn't foolproof. No amount of simulation can guarantee perfect safety in the real world. But it's significantly better than the alternative—deploying autonomous vehicles with limited testing and hoping they perform well when situations get weird.

For Waymo, this is about competitive advantage. Better simulation leads to safer vehicles, which leads to faster approval for wider deployment, which leads to market leadership. For consumers and regulators, this is about confidence that autonomous vehicles have been properly tested before operating in communities.

The technology is still early. Generative world models like Genie 3 will continue to improve. Simulation will become more sophisticated, more realistic, and more efficient. As these tools evolve, so will the safety and capability of autonomous vehicles.

Waymo's World Model is one step in a longer journey toward fully autonomous vehicles that the public trusts. It's a sophisticated step, built on cutting-edge AI, validated rigorously, and deployed strategically. But it's still just one step. The journey continues, and the next innovations will likely come from companies that, like Waymo, invest in understanding the hard problems and building systematic, evidence-based solutions.

What happens when Waymo runs into a tornado? Based on this simulation technology, it's prepared. Not perfectly prepared. But far better prepared than any system could be without the ability to test against synthetically generated edge cases. That preparation, multiplied across thousands of scenarios and millions of test miles, is what moves autonomous vehicles from theoretical possibility to practical reality.

Key Takeaways

- Waymo's World Model uses Google's Genie 3 to generate photorealistic edge case scenarios for testing autonomous vehicles safely

- Three control mechanisms—driving action, scene layout, and language control—enable flexible, rapid scenario generation from text prompts

- Simulation compresses validation timelines from years of natural testing to weeks of intensive synthetic testing

- Sensor simulation must be physically accurate to ensure vehicles learn from realistic data, not idealized conditions

- The continuous feedback loop of real-world data, simulation improvement, and system deployment represents the practical path to safer autonomous vehicles

