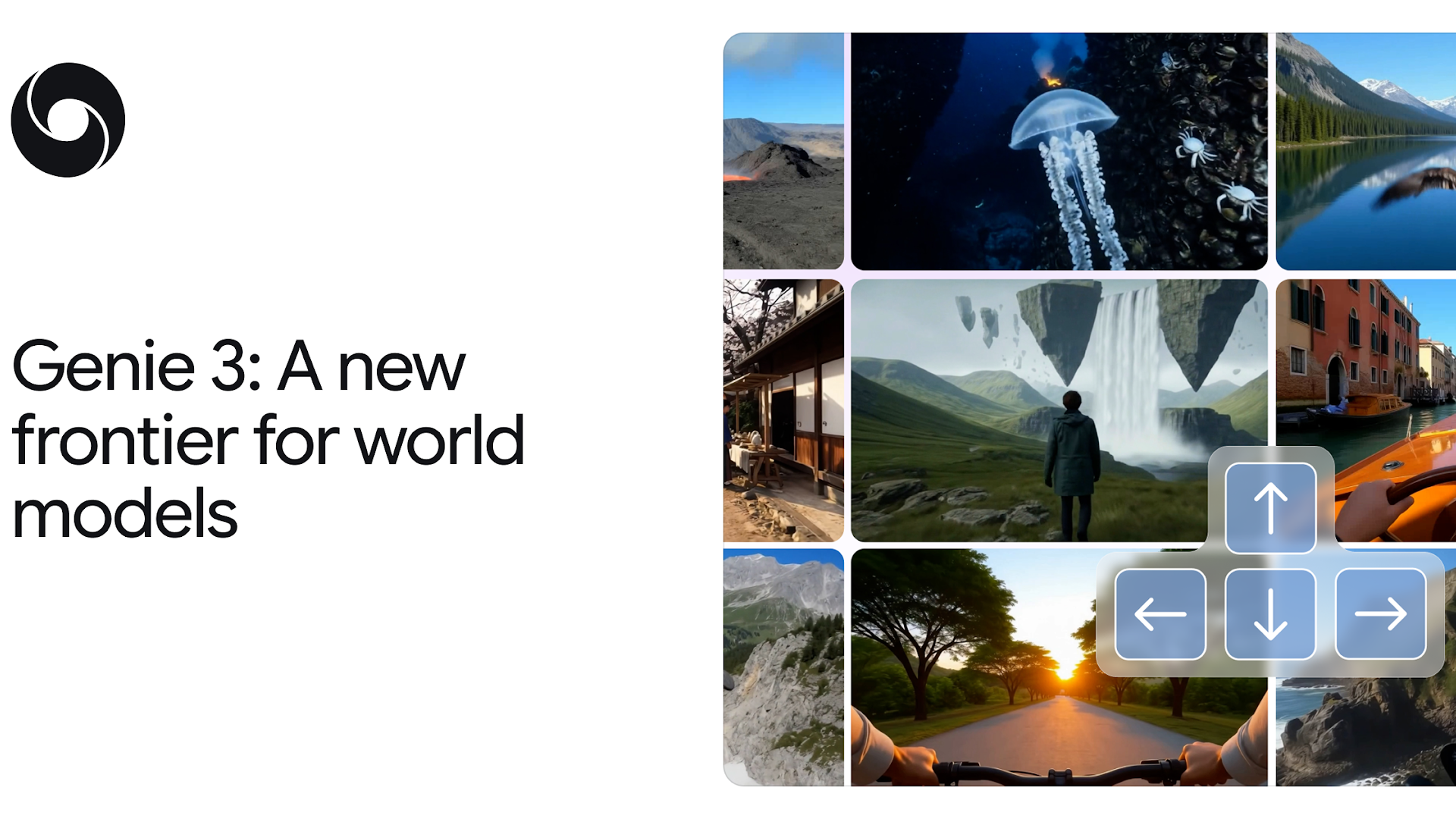

Introduction: Building Worlds with AI Is Finally Here

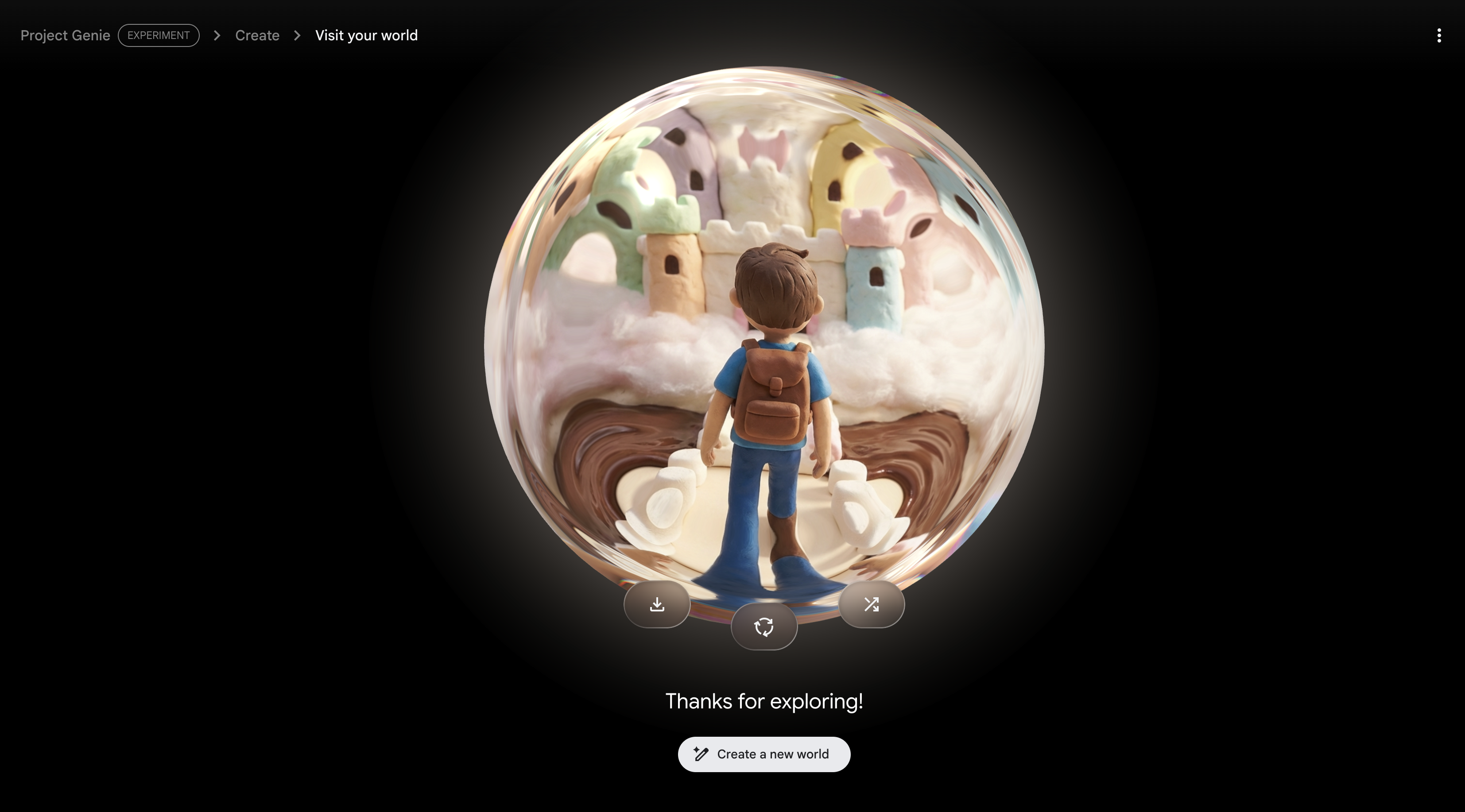

Last year, I would've laughed if you told me I'd be exploring marshmallow castles in a video game I created by just typing a description. Not because it sounds impossible, but because it felt like science fiction that was still decades away. Yet here we are. Google DeepMind just dropped Project Genie into the hands of everyday users, and honestly, the results are wild.

This isn't some flashy marketing stunt either. Project Genie represents a genuine leap forward in what AI can do. It's a world model, which means it models how environments work, how physics behaves, and how characters move through space. That's fundamentally different from most AI tools you've used before.

For context, world models are the missing piece that AI researchers believe we need to get closer to artificial general intelligence (AGI). They're not just pattern-matching machines; they're systems that can generate internal representations of environments and predict how actions will ripple through a world. Think of it like teaching an AI to understand the rules of reality itself.

Google is rolling this out as an experimental prototype to Google AI Ultra subscribers in the US starting this month. You get 60 seconds per generation to explore whatever world you dream up, though that limit is more about compute constraints than technical impossibility. DeepMind is also refreshingly honest about the prototype's flaws. It's inconsistent: sometimes brilliant, sometimes baffling. But that's the point of a research tool like this.

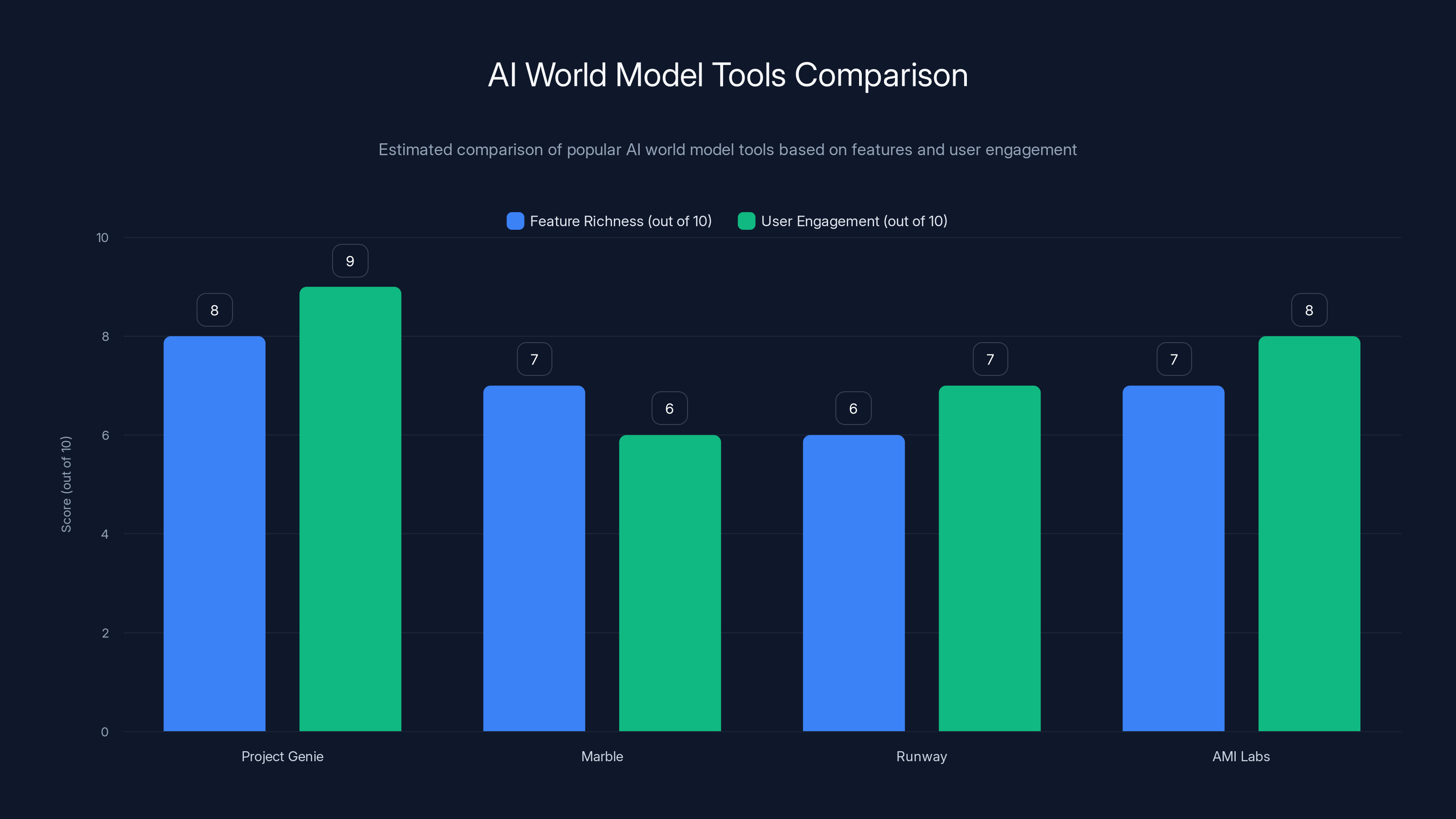

The timing matters. We're in the middle of what I'd call the world model arms race. Fei-Fei Li's World Labs released Marble last year. Runway released its own world model. Yann LeCun's AMI Labs is focused entirely on this problem. The race is on, and Project Genie is Google's opening move to capture user feedback and training data while proving DeepMind can ship consumer products, not just research papers.

But here's what really fascinates me: this isn't about games, not really. Games are just the first commercial use case. The real endgame is using world models to train robots and embodied agents in simulation before they ever touch the real world. That's where the serious money and impact will be.

TL;DR

- Project Genie creates interactive game worlds from text descriptions and images using advanced AI models

- Powered by three core technologies: Genie 3 world model, Nano Banana Pro image generation, and Gemini for understanding prompts

- Currently limited to 60 seconds of world exploration due to compute constraints, but the capability exists for much longer sessions

- Performance is hit-or-miss: whimsical, creative worlds work beautifully, while realistic or copyrighted content often falls flat

- Broader implications extend beyond gaming toward training AI-controlled robots and agents in simulation, potentially accelerating AGI research

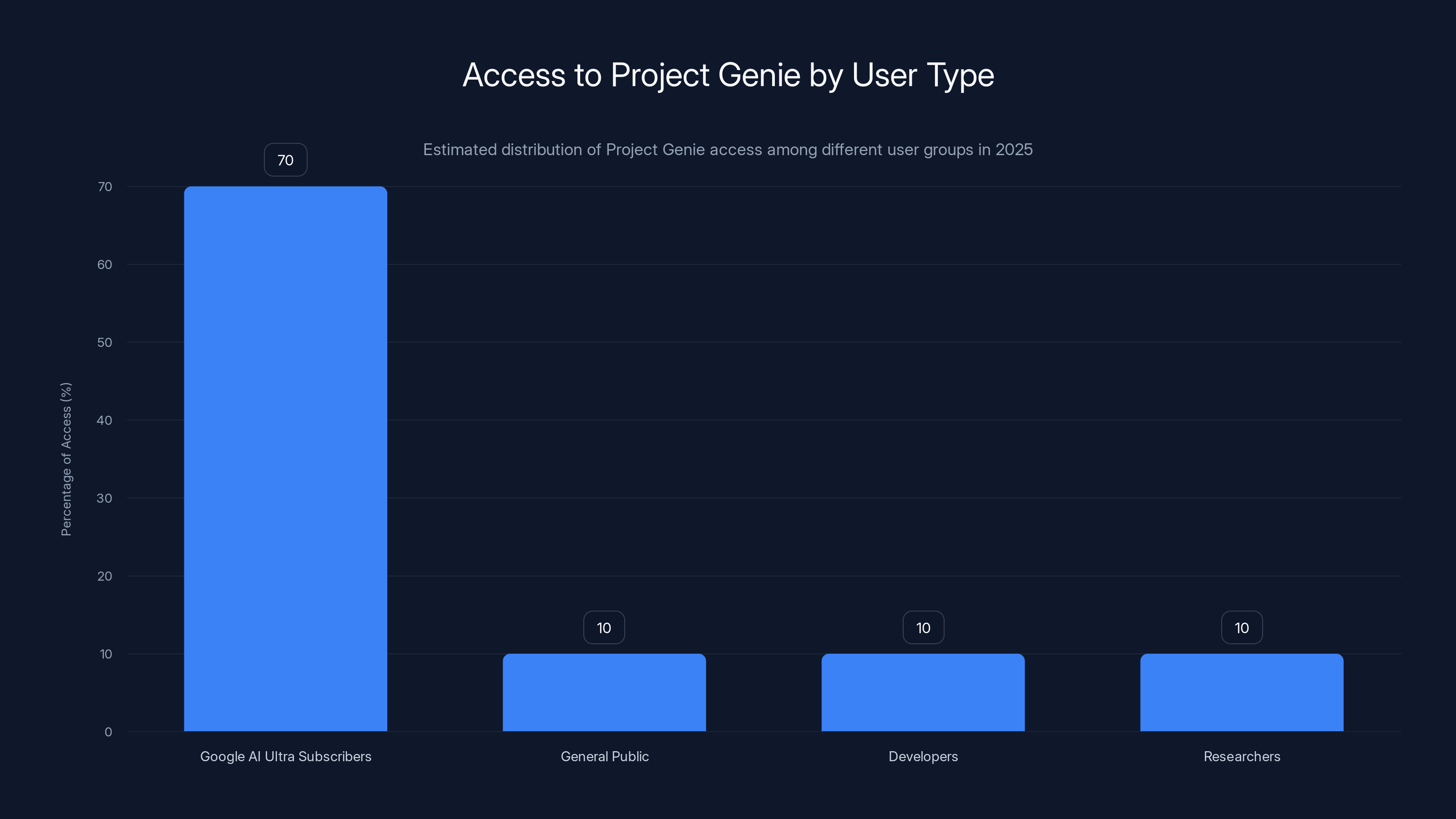

Estimated breakdown of Project Genie access: 70% Google AI Ultra subscribers, with the remaining 30% shared among developers, researchers, and the general public. Estimated data based on access restrictions.

How Project Genie Actually Works: The Three-Part System

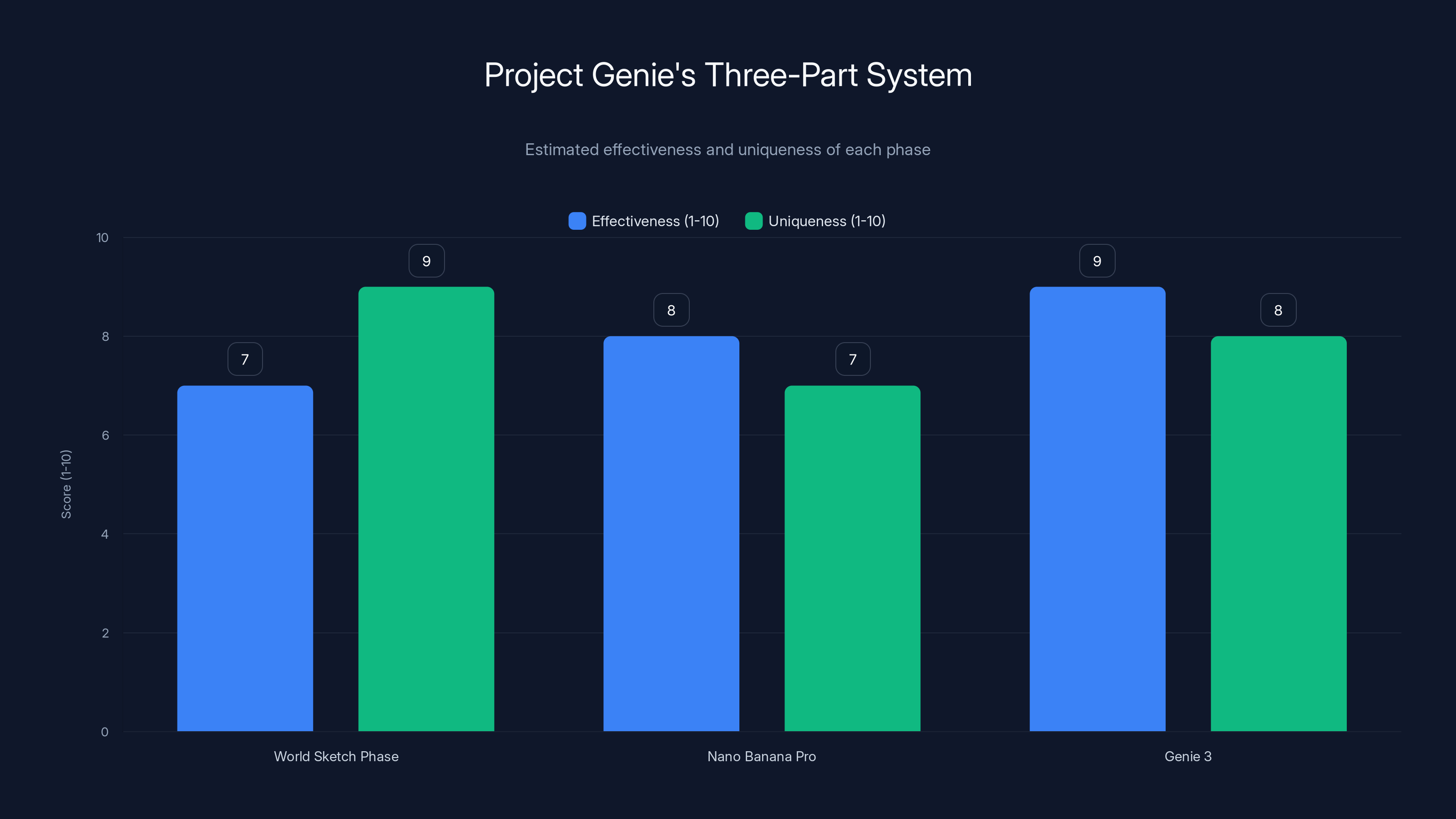

Project Genie isn't a single model. It's a three-part orchestra, and each instrument matters.

First, you've got the world sketch phase. You describe the environment you want and the character you'll be playing as. These are natural language prompts, so you can be vague or hyper-specific. "A castle in the clouds made of marshmallows with chocolate sauce rivers and candy trees" works just as well as "futuristic city street."

The second part is Nano Banana Pro, Google's image generation model. It takes your descriptions and creates a visual representation. This step is crucial because Genie 3, the actual world model, uses an image as its starting point. You can modify the generated image before moving forward, editing details to better match your vision. The model sometimes misses the mark here (ask for green hair and you might get purple), but it usually gets you close.

Here's where it gets interesting: you can also use real photographs as your baseline. Hand over a photo of your backyard, and Genie will try to build an interactive world around it. This feature is genuinely mind-bending when it works, though results are inconsistent.
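To make the hand-offs between the stages concrete, here's a minimal Python sketch of how a world sketch becomes the starting image for the world model. Every name in it (`WorldSketch`, `generate_image`, `apply_user_edit`, `prepare_starting_image`) is hypothetical: Google hasn't published a Project Genie API, and the image step is a stub standing in for Nano Banana Pro.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class WorldSketch:
    environment: str                  # e.g. "a castle in the clouds made of marshmallows"
    character: str                    # e.g. "a small knight"
    photo_path: Optional[str] = None  # optional real photo used as the baseline


@dataclass
class StartingImage:
    description: str
    edits: List[str] = field(default_factory=list)


def generate_image(sketch: WorldSketch) -> StartingImage:
    """Stub for the image step (hypothetical stand-in for Nano Banana Pro)."""
    if sketch.photo_path is not None:
        # A real photo replaces the generated scene as the starting point.
        return StartingImage(f"photo {sketch.photo_path} with {sketch.character}")
    return StartingImage(f"{sketch.environment} with {sketch.character}")


def apply_user_edit(image: StartingImage, edit: str) -> StartingImage:
    """Record a user correction made before the world model sees the image."""
    return StartingImage(image.description, image.edits + [edit])


def prepare_starting_image(sketch: WorldSketch, edits: List[str]) -> StartingImage:
    image = generate_image(sketch)
    for edit in edits:
        image = apply_user_edit(image, edit)
    return image  # this is what the world model (Genie 3) would start from


img = prepare_starting_image(
    WorldSketch("a marshmallow castle in the clouds", "a knight"),
    ["make the knight's hair green"],
)
print(img.description)  # a marshmallow castle in the clouds with a knight
print(img.edits)        # ["make the knight's hair green"]
```

The point of the structure is that user edits sit between image generation and world generation, so mistakes get fixed before they propagate.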

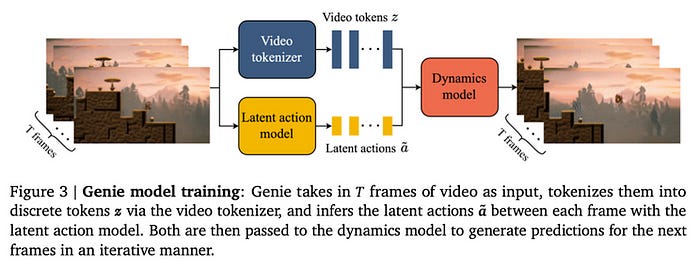

Once you've locked in your image, Genie 3 takes over. This is the actual world model. It's auto-regressive, meaning it generates the interactive environment frame by frame, predicting each new frame from the previous frames and your inputs. You get an explorable world in seconds, not minutes.
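A toy version of the auto-regressive loop shows the key property: each new frame is predicted from recent frames plus the latest input, then appended and fed back in. The "frames" here are two-number vectors, and `toy_predictor` and the `context` window size are invented for illustration; the article doesn't describe Genie 3's actual architecture or context length.

```python
from typing import Callable, List, Sequence

Frame = List[float]  # toy "frame": a tiny vector standing in for an image


def rollout(first_frame: Frame,
            actions: Sequence[str],
            predict_next: Callable[[Sequence[Frame], str], Frame],
            context: int = 16) -> List[Frame]:
    """Generate one frame per action, each conditioned on earlier frames."""
    frames = [first_frame]
    for action in actions:
        history = frames[-context:]          # only recent frames are visible
        frames.append(predict_next(history, action))
    return frames


def toy_predictor(history: Sequence[Frame], action: str) -> Frame:
    # Shift the scene by one unit for "left"/"right"; hold still otherwise.
    step = {"left": -1.0, "right": 1.0}.get(action, 0.0)
    return [x + step for x in history[-1]]


frames = rollout([0.0, 0.0], ["right", "right", "left"], toy_predictor)
print(frames[-1])  # [1.0, 1.0]
```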

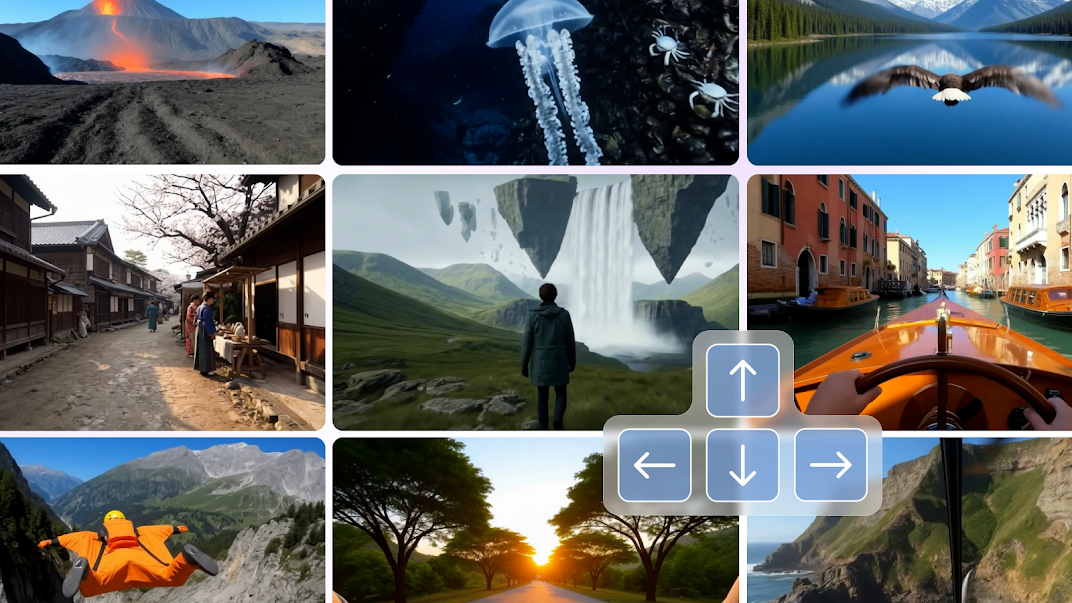

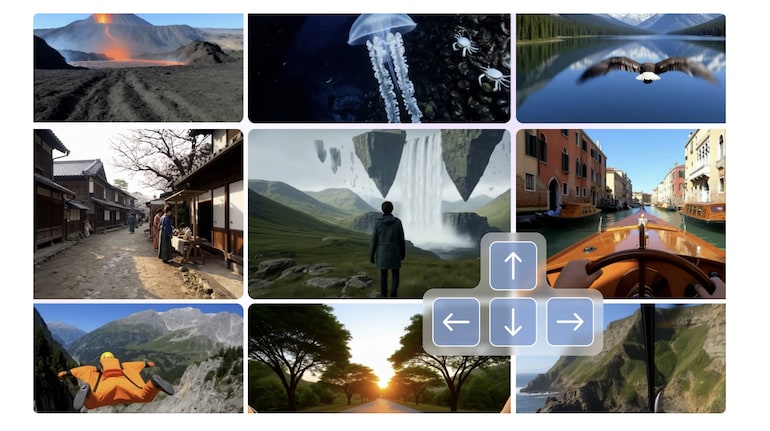

The system handles both first-person and third-person perspectives. You can move through the world, interact with objects, and watch how the environment responds to your actions. The interactions are limited compared to AAA games, but remember, this entire world was generated from text. That's the staggering part.

You can download videos of your creations, share them, or remix them. The remix feature lets you build on existing worlds by tweaking the prompts and regenerating. There's also a gallery of curated worlds and a randomizer if inspiration isn't striking.

The entire system runs on Google's infrastructure, which means the actual compute happens in data centers, not on your device. That's why they had to cap it at 60 seconds per session. Genie 3, being auto-regressive, demands dedicated hardware per user. Shlomi Fruchter, a research director at DeepMind, explained it directly: when you're using it, there's literally a chip somewhere that's only yours, running only your session.

Exceed 60 seconds and the compute costs become unjustifiable for a free or affordable service. Extend that limit substantially and you've eliminated the ability to serve thousands of concurrent users. It's a hard ceiling that Fruchter acknowledged as a current limitation they hope to improve, though he also noted that the environments become somewhat repetitive after about 60 seconds anyway.
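Fruchter's "a chip that's only yours" description maps onto a simple scheduling model: a fixed pool of accelerators, one per active session, with each chip released when its session hits the cap. The `ChipPool` class below is a hypothetical illustration of that model, not how Google actually schedules Genie sessions.

```python
from collections import deque
from typing import Dict, Hashable, List, Optional, Tuple


class ChipPool:
    """Toy model: one dedicated chip per active session, hard time cap."""

    def __init__(self, num_chips: int, session_cap_s: float = 60.0):
        self.free = deque(range(num_chips))
        self.active: Dict[Hashable, Tuple[int, float]] = {}  # user -> (chip, start)
        self.session_cap_s = session_cap_s

    def start(self, user: Hashable, now: float) -> Optional[int]:
        """Assign a free chip, or return None if every chip is busy."""
        if not self.free:
            return None
        chip = self.free.popleft()
        self.active[user] = (chip, now)
        return chip

    def expire(self, now: float) -> List[Hashable]:
        """End sessions that reached the cap and return their chips to the pool."""
        ended = [u for u, (_, t0) in self.active.items()
                 if now - t0 >= self.session_cap_s]
        for user in ended:
            chip, _ = self.active.pop(user)
            self.free.append(chip)
        return ended


pool = ChipPool(num_chips=2)
pool.start("alice", now=0)          # gets chip 0
pool.start("bob", now=0)            # gets chip 1
print(pool.start("carol", now=10))  # None: no free chip yet
print(pool.expire(now=60))          # ['alice', 'bob']
print(pool.start("carol", now=60))  # 0
```

In this model, the number of chips bounds how many people play at once, and the cap decides how fast a waiting user gets a turn.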

The Three Technologies Behind Project Genie

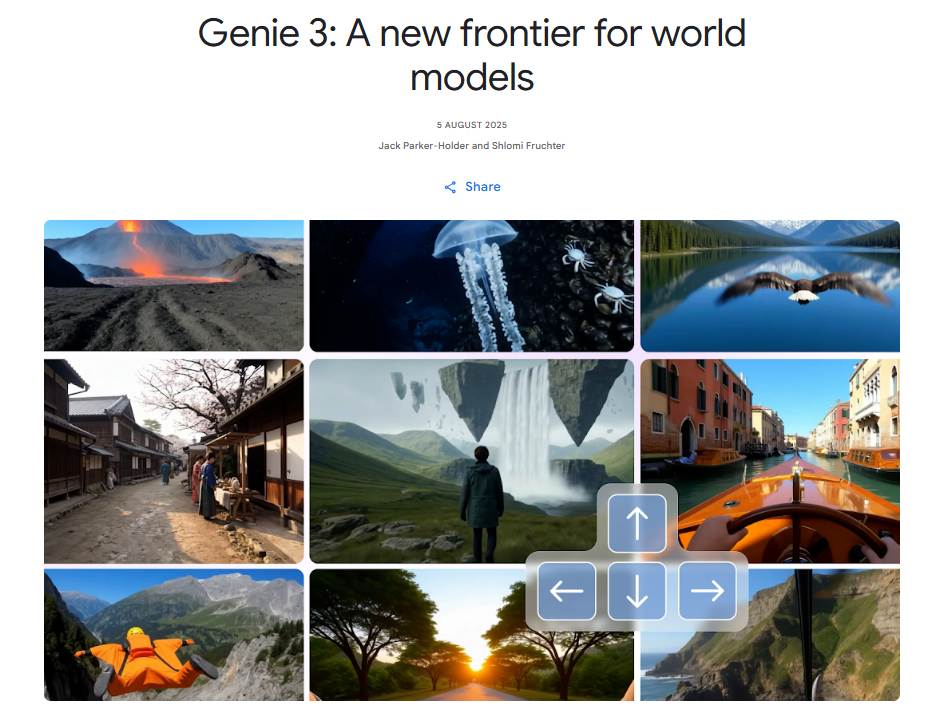

Genie 3: The World Model That Changed Everything

Genie 3 is the beating heart of this entire system. It's a world model in the truest sense, which means it's not just generating random pixels that happen to look like a world. It's learning and modeling the actual rules of how worlds work.

World models function by building an internal representation of an environment. Think of them like a neural network that's learned the physics of a space—gravity, momentum, collision, causality. Feed Genie 3 a starting image and a sequence of actions, and it can predict what the next frames will look like. More importantly, it can do this realistically within the rules of the world it's generating.

This is genuinely difficult. Most AI image generation models are stateless. Generate one image, and the next image is independent. World models need memory. They need consistency. They need to understand that if you move a character forward, the background should shift in a specific way based on perspective and distance.
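The difference between stateless and stateful generation shows up clearly in a toy camera. A stateless generator has nothing to remember between calls. The class below keeps the camera position, so when the camera moves sideways, nearby objects shift more than distant ones (a simple pinhole-camera approximation). It illustrates the consistency requirement only, not how Genie 3 represents state internally.

```python
class ParallaxCamera:
    """Toy stateful view: position persists between frames."""

    def __init__(self, focal: float = 1.0):
        self.x = 0.0          # camera position, remembered across frames
        self.focal = focal

    def move(self, dx: float) -> None:
        self.x += dx

    def screen_offset(self, depth: float) -> float:
        # Pinhole approximation: apparent shift is inversely proportional to depth.
        return -self.focal * self.x / depth


cam = ParallaxCamera()
cam.move(2.0)
print(cam.screen_offset(depth=1.0))  # -2.0  (a nearby tree slides a lot)
print(cam.screen_offset(depth=4.0))  # -0.5  (a distant castle barely moves)
```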

Genie 3 was trained on large amounts of video data, learning patterns about how worlds evolve over time: how lighting changes, how momentum works, how objects interact. That video is where it picked up its baseline sense of the rules.

The breakthrough with Genie 3 specifically is that it can do this generation in real-time, interactively. You're not waiting 10 seconds per frame. The world is rendering at what feels like video game speed.

Here's the trade-off: the environments are inherently constrained. The model understands basic physics and interaction, but it's not simulating a full physics engine. Think of it more like a very sophisticated visual prediction tool rather than a true simulation. The interactions you can have are limited to what the model has learned from training data.

But this is still remarkable. Previous world models were slower, less stable, and generated less coherent worlds. Genie 3 raised the bar significantly, and that's why DeepMind feels confident releasing it to the public.

Nano Banana Pro: The Image Generation Foundation

Nano Banana Pro is Google's image generation model. Despite the "nano" in its whimsical name, it's the higher-end tier of Google's Gemini image models, built on Gemini 3 Pro. The name's playful, but the technology is serious.

Why does the image step matter so much to the experience? Responsiveness. The entire Project Genie system needs to feel snappy: you describe a world, generate an image, and move to world model generation in seconds. An image step that was slow or erratic would stall the whole pipeline.

Nano Banana Pro has its limitations. It sometimes adds details you didn't ask for, misses style requests (like that green hair turning purple), and occasionally struggles with complex prompts. But it's predictable and fast, which matters more in this context than absolute perfection.

The interesting design choice is letting users edit the generated image before Genie takes over. That's not just user-friendly; it's fixing model errors through human input. If Nano Banana Pro misses the mark, you can paint over it or adjust it before the world model sees it.

Gemini: The Prompt Understander

Gemini is the large language model that's interpreting your natural language descriptions and converting them into meaningful inputs for the image and world generation systems.

Why does this matter? Because most AI systems are sensitive to how you phrase things. Gemini's job is to take your somewhat loose description and extract the semantically important details. Is it a castle? How big? What's it made of? What mood or atmosphere are you going for?
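Here's a deliberately crude sketch of the kind of structured output that step produces. A keyword matcher stands in for Gemini, which would handle this with far more nuance; the `WorldSpec` fields and the word lists are assumptions for illustration, not Project Genie's actual schema.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class WorldSpec:
    subject: Optional[str] = None
    material: Optional[str] = None
    scale: Optional[str] = None
    mood: Optional[str] = None


# Hypothetical vocabularies; a language model would not need fixed lists like these.
SUBJECTS = {"castle", "forest", "city", "airship", "village"}
SCALES = {"tiny", "small", "huge", "giant", "massive"}
MOODS = {"cozy", "eerie", "whimsical", "gloomy", "serene"}


def extract_spec(prompt: str) -> WorldSpec:
    """Pull a few semantically important slots out of a loose description."""
    words = prompt.lower().replace(",", " ").split()
    spec = WorldSpec(
        subject=next((w for w in words if w in SUBJECTS), None),
        scale=next((w for w in words if w in SCALES), None),
        mood=next((w for w in words if w in MOODS), None),
    )
    # Treat "made of X" as the material.
    for i in range(len(words) - 2):
        if words[i] == "made" and words[i + 1] == "of":
            spec.material = words[i + 2]
            break
    return spec


print(extract_spec("A huge whimsical castle in the clouds made of marshmallows"))
# WorldSpec(subject='castle', material='marshmallows', scale='huge', mood='whimsical')
```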

Gemini is handling reasoning, context, and nuance. It's also helping enforce safety guardrails, which brings us to an important limitation.

Estimated data shows Genie 3 as the most effective phase, while the World Sketch Phase is the most unique. Nano Banana Pro provides a strong balance between the two.

What Works Brilliantly: The Whimsy Advantage

I tested Project Genie for hours, and the clearest pattern emerged quickly: whimsy works. Fantasy works. Stylized, creative, unconventional worlds work beautifully. Realism falters.

My first creation was that marshmallow castle. I asked for a claymation-style fortress in the clouds, complete with a chocolate sauce river and candy trees. The result was stunning. The pastels, the puffy textures, the way the candy trees moved as I walked past them—it nailed the aesthetic. This was a world I'd imagined as a kid, and the AI actually built it.

I wasn't alone in this experience. Other testers reported similar success with whimsical scenarios. Ask for a magical forest where everything glows with bioluminescence? You get a magical forest. Ask for a steampunk airship interior? The model handles it.

The key is that whimsical worlds have less rigid physical rules. If things look slightly off or physics bends in unexpected ways, it fits the aesthetic. When you're exploring a world made of candy, you don't expect gravity to work perfectly.

I also tested remix worlds, building on top of existing creations in the gallery. Changing a few keywords in an existing prompt and regenerating produced interesting variations. The system understood the core concept and could pivot it in new directions. This feature has genuine creative potential if you're iterating on ideas.
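At its simplest, a remix is the original prompt with a few keywords swapped before regenerating. The `remix` helper below is a hypothetical sketch of that idea using whole-word regex substitution; it doesn't reflect how the gallery's remix feature is actually implemented.

```python
import re
from typing import Dict


def remix(prompt: str, swaps: Dict[str, str]) -> str:
    """Swap whole-word keywords in an existing prompt, leaving the rest intact."""
    if not swaps:
        return prompt
    pattern = re.compile(r"\b(" + "|".join(map(re.escape, swaps)) + r")\b")
    return pattern.sub(lambda m: swaps[m.group(1)], prompt)


original = "A claymation castle in the clouds, with candy trees."
print(remix(original, {"claymation": "watercolor", "candy": "crystal"}))
# A watercolor castle in the clouds, with crystal trees.
```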

The best results came from users who treated Project Genie like a creative partner rather than a literal command system. Instead of demanding photorealism, embrace the AI's strengths. Ask for stylized environments. Request specific art styles. Give it parameters it can actually excel within.

What Fails Badly: The Realism Problem

Now, the flip side. Ask Project Genie to generate a photorealistic world, and you'll quickly understand why this is still experimental.

I attempted several realistic scenarios. A detailed office building. A beach scene. A city street. The results ranged from unsettling to laughable. Proportions were off. Objects didn't quite fit together. Lighting was inconsistent. Textures were blurry or wrong. It was like looking at a dream you can't quite focus on.

The problem is that realism is unforgiving. Our brains have spent decades learning to spot errors in realistic imagery. Photorealism demands precision. It demands that details make physical sense. A claymation castle can have impossible geometry and we don't care. A photorealistic office should have doors that make sense, windows that are proportional, desks that don't float.

Genie 3 simply hasn't learned enough constraints about realistic physics to handle this. The model is predicting frames based on patterns in training data, but realistic worlds have far stricter rules than fantasy worlds.

I also ran into the copyright guardrails multiple times. I tried generating a Little Mermaid underwater fantasy world and got blocked. Asked for an ice queen's castle and was denied. The safety systems, powered by Gemini, are actively filtering out anything that might resemble copyrighted characters or IP.

This makes sense given Disney's recent cease-and-desist letter to Google, but it does create an awkward creative constraint. You can't build worlds based on existing fictional universes; you can only build original creations.

There's also the issue of consistency across extended exploration. Even when a world generates successfully, if you explore it for too long, details start degrading. The auto-regressive nature of the model means it's predicting frame-by-frame, and small errors accumulate over time. After a minute or so, you might notice weird visual artifacts creeping in.
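A toy calculation shows why feeding a model its own output makes errors pile up. Below, a "model" tracks an object that moves one unit per frame but overshoots by a small, fixed bias on every step. When each prediction starts from the true previous frame, the error stays at one step's bias; when it starts from its own previous prediction, as in an auto-regressive rollout, the error grows with every frame. The constant bias is a simplifying assumption; real model errors are messier.

```python
def rollout_error(n_frames: int, per_step_bias: float,
                  feed_back_own_output: bool) -> float:
    """Absolute position error after n_frames of a slightly biased predictor."""
    true_pos = 0.0
    predicted = 0.0
    for _ in range(n_frames):
        prev = predicted if feed_back_own_output else true_pos
        true_pos += 1.0                          # ground truth: one unit per frame
        predicted = prev + 1.0 + per_step_bias   # small error on every step
    return abs(predicted - true_pos)


print(round(rollout_error(100, 0.01, feed_back_own_output=False), 4))  # 0.01
print(round(rollout_error(100, 0.01, feed_back_own_output=True), 4))   # 1.0
```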

The Compute Reality: Why 60 Seconds Exists

That 60-second limit isn't arbitrary, and understanding why reveals something crucial about how AI scales right now.

Genie 3 is auto-regressive. That means for every frame it generates, it runs inference on a large model. For a 60-second world at 24 frames per second, that's 1,440 sequential model runs per user session, and each run requires significant GPU resources.
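The arithmetic is worth spelling out, along with one consequence it implies: to keep up in real time, each forward pass has to finish within the time budget of a single frame. This sketch assumes, as the paragraph does, one model run per frame at 24 fps.

```python
FPS = 24  # frame rate used in the estimate above


def frames_per_session(seconds: float, fps: int = FPS) -> int:
    """Sequential model calls per session, assuming one forward pass per frame."""
    return round(seconds * fps)


def per_frame_budget_ms(fps: int = FPS) -> float:
    """Time each forward pass must fit into to keep pace in real time."""
    return 1000.0 / fps


print(frames_per_session(60))            # 1440
print(frames_per_session(300))           # 7200 for a 5-minute session
print(round(per_frame_budget_ms(), 1))   # 41.7 milliseconds per frame
```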

When you're using Project Genie, you're not sharing computational resources with other users. Fruchter was explicit about this: there's literally a dedicated chip running only your session. That's necessary for the low-latency, interactive experience you get. If they tried to multiplex users on the same GPU, latency would spike and the experience would become unresponsive.

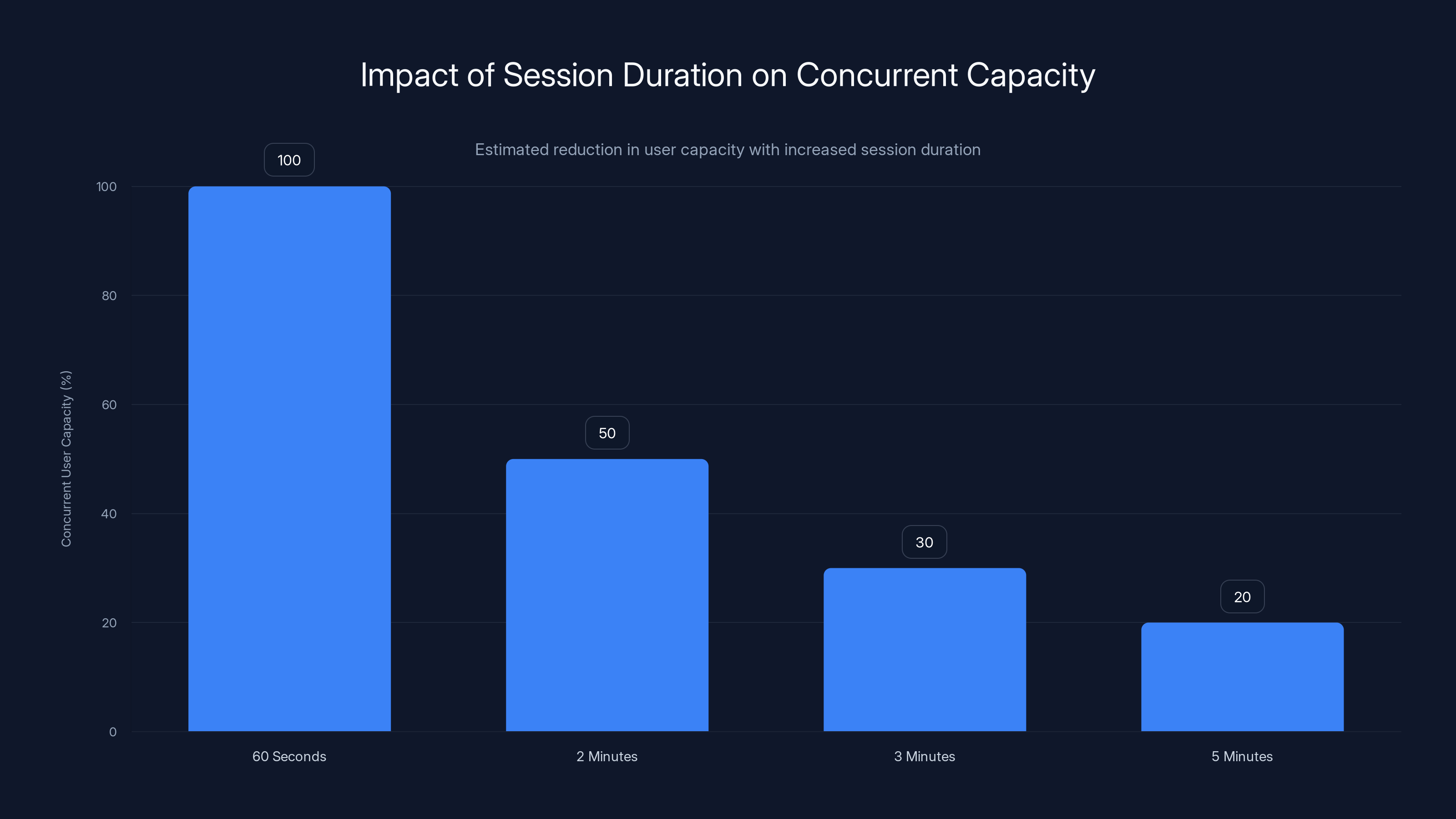

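Google is running this on some of the most advanced hardware available, but even with that infrastructure, there's a hard limit on how many people it can serve. With one dedicated chip per active session, the chip count caps how many people can play at once, and session length determines how quickly a chip frees up for the next person. Capped at 60 seconds, each chip can cycle through 60 sessions an hour, which is how you serve thousands of people with current hardware. Extend that to 5 minutes? The same chip serves only 12 sessions an hour, an 80% drop in how many people your hardware can serve.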
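The 80% figure falls out of simple arithmetic once you assume each chip serves one session at a time, back to back, with no idle gaps between sessions (a simplifying assumption).

```python
def sessions_per_chip_hour(session_seconds: float) -> float:
    """How many back-to-back sessions one dedicated chip can serve per hour."""
    return 3600.0 / session_seconds


baseline = sessions_per_chip_hour(60)    # 60 sessions per chip-hour
extended = sessions_per_chip_hour(300)   # 12 sessions per chip-hour
print(baseline, extended)                # 60.0 12.0
print(f"{1 - extended / baseline:.0%}")  # 80%
```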

At scale, the economics matter. Google is offering this as an experimental prototype to AI Ultra subscribers, so it isn't free, but it's very likely subsidized. The actual cost per session is probably significant, and every extra second of generation time multiplies across millions of potential users.

Fruchter also noted that extending beyond 60 seconds might have diminishing returns anyway. The worlds don't become dramatically more interesting or valuable after that point; they become more repetitive, because the model keeps generating variations on what it has already created. You end up exploring the same space over and over.

But here's the silver lining: this is a hardware limitation, not a fundamental technical one. As inference speeds improve, as GPUs become more efficient, as model compression techniques evolve, that 60-second window will expand. It's not a permanent ceiling; it's a checkpoint.

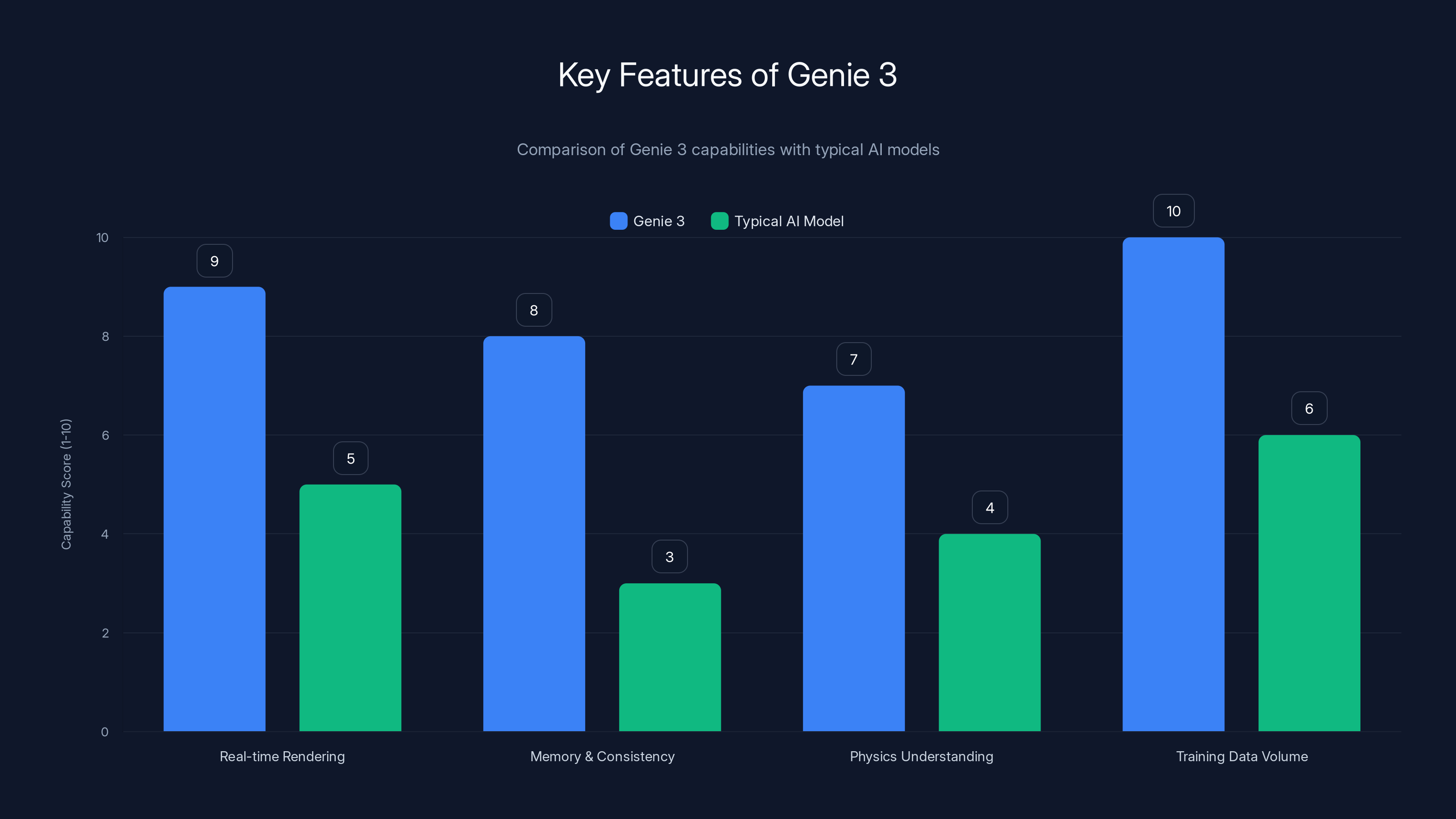

Genie 3 excels in real-time rendering and utilizes extensive training data, providing better memory and physics understanding compared to typical AI models. Estimated data.

Safety Guardrails: What You Can't Build

Project Genie isn't a lawless creative sandbox. There are guardrails, and they're actively enforced.

I couldn't generate anything remotely resembling nudity. That was enforced at the Gemini level—the prompt was rejected before it even reached the image generation stage.

I couldn't generate copyrighted characters or content. Disney, Marvel, Star Wars, DC, animated franchises—all blocked. This is partly legal protection post-Disney cease-and-desist, and partly responsible AI practices.

I couldn't generate graphic violence or gore. Disturbing imagery was filtered out.

The interesting part is how transparent this all was. When I tried to create a Little Mermaid world, I didn't get an error. I got a clear explanation that I couldn't generate worlds based on copyrighted characters, and I was invited to create an original underwater world instead.
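The flow described here (screen the prompt first, then return an explanation plus an alternative instead of a bare error) can be sketched as follows. The blocklist and `check_prompt` are hypothetical toys: the article says Gemini does this filtering, and a model-based classifier wouldn't rely on substring matching.

```python
from dataclasses import dataclass
from typing import Dict, Optional

# Hypothetical blocklist mapping a protected franchise to an original alternative.
# A production system would use a model-based classifier, not substring matching.
BLOCKED: Dict[str, str] = {
    "little mermaid": "an original underwater kingdom",
}


@dataclass
class Verdict:
    allowed: bool
    message: Optional[str] = None


def check_prompt(prompt: str) -> Verdict:
    """Screen a prompt before any image or world generation runs."""
    text = prompt.lower()
    for name, alternative in BLOCKED.items():
        if name in text:
            return Verdict(False,
                           "Worlds based on copyrighted characters aren't supported. "
                           f"Try {alternative} instead.")
    return Verdict(True)


print(check_prompt("A Little Mermaid underwater world").message)
# Worlds based on copyrighted characters aren't supported. Try an original underwater kingdom instead.
print(check_prompt("A marshmallow castle in the clouds").allowed)  # True
```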

These guardrails are reasonable, especially in a world where AI is under intense legal and regulatory scrutiny. They prevent copyright infringement, they prevent misuse, and they keep the tool from generating harmful content.

But they also reveal a constraint: you're building within a specific creative box. You can't lean on existing intellectual property as inspiration. That's actually fine for most users, but it does eliminate an entire category of possible creations.

There's also an implicit brand safety guardrail. Google wouldn't want viral videos of Project Genie generating disturbing worlds or clearly infringing content. The guardrails protect both users and the company.

The question is whether these guardrails will evolve. As model safety improves, as legal precedents clarify, will these restrictions loosen? Or will they tighten? Right now, they're in a reasonable middle ground.

The World Model Race Is Heating Up

Project Genie doesn't exist in a vacuum. There's a competitive race happening in world models, and it matters far more than most people realize.

Fei-Fei Li's World Labs released Marble, a world model product, late last year. Marble generates persistent 3D worlds from text, images, or video that can be edited and exported, and World Labs pitches it at creators in gaming, VFX, and VR as well as at simulation and robotics work.

Runway, the AI video generation startup, launched their own world model recently. Runway's approach emphasizes video synthesis and creative applications. Their world model is integrated into their video generation tool, allowing for more consistent and controllable long-form video generation.

Yann LeCun's AMI Labs is focused entirely on developing world models. LeCun, a legendary figure in AI who spent years at Meta, is building from first principles with a focus on scaling world models efficiently.

OpenAI has been quiet about world models publicly, but the company has almost certainly been working on this internally. The capability is too important to AGI development to ignore.

What's emerging is a clear pattern: every major AI lab and company understands that world models are crucial. They're not a novelty. They're fundamental to the next phase of AI development.

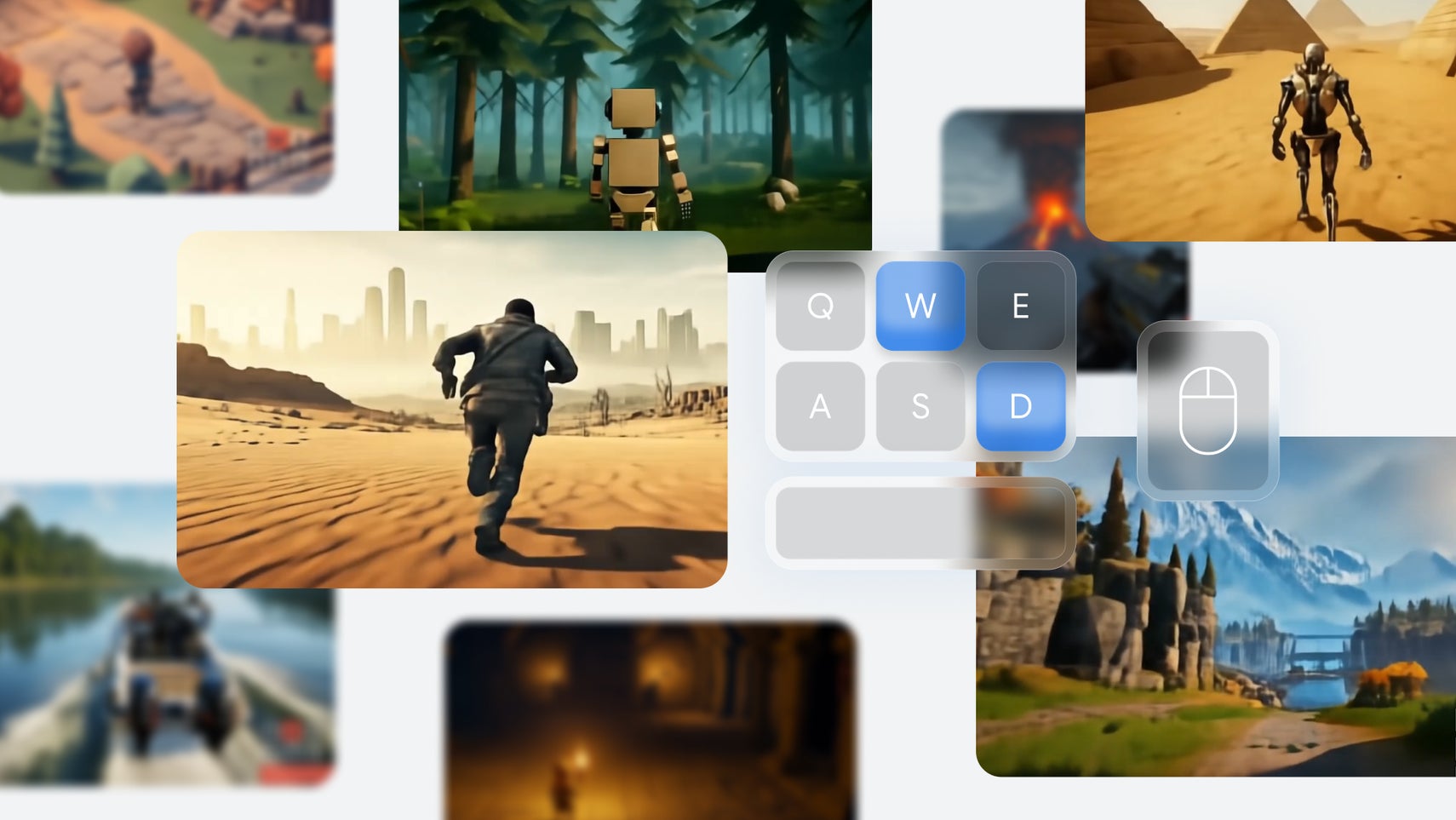

The race has both near-term and long-term implications. Near-term, whoever builds the best world model for consumer applications wins that market segment. Games, interactive entertainment, creative tools—these are all viable businesses built on world models.

Long-term, whoever masters world models gets closer to AGI. If you can teach an AI system to understand and predict how the world works, you've solved a massive part of the intelligence puzzle.

Project Genie's release is Google saying, "We're in this race, we're shipping products, and we're ready to compete." It's a statement of intent and capability.

From Entertainment to Embodied AI: The Real Endgame

Here's where the conversation shifts from cool to genuinely important.

Games are the launch vehicle. They're a clear commercial use case. People understand games. The market exists. Companies will pay for tools that make game development easier or enable new types of interactive experiences.

But that's not why DeepMind is actually building world models.

The real application is training embodied agents—basically, robots and AI-controlled systems—in simulation before they ever interact with the real world. Think about how you'd train a robot to navigate a house, manipulate objects, or perform complex tasks. Training in the real world is slow, expensive, and error-prone. Train in a simulation first? Dramatically faster and cheaper.

World models enable this by creating simulations that are physics-aware and consistent. A robot trained in a world model simulation can transfer its learning to the real world, but only to the extent that the simulation has captured the rules of physical reality.

This is why companies like Boston Dynamics care about world models. This is why Elon Musk's Tesla is investing heavily in robotics and simulation. This is why this matters so much.

DeepMind's longer-term strategy is almost certainly: perfect world models through consumer gaming and feedback, then license that technology to robotics companies, autonomous vehicle manufacturers, and industrial automation companies for serious money.

The games aren't the product. They're the training ground.

This also hints at why DeepMind is being so open about releasing Project Genie and gathering user feedback. They need massive amounts of diverse, real-world usage data to improve the models. Gaming communities will provide that. Users will create worlds the researchers never anticipated, find edge cases, generate patterns the model needs to learn from.

It's a clever business model: let consumers drive innovation and improvement through actual usage, gather valuable training data, and then deploy that knowledge in far more lucrative applications.

Estimated data shows that extending session duration from 60 seconds to 5 minutes reduces concurrent user capacity by 80%.

Technical Limitations and Trade-offs

Project Genie is experimental, and that shows in several technical areas.

Interaction depth is limited. You can move through worlds and the environment responds, but the types of interactions are constrained. You can't pick up arbitrary objects and use them in novel ways, dynamically alter the world, or hold complex dialogue with NPCs. The model supports basic environmental interaction, not the kind of physics simulation that engines like Unreal Engine provide.

Visual consistency degrades over time. As mentioned, because the model is predicting frame-by-frame, errors accumulate. After extended exploration, you'll notice visual artifacts, weird textures, objects behaving strangely. This isn't a bug; it's a fundamental limitation of auto-regressive generation.

Scalability is expensive. The compute cost per user per second is high. That's why the 60-second limit exists. Until inference becomes dramatically faster or cheaper, scaling this to millions of concurrent users is economically challenging.

Training data bias is implicit. Genie 3 was trained on video data, which biases the model toward patterns, structures, and scenarios that exist in that training data. Novel or unusual environments sometimes confuse the model because they weren't well-represented in training.

Physics accuracy is approximate. The model doesn't use true physics simulation. It's predicting what a realistic-looking next frame would be based on patterns. This works reasonably well for most scenarios but breaks down in unusual situations where physics would behave in counterintuitive ways.

Determinism and control are limited. You can't precisely control every detail of generated worlds. There's inherent randomness and stochasticity in the generation process. Two identical prompts might generate slightly different worlds.
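Stochastic generation typically comes from sampling with a random number generator. In the toy sampler below, leaving `seed` unset gives a different layout on each call, while fixing it makes the output repeatable. The article doesn't say whether Project Genie exposes any seed control; `sample_layout` is purely illustrative.

```python
import random
from typing import List, Optional, Tuple


def sample_layout(prompt: str, seed: Optional[int] = None,
                  n: int = 4) -> List[Tuple[str, float, float]]:
    """Toy sampler: scatter words from the prompt at random (x, y) positions."""
    rng = random.Random(seed)  # seed=None draws fresh system randomness
    words = [w.strip(",.") for w in prompt.split() if len(w) > 3]
    return [(rng.choice(words), round(rng.uniform(0, 100), 1),
             round(rng.uniform(0, 100), 1)) for _ in range(n)]


prompt = "castle, candy trees, chocolate river"
a = sample_layout(prompt)  # unseeded: usually differs from run to run
same = sample_layout(prompt, seed=7) == sample_layout(prompt, seed=7)
print(same)  # True
```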

These aren't flaws in the research sense. These are fundamental properties of the current approach. Solving them requires different architectures, better training data, or entirely new approaches.

Generative AI Integration: The Broader Context

Project Genie doesn't exist separately from other generative AI tools. It's part of a larger ecosystem.

You need image generation to create the starting point. That's why Nano Banana Pro is embedded. But it also means Project Genie inherits all the issues current image generation models face: bias, sometimes inaccurate details, style drift.

You need language understanding to interpret prompts. That's why Gemini is integrated. But language understanding is imperfect. Ambiguous prompts might be interpreted differently than you intended.

You need world modeling to generate consistent environments. That's Genie 3. But world models have their own limitations and failure modes.

The system is only as good as its weakest link. If the image generation fumbles, the world model might work with a flawed foundation. If language understanding misses nuance in the prompt, you'll get something unexpected.

But here's the beautiful part: these three models are complementary. Imperfect image generation can be edited by users. Language understanding is augmented by user feedback. World modeling is visually grounded in the image. Together, they're more capable than any single component.

This is the pattern we're seeing across generative AI: instead of single monolithic models, we're seeing orchestrations of multiple models, each handling what it's good at, with human feedback in the loop.

Practical Use Cases and Creative Possibilities

Beyond the cool factor, what can people actually use Project Genie for?

Game prototyping is an obvious one. Designers can quickly generate test worlds to explore ideas before investing in full development. "Does this layout feel good to navigate? How do players feel exploring this environment?" You can answer those questions in minutes with Project Genie instead of weeks of traditional level design.

Storyboarding and visualization is another strong use case. Write a scene description, generate a world, capture video, and you've got visual reference material for actual game development, film production, or animation.

Education and training could use world generation. Imagine generating historical environments for educational purposes. "Show me a medieval village in 1200 AD." The limitations mean it wouldn't be perfectly accurate, but it could still provide useful visual context.

Creative expression is the most open-ended. Some users will just want to build increasingly absurd worlds. A city floating in space made of impossible architecture. A surreal landscape where everything is made of water. A candy land that shifts and changes. These have commercial value to creators, streamers, and artists.

Mod creation could be interesting if the tool matures. Imagine game modders using Project Genie to quickly generate variations on game worlds, then fine-tuning them with traditional tools.

The use cases will expand as the technology improves. Right now, we're at the "cool toys" phase. But that's how transformative technologies often start.

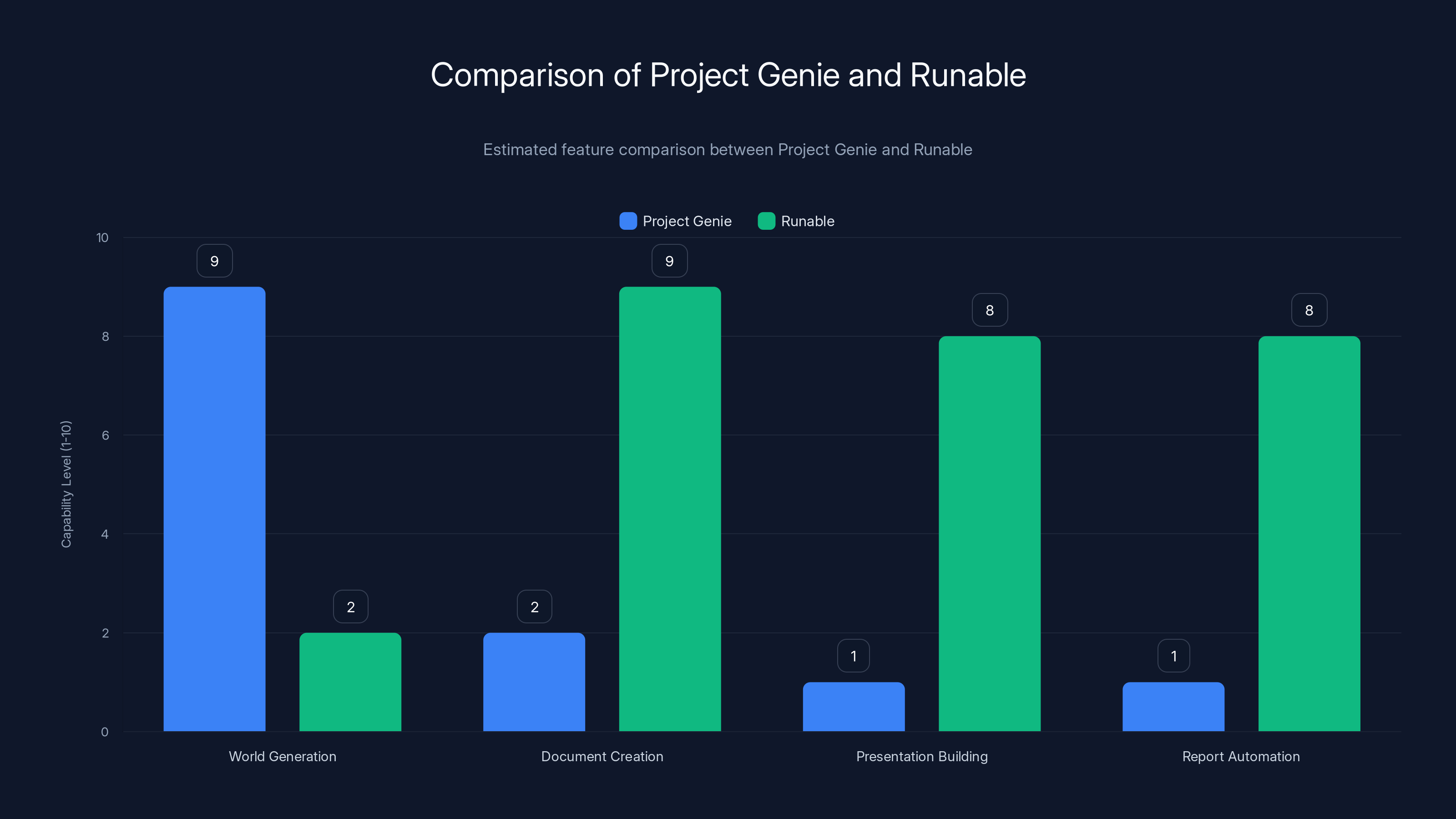

Project Genie excels in world generation, while Runable is stronger in document and presentation automation. Estimated data.

Comparing Project Genie to Existing Tools

How does Project Genie stack up against other game creation and world generation tools?

Unreal Engine and Unity are the industry standard for game development. They offer incredible control and fidelity. But they also require extensive learning and manual work. Project Genie is the opposite: minimal learning, minimal manual work, but less control and fidelity. They're not competing; they're in different categories. Project Genie is concept generation. Unity and Unreal are production tools.

Runway's world model is more focused on video generation than interactive exploration. You get video outputs rather than navigable environments. Different use case, different tool.

World Labs' Marble produces persistent 3D worlds you can edit and export into other tools. That makes it closer to a 3D scene-creation pipeline than to Project Genie's real-time, frame-by-frame exploration.

Procedural generation tools in game engines can create worlds, but they're rule-based systems that humans design. Project Genie is generative, meaning it creates novel worlds from descriptions.

Project Genie's unique position is as an accessible, generative, interactive world creation tool. You don't need to be a programmer or game developer. You don't need specialized knowledge. You describe a world and explore it. That's genuinely novel.

For hobbyists, creative professionals, and people who want to experiment with world generation, Project Genie occupies a unique space.

The Safety and Copyright Implications

Project Genie exists in a legally and ethically complex landscape.

Copyright is the immediate issue. Disney's cease-and-desist showed that AI training on copyrighted material and generation of copyrighted content is legally fraught. Google has responded by implementing strong filtering. You can't generate Disney worlds. This is both legally protective and creatively limiting.

The broader question is whether generative AI should be allowed to train on copyrighted material at all. This is being litigated right now in multiple jurisdictions. The outcome will determine how future world models are trained and what they can generate.

There's also the question of synthetic training data. Once Project Genie exists and people use it to generate worlds, that synthetic data could theoretically be used to train future models. Is that ethical? Is that legal? We don't know yet.

Deepfakes and misinformation are potential risks. As world models get better, could they be used to generate convincing false videos of events? It's theoretically possible, though current technology isn't there yet.

Bias and representation matter. Genie 3 was trained on video data that reflects real-world biases. Those biases get encoded into the model. When you generate worlds, you're often seeing those biases reflected back.

Google's approach of releasing slowly, gathering feedback, and implementing safety measures seems reasonably responsible. But these are unsolved problems. As the technology gets better, the risks get more serious.

The Future of World Models and AI

Where does this go from here?

Immediate improvements are probably in inference speed and visual quality. Faster models mean longer sessions. Better models mean more consistent, realistic, or beautiful worlds.

Interaction depth will likely expand. Right now, interaction is basic. Future versions might support object manipulation, NPC interaction, and more complex physics. Imagine stepping into a Project Genie world you could interact with like a real game.

Mobile and edge deployment could be coming. Right now, Project Genie requires cloud compute. But as models become more efficient, could they run on phones or local machines? That would democratize access and unlock new use cases.

Integration with other tools is almost certain. Imagine Project Genie as a plugin in game engines, 3D modeling tools, or animation software. Generate a base world, then refine it with traditional tools.

Multi-user worlds could be possible. Right now, you explore alone. Could future versions support multiple players exploring the same generated world simultaneously?

Embodied AI training will almost certainly be the next phase. Once the consumer product roadmap is established, DeepMind will probably release specialized APIs for robotics companies and embodied AI researchers to use world models for training.

Regulation will shape development. As governments implement AI regulations, world model development will need to fit within those constraints. That could slow progress or redirect it, depending on how thoughtful regulation is.

The long-term trajectory is clear: world models will become more capable, more efficient, more accessible, and more integrated into AI development. Project Genie is the consumer-facing version of something that will have profound implications for robotics, autonomous systems, and eventually AGI development.

Project Genie leads in user engagement with a score of 9, while it also scores high in feature richness. Estimated data based on current trends.

Getting Started with Project Genie: What You Need

If you want to actually use this tool right now, here's what you need:

A Google account is the baseline.

Google AI Ultra subscription is required. This is Google's premium tier for their AI services.

US location is currently required. Project Genie is rolling out to US users first.

A web browser is all the interface you need. No downloads, no installations, no special hardware on your end.

Once you have those, the actual interface is straightforward. You describe your world and character, edit the generated image if needed, and then explore the generated environment.

There's no extra charge to experiment with the tool beyond your AI Ultra subscription, but the underlying compute is subsidized, not free. Google is absorbing significant costs to make this accessible.

Tips for success:

Be specific but creative in descriptions. Vague prompts produce vague results.

Use art style modifiers: "claymation," "watercolor," "cyberpunk aesthetic." These help the model aim for specific looks.

Start simple. Master basic world generation before trying complex scenarios.

Edit the generated image before Genie creates the world. Fix obvious issues and the world model will have better input.

Experiment with the remix feature. Building on existing worlds is often more productive than starting from scratch.

Download and share interesting worlds. The gallery and community feedback help DeepMind improve the model.

Accept imperfection. This is experimental software. Weird things will happen. That's part of the fun.

What DeepMind's Ambitions Really Are

Looking at Project Genie, it's easy to see it as a cool gaming tool. But the real ambitions are bigger.

DeepMind is investing in world models because it believes, reasonably given a view widely shared among AI researchers, that world models are crucial to AGI. If you can teach an AI system to actually understand how the world works, not just recognize patterns, you've solved a massive problem.

World models also have enormous commercial potential in robotics, autonomous vehicles, industrial automation, and simulation-based training. The company or lab that perfects world models gets access to huge markets.

Project Genie serves multiple purposes simultaneously. It's a research tool gathering data about how humans interact with generated worlds. It's a product that builds user relationships and captures value. It's a proof-of-concept showing that DeepMind can ship research, not just publish papers. And it's a stepping stone toward more ambitious applications.

By releasing to the public and gathering feedback, DeepMind is doing something smart: letting the community help improve the model while building familiarity and excitement. Users who experiment with Project Genie today might be the ones building robotics companies that license world model technology tomorrow.

It's strategic, it's smart, and it reveals how seriously DeepMind takes the world model opportunity.

Comparing Project Genie to AI-Powered Automation Platforms

While Project Genie focuses on interactive world generation, there's a broader context of AI-powered automation tools transforming how teams work. Platforms like Runable represent a different but related frontier: automating document creation, presentations, reports, and complex workflows.

Project Genie and tools like Runable share core similarities. They both use AI agents to understand user intent and generate sophisticated outputs. Runable's AI agents create presentations, documents, reports, and images from simple prompts, much like Genie generates worlds from descriptions.

Where they diverge is scope. Project Genie specializes in interactive world generation for creative and research applications. Runable ($9/month) focuses on business automation, enabling teams to build presentations, documentation, and reports without manual effort.

Both represent the wave of AI tools moving beyond chat-based interfaces toward specialized, output-oriented agents. Instead of having a conversation with an AI, you specify what you want and the system generates it for you.


The Convergence of AI Technologies

Project Genie demonstrates something important: the convergence of multiple AI capabilities into specialized tools.

You have language models handling interpretation, image generation providing visual grounding, and world models creating consistent environments. Five years ago, none of these technologies was mature enough to ship in a consumer product. Now they're orchestrated together into one.

This pattern will repeat. We'll see more tools that combine multiple AI capabilities in novel ways. The question becomes: which combinations are most valuable? Where do users get the most benefit from AI working across multiple domains?

Project Genie found a great answer: let people describe worlds in language, visualize them with image generation, and then explore them with world models. Each technology handles what it's good at.

Future products will discover other powerful combinations. Some will be straightforward. Others will be surprising. But the pattern is clear: the next era of AI isn't about better single models. It's about smarter orchestration of multiple models toward specific user outcomes.

Conclusion: The Marshmallow Castle Moment

I started this exploration with a marshmallow castle. It's not profound. It's whimsical. But it's meaningful because it represents something that wasn't possible two years ago.

I described a fantasy world, and an AI system actually built it. Not perfectly, not with the kind of fidelity that professional game developers would achieve, but real enough that I could explore it, move through it, and experience it.

That crosses an invisible line. We've moved from AI that talks about things to AI that builds things: AI that understands not just language but spatial relationships, physics, causality, and interaction.

Project Genie is explicitly experimental. It's flawed. It has real limitations. The 60-second window feels constraining. The graphics sometimes look weird. Realistic worlds don't work well. Copyrighted content is blocked.

But none of that changes the fundamental fact: this works. It's a shipping product, available to paying subscribers today, that does something genuinely novel.

The world model race is real, the competition is fierce, and Project Genie is Google's opening move. DeepMind is signaling that they're in this game, they're capable, and they're building toward something bigger than gaming.

That's what matters most. The marshmallow castle is cool. The world models that will eventually train robots and autonomous systems? That's transformative.

We're in the early phase of something significant. Project Genie is the obvious, consumer-facing manifestation. But the implications extend far beyond gaming and creativity into robotics, simulation, training, and eventually AGI development.

If you've got a Google AI Ultra subscription and access in the US, spend 20 minutes playing with Project Genie. Try to break it. Get creative. Embrace the whimsy. You're not just having fun; you're contributing training data that will improve the world models of the future.

And if you're thinking about what this means for your industry, your work, or your future, world models are worth paying attention to. This is barely the beginning.

FAQ

What is Project Genie and how does it work?

Project Genie is Google DeepMind's AI system for generating interactive game worlds from text descriptions and images. The system works in three stages: first, you describe the world and character you want, then Nano Banana Pro (Google's image generation model) creates a visual representation, and finally Genie 3 (the world model) transforms that image into an interactive, explorable environment. The entire process takes seconds, and you can navigate through the generated world in either first-person or third-person perspective.
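To make the three stages concrete, here's a minimal Python sketch of how such a pipeline could be wired together. It's illustrative only: `WorldSession`, `generate_image`, `generate_world`, and `run_pipeline` are hypothetical names, not a real Google API, and the stubs return placeholder strings where the real system would call Nano Banana Pro and Genie 3. The optional `edit` hook stands in for the step where you tweak the generated image before the world is built.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional


@dataclass
class WorldSession:
    """Hypothetical record of one request moving through the pipeline."""
    world_prompt: str
    character_prompt: str
    perspective: str = "first-person"  # or "third-person"
    stages: List[str] = field(default_factory=list)
    image: Optional[str] = None
    world: Optional[str] = None


def generate_image(world_prompt: str, character_prompt: str) -> str:
    # Placeholder for the image model (Nano Banana Pro in the article).
    return f"image<{world_prompt} | {character_prompt}>"


def generate_world(image: str, perspective: str) -> str:
    # Placeholder for the world model (Genie 3 in the article).
    return f"world<{image} @ {perspective}>"


def run_pipeline(session: WorldSession,
                 edit: Optional[Callable[[str], str]] = None) -> WorldSession:
    # Stage 1 (your description) is the input; stage 2 turns it into a still image.
    session.image = generate_image(session.world_prompt, session.character_prompt)
    session.stages.append("image")
    # Optional user edit of the image before the world is generated.
    if edit is not None:
        session.image = edit(session.image)
        session.stages.append("edit")
    # Stage 3: the (possibly edited) image becomes an explorable environment.
    session.world = generate_world(session.image, session.perspective)
    session.stages.append("world")
    return session


if __name__ == "__main__":
    s = run_pipeline(WorldSession("a marshmallow castle", "a small knight"),
                     edit=lambda img: img + " +brighter sky")
    print(s.stages)  # ['image', 'edit', 'world']
```

Keeping the image as an explicit intermediate is what makes it possible to inspect and fix it before the world-generation stage runs.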

Who can access Project Genie right now?

At launch, Project Genie is available exclusively to Google AI Ultra subscribers in the United States. The tool is an experimental research preview: it's limited in some ways but fully functional. Access is expected to expand based on user feedback and infrastructure capacity, but for now you need an active Google AI Ultra subscription and a US-based account to use it.

Why is Project Genie limited to 60 seconds?

The 60-second limit exists because Genie 3 is an autoregressive model that requires dedicated GPU compute for each user session. Every generated frame demands significant computational resources, and extending sessions beyond 60 seconds would drastically reduce how many users Google can serve at once. As inference becomes more efficient, this limit will likely be raised, but for now 60 seconds balances computational resources with user experience.
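Why does session length translate so directly into compute? Here's a toy autoregressive loop in Python. It's a sketch of the general technique, not Genie 3's architecture: `toy_model_step` is a hypothetical stand-in that just averages recent frames and nudges them by the user's action, and the 24 fps frame rate and 16-frame context window are assumed parameters. The structural point carries over: each frame needs its own model call conditioned on frames that were only just generated, so a 60-second session at 24 fps means 1,440 strictly sequential calls that can't be precomputed.

```python
from typing import Callable, List, Sequence

Frame = List[float]  # toy stand-in for an image: a short vector of pixel values
StepFn = Callable[[Sequence[Frame], str], Frame]


def toy_model_step(history: Sequence[Frame], action: str) -> Frame:
    """Hypothetical 'model': average the context frames, then shift by the action."""
    n = len(history)
    avg = [sum(values) / n for values in zip(*history)]
    shift = {"forward": 1.0, "back": -1.0}.get(action, 0.0)
    return [v + shift for v in avg]


def rollout(step: StepFn, first_frame: Frame, actions: List[str],
            context: int = 16) -> List[Frame]:
    """Generate one new frame per user action, each conditioned on recent frames."""
    frames = [first_frame]
    for action in actions:
        # Frame t depends on frame t-1, so this loop cannot be parallelized
        # across time: one model call per frame, in order.
        frames.append(step(frames[-context:], action))
    return frames


def frames_per_session(seconds: float, fps: int) -> int:
    """Number of sequential model calls a session of this length needs."""
    return round(seconds * fps)


if __name__ == "__main__":
    calls = frames_per_session(60, 24)
    frames = rollout(toy_model_step, [0.0, 0.0], ["forward"] * calls)
    print(calls, len(frames))  # 1440 1441
```

Doubling the session length doubles the calls per user, which, for a fixed pool of GPUs, means fewer users served per hour.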

Can I generate worlds based on Disney or other copyrighted characters?

No. Project Genie explicitly blocks generation of copyrighted content, Disney characters, and unauthorized IP. This is both a legal safety measure following Disney's cease-and-desist letter and a responsible AI practice. You can generate original worlds and characters, but building directly on existing fictional universes is not permitted by the system's safety filters.

What's the difference between Project Genie and other game creation tools like Unity or Unreal Engine?

Project Genie is a generative tool for rapid world creation, while Unity and Unreal Engine are production engines for professional game development. Project Genie requires minimal technical knowledge and creates worlds in seconds, but with less control and lower fidelity. Unity and Unreal require extensive learning but offer complete creative control. They're complementary tools: use Project Genie for concept generation and rapid prototyping, then refine in traditional engines if you need production-quality results.

How does Project Genie connect to world models and AGI development?

World models are AI systems that learn and simulate how environments work, including physics, causality, and interaction. DeepMind and other AI labs believe world models are crucial for achieving artificial general intelligence (AGI). Project Genie demonstrates consumer-facing world model capability, but the real strategic importance lies in robotics and simulation applications. Eventually, companies will use world models to train robots and autonomous systems in simulation before deploying them in the real world, where the implications are far more significant than gaming.

Why do whimsical worlds work better than realistic ones in Project Genie?

Realistic worlds demand precise physics, accurate proportions, and consistent lighting, details our brains are trained to recognize as correct or wrong. Whimsical, stylized, and fantasy worlds are more forgiving. A claymation castle can have impossible geometry and it fits the aesthetic; a photorealistic building must follow actual architectural rules. Genie 3 hasn't learned enough constraints about rigid realistic physics to handle photorealism consistently, but it excels at fantasy, cartoon, and stylized environments where physics can bend creatively.

Can I download and share worlds I create in Project Genie?

Yes. You can download videos of any world you explore and share them on social media or other platforms. This is one way users contribute to training data: by generating diverse worlds and exploring them, they're providing feedback that helps DeepMind improve the model. The gallery and remix features also let you build on existing community-generated worlds or share your creations with other Project Genie users.

What are the main limitations of Project Genie?

The primary limitations are: a 60-second generation limit due to compute constraints; inconsistent results with realistic imagery; no complex object interaction (you can't pick up arbitrary items and use them creatively); copyright filtering that blocks IP-based worlds; and visual consistency that degrades during extended exploration, because errors in frame-by-frame prediction accumulate. These are less flaws than trade-offs of the current approach, and they should ease as the technology evolves.
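The last limitation in that list, drift from frame-by-frame prediction, can be illustrated with a toy simulation in Python. This is not how Genie 3 works internally; it's a hypothetical setup with made-up numbers. A character truly moves exactly one unit per frame, while the "model" predicts each new position from its own previous prediction, adding a small systematic bias plus random noise. Because every prediction builds on the last one, errors carry forward and accumulate instead of resetting each frame.

```python
import random
from typing import List


def drift(steps: int, bias: float = 0.01, noise: float = 0.05,
          seed: int = 0) -> List[float]:
    """Return |predicted - true| position after each step of a toy rollout.

    The true position advances exactly 1.0 per step. The toy model predicts
    the next position from its OWN previous prediction, adding a small
    systematic bias plus Gaussian noise (both values are illustrative guesses),
    so any error made at step t is still present at step t+1.
    """
    rng = random.Random(seed)
    true_pos, pred_pos = 0.0, 0.0
    errors = []
    for _ in range(steps):
        true_pos += 1.0
        pred_pos += 1.0 + bias + rng.gauss(0.0, noise)
        errors.append(abs(pred_pos - true_pos))
    return errors


if __name__ == "__main__":
    errs = drift(1440)
    for t in (24, 240, 1440):  # 1 s, 10 s, 60 s at an assumed 24 fps
        print(f"frame {t:5d}: error {errs[t - 1]:.2f}")
```

With the bias alone, the error grows roughly linearly with the number of frames; the noise adds a random-walk component on top. Longer sessions therefore drift further from a consistent world unless the model corrects itself.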

How does Project Genie compare to World Labs' Marble and Runway's world model?

Each serves different purposes. Project Genie is consumer-facing and designed for interactive entertainment and creative exploration. World Labs' Marble is also publicly available, but it centers on generating persistent 3D worlds that can be exported to other tools, which suits creative pipelines and simulation work. Runway's world model grows out of the company's video-generation roots. Project Genie's distinguishing feature is real-time interactive exploration in a prompt-driven product for general users, though it lacks the specialization of more research-focused alternatives.

What skills do I need to use Project Genie effectively?

You don't need technical skills at all. The tool is designed for anyone to use through natural language prompts. However, effectiveness improves with a few practical skills: writing clear, specific descriptions helps generate better worlds, understanding art style terminology ("claymation," "watercolor," "cyberpunk") helps target specific aesthetics, and iterating on prompts teaches you what the model responds to. Essentially, it's a creative tool that rewards experimentation and clear communication of your vision.

Key Takeaways

- Project Genie generates interactive game worlds from text descriptions using orchestrated AI models (Genie 3, Nano Banana Pro, and Gemini)

- The tool is limited to 60 seconds of world exploration because of GPU compute constraints rather than technical impossibility

- Whimsical and stylized worlds work beautifully; realistic scenes are inconsistent, and copyrighted content is blocked

- World models represent a crucial stepping stone toward AGI, with major AI labs racing to develop superior versions

- The long-term endgame for world models is training robots and embodied AI systems in simulation before real-world deployment
