Introduction: Welcome to AI Time

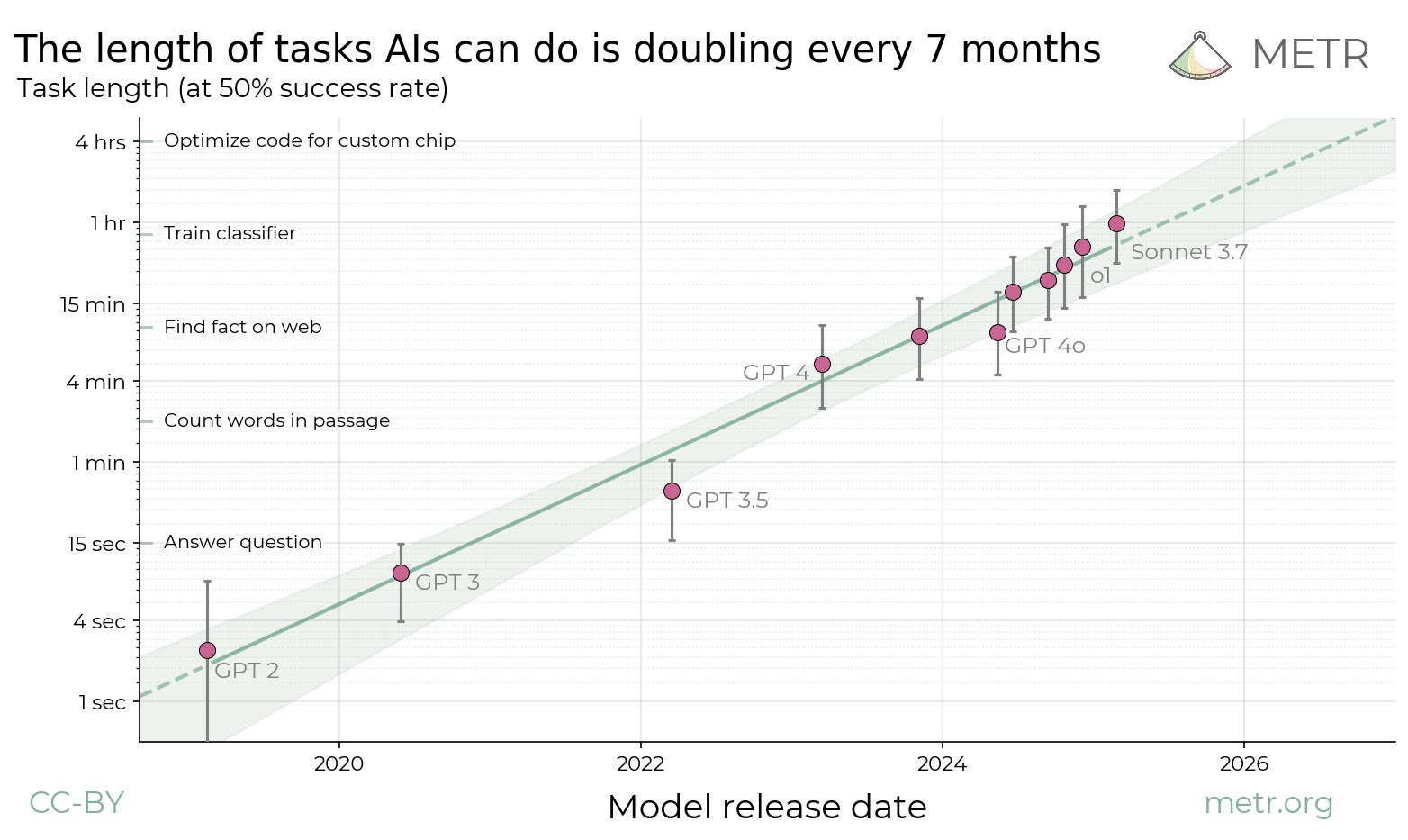

We're living through something unprecedented. Not a gradual technological shift that plays out over decades, but a compression of change happening in months. What used to take ten years now happens in three. What took three years happens in a quarter.

This isn't hyperbole. This is the reality of AI Time.

Most people sense it. Your job description is different than it was last year. The tools your team uses barely existed eighteen months ago. The skills that felt valuable six months back? Already outdated. You're not paranoid for noticing this. You're paying attention.

But here's what trips people up: we keep trying to understand AI's impact using frameworks designed for slower change. We ask "Will AI replace my job?" as if it were a binary switch. We wonder if AI will "take over" as if we're in a movie. We create five-year plans that are obsolete before the ink dries.

The real problem isn't any single AI application. It's that we're living in a time signature humans never evolved to handle. Our brains are wired for incremental change. Year over year, we handle it fine. But AI Time? That's different.

Yet here's the thing that keeps me going: understanding this time compression is exactly what saves us. Once you see how AI Time actually works, you stop panicking about the wrong things. You start preparing for what actually matters.

Let's dig into what living in AI Time really means, why it feels so disorienting, and most importantly, why we're not actually screwed.

What Is AI Time, Anyway?

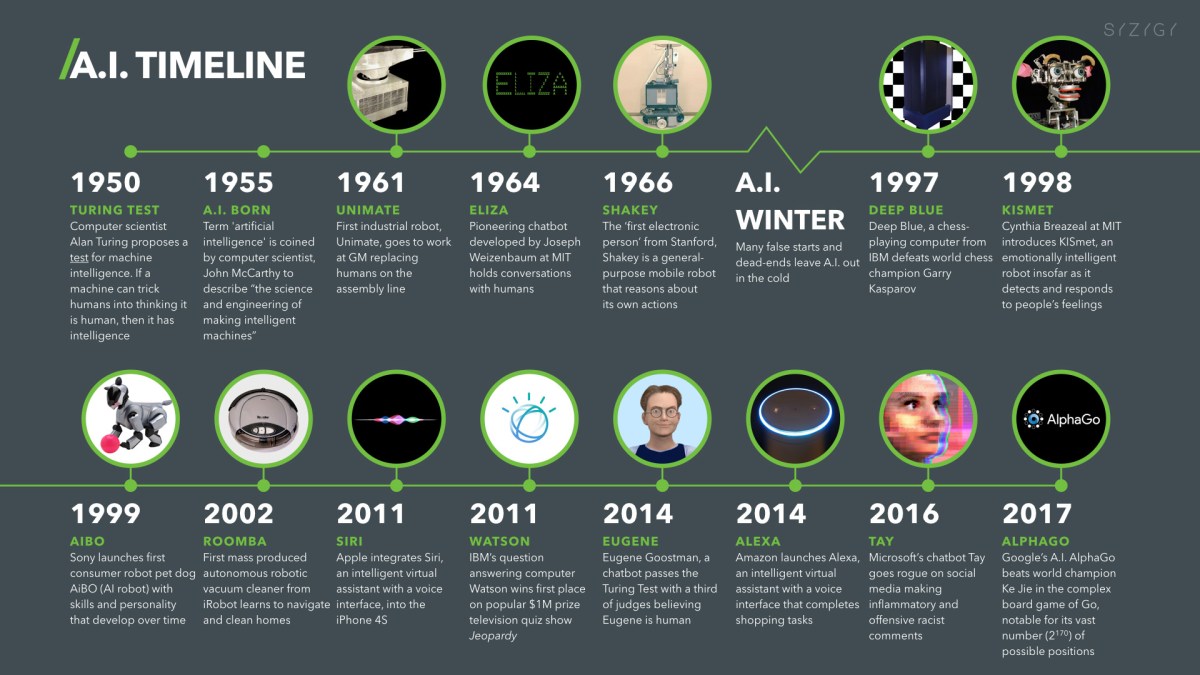

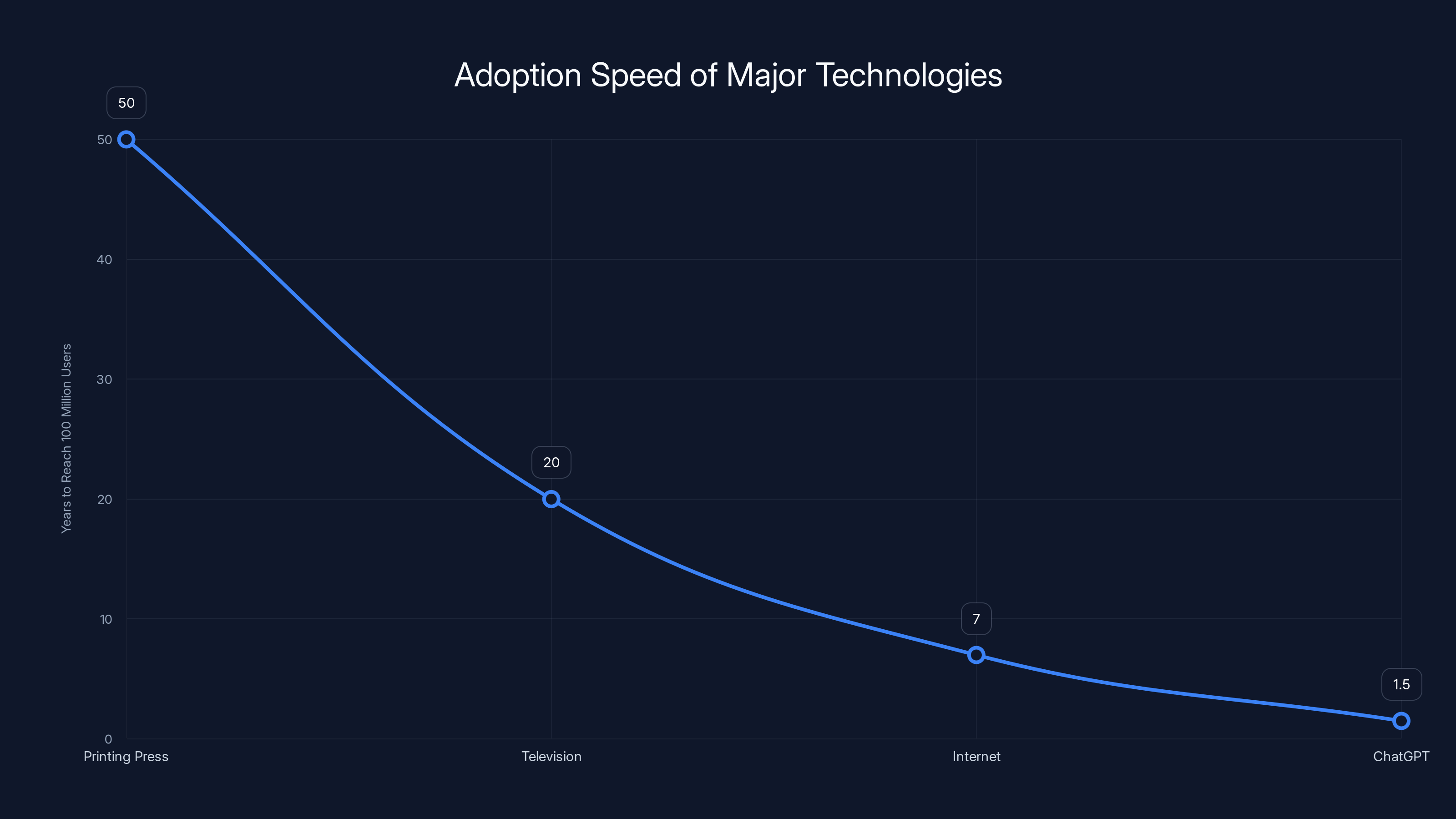

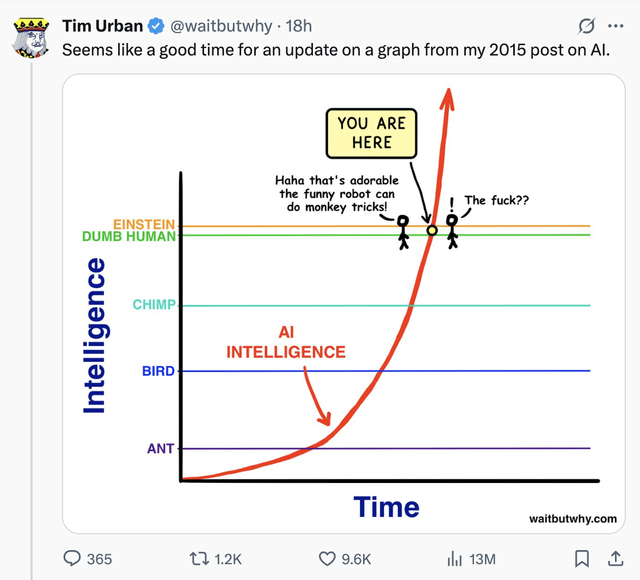

AI Time is fundamentally different from technological change we've experienced before. It's not just the speed of change that makes it unique. The printing press was fast for its era. The internet was fast. Television adoption was fast.

What makes AI Time different is the simultaneity of change across every domain at once.

When the web emerged, it didn't immediately touch manufacturing, agriculture, physical retail, or construction. It was a layer on top of existing systems. You could ignore it for a while if your job wasn't digital. Your accountant still worked the same way in 2000 as in 1995, even while web companies exploded.

AI is different. It's not a new layer. It's an intrusion into the foundation of knowledge work itself.

Consider what we've seen in just the past eighteen months. ChatGPT reached an estimated 100 million users within about two months of launch, faster than any consumer application before it. Not because it's perfect, but because it immediately proved useful for dozens of different professions simultaneously. Lawyers are using it to draft contracts. Doctors are using it to review research. Engineers are using it to debug code. Marketing teams are using it to brainstorm campaigns. Each discovery creates new pressure on adjacent industries.

The velocity is genuinely disorienting because there's no "wait and see" option anymore. By the time you decide whether to adopt an AI tool, three better versions exist and your competitors are already using them.

This compression creates a weird paradox. On one hand, everything feels urgent and unstable. On the other hand, the tools keep getting better and more accessible. Last year's

That's AI Time. It's not that AI is necessarily more dangerous than previous tech. It's that the disruption touches everything humans do at once: your job, your industry, your skills, your relationship with work itself.

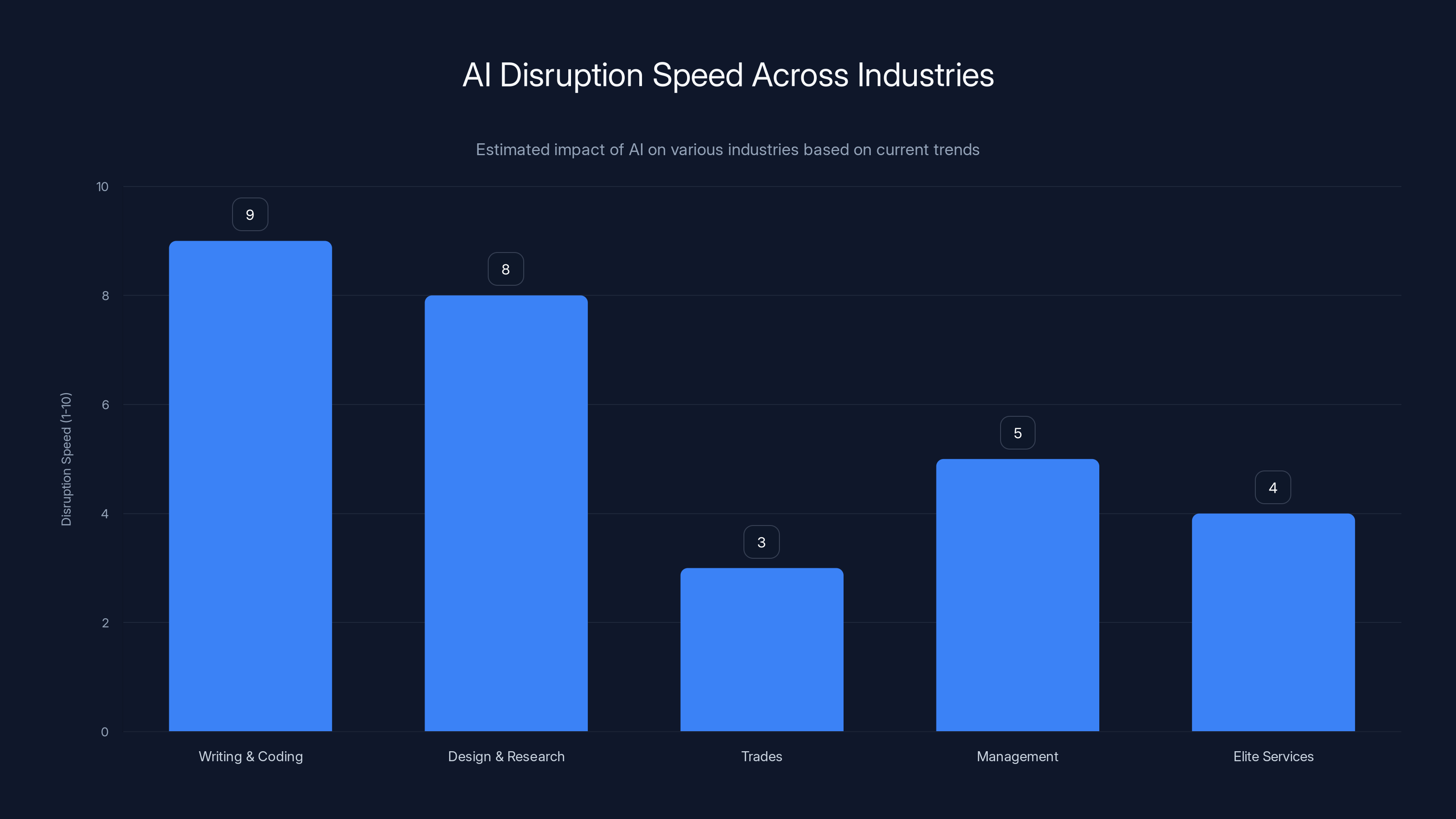

Knowledge-intensive fields like writing and coding are experiencing the fastest AI disruption, while trades and elite services are less affected. (Estimated data)

The Discomfort of Compressed Time

Here's what nobody talks about honestly: living in AI Time is psychologically exhausting.

Our brains evolved for gradual change. You learn a skill, use it for twenty years, maybe have to refresh it once. That's the rhythm humans are built for. Mastery takes time, and time used to be abundant.

AI Time inverts that. Mastery becomes a moving target. By the time you've mastered a tool, a better one exists. By the time you understand a technology's implications, it's already reshaping your industry.

This creates a specific kind of anxiety that's different from previous career concerns. It's not "Will I be replaced?" That's too simple. It's more like "By what will I be replaced, and when, and will I even see it coming?"

There's a familiar pattern in how people handle change. Humans adapt well to change we see coming. We adapt less well to change we don't understand. We adapt worst to change that defies our mental models entirely.

AI Time does all three simultaneously. It's coming fast (we see that). It's hard to understand (AI explanations are genuinely technical or insufferably oversimplified, nothing in between). And it defies mental models because it's not like previous technology shifts. There's no "AI adoption curve" that looks like previous S-curves. There's no "widespread adoption in 2027" timeline that feels reliable.

So you get this ambient anxiety. Not panic attacks. Just a persistent low-level sense that you're missing something important, that everyone's adapting except you, that the rug is about to get pulled.

The uncomfortable truth is that some of this anxiety is warranted. Some roles will be genuinely disrupted. Some skillsets will become less valuable. Some organizations built on outdated processes will get crushed.

But here's where most people's thinking fails: they assume this disruption is new. It isn't. Every major technology shift in history created winners and losers. The difference is velocity and visibility. In AI Time, you can see it happening in real-time. You can watch your industry transform in Slack channels instead of wondering about it in retrospect.

That visibility feels worse. But it's actually better.

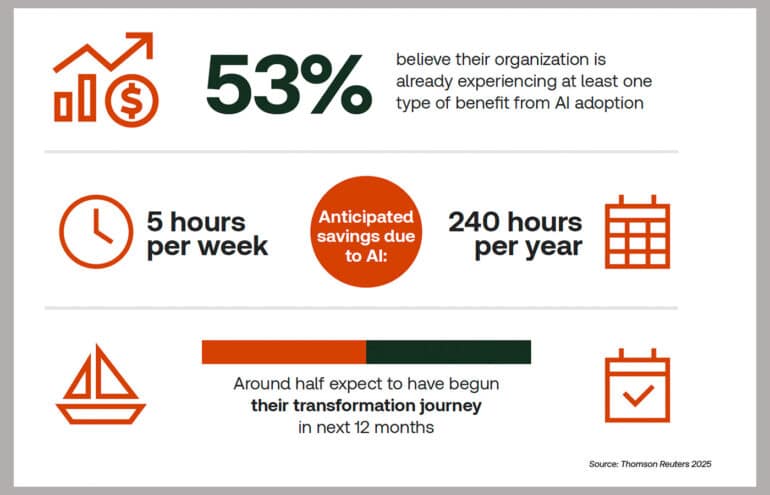

Estimated data shows that marketing teams spend 30% of their time on routine tasks like content formatting and deck building. By integrating AI tools, they can reclaim this time for strategic and creative activities.

Why Skills Are Becoming Liquid

One of the harshest realities of AI Time is that the concept of "expertise" as we've understood it is becoming unstable.

Historically, expertise meant accumulated knowledge in a domain. You spent ten years learning your field, and that knowledge had a half-life of maybe five to ten years. You could be an expert for a decade. Maybe longer if your field moved slowly.

In AI Time, the half-life of specific technical skills is shrinking dramatically. Not all skills. Not forever. But for knowledge work in fields AI can touch? The math is brutal.
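To make the "half-life" framing concrete, here is a minimal sketch that treats the value of a specific skill as decaying exponentially. That decay model is an assumption made for illustration (the article uses "half-life" as a metaphor, not a measured quantity), and the function name `remaining_value` and the half-lives plugged in below are hypothetical.

```python
def remaining_value(years_elapsed: float, half_life_years: float) -> float:
    """Fraction of a skill's original value left after `years_elapsed`,
    assuming (hypothetically) smooth exponential decay."""
    if half_life_years <= 0:
        raise ValueError("half_life_years must be positive")
    return 0.5 ** (years_elapsed / half_life_years)


# Hypothetical half-lives, chosen only for illustration.
for half_life in (10.0, 5.0, 2.0):
    kept = remaining_value(5.0, half_life)
    print(f"half-life {half_life:>4} years -> {kept:.0%} of value left after 5 years")
```

Under these assumed numbers, a skill with a ten-year half-life keeps roughly 71% of its value after five years, while one with a two-year half-life keeps roughly 18%. The exact figures matter less than the shape: shortening the half-life is what makes the math feel brutal.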

Consider programming. Five years ago, being good at writing Python meant something durable. The language changed slowly. Best practices evolved gradually. You could invest in mastery.

Now? A developer who's world-class at writing Python by hand is potentially less valuable than a junior developer who's excellent at directing AI to write Python. The skill isn't "Can you write Python?" anymore. It's "Can you specify what you want, review what AI produces, and catch errors?"

That's not a small shift. That's a complete recalibration of what "expertise" means.

This is happening across fields. In writing, the skill has shifted from "Can you produce prose?" to "Can you edit and direct AI prose?" In design, it's shifting from "Can you create layouts?" to "Can you guide AI through iteration?"

The uncomfortable part: previous expertise becomes baggage. The systems that made you valuable (the specific techniques, the workarounds you've memorized, the knowledge you've internalized) are suddenly irrelevant. Or worse, they slow you down because you're fighting against a tool that works differently.

It's like being an expert horseback rider right when cars were invented. The skills don't transfer. The muscle memory is wrong. The mental models don't apply.

But here's what's crucial and often missed: new expertise is emerging just as fast. The experts of AI Time aren't the people who memorized the most about their field. They're the people who understand how to work alongside AI.

Think of it like this: a master watchmaker might struggle with programming because the skills don't transfer. But someone who's good at understanding systems, breaking problems into components, and iteratively improving solutions? They'll adapt to AI-augmented work faster.

The liquid skill isn't in the specific domain knowledge. It's in the meta-skills: problem decomposition, quality judgment, creative constraint recognition, understanding what AI is actually good and bad at doing.

The Automation Paradox

Here's something counterintuitive that AI Time reveals: the more powerful AI becomes, the stranger work becomes.

We were promised automation would reduce toil. That's true. AI absolutely eliminates busywork. Spending two hours writing an email to a client? AI knocks that to fifteen minutes. Reviewing documentation for consistency? AI catches issues you'd miss manually.

But there's a flip side almost nobody anticipated.

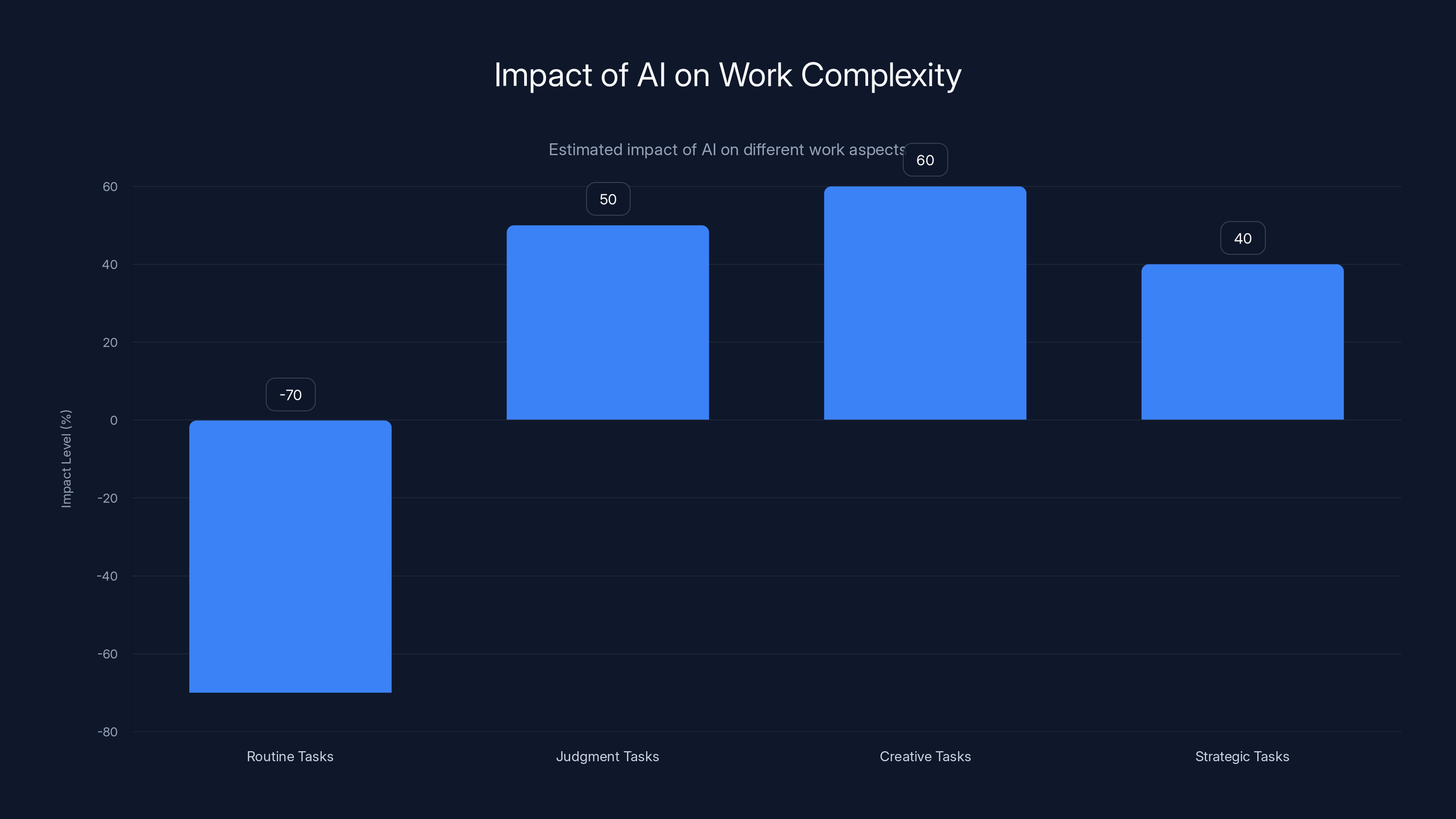

As AI handles routine work, what remains isn't just "more interesting work." What remains is the strange, edge-casey, ambiguous stuff. The work that requires judgment. The problems that don't have clean solutions. The situations where being "good enough" isn't good enough.

This creates a weird paradox. Work becomes simultaneously easier and harder. Easier because the grinding busywork disappears. Harder because you're left with the genuinely difficult problems.

It's like the difference between being a taxi driver (clear inputs, somewhat clear objectives, established routes) versus being a therapist (ambiguous problems, multiple possible solutions, judgment calls). As AI automates the taxi part, human workers get pushed toward the therapy part.

Except most people weren't hired to be therapists. They were hired to do tasks. Now the tasks are gone, but the jobs remain, and suddenly they're supposed to figure out strategy, judge edge cases, and make decisions without clear criteria.

This is where AI Time gets genuinely disorienting. Not because jobs disappear. Because the nature of work transforms faster than organizations can restructure, and faster than individuals can psychologically adapt.

In theory, this should be great. Remove toil, focus on impact. In practice? Many organizations don't know how to structure work around judgment and creativity. The office buildings and management systems and career ladders were all designed for task work.

AI Time reveals how much of our organizational infrastructure was built for a world of task execution. The org charts. The job titles. The metrics. The performance reviews. None of it maps well onto ambiguity and judgment calls.

So we're in this weird liminal space. By rough estimate, AI can handle something like 60-70% of routine professional work well enough to ship it. But organizations still need people. The question is: people for what?

That question hasn't been answered at scale. Most companies are still figuring it out. Which means most workers are still figuring it out.

Organizations face significant challenges in adapting to AI Time, with job architecture and market velocity mismatch being the most impacted areas. (Estimated data)

How Speeds Vary Across Industries

One of the cruel features of AI Time is that the impact isn't evenly distributed. Some industries are getting hit by the hurricane. Others haven't even felt the breeze yet.

Knowledge-intensive fields with clear outputs are disrupted first. Writing, coding, design, research, analysis. These are the canaries in the coal mine. If your job involves producing knowledge artifacts (documents, code, visuals, analyses), you're already experiencing AI Time in full force.

Other fields are harder to disrupt. Trades that require physical presence and fine motor control (plumbing, electrical work, surgery, hairstyling) will take longer. Not because AI can't contribute, but because the interface between digital and physical is messier.

Management and leadership feel somewhat insulated right now because people still want to see a human making decisions. But AI is already creeping in at the edges. HR departments are using AI to screen resumes. Sales leaders are using AI to draft strategy. Finance teams are using AI to build models.

The cruelest part? The industries most disrupted are often the ones that provided stable middle-class work for people without elite degrees. Writing, customer service, data entry, basic coding. These aren't jobs that required going to Harvard. They were accessible. They paid okay.

AI is disrupting those first and hardest.

Meanwhile, elite professional services (strategy consulting, high-end law, investment banking) are integrating AI more slowly because the economics are different. These industries extract value from scarcity and judgment. AI actually threatens their fundamental business model less than it threatens straightforward execution work.

So you get this perverse inversion. The jobs that were hardest to get were the least vulnerable to automation. The jobs that were easier to access are getting disrupted first.

But here's the thing that's often missed in this analysis: disruption isn't instantaneous. The impact varies by company size, industry maturity, and specific role.

A large media company might lose 30% of its writing staff to AI but keep the other 70%, because those writers handle strategy, client relationships, and editorial judgment that AI can't (yet) replace alone.

A small content agency might disappear entirely because its competitive advantage was fast, cheap writing. Once AI does fast and cheap, what's the moat?

The speed of impact depends on how replaceable you are, how quickly your organization adopts, and whether you're in an industry that can restructure around AI or an industry that gets disrupted by it.

The Adaptation Challenge

Here's what makes AI Time particularly brutal: it's not just that things change fast. It's that adaptation itself is hard, and AI Time doesn't give you much time to adapt.

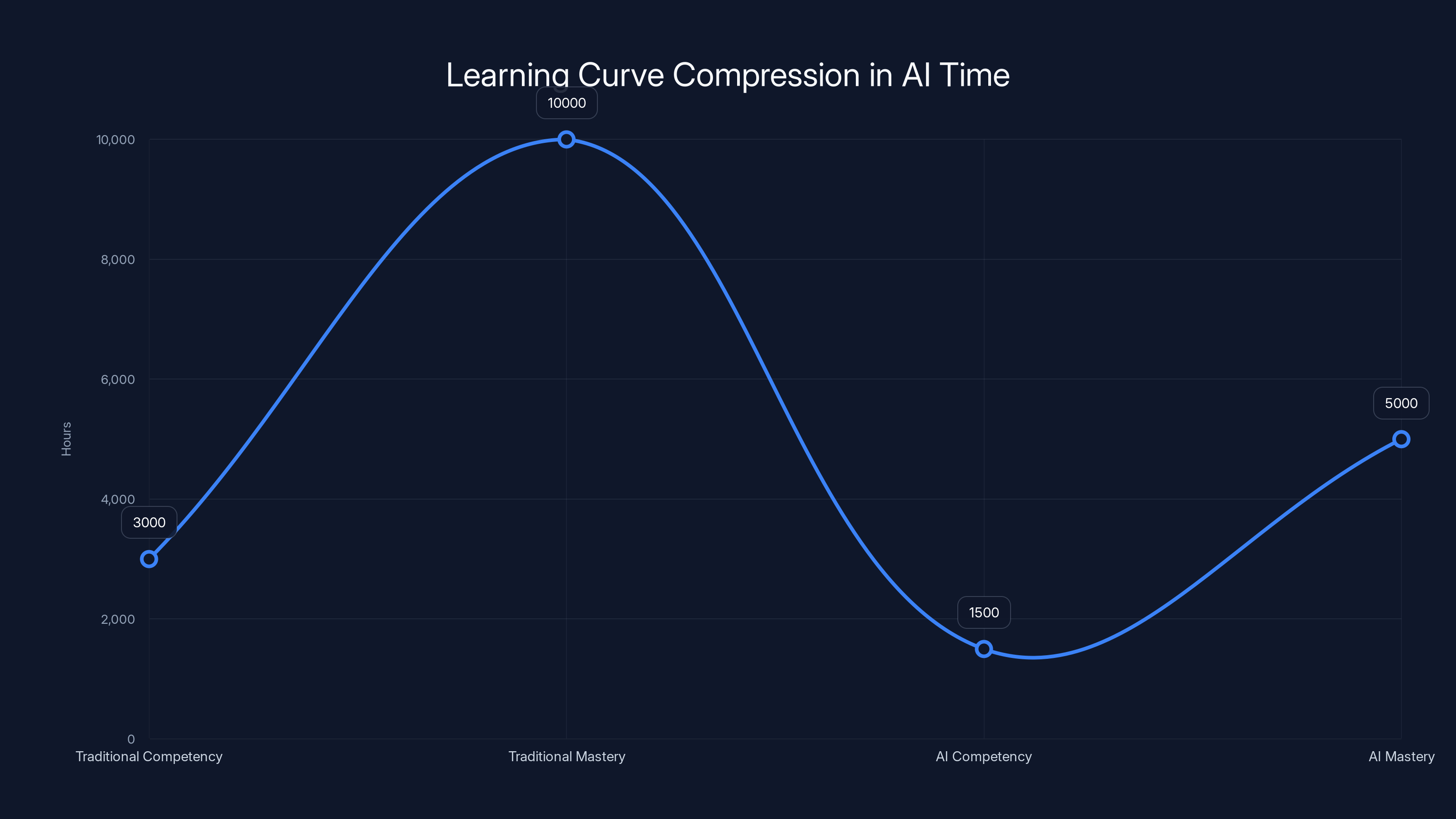

Learning curves in traditional fields feel well-understood. The popular rule of thumb says it takes 10,000 hours to become world-class at something. Maybe 1,000-5,000 hours to become competent. These timelines are baked into how we think about learning and development.

AI Time compresses these timelines on one hand (you can learn faster with AI tutors, better documentation, faster feedback loops) while stretching them on the other (you have to keep learning because everything keeps changing).

The psychological burden is underestimated. Learning something new is cognitively expensive. It's stressful. You have to maintain beginner's mind about things that used to be comfortable and automatic.

Adapt to the new AI writing tool. Just got good at it? Here comes GPT-5 and you have to relearn workflows. Finally figured out the new design AI? Better learn the next one.

This isn't necessarily a bad thing in moderation. But in AI Time, you're not moderating. You're sprinting on a treadmill that keeps speeding up.

The companies and people who handle this best aren't the ones who are "smarter" or "more technical." They're the ones who've built psychological resilience around learning and changing. They don't try to master anything. They try to develop fluency. They don't expect tools to be stable. They expect tools to evolve and plan accordingly.

It's a different relationship with knowledge. Less "I know this" and more "I can learn this quickly when I need to."

Organizations that get this right tend to have a few things in common:

- Regular learning budgets: Not "one training class a year" but ongoing investment in skill development and experimentation.

- Psychological safety to experiment: If trying new tools carries risk of failure that impacts your job, you won't try them. If there's space to experiment, learn, and fail, people adapt faster.

- Clear-eyed thinking about what's changing: Organizations that deny AI's impact or pretend it's hype adapt slower. Organizations that look directly at what's changing in their industry and prepare accordingly move faster.

- Tolerance for inefficiency: The fastest way to adapt is to give people time to learn and experiment. That looks inefficient. It is. But it's faster than trying to maintain 100% efficiency while the world shifts underneath you.

AI significantly reduces routine tasks (-70%) while increasing the complexity of judgment (+50%), creative (+60%), and strategic (+40%) tasks. (Estimated data)

The Productivity Mirage

One of the biggest misconceptions about AI Time is that it automatically makes everyone more productive.

It doesn't. Or rather, it does in some ways and creates new problems in others.

Yes, AI can make individual tasks faster. You can write faster. Code faster. Design faster. Analyze faster.

But productivity at the system level is different. Productivity is output relative to input. If AI makes you faster but also creates new expectations (faster turnaround, more output, more frequent iteration), then you've increased speed without increasing productivity. You're just working faster.

Worse, there's a phenomenon researchers call "the productivity paradox." Systems that should obviously increase productivity sometimes don't, because they create new processes and overhead that consume the gains.

Example: Email was supposed to save time. It did. It also created expectations for instant responses, constant connectivity, and volume that consumed all the time it saved. Many people now spend more time on email than they ever did on letters and phone calls combined.

AI is triggering the same pattern. It can write faster. It also creates an expectation of more writing. It can code faster. It also creates an expectation of more features. The time saved gets consumed by increased scope.

This is particularly visible in knowledge work. Teams adopt AI tools for productivity. Initially, they get faster. Then they use that speed to take on more work. Then they're back to being busy, just busier with more ambitious projects.
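The arithmetic behind "time saved gets consumed by increased scope" can be sketched in a few lines. Every number below is hypothetical and chosen only to illustrate the pattern, and `weekly_hours` is an illustrative helper, not a measurement method from any study.

```python
def weekly_hours(tasks_per_week: int, hours_per_task: float) -> float:
    """Total hours spent per week on a given workload."""
    return tasks_per_week * hours_per_task


# Hypothetical numbers for illustration only.
before = weekly_hours(tasks_per_week=20, hours_per_task=2.0)            # pre-AI workload
after_same_scope = weekly_hours(tasks_per_week=20, hours_per_task=0.5)  # AI makes each task 4x faster
after_more_scope = weekly_hours(tasks_per_week=80, hours_per_task=0.5)  # expectations grow to fill the time

print(f"before AI:           {before:.0f} h/week")
print(f"with AI, same scope: {after_same_scope:.0f} h/week")
print(f"with AI, scope x4:   {after_more_scope:.0f} h/week")
```

With these assumed figures the team goes from 40 hours to 10 hours if scope holds steady, and straight back to 40 hours once scope quadruples. Output per hour has risen, but nobody is less busy.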

Whether this is good or bad depends on your perspective. On one hand, you're accomplishing more. On the other hand, you're not actually less stressed. You're just running faster to stay in place.

There's also a quality concern. If AI makes you faster but you're using that speed to do more things rather than do fewer things better, you might be sacrificing depth. Three well-researched documents are harder to argue with than six drafted-by-AI documents that are serviceable but surface-level.

The organizations that handle this best are the ones that don't just adopt AI for speed. They adopt it to reclaim time, then intentionally spend that time on different work. Instead of writing faster, they write less but focus more. Instead of coding faster, they write less code but architect better.

That requires discipline. Because capitalism and competition create pressure to just go faster, produce more, ship more. If your competitor adopts AI and uses it to go faster, staying disciplined about "we're going to use this to go slower but better" feels risky.

It probably is risky. Just less risky than the alternative (everyone goes faster, quality suffers, and you're competing on speed and cost where AI owns the advantage).

What AI Is Actually Bad At (And Why It Matters)

One of the most useful things to understand in AI Time is what AI genuinely sucks at. Not because it's reassuring (though it is), but because it defines what humans still own.

AI is bad at:

Novelty: AI is trained on what already exists. It can remix and recombine, but generating truly novel approaches is hard. It works great for variations on known themes. It struggles with "nobody's done this before." Human creativity still owns that space, though AI is becoming a better collaborator in the creative process.

Context and judgment: AI can't actually understand context the way humans do. It can simulate it well enough that it sounds human. But it's missing the embodied understanding that comes from actually living in a world. When something requires genuine judgment ("Is this worth doing?" "What matters here?"), AI can support the thinking but can't replace the thinker.

Relationships: People buy from people. They trust people. They follow people. AI as customer service feels fine for help with billing. AI as trusted advisor doesn't work. This is partly technical (AI isn't good enough yet) and partly psychological (people don't trust AI with relational stakes yet, maybe ever).

Ethical judgment: This is bigger than "AI shouldn't be used for bad things." It's that AI can't actually determine what "good" means in context. It can be constrained by human values. It can't generate new values or recognize when ethical tradeoffs are needed. When two goods are in tension (fairness vs. efficiency, individual vs. collective, short-term vs. long-term), humans have to make those calls.

Accountability: When something goes wrong, who's responsible? AI can't answer that. A human has to be. This isn't going away, which means every AI system needs humans who are accountable for its outputs.

Physical presence: There's still something about being there that matters. Therapy over video works okay. Therapy in person works better. Teaching online works. Teaching in person works better. The presence gap closes with technology but doesn't disappear.

The list of what AI is bad at is important because it's the closest thing we have to a job security list. Not because these things won't be disrupted, but because they're harder to disrupt, which gives you time to evolve.

The things to worry about aren't "Can AI do this task?" but rather "How soon will AI do this task well enough that economics favor automation?"

Sometimes the answer is "Never." Mostly it's "In 5-20 years." Sometimes it's "Already did."

ChatGPT reached an estimated 100 million users within about two months of launch, showcasing the rapid adoption speed of AI compared to previous technologies. Estimated data based on historical trends.

Why Organizations Struggle With AI Time

Individual workers experience AI Time as disorienting. Organizations experience it as destabilizing.

Most organizational structures were built for stability. You have departments that own specific functions. You have processes optimized for repetition. You have leadership hierarchies designed for control and consistency. None of this is bad. It all makes sense for a stable environment.

AI Time is not stable.

The problems emerge immediately:

Job architecture breaks: If a role is 70% writing and 30% strategy, and AI takes over writing work equal to 60% of the role, what's the role now? 10% writing, 30% strategy, 60%... what? Most organizations don't know. So they eliminate the person instead of restructuring.

Skill valuation becomes unclear: If expertise in "Python programming" is worth less when AI can write Python, what's your compensation structure? How do you hire? What's the career path? Organizations typically respond with chaos and unfairness.

Metrics become meaningless: Output metrics (lines of code, documents written, cases processed) become weird when AI can distort the numbers. Quality metrics become hard to measure. Productivity metrics become ambiguous.

Leadership loses control: Traditional management is about directing work. In AI Time, the work is partly invisible (AI is doing it), partly unpredictable (AI outputs are weird), and partly dependent on judgment calls that leadership isn't equipped to evaluate.

Risk becomes undefined: What's the risk of shipping AI-generated content? What's the liability? What's the brand impact? Most organizations haven't decided. So they vacillate between "this is great" and "we can't use this" multiple times a year.

Organizational inertia vs. market velocity mismatch: Organizations can't restructure every quarter. But market conditions are shifting that fast. So you get into this weird state where your org structure is increasingly divorced from reality.
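The job-architecture example above is easier to see with the arithmetic written out. This sketch reads it as AI absorbing 60 percentage points of the whole role, all taken from writing, which is the reading that leaves 10% writing and 30% strategy. The function `recompose_role` and the "unassigned" bucket are hypothetical names introduced for illustration.

```python
def recompose_role(shares: dict[str, int], task: str, points_absorbed: int) -> dict[str, int]:
    """Return new role shares (in percentage points of the whole role)
    after AI absorbs `points_absorbed` points of `task`."""
    if points_absorbed > shares[task]:
        raise ValueError("AI cannot absorb more of a task than the role contains")
    updated = dict(shares)  # copy so the original role is left untouched
    updated[task] -= points_absorbed
    # The freed capacity has no owner yet: this is the "60%... what?" bucket.
    updated["unassigned"] = updated.get("unassigned", 0) + points_absorbed
    return updated


role = {"writing": 70, "strategy": 30}
new_role = recompose_role(role, task="writing", points_absorbed=60)
print(new_role)  # {'writing': 10, 'strategy': 30, 'unassigned': 60}
```

The shares still sum to 100, which is the point: the capacity doesn't vanish, it just stops having a definition. Deciding what fills the unassigned bucket is the restructuring work most organizations skip.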

The organizations handling this best tend to be:

Startups with clarity: New companies that start from "how do we use AI as core infrastructure" don't have legacy processes to unwind. These newer, AI-native companies are baking AI into their models from day one.

Large organizations with dedicated AI functions: Companies that built separate AI teams to experiment, learn, and eventually integrate. Microsoft, Google, and mature tech companies have this.

Companies comfortable with chaos: Some organizations are just accepting that the next 5 years will be messy. They're hiring for adaptability, funding experiments, and treating it as managed chaos rather than trying to maintain perfect stability.

The ones struggling most are mid-market companies with legacy structures, long decision cycles, and conservative cultures. They know they need to adapt. They're not sure how. So they move slowly and fall further behind.

The Skill Inversion: What's Actually Valuable Now

One of the clearest patterns of AI Time is an inversion in what skills are valuable.

For decades, the premium was on deep, narrow expertise. You became a specialist. That specialization was expensive (hard to learn) and defensible (hard to replace). Specialists commanded high salaries.

AI Time inverts this.

Deep specialization in a narrow technical domain becomes less valuable when AI can do the narrow domain stuff. A PhD in machine learning is valuable until AI can generate machine learning papers. A CPA is valuable until AI can do tax returns. Suddenly the narrow expertise isn't defensible anymore.

Meanwhile, skills that were previously considered "soft" or less valuable are becoming premium:

Systems thinking: Not understanding one piece deeply, but understanding how pieces connect. Seeing your domain in relation to adjacent domains. Understanding failure modes and edge cases. This is hard for AI because it requires synthesis and judgment.

Communication clarity: The ability to explain complex things simply, to translate between technical and non-technical, to persuade, to teach. AI can write, but writing that actually persuades a specific person in a specific context is harder for AI to do well.

Taste and judgment: Being able to distinguish good from mediocre. Knowing what matters in your field. Recognizing what's being missed. Judgment is inherently human because it requires values and context.

Resilience and adaptability: Less about any specific skill and more about the ability to learn, adjust, and not freak out when things change. This is becoming a primary skill.

Ethical reasoning: More companies need people who can think through ethical implications of what they're building. Not because they suddenly care (though some do), but because ethical missteps are increasingly costly, and you need someone who's actually thought about it.

Cross-domain thinking: Understanding how ideas from one field apply to another. This is genuinely hard for AI because it requires broad knowledge plus synthesis.

This is a subtle but real shift in what organizations pay for.

It also means career paths are changing. The old model was: apprentice in narrow field, become expert, profit from expertise for 30 years.

The new model is harder to articulate but looks more like: develop broad competence, build judgment about your domain, stay adaptable, develop strong communication skills, and use those to move across roles and contexts.

It's less secure in some ways (you're not protected by deep expertise). It's more secure in others (you're not vulnerable when your specialty is automated).

AI Time compresses learning timelines significantly, allowing faster competency and mastery. Estimated data shows AI tools can halve traditional learning hours.

The Opportunity In AI Time

Everything so far sounds grim. Here's where it gets interesting: AI Time is also genuinely full of opportunity.

Every disruption creates gaps. People and companies that can move into those gaps quickly win big.

Consider what's happening right now. AI can do routine writing. What it can't do well is:

- Editorial direction: Deciding what story to tell, what angle to take, what the point actually is.

- Authentic voice: Writing that sounds like you, carries your perspective, and couldn't be replaced.

- Relationship building: Using writing to genuinely connect with specific people.

- Strategic thinking: Using writing to move business forward, not just produce content.

The writers who are thriving in AI Time aren't the ones who write faster. They're the ones who moved up the stack: strategy, voice, relationship, direction. They use AI as a tool to execute their thinking, not as a replacement for their thinking.

Same pattern across fields. The opportunity isn't "How do I do what I did before, faster?" It's "What did I want to do that I couldn't because I was busy with routine stuff? How do I do that now that routine stuff is handled?"

A customer service team that used to spend 80% of time handling standard inquiries now has the opportunity to spend that time building relationships with high-value customers, understanding pain points, and feeding that back into product development.

A developer who used to spend half their time writing boilerplate code now has capacity to think about architecture, mentor junior devs, or contribute to open source.

An analyst who used to spend days gathering and formatting data now has capacity to do deeper investigation and smarter recommendations.

These aren't obvious opportunities. They require initiative. The opportunity is there, but claiming it requires you to do the work of figuring out what you actually want to do with the freed time.

There's also an opportunity in being the person who understands both the AI tools and the domain. An accountant who understands both accounting and how AI can improve it is suddenly valuable in a way they weren't before. They're not competing on execution (AI wins). They're competing on judgment, strategic thinking, and understanding the full system.

The companies that are winning at AI Time are the ones that:

- Adopt AI early but carefully: Not blindly adopting every tool, but experimenting thoughtfully.

- Restructure around freed time: Not laying people off, but asking what work never got done because people were busy, and then doing that work.

- Invest in workers who can adapt: Helping existing staff develop new skills rather than just hiring new people.

- Stay focused on what they're actually good at: Not trying to be everything. Using AI to be better at what they already do well.

Building Resilience In Uncertain Times

If you take nothing else from living in AI Time, take this: you can't predict exactly what's going to change, so plan for adaptability instead of predicting the future.

Resilience in AI Time has a few components:

Financial resilience: Keep some runway. Don't spend everything you earn. Don't load up on debt right now. If your situation changes (role gets restructured, industry gets disrupted, field changes direction), having six months of expenses saved gives you options.

Skill diversity: Don't put all eggs in one expertise basket. Know how to do your main thing well. Also know adjacent things. Also maintain some generalist skills. This isn't about being a generalist forever. It's about not being dependent on one narrow skill for survival.

Relational resilience: Do actual work maintaining professional relationships. Not networking (ugh). Just real work relationships. Know people. Let people know who you are. When disruption happens, your network is your lifeline.

Psychological resilience: This is the hardest one. It's about developing the ability to handle uncertainty and change without freaking out. This looks like: practicing accepting that you don't know what's coming, getting comfortable saying "I don't know," trying new things and being okay with failing, and maintaining curiosity instead of fear.

Institutional awareness: Know what's happening in your industry and your company. Not to predict the future, but to be less blindsided. Read the trends. Pay attention to what's shifting. Adapt when you see the direction, rather than reacting after the fact.

Organizations build resilience similarly, but scaled up:

- Financial: Maintain margins, avoid over-leveraging, keep cash reserves.

- Product: Don't be dependent on one product, revenue stream, or customer.

- Team: Hire for adaptability, invest in growth, build a culture that tolerates change.

- Information: Stay aware of market shifts, competitive threats, technological changes.

The organizations that make it through AI Time intact aren't the ones that were "smartest" or "most technical." They're the ones that built flexibility into their structure.

The Future Of Work In AI Time

We're going to spend the next decade figuring out what work actually looks like in a world where AI can do routine cognitive work.

It won't be "robots took all the jobs" because the economics don't work that way. It will be "work changed, some jobs disappeared, other jobs were created, everyone had to adapt."

The most likely scenarios aren't dramatic. They're boring and complicated.

Some roles get eliminated. Some get restructured. New roles get created. Some people thrive. Some people struggle. Organizations that adapt well grow. Organizations that don't adapt shrink.

There will be disruption. There will also be opportunity. The people positioned to take advantage of opportunity are the ones who:

- Stay aware: Pay attention to what's changing in your field.

- Develop judgment: Build the ability to see around corners, recognize what matters, distinguish signal from noise.

- Maintain adaptability: Stay in the habit of learning and changing.

- Protect what's human: Invest in relationships, communication, ethics, creativity. The things AI genuinely struggles with.

- Build resilience: Keep financial, relational, and skill buffers.

The future of work in AI Time isn't predetermined. It's being built right now by the decisions organizations and individuals make. If you wait for a clear path to emerge, you'll be reactive. If you start thinking about it now, you can be strategic.

The essay that nailed this (the one that inspired this whole piece) captured something important: we're not doomed, but we're also not safe. We're in a transition. And transitions are uncomfortable.

But discomfort is also opportunity. What's being unmade right now will be remade. The question is whether you're going to be passive in that process (hoping your job doesn't disappear) or active in it (helping shape what comes next).

Why We're Not Actually Screwed

Okay, let's be direct: the reason we're not screwed is that humans have a weird superpower that AI doesn't have.

We can redefine what we're doing.

AI can't do that. It can do what it's trained to do, and variations on that. But it can't look at its situation and decide "Actually, let's do something completely different."

Humans do that constantly. We get disrupted, and we figure out something new to do. Sometimes it's a conscious choice. Sometimes it's just necessity driving creativity.

When agriculture automated, people didn't just sit around unemployed. They moved to cities and worked in factories. When factories automated, people moved to services and knowledge work. This isn't to say these transitions were smooth (they weren't) or fair (they weren't). But there was always work to do.

AI is bigger than previous technological shifts. It's touching more domains simultaneously. But the fundamental dynamic is the same: disruption forces adaptation, adaptation creates new work, new work creates new problems, and solving those problems requires humans.

The people who are going to struggle most are the ones who treat AI like a static threat to manage. The ones who are going to thrive are the ones who treat it like a dynamic element in an evolving landscape.

You can't control whether AI disrupts your field. You can control how you respond. You can control whether you adapt. You can control whether you stay curious. You can control whether you invest in the skills and resilience that matter.

AI Time is real. It's disorienting. It's also not the end of human work or human relevance. It's a transition that rewards adaptability, judgment, and relational skills.

The harsh reality is that some things you learned are becoming less valuable. The hopeful reality is that the skills becoming more valuable (judgment, creativity, communication, ethics, adaptability) are human strengths.

We're not screwed. We're uncomfortable. Discomfort is the price of transition, and transitions are how humans actually get better at things.

So here's my take: Stop trying to predict whether your job will survive. Start building the resilience and skills to thrive regardless of what comes. The future of AI Time isn't about avoiding disruption. It's about being the kind of person who gets disrupted and figures out something better to do.

FAQ

What exactly does "AI Time" mean?

AI Time refers to a period of accelerated technological change where artificial intelligence systems are advancing and disrupting work across multiple sectors simultaneously, rather than in isolated industries. Unlike previous technology shifts that happened industry-by-industry over decades, AI's impact is happening across knowledge work, creative fields, analysis, and coding all at once. This creates a compressed timeline where adaptation that would normally take decades is squeezed into months or years. The speed of change is genuinely disorienting because you're learning new tools, adapting workflows, and redeveloping skills on a schedule that doesn't match how humans typically handle change.

How does AI Time differ from previous technological disruptions?

Previous technological shifts were more localized and gradual. The internet disrupted publishing and retail, but your accountant worked the same way in 2000 as in 1995. The printing press took centuries to fully reshape society. AI is different because it simultaneously touches every domain involving knowledge work, decision-making, and creative work. A lawyer, a designer, a programmer, and a marketer all experienced significant disruption from AI within months of each other. There's no "waiting it out" in an unaffected sector. There's also no clear endpoint. Each AI advancement creates new possibilities and new disruptions, unlike previous tech, which eventually stabilized and became "normal."

Why are routine jobs more at risk than specialized roles?

Jobs that consist primarily of routine execution (data entry, straightforward writing, basic coding, customer service) are more vulnerable to AI because these tasks follow learnable patterns that AI can replicate. Specialized roles that depend on judgment, client relationships, strategic thinking, or creative novelty are harder to automate because they require understanding context and making judgment calls. However, this isn't permanent. As AI becomes more sophisticated, it will move up the complexity ladder. The key difference is timing: routine jobs are being disrupted now, while specialized judgment work is being disrupted more gradually, which gives you time to adapt.

What skills should I be developing in AI Time?

Focus on skills that are difficult or impossible for AI to replicate: complex judgment, creativity, cross-domain thinking, ethical reasoning, relationship building, and communication. Also develop meta-skills like learning agility (the ability to pick up new tools and concepts quickly), systems thinking (seeing how different pieces connect), and psychological resilience. Avoid putting all your career eggs in narrow technical expertise that AI can potentially learn. Instead, build breadth plus deep judgment in your domain. The premium is shifting from "know everything about one narrow thing" to "understand your domain broadly, judge what matters, and communicate about it effectively."

How can I make myself less replaceable by AI?

The goal isn't to make yourself irreplaceable (eventually, you will be replaceable). It's to make yourself valuable in ways that require judgment and relationships. Do work that requires understanding context and nuance. Build relationships with clients or colleagues that are based on trust and understanding. Develop a point of view and perspective that's distinctly yours. Contribute to decision-making that has real consequences, because responsibility is hard to automate. And keep learning, because versatility and adaptability are genuinely hard for AI to simulate: AI is stable once trained, but humans stay flexible.

Should I worry about my job being automated away?

Some worry is warranted and productive. Unproductive worry leads to paralysis. The productive version looks like: understand what percentage of your job is routine vs. judgment-based, pay attention to what's changing in your industry, develop skills in the judgment-based aspects, and build financial resilience so you have options if your situation changes. You should also honestly assess: Is my industry likely to be disrupted? (Yes, in five years, maybe now.) How fast is my organization moving? (Slow, which might protect you in the short term but hurts you long-term.) What's my actual competitive advantage? (If it's "I write well," AI competes with you directly. If it's "I understand my customers and write for them," you're in better shape.)

How should organizations adapt to AI Time?

The most successful organizations are ones that adopt AI thoughtfully, restructure work around freed time rather than just cutting headcount, invest in helping workers adapt rather than replacing them, and maintain clear thinking about what they're actually good at. They also build tolerance for experimentation and a culture where trying new things and failing is normal. Less successful organizations are ones that pretend AI won't affect them, delay adaptation until it's too late, try to maintain past practices while pretending things haven't changed, or treat AI as purely a cost-cutting tool. The best competitive advantage is clarity: knowing what you do better than anyone and using AI to do it even better, rather than trying to do everything.

Is there actual opportunity in AI Time, or just threat?

Genuine opportunity exists for people and organizations positioned to see it. Every time routine work gets automated, the people who were doing that work have freed capacity. The opportunity is in figuring out what work never happened because people were too busy. For writers, it's strategy and voice rather than volume. For developers, it's architecture and mentoring rather than boilerplate. For customer service, it's relationships and insight rather than triage. Opportunity also exists in the new roles being created: people who can bridge AI and domain expertise, people who can judge AI outputs, people who can orchestrate AI-human workflows. The companies and individuals that treat AI Time as a disruption to survive will struggle. The ones that treat it as a capability to leverage will thrive.

What does resilience in AI Time actually mean?

Resilience has multiple components: financial (keep runway and reserves), skill-based (maintain diverse capabilities), relational (build and maintain professional relationships), psychological (develop comfort with uncertainty and change), and informational (stay aware of what's happening in your field). It's not about predicting the future or preventing disruption. It's about being positioned to handle disruption when it comes, and having enough options that disruption doesn't feel like catastrophe. Resilience is built now, in advance, not during crisis. Financial resilience means saving money. Skill resilience means learning. Relational resilience means maintaining relationships. Psychological resilience means practicing accepting uncertainty. These aren't fun activities, but they're better than hoping disruption doesn't happen.

TL;DR

- AI Time is real: Technological change is happening faster than humans can adapt, simultaneously across all knowledge work sectors, creating genuine psychological and professional stress that's different from previous disruptions.

- Some jobs are more at risk: Routine execution-based work (writing, coding, analysis, data entry) is being disrupted now. Judgment-based, relational, and creative work is more stable, at least in the short term.

- Skills are becoming liquid: The half-life of specific technical expertise is shrinking. What matters increasingly is adaptability, judgment, systems thinking, and communication rather than deep narrow expertise.

- Opportunity exists for the positioned: Organizations and individuals that restructure around freed time, invest in adaptation, and develop judgment-based work will thrive. Cost-cutting approaches and denial strategies will struggle.

- You're not screwed: Humans still have advantages AI doesn't: redefining what we do, exercising judgment, building relationships, and maintaining creativity. The future isn't predetermined; it's being built by choices we make now about how to adapt.

Key Takeaways

- AI Time is characterized by simultaneous disruption across all knowledge work sectors rather than the sequential technology adoption of previous shifts, creating psychological stress humans haven't evolved to handle

- Routine execution-based jobs (writing, coding, analysis) are disrupted first and fastest; judgment-based and relational work remains more stable, at least in the short term

- Technical expertise is becoming liquid, with a shrinking half-life; the premium is shifting toward systems thinking, judgment, communication, and adaptability rather than deep narrow specialization

- Organizations that restructure around AI-freed time to do previously undone work thrive, while those using AI purely for cost-cutting and headcount reduction struggle with disruption

- Humans retain genuine competitive advantages (redefining what we do, exercising judgment in ambiguous situations, building relationships, and creative novelty), which means we're not screwed if we adapt deliberately

