Microsoft's Historic $7.6B OpenAI Payout: What It Means for AI and Cloud Computing

When Microsoft reported its Q3 2025 earnings on Wednesday, the numbers were impressive. One line item stood out: a $7.6 billion increase in net income from its OpenAI investment.

That's not a quarterly revenue number. That's pure profit from owning a piece of one of the world's most valuable AI companies. And it signals something fundamental about how AI investments are reshaping corporate balance sheets, cloud computing infrastructure, and the entire technology landscape. As reported by GeekWire, these investments are transforming Microsoft's business model and future revenue streams.

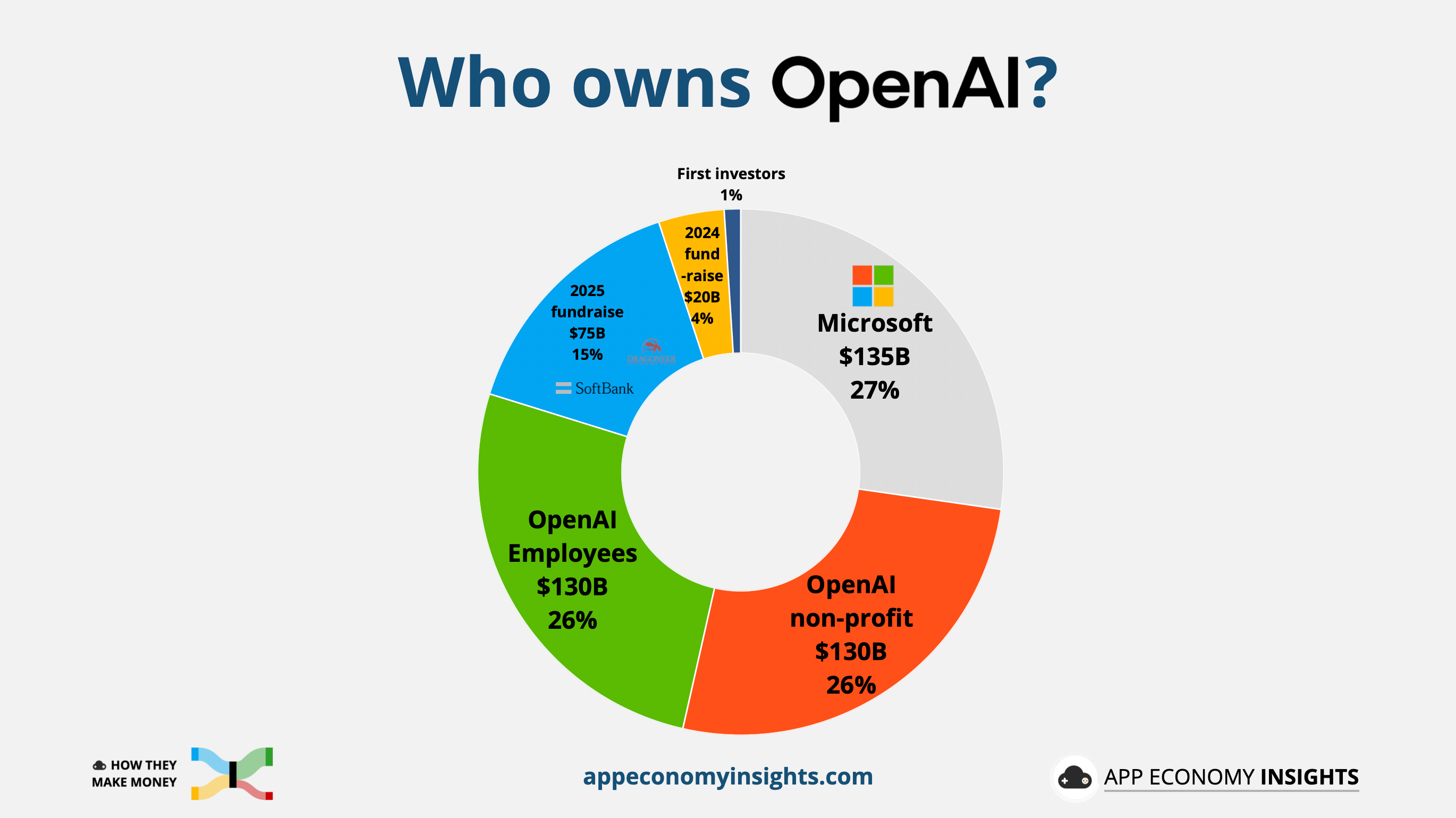

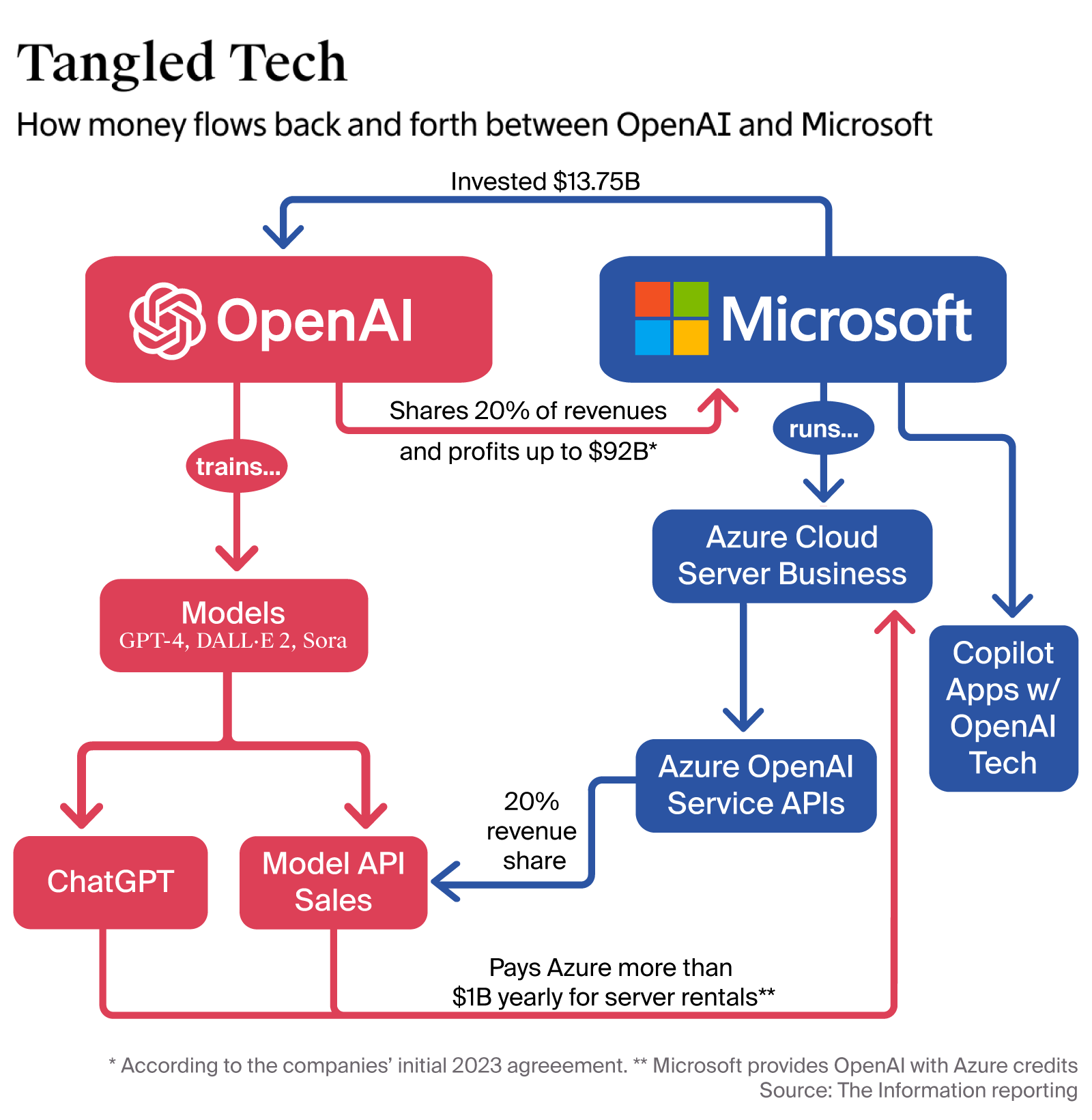

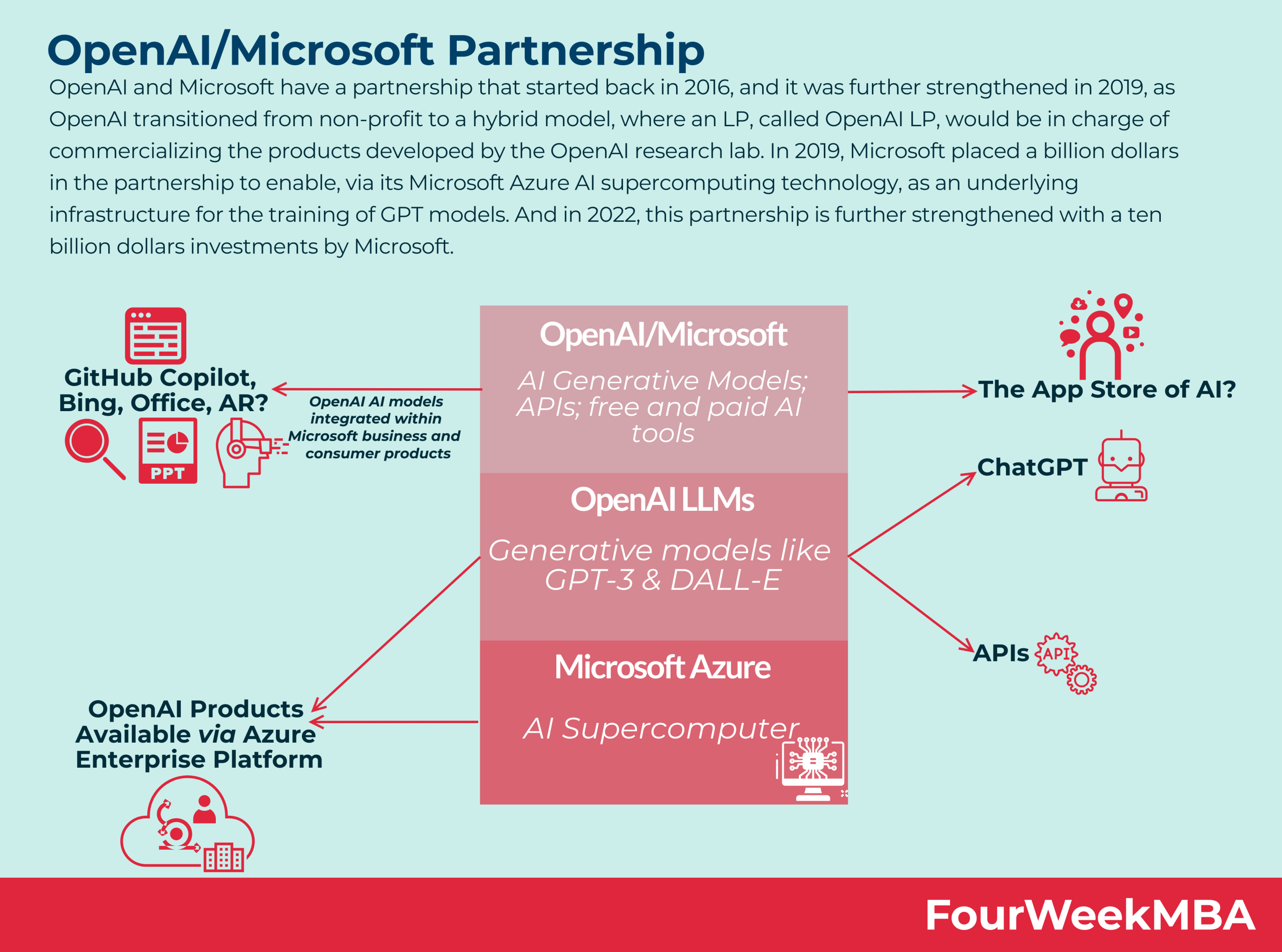

Microsoft didn't invent this partnership overnight. The company has poured more than $13 billion into OpenAI since 2019, making it by far the largest financial backer of the AI lab. Along the way, it's built a commercial relationship that's become increasingly symbiotic. Microsoft gets exclusive cloud infrastructure rights. OpenAI gets world-class computing power and enterprise distribution. And now, the returns are starting to materialize in ways that would've seemed impossible just three years ago.

The question isn't whether Microsoft's bet on AI is paying off anymore. The question is how much bigger it gets.

TL;DR

- Microsoft recorded $7.6B in net income from its OpenAI investment last quarter, proving that AI company stakes are now serious earnings drivers

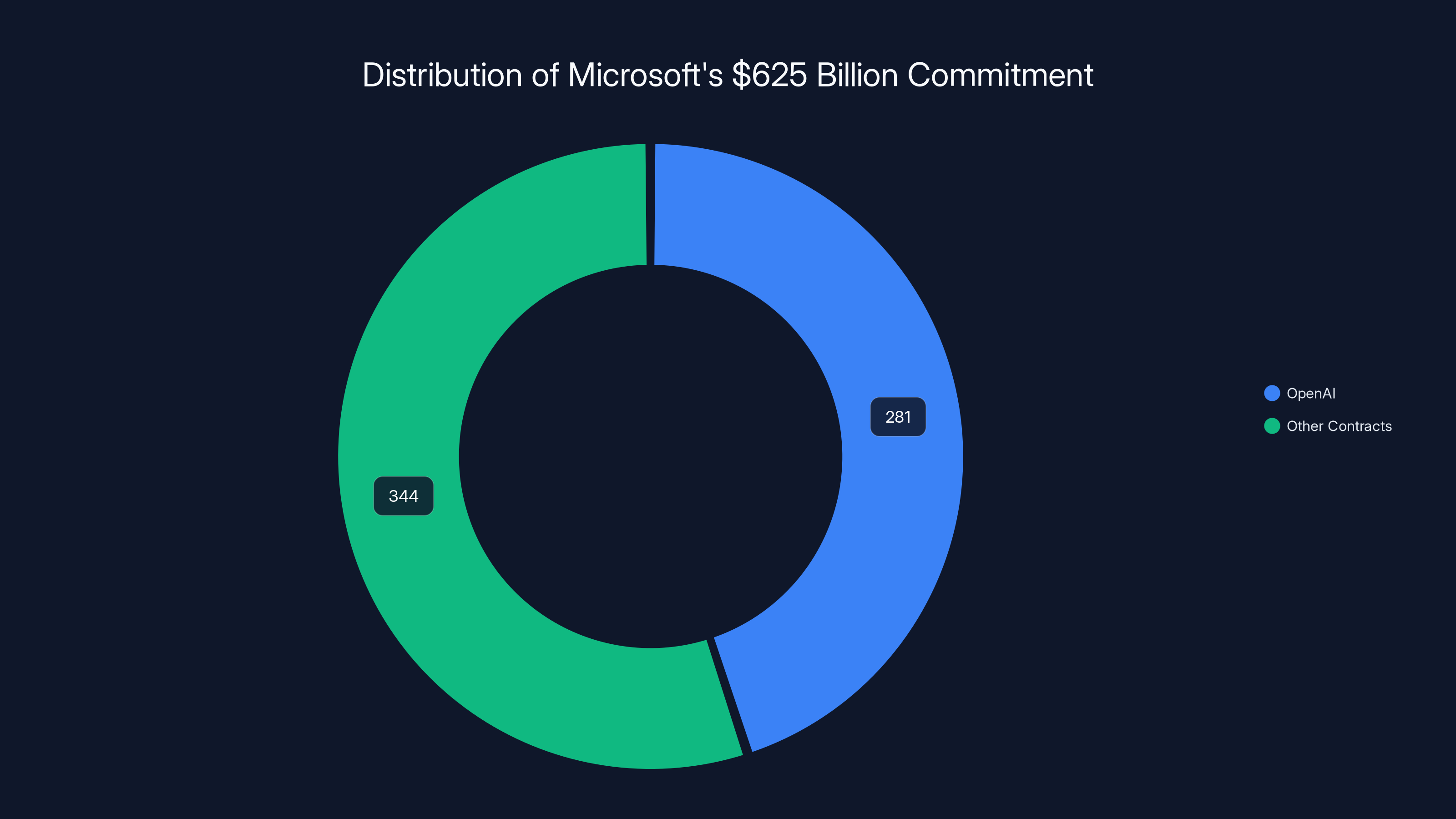

- Microsoft's commercial remaining performance obligations now total $625 billion, with 45% tied to OpenAI commitments through 2030 and beyond, as highlighted by MarketWatch

- Microsoft is spending $37.5B quarterly on AI infrastructure, with two-thirds going to GPU/CPU assets for Azure AI services

- The reported 20% revenue share agreement with OpenAI means Microsoft benefits directly from every dollar of revenue OpenAI earns

- This model is replicating across the industry, with Microsoft also investing $5B in Anthropic, which has committed to $30B of Azure compute, as noted by CNBC

OpenAI accounts for 45% (

The $7.6 Billion Question: How Did This Happen?

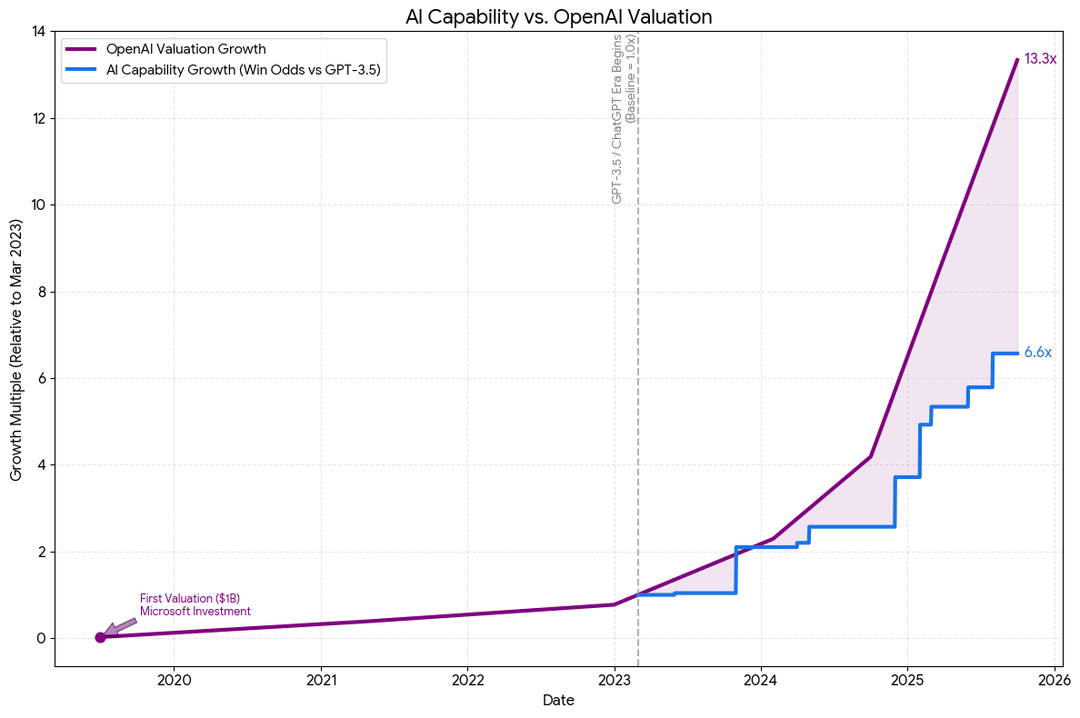

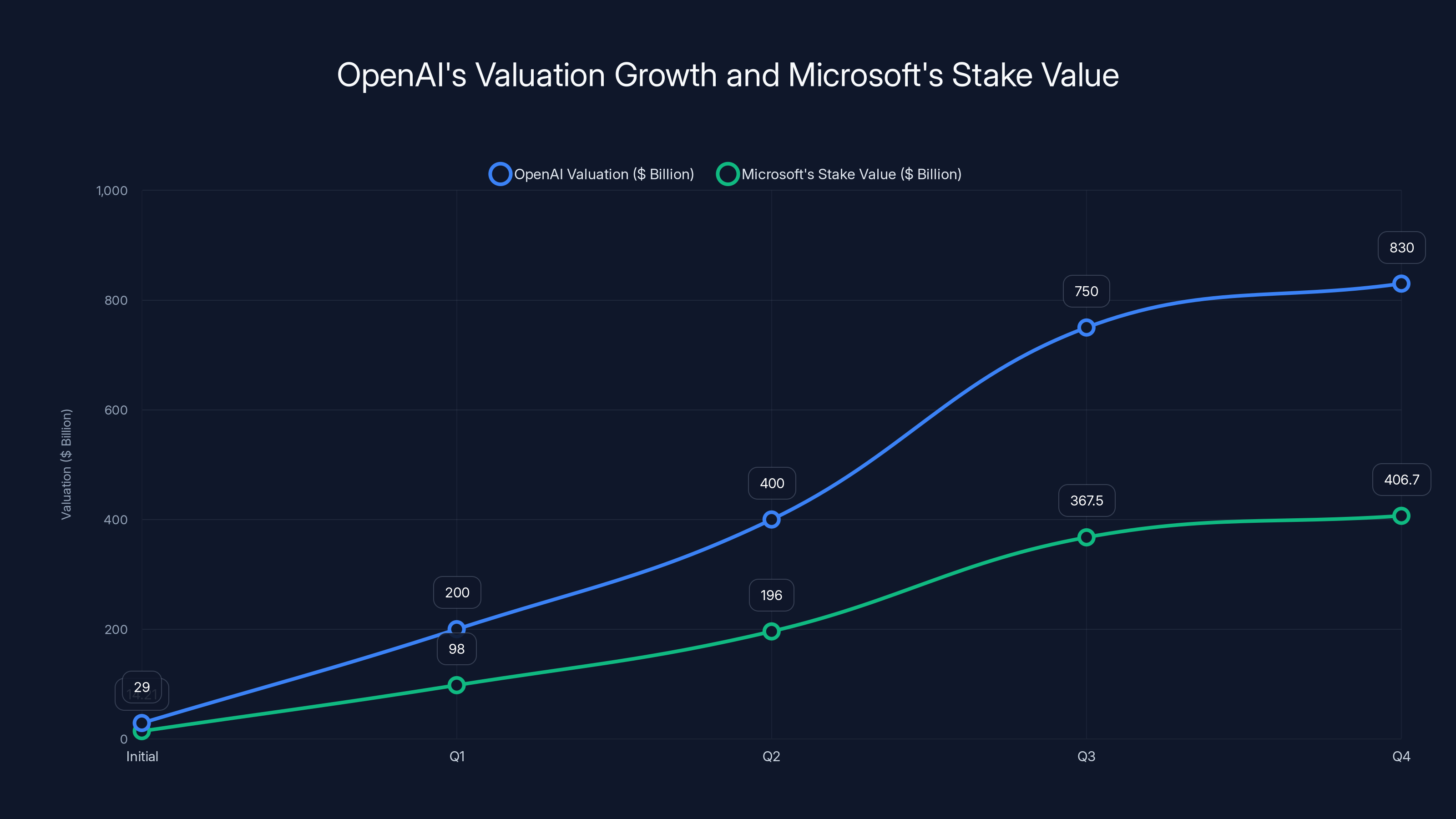

Let's start with what seems straightforward but actually requires some unpacking. Microsoft's quarterly earnings report listed a $7.6 billion increase in net income from its OpenAI investment. That's not an investment gain in the traditional venture capital sense, where you wait for an exit and hope for a return multiple.

This is something different. This is a valuation increase on existing holdings. As Open AI's valuation has climbed from

But here's the critical part: it's not just paper gains. The

This is how big tech companies boost earnings without actually selling products or delivering services. They make strategic investments in high-growth companies, those companies become unicorns or decacorns, and suddenly their balance sheet shows massive unrealized gains. Microsoft has been running this playbook for years with companies like Alibaba and GitHub. But the OpenAI numbers dwarf anything the company has seen before.

The real kicker? This isn't a one-time event. OpenAI's valuation continues climbing. As long as the company keeps growing its revenue and the investment community keeps bidding up AI company valuations, Microsoft's stake will keep appreciating. That $7.6 billion could look like a modest gain in three years.

But appreciation alone doesn't tell the whole story. Microsoft isn't just hoping OpenAI succeeds as a theoretical exercise. The company has structured a deal where it directly benefits from OpenAI's revenue growth and capital commitments.

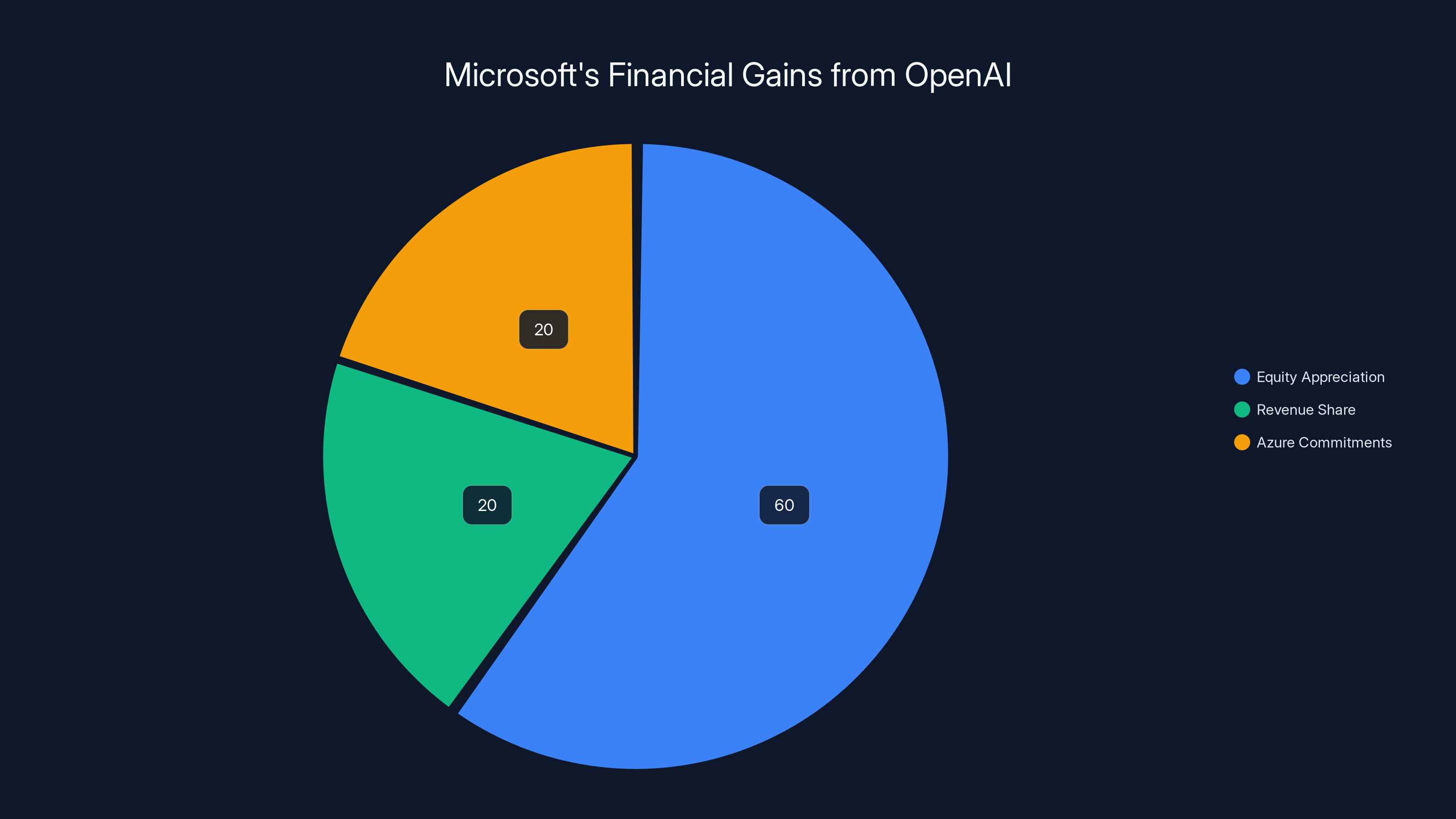

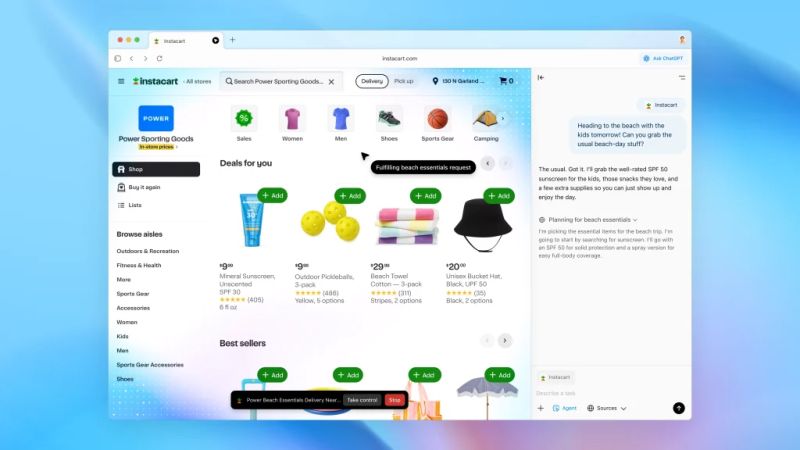

Microsoft's $7.6 billion gain from OpenAI is primarily driven by equity appreciation (60%), with revenue share and Azure commitments each contributing 20%. Estimated data.

The 20% Revenue Share: Microsoft's Biggest Advantage

Neither Microsoft nor OpenAI officially announced the exact terms of their commercial agreement. But industry sources and financial analysis from major investors consistently point to one critical number: Microsoft takes 20% of OpenAI's revenue for providing cloud infrastructure through Azure. This arrangement was highlighted in a Medium article by Beth Kindig.

Think about what that means. Every dollar OpenAI makes from ChatGPT subscriptions, API calls, enterprise contracts, and future products flows through Azure data centers. Microsoft doesn't have to sell ChatGPT. Doesn't have to support users. Doesn't have to handle customer service or product management. OpenAI handles all of that. Microsoft just operates the infrastructure and captures 20% of the top line.

In traditional software partnerships, you'd be thrilled with 30% gross margins. But this is different. Microsoft is getting paid to run the servers that power one of the hottest products on the planet. If OpenAI's revenue grows 50% next year, as many industry analysts predict, Microsoft's share of that revenue grows 50% as well, almost automatically.
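The proportionality claim is easy to check with a few lines of arithmetic. The sketch below assumes a flat 20% share (the reported, unconfirmed figure) and uses a purely hypothetical $10 billion base revenue; the function name and the base figure are illustrative and do not come from Microsoft or OpenAI.

```python
def revenue_share(partner_revenue: float, share_rate: float = 0.20) -> float:
    """Return the infrastructure provider's cut of the partner's revenue."""
    return partner_revenue * share_rate


# Hypothetical base-year revenue in billions of dollars (illustrative only).
base_revenue = 10.0
growth = 0.50  # the 50% growth scenario described in the text

share_now = revenue_share(base_revenue)
share_next = revenue_share(base_revenue * (1 + growth))
share_growth = share_next / share_now - 1

print(f"Share this year: ${share_now:.2f}B")
print(f"Share next year: ${share_next:.2f}B")
print(f"Growth in share: {share_growth:.0%}")
```

Because the share is a fixed fraction of revenue, the base figure cancels out: whatever OpenAI's actual revenue is, a 50% rise in revenue produces a 50% rise in the share.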

How much is Open AI making? The company reportedly hit

But the revenue share agreement is just the foundation. The real commitment comes in the form of compute obligations.

The $625 Billion Commitment That Changes Everything

This is where the partnership becomes genuinely historic. Microsoft reported that its "commercial remaining performance obligations"—essentially, contracts that haven't been paid out yet—jumped from

Where did it come from? OpenAI accounts for 45% of that total, according to Microsoft's earnings disclosure. That means OpenAI has committed to purchasing approximately $281 billion of Azure services (45% of $625 billion), mostly GPU and compute infrastructure, over the next several years.
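For readers who want to reproduce the figure, here is a minimal sketch of the split. The two inputs are the numbers quoted in this article; the function and variable names are illustrative.

```python
def split_obligations(total: float, fraction: float) -> tuple[float, float]:
    """Split a total into the part attributed to one customer and the remainder."""
    attributed = total * fraction
    return attributed, total - attributed


total_rpo = 625.0       # commercial remaining performance obligations, $B (from the article)
openai_fraction = 0.45  # portion attributed to OpenAI (from the article)

openai_commitment, everyone_else = split_obligations(total_rpo, openai_fraction)

print(f"OpenAI commitment: ${openai_commitment:.2f}B")
print(f"All other customers: ${everyone_else:.2f}B")
```

The result is $281.25 billion for OpenAI, which the article rounds to $281 billion, leaving roughly $344 billion spread across every other commercial customer.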

Let that sink in. Open AI has contractually committed to spending

Why would OpenAI do this? Because to run large language models at scale, you need access to the most advanced GPUs available, and those GPUs live in Microsoft's Azure data centers. The company can't scale ChatGPT, GPT-5, and future AI products without that computing power. And Microsoft, aware of its leverage, negotiated a long-term commitment to guarantee that scale.

From Microsoft's perspective, this commitment is extraordinary. It guarantees revenue streams for the next five to seven years, minimum. It locks in pricing and terms while the AI market is still establishing baselines. And it gives Microsoft visibility into OpenAI's roadmap and growth trajectory, information worth more than gold in the competitive AI space.

For the financial community, it explains why Microsoft's earnings beat expectations and why the company's guidance for future quarters remains robust. Every dollar of that $281 billion will flow through Microsoft's income statement as revenue and contribute to operating margin.

But here's the thing: OpenAI is burning through that commitment quickly. Reports suggest the company is consuming compute resources at rates that will exhaust the initial commitments within three to five years, depending on product rollout pace. Which means OpenAI will likely need to negotiate additional commitments or Azure will need to expand capacity even further. Either way, Microsoft wins.

OpenAI's valuation has surged from

Capital Expenditure: The Hidden Cost of Winning

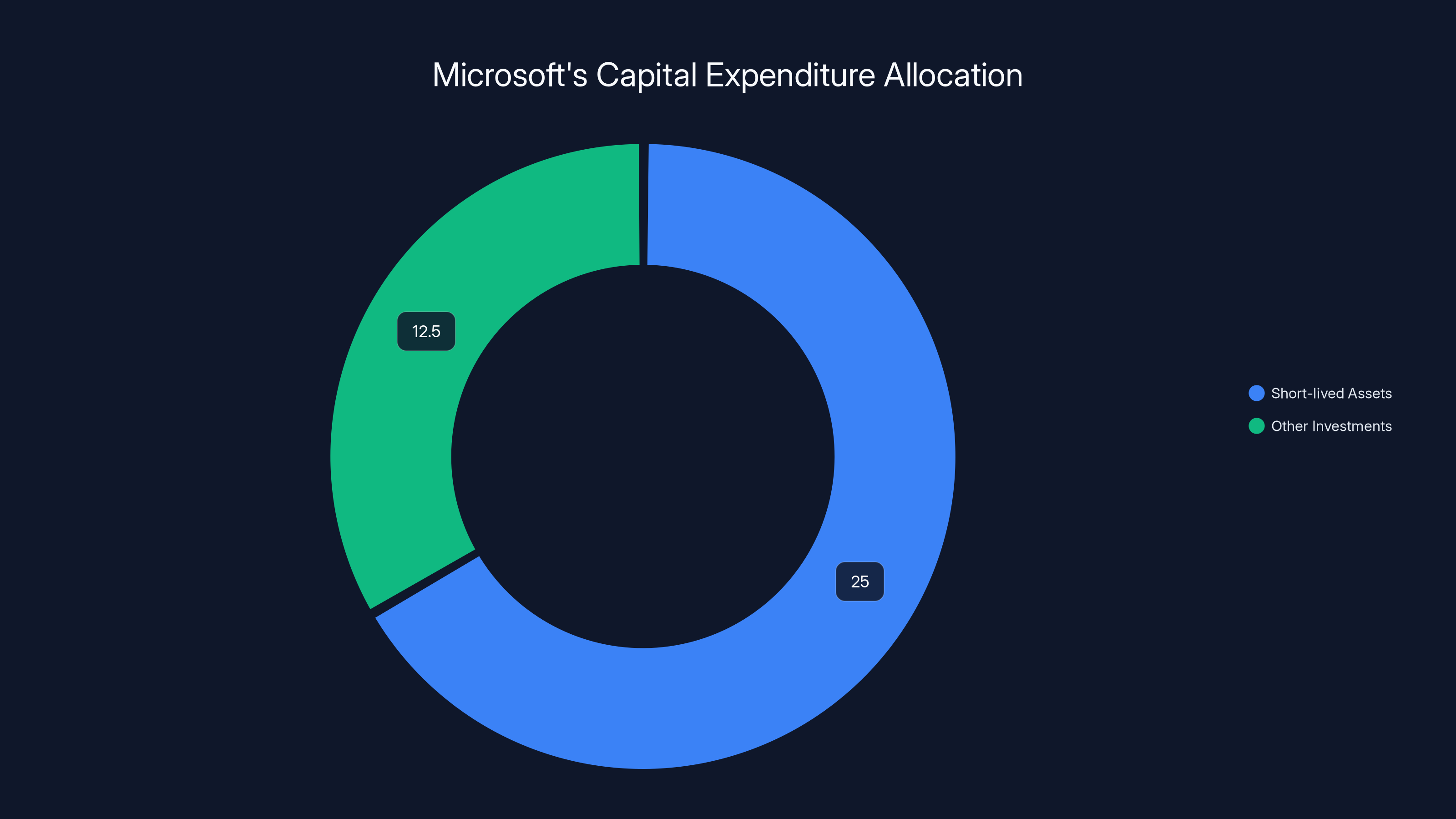

Now for the less celebrated part of Microsoft's earnings report: the company spent $37.5 billion in capital expenditures last quarter. That's the single largest quarterly capex number in company history. This was highlighted in Microsoft's blog.

Two-thirds of that money—roughly $25 billion—went toward what Microsoft called "short-lived assets." Translation: GPUs, AI accelerators, data center infrastructure, and networking equipment. The stuff that powers Azure's AI capabilities.

Why "short-lived"? Because cutting-edge AI hardware turns over fast. A GPU that's state-of-the-art today is ancient history in 18 months. Nvidia releases new chip architectures faster than ever. AMD is catching up. And inference workloads keep changing as model architectures evolve. So Microsoft is essentially writing off this hardware over a 3-to-5-year period instead of the traditional 7-to-10-year depreciation schedule for data center equipment.
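To see why the shorter schedule matters, the sketch below compares the annual expense for one quarter's worth of short-lived assets under each useful life mentioned above. It assumes straight-line depreciation with zero salvage value, which is a simplification chosen for illustration and not a description of Microsoft's actual accounting policy.

```python
def annual_straight_line(cost: float, useful_life_years: int, salvage: float = 0.0) -> float:
    """Annual expense under straight-line depreciation."""
    if useful_life_years <= 0:
        raise ValueError("useful_life_years must be positive")
    return (cost - salvage) / useful_life_years


quarterly_capex = 37.5                  # $B, from the article
short_lived = quarterly_capex * 2 / 3   # two-thirds of capex, about $25B

# Annual depreciation for one quarter's purchases under each schedule.
schedule = {years: annual_straight_line(short_lived, years) for years in (3, 5, 7, 10)}

for years, expense in schedule.items():
    print(f"{years:>2}-year life: ${expense:.2f}B per year")
```

Under these assumptions, moving from a 10-year life to a 3-year life more than triples the annual expense recognized on the same hardware, which is why the revenue earned from that hardware has to arrive quickly.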

That means Microsoft is on a perpetual treadmill. Spend

But here's where the math works out for Microsoft: the revenue from that infrastructure (the 20% OpenAI share, plus all the other enterprise AI customers using Azure) grows faster than the hardware costs. Gross margins on cloud services typically run 60% to 70%. Even with aggressive depreciation, Microsoft is likely generating more than $15 billion in gross profit quarterly from AI services.

Which means the company is running a cash-positive business despite investing at unprecedented scale. That's the hallmark of a platform winner. Microsoft isn't gambling on AI. It's investing in its own future by ensuring it remains the essential infrastructure layer for every AI company worth talking about.

The key metrics tell the story:

- Capital expenditure on AI hardware: $25 billion per quarter

- Estimated gross margin on cloud services: 65% to 70%

- Projected quarterly gross profit from Azure AI: 18 billion

- Revenue payback period on infrastructure investment: 18 to 24 months

Why Open AI Agreed to the Azure Monopoly

From an outside perspective, the arrangement seems one-sided. Microsoft gets guaranteed Azure revenue, a cut of OpenAI's sales, and insider visibility into one of the world's most important AI companies. OpenAI gets... a place to run its workloads?

But OpenAI's perspective is more nuanced. The company has a few basic options when it comes to infrastructure:

First, it could build its own data centers. That sounds appealing in theory: you own the assets, you control the margin. But building data center capacity at the scale OpenAI needs costs tens of billions of dollars, takes years, and requires expertise in power infrastructure, networking, and hardware management that OpenAI doesn't have. Amazon and Google have spent 15+ years building their cloud infrastructure. OpenAI could never catch up alone.

Second, it could use multiple cloud providers. That's what some large AI companies like Anthropic are attempting. You diversify your infrastructure risk and potentially negotiate better pricing by playing providers against each other. But it comes with massive operational complexity—different API conventions, different performance characteristics, different costs. You end up building custom abstraction layers just to manage the complexity. For a company focused on AI research and product, that's a distraction.

Third, it could use the hyperscalers that already exist: Microsoft, Google Cloud, or AWS. Google Cloud offers cutting-edge infrastructure and is seriously investing in AI. AWS is enterprise-grade and globally distributed. Microsoft offers... all of those things, plus a CEO (Satya Nadella) who genuinely understands both enterprise software and AI, plus integration with Office, GitHub, Teams, and every Microsoft product. Plus, crucially, the largest enterprise sales force in software, which can embed ChatGPT into workflows across millions of knowledge workers.

From OpenAI's perspective, the Microsoft partnership makes sense. The company gets infrastructure it can't build, distribution it can't replicate, and a partner whose incentives are aligned. And in exchange, Microsoft gets a slice of the revenue and contractual commitments that guarantee OpenAI will keep growing with Microsoft's infrastructure as the backbone.

It's a partnership where both sides win. That's rare. That's valuable. That's why the deal has held even as the industry has changed.

Two-thirds of Microsoft's $37.5 billion quarterly capex was allocated to short-lived assets like AI hardware, highlighting the company's focus on AI infrastructure. Estimated data.

The Anthropic Playbook: How Microsoft Is Betting on Multiple AI Winners

But Microsoft isn't putting all its eggs in the OpenAI basket. In November 2025, the company announced a $5 billion investment in Anthropic, the AI company founded by former OpenAI executives Dario and Daniela Amodei. This was reported by CNBC.

Anthropic has committed to $30 billion in Azure compute capacity, with the option to buy more. That's not as massive as OpenAI's commitment, but it's substantial. And it follows the exact same playbook: Microsoft invests capital, the AI company commits to using Microsoft infrastructure, Microsoft captures revenue and margin.

Why does Microsoft need Anthropic if it already has OpenAI? Portfolio diversification. OpenAI's leadership and board structure have been chaotic: the internal drama, the merger talks with Stripe, the restructuring into a public benefit corporation. Microsoft benefits from having a second large AI company that owes it billions in infrastructure commitments and that has a different technical roadmap.

If Anthropic's Claude architecture turns out to be superior to GPT, Microsoft still wins because Claude runs on Azure. If OpenAI stumbles on the next generation of model scaling, Microsoft still wins because Anthropic is available. If both companies hit rough patches, Microsoft has infrastructure commitments from both.

This is the ultimate hedge strategy. Instead of betting on one AI company succeeding, Microsoft is betting on the infrastructure layer becoming indispensable regardless of who wins the model wars. And that's a much safer bet.

Microsoft also mentioned that Anthropic helped boost its "commercial bookings," which grew 230% year-over-year. That's an extraordinary number: growth of 230% means total new contract value reached 3.3x the prior-year level. Some of that is from existing Azure customers adding AI workloads. Some is from new customers signing up specifically because Microsoft offers integrated AI. And some is from AI companies like Anthropic committing to multi-billion-dollar infrastructure contracts.

The strategy is working.

The Cloud Revenue Milestone That Changes Everything

Microsoft has been gradually shifting from a software company to a cloud company for the past decade. Azure has become the company's second-most profitable business unit after Office. But last quarter represented a watershed moment: Microsoft Cloud revenue hit $50 billion for the first time in a single quarter. This milestone was covered by The Motley Fool.

For context, that number exceeds the total annual revenue of most Fortune 500 companies. IBM's total annual revenue is roughly

And the growth isn't slowing. All three of Microsoft's segments (Productivity and Business Processes, Intelligent Cloud, and More Personal Computing) grew by double digits. Even Xbox, typically the weakest performer, was only slightly down.

The Windows business was essentially flat, growing just 1%. That's the real story of Microsoft's evolution. The company no longer depends on Windows as the primary profit driver. Windows is now background infrastructure. The money is in the cloud, and specifically in the cloud services that power enterprise AI, data analytics, and infrastructure transformation.

Why does that matter? Because it means Microsoft's fundamental business model has shifted. The company is no longer selling perpetual software licenses to individuals and enterprises. It's selling ongoing cloud services where the customer gets billed continuously for computing power, storage, and AI capabilities.

Once you switch to Azure, switching away is expensive and disruptive. Your data lives there. Your applications are optimized for it. Your team is trained on the tools. This creates stickiness that a Windows license never had.

And with AI workloads now driving incremental cloud adoption (enterprises adding GPU capacity, testing new AI services, building custom AI applications), Microsoft's cloud business is in a virtuous cycle. More enterprises use Azure. More need AI capabilities. Those AI capabilities mostly run on Azure (because that's where OpenAI runs). Which drives more adoption. The cycle reinforces itself.

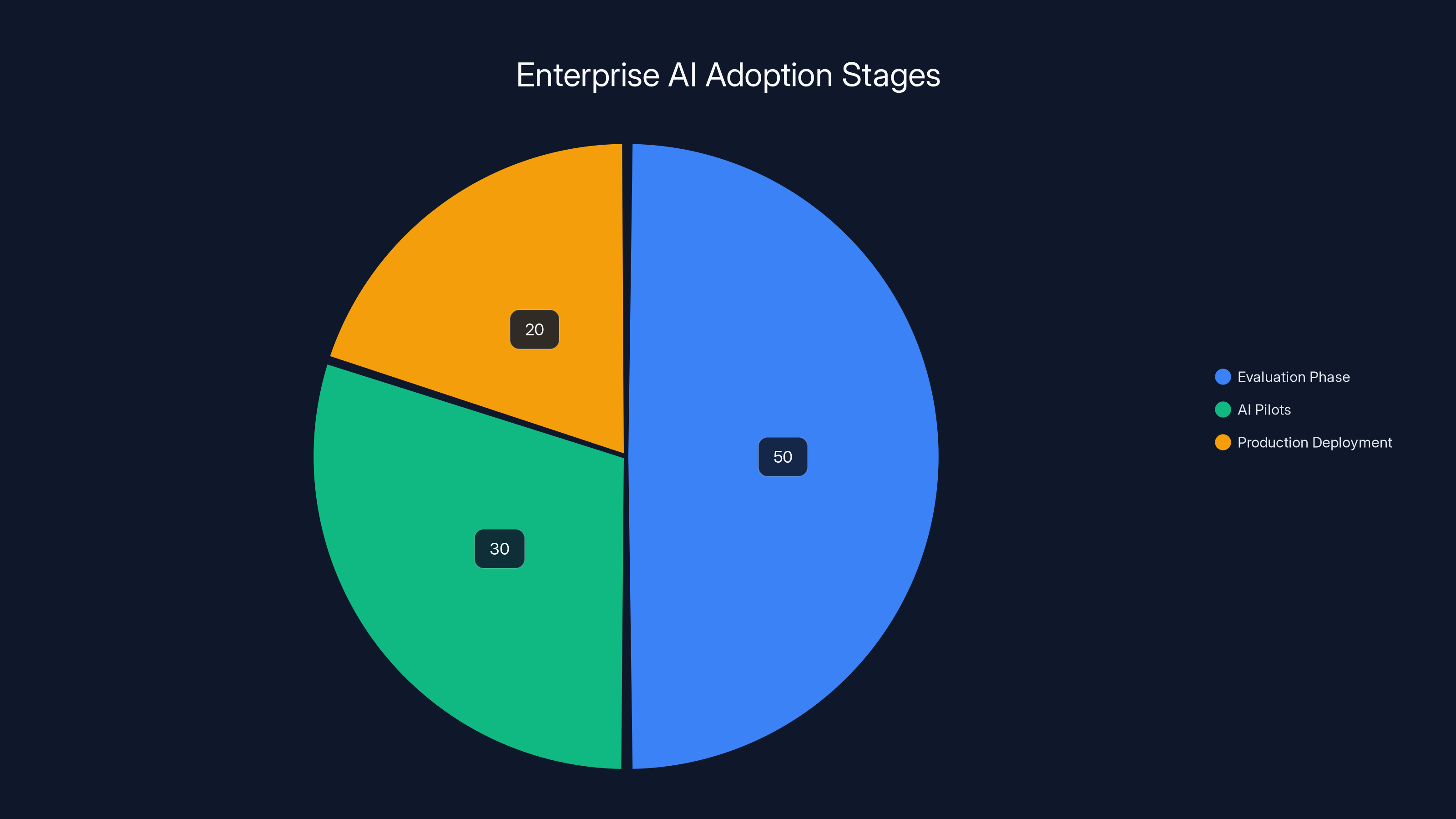

Estimated data shows that 50% of enterprises are in the evaluation phase, 30% have AI pilots, and only 20% have deployed AI in production.

The GPU Shortage That Microsoft Solved

One of the most underrated aspects of Microsoft's AI strategy is that it essentially solved the GPU shortage problem that plagued the industry in 2023 and 2024.

Nvidia supplies roughly 70% to 80% of all AI chips. When demand exploded, Nvidia couldn't get them produced fast enough. Customers who wanted to build AI infrastructure faced wait times of 6 to 12 months for the latest GPUs. It created a massive bottleneck in AI development.

Microsoft's solution was to commit to buying GPUs in such massive quantities that Nvidia essentially reserved production for them. The company likely negotiated exclusive access to Nvidia's latest chips, or at minimum, reserved large portions of manufacturing capacity. In exchange, Microsoft pays a premium—but it guarantees supply.

That guaranteed supply means OpenAI, Anthropic, and every other Azure customer never faces the GPU shortage problem. They can scale workloads without worrying about hardware availability. That's worth billions in avoided delays and lost revenue to customers.

But there's another layer. Microsoft hasn't put all its chips in Nvidia's basket. The company has been aggressively developing its own custom AI accelerators, chips designed specifically for Azure workloads. These chips, code-named "Maia" among other projects, are unlikely to ever match Nvidia's software ecosystem. But they can run inference workloads at lower power and cost, and inference is where the majority of AI workload execution happens.

This is Microsoft playing the long game. Nvidia dominates training. But inference—running trained models on new data—is a different market. If Microsoft can provide cheaper inference chips through custom hardware, customers will use those for at least part of their workload. That drives more revenue to Azure, more margin to Microsoft, and less dependence on Nvidia long-term.

The Enterprise AI Market Is Just Getting Started

Here's the thing about the

Enterprise adoption of AI is still in the early stages. According to industry surveys, fewer than 20% of enterprises have deployed large language models in production. Fewer than 30% have serious AI pilots running. Most companies are still in the evaluation phase.

That means the wave of AI adoption is still coming. As enterprises move from pilots to production, as they build custom AI applications, as they integrate AI into existing workflows, they're going to need compute infrastructure. Lots of it.

Where will they get it? Increasingly from Microsoft, because Microsoft owns the distribution channels (Office, Teams, Dynamics, Power Platform), the enterprise relationships, and the infrastructure scale that's hard to match.

Google Cloud is investing heavily in AI and has strong technology, but lacks the enterprise sales force and relationship depth. AWS is enterprise-grade but has been slower to embrace AI. That leaves Microsoft as the primary beneficiary of enterprise AI adoption.

But there's a wild card: open-source models. Companies like Meta, Mistral, and others are releasing increasingly capable open-source large language models. Some of these models can run on commodity hardware or smaller clusters. If enterprises can run decent AI workloads on cheaper, open-source infrastructure, that reduces the demand for Microsoft's premium cloud services.

Microsoft's answer to this threat is twofold. First, provide better integrations with open-source models through Azure so customers can run them cheaply. Second, invest in proprietary models and services that are good enough that enterprises prefer the convenience and support of paying for premium services.

The company is essentially trying to own the entire stack: the infrastructure, the models, the software integration, and the enterprise support. That's a powerful position.

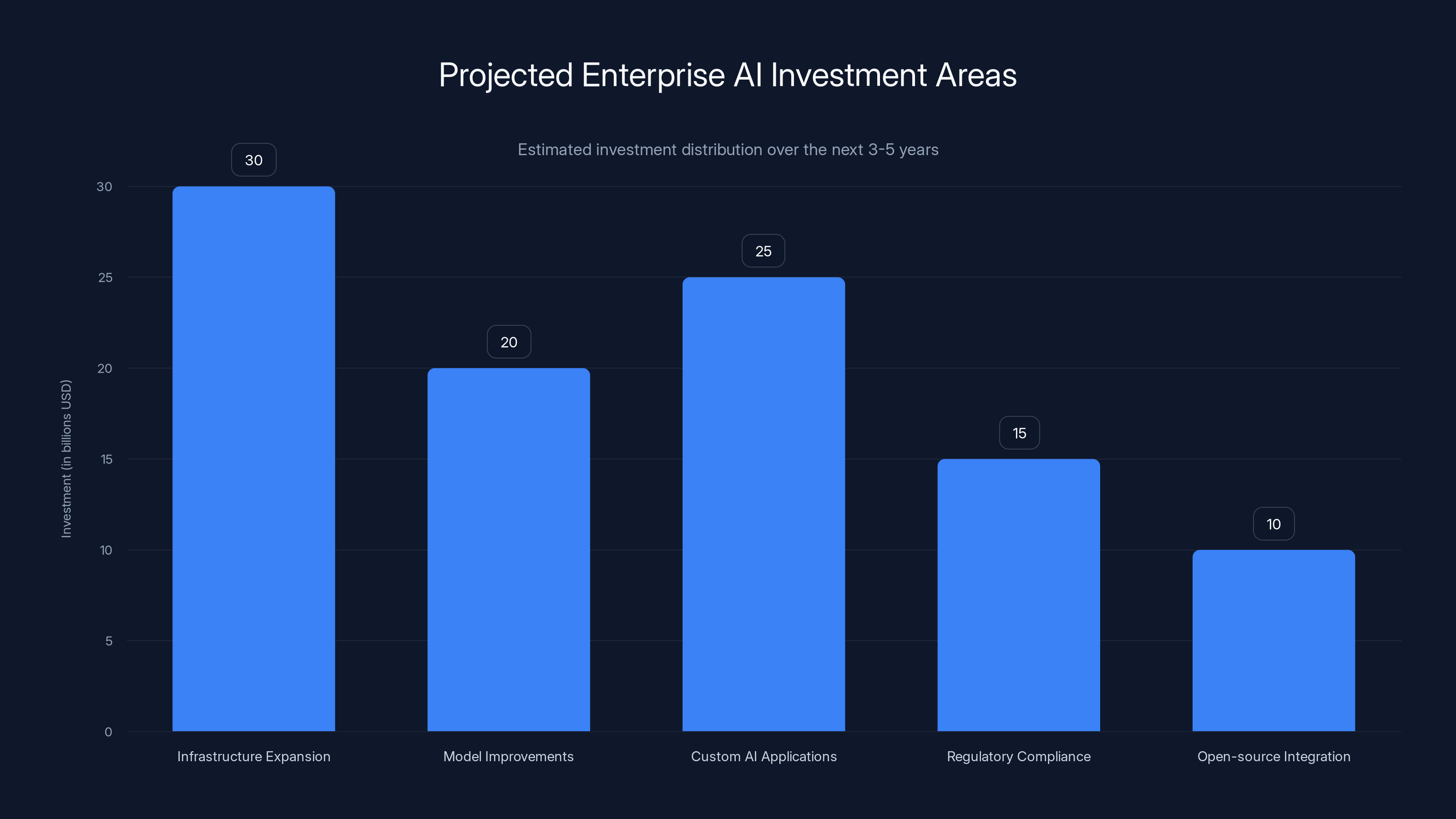

Estimated data suggests significant investment in AI infrastructure and custom applications, with regulatory compliance and open-source integration also receiving attention.

The Rivalry With OpenAI That Actually Benefits Microsoft

Microsoft and OpenAI have a notoriously rocky relationship, even though they're financially intertwined. Reports suggest deep tensions between the companies' cultures, strategies, and leadership.

OpenAI wants to be independent. Microsoft wants closer integration. OpenAI is exploring its own chip design. Microsoft would prefer OpenAI depend entirely on Azure. OpenAI is raising capital at a $750 billion+ valuation, which might let it take on debt and reduce its dependence on Microsoft funding. Microsoft would prefer OpenAI remain financially constrained enough to need deep partnership.

The recent restructuring of OpenAI into a public benefit corporation was partly designed to make the company more independent from Microsoft's influence. But the restructuring also included a $250 billion Azure purchase commitment that essentially locks OpenAI into Microsoft for the rest of the decade.

The tensions are real. But they actually benefit Microsoft. Because OpenAI, driven by a desire to reduce dependence on Microsoft, will likely invest in building its own infrastructure, recruiting infrastructure teams, and exploring alternatives. This creates strategic friction.

But that friction is completely asymmetrical. If OpenAI invests $10 billion building its own infrastructure, Microsoft wins. Because OpenAI still has to use Azure for peak capacity, for redundancy, and for customer demands that exceed in-house capacity. So OpenAI's infrastructure investments complement Microsoft's rather than compete.

Meanwhile, Microsoft's infrastructure becomes more proven, more reliable, and more capable because it handles OpenAI's workloads. That makes it more attractive to other enterprise customers and other AI companies.

So the rivalry, which looks like a weakness on the surface, is actually a strength. Microsoft benefits from OpenAI's independence efforts because those efforts ultimately drive more value to the partnership.

How the Renegotiation Changed Everything

In October 2025, OpenAI restructured its corporate form and finalized renegotiated terms with Microsoft. This is significant because it revealed how much both companies value the relationship.

OpenAI's restructuring into a public benefit corporation was designed to enable the company to eventually go public while preserving its mission focus. But it required capital commitments from existing investors, including Microsoft.

During the renegotiation, Open AI secured a

For Microsoft, that's extraordinary. It's a 10-year contractual commitment (or longer) with one customer. It locks in predictable revenue. It justifies the capital expenditure on AI infrastructure because the company has contractual assurance the infrastructure will be used.

For OpenAI, it's a strategic commitment that says: "We're betting on Microsoft Azure to scale our product for the next decade." That's a vote of confidence in Microsoft's infrastructure that, frankly, Microsoft couldn't have bought with advertising.

The renegotiation also set terms that likely reduced Microsoft's concerns about OpenAI exploring alternatives. The deeper the contractual commitment, the less attractive alternatives become. By locking OpenAI into a $250 billion commitment, Microsoft made sure the company won't seriously explore other cloud providers.

This is Microsoft playing 4D chess while other companies play checkers. The capital expenditure on AI infrastructure is massive. But the revenue commitments are larger. The strategy is sound.

Implications for Competitors: Google, Amazon, and Others

What does Microsoft's success mean for Google Cloud and AWS?

For Google, it's a warning. Google has strong AI technology—its Gemini models are competitive with GPT-4. But Google's cloud infrastructure lacks deep enterprise relationships. Many large enterprises are either already committed to AWS or are cautious about cloud adoption generally. Google's position in AI isn't weak, but it's not the default choice for enterprises the way Microsoft is.

Google is trying to compete by offering better pricing, deeper AI model customization, and unique capabilities in areas like multimodal AI and robotics. But pricing competition in cloud services historically favors the incumbent, which is increasingly Microsoft in the enterprise space.

For AWS, the situation is more concerning. Amazon's cloud business is the largest and most mature. But AWS has been slower to embrace AI than Microsoft. The company does offer AI services and is integrating Anthropic's models into its portfolio. But AWS doesn't have the same enterprise software relationships that Microsoft has through Office and Teams.

What's the difference? When an Office or Teams user wants to use AI, they click a button and get ChatGPT through Microsoft's integration. That integration is so seamless that many users don't realize they're using Azure infrastructure in the background. AWS has no equivalent.

AWS is trying to catch up by making it easier to run open-source models on its infrastructure and by investing in its own AI models. But the head start Microsoft has is enormous.

For everyone else—startups offering cloud infrastructure, edge computing companies, specialized AI hardware providers—Microsoft's dominance is a problem. The company is on track to own the entire stack: the hardware, the infrastructure, the software, the distribution, and the enterprise relationships.

That's unprecedented concentration of power in the cloud market. It creates opportunities for competitors to carve out niches. But it makes building a general-purpose competitor to Microsoft much harder.

What This Means for AI Companies and Startups

If you're an AI company or startup, the Microsoft-OpenAI partnership has direct implications for your business.

First, if you're building an AI product, you probably want to run on Microsoft Azure. It's increasingly where your customers are. It's integrated with the tools enterprises already use. And there are probably network effects—as more AI companies run on Azure, Microsoft invests more in AI infrastructure, which makes Azure more attractive.

Second, if you're raising venture capital, investors will ask whether you have a clear path to distribution. For many AI companies, that path runs through Microsoft. Either you're running on Azure, or you need a really good reason not to.

Third, if you're building infrastructure—GPUs, accelerators, networking, storage—you need to decide how to position yourself relative to Microsoft. You can compete head-on, which is expensive and probably futile. Or you can specialize in niches that Microsoft doesn't dominate: edge computing, embedded AI, specific verticals, open-source infrastructure. That's more viable but requires accepting that you'll never be as large as Microsoft.

Fourth, if you're building software or services on top of AI models, you need to think about which models and platforms you're optimized for. Building only on OpenAI/GPT creates a dependency on a single provider. Building on multiple models (OpenAI, Anthropic, open-source) gives you flexibility but adds complexity. Many companies are choosing the belt-and-suspenders approach: optimize for the model that's best, but make it possible to swap in alternatives if needed.
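One way to picture the belt-and-suspenders approach is a thin wrapper that tries a preferred model first and falls back to alternatives. The sketch below is illustrative only: the backends are local stand-in functions and not real vendor SDK calls, and every name in it (`complete_with_fallback`, `primary_model`, `secondary_model`) is hypothetical.

```python
from typing import Callable, Sequence

# A backend is any callable that takes a prompt and returns generated text.
Backend = Callable[[str], str]


class AllBackendsFailed(RuntimeError):
    """Raised when every configured backend raised an error."""


def complete_with_fallback(
    prompt: str, backends: Sequence[tuple[str, Backend]]
) -> tuple[str, str]:
    """Try each (name, backend) pair in order; return the first success."""
    errors = []
    for name, backend in backends:
        try:
            return name, backend(prompt)
        except Exception as exc:  # any failure triggers the next backend
            errors.append(f"{name}: {exc}")
    raise AllBackendsFailed("; ".join(errors))


# Stand-in backends for demonstration; a real one would wrap a vendor client.
def primary_model(prompt: str) -> str:
    raise TimeoutError("primary unavailable")


def secondary_model(prompt: str) -> str:
    return f"[secondary] {prompt.upper()}"


used, answer = complete_with_fallback(
    "summarize q2",
    [("primary", primary_model), ("secondary", secondary_model)],
)
print(used, answer)
```

Keeping the application code dependent only on the `Backend` signature is what makes a swap cheap; the cost is that prompts and output handling must stay generic enough to work across models.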

The power dynamics are real, but they're not immovable. Companies that build genuinely better products, that create strong network effects, that distribute directly to users can still succeed outside of Microsoft's ecosystem. But it's harder. And the most likely path to success is to work with Microsoft, not against it.

The Future: What Comes Next?

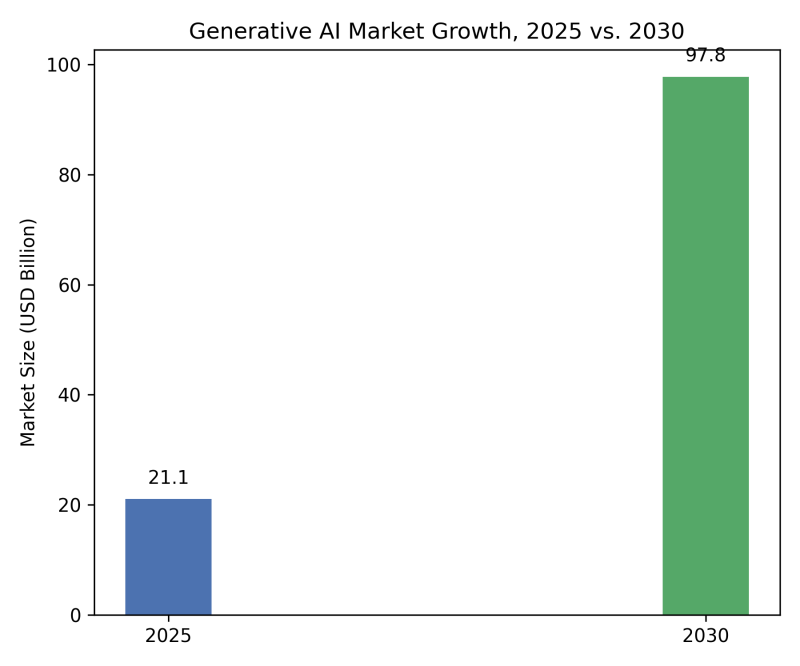

If Microsoft's earnings and the partnership with Open AI tell us anything, it's that the enterprise AI market is just beginning. The

Over the next three to five years, expect:

Continued infrastructure expansion: Microsoft will keep spending

Model capability improvements: OpenAI and other AI companies will continue improving their models. GPT-5 and beyond will be more capable, more efficient, and more valuable. That drives higher enterprise demand for inference and fine-tuning services, which means more Azure usage.

Custom AI applications: Most of the enterprise AI upside comes from custom applications built on top of foundation models. Your company's proprietary data, your specific problems, your unique workflows. These require compute infrastructure to run. Microsoft will be the platform where most of these are built.

Regulatory framework evolution: AI regulation is coming. EU AI Act, potential US legislation, industry standards. Companies that have deep relationships with regulators and enterprise customers (like Microsoft) will be better positioned to navigate this. That's another advantage for Microsoft.

Open-source challenge: Open-source models will get better. Some enterprises will choose to run self-hosted AI rather than pay for cloud services. This will reduce the total addressable market for cloud providers. But it's unlikely to reduce Microsoft's relative position, because Microsoft will offer the most seamless way to run open-source models in production.

Hardware diversification: Nvidia's dominance in AI chips will gradually decrease. Custom AI accelerators from Microsoft, AMD, Intel, and others will capture increasing share of inference and some training workloads. Microsoft will be well-positioned with its custom chips and relationship with Nvidia.

The base case is that Microsoft's AI-related revenues grow from

But execution is the key word. Microsoft has to keep investing in infrastructure at massive scale. The company has to keep innovating in AI software integration. And the company has to avoid catastrophic setbacks, such as antitrust action from regulators or fundamental AI architecture breakthroughs that invalidate its infrastructure investments.

Those risks are real. But the opportunity is enormous.

The Broader Implications for Tech and Business

Zoom out from Microsoft for a moment and consider what's happening at a macroeconomic level.

Tech companies have historically made money from software licensing (Microsoft, Adobe), advertising (Google, Meta), e-commerce (Amazon), or smartphones (Apple). These are different business models with different economics.

AI is reshaping that. Now the way you make money from AI is by owning three things: the models, the infrastructure, and the software integrations. Microsoft is trying to own all three. OpenAI is trying to own the models. But OpenAI runs on Azure, which means Microsoft owns the infrastructure.

That creates an unusual power dynamic where Microsoft isn't the coolest company in the room. It's not the company with the breakthrough technology. But it's the company that gets paid either way.

This is reminiscent of the gold rush—the companies that sold mining equipment and supplies made more reliable money than the prospectors. Or the early internet—companies like Akamai that provided infrastructure made steadier money than dot-com companies that burned through venture capital.

Microsoft's position isn't predatory—the company provides genuine value. Enterprise customers choose to run workloads on Azure because it's reliable, integrated, and cost-effective. They're not forced. But they do recognize that Azure is increasingly the path of least resistance.

Over time, this might create antitrust concerns. If Microsoft becomes so dominant in AI infrastructure that competitors can't viably build alternatives, regulators might step in. That's already starting to happen in the EU with the Digital Markets Act.

But for now, Microsoft's position is both enviable and earned. The company made big bets on AI years ago when the outcome was uncertain. It invested in the right partners. It built infrastructure at scale. And it's harvesting the results.

Final Perspective: The Unsexy Winner in AI

There's something almost humorous about how the narrative around AI has evolved.

When ChatGPT launched, the narrative was all about OpenAI. The company was changing everything. Beating Google. Threatening all of human employment. Sam Altman was the visionary. The model was the product.

Then it became about the model arms race. GPT-4 vs. Claude vs. Gemini. Who would build the most capable model? That was the competition that mattered.

But quietly, Microsoft was playing a different game. While everyone debated which model was best, Microsoft ensured that regardless of which model won, it would run on Azure. Which meant Microsoft would capture the infrastructure margin.

Now, in quarterly earnings, we see the proof point. Microsoft made $7.6 billion from its OpenAI investment in a single quarter. That's not from selling ChatGPT subscriptions. That's from owning a stake in the company and supplying the infrastructure that powers it.

It's the unsexy winner position. Microsoft doesn't get the cultural prestige of OpenAI. It's not celebrated as a visionary company. But it's making the most reliable, largest profits from the AI boom.

That's the real story of Microsoft's AI strategy: not brilliance, but systematic advantage. The company isn't trying to build the best AI model. It's trying to be the platform that all AI companies depend on. And it's succeeding spectacularly.

For enterprise customers, that's reassuring. You know Microsoft will be there for the next decade, maintaining infrastructure, supporting your workloads, integrating new capabilities. You know the company has the capital and commitment to stay competitive.

For competitors, it's a warning. Building a better model isn't enough if you're dependent on someone else's infrastructure. Building great software isn't enough if you lack distribution. The structural advantages accrue to companies that own multiple parts of the stack and have deep enterprise relationships.

For investors, Microsoft's earnings prove that AI is now a serious revenue driver, not just a long-term bet. And the company has positioned itself to capture a substantial share of that revenue for the next decade, which makes the stock valuation more justified than many acknowledge.

The $7.6 billion number isn't a fluke or a one-time boost. It's the beginning of a pattern where Microsoft's earnings increasingly reflect the value it's capturing from the AI boom. As long as AI demand keeps growing and OpenAI remains dependent on Azure, that pattern will continue.

That's the real story beneath the headlines.

FAQ

How is Microsoft making $7.6 billion from OpenAI?

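Microsoft's $7.6 billion is net income from its investment in OpenAI. It reflects appreciation in the value of that stake, not quarterly operating revenue.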

What is the 20% revenue share agreement between Microsoft and OpenAI?

While neither company has officially confirmed the exact terms, industry sources consistently point to Microsoft receiving 20% of OpenAI's revenue in exchange for providing infrastructure through Azure cloud services. This means every dollar of OpenAI's ChatGPT subscriptions, API revenue, and enterprise contracts generates roughly $0.20 in revenue for Microsoft as an infrastructure provider.
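The arithmetic behind that figure can be sketched in a few lines. This is an illustration only: the 20% rate is the reported, unconfirmed figure, and the function name `microsoft_share` and the sample revenue amounts are hypothetical, not taken from either company's disclosures.

```python
# Reported but unconfirmed revenue share; treat it as an assumption.
REPORTED_REVENUE_SHARE = 0.20


def microsoft_share(openai_revenue_usd: float,
                    share: float = REPORTED_REVENUE_SHARE) -> float:
    """Return the portion of OpenAI revenue that would flow to Microsoft."""
    if openai_revenue_usd < 0:
        raise ValueError("revenue cannot be negative")
    if not 0.0 <= share <= 1.0:
        raise ValueError("share must be between 0 and 1")
    return openai_revenue_usd * share


if __name__ == "__main__":
    # Hypothetical revenue levels, chosen only to show the scaling.
    for revenue in (1.0, 1_000_000_000.0):
        owed = microsoft_share(revenue)
        print(f"${revenue:,.2f} of OpenAI revenue -> ${owed:,.2f} to Microsoft")
```

Keeping the rate as a parameter makes it easy to see how sensitive Microsoft's take is to the actual terms, which remain undisclosed.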

Why did OpenAI commit $250 billion to Azure?

OpenAI committed $250 billion to purchasing Azure compute services because large language models require massive computational power, particularly GPUs and specialized AI accelerators that Microsoft provides through Azure. OpenAI can't build equivalent data center infrastructure independently, so outsourcing to Microsoft Azure ensures reliable access to the computing power needed to scale ChatGPT and future models. The long-term commitment also gives OpenAI favorable pricing and guaranteed capacity allocation.

How much is Microsoft spending quarterly on AI infrastructure?

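Microsoft reported spending $37.5 billion in the quarter on AI infrastructure, with about two-thirds going to GPU and CPU assets for Azure AI services.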

Could Microsoft's AI dominance create antitrust problems?

Potentially yes. If Microsoft becomes so dominant in AI infrastructure that competitors can't viably build alternatives, antitrust regulators might intervene, particularly in the EU where the Digital Markets Act already applies to Microsoft. However, customers currently choose Azure voluntarily due to its reliability, integration with Microsoft software, and cost-effectiveness. The regulatory risk exists long-term but is not imminent, provided Microsoft continues providing genuine competitive value.

What happens if OpenAI becomes independent from Microsoft?

OpenAI's recent restructuring into a public benefit corporation was partly designed to enable eventual independence. However, the company's $250 billion (and growing) commitment to purchase Azure services makes true independence difficult. Even if OpenAI builds some proprietary infrastructure, it will rely on Azure for peak capacity, redundancy, and customer demands exceeding internal capacity. Microsoft's position is so entrenched that OpenAI cannot easily reduce dependence without massive additional capital investment in competing infrastructure.

Is open-source AI a threat to Microsoft's dominance?

Open-source AI models like Meta's Llama and Mistral's offerings do create an alternative path for some enterprises, but they don't fundamentally threaten Microsoft's infrastructure dominance. Open-source models still need to run somewhere—usually on cloud infrastructure like Azure. Microsoft can offer the most seamless way to deploy and run open-source models in production, which maintains its strategic advantage even as open-source models improve.

How does the Anthropic investment fit into Microsoft's strategy?

Microsoft's

Microsoft's $7.6 billion OpenAI windfall isn't just a one-time accounting gain. It's a signal that the company's infrastructure-first AI strategy is generating extraordinary returns. The partnership demonstrates how the unsexy infrastructure layer often generates more sustainable profits than the celebrated model creators. As enterprise AI adoption accelerates, Microsoft's position should only strengthen, making its earnings reports increasingly impressive and its competitive moat deeper. For anyone building in the AI space, the lesson is clear: own the platform, not just the application.

Key Takeaways

- Microsoft generated $7.6 billion in net income from OpenAI investment appreciation, demonstrating the scale of AI company valuations

- OpenAI has committed to purchasing $250 billion in Azure services (part of Microsoft's $625B in commercial performance obligations), locking in long-term revenue

- Microsoft's reported 20% revenue share agreement means roughly $0.20 of every dollar OpenAI generates in revenue flows to Microsoft

- The company is spending $37.5 billion quarterly on AI infrastructure, about $25 billion of it on GPU and CPU hardware, positioning itself as indispensable to all major AI companies

- Microsoft's strategy of owning infrastructure rather than just models has proven more profitable than competing on model capabilities alone

