The AI Paradox: Spending Big Without Seeing Results

There's a quiet frustration building in corporate boardrooms across the globe. Companies have committed billions to artificial intelligence initiatives, hired specialized teams, reorganized departments around AI-first strategies, and yet the financial needle isn't moving the way executives promised it would.

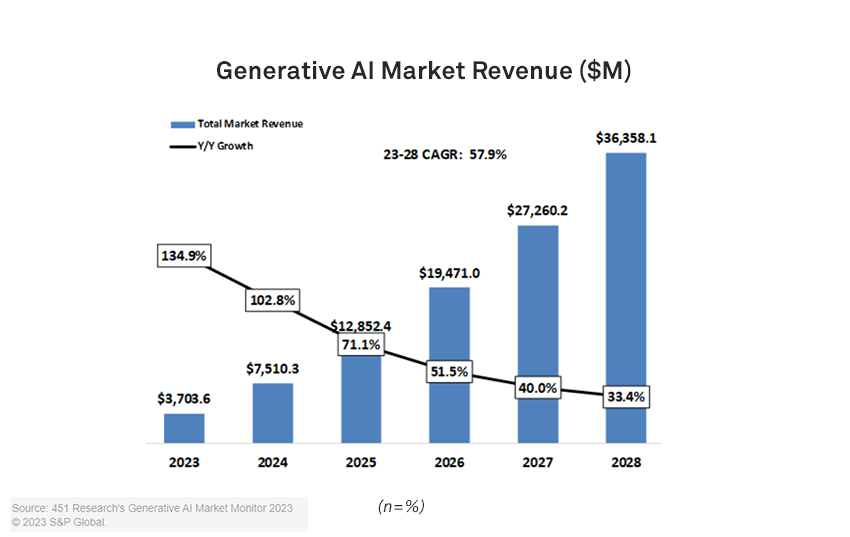

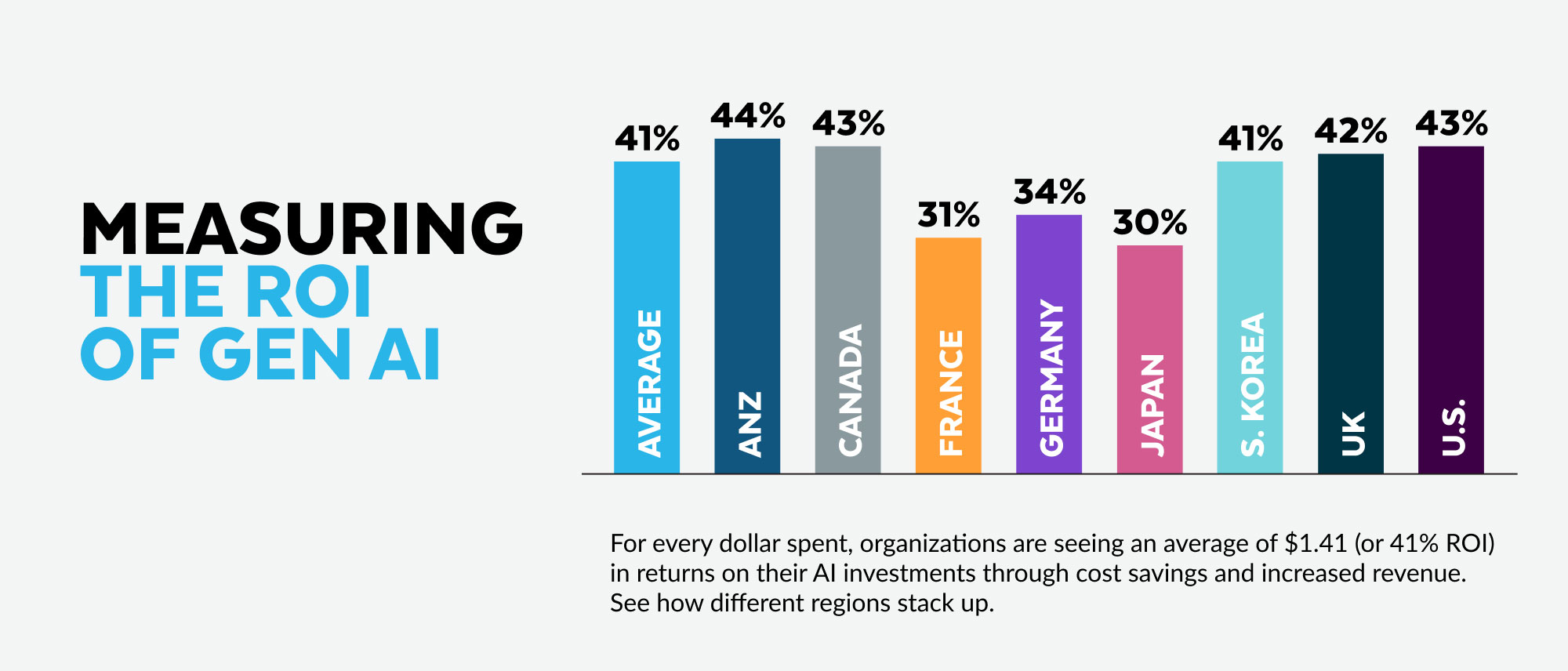

The numbers tell a sobering story. While roughly three-quarters of organizations have explicitly designated revenue growth as their primary success metric for AI deployment, only about one in five has actually achieved it. That's a gap of more than 50 percentage points between ambition and reality. Meanwhile, when asked about transformative impact, barely a quarter of business leaders describe their AI efforts in those terms, despite two-thirds acknowledging that these tools do improve productivity and efficiency to some degree.
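Here is a quick check of the headline arithmetic, using the rounded figures quoted here: 74% of organizations target revenue growth and 20% achieve it. The second number below depends on an assumption that the survey wording implies but does not state, namely that the organizations achieving revenue growth are among those targeting it.

```python
# Rounded survey figures quoted in the text.
TARGETING = 0.74  # share of organizations targeting revenue growth from AI
ACHIEVED = 0.20   # share of organizations that achieved it


def ambition_gap(targeting: float, achieved: float) -> tuple:
    """Return (gap in percentage points, share of targeting organizations that fell short).

    The second value assumes every organization that achieved revenue growth
    was also one of the organizations targeting it.
    """
    gap_points = (targeting - achieved) * 100
    fell_short = 1 - achieved / targeting
    return gap_points, fell_short


points, short = ambition_gap(TARGETING, ACHIEVED)
print(f"Gap: {points:.0f} percentage points")   # Gap: 54 percentage points
print(f"Targeters falling short: {short:.0%}")  # Targeters falling short: 73%
```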

This isn't a failure of the technology itself. Modern AI systems are genuinely powerful. They can analyze datasets in seconds that would take human teams weeks. They can identify patterns invisible to traditional analytics. They can generate content, code, and strategies at a scale that would have seemed impossible five years ago. The problem isn't capability. It's implementation, expectation-setting, and the messy reality of actually integrating transformational technology into organizations built on legacy systems and human workflows.

What makes this situation particularly interesting is how calm executives remain about it. There's no panic. No mass abandonment of AI investments. Instead, what's emerging is a pragmatic, almost cautious approach to AI deployment. Companies are treating this technology like a long-term strategic asset rather than a quick-fix productivity tool. They're moving deliberately, building foundations, and being selective about where they place their bets.

This is the story of the AI investment gap: why it exists, what's causing it, and critically, how organizations that understand these dynamics will eventually separate themselves from the pack.

TL;DR

- Only 20% of organizations have achieved revenue growth from AI despite 74% targeting it as their primary metric

- Just 25% report transformative impact even though 66% see modest productivity improvements

- Three-quarters of companies are moving AI pilots to production, signaling a shift from experimentation to implementation

- Agentic AI adoption is expected to jump from 23% today to 74% within two years, but deployment will remain measured and deliberate

- Bottom Line: The AI ROI gap reflects unrealistic initial expectations rather than technological failure, and organizations treating AI as a five-year strategic play, not a one-year revenue driver, will eventually win

Understanding the Revenue Growth Gap: Where Organizations Stumble

The Expectation Inflation Problem

When companies began seriously evaluating AI three to five years ago, the narrative was intoxicating. Industry analysts projected that AI would drive productivity improvements of 30-50%. Technology vendors promised automation that would slash operational costs dramatically. Consultants published studies showing early adopters gaining competitive advantages worth millions. Against that backdrop, boards approved budgets. Executives committed to timelines. Teams were hired specifically to make AI work.

But here's what happened: the gap between the promise and the deployment reality turned out to be enormous. A company doesn't get 30% productivity gains by simply turning on ChatGPT. It doesn't suddenly save $10 million annually by running a few pilots with machine learning models. Real transformation requires rethinking business processes, retraining workforces, changing how decisions get made, and often discovering that your data infrastructure is decades behind where it needs to be.

Consider a financial services firm that spent months developing an AI system to detect fraud. The model itself works beautifully, catching 15% more fraudulent transactions than the previous rule-based system. But integrating it into the existing compliance workflow meant building new approval processes, training investigators to interpret model outputs, and meeting regulatory requirements around explainability. Two years into deployment, the firm is realizing that, once development, training, and integration costs are accounted for, the system will need another three years to pay for itself.
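A payback calculation shows why a model that works well can still take years to earn its keep. This is a minimal sketch with invented figures in thousands of dollars. The cost and benefit profile is a hypothetical stand-in for a project like the one described, not data from any real firm.

```python
from typing import List, Optional


def payback_year(upfront: float, annual_costs: List[float], annual_benefits: List[float]) -> Optional[int]:
    """Return the first year (1-based) in which cumulative net benefit repays the upfront spend.

    Returns None if the project has not paid back within the years supplied.
    """
    cumulative = -upfront
    for year, (cost, benefit) in enumerate(zip(annual_costs, annual_benefits), start=1):
        cumulative += benefit - cost
        if cumulative >= 0:
            return year
    return None


# Hypothetical figures in thousands of dollars, for illustration only.
development = 2_000                                     # model build before go-live
integration_and_training = [800, 500, 300, 300, 300]    # new workflows, investigator training, upkeep
fraud_losses_avoided = [300, 600, 1_000, 1_200, 1_200]  # benefit ramps up as the workflow matures

print(payback_year(development, integration_and_training, fraud_losses_avoided))  # 5
```

With these assumptions the model never runs at a loss on its own merits after year one, yet the integration and training costs push payback out to year five.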

This pattern repeats across industries. Healthcare organizations implemented AI for diagnostic support and found that doctors still needed to verify results. Manufacturing facilities deployed computer vision systems for quality control and discovered their legacy equipment couldn't capture images in the right format. Retailers built AI recommendation engines only to learn that their data on customer behavior was inconsistent and fragmented across incompatible systems.

The Measurement and Attribution Challenge

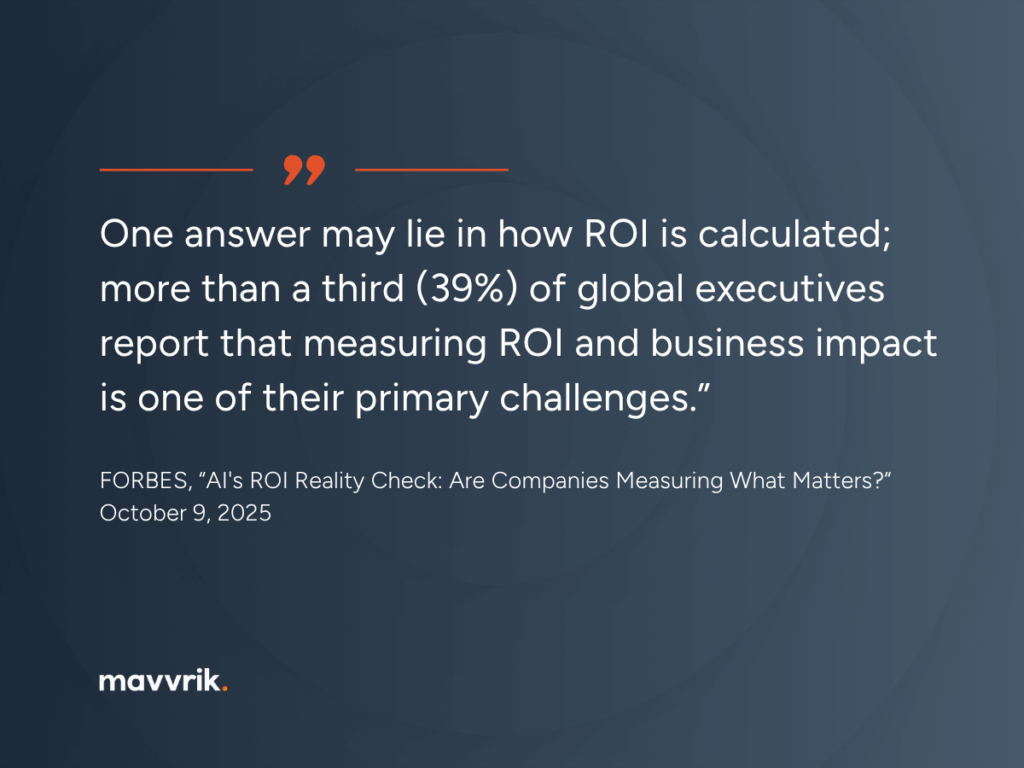

Proving that AI actually caused improvements is harder than it sounds. If customer service response times improve after deploying an AI chatbot, how much of that improvement came from the chatbot itself versus the fact that you also upgraded your infrastructure, retrained your team, and were already experiencing seasonal demand increases? How do you isolate the impact of an AI forecasting model when multiple factors influence inventory levels?

Many organizations struggle with this attribution problem. They see improvements, but they can't cleanly connect them to their AI investments with the certainty that finance departments demand. So they categorize the gains as "operational efficiency" or "process improvement" rather than attributing them specifically to AI. From a financial perspective, this means AI doesn't get credited with the returns it actually delivered, making the investment appear less valuable than it truly is.
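One common way to approach the attribution problem is a difference-in-differences comparison. This piece does not prescribe it; it is offered as one option. You measure the metric before and after rollout for a team that received the AI tool and for a comparable team that did not, then subtract the control team's change. The sketch uses made-up response times. It assumes both teams shared the infrastructure upgrade and seasonal shift, and that their metrics would otherwise have moved in parallel.

```python
from statistics import mean


def diff_in_diff(treated_before, treated_after, control_before, control_after) -> float:
    """Change in the treated group minus change in the control group."""
    treated_change = mean(treated_after) - mean(treated_before)
    control_change = mean(control_after) - mean(control_before)
    return treated_change - control_change


# Hypothetical average response times in minutes (lower is better).
# Both teams got the infrastructure upgrade and the seasonal shift; only one got the chatbot.
chatbot_team_before = [30, 32, 31]
chatbot_team_after = [20, 22, 21]
control_team_before = [29, 31, 30]
control_team_after = [25, 27, 26]

effect = diff_in_diff(chatbot_team_before, chatbot_team_after,
                      control_team_before, control_team_after)
print(f"Estimated chatbot effect: {effect:+.1f} minutes")  # Estimated chatbot effect: -6.0 minutes
```

The raw before/after drop for the chatbot team is 10 minutes. The control team improved by 4 minutes without the chatbot, so only about 6 minutes is plausibly attributable to it, and only if the parallel-trends assumption holds.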

There's also the issue of intangible improvements. An AI system might dramatically improve employee satisfaction by handling tedious tasks, but how do you translate that into a revenue number? It might reduce decision-making time from days to hours, giving the company more agility in a competitive market. That's valuable, but it doesn't show up as a discrete revenue event in quarterly financials.

Why Productivity Improvements Don't Automatically Equal Revenue Growth

This distinction is critical and often overlooked. Two-thirds of organizations report that their AI tools improve productivity and efficiency. That's not nothing. But only one-fifth report that this translates into revenue growth. Why the disconnect?

Because productivity gains and revenue growth are fundamentally different things. If your customer service team processes tickets 20% faster because of AI, you can take one of three paths: hire fewer people (cost savings, not revenue growth), handle more customers with the same team (potential revenue growth if you're capacity-constrained), or improve customer satisfaction and retention (also potential revenue growth, but indirect and difficult to measure).

Many organizations took path one: they realized they could reduce their customer service headcount and used that as a cost-cutting mechanism. That's rational from a financial perspective, but it doesn't produce revenue growth. It produces margin improvement. Financially, a dollar of margin improvement can be worth more than a dollar of revenue growth because it goes straight to the bottom line. But for executives who committed to AI initiatives as revenue-growth plays, it feels like the investment underperformed against its stated objectives.
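A toy calculation makes the first two paths comparable. Every number below is hypothetical. "20% faster" is read as 20% more tickets per agent; a 20% cut in handling time would instead mean 25% more. The sketch shows that path one books a predictable cost saving, while path two only becomes revenue when there is unserved demand to absorb.

```python
import math

# All figures are hypothetical, chosen only to make the paths comparable.
TEAM_SIZE = 50
COST_PER_AGENT = 60_000        # annual fully loaded cost per agent, in dollars
TICKETS_PER_AGENT = 5_000      # annual tickets per agent before AI
REVENUE_PER_EXTRA_TICKET = 10  # only real if demand is currently going unserved
SPEEDUP = 0.20                 # "20% faster", read here as 20% more tickets per agent


def cost_savings_path() -> int:
    """Path one: keep ticket volume fixed and shrink the team (margin, not revenue)."""
    agents_needed = math.ceil(TEAM_SIZE / (1 + SPEEDUP))
    return (TEAM_SIZE - agents_needed) * COST_PER_AGENT


def capacity_revenue_path(unserved_tickets: int) -> float:
    """Path two: keep the team and absorb more volume, capped by real unserved demand."""
    extra_capacity = TEAM_SIZE * TICKETS_PER_AGENT * SPEEDUP
    return min(extra_capacity, unserved_tickets) * REVENUE_PER_EXTRA_TICKET


print(f"Path one, cost savings:            ${cost_savings_path():,}")
print(f"Path two, no unserved demand:      ${capacity_revenue_path(0):,.0f}")
print(f"Path two, 20,000 unserved tickets: ${capacity_revenue_path(20_000):,.0f}")
```

Path three, retention, is left out deliberately. Its revenue effect depends on churn assumptions that would need their own data.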

Additionally, if your entire industry adopts the same productivity tools, you all get faster. But the actual revenue pie doesn't grow because customers aren't paying more for the same service. They're just expecting better service quality as the new normal. The AI becomes table stakes rather than a differentiator, which is fine for maintaining competitiveness but terrible for proving exceptional ROI on your AI investment.

The Agentic AI Revolution: Why Everyone's Optimistic Despite Current Underperformance

What Agentic AI Actually Means (And Why It's Different)

Agentic AI represents a meaningful shift from the AI systems currently deployed in most organizations. Today's typical implementation is what you might call "tool AI": humans use AI to help with specific tasks. An employee might use ChatGPT to draft an email, a data analyst might use a machine learning model to make a forecast, and a marketer might use an image generator for social content.

Agentic AI flips this dynamic. Instead of humans directing the AI, the system operates with some degree of autonomy, pursuing defined objectives and making decisions along the way. It might monitor systems, identify issues, propose solutions, implement changes, and even handle the subsequent troubleshooting, all with minimal human intervention. You set the goals and guardrails, and the agent figures out how to achieve them.

The difference is profound. Tool AI requires constant human attention and decision-making. You have to prompt it, evaluate its output, and decide what to do with the results. Agentic AI can potentially run unattended for extended periods, handling routine work that would otherwise require significant human time.
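The difference between the two modes can be sketched as a loop. This toy example does not represent any vendor's agent framework; the `DiskAgent` class, the disk-usage scenario, the risk labels, and the decision rule are all hypothetical. It shows the pattern described here: a human sets the goal and the guardrail, and the agent repeatedly observes, proposes a fix, checks the guardrail, then either acts or escalates back to a person.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Action:
    name: str
    risk: str             # hypothetical risk label: "low" or "high"
    frees_percent: float  # how much disk usage the action is expected to free


@dataclass
class DiskAgent:
    goal_max_usage: float                         # objective set by a human
    allowed_risk: frozenset = frozenset({"low"})  # guardrail set by a human
    log: List[str] = field(default_factory=list)

    def propose(self, usage: float) -> Optional[Action]:
        """Toy decision rule: choose a remediation only when the goal is violated."""
        if usage <= self.goal_max_usage:
            return None
        if usage < 95:
            return Action("rotate_logs", "low", 10)
        return Action("delete_old_backups", "high", 30)

    def step(self, usage: float) -> float:
        """One observe -> propose -> guardrail check -> act or escalate cycle."""
        action = self.propose(usage)
        if action is None:
            self.log.append(f"ok at {usage:.0f}%")
            return usage
        if action.risk not in self.allowed_risk:
            # Outside the guardrails: hand the decision back to a human.
            self.log.append(f"escalated {action.name} at {usage:.0f}%")
            return usage
        self.log.append(f"ran {action.name} at {usage:.0f}%")
        return usage - action.frees_percent


agent = DiskAgent(goal_max_usage=80)
usage = 88.0
for _ in range(3):  # unattended: the agent runs repeated cycles on its own
    usage = agent.step(usage)
agent.step(97.0)    # a high-risk fix is proposed but escalated, not executed
print(agent.log)
```

A tool-AI version of the same task would stop after `propose` and wait for a person every time. The agentic version only pulls a human in when the guardrail says it must.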

Currently, agentic AI adoption sits at around 23% of organizations, and research suggests it will jump to 74% within the next two years. That isn't necessarily because the technology is ready for such widespread deployment, but because executives believe it will be, and they're preparing for the transition.

Why The Optimism Exists Despite Current Underperformance

So if current AI initiatives are underperforming, why are leaders so confident about future investments? Several factors contribute to this.

First, they're seeing evidence of progress. One in four organizations has already moved 40% or more of its AI pilots into production. This is significant because it shows that, despite the challenges and the gap between initial expectations and actual results, the technology is advancing from the experimental phase into operational deployment. When AI becomes operational rather than experimental, the ROI dynamics shift. You're no longer trying to prove the concept; you're optimizing an existing system.

Second, executives are learning from the current cohort's experience. The organizations that have struggled with ROI are creating case studies that the broader market can learn from. Early-stage AI initiatives have revealed where the pitfalls exist: poor data quality, lack of internal expertise, incompatibility with legacy systems, regulatory challenges, and the need for process redesign. Armed with this knowledge, the next wave of implementations can avoid some of these mistakes.

Third, the technology itself continues to improve at a rapid pace. The capabilities of AI systems today exceed what was possible two years ago, which exceeded what was possible two years before that. When you're planning investments based on where the technology is heading, not where it is now, you're naturally more optimistic. By the time organizations actually implement agentic AI at scale, it will be more capable, more reliable, and more integrated with business processes than it is today.

The Measured Approach to Scaling

Importantly, executives aren't charging forward recklessly. The plan isn't to move from 23% to 74% agentic AI adoption by suddenly deploying autonomous systems everywhere. Instead, what's happening is a methodical scaling process. Organizations are identifying the highest-value use cases, deploying agents in controlled environments, measuring results, and then expanding to other areas.

This measured approach reflects lessons learned from current AI deployments. Companies that aggressively scaled tools without proper governance, testing, and oversight often encountered problems: inaccurate outputs going to customers, systems making unexpected decisions, models trained on biased data perpetuating those biases at scale. The discipline now is to move deliberately.

The timeline matters too. Expecting agentic AI adoption to reach 74% over 24 months doesn't mean full production deployment across all areas by then. It means 74% of organizations will be using some form of agentic AI in some part of their business, perhaps still in limited capacity. The actual widespread transformation will take considerably longer.

Why Data and Infrastructure Are Actually The Real Bottlenecks

The Unsexy Truth About AI Implementation

When executives hear "AI implementation", they picture sophisticated algorithms and machine learning teams building cutting-edge models. They don't picture someone spending three weeks cleaning inconsistent data entries or discovering that data from one system doesn't match data from another system because they were built using different definitions of what a "customer" actually is.

Yet this unglamorous work is often the highest-value use of early AI project resources. Many organizations find that their data isn't in condition for AI systems to work effectively. Customer information is duplicated across multiple databases. Historical transaction data is incomplete. Product classifications use different schemas in different departments. Regional operations maintained their own records using their own formats.

Before any machine learning model can deliver value, this data needs to be integrated, cleaned, and organized. This isn't a one-time project either. Data quality requires ongoing governance. Bad data constantly finds ways back into systems. New data sources get added. Systems change. Without continuous investment in data quality infrastructure, AI models start making decisions based on garbage.
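The cleanup work described here can be sketched as a general data-integration pattern. This piece does not prescribe a specific method. The pattern is to normalize the join key, map both systems onto one schema, and surface conflicts for a person to resolve rather than letting one system silently win. The field names, the country mapping, and the records are all hypothetical.

```python
from typing import Dict, List

# Hypothetical exports from two systems that define "customer" differently.
billing_rows = [
    {"email": "Ana.Lopez@Example.com ", "country": "ES"},
    {"email": "raj@example.com", "country": "IN"},
]
crm_rows = [
    {"contact_email": "ana.lopez@example.com", "name": "Ana Lopez", "country": "Spain"},
    {"contact_email": "mei@example.com", "name": "Mei Chen", "country": "SG"},
]

# Hypothetical mapping that brings one system's free-text countries onto the other's codes.
COUNTRY_ALIASES = {"Spain": "ES"}


def normalize_email(raw: str) -> str:
    return raw.strip().lower()


def merge_customers(billing: List[dict], crm: List[dict]) -> Dict[str, dict]:
    """Build one record per normalized email, flagging disagreements instead of overwriting them."""
    merged: Dict[str, dict] = {}
    sources = (("billing", billing, "email"), ("crm", crm, "contact_email"))
    for source, rows, email_field in sources:
        for row in rows:
            key = normalize_email(row[email_field])
            country = COUNTRY_ALIASES.get(row["country"], row["country"])
            record = merged.setdefault(
                key, {"email": key, "country": country, "sources": [], "conflicts": []}
            )
            if record["country"] != country:
                record["conflicts"].append(f"{source} says country={country}")
            record["sources"].append(source)
            if "name" in row:
                record["name"] = row["name"]
    return merged


customers = merge_customers(billing_rows, crm_rows)
for record in customers.values():
    print(record)
```

The conflict list is the part that makes this ongoing governance rather than a one-time script. New sources and schema changes keep producing disagreements, and someone has to own resolving them.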

Organizations that recognized this early are progressing faster. Those that underestimated the data challenge are stuck in technical debt, spending more time fixing data problems than building AI capabilities. This directly explains a significant portion of the ROI gap: the invested capital went into fixing foundational issues rather than developing user-facing capabilities.

Infrastructure and Sovereign AI Concerns

Parallel to data quality, organizations face infrastructure challenges. Modern AI systems, especially large language models and agentic systems, require computational resources that many organizations didn't plan for. Running inference at scale (using trained models to make predictions or generate outputs) can be expensive, and training models on large datasets can demand costly infrastructure of its own.

There's also the sovereignty question. When you send data to a cloud-based AI service operated by another company, you're introducing security, privacy, and compliance considerations. Your customer data leaves your control. Your competitive intelligence gets processed by external systems. In regulated industries like healthcare, finance, and government, this creates legal and operational challenges.

Four in five organizations now view sovereign AI (maintaining control over your data and AI infrastructure) as strategically important. This is a significant shift that affects deployment choices. Instead of subscribing to a ready-made AI service, organizations are increasingly interested in running models on their own infrastructure or using services that can run in private environments.

This choice has cost implications. Building your own infrastructure is more expensive than using a shared service. Training your own models takes more effort than fine-tuning pre-built ones. But the trade-offs around data control and competitive advantage make it worthwhile for many organizations. This is another factor that extends timelines and increases upfront investment before revenue benefits materialize.

The Workforce Adoption Challenge: Why Fewer Than 3 in 5 Workers Use AI Daily

The Adoption Gap Nobody Talks About

Here's a paradox that rarely makes it into executive presentations: fewer than three in five workers actually use AI in their daily workflows, even though their organizations have invested in AI tools and training. At the same time, organizations are actively managing adoption, in some cases even expanding their lists of banned AI tools.

Why would a company restrict access to tools they invested in? Because uncontrolled AI use creates problems. When employees use generative AI without oversight, they sometimes generate incorrect information and pass it along to customers. They accidentally expose confidential information in prompts. They create content that violates company policies or brand guidelines. They spend time experimenting with tools rather than focusing on core work.

So organizations are taking a governance approach: rather than maximizing adoption of every AI tool, they're being selective about when, where, and how AI gets used. In some departments, AI usage is encouraged. In others, it's restricted or banned entirely. This creates a more controlled environment, but it also helps explain why adoption rates remain relatively low even though investment across the industry is high.

The workforce adoption challenge also reflects a training and competency issue. Using AI effectively isn't as simple as handing someone access to a tool. There's a learning curve. Employees need to understand what these systems can do, what they can't do, how to evaluate output quality, and how to use them for actual business value rather than just entertainment.

Organizations that have invested heavily in training and that frame AI adoption as part of long-term capability building see better results. Those that deployed AI tools without accompanying training programs see lower adoption and higher frustration.

Building AI-Native Cultures Takes Time

The most successful organizations are treating AI adoption as a cultural shift, not a tool deployment. They're redesigning job descriptions to incorporate AI-assisted workflows. They're adjusting performance metrics to recognize when employees effectively leverage AI. They're hiring new people specifically because they have strong AI capabilities. They're rotating executives and managers through AI training programs.

This cultural transformation is essential, but it's slow. Cultural change in large organizations typically takes three to five years before it's genuinely embedded. You can't achieve real ROI on AI investments until the organization's culture, incentives, and processes align to actually use these tools effectively.

Companies trying to rush this process typically see diminishing returns. They invest in tools, see that adoption is low, blame the tools, and then switch to different tools. Meanwhile, the actual blocker—organizational readiness and culture—remains unaddressed.

Competing Priorities: Why AI Isn't The Only Investment Pulling Resources

The Core Business Vs. Future Innovation Dilemma

Organizations operate under a fundamental constraint: they need to keep the current business running while simultaneously investing in future capabilities. It's easy for executives to commit to AI transformation when the strategic discussion is happening in the abstract. It's much harder when the question becomes: "Do we spend this dollar on maintaining our core technology stack, or do we spend it on AI experimentation?"

The reality is that most organizations carry significant technical debt. Legacy systems that are twenty, thirty, or even forty years old underpin critical business processes. These systems are fragile, expensive to maintain, and difficult to integrate with modern tools. But they can't simply be replaced overnight because the business depends on them running reliably.

When an IT organization has to choose between finally modernizing an aging billing system or investing in a new AI initiative, the billing system usually wins because its failure would directly impact revenue. This isn't a failure of strategic vision; it's a rational allocation of finite resources under competing constraints.

Organizations with lower technical debt can allocate more resources to AI innovation. Organizations with high technical debt struggle to find budget for AI beyond the most compelling use cases. This creates a bifurcation where the most digitally mature companies accelerate their AI capabilities while others move more slowly. Over a five to ten-year horizon, this will create significant competitive gaps.

The Talent Scarcity Problem

Even when budget exists, finding people to execute AI projects remains challenging. The market for AI expertise is tight. Experienced machine learning engineers, data scientists, and AI architects command significant salaries. Building internal capability in these areas is expensive and takes time.

Many organizations respond by outsourcing AI development to consulting firms or bringing in contract specialists. This works for specific projects but doesn't build lasting internal capability. The moment a strategic AI initiative concludes, the external talent moves on to the next project, and the organization is left without the expertise to maintain and optimize what was built.

Building genuine internal AI capability requires committing to hiring, training, and retaining talent, which is expensive and requires multi-year commitment. This is another reason why the ROI timeline is longer than executives initially expect.

The Positive Signals: Why Optimism Is Actually Justified

Production Deployment As An Indicator of Maturation

The fact that one in four organizations has moved 40% or more of its AI pilots into production is significant. It indicates that the ecosystem is progressing from pure experimentation to operational deployment. When a company moves something from pilot to production, it means they've tested it, solved the initial problems, and are confident enough to rely on it for actual business processes.

Production deployments are also where the real economics start to materialize. Experimental pilots can demonstrate value but don't necessarily generate returns because they're not at scale and they require intensive management. Production systems, once debugged and optimized, can generate returns at scale with reduced operational overhead.

The fact that this transition is happening at a meaningful scale (one in four organizations making substantial moves to production) suggests that the earlier cohort of AI adopters is moving successfully through the implementation valley and into operational maturity. This will likely accelerate adoption among the later cohort because they'll have demonstrated success stories to learn from.

Evidence of Learning and Course Correction

Organizations are also proving that they can learn and adapt. The expansion of banned AI tools isn't a sign of failure; it's evidence of developing judgment. Companies are figuring out which AI tools are genuinely valuable and which are distractions. They're developing policies around how and when AI should be used. They're building governance structures that balance innovation with appropriate risk management.

This kind of maturation typically precedes significant value realization. Once you've established which tools work, you've built the governance framework, and you've trained people on proper usage, you can then scale those tools with confidence. That's when ROI tends to appear.

The Long-Game Perspective

Fundamentally, executives are right to take a patient approach. AI capabilities that don't exist today will exist in eighteen months. Business models that seem impossible now will become viable. Integration challenges that currently seem intractable will be solved by the next generation of platforms. Organizations that treat this as a five-year journey rather than a one-year revenue driver will ultimately perform better than those that expected immediate returns.

The organizations that will win at AI over the next five years aren't necessarily those that achieved the highest ROI in the first two years. They're the ones that built strong data foundations, developed internal capability, created cultures that embrace AI tools, and maintained strategic patience while the technology and their operations matured together.

Building Effective AI Strategies: What Actually Works

Start With Use Cases, Not Technology

One consistent theme from organizations that are seeing better results: they start by identifying specific business problems that AI could potentially solve, then they evaluate whether the technology makes sense for that use case. They don't start with the technology and then hunt for problems to apply it to.

This sounds obvious, but it's remarkable how many organizations get it backwards. A company becomes interested in ChatGPT, so they spin up ChatGPT projects. They read about computer vision, so they launch computer vision initiatives. Instead of driving strategic value, they end up with technology looking for a home.

Companies seeing better ROI start by asking: "What would meaningful business impact look like in this area? What would we need to be true for an AI solution to be viable? Do we have the data? Do we have the expertise? Is the ROI compelling enough to justify the effort?"

This discipline filters out projects that will consume resources without generating meaningful return. It focuses effort on use cases where success is actually achievable and valuable.

Focus On Continuous Improvement, Not Transformation

The expectation that AI would deliver transformation tends to be the source of disappointment. Transformation is rare. Continuous improvement is common.

A five percent improvement in customer satisfaction, a ten percent reduction in operational costs, a fifteen percent improvement in decision-making speed: none of these are transformational changes. But they're real, achievable, and they compound. A company that achieves a five percent improvement across twenty business processes sees a meaningful improvement in its overall performance.
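Here is one way to put "they compound" into numbers, under stated assumptions. Gains multiply when the improved processes sit in series, so that each stage of a hypothetical sales funnel feeds the next. They also multiply when the same process keeps improving year after year. The four-stage funnel and the five-year horizon are illustrative assumptions, not figures from the text.

```python
from math import prod


def compounded_gain(relative_gains):
    """Overall relative gain when improvements multiply, e.g. conversion rates of stages in series."""
    return prod(1 + g for g in relative_gains) - 1


# Assumption: a hypothetical four-stage funnel (lead -> qualified -> proposal -> closed)
# in which each stage's conversion rate improves by 5% in relative terms.
funnel_gain = compounded_gain([0.05] * 4)
print(f"Four 5% stage gains in series: {funnel_gain:.1%} more closed deals")  # 21.6%

# Assumption: the same 5% gain achieved on one process each year for five years.
five_year_gain = compounded_gain([0.05] * 5)
print(f"Five annual 5% gains: {five_year_gain:.1%}")  # 27.6%
```

Gains in processes that run side by side behave differently: they add rather than multiply. If twenty independent processes each get 5% cheaper, the combined cost of those processes falls by 5%, no more.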

Organizations that are seeing positive ROI tend to frame their AI strategy around continuous improvement rather than transformation. They expect to get better gradually, and they're pleasantly surprised when the gains exceed expectations. Organizations that frame AI as transformational tend to be disappointed because transformation is the exception, not the rule.

Invest In Human-AI Partnership, Not Replacement

The most successful implementations position AI as enhancing human capability rather than replacing humans. A doctor using AI for diagnostic support makes better diagnoses than a doctor without that support, and definitely makes better diagnoses than the AI system alone. A customer service representative using AI to suggest responses to customers provides better service than a pure chatbot and also better than providing no AI assistance.

Organizations trying to use AI to eliminate jobs tend to see poor adoption and mediocre results. Employees rightfully resist tools designed to replace them. Customers often prefer having human options. The emotional and practical friction around replacement stalls implementation.

Organizations positioning AI as a tool for doing the same job better see faster adoption and better results. Employees embrace tools that make their jobs easier. Customers appreciate the improved service. The financial math is also better because you're improving output from the same workforce rather than managing workforce reductions.

Establish Clear Measurement From Day One

We mentioned this earlier, but it's worth emphasizing: you need to establish clear baseline metrics and success measures before you deploy anything. After the fact, you can rationalize almost anything as success. Before deployment, you either have clear metrics or you don't.

For each AI initiative, ask: What specific metric will we measure? How will we gather this data? What does improvement look like? What's the baseline we're measuring against? How often will we check progress?

This discipline prevents the rationalization problem, where you can't cleanly attribute improvements to your AI investment. With clear measurement in place from the beginning, attribution becomes much more straightforward.
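One lightweight way to enforce this discipline is to write each initiative's answers to those five questions down as a record before launch, then measure progress against the recorded baseline. The `MetricPlan` structure and the sample values are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MetricPlan:
    """Answers to the pre-deployment questions, written down before launch."""
    initiative: str
    metric: str             # What specific metric will we measure?
    data_source: str        # How will we gather this data?
    baseline: float         # What's the baseline we're measuring against?
    target: float           # What does improvement look like?
    review_every_days: int  # How often will we check progress?

    def progress(self, current: float) -> float:
        """Share of the baseline-to-target distance covered; works whether lower or higher is better."""
        span = self.target - self.baseline
        if span == 0:
            raise ValueError("target must differ from baseline")
        return (current - self.baseline) / span


plan = MetricPlan(
    initiative="support reply assistant",
    metric="median first-response time (minutes)",
    data_source="weekly ticketing-system export",
    baseline=30.0,
    target=20.0,
    review_every_days=30,
)
print(f"{plan.progress(25.0):.0%} of the way to target")  # 50% of the way to target
```

Making the record immutable (`frozen=True`) is a small design choice aimed at the rationalization problem: the baseline and target can't be quietly edited after results come in.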

The Role of Governance and Policy

Why Restrictions Often Precede Scale

One counterintuitive finding is that the expansion of restricted and banned tool lists is evidence of development, not regression. This seems backwards until you consider what's actually happening.

When an organization first gets access to AI tools, usage increases rapidly as people experiment and find interesting applications. During this period, some uses are valuable, but others are frivolous or risky. An employee might share confidential information in a prompt. Another might use company time for personal projects. A third might generate content that violates brand guidelines.

After the chaotic experimentation phase, organizations typically establish governance: clear policies about what's allowed, what's not, and what requires oversight. From the outside, this phase can look like a retreat, because restrictions increase significantly as organizations move from "anything goes" to "usage must be intentional and appropriate."

But what follows governance is optimization. Once you've defined the rules, you can educate people, you can build processes that use the tools effectively, and you can scale with confidence. The organizations that move most successfully through experimentation -> governance -> optimization will realize the most value.

Organizations that try to skip the governance phase and jump straight from experimentation to optimization at scale tend to encounter problems. You end up with uncontrolled usage, poor results, and loss of trust in the technology. The restriction phase is actually essential; it's where you develop the maturity necessary for responsible scale.

Building Your Own Governance Framework

If your organization hasn't yet established clear governance around AI usage, here's a framework that organizations have found effective:

Define Approved Use Cases: Identify specific processes where AI usage is encouraged, supported, and potentially required. Make these explicit so people know they should be using the tools.

Establish Restricted Domains: Identify areas where AI usage is problematic or risky. Healthcare diagnosis, financial advice, and hiring decisions are examples where AI should supplement human judgment, not replace it. Establish clear policies about this.

Create Approval Workflows: For uses that don't fit clearly into approved or restricted categories, establish a lightweight approval process. This prevents people from avoiding governance while still enabling innovation.

Implement Auditing: You need visibility into how tools are being used. This isn't about surveillance; it's about understanding patterns and identifying problems before they become crises.

Provide Training: Don't assume people know how to use AI tools effectively. Provide training on proper usage, understanding limitations, and avoiding common pitfalls.

Iterate: Your governance framework should evolve as your use of AI matures. What made sense when adoption was 10% might need adjustment when adoption reaches 50%.
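The framework can be expressed as a small policy table plus an audit log. This is a hypothetical sketch: the use-case names, the three decision categories, and the `AIUsagePolicy` class are illustrative and do not come from any standard or product API. Approved and restricted domains map to allow and deny decisions. Anything unclassified goes to the approval workflow. Every request is logged, and iteration amounts to promoting reviewed use cases.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from enum import Enum
from typing import Dict, List, Set


class Decision(Enum):
    ALLOWED = "allowed"                # approved use case
    DENIED = "denied"                  # restricted domain
    NEEDS_APPROVAL = "needs_approval"  # not yet classified: route to the approval workflow


@dataclass
class AIUsagePolicy:
    approved: Set[str]
    restricted: Set[str]
    audit_log: List[Dict[str, str]] = field(default_factory=list)

    def check_request(self, user: str, use_case: str) -> Decision:
        """Classify a request and record it in the audit log."""
        if use_case in self.restricted:
            decision = Decision.DENIED
        elif use_case in self.approved:
            decision = Decision.ALLOWED
        else:
            decision = Decision.NEEDS_APPROVAL
        self.audit_log.append({
            "time": datetime.now(timezone.utc).isoformat(),
            "user": user,
            "use_case": use_case,
            "decision": decision.value,
        })
        return decision

    def approve(self, use_case: str) -> None:
        """Iterate: promote a reviewed use case into the approved list."""
        self.approved.add(use_case)


policy = AIUsagePolicy(
    approved={"draft_internal_email", "summarize_meeting_notes"},
    restricted={"final_hiring_decision", "individual_financial_advice"},
)
print(policy.check_request("j.doe", "summarize_meeting_notes").value)  # allowed
print(policy.check_request("j.doe", "final_hiring_decision").value)    # denied
print(policy.check_request("a.kim", "translate_product_docs").value)   # needs_approval
policy.approve("translate_product_docs")
print(policy.check_request("a.kim", "translate_product_docs").value)   # allowed
```

The audit log doubles as input for the iteration step. Unclassified use cases that keep reaching the approval queue are the natural candidates to add to the approved list.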

Sovereign AI: Control As Competitive Advantage

Why Companies Are Prioritizing Data Control

We mentioned earlier that four in five organizations now view sovereign AI as strategically important. Understanding why this is happening is important for your strategy.

When you rely on third-party AI services (like commercial versions of ChatGPT or other APIs), your data flows to external systems. The service provider processes your data and, depending on its terms, may retain it or use it to improve its own systems. In that case, the provider is extracting value from your proprietary information.

For many organizations, this trade-off is acceptable. The convenience of a ready-made service outweighs the cost of having your data processed externally. But for organizations with sensitive data, competitively sensitive information, or regulatory constraints, the trade-off doesn't work.

Consider a pharmaceutical company using AI to help analyze potential drug compounds. That data represents years of research and potentially billions in future value. Sending it to a third-party service for processing creates a risk that competitors could eventually see it (through data breaches, service provider negligence, or regulatory disclosure). For a pharmaceutical company, sovereign AI (running its own models on its own infrastructure) isn't optional; it's strategically essential.

The same logic applies to financial institutions with customer data, insurance companies with underwriting models, manufacturers with production optimization algorithms, and many other organizations. The more proprietary your information and the higher the strategic value, the more important sovereign AI becomes.

The Cost-Benefit Analysis

Sovereign AI is more expensive than using external services. You need to build infrastructure, maintain it, train people to operate it, and handle the operational burden. Smaller organizations often can't justify this cost.

But over time, the math changes. As you deploy more AI systems, the fixed cost of maintaining your own infrastructure gets amortized across more applications. As your team develops expertise, the cost per project decreases. Eventually, sovereign AI can be more economical than paying for external services, especially if your AI usage is heavy.
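The amortization argument can be sketched with simple arithmetic. The Python below rests on three assumptions:

- a fixed annual infrastructure cost that is shared across all applications
- a constant marginal cost for each sovereign application
- a flat per-application price for the external service

All three figures in the example are hypothetical. They show the shape of the curve and are not drawn from any survey.

```python
import math
from typing import Optional


def sovereign_cost_per_app(fixed_annual: float, marginal_per_app: float, n_apps: int) -> float:
    """Annual cost per application when the fixed infrastructure cost is shared by n_apps."""
    return fixed_annual / n_apps + marginal_per_app


def break_even_apps(fixed_annual: float, marginal_per_app: float,
                    external_per_app: float) -> Optional[int]:
    """Smallest number of applications at which sovereign cost per app is no higher than external."""
    if external_per_app <= marginal_per_app:
        return None  # the external service is cheaper per app no matter how many apps you run
    # Solve fixed / n + marginal <= external  =>  n >= fixed / (external - marginal)
    return max(1, math.ceil(fixed_annual / (external_per_app - marginal_per_app)))


# Hypothetical figures, for illustration only.
FIXED, MARGINAL, EXTERNAL = 1_000_000, 50_000, 150_000

for n in (1, 5, 10, 20):
    cost = sovereign_cost_per_app(FIXED, MARGINAL, n)
    print(f"{n:>2} apps: {cost:>12,.0f} per app (external: {EXTERNAL:,})")
print("Break-even at", break_even_apps(FIXED, MARGINAL, EXTERNAL), "applications")
```

With these made-up numbers, break-even lands at 10 applications. The model leaves out factors the paragraph mentions, such as staff training and the falling cost per project as expertise grows. Treat the break-even point as directional rather than precise.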

More importantly, sovereign AI creates strategic optionality. If you're running your own models, you can customize them to your specific needs, optimize them for your particular use cases, and maintain complete control over your intellectual property and data. If you're dependent on external services, you're limited to what the service provider offers, and you're subject to their pricing changes, policy changes, and service availability.

Organizations planning for the next five years are increasingly choosing sovereign AI because they expect AI to become strategically central to their operations. If that's true, you want to own your capability rather than rent it.

Hybrid Approaches

Most organizations won't go fully sovereign or fully dependent on external services. Instead, they're adopting hybrid approaches: using external services for some use cases where sovereignty doesn't matter and they benefit from the convenience, while maintaining sovereign capability for strategically sensitive applications.

This allows organizations to benefit from both models: the speed and convenience of external services where it's appropriate, and the control and customization of sovereign systems where it's strategically important.
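One simple way to express a hybrid approach is a routing rule keyed on data sensitivity. The Python sketch below assumes a four-level classification scheme. It also assumes a threshold at or above which requests stay on sovereign infrastructure. Both the levels and the threshold are hypothetical and would come from your own data classification policy.

```python
from enum import IntEnum


class Sensitivity(IntEnum):
    """Hypothetical data classification levels, ordered from least to most sensitive."""
    PUBLIC = 0
    INTERNAL = 1
    CONFIDENTIAL = 2
    REGULATED = 3


# Assumption: anything CONFIDENTIAL or above must stay on sovereign infrastructure.
SOVEREIGN_THRESHOLD = Sensitivity.CONFIDENTIAL


def choose_backend(sensitivity: Sensitivity) -> str:
    """Route sensitive workloads to in-house models and everything else to an external service."""
    return "sovereign" if sensitivity >= SOVEREIGN_THRESHOLD else "external"


for level in Sensitivity:
    print(f"{level.name:<12} -> {choose_backend(level)}")
```

Because the levels are an ordered enum, tightening or loosening the policy is a one-line change to the threshold.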

Realistic Timelines and Expectations

The Three-to-Five-Year Horizon

Based on patterns emerging from organizations at various stages of AI adoption, a realistic timeline for achieving significant ROI looks like this:

Year 1: Pilots, learning, establishing governance. You might see some cost savings or efficiency improvements, but you shouldn't expect major returns. Focus on capability building and learning.

Year 2: Scaling pilots, moving systems to production, training workforce. You should start seeing clearer evidence of value as more systems become operational. Expect moderate improvements.

Years 3-5: Full-scale deployment, mature operations, optimization. This is when your earlier investments start generating compounding returns. Organizations that stuck with their AI strategy through the difficult earlier years start to pull ahead of competitors that didn't.

This timeline assumes you're taking a disciplined approach: clear governance, measured scaling, continuous learning, and willingness to course-correct. Organizations that try to shortcut the timeline typically don't get better results; they just get disappointing results sooner.

Managing Expectations With Stakeholders

One of the most important things you can do in your organization is set correct expectations around AI ROI timing. Executive teams that expect returns in year one will be disappointed and will lose faith in AI initiatives. Executive teams that understand that the real returns come in year three and beyond can stay committed through the valley of disillusionment.

Frame AI strategy conversations around multi-year capability building rather than near-term returns. Show the progress that's being made (data quality improving, production deployments increasing, employee adoption growing) rather than just financial metrics. Help leaders understand that they're investing in strategic capability that will compound over time.

Organizations that have managed these expectations successfully are the ones seeing the best results. They maintained consistent investment and support through the period when the hardest work happens.

Accelerating AI ROI: Practical Strategies

Focus On High-Impact Use Cases First

Not all AI applications are created equal. Some use cases generate value quickly and with relatively low complexity. Others promise big returns but require significant foundational work before they can deliver.

Identify your highest-impact, highest-certainty use cases and focus there first. A retail company might see faster ROI from demand forecasting than from fully autonomous customer service. A financial services firm might see faster ROI from process automation than from credit risk modeling. By starting with the highest-confidence, highest-impact applications, you build momentum and demonstrate value that funds additional investment.
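One rough way to apply "highest-impact, highest-certainty first" is to score each candidate by expected impact per unit of effort. The Python sketch below assumes 1-5 scales for impact and effort, and a 0-1 probability for certainty. The scoring formula and the example scores are hypothetical placeholders for your own estimates, not benchmarks.

```python
from dataclasses import dataclass


@dataclass
class UseCase:
    name: str
    impact: float     # estimated value if it succeeds, 1-5 scale (assumed)
    certainty: float  # estimated probability it delivers that value, 0-1 (assumed)
    effort: float     # foundational work required before it can deliver, 1-5 scale (assumed)

    @property
    def priority(self) -> float:
        # Expected impact per unit of effort: rewards impact and certainty, penalizes heavy prerequisites.
        return self.impact * self.certainty / self.effort


def rank(use_cases: list[UseCase]) -> list[UseCase]:
    """Return use cases sorted from highest to lowest priority score."""
    return sorted(use_cases, key=lambda uc: uc.priority, reverse=True)


# Placeholder scores for the retail example in the text; replace with your own estimates.
candidates = [
    UseCase("autonomous_customer_service", impact=5, certainty=0.4, effort=5),
    UseCase("demand_forecasting", impact=4, certainty=0.8, effort=2),
]

for uc in rank(candidates):
    print(f"{uc.name}: {uc.priority:.2f}")
```

Dividing by effort is a design choice. It favors work that can show value before the heavy foundational projects are finished, which is the momentum argument the paragraph makes.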

Leverage Transfer Learning and Existing Models

You don't need to train everything from scratch. Numerous open-source models exist that were trained on massive datasets and can be adapted to your specific use case. Using transfer learning—taking a pre-trained model and adapting it to your domain—dramatically reduces the time and cost to get to working systems.

This is different from using a commercial service because you maintain control over the model and can optimize it specifically for your needs. You get some of the speed benefits of using external services while maintaining sovereignty and customization options.

Invest In Data Quality Early

Everything returns to data. Investing in data quality upfront means every downstream AI application works better and requires less effort to deploy and maintain. It's the most leveraged investment you can make.

Specifically: establish data governance (clear definitions, ownership, quality standards), invest in data infrastructure (systems that can handle scale and complexity), and build data culture (where people understand that data quality is everyone's responsibility).

Organizations that make this investment early compound their advantage over time. Organizations that neglect it constantly fight data quality problems that make AI initiatives harder and more expensive.

Partner Strategically

You don't need to build everything internally. Partnerships with AI vendors, consulting firms, and technology providers can accelerate progress. The key is choosing partnerships strategically.

Look for partners who have deep expertise in your specific domain and have demonstrated success with similar problems. Be cautious of partners who promise universal solutions or who want to take full control of your AI strategy. The best partnerships are those where the external partner helps you build internal capability, not where they make you dependent on their continued support.

The Competitive Dynamics: Who Wins At AI?

First-Mover Advantage Is Real, But It's Not What You Think

Many companies believe that moving fastest gives them the biggest advantage. In AI, first-mover advantage is more subtle. It goes not to the company that deploys AI first, but to the company that learns faster from its deployments and improves faster.

If Company A deploys an AI system six months before Company B, but Company A learns slowly and Company B learns quickly, Company B will have better results by year two. The timing of initial deployment matters far less than the pace of learning and improvement.

This is why some organizations outperform companies that deploy aggressively but don't learn effectively. They are thoughtful about measurement, run regular retrospectives, and systematically incorporate learnings into the next iteration. Speed matters, but learning speed matters more.
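The Company A versus Company B comparison can be checked with a toy model. The Python sketch below assumes each company's system starts at a quality of 1.0 when deployed and then compounds at a fixed monthly improvement rate. The 2% and 5% rates and the six-month head start are hypothetical numbers chosen to illustrate the argument, not measurements.

```python
from typing import Optional


def quality(month: int, deploy_month: int, monthly_improvement: float) -> float:
    """Relative system quality: 0 before deployment, then 1.0 compounding monthly."""
    if month < deploy_month:
        return 0.0
    return (1 + monthly_improvement) ** (month - deploy_month)


def first_month_ahead(a_deploy: int, a_rate: float, b_deploy: int, b_rate: float,
                      horizon: int = 24) -> Optional[int]:
    """First month in which company B's quality exceeds company A's, or None within the horizon."""
    for month in range(horizon + 1):
        if quality(month, b_deploy, b_rate) > quality(month, a_deploy, a_rate):
            return month
    return None


# Company A deploys six months earlier but improves 2% a month; Company B improves 5% a month.
print(first_month_ahead(a_deploy=0, a_rate=0.02, b_deploy=6, b_rate=0.05))  # 11
```

Under these assumptions, B pulls ahead in month 11, roughly a year after A's launch. A smaller learning gap pushes the crossover later. If B learns no faster than A, the crossover never comes. The point is the shape of the curves, not the specific month.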

Network Effects and Data Advantages

In some domains, AI creates winner-take-most dynamics because of network effects. If your AI system gets better with more data, and you already have more data than competitors, you can pull further ahead. If your system benefits from feedback loops (more users -> better outcomes -> more users), early leadership creates compounding advantage.

But this doesn't apply to every AI use case. In many domains, a smaller, more focused dataset is actually better than a larger, messier one. In other domains, AI quality improvements hit diminishing returns relatively quickly.

Understanding whether your AI applications have network effects and data advantages is important for strategy. If they do, speed and scale matter significantly. If they don't, a more measured, quality-focused approach might serve you better.

Talent as Sustainable Advantage

One of the most sustainable advantages in AI is a strong team with deep capability. Companies with world-class machine learning engineers, data scientists, and AI architects can build better systems faster than competitors that lack those capabilities. Unlike advantages that erode over time, good teams tend to get better and harder to compete against, because they accumulate experience and build institutional knowledge.

Investing in talent—hiring the best people you can, developing them over time, and creating an environment where they want to stay—might be the single best ROI play in AI strategy. It's expensive in the short term but compounds massively over time.

The Path Forward: Reconciling Optimism With Realism

Why The Current Situation Isn't Actually A Problem

Stepping back, the situation where most organizations haven't yet achieved revenue growth from AI despite heavy investment isn't actually a problem. It's a healthy transition period.

You're in a phase where the most important work is foundational: building data infrastructure, developing internal capability, establishing governance, moving pilots to production, and learning what actually works in your organization. Revenue growth will follow, but trying to force it in year one creates pressure that distorts decision-making.

Organizations that understand this and manage expectations appropriately will maintain the patience to do the foundational work properly. Organizations that panic and try to force returns will make poor decisions and end up with worse outcomes.

The leaders who are staying calm about current underperformance are actually the ones playing it right. They're not seeing the AI investment as a failure; they're seeing it as a long-term strategic capability that's being built appropriately.

Building For Sustainable Advantage

The goal isn't to optimize for year-one ROI. It's to build sustainable competitive advantage that compounds over time. That requires:

- Patience: Maintaining commitment to AI strategy even when near-term results are mixed

- Discipline: Making decisions based on data and analysis, not hype or fear

- Humility: Acknowledging what you don't know and learning systematically

- Flexibility: Being willing to change course when evidence suggests you should

- People Focus: Remembering that technology is only valuable if humans can use it effectively

Organizations that combine these elements will ultimately separate themselves from the pack, even if their year-one results look similar to everyone else's.

Your Next Steps

If you're responsible for AI strategy in your organization, here's what to focus on:

- Establish clear measurement for every AI initiative before you launch it

- Prioritize data quality as the highest-leverage investment you can make

- Build governance that balances innovation with appropriate risk management

- Invest in talent development, building internal capability that lasts over the long term

- Set realistic expectations with stakeholders about timeline and returns

- Focus on learning, not just deployment; the pace of learning matters more than the speed of deployment

- Start with high-confidence, high-impact use cases that can demonstrate value quickly

- Plan for the long term while executing in the short term

The organizations that nail this balance—between strategic patience and operational discipline—will be the ones that look back in five years and wonder how they ever competed without the capabilities they've built.

FAQ

Why are most organizations not seeing revenue growth from AI despite investing heavily?

The gap between AI investment and revenue growth reflects several factors: unrealistic initial expectations about how quickly transformation would occur, the significant foundational work required before AI systems can deliver value (data quality, infrastructure, governance), and the difficulty in attributing financial returns cleanly to specific AI initiatives. Most organizations underestimated the time, expertise, and organizational change required to realize returns. What they're actually achieving—modest productivity improvements and operational efficiency—is real and valuable, but doesn't match the transformational narrative that often accompanies AI investment.

How long should we realistically expect before seeing meaningful ROI from AI investments?

A realistic timeline is three to five years for meaningful, sustainable ROI. Year one typically focuses on pilots, learning, and foundational work with minimal financial returns. Year two involves scaling pilots to production and should show moderate improvements and early returns. Years three through five are when earlier investments compound and serious advantages emerge relative to competitors who didn't maintain commitment through the difficult middle period. Organizations expecting returns in year one typically become disappointed and lose confidence in their AI strategy. Those planning for the three-to-five-year horizon can stay committed through challenging periods and realize better ultimate outcomes.

Why is data quality such a critical factor in AI success, and how should organizations prioritize it?

Data quality determines the ceiling on what any AI system can achieve. If your data is incomplete, inconsistent, or inaccurate, your AI models will make poor decisions. Most organizations discover that fixing data quality problems consumes significant effort and budget. Establishing clear data governance, investing in data infrastructure, and building a culture where people understand data quality is everyone's responsibility creates leverage for every subsequent AI initiative. Organizations that prioritize data quality early achieve faster results with lower costs across all their AI efforts. This is one of the highest-ROI investments you can make, yet it's often overlooked because it's less glamorous than developing new algorithms.

What's the difference between agentic AI and the AI tools most organizations use today, and when should we expect significant agentic AI deployment?

Most AI tools in use today are "tool AI": humans direct them to perform specific tasks. Agentic AI operates with some degree of autonomy toward defined objectives, potentially handling multiple steps and making decisions along the way with minimal human intervention. Current agentic AI adoption is around 23% and is expected to reach 74% within two years. However, this increase reflects adoption of some agentic capabilities, not full-scale autonomous system deployment. Real-world agentic AI will likely scale more slowly and cautiously than raw adoption numbers suggest, because autonomous systems require even more careful governance and testing than current tools. Organizations should begin preparing for agentic AI by establishing strong governance frameworks now, while the stakes are lower.

How should organizations balance the desire for fast AI deployment against the need for proper governance and testing?

Speed and governance aren't actually opposed; they're complementary. Organizations that rush deployment without proper governance typically encounter problems that slow them down significantly later: systems making unexpected decisions, poor output quality, employee resistance due to inadequate training, data breaches or privacy issues. Organizations that establish governance thoughtfully can actually accelerate their long-term progress because they avoid these later problems. A measured approach—clear governance upfront, rapid deployment within those guardrails, continuous learning and iteration—actually enables faster overall progress than aggressive deployment followed by firefighting. The friction you feel setting up governance is doing important work that speeds up subsequent scaling.

What role should AI play in improving productivity versus generating new revenue, and how should we frame these outcomes financially?

Both productivity improvement and revenue generation are valuable, but they're often confused or conflated, leading to disappointed expectations. If AI improves customer service productivity by 20%, the organization can either reduce costs (margin improvement) or handle more customers (potential revenue growth if capacity-constrained). If the entire industry adopts the same AI improvements, productivity gains benefit everyone but don't create competitive advantage. Revenue growth comes from using AI to do things that were previously impossible, serve new customers, or enter new markets. Most current AI deployments deliver productivity improvements, which is valuable but less impressive than the revenue growth narrative that often accompanies investment announcements. Frame AI investments honestly: "This will improve productivity and reduce costs" is actually a compelling value proposition, and it's more likely to be realized than promised transformational revenue growth.
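The 20% example hides a small asymmetry worth spelling out. Suppose "improves productivity by 20%" means the same team can handle 20% more work. Then there are two ways to use the gain:

- Holding volume constant cuts labor cost by about 16.7% (1 - 1/1.2), not 20%.
- Holding staff constant gives 20% more capacity.

The Python below makes that arithmetic explicit. It assumes, for illustration only, that labor cost scales linearly with headcount.

```python
def productivity_outcomes(gain: float) -> tuple[float, float]:
    """Return (labor cost cut at constant volume, capacity increase at constant staff).

    Assumes 'gain' means each person produces (1 + gain) times as much output
    and that labor cost scales linearly with headcount.
    """
    cost_reduction = 1 - 1 / (1 + gain)  # fewer people needed for the same volume
    capacity_increase = gain             # same people, more volume
    return cost_reduction, capacity_increase


cost_cut, extra_capacity = productivity_outcomes(0.20)
print(f"{cost_cut:.1%} lower labor cost, or {extra_capacity:.0%} more capacity")
```

This gap is one reason to state clearly which outcome a business case is counting on.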

How important is sovereign AI versus relying on third-party AI services, and what factors should guide this decision?

Sovereign AI (maintaining control over your data and infrastructure) is strategically important for three kinds of organizations: those with sensitive or proprietary data, those whose competitive advantage rests on their data, and those facing regulatory requirements around data handling. Four in five organizations now view it as important, though the urgency varies by industry. Sovereign AI is more expensive than using third-party services but provides control and customization advantages. Most organizations adopt a hybrid approach. They use third-party services where sovereignty doesn't matter and convenience is valuable, and they maintain sovereign capability for strategically sensitive applications. The more central AI becomes to your operations and the more proprietary your data, the stronger the case for sovereign capability. For organizations early in their AI journey, the most pragmatic path is often to begin with external services to learn quickly, then build sovereign capability for high-value use cases.

Why are organizations increasing restrictions and bans on certain AI tools even as they invest more heavily in AI overall?

This seems contradictory but is actually evidence of healthy maturation. During initial experimentation phases, AI usage increases rapidly and broadly, with both valuable and problematic applications. After some experience, organizations establish governance to distinguish between approved and restricted uses—focusing on high-value use cases while preventing problematic ones. The restriction phase isn't the endpoint; it's essential groundwork for responsible scale. Organizations that move systematically through experimentation -> governance -> optimized deployment actually realize more value long-term than those trying to skip the governance phase. Think of restrictions as establishing rules that allow confident scaling to follow. The organizations that will scale AI most successfully are those that are being thoughtfully selective now about how these tools are used.

Key Takeaways

- 80% of organizations targeting AI-driven revenue growth haven't achieved it yet, but this reflects expectation misalignment rather than technology failure

- The real ROI timeline is 3-5 years, not 1-2 years. Year one focuses on foundational work; years 3-5 show compounding returns

- Data quality is the highest-leverage investment—it improves every downstream AI system and often becomes the primary bottleneck

- Agentic AI adoption is expected to reach 74% within two years, but deployment will likely remain measured because autonomous systems require even stronger governance

- Organizations winning at AI are treating it as a strategic five-year capability play, not a quick-fix productivity tool—patience and discipline matter more than speed
