Nvidia Cosmos Reason 2: Physical AI Reasoning Models Explained [2025]

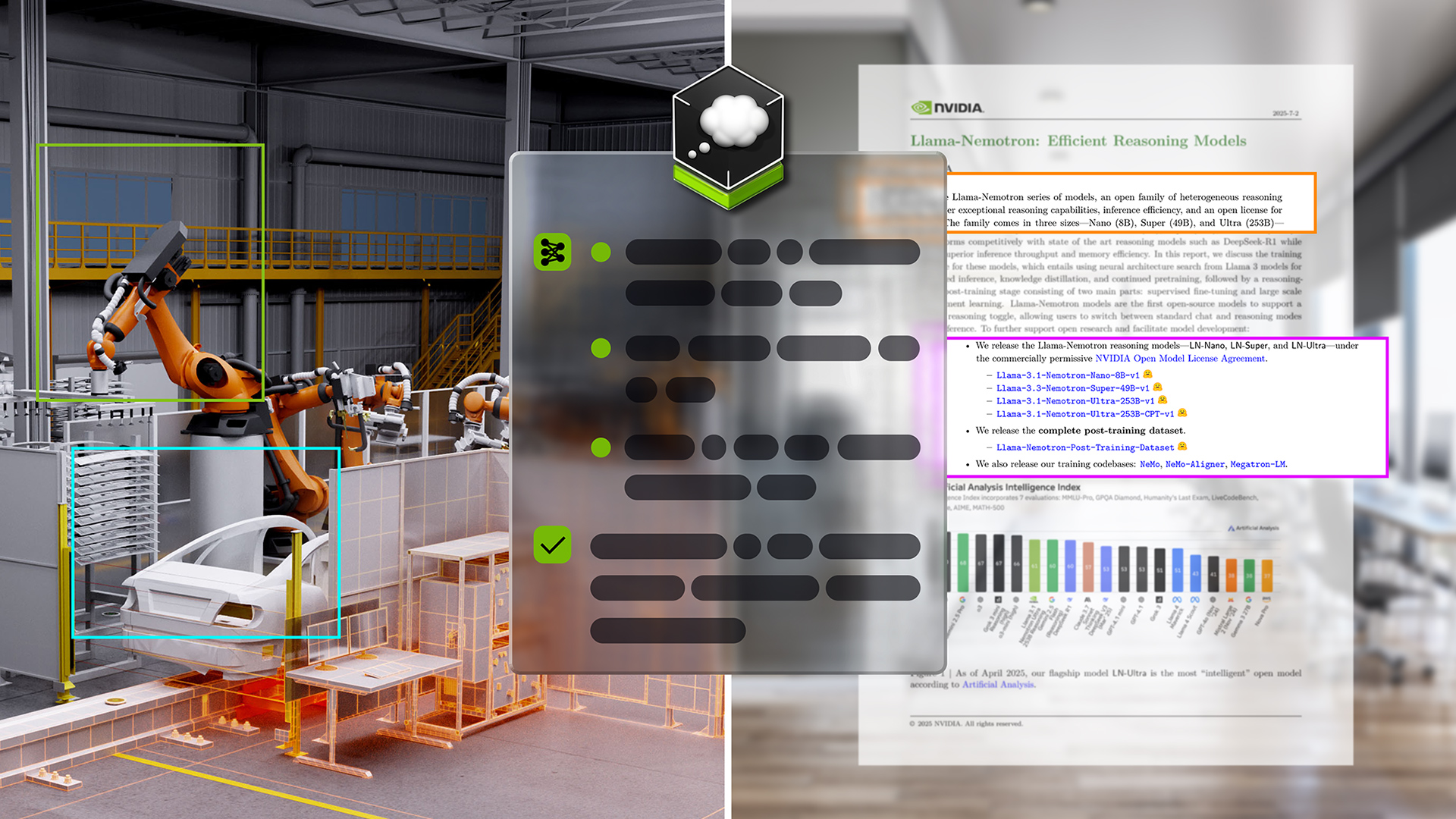

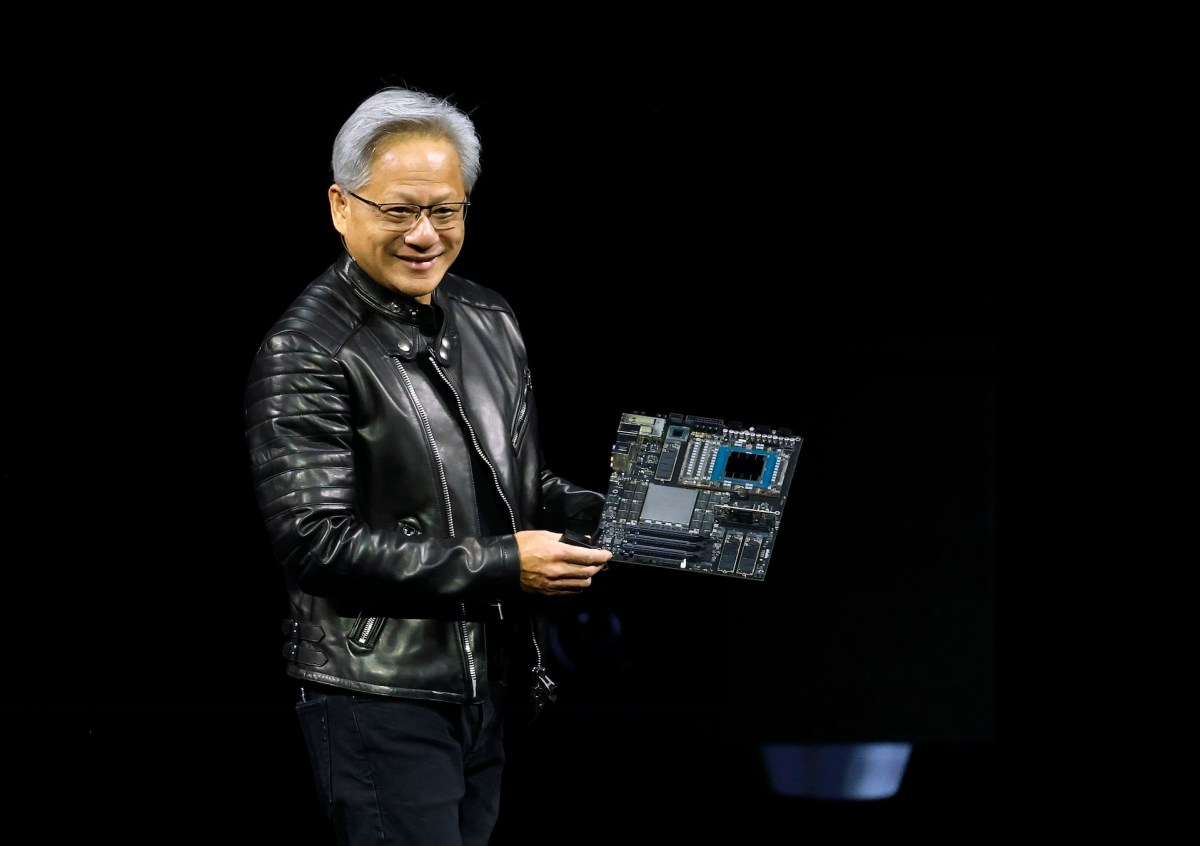

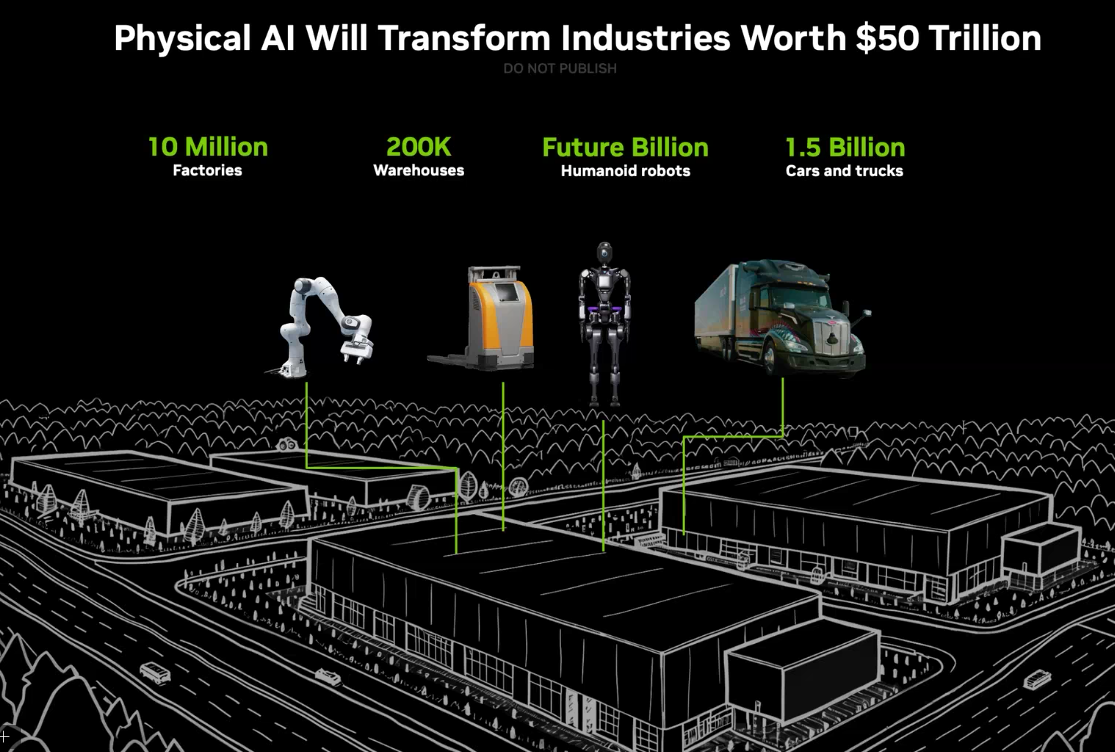

When Nvidia CEO Jensen Huang declared we're entering the age of physical AI, he wasn't being hyperbolic. The company is backing that statement with real infrastructure, real models, and a clear roadmap to move AI from chat windows into robots, autonomous vehicles, and manufacturing systems.

The latest proof of concept? Cosmos Reason 2, unveiled at CES 2025. This is Nvidia's next-generation vision-language model built specifically for embodied reasoning, and it represents a fundamental shift in how AI companies think about machine intelligence.

Here's what makes this moment significant: for years, AI has excelled at pattern matching in text and images. ChatGPT can write code. DALL-E can generate images. But none of these models truly understands causality, physics, or how to make decisions when the environment fights back. A robot doesn't care about token prediction. It needs to understand that if it knocks over a cup, the coffee spills. That if it moves too fast, the gripper loses grip. That if it encounters an obstacle, it needs a new plan.

Cosmos Reason 2 is built to understand those dynamics. It's not just seeing the world—it's reasoning through it.

TL;DR

- Cosmos Reason 2 is Nvidia's latest vision-language model designed for embodied reasoning in robots and autonomous systems, built on a proven two-dimensional ontology for physical understanding

- The model enables robots to plan and reason through unpredictable physical environments, similar to how software agents reason through digital workflows

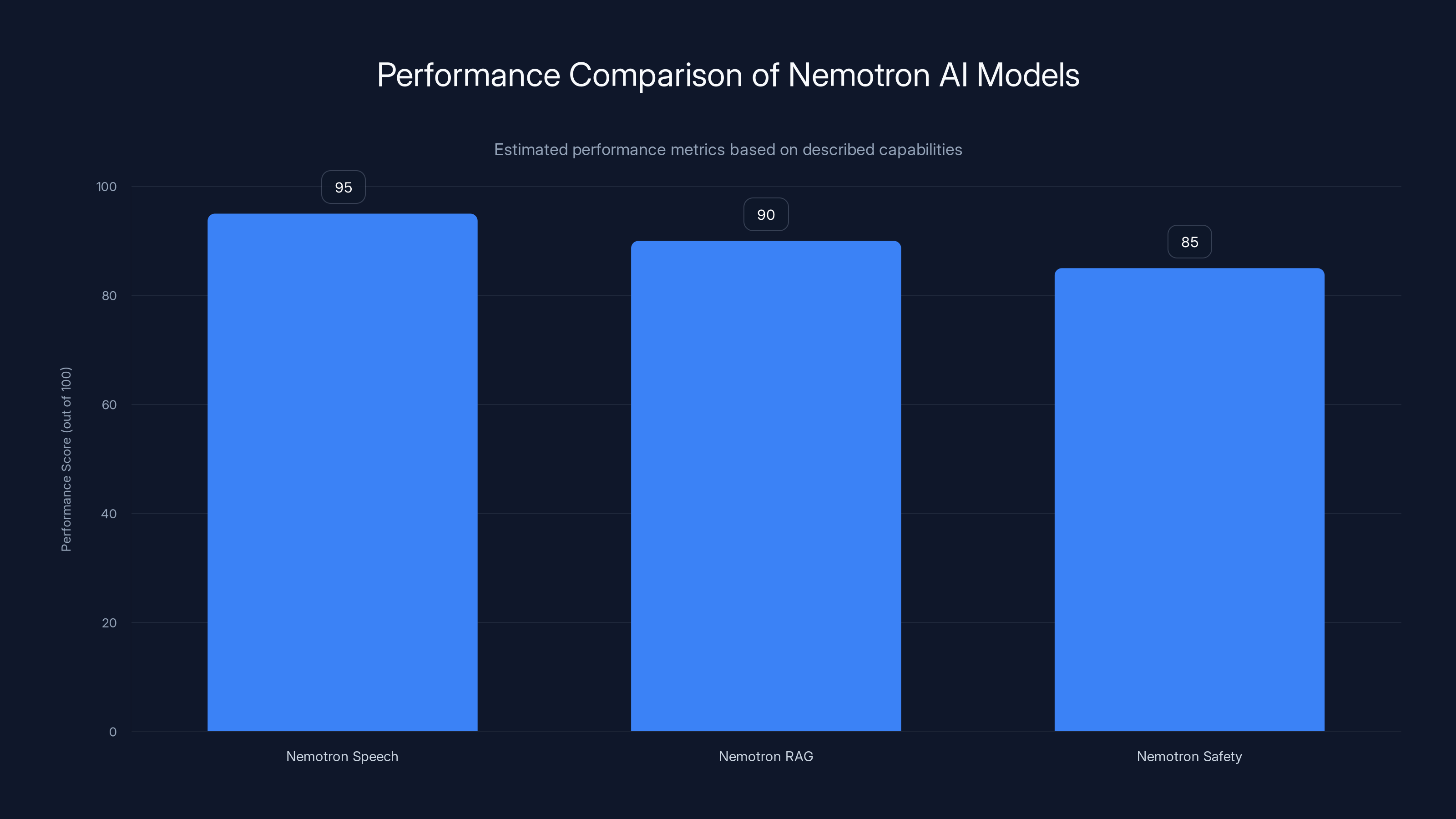

- Nvidia expanded its reasoning stack with three new Nemotron models (Speech, RAG, Safety) that work together to create comprehensive AI agent ecosystems

- Enterprises gain customization flexibility to adapt physical AI applications to specific manufacturing, logistics, and robotics use cases

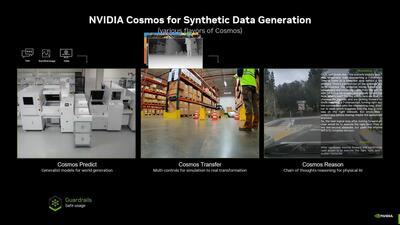

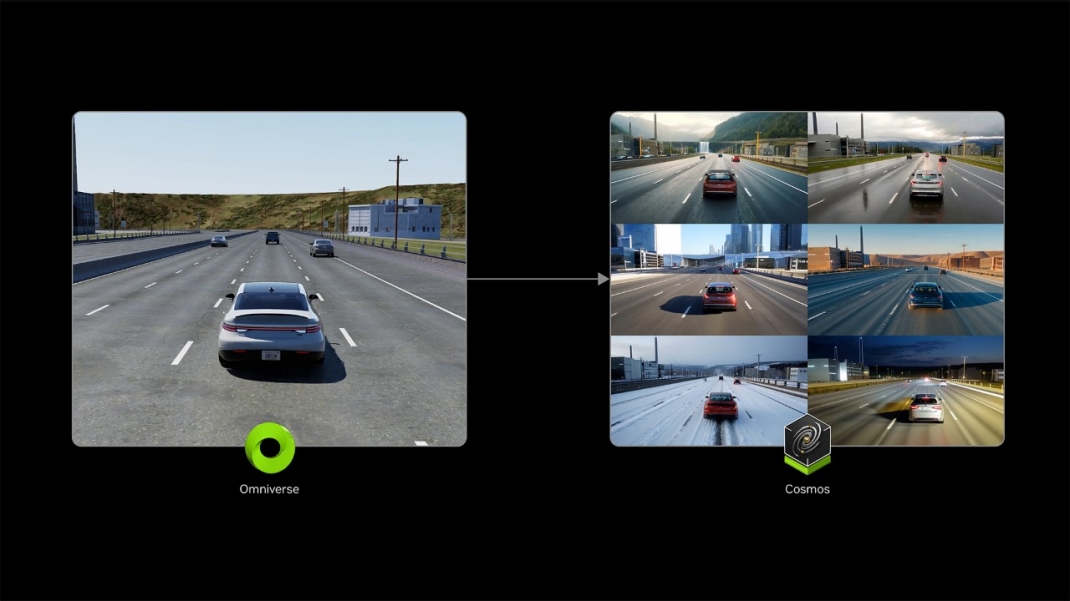

- The physics simulation layer (Cosmos Transfer) lets developers generate unlimited training data for robotic systems without expensive real-world testing

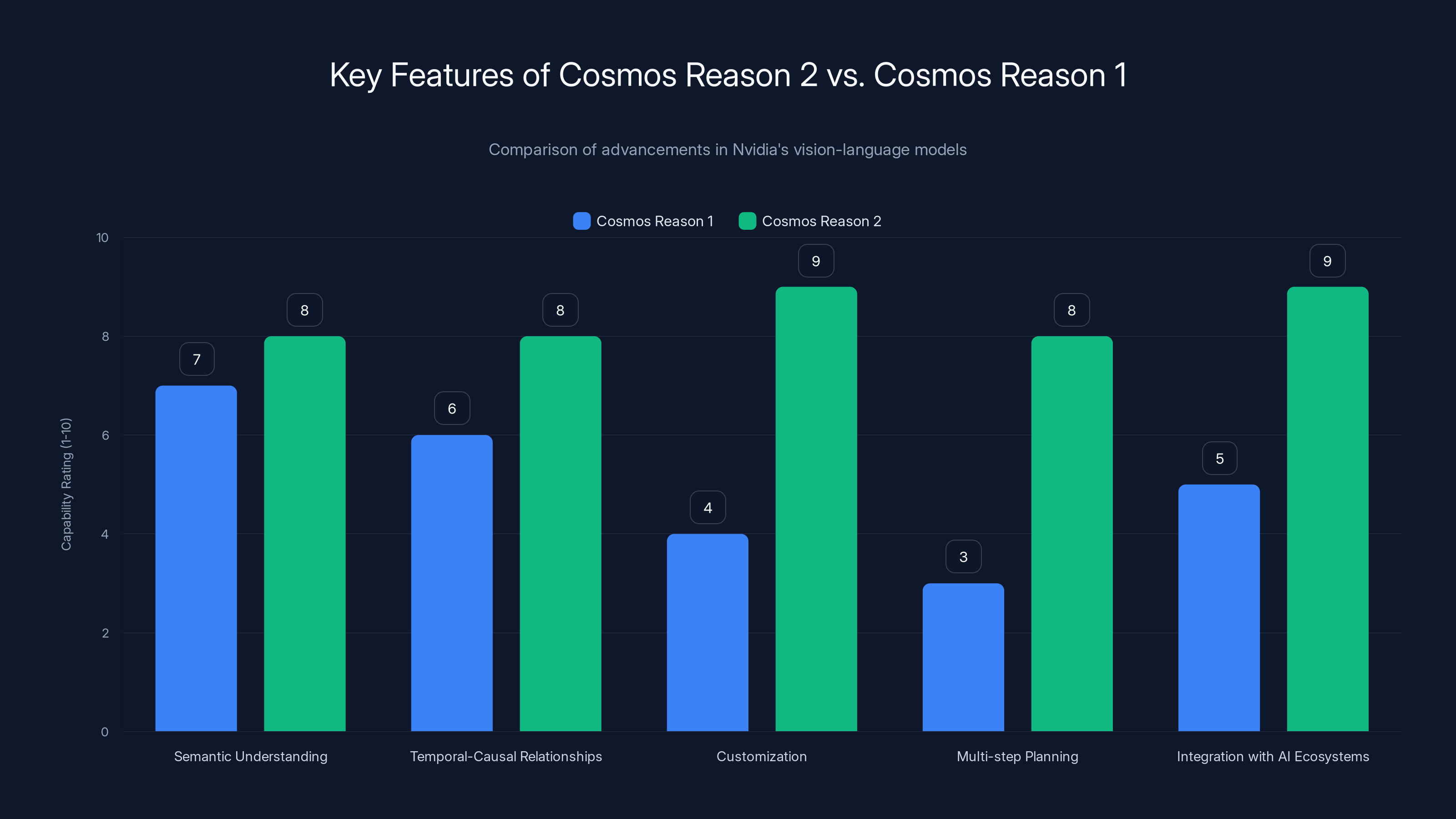

Cosmos Reason 2 significantly enhances customization, multi-step planning, and integration with AI ecosystems compared to its predecessor, enabling more effective physical reasoning. Estimated data.

What Is Physical AI, and Why Does It Matter?

Let's start with a basic definition. Physical AI sounds like science fiction, but it's actually straightforward: it's artificial intelligence deployed in the real world through robots, drones, autonomous vehicles, and manufacturing systems. Instead of being confined to digital interfaces, the AI makes decisions that have physical consequences.

The challenge is far harder than in digital AI because mistakes have physical costs. A language model can't break the internet if it makes a mistake. A robot arm moving at the wrong speed in a manufacturing plant can shatter parts worth thousands. An autonomous vehicle making a split-second decision affects human lives.

For decades, robotics required hand-coded rules and specialized training for narrow tasks. A robot trained to pick up balls couldn't handle boxes. A vision system trained on warehouse lighting failed when the sun came through the window differently. This specialization made robotics expensive and inflexible.

Cosmos Reason 2 changes this by introducing generalist reasoning at the foundation. The model doesn't just recognize objects—it understands their properties, how they behave when manipulated, and what happens when plans change. This is the difference between a reactive system and a thinking agent.

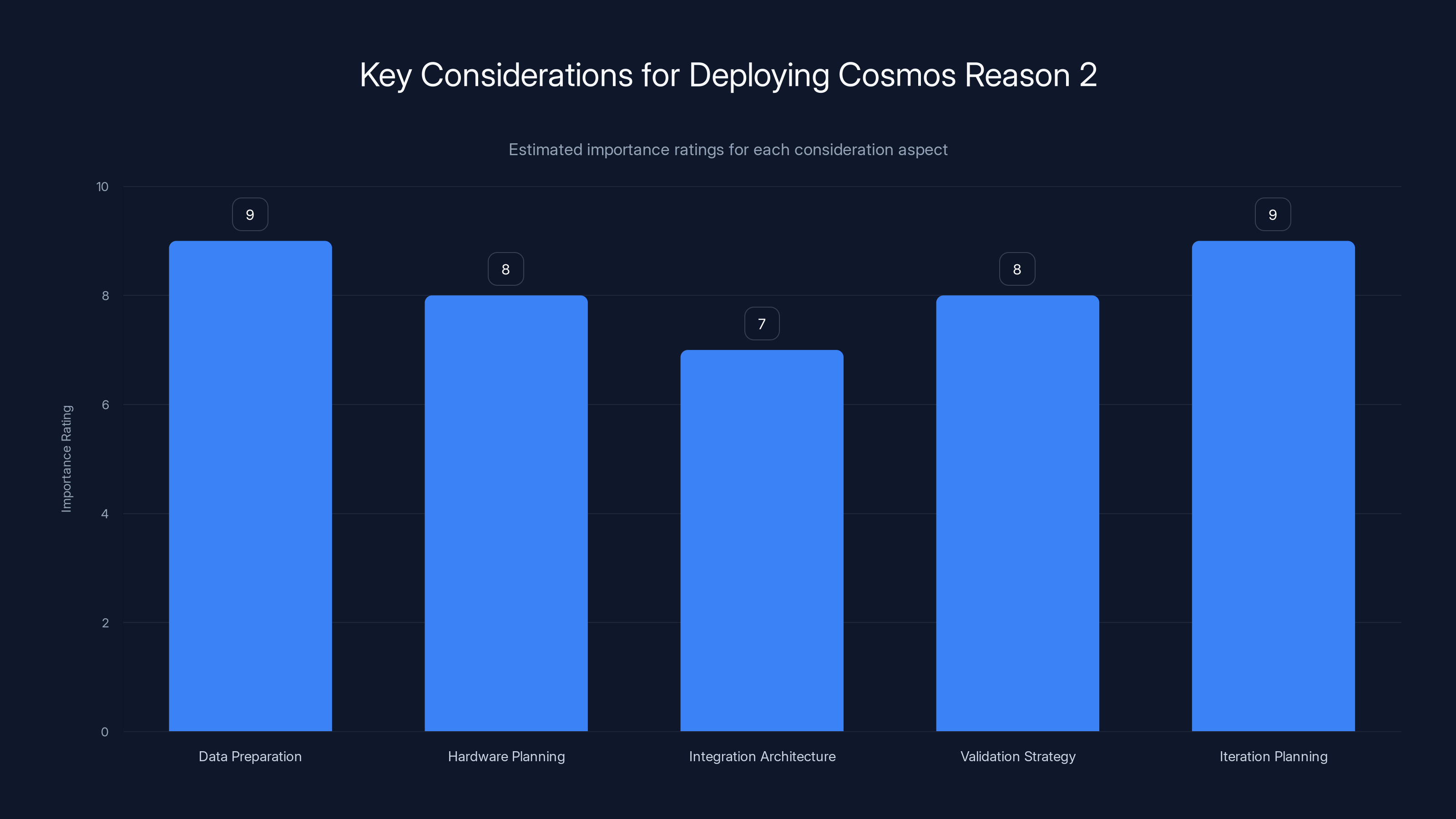

Data preparation and iteration planning are crucial for deploying Cosmos Reason 2 effectively. Estimated data based on typical deployment priorities.

Understanding Vision-Language Models (VLMs)

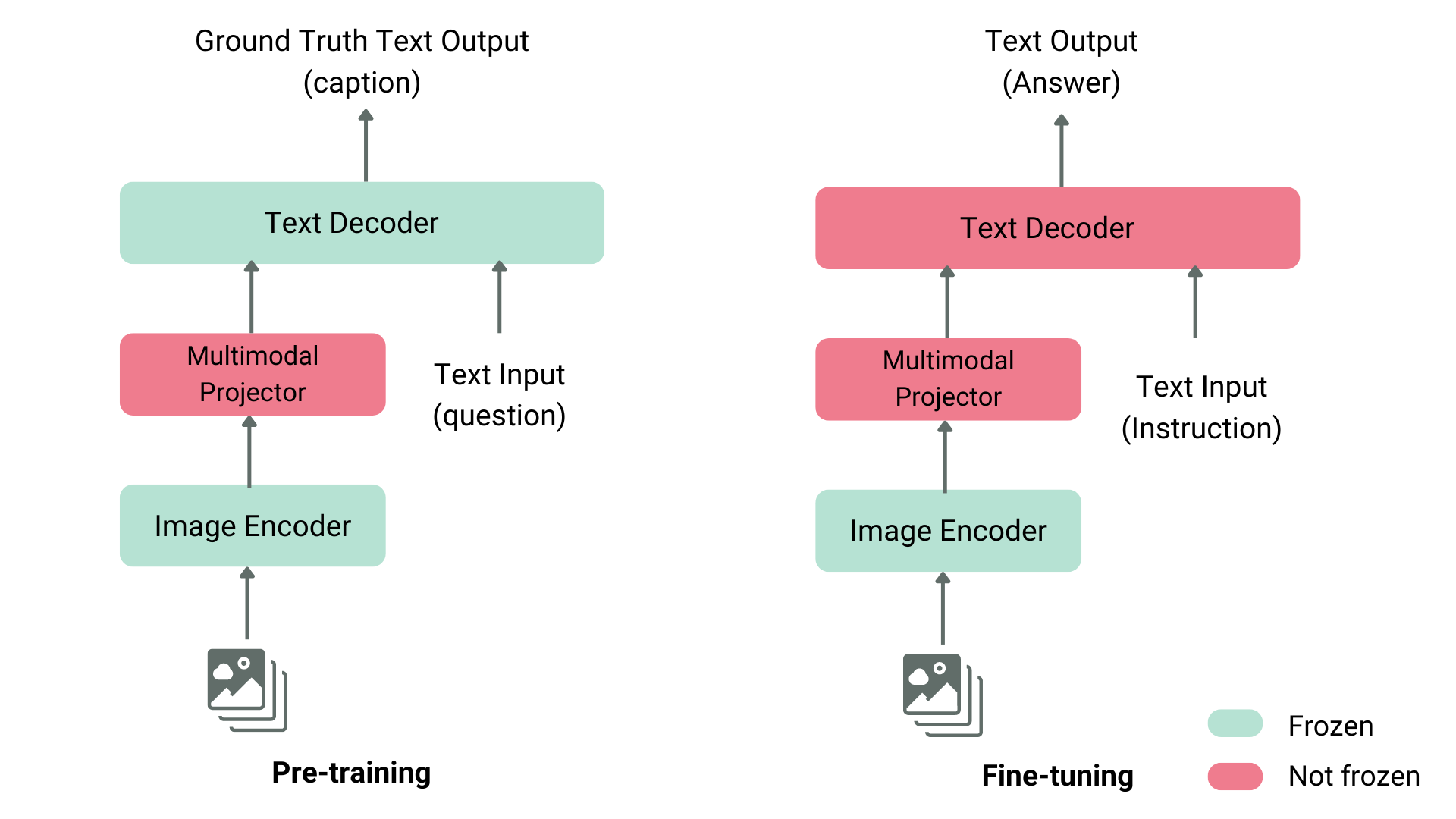

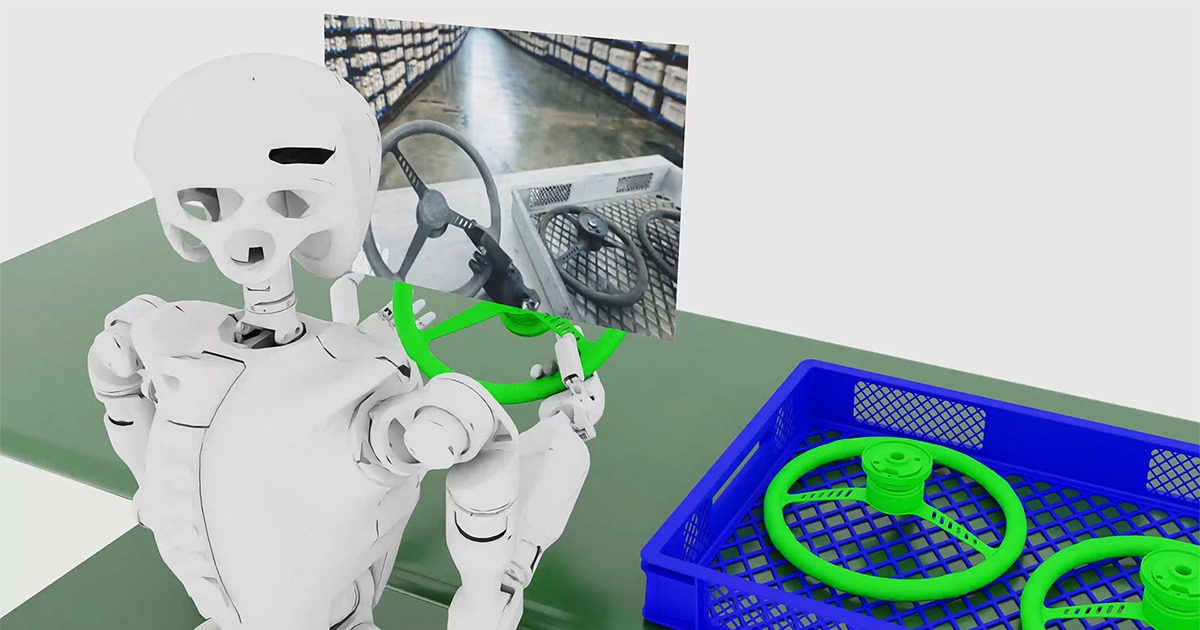

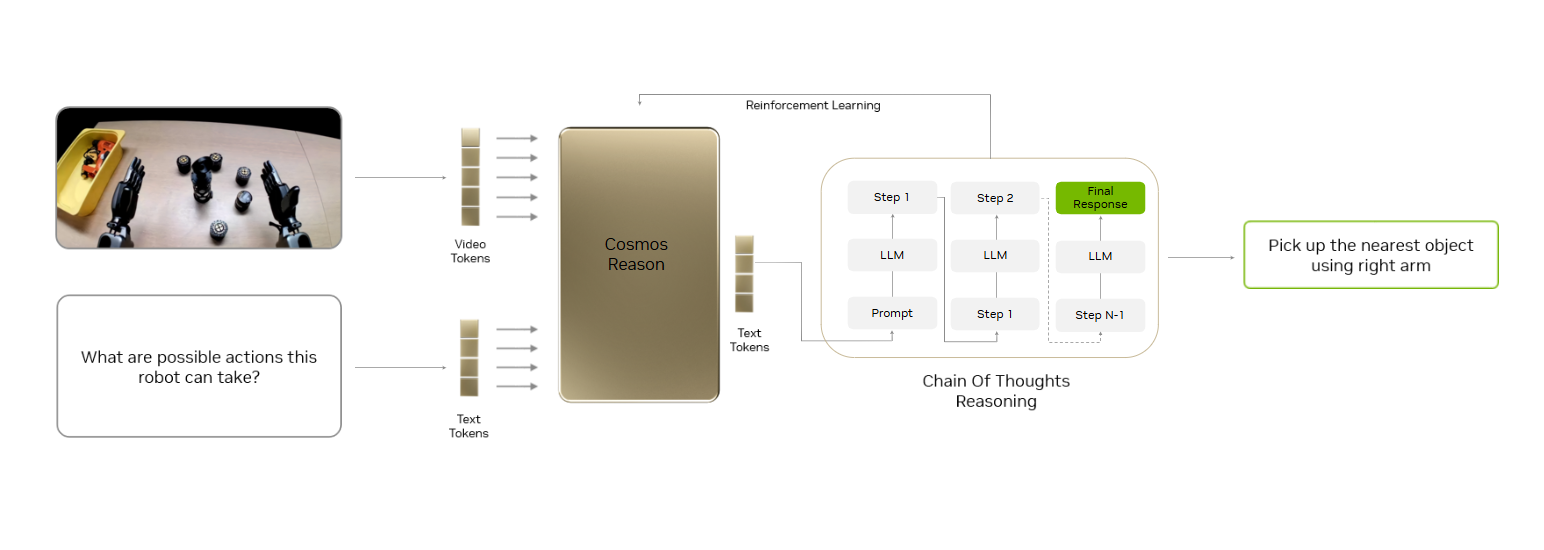

Before diving into Cosmos Reason 2, you need to understand its category. A vision-language model is an AI system trained on paired image and text data, allowing it to process visual inputs and generate language outputs describing what it sees.

Think of models like GPT-4 Vision or Claude 3. You show them an image, and they describe it in natural language. That's useful for accessibility and content moderation. But traditional VLMs don't reason about causality or physics.

Reasoning VLMs take this further. They understand not just what's in an image, but how things relate to each other, what would happen if something moved, whether a plan makes sense given the constraints of the physical world.

Nvidia's approach here is critical: they didn't just train a bigger model on more data. They built in a two-dimensional ontology specifically for embodied reasoning. An ontology is essentially a structured way of understanding concepts and their relationships. Nvidia's version captures the relationships between objects, actions, outcomes, and temporal sequences.

Why does this matter? Because it means the model can generalize. Train it on robots picking up cubes, and it can reason about picking up boxes. Train it on warehouse navigation, and it starts understanding principles that apply to factory floors. The structured knowledge transfers across domains.

Cosmos Reason 1: The Foundation

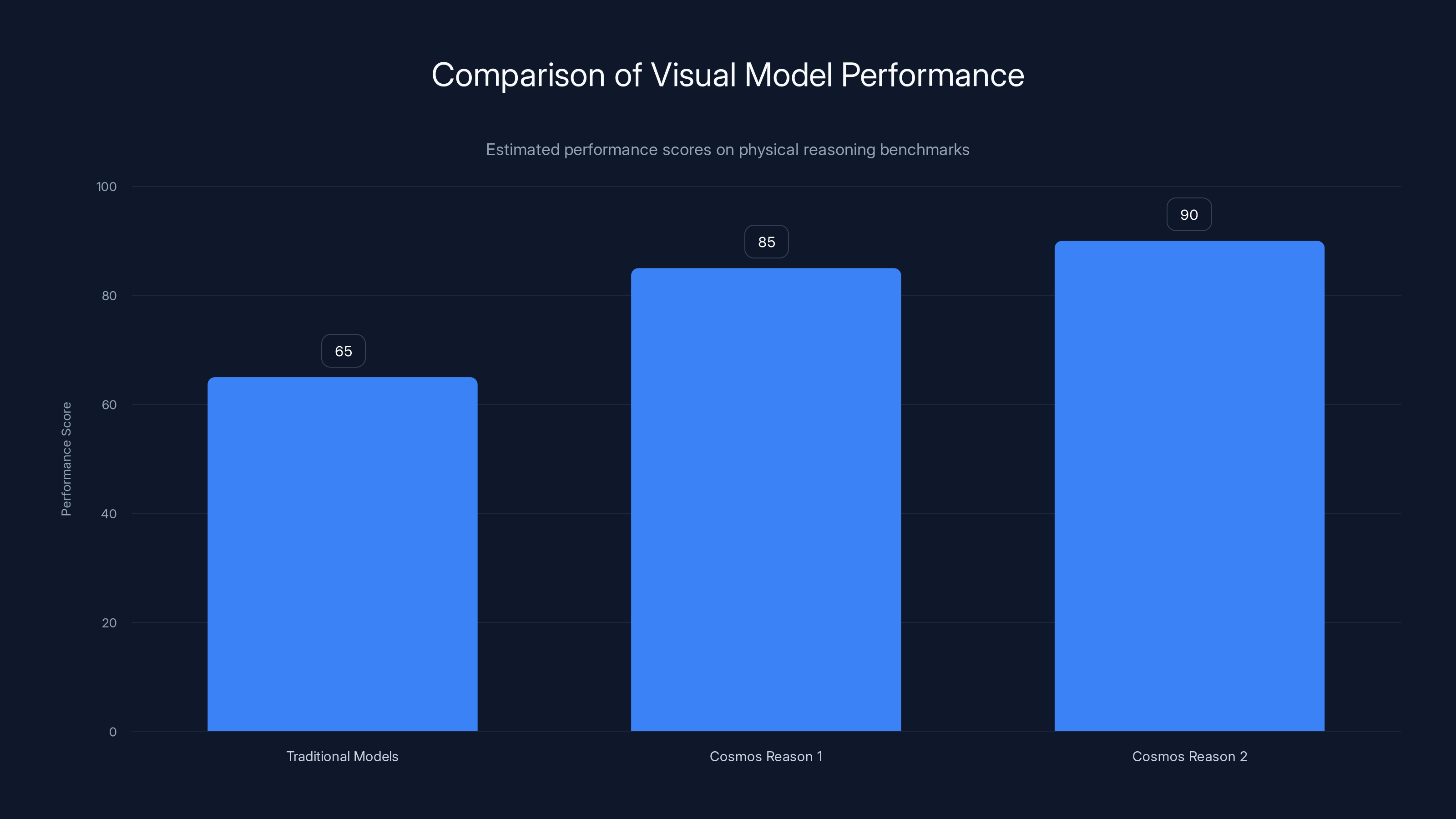

Released last year, Cosmos Reason 1 introduced Nvidia's approach to embodied reasoning. It immediately dominated Hugging Face's physical reasoning for video leaderboard, the standard benchmark for evaluating how well models understand physical dynamics.

What made Reason 1 successful wasn't raw size—it was the training approach and data quality. Nvidia focused on real robotic data, simulation data, and carefully curated datasets that showed cause and effect. The model learned that when a robot gripper closes too fast, precision decreases. When an arm extends too far, stability drops. These aren't obvious to a model trained purely on image data.

The two-dimensional ontology proved its value immediately. The first dimension captured semantic understanding—what objects are, what they can do. The second captured temporal and causal relationships—what happens next, why it happens, what would change the outcome.

But there were limitations. Enterprises couldn't easily customize Reason 1 for their specific use cases. The model was somewhat locked into the training paradigm. Developers had limited tools to fine-tune or adapt it. And while it excelled at physical understanding, it wasn't integrated with broader AI agent ecosystems needed for production deployments.

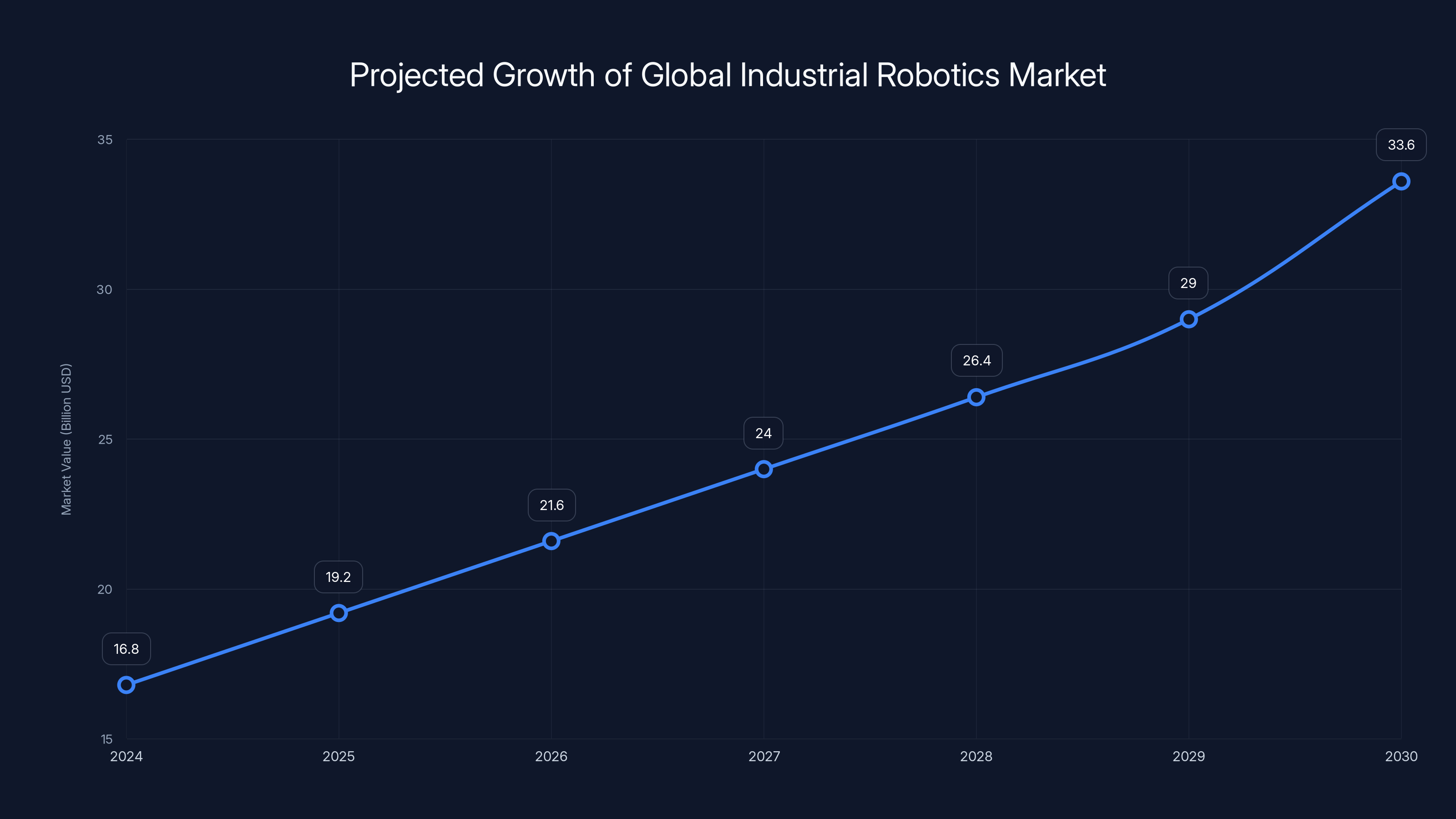

The global industrial robotics market is projected to double from

Cosmos Reason 2: Architectural Improvements

Cosmos Reason 2 addresses these limitations directly. The core ontology remains—that foundation works—but Nvidia rebuilt the model architecture around enterprise flexibility.

The first improvement is customization depth. Enterprises can now fine-tune Reason 2 more effectively for their specific physical environments. A logistics company can train it specifically on their warehouse layout, lighting conditions, and cargo types. A manufacturing facility can focus on their specific assembly sequences and failure modes. A robotics startup can adapt it to their hardware constraints without retraining from scratch.

The second improvement is planning capability. Reason 2 models can now reason through multi-step physical plans, similar to how software agents break down digital workflows. Instead of just understanding the current frame, the model can think ahead: "If I move left, the obstacle is still in the way. If I move right, then forward, I reach the goal. Moving right first is the better plan."

This planning capability is subtle but transformative. It means robots stop being reactive and start being strategic. They can handle novel situations because they're reasoning through consequences, not pattern-matching against trained examples.
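The left/right example above can be made concrete with a small search over a grid. This is a minimal sketch of multi-step planning in general, not a description of how Cosmos Reason 2 plans internally. The grid layout, the `plan_path` function, and the move names are all illustrative assumptions.

```python
from collections import deque

# Hypothetical top-down map: '#' is an obstacle, 'S' the robot, 'G' the goal.
# "forward" means one row up in this picture.
GRID = [
    "...",
    "##G",
    ".S.",
]

MOVES = {
    "left": (0, -1),
    "right": (0, 1),
    "forward": (-1, 0),
    "back": (1, 0),
}


def find(grid, symbol):
    """Return (row, col) of the first cell containing symbol."""
    for r, row in enumerate(grid):
        c = row.find(symbol)
        if c != -1:
            return (r, c)
    raise ValueError(f"{symbol!r} not found in grid")


def plan_path(grid):
    """Breadth-first search: returns the shortest list of moves, or None."""
    start, goal = find(grid, "S"), find(grid, "G")
    queue = deque([(start, [])])
    visited = {start}
    while queue:
        (r, c), path = queue.popleft()
        if (r, c) == goal:
            return path
        for name, (dr, dc) in MOVES.items():
            nr, nc = r + dr, c + dc
            inside = 0 <= nr < len(grid) and 0 <= nc < len(grid[0])
            if inside and grid[nr][nc] != "#" and (nr, nc) not in visited:
                visited.add((nr, nc))
                queue.append(((nr, nc), path + [name]))
    return None  # no route exists, so the caller should replan or ask for help


print(plan_path(GRID))  # ['right', 'forward']
```

Moving left leads to a dead end behind the obstacle, so the search settles on right, then forward. A reasoning model works from camera frames rather than a hand-drawn grid, but the idea of comparing the consequences of candidate moves before acting is the same.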

The third improvement is integration with Nvidia's broader agent ecosystem. Reason 2 doesn't exist in isolation. It connects with the Nemotron family of models, with simulation tools, with deployment infrastructure. This ecosystem approach means physical AI systems can leverage the full stack of modern AI capabilities.

The Two-Dimensional Ontology Explained

Understanding Nvidia's ontology approach is key to grasping why Cosmos Reason 2 works. Traditional models treat each image independently. Cosmos models understand structured relationships.

Dimension 1 (Semantic): What is in the image? Object types, their properties, their capabilities. A ball is spherical, compressible, rolls freely. A cup holds liquid, breaks when dropped, doesn't roll predictably. A gripper has a maximum closing force, requires calibration, degrades with use.

Dimension 2 (Temporal-Causal): How do things change? What causes what? If the gripper closes with force X, does the object break or grasp? If the robot moves distance Y at speed Z, does it collide or succeed? These relationships form chains of causality that the model can reason through.

When you combine these dimensions, something remarkable happens. The model understands not just what it sees, but the principles governing what it sees. It can transfer knowledge across different objects, different tasks, different environments—because it's reasoning about underlying principles, not memorizing specific scenarios.
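One way to picture the two dimensions is as a data structure plus a rule. The sketch below is purely illustrative: the article does not say how Nvidia encodes the ontology, and the `ObjectProfile` fields, force thresholds, and `grasp_outcome` rule are assumptions chosen to mirror the cup, ball, and gripper examples.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ObjectProfile:
    """Dimension 1 (semantic): what the object is and its properties."""
    name: str
    min_grip_force_n: float  # assumed: below this force the object slips
    max_safe_force_n: float  # assumed: above this force the object is damaged


def grasp_outcome(obj, force_n):
    """Dimension 2 (temporal-causal): what happens if the gripper applies force_n."""
    if force_n < obj.min_grip_force_n:
        return "slip"
    if force_n > obj.max_safe_force_n:
        return "break"
    return "grasp"


# Hypothetical values for illustration only.
cup = ObjectProfile("ceramic cup", min_grip_force_n=2.0, max_safe_force_n=15.0)
ball = ObjectProfile("foam ball", min_grip_force_n=0.5, max_safe_force_n=40.0)

for obj in (cup, ball):
    print(obj.name, [grasp_outcome(obj, f) for f in (1.0, 10.0, 30.0)])
```

The same causal rule applies to both objects. Only the semantic properties differ, which is the intuition behind transferring knowledge across objects and tasks.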

This is why Cosmos Reason 1 immediately outperformed other visual models on physical reasoning benchmarks. It wasn't bigger or faster. It just understood the structure of the problem.

Cosmos Reason 2 refines this ontology further while making it more adaptable. Enterprises can now layer domain-specific knowledge on top of the foundation. The base model understands physics. Your customization teaches it about your specific constraints, equipment, and goals.

Cosmos Reason models outperform traditional visual models due to their advanced understanding of structured relationships and causal reasoning. (Estimated data)

Real-World Applications in Robotics

How does this translate to actual robots? Let's walk through some concrete scenarios.

Manufacturing and Assembly: A robot trained with Cosmos Reason 2 can handle variations in components. If a part is slightly warped, it reasons about the adjusted gripper pressure needed. If a fastener is stuck, it considers a different angle rather than forcing it. If the assembly sequence changes because materials arrived in different order, it adapts the plan.

This matters enormously for small-batch manufacturing and customization. Traditional industrial robots require complete reprogramming for different products. Cosmos Reason 2-enabled systems can adapt with minutes of guidance rather than days of engineering.

Warehouse and Logistics: Autonomous systems using Cosmos Reason 2 can handle unstructured environments. A box is damaged and leaning at an angle—the system plans how to grab it without causing collapse. A narrow shelf requires precise placement—the system calculates approach angles dynamically. A fragile item sits next to heavy boxes—the system understands weight distribution and picks items in the right order.

Warehouses are chaos compared to controlled manufacturing. Every item is slightly different. Environments change hourly. This is exactly where general physical reasoning becomes valuable.

Healthcare and Delicate Handling: In medical facilities and research labs, robots must handle sensitive materials. A tube of samples needs positioning without contamination. A patient requires gentle mobility assistance. A delicate instrument needs careful placement. Cosmos Reason 2 can reason about forces, pressure points, and failure modes in ways that pre-programmed sequences can't match.

Autonomous Vehicles: While autonomous driving uses specialized models, the physical reasoning layer from Cosmos applies. Understanding friction, braking distances, how objects will behave—these foundation concepts apply across different robotics domains.

In each scenario, the advantage isn't dramatic for any single task. But the flexibility across tasks, the ability to handle edge cases, and the graceful degradation when plans fail—that's where the value emerges.

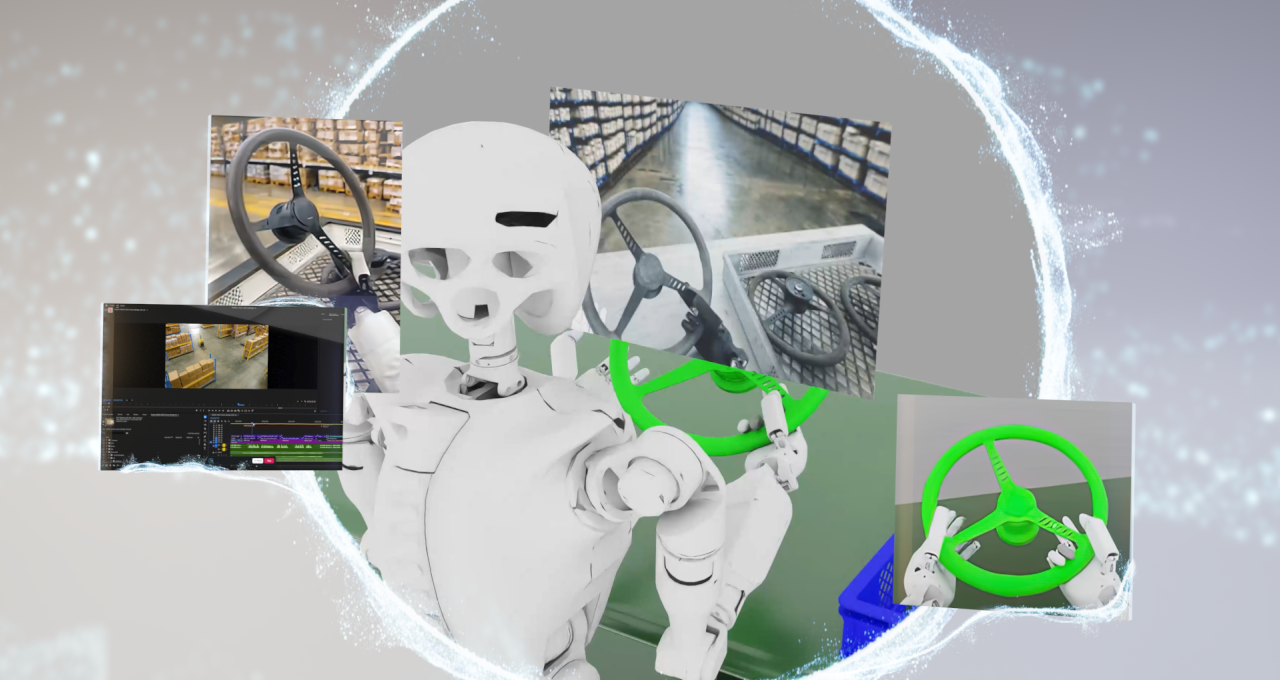

Cosmos Transfer: Synthetic Data at Scale

There's a practical problem hiding in physical AI: where does your training data come from?

You can't train a robot manufacturing system by running failed experiments in your production line. The cost of failures is too high. Real-world data is expensive, limited, and specific to your exact environment.

Enter Cosmos Transfer, the simulation and synthetic data generation component of Nvidia's ecosystem. It lets developers create unlimited training scenarios in virtual environments, then apply what the model learned to physical robots.

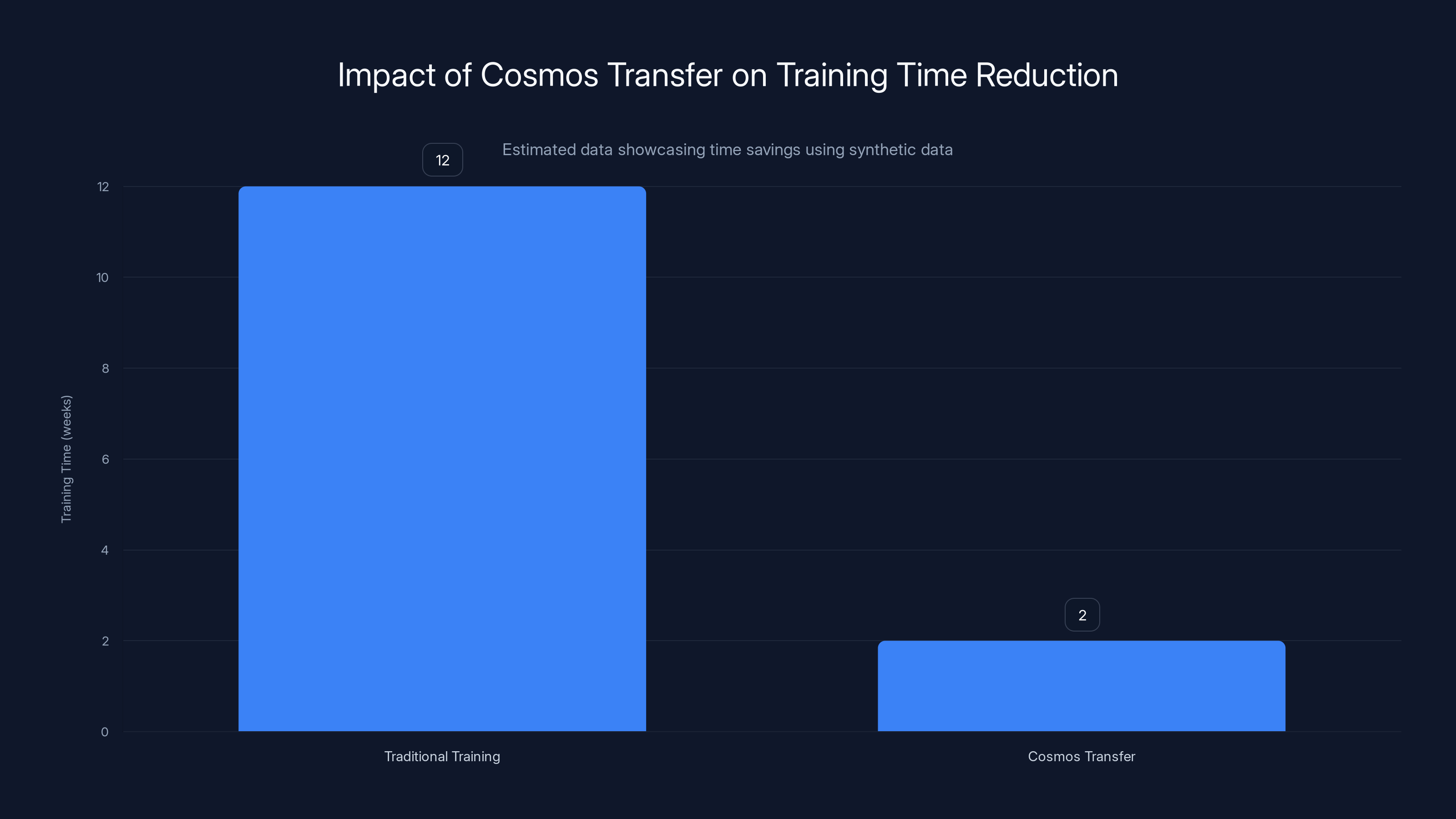

This is hard to overstate in importance. If your robot fails to grasp objects correctly in simulation, you iterate freely. No cost. No physical damage. No production downtime. You run 10,000 scenarios in simulation, the model learns, then you deploy to one physical robot for validation.

Cosmos Transfer improved in the latest version with better physics simulation fidelity and faster rendering. The gap between synthetic training and real-world performance continues to narrow. Most teams report that models trained predominantly on Cosmos Transfer data transfer effectively to physical hardware with only modest fine-tuning.

Here's the workflow: Define your robotic task in simulation. Set up variations—different object sizes, lighting conditions, material properties, gripper configurations. Run extensive simulation experiments. Train Cosmos Reason 2 on the simulation data. Deploy to physical robots. Fine-tune with real data. Iterate.
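The "set up variations" step is often done with domain randomization, where each simulated scenario draws its parameters at random. The sketch below shows only that sampling step under assumed parameter names and ranges. It does not call Cosmos Transfer or any Nvidia API.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class Scenario:
    """One simulated trial. All fields and ranges are illustrative assumptions."""
    object_size_cm: float
    light_lux: float
    friction: float
    gripper: str


GRIPPERS = ("parallel", "suction", "three_finger")


def sample_scenarios(n, seed=0):
    """Draw n randomized scenarios; a fixed seed makes the batch reproducible."""
    rng = random.Random(seed)
    return [
        Scenario(
            object_size_cm=rng.uniform(2.0, 30.0),
            light_lux=rng.uniform(100.0, 1000.0),
            friction=rng.uniform(0.2, 0.9),
            gripper=rng.choice(GRIPPERS),
        )
        for _ in range(n)
    ]


scenarios = sample_scenarios(10_000)
print(len(scenarios), scenarios[0])
```

Each `Scenario` would then be handed to whatever simulator you use. Keeping the seed alongside the training run makes it possible to regenerate the exact batch when debugging a failure.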

This approach reduces the cost and risk of deploying new robotic systems by orders of magnitude. A new manufacturing task that once required weeks of integration and months of training can now be deployed in days.

Using Cosmos Transfer, the training time for deploying new robotic systems can be reduced from 12 weeks to just 2 weeks. Estimated data.

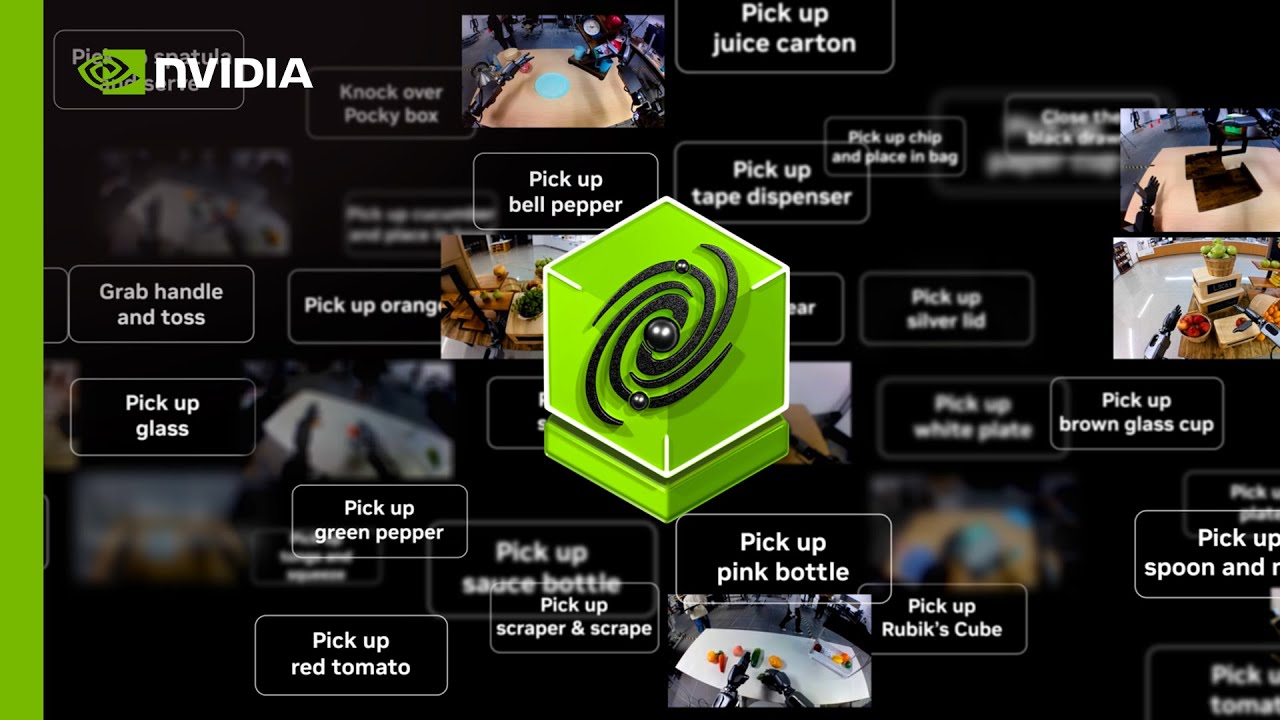

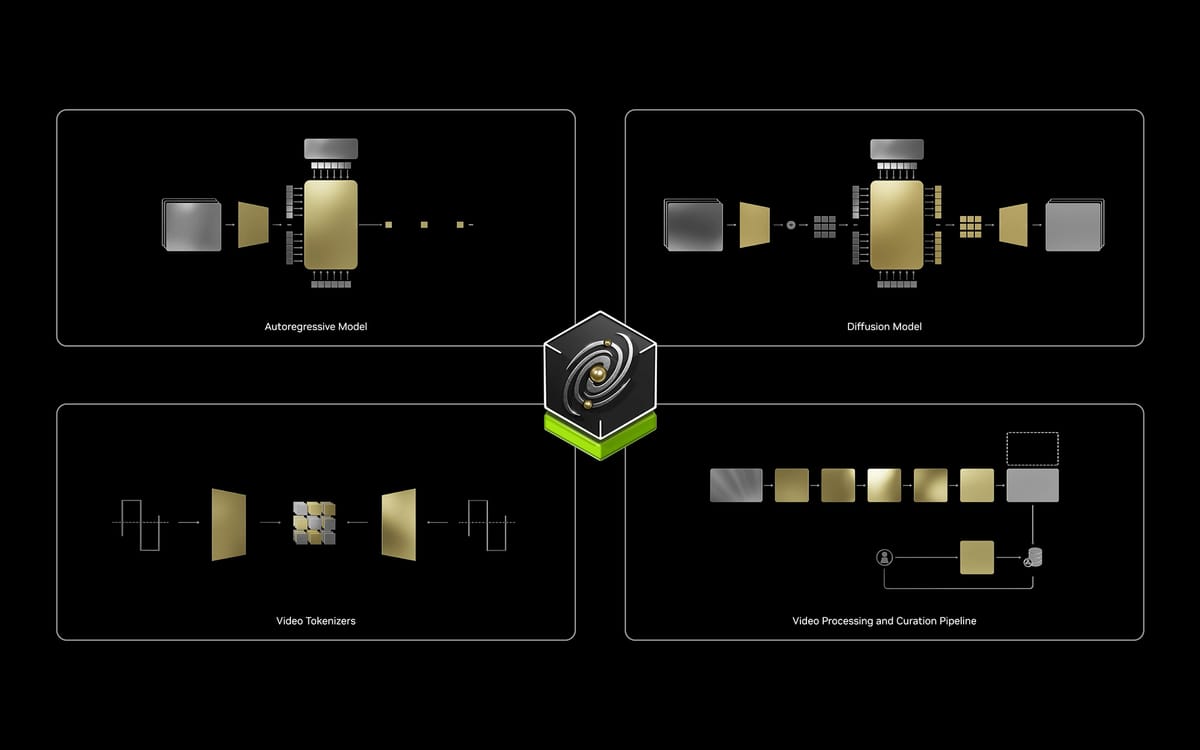

The Nemotron Family: AI Agents Beyond Vision

Here's where the thinking becomes systemic. Cosmos Reason 2 is powerful, but it's just the vision component. Real AI agents need more capabilities. Nvidia released three new Nemotron models that work alongside Cosmos to create complete agent systems.

Nemotron Speech: Delivers real-time, low-latency speech recognition. This matters for robots that need to respond to verbal commands instantly. Ten times faster than competing speech models, according to Nvidia. For a warehouse robot receiving instruction from a worker, the latency difference between speech-to-text taking 500ms versus 50ms is noticeable and affects safety.

More importantly, Nemotron Speech handles real-world audio—background machinery noise, overlapping speech, accents. This is where general models often fail. The model was trained on diverse audio from multiple languages and environments.
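To see why the 500 ms versus 50 ms difference affects safety, consider how far a moving robot travels before a spoken "stop" is even transcribed. The calculation below is a back-of-the-envelope sketch. The 1.5 m/s speed is an assumed figure, not one from Nvidia.

```python
def distance_during_latency(speed_m_s, latency_ms):
    """Distance covered (in meters) while waiting latency_ms for transcription."""
    return speed_m_s * latency_ms / 1000.0


ASSUMED_SPEED_M_S = 1.5  # hypothetical warehouse robot speed

for latency_ms in (500, 50):
    d = distance_during_latency(ASSUMED_SPEED_M_S, latency_ms)
    print(f"{latency_ms} ms latency -> {d:.3f} m travelled")
```

At that assumed speed, the slower recognizer costs 0.75 m of travel against 0.075 m for the faster one, before any braking begins.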

Nemotron RAG: Stands for Retrieval-Augmented Generation, but Nvidia's implementation is notable because it's multimodal. The embedding model understands both text and images. The reranking model can look at an image and decide if it's relevant to a query.

This matters for agents that need to access databases of knowledge. A robot encountering an object it hasn't seen before can query a product database by image, retrieve relevant documentation instantly, and reason about how to handle it. An autonomous system can search visual logs from similar situations and apply learned approaches.

Nvidia evaluates Nemotron RAG's embedding quality on the MMTEB benchmark and reports strong multilingual performance while using less compute power and memory. In production systems handling thousands of queries per second, this efficiency matters.
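The retrieval step behind any embedding-based RAG system comes down to ranking stored vectors by similarity to a query vector. The sketch below uses made-up three-dimensional vectors in place of real embeddings. In practice an embedding model would produce them, and nothing here reflects Nemotron RAG's actual interface.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


# Made-up vectors standing in for embeddings of documents in a product database.
CATALOG = {
    "glass vial handling guide": [0.9, 0.1, 0.0],
    "cardboard box stacking rules": [0.1, 0.9, 0.1],
    "pallet jack manual": [0.0, 0.2, 0.9],
}


def retrieve(query_vec, catalog, top_k=2):
    """Return the top_k document names most similar to the query vector."""
    ranked = sorted(
        catalog,
        key=lambda doc: cosine(query_vec, catalog[doc]),
        reverse=True,
    )
    return ranked[:top_k]


# Pretend this vector is the embedding of a camera frame showing a vial.
image_query = [0.8, 0.2, 0.1]
print(retrieve(image_query, CATALOG))
```

Because a multimodal embedding model places images and text in the same vector space, the query can come from a camera frame while the catalog entries come from written documentation.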

Nemotron Safety: A specialized model that detects sensitive data and prevents accidental exposure. For agents running in enterprise environments with confidential information, this is critical. A robot processing documents shouldn't accidentally transcribe or log personally identifiable information. A system querying databases shouldn't leak customer data. Nemotron Safety works alongside other models to validate outputs and flag risky content.
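To show where such a filter sits in a pipeline, the sketch below redacts text before it is logged. Nemotron Safety is a learned model, so this example deliberately swaps in two simple regular expressions as a stand-in. The patterns and the `redact` helper are illustrative assumptions and would miss many real-world cases.

```python
import re

# Deliberately simple patterns: a stand-in for a learned detector.
PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"),
    "PHONE": re.compile(r"\b\d{3}[-.\s]\d{3}[-.\s]\d{4}\b"),
}


def redact(text):
    """Replace matches with a [LABEL] tag and report which labels were found."""
    found = []
    for label, pattern in PATTERNS.items():
        if pattern.search(text):
            found.append(label)
            text = pattern.sub(f"[{label}]", text)
    return text, found


clean, flags = redact("Ship to jane.doe@example.com, call 555-010-4477.")
print(clean)  # Ship to [EMAIL], call [PHONE].
print(flags)  # ['EMAIL', 'PHONE']
```

The useful design point is the placement: the check runs on every output before it reaches a log or a downstream system, and the returned flags let the caller decide whether to block, redact, or escalate.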

The strategic insight here is ecosystem thinking. No single model does everything. A complete agent system needs vision reasoning, language understanding, speech processing, knowledge retrieval, and safety validation. Nvidia is building the full stack.

Generalist vs. Specialist Robots: The Strategic Shift

Kari Briski, Nvidia's VP for Generative AI Software, articulated an important shift happening in robotics: the move from specialist robots to generalist-specialist systems.

Old approach: Build a robot for one task. Assembly line robot bolts parts together, nothing else. Warehouse robot picks items from low shelves, can't reach high ones. This approach becomes economically viable only at high volume, where specialization pays for itself.

New approach: Build a robot with broad foundational knowledge plus deep task-specific skills. Same robot can assemble different products. Same system can work in different warehouse layouts. Same platform adapts to different industries. The broad foundation comes from models like Cosmos Reason 2. The task-specific skills come from fine-tuning and domain data.

This changes the economics. Lower deployment volume becomes viable. Custom applications become feasible. Robotics moves from pure manufacturing into smaller facilities, logistics operations, healthcare, research.

Broad foundational knowledge without task-specific skills is useless—the robot is generalist but ineffective. Deep task-specific skills without broad foundation is the old specialist model—expensive and inflexible. The combination is the sweet spot.

Cosmos Reason 2 enables this shift by providing that broad foundation. It understands physics, causality, planning. Organizations layer their domain expertise on top. The result is robots that are flexible enough to handle variations while being focused enough to excel at their job.

This also affects how you build and train robotic systems. You're not training from scratch. You're starting with Cosmos Reason 2's physical understanding and adapting from there. Much faster iteration. Lower technical barriers to entry.

Nemotron Speech excels with a high performance score due to its low-latency and real-world audio handling capabilities. Estimated data based on described features.

Customization and Enterprise Flexibility

One of Cosmos Reason 2's advertised advantages is enterprise customization. What does that mean practically?

First, fine-tuning depth. You can adapt the base model more effectively for your specific domain. Custom training data from your facility. Custom loss functions reflecting your specific objectives. Custom evaluation metrics matching your real-world success criteria. This isn't trivial—poor fine-tuning often damages model performance. But with Reason 2's architecture, carefully executed customization improves results.

Second, integration flexibility. The model works with your existing compute infrastructure, simulation systems, and robotics platforms. Nvidia provides guides and examples for common scenarios (warehouse automation, manufacturing, etc.) but the system is flexible enough for novel applications.

Third, deployment flexibility. You can run Cosmos Reason 2 on various hardware platforms—Nvidia GPUs obviously, but also with optimization for edge devices. A robot with onboard inference doesn't need to call home for every decision. Decisions happen locally, reducing latency and enabling autonomous operation.

Fourth, version management. As Nvidia improves Cosmos models, you can upgrade your deployment. New versions offer better reasoning, expanded capabilities, improved efficiency. You can migrate from Reason 2 to future versions without rewriting your entire system.

This flexibility is why enterprises adopt new AI models. It's not just about marginally better performance. It's about the system fitting into existing operations without requiring complete overhauls.

Competing Approaches and Why Cosmos Matters

Nvidia isn't alone in pursuing physical AI. How does Cosmos compare?

Google DeepMind has extensive robotics research but hasn't released publicly available reasoning models at Cosmos's level. Their approach emphasizes reinforcement learning and robotics-specific training. Powerful for specific tasks, less general-purpose.

Mistral's Pixtral Large is a capable vision-language model but doesn't specifically target physical reasoning. It understands images well but not causality or planning in the structured way Cosmos does.

Google has internal robotics capabilities but hasn't productized physical reasoning models for general use. OpenAI focuses primarily on language and image generation, less on embodied reasoning.

Nvidia's advantage is the ecosystem. Cosmos doesn't exist alone. It integrates with simulation tools, hardware partners, deployment infrastructure, and complementary models like Nemotron. This ecosystem advantage compounds—having Cosmos Transfer means better training data; having Nemotron models means complete agent systems; having GPU infrastructure means efficient deployment.

The public availability of Cosmos models also matters. Google's systems are powerful but private. Nvidia open-sourced Cosmos, letting researchers and companies build on it. This openness drives adoption and innovation.

The Road Ahead: GR00T and Vision-Language-Action Models

While Cosmos Reason 2 handles the reasoning layer, Nvidia also emphasizes GR00T, an open reasoning vision-language-action (VLA) model that bridges reasoning to actual robot control.

The distinction matters. Cosmos Reason 2 says "here's what should happen." GR00T translates that into actual motor commands: specific joint angles, grip force, movement speed. It's the connection between thinking and doing.

GR00T incorporates physical constraints, meaning the actions the robot can actually perform given its hardware. It handles real-world latencies, imperfections, and failures. The reasoning says "pick up the box." GR00T executes that while accounting for the robot's actual capabilities.

Nvidia's roadmap includes expanding both models. Better reasoning. Better action execution. Faster inference. Lower power consumption. Support for more robot hardware platforms.

The long-term vision is clear: AI systems that reason about physical tasks at a level matching human reasoning, then execute those plans reliably despite real-world chaos. Not there yet, but the trajectory is visible.

Practical Implementation Considerations

If you're considering deploying Cosmos Reason 2, what do you need to think about?

Data Preparation: Cosmos learns better from good data. Invest in capturing diverse scenarios in your environment. Include edge cases and failures—the model learns from what doesn't work. Synthetic data from Cosmos Transfer helps, but some real-world data is usually necessary.

Hardware Planning: Running Cosmos Reason 2 requires reasonable compute. Inference on an A100 GPU or newer runs efficiently. Edge deployment needs planning—can your robot carry the compute? Can you handle latency if decisions must round-trip to a data center?

Integration Architecture: How does Cosmos fit with your existing systems? Vision pipelines? Control systems? Databases and knowledge systems? Planning the integration architecture early prevents expensive refactoring later.

Validation Strategy: Reasoning models sometimes confidently make wrong decisions. Build validation into your system. Physical safety stops. Logic checks before executing critical actions. Testing in simulation before deployment.

Iteration Planning: Expect multiple development cycles. First deployment rarely matches optimal performance. Plan for gathering real-world data, improving fine-tuning, and iterating the model.
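The logic checks mentioned under Validation Strategy can be as simple as a gate that every proposed action must pass before it reaches the controller. The sketch below is one hedged way to write such a gate. The `Action` fields, the `Limits` values, and the `validate` function are hypothetical, and a software check like this complements hardware safety stops rather than replacing them.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Action:
    """A proposed command from the reasoning layer (hypothetical fields)."""
    grip_force_n: float
    speed_m_s: float
    target_xyz: tuple


@dataclass(frozen=True)
class Limits:
    """Assumed hardware and workspace limits; replace with your robot's values."""
    max_grip_force_n: float = 20.0
    max_speed_m_s: float = 0.5
    workspace_min: tuple = (-0.5, -0.5, 0.0)
    workspace_max: tuple = (0.5, 0.5, 0.8)


def validate(action, limits=Limits()):
    """Return a list of problems; an empty list means the action may proceed."""
    problems = []
    if not 0.0 <= action.grip_force_n <= limits.max_grip_force_n:
        problems.append("grip force out of range")
    if not 0.0 <= action.speed_m_s <= limits.max_speed_m_s:
        problems.append("speed out of range")
    bounds = zip(action.target_xyz, limits.workspace_min, limits.workspace_max)
    if any(not lo <= value <= hi for value, lo, hi in bounds):
        problems.append("target outside workspace")
    return problems


safe = Action(grip_force_n=8.0, speed_m_s=0.3, target_xyz=(0.1, 0.2, 0.4))
risky = Action(grip_force_n=35.0, speed_m_s=0.3, target_xyz=(0.1, 0.9, 0.4))
print(validate(safe))
print(validate(risky))
```

Returning the full list of problems, rather than stopping at the first, gives operators a more useful log entry when an action is rejected.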

Safety, Ethics, and Physical AI Governance

When AI systems make decisions affecting the physical world, safety becomes paramount. A wrong answer in a chatbot is embarrassing. A wrong answer in a robot arm near a human can mean injury or death.

Nemotron Safety addresses one dimension—preventing information leaks. But broader safety questions require organizational governance.

Physical Safety: Robots should have hard stops. Momentum overrides. Collision detection. No software issue should cause dangerous behavior. These are primarily hardware and systems considerations, not model considerations, but the model architecture should support safety features.

Failure Modes: What happens when reasoning is wrong? When the model is uncertain? When the environment is outside its training distribution? Good systems fail gracefully. Robot stops. Alerts operators. Requests human intervention. Cosmos-enabled systems should be designed with these failure modes in mind.

Bias and Fairness: Physical AI systems can embed human biases. A system trained primarily on one manufacturer's equipment might struggle with different equipment. A system trained in one environment might fail in another. Diverse training data and validation across environments matters.

Transparency: For critical applications, organizations need to understand why the system made a decision. This is harder than post-hoc explanations with language models. But having reasoning traces helps.

Nvidia emphasizes that deploying physical AI is an organizational responsibility. The model is a component. The system architecture, safety infrastructure, monitoring, and governance—these are customer responsibilities.
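The graceful-failure behavior described under Failure Modes (stop, alert operators, request intervention) can be expressed as a small decision gate in the surrounding system. The sketch below assumes the system can supply a confidence score and an out-of-distribution flag. Both inputs, the 0.8 threshold, and the `gate` function are hypothetical, since the article does not say what signals the model exposes.

```python
from enum import Enum


class Decision(Enum):
    EXECUTE = "execute"
    STOP_AND_ALERT = "stop_and_alert"
    REQUEST_HUMAN = "request_human"


def gate(confidence, in_distribution, min_confidence=0.8):
    """Decide whether to act, given an assumed confidence score in [0, 1]."""
    if not 0.0 <= confidence <= 1.0:
        raise ValueError("confidence must be between 0 and 1")
    if not in_distribution:
        # The scene looks unlike anything validated: hand over to a person.
        return Decision.REQUEST_HUMAN
    if confidence < min_confidence:
        # Familiar scene but an unsure plan: stop and notify operators.
        return Decision.STOP_AND_ALERT
    return Decision.EXECUTE


print(gate(0.95, True))
print(gate(0.60, True))
print(gate(0.95, False))
```

Checking the out-of-distribution flag first matters: a model can report high confidence on inputs it has never seen, so confidence alone is not a sufficient condition for acting.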

The Economics of Physical AI Deployment

When do investments in Cosmos Reason 2-based systems make financial sense?

Cost Structure: Model licensing is one component. Deployment infrastructure (GPU hardware, software platforms) is another. Integration and customization work is typically largest. Ongoing operation and monitoring. These costs stack up.

Benefit Timeline: Benefits accrue over time. Improved efficiency—fewer failed grasps, better task planning. Flexibility—same system handles new products or environments. Reduced downtime—better diagnosis of issues. These benefits are real but don't appear on day one.

Payback Periods: For automation of high-volume repetitive tasks in expensive environments (advanced manufacturing, autonomous vehicles), payback is often 1-2 years. For moderate-volume custom applications (small facility logistics, specialized assembly), 2-4 years. For experimental or research applications, financial payback isn't the goal.

Competitive Dynamics: If competitors deploy Cosmos-based systems and you don't, you gradually fall behind. Rivals operate more flexibly, adapt faster, reduce costs better. The competitive pressure drives adoption.

The financial case is usually strongest for organizations where labor is expensive, environments are complex, and flexibility is valuable. It's weakest for simple fixed tasks in low-cost labor markets.
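The payback periods above follow from simple arithmetic: upfront cost divided by net annual benefit. The sketch below shows that calculation with invented figures. The dollar amounts are placeholders for illustration, not estimates for any real deployment.

```python
def payback_years(upfront_cost, annual_benefit, annual_operating_cost):
    """Simple (undiscounted) payback period in years, or None if it never pays back."""
    net_annual = annual_benefit - annual_operating_cost
    if net_annual <= 0:
        return None
    return upfront_cost / net_annual


# Hypothetical deployment: all three figures are made up.
years = payback_years(
    upfront_cost=600_000,          # hardware, integration, customization
    annual_benefit=400_000,        # labor savings, fewer failed grasps, less downtime
    annual_operating_cost=100_000, # monitoring, maintenance, compute
)
print(years)  # 2.0
```

This ignores discounting and ramp-up time, so treat it as a first filter. Integration and customization usually dominate the upfront figure, which is why the same model can pay back quickly in one facility and slowly in another.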

Looking Forward: The Physical AI Inflection Point

We're at an inflection moment. Physical AI capabilities have crossed a threshold where commercial deployment makes sense for an expanding range of applications.

Five years ago, deploying an AI-guided robot in a warehouse was experimental. Now it's becoming standard practice. The capability threshold has been cleared. The economic case has been validated. Remaining barriers are integration and organizational adoption, not fundamental technical limitations.

Cosmos Reason 2 represents this transition. It's not a research model—it's a production tool. Organizations can deploy it today and expect reasonable results within months.

The next five years will likely see physical AI move from "fascinating technology" to "infrastructure." Not ubiquitous yet, but no longer novel. Expect major manufacturers to have deployments. Expect new startups building on Cosmos to emerge. Expect investments in physical AI to accelerate.

Cosmos Reason 2 is part of this transition, but it's not the end point. Future versions will reason more deeply, plan further ahead, handle greater complexity. But the fundamental capability—AI systems that understand and navigate physical environments—is now established.

For organizations considering physical automation, the question is shifting from "Is this possible?" to "When should we deploy this?" and "How do we integrate this into operations?" Those are harder questions. They require organizational change, not just technical innovation. But at least the technology is ready.

FAQ

What is Cosmos Reason 2 exactly?

Cosmos Reason 2 is Nvidia's vision-language model specifically designed for physical reasoning in robots and autonomous systems. Unlike general-purpose VLMs, it understands causality, physics, and how the physical world behaves, enabling robots to reason through complex tasks and adapt to unpredictable environments rather than just executing pre-programmed sequences.

How is Cosmos Reason 2 different from previous AI models?

Cosmos Reason 2 builds on a two-dimensional ontology that captures both semantic understanding (what objects are and what they do) and temporal-causal relationships (what happens next and why). This structured approach to reasoning enables the model to generalize across different tasks and environments more effectively than traditional vision-language models trained purely on image data. Previous reasoning models like Cosmos Reason 1 introduced this approach, but Reason 2 adds better customization, multi-step planning, and integration with broader AI agent ecosystems.

What makes physical reasoning different from regular image understanding?

Regular image understanding recognizes objects and their properties in static images. Physical reasoning understands dynamics—how objects behave when interacted with, what forces are needed for different actions, how to plan sequences of actions to achieve goals. A standard vision model sees a cube and knows it's a cube. A physical reasoning model understands that a cube with certain material properties requires specific grip force, will behave predictably when moved, and can be stacked with other cubes while maintaining structural integrity. This difference is fundamental to enabling robots to act effectively in the real world.

Can Cosmos Reason 2 be customized for specific industries?

Yes, Cosmos Reason 2 is designed with customization as a core feature. Organizations can fine-tune the model using domain-specific training data from their facilities or environments. Manufacturing plants can optimize it for their specific equipment and assembly sequences. Warehouse operators can adapt it to their specific layouts and cargo types. This customization capability is what makes the model valuable for enterprise deployment—you're not limited to the general-purpose version.

How does Cosmos Transfer work with Cosmos Reason 2?

Cosmos Transfer is the simulation and synthetic data generation component that works alongside Cosmos Reason 2. It lets developers create unlimited training scenarios in virtual environments that closely match physical conditions. You train the reasoning model extensively in simulation (10,000+ scenarios) where failures are free, then deploy to physical robots knowing the model learned in realistic conditions. This dramatically reduces the risk and cost of deployment compared to learning primarily from expensive real-world experiments.

What are the practical deployment challenges?

Key challenges include ensuring good training data quality that covers edge cases, integrating the model with existing robotics and control systems, planning appropriate hardware infrastructure for running inference, designing safety mechanisms that work even when reasoning is wrong, and building validation processes before deploying to physical robots. Organizations also need to plan for iteration and continuous improvement rather than expecting perfect performance on day one. The technology is ready, but organizational integration requires careful planning.

How does Cosmos Reason 2 handle uncertainty and edge cases?

The model is designed to reason through uncertainty by understanding probability distributions over outcomes rather than single deterministic answers. When encountering novel situations outside the training distribution, well-designed systems using Cosmos Reason 2 fail gracefully: the robot stops, alerts operators, and requests human intervention rather than attempting actions it's uncertain about. The underlying planning capability helps the model consider alternative approaches when the preferred plan seems risky.
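The fail-gracefully behavior described above can be sketched as a simple confidence gate around candidate plans. This is an illustrative sketch only: the `Plan` type, its `confidence` score, and the `0.8` threshold are assumptions made for the example, not outputs or parameters documented for Cosmos Reason 2.

```python
from dataclasses import dataclass
from enum import Enum
from typing import List, Optional, Tuple


class Decision(Enum):
    EXECUTE = "execute"
    STOP_AND_ESCALATE = "stop_and_escalate"


@dataclass
class Plan:
    name: str
    confidence: float  # hypothetical score in [0, 1] from the reasoning layer


def choose_plan(
    candidates: List[Plan], min_confidence: float = 0.8
) -> Tuple[Decision, Optional[Plan]]:
    """Pick the most confident plan, or escalate if none clears the threshold."""
    viable = [p for p in candidates if p.confidence >= min_confidence]
    if not viable:
        # No plan is trusted enough: stop and hand control to a human.
        return Decision.STOP_AND_ESCALATE, None
    return Decision.EXECUTE, max(viable, key=lambda p: p.confidence)
```

With candidates scored 0.6 and 0.9, the gate executes the 0.9 plan; with only the 0.6 plan available, it stops and escalates instead of acting.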

What's the relationship between Cosmos Reason 2 and the Nemotron models?

Cosmos Reason 2 handles visual reasoning and planning. The Nemotron models handle complementary functions: Nemotron Speech processes voice commands in real time, Nemotron RAG enables agents to retrieve and reason about information from databases and knowledge systems, and Nemotron Safety prevents accidental exposure of sensitive information. Together, they form a complete agent stack that can perceive, reason, communicate, access knowledge, and operate safely in enterprise environments.

Is Cosmos Reason 2 open-source?

Nvidia releases the Cosmos models openly, making weights available for research and commercial deployment. This open approach differs from some competitors' closed models and drives broader adoption and innovation. Organizations can download weights, fine-tune them for their applications, and deploy them, subject to the license terms published with each model release. Review those terms before committing to a commercial deployment.

What's the timeline for ROI when deploying Cosmos Reason 2 systems?

ROI timelines vary by application. For automation of high-volume, expensive tasks (advanced manufacturing, autonomous vehicles), payback typically occurs within 1-2 years. For moderate-volume custom applications, 2-4 years is common. For experimental deployments where financial return isn't the primary goal, benefits show up as capability expansion and competitive advantage. The economics are strongest in complex environments where flexibility matters and labor or error costs are high.
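To see where a deployment might fall in those ranges, a simple payback calculation is a reasonable starting point. The sketch below assumes a flat annual saving and operating cost; the function name and the figures in the usage note are hypothetical and not taken from any reported deployment.

```python
def payback_years(
    upfront_cost: float,
    annual_savings: float,
    annual_operating_cost: float = 0.0,
) -> float:
    """Simple payback period: upfront cost divided by net annual benefit."""
    net_annual_benefit = annual_savings - annual_operating_cost
    if net_annual_benefit <= 0:
        # The system never pays for itself under these assumptions.
        return float("inf")
    return upfront_cost / net_annual_benefit
```

For example, a hypothetical 600,000 upfront cost with 500,000 in annual savings and 100,000 in annual operating cost gives `payback_years(600_000, 500_000, 100_000)`, which evaluates to 1.5 years. This ignores discounting and ramp-up time, so treat it as a first-pass estimate.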

The Future of Physical AI Is Reasoning, Not Just Recognition

Nvidia's trajectory from Cosmos Reason 1 through Reason 2 and into future versions tells a story about where AI is heading. The industry spent years perfecting pattern matching in digital space. Now the frontier moves to reasoning in physical space.

Robots that truly understand the world they operate in. That can plan through complexity. That adapt when plans fail. That handle novel situations using underlying principles rather than memorized responses. This is the promise of physical AI.

Cosmos Reason 2 is not there yet, but it's unmistakably moving in that direction. The model understands physics and causality in ways that enable useful reasoning. Organizations are deploying it to real systems and reporting improved performance and flexibility. The ecosystem of supporting tools continues expanding.

If you're responsible for automation, robotics, or AI strategy in an organization, Cosmos Reason 2 deserves serious attention. Not as a solution to deploy immediately in every scenario, but as the foundation layer for future systems. The capabilities represent a meaningful step forward. The timing suggests production readiness for many applications. The ecosystem support indicates serious long-term commitment from Nvidia.

The age of physical AI that CEO Jensen Huang described isn't coming. It's here. And with each model release, the foundation for serious, widespread deployment strengthens.

Key Takeaways

- Cosmos Reason 2 uses a two-dimensional ontology to understand both object properties and temporal-causal relationships, enabling robots to reason through physical tasks rather than just pattern-matching

- Multi-step planning capability allows robots to reason through consequences before acting, similar to how software agents break down digital workflows

- The Nemotron family of complementary models (Speech, RAG, Safety) creates complete AI agent ecosystems beyond just visual reasoning

- Cosmos Transfer synthetic data generation dramatically reduces deployment risk and cost by enabling extensive training in simulation before physical robot deployment

- The shift from specialist robots to generalist-specialist systems changes robotics economics, making custom applications viable at lower volumes than traditional fixed-purpose automation
